
PERSPECTIVE article

Front. Ophthalmol., 04 January 2023

Sec. Glaucoma

Volume 2 - 2022 | https://doi.org/10.3389/fopht.2022.1057896

This article is part of the Research Topic Translational Opportunities for AI in Glaucoma.

Da Ma1,2,3*, Louis R. Pasquale4, Michaël J. A. Girard5,6,7, Christopher K. S. Leung8, Yali Jia9, Marinko V. Sarunic10,3, Rebecca M. Sappington1,2 and Kevin C. Chan11,12*

Artificial intelligence (AI) has been applied in biomedical research in diverse areas, from bedside clinical studies to benchtop basic science. In ophthalmic research, and glaucoma in particular, AI applications are growing rapidly toward potential clinical translation, given the vast amount of available data and the introduction of federated learning. In contrast, AI for basic science remains limited despite its potential to provide mechanistic insight. In this perspective, we discuss recent progress, opportunities, and challenges in applying AI to glaucoma for scientific discovery. Specifically, we focus on the research paradigm of reverse translation, in which clinical data are first used for patient-centered hypothesis generation, followed by basic science studies for hypothesis validation. We elaborate on several distinctive areas of research opportunity for reverse translation of AI in glaucoma, including disease risk and progression prediction, pathology characterization, and sub-phenotype identification. We conclude with current challenges and future opportunities for AI research in basic glaucoma science, such as inter-species diversity, AI model generalizability and explainability, and AI applications using advanced ocular imaging and genomic data.

In therapeutic development research, the conventional forward translation paradigm follows a benchtop-to-bedside scheme: genomic associations or therapeutic biomarkers are first identified in in vitro studies and animal models and then validated in human subjects for further refinement and therapeutic development. Reverse translation instead starts from human studies to identify and generate hypotheses for validation in animal or in vitro studies (1). Because it is a more patient-centered, seamless, continuous, and cyclical process, this alternative paradigm is poised to address several bottlenecks of conventional forward translation (2).

In glaucoma research, there is an urgent need to develop therapies beyond the conventional, clinically proven intervention of lowering intraocular pressure (IOP). Glaucoma is the leading cause of irreversible blindness worldwide. Although glaucoma is characterized by progressive damage to retinal ganglion cells and their axons, very little is known about its underlying mechanisms. IOP is a major risk factor for the disease, but not its cause. However, only a small fraction of current clinical trials (<7%) focus on novel neurotherapeutic targets (3). This mismatch between need and reality in therapeutic development is due in part to limitations inherent in the conventional forward translation paradigm, and reverse translation could help alleviate these barriers. For example, recent work using a transgenic mouse model carrying the optineurin E50K mutation, originally discovered in human normal-tension glaucoma patients (4), revealed novel mutation-level-dependent age effects on visual impairment (5). In another example, inspired by the clinical relationship between aging and glaucoma, Lu et al. reprogrammed retinal tissue through ectopic expression of three of the four Yamanaka transcription factors (Oct4, Sox2, and Klf4) and showed reversal of age-related vision loss and eye damage in aged mice and in a mouse model of glaucoma (6). These and other related studies demonstrate the successful application of reverse translation in glaucoma research (7–9).

Across medicine, machine learning and artificial intelligence (AI) have been applied mostly to clinical data, with far fewer studies pertaining to animal models. This disparity arises largely from the greater availability and standardization of bedside clinical data compared with benchtop data. It is particularly pronounced in glaucoma, where much of the recent technology development has taken place in bedside clinical settings (10, 11). Such disparities in technological advancement, which favor clinical applications, present an ideal opportunity to apply the reverse translation research paradigm. AI applications in glaucoma are therefore poised for technical adaptations that can pioneer reverse translation from humans to animal models. In this perspective, we summarize several areas of research opportunity for reverse AI translation in glaucoma, point out current challenges in the field, and identify research directions toward successful reverse translation for scientific discoveries in glaucoma.

This perspective is structured as follows. We first review recent research using supervised classification AI models to predict glaucoma-related clinical and pathological conditions, such as rapid glaucoma progression (Section 2.1) and optic nerve head (ONH) abnormalities (Section 2.2). In parallel, we summarize applications of unsupervised clustering algorithms to identify glaucoma subtypes (Section 2.3), such as novel archetypal visual field loss patterns and structural patterns of ONH abnormality. The following sections focus on AI algorithms that identify glaucoma-related risk factors (Section 2.4) and endophenotypes (Sections 2.5-2.7). Specifically, we take a close look at AI-derived phenotypic biomarkers for glaucoma from ophthalmic imaging techniques, including structural optical coherence tomography (OCT) (Sections 2.5-2.6) and vascular OCT angiography (OCTA) (Section 2.7). We then review recent glaucoma AI research that addresses common AI challenges, including model generalizability (Section 2.8), model explainability (Section 2.9), and model transferability through federated learning (Section 2.10), which trains aggregated models across multiple sites without sharing data among the participating sites. We conclude by discussing current opportunities and challenges for reverse AI translation in glaucoma (Section 3.1) and share our perspective on future research directions (Section 3.2) that incorporate state-of-the-art explainable AI methods into cutting-edge ophthalmic imaging and genomic techniques.

Predicting disease progression or risk is important for patient stratification and for guiding early intervention. Visual field testing is a low-cost diagnostic tool for evaluating visual function. Using deep neural networks trained on low-dimensional, baseline 2D visual field measurements, recent studies showed promising predictive power for forecasting the risk of rapid glaucomatous progression (12, 13). OCT, in turn, may be capable of predicting visual field progression (14). An important question for future reverse translation studies is which approach is more appropriate for which purpose. Would visual field measurements that predict glaucoma risk be more valuable for clinical applications such as early screening or stratification of clinical trial participants, while OCT-based prediction of visual fields fits better in animal studies aimed at understanding the structure-function relationships within the pathogenic mechanisms of glaucoma? To answer these questions, longitudinal experiments could be designed in transgenic animal models to simultaneously evaluate the progression of functional and structural abnormalities and to model their pathogenic cascades as a function of time.
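The progression studies above learn to predict a rapid-progression label. As a minimal sketch of one common way such a label can be defined in the first place (an assumption here, not the criterion used in (12, 13)), one can fit an ordinary least-squares line to visual field mean deviation (MD) over follow-up and flag eyes whose slope is at or below a cutoff. The -1 dB/year cutoff and all function names are hypothetical.

```python
from statistics import fmean


def md_slope(years, md_db):
    """Ordinary least-squares slope of mean deviation (dB) against time (years)."""
    if len(years) != len(md_db) or len(years) < 2:
        raise ValueError("need at least two paired (time, MD) measurements")
    t_bar, y_bar = fmean(years), fmean(md_db)
    sxx = sum((t - t_bar) ** 2 for t in years)
    if sxx == 0:
        raise ValueError("time points must not all be identical")
    sxy = sum((t - t_bar) * (y - y_bar) for t, y in zip(years, md_db))
    return sxy / sxx


def is_rapid_progressor(years, md_db, cutoff_db_per_year=-1.0):
    """Hypothetical labeling rule: an MD slope at or below the cutoff counts as rapid."""
    return md_slope(years, md_db) <= cutoff_db_per_year


# Hypothetical eye whose MD falls by 1.5 dB per year
years = [0.0, 1.0, 2.0, 3.0]
md = [-2.0, -3.5, -5.0, -6.5]
print(md_slope(years, md))             # -1.5
print(is_rapid_progressor(years, md))  # True
```

A deep-learning predictor replaces the need for a long series: it estimates, from the baseline field alone, whether this slope-based label will eventually apply.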

The ONH is where all retinal ganglion cell axons converge to leave the eye, making it a critical structure for the entire visual system. Glaucoma is associated with elevated IOP, and the ONH, which bears the biomechanical forces resulting from elevated IOP, is therefore susceptible to structural damage and the associated functional loss. Han et al. trained a convolutional neural network (CNN) on a large dataset of 282,100 images from the UK Biobank and the Canadian Longitudinal Study on Aging (CLSA) to label the ONH automatically (15). Their model extracted two key ONH parameters: vertical cup-to-disc ratio and vertical disc diameter. Using OCT, Heisler et al. demonstrated automatic extraction of the peripapillary region in a much smaller clinical cohort by combining peripapillary layer segmentation with Faster R-CNN-based object detection of Bruch’s membrane opening (BMO) (16). Accurate segmentation of the optic disc region and peripapillary retinal boundaries has also been demonstrated by combining a CNN with multi-weight graph search (17). These results show promising potential for reverse translation to animal glaucoma models, in which sample sizes are much smaller than in large-scale population studies such as the UK Biobank. Special care should be taken in experimental design, however, because such reverse translation may mainly apply to animals whose ONH anatomy is similar to that of the human eye.
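Once a CNN has segmented the optic cup and disc, the two ONH parameters extracted by Han et al. reduce to simple geometry. The sketch below is a simplified illustration, not their pipeline: it assumes binary cup and disc masks on a fundus image and a hypothetical pixel scale, and it measures vertical extents row by row.

```python
def vertical_extent(mask):
    """Number of image rows spanned by the foreground (truthy pixels) of a 2D mask."""
    rows = [i for i, row in enumerate(mask) if any(row)]
    return 0 if not rows else rows[-1] - rows[0] + 1


def vertical_cdr(cup_mask, disc_mask):
    """Vertical cup-to-disc ratio: vertical cup height / vertical disc height."""
    disc_height = vertical_extent(disc_mask)
    if disc_height == 0:
        raise ValueError("disc mask is empty")
    return vertical_extent(cup_mask) / disc_height


def vertical_disc_diameter_mm(disc_mask, mm_per_px):
    """Vertical disc diameter, given an assumed (hypothetical) pixel scale."""
    return vertical_extent(disc_mask) * mm_per_px


# Toy 10 x 10 masks: the disc occupies rows 1-8, the cup occupies rows 3-6
disc = [[1 if 1 <= r <= 8 and 2 <= c <= 7 else 0 for c in range(10)] for r in range(10)]
cup = [[1 if 3 <= r <= 6 and 4 <= c <= 5 else 0 for c in range(10)] for r in range(10)]
print(vertical_cdr(cup, disc))                # 0.5
print(vertical_disc_diameter_mm(disc, 0.25))  # 2.0
```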

Understanding disease subtypes is important for achieving precision medicine. For example, different subtypes of primary open-angle glaucoma (POAG) show different patterns of visual field progression (18). Distinctive glaucoma sub-phenotypes have been discovered from visual field patterns (19) or from structural descriptors of ONH shape (20) using data-driven clustering and dimensionality reduction methods such as Uniform Manifold Approximation and Projection (UMAP) and non-negative matrix factorization (21). Such clinically informed POAG sub-phenotypes open new frontiers for reverse translation. Existing AI studies in animal models are mainly limited to discriminating between glaucomatous and healthy eyes with OCT (22). By reverse translating AI findings from clinical studies, novel animal models can be developed or identified to study the distinctive disease mechanisms of each POAG subtype.
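Non-negative matrix factorization, one of the methods named above, factors a non-negative data matrix V (for example, eyes by visual field locations, with loss expressed as non-negative values) into per-eye weights W and a small set of basis patterns H. The following is a minimal pure-Python sketch using the classic Lee-Seung multiplicative updates. It is illustrative only and is not the pipeline of (19), (20), or (21); the archetypal analysis behind Figure 1 is a related but distinct decomposition.

```python
import random


def matmul(a, b):
    """Product of two matrices stored as lists of rows."""
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)] for row in a]


def transpose(a):
    return [list(col) for col in zip(*a)]


def frob_error(v, w, h):
    """Squared Frobenius norm of V - WH."""
    wh = matmul(w, h)
    return sum((x - y) ** 2 for rv, rw in zip(v, wh) for x, y in zip(rv, rw))


def nmf(v, k, iters=200, seed=0, eps=1e-9):
    """Lee-Seung multiplicative updates for V ~ W H with W, H >= 0.

    v: m x n non-negative matrix (e.g. eyes x visual field locations).
    Returns W (m x k per-eye weights) and H (k x n basis patterns).
    """
    rng = random.Random(seed)
    m, n = len(v), len(v[0])
    w = [[rng.random() + eps for _ in range(k)] for _ in range(m)]
    h = [[rng.random() + eps for _ in range(n)] for _ in range(k)]
    for _ in range(iters):
        # H <- H * (W^T V) / (W^T W H)
        wt = transpose(w)
        num, den = matmul(wt, v), matmul(matmul(wt, w), h)
        h = [[h[i][j] * num[i][j] / (den[i][j] + eps) for j in range(n)] for i in range(k)]
        # W <- W * (V H^T) / (W H H^T)
        ht = transpose(h)
        num, den = matmul(v, ht), matmul(w, matmul(h, ht))
        w = [[w[i][j] * num[i][j] / (den[i][j] + eps) for j in range(k)] for i in range(m)]
    return w, h


# Toy loss matrix: 4 eyes x 4 locations built from a "superior" and an "inferior" pattern
V = [[2, 2, 0, 0], [0, 0, 3, 3], [1, 1, 1, 1], [4, 4, 2, 2]]
W, H = nmf(V, k=2)
print(round(frob_error(V, W, H), 4))
```

Rows of H play the role of candidate sub-phenotype patterns, and rows of W give each eye's decomposition weights. In practice the rank k, the initialization, and the convergence checks would need careful choice.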

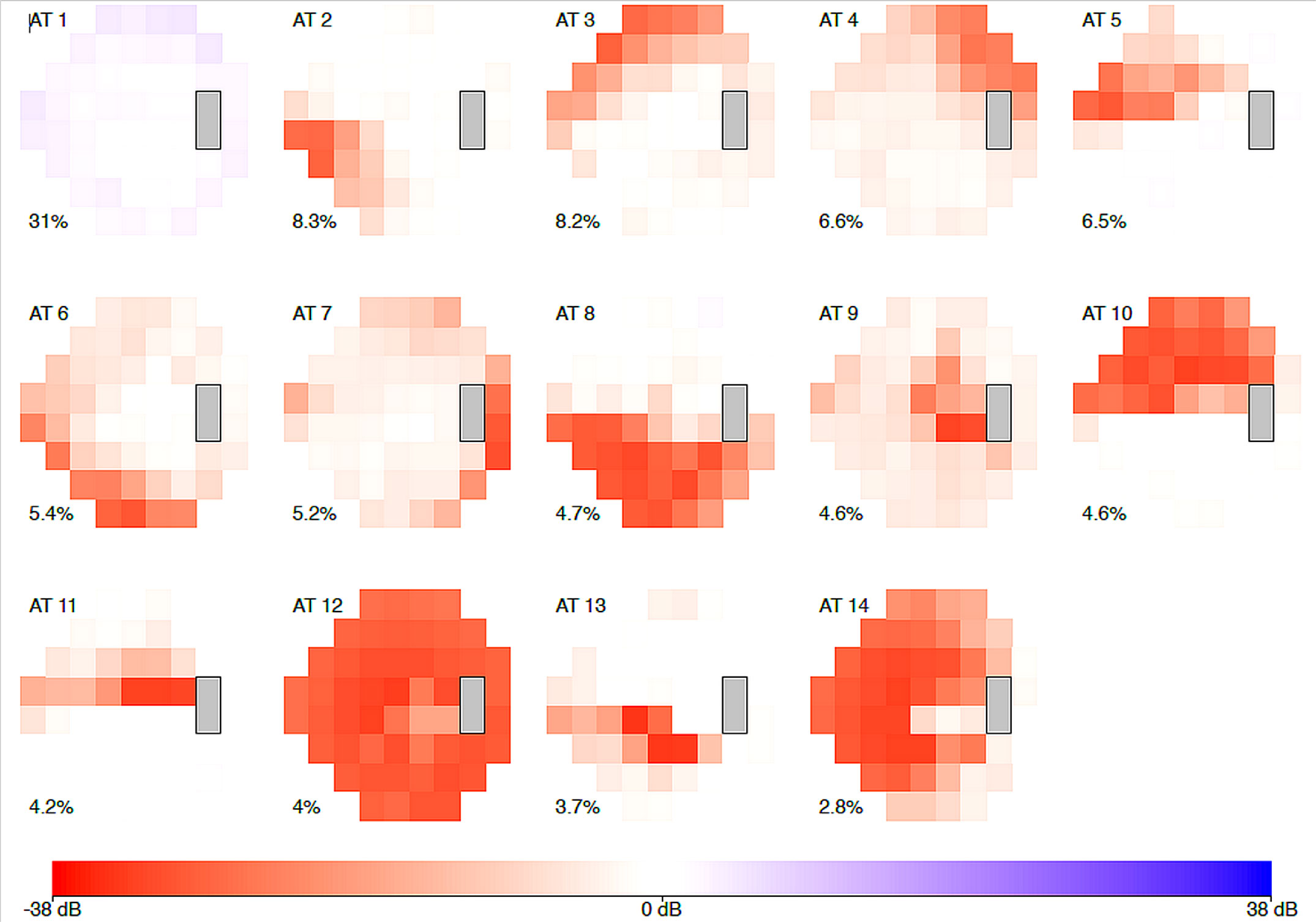

Genotype-phenotype associations are an important inter-species bridge between benchtop and bedside studies. A recent study identified 14 archetypes of POAG by applying data-driven clustering to visual field patterns (19) (Figure 1). By linking the discovered sub-phenotypes with ancestry data, the authors identified African ancestry as a risk factor for specific POAG sub-phenotypes among both early and advanced loss archetypes. In another study, a genome-wide meta-analysis identified 127 open-angle glaucoma loci (23). AI-driven algorithms can also be used to assign vertical cup-to-disc ratios, extending our knowledge of the genetic architecture of glaucoma (15). Such findings may guide the development of novel transgenic mouse models that are highly relevant to the human disease process. However, given the differences in ocular anatomy between mice and humans, it will be important to determine the inter-species translatability of glaucoma sub-phenotypes, and animal-specific sub-phenotyping models will need to be carefully trained and interpreted.

Figure 1 The 14 archetypal visual field loss patterns derived from visual fields of the 1957 incident primary open-angle glaucoma cases (2581 affected eyes). The integer at the top left of each archetype (AT) denotes the archetype number. The percentage at the bottom left of each archetype indicates this pattern’s respective average decomposition weight. The algorithm identified 14 archetypes: four representing advanced loss patterns, nine of early loss, and one of no visual field loss. African-American patients made up 1.3 percent of the study but had a nearly twofold increased risk of early visual field loss archetypes, and a sixfold higher risk for advanced field loss archetypes, when compared to white patients. [excerpted from (19)].

Describing the morphological and biomechanical phenotype of the ONH is critical for glaucoma research. Changes in ONH structure are considered a central event in glaucoma, and the fragile ONH is constantly exposed to three major loads: IOP, cerebrospinal fluid pressure (CSFP), and optic nerve traction during eye movements. To better describe ONH structure in patients, Devalla et al. proposed several deep learning approaches (e.g., DRUNET and ONH-Net) that simultaneously segment the connective and neural tissues of the ONH in OCT images (24), one of which was device-independent (25). Panda et al. and Braeu et al. employed these AI-driven approaches to identify novel morphological biomarkers of glaucoma in humans (20, 26). These technologies were then successfully ‘reverse-translated’ to the tree shrew model (27) to better understand non-linear optical distortions in OCT images, knowledge that could ultimately improve glaucoma prediction in patients.

Several technologies have been developed in humans to assess ONH biomechanics. Because IOP-induced (or gaze-induced) ONH deformations can now be observed with OCT, techniques such as digital volume correlation, the virtual fields method, and other AI-driven approaches have been used to map local ONH tissue strain, biomechanical properties, and robustness (28–32). In a large glaucoma population, Chuangsuwanich et al. identified two key biomechanical trends: (1) IOP-induced deformations were associated with visual field loss in high-tension glaucoma but not in normal-tension glaucoma (33); and (2) normal-tension glaucoma ONHs were more biomechanically sensitive to changes in gaze, whereas high-tension glaucoma ONHs appeared more sensitive to changes in IOP (34). Similar techniques have in turn been used to test biomechanical hypotheses in non-human primates, which allow greater experimental freedom, including simultaneous control of IOP, CSFP, and blood pressure (35, 36). Knowledge gathered from these animal experiments could ultimately help refine a viable clinical test of ONH biomechanics in patients.
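Digital volume correlation and related methods first estimate how each tissue point moves between two OCT volumes; strain is then the spatial gradient of that displacement field. As a deliberately reduced sketch, assuming displacements have already been tracked at points along a single axial line through the lamina (real analyses work in 3D and report full strain tensors), the small-strain estimate du/dx can be computed with finite differences. The profile values are hypothetical.

```python
def strain_1d(positions_um, displacements_um):
    """Small (engineering) strain du/dx along a 1D tissue profile.

    Central differences in the interior, one-sided differences at the two ends.
    Positive values indicate stretching, negative values compression.
    """
    n = len(positions_um)
    if n != len(displacements_um) or n < 2:
        raise ValueError("need at least two paired samples")
    x, u = positions_um, displacements_um
    strain = []
    for i in range(n):
        lo, hi = max(i - 1, 0), min(i + 1, n - 1)
        strain.append((u[hi] - u[lo]) / (x[hi] - x[lo]))
    return strain


# Hypothetical axial profile: points tracked before and after an IOP increase
positions = [0, 100, 200, 300, 400]   # um
displacements = [0, -1, -2, -3, -4]   # um, a uniform 1% compression
print(strain_1d(positions, displacements))  # [-0.01, -0.01, -0.01, -0.01, -0.01]
```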

Biological and clinical explainability is important for both forward and reverse translation. Lee et al. developed a computational morphometric analysis pipeline that measures individualized glaucoma-induced retinal structural changes by estimating retinal layer thickness and shape deformation over time (37). Such measurements require registration-based computation on longitudinal data (38). Recent work by Saini et al. used non-negative matrix factorization, an unsupervised dimensionality reduction and clustering method, to derive distinctive sub-phenotypes from surface-shape features of the ONH and peripapillary retinal nerve fiber layer (RNFL), which could further improve the prediction accuracy of subsequent glaucomatous visual field loss (21).
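Once retinal boundaries are segmented, layer thickness is the distance between two boundaries along each A-scan, and longitudinal change is the difference between visits. The sketch below covers only that last step and assumes the registration described above has already aligned the visits. It is not the pipeline of Lee et al. (37), and the axial pixel size and boundary values are hypothetical.

```python
from statistics import fmean


def layer_thickness_um(upper_px, lower_px, axial_um_per_px):
    """Per-A-scan thickness between two segmented boundaries.

    upper_px / lower_px: boundary depth (row index) for each A-scan, upper above lower.
    """
    if len(upper_px) != len(lower_px):
        raise ValueError("boundaries must have one value per A-scan")
    return [(lo - up) * axial_um_per_px for up, lo in zip(upper_px, lower_px)]


def mean_thickness_change(baseline, follow_up, axial_um_per_px):
    """Mean thickness at follow-up minus mean thickness at baseline (um).

    Each visit is an (upper, lower) pair of boundary lists, assumed to be
    registered so that index i refers to the same A-scan location at both visits.
    """
    base = fmean(layer_thickness_um(*baseline, axial_um_per_px))
    follow = fmean(layer_thickness_um(*follow_up, axial_um_per_px))
    return follow - base


AXIAL_UM_PER_PX = 2.0                     # hypothetical axial pixel size
baseline = ([10, 10, 10], [60, 62, 58])   # thickness 100, 104, 96 um
follow_up = ([10, 10, 10], [55, 56, 54])  # thickness 90, 92, 88 um
print(mean_thickness_change(baseline, follow_up, AXIAL_UM_PER_PX))  # -10.0
```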

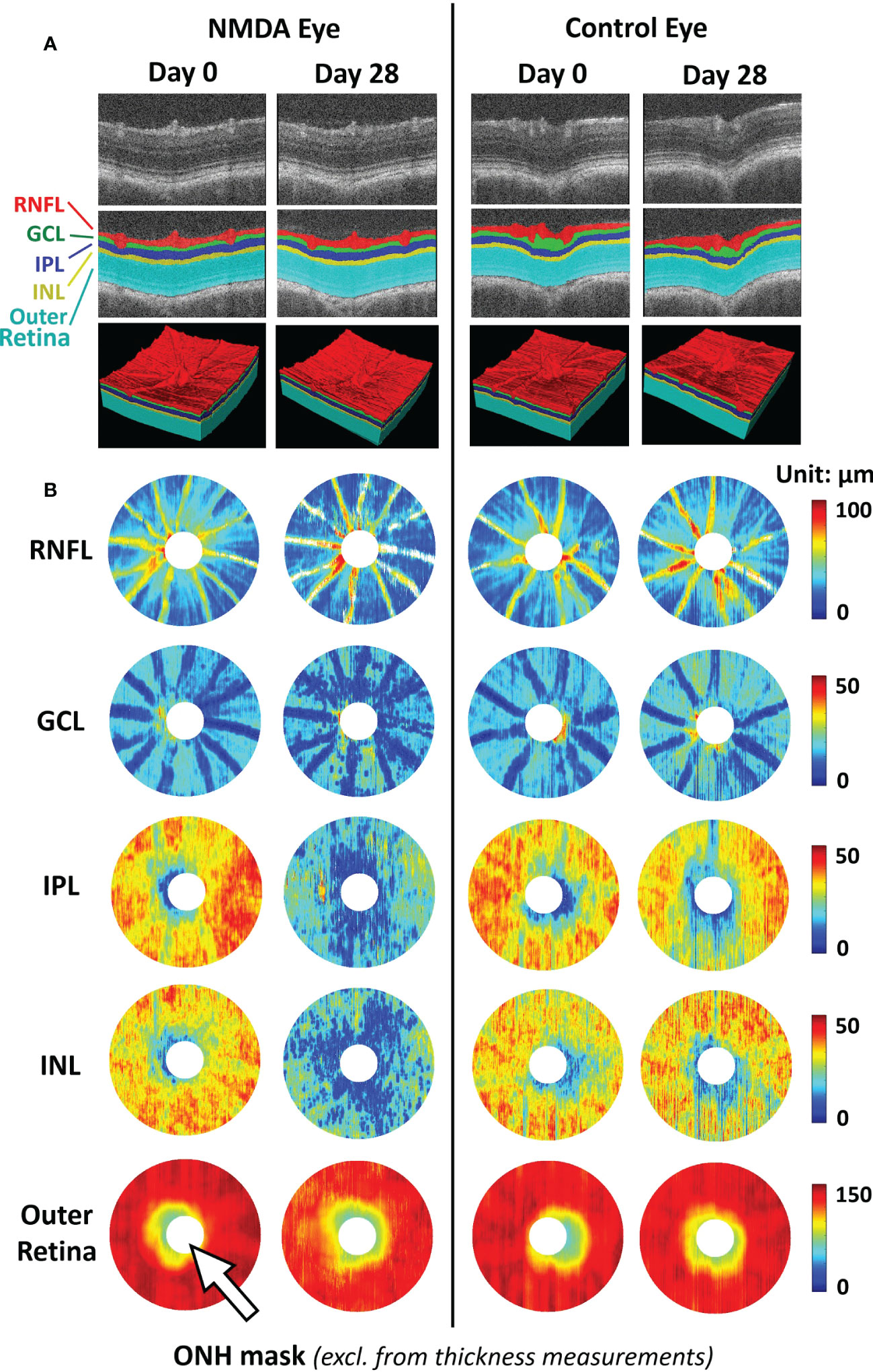

Focusing on RNFL bundles, Leung et al. developed an optical texture analysis (39) and illustrated its diagnostic value for both glaucoma and non-glaucomatous optic neuropathies (40). Such mathematically driven modeling tools can be applied to both clinical studies and animal models to understand the relationships between RNFL integrity and other structural pathologies, such as retinal vascular disruption. These models could further benefit from more accurate RNFL segmentation, since deep learning-based methods enable automatic segmentation of retinal layers with high accuracy (41). Compared with clinical OCT, animal studies have limited sample sizes and few labeled ground-truth data for training. Transfer learning and pseudo-labeling have proven beneficial here, allowing deep learning models pre-trained on larger-scale clinical OCT data to be used in animal studies with limited training data and minimal ground-truth labels (42) (Figure 2).

Figure 2 Representative images of deep learning-assisted automatic retinal layer segmentation (A) and the thickness measurements of 5 retinal layers for both injured and control rat eyes (B) before and 28 days after unilateral N-methyl-D-aspartate (NMDA) injection. Automatic retinal layer segmentation was achieved using LF-UNet - an anatomical-aware cascaded deep-learning-based retinal optical coherence tomography (OCT) segmentation framework that has been validated on human retinal OCT data (42). In this work, two techniques were applied to improve the efficiency and generalizability of the LF-UNet segmentation framework when training with a small, labeled dataset – 1) composited transfer-learning and domain adaptation, and 2) pseudo-labeling. [excerpted from (42)]. (RNFL, retinal nerve fiber layer; GCL, ganglion cell layer; IPL, inner plexiform layer; INL, inner nuclear layer; ONH, optic nerve head).
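Pseudo-labeling, one of the two techniques mentioned in the caption, can be sketched in a few lines: a model trained on the small labeled set predicts class probabilities on unlabeled scans, and only confident predictions are kept as extra training labels. This is a generic illustration under stated assumptions (a 0.9 confidence threshold, per-pixel probabilities already computed, an ignore value of -1), not the exact procedure of (42).

```python
IGNORE = -1  # label value to be excluded from the loss when retraining


def pseudo_labels(prob_maps, threshold=0.9):
    """Convert per-pixel class probabilities on unlabeled scans into pseudo-labels.

    prob_maps: 2D grid (rows of pixels); each pixel is a list of class probabilities.
    Pixels whose highest probability is below `threshold` are marked IGNORE.
    """
    labels = []
    for row in prob_maps:
        out_row = []
        for probs in row:
            best = max(range(len(probs)), key=probs.__getitem__)
            out_row.append(best if probs[best] >= threshold else IGNORE)
        labels.append(out_row)
    return labels


def coverage(labels):
    """Fraction of pixels that received a confident pseudo-label."""
    flat = [v for row in labels for v in row]
    return sum(v != IGNORE for v in flat) / len(flat)


# One row of three pixels; classes are (background, RNFL, GCL) in this toy example
probs = [[[0.95, 0.03, 0.02], [0.20, 0.70, 0.10], [0.05, 0.92, 0.03]]]
labels = pseudo_labels(probs)
print(labels)  # [[0, -1, 1]]
print(coverage(labels))
```

The threshold trades label quantity against label noise: lowering it labels more pixels but lets more of the model's own errors back into training.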

Recent work by Braeu et al. incorporated state-of-the-art geometric deep learning to train classifiers on point-cloud data derived from segmented ONH boundaries (26, 43). Using a dynamic graph convolutional neural network (DGCNN) as an explainable AI method, the authors identified the critical 3D structural features of the ONH that contribute to improved glaucoma diagnosis. Some of these regions strongly colocalized with the central retinal vessels, which aligns with their finding that the central retinal vessel trunk and branches have stronger diagnostic power for glaucoma than RNFL thickness (44). This model is also currently under clinical assessment (45). Importantly, such analyses could be extended to animal models, given recent AI developments in glaucoma animal models. In OCT images, Choy et al. employed AI to delineate Schlemm’s canal lumens in mouse eyes (46). Similar segmentation and shape analysis approaches have recently been applied to fixed tissues for automated analysis of multiple retinal morphological changes in animal models, including RNFL thickness (42), optic nerve density, and retinal ganglion cell soma density (47, 48). These studies suggest that, while the small populations available for ground-truth training may be an obstacle to reverse translation of AI technology, this obstacle is not insurmountable.
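DGCNN operates on a graph built from each point's k nearest neighbours. Its EdgeConv layers apply a shared learned function to, for every edge (i, j), the point's own features x_i together with the offset x_j - x_i, and then aggregate over the neighbours. The sketch below shows only this graph and edge-feature construction on raw 3D coordinates; the learned layers are omitted, and the toy point cloud is hypothetical.

```python
import math


def knn_indices(points, k):
    """Indices of the k nearest neighbours (Euclidean) of each point, excluding itself."""
    neighbours = []
    for i, p in enumerate(points):
        ranked = sorted((math.dist(p, q), j) for j, q in enumerate(points) if j != i)
        neighbours.append([j for _, j in ranked[:k]])
    return neighbours


def edge_features(points, k):
    """EdgeConv-style inputs: for each edge (i, j), concatenate x_i with x_j - x_i."""
    feats = []
    for i, nbrs in enumerate(knn_indices(points, k)):
        xi = points[i]
        feats.append([list(xi) + [b - a for a, b in zip(xi, points[j])] for j in nbrs])
    return feats


# Toy ONH point cloud (x, y, z), arbitrary units
cloud = [(0, 0, 0), (1, 0, 0), (0, 2, 0), (5, 5, 5)]
print(knn_indices(cloud, 1))       # [[1], [0], [0], [2]]
print(edge_features(cloud, 1)[3])  # [[5, 5, 5, -5, -3, -5]]
```

In a full DGCNN the neighbourhood is recomputed in feature space after each layer, which is what makes the graph dynamic.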

OCT angiography (OCTA) is a functional extension of OCT that allows detection of vascular-related retinal and optic nerve diseases (49, 50). OCTA has been used to quantify ONH blood flow in glaucoma since 2012 (51). Clinical studies show that OCTA-based vessel density and flow index are lower in glaucomatous eyes in the optic disc (52), peripapillary retina (53), and macula (54). OCTA-based vessel density also reflects the severity of visual field loss in glaucoma patients (55). Recently, OCTA-derived measurements of the nerve fiber layer plexus have been correlated with visual function through simulated sector-wise visual fields (56). It is now also possible to detect glaucomatous focal perfusion loss with OCTA (57). Given the close relationship between glaucoma-related pathologies and retinal vascular regions (26), validated by other imaging modalities (44), we believe OCTA may serve as a new tool for glaucoma diagnosis and monitoring, as well as for understanding the mechanisms of disease development (58, 59).
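Vessel density, the most common of these OCTA metrics, is essentially the fraction of pixels in a region classified as perfused. A minimal sketch follows, assuming an en face flow image and a single fixed threshold (commercial and research pipelines use their own binarization, and some exclude large vessels), with a hypothetical mask standing in for a peripapillary sector.

```python
def vessel_density(flow, threshold, region=None):
    """Fraction of pixels in a region whose en face OCTA flow signal exceeds `threshold`.

    flow: 2D list of flow values; region: optional same-shape boolean mask
    (e.g. a peripapillary sector). A fixed global threshold is a simplification.
    """
    total = vessel = 0
    for r, row in enumerate(flow):
        for c, value in enumerate(row):
            if region is not None and not region[r][c]:
                continue
            total += 1
            if value > threshold:
                vessel += 1
    if total == 0:
        raise ValueError("region contains no pixels")
    return vessel / total


flow = [[0.1, 0.8, 0.9],
        [0.2, 0.7, 0.1],
        [0.0, 0.6, 0.3]]
right_sector = [[c > 0 for c in range(3)] for _ in range(3)]  # hypothetical sector mask
print(vessel_density(flow, 0.5))                # 4 of 9 pixels
print(vessel_density(flow, 0.5, right_sector))  # 4 of 6 pixels
```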

However, OCTA data can suffer from projection and motion artifacts (60). AI methods (61) have been applied to remove projection artifacts (60, 62). AI has also been applied to enhance retinal capillaries (63, 64), segment retinal vessels (65, 66), quantify the avascular zone (67–71), and map arteries and veins (72) in OCTA. These developments offer opportunities for side-by-side method development in humans and animal models with the intent to reverse translate.
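The avascular zone metrics cited above rely on AI segmentation. As a simpler classical stand-in, assuming a vessel mask has already been binarized and a seed point is known to lie inside the zone, the zone's area can be measured by 4-connected region growing. The mask, seed, and pixel scale below are hypothetical.

```python
from collections import deque


def avascular_area(vessel_mask, seed, mm_per_px):
    """Area (mm^2) of the connected non-vessel region containing `seed`.

    vessel_mask: 2D list of booleans (True = vessel pixel).
    seed: (row, col) assumed to lie inside the avascular zone.
    Uses 4-connected region growing (breadth-first flood fill).
    """
    rows, cols = len(vessel_mask), len(vessel_mask[0])
    r0, c0 = seed
    if vessel_mask[r0][c0]:
        raise ValueError("seed lies on a vessel pixel")
    seen = {(r0, c0)}
    queue = deque([(r0, c0)])
    while queue:
        r, c = queue.popleft()
        for dr, dc in ((1, 0), (-1, 0), (0, 1), (0, -1)):
            nr, nc = r + dr, c + dc
            if (0 <= nr < rows and 0 <= nc < cols
                    and not vessel_mask[nr][nc] and (nr, nc) not in seen):
                seen.add((nr, nc))
                queue.append((nr, nc))
    return len(seen) * mm_per_px ** 2


# Toy 5 x 5 mask: a ring of vessel pixels enclosing a 3 x 3 avascular zone
mask = [[r in (0, 4) or c in (0, 4) for c in range(5)] for r in range(5)]
print(avascular_area(mask, seed=(2, 2), mm_per_px=0.5))  # 9 px * 0.25 mm^2 = 2.25
```

A gap in the surrounding vessel ring would let the fill leak outward, which is one reason learned segmentation is preferred on noisy OCTA data.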

Model generalization across devices is a crucial but challenging issue in AI development. Devalla et al. proposed a “3D digital staining” approach (25) that uses an “enhancer” neural network to learn mathematical morphological operations that enhance raw OCT images, allowing a pre-trained ONH segmentation model to be applied to images from different devices and thereby reducing variability. Similar results can be achieved through domain adaptation using generative adversarial networks (GANs), with the potential to add shape or feature priors and constraints to pre-trained segmentation models (41), such as source-domain, structural-similarity, signal-to-noise-ratio, and high-level perceptual-feature constraints (73). Such data harmonization approaches can be important when translating to animal studies, both to account for different experimental setups and to eliminate potential batch effects.
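The enhancer network and GAN-based adaptation learn device-specific corrections. A far simpler baseline, shown here only to illustrate what harmonization tries to remove, is per-scan intensity standardization, which cancels any global gain and offset difference between devices. This is an assumed, swapped-in technique, not the method of (25) or (73), and it cannot correct differences in speckle, resolution, or contrast that are not a simple gain and offset.

```python
from statistics import fmean, pstdev


def standardize(intensities):
    """Rescale one scan's intensities to zero mean and unit variance."""
    mu, sd = fmean(intensities), pstdev(intensities)
    if sd == 0:
        raise ValueError("a constant image cannot be standardized")
    return [(v - mu) / sd for v in intensities]


# The same toy A-scan profile acquired on two hypothetical devices that differ
# by a global gain (x2) and offset (+90) in raw intensity
device_a = [10.0, 20.0, 30.0, 20.0]
device_b = [2 * v + 90 for v in device_a]
za, zb = standardize(device_a), standardize(device_b)
print(max(abs(x - y) for x, y in zip(za, zb)) < 1e-12)  # True
```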

The explainability of AI models is crucial for translational applications in both clinical and pre-clinical animal studies. Recent studies have shown that explainable visualization can identify previously unreported regions surrounding the ONH that may be associated with glaucoma pathogenesis (74, 75). Some conventional explainable AI methods, such as Grad-CAM, are limited in showing localized feature-importance saliency maps because of their low resolution. By integrating B-scan aggregation and en face projection into AI model visualization (76), it becomes feasible to identify localized pathological signatures that differentiate retinal disease subtypes using a feature-agnostic AI classifier trained on a relatively small dataset, without the need to label pathological regions. More excitingly, the recently proposed biomarker activation map (BAM) is an explainable visualization method specifically designed for AI-based disease diagnosis (77). BAMs are designed to localize only the unique biomarkers that the AI model uses for the positive class, and they showed much higher localization capability (77) than Grad-CAM or other conventional explainable AI methods, such as attention maps. Such techniques could foreseeably also be used to investigate glaucoma pathogenesis and validate biomarkers in future pre-clinical studies.
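Grad-CAM's resolution limit follows from how it is computed: the map lives on the grid of a late convolutional layer, which is much coarser than the input image. A minimal sketch of the standard computation follows, assuming the feature maps A_k and the gradients of the target class score with respect to them have already been obtained from a trained network (toy values here).

```python
def grad_cam(activations, gradients):
    """Grad-CAM map for one convolutional layer.

    activations, gradients: lists of K feature maps (each a 2D list, H x W);
    gradients hold d(target class score)/d(activation).
    """
    if not activations or len(activations) != len(gradients):
        raise ValueError("need matching, non-empty activation and gradient lists")
    cam = [[0.0] * len(row) for row in activations[0]]
    for a_k, g_k in zip(activations, gradients):
        # Channel weight alpha_k: global-average-pooled gradient
        n = sum(len(row) for row in g_k)
        alpha = sum(sum(row) for row in g_k) / n
        for r, row in enumerate(a_k):
            for c, value in enumerate(row):
                cam[r][c] += alpha * value
    # ReLU keeps only evidence that increases the target class score
    return [[max(v, 0.0) for v in row] for row in cam]


acts = [[[1, 0], [0, 1]], [[0, 1], [1, 0]]]
grads = [[[0.5, 0.5], [0.5, 0.5]], [[-0.25, -0.25], [-0.25, -0.25]]]
print(grad_cam(acts, grads))  # [[0.5, 0.0], [0.0, 0.5]]
```

Upsampling this map to the input size for display does not recover detail lost at the coarse layer, which motivates the higher-resolution alternatives described above.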

Developing robust and generalizable deep learning models usually requires large samples of representative training data, which may require aggregating data from multiple sites. However, sharing clinical data is often restricted because of patient privacy concerns. Federated learning, or collaborative learning, helps resolve this barrier by training an aggregated model without transferring data, and it is important for accelerating AI glaucoma research. Lo et al. demonstrated a successful federated framework for training more generalizable aggregated models of retinal vessel segmentation and diabetic retinopathy classification using OCT data from three institutions with different OCT machines and distinct distributions of disease severity (78). In glaucoma, Christopher et al. successfully trained federated models for glaucoma detection using data from two institutions with distinct racial populations (79). To accelerate reverse translation into basic science, we envision future collaborative efforts to build open, shared data sources of pre-clinical imaging, biochemical, and behavioral data from healthy animals across species, age, and sex, as well as from experimental high-tension and normal-tension glaucoma models, to establish robust baselines and predict neurobehavioral changes for further research. For pre-clinical animal data, the barriers to data sharing would be lower because there are no patient privacy concerns. Moreover, techniques developed within the federated learning framework, such as parallel model training with normalized weighted sharing and dataset-specific domain adaptation, would benefit reverse translation.
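Federated averaging (FedAvg) is one widely used aggregation rule in federated learning: each site trains locally, and a server combines the returned parameters as a mean weighted by local sample count. The sketch below shows only this aggregation step on flat parameter lists. Local training is omitted, and it is an assumption, not something stated in the cited studies (78, 79), that they used exactly this rule.

```python
def fed_avg(client_weights, client_sizes):
    """Federated averaging: sample-size-weighted mean of client parameter vectors.

    client_weights: one flat list of model parameters per site.
    client_sizes: number of local training samples at each site.
    Only parameters, never images, leave each site.
    """
    if not client_weights or len(client_weights) != len(client_sizes):
        raise ValueError("need one size per client and at least one client")
    total = sum(client_sizes)
    n_params = len(client_weights[0])
    global_weights = [0.0] * n_params
    for w, size in zip(client_weights, client_sizes):
        if len(w) != n_params:
            raise ValueError("all clients must share the same architecture")
        for i, value in enumerate(w):
            global_weights[i] += value * size / total
    return global_weights


# Two hypothetical sites: A with 100 scans, B with 300 scans
print(fed_avg([[1.0, 2.0], [3.0, 6.0]], [100, 300]))  # [2.5, 5.0]
```

One communication round consists of broadcasting the global weights, training at each site for a few epochs, and aggregating the returned weights; rounds repeat until convergence.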

In the past decade, extensive efforts have gone into developing and investigating AI methods for glaucoma research in clinical settings. These studies not only showed promising results for improving clinical outcomes at the bedside but also provided valuable first-hand experience that researchers can draw on for reverse translation to animal studies. Data-driven findings of glaucoma-related genomic loci and glaucoma sub-phenotypes provide information for potential novel transgenic animal models to further study biological mechanisms relevant to precision medicine. The effectiveness of using OCT to predict visual field progression, and of using visual field measurements to predict the risk of accelerated glaucoma progression, indicates strong structure-function correlations in disease progression. Such insights will likely guide the design of future animal studies toward specific pathophysiological and functional pathways of the disease. Furthermore, many imaging-based AI models are readily translatable to pre-clinical animal studies, from structural segmentation to shape and biomechanical models of the ONH and optic disc, detection of peripapillary retinal vascular pathology and avascular zone abnormalities, and retinal nerve fiber bundle texture analysis. Finally, AI methods for model generalizability, domain adaptation, and explainability are crucial for reverse translation, to evaluate the applicability of animal models for monitoring glaucomatous conditions.

To date, most AI method development in glaucoma has focused on clinical applications, leaving much room for reverse translation to benchside basic science research. Current challenges in reverse translation research include the anatomical differences in the visual system between humans and animal models. For example, human and rodent eyes differ in size; the fovea and lamina cribrosa are present in humans but not in rodents (although rodents possess a pseudofovea and a glial lamina); and the proportion of optic nerve fibers that decussate at the optic chiasm to the contralateral hemisphere differs between humans (52%) and rodents (above 90%). Finally, like other biomedical and clinical applications, AI applications in glaucoma research inherit current general limitations of AI, such as limited model interpretability and generalizability.

Future research on reverse translation in glaucoma can further benefit from integrating state-of-the-art AI methods. Advanced AI models such as vision transformers have shown better generalizability in POAG detection when applied to diverse independent datasets (80). Fast-growing self-supervised learning techniques have shown promise for efficiently using relatively large amounts of unlabeled data to learn pathological features that are not specific to one disease but generalize to other diagnoses, such as in patients with glaucoma and comorbid diabetic retinopathy or age-related macular degeneration. Although this approach can be more practical because comorbidities are common, care should be taken when applying self-supervised learning to medical image data in which pathology-related variations are highly localized. Such data often make the algorithm prone to shortcut learning, in which easily detected but clinically irrelevant features drive predictions (81). It is therefore essential to incorporate domain-specific information when developing self-supervised learning methods to avoid contamination by spurious features (82). This can also improve robustness when training on clinical problems with small sample sizes, by bootstrapping performance to achieve diagnosis-level explanation (83). Future research on explainable AI should resolve not only where the model focuses but also how changes at those locations affect model performance. Counterfactual approaches (84) could help open the black box of deep learning models by interrogating the explainability of the network's internal layers, leading to causal explanations within the model (85, 86).

Successful reverse translation of AI may also benefit the development and use of large animal models of glaucoma (87–90). While larger animals, such as dogs, swine, and non-human primates, have greater anatomical homology to humans, their use is limited by the cost of cohorts and by ethical considerations. Tree shrews may be considered an alternative glaucoma animal model given the presence of a lamina cribrosa in the eyes of these small animals (27). The ability to obtain refined measurements of glaucoma pathology longitudinally and non-invasively could improve the usability of these models going forward (91–93).

In addition, recently developed imaging techniques have created major opportunities for data-driven AI approaches in basic science studies. For example, recent advances in adaptive optics, a technology adapted from astronomical telescopes, and in two-photon imaging (94–96) have enabled in vivo visualization of glaucoma-related ocular structures such as the retina, ONH, and trabecular meshwork in unprecedented detail (97). These data can facilitate efficient and accurate retinal layer segmentation (98, 99), cellular-level imaging of photoreceptors (100), and detection of subtle pathological protein deposition in the RNFL in both human (101) and animal studies (102). Furthermore, transmission electron microscopy and laser scanning microscopy can image the ultrastructural morphology of the ONH (103, 104), trabecular meshwork (105), and RNFL (106) in mouse and non-human primate models of glaucoma, enabling a more in-depth understanding of glaucoma pathogenesis (105), including astrocytic responses (104). While the large amounts of high-resolution imaging data pose analytic challenges for traditional image analysis methods, the fast-growing field of AI in digital pathology offers a potential solution (107–109). Increased collection and digitization of ophthalmic imaging from both human and experimental animal specimens provide great opportunities for harnessing reverse AI translation to evaluate glaucoma pathogenesis on a large scale with improved robustness.

Finally, recent efficient sequencing techniques have enabled AI applications on multi-omics data for basic glaucoma research. For instance, genome-wide association studies (GWAS) have revealed hundreds of POAG-related genetic loci with consistent effects across ancestries (23, 110, 111), and whole-genome polygenic risk scores enable prediction of future glaucoma risk (112). Furthermore, multi-omics investigations can help identify molecular signatures of glaucoma predisposition (113), patient-specific tear composition (114), mechanical stress-induced cytoskeletal changes in the trabecular meshwork (115), and risk factors for IOP elevation (116). Integrating image data with multi-omics data (e.g., genomics, transcriptomics, proteomics, and metabolomics) using approaches such as spatial transcriptomics (117) may reveal novel genotype-phenotype associations and causal inferences, enabling a highly localized understanding of glaucoma etiology as well as the identification of more biologically grounded glaucoma phenotypes.
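A polygenic risk score of the kind mentioned above is, at its core, a weighted sum: each variant's effect-allele dosage multiplied by its GWAS-estimated effect size. The minimal sketch below assumes the effect sizes are given. Real pipelines also select or shrink variants and standardize scores against a reference population, and the variant IDs and weights here are hypothetical.

```python
def polygenic_risk_score(dosages, effect_sizes):
    """Sum over variants of effect-allele dosage (0, 1 or 2) times per-allele effect size.

    dosages: {variant_id: dosage} for one individual.
    effect_sizes: {variant_id: beta}, e.g. log odds ratios from a GWAS.
    Variants not genotyped in the individual are skipped.
    """
    return sum(beta * dosages[v] for v, beta in effect_sizes.items() if v in dosages)


# Hypothetical variants and weights, for illustration only
weights = {"var1": 0.25, "var2": -0.125, "var3": 0.5}
person = {"var1": 2, "var2": 1}
print(polygenic_risk_score(person, weights))  # 0.375
```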

The original contributions presented in the study are included in the article/supplementary material. Further inquiries can be directed to the corresponding authors.

MS, RS, and KC proposed the topic. DM and KC wrote the manuscript. LP, YJ, CL, MS, MG, and RS edited the manuscript. All authors contributed to the article and approved the submitted version.

This work is supported in part by the National Institutes of Health R01-EY015473 [LP], R01-EY020496 [RS], R01-EY028125 [KC], R01-EY031394 [YJ] (Bethesda, Maryland), BrightFocus Foundation G2019103 [KC], G2020168 [YJ], G2021010S [MG] (Clarksburg, Maryland), an unrestricted grant from Research to Prevent Blindness to NYU Langone Health Department of Ophthalmology (New York, New York) [KC]; the NMRC-LCG grant ‘TAckling & Reducing Glaucoma Blindness with Emerging Technologies (TARGET)’, award ID: MOH-OFLCG21jun-0003 [MG]; the “Retinal Analytics through Machine learning aiding Physics (RAMP)” project that is supported by the National Research Foundation, Prime Minister’s Office, Singapore under its Intra-Create Thematic Grant “Intersection Of Engineering And Health” - NRF2019-THE002-0006 awarded to the Singapore MIT Alliance for Research and Technology (SMART) Centre [MG], Moorfields Eye Charity grant GR001424 [MS].

This perspective summarizes several findings and research developments presented in the mini-symposium “Reverse translation of AI in glaucoma” at the 2022 Meeting of the Association for Research in Vision and Ophthalmology (ARVO), held in Denver, Colorado, USA, and virtually. We thank all collaborators who contributed to the research papers on which the present commentary is based.

LP: Consultant to Eyenovia, Skye Biosciences, Character Biosciences, and Twenty Twenty. MG: Abyss Processing Pte Ltd Co-founder and Consultant. YJ: Optovue, Inc F, P; Optos, Inc. P. MS: Seymour Vision I.

The remaining authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

1. Myserlis P, Radmanesh F, Anderson CD. Translational genomics in neurocritical care: a review. Neurotherapeutics (2020) 17(2):563–80. doi: 10.1007/s13311-020-00838-1

2. Shakhnovich V. It’s time to reverse our thinking: The reverse translation research paradigm. Clin Transl Sci (2018) 11(2):98–9. doi: 10.1111/cts.12538

3. Storgaard L, Tran TL, Freiberg JC, Hauser AS, Kolko M. Glaucoma clinical research: Trends in treatment strategies and drug development. Front Med (2021) 8:733080. doi: 10.3389/fmed.2021.733080

4. Rezaie T, Child A, Hitchings R, Brice G, Miller L, Coca-Prados M, et al. Adult-onset primary open-angle glaucoma caused by mutations in optineurin. Science (2002) 295(5557):1077–9. doi: 10.1126/science.1066901

5. Adi V, Sims J, Forlenza D, Liu C, Song H, Hamilton-Fletcher G, et al. Longitudinal age effects of optineurin E50K mutation and deficiency on visual function. Invest Ophthalmol Vis Sci (2021) 62(8):2385.

6. Lu Y, Brommer B, Tian X, Krishnan A, Meer M, Wang C, et al. Reprogramming to recover youthful epigenetic information and restore vision. Nature (2020) 588(7836):124–9. doi: 10.1038/s41586-020-2975-4

7. Evangelho K, Mastronardi CA, de-la-Torre A. Experimental models of glaucoma: A powerful translational tool for the future development of new therapies for glaucoma in humans–a review of the literature. Medicina (Kaunas) (2019) 55(6):280. doi: 10.3390/medicina55060280

8. Pilkinton S, Hollingsworth TJ, Jerkins B, Jablonski MM. An overview of glaucoma: Bidirectional translation between humans and pre-clinical animal models. In: Preclinical animal modeling in medicine. IntechOpen (2021). Available at: https://www.intechopen.com/chapters/76040.

9. Shinozaki Y, Leung A, Namekata K, Saitoh S, Nguyen HB, Takeda A, et al. Astrocytic dysfunction induced by ABCA1 deficiency causes optic neuropathy. Sci Adv (2022) 8(44):eabq1081. doi: 10.1126/sciadv.abq1081

10. Ittoop SM, Jaccard N, Lanouette G, Kahook MY. The role of artificial intelligence in the diagnosis and management of glaucoma. J Glaucoma (2022) 31(3):137–46. doi: 10.1097/IJG.0000000000001972

11. Thompson AC, Falconi A, Sappington RM. Deep learning and optical coherence tomography in glaucoma: Bridging the diagnostic gap on structural imaging. Front Ophthalmol (2022) 2:937205. doi: 10.3389/fopht.2022.937205

12. Herbert P, Hou K, Bradley C, Boland MV, Ramulu PY, Unberath M, et al. Forecasting risk of future rapid glaucoma worsening using early visual field, optical coherence tomography and clinical data. Invest Ophthalmol Vis Sci (2022) 63(7):2294.

13. Shuldiner SR, Boland MV, Ramulu PY, De Moraes CG, Elze T, Myers J, et al. Predicting eyes at risk for rapid glaucoma progression based on an initial visual field test using machine learning. PloS One (2021) 16(4):e0249856. doi: 10.1371/journal.pone.0249856

14. Nouri-Mahdavi K, Mohammadzadeh V, Rabiolo A, Edalati K, Caprioli J, Yousefi S. Prediction of visual field progression from OCT structural measures in moderate to advanced glaucoma. Am J Ophthalmol (2021) 226:172–81. doi: 10.1016/j.ajo.2021.01.023

15. Han X, Steven K, Qassim A, Marshall HN, Bean C, Tremeer M, et al. Automated AI labeling of optic nerve head enables insights into cross-ancestry glaucoma risk and genetic discovery in >280,000 images from UKB and CLSA. Am J Hum Genet (2021) 108(7):1204–16. doi: 10.1016/j.ajhg.2021.05.005

16. Heisler M, Bhalla M, Lo J, Mammo Z, Lee S, Ju MJ, et al. Semi-supervised deep learning based 3D analysis of the peripapillary region. BioMed Opt Express (2020) 11(7):3843–56. doi: 10.1364/BOE.392648

17. Zang P, Wang J, Hormel TT, Liu L, Huang D, Jia Y. Automated segmentation of peripapillary retinal boundaries in OCT combining a convolutional neural network and a multi-weights graph search. BioMed Opt Express (2019) 10(8):4340–52. doi: 10.1364/BOE.10.004340

18. Su WW, Hsieh SS, Cheng ST, Su CW, Wu WC, Chen HSL. Visual subfield progression in glaucoma subtypes. J Ophthalmol (2018) 2018:7864219. doi: 10.1155/2018/7864219

19. Kang JH, Wang M, Frueh L, Rosner B, Wiggs JL, Elze T, et al. Cohort study of Race/Ethnicity and incident primary open-angle glaucoma characterized by autonomously determined visual field loss patterns. Transl Vis Sci Technol (2022) 11(7):21. doi: 10.1167/tvst.11.7.21

20. Panda SK, Cheong H, Tun TA, Devalla SK, Senthil V, Krishnadas R, et al. Describing the structural phenotype of the glaucomatous optic nerve head using artificial intelligence. Am J Ophthalmol (2022) 236:172–82. doi: 10.1016/j.ajo.2021.06.010

21. Saini C, Shen LQ, Pasquale LR, Boland MV, Friedman DS, Zebardast N, et al. Assessing surface shapes of the optic nerve head and peripapillary retinal nerve fiber layer in glaucoma with artificial intelligence. Ophthalmol Sci (2022) 2(3):100161. doi: 10.1016/j.xops.2022.100161

22. Fuentes-Hurtado F, Morales S, Mossi JM, Naranjo V, Fedulov V, Woldbye D, et al. Deep-Learning-Based classification of rat OCT images after intravitreal injection of ET-1 for glaucoma understanding. In: Yin H, Camacho D, Novais P, Tallón-Ballesteros AJ, editors. Intelligent data engineering and automated learning – IDEAL 2018. Cham: Springer International Publishing (2018). p. 27–34.

23. Gharahkhani P, Jorgenson E, Hysi P, Khawaja AP, Pendergrass S, Han X, et al. Genome-wide meta-analysis identifies 127 open-angle glaucoma loci with consistent effect across ancestries. Nat Commun (2021) 12(1):1258. doi: 10.1038/s41467-020-20851-4

24. Devalla SK, Chin KS, Mari JM, Tun TA, Strouthidis NG, Aung T, et al. A deep learning approach to digitally stain optical coherence tomography images of the optic nerve head. Invest Ophthalmol Vis Sci (2018) 59(1):63–74. doi: 10.1167/iovs.17-22617

25. Devalla SK, Pham TH, Panda SK, Zhang L, Subramanian G, et al. Towards label-free 3D segmentation of optical coherence tomography images of the optic nerve head using deep learning. BioMed Opt Express (2020) 11(11):6356–78. doi: 10.1364/BOE.395934

26. Braeu FA, Thiéry AH, Tun TA, Kadziauskiene A, Barbastathis G, Aung T, et al. Geometric deep learning to identify the critical 3D structural features of the optic nerve head for glaucoma diagnosis. arXiv (2022). Available at: http://arxiv.org/abs/2204.06931.

27. Grytz R, Hamdaoui ME, Fuchs PA, Fazio MA, et al. Nonlinear distortion correction for posterior eye segment optical coherence tomography with application to tree shrews. BioMed Opt Express (2022) 13(2):1070–86. doi: 10.1364/BOE.447595

28. Beotra MR, Wang X, Tun TA, Zhang L, Baskaran M, Aung T, et al. In vivo three-dimensional lamina cribrosa strains in healthy, ocular hypertensive, and glaucoma eyes following acute intraocular pressure elevation. Invest Ophthalmol Vis Sci (2018) 59(1):260–72. doi: 10.1167/iovs.17-21982

29. Braeu FA, Chuangsuwanich T, Tun TA, Thiery AH, Aung T, Barbastathis G, et al. AI-Based clinical assessment of optic nerve head robustness superseding biomechanical testing. arXiv (2022). Available at: http://arxiv.org/abs/2206.04689.

30. Midgett DE, Quigley HA, Nguyen TD. In vivo characterization of the deformation of the human optic nerve head using optical coherence tomography and digital volume correlation. Acta Biomater (2019) 96:385–99. doi: 10.1016/j.actbio.2019.06.050

31. Zhang L, Beotra MR, Baskaran M, Tun TA, Wang X, Perera SA, et al. In vivo measurements of prelamina and lamina cribrosa biomechanical properties in humans. Invest Ophthalmol Vis Sci (2020) 61(3):27. doi: 10.1167/iovs.61.3.27

32. Zhong F, Wang B, Wei J, Hua Y, Wang B, Reynaud J, et al. A high-accuracy and high-efficiency digital volume correlation method to characterize in-vivo optic nerve head biomechanics from optical coherence tomography. Acta Biomater (2022) 143:72–86. doi: 10.1016/j.actbio.2022.02.021

33. Chuangsuwanich T, Tun TA, Braeu FA, Wang X, Chin ZY, Panda SK, et al. Differing associations between optic nerve head strains and visual field loss in normal- and high-tension glaucoma subjects. Ophthalmology (2022). doi: 10.1016/j.ophtha.2022.08.007

34. Chuangsuwanich T, Tun TA, Braeu FA, Wang X, Chin ZY, Panda SK, et al. Adduction induces large optic nerve head deformations in subjects with normal tension glaucoma. bioRxiv (2022), 2021.08.25.457300. doi: 10.1101/2021.08.25.457300

35. Tran H, Grimm J, Wang B, Smith MA, Gogola A, Nelson S, et al. Mapping in-vivo optic nerve head strains caused by intraocular and intracranial pressures. In: Optical elastography and tissue biomechanics IV. San Francisco, California, United States: SPIE (2017). p. 6–15. doi: 10.1117/12.2257360

36. Zhu Z, Waxman S, Wang B, Wallace J, Schmitt SE, Tyler-Kabara E, et al. Interplay between intraocular and intracranial pressure effects on the optic nerve head in vivo. Exp Eye Res (2021) 213:108809. doi: 10.1016/j.exer.2021.108809

37. Lee S, Heisler ML, Popuri K, Charon N, Charlier B, Trouvé A, et al. Age and glaucoma-related characteristics in retinal nerve fiber layer and choroid: Localized morphometrics and visualization using functional shapes registration. Front Neurosci (2017) 11:381. doi: 10.3389/fnins.2017.00381

38. Lee S, Charon N, Charlier B, Popuri K, Lebed E, Sarunic MV, et al. Atlas-based shape analysis and classification of retinal optical coherence tomography images using the functional shape (fshape) framework. Med Image Anal (2017) 35:570–81. doi: 10.1016/j.media.2016.08.012

39. Leung CKS, Guo PY, Lam AKN. Retinal nerve fiber layer optical texture analysis: Involvement of the papillomacular bundle and papillofoveal bundle in early glaucoma. Ophthalmology (2022) 129(9):1043–55. doi: 10.1016/j.ophtha.2022.04.012

40. Leung CKS, Lam AKN, Weinreb RN, Garway-Heath DF, Yu M, Guo PY, et al. Diagnostic assessment of glaucoma and non-glaucomatous optic neuropathies via optical texture analysis of the retinal nerve fibre layer. Nat BioMed Eng (2022) 6(5):593–604. doi: 10.1038/s41551-021-00813-x

41. Ma D, Lu D, Chen S, Heisler M, Dabiri S, Lee S, et al. LF-UNet - a novel anatomical-aware dual-branch cascaded deep neural network for segmentation of retinal layers and fluid from optical coherence tomography images. Comput Med Imaging Graph (2021) 94:101988. doi: 10.1016/j.compmedimag.2021.101988

42. Ma D, Deng W, Wang X, Lee S, Matsubara J, Sarunic M, et al. Longitudinal assessments of retinal degeneration after excitotoxic injury using an end-to-end pipeline with deep learning-based automatic layer segmentation. Invest Ophthalmol Vis Sci (2020) 61(9):PB0053.

43. Thiery AH, Braeu F, Tun TA, Aung T, Girard MJA. Medical application of geometric deep learning for the diagnosis of glaucoma. arXiv (2022). Available at: http://arxiv.org/abs/2204.07004.

44. Panda SK, Cheong H, Tun TA, Chuangsuwanich T, Kadziauskiene A, Senthil V, et al. The three-dimensional structural configuration of the central retinal vessel trunk and branches as a glaucoma biomarker. Am J Ophthalmol (2022) 240:205–16. doi: 10.1016/j.ajo.2022.02.020

45. Braeu FA, Chuangsuwanich T, Tun TA, Thiery A, Barbastathis G, Aung T, et al. AI-Based clinical assessment of optic nerve head robustness from 3D optical coherence tomography imaging. Invest Ophthalmol Vis Sci (2022) 63(7):808.

46. Choy KC, Li G, Stamer WD, Farsiu S. Open-source deep learning-based automatic segmentation of mouse schlemm’s canal in optical coherence tomography images. Exp Eye Res (2022) 214:108844. doi: 10.1016/j.exer.2021.108844

47. Deng W, Hedberg-Buenz A, Soukup DA, Taghizadeh S, Wang K, Anderson MG, et al. AxonDeep: Automated optic nerve axon segmentation in mice with deep learning. Transl Vis Sci Technol (2021) 10(14):22. doi: 10.1167/tvst.10.14.22

48. Ritch MD, Hannon BG, Read AT, Feola AJ, Cull GA, Reynaud J, et al. AxoNet: A deep learning-based tool to count retinal ganglion cell axons. Sci Rep (2020) 10(1):8034. doi: 10.1038/s41598-020-64898-1

49. Jia Y, Tan O, Tokayer J, Potsaid B, Wang Y, Liu JJ, et al. Split-spectrum amplitude-decorrelation angiography with optical coherence tomography. Opt Express (2012) 20(4):4710–25. doi: 10.1364/OE.20.004710

50. Jia Y, Bailey ST, Hwang TS, McClintic SM, Gao SS, Pennesi ME, et al. Quantitative optical coherence tomography angiography of vascular abnormalities in the living human eye. Proc Natl Acad Sci (2015) 112(18):E2395–402. doi: 10.1073/pnas.1500185112

51. Jia Y, Morrison JC, Tokayer J, Tan O, Lombardi L, Baumann B, et al. Quantitative OCT angiography of optic nerve head blood flow. BioMed Opt Express (2012) 3(12):3127–37. doi: 10.1364/BOE.3.003127

52. Jia Y, Wei E, Wang X, Zhang X, Morrison JC, Parikh M, et al. Optical coherence tomography angiography of optic disc perfusion in glaucoma. Ophthalmology (2014) 121(7):1322–32. doi: 10.1016/j.ophtha.2014.01.021

53. Liu L, Jia Y, Takusagawa HL, Pechauer AD, Edmunds B, Lombardi L, et al. Optical coherence tomography angiography of the peripapillary retina in glaucoma. JAMA Ophthalmol (2015) 133(9):1045–52. doi: 10.1001/jamaophthalmol.2015.2225

54. Takusagawa HL, Liu L, Ma KN, Jia Y, Gao SS, Zhang M, et al. Projection-resolved optical coherence tomography angiography of macular retinal circulation in glaucoma. Ophthalmology (2017) 124(11):1589–99. doi: 10.1016/j.ophtha.2017.06.002

55. Yarmohammadi A, Zangwill LM, Diniz-Filho A, Suh MH, Yousefi S, Saunders LJ, et al. Relationship between optical coherence tomography angiography vessel density and severity of visual field loss in glaucoma. Ophthalmology (2016) 123(12):2498–508. doi: 10.1016/j.ophtha.2016.08.041

56. Liu L, Tan O, Ing E, Morrison JC, Edmunds B, Davis E, et al. Sectorwise visual field simulation using optical coherence tomographic angiography nerve fiber layer plexus measurements in glaucoma. Am J Ophthalmol (2020) 212:57–68. doi: 10.1016/j.ajo.2019.11.018

57. Chen A, Liu L, Wang J, Zang P, Edmunds B, Lombardi L, et al. Measuring glaucomatous focal perfusion loss in the peripapillary retina using OCT angiography. Ophthalmology (2020) 127(4):484–91. doi: 10.1016/j.ophtha.2019.10.041

58. Rao HL, Pradhan ZS, Suh MH, Moghimi S, Mansouri K, Weinreb RN. Optical coherence tomography angiography in glaucoma. J Glaucoma (2020) 29(4):312–21. doi: 10.1097/IJG.0000000000001463

59. Van Melkebeke L, Barbosa-Breda J, Huygens M, Stalmans I. Optical coherence tomography angiography in glaucoma: A review. Ophthalmic Res (2018) 60(3):139–51. doi: 10.1159/000488495

60. Hormel TT, Huang D, Jia Y. Artifacts and artifact removal in optical coherence tomographic angiography. Quant Imaging Med Surg (2021) 11(3):1120–33. doi: 10.21037/qims-20-730

61. Wang J, Hormel T, Jia Y. Artificial intelligence-assisted projection-resolved optical coherence tomographic angiography (aiPR-OCTA). Invest Ophthalmol Vis Sci (2022) 63(7):2910–F0063.

62. Zhang M, Hwang TS, Campbell JP, Bailey ST, Wilson DJ, Huang D, et al. Projection-resolved optical coherence tomographic angiography. BioMed Opt Express (2016) 7(3):816–28. doi: 10.1364/BOE.7.000816

63. Gao M, Guo Y, Hormel TT, Sun J, Hwang TS, Jia Y. Reconstruction of high-resolution 6×6-mm OCT angiograms using deep learning. BioMed Opt Express (2020) 11(7):3585–600. doi: 10.1364/BOE.394301

64. Gao M, Hormel TT, Wang J, Guo Y, Bailey ST, Hwang TS, et al. An open-source deep learning network for reconstruction of high-resolution oct angiograms of retinal intermediate and deep capillary plexuses. Transl Vis Sci Technol (2021) 10(13):13. doi: 10.1167/tvst.10.13.13

65. Guo Y, Hormel TT, Pi S, Wei X, Gao M, Morrison J, et al. An end-to-end network for segmenting the vasculature of three retinal capillary plexuses from OCT angiographic volume. Invest Ophthalmol Vis Sci (2020) 61(9):PB00119.

66. Lo J, Heisler M, Vanzan V, Karst S, Matovinović IZ, Lončarić S, et al. Microvasculature segmentation and intercapillary area quantification of the deep vascular complex using transfer learning. Transl Vis Sci Technol (2020) 9(2):38. doi: 10.1167/tvst.9.2.38

67. Guo Y, Camino A, Wang J, Huang D, Hwang TS, Jia Y. MEDnet, a neural network for automated detection of avascular area in OCT angiography. BioMed Opt Express (2018) 9(11):5147–58. doi: 10.1364/BOE.9.005147

68. Guo Y, Hormel TT, Xiong H, Wang B, Camino A, Wang J, et al. Development and validation of a deep learning algorithm for distinguishing the nonperfusion area from signal reduction artifacts on OCT angiography. BioMed Opt Express (2019) 10(7):3257–68. doi: 10.1364/BOE.10.003257

69. Guo Y, Hormel TT, Gao L, You Q, Wang B, Flaxel CJ, et al. Quantification of nonperfusion area in montaged widefield OCT angiography using deep learning in diabetic retinopathy. Ophthalmol Sci (2021) 1(2):100027. doi: 10.1016/j.xops.2021.100027

70. Heisler M, Chan F, Mammo Z, Balaratnasingam C, Prentasic P, Docherty G, et al. Deep learning vessel segmentation and quantification of the foveal avascular zone using commercial and prototype OCT-A platforms. arXiv (2019). Available at: http://arxiv.org/abs/1909.11289.

71. Wang J, Hormel TT, You Q, Guo Y, Wang X, et al. Robust non-perfusion area detection in three retinal plexuses using convolutional neural network in OCT angiography. BioMed Opt Express (2020) 11(1):330–45. doi: 10.1364/BOE.11.000330

72. Gao M, Guo Y, Hormel TT, Tsuboi K, Pacheco G, Poole D, et al. A deep learning network for classifying arteries and veins in montaged widefield OCT angiograms. Ophthalmol Sci (2022) 2(2):100149. doi: 10.1016/j.xops.2022.100149

73. Chen S, Ma D, Lee S, Yu TTL, Xu G, Lu D, et al. Segmentation-guided domain adaptation and data harmonization of multi-device retinal optical coherence tomography using cycle-consistent generative adversarial networks. arXiv (2022). Available at: http://arxiv.org/abs/2208.14635.

74. Maetschke S, Antony B, Ishikawa H, Wollstein G, Schuman J, Garnavi R. A feature agnostic approach for glaucoma detection in OCT volumes. PloS One (2019) 14(7):e0219126. doi: 10.1371/journal.pone.0219126

75. Ran AR, Cheung CY, Wang X, Chen H, Luo LY, Chan PP, et al. Detection of glaucomatous optic neuropathy with spectral-domain optical coherence tomography: a retrospective training and validation deep-learning analysis. Lancet Digit Health (2019) 1(4):e172–82. doi: 10.1016/S2589-7500(19)30085-8

76. Ma D, Kumar M, Khetan V, Sen P, Bhende M, Chen S, et al. Clinical explainable differential diagnosis of polypoidal choroidal vasculopathy and age-related macular degeneration using deep learning. Comput Biol Med (2022) 143:105319. doi: 10.1016/j.compbiomed.2022.105319

77. Zang P, Hormel T, Wang J, Bailey ST, Flaxel CJ, Huang D, et al. Interpretable diabetic retinopathy diagnosis based on biomarker activation map. arXiv (2022). doi: 10.48550/arXiv.2212.06299

78. Lo J, Yu TT, Ma D, Zang P, Owen JP, Zhang Q, et al. Federated learning for microvasculature segmentation and diabetic retinopathy classification of OCT data. Ophthalmol Sci (2021) 1(4):100069. doi: 10.1016/j.xops.2021.100069

79. Christopher M, Nakahara K, Bowd C, Proudfoot JA, Belghith A, Goldbaum MH, et al. Effects of study population, labeling and training on glaucoma detection using deep learning algorithms. Transl Vis Sci Technol (2020) 9(2):27. doi: 10.1167/tvst.9.2.27

80. Bowd C, Fan R, Alipour K, Christopher M, Brye N, Proudfoot JA, et al. Primary open-angle glaucoma detection with vision transformer: Improved generalization across independent fundus photograph datasets. Invest Ophthalmol Vis Sci (2022) 63(7):2295.

81. DeGrave AJ, Janizek JD, Lee SI. AI For radiographic COVID-19 detection selects shortcuts over signal. Nat Mach Intell (2021) 3(7):610–9. doi: 10.1038/s42256-021-00338-7

82. Robinson J, Sun L, Yu K, Batmanghelich K, Jegelka S, Sra S. Can contrastive learning avoid shortcut solutions? In: Advances in neural information processing systems. Curran Associates, Inc (2021). p. 4974–86. Available at: https://proceedings.neurips.cc/paper/2021/hash/27934a1f19d678a1377c257b9a780e80-Abstract.html.

83. Sun L, Yu K, Batmanghelich K. Context matters: Graph-based self-supervised representation learning for medical images. Proc AAAI Conf Artif Intell (2021) 35(6):4874–82. doi: 10.1609/aaai.v35i6.16620

84. Singla S, Pollack B, Wallace S, Batmanghelich K. Explaining the black-box smoothly- a counterfactual approach. arXiv (2021). Available at: http://arxiv.org/abs/2101.04230.

85. Singla S, Wallace S, Triantafillou S, Batmanghelich K. Using causal analysis for conceptual deep learning explanation. Med Image Comput Comput Assist Interv (2021) 12903:519–28. doi: 10.1007/978-3-030-87199-4_49

86. Singla S, Murali N, Arabshahi F, Triantafyllou S, Batmanghelich K. Augmentation by counterfactual explanation – fixing an overconfident classifier. arXiv, Waikoloa, Hawaii (2022). Available at: http://arxiv.org/abs/2210.12196.

87. Colbert MK, Ho LC, van der Merwe Y, Yang X, McLellan GJ, Hurley SA, et al. Diffusion tensor imaging of visual pathway abnormalities in five glaucoma animal models. Invest Ophthalmol Vis Sci (2021) 62(10):21. doi: 10.1167/iovs.62.10.21

88. Fortune B, Burgoyne CF, Cull GA, Reynaud J, Wang L. Structural and functional abnormalities of retinal ganglion cells measured In vivo at the onset of optic nerve head surface change in experimental glaucoma. Invest Ophthalmol Vis Sci (2012) 53(7):3939–50. doi: 10.1167/iovs.12-9979

89. Glidai Y, Lucy KA, Schuman JS, Alexopoulos P, Wang B, Wu M, et al. Microstructural deformations within the depth of the lamina cribrosa in response to acute In vivo intraocular pressure modulation. Invest Ophthalmol Vis Sci (2022) 63(5):25. doi: 10.1167/iovs.63.5.25

90. Pasquale LR, Gong L, Wiggs JL, Pan L, Yang Z, Wu M, et al. Development of primary open angle glaucoma-like features in a rhesus macaque colony from southern China. Transl Vis Sci Technol (2021) 10(9):20. doi: 10.1167/tvst.10.9.20

91. van der Merwe Y, Murphy MC, Sims JR, Faiq MA, Yang XL, Ho LC, et al. Citicoline modulates glaucomatous neurodegeneration through intraocular pressure-independent control. Neurotherapeutics (2021) 18(2):1339–59. doi: 10.1007/s13311-021-01033-6

92. Yang XL, van der Merwe Y, Sims J, Parra C, Ho LC, Schuman JS, et al. Age-related changes in eye, brain and visuomotor behavior in the DBA/2J mouse model of chronic glaucoma. Sci Rep (2018) 8(1):4643. doi: 10.1038/s41598-018-22850-4

93. Zhu J, Sainulabdeen A, Akers K, Adi V, Sims JR, Yarsky E, et al. Oral scutellarin treatment ameliorates retinal thinning and visual deficits in experimental glaucoma. Front Med (2021) 8:681169. doi: 10.3389/fmed.2021.681169

94. Newberry W, Vargas L, Sarunic MV. Progress on bimodal adaptive optics OCT and two-photon imaging. In: 2021 IEEE photonics conference (IPC) (2021). p. 1–2.

95. Newberry W, Wahl DJ, Ju MJ, Jian Y, Sarunic MV. Progress on multimodal adaptive optics OCT and multiphoton imaging. In: 2020 IEEE photonics conference (IPC) (2020). p. 1–2.

96. Durech E, Newberry W, Franke J, Sarunic MV. Wavefront sensor-less adaptive optics using deep reinforcement learning. BioMed Opt Express (2021) 12(9):5423–38. doi: 10.1364/BOE.427970

97. King BJ, Burns SA, Sapoznik KA, Luo T, Gast TJ. High-resolution, adaptive optics imaging of the human trabecular meshwork In vivo. Transl Vis Sci Technol (2019) 8(5):5. doi: 10.1167/tvst.8.5.5

98. Jian Y, Borkovkina S, Janpongsri W, Camino A, Sarunic MV. Real-time retinal layer segmentation of adaptive optics optical coherence tomography angiography with deep learning. In: 2020 IEEE photonics conference (IPC) (2020). p. 1–2.

99. Borkovkina S, Camino A, Janpongsri W, Sarunic MV, Jian Y. Real-time retinal layer segmentation of OCT volumes with GPU accelerated inferencing using a compressed, low-latency neural network. BioMed Opt Express (2020) 11(7):3968–84. doi: 10.1364/BOE.395279

100. Heisler M, Ju MJ, Bhalla M, Schuck N, Athwal A, Navajas EV, et al. Automated identification of cone photoreceptors in adaptive optics optical coherence tomography images using transfer learning. BioMed Opt Express (2018) 9(11):5353–67. doi: 10.1364/BOE.9.005353

101. Zhang YS, Onishi AC, Zhou N, Song J, Samra S, Weintraub S, et al. Characterization of inner retinal hyperreflective alterations in early cognitive impairment on adaptive optics scanning laser ophthalmoscopy. Invest Ophthalmol Vis Sci (2019) 60(10):3527–36. doi: 10.1167/iovs.19-27135

102. Sidiqi A, Wahl D, Lee S, Ma D, To E, Cui J, et al. In vivo retinal fluorescence imaging with curcumin in an Alzheimer mouse model. Front Neurosci (2020) 14:713. doi: 10.3389/fnins.2020.00713

103. Zhu Y, Pappas AC, Wang R, Seifert P, Sun D, Jakobs TC. Ultrastructural morphology of the optic nerve head in aged and glaucomatous mice. Invest Ophthalmol Vis Sci (2018) 59(10):3984–96. doi: 10.1167/iovs.18-23885

104. Quillen S, Schaub J, Quigley H, Pease M, Korneva A, Kimball E. Astrocyte responses to experimental glaucoma in mouse optic nerve head. PloS One (2020) 15(8):e0238104. doi: 10.1371/journal.pone.0238104

105. Sihota R, Goyal A, Kaur J, Gupta V, Nag TC. Scanning electron microscopy of the trabecular meshwork: Understanding the pathogenesis of primary angle closure glaucoma. Indian J Ophthalmol (2012) 60(3):183–8. doi: 10.4103/0301-4738.95868

106. Wilsey LJ, Burgoyne CF, Fortune B. Transmission electron microscopy study of the retinal nerve fiber layer (RNFL) in nonhuman primate experimental glaucoma. Invest Ophthalmol Vis Sci (2018) 59(9):3742.

107. Pantanowitz L. Digital images and the future of digital pathology. J Pathol Inform (2010) 1:15. doi: 10.4103/2153-3539.68332

108. Niazi MKK, Parwani AV, Gurcan MN. Digital pathology and artificial intelligence. Lancet Oncol (2019) 20(5):e253–61. doi: 10.1016/S1470-2045(19)30154-8

109. Tizhoosh HR, Pantanowitz L. Artificial intelligence and digital pathology: Challenges and opportunities. J Pathol Inform (2018) 9(1):38. doi: 10.4103/jpi.jpi_53_18

110. The Genetics of Glaucoma in People of African Descent (GGLAD) Consortium, Hauser MA, Allingham RR, Aung T, Van Der Heide CJ, Taylor KD, et al. Association of genetic variants with primary open-angle glaucoma among individuals with African ancestry. JAMA (2019) 322(17):1682–91. doi: 10.1001/jama.2019.16161

111. Qassim A, Siggs OM. Predicting the genetic risk of glaucoma. Biochemist (2020) 42(5):26–30. doi: 10.1042/BIO20200063

112. Han X, Hewitt AW, MacGregor S. Predicting the future of genetic risk profiling of glaucoma: A narrative review. JAMA Ophthalmol (2021) 139(2):224–31. doi: 10.1001/jamaophthalmol.2020.5404

113. Golubnitschaja O, Yeghiazaryan K, Flammer J. Multiomic signature of glaucoma predisposition in Flammer syndrome affected individuals – innovative predictive, preventive and personalised strategies in disease management. In: Golubnitschaja O, editor. Flammer syndrome: From phenotype to associated pathologies, prediction, prevention and personalisation. Cham: Springer International Publishing (2019). p. 79–104. doi: 10.1007/978-3-030-13550-8_5

114. Rossi C, Cicalini I, Cufaro MC, Agnifili L, Mastropasqua L, Lanuti P, et al. Multi-omics approach for studying tears in treatment-naïve glaucoma patients. Int J Mol Sci (2019) 20(16):E4029. doi: 10.3390/ijms20164029

115. Soundararajan A, Wang T, Sundararajan R, Wijeratne A, Mosley A, Harvey FC, et al. Multiomics analysis reveals the mechanical stress-dependent changes in trabecular meshwork cytoskeletal-extracellular matrix interactions. Front Cell Dev Biol (2022) 10:874828. doi: 10.3389/fcell.2022.874828

116. Hysi PG, Khawaja AP, Hammond C. A multiomics investigation of risk factors associated with intraocular pressure in the general population. Invest Ophthalmol Vis Sci (2020) 61(7):979.

Keywords: deep learning, artificial intelligence, reverse translation, transfer learning, glaucoma, optical coherence tomography, visual field

Citation: Ma D, Pasquale LR, Girard MJA, Leung CKS, Jia Y, Sarunic MV, Sappington RM and Chan KC (2023) Reverse translation of artificial intelligence in glaucoma: Connecting basic science with clinical applications. Front. Ophthalmol. 2:1057896. doi: 10.3389/fopht.2022.1057896

Received: 30 September 2022; Accepted: 05 December 2022;

Published: 04 January 2023.

Edited by:

Shi Song Rong, Harvard Medical School, United States

Copyright © 2023 Ma, Pasquale, Girard, Leung, Jia, Sarunic, Sappington and Chan. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Da Ma, dma@wakehealth.edu; Kevin C. Chan, chuenwing.chan@fulbrightmail.org
