- 1Department of Ultrasound, The First Affiliated Hospital of Wannan Medical College (Yijishan Hospital), Wuhu, China

- 2Department of Radiology, The First Affiliated Hospital of Wannan Medical College (Yijishan Hospital), Wuhu, China

- 3Department of Ultrasound, WuHu Hospital, East China Normal University (The Second People’s Hospital, WuHu), Wuhu, Anhui, China

- 4Department of Ultrasound, Linyi Central Hospital, Linyi, Shandong, China

- 5Department of Ultrasound, Zibo Central Hospital, Zibo, Shandong, China

Objectives: To construct deep learning-assisted diagnosis models based on automatic segmentation of ultrasound images to facilitate radiologists in differentiating benign and malignant parotid tumors.

Methods: A total of 582 patients histopathologically diagnosed with parotid gland tumors (PGTs) were retrospectively recruited from four centers, and their data were collected for analysis. The radiomics features of six deep learning models (ResNet18, Inception_v3, etc.) were analyzed based on the ultrasound images obtained with the best of three automatic segmentation models (Deeplabv3, UNet++, and UNet). The diagnostic performance of three physicians was compared with and without the assistance of the optimal model. The Net Reclassification Index (NRI) and Integrated Discrimination Improvement (IDI) were utilized to evaluate the clinical benefit of the optimal model.

Results: The Deeplabv3 model performed optimally in terms of automatic segmentation. The ResNet18 deep learning model had the best prediction performance, with an area under the receiver-operating characteristic curve of 0.808 (0.694−0.923), 0.809 (0.712−0.906), and 0.812 (0.680−0.944) in the internal test set and external test sets 1 and 2, respectively. Meanwhile, the optimal model-assisted clinical and overall benefits were markedly enhanced for two out of three radiologists (in internal validation set, NRI: 0.259 and 0.213 [p = 0.002 and 0.017], IDI: 0.284 and 0.201 [p = 0.005 and 0.043], respectively; in external test set 1, NRI: 0.183 and 0.161 [p = 0.019 and 0.008], IDI: 0.205 and 0.184 [p = 0.031 and 0.045], respectively; in external test set 2, NRI: 0.297 and 0.297 [p = 0.038 and 0.047], IDI: 0.332 and 0.294 [p = 0.031 and 0.041], respectively).

Conclusions: The deep learning model constructed for automatic segmentation of ultrasound images can improve the diagnostic performance of radiologists for PGTs.

Introduction

Salivary gland tumors (SGTs) are relatively uncommon diseases representing 3-12% of head and neck tumors (1), which most frequently occur in the parotid gland, an important exocrine organ. As data show, parotid gland tumors (PGTs) are the most common type of SGTs, which are approximately 80% benign and 20% malignant (2). The most common malignant PGTs (MPTs) are mucoepidermoid carcinoma and adenoid cystadenocarcinoma, while the most common benign PGTs (BPTs) are pleomorphic adenoma and Warthin tumor (3). Currently, the treatment for PGTs is predominantly surgical, and their treatment strategies and prognosis vary by histopathological types (4, 5). Specifically, BPTs are treated with local excision or lateral parotidectomy, while MPTs require more radical surgery such as extended resection and lymph node dissection. Therefore, accurate preoperative diagnosis of BPTs and MPTs is of utmost importance in adjusting treatment decisions.

As all PGTs are generally asymptomatic, their nature is distinguished before surgery primarily by ultrasound-guided core needle biopsy (USCB) or fine-needle aspiration (FNA) and medical imaging (6–9). Although USCB and FNA are considered minimally invasive and safe, they confer a risk of facial nerve injury, mumps, and tumor cell spread along the needle track, with the disadvantage of limited sampling that causes uncertain histological or cytological diagnosis (7, 8). Currently, preoperative imaging for PGTs includes ultrasound, magnetic resonance imaging (MRI), and computed tomography (CT). Despite the higher image quality of CT and MRI in differentiating parotid gland lesions (10), they also have some limitations, including exposure to ionizing radiation, contraindications for patients with internal ferromagnetic devices, high monetary cost, and prolonged examination times. These limitations have restricted the clinical use of CT and MRI in evaluating therapeutic effects in patients with PGTs (11, 12).

Notably, ultrasound is ideally applicable to patients with PGTs since PGTs are typically located in the superficial lobe and ultrasound is widely accepted to be preferred for superficial organ examination. Additionally, ultrasound has the advantages of low cost, non-invasiveness, and no ionizing radiation (12). Therefore, ultrasound is often used for the diagnosis of PGTs. Nevertheless, differential diagnosis of PGTs by ultrasound is challenging. A prior study exhibited that the accuracy of ultrasound in diagnosing malignant parotid masses was only 20%, despite its sensitivity and specificity of 38.9% and 90.1%, respectively, in diagnosing parotid masses (13). Therefore, it is urgent to develop a more reliable, non-invasive, and rapid method for determining the nature of PGTs.

Recently, machine learning (ML) has been increasingly used to analyze medical images. Deep learning (DL), an essential technology in ML, involves multiple levels, which has attracted wide attention because it can automatically learn semantic and spatial features of the hidden layers of neural networks (14). Intriguingly, several studies have yielded similar results in terms of the diagnostic performance of DL systems in the medical imaging diagnosis of various diseases, including thyroid diseases (15), breast diseases (16), and liver tumors (17). Additionally, some previous studies have revealed that DL can discriminate PGTs using medical image pairs, mainly involving CT (6, 18) and MRI (1). Yet, few studies have assessed the performance of DL methods based on ultrasound images in distinguishing BPTs from MPTs. Basically, images of PGTs obtained by a method involving DL are manually segmented by radiologists, which has two chief drawbacks. First, manual segmentation is quite time-consuming and error-prone. Second, accurate segmentation heavily depends on the subjectivity of radiologists, signifying that image segmentation quality is physician-dependent (19). Accordingly, it is imperative to develop a model integrating the optimal automatic segmentation model and DL methods for improving workflow efficiency and assisting radiologists in diagnosis. This study determined regions of interest (ROI) with an automatic segmentation algorithm, evaluated popular image segmentation algorithms including Deeplabv3, UNet, and UNet++, and constructed a deep learning-assisted diagnostic model for PGTs based on automatic segmentation of ultrasound images.

Materials and methods

Patients

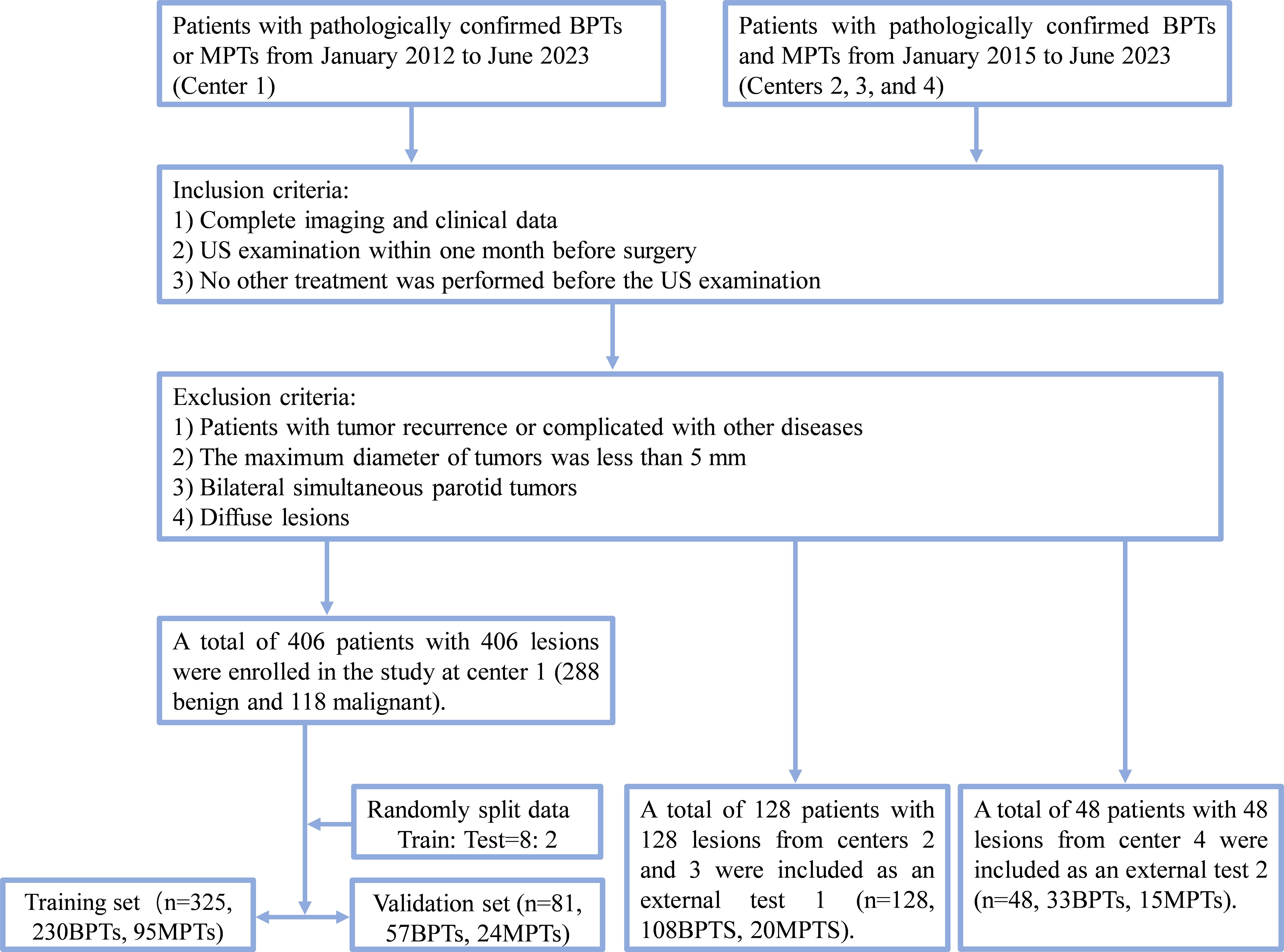

A total of 582 patients histopathologically diagnosed with BPTs and MPTs were retrospectively recruited from four centers (Figure 1), consisting of 406 patients from center 1, 95 patients from center 2, 33 patients from center 3 (patients in the center 2 and center 3 datasets were included in external test set 1), and 48 patients from center 4 (see Supplementary Table S1 for the classification of histopathological types and numbers). Inclusion criteria for patients were as follows: (1) patients with complete imaging and clinical data; (2) patients who received ultrasound within one month before surgery; (3) patients who did not receive any other treatments before the ultrasound. Exclusion criteria for patients were as follows: (1) patients with tumor recurrence or complicated with other diseases; (2) patients with tumors less than 5 mm in maximum diameter; (3) patients with simultaneous bilateral PGTs; (4) patients with diffuse lesions.

Figure 1. Flow chart of patient recruitment. Center 1, the First Affiliated Hospital of Wannan Medical College (Yijishan Hospital); External test set 1: center 2, WuHu Hospital, East China Normal University (The Second People’s Hospital, WuHu) and center 3, the Zibo Central Hospital; External test set 2, the Linyi Central Hospital. BPTs, benign parotid gland tumors; MPTs, malignant parotid gland tumor.

Ultrasonic instrument

Ultrasound examinations of all patients were performed at the four centers with the equipment listed in Supplementary Table S2.

Ultrasound image acquisition

All ultrasound images were stored in JPG or BMP format in the picture archiving and communication system (PACS). Before ultrasound, patients were placed in a supine position, with the neck slightly tilted to fully expose the parotid gland examination area. The sonographer performed crossing, multi-section, and bilateral contrast scanning of the parotid gland. The following features of the lesion were visualized with conventional ultrasound: maximum diameter, side (left/right), number (single/multiple), margin (clear/unclear), shape (regular/irregular), echo (uniform/uneven), posterior echogenicity enhancement (presence/absence), cystic or necrotic areas (presence/absence), calcification (presence/absence), Alder (20) grade (Alder 0/I/II/III), abnormal lymph nodes (presence/absence).

Clinical model construction and validation

Statistical analysis was conducted. The independent samples t-test was used only for comparisons of continuous variables between two groups, and the χ² test was utilized to analyze discrete variables. All clinical characteristics were subjected to univariate analyses, followed by the calculation of odds ratios (OR) and corresponding p values for each variable. Then, clinical characteristics with p < 0.05 were included to construct clinical models. The features identified in the univariate analyses were modeled with three ML algorithms (Logistic Regression [LR], Random Forest, and XGBoost).
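The univariate screening step described above can be illustrated with a minimal sketch for a single binary ultrasound feature. The function names and the 2×2 counts in the test are hypothetical and are not taken from the study; the statistic shown is the uncorrected Pearson χ² test, which may differ from the exact procedure used by the authors.

```python
import math

def odds_ratio(a, b, c, d):
    """Odds ratio for a 2x2 table.

    a: feature present, malignant    b: feature present, benign
    c: feature absent,  malignant    d: feature absent,  benign
    Raises ZeroDivisionError when b * c == 0 (no correction is applied here).
    """
    return (a * d) / (b * c)

def chi_square_2x2(a, b, c, d):
    """Pearson chi-square statistic (no continuity correction) and its p value."""
    n = a + b + c + d
    row1, row2 = a + b, c + d
    col1, col2 = a + c, b + d
    stat = n * (a * d - b * c) ** 2 / (row1 * row2 * col1 * col2)
    # With 1 degree of freedom, P(X > x) equals erfc(sqrt(x / 2)).
    p_value = math.erfc(math.sqrt(stat / 2.0))
    return stat, p_value

def passes_univariate_screen(a, b, c, d, alpha=0.05):
    """Keep the feature for model construction when p < alpha, as in the text."""
    _, p_value = chi_square_2x2(a, b, c, d)
    return p_value < alpha
```

A feature that passes this screen would then be passed on to the clinical models (LR, Random Forest, and XGBoost).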

Analysis of radiological and clinical data

The images and clinical data of all patients were attained from the routine clinical records and PACS of hospitals. All images were stored. Combined with the medical history of patients, the lesions were directly evaluated by three physicians from center 1 (radiologist A: XZ, a senior radiologist with 20 years of ultrasound experience; radiologist B: FHJ, an attending radiologist with 13 years of ultrasound experience; radiologist C: JW, a junior radiologist with 5 years of ultrasound experience). Meanwhile, all sonographic features were independently reviewed by ultrasound radiologists A and B, and all images were read by two radiologists in a double-blind manner. If the two radiologists disagreed on the image features of the lesion, they consulted and reached a consensus. Afterward, DL analysis was conducted with B-mode images containing lesions with the largest diameter or planes of suspected malignant features. Radiologists were blinded to the final histopathological findings throughout the study.

ROI segmentation

ROIs were identified with an automatic segmentation algorithm. Common image segmentation algorithms, including Deeplabv3, UNet, and UNet++, were evaluated. Additionally, different algorithms were combined with a post-fusion algorithm to obtain more accurate ROI segmentation results.

Training process

Data augmentation was performed. Concretely, sub-volumes were randomly cropped from the images and labeled according to their positive and negative labels, with spatial size and number of samples specified. Online data augmentation was used during the training process, such as spacing and random crop methods, which generated many different images as training iterations. DiceCELoss combining Dice Loss and Cross-Entropy Loss functions (Loss functions in Supplementary S3) was utilized. The weights of unlabeled pixels were set to zero, enabling unlabeled pixels to learn from only the labeled ones and, hence, to be generalized to the whole volume.
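As an illustration of how a combined Dice and cross-entropy loss behaves, the following sketch computes both terms for a binary mask given as flat lists. The exact formulation used in the study is given in Supplementary S3 and is not reproduced here; the equal weighting of the two terms and the smoothing constants are assumptions.

```python
import math

def dice_loss(probs, targets, eps=1e-6):
    """Soft Dice loss: 1 - 2|P∩T| / (|P| + |T|), with a small smoothing term."""
    intersection = sum(p * t for p, t in zip(probs, targets))
    denominator = sum(probs) + sum(targets)
    return 1.0 - (2.0 * intersection + eps) / (denominator + eps)

def cross_entropy_loss(probs, targets, eps=1e-7):
    """Mean binary cross-entropy; probabilities are clamped to avoid log(0)."""
    total = 0.0
    for p, t in zip(probs, targets):
        p = min(max(p, eps), 1.0 - eps)
        total -= t * math.log(p) + (1 - t) * math.log(1.0 - p)
    return total / len(probs)

def dice_ce_loss(probs, targets, w_dice=1.0, w_ce=1.0):
    """Weighted sum of the two terms (equal weights are an assumption)."""
    return w_dice * dice_loss(probs, targets) + w_ce * cross_entropy_loss(probs, targets)
```

The Dice term rewards overlap with the labeled region as a whole, while the cross-entropy term penalizes each pixel individually, which is why the two are often combined for small lesions.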

Hyperparameters were selected. The Adam optimizer was used at an initial learning rate of 1e-3. Our model was trained with 32 rounds of early stopping.
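One common way to implement early stopping is sketched below. It assumes that "32 rounds" refers to a patience of 32 epochs without improvement in validation loss; this interpretation, the class name, and the `min_delta` parameter are assumptions rather than details confirmed by the source.

```python
class EarlyStopping:
    """Stop training when validation loss has not improved for `patience` epochs."""

    def __init__(self, patience=32, min_delta=0.0):
        self.patience = patience
        self.min_delta = min_delta
        self.best = float("inf")
        self.bad_epochs = 0

    def step(self, val_loss):
        """Record one epoch's validation loss; return True when training should stop."""
        if val_loss < self.best - self.min_delta:
            self.best = val_loss
            self.bad_epochs = 0
        else:
            self.bad_epochs += 1
        return self.bad_epochs >= self.patience

def stop_epoch(val_losses, patience=32):
    """Index of the epoch at which training stops, or None if it never stops."""
    stopper = EarlyStopping(patience=patience)
    for epoch, loss in enumerate(val_losses):
        if stopper.step(loss):
            return epoch
    return None
```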

DL procedure

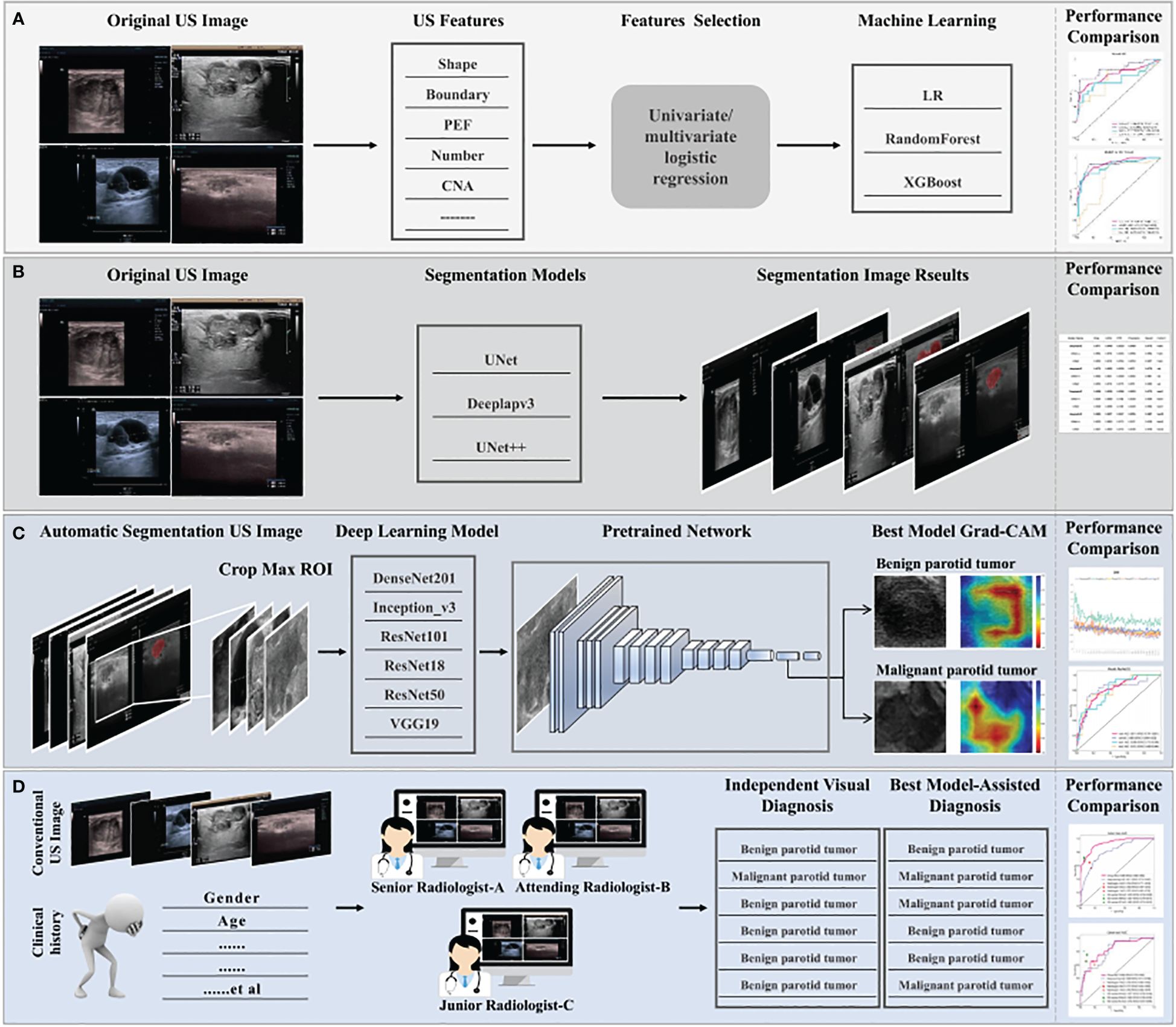

All 2D rectangular ROIs were cropped from raw US images as per the 2D automatic segmentation mask of the tumor. Figure 2 summarizes the overall flow of this study. Six commonly used pre-trained convolutional neural network (CNN) models, including DenseNet201, Inception_V3, ResNet101, ResNet18, ResNet50, and VGG19, were used, all of which were initially trained on the ILSVRC-2012 dataset. The slice with the largest ROI was chosen to represent each patient. After that, gray values were normalized to the range [-1, 1] through min-max transformation. Each cropped sub-volume image was resized to 224 × 224 with nearest-neighbor interpolation to obtain images suited for model input. Due to the limited image data, the learning rate was determined with caution to improve generalization. In this study, the cosine decay learning rate algorithm was implemented (learning rate in Supplementary S4).
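The preprocessing steps above (rectangular crop from the segmentation mask, min-max normalization to [-1, 1], and nearest-neighbor resizing) can be sketched in plain Python on nested lists. The function names are hypothetical, and the handling of a constant-intensity crop is an assumption; the study's own pipeline was built with MONAI and PyTorch.

```python
def bounding_box(mask):
    """Tightest rectangle (r0, r1, c0, c1) enclosing all non-zero mask pixels."""
    rows = [r for r, row in enumerate(mask) if any(row)]
    cols = [c for c in range(len(mask[0])) if any(row[c] for row in mask)]
    return rows[0], rows[-1] + 1, cols[0], cols[-1] + 1

def crop(image, box):
    """Cut the rectangular ROI out of the image."""
    r0, r1, c0, c1 = box
    return [row[c0:c1] for row in image[r0:r1]]

def minmax_to_signed_unit(image):
    """Min-max transform of gray values to the range [-1, 1]."""
    flat = [v for row in image for v in row]
    lo, hi = min(flat), max(flat)
    if hi == lo:  # constant image: map everything to 0 (assumption)
        return [[0.0 for _ in row] for row in image]
    return [[2.0 * (v - lo) / (hi - lo) - 1.0 for v in row] for row in image]

def resize_nearest(image, out_h, out_w):
    """Nearest-neighbor resize: each output pixel copies one source pixel."""
    in_h, in_w = len(image), len(image[0])
    return [[image[(r * in_h) // out_h][(c * in_w) // out_w]
             for c in range(out_w)]
            for r in range(out_h)]

def preprocess(image, mask, size=224):
    """Crop, normalize, then resize, in the order described in the text."""
    roi = crop(image, bounding_box(mask))
    return resize_nearest(minmax_to_signed_unit(roi), size, size)
```

Nearest-neighbor interpolation introduces no new gray values, so the normalized range is preserved exactly after resizing.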

Figure 2. The overall flow of this study. (A) Construction process for the clinical machine learning model based on baseline ultrasound features. (B) Selection of segmentation models: automatic segmentation algorithms, including Deeplabv3, UNet, and UNet++, were used to identify regions of interest, and the optimal segmentation model was selected by quantitative evaluation. (C) Construction process for the deep learning model. Ultrasound images obtained from the automatic segmentation results were used as the input of six classical pre-trained convolutional neural networks (DenseNet201, Inception_V3, ResNet101, ResNet18, ResNet50, and VGG19). The predicted probability for BPTs and MPTs was the output. In addition, Grad-CAM (gradient-weighted class activation mapping) was applied to visualize the decision-making process of the model. (D) Three radiologists with different years of experience provided a comprehensive diagnosis of MPTs and BPTs, with or without the aid of the model. Radiologist C represents resident radiologists. Radiologist B represents attending radiologists. Radiologist A represents senior radiologists.

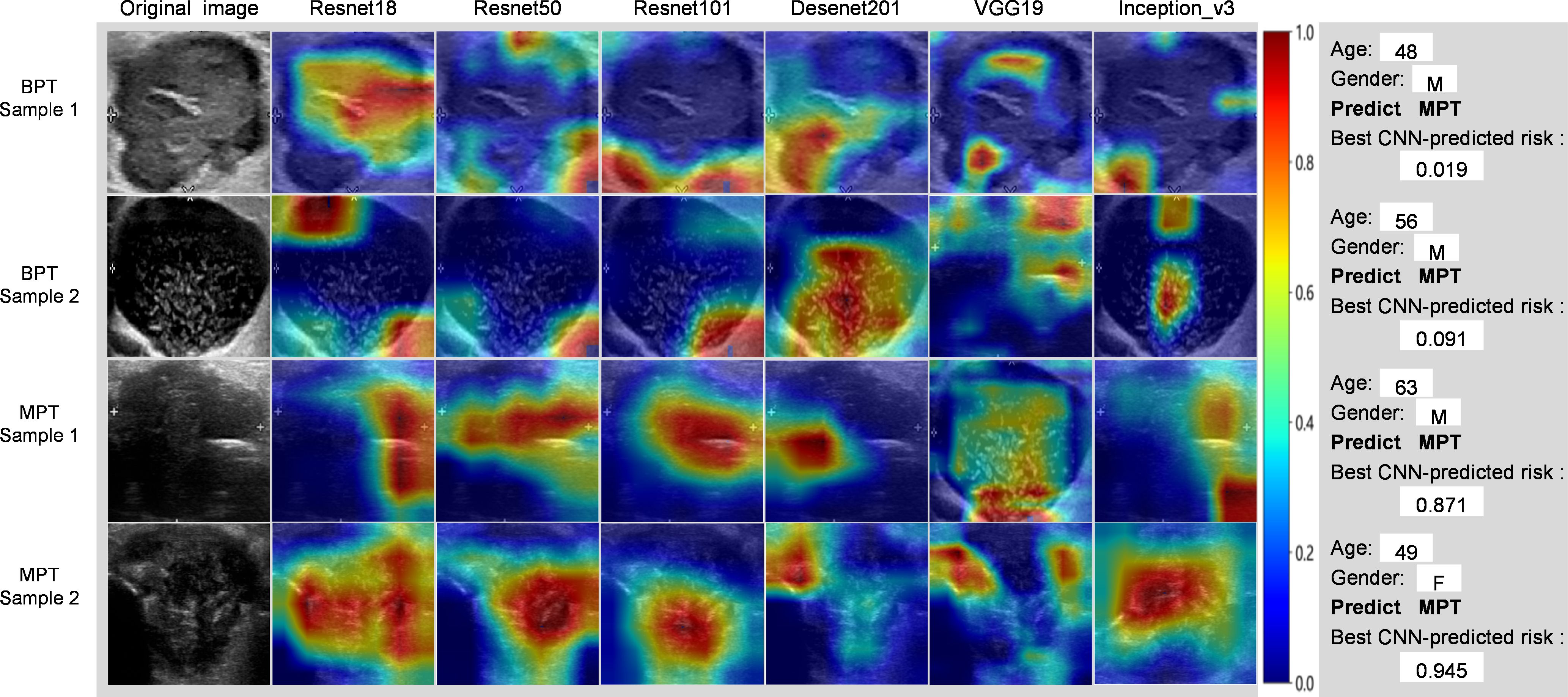

To increase the visibility of DL models and deepen the understanding of the underlying decision-making process, the goal of achieving model visualization and verification is of tremendous significance in model development (21). Therefore, to more transparently study the interpretability of DL-based radiomics, the model was visualized with gradient-weighted class activation mapping (Grad-CAM). The gradient information from the last convolutional layer of CNN was used for weighted fusion to acquire a class activation map, highlighting the important regional images for the classification target.
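The weighted fusion described above can be sketched as follows, assuming the activations and gradients of the last convolutional layer have already been extracted as nested lists of shape channels × height × width. The function name and the final scaling to [0, 1] are illustrative assumptions; in practice these tensors would come from framework hooks.

```python
def grad_cam(activations, gradients):
    """Class activation map from last-layer activations and class-score gradients.

    Each channel weight is the spatial mean of that channel's gradient; the map
    is the ReLU of the weighted sum of activation channels, scaled to [0, 1].
    """
    n_channels = len(activations)
    height, width = len(activations[0]), len(activations[0][0])
    # Global average pooling of the gradients gives one weight per channel.
    weights = [sum(sum(row) for row in g) / (height * width) for g in gradients]
    cam = [[max(0.0, sum(weights[k] * activations[k][i][j] for k in range(n_channels)))
            for j in range(width)]
           for i in range(height)]
    peak = max(max(row) for row in cam)
    if peak > 0:
        cam = [[v / peak for v in row] for row in cam]
    return cam
```

The ReLU keeps only regions that push the score of the target class upward, which is why the resulting heat map highlights areas supporting the predicted label.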

The entire training process was performed on an NVIDIA 4090 GPU with the use of MONAI 0.8.1 and PyTorch 1.8.1.
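For reference, the cosine decay learning rate schedule mentioned in the DL procedure is commonly written as shown in the sketch below. The actual settings are given in Supplementary S4; the function name, the absence of warm-up, and the default minimum rate here are assumptions.

```python
import math

def cosine_decay_lr(step, total_steps, lr_max, lr_min=0.0):
    """Learning rate at `step`, decaying from lr_max to lr_min along a half cosine."""
    step = min(max(step, 0), total_steps)  # clamp to the schedule range
    cos_term = 0.5 * (1.0 + math.cos(math.pi * step / total_steps))
    return lr_min + (lr_max - lr_min) * cos_term
```

The rate falls slowly at first, fastest in the middle of training, and slowly again near the end, which tends to stabilize the final epochs on small datasets.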

Visual evaluations and DL model–assisted diagnosis of radiologists

All ultrasound images of PGTs were reviewed independently by three radiologists (radiologists A, B, and C) who were blinded to the histopathological findings. These radiologists comprehensively interpreted the condition of the recruited patients with BPTs or MPTs based on ultrasound images and clinical information, with or without the prediction of the optimal model. The assisted reading proceeded in two steps. In step 1, two main types of information were provided: first, the ultrasound images and clinical data; second, the prediction results of the AI model, namely the prediction label (probability of benign or malignant; for example, 0.147 for BPT and 0.853 for MPT) and the corresponding confidence score. In step 2, at the request of the radiologist, two pieces of supplementary information were provided: the Grad-CAM visualization generated by our deep learning model, which helps radiologists initially focus on salient parts of the image, and the region of interest (ROI) identified by our automatic segmentation algorithm. With the assistance of the optimal DL model, the independent diagnosis was carried out again in a random order. The Net Reclassification Index (NRI) and Integrated Discrimination Improvement (IDI) were then calculated for the three radiologists to assess the clinical benefit of model assistance.
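The two benefit measures can be made concrete with a small sketch using their standard definitions. It assumes malignancy is coded as 1, that unassisted and assisted readings are available as binary calls (for NRI) and as malignancy probabilities (for IDI), and it omits the significance tests; how the study derived these inputs from the radiologists' readings is not specified here.

```python
def nri(y_true, old_call, new_call):
    """Categorical Net Reclassification Index for binary calls (1 = malignant).

    NRI = [P(up | event) - P(down | event)] + [P(down | non-event) - P(up | non-event)]
    """
    events = [(o, n) for y, o, n in zip(y_true, old_call, new_call) if y == 1]
    nonevents = [(o, n) for y, o, n in zip(y_true, old_call, new_call) if y == 0]
    up_e = sum(1 for o, n in events if n > o) / len(events)
    down_e = sum(1 for o, n in events if n < o) / len(events)
    up_ne = sum(1 for o, n in nonevents if n > o) / len(nonevents)
    down_ne = sum(1 for o, n in nonevents if n < o) / len(nonevents)
    return (up_e - down_e) + (down_ne - up_ne)

def idi(y_true, old_prob, new_prob):
    """Integrated Discrimination Improvement.

    Mean change in predicted probability among events minus the mean change
    among non-events.
    """
    ev = [n - o for y, o, n in zip(y_true, old_prob, new_prob) if y == 1]
    ne = [n - o for y, o, n in zip(y_true, old_prob, new_prob) if y == 0]
    return sum(ev) / len(ev) - sum(ne) / len(ne)
```

A positive NRI means that assisted readings moved more cases in the correct direction than in the wrong one; a positive IDI means that the separation between malignant and benign cases widened.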

Statistical analysis

The data were analyzed with Python software (version 3.9.16), R software (4.1.0), and SPSS software (version 27.0, IBM Corporation, Armonk, New York, USA). Differences were considered statistically significant at two-sided p < 0.05. Statistical analysis is detailed in the Supplementary S5.

Results

Analysis of baseline characteristics and the XGBoost model

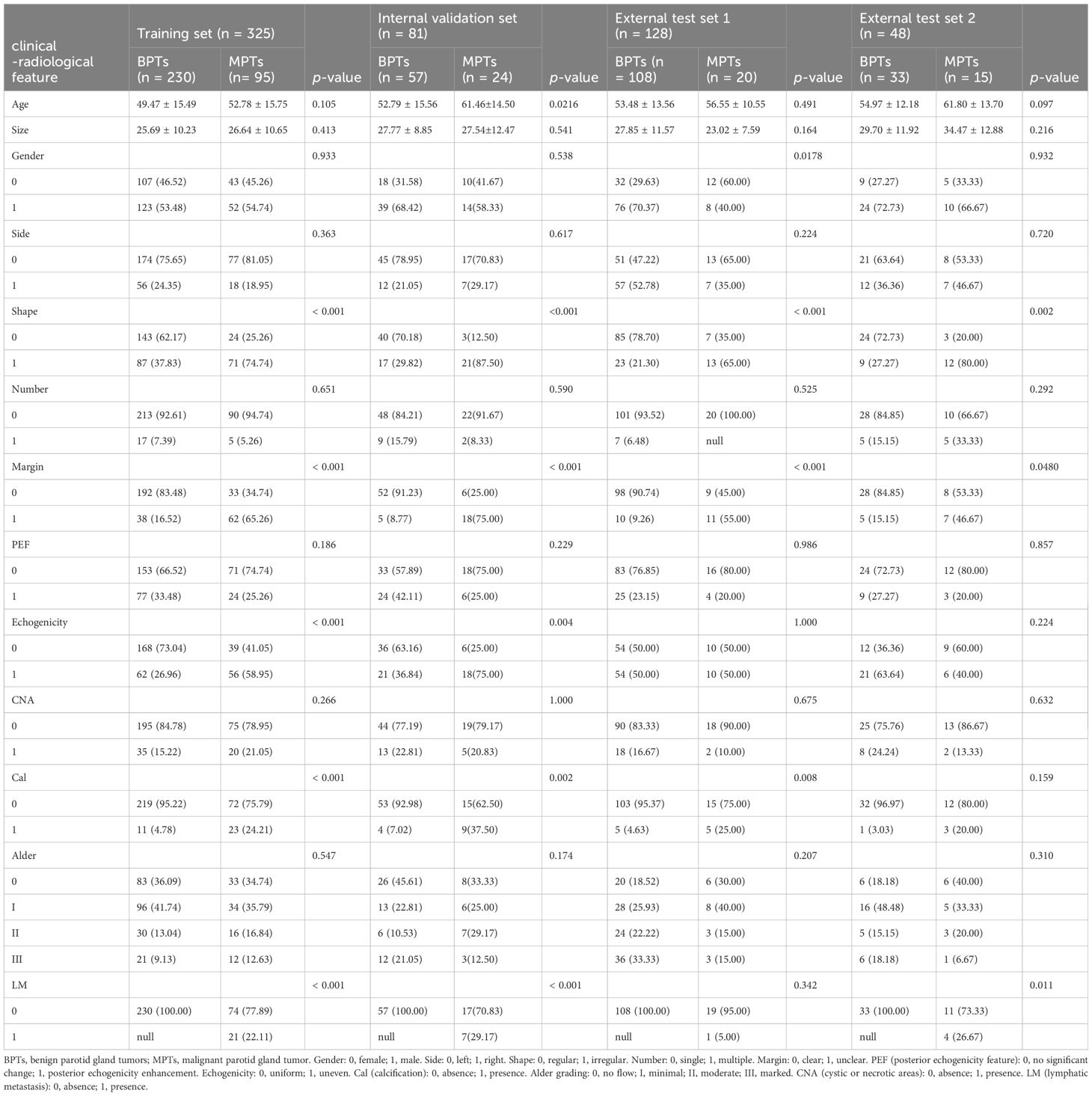

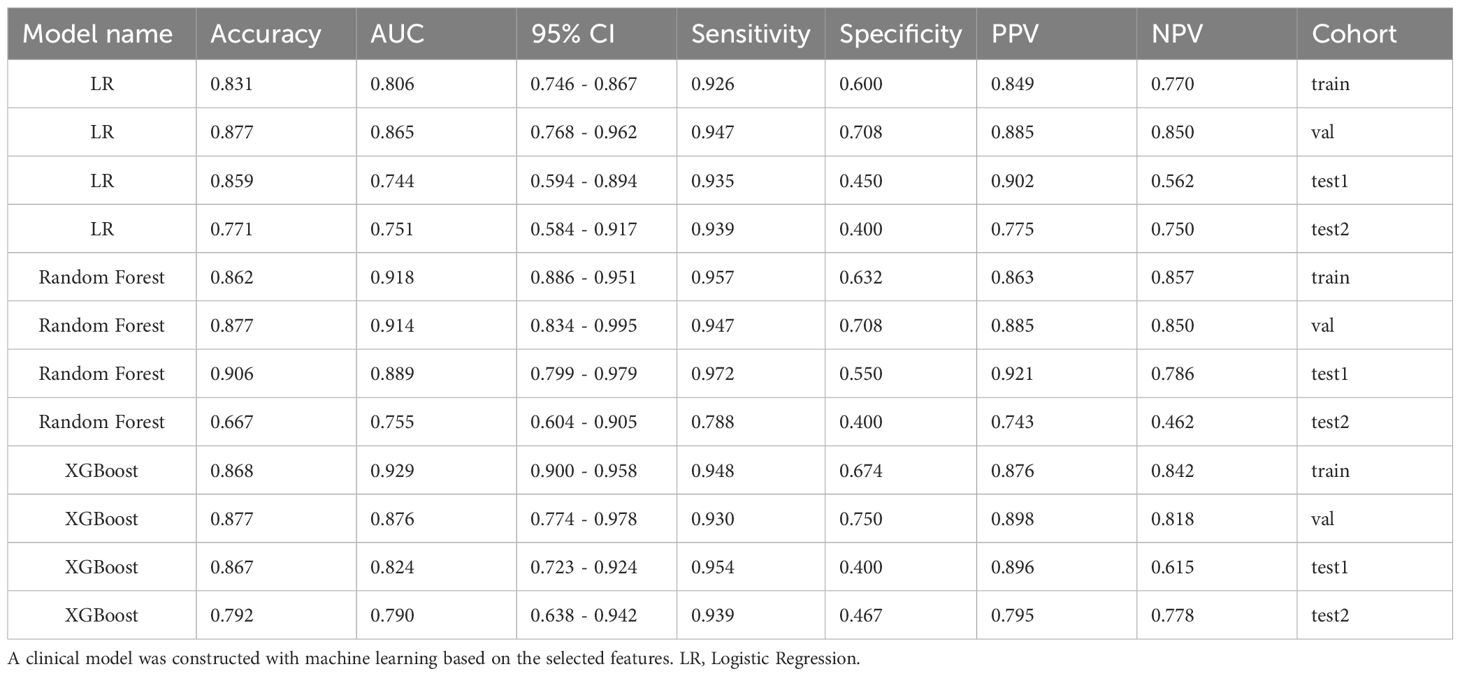

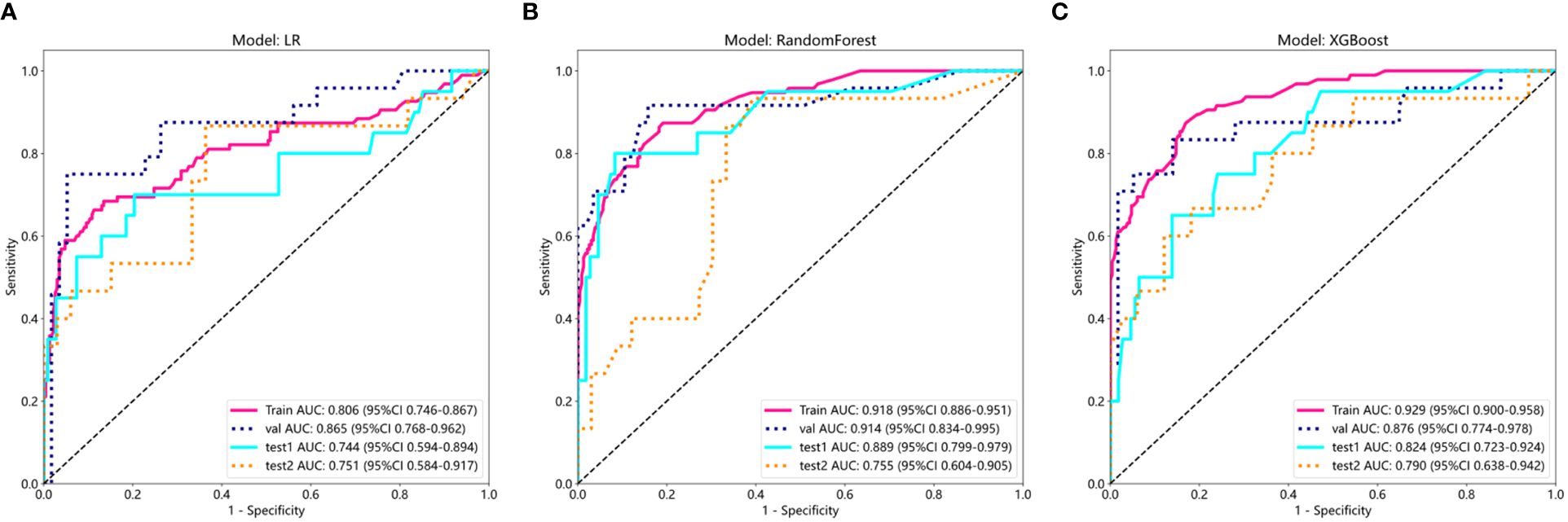

Supplementary Table S3 shows Cohen’s kappa consistency analysis results of subjective morphological features of ultrasound by senior radiologists and attending radiologists (radiologists A and B). The baseline clinical radiological characteristics are listed in Table 1. Univariate analyses were performed on all clinical features, and OR and corresponding p values were calculated for each variable. Features including shape, echogenicity, calcification, margin, and lymphatic metastasis (LM) had p-values below 0.05. Then, multivariate logistic regression analyses were conducted to screen the independent risk factors for predicting MPTs, followed by the construction of a clinical diagnostic model. It was found that shape, margin, and LM were independent risk factors for ultrasound diagnosis of MPTs (Supplementary Table S4). Therefore, clinical models were constructed based on these features with three ML algorithms. The results manifested that the performance of the nonlinear model was superior to that of the linear model. The XGBoost model was chosen as the benchmark for clinical characteristics in subsequent comparisons (Table 2, Figure 3).

Table 2. Comparison of clinical models constructed using machine learning based on features screened by univariate and multivariate analyses of clinical-radiological characteristics.

Figure 3. ROC results of clinical features of three different machine learning models (LR, Random Forest, and XGBoost). (A) ROC curve of logistic regression (LR); (B) ROC curve of Random Forest; (C) ROC curve of XGBoost.

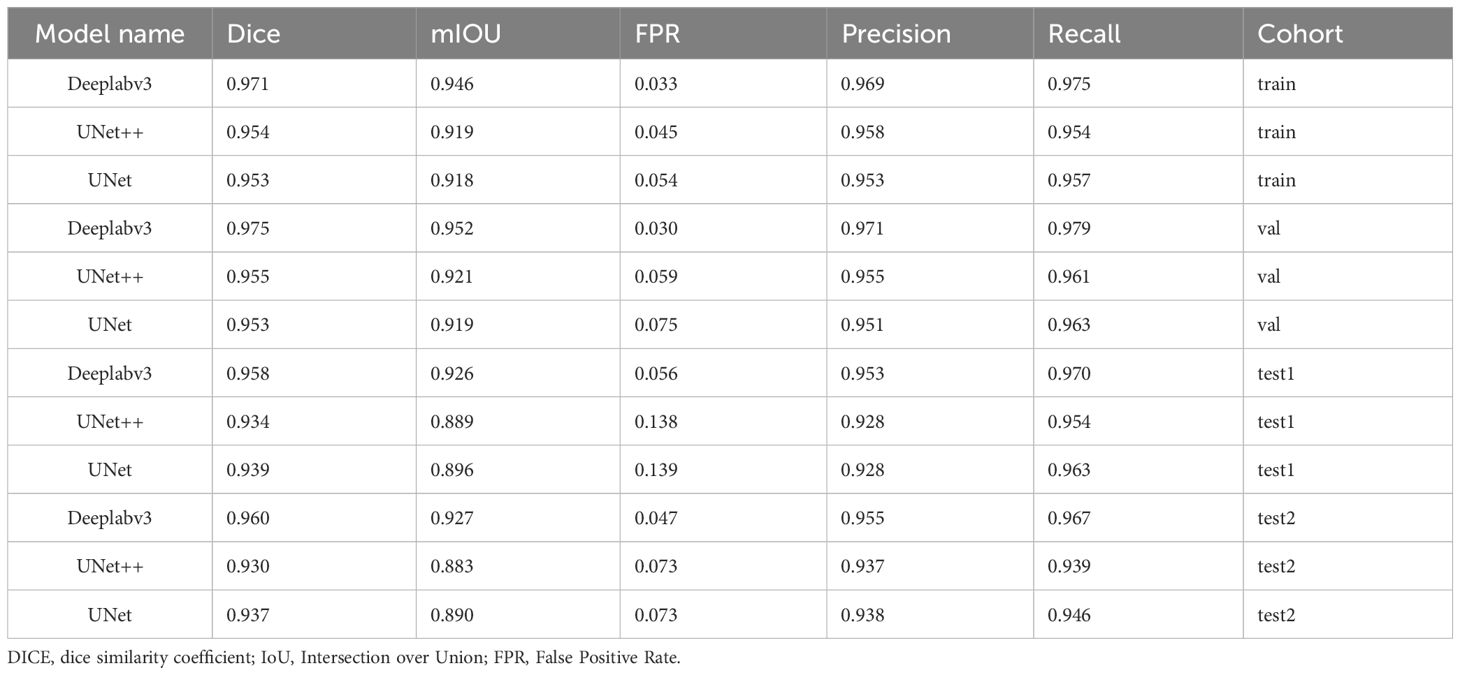

ROI segmentation evaluation

The performance of models was compared with various evaluation indicators (Table 3). Deeplabv3 consistently exhibited excellent performance and outperformed UNet++ and UNet models in terms of multiple indicators, including Dice coefficient, mIOU, FPR, Precision, and Recall (Supplementary S8).
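The evaluation indicators listed above follow from pixel-wise confusion counts between a predicted mask and a reference mask, as in the sketch below. The study's exact definitions are in Supplementary S8; treating mIOU as the mean of the foreground and background IoU is an assumption, and the function does not handle empty masks.

```python
def segmentation_metrics(pred, truth):
    """Dice, mIOU, FPR, precision, and recall for two binary masks (nested lists)."""
    p = [int(v) for row in pred for v in row]
    t = [int(v) for row in truth for v in row]
    tp = sum(1 for a, b in zip(p, t) if a == 1 and b == 1)
    fp = sum(1 for a, b in zip(p, t) if a == 1 and b == 0)
    fn = sum(1 for a, b in zip(p, t) if a == 0 and b == 1)
    tn = sum(1 for a, b in zip(p, t) if a == 0 and b == 0)
    iou_foreground = tp / (tp + fp + fn)
    iou_background = tn / (tn + fp + fn)
    return {
        "dice": 2 * tp / (2 * tp + fp + fn),
        "miou": (iou_foreground + iou_background) / 2,  # two-class mean (assumption)
        "fpr": fp / (fp + tn),
        "precision": tp / (tp + fp),
        "recall": tp / (tp + fn),
    }
```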

Table 3. Comparison of the performance of the three automatic segmentation models, Deeplabv3, UNet++, and UNet, in terms of various evaluation indicators.

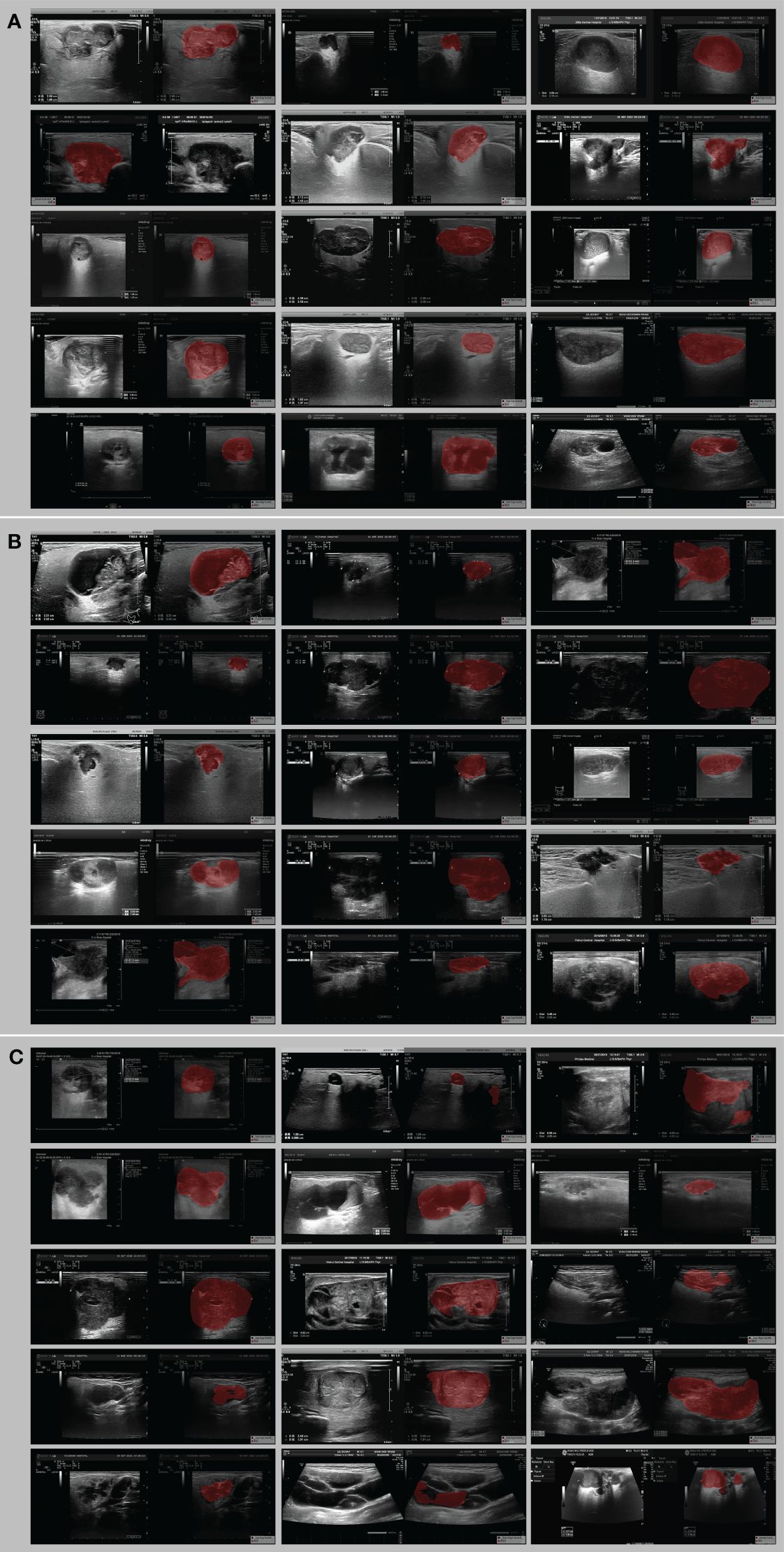

Visual evaluation results

Based on the quantitative results, the optimal segmentation model (DeeplabV3) was selected. The outputs of the three models on the dataset were visualized to compare the segmentation results of our proposed automatic segmentation model with those of the other 2 models. Table 4 depicts the results of the qualitative comparison. As observed, the segmentation results of the proposed Deeplabv3+ model were superior to those of U-Net and U-Net++, which had some subtle visible errors. The Deeplabv3+ model also exhibited higher reproducibility than the other 2 models. To further assess clinical reliability, the Deeplabv3+ prediction results were presented to three experienced experts, who evaluated our segmentation results through majority voting. Approximately 96% of the predicted segmentation results were endorsed by the experts, highlighting the effectiveness of our approach in a real-world clinical setting (Figure 4).

Figure 4. Example image of the best Deeplabv3 segmentation model for lesion delineation on ultrasound images. (A) all three physicians were satisfied with the visual assessment, (B) one of the three physicians was dissatisfied with the segmentation, and (C) two or three of the three physicians were dissatisfied with the segmentation.
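The majority-voting assessment and the three categories of Figure 4 can be expressed as a short sketch. It assumes each expert gives a binary satisfied/dissatisfied vote per image and that a segmentation counts as endorsed when at least two of the three experts are satisfied; the function names and votes in the test are hypothetical.

```python
def figure4_category(votes):
    """Map three satisfied/dissatisfied votes to panel A, B, or C of Figure 4."""
    dissatisfied = sum(1 for v in votes if not v)
    if dissatisfied == 0:
        return "A"  # all three physicians satisfied
    if dissatisfied == 1:
        return "B"  # one physician dissatisfied
    return "C"      # two or three physicians dissatisfied

def endorsement_rate(all_votes):
    """Fraction of cases in which a strict majority of experts is satisfied."""
    endorsed = sum(1 for votes in all_votes if sum(votes) * 2 > len(votes))
    return endorsed / len(all_votes)
```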

The above quantification and visual evaluation results illustrated that the Deeplabv3 model had the best segmentation performance, thus laying the foundation for subsequent model construction.

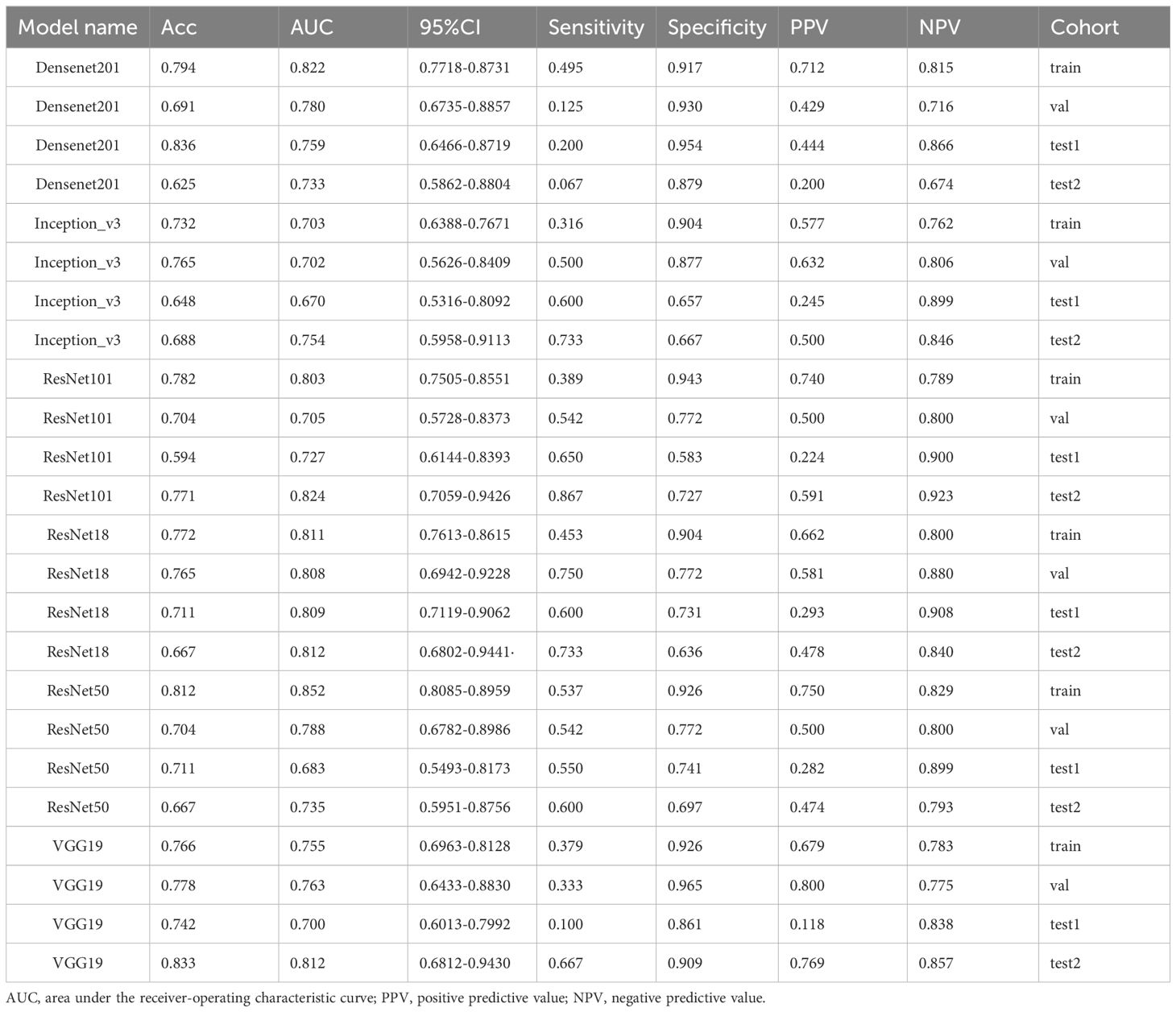

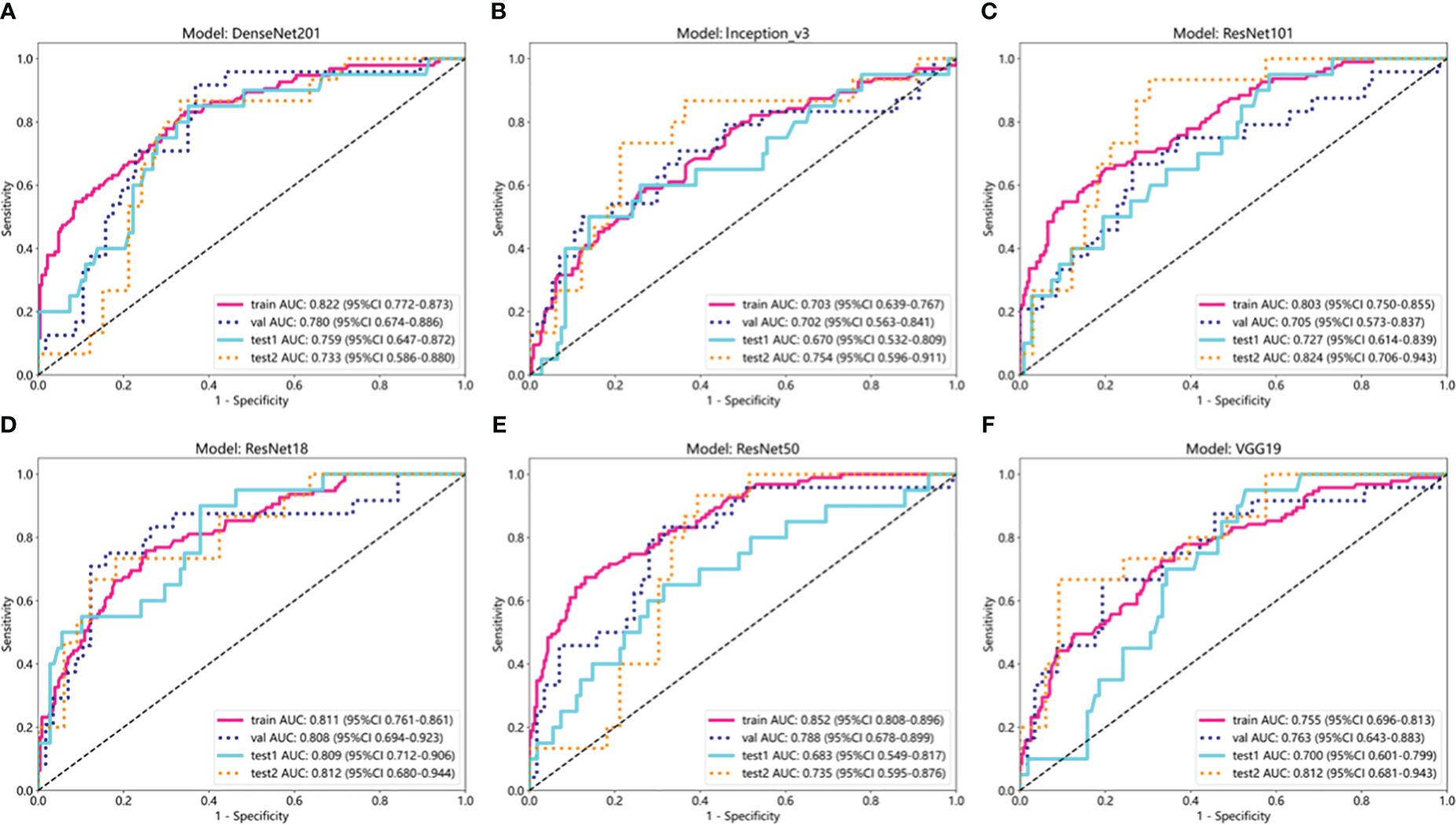

DL signature

The results of the DL models (Table 4) demonstrated that the ResNet18 model outperformed other models in terms of classification performance. ResNet18 exhibited a minimal loss value during training (22), indicating fewer errors, and achieved a higher convergence rate compared to other CNNs (Supplementary Figure 1). In the internal validation set, ResNet18 achieved an AUC of 0.808 (95%CI: 0.694−0.923), an accuracy of 0.765, a sensitivity of 0.750, and a specificity of 0.772. Additionally, in external test sets 1 and 2, ResNet18 maintained favorable classification performance with AUCs of 0.809 (95%CI: 0.712−0.906) and 0.812 (95%CI: 0.680−0.944), accuracies of 0.711 and 0.667, sensitivities of 0.600 and 0.733, and specificities of 0.731 and 0.636, respectively. Although the ResNet18 model did not surpass the clinical model in overall performance, it showed comparable AUCs in all test sets. Specifically, the AUCs of ResNet18 and the clinical models were 0.808 and 0.876 (p = 0.264) in the internal validation set, 0.809 and 0.824 (p = 0.814) in external test set 1, and 0.812 and 0.824 (p = 0.809) in external test set 2 (Figure 5).
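The classification metrics reported above can be computed from predicted malignancy probabilities as in the following sketch. The AUC is obtained from pairwise comparisons (the Mann-Whitney formulation), the 0.5 decision threshold is an assumption, and confidence intervals and the AUC comparison tests described in Supplementary S5 are not reproduced.

```python
def auc(y_true, scores):
    """Area under the ROC curve: the probability that a malignant case scores
    higher than a benign case, with ties counted as one half."""
    pos = [s for y, s in zip(y_true, scores) if y == 1]
    neg = [s for y, s in zip(y_true, scores) if y == 0]
    wins = 0.0
    for p in pos:
        for n in neg:
            if p > n:
                wins += 1.0
            elif p == n:
                wins += 0.5
    return wins / (len(pos) * len(neg))

def confusion_metrics(y_true, scores, threshold=0.5):
    """Accuracy, sensitivity, and specificity at a fixed probability threshold."""
    tp = fp = tn = fn = 0
    for y, s in zip(y_true, scores):
        pred = 1 if s >= threshold else 0
        if y == 1 and pred == 1:
            tp += 1
        elif y == 1:
            fn += 1
        elif pred == 1:
            fp += 1
        else:
            tn += 1
    return {
        "accuracy": (tp + tn) / (tp + tn + fp + fn),
        "sensitivity": tp / (tp + fn),
        "specificity": tn / (tn + fp),
    }
```

Because the AUC depends only on the ranking of scores, it is unaffected by the choice of threshold, whereas sensitivity and specificity trade off against each other as the threshold moves.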

Figure 5. ROC curves of six deep learning models in internal training and validation sets and external test sets 1 and 2. (A) ROC curve of DenseNet201; (B) ROC curve of Inception_v3; (C) ROC curve of ResNet101; (D) ROC curve of ResNet18; (E) ROC curve of ResNet50; (F) ROC curve of VGG19.

In terms of model interpretability, ResNet18 exhibited clearer regions of interest (ROIs), focusing on the margin and internal regions of the tumor, while not activating regions adjacent to normal parotid tissues (Figure 6).

Figure 6. Grad-CAM visualization of 4 typical samples, showing the attention regions of different deep learning models in the analysis of US images of benign and malignant parotid tumors. BPT, benign parotid gland tumor; MPT, malignant parotid gland tumor.

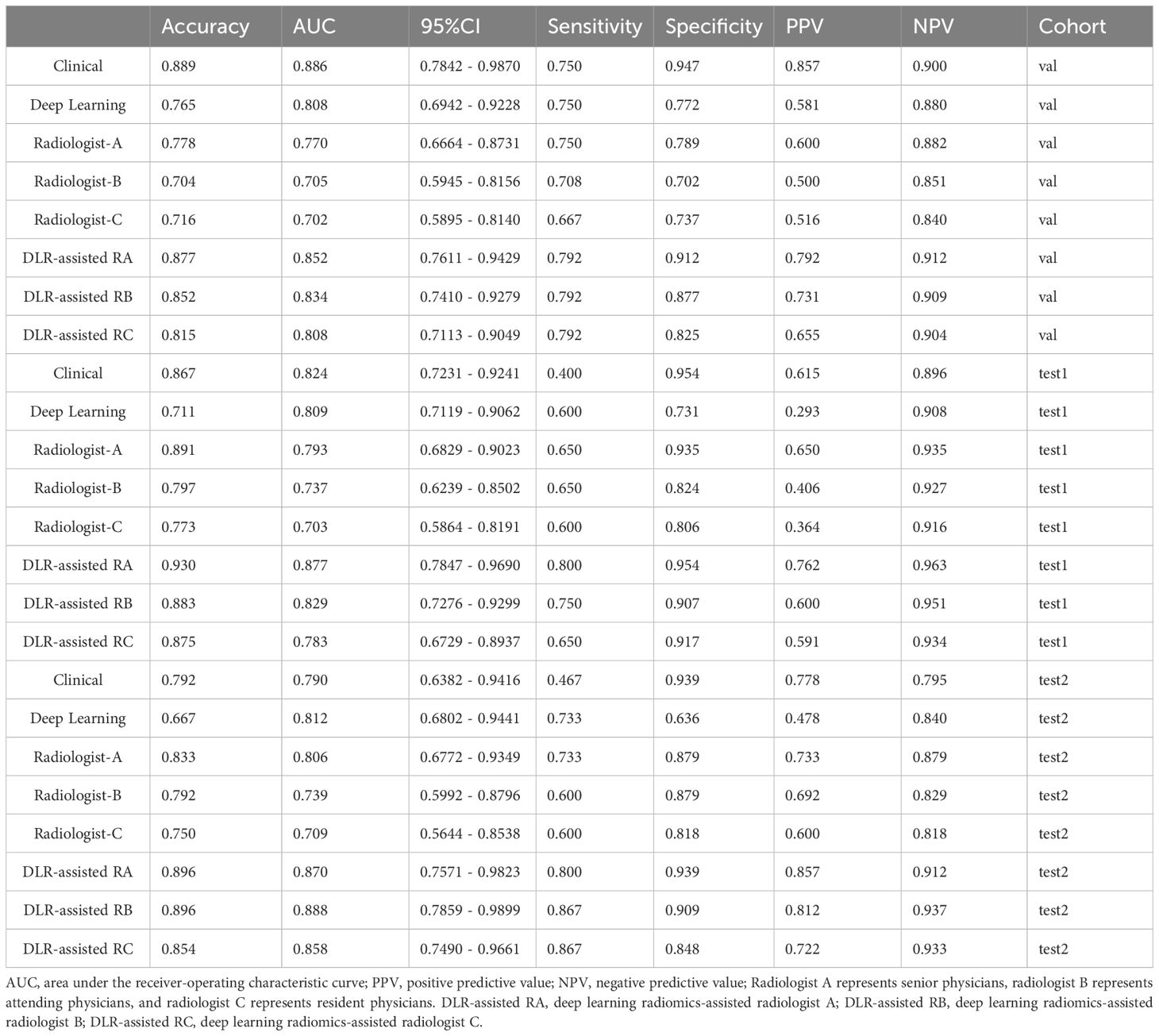

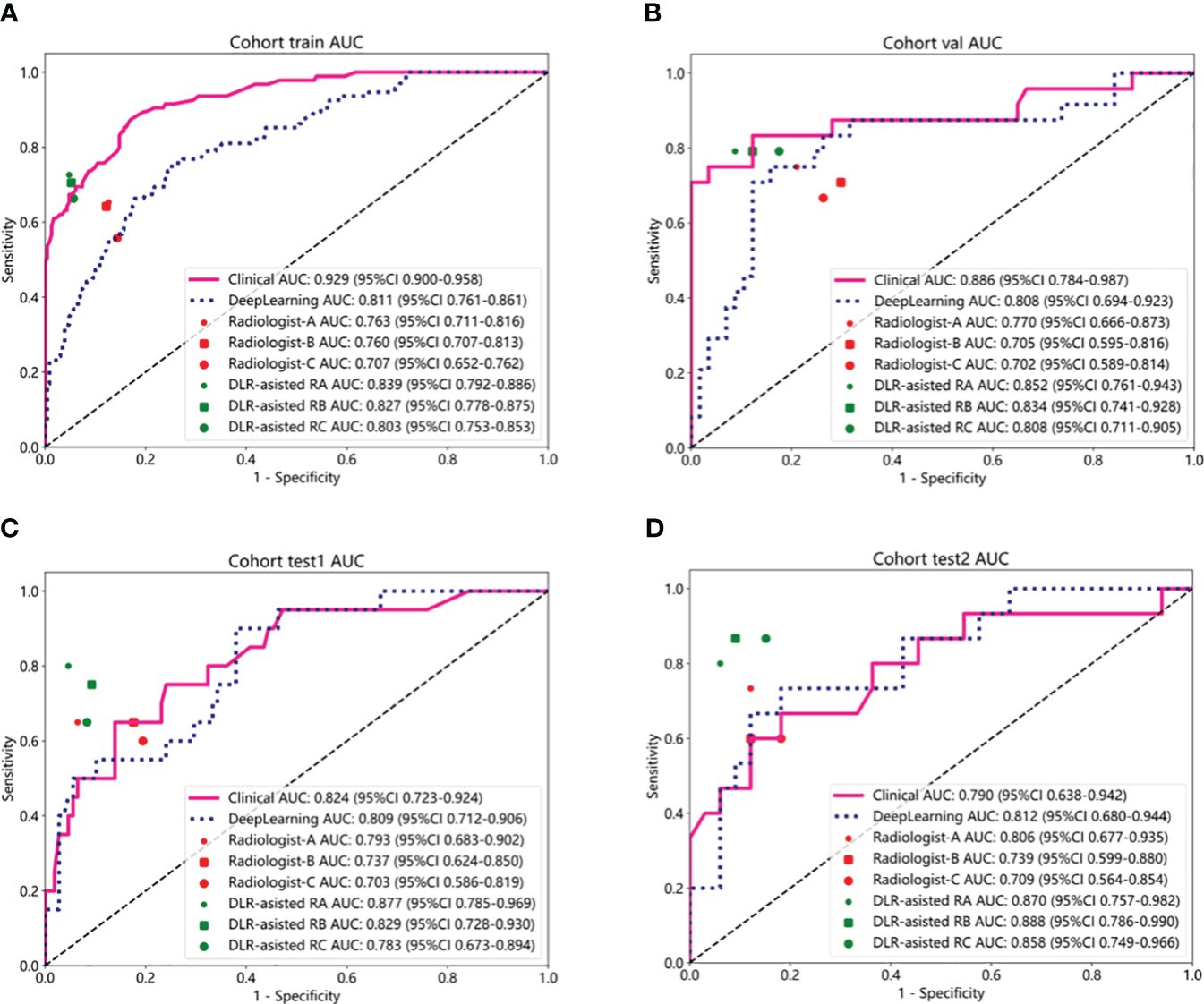

Diagnostic performance of the radiologist and DL model–assisted diagnosis

The diagnostic performance of radiologists in distinguishing BPTs from MPTs with the aid of ResNet18 is summarized in Table 5. The overall performance of radiologists with different levels of experience was enhanced with the assistance of the ResNet18 model, and the AUC improvement was statistically significant in all comparisons except for radiologist A in external test set 2. For radiologist A, the AUC increased by 0.082 (p = 0.012) in the internal validation set, 0.084 (p = 0.042) in external test set 1, and 0.064 (p = 0.107) in external test set 2. For radiologist B, the AUC improvement was 0.129 (p = 0.001) in the internal validation set, 0.092 (p = 0.016) in external test set 1, and 0.149 (p = 0.022) in external test set 2. Radiologist C saw AUC enhancements of 0.106 (p = 0.014), 0.080 (p = 0.007), and 0.149 (p = 0.030) in the internal validation set, external test set 1, and external test set 2, respectively.

Table 5. Performance comparison between ResNet18 and radiologists of different seniorities and between radiologists with and without model assistance.

The diagnostic performance of the ResNet18 model was comparable to that of senior radiologist A, and significantly better than radiologists B and C (Figure 7). The kappa values for radiologists B and C improved from 0.615 to 0.808 in the internal validation set, from 0.814 to 0.870 in external test set 1, and from 0.698 to 0.727 in external test set 2 with the assistance of ResNet18. The clinical diagnostic benefit of radiologists aided by the ResNet18 DL model was superior to that of independent visual assessments by radiologists. In the internal validation set, the NRI values for radiologists A, B, and C were 0.164 (p = 0.015), 0.259 (p = 0.002), and 0.213 (p = 0.017), respectively, with corresponding IDI values of 0.235 (p = 0.016), 0.284 (p = 0.005), and 0.201 (p = 0.043). In external test set 1, the NRI values for radiologists A, B, and C were 0.169 (p = 0.054), 0.183 (p = 0.019), and 0.161 (p = 0.008), respectively, with IDI values of 0.204 (p = 0.068), 0.205 (p = 0.031), and 0.184 (p = 0.045). In external test set 2, the NRI values for radiologists A, B, and C were 0.127 (p = 0.109), 0.297 (p = 0.038), and 0.297 (p = 0.047), with IDI values of 0.194 (p = 0.132), 0.332 (p = 0.031), and 0.294 (p = 0.046), respectively.
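The kappa values quoted above measure agreement between two readers beyond what chance alone would produce. A minimal sketch of Cohen's kappa is given below; the function name and the ratings in the test are hypothetical, and the case of perfect chance agreement (expected agreement of 1) is not handled.

```python
def cohen_kappa(rater1, rater2):
    """Cohen's kappa: (observed agreement - chance agreement) / (1 - chance agreement)."""
    n = len(rater1)
    labels = set(rater1) | set(rater2)
    observed = sum(1 for a, b in zip(rater1, rater2) if a == b) / n
    # Chance agreement from each rater's marginal label frequencies.
    expected = sum((rater1.count(label) / n) * (rater2.count(label) / n)
                   for label in labels)
    return (observed - expected) / (1.0 - expected)
```

For example, two readers who agree on 8 of 10 cases while each calling 4 of them malignant have an observed agreement of 0.80 but a kappa of about 0.58, because 0.52 agreement would be expected by chance.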

Figure 7. Performance comparison between clinical model and deep learning model (ResNet18). (A-D) Comparison between radiologists with and without ResNet18 assistance in discriminating between BPTs and MPTs in the internal training set (A), the internal validation set (B), external test set 1 (C), and external test set 2 (D). Radiologist A represents senior physicians, radiologist B represents attending physicians, and radiologist C represents resident physicians. DLR-assisted RA, deep learning radiomics-assisted radiologist A; DLR-assisted RB, deep learning radiomics-assisted radiologist B; DLR-assisted RC, deep learning radiomics-assisted radiologist C.

Discussion

Although the preferred treatment for PGTs is surgery, the various histological types of PGTs lead to obvious differences in treatment decisions and prognosis (23). Therefore, accurate differentiation of BPTs from MPTs is the key to clinical treatment decisions and prognosis of patients. Identification and segmentation of tumor regions are a prerequisite for tumor evaluation and also simplify subsequent clinical evaluation. In clinical practice, however, PGT segmentation is usually performed by clinicians manually or with semi-automatic methods and is followed by pixel layering, which is time-consuming and laborious. As medical image data grow dramatically, there is an increasingly urgent clinical need for automatic segmentation methods for medical images.

In recent years, artificial intelligence (AI) has been extensively applied to medical image segmentation. Nevertheless, to our knowledge, no research pertaining to PGT segmentation has been published. As such, our study evaluated the feasibility of DL for PGT segmentation. Ultrasound images of 582 patients with PGTs from four hospitals were included in the present study. Five evaluation indicators were utilized to analyze the segmentation performance of different models. The Deeplabv3+ model yielded the optimal segmentation results in both internal and external test sets, with a Dice similarity coefficient of 0.975, 0.958, and 0.960 in the internal validation set and external test sets 1 and 2, respectively, indicating higher accuracy and generalization of the Deeplabv3+ model. This study mainly aimed to construct a model for PGT segmentation based on ultrasound images and further develop a model incorporating the optimal automatic segmentation model and DL methods, thus increasing workflow efficiency and assisting radiologists in diagnosis. Our results showed that despite the presence of several subtle visible errors in the segmentation results of U-Net, Deeplabv3+, and U-Net++, the segmentation results of the Deeplabv3+ model were more faithful in terms of both contour and detail. In addition, the repeatability of the Deeplabv3+ model was higher than that of the other two models, as demonstrated by the quantitative values of Dice, mIOU, FPR, and Precision in the internal validation set and external test sets 1 and 2.

At present, surgery is the predominant treatment strategy for PGTs, and the preoperative differential diagnosis of BPTs and MPTs is essential for surgical planning (9). As reported, BPTs can be treated with local excision or lateral parotidectomy, whereas the treatment of MPTs generally involves radical parotidectomy, such as extended resection combined with lymph node dissection, or even facial nerve resection and postoperative radiotherapy, which may cause more complications and invasive injuries for patients (12, 23). Although USCB and FNA can differentiate BPTs from MPTs to some extent, both methods are invasive and carry a risk of complications. Due to the overlapping imaging features of different types of PGTs, traditional visual assessment is commonly affected by confounding factors. Of note, PGTs are usually located in the superficial lobe and are readily imaged by routine ultrasound, making ultrasound an ideal examination method for PGTs. Ultrasound is a broadly accepted technique for PGT screening and is applied to diagnose most PGTs because it is sensitive, non-invasive, quick, radiation-free, and inexpensive. Nonetheless, the accurate diagnosis of PGTs by ultrasound is largely determined by the professional experience and expertise of radiologists. A former study reported that although the sensitivity and specificity of B-mode ultrasound were 38.9% and 90.1% in differentiating malignant and benign parotid nodules, respectively, its accuracy in differentiating malignant masses was only 20% (13). Consequently, more reliable methods to distinguish PGTs need to be developed to improve the differential diagnosis of PGTs by ultrasound. In this context, this study explored whether satisfactory results could be acquired by using an automated segmentation model together with the optimal DL model, without the use of invasive methods. ML has been reported to be more advantageous in classification tasks than visual assessment by a radiologist, since it learns features directly from medical images and thus maintains consistency and repeatability in diagnosis.

The present study assessed the value of several DL systems with different deep neural networks in the identification of PGTs. DL enables end-to-end classification and prediction. Many published articles have reported that DL can be used for the diagnosis and management of various tumors. Additionally, there are also multiple studies on the use of DL to characterize PGTs. For example, Wang et al. (12) reported that four DL methods could distinguish BPTs and MPTs based on ultrasound images with an AUC of 0.80−0.82. Xia et al. (24) developed a DL algorithm with an accuracy of 82.18% (95%CI = 0.77, 0.86) in the diagnosis and staging of parotid gland cancer based on MRI images. Some studies (18, 25) constructed DL-assisted diagnostic models based on CT images to improve the differential diagnosis of BPTs and MPTs by radiologists. At present, however, DL based on ultrasound images has rarely been applied to identify the nature of PGTs, particularly in combination with automatic image segmentation. This study incorporated a relatively large sample size and two independent external test sets. Ultrasound images were analyzed to train an optimal segmentation model, which was subsequently used to develop a DL model aimed at assisting radiologists in diagnosis. However, because the radiologists had already reviewed the cases before reading them again with DL assistance, they may have gained insights that carried over to the second reading. The study design therefore leaves it uncertain whether the enhanced accuracy of the radiologists was due to repeated case reviews or to the assistance of the DL model. This ambiguity constitutes a major limitation of our study. Nonetheless, the study demonstrated a positive impact, suggesting that our findings have clinical value.

In this study, six DL models were utilized to distinguish BPTs from MPTs. ResNet18 showed more robust generalization and better overall prediction than the other classical CNNs, which may reflect differences in the internal structure of the networks. ResNet is a deep residual network proposed by He et al. (26) in 2016 to address the degradation problem in deep neural networks. By introducing residual connections into the network structure, it mitigates vanishing and exploding gradients and thereby enables the training of deeper networks with improved expressive ability and performance. Residual connections also preserve the original features, which smooths and stabilizes the learning of the network and further improves the accuracy and generalization ability of the model. ResNet offers detailed and accurate feature description and strong robustness, and is more suitable for training on small datasets. Several studies (27–29) have demonstrated that ResNet18 performs well in image classification and has been applied to medical image research. In our study, the Grad-CAM visualization of ResNet18 highlighted two key tumor regions (the margin and the inner region) that facilitated the differentiation of BPTs from MPTs. Compared with BPTs, MPTs have a more irregular shape, a more blurred margin, and more complex internal heterogeneity. These two key regions therefore correspond to the areas on which radiologists focus, which supports the validity of the model to a certain extent.

In addition, with the aid of the optimal model, the clinical benefit of radiologists with different levels of seniority was improved to some extent. In the internal validation set, the NRI values of radiologists B and C were 0.259 (p = 0.002) and 0.213 (p = 0.017), and their IDI values were 0.284 (p = 0.005) and 0.201 (p = 0.043), respectively. In external test set 1, the NRI values of radiologists B and C were 0.183 (p = 0.019) and 0.161 (p = 0.008), with IDI values of 0.205 (p = 0.031) and 0.184 (p = 0.045), respectively. In external test set 2, radiologists B and C showed NRI values of 0.297 (p = 0.038) and 0.297 (p = 0.047) and IDI values of 0.332 (p = 0.031) and 0.294 (p = 0.046), respectively. The diagnostic performance of the junior and attending radiologists was also enhanced, indicating that the DL model built on the automatic segmentation model is a potential aid for quickly helping radiologists optimize radiological interpretation and reduce misdiagnosis due to inexperience.
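
To make the two indices concrete, the following minimal Python sketch computes a two-category NRI and an IDI from their standard definitions. It is an illustration only and not the analysis code used in this study: the function names (`categorical_nri`, `idi`), the toy labels, and the toy probabilities are hypothetical, and malignancy is assumed to be coded as 1 and benignity as 0. Here "old" stands for the unaided reading and "new" for the model-assisted reading.

```python
from statistics import mean


def _split(y_true, old, new):
    """Pair old/new values and split them into events (1) and non-events (0)."""
    events = [(o, n) for y, o, n in zip(y_true, old, new) if y == 1]
    nonevents = [(o, n) for y, o, n in zip(y_true, old, new) if y == 0]
    if not events or not nonevents:
        raise ValueError("need at least one event and one non-event")
    return events, nonevents


def categorical_nri(y_true, old_pred, new_pred):
    """Two-category NRI from binary calls (1 = malignant, 0 = benign).

    NRI = [P(up | event) - P(down | event)]
        + [P(down | non-event) - P(up | non-event)]
    """
    events, nonevents = _split(y_true, old_pred, new_pred)
    up_e = sum(n > o for o, n in events) / len(events)
    down_e = sum(n < o for o, n in events) / len(events)
    up_ne = sum(n > o for o, n in nonevents) / len(nonevents)
    down_ne = sum(n < o for o, n in nonevents) / len(nonevents)
    return (up_e - down_e) + (down_ne - up_ne)


def idi(y_true, old_prob, new_prob):
    """IDI from predicted probabilities of malignancy.

    IDI = mean gain in probability among events
        - mean gain in probability among non-events
    """
    events, nonevents = _split(y_true, old_prob, new_prob)
    gain_events = mean(n - o for o, n in events)
    gain_nonevents = mean(n - o for o, n in nonevents)
    return gain_events - gain_nonevents


# Hypothetical toy data: 4 malignant and 4 benign cases.
y_true = [1, 1, 1, 1, 0, 0, 0, 0]
old_pred = [1, 0, 0, 1, 0, 1, 0, 0]  # unaided binary calls
new_pred = [1, 1, 0, 1, 0, 0, 0, 1]  # assisted binary calls
old_prob = [0.6, 0.4, 0.3, 0.7, 0.2, 0.6, 0.3, 0.4]
new_prob = [0.8, 0.6, 0.4, 0.8, 0.1, 0.4, 0.3, 0.5]

print(round(categorical_nri(y_true, old_pred, new_pred), 3))  # 0.25
print(round(idi(y_true, old_prob, new_prob), 3))              # 0.2
```

A positive value of either index indicates that the assisted reading reclassifies or separates malignant and benign cases better than the unaided reading. The significance testing behind the reported p values is not covered by this sketch.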

This study has several limitations. First, it is a retrospective study, so our results need to be validated in prospective clinical trials before the assistive system can be applied in the clinic. Second, few pathological types of MPTs were represented in external test sets 1 and 2. Third, this study focused only on the differential diagnosis of BPTs and MPTs without further investigating pathological classification. Fourth, the optimal automatic segmentation model was used without manual adjustment, which may have introduced some bias into the results. Accordingly, further studies are warranted to investigate whether combining automatic segmentation with manual tuning can further improve the performance of this model. Fifth, patients underwent ultrasound on different instruments at the different centers. Sixth, the main limitation of the study is likely the bias arising from the radiologists having already viewed the cases when they were assisted by the DL model. The study design makes it uncertain whether the increased accuracy of the radiologists was due to repeated review of the cases or to assistance from the model. Lastly, developing ML models that incorporate a wider range of radiological and multimodal images is the future of medicine; however, owing to the retrospective nature of our study, a combined model based on color Doppler flow imaging (CDFI), elastography, and contrast-enhanced ultrasound (CEUS) images for patients with PGTs has not been established, making this an important direction for our future research.

Conclusion

In this study, the automatic segmentation model effectively segmented parotid lesions on ultrasound images to a certain extent. On the basis of the segmentation model, we proposed a DL-aided diagnostic model, which displayed excellent performance in differentiating BPTs from MPTs and helped narrow the gap between radiologists with different levels of experience, thereby fostering the precise treatment of patients with PGTs.

Data availability statement

The raw data supporting the conclusions of this article will be made available by the authors, without undue reservation.

Ethics statement

Ethical approval was not required for the study involving humans in accordance with the local legislation and institutional requirements. Written informed consent to participate in this study was not required from the participants or the participants’ legal guardians/next of kin in accordance with the national legislation and the institutional requirements.

Author contributions

WW: Conceptualization, Formal analysis, Project administration, Writing – original draft. JX: Data curation, Project administration, Writing – original draft. FX: Data curation, Methodology, Writing – review & editing. JL: Data curation, Writing – review & editing. ZZ: Data curation, Writing – review & editing. JW: Formal analysis, Writing – review & editing. TW: Investigation, Methodology, Writing – review & editing. HF: Formal analysis, Investigation, Writing – review & editing. QM: Formal analysis, Investigation, Writing – review & editing. FJ: Supervision, Writing – review & editing. XMZ: Supervision, Writing – review & editing. XZ: Formal analysis, Funding acquisition, Visualization, Writing – review & editing.

Funding

The author(s) declare that financial support was received for the research, authorship, and/or publication of this article. This work was supported by the Natural Science Research Project of the Higher Education in Anhui Province (grant number KJ 2020A0616) and the University-level quality engineering project of Wannan Medical College (grant number 2022jyxm31).

Acknowledgments

We are very grateful to the OnekeyAI platform and its developers, to all the individuals involved in these studies, and to every researcher and technician who made this work possible.

Conflict of interest

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Publisher’s note

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

Supplementary material

The Supplementary Material for this article can be found online at: https://www.frontiersin.org/articles/10.3389/fonc.2024.1417330/full#supplementary-material

References

1. Gunduz E, Alcin OF, Kizilay A, Yildirim IO. Deep learning model developed by multiparametric MRI in differential diagnosis of parotid gland tumors. Eur Arch Otorhinolaryngol. (2022) 279(11):5389–99. doi: 10.1007/s00405-022-07455-y

2. Bussu F, Parrilla C, Rizzo D, Almadori G, Paludetti G, Galli J. Clinical approach and treatment of benign and malignant parotid masses, personal experience. Acta Otorhinolaryngol Ital. (2011) 31(3):135–43. doi: 10.1007/s00405-011-1574-2

3. Choi SY, Lee E, Kim E, Chung E, Son MK, Baek YI, et al. Clinical outcomes of bulky parotid gland cancers: need for self-examination and screening program for early diagnosis of parotid tumors. BMC Cancer. (2021) 21(1):178. doi: 10.1186/s12885-021-07902-9

4. Alzumaili B, Xu B, Saliba M, Abuhashem M, Ganly A, Ghossein I, et al. Clinicopathologic characteristics and prognostic factors of primary and recurrent pleomorphic adenoma: A single institution retrospective study of 705 cases. Am J Surg Pathol. (2022) 46(6):854–62. doi: 10.1097/PAS.0000000000001837

5. Lewis AG, Tong T, Maghami E. Diagnosis and management of malignant salivary gland tumors of the parotid gland. Otolaryngol Clin North Am. (2016) 49:343–80. doi: 10.1016/j.otc.2015.11.001

6. Zheng Y, Zhou D, Liu H, Wen M. CT-based radiomics analysis of different machine learning models for differentiating benign and malignant parotid tumors. Eur Radiol. (2022) 32(10):6953–64. doi: 10.1007/s00330-022-08830-3

7. Silva WP, Stramandinoli-Zanicotti RT, Schussel JL, Ramos GH, Ioshi SO, Sassi LM. Accuracy, sensitivity and specificity of fine needle aspiration biopsy for salivary gland tumors: A retrospective study from 2006 to 2011. Asian Pac J Cancer Prev. (2016) 17(11):4973–6. doi: 10.22034/APJCP.2016.17.11.4973

8. Cengiz AB, Tansuker HD, Gul R, Emre F, Demirbas T, Oktay MF. Comparison of preoperative diagnostic accuracy of fine needle aspiration and core needle biopsy in parotid gland neoplasms. Eur Arch Otorhinolaryngol. (2021) 278(10):4067–74. doi: 10.1007/s00405-021-07022-x

9. Psychogios G, Bohr C, Constantinidis J, Canis M, Vander Poorten V, Plzak J, et al. Review of surgical techniques and guide for decision making in the treatment of benign parotid tumors. Eur Arch Otorhinolaryngol. (2021) 278(1):15–29. doi: 10.1007/s00405-020-06250-x

10. Dhanani R, Iftikhar H, Awan MS, Zahid N, Momin SNA. Role of fine needle aspiration cytology in the diagnosis of parotid gland tumors: analysis of 193 cases. Int Arch Otorhinolaryngol. (2020) 24(4):e508–12. doi: 10.1055/s-0040-1709111

11. Yabuuchi H, Matsuo Y, Kamitani T, Setoguchi T, Okafuji T, Soeda H, et al. Parotid gland tumors: can addition of diffusion-weighted MR imaging to dynamic contrast-enhanced MR imaging improve diagnostic accuracy in characterization? Radiology. (2008) 249(3):909–16. doi: 10.1148/radiol.2493072045

12. Wang Y, Xie W, Huang S, Feng M, Ke X, Zhong Z. The diagnostic value of ultrasound-based deep learning in differentiating parotid gland tumors. J Oncol. (2022) 2022:8192999. doi: 10.1155/2022/8192999

13. Wu S, Liu G, Chen R, Guan Y. Role of ultrasound in the assessment of benignity and malignancy of parotid masses. Dentomaxillofac Radiol. (2012) 41(2):131–5. doi: 10.1259/dmfr/60907848

14. McBee MP, Awan OA, Colucci AT, Ghobadi CW, Kadom N, Kansagra AP, et al. Deep learning in radiology. Acad Radiol. (2018) 25(11):1472–80. doi: 10.1016/j.acra.2018.02.018

15. Zhang Q, Zhang S, Pan Y, Sun L, Li J, Qiao Y, et al. Deep learning to diagnose Hashimoto's thyroiditis from sonographic images. Nat Commun. (2022) 13(1):3759. doi: 10.1038/s41467-022-31449-3

16. Zhao X, Bai JW, Guo Q, Ren K, Zhang GJ. Clinical applications of deep learning in breast MRI. Biochim Biophys Acta Rev Cancer. (2023) 1878(2):188864. doi: 10.1016/j.bbcan.2023.188864

17. Park HJ, Park B, Lee SS. Radiomics and deep learning: hepatic applications. Korean J Radiol. (2020) 21:387–401. doi: 10.3348/kjr.2019.0752

18. Yu Q, Ning Y, Wang A, Li S, Gu J, Li Q, et al. Deep learning-assisted diagnosis of benign and malignant parotid tumors based on contrast-enhanced CT: a multicenter study. Eur Radiol. (2023) 33(9):6054–65. doi: 10.1007/s00330-023-09568-2

19. Carneiro G, Georgescu B, Good S, Comaniciu D. Detection and measurement of fetal anatomies from ultrasound images using a constrained probabilistic boosting tree. IEEE Trans Med Imaging. (2008) 27(9):1342–55. doi: 10.1109/TMI.2008.928917

20. Adler DD, Carson PL, Rubin JM, Quinn-Reid D. Doppler ultrasound color flow imaging in the study of breast cancer: preliminary findings. Ultrasound Med Biol. (1990) 16(6):553–9. doi: 10.1016/0301-5629(90)90020-d

21. Groen AM, Kraan R, Amirkhan SF, Daams JG, Maas M. A systematic review on the use of explainability in deep learning systems for computer aided diagnosis in radiology: Limited use of explainable AI? Eur J Radiol. (2022) 157:110592. doi: 10.1016/j.ejrad.2022.110592

22. Zhang H, Lai H, Wang Y, Lv X, Hong Y, Peng J, et al. Research on the classification of benign and malignant parotid tumors based on transfer learning and a convolutional neural network. IEEE Access. (2021) 9:40360–71. doi: 10.1109/Access.6287639

23. Moore MG, Yueh B, Lin DT, Bradford CR, Smith RV, Khariwala SS. Controversies in the workup and surgical management of parotid neoplasms. Otolaryngol Head Neck Surg. (2021) 164:27–36. doi: 10.1177/0194599820932512

24. Xia X, Feng B, Wang J, Hua Q, Yang Y, Sheng L, et al. Deep learning for differentiating benign from malignant parotid lesions on MR images. Front Oncol. (2021) 11:632104. doi: 10.3389/fonc.2021.632104

25. Shen XM, Mao L, Yang ZY, Chai ZK, Sun TG, Xu YC, et al. Deep learning-assisted diagnosis of parotid gland tumors by using contrast-enhanced CT imaging. Oral Dis. (2022) 29(8):3325–36. doi: 10.1111/odi.14474

26. He K, Ren S, Sun J. Deep residual learning for image recognition. Proceedings of the IEEE conference on computer vision and pattern recognition (2016), 770–8. doi: 10.1109/CVPR.2016.90

27. Gao Z, Pan X, Shao J, Jiang X, Su Z, Jin K, et al. Automatic interpretation and clinical evaluation for fundus fluorescein angiography images of diabetic retinopathy patients by deep learning. Br J Ophthalmol. (2023) 107(12):1852–8. doi: 10.1136/bjo-2022-321472

28. Liu Y, She GR, Chen SX. Magnetic resonance image diagnosis of femoral head necrosis based on ResNet18 network. Comput Methods Programs Biomed. (2021) 208:106254. doi: 10.1016/j.cmpb.2021.106254

Keywords: automatic segmentation, deep learning, parotid gland tumors, ultrasound, net reclassification index, integrated discrimination improvement

Citation: Wei W, Xu J, Xia F, Liu J, Zhang Z, Wu J, Wei T, Feng H, Ma Q, Jiang F, Zhu X and Zhang X (2024) Deep learning-assisted diagnosis of benign and malignant parotid gland tumors based on automatic segmentation of ultrasound images: a multicenter retrospective study. Front. Oncol. 14:1417330. doi: 10.3389/fonc.2024.1417330

Received: 14 April 2024; Accepted: 18 July 2024; Published: 09 August 2024.

Edited by:

Lorenzo Faggioni, University of Pisa, Italy

Reviewed by:

Jared Shenson, Orlando Health Cancer Institute, United States

David G. Ellis, University of Nebraska Medical Center, United States

Copyright © 2024 Wei, Xu, Xia, Liu, Zhang, Wu, Wei, Feng, Ma, Jiang, Zhu and Zhang. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Xia Zhang, eWpzdXNkQDE2My5jb20=

†These authors have contributed equally to this work
