
SYSTEMATIC REVIEW article

Front. Oncol., 27 October 2023

Sec. Pediatric Oncology

Volume 13 - 2023 | https://doi.org/10.3389/fonc.2023.1285775

This article is part of the Research Topic Artificial Intelligence and Machine Learning in Pediatrics.

Alberto Eugenio Tozzi1*, Ileana Croci1, Paul Voicu2, Francesco Dotta3, Giovanna Stefania Colafati3, Andrea Carai4, Francesco Fabozzi5, Giuseppe Lacanna1, Roberto Premuselli5 and Angela Mastronuzzi5

Introduction: Europe is working to improve cancer management through the use of artificial intelligence (AI), and there is a need to accelerate the development of AI applications for childhood cancer. However, the strategies currently used to develop algorithms for childhood cancer may introduce bias and limit generalizability. This study reviewed existing publications on AI tools for pediatric brain tumors, the most common type of childhood solid tumor in Europe, to examine the data sources used to develop these tools.

Methods: We performed a bibliometric analysis of the publications on AI tools for pediatric brain tumors, and we examined the type of data used, data sources, and geographic location of cohorts to evaluate the generalizability of the algorithms.

Results: We screened 10,503 publications and selected 45. Of these, 34/45 publications developing AI tools focused on glial tumors, and 35/45 used MRI as a source of information to predict tumor classification and prognosis. The median number of patients used for algorithm development was 89 in single-center studies and 120 in multicenter studies. A total of 17/45 publications used pediatric datasets from the UK.

Discussion: Since the development of AI tools for pediatric brain tumors is still in its infancy, data exchange and collaboration between centers need to be supported to increase the number of patients available for algorithm training and to improve generalizability. To this end, it is also worth exploring decentralized privacy-preserving technologies consistent with the General Data Protection Regulation (GDPR). This is particularly important in light of the European Health Data Space and of international collaborations.

AI holds the promise of addressing various unmet needs in cancer care, although its systematic application is still in progress (1–3). A pivotal requirement for the successful integration of AI into healthcare is that the data used to develop algorithms be representative of the population’s diversity, so as to avoid bias and adverse patient events. Mitigating bias stemming from inadequate representation in the training of healthcare AI tools is a widely acknowledged challenge that demands targeted strategies (4). The cornerstone of these strategies is the collection and integration of disparate datasets from various institutions, which helps prevent the underrepresentation of specific population subsets and the discrimination that may ensue. Numerous global initiatives have been launched to create openly accessible datasets that encompass a variety of data types essential for training and validating AI systems (5). At the same time, consolidating datasets from multiple European centers requires compliance with the GDPR, which may hamper data sharing for scientific purposes (6, 7). In this context, a notable question arises: to what extent are the AI tools currently tailored for clinical use in Europe trained on datasets that represent diverse populations?

In 2021, the European Commission introduced Europe’s Beating Cancer Plan, a strategic framework aimed at bridging gaps in cancer prevention, treatment, and care, with a specific emphasis on childhood cancer priorities (8). The plan aligns with the European Digital Strategy’s endorsement of artificial intelligence (9). Building on this perspective, the European Society for Pediatric Oncology (SIOP Europe) envisions harnessing AI technologies to their full potential for the benefit of pediatric cancer patients in Europe (10).

Central nervous system (CNS) tumors constitute nearly 20% of childhood cancers, making them the predominant solid neoplasms in this age group (11). Despite significant progress that has improved the outlook for pediatric CNS tumor patients, challenges persist in fully implementing precision medicine, enabling less invasive interventions, predicting treatment responses, and identifying new therapeutic approaches (10–12).

Childhood CNS tumors differ significantly from their adult counterparts in incidence, histology, molecular biology, treatment strategies, and short- and long-term outcomes. Consequently, extrapolating data from adults to children is not appropriate (13). At the same time, the rarity of brain tumors in pediatric patients makes it difficult to amass enough observations to train algorithms appropriately in this disease group.

The WHO stresses that AI developers must ensure that the data used for AI are accurate, comprehensive, and representative of diverse age groups, including children (14, 15). Moreover, the proposed EU AI Act requires that “…training, validation and testing data sets shall be relevant, representative, free of errors and complete” (Art. 10) (16). However, a substantial portion of existing research on childhood CNS tumors consists of proof-of-concept studies based on limited datasets, which are not yet directly applicable to clinical practice (12, 17).

All this considered, we undertook a systematic review of the current literature related to AI tools specifically developed for tackling pediatric brain tumors in the European context. Our objective was to describe the characteristics of the data utilized in their development and the potential associated bias. Our inquiry centered on delineating the sources of data, gauging the scale of the datasets, evaluating their interoperability, ascertaining the presence of external validation, and scrutinizing the geographic representation of the cohorts employed in refining the algorithms. Based on these findings, we discuss potential strategies to accelerate the development and integration of AI tools for pediatric brain tumors into clinical practice in Europe.

We performed a systematic review of the current literature by employing a search query based on the terms recommended by the Cochrane collaboration for pediatric tumors (18), including terms specific to pediatric brain cancer. Furthermore, we developed a distinct search query tailored to encompass AI techniques, subsequently merging it with the prior query (Supplemental materials - S1).
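The query-merging step can be pictured as OR-ing the synonyms within each concept and AND-ing the concepts together. The sketch below assumes that structure; the term lists and the helper names `or_block` and `combine_blocks` are hypothetical and abbreviated, and the actual queries (Supplemental materials S1) and the database-specific syntax of MEDLINE, EMBASE, Web of Science, and Scopus differ.

```python
def or_block(terms):
    """Join the synonyms for one concept with OR, quoting multi-word phrases."""
    quoted = [f'"{t}"' if " " in t else t for t in terms]
    return "(" + " OR ".join(quoted) + ")"


def combine_blocks(*blocks):
    """Require every concept to match by joining the OR-blocks with AND."""
    return " AND ".join(or_block(b) for b in blocks)


# Hypothetical, abbreviated term lists (not the review's actual queries).
pediatric_terms = ["child*", "pediatric", "paediatric", "adolescen*"]
brain_tumor_terms = ["brain tumor", "glioma", "medulloblastoma", "ependymoma"]
ai_terms = ["artificial intelligence", "machine learning", "deep learning"]

query = combine_blocks(pediatric_terms, brain_tumor_terms, ai_terms)
print(query)
```

Keeping each concept as a separate OR-block makes it easy to add synonyms to one concept without disturbing the others.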

Our search covered MEDLINE, EMBASE, Web of Science, and Scopus and was limited to papers published in English between January 2010 and May 31, 2022. The results were imported into the Rayyan software (19) and screened for duplicates.
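Rayyan performs its own duplicate detection, and its algorithm is not described here. As a hypothetical illustration of the general idea, records exported from several databases can be matched on DOI or on a normalized title. The record fields and the helpers `normalize_title` and `dedupe` are assumptions for this sketch.

```python
import re


def normalize_title(title):
    """Lowercase and drop everything except letters and digits."""
    return re.sub(r"[^a-z0-9]", "", title.lower())


def dedupe(records):
    """Keep the first record seen for any matching DOI or normalized title."""
    seen = set()
    unique = []
    for rec in records:
        keys = {("title", normalize_title(rec["title"]))}
        doi = (rec.get("doi") or "").strip().lower()
        if doi:
            keys.add(("doi", doi))
        if keys & seen:
            continue  # duplicate of a record already kept
        seen |= keys
        unique.append(rec)
    return unique


# Hypothetical exports; DOIs use the 10.1000 example prefix.
records = [
    {"source": "MEDLINE", "title": "Deep learning for pediatric glioma MRI", "doi": "10.1000/XYZ1"},
    {"source": "Scopus", "title": "Deep Learning for Pediatric Glioma MRI.", "doi": "10.1000/xyz1"},
    {"source": "EMBASE", "title": "Deep learning for pediatric glioma MRI", "doi": ""},
    {"source": "Web of Science", "title": "Radiomics of medulloblastoma", "doi": None},
]
print([r["source"] for r in dedupe(records)])
```

Matching on either key catches a record that carries a DOI in one database but not in another.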

After duplicate removal, we manually reviewed the remaining records against a set of predefined eligibility criteria: 1) original articles; 2) papers describing the development of AI tools for pediatric brain tumor diagnosis, prognosis prediction, or therapeutic decision support; 3) at least one author affiliated with a European institution; 4) publications in English. Conversely, we excluded: 1) reviews and other articles not presenting original data; 2) articles published in languages other than English; 3) articles with no author affiliated with a European institution; 4) studies conducted on animals or in simulated environments.

We included publications by authors from UK institutions, as these institutions remain eligible for collaborative projects within established European networks after Brexit.

The selected articles were categorized into two distinct groups: 1) those exclusively comprising observations from patients under 18 years of age for algorithm training; 2) those encompassing both pediatric and adult populations.
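The eligibility criteria and the two population groups can be expressed as a screening function. In the review these judgments were made manually; the sketch below is only a hypothetical encoding that assumes each record has already been annotated. The `Record` fields, the abbreviated `EUROPEAN_COUNTRIES` set (which, as in the review, includes the UK), and the labels returned by `screen` are all illustrative.

```python
from dataclasses import dataclass
from typing import Optional, Tuple

# Hypothetical, abbreviated set; the UK is included as in the review.
EUROPEAN_COUNTRIES = {"UK", "Belgium", "Denmark", "Italy", "Netherlands", "Spain", "Sweden"}


@dataclass
class Record:
    original_research: bool
    develops_ai_tool: bool
    language: str
    author_countries: frozenset
    human_subjects: bool
    age_range: Optional[Tuple[float, float]]  # (min, max) age in years; None if not reported


def eligible(r):
    """Apply the inclusion criteria and the animal/simulation exclusion."""
    return (r.original_research and r.develops_ai_tool and r.language == "English"
            and bool(r.author_countries & EUROPEAN_COUNTRIES) and r.human_subjects)


def screen(r):
    """Return the population group, or a reason for exclusion."""
    if not eligible(r):
        return "excluded: eligibility"
    if r.age_range is None:
        return "excluded: age not reported"
    min_age, max_age = r.age_range
    if max_age < 18:
        return "pediatric only"
    if min_age < 18:
        return "pediatric and adult"
    return "excluded: adults only"


candidates = [
    Record(True, True, "English", frozenset({"UK", "USA"}), True, (2.0, 65.0)),
    Record(True, True, "English", frozenset({"Italy"}), True, (0.5, 17.0)),
    Record(True, True, "English", frozenset({"USA"}), True, (1.0, 16.0)),
    Record(True, True, "English", frozenset({"Spain"}), True, None),
]
print([screen(r) for r in candidates])
```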

Bibliometric details were extracted in a format compatible with bibliometric analysis tools, and three independent reviewers manually extracted the following details from each publication: a) the brain tumor type under examination; b) the scope of the AI tool; c) the type of data used; d) the data repository employed; e) the number of patients contributing data for algorithm development; f) the use of data standards for interoperability; g) the use of external validation of the algorithms; h) the performance of the AI tool; i) the geographic representation of the cohorts used to develop the algorithm.

The resulting information was analyzed descriptively, with bibliometric data analyzed using the Biblioshiny software (20). Additionally, we evaluated the FAIR Guiding Principles score (21) for each dataset included in the reviewed articles using the SATIFYD online tool (https://satifyd.dans.knaw.nl/). Other statistical analyses were performed using R (22).

Our search strategy yielded a total of 10,503 scientific publications. The selection process followed PRISMA standards and is shown in Figure 1. After removing duplicates across databases, we identified 7,478 unique records. Review of titles and abstracts narrowed the selection to 572 records, whose full texts were evaluated. Within this group, we excluded 246 articles focused solely on adult populations, 24 that did not involve AI methods, and 29 that did not meet the eligibility criteria for other reasons, leaving 273 studies.

Of these 273 studies, 228 failed to report the age of the patients and were consequently excluded. Ultimately, we selected 45 articles that incorporated pediatric data for the AI algorithm development. Among these, 25 articles exclusively focused on children, while 20 articles used data from both pediatric and adult cohorts (Supplemental materials - S2).
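The screening counts reported above can be checked with simple arithmetic. The two intermediate figures labeled as derived (duplicates removed and records excluded at the title/abstract stage) are implied by the reported counts rather than stated in the text.

```python
# Counts reported in the text (PRISMA flow, Figure 1).
identified = 10503
unique_records = 7478
full_texts_assessed = 572
full_text_exclusions = {
    "adult populations only": 246,
    "no AI methodology": 24,
    "other eligibility criteria": 29,
}
age_not_reported = 228
pediatric_only, pediatric_and_adult = 25, 20

# Derived quantities (implied by the reported counts, not stated directly).
duplicates_removed = identified - unique_records
excluded_title_abstract = unique_records - full_texts_assessed
remaining = full_texts_assessed - sum(full_text_exclusions.values())
included = remaining - age_not_reported

# The final set should equal the two population groups combined.
assert included == pediatric_only + pediatric_and_adult
print(duplicates_removed, excluded_title_abstract, remaining, included)  # 3025 6906 273 45
```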

The earliest included publication dated from 2012, and 27 of the 45 articles (60.0%) were published from 2020 onward. The eight studies published before 2016 used relatively small numbers of patients for algorithm training and simple AI techniques. The AI methods used were diverse, including linear discriminant analysis (LDA), k-nearest neighbors (KNN), naive Bayes, support vector machines (SVM), random forests, and others. The software also varied, including Python, R, MaZda, and Orange, although most papers analyzed data in a Python environment. On average, the selected publications received 57.8 citations each.

Table 1 provides an overview of the brain tumor types for which AI algorithms were developed, by type of population included. Most publications focused on glial tumors, while other tumor types were addressed less frequently. The category of unclassified tumors comprised tumors described only generically as brain or posterior fossa tumors, as well as pituitary adenoma and large B-cell lymphoma. Table 2 shows the scope of the included articles by type of population. Most of the selected papers (24 of 45, 53.3%) focused on developing algorithms for tumor classification, while fewer addressed prognosis, decision support, or validation tasks.

Most publications used the same dataset for both training and validating their algorithms, and only 3 of 45 used external datasets for validation. When reported, the algorithms' performance on external validation was lower than on internal validation.
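Why internal validation can overstate performance is easy to see in a toy simulation. The sketch below assumes a single synthetic imaging-derived feature, two tumor classes, and a second "site" whose values are offset by a constant (mimicking a different scanner or protocol); the classifier is a deliberately simple threshold at the midpoint of the class means. None of this reflects data or models from the reviewed studies.

```python
import random
import statistics


def simulate_site(n_per_class, shift, rng):
    """Return shuffled (feature, label) pairs; `shift` mimics a site-specific offset."""
    data = [(rng.gauss(0.0, 1.0) + shift, 0) for _ in range(n_per_class)]
    data += [(rng.gauss(2.0, 1.0) + shift, 1) for _ in range(n_per_class)]
    rng.shuffle(data)
    return data


def fit_threshold(train):
    """Place the decision threshold midway between the two class means."""
    mean0 = statistics.fmean(x for x, y in train if y == 0)
    mean1 = statistics.fmean(x for x, y in train if y == 1)
    return (mean0 + mean1) / 2


def accuracy(threshold, data):
    """Fraction of samples where 'feature above threshold' matches label 1."""
    return sum((x > threshold) == bool(y) for x, y in data) / len(data)


rng = random.Random(42)
site_a = simulate_site(1000, shift=0.0, rng=rng)
train, internal_test = site_a[:1000], site_a[1000:]      # split within one site
external_test = simulate_site(1000, shift=1.0, rng=rng)  # a different site

t = fit_threshold(train)
internal_acc = accuracy(t, internal_test)
external_acc = accuracy(t, external_test)
print(f"internal {internal_acc:.2f}, external {external_acc:.2f}")
```

In this setup internal accuracy is around 0.84 and external accuracy around 0.74, because the threshold learned at one site no longer separates the classes well once the feature distribution shifts.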

Table 3 shows the data sources used across the reviewed publications. Most AI studies used diagnostic images as their primary data source, specifically multiple conventional MRI sequences for the classification and prognosis of CNS tumors. Some studies used MR spectroscopy, a more specialized MRI technique often used at diagnosis (23). Computed tomography and histopathology images were also used as data sources. Metabolite profiles, epigenetics, gene expression, and clinical features were used less frequently than images, and only 6 of 45 publications (13.3%) integrated data from multiple sources.

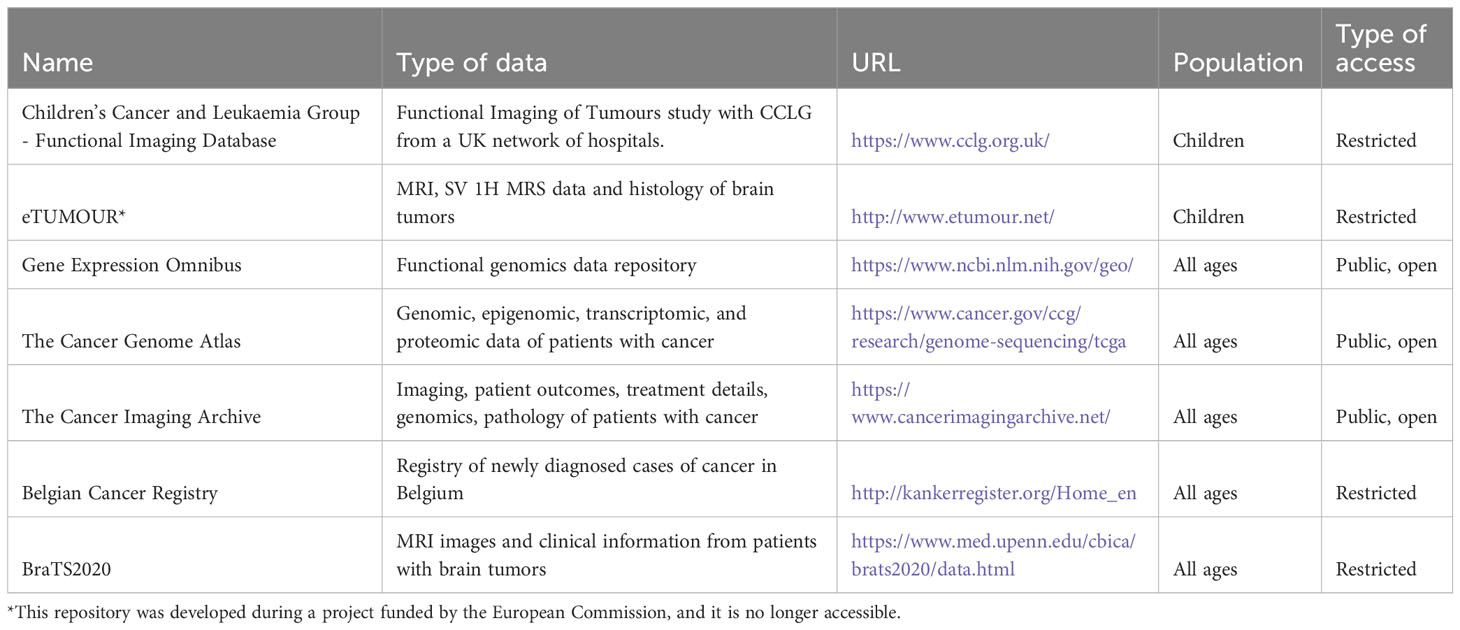

Table 4 shows the repositories gathering data from multiple sites used for the development of algorithms and accessible on the web, as reported in the articles included in the review.

Table 4 Data repositories gathering data from multiple sites used in articles included in this review.

Considering data origin, 29 articles (64.4%) drew from single centers, while 16 (35.6%) were the result of multicenter collaborations. Two studies used synthetic data to inform their algorithms’ development, and no instances of federated learning were noted. Notably, no selected publication provided insights into the interoperability of their datasets.

As for the FAIR Guiding Principles score, the median value for the datasets used in the articles in this review stood at 23 (IQR: 15-32). This relatively modest score stemmed from the frequent absence of accessible metadata and from the limited accessibility of datasets used for algorithm development.

The number of patients included in the datasets used to develop AI algorithms varied widely and was small for most data sources. The median number of patients was 89 for single-center studies and 120 for publications from multicenter collaborations.
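Medians and interquartile ranges such as those reported above can be computed with Python's standard library. The patient counts below are invented for illustration and are not the review's data. The `method="inclusive"` option interpolates linearly between order statistics, which, as far as we can tell, matches R's default `quantile()` (type 7); the text does not state which quantile definition was used, and other definitions give slightly different IQRs.

```python
import statistics


def median_iqr(values):
    """Return (median, Q1, Q3) using linear interpolation between order statistics."""
    q1, median, q3 = statistics.quantiles(values, n=4, method="inclusive")
    return median, q1, q3


# Hypothetical patient counts for seven single-center studies (not the review's data).
patients = [30, 45, 60, 75, 110, 140, 260]
print(median_iqr(patients))  # (75.0, 52.5, 125.0)
```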

In terms of geographic scope, 7 of the 45 reviewed articles drew data from public repositories. An additional 14 articles incorporated data from outside Europe, including from the USA, Canada, China, Iran, and Argentina. Among European pediatric datasets, 17 publications (37.8%) used cohorts from the UK, while single datasets came from Belgium, Denmark, Italy, the Netherlands, Spain, and Sweden.

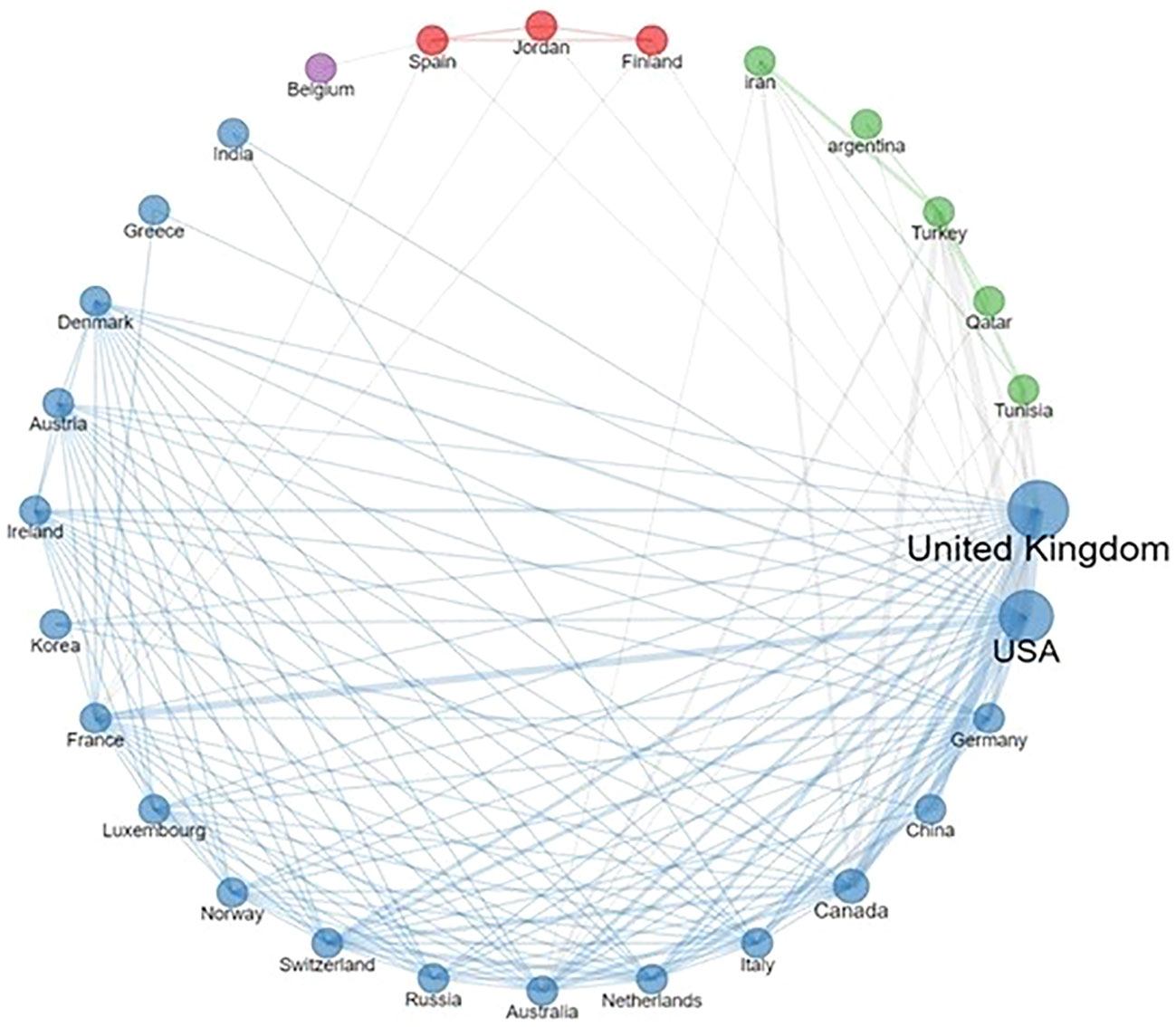

Figure 2 illustrates the collaboration network among the countries represented in the selected publications. The UK stood out as a frequent contributor, not only in terms of author affiliations but also through its extensive collaborations with both European and non-European partners. The network also reveals consistent partnerships between EU countries and the USA.

Figure 2 Country collaboration network. The thickness of lines reflects the frequency of collaborations.
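A country collaboration network of this kind can be derived from author affiliations by counting, for each paper, every pair of distinct countries that appear together; the count then sets the edge weight (line thickness). Biblioshiny's exact counting rules are not described in the text, so the following is a simplified, hypothetical sketch with invented affiliation data; `country_links` is an illustrative helper, not part of bibliometrix.

```python
from collections import Counter
from itertools import combinations


def country_links(papers):
    """Count co-authorship links: each pair of distinct countries on a paper adds one."""
    links = Counter()
    for countries in papers:
        for a, b in combinations(sorted(set(countries)), 2):
            links[(a, b)] += 1
    return links


# Hypothetical affiliation countries per paper (not the review's data).
papers = [
    ["UK", "UK", "USA"],
    ["UK", "Netherlands", "USA"],
    ["Italy"],
    ["Spain", "UK"],
]
links = country_links(papers)
print(links.most_common(2))
```

Single-country papers (here, the Italian one) contribute no edges, which is why a network view can understate the output of countries that publish mainly alone.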

Our review revealed a scarcity of publications on AI applied to CNS tumors in children in Europe. Most studies came from single centers, indicating a pressing need to strengthen research in this field. This is particularly important for less prevalent tumor types, which are often overlooked in the development of AI tools.

Furthermore, our review showed that several European institutions are developing AI tools for the same categories of pediatric brain tumors, yet only 16 publications reflected multicenter data contributions. Moreover, we found no information on data standards for interoperability that could support collaborative research in this field. Nevertheless, our analysis revealed collaborative networks both within Europe and globally, offering fertile ground for reinforcing and expanding such partnerships.

We also observed that most publications reported AI tools trained on small numbers of patients from selected populations, and few reported external validation of their algorithms.

As in many other medical fields, AI research on pediatric CNS tumors suffers from fragmented data and small sample sizes. Although the published algorithms in this domain perform fairly well on internal validation, their potential for bias is high and their generalizability may be limited.

One of the major obstacles to data sharing and collaboration among institutions, and hence to developing AI tools trained on diverse and representative populations, is concern for privacy. This is particularly relevant in the EU, where the General Data Protection Regulation (GDPR) applies. Data-sharing agreements require a thorough evaluation of potential risks, which can take considerable time to complete. Additionally, the interpretation of GDPR requirements for data sharing still varies between countries (24, 25). Finding the right balance between privacy preservation and the full development of AI solutions will be one of the most important topics in data governance discussions.

While compliance with data-sharing regulations is important for scientific research in AI (26), privacy-preserving technologies could be prioritized to accelerate the development of AI tools. These include federated and swarm learning (27–29), in which models are trained locally at each site and only model parameters, not patient data, are exchanged, and synthetic data (30). Notably, none of the publications reviewed in our study used federated or swarm learning, and only two explored the use of synthetic data.
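Federated averaging (FedAvg) is a common form of federated learning: a coordinator sends the current model to each site, each site trains on its own data, and the coordinator averages the returned parameters weighted by site size. The sketch below is a minimal illustration assuming a toy one-feature linear model and three hypothetical hospitals with synthetic data; the helpers `local_update`, `federated_averaging`, and `make_site` are illustrative. Real deployments (27, 28) add safeguards such as secure aggregation, and swarm learning (29) removes the central coordinator altogether.

```python
import random


def local_update(w, b, data, lr=0.1, epochs=5):
    """A few epochs of gradient descent on mean squared error, run at one site."""
    n = len(data)
    for _ in range(epochs):
        gw = sum((w * x + b - y) * x for x, y in data) * 2 / n
        gb = sum((w * x + b - y) for x, y in data) * 2 / n
        w, b = w - lr * gw, b - lr * gb
    return w, b


def federated_averaging(sites, rounds=50):
    """Broadcast global weights, train locally, then average weighted by site size."""
    w, b = 0.0, 0.0
    total = sum(len(d) for d in sites)
    for _ in range(rounds):
        updates = [(local_update(w, b, d), len(d)) for d in sites]
        w = sum(uw * n for (uw, _), n in updates) / total
        b = sum(ub * n for (_, ub), n in updates) / total
    return w, b


def make_site(n, rng):
    """Synthetic cohort following y = 3x + 1 plus noise (hypothetical)."""
    xs = [rng.uniform(-1, 1) for _ in range(n)]
    return [(x, 3 * x + 1 + rng.gauss(0, 0.1)) for x in xs]


rng = random.Random(0)
sites = [make_site(n, rng) for n in (40, 90, 150)]
w, b = federated_averaging(sites)
print(round(w, 2), round(b, 2))
```

Only `w` and `b` ever leave a site, yet the averaged model recovers roughly the same relationship a pooled analysis would.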

The datasets examined in this review seldom adhered to all FAIR principles, which points to limited commitment to reusing and integrating data across different contexts.

Moreover, interoperability and data harmonization (31) are critical to addressing data fragmentation. The Observational Health Data Sciences and Informatics (OHDSI) community conducts research to promote the use of standards such as the OMOP Common Data Model and standardized vocabularies (32, 33). Another standard that supports interoperability is HL7 FHIR (34). However, our review found no information on the interoperability of the datasets used to develop AI tools.
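To make harmonization concrete, the sketch below maps a local diagnosis record to a dictionary shaped like a row of the OMOP CDM `CONDITION_OCCURRENCE` table. Only a subset of columns is shown (required fields such as `condition_type_concept_id` are omitted for brevity). The local codes and concept IDs in `LOCAL_TO_CONCEPT` are hypothetical placeholders, not real OMOP concepts; real mappings come from the OHDSI standardized vocabularies. Using concept ID 0 for unmapped codes follows the OMOP convention for "no matching concept".

```python
from datetime import date

# Hypothetical lookup from local diagnosis codes to OMOP concept IDs.
# The numbers are placeholders, NOT real concepts.
LOCAL_TO_CONCEPT = {
    "LOCAL-MB": 1000001,
    "LOCAL-PA": 1000002,
}


def to_condition_occurrence(row_id, person_id, local_code, diagnosis_date):
    """Build a dict with a subset of OMOP CDM CONDITION_OCCURRENCE columns."""
    return {
        "condition_occurrence_id": row_id,
        "person_id": person_id,
        # By OMOP convention, concept_id 0 means "no matching standard concept".
        "condition_concept_id": LOCAL_TO_CONCEPT.get(local_code, 0),
        "condition_start_date": diagnosis_date.isoformat(),
        # Keep the original code so the mapping can be audited later.
        "condition_source_value": local_code,
    }


row = to_condition_occurrence(1, 42, "LOCAL-MB", date(2021, 3, 15))
unmapped = to_condition_occurrence(2, 43, "LOCAL-XYZ", date(2020, 1, 1))
print(row["condition_concept_id"], unmapped["condition_concept_id"])  # 1000001 0
```

Once every site emits the same table structure and vocabulary, the same analysis code can run unchanged at each site, which is what makes federated or pooled analyses practical.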

The effectiveness of AI tools depends on the quality of the data they are trained on. When the data reflect population selection, misrepresentation, or missingness, generalizability across subgroups cannot be ensured. Our review found publications that used data from both children and adults to develop algorithms for brain cancer, which may hinder their generalizability to children. The 2021 edition of the WHO classification of CNS tumors, discussed in (13), distinguishes tumor types on a molecular basis, including those specific to children, and is a valuable tool for this purpose. AI tools can further improve classification if algorithms are trained on generalizable data.
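One practical safeguard when adult and pediatric data are pooled is to report performance separately for each age group, since a single pooled figure can hide a gap. The sketch below uses invented predictions for illustration; the tumor labels and the helper `accuracy_by_group` are hypothetical.

```python
from collections import defaultdict


def accuracy_by_group(records):
    """records: iterable of (group, predicted_label, true_label); returns accuracy per group."""
    hits, totals = defaultdict(int), defaultdict(int)
    for group, pred, true in records:
        totals[group] += 1
        hits[group] += int(pred == true)
    return {g: hits[g] / totals[g] for g in totals}


# Hypothetical predictions from a model trained mostly on adult data.
results = [
    ("adult", "glioma", "glioma"), ("adult", "glioma", "glioma"),
    ("adult", "meningioma", "meningioma"), ("adult", "glioma", "meningioma"),
    ("child", "glioma", "medulloblastoma"), ("child", "glioma", "glioma"),
    ("child", "glioma", "ependymoma"), ("child", "glioma", "medulloblastoma"),
]
print(accuracy_by_group(results))  # {'adult': 0.75, 'child': 0.25}
```

Here the pooled accuracy is 0.5, which hides the fact that the model performs much worse in children.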

Most AI tools in the reviewed publications used diagnostic images as their data source. Neuroimaging, a well-developed area for AI in oncology, enables detailed analysis of brain microstructure and pathophysiology specific to children (35). However, AI also has the potential to integrate multiple types of data, which could help uncover complex patterns and increase algorithm accuracy. Our review found that only a few publications used this approach, an area that deserves more attention.
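The simplest way to integrate several data types is early fusion: concatenating each patient's feature vectors from different sources into one input vector. The sketch below assumes features have already been extracted per modality and keyed by a patient ID; the IDs, feature values, and the helper `early_fusion` are hypothetical. It keeps only patients present in every source (a complete-case approach); handling missing modalities and scaling features to comparable ranges would need further design choices.

```python
def early_fusion(*modalities):
    """Concatenate per-patient feature vectors, keeping only patients present in all sources."""
    common = set(modalities[0])
    for m in modalities[1:]:
        common &= set(m)
    return {pid: [v for m in modalities for v in m[pid]] for pid in sorted(common)}


# Hypothetical per-patient features from three sources.
imaging = {"P01": [0.82, 0.10], "P02": [0.45, 0.33], "P03": [0.51, 0.29]}
clinical = {"P01": [7.0, 1.0], "P03": [12.0, 0.0]}
methylation = {"P01": [0.66], "P02": [0.12], "P03": [0.40]}

fused = early_fusion(imaging, clinical, methylation)
print(fused)
```

Note that patient P02 is dropped because clinical data are missing, illustrating how multimodal integration can shrink already small pediatric cohorts.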

Outside medicine, AI tools are typically developed by aggregating massive datasets from multiple sources, a scale that supports accurate predictions (36). Open repositories for developing AI tools are an attempt to concentrate large amounts of diagnostic images and overcome existing barriers to data access. Of the 45 publications included in this review, 7 used a data repository to develop their algorithm, of which only 3 were publicly accessible. Several public datasets are available, particularly for neuroimaging, which is the main area of application of AI in neuro-oncology.

Unfortunately, public datasets for AI purposes are limited in size and do not accurately represent the entire population of children with brain tumors. Additionally, neuroimaging data in these datasets may be inconsistent because of variability in tumors and scanning. Furthermore, technological advances can change the quality and features of diagnostic images over time, a phenomenon known as data drift, which can degrade the performance of AI algorithms. This highlights the need for ongoing updates to the datasets used for training AI algorithms (37, 38).
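A basic way to monitor data drift is to compare the distribution of an image-derived feature in the training-era data with that in newly acquired scans, for example with the two-sample Kolmogorov-Smirnov (KS) statistic, the maximum distance between the two empirical cumulative distribution functions. The sketch below implements the statistic from scratch; the feature values and the alert threshold `DRIFT_THRESHOLD` are hypothetical. In practice, `scipy.stats.ks_2samp` computes the statistic together with a p-value.

```python
def ks_statistic(a, b):
    """Two-sample KS statistic: max distance between the empirical CDFs of a and b."""
    a, b = sorted(a), sorted(b)
    i = j = 0
    d = 0.0
    while i < len(a) and j < len(b):
        x = min(a[i], b[j])
        # Advance past every value equal to x in both samples (handles ties).
        while i < len(a) and a[i] <= x:
            i += 1
        while j < len(b) and b[j] <= x:
            j += 1
        d = max(d, abs(i / len(a) - j / len(b)))
    return d


DRIFT_THRESHOLD = 0.3  # hypothetical alert level

# Hypothetical normalized intensity feature: training-era scans vs. scans from a new scanner.
reference = [0.50, 0.52, 0.55, 0.58, 0.60, 0.61, 0.63, 0.65]
new_scans = [0.60, 0.64, 0.66, 0.68, 0.70, 0.71, 0.73, 0.75]

d = ks_statistic(reference, new_scans)
print(d, "drift suspected" if d > DRIFT_THRESHOLD else "no drift flagged")  # 0.75 drift suspected
```

A flagged feature does not prove the model has degraded, but it signals that the training data may no longer reflect current acquisitions and that revalidation or retraining may be needed.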

In essence, the success of AI tools depends heavily on the quality and representativeness of their training data. Currently, many AI models for pediatric brain tumors are trained on small, single-center datasets. To improve accuracy and applicability, research groups working on the same diseases need to collaborate and share data to make AI models more robust. Without this collective effort, AI models developed in isolation may lack the accuracy needed for clinical decision-making, potentially introducing bias and errors, and remain vulnerable to overfitting: performing well on training data but poorly on external validation in different settings.

Our review has some limitations. We aimed to review the existing literature on AI for pediatric brain tumors comprehensively by using multiple bibliographic databases and a structured search strategy with manual review. However, information about the datasets used to develop AI tools may not be readily available in the literature, particularly if they are part of an industrial process; policies that promote wider access to and analysis of these datasets should therefore be supported. Additionally, we intentionally focused only on publications from European institutions. Our aim was to shed light on the challenges faced in Europe in terms of data sharing and AI model development, taking into account factors such as the GDPR and the data interoperability of existing infrastructures. This approach helps in understanding the European context, which features many scientific collaborations, particularly in view of the planned European Health Data Space (39). Finally, we focused only on data-driven bias and did not evaluate potential algorithmic bias in the included studies.

In summary, this review highlights several potential sources of bias in AI tools developed for pediatric CNS tumors that may limit their clinical application. The most important strategy to address this limitation is to promote the use of larger and more diverse datasets through collaboration among institutions, improving data availability, sharing, standardization, interoperability, continuous updating, and quality. First, it is of paramount importance to promote the adoption of the FAIR Principles during the development of AI tools. Second, expanding existing networks that adhere to the same standards could benefit EU-funded research and development projects and data exchange through the European Health Data Space (39). Collaborative efforts should be supported by data standards such as the OMOP Common Data Model (31, 32). Furthermore, decentralized privacy-preserving technologies such as federated learning, together with synthetic data, may accelerate the development of AI tools based on large populations from different clinical sites while complying with EU privacy regulations (30, 40). Data access remains the biggest challenge in training and developing AI tools, and overcoming it will require significant research effort and investment to enable AI tools for pediatric brain tumors.

The original contributions presented in the study are included in the article/Supplementary Material. Further inquiries can be directed to the corresponding author.

AT: Conceptualization, Methodology, Supervision, Writing – original draft, Writing – review & editing. IC: Data curation, Formal Analysis, Writing – original draft, Writing – review & editing. PV: Data curation, Formal Analysis, Writing – review & editing. FD: Data curation, Formal Analysis, Investigation, Writing – review & editing. GC: Data curation, Formal Analysis, Writing – review & editing. AC: Data curation, Supervision, Writing – review & editing. FF: Data curation, Formal Analysis, Investigation, Writing – review & editing. GL: Data curation, Formal Analysis, Writing – review & editing. RP: Supervision, Visualization, Writing – review & editing. AM: Conceptualization, Formal Analysis, Supervision, Writing – review & editing.

The author(s) declare that financial support was received for the research, authorship, and/or publication of this article. This work was supported by UNICA4EU, a project funded by the European Union’s Call for Pilot Projects and Preparatory Actions (PPPA) under Grant Agreement No. 101052609. This work was also supported by the Italian Ministry of Health through Current Research funds.

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

The Supplementary Material for this article can be found online at: https://www.frontiersin.org/articles/10.3389/fonc.2023.1285775/full#supplementary-material

1. Briganti G, Le Moine O. Artificial intelligence in medicine: today and tomorrow. Front Med (2020) 7:27. doi: 10.3389/fmed.2020.00027

2. Rajpurkar P, Chen E, Banerjee O, Topol EJ. AI in health and medicine. Nat Med (2022) 28:31–8. doi: 10.1038/s41591-021-01614-0

3. Iqbal MJ, Javed Z, Sadia H, Qureshi IA, Irshad A, Ahmed R, et al. Clinical applications of artificial intelligence and machine learning in cancer diagnosis: looking into the future. Cancer Cell Int (2021) 21:270. doi: 10.1186/s12935-021-01981-1

4. Parikh RB, Teeple S, Navathe AS. Addressing bias in artificial intelligence in health care. JAMA (2019) 322:2377. doi: 10.1001/jama.2019.18058

5. Bhinder B, Gilvary C, Madhukar NS, Elemento O. Artificial intelligence in cancer research and precision medicine. Cancer Discovery (2021) 11:900–15. doi: 10.1158/2159-8290.CD-21-0090

6. Regulation (EU) 2016/679 of the European Parliament and of the Council of 27 April 2016 on the protection of natural persons with regard to the processing of personal data and on the free movement of such data, and repealing Directive 95/46/EC (General Data Protection Regulation) (Text with EEA relevance) (2016). Available at: https://eur-lex.europa.eu/legal-content/EN/TXT/?uri=CELEX:32016R0679.

7. Hoofnagle CJ, van der Sloot B, Borgesius FZ. The European Union general data protection regulation: what it is and what it means. Inf Commun Technol Law (2019) 28:65–98. doi: 10.1080/13600834.2019.1573501

8. Europe’s Beating Cancer Plan. European Commission. Available at: https://ec.europa.eu/commission/presscorner/detail/en/IP_21_342 (Accessed July 13, 2023).

9. A European approach to artificial intelligence | Shaping Europe’s digital future (2023). Available at: https://digital-strategy.ec.europa.eu/en/policies/european-approach-artificial-intelligence (Accessed July 31, 2023).

10. SIOP Europe Strategic Plan Update 2021-2026. Available at: https://siope.eu/media/documents/siop-europes-strategic-plan-update-2021-2026.pdf (Accessed July 24, 2023).

12. Tozzi AE, Fabozzi F, Eckley M, Croci I, Dell’Anna VA, Colantonio E, et al. Gaps and opportunities of artificial intelligence applications for pediatric oncology in european research: A systematic review of reviews and a bibliometric analysis. Front Oncol (2022) 12:905770. doi: 10.3389/fonc.2022.905770

13. Horbinski C, Berger T, Packer RJ, Wen PY. Clinical implications of the 2021 edition of the WHO classification of central nervous system tumours. Nat Rev Neurol (2022) 18:515–29. doi: 10.1038/s41582-022-00679-w

14. World Health Organization. Ethics and governance of artificial intelligence for health: WHO guidance (2021). Geneva: World Health Organization. Available at: https://apps.who.int/iris/handle/10665/341996 (Accessed November 24, 2022).

15. World Health Organization. Ageism in artificial intelligence for health: WHO policy brief (2022). Geneva: World Health Organization. Available at: https://apps.who.int/iris/handle/10665/351503 (Accessed November 24, 2022).

16. European Commission. Regulation of the European Parliament and of the Council laying down harmonised rules on artificial intelligence (Artificial Intelligence Act) and amending certain Union legislative acts. Available at: https://eur-lex.europa.eu/legal-content/EN/TXT/?uri=celex%3A52021PC0206.

17. Huang J, Shlobin NA, Lam SK, DeCuypere M. Artificial intelligence applications in pediatric brain tumor imaging: A systematic review. World Neurosurg (2022) 157:99–105. doi: 10.1016/j.wneu.2021.10.068

18. Kremer L, Leclercq E, van Dalen E. Methods to be used in reviews – CCG standards. Cochrane Child Cancer Group. Available at: http://childhoodcancer.cochrane.org/sites/childhoodcancer.cochrane.org/files/public/uploads/CCG%20standards_Search%20strategy%20and%20Selection%20of%20studies.pdf (Accessed March 5, 2022).

19. Ouzzani M, Hammady H, Fedorowicz Z, Elmagarmid A. Rayyan—a web and mobile app for systematic reviews. Syst Rev (2016) 5:210. doi: 10.1186/s13643-016-0384-4

20. Aria M, Cuccurullo C. bibliometrix: An R-tool for comprehensive science mapping analysis. J Informetr (2017) 11:959–75. doi: 10.1016/j.joi.2017.08.007

21. Wilkinson M, Dumontier M, Aalbersberg I, Appleton G, Axton M, Baak A, et al. The FAIR Guiding Principles for scientific data management and stewardship. Sci Data (2016) 3:160018. doi: 10.1038/sdata.2016.18

22. R: The R Project for Statistical Computing. Available at: https://www.r-project.org/ (Accessed November 24, 2022).

23. Ross B, Michaelis T. Clinical applications of magnetic resonance spectroscopy. Magn Reson Q (1994) 10:191–247.

24. Bentzen HB, Castro R, Fears R, Griffin G, Ter Meulen V, Ursin G. Remove obstacles to sharing health data with researchers outside of the European Union. Nat Med (2021) 27:1329–33. doi: 10.1038/s41591-021-01460-0

25. Vlahou A, Hallinan D, Apweiler R, Argiles A, Beige J, Benigni A, et al. Data sharing under the general data protection regulation: time to harmonize law and research ethics? Hypertension (2021) 77:1029–35. doi: 10.1161/HYPERTENSIONAHA.120.16340

26. Char DS, Shah NH, Magnus D. Implementing machine learning in health care - addressing ethical challenges. N Engl J Med (2018) 378:981–3. doi: 10.1056/NEJMp1714229

27. Xu J, Glicksberg BS, Su C, Walker P, Bian J, Wang F. Federated learning for healthcare informatics. J Healthc Inform Res (2021) 5:1–19. doi: 10.1007/s41666-020-00082-4

28. Rieke N, Hancox J, Li W, Milletarì F, Roth HR, Albarqouni S, et al. The future of digital health with federated learning. NPJ Digit Med (2020) 3:119. doi: 10.1038/s41746-020-00323-1

29. Warnat-Herresthal S, Schultze H, Shastry KL, Manamohan S, Mukherjee S, Garg V, et al. Swarm Learning for decentralized and confidential clinical machine learning. Nature (2021) 594:265–70. doi: 10.1038/s41586-021-03583-3

30. Rajotte J-F, Bergen R, Buckeridge DL, El Emam K, Ng R, Strome E. Synthetic data as an enabler for machine learning applications in medicine. iScience (2022) 25:105331. doi: 10.1016/j.isci.2022.105331

31. Belenkaya R, Gurley MJ, Golozar A, Dymshyts D, Miller RT, Williams AE, et al. Extending the OMOP common data model and standardized vocabularies to support observational cancer research. JCO Clin Cancer Inform (2021) 5:12–20. doi: 10.1200/CCI.20.00079

32. Hripcsak G, Duke JD, Shah NH, Reich CG, Huser V, Schuemie MJ, et al. Observational health data sciences and informatics (OHDSI): opportunities for observational researchers. Stud Health Technol Inform (2015) 216:574–8. doi: 10.3233/978-1-61499-564-7-574

33. Papez V, Moinat M, Voss EA, Bazakou S, Van Winzum A, Peviani A, et al. Transforming and evaluating the UK Biobank to the OMOP Common Data Model for COVID-19 research and beyond. J Am Med Inform Assoc (2022) 30:103–11. doi: 10.1093/jamia/ocac203

34. Saripalle R, Runyan C, Russell M. Using HL7 FHIR to achieve interoperability in patient health record. J BioMed Inform (2019) 94:103188. doi: 10.1016/j.jbi.2019.103188

35. Nikam RM, Yue X, Kaur G, Kandula V, Khair A, Kecskemethy HH, et al. Advanced neuroimaging approaches to pediatric brain tumors. Cancers (2022) 14:3401. doi: 10.3390/cancers14143401

36. Deng J, Dong W, Socher R, Li L-J, Li K, Li F-F. (2009). ImageNet: A large-scale hierarchical image database, in: 2009 IEEE Conference on Computer Vision and Pattern Recognition, Miami, FL: IEEE. pp. 248–55. doi: 10.1109/CVPR.2009.5206848

37. Quiñonero-Candela J, ed. Dataset shift in machine learning. Cambridge, Mass: MIT Press (2009). p. 229.

38. Chi S, Tian Y, Wang F, Zhou T, Jin S, Li J. A novel lifelong machine learning-based method to eliminate calibration drift in clinical prediction models. Artif Intell Med (2022) 125:102256. doi: 10.1016/j.artmed.2022.102256

39. Shabani M. Will the European Health Data Space change data sharing rules? Science (2022) 375:1357–9. doi: 10.1126/science.abn4874

Keywords: artificial intelligence, CNS tumors, pediatric oncology, childhood cancer, data sharing

Citation: Tozzi AE, Croci I, Voicu P, Dotta F, Colafati GS, Carai A, Fabozzi F, Lacanna G, Premuselli R and Mastronuzzi A (2023) A systematic review of data sources for artificial intelligence applications in pediatric brain tumors in Europe: implications for bias and generalizability. Front. Oncol. 13:1285775. doi: 10.3389/fonc.2023.1285775

Received: 30 August 2023; Accepted: 16 October 2023;

Published: 27 October 2023.

Edited by:

Kaya Kuru, University of Central Lancashire, United Kingdom

Reviewed by:

Sabyasachi Dash, AstraZeneca (United States), United States

Copyright © 2023 Tozzi, Croci, Voicu, Dotta, Colafati, Carai, Fabozzi, Lacanna, Premuselli and Mastronuzzi. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Alberto Eugenio Tozzi, albertoeugenio.tozzi@opbg.net
