
REVIEW article

Front. Oncol. , 01 March 2024

Sec. Genitourinary Oncology

Volume 13 - 2023 | https://doi.org/10.3389/fonc.2023.1252630

Kidney disease is a serious public health problem, and various kidney diseases can progress to end-stage renal disease. The many complications of end-stage renal disease have a significant impact on the physical and mental health of patients. Ultrasound can be the test of choice for evaluating the kidney and perirenal tissue because it is real-time, widely available, and free of ionizing radiation. To overcome substantial interobserver variability in renal ultrasound interpretation, artificial intelligence (AI) has the potential to be a new method to help radiologists make clinical decisions. This review introduces the applications of AI in renal ultrasound, including automatic segmentation of the kidney, measurement of the renal volume, prediction of kidney function, and diagnosis of kidney diseases. The advantages and disadvantages of these applications are also presented to guide clinicians in conducting research. Additionally, the challenges and future perspectives of AI are discussed.

Kidney disease is a serious public health problem affecting more than 10% of the global population (1). End-stage renal disease, which develops from various kidney conditions, eventually forces patients to rely on renal replacement treatment to prolong their lives (2). The many complications of end-stage renal disease have a significant impact on the physical and mental health of patients. Early and accurate diagnosis is therefore essential to slow the progression of kidney disease and improve patients' quality of life.

Over the past few decades, the early detection, diagnosis, and treatment of diseases have benefited greatly from the application of several medical imaging modalities, such as computed tomography (CT), magnetic resonance imaging (MRI), ultrasound, and X-ray (3). Ultrasound is often the test of choice for evaluating the kidney and perirenal tissue because it is real-time, accessible, and non-radioactive. However, in clinical practice, the interpretation and analysis of medical images have mostly been performed by specialized physicians, which has been highly dependent on long-term training and often prone to subjective judgments. Furthermore, due to the high subjective heterogeneity in visual interpretation, it is challenging to translate experience-based prediction into standardized practice (4).

In recent decades, with the rapid development of AI technology, a large number of AI applications in medical imaging have emerged that greatly benefit clinicians (5). AI is widely used in the diagnosis of diseases that rely on medical imaging, such as breast cancer (6), liver diseases (7), pancreatic cancer (8), thyroid nodules (9), and urological diseases (10). AI has been explored as a potential new tool to assist radiologists in clinical decision making and to overcome the substantial interobserver variability in image acquisition and interpretation.

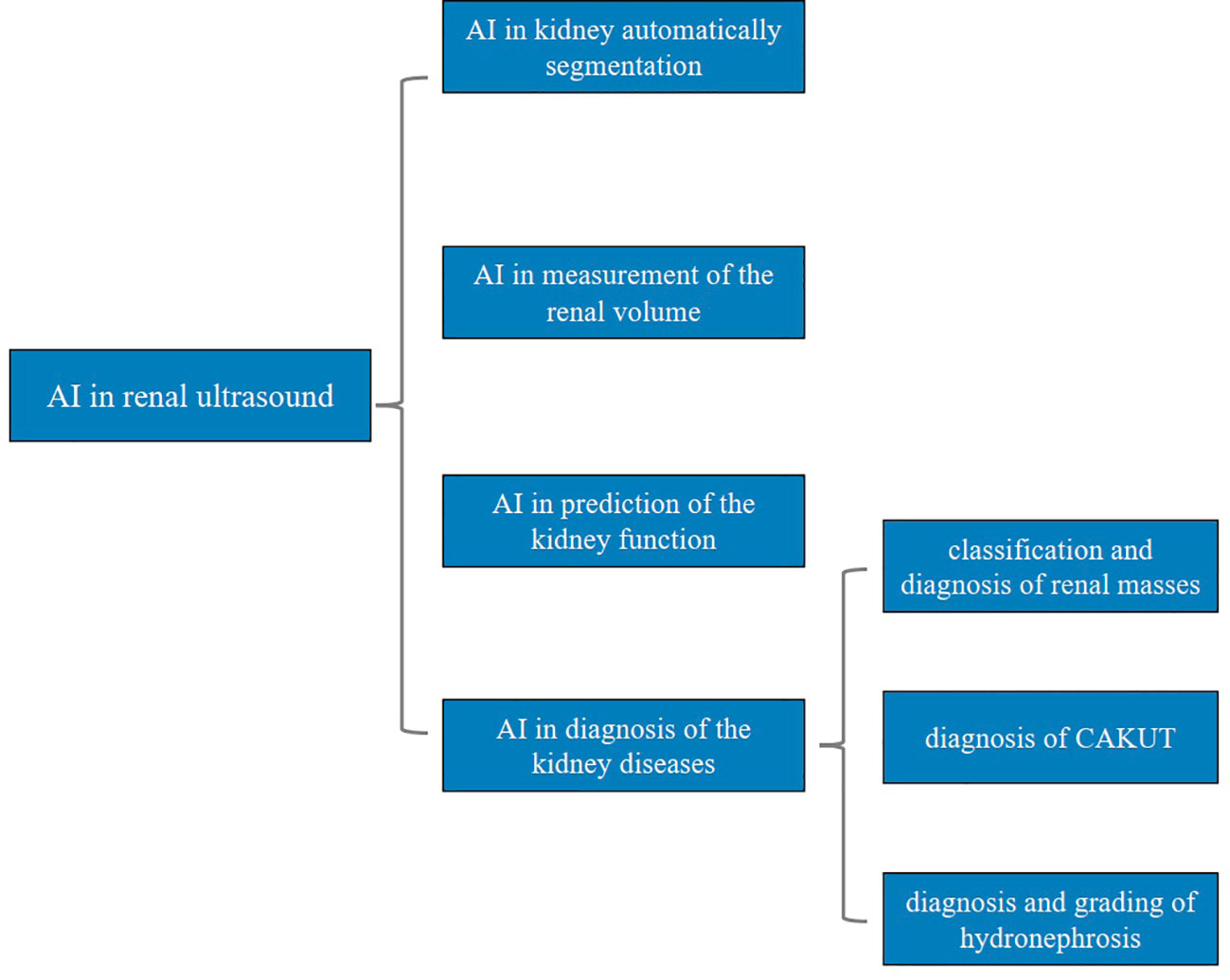

In this review, we comprehensively introduce the applications of AI in renal ultrasound, including automatic segmentation of the kidney, measurement of the renal volume, prediction of kidney function, and diagnosis of kidney diseases. The advantages and disadvantages of these applications are also presented to guide clinicians in conducting research. Additionally, the challenges and future perspectives of AI in renal ultrasound are discussed. The main structure of this review is presented in Figure 1.

Figure 1 Main structure of this review. AI, artificial intelligence; CAKUT, congenital abnormalities of the kidney and urinary tract.

We searched the PubMed and Web of Science databases for all research published in English up to 1 Jan 2023. The search strategy used terms including “artificial intelligence”, “ultrasound”, and “renal”. The complete search strategy is available from the authors. We also included some narrative and systematic reviews to provide our readers with adequate details within the allowed number of references. In addition to the database search, a hand search was performed, covering the reference lists of related articles and reviews and the Google Scholar search engine.

We mainly focused on AI in renal ultrasound image segmentation and on the diagnostic and predictive capabilities of AI-assisted ultrasound in renal diseases. Hence, the relevance of each article was determined using the following criterion: the study had to apply AI methods to analyze ultrasound images of normal kidneys or renal diseases. Articles outside this scope were excluded.

The titles and abstracts of all eligible articles were reviewed independently by two authors. Articles whose abstracts were not relevant were discarded; for the remainder, the full-text articles were accessed. Review of the full texts led to the exclusion of further irrelevant articles, and the articles that met the inclusion criteria were retained in this review. Any discrepancy between the two authors regarding the articles ultimately included was resolved by consensus.

AI was originally proposed at the Dartmouth Conference in 1956. The main goal of AI is to enable machines to perform complex tasks that require human intelligence. The advancement of network technology has hastened the growth of AI research and significantly broadened the technology’s usage. As an important component of the fourth industrial revolution, AI has dramatically changed our world, from facial recognition to smart homes. In particular, AI methods have found myriad applications in the field of medical image analysis, such as detection, segmentation, diagnosis, and risk assessment, driving it forward at a rapid pace (5).

ML, a subfield of AI, is a general term for a class of algorithms. The basic idea of ML is to abstract a real-world problem into a mathematical model, to solve the model using mathematical methods to deal with the problem, and to evaluate whether and how well it has addressed the problem (11). Therefore, the process of ML could be simply summarized into three processes: training, validation, and testing.
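The three stages named above (training, validation, and testing) presuppose that the available data are partitioned into three non-overlapping subsets. The following is a minimal sketch of such a partition using only the Python standard library; the function name `split_dataset`, the 70/15/15 proportions, and the image identifiers are illustrative assumptions and are not taken from any of the reviewed studies.

```python
import random


def split_dataset(items, val_frac=0.15, test_frac=0.15, seed=0):
    """Shuffle items and split them into training, validation, and test subsets."""
    if val_frac < 0 or test_frac < 0 or val_frac + test_frac >= 1:
        raise ValueError("val_frac and test_frac must be non-negative and sum to less than 1")
    shuffled = list(items)
    # A fixed seed keeps the split reproducible between runs.
    random.Random(seed).shuffle(shuffled)
    n_val = round(len(shuffled) * val_frac)
    n_test = round(len(shuffled) * test_frac)
    test = shuffled[:n_test]
    val = shuffled[n_test:n_test + n_val]
    train = shuffled[n_test + n_val:]
    return train, val, test


# Hypothetical image identifiers, used only for illustration.
image_ids = [f"img_{i:03d}" for i in range(100)]
train, val, test = split_dataset(image_ids)
print(len(train), len(val), len(test))  # 70 15 15
```

In imaging studies it is usually safer to split by patient rather than by image, so that images from one patient never appear in both the training and the test subset.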

According to the training method, ML can be roughly divided into three types: supervised learning, unsupervised learning, and reinforcement learning. Among them, supervised learning is the most widely used in the field of medical imaging (12). It uses known input-output pairs to train a model that maps input data to output results, yielding an inference function that can then predict output results from new input data. It is thus useful for classification, characterization, and regression tasks for which labeled examples are available (12).

Consequently, in ultrasound imaging applications, expert-designed or radiomics-extracted image features can be used as input data to provide output predictions regarding subsequent disease outcomes. Commonly used ML techniques include linear regression, K-means, decision trees, random forests, support vector machines (SVMs), and neural networks (6).

The concept of DL, the most significant branch of ML, is derived from artificial neural networks, as illustrated in Figure 2. DL methods are representation-learning methods with multiple levels of representation, obtained by composing simple but non-linear modules that each transform the representation at one level (starting with the raw input) into a representation at a higher, slightly more abstract level (13). In contrast to traditional ML, which relies on manual feature extraction, DL is based on automatic feature extraction by machines. DL is highly dependent on data, and its performance generally improves as the amount of data grows. With sufficient data, it has even surpassed human performance in tasks such as image recognition and facial recognition (13).

Currently, convolutional neural networks (CNNs) are the most popular type of DL architecture in the medical image analysis field (14). A typical CNN architecture consists of three parts: convolutional layers, pooling layers, and fully connected layers. Generally, a CNN model has many convolutional and pooling layers, which are arranged alternately (7). The convolutional layer uses filters (convolution kernels) to scan regions of the image and extract features. Pooling layers reduce the dimensionality of the feature maps, which not only greatly reduces the number of operations but also helps to limit overfitting. The data processed by the convolutional and pooling layers are fed to the fully connected layer, which integrates the local information to discriminate between classes (7).
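The three building blocks described above can be illustrated on a toy image. The sketch below is written in plain Python for readability rather than speed; the function names, the hand-picked kernel, and the dense-layer weights are illustrative assumptions, whereas a real CNN learns its kernels and weights from data.

```python
def conv2d(image, kernel):
    """Valid 2D cross-correlation (the 'convolution' used in CNNs), stride 1."""
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    return [
        [
            sum(image[i + a][j + b] * kernel[a][b] for a in range(kh) for b in range(kw))
            for j in range(out_w)
        ]
        for i in range(out_h)
    ]


def max_pool2d(fmap, size=2):
    """Non-overlapping max pooling; rows or columns that do not fill a window are dropped."""
    out_h, out_w = len(fmap) // size, len(fmap[0]) // size
    return [
        [
            max(fmap[i * size + a][j * size + b] for a in range(size) for b in range(size))
            for j in range(out_w)
        ]
        for i in range(out_h)
    ]


def dense(features, weights, bias):
    """A single fully connected unit: weighted sum of the flattened features plus a bias."""
    return sum(f * w for f, w in zip(features, weights)) + bias


# Toy 5x5 "image" with a vertical edge between the second and third columns.
image = [[0, 0, 1, 1, 1] for _ in range(5)]
# Hand-picked kernel that responds to left-to-right intensity increases.
kernel = [[-1, 1], [-1, 1]]

feature_map = conv2d(image, kernel)   # 4x4 map, non-zero only at the edge
pooled = max_pool2d(feature_map)      # 2x2 map after 2x2 max pooling
flat = [v for row in pooled for v in row]
score = dense(flat, weights=[0.5, 0.0, 0.5, 0.0], bias=-1.0)
print(feature_map[0], pooled, score)  # [0, 2, 0, 0] [[2, 0], [2, 0]] 1.0
```

The pooled map is four times smaller than the feature map yet still records where the edge lies, which is the dimensionality reduction that the text attributes to pooling layers.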

The emergence and continuous development of DL has shed new light on medical image analysis and had a significant impact on both clinical applications and scientific research (15).

Kidney segmentation is a key and fundamental step in medical image analysis. The main motivations for kidney segmentation in clinical practice are: (a) evaluation of kidney parameters, namely size and volume, to diagnose potential diseases; (b) assessment of renal morphology and function; (c) localization of abnormalities or pathologies present in the kidney; (d) support for the decision-making process in treatment and interventional planning; and (e) post-operative follow-up after a renal intervention (16). Studies on kidney segmentation currently use three main types of methods: manual, semi-automatic, and fully automatic. Manual and semi-automatic kidney image segmentation relies heavily on time-consuming manual tasks, which also lead to higher inter-operator variability (17). Thus, automatic AI-based segmentation methods for kidney ultrasound images have been proposed, as summarized in Table 1.

Based on 2D ultrasound, Yang et al. developed a framework that combined nonlocal total variation image denoising, distance regularized level set evolution, and shape priors for kidney segmentation from noisy ultrasound images (18). The results showed that the sensitivity and specificity of the proposed method could reach 96% and 95%, respectively. Inspired by the excellent performance of boundary detection-based kidney segmentation methods, Yin et al. developed a fully automatic CNN-based kidney image segmentation method, which consisted of a transfer learning network, a boundary distance regression network, and a kidney pixelwise classification network (19). Their technique used an image segmentation network architecture derived from DeepLab (21) to speed up model training and improve the performance of kidney image segmentation. Moreover, image-registration data augmentation based on thin-plate spline transformation and flipping was used to make the trained kidney segmentation model more robust. The results showed that the proposed method performed significantly better than six other state-of-the-art DL segmentation networks, namely DeepLab (21), FCNN (22), U-Net (23), SegNet (24), PSPnet (25), and DeeplabV3+ (26). Chen et al. proposed a deep neural network architecture, the Multi-branch Aware Network, to segment the kidney (20). The network mainly consists of a multi-scale feature pyramid, a multi-branch encoder, and a master decoder. The multi-scale feature pyramid gives the network access to different kinds of detail at different scales. The information exchange between the branches of the encoder reduces the loss of feature information and improves the segmentation accuracy of the network. Thus, this method can not only segment kidney images more robustly and accurately, but also reduce the false detection rate and missed detection rate of the network.
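Sensitivity and specificity, as reported for segmentation above, can be computed pixel by pixel from a predicted mask and a reference mask. The sketch below assumes binary masks stored as nested lists (1 for kidney, 0 for background); the toy masks and function names are illustrative and do not come from the cited studies.

```python
def confusion_counts(pred, truth):
    """Count true/false positives and negatives between two binary masks of equal shape."""
    tp = fp = tn = fn = 0
    for p_row, t_row in zip(pred, truth):
        for p, t in zip(p_row, t_row):
            if p and t:
                tp += 1
            elif p and not t:
                fp += 1
            elif not p and t:
                fn += 1
            else:
                tn += 1
    return tp, fp, tn, fn


def sensitivity_specificity(pred, truth):
    """Sensitivity = TP / (TP + FN); specificity = TN / (TN + FP)."""
    tp, fp, tn, fn = confusion_counts(pred, truth)
    sensitivity = tp / (tp + fn) if (tp + fn) else float("nan")
    specificity = tn / (tn + fp) if (tn + fp) else float("nan")
    return sensitivity, specificity


# Toy 4x4 masks: the reference kidney occupies the central 2x2 block.
truth = [
    [0, 0, 0, 0],
    [0, 1, 1, 0],
    [0, 1, 1, 0],
    [0, 0, 0, 0],
]
pred = [
    [0, 0, 0, 0],
    [0, 1, 1, 1],
    [0, 1, 0, 0],
    [0, 0, 0, 0],
]
sens, spec = sensitivity_specificity(pred, truth)
print(sens, round(spec, 3))  # 0.75 0.917
```

Because background pixels usually far outnumber kidney pixels, specificity tends to look high even for mediocre masks, which is one reason overlap measures are often reported alongside it.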

In summary, DL-based segmentation of renal ultrasound images could not only save radiologists a considerable amount of time and provide more objective and reliable information for clinical work, but also contribute to the development of AI for the automatic diagnosis of renal diseases. However, the aforementioned studies segmented only normal kidneys, not those with abnormalities, and did not use external validation sets to further validate their models. These issues remain to be addressed by future research.

Renal length is closely related to kidney function, and its change is considered an important factor in assessing kidney status in patients with kidney disease (27). Renal bipolar length is widely used as a clinical indicator of chronicity and, indirectly, to estimate the severity of pre-existing parenchymal damage (28). However, the bipolar length is a poor predictor of parenchymal volume and renal function, particularly in studies utilizing MRI and CT to assess renal morphology (29). Renal volume is a relatively more reliable and accurate indicator than renal length; this is confirmed by several methods for measuring renal volume, such as CT, MRI, and ultrasound (30). Among these imaging technologies, ultrasound is undoubtedly the safer one for children. Kim D-W et al. aimed to develop a new automated method for renal volume measurement using hybrid learning, which integrated the DL-based U-Net model with an ML-based active contouring method to locate regions (31). They demonstrated the accuracy and reliability of renal volume calculation with the proposed process by comparing it with renal volume measured by CT. Unfortunately, the study’s sample size was insufficient, and there were not enough kidney samples from children in each age group to assess the model’s generalizability, statistical uncertainty, and potential bias. Overall, this is the first study on healthy children to use image pre-processing and hybrid learning to determine renal volume changes, and it is valuable because an age-matched normal reference value of renal volume could help in the diagnosis and prognosis of kidney diseases, especially in childhood.
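For orientation, a renal volume is commonly approximated from three orthogonal ultrasound diameters with the ellipsoid formula V = (π/6) × length × width × thickness. The sketch below implements only that conventional approximation; it is an assumption for illustration and is not the hybrid-learning method of the study cited above, whose exact computation is not described here. The measurements are hypothetical.

```python
import math


def ellipsoid_volume_ml(length_cm, width_cm, thickness_cm):
    """Ellipsoid approximation of organ volume; 1 cubic centimetre equals 1 mL."""
    if min(length_cm, width_cm, thickness_cm) <= 0:
        raise ValueError("all diameters must be positive")
    return math.pi / 6.0 * length_cm * width_cm * thickness_cm


# Hypothetical diameters in centimetres.
volume = ellipsoid_volume_ml(10.0, 5.0, 4.0)
print(round(volume, 1))  # 104.7
```

The formula shows why length alone is a weak surrogate for volume: two kidneys of equal length can differ substantially in volume if their width or thickness differs.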

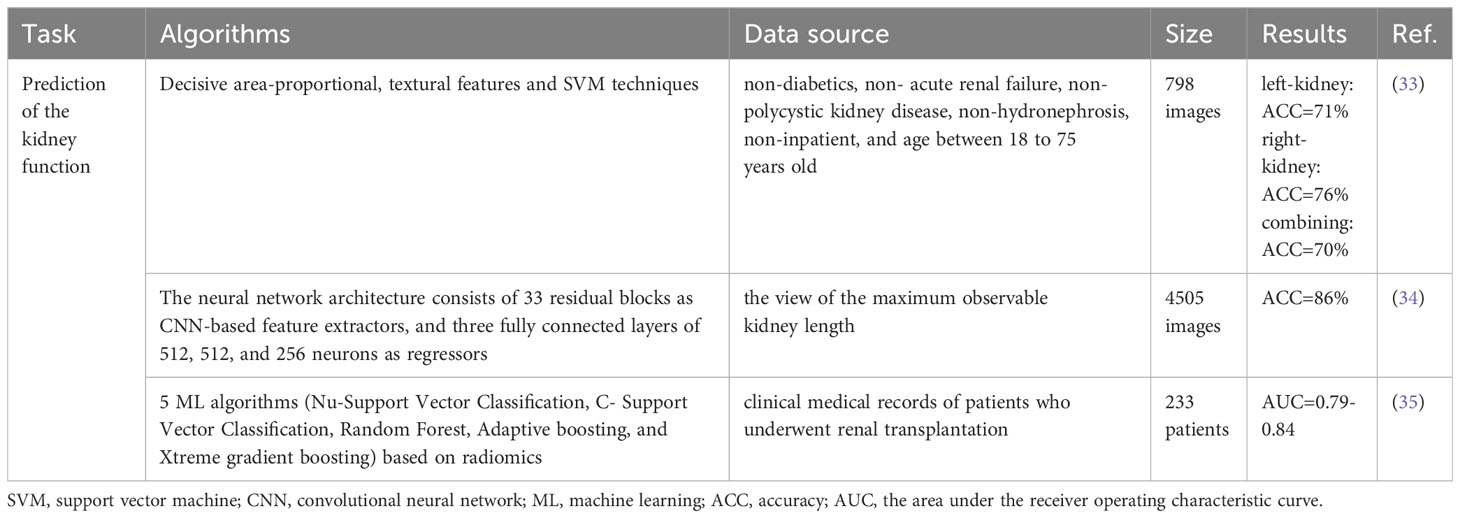

Estimated glomerular filtration rate (eGFR) is the main index for evaluating kidney function and the main basis for the diagnosis and staging of chronic kidney disease (CKD) (32). Determining eGFR with traditional methods is invasive and does not give immediate results. Hence, the use of ultrasound for non-invasive prediction of kidney function has been proposed, as shown in Table 2.

Table 2 Summary of the application of AI in the renal ultrasound for prediction of the kidney function.

Using texture features and an SVM classification algorithm, Chen et al. designed an integrated image analysis system for diagnosing different CKD stages (33). The accuracy of its predictions reached 75.95%. Kuo et al. employed a deep CNN to predict eGFR from renal ultrasound images (34). Their neural network architecture was based on the ResNet-101 model and included 33 residual blocks and three fully connected layers of 512, 512, and 256 neurons. For classifying eGFR with a threshold of 60 mL/min/1.73 m², their model achieved an overall accuracy of 85.6% and an area under the receiver operating characteristic curve (AUC) of 0.904. Radiomics has also been found to be useful in evaluating kidney function. Zhu et al. used different ML methods to develop models that predict kidney function from clinical features, ultrasound image features, and radiomic features (35). The results of this study supported the feasibility of using radiomic features to evaluate kidney function.
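The two figures of merit quoted above, accuracy and AUC at an eGFR threshold of 60 mL/min/1.73 m², can be computed as in the following sketch. It assumes that the positive class is eGFR below the threshold and that the model outputs a probability for that class; both are labeling conventions chosen here for illustration, and all values are hypothetical.

```python
def binarize_egfr(egfr_values, threshold=60.0):
    """Label 1 when eGFR is below the threshold (assumed positive class), else 0."""
    return [1 if v < threshold else 0 for v in egfr_values]


def auc(labels, scores):
    """AUC as the probability that a random positive outranks a random negative.

    Ties between a positive and a negative score count as half a win.
    """
    pos = [s for y, s in zip(labels, scores) if y == 1]
    neg = [s for y, s in zip(labels, scores) if y == 0]
    if not pos or not neg:
        raise ValueError("both classes must be present")
    wins = sum(1.0 if p > n else 0.5 if p == n else 0.0 for p in pos for n in neg)
    return wins / (len(pos) * len(neg))


def accuracy(labels, scores, cutoff=0.5):
    """Fraction of cases whose thresholded score matches the label."""
    preds = [1 if s >= cutoff else 0 for s in scores]
    return sum(p == y for p, y in zip(preds, labels)) / len(labels)


# Hypothetical reference eGFR values and model outputs.
egfr = [95, 72, 58, 40, 61, 30]
labels = binarize_egfr(egfr)                # [0, 0, 1, 1, 0, 1]
scores = [0.1, 0.4, 0.6, 0.8, 0.7, 0.9]     # predicted probability of eGFR < 60

print(round(auc(labels, scores), 3), round(accuracy(labels, scores), 3))  # 0.889 0.833
```

Accuracy depends on the chosen cutoff, whereas AUC summarizes ranking quality over all cutoffs, which is why the two are usually reported together.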

The combination of renal ultrasound imaging and AI offers the possibility of non-invasive prediction of kidney function, which can be considered as complementary evidence to aid clinical diagnosis.

Ultrasound is widely used in diagnostic studies of the kidney and urinary tract. However, the diagnosis of kidney disease from ultrasound imaging data relies on a variety of anatomical signatures, such as the renal length, the renal volume, the symmetry of the kidneys, and the echogenicity of the renal parenchyma, which are usually obtained manually and exhibit a degree of interobserver dependence (36). Therefore, it is desirable to automate image analysis for a robust diagnosis of renal disease. At present, several modified AI algorithms have been developed to diagnose various kidney diseases from ultrasound images, with the intention of helping radiologists make unbiased diagnoses; these are summarized in Table 3. Developing such AI algorithms helps clinicians integrate AI more deeply with renal ultrasound and find broader, more practical clinical applications.

Renal tumors are among the common tumors of the urological system, and most of them are malignant. As medical imaging technology has developed rapidly in recent years, the detection rate of renal tumors has also increased each year (47). Nevertheless, 16-19% of renal tumors are benign, and the benign rate is even higher, 20-30%, in small renal masses (SRM, <4 cm in size) (37, 48). Accordingly, percutaneous renal biopsy could be considered an essential pre-treatment diagnostic procedure in clinical practice. However, percutaneous renal biopsy has limitations, such as a high rate of false negatives, the inability to accurately diagnose SRM, and the risk of bleeding and tumor dissemination. Benign findings in the biopsy specimen do not exclude the possibility of malignancy elsewhere in the lesion (49). Therefore, accurate preoperative identification of benign and malignant renal masses can avoid unnecessary surgery and serious complications and reduce the financial burden on patients. Among renal malignancies, renal cell carcinoma (RCC) is the most morbid and lethal pathological type, whereas angiomyolipoma (AML) is the most common type of benign renal tumor (50, 51).

The imaging features of these two kinds of tumors partially overlap, and their interpretation is easily influenced by the subjective experience of radiologists, so identifying them from imaging features alone remains challenging and controversial. Four supervised ML algorithms, namely quadratic discriminant analysis, logistic regression, naive Bayes, and nonlinear SVM, were compared for accuracy in distinguishing RCC from AML, using features of the tumor, cortical, and medullary regions as statistical inputs. Hersh et al. demonstrated that SVM achieved the best performance in distinguishing RCC from AML, with an accuracy of 94% (37). Ultrasound-based radiomics is a promising diagnostic aid, as confirmed by the studies of Li et al. and Peiman et al. (38, 39). The ultrasound-based radiomics nomogram constructed by Li et al. distinguished RCC from AML more accurately than junior and senior radiologists (38). Peiman et al. used radiomics to extract quantitative texture information and combined it with the tumor-to-cortex echo intensity ratio and tumor size to construct a classification model that could accurately diagnose RCC and AML with an AUC of 0.945 (39). Although these studies have yielded promising results, they all have limitations that can be improved upon, including too few images, the lack of a validation set, and a ratio of RCCs to AMLs that differs significantly from the actual incidence in the real world. As a result, when these models are applied in the real world, the outcomes may be quite different.
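The last limitation can be made concrete with Bayes' rule: for a fixed sensitivity and specificity, the predictive values of a classifier change with the proportion of malignant cases in the population to which it is applied. The sketch below uses hypothetical operating characteristics and prevalences, not figures from the cited studies.

```python
def predictive_values(sensitivity, specificity, prevalence):
    """Positive and negative predictive value from Bayes' rule.

    prevalence is the fraction of cases that are truly positive (here, malignant).
    """
    tp = sensitivity * prevalence
    fn = (1.0 - sensitivity) * prevalence
    tn = specificity * (1.0 - prevalence)
    fp = (1.0 - specificity) * (1.0 - prevalence)
    ppv = tp / (tp + fp) if (tp + fp) else float("nan")
    npv = tn / (tn + fn) if (tn + fn) else float("nan")
    return ppv, npv


# Hypothetical classifier with 90% sensitivity and 90% specificity.
ppv_balanced, npv_balanced = predictive_values(0.9, 0.9, prevalence=0.5)
ppv_skewed, npv_skewed = predictive_values(0.9, 0.9, prevalence=0.8)

print(round(ppv_balanced, 3), round(npv_balanced, 3))  # 0.9 0.9
print(round(ppv_skewed, 3), round(npv_skewed, 3))      # 0.973 0.692
```

In this illustration a "benign" call is correct 90% of the time in a balanced study cohort but only about 69% of the time when 80% of masses are malignant, even though the classifier itself is unchanged.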

Contrast-enhanced ultrasound (CEUS) is particularly indicated for the differential diagnosis between solid lesions and cysts. Previous studies showed that CEUS can be used to differentiate among lesions with equivocal enhancement at CT or MRI (52, 53). This suggests that CEUS is a promising additional diagnostic tool capable of differentiating malignant from benign renal masses. Accordingly, Zhu et al. (40) constructed a multimodal ultrasound fusion network, which can independently extract features from each of the two modalities (B-mode and CEUS-mode) and learn adaptive weights to fuse the features for each sample.

AI can not only assist in the differential diagnosis of RCC and AML, but also distinguish between multiple classes of renal abnormalities. Sudharson et al. proposed an ensemble of deep neural networks based on transfer learning, which could classify renal ultrasound images into four categories, namely normal, cysts, stones, and tumors (41). Their technique combined different deep neural networks, including ResNet-101, ShuffleNet, and MobileNet-v2, for feature extraction and then used SVM for classification. The ensemble model demonstrated better classification performance than the individual deep neural network models.
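One simple way to combine the outputs of several classifiers into an ensemble decision is majority voting. The sketch below illustrates that general idea only; the text above does not state how the cited ensemble fused its members, so the voting rule, the tie-breaking convention, and the example predictions are all assumptions.

```python
from collections import Counter


def majority_vote(predictions):
    """Return the most frequent label; ties go to the label that appears first."""
    if not predictions:
        raise ValueError("at least one prediction is required")
    counts = Counter(predictions)
    best = max(counts.values())
    for label in predictions:
        if counts[label] == best:
            return label


# Hypothetical per-model predictions for one image.
per_model = {"model_a": "cyst", "model_b": "stone", "model_c": "cyst"}
decision = majority_vote(list(per_model.values()))
print(decision)  # cyst
```

An ensemble of this kind helps when the member models make different mistakes, because an error by a single model can be outvoted by the others.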

Congenital abnormalities of the kidney and urinary tract (CAKUT) are disorders caused by developmental defects of the kidney and its outflow tract, including abnormalities in the location and number of kidneys, abnormalities in the size and structure of the kidneys, and dilatation of the urinary tract, which could accelerate the progression of CKD (54). The prevalence is estimated to be 0.04-0.6% (55). Although the widespread use of ultrasound imaging facilitates early detection of CAKUT, current methods are limited by the lack of automated processes that accurately classify diseased and normal kidneys (36).

Recent studies have introduced algorithms for the accurate automated diagnosis of CAKUT. Yin et al. developed a clinical diagnostic model for renal ultrasound images in multiple views, built upon transfer learning and multi-instance learning (MIL) (36). Diagnostic performance, measured by AUC and accuracy, reached 96.5% and 93.5%, respectively. The results showed that their multi-view multi-instance DL method obtained higher classification accuracy than DL models built on individual kidney images or on kidney images in a single view. Yin et al. also developed a deep MIL method for accurately diagnosing CAKUT, which uses graph convolutional networks to optimize the instance-level features learned by CNNs and integrates instance-level and bag-level supervision to improve the classification (42). The proposed method achieved an accuracy, sensitivity, and specificity of 85%, 86%, and 84%, respectively, outperforming other state-of-the-art deep MIL methods for kidney disease diagnosis. Zheng et al. built SVM classifiers that integrate texture image features and deep transfer learning image features to classify the kidneys of normal children and those with CAKUT (e.g., posterior urethral valves, kidney dysplasia) (43). The results suggested that the proposed method performed better than classifiers based on either the transfer learning features or the conventional features alone and yielded the best classification performance for distinguishing children with CAKUT from controls.
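In multi-instance learning, a patient is treated as a "bag" of images (instances), and a single label is predicted for the whole bag. The sketch below shows the two simplest ways of turning instance-level scores into a bag-level score, max pooling and mean pooling. It illustrates the general principle only: the methods cited above use learned aggregation, which is more elaborate, and the scores here are hypothetical.

```python
def bag_score(instance_scores, pooling="max"):
    """Aggregate per-image abnormality scores into one patient-level score."""
    if not instance_scores:
        raise ValueError("a bag must contain at least one instance")
    if pooling == "max":
        # The bag is as abnormal as its most abnormal image.
        return max(instance_scores)
    if pooling == "mean":
        # Every image contributes equally to the bag score.
        return sum(instance_scores) / len(instance_scores)
    raise ValueError(f"unknown pooling: {pooling}")


# Hypothetical scores for three ultrasound views of one patient.
views = [0.2, 0.9, 0.4]
print(bag_score(views, "max"), round(bag_score(views, "mean"), 2))  # 0.9 0.5
```

Max pooling reflects the assumption that one clearly abnormal view is enough to flag the patient, whereas mean pooling dilutes a single abnormal view among normal ones.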

CAKUT is a very broad term that encompasses a variety of congenital anomalies that may occur in the urinary tract. Different types of CAKUT can result in different sonographic findings in the urinary tract. Nevertheless, the clinical characteristics of the CAKUT patients included in the research by Yin et al. were not described in detail (36, 42). The study by Zheng et al. involved ultrasound images of only 50 CAKUT patients and 50 controls for modeling, without further validation (43). These points should be taken into consideration and improved in the design of future studies.

Hydronephrosis is a dilatation of the kidney collecting system that occurs unilaterally or bilaterally. Hydronephrosis is graded according to the Society for Fetal Urology (SFU) system, which uses the dilatation of the renal pelvis, the number of calyces seen, and parenchymal atrophy to define five grades of increasing severity (56). SFU grade 0 denotes a normal kidney. Mild hydronephrosis (SFU grade 1-2) is usually considered a benign and relatively self-limiting condition that stabilizes or resolves spontaneously in most patients (57). In contrast, moderate (SFU grade 3) and severe (SFU grade 4) hydronephrosis are closely related to a high risk of urinary tract infection and loss of kidney function (58, 59). Grading the severity of hydronephrosis relies on the subjective interpretation of renal ultrasound images. Hence, the emergence of AI offers a promising option for the evaluation and grading of hydronephrosis.

DL models have been used for hydronephrosis detection and grading in several studies. Guan et al. trained an encoder-decoder framework for ultrasonic hydronephrosis diagnosis (44). They selected U-Net to segment the renal region and the hydronephrosis region of renal ultrasound images. The segmentation U-Net was trained on a labeled dataset annotated by professionals. The classification network comprises the encoder of U-Net for feature extraction and the Image Adaptive Classifier for image classification. The semantic segmentation section and the classification section share a transformation structure, with the encoder and decoder trained separately in a repeated loop. This design can jointly use different kinds of supervision to diagnose the severity of hydronephrosis automatically by first segmenting and then classifying, which helps to improve the accuracy of the image classification network. A recent study by Lin et al. indicated that the Attention-Unet achieved a Dice coefficient of 0.83 for segmentation of the kidney and the dilated pelvicalyceal system (45). They further applied a fluid-to-kidney-area ratio measurement as a DL-derived biomarker for the semi-quantification of hydronephrosis. This DL method has been confirmed to provide an objective evaluation of pediatric hydronephrosis. Smail et al. developed a five-layer CNN model with the Keras neural network API and TensorFlow to grade hydronephrosis ultrasound images (46). The CNN model achieved an average accuracy of 78% when classifying SFU grades 1-2 vs. SFU grades 3-4.
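The Dice coefficient and the fluid-to-kidney-area ratio mentioned above can both be computed directly from binary segmentation masks. In the sketch below, the ratio is taken as the number of fluid pixels divided by the number of kidney pixels, with the kidney mask assumed to include the collecting system; that definition, the function names, and the toy masks are assumptions for illustration rather than the cited study's exact procedure.

```python
def count(mask):
    """Number of foreground pixels in a binary mask stored as nested lists."""
    return sum(sum(1 for v in row if v) for row in mask)


def dice(pred, truth):
    """Dice coefficient: 2 * |A and B| / (|A| + |B|); defined as 1.0 if both masks are empty."""
    inter = sum(
        1 for p_row, t_row in zip(pred, truth) for p, t in zip(p_row, t_row) if p and t
    )
    total = count(pred) + count(truth)
    return 2.0 * inter / total if total else 1.0


def fluid_to_kidney_ratio(fluid_mask, kidney_mask):
    """Area of the segmented fluid region relative to the segmented kidney area."""
    kidney_area = count(kidney_mask)
    if kidney_area == 0:
        raise ValueError("kidney mask is empty")
    return count(fluid_mask) / kidney_area


# Toy 3x4 masks.
kidney_truth = [[0, 1, 1, 0], [1, 1, 1, 1], [0, 1, 1, 0]]
kidney_pred = [[0, 1, 1, 0], [1, 1, 1, 0], [0, 1, 1, 1]]
fluid_pred = [[0, 0, 0, 0], [0, 1, 1, 0], [0, 0, 0, 0]]

print(dice(kidney_pred, kidney_truth))               # 0.875
print(fluid_to_kidney_ratio(fluid_pred, kidney_pred))  # 0.25
```

Because the ratio is derived entirely from the masks, its reliability is bounded by the segmentation quality that the Dice coefficient measures.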

Current studies have explored the application of DL to the detection and grading of hydronephrosis, supporting the potential of DL methods to serve as a decision aid in clinical practice. However, the aforementioned studies were retrospective, so the SFU grades of the included patients were not balanced. Constructing a DL model from a larger dataset with balanced grades may result in better performance and more accurately assist physicians in diagnosing hydronephrosis.

Despite the unprecedented and rapid development of AI technology in medical imaging, it is still far from being applied in hospitals on a large scale. At present, the studies conducted on the application of AI to renal ultrasound have many limitations. Open issues in radiomics applications include how to standardize ultrasound images, the fact that manual segmentation of images is both time-consuming and unstable, and the risk that unbalanced datasets used to construct models lead to overfitting. In DL applications, on the other hand, the lack of large-scale public datasets, high requirements for image quality, differences between ultrasound devices from different manufacturers, and heterogeneity among operators may lead to variability in the training process. In addition, when the inclusion and exclusion criteria for the data are more restrictive, data censoring and bias become serious, which can also lead to AI models that deviate from the real world and generalize poorly.

At the same time, these limitations also offer new impetus for the development of renal ultrasound and show the broad prospects of AI-powered ultrasound in the future. Prospective multicenter studies are now urgently needed, so eliminating discrepancies between different ultrasound devices and imaging parameters is a primary problem to be solved. The heterogeneity and variability of ultrasound data acquisition methods are the main obstacles that limit the comparison and generalization of different methods across different tasks. Therefore, the establishment of a standardized database for ultrasound applications is one of the directions for future research. More robust methods are also needed to deal with speckle noise in ultrasound images and to automate the segmentation process (16).

In the subfield of renal ultrasound, there are still many applications that deserve in-depth study. For instance, in the treatment of renal masses, AI enables an optimized surgical approach known as robot-assisted partial nephrectomy. Robot-assisted partial nephrectomy has shown a significant improvement in the preservation of renal function, with no significant difference from radical nephrectomy in terms of overall survival, cancer-specific survival, recurrence, or comorbidities (60, 61). However, one of the problems of AI in the diagnosis and treatment of kidney disease is how to reliably evaluate the remaining functional part of the kidney and choose the appropriate surgical scope. Therefore, in addition to the diagnosis of benign and malignant renal tumors, the proper estimation of the remaining portion of the kidney for an accurate surgical scope should be addressed when using AI for renal ultrasound.

AI, particularly DL, is progressively changing the field of medical imaging, giving rise to improved performance in kidney segmentation, prediction of kidney function, and diagnosis of kidney diseases. Owing to its powerful image processing capability, fast computing speed, and freedom from fatigue, AI applied to ultrasound is becoming more mature and coming closer to routine clinical application (62). In the future, AI technology is likely to become gradually inseparable from clinical work. However, algorithms cannot be applied directly to clinical tasks without scrutiny. Given the strengths and weaknesses of different algorithms, we need to choose the algorithm carefully, according to the specific ultrasound task, to make the best use of its advantages. With the rapid development of AI technology, clinicians must quickly adapt to their new roles as technology users and patient advocates, and refine their expertise for the benefit of their patients (63).

X-WC and Y-XP established the design and conception of the paper; TX, X-YZ, and NY explored the literature data; TX provided the first draft of the manuscript, which was discussed and revised critically for intellectual content by X-YZ, NY, X-FP, G-QC, X-WC, and Y-XP. All authors contributed to the article and approved the submitted version.

The author(s) declare financial support was received for the research, authorship, and/or publication of this article. This work has received funding from the High-end Foreign Experts Recruitment Plan of China (grant no. G2022154032L).

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

1. Lv JC, Zhang LX. Prevalence and disease burden of chronic kidney disease. Adv Exp Med Biol (2019) 1165:3–15. doi: 10.1007/978-981-13-8871-2_1

2. Kanda H, Hirasaki Y, Iida T, Kanao-Kanda M, Toyama Y, Chiba T, et al. Perioperative management of patients with end-stage renal disease. J Cardiothorac Vasc Anesth (2017) 31(6):2251–67. doi: 10.1053/j.jvca.2017.04.019

4. Chen X, Wang X, Zhang K, Fung KM, Thai TC, Moore K, et al. Recent advances and clinical applications of deep learning in medical image analysis. Med Image Anal (2022) 79:102444. doi: 10.1016/j.media.2022.102444

5. Hosny A, Parmar C, Quackenbush J, Schwartz LH, Aerts H. Artificial intelligence in radiology. Nat Rev Cancer. (2018) 18(8):500–10. doi: 10.1038/s41568-018-0016-5

6. Lei YM, Yin M, Yu MH, Yu J, Zeng SE, Lv WZ, et al. Artificial intelligence in medical imaging of the breast. Front Oncol (2021) 11:600557. doi: 10.3389/fonc.2021.600557

7. Zhou LQ, Wang JY, Yu SY, Wu GG, Wei Q, Deng YB, Wu XL, et al. Artificial intelligence in medical imaging of the liver. World J Gastroenterol (2019) 25(6):672–82. doi: 10.3748/wjg.v25.i6.672

8. Dietrich CF, Shi L, Koch J, Lowe A, Dong Y, Cui XW, et al. Early detection of pancreatic tumors by advanced EUS imaging. Minerva Gastroenterol Dietol. (2020) 68(2):133–43. doi: 10.23736/S1121-421X.20.02789-0

9. Wu GG, Lv WZ, Yin R, Xu JW, Yan YJ, Chen RX, et al. Deep learning based on ACR TI-RADS can improve the differential diagnosis of thyroid nodules. Front Oncol (2021) 11:575166. doi: 10.3389/fonc.2021.575166

10. Suarez-Ibarrola R, Hein S, Reis G, Gratzke C, Miernik A. Current and future applications of machine and deep learning in urology: a review of the literature on urolithiasis, renal cell carcinoma, and bladder and prostate cancer. World J Urol. (2020) 38(10):2329–47. doi: 10.1007/s00345-019-03000-5

11. Choi RY, Coyner AS, Kalpathy-Cramer J, Chiang MF, Campbell JP. Introduction to machine learning, neural networks, and deep learning. Transl Vis Sci Technol (2020) 9(2):14. doi: 10.1167/tvst.9.2.14

12. Shen YT, Chen L, Yue WW, Xu HX. Artificial intelligence in ultrasound. Eur J Radiol (2021) 139:109717. doi: 10.1016/j.ejrad.2021.109717

13. LeCun Y, Bengio Y, Hinton G. Deep learning. Nature. (2015) 521(7553):436–44. doi: 10.1038/nature14539

14. Litjens G, Kooi T, Bejnordi BE, Setio AAA, Ciompi F, Ghafoorian M, et al. A survey on deep learning in medical image analysis. Med Image Anal (2017) 42:60–88. doi: 10.1016/j.media.2017.07.005

15. Shen D, Wu G, Suk H-I. Deep learning in medical image analysis. Annu Rev Biomed Eng (2017) 19:221–48. doi: 10.1146/annurev-bioeng-071516-044442

16. Torres HR, Queiros S, Morais P, Oliveira B, Fonseca JC, Vilaca JL. Kidney segmentation in ultrasound, magnetic resonance and computed tomography images: A systematic review. Comput Methods Programs Biomed (2018) 157:49–67. doi: 10.1016/j.cmpb.2018.01.014

17. Cardenas CE, Yang J, Anderson BM, Court LE, Brock KB. Advances in auto-segmentation. Semin Radiat Oncol (2019) 29(3):185–97. doi: 10.1016/j.semradonc.2019.02.001

18. Yang F, Qin W, Xie Y, Wen T, Gu J. A shape-optimized framework for kidney segmentation in ultrasound images using NLTV denoising and DRLSE. BioMed Eng Online. (2012) 11:82. doi: 10.1186/1475-925X-11-82

19. Yin S, Peng Q, Li H, Zhang Z, You X, Fischer K, et al. Automatic kidney segmentation in ultrasound images using subsequent boundary distance regression and pixelwise classification networks. Med Image Anal (2020) 60:101602. doi: 10.1016/j.media.2019.101602

20. Chen G, Dai Y, Zhang J, Yin X, Cui L. MBANet: Multi-branch aware network for kidney ultrasound images segmentation. Comput Biol Med (2022) 141:105140. doi: 10.1016/j.compbiomed.2021.105140

21. Chen LC, Papandreou G, Kokkinos I, Murphy K, Yuille AL. DeepLab: semantic image segmentation with deep convolutional nets, atrous convolution, and fully connected CRFs. IEEE Trans Pattern Anal Mach Intell (2018) 40(4):834–48. doi: 10.1109/TPAMI.2017.2699184

22. Shelhamer E, Long J, Darrell T. Fully convolutional networks for semantic segmentation. IEEE Trans Pattern Anal Mach Intell (2017) 39(4):640–51. doi: 10.1109/TPAMI.2016.2572683

23. Ronneberger O, Fischer P, Brox T. U-net: convolutional networks for biomedical image segmentation. Cham: Springer International Publishing (2015).

24. Badrinarayanan V, Kendall A, Cipolla R. SegNet: A deep convolutional encoder-decoder architecture for image segmentation. IEEE Trans Pattern Anal Mach Intell (2017) 39(12):2481–95. doi: 10.1109/TPAMI.2016.2644615

25. Zhao H, Shi J, Qi X, Wang X, Jia J. Pyramid scene parsing network. Proceedings - 30th IEEE Conference on Computer Vision and Pattern Recognition (CVPR). Honolulu, HI, USA: IEEE. (2017). p.6230–9. doi: 10.1109/CVPR.2017.660

26. Chen L-C, Zhu Y, Papandreou G, Schroff F, Adam H. Encoder-decoder with atrous separable convolution for semantic image segmentation. Cham: Springer International Publishing (2018).

27. Al Salmi I, Al Hajriy M, Hannawi S. Ultrasound measurement and kidney development: a mini-review for nephrologists. Saudi J Kidney Dis Transpl (2021) 32(1):174–82. doi: 10.4103/1319-2442.318520

28. Moghazi S, Jones E, Schroepple J, Arya K, McClellan W, Hennigar RA, O’Neill WC. Correlation of renal histopathology with sonographic findings. Kidney Int (2005) 67:1515–20. doi: 10.1111/j.1523-1755.2005.00230.x

29. Cheung CM, Chrysochou C, Shurrab AE, Buckley DL, Cowie A, Kalra PA. Effects of renal volume and single-kidney glomerular filtration rate on renal functional outcome in atherosclerotic renal artery stenosis. Nephrol Dial Transplant. (2010) 25(4):1133–40. doi: 10.1093/ndt/gfp623

30. Magistroni R, Corsi C, Marti T, Torra R. A review of the imaging techniques for measuring kidney and cyst volume in establishing autosomal dominant polycystic kidney disease progression. Am J Nephrol. (2018) 48(1):67–78. doi: 10.1159/000491022

31. Kim DW, Ahn HG, Kim J, Yoon CS, Kim JH, Yang S. Advanced kidney volume measurement method using ultrasonography with artificial intelligence-based hybrid learning in children. Sensors (Basel). (2021) 21(20):6846. doi: 10.3390/s21206846

32. Benzing T, Salant D. Insights into glomerular filtration and albuminuria. N Engl J Med (2021) 384(15):1437–46. doi: 10.1056/NEJMra1808786

33. Chen C-J, Pai T-W, Hsu H-H, Lee C-H, Chen K-S, Chen Y-C. Prediction of chronic kidney disease stages by renal ultrasound imaging. Enterp Inf Syst (2019) 14(2):178–95. doi: 10.1080/17517575.2019.1597386

34. Kuo CC, Chang CM, Liu KT, Lin WK, Chiang HY, Chung CW, et al. Automation of the kidney function prediction and classification through ultrasound-based kidney imaging using deep learning. NPJ Digit Med (2019) 2:29. doi: 10.1038/s41746-019-0104-2

35. Zhu L, Huang R, Li M, Fan Q, Zhao X, Wu X, et al. Machine learning-based ultrasound radiomics for evaluating the function of transplanted kidneys. Ultrasound Med Biol (2022) 48(8):1441–52. doi: 10.1016/j.ultrasmedbio.2022.03.007

36. Yin S, Peng Q, Li H, Zhang Z, You X, Fischer K, et al. Computer-aided diagnosis of congenital abnormalities of the kidney and urinary tract in children using a multi-instance deep learning method based on ultrasound imaging data. Proc IEEE Int Symp BioMed Imaging. (2020) 2020:1347–50. doi: 10.1109/ISBI45749.2020.9098506

37. Sagreiya H, Akhbardeh A, Li D, Sigrist R, Chung BI, Sonn GA, et al. Point shear wave elastography using machine learning to differentiate renal cell carcinoma and angiomyolipoma. Ultrasound Med Biol (2019) 45(8):1944–54. doi: 10.1016/j.ultrasmedbio.2019.04.009

38. Li C, Qiao G, Li J, Qi L, Wei X, Zhang T, et al. An ultrasonic-based radiomics nomogram for distinguishing between benign and malignant solid renal masses. Front Oncol (2022) 12:847805. doi: 10.3389/fonc.2022.847805

39. Habibollahi P, Sultan LR, Bialo D, Nazif A, Faizi NA, Sehgal CM, et al. Hyperechoic renal masses: differentiation of angiomyolipomas from renal cell carcinomas using tumor size and ultrasound radiomics. Ultrasound Med Biol (2022) 48(5):887–94. doi: 10.1016/j.ultrasmedbio.2022.01.011

40. Zhu D, Li J, Li Y, Wu J, Zhu L, Li J, et al. Multimodal ultrasound fusion network for differentiating between benign and malignant solid renal tumors. Front Mol Biosci (2022) 9:982703. doi: 10.3389/fmolb.2022.982703

41. Sudharson S, Kokil P. An ensemble of deep neural networks for kidney ultrasound image classification. Comput Methods Programs Biomed (2020) 197:105709. doi: 10.1016/j.cmpb.2020.105709

42. Yin S, Peng Q, Li H, Zhang Z, You X, Liu H, et al. Multi-instance deep learning with graph convolutional neural networks for diagnosis of kidney diseases using ultrasound imaging. Uncertain Safe Util Mach Learn Med Imaging Clin Image Based Proced (2019) 11840:146–54. doi: 10.1007/978-3-030-32689-0_15

43. Zheng Q, Furth SL, Tasian GE, Fan Y. Computer-aided diagnosis of congenital abnormalities of the kidney and urinary tract in children based on ultrasound imaging data by integrating texture image features and deep transfer learning image features. J Pediatr Urol. (2019) 15(1):75.e1–e7. doi: 10.1016/j.jpurol.2018.10.020

44. Guan Y, Peng H, Li J, Wang Q. A mutual promotion encoder-decoder method for ultrasonic hydronephrosis diagnosis. Methods. (2022) 203:78–89. doi: 10.1016/j.ymeth.2022.03.014

45. Lin Y, Khong PL, Zou Z, Cao P. Evaluation of pediatric hydronephrosis using deep learning quantification of fluid-to-kidney-area ratio by ultrasonography. Abdom Radiol (NY). (2021) 46(11):5229–39. doi: 10.1007/s00261-021-03201-w

46. Smail LC, Dhindsa K, Braga LH, Becker S, Sonnadara RR. Using deep learning algorithms to grade hydronephrosis severity: toward a clinical adjunct. Front Pediatr (2020) 8:1. doi: 10.3389/fped.2020.00001

47. Perazella MA, Dreicer R, Rosner MH. Renal cell carcinoma for the nephrologist. Kidney Int (2018) 94(3):471–83. doi: 10.1016/j.kint.2018.01.023

48. Rossi SH, Prezzi D, Kelly-Morland C, Goh V. Imaging for the diagnosis and response assessment of renal tumours. World J Urol (2018) 36(12):1927–42. doi: 10.1007/s00345-018-2342-3

49. Leveridge MJ, Finelli A, Kachura JR, Evans A, Chung H, Shiff DA, et al. Outcomes of small renal mass needle core biopsy, nondiagnostic percutaneous biopsy, and the role of repeat biopsy. Eur Urol (2011) 60(3):578–84. doi: 10.1016/j.eururo.2011.06.021

50. Attalla K, Weng S, Voss MH, Hakimi AA. Epidemiology, risk assessment, and biomarkers for patients with advanced renal cell carcinoma. Urol Clin North Am (2020) 47(3):293–303. doi: 10.1016/j.ucl.2020.04.002

51. Kutikov A, Fossett LK, Ramchandani P, Tomaszewski JE, Siegelman ES, Banner MP, et al. Incidence of benign pathologic findings at partial nephrectomy for solitary renal mass presumed to be renal cell carcinoma on preoperative imaging. Urology. (2006) 68(4):737–40. doi: 10.1016/j.urology.2006.04.011

52. Bertolotto M, Cicero C, Perrone R, Degrassi F, Cacciato F, Cova MA. Renal masses with equivocal enhancement at CT: characterization with contrast-enhanced ultrasound. AJR Am J Roentgenol. (2015) 204(5):W557–65. doi: 10.2214/AJR.14.13375

53. Tufano A, Drudi FM, Angelini F, Polito E, Martino M, Granata A, et al. Contrast-enhanced ultrasound (CEUS) in the evaluation of renal masses with histopathological validation-results from a prospective single-center study. Diagnostics (Basel) (2022) 12(5):1209. doi: 10.3390/diagnostics12051209

54. Dodson JL, Jerry-Fluker JV, Ng DK, Moxey-Mims M, Schwartz GJ, Dharnidharka VR, et al. Urological disorders in chronic kidney disease in children cohort: clinical characteristics and estimation of glomerular filtration rate. J Urol. (2011) 186(4):1460–6. doi: 10.1016/j.juro.2011.05.059

55. Murugapoopathy V, Gupta IR. A primer on congenital anomalies of the kidneys and urinary tracts (CAKUT). Clin J Am Soc Nephrol. (2020) 15(5):723–31. doi: 10.2215/CJN.12581019

56. Fernbach SK, Maizels M, Conway JJ. Ultrasound grading of hydronephrosis: introduction to the system used by the Society for Fetal Urology. Pediatr Radiol (1993) 23(6):478–80. doi: 10.1007/BF02012459

57. Gokaslan F, Yalcinkaya F, Fitoz S, Ozcakar ZB. Evaluation and outcome of antenatal hydronephrosis: a prospective study. Ren Fail (2012) 34(6):718–21. doi: 10.3109/0886022X.2012.676492

58. Walsh TJ, Hsieh S, Grady R, Mueller BA. Antenatal hydronephrosis and the risk of pyelonephritis hospitalization during the first year of life. Urology. (2007) 69(5):970–4. doi: 10.1016/j.urology.2007.01.062

59. Patel K, Batura D. An overview of hydronephrosis in adults. Br J Hosp Med (Lond). (2020) 81(1):1–8. doi: 10.12968/hmed.2019.0274

60. Cerrato C, Meagher MF, Autorino R, Simone G, Yang B, Uzzo RG, et al. Partial versus radical nephrectomy for complex renal mass: multicenter comparative analysis of functional outcomes (Rosula collaborative group). Minerva Urol Nephrol (2023) 75(4):425–33. doi: 10.23736/S2724-6051.23.05123-6

61. Cerrato C, Patel D, Autorino R, Simone G, Yang B, Uzzo R, et al. Partial or radical nephrectomy for complex renal mass: a comparative analysis of oncological outcomes and complications from the ROSULA (Robotic Surgery for Large Renal Mass) Collaborative Group. World J Urol (2023) 41(3):747–55. doi: 10.1007/s00345-023-04279-1

62. Akkus Z, Cai J, Boonrod A, Zeinoddini A, Weston AD, Philbrick KA, et al. A survey of deep-learning applications in ultrasound: artificial intelligence-powered ultrasound for improving clinical workflow. J Am Coll Radiol (2019) 16(9 Pt B):1318–28. doi: 10.1016/j.jacr.2019.06.004

Keywords: artificial intelligence, ultrasound, kidney, machine learning, deep learning

Citation: Xu T, Zhang X-Y, Yang N, Jiang F, Chen G-Q, Pan X-F, Peng Y-X and Cui X-W (2024) A narrative review on the application of artificial intelligence in renal ultrasound. Front. Oncol. 13:1252630. doi: 10.3389/fonc.2023.1252630

Received: 14 July 2023; Accepted: 12 December 2023; Published: 01 March 2024.

Edited by:

Salvatore Siracusano, University of L’Aquila, Italy

Reviewed by:

Antonio Tufano, Sapienza University of Rome, Italy

Copyright © 2024 Xu, Zhang, Yang, Jiang, Chen, Pan, Peng and Cui. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Yue-Xiang Peng, c3lweXg0QDE2My5jb20=; Xin-Wu Cui, Y3VpeGlud3VAbGl2ZS5jbg==
