- ¹Department of Circulation and Medical Imaging, Norwegian University of Science and Technology, Trondheim, Norway

- ²Department of Radiology and Nuclear Medicine, St. Olavs Hospital, Trondheim University Hospital, Trondheim, Norway

Introduction: The five-class Dixon-based PET/MR attenuation correction (AC) model, which adds bone information to the four-class model by registering major bones from a bone atlas, has been shown to be error-prone. In this study, we introduce a novel method of accounting for bone in pelvic PET/MR AC by directly predicting the errors in the PET image space caused by the lack of bone in four-class Dixon-based attenuation correction.

Methods: A convolutional neural network was trained to predict the four-class AC error map relative to CT-based attenuation correction. Dixon MR images and the four-class attenuation correction µ-map were used as input to the models. CT and PET/MR examinations for 22 patients ([18F]FDG) were used for training and validation, and 17 patients were used for testing (6 [18F]PSMA-1007 and 11 [68Ga]Ga-PSMA-11). A quantitative analysis of PSMA uptake using voxel- and lesion-based error metrics was used to assess performance.

Results: In the voxel-based analysis, the proposed model reduced the median root mean squared percentage error from 12.1% and 8.6% for the four- and five-class Dixon-based AC methods, respectively, to 6.2%. The median absolute percentage error in the maximum standardized uptake value (SUVmax) in bone lesions improved from 20.0% and 7.0% for four- and five-class Dixon-based AC methods to 3.8%.

Conclusion: The proposed method reduces the voxel-based error and SUVmax errors in bone lesions when compared to the four- and five-class Dixon-based AC models.

1 Introduction

The advent of prostate-specific membrane antigen (PSMA) tracers has led to the increasing adoption of PET as the modality of choice for diagnosing recurrent prostate cancer (1). For this patient group, [68Ga]Ga-PSMA-11 PET/MR has been shown to have diagnostic performance similar to that of [68Ga]Ga-PSMA-11 PET/CT for nodal and osseous metastases (2–4) and superior performance in the detection of local recurrences owing to the higher soft-tissue contrast provided by MR (2, 5). However, attenuation correction (AC), which is the most important correction required for quantitatively accurate PET imaging, remains a challenge in PET/MR imaging (6–8).

For PET/CT, the contrast of the CT images is dependent on the electron density of the imaged tissue, which in turn is related to the linear attenuation coefficient (LAC) of the PET photons (6, 9). A piecewise linear transformation of the CT Hounsfield units can be used to estimate the LAC at the PET photon energy of 511 keV (10). This approach is widely accepted as AC for PET/CT in clinical practice (6, 11). Since the signal in MR comes from proton densities and tissue relaxation times, no such straightforward relationship between the MR intensity values and LAC at the PET photon energy exists (6, 9, 11).
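As a minimal sketch of such a piecewise linear (bilinear) conversion, the function below maps Hounsfield units to LAC at 511 keV with two line segments that meet at water (0 HU). The water LAC of roughly 0.096 cm⁻¹ is approximate, and the slope above 0 HU (`MU_BONE_SLOPE`) is a hypothetical illustrative value, not the parameter set of Burger et al. (10).

```python
import numpy as np

# Illustrative parameters (assumptions, not the values used in the study)
MU_WATER = 0.096          # cm^-1, approximate LAC of water at 511 keV
MU_BONE_SLOPE = 5.1e-5    # cm^-1 per HU above 0 HU, hypothetical


def hu_to_lac(hu):
    """Bilinear map from CT Hounsfield units to LAC (cm^-1) at 511 keV."""
    hu = np.asarray(hu, dtype=float)
    # At or below 0 HU: linear mixture of air (0 cm^-1) and water
    soft = MU_WATER * (1.0 + hu / 1000.0)
    # Above 0 HU: a shallower slope, because bone attenuates less relative
    # to water at 511 keV than at CT energies
    bone = MU_WATER + MU_BONE_SLOPE * hu
    lac = np.where(hu <= 0, soft, bone)
    # Values below air (e.g. -1100 HU from noise) are clipped to zero
    return np.clip(lac, 0.0, None)
```

The break point at 0 HU is what makes the mapping "piecewise": a single linear scaling calibrated on soft tissue would overestimate bone attenuation at 511 keV.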

In current clinical practice, whole-body PET/MR AC is typically derived from Dixon MR sequences. These sequences are time-efficient to acquire and are available in all commercially available clinical PET/MR scanners (12). In four-class Dixon-based AC, Dixon MR images are segmented into four components: fat, lung, soft tissue, and background air, and each component is subsequently assigned a respective predefined LAC (13). Bone, although highly attenuating, is not accounted for in this four-class attenuation correction model. Disregarding the bone can lead to an underestimation of the standardized uptake values in and near the bone. For the pelvic region in particular, errors as large as 30% have been found in the most impacted bone lesions (14). In the staging of prostate cancer recurrence after definitive therapy, bone lesions are also fairly common and can be expected in more than 20% of the cases (15). In restaging after salvage radiotherapy, bone lesions are even more common and are observed in as many as 45% of cases (16).
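The class-to-LAC assignment can be sketched as a simple table lookup. The integer label convention and the LAC values below (air 0, lung 0.018, fat 0.086, soft tissue 0.100 cm⁻¹) are illustrative assumptions of the order used for four-class Dixon AC, not the values of any specific scanner software. Bone has no label of its own: it is segmented as soft tissue, which is the source of the error discussed here.

```python
import numpy as np

# Hypothetical label convention for a segmented Dixon volume
AIR, LUNG, FAT, SOFT_TISSUE = 0, 1, 2, 3

# Illustrative LAC values at 511 keV in cm^-1, indexed by label (assumed values)
CLASS_LAC = np.array([0.0, 0.018, 0.086, 0.100])


def four_class_mu_map(labels):
    """Map a label volume to a four-class mu-map by table lookup.

    Bone is not a separate class: bone voxels carry the SOFT_TISSUE label
    and therefore receive the soft-tissue LAC.
    """
    labels = np.asarray(labels, dtype=int)
    return CLASS_LAC[labels]
```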

The short relaxation times and low proton density of cortical bone cause the MR signal to decay quickly in bone tissue (17) and make bone hard to distinguish from air in conventional MR images. Thus, in the four-class Dixon-based AC model, bone is classified as soft tissue. To tackle this issue, Paulus et al. (18) proposed the five-class Dixon-based AC model. This model is an atlas-based approach for whole-body PET AC based on individual registration of the major bones (skull, femur, hips, and spine, including sacrum) from a bone atlas consisting of paired Dixon MR images and bone masks. However, this method is prone to registration errors and misses bones entirely in some cases (19, 20). In a recent retrospective study of 200 patients, it was advised not to use this form of AC for PSMA PET/MR without great caution and thorough inspection of the resulting µ-maps (20).

Several deep learning-based approaches have also been suggested to improve AC in PET/MR. These approaches can broadly be categorized into those that only use the Dixon images (7, 21), those that require MR sequences in addition to the standard Dixon images (22–25), and those that only use the PET data (26). Alternatively, these approaches can be categorized by whether their goal is to create a pseudo-CT image or a µ-map (21–23, 25, 27) or to directly correct or predict the corrected PET image itself (26).

An obvious limitation of the acquisition of additional MR sequences for AC purposes is that it requires additional scan time. Approaches that rely on the PET data itself as input data, either for µ-map prediction or for direct prediction of the corrected PET image itself, are dependent on the tracer for which the model is trained. These models are thus not directly applicable to multiple tracers without retraining the model.

We introduce a novel, deep learning-based approach to improving AC in pelvic PET/MR acquisitions. The proposed method aims to directly correct the errors in the PET images caused by bones not being included in the four-class AC model rather than predicting new µ-maps. For this purpose, a voxel-wise correction map is predicted by a convolutional neural network using Dixon MR and the four-class µ-map as input. The predicted correction map can subsequently be applied as a postprocessing step directly in the PET image space to correct PET images reconstructed with the four-class µ-map without re-reconstruction of the images. Additionally, the proposed model requires no additional sequences beyond the standard Dixon MR images and does not require retraining to be used with multiple tracers. In this work, we evaluated the quantitative impact of the proposed method on PSMA uptake in the pelvic region of patients suspected of recurrence of prostate cancer.

2 Materials and methods

2.1 Patient selection and data acquisition

This study included 49 patients who underwent same-day PET/CT and PET/MR procedures following a single tracer injection. One cohort, consisting of male patients with suspected lymphoma or lung cancer scanned with [18F]FDG (FDG), was used for training and validation of the proposed model. A separate cohort of patients with suspected recurrence of prostate cancer after radical treatment, scanned with [68Ga]Ga-PSMA-11 or [18F]PSMA-1007, was used as the test set. The PET acquisitions included one to five bed positions, with an acquisition time of 5–10 min per bed position. Patients with sphincter pumps or metal implants were excluded, as were those with imperfect coregistration between MR and CT.

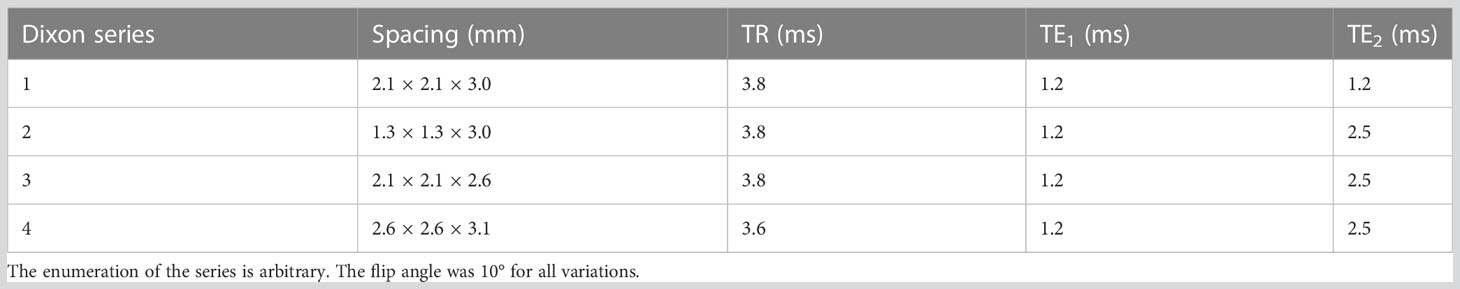

PET and MR images were acquired on a 3T Biograph mMR PET/MR scanner (Siemens Healthineers, Erlangen, Germany; software versions MR B20P and MR E11). A standard Dixon sequence was acquired for attenuation correction purposes. The scan parameters and resolution of the Dixon series varied within the dataset; they are summarized in Table 1.

Low-dose CT images were acquired on a Biograph64 PET/CT scanner (Siemens Healthineers, Erlangen, Germany) using adaptive exposure control (tube voltage: 120 kV peak; tube current: median 34.8, range 17–52; slice thickness: 3 mm; matrix: 512 × 512; pixel spacing: 1.5 mm × 1.5 mm). The CT images were acquired with the arms up, whereas the MR images were not. The PET images from the PET/CT examination were not used in this study.

2.2 µ-Map generation

To generate the reference standard µ-map, the CT images were first registered to the Dixon MR images with the Elastix registration toolbox (28, 29) using a composite registration scheme consisting of a rigid and a deformable stage (Supplementary Section 1). To obtain an accurate registration between CT and MR, arms were masked out from the MR images and corresponding four-class µ-maps. After the registration, the CT Hounsfield unit values were scaled to their corresponding LAC at 511 keV according to parameters by Burger et al. (10).

Because it is difficult to achieve accurate coregistration in soft tissue and bone simultaneously, the reference standard µ-map was not formed from the scaled, registered CT directly. Instead, only the bone information from the CT was transferred into the four-class Dixon µ-map exported from the scanner console. Bone was defined as all voxels in the scaled CT image with a LAC of >0.1 cm−1. This approach is similar to that of Bradshaw et al. (25), who also used MR-based AC for the soft-tissue classes and coregistered bone information from CT images to form the reference standard µ-map. The CT-to-MR coregistration of each case was closely examined, and only cases with good registration on visual inspection were kept in the dataset. The bone information was only inserted within a mask covering the pelvic region, defined as all slices from approximately the upper thighs to approximately the L2 vertebra of the lumbar spine.
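The bone insertion step can be sketched as below, assuming the CT has already been registered to the Dixon images, converted to LAC at 511 keV, and resampled onto the same voxel grid as the four-class µ-map. The function and argument names are hypothetical; the 0.1 cm⁻¹ bone threshold and the restriction to the pelvic mask follow the text.

```python
import numpy as np

BONE_THRESHOLD = 0.1  # cm^-1, bone definition stated in the text


def reference_mu_map(mu_4c, mu_ct_registered, pelvic_mask):
    """Overwrite four-class LACs with CT bone LACs inside the pelvic mask.

    All three arrays are assumed to share one voxel grid (hypothetical inputs).
    """
    mu_4c = np.asarray(mu_4c, dtype=float)
    mu_ct = np.asarray(mu_ct_registered, dtype=float)
    # A voxel counts as bone if its CT-derived LAC is strictly above the threshold
    bone = (mu_ct > BONE_THRESHOLD) & np.asarray(pelvic_mask, dtype=bool)
    mu_ref = mu_4c.copy()          # leave the input mu-map untouched
    mu_ref[bone] = mu_ct[bone]     # soft tissue, fat, lung, air stay MR-derived
    return mu_ref
```

Keeping every non-bone voxel from the MR-derived map means small soft-tissue misregistrations between CT and MR do not propagate into the reference.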

2.3 PET reconstruction

PET reconstructions were performed using the Siemens proprietary offline PET reconstruction tools (E7tools, Siemens Healthineers, Erlangen, Germany) or at the scanner console with the following settings: ordinary Poisson ordered-subset expectation-maximization with three iterations and 21 subsets, resolution modeling (point spread function, PSF), and a 4-mm Gaussian postreconstruction filter. The PET volumes were reconstructed with a 344 × 344 matrix, an in-plane resolution of 2.1 mm × 2.1 mm, and a slice thickness of 2.0 mm. Absolute scaling (30) was used for scatter correction in patients imaged with [68Ga]Ga-PSMA-11, and relative scaling was used for [18F]PSMA-1007 and FDG images (31).

2.4 Correction method

A deep convolutional neural network was trained to predict the correction map between the PET images reconstructed using the reference standard µ-map, referred to as PETCT+MR, and the PET images reconstructed using the four-class Dixon-based µ-map, referred to as PET4C. The correction map was calculated as shown in Eq. (1); for more details, see Supplementary Section 2.

The correction map was only estimated within the mask covering the pelvic region. Dixon in-phase, Dixon out-of-phase, and the four-class Dixon-based µ-map were used as inputs to the network. The images were resampled to the PET image space and min–max scaled to intensities in the range [0,1]. A threshold intensity mask was used to set intensity values outside the body contour to zero.
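The input preparation can be sketched as follows, assuming the Dixon in-phase, out-of-phase, and four-class µ-map volumes have already been resampled to the PET grid. The per-volume min–max scaling follows the text; using the scaled in-phase image for the body mask and the value `body_threshold=0.05` are hypothetical choices, since the exact thresholding rule is not specified.

```python
import numpy as np


def min_max_scale(volume):
    """Scale a volume linearly to [0, 1]; a constant volume maps to zeros."""
    volume = np.asarray(volume, dtype=float)
    lo, hi = volume.min(), volume.max()
    if hi == lo:
        return np.zeros_like(volume)
    return (volume - lo) / (hi - lo)


def prepare_inputs(in_phase, out_phase, mu_4c, body_threshold=0.05):
    """Stack the three network inputs as channels and zero everything outside the body.

    All inputs are assumed to be on the PET voxel grid already.
    The body-mask rule (scaled in-phase > body_threshold) is hypothetical.
    """
    channels = [min_max_scale(v) for v in (in_phase, out_phase, mu_4c)]
    body = channels[0] > body_threshold
    return np.stack([c * body for c in channels], axis=0)
```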

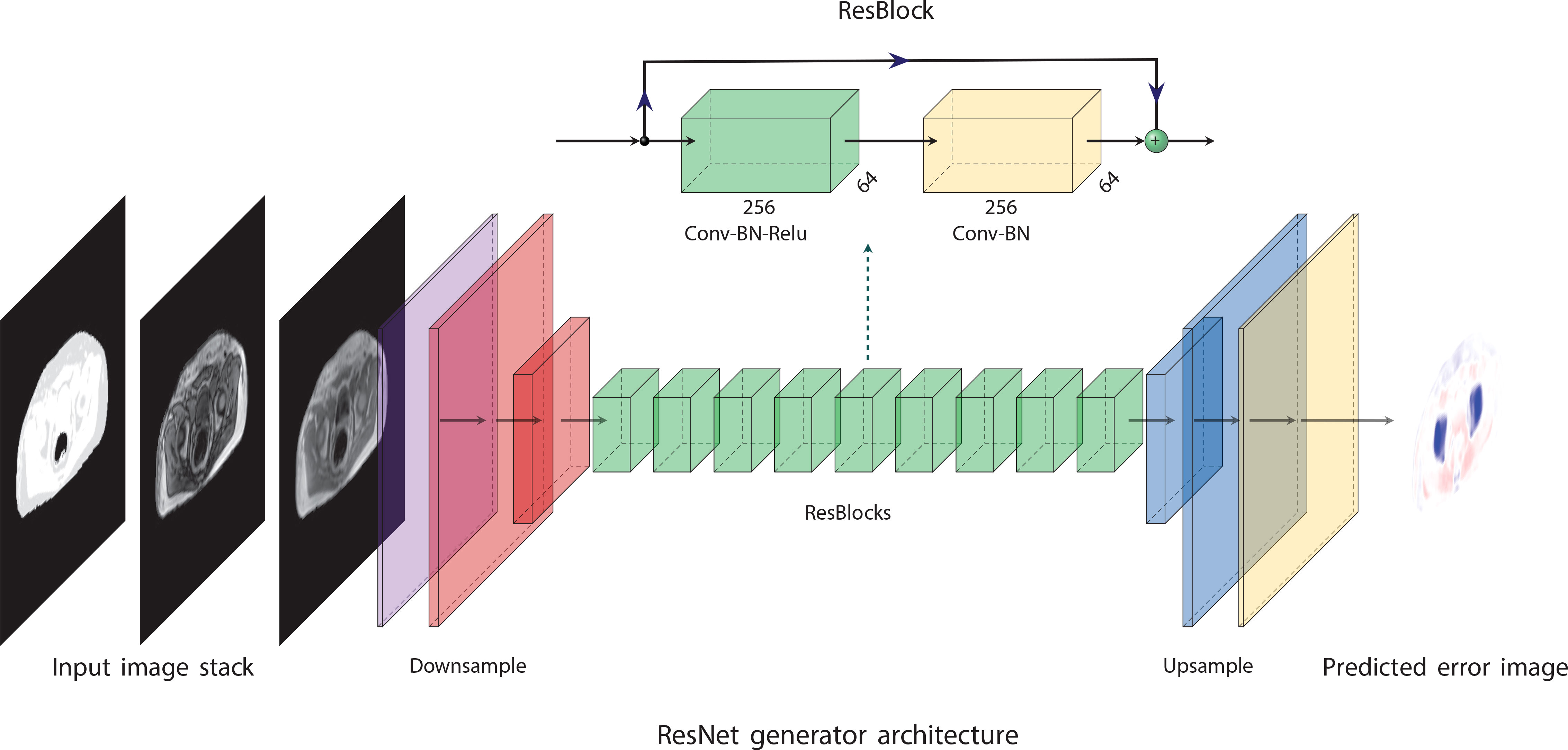

An overview of the network architecture is given in Figure 1. The network consisted of a nine-block ResNet (33) architecture inspired by Johnson et al. (32) and Wang et al. (35). The network was trained using an L2 loss and the Adam optimizer (36) for 100 epochs, with a learning rate that decayed linearly from epoch 50 onward. The code for training the network and the hyperparameters used in the final model are available on GitHub (https://github.com/ntnu-mr-cancer/PETMR-4CMRAC-Correction-maps).
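The training schedule can be sketched as a function of the 0-based epoch: constant for the first 50 epochs, then decaying linearly over the remaining 50. The base learning rate of 2e-4 and the decay toward zero are assumptions for illustration; the hyperparameters actually used are in the linked repository.

```python
def learning_rate(epoch, base_lr=2e-4, n_epochs=100, decay_start=50):
    """Constant learning rate, then linear decay toward zero (epoch is 0-based).

    base_lr is a hypothetical value chosen for illustration.
    """
    if epoch < decay_start:
        return base_lr
    remaining = n_epochs - decay_start
    return base_lr * (1.0 - (epoch - decay_start) / remaining)
```

In PyTorch, the ratio `learning_rate(epoch) / base_lr` could be supplied as the multiplicative factor to `torch.optim.lr_scheduler.LambdaLR`.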

Figure 1 The network architecture is based on ideas proposed by Johnson et al. (32). It consists of nine residual blocks (33) (ResBlocks) between a convolutional front-end and a transposed convolutional back-end. The convolutional front-end downsamples the images to one-fourth of their original resolution, and the transposed convolutional back-end upsamples them back to their original resolution. The figure was made using PlotNeuralNet (34).
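The factor-of-four downsampling and its reversal can be checked with the standard output-size formulas for convolutions and transposed convolutions. The kernel sizes, strides, and paddings below (a 7 × 7 stem, two stride-2 3 × 3 convolutions, and two stride-2 3 × 3 transposed convolutions with output padding 1) are assumptions in the style of Johnson et al. (32), not values taken from the study; the residual blocks are assumed to preserve spatial size.

```python
def conv_out(n, kernel, stride, padding):
    """Output side length of a convolution."""
    return (n + 2 * padding - kernel) // stride + 1


def conv_transpose_out(n, kernel, stride, padding, output_padding):
    """Output side length of a transposed convolution."""
    return (n - 1) * stride - 2 * padding + kernel + output_padding


def trace_generator(n):
    """Side length after each stage of an assumed Johnson-style generator."""
    sizes = [n]
    n = conv_out(n, 7, 1, 3)          # 7x7 stem keeps the size
    sizes.append(n)
    for _ in range(2):                # two stride-2 downsampling convolutions
        n = conv_out(n, 3, 2, 1)
        sizes.append(n)
    # The nine residual blocks use padded 3x3 convolutions: size unchanged
    for _ in range(2):                # two stride-2 transposed convolutions
        n = conv_transpose_out(n, 3, 2, 1, 1)
        sizes.append(n)
    n = conv_out(n, 7, 1, 3)          # 7x7 output layer keeps the size
    sizes.append(n)
    return sizes
```

For the 344 × 344 PET matrix this gives 344 → 172 → 86 in the front-end and 86 → 172 → 344 in the back-end. With these settings the output side is 4·⌈n/4⌉, so a side length not divisible by four would not be restored exactly.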

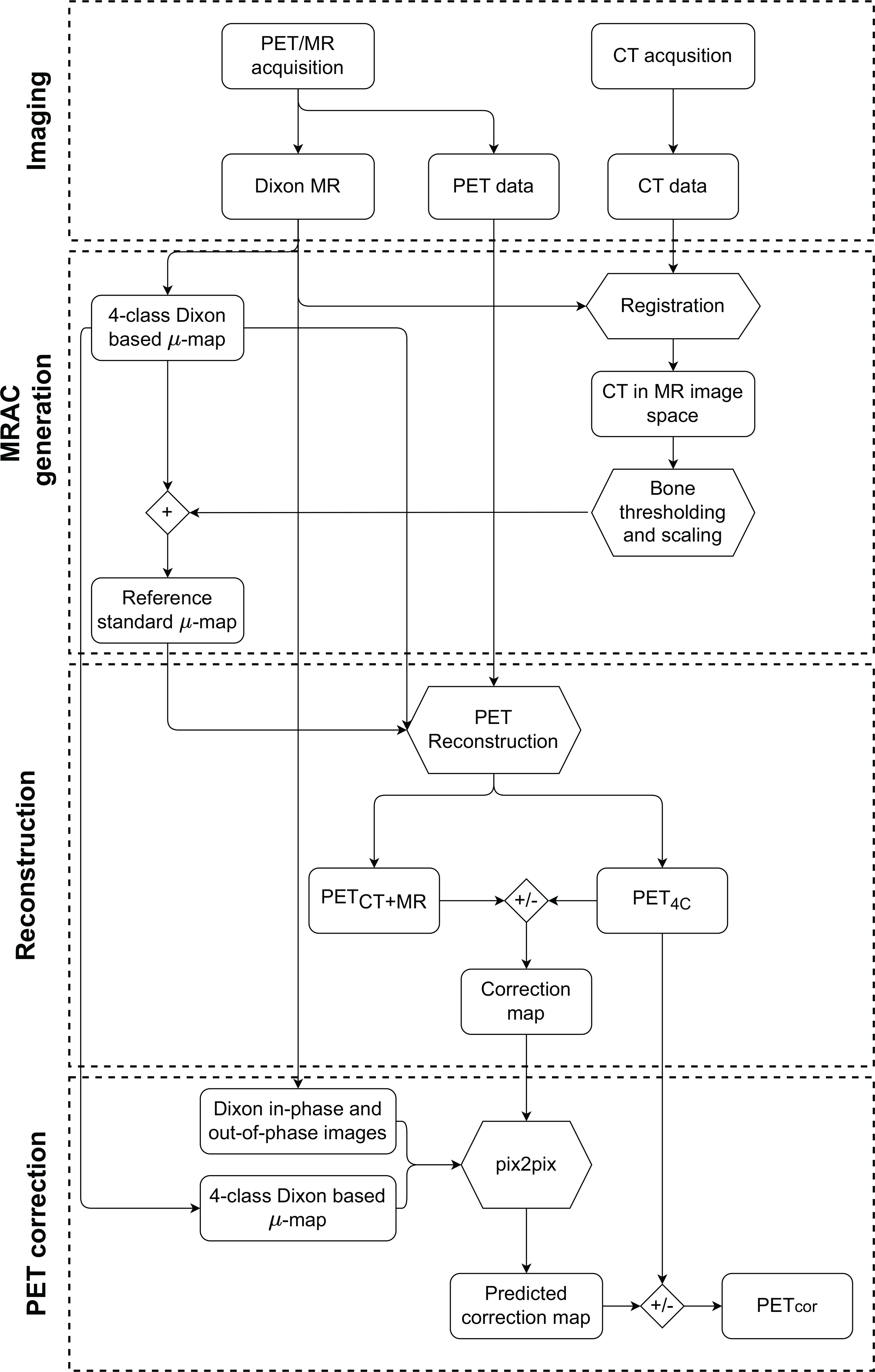

Results for additional tested network architectures can be found in Supplementary Section 3. The output of the network is a predicted correction map, which is used to correct the PET4C images by solving Eq. (1) for PETCT+MR. The resulting corrected PET image will be referred to as PETcor. An overview of the methods from image acquisition to corrected PET images is given in Figure 2.
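Because the exact form of Eq. (1) is given in the original article and Supplementary Section 2, the sketch below assumes, for illustration only, an additive definition in which the correction map is the voxel-wise difference PETCT+MR − PET4C inside the pelvic mask. Under that assumption, solving for PETCT+MR amounts to adding the predicted map to PET4C, a pure image-space operation that needs no re-reconstruction. Function names are hypothetical.

```python
import numpy as np


def correction_map(pet_ref, pet_4c, pelvic_mask):
    """Training target under an ASSUMED additive definition; zero outside the mask."""
    mask = np.asarray(pelvic_mask, dtype=bool)
    diff = np.asarray(pet_ref, dtype=float) - np.asarray(pet_4c, dtype=float)
    return np.where(mask, diff, 0.0)


def apply_correction(pet_4c, predicted_map, pelvic_mask):
    """Post-processing in image space: PETcor = PET4C + map inside the mask."""
    mask = np.asarray(pelvic_mask, dtype=bool)
    pet_4c = np.asarray(pet_4c, dtype=float)
    return np.where(mask, pet_4c + np.asarray(predicted_map, dtype=float), pet_4c)
```

With a perfect prediction, `apply_correction` recovers the reference image inside the mask and leaves PET4C unchanged outside it; a multiplicative definition would follow the same pattern with a ratio instead of a difference.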

Figure 2 Graphical overview of the methodology from acquisition to generation of the corrected PET image (PETcor). The node labeled + refers to overwriting linear attenuation coefficients (LAC) in the four-class Dixon-based µ-map with CT bone information scaled to LAC at 511 keV. The nodes labeled +/- represent the creation of the correction map as specified in Eq. (1) and the application of the correction map to PET reconstructed with the four-class Dixon-based µ-map (PET4C). PETMR+CT is PET reconstructed using the reference standard µ-map, which is obtained by using the four-class Dixon-based µ-map for soft tissue and bone information from a coregistered CT image.

2.5 Analysis and statistics

Voxel- and lesion-based analyses were performed to assess the performance of the method. For the voxel-based analysis, only voxels within the pelvic mask with an activity concentration of > 300 Bq ml−1 were used in the calculation. The relative error image (REx) is defined as

$$RE_x = \frac{I_x - I_{GS}}{I_{GS}} \times 100\% \tag{2}$$

where I is the image intensity, GS denotes the reference standard PET image PETCT+MR, and x denotes the PET image compared with PETCT+MR (i.e., PETcor or PET4C). REx was used as the basis for calculating the mean absolute percentage error (MAPE) and the root-mean-squared percentage error (RMSPE), which served as the quantitative metrics in the voxel-based analysis. MAPE and RMSPE were defined as

$$\mathrm{MAPE}_x = \frac{1}{n_{\mathrm{mask}}} \sum \left| RE_x \right|, \qquad \mathrm{RMSPE}_x = \sqrt{\mu_{RE_x}^{2} + \sigma_{RE_x}^{2}} \tag{3}$$

where µREx and σREx are the mean and standard deviation of REx, respectively, and nmask is the number of voxels within the pelvic mask that satisfy the activity concentration threshold. The summation in MAPE, as well as the summations used to compute µREx and σREx in RMSPE, are taken over these nmask voxels only.
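The voxel-based metrics can be computed as below, assuming REx = 100 · (Ix − IGS)/IGS and RMSPE = √(µ² + σ²) with the population standard deviation, which equals the root of the mean squared relative error. Applying the 300 Bq ml⁻¹ threshold to the reference image is an assumption, as the text does not state which image it was applied to; function and argument names are hypothetical.

```python
import numpy as np


def voxel_metrics(pet_x, pet_gs, pelvic_mask, threshold_bq_ml=300.0):
    """Return (MAPE, RMSPE) in percent for PET image x against the reference GS."""
    pet_x = np.asarray(pet_x, dtype=float)
    pet_gs = np.asarray(pet_gs, dtype=float)
    # Assumption: the activity threshold is applied to the reference image
    selected = np.asarray(pelvic_mask, dtype=bool) & (pet_gs > threshold_bq_ml)
    re = 100.0 * (pet_x[selected] - pet_gs[selected]) / pet_gs[selected]
    mape = np.mean(np.abs(re))
    # Population std (ddof=0), so sqrt(mean^2 + std^2) == sqrt(mean(re**2))
    rmspe = np.sqrt(np.mean(re) ** 2 + np.std(re) ** 2)
    return float(mape), float(rmspe)
```

Unlike MAPE, RMSPE penalizes both a systematic bias (the µ term) and voxel-to-voxel spread (the σ term), so a method that is unbiased on average but noisy still scores poorly.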

To assess lesion performance, lesions were extracted from radiology reports. The lesion performance was measured as the relative error and MAPE of the maximum standardized uptake values (SUVmax) of the corresponding lesions between the PET images.
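A per-lesion SUVmax comparison can be sketched as follows. How lesions were delineated is not described here, so the boolean lesion masks are hypothetical inputs. Because SUV normalization (injected dose and body weight) is the same factor for both images of a patient, the relative SUVmax error can be computed directly on activity concentrations.

```python
import numpy as np


def suvmax(pet, lesion_mask):
    """Maximum voxel value inside a (hypothetical) boolean lesion mask."""
    return float(np.max(np.asarray(pet, dtype=float)[np.asarray(lesion_mask, dtype=bool)]))


def lesion_suvmax_errors(pet_x, pet_gs, lesion_masks):
    """Relative SUVmax error (%) per lesion, and the MAPE over all lesions."""
    rel = []
    for mask in lesion_masks:
        ref = suvmax(pet_gs, mask)
        rel.append(100.0 * (suvmax(pet_x, mask) - ref) / ref)
    mape = float(np.mean(np.abs(rel)))
    return rel, mape
```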

All values are given as medians with ranges in brackets unless otherwise stated. A two-sided Wilcoxon signed-rank test was performed to assess whether the RMSPE values of the PETcor images differed from those of the PET images reconstructed using four- and five-class Dixon-based AC (PET4C and PET5C) in the test set. The same test was also performed separately for each tracer in the test set. Benjamini–Hochberg correction was used to adjust the p-values for multiple comparisons where applicable (37). A Mann–Whitney U test was used to compare the RMSPE between [68Ga]Ga-PSMA-11 and [18F]PSMA-1007 images. No statistical tests were performed for the lesion-based analysis because of the limited number of samples. A difference was considered significant at p < 0.05.
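For a small number of comparisons, the Benjamini–Hochberg adjustment can be written in a few lines of plain Python, as sketched below; the function name is hypothetical. The paired RMSPE comparisons themselves could be run with `scipy.stats.wilcoxon` and the between-tracer comparison with `scipy.stats.mannwhitneyu`; recent SciPy versions also provide `scipy.stats.false_discovery_control` for the adjustment.

```python
def benjamini_hochberg(p_values):
    """Return Benjamini-Hochberg adjusted p-values in the original order."""
    m = len(p_values)
    order = sorted(range(m), key=lambda i: p_values[i])
    adjusted = [0.0] * m
    running_min = 1.0  # adjusted p-values are capped at 1
    # Walk from the largest p-value to the smallest, enforcing monotonicity
    for rank in range(m, 0, -1):
        i = order[rank - 1]
        running_min = min(running_min, p_values[i] * m / rank)
        adjusted[i] = running_min
    return adjusted
```

An adjusted value below 0.05 then corresponds to rejecting that hypothesis while controlling the false discovery rate at 5%.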

3 Results

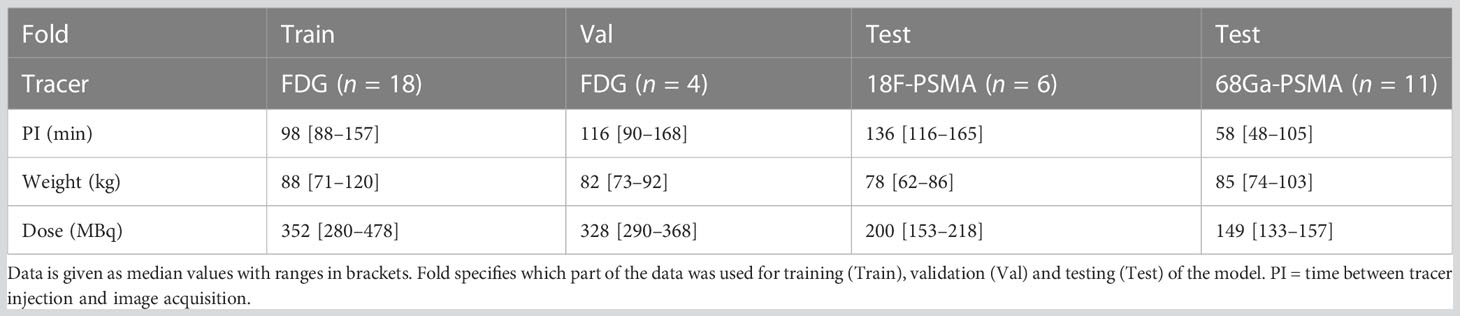

Of the 49 included patients, two were removed from the dataset due to severe artifacts in the PET images and eight due to suboptimal coregistration between CT and MR. This resulted in a training set of 18 patients scanned with FDG, a validation set of four patients scanned with FDG, and a test set of 17 patients scanned with [68Ga]Ga-PSMA-11 or [18F]PSMA-1007. An overview of the dataset is presented in Table 2, and a flow diagram of patient inclusion can be found in the Supplementary Material (S1). From the radiology reports of patients in the test set, 16 soft-tissue lesions and four bone lesions were extracted.

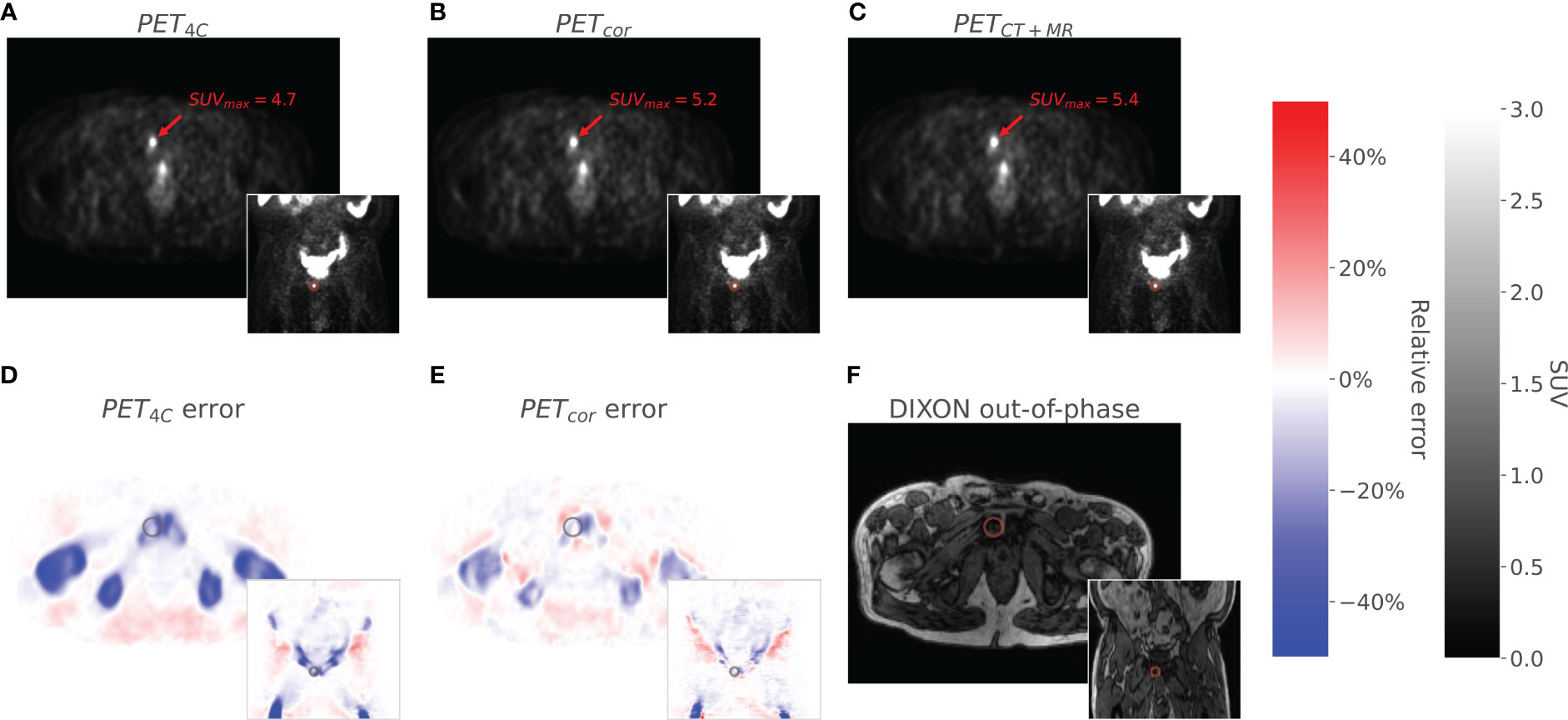

Training the convolutional neural network took approximately 4 h on a single NVIDIA GeForce GTX 1080 Ti. An example of a corrected PET image can be seen in Figure 3. The corrected PET images were found to closely resemble the reference standard PETCT+MR images.

Figure 3 Axial and coronal images of PET4C (A), PETcor (B), and PETMR+CT (C). The PET4C error (D) is the relative error image between the PET4C image and PETMR+CT, and the PETcor error (E) is the relative error image between PETcor and PETMR+CT. Values outside the body contour in the relative error images are set to zero. The Dixon out-of-phase image is given as an anatomical correlate (F). A lesion located in the os pubis is highlighted in all images.

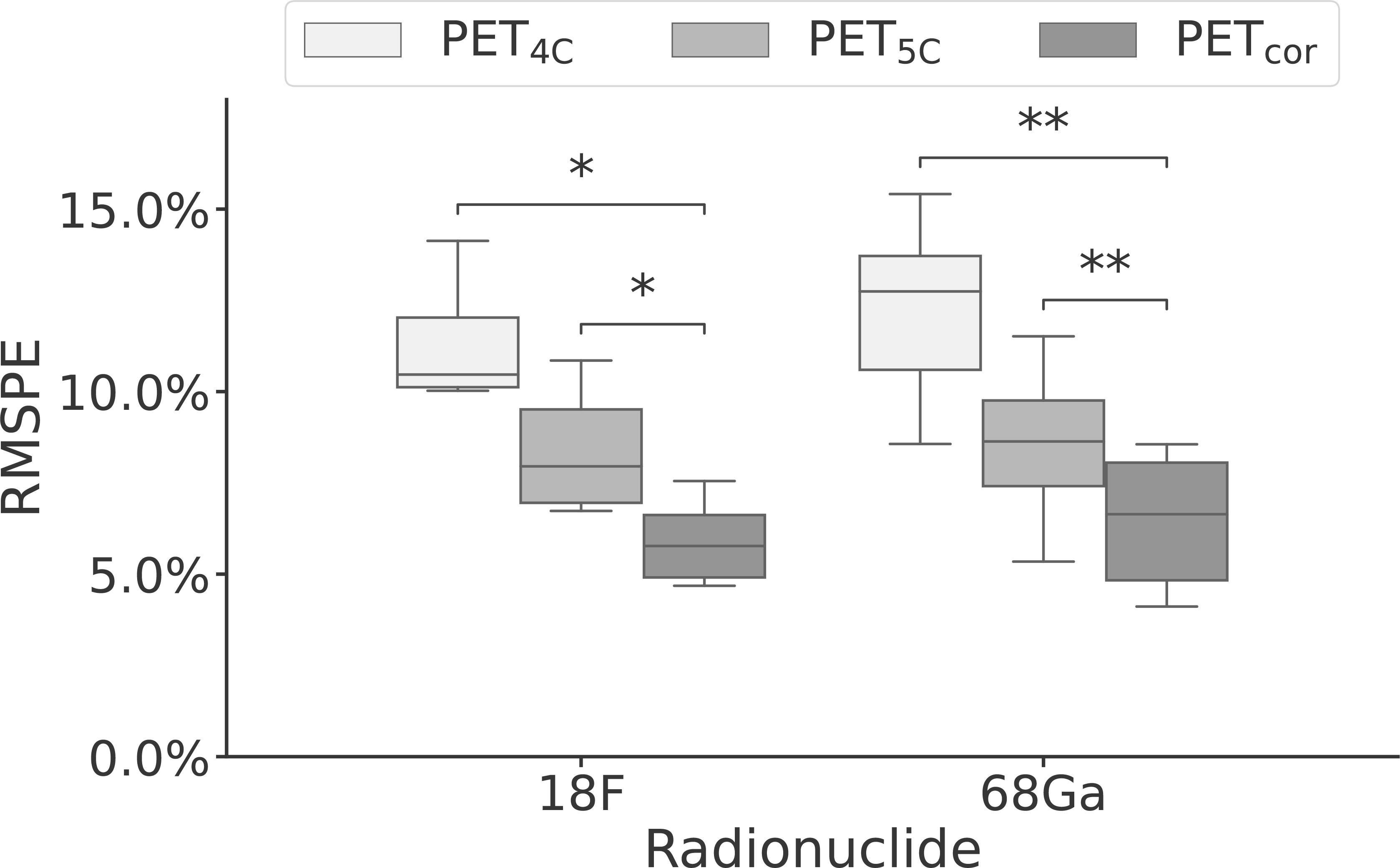

The voxel-based RMSPE and MAPE were 12.1% [8.6%, 15.4%] and 6.2% [4.0%, 10.3%], respectively, for the PET4C images and 8.6% [5.3%, 11.5%] and 3.5% [2.3%, 5.1%], respectively, for the PET5C images. In the PETcor images, the RMSPE was 6.2% [4.1%, 8.6%] and the MAPE was 3.3% [2.3%, 4.6%]. The error in PETcor is thus approximately half that of the PET4C images. The RMSPE of the PETcor images differed significantly from that of both the PET4C images (p < 0.0001) and the PET5C images (p < 0.0001). When the tracers were analyzed separately, the RMSPE of PETcor also differed significantly from that of both PET4C and PET5C for both [68Ga]Ga-PSMA-11 and [18F]PSMA-1007 patients, as shown in Figure 4. No significant difference was found when comparing the RMSPE of PETcor between patients scanned with [68Ga]Ga-PSMA-11 and [18F]PSMA-1007.

Figure 4 Box-and-whisker plot of the root mean squared percentage error stratified by radionuclide for PET reconstructed using four- and five-class Dixon-based attenuation correction (PET4C and PET5C) and the proposed model (PETcor). The central line within each box is the median. The box edges extend from the 25th to the 75th percentile. Values were considered outliers if they lay more than 1.5 times the interquartile range beyond the box edges. The whiskers extend to the most extreme nonoutlier value in the data. *p < 0.05; **p < 0.01.
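The outlier rule in these box plots is the usual Tukey fence: values more than 1.5 × IQR below the first quartile or above the third quartile. A minimal plain-Python sketch is shown below, using linear-interpolation quartiles (`method="inclusive"`, which matches NumPy's default percentile); the function names are hypothetical.

```python
import statistics


def tukey_fences(values, k=1.5):
    """Lower and upper outlier fences from linear-interpolation quartiles."""
    q1, _, q3 = statistics.quantiles(values, n=4, method="inclusive")
    iqr = q3 - q1
    return q1 - k * iqr, q3 + k * iqr


def split_outliers(values, k=1.5):
    """Partition values into (inliers, outliers); whiskers end at min/max of inliers."""
    lo, hi = tukey_fences(values, k)
    inliers = [v for v in values if lo <= v <= hi]
    outliers = [v for v in values if v < lo or v > hi]
    return inliers, outliers
```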

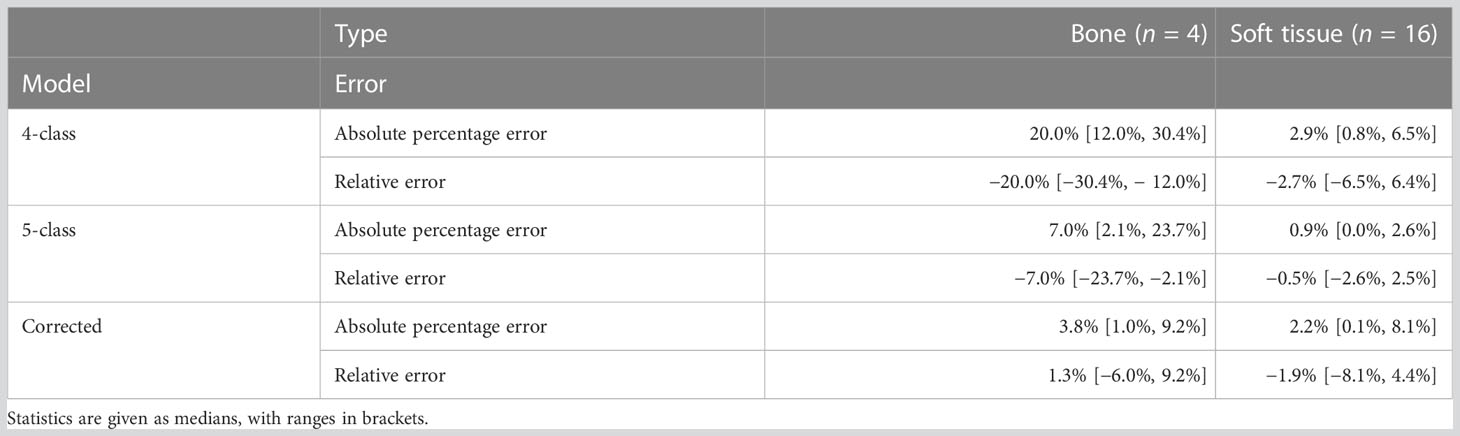

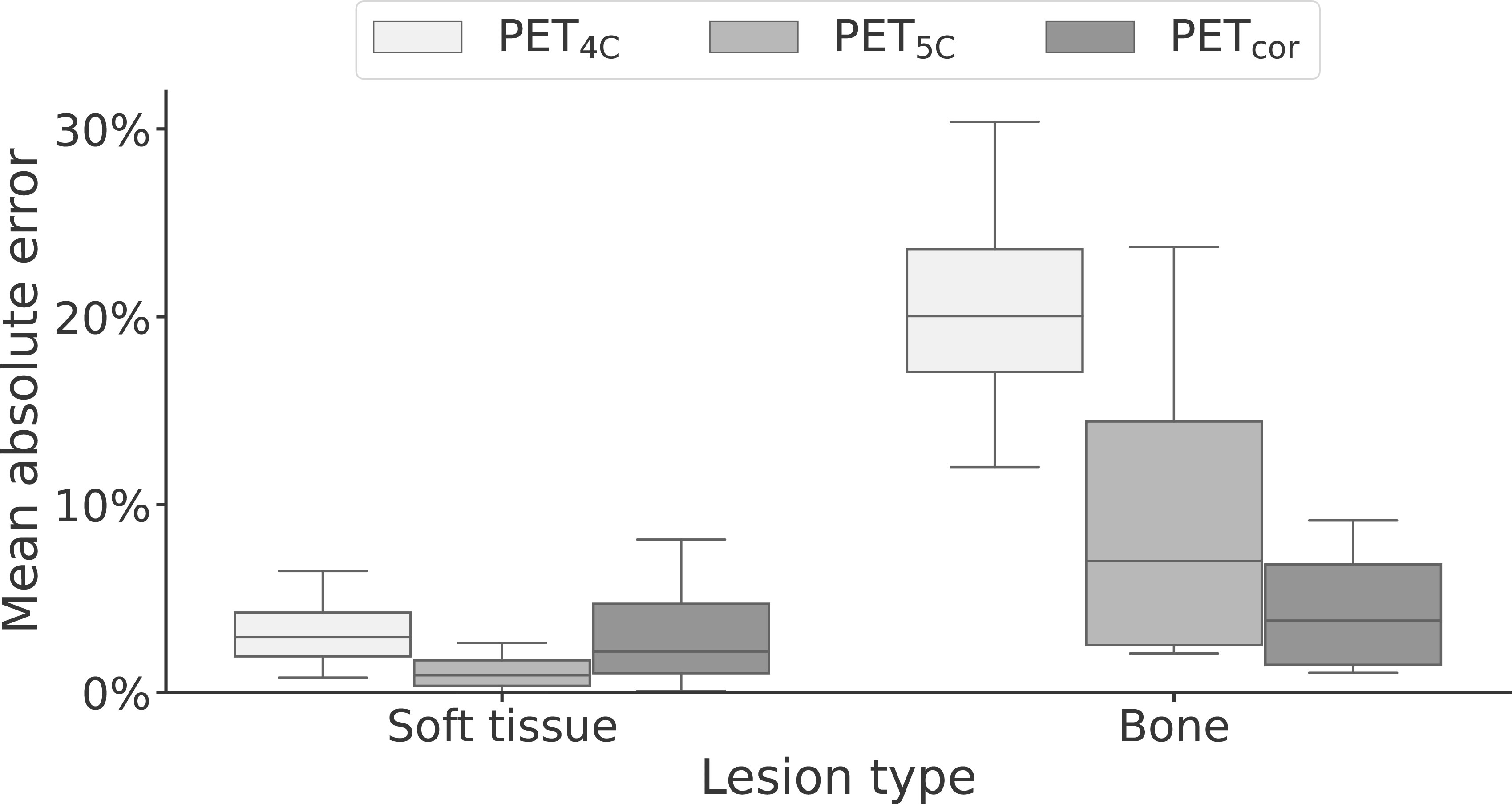

The lesion performance is summarized in Table 3 and Figure 5. Performance in soft tissue lesions improved marginally from a MAPE of 2.9% [0.8%, 6.5%] in PET4C to 2.2% [0.1%, 8.1%] for PETcor. For bone lesions, we observed more than a fivefold decrease in MAPE from 20.0% [12.0%, 30.4%] in PET4C to 3.8% [1.0%, 9.2%] in PETcor. PET5C, in comparison to the proposed model, had lower MAPE in soft tissue lesions and higher MAPE in bone lesions.
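The fold changes quoted in the results follow directly from the reported medians, as the short check below shows. A ratio of medians is not the same as the median of per-patient or per-lesion ratios, so these numbers summarize the reported values rather than re-deriving them from the underlying data.

```python
# Medians reported in the text (percent)
RMSPE = {"PET4C": 12.1, "PET5C": 8.6, "PETcor": 6.2}
BONE_MAPE = {"PET4C": 20.0, "PET5C": 7.0, "PETcor": 3.8}


def fold_change(before, after):
    """How many times smaller the error became."""
    return before / after


def percent_reduction(before, after):
    """Relative reduction of the error, in percent."""
    return 100.0 * (before - after) / before


bone_fold = fold_change(BONE_MAPE["PET4C"], BONE_MAPE["PETcor"])   # about 5.3
rmspe_drop = percent_reduction(RMSPE["PET4C"], RMSPE["PETcor"])    # about 48.8
print(f"bone-lesion MAPE: {bone_fold:.1f}-fold lower; voxel RMSPE: {rmspe_drop:.1f}% lower")
```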

Table 3 Lesion performance of PET images reconstructed using four- and five-class Dixon-based µ-maps (PET4C and PET5C) and PET corrected using the proposed model (PETcor) relative to the reference standard.

Figure 5 Box-and-whisker plot of the mean absolute error of soft-tissue and bone lesions for PET reconstructed using four- and five-class Dixon-based attenuation correction (PET4C and PET5C) and the proposed model (PETcor). The central line within each box is the median. The box edges extend from the 25th to the 75th percentile. Values were considered outliers if they lay more than 1.5 times the interquartile range beyond the box edges. The whiskers extend to the most extreme nonoutlier value in the data.

We observed a decrease in performance with increasing postinjection time (PI) in the test set. In a simple linear regression model, a significant linear trend (p < 0.05, r² = 0.30) was found between the voxel-based RMSPE and the PI (see Supplementary Section 5 for further details).
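A simple least-squares fit and its coefficient of determination r² can be sketched in plain Python as follows; the example values are hypothetical and are not the study data. In practice, `scipy.stats.linregress` returns the slope, intercept, correlation coefficient `rvalue` (so r² = `rvalue**2`), and the p-value of the slope in one call.

```python
def linear_fit(x, y):
    """Ordinary least-squares line y = intercept + slope * x, with r^2."""
    n = len(x)
    mx = sum(x) / n
    my = sum(y) / n
    sxx = sum((xi - mx) ** 2 for xi in x)
    sxy = sum((xi - mx) * (yi - my) for xi, yi in zip(x, y))
    slope = sxy / sxx
    intercept = my - slope * mx
    ss_res = sum((yi - (intercept + slope * xi)) ** 2 for xi, yi in zip(x, y))
    ss_tot = sum((yi - my) ** 2 for yi in y)
    r2 = 1.0 - ss_res / ss_tot
    return slope, intercept, r2


# Hypothetical PI times (min) and RMSPE values (%), for illustration only
slope, intercept, r2 = linear_fit([60, 90, 120, 150], [5.0, 6.1, 6.4, 7.9])
```

An r² of 0.30, as reported, means the PI explains roughly 30% of the variance in RMSPE across test patients.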

4 Discussion

In this study, we propose a novel attenuation correction method that seeks to directly correct for the errors obtained by not including bone when using the conventional four-class Dixon-based AC. We show that the model reduces quantification errors in a voxel-based analysis and in bone lesions compared to four- and five-class Dixon-based methods. For soft tissue lesions, the performance remains similar to that of the four-class Dixon-based AC model.

Our method can be directly applied as a correction filter in the image space to PET4C images without the need for additional reconstruction or the acquisition of additional MR sequences. It can thus also be used to correct PET4C images retrospectively, as long as Dixon MR images are available. This distinguishes it from other models that seek to improve pseudo-CT generation. The model also only relies on Dixon MR images, which are fast to acquire. Furthermore, like the models that predict pseudo-CT images from MR series (21–23, 25, 27), but unlike the models that predict the pseudo-CT images or AC and scatter-corrected PET directly from non-attenuation corrected PET (26, 38, 39), the predicted correction maps are not tracer-dependent.

The proposed method appeared robust to variations in tracer type and scatter correction method. The training and validation sets consisted of FDG images, whereas the test set consisted of [68Ga]Ga-PSMA-11 and [18F]PSMA-1007 images. Since there were no [68Ga]Ga-PSMA-11 images in the training data, the model had only seen images reconstructed with relative scaling for scatter correction during training. Nevertheless, no difference in performance was seen between [68Ga]Ga-PSMA-11 and [18F]PSMA-1007 patients in the test set.

A decrease in performance was seen with increasing PI. This trend may be explained by the higher number of counts acquired at shorter PI, which makes the correction map that the model tries to predict less noisy. All patients had PET/MR and PET/CT acquisitions performed on the same day. For around half of the patients in the test set, the PET/CT images were acquired at what was considered the optimal PI for the given tracer, and the PET/MR images were acquired once the PET/CT examination was finished. If all PET/MR images had been acquired closer to the recommended PI, we would expect a slight increase in performance.

Multiple other methods have been suggested for improving PET/MR attenuation correction in the pelvic region (7, 22, 23, 25, 27). Bradshaw et al. (25) proposed a model based on the DeepMedic CNN architecture (40), in which T2-weighted images and T1-weighted LAVA Flex images were used to predict pseudo-CT images, resulting in an RMSPE of 4.9% in the reconstructed PET image. Leynes et al. (23) used Dixon images and zero-echo-time images as input to a deep learning model based on the U-Net architecture to generate µ-maps; the resulting PET reconstruction had an RMSPE of 2.85%. Similar to Bradshaw et al. (25), Torrado-Carvajal et al. (7) created a pseudo-CT image, using a U-Net-like architecture with solely Dixon MR images as input. Their approach resulted in an absolute mean relative change of 1.83%.

A different family of models is composed of models that use non-attenuation corrected PET images as input and either the pseudo-CT (41) or the attenuation and scatter-corrected PET as output (26, 38). Though good performance can be obtained with these models, they are tracer-dependent and would likely need to be retrained for optimal performance with each new tracer. In addition, the models proposed in the literature (26, 38, 41) have only been trained and validated on PET/CT data so far, and their performance is yet to be assessed using data acquired on the PET/MR system.

Although many methods have been proposed, their performance is difficult to compare directly. As pointed out by Lee (42), there is substantial heterogeneity in the choice of PET reconstruction parameters and performance metrics, and there is no reference dataset that can be used to benchmark different models. What is considered gold-standard AC also varies between studies. The most common approach is to use registered CT images converted to LAC at 511 keV as the gold-standard µ-map. This does, however, rely on close-to-perfect coregistration between CT and MR images, which can be difficult to obtain in practice (25, 43, 44). Because this study aims to directly estimate the error caused by not including bone in the four-class Dixon-based µ-maps, we adopted a method similar to that of Bradshaw et al. (25), in which the soft-tissue classes and air in the µ-map are derived from the MR images, and bone and osseous tissue are derived from the CT images.

A primary limitation of this study is the limited number of patients. The training set consisted of only 18 patients, and the test set consisted of 17 patients. Since the model was trained using a 2D network, this still constituted a considerable number of images, but we do not expect that the limited training set was able to capture all the expected interpatient variability. The number of lesions was also limited. In bone, where the model had the largest impact in our testing, only four lesions were found.

The current method is also limited to pelvic imaging; adapting it to a different clinical application would require retraining the model. Additionally, only a limited set of reconstruction parameters was used in the current work, and we did not evaluate the robustness of the method to changes in reconstruction parameters. However, since the method is trained to predict correction maps for the PET images directly, we suspect that the model is subject to variability across reconstruction parameters similar to that of SUVmax measurements themselves (45, 46). Lastly, the model was only tested on a specific patient cohort consisting of elderly male patients. Consequently, the model must be evaluated on a larger and more diverse patient cohort before implementation in clinical practice is justified.

5 Conclusion

Direct correction of four-class Dixon-based AC PET in the image space is a viable method for improving AC of pelvic PSMA PET/MR imaging. The method is tracer-independent, requires only the Dixon MR series and the four-class Dixon-based µ-map, and can be retrospectively applied to PET data without the need for re-reconstruction. It gives superior performance to the four- and five-class Dixon-based AC in a voxel-based RMSPE analysis and for quantification of bone lesion uptake.

Data availability statement

The datasets presented in this article are not publicly available because they contain information that could compromise research participant privacy or consent. Requests to access the datasets should be directed to bendik.s.abrahamsen@ntnu.no.

Ethics statement

The studies involving humans were approved by the Regional Committee for Medical and Health Research Ethics Mid Norway (FDG data: identifier REK2014/1289 and PSMA data: identifier REK2020/83009). The studies were conducted in accordance with the local legislation and institutional requirements. The participants provided their written informed consent to participate in this study.

Author contributions

All authors contributed to the study conception and design. The data collection of the FDG lymphoma and lung cancer dataset was performed by LE. Data collection of the [18F]PSMA-1007 and [68Ga]Ga-PSMA-11 images was performed by IK and BA. Analysis and interpretation of data were performed by ME, TB and BA. The first draft of the manuscript was written by BA, and all authors commented on previous versions of the manuscript. All authors read and approved the final manuscript.

Funding

This work was supported by the Norwegian Cancer Society and Prostatakreft-foreningen (Grant Number 215951), the Liaison Committee between the Central Norway Regional Health Authority and the Norwegian University of Science and Technology (Grant Number 90265300), and 180N – Norwegian Nuclear Medicine Consortium.

Conflict of interest

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Publisher’s note

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

Supplementary material

The Supplementary Material for this article can be found online at: https://www.frontiersin.org/articles/10.3389/fonc.2023.1220009/full#supplementary-material

Abbreviations

AC, attenuation correction; LAC, linear attenuation coefficient; MAE, mean absolute error; MAPE, mean absolute percentage error; PET4C, PET images reconstructed using four-class Dixon-based µ-map; PET5C, PET images reconstructed using five-class Dixon-based µ-map with bone registered from bone atlas; PETMR+CT, PET images reconstructed using four-class Dixon-based µ-map with bone information from CT; PETcor, reconstructed PET4C corrected using the proposed method; PI, postinjection time; RMSPE, root-mean-squared percentage error

References

1. Fanti S, Goffin K, Hadaschik BA, Herrmann K, Maurer T, MacLennan S, et al. Consensus statements on PSMA PET/CT response assessment criteria in prostate cancer. Eur J Nucl Med Mol Imaging (2021) 48:469–76. doi: 10.1007/s00259-020-04934-4

2. Guberina N, Hetkamp P, Ruebben H, Fendler W, Grueneisen J, Suntharalingam S, et al. Whole-body integrated [68Ga]PSMA-11-PET/MR imaging in patients with recurrent prostate cancer: Comparison with whole-body PET/CT as the standard of reference. Mol Imaging Biol (2019) 22:788–96. doi: 10.1007/s11307-019-01424-4

3. Jentjens S, Mai C, Ahmadi Bidakhvidi N, De Coster L, Mertens N, Koole M, et al. Prospective comparison of simultaneous [68Ga]Ga-PSMA-11 PET/MR versus PET/CT in patients with biochemically recurrent prostate cancer. Eur Radiol (2022) 32:901–11. doi: 10.1007/s00330-021-08140-0

4. Freitag MT, Radtke JP, Hadaschik BA, Kopp-Schneider A, Eder M, Kopka K, et al. Comparison of hybrid 68Ga-PSMA PET/MRI and 68Ga-PSMA PET/CT in the evaluation of lymph node and bone metastases of prostate cancer. Eur J Nucl Med Mol Imaging (2016) 43:70–83. doi: 10.1007/s00259-015-3206-3

5. Freitag MT, Radtke JP, Afshar-Oromieh A, Roethke MC, Hadaschik BA, Gleave M, et al. Local recurrence of prostate cancer after radical prostatectomy is at risk to be missed in 68Ga-PSMA-11-PET of PET/CT and PET/MRI: comparison with mpMRI integrated in simultaneous PET/MRI. Eur J Nucl Med Mol Imaging (2017) 44:776–87. doi: 10.1007/s00259-016-3594-z

6. Chen Y, An H. Attenuation correction of PET/MR imaging. Magn Reson Imaging Clin N Am (2017) 25:245–55. doi: 10.1016/j.mric.2016.12.001

7. Torrado-Carvajal A, Vera-Olmos J, Izquierdo-Garcia D, Catalano OA, Morales MA, Margolin J, et al. Dixon-VIBE deep learning (DIVIDE) pseudo-CT synthesis for pelvis PET/MR attenuation correction. J Nucl Med (2019) 60:429–35. doi: 10.2967/jnumed.118.209288

8. Ringheim A, Campos Neto GdC, Martins KM, Vitor T, da Cunha ML, Baroni RH. Reproducibility of standardized uptake values of same-day randomized 68Ga-PSMA-11 PET/CT and PET/MR scans in recurrent prostate cancer patients. Ann Nucl Med (2018) 32:523–31. doi: 10.1007/s12149-018-1275-7

9. Mehranian A, Arabi H, Zaidi H. Vision 20/20: Magnetic resonance imaging-guided attenuation correction in PET/MRI: Challenges, solutions, and opportunities. Med Phys (2016) 43:1130–55. doi: 10.1118/1.4941014

10. Burger C, Goerres G, Schoenes S, Buck A, Lonn A, von Schulthess G. PET attenuation coefficients from CT images: experimental evaluation of the transformation of CT into PET 511-keV attenuation coefficients. Eur J Nucl Med (2002) 29:922–7. doi: 10.1007/s00259-002-0796-3

11. Catana C. Attenuation correction for human PET/MRI studies. Phys Med Biol (2020) 65(23):TR02. doi: 10.1088/1361-6560/abb0f8

12. Iagaru A, Hope T, Veit-Haibach P. PET/MRI in oncology. 1 edn. Cham, Switzerland: Springer International Publishing (2018). doi: 10.1007/978-3-319-68517-5

13. Martinez-Moller A, Souvatzoglou M, Delso G, Bundschuh RA, Chefd’hotel C, Ziegler SI, et al. Tissue classification as a potential approach for attenuation correction in whole-body PET/MRI: Evaluation with PET/CT data. J Nucl Med (2009) 50:520–6. doi: 10.2967/jnumed.108.054726

14. Samarin A, Burger C, Wollenweber SD, Crook DW, Burger IA, Schmid DT, et al. PET/MR imaging of bone lesions – implications for PET quantification from imperfect attenuation correction. Eur J Nucl Med Mol Imaging (2012) 39:1154–60. doi: 10.1007/s00259-012-2113-0

15. Perera M, Papa N, Roberts M, Williams M, Udovicich C, Vela I, et al. Gallium-68 prostate-specific membrane antigen positron emission tomography in advanced prostate cancer—updated diagnostic utility, sensitivity, specificity, and distribution of prostate-specific membrane antigen-avid lesions: A systematic review and meta-analysis. Eur Urol (2020) 77:403–17. doi: 10.1016/j.eururo.2019.01.049

16. Zamboglou C, Strouthos I, Sahlmann J, Farolfi A, Serani F, Medici F, et al. Metastasis-free survival and patterns of distant metastatic disease after PSMA-PET-guided salvage radiotherapy in recurrent or persistent prostate cancer after prostatectomy. Int J Radiat Oncol Biol Phys (2022) 113(5):1015–24. doi: 10.1016/j.ijrobp.2022.04.048

17. Du J, Carl M, Bydder M, Takahashi A, Chung CB, Bydder GM. Qualitative and quantitative ultrashort echo time (UTE) imaging of cortical bone. J Magn Reson (2010) 207:304–11. doi: 10.1016/j.jmr.2010.09.013

18. Paulus DH, Quick HH, Geppert C, Fenchel M, Zhan Y, Hermosillo G, et al. Whole-body PET/MR imaging: Quantitative evaluation of a novel model-based MR attenuation correction method including bone. J Nucl Med (2015) 56:1061–6. doi: 10.2967/jnumed.115.156000

19. Elschot M, Selnæs KM, Johansen H, Krüger-Stokke B, Bertilsson H, Bathen TF. The effect of including bone in Dixon-based attenuation correction for 18F-fluciclovine PET/MRI of prostate cancer. J Nucl Med (2018) 59:1913–7. doi: 10.2967/jnumed.118.208868

20. Bogdanovic B, Gafita A, Schachoff S, Eiber M, Cabello J, Weber WA, et al. Almost 10 years of PET/MR attenuation correction: the effect on lesion quantification with PSMA: clinical evaluation on 200 prostate cancer patients. Eur J Nucl Med Mol Imaging (2020) 48:543–53. doi: 10.1007/s00259-020-04957-x

21. Pozaruk A, Pawar K, Li S, Carey A, Cheng J, Sudarshan VP, et al. Augmented deep learning model for improved quantitative accuracy of MR-based PET attenuation correction in PSMA PET-MRI prostate imaging. Eur J Nucl Med Mol Imaging (2020) 48:9–20. doi: 10.1007/s00259-020-04816-9

22. Leynes AP, Yang J, Shanbhag DD, Kaushik SS, Seo Y, Hope TA, et al. Hybrid ZTE/Dixon MR-based attenuation correction for quantitative uptake estimation of pelvic lesions in PET/MRI. Med Phys (2017) 44:902–13. doi: 10.1002/mp.12122

23. Leynes AP, Yang J, Wiesinger F, Kaushik SS, Shanbhag DD, Seo Y, et al. Zero-echo-time and Dixon deep pseudo-CT (ZeDD CT): Direct generation of pseudo-CT images for pelvic PET/MRI attenuation correction using deep convolutional neural networks with multiparametric MRI. J Nucl Med (2018) 59:852–8. doi: 10.2967/jnumed.117.198051

24. Qian P, Zheng J, Zheng Q, Liu Y, Wang T, Al Helo R, et al. Transforming UTE-mDixon MR abdomen-pelvis images into CT by jointly leveraging prior knowledge and partial supervision. IEEE/ACM Trans Comput Biol Bioinform (2021) 18:70–82. doi: 10.1109/TCBB.2020.2979841

25. Bradshaw TJ, Zhao G, Jang H, Liu F, McMillan AB. Feasibility of deep learning–based PET/MR attenuation correction in the pelvis using only diagnostic MR images. Tomography (2018) 4:138–47. doi: 10.18383/j.tom.2018.00016

26. Mostafapour S, Gholamiankhah F, Dadgar H, Arabi H, Zaidi H. Feasibility of deep learning-guided attenuation and scatter correction of whole-body 68Ga-PSMA PET studies in the image domain. Clin Nucl Med (2021) 46:609–15. doi: 10.1097/RLU.0000000000003585

27. Qian P, Chen Y, Kuo J-W, Zhang Y-D, Jiang Y, Zhao K, et al. mDixon-based synthetic CT generation for PET attenuation correction on abdomen and pelvis jointly using transfer fuzzy clustering and active learning-based classification. IEEE Trans Med Imaging (2020) 39:819–32. doi: 10.1109/TMI.2019.2935916

28. Klein S, Staring M, Murphy K, Viergever MA, Pluim JPW. elastix: A toolbox for intensity-based medical image registration. IEEE Trans Med Imaging (2010) 29:196–205. doi: 10.1109/TMI.2009.2035616

29. Shamonin DP, Bron EE, Lelieveldt BPF, Smits M, Klein S, Staring M. Fast parallel image registration on CPU and GPU for diagnostic classification of Alzheimer’s disease. Front Neuroinform (2014) 7:50. doi: 10.3389/fninf.2013.00050

30. Heußer T, Mann P, Rank CM, Schäfer M, Dimitrakopoulou-Strauss A, Schlemmer HP, et al. Investigation of the halo-artifact in 68Ga-PSMA-11-PET/MRI. PLoS One (2017) 12:e0183329. doi: 10.1371/journal.pone.0183329

31. Ollinger JM. Model-based scatter correction for fully 3D PET. Phys Med Biol (1996) 41:153–76. doi: 10.1088/0031-9155/41/1/012

32. Johnson J, Alahi A, Fei-Fei L. Perceptual losses for real-time style transfer and super-resolution. In: Leibe B, Matas J, Sebe N, Welling M, editors. Computer vision – ECCV 2016, vol. 9906. Cham, Switzerland: Springer International Publishing (2016). p. 694–711. doi: 10.1007/978-3-319-46475-6_43

33. He K, Zhang X, Ren S, Sun J. Deep residual learning for image recognition. Proc IEEE Conf Comput Vision Pattern Recognition (CVPR) (2016), 770–8. doi: 10.1109/CVPR.2016.90

35. Wang TC, Liu M-Y, Zhu J-Y, Tao A, Kautz J, Catanzaro B. High-resolution image synthesis and semantic manipulation with conditional GANs. Proc IEEE Conf Comput Vision Pattern Recognition (CVPR) (2018), 8798–807. doi: 10.1109/CVPR.2018.00917

36. Kingma DP, Ba J. Adam: A method for stochastic optimization. arXiv preprint arXiv:1412.6980 (2014).

37. Benjamini Y, Hochberg Y. Controlling the false discovery rate: A practical and powerful approach to multiple testing. J R Stat Soc Ser B Stat Methodol (1995) 57:289–300. doi: 10.1111/j.2517-6161.1995.tb02031.x

38. Shiri I, Arabi H, Geramifar P, Hajianfar G, Ghafarian P, Rahmim A, et al. Deep-JASC: joint attenuation and scatter correction in whole-body 18F-FDG PET using a deep residual network. Eur J Nucl Med Mol Imaging (2020) 47:2533–48. doi: 10.1007/s00259-020-04852-5

39. Dong X, Lei Y, Wang T, Higgins K, Liu T, Curran WJ, et al. Deep learning-based attenuation correction in the absence of structural information for whole-body positron emission tomography imaging. Phys Med Biol (2020) 65:055011. doi: 10.1088/1361-6560/ab652c

40. Kamnitsas K, Ledig C, Newcombe VFJ, Simpson JP, Kane AD, Menon DK, et al. Efficient multi-scale 3D CNN with fully connected CRF for accurate brain lesion segmentation. Med Image Anal (2017) 36:61–78. doi: 10.1016/j.media.2016.10.004

41. Dong X, Wang T, Lei Y, Higgins K, Liu T, Curran WJ, et al. Synthetic CT generation from nonattenuation corrected PET images for whole-body PET imaging. Phys Med Biol (2019) 64:215016. doi: 10.1088/1361-6560/ab4eb7

42. Lee J. A review of deep-learning-based approaches for attenuation correction in positron emission tomography. IEEE Trans Radiat Plasma Med Sci (2021) 5(2):160–184. doi: 10.1109/TRPMS.2020.3009269

43. Lee MS, Cho JY, Kim SY, Cheon GJ, Moon MH, et al. Diagnostic value of integrated PET/MRI for detection and localization of prostate cancer: Comparative study of multiparametric MRI and PET/CT. J Magn Reson Imaging (2017) 45:597–609. doi: 10.1002/jmri.25384

44. Akbarzadeh A, Gutierrez D, Baskin A, Ay MR, Ahmadian A, Riahi Alam N, et al. Evaluation of whole-body MR to CT deformable image registration. J Appl Clin Med Phys (2013) 14:238–53. doi: 10.1120/jacmp.v14i4.4163

45. Adams MC, Turkington TG, Wilson JM, Wong TZ. A systematic review of the factors affecting accuracy of SUV measurements. AJR Am J Roentgenol (2010) 195:310–20. doi: 10.2214/AJR.10.4923

46. Brendle C, Kupferschläger J, Nikolaou K, la Fougère C, Gatidis S, Pfannenberg C. Is the standard uptake value (SUV) appropriate for quantification in clinical PET imaging? - variability induced by different SUV measurements and varying reconstruction methods. Eur J Radiol (2015) 84:158–62. doi: 10.1016/j.ejrad.2014.10.018

Keywords: PET/MR, attenuation correction, deep learning, prostate cancer, artificial intelligence frontiers, MRAC, pseudo-CT

Citation: Abrahamsen BS, Knudtsen IS, Eikenes L, Bathen TF and Elschot M (2023) Pelvic PET/MR attenuation correction in the image space using deep learning. Front. Oncol. 13:1220009. doi: 10.3389/fonc.2023.1220009

Received: 09 May 2023; Accepted: 31 July 2023;

Published: 24 August 2023.

Edited by:

Fabio Grizzi, Humanitas Research Hospital, Italy

Reviewed by:

Chenyang Shen, University of Texas Southwestern Medical Center, United States

James C. L. Chow, University of Toronto, Canada

Copyright © 2023 Abrahamsen, Knudtsen, Eikenes, Bathen and Elschot. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Bendik Skarre Abrahamsen, bendik.s.abrahamsen@ntnu.no