- ¹Research Institute, Cleveland Clinic Foundation, Cleveland, OH, United States

- ²Department of Leukemia, The University of Texas MD Anderson Cancer Center, Houston, TX, United States

- ³Department of Hematology and Oncology, Saint-Joseph University of Beirut, Beirut, Lebanon

- ⁴Maroone Cancer Center, Cleveland Clinic Florida, Weston, FL, United States

- ⁵Division of Hematology and Oncology, State University of New York Upstate Medical University, Syracuse, NY, United States

Introduction

The availability of medical information on the internet has transformed the dynamic of the patient-physician relationship. Patients increasingly rely on online resources to address their concerns. In November 2022, a dialogue-based artificial intelligence (AI) large language model (ChatGPT) was made accessible to the general public and has since gained millions of daily users (1, 2). Despite its capabilities in handling complex writing tasks (3), the use of chat-based AI in medicine is still in its early stages, and the model is not yet intended for medical use. However, recent studies suggest that such technology has the potential to assist in patient education and provide supplementary information on various medical topics (4–10). We aimed to assess the ability of the AI model to provide accurate responses to evidence-based, commonly asked, patient-centered oncology questions.

Methods

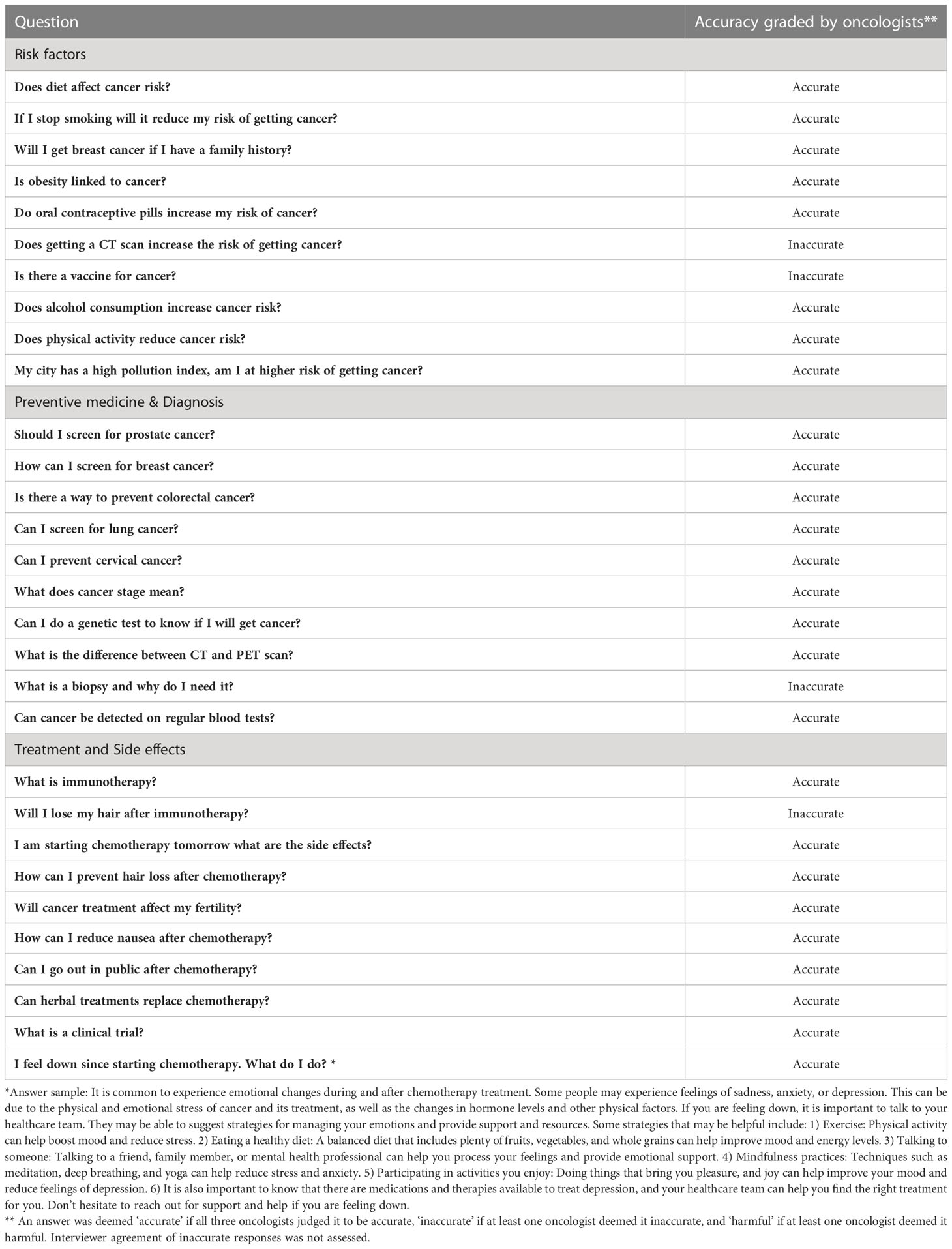

We convened a team of three oncologists from different institutions with extensive clinical and patient care experience to develop a set of 30 questions targeting common oncology topics. The questions focused on risk factors, preventive measures, diagnosis, treatment, and side effects, and were designed in alignment with guideline-based topics, the oncologists’ clinical experience in both inpatient and outpatient settings, and evidence-based question prompt lists for patients seeing a medical or radiation oncologist (11, 12). The study was conducted in February 2023 using ChatGPT 3.5 (default model). The same questions were submitted to the online AI model three times to assess consistency, and each resulting set of 30 answers was assigned to one of the three oncologists. Each oncologist qualitatively graded each answer based on its relevance to current published preventive and management guidelines (13, 14) and their clinical expertise, classifying each response as ‘accurate’, ‘inaccurate’, or ‘harmful’. An answer was considered accurate if, according to the oncologist, it contained essential and appropriate information; inaccurate if it provided false or incomplete information; and harmful if it included information potentially harmful to the patient. The oncologists’ assessments were then reviewed, and their evaluations combined. To limit subjectivity and selection bias, an answer was deemed ‘accurate’ only if all three oncologists judged it to be accurate, ‘inaccurate’ if at least one oncologist deemed it inaccurate, and ‘harmful’ if at least one oncologist deemed it harmful.
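The rule used to combine the three assessments can be sketched in a few lines of Python. This is only an illustration under stated assumptions, not the procedure the authors implemented: the function names `combine_grades` and `summarize` and the example grades are hypothetical, and because the text does not say which label applies when one oncologist grades an answer ‘inaccurate’ and another ‘harmful’, the sketch assumes that ‘harmful’ takes precedence.

```python
from collections import Counter
from typing import Iterable, Sequence

LABELS = ("accurate", "inaccurate", "harmful")


def combine_grades(grades: Sequence[str]) -> str:
    """Combine the oncologists' grades for one question into a single label."""
    if not grades:
        raise ValueError("at least one grade is required")
    unknown = set(grades) - set(LABELS)
    if unknown:
        raise ValueError(f"unexpected grade(s): {sorted(unknown)}")
    # Assumption (not stated in the text): 'harmful' outranks 'inaccurate'
    # when both labels appear for the same question.
    if "harmful" in grades:
        return "harmful"
    if "inaccurate" in grades:
        return "inaccurate"
    # Reached only when every oncologist graded the answer 'accurate'.
    return "accurate"


def summarize(all_grades: Iterable[Sequence[str]]) -> Counter:
    """Count the combined labels across all questions."""
    return Counter(combine_grades(grades) for grades in all_grades)


# Hypothetical grades for three questions (one grade per oncologist).
example = [
    ("accurate", "accurate", "accurate"),
    ("accurate", "inaccurate", "accurate"),
    ("accurate", "accurate", "harmful"),
]
print(summarize(example))
```

Under this rule a single dissenting grade is enough to downgrade an answer, so the combined label is deliberately conservative.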

Results

AI model responses were considered “accurate” for 26 of 30 questions (86%). Four responses (14%) were considered “inaccurate”, and none were considered “harmful” (Table 1). For example, when asked about hair loss as a side effect of immunotherapy, the AI model did not provide an adequate response and gave false but non-harmful information. By contrast, when asked about preventive strategies and screening recommendations for various types of cancer, such as breast, lung, and colorectal cancer, the AI model's answers were in line with current recommendations. The average response time was approximately 0.18 seconds per word.

Table 1 Assessment of Oncology Topics Recommendations by Experienced Oncologists using an Online Chat-Based Artificial Intelligence Model.

Discussion

We found that a popular online chat-based AI model provided mostly accurate responses to oncology-related questions, as evaluated by experienced oncologists. These findings suggest the potential of interactive AI to assist in patient education. This could be particularly beneficial for oncology patients, who may have complex and diverse questions regarding their condition. For example, the use of AI in this setting could reduce the need for patients to search for answers across numerous websites, a process that is often overwhelming and time-consuming and can yield conflicting information. Nonetheless, the added value of such AI-based online chat remains heavily debated. With 14% of answers being inaccurate, the risk of receiving incorrect recommendations is substantial. While future medically validated AI-based online chat could eventually provide patients with accurate supplementary information and resources, it is important for patients to understand that these technologies cannot replace the essential role of patient-physician interaction in making informed decisions about treatment options and managing care.

This study has several limitations. Firstly, the AI model used is not intended for actual medical purposes. Secondly, oncology is a complex field that cannot be fully covered by the limited set of questions used in this study. Thirdly, the accuracy and reliability of AI are susceptible to limitations and biases in the training data provided by the developers, which may result in incorrect or outdated information being provided. Fourthly, the evaluation of the responses was qualitative and conducted by a group of oncologists who may not represent the diverse views and opinions of healthcare professionals across different settings. Fifthly, inter-reviewer agreement and heterogeneity between the three sets of AI responses were not assessed in detail. Finally, the AI responses did not include references to supporting evidence.

Future research should focus on developing a more standardized system for grading responses and assessing accuracy through a clear comparative analysis between physicians’ answers and AI-generated answers, as well as evaluating the usefulness of such tools in supporting physician practice.

Author contributions

JK drafted the manuscript. All authors contributed to the writing and editing of the article and approved the submitted version.

Conflict of interest

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Publisher’s note

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

References

1. ChatGPT: optimizing language models for dialogue (2022). OpenAI. Available at: https://openai.com/blog/chatgpt/ (Accessed February 9, 2023).

2. Ruby D. ChatGPT statistics for 2023: comprehensive facts and data (2023). Demand Sage. Available at: https://www.demandsage.com/chatgpt-statistics/ (Accessed February 9, 2023).

3. Stokel-Walker C. AI Bot ChatGPT writes smart essays - should professors worry? Nature (2022) 9. doi: 10.1038/d41586-022-04397-7

4. Sarraju A, Bruemmer D, Van Iterson E, Cho L, Rodriguez F, Laffin L. Appropriateness of cardiovascular disease prevention recommendations obtained from a popular online chat-based artificial intelligence model. JAMA (2023) 329(10):842–844. doi: 10.1001/jama.2023.1044

6. Bhattaram S, Shinde VS, Khumujam PP. ChatGPT: the next-gen tool for triaging? Am J Emerg Med (2023) S0735-6757(23):00142–0. doi: 10.1016/j.ajem.2023.03.027

7. Ayers JW, Poliak A, Dredze M, Leas EC, Zhu Z, Kelley JB, et al. Comparing physician and artificial intelligence chatbot responses to patient questions posted to a public social media forum. JAMA Intern Med (2023) 28:e231838. doi: 10.1001/jamainternmed.2023.1838

8. Mihalache A, Popovic MM, Muni RH. Performance of an artificial intelligence chatbot in ophthalmic knowledge assessment. JAMA Ophthalmol (2023) 27:e231144. doi: 10.1001/jamaophthalmol.2023.1144

9. Görtz M, Baumgärtner K, Schmid T, Muschko M, Woessner P, Gerlach A, et al. An artificial intelligence-based chatbot for prostate cancer education: design and patient evaluation study. Digit Health (2023) 9:20552076231173304. doi: 10.1177/20552076231173304

10. Kassab J, El Dahdah J, El Helou MC, Layoun H, Sarraju A, Laffin L, et al. Assessing the accuracy of an online chat-based artificial intelligence model in providing recommendations on hypertension management in accordance with the 2017 American College of Cardiology/American Heart Association and 2018 ESC/European Society of Hypertension guidelines. Hypertension (2023). doi: 10.1161/HYPERTENSIONAHA.123.21183

11. Dimoska A, Tattersall MHN, Butow PN, Shepherd H, Kinnersley P. Can a “prompt list” empower cancer patients to ask relevant questions? Cancer (2008) 113:225–37. doi: 10.1002/cncr.23543

12. Miller N, Rogers SN. A review of question prompt lists used in the oncology setting with comparison to the patient concerns inventory. Eur J Cancer Care (2018) 27:e12489. doi: 10.1111/ecc.12489

13. Search results | United States Preventive Services Taskforce. Available at: https://www.uspreventiveservicestaskforce.org/uspstf/topic_search_results?topic_status=P (Accessed February 9, 2023).

14. Treatment by cancer type. NCCN. Available at: https://www.nccn.org/guidelines/category_1 (Accessed February 9, 2023).

Keywords: ChatGPT, chatbot, artificial intelligence - AI, oncology, assessment

Citation: Kassab J, Nasr L, Gebrael G, Chedid El Helou M, Saba L, Haroun E, Dahdah JE and Nasr F (2023) AI-based online chat and the future of oncology care: a promising technology or a solution in search of a problem? Front. Oncol. 13:1176617. doi: 10.3389/fonc.2023.1176617

Received: 28 February 2023; Accepted: 16 May 2023; Published: 25 May 2023.

Edited by:

Wei Liu, Mayo Clinic Arizona, United States

Reviewed by:

Xiang Li, Harvard Medical School, United States

Jason Holmes, Mayo Clinic Arizona, United States

Copyright © 2023 Kassab, Nasr, Gebrael, Chedid El Helou, Saba, Haroun, Dahdah and Nasr. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Fadi Nasr

†These authors have contributed equally to this work
