
REVIEW article

Front. Oncol., 01 September 2023

Sec. Genitourinary Oncology

Volume 13 - 2023 | https://doi.org/10.3389/fonc.2023.1152622

This article is part of the Research Topic Radiomics and Radiogenomics in Genitourinary Oncology: Artificial Intelligence and Deep Learning Applications

Zijie Wang1, Xiaofei Zhang2, Xinning Wang3, Jianfei Li4, Yuhao Zhang3, Tianwei Zhang3, Shang Xu3, Wei Jiao3* and Haitao Niu3

This study summarizes the latest achievements, challenges, and future research directions of deep learning technologies for the diagnosis of renal cell carcinoma (RCC). To our knowledge, this is the first review of deep learning applications in RCC. It aims to show that deep learning holds great promise for RCC diagnosis, and we look forward to further research that benefits patients with RCC. Medical imaging plays an important role in the early detection of RCC and in monitoring and evaluating the disease during treatment. The most commonly used modalities, such as contrast-enhanced computed tomography (CECT), ultrasound, and magnetic resonance imaging (MRI), are now digital, which allows deep learning to be applied to them. Deep learning is one of the fastest-growing areas of medical imaging, and its rapidly emerging applications have changed the traditional paradigm of medical care. With deep learning-based imaging tools, clinicians can diagnose and evaluate renal tumors more accurately and quickly. This paper describes the application of deep learning-based imaging techniques to RCC assessment and provides a comprehensive review.

Renal cell carcinoma (RCC) is one of the most common and lethal tumors of the urinary system. It originates from the renal tubular epithelium of the renal parenchyma and accounts for approximately 4% of human malignancies. Its annual incidence exceeds 400,000 cases, with approximately 431,288 cases worldwide in 2020 (1). Clear cell RCC (ccRCC) is the predominant pathological type. RCC is usually detected on computed tomography (CT), and an estimated 15–40% of patients are diagnosed incidentally during CT examinations (2, 3). Because RCC is usually asymptomatic in its early stages, approximately 25–30% of patients already have metastases at diagnosis. Early diagnosis substantially improves prognosis. As the number of RCC cases grows, it is therefore critical to develop effective strategies for early diagnosis and for identifying tumors with a poor prognosis (4).

Deep learning is a branch of machine learning. Traditional machine learning techniques include support vector machines (SVM), random forests, decision trees, K-nearest neighbors, naive Bayes, and logistic regression (5, 6). The emergence of convolutional neural networks (CNNs) raised the accuracy of machine learning to a new level. As models have grown deeper and more complex, machine image recognition has, for the first time, reached human-level performance on benchmark tasks (7). This has fueled today's explosion of deep learning applications. Deep learning is already changing many areas of industry and daily life, with well-known examples such as AlphaGo, facial-recognition payment, and autonomous driving.
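To make the idea of a convolutional layer concrete, the following minimal sketch computes one "valid" convolution followed by a ReLU non-linearity in pure Python. It is an illustration only and not code from any cited study. The kernel is hand-set here to respond to a vertical edge, whereas a CNN learns thousands of such kernels from data. The names `conv2d_valid` and `relu` are hypothetical helpers.

```python
from typing import List

Matrix = List[List[float]]


def conv2d_valid(image: Matrix, kernel: Matrix) -> Matrix:
    """'Valid' 2D cross-correlation, which deep learning libraries call convolution."""
    kh, kw = len(kernel), len(kernel[0])
    out_h, out_w = len(image) - kh + 1, len(image[0]) - kw + 1
    return [
        [
            # Weighted sum of the kh x kw neighbourhood currently under the kernel
            sum(image[i + u][j + v] * kernel[u][v] for u in range(kh) for v in range(kw))
            for j in range(out_w)
        ]
        for i in range(out_h)
    ]


def relu(feature_map: Matrix) -> Matrix:
    """Element-wise ReLU non-linearity applied after the convolution."""
    return [[max(0.0, float(x)) for x in row] for row in feature_map]


# Toy 4x4 "image" containing a vertical edge, and a hand-set vertical-edge kernel.
image = [[0, 0, 1, 1] for _ in range(4)]
kernel = [[-1, 1], [-1, 1]]
feature_map = relu(conv2d_valid(image, kernel))
```

Every row of `feature_map` is `[0.0, 2.0, 0.0]`: the response peaks exactly where intensity changes from 0 to 1. Stacking many learned kernels and non-linearities in this way is what lets a CNN build up higher-level image features.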

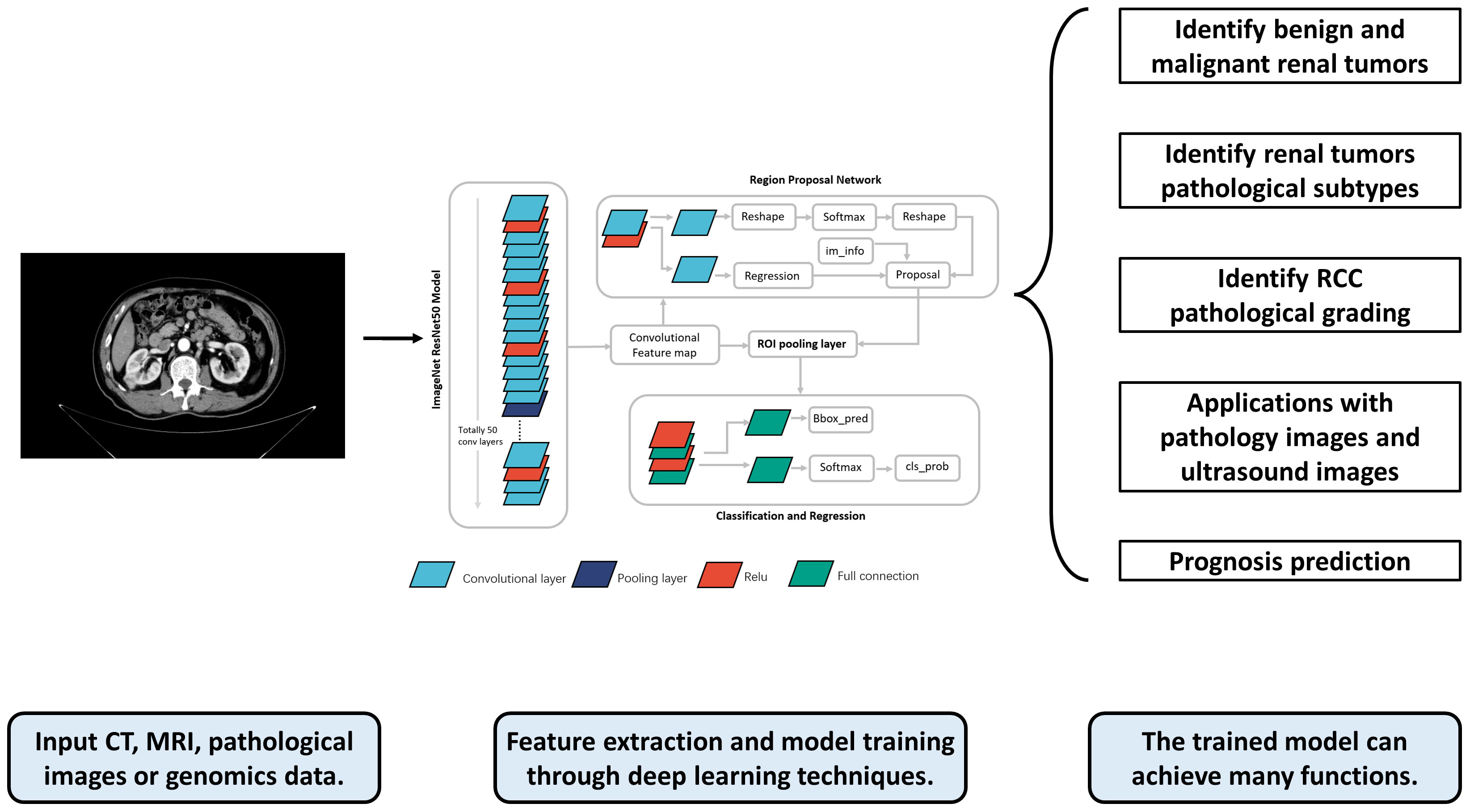

With the rapid development of computer hardware and deep learning theory, deep learning has been widely used for medical image classification (8). For many tumor types, deep learning models now achieve diagnostic accuracy comparable to that of radiologists, for example in rectal cancer (9), breast cancer (10), and lung cancer (11). CNNs and their improved variants are widely used in medical image processing (12). In urology, deep learning-based predictive models have achieved excellent results in the diagnosis and treatment of various diseases, including RCC, prostate cancer (13–15), bladder cancer (16–18), and urolithiasis (19–21). This paper summarizes research on deep learning for the pathological identification, pathological grading, and prognosis and treatment of RCC, and discusses future research directions. The flowchart and an overview of deep learning applications are shown in Figure 1.

Figure 1 The flowchart and application overview of deep learning research. First, data such as radiological, pathological, and genomic data from patients are collected as inputs. These data are preprocessed and fed into the deep learning model for training. The trained model can output a variety of predictions, such as the pathological grade, pathological type, and prognosis of patients with RCC, providing a reference for clinicians' subsequent diagnosis and treatment.

Accurate imaging characterization of renal tumors is important because not all incidentally detected renal tumors are RCC. Up to 20% of solid renal tumors smaller than 4 cm are benign, most commonly renal oncocytoma (RO) and fat-poor angiomyolipoma (fpAML) (22). Methods for differentiating benign from malignant renal tumors remain limited. Although percutaneous biopsy can confirm the diagnosis in most cases, it is invasive. Studies have shown a risk of needle-tract seeding after renal tumor biopsy (1.2%), which is higher for papillary RCC (pRCC) (12.5%) (23). As stated in the EAU guidelines (24), this “small but real” risk of tract seeding must therefore be weighed when biopsy is needed to determine subsequent treatment. Complications of renal tumor biopsy (e.g., hematoma, back pain, severe hematuria, pneumothorax, and hemorrhage) are relatively uncommon but should not be ignored either (25). An ideal diagnostic method for renal tumors should therefore achieve high accuracy and detection rates while sparing patients unnecessary risks as far as possible. This calls for further improvement of complementary diagnostic techniques to increase sensitivity and specificity. Preoperative imaging diagnostic systems built with deep learning are mostly trained with pathological results as the gold standard, and their accuracy often exceeds 90%. In clinical practice, such systems could help patients avoid the risks of biopsy and substantially improve the diagnostic rate (see Table 1).

Zabihollahy, F. et al. (28) built a classification model from 77 benign renal tumors (57 RO, 20 fpAML) and 238 malignant renal tumors (123 ccRCC, 69 pRCC, and 46 chromophobe RCC (chRCC)). To segment the tumor from normal renal tissue, they used their own semi-automatic and fully automatic methods (39). On the test set, the semi-automatic approach achieved an accuracy, precision, and recall of 83.75%, 89.05%, and 91.73%, respectively. The fully automatic approach achieved 77.36%, 85.92%, and 87.22%.
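The studies in this section report accuracy, precision (positive predictive value), recall (sensitivity), and specificity. The following sketch shows how these four metrics are derived from a binary confusion matrix, assuming "malignant" is the positive class. The counts are hypothetical and are not data from any cited study. `ConfusionCounts` and `binary_metrics` are illustrative names.

```python
from dataclasses import dataclass


@dataclass
class ConfusionCounts:
    """Counts for a binary task with 'malignant' as the positive class."""
    tp: int  # malignant tumors predicted malignant
    fp: int  # benign tumors predicted malignant
    tn: int  # benign tumors predicted benign
    fn: int  # malignant tumors predicted benign


def binary_metrics(c: ConfusionCounts) -> dict:
    """Accuracy, precision (PPV), recall (sensitivity), and specificity."""
    total = c.tp + c.fp + c.tn + c.fn
    return {
        "accuracy": (c.tp + c.tn) / total,
        "precision": c.tp / (c.tp + c.fp),    # of tumors called malignant, fraction truly malignant
        "recall": c.tp / (c.tp + c.fn),       # of malignant tumors, fraction detected
        "specificity": c.tn / (c.tn + c.fp),  # of benign tumors, fraction correctly cleared
    }


# Hypothetical counts, for illustration only.
metrics = binary_metrics(ConfusionCounts(tp=45, fp=5, tn=40, fn=10))
```

Here accuracy is 0.85 and precision is 0.90. Because benign and malignant groups are usually imbalanced (77 vs. 238 in the study above), accuracy alone can be misleading. This is why sensitivity and specificity are normally reported alongside it.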

Tanaka, T. et al. (27) aimed to distinguish benign from malignant small renal tumors (≤ 4 cm). They collected four-phase contrast-enhanced CT (CECT) data for 168 renal tumors. Using the Inception-v3 CNN architecture, they trained six models: one each for the unenhanced (UN), corticomedullary (CM), nephrographic (NP), and excretory (EX) phases, one for the three enhanced phases combined, and one for all four phases. The NP-phase model achieved the highest accuracy (88%), with an area under the receiver operating characteristic (ROC) curve (AUC) of 0.846.
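The AUC quoted above has a simple probabilistic reading: it is the probability that a randomly chosen malignant case receives a higher model score than a randomly chosen benign case, with ties counting one half. The following minimal sketch computes it directly from that definition (the Mann–Whitney formulation). Labels and scores are hypothetical, and `roc_auc` is an illustrative name rather than any library's API.

```python
from itertools import product
from typing import Sequence


def roc_auc(labels: Sequence[int], scores: Sequence[float]) -> float:
    """AUC as P(score of a positive > score of a negative); ties count 0.5."""
    pos = [s for y, s in zip(labels, scores) if y == 1]
    neg = [s for y, s in zip(labels, scores) if y == 0]
    if not pos or not neg:
        raise ValueError("AUC needs at least one positive and one negative case")
    wins = 0.0
    for p, n in product(pos, neg):  # compare every positive/negative pair
        if p > n:
            wins += 1.0
        elif p == n:
            wins += 0.5
    return wins / (len(pos) * len(neg))


# Hypothetical model outputs: 1 = malignant, 0 = benign.
labels = [1, 1, 1, 0, 0, 0]
scores = [0.9, 0.8, 0.4, 0.6, 0.3, 0.2]
auc = roc_auc(labels, scores)  # 8 of 9 pairs correctly ordered
```

The pairwise loop is O(n·m) and meant only for clarity. Rank-based implementations compute the same quantity far faster on real cohorts.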

Magnetic resonance imaging (MRI) is suitable for patients who are allergic to intravenous CT contrast agents and for pregnant women, and it outperforms CECT in assessing inferior vena cava involvement. Xi, I. L. et al. (29) used data from 1162 renal lesions to develop a deep learning model that distinguishes benign renal tumors from RCC. The model applies a residual network (ResNet) to MRI: contrast-enhanced T1-weighted (T1C) and T2-weighted (T2WI) images. Its accuracy (0.70), sensitivity (0.92), and specificity (0.41) were higher than those of both a radiomics model and expert radiologists.
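The defining feature of a ResNet is the identity shortcut introduced by He et al. (7): each block outputs ReLU(F(x) + x), so its layers only need to learn a residual correction to the input. The following sketch shows that idea on a plain vector. It is a simplification made for brevity: real ResNet blocks use convolutions and batch normalization rather than the small dense matrices assumed here. `residual_block` and its weight arguments are hypothetical.

```python
from typing import List

Vector = List[float]
Matrix = List[List[float]]


def matvec(w: Matrix, x: Vector) -> Vector:
    """Matrix-vector product W @ x."""
    return [sum(wij * xj for wij, xj in zip(row, x)) for row in w]


def relu(v: Vector) -> Vector:
    return [max(0.0, a) for a in v]


def residual_block(x: Vector, w1: Matrix, w2: Matrix) -> Vector:
    """y = ReLU(F(x) + x), where the residual branch is F(x) = W2 @ ReLU(W1 @ x)."""
    fx = matvec(w2, relu(matvec(w1, x)))
    # Identity shortcut: the input is added back, so the block only learns F(x) = H(x) - x
    return relu([f + xi for f, xi in zip(fx, x)])


zeros = [[0.0, 0.0], [0.0, 0.0]]
identity = [[1.0, 0.0], [0.0, 1.0]]
passthrough = residual_block([1.0, -2.0], zeros, zeros)  # F(x) = 0: input passes through ReLU
```

With all-zero weights the block behaves like an identity mapping followed by ReLU. This is why very deep ResNets remain trainable: adding a block cannot easily make the network worse than the shallower version.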

Histologically, 60% to 80% of RCCs are ccRCC and the rest are non-ccRCC. The World Health Organization (WHO) has published four editions of its renal tumor classification. The edition currently followed is the fourth, introduced in 2016 (40). Growth pattern, treatment options, and risk of recurrence vary among pathological subtypes. For example, AML, RO, renal cysts, cystic renal cancer, and similar lesions can be managed with follow-up observation. Accurate preoperative evaluation of tumor pathology can therefore reduce unnecessary surgery. The choice and dosing of targeted therapy and immunotherapy also depend on the pathological subtype. In short, patients benefit significantly if the pathological type of a renal tumor is known before surgery.

Coy, H. et al. (35) used the open-source Inception model in Google TensorFlow™ to discriminate RO from ccRCC. They incorporated three-phase CECT data as well as coronal, sagittal, and axial images into training, and achieved a positive predictive value of 82.5%. Differentiating RO from chRCC on biopsy remains a challenge, because the two share similar molecular characteristics as well as typical histological features of the tumor cells. Baghdadi, A. et al. (36) developed a predictive metric, the tumor-to-cortex peak early-phase enhancement ratio (PEER) (41), that can discriminate RO from chRCC on CECT images. They built deep learning algorithms that measure this metric automatically and thereby identify the tumor type. The authors also used the Dice similarity score (DSS) to quantify agreement between the model's tumor outline and the expert's outline, as an additional indicator of model accuracy. Compared with the actual pathology results, the PEER assessment classified tumor type with 95% accuracy (100% sensitivity and 89% specificity).
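The Dice similarity score mentioned above compares two segmentations A and B as 2|A ∩ B| / (|A| + |B|). It equals 1 for identical outlines and 0 for outlines that do not overlap at all. The following minimal sketch computes it from two binary masks. The masks are hypothetical, and treating two empty masks as perfect agreement is a convention assumed here.

```python
from typing import Sequence


def dice_score(mask_a: Sequence[Sequence[int]], mask_b: Sequence[Sequence[int]]) -> float:
    """Dice similarity 2|A ∩ B| / (|A| + |B|) for two binary masks of equal shape."""
    a = {(i, j) for i, row in enumerate(mask_a) for j, v in enumerate(row) if v}
    b = {(i, j) for i, row in enumerate(mask_b) for j, v in enumerate(row) if v}
    if not a and not b:
        return 1.0  # convention: two empty masks agree perfectly
    return 2 * len(a & b) / (len(a) + len(b))


# Hypothetical 3x3 outlines: 1 = pixel inside the tumor.
model_mask = [[0, 1, 1],
              [0, 1, 1],
              [0, 0, 0]]
expert_mask = [[0, 1, 1],
               [0, 1, 0],
               [0, 1, 0]]
dsc = dice_score(model_mask, expert_mask)  # 3 shared pixels, 4 + 4 total -> 0.75
```

On real CT volumes the same formula is applied voxel-wise in 3D.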

pRCC and chRCC are the most common types of non-ccRCC. Differences in their origins and driver genes lead to different treatment options and prognoses (42). The two also differ on imaging: pRCC can present with cystic change, necrosis, and calcification, whereas chRCC can present with central whorl-like enhancement (43). However, in early-stage or small masses these features are atypical and often cause diagnostic difficulty. Teng et al. (32) used six deep learning models to distinguish pRCC from chRCC. To help form an external test set, they took four cases from The Cancer Imaging Archive (TCIA), a public database of cancer images. The best model (MobileNetV2) achieved 96.9% accuracy on the validation set (99.4% sensitivity and 94.1% specificity). On the test set it achieved 100% case-level accuracy and 93.3% image-level accuracy. Han, S. et al. (30) built a multiclass model based on GoogLeNet to discriminate ccRCC, pRCC, and chRCC. The network achieved an accuracy of 0.85, sensitivity of 0.64–0.98, specificity of 0.83–0.93, and an AUC of 0.9.
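In a three-class task, a single accuracy is accompanied by a range of sensitivities and specificities. The source does not say how Han et al. derived their ranges. One common convention, assumed in the following sketch, is one-vs-rest evaluation: each subtype is treated in turn as the "positive" class, which yields one sensitivity and one specificity per class. The labels are hypothetical.

```python
from typing import Dict, List, Sequence


def one_vs_rest_metrics(
    y_true: Sequence[str], y_pred: Sequence[str], classes: List[str]
) -> Dict[str, Dict[str, float]]:
    """Per-class sensitivity and specificity, treating each class in turn as positive."""
    results = {}
    pairs = list(zip(y_true, y_pred))
    for c in classes:
        tp = sum(t == c and p == c for t, p in pairs)
        fn = sum(t == c and p != c for t, p in pairs)
        fp = sum(t != c and p == c for t, p in pairs)
        tn = sum(t != c and p != c for t, p in pairs)
        results[c] = {"sensitivity": tp / (tp + fn), "specificity": tn / (tn + fp)}
    return results


# Hypothetical predictions for seven tumors.
y_true = ["ccRCC", "ccRCC", "ccRCC", "pRCC", "pRCC", "chRCC", "chRCC"]
y_pred = ["ccRCC", "ccRCC", "pRCC", "pRCC", "ccRCC", "chRCC", "chRCC"]
per_class = one_vs_rest_metrics(y_true, y_pred, ["ccRCC", "pRCC", "chRCC"])
```

In this toy example, sensitivity ranges from 0.5 (pRCC) to 1.0 (chRCC). The reported ranges therefore describe how well each subtype is recognized individually.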

The Fuhrman grading system is well established in oncological diagnosis and widely used for the pathological grading of ccRCC (44). In 2012, the International Society of Urological Pathology (ISUP) introduced a new grading system for ccRCC and pRCC (45). It was incorporated into the latest World Health Organization (WHO) classification of renal tumors as the WHO/ISUP grading system (40). In this system, tumors are classified into four grades (I, II, III, and IV), with higher grades indicating more aggressive disease. Automatic pathological classification with deep learning can substantially reduce pathologists' workload. Predicting pathological grade from preoperative imaging can also help urologists develop tailored treatment strategies earlier, which may improve patient survival and reduce suffering (see Table 2).

Lin, F. et al. (48) grouped WHO/ISUP grades I and II as low grade and grades III and IV as high grade. They then trained ResNet models on CECT images and achieved good results on both the internal validation set (accuracy 73.7%, AUC 0.82) and the external test set (accuracy 77.9%, AUC 0.81).

Xu, L. et al. (47) were the first to validate such a model on data from a large cohort, using 706 patients with ccRCC to build a deep learning model that predicts Fuhrman grade. Their goal was to identify high-grade ccRCC while dealing with domain shift, noisy labels, and class imbalance. To do so, they refined the conventional model with a two-step process combining a mixed loss strategy and sample reweighting. They developed four deep learning networks separately and then combined them with different weights to improve prediction. In the validation cohort, the single deep learning model achieved an AUC of 0.864, whereas the integrated model achieved an AUC of 0.882.
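The source does not detail Xu et al.'s mixed loss or their reweighting scheme. The following sketch therefore uses common stand-ins, which are assumptions and not the authors' method:

- inverse-frequency class weights, so that the rarer high-grade class counts more;
- a class-weighted binary cross-entropy loss;
- a weighted average of several models' predicted probabilities.

All names, weights, and probabilities are hypothetical.

```python
import math
from collections import Counter
from typing import Dict, List, Sequence


def inverse_frequency_weights(labels: Sequence[int]) -> Dict[int, float]:
    """Class weight w_c = N / (K * n_c): rarer classes (e.g. high grade) get larger weights."""
    counts = Counter(labels)
    n, k = len(labels), len(counts)
    return {c: n / (k * n_c) for c, n_c in counts.items()}


def weighted_bce(y_true: Sequence[int], p_high: Sequence[float], class_w: Dict[int, float]) -> float:
    """Binary cross-entropy in which each sample's loss is scaled by its class weight."""
    eps = 1e-12  # avoid log(0)
    losses = [
        -class_w[y] * (y * math.log(max(p, eps)) + (1 - y) * math.log(max(1 - p, eps)))
        for y, p in zip(y_true, p_high)
    ]
    return sum(losses) / len(losses)


def weighted_ensemble(probs_per_model: List[List[float]], model_weights: List[float]) -> List[float]:
    """Weighted average of each model's predicted P(high grade), patient by patient."""
    total = sum(model_weights)
    return [
        sum(w * p for w, p in zip(model_weights, patient_probs)) / total
        for patient_probs in zip(*probs_per_model)
    ]


# Hypothetical cohort: 6 low-grade (0) and 2 high-grade (1) cases.
class_weights = inverse_frequency_weights([0] * 6 + [1] * 2)  # {0: 0.667, 1: 2.0}
# Four hypothetical models, two patients each; model weights are illustrative.
fused = weighted_ensemble(
    [[0.9, 0.2], [0.8, 0.4], [0.7, 0.1], [0.6, 0.3]],
    model_weights=[0.4, 0.3, 0.2, 0.1],
)
```

In practice, ensemble weights would be tuned on validation data rather than fixed by hand.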

Zhao, Y. et al. (46) evaluated how well ResNet applied to MRI discriminates high-grade from low-grade RCC in patients with AJCC stage I and II disease. A total of 353 Fuhrman-graded RCCs were divided into training, validation, and test sets in a 7:2:1 ratio, and 77 WHO/ISUP-graded RCCs were used as a separate test set. On the Fuhrman test set, the model achieved 0.88 accuracy, 0.89 sensitivity, and 0.88 specificity. On the WHO/ISUP test set, it achieved 0.83 accuracy, 0.92 sensitivity, and 0.78 specificity.
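A 7:2:1 train/validation/test split like the one above can be produced as in the following sketch. Stratifying by grade, so that each subset keeps roughly the same low/high-grade mix, is an assumption here; the source does not say whether Zhao et al. stratified. The cohort, the case IDs, and `stratified_split` are hypothetical, and each ID stands for one patient.

```python
import random
from collections import defaultdict
from typing import Dict, List, Sequence, Tuple


def stratified_split(
    case_ids: Sequence[str],
    labels: Sequence[int],
    train_frac: float = 0.7,
    val_frac: float = 0.2,
    seed: int = 0,
) -> Tuple[List[str], List[str], List[str]]:
    """Split cases into train/val/test (7:2:1 by default), preserving the label mix.

    The test set receives whatever remains, i.e. about 1 - train_frac - val_frac.
    """
    rng = random.Random(seed)  # fixed seed makes the split reproducible
    by_label: Dict[int, List[str]] = defaultdict(list)
    for cid, y in zip(case_ids, labels):
        by_label[y].append(cid)
    train, val, test = [], [], []
    for group in by_label.values():
        rng.shuffle(group)
        n_train = round(len(group) * train_frac)
        n_val = round(len(group) * val_frac)
        train += group[:n_train]
        val += group[n_train:n_train + n_val]
        test += group[n_train + n_val:]
    return train, val, test


# Hypothetical cohort: 70 low-grade (0) and 30 high-grade (1) cases.
ids = [f"case{i:03d}" for i in range(100)]
grades = [0] * 70 + [1] * 30
train, val, test = stratified_split(ids, grades)
```

Splitting by patient rather than by image keeps slices from the same tumor from appearing in both training and test data.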

Radiomics grew out of image texture analysis. It extracts large numbers of quantitative features from image data in a high-throughput manner, selects the informative ones, and builds diagnostic or predictive models on them, usually with traditional machine learning methods.

With the advent of deep learning, some studies have used custom-built or established CNNs to model the extracted radiomics features (33, 50). Traditional machine learning-based radiomics and deep learning-based radiomics differ in several ways. Traditional radiomics relies on manually designed feature extraction and traditional machine learning algorithms to analyze medical image data. These features may include shape, texture, and intensity. Algorithms such as support vector machines (SVM) and random forests are trained on these features and then applied to tasks such as classification, segmentation, and prediction. Deep learning-based radiomics instead uses neural networks for automatic feature learning and pattern recognition. Through multiple network layers, deep learning models learn high-level abstract features, eliminating the need for manual feature extraction. This capacity for automatic learning allows deep learning-based radiomics to perform well on large-scale, complex medical image data. Furthermore, the performance of traditional machine learning methods is often limited by the quality and selection of features. Deep learning-based radiomics, by contrast, can learn feature representations directly from raw data through end-to-end training and optimization, which can yield better performance.
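To illustrate what "manually designed" features means in traditional radiomics, the following minimal sketch computes four first-order features over the pixels inside a region-of-interest mask: mean, standard deviation, skewness, and histogram entropy. The bin settings (`n_bins`, `lo`, `hi`) and the toy image are assumptions. Dedicated packages such as PyRadiomics implement many more features with standardized definitions, and a CNN would instead learn its features from the data.

```python
import math
from collections import Counter
from typing import Dict, List


def first_order_features(
    image: List[List[float]],
    mask: List[List[int]],
    n_bins: int = 4,
    lo: float = 0.0,
    hi: float = 100.0,
) -> Dict[str, float]:
    """Hand-crafted first-order features over the pixels inside a binary ROI mask."""
    vals = [v for img_row, m_row in zip(image, mask) for v, m in zip(img_row, m_row) if m]
    n = len(vals)
    mean = sum(vals) / n
    std = math.sqrt(sum((v - mean) ** 2 for v in vals) / n)
    skewness = (sum((v - mean) ** 3 for v in vals) / n) / std ** 3 if std > 0 else 0.0
    # Discretise intensities into fixed-width bins, then take the Shannon entropy (bits).
    width = (hi - lo) / n_bins
    bins = Counter(min(max(int((v - lo) / width), 0), n_bins - 1) for v in vals)
    entropy = -sum((c / n) * math.log2(c / n) for c in bins.values())
    return {"mean": mean, "std": std, "skewness": skewness, "entropy": entropy}


# Hypothetical 2x2 intensity patch with the whole patch inside the ROI.
features = first_order_features([[10, 30], [60, 90]], [[1, 1], [1, 1]])
```

Each feature here is a fixed formula chosen by a human. In a traditional pipeline, a feature-selection step and a classifier such as an SVM or random forest would follow.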

Identifying histological differences among RCC subtypes under the microscope is time-consuming and labor-intensive for pathologists, and manual identification of RCC shows high inter- and intra-observer variability (51). Kidney tumors can have diverse appearances and mixed morphologies, which makes them difficult to classify. With the advent of whole-slide images (WSIs) in digital pathology, automated histopathology image analysis systems have shown great promise for diagnosis (52–54). Computerized image analysis can provide a more valid, objective, and consistent assessment to assist pathologists. Deep learning models can automatically process digitized histopathology images and learn to extract cellular patterns associated with tumors. Such models can assist pathologists by:

(1) automatically pre-screening slides to reduce false-negative cases;
(2) highlighting important regions on digitized slides to expedite diagnosis;
(3) providing objective and accurate diagnoses (see Table 3).
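Whole-slide images are far too large to feed to a CNN directly, so a typical first step is to cut them into tiles and discard tiles that are mostly blank glass. The following sketch shows that step on a small grayscale array. In practice slides are read at a chosen magnification with a library such as OpenSlide. The brightness threshold, the tile size, and `tissue_tiles` are hypothetical choices, not parameters from the cited studies.

```python
from typing import List, Tuple

Tile = Tuple[int, int]  # (row, col) of a tile's top-left pixel


def tissue_tiles(
    gray: List[List[int]], tile: int, max_background: float = 0.5, bg_threshold: int = 220
) -> List[Tile]:
    """Top-left coordinates of non-overlapping tiles whose background fraction <= max_background.

    Pixels brighter than bg_threshold (0-255 grayscale) are treated as background glass.
    """
    h, w = len(gray), len(gray[0])
    keep = []
    for r in range(0, h - tile + 1, tile):
        for c in range(0, w - tile + 1, tile):
            bright = sum(gray[r + i][c + j] > bg_threshold for i in range(tile) for j in range(tile))
            if bright / (tile * tile) <= max_background:
                keep.append((r, c))
    return keep


# Hypothetical 4x4 "slide": left half tissue (100), right half glass (250).
slide = [[100, 100, 250, 250] for _ in range(4)]
kept = tissue_tiles(slide, tile=2)  # only the two left-hand tiles contain tissue
```

The kept tiles would then be classified individually, and their predictions aggregated to a slide-level diagnosis.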

Zhu, M. et al. (56) developed a deep learning model that classifies digitized surgical and biopsy slides into five relevant categories: ccRCC, pRCC, chRCC, RO, and normal tissue. Their test set included 78 whole slides of surgical resections and 79 biopsy slides from the same institution, plus 917 surgical resection slides from The Cancer Genome Atlas (TCGA) database. The mean AUC of the model on internal surgical slides, internal biopsy slides, and external TCGA slides was 0.98, 0.98, and 0.97, respectively. Abu Haeyeh, Y. et al. (57) trained three multi-scale CNNs and applied decision fusion to their predictions to obtain the final classification. The task covered four types of kidney tissue: non-RCC renal parenchyma, non-RCC adipose tissue, ccRCC, and clear cell papillary RCC (ccpRCC). The system showed high slide-level classification accuracy and sensitivity on RCC biopsy samples, with an overall accuracy of 93.0%, sensitivity of 91.3%, and specificity of 95.6%.
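The source does not state which decision-fusion rule Abu Haeyeh et al. used. The following sketch shows one common choice, majority voting across the per-scale models, with ties broken by the highest mean probability. The class probabilities are hypothetical, and `fuse_by_majority` is an illustrative name.

```python
from collections import Counter
from typing import Dict, List


def fuse_by_majority(scale_probs: List[Dict[str, float]]) -> str:
    """Each scale's model votes for its top class; ties go to the highest mean probability."""
    votes = Counter(max(p, key=p.get) for p in scale_probs)
    top = max(votes.values())
    tied = [c for c, v in votes.items() if v == top]
    classes = scale_probs[0].keys()
    mean_prob = {c: sum(p[c] for p in scale_probs) / len(scale_probs) for c in classes}
    return max(tied, key=mean_prob.get)


# Hypothetical outputs of three CNNs operating at different magnifications.
scales = [
    {"ccRCC": 0.6, "ccpRCC": 0.3, "parenchyma": 0.05, "fat": 0.05},
    {"ccRCC": 0.4, "ccpRCC": 0.5, "parenchyma": 0.05, "fat": 0.05},
    {"ccRCC": 0.7, "ccpRCC": 0.2, "parenchyma": 0.05, "fat": 0.05},
]
decision = fuse_by_majority(scales)  # two of three scales vote ccRCC
```

Voting lets a scale that sees fine cellular detail and a scale that sees tissue architecture correct each other. Averaging the probabilities directly is an equally common alternative.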

A recent systematic review and meta-analysis (60) compared the diagnostic performance of contrast-enhanced ultrasound (CEUS) and CECT in assessing benign and malignant renal masses. Sixteen studies were included in the pooled analysis, and CEUS showed diagnostic performance comparable to CECT (sensitivity 0.90 vs. 0.96). Relatively few deep learning systems have been built on RCC ultrasound images. However, several studies have applied deep learning to assess the severity of hydronephrosis (61–63), suggesting that deep learning also has strong diagnostic efficacy on renal ultrasound images. Zhu, D. et al. (58) developed a deep learning model for CEUS images, the multimodal ultrasound fusion network (MUF-Net), to automatically classify solid renal tumors as benign or malignant. A total of 9794 images were cropped from CEUS videos for this purpose. The model's performance was compared with that of radiologists of different experience levels. Accuracy was 70.6%, 75.7%, and 80.0% for the junior radiologist group, the senior radiologist group, and MUF-Net, respectively, with AUCs of 0.740, 0.794, and 0.877.

Using deep learning to predict the prognosis of renal cancer can give clinicians more accurate patient risk assessment and treatment decision support, helping to avoid over-treatment or delayed treatment. Furthermore, the automated feature learning and prediction capabilities of deep learning models could make prognostic assessment more efficient and faster, offering practical solutions for large-scale prognostic evaluation of patients with renal cancer.

Studies on deep learning-based prognosis prediction for renal tumors are currently limited. Schulz, S. et al. (59) were the first to train a model on multimodal data, combining histopathological images, CT/MRI scans, and genomic data from whole-exome sequencing of 248 patients. They developed and evaluated a multimodal deep learning model (MMDLM) for predicting the prognosis of ccRCC. The model achieved promising results, with a mean C-index of 0.7791 and a mean accuracy of 83.43%. However, the study also had limitations, such as missing imaging data for some patients and a relatively small dataset.
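The C-index reported above measures how well predicted risks order patients by survival. A value of 0.5 corresponds to random ordering and 1.0 to perfect ordering. The following sketch implements Harrell's C-index for right-censored data. It is an assumption that Schulz et al. used this particular variant. For simplicity, the sketch ignores pairs with tied survival times. The survival times, event flags, and risk scores are hypothetical.

```python
from typing import Sequence


def harrell_c_index(times: Sequence[float], events: Sequence[int], risk: Sequence[float]) -> float:
    """Harrell's concordance index for right-censored survival data.

    A pair (i, j) is comparable if patient i had an event (events[i] == 1) before time j.
    It is concordant if i, who fared worse, also has the higher predicted risk; risk ties count 0.5.
    """
    concordant = 0.0
    comparable = 0
    for i in range(len(times)):
        for j in range(len(times)):
            if events[i] == 1 and times[i] < times[j]:
                comparable += 1
                if risk[i] > risk[j]:
                    concordant += 1.0
                elif risk[i] == risk[j]:
                    concordant += 0.5
    if comparable == 0:
        raise ValueError("no comparable pairs")
    return concordant / comparable


# Hypothetical follow-up (months), event flags (1 = death, 0 = censored) and model risk scores.
c = harrell_c_index([2, 4, 6, 8], [1, 1, 0, 1], [0.9, 0.5, 0.7, 0.1])  # 4 of 5 pairs concordant
```

Censored patients still contribute as the longer-surviving member of a pair. This is why the C-index, rather than plain accuracy, is the usual headline metric for survival models.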

In recent years, deep learning has made significant progress in a wide range of computer vision tasks and biomedical image analysis applications. It has been integrated into medicine for several years and has shown significant value in the diagnosis, identification, and staging of RCC, but many research areas have yet to be explored with deep learning. Some possible future research directions follow.

Prognostic analysis of patients with cancer is an important application of deep learning, but current deep learning research on RCC has focused mostly on diagnosis and identification. Research on predicting the prognosis of patients with RCC is limited. Studies on the efficacy of immunotherapy and targeted therapy in these patients are still lacking.

Combining radiomics with genomics has given rise to radiogenomics, in which high expression or mutation of specific genes can be inferred from preoperative images. Examples include PET/MRI-based identification of VEGF expression (64) and CT-based identification of PBRM1, BAP1, and VHL mutation status (65–68), and related studies have also incorporated proteomics (69). Such studies not only extend the boundaries of deep learning prediction models but also add a plausible molecular-level biological explanation that deepens our understanding of how deep learning works. Future studies could replace the machine learning models used in these studies with deep learning models, which may substantially improve prediction accuracy.

Many clinical needs in diagnosis and treatment remain unaddressed by deep learning, particularly the evaluation of fine-grained clinicopathological indicators. In rectal cancer, for example, indicators such as circumferential resection margin (CRM) status (70) and tumor budding (71) have become research hot spots alongside traditional benign–malignant differentiation and TNM staging. These indicators remain indispensable for guiding prognosis. In RCC, several radiomics studies have not yet been transferred to deep learning models, including those on juxtatumoral perinephric fat invasion (72), inferior vena cava tumor thrombus and vessel wall invasion (73), and perirenal fat adhesion (74). Methodologically, such studies are no longer difficult to perform; they simply have not yet been published.

An emerging area in RCC imaging is pharmacokinetic analysis of dynamic contrast-enhanced MRI (DCE-MRI). By dynamically tracking the distribution and clearance of MRI contrast agents, pharmacokinetic analysis can provide important information about tumor blood flow, vascular permeability, and extracellular space. This information is highly valuable for the diagnosis and differential diagnosis of RCC. For instance, Wang et al. (75) demonstrated the potential of pharmacokinetic parameters for differentiating RCC subtypes and determining tumor malignancy. Deep learning techniques, especially CNNs, have been applied to DCE-MRI data to automatically extract and learn these pharmacokinetic parameters, with the aim of further improving diagnostic accuracy for RCC. However, challenges remain, such as how to extract pharmacokinetic parameters accurately from different dynamic sequences and how to handle the temporal and spatial resolution of dynamic enhancement data. Future research needs to address these issues and further explore deep learning for the pharmacokinetic analysis of DCE-MRI in RCC.
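The paragraph above does not name a pharmacokinetic model. One widely used choice, assumed in the following sketch, is the standard Tofts model. It describes tissue contrast concentration as Ct(t) = Ktrans · ∫ Cp(τ) · exp(−kep · (t − τ)) dτ, where:

- Ktrans is the volume transfer constant (related to permeability and blood flow);
- ve is the extracellular extravascular volume fraction;
- kep = Ktrans / ve.

The sketch evaluates the integral with the trapezoidal rule. The constant plasma input and the parameter values are hypothetical and serve only as a sanity check against the closed-form result.

```python
import math
from typing import List, Sequence


def tofts_concentration(t: Sequence[float], cp: Sequence[float], ktrans: float, ve: float) -> List[float]:
    """Standard Tofts model Ct(t) = Ktrans * integral_0^t Cp(tau) * exp(-kep * (t - tau)) dtau.

    kep = Ktrans / ve; the integral is approximated with the trapezoidal rule on the grid t.
    """
    kep = ktrans / ve
    ct = []
    for k, tk in enumerate(t):
        # Plasma input weighted by how much contrast has washed back out since time t[i]
        integrand = [cp[i] * math.exp(-kep * (tk - t[i])) for i in range(k + 1)]
        area = sum(0.5 * (integrand[i] + integrand[i + 1]) * (t[i + 1] - t[i]) for i in range(k))
        ct.append(ktrans * area)
    return ct


# Sanity check with a hypothetical constant plasma concentration Cp = 1 from t = 0:
# the closed-form solution is Ct(t) = ve * (1 - exp(-kep * t)).
times = [i * 0.01 for i in range(501)]  # 0 to 5 minutes
ct = tofts_concentration(times, [1.0] * len(times), ktrans=0.25, ve=0.5)  # Ktrans in 1/min
expected = 0.5 * (1 - math.exp(-0.5 * 5.0))
```

Conventional analysis fits Ktrans and ve to each voxel's measured curve by nonlinear least squares. Deep learning approaches of the kind described above aim to estimate such parameters, or features derived from them, directly from the dynamic images.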

Deep learning techniques have a wide range of promising applications in various clinical disciplines, but many challenges remain before the relevant results can be translated into clinical applications.

Most studies to date come from a single medical center and have not been fully validated in independent cohorts, which can bias results and reduce generalizability. More multicenter, randomized controlled trials are still needed for rigorous testing. Broad multidisciplinary cooperation is also needed to promote the maturation, standardization, and clinical translation of deep learning research.

Because this field relies on medical big data, sufficient data is a prerequisite both for building models and for keeping system performance stable. Current studies in the main research areas mostly include only around 100–200 cases, which carries a risk of overfitting. A platform for sharing large multicenter imaging datasets is urgently needed.

Current studies in these areas are mostly retrospective and lack large, randomized, multicenter prospective validation. A large gap therefore remains before clinical application.

Image acquisition for deep learning studies lacks a unified standard or evaluation system. As a result, comparability among studies of the same type is poor, owing to differences in imaging equipment parameters, image reconstruction, radiologists' habits, and patient compliance.

Image segmentation is an essential step in building deep learning models. Manual, semi-automatic, and automatic methods differ in repeatability, and each has its own advantages and disadvantages. Improving delineation accuracy while maintaining high repeatability is therefore a current problem to be solved. Both overfitting and underfitting can affect model repeatability, and algorithm optimization remains the main avenue for innovation in this field.

Because training deep learning models requires high-throughput data processing, the traditional statistical methods and analysis tools used in clinical research are no longer adequate. This places higher demands on the interdisciplinary skills and communication of radiologists, surgeons, and computer engineers. How clinicians and engineers can collaborate more effectively remains to be worked out.

Self-supervised learning can partially address data scarcity, especially in segmentation tasks. Traditional supervised learning requires a large amount of labeled data for model training, which is costly and time-consuming to obtain. In contrast, self-supervised learning exploits unlabeled data, either by designing tasks whose labels are generated automatically from the data or by using unsupervised tasks. This allows models to learn meaningful features and semantic information from unlabeled data. By making full use of such data, self-supervised learning can improve model performance, reduce reliance on large labeled datasets, and accelerate training. It therefore offers a valuable way to cope with data scarcity.
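As an example of "tasks whose labels are generated automatically", the following sketch builds training pairs for rotation prediction. Rotation prediction is one classic pretext task: each unlabeled image is rotated by 0°, 90°, 180°, and 270°, and the rotation index becomes the label, with no human annotation. The source does not name a specific pretext task, so this choice and the helper names are illustrative assumptions.

```python
from typing import List, Tuple

Image = List[List[float]]


def rotate90(img: Image) -> Image:
    """Rotate a 2D image 90 degrees counter-clockwise (transpose, then reverse row order)."""
    return [list(row) for row in zip(*img)][::-1]


def rotation_pretext_pairs(unlabeled: List[Image]) -> List[Tuple[Image, int]]:
    """Build (input, label) pairs with no annotation: label k means 'rotated by k * 90 degrees'."""
    pairs = []
    for img in unlabeled:
        rotated = img
        for k in range(4):
            pairs.append((rotated, k))
            rotated = rotate90(rotated)
    return pairs


# One hypothetical 2x2 unlabeled patch yields four labeled training examples.
pairs = rotation_pretext_pairs([[[1, 2], [3, 4]]])
```

A network pre-trained to predict these rotations must learn something about anatomy and orientation. It can then be fine-tuned on the small set of expert-labeled images for the actual segmentation or classification task.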

Deep learning models have achieved impressive results in medicine, but their explainability remains a challenge. These models typically consist of many neural network layers, with large numbers of parameters and complex nonlinear mappings. This complexity makes their decision-making opaque, so the basis of their predictions is difficult to explain. The resulting lack of explainability can raise issues of trust and acceptance in medical practice. Researchers have proposed various strategies to address this problem, and one important approach is Grad-CAM (Gradient-weighted Class Activation Mapping) (76). Grad-CAM is a gradient-based interpretability method that links a model's prediction to specific local regions of the input image. It computes the gradient of the predicted class score with respect to the feature maps of the last convolutional layer to determine which image regions are crucial for that prediction. It then visualizes these key regions on the image to help clinicians and researchers understand the basis of the model's decisions. Such visualizations intuitively display the areas the model attends to during prediction, offering some explanatory power for its decisions. Besides Grad-CAM, other methods can enhance the interpretability of deep learning models, such as LIME (Local Interpretable Model-agnostic Explanations) and SHAP (SHapley Additive exPlanations) (77). These methods analyze model predictions from different perspectives, providing explanatory insights and increasing the trustworthiness and acceptability of models in medical practice.
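The core Grad-CAM computation is short once a deep learning framework has supplied two inputs for the predicted class: the last convolutional layer's feature maps A_k and the gradients of the class score with respect to them. Each channel weight α_k is the spatial mean of its gradient. The heatmap is then ReLU(Σ_k α_k A_k), here scaled to [0, 1]. The following sketch performs only this combination step on hypothetical 2×2 maps. Obtaining the activations and gradients, and upsampling the heatmap to image size, are left to the framework and are assumptions outside this code.

```python
from typing import List

Map = List[List[float]]


def grad_cam(activations: List[Map], gradients: List[Map]) -> Map:
    """Combine feature maps A_k with gradients d(score)/dA_k into a normalised Grad-CAM heatmap."""
    h, w = len(activations[0]), len(activations[0][0])
    # alpha_k: global-average-pooled gradient = importance of channel k for the class
    alphas = [sum(sum(row) for row in g) / (h * w) for g in gradients]
    cam = [[0.0] * w for _ in range(h)]
    for alpha, a in zip(alphas, activations):
        for i in range(h):
            for j in range(w):
                cam[i][j] += alpha * a[i][j]
    # ReLU keeps only regions that push the class score up
    cam = [[max(0.0, v) for v in row] for row in cam]
    peak = max(max(row) for row in cam)
    return [[v / peak for v in row] for row in cam] if peak > 0 else cam


# Hypothetical two-channel example: channel 1 supports the class, channel 2 opposes it.
acts = [[[1.0, 0.0], [0.0, 0.0]], [[0.0, 0.0], [0.0, 1.0]]]
grads = [[[1.0, 1.0], [1.0, 1.0]], [[-1.0, -1.0], [-1.0, -1.0]]]
heatmap = grad_cam(acts, grads)  # only the top-left region is highlighted
```

The negatively weighted channel is suppressed by the ReLU, so the heatmap highlights only evidence for the predicted class. This is what makes the overlay readable for clinicians.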

In this paper, we conducted a comprehensive review of the latest advancements and challenges in the use of deep learning techniques for the imaging diagnosis of renal cell carcinoma. Through the analysis of various deep learning models in the application of renal cell carcinoma imaging diagnosis, we found that these technologies have enormous potential, significantly improving the accuracy and efficiency of diagnosis. However, these methods also have some limitations, such as the availability and quality of data, the interpretability of the models, and challenges in clinical applications. Despite these challenges, we believe that with the further development and improvement of deep learning techniques, their applications in the imaging diagnosis of renal cell carcinoma will become increasingly widespread. We look forward to more research in the future to overcome existing challenges and further promote the development of this field.

ZW, JL, and WJ: project development. TZ, XZ, and YZ: literature review and data extraction. ZW, TZ, and XW: manuscript drafting. WJ and HN: critical revision of the manuscript. All authors contributed to the article and approved the submitted version.

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

1. Bukavina L, Bensalah K, Bray F, Carlo M, Challacombe B, Karam JA, et al. Epidemiology of renal cell carcinoma: 2022 update. Eur Urol (2022) 82:529–42. doi: 10.1016/j.eururo.2022.08.019

2. Meyer HJ, Pfeil A, Schramm D, Bach AG, Surov A. Renal incidental findings on computed tomography: Frequency and distribution in a large non selected cohort. Medicine (2017) 96:e7039. doi: 10.1097/MD.0000000000007039

3. O'Connor SD, Pickhardt PJ, Kim DH, Oliva MR, Silverman SG. Incidental finding of renal masses at unenhanced CT: prevalence and analysis of features for guiding management. AJR Am J Roentgenol (2011) 197:139–45. doi: 10.2214/AJR.10.5920

4. Giulietti M, Cecati M, Sabanovic B, Scire A, Cimadamore A, Santoni M, et al. The role of artificial intelligence in the diagnosis and prognosis of renal cell tumors. Diagnostics (Basel) (2021) 11(2):206. doi: 10.3390/diagnostics11020206

5. Pasini G, Stefano A, Russo G, Comelli A, Marinozzi F, Bini F. Phenotyping the histopathological subtypes of non-small-cell lung carcinoma: how beneficial is radiomics? Diagnostics (Basel) (2023) 13(6):1167. doi: 10.3390/diagnostics13061167

6. Li X, Ono C, Warita N, Shoji T, Nakagawa T, Usukura H, et al. Heart rate information-based machine learning prediction of emotions among pregnant women. Front Psychiatry (2021) 12:799029. doi: 10.3389/fpsyt.2021.799029

7. He K, Zhang X, Ren S, Sun J. Deep residual learning for image recognition. In: Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR). New York, NY, USA: Institute of Electrical and Electronics Engineers (IEEE) (2016). p. 770–8.

8. Hosny A, Parmar C, Quackenbush J, Schwartz LH, Aerts H. Artificial intelligence in radiology. Nat Rev Cancer (2018) 18:500–10. doi: 10.1038/s41568-018-0016-5

9. Lu Y, Yu Q, Gao Y, Zhou Y, Liu G, Dong Q, et al. Identification of metastatic lymph nodes in MR imaging with faster region-based convolutional neural networks. Cancer Res (2018) 78:5135–43. doi: 10.1158/0008-5472.CAN-18-0494

10. Sechopoulos I, Teuwen J, Mann R. Artificial intelligence for breast cancer detection in mammography and digital breast tomosynthesis: State of the art. Semin Cancer Biol (2021) 72:214–25. doi: 10.1016/j.semcancer.2020.06.002

11. Forte GC, Altmayer S, Silva RF, Stefani MT, Libermann LL, Cavion CC, et al. Deep learning algorithms for diagnosis of lung cancer: A systematic review and meta-analysis. Cancers (2022) 14(16):3856. doi: 10.3390/cancers14163856

12. Litjens G, Kooi T, Bejnordi BE, Setio AAA, Ciompi F, Ghafoorian M, et al. A survey on deep learning in medical image analysis. Med Image Anal (2017) 42:60–88. doi: 10.1016/j.media.2017.07.005

13. Rabaan AA, Bakhrebah MA, AlSaihati H, Alhumaid S, Alsubki RA, Turkistani SA, et al. Artificial intelligence for clinical diagnosis and treatment of prostate cancer. Cancers (2022) 14(22):5595. doi: 10.3390/cancers14225595

14. Naik N, Tokas T, Shetty DK, Hameed BMZ, Shastri S, Shah MJ, et al. Role of deep learning in prostate cancer management: past, present and future based on a comprehensive literature review. J Clin Med (2022) 11(13):3575. doi: 10.3390/jcm11133575

15. Almeida G, Tavares J. Deep learning in radiation oncology treatment planning for prostate cancer: A systematic review. J Med Syst (2020) 44:179. doi: 10.1007/s10916-020-01641-3

16. Bandyk MG, Gopireddy DR, Lall C, Balaji KC, Dolz J. MRI and CT bladder segmentation from classical to deep learning based approaches: Current limitations and lessons. Comput Biol Med (2021) 134:104472. doi: 10.1016/j.compbiomed.2021.104472

17. Borhani S, Borhani R, Kajdacsy-Balla A. Artificial intelligence: A promising frontier in bladder cancer diagnosis and outcome prediction. Crit Rev Oncol Hematol (2022) 171:103601. doi: 10.1016/j.critrevonc.2022.103601

18. Li M, Jiang Z, Shen W, Liu H. Deep learning in bladder cancer imaging: A review. Front Oncol (2022) 12:930917. doi: 10.3389/fonc.2022.930917

19. Black KM, Law H, Aldoukhi A, Deng J, Ghani KR. Deep learning computer vision algorithm for detecting kidney stone composition. BJU Int (2020) 125(6):920–4. doi: 10.1111/bju.15035

20. Caglayan A, Horsanali MO, Kocadurdu K, Ismailoglu E, Guneyli S. Deep learning model-assisted detection of kidney stones on computed tomography. Int Braz J Urol (2022) 48:830–9. doi: 10.1590/s1677-5538.ibju.2022.0132

21. Liu YY, Huang ZH, Huang KW. Deep learning model for computer-aided diagnosis of urolithiasis detection from kidney-ureter-bladder images. Bioengineering (Basel) (2022) 9(12):811. doi: 10.3390/bioengineering9120811

22. Schieda N, Lim RS, McInnes MDF, Thomassin I, Renard-Penna R, Tavolaro S, et al. Characterization of small (<4cm) solid renal masses by computed tomography and magnetic resonance imaging: Current evidence and further development. Diagn Interv Imaging (2018) 99:443–55. doi: 10.1016/j.diii.2018.03.004

23. Hora M, Macklin PS, Sullivan ME, Tapping CR, Cranston DW, Webster GM, et al. Tumour seeding in the tract of percutaneous renal tumour biopsy: A report on seven cases from a UK tertiary referral centre. Eur Urol (2019) 75:861–7. doi: 10.1016/j.eururo.2018.12.011

24. Ljungberg B, Albiges L, Abu-Ghanem Y, Bedke J, Capitanio U, Dabestani S, et al. EAU guidelines on renal cell carcinoma 2022. Arnhem, The Netherlands: European Association of Urology (2022).

25. Herrera-Caceres JO, Finelli A, Jewett MAS. Renal tumor biopsy: indicators, technique, safety, accuracy results, and impact on treatment decision management. World J Urol (2019) 37:437–43. doi: 10.1007/s00345-018-2373-9

26. Zhou L, Zhang Z, Chen YC, Zhao ZY, Yin XD, Jiang HB. A deep learning-based radiomics model for differentiating benign and malignant renal tumors. Transl Oncol (2019) 12:292–300. doi: 10.1016/j.tranon.2018.10.012

27. Tanaka T, Huang Y, Marukawa Y, Tsuboi Y, Masaoka Y, Kojima K, et al. Differentiation of small (≤ 4 cm) renal masses on multiphase contrast-enhanced CT by deep learning. AJR Am J Roentgenol (2020) 214(3):605–12. doi: 10.2214/AJR.19.22074

28. Zabihollahy F, Schieda N, Krishna S, Ukwatta E. Automated classification of solid renal masses on contrast-enhanced computed tomography images using convolutional neural network with decision fusion. Eur Radiol (2020) 30(9):5183–90. doi: 10.1007/s00330-020-06787-9

29. Xi IL, Zhao Y, Wang R, Chang M, Purkayastha S, Chang K, et al. Deep learning to distinguish benign from malignant renal lesions based on routine MR imaging. Clin Cancer Res (2020) 26:1944–52. doi: 10.1158/1078-0432.CCR-19-0374

30. Han S, Hwang SI, Lee HJ. The classification of renal cancer in 3-phase CT images using a deep learning method. J Digit Imaging (2019) 32:638–43. doi: 10.1007/s10278-019-00230-2

31. Zheng Y, Wang S, Chen Y, Du HQ. Deep learning with a convolutional neural network model to differentiate renal parenchymal tumors: a preliminary study. Abdom Radiol (NY) (2021) 46(7):3260–8. doi: 10.1007/s00261-021-02981-5

32. Zuo T, Zheng Y, He L, Chen T, Zheng B, Zheng S, et al. Automated classification of papillary renal cell carcinoma and chromophobe renal cell carcinoma based on a small computed tomography imaging dataset using deep learning. Front Oncol (2021) 11:746750. doi: 10.3389/fonc.2021.746750

33. Lee H, Hong H, Kim J, Jung DC. Deep feature classification of angiomyolipoma without visible fat and renal cell carcinoma in abdominal contrast-enhanced CT images with texture image patches and hand-crafted feature concatenation. Med Phys (2018) 45:1550–61. doi: 10.1002/mp.12828

34. Oberai A, Varghese B, Cen S, Angelini T, Hwang D, Gill I, et al. Deep learning based classification of solid lipid-poor contrast enhancing renal masses using contrast enhanced CT. Br J Radiol (2020) 93:20200002. doi: 10.1259/bjr.20200002

35. Coy H, Hsieh K, Wu W, Nagarajan MB, Young JR, Douek ML, et al. Deep learning and radiomics: the utility of Google TensorFlow Inception in classifying clear cell renal cell carcinoma and oncocytoma on multiphasic CT. Abdom Radiol (NY) (2019) 44:2009–20. doi: 10.1007/s00261-019-01929-0

36. Baghdadi A, Aldhaam NA, Elsayed AS, Hussein AA, Cavuoto LA, Kauffman E, et al. Automated differentiation of benign renal oncocytoma and chromophobe renal cell carcinoma on computed tomography using deep learning. BJU Int (2020) 125:553–60. doi: 10.1111/bju.14985

37. Pedersen M, Andersen MB, Christiansen H, Azawi NH. Classification of renal tumour using convolutional neural networks to detect oncocytoma. Eur J Radiol (2020) 133:109343. doi: 10.1016/j.ejrad.2020.109343

38. Nikpanah M, Xu Z, Jin D, Farhadi F, Saboury B, Ball MW, et al. A deep-learning based artificial intelligence (AI) approach for differentiation of clear cell renal cell carcinoma from oncocytoma on multi-phasic MRI. Clin Imaging (2021) 77:291–8. doi: 10.1016/j.clinimag.2021.06.016

39. Zabihollahy F, Schieda N, Krishna S, Ukwatta E. Ensemble U-net-based method for fully automated detection and segmentation of renal masses on computed tomography images. Med Phys (2020) 47:4032–44. doi: 10.1002/mp.14193

40. Moch H, Cubilla AL, Humphrey PA, Reuter VE, Ulbright TM. The 2016 WHO classification of tumours of the urinary system and male genital organs-part A: renal, penile, and testicular tumours. Eur Urol (2016) 70:93–105. doi: 10.1016/j.eururo.2016.02.029

41. Amin J, Xu B, Badkhshan S, Creighton TT, Abbotoy D, Murekeyisoni C, et al. Identification and validation of radiographic enhancement for reliable differentiation of CD117(+) benign renal oncocytoma and chromophobe renal cell carcinoma. Clin Cancer Res (2018) 24:3898–907. doi: 10.1158/1078-0432.CCR-18-0252

42. Ljungberg B, Albiges L, Abu-Ghanem Y, Bedke J, Capitanio U, Dabestani S, et al. European association of urology guidelines on renal cell carcinoma: the 2022 update. Eur Urol (2022) 82:399–410. doi: 10.1016/j.eururo.2022.03.006

43. Wang D, Huang X, Bai L, Zhang X, Wei J, Zhou J. Differential diagnosis of chromophobe renal cell carcinoma and papillary renal cell carcinoma with dual-energy spectral computed tomography. Acta Radiol (2020) 61:1562–9. doi: 10.1177/0284185120903447

44. Fuhrman SA, Lasky LC, Limas C. Prognostic significance of morphologic parameters in renal cell carcinoma. Am J Surg Pathol (1982) 6:655–63. doi: 10.1097/00000478-198210000-00007

45. Delahunt B, Cheville JC, Martignoni G, Humphrey PA, Magi-Galluzzi C, McKenney J, et al. The International Society of Urological Pathology (ISUP) grading system for renal cell carcinoma and other prognostic parameters. Am J Surg Pathol (2013) 37:1490–504. doi: 10.1097/PAS.0b013e318299f0fb

46. Zhao Y, Chang M, Wang R, Xi IL, Chang K, Huang RY, et al. Deep learning based on MRI for differentiation of low- and high-grade in low-stage renal cell carcinoma. J Magn Reson Imaging (2020) 52:1542–9. doi: 10.1002/jmri.27153

47. Xu L, Yang C, Zhang F, Cheng X, Wei Y, Fan S, et al. Deep learning using CT images to grade clear cell renal cell carcinoma: development and validation of a prediction model. Cancers (2022) 14(11):2574. doi: 10.3390/cancers14112574

48. Lin F, Ma C, Xu J, Lei Y, Li Q, Lan Y, et al. A CT-based deep learning model for predicting the nuclear grade of clear cell renal cell carcinoma. Eur J Radiol (2020) 129:109079. doi: 10.1016/j.ejrad.2020.109079

49. Yang M, He X, Xu L, Liu M, Deng J, Cheng X, et al. CT-based transformer model for non-invasively predicting the Fuhrman nuclear grade of clear cell renal cell carcinoma. Front Oncol (2022) 12:961779. doi: 10.3389/fonc.2022.961779

50. He X, Wei Y, Zhang H, Zhang T, Yuan F, Huang Z, et al. Grading of clear cell renal cell carcinomas by using machine learning based on artificial neural networks and radiomic signatures extracted from multidetector computed tomography images. Acad Radiol (2020) 27:157–68. doi: 10.1016/j.acra.2019.05.004

51. Al-Aynati M, Chen V, Salama S, Shuhaibar H, Treleaven D, Vincic L. Interobserver and intraobserver variability using the Fuhrman grading system for renal cell carcinoma. Arch Pathol Lab Med (2003) 127:593–6. doi: 10.5858/2003-127-0593-IAIVUT

52. Araújo T, Aresta G, Castro E, Rouco J, Aguiar P, Eloy C, et al. Classification of breast cancer histology images using convolutional neural networks. PLoS One (2017) 12:e0177544. doi: 10.1371/journal.pone.0177544

53. Coudray N, Ocampo PS, Sakellaropoulos T, Narula N, Snuderl M, Fenyö D, et al. Classification and mutation prediction from non-small cell lung cancer histopathology images using deep learning. Nat Med (2018) 24:1559–67. doi: 10.1038/s41591-018-0177-5

54. Wei JW, Suriawinata AA, Vaickus LJ, Ren B, Liu X, Lisovsky M, et al. Evaluation of a deep neural network for automated classification of colorectal polyps on histopathologic slides. JAMA Netw Open (2020) 3:e203398. doi: 10.1001/jamanetworkopen.2020.3398

55. Tabibu S, Vinod PK, Jawahar CV. Pan-Renal Cell Carcinoma classification and survival prediction from histopathology images using deep learning. Sci Rep (2019) 9:10509. doi: 10.1038/s41598-019-46718-3

56. Zhu M, Ren B, Richards R, Suriawinata M, Tomita N, Hassanpour S. Development and evaluation of a deep neural network for histologic classification of renal cell carcinoma on biopsy and surgical resection slides. Sci Rep (2021) 11(1):7080. doi: 10.1038/s41598-021-86540-4

57. Abu Haeyeh Y, Ghazal M, El-Baz A, Talaat IM. Development and evaluation of a novel deep-learning-based framework for the classification of renal histopathology images. Bioengineering (Basel) (2022) 9(9):423. doi: 10.3390/bioengineering9090423

58. Zhu D, Li J, Li Y, Wu J, Zhu L, Li J, et al. Multimodal ultrasound fusion network for differentiating between benign and malignant solid renal tumors. Front Mol Biosci (2022) 9:982703. doi: 10.3389/fmolb.2022.982703

59. Schulz S, Woerl AC, Jungmann F, Glasner C, Stenzel P, Strobl S, et al. Multimodal deep learning for prognosis prediction in renal cancer. Front Oncol (2021) 11:788740. doi: 10.3389/fonc.2021.788740

60. Furrer MA, Spycher SCJ, Büttiker SM, Gross T, Bosshard P, Thalmann GN, et al. Comparison of the diagnostic performance of contrast-enhanced ultrasound with that of contrast-enhanced computed tomography and contrast-enhanced magnetic resonance imaging in the evaluation of renal masses: A systematic review and meta-analysis. Eur Urol Oncol (2020) 3:464–73. doi: 10.1016/j.euo.2019.08.013

61. Smail LC, Dhindsa K, Braga LH, Becker S, Sonnadara RR. Using deep learning algorithms to grade hydronephrosis severity: toward a clinical adjunct. Front Pediatr (2020) 8:1. doi: 10.3389/fped.2020.00001

62. Lien WC, Chang YC, Chou HH, Lin LC, Liu YP, Liu L, et al. Detecting hydronephrosis through ultrasound images using state-of-the-art deep learning models. Ultrasound Med Biol (2022) 49(3):723–33. doi: 10.1016/j.ultrasmedbio.2022.10.001

63. Song SH, Han JH, Kim KS, Cho YA, Youn HJ, Kim YI, et al. Deep-learning segmentation of ultrasound images for automated calculation of the hydronephrosis area to renal parenchyma ratio. Invest Clin Urol (2022) 63:455–63. doi: 10.4111/icu.20220085

64. Yin Q, Hung SC, Wang L, Lin W, Fielding JR, Rathmell WK, et al. Associations between tumor vascularity, vascular endothelial growth factor expression and PET/MRI radiomic signatures in primary clear-cell-renal-cell-carcinoma: proof-of-concept study. Sci Rep (2017) 7:43356. doi: 10.1038/srep43356

65. Kocak B, Durmaz ES, Ates E, Ulusan MB. Radiogenomics in clear cell renal cell carcinoma: machine learning-based high-dimensional quantitative CT texture analysis in predicting PBRM1 mutation status. AJR Am J Roentgenol (2019) 212:W55–W63. doi: 10.2214/AJR.18.20443

66. Feng Z, Zhang L, Qi Z, Shen Q, Hu Z, Chen F. Identifying BAP1 mutations in clear-cell renal cell carcinoma by CT radiomics: preliminary findings. Front Oncol (2020) 10:279. doi: 10.3389/fonc.2020.00279

67. Kocak B, Durmaz ES, Kaya OK, Kilickesmez O. Machine learning-based unenhanced CT texture analysis for predicting BAP1 mutation status of clear cell renal cell carcinomas. Acta Radiol (2019) 61(6):856–64. doi: 10.1177/0284185119881742

68. Li ZC, Zhai G, Zhang J, Wang Z, Liu G, Wu GY, et al. Differentiation of clear cell and non-clear cell renal cell carcinomas by all-relevant radiomics features from multiphase CT: a VHL mutation perspective. Eur Radiol (2019) 29:3996–4007. doi: 10.1007/s00330-018-5872-6

69. Scrima AT, Lubner MG, Abel EJ, Havighurst TC, Shapiro DD, Huang W, et al. Texture analysis of small renal cell carcinomas at MDCT for predicting relevant histologic and protein biomarkers. Abdom Radiol (NY) (2019) 44:1999–2008. doi: 10.1007/s00261-018-1649-2

70. Wang D, Xu J, Zhang Z, Li S, Zhang X, Zhou Y, et al. Evaluation of rectal cancer circumferential resection margin using faster region-based convolutional neural network in high-resolution magnetic resonance images. Dis Colon Rectum (2020) 63:143–51. doi: 10.1097/DCR.0000000000001519

71. Liu S, Zhang Y, Ju Y, Li Y, Kang X, Yang X, et al. Establishment and clinical application of an artificial intelligence diagnostic platform for identifying rectal cancer tumor budding. Front Oncol (2021) 11:626626. doi: 10.3389/fonc.2021.626626

72. Gill TS, Varghese BA, Hwang DH, Cen SY, Aron M, Aron M, et al. Juxtatumoral perinephric fat analysis in clear cell renal cell carcinoma. Abdom Radiol (NY) (2019) 44:1470–80. doi: 10.1007/s00261-018-1848-x

73. Alayed A, Krishna S, Breau RH, Currin S, Flood TA, Narayanasamy S, et al. Diagnostic accuracy of MRI for detecting inferior vena cava wall invasion in renal cell carcinoma tumor thrombus using quantitative and subjective analysis. AJR Am J Roentgenol (2019) 212:562–9. doi: 10.2214/AJR.18.20209

74. Khene ZE, Bensalah K, Largent A, Shariat S, Verhoest G, Peyronnet B, et al. Role of quantitative computed tomography texture analysis in the prediction of adherent perinephric fat. World J Urol (2018) 36:1635–42. doi: 10.1007/s00345-018-2292-9

75. Wang HY, Su ZH, Xu X, Huang N, Sun ZP, Wang YW, et al. Dynamic contrast-enhanced MRI in renal tumors: common subtype differentiation using pharmacokinetics. Sci Rep (2017) 7:3117. doi: 10.1038/s41598-017-03376-7

76. Selvaraju RR, Cogswell M, Das A, Vedantam R, Parikh D, Batra D. (2017). Grad-CAM: Visual explanations from deep networks via gradient-based localization, in: Proceedings of the IEEE international conference on computer vision, Los Alamitos, CA, USA: IEEE Computer Society. pp. 618–26.

Keywords: deep learning, artificial intelligence, carcinoma, prediction model, imaging diagnosis

Citation: Wang Z, Zhang X, Wang X, Li J, Zhang Y, Zhang T, Xu S, Jiao W and Niu H (2023) Deep learning techniques for imaging diagnosis of renal cell carcinoma: current and emerging trends. Front. Oncol. 13:1152622. doi: 10.3389/fonc.2023.1152622

Received: 28 January 2023; Accepted: 11 August 2023;

Published: 01 September 2023.

Edited by:

Alessandro Stefano, National Research Council (CNR), Italy

Reviewed by:

Rakesh Shiradkar, Emory University, United States

Copyright © 2023 Wang, Zhang, Wang, Li, Zhang, Zhang, Xu, Jiao and Niu. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Wei Jiao, jiaowei3929@163.com
