
MINI REVIEW article

Front. Oncol., 17 January 2023

Sec. Surgical Oncology

Volume 13 - 2023 | https://doi.org/10.3389/fonc.2023.1116761

This article is part of the Research Topic: Artificial Intelligence in Colorectal Cancers

Background: A considerable number of recent studies have used artificial intelligence (AI) in the area of colorectal cancer (CRC). Surgical treatment remains the most important curative component of CRC management. Artificial intelligence in CRC surgery is not nearly as advanced as it is in screening (colonoscopy), diagnosis and prognosis, mainly because of the greater complexity and variability of structures and elements in the surgical field of view, as well as a general shortage of annotated video banks.

Methods: A literature search was made and relevant studies were included in the minireview.

Results: The intraoperative steps which, at this moment, can benefit from AI in CRC are: phase and action recognition, excision plane navigation, endoscopy control, real-time circulation analysis, knot tying, automatic optical biopsy and hyperspectral imaging. This minireview also analyses the current advances in robotic treatment of CRC as well as the present possibility of automated CRC robotic surgery.

Conclusions: The use of AI in CRC surgery is still at its beginnings. The development of AI models capable of reproducing a colorectal expert surgeon’s skill, the creation of large and complex datasets and the standardization of surgical colorectal procedures will contribute to the widespread use of AI in CRC surgical treatment.

Colorectal cancer (CRC) is the second most prevalent cause of cancer-related deaths worldwide and the third most common malignancy in both men and women (1, 2). With liver metastases present in nearly 20% of cases, 60–70% of individuals with clinical symptoms of CRC are detected at advanced stages. Additionally, individuals with metastatic dissemination at the time of diagnosis have a 5-year overall survival rate of only 10–15%, compared with 80–90% for patients with local malignancy (3).

Artificial intelligence (AI) is a branch of computer science that focuses on creating intelligent computers capable of performing activities that normally require human intelligence. Several AI technologies exist all around us, but understanding and evaluating their impact on today’s society can be difficult. Deep learning algorithms and support vector machines (SVMs) have made important contributions to this technology during the last decade, playing a key role in medical and healthcare systems (4).

There are two types of AI applications in the medical field: virtual and physical. The virtual component of AI is made up of machine learning (ML) and deep learning (DL, a subset of ML) (5). There are three types of machine learning algorithms: supervised, unsupervised, and reinforcement learning. Meanwhile, the most well-known deep learning scheme, a convolutional neural network (CNN), is a sort of multilayer artificial neural network that is extremely efficient for image categorization (6).

Artificial neural networks (ANNs) are ML tools. In function, they mimic the human brain by connecting and discovering complicated relationships and patterns in data. ANNs are made up of numerous computational units (neurons) that accept inputs, execute calculations, and send output to the next computational unit. The input is processed as signals by layers of algorithms, which produce specific patterns as final output, which are interpreted and employed in decision-making. Simple 1- or 2-layered neural networks are typically used in ANNs (7, 8).
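The flow of signals described above (inputs, per-neuron calculation, output passed to the next layer) can be illustrated with a minimal sketch of a two-layer network. The weights, the sigmoid activation, and all function names below are hypothetical choices made for illustration only; they are not taken from any of the cited studies.

```python
import math


def sigmoid(x):
    """Squash a real number into the range (0, 1)."""
    return 1.0 / (1.0 + math.exp(-x))


def neuron(inputs, weights, bias):
    """One computational unit: weighted sum of its inputs, then an activation."""
    total = sum(x * w for x, w in zip(inputs, weights)) + bias
    return sigmoid(total)


def layer(inputs, weight_rows, biases):
    """A layer is a list of neurons that all read the same inputs."""
    return [neuron(inputs, w, b) for w, b in zip(weight_rows, biases)]


def forward(inputs, hidden_w, hidden_b, out_w, out_b):
    """Two-layer network: the hidden layer's outputs feed the output layer."""
    hidden = layer(inputs, hidden_w, hidden_b)
    return layer(hidden, out_w, out_b)


# Hypothetical weights chosen only for illustration (not trained values).
HIDDEN_W = [[0.5, -0.4], [0.3, 0.8]]
HIDDEN_B = [0.0, -0.1]
OUT_W = [[1.2, -0.7]]
OUT_B = [0.05]

if __name__ == "__main__":
    # A single output between 0 and 1 that could be read as a class score.
    print(forward([1.0, 0.5], HIDDEN_W, HIDDEN_B, OUT_W, OUT_B))
```

In a trained network the weights would be learned from data rather than fixed by hand; the sketch only shows how outputs of one layer become inputs of the next.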

Computer vision (CV) is focused on how computers may learn to understand digital images and videos (such as object and scene recognition) at a high level, in a manner similar to the human eye. The processed data can include video sequences, several camera perspectives, or multidimensional data from a medical scanning instrument (7, 9–11).

The physical branch of AI includes medical devices and robots, such as the Da Vinci Surgical System (Intuitive Surgical Inc., Sunnyvale, CA, USA), as well as nanorobots.

A considerable number of recent studies have used AI in the area of CRC (7–9). From the standpoint of clinical practice, the available AI applications in CRC primarily cover four clinical aspects (10):

● Screening: Endoscopy is the gold standard for CRC screening. AI-assisted colonoscopy for polyp detection and characterization, and risk prediction models using clinical and omics data, are expected to improve CRC screening.

● Diagnosis: The qualitative diagnosis and staging of CRC are mostly based on imaging and pathological examination. DL can greatly improve medical image interpretation, minimize disparities in experience, and reduce misinterpretation rates thanks to powerful image recognition processing technology (9).

● Treatment: The treatment of CRC mainly consists of surgery, chemotherapy and radiotherapy. Novel therapies can be evaluated with the help of AI, and AI can support a more precise treatment choice, individually tailored to each patient (11).

● Prognosis: Predicting recurrence and estimating survival is more accurate with an ML approach, as it uses various multidimensional information. Deep learning has been demonstrated to be as good as or better than statistical methods (e.g., the Cox regression model) in cancer prognosis (12).

Surgical treatment of CRC still remains the most important curative component. Artificial intelligence in CRC surgery is not nearly as advanced as it is in screening (colonoscopy), diagnosis and prognosis. This is most likely due to the increased complexity and variability of structures and elements in all fields of view, as well as a general shortage of equivalent annotated video banks for utilization (13, 14).

The aim of this minireview is to summarize up-to-date information on the possibility of using AI to improve the outcome of surgical treatment of CRC. It concentrates on the intraoperative steps which can benefit from AI, summarizes the published studies, and gives a brief outline of current AI applications in colorectal surgery. It also analyzes the current advances in CRC robotic treatment, especially automated surgeries. In order to make appropriate decisions on topics deserving further investigation, it is necessary to understand the current state of AI in the surgical treatment of CRC.

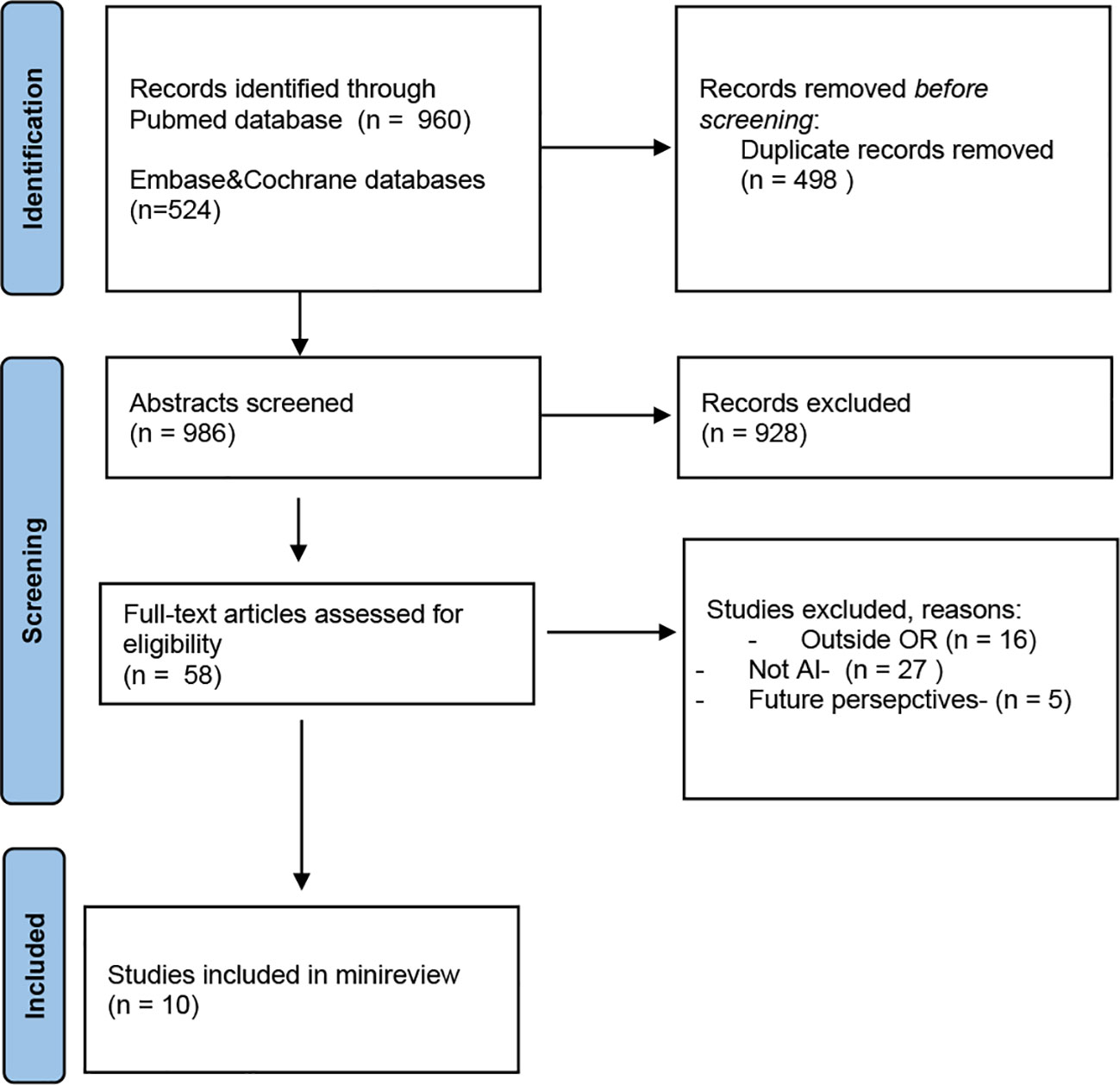

A literature search was performed up to September 5th, 2022 using the following online databases: PubMed, Embase, and Cochrane Library. The terms AI, OR, and surgery, including synonyms or equivalent terms, were used to obtain the literature. We read the abstracts and selected the articles presenting data which can be used during CRC surgical treatment. The literature search retrieved 1484 articles from the 3 databases. Finally, 10 studies were included. The flow diagram can be viewed in Figure 1.

Figure 1 PRISMA 2020 flow diagram search (15).

Table 1 shows an overview of the included studies, their application, and the specific AI subfield the application is based on.

With the introduction of robotic colorectal surgery, colorectal cancer surgical treatment has entered a new era. The da Vinci System is now the most extensively utilized robotic surgical system. It allows surgeons to execute extremely delicate or highly complex procedures with wristed instruments that have seven degrees of freedom. When compared to traditional open surgery, the advantages of surgery with these robots include a shorter period of recovery and hospital stay, minimal scarring, smaller incisions, and a significant reduction in the risk of surgical site infections, postoperative pain, and blood loss (26, 27). Computer-controlled instruments enable surgeons to work with a wider viewing field, greater flexibility, dexterity, and precision, and less fatigue. The da Vinci dual console provides integrated teaching and supervision for residents’ surgical training. The Senhance surgical robot (TransEnterix Surgical Inc., Morrisville, NC, USA) is a laparoscopy-based technology that allows skilled laparoscopic surgeons to perform more sophisticated surgeries (28).

The robotic platform has a distinct benefit in that it allows access to difficult-to-reach locations, such as a narrow pelvis, while also preserving postoperative urinary and sexual function (29). In the ROLARR randomized clinical trial, conventional laparoscopic rectal surgery was associated with higher conversion rates than robotic surgery in men, obese patients, and patients undergoing a low anterior resection (30). Recent research has also shown that robotic-assisted surgery appears to be more suitable for protecting the pelvic autonomic nerve (29, 31, 32).

The Intuitive Surgical da Vinci system pioneered the notion of transparent teleoperation, in which motions made by the surgeon on the control interface are precisely copied by surgical tools on the patient side. The lack of a decision-making process by the machine in the transparent teleoperation paradigm gives the surgeon unlimited control. However, these devices have a certain degree of algorithmic autonomy, such as tremor suppression and redundancy resolution, which does not interfere with the surgeon’s actions (33). They cannot be considered AI-driven devices, but they offer a hardware starting point for future autonomous operating robots.

Shademan et al. described complete in vivo autonomous robotic anastomosis of porcine intestine utilizing the Smart Tissue Autonomous Robot (STAR) (16). Although the experiments were conducted in a carefully controlled setting, STAR surpassed human surgeons in a range of ex vivo and in vivo surgical tasks. In later in vivo tests, STAR placed 66.28% of stitches correctly at the first attempt, which corresponded to an average of 0.34 suture hesitancy events per stitch (17).

For the first time, these experiments proved the fledgling clinical feasibility of an autonomous soft-tissue surgical robot. STAR was controlled by artificial intelligence (AI) algorithms and received input from an array of optical and tactile sensors, as opposed to traditional surgical robots, which are managed in real time by people and have become ubiquitous in specific subspecialties.

Phase recognition is the task of identifying surgical images according to preset surgical phases. Phases are parts of surgical operations that are required to finish procedures successfully. They are often determined by consensus and recorded on surgical videos (34).

Several studies have addressed phase and action recognition in different types of surgery, including colorectal surgery. Kitaguchi et al. aimed to create a large annotated dataset of laparoscopic colorectal surgery videos and to evaluate the accuracy of automatic recognition of surgical phase, action, and tool by applying AI to the dataset. They used 300 intraoperative videos; 82 million frames were annotated for a phase and action classification task, while 4000 frames were annotated for a tool segmentation task. Of these frames, 80% were used for the training dataset and 20% for the test dataset. A CNN was used to analyze the videos. The accuracy for the automatic surgical phase recognition task was 81%, while the accuracy for the action classification task was 83.2% (18).
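To make the evaluation setup concrete, the following is a minimal sketch of an 80/20 split of annotated frames and of the accuracy metric reported above. It is not the pipeline used by Kitaguchi et al.; the function names, the random seed, and the phase labels in the usage note are hypothetical.

```python
import random


def split_frames(frame_ids, train_fraction=0.8, seed=0):
    """Shuffle frame identifiers and split them into train and test lists."""
    ids = list(frame_ids)
    random.Random(seed).shuffle(ids)  # fixed seed so the split is reproducible
    cut = int(len(ids) * train_fraction)
    return ids[:cut], ids[cut:]


def accuracy(true_labels, predicted_labels):
    """Fraction of frames whose predicted phase matches the annotation."""
    if len(true_labels) != len(predicted_labels):
        raise ValueError("label lists must have the same length")
    if not true_labels:
        raise ValueError("no labels to score")
    correct = sum(t == p for t, p in zip(true_labels, predicted_labels))
    return correct / len(true_labels)
```

For example, `accuracy(["dissection", "dissection", "anastomosis", "closure"], ["dissection", "dissection", "anastomosis", "dissection"])` scores three of four frames as correct, so an accuracy of 81% means that roughly four out of five test frames were assigned the annotated phase.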

Igaki et al. reported the creation of an image-guided navigation system for areolar tissue in the total mesorectal excision plane using deep learning. This could be useful to surgeons, since areolar tissue can be used as a landmark for the optimum dissection plane. Deep learning-based semantic segmentation of areolar tissue was conducted in the total mesorectal excision plane. The deep learning model was trained using intraoperative images of the total mesorectal excision scene taken from videos of laparoscopic left-sided resections. Six hundred annotated images were generated from 32 videos, with 528 images used in training and 72 images used in testing. The resulting semantic segmentation model helps in locating and highlighting the areolar tissue area in the total mesorectal excision plane (19).

There are commercial systems available that allow the endoscopic camera to move without human intervention, following particular features in the scene. ViKY (35), FreeHand (36), SOLOASSIST (37) and AutoLap (20), for example, perform camera stabilization and target tracking. These were the first autonomous systems used to assist with minimally invasive surgery (MIS) interventions. The autonomy is implemented via feature-tracking algorithms that keep the surgical instrument in the endoscope’s visual field (38).
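The idea of keeping a tracked instrument inside the visual field can be sketched as a simple proportional re-centering rule. This is an illustrative assumption and not the control law of any of the commercial systems named above; the function name, the dead zone, and the gain are all hypothetical.

```python
def camera_correction(tool_xy, frame_size, dead_zone=0.1, gain=0.5):
    """Return a (pan, tilt) command that moves the tool tip toward the image centre.

    tool_xy    -- (x, y) pixel position of the tracked instrument tip
    frame_size -- (width, height) of the endoscopic image in pixels
    dead_zone  -- normalised radius around the centre in which no motion is issued
    gain       -- proportional gain applied to the normalised offset
    """
    width, height = frame_size
    # Normalise the offset from the image centre to the range [-1, 1].
    dx = (tool_xy[0] - width / 2) / (width / 2)
    dy = (tool_xy[1] - height / 2) / (height / 2)
    # Ignore small offsets so the camera does not jitter around the centre.
    if (dx * dx + dy * dy) ** 0.5 <= dead_zone:
        return (0.0, 0.0)
    return (gain * dx, gain * dy)
```

With a 1920 by 1080 image, a tool tip at the right edge of the frame, `camera_correction((1920, 540), (1920, 1080))`, yields a pan command of 0.5 and no tilt, while a tool already at the centre yields no motion.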

For AutoLap, minimally invasive rectal resection with total mesorectal excision was chosen to experimentally test cognitive camera guidance, as this surgical method places great demands on camera control. A single surgeon performed twenty surgeries with human camera guidance for learning purposes. After the completion of the surgeon’s learning curve, two different robots were trained on data from the manual camera guidance, followed by using one robot to train the other. The performance of the cognitive camera robot improved with experience: both the duration of each surgery and the quality of the camera guidance (rated by the surgeon as good/neutral/poor) improved, with guidance rated good in 56.2% of evaluations (20).

In order to predict anastomotic complications attributable to hypoperfusion after laparoscopic colonic surgery, a fluorescence laparoscopic system can be used during surgery for angiography with indocyanine green (ICG). Each patient has a different perfusion status, due to individual variations in collateral circulation blood flow pathways, which yields a different ICG curve. A well-trained AI can forecast the probability of hypoperfusion-related anastomotic problems by analyzing the microcirculation state using numerous metrics and ICG curve patterns. The AI-based microperfusion analysis system can help surgeons by quickly performing real-time analysis and presenting the information as a color map. Using a neural network that imitates the visual cortex, Park et al. clustered 10,000 ICG curves into 25 patterns using unsupervised learning, an AI training approach that does not require annotations during training. ICG curves were derived from 65 procedures. Curves were preprocessed to minimize the degradation of the AI model caused by external factors such as light source reflection, background, and camera movement. The AI model was more accurate in the microcirculation evaluation when the AUC of the AI-based technique was compared with those of T1/2max (time from first fluorescence increase to half of maximum), TR (time ratio: T1/2max/Tmax, where Tmax is the time from first fluorescence increase to maximum), and RS (rise slope), with values of 0.842, 0.750, 0.734, and 0.677, respectively. This makes it easier to create a red-green-blue color mapping scheme that classifies the degree of vascularization. In comparison to a surgeon’s purely visual inspection, this AI model delivers a more objective and accurate approach to fluorescence signal evaluation. It can provide an immediate evaluation of the grade of perfusion during minimally invasive colorectal procedures, allowing for early detection of insufficient vascularization (21, 39).
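The conventional curve parameters that the AI model was compared against can be computed directly from a time-intensity curve. The sketch below follows the definitions given in the text for T1/2max, Tmax, and TR. Several details are assumptions made for illustration: the first sample is taken as the baseline, the half-maximum time is found by linear interpolation, and RS is computed as the rise above baseline divided by Tmax, since the text does not define the rise slope precisely. The function name and the sample curve are hypothetical.

```python
def icg_metrics(times, intensities):
    """Compute T1/2max, Tmax, TR and RS from one ICG time-intensity curve.

    times are assumed to be strictly increasing; the first sample is
    assumed to represent the baseline before any fluorescence appears.
    """
    if len(times) != len(intensities) or len(times) < 2:
        raise ValueError("need two equally long sequences with at least 2 samples")
    baseline = intensities[0]
    peak = max(intensities)
    if peak <= baseline:
        raise ValueError("curve shows no fluorescence increase")

    # Onset: the last sample before the intensity first rises above baseline.
    first_rise = next(i for i, v in enumerate(intensities) if v > baseline)
    onset = first_rise - 1

    # Tmax: time from first fluorescence increase to maximum.
    peak_index = intensities.index(peak)
    t_max = times[peak_index] - times[onset]

    # T1/2max: time from first increase to half of maximum (linear interpolation).
    half_level = baseline + (peak - baseline) / 2
    i = next(k for k in range(onset + 1, len(intensities))
             if intensities[k] >= half_level)
    t0, t1 = times[i - 1], times[i]
    v0, v1 = intensities[i - 1], intensities[i]
    t_half_abs = t0 + (half_level - v0) * (t1 - t0) / (v1 - v0)
    t_half = t_half_abs - times[onset]

    return {
        "t_half_max": t_half,
        "t_max": t_max,
        "tr": t_half / t_max,              # time ratio T1/2max / Tmax
        "rs": (peak - baseline) / t_max,   # assumed definition of rise slope
    }
```

For a hypothetical curve sampled once per second, `icg_metrics([0, 1, 2, 3, 4, 5], [0, 0, 10, 20, 20, 15])` gives T1/2max = 1 s, Tmax = 2 s, TR = 0.5, and RS = 10 intensity units per second.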

Knot-tying is a basic surgical skill and a quick technique in open surgery, whereas a single laparoscopic knot can take up to three minutes to tie. Mayer et al. described a solution based on recurrent neural networks (RNNs) to speed up knot-tying in robotic cardiac surgery. The surgeon inputs a sequence to the network (for example, instances of human-performed knot-tying), and an RNN with long-term storage learns the task. The preprogrammed controller was able to construct a knot in 33.7 seconds; the introduction of an RNN offered a speed improvement of about 25% after learning from 50 prior runs, generating a knot in 25.8 seconds (22, 40, 41).

Optical biopsy is a light-based, nondestructive, in situ assessment of tissue pathologic features. Hyperspectral imaging (HSI) is a non-invasive optical imaging tool that provides pixel-by-pixel spectroscopic and spatial information about the investigated area. Tissue-light interaction produces distinct spectral signatures, allowing the visualization of tissue perfusion and the differentiation of tissue types. HSI cameras are commercially available and are easily compatible with laparoscopes (42, 43). In recent years, several very promising studies that used different AI methods to detect CRC during surgery using HSI have been published.

Jansen-Winkeln et al. used HSI records from 54 patients who underwent colorectal resections, creating a realistic intraoperative setting for their study. Using a CNN method, they obtained a sensitivity of 86% and a specificity of 95% for the distinction between cancer and healthy mucosa, while differentiating cancer from adenoma had a sensitivity of 68% and a specificity of 59% for CRC (23).

Collins et al. used HSI on specimens obtained immediately after extraction from 34 patients undergoing surgical resection for CRC. Using a CNN to automatically detect CRC in the HSI images, they obtained a sensitivity of 87% and a specificity of 90% for cancer detection. Their approach could be used for objectively assessing tumor margins during surgery (24).
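Sensitivity and specificity, the two figures quoted for these HSI studies, are derived from counts of correct and incorrect classifications. The following sketch shows the calculation; the function name and the label strings are hypothetical, and the usage data are invented solely to illustrate the arithmetic, not taken from the cited studies.

```python
def sensitivity_specificity(true_labels, predicted_labels, positive="cancer"):
    """Return (sensitivity, specificity) for a binary classification task."""
    tp = fn = tn = fp = 0
    for truth, pred in zip(true_labels, predicted_labels):
        if truth == positive:
            if pred == positive:
                tp += 1  # cancer correctly detected
            else:
                fn += 1  # cancer missed
        else:
            if pred == positive:
                fp += 1  # healthy tissue flagged as cancer
            else:
                tn += 1  # healthy tissue correctly cleared
    if tp + fn == 0 or tn + fp == 0:
        raise ValueError("both classes must be present in true_labels")
    return tp / (tp + fn), tn / (tn + fp)
```

With four cancer samples of which three are detected and four healthy samples of which three are cleared, the function returns 0.75 for both measures. A sensitivity of 87% therefore means that 87 of every 100 cancerous samples were flagged, and a specificity of 90% means that 90 of every 100 non-cancerous samples were correctly left unflagged.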

By combining HSI and a CNN trained with deep learning on porcine models, Okamoto et al. obtained an automatic distinction of different anatomical layers in CRC surgery, achieving a recognition sensitivity of 79.0 ± 21.0% for the retroperitoneum and 86.0 ± 16.0% for the colon and mesentery (25). These results are promising for improving the outcome of complete mesocolic excision, by lowering the complications associated with it (such as lesions of the ureter and gonadal vessels) and offering a better oncologic result.

This minireview offers an overview of various AI applications currently available for the surgical treatment of CRC, which will show their utility in improving treatment outcome in the future. Although promising in their pilot effort, the AI applications mentioned in this article are not ready yet for large-scale clinical usage.

Autonomous robots are still part of the future, but the moment they will become part of the surgical treatment is getting nearer. The hardware part is available (commercially available surgical robots), while several intraoperative aspects of CRC surgeries have been captured, analyzed and successfully reproduced using AI.

The current use of AI in the medical field is steadily changing the diagnostic and treatment approach to a wide range of diseases. While many AI applications have been used and investigated in several cancer entities, such as lung and breast cancer, the use of AI in CRC is still in its early stages (44). AI’s utility in CRC has been established mostly for aiding in screening and staging. Meanwhile, evidence on the use of AI in colorectal surgery is limited.

Surgical data and applications are more difficult to analyze and use than data for AI in screening endoscopy, radiology, and pathology. Surgical videos are dynamic, displaying difficult-to-model tool-tissue interactions that modify and even entirely reshape anatomical situations. Surgical workflows and techniques are difficult to standardize, particularly in long and unpredictable operations like colorectal surgery for CRC. During surgical interventions, surgeons use prior knowledge, such as preoperative imaging, as well as their personal experience and intuition to make decisions. More and better data are required to address these challenges. This includes reaching an agreement on annotation techniques (45) and publicly releasing large, high-quality annotated datasets. Multiple institutions must collaborate in this context to ensure that data are diverse and representative (46). Such datasets will be critical for training stronger AI models, and also for demonstrating generalizability through external validation studies (47).

The use of AI in CRC surgery is still at its beginnings, despite the fact that AI has already demonstrated its clear clinical benefits in the screening and diagnosis of CRC. Many studies are still in the preclinical phase. The development of AI models capable of reproducing a colorectal expert surgeon’s skill, the creation of large and complex datasets and the standardization of surgical colorectal procedures will contribute to the widespread use of AI in CRC surgical treatment.

MA contributed to the conception, research of the primary literature, and writing of the article. DL and MM contributed to the conception and research of the primary literature for the article. SO contributed to the conception, research of the primary literature, and writing of the article. All authors contributed to the article and approved the submitted version.

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

1. Sung H, Ferlay J, Siegel RL, Laversanne M, Soerjomataram I, Jemal A, et al. Global cancer statistics 2020: GLOBOCAN estimates of incidence and mortality worldwide for 36 cancers in 185 countries. CA Cancer J Clin (2021) 71:209–49. doi: 10.3322/caac.21660

2. Fitzmaurice C, Abate D, Abbasi N, Abbastabar H, Abd-Allah F, Abdel-Rahman O, et al. Global, regional, and national cancer incidence, mortality, years of life lost, years lived with disability, and disability-adjusted life-years for 29 cancer groups, 1990 to 2017: A systematic analysis for the global burden of disease study. JAMA Oncol (2019) 5:1749–68. doi: 10.1001/jamaoncol.2019.2996

3. Miller KD, Nogueira L, Mariotto AB, Rowland JH, Yabroff KR, Alfano CM, et al. Cancer treatment and survivorship statistics, 2019. CA Cancer J Clin (2019) 69:363–85. doi: 10.3322/caac.21565

4. Shalev-Shwartz S, Ben-David S. Understanding machine learning: From theory to algorithms. Cambridge, UK: Cambridge University Press (2014).

5. Hamet P, Tremblay J. Artificial intelligence in medicine. Metabolism (2017) 69:S36–40. doi: 10.1016/j.metabol.2017.01.011

6. Ruffle JK, Farmer AD, Aziz Q. Artificial intelligence-assisted gastroenterology–promises and pitfalls. Am J Gastroenterol (2019) 114:422–8. doi: 10.1038/s41395-018-0268-4

7. Hashimoto DA, Rosman G, Rus D, Meireles OR. Artificial intelligence in surgery: Promises and perils. Ann Surg (2018) 268(1):70–6. doi: 10.1097/sla.0000000000002693

8. Deo RC. Machine learning in medicine. Circulation (2015) 132(20):1920–30. doi: 10.1161/circulationaha.115.001593

9. Szeliski R. Computer vision: Algorithms and applications. New York City, NY: Springer Science & Business Media (2010).

10. Huang T. Computer vision: Evolution and promise. Champaign, IL: University of Illinois Press (1996).

11. Sonka M, Hlavac V, Boyle R. Image processing, analysis, and machine vision. Boston, MA: Cengage Learning (2014).

12. Chahal D, Byrne MF. A primer on artificial intelligence and its application to endoscopy. Gastrointest Endosc (2020) 92:813–20. doi: 10.1016/j.gie.2020.04.074

13. Pacal I, Karaboga D, Basturk A, Akay B, Nalbantoglu U. A comprehensive review of deep learning in colon cancer. Comput Biol Med (2020) 126:104003. doi: 10.1016/j.compbiomed.2020.104003

14. Goyal H, Mann R, Gandhi Z, Perisetti A, Ali A, Aman Ali K, et al. Scope of artificial intelligence in screening and diagnosis of colorectal cancer. J Clin Med (2020) 9:3313. doi: 10.3390/jcm9103313

15. Page MJ, McKenzie JE, Bossuyt PM, Boutron I, Hoffmann TC, Mulrow CD, et al. The PRISMA 2020 statement: An updated guideline for reporting systematic reviews. J Clin Epidemiol (2021) 134:178–89. doi: 10.1016/j.jclinepi.2021.03.001

16. Shademan A, Decker RS, Opfermann JD, Leonard S, Krieger A, Kim PC. Supervised autonomous robotic soft tissue surgery. Sci Transl Med (2016) 8(337):337ra64. doi: 10.1126/scitranslmed.aad9398

17. Saeidi H, Opfermann JD, Kam M, Wei S, Leonard S, Hsieh MH, et al. Autonomous robotic laparoscopic surgery for intestinal anastomosis. Sci Robot (2022) 7(62):eabj2908. doi: 10.1126/scirobotics.abj2908

18. Kitaguchi D, Takeshita N, Matsuzaki H, Oda T, Watanabe M, Mori K, et al. Automated laparoscopic colorectal surgery workflow recognition using artificial intelligence: Experimental research. Int J Surg (2020) 79:88–94. doi: 10.1016/j.ijsu.2020.05.015

19. Igaki T, Kitaguchi D, Kojima S, Hasegawa H, Takeshita N, Mori K, et al. Artificial intelligence-based total mesorectal excision plane navigation in laparoscopic colorectal surgery. Dis Colon Rectum (2022) 65(5):e329–33. doi: 10.1097/DCR.0000000000002393

20. Wagner M, Bihlmaier A, Kenngott HG, Mietkowski P, Scheikl PM, Bodenstedt S, et al. A learning robot for cognitive camera control in minimally invasive surgery. Surg Endosc (2021) 35:5365–74. doi: 10.1007/s00464-021-08509-8

21. Park SH, Park HM, Baek KR, Ahn HM, Lee IY, Son GM. Artificial intelligence based real-time microcirculation analysis system for laparoscopic colorectal surgery. World J Gastroenterol (2020) 26:6945–62. doi: 10.3748/wjg.v26.i44.6945

22. Weede O, Mönnich H, Müller B, Wörn H. An intelligent and autonomous endoscopic guidance system for minimally invasive surgery, in: 2011 IEEE International Conference on Robotics and Automation. (2011) 2011:5762–8. doi: 10.1109/ICRA.2011.5980216

23. Jansen-Winkeln B, Barberio M, Chalopin C, Schierle K, Diana M, Köhler H, et al. Feedforward artificial neural network-based colorectal cancer detection using hyperspectral imaging: A step towards automatic optical biopsy. Cancers (Basel) (2021) 13(5):967. doi: 10.3390/cancers13050967

24. Collins T, Bencteux V, Benedicenti S, Moretti V, Mita MT, Barbieri V, et al. Automatic optical biopsy for colorectal cancer using hyperspectral imaging and artificial neural networks. Surg Endosc (2022) 36:8549–59. doi: 10.1007/s00464-022-09524-z

25. Okamoto N, Rodríguez-Luna MR, Bencteux V, Al-Taher M, Cinelli L, Felli E, et al. Computer-assisted differentiation between colon-mesocolon and retroperitoneum using hyperspectral imaging (HSI) technology. Diagnost (Basel) (2022) 12(9):2225. doi: 10.3390/diagnostics12092225

26. Hussain A, Malik A, Halim MU, Ali AM. The use of robotics in surgery: A review. Int J Clin Pract (2014) 68:1376–82. doi: 10.1111/ijcp.12492

28. Hirano Y, Kondo H, Yamaguchi S. Robot-assisted surgery with senhance robotic system for colon cancer: Our original single-incision plus 2-port procedure and a review of the literature. Tech Coloproctol (2021) 25:1–5. doi: 10.1007/s10151-020-02389-1

29. Kim HJ, Choi G-S, Park JS, Park SY, Yang CS, Lee HJ. The impact of robotic surgery on quality of life, urinary and sexual function following total mesorectal excision for rectal cancer: A propensity score-matched analysis with laparoscopic surgery. Colorectal Dis (2018) 20:O103–13. doi: 10.1111/codi.14051

30. Jayne D, Pigazzi A, Marshall H, Croft J, Corrigan N, Copeland J, et al. Effect of robotic-assisted vs conventional laparoscopic surgery on risk of conversion to open laparotomy among patients undergoing resection for rectal cancer: The ROLARR randomized clinical trial. JAMA (2017) 318:1569–80. doi: 10.1001/jama.2017.7219

31. Yang S-X, Sun Z-Q, Zhou Q-B, Xu J-Z, Chang Y, Xia K-K, et al. Security and radical assessment in open, laparoscopic, robotic colorectal cancer surgery: A comparative study. Technol Cancer Res Treat (2018) 17. doi: 10.1177/1533033818794160

32. Mitsala A, Tsalikidis C, Pitiakoudis M, Simopoulos C, Tsaroucha AK. Artificial intelligence in colorectal cancer screening, diagnosis and treatment. A new era. Curr Oncol (2021) 28(3):1581–607. doi: 10.3390/curroncol28030149

33. Attanasio A, Scaglioni B, De Momi E, Fiorini P, Valdastri P. Autonomy in surgical robotics. Annu Rev Control Robot Auto Syst (2021) 4(1):651–79. doi: 10.1146/annurev-control-062420-090543

34. Garrow CR, Kowalewski KF, Li L, Wagner M, Schmidt MW, Engelhardt S, et al. Machine learning for surgical phase recognition: A systematic review. Ann Surg (2021) 273:684–93. doi: 10.1097/SLA.0000000000004425

35. Endo control system website. Available at: https://www.endocontrol-medical.com/en/viky-en/ (Accessed Sept. 10, 2022).

36. Free hand system website. Available at: https://www.freehandsurgeon.com (Accessed Sept. 10, 2022).

37. SOLOASSIST system website. Available at: https://aktormed.info/en/products/soloassist-en (Accessed Sept. 10, 2022).

38. Fiorini P, Goldberg KY, Liu Y, Taylor RH. Concepts and trends in autonomy for robot-assisted surgery. Proc IEEE Inst Electr Electron Eng (2022) 110(7):993–1011. doi: 10.1109/JPROC.2022.3176828

39. Vasey B, Nagendran M, Campbell B, Clifton DA, Collins GS, Denaxas S, et al. Reporting guideline for the early-stage clinical evaluation of decision support systems driven by artificial intelligence: DECIDE-AI. Nat Med (2022) 28:924–33. doi: 10.1038/s41591-022-01772-9

40. Mayer H, Gomez F, Wierstra D, Nagy I, Knoll A, Schmidhuber J. A system for robotic heart surgery that learns to tie knots using recurrent neural networks. Adv Robot (2008) 22(13-14):1521–37. doi: 10.1163/156855308X360604

41. Kassahun Y, Yu B, Tibebu AT, Stoyanov D, Giannarou S, Metzen JH, et al. Surgical robotics beyond enhanced dexterity instrumentation: a survey of machine learning techniques and their role in intelligent and autonomous surgical actions. Int J CARS (2016) 11:553–68. doi: 10.1007/s11548-015-1305-z

42. Barberio M, Longo F, Fiorillo C, Seeliger B, Mascagni P, Agnus V, et al. HYPerspectral enhanced reality (HYPER): A physiology-based surgical guidance tool. Surg Endosc (2020) 34:1736–44. doi: 10.1007/s00464-019-06959-9

43. Clancy NT, Jones G, Maier-Hein L, Elson DS, Stoyanov D. Surgical spectral imaging. Med Image Anal (2020) 63:101699. doi: 10.1016/j.media.2020.101699

44. Hamamoto R, Suvarna K, Yamada M, Kobayashi K, Shinkai N, Miyake M, et al. Application of artificial intelligence technology in oncology: Towards the establishment of precision medicine. Cancers (2020) 12:3532. doi: 10.3390/cancers12123532

45. Mascagni P, Alapatt D, Garcia A, Okamoto N, Vardazaryan A, Costamagna G, et al. Surgical data science for safe cholecystectomy: A protocol for segmentation of hepatocystic anatomy and assessment of the critical view of safety. arXiv (2021) arXiv:2106.10916. doi: 10.48550/ARXIV.2106.10916

46. Ward TM, Mascagni P, Madani A, Padoy N, Perretta S, Hashimoto DA. Surgical data science and artificial intelligence for surgical education. J Surg Oncol (2021) 124:221–30. doi: 10.1002/jso.26496

Keywords: artificial intelligence, colorectal cancer, automated robotic surgery, phase recognition, excision plane navigation, endoscopy control, annotated video banks

Citation: Avram MF, Lazăr DC, Mariş MI and Olariu S (2023) Artificial intelligence in improving the outcome of surgical treatment in colorectal cancer. Front. Oncol. 13:1116761. doi: 10.3389/fonc.2023.1116761

Received: 05 December 2022; Accepted: 03 January 2023;

Published: 17 January 2023.

Edited by:

Pasquale Cianci, Azienda Sanitaria Locale della Provincia di Barletta Andria Trani (ASL BT), Italy

Reviewed by:

Altamura Amedeo, Pia Fondazione di Culto e Religione Card. G. Panico, Italy

Copyright © 2023 Avram, Lazăr, Mariş and Olariu. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Mihaela Flavia Avram
