- 1Department of Ultrasound, The First Affiliated Hospital of University of Science and Technology of China (USTC), Division of Life Sciences and Medicine, University of Science and Technology of China, Hefei, Anhui, China

- 2Department of Computing, Hebin Intelligent Robots Co., LTD., Hefei, China

- 3Department of Respiratory and Critical Care Medicine, The First People’s Hospital of Hefei City, The Third Affiliated Hospital of Anhui Medical University, Hefei, China

Objective: Our aim was to develop dual-modal CNN models based on combining conventional ultrasound (US) images and shear-wave elastography (SWE) of peritumoral region to improve prediction of breast cancer.

Method: We retrospectively collected US images and SWE data of 1271 ACR-BIRADS 4 breast lesions from 1116 female patients (mean age ± standard deviation, 45.40 ± 9.65 years). The lesions were divided into three subgroups based on the maximum diameter (MD): ≤15 mm; >15 mm and ≤25 mm; >25 mm. We recorded lesion stiffness (SWV1) and 5-point average stiffness of the peritumoral tissue (SWV5). The CNN models were built based on the segmentation of different widths of peritumoral tissue (0.5 mm, 1.0 mm, 1.5 mm, 2.0 mm) and internal SWE image of the lesions. All single-parameter CNN models, dual-modal CNN models, and quantitative SWE parameters in the training cohort (971 lesions) and the validation cohort (300 lesions) were assessed by receiver operating characteristic (ROC) curve analysis.

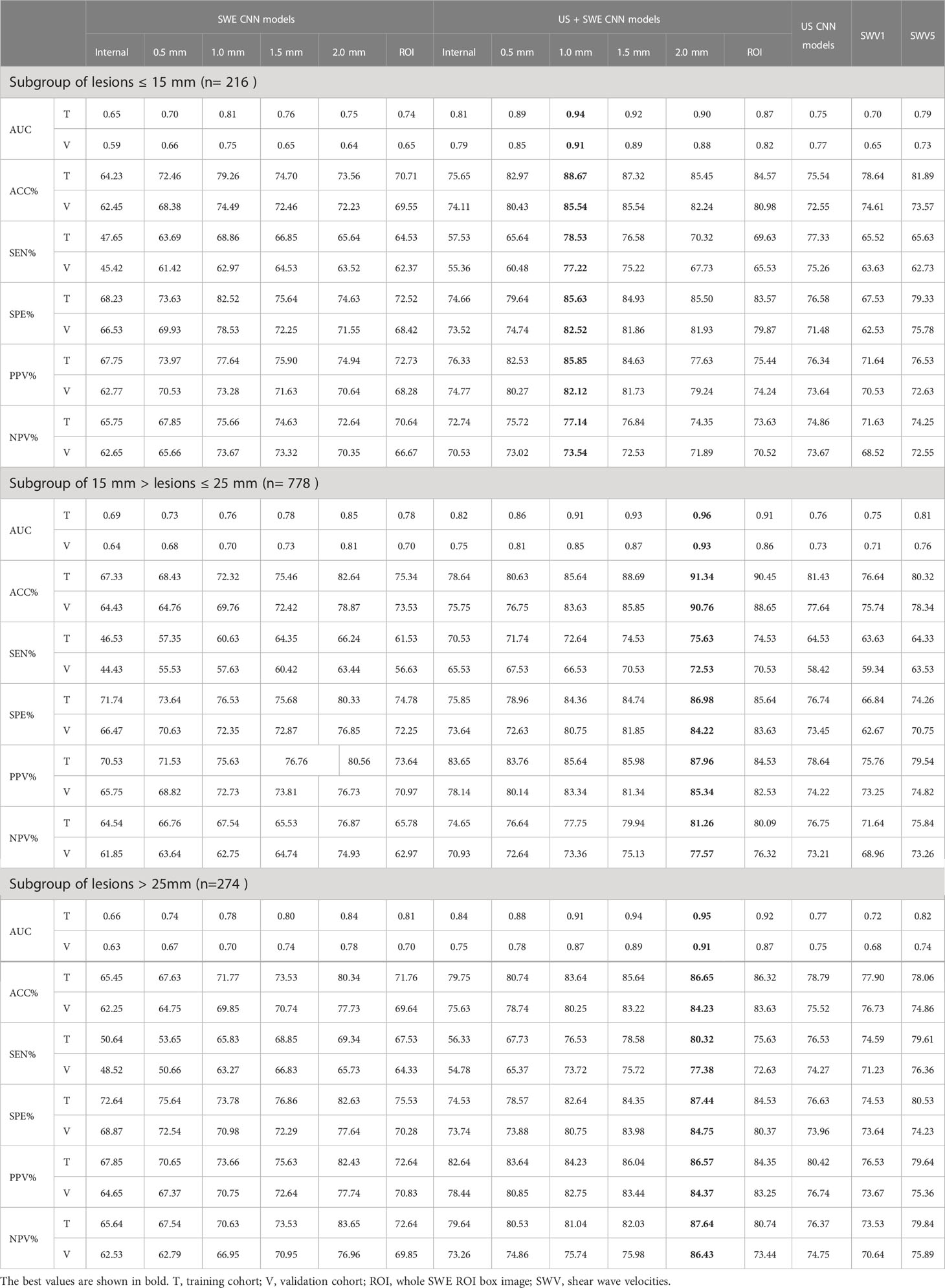

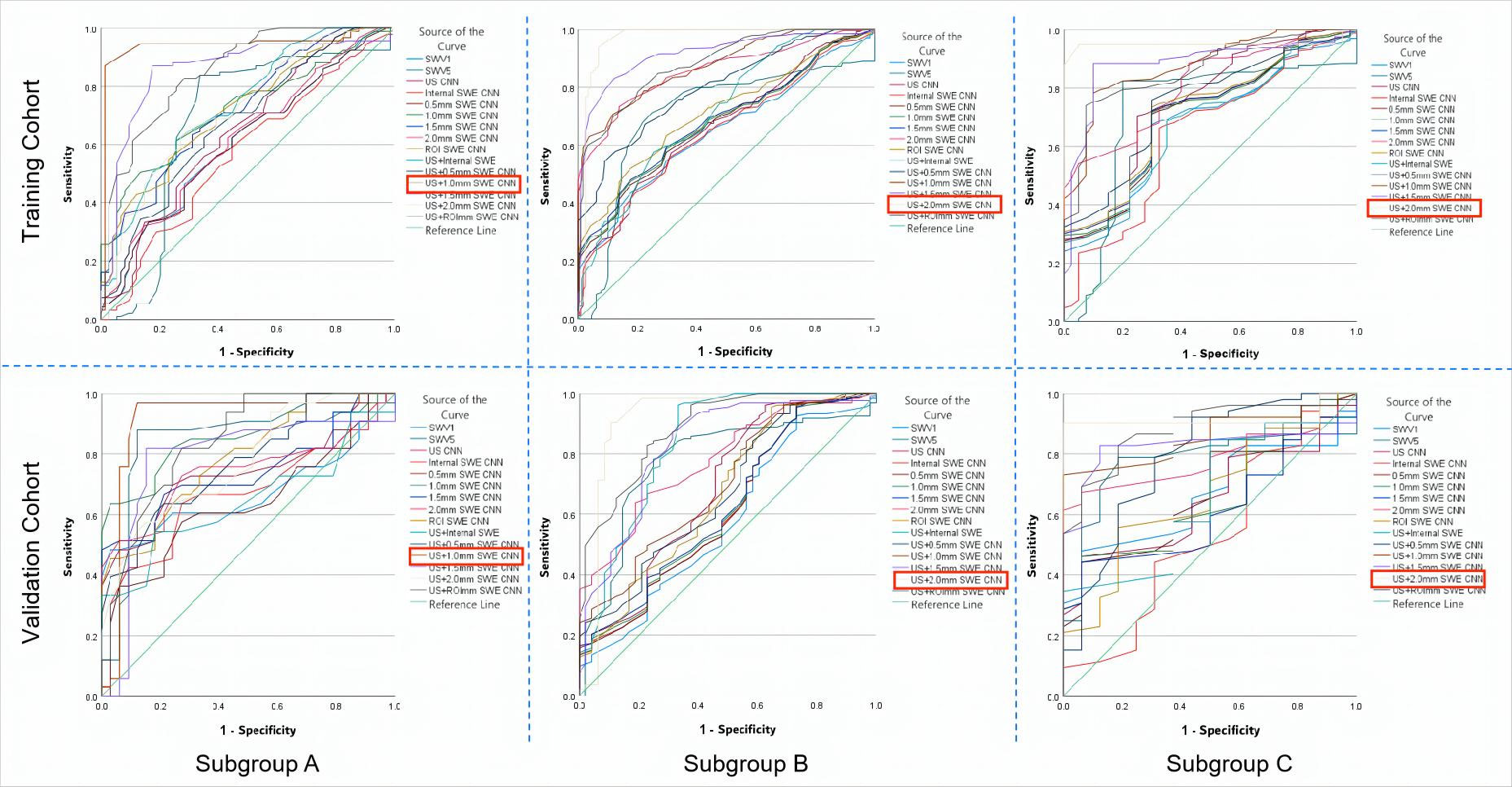

Results: The US + 1.0 mm SWE model achieved the highest area under the ROC curve (AUC) in the subgroup of lesions with MD ≤15 mm in both the training (0.94) and the validation cohorts (0.91). In the subgroups with MD between 15 and 25 mm and above 25 mm, the US + 2.0 mm SWE model achieved the highest AUCs in both the training cohort (0.96 and 0.95, respectively) and the validation cohort (0.93 and 0.91, respectively).

Conclusion: The dual-modal CNN models based on the combination of US and peritumoral region SWE images allow accurate prediction of breast cancer.

Introduction

The morbidity of breast cancer in Asian women with dense breasts is 4–6 times higher than that in Western women with fatty breasts (1–3). Breast ultrasound (US) has been recognized as the main imaging method for diagnosing breast cancer (4–6). The American College of Radiology Breast Imaging Reporting and Data System (ACR-BIRADS) is used for the evaluation and follow-up recommendations of breast lesions detected by US. However, differences in radiologists’ subjective classification may affect diagnostic performance and lead to overtreatment, especially for ACR-BIRADS category 4 lesions (5, 6).

Shear-wave elastography (SWE) may improve the diagnostic specificity of conventional US for breast cancer, even in cases of small or interval breast cancer (IBC) (4–7). However, inhomogeneity within the lesion (hemorrhage, calcification, and cystic appearance) introduces subjective bias that influences the final measured value. As a result, the specificity remains limited to at most 86% when quantitative SWE parameters are used, which may lead to unnecessary biopsies (5–8).

Previous studies have confirmed that the assessment of peritumoral stiffness of breast lesions can improve the accuracy of SWE in predicting breast cancer, considering desmoplastic reaction and tumor cell infiltration into the peritumoral stroma (9–12). Moreover, the peritumoral invasion is an independent prognostic factor significantly associated with an increased risk of relapse and death in node-negative breast cancer patients (13, 14). However, it is difficult to distinguish the boundary between the normal and tumor tissue in SWE (13). Therefore, peritumoral stiffness of breast lesions is highly dependent on radiologists’ experience rather than on the integrated high-throughput imaging information (12–14). Thus, it is desirable to develop approaches using artificial intelligence (AI) to integrate high-throughput imaging information that cannot be directly identified by unaided eye, so as to offer assistance to radiologists and improve the efficiency and accuracy of breast cancer diagnosis.

Recently, deep convolutional neural network (CNN)-based approaches have been considered as an effective approach for the feature extraction and classification of US images in breast cancer diagnosis (15–18). However, most of the CNN models used in the diagnosis of breast cancer have been based on the US or SWE images of intratumoral tissue rather than peritumoral tissue (15–20). The peritumoral stiffness of breast lesions is an accurate predictor of breast cancer (9–12). However, based on the traditional SWE technology, it is difficult to obtain accurate SWE image of the peritumoral tissue, and it is hard to estimate which width of the peritumoral tissue should be evaluated to provide the optimal diagnostic index of benign and malignant lesions (13).

Few studies have used CNN-based AI diagnostic systems to predict breast cancer based on peritumoral region’s SWE image. Therefore, the purpose of this study was to develop a dual-modal CNN model based on peritumoral SWE image of breast lesions and examine its diagnostic performance in breast cancer. The dual-modal CNN model was able to automatically recognize the location of breast lesions in B-mode US images. After mapping the lesions’ boundaries detected on B-mode US images to SWE images, segmentation of different widths (0.5 mm, 1.0 mm, 1.5 mm, 2.0 mm) of the peritumoral tissue was automatically completed. Then, we evaluated the predictive performance of each CNN model based on different peritumoral widths for breast cancer.

Materials and methods

Study population

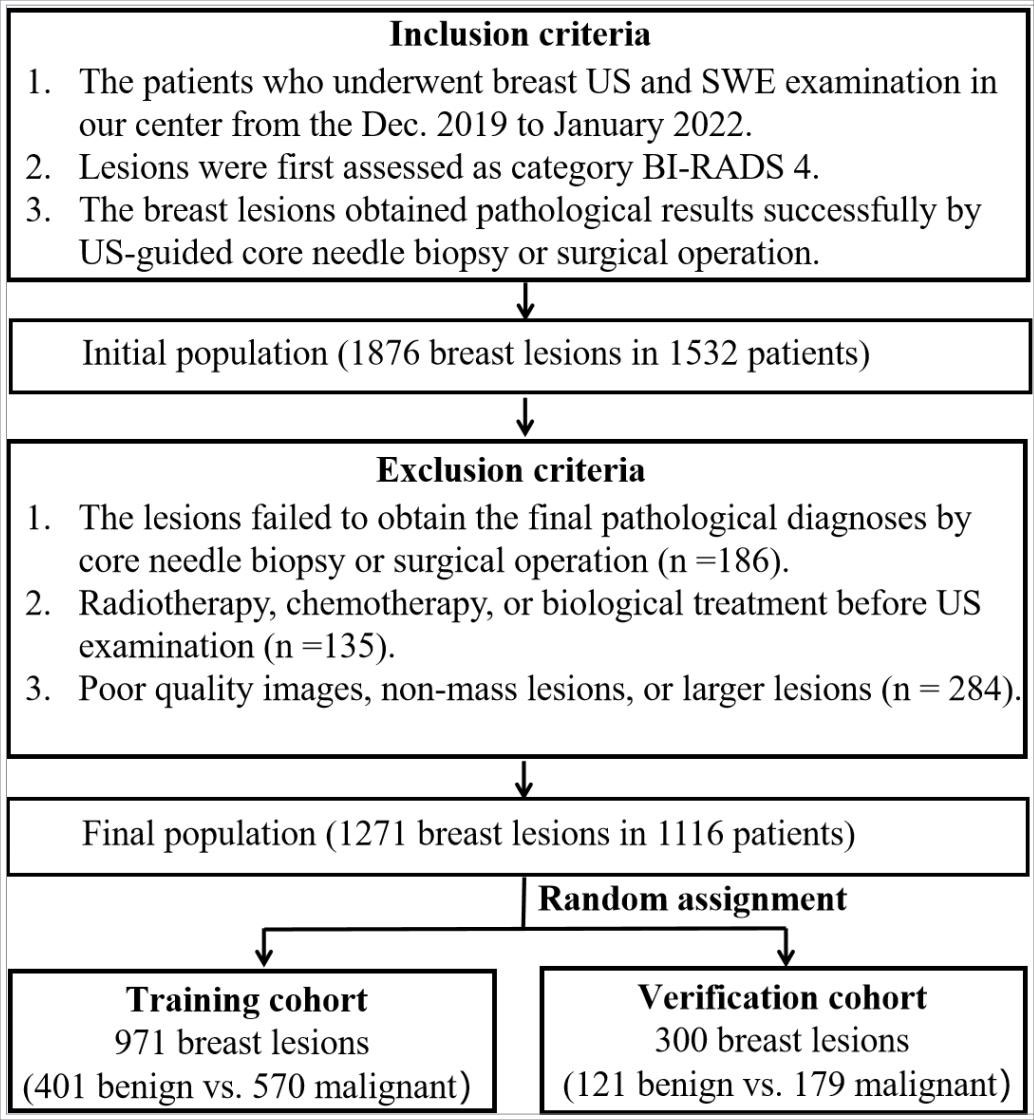

This retrospective study was approved by the institutional ethics committee of the First Affiliated Hospital of the University of Science and Technology of China (USTC). Between December 2019 and April 2022, the initial population included 1876 breast lesions in 1532 consecutive patients who had undergone US and SWE examinations. The inclusion criteria were as follows: (i) ACR-BIRADS 4 category of breast lesions; (ii) solid or cystic solid breast lesions examined by B-mode US and SWE; (iii) core needle biopsy or surgical resection performed to obtain accurate pathological results. The exclusion criteria were as follows: (i) radiotherapy, chemotherapy, or biological treatment before US examination; (ii) a history of breast surgery (including excision or plastic surgery); (iii) pregnancy or lactation; (iv) non-mass lesions or lesions larger than 40 mm, which were beyond the maximum range of the SWE sampling frame. Finally, a total of 1271 ACR-BIRADS 4 lesions from 1116 female patients were analyzed in this study. The lesions were assigned to the training cohort (971 lesions) and the validation cohort (300 lesions) by random sampling at an approximate ratio of 3:1 (Figure 1).
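The random assignment of lesions to the two cohorts can be sketched as follows. This is a minimal illustration and not the authors' code: the function name `split_lesions`, the integer lesion IDs, and the seed are assumptions; only the cohort sizes (971 and 300 of 1271 lesions) come from the text.

```python
import random


def split_lesions(lesion_ids, n_validation, seed=0):
    """Randomly assign lesions to a training and a validation cohort.

    `seed` is a hypothetical choice that makes the split reproducible.
    """
    rng = random.Random(seed)
    validation = set(rng.sample(lesion_ids, n_validation))
    training = [i for i in lesion_ids if i not in validation]
    return training, sorted(validation)


# 1271 lesions in total; 300 are held out for validation, as in the study.
train_ids, val_ids = split_lesions(list(range(1271)), n_validation=300)
print(len(train_ids), len(val_ids))  # 971 300
```

The sketch samples at the lesion level, which is how the cohort sizes are reported.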

Dual-modal image acquisition and preprocessing

All of the US and SWE examinations were performed by breast radiologists using the Siemens ACUSON Sequoia (Siemens Healthcare GmbH, USA) US system equipped with 10 MHz linear array transducers. We acquired and stored transverse and longitudinal static images with the maximum diameter of breast lesions on US, and images containing the lesion’s characteristics (such as calcification, angulation, and spiculation sign) were also stored. All breast lesions included in this study were of ACR-BIRADS 4 category. The category of all lesions was reassessed by two radiologists, each with more than eight years of working experience, and another radiologist with 12 years of breast examination experience was consulted to reach a final decision when disagreements occurred.

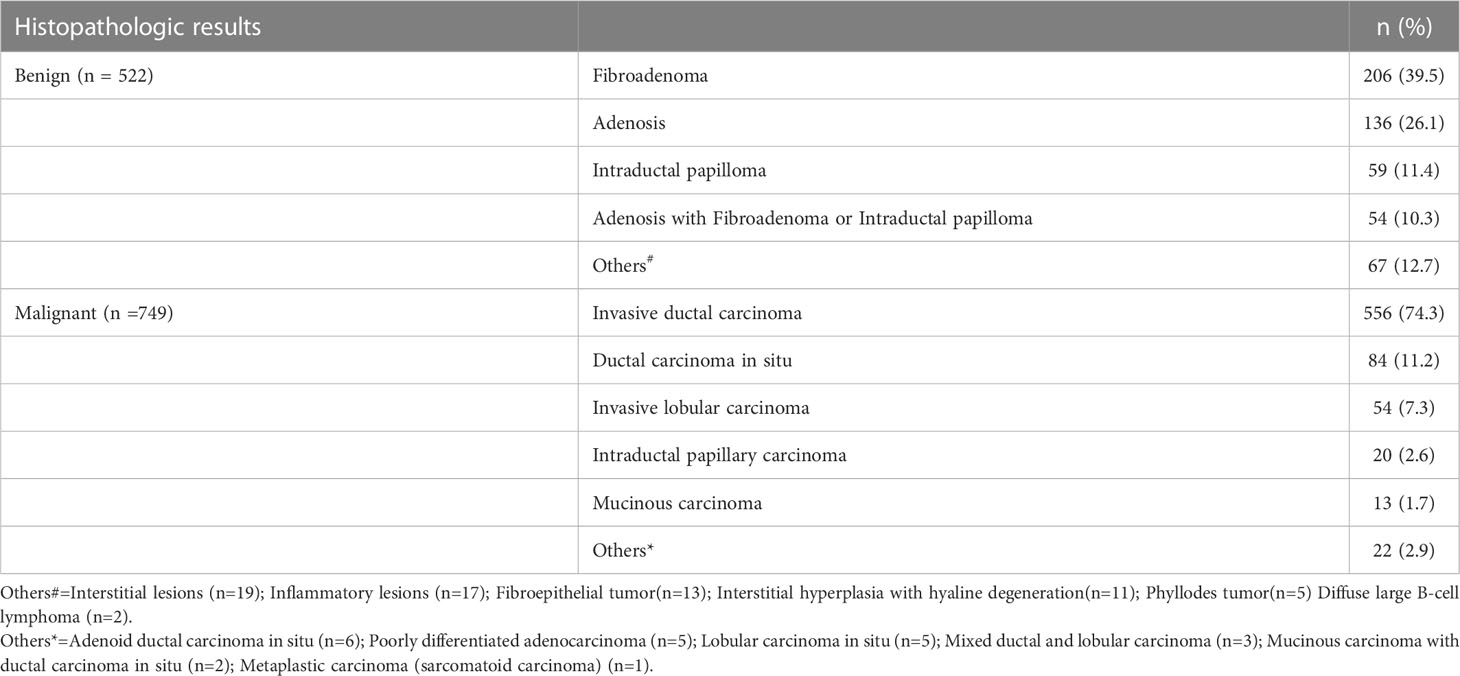

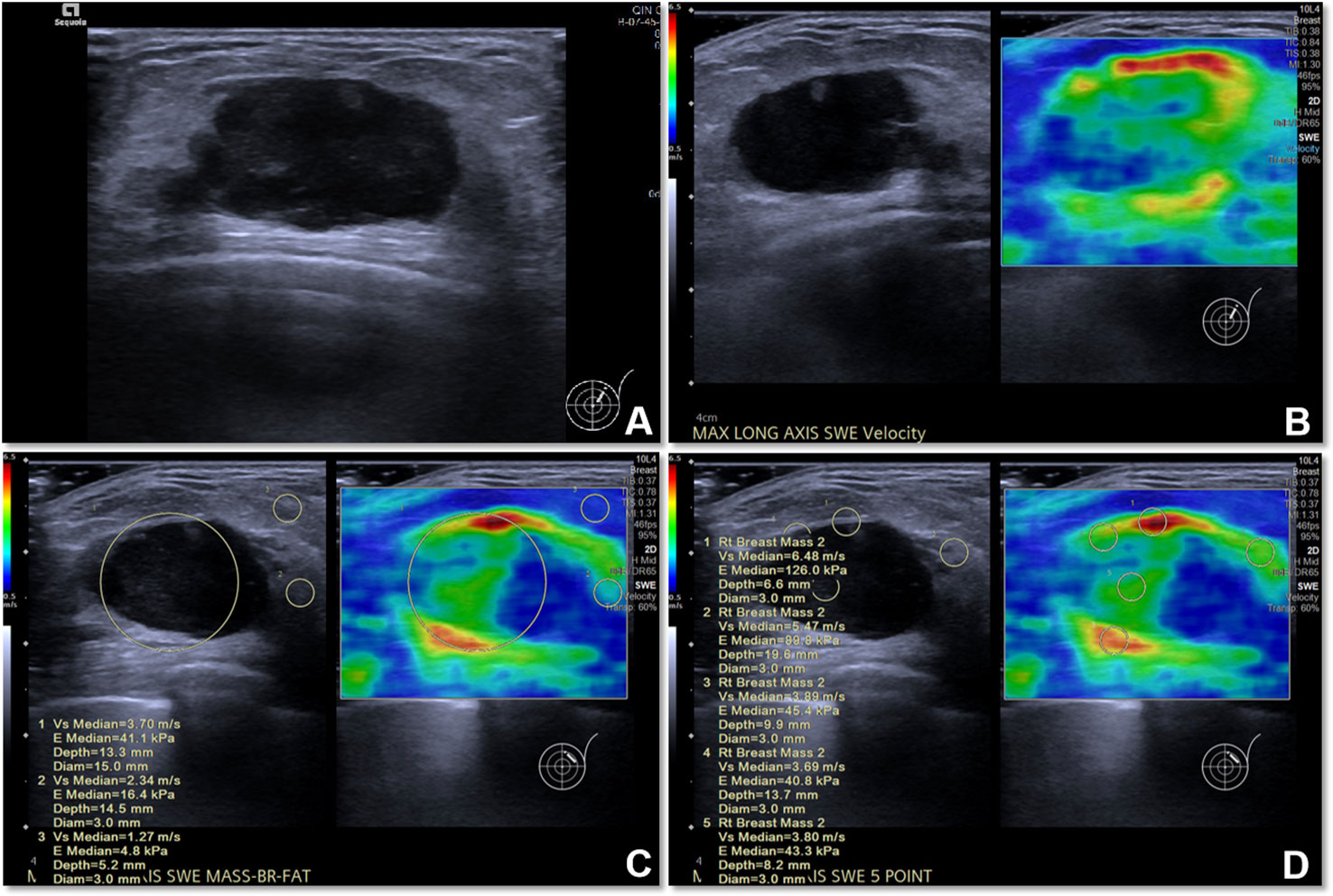

SWE examination of each lesion was performed in machine default modes, and we adjusted the size of a rectangular region of interest (ROI) to cover the whole lesion. We stored a static SWE image of the lesion and the surrounding tissue without measurement (for image segmentation); the lesion was put in the middle of the SWE region, ensuring that the ROI included the lesion and at least 5 mm of the surrounding breast tissue. Then, quantitative SWE parameters were measured as follows: (i) a round ROI containing the lesion was measured and its shear-wave velocity (SWV) was recorded as SWV1, which represents the average stiffness of the lesion; (ii) five 3-mm-wide round ROIs were selected for measurement; four of them were placed at locations adjacent to the lesion (including peritumoral and intratumoral tissues), and one ROI was placed inside the lesion, and the average SWV was recorded as SWV5, which represents the average stiffness of the lesion including the peritumoral tissue (Figure 2). All SWE images were taken from the longitudinal and transverse sections of the breast lesions, and two senior breast radiologists with more than eight years of clinical experience performed all of the SWE examinations. The radiologists in this study were blinded to the patients’ clinical data and pathological results. All of the US and SWE images extracted from the Siemens database sites were in .jpg format. To determine whether lesion size affects the selection of the optimal peritumoral region on SWE images, we divided the lesions into three subgroups depending on their maximum diameter (MD) (≤15 mm; >15 mm and ≤25 mm; >25 mm).
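The subgroup rule and the five-point average can be written down compactly. The sketch below is illustrative only: the helper names `md_subgroup` and `five_point_swv` and the example velocities are hypothetical, while the thresholds (15 mm and 25 mm) and the four-adjacent-plus-one-internal ROI layout follow the description above.

```python
from statistics import mean


def md_subgroup(md_mm):
    """Return the size subgroup for a lesion's maximum diameter in mm."""
    if md_mm <= 0:
        raise ValueError("maximum diameter must be positive")
    if md_mm <= 15:
        return "MD <= 15 mm"
    if md_mm <= 25:
        return "15 mm < MD <= 25 mm"
    return "MD > 25 mm"


def five_point_swv(adjacent_swv, internal_swv):
    """Average SWV (m/s) over four ROIs adjacent to the lesion and one inside."""
    if len(adjacent_swv) != 4:
        raise ValueError("expected four adjacent ROIs")
    return mean(list(adjacent_swv) + [internal_swv])


# Hypothetical readings in m/s, not taken from the study data.
swv5 = five_point_swv([4.0, 4.5, 5.0, 4.5], internal_swv=3.0)
print(md_subgroup(18.2), round(swv5, 2))
```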

Figure 2 The US image and quantitative SWE parameters of metaplastic carcinoma (sarcomatoid carcinoma) in a 77-year-old woman. (A) US image of the lesion. (B) SWE image of the lesion. (C) The SWV1 value of the lesion was 3.70 m/s, while that of the normal mammary gland was 2.34 m/s and that of adipose tissue was 1.27 m/s. (D) The SWV5 value of the lesion and peritumoral region was 4.66 m/s.

All of the breast lesions were pathologically confirmed through US-guided core needle biopsy or surgical pathology after US and SWE examination. Benign lesions were followed up with US for at least 18 months and showed no increase in the maximum diameter and volume on conventional US.

Labeling and segmentation of the peritumoral region

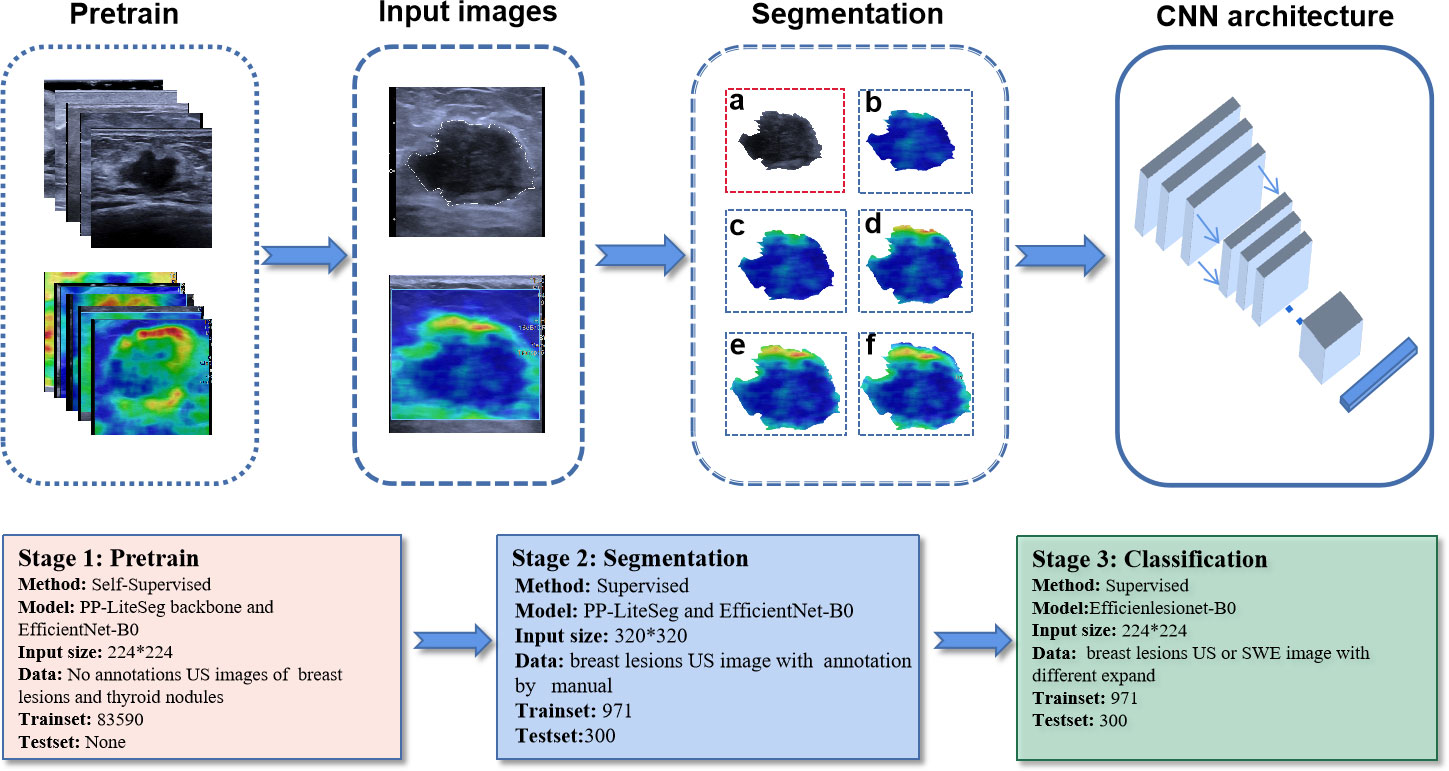

We constructed a CNN segmentation model for segmentation of lesions on SWE images. Traditionally, color SWE images are often not suitable for deep learning or induce instability because boundaries of lesions are difficult to label. Therefore, for the segmentation of the interior of the lesion, we labeled the SWE images according to the lesion boundary on the US image. First, we pretrained two backbone models of PP-LiteSeg and EfficientNet-B0 using the SimSiam network architecture to identify the lesions on US images. SimSiam is a siamese network that has been shown to be an effective self-supervised method. We used 83590 non-annotated US images of breast lesions for pretraining. Then, the lesion’s region in each US image in the training cohort was manually labeled using an open-source annotation tool (Labelme, https://github.com/wkentaro/labelme) by one radiologist (with eight years of experience in breast US) who was blinded to the clinical data and histopathological results of the patients.

After training, we obtained a segmentation model for describing the shape of the lesion on US images. The segmentation model allowed us to draw an ROI encompassing breast lesion’s boundary on the two-dimensional image, and then map the lesion’s boundary onto the SWE image and automatically expand the boundary of the peritumoral tissue with different widths (0.5 mm, 1.0 mm, 1.5 mm, 2.0 mm). For each SWE image of breast lesions, the following six images were finally segmented: the interior of the lesion; the lesion including 0.5 mm of peritumoral tissue; the lesion including 1.0 mm of peritumoral tissue; the lesion including 1.5 mm of peritumoral tissue; the lesion including 2.0 mm of peritumoral tissue; and the whole rectangular SWE ROI image.
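The boundary expansion step can be illustrated with a small sketch. The text does not state the expansion algorithm or the pixel spacing, so both are assumptions here: the mask is grown by a Euclidean disc whose radius is the peritumoral width converted to pixels, and `MM_PER_PIXEL` and `expand_mask` are hypothetical names. In practice a distance transform from an image-processing library would do the same job faster.

```python
import math


def expand_mask(mask, width_mm, mm_per_pixel):
    """Grow a binary lesion mask outward by `width_mm`.

    A pixel joins the expanded mask when its centre lies within `width_mm`
    of the centre of any lesion pixel (Euclidean distance).
    """
    radius_px = width_mm / mm_per_pixel
    reach = int(math.floor(radius_px))
    rows, cols = len(mask), len(mask[0])
    # Pixel offsets that fall inside a disc of radius `radius_px`.
    offsets = [
        (dr, dc)
        for dr in range(-reach, reach + 1)
        for dc in range(-reach, reach + 1)
        if dr * dr + dc * dc <= radius_px * radius_px
    ]
    out = [[0] * cols for _ in range(rows)]
    for r in range(rows):
        for c in range(cols):
            if mask[r][c]:
                for dr, dc in offsets:
                    rr, cc = r + dr, c + dc
                    if 0 <= rr < rows and 0 <= cc < cols:
                        out[rr][cc] = 1
    return out


# Toy example: a single lesion pixel in the centre of a 9x9 image.
lesion = [[0] * 9 for _ in range(9)]
lesion[4][4] = 1
MM_PER_PIXEL = 0.5  # hypothetical; the real value comes from the image scale

for width in (0.5, 1.0, 1.5, 2.0):
    grown = expand_mask(lesion, width, MM_PER_PIXEL)
    print(width, "mm ->", sum(map(sum, grown)), "pixels")
```

Cropping the SWE image with each expanded mask would then yield the lesion-plus-margin inputs listed above.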

The segmentation model comprised an encoder, an aggregation module, and a decoder. It was implemented on PaddlePaddle (https://github.com/PaddlePaddle/PaddleSeg). Compared with the default config, we removed some data augmentations including ResizeStepScaling and RandomPaddingCrop, added rotation augmentations, set num_classes to 2, and limited input_size to 320×320. OHEM loss was selected because it performed better than cross-entropy loss in binary segmentation of small targets. The process of model pretraining and segmentation network construction is shown in Figure 3.

Figure 3 The process of model pretraining and segmentation network construction. The trained segmentation model automatically segments the original US and SWE images into six standard data inputs to classification models: (A) the segmented US image of the lesion; (B) the segmented SWE image of the lesion; (C) the segmented SWE image of the lesion including 0.5 mm of peritumoral tissue; (D) the segmented SWE image of the lesion including 1.0 mm of peritumoral tissue; (E) the segmented SWE image of the lesion including 1.5 mm of peritumoral tissue; (F) the segmented SWE image of the lesion including 2.0 mm of peritumoral tissue.

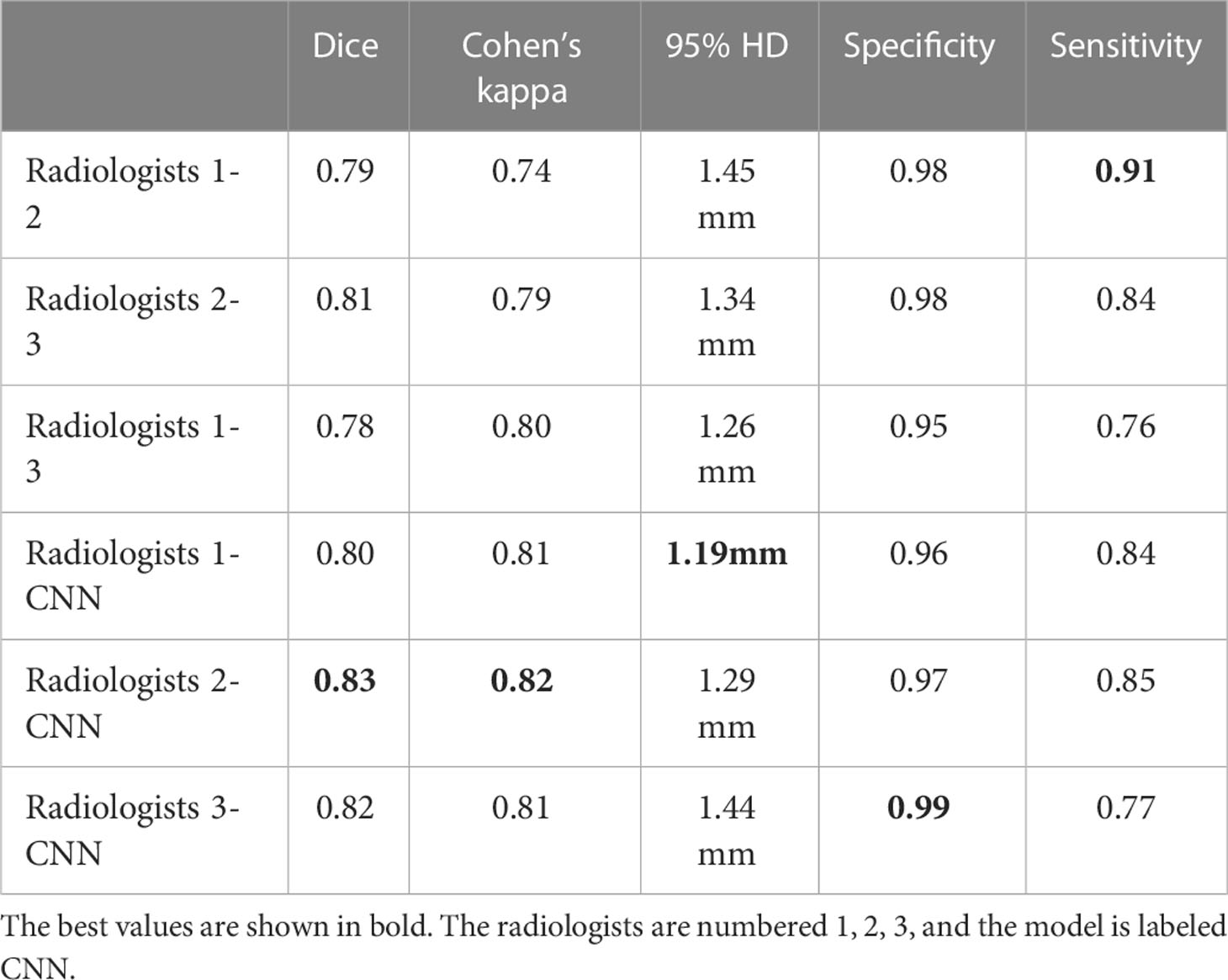

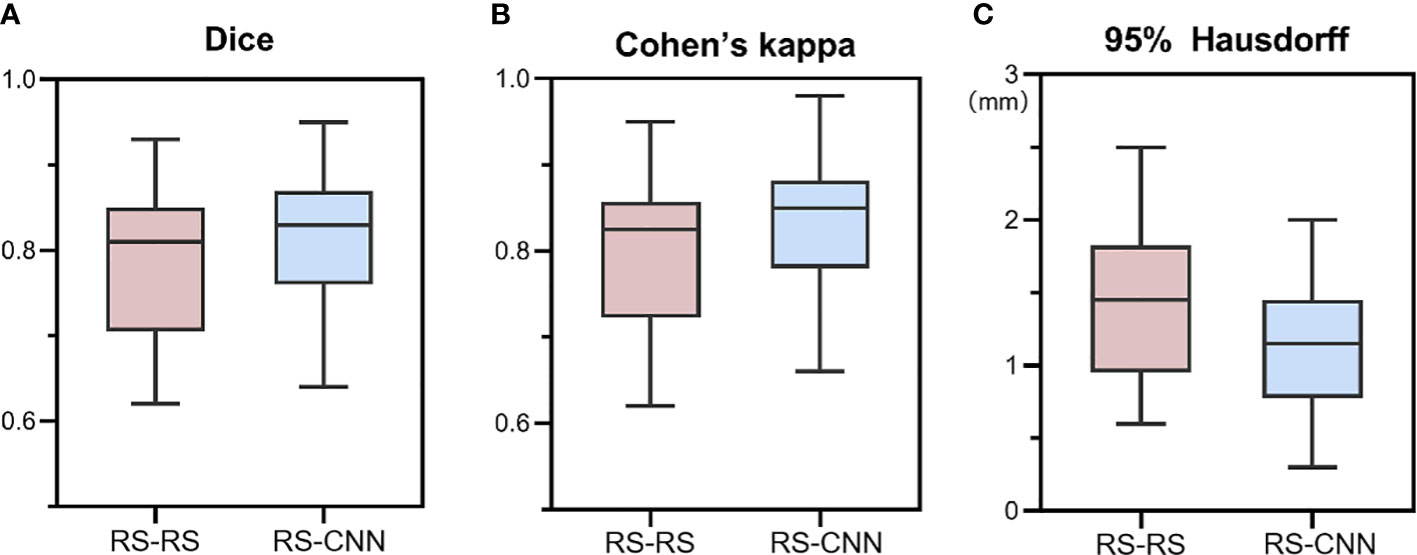

To achieve high consistency of the automatic segmentation model, the Dice similarity coefficient, Cohen’s kappa, Hausdorff 95 (95% HD), and the segmentation metrics (area, major axis length, and minor axis length) were used to evaluate each lesion’s pixel and boundary consistency of the three radiologists and the CNN segmentation model in the validation cohort. Each of the three radiologists had eight years of experience in breast US and was blinded to the clinical data and histopathological results of the patients.
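For readers unfamiliar with the overlap metrics, the following sketch computes the Dice similarity coefficient and Cohen's kappa for a pair of binary masks using their standard definitions. The function name and the toy masks are hypothetical; the Hausdorff distance is omitted for brevity.

```python
def dice_and_kappa(mask_a, mask_b):
    """Return (Dice, Cohen's kappa) for two equally sized binary masks."""
    a = [bool(v) for row in mask_a for v in row]
    b = [bool(v) for row in mask_b for v in row]
    if len(a) != len(b):
        raise ValueError("masks must have the same shape")
    n = len(a)
    both = sum(x and y for x, y in zip(a, b))  # lesion in both masks
    neither = sum(not x and not y for x, y in zip(a, b))
    size_a, size_b = sum(a), sum(b)

    # Dice: twice the overlap divided by the sum of the two mask sizes.
    dice = 2 * both / (size_a + size_b) if size_a + size_b else 1.0

    observed = (both + neither) / n
    # Agreement expected by chance from each rater's marginal frequencies.
    expected = (size_a * size_b + (n - size_a) * (n - size_b)) / (n * n)
    kappa = (observed - expected) / (1 - expected) if expected < 1 else 1.0
    return dice, kappa


# Toy masks standing in for a radiologist's and the model's segmentation.
radiologist = [[0, 1, 1, 0], [0, 1, 1, 0], [0, 0, 0, 0]]
model = [[0, 1, 1, 0], [0, 1, 0, 0], [0, 0, 0, 0]]
dice, kappa = dice_and_kappa(radiologist, model)
print(round(dice, 3), round(kappa, 3))
```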

CNN-based predictive model building based on the single-segmentation peritumoral region SWE image

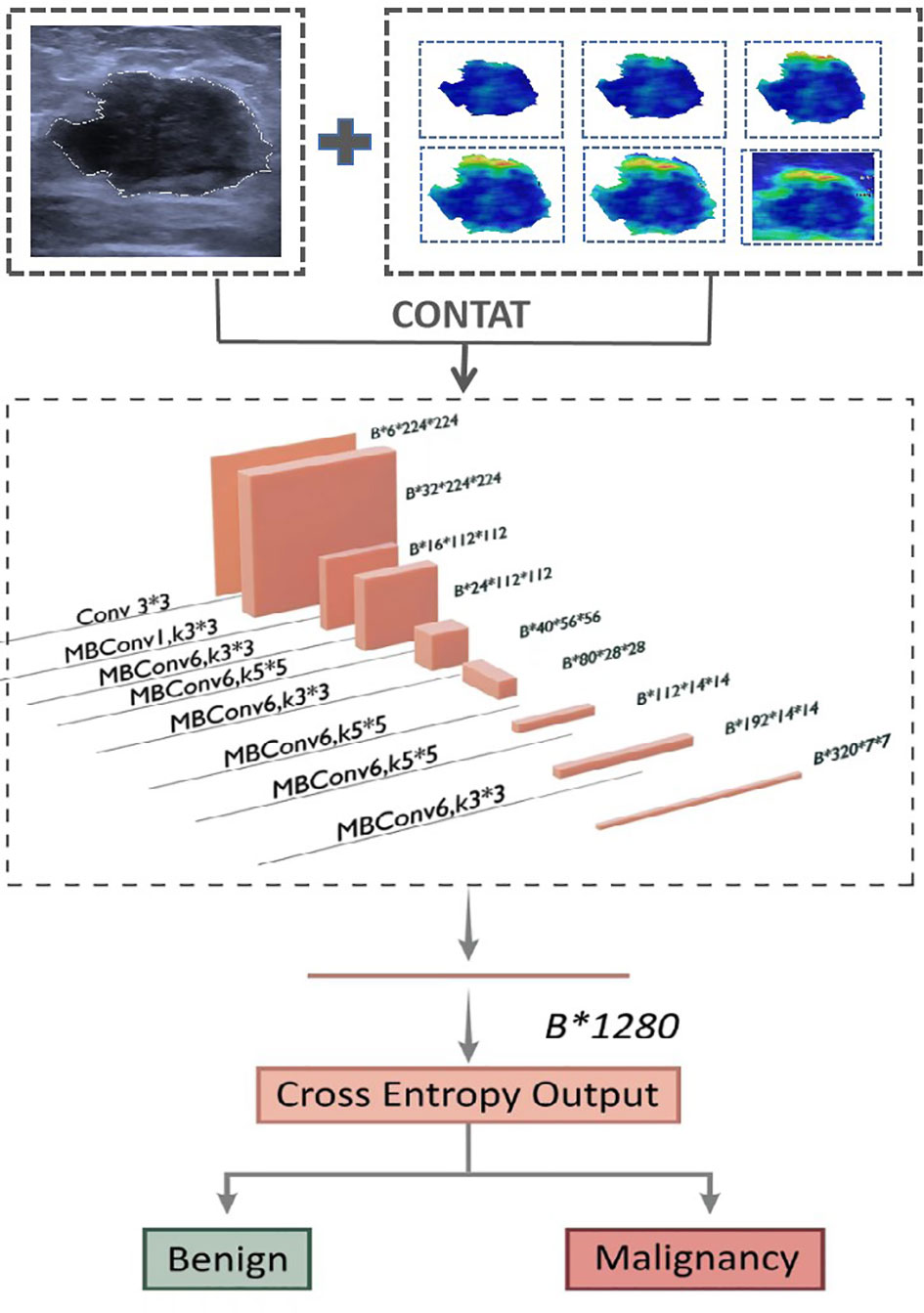

For predicting breast cancer, we built benign and malignant binary classification CNN models using EfficientNet-B0. Seven single-parameter CNN models were trained based on seven individual input images (Figure 4). Five of them were segmented on SWE (Internal SWE CNN, 0.5 mm SWE CNN, 1.0 mm SWE CNN, 1.5 mm SWE CNN, 2.0 mm SWE CNN); one was segmented on the US image; and the last one was the whole heat map region on the SWE image. The EfficientNet-B0 architecture is shown in Figure 4. The optimizer was SGD with a momentum of 0.9 and a weight decay of 1e-4; the loss function was cross-entropy loss; the batch size was 128; and the learning rate was 0.01. Data augmentation included random horizontal flip, random brightness, random contrast, and random saturation. We implemented them in an open-source machine learning framework (PyTorch, version 1.10, https://pytorch.org).
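For reference, the optimizer settings above correspond to the following parameter update, written in the form that PyTorch documents for SGD with momentum and weight decay. The symbols are our own notation, and we assume that dampening and Nesterov momentum, which the text does not mention, are left at their defaults (off).

```latex
\begin{aligned}
g_t      &= \nabla_\theta \mathcal{L}_{\mathrm{CE}}(\theta_{t-1}) + \lambda\,\theta_{t-1}, \\
v_t      &= \mu\, v_{t-1} + g_t, \qquad v_0 = 0, \\
\theta_t &= \theta_{t-1} - \eta\, v_t,
\end{aligned}
\qquad \eta = 0.01, \quad \mu = 0.9 .
```

Here $\theta$ denotes the network weights, $\mathcal{L}_{\mathrm{CE}}$ the cross-entropy loss averaged over a batch of 128 images, $\eta$ the learning rate, $\mu$ the momentum, and $\lambda$ the weight-decay coefficient reported above.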

Figure 4 Network architecture of single prediction model for breast cancer. The input layer is a single US image or a segmented SWE image.

CNN-based predictive model building based on the fusion of peritumoral region segmentation SWE images and US images

Furthermore, six corresponding US + SWE image CNN models (US + Internal SWE CNN; US + 0.5 mm SWE CNN; US + 1.0 mm SWE CNN; US + 1.5 mm SWE CNN; US + 2.0 mm SWE CNN; US + ROI SWE CNN) were also trained based on the fusion-input binary classification CNN by using both US and SWE images as the network input. We fused the two images on the first convolution layer of the network. For the fusion input, we modified the EfficientNet-B0, which increased input channel of the first convolution layer from 3 to 6. Correspondingly, two three-channel images were concatenated into six channels on the channel axis and then resized into 224×224. Other parameters of the model were the same as before, and the model architecture is shown in Figure 5.

Figure 5 Network architecture of dual-modal prediction model for breast cancer. The input layer inputs a US image and a segmented SWE image at the same time.

Statistical analysis

Statistical analysis was performed using commercially available SPSS software (version 19.0; Chicago, USA). All numerical data were presented as the mean ± standard deviation. The Shapiro–Wilk test was used to verify whether the quantitative data were normally distributed. The Mann–Whitney U tests were used to compare the continuous variables between the benign and malignant groups or between the three subgroups in the training and validation cohorts. To evaluate the consistency between the radiologists and the CNN segmentation model segmentation, we utilized the sensitivity, specificity, Dice coefficient, Cohen’s kappa, and 95% Symmetric Hausdorff Distance. The Pearson correlation coefficients and Wilcoxon signed-rank tests were used to determine whether the CNN segmentation model’s performance aligned with that of the radiologists. We used three metrics (area, length of major axis, and length of minor axis) to evaluate the consistency of segmentation. The performances of all predictive CNN models and two quantitative SWE parameters were assessed using receiver operating characteristic (ROC) curve analysis. ROC was also used to calculate the corresponding sensitivity (SEN), specificity (SPE), positive predictive value (PPV), negative predictive value (NPV), and area under the ROC curve (AUC). The McNemar test was used for paired comparisons of proportions. All statistical tests were two-sided, and p values lower than 0.05 were considered to indicate statistical significance.
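The ROC-derived quantities can be reproduced outside a statistics package. The sketch below is an illustration under our own assumptions, not the SPSS procedure used in the study: AUC is computed as the probability that a malignant lesion receives a higher score than a benign one (ties count one half), and the operating threshold of 0.5 and all scores are hypothetical.

```python
def auc(scores, labels):
    """AUC as the probability that a malignant case outscores a benign one."""
    pos = [s for s, y in zip(scores, labels) if y == 1]
    neg = [s for s, y in zip(scores, labels) if y == 0]
    if not pos or not neg:
        raise ValueError("both classes are required")
    wins = sum((p > q) + 0.5 * (p == q) for p in pos for q in neg)
    return wins / (len(pos) * len(neg))


def ratio(num, den):
    """Safe division; returns NaN when the denominator is zero."""
    return num / den if den else float("nan")


def confusion_metrics(scores, labels, threshold):
    """SEN, SPE, PPV and NPV when score >= threshold is called malignant."""
    pairs = list(zip(scores, labels))
    tp = sum(s >= threshold and y == 1 for s, y in pairs)
    fp = sum(s >= threshold and y == 0 for s, y in pairs)
    fn = sum(s < threshold and y == 1 for s, y in pairs)
    tn = sum(s < threshold and y == 0 for s, y in pairs)
    return {
        "SEN": ratio(tp, tp + fn),
        "SPE": ratio(tn, tn + fp),
        "PPV": ratio(tp, tp + fp),
        "NPV": ratio(tn, tn + fn),
    }


# Hypothetical model outputs; label 1 = malignant, 0 = benign.
scores = [0.9, 0.8, 0.7, 0.6, 0.4, 0.3, 0.2, 0.1]
labels = [1, 1, 0, 1, 1, 0, 0, 0]
print(auc(scores, labels))
print(confusion_metrics(scores, labels, threshold=0.5))
```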

Results

Clinicopathologic characteristics of all breast lesions

The clinicopathologic characteristics and subgroups of all 1271 breast lesions are summarized in Table 1. Among the 1271 ACR-BIRADS 4 lesions (ACR-BIRADS 4A: 397; ACR-BIRADS 4B: 575; ACR-BIRADS 4C: 299), 749 (58.9%) were malignant and 522 (41.1%) were benign, as shown in Table 2. The mean age of the entire cohort was 45.40 ± 9.65 years (range, 19–79 years); the mean age in the malignant group was greater than that in the benign group (51.29 ± 7.29 vs. 39.56 ± 6.73, P < 0.001), and there was no significant difference in the mean age between the training and the validation cohorts (P > 0.05 for all). The MD of the malignant group was larger than that of the benign group (19.98 ± 5.22 mm vs. 14.55 ± 7.74 mm, P < 0.001), but there was no difference between the training cohorts and the validation cohorts (P > 0.05 for all). The lesions were divided into subgroups depending on the MD. The subgroup with the MD of lesions ≤15 mm included 218 lesions (167 in the training cohort and 51 in the validation cohort; 89 benign and 129 malignant); the subgroup with MD between 15 mm and 25 mm included 779 lesions (598 in the training cohort and 181 in the validation cohort; 327 benign and 452 malignant); and the subgroup with MD >25 mm included 274 lesions (206 in the training cohort and 68 in the validation cohort; 112 benign and 162 malignant).

Quantitative SWE parameters of all breast lesions

Across all 1271 lesions, SWV values of the malignant group were significantly higher than those of the benign group, including the intratumoral stiffness (SWV1: 3.76 ± 0.78 m/s vs. 1.85 ± 0.65 m/s; P < 0.01) and the peritumoral stiffness (SWV5: 4.02 ± 0.82 m/s vs. 1.67 ± 0.74 m/s; P < 0.01).

SWV5 values were significantly higher than SWV1 values in the malignant group, ACR-BIRADS 4B and 4C group; SWV5 values were lower than SWV1 values in the benign group and ACR-BIRADS 4A group (all P < 0.01). SWV5 and SWV1 values in the subgroup with 15 mm <MD ≤25 mm were higher than those in the subgroups with MD ≤15 mm and MD >25 mm, because there were more malignant lesions in this subgroup (all P < 0.01).

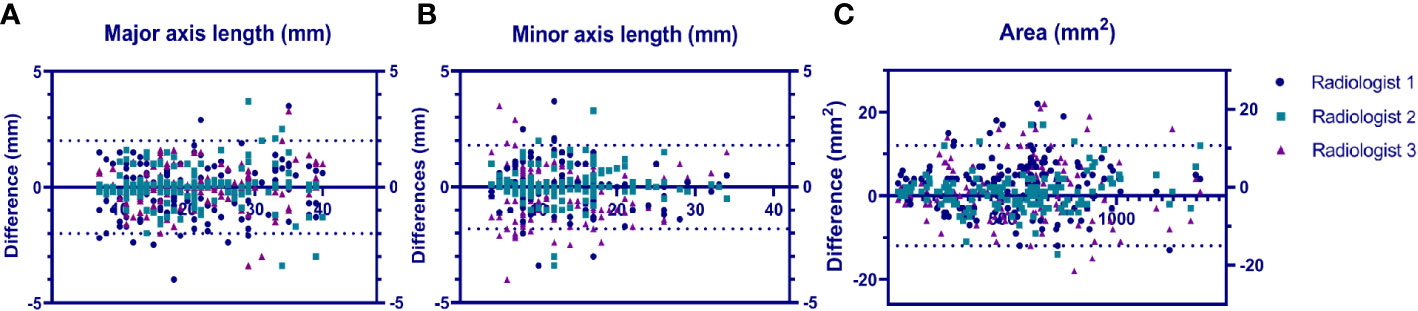

Consistency analysis of lesion segmentation manually vs. CNN model

We calculated the average values of the segmentation metrics’ intra- and interrater consistency of the three radiologists, as shown in Table 3. Wilcoxon signed-rank tests were conducted, and Pearson’s correlation coefficients were calculated using geometric features extracted from pairwise comparison metrics in the radiologists’ segmentations. Wilcoxon tests indicated that the CNN segmentation model satisfactorily matched the performance of the radiologists regarding sensitivity, specificity, the Dice coefficient, Cohen’s kappa, and 95% Symmetric Hausdorff Distance (P > 0.05). The Dice coefficient and Cohen’s kappa had the best values in Radiologist 2-CNN (0.83; 0.82); the specificity had the best values in Radiologist 3-CNN (0.99); and the 95% Symmetric Hausdorff Distance had the best values in Radiologist 1-CNN (1.19 mm) (Table 3). The box-plot diagrams show the intra- and interrater consistency of the radiologists and the CNN model compared to the radiologists (Figure 6). The Pearson’s correlation coefficients showed a strong relationship between the area, major and minor axis length between all of the observers (radiologists vs. CNN model r = 0.98, 0.97, 0.99). As shown in Bland–Altman plots, the differences between the CNN model and the three radiologists in segmentation area, major axis length, and minor axis length were almost 0 (Figure 7).

Figure 6 Box-plot diagrams of Dice (A), Cohen’s kappa (B), and 95% Hausdorff metrics (C) compared between the radiologists’ segmentation (RS-RS) and between the experts and deep learning model segmentation (RS-CNN). RS, radiologists; CNN, convolutional neural network.

Figure 7 Bland–Altman plots showing the model performance vs. that of each radiologist in terms of lesion major axis length (A), lesion minor axis length (B) and lesion area (C).
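The quantities behind a Bland–Altman comparison are the mean of the paired differences (the bias) and the 95% limits of agreement, conventionally taken as the bias ± 1.96 standard deviations of the differences. The sketch below uses that convention; the function name and the measurements are hypothetical and are not study data.

```python
from statistics import mean, stdev


def bland_altman(model_values, reader_values):
    """Return (bias, lower limit, upper limit) of agreement."""
    if len(model_values) != len(reader_values):
        raise ValueError("paired measurements are required")
    diffs = [m - r for m, r in zip(model_values, reader_values)]
    bias = mean(diffs)
    spread = 1.96 * stdev(diffs)  # sample SD of the paired differences
    return bias, bias - spread, bias + spread


# Hypothetical major-axis lengths in mm for five lesions.
model_mm = [12.0, 18.5, 25.0, 9.5, 30.0]
reader_mm = [12.5, 18.0, 25.5, 9.0, 30.0]
bias, lower, upper = bland_altman(model_mm, reader_mm)
print(round(bias, 2), round(lower, 2), round(upper, 2))
```

A bias close to 0 with narrow limits, as reported for the three geometric metrics, indicates that the model neither over- nor under-segments systematically relative to the radiologists.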

Diagnostic performance of the quantitative SWE parameters, US CNN model, and SWE CNN models for predicting breast cancer in the training and the validation cohorts

The diagnostic performances of quantitative SWE parameters, US CNN models, and SWE CNN models in both the training and the validation cohorts are summarized in Table 4.

Table 4 Performance summary of SWE CNN models, US + SWE CNN models, and quantitative SWE parameters for predicting breast cancer in the training and validation cohorts.

Among these three single models, the 1.0 mm SWE image CNN model had the highest area under curve (AUC), accuracy (ACC), sensitivity, and specificity for predicting breast cancer in the subgroup with MD ≤15 mm both in the training and in the validation cohort (0.81, 79.26%, 68.86%, 82.52% vs. 0.75, 74.49%, 62.97%, 78.53%).

In the subgroups with 15 mm <MD ≤25 mm and MD >25 mm, the 2.0 mm SWE image CNN model had the highest AUC, ACC, sensitivity, and specificity both in the training cohort (15 mm <MD ≤25 mm: 0.85, 82.64%, 66.24%, 80.33%; MD >25 mm: 0.84, 80.34%, 69.34%, 82.63%) and the validation cohort (15 mm <MD ≤25 mm: 0.81, 78.87%, 63.44%, 76.85%; MD >25 mm: 0.78, 77.73%, 65.73%, 77.64%).

Regardless of the grouping method, the AUCs and ACC of the SWE image CNN models were all better than SWV5 and the US CNN model in predicting breast cancer (all P < 0.05), except for the 0.5 mm and the internal SWE image CNN models. There was no significant difference between the SWV5 and the US CNN model in sensitivity, specificity, and AUCs (all P > 0.05, Figure 8).

Figure 8 The ROC curves of the prediction CNN models and the quantitative SWE parameters (SWV1 and SWV5) in both the training and the validation cohorts for the three subgroups. (A) Based on the ROC curves of the subgroup with MD ≤15 mm, the US + 1.0 mm SWE CNN showed the highest AUCs in the training (0.94) and in the validation (0.91) cohorts. (B) From the ROC curves of the subgroup with 15 mm <MD ≤25 mm, the US + 2.0 mm SWE CNN showed the highest AUCs in the training (0.96) and in the validation (0.93) cohorts. (C) From the ROC curves of the subgroup with MD >25 mm, the US + 2.0 mm SWE CNN showed the highest AUC in both the training (0.95) and the validation (0.91) cohorts. ROC, receiver operating characteristic; AUC, area under the ROC curve; MD, maximum diameter of the lesion. The best values are marked with red boxes.

Diagnostic performance of the US + SWE dual-modal CNN model for predicting breast cancer in the training and the validation cohorts

In both the training and the validation cohorts, the overall performances of the US + SWE image CNN models were slightly higher than those of the corresponding single-image CNN models.

The US + 1.0 mm SWE CNN model achieved the highest AUC for MD ≤15 mm both in the training cohort (0.94) and in the validation cohort (0.91) (Figure 8). In the same subgroup, this model also achieved the highest ACC, sensitivity, and specificity both in the training cohort (88.67%, 78.53%, 85.63%, respectively) and in the validation cohort (85.54%, 77.72%, 82.52%, respectively).

In the subgroups with 15 mm <MD ≤25 mm and MD >25 mm, the US + 2.0 mm SWE CNN model achieved the highest AUCs both in the training cohort (0.96, 0.95, respectively) and in the validation cohort (0.93, 0.91, respectively) (Figure 8). Compared with the US image CNN model, the US + SWE dual-modal CNN model showed average increases of 6.2%, 5.7%, and 8.7% in ACC, sensitivity, and specificity, respectively. The improvement in specificity was significant, indicating that the dual-modal CNN model can improve specificity without loss of sensitivity for classifying breast cancer.

Similarly, the US + 2.0 mm SWE CNN model achieved the highest ACC, sensitivity, and specificity in both the training and the validation cohorts for 15 mm <MD ≤25 mm (91.34%, 75.63%, 86.98% and 90.76%, 72.53%, 84.22%) and MD >25 mm (86.65%, 80.32%, 87.44% and 84.23%, 77.38%, 84.75%).

Discussion

The most important contribution of this work is the introduction of the dual-modal CNN architecture to predict breast cancer. We adopted the deep learning algorithm-assisted strategy for clinical diagnosis of breast cancer based on the automatic segmentation of peritumoral region on ultrasound SWE images. Our dual-modal CNN architecture improved the SWE diagnostic accuracy of breast cancer, reaching 90.76%.

The ACR-BIRADS category is based on the morphological features visible on US images. This approach has high sensitivity but relatively low specificity, thereby leading to unnecessary biopsy and excessive diagnosis (19, 20). The SWE examination has been reported to be able to increase specificity and sensitivity for predicting breast cancer (8–10). Our results showed that SWV5 values were higher than SWV1 values in the malignant group (all P < 0.001), while there was no significant difference between SWV1 and SWV5 in the benign group. SWV5 had higher AUCs than SWV1 both in the training and in the validation cohorts for predicting breast cancer, regardless of the subgroup. This demonstrated that stiffness that includes peritumoral tissue is a better indicator of breast cancer, which is consistent with previous studies (21–24), because the peritumoral region of breast cancer has abnormally stiff collagen fibers, which is related to cancer fibroblasts, as well as infiltration of cancer cells into the surrounding tissue (25, 26). However, based on the traditional SWE technology, it is difficult to accurately select the surrounding tissues and obtain accurate peritumoral stiffness value. This may be the reason why SWE technology has not yet been widely applied for clinical diagnosis of breast cancer (27, 28). Few studies have evaluated the value of intra- and peritumoral regions in the prediction of breast cancer, and no studies have shown the diagnostic efficacy of CNNs segmenting different widths of peritumoral region of breast cancer.

In the subgroup with MD ≤15 mm, the dual-modal CNN models integrating US and SWE images improved accuracy, sensitivity, and AUC compared with the single CNN models using only US or SWE images, with specificity increasing most significantly (average 7.8%). Such an improvement in specificity may be because SWE images provide information on the stiffness of lesions, complementing the US image diagnosis of breast cancer from another dimension. The US + 1.0 mm SWE model achieved the highest AUC in diagnosing breast cancer both in the training (0.94) and in the validation cohorts (0.91).

In the subgroups with 15 mm <MD ≤25 mm and MD >25 mm, the dual-modal US + SWE model still had higher AUC, accuracy, sensitivity, and specificity than any of the single-parameter CNN models. The US + 2.0 mm SWE model achieved the highest AUC in predicting breast cancer both in the training (0.96; 0.95) and in the validation cohort (0.93; 0.91). These results show that, for these subgroups, the most effective SWE image region for predicting breast cancer is the area containing 2.0 mm of peritumoral tissue. Similarly, for the lesions with the maximum diameter ≤15 mm, the most effective image region for SWE to predict breast cancer is the area containing 1.0 mm of peritumoral tissue. Regardless of the group, the 0.5 mm SWE CNN model and the US + 0.5 mm SWE dual-modal CNN model showed no significant difference from the corresponding lesion’s internal SWE CNN model, possibly because stiffness of the 0.5 mm peritumoral tissue was very close to intratumoral tissue stiffness.

The reliability and repeatability of automatic segmentation are crucial. In our research, the segmentation CNN model achieved stable consistency with all three radiologists in terms of Dice coefficient and Cohen’s kappa. The difference of each observer in the segmentation area and the maximum diameter of the transverse and longitudinal sections of the lesions was very close to 0, indicating that the CNN segmentation model has excellent consistency with radiologists.

The CNN architecture does not require manual input of radiomics signatures, but automatically selects useful features from US images for classification; this improves diagnostic performance and efficiency while minimizing artificial false-positive or false-negative errors (29–32). However, it is unknown which region of the CNN model based on US and SWE images can improve the diagnostic efficiency of breast cancer, so it is called a black-box learning method, which may lead to ambiguous interpretation of CNN features (33, 34). In our study, we constructed an image segmentation model to automatically segment the effective area (the optimal peritumoral width) on SWE images that is conducive to the diagnosis of breast cancer, and then integrated the automatic segmentation model into our CNN prediction model, thereby breaking the previous CNN black-box learning mode and greatly improving the efficiency of the CNN model in the diagnosis of breast cancer.

Our results showed that the CNN models combining US images with SWE images containing 1 mm or 2 mm of the peritumoral tissue were superior to whole SWE ROI image models in predicting breast cancer, indicating that the effective SWE region of breast cancer was the area covering a certain width of peritumoral tissue. Moreover, the lesions with different diameters have differential infiltration into the peritumoral tissue. Our CNN model based on automatic segmentation of SWE images of peritumoral tissue can automatically identify effective regions of breast lesions’ SWE image, thereby improving the work efficiency of radiologists and reducing their SWE measurement workload.

There are some limitations to this study. First, this study only considered ACR-BIRADS 4 lesions, so there may be selection bias in the study sample. Second, all images and data came from a single center. Therefore, a larger data cohort acquired from multiple centers with different models of US equipment is necessary to create a more comprehensive training cohort.

Conclusion

In conclusion, the dual-modal CNN models based on the combination of US images and peritumoral region SWE images allow for accurate prediction of breast cancer. Moreover, when the lesion diameter is ≤15 mm, the best diagnostic SWE image area for predicting breast cancer contains 1.0 mm of the peritumoral region. When the lesion diameter is between 15 mm and 25 mm or >25 mm, the SWE image containing 2.0 mm of the peritumoral region is the optimal diagnostic area for predicting breast cancer.

Data availability statement

The raw data supporting the conclusions of this article will be made available by the authors, without undue reservation.

Ethics statement

The studies involving human participants were reviewed and approved by USTC. Written informed consent for participation was not required for this study in accordance with the national legislation and the institutional requirements.

Author contributions

LX, ZL, CP, XL, Y-YC, and LH participated in the literature search, data acquisition, data analysis, or data interpretation. LX and LH conceived and designed the study, performed the research, collected and analyzed the data, wrote the first draft, and critically revised the manuscript. LX, N-AH, and LH participated in writing and revising the manuscript. All authors contributed to the article and approved the submitted version.

Conflict of interest

Author ZL was employed by Hebin Intelligent Robots Co., LTD.

The remaining authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Publisher’s note

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

Abbreviations

ACR-BIRADS, American College of Radiology Breast Imaging Reporting and Data System; CNN, convolutional neural network; FNA, fine-needle aspiration; MD, maximum diameter; ROI, region of interest; SWE, shear-wave elastography; US, ultrasound.

References

1. Sung H, Ferlay J, Siegel RL, Laversanne M, Soerjomataram I, Jemal A, et al. Global cancer statistics 2020: GLOBOCAN estimates of incidence and mortality worldwide for 36 cancers in 185 countries. CA Cancer J Clin (2021) 71(3):209–49. doi: 10.3322/caac.21660

2. Shepherd JA, Kerlikowske K. Do fatty breasts increase or decrease breast cancer risk? Breast Cancer Res (2012) 14(1):102. doi: 10.1186/bcr3081

3. van der Waal D, Verbeek ALM, Broeders MJM. Breast density and breast cancer-specific survival by detection mode. BMC Cancer (2018) 18(1):386. doi: 10.1186/s12885-018-4316-7

4. Kim MY, Kim SY, Kim YS, Kim ES, Chang JM. Added value of deep learning-based computer-aided diagnosis and shear wave elastography to B-mode ultrasound for evaluation of breast masses detected by screening ultrasound. Medicine (2021) 100(31):e26823. doi: 10.1097/MD.0000000000026823

5. Farooq F, Mubarak S, Shaukat S, Khan N, Jafar K, Mahmood T, et al. Value of elastography in differentiating benign from malignant breast lesions keeping histopathology as gold standard. Cureus (2019) 11:e5861. doi: 10.7759/cureus.5861

6. Fujioka T, Katsuta L, Kubota K, Mio M, Yuka K, Arisa K, et al. Classification of breast masses on ultrasound shear wave elastography using convolutional neural networks. Ultrason Imaging (2020) 42(4-5):213–20. doi: 10.1177/0161734620932609

7. Choi HY, Seo M, Sohn YM, Hwang JH, Song EJ, Min SY, et al. Shear wave elastography for the diagnosis of small (≤2 cm) breast lesions: Added value and factors associated with false results. Br J Radiol (2019) 92:20180341. doi: 10.3348/kjr.2018.0530

8. Zhang X, Liang M, Yang Z, Zheng C, Wu J, Ou B, et al. Deep learning-based radiomics of B-mode ultrasonography and shear-wave elastography: Improved performance in breast mass classification. Front Oncol (2020) 10:1621. doi: 10.3389/fonc.2020.01621

9. Xie X, Zhang Q, Liu S, Ma Y, Liu Y, Xu M, et al. Value of quantitative sound touch elastography of tissues around breast lesions in the evaluation of malignancy. Clin Radiol (2021) 76(1):79.e21–8. doi: 10.1016/j.crad.2020.08.016

10. Zhang L, Xu J, Wu H, Liang WY, Ye XQ, Tian HT, et al. Screening breast lesions using shear modulus and its 1-mm shell in sound touch elastography. Ultrasound Med Biol (2019) 45(3):710–9. doi: 10.1016/j.ultrasmedbio.2018.11.013

11. Kong WT, Wang Y, Zhou WJ, Zhang YD, Wang WP, Zhuang XM, et al. Can measuring perilesional tissue stiffness and stiff rim sign improve the diagnostic performance between benign and malignant breast lesions? J Med Ultrasonics (2021) 48(1):53–61. doi: 10.1007/s10396-020-01064-0

12. Xu P, Wu M, Yang M, Xiao J, Ruan ZM, Wu LY. Evaluation of internal and shell stiffness in the differential diagnosis of breast non-mass lesions by shear wave elastography. World J Clin Cases (2020) 8(12):2510–9. doi: 10.12998/wjcc.v8.i12.2510

13. Mao YJ, Lim HJ, Ni M, Yan WH, Wong DWC, Cheung JCW, et al. Breast tumour classification using ultrasound elastography with machine learning: A systematic scoping review. Cancers (2022) 14(2):367. doi: 10.3390/cancers14020367

14. Zhou J, Zhan W, Dong Y, Yang ZF, Zhou C. Stiffness of the surrounding tissue of breast lesions evaluated by ultrasound elastography. Eur Radiol (2014) 24(7):1659–67. doi: 10.1007/s00330-014-3152-7

15. Pourasad Y, Zarouri E, Salemizadeh PM, Mohammed AS. Presentation of novel architecture for diagnosis and identifying breast cancer location based on ultrasound images using machine learning. Diagnostics (Basel Switzerland) (2021) 11(10):1870. doi: 10.3390/diagnostics11101870

16. Wijata A, Andrzejewski J, Pyciński B. An automatic biopsy needle detection and segmentation on ultrasound images using a convolutional neural network. Ultrason Imaging (2021) 43(5):262–72. doi: 10.1177/01617346211025267

17. Jiang MP, Lei SL, Zhang JH, Hou LQ, Zhang MX, Luo YC, et al. Multimodal imaging of target detection algorithm under artificial intelligence in the diagnosis of early breast cancer. J Healthcare Eng (2022) 2022:9322937. doi: 10.1155/2022/9322937

18. Wang Y, Choi EJ, Choi Y, Zhang H, Jin JY, Ko SB, et al. Breast cancer classification in automated breast ultrasound using multiview convolutional neural network with transfer learning. Ultrasound Med Biol (2020) 46(5):1119–32. doi: 10.1016/j.ultrasmedbio.2020.01.001

19. Kim J, Kim HJ, Kim C, Kim WH. Artificial intelligence in breast ultrasonography. Ultrasonography (2021) 40(2):183–90. doi: 10.14366/usg.20117

20. Ozaki J, Fujioka T, Yamaga E, Hayashi A, Kujiraoka Y, Imokawa T, et al. Deep learning method with a convolutional neural network for image classification of normal and metastatic axillary lymph nodes on breast ultrasonography. Japanese J Radiol (2022) 40:814–22. doi: 10.1007/s11604-022-01261-6

21. Cui Y-Y, He N-A, Ye X-J, Hu L, Xie L, Zhong W, et al. Evaluation of tissue stiffness around lesions by sound touch shear wave elastography in breast malignancy diagnosis. Ultrasound Med Biol (2022) 48(8):1672–80. doi: 10.1016/j.ultrasmedbio.2022.04.219

22. Hu L, Liu X, Pei C, Xie L, He N. Assessment of perinodular stiffness in differentiating malignant from benign thyroid nodules. Endocr Connect (2021) 10(5):492–501. doi: 10.1530/EC-21-0034

23. Hu L, He N, Xie L, Ye X, Liu X, Pei C, et al. Evaluation of the perinodular stiffness potentially predicts the malignancy of the thyroid nodule. J Ultrasound Med (2020) 89:3251–3255. doi: 10.1002/jum.15329

24. Park HS, Shin HJ, Shin CK, Cha JH, Chae EY, Choi WJ, et al. Comparison of peritumoral stromal tissue stiffness obtained by shear wave elastography between benign and malignant breast lesions. Acta Radiol (2018) 59(10):1168–75. doi: 10.1177/0284185117753728

25. Zhang X, Liang M, Yang ZH, Zheng CS, Wu JY, Ou B, et al. Deep learning-based radiomics of B-mode ultrasonography and shear-wave elastography: Improved performance in breast mass classification. Front Oncol (2020) 10:1621. doi: 10.3389/fonc.2020.01621

26. Sun Q, Lin X, Zhao YS, Li L, Yan K, Liang D, et al. Deep learning vs. radiomics for predicting axillary lymph node metastasis of breast cancer using ultrasound images: Don't forget the peritumoral region. Front Oncol (2020) 10:53. doi: 10.3389/fonc.2020.00053

27. Dong F, Wu H, Zhang L, Tian HT, Liang WY, Ye XY, et al. Diagnostic performance of multimodal sound touch elastography for differentiating benign and malignant breast masses. J Ultrasound Med (2019) 38(8):2181–90. doi: 10.1002/jum.14915

28. Choi JS, Han BK, Ko ES, Bae JM, Ko EY, Song SH, et al. Effect of a deep learning framework-based computer-aided diagnosis system on the diagnostic performance of radiologists in differentiating between malignant and benign masses on breast ultrasonography. Korean J Radiol (2019) 20(5):749–58. doi: 10.3348/kjr.2018.0530

29. Wang Q, Chen H, Luo G, Li B, Shang HT, Shao H, et al. Performance of novel deep learning network with the incorporation of the automatic segmentation network for diagnosis of breast cancer in automated breast ultrasound. Eur Radiol (2022) 32:7163–72. doi: 10.1007/s00330-022-08836-x

30. Misra S, Jeon S, Managuli R, Lee S, Kim G, Yoon C, et al. Bi-modal transfer learning for classifying breast cancers via combined B-mode and ultrasound strain imaging. IEEE Trans Ultrason Ferroelectr Freq Control (2022) 69(1):222–32. doi: 10.1109/TUFFC.2021.3119251

31. Jabeen K, Khan MA, Alhaisoni M, Tariq U, Zhang YD, Hamza A, et al. Breast cancer classification from ultrasound images using probability-based optimal deep learning feature fusion. Sensors (Basel Switzerland) (2022) 22(3). doi: 10.3390/s22030807

32. Yi J, Kang HK, Kwon JH, Kim KS, Park MH, Seong YK, et al. Technology trends and applications of deep learning in ultrasonography: Image quality enhancement, diagnostic support, and improving workflow efficiency. Ultrasonography (2021) 40(1):7–22. doi: 10.14366/usg.20102

33. Gu J, Tong T, He C, Xu M, Yang X, Tian J, et al. Deep learning radiomics of ultrasonography can predict response to neoadjuvant chemotherapy in breast cancer at an early stage of treatment: A prospective study. Eur Radiol (2022) 32(3):2099–109. doi: 10.1007/s00330-021-08293-y

Keywords: convolutional neural networks (CNN), shear-wave elastography (SWE), peritumoral stiffness, segmentation, breast cancer

Citation: Xie L, Liu Z, Pei C, Liu X, Cui Y-y, He N-a and Hu L (2023) Convolutional neural network based on automatic segmentation of peritumoral shear-wave elastography images for predicting breast cancer. Front. Oncol. 13:1099650. doi: 10.3389/fonc.2023.1099650

Received: 18 November 2022; Accepted: 31 January 2023;

Published: 14 February 2023.

Edited by:

Richard Gary Barr, Northeast Ohio Medical University, United States

Reviewed by:

Yuanpin Zhou, Sun Yat-sen University, China

Subathra Adithan, Jawaharlal Institute of Postgraduate Medical Education and Research (JIPMER), India

Copyright © 2023 Xie, Liu, Pei, Liu, Cui, He and Hu. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Nian-an He, aGVuaWFuYW43MUBxcS5jb20=; Lei Hu, aHVsZWlAdXN0Yy5lZHUuY24=

†These authors have contributed equally to this work
