- Department of General Surgery, The Second Affiliated Hospital of Nanjing Medical University, Nanjing, Jiangsu, China

Purpose: To construct a deep learning system (DLS) based on enhanced computed tomography (CT) images for the preoperative prediction of stage and human epidermal growth factor receptor 2 (HER2) status in patients with gastric cancer.

Methods: The raw enhanced CT image dataset consisted of CT images of 389 patients from the retrospective cohort, The Cancer Imaging Archive (TCIA) cohort, and the prospective cohort. The DLS was developed by transfer learning for tumor detection, staging, and HER2 status prediction. Pre-trained YOLOv5, EfficientNet, EfficientNetV2, Vision Transformer (VIT), and Swin Transformer (SWT) models were evaluated. The tumor detection and staging dataset consisted of 4860 enhanced CT images with annotated tumor bounding boxes. The HER2 status prediction dataset consisted of 38900 enhanced CT images.

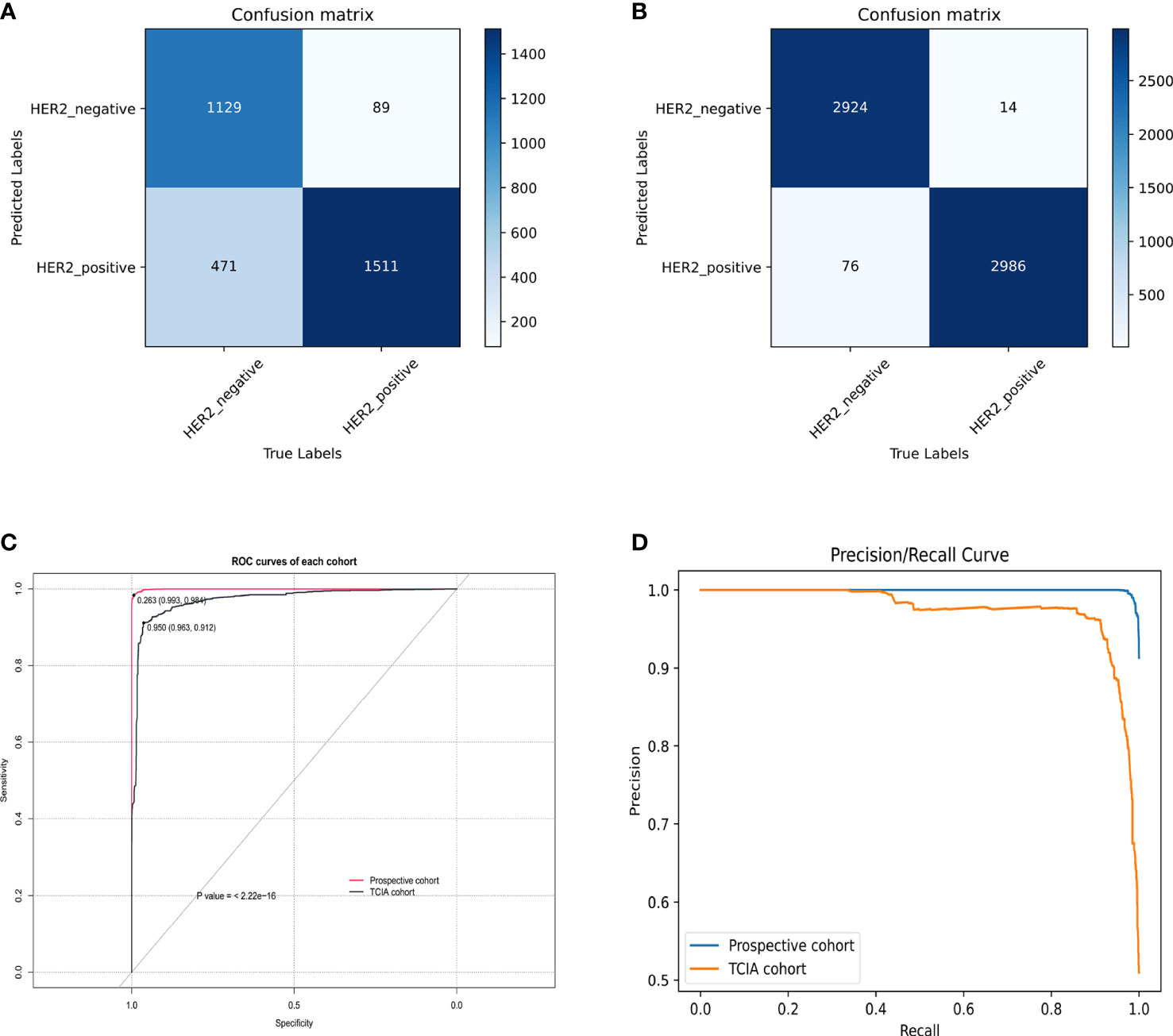

Results: The YOLOv5-based DetectionNet performed tumor detection and staging and achieved a mean average precision at an intersection-over-union threshold of 0.5 (mAP_0.5) of 0.909 in the external validation cohort. The VIT-based PredictionNet performed best in HER2 status prediction, with areas under the receiver operating characteristic curve (AUC) of 0.9721 and 0.9995 in the TCIA cohort and prospective cohort, respectively. The DLS, comprising the DetectionNet and the PredictionNet, showed excellent performance in CT image interpretation.

Conclusion: This study developed an enhanced CT-based DLS to preoperatively predict the stage and HER2 status of gastric cancer patients, which may help clinicians choose the appropriate treatment and thereby improve the survival of gastric cancer patients.

Introduction

Gastric cancer is one of the most common tumors worldwide and ranks fourth among causes of cancer-related death (1). Because early symptoms are atypical, many patients with gastric cancer already have advanced disease at diagnosis (2, 3). The tumor, node, and metastasis (TNM) stage is commonly used to guide treatment decisions, and for patients with advanced disease, neoadjuvant chemotherapy is recommended as the standard preoperative treatment (4, 5). However, the accuracy of medical imaging for preoperative staging remains unsatisfactory (6, 7). Surgical resection combined with adjuvant chemotherapy or chemoradiotherapy is still the main treatment for advanced gastric cancer (4). Even with standard treatment, however, the prognosis of patients with advanced gastric cancer remains poor (8, 9). HER2 overexpression is an important driver of gastric carcinogenesis and is associated with poor prognosis in advanced gastric cancer (10–12). Trastuzumab combined with standard chemotherapy significantly improves overall survival in HER2-positive patients (4, 11, 13, 14), so accurate assessment of HER2 status is crucial in the treatment of gastric cancer (15). HER2 status is commonly determined by immunohistochemistry (IHC) or fluorescence in situ hybridization (FISH), but both tests require invasively obtained tissue and are costly (16, 17).

Identifying imaging biomarkers is critical in oncology (18). Studies have shown that medical images can capture tumor biology at the genetic and cellular levels (19). Enhanced CT is widely used in clinical practice and is a routine preoperative examination for patients with gastric cancer (20). In recent years, deep learning (DL), which can extract more information from input data, has gained increasing attention in oncology (21–23). Convolutional neural networks (CNNs) are the most mature deep learning algorithms and perform well in a variety of image classification tasks (24, 25). Transformers have also received extensive attention in image classification and detection (26–28). Research has confirmed that, under certain conditions, the predictive performance of DL models is not inferior to that of human experts (29, 30).

Therefore, this study aimed to develop a DLS for the preoperative prediction of stage and HER2 status in patients with gastric cancer. We also built a simple web service to make these predictions more accessible to clinicians. To our knowledge, this has not been reported in any published study.

Materials and methods

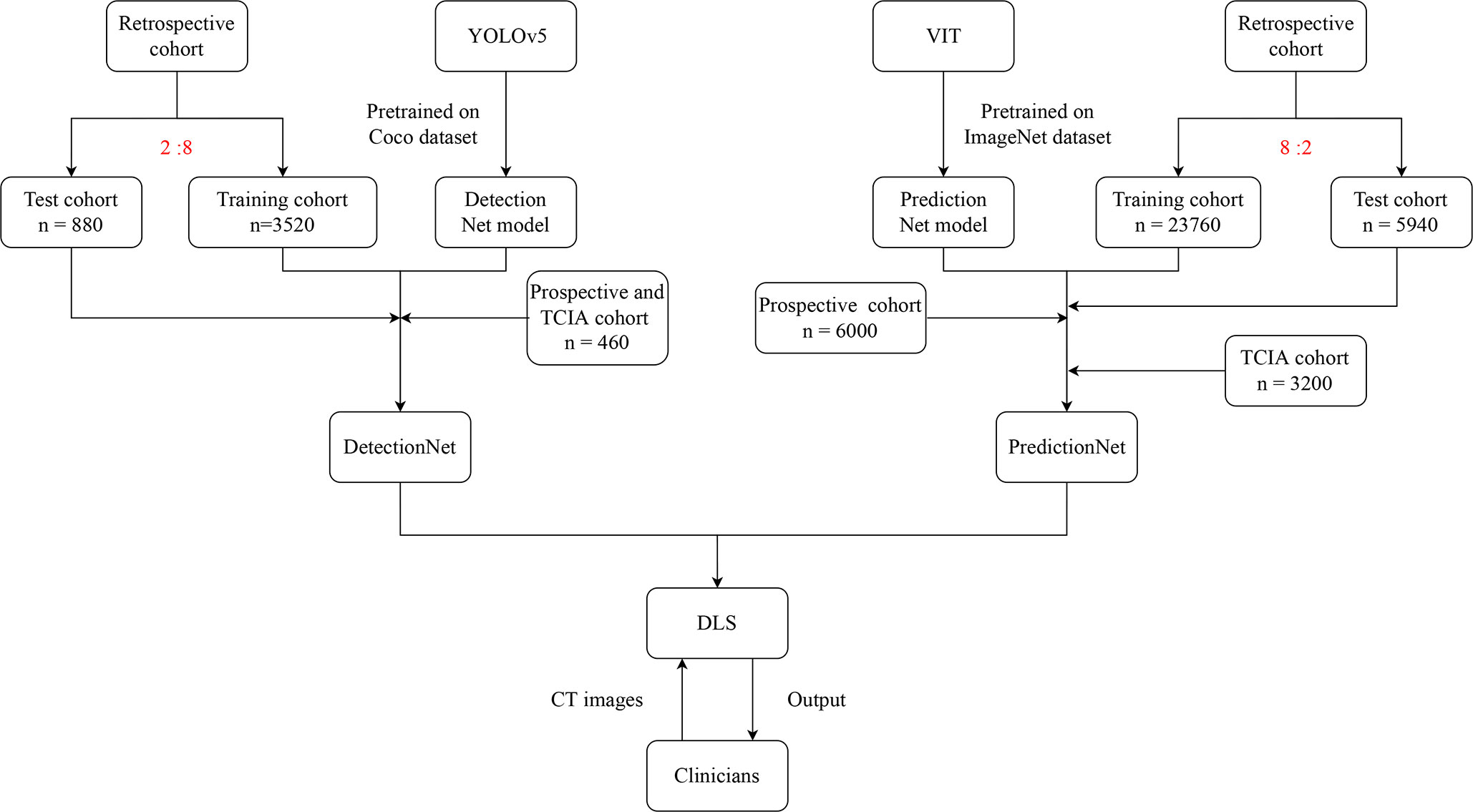

Figure 1 depicts the workflow of this study.

Patients

We collected three cohorts. Our research team retrospectively collected clinical data from all gastric cancer patients from January 2017 to June 2021, of whom 297 were included in this study. We also collected CT images and clinical information of 40 patients from the TCIA database. In addition, we prospectively and consecutively collected clinical data of 60 gastric cancer patients from October 2021 to April 2022. The inclusion criteria were as follows: enhanced CT within 1 week before gastrectomy; postoperative pathological confirmation of gastric cancer; HER2 testing after gastrectomy with a clear HER2 status; and no preoperative chemotherapy or radiotherapy. The exclusion criteria were: poor gastric distension or artifacts on CT images; small gastric cancer lesions that were difficult to identify; and an indeterminate HER2 status. The Supplementary Material details the sample size assessment (Figure S1) and the data collection (Figures S2A–C).

CT image acquisition

CT examinations were performed on a 64-slice dual-source CT scanner. Patients fasted for more than 8 hours and received 20 mg of anisodamine intravenously to suppress gastric motility. In addition, all patients drank 1000 mL of warm water to distend the stomach before the examination and held their breath during the scan. After a non-enhanced abdominal CT scan, patients received 1.5 mL/kg of iodinated contrast medium (ioversol injection, 320 mg I/mL; Jiangsu Hengrui Pharmaceuticals Co., Ltd., Jiangsu, China) intravenously at a flow rate of 3.0 mL/s via an automatic power injector. After contrast injection began, arterial-phase images were acquired 8 seconds after the contrast enhancement reached 100 HU, venous-phase images 21 seconds after arterial-phase imaging, and delayed-phase images 90 seconds after venous-phase imaging. The CT scan parameters were as follows: tube voltage, 120 kV; tube current, 150–300 mA; field of view, 30–50 cm; matrix, 512 × 512; rotation time, 0.5 seconds; pitch, 1.0; and reconstructed section thickness, 2 mm.
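The contrast dose and the phase timing above are simple arithmetic; the following sketch makes the sequence explicit. `ContrastProtocol` and `plan_scan` are illustrative names, not part of any scanner software, and the patient weight and trigger time used in the example are hypothetical values.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class ContrastProtocol:
    """Parameters taken from the acquisition protocol described in the text."""
    dose_ml_per_kg: float = 1.5          # contrast volume per kg body weight
    flow_rate_ml_per_s: float = 3.0      # power-injector flow rate
    arterial_delay_s: float = 8.0        # arterial phase: 8 s after enhancement reaches 100 HU
    venous_after_arterial_s: float = 21.0
    delayed_after_venous_s: float = 90.0


def plan_scan(weight_kg: float, trigger_time_s: float,
              p: Optional[ContrastProtocol] = None) -> dict:
    """Contrast volume, injection duration and phase start times (s after injection start).

    trigger_time_s is the (hypothetical) moment at which the monitored enhancement reaches 100 HU.
    """
    p = p or ContrastProtocol()
    volume_ml = p.dose_ml_per_kg * weight_kg
    arterial = trigger_time_s + p.arterial_delay_s
    venous = arterial + p.venous_after_arterial_s
    delayed = venous + p.delayed_after_venous_s
    return {
        "volume_ml": volume_ml,
        "injection_s": volume_ml / p.flow_rate_ml_per_s,
        "arterial_s": arterial,
        "venous_s": venous,
        "delayed_s": delayed,
    }


if __name__ == "__main__":
    print(plan_scan(weight_kg=60, trigger_time_s=15))
```

For a 60 kg patient whose threshold is reached 15 s after the injection starts, this gives 90 mL of contrast injected over 30 s, with arterial, venous, and delayed acquisitions starting at 23 s, 44 s, and 134 s.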

CT images collection

Previous studies have shown that features extracted from arterial-phase enhanced CT images have better predictive performance than those from portal venous-phase images (31, 32). We therefore used arterial-phase images, which were resampled to 1 × 1 × 1 mm³ voxels to standardize the slice thickness. Two radiologists, each with more than eight years of experience in medical imaging, independently reviewed each patient's arterial-phase images while blinded to the pathological information. For each patient, they selected five consecutive slices around the largest cross-section of the tumor and marked the tumor location on these images. Disagreements were resolved by a chief physician with 15 years of experience in medical imaging, whose opinion was final.
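Resampling to 1 × 1 × 1 mm³ means interpolating the volume onto a new grid whose spacing is 1 mm along every axis. The numpy-only sketch below uses separable linear interpolation and assumes a (z, y, x) axis order with known voxel spacing in millimetres; the function names are hypothetical, and in practice a library routine such as SimpleITK resampling or `scipy.ndimage.zoom` would more likely be used.

```python
import numpy as np


def _resample_axis(vol: np.ndarray, axis: int, old_spacing: float, new_spacing: float) -> np.ndarray:
    """Linearly interpolate `vol` along one axis onto a grid with `new_spacing` (mm)."""
    n_old = vol.shape[axis]
    extent = (n_old - 1) * old_spacing                   # physical length spanned by voxel centres
    n_new = int(np.floor(extent / new_spacing)) + 1
    pos = np.arange(n_new) * new_spacing / old_spacing   # new sample positions in old-index units
    lo = np.clip(np.floor(pos).astype(int), 0, n_old - 1)
    hi = np.clip(lo + 1, 0, n_old - 1)
    w = pos - lo                                         # fractional distance from the lower neighbour
    shape = [1] * vol.ndim
    shape[axis] = n_new
    w = w.reshape(shape)                                 # broadcast weights along the resampled axis
    return (1 - w) * np.take(vol, lo, axis=axis) + w * np.take(vol, hi, axis=axis)


def resample_isotropic(vol: np.ndarray, spacing_zyx, new_spacing: float = 1.0) -> np.ndarray:
    """Resample a (Z, Y, X) volume to isotropic voxels of `new_spacing` mm."""
    out = np.asarray(vol, dtype=np.float32)
    for axis, spacing in enumerate(spacing_zyx):
        out = _resample_axis(out, axis, spacing, new_spacing)
    return out
```

With 2 mm slices, for example, a stack of 10 slices becomes 19 slices at 1 mm spacing, while in-plane axes that are already at 1 mm are left unchanged.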

Dataset construction

We selected the five consecutive axial slices with the largest tumor area from each patient's CT images to construct the dataset (33). Specifically, the slice showing the largest tumor cross-section was chosen as the center, and the two slices above and the two slices below it were added, giving five slices in total.
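The slice-selection rule can be written in a few lines. This sketch assumes a per-slice binary tumor mask is available (a hypothetical input; the study's annotations were tumor locations marked by radiologists), and it shifts the five-slice window inward when the largest slice lies near either end of the stack, which is our own handling of that edge case.

```python
import numpy as np


def select_center_slices(volume: np.ndarray, tumor_mask: np.ndarray, n: int = 5):
    """Return `n` consecutive axial slices centred on the slice with the largest tumour area.

    volume, tumor_mask: arrays of shape (Z, H, W); tumor_mask is non-zero inside the tumour.
    Returns (slices, indices).
    """
    if volume.shape[0] < n:
        raise ValueError(f"need at least {n} slices, got {volume.shape[0]}")
    areas = (tumor_mask > 0).reshape(tumor_mask.shape[0], -1).sum(axis=1)  # tumour pixels per slice
    centre = int(np.argmax(areas))
    # Keep the window inside the stack if the largest slice is near the top or bottom.
    start = min(max(centre - n // 2, 0), volume.shape[0] - n)
    idx = np.arange(start, start + n)
    return volume[idx], idx
```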

For automatic tumor detection and stage prediction, we retrospectively collected 1100 images, including 370 stage I, 360 stage II, and 370 stage III images. Additional images were generated by cropping, flipping, and rotation, which reduces the risk of overfitting (34), yielding a total of 4400 images. We then randomly divided these images into training and test cohorts at a ratio of 8:2; the training cohort was used for model training and the test cohort for model validation. We also collected 160 images from the TCIA cohort and 300 images from the prospective cohort. Because the vast majority of patients in the TCIA cohort had stage III disease, we pooled the two cohorts into one external validation cohort to further validate the model.
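For a detection dataset, flips and rotations must be applied to the annotated tumor bounding boxes as well as to the pixels. The following numpy sketch, with hypothetical function names, shows both transforms for a box given as pixel edges `(x1, y1, x2, y2)`, together with a seeded 8:2 random split.

```python
import random

import numpy as np


def hflip_with_box(image: np.ndarray, box):
    """Horizontally flip an (H, W) image; x -> W - x for box edges."""
    w = image.shape[1]
    x1, y1, x2, y2 = box
    return image[:, ::-1], (w - x2, y1, w - x1, y2)


def rot90_with_box(image: np.ndarray, box):
    """Rotate an (H, W) image 90 degrees counter-clockwise (np.rot90) and move its box.

    np.rot90 sends pixel (row r, col c) to (row W-1-c, col r); for box edges this is
    new_x = old_y and new_y = W - old_x.
    """
    w = image.shape[1]
    x1, y1, x2, y2 = box
    return np.rot90(image), (y1, w - x2, y2, w - x1)


def split_train_test(items, groups=None, train_frac=0.8, seed=0):
    """Random split at the group level; groups=None makes every item its own group."""
    groups = list(range(len(items))) if groups is None else list(groups)
    unique = sorted(set(groups))
    random.Random(seed).shuffle(unique)
    train_groups = set(unique[:round(train_frac * len(unique))])
    train = [it for it, g in zip(items, groups) if g in train_groups]
    test = [it for it, g in zip(items, groups) if g not in train_groups]
    return train, test
```

With `groups=None` the split is image-level, so 4400 images yield 3520 training and 880 test images. Passing patient identifiers as `groups` is a design alternative that keeps every augmented copy of a patient's slices on the same side of the split, which avoids near-duplicate images appearing in both cohorts.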

For HER2 status prediction, we retrospectively collected a total of 1485 CT images, including 800 HER2-negative and 685 HER2-positive images. We applied 19 image transformations to expand the original dataset. Augmentation was implemented with the “transforms” module, using three categories of methods: cropping (Crop), flipping and rotation (Flip and Rotation), and image transformations (Transform). The specific methods were RandomCrop, CenterCrop, RandomResizedCrop, RandomRotation, RandomVerticalFlip, RandomHorizontalFlip, Normalize, RandomErasing, Pad, ColorJitter, RandomGrayscale, Affine, and RandomOrder. We randomly split these images into training and test cohorts at a ratio of 8:2; the training cohort was used for model training and the test cohort for model validation. We also collected 160 and 300 images from the TCIA cohort and the prospective cohort, respectively. After the same augmentation, they served as two external validation cohorts for further validation of the model. All images were normalized.
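The expansion step (each original image plus 19 transformed copies) can be sketched without a deep learning framework. The transforms below are simplified numpy stand-ins for a few of the named operations (flips, rotation, crop with padding, random erasing, intensity jitter); their parameters and the random choice of one transform per copy are assumptions, and the rotation assumes square slices such as the 512 × 512 matrix used here.

```python
import numpy as np

rng = np.random.default_rng(seed=0)  # fixed seed so the sketch is reproducible


def hflip(img):
    return img[:, ::-1]


def vflip(img):
    return img[::-1, :]


def rotate90(img):
    # Assumes square slices, so the output shape matches the input.
    return np.rot90(img, k=int(rng.integers(1, 4)))


def crop_and_pad(img, keep=0.9):
    """Random crop covering `keep` of each side, zero-padded back to the original size."""
    h, w = img.shape
    ch, cw = int(h * keep), int(w * keep)
    y, x = int(rng.integers(0, h - ch + 1)), int(rng.integers(0, w - cw + 1))
    out = np.zeros_like(img)
    out[:ch, :cw] = img[y:y + ch, x:x + cw]
    return out


def random_erase(img, frac=0.2):
    """Zero out a random rectangle covering `frac` of each side."""
    h, w = img.shape
    eh, ew = max(1, int(h * frac)), max(1, int(w * frac))
    y, x = int(rng.integers(0, h - eh + 1)), int(rng.integers(0, w - ew + 1))
    out = img.copy()
    out[y:y + eh, x:x + ew] = 0
    return out


def jitter_intensity(img, max_scale=0.1):
    """Brightness-style jitter: scale intensities by a random factor close to 1."""
    return img * (1 + rng.uniform(-max_scale, max_scale))


TRANSFORMS = [hflip, vflip, rotate90, crop_and_pad, random_erase, jitter_intensity]


def expand(images, n_aug=19):
    """Return each original image followed by `n_aug` randomly transformed copies."""
    out = []
    for img in images:
        out.append(img)
        for _ in range(n_aug):
            transform = TRANSFORMS[int(rng.integers(len(TRANSFORMS)))]
            out.append(transform(img))
    return out
```

If every image is kept together with 19 transformed copies, the HER2 images of all three cohorts give (1485 + 160 + 300) × 20 = 38,900 images, which matches the dataset size reported in the Abstract. The arithmetic is consistent, although the text does not state this accounting explicitly.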

Model construction

We divided the modeling into two tasks. The first task was to detect the location of gastric cancer in enhanced CT images and predict its stage (DetectionNet). The second task was to predict the HER2 status of the tumor (PredictionNet).

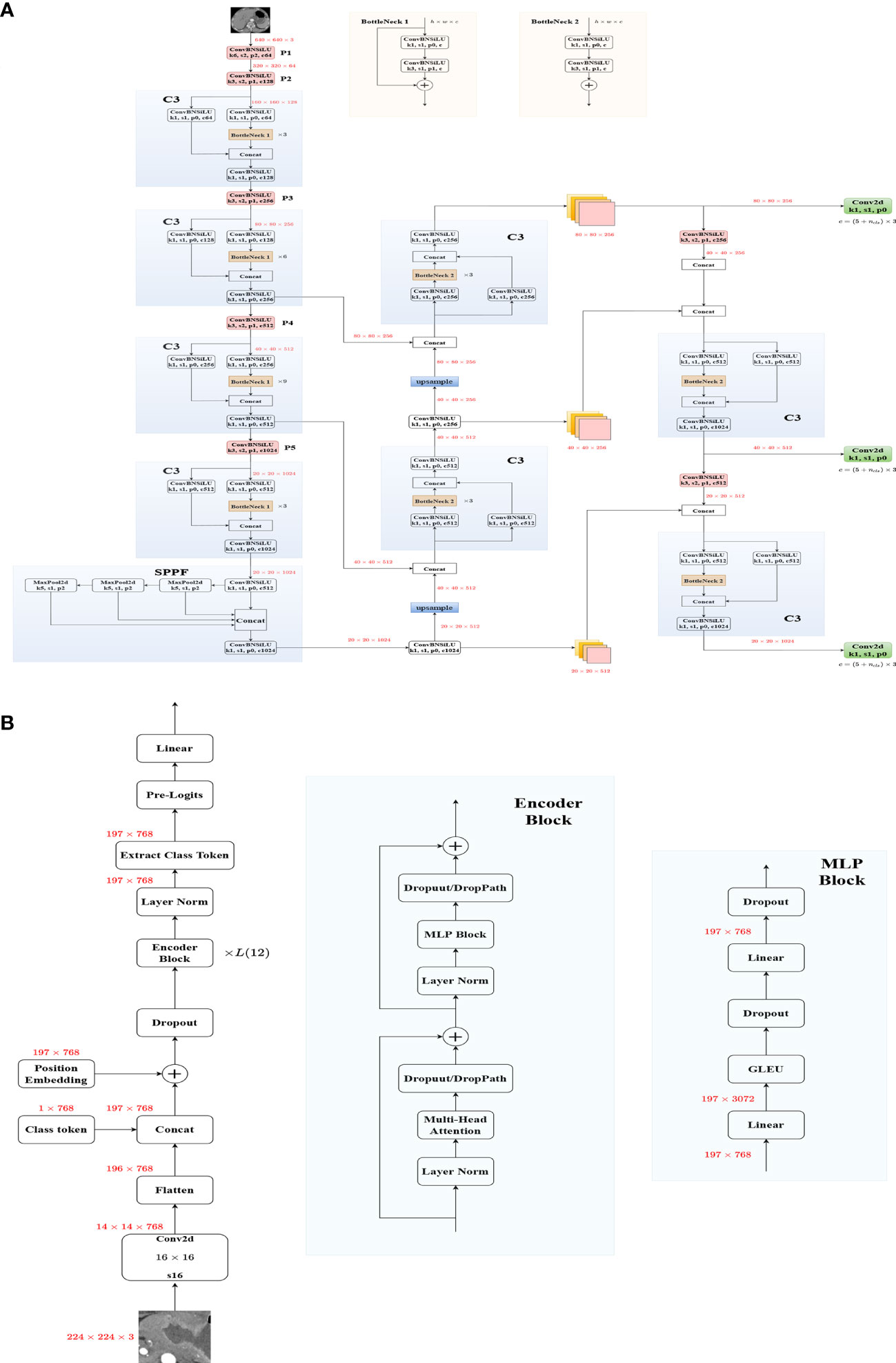

YOLOv5, pre-trained on the COCO dataset, was used to build the DetectionNet. Data augmentation techniques such as image translation and scaling were used during its construction. The architecture is shown in Figure 2A; YOLOv5 was used without modification.
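YOLOv5 reads one text label file per image, with one row per object in the form `class x_center y_center width height`, all coordinates normalized to [0, 1]. The sketch below converts a pixel bounding box into that format and back; the stage-to-class mapping is a hypothetical choice, not taken from the study.

```python
# Hypothetical mapping from pathological stage to YOLOv5 class index.
STAGE_TO_CLASS = {"I": 0, "II": 1, "III": 2}


def to_yolo_line(box_xyxy, stage: str, img_w: int, img_h: int) -> str:
    """Convert a pixel box (x1, y1, x2, y2) and stage into a YOLO label row."""
    x1, y1, x2, y2 = box_xyxy
    if not (0 <= x1 < x2 <= img_w and 0 <= y1 < y2 <= img_h):
        raise ValueError(f"box {box_xyxy} lies outside a {img_w}x{img_h} image")
    xc = (x1 + x2) / 2 / img_w      # normalized box centre
    yc = (y1 + y2) / 2 / img_h
    bw = (x2 - x1) / img_w          # normalized box size
    bh = (y2 - y1) / img_h
    return f"{STAGE_TO_CLASS[stage]} {xc:.6f} {yc:.6f} {bw:.6f} {bh:.6f}"


def from_yolo_line(line: str, img_w: int, img_h: int):
    """Inverse conversion: label row -> (class index, pixel box)."""
    cls, xc, yc, bw, bh = line.split()
    xc, bw = float(xc) * img_w, float(bw) * img_w
    yc, bh = float(yc) * img_h, float(bh) * img_h
    return int(cls), (xc - bw / 2, yc - bh / 2, xc + bw / 2, yc + bh / 2)
```

For a 512 × 512 slice with a stage III tumor boxed at (128, 256, 256, 384), the label row is `2 0.375000 0.625000 0.250000 0.250000`.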

When building the PredictionNet, we applied different preprocessing to the images of the training cohort and the test cohort (35). EfficientNet is one of the most powerful CNNs; at the time of its publication it achieved the highest top-1 accuracy on ImageNet while requiring fewer computing resources than other models (36). We therefore chose EfficientNet (Figure S3) and EfficientNetV2 (Figure S4) to build the CNN models. Transformer-based image architectures have strong non-local feature extraction ability; VIT (Figure 2B) and SWT perform well and are considered strong backbones (27, 28, 37), so both were used to build the transformer models. All models were pre-trained on the ImageNet dataset (38, 39). The Supplementary Material details the model training process.
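The "non-local" ability of a Vision Transformer comes from splitting the image into patches and letting every patch attend to every other patch. The numpy sketch below shows the patch split used by ViT-B/16-style models (a 224 × 224 × 3 input gives 196 patches of 768 values) and a single self-attention head. Real ViTs use learned projections, a class token, position embeddings, and multi-head attention; the random projection weights here are placeholders only, and the study's exact ViT configuration is not specified.

```python
import numpy as np


def patchify(img: np.ndarray, patch: int = 16) -> np.ndarray:
    """Split an (H, W, C) image into flattened non-overlapping patches of shape (N, patch*patch*C)."""
    h, w, c = img.shape
    if h % patch or w % patch:
        raise ValueError("image size must be divisible by the patch size")
    x = img.reshape(h // patch, patch, w // patch, patch, c)
    x = x.transpose(0, 2, 1, 3, 4)          # (patch rows, patch cols, patch, patch, C)
    return x.reshape(-1, patch * patch * c)  # one row per patch, row-major over the grid


def self_attention(tokens: np.ndarray, d_k: int = 64, seed: int = 0):
    """Single-head scaled dot-product attention with random (untrained) projections."""
    rng = np.random.default_rng(seed)
    d = tokens.shape[1]
    wq, wk, wv = (rng.standard_normal((d, d_k)) / np.sqrt(d) for _ in range(3))
    q, k, v = tokens @ wq, tokens @ wk, tokens @ wv
    scores = q @ k.T / np.sqrt(d_k)                  # every patch scored against every patch
    scores -= scores.max(axis=1, keepdims=True)      # numerical stability for the softmax
    attn = np.exp(scores)
    attn /= attn.sum(axis=1, keepdims=True)          # each row is a probability distribution
    return attn @ v, attn
```

Each row of the attention matrix spans all 196 patches, which is what allows a patch in the tumor region to draw on context from anywhere in the slice, unlike the local receptive field of an early convolution layer.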

Model evaluation

For the DetectionNet, the loss (compute_loss) consists of three parts: cls_loss, box_loss, and obj_loss. cls_loss is the classification loss, which measures whether the class assigned to each anchor box matches its ground-truth label (BCE loss). box_loss is the localization loss, which measures the error between the predicted box and the ground-truth box (CIoU loss). obj_loss is the objectness loss, which measures the network's confidence that an object is present (BCE loss). The formula of the loss function is detailed in the Supplementary Material. The loss is computed on each output feature map. Confusion matrices, mAP, and precision-recall (P-R) curves were used to further evaluate the performance of the DetectionNet.
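The three loss terms can be illustrated for a single box. The sketch follows the Complete-IoU formulation, 1 − IoU + ρ²/c² + αv, where ρ is the distance between box centres, c is the diagonal of the smallest enclosing box, and v penalizes aspect-ratio mismatch. The BCE helper takes probabilities rather than logits, and the weights in `total_loss` are left as parameters because the values used in the study are not given; all names are illustrative.

```python
import math


def ciou_loss(pred, target, eps: float = 1e-9) -> float:
    """CIoU loss for two boxes given as (x1, y1, x2, y2)."""
    px1, py1, px2, py2 = pred
    tx1, ty1, tx2, ty2 = target
    pw, ph, tw, th = px2 - px1, py2 - py1, tx2 - tx1, ty2 - ty1
    inter = max(0.0, min(px2, tx2) - max(px1, tx1)) * max(0.0, min(py2, ty2) - max(py1, ty1))
    iou = inter / (pw * ph + tw * th - inter + eps)
    # Squared distance between box centres.
    rho2 = ((px1 + px2 - tx1 - tx2) ** 2 + (py1 + py2 - ty1 - ty2) ** 2) / 4
    # Squared diagonal of the smallest box enclosing both.
    c2 = (max(px2, tx2) - min(px1, tx1)) ** 2 + (max(py2, ty2) - min(py1, ty1)) ** 2 + eps
    # Aspect-ratio consistency term and its trade-off weight.
    v = (4 / math.pi ** 2) * (math.atan(tw / (th + eps)) - math.atan(pw / (ph + eps))) ** 2
    alpha = v / ((1 - iou) + v + eps)
    return 1 - iou + rho2 / c2 + alpha * v


def bce(p: float, y: int, eps: float = 1e-7) -> float:
    """Binary cross-entropy for a predicted probability p and label y in {0, 1}."""
    p = min(max(p, eps), 1 - eps)
    return -(y * math.log(p) + (1 - y) * math.log(1 - p))


def total_loss(box_l: float, obj_l: float, cls_l: float,
               w_box: float, w_obj: float, w_cls: float) -> float:
    """Weighted sum of the three terms; the weights are hyperparameters."""
    return w_box * box_l + w_obj * obj_l + w_cls * cls_l
```

Identical boxes give a CIoU loss of about 0, while two unit boxes one unit apart, (0, 0, 1, 1) and (2, 0, 3, 1), give 1 − 0 + 4/10 = 1.4: the centre-distance term keeps a useful gradient even when the boxes do not overlap.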

For the PredictionNet, we evaluated the classification performance of each network by accuracy and loss value and selected the best network. Receiver operating characteristic (ROC) curves and P-R curves were also used for network evaluation. Gradient-weighted Class Activation Mapping (Grad-CAM) was used to visualize the image regions that drove the output of a given network layer (40).
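The AUC reported for the PredictionNet equals the probability that a randomly chosen HER2-positive image receives a higher score than a randomly chosen HER2-negative one (ties counting one half). The sketch below computes it directly from that definition, alongside accuracy at a threshold; the 0.5 threshold is an assumption. `sklearn.metrics.roc_auc_score` computes the same quantity.

```python
import numpy as np


def roc_auc(y_true, scores) -> float:
    """AUC via pairwise comparison of positive and negative scores (Mann-Whitney form)."""
    y = np.asarray(y_true).astype(bool)
    s = np.asarray(scores, dtype=float)
    pos, neg = s[y], s[~y]
    if pos.size == 0 or neg.size == 0:
        raise ValueError("need at least one positive and one negative case")
    diff = pos[:, None] - neg[None, :]          # every positive-negative pair
    return float((diff > 0).mean() + 0.5 * (diff == 0).mean())


def accuracy(y_true, scores, threshold: float = 0.5) -> float:
    """Fraction of cases whose thresholded score matches the label."""
    y = np.asarray(y_true).astype(bool)
    return float(((np.asarray(scores) >= threshold) == y).mean())
```

The pairwise form uses memory proportional to (positives × negatives), which is acceptable for validation cohorts of a few hundred images; a rank-based implementation scales better for larger sets.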

DLS construction

The DLS consisted of two parts: tumor detection and staging by the DetectionNet, and HER2 status prediction by the PredictionNet. We built a graphical interface for the DetectionNet with “pyqt5” and packaged the PredictionNet into an executable file with “pyinstaller”. To make the DLS easier for clinicians to use, we also built a simple web service with “flask”, which was accessible from all Internet Protocol (IP) addresses on the hospital's local area network so that all hospital staff could use it.

Results

Patient characteristics

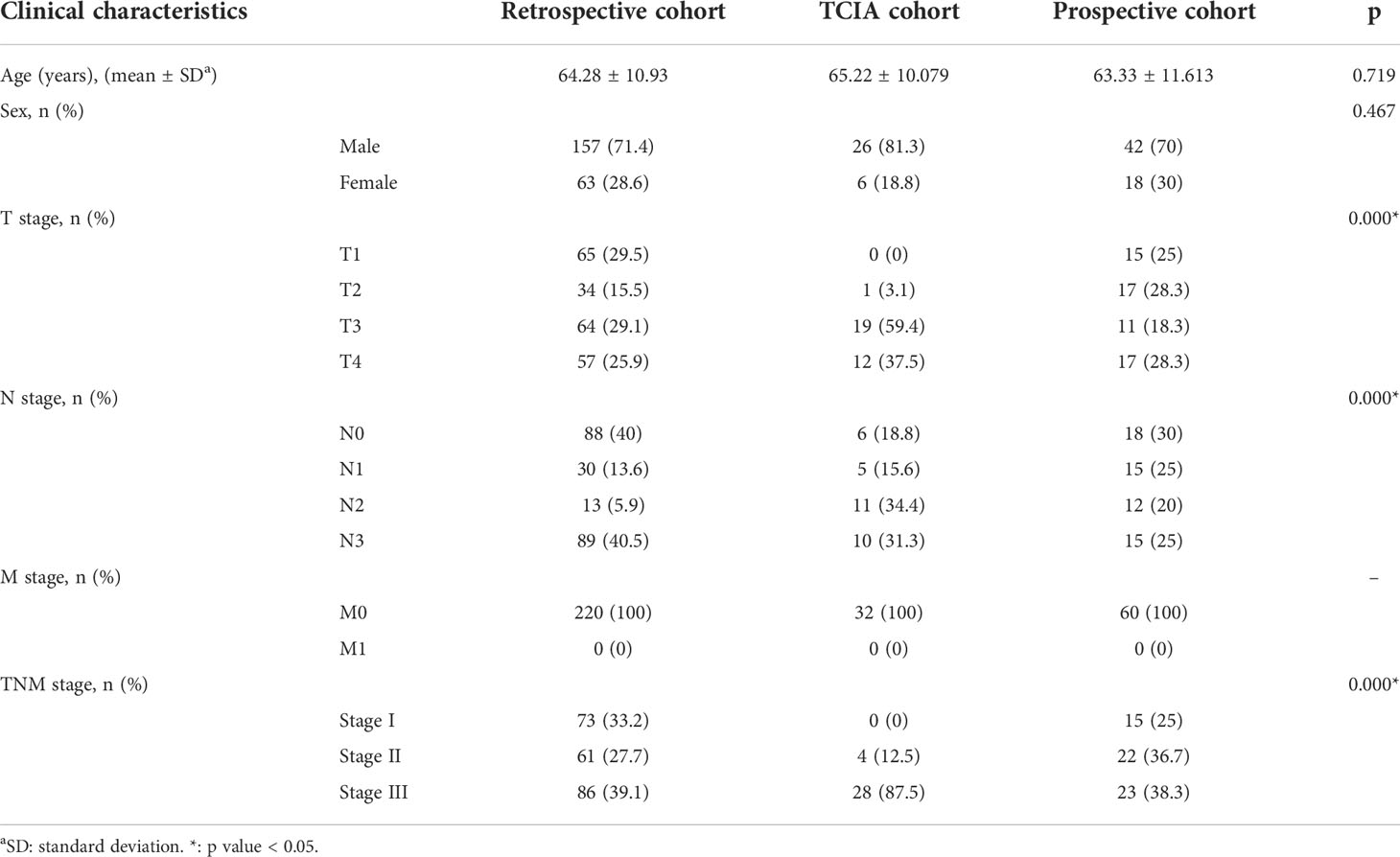

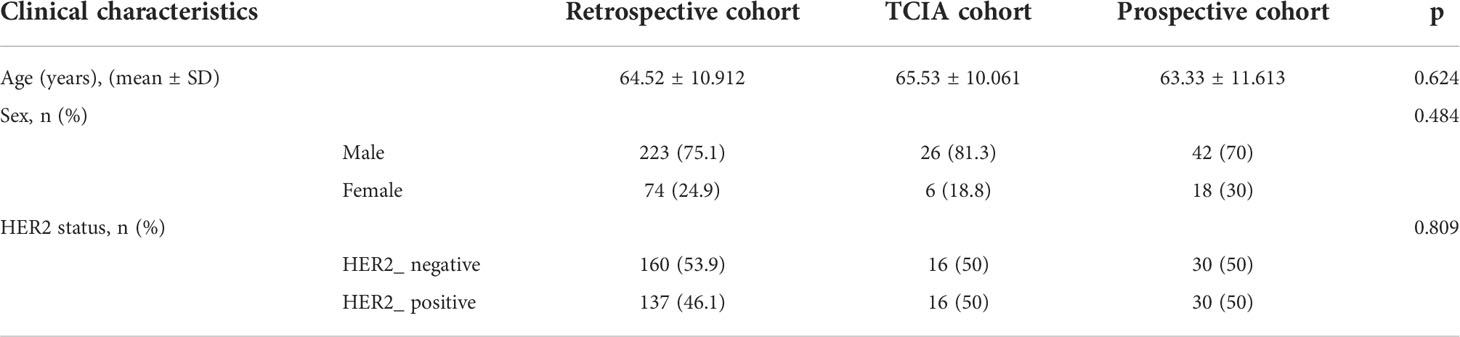

A total of 397 patients were included in this study. Tables 1 and 2 summarize the clinical characteristics of the retrospective, TCIA, and prospective cohorts.

Model performance

After 150 training epochs, YOLOv5 reached its best-optimized parameters, achieving a precision of 0.9717 and a recall of 0.9579 in the test cohort (Figure S5). Confusion matrices for the test and external validation cohorts are shown in Figures 3A, B. The mAP_0.5 values in the test and external validation cohorts were 0.974 and 0.909, respectively (Figures 3C, D). The F1 scores of the model in the test and external validation cohorts were 0.97 and 0.88, respectively (Figures 3E, F).

Figure 3 Evaluation of DetectionNet performance in the test and external validation cohorts. (A, B) Confusion matrices in the test (A) and external validation (B) cohorts. (C, D) P-R curves. The mAP_0.5 values of the test cohort (C) and external validation cohort (D) were 0.974 and 0.909, respectively. (E, F) F1 curves. The F1 scores of the model in the test cohort (E) and external validation cohort (F) were 0.97 and 0.88, respectively.

Based on the training loss and accuracy, all networks reached their best-optimized parameters after 160 training epochs (Figure S6). VIT achieved the best classification results and outperformed the CNNs in the test cohort (Figure S6). VIT was therefore selected to build the PredictionNet, and the confusion matrices showed that the PredictionNet had good classification performance (Figures 4A, B). As shown in Figure 4C, the PredictionNet performed excellently in the TCIA and prospective cohorts, with AUCs of 0.9721 and 0.9995, respectively. Figure 4D shows the P-R curves of the PredictionNet, and Figure 5 shows representative Grad-CAM images for the VIT.

Figure 4 Evaluation of PredictionNet performance. (A, B) Confusion matrices in the TCIA (A) and prospective (B) cohorts. (C) ROC curves. The AUC values of the TCIA cohort and prospective cohort were 0.9721 and 0.9995, respectively. (D) P-R curves.

Figure 5 Enhanced CT arterial phase images and feature heatmaps generated by VIT. The importance of features is represented by color bars.
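Heatmaps like those in Figure 5 follow the Grad-CAM recipe: average the gradient of the class score over each feature channel, use these averages to weight the channel activations, sum, and keep only positive evidence. The numpy sketch below implements that computation given activations and gradients already extracted from a network. For a ViT, one common adaptation is to drop the class token and reshape the patch tokens of a chosen block into a 14 × 14 grid; whether the study did exactly this is not stated, so `vit_tokens_to_grid` is an assumption.

```python
import numpy as np


def grad_cam(activations: np.ndarray, gradients: np.ndarray) -> np.ndarray:
    """Grad-CAM map from one layer's activations and the class-score gradients.

    activations, gradients: arrays of shape (K, H, W) for K feature channels.
    Returns an (H, W) map scaled to [0, 1].
    """
    weights = gradients.mean(axis=(1, 2))               # alpha_k: spatially averaged gradients
    cam = np.tensordot(weights, activations, axes=1)    # sum_k alpha_k * A_k -> (H, W)
    cam = np.maximum(cam, 0)                            # ReLU keeps regions that raise the score
    peak = cam.max()
    return cam / peak if peak > 0 else cam


def vit_tokens_to_grid(tokens: np.ndarray, grid: int = 14, has_cls: bool = True) -> np.ndarray:
    """Reshape ViT token features (N, D) into channel-first maps (D, grid, grid)."""
    patch_tokens = tokens[1:] if has_cls else tokens
    return patch_tokens.reshape(grid, grid, -1).transpose(2, 0, 1)
```

The resulting low-resolution map is then upsampled to the slice size and overlaid on the CT image as a color heatmap.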

Application of DLS

After training the DetectionNet for tumor detection and staging and the PredictionNet for HER2 status prediction, we combined these networks to implement the DLS. The DLS accepted raw enhanced CT images as input. It automatically processed the input images, performed tumor detection and staging, and output the detection results as image blocks (Figures S7A, B). The tumor image was then fed into the DLS to predict HER2 status, and the prediction was output for the doctor to check (Figure S7C). In addition, we designed a simple web service on the hospital's local area network to make the prediction process more accessible to clinicians without AI expertise (Figure S7D).
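The two-stage flow, detection and staging followed by HER2 prediction on the detected tumor block, can be expressed as a small orchestration function. In this sketch, `detect` and `classify_her2` are hypothetical callables standing in for the trained DetectionNet and PredictionNet, and the 0.5 decision threshold is an assumption.

```python
from typing import Callable, List, Tuple

import numpy as np

Box = Tuple[int, int, int, int]          # (x1, y1, x2, y2) in pixels
Detection = Tuple[Box, str, float]       # box, predicted stage, detection confidence


def run_pipeline(image: np.ndarray,
                 detect: Callable[[np.ndarray], List[Detection]],
                 classify_her2: Callable[[np.ndarray], float],
                 threshold: float = 0.5) -> list:
    """Detect and stage tumours, then predict HER2 status on each detected image block."""
    results = []
    for (x1, y1, x2, y2), stage, det_conf in detect(image):
        crop = image[y1:y2, x1:x2]               # tumour image block passed to the HER2 model
        p_pos = float(classify_her2(crop))       # predicted probability of HER2 positivity
        results.append({
            "box": (x1, y1, x2, y2),
            "stage": stage,
            "det_conf": det_conf,
            "her2_prob": p_pos,
            "her2": "positive" if p_pos >= threshold else "negative",
        })
    return results
```

Keeping the two models behind plain callables lets the same orchestration serve both the desktop tools and the web service.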

Discussion

In this study, we developed and validated an enhanced CT-based deep learning system for the preoperative prediction of stage and HER2 status in gastric cancer patients. The DLS successfully stratified gastric cancer patients by stage and HER2 status, facilitating individualized preoperative assessment. More importantly, we built a web service for the preoperative prediction of stage and HER2 status in gastric cancer patients.

Accurate and effective stage assessment and HER2 testing play a crucial role in the treatment and prognosis of patients with gastric cancer (4, 5, 15). Medical imaging is commonly used for preoperative staging, but its accuracy is unsatisfactory (5, 6). Gastroscopic biopsy is a common method for preoperative detection of HER2 status; however, it can lead to serious complications such as infection, bleeding, and perforation (41). Studies have attempted to assess HER2 status with positron emission tomography (PET/CT) and magnetic resonance imaging (MRI) (42, 43). Although these studies achieved some success, PET/CT and MRI are not routine preoperative examinations for gastric cancer patients. Enhanced CT is more commonly used in the examination and treatment of tumors (31, 44). To our knowledge, this is the first study to use enhanced CT images of gastric cancer and deep learning to preoperatively predict both stage and HER2 status.

Most deep learning research on gastric cancer has focused on the classification and prognostic analysis of endoscopic or pathological images (45–48). Compared with endoscopy and tissue biopsy, enhanced CT is a non-invasive, routine preoperative test with few risks (49). Furthermore, this study established a DLS and tested it in the TCIA cohort and an independent prospective cohort. Our findings suggest that enhanced CT, a routine preoperative examination for gastric cancer patients, contains inherent features related to receptor expression and can therefore reflect HER2 expression status. Several studies have reported correlations between CT features and genes in lung and colorectal cancers (50, 51). The DLS performed excellently, achieving AUCs of 0.9721 and 0.9995 in the TCIA cohort and the prospective cohort, respectively. Because of the "black box" nature of deep learning, clinician bias, and other factors, the DLS is not yet sufficient to replace endoscopic biopsy. However, the DLS has an advantage over endoscopy and tissue biopsy in that it assesses the tumor as a whole, and it may be useful when a biopsy is of poor quality. This is valuable because clinicians can hardly determine HER2 status from enhanced CT by visual inspection. Grad-CAM visualized the outputs of the deep learning models, and further research building on these results is warranted.

In addition, the DLS successfully performed tumor detection and preoperative stage prediction on CT images of gastric cancer patients. Previous studies generally focused on a single component of staging, such as the depth of tumor invasion or lymph node metastasis, and did not assess the overall stage (45, 52, 53). Clinical guidelines require clinicians to determine the TNM stage before initiating any treatment (54). Huang et al. (55) showed that integrating multiple markers into one model facilitates individualized patient management and is superior to using a single marker. We agree with this view: focusing only on T or N staging may not comprehensively reflect the patient's condition and may thus affect clinicians' diagnosis, treatment, and prognostic evaluation.

After reviewing the literature, we found that CT-based deep learning studies use anywhere from one to several dozen CT slices as input (33, 52, 56–59). However, we found no literature establishing how many input CT slices are optimal for deep learning. In clinical work, artificial intelligence needs to process and interpret images quickly and accurately, reducing workload and medical errors (60). It is therefore essential to reduce doctors' workload while ensuring accuracy. The aim of this study was to build a deep learning system that helps clinicians assess patients quickly. Entering too many CT slices increases the clinician's workload as well as the hardware required to run the model (61). Hu et al. showed that a model built on three consecutive CT slices centered on the largest cross-section of the tumor performed nearly as well as a model built on the whole tumor volume (AUC, 0.712 vs. 0.725) (62). This provided the basis for our approach of selecting the five consecutive axial slices with the largest tumor area from each patient's CT images to construct the dataset. Our results showed that both the DetectionNet and the PredictionNet achieved excellent performance using only five CT slices, which may serve as a reference for other researchers.

Radiomics is an emerging field that has received significant attention in oncology practice (31, 63). Li et al. (64) used radiomics to predict the depth of tumor invasion and Wang et al. (65) to predict lymph node metastasis, with accuracies of 0.77 and 0.80, respectively. Li et al. (66) predicted HER2 status in gastric cancer patients based on CT radiomics, with an AUC of 0.771 in the test cohort, and Wang et al. (67) reported similar results. The accuracy of the DetectionNet and the AUC of the PredictionNet were considerably higher than those of these radiomics models, which may be attributable to the use of deep learning algorithms. Yun et al. (68) showed that integrating deep learning features with radiomics features reduced the classification performance of deep learning feature models. Chalkidou et al. (69) argued that radiomics features may contain human bias, and reproducibility has long been a problem in radiomics (70). With the advent of deep learning, the value of traditional radiomics has been called into question (71, 72). Deep learning allows relevant features to be learned automatically, without prior definition by the researcher, and these abstract representations improve learning capability, increasing generality and accuracy while reducing potential bias (73). By contrast, human-defined radiomics features have limitations, and differences between tissue types may not be fully captured by them.

More importantly, this study also explored the clinical application of deep learning models. Although previous artificial intelligence studies have also reported excellent performance, their models were only tested in internal or external validation cohorts and were not applied in clinical practice (74, 75), which is not in line with the trend toward personalized medicine (76). Schmidt et al. (77) argued that medical research should serve clinical applications. We therefore developed the DLS for clinicians and built a simple web service for use in clinical work. When enhanced CT images of gastric cancer are uploaded, no expert annotation is required; the DLS or web service displays brief stage and HER2 predictions. Although translating medical research into clinical technology involves many difficulties (78), and Cabitza et al. (79) pointed out that artificial intelligence may have unforeseen consequences in clinical practice, we still believe that this is a worthwhile attempt. Artificial intelligence, with its efficient learning and data processing capabilities, will change the way we manage gastric cancer and become an invaluable tool for clinicians.

This study has several limitations. First, most patients were from a single center, so the DLS may not perform well on contrast-enhanced CT images from other hospitals. In further studies, we will conduct a multicenter study to reduce inter-hospital differences and make the DLS more robust. Second, the TCIA and prospective cohorts were small, so the DLS needs to be validated in larger cohorts. Third, only arterial-phase enhanced CT images were used for prediction; the other phases of contrast-enhanced CT warrant further study. Finally, the DLS was constructed from 2D models; in future studies, we will explore the clinical value of 3D CT models.

In conclusion, our DLS can preoperatively predict the stage and HER2 status in gastric cancer patients based only on enhanced CT images. To make our model more intuitive and convenient for clinicians, we designed a web service based on the developed algorithm. The DLS will help clinicians evaluate the stage and HER2 status of gastric cancer patients preoperatively and select the appropriate treatment, thereby reducing the physical and financial burden on patients.

Data availability statement

The raw data supporting the conclusions of this article will be made available by the authors, without undue reservation.

Ethics statement

This study was performed in line with the principles of the Declaration of Helsinki and approved by the Ethics Committee of the Second Affiliated Hospital of Nanjing Medical University (NO [2022].-KY-113-01). The patients/participants provided their written informed consent to participate in this study. Written informed consent was obtained from the individual(s) for the publication of any potentially identifiable images or data included in this article.

Author contributions

NL collected and organized the clinical data. XG completed the modeling and data analysis and wrote the manuscript. JZ directed the research. All authors contributed to the article and approved the submitted version.

Funding

This work was supported by the National Natural Science Foundation of China (NO. 81874058).

Acknowledgments

The authors thank all colleagues who contributed to this work.

Conflict of interest

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Publisher’s note

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

Supplementary material

The Supplementary Material for this article can be found online at: https://www.frontiersin.org/articles/10.3389/fonc.2022.950185/full#supplementary-material

References

1. Sung H, Ferlay J, Siegel RL, Laversanne M, Soerjomataram I, Jemal A, et al. Global cancer statistics 2020: GLOBOCAN estimates of incidence and mortality worldwide for 36 cancers in 185 countries. CA: Cancer J Clin (2021) 71(3):209–49. doi: 10.3322/caac.21660

2. Rice TW, Gress D, Patil DT, Hofstetter WL, Kelsen DP, Blackstone EH. Cancer of the esophagus and esophagogastric junction-major changes in the American joint committee on cancer eighth edition cancer staging manual. CA: Cancer J Clin (2017) 67(4):304–17. doi: 10.3322/caac.21399

3. Digklia A, Wagner AD. Advanced gastric cancer: Current treatment landscape and future perspectives. World J Gastroenterol (2016) 22(8):2403–14. doi: 10.3748/wjg.v22.i8.2403

4. National comprehensive cancer network (NCCN) guidelines. Available at: http://www.nccn.org/ (Accessed January 11, 2022).

5. Fukagawa T, Katai H, Mizusawa J, Nakamura K, Sano T, Terashima M, et al. A prospective multi-institutional validity study to evaluate the accuracy of clinical diagnosis of pathological stage III gastric cancer (JCOG1302A). Gastric Cancer (2018) 21(1):68–73. doi: 10.1007/s10120-017-0701-1

6. Kubota K, Suzuki A, Shiozaki H, Wada T, Kyosaka T, Kishida A. Accuracy of multidetector-row computed tomography in the preoperative diagnosis of lymph node metastasis in patients with gastric cancer. Gastrointestinal tumors (2017) 3(3-4):163–70. doi: 10.1159/000454923

7. Joo I, Lee JM, Kim JH, Shin CI, Han JK, Choi BI. Prospective comparison of 3T MRI with diffusion-weighted imaging and MDCT for the preoperative TNM staging of gastric cancer. J magnetic resonance Imaging JMRI (2015) 41(3):814–21. doi: 10.1002/jmri.24586

8. Miao RL, Wu AW. Towards personalized perioperative treatment for advanced gastric cancer. World J Gastroenterol (2014) 20(33):11586–94. doi: 10.3748/wjg.v20.i33.11586

9. Orditura M, Galizia G, Sforza V, Gambardella V, Fabozzi A, Laterza MM, et al. Treatment of gastric cancer. World J Gastroenterol (2014) 20(7):1635–49. doi: 10.3748/wjg.v20.i7.1635

10. Boku N. HER2-positive gastric cancer. Gastric Cancer (2014) 17(1):1–12. doi: 10.1007/s10120-013-0252-z

11. Janjigian YY, Kawazoe A, Yañez P, Li N, Lonardi S, Kolesnik O, et al. The KEYNOTE-811 trial of dual PD-1 and HER2 blockade in HER2-positive gastric cancer. Nature (2021) 600(7890):727–30. doi: 10.1038/s41586-021-04161-3

12. Chung HC, Bang YJ, Fuchs CS, Qin SK, Satoh T, Shitara K, et al. First-line pembrolizumab/placebo plus trastuzumab and chemotherapy in HER2-positive advanced gastric cancer: KEYNOTE-811. Future Oncol (London England) (2021) 17(5):491–501. doi: 10.2217/fon-2020-0737

13. Smyth EC, Verheij M, Allum W, Cunningham D, Cervantes A, Arnold D. Gastric cancer: ESMO clinical practice guidelines for diagnosis, treatment and follow-up. Ann Oncol (2016) 27(suppl 5):v38–49. doi: 10.1093/annonc/mdw350

14. Charalampakis N, Economopoulou P, Kotsantis I, Tolia M, Schizas D, Liakakos T, et al. Medical management of gastric cancer: a 2017 update. Cancer Med (2018) 7(1):123–33. doi: 10.1002/cam4.1274

15. Curea FG, Hebbar M, Ilie SM, Bacinschi XE, Trifanescu OG, Botnariuc I, et al. Current targeted therapies in HER2-positive gastric adenocarcinoma. Cancer biotherapy radiopharmaceuticals (2017) 32(10):351–63. doi: 10.1089/cbr.2017.2249

16. Lordick F, Al-Batran SE, Dietel M, Gaiser T, Hofheinz RD, Kirchner T, et al. HER2 testing in gastric cancer: results of a German expert meeting. J Cancer Res Clin Oncol (2017) 143(5):835–41. doi: 10.1007/s00432-017-2374-x

17. Hirai I, Tanese K, Nakamura Y, Otsuka A, Fujisawa Y, Yamamoto Y, et al. Assessment of the methods used to detect HER2-positive advanced extramammary paget's disease. Med Oncol (Northwood London England) (2018) 35(6):92. doi: 10.1007/s12032-018-1154-z

18. Sorace AG, Elkassem AA, Galgano SJ, Lapi SE, Larimer BM, Partridge SC, et al. Imaging for response assessment in cancer clinical trials. Semin Nucl Med (2020) 50(6):488–504. doi: 10.1053/j.semnuclmed.2020.05.001

19. Aerts HJ, Velazquez ER, Leijenaar RT, Parmar C, Grossmann P, Carvalho S, et al. Decoding tumour phenotype by noninvasive imaging using a quantitative radiomics approach. Nat Commun (2014) 5:4006. doi: 10.1038/ncomms5006

20. Amin MB, Edge SB, Greene FL, Byrd DB, Brookland RK, Washington MK, et al. AJCC cancer staging manual. 8th Edition. New York: Springer (2016).

21. Huang S, Yang J, Fong S, Zhao Q. Artificial intelligence in cancer diagnosis and prognosis: Opportunities and challenges. Cancer Lett (2020) 471:61–71. doi: 10.1016/j.canlet.2019.12.007

22. Deo RC. Machine learning in medicine. Circulation (2015) 132(20):1920–30. doi: 10.1161/CIRCULATIONAHA.115.001593

23. Wong D, Yip S. Machine learning classifies cancer. Nature (2018) 555(7697):446–7. doi: 10.1038/d41586-018-02881-7

24. Skrede OJ, De Raedt S, Kleppe A, Hveem TS, Liestøl K, Maddison J, et al. Deep learning for prediction of colorectal cancer outcome: a discovery and validation study. Lancet (London England) (2020) 395(10221):350–60. doi: 10.1016/S0140-6736(19)32998-8

25. Coudray N, Ocampo PS, Sakellaropoulos T, Narula N, Snuderl M, Fenyö D, et al. Classification and mutation prediction from non-small cell lung cancer histopathology images using deep learning. Nat Med (2018) 24(10):1559–67. doi: 10.1038/s41591-018-0177-5

26. Carion N, Massa F, Synnaeve G, Usunier N, Kirillov A, Zagoruyko S. End-to-end object detection with transformers. In: Vedaldi A, Bischof H, Brox T, Frahm J-M, editors. Computer vision–ECCV 2020. New York, NY, USA: Springer International Publishing (2020).

27. Dosovitskiy A, Beyer L, Kolesnikov A, Weissenborn D, Zhai X, Unterthiner T, et al. An image is worth 16x16 words: Transformers for image recognition at scale. arXiv preprint arXiv:2010.11929 (2020).

28. Liu Z, Lin Y, Cao Y, Hu H, Wei Y, Zhang Z, et al. Swin transformer: hierarchical vision transformer using shifted windows. In: Proceedings of the IEEE/CVF International Conference on Computer Vision (2021) pp. 10012–22. doi: 10.48550/arXiv.2103.14030

29. Kermany DS, Goldbaum M, Cai W, Valentim CCS, Liang H, Baxter SL, et al. Identifying medical diagnoses and treatable diseases by image-based deep learning. Cell (2018) 172(5):1122–31.e9. doi: 10.1016/j.cell.2018.02.010

30. Jiang K, Jiang X, Pan J, Wen Y, Huang Y, Weng S, et al. Current evidence and future perspective of accuracy of artificial intelligence application for early gastric cancer diagnosis with endoscopy: A systematic and meta-analysis. Front Med (2021) 8:629080. doi: 10.3389/fmed.2021.629080

31. Liu S, Liu S, Ji C, Zheng H, Pan X, Zhang Y, et al. Application of CT texture analysis in predicting histopathological characteristics of gastric cancers. Eur Radiol (2017) 27(12):4951–9. doi: 10.1007/s00330-017-4881-1

32. Ba-Ssalamah A, Muin D, Schernthaner R, Kulinna-Cosentini C, Bastati N, Stift J, et al. Texture-based classification of different gastric tumors at contrast-enhanced CT. Eur J Radiol (2013) 82(10):e537–43. doi: 10.1016/j.ejrad.2013.06.024

33. Ariji Y, Fukuda M, Kise Y, Nozawa M, Yanashita Y, Fujita H, et al. Contrast-enhanced computed tomography image assessment of cervical lymph node metastasis in patients with oral cancer by using a deep learning system of artificial intelligence. Oral Surg Oral Med Oral Pathol Oral Radiol (2019) 127(5):458–63. doi: 10.1016/j.oooo.2018.10.002

34. Zhong Z, Zheng L, Kang G, Li S, Yang Y. Random erasing data augmentation. Proc AAAI Conf Artif Intell (2020) 34(07):13001–8. doi: 10.1609/aaai.v34i07.7000

35. Drozdzal M, Chartrand G, Vorontsov E, Shakeri M, Di Jorio L, Tang A, et al. Learning normalized inputs for iterative estimation in medical image segmentation. Med Image Anal (2018) 44:1–13. doi: 10.1016/j.media.2017.11.005

36. Tan M, Le QV. EfficientNet: Rethinking model scaling for convolutional neural networks. In: International Conference on Machine Learning (2019) pp. 6105–14. doi: 10.48550/arXiv.1905.11946

37. Vaswani A, Shazeer N, Parmar N, Uszkoreit J, Jones L, Gomez AN, et al. Attention is all you need. In: Proceedings of the 31st international conference on neural information processing systems, NIPS’17. Red Hook, NY, USA: Curran Associates Inc (2017). p. 6000–6010.

38. Simonyan K, Zisserman A. Very deep convolutional networks for large-scale image recognition. arXiv preprint arXiv:1409.1556 (2014). doi: 10.48550/arXiv.1409.1556

39. Russakovsky O, Deng J, Su H, Krause J, Satheesh S, Ma S, et al. ImageNet Large scale visual recognition challenge. Int J Comput Vision (2015) 115(3):211–52. doi: 10.1007/s11263-015-0816-y

40. Selvaraju RR, Das A, Vedantam R, Cogswell M, Parikh D, Batra D. Grad-CAM: visual explanations from deep networks via gradient-based localization. Int J Comput Vision (2020) 128:336–59. doi: 10.1007/s11263-019-01228-7

41. Levy I, Gralnek IM. Complications of diagnostic colonoscopy, upper endoscopy, and enteroscopy. Best Pract Res Clin Gastroenterol (2016) 30(5):705–18. doi: 10.1016/j.bpg.2016.09.005

42. Chen R, Zhou X, Liu J, Huang G. Relationship between 18F-FDG PET/CT findings and HER2 expression in gastric cancer. J Nucl Med (2016) 57(7):1040–4. doi: 10.2967/jnumed.115.171165

43. Ji C, Zhang Q, Guan W, Guo T, Chen L, Liu S, et al. Role of intravoxel incoherent motion MR imaging in preoperative assessing HER2 status of gastric cancers. Oncotarget (2017) 8(30):49293–302. doi: 10.18632/oncotarget.17570

44. Liu S, Shi H, Ji C, Guan W, Chen L, Sun Y, et al. CT textural analysis of gastric cancer: correlations with immunohistochemical biomarkers. Sci Rep (2018) 8(1):11844. doi: 10.1038/s41598-018-30352-6

45. Yoon HJ, Kim S, Kim JH, Keum JS, Oh SI, Jo J, et al. A lesion-based convolutional neural network improves endoscopic detection and depth prediction of early gastric cancer. J Clin Med (2019) 8(9):E1310. doi: 10.3390/jcm8091310

46. Song Z, Zou S, Zhou W, Huang Y, Shao L, Yuan J, et al. Clinically applicable histopathological diagnosis system for gastric cancer detection using deep learning. Nat Commun (2020) 11(1):4294. doi: 10.1038/s41467-020-18147-8

47. Su F, Sun Y, Hu Y, Yuan P, Wang X, Wang Q, et al. Development and validation of a deep learning system for ascites cytopathology interpretation. Gastric Cancer (2020) 23(6):1041–50. doi: 10.1007/s10120-020-01093-1

48. Huang B, Tian S, Zhan N, Ma J, Huang Z, Zhang C, et al. Accurate diagnosis and prognosis prediction of gastric cancer using deep learning on digital pathological images: A retrospective multicentre study. EBioMedicine (2021) 73:103631. doi: 10.1016/j.ebiom.2021.103631

49. Chang X, Guo X, Li X, Han X, Li X, Liu X, et al. Potential value of radiomics in the identification of stage T3 and T4a esophagogastric junction adenocarcinoma based on contrast-enhanced CT images. Front Oncol (2021) 11:627947. doi: 10.3389/fonc.2021.627947

50. Liu Y, Kim J, Balagurunathan Y, Li Q, Garcia AL, Stringfield O, et al. Radiomic features are associated with EGFR mutation status in lung adenocarcinomas. Clin Lung Cancer (2016) 17(5):441–448.e6. doi: 10.1016/j.cllc.2016.02.001

51. Yang L, Dong D, Fang M, Zhu Y, Zang Y, Liu Z, et al. Can CT-based radiomics signature predict KRAS/NRAS/BRAF mutations in colorectal cancer? Eur Radiol (2018) 28(5):2058–67. doi: 10.1007/s00330-017-5146-8

52. Zheng L, Zhang X, Hu J, Gao Y, Zhang X, Zhang M, et al. Establishment and applicability of a diagnostic system for advanced gastric cancer T staging based on a faster region-based convolutional neural network. Front Oncol (2020) 10:1238. doi: 10.3389/fonc.2020.01238

53. Dong D, Fang MJ, Tang L, Shan XH, Gao JB, Giganti F, et al. Deep learning radiomic nomogram can predict the number of lymph node metastasis in locally advanced gastric cancer: an international multicenter study. Ann Oncol (2020) 31(7):912–20. doi: 10.1016/j.annonc.2020.04.003

54. AAlAbdulsalam AK, Garvin JH, Redd A, Carter ME, Sweeny C, Meystre SM. Automated extraction and classification of cancer stage mentions from unstructured text fields in a central cancer registry. AMIA Jt Summits Transl Sci Proc (2018) 2017:16–25.

55. Huang YQ, Liang CH, He L, Tian J, Liang CS, Chen X, et al. Development and validation of a radiomics nomogram for preoperative prediction of lymph node metastasis in colorectal cancer. J Clin Oncol (2016) 34(18):2157–64. doi: 10.1200/JCO.2015.65.9128

56. Chen X, Feng B, Chen Y, Duan X, Liu K, Li K, et al. A CT-based deep learning model for subsolid pulmonary nodules to distinguish minimally invasive adenocarcinoma and invasive adenocarcinoma. Eur J Radiol (2021) 145:110041. doi: 10.1016/j.ejrad.2021.110041

57. Marentakis P, Karaiskos P, Kouloulias V, Kelekis N, Argentos S, Oikonomopoulos N, et al. Lung cancer histology classification from CT images based on radiomics and deep learning models. Med Biol Eng Comput (2021) 59(1):215–26. doi: 10.1007/s11517-020-02302-w

58. Lee S, Choe EK, Kim SY, Kim HS, Park KJ, Kim D. Liver imaging features by convolutional neural network to predict the metachronous liver metastasis in stage I-III colorectal cancer patients based on preoperative abdominal CT scan. BMC Bioinf (2020) 21(Suppl 13):382. doi: 10.1186/s12859-020-03686-0

59. Ma J, He N, Yoon JH, Ha R, Li J, Ma W, et al. Distinguishing benign and malignant lesions on contrast-enhanced breast cone-beam CT with deep learning neural architecture search. Eur J Radiol (2021) 142:109878. doi: 10.1016/j.ejrad.2021.109878

60. Topol EJ. High-performance medicine: the convergence of human and artificial intelligence. Nat Med (2019) 25(1):44–56. doi: 10.1038/s41591-018-0300-7

61. Beam AL, Kohane IS. Translating artificial intelligence into clinical care. JAMA (2016) 316(22):2368–9. doi: 10.1001/jama.2016.17217

62. Hu Y, Xie C, Yang H, Ho JWK, Wen J, Han L, et al. Computed tomography-based deep-learning prediction of neoadjuvant chemoradiotherapy treatment response in esophageal squamous cell carcinoma. Radiother Oncol (2021) 154:6–13. doi: 10.1016/j.radonc.2020.09.014

63. Lambin P, Leijenaar RTH, Deist TM, Peerlings J, de Jong EEC, van Timmeren J, et al. Radiomics: the bridge between medical imaging and personalized medicine. Nat Rev Clin Oncol (2017) 14(12):749–62. doi: 10.1038/nrclinonc.2017.141

64. Li C, Shi C, Zhang H, Chen Y, Zhang S. Multiple instance learning for computer aided detection and diagnosis of gastric cancer with dual-energy CT imaging. J Biomed Inf (2015) 57:358–68. doi: 10.1016/j.jbi.2015.08.017

65. Wang Y, Liu W, Yu Y, Liu JJ, Xue HD, Qi YF, et al. CT radiomics nomogram for the preoperative prediction of lymph node metastasis in gastric cancer. Eur Radiol (2020) 30(2):976–86. doi: 10.1007/s00330-019-06398-z

66. Li Y, Cheng Z, Gevaert O, He L, Huang Y, Chen X, et al. A CT-based radiomics nomogram for prediction of human epidermal growth factor receptor 2 status in patients with gastric cancer. Chin J Cancer Res (2020) 32(1):62–71. doi: 10.21147/j.issn.1000-9604.2020.01.08

67. Wang Y, Yu Y, Han W, Zhang YJ, Jiang L, Xue HD, et al. CT radiomics for distinction of human epidermal growth factor receptor 2 negative gastric cancer. Acad Radiol (2021) 28(3):e86–92. doi: 10.1016/j.acra.2020.02.018

68. Yun J, Park JE, Lee H, Ham S, Kim N, Kim HS. Radiomic features and multilayer perceptron network classifier: a robust MRI classification strategy for distinguishing glioblastoma from primary central nervous system lymphoma. Sci Rep (2019) 9(1):5746. doi: 10.1038/s41598-019-42276-w

69. Chalkidou A, O'Doherty MJ, Marsden PK. False discovery rates in PET and CT studies with texture features: A systematic review. PLoS One (2015) 10(5):e0124165. doi: 10.1371/journal.pone.0124165

70. Traverso A, Wee L, Dekker A, Gillies R. Repeatability and reproducibility of radiomic features: A systematic review. Int J Radiat Oncol Biol Phys (2018) 102(4):1143–58. doi: 10.1016/j.ijrobp.2018.05.053

71. Xu Y, Hosny A, Zeleznik R, Parmar C, Coroller T, Franco I, et al. Deep learning predicts lung cancer treatment response from serial medical imaging. Clin Cancer Res (2019) 25(11):3266–75. doi: 10.1158/1078-0432.CCR-18-2495

72. Hosny A, Parmar C, Coroller TP, Grossmann P, Zeleznik R, Kumar A, et al. Deep learning for lung cancer prognostication: A retrospective multi-cohort radiomics study. PLoS Med (2018) 15(11):e1002711. doi: 10.1371/journal.pmed.1002711

73. LeCun Y, Bengio Y, Hinton G. Deep learning. Nature (2015) 521(7553):436–44. doi: 10.1038/nature14539

74. Li L, Chen Y, Shen Z, Zhang X, Sang J, Ding Y, et al. Convolutional neural network for the diagnosis of early gastric cancer based on magnifying narrow band imaging. Gastric Cancer (2020) 23(1):126–32. doi: 10.1007/s10120-019-00992-2

75. Ueyama H, Kato Y, Akazawa Y, Yatagai N, Komori H, Takeda T, et al. Application of artificial intelligence using a convolutional neural network for diagnosis of early gastric cancer based on magnifying endoscopy with narrow-band imaging. J Gastroenterol Hepatol (2021) 36(2):482–9. doi: 10.1111/jgh.15190

76. Balachandran VP, Gonen M, Smith JJ, DeMatteo RP. Nomograms in oncology: more than meets the eye. Lancet Oncol (2015) 16(4):e173–80. doi: 10.1016/S1470-2045(14)71116-7

77. Schmidt DR, Patel R, Kirsch DG, Lewis CA, Vander Heiden MG, Locasale JW. Metabolomics in cancer research and emerging applications in clinical oncology. CA: Cancer J Clin (2021) 71(4):333–58. doi: 10.3322/caac.21670

78. de Boer LL, Spliethoff JW, Sterenborg H, Ruers TJM. Review: in vivo optical spectral tissue sensing-how to go from research to routine clinical application? Lasers Med Sci (2017) 32(3):711–9. doi: 10.1007/s10103-016-2119-0

Keywords: gastric cancer, HER2 status, deep learning, CNN, transformer

Citation: Guan X, Lu N and Zhang J (2022) Accurate preoperative staging and HER2 status prediction of gastric cancer by the deep learning system based on enhanced computed tomography. Front. Oncol. 12:950185. doi: 10.3389/fonc.2022.950185

Received: 31 May 2022; Accepted: 24 October 2022;

Published: 14 November 2022.

Edited by: Marco Rengo, Sapienza University of Rome, Italy

Reviewed by: Zijian Zhang, Xiangya Hospital, Central South University, China; Kourosh Zarringhalam, University of Massachusetts Boston, United States

Copyright © 2022 Guan, Lu and Zhang. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Jianping Zhang, drzhangjp@njmu.edu.cn
