- 1Chitkara University Institute of Engineering and Technology, Chitkara University, Baddi, India

- 2Chitkara University Institute of Engineering and Technology, Chitkara University, Rajpura, India

- 3Department of Computer Science and Engineering, Kongju National University, Cheonan, South Korea

- 4KIET Group of Institutions, Ghaziabad, India

- 5Department of Software, Kongju National University, Cheonan, South Korea

- 6Department of Computer Science, College of Computers and Information Technology, Taif University, Taif, Saudi Arabia

- 7Department of Mathematics, Faculty of Science, Suez Canal University, Ismailia, Egypt

Recent advances in deep learning have shown promising performance in the analysis of medical images. Every year, pneumonia is a leading cause of death among children under the age of 5 years. Chest X-ray imaging is the first-line technique for detecting pneumonia, and various deep learning and computer vision techniques can be applied to chest X-ray images to detect it. The availability of large datasets now makes it possible to use Convolutional Neural Networks (CNN) for the classification and analysis of images. In this work, a CNN model is implemented to recognize chest X-ray images for the detection of pneumonia. The model is trained on a publicly available chest X-ray dataset with two classes, normal and pneumonic chest X-ray images, each containing 5000 samples. 80% of the data is used to train the model and the remaining 20% to test it. The model is trained and validated using two optimizers, Adam and RMSprop, and a maximum recognition accuracy of 98% is obtained on the validation dataset. The results are further compared with those obtained by other researchers for the recognition of biomedical images.

1 Introduction

Pneumonia is an infection of the lungs caused by bacteria, viruses, or fungi, which fill the lungs with fluid. It inflames the air sacs and can cause pleural effusion. Pneumonia accounts for 15% of the deaths of children under the age of 5 years (1), and it is more prevalent in underdeveloped or developing countries with scarce medical resources. The disease can be fatal if not diagnosed and treated early, as it can lead to respiratory failure or uncontrolled inflammation. Magnetic resonance imaging (MRI), radiography (X-ray), and computed tomography (CT) are all used for its diagnosis, but radiography is the most common because it is an inexpensive and non-invasive examination. In a chest X-ray, the chest is exposed to radiation to produce an image of its internal organs, which a radiologist then interprets. Manual examination of chest X-rays is prone to variability (2), and X-rays are sometimes unclear, which makes it difficult for the radiologist to identify the features that indicate disease. An automated system that detects pneumonia from X-ray images is therefore needed, such as a deep learning-based CNN model that automatically distinguishes healthy from pneumonic lungs in chest X-ray images (3). Deep learning and machine learning are branches of artificial intelligence used in fields such as visual recognition, natural language processing (NLP), self-driving cars, fraud detection, and news aggregation. Deep learning models are loosely inspired by the human brain: input data is processed through successive layers in order to recognize it.
These models can find hidden features that radiologists are sometimes unable to detect. CNNs are the main deep learning tool for images: they automatically learn the features that make it possible to assign images to different classes.

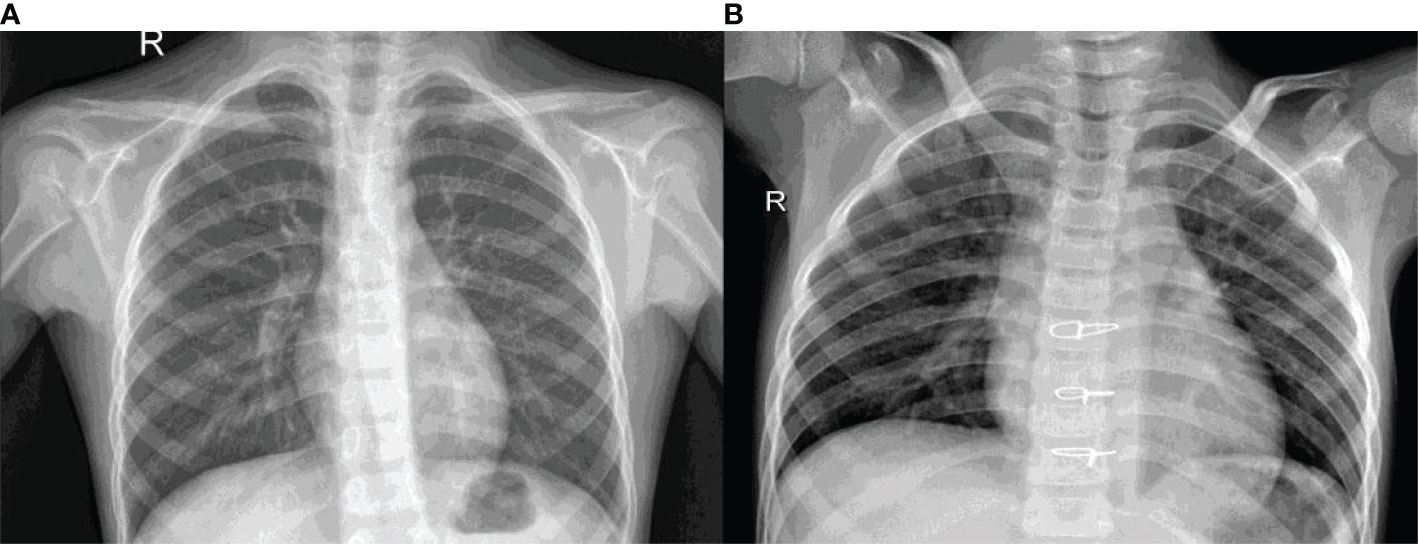

Figure 1 shows X-ray images of a healthy and a pneumonic chest: Figure 1A is the healthy X-ray, whereas Figure 1B is the pneumonic X-ray. The pneumonic X-ray shows white spots called infiltrates.

The paper is structured as follows: related work is reviewed in Section 2, Section 3 describes the methods used in this paper, Section 4 presents the results obtained, and Section 5 concludes the paper. The contributions of the proposed work are as follows:

• A CNN-based model is developed for the recognition of chest X-ray images of the lungs.

• The model is evaluated to find a suitable optimizer, number of epochs, and learning rate (LR) for the available dataset.

• The proposed work is compared with other models developed for the recognition of biomedical images.

2 Related Work

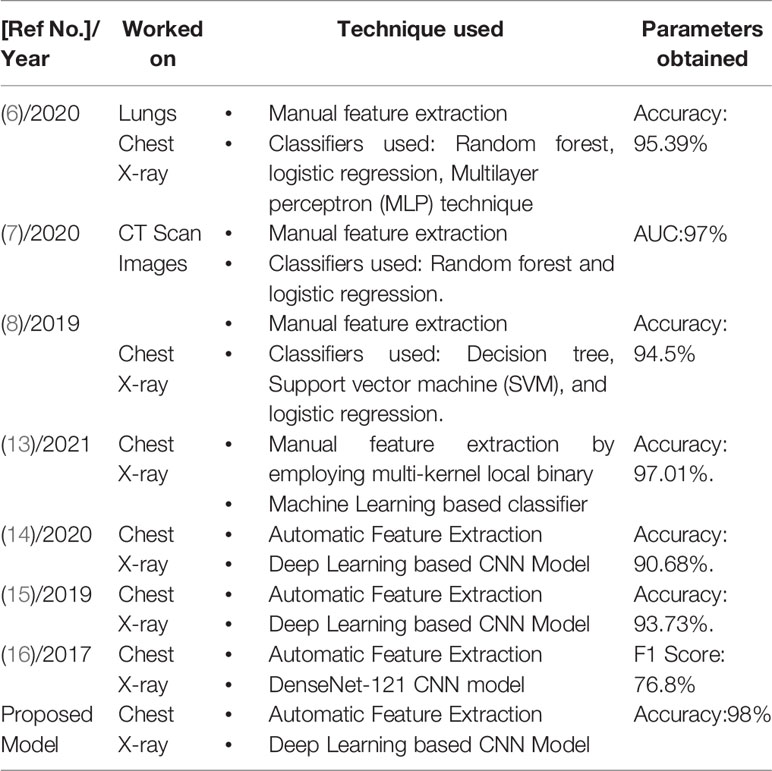

Detecting pneumonia from chest X-ray images has been a research problem for many years (4, 5). Chest X-rays are sometimes not clear enough to identify the features that help in diagnosing the disease. Various machine learning and deep learning models are used for the detection and classification of pneumonia. Machine learning techniques require features to be extracted manually, whereas deep learning techniques extract features automatically. This section presents work on the recognition of biomedical images using machine learning and deep learning techniques. Chandra et al. (6) employed a machine learning technique to detect pneumonia: the lung regions are first segmented from the X-ray, eight statistical features are then extracted from these regions, and the features are classified with classifiers such as random forest, logistic regression, and a multilayer perceptron (MLP). The model is evaluated on 412 images and achieves an accuracy of 95.39%. Yue et al. (7) extracted six features from 52 CT scan images for the detection of pneumonia and achieved an AUC (area under the curve) of 97%. Similarly, Kuo et al. (8) manually extracted various features for the detection and classification of pneumonia and applied them to classification models such as decision tree, support vector machine (SVM), and logistic regression. The maximum accuracy, 94.5%, is obtained with the decision tree.

Features are manually extracted in machine learning techniques (9, 10), whereas they are automatically extracted in deep learning techniques (11, 12). Tuncer et al. (13) also employed a machine learning-based technique: a fuzzy tree transformation is first applied to the images, features are then extracted with a multi-kernel local binary pattern, and various classifiers are finally applied, yielding an accuracy of 97.01%. Various deep learning techniques are also employed for the detection and classification of chest X-rays.

Sharma et al. (14) implemented a CNN-based model for the classification of pneumonia in chest X-rays, using data augmentation to enlarge the dataset, and obtained an accuracy of 90.68%. Similarly, Stephen et al. (15) employed a CNN-based model with data augmentation and obtained an accuracy of 93.73%. Rajpurkar et al. (16) implemented a DenseNet-121 CNN model for classifying pneumonia from chest X-ray images but achieved an F1 score of only 76.8%; the model did not perform well because the patients' history was unavailable. In summary, various machine learning and deep learning models have been implemented for recognition. Machine learning models require manual feature extraction, which is a cumbersome task, whereas deep learning models extract features automatically, which makes them easier to implement. In this work, a recognition accuracy of 98% is obtained using a customized 12-layer CNN model with the RMSprop optimizer. A suitable optimizer for the available dataset is also identified by evaluating the same model with the Adam optimizer while keeping the other parameters the same.

3 Proposed Approach

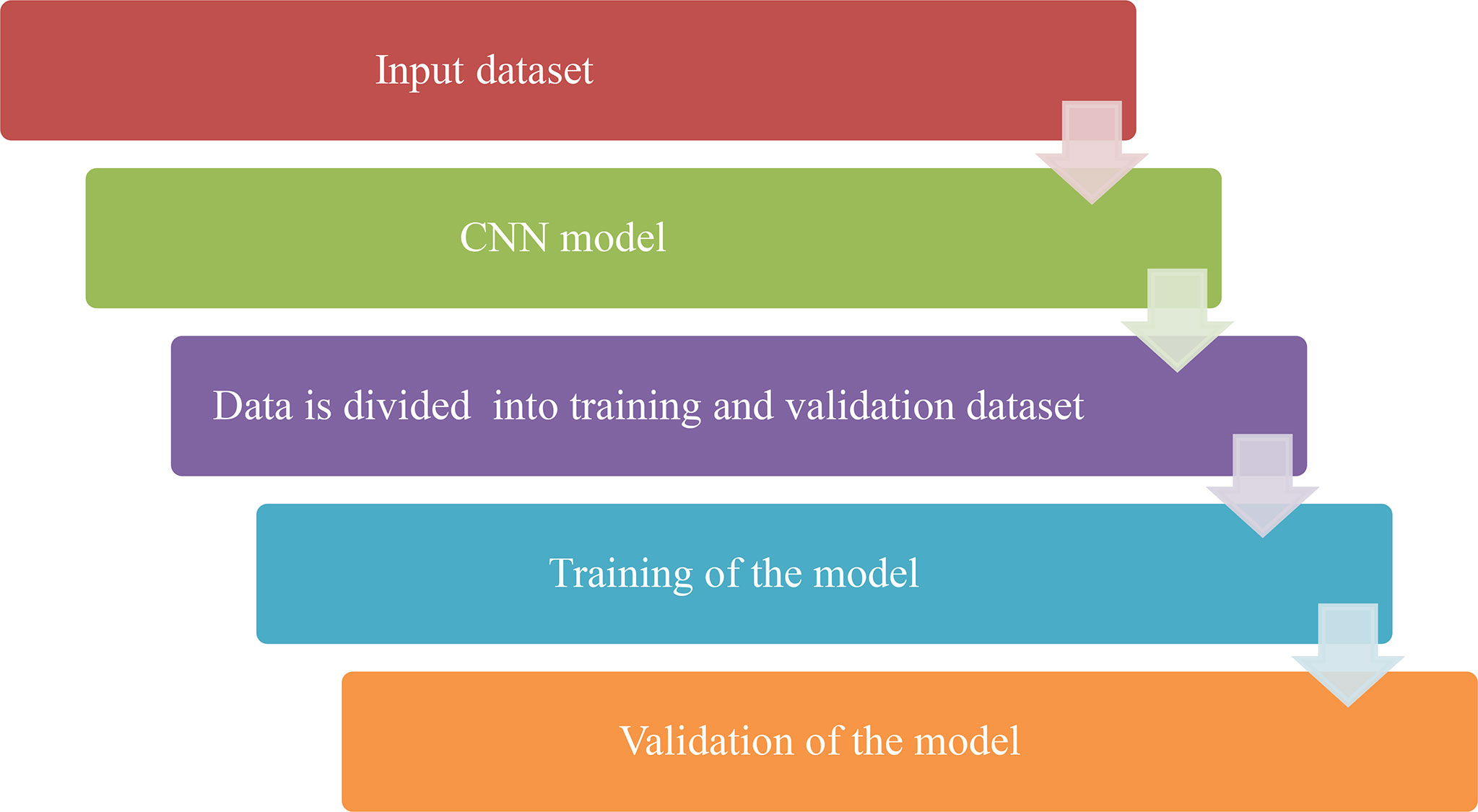

A CNN-based model is proposed for the recognition and classification of chest X-ray images, using a publicly available Kaggle dataset of X-ray images. The proposed methodology is shown in Figure 2: an X-ray image is given as input to the CNN model. After the data is divided into training and validation sets, each image is resized to 64 × 32 and passed through the convolution, pooling, and flattening layers. Finally, the output layer predicts the class of the image, i.e., pneumonic or healthy.
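The text states only that each image is resized to 64 × 32. As a minimal sketch, assuming a grayscale image stored as a list of rows, nearest-neighbour sampling, and a height of 64 by a width of 32 (the paper gives neither the interpolation method nor the axis order), the resizing step could look like this. `resize_nearest` is a hypothetical helper; a real pipeline would normally call an image library's resize function instead.

```python
def resize_nearest(image, out_h, out_w):
    """Resize a 2-D image (list of rows) by nearest-neighbour sampling."""
    in_h, in_w = len(image), len(image[0])
    # Map each output pixel back to the input pixel it falls on.
    return [
        [image[r * in_h // out_h][c * in_w // out_w] for c in range(out_w)]
        for r in range(out_h)
    ]


# Usage: shrink a toy 128 x 64 "image" to 64 x 32 (height x width, assumed order).
toy = [[r * 64 + c for c in range(64)] for r in range(128)]
small = resize_nearest(toy, 64, 32)
print(len(small), len(small[0]))  # 64 32
```

Nearest-neighbour sampling is chosen here only because it is the simplest rule to read; bilinear or area interpolation would usually preserve fine X-ray detail better when downscaling.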

3.1 Input Dataset

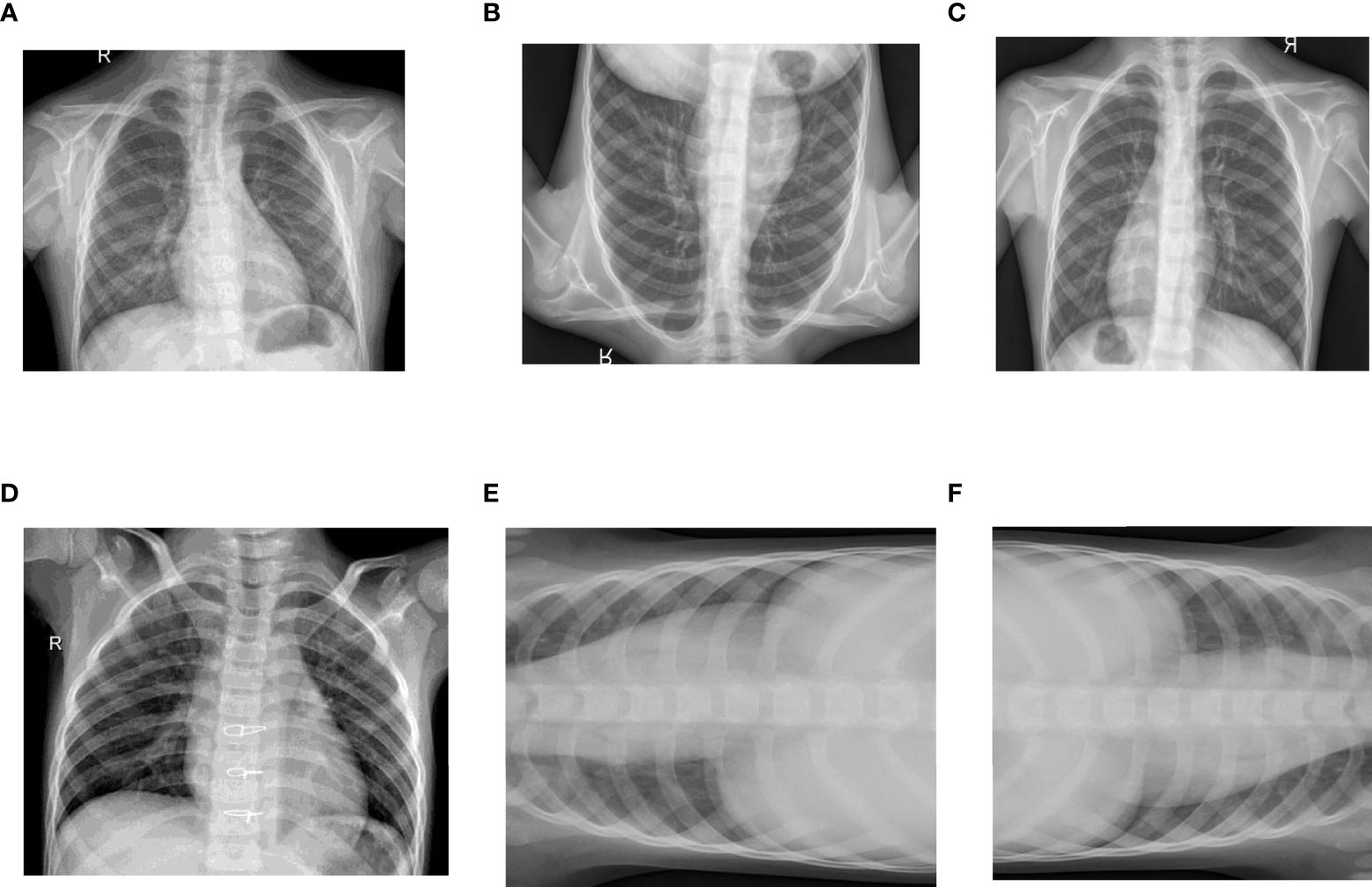

The Kaggle dataset is used for classification and recognition. No data preprocessing is required, as the dataset is already available in a clean, pre-processed format. The dataset consists of two classes of chest X-ray images, healthy and pneumonic, with 5000 images per class. For each class, 4000 images are used to train the model and 1000 images to test it. A few sample images of healthy and pneumonic chest X-rays are shown in Figure 3.
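Using 4000 of the 5000 images in each class for training and the other 1000 for testing is a stratified 80/20 split. The sketch below shows one way to do this in plain Python; the file names, the fixed seed, and the `stratified_split` helper are illustrative assumptions, not the authors' code.

```python
import random


def stratified_split(samples_by_class, train_fraction=0.8, seed=42):
    """Shuffle and split each class separately so both splits keep the class balance."""
    rng = random.Random(seed)  # fixed seed makes the split reproducible
    train, test = [], []
    for label, samples in samples_by_class.items():
        shuffled = list(samples)
        rng.shuffle(shuffled)
        cut = round(len(shuffled) * train_fraction)
        train += [(s, label) for s in shuffled[:cut]]
        test += [(s, label) for s in shuffled[cut:]]
    return train, test


# Hypothetical file names standing in for the 5000 images per class.
data = {
    "healthy": [f"healthy_{i}.png" for i in range(5000)],
    "pneumonic": [f"pneumonic_{i}.png" for i in range(5000)],
}
train, test = stratified_split(data)
print(len(train), len(test))  # 8000 2000
```

Splitting per class, rather than shuffling the pooled 10,000 images once, guarantees exactly 4000/1000 images of each class in the two sets.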

Figure 3 Dataset Images: (A) Healthy Chest X-ray 1, (B) Healthy Chest X-ray 2, (C) Healthy Chest X-ray 3, (D) Pneumonic Chest X-ray 1, (E) Pneumonic Chest X-ray 2 and (F) Pneumonic Chest X-ray 3.

3.2 Data Augmentation

A data augmentation technique is employed to increase the number of images in each class. Data augmentation helps improve the performance of the model because the model is trained on more varied examples. The existing images are flipped and rotated to enlarge the dataset, as shown in Figure 4.
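Figure 4 names four transformations: vertical and horizontal flips and 90-degree rotations to the left and right. A minimal sketch on a 2-D list of pixel values follows. It assumes the NumPy-style convention in which a vertical flip turns the image upside down (like `flipud`) and a horizontal flip mirrors it left to right (like `fliplr`); the paper does not define these terms explicitly, and the helper names are hypothetical.

```python
def flip_vertical(img):
    """Upside-down flip: reverse the order of the rows."""
    return img[::-1]


def flip_horizontal(img):
    """Left-right mirror: reverse each row."""
    return [row[::-1] for row in img]


def rotate_left(img):
    """Rotate 90 degrees counter-clockwise (transpose, then reverse the rows)."""
    return [list(col) for col in zip(*img)][::-1]


def rotate_right(img):
    """Rotate 90 degrees clockwise (reverse the rows, then transpose)."""
    return [list(col) for col in zip(*img[::-1])]


def augment(img):
    """Return the original image plus its four flipped/rotated variants."""
    return [img, flip_vertical(img), flip_horizontal(img),
            rotate_left(img), rotate_right(img)]


image = [[1, 2, 3],
         [4, 5, 6]]
for variant in augment(image):
    print(variant)
```

Because a 90-degree rotation swaps the height and width of a non-square image, rotated images would have to be resized again to match a fixed 64 × 32 input size.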

Figure 4 Data Augmentation of Images: (A) Original Healthy Chest X-ray, (B) Healthy Chest X-ray Flipped Vertically, (C) Healthy Chest X-ray Flipped Horizontally, (D) Original Pneumonic Chest X-ray, (E) Pneumonic Chest X-ray rotated 90 degrees left and (F) Pneumonic Chest X-ray rotated 90 degrees right.

3.3 Proposed Convolution Model

A total of 10,000 images of healthy and pneumonic chest X-rays are used, with 5000 samples per class.

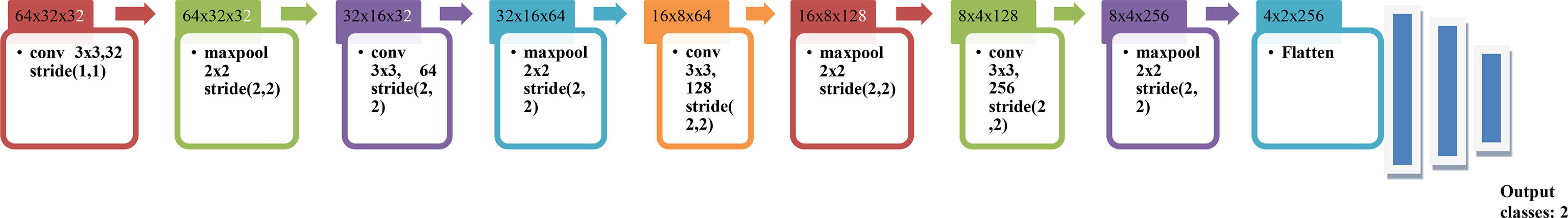

All the samples are passed through the three main types of layers of the CNN model: convolution, pooling, and fully connected layers. The basic block diagram of the proposed model is shown in Figure 5. CNN models have been among the biggest contributors to image analysis and computer vision, because a CNN (ConvNet) can learn the features of images that are used for their classification and recognition. The convolution layer extracts features automatically, producing feature maps from the images. The pooling layer retains the important features while dropping those that are least important for recognition, and it reduces the size of the feature maps. The fully connected layer at the end produces the output classes.

The architecture of the CNN model is shown in Figure 6. The X-ray image is given as input to the first layer of the model, a convolution layer with 32 filters of size 3 × 3, which produces 32 feature maps. These are passed to a max-pooling layer with a 2 × 2 pool and a stride of (2, 2); all max-pooling layers in the model have the same size. The next convolution layer increases the number of filters from 32 to 64 with the same 3 × 3 size and is again followed by a max-pooling layer. The next convolution layer has 128 filters and is followed by a max-pooling layer, and the last convolution layer has 256 filters (17, 18). Finally, a flattening layer converts the pooled feature maps into a one-dimensional vector that can be fed to the fully connected neural network (19, 20). Two dense layers follow the flattening layer, and they are followed by an output layer.
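The text fixes the filter counts (32, 64, 128, 256), the 3 × 3 kernels, the 2 × 2 max pooling with stride (2, 2), and the 64 × 32 input, but not the padding mode, the number of input channels, or the axis order. The sketch below traces feature-map sizes through the convolution and pooling stack under these assumptions: "same" padding, a single-channel input 64 pixels high and 32 wide, and no pooling after the 256-filter layer. With "valid" (unpadded) convolutions, the feature map is only 2 pixels wide when it reaches the fourth 3 × 3 convolution, so that layer could not be applied; this is why "same" padding is assumed here. In Keras terms, these layers would correspond to `Conv2D(filters, (3, 3), padding="same")` and `MaxPooling2D(pool_size=(2, 2), strides=(2, 2))`, although the paper does not state which framework was used.

```python
def conv_out(size, kernel=3, padding="same"):
    """Output length along one axis for a stride-1 convolution."""
    return size if padding == "same" else size - kernel + 1  # "valid" = no padding


def pool_out(size, pool=2, stride=2):
    """Output length along one axis for unpadded max pooling."""
    return (size - pool) // stride + 1


# Layer sequence as described in the text; no pooling after the 256-filter layer.
LAYERS = [("conv", 32), ("pool", None), ("conv", 64), ("pool", None),
          ("conv", 128), ("pool", None), ("conv", 256)]


def trace_shapes(height=64, width=32, padding="same"):
    """Return (layer, h, w, channels) after each layer and the flattened length."""
    h, w, c = height, width, 1  # assumed single-channel (grayscale) input
    shapes = []
    for kind, filters in LAYERS:
        if kind == "conv":
            h, w, c = conv_out(h, padding=padding), conv_out(w, padding=padding), filters
        else:
            h, w = pool_out(h), pool_out(w)
        if h < 1 or w < 1:
            raise ValueError(f"{kind} layer would produce an empty {h}x{w} map")
        shapes.append((kind, h, w, c))
    return shapes, h * w * c


shapes, flat_len = trace_shapes()
for shape in shapes:
    print(shape)
print("flattened length:", flat_len)  # 8192 with "same" padding
```

Under these assumptions, the flattening layer would hand an 8 × 4 × 256 = 8192-element vector to the two dense layers.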

4 Results and Analysis

This section presents the results obtained for the recognition of chest X-rays. The CNN model is trained on 80% of the data to recognize healthy and pneumonic chest X-rays and is then validated on the remaining images. The model is trained with both the RMSprop and Adam optimizers, using an LR of 0.01 for 30 epochs. The model does not perform as well with other optimizers as with these two, and increasing the number of epochs beyond 30 causes its performance to deteriorate or to remain the same. The model therefore performs best with the selected optimization parameters. All results are obtained on Google Colab, a free, cloud-hosted Jupyter notebook environment.

4.1 Result Analysis Using RMSprop Optimizer

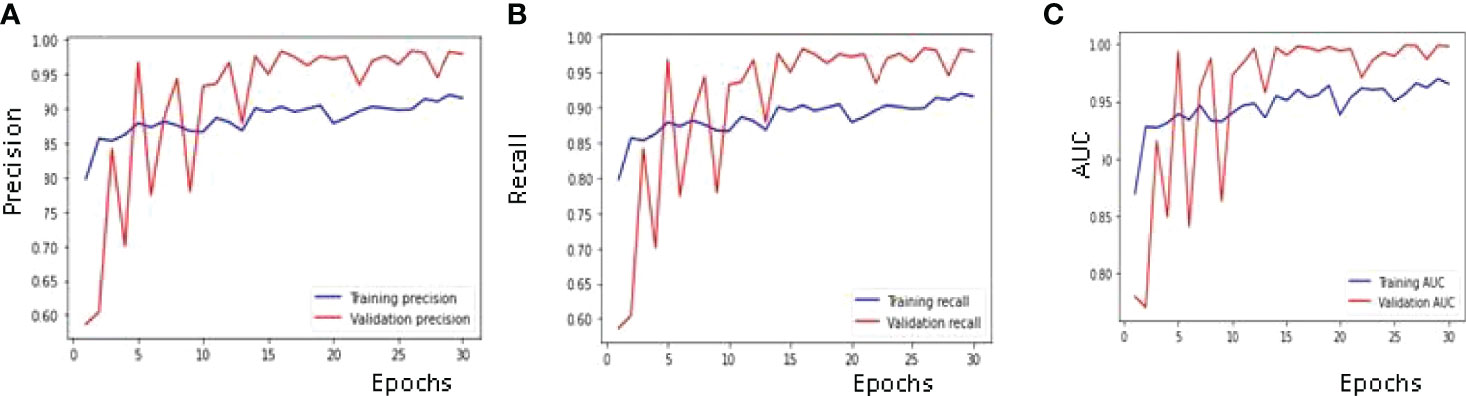

The model is trained and validated with the RMSprop optimizer for 30 epochs, with an LR of 0.01 and a batch size of 4. The model is evaluated with metrics such as accuracy, loss, recall, area under the curve (AUC), and precision (19, 21).
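To show what the RMSprop optimizer does with the reported LR of 0.01, here is a minimal sketch of the textbook RMSprop update on a one-dimensional toy objective. The decay rate `rho=0.9` and `eps=1e-7` are assumptions (they match the Keras defaults); the paper reports only the learning rate, and the toy objective is purely illustrative.

```python
import math


def rmsprop_minimize(grad, w0, lr=0.01, rho=0.9, eps=1e-7, steps=2000):
    """Minimise a 1-D function with plain RMSprop (no momentum, not centred)."""
    w, v = w0, 0.0
    for _ in range(steps):
        g = grad(w)
        v = rho * v + (1 - rho) * g * g     # moving average of squared gradients
        w -= lr * g / (math.sqrt(v) + eps)  # step divided by the gradient's RMS
    return w


# Toy objective f(w) = (w - 3)^2 with gradient 2(w - 3); the minimum is at w = 3.
w_final = rmsprop_minimize(lambda w: 2 * (w - 3), w0=0.0)
print(f"{w_final:.3f}")
```

Dividing by the running RMS of the gradient makes each step roughly `lr` in size regardless of the gradient's scale, which is why the learning rate largely sets how far each weight moves per update.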

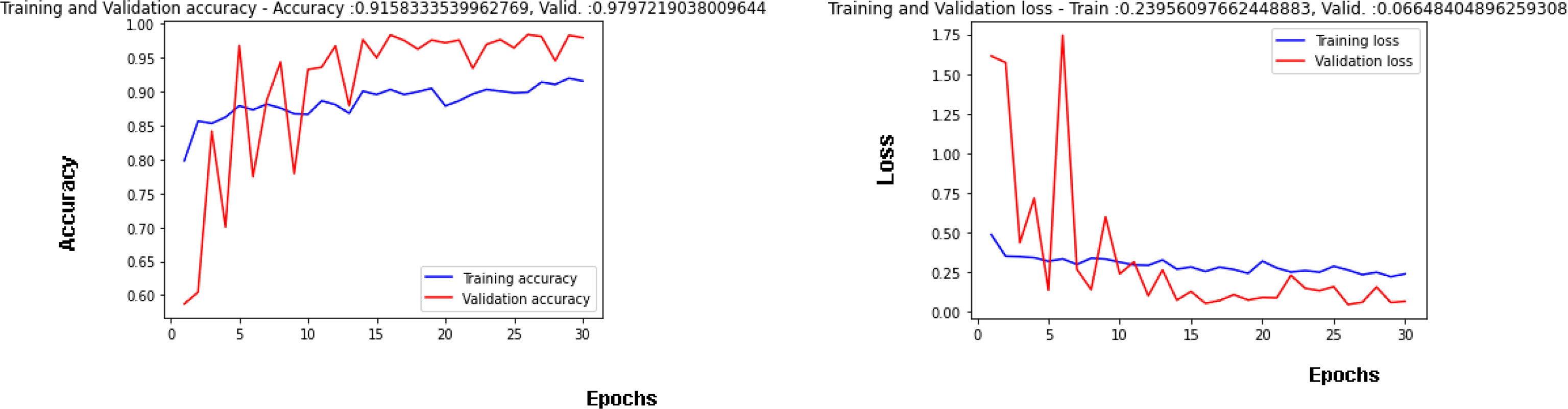

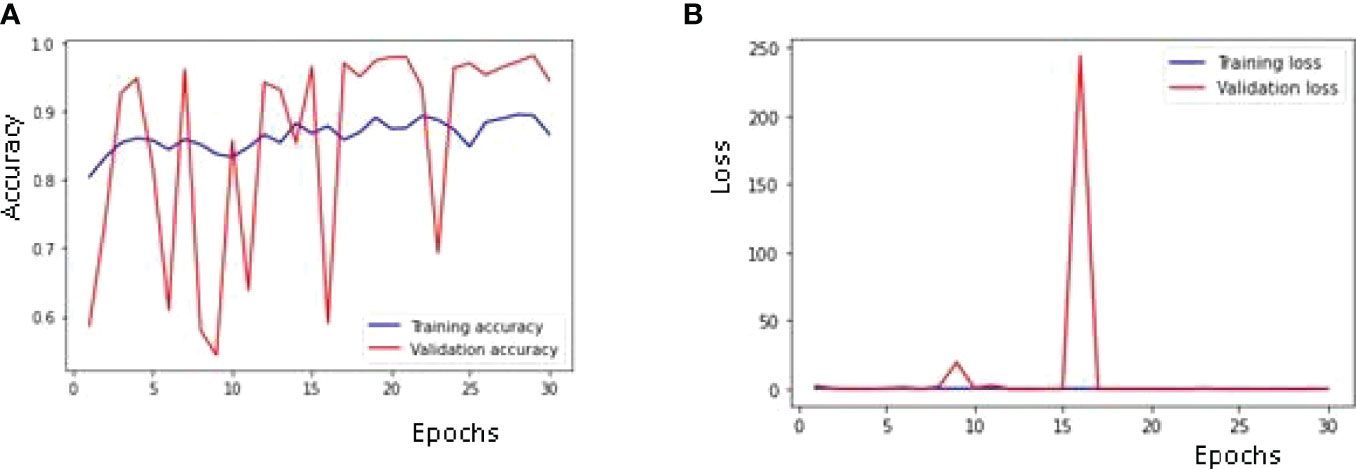

4.1.1 Training and Validation: Accuracy and Loss Curves

Figure 7 shows the results obtained on the training and validation datasets. In Figure 7A, the validation accuracy (22) is below 60% in the 1st epoch, rises to around 84% by the 3rd epoch, decreases in the 4th epoch, and increases again in the next epoch (23–25). The highest validation accuracy, 98%, is obtained in the 29th epoch, and the accuracy is 97% in the last epoch. The training accuracy starts at around 80% in the 1st epoch and approaches 90% by the last epoch. Figure 7B shows the loss curves for the training and validation datasets. The validation loss is around 1.61 in the 1st epoch and decreases to 0.13 by the 5th epoch, but rises again to 1.74 in the 6th epoch. The validation loss is 0.06 in the last epoch, while its minimum, 0.05, is obtained in the 29th epoch. The training loss is 0.50 in the 1st epoch and decreases to close to 0.30 by the last epoch.

4.1.2 Confusion Matrix Parameters Analysis

Performance metrics are used to evaluate pattern recognition and image classification, and they are important for building a model that gives accurate and precise results. The minimum validation loss, maximum validation precision, maximum validation recall, and maximum validation AUC obtained are 0.05, 98%, 98%, and 99%, respectively, all in the 29th epoch.
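Precision and recall are derived from the confusion matrix of a binary classifier. The sketch below computes them, together with accuracy, from lists of true and predicted labels. It assumes that pneumonic is the positive class (label 1); the labels are toy values, not the paper's predictions.

```python
def confusion_metrics(y_true, y_pred, positive=1):
    """Accuracy, precision and recall from binary labels (positive = pneumonic)."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == positive and p == positive)
    fp = sum(1 for t, p in pairs if t != positive and p == positive)
    fn = sum(1 for t, p in pairs if t == positive and p != positive)
    tn = len(pairs) - tp - fp - fn
    return {
        "tp": tp, "fp": fp, "fn": fn, "tn": tn,
        "accuracy": (tp + tn) / len(pairs),
        # Of the images predicted pneumonic, the fraction that really are.
        "precision": tp / (tp + fp) if tp + fp else 0.0,
        # Of the truly pneumonic images, the fraction that were found.
        "recall": tp / (tp + fn) if tp + fn else 0.0,
    }


# Toy labels: 1 = pneumonic, 0 = healthy.
y_true = [1, 1, 1, 0, 0, 0, 0, 1]
y_pred = [1, 0, 1, 0, 1, 1, 0, 1]
print(confusion_metrics(y_true, y_pred))
```

For pneumonia screening, recall is usually the more critical of the two, since a false negative is a missed case.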

The best results for the available dataset using the proposed CNN model are obtained in the 29th epoch. Figure 8A shows the precision curves for the training and validation datasets. The validation precision is below 0.60 in the 1st epoch, increases until the 3rd epoch, and then decreases and increases again. The maximum precision is 0.98 in the 29th epoch and 0.97 in the last epoch. The training precision is around 0.80 in the 1st epoch and approaches 0.90 by the last epoch. The convergence plot for recall is shown in Figure 8B. The validation recall is below 0.60 in the 1st epoch and 0.97 in the last epoch, with a maximum of 0.98 in the 29th epoch. The training recall is 0.80 in the 1st epoch and close to 0.90 in the last epoch. The AUC for the training and validation datasets is shown in Figure 8C. The highest validation AUC, around 0.99, is achieved in both the 29th and 30th epochs; the validation AUC starts at 0.78 in the 1st epoch and reaches its minimum of 0.77 in the 2nd epoch. The training AUC is around 0.87 in the 1st epoch and approaches 0.96 by the 30th epoch.

Figure 8 Confusion Matrix Parameter Curves using RMSprop Optimizer: (A) Precision, (B) Recall and (C) AUC.
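The AUC values discussed above can be read as the probability that a randomly chosen pneumonic image receives a higher predicted score than a randomly chosen healthy one. A minimal pairwise sketch of that definition follows; the labels and scores are toy values. Library implementations such as the Keras `AUC` metric approximate the curve with a fixed number of thresholds, so their values can differ slightly from this exact form.

```python
def roc_auc(y_true, scores):
    """ROC AUC as the probability that a random positive outscores a random negative.

    Ties count as one half. This pairwise form is O(P * N), fine for illustration.
    """
    pos = [s for t, s in zip(y_true, scores) if t == 1]
    neg = [s for t, s in zip(y_true, scores) if t == 0]
    if not pos or not neg:
        raise ValueError("AUC needs at least one positive and one negative sample")
    wins = 0.0
    for p in pos:
        for n in neg:
            if p > n:
                wins += 1.0
            elif p == n:
                wins += 0.5
    return wins / (len(pos) * len(neg))


# Toy labels (1 = pneumonic) and predicted pneumonia probabilities.
print(roc_auc([0, 0, 1, 1], [0.1, 0.4, 0.35, 0.8]))  # 0.75
```

Unlike accuracy, AUC does not depend on a single decision threshold, which is why it can stay high even in epochs where the thresholded accuracy fluctuates.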

4.2 Result Analysis Using Adam Optimizer

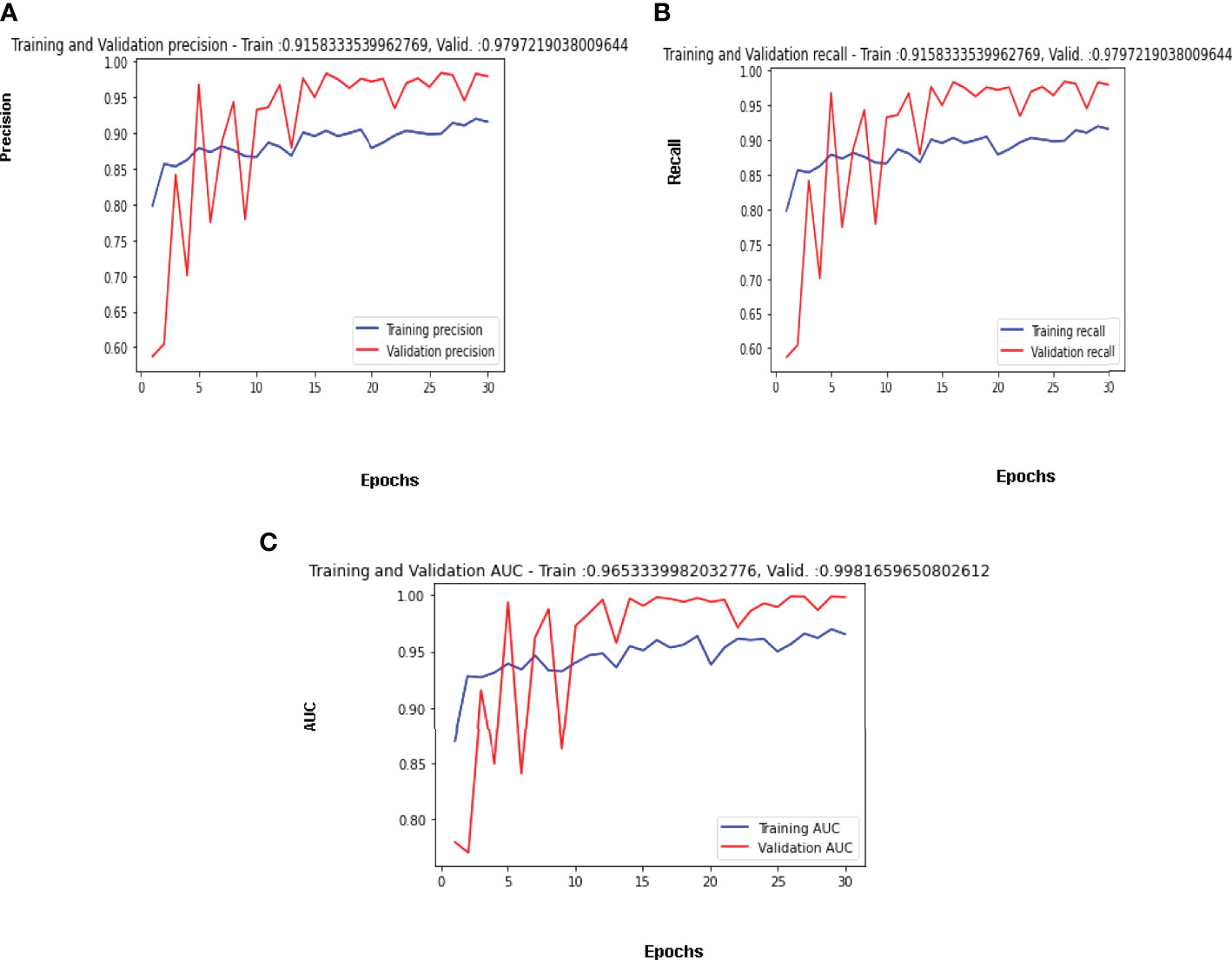

Here the model is trained and validated with the Adam optimizer (19, 20) for 30 epochs, with an LR of 0.01 and a batch size of 4. The model is evaluated with metrics such as accuracy, loss, recall, area under the curve (AUC) (19, 26), and precision (22, 27).
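For comparison with RMSprop, here is a minimal sketch of the textbook Adam update with bias correction, again on a one-dimensional toy objective and with the reported LR of 0.01. The values `beta1=0.9`, `beta2=0.999`, and `eps=1e-7` are assumptions (the Keras defaults); the paper states only the learning rate.

```python
import math


def adam_minimize(grad, w0, lr=0.01, beta1=0.9, beta2=0.999, eps=1e-7, steps=3000):
    """Minimise a 1-D function with the standard Adam update."""
    w, m, v = w0, 0.0, 0.0
    for t in range(1, steps + 1):
        g = grad(w)
        m = beta1 * m + (1 - beta1) * g      # first moment: momentum-like average
        v = beta2 * v + (1 - beta2) * g * g  # second moment, as in RMSprop
        m_hat = m / (1 - beta1 ** t)         # correct the bias towards zero
        v_hat = v / (1 - beta2 ** t)         # of the early averages
        w -= lr * m_hat / (math.sqrt(v_hat) + eps)
    return w


# Toy objective f(w) = (w - 3)^2 with gradient 2(w - 3); the minimum is at w = 3.
w_final = adam_minimize(lambda w: 2 * (w - 3), w0=0.0)
print(f"{w_final:.3f}")
```

The only structural difference from the RMSprop sketch is the momentum term `m` and the bias correction; with `beta1=0` and no bias correction, the update reduces to RMSprop with `rho=beta2`.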

4.2.1 Training and Validation: Accuracy and Loss Curves

Figure 9 shows the results obtained on the training and validation datasets. Figure 9A shows that the validation accuracy is below 60% in the 1st epoch, rises to around 94% by the 4th epoch, decreases in the 5th and 6th epochs, and increases again in the next epoch. The highest validation accuracy, 97%, is obtained in the 29th epoch, and the accuracy is 94% in the last epoch. The training accuracy starts at around 80% in the 1st epoch and remains below 90% until the last epoch. Figure 9B shows the loss curves for the training and validation datasets. The validation loss is around 2.2 in the 1st epoch and decreases to 0.47 by the 5th epoch, but rises again to 1.25 in the 6th epoch. The validation loss is 0.15 in the last epoch, while its minimum, 0.08, is obtained in the 29th epoch. The training loss is around 2 in the 1st epoch and decreases to close to 0.80 by the last epoch.

4.2.2 Confusion Matrix Parameters Analysis

The maximum validation precision, maximum validation recall, and maximum validation AUC obtained are 98%, 98%, and 99%, respectively, in the 29th epoch, which gives the best results for the available dataset using the proposed CNN model. Figure 10A shows the precision curves for the training and validation datasets. The validation precision is below 0.60 in the 1st epoch, increases until the 5th epoch, and then decreases and increases again. The maximum precision is 0.98 in the 29th epoch and 0.94 in the last epoch. The training precision is around 0.80 in the 1st epoch and approaches 0.87 by the last epoch. The convergence plot for recall is shown in Figure 10B. The validation recall is below 0.60 in the 1st epoch and 0.94 in the last epoch, with a maximum of 0.98 in the 29th epoch. The training recall is 0.80 in the 1st epoch and close to 0.87 in the last epoch. The AUC for the training and validation datasets is shown in Figure 10C. The highest validation AUC is around 0.99 in the 29th epoch and 0.98 in the 30th epoch, while its minimum, 0.67, occurs in the 1st epoch. The training AUC is around 0.87 in the 1st epoch and approaches 0.93 by the 30th epoch.

Figure 10 Confusion Matrix Parameter Curves Using Adam Optimizer: (A) Precision, (B) Recall, and (C) AUC.

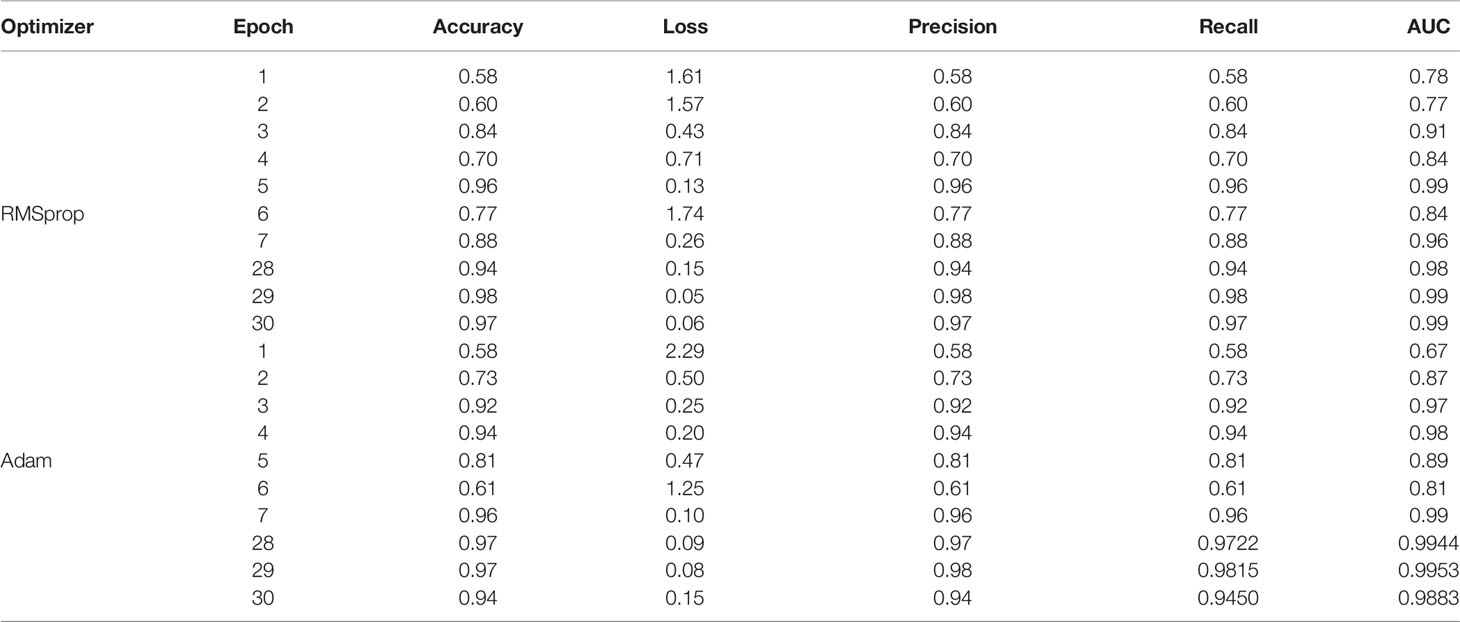

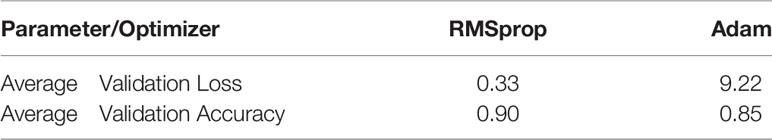

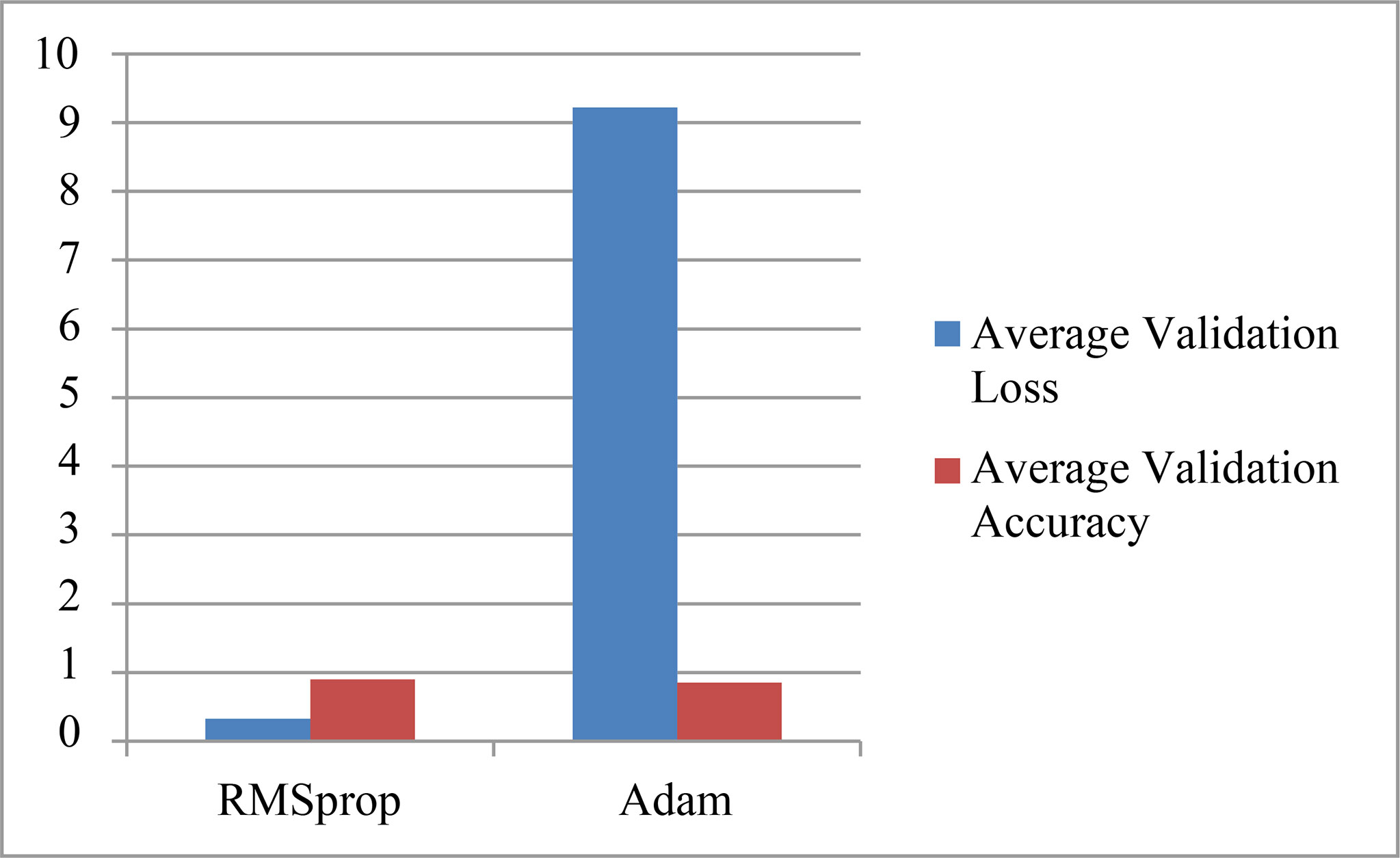

4.3 Comparison of Results Using the Adam and RMSprop Optimizers

The parameter values obtained with the two optimizers, RMSprop and Adam, are shown in Table 1, which gives the results for validation metrics such as accuracy, loss, precision, recall, and AUC (25). The model is trained and validated for 30 epochs, and the maximum accuracy is obtained in the 29th epoch with both optimizers. RMSprop achieves a maximum accuracy of 98% with a minimum loss of 0.05, while Adam achieves a maximum accuracy of 97% with a minimum loss of 0.08. The average validation accuracy and loss are given in Table 2: the average validation loss and accuracy are 0.33 and 90% with RMSprop, compared with 9.22 and 85% with Adam. These values are plotted in Figure 11.
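The comparison above uses two kinds of summary over the 30 per-epoch validation values: the best epoch (maximum accuracy, minimum loss) and the average over all epochs. A minimal sketch of both follows; `summarize_history` is a hypothetical helper and the four-epoch curves are toy values, not the paper's results. If the model were trained with Keras, `model.fit(...)` returns a `History` object whose `history` dict holds such per-epoch lists (for example under `"val_loss"`), but the paper does not name its framework.

```python
def summarize_history(val_acc, val_loss):
    """Best epoch (1-based), extremes and means of per-epoch validation curves."""
    best = max(range(len(val_acc)), key=lambda i: val_acc[i])
    return {
        "best_epoch": best + 1,  # epochs counted from 1, as in the text
        "max_val_acc": val_acc[best],
        "min_val_loss": min(val_loss),
        "mean_val_acc": sum(val_acc) / len(val_acc),
        "mean_val_loss": sum(val_loss) / len(val_loss),
    }


# Toy curves for four epochs (illustrative values, not the paper's results).
summary = summarize_history([0.55, 0.80, 0.92, 0.90], [1.6, 0.6, 0.2, 0.3])
print(summary)
```

The mean is sensitive to spikes in early epochs, so the best-epoch values and the averages can rank two optimizers differently; reporting both, as Tables 1 and 2 do, covers both views.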

4.4 Comparison of the Proposed Work With the Existing Models

Table 3 compares the proposed work with existing models used for the recognition of biomedical images and lists the parameter values they obtain. The proposed work achieves the highest recognition accuracy, 98%, using a CNN-based deep learning model with automatic feature extraction, whereas the other models achieve lower accuracy.

5 Conclusion

Pneumonia is a lung infection that can be life-threatening if not detected at an early stage. Chest X-ray is the most common method for diagnosing the disease, and radiologists manually inspect chest X-rays for the features that help identify pneumonia. In this work, a CNN model is proposed for the recognition of chest X-rays, which are classified into two classes: healthy X-rays and pneumonic X-rays. The model is trained with two optimizers, RMSprop and Adam, on a Kaggle dataset with 5000 image samples per class. The results show that the RMSprop optimizer with an LR of 0.01 achieves a maximum validation accuracy of 98%, while the Adam optimizer achieves a maximum validation accuracy of 97%. RMSprop also achieves the highest average validation accuracy (90%) and the lowest average validation loss (0.33) on the available dataset. Because large volumes of data must be processed for recognition and classification, a future direction is to reduce the processing time. Selective information processing, which processes only the required part of an image instead of the whole image, is one possible solution.

It can be concluded from the obtained results that RMSprop has outperformed Adam Optimizer for the recognition of the available dataset.

Data Availability Statement

The original contributions presented in the study are included in the article/supplementary material. Further inquiries can be directed to the corresponding authors.

Author Contributions

Conceptualization, SS, SG, DG. Data curation, SS, SG, DG, JR, SJ, JK, ME. Formal analysis, SS, SG, DG, JR, SJ. Funding acquisition, JK and JR. Investigation, SS, SG, DG, JR, SJ. Methodology, SS, SG, DG, JR, SJ, JK. Project administration, SG and DG. Writing—original draft, SS, SG, DG, JR, SJ. Writing—review and editing, SS, SG, DG, JR, SJ, JK, ME. All authors contributed to the article and approved the submitted version.

Funding

This research was partly supported by the Technology Development Program of MSS [No. S3033853] and by the National Research Foundation of Korea (NRF) grant funded by the Korea government (MSIT) (No. 2021R1A4A1031509).

Conflict of Interest

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Publisher’s Note

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

Acknowledgments

Our deepest gratitude goes to the anonymous reviewers for improving the quality of the paper.

References

1. WHO. Pneumonia. World Health Organization (2019). Available at: https://www.who.int/news-room/fact-sheets/detail/pneumonia.

2. Neuman M, Lee E, Bixby S, Diperna S, Hellinger J, Markowitz R. Variability in the Interpretation of Chest Radiographs for the Diagnosis of Pneumonia in Children. J Hosp Med (2012) 7:294–8. doi: 10.1002/jhm.955. PMID: 22009855

3. Williams G, Macaskill P, Kerr M, Fitzgerald D, Isaacs D, Codarini M. Variability and Accuracy in Interpretation of Consolidation on Chest Radiography for Diagnosing Pneumonia in Children Under 5 Years of Age. Pediatr Pulmonol (2013) 48:1195–200. doi: 10.1002/ppul.22806. PMID: 23997040

4. Albahli S, Rauf HT, Algosaibi A, Balas VE. AI-Driven Deep CNN Approach for Multi-Label Pathology Classification Using Chest X-Rays. PeerJ Comput Sci (2021) 7:e495. doi: 10.7717/peerj-cs.495

5. Albahli S, Rauf H, Arif M, Nafis M, Algosaibi A. Identification of Thoracic Diseases by Exploiting Deep Neural Networks. Neural Netw (2021) 5:6. doi: 10.32604/cmc.2021.014134

6. Chandra T, Verma K. Pneumonia Detection on Chest X-Ray Using Machine Learning Paradigm. In: Proceedings of 3rd International Conference on Computer Vision and Image Processing (India: Springer) (2020). p. 21–33.

7. Yue H, Yu Q, Liu C, Huang Y, Jiang Z, Shao C. Machine Learning-Based CT Radiomics Method for Predicting Hospital Stay in Patients With Pneumonia Associated With SARS-CoV-2 Infection: A Multicenter Study. Ann Trans Med (2020) 8:1–7. doi: 10.21037/atm-203026. PMID: 32793703

8. Kuo K, Talley P, Huang C, Cheng L. Predicting Hospital-Acquired Pneumonia Among Schizophrenic Patients: A Machine Learning Approach. BMC Med Inf Decision Making (2019) 19:1–8. doi: 10.1186/s12911-019-0792-1

9. Meraj T, Hassan A, Zahoor S, Rauf H, Lali M, Ali L. Lungs Nodule Detection Using Semantic Segmentation and Classification With Optimal Features. Preprints (2019) 10:4135–49. doi: 10.20944/preprints201909.0139.v1

10. Rajinikanth V, Kadry S, Damaševičius R, Taniar D, Rauf H. Machine-Learning-Scheme to Detect Choroidal-Neovascularization in Retinal OCT Image. In: 2021 Seventh International Conference on Bio Signals, Images, and Instrumentation (ICBSII) (Chennai, India: IEEE) (2021). p. 1–5.

11. Kadry S, Nam Y, Rauf H, Rajinikanth V, Lawal I. Automated Detection of Brain Abnormality Using Deep-Learning-Scheme: A Study. In: 2021 Seventh International Conference on Bio Signals, Images, and Instrumentation (ICBSII) (Chennai, India: IEEE) (2021). p. 1–5.

12. Rajinikanth V, Kadry S, Taniar D, Damaševičius R, Rauf H. Breast-Cancer Detection Using Thermal Images With Marine-Predators-Algorithm Selected Features. In: 2021 Seventh International Conference on Bio Signals, Images, And Instrumentation (ICBSII) (Chennai, India: IEEE) (2021). p. 1–6.

13. Tuncer T, Ozyurt F, Dogan S, Subasi A. A Novel Covid-19 and Pneumonia Classification Method Based on F-Transform. Chemometr Intell Lab Syst (2021) 210:104256. doi: 10.1016/j.chemolab.2021.104256

14. Sharma H, Jain J, Bansal P, Gupta S. Feature Extraction and Classification of Chest X-Ray Images Using CNN to Detect Pneumonia. In: 2020 10th International Conference On Cloud Computing, Data Science & Engineering (Confluence) (Noida, India: IEEE) (2020). p. 227–31.

15. Stephen O, Sain M, Maduh U, Jeong D. An Efficient Deep Learning Approach to Pneumonia Classification in Healthcare. J Healthcare Eng (2019) 2019:1–7. doi: 10.1155/2019/4180949

16. Rajpurkar P, Irvin J, Zhu K, Yang B, Mehta H, Duan T. Chexnet: Radiologist-Level Pneumonia Detection on Chest X-Rays With Deep Learning. ArXiv Preprint (2017).

17. Juneja S, Dhiman G, Kautish S, Viriyasitavat W, Yadav K. A Perspective Roadmap for IoMT-Based Early Detection and Care of the Neural Disorder, Dementia. J Healthcare Eng (2021) 2021:1–11. doi: 10.1155/2021/6712424

18. Sharma S, Gupta S, Gupta D, Juneja S, Singal G, Dhiman G, et al. Recognition of Gurmukhi Handwritten City Names Using Deep Learning and Cloud Computing. Sci Program (2022) 2022:1–16. doi: 10.1155/2022/5945117

19. Dhankhar A, Juneja S, Juneja A, Bali V. Kernel Parameter Tuning to Tweak the Performance of Classifiers for Identification of Heart Diseases. Int J E-Health Med Commun (2021) 12(4):1–16. doi: 10.4018/IJEHMC.20210701.oa1

20. Kumar N, Gupta M, Gupta D, Tiwari S. Novel Deep Transfer Learning Model for COVID-19 Patient Detection Using X-Ray Chest Images. J Ambient Intell Humaniz Comput (2021) 2021:1–10. doi: 10.1007/s12652-021-03306-6

21. Dhiman G, Juneja S, Viriyasitavat W, Mohafez H, Hadizadeh M, Islam MA, et al. A Novel Machine-Learning-Based Hybrid CNN Model for Tumor Identification in Medical Image Processing. Sustainability (Switzerland) (2022) 14(3):1–13. doi: 10.3390/su14031447

22. Dhiman G, Rashid J, Kim J, Juneja S, Viriyasitavat W, Gulati K. Privacy for Healthcare Data Using the Byzantine Consensus Method. IETE J Res (2022) 2022:1–12. doi: 10.1080/03772063.2022.2038288

23. Chowdhary CL, Alazab M, Chaudhary A, Hakak S, Gadekallu TR. Computer Vision and Recognition Systems Using Machine and Deep Learning Approaches: Fundamentals, Technologies and Application. The Institution of Engineering and Technology (2021).

24. Ali TM, Nawaz A, Rehman AU, Ahmad RZ, Javed AR, Gadekallu TR, et al. A Sequential Machine Learning Cum Attention Mechanism for Effective Segmentation of Brain Tumor. Front Oncol (2022) 2129. doi: 10.3389/fonc.2022.873268

25. Mannan A, Abbasi A, Javed AR, Ahsan A, Gadekallu TR, Xin Q. Hypertuned Deep Convolutional Neural Network for Sign Language Recognition. Comput Intell Neurosci (2022) 2022:1–10. doi: 10.1155/2022/1450822

26. Sharma S, Gupta S, Gupta D, Juneja S, Gupta P, Dhiman G, et al. Deep Learning Model for the Automatic Classification of White Blood Cells. Comput Intell Neurosci (2022) 2022:1–13. doi: 10.1155/2022/7384131

Keywords: biomedical images, convolutional neural network, deep learning, chest X-rays, optimizers

Citation: Sharma S, Gupta S, Gupta D, Rashid J, Juneja S, Kim J and Elarabawy MM (2022) Performance Evaluation of the Deep Learning Based Convolutional Neural Network Approach for the Recognition of Chest X-Ray Images. Front. Oncol. 12:932496. doi: 10.3389/fonc.2022.932496

Received: 29 April 2022; Accepted: 01 June 2022;

Published: 29 June 2022.

Edited by:

Thippa Reddy Gadekallu, VIT University, India

Reviewed by:

Sumit Badotra, Lovely Professional University, India

Sharma Tripti, Maharaja Surajmal Institute of Technology, India

Copyright © 2022 Sharma, Gupta, Gupta, Rashid, Juneja, Kim and Elarabawy. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Junaid Rashid, junaidrashid062@gmail.com; Jungeun Kim, jekim@kongju.ac.kr
