REVIEW article

Front. Oncol., 29 July 2022

Sec. Gynecological Oncology

Volume 12 - 2022 | https://doi.org/10.3389/fonc.2022.924945

This article is part of the Research Topic: Insights in Gynecological Oncology: 2021

Histopathologic evaluations of tissue sections are key to diagnosing and managing ovarian cancer. Pathologists empirically assess and integrate visual information, such as cellular density, nuclear atypia, mitotic figures, architectural growth patterns, and higher-order patterns, to determine the tumor type and grade, which guides oncologists in selecting appropriate treatment options. Latent data embedded in pathology slides can be extracted using computational imaging. Computers can analyze digital slide images to simultaneously quantify thousands of features, some of which are visible with a manual microscope, such as nuclear size and shape, while others, such as entropy, eccentricity, and fractal dimensions, are quantitatively beyond the grasp of the human mind. Applications of artificial intelligence and machine learning tools to interpret digital image data provide new opportunities to explore and quantify the spatial organization of tissues, cells, and subcellular structures. In comparison to genomic, epigenomic, transcriptomic, and proteomic patterns, morphologic and spatial patterns are expected to be more informative as quantitative biomarkers of complex and dynamic tumor biology. As computational pathology is not limited to visual data, nuanced subvisual alterations that occur in the seemingly “normal” pre-cancer microenvironment could facilitate research in early cancer detection and prevention. Currently, efforts to maximize the utility of computational pathology are focused on integrating image data with other -omics platforms that lack spatial information, thereby providing a new way to relate the molecular, spatial, and microenvironmental characteristics of cancer. Despite a dire need for improvements in ovarian cancer prevention, early detection, and treatment, the ovarian cancer field has lagged behind other cancers in the application of computational pathology. 
The intent of this review is to encourage ovarian cancer research teams to apply existing and/or develop additional tools in computational pathology for ovarian cancer and actively contribute to advancing this important field.

Opinions about the role of computational imaging in the future of pathology range from the belief that computers will entirely replace pathologists to the conviction that computers will never achieve the competency of a well-trained pathologist. As with advanced chess-playing software, which is now practically unbeatable by humans, computers will likely become faster and more accurate than pathologists at specific tasks. However, the main purpose of computational pathology is not to outperform pathologists in routine analyses but to provide them with a completely new and complementary set of tools, some of which are highlighted below:

Ovarian cancer is the fifth most deadly cancer in women, accounting for more than 200,000 deaths per year worldwide (1). Pathologic diagnosis is primarily confirmed by histopathologic evaluation and interpretation of H&E stained sections of tumor obtained during surgical debulking and/or biopsies obtained during treatment. Years of training with meticulous attention to histopathologic details in numerous examples of normal, altered but benign, and cancerous tissues enable pathologists to extract and empirically integrate an array of visual information from H&E slides into discrete features, such as tumor type, grade, mitotic count, and lymphovascular invasion. Using these features and additional pathological parameters allows the pathologist to categorize each tumor within a universally accepted classification, such as the World Health Organization (WHO) classification of tumors, which ultimately guides clinicians in patient management. Whereas each pathologist is variably limited by a composite of experience, memory, ability to recall visual detail, and case-correlated clinical outcome information locally available, computer algorithms take advantage of a searchable database with quantitative image features linked to clinical outcomes from thousands of patients. By applying computational image analyses, a new patient’s tumor can be compared to tumors in the database to find similar tumor phenotypes and help predict the most likely clinical outcome for the new patient.
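
The database comparison described above can be sketched as a nearest-neighbor lookup. This is a minimal illustration, not a clinical method: the feature vectors, the outcome labels, and the names `DATABASE`, `most_similar`, and `predict_outcome` are all hypothetical, and a real system would use many more features and patients.

```python
import math
from collections import Counter

# Hypothetical reference database: each entry pairs a quantitative image
# feature vector (made-up values) with a recorded clinical outcome label.
DATABASE = [
    ((0.82, 0.10, 0.35), "responder"),
    ((0.78, 0.15, 0.40), "responder"),
    ((0.30, 0.70, 0.90), "non-responder"),
    ((0.25, 0.65, 0.85), "non-responder"),
    ((0.80, 0.20, 0.30), "responder"),
]

def euclidean(a, b):
    """Euclidean distance between two feature vectors of equal length."""
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))

def most_similar(query, database, k=3):
    """Return the k database entries whose features are closest to the query."""
    ranked = sorted(database, key=lambda entry: euclidean(query, entry[0]))
    return ranked[:k]

def predict_outcome(query, database, k=3):
    """Predict the outcome as the majority label among the k nearest tumors."""
    neighbours = most_similar(query, database, k)
    votes = Counter(label for _, label in neighbours)
    return votes.most_common(1)[0][0]

new_tumor = (0.79, 0.12, 0.37)  # features of a hypothetical new patient
prediction = predict_outcome(new_tumor, DATABASE)
print(prediction)
```

The choice of distance measure and of `k` is an assumption here; in practice both would need to be validated against outcome data.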

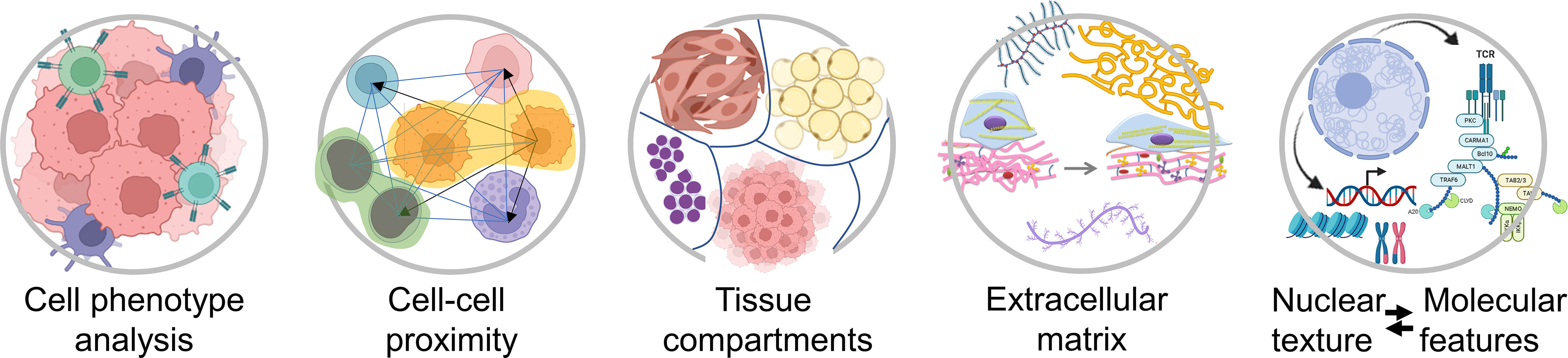

The invention of microscopes enabled the visualization of subcellular details, including nuclear enlargement, atypia, nucleolar prominence, hyperchromatism, and mitotic activity, which are usually important in establishing cancer diagnoses. However, a computer can extract and quantify hundreds, if not thousands, of additional visual and subvisual features (Figure 1), which can be configured into an algorithm to predict specific tumor behavior (e.g., likelihood of sensitivity to immunotherapy) or assess the efficacy of chemotherapy in tumor biopsies obtained at different time points during treatment. Extracting and analyzing information that is beyond human visual perception might also help identify new tissue, cellular, or subcellular structures, features, and/or spatial relationships that are difficult to characterize in H&E slides, such as liquid flow and vacuolarity, and intratumoral areas with increased tension, pH, metabolic activity, and/or genotoxic stress.
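
Two of the subvisual features named in this review, entropy and eccentricity, can be computed in a few lines. The sketch below assumes a grayscale patch given as a flat list of pixel intensities and a segmented nucleus given as a binary mask; the function names are hypothetical. Entropy is the Shannon entropy of the intensity histogram, and eccentricity is derived from the second-order moments of the mask, which is one common definition among several.

```python
import math
from collections import Counter

def shannon_entropy(pixels):
    """Shannon entropy (in bits) of the intensity histogram of a patch."""
    counts = Counter(pixels)
    n = len(pixels)
    return -sum((c / n) * math.log2(c / n) for c in counts.values())

def eccentricity(mask):
    """Eccentricity of the ellipse with the same second moments as the mask.

    0 corresponds to a circle-like shape, values near 1 to an elongated one.
    """
    coords = [(r, c) for r, row in enumerate(mask)
              for c, value in enumerate(row) if value]
    n = len(coords)
    mean_r = sum(r for r, _ in coords) / n
    mean_c = sum(c for _, c in coords) / n
    # Entries of the 2x2 covariance matrix of the pixel coordinates.
    s_rr = sum((r - mean_r) ** 2 for r, _ in coords) / n
    s_cc = sum((c - mean_c) ** 2 for _, c in coords) / n
    s_rc = sum((r - mean_r) * (c - mean_c) for r, c in coords) / n
    # Closed-form eigenvalues of a symmetric 2x2 matrix.
    half_trace = (s_rr + s_cc) / 2
    spread = math.sqrt(((s_rr - s_cc) / 2) ** 2 + s_rc ** 2)
    lam_max, lam_min = half_trace + spread, half_trace - spread
    if lam_max == 0:
        return 0.0
    return math.sqrt(max(0.0, 1 - lam_min / lam_max))

round_nucleus = [[1, 1, 1], [1, 1, 1], [1, 1, 1]]
elongated_nucleus = [[1, 1, 1, 1, 1]]
print(shannon_entropy([0, 0, 255, 255]))   # two equally likely intensities
print(eccentricity(round_nucleus), eccentricity(elongated_nucleus))
```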

In most solid malignancies, including ovarian cancer, cancer cells exist within a complex microenvironment that supports tumor growth. In general, the presence of immune cells is associated with better prognosis while the presence of cancer-associated fibroblasts (CAFs) is associated with worse prognosis. In addition to phenotypic diversity, it is becoming apparent that spatial relationships between cancer cells, immune cells, and CAFs play key roles in tumor aggressiveness and response to therapy. However, our knowledge and understanding about the composition of, and the spatial organization within, the tumor microenvironment is still in its infancy. Higher-order spatial patterns, such as cell-cell interactions, tissue interfaces, collagen alignment, and extent of tumor heterogeneity (Figure 2), are important readouts of the complexities of tumor pathophysiology and response to treatment yet remain largely unexplored. While the quantitation of individual cell types and the algorithms that are currently applied to summarize the extent and intensity of tumor staining in a biomarker panel are sufficient for patient stratification into broad groups, i.e. responders and non-responders, comprehensive spatial and morphometric readouts will be necessary to effectively tailor medical treatment to the individual characteristics of each patient (2, 3).
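
One simple spatial readout of the kind discussed above is the distance from each cancer cell to its nearest immune cell. The following sketch assumes that cell centroids have already been detected and typed by upstream software; the coordinates, the 10-unit radius, and the function names are hypothetical.

```python
import math

def nearest_distances(sources, targets):
    """For each source cell, the distance to the closest target cell."""
    return [min(math.dist(s, t) for t in targets) for s in sources]

def fraction_within(sources, targets, radius):
    """Fraction of source cells with at least one target cell within radius."""
    distances = nearest_distances(sources, targets)
    return sum(d <= radius for d in distances) / len(distances)

# Hypothetical centroids (in arbitrary units) from a segmented image.
cancer_cells = [(0, 0), (10, 0), (50, 50)]
lymphocytes = [(3, 4), (10, 5)]

distances = nearest_distances(cancer_cells, lymphocytes)
infiltrated = fraction_within(cancer_cells, lymphocytes, radius=10)
print(distances, infiltrated)
```

Summaries such as the mean nearest-neighbor distance or the fraction of cancer cells with a lymphocyte nearby give a quantitative counterpart to a descriptive impression of immune infiltration.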

Figure 2 Examples of spatial characteristics within the tumor microenvironment that can be quantified using different types of computational image software. Created with BioRender.com.

Knowing where and what to look for when examining a specimen or a histologic slide is crucial. Information that is not considered clinically relevant is more likely to be only cursorily examined or implicitly overlooked. For example, precursor lesions in the fallopian tube became obvious to pathologists only at the beginning of the 21st century after decades of searching for these lesions in the ovary, which at the time seemed to be the most logical place for ovarian cancer initiation. Computers can record information extracted from hundreds of scanned slide images in a matter of hours. Using unbiased systematic processing of the recorded data, new and/or non-intuitive patterns can emerge (4). For example, although precursor lesions, such as serous tubal intraepithelial carcinoma (STIC), are identifiable in serially sectioned fallopian tubes processed with standard histopathology methods (5), the morphologic events that precede STIC formation are still unknown. Indeed, it is likely that STIC lesions are preceded by subtle morphologic changes that are either not visible to the human eye or are too nuanced to detect without prior knowledge of where and what to look for. A recent analysis of global stromal features in fallopian tubes from postmenopausal women showed that fallopian tubes with STIC lesions exhibit subvisual global changes in the stroma that might precede STIC formation and/or provide a permissible microenvironment for the neoplastic transformation of tubal epithelial cells (6). The ability to detect and understand what constitutes a “permissible” microenvironment for cancerous transformation provides new opportunities for the early detection of cancer and for the identification of rate-limiting events in the early stages of cancer development.

Artificial Intelligence (AI) comprises a set of algorithms that mimic human intelligence, enabling machines to perform complex tasks, such as cognitive perception, decision-making, and communication. Machine learning is a subcategory of AI in which a machine is initially provided with large amounts of training data needed to build models to accurately analyze and interpret new data (7, 8). Machine learning models can automatically learn, improve their performance, and solve problems. Support vector machines, clustering algorithms, k-nearest neighbor classifiers, and logistic regression models (7, 8) are examples of commonly used models. However, because they can learn only a handful of features, they are being replaced by models that use deep learning. Deep learning is a type of machine learning that uses a neural network consisting of three or more functional layers, which include an input layer, multiple hidden layers, and an output layer (9, 10). The hidden layers promote learning and refinement of information “seen” by the input layers to increase the accuracy of predictions of the model’s output. The layers are connected to each other, and the strengths of these connections (termed “weights”) are learned from the training data.
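
The layered structure described above can be made concrete with a forward pass through a very small network. This sketch assumes three input features, one hidden layer with two units, and a single output; the weights are arbitrary values chosen for illustration, whereas in a trained model they would be learned from the training data.

```python
import math

def relu(z):
    """Rectified linear unit, a common hidden-layer activation."""
    return max(0.0, z)

def sigmoid(z):
    """Squashes a score into the range (0, 1)."""
    return 1.0 / (1.0 + math.exp(-z))

def dense(inputs, weights, biases, activation):
    """One fully connected layer: weighted sum plus bias, then activation."""
    return [activation(sum(w * x for w, x in zip(row, inputs)) + b)
            for row, b in zip(weights, biases)]

# Arbitrary illustrative weights (the connection strengths between layers).
HIDDEN_W = [[1.0, 0.0, -1.0], [0.5, 0.5, 0.5]]
HIDDEN_B = [0.0, 0.0]
OUTPUT_W = [[1.0, 1.0]]
OUTPUT_B = [-3.0]

def forward(features):
    """Input layer -> hidden layer -> output layer."""
    hidden = dense(features, HIDDEN_W, HIDDEN_B, relu)
    output = dense(hidden, OUTPUT_W, OUTPUT_B, sigmoid)
    return hidden, output[0]

hidden, probability = forward([1.0, 2.0, 3.0])
print(hidden, probability)
```

Training would repeatedly adjust `HIDDEN_W`, `OUTPUT_W`, and the biases to reduce the error between `probability` and the known label; that step is omitted here.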

A convolutional neural network (CNN) is a type of deep learning model used to build advanced decision-making workflows in digital image analysis, ranging from image processing, classification and segmentation of tissue images to the prediction of patient outcomes. A CNN often employs more than three convolutional sets of learnable filters (kernels) to extract features from the images. Kernel filters provide low-pass (smoothing), high-pass (sharpening), and selective filtering. The filters distill various basic features such as edges, shapes, color, intensity and location of objects in the image. By flattening an image, removing or reducing the dimensions, convolutional kernels conduct pre-processing steps that enable selection and organization of the most informative learned features and recognition of these features in previously unseen images. In deeper layers of the CNN, the features are transformed into feature maps that summarize the presence of detected features in the input image (11). Another model that could prove useful in pathology is the fully convolutional network (FCN), characterized by a hierarchy of convolutional layers that are not fully connected. Unlike a CNN, which learns from repetitive features that occur throughout the entire image, an FCN learns from every pixel, thereby allowing detection of objects or features that might be only sporadically present in an entire image (12), for example cancer stem cells, which comprise a minute fraction of the tumor. CNN and FCN models are well suited for tumor/object detection, classification, and segmentation tasks (13–16). A recurrent neural network (RNN) model is characterized by dynamic behavior, meaning that it can memorize inputs over different time points or time intervals and learn from them in a sequential manner. An RNN could be useful to analyze serial biopsies from a patient who is under surveillance for disease development, progression, or emergence of therapy resistance (17).
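
The effect of smoothing and sharpening kernels can be seen by sliding a 3×3 filter over a small image. The sketch below uses two fixed, hand-written kernels on a made-up image containing a vertical edge; in a CNN the kernel values would be learned rather than fixed, and the function name `convolve2d` is hypothetical.

```python
def convolve2d(image, kernel):
    """Slide the kernel over the image (no padding) and sum the products."""
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    return [[sum(kernel[i][j] * image[r + i][c + j]
                 for i in range(kh) for j in range(kw))
             for c in range(out_w)]
            for r in range(out_h)]

# Fixed illustrative kernels; a CNN would learn its own values.
SMOOTH = [[1 / 9] * 3 for _ in range(3)]            # box blur (low-pass)
SHARPEN = [[0, -1, 0], [-1, 5, -1], [0, -1, 0]]     # edge-enhancing (high-pass)

# A 5x6 toy image: dark on the left, bright on the right.
image = [[0, 0, 0, 9, 9, 9] for _ in range(5)]

smoothed = convolve2d(image, SMOOTH)
sharpened = convolve2d(image, SHARPEN)
print(smoothed[0])    # the step is spread into a gradual ramp
print(sharpened[0])   # the step is exaggerated on both sides of the edge
```

As is conventional for CNNs, the operation shown does not flip the kernel; both kernels here are symmetric, so the result is the same either way.
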
Machine learning can be applied in a supervised or unsupervised manner. Supervised machine learning uses labeled data sets to train algorithms that classify data or predict outcomes. As input data are fed into the model, the significance of the individual data is adjusted until the model has been appropriately fitted to infer a function that maps input images to a designated outcome label or an object. In contrast, unsupervised machine learning uses unlabeled images to infer a function and detect patterns in the data, clustering them by any distinguishing characteristic(s). Both supervised and unsupervised learning can be useful to reveal new non-intuitive patterns that correlate with specific clinical outcomes.
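
The difference between the two modes can be illustrated on the same toy data. In this sketch, the supervised model is a nearest-centroid classifier fitted with labels, and the unsupervised model is a two-cluster k-means that never sees them. The feature values, labels, and function names are hypothetical, and the deterministic initialization from the first and last points is a simplification.

```python
import math

def centroid(points):
    """Coordinate-wise mean of a list of points."""
    n = len(points)
    return tuple(sum(p[d] for p in points) / n for d in range(len(points[0])))

def nearest(point, centers):
    """Index of the center closest to the point."""
    return min(range(len(centers)), key=lambda i: math.dist(point, centers[i]))

def fit_supervised(points, labels):
    """Supervised: use the labels to compute one centroid per class."""
    groups = {}
    for p, label in zip(points, labels):
        groups.setdefault(label, []).append(p)
    return {label: centroid(members) for label, members in groups.items()}

def predict(model, point):
    """Assign the label of the nearest class centroid."""
    names = list(model)
    return names[nearest(point, [model[name] for name in names])]

def two_means(points, iterations=10):
    """Unsupervised: k-means with k=2, using no labels at all."""
    centers = [points[0], points[-1]]   # simplistic deterministic start
    assignment = []
    for _ in range(iterations):
        assignment = [nearest(p, centers) for p in points]
        for k in range(2):
            members = [p for p, a in zip(points, assignment) if a == k]
            if members:
                centers[k] = centroid(members)
    return assignment

FEATURES = [(1.0, 1.0), (1.2, 0.9), (0.8, 1.1),
            (5.0, 5.0), (5.1, 4.8), (4.9, 5.2)]
LABELS = ["responder"] * 3 + ["non-responder"] * 3

model = fit_supervised(FEATURES, LABELS)
clusters = two_means(FEATURES)
print(predict(model, (4.5, 4.7)), clusters)
```

The clusters recovered without labels coincide with the labeled groups here only because the toy data are cleanly separated.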

Oncologists in consultation with pathologists increasingly rely on molecular assays to guide patient-tailored cancer therapy. Such assays can be costly and time-consuming in comparison to routine H&E slides, which can be digitized and analyzed on site. If the application of AI to H&E stained slides could provide molecular information, i.e. predict mutation status or expression levels of actionable cancer drivers in a patient’s tumor, several of the currently used technologies, such as immunohistochemistry, in situ hybridization, and next generation sequencing, might become superfluous. Recent efforts to integrate digitized H&E image data with molecular data indicate that determining molecular information from digitized H&E slides is not only feasible (18–21) but might achieve greater accuracy than other techniques that are subject to human error-prone steps, such as specialized specimen preparation and complex laboratory tasks.

Since tissue and cellular morphologies are known to reflect their physiological and pathological conditions, it would be reasonable to expect that higher-order features extracted from histopathology slides are associated with distinct molecular profiles, such as specific gene and protein expression, metabolism, and sensitivity to specific drugs. For example, a higher-order chromatin structure was shown to be the main determinant of genomic instability and mutation frequency in cancer cells (22–24) as well as a strong prognostic indicator in a pan-cancer study (25). Recent reports indicate that, relying only on tumor histomorphology in H&E slides, it was possible to predict microsatellite instability in gastrointestinal cancers (26–28), determine molecular expression of ER, PR, and HER2 in breast cancer (29), and detect mutations in prognostically and therapeutically relevant driver genes in different cancer types, including BRAF in melanoma (30), APC, KRAS, PIK3CA, SMAD4, and TP53 in colorectal cancer (31), EGFR, KRAS, TP53, STK11, FAT1, and SETBP1 in lung cancer (32), TP53, CTNNB1, FMN2, and ZFX4 in hepatocellular carcinoma (33), FGFR3 in bladder cancer (34), and IDH1 in glioma (35).

With an increasing number of available cancer therapies, it is crucial to develop companion diagnostic tests that identify and stratify subpopulations of patients by their likelihood to benefit from specific therapies. For example, in ovarian cancer, immunotherapy can be associated with serious side effects but only shows efficacy in about 10% of ovarian cancer patients (36). Another example is the need to identify patients who could benefit from PARP inhibitors. In the absence of better biomarkers, detection of a gene mutation (e.g., BRCA1 mutation) or elevated protein expression (e.g., PD-1 and PD-L1) of the intended therapeutic target is frequently used as a proxy for treatment response. However, most of the current treatment response biomarkers perform poorly. While it is logical to expect that BRCA mutation carriers would be more sensitive to PARP inhibitors, clinical trials have shown that many non-carriers are responsive to PARP inhibitors although the rationale for this response is still unclear (37). Similarly, immunohistochemical detection of tumor-infiltrating lymphocytes and PD-L1 is a poor proxy for sensitivity to immunotherapy in most cancer types, including ovarian cancer (38). In fact, microsatellite instability, a less specific biomarker, seems to be a better predictor of sensitivity to immunotherapy in various solid cancers (39), possibly because microsatellite instability encompasses multiple proteins and pathways that are involved in the immune response. Using the same reasoning, it is likely that morphometric tumor features that reflect complex pathophysiological states will prove to be more clinically useful biomarkers even if they are initially non-intuitive or currently non-explainable.

Finding a biomarker in an unexpected place is exemplified in the tumor microenvironment. While cancer research has mostly focused on cancer cells, the unbiased application of deep imaging to digitized H&E slides combined with software that identifies areas of the image that influence algorithm-based classification has frequently revealed stromal features as better biomarkers of clinical outcomes than features of cancer cells (40). In ovarian cancer, a high ratio of stromal CAFs to cancer cells is an independent biomarker of poor survival and chemotherapy resistance (41–44). Indeed, stromal features were identified as the most effective biomarkers in colon cancer (45–47), mesothelioma (48), breast cancer (49, 50), lung adenocarcinoma (51) and early-stage non-small cell lung cancer (52). The discovery of stromal features as the most relevant predictors of clinical outcomes is not surprising as multiple molecular studies of expression profiles and proteomic signatures have also shown that an increased stroma/cancer ratio and increased expression of gene signatures associated with stromal remodeling were the strongest predictors of clinical outcomes (53–62). Studies in several cancer types have also shown that the signature of TGFβ-mediated extracellular matrix remodeling is the best predictor of therapy failure (63). The tumor stroma could contribute to poor survival and therapeutic resistance through multiple mechanisms, including promotion of tumor growth, angiogenesis, invasion and metastasis, provision of protective niches for cancer stem cells, creation of an immunosuppressive microenvironment that excludes immune cells from proximity to cancer cells, and/or generation of physical barriers that block access of chemotherapies and immunotherapies to cancer cells (64–68). Thus, it is unlikely that a single molecular pathway will be sufficient as a biomarker of the complex biology that dictates clinical outcomes. 
A recent computational image analysis of H&E slides of non-small cell lung and gynecologic cancers has shown that the spatial architecture and the interaction of cancer cells and tumor-infiltrating lymphocytes can predict clinical benefit in patients receiving immune checkpoint inhibitors. Importantly, the computational image classifier was associated with clinical outcome independent of clinical factors and PD-L1 expression levels (69). In addition to identifying new biomarkers by highlighting specific structures and regions, computational tissue imaging could assist with the identification of new therapeutic targets. For example, targeting CAFs with various TGFβ inhibitors was effective in improving immunotherapeutic efficacy in several preclinical cancer models (66–68).

Potential applications of computational pathology in cancer include diagnosis, phenotyping, subtype classification, early detection, prognostication, assessment of sensitivity to chemotherapy and immunotherapy, and identification of suitable targeted therapies (20). For example, open-source software, QuPath (70), was capable of classifying serous borderline ovarian tumors and high-grade serous ovarian cancer with >90% accuracy by examining only a small number of tiles extracted from whole H&E slide images (71). While this approach does not reach the accuracy achieved by pathologists who examine multiple sections from different areas of each tumor and have access to surgical records and other clinical information, this example suggests that improvements in the software recognition pattern and computational capacity to analyze pathology slides could achieve clinical-grade tumor classification (72).
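
Tile-based classification of a whole slide image usually involves two bookkeeping steps: cutting the slide into tiles and combining tile-level predictions into one slide-level call. The sketch below illustrates both in a generic way and is not the workflow of QuPath or of the cited study. The slide dimensions, tile size, tile labels, and function names are hypothetical, and majority voting is only one of several possible aggregation rules.

```python
from collections import Counter

def tile_grid(width, height, tile, stride=None):
    """Top-left (x, y) coordinates of all full tiles that fit in the slide."""
    stride = stride or tile
    return [(x, y)
            for y in range(0, height - tile + 1, stride)
            for x in range(0, width - tile + 1, stride)]

def slide_label(tile_labels):
    """Aggregate tile-level predictions into one label by majority vote."""
    return Counter(tile_labels).most_common(1)[0][0]

# Hypothetical slide of 1000 x 600 pixels cut into 256-pixel tiles.
tiles = tile_grid(1000, 600, 256)

# Stand-in for the output of a tile classifier, one label per tile.
tile_predictions = ["high-grade", "high-grade", "borderline",
                    "high-grade", "borderline", "high-grade"]
diagnosis = slide_label(tile_predictions)
print(len(tiles), diagnosis)
```

Passing a `stride` smaller than `tile` produces overlapping tiles, which increases the number of samples per slide at the cost of more computation.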

Several examples report the utility of computational imaging to automate cancer diagnoses without compromising accuracy. For example, a CNN trained to classify images as malignant melanoma or benign nevi demonstrated superior performance compared to manual scoring by histopathologists (73, 74). Another study that assessed the ability of deep learning algorithms to accurately detect breast cancer metastases in H&E slides of lymph node sections reported that the algorithms were superior in detecting micrometastases and equivalent to the best performing pathologists when under time constraints in detecting macrometastases (75). Similarly, an AI system has reached a clinically acceptable level of cancer detection accuracy in prostate needle biopsies (76). A study of deep CNN in Gleason scoring of prostatectomy specimens reported that the deep learning-based Gleason classification achieved higher sensitivity and specificity than 9 out of 10 pathologists (77). Computational analyses of H&E images have been applied for the prediction of patient survival and recurrence. Deep learning-based algorithms have also been reported to predict prostate cancer recurrence comparably to a genomic companion diagnostic test (78). Other studies utilizing deep learning-based classification involve determining histologic subtype in ovarian cancer (79), predicting platinum resistance in ovarian cancer (41, 80), predicting survival outcome in patients with mesothelioma (48), predicting metastatic recurrence and death in patients with primary melanoma (81) and colon cancer (46), predicting lung cancer recurrence after surgical resection to identify patients who should receive additional adjuvant therapy (52, 82), and predicting response to ipilimumab immunotherapy in patients with malignant melanoma (83). 
A computational quantitative characterization of the architecture of tumor-infiltrating lymphocytes and their interplay with cancer cells from H&E slides of three different gynecologic cancer types (ovarian, cervical, and endometrial) and across three different treatment approaches (platinum, radiation and immunotherapy) showed that the geospatial profile was prognostic of disease progression and survival irrespective of the treatment modality (84). This computationally-derived profile even outperformed the stage variable, which is the current standard for gynecologic oncology (84). Upon closer inspection, features related to the increased density of lymphocytes in the epithelium and invasive tumor front were associated with better survival compared with features related to the increased density of lymphocytes in the stromal compartment (84). Although these patterns can be observed by conventional pathology examination, the value of the computational geospatial profile is in the inherently quantitative output rather than the descriptive output from conventional pathology.

Computational pathology has already become an integral component of cancer research and is increasingly being used in anatomic pathology to automate tasks, reduce subjectivity, and improve accuracy and reproducibility (17, 85, 86). The development of high-resolution whole slide image scanners, which are specialized microscopes fitted with high resolution cameras, high magnification objectives, and software for the identification and automated correction of out-of-focus points, constitutes a major advance in computational pathology. Two of these high-quality scanners, the Leica Aperio AT2 DX System and the Philips IntelliSite Pathology Solution, now have FDA approval for clinical review and expert interpretation of pathology slides in routine diagnoses (87). To facilitate the adoption and implementation of this technology into pathology workflows, the Digital Pathology Association has published several practical guides to whole slide imaging (88–91). Digitization of pathology slides enables simultaneous review of slides by multiple pathologists at different institutions and the creation and growth of centralized cloud-based image repositories and databases that are accessible for remote high-throughput studies and/or the development of multiple freely-available machine learning-based tools that can recognize and quantify positive immunostaining or basic structures, such as epithelial-lined ducts, blood vessels, and mitotic figures in H&E slides. Proof-of-concept studies show that automated analyses of simple morphologic patterns can assist pathologists and researchers, allowing them to focus on more complex tasks (2, 92).

Academic pathology departments are increasingly interested in adopting AI in routine practice to optimize operations, reduce costs, and improve patient care. A survey of physician perspectives on the integration of AI into diagnostic pathology revealed that 75% of pathologists across more than fifty countries are interested in using AI as a diagnostic tool in cancer care (93). With the continuous increase in the aged population and the growing number of available tests for personalized medicine, pathologists are overburdened by tasks, such as counting mitotic figures or scoring Ki67 staining, that could be easily performed by machines. Hence, automated computational imaging platforms for the detection and quantitation of stained markers in defined cell subpopulations are the first candidates to be integrated into pathology workflows as these analyses could provide accurate, unbiased, reproducible, and standardized results that can be viewed and verified (72, 89, 94–96). In contrast, results of artificial neural network analyses that cannot be verified visually are more likely to be met with mistrust by the pathology community because the pathologist needs to be confident in the result before signing out a report (97). This problem might be solved by the further development of tools that introduce transparency for non-linear machine learning methods, such as gradient-weighted class activation mapping (Grad-CAM), which overlays heatmaps on images to better visualize the cell type or region in which the informative features were expressed (98).
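
The core of gradient-weighted class activation mapping is a short calculation: each feature map from a convolutional layer is weighted by the average gradient of the class score with respect to that map, the weighted maps are summed, and negative values are clipped to zero. The sketch below shows only this combination step. It assumes the activations and gradients have already been obtained from a trained network, and the numbers and the function name `grad_cam` are hypothetical.

```python
def grad_cam(activations, gradients):
    """Combine feature maps into a class-specific heatmap.

    activations: list of K feature maps, each a 2D list (H x W).
    gradients:   gradients of the class score with respect to each map,
                 in the same K x H x W layout.
    """
    height, width = len(activations[0]), len(activations[0][0])
    # Importance of each feature map: global average of its gradients.
    weights = [sum(sum(row) for row in g) / (height * width)
               for g in gradients]
    # Weighted sum over maps, followed by ReLU to keep positive evidence only.
    return [[max(0.0, sum(w * a[i][j] for w, a in zip(weights, activations)))
             for j in range(width)]
            for i in range(height)]

# Two hypothetical 2x2 feature maps and their gradients.
activations = [[[1, 0], [0, 2]],
               [[0, 3], [1, 0]]]
gradients = [[[1, 1], [1, 1]],        # this map supports the class
             [[-1, -1], [-1, -1]]]    # this map argues against it

heatmap = grad_cam(activations, gradients)
print(heatmap)
```

In practice the heatmap is upsampled to the size of the input image and displayed as a color overlay, so that a pathologist can check whether the highlighted regions are plausible.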

Although research results utilizing computational imaging in pathology are promising, significant improvements need to be made to achieve the safety and reliability required for endorsement by medical specialty societies, legal approvals, and implementation of this technology into daily clinical pathological diagnostics (99–101). One of the first steps will involve the standardization of slide scanners, display devices, image formats, and platforms for image processing and analysis, as well as the standardization of the interfaces with clinical information systems and reimbursement mechanisms. Among the technical challenges that must be overcome are normalization methods for histological data preparation at different institutions. This is a pressing issue given that histologic slides are stained either manually or with auto-stainers using site-specific protocols and then digitized on scanners from various manufacturers. Tissue handling, processing, and digitization can also introduce artefacts, such as air bubbles, fingerprints, excessive glue, blurry or out-of-focus tissue images, unstained or overstained tissue, and folded or cauterized tissue (102). While the human eye might not be sensitive to slight differences in tissue fixation, sectioning, staining, and coverslip mounting, all of these factors indiscriminately impact machine learning, and each can have drastic implications for the final results (102). For these reasons, the computational pathology community strongly promotes rigorous algorithm development and testing schemes. First, innovative methods must be used to address pixel data normalization, color standardization, and recognition of the areas on the slide that are out of focus, blurred or contain folded or damaged tissue (103–109).
Second, algorithm generalizability on unseen digitized specimens collected at different institutions or deposited in public repositories, such as The Cancer Genome Atlas (TCGA) (110) and The Cancer Imaging Archive (TCIA) (111), will need to be tested and standardized (7, 112, 113).
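
As one example of recognizing out-of-focus areas, a widely used heuristic scores each patch by the variance of its Laplacian response: sharp patches have strong, variable edge responses, whereas blurred or empty patches do not. This is a sketch of that heuristic, not of the methods in the cited references. The toy patches, the threshold of 100, and the function names are hypothetical, and a threshold would need to be calibrated per scanner and stain.

```python
from statistics import pvariance

# Discrete Laplacian kernel, which responds to rapid intensity changes.
LAPLACIAN = [[0, 1, 0], [1, -4, 1], [0, 1, 0]]

def focus_score(patch):
    """Variance of the Laplacian response over the interior of a patch."""
    responses = [
        sum(LAPLACIAN[i][j] * patch[r + i][c + j]
            for i in range(3) for j in range(3))
        for r in range(len(patch) - 2)
        for c in range(len(patch[0]) - 2)
    ]
    return pvariance(responses)

def is_blurry(patch, threshold):
    """Flag a patch whose focus score falls below the chosen threshold."""
    return focus_score(patch) < threshold

# Toy grayscale patches: a high-contrast checkerboard and a featureless one.
sharp_patch = [[255 if (r + c) % 2 == 0 else 0 for c in range(5)]
               for r in range(5)]
flat_patch = [[128] * 5 for _ in range(5)]

print(focus_score(sharp_patch), focus_score(flat_patch))
print(is_blurry(flat_patch, threshold=100))
```

A low score does not distinguish blur from genuinely uniform tissue or background, so a filter like this is typically combined with tissue detection.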

Many commercial and freely available viewers and image analysis software packages support different whole slide image formats for Windows, Linux, and macOS. Commercial slide viewers include ImageScope (Aperio/Leica), CaseViewer (3DHistech), and ObjectiveView. The development of web-based viewing interfaces and custom computational pathology pipelines is supported by the OpenSlide and SlideIO software libraries. For users with little to no programming skills, the most popular free software packages for general tissue image analysis include ImageJ (114), Fiji (115), Icy (116), Orbit (117), ilastik (118), CellProfiler (119), QuPath (70), SlicerScope (120), and PathML (121). These platforms have been embraced in the research arena because they can be easily adapted to different types of image analyses by introducing new code or incorporating plugins from other programs. They are also flexible in processing different types of image files. Additionally, a plethora of fit-for-purpose packages with custom-made computational pathology pipelines can be found on GitHub (https://github.com/). Commercial digital pathology solutions, such as PerkinElmer (USA), TissueGnostics (Austria), Halo (USA), Visiopharm (Denmark) and Definiens (Germany), are often preferred by pathology departments because these platforms offer built-in databases of analytical engines and available technical support. Recognizing the importance of standardization in clinical practice, the freely-accessible Pathology Analytic Imaging Standards (PAIS) was created to support the standardization of image analysis algorithms and image features (122, 123). Resources of web-based interfaces and online tools, such as The Cancer Digital Slide Archive (CDSA, http://cancer.digitalslidearchive.net/), for visualization and analysis of pathology image data are also becoming more readily available (124).

Computational image analysis holds great promise in advancing ovarian cancer research, including a better understanding of the events that provide a permissible microenvironment for early cancerous transformation in the fallopian tube, the role of the stroma in therapeutic resistance, and biomarkers for the selection of patients most likely to benefit from different therapeutic approaches. Although computational pathology in the ovarian cancer research field is still too rudimentary to use in a clinical setting, we anticipate that it will become one of the main tools for cancer research and precision medicine in the next decade, surpassing current methods for the selection of patient-tailored therapy, such as immunostaining, mutation sequencing, and expression profiling. Among the key factors that will propel computational pathology to the forefront of medical research are the accessibility of histopathology slides, the low cost of image analysis and storage, seamless integration with other computational imaging platforms, and the potential for rapid, accurate, and reliable prediction of patient outcomes.

All authors contributed to the article writing and revisions and approved the submitted version.

SO is supported by the Veterans Administration Merit Award VA-ORD BX004974, the Iris Cantor-UCLA Women’s Health Center/UCLA National Center of Excellence in Women’s Health Pilot Research Project NCATS UCLA CTSI grant UL1TR00188, the Sandy Rollman Ovarian Cancer Foundation, the Mary Kay Foundation, and the Annenberg Foundation. AG is supported by the Initiative of Excellence - Research University - a grant program at Silesian University of Technology (year 2021, no.07/010/SDU/10-21-01).

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

1. Sung H, Ferlay J, Siegel RL, Laversanne M, Soerjomataram I, Jemal A, et al. Global cancer statistics 2020: GLOBOCAN estimates of incidence and mortality worldwide for 36 cancers in 185 countries. CA: A Cancer J Clin (2021) 71:209. doi: 10.3322/caac.21660

2. Acs B, Rantalainen M, Hartman J. Artificial intelligence as the next step towards precision pathology. J Intern Med (2020) 288:62. doi: 10.1111/joim.13030

3. Dlamini Z, Francies FZ, Hull R, Marima R. Artificial intelligence (AI) and big data in cancer and precision oncology. Comput Struct Biotechnol J (2020) 18:2300. doi: 10.1016/j.csbj.2020.08.019

4. Großerueschkamp F, Jütte H, Gerwert K, Tannapfel A. Advances in digital pathology: From artificial intelligence to label-free imaging. Visc Med (2021) 37:482. doi: 10.1159/000518494

5. Nik NN, Vang R, Shih Ie M, Kurman RJ. Origin and pathogenesis of pelvic (ovarian, tubal, and primary peritoneal) serous carcinoma. Annu Rev Pathol (2014) 9:27. doi: 10.1146/annurev-pathol-020712-163949

6. Wu J, Raz Y, Recouvreux MS, Diniz MA, Lester J, Karlan BY, et al. Focal serous tubal intra-epithelial carcinoma lesions are associated with global changes in the fallopian tube epithelia and stroma. Front Oncol (2022) 12:853755. doi: 10.3389/fonc.2022.853755

7. Komura D, Ishikawa S. Machine learning methods for histopathological image analysis. Comput Struct Biotechnol J (2018) 16:34. doi: 10.1016/j.csbj.2018.01.001

8. Cui M, Zhang DY. Artificial intelligence and computational pathology. Lab Invest (2021) 101:412. doi: 10.1038/s41374-020-00514-0

10. Wang S, Yang DM, Rong R, Zhan X, Xiao G. Pathology image analysis using segmentation deep learning algorithms. Am J Pathol (2019) 189:1686. doi: 10.1016/j.ajpath.2019.05.007

11. Mobadersany P, Yousefi S, Amgad M, Gutman DA, Barnholtz-Sloan JS, Velázquez Vega JE, et al. Predicting cancer outcomes from histology and genomics using convolutional networks. Proc Natl Acad Sci USA (2018) 115:E2970. doi: 10.1073/pnas.1717139115

12. Xing F, Cornish TC, Bennett T, Ghosh D, Yang L. Pixel-to-Pixel learning with weak supervision for single-stage nucleus recognition in Ki67 images. IEEE Trans BioMed Eng (2019) 66:3088. doi: 10.1109/TBME.2019.2900378

13. Gertych A, Swiderska-Chadaj Z, Ma Z, Ing N, Markiewicz T, Cierniak S, et al. Convolutional neural networks can accurately distinguish four histologic growth patterns of lung adenocarcinoma in digital slides. Sci Rep (2019) 9:1483. doi: 10.1038/s41598-018-37638-9

14. Li W, Li J, Sarma KV, Ho KC, Shen S, Knudsen BS, et al. Path r-CNN for prostate cancer diagnosis and Gleason grading of histological images. IEEE Trans Med Imaging (2018) 38:945. doi: 10.1109/TMI.2018.2875868

15. Klimov S, Xue Y, Gertych A, Graham RP, Jiang Y, Bhattarai S, et al. Predicting metastasis risk in pancreatic neuroendocrine tumors using deep learning image analysis. Front Oncol (2020) 10:593211. doi: 10.3389/fonc.2020.593211

16. Golden JA. Deep learning algorithms for detection of lymph node metastases from breast cancer: Helping artificial intelligence be seen. JAMA (2017) 318:2184. doi: 10.1001/jama.2017.14580

17. Bera K, Schalper KA, Rimm DL, Velcheti V, Madabhushi A. Artificial intelligence in digital pathology - new tools for diagnosis and precision oncology. Nat Rev Clin Oncol (2019) 16:703. doi: 10.1038/s41571-019-0252-y

18. Schmauch B, Romagnoni A, Pronier E, Saillard C, Maillé P, Calderaro J, et al. A deep learning model to predict RNA-seq expression of tumours from whole slide images. Nat Commun (2020) 11:3877. doi: 10.1038/s41467-020-17678-4

19. Montalto MC, Edwards R. And they said it couldn't be done: Predicting known driver mutations from H&E slides. J Pathol Inform (2019) 10:17. doi: 10.4103/jpi.jpi_91_18

20. Kather JN, Heij LR, Grabsch HI, Loeffler C, Echle A, Muti HS, et al. Pan-cancer image-based detection of clinically actionable genetic alterations. Nat Cancer (2020) 1:789. doi: 10.1038/s43018-020-0087-6

21. Fu Y, Jung AW, Torne RV, Gonzalez S, Vöhringer H, Shmatko A, et al. Pan-cancer computational histopathology reveals mutations, tumor composition and prognosis. Nat Cancer (2020) 1:800. doi: 10.1038/s43018-020-0085-8

22. Schuster-Bockler B, Lehner B. Chromatin organization is a major influence on regional mutation rates in human cancer cells. Nature (2012) 488:504. doi: 10.1038/nature11273

23. Dixon JR, Jung I, Selvaraj S, Shen Y, Antosiewicz-Bourget JE, Lee AY, et al. Chromatin architecture reorganization during stem cell differentiation. Nature (2015) 518:331. doi: 10.1038/nature14222

24. Gisselsson D, Bjork J, Hoglund M, Mertens F, Dal Cin P, Akerman M, et al. Abnormal nuclear shape in solid tumors reflects mitotic instability. Am J Pathol (2001) 158:199. doi: 10.1016/S0002-9440(10)63958-2

25. Kleppe A, Albregtsen F, Vlatkovic L, Pradhan M, Nielsen B, Hveem TS, et al. Chromatin organisation and cancer prognosis: a pan-cancer study. Lancet Oncol (2018) 19:356. doi: 10.1016/S1470-2045(17)30899-9

26. Kather JN, Pearson AT, Halama N, Jäger D, Krause J, Loosen SH, et al. Deep learning can predict microsatellite instability directly from histology in gastrointestinal cancer. Nat Med (2019) 25:1054. doi: 10.1038/s41591-019-0462-y

27. Schrammen PL, Ghaffari Laleh N, Echle A, Truhn D, Schulz V, Brinker TJ, et al. Weakly supervised annotation-free cancer detection and prediction of genotype in routine histopathology. J Pathol (2022) 256:50. doi: 10.1002/path.5800

28. Echle A, Grabsch HI, Quirke P, van den Brandt PA, West NP, Hutchins GGA, et al. Clinical-grade detection of microsatellite instability in colorectal tumors by deep learning. Gastroenterology (2020) 159:1406. doi: 10.1053/j.gastro.2020.06.021

29. Shamai G, Binenbaum Y, Slossberg R, Duek I, Gil Z, Kimmel R. Artificial intelligence algorithms to assess hormonal status from tissue microarrays in patients with breast cancer. JAMA Netw Open (2019) 2:e197700. doi: 10.1001/jamanetworkopen.2019.7700

30. Kim RH, Nomikou S, Coudray N, Jour G, Dawood Z, Hong R, et al. Deep learning and pathomics analyses reveal cell nuclei as important features for mutation prediction of BRAF-mutated melanomas. J Invest Dermatol (2021) 142:1650. doi: 10.1016/j.jid.2021.09.034

31. Jang HJ, Lee A, Kang J, Song IH, Lee SH. Prediction of clinically actionable genetic alterations from colorectal cancer histopathology images using deep learning. World J Gastroenterol (2020) 26:6207. doi: 10.3748/wjg.v26.i40.6207

32. Coudray N, Ocampo PS, Sakellaropoulos T, Narula N, Snuderl M, Fenyö D, et al. Classification and mutation prediction from non-small cell lung cancer histopathology images using deep learning. Nat Med (2018) 24:1559. doi: 10.1038/s41591-018-0177-5

33. Chen M, Zhang B, Topatana W, Cao J, Zhu H, Juengpanich S, et al. Classification and mutation prediction based on histopathology H&E images in liver cancer using deep learning. NPJ Precis Oncol (2020) 4:14. doi: 10.1038/s41698-020-0120-3

34. Loeffler CML, Ortiz Bruechle N, Jung M, Seillier L, Rose M, Laleh NG, et al. Artificial intelligence-based detection of FGFR3 mutational status directly from routine histology in bladder cancer: A possible preselection for molecular testing? Eur Urol Focus (2021). doi: 10.1016/j.euf.2021.04.007

35. Chang P, Grinband J, Weinberg BD, Bardis M, Khy M, Cadena G, et al. Deep-learning convolutional neural networks accurately classify genetic mutations in gliomas. AJNR Am J Neuroradiol (2018) 39:1201. doi: 10.3174/ajnr.A5667

36. Kandalaft LE, Odunsi K, Coukos G. Immune therapy opportunities in ovarian cancer. Am Soc Clin Oncol Educ Book (2020) 40:1. doi: 10.1200/EDBK_280539

37. Miller RE, Leary A, Scott CL, Serra V, Lord CJ, Bowtell D, et al. ESMO recommendations on predictive biomarker testing for homologous recombination deficiency and PARP inhibitor benefit in ovarian cancer. Ann Oncol (2020) 31:1606. doi: 10.1016/j.annonc.2020.08.2102

38. Chan TA, Yarchoan M, Jaffee E, Swanton C, Quezada SA, Stenzinger A, et al. Development of tumor mutation burden as an immunotherapy biomarker: utility for the oncology clinic. Ann Oncol (2019) 30:44. doi: 10.1093/annonc/mdy495

39. Chang L, Chang M, Chang HM, Chang F. Microsatellite instability: A predictive biomarker for cancer immunotherapy. Appl Immunohistochem Mol Morphol (2018) 26:e15. doi: 10.1097/PAI.0000000000000575

40. Diao JA, Wang JK, Chui WF, Mountain V, Gullapally SC, Srinivasan R, et al. Human-interpretable image features derived from densely mapped cancer pathology slides predict diverse molecular phenotypes. Nat Commun (2021) 12:1613. doi: 10.1038/s41467-021-21896-9

41. Yu KH, Hu V, Wang F, Matulonis UA, Mutter GL, Golden JA, et al. Deciphering serous ovarian carcinoma histopathology and platinum response by convolutional neural networks. BMC Med (2020) 18:236. doi: 10.1186/s12916-020-01684-w

42. Lan C, Heindl A, Huang X, Xi S, Banerjee S, Liu J, et al. Quantitative histology analysis of the ovarian tumour microenvironment. Sci Rep (2015) 5:16317. doi: 10.1038/srep16317

43. Lan C, Li J, Huang X, Heindl A, Wang Y, Yan S, et al. Stromal cell ratio based on automated image analysis as a predictor for platinum-resistant recurrent ovarian cancer. BMC Cancer (2019) 19:159. doi: 10.1186/s12885-019-5343-8

44. Verhaak RG, Tamayo P, Yang JY, Hubbard D, Zhang H, Creighton CJ, et al. Prognostically relevant gene signatures of high-grade serous ovarian carcinoma. J Clin Invest (2013) 123:517. doi: 10.1172/JCI65833

45. Jiao Y, Li J, Qian C, Fei S. Deep learning-based tumor microenvironment analysis in colon adenocarcinoma histopathological whole-slide images. Comput Methods Programs BioMed (2021) 204:106047. doi: 10.1016/j.cmpb.2021.106047

46. Kwak MS, Lee HH, Yang JM, Cha JM, Jeon JW, Yoon JY, et al. Deep convolutional neural network-based lymph node metastasis prediction for colon cancer using histopathological images. Front Oncol (2020) 10:619803. doi: 10.3389/fonc.2020.619803

47. Bilal M, Raza SEA, Azam A, Graham S, Ilyas M, Cree IA, et al. Novel deep learning algorithm predicts the status of molecular pathways and key mutations in colorectal cancer from routine histology images. Lancet Digit Health (2021) 3:e763. doi: 10.1016/S2589-7500(21)00180-1

48. Courtiol P, Maussion C, Moarii M, Pronier E, Pilcer S, Sefta M, et al. Deep learning-based classification of mesothelioma improves prediction of patient outcome. Nat Med (2019) 25:1519. doi: 10.1038/s41591-019-0583-3

49. Mittal S, Yeh K, Leslie LS, Kenkel S, Kajdacsy-Balla A, Bhargava R. Simultaneous cancer and tumor microenvironment subtyping using confocal infrared microscopy for all-digital molecular histopathology. Proc Natl Acad Sci USA (2018) 115:E5651. doi: 10.1073/pnas.1719551115

50. Li H, Bera K, Toro P, Fu P, Zhang Z, Lu C, et al. Collagen fiber orientation disorder from H&E images is prognostic for early stage breast cancer: clinical trial validation. NPJ Breast Cancer (2021) 7:104. doi: 10.1038/s41523-021-00310-z

51. Wang S, Rong R, Yang DM, Fujimoto J, Yan S, Cai L, et al. Computational staining of pathology images to study the tumor microenvironment in lung cancer. Cancer Res (2020) 80:2056. doi: 10.1158/0008-5472.CAN-19-1629

52. Wang X, Janowczyk A, Zhou Y, Thawani R, Fu P, Schalper K, et al. Prediction of recurrence in early stage non-small cell lung cancer using computer extracted nuclear features from digital H&E images. Sci Rep (2017) 7:13543. doi: 10.1038/s41598-017-13773-7

53. Jia D, Liu Z, Deng N, Tan TZ, Huang RY, Taylor-Harding B, et al. A COL11A1-correlated pan-cancer gene signature of activated fibroblasts for the prioritization of therapeutic targets. Cancer Lett (2016) 382:203. doi: 10.1016/j.canlet.2016.09.001

54. Cheon DJ, Tong Y, Sim MS, Dering J, Berel D, Cui X, et al. A collagen-remodeling gene signature regulated by TGF-beta signaling is associated with metastasis and poor survival in serous ovarian cancer. Clin Cancer Res (2014) 20:711. doi: 10.1158/1078-0432.CCR-13-1256

55. Hu Y, Taylor-Harding B, Raz Y, Haro M, Recouvreux MS, Taylan E, et al. Are epithelial ovarian cancers of the mesenchymal subtype actually intraperitoneal metastases to the ovary? Front Cell Dev Biol (2020) 8:647. doi: 10.3389/fcell.2020.00647

56. Huang R, Wu D, Yuan Y, Li X, Holm R, Trope CG, et al. CD117 expression in fibroblasts-like stromal cells indicates unfavorable clinical outcomes in ovarian carcinoma patients. PLoS One (2014) 9:e112209. doi: 10.1371/journal.pone.0112209

57. Becht E, de Reynies A, Giraldo NA, Pilati C, Buttard B, Lacroix L, et al. Immune and stromal classification of colorectal cancer is associated with molecular subtypes and relevant for precision immunotherapy. Clin Cancer Res (2016) 22:4057. doi: 10.1158/1078-0432.CCR-15-2879

58. Kim H, Watkinson J, Varadan V, Anastassiou D. Multi-cancer computational analysis reveals invasion-associated variant of desmoplastic reaction involving INHBA, THBS2 and COL11A1. BMC Med Genomics (2010) 3:51. doi: 10.1186/1755-8794-3-51

59. Karlan BY, Dering J, Walsh C, Orsulic S, Lester J, Anderson LA, et al. POSTN/TGFBI-associated stromal signature predicts poor prognosis in serous epithelial ovarian cancer. Gynecol Oncol (2014) 132:334. doi: 10.1016/j.ygyno.2013.12.021

60. Zhang S, Jing Y, Zhang M, Zhang Z, Ma P, Peng H, et al. Stroma-associated master regulators of molecular subtypes predict patient prognosis in ovarian cancer. Sci Rep (2015) 5:16066. doi: 10.1038/srep16066

61. Liu Z, Beach JA, Agadjanian H, Jia D, Aspuria PJ, Karlan BY, et al. Suboptimal cytoreduction in ovarian carcinoma is associated with molecular pathways characteristic of increased stromal activation. Gynecol Oncol (2015) 139:394. doi: 10.1016/j.ygyno.2015.08.026

62. Ryner L, Guan Y, Firestein R, Xiao Y, Choi Y, Rabe C, et al. Upregulation of periostin and reactive stroma is associated with primary chemoresistance and predicts clinical outcomes in epithelial ovarian cancer. Clin Cancer Res (2015) 21:2941. doi: 10.1158/1078-0432.CCR-14-3111

63. Chakravarthy A, Khan L, Bensler NP, Bose P, De Carvalho DD. TGF-β-associated extracellular matrix genes link cancer-associated fibroblasts to immune evasion and immunotherapy failure. Nat Commun (2018) 9:4692. doi: 10.1038/s41467-018-06654-8

64. Jain RK. Normalizing tumor microenvironment to treat cancer: bench to bedside to biomarkers. J Clin Oncol (2013) 31:2205. doi: 10.1200/JCO.2012.46.3653

65. Su S, Chen J, Yao H, Liu J, Yu S, Lao L, et al. CD10(+)GPR77(+) cancer-associated fibroblasts promote cancer formation and chemoresistance by sustaining cancer stemness. Cell (2018) 172:841. doi: 10.1016/j.cell.2018.01.009

66. Mariathasan S, Turley SJ, Nickles D, Castiglioni A, Yuen K, Wang Y, et al. TGFbeta attenuates tumour response to PD-L1 blockade by contributing to exclusion of T cells. Nature (2018) 554:544. doi: 10.1038/nature25501

67. Tauriello DVF, Palomo-Ponce S, Stork D, Berenguer-Llergo A, Badia-Ramentol J, Iglesias M, et al. TGFbeta drives immune evasion in genetically reconstituted colon cancer metastasis. Nature (2018) 554:538. doi: 10.1038/nature25492

68. Grauel AL, Nguyen B, Ruddy D, Laszewski T, Schwartz S, Chang J, et al. TGFβ-blockade uncovers stromal plasticity in tumors by revealing the existence of a subset of interferon-licensed fibroblasts. Nat Commun (2020) 11:6315. doi: 10.1038/s41467-020-19920-5

69. Wang X, Barrera C, Bera K, Viswanathan VS, Azarianpour-Esfahani S, Koyuncu C, et al. Spatial interplay patterns of cancer nuclei and tumor-infiltrating lymphocytes (TILs) predict clinical benefit for immune checkpoint inhibitors. Sci Adv (2022) 8:eabn3966. doi: 10.1126/sciadv.abn3966

70. Bankhead P, Loughrey MB, Fernandez JA, Dombrowski Y, McArt DG, Dunne PD, et al. QuPath: Open source software for digital pathology image analysis. Sci Rep (2017) 7:16878. doi: 10.1038/s41598-017-17204-5

71. Jiang J, Tekin B, Guo R, Liu H, Huang Y, Wang C. Digital pathology-based study of cell- and tissue-level morphologic features in serous borderline ovarian tumor and high-grade serous ovarian cancer. J Pathol Inform (2021) 12:24. doi: 10.4103/jpi.jpi_76_20

72. Campanella G, Hanna MG, Geneslaw L, Miraflor A, Werneck Krauss Silva V, Busam KJ, et al. Clinical-grade computational pathology using weakly supervised deep learning on whole slide images. Nat Med (2019) 25:1301. doi: 10.1038/s41591-019-0508-1

73. Hekler A, Utikal JS, Enk AH, Solass W, Schmitt M, Klode J, et al. Deep learning outperformed 11 pathologists in the classification of histopathological melanoma images. Eur J Cancer (2019) 118:91. doi: 10.1016/j.ejca.2019.06.012

74. Esteva A, Kuprel B, Novoa RA, Ko J, Swetter SM, Blau HM, et al. Dermatologist-level classification of skin cancer with deep neural networks. Nature (2017) 542:115. doi: 10.1038/nature21056

75. Ehteshami Bejnordi B, Veta M, Johannes van Diest P, van Ginneken B, Karssemeijer N, Litjens G, et al. Diagnostic assessment of deep learning algorithms for detection of lymph node metastases in women with breast cancer. JAMA (2017) 318:2199. doi: 10.1001/jama.2017.14585

76. Pantanowitz L, Quiroga-Garza GM, Bien L, Heled R, Laifenfeld D, Linhart C, et al. An artificial intelligence algorithm for prostate cancer diagnosis in whole slide images of core needle biopsies: a blinded clinical validation and deployment study. Lancet Digit Health (2020) 2:e407. doi: 10.1016/S2589-7500(20)30159-X

77. Nagpal K, Foote D, Liu Y, Chen PC, Wulczyn E, Tan F, et al. Development and validation of a deep learning algorithm for improving Gleason scoring of prostate cancer. NPJ Digit Med (2019) 2:48. doi: 10.1038/s41746-019-0112-2

78. Leo P, Janowczyk A, Elliott R, Janaki N, Bera K, Shiradkar R, et al. Computer extracted gland features from H&E predicts prostate cancer recurrence comparably to a genomic companion diagnostic test: a large multi-site study. NPJ Precis Oncol (2021) 5:35. doi: 10.1038/s41698-021-00174-3

79. Wu M, Yan C, Liu H, Liu Q. Automatic classification of ovarian cancer types from cytological images using deep convolutional neural networks. Biosci Rep (2018) 38:BSR20180289. doi: 10.1042/BSR20180289

80. Laury AR, Blom S, Ropponen T, Virtanen A, Carpén OM. Artificial intelligence-based image analysis can predict outcome in high-grade serous carcinoma via histology alone. Sci Rep (2021) 11:19165. doi: 10.1038/s41598-021-98480-0

81. Kulkarni PM, Robinson EJ, Sarin Pradhan J, Gartrell-Corrado RD, Rohr BR, Trager MH, et al. Deep learning based on standard H&E images of primary melanoma tumors identifies patients at risk for visceral recurrence and death. Clin Cancer Res (2020) 26:1126. doi: 10.1158/1078-0432.CCR-19-1495

82. Wu Z, Wang L, Li C, Cai Y, Liang Y, Mo X, et al. DeepLRHE: A deep convolutional neural network framework to evaluate the risk of lung cancer recurrence and metastasis from histopathology images. Front Genet (2020) 11:768. doi: 10.3389/fgene.2020.00768

83. Harder N, Schönmeyer R, Nekolla K, Meier A, Brieu N, Vanegas C, et al. Automatic discovery of image-based signatures for ipilimumab response prediction in malignant melanoma. Sci Rep (2019) 9:7449. doi: 10.1038/s41598-019-43525-8

84. Azarianpour S, Corredor G, Bera K, Leo P, Fu P, Toro P, et al. Computational image features of immune architecture is associated with clinical benefit and survival in gynecological cancers across treatment modalities. J Immunother Cancer (2022) 10:e003833. doi: 10.1136/jitc-2021-003833

85. Niazi MKK, Parwani AV, Gurcan MN. Digital pathology and artificial intelligence. Lancet Oncol (2019) 20:e253. doi: 10.1016/S1470-2045(19)30154-8

86. Colling R, Pitman H, Oien K, Rajpoot N, Macklin P, Snead D, et al. Artificial intelligence in digital pathology: a roadmap to routine use in clinical practice. J Pathol (2019) 249:143. doi: 10.1002/path.5310

87. Evans AJ, Bauer TW, Bui MM, Cornish TC, Duncan H, Glassy EF, et al. US Food and drug administration approval of whole slide imaging for primary diagnosis: A key milestone is reached and new questions are raised. Arch Pathol Lab Med (2018) 142:1383. doi: 10.5858/arpa.2017-0496-CP

88. Zarella MD, Bowman D, Aeffner F, Farahani N, Xthona A, Absar SF, et al. A practical guide to whole slide imaging: A white paper from the digital pathology association. Arch Pathol Lab Med (2019) 143:222. doi: 10.5858/arpa.2018-0343-RA

89. Abels E, Pantanowitz L, Aeffner F, Zarella MD, van der Laak J, Bui MM, et al. Computational pathology definitions, best practices, and recommendations for regulatory guidance: a white paper from the digital pathology association. J Pathol (2019) 249:286. doi: 10.1002/path.5331

90. Aeffner F, Zarella MD, Buchbinder N, Bui MM, Goodman MR, Hartman DJ, et al. Introduction to digital image analysis in whole-slide imaging: A white paper from the digital pathology association. J Pathol Inform (2019) 10:9. doi: 10.4103/jpi.jpi_82_18

91. Lujan G, Quigley JC, Hartman D, Parwani A, Roehmholdt B, Meter BV, et al. Dissecting the business case for adoption and implementation of digital pathology: A white paper from the digital pathology association. J Pathol Inform (2021) 12:17. doi: 10.4103/jpi.jpi_67_20

92. Rizzardi AE, Johnson AT, Vogel RI, Pambuccian SE, Henriksen J, Skubitz AP, et al. Quantitative comparison of immunohistochemical staining measured by digital image analysis versus pathologist visual scoring. Diagn Pathol (2012) 7:42. doi: 10.1186/1746-1596-7-42

93. Sarwar S, Dent A, Faust K, Richer M, Djuric U, Van Ommeren R, et al. Physician perspectives on integration of artificial intelligence into diagnostic pathology. NPJ Digit Med (2019) 2:28. doi: 10.1038/s41746-019-0106-0

94. Bhargava R, Madabhushi A. Emerging themes in image informatics and molecular analysis for digital pathology. Annu Rev BioMed Eng (2016) 18:387. doi: 10.1146/annurev-bioeng-112415-114722

95. Ghaznavi F, Evans A, Madabhushi A, Feldman M. Digital imaging in pathology: whole-slide imaging and beyond. Annu Rev Pathol (2013) 8:331. doi: 10.1146/annurev-pathol-011811-120902

96. Mukhopadhyay S, Feldman MD, Abels E, Ashfaq R, Beltaifa S, Cacciabeve NG, et al. Whole slide imaging versus microscopy for primary diagnosis in surgical pathology: A multicenter blinded randomized noninferiority study of 1992 cases (Pivotal study). Am J Surg Pathol (2018) 42:39. doi: 10.1097/PAS.0000000000000948

97. Durán JM, Jongsma KR. Who is afraid of black box algorithms? on the epistemological and ethical basis of trust in medical AI. J Med Ethics (2021) 47:329. doi: 10.1136/medethics-2020-106820

98. Teo YYA, Danilevsky A, Shomron N. Overcoming interpretability in deep learning cancer classification. Methods Mol Biol (2021) 2243:297. doi: 10.1007/978-1-0716-1103-6_15

99. Serag A, Ion-Margineanu A, Qureshi H, McMillan R, Saint Martin MJ, Diamond J, et al. Translational AI and deep learning in diagnostic pathology. Front Med (Lausanne) (2019) 6:185. doi: 10.3389/fmed.2019.00185

100. Muehlematter UJ, Daniore P, Vokinger KN. Approval of artificial intelligence and machine learning-based medical devices in the USA and Europe (2015-20): a comparative analysis. Lancet Digit Health (2021) 3:e195. doi: 10.1016/S2589-7500(20)30292-2

101. Benjamens S, Dhunnoo P, Meskó B. The state of artificial intelligence-based FDA-approved medical devices and algorithms: an online database. NPJ Digit Med (2020) 3:118. doi: 10.1038/s41746-020-00324-0

102. Schmitt M, Maron RC, Hekler A, Stenzinger A, Hauschild A, Weichenthal M, et al. Hidden variables in deep learning digital pathology and their potential to cause batch effects: Prediction model study. J Med Internet Res (2021) 23:e23436. doi: 10.2196/23436

103. Bautista P, Hashimoto N, Yagi Y. Color standardization in whole slide imaging using a color calibration slide. J Pathol Inform (2014) 5:4. doi: 10.4103/2153-3539.126153

104. Khan AM, Rajpoot N, Treanor D, Magee D. A nonlinear mapping approach to stain normalization in digital histopathology images using image-specific color deconvolution. IEEE Trans Biomed Eng (2014) 61:1729. doi: 10.1109/TBME.2014.2303294

105. Chappelow J, Tomaszewski JE, Feldman M, Shih N, Madabhushi A. HistoStitcher©: An interactive program for accurate and rapid reconstruction of digitized whole histological sections from tissue fragments. Computerized Med Imaging Graphics (2011) 35:557. doi: 10.1016/j.compmedimag.2011.01.010

106. Kothari S, Phan J, Wang M. Eliminating tissue-fold artifacts in histopathological whole-slide images for improved image-based prediction of cancer grade. J Pathol Inform (2013) 4:22. doi: 10.4103/2153-3539.117448

107. Janowczyk A, Zuo R, Gilmore H, Feldman M, Madabhushi A. HistoQC: An open-source quality control tool for digital pathology slides. JCO Clin Cancer Inform (2019) 3:1. doi: 10.1200/CCI.18.00157

108. Senaras C, Niazi MKK, Lozanski G, Gurcan MN. DeepFocus: Detection of out-of-focus regions in whole slide digital images using deep learning. PLoS One (2018) 13:e0205387. doi: 10.1371/journal.pone.0205387

109. Moles Lopez X, D'Andrea E, Barbot P, Bridoux AS, Rorive S, Salmon I, et al. An automated blur detection method for histological whole slide imaging. PLoS One (2013) 8:e82710. doi: 10.1371/journal.pone.0082710

110. Hutter C, Zenklusen JC. The cancer genome atlas: Creating lasting value beyond its data. Cell (2018) 173:283. doi: 10.1016/j.cell.2018.03.042

111. Clark K, Vendt B, Smith K, Freymann J, Kirby J, Koppel P, et al. The cancer imaging archive (TCIA): maintaining and operating a public information repository. J Digit Imaging (2013) 26:1045. doi: 10.1007/s10278-013-9622-7

112. Lu MY, Chen RJ, Kong D, Lipkova J, Singh R, Williamson DFK, et al. Federated learning for computational pathology on gigapixel whole slide images. Med Image Anal (2022) 76:102298. doi: 10.1016/j.media.2021.102298

113. Sheller MJ, Edwards B, Reina GA, Martin J, Pati S, Kotrotsou A, et al. Federated learning in medicine: facilitating multi-institutional collaborations without sharing patient data. Sci Rep (2020) 10:12598. doi: 10.1038/s41598-020-69250-1

114. Schneider CA, Rasband WS, Eliceiri KW. NIH Image to ImageJ: 25 years of image analysis. Nat Methods (2012) 9:671. doi: 10.1038/nmeth.2089

115. Schindelin J, Arganda-Carreras I, Frise E, Kaynig V, Longair M, Pietzsch T, et al. Fiji: an open-source platform for biological-image analysis. Nat Methods (2012) 9:676. doi: 10.1038/nmeth.2019

116. de Chaumont F, Dallongeville S, Chenouard N, Hervé N, Pop S, Provoost T, et al. Icy: an open bioimage informatics platform for extended reproducible research. Nat Methods (2012) 9:690. doi: 10.1038/nmeth.2075

117. Stritt M, Stalder AK, Vezzali E. Orbit image analysis: An open-source whole slide image analysis tool. PLoS Comput Biol (2020) 16:e1007313. doi: 10.1371/journal.pcbi.1007313

118. Sommer C, Straehle C, Köthe U, Hamprecht FA. Ilastik: Interactive learning and segmentation toolkit. In: 2011 IEEE international symposium on biomedical imaging: From nano to macro. New York: IEEE (2011). p. 230.

119. Carpenter AE, Jones TR, Lamprecht MR, Clarke C, Kang IH, Friman O, et al. CellProfiler: image analysis software for identifying and quantifying cell phenotypes. Genome Biol (2006) 7:R100. doi: 10.1186/gb-2006-7-10-r100

120. Yu X, Zhao B, Huang H, Tian M, Zhang S, Song H, et al. An open source platform for computational histopathology. IEEE Access (2021) 9:73651. doi: 10.1109/ACCESS.2021.3080429

121. Rosenthal J, Carelli R, Omar M, Brundage D, Halbert E, Nyman J, et al. Building tools for machine learning and artificial intelligence in cancer research: Best practices and a case study with the PathML toolkit for computational pathology. Mol Cancer Res (2022) 20:202. doi: 10.1158/1541-7786.MCR-21-0665

122. Wang F, Kong J, Cooper L, Pan T, Kurc T, Chen W, et al. A data model and database for high-resolution pathology analytical image informatics. J Pathol Inform (2011) 2:32. doi: 10.4103/2153-3539.83192

123. Wang F, Kong J, Gao J, Cooper L, Kurc T, Zhou Z, et al. A high-performance spatial database based approach for pathology imaging algorithm evaluation. J Pathol Inform (2013) 4:5. doi: 10.4103/2153-3539.108543

Keywords: artificial intelligence, computational pathology, convolutional neural network (CNN), deep learning, artificial neural network, digital pathology, machine learning, ovarian cancer

Citation: Orsulic S, John J, Walts AE and Gertych A (2022) Computational pathology in ovarian cancer. Front. Oncol. 12:924945. doi: 10.3389/fonc.2022.924945

Received: 20 April 2022; Accepted: 27 June 2022; Published: 29 July 2022.

Edited by:

Sarah M. Temkin, National Institutes of Health (NIH), United States

Reviewed by:

Saira Fatima, Aga Khan University, Pakistan

Copyright © 2022 Orsulic, John, Walts and Gertych. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Sandra Orsulic, sorsulic@mednet.ucla.edu
