- 1 Department of Pathology, Peking University People’s Hospital, Beijing, China

- 2 Department of Pathology, Chinese PLA General Hospital, Beijing, China

- 3 R&D Department, China Academy of Launch Vehicle Technology, Beijing, China

- 4 Thorough Lab, Thorough Future, Beijing, China

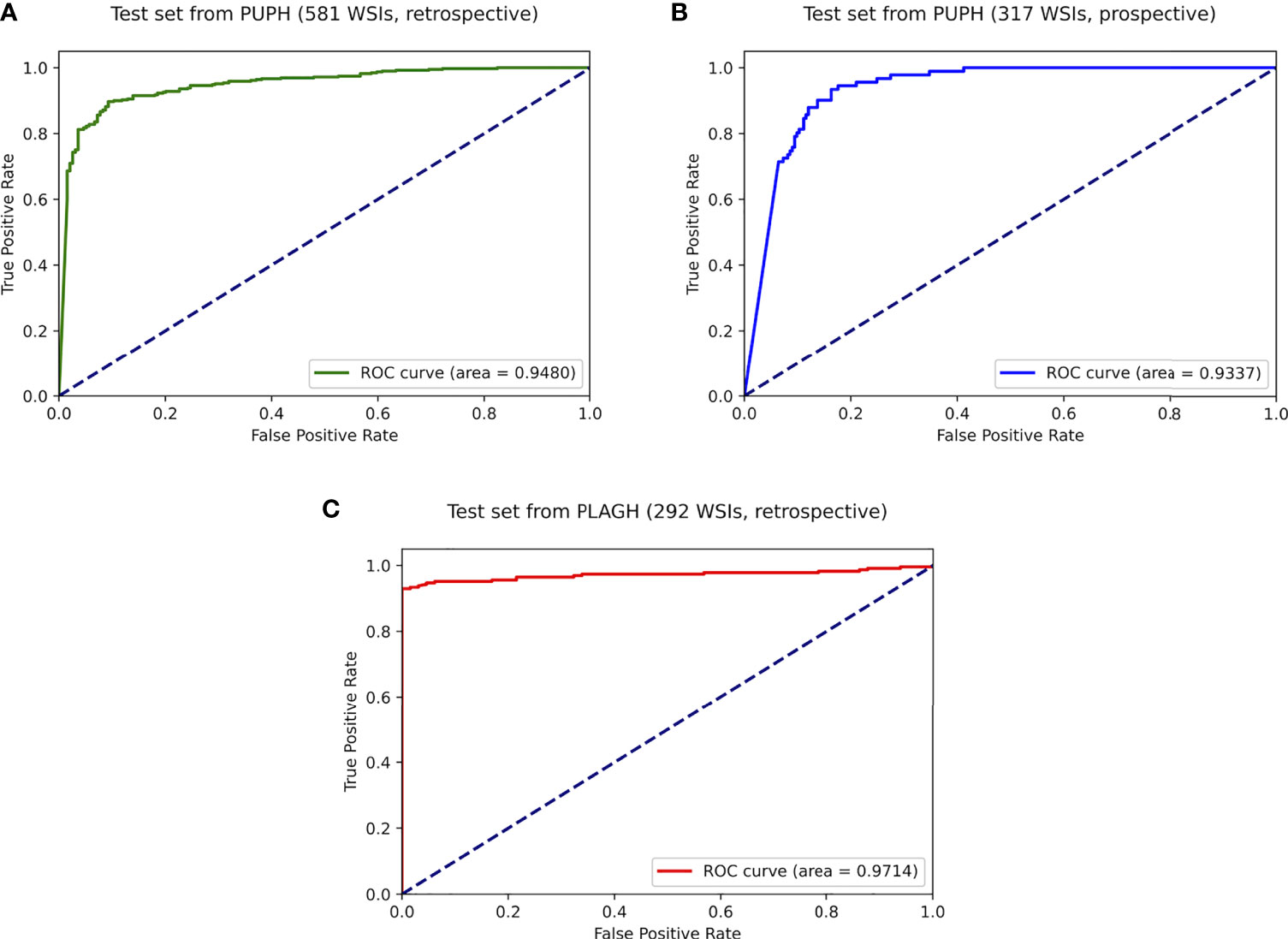

Accurate pathological diagnosis of endometrial cancer (EC) improves treatment outcomes and reduces mortality. Deep learning has demonstrated expert-level performance in the pathological diagnosis of a variety of organ systems using whole-slide images (WSIs), so there is an urgent need for a deep learning system that detects EC from WSIs. The deep learning model was trained and validated on a dataset of 601 WSIs from Peking University People’s Hospital (PUPH). Model performance was tested on three independent datasets containing a total of 1,190 WSIs. For the retrospective test, we evaluated the model on 581 WSIs from PUPH. In the prospective study, 317 consecutive WSIs were collected from PUPH between April 2022 and May 2022. To further evaluate the generalizability of the model, 292 WSIs were gathered from the Chinese PLA General Hospital (PLAGH) as an external test set. The predictions were thoroughly analyzed by expert pathologists. In classifying EC versus non-EC on all 1,190 WSIs, the model achieved an area under the receiver operating characteristic curve (AUC), sensitivity, and specificity of 0.928, 0.924, and 0.801, respectively. On the retrospective datasets from PUPH/PLAGH, the model achieved an AUC, sensitivity, and specificity of 0.948/0.971, 0.928/0.947, and 0.800/0.938, respectively. On the prospective dataset, the AUC, sensitivity, and specificity were 0.933, 0.934, and 0.837, respectively. Falsely predicted results were analyzed to further improve pathologists’ confidence in the model. The deep learning model identified EC in WSIs with a high degree of accuracy. By pre-screening suspicious EC regions, it could serve as an assistive diagnostic tool that improves pathologists’ working efficiency.

Introduction

Endometrial cancer (EC) is one of the most common gynecological tumors in women, with rising incidence and mortality rates worldwide (1, 2). In developed countries, EC is the most common malignancy of the female reproductive system (2, 3). In the United States, an estimated 52,600 new cases of EC occurred in 2014, rising to 61,880 in 2019, and the incidence continues to increase (2, 4, 5). According to 2019 statistics from the National Cancer Center of China, the incidence of EC was 10.28 per 100,000 and the mortality rate was 1.9 per 100,000 (6, 7). In recent years, the incidence of EC in China has been rising owing to high-fat, high-calorie diets and sedentary lifestyles (3, 7).

In clinical practice, pathological diagnosis is the gold standard for endometrial specimens. Gynecologists can recommend further treatment only after pathologists have made an accurate diagnosis, which places a heavy demand on pathologists. Anatomic pathologists are in short supply both in China and globally, which overloads the workforce and can compromise diagnostic accuracy (8). According to the China Diagnostic Pathology Industry Analysis Report, the country needs 84,000–168,000 pathologists, based on a requirement of 1–2 pathologists per 100 hospital beds. However, as of 2018, only 18,000 pathologists were registered, leaving a shortfall of at least 66,000.

Most ECs can be diagnosed from curettage or biopsy specimens, after which surgical treatment follows. Beyond the large daily diagnostic volume, curettage and biopsy specimens are frequently fragmented and irregular and may contain blood or even cervical mucus, which increases diagnostic complexity. These circumstances place considerable pressure on pathological diagnosis: diagnostic complexity is growing while pathologists remain scarce. It is therefore worthwhile to investigate new technologies that allow pathologists to concentrate on regions of interest (ROIs).

In recent years, artificial intelligence has grown tremendously, and applying this cutting-edge technology to pathology has gradually become a new trend. Recent studies have shown that deep learning can be applied to the pathological diagnosis of a variety of organs and lesions, such as prostate cancer (9, 10), gastric cancer (11–13), melanoma (14), and lymph node metastasis (15). In these studies, deep learning models served as screening tools that flag suspected malignant areas in advance, prompting pathologists to examine the ROIs thoroughly and thereby improving diagnostic accuracy and shortening diagnostic time. Several studies have also applied deep learning to EC recognition using whole-slide images (WSIs) (16–18). Sun et al. developed a convolutional neural network (CNN) to interpret hematoxylin and eosin (H&E)-stained image patches from endometrial specimens (16); this study classified pathological images at the patch level and evaluated the model on retrospectively collected cases. Zhao et al. developed a CNN to screen for endometrial intraepithelial neoplasia (17). Hong et al. trained a CNN to predict EC subtypes and molecular features (18).

In this study, we established a high-accuracy deep learning model for EC detection and conducted retrospective, prospective, and multicenter studies to demonstrate its clinical utility. The model detected EC in WSIs with high sensitivity, achieving an area under the receiver operating characteristic (ROC) curve (AUC), sensitivity, and specificity of 0.928, 0.924, and 0.801, respectively, on 1,190 WSIs collected from Peking University People’s Hospital (PUPH) and the Chinese PLA General Hospital (PLAGH). By studying the model’s predictions, we found that it could detect ECs with different morphologies, including those with deceptive appearances.

Materials and methods

Dataset

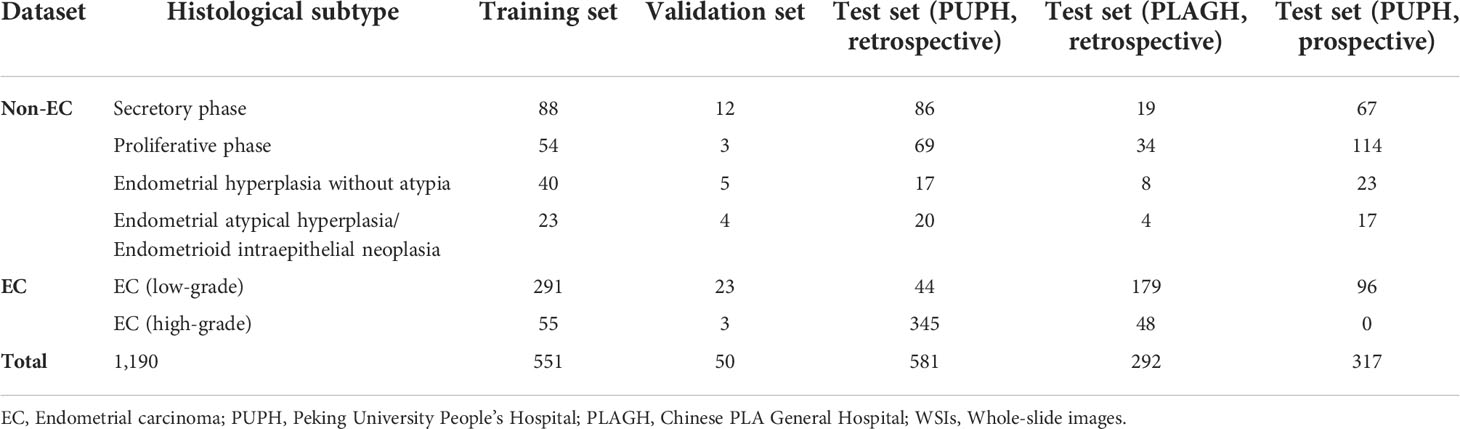

To train and validate the CNN for EC detection, we collected a total of 601 slides (551 for training and 50 for validation) of endometrial specimens from 601 patients at PUPH, covering all main pathological subtypes of the endometrium (Table 1). Cases of secretory phase, proliferative phase, endometrial hyperplasia without atypia, and endometrial atypical hyperplasia/endometrioid intraepithelial neoplasia were considered non-cancer. According to the World Health Organization classification of female genital tumors, low-grade and high-grade ECs correspond to grades 1–2 and grade 3, respectively (19, 20). All slides were reviewed by two senior gynecological pathologists, who reached agreement on the final diagnosis.
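The labeling rule described above reduces to a simple lookup. The following minimal Python sketch uses the category abbreviations from Figure 3; the function names are hypothetical, and this is an illustration of the grouping rather than the authors’ code.

```python
# Category abbreviations as in Figure 3; grouping as described in the text.
NON_CANCER = {"SP", "PP", "EHw/oA", "EAH/EIN"}
CANCER = {"LGEC", "HGEC"}


def binary_label(category: str) -> int:
    """Hypothetical helper: 1 for EC, 0 for non-cancer; reject unknown categories."""
    if category in CANCER:
        return 1
    if category in NON_CANCER:
        return 0
    raise ValueError(f"unknown category: {category!r}")


def ec_grade_group(grade: int) -> str:
    """Hypothetical helper: grades 1-2 are low-grade EC, grade 3 is high-grade EC."""
    if grade in (1, 2):
        return "LGEC"
    if grade == 3:
        return "HGEC"
    raise ValueError(f"grade must be 1, 2, or 3, got {grade}")
```

Note that atypical hyperplasia/EIN (EAH/EIN) falls on the non-cancer side of this binary split.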

The test set comprised retrospective and prospective parts. For the retrospective study, we collected 581 endometrial biopsy specimens from PUPH and 292 from PLAGH. In addition, 317 consecutive endometrial biopsies were collected from PUPH from April 2022 to May 2022 as the prospective test set. The gold-standard diagnoses of all test cases were determined by two experts (X. Zhang and Z. Song) using a multi-head microscope; discordant cases were discussed until consensus was reached.

All training and validation slides were digitized with a KF-PRO-005 scanner (KFBio) (eyepiece magnification fixed at 10x). The test slides from PUPH were digitized with both the KF-PRO-005 and a Motic EasyScan 102, and the test slides from PLAGH were scanned with the Motic EasyScan 102. All WSIs had a maximum magnification of 400x and a physical resolution of 0.238 μm/pixel.

Data annotation

Expert pathologists annotated the 346 training slides and 26 validation slides that contained EC using a self-developed iPad-based annotation system, following the fourth edition of the WHO Classification of Tumours of Female Reproductive Organs. A three-step procedure was used: initial labeling, further verification, and final expert review (by D. Shen). Once labeling was complete, the slides and annotations were used for model training.

Model development

When producing the training and validation sets, we filtered out the slide background using Otsu’s method, a standard thresholding approach for foreground detection in computer vision. On a grayscale thumbnail of the slide, a grid search over the threshold was performed to minimize the intra-class variance, which yielded the effective tissue area. This area was then divided into 320×320-pixel patches extracted side by side without overlap. In total, 891,330 malignant and 1,983,966 benign patches were used for training.
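The background filtering and tiling steps can be sketched in Python with NumPy. This is a minimal illustration under stated assumptions, not the authors’ implementation: it assumes an 8-bit grayscale image in which tissue is darker than the white background, computes the tissue mask at the same resolution as the patches (in practice the mask would be computed on the thumbnail and scaled up), and keeps a patch when at least half of it is tissue, a hypothetical cutoff that the text does not specify.

```python
import numpy as np


def otsu_threshold(gray: np.ndarray) -> int:
    """Grid-search all 8-bit thresholds t and return the one minimizing the
    weighted intra-class variance of the groups {< t} and {>= t}."""
    hist = np.bincount(gray.ravel(), minlength=256).astype(np.float64)
    prob = hist / hist.sum()
    levels = np.arange(256)
    best_t, best_var = 0, np.inf
    for t in range(1, 256):
        w0, w1 = prob[:t].sum(), prob[t:].sum()
        if w0 == 0 or w1 == 0:  # one class empty: threshold is meaningless
            continue
        mu0 = (levels[:t] * prob[:t]).sum() / w0
        mu1 = (levels[t:] * prob[t:]).sum() / w1
        var0 = (((levels[:t] - mu0) ** 2) * prob[:t]).sum() / w0
        var1 = (((levels[t:] - mu1) ** 2) * prob[t:]).sum() / w1
        intra = w0 * var0 + w1 * var1
        if intra < best_var:
            best_t, best_var = t, intra
    return best_t


def tissue_mask(gray: np.ndarray) -> np.ndarray:
    """Tissue is assumed darker than the background, so keep pixels below t."""
    return gray < otsu_threshold(gray)


def tissue_patch_origins(mask: np.ndarray, patch: int = 320, min_tissue: float = 0.5):
    """Top-left corners of non-overlapping patch x patch windows whose tissue
    fraction is at least min_tissue (hypothetical cutoff)."""
    h, w = mask.shape
    origins = []
    for r in range(0, h - patch + 1, patch):
        for c in range(0, w - patch + 1, patch):
            if mask[r:r + patch, c:c + patch].mean() >= min_tissue:
                origins.append((r, c))
    return origins
```

Minimizing the intra-class variance is equivalent to maximizing the between-class variance, so either criterion yields the same Otsu threshold.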

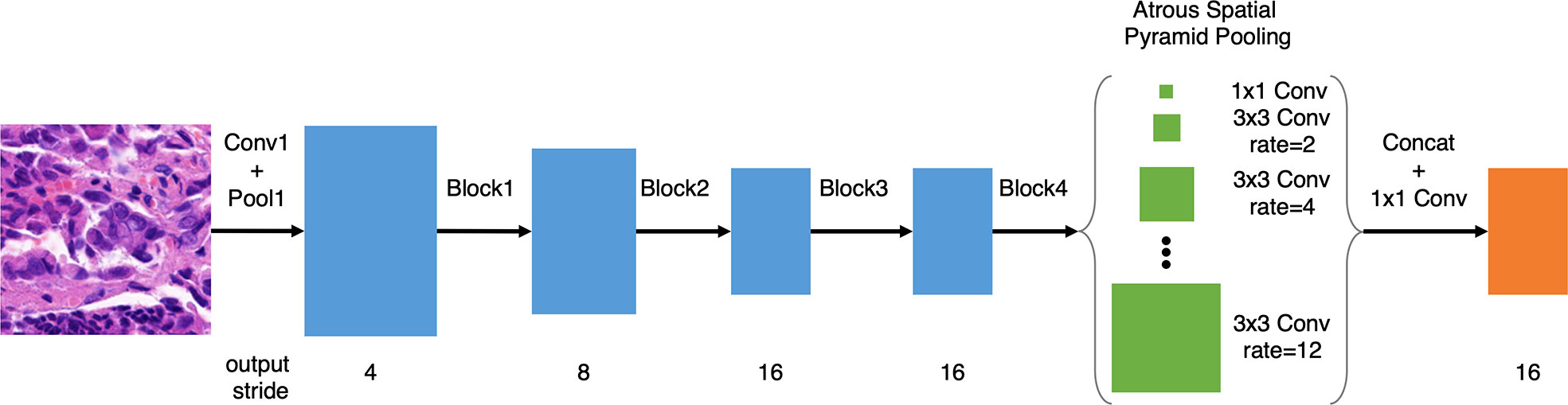

Building on our previous work on gastric cancer detection (13), which used DeepLab v3 with a ResNet-50 backbone, we modified the atrous spatial pyramid pooling (ASPP) module to use dilation rates of 2, 4, 6, 8, 10, and 12, as illustrated in Figure 1, which improved multi-scale detection. We also removed the image-level pooling branch from the ASPP to force the model to focus on tumor cells. Because histology slides have no intrinsic orientation, we augmented the training data with random rotation and mirroring. Random scaling between 1.0x and 1.5x made the model more tolerant of slight variations in scanning magnification. We further randomized patch brightness, contrast, hue, and saturation (with a maximum delta of 0.08).
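The augmentations above can be sketched with NumPy alone. Several details are assumptions not stated in the text: rotations are restricted to multiples of 90°, scaling is a nearest-neighbor zoom about the patch center, brightness and hue shifts are additive offsets of at most 0.08 while contrast and saturation are multiplicative factors in [0.92, 1.08], and hue is approximated by rotating the chroma plane in YIQ space instead of converting to HSV. The original training used TensorFlow, whose built-in image operations differ in detail.

```python
import numpy as np

# NTSC RGB -> YIQ matrix; the first row is the luma (Y) weighting.
RGB2YIQ = np.array([[0.299, 0.587, 0.114],
                    [0.596, -0.274, -0.322],
                    [0.211, -0.523, 0.312]])
YIQ2RGB = np.linalg.inv(RGB2YIQ)


def scale_nearest(arr: np.ndarray, s: float) -> np.ndarray:
    """Zoom into the patch center by factor s >= 1 (nearest neighbor), same output size."""
    h, w = arr.shape[:2]
    rows = np.clip(((np.arange(h) + 0.5 - h / 2) / s + h / 2).astype(int), 0, h - 1)
    cols = np.clip(((np.arange(w) + 0.5 - w / 2) / s + w / 2).astype(int), 0, w - 1)
    return arr[rows][:, cols]


def augment(image, mask, rng, max_delta=0.08):
    """image: float RGB in [0, 1], shape (H, H, 3); mask: (H, H) label map."""
    # Geometric transforms are applied identically to image and mask.
    k = rng.integers(4)
    image, mask = np.rot90(image, k), np.rot90(mask, k)
    if rng.random() < 0.5:
        image, mask = image[:, ::-1], mask[:, ::-1]
    s = rng.uniform(1.0, 1.5)
    image, mask = scale_nearest(image, s), scale_nearest(mask, s)

    # Color jitter is applied to the image only.
    image = image + rng.uniform(-max_delta, max_delta)  # brightness offset
    mean = image.mean(axis=(0, 1), keepdims=True)
    image = mean + rng.uniform(1 - max_delta, 1 + max_delta) * (image - mean)  # contrast
    luma = (image @ RGB2YIQ[0])[..., None]
    image = luma + rng.uniform(1 - max_delta, 1 + max_delta) * (image - luma)  # saturation
    theta = 2 * np.pi * rng.uniform(-max_delta, max_delta)  # hue, as a fraction of a turn
    yiq = image @ RGB2YIQ.T
    i_c, q_c = yiq[..., 1].copy(), yiq[..., 2].copy()
    yiq[..., 1] = np.cos(theta) * i_c - np.sin(theta) * q_c
    yiq[..., 2] = np.sin(theta) * i_c + np.cos(theta) * q_c
    image = yiq @ YIQ2RGB.T
    return np.clip(image, 0.0, 1.0), mask
```

The mask goes through the same rotation, mirroring, and nearest-neighbor scaling as the image, so pixel labels stay aligned and remain discrete.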

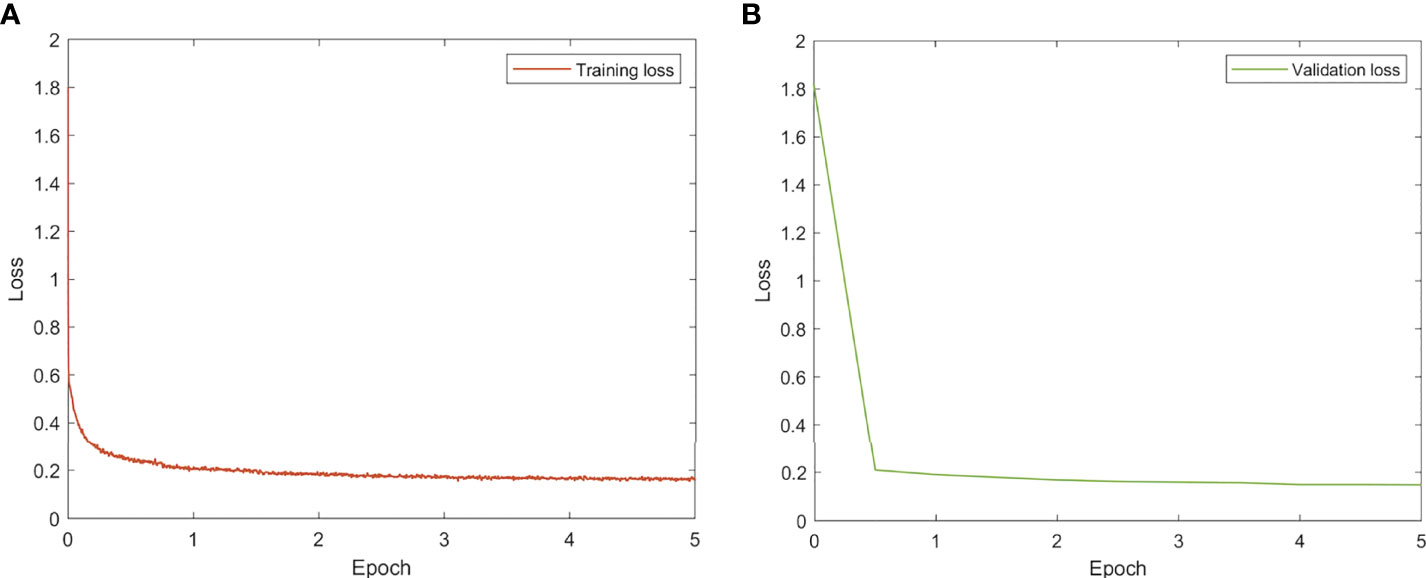

All models were trained and evaluated using TensorFlow on an Ubuntu server with four NVIDIA GTX 1080 Ti GPUs. Models were trained with the Adam optimizer at a fixed learning rate of 0.001 and a batch size of 80 (20 per GPU), and training was stopped after 5 epochs. The learning curves are shown in Figure 2.
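For a sense of scale, the training configuration can be written down and turned into an approximate step count. The sketch assumes that each of the 891,330 + 1,983,966 = 2,875,296 training patches is visited once per epoch and that a partial final batch counts as one step; the text does not state whether patches were resampled or class-balanced, and `TrainConfig` is a hypothetical name.

```python
import math
from dataclasses import dataclass


@dataclass(frozen=True)
class TrainConfig:
    num_gpus: int = 4
    per_gpu_batch: int = 20
    learning_rate: float = 1e-3  # fixed Adam learning rate, no decay schedule
    epochs: int = 5

    @property
    def global_batch(self) -> int:
        return self.num_gpus * self.per_gpu_batch


def total_steps(cfg: TrainConfig, num_patches: int) -> int:
    """Optimizer steps if every patch is seen once per epoch (assumption)."""
    steps_per_epoch = math.ceil(num_patches / cfg.global_batch)
    return steps_per_epoch * cfg.epochs


cfg = TrainConfig()
num_patches = 891_330 + 1_983_966  # malignant + benign training patches
print(cfg.global_batch, num_patches, total_steps(cfg, num_patches))
# prints: 80 2875296 179710
```

Under these assumptions, each epoch takes 35,942 steps at the global batch size of 80, or 179,710 steps over 5 epochs.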

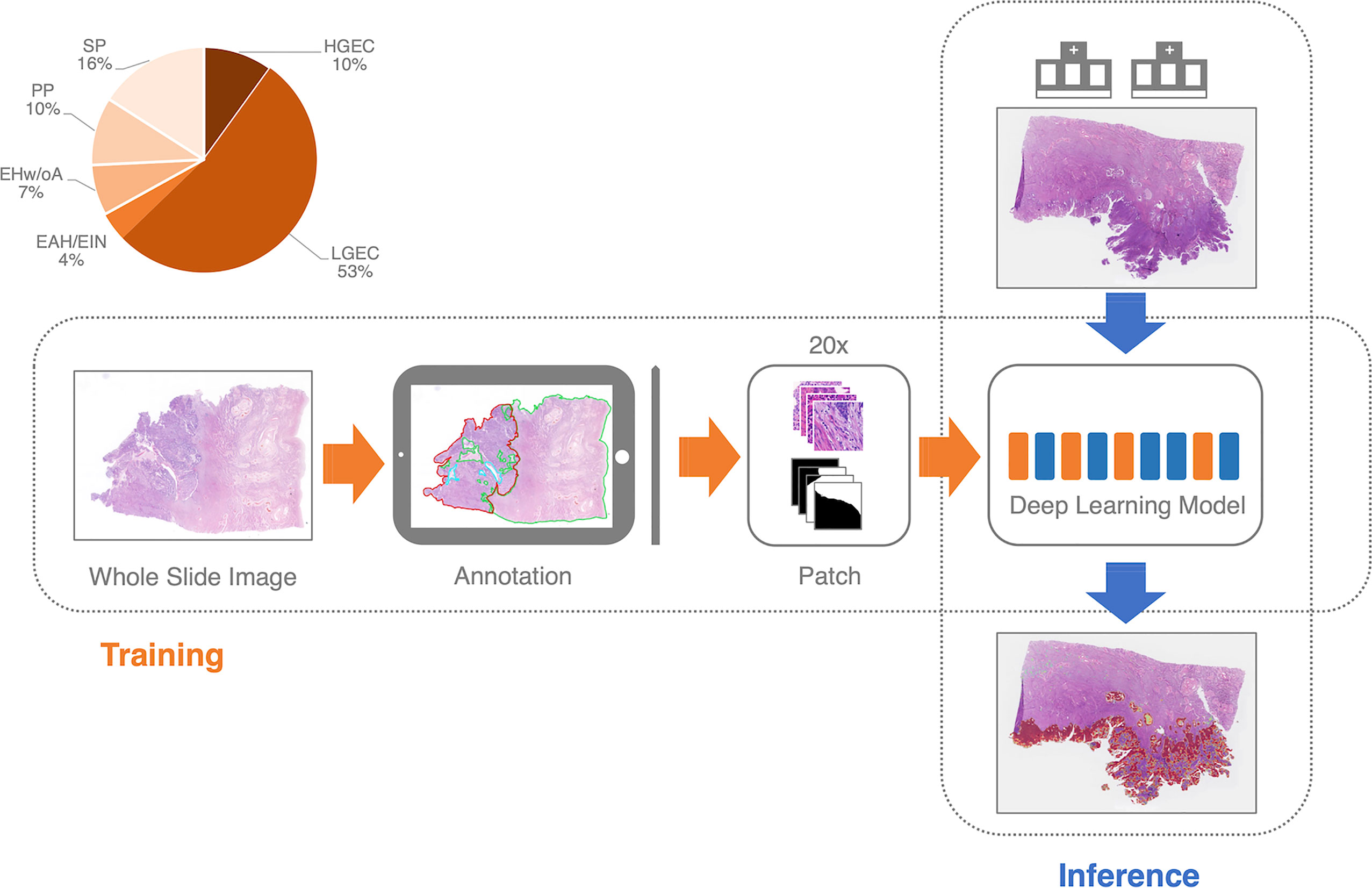

Because the network is fully convolutional and contains no fully connected layers, the training and inference tile sizes need not be equal (Figure 3). During inference, we divided each WSI into 2,000×2,000-pixel tiles. To implement the overlap-patch method, we fed a 2,200×2,200-pixel tile into the model but used only the central 2,000×2,000-pixel region for the final prediction, which was the EC probability for those 2,000×2,000 pixels. The tile-level predictions were then stitched together into a slide-level probability map, and the 1000th-highest pixel-level probability was taken as the slide-level EC probability. The ROC curve was constructed by thresholding these slide-level probabilities.

Figure 3 The training and inference pipeline of the deep learning model. EC, endometrial cancer; SP, secretory phase; PP, proliferative phase; EHw/oA, endometrial hyperplasia without atypia; EAH/EIN, endometrial atypical hyperplasia/endometrioid intraepithelial neoplasia; LGEC, low-grade EC; HGEC, high-grade EC.
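The overlap-tile inference and slide-level aggregation described above can be sketched as follows. The `model` argument is a stand-in for any function that maps an image to a same-sized per-pixel EC probability map; padding the slide borders by edge replication is an assumption, since the text does not say how border tiles were handled.

```python
import numpy as np


def predict_slide(slide, model, tile=2000, margin=100):
    """slide: (H, W, 3) array. Each model input is the tile plus `margin` pixels
    of context per side (2,000 + 2 x 100 = 2,200); only the central core is kept."""
    h, w = slide.shape[:2]
    # Edge-replicate the borders so every core has full context (assumption).
    padded = np.pad(slide, ((margin, margin), (margin, margin), (0, 0)), mode="edge")
    prob = np.zeros((h, w), dtype=np.float32)
    for r in range(0, h, tile):
        for c in range(0, w, tile):
            core_h, core_w = min(tile, h - r), min(tile, w - c)
            # Slide pixel (r, c) sits at padded (r + margin, c + margin).
            window = padded[r:r + core_h + 2 * margin, c:c + core_w + 2 * margin]
            out = model(window)
            prob[r:r + core_h, c:c + core_w] = out[margin:margin + core_h,
                                                   margin:margin + core_w]
    return prob


def slide_probability(prob_map, k=1000):
    """Slide-level EC probability: the k-th highest pixel probability."""
    flat = prob_map.ravel()
    k = min(k, flat.size)
    idx = flat.size - k  # position of the k-th largest value in ascending order
    return float(np.partition(flat, idx)[idx])
```

Presumably, taking the 1000th-highest pixel rather than the maximum makes the slide score less sensitive to a handful of spuriously confident pixels, while a lesion covering at least 1,000 high-probability pixels can still drive the slide-level call.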

Evaluation metrics

We used three measures to characterize model performance: accuracy = (TP + TN)/(TP + FN + FP + TN), sensitivity = TP/(TP + FN), and specificity = TN/(TN + FP), where TP, FP, TN, and FN denote true positives, false positives, true negatives, and false negatives, respectively. Accuracy is the proportion of correctly predicted slides among all slides, and sensitivity and specificity are the proportions of EC and non-EC slides, respectively, that were correctly recognized. Statistics were computed with in-house Python scripts and plotted with matplotlib.
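The three metrics, together with the ROC curve and AUC obtained by sweeping the slide-level threshold, can be computed as in the sketch below. It is a minimal reconstruction, not the authors’ in-house script; the AUC uses the rank (Mann–Whitney) formulation with ties counted as one half, which equals the area under the empirical ROC curve. Both classes must be present for the ratios to be defined.

```python
import numpy as np


def confusion_metrics(y_true, y_prob, threshold):
    """Accuracy, sensitivity, and specificity at a slide-level threshold (EC = 1)."""
    y_true = np.asarray(y_true).astype(bool)
    y_pred = np.asarray(y_prob) >= threshold
    tp = int(np.sum(y_pred & y_true))
    tn = int(np.sum(~y_pred & ~y_true))
    fp = int(np.sum(y_pred & ~y_true))
    fn = int(np.sum(~y_pred & y_true))
    return {
        "accuracy": (tp + tn) / (tp + fn + fp + tn),
        "sensitivity": tp / (tp + fn),
        "specificity": tn / (tn + fp),
    }


def roc_points(y_true, y_prob):
    """(1 - specificity, sensitivity) pairs for every distinct threshold, high to low."""
    thresholds = np.unique(np.asarray(y_prob, dtype=float))[::-1]
    points = [(0.0, 0.0)]
    for t in thresholds:
        m = confusion_metrics(y_true, y_prob, t)
        points.append((1 - m["specificity"], m["sensitivity"]))
    return points


def roc_auc(y_true, y_prob):
    """Probability that a random EC slide outscores a random non-EC slide."""
    y_true = np.asarray(y_true).astype(bool)
    y_prob = np.asarray(y_prob, dtype=float)
    pos, neg = y_prob[y_true], y_prob[~y_true]
    greater = (pos[:, None] > neg[None, :]).sum()
    ties = (pos[:, None] == neg[None, :]).sum()
    return float((greater + 0.5 * ties) / (pos.size * neg.size))
```

The pairwise comparison is quadratic in the number of slides, which is negligible for test sets of roughly a thousand WSIs.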

Results

Model performance

On the retrospective test set from PUPH, the deep learning model achieved an AUC, sensitivity, and specificity of 0.948, 0.928, and 0.800, respectively (Figure 4A). For the prospective study, 317 consecutive endometrial specimens received at PUPH over one continuous month were collected, digitized, and tested; the model achieved an AUC, sensitivity, and specificity of 0.933, 0.934, and 0.837, respectively (Figure 4B). Performance on the prospective dataset was comparable to that on the retrospective one, suggesting that the model can cope with routine daily diagnosis.

Figure 4 ROC curves of the best-trained model showed promising predictive power on the test datasets. (A) Retrospective test dataset from PUPH. (B) Prospective test dataset from PUPH. (C) Retrospective test dataset from PLAGH.

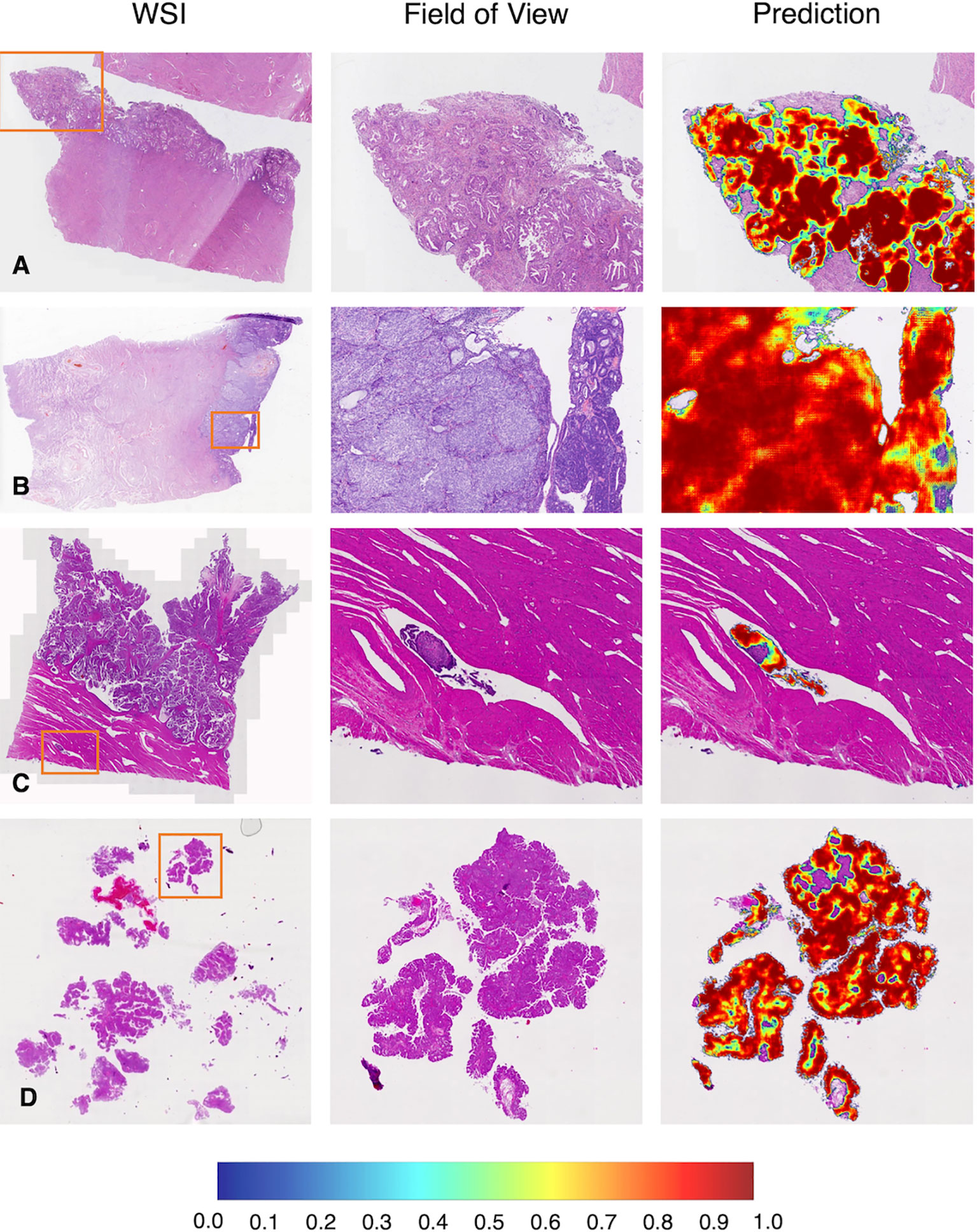

As shown in Figures 5A, B, the deep learning model accurately detected common EC subtypes, including low-grade and high-grade ECs. In the predicted heatmaps, the borders of the dark red areas closely matched the cancerous regions. Figure 5C shows an example of lymph-vascular space invasion (LVSI), in which the model correctly identified the small infiltrating lesion. Similarly, in Figure 5D, the model detected scattered and fragmented EC components.

Figure 5 Representative examples in the test dataset from PUPH. (A) Low-grade EC. (B) High-grade EC. (C) Intralymphatic carcinoma thrombus. (D) Fragmented component of EC. WSI, whole-slide image.

Multicenter study

To test the robustness of the model, we evaluated it on 292 WSIs from PLAGH. The model achieved an AUC, sensitivity, and specificity of 0.971, 0.947, and 0.938, respectively (Figure 4C). Despite the shift in data distribution between the two centers, performance on the PLAGH retrospective dataset was even better than on the PUPH one, supporting the generalizability of the model.

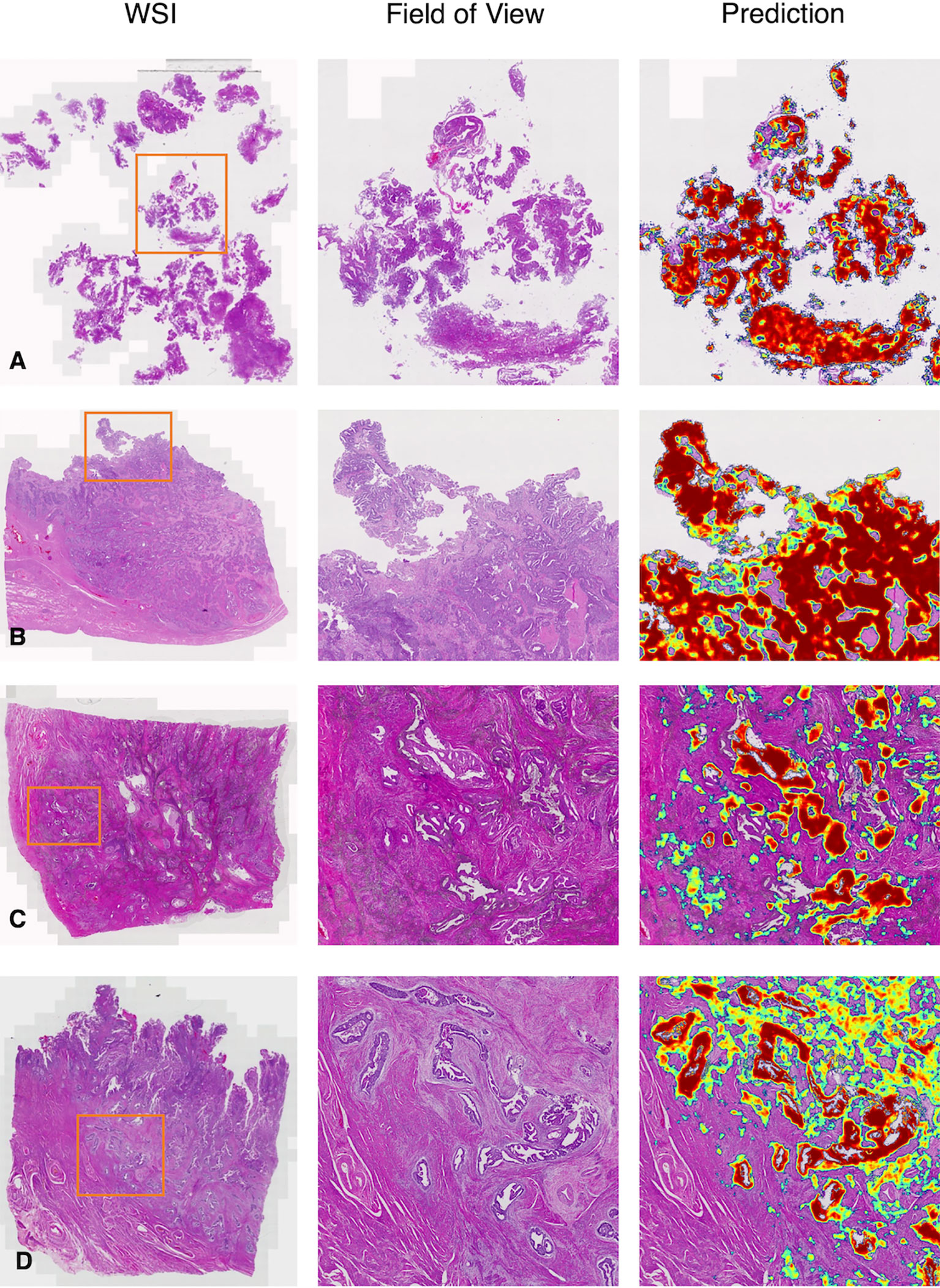

Figure 6 gives four predicted examples. Figure 6A shows a case of low-grade EC, in which the model detected cancer within fragmented specimens. Figure 6B shows a surgical specimen with high-grade EC. Figure 6C presents a more difficult situation, with small infiltrating lesions in the deep muscularis away from the main tumor area. Figure 6D shows an example of microcystic, elongated, and fragmented (MELF) infiltration.

Figure 6 Representative examples in the test dataset from PLAGH. (A) Low-grade EC. (B) High-grade EC. (C) Small infiltrating lesions in the deep muscularis away from the main tumor area. (D) MELF infiltration. WSI, whole-slide image.

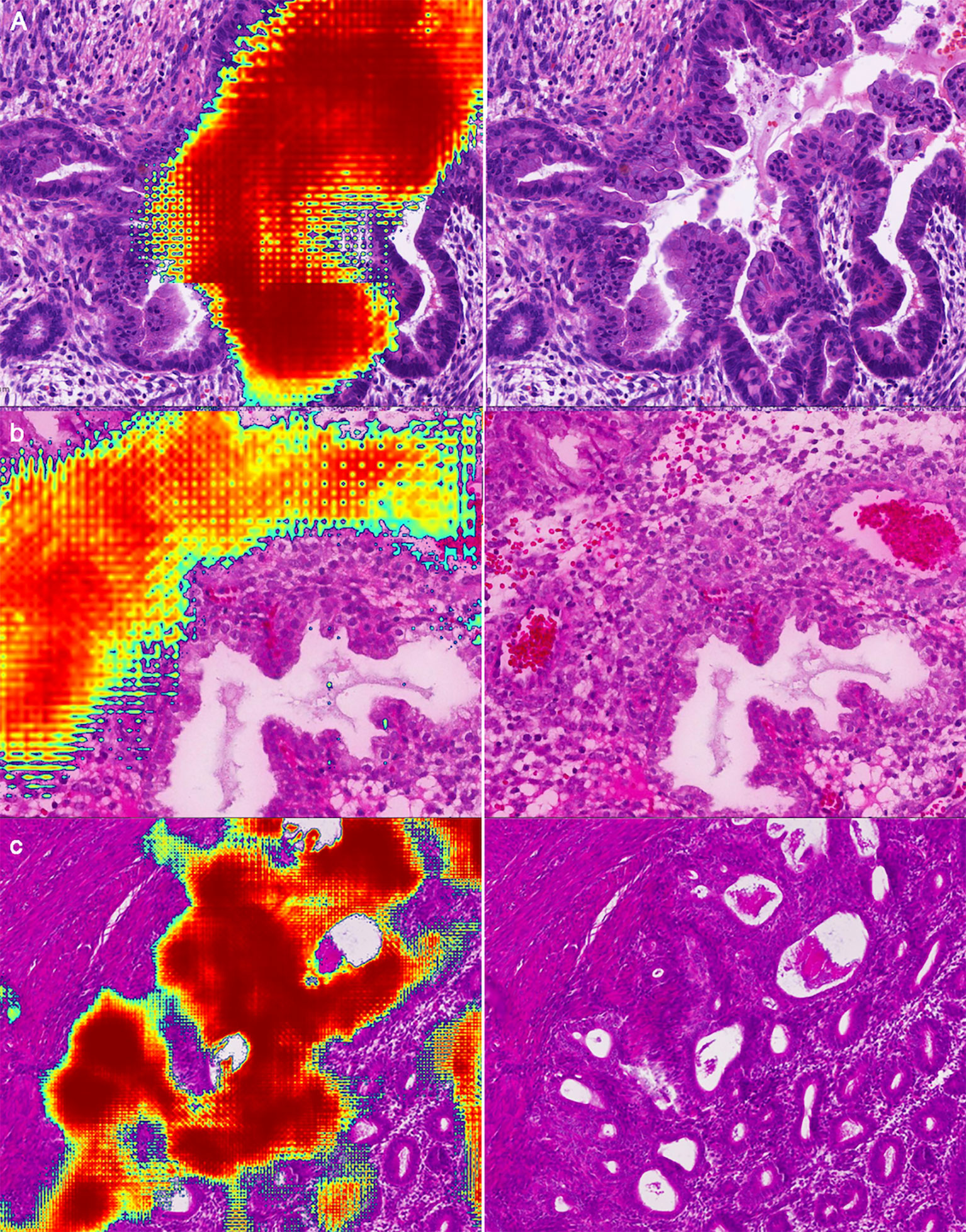

Analysis of false predictions

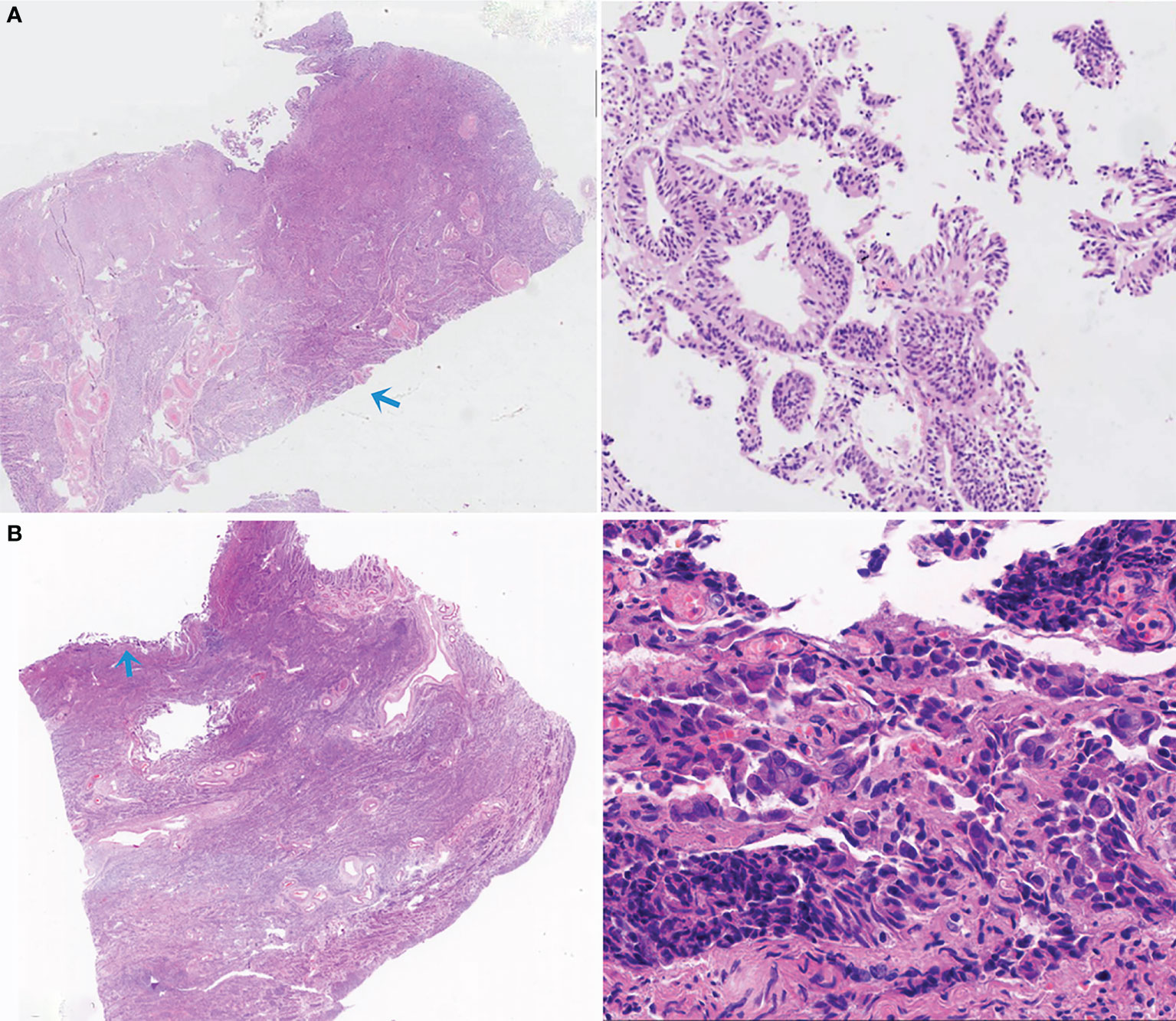

Figure 7 shows three common false-positive patterns of the deep learning model. In Figure 7A, mucinous metaplasia of endometrial glands with papillary hyperplasia overlapped morphologically with mucinous EC; such lesions are cancer mimics and are extremely challenging to diagnose. In Figure 7B, decidualized stromal cells show epithelioid morphology, a typical histological feature of secretory endometrium that morphologically resembles high-grade EC. Figure 7C is a hysterectomy specimen for multiple uterine leiomyomas, with endometrium in the proliferative phase. Because the endometrium lacks a submucosa, the interface between the endometrium and the uterine muscle wall is irregular, and endometrial glands often appear in the superficial muscle layer, creating the illusion of cancer invading the uterine wall.

Figure 7 Representative examples of false positives: (A) Mucinous metaplasia of endometrial glands with papillary hyperplasia. (B) Decidualized stromal cells with epithelioid morphology. (C) Endometrium in the proliferative phase.

We were also interested in false negatives, two representative examples of which are given in Figure 8. In Figure 8A, the deep learning model missed scattered and fragmented EC components that had separated from the main body of the uterus. In Figure 8B, the tissue had been crushed: the cells appeared dark, the nuclei were elongated, and the nuclear-to-cytoplasmic ratio appeared increased. These changes resemble the morphological characteristics of cancer.

Figure 8 Two representative examples of false negatives. (A) The small, scattered, and fragmented EC components were missed by the deep learning model. (B) Because of artifacts, compressive deformation of the tissue was evident, leading to a missed diagnosis.

Discussion

In recent years, artificial intelligence has developed at an unprecedented pace, and applying this frontier technology to medicine has gradually become a new trend. Recent studies have demonstrated promising results of deep learning algorithms in recognizing various lesions using WSIs (12, 14, 15, 21, 22). For EC, the increasing diagnostic workload of endometrial biopsy specimens calls for new models with high sensitivity and specificity.

We developed a deep learning model to detect EC and demonstrated its performance and generalizability. Given that the clinically significant diagnostic error rate in surgical pathology has been reported to range from 0.26% to 1.2% (23, 24), the model’s performance in diagnosing EC approached that of human pathologists, suggesting that it may help pathologists in real-world practice as a second opinion (25, 26).

Better diagnosis leads to better treatment of EC. LVSI is a high-risk prognostic factor in EC (27), and the treatment approach differs depending on whether LVSI is present. Lesions such as the one shown in Figure 5C tend to be missed by human pathologists in clinical practice, especially under high diagnostic pressure. The deep learning model successfully flagged these subtle regions, alerting pathologists to re-examine the case. MELF invasion is an independent prognostic factor closely related to the risk of lymph node metastasis (28) and indicates poor prognosis. Missing deep muscular infiltration leads to a lower stage (from IA2 to IA1). As shown in Figures 6C, D, the model’s assistance could help pathologists make better diagnoses.

To improve pathologists’ confidence in the deep learning model, we thoroughly analyzed the falsely predicted cases. In clinical practice, tissue may be substantially damaged by cauterization or compression during biopsy, producing misleading appearances that also confuse junior pathologists. In addition, a human pathologist can take the patient’s menstrual history into account, information that was not available to the model.

Most false-positive and false-negative cases in the test datasets were caused by artifactual tissue deformation. These issues may be alleviated with more training data and better data augmentation techniques.

Nevertheless, the deep learning model performed excellently on the real-world test dataset and showed the potential to help pathologists avoid missed diagnoses. Unlike pathologists, who can review additional immunohistochemistry (IHC) slides and clinical data before making a final diagnosis, the model relies solely on H&E-stained slides. Thus, even an accurate deep learning model cannot replace the breadth and contextual knowledge of pathologists; it would instead function as a supplemental diagnostic tool to help pathologists distinguish EC from non-cancer. To increase its clinical value, we will add subtype identification capabilities to the model in future work.

Data availability statement

The original contributions presented in the study are included in the article/supplementary material. Further inquiries can be directed to the corresponding authors.

Ethics statement

This study was approved by the Institutional Review Boards at PUPH and PLAGH. This study was conducted in accordance with the Declaration of Helsinki and informed consent from the patients was waived.

Author contributions

XZ, SW, ZS, and DS proposed the research, XiaoyZ, XiaobZ, CW, YZ, SL, and DS performed the WSI annotation, ZS led the multicenter study, XiaobZ, WB, and SW conducted the experiment, LW, SW, and QL wrote the deep learning code and performed data analysis, XiaobZ, WB, and SW drafted the manuscript, ZS and DS reviewed the manuscript. All authors contributed to the article and approved the submitted version.

Funding

This work is supported by the Medical Big Data and Artificial Intelligence Project of the Chinese PLA General Hospital.

Acknowledgments

We would like to thank Cancheng Liu at Thorough Future for data collection and helpful discussions.

Conflict of interest

SW is the co-founder and chief technology officer (CTO) of Thorough Future. LW is an algorithm researcher at Thorough Future.

The remaining authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Publisher’s note

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

References

1. Morice P, Leary A, Creutzberg C, Abu-Rustum N, Darai E. Endometrial cancer. Lancet (2016) 387:1094–108. doi: 10.1016/S0140-6736(15)00130-0

2. Siegel RL, Miller KD, Jemal A. Cancer statistics, 2019. CA Cancer J Clin (2019) 69:7–34. doi: 10.3322/caac.21551

3. Chen W, Zheng R, Baade PD, Zhang S, Zeng H, Bray F, et al. Cancer statistics in China, 2015. CA Cancer J Clin (2016) 66:115–32. doi: 10.3322/caac.21338

4. SGO Clinical Practice Endometrial Cancer Working Group, Burke WM, Orr J, Leitao M, Salom E, Gehrig P, et al. Endometrial cancer: a review and current management strategies: part II. Gynecol Oncol (2014) 134:393–402. doi: 10.1016/j.ygyno.2014.06.003

5. SGO Clinical Practice Endometrial Cancer Working Group, Burke WM, Orr J, Leitao M, Salom E, Gehrig P, et al. Endometrial cancer: a review and current management strategies: part I. Gynecol Oncol (2014) 134:385–92. doi: 10.1016/j.ygyno.2014.05.018

6. Cao W, Chen HD, Yu YW, Li N, Chen WQ. Changing profiles of cancer burden worldwide and in China: a secondary analysis of the global cancer statistics 2020. Chin Med J (Engl) (2021) 134:783–91. doi: 10.1097/CM9.0000000000001474

7. Wu C, Li M, Meng H, Liu Y, Niu W, Zhou Y, et al. Analysis of status and countermeasures of cancer incidence and mortality in China. Sci China Life Sci (2019) 62:640–7. doi: 10.1007/s11427-018-9461-5

8. Metter DM, Colgan TJ, Leung ST, Timmons CF, Park JY. Trends in the US and Canadian pathologist workforces from 2007 to 2017. JAMA Netw Open (2019) 2:e194337. doi: 10.1001/jamanetworkopen.2019.4337

9. Strom P, Kartasalo K, Olsson H, Solorzano L, Delahunt B, Berney DM, et al. Artificial intelligence for diagnosis and grading of prostate cancer in biopsies: a population-based, diagnostic study. Lancet Oncol (2020) 21:222–32. doi: 10.1016/S1470-2045(19)30738-7

10. Nagpal K, Foote D, Liu Y, Chen PC, Wulczyn E, Tan F, et al. Development and validation of a deep learning algorithm for improving Gleason scoring of prostate cancer. NPJ Digit Med (2019) 2:48. doi: 10.1038/s41746-019-0112-2

11. Park J, Jang BG, Kim YW, Park H, Kim BH, Kim MJ, et al. A prospective validation and observer performance study of a deep learning algorithm for pathologic diagnosis of gastric tumors in endoscopic biopsies. Clin Cancer Res (2021) 27:719–28. doi: 10.1158/1078-0432.CCR-20-3159

12. Ba W, Wang S, Shang M, Zhang Z, Wu H, Yu C, et al. Assessment of deep learning assistance for the pathological diagnosis of gastric cancer. Mod Pathol (2022) 35:1262–8. doi: 10.1038/s41379-022-01073-z

13. Song Z, Zou S, Zhou W, Huang Y, Shao L, Yuan J, et al. Clinically applicable histopathological diagnosis system for gastric cancer detection using deep learning. Nat Commun (2020) 11:4294. doi: 10.1038/s41467-020-18147-8

14. Ba W, Wang R, Yin G, Song Z, Zou J, Zhong C, et al. Diagnostic assessment of deep learning for melanocytic lesions using whole-slide pathological images. Transl Oncol (2021) 14:101161. doi: 10.1016/j.tranon.2021.101161

15. Ehteshami Bejnordi B, Veta M, Johannes van Diest P, van Ginneken B, Karssemeijer N, Litjens G, et al. Diagnostic assessment of deep learning algorithms for detection of lymph node metastases in women with breast cancer. JAMA (2017) 318:2199–210. doi: 10.1001/jama.2017.14585

16. Sun H, Zeng X, Xu T, Peng G, Ma Y. Computer-aided diagnosis in histopathological images of the endometrium using a convolutional neural network and attention mechanisms. IEEE J BioMed Health Inform (2020) 24:1664–76. doi: 10.1109/JBHI.2019.2944977

17. Zhao F, Dong D, Du H, Guo Y, Su X, Wang Z, et al. Diagnosis of endometrium hyperplasia and screening of endometrial intraepithelial neoplasia in histopathological images using a global-to-local multi-scale convolutional neural network. Comput Methods Programs Biomed (2022) 221:106906. doi: 10.1016/j.cmpb.2022.106906

18. Hong R, Liu W, DeLair D, Razavian N, Fenyo D. Predicting endometrial cancer subtypes and molecular features from histopathology images using multi-resolution deep learning models. Cell Rep Med (2021) 2:100400. doi: 10.1016/j.xcrm.2021.100400

19. Zheng XD, Chen GY, Huang SF. Updates on adenocarcinomas of the uterine corpus and the cervix in the 5th edition of WHO classification of the female genital tumors. Zhonghua Bing Li Xue Za Zhi (2021) 50:437–41. doi: 10.3760/cma.j.cn112151-20201021-00797

20. Mayr D, Schmoeckel E, Hohn AK, Hiller GGR, Horn LC. Current WHO classification of the female genitals: Many new things, but also some old. Pathologe (2021) 42:259–69. doi: 10.1007/s00292-021-00933-w

21. Litjens G, Sanchez CI, Timofeeva N, Hermsen M, Nagtegaal I, Kovacs I, et al. Deep learning as a tool for increased accuracy and efficiency of histopathological diagnosis. Sci Rep (2016) 6:26286. doi: 10.1038/srep26286

22. Coudray N, Ocampo PS, Sakellaropoulos T, Narula N, Snuderl M, Fenyo D, et al. Classification and mutation prediction from non-small cell lung cancer histopathology images using deep learning. Nat Med (2018) 24:1559–67. doi: 10.1038/s41591-018-0177-5

23. Nakhleh RE, Zarbo RJ. Amended reports in surgical pathology and implications for diagnostic error detection and avoidance: a college of American pathologists q-probes study of 1,667,547 accessioned cases in 359 laboratories. Arch Pathol Lab Med (1998) 122:303–9.

24. Mukhopadhyay S, Feldman MD, Abels E, Ashfaq R, Beltaifa S, Cacciabeve NG, et al. Whole slide imaging versus microscopy for primary diagnosis in surgical pathology: A multicenter blinded randomized noninferiority study of 1992 cases (Pivotal study). Am J Surg Pathol (2018) 42:39–52. doi: 10.1097/PAS.0000000000000948

25. Hanna MG, Ardon O, Reuter VE, Sirintrapun SJ, England C, Klimstra DS, et al. Integrating digital pathology into clinical practice. Mod Pathol (2022) 35:152–64. doi: 10.1038/s41379-021-00929-0

26. Topol EJ. High-performance medicine: the convergence of human and artificial intelligence. Nat Med (2019) 25:44–56. doi: 10.1038/s41591-018-0300-7

27. Sanderson PA, Critchley HO, Williams AR, Arends MJ, Saunders PT. New concepts for an old problem: the diagnosis of endometrial hyperplasia. Hum Reprod Update (2017) 23:232–54. doi: 10.1093/humupd/dmw042

Keywords: endometrial cancer, deep learning, artificial intelligence, convolutional neural network, data analysis

Citation: Zhang X, Ba W, Zhao X, Wang C, Li Q, Zhang Y, Lu S, Wang L, Wang S, Song Z and Shen D (2022) Clinical-grade endometrial cancer detection system via whole-slide images using deep learning. Front. Oncol. 12:1040238. doi: 10.3389/fonc.2022.1040238

Received: 09 September 2022; Accepted: 19 October 2022;

Published: 02 November 2022.

Edited by:

Cheng Lu, Guangdong Provincial People’s Hospital, China

Copyright © 2022 Zhang, Ba, Zhao, Wang, Li, Zhang, Lu, Wang, Wang, Song and Shen. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Danhua Shen, danhuashen@126.com; Zhigang Song, songzhg301@139.com; Shuhao Wang, to@shuhao.wang

†These authors have contributed equally to this work
