- 1 Visual Analytics for Knowledge Laboratory (VIS2KNOW Lab), School of Convergence, College of Computing and Informatics, Sungkyunkwan University, Seoul, South Korea

- 2 Department of Software, Sejong University, Seoul, South Korea

- 3 Information Systems Department, College of Computer and Information Sciences, Imam Mohammad Ibn Saud Islamic University (IMSIU), Riyadh, Saudi Arabia

- 4 Department of Computer Science & Engineering, Oakland University, Rochester, MI, United States

- 5 Faculty of Computers & Information Technology, Computer Science Department, University of Tabuk, Tabuk, Saudi Arabia

- 6 Digital Image Processing Laboratory, Islamia College Peshawar, Peshawar, Pakistan

- 7 Color and Visual Computing Lab, Department of Computer Science, Norwegian University of Science and Technology (NTNU), Gjøvik, Norway

The coronavirus disease 2019 (COVID-19) pandemic has caused a major outbreak around the world with a severe impact on health, human lives, and the global economy. One of the crucial steps in fighting COVID-19 is the ability to detect infected patients at early stages and put them under special care. Detecting COVID-19 from radiography images using computational medical imaging methods is one of the fastest ways to diagnose patients. However, early detection with significant results is a major challenge, given the limited medical imaging data available and conflicting performance metrics. Therefore, this work aims to develop a novel, computationally efficient, deep learning-based medical imaging framework for effective modeling and early diagnosis of COVID-19 from chest x-ray and computed tomography images. The proposed framework, “WEENet,” exploits an efficient convolutional neural network to extract high-level features, followed by classification mechanisms for COVID-19 diagnosis in medical image data. The performance of our method is evaluated on three benchmark chest x-ray and computed tomography image datasets using eight evaluation metrics, together with a novel cross-corpus evaluation strategy and a robustness evaluation, and the results surpass those of state-of-the-art methods. The outcome of this work can assist epidemiologists and healthcare authorities in analyzing infected chest x-ray and computed tomography images, support the management of the COVID-19 pandemic, and help bridge the gap between early diagnosis and treatment in Internet of Medical Things environments.

1 Introduction

In early December 2019, a novel infectious acute disease called coronavirus disease 2019 (COVID-19), caused by severe acute respiratory syndrome coronavirus 2 (SARS-CoV-2), emerged and had a severe impact on health, human lives, and the global economy. COVID-19 originated in Wuhan, China, then spread to many other countries and became a global pandemic (1). The virus is easily transmitted between people through small respiratory droplets produced by coughing, sneezing, and talking during close contact. Infected persons usually develop symptoms after 7 days, including high fever, continuous cough, shortness of breath, and loss of taste. According to the statistical report of the World Health Organization (WHO) (2), COVID-19 had affected around 192 countries, with 199 million confirmed cases and 4.2 million confirmed deaths as of August 4, 2021. Given its fast spread and highly contagious nature, it is essential to diagnose COVID-19 at an early stage so that infected persons can be isolated, which helps contain the outbreak and minimizes the risk of infecting healthy people. To date, the most common and convenient technique for diagnosing COVID-19 is reverse transcription polymerase chain reaction (RT-PCR). However, this technique has low precision, long delays, and low sensitivity, making it less effective in preventing the spread of COVID-19 (3).

Besides RT-PCR testing, there are several medical imaging-based methods for diagnosing COVID-19, such as computed tomography (CT) (4–6) and chest radiography (x-ray) (7, 8). Diagnosis of COVID-19 is typically based on both pneumonia symptoms and chest x-ray findings (9, 10). Chest x-ray is the first-line medical imaging technique and plays an important role in the diagnosis and detection of COVID-19. Several attempts have been made in the literature to detect COVID-19 from chest x-ray images using machine learning and deep learning approaches (11, 12). For instance, Narin et al. (13) evaluated the performance of five pretrained convolutional neural network (CNN)-based models for detecting patients with coronavirus pneumonia using chest x-ray images. Ismail et al. (14) used deep feature extraction and fine-tuning of pretrained CNNs to classify COVID-19 and normal (healthy) chest x-ray images. Tang et al. (15) used chest x-ray images for effective screening and detection of COVID-19 cases. Furthermore, Jain et al. (16) used transfer learning for COVID-19 detection in chest x-ray images and compared the performance of medical imaging-based COVID-19 detection methods.

More recently, several other deep learning-based approaches (17) have been presented to overcome the limitations of previous imaging-based COVID-19 detection methods. For instance, Minaee et al. (18) proposed a transfer learning strategy to improve the COVID-19 recognition rate in chest x-ray images. They investigated different pretrained CNN architectures on their newly prepared COVID-19 x-ray image dataset and reported reasonable results. However, their dataset is not balanced and has far fewer COVID-19-positive images than non-COVID-19 images. Aniello et al. (19) presented ADECO-CNN to classify infected and noninfected patients from CT images and compared their CNN architecture with pretrained CNNs including VGG19, GoogleNet, and ResNet50. Yujin et al. (20) suggested a patch-based CNN approach for efficient classification and segmentation of COVID-19 chest x-ray images. They first preprocessed the chest x-ray images and then fed them into their proposed network to segment the infected lung areas and classify the images. However, their performance is relatively low due to the small number of images in their dataset. Similarly, Yu-Huan et al. (21) presented a joint classification and segmentation framework called JCS for COVID-19 diagnosis from chest CT. They trained JCS on their newly created COVID-19 classification and segmentation dataset and reported real-time, explainable diagnosis of COVID-19 in chest CT images with high efficiency in both classification and segmentation. Afshar et al. (22) proposed a deep uncertainty-aware transfer learning framework for COVID-19 detection in x-ray and CT images. They first extracted CNN features from chest x-ray and CT scan images and then evaluated them with different machine learning classifiers to classify each input image as COVID or non-COVID.

The COVID-19 pandemic has overwhelmed the healthcare systems of even developed countries, contributing to a rising daily death toll. In addition, the limited access of rural areas to healthcare and medication has increased the loss of human lives. Therefore, intelligent AI-driven healthcare systems are needed to combat the COVID-19 pandemic and relieve hospitals and medical staff. The Internet of Things (23–26) and the Internet of Medical Things (IoMT) (27, 28) offer powerful features (e.g., online monitoring, high-speed communication, and remote checkups) that can greatly assist a country's healthcare system against the COVID-19 pandemic (29). Healthcare 5.0 with a 5G-enabled IoMT environment can also effectively improve the access of doctors and nurses to patients in remote areas, enabling COVID-19 patients to manage their health based on daily recommendations from doctors.

The deployment of 5G-enabled IoMT protocols can greatly enhance the performance of smart healthcare systems by connecting hospitals and patients and transmitting health-related data between them. However, such a smart IoMT healthcare environment demands computationally efficient yet accurate AI algorithms (including both machine learning and deep learning algorithms) (30). Most existing deep learning approaches use computationally complex CNN architectures that demand high network bandwidth and computational resources and therefore cannot be deployed on resource-constrained devices. Thus, the AI algorithm to be deployed (i.e., the CNN architecture) must meet the requirements of its execution environment (the devices used in the IoMT environment).

To alleviate the shortcomings of previous approaches and design an energy-efficient model for IoMT-enabled environments, we propose a computationally efficient yet accurate CNN architecture called WEENet. The proposed architecture is designed to detect COVID-19 efficiently in chest x-ray images while requiring limited computational resources. The key contributions of this study are summarized as follows:

1. Deep learning-based models require a huge amount of medical imaging data to train effectively, but COVID-19 benchmarks have a relatively limited number of samples, especially for the COVID class. To increase the number of images for effective training of the proposed WEENet framework, we applied offline data augmentation techniques such as rotation, flipping, and zooming to the available chest x-ray images, which improved performance, as evident from the results.

2. WEENet is developed to detect COVID-19 in chest x-ray images and to support the management of IoMT environments. WEENet uses the EfficientNet (31) model as a backbone for feature extraction from chest x-ray images, followed by stacked autoencoding layers that represent the features in a more abstract form before the final classification decision.

3. The performance of several deep learning-based models is evaluated on benchmark chest x-ray image datasets using eight evaluation metrics, together with a novel cross-corpus evaluation strategy and a robustness evaluation for COVID-19 detection in chest x-ray images. We also compare WEENet with other state-of-the-art (SOTA) methods, and it outperforms them on several evaluation metrics.

The remainder of the article is organized as follows: Section 2 covers the proposed IoMT-based WEENet framework with a discussion on datasets. In Section 3, we discuss the experimental setups, the experimental results, and their analysis. Finally, Section 4 concludes this paper and suggests future research directions.

2 Proposed IoMT-Based WEENet Framework

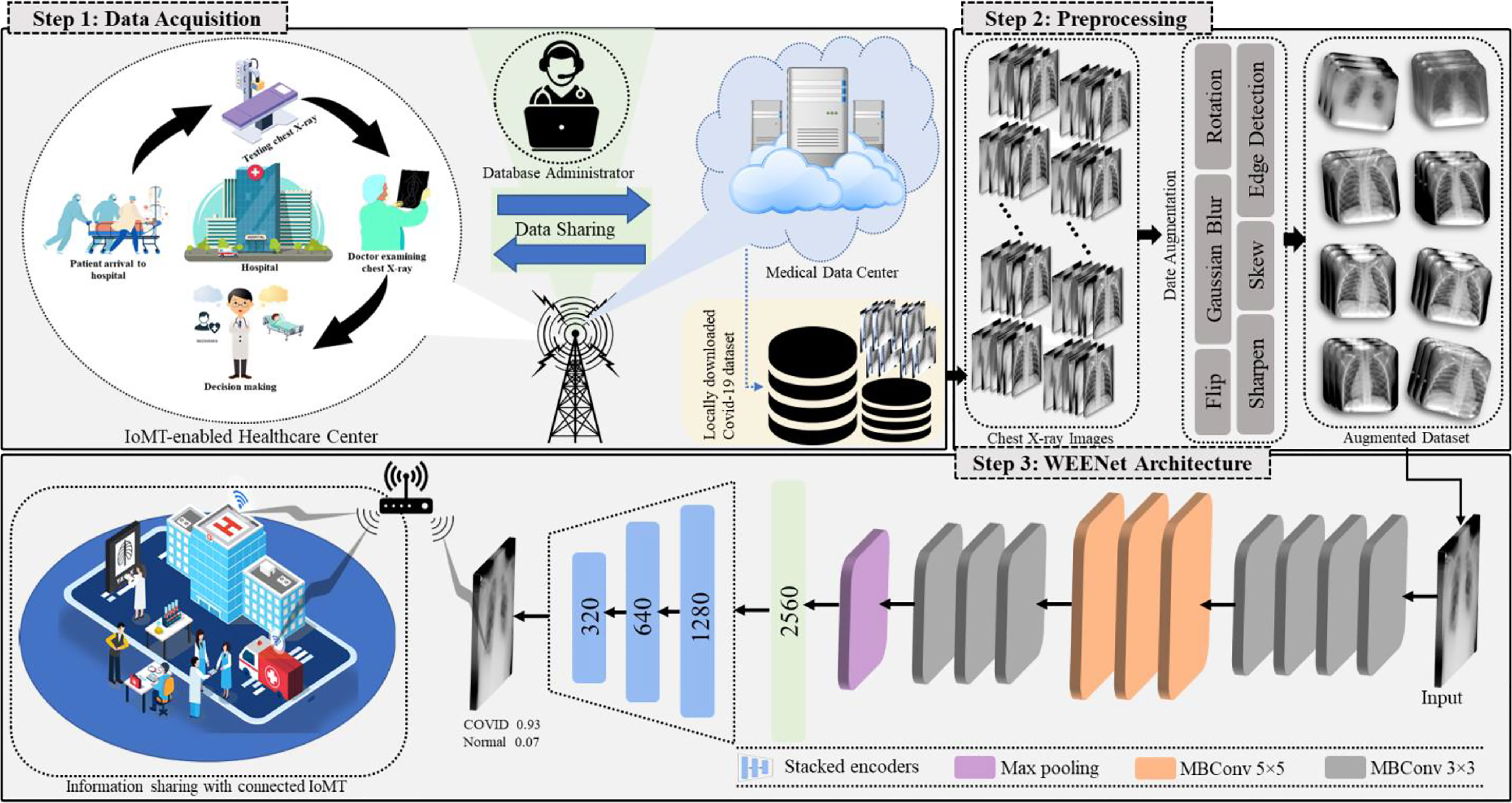

This section describes the overall workflow of the WEENet framework in an IoMT environment for efficient and timely detection of COVID-19 in x-ray images on edge computing platforms. For clarity, the proposed WEENet framework is divided into three phases: Data Acquisition, Preprocessing, and WEENet. The first phase covers data collection from different sources. The second phase performs extensive data augmentation on the data collected in the first phase to prevent underfitting and overfitting. The third phase contains the WEENet architecture, which is responsible for detecting COVID-19 in x-ray images. A graphical overview of the proposed framework with all phases is given in Figure 1, and each phase is explained in the following subsections.

Figure 1 Overview of the proposed WEENet-assisted framework for COVID-19 diagnosis using chest x-ray images with the support of 5G technology and efficient management for IoMT environments.

2.1 Data Acquisition and Preprocessing

During the pandemic, hospitals around the world produced image data related to COVID-19 (such as x-ray and CT images), and some of these data are publicly available for medical imaging research. However, the available COVID-19 image datasets are either poorly organized or imbalanced between positive and negative samples, which often leads to overfitting during training. The research community is therefore working to organize the available COVID-19 image data and make it usable for the early diagnosis of COVID-19. To achieve data diversity and balance between positive and negative samples, we used data augmentation, which not only increases the volume of data but can also significantly improve the classification performance of deep learning models, as our experiments show.

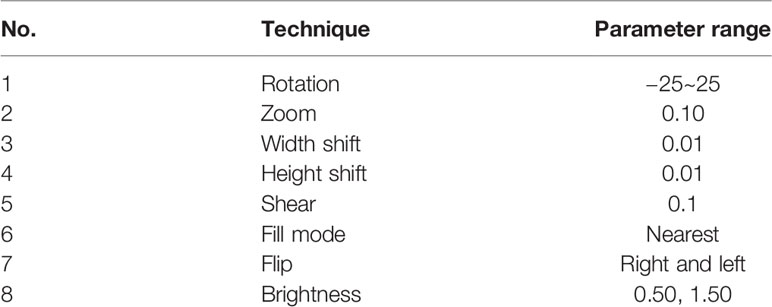

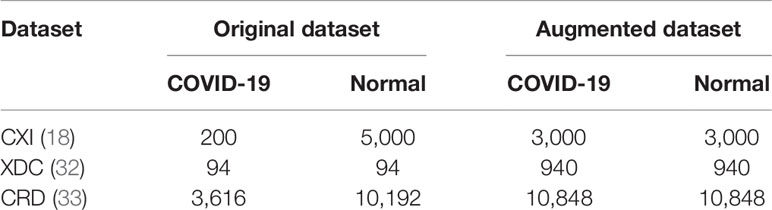

In this research, we used three COVID-19 image datasets, namely chest x-ray images (CXI) (18), x-ray dataset COVID-19 (XDC) (32), and the COVID-19 radiography database (CRD) (33), each of which contains chest x-ray images of positive and negative patients. To reduce the chances of overfitting and class bias, we performed extensive data augmentation to equalize the number of positive and negative samples in each dataset. Because the number of images per class differs across datasets, we used a different augmentation ratio for each dataset to obtain balanced training data. Following this strategy, we augmented the COVID-19 images of the CXI (18) dataset with an augmentation ratio of 1:15, so that each image is reproduced in 15 different variants. Similarly, for XDC (32), we used an augmentation ratio of 1:10 for both positive and negative classes. For the CRD (33) dataset, we augmented only the COVID-19 class, with an augmentation ratio of 1:3, so that each image is reproduced in 3 different variants. After analyzing different augmentation approaches, we selected the eight most suitable operations and applied them to each image before forwarding it to WEENet for training: Rotation, Zoom, Width shift, Height shift, Shear, Fill mode, Flip, and Brightness. The details of the augmentation operations used in our method are listed in Table 1.
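The ratio arithmetic above can be checked with a small sketch. It assumes that a 1:k ratio turns each source image into k variants, so a class of n images becomes n·k images, which agrees with the increase from 94 to 940 images reported for XDC in Section 3.2.2. The helper names and the ceiling-based balancing rule in `pick_ratio` are hypothetical illustrations, not the authors' procedure.

```python
# Hypothetical helpers illustrating the 1:k augmentation ratios described above.
# Assumption: each source image is reproduced as k variants (originals not
# counted separately), so n images become n * k images.


def augmented_count(num_images: int, ratio: int) -> int:
    """Number of images after 1:ratio augmentation of one class."""
    if num_images < 0 or ratio < 1:
        raise ValueError("num_images must be >= 0 and ratio >= 1")
    return num_images * ratio


def pick_ratio(minority: int, majority: int) -> int:
    """Smallest integer ratio that lifts the minority class to at least the
    majority class size (a hypothetical balancing heuristic)."""
    if minority <= 0:
        raise ValueError("minority class must be non-empty")
    return max(1, -(-majority // minority))  # ceiling division
```

For CRD, `pick_ratio(3616, 10192)` returns 3, the same 1:3 ratio used above, and `augmented_count(3616, 3)` yields 10,848 COVID-19 images against 10,192 normal ones. The rule is only a heuristic; the ratios actually used for each dataset are those stated in the text.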

The original XDC dataset contains too few images to train a deep learning algorithm. In addition, before data augmentation the CXI dataset has considerably fewer positive samples than negative samples. Similarly, the CRD dataset shows a large gap between the numbers of positive and negative samples. By contrast, the augmented versions of these datasets are well balanced and rich in data diversity, and are therefore more suitable for deep learning-based methods.

2.2 EfficientNet: The Backbone Architecture

Before choosing the backbone model, we explored several CNN architectures that are widely used in domains such as time series prediction, classification, object detection, and crowd estimation. These architectures, including VGG16 (34), VGG19 (34), ResNet18 (35), ResNet50 (35), and ResNet101 (35), have been used by researchers for COVID-19 detection in chest x-ray images, and each has its own pros and cons. To boost accuracy, researchers commonly scale such architectures by adjusting the network depth, width, or input resolution. Most networks scale only a single dimension (depth, width, or resolution). Scaling two or three dimensions arbitrarily, on the other hand, requires tedious manual tuning and often yields suboptimal accuracy and efficiency. To this end, we investigate EfficientNet, which uniformly scales all three dimensions with a compound scaling technique. Its baseline network was developed through multi-objective neural architecture search, which optimizes both accuracy and floating point operations (FLOPs). EfficientNet uses the same search space as (36) and the optimization objective $\mathrm{ACC}(m) \times [\mathrm{FLOPS}(m)/T]^{w}$, where $\mathrm{ACC}(m)$ and $\mathrm{FLOPS}(m)$ denote the accuracy and FLOPs of model $m$, $T$ is the target FLOPs, and $w$ is a hyperparameter; together, these terms control the trade-off between accuracy and FLOPs. The network comprises several convolutional layers with kernels of different sizes. The input image has three channels (R, G, B) and a size such as 224 × 224 × 3. Subsequent layers progressively reduce the resolution of the feature maps while the width is scaled up to increase accuracy, which ensures that important features are collected from the input image. For example, the second layer has Width = 112 kernels, and the next convolution has Width = 64 kernels. The last layer uses the maximum number of kernels (Depth = 2,560) at a resolution of 7 × 7 and represents the most discriminative features. On top of this backbone, we added a max pooling layer followed by encoding layers and a SoftMax layer for the final classification.
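The two EfficientNet ideas mentioned above, the accuracy/FLOPs search objective and compound scaling, can be illustrated numerically. This sketch assumes the constants reported in the original EfficientNet paper (31): w = -0.07 in the objective, and base multipliers α = 1.2, β = 1.1, γ = 1.15 for depth, width, and resolution, which are raised to a compound coefficient φ. The function names are introduced here for illustration; none of these values were tuned in this work.

```python
# Sketch of EfficientNet's search objective and compound scaling.
# Constants are those reported in the EfficientNet paper (31), used here as
# assumptions for illustration only.
ALPHA, BETA, GAMMA = 1.2, 1.1, 1.15   # depth, width, resolution bases
W_TRADEOFF = -0.07                     # exponent w in ACC(m) * [FLOPS(m)/T]^w


def search_objective(acc, flops, target_flops, w=W_TRADEOFF):
    """Multi-objective reward ACC(m) * [FLOPS(m) / T] ** w."""
    if flops <= 0 or target_flops <= 0:
        raise ValueError("FLOPs must be positive")
    return acc * (flops / target_flops) ** w


def compound_scale(phi):
    """Depth, width, and resolution multipliers for compound coefficient phi."""
    return ALPHA ** phi, BETA ** phi, GAMMA ** phi


def flops_multiplier(phi):
    """Approximate FLOPs growth; convolution FLOPs scale with d * w^2 * r^2."""
    depth, width, resolution = compound_scale(phi)
    return depth * width ** 2 * resolution ** 2
```

A model exactly at the target FLOPs keeps its accuracy as its reward, a model twice as expensive is penalized by a factor of 2^-0.07 ≈ 0.953, and each unit of φ roughly doubles the FLOPs (`flops_multiplier(1)` ≈ 1.92).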

2.3 The Proposed WEENet

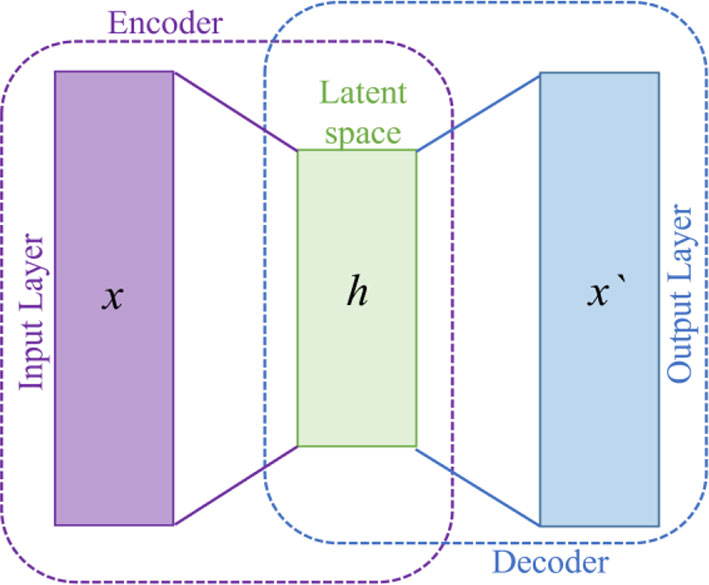

The proposed WEENet consists of an EfficientNet backbone followed by encoding layers. EfficientNet extracts important features from the input data, and its output is fed forward to stacked encoding layers. The stacked encoding layers are based on an autoencoder (37), which compresses the data from a high dimension into a low dimension while preserving the salient information of the input. Autoencoders are a type of deep neural network that maps the data to itself through (nonlinear) dimensionality reduction followed by dimensionality expansion. An autoencoder consists of three layers (input, hidden, and output), as shown in Figure 2. The encoder maps the input data into a lower dimension, and the decoder reconstructs it. Let the input data be $\{X_n\}_{n=1}^{N}$, where $X_n \in \mathbb{R}^{m \times 1}$, $h_n$ is the low-dimensional map (hidden state) computed from $X_n$, and $O_n$ is the output of the decoder. The encoding layer is given in Eq. (1):

$$h_n = F\left(W_1 X_n + b_1\right) \tag{1}$$

Here, $F$ represents the encoding function, $W_1$ is the weight matrix, and $b_1$ is the bias term. The decoding layer is given in Eq. (2):

$$O_n = G\left(W_2 h_n + b_2\right) \tag{2}$$

In this equation, $G$ is the decoding function, $W_2$ is the weight matrix, and $b_2$ is the bias term of the decoding layer. In WEENet, we use only the encoding part of the autoencoder to represent the features in a more abstract form: these layers encode the high-dimensional EfficientNet features into low-dimensional features. In the proposed model, two encoding layers are incorporated with the EfficientNet architecture. The 2,560-dimensional output of EfficientNet is encoded into a 1,280-dimensional feature vector, which is further encoded into a 640-dimensional and then a 320-dimensional feature vector. The proposed model is trained for 50 epochs using the SGD optimizer with a learning rate of 0.0001, and its performance is tested against SOTA methods in Section 3.
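To make Eqs. (1) and (2) and the feature sizes above concrete, here is a dependency-free sketch. It assumes a sigmoid for both F and G, uses untrained random weights on a toy 8-dimensional input, and counts the weights of a fully connected head with the stated 2,560, 1,280, 640, and 320 sizes plus an assumed two-unit SoftMax output (COVID-19 vs. normal). It is an illustration, not the WEENet implementation.

```python
import math
import random

# Sketch of Eqs. (1)-(2) and of the WEENet head sizes. The sigmoid activation,
# toy 8 -> 3 -> 8 sizes, and 2-unit output layer are assumptions.


def sigmoid(z: float) -> float:
    return 1.0 / (1.0 + math.exp(-z))


def dense(x, W, b, act):
    """Fully connected layer act(W x + b) for one vector x (list of floats)."""
    return [act(sum(w_ij * x_j for w_ij, x_j in zip(row, x)) + b_i)
            for row, b_i in zip(W, b)]


def random_layer(n_in, n_out, rng):
    """Small random weights (n_out rows of n_in) and zero biases."""
    W = [[rng.uniform(-0.1, 0.1) for _ in range(n_in)] for _ in range(n_out)]
    return W, [0.0] * n_out


def autoencode(x, hidden, seed=0):
    """Encode x to `hidden` units (Eq. 1) and decode back (Eq. 2).
    Weights are untrained, so O_n is not yet a faithful reconstruction."""
    rng = random.Random(seed)
    W1, b1 = random_layer(len(x), hidden, rng)
    W2, b2 = random_layer(hidden, len(x), rng)
    h = dense(x, W1, b1, sigmoid)   # h_n = F(W1 X_n + b1)
    o = dense(h, W2, b2, sigmoid)   # O_n = G(W2 h_n + b2)
    return h, o


def dense_params(n_in, n_out):
    """Weights plus biases of one fully connected layer."""
    return n_in * n_out + n_out


# Feature sizes from the text; the final 2 units are an assumption.
HEAD_DIMS = [2560, 1280, 640, 320, 2]


def head_params(dims=HEAD_DIMS):
    """Total parameters of the stacked fully connected head."""
    return sum(dense_params(a, b) for a, b in zip(dims, dims[1:]))
```

For this assumed head, `head_params()` gives 4,303,682 weights and biases, about 17.2 MB in float32. Because WEENet keeps only the encoder, the decoder in `autoencode` serves solely to illustrate Eq. (2).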

3 Experimental Results and Discussion

In this section, we evaluate WEENet on three publicly available COVID-19 datasets and compare its classification performance with that of other methods. We first describe the experimental settings, followed by the datasets and the metrics used for performance evaluation. We then compare WEENet with other SOTA CNN architectures used for COVID-19 classification. Finally, we close this section by discussing the feasibility of the WEENet framework for COVID-19 diagnosis in 5G-enabled IoMT environments.

3.1 Implementation Details

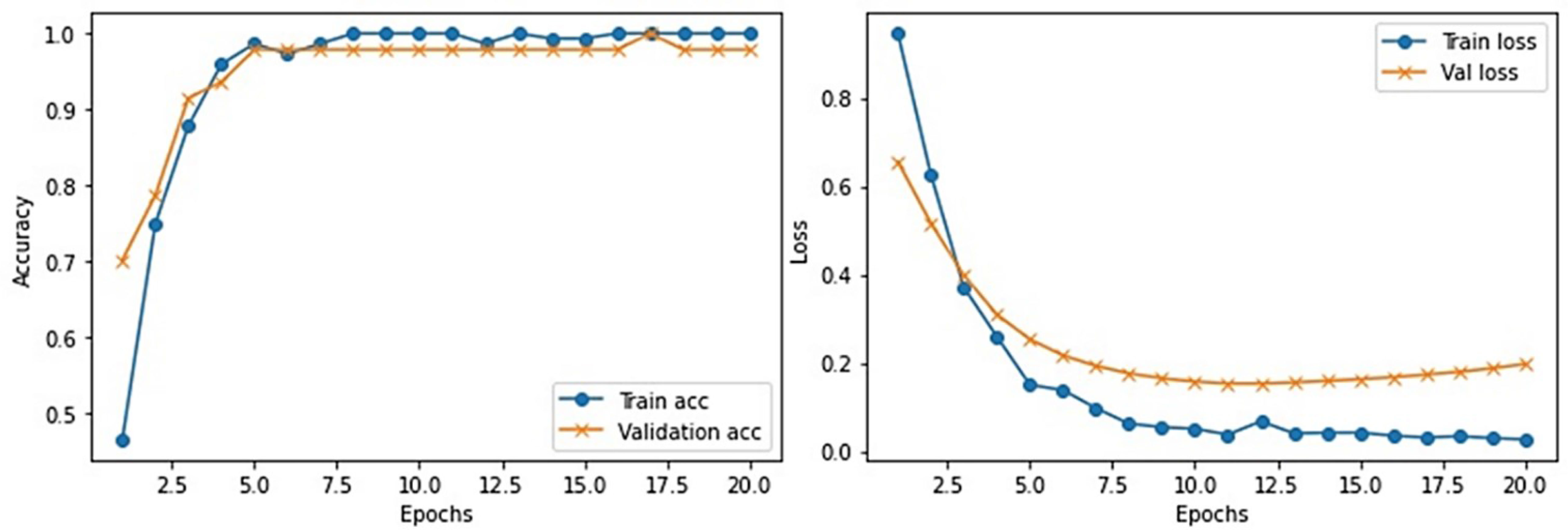

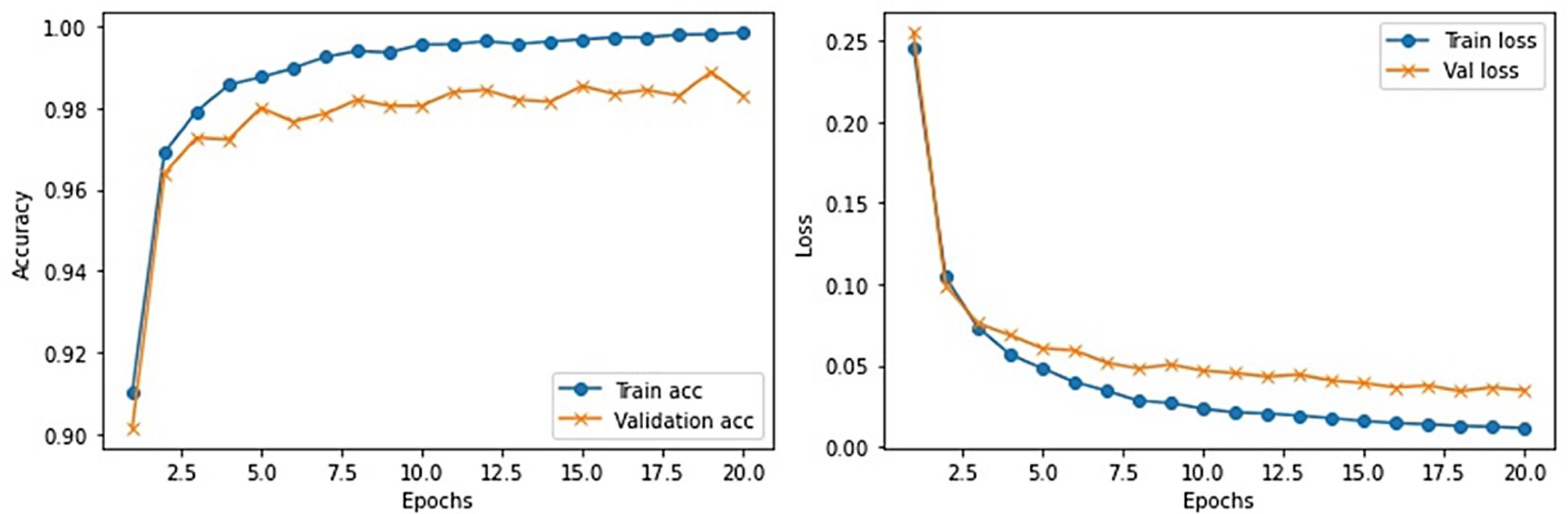

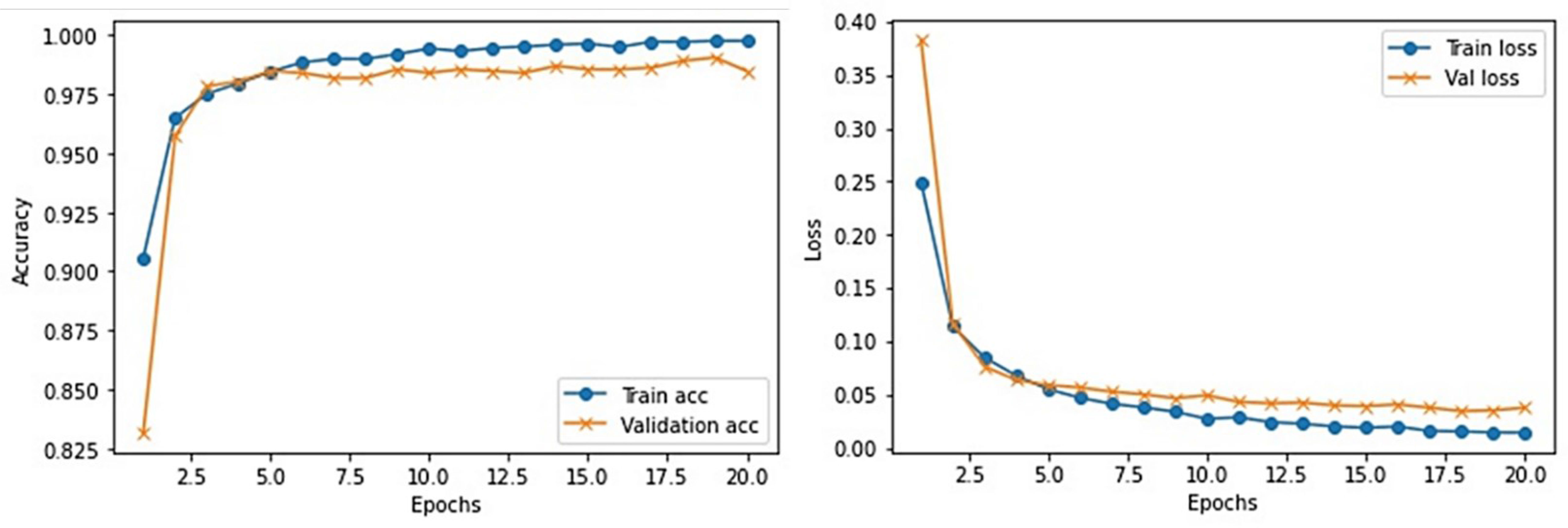

This section describes the experimental settings and the execution environment used to implement the WEENet framework. The proposed method is implemented entirely in Python 3.5 using the Visual Studio Code (VSCode) integrated development environment. WEENet is implemented with the Keras deep learning framework on an Intel Core i7 CPU equipped with an Nvidia GTX GPU with 6 GB of onboard memory. WEENet is trained on three datasets, CXI, XDC, and CRD, with the same hyperparameter configuration (number of epochs, batch size, learning rate, weight decay, etc.). The training and validation performance of WEENet on the CXI, XDC, and CRD datasets is depicted in Figures 3–5, respectively.

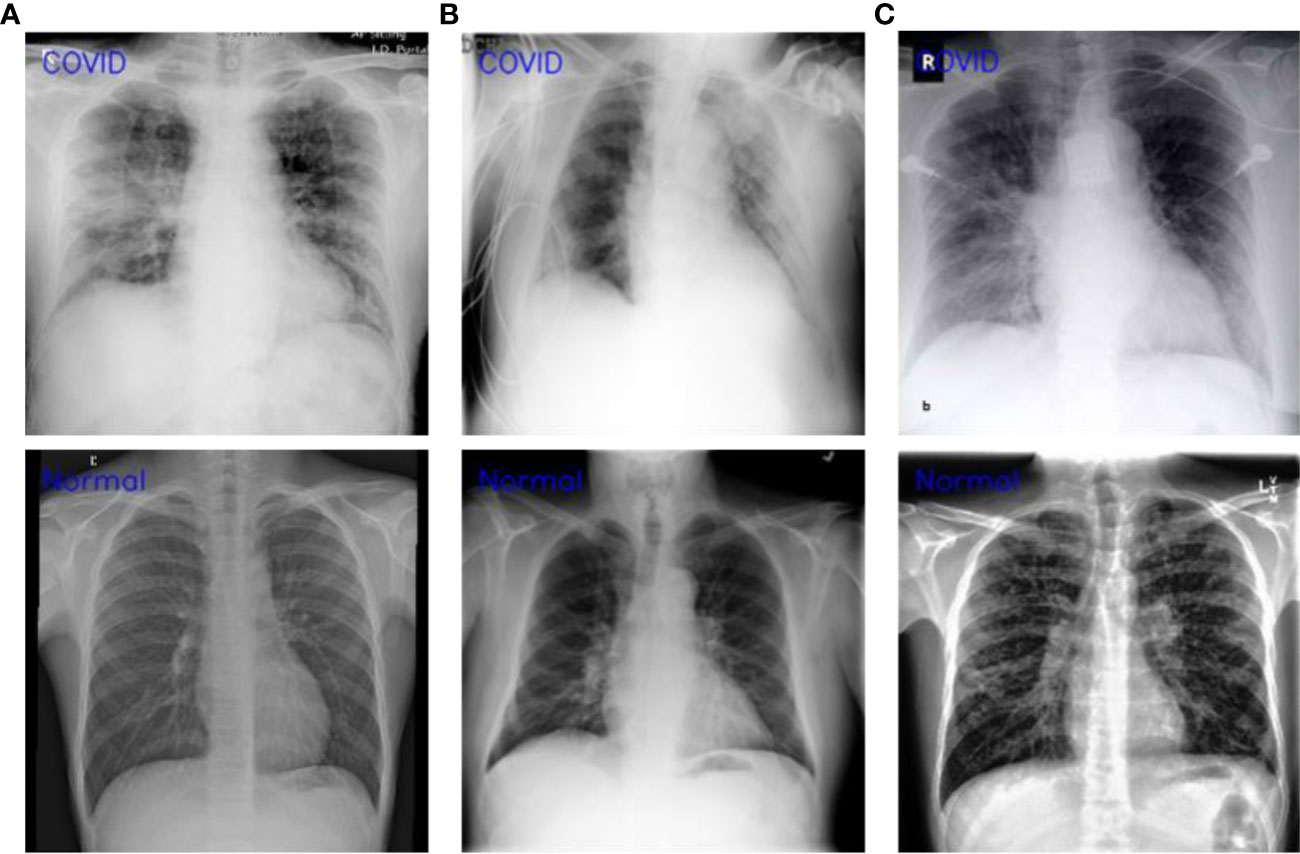

3.2 Details of the Datasets

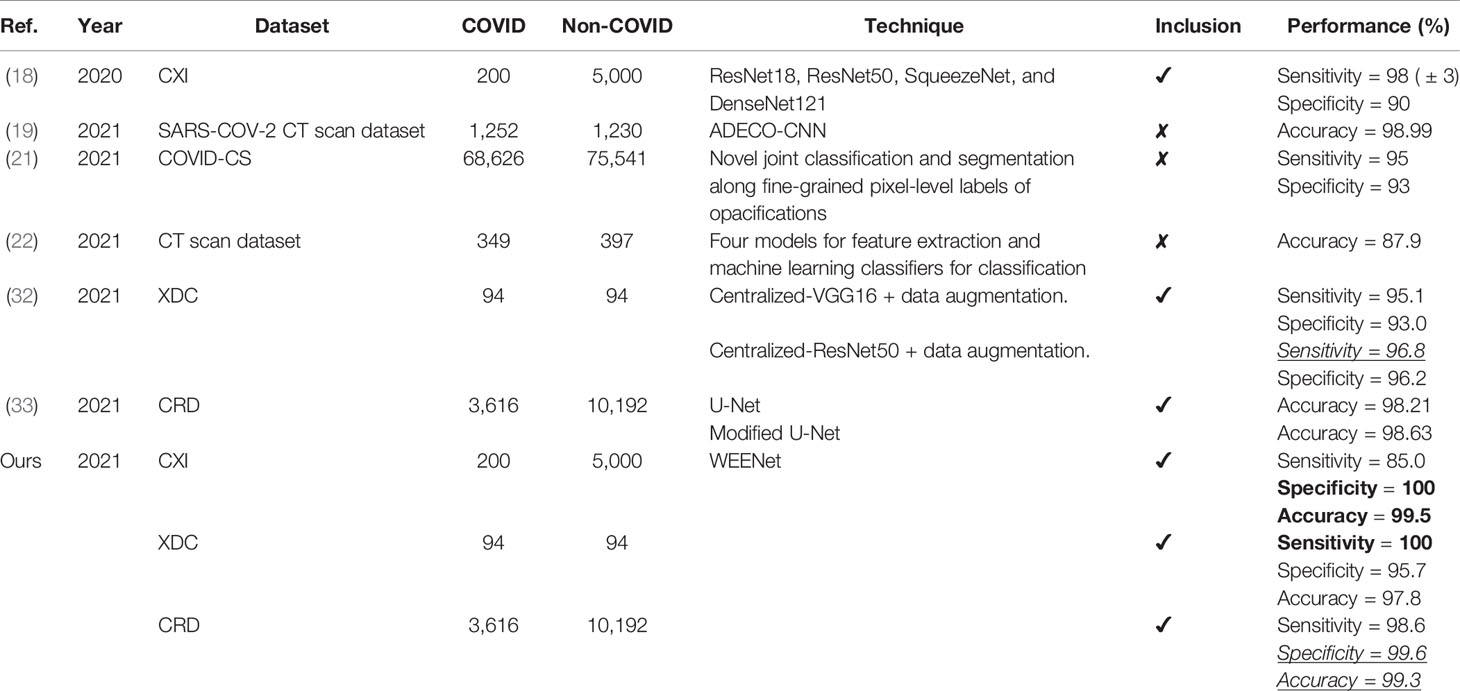

For experimental evaluation, we used three publicly available datasets (18, 32, 33) to compare the performance of our proposed method with other SOTA CNN architectures. These datasets contain chest x-ray images of COVID-19-positive and COVID-19-negative patients with the corresponding labels, i.e., COVID-19 and normal. The statistics of these datasets are listed in Table 2. Several other publicly available datasets are commonly used for COVID-19 classification; however, most of them are either imbalanced or lack diversity, leading to poor performance. We therefore selected the CXI, XDC, and CRD datasets from the publicly available datasets listed in Table 3. For each dataset, Table 3 gives the publication year, dataset name, number of samples in the COVID and non-COVID classes, evaluation methods, and experimental outcomes in terms of sensitivity, specificity, and accuracy.

Table 3 Detailed information on the collected SOTA methods, including their techniques and other important remarks.

3.2.1 CXI Dataset

This is one of the most widely used datasets for COVID-19 diagnosis in the medical image analysis community. It contains a total of 184 COVID-19-infected and more than 5,000 normal chest x-ray images. The original CXI (18) dataset is therefore class-imbalanced, which significantly affects a model's performance during training. To reduce the risk of overfitting, we augmented the dataset and balanced the number of images in the COVID-19 and normal classes. The number of images per class in both the original and the augmented CXI dataset is listed in Table 2.

3.2.2 XDC Dataset

This dataset was created by collecting a small number of chest x-ray images of COVID-19-positive and COVID-19-negative patients. Overall, it is very small and cannot be used to train large CNNs. Such a small amount of image data also often leads to underfitting, where the model struggles to learn from the data. To avoid such problems during training, we augmented the XDC (32) dataset and increased the number of images from 94 to 940 for both the COVID-19 and normal classes, as given in Table 2.

3.2.3 CRD Dataset

The COVID-19 radiography database is a large-scale chest x-ray image dataset released in several versions. The first release publicly shared 219 COVID-19-infected and 1,341 normal chest x-ray images. The second release increased the number of COVID-19-infected chest x-ray images to 1,200. The third release increased the number of COVID-19-infected chest x-ray images to 3,616 and normal chest x-ray images to 10,192. In this paper, we used the final release of the CRD (33) dataset, whose statistics are presented in Table 2.

3.3 Evaluation Metrics

In image classification problems, the performance of a trained CNN model is mostly assessed quantitatively using common classification metrics. These metrics can be easily computed from the confusion matrix obtained by comparing the actual class labels with the predicted labels. Following this strategy, we used eight common performance evaluation metrics, namely true positive (TP), false positive (FP), false negative (FN), true negative (TN), sensitivity, specificity, accuracy, and receiver operating characteristics (ROC), to validate the classification performance of WEENet. The values of TP, FP, FN, and TN are retrieved from the confusion matrix, and sensitivity, specificity, accuracy, and ROC are computed as in Eqs. (3) to (6).

$$\text{Sensitivity} = \frac{TP}{TP + FN} \tag{3}$$

$$\text{Specificity} = \frac{TN}{TN + FP} \tag{4}$$

$$\text{Accuracy} = \frac{TP + TN}{TP + TN + FP + FN} \tag{5}$$

$$\text{ROC} = \mathcal{R}\left(\text{Sensitivity},\ 1 - \text{Specificity}\right) \tag{6}$$

Here, sensitivity is the ratio of correctly classified positive samples to the total number of positive samples. Similarly, specificity is the ratio of correctly classified negative samples to the total number of negative samples. Accuracy is a generic classification metric given by the total number of correct classifications over the total number of samples. Finally, the ROC metric represents the relationship, denoted by $\mathcal{R}$, between sensitivity and specificity, conventionally plotted as sensitivity against 1 − specificity over a range of decision thresholds.
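Eqs. (3) to (5), together with the threshold sweep behind an ROC curve, can be sketched in plain Python. The 0/1 label encoding (1 = COVID-19, 0 = normal) is an assumption, and the trapezoidal area under the curve (AUC) is shown only as one common scalar summary of the ROC curve, not necessarily the one used in this work.

```python
# Sketch of Eqs. (3)-(6) for binary labels (1 = COVID-19, 0 = normal; an
# assumed encoding). Both classes must be present to avoid division by zero.


def confusion_counts(y_true, y_pred):
    """Return (TP, FP, FN, TN) for 0/1 labels."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == 1 and p == 1)
    fp = sum(1 for t, p in pairs if t == 0 and p == 1)
    fn = sum(1 for t, p in pairs if t == 1 and p == 0)
    tn = sum(1 for t, p in pairs if t == 0 and p == 0)
    return tp, fp, fn, tn


def sensitivity(tp, fn):
    return tp / (tp + fn)                      # Eq. (3)


def specificity(tn, fp):
    return tn / (tn + fp)                      # Eq. (4)


def accuracy(tp, fp, fn, tn):
    return (tp + tn) / (tp + tn + fp + fn)     # Eq. (5)


def roc_points(y_true, scores):
    """(1 - specificity, sensitivity) at every distinct score threshold."""
    points = [(0.0, 0.0)]
    for threshold in sorted(set(scores), reverse=True):
        y_pred = [1 if s >= threshold else 0 for s in scores]
        tp, fp, fn, tn = confusion_counts(y_true, y_pred)
        points.append((1.0 - specificity(tn, fp), sensitivity(tp, fn)))
    return points


def auc(points):
    """Area under the ROC curve using the trapezoidal rule."""
    return sum((x1 - x0) * (y0 + y1) / 2
               for (x0, y0), (x1, y1) in zip(points, points[1:]))
```

For example, with labels `[1, 1, 0, 0]` and scores `[0.9, 0.3, 0.8, 0.1]`, one positive is ranked below a negative, and `auc(roc_points(...))` gives 0.75.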

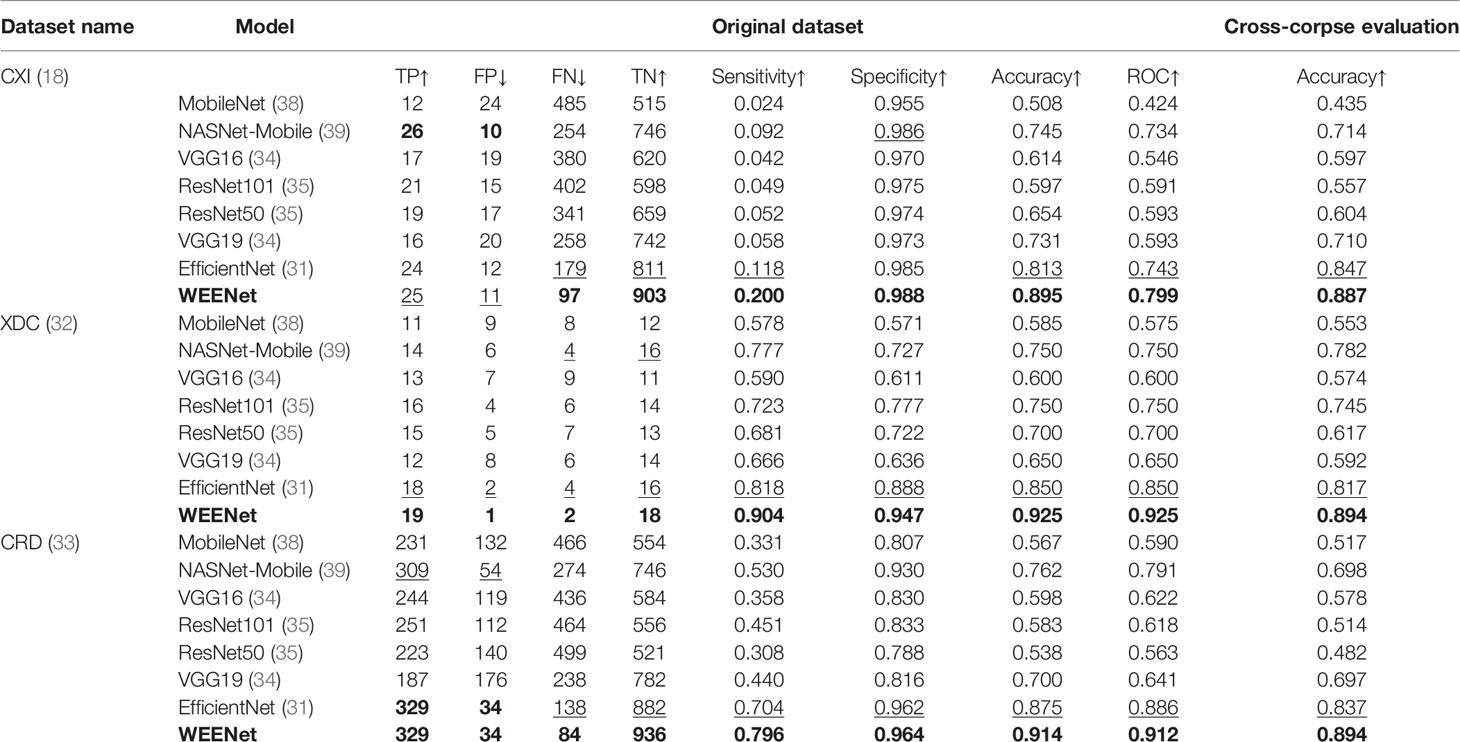

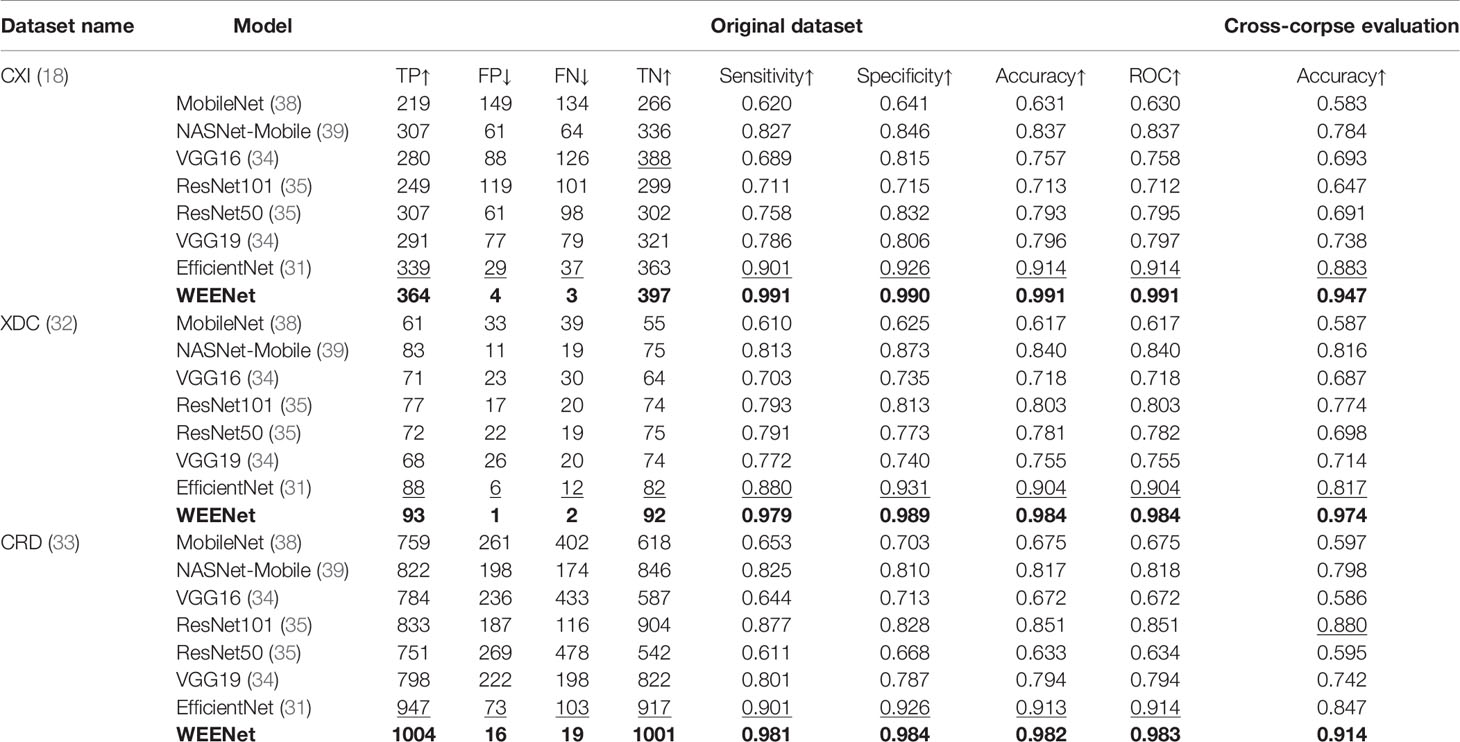

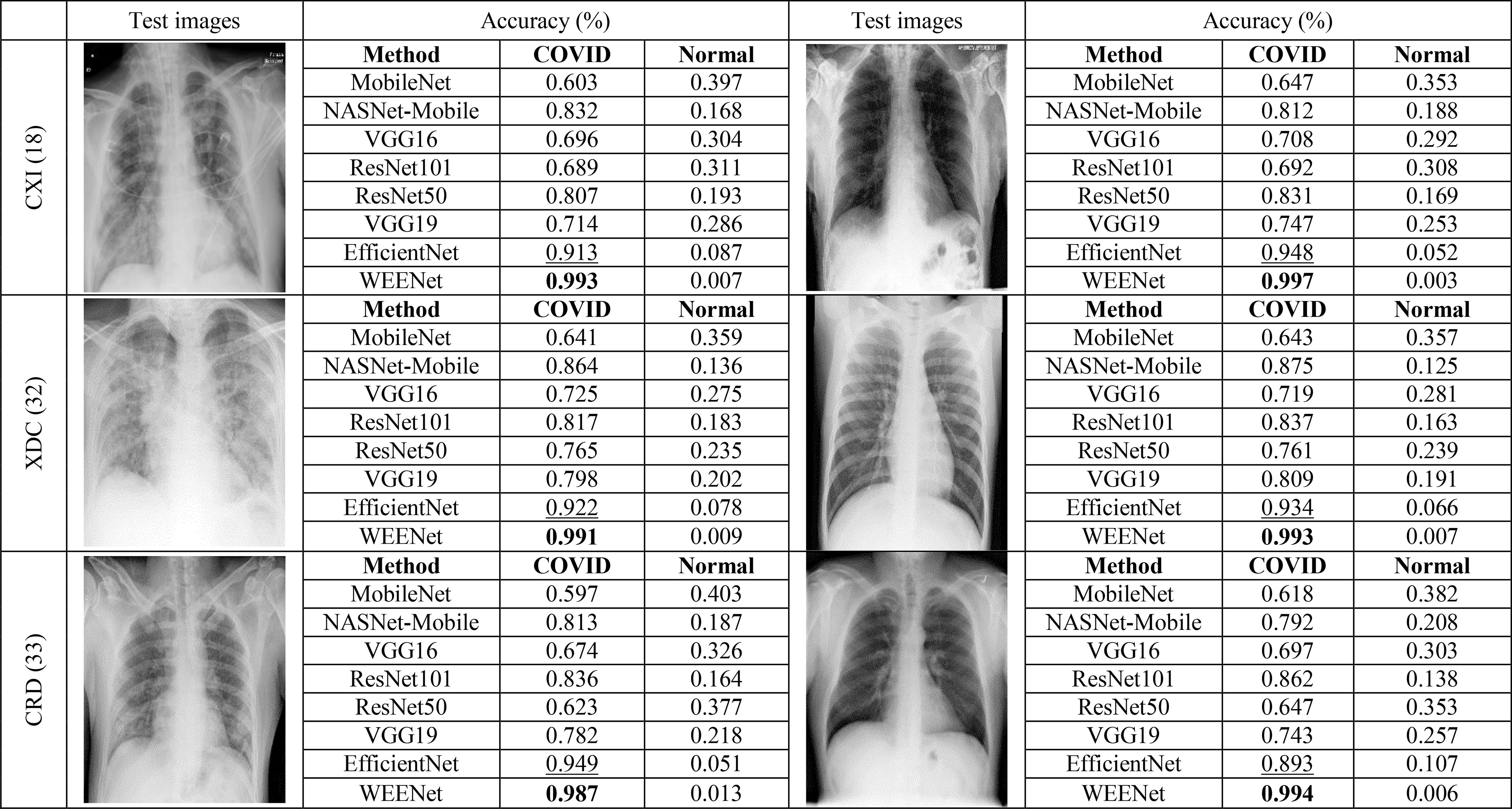

3.4 Cross-Corpus Evaluation and Robustness Analysis

Generalization plays an important role, especially in uncertain computational environments where the data under observation differ semantically from the data used to train the algorithm. With this in mind, we propose a new evaluation strategy, called cross-corpus evaluation, to validate the generalization and robustness of the proposed system in uncertain environments. In the first round of experiments, we evaluate our method against SOTA methods on the test sets of the same datasets used for training. In the second round, we assess the proposed approach and the investigated CNNs on the test sets of datasets other than the training dataset; this is termed cross-corpus evaluation. The quantitative results for both the same-dataset and the cross-corpus evaluation are presented in Tables 4, 5. As expected, accuracy under cross-corpus evaluation is lower than on the original datasets, yet the scores still indicate good generalization. Furthermore, the results in Tables 4, 5 show that our method obtains the highest accuracy on every dataset under both same-dataset and cross-corpus evaluation. We also evaluated the qualitative performance of our method against SOTA methods by classifying randomly selected images from the test sets of each dataset. The predictions for these images are shown in Figure 6, where our method provides the best predictions among the compared SOTA methods for COVID-19 classification.
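The two rounds of the cross-corpus protocol amount to a train/test grid over datasets. In this sketch, `train_fn` and `eval_fn` are hypothetical placeholders for training a model on one dataset and scoring it on another dataset's test split; diagonal entries of the grid correspond to the same-dataset round and off-diagonal entries to the cross-corpus round.

```python
# Sketch of the cross-corpus protocol. `train_fn` and `eval_fn` are
# hypothetical callables standing in for model training and test-set scoring.


def cross_corpus_grid(datasets, train_fn, eval_fn):
    """Train once per dataset and score each model on every dataset's test set.

    Returns {(train_name, test_name): score}. Diagonal entries form the
    same-dataset round; off-diagonal entries form the cross-corpus round.
    """
    results = {}
    for train_name in datasets:
        model = train_fn(train_name)
        for test_name in datasets:
            results[(train_name, test_name)] = eval_fn(model, test_name)
    return results


def split_rounds(results):
    """Separate same-dataset scores from cross-corpus scores."""
    same = {k: v for k, v in results.items() if k[0] == k[1]}
    cross = {k: v for k, v in results.items() if k[0] != k[1]}
    return same, cross
```

With three datasets (CXI, XDC, CRD), the grid has 3 same-dataset entries and 6 cross-corpus entries; in practice, `train_fn` would wrap model training and `eval_fn` would compute a metric such as accuracy on the target test set.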

Figure 6 Robustness analysis of our proposed method against other SOTA CNNs on randomly selected test images from each dataset and the corresponding predictions made by each method.

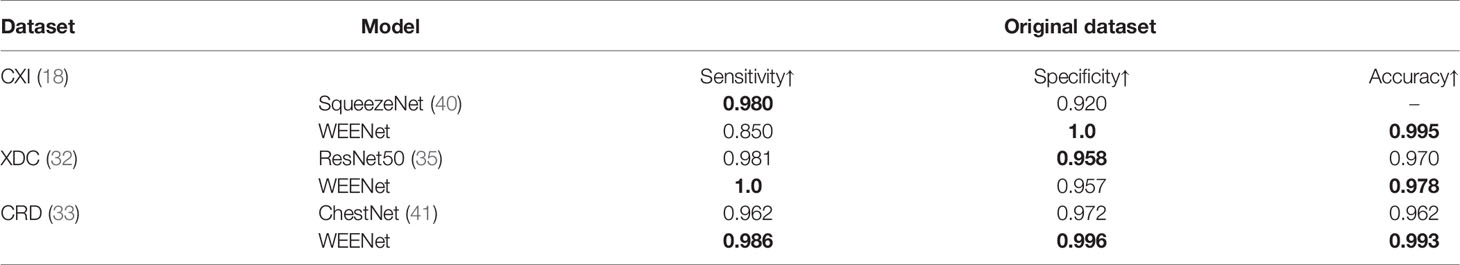

3.5 Comparison With Other CNN Models for COVID-19 Classification

This section presents a comparative analysis of WEENet and other SOTA methods for COVID-19 classification on the test sets of the CXI, XDC, and CRD datasets. We compared our method with SOTA models including MobileNet (38), NASNet-Mobile (39), VGG16 (34), ResNet101 (35), ResNet50 (35), VGG19 (34), and EfficientNet (31). To investigate the performance of our method and validate the effectiveness of the proposed data augmentation strategy, we conducted experiments on both the original and the augmented datasets and compared the results with those of the SOTA methods. The results on the original datasets are given in Table 4: WEENet outperforms all compared CNNs on every evaluation metric, except that NASNet-Mobile (39) performs better in terms of TP and FP on the CXI dataset. The results on the augmented datasets are given in Table 5, where WEENet achieves the best results on every evaluation metric, showing its superiority and efficiency for COVID-19 classification in chest x-ray images. We also compared WEENet with other SOTA CNN-based COVID-19 classification approaches and report the results in Table 6. These results show that WEENet dominates on the CRD dataset across every evaluation metric. Although our method obtained comparatively lower sensitivity and specificity on the CXI and XDC datasets, it still attained the best results on these datasets for the other two evaluation metrics. In Tables 3–6, the best results are shown in bold and the runner-up scores are underlined. Furthermore, some visual results of WEENet on the test set of each dataset are given in Figure 7.

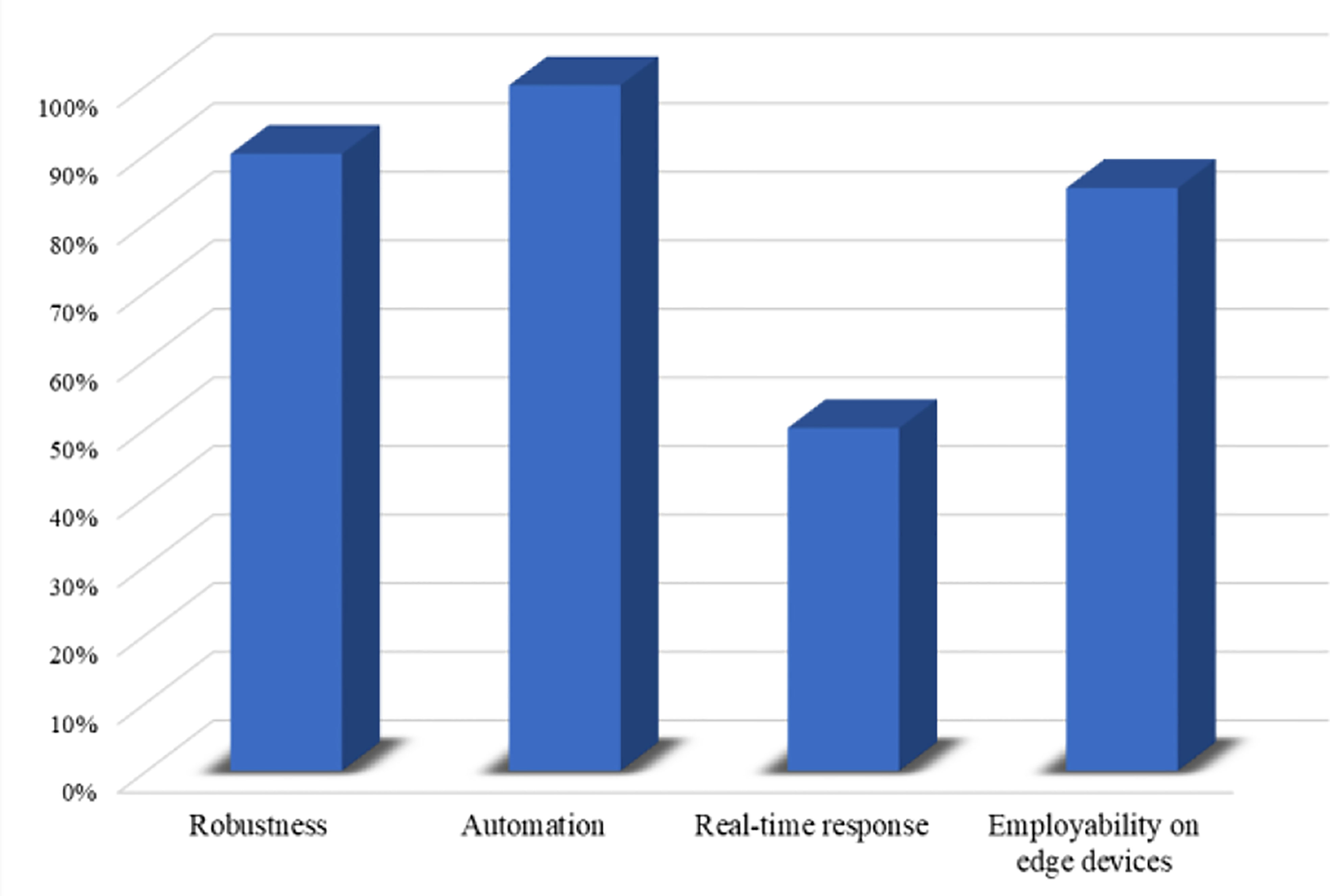

3.6 Feasibility Analysis for 5G-Enabled IoMT Environment

Given the requirements of 5G-enabled IoMT environments for rapid and accurate smart healthcare systems (42–44), it is essential to analyze the feasibility of a system before deploying it in the real world. A feasibility assessment involves several steps that examine whether a system suits the problem under observation in aspects such as the robustness of the decision-making system, automation, real-time response, and deployability on edge computing platforms. With this in mind, we conducted feasibility analysis experiments and investigated WEENet in these aspects. Based on the quantitative results in the previous section, we estimated the robustness of WEENet by averaging its accuracy across all datasets, obtaining an average accuracy of 90%. The proposed method also meets automation requirements, as it is a fully end-to-end deep learning system. Although our method takes relatively more time to diagnose COVID-19 in a chest x-ray image, it has limited memory storage requirements for deployment on edge devices, making it a suitable approach for early COVID-19 detection in 5G-enabled IoMT environments. The findings of the feasibility assessment are depicted in Figure 8.
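The robustness estimate (mean accuracy across datasets) and the storage side of edge deployment both reduce to simple arithmetic. This sketch assumes dense, uncompressed weights at 4 bytes per float32 parameter; the `budget_mb` argument is a hypothetical device constraint, not a figure from this work.

```python
# Sketch of two feasibility checks. Storage estimates assume dense,
# uncompressed weights (4 bytes per float32 parameter by default).


def average_accuracy(per_dataset):
    """Mean accuracy over datasets, used as a simple robustness estimate."""
    if not per_dataset:
        raise ValueError("need at least one dataset")
    return sum(per_dataset.values()) / len(per_dataset)


def weights_size_mb(num_params, bytes_per_param=4):
    """Approximate weight storage in megabytes (10**6 bytes)."""
    return num_params * bytes_per_param / 1e6


def fits_on_device(num_params, budget_mb, bytes_per_param=4):
    """True if the raw weights fit within a (hypothetical) storage budget."""
    return weights_size_mb(num_params, bytes_per_param) <= budget_mb
```

For example, a model with 1,000,000 parameters needs about 4 MB in float32, or about 1 MB if its weights are quantized to 8-bit integers (`bytes_per_param=1`).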

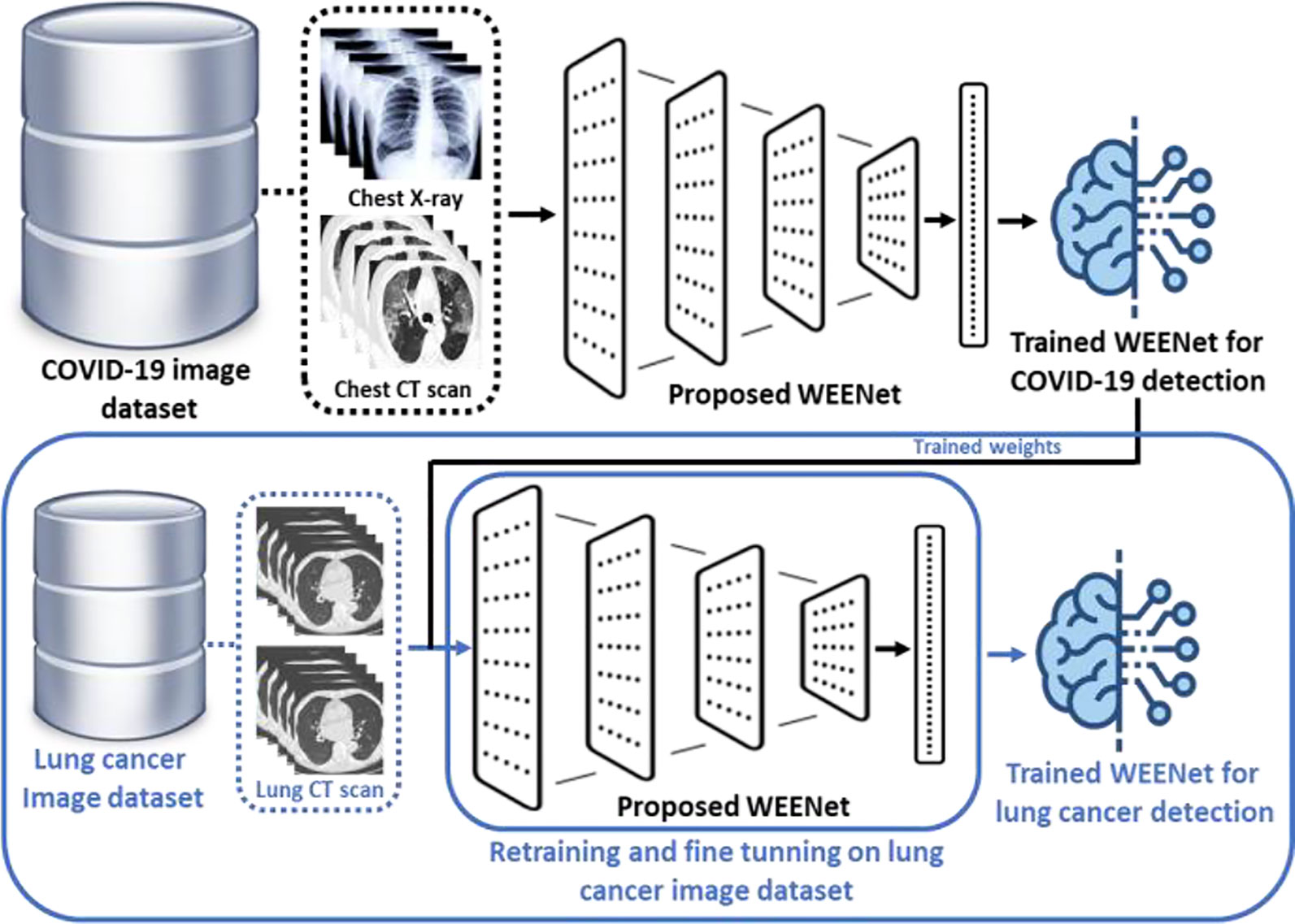

3.7 WEENet for Lung Cancer Detection

In this section, we discuss the effectiveness and reusability of the WEENet framework for the early detection of lung cancer in chest CT scan images. Deep learning-based early detection of lung cancer (45) can greatly help doctors and other medical staff eliminate cancer cells at an early stage by providing proper care and treatment to affected patients. Given the similarity between the chest CT scan images used for COVID-19 detection and lung cancer CT scan images (46), the WEENet framework can be used for lung cancer detection by fine-tuning the architecture on lung cancer image data with a transfer learning strategy (47). For efficient retraining, the weights already learned on COVID-19 image data can be used to initialize WEENet when training it on lung cancer image data. Using these pretrained weights can not only reduce the training effort (in terms of training time) but also improve the performance of the retrained architecture for lung cancer detection. The reusability workflow of WEENet for the lung cancer detection task is depicted in Figure 9.

Figure 9 Graphical overview of the reusability process of our proposed WEENet for the lung cancer detection task.
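The weight-reuse idea behind this workflow can be illustrated with the simplest form of transfer learning. The pure-Python sketch below assumes a frozen feature extractor (a stand-in for WEENet's convolutional layers trained on COVID-19 images) and a binary logistic classification head whose weights are initialized from the source task and then fine-tuned on target (lung cancer) data. The names `backbone`, `fine_tune_head`, and `predict` are hypothetical; WEENet's actual layers and training settings are not reproduced here, and full fine-tuning, as described in the text, would also update the backbone layers rather than only the head.

```python
import math
from typing import Callable, List, Sequence, Tuple

Vector = List[float]
Backbone = Callable[[Sequence[float]], Vector]  # frozen feature extractor (hypothetical)


def sigmoid(z: float) -> float:
    """Numerically stable logistic function."""
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)


def _logit(w: Vector, b: float, f: Vector) -> float:
    return sum(wi * fi for wi, fi in zip(w, f)) + b


def fine_tune_head(
    backbone: Backbone,
    source_weights: Vector,
    source_bias: float,
    images: Sequence[Sequence[float]],
    labels: Sequence[int],
    lr: float = 0.5,
    epochs: int = 100,
) -> Tuple[Vector, float]:
    """Warm-start a binary logistic head from source-task weights and fine-tune it
    with full-batch gradient descent on binary cross-entropy. The backbone is frozen."""
    w = list(source_weights)  # copy: the source-task weights stay untouched
    b = source_bias
    feats = [backbone(x) for x in images]  # frozen backbone, so features are computed once
    n = len(feats)
    for _ in range(epochs):
        grad_w = [0.0] * len(w)
        grad_b = 0.0
        for f, y in zip(feats, labels):
            err = sigmoid(_logit(w, b, f)) - y  # derivative of BCE w.r.t. the logit
            for i, fi in enumerate(f):
                grad_w[i] += err * fi
            grad_b += err
        w = [wi - lr * gi / n for wi, gi in zip(w, grad_w)]
        b -= lr * grad_b / n
    return w, b


def predict(backbone: Backbone, w: Vector, b: float, image: Sequence[float]) -> int:
    """Return 1 (e.g. cancer-positive) if the head's probability is at least 0.5."""
    return int(sigmoid(_logit(w, b, backbone(image))) >= 0.5)
```

Passing the source-task head weights as `source_weights` is the warm start; passing zeros or random values instead corresponds to training the head from scratch, which is the baseline against which the claimed savings in training time would be measured.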

4 Conclusion and Future Work

The COVID-19 pandemic started in 2019 and has severely affected human life and the world economy, prompting various actions to stop its spread and manage the pandemic efficiently. Such actions include smart lockdowns, the development of new devices for temperature checking, early detection of COVID-19 using medical imaging techniques, and treatment plans for patients at different risk levels. This work supports early COVID-19 detection using medical chest x-ray images in 5G-enabled IoMT environments, contributing to the management of the COVID-19 pandemic. Considering the limited available medical imaging data and the conflicting performance metrics for early COVID-19 detection, we investigated deep learning-based frameworks for effective modeling and early diagnosis of COVID-19 from medical chest x-ray images in IoMT-enabled environments. We proposed “WEENet” for COVID-19 diagnosis using an efficient CNN architecture and evaluated its performance on three benchmark medical chest x-ray and CT image datasets using eight evaluation metrics, including accuracy, ROC, robustness, specificity, and sensitivity. We also tested our method using a cross-corpus evaluation strategy. Our results compare favorably with state-of-the-art (SOTA) methods and can support healthcare authorities in analyzing medical chest x-ray images of infected patients and in managing the COVID-19 pandemic in IoMT environments.
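Several of the metrics listed above follow directly from the binary confusion matrix. The sketch below is illustrative only and does not reproduce the paper's evaluation code or its full set of eight metrics; it assumes labels coded as 1 for COVID-19 positive and 0 for negative, and summarizes the ROC curve by its area (AUC) using the pairwise-ranking interpretation. The function names `binary_metrics` and `roc_auc` are hypothetical.

```python
from typing import Dict, Sequence


def binary_metrics(y_true: Sequence[int], y_pred: Sequence[int]) -> Dict[str, float]:
    """Confusion-matrix metrics with 1 = COVID-19 positive and 0 = negative."""
    if len(y_true) != len(y_pred):
        raise ValueError("y_true and y_pred must have the same length")
    tp = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 1)
    tn = sum(1 for t, p in zip(y_true, y_pred) if t == 0 and p == 0)
    fp = sum(1 for t, p in zip(y_true, y_pred) if t == 0 and p == 1)
    fn = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 0)

    def ratio(num: float, den: float) -> float:
        return num / den if den else 0.0  # convention: 0 when the metric is undefined

    precision = ratio(tp, tp + fp)
    sensitivity = ratio(tp, tp + fn)  # recall, true positive rate
    return {
        "accuracy": ratio(tp + tn, tp + tn + fp + fn),
        "sensitivity": sensitivity,
        "specificity": ratio(tn, tn + fp),  # true negative rate
        "precision": precision,
        "f1": ratio(2 * precision * sensitivity, precision + sensitivity),
    }


def roc_auc(y_true: Sequence[int], scores: Sequence[float]) -> float:
    """AUC as the probability that a random positive scores above a random
    negative, with ties counted as one half."""
    pos = [s for t, s in zip(y_true, scores) if t == 1]
    neg = [s for t, s in zip(y_true, scores) if t == 0]
    if not pos or not neg:
        raise ValueError("AUC needs at least one positive and one negative example")
    wins = sum(1.0 if p > n else 0.5 if p == n else 0.0 for p in pos for n in neg)
    return wins / (len(pos) * len(neg))
```

The pairwise AUC is quadratic in the number of samples, which is adequate for test sets of a few thousand images; a rank-based implementation gives the same value in O(n log n).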

Although the reported results surpass those of SOTA methods, our model is not the smallest among all methods under consideration (though it is smaller than most of them). This is due to some architectural layers that were tuned to balance the competing performance metrics. Further investigation is needed to reduce the model size without affecting performance, which is one of our future plans. We also plan to extend this work to a multiclass problem covering mild, moderate, and severe cases, as in the COVIDGR dataset (48) from the University of Granada, Spain.

Data Availability Statement

The original contributions presented in the study are included in the article/supplementary material. Further inquiries can be directed to the corresponding authors.

Author Contributions

KM, HU, and ZK contributed to the idea conceptualization, data acquisition, implementation, experimental assessment, manuscript writing, and revision. AS contributed to the data acquisition and manuscript revision. AA, MA, MK, and MHAH contributed to the acquisition and interpretation of data. KMM, MH, and MS contributed to the design of the study and revision of the manuscript.

Funding

This work was supported by the Deputyship for Research & Innovation, Ministry of Education in Saudi Arabia, through project number 959.

Conflict of Interest

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Publisher’s Note

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

Acknowledgments

The authors extend their appreciation to the Deputyship for Research & Innovation, Ministry of Education in Saudi Arabia, for funding this research work through project number 959.

References

1. Song F, Shi N, Shan F, Zhang Z, Shen J, Lu H, et al. Emerging 2019 Novel Coronavirus (2019-nCoV) Pneumonia. Radiology (2020) 295(1):210–7. doi: 10.1148/radiol.2020200274

2. WHO. WHO Coronavirus (COVID-19) Dashboard (2021). Available at: https://covid19.who.int/.

3. Xie X, Zhong Z, Zhao W, Zheng C, Wang F, Liu J. Chest CT for Typical Coronavirus Disease 2019 (COVID-19) Pneumonia: Relationship to Negative RT-PCR Testing. Radiology (2020) 296(2):E41–5. doi: 10.1148/radiol.2020200343

4. Huang C, Wang Y, Li X, Ren L, Zhao J, Hu Y, et al. Clinical Features of Patients Infected With 2019 Novel Coronavirus in Wuhan, China. Lancet (2020) 395(10223):497–506. doi: 10.1016/S0140-6736(20)30183-5

5. Liang X, Zhang Y, Wang J, Ye Q, Liu Y, Tong J. Diagnosis of COVID-19 Pneumonia Based on Graph Convolutional Network. Front Med (2021) 7:1071. doi: 10.3389/fmed.2020.612962

6. Heidarian S, Afshar P, Enshaei N, Naderkhani F, Rafiee MJ, Fard FB, et al. COVID-FACT: A Fully-Automated Capsule Network-Based Framework for Identification of COVID-19 Cases From Chest CT Scans. Front Artif Intell (2021) 4:598932. doi: 10.3389/frai.2021.598932

7. Bogoch II, Watts A, Thomas-Bachli A, Huber C, Kraemer MU, Khan K. Pneumonia of Unknown Aetiology in Wuhan, China: Potential for International Spread via Commercial Air Travel. J Travel Med (2020) 27(2):taaa008. doi: 10.1093/jtm/taaa008

8. Yoo SH, Geng H, Chiu TL, Yu SK, Cho DC, Heo J, et al. Deep Learning-Based Decision-Tree Classifier for COVID-19 Diagnosis From Chest X-Ray Imaging. Front Med (2020) 7:427. doi: 10.3389/fmed.2020.00427

9. Shi H, Han X, Jiang N, Cao Y, Alwalid O, Gu J, et al. Radiological Findings From 81 Patients With COVID-19 Pneumonia in Wuhan, China: A Descriptive Study. Lancet Infect Dis (2020) 20(4):425–34. doi: 10.1016/S1473-3099(20)30086-4

10. Mohammad-Rahimi H, Nadimi M, Ghalyanchi-Langeroudi A, Taheri M, Ghafouri-Fard S. Application of Machine Learning in Diagnosis of COVID-19 Through X-Ray and CT Images: A Scoping Review. Front Cardiovasc Med (2021) 8:185. doi: 10.3389/fcvm.2021.638011

11. Hu S, Gao Y, Niu Z, Jiang Y, Li L, Xiao X, et al. Weakly Supervised Deep Learning for COVID-19 Infection Detection and Classification From CT Images. IEEE Access (2020) 8:118869–83. doi: 10.1109/ACCESS.2020.3005510

12. Ye Q, Xia J, Yang G. Explainable AI For COVID-19 CT Classifiers: An Initial Comparison Study. arXiv preprint (2021). doi: 10.1109/CBMS52027.2021.00103

13. Narin A, Kaya C, Pamuk Z. Automatic Detection of Coronavirus Disease (COVID-19) Using X-Ray Images and Deep Convolutional Neural Networks. Pattern Anal Appl (2021) 24:1207–20. doi: 10.1007/s10044-021-00984-y

14. Ismael AM, Şengür A. Deep Learning Approaches for COVID-19 Detection Based on Chest X-Ray Images. Expert Syst Appl (2021) 164:114054. doi: 10.1016/j.eswa.2020.114054

15. Tang S, Wang C, Nie J, Kumar N, Zhang Y, Xiong Z, et al. EDL-COVID: Ensemble Deep Learning for COVID-19 Cases Detection From Chest X-Ray Images. IEEE Trans Ind Inf (2021) 17(9):6539–49. doi: 10.1109/TII.2021.3057683

16. Jain R, Gupta M, Taneja S, Hemanth DJ. Deep Learning Based Detection and Analysis of COVID-19 on Chest X-Ray Images. Appl Intell (2021) 51(3):1690–700. doi: 10.1007/s10489-020-01902-1

17. Wang L, Zhang Y, Wang D, Tong X, Liu T, Zhang S, et al. Artificial Intelligence for COVID-19: A Systematic Review. Front Med (2021) 8:1457. doi: 10.3389/fmed.2021.704256

18. Minaee S, Kafieh R, Sonka M, Yazdani S, Soufi GJ. Deep-COVID: Predicting COVID-19 From Chest X-Ray Images Using Deep Transfer Learning. Med Image Anal (2020) 65:101794. doi: 10.1016/j.media.2020.101794

19. Castiglione A, Vijayakumar P, Nappi M, Sadiq S, Umer M. COVID-19: Automatic Detection of the Novel Coronavirus Disease From CT Images Using an Optimized Convolutional Neural Network. IEEE Trans Ind Inf (2021) 17(9):6480–8. doi: 10.1109/TII.2021.3057524

20. Oh Y, Park S, Ye JC. Deep Learning COVID-19 Features on CXR Using Limited Training Data Sets. IEEE Trans Med Imaging (2020) 39(8):2688–700. doi: 10.1109/TMI.2020.2993291

21. Wu Y-H, Gao S-H, Mei J, Xu J, Fan D-P, Zhang R-G, et al. JCS: An Explainable COVID-19 Diagnosis System by Joint Classification and Segmentation. IEEE Trans Image Process (2021) 30:3113–26. doi: 10.1109/TIP.2021.3058783

22. Shamsi A, Asgharnezhad H, Jokandan SS, Khosravi A, Kebria RM, Nahavandi D, et al. An Uncertainty-Aware Transfer Learning-Based Framework for COVID-19 Diagnosis. IEEE Trans Neural Netw Learn Syst (2021) 32(4):1408–17. doi: 10.1109/TNNLS.2021.3054306

23. Zhang W, Lu Q, Yu Q, Li Z, Liu Y, Lo SK, et al. Blockchain-Based Federated Learning for Device Failure Detection in Industrial IoT. IEEE Internet Things J (2020) 8(7):5926–37. doi: 10.1109/JIOT.2020.3032544

24. Lu H, He X, Du M, Ruan X, Sun Y, Wang K. Edge QoE: Computation Offloading With Deep Reinforcement Learning for Internet of Things. IEEE Internet Things J (2020) 7(10):9255–65. doi: 10.1109/JIOT.2020.2981557

25. Du M, Wang K, Chen Y, Wang X, Sun Y. Big Data Privacy Preserving in Multi-Access Edge Computing for Heterogeneous Internet of Things. IEEE Commun Mag (2018) 56(8):62–7. doi: 10.1109/MCOM.2018.1701148

26. Xu C, Wang K, Sun Y, Guo S, Zomaya AY. Redundancy Avoidance for Big Data in Data Centers: A Conventional Neural Network Approach. IEEE Trans Network Sci Eng (2018) 7(1):104–14. doi: 10.1109/TNSE.2018.2843326

27. Parah SA, Kaw JA, Bellavista P, Loan NA, Bhat GM, Muhammad K, et al. Efficient Security and Authentication for Edge-Based Internet of Medical Things. IEEE Internet Things J (2020) 8(21):15652–62. doi: 10.1109/JIOT.2020.3038009

28. Dourado CM, Da Silva SPP, Da Nóbrega RVM, Rebouças Filho PP, Muhammad K, De Albuquerque VHC. An Open IoHT-Based Deep Learning Framework for Online Medical Image Recognition. IEEE J Selected Areas Commun (2020) 39(2):541–8. doi: 10.1109/JSAC.2020.3020598

29. Bellavista P, Torello M, Corradi A, Foschini L. Smart Management of Healthcare Professionals Involved in COVID-19 Contrast With SWAPS. Front Sustain Cities (2021) 03:73. doi: 10.3389/frsc.2021.638743

30. Zhang W, Zhou T, Lu Q, Wang X, Zhu C, Sun H, et al. Dynamic Fusion-Based Federated Learning for COVID-19 Detection. IEEE Internet Things J (2021) 8(21):15884–91. doi: 10.1109/JIOT.2021.3056185

31. Tan M, Le Q. EfficientNet: Rethinking Model Scaling for Convolutional Neural Networks. In: International Conference on Machine Learning. California: PMLR (2019). p. 6105–14.

32. Feki I, Ammar S, Kessentini Y, Muhammad K. Federated Learning for COVID-19 Screening From Chest X-Ray Images. Appl Soft Comput (2021) 106:107330. doi: 10.1016/j.asoc.2021.107330

33. Rahman T, Khandakar A, Qiblawey Y, Tahir A, Kiranyaz S, Kashem SBA, et al. Exploring the Effect of Image Enhancement Techniques on COVID-19 Detection Using Chest X-Ray Images. Comput Biol Med (2021) 132:104319. doi: 10.1016/j.compbiomed.2021.104319

34. Simonyan K, Zisserman A. Very Deep Convolutional Networks for Large-Scale Image Recognition. arXiv preprint (2014).

35. He K, Zhang X, Ren S, Sun J. Deep Residual Learning for Image Recognition. In: 29th IEEE Conference on Computer Vision and Pattern Recognition. Las Vegas: IEEE (2016). p. 770–8.

36. Tan M, Chen B, Pang R, Vasudevan V, Sandler M, Howard A, et al. MnasNet: Platform-Aware Neural Architecture Search for Mobile. In: Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition. California: IEEE (2019). p. 2820–8.

37. Kramer MA. Nonlinear Principal Component Analysis Using Autoassociative Neural Networks. AIChE J (1991) 37(2):233–43. doi: 10.1002/aic.690370209

38. Howard AG, Zhu M, Chen B, Kalenichenko D, Wang W, Weyand T, et al. MobileNets: Efficient Convolutional Neural Networks for Mobile Vision Applications. arXiv preprint (2017).

39. Zoph B, Vasudevan V, Shlens J, Le QV. Learning Transferable Architectures for Scalable Image Recognition. In: 2018 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR). Salt Lake City: IEEE (2018). p. 8697–710.

40. Iandola FN, Han S, Moskewicz MW, Ashraf K, Dally WJ, Keutzer K. SqueezeNet: AlexNet-Level Accuracy With 50x Fewer Parameters and <0.5 MB Model Size. arXiv preprint (2016).

41. Wang H, Xia Y. ChestNet: A Deep Neural Network for Classification of Thoracic Diseases on Chest Radiography. arXiv preprint (2018).

42. Rachakonda L, Bapatla AK, Mohanty SP, Kougianos E. SaYoPillow: Blockchain-Integrated Privacy-Assured IoMT Framework for Stress Management Considering Sleeping Habits. IEEE Trans Consumer Electron (2020) 67(1):20–9. doi: 10.1109/TCE.2020.3043683

43. Salem O, Alsubhi K, Shaafi A, Gheryani M, Mehaoua A, Boutaba R. Man-in-the-Middle Attack Mitigation in Internet of Medical Things. IEEE Trans Ind Inf (2021) 18:2053–62. doi: 10.1109/TII.2021.3089462

44. Sun Y, Liu J, Yu K, Alazab M, Lin K. PMRSS: Privacy-Preserving Medical Record Searching Scheme for Intelligent Diagnosis in IoT Healthcare. IEEE Trans Ind Inf (2021) 18:1981–90. doi: 10.1109/TII.2021.3070544

45. Ibrahim DM, Elshennawy NM, Sarhan AM. Deep-Chest: Multi-Classification Deep Learning Model for Diagnosing COVID-19, Pneumonia, and Lung Cancer Chest Diseases. Comput Biol Med (2021) 132:104348. doi: 10.1016/j.compbiomed.2021.104348

46. Quarato CMI, Mirijello A, Maggi MM, Borelli C, Russo R, Lacedonia D, et al. Lung Ultrasound in the Diagnosis of COVID-19 Pneumonia: Not Always and Not Only What Is COVID-19 “Glitters”. Front Med (2021) 8. doi: 10.3389/fmed.2021.707602

47. Shrey SB, Hakim L, Kavitha M, Kim HW, Kurita T. Transfer Learning by Cascaded Network to Identify and Classify Lung Nodules for Cancer Detection. In: International Workshop on Frontiers of Computer Vision. Ibusuki: Springer (2020). p. 262–73.

48. DaSCI Andalusian Research Institute in Data Science and Computational Intelligence. COVIDGR. Available at: https://dasci.es/es/transferencia/open-data/covidgr/.

Keywords: medical imaging, COVID-19 diagnosis, machine learning, Internet of Medical Things, deep learning, x-ray imaging, cancer categorization

Citation: Muhammad K, Ullah H, Khan ZA, Saudagar AKJ, AlTameem A, AlKhathami M, Khan MB, Abul Hasanat MH, Mahmood Malik K, Hijji M and Sajjad M (2022) WEENet: An Intelligent System for Diagnosing COVID-19 and Lung Cancer in IoMT Environments. Front. Oncol. 11:811355. doi: 10.3389/fonc.2021.811355

Received: 08 November 2021; Accepted: 01 December 2021;

Published: 02 February 2022.

Edited by:

Guang Yang, Imperial College London, United Kingdom

Reviewed by:

Kun Wang, UCLA School of Law, United States

Kemal Polat, Abant Izzet Baysal University, Turkey

Zhenghua Chen, Nanyang Technological University, Singapore

Copyright © 2022 Muhammad, Ullah, Khan, Saudagar, AlTameem, AlKhathami, Khan, Abul Hasanat, Mahmood Malik, Hijji and Sajjad. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Khan Muhammad, khan.muhammad@ieee.org; Abdul Khader Jilani Saudagar, aksaudagar@imamu.edu.sa
