
SYSTEMATIC REVIEW article

Front. Oncol., 25 January 2022

Sec. Genitourinary Oncology

Volume 11 - 2021 | https://doi.org/10.3389/fonc.2021.792120

This article is part of the Research Topic Advances in Prostate Cancer: Model Systems, Molecular and Cellular Mechanisms, Early Detection, and Therapies

Purpose: To evaluate the diagnostic performance of the extraprostatic extension (EPE) grading system for detection of EPE in patients with prostate cancer (PCa).

Materials and Methods: We performed a literature search of Web of Science, MEDLINE (Ovid and PubMed), Cochrane Library, EMBASE, and Google Scholar to identify eligible articles published before August 31, 2021, with no language restrictions applied. We included studies using the EPE grading system for the prediction of EPE, with histopathological results as the reference standard. The pooled sensitivity, specificity, positive likelihood ratio (LR+), negative likelihood ratio (LR−), and diagnostic odds ratio (DOR) were calculated with the bivariate model. Quality assessment of included studies was performed using the Quality Assessment of Diagnostic Accuracy Studies-2 tool.

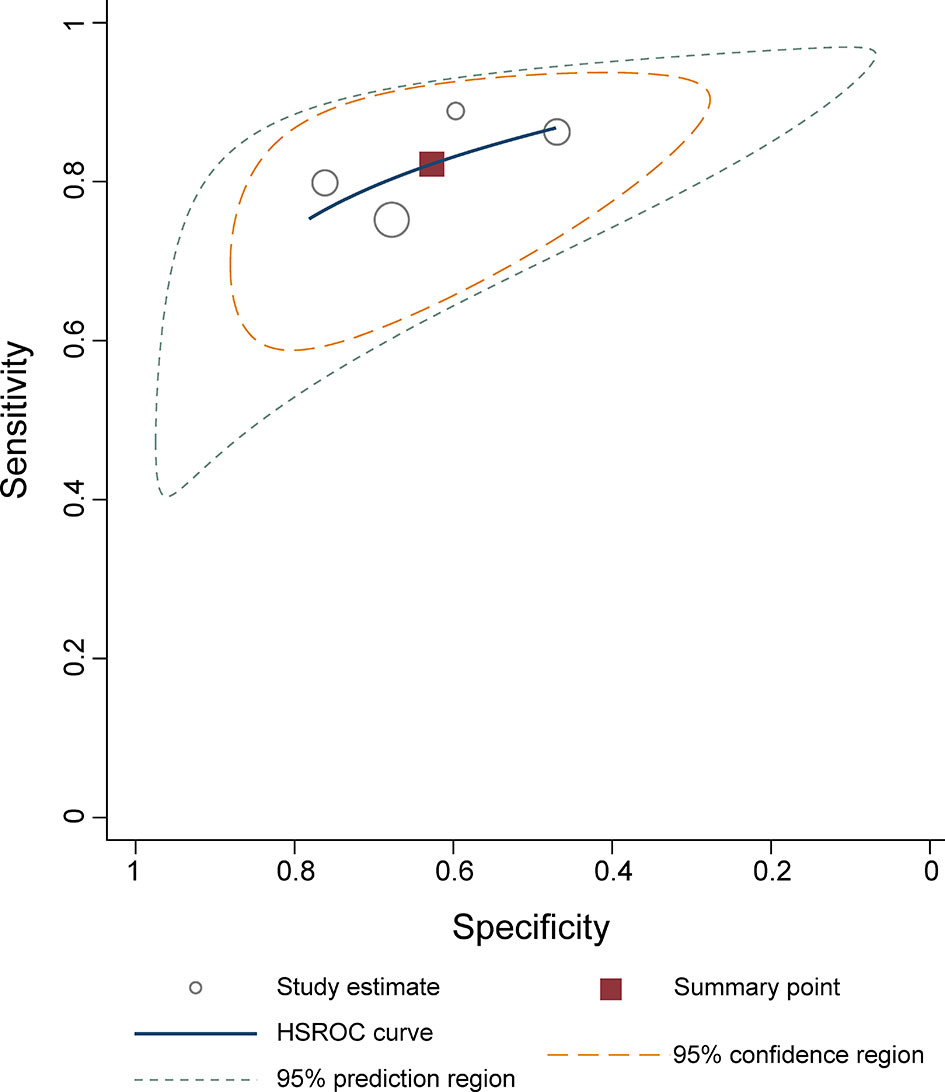

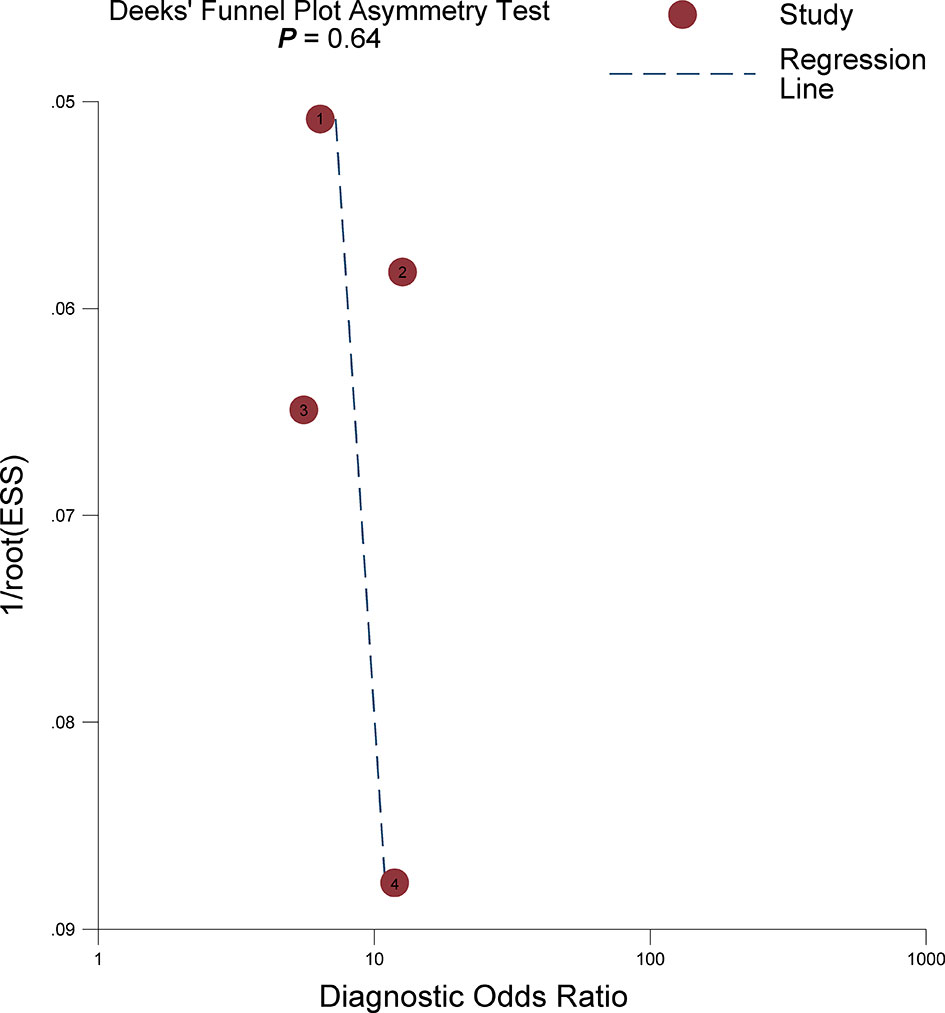

Results: A total of 4 studies with 1,294 patients were included in the current systematic review. The pooled sensitivity and specificity were 0.82 (95% CI 0.76–0.87) and 0.63 (95% CI 0.51–0.73), with an area under the hierarchical summary receiver operating characteristic (HSROC) curve of 0.82 (95% CI 0.79–0.85). The pooled LR+, LR−, and DOR were 2.20 (95% CI 1.70–2.86), 0.28 (95% CI 0.22–0.36), and 7.77 (95% CI 5.27–11.44), respectively. The quality of the included studies was high, and Deeks’ funnel plot indicated a low likelihood of publication bias (p = 0.64).

Conclusion: The EPE grading system demonstrated high sensitivity and moderate specificity, with good inter-reader agreement. However, further studies are needed to validate this scoring system in clinical practice.

Prostate cancer (PCa) is the most common malignancy among men in North America and Europe, where one in nine men will be diagnosed with PCa at some point during their lifetime (1, 2). Compared with organ-confined disease (pT2), which can benefit from nerve-sparing surgical procedures, locally advanced disease [pT3, or extraprostatic extension (EPE)] is associated with a higher risk of biochemical recurrence and metastatic disease (3, 4). Although patients who undergo radical prostatectomy (RP) have high cancer-specific survival, many suffer from postoperative erectile dysfunction and urinary incontinence (5). Conversely, preservation of the neurovascular bundles (NVBs) can improve postoperative potency rates but increases the risk of positive surgical margins, which in turn leads to biochemical recurrence and treatment failure (6). Preoperative evaluation of EPE therefore plays a crucial role in clinical management and treatment planning. Various clinical models and grading systems have previously been proposed for predicting EPE, including the Cancer of the Prostate Risk Assessment (CAPRA) score, the Memorial Sloan Kettering Cancer Center (MSKCC) nomogram, and the Partin tables (PT). Nonetheless, these risk stratification tools lack accuracy and correlate only roughly with final histopathologic results in clinical practice, with reported areas under the curve (AUCs) ranging from 0.61 to 0.81 (7–10).

In 2012, the European Society of Urogenital Radiology (ESUR) introduced the Prostate Imaging Reporting and Data System (PI-RADS) for the acquisition, interpretation, and reporting of multiparametric MRI (mpMRI) of PCa (11–13), and it has since been widely applied in clinical practice (14–16). However, for detecting EPE in locally advanced PCa, the ESUR scoring system has shown only moderate diagnostic accuracy, depends mainly on radiologists’ own experience, and lacks reproducibility (17). Recently, Mehralivand et al. proposed a new scoring system termed the EPE grade (18), whose primary strength is its simplicity: it does not require the integration of complex imaging features. According to this grading system, grade 1 is defined as either curvilinear contact length ≥15 mm or capsular bulge and irregularity; grade 2 is defined as both curvilinear contact length ≥15 mm and capsular bulge and irregularity; and grade 3 is defined as visible EPE at MRI. Several studies have shown that the EPE grading system has favorable diagnostic performance; however, it has not yet been evaluated systematically. In this study, we therefore aimed to assess the diagnostic accuracy of the EPE grading system for the prediction of EPE.
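The grade definitions above are simple enough to state as a function. The sketch below is a minimal illustration, not the authors’ implementation: the field names are hypothetical, it assumes that visible EPE (grade 3) takes precedence over the other criteria, and it returns grade 0 when no feature is present, which the definitions above do not state explicitly.

```python
from dataclasses import dataclass


@dataclass
class EpeFindings:
    """MRI findings for one lesion (hypothetical field names, for illustration)."""
    curvilinear_contact_mm: float         # tumor-capsule curvilinear contact length
    capsular_bulge_or_irregularity: bool  # capsular bulge and irregularity present
    visible_epe: bool                     # frank extraprostatic extension seen at MRI


def epe_grade(f: EpeFindings, contact_cutoff_mm: float = 15.0) -> int:
    """Return the EPE grade (0-3) following the definitions in the text.

    Assumptions of this sketch: visible EPE overrides the other criteria,
    and grade 0 is returned when none of the features is present.
    """
    if f.visible_epe:
        return 3
    long_contact = f.curvilinear_contact_mm >= contact_cutoff_mm
    bulge = f.capsular_bulge_or_irregularity
    if long_contact and bulge:
        return 2  # both criteria met
    if long_contact or bulge:
        return 1  # exactly one criterion met
    return 0


def predicts_epe(grade: int, cutoff: int = 1) -> bool:
    """Dichotomize the grade for accuracy analysis (e.g., EPE grade >= 1)."""
    return grade >= cutoff


if __name__ == "__main__":
    print(epe_grade(EpeFindings(18.0, False, False)))  # 1: long contact only
    print(epe_grade(EpeFindings(18.0, True, False)))   # 2: both criteria
    print(epe_grade(EpeFindings(8.0, False, True)))    # 3: visible EPE
```

The `cutoff` argument of `predicts_epe` mirrors the dichotomization thresholds (≥1, ≥2, ≥3) evaluated in the included studies.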

This meta-analysis complied with the Preferred Reporting Items for Systematic Reviews and Meta-Analyses (PRISMA) guidelines (19) and was performed according to a standardized review and data extraction protocol. The research question was formulated using the Patient, Index test, Comparator, Outcome, and Study design (PICOS) criteria: what is the overall diagnostic performance of the EPE grading system for the prediction of EPE in patients with PCa? Our goal was to pool sensitivity and specificity from currently available retrospective and prospective cohort studies.

A computerized literature search of Web of Science, MEDLINE (Ovid and PubMed), Cochrane Library, EMBASE, and Google Scholar was performed to identify studies applying the EPE grading system published from December 2018 to September 2021, with no language restrictions. The search combined the following synonyms: [(EPE) or (ECE) or (extracapsular extension) or (extraprostatic extension)] and [(PCa) or (prostate cancer) or (prostate carcinoma)]. Additional papers were identified from the most recent reviews and the reference lists of eligible papers.
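As a rough illustration of how the two synonym groups combine, the sketch below assembles a Boolean string from the term lists. The formatting is an assumption: each database (PubMed, Ovid, EMBASE, Web of Science) has its own field tags and phrase syntax, and date limits are applied separately through each interface.

```python
def or_group(terms: list[str]) -> str:
    """Join synonyms with OR, quoting multi-word phrases (assumed syntax)."""
    quoted = [f'"{t}"' if " " in t else t for t in terms]
    return "(" + " OR ".join(quoted) + ")"


def build_query(*groups: list[str]) -> str:
    """Combine synonym groups with AND."""
    return " AND ".join(or_group(g) for g in groups)


# Synonym groups taken from the search strategy described above
EPE_TERMS = ["EPE", "ECE", "extracapsular extension", "extraprostatic extension"]
PCA_TERMS = ["PCa", "prostate cancer", "prostate carcinoma"]

if __name__ == "__main__":
    # Prints: (EPE OR ECE OR "extracapsular extension" OR "extraprostatic extension")
    #         AND (PCa OR "prostate cancer" OR "prostate carcinoma")   [on one line]
    print(build_query(EPE_TERMS, PCA_TERMS))
```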

Studies were included if they met all of the following criteria: 1) included patients who underwent MRI for assessment of suspected EPE; 2) used the EPE grading system to predict EPE in PCa; 3) reported sufficient information to reconstruct 2 × 2 tables for evaluating diagnostic performance; and 4) used histopathological findings after RP as the reference standard.

Studies were excluded if any of the following criteria were met: 1) small sample size (fewer than 20 participants); 2) use of other guidelines or risk stratification tools instead of the EPE grading system; 3) insufficient details for assessing diagnostic performance; 4) overlapping study populations; and 5) review articles, guidelines, consensus statements, letters, editorials, and conference abstracts. Two reviewers (WL and WS, with 8 and 5 years of experience, respectively, in performing systematic reviews and meta-analyses) independently screened all abstracts, then reviewed the full texts and selected potentially eligible articles; all disagreements were resolved by consensus in consultation with a third reviewer (AD).
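To make the eligibility rules concrete, the sketch below encodes them as a checklist that returns the reasons for excluding a study. The record fields are hypothetical summaries of what a reviewer would judge from the full text; in this review, the criteria were applied manually by two independent reviewers, not by code.

```python
from dataclasses import dataclass

# Publication types excluded under criterion 5 (labels are illustrative)
EXCLUDED_TYPES = {"review", "guideline", "consensus", "letter", "editorial", "conference abstract"}


@dataclass
class Candidate:
    """Hypothetical reviewer judgments for one full-text article."""
    n_patients: int
    uses_epe_grade: bool             # MRI read with the EPE grading system
    has_2x2_data: bool               # TP/FP/FN/TN recoverable
    reference_is_rp_histology: bool  # histopathology after RP as reference standard
    overlaps_included_cohort: bool
    publication_type: str            # e.g., "original", "review", "letter"


def exclusion_reasons(c: Candidate) -> list[str]:
    """List every inclusion/exclusion criterion the study fails."""
    reasons = []
    if c.n_patients < 20:
        reasons.append("fewer than 20 participants")
    if not c.uses_epe_grade:
        reasons.append("EPE grading system not used")
    if not c.has_2x2_data:
        reasons.append("insufficient data for a 2 x 2 table")
    if not c.reference_is_rp_histology:
        reasons.append("reference standard not RP histopathology")
    if c.overlaps_included_cohort:
        reasons.append("overlapping population")
    if c.publication_type in EXCLUDED_TYPES:
        reasons.append(f"excluded publication type: {c.publication_type}")
    return reasons


def is_eligible(c: Candidate) -> bool:
    return not exclusion_reasons(c)
```

Returning all failing criteria, rather than stopping at the first, makes it easier to tabulate exclusion reasons for a PRISMA flowchart.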

The following information was extracted from each study: 1) demographic characteristics (sample size, patient age, prostate-specific antigen (PSA) level, Gleason score or International Society of Urological Pathology (ISUP) grade group, and number of patients with histopathologically confirmed EPE); 2) study characteristics (first author, publication year, affiliation and location, patient recruitment period, study design, cutoff threshold, other scoring systems used, number of readers and their experience, and blinding); 3) technical characteristics (MRI sequences, magnetic field strength, and coil type); and 4) diagnostic accuracy data (numbers of true-positive, false-negative, false-positive, and true-negative findings according to the diagnostic criteria). Data extraction was performed by one investigator (WL) and confirmed by a second investigator (WS), with disagreements resolved by consensus after discussion with a third investigator (AD). The methodologic quality of the included studies was assessed with the Quality Assessment of Diagnostic Accuracy Studies-2 (QUADAS-2) tool (20).
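The 2 × 2 counts extracted under item 4 are sufficient to recompute each study’s accuracy measures. The sketch below shows the standard formulas; the 0.5 continuity correction applied when any cell is zero is a common convention assumed here, not a detail reported in this review, and the example counts are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class TwoByTwo:
    tp: int  # EPE on MRI and at histopathology
    fp: int  # EPE on MRI only
    fn: int  # EPE at histopathology only
    tn: int  # EPE on neither


def accuracy_measures(t: TwoByTwo, correction: float = 0.5) -> dict[str, float]:
    """Sensitivity, specificity, LR+, LR- and DOR from one 2 x 2 table."""
    tp, fp, fn, tn = t.tp, t.fp, t.fn, t.tn
    if 0 in (tp, fp, fn, tn):
        # Assumed convention: add 0.5 to every cell when any cell is zero
        tp, fp, fn, tn = (c + correction for c in (tp, fp, fn, tn))
    sens = tp / (tp + fn)
    spec = tn / (tn + fp)
    return {
        "sensitivity": sens,
        "specificity": spec,
        "lr_pos": sens / (1 - spec),
        "lr_neg": (1 - sens) / spec,
        "dor": (tp * tn) / (fp * fn),  # equals lr_pos / lr_neg
    }


if __name__ == "__main__":
    # Hypothetical study: 100 patients with EPE, 100 without
    m = accuracy_measures(TwoByTwo(tp=80, fp=40, fn=20, tn=60))
    for name, value in m.items():
        print(f"{name}: {value:.2f}")
```

For this hypothetical table, sensitivity is 0.80, specificity 0.60, LR+ 2.00, LR− 0.33, and DOR 6.00.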

Heterogeneity among the included studies was assessed with the Q test and the inconsistency index (I²), interpreted as follows: 0%–40%, unimportant; 30%–60%, moderate; 50%–90%, substantial; and 75%–100%, considerable (21). Pooled sensitivity, specificity, positive likelihood ratio (LR+), negative likelihood ratio (LR−), diagnostic odds ratio (DOR), and their 95% CIs were calculated with the bivariate model (22, 23) and presented graphically in forest plots; the area under the hierarchical summary receiver operating characteristic (HSROC) curve was also calculated. In addition, we constructed an HSROC curve with a 95% confidence region and a 95% prediction region to display the results (22, 23). Publication bias was evaluated with Deeks’ funnel plot and Deeks’ asymmetry test (24). All analyses were conducted using STATA 16.0, and statistical significance was set at p < 0.05.
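For readers unfamiliar with I², the sketch below computes Cochran’s Q and I² for logit-transformed sensitivities using inverse-variance weights, and maps the result onto the overlapping interpretation bands listed above. This is the standard univariate calculation, offered as an illustration under that assumption; the bivariate routine in STATA may derive its I² values differently, so results need not match those in the Results exactly. The (tp, fn) pairs in the example are hypothetical.

```python
import math


def logit_sensitivity(tp: int, fn: int) -> tuple[float, float]:
    """Logit of sensitivity and its approximate variance (1/tp + 1/fn)."""
    sens = tp / (tp + fn)
    return math.log(sens / (1 - sens)), 1 / tp + 1 / fn


def cochran_q_and_i2(estimates: list[float], variances: list[float]) -> tuple[float, float]:
    """Cochran's Q and I^2 (in %) with fixed-effect inverse-variance weights."""
    weights = [1 / v for v in variances]
    pooled = sum(w * y for w, y in zip(weights, estimates)) / sum(weights)
    q = sum(w * (y - pooled) ** 2 for w, y in zip(weights, estimates))
    df = len(estimates) - 1
    i2 = max(0.0, (q - df) / q) * 100 if q > 0 else 0.0
    return q, i2


# Overlapping bands as listed in the Methods
BANDS = [(0, 40, "unimportant"), (30, 60, "moderate"),
         (50, 90, "substantial"), (75, 100, "considerable")]


def interpret_i2(i2: float) -> list[str]:
    """Return every band containing i2 (the bands overlap by design)."""
    return [label for low, high, label in BANDS if low <= i2 <= high]


if __name__ == "__main__":
    # Hypothetical (tp, fn) pairs for four studies
    pairs = [(90, 20), (40, 5), (120, 40), (60, 10)]
    logits, variances = zip(*(logit_sensitivity(tp, fn) for tp, fn in pairs))
    q, i2 = cochran_q_and_i2(list(logits), list(variances))
    print(f"Q = {q:.2f}, I2 = {i2:.1f}% -> {interpret_i2(i2)}")
```

Because the bands overlap, a value such as the 55.87% reported for sensitivity falls in both the moderate and substantial ranges; the label chosen is a judgment call.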

Figure 1 shows the flowchart of the study selection process. Our searches yielded 137 records, of which 39 were excluded as duplicates. After abstract screening, 65 records were excluded, and the full texts of the remaining 33 potentially eligible studies were examined. Of these, 29 studies were excluded because they provided insufficient data to reconstruct 2 × 2 tables, were outside the field of interest, or reported sensitivity and specificity that could not be reproduced. Consequently, 4 studies comprising 1,294 participants were included in the present meta-analysis (18, 25–27).

The demographic characteristics are presented in Table 1. Sample sizes ranged from 130 to 553 patients, with mean ages of 60–65 years. Histopathological results after RP showed EPE in 22.6%–48.5% of patients. Mean PSA levels ranged from 6.28 to 9.95 ng/ml, and ISUP grade groups ranged from 1 to 5. Regarding study design, 1 study was prospective and the remaining 3 were retrospective. All studies used T2-weighted imaging (T2WI), dynamic contrast-enhanced (DCE) imaging, and diffusion-weighted imaging (DWI). Regarding the cutoff, 1 study reported outcomes at 3 thresholds (EPE grade ≥1, ≥2, and ≥3) (18), whereas the remaining studies reported only the outcome at a threshold of ≥1. In addition to the EPE grading system, the diagnostic accuracy of quantitative length of capsular contact (LCC) assessment and of an in-house Likert scale was reported by 2 studies (18, 25, 26). In all studies, the MRI images were interpreted independently by 2 radiologists with 2–15 years of experience. Inter-reader agreement, expressed as kappa values, was reported by 3 studies and ranged from 0.47 to 0.88 (25–27). One study used a 1.5-T scanner (25), whereas the remaining 3 used 3.0-T scanners. In 3 studies, the readers were blinded to final pathology results; however, 1 study reported that the readers were aware that the patients had PCa (26). The study characteristics are summarized in Table 2, and the key points of the included studies in Table 3.

Overall, the quality of the included studies was high (Figure 2). In the patient selection domain, however, 3 of the 4 studies were retrospective (25–27). In the index test domain, one study reported that the radiologists knew that the patients had been diagnosed with PCa and had undergone RP, although they were unaware of the final histopathologic findings (26). In the remaining two domains (reference standard, and flow and timing), all studies were judged to be at low risk of bias.

Figure 2 Grouped bar charts show the risk of bias and concerns for applicability of included studies.

Sensitivity and specificity for the individual studies ranged from 0.75 to 0.89 and from 0.47 to 0.76, respectively. The pooled sensitivity and specificity of the 4 included studies were 0.82 (95% CI 0.76–0.87) and 0.63 (95% CI 0.51–0.73), respectively; the coupled forest plots are presented in Figure 3. Higgins’ I² statistics revealed moderate heterogeneity for sensitivity (I² = 55.87%) and considerable heterogeneity for specificity (I² = 93.05%). The pooled LR+ and LR− were 2.20 (95% CI 1.70–2.86) and 0.28 (95% CI 0.22–0.36), respectively, with a DOR of 7.77 (95% CI 5.27–11.44; Figure 4). The area under the HSROC curve was 0.82 (95% CI 0.79–0.85). The large difference between the 95% confidence region and the 95% prediction region of the HSROC curve indicated between-study heterogeneity (Figure 5). Deeks’ funnel plot and asymmetry test showed no significant evidence of publication bias among the included studies (p = 0.64; Figure 6).
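As a rough consistency check, the likelihood ratios and DOR can be approximated from the pooled point estimates of sensitivity (Se = 0.82) and specificity (Sp = 0.63). This back-of-the-envelope calculation uses rounded summary values, so it only approximates the model-based estimates reported above (LR+ 2.20, LR− 0.28, DOR 7.77):

```latex
\begin{aligned}
\mathrm{LR}^{+} &= \frac{\mathrm{Se}}{1-\mathrm{Sp}} = \frac{0.82}{0.37} \approx 2.22, \\
\mathrm{LR}^{-} &= \frac{1-\mathrm{Se}}{\mathrm{Sp}} = \frac{0.18}{0.63} \approx 0.29, \\
\mathrm{DOR} &= \frac{\mathrm{LR}^{+}}{\mathrm{LR}^{-}}
  = \frac{\mathrm{Se}\,\mathrm{Sp}}{(1-\mathrm{Se})(1-\mathrm{Sp})}
  = \frac{0.82 \times 0.63}{0.18 \times 0.37} \approx 7.76.
\end{aligned}
```

The close agreement (for example, 7.76 versus 7.77 for the DOR) suggests that the reported summary measures are internally consistent.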

Figure 5 Hierarchical summary receiver operating characteristic plots with summary point and 95% confidence area for the overall.

Figure 6 Deeks’s funnel plot. A p-value of 0.64 suggests that the likelihood of publication bias is low.
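Deeks’ test regresses each study’s log DOR on 1/√ESS, weighting by ESS, where the effective sample size ESS = 4·n₁·n₂/(n₁ + n₂) combines the numbers of patients with (n₁) and without (n₂) EPE; a slope significantly different from zero suggests funnel-plot asymmetry (24). The sketch below is a plain reimplementation for illustration, not the STATA routine used in this review. It assumes a 0.5 continuity correction for zero cells and returns the t statistic of the slope, which would be compared with a t distribution on k − 2 degrees of freedom; with only 4 studies that leaves 2 degrees of freedom, so the test has little power. The example tables are hypothetical.

```python
import math


def deeks_test(tables: list[tuple[int, int, int, int]]) -> tuple[float, float, float]:
    """Deeks' funnel-plot asymmetry regression.

    tables: (tp, fp, fn, tn) per study.
    Returns (intercept, slope, t statistic of the slope).
    """
    if len(tables) < 3:
        raise ValueError("need at least 3 studies")
    xs, ys, ws = [], [], []
    for cells in tables:
        if 0 in cells:
            cells = tuple(c + 0.5 for c in cells)  # assumed continuity correction
        tp, fp, fn, tn = cells
        n1, n2 = tp + fn, fp + tn  # patients with / without EPE
        ess = 4 * n1 * n2 / (n1 + n2)
        xs.append(1 / math.sqrt(ess))
        ys.append(math.log((tp * tn) / (fp * fn)))
        ws.append(ess)
    # Weighted least squares of ln(DOR) on 1/sqrt(ESS)
    sw = sum(ws)
    x_bar = sum(w * x for w, x in zip(ws, xs)) / sw
    y_bar = sum(w * y for w, y in zip(ws, ys)) / sw
    sxx = sum(w * (x - x_bar) ** 2 for w, x in zip(ws, xs))
    sxy = sum(w * (x - x_bar) * (y - y_bar) for w, x, y in zip(ws, xs, ys))
    slope = sxy / sxx
    intercept = y_bar - slope * x_bar
    rss = sum(w * (y - intercept - slope * x) ** 2 for w, x, y in zip(ws, xs, ys))
    se_slope = math.sqrt(rss / (len(xs) - 2) / sxx)
    t_stat = slope / se_slope if se_slope > 0 else math.nan
    return intercept, slope, t_stat


if __name__ == "__main__":
    studies = [(100, 50, 50, 100), (30, 10, 10, 30), (10, 2, 2, 10), (60, 30, 15, 90)]
    _, b1, t = deeks_test(studies)
    print(f"slope = {b1:.2f}, t = {t:.2f} (df = {len(studies) - 2})")
```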

In the current study, we assessed the diagnostic performance of the EPE grading system for predicting EPE in patients with PCa. Based on 4 studies, the pooled sensitivity and specificity were 0.82 (95% CI 0.76–0.87) and 0.63 (95% CI 0.51–0.73), with an area under the HSROC curve of 0.82 (95% CI 0.79–0.85). Because of insufficient data, pooling summary estimates of inter-reader agreement was not feasible; however, the 3 studies that reported κ values found values ranging from 0.47 to 0.88, indicating moderate to substantial reproducibility among radiologists.
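The κ values cited above measure agreement between two readers beyond what chance alone would produce. The sketch below computes unweighted Cohen’s κ for paired categorical ratings; whether the included studies used unweighted or weighted κ for the ordinal EPE grades is not stated here, so this is illustrative only, and the ratings in the example are hypothetical.

```python
from collections import Counter


def cohens_kappa(reader_a: list[int], reader_b: list[int]) -> float:
    """Unweighted Cohen's kappa for two readers' paired ratings."""
    if len(reader_a) != len(reader_b) or not reader_a:
        raise ValueError("ratings must be paired and non-empty")
    n = len(reader_a)
    observed = sum(a == b for a, b in zip(reader_a, reader_b)) / n
    count_a, count_b = Counter(reader_a), Counter(reader_b)
    # Chance agreement: sum over categories of the product of marginal proportions
    expected = sum(count_a[c] * count_b[c] for c in count_a.keys() | count_b.keys()) / n**2
    if expected == 1:
        return float("nan")  # undefined when both readers use one identical category
    return (observed - expected) / (1 - expected)


if __name__ == "__main__":
    # Hypothetical EPE grades assigned by two readers to eight patients
    a = [0, 1, 1, 2, 3, 0, 1, 2]
    b = [0, 1, 2, 2, 3, 0, 0, 2]
    print(f"kappa = {cohens_kappa(a, b):.2f}")
```

In this toy example the readers agree on 6 of 8 patients (observed agreement 0.75), but chance agreement is 0.25, so κ is about 0.67.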

The conventional 5-point EPE Likert scale (1 = highly unlikely, 2 = unlikely, 3 = equivocal or indeterminate, 4 = likely, and 5 = highly likely) has been widely used in clinical practice; with it, radiologists assign a score for the likelihood of EPE during MRI interpretation. However, the Likert scale depends primarily on radiologists’ individual reading patterns and experience and lacks objective criteria, resulting in widely varying accuracy (28–30). A prior meta-analysis reported pooled sensitivity and specificity of 0.57 and 0.91 for the detection of EPE with mpMRI (31); by comparison, the EPE grading system yielded higher sensitivity but lower specificity, with similar overall diagnostic performance. Compared with previous MRI grading methods, however, the EPE grading system provides a standardized and simplified scoring system for the prediction of EPE, because it relies on only a few imaging features and is easy to teach and learn. Moreover, Xu et al. and Park et al. reported good inter-reader agreement with the EPE grading system and found that less experienced radiologists could benefit from it and achieve good diagnostic accuracy (26, 27). Nonetheless, the EPE grading system remains subject to inter-reader subjectivity because some of its criteria are qualitative (25).

For patients with EPE, aggressive surgery leads to high cancer-specific survival but at the cost of higher rates of urinary incontinence and erectile dysfunction, whereas preservation of the NVBs leads to a higher risk of positive surgical margins and biochemical recurrence, and thus to treatment failure after RP. The optimal clinical decision is a trade-off that requires accurate preoperative assessment of histopathologic EPE. An ideal scoring system should be based on precise definitions, be easy to apply in clinical practice, be robust, and show a high level of inter-reader agreement. For the prediction of EPE, it should include both quantitative measures (apparent diffusion coefficient [ADC], tumor size, tumor volume, and LCC) and qualitative criteria. The ESUR PI-RADS recommends reporting these features when evaluating mpMRI prostate examinations; however, it does not assign a likelihood of EPE based on a combination of these findings (11–13). Although the ESUR PI-RADS includes a discontinuous scale (1 = capsular abutment; 2 = not specified; 3 = capsular irregularity; 4 = NVB thickening, bulge, or loss of capsule; and 5 = measurable extracapsular disease) for the prediction of EPE, only a few studies have assessed its diagnostic performance. A recent meta-analysis reported pooled sensitivity and specificity of 0.71 and 0.76, respectively (17). However, that meta-analysis extracted the results of the more experienced readers or the more accurate outcomes, which may have made its estimates optimistic.

In recent years, quantitative metrics, including LCC, ADC, tumor volume, and tumor size, have been intensively investigated to assist in the prediction of EPE. As independent predictors, these mpMRI quantitative metrics have shown moderate-to-high diagnostic accuracy for the detection of EPE. Nonetheless, differences in measurement approaches and tools, as well as in MRI techniques and sequences, result in widely varying optimal cutoff thresholds (32, 33). The EPE grading system uses a curvilinear contact length of 15 mm as the quantitative threshold for evaluating EPE; however, it is unclear how this threshold was derived. According to current evidence, reported optimal LCC thresholds vary from 6 to 20 mm, with sensitivity of 0.59–0.91 and specificity of 0.44–0.88 (34). A lower cutoff value increases sensitivity at the cost of decreased specificity, and vice versa. In PI-RADS v2, a tumor size of 15 mm was recommended as the cutoff for predicting EPE, whereas some studies have demonstrated an optimal threshold of 16–18 mm (35, 36). Nevertheless, tumor size is not included in the EPE grading system.
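The cutoff trade-off described above follows directly from how a threshold dichotomizes a continuous measurement: raising the threshold can only turn positive calls into negative ones, so sensitivity cannot increase and specificity cannot decrease. The sketch below illustrates this on a small, entirely hypothetical set of LCC measurements; it does not reproduce any included study.

```python
def sens_spec_at(cutoff_mm: float, lcc_mm: list[float], has_epe: list[bool]) -> tuple[float, float]:
    """Sensitivity and specificity when LCC >= cutoff_mm is called EPE-positive."""
    pairs = list(zip(lcc_mm, has_epe))
    tp = sum(1 for x, epe in pairs if epe and x >= cutoff_mm)
    fn = sum(1 for x, epe in pairs if epe and x < cutoff_mm)
    tn = sum(1 for x, epe in pairs if not epe and x < cutoff_mm)
    fp = sum(1 for x, epe in pairs if not epe and x >= cutoff_mm)
    return tp / (tp + fn), tn / (tn + fp)


# Hypothetical toy data (mm); True = EPE at histopathology
LCC_MM = [4, 7, 9, 12, 14, 16, 18, 21, 25, 30]
HAS_EPE = [False, False, False, True, False, True, False, True, True, True]

if __name__ == "__main__":
    for cutoff in (6, 10, 15, 20):
        sens, spec = sens_spec_at(cutoff, LCC_MM, HAS_EPE)
        print(f"cutoff {cutoff:>2} mm: sensitivity {sens:.2f}, specificity {spec:.2f}")
```

On this toy data, moving the cutoff from 6 to 20 mm lowers sensitivity from 1.00 to 0.60 while raising specificity from 0.20 to 1.00, with 15 mm giving 0.80 for both.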

Our study has some limitations. First, most of the included studies were retrospective, leading to a high risk of bias in the patient selection domain; because only 1 study was prospective, summary estimates could not be pooled from prospective studies alone. Second, substantial heterogeneity was found across the included studies, which may limit the generalizability of our findings. Because only 4 studies were included, meta-regression and subgroup analyses to investigate the sources of heterogeneity were not feasible; nevertheless, we followed a rigorous methodology based on the guidelines published by the Cochrane Collaboration. Third, our analysis was based on only 4 studies; the results should therefore be interpreted with caution, and large prospective studies are needed to validate this grading system in the future. In addition, because of insufficient information, we could not directly compare the EPE grading system with other scoring systems.

The EPE grading system demonstrated high sensitivity and moderate specificity, with good inter-reader agreement. However, large prospective studies are needed to validate this scoring system in clinical practice.

The original contributions presented in the study are included in the article/supplementary material. Further inquiries can be directed to the corresponding authors.

Guarantor of the article: JT. Conception and design: WL and WWS. Collection and assembly of data: FL and YS. Data analysis and interpretation: YMW, JT, and ADD. All authors contributed to the article and approved the submitted version.

This study was supported by the Natural Science Foundation of Jiangsu Vocational College of Medicine (No. 20204112).

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

1. Ferlay J, Colombet M, Soerjomataram I, Dyba T, Randi G, Bettio M, et al. Cancer Incidence and Mortality Patterns in Europe: Estimates for 40 Countries and 25 Major Cancers in 2018. Eur J Cancer (2018) 103:356–87. doi: 10.1016/j.ejca.2018.07.005

2. Siegel RL, Miller KD, Jemal A. Cancer Statistics, 2020. CA Cancer J Clin (2020) 70:7–30. doi: 10.3322/caac.21590

3. Tollefson MK, Karnes RJ, Rangel LJ, Bergstralh EJ, Boorjian SA. The Impact of Clinical Stage on Prostate Cancer Survival Following Radical Prostatectomy. J Urol (2013) 189:1707–12. doi: 10.1016/j.juro.2012.11.065

4. Mikel Hubanks J, Boorjian SA, Frank I, Gettman MT, Houston Thompson R, Rangel LJ, et al. The Presence of Extracapsular Extension is Associated With an Increased Risk of Death From Prostate Cancer After Radical Prostatectomy for Patients With Seminal Vesicle Invasion and Negative Lymph Nodes. Urol Oncol (2014) 32:26.e1–7. doi: 10.1016/j.urolonc.2012.09.002

5. Quinlan DM, Epstein JI, Carter BS, Walsh PC. Sexual Function Following Radical Prostatectomy: Influence of Preservation of Neurovascular Bundles. J Urol (1991) 145:998–1002. doi: 10.1016/s0022-5347(17)38512-9

6. Swindle P, Eastham JA, Ohori M, Kattan MW, Wheeler T, Maru N, et al. Do Margins Matter? The Prognostic Significance of Positive Surgical Margins in Radical Prostatectomy Specimens. J Urol (2008) 179:S47–51. doi: 10.1016/j.juro.2008.03.137

7. Rayn KN, Bloom JB, Gold SA, Hale GR, Baiocco JA, Mehralivand S, et al. Added Value of Multiparametric Magnetic Resonance Imaging to Clinical Nomograms for Predicting Adverse Pathology in Prostate Cancer. J Urol (2018) 200:1041–7. doi: 10.1016/j.juro.2018.05.094

8. Ohori M, Kattan MW, Koh H, Maru N, Slawin KM, Shariat S, et al. Predicting the Presence and Side of Extracapsular Extension: A Nomogram for Staging Prostate Cancer. J Urol (2004) 171:1844–9; discussion 1849. doi: 10.1097/01.ju.0000121693.05077.3d

9. Eifler JB, Feng Z, Lin BM, Partin MT, Humphreys EB, Han M, et al. An Updated Prostate Cancer Staging Nomogram (Partin Tables) Based on Cases From 2006 to 2011. BJU Int (2013) 111:22–9. doi: 10.1111/j.1464-410X.2012.11324.x

10. Morlacco A, Sharma V, Viers BR, Rangel LJ, Carlson RE, Froemming AT, et al. The Incremental Role of Magnetic Resonance Imaging for Prostate Cancer Staging Before Radical Prostatectomy. Eur Urol (2017) 71:701–4. doi: 10.1016/j.eururo.2016.08.015

11. Barentsz JO, Richenberg J, Clements R, Choyke P, Verma S, Villeirs G, et al. ESUR Prostate MR Guidelines 2012. Eur Radiol (2012) 22:746–57. doi: 10.1007/s00330-011-2377-y

12. Weinreb JC, Barentsz JO, Choyke PL, Cornud F, Haider MA, Macura KJ, et al. PI-RADS Prostate Imaging – Reporting and Data System: 2015, Version 2. Eur Urol (2016) 69:16–40. doi: 10.1016/j.eururo.2015.08.052

13. Turkbey B, Rosenkrantz AB, Haider MA, Padhani AR, Villeirs G, Macura KJ, et al. Prostate Imaging Reporting and Data System Version 2.1: 2019 Update of Prostate Imaging Reporting and Data System Version 2. Eur Urol (2019) 76:340–51. doi: 10.1016/j.eururo.2019.02.033

14. Hamoen EHJ, de Rooij M, Witjes JA, Barentsz JO, Rovers MM. Use of the Prostate Imaging Reporting and Data System (PI-RADS) for Prostate Cancer Detection With Multiparametric Magnetic Resonance Imaging: A Diagnostic Meta-Analysis. Eur Urol (2015) 67:1112–21. doi: 10.1016/j.eururo.2014.10.033

15. Woo S, Suh CH, Kim SY, Cho JY, Kim SH. Diagnostic Performance of Prostate Imaging Reporting and Data System Version 2 for Detection of Prostate Cancer: A Systematic Review and Diagnostic Meta-Analysis. Eur Urol (2017) 72:177–88. doi: 10.1016/j.eururo.2017.01.042

16. Park KJ, Choi SH, Kim M-H, Kim JK, Jeong IG. Performance of Prostate Imaging Reporting and Data System Version 2.1 for Diagnosis of Prostate Cancer: A Systematic Review and Meta-Analysis. J Magn Reson Imaging (2021) 54:103–12. doi: 10.1002/jmri.27546

17. Li W, Dong A, Hong G, Shang W, Shen X. Diagnostic Performance of ESUR Scoring System for Extraprostatic Prostate Cancer Extension: A Meta-Analysis. Eur J Radiol (2021) 143:109896. doi: 10.1016/j.ejrad.2021.109896

18. Mehralivand S, Shih JH, Harmon S, Smith C, Bloom J, Czarniecki M, et al. A Grading System for the Assessment of Risk of Extraprostatic Extension of Prostate Cancer at Multiparametric MRI. Radiology (2019) 290:709–19. doi: 10.1148/radiol.2018181278

19. Liberati A, Altman DG, Tetzlaff J, Mulrow C, Gøtzsche PC, Ioannidis JPA, et al. The PRISMA Statement for Reporting Systematic Reviews and Meta-Analyses of Studies That Evaluate Healthcare Interventions: Explanation and Elaboration. J Clin Epidemiol (2009) 62:e1–34. doi: 10.1016/j.jclinepi.2009.06.006

20. Whiting PF. QUADAS-2: A Revised Tool for the Quality Assessment of Diagnostic Accuracy Studies. Ann Intern Med (2011) 155:529. doi: 10.7326/0003-4819-155-8-201110180-00009

21. Higgins JPT, Altman DG, Gøtzsche PC, Jüni P, Moher D, Oxman AD, et al. The Cochrane Collaboration’s Tool for Assessing Risk of Bias in Randomised Trials. BMJ (2011) 343:889–93. doi: 10.1136/bmj.d5928

22. Reitsma JB, Glas AS, Rutjes AWS, Scholten RJPM, Bossuyt PM, Zwinderman AH. Bivariate Analysis of Sensitivity and Specificity Produces Informative Summary Measures in Diagnostic Reviews. J Clin Epidemiol (2005) 58:982–90. doi: 10.1016/j.jclinepi.2005.02.022

23. Rutter CM, Gatsonis CA. A Hierarchical Regression Approach to Meta-Analysis of Diagnostic Test Accuracy Evaluations. Stat Med (2001) 20:2865–84. doi: 10.1002/sim.942

24. Deeks JJ. Systematic Reviews of Evaluations of Diagnostic and Screening Tests. BMJ (2001) 323:157–62. doi: 10.1136/bmj.323.7305.157

25. Reisæter LAR, Halvorsen OJ, Beisland C, Honoré A, Gravdal K, Losnegård A, et al. Assessing Extraprostatic Extension With Multiparametric MRI of the Prostate: Mehralivand Extraprostatic Extension Grade or Extraprostatic Extension Likert Scale? Radiol Imaging Cancer (2020) 2:e190071. doi: 10.1148/rycan.2019190071

26. Park KJ, Kim M, Kim JK. Extraprostatic Tumor Extension: Comparison of Preoperative Multiparametric MRI Criteria and Histopathologic Correlation After Radical Prostatectomy. Radiology (2020) 296:87–95. doi: 10.1148/radiol.2020192133

27. Xu L, Zhang G, Zhang X, Bai X, Yan W, Xiao Y, et al. External Validation of the Extraprostatic Extension Grade on MRI and Its Incremental Value to Clinical Models for Assessing Extraprostatic Cancer. Front Oncol (2021) 11:655093. doi: 10.3389/fonc.2021.655093

28. Costa DN, Passoni NM, Leyendecker JR, de Leon AD, Lotan Y, Roehrborn CG, et al. Diagnostic Utility of a Likert Scale Versus Qualitative Descriptors and Length of Capsular Contact for Determining Extraprostatic Tumor Extension at Multiparametric Prostate MRI. AJR Am J Roentgenol (2018) 210:1066–72. doi: 10.2214/AJR.17.18849

29. Freifeld Y, Diaz de Leon A, Xi Y, Pedrosa I, Roehrborn CG, Lotan Y, et al. Diagnostic Performance of Prospectively Assigned Likert Scale Scores to Determine Extraprostatic Extension and Seminal Vesicle Invasion With Multiparametric MRI of the Prostate. AJR Am J Roentgenol (2019) 212:576–81. doi: 10.2214/AJR.18.20320

30. Fütterer JJ, Engelbrecht MR, Huisman HJ, Jager GJ, Hulsbergen-van De Kaa CA, Witjes JA, et al. Staging Prostate Cancer With Dynamic Contrast-Enhanced Endorectal MR Imaging Prior to Radical Prostatectomy: Experienced Versus Less Experienced Readers. Radiology (2005) 237:541–9. doi: 10.1148/radiol.2372041724

31. de Rooij M, Hamoen EHJ, Witjes JA, Barentsz JO, Rovers MM. Accuracy of Magnetic Resonance Imaging for Local Staging of Prostate Cancer: A Diagnostic Meta-Analysis. Eur Urol (2016) 70:233–45. doi: 10.1016/j.eururo.2015.07.029

32. Shieh AC, Guler E, Ojili V, Paspulati RM, Elliott R, Ramaiya NH, et al. Extraprostatic Extension in Prostate Cancer: Primer for Radiologists. Abdom Radiol (NY) (2020) 45:4040–51. doi: 10.1007/s00261-020-02555-x

33. Schieda N, Lim CS, Zabihollahy F, Abreu-Gomez J, Krishna S, Woo S, et al. Quantitative Prostate MRI. J Magn Reson Imaging (2020) 53:1632–45. doi: 10.1002/jmri.27191

34. Kim T-H, Woo S, Han S, Suh CH, Ghafoor S, Hricak H, et al. The Diagnostic Performance of the Length of Tumor Capsular Contact on MRI for Detecting Prostate Cancer Extraprostatic Extension: A Systematic Review and Meta-Analysis. Korean J Radiol (2020) 21:684–94. doi: 10.3348/kjr.2019.0842

35. Ahn H, Hwang SI, Lee HJ, Suh HS, Choe G, Byun S-S, et al. Prediction of Extraprostatic Extension on Multi-Parametric Magnetic Resonance Imaging in Patients With Anterior Prostate Cancer. Eur Radiol (2020) 30:26–37. doi: 10.1007/s00330-019-06340-3

Keywords: prostate neoplasms, magnetic resonance imaging, diagnostic performance, extraprostatic extension, systematic review

Citation: Li W, Shang W, Lu F, Sun Y, Tian J, Wu Y and Dong A (2022) Diagnostic Performance of Extraprostatic Extension Grading System for Detection of Extraprostatic Extension in Prostate Cancer: A Diagnostic Systematic Review and Meta-Analysis. Front. Oncol. 11:792120. doi: 10.3389/fonc.2021.792120

Received: 09 October 2021; Accepted: 27 December 2021;

Published: 25 January 2022.

Edited by:

Tanya I. Stoyanova, Stanford University, United States

Reviewed by:

Helena Vila-Reyes, Columbia University Irving Medical Center, United States

Copyright © 2022 Li, Shang, Lu, Sun, Tian, Wu and Dong. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Jun Tian, 11368@jsmc.edu.cn; Anding Dong, submitfor_sci@126.com

†These authors have contributed equally to this work
