
ORIGINAL RESEARCH article

Front. Oncol., 26 July 2021

Sec. Cancer Imaging and Image-directed Interventions

Volume 11 - 2021 | https://doi.org/10.3389/fonc.2021.700158

This article is part of the Research Topic: The Use Of Deep Learning In Mapping And Diagnosis Of Cancers

Tianle Shen1†, Runping Hou1,2†, Xiaodan Ye3, Xiaoyang Li1, Junfeng Xiong2, Qin Zhang1, Chenchen Zhang1, Xuwei Cai1, Wen Yu1, Jun Zhao2*‡, Xiaolong Fu1*‡

Background: To develop and validate a deep learning–based model on CT images for the malignancy and invasiveness prediction of pulmonary subsolid nodules (SSNs).

Materials and Methods: This study retrospectively collected patients with pulmonary SSNs treated by surgery in our hospital from 2012 to 2018. Postoperative pathology was used as the diagnostic reference standard. Three-dimensional convolutional neural network (3D CNN) models were constructed using preoperative CT images to predict the malignancy and invasiveness of SSNs. An observer study with two thoracic radiologists was then conducted for comparison with the CNN model. The diagnostic power of the models was evaluated with receiver operating characteristic (ROC) curve analysis.

Results: A total of 2,614 patients were included and randomly divided into training (60.9%), validation (19.1%), and testing (20%) datasets. For benign versus malignant classification, the best 3D CNN model achieved an AUC of 0.913 (95% CI: 0.885–0.940), sensitivity of 86.1%, and specificity of 83.8% at the optimal decision point, outperforming both radiologist readers (AUC: 0.846±0.031). For pre-invasive versus invasive classification of malignant SSNs, the 3D CNN achieved an AUC of 0.908 (95% CI: 0.877–0.939), sensitivity of 87.4%, and specificity of 80.8%.

Conclusion: The deep-learning model showed its potential to accurately identify the malignancy and invasiveness of SSNs and thus can help surgeons make treatment decisions.

Lung cancer is one of the most lethal malignancies worldwide (1). Early detection and accurate diagnosis of pulmonary nodules can decrease the mortality of lung cancer (2). According to the proportion of solid component, pulmonary nodules can be divided into solid nodules and subsolid nodules (SSNs). The two types are managed very differently in clinical practice because of their different biological characteristics (3).

SSNs are defined as nodular areas of homogeneous or heterogeneous attenuation that do not completely obscure the lung parenchyma within them, and include pure ground-glass nodules (PGGNs) and part-solid nodules (PSNs) (4) (Supplementary Figure S1). According to pathology, SSNs can be further divided into benign and malignant lesions, and malignant SSNs include pre-invasive lesions (atypical adenomatous hyperplasia, AAH; adenocarcinoma in situ, AIS; minimally invasive adenocarcinoma, MIA) and invasive lesions (invasive pulmonary adenocarcinoma, IA) (5). These three categories (benign, pre-invasive, and invasive) have different biological characteristics and need different clinical management. Benign SSNs include hemorrhage, inflammation, fibrosis, pulmonary alveolar proteinosis, etc. (6), and need almost no intervention other than follow-up. In contrast, malignant SSNs comprise subtypes of adenocarcinoma that need careful intervention, such as surgical resection or stereotactic body radiation therapy (SBRT) (7). Specifically, systematic lymph node dissection does not significantly improve the prognosis of patients with pre-invasive SSNs (8, 9). Pre-invasive malignant SSNs may therefore only need a conservative approach (sub-lobectomy or wedge resection) with long-term CT follow-up, while more aggressive surgical treatment (standard lobectomy with extended lymph node dissection) is necessary for patients with invasive (IA) SSNs. The prognosis of different pathological subtypes also varies greatly after the corresponding treatment (10, 11). Therefore, accurate classification of SSNs is of great importance for clinical decision-making and prognosis evaluation, especially for thoracic surgeons, as it determines the candidates for surgery and the type of lung resection.

Nowadays, the widespread use of high-resolution CT scanning means that more SSNs are detected at an early stage. However, accurate diagnosis of detected SSNs remains difficult in clinical practice. For example, the synchronous or asynchronous appearance of multiple primary SSNs, an unfavorable location of the SSNs, and the poor physical condition of some patients make it impossible to access every SSN by biopsy. CT imaging has therefore become the most important method to help clinicians make diagnostic decisions about SSNs. As reported, clinicians often make decisions according to CT morphological features (12, 13). Nevertheless, these morphological features are subjective and qualitative, which often leads to low inter-observer agreement and unsatisfactory accuracy (14–16). The inaccurate diagnosis caused by these limitations has led to undertreatment or overtreatment of patients with SSNs in clinical practice. Therefore, a more objective and quantitative method to accurately determine the malignancy and invasiveness of SSNs is urgently needed.

Recently, deep learning has been widely used to analyze medical images across various imaging modalities (17–20). Previous studies have shown the effectiveness of deep learning in pulmonary nodule detection and classification (21–23). However, most of these studies are based on solid nodules, and few concentrate on SSNs. Therefore, this study aims to develop and validate a deep learning–based malignancy and invasiveness prediction model for patients with SSNs from a real-world clinical cohort.

With approval from the institutional review board, we retrospectively collected patients with pulmonary nodules in Shanghai Chest Hospital from January 1, 2012, to December 31, 2018. The inclusion criteria were as follows: (1) patients underwent surgical resection of pulmonary nodules in our hospital; (2) patients underwent pre-surgery chest CT scanning (thickness ≤5 mm) in our hospital; (3) subsolid nodules were confirmed on chest CT. Patients were excluded if (1) post-surgery pathological results were not available; (2) distant metastasis was found in preoperative examinations; or (3) other malignant radiological features were present, including enlarged hilar nodes, pleural effusion, atelectasis, etc.

Chest CT scans were acquired with a 64-detector-row CT scanner (Brilliance 64; Philips, Eindhoven, Netherlands). Some patients underwent a targeted thin-section helical CT scan with a layer thickness of 1 mm, while the others only had a whole-lung scan with a layer thickness of 5 mm.

SSNs were manually segmented by one radiation oncologist (with 5 years of experience in CT interpretation) using the MIM software (version 5.5.1, shown with window level −400 and window width 1,600), then the region of interest (ROI) was confirmed by one radiologist (with over 10 years of experience in CT interpretation).

The image preprocessing procedure was as follows: CT scans were converted into Hounsfield units (HU), then voxel intensity was clipped to [−1,024, 400] HU for the lung window and [−160, 240] HU for the mediastinal window. Min-max normalization was used to rescale the image to [0, 1]. Linear interpolation was applied to obtain isotropic volumes with a resolution of 0.5 mm × 0.5 mm × 0.5 mm. Then, an image cube of 64 × 64 × 64 voxels and the corresponding segmentation mask were cropped from the interpolated CT image, centered on the tumor. The cropped image cubes were used as the input of our 3D CNN classification model.
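The windowing, normalization, and cropping steps above can be sketched as follows. This is a minimal illustration and not the authors' code: the function names are hypothetical, the min-max rescaling is assumed to use the window bounds as minimum and maximum, the zero padding at the volume border is an assumption, and the linear interpolation to 0.5 mm isotropic voxels is omitted (the input is assumed to be already resampled).

```python
import numpy as np

# Window bounds in HU, taken from the text above.
LUNG_WINDOW = (-1024.0, 400.0)
MEDIASTINAL_WINDOW = (-160.0, 240.0)


def window_and_normalize(volume_hu, window):
    """Clip a HU volume to `window` and rescale it to [0, 1].

    Assumption: the min-max normalization uses the window bounds
    as the minimum and maximum.
    """
    low, high = window
    clipped = np.clip(volume_hu.astype(np.float32), low, high)
    return (clipped - low) / (high - low)


def crop_cube(volume, center, size=64):
    """Crop a size^3 cube centered on `center` (z, y, x).

    Assumption: regions outside the volume are zero-padded.
    """
    half = size // 2
    padded = np.pad(volume, half, mode="constant", constant_values=0)
    # After padding by `half`, original index c sits at padded index
    # c + half, so the cube [c - half, c + half) starts at padded index c.
    z, y, x = (int(c) for c in center)
    return padded[z:z + size, y:y + size, x:x + size]
```

Applying `window_and_normalize` twice, once per window, and cropping each result and the mask with the same center would give the three aligned 64 × 64 × 64 inputs described for the AdaDense_M model.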

According to the pathological report, each SSN was given a specific label (benign, AAH, AIS, MIA, IA). For the malignancy classification, patients who had at least one pathologically confirmed malignant SSN (including AAH, AIS, MIA, IA) were regarded as positive samples with label 1, and those without malignant findings were negative samples with label 0. For the invasiveness classification, patients who were pathologically confirmed as AAH, AIS, or MIA were regarded as pre-invasive samples with label 0, while patients confirmed as IA were regarded as invasive samples with label 1.
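The labeling scheme above amounts to two small lookup rules. The sketch below is illustrative only; the function names and the string spellings of the pathology labels are assumptions, and benign nodules are given no invasiveness label because that task is defined on malignant SSNs.

```python
# Pathology subtypes as listed in the text above.
MALIGNANT_SUBTYPES = {"AAH", "AIS", "MIA", "IA"}
PRE_INVASIVE_SUBTYPES = {"AAH", "AIS", "MIA"}


def malignancy_label(pathology):
    """Return 1 for a malignant subtype and 0 for a benign finding."""
    if pathology == "benign":
        return 0
    if pathology in MALIGNANT_SUBTYPES:
        return 1
    raise ValueError(f"unknown pathology label: {pathology!r}")


def invasiveness_label(pathology):
    """Return 1 for IA, 0 for pre-invasive subtypes, None for benign.

    Benign nodules are not part of the invasiveness task, so they
    get no label here (an assumption about how they are excluded).
    """
    if pathology == "IA":
        return 1
    if pathology in PRE_INVASIVE_SUBTYPES:
        return 0
    if pathology == "benign":
        return None
    raise ValueError(f"unknown pathology label: {pathology!r}")
```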

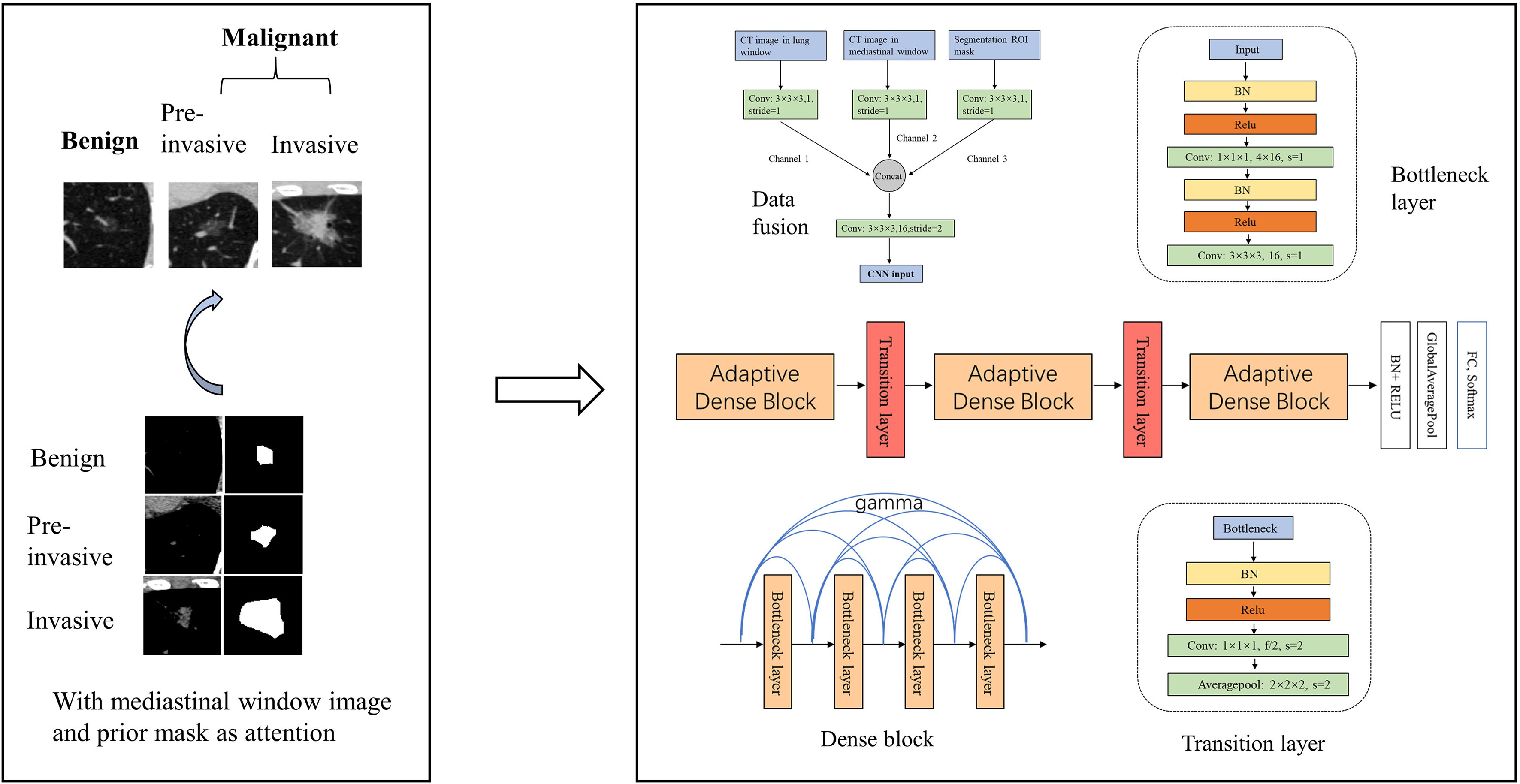

We established two binary classifiers: one to distinguish benign from malignant SSNs and another to distinguish pre-invasive from invasive SSNs. The framework of our models is shown in Figure 1.

Figure 1 Framework of our model. We developed a 3D CNN model for the malignancy and invasiveness recognition of subsolid pulmonary nodules. The 3D CNN model was based on modified 3D adaptive DenseNet and was improved by incorporating different window images and segmentation mask.

In total, we constructed three models for each of the two prediction tasks (malignancy and invasiveness). First, a logistic regression model built with nodule size was used as the baseline clinical model. Second, a 3D CNN model based on a modified adaptive DenseNet using the lung window image as input was constructed (AdaDense) (24). The adaptive densely connected structure can effectively reuse the features of shallow layers by giving each layer access to the feature maps of all its preceding layers, which makes it easier to obtain a smooth decision function with better generalization performance. However, as most subsolid nodules are small, the cropped image patches contain a lot of noisy background information. Therefore, we incorporated the segmentation mask as an attention map to help the network focus on regions within the nodule. Moreover, studies have shown that the solid portions of SSNs detected in the mediastinal window can help distinguish pure ground-glass nodules from part-solid nodules (25, 26), and the proportion of solid components is considered to be related to malignancy and invasiveness (8, 27). Therefore, to take the segmentation mask and solid component into account, we built a third model, a 3D CNN that takes the lung window image [HU: (−1,024, 400)], the mediastinal window image [HU: (−160, 240)], and the mask image as input (AdaDense_M). Given the CT image of an SSN, the CNN model outputs the predicted probability that the SSN is malignant or invasive.

The architecture of the AdaDense_M model is shown in Figure 1 and consists of two parts: data fusion and the main structure. In the data fusion part, the CT image patches in the two windows and the corresponding segmentation mask were separately convolved with a 3×3×3 kernel to obtain channels 1, 2, and 3, respectively. The three channels were then concatenated and convolved with a 3×3×3 kernel with stride 2 to form the input of the main structure. This operation reduced the original 64×64×64 feature map to 32×32×32. The main structure comprised three dense blocks connected by transition layers. Each dense block contained four bottleneck structures, and after each bottleneck layer, all feature maps from the previous layers were adaptively concatenated to realize feature reuse. The bottleneck layer reduces the number of input feature maps, thereby improving computational efficiency. The transition layer further compressed the parameters by halving the number of feature maps after each dense block.
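The reduction from 64 to 32 voxels per side follows from the standard output-size formula for a strided convolution. As a worked check, with kernel size $k = 3$ and stride $s = 2$ from the text, and assuming a padding of $p = 1$ voxel per side (the padding is not stated in the text):

$$
n_{\text{out}} = \left\lfloor \frac{n_{\text{in}} + 2p - k}{s} \right\rfloor + 1 = \left\lfloor \frac{64 + 2 - 3}{2} \right\rfloor + 1 = 31 + 1 = 32 .
$$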

As the sample size was limited, we used data augmentation to reduce overfitting. We performed online augmentation including rotation, reflection, and translation. A given nodule patch and its corresponding mask were first translated by one to three voxels in three directions. The translated images were then randomly rotated by 90, 180, 270, or 360° around the x-, y-, and z-axes. Finally, the rotated images were randomly flipped along the x-, y-, and z-axes.
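A minimal sketch of such an online augmentation, applied identically to the image cube and its mask, might look as follows. It is not the authors' implementation: the function name is hypothetical, the translation is approximated with a wrap-around shift (`np.roll`) and a random sign, and a flip probability of 0.5 is assumed.

```python
import numpy as np


def augment(image, mask, rng):
    """Apply one random translate / rotate / flip to image and mask together."""
    # Translation by 1-3 voxels along each axis. np.roll wraps around at
    # the border, which is a simplification; the random sign is an assumption.
    shifts = tuple(
        int(rng.integers(1, 4)) * int(rng.choice([-1, 1])) for _ in range(3)
    )
    image = np.roll(image, shifts, axis=(0, 1, 2))
    mask = np.roll(mask, shifts, axis=(0, 1, 2))

    # Rotation by k quarter turns in each of the three axis planes.
    for axes in ((0, 1), (0, 2), (1, 2)):
        k = int(rng.integers(1, 5))  # 1..4; k = 4 is the 360 degree identity
        image = np.rot90(image, k, axes=axes)
        mask = np.rot90(mask, k, axes=axes)

    # Random flip along each axis (probability 0.5 is an assumption).
    for axis in range(3):
        if rng.random() < 0.5:
            image = np.flip(image, axis=axis)
            mask = np.flip(mask, axis=axis)

    return image.copy(), mask.copy()
```

Because every operation is applied to both arrays with the same parameters, the mask stays aligned with the nodule after augmentation.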

For network training, we used the cross-entropy loss function and the Adam optimizer. Xavier initialization was used to initialize the network. The learning rate was set to 1e-4, and the maximum number of epochs was 1,000. Training was stopped early when performance on the validation dataset showed no improvement within five epochs. The batch size for each iteration was set to 24. A multiple-test method was used to improve the stability of testing performance: given a test example, the input image patch in the different windows and the corresponding mask were randomly generated 10 times to obtain 10 different prediction probabilities, and the final prediction was computed by averaging all of them. The study was implemented with the TensorFlow framework on a GeForce GTX 1080Ti GPU.
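The early-stopping rule and the multiple-test averaging can be sketched in a framework-independent way. The names below are hypothetical, the validation metric is assumed to be one where higher is better, and the random generation of test inputs is assumed to reuse an augmentation function; `predict` and `augment` stand in for the trained model and the augmentation step.

```python
def should_stop(val_scores, patience=5):
    """Return True once the last `patience` epochs failed to beat the earlier best.

    `val_scores` holds one validation score per epoch (higher is better,
    which is an assumption about the monitored metric).
    """
    if len(val_scores) <= patience:
        return False
    best_before = max(val_scores[:-patience])
    return max(val_scores[-patience:]) <= best_before


def averaged_prediction(predict, augment, sample, n_repeats=10):
    """Average the predicted probability over `n_repeats` random variants."""
    probs = [predict(augment(sample)) for _ in range(n_repeats)]
    return sum(probs) / len(probs)
```

In a training loop, `should_stop` would be called after each epoch with the history of validation scores, and `averaged_prediction` would be used only at test time.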

To compare the performance of the CNN model with that of human experts for malignancy prediction, an observer study was conducted on the same testing dataset. Two radiologists (with over 10 years of clinical experience) were each asked to grade the SSNs based on preoperative CT images. The scores ranged from 0 to 10; the higher the score, the more likely the radiologist considered the SSN to be malignant. The detailed scoring criteria can be found in Supplementary Figure S2. The radiologists made their decisions independently and were given access to patients’ demographics and clinical history as auxiliary information.

To evaluate the discrimination ability of the different models, the receiver operating characteristic (ROC) curve was plotted, and the area under the ROC curve (AUC), sensitivity, and specificity were calculated. The DeLong test was used for pairwise comparison of ROC curves. Calibration curves were used to assess the calibration of the models, and the Brier score was calculated to quantify it, with lower values (closer to 0) indicating better calibration. Decision curve analysis was used to determine the clinical usefulness of the models by calculating their net benefit at different threshold probabilities.
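For reference, the discrimination and calibration metrics named above can be computed in a few lines of plain Python. This is an illustrative sketch with hypothetical function names and toy numbers, not the R or Python code used in the study; the AUC is computed through its rank interpretation (the probability that a random positive case scores higher than a random negative case, with ties counted as one half).

```python
def auc(labels, scores):
    """AUC as the fraction of positive/negative pairs ranked correctly."""
    pos = [s for y, s in zip(labels, scores) if y == 1]
    neg = [s for y, s in zip(labels, scores) if y == 0]
    wins = sum(
        1.0 if p > n else 0.5 if p == n else 0.0 for p in pos for n in neg
    )
    return wins / (len(pos) * len(neg))


def brier_score(labels, probs):
    """Mean squared difference between predicted probability and outcome."""
    return sum((p - y) ** 2 for y, p in zip(labels, probs)) / len(labels)


def sensitivity_specificity(labels, scores, threshold):
    """Sensitivity and specificity when scores >= threshold count as positive."""
    tp = sum(1 for y, s in zip(labels, scores) if y == 1 and s >= threshold)
    fn = sum(1 for y, s in zip(labels, scores) if y == 1 and s < threshold)
    tn = sum(1 for y, s in zip(labels, scores) if y == 0 and s < threshold)
    fp = sum(1 for y, s in zip(labels, scores) if y == 0 and s >= threshold)
    return tp / (tp + fn), tn / (tn + fp)


# Toy example (synthetic values, not study data).
toy_labels = [0, 0, 1, 1]
toy_scores = [0.1, 0.4, 0.35, 0.8]
print(auc(toy_labels, toy_scores))  # 0.75
```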

The Mann-Whitney test was used to compare patient age and maximum nodule diameter between groups. Pearson’s χ2 test was used to compare the proportions of patient gender and nodule location between groups.

The statistical analysis was conducted with R software (Rproject.org) and Python (version 3.7). A p-value less than 0.05 was considered statistically significant.

Of the 2,614 patients, 1,791 had malignant nodules and 823 had benign nodules. A total of 1,735 patients (66.4%) had scans with 1 mm layer thickness, while the other 879 (33.6%) had scans with 5 mm layer thickness. The median nodule diameter was 1 cm. Patient characteristics are shown in Table 1, and the detailed distribution of nodule sizes is shown in Supplementary Figure S3. Generally, female sex, larger diameter, and location in the right upper or left upper lobe were associated with malignancy. The patients were randomly divided into training (60.9%), validation (19.1%), and testing (20%) datasets for the following analysis. The distribution of the different SSN subtypes in each dataset is shown in Table 2. No significant difference was found among the datasets (Supplementary Table S1).

The ROC curves, AUC, sensitivity, and specificity of the radiologist readers are shown in Table 3 and Supplementary Figure S4. One radiologist achieved the best performance, with an AUC of 0.877 (95% CI: 0.843–0.911), sensitivity of 95.4%, and specificity of 66.7%, which was significantly better than the other radiologist, whose AUC was 0.815 (95% CI: 0.774–0.856). This difference also indicates the low inter-observer agreement in malignancy recognition in clinical practice.

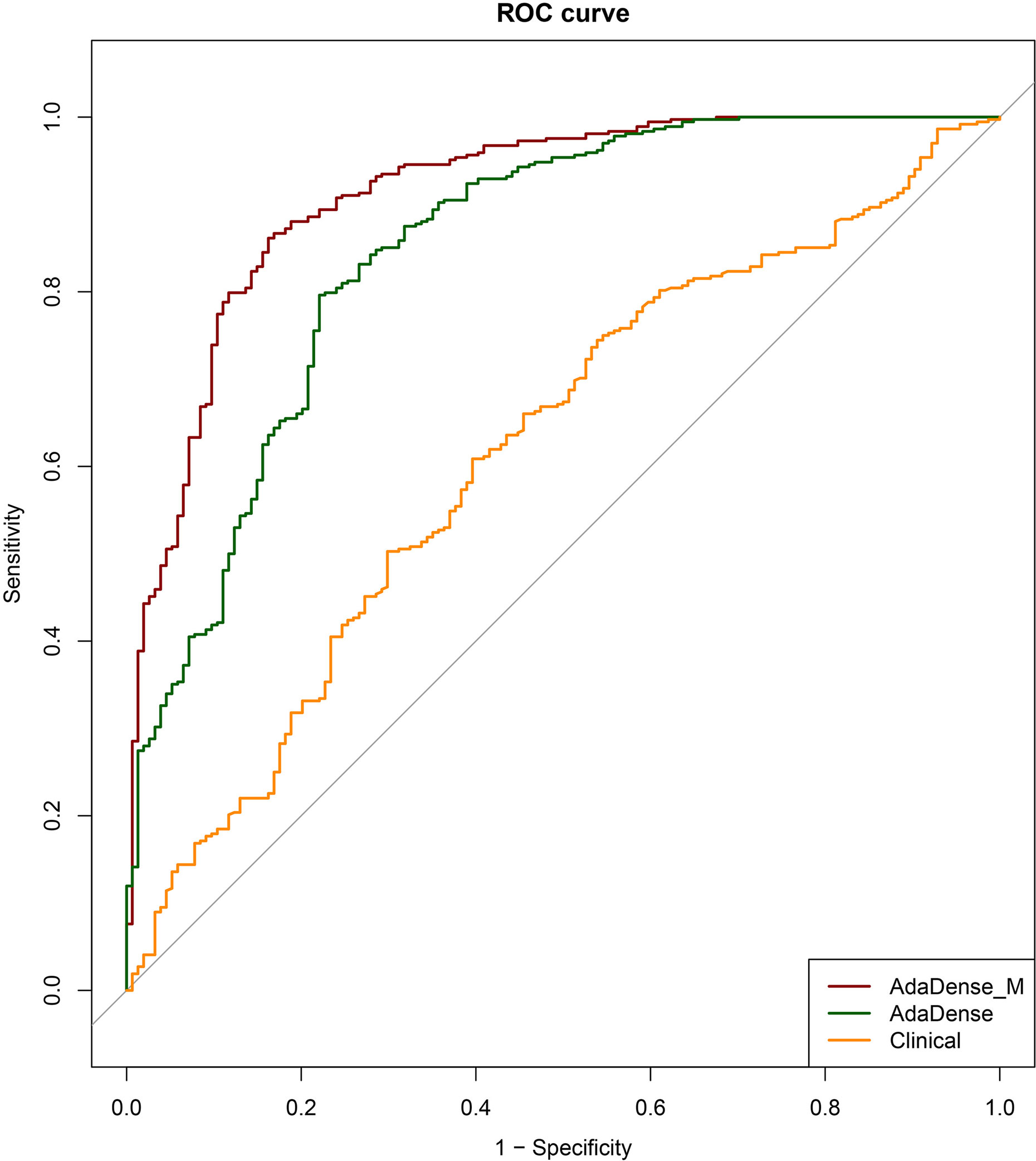

The ROC curves of the 3D CNN models for malignancy classification in the testing dataset are shown in Figure 2. The best CNN model based on CT images was the 3D CNN incorporating the different window images and the segmentation mask (AdaDense_M). Its AUC was 0.913 (95% CI: 0.885–0.940), which was significantly better than that of the 3D CNN with only the lung window image as input (AdaDense), with an AUC of 0.848 (95% CI: 0.810–0.886). The CNN model also performed significantly better than the clinical feature-based model (AUC: 0.618), and adding clinical features to the CNN model yielded no significant improvement (AUC: 0.914, p = 0.489). The sensitivity and specificity of the AdaDense_M model at the optimal decision point were 86.1% and 83.8%, respectively. At sensitivities of 100%, 98%, and 95%, the percentages of benign nodules that could be correctly identified were 32.5%, 47.4%, and 63.0%, respectively. The AdaDense_M model also performed better than both radiologist readers (AUC: 0.846±0.031).
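The percentage of benign nodules correctly identified at a fixed sensitivity is the specificity at the highest threshold that still reaches that sensitivity. A minimal sketch of this calculation is given below; the function name and the toy scores are hypothetical, and scores at or above the threshold are assumed to be classified as malignant.

```python
import math


def specificity_at_sensitivity(labels, scores, target_sensitivity):
    """Return (threshold, specificity) for the highest threshold that
    still classifies at least `target_sensitivity` of positives as positive."""
    pos = sorted(s for y, s in zip(labels, scores) if y == 1)
    neg = [s for y, s in zip(labels, scores) if y == 0]
    # Number of positives that must score at or above the threshold;
    # the small epsilon guards against floating-point round-up.
    needed = max(1, math.ceil(target_sensitivity * len(pos) - 1e-9))
    # The needed-th largest positive score is the highest valid threshold.
    threshold = pos[len(pos) - needed]
    specificity = sum(1 for s in neg if s < threshold) / len(neg)
    return threshold, specificity


# Toy example (synthetic values, not study data).
toy_labels = [1, 1, 1, 1, 0, 0, 0, 0]
toy_scores = [0.9, 0.8, 0.6, 0.3, 0.7, 0.4, 0.2, 0.1]
print(specificity_at_sensitivity(toy_labels, toy_scores, 1.0))  # (0.3, 0.5)
```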

Figure 2 The ROC curves of the CNN models for malignancy prediction. The ROC curves of the AdaDense_M (CNN incorporated with different window images and segmentation mask), AdaDense (CNN only with the lung window image as input), and baseline clinical model (diameter) for malignancy prediction in the testing dataset. The three models’ corresponding AUCs were 0.913, 0.848, and 0.618, respectively. DeLong tests showed that the AdaDense_M performs significantly better than the AdaDense model and the clinical model (p<0.001).

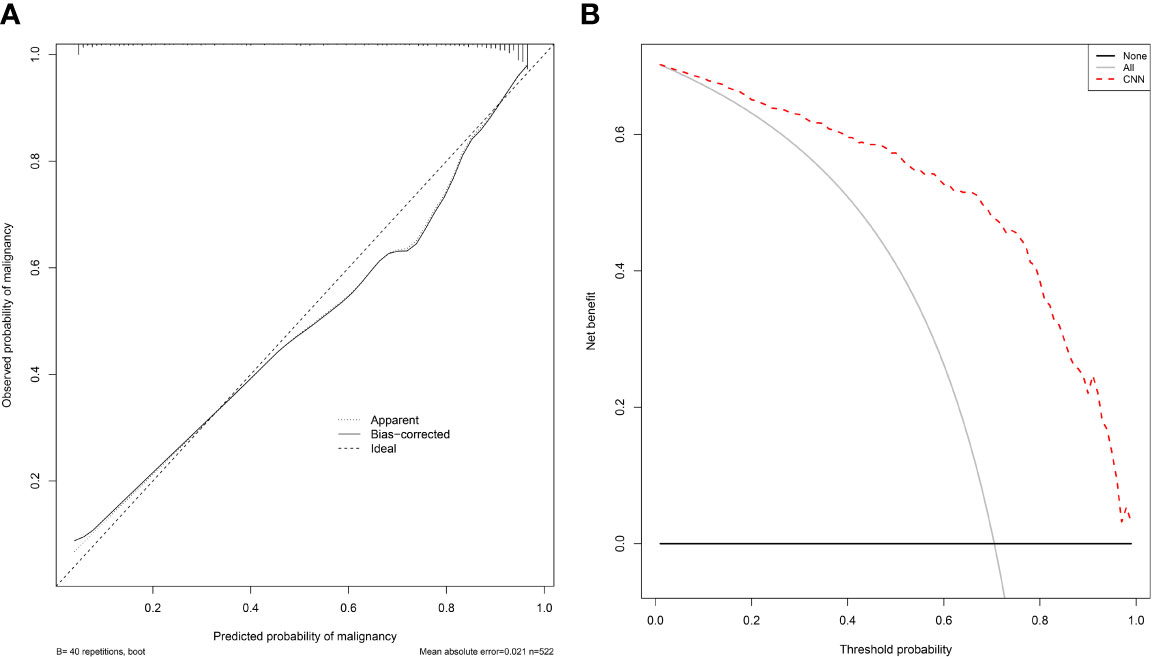

The calibration curve and decision curve of the CNN model (AdaDense_M) are shown in Figure 3. The Brier score was 0.101, showing satisfactory consistency between the predicted probability of malignancy and the actual observation (Figure 3A). The model also provided a higher net benefit for malignancy classification than the treat-all strategy (performing surgery in all patients) at threshold probabilities of 0.01–0.99 (Figure 3B).

Figure 3 The calibration curve and decision curve of the CNN model for malignancy prediction. (A) The calibration curve of the CNN model (AdaDense_M) for malignancy prediction. The diagonal dotted line represents a perfect prediction by an ideal model. (B) The decision curve of the CNN model (AdaDense_M) for malignancy prediction. The gray solid line represents the assumption that all patients had malignant nodules. The black solid line represents the assumption that no patients had malignant nodules. The net benefit was calculated by subtracting the proportion of all patients who are false positive from the proportion who are true positive, weighting by the relative harm of a false-positive and a false-negative result.
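The net benefit described in the caption can be written as TP/N − (FP/N) × p_t/(1 − p_t), where p_t is the threshold probability and the factor p_t/(1 − p_t) weights false positives relative to false negatives. The sketch below uses this standard decision-curve formula with hypothetical function names and toy data; it is an illustration, not the code used to produce Figure 3B.

```python
def net_benefit(labels, probs, threshold):
    """Net benefit of treating patients whose predicted probability >= threshold."""
    n = len(labels)
    tp = sum(1 for y, p in zip(labels, probs) if p >= threshold and y == 1)
    fp = sum(1 for y, p in zip(labels, probs) if p >= threshold and y == 0)
    return tp / n - (fp / n) * threshold / (1.0 - threshold)


def net_benefit_treat_all(labels, threshold):
    """Net benefit when every patient is treated as malignant."""
    prevalence = sum(labels) / len(labels)
    return prevalence - (1.0 - prevalence) * threshold / (1.0 - threshold)


# Toy example (synthetic values, not study data).
toy_labels = [1, 1, 0, 0]
toy_probs = [0.9, 0.6, 0.7, 0.2]
print(net_benefit(toy_labels, toy_probs, 0.5))   # 0.25
print(net_benefit_treat_all(toy_labels, 0.5))    # 0.0
```

The treat-none strategy has a net benefit of zero at every threshold, so a model is useful over the range of thresholds where its curve lies above both reference lines.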

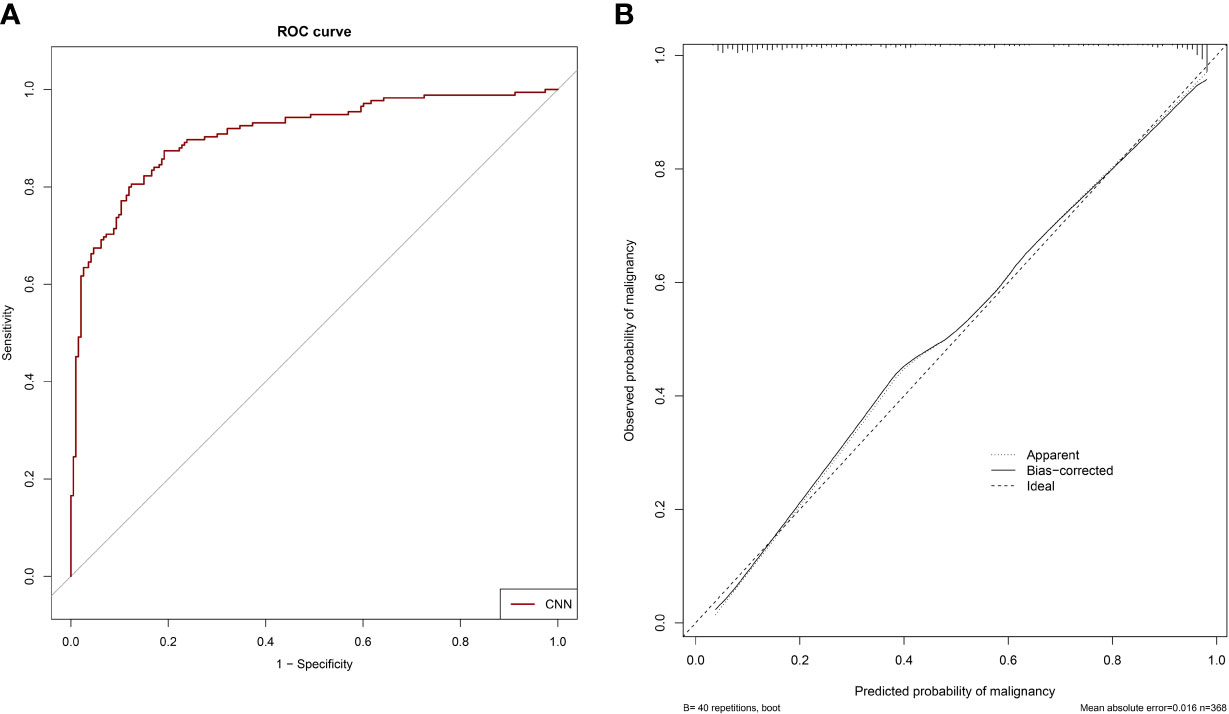

The ROC curves of the 3D CNN models for invasiveness classification in the testing dataset are shown in Figure 4A. The CNN model (AdaDense_M) achieved an AUC of 0.908 (95% CI: 0.877–0.939), sensitivity of 87.4%, and specificity of 80.8% at the optimal decision point. The confusion matrix is shown in Table 4. The calibration curve showed satisfactory consistency between the predicted probability of invasiveness and the actual observation, with a Brier score of 0.124 (Figure 4B).

Figure 4 The ROC curve and calibration curve of the CNN model for invasiveness prediction. (A) The ROC curve of the CNN model (AdaDense_M) for invasiveness prediction with an AUC of 0.908 in the testing dataset. (B) The calibration curve of the CNN model (AdaDense_M) for invasiveness prediction in the testing dataset. The diagonal dotted line represents a perfect prediction by an ideal model.

Accurate diagnosis of malignancy and invasiveness of SSNs plays an important role in clinical decision-making, especially for thoracic surgeons. In this study, we developed and validated a novel deep-learning model based on preoperative CT images for accurate classification of SSNs. Moreover, the deep-learning model outperformed radiologists for malignancy prediction.

According to the Fleischner recommendations (3), follow-up CT is recommended when subsolid nodules are first detected, to differentiate transient from persistent nodules. If the nodules are persistent, management is determined by the patient’s age, performance status, nodule size, and solid portion size. However, as there exists no national strategy for early-stage lung cancer screening in China, patients with pulmonary nodules may come to the hospital for a variety of reasons. Thus, for Chinese patients in routine clinical practice, lesions are usually larger at the first visit, which makes diagnosis by dynamic follow-up risky. It is therefore necessary to diagnose SSNs based on preoperative CT images at a single time point. Furthermore, this diagnostic result largely determines the subsequent treatment strategy in clinical practice. For SSNs diagnosed as benign, almost no intervention other than follow-up is needed, whereas for SSNs highly suspicious for malignancy, surgery or SBRT is usually adopted according to the individual condition of the patient. More specifically, sub-lobectomy is more appropriate for pre-invasive SSNs, while lobectomy with extended lymph node dissection is more suitable for invasive SSNs. Currently, inaccurate diagnosis based on radiologists’ subjective judgment may cause overtreatment or undertreatment, which is harmful to the long-term survival of patients. Here, we established a quantitative deep-learning model that can accurately identify the malignancy and invasiveness of SSNs before the operation. This can guide decisions on the extent of surgical resection, helping to avoid unnecessary surgical trauma, reduce complications, and preserve lung function as far as possible, while still giving patients the opportunity for radical treatment.

Considering that CNNs have a great advantage in automatically extracting deep representative image features, we decided to establish a CNN model for malignancy and invasiveness recognition of SSNs. Our CNN model incorporating the different window images and the segmentation mask (AdaDense_M) achieved satisfactory classification performance. In addition, we developed a fusion model by combining the CNN model’s prediction and the best radiologist’s score with logistic regression. The fusion model achieved an AUC of 0.956 (95% CI: 0.938–0.975) for malignancy prediction, which was significantly better than the CNN model or the radiologist alone. This result suggests that the CNN model has great potential to help radiologists make a better diagnosis of the malignancy of SSNs.
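To illustrate how such a fusion could work, the sketch below fits a two-feature logistic regression by plain gradient descent on synthetic data. Everything here is an assumption made for illustration: the function names, the rescaling of the 0–10 radiologist score to [0, 1], the learning rate and number of epochs, and the toy values, none of which come from the study.

```python
import math


def fit_logistic(features, labels, lr=0.1, epochs=2000):
    """Fit weights and bias by batch gradient descent on the log-loss."""
    n_features = len(features[0])
    weights = [0.0] * n_features
    bias = 0.0
    n = len(features)
    for _ in range(epochs):
        grad_w = [0.0] * n_features
        grad_b = 0.0
        for x, y in zip(features, labels):
            z = bias + sum(w * xi for w, xi in zip(weights, x))
            err = 1.0 / (1.0 + math.exp(-z)) - y  # predicted minus observed
            for j, xi in enumerate(x):
                grad_w[j] += err * xi
            grad_b += err
        weights = [w - lr * g / n for w, g in zip(weights, grad_w)]
        bias -= lr * grad_b / n
    return weights, bias


def predict_proba(weights, bias, x):
    """Fused probability of malignancy for one feature vector."""
    z = bias + sum(w * xi for w, xi in zip(weights, x))
    return 1.0 / (1.0 + math.exp(-z))


# Synthetic toy data: (CNN probability, radiologist score / 10).
toy_x = [(0.9, 0.8), (0.8, 0.9), (0.7, 0.6), (0.6, 0.9),
         (0.3, 0.4), (0.2, 0.1), (0.4, 0.2), (0.1, 0.3)]
toy_y = [1, 1, 1, 1, 0, 0, 0, 0]
w, b = fit_logistic(toy_x, toy_y)
```

The fitted model assigns a higher fused probability when both the CNN output and the radiologist score are high, which is the behavior the fusion relies on.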

Small sample size has been the bottleneck in developing high-performance prediction models to distinguish pulmonary SSNs in previous studies (28–32) (Table 5). Our study used the largest sample size to date, with detailed CT images and pathologic information of SSNs. Compared with models built with qualitative features and radiomics (28–30), our CNN model can automatically learn deep representative features, which have stronger predictive ability than hand-crafted features. Thus, our CNN model performs significantly better than other radiomics models for malignancy prediction of SSNs. Furthermore, in comparison with previous CNN-based models (31, 32), our AdaDense_M model uses the prior segmentation mask and the tumor cube in the mediastinal window as an attention map, which makes the network focus on information within the tumor and its solid components. The results show that the CNN model we built achieved a high AUC value for invasiveness prediction of SSNs among the existing studies.

This study also has some limitations. First, we only included patients with pathologically confirmed SSNs who had undergone surgical resection, which introduces a selection bias toward malignant cases. If more benign samples can be included, our model could be further improved. Second, 33.6% of patients only underwent regular CT scans with a layer thickness of 5 mm. Due to the small size and unique morphology of SSNs, regular CT scans of SSNs are too blurred for the CNN to extract deep features. More thin-section CT scan data will be collected in the future, and the model performance may be further improved. Moreover, an external dataset and a prospective cohort are also required to validate the generalization ability of our model.

We constructed a deep learning–based model to identify the malignancy and invasiveness of pulmonary SSNs based on CT images. The model achieved satisfactory performance and showed potential to guide the selection of surgery candidates and the type of lung resection.

The datasets presented in this article are not readily available because the datasets are privately owned by Shanghai Chest Hospital and are not made public. Requests to access the datasets should be directed to XF,eGxmdTE5NjRAaG90bWFpbC5jb20=.

The studies involving human participants were reviewed and approved by Shanghai Chest Hospital, Shanghai Jiaotong University. The ethics committee waived the requirement of written informed consent for participation.

All authors contributed to the article and approved the submitted version. XF, JZ, TS, and RH contributed to the study concept and design. TS, RH and XL contributed to acquisition of data. RH, TS, XY, XL, JX, QZ, CZ, XC, and WY contributed to analysis and interpretation of data. TS and RH contributed to drafting of the manuscript.

This work was supported in part by the Major Research Plan of the National Natural Science Foundation of China (Grant No. 92059206), Shanghai Jiao Tong University Medical Engineering Cross Research Funds (No. YG2017ZD10 and YG2014ZD05), National Key Research and Development Program (No. 2016YFC0905502 and 2016YFC0104608), and National Natural Science Foundation of China (No. 81371634).

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

The Supplementary Material for this article can be found online at: https://www.frontiersin.org/articles/10.3389/fonc.2021.700158/full#supplementary-material

1. Siegel RL, Miller KD, Jemal A. Cancer Statistics, 2019. CA Cancer J Clin (2019) 69(1):7–34. doi: 10.3322/caac.21551

2. Aberle DR, Adams AM, Berg CD, Black WC, Clapp JD, Fagerstrom RM, et al. Reduced Lung-Cancer Mortality With Low-Dose Computed Tomographic Screening. N Engl J Med (2011) 365(5):395–409. doi: 10.1056/NEJMoa1102873

3. Macmahon H, Naidich DP, Goo JM, Lee KS, Leung ANC, Mayo JR, et al. Guidelines for Management of Incidental Pulmonary Nodules Detected on CT Images: From the Fleischner Society. Radiology (2017) 284(1):228–43. doi: 10.1148/radiol.2017161659

4. Henschke C. CT Screening for Lung Cancer: Frequency and Significance of Part-Solid and Nonsolid Nodules. AJR Am J Roentgenol (2002) 178(5):1053–7. doi: 10.2214/ajr.178.5.1781053

5. Travis WD, Brambilla E, Nicholson AG, Yatabe Y, Austin JHM, Beasley MB, et al. The 2015 World Health Organization Classification of Lung Tumors: Impact of Genetic, Clinical and Radiologic Advances Since the 2004 Classification. J Thorac Oncol (2015) 10(9):1243–60. doi: 10.1097/jto.0000000000000630

6. Godoy MCB, Naidich DP. Subsolid Pulmonary Nodules and the Spectrum of Peripheral Adenocarcinomas of the Lung: Recommended Interim Guidelines for Assessment and Management. Radiology (2009) 253(3):606–22. doi: 10.1148/radiol.2533090179

7. Hammer MM, Hatabu H. Subsolid Pulmonary Nodules: Controversy and Perspective. Eur J Radiol Open (2020) 7:100267. doi: 10.1016/j.ejro.2020.100267

8. Ye T, Deng L, Wang S, Xiang J, Zhang Y, Hu H, et al. Lung Adenocarcinomas Manifesting as Radiological Part-Solid Nodules Define a Special Clinical Subtype. J Thorac Oncol (2019) 14(4):617–27. doi: 10.1016/j.jtho.2018.12.030

9. Ye T, Deng L, Xiang J, Zhang Y, Hu H, Sun Y, et al. Predictors of Pathologic Tumor Invasion and Prognosis for Ground Glass Opacity Featured Lung Adenocarcinoma. Ann Thorac Surg (2018) 106(6):1682–90. doi: 10.1016/j.athoracsur.2018.06.058

10. Tsutani Y, Miyata Y, Nakayama H, Okumura S, Adachi S, Yoshimura M, et al. Appropriate Sublobar Resection Choice for Ground Glass Opacity-Dominant Clinical Stage IA Lung Adenocarcinoma: Wedge Resection or Segmentectomy. Chest (2014) 145(1):66–71. doi: 10.1378/chest.13-1094

11. Zhang J, Wu J, Tan Q, Zhu L, Gao W. Why do Pathological Stage IA Lung Adenocarcinomas Vary From Prognosis?: A Clinicopathologic Study of 176 Patients With Pathological Stage IA Lung Adenocarcinoma Based on the IASLC/ATS/ERS Classification. J Thorac Oncol (2013) 8(9):1196–202. doi: 10.1097/JTO.0b013e31829f09a7

12. Yang J, Wang H, Geng C, Dai Y, Ji J. Advances in Intelligent Diagnosis Methods for Pulmonary Ground-Glass Opacity Nodules. Biomed Eng Online (2018) 17(1):1–18. doi: 10.1186/s12938-018-0435-2

13. Kim H, Park CM, Koh JM, Lee SM, Goo JM. Pulmonary Subsolid Nodules: What Radiologists Need to Know About the Imaging Features and Management Strategy. Diagn Interv Radiol (2014) 20(1):47–57. doi: 10.5152/dir.2013.13223

14. Jin X, Zhao S-H, Gao J, Wang D-J, Wu J, Wu C-C. CT Characteristics and Pathological Implications of Early Stage (T1N0M0) Lung Adenocarcinoma With Pure Ground-Glass Opacity. Eur Radiol (2015) 25(9):2532–40. doi: 10.1007/s00330-015-3637-z

15. Dai C, Ren Y, Xie H, Jiang S, Fei K, Jiang G, et al. Clinical and Radiological Features of Synchronous Pure Ground-Glass Nodules Observed Along With Operable Non-Small Cell Lung Cancer. J Surg Oncol (2016) 113(7):738–44. doi: 10.1002/jso.24235

16. Gierada DS, Pilgram TK, Ford M, Fagerstrom RM, Church TR, Nath H, et al. Lung Cancer: Interobserver Agreement on Interpretation of Pulmonary Findings at Low-Dose CT Screening. Radiology (2008) 246(1):265–72. doi: 10.1148/radiol.2461062097

17. Ardila D, Kiraly AP, Bharadwaj S, Choi B, Reicher JJ, Peng L, et al. End-To-End Lung Cancer Screening With Three-Dimensional Deep Learning on Low-Dose Chest Computed Tomography. Nat Med (2019) 25(6):954–61. doi: 10.1038/s41591-019-0447-x

18. Esteva A, Kuprel B, Novoa RA, Ko J, Swetter SM, Blau HM, et al. Dermatologist-Level Classification of Skin Cancer With Deep Neural Networks. Nature (2017) 542(7639):115–8. doi: 10.1038/nature21056

19. Spampinato C, Palazzo S, Giordano D, Aldinucci M, Leonardi R. Deep Learning for Automated Skeletal Bone Age Assessment in X-Ray Images. Med Image Anal (2017) 36:41–51. doi: 10.1016/j.media.2016.10.010

20. van der Burgh HK, Schmidt R, Westeneng HJ, de Reus MA, van den Berg LH, van den Heuvel MP. Deep Learning Predictions of Survival Based on MRI in Amyotrophic Lateral Sclerosis. NeuroImage Clin (2017) 13:361–9. doi: 10.1016/j.nicl.2016.10.008

21. Jiang H, Ma H, Qian W, Gao M, Li Y. An Automatic Detection System of Lung Nodule Based on Multi-Group Patch-Based Deep Learning Network. IEEE J Biomed Health Informatics (2017) PP(99):1. doi: 10.1109/JBHI.2017.2725903

22. Xie Y, Xia Y, Zhang J, Song Y, Feng D, Fulham M, et al. Knowledge-Based Collaborative Deep Learning for Benign-Malignant Lung Nodule Classification on Chest CT. IEEE Trans Med Imaging (2019) 38(4):991–1004. doi: 10.1109/tmi.2018.2876510

23. Hua KL, Hsu CH, Hidayati SC, Cheng WH, Chen YJ. Computer-Aided Classification of Lung Nodules on Computed Tomography Images via Deep Learning Technique. Oncotargets Ther (2015) 8:2015–22. doi: 10.2147/OTT.S80733

24. Huang G, Liu Z, Weinberger KQ. Densely Connected Convolutional Networks. 2017 IEEE Conf Computer Vision Pattern Recog (CVPR) (2017) 2261–9. doi: 10.1109/CVPR.2017.243

25. Kamiya S, Iwano S, Umakoshi H, Ito R, Shimamoto H, Nakamura S, et al. Computer-Aided Volumetry of Part-Solid Lung Cancers by Using CT: Solid Component Size Predicts Prognosis. Radiology (2018) 287(3):1030–40. doi: 10.1148/radiol.2018172319

26. Revel MP, Mannes I, Benzakoun J, Guinet C, Leger T, Grenier P, et al. Subsolid Lung Nodule Classification: A CT Criterion for Improving Interobserver Agreement. Radiology (2018) 286(1):316–25. doi: 10.1148/radiol.2017170044

27. Ge X, Gao F, Li M, Chen Y, Hua Y. Diagnostic Value of Solid Component for Lung Adenocarcinoma Shown as Ground-Glass Nodule on Computed Tomography. Zhonghua Yi Xue Za Zhi (2014) 94(13):1010–3. doi: 10.3760/cma.j.issn.0376-2491.2014.13.014

28. Gong J, Liu J, Hao W, Nie S, Wang S, Peng W. Computer-Aided Diagnosis of Ground-Glass Opacity Pulmonary Nodules Using Radiomic Features Analysis. Phys Med Biol (2019) 64(13):135015. doi: 10.1088/1361-6560/ab2757

29. Digumarthy SR, Padole AM, Rastogi S, Price M, Mooradian MJ, Sequist LV, et al. Predicting Malignant Potential of Subsolid Nodules: Can Radiomics Preempt Longitudinal Follow Up CT? Cancer Imaging (2019) 19(1):36. doi: 10.1186/s40644-019-0223-7

30. Yang W, Sun Y, Fang W, Qian F, Ye J, Chen Q, et al. High-Resolution Computed Tomography Features Distinguishing Benign and Malignant Lesions Manifesting as Persistent Solitary Subsolid Nodules. Clin Lung Cancer (2018) 19(1):e75–83. doi: 10.1016/j.cllc.2017.05.023

31. Gong J, Liu J, Hao W, Nie S, Zheng B, Wang S, et al. A Deep Residual Learning Network for Predicting Lung Adenocarcinoma Manifesting as Ground-Glass Nodule on CT Images. Eur Radiol (2019) 30(4):1847–55. doi: 10.1007/s00330-019-06533-w

Keywords: pulmonary subsolid nodules, computed tomography, diagnosis, computer-aided diagnosis (CAD), deep learning

Citation: Shen T, Hou R, Ye X, Li X, Xiong J, Zhang Q, Zhang C, Cai X, Yu W, Zhao J and Fu X (2021) Predicting Malignancy and Invasiveness of Pulmonary Subsolid Nodules on CT Images Using Deep Learning. Front. Oncol. 11:700158. doi: 10.3389/fonc.2021.700158

Received: 25 April 2021; Accepted: 08 July 2021;

Published: 26 July 2021.

Edited by:

Abhishek Mahajan, Tata Memorial Hospital, India

Reviewed by:

Shouliang Qi, Northeastern University, China

Copyright © 2021 Shen, Hou, Ye, Li, Xiong, Zhang, Zhang, Cai, Yu, Zhao and Fu. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Jun Zhao, anVuemhhb0BzanR1LmVkdS5jbg==; Xiaolong Fu, eGxmdTE5NjRAaG90bWFpbC5jb20=

†These authors have contributed equally to this work and share first authorship

‡These authors have contributed equally to this work and share last authorship
