- ¹School of Computer Science and Engineering, University of Electronic Science and Technology of China, Chengdu, China

- ²Department of General Surgery (Hepatobiliary Surgery), The Affiliated Hospital of Southwest Medical University, Luzhou, China

- ³Department of Hepatobiliary Surgery, Deyang People’s Hospital, Deyang, China

- ⁴School of Information and Software Engineering, University of Electronic Science and Technology of China, Chengdu, China

- ⁵Department of Pathology, The Affiliated Hospital of Southwest Medical University, Luzhou, China

- ⁶Department of Radiology, The Affiliated Hospital of Southwest Medical University, Luzhou, China

- ⁷Department of Anesthesiology, The Affiliated Hospital of Southwest Medical University, Luzhou, China

Background: This study aimed to develop and validate a deep learning method to automatically segment the peri-ampullary (PA) region in magnetic resonance imaging (MRI) images.

Methods: A group of patients with or without periampullary carcinoma (PAC) was included. The PA regions were manually annotated in MRI images by experts. Patients were randomly divided into one training set, one validation set, and one test set. Deep learning methods were developed to automatically segment the PA region in MRI images. The segmentation performance of the methods was compared in the validation set. The model with the highest intersection over union (IoU) was evaluated in the test set.

Results: The best-performing deep learning models segmented the PA region in both T1 and T2 MRI images. The IoU was 0.68, 0.68, and 0.64 for T1 images, T2 images, and the combination of T1 and T2 images, respectively.

Conclusions: Deep learning is a promising approach for segmenting the PA region in MRI images, with accuracy concordant with manual human assessment. This automated, non-invasive method can help clinicians identify and locate the PA region on preoperative MRI scans.

Introduction

The peri-ampullary (PA) region refers to the area within 2 cm of the main papilla of the duodenum, including the ampulla of Vater, the lower segment of the common bile duct, the opening of the pancreatic duct, the duodenal papilla, and the nearby duodenal mucosa (1–4). This region lies deep in the abdomen, is narrow, and is surrounded by many organs and blood vessels, so it is difficult to identify with conventional imaging examinations. At the same time, the PA region is prone to a range of diseases, including malignant tumors such as periampullary carcinoma (PAC) and benign lesions such as chronic mass-forming pancreatitis, inflammatory stricture of the lower common bile duct, and stones in the lower common bile duct (5, 6). The treatment and prognosis of these diseases vary widely, so accurate diagnosis has important clinical significance. However, imaging diagnosis of these diseases depends on first determining the specific location of the PA region.

Among modern imaging techniques, magnetic resonance imaging (MRI) is a preferred choice for detecting diseases of the PA region because of its excellent soft-tissue contrast and absence of ionizing radiation (5, 7). However, the accuracy and specificity of MRI in diagnosing these diseases remain unsatisfactory. One study reported that the specificity of MRI was only 78.26%, while the accuracy was 89.89%, in the diagnosis of PAC (5). Similarly, our previous study found that MRI had only 87% accuracy in detecting PAC (8). For diseases of the PA region, misdiagnosis adversely affects the follow-up treatment of patients (8, 9). Therefore, it is necessary to further improve the preoperative diagnostic accuracy for diseases in this region, and precise segmentation of the PA region is the first step and the foundation for accurate diagnosis.

Deep learning is an emerging sub-branch of artificial intelligence that has demonstrated transformative capabilities in many domains (10). Technically, deep learning uses neural networks with multiple layers that can extract abstract representations of input data such as images, videos, time series, and natural language text. Recently, there have been remarkable advances in applying deep learning to healthcare and clinical medicine (11–13). Deep learning has applications in the analysis of electronic health records and physiological data, and especially in the diagnosis of diseases using medical imaging (14). In the analysis of MRI, computed tomography (CT), X-ray, microscopy, and other medical images, deep learning shows promising performance in tasks such as classification, segmentation, detection, and registration (15). A considerable body of literature has addressed image segmentation of different human organs, such as the pancreas (16), liver (17, 18), heart (19), and brain (20, 21). However, the PA region remains largely unexplored in deep learning-based medical image analysis. Although neural networks have been applied to classify ampullary tumors, the images were taken by endoscopy during operations rather than by preoperative, non-invasive MRI or CT scanning (22). To the best of our knowledge, no reported work has developed and evaluated deep learning methods to segment the PA region in MRI images.

Therefore, in this study, we present a deep learning method to automatically segment the PA region in MRI images. We retrospectively collected an MRI dataset covering different types of PA region conditions, including PAC and non-PAC patients, so that the PA region could be identified accurately across different cases. We developed the deep learning methods on the training set and validated their performance on the validation set. This work provides a basis for further research on the diagnosis of PAC.

Materials and Methods

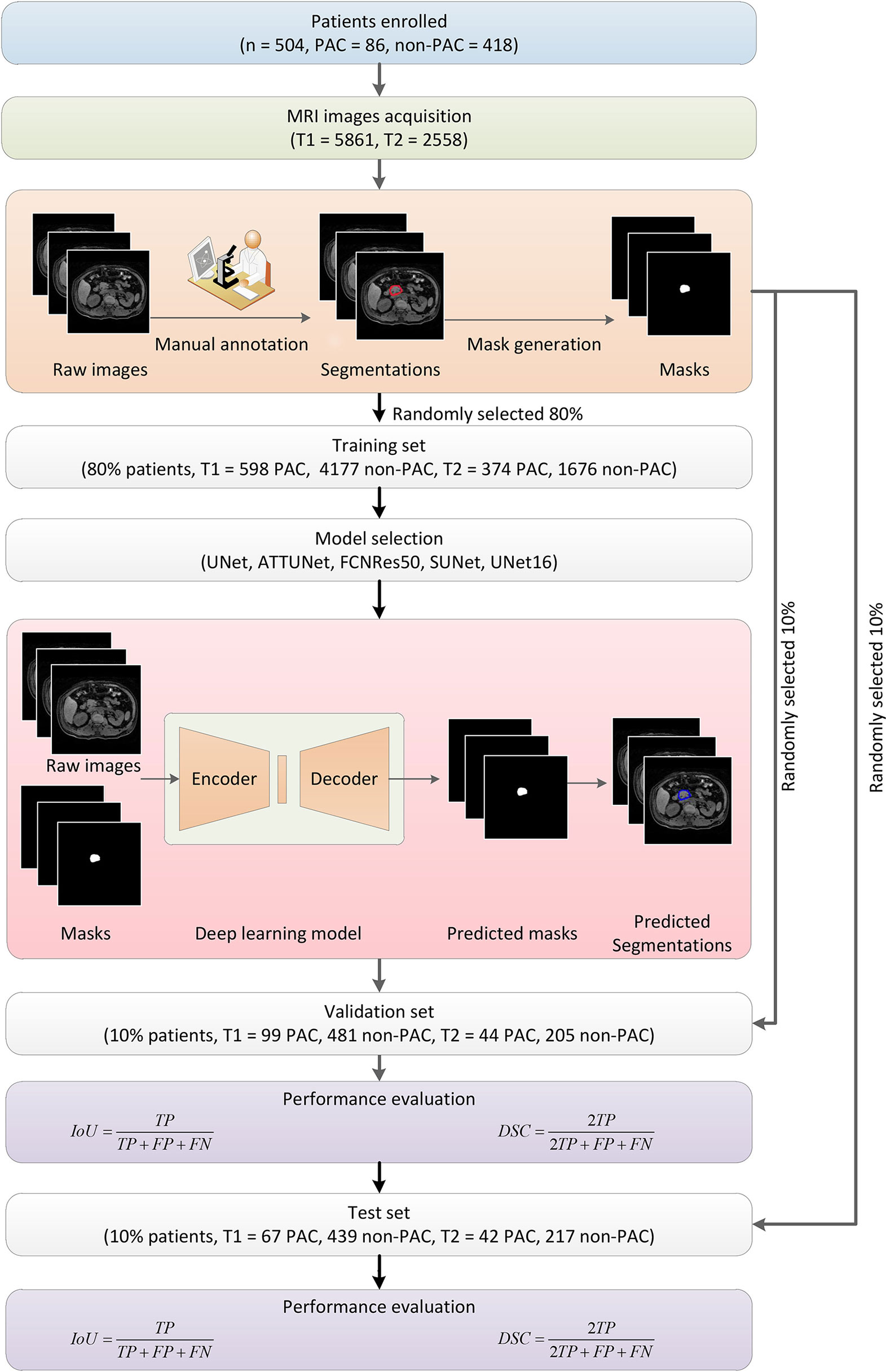

The overall workflow of this study is illustrated in Figure 1. First, patients were included, and the MRI images were obtained. Next, the PA regions were annotated in the MRI images by experts. Based on the raw images and annotation information, the deep learning segmentation algorithms were trained and evaluated on the training and validation datasets, respectively. Finally, the performance was summarized and reported.

Figure 1 Overall flowchart of this study. First, MRI images were obtained from enrolled patients and manually annotated by experts to obtain the masks for later deep learning algorithm development. The dataset was randomly divided into subsets for algorithm training, validation, and testing, respectively. Five models were developed and evaluated, and the UNet16 and FCNRes50 achieved the best performance.

Patients Characteristics

This was a retrospective study approved by the Ethics Committee of the Affiliated Hospital of Southwest Medical University (No. KY2020157). A total of 504 patients who underwent MRI examinations in the Department of Hepatobiliary and Pancreatic Surgery of the Affiliated Hospital of Southwest Medical University between June 1, 2018 and May 1, 2019 were included. Of these, 86 patients were diagnosed with peri-ampullary carcinoma by pathology after surgery or endoscopy, and the other 418 patients had no peri-ampullary lesion as determined by a radiologist. The demographic and clinical characteristics of PAC and non-PAC patients are shown in Table S1 and Table S2, respectively.

MRI Techniques

After 3-8 hours of fasting, patients were asked to practice their breathing techniques. Drinking water and conventional oral medicines were not restricted. MRI was performed in all patients in the supine position on a 3.0-T MR scanner (Philips Achieva, Holland, Netherlands) with a Quasar Dual gradient system and a 16-channel phased-array torso coil. The MR scan started with the localization scan, followed by a sensitivity-encoding (SENSE) reference scan. The scanning sequences were as follows: breath-hold axial dual fast field echo (dual FFE) and high spatial resolution isotropic volume exam (THRIVE) T1-weighted imaging (T1WI), respiratory-triggered coronal turbo spin echo (TSE) T2-weighted imaging (T2WI), axial fat-suppressed TSE-T2WI, single-shot TSE echo-planar imaging (EPI) diffusion-weighted imaging (DWI), and MR cholangiopancreatography (MRCP). For dynamic contrast-enhanced (DCE) MRI, axial THRIVE-T1WI was used. A total of 15 mL of the contrast agent Gd-DTPA was injected through the antecubital vein at a rate of 2 mL/s. DCE-MRI was performed in three phases (arterial, portal, and delayed), and images were collected after 20 s, 60 s, and 180 s, respectively (10). As a result, among the 504 patients, 485 had THRIVE-T1W images (n = 5,861) and 495 had T2W images (n = 2,558).

MRI Imaging Analysis

Post-processing of MRI images was performed using the Extended MR Workspace R2.6.3.1 (Philips Healthcare) with the FuncTool package. Typical MRI manifestations of PAC were as follows: (1) the mass was nodular or invasive; (2) the tumor parenchyma showed equal or slightly lower signal on T1WI; (3) the tumor parenchyma showed equal or slightly higher signal on T2WI; (4) DWI showed high signal intensity; (5) the mass showed mild or moderate enhancement after contrast administration; and (6) on MRCP, the bile duct terminated abruptly and asymmetrically and was proportionally dilated (a double-duct sign may occur when the lesion obstructs the ducts) (8).

Pathological Examination

The pathological data from all of the cases were analyzed by two pathologists with more than 15 years of diagnostic experience. The pathologists were blinded to the clinical and imaging findings.

Image Annotation

First, all MRI images were annotated by two experienced radiologists using in-house software. For each image, a radiologist manually drew the outline of the PA region. The outline was used to generate a corresponding mask image of the same size to indicate the segmentation of the PA region. An expert radiologist then reviewed all manual annotations to ensure their quality, and the reviewed annotations served as ground truths to develop and validate the deep learning algorithms (23–26).
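
The step from a drawn outline to a same-size mask image can be illustrated with a minimal, dependency-free sketch. The in-house annotation software is not described in detail, so the even-odd ray casting rule, the pixel-center convention, and the names `point_in_polygon` and `outline_to_mask` are assumptions made here for illustration only.

```python
def point_in_polygon(x, y, polygon):
    """Even-odd ray casting: True if (x, y) lies inside the closed polygon."""
    inside = False
    n = len(polygon)
    for i in range(n):
        x1, y1 = polygon[i]
        x2, y2 = polygon[(i + 1) % n]
        # Only edges that straddle the horizontal line through y can be crossed.
        if (y1 > y) != (y2 > y):
            # x coordinate at which the edge crosses that horizontal line.
            x_cross = x1 + (y - y1) * (x2 - x1) / (y2 - y1)
            if x < x_cross:
                inside = not inside
    return inside


def outline_to_mask(outline, height, width):
    """Rasterize an outline into a binary mask with the same size as the image.

    Assumption: a pixel is labeled 1 when its center (col + 0.5, row + 0.5)
    falls inside the outline.
    """
    return [
        [1 if point_in_polygon(col + 0.5, row + 0.5, outline) else 0
         for col in range(width)]
        for row in range(height)
    ]


# Hypothetical square outline on a 6 x 6 image, vertices given as (x, y).
outline = [(1, 1), (4, 1), (4, 4), (1, 4)]
mask = outline_to_mask(outline, height=6, width=6)
```

In practice a library routine such as a polygon fill from an image processing package would likely be used instead; the sketch only makes the geometry explicit.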

Among the 504 patients, 485 had T1 images (n = 5,861) and 495 had T2 images (n = 2,558); the two image types were processed separately. We developed algorithms for three cases, namely using only T1 images, only T2 images, and the combination of T1 and T2 images. In a hold-out approach, we first randomly divided the patients into three independent cohorts: one training cohort (80%), one validation cohort (10%), and one test cohort (10%). Their images and corresponding annotated mask images were grouped accordingly into one training set, one validation set, and one test set. In other words, the MRI images and corresponding mask images of the training cohort were used to train the deep learning algorithms, and those of the validation and test cohorts were later used to select the best algorithm and evaluate its performance, respectively.
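
A patient-level split of this kind can be sketched as follows. This is an illustrative outline rather than the authors' code: the function names, the fixed seed, the rounding rule, and the synthetic patient and image identifiers are all assumptions, so the resulting cohort sizes need not match those reported in the Results.

```python
import random


def split_patients(patient_ids, train_frac=0.8, val_frac=0.1, seed=0):
    """Shuffle unique patient IDs and split them into three disjoint cohorts."""
    ids = sorted(set(patient_ids))
    rng = random.Random(seed)  # fixed seed (assumption) for reproducibility
    rng.shuffle(ids)
    n_train = int(round(train_frac * len(ids)))
    n_val = int(round(val_frac * len(ids)))
    return {
        "train": set(ids[:n_train]),
        "val": set(ids[n_train:n_train + n_val]),
        "test": set(ids[n_train + n_val:]),
    }


def assign_images(images, cohorts):
    """Send each (patient_id, image_name) pair to the set of its patient."""
    sets = {name: [] for name in cohorts}
    for patient_id, image_name in images:
        for name, members in cohorts.items():
            if patient_id in members:
                sets[name].append((patient_id, image_name))
                break
    return sets


# Hypothetical data: 504 patients with 3 slices each.
patients = [f"P{i:03d}" for i in range(504)]
images = [(p, f"{p}_slice{k}.png") for p in patients for k in range(3)]
cohorts = split_patients(patients)
image_sets = assign_images(images, cohorts)
```

Splitting by patient rather than by image keeps all slices of one patient in a single set, which avoids leakage of near-identical slices between training and evaluation.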

Deep Learning Methods

In this study, we developed deep learning algorithms using multiple layers of convolutional neural networks (CNNs) to automatically segment PA regions in MRI images. CNNs are usually used to extract hierarchical patterns from images in a feedforward manner. CNN-based deep learning algorithms have achieved remarkable performance in many computer vision applications, in some cases surpassing human experts (10). In medical image analysis, UNet adopts a two-block structure built from multiple CNN layers (27). More specifically, the architecture consists of two components: an encoder that transforms the high-dimensional input images into low-dimensional abstract representations, and a following decoder that projects these representations back to the high-dimensional space by reversing the encoding. Finally, the output images carry pixel-level label information indicating the PA region. The detailed structures are illustrated in Figure S1A for UNet16 and Figure S1B for FCNRes50. To systematically investigate the performance of the deep learning approach, we also considered four other structures: ATTUNet, which adds attention gates to UNet (27); FCNRes50, which uses ResNet50 for downsampling and combines residual network and fully convolutional network structures to extract pixel-level information and generate the segmentation (28); UNet16, which uses VGG16 for downsampling (29); and SUNet, which uses SELU instead of ReLU as the nonlinear activation function.

In the training stage, the MRI images of the training cohort were input into the encoder one by one. The output masks generated by the decoder were compared against the corresponding ground truth to calculate the loss function, which measured the deviation of the predicted segmentation. Using back-propagation with stochastic gradient-based optimization, the encoder-decoder structure was iteratively optimized to minimize the loss. More technically, the weights of the neural network layers were adjusted to improve the segmentation capability. The encoder and decoder were trained together from the start. In this manner, a satisfactory deep neural network could be obtained given enough training samples. Meanwhile, since the input and output were both images, this deep learning approach had a significant advantage over conventional image analysis methods in that it eliminated laborious feature engineering and manual intervention. After the training stage, the trained encoder-decoder structure was used for inference to predict PA regions in MRI images, with the weights kept unchanged. In the validation stage, the MRI images of the validation set were input into the neural network, and the corresponding mask images were obtained. The images of the test cohort were used to evaluate the performance of the selected best model. We systematically considered four variations of the UNet structure and one FCNRes50 structure to find the best-performing deep learning structure. The algorithms were trained, validated, and tested separately on the respective T1 and T2 images. The five models were also trained, validated, and tested on the dataset containing both T1 and T2 images.
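
The selection step described above, in which the model with the highest validation IoU is carried forward to the test set, can be sketched as below. The per-image IoU values are hypothetical placeholders and not results from this study, and `select_best_model` is a name introduced here for illustration.

```python
from statistics import mean


def select_best_model(validation_ious):
    """Return the model with the highest mean validation IoU and all means."""
    mean_iou = {name: mean(scores) for name, scores in validation_ious.items()}
    best = max(mean_iou, key=mean_iou.get)
    return best, mean_iou


# Hypothetical per-image validation IoU values (not data from the study).
validation_ious = {
    "UNet": [0.60, 0.62, 0.58],
    "ATTUNet": [0.61, 0.59, 0.63],
    "SUNet": [0.55, 0.60, 0.57],
    "UNet16": [0.70, 0.66, 0.68],
    "FCNRes50": [0.65, 0.69, 0.64],
}
best_model, mean_iou = select_best_model(validation_ious)
```

Only the selected model would then be run once on the held-out test images, so that the test set plays no part in choosing among structures.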

All programs were implemented in the Python programming language (version 3.7) with freely available open-source packages, including OpenCV-Python (version 4.1.0.25) for image and data processing, SciPy (version 1.2.1) and NumPy (version 1.16.2) for data management, PyTorch (version 1.1) as the deep learning framework, and CUDA (version 10.1) for graphics processing unit (GPU) support. Training and validation were conducted on a computer equipped with an NVIDIA 3090Ti GPU, 24GB main memory, and an Intel(R) Xeon(R) 2.10GHz central processing unit (CPU). It is worth mentioning that the validation task could be done on a conventional personal computer within an acceptable time, since inference requires less computation than training.

Statistical Evaluation of Segmentation

The performance of the segmentation task for the PA region in MRI images was quantitatively evaluated using intersection over union (IoU) and Dice similarity coefficient (DSC). For one PA region instance in an MRI image, the manually annotated ground truth and the deep learning predicted segmentation were compared at pixel-level to see how the two regions overlapped. In general, larger values of IoU and DSC indicated better segmentation accuracies. The average IoU and DSC were calculated based on predictions for all images in the validation set. For simplicity, we used IoU as the main measurement, and the performance of five deep learning structures was ranked according to IoU. The predictions of T1 and T2 MRI images were conducted separately in the same manner.
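
As a concrete illustration of the two metrics, the following minimal sketch computes IoU and DSC for a pair of binary masks stored as nested lists. It is not the evaluation code used in the study; in particular, the convention for two empty masks is an assumption, since the text does not state how such cases were handled.

```python
def iou_and_dsc(truth, pred):
    """Compute IoU and DSC for two same-size binary masks (nested lists of 0/1)."""
    intersection = 0
    truth_area = 0
    pred_area = 0
    for truth_row, pred_row in zip(truth, pred):
        for t, p in zip(truth_row, pred_row):
            truth_area += t
            pred_area += p
            intersection += t & p
    union = truth_area + pred_area - intersection
    if union == 0:
        # Assumption: two empty masks are treated as perfect agreement.
        return 1.0, 1.0
    iou = intersection / union
    dsc = 2 * intersection / (truth_area + pred_area)
    return iou, dsc


# Toy example: two 2 x 2 regions that share one column of two pixels.
truth = [
    [0, 1, 1, 0],
    [0, 1, 1, 0],
    [0, 0, 0, 0],
]
pred = [
    [0, 0, 1, 1],
    [0, 0, 1, 1],
    [0, 0, 0, 0],
]
iou, dsc = iou_and_dsc(truth, pred)
```

Here the overlap is 2 pixels and the union is 6 pixels, so the IoU is 1/3 while the DSC is 0.5, which shows that DSC is never smaller than IoU for the same pair of masks.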

Results

MRI Images

In preparing the training, validation, and test datasets, we divided the initial dataset by patient to ensure that images from a given patient appeared in only one dataset. As a result, for T1 images (n = 5,861), the training set included 598 images from 67 PAC patients and 4,177 images from 322 patients without PAC; the validation set included 99 images from 8 PAC patients and 418 images from 40 patients without PAC; and the test set included 67 images from 8 PAC patients and 439 images from 40 patients without PAC. For T2 images (n = 2,558), the training set included 374 images from 68 PAC patients and 1,676 images from 329 patients without PAC; the validation set included 44 images from 8 PAC patients and 205 images from 41 patients without PAC; and the test set included 42 images from 8 PAC patients and 217 images from 41 patients without PAC. For the dataset combining T1 and T2 MRI images (n = 8,419), the training set included 959 images from 69 PAC patients and 5,701 images from 335 patients without PAC; the validation set included 176 images from 9 PAC patients and 806 images from 42 patients without PAC; and the test set included 89 images from 8 PAC patients and 668 images from 41 patients without PAC.

Segmentation Performance

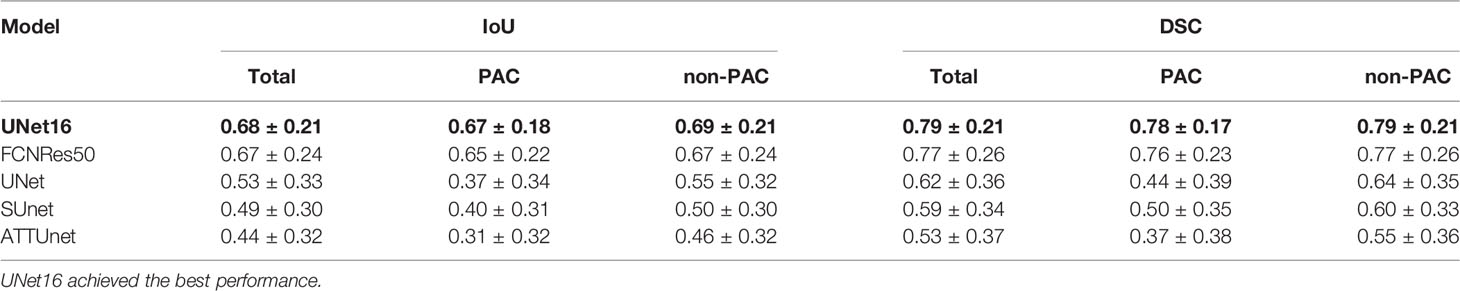

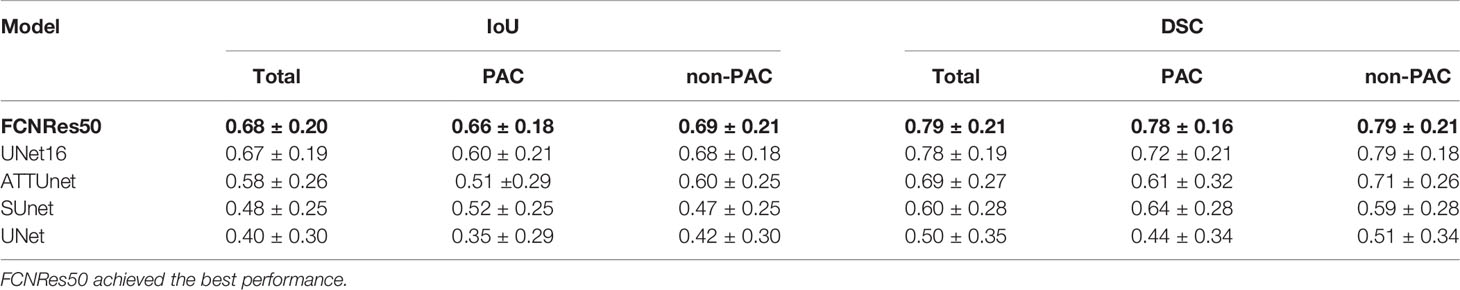

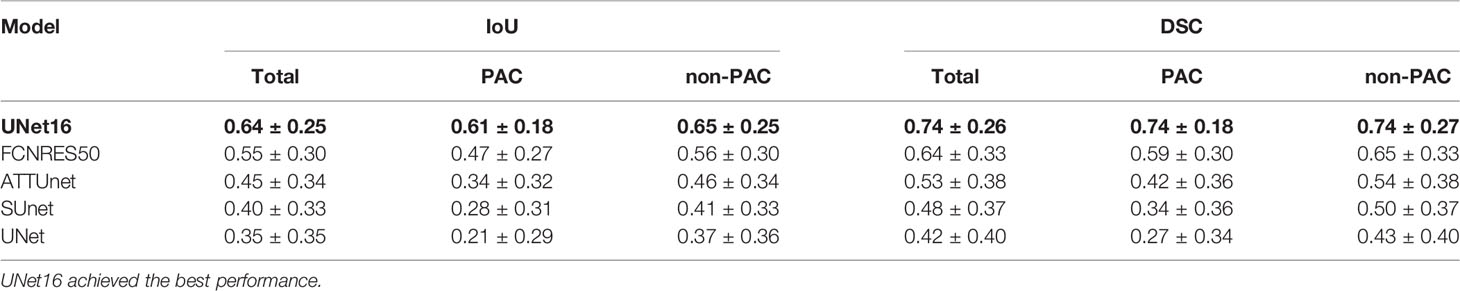

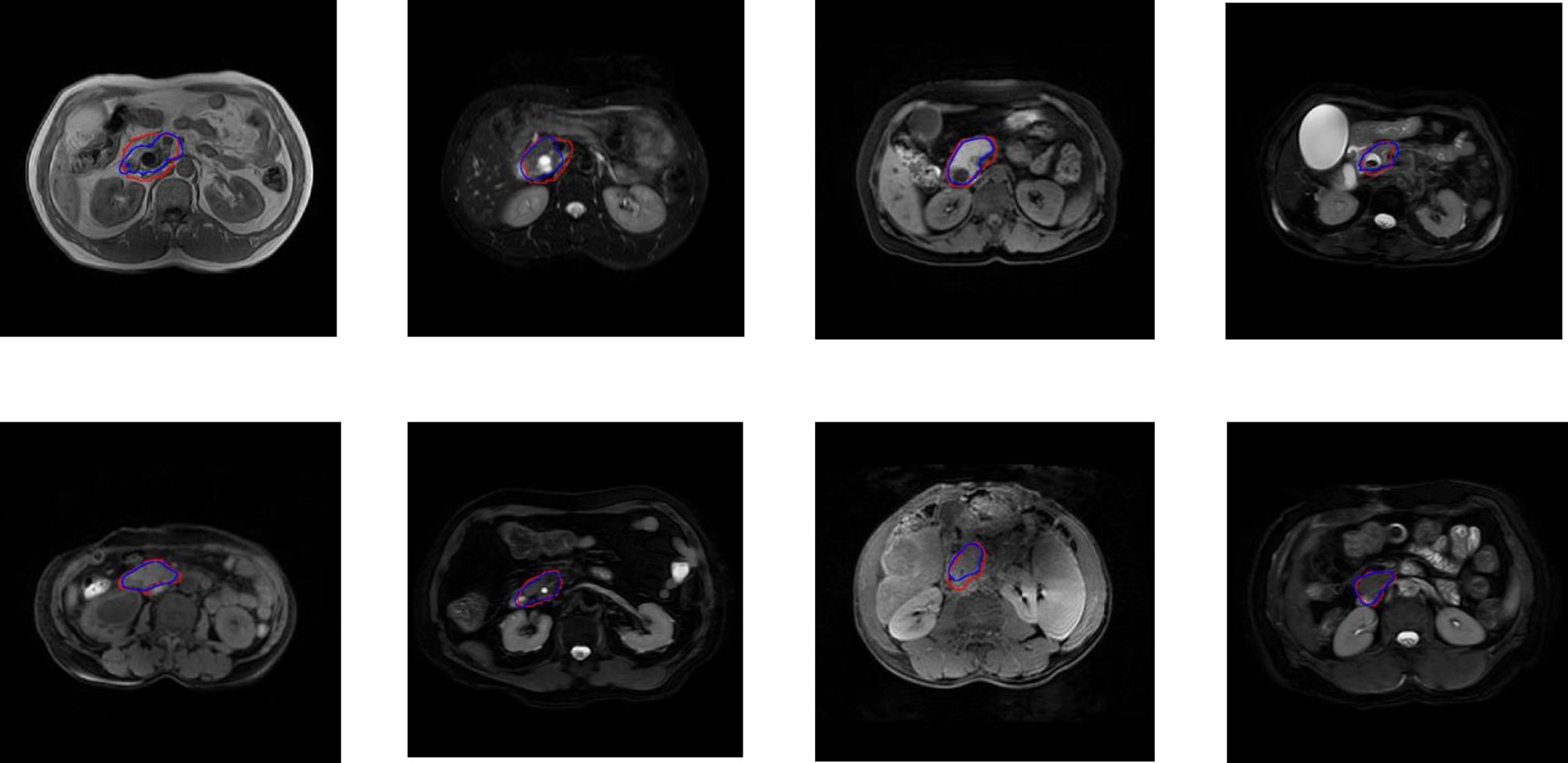

For the five deep learning segmentation structures, we followed the same approach with separate training, validation, and testing. Specifically, each image formed a batch (batch size = 1), and training was repeated for ten rounds (epochs = 10) to ensure convergence of the loss. All models used the Adam optimizer with a learning rate of 0.0001. The final segmentation performance of all five structures is presented in Table 1 for T1 images, Table 2 for T2 images, and Table 3 for combined T1 and T2 images. UNet16 outperformed the other structures for T1 images only (IoU = 0.68, DSC = 0.79) and for combined T1 and T2 images (IoU = 0.64, DSC = 0.74). FCNRes50 performed better than UNet16 for T2 images only (IoU = 0.68, DSC = 0.79). As shown in the tables, performance was calculated separately for patients with PAC and patients without PAC. Figure 2 shows segmentation samples obtained by UNet16 for T1 images, FCNRes50 for T2 images, and UNet16 for combined T1 and T2 images. In terms of speed, the algorithms output the segmentation for a given image within two seconds, which can substantially improve the efficiency of image analysis.
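
The reported IoU and DSC values can be related through a standard identity. For a single image with ground truth region A and predicted region B (symbols introduced here for illustration), and using |A| + |B| = |A ∪ B| + |A ∩ B|:

```latex
\mathrm{IoU} = \frac{|A \cap B|}{|A \cup B|}, \qquad
\mathrm{DSC} = \frac{2\,|A \cap B|}{|A| + |B|}
             = \frac{2\,\mathrm{IoU}}{1 + \mathrm{IoU}}
```

Applied to the mean IoU values, this gives 2(0.68)/1.68 ≈ 0.81 and 2(0.64)/1.64 ≈ 0.78, slightly above the reported mean DSC values of 0.79 and 0.74. Assuming both metrics were averaged per image, as described in the Statistical Evaluation section, such a gap is expected: the mapping from IoU to DSC is concave, so the mean of the per-image DSC values cannot exceed the DSC computed from the mean IoU.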

Table 1 Segmentation performance of deep learning structures in the test T1 images ranked by mean IoU.

Table 2 Segmentation performance of deep learning structures in the test T2 images ranked by mean IoU.

Table 3 Segmentation performance of deep learning structures in the test T1 and T2 images ranked by mean IoU.

Figure 2 Examples of PA regions of PAC patients (top panel) and of patients without PAC (bottom panel). The first column shows T1 MRI images segmented by UNet16 trained using only T1 images, the second column shows T2 MRI images segmented by FCNRes50 trained using only T2 images, the third column shows T1 MRI images segmented by UNet16 trained using both T1 and T2 images, and the fourth column shows T2 MRI images segmented by UNet16 trained using both T1 and T2 images. Blue, algorithm; red, expert.

Discussion

PAC accounts for 5% of gastrointestinal tumors, and pancreatic cancer is the most common type, followed by distal cholangiocarcinoma (2, 30). Pancreatoduodenectomy (PD) is the standard treatment for patients with PAC (31). However, complications such as pancreatic fistula, biliary fistula, infection, and hemorrhage often occur after PD. A previous study has shown that the incidence of postoperative complications of PD may be as high as 30-65% (32). For patients with benign lesions, unnecessary PD could lead to these surgical complications, or even death in some patients. Conversely, if malignant lesions are misdiagnosed as benign, treatment will be delayed, resulting in a poor prognosis. Because of the anatomical complexity of the periampullary region and the lack of specific serum markers, early and accurate diagnosis of PAC remains challenging. Currently, non-invasive diagnostic methods, including ultrasound, CT, and MRI, have been applied to the detection and diagnosis of PAC. One study reported that the specificity of ultrasound was only 52.1%, while the accuracy was 61.61%, in the diagnosis of PAC (6). Another study reported that the specificity of CT was only 16.7%, while the accuracy was 84.4%, in the diagnosis of PAC (33). Among these imaging techniques, MRI has been reported to be an optimal choice for assessing periampullary lesions (32). However, there are still limiting factors in evaluating the disease with MRI, because the PA region is small and its anatomy is relatively complicated. Moreover, the tapered area of the distal biliary and pancreatic ducts contains little or no fluid, and physiologic contraction of the sphincter of Oddi also makes it difficult to evaluate the PA region (34).

Recently, with significant developments in deep learning and increasing medical needs, artificial intelligence (AI) technology has shown significant advantages in improving the diagnosis of diseases. Therefore, we proposed and developed a deep learning method to automatically segment the PA region in MRI images, which could be extended to future AI-based diagnosis of diseases in the PA region and could also facilitate the planning of surgery and endoscopic treatment by clinicians.

In this work, we developed deep learning structures to automatically segment the PA region using MRI T1 and T2 images. Many recent studies have developed AI algorithms for the segmentation of abdominal organs or structures, including the pancreas (16), liver (17, 18), spleen (35, 36), gallbladder (37), kidney (38, 39), and local lesions of the stomach (40). However, there is no report of PA region segmentation using AI algorithms. To the best of our knowledge, this work is the first systematic study to develop and evaluate deep learning approaches for segmentation of the PA region in MRI. To evaluate the performance of various deep learning structures, we implemented five algorithms from the deep learning literature: UNet (27), ATTUNet (41), FCNRes50 (28), UNet16 (29), and SUNet. UNet is the most widely used deep learning structure in medical image analysis and uses encoder and decoder components based on CNNs (42). The other UNet variations modify the UNet structure by adding attention, changing the downsampling backbone, or replacing the nonlinear activation function. This study considered these structures and compared their performance on the same datasets.

In total, 504 patients were included in this study, and 5,861 T1 images and 2,558 T2 images were collected. All images were manually annotated by experts to delineate the PA regions. By dividing patients into training, validation, and test cohorts, their images were split into a training set for algorithm training, a validation set for model selection, and a test set for final performance evaluation. UNet16 achieved the best performance among the five structures for T1 images, with an IoU of 0.68 and a DSC of 0.79. The best model for T2 image segmentation was FCNRes50, with an IoU of 0.68 and a DSC of 0.79. UNet16 also achieved the best performance on the combined T1 and T2 dataset, with an IoU of 0.64 and a DSC of 0.74, which were lower than the results obtained on the separate T1 or T2 datasets. Overall, the results showed that UNet16 and FCNRes50 were able to identify the PA region in MRI images with IoU values of up to 0.68.

However, this study has several limitations. First, we focused only on developing an AI method to automatically localize and segment the PA region in MRI images, and did not address diagnosis. In the future, we will collect more data and extend the present deep learning framework to classify and diagnose PA cancer. Second, this is a retrospective study from a single hospital, which may inevitably lead to selection bias. The results need to be validated in prospective and external cohorts. Third, the AI technologies applied in this study are still evolving rapidly, with more advanced deep learning algorithms emerging. In the future, it will be necessary to evaluate new deep learning algorithms in PA cancer image analysis to achieve better performance.

In conclusion, we established an MRI image dataset, developed an MRI image annotation system, built an automatic deep learning model for segmenting the PA region, and achieved localization of the PA region.

Data Availability Statement

The raw data supporting the conclusions of this article will be made available by the authors, without undue reservation.

Ethics Statement

This was a retrospective study approved by the Ethics Committee of the Affiliated Hospital of Southwest Medical University. Written informed consent for participation was not required for this study in accordance with the national legislation and the institutional requirements. Written informed consent was obtained from the individual(s) for the publication of any potentially identifiable images or data included in this article.

Author Contributions

YT, YZ, XC, WW, QG, JS, JW, and SS conceived and designed the study, and were responsible for the final decision to submit for publication. All authors contributed to the article and approved the submitted version.

Funding

This study is supported by the Innovation Method Program of the Ministry of Science and Technology of the People’s Republic of China (M112017IM010700), The Key Research and Development Project of Science & Technology Department of Sichuan Province (20ZDYF1129), The Applied Basic Research Project of Science & Technology Department of Luzhou city (2018-JYJ-45).

Conflict of Interest

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Acknowledgments

The authors thank the Department of Radiology and the Department of Pathology of the Affiliated Hospital of Southwest Medical University for the support of this research.

Supplementary Material

The Supplementary Material for this article can be found online at: https://www.frontiersin.org/articles/10.3389/fonc.2021.674579/full#supplementary-material

Supplementary Figure 1 | Schematic diagram of the proposed deep learning algorithms UNet16 (A) and FCNRes50 (B). UNet16 is based on an encoder-decoder architecture, in which the encoder is a down-sampling stage and the decoder is an up-sampling stage. FCNRes50 combines residual network and fully convolutional network structures to extract pixel-level information and generate the segmentation. Images and ground truth masks were input into the network to obtain the predicted segmentation.

References

1. Berberat PO, Künzli BM, Gulbinas A, Ramanauskas T, Kleeff J, Müller MW, et al. An Audit of Outcomes of a Series of Periampullary Carcinomas. Eur J Surg Oncol (2009) 35(2):187–91. doi: 10.1016/j.ejso.2008.01.030

2. Heinrich S, Clavien PA. Ampullary Cancer. Curr Opin Gastroen (2010) 26(3):280–5. doi: 10.1097/MOG.0b013e3283378eb0

3. Bronsert P, Kohler I, Werner M, Makowiec F, Kuesters S, Hoeppner J, et al. Intestinal-Type of Differentiation Predicts Favourable Overall Survival: Confirmatory Clinicopathological Analysis of 198 Periampullary Adenocarcinomas of Pancreatic, Biliary, Ampullary and Duodenal Origin. BMC Cancer (2013) 13:428. doi: 10.1186/1471-2407-13-428

4. Baghmar S, Agrawal N, Kumar G, Bihari C, Patidar Y, Kumar S, et al. Prognostic Factors and the Role of Adjuvant Treatment in Periampullary Carcinoma: A Single-Centre Experience of 95 Patients. J Gastrointest Cancer (2019) 50(3):361–9. doi: 10.1007/s12029-018-0058-7

5. Zhang T, Su ZZ, Wang P, Wu T, Tang W, Xu EJ, et al. Double Contrast-Enhanced Ultrasonography in the Detection of Periampullary Cancer: Comparison With B-Mode Ultrasonography and MR Imaging. Eur J Radiol (2016) 85(11):1993–2000. doi: 10.1016/j.ejrad.2016.08.021

6. Hester CA, Dogeas E, Augustine MM, Mansour JC, Polanco PM, Porembka MR, et al. Incidence and Comparative Outcomes of Periampullary Cancer: A Population-Based Analysis Demonstrating Improved Outcomes and Increased Use of Adjuvant Therapy From 2004 to 2012. J Surg Oncol (2019) 119(3):303–17. doi: 10.1002/jso.25336

7. Sugita R, Furuta A, Ito K, Fujita N, Ichinohasama R, Takahashi S. Periampullary Tumors: High-Spatial-Resolution MR Imaging and Histopathologic Findings in Ampullary Region Specimens. Radiology (2004) 231(3):767–74. doi: 10.1148/radiol.2313030797

8. Chen XP, Liu J, Zhou J, Zhou PC, Shu J, Xu LL, et al. Combination of CEUS and MRI for the Diagnosis of Periampullary Space-Occupying Lesions: A Retrospective Analysis. BMC Med Imaging (2019) 19(1):77. doi: 10.1186/s12880-019-0376-7

9. Schmidt CM, Powell ES, Yiannoutsos CT, Howard TJ, Wiebke EA, Wiesenauer CA, et al. Pancreaticoduodenectomy: A 20-Year Experience in 516 Patients. Arch Surg (Chicago Ill 1960) (2004) 139(7):718–25, 725-7. doi: 10.1001/archsurg.139.7.718

10. LeCun Y, Bengio Y, Hinton G. Deep Learning. Nature (2015) 521(7553):436–44. doi: 10.1038/nature14539

11. Chen JH, Asch SM. Machine Learning and Prediction in Medicine — Beyond the Peak of Inflated Expectations. N Engl J Med (2017) 376(26):2507–9. doi: 10.1056/NEJMp1702071

12. Hinton G. Deep Learning—a Technology With the Potential to Transform Health Care. JAMA (2018) 320(11):1101–2. doi: 10.1001/jama.2018.11100

13. Stead WW. Clinical Implications and Challenges of Artificial Intelligence and Deep Learning. JAMA (2018) 320(11):1107–8. doi: 10.1001/jama.2018.11029

14. Esteva A, Robicquet A, Ramsundar B, Kuleshov V, DePristo M, Chou K, et al. A Guide to Deep Learning in Healthcare. Nat Med (2019) 25(1):24–9. doi: 10.1038/s41591-018-0316-z

15. Litjens G, Kooi T, Bejnordi B, Arindra A, Setio A, Ciompi F, et al. A Survey on Deep Learning in Medical Image Analysis. Med Image Anal (2017) 42:60–88. doi: 10.1016/j.media.2017.07.005

16. Roth HR, Lu L, Lay N, Harrison AP, Farag A, Sohn A, et al. Spatial Aggregation of Holistically-Nested Convolutional Neural Networks for Automated Pancreas Localization and Segmentation. Med Image Anal (2018) 45:94–107. doi: 10.1016/j.media.2018.01.006

17. Chung M, Lee J, Park S, Lee CE, Lee J, Shin YG. Liver Segmentation in Abdominal CT Images Via Auto-Context Neural Network and Self-Supervised Contour Attention. Artif Intell Med (2021) 113:102023. doi: 10.1016/j.artmed.2021.102023

18. Kushnure DT, Talbar SN. MS-Unet: A Multi-Scale Unet With Feature Recalibration Approach for Automatic Liver and Tumor Segmentation in CT Images. Computerized Med Imaging Graphics (2021) 89:101885. doi: 10.1016/j.compmedimag.2021.101885

19. Bai W, Shi W, O’Regan D, Tong T, Wang H, Jamil-Copley S, et al. A Probabilistic Patch-Based Label Fusion Model for Multi-Atlas Segmentation With Registration Refinement: Application to Cardiac MR Images. IEEE TMI (2013) 32(7):1302–15. doi: 10.1109/TMI.2013.2256922

20. Wang H, Suh J, Das S, Pluta J, Craige C, Yushkevich P. Multiatlas Segmentation With Joint Label Fusion. IEEE PAMI (2013) 35:611–23. doi: 10.1109/TPAMI.2012.143

21. Wang L, Shi F, Li G, Gao Y, Lin W, Gilmore J, et al. Segmentation of Neonatal Brain Mr Images Using Patch-Driven Level Sets. NeuroImage (2014) 84(1):141–58. doi: 10.1016/j.neuroimage.2013.08.008

22. Seo JD, Seo DW, Alirezaie J. Simple Net: Convolutional Neural Network to Perform Differential Diagnosis of Ampullary Tumors, in: 2018 IEEE 4th Middle East Conference on Biomedical Engineering (MECBME). IEEE (2018). p. 187–92.

23. Vorontsov E, Cerny M, Régnier P, Jorio L, Pal C, Lapointe R, et al. Deep Learning for Automated Segmentation of Liver Lesions At CT in Patients With Colorectal Cancer Liver Metastases. Radiol: Artif Intell (2019) 1:180014. doi: 10.1148/ryai.2019180014

24. Ahn Y, Yoon JS, Lee SS, Suk HI, Son JH, Sung YS, et al. Deep Learning Algorithm for Automated Segmentation and Volume Measurement of the Liver and Spleen Using Portal Venous Phase Computed Tomography Images. Korean J Radiol (2020) 21(8):987–97. doi: 10.3348/kjr.2020.0237

25. Chen Y, Dan R, Xiao J, Wang L, Sun B, Saouaf R, et al. Fully Automated Multi-Organ Segmentation in Abdominal Magnetic Resonance Imaging With Deep Neural Networks. Med Phys (2020). doi: 10.1002/MP.14429

26. Song D, Wang Y, Wang W, Wang Y, Cai J, Zhu K, et al. Using Deep Learning to Predict Microvascular Invasion in Hepatocellular Carcinoma Based on Dynamic Contrast-Enhanced MRI Combined With Clinical Parameters. J Cancer Res Clin Oncol (2021). doi: 10.1007/s00432-021-03617-3

27. Ronneberger O, Fischer P, Brox T. U-Net: Convolutional Networks for Biomedical Image Segmentation, in: Medical Image Computing and Computer-Assisted Intervention – MICCAI 2015. Navab N, Hornegger J, Wells W, Frangi A, editors. Springer International Publishing (2015). p. 234–41.

28. Shelhamer E, Long J, Darrell T. Fully Convolutional Networks for Semantic Segmentation. IEEE T Pattern Anal (2017) 39(4):640–51. doi: 10.1109/TPAMI.2016.2572683

29. Pravitasari AA, Iriawan N, Almuhayar M, Azmi T, Fithriasari K, Purnami SW, et al. UNet-VGG16 With Transfer Learning for MRI-Based Brain Tumor Segmentation. Telkomnika (2020) 18:1310–8. doi: 10.12928/TELKOMNIKA.v18i3.14753

30. Albores-Saavedra J, Schwartz AM, Batich K, Henson DE. Cancers of the Ampulla of Vater: Demographics, Morphology, and Survival Based on 5,625 Cases From the SEER Program. J Surg Oncol (2009) 100(7):598–605. doi: 10.1002/jso.21374

31. Winter JM, Brennan MF, Tang LH, D’Angelica MI, Dematteo RP, Fong Y, et al. Survival After Resection of Pancreatic Adenocarcinoma: Results From a Single Institution Over Three Decades. Ann Surg Oncol (2012) 19(1):169–75. doi: 10.1245/s10434-011-1900-3

32. Hill JS, Zhou Z, Simons JP, Ng SC, McDade TP, Whalen GF, et al. A Simple Risk Score to Predict in-Hospital Mortality After Pancreatic Resection for Cancer. Ann Surg Oncol (2010) 17(7):1802–7. doi: 10.1245/s10434-010-0947-x

33. Hashemzadeh S, Mehrafsa B, Kakaei F, Javadrashid R, Golshan R, Seifar F, et al. Diagnostic Accuracy of a 64-Slice Multi-Detector CT Scan in the Preoperative Evaluation of Periampullary Neoplasms. J Clin Med (2018) 7(5):91. doi: 10.3390/jcm7050091

34. Kim JH, Kim M-J, Chung J-J, Lee WJ, Yoo HS, Lee JT. Differential Diagnosis of Periampullary Carcinomas at MR Imaging. Radiographics (2002) 22(6):1335–52. doi: 10.1148/rg.226025060

35. Moon H, Huo Y, Abramson RG, Peters RA, Assad A, Moyo TK, et al. Acceleration of Spleen Segmentation With End-to-End Deep Learning Method and Automated Pipeline. Comput Biol Med (2019) 107:109–17. doi: 10.1016/j.compbiomed.2019.01.018

36. Yucheng T, Yuankai H, Yunxi X, Hyeonsoo M, Albert A, Tamara KM, et al. Improving Splenomegaly Segmentation by Learning From Heterogeneous Multi-Source Labels. Proc SPIE Int Soc Opt Eng (2019) 10949. doi: 10.1117/12.2512842

37. Lian J, Ma Y, Ma Y, Shi B, Liu J, Yang Z, et al. Automatic Gallbladder and Gallstone Regions Segmentation in Ultrasound Image. Int J Comput Assist Radiol Surg (2017) 12(4):553–68. doi: 10.1007/s11548-016-1515-z

38. Park J, Bae S, Seo S, Park S, Bang J-I, Han JH, et al. Measurement of Glomerular Filtration Rate Using Quantitative SPECT/CT and Deep-Learning-Based Kidney Segmentation. Sci Rep (2019) 9(1):4223. doi: 10.1038/s41598-019-40710-7

39. Onthoni DD, Sheng T-W, Sahoo PK, Wang L-J, Gupta P. Deep Learning Assisted Localization of Polycystic Kidney on Contrast-Enhanced CT Images. Diagn (Basel) (2020) 10(12):1113. doi: 10.3390/diagnostics10121113

40. Huang L, Li M, Gou S, Zhang X, Jiang K. Automated Segmentation Method for Low Field 3D Stomach MRI Using Transferred Learning Image Enhancement Network. BioMed Res Int (2021) 2021:6679603. doi: 10.1155/2021/6679603

41. Lian S, Luo Z, Zhong Z, Lin X, Su S, Li S. Attention Guided U-Net for Accurate Iris Segmentation. J Vis Commun Image Represent (2018) 56:296–304. doi: 10.1016/j.jvcir.2018.10.001

Keywords: peri-ampullary cancer, periampullary regions, MRI, deep learning, segmentation

Citation: Tang Y, Zheng Y, Chen X, Wang W, Guo Q, Shu J, Wu J and Su S (2021) Identifying Periampullary Regions in MRI Images Using Deep Learning. Front. Oncol. 11:674579. doi: 10.3389/fonc.2021.674579

Received: 02 March 2021; Accepted: 20 April 2021; Published: 28 May 2021.

Edited by:

Naranamangalam Raghunathan Jagannathan, Chettinad University, India

Reviewed by:

Amit Mehndiratta, Indian Institute of Technology Delhi, India

Yee Kai Tee, Tunku Abdul Rahman University, Malaysia

Copyright © 2021 Tang, Zheng, Chen, Wang, Guo, Shu, Wu and Su. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Jian Shu, shujiannc@163.com; Jiali Wu, 13982759964@163.com; Song Su, 13882778554@163.com

†These authors share first authorship
