- Department of Gastroenterology and Hepatology, Kindai University Faculty of Medicine, Osaka-Sayama, Japan

Recent advances in artificial intelligence (AI) have facilitated the development of AI-powered medical imaging, including ultrasonography (US). Overlooking or misdiagnosing malignant lesions may have serious consequences, and introducing AI to imaging modalities may be an ideal way to prevent such human error. To develop AI for medical imaging, it is necessary to understand the characteristics of each modality in the context of task setting, required datasets, suitable AI algorithms, and expected performance with clinical impact. Regarding AI-aided US diagnosis, several attempts have been made to construct image databases and develop AI-aided diagnosis systems in the field of oncology. For the diagnosis of liver tumors using US images, 4- or 5-class classifications, including the discrimination of hepatocellular carcinoma (HCC), metastatic tumors, hemangiomas, liver cysts, and focal nodular hyperplasia, have been reported using AI. The combination of a radiomic approach with AI is also becoming a powerful tool for predicting outcomes in patients with HCC after treatment, indicating the potential of AI for personalized medical care. However, US images show high heterogeneity because of differences in examination conditions, and a variety of imaging parameters may affect image quality; such conditions may hamper the development of US-based AI. In this review, we summarize the development of AI in medical imaging, along with the challenges of task setting and data curation, and focus on the application of AI to the management of liver tumors, especially for US diagnosis.

Introduction

Artificial intelligence (AI) is generally considered to be intelligence performed by computational statistics, and machine learning is a subset of AI. Recently, AI has been emerging as a major constituent in the field of medicine and healthcare. In particular, AI can be easily applied to imaging data because these data are electronically organized, and AI excels at recognizing unique and complex features of images and facilitates quantitative assessments in an automated fashion. This characteristic of AI is ideal in the constrained clinical setting wherein medical staff must interpret large image datasets based on their visual perception with uncertainty, in which human errors are inevitable. For example, AI is a powerful tool in radiomics, where a large number of features must be extracted from medical images. Based on this advantage, AI has been applied to the classification of lesions, such as liver tumors, and to the prediction of prognosis using image data from computed tomography (CT) and magnetic resonance imaging (MRI) (1). In addition, AI-based image processing techniques have also been introduced in the field of ultrasonography (US). This review describes the recent progress in AI for medical imaging, particularly AI-aided diagnosis for the detection, characterization, subsequent monitoring, and prediction of outcomes in patients with liver cancer, with an emphasis on US diagnosis.

History and Recent Progress of AI in Medical Imaging

The application of pattern recognition to medical problems was proposed in the early 1960s. In the 1980s, the spread of computers spurred the development of medical AI in radiology using a quantitatively computable domain. Since the emergence of deep neural networks, the rate at which AI is changing radiology has grown rapidly, in proportion to the growth of medical image data volume and computational power (2).

For image analysis, a convolutional neural network (CNN) is commonly applied; this is a class of deep neural networks that uses pixel values and assembles complex patterns from smaller and simpler patterns (2). The algorithm contains multiple hidden layers, including multiple convolutional and pooling layers. A CNN-based AI model trained using ≥120,000 retinal fundus images has been demonstrated to show high performance comparable to that of an experienced ophthalmologist for detecting referable diabetic retinopathy, and is expected to effectively assist ophthalmologists in the clinical workflow (3). An assessment of AI models for detecting lymph node metastasis of breast cancer based on whole-slide microscopic images showed that AI outperformed pathologists in detecting cancer cells in specimens (4). A pre-trained CNN-based AI model for the diagnosis of skin cancer achieved performance on par with that of expert dermatologists in discriminating skin cancers from corresponding benign lesions on dermography (5). AI models for the detection of pediatric pneumonia on chest radiography images and for the discrimination of diabetic macular edema from age-related macular degeneration on optical coherence tomography images have also been reported to show high performance, comparable to that of human experts (6). An AI-based colonoscopy system has been shown to accurately differentiate neoplastic lesions from non-neoplastic lesions on stained endocytoscopic images and endocytoscopic narrow-band images in the endoscopic evaluation of small colon polyps (7). The application of AI to US-based diagnosis has mainly been reported for malignant tumors, such as breast and thyroid cancers (8–11). Li et al. reported an AI model for the diagnosis of thyroid cancer pre-trained with 312,399 B-mode US images of cancer and healthy controls (12). The model’s diagnostic performance was validated in three test datasets with AUCs of 0.908–0.947.
The AI model showed higher specificity in identifying thyroid cancer than experienced radiologists, with comparable sensitivity. Another report described a real-time detection system for thyroid tumors based on the “You Only Look Once” (YOLO) algorithm. Compared with experienced radiologists, this model achieved similar sensitivity, positive predictive value, negative predictive value, and accuracy for the diagnosis of malignant thyroid tumors, with higher specificity (12, 13). For the detection of breast cancer, Kumar et al. reported a real-time segmentation model for breast tumors using a CNN (14). This system can reportedly segment tumor images in real time, suggesting its potential for clinical applications. Collectively, the diagnostic accuracy of well-trained AI models for medical images is at least on par with that of human experts, with much quicker output, suggesting higher diagnostic efficiency in the clinical setting.

More recently, Skrede et al. reported the use of AI for the prediction of outcomes after colorectal cancer resection using a pre-trained CNN-based model with pathological images (15). The model discriminated cases with a poor prognosis from those with a good prognosis, indicating the potential of medical AI for the management of cancer, such as the identification of patients who would benefit from adjuvant treatment after resection.

Process for Developing AI Models for Imaging Diagnosis

Setting Tasks for AI in Medical Imaging

For the development of AI in medical imaging, it is important to select tasks that reflect important needs at clinical sites. For example, large-volume screening of medical images requires extensive effort, which is time consuming and invites human errors. In this setting, AI should be a powerful tool for clinicians because of its advantages in the precise detection of subtle features of lesions, segmentation, and quick output. AI models that can estimate the risk of disease may contribute to avoiding invasive examinations, representing an attractive task (16).

Data Sets for Developing AI Models for Medical Images

Generally, three independent datasets are required for developing medical AI (17). A training set is required for the training of AI models, which contains many images to update model parameters. A tuning set is for the selection of a model’s hyperparameters that are necessary for the best expected output. A test set is for the final assessment of the performance of AI models. The splitting of curated data must be clean, and each dataset should be completely independent without any overlap with respect to lesions to avoid overfitting the output.
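The lesion-level independence described above can be illustrated with a minimal sketch. It assumes that every image carries a lesion identifier and that whole lesions, rather than single images, are assigned to the training, tuning, and test sets. The function name `split_by_lesion`, the 70/15/15 fractions, and the toy identifiers are hypothetical choices for illustration and are not taken from the cited studies.

```python
import random
from collections import defaultdict


def split_by_lesion(image_to_lesion, fractions=(0.7, 0.15, 0.15), seed=0):
    """Assign whole lesions (not single images) to train/tuning/test sets."""
    lesions = sorted(set(image_to_lesion.values()))
    random.Random(seed).shuffle(lesions)  # reproducible shuffle of lesion IDs
    n_train = int(round(fractions[0] * len(lesions)))
    n_tune = int(round(fractions[1] * len(lesions)))
    groups = {
        "train": set(lesions[:n_train]),
        "tuning": set(lesions[n_train:n_train + n_tune]),
        "test": set(lesions[n_train + n_tune:]),
    }
    split = defaultdict(list)
    for image, lesion in sorted(image_to_lesion.items()):
        for name, members in groups.items():
            if lesion in members:
                split[name].append(image)
    return dict(split), groups


# Hypothetical toy data: 20 lesions with 3 images each.
images = {f"lesion{l:02d}_img{i}": f"lesion{l:02d}"
          for l in range(20) for i in range(3)}
split, groups = split_by_lesion(images)
```

Because the split is made on lesion identifiers, images of the same lesion can never appear in more than one set. In practice the same idea would usually be applied at the patient level as well.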

For disease classification, such as that corresponding to diagnosis, the data volume in each subclass should be similar because imbalances in data volumes among subclasses may lead to overfitting of the output, which may limit the performance of an AI model. For images of rare diseases, synthetic images created through generative adversarial networks might also be applicable.
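When subclass volumes cannot be equalized, one common mitigation is to weight each class inversely to its frequency during training. This is a general technique and is not necessarily what the studies reviewed here used. The following sketch computes such weights; the class names and image counts are hypothetical.

```python
def inverse_frequency_weights(class_counts):
    """Weight each class by N / (K * n_c), so rare classes count more.

    N is the total number of images, K the number of classes, and n_c the
    number of images in class c. A perfectly balanced dataset gives 1.0
    for every class.
    """
    total = sum(class_counts.values())
    n_classes = len(class_counts)
    return {name: total / (n_classes * count)
            for name, count in class_counts.items()}


# Hypothetical image counts per tumor class (not from any cited study).
counts = {"HCC": 600, "metastasis": 200, "hemangioma": 150, "cyst": 50}
weights = inverse_frequency_weights(counts)
```

With these toy counts, the rarest class (cyst) receives a weight of 5.0 and the most frequent class (HCC) a weight below 1, so each class contributes equally to a weighted loss.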

AI Algorithm

During training, AI models automatically detect specific features of images through the fitting of model parameters, which improves the performance. CNNs are commonly applied as the AI algorithm for imaging data (2). However, US examinations require real-time output, and an algorithm that requires many mathematical operations might not be appropriate for analyzing US images. The YOLO-based algorithm is suitable for the real-time detection and classification of lesions with high-speed processing. The process of selecting a model’s architecture and training essentially involves a balance between model underfitting and overfitting (17). Underfitting occurs when a low-capacity model is used relative to the problem complexity and data size. Overfitting indicates that the evaluation overestimates the model’s performance on previously unencountered data, in which case low performance on the test set is observed. Because US images are highly diverse in terms of examination conditions and image parameter settings, larger volumes of data are required than for the development of other medical imaging AI.
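To make the building blocks of a CNN concrete, the sketch below implements a single convolution, a rectified linear activation, and a max-pooling step in plain Python. It is a toy illustration only: the 5×5 "image" and the fixed edge-detecting kernel are hypothetical, whereas a real CNN learns its kernel values during training and stacks many such layers.

```python
def conv2d(image, kernel):
    """Valid (no padding) 2-D cross-correlation, as used in CNN layers."""
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    return [[sum(image[r + i][c + j] * kernel[i][j]
                 for i in range(kh) for j in range(kw))
             for c in range(out_w)]
            for r in range(out_h)]


def relu(feature_map):
    """Set negative responses to zero."""
    return [[max(0, v) for v in row] for row in feature_map]


def max_pool(feature_map, size=2):
    """Non-overlapping max pooling; rows/columns that do not fit are dropped."""
    return [[max(feature_map[r + i][c + j]
                 for i in range(size) for j in range(size))
             for c in range(0, len(feature_map[0]) - size + 1, size)]
            for r in range(0, len(feature_map) - size + 1, size)]


# Toy 5x5 "image" with a bright vertical stripe, and a vertical-edge kernel.
image = [[0, 0, 9, 0, 0] for _ in range(5)]
kernel = [[-1, 1], [-1, 1]]
features = max_pool(relu(conv2d(image, kernel)))
# features == [[18, 0], [18, 0]]: the left edge of the stripe is retained
```

The convolution responds where the local pattern matches the kernel, and pooling keeps the strongest response in each neighborhood while reducing the size of the feature map, which is also what keeps the number of operations manageable.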

Evaluation of Performance and Potential Impact

One of the major categories of evaluation of AI-aided imaging diagnosis is the ability to discriminate lesions, such as benign from malignant. The area under the receiver operating characteristic curve (AUC) is commonly used as a threshold-free discriminative metric. Evaluation may also be based on other metrics, such as sensitivity (recall), specificity, and precision (positive predictive value); these are threshold-dependent. In addition, calibration, which evaluates how well the predicted probability matches the actual diagnosis, should also be estimated (17). The variability in the predicted probability for the same lesion may also need to be analyzed because US images can vary even within the same lesion owing to differences in parameter settings. Validation of accuracy is a critical step in the transitional process of medical AI. The performance of AI models must be evaluated using independent test cohorts and compared with that of experienced human controls in real-world scenarios.
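The metrics named above can be sketched as follows for a binary benign (0) versus malignant (1) task. The AUC is computed here from its rank interpretation, namely the probability that a randomly chosen malignant lesion receives a higher score than a randomly chosen benign one. The Brier score is included as one simple summary that is sensitive to calibration; this is an illustrative choice rather than a metric prescribed by the cited work. The labels and probabilities are hypothetical.

```python
def threshold_metrics(y_true, y_prob, threshold=0.5):
    """Sensitivity, specificity, and precision at a fixed probability cut-off.

    Assumes both classes are present and at least one positive prediction.
    """
    tp = fp = tn = fn = 0
    for y, p in zip(y_true, y_prob):
        predicted = 1 if p >= threshold else 0
        if predicted == 1 and y == 1:
            tp += 1
        elif predicted == 1 and y == 0:
            fp += 1
        elif predicted == 0 and y == 0:
            tn += 1
        else:
            fn += 1
    return tp / (tp + fn), tn / (tn + fp), tp / (tp + fp)


def auc(y_true, y_prob):
    """Threshold-free AUC: fraction of (positive, negative) pairs ranked correctly."""
    pos = [p for y, p in zip(y_true, y_prob) if y == 1]
    neg = [p for y, p in zip(y_true, y_prob) if y == 0]
    wins = sum(1.0 if p > n else 0.5 if p == n else 0.0
               for p in pos for n in neg)
    return wins / (len(pos) * len(neg))


def brier_score(y_true, y_prob):
    """Mean squared difference between predicted probability and outcome."""
    return sum((p - y) ** 2 for y, p in zip(y_true, y_prob)) / len(y_true)


# Hypothetical labels and predicted probabilities of malignancy.
y_true = [1, 1, 1, 1, 0, 0, 0, 0]
y_prob = [0.9, 0.8, 0.6, 0.4, 0.7, 0.3, 0.2, 0.1]
sensitivity, specificity, precision = threshold_metrics(y_true, y_prob)
auc_value = auc(y_true, y_prob)
brier = brier_score(y_true, y_prob)
```

Changing `threshold` alters sensitivity, specificity, and precision but leaves the AUC unchanged, which is the distinction between threshold-dependent and threshold-free metrics drawn in the text.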

Current AI Models for Medical Imaging of Liver Lesions

AI Using Medical Image for the Management of Liver Tumors

Recently, many reports have described the development of AI models for the detection and diagnosis of liver tumors; some studies have aimed to predict outcomes after treatments, which may be applicable for the personalized management of patients (18, 19).

Hamm et al. reported the classification of 6 types of liver tumors by a pre-trained CNN using MRI data of 494 lesions from 334 cases (20). After data augmentation of the images for training, the established AI model demonstrated 90% sensitivity and 98% specificity for the test cohort. The average sensitivity and specificity for the radiologists were 82.5% and 96.5%, respectively. For the diagnosis of hepatocellular carcinoma (HCC), the sensitivities were 90% for the AI model and 60–70% for the radiologists. Considering the short processing time (only 6.6 ms) for output, the pre-trained AI model showed superior performance compared to that of the human radiologists.

AI is also useful for the detection of specific radiological features that may reflect histopathological characteristics associated with the biological behavior of a tumor. From this point of view, the development of AI for the prediction of outcomes after treatment, including tumor recurrence after surgery, may be possible. If pathological diagnosis is used as the reference for constructing an AI model for medical imaging, the model may become a non-invasive substitute for biopsy, which may significantly impact the management of cancer. Feng et al. reported a preoperative prediction model for microvascular invasion in patients with resectable HCC who do not show macroscopic vascular invasion through training using gadolinium-ethoxybenzyl (EOB)-diethylenetriamine pentaacetic acid-enhanced MRI data (21). The AI model selected ten specific features of EOB-enhanced MRI data to predict microvascular invasion. The AI model showed an AUC of 0.83 with 90.0, 75.0, and 84.0% sensitivity, specificity, and accuracy, respectively, which were much better than those of human radiologists. Kim et al. reported an AI model for the prediction of early and late recurrence of tumors after surgery using EOB-MRI data from solitary HCC cases (22). They established their AI model using a random survival forest to predict disease-free survival and found that peritumoral image features 3 mm outside the tumor border are important for the prediction of early recurrence after curative surgery.

AI Using Histopathological Images for Diagnosis and Management of Liver Cancers

It has also been reported that an AI model pre-trained with histopathological images of liver cancer using transfer learning can distinguish cancerous tissue from healthy liver tissue (23). Saillard et al. showed that a deep-learning model of histopathological images predicts survival after resection of HCC (24). They developed two kinds of AI models pre-trained with supervised image data, which was annotated based on the tumor portion in the slide images by pathologists, and non-supervised data without human annotations. The concordance indices for survival prediction were 0.78 and 0.75 for the pre-trained AI models with supervised and non-supervised data, respectively. Reportedly, these histopathological AI models showed a higher discriminatory power than that derived from a combination of known clinical risk factors. Some pathological findings, including vascular space, a macrotrabecular pattern of tumor cell architecture, a high degree of cytological atypia, and nuclear hyperchromasia, effectively predicted poor survival, and immune infiltrates and fibrosis in tumor and non-tumor tissues were associated with a low risk of short survival. These studies indicate that histopathological images yield useful training data for the prediction of prognosis in HCC cases (25).
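The concordance index quoted above measures how often a model ranks patients correctly by risk. A minimal sketch of Harrell's concordance index for right-censored survival data follows. It is a simplified version (tied event times are not treated specially), and the follow-up times, event indicators, and risk scores are hypothetical rather than data from the cited study.

```python
def concordance_index(times, events, risks):
    """Harrell's C: fraction of comparable pairs that the risk score orders correctly.

    times  : follow-up time per patient
    events : 1 if death was observed, 0 if the patient was censored
    risks  : model output, where a higher value means a worse predicted prognosis
    """
    concordant = 0.0
    comparable = 0
    n = len(times)
    for i in range(n):
        for j in range(n):
            # A pair is comparable if patient i died before patient j's last follow-up.
            if events[i] == 1 and times[i] < times[j]:
                comparable += 1
                if risks[i] > risks[j]:
                    concordant += 1.0
                elif risks[i] == risks[j]:
                    concordant += 0.5
    return concordant / comparable


# Hypothetical cohort of five patients (months, event flag, predicted risk).
times = [5, 10, 12, 20, 30]
events = [1, 1, 0, 1, 0]
risks = [0.9, 0.4, 0.5, 0.3, 0.1]
c_index = concordance_index(times, events, risks)  # 7 of 8 comparable pairs -> 0.875
```

A value of 0.5 corresponds to random ranking and 1.0 to perfect ranking, which gives a scale against which the reported values of 0.78 and 0.75 can be read.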

AI-Aided Diagnosis for Liver Tumors in Ultrasonography

Generally, US images are more heterogeneous than other kinds of medical images because of the multiple image parameters and examination conditions. Such heterogeneity of image data makes it difficult to develop AI for US diagnosis, especially for liver tumors (18).

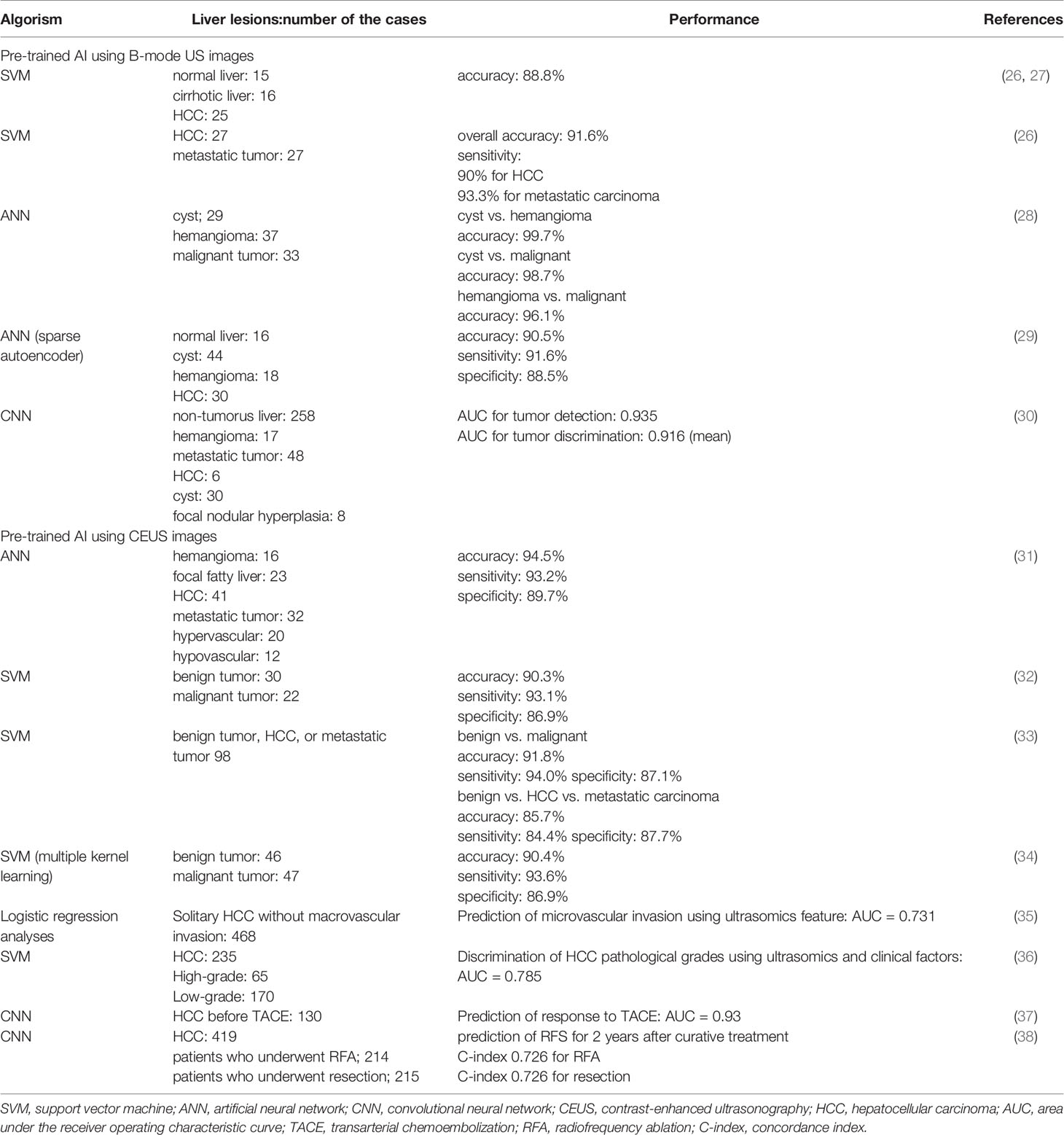

AI models are trained using cropped images of regions of interest that focus specifically on tumors as input to the neural network, and can be evaluated using cross-validation methods for small sample cohorts. Studies that applied machine learning to B-mode US images for the diagnosis of liver tumors are summarized in Table 1. Virmani et al. reported machine learning for discriminating HCC from metastatic liver tumors using a support vector machine (SVM); the overall accuracy was 91.6%, with sensitivities of 90% for HCC and 93.3% for metastatic tumors (26). Hwang et al. extracted textural features of liver tumors, including cysts, hemangiomas, and malignant lesions, for diagnosis; they examined the accuracy of two-class discriminations for cyst vs. hemangioma, cyst vs. malignant tumor, and hemangioma vs. malignant tumor, demonstrating an accuracy of more than 95% for each comparison (28). In addition, a study using an artificial neural network (ANN) showed 4-class discrimination of normal liver, cyst, hemangioma, and HCC, reporting an accuracy of almost 90% and similar levels of sensitivity and specificity (29). In general, these early studies failed to show the superiority of neural networks over conventional machine learning in the diagnostic accuracy for liver tumors because of the small size of the training cohorts. Schmauch et al. reported an AI model for lesion detection and diagnosis from whole-liver B-mode US images using a 50-layer residual network (30). Despite the relatively small volume of training data, the model achieved considerable AUCs by cross-validation: 0.953 for tumor detection and 0.916 for 5-class discrimination (HCC, metastatic tumors, hemangiomas, cysts, and focal nodular hyperplasia).
To reduce the heterogeneity of the US images, they cropped the images maximally to remove the black borders and standardized the aspect ratio. They also rescaled the image intensity for normalization based on the intensity of the abdominal wall.
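The two preprocessing ideas described here, removing black borders and rescaling intensity against a reference tissue, can be sketched as below. This is an illustration under stated assumptions and not the authors' implementation: the image is a plain nested list of gray values, the reference region (standing in for the abdominal wall) is supplied by hand as hypothetical row and column bounds, and aspect-ratio standardization is omitted.

```python
def crop_black_borders(image, threshold=0):
    """Drop outer rows and columns whose pixels are all <= threshold."""
    rows = [r for r, row in enumerate(image) if any(v > threshold for v in row)]
    cols = [c for c in range(len(image[0]))
            if any(row[c] > threshold for row in image)]
    if not rows or not cols:
        return []  # the frame is entirely black
    return [row[cols[0]:cols[-1] + 1] for row in image[rows[0]:rows[-1] + 1]]


def rescale_to_reference(image, reference_region, target=1.0):
    """Scale all intensities so that the mean of a reference region equals `target`.

    reference_region is (row_start, row_stop, col_start, col_stop), a
    hypothetical hand-picked box assumed to cover the reference tissue.
    """
    r0, r1, c0, c1 = reference_region
    reference = [v for row in image[r0:r1] for v in row[c0:c1]]
    mean_reference = sum(reference) / len(reference)
    return [[v * target / mean_reference for v in row] for row in image]


# Toy frame: a 2x3 image surrounded by a black border.
frame = [
    [0, 0, 0, 0, 0],
    [0, 40, 60, 20, 0],
    [0, 50, 50, 10, 0],
    [0, 0, 0, 0, 0],
]
cropped = crop_black_borders(frame)
normalized = rescale_to_reference(cropped, reference_region=(0, 1, 0, 2))
```

After rescaling, images acquired with different gain settings share a common intensity scale relative to the reference tissue, which is one way to reduce scanner- and operator-dependent variation before training.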

Table 1 Performance of machine learning for the diagnosis of liver tumors based on ultrasonography images.

In addition to grayscale B-mode US, Doppler US, contrast-enhanced US (CEUS), shear wave elastography (SWE), and three-dimensional US images are also applicable for the training of AI models. Still CEUS images have been used as training data for more accurate discrimination of liver tumors. Streba et al. applied an ANN to the 4-class discrimination of liver tumors, with accuracy, sensitivity, and specificity of 94.5, 94.2, and 89.7%, respectively (31). Gatos et al. and Kondo et al. reported the 4-class classification of benign tumors, hepatocellular carcinoma, and metastatic tumors using an SVM pretrained with CEUS images (32, 33). A contrast agent, Sonazoid, was used and, reportedly, the sensitivity, specificity, and accuracy for discriminating malignant from benign lesions were 94.0, 87.1, and 91.8%, respectively (33). Another report applied a pretrained SVM using CEUS images and achieved an accuracy, sensitivity, and specificity of 90.4, 93.6, and 86.9%, respectively, for the differential diagnosis of benign and malignant liver tumors (34). Discrimination of benign and malignant lesions is a critical task for the management of patients with liver tumors, and CEUS images yield attractive data for the development of AI models to detect malignant tumors.

Meanwhile, because of the development of new treatments for HCC, the management of this type of cancer is becoming complex (39). Recently, in addition to detection and diagnosis, AI models for the management of HCC, such as the prediction of microvascular invasion, pathological grading, and treatment outcomes, have been reported. Hu et al. proposed a US-based radiomics score consisting of six selected features, which was an independent predictor of microvascular invasion in HCC (35). A model for predicting the pathological grade of HCC before surgery was also reported using ultrasomics of CEUS images (36). Liu et al. developed an AI model for the prediction of responses to transarterial chemoembolization in patients with HCC through training with B-mode US and CEUS images (37). They reported AUCs of 0.93 and 0.81 for the AI based on CEUS and B-mode US images, respectively, indicating a higher performance of the model pre-trained with CEUS images than that with B-mode US images. They also reported AI models for predicting outcomes in patients with HCC after two types of treatment, radiofrequency ablation (RFA) and liver resection, from radiomics information based on CEUS images (38). For the prediction of two-year progression-free survival (PFS), both models provided high prediction accuracy. Interestingly, the models indicated that some patients who underwent RFA or surgery would have had a higher probability of 2-year PFS had they received the other treatment. In addition, another report showed that a radiomic signature from grayscale US images of the gross tumoral region had potential for predicting microvascular invasion of HCC before surgery, suggesting the potential of the radiomic approach for outcome prediction (40). Such AI prediction models using radiomic signatures may be applicable to personalized medicine in HCC treatment.

The grading of liver fibrosis and steatosis is also an important task for the management of liver disease because these background conditions may confer a risk of liver cancer. Several reports have described the classification of fibrosis and steatosis based on disease progression using AI models trained with B-mode US and SWE images (18, 41). Deep-learning models show superior performance in the detection and risk stratification of fatty liver disease compared with conventional machine-learning models (42). AI models pre-trained with color images of US-SWE can also discriminate chronic liver disease from healthy cases (43). Reportedly, the combination of B-mode US images, raw radiofrequency data, and dynamic contrast-enhanced microflow is a useful dataset for developing AI models that classify the stage of liver fibrosis (44), where datasets involving raw radiofrequency data provide better predictive value than those from conventional US images only. Therefore, AI using multiparametric ultrasomics may help improve model performance. To develop AI that determines the stage of liver fibrosis more accurately, Gatos et al. reported a detection algorithm that excludes unreliable regions on SWE images, which contributes to a reduction in interobserver variability (45). Applying these AI models may be an alternative to invasive liver biopsy for predicting the progression of liver disease, which may be associated with a risk of liver cancer.

Conclusion

Among the imaging modalities, US is the most commonly used in clinical practice for the detection of liver tumors because of its low cost, lack of ionizing radiation, and portable point-of-care characteristics that provide real-time images. From this point of view, AI-powered US carries more advantages in routine clinical applications than AI-powered CT and MRI (46). Although US images involve operator-, patient-, and scanner-dependent variations, AI-aided US diagnosis is maturing, owing to recent advances in US equipment and the increase in computing power available to identify complex imaging features. In addition to B-mode images, images from CEUS and US elastography are becoming promising data for AI-based diagnosis of liver tumors with the spread of high-end US equipment (46, 47). These could also be a safeguard against misdiagnosis in the actual workflow. The development of AI-aided technologies for the detection and diagnosis of malignant tumors may carry sufficient potential to reduce cancer-related mortality in the near future.

Author Contributions

Conceptualization: NN. Writing—original draft preparation: NN. Writing—review and editing: NN. Supervision: MK. Funding acquisition: NN and MK. All authors contributed to the article and approved the submitted version.

Funding

This work was supported by AMED (Japan Agency for Medical Research and Development) under Grant No. JP19lk1010035 (NN and MK) and ROIS NII Open Collaborative Research 2020 under Grant No. 20S0601 (NN).

Conflict of Interest

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

References

1. Haj-Mirzaian A, Kadivar A, Kamel IR, Zaheer A. Updates on Imaging of Liver Tumors. Curr Oncol Rep (2020) 22:46. doi: 10.1007/s11912-020-00907-w

2. Hosny A, Parmar C, Quackenbush J, Schwartz LH, Aerts H. Artificial intelligence in radiology. Nat Rev Cancer (2018) 18:500–10. doi: 10.1038/s41568-018-0016-5

3. Gulshan V, Peng L, Coram M, Stumpe MC, Wu D, Narayanaswamy A, et al. Development and Validation of a Deep Learning Algorithm for Detection of Diabetic Retinopathy in Retinal Fundus Photographs. JAMA (2016) 316:2402–10. doi: 10.1001/jama.2016.17216

4. Ehteshami Bejnordi B, Veta M, Johannes van Diest P, van Ginneken B, Karssemeijer N, Litjens G, et al. Diagnostic Assessment of Deep Learning Algorithms for Detection of Lymph Node Metastases in Women With Breast Cancer. JAMA (2017) 318:2199–210. doi: 10.1001/jama.2017.14580

5. Esteva A, Kuprel B, Novoa RA, Ko J, Swetter SM, Blau HM, et al. Dermatologist-level classification of skin cancer with deep neural networks. Nature (2017) 542:115–8. doi: 10.1038/nature21056

6. Kermany DS, Goldbaum M, Cai W, Valentim CCS, Liang H, Baxter SL, et al. Identifying Medical Diagnoses and Treatable Diseases by Image-Based Deep Learning. Cell (2018) 172:1122–31.e1129. doi: 10.1016/j.cell.2018.02.010

7. Kudo SE, Misawa M, Mori Y, Hotta K, Ohtsuka K, Ikematsu H, et al. Artificial Intelligence-assisted System Improves Endoscopic Identification of Colorectal Neoplasms. Clin Gastroenterol Hepatol (2020) 18:1874–81.e1872. doi: 10.1016/j.cgh.2019.09.009

8. Zhang Z, Zhang X, Lin X, Dong L, Zhang S, Zhang X, et al. Ultrasonic Diagnosis of Breast Nodules Using Modified Faster R-CNN. Ultrason Imaging (2019) 41:353–67. doi: 10.1177/0161734619882683

9. Fujioka T, Kubota K, Mori M, Kikuchi Y, Katsuta L, Kasahara M, et al. Distinction between benign and malignant breast masses at breast ultrasound using deep learning method with convolutional neural network. Jpn J Radiol (2019) 37:466–72. doi: 10.1007/s11604-019-00831-5

10. Zhao WJ, Fu LR, Huang ZM, Zhu JQ, Ma BY. Effectiveness evaluation of computer-aided diagnosis system for the diagnosis of thyroid nodules on ultrasound: A systematic review and meta-analysis. Medicine (Baltimore) (2019) 98:e16379. doi: 10.1097/MD.0000000000016379

11. Ciritsis A, Rossi C, Eberhard M, Marcon M, Becker AS, Boss A. Automatic classification of ultrasound breast lesions using a deep convolutional neural network mimicking human decision-making. Eur Radiol (2019) 29:5458–68. doi: 10.1007/s00330-019-06118-7

12. Li X, Zhang S, Zhang Q, Wei X, Pan Y, Zhao J, et al. Diagnosis of thyroid cancer using deep convolutional neural network models applied to sonographic images: a retrospective, multicohort, diagnostic study. Lancet Oncol (2019) 20:193–201. doi: 10.1016/S1470-2045(18)30762-9

13. Verburg F, Reiners C. Sonographic diagnosis of thyroid cancer with support of AI. Nat Rev Endocrinol (2019) 15:319–21. doi: 10.1038/s41574-019-0204-8

14. Kumar V, Webb JM, Gregory A, Denis M, Meixner DD, Bayat M, et al. Automated and real-time segmentation of suspicious breast masses using convolutional neural network. PLoS One (2018) 13:e0195816. doi: 10.1371/journal.pone.0195816

15. Skrede OJ, De Raedt S, Kleppe A, Hveem TS, Liestol K, Maddison J, et al. Deep learning for prediction of colorectal cancer outcome: a discovery and validation study. Lancet (2020) 395:350–60. doi: 10.1016/S0140-6736(19)32998-8

16. Poplin R, Varadarajan AV, Blumer K, Liu Y, McConnell MV, Corrado GS, et al. Prediction of cardiovascular risk factors from retinal fundus photographs via deep learning. Nat BioMed Eng (2018) 2:158–64. doi: 10.1038/s41551-018-0195-0

17. Chen PC, Liu Y, Peng L. How to develop machine learning models for healthcare. Nat Mater (2019) 18:410–4. doi: 10.1038/s41563-019-0345-0

18. Nishida N, Yamakawa M, Shiina T, Kudo M. Current status and perspectives for computer-aided ultrasonic diagnosis of liver lesions using deep learning technology. Hepatol Int (2019) 13:416–21. doi: 10.1007/s12072-019-09937-4

19. Spann A, Yasodhara A, Kang J, Watt K, Wang B, Goldenberg A, et al. Applying Machine Learning in Liver Disease and Transplantation: A Comprehensive Review. Hepatology (2020) 71:1093–105. doi: 10.1002/hep.31103

20. Hamm CA, Wang CJ, Savic LJ, Ferrante M, Schobert I, Schlachter T, et al. Deep learning for liver tumor diagnosis part I: development of a convolutional neural network classifier for multi-phasic MRI. Eur Radiol (2019) 29:3338–47. doi: 10.1007/s00330-019-06205-9

21. Feng ST, Jia Y, Liao B, Huang B, Zhou Q, Li X, et al. Preoperative prediction of microvascular invasion in hepatocellular cancer: a radiomics model using Gd-EOB-DTPA-enhanced MRI. Eur Radiol (2019) 29:4648–59. doi: 10.1007/s00330-018-5935-8

22. Kim S, Shin J, Kim DY, Choi GH, Kim MJ, Choi JY. Radiomics on Gadoxetic Acid-Enhanced Magnetic Resonance Imaging for Prediction of Postoperative Early and Late Recurrence of Single Hepatocellular Carcinoma. Clin Cancer Res (2019) 25:3847–55. doi: 10.1158/1078-0432.CCR-18-2861

23. Sun C, Xu A, Liu D, Xiong Z, Zhao F, Ding W. Deep Learning-Based Classification of Liver Cancer Histopathology Images Using Only Global Labels. IEEE J BioMed Health Inform (2020) 24:1643–51. doi: 10.1109/JBHI.2019.2949837

24. Saillard C, Schmauch B, Laifa O, Moarii M, Toldo S, Zaslavskiy M, et al. Predicting survival after hepatocellular carcinoma resection using deep-learning on histological slides. Hepatology (2020). doi: 10.1002/hep.31207

25. Chaudhary K, Poirion OB, Lu L, Garmire LX. Deep Learning-Based Multi-Omics Integration Robustly Predicts Survival in Liver Cancer. Clin Cancer Res (2018) 24:1248–59. doi: 10.1158/1078-0432.CCR-17-0853

26. Virmani J, Kumar V, Kalra N, Khandelwal N. Characterization of primary and secondary malignant liver lesions from B-mode ultrasound. J Digit Imaging (2013) 26:1058–70. doi: 10.1007/s10278-013-9578-7

27. Virmani J, Kumar V, Kalra N, Khandelwal N. SVM-based characterization of liver ultrasound images using wavelet packet texture descriptors. J Digit Imaging (2013) 26:530–43. doi: 10.1007/s10278-012-9537-8

28. Hwang YN, Lee JH, Kim GY, Jiang YY, Kim SM. Classification of focal liver lesions on ultrasound images by extracting hybrid textural features and using an artificial neural network. BioMed Mater Eng (2015) 26 Suppl 1:S1599–1611. doi: 10.3233/BME-151459

29. Tarek M, Hassan M, El-Sayed S. Diagnosis of focal liver diseases based on deep learning technique for ultrasound images. Arab J Sci Eng (2017) 42:3127–40. doi: 10.1007/s13369-016-2387-9

30. Schmauch B, Herent P, Jehanno P, Dehaene O, Saillard C, Aube C, et al. Diagnosis of focal liver lesions from ultrasound using deep learning. Diagn Interv Imaging (2019) 100:227–33. doi: 10.1016/j.diii.2019.02.009

31. Streba CT, Ionescu M, Gheonea DI, Sandulescu L, Ciurea T, Saftoiu A, et al. Contrast-enhanced ultrasonography parameters in neural network diagnosis of liver tumors. World J Gastroenterol (2012) 18:4427–34. doi: 10.3748/wjg.v18.i32.4427

32. Gatos I, Tsantis S, Spiliopoulos S, Skouroliakou A, Theotokas I, Zoumpoulis P, et al. A new automated quantification algorithm for the detection and evaluation of focal liver lesions with contrast-enhanced ultrasound. Med Phys (2015) 42:3948–59. doi: 10.1118/1.4921753

33. Kondo S, Takagi K, Nishida M, Iwai T, Kudo Y, Ogawa K, et al. Computer-Aided Diagnosis of Focal Liver Lesions Using Contrast-Enhanced Ultrasonography With Perflubutane Microbubbles. IEEE Trans Med Imaging (2017) 36:1427–37. doi: 10.1109/TMI.2017.2659734

34. Guo LH, Wang D, Qian YY, Zheng X, Zhao CK, Li XL, et al. A two-stage multi-view learning framework based computer-aided diagnosis of liver tumors with contrast enhanced ultrasound images. Clin Hemorheol Microcirc (2018) 69:343–54. doi: 10.3233/CH-170275

35. Hu HT, Wang Z, Huang XW, Chen SL, Zheng X, Ruan SM, et al. Ultrasound-based radiomics score: a potential biomarker for the prediction of microvascular invasion in hepatocellular carcinoma. Eur Radiol (2019) 29:2890–901. doi: 10.1007/s00330-018-5797-0

36. Wang W, Wu SS, Zhang JC, Xian MF, Huang H, Li W, et al. Preoperative Pathological Grading of Hepatocellular Carcinoma Using Ultrasomics of Contrast-Enhanced Ultrasound. Acad Radiol (2020). doi: 10.1016/j.acra.2020.05.033

37. Liu D, Liu F, Xie X, Su L, Liu M, Xie X, et al. Accurate prediction of responses to transarterial chemoembolization for patients with hepatocellular carcinoma by using artificial intelligence in contrast-enhanced ultrasound. Eur Radiol (2020) 30:2365–76. doi: 10.1007/s00330-019-06553-6

38. Liu F, Liu D, Wang K, Xie X, Ming L. Deep learning radiomics based on contrast-enhanced ultrasound might optimize curative treatments for very early or early stage hepatocellular carcinoma patients. Liver Cancer (2020) 9:397–413. doi: 10.1159/000505694

39. Nishida N, Kudo M. Immune checkpoint blockade for the treatment of human hepatocellular carcinoma. Hepatol Res (2018) 48:622–34. doi: 10.1111/hepr.13191

40. Dong Y, Zhou L, Xia W, Zhao XY, Zhang Q, Jian JM, et al. Preoperative Prediction of Microvascular Invasion in Hepatocellular Carcinoma: Initial Application of a Radiomic Algorithm Based on Grayscale Ultrasound Images. Front Oncol (2020) 10:353. doi: 10.3389/fonc.2020.00353

41. Wang K, Lu X, Zhou H, Gao Y, Zheng J, Tong M, et al. Deep learning Radiomics of shear wave elastography significantly improved diagnostic performance for assessing liver fibrosis in chronic hepatitis B: a prospective multicentre study. Gut (2019) 68:729–41. doi: 10.1136/gutjnl-2018-316204

42. Biswas M, Kuppili V, Edla DR, Suri HS, Saba L, Marinhoe RT, et al. Symtosis: A liver ultrasound tissue characterization and risk stratification in optimized deep learning paradigm. Comput Methods Programs BioMed (2018) 155:165–77. doi: 10.1016/j.cmpb.2017.12.016

43. Gatos I, Tsantis S, Spiliopoulos S, Karnabatidis D, Theotokas I, Zoumpoulis P, et al. A Machine-Learning Algorithm Toward Color Analysis for Chronic Liver Disease Classification, Employing Ultrasound Shear Wave Elastography. Ultrasound Med Biol (2017) 43:1797–810. doi: 10.1016/j.ultrasmedbio.2017.05.002

44. Li W, Huang Y, Zhuang BW, Liu GJ, Hu HT, Li X, et al. Multiparametric ultrasomics of significant liver fibrosis: A machine learning-based analysis. Eur Radiol (2019) 29:1496–506. doi: 10.1007/s00330-018-5680-z

45. Gatos I, Tsantis S, Spiliopoulos S, Karnabatidis D, Theotokas I, Zoumpoulis P, et al. Temporal stability assessment in shear wave elasticity images validated by deep learning neural network for chronic liver disease fibrosis stage assessment. Med Phys (2019) 46:2298–309. doi: 10.1002/mp.13521

46. Akkus Z, Cai J, Boonrod A, Zeinoddini A, Weston AD, Philbrick KA, Erickson BJ. A Survey of Deep-Learning Applications in Ultrasound: Artificial Intelligence-Powered Ultrasound for Improving Clinical Workflow. J Am Coll Radiol (2019) 16:1318–28. doi: 10.1016/j.jacr.2019.06.004

Keywords: artificial intelligence, ultrasound, imaging, liver cancer, neural network, diagnosis

Citation: Nishida N and Kudo M (2020) Artificial Intelligence in Medical Imaging and Its Application in Sonography for the Management of Liver Tumor. Front. Oncol. 10:594580. doi: 10.3389/fonc.2020.594580

Received: 13 August 2020; Accepted: 16 November 2020; Published: 21 December 2020.

Edited by:

Hui-Xiong Xu, Tongji University, China

Reviewed by:

Christoph Dietrich, Hirslanden Private Hospital Group, Switzerland

Ming-de Lu, Sun Yat-sen University, China

Copyright © 2020 Nishida and Kudo. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Naoshi Nishida, naoshi@med.kindai.ac.jp
