
HYPOTHESIS AND THEORY article

Front. Syst. Neurosci., 23 December 2021

Volume 15 - 2021 | https://doi.org/10.3389/fnsys.2021.786252

This article is part of the Research Topic Understanding in the Human and the Machine

The Air Force research programs envision developing AI technologies that will ensure battlespace dominance through radical increases in the speed of battlespace understanding and decision-making. In the last half century, advances in AI have been concentrated in the area of machine learning. Recent experimental findings and insights in systems neuroscience, the biophysics of cognition, and other disciplines provide converging results that set the stage for technologies of machine understanding and machine-augmented Situational Understanding. This paper reviews some of the key ideas and results in the literature and outlines new suggestions. We define situational understanding and the distinctions between understanding and awareness, consider examples of how understanding (or the lack of it) manifests in performance, and review hypotheses concerning the underlying neuronal mechanisms. Suggestions for further R&D are motivated by these hypotheses and are centered on the notions of Active Inference and Virtual Associative Networks.

The notions of Situational Awareness (SA) and Situational Understanding (SU) figure prominently in multiple DoD documents, which predicate the achievement of battlespace dominance on SA/SU superiority, as, for example, in the following:

“Joint and Army commanders rely on data, information, and intelligence during operations to develop situational understanding against determined and adaptive enemies… because of limitations associated with human cognition, and because much of the information obtained in war is contradictory or false, more information will not equate to better understanding. Commanders and units must be prepared to integrate intelligence and operations to develop situational understanding” (The United States Army Functional Concept for Intelligence, 2020–2040, TRADOC 2017 Pamphlet 525- 2-, p. iii).

Distinctions between SA and SU are defined as follows:

“Situational awareness is immediate knowledge of the conditions of the operation, constrained geographically in time. More simply, it is Soldiers knowing what is currently happening around them. Situational awareness occurs in Soldiers’ minds. It is not a display or the common operating picture; it is the interpretation of displays or the current actual observation of the situation. …

Situational understanding is the product of applying analysis and judgment to relevant information to determine the relationships among the mission variables to facilitate decision making. It enables commanders to determine the implications of what is happening and forecast what may happen.” (The United States Army Operations and Doctrine, Guide to FM 3-0).

Definitive publications by the originator of the SA/SU concept and theory (Endsley, 1987, 1988, 1994; Endsley and Connors, 2014) identify three levels of situation awareness and associate understanding with Level 2, as shown in Figure 1.

Figure 1. Three levels of Situation Awareness (adapted from Endsley and Connors, 2014).

According to the schematic in Figure 1, understanding mediates between perception and prediction. The question is: what does such mediation involve, and what, exactly, does understanding contribute? The significance of such a contribution can be questioned by, for example, pointing at innumerable cases in the animal domain of going directly from perception to prediction (e.g., intercepting prey requires predators to possess mechanisms for movement prediction, as in frogs shooting out their tongues to catch flying insects). The bulk of this paper is dedicated to analyzing the role and contribution of understanding in human performance, pointing, in particular, at uniquely human forms of prediction involving the generation of explanations derived from attentively (deliberately, consciously) constructed situation models. Because prediction necessarily entails the consequences of action, these models must include the (counterfactual) consequences of acting. In turn, this mandates generative models of the future (i.e., with temporal depth) and implicit agency. The ensuing approach differs from that adopted in conventional AI, as follows.

Behaviorist psychology conceptualized the brain as a “black box” and was “fanatically uninterested” in reports concerning events in the box (Solms, 2021, p. 10). Borrowing from this expression, one might suggest that cognitivist psychology and AI have been “fanatically uninterested” in the role of understanding, focusing predominantly on learning and reasoning (this contention will be revisited later in the paper). This paper argues that the capacity for understanding is the defining feature of human intellect, enabling adequate performance in novel situations when one needs to act without the benefit of prior experience or even to counteract the inertia of prior learning. The argument is presented in five parts: the remainder of part I analyzes the notions of situation awareness and situation understanding, focusing on the latter; part II outlines the Virtual Associative Network (VAN) theory of understanding; part III places VAN theory in the broader context of Active Inference; part IV considers implementation (machine understanding); and part V offers a concluding discussion. In the remainder of this part, we define some of the key notions that set the stage for, and will be unpacked in, the rest of the paper.

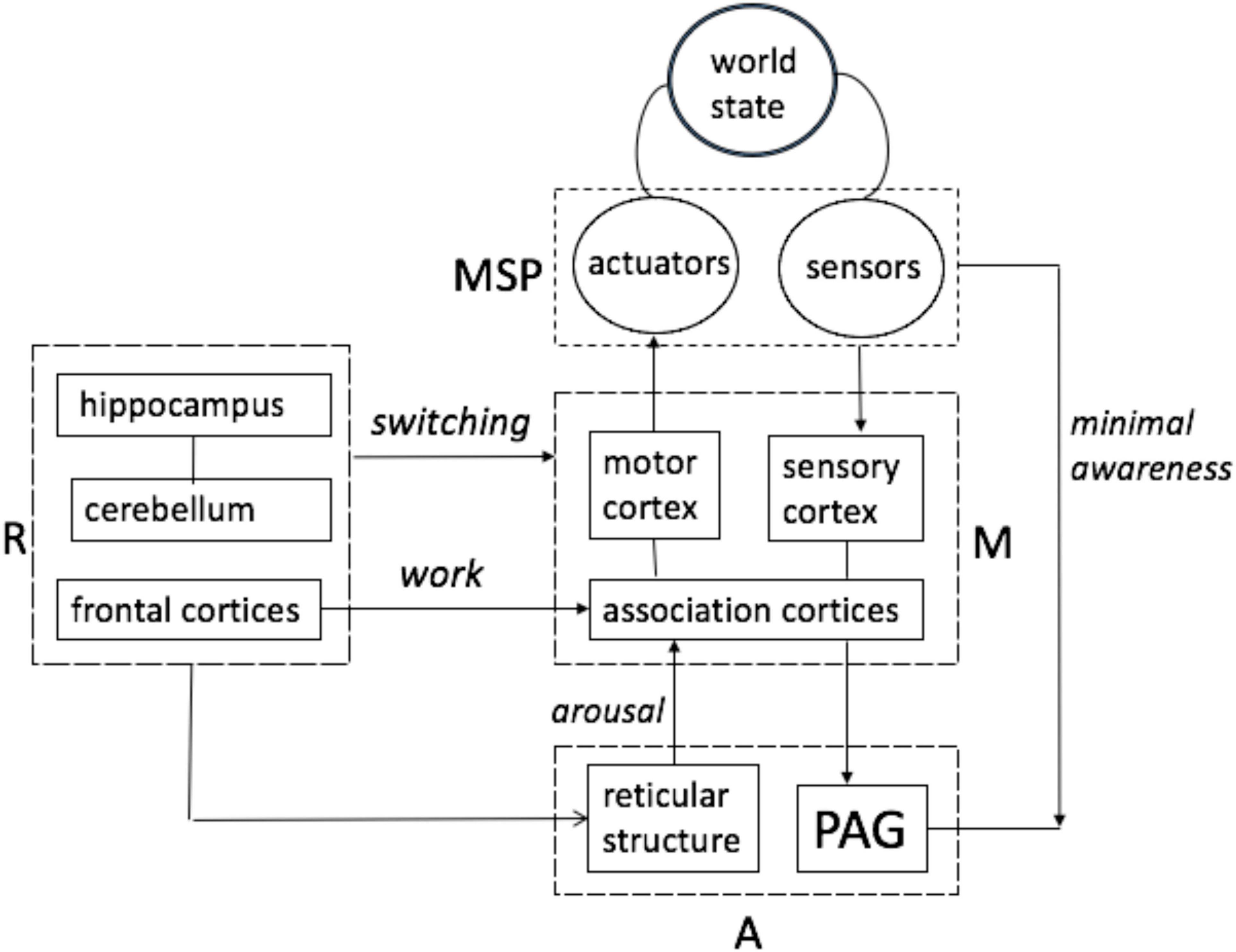

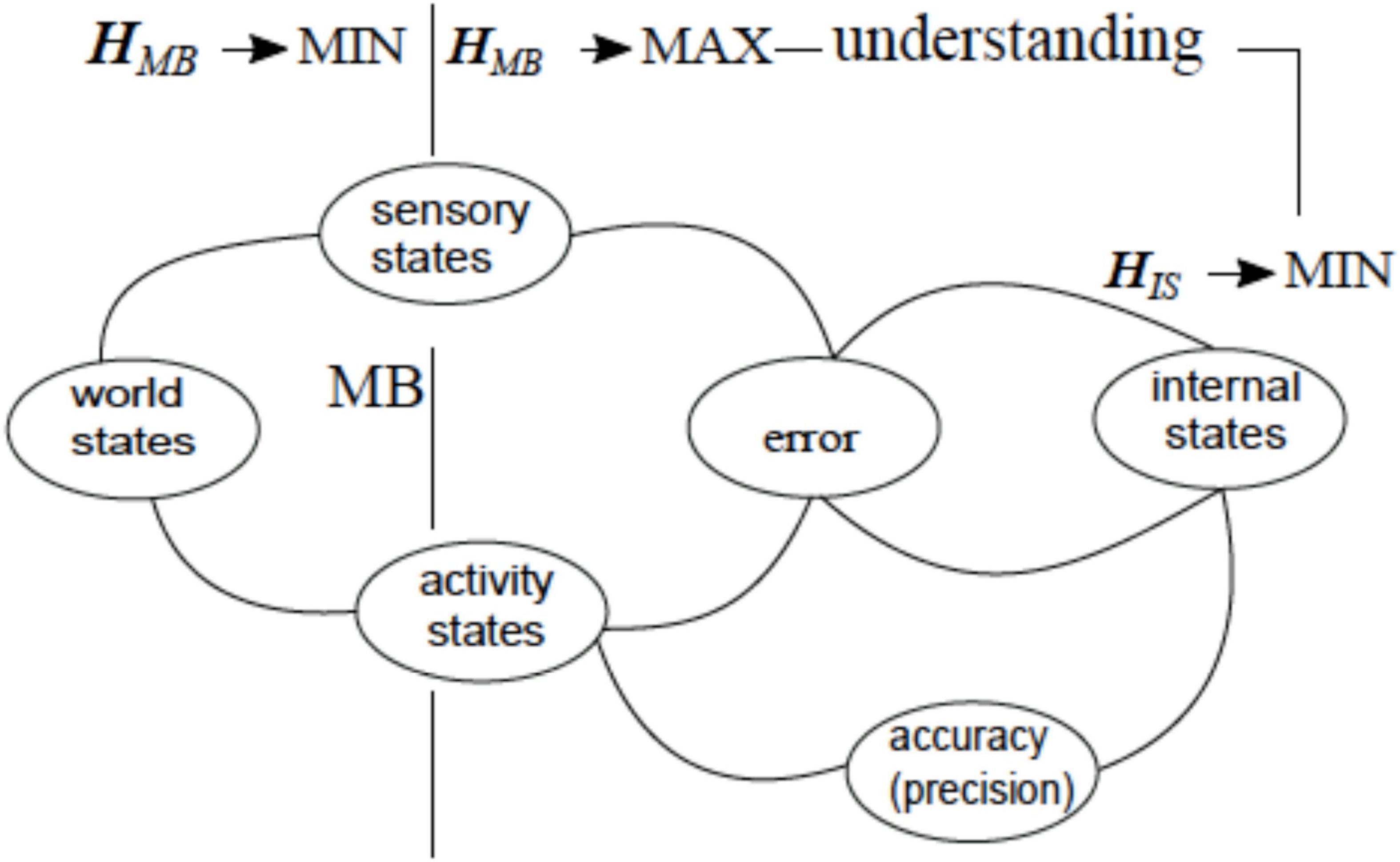

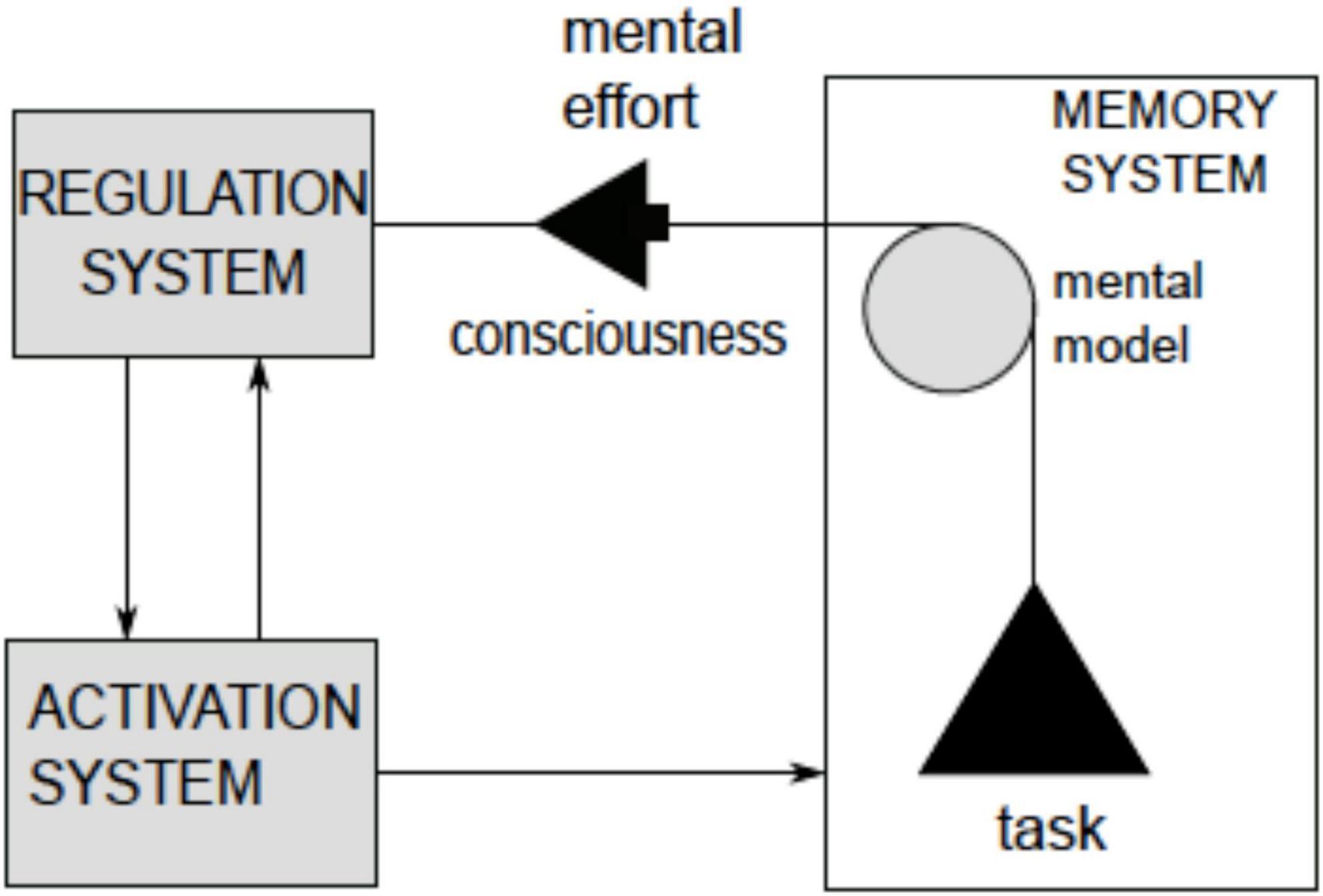

The central tenet of this paper boils down to the notion that understanding involves the self-directed construction and manipulation of mental models; in short, planning (as inference). This idea is not original, but the suggestions concerning the structure of the models and the underlying neuronal mechanisms are (Yufik, 1996, 1998; Yufik and Friston, 2016; Yufik, 2018, 2021b). Figure 2 introduces some key notions in the proposal, seeking to position mechanisms of awareness and mental modeling within the brain’s functional architecture. Stated succinctly, the following treatment builds upon an understanding of the computational architecture of the only systems that evince “understanding,” namely, ourselves.

Figure 2. Mental models are structures formed in the Memory System (M) and manipulated by the Regulatory System (R). Manipulation is enabled by activation (arousal) arriving from the Activation System (A), which includes the Reticular Activating System (RAS), and serves to organize activities in the Motor-Sensory Periphery (MSP) in such a way that the resulting changes in world states are consistent with the intent originating in R.

Figure 2 adopts the classical tripartite model of brain architecture (Luria, 1973, 1974; Sigurdsson and Duvarci, 2016), except for the inclusion of the cerebellum and the Periaqueductal Grey (PAG), whose role in cognitive processes, in particular the maintenance of awareness, was recently discovered (Solms, 2021). It was found that removing the bulk of the cortex (in both the R and M systems) while leaving the PAG intact preserves a degree of awareness (Solms, 2021). For example, hydranencephalic children (born without a cortex) respond to objects placed in their hands, and surgically decorticated animals remain capable of some responses and even rudimentary learning (moreover, in some cases a casual observer might fail to notice differences between the behavior of decorticated animals and that of intact controls) (Oakley, 1981; Cerminara et al., 2009; Solms, 2021). By contrast, lesions of the PAG and/or reticular structures obliterate awareness (reticular structures project into the cortex, while the PAG receives converging projections from the cortex) (Solms, 2021). The architecture in Figure 2 indicates that the intact PAG and RAS support minimal awareness (the link from PAG to MSP indicates awareness achieved in the absence of the cortex), while an interplay of all the other functional systems produces a hierarchy of awareness levels above the minimal.

To define levels of awareness, one needs to conceptualize the world as generating a stream of stimuli, and cognition as a process of assimilating sensory streams aimed at extracting energy and sustaining energy inflows (these crucially important notions will be reiterated throughout the paper). With these notions in mind, the following levels of awareness can be identified.

1. Minimal awareness (“vegetative wakefulness,” the term is due to Solms, 2021, p. 134). Streams of sensory stimuli are experienced as flux (noise).

2. Selective awareness. The organism responds to fixed combinations of contiguous stimuli as they appear in the flux (as in frogs catching flies).

3. Discriminating awareness. “Blobs” with fuzzy boundaries emerge in perceptual synthesis, comprising groupings of contiguous stimuli with varying correlation strength inside the groups.

4. Differentiating awareness. Different stimuli compositions are assimilated into “blobs” that are sharply bounded and segregated from the surrounds (“blobs” subsequently turn into distinct “objects,” as in telling letters apart).

5. Recognition-based awareness. Variations in the stimulus compositions of objects are differentiated (stated differently, different stimulus compositions are experienced as manifestations of the same object, as in recognizing letters in different fonts or handwriting).

6. Context-based awareness. The perceptual recognition of objects is influenced by their surrounds (think of the often-cited example of perceiving a shape that looks like a distorted letter A or a distorted letter H, depending on whether it appears in the middle of C_T or at the beginning of _AT).

7. Understanding-based awareness. This level is qualitatively different from the preceding ones: all of them deal with learning, i.e., developing memory structures that reflect the statistics of correlation, contingency, and contiguity in the world. By contrast, this level produces and manipulates complex relational structures (mental models) uprooted from such statistics (accounting for non-contiguous and weakly correlated, sparse stimulus groupings), in other words, compositions and counterfactuals. To illustrate the distinction: the statistics of English texts would suffice for resolving the “_AT or C_T” ambiguity but not for understanding the expression “hats on cats” (when was the last time you saw or read about cats wearing hats?).
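The contrast between context-based awareness (level 6) and understanding-based awareness (level 7) can be illustrated with a toy sketch. It assumes a tiny, made-up table of word and word-pair counts standing in for “the statistics of English texts”; the counts and the names `WORD_COUNTS`, `PAIR_COUNTS`, `resolve_glyph`, and `pair_support` are hypothetical. The ambiguous glyph is resolved by choosing the letter that forms the more frequent word, whereas the same statistics offer no support at all for pairing hats with cats.

```python
from typing import Dict, Tuple

# Hypothetical corpus statistics standing in for "the statistics of English texts".
WORD_COUNTS: Dict[str, int] = {"CAT": 120, "CHT": 0, "HAT": 80, "AAT": 0}
# Hypothetical word-pair co-occurrence counts; "hats on cats" never occurs.
PAIR_COUNTS: Dict[Tuple[str, str], int] = {("HAT", "HEAD"): 40, ("CAT", "MOUSE"): 35}

AMBIGUOUS = "?"          # the shape that can be read as either A or H
CANDIDATES = ("A", "H")


def resolve_glyph(template: str) -> str:
    """Level 6: choose the reading of the ambiguous glyph that context makes most frequent."""
    return max(
        CANDIDATES,
        key=lambda letter: WORD_COUNTS.get(template.replace(AMBIGUOUS, letter), 0),
    )


def pair_support(w1: str, w2: str) -> int:
    """Co-occurrence support for a word pair in either order; 0 means no precedent."""
    return PAIR_COUNTS.get((w1, w2), 0) + PAIR_COUNTS.get((w2, w1), 0)


if __name__ == "__main__":
    print(resolve_glyph("C?T"))        # A
    print(resolve_glyph("?AT"))        # H
    print(pair_support("HAT", "CAT"))  # 0
```

The last line carries the point: a model built purely from such counts resolves the letter but has nothing to offer for “hats on cats,” which, on the account given here, calls for a relational structure uprooted from the statistics.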

Arguably, Figure 1 refers primarily to understanding-based situation awareness. It is informative to note that cells in prefrontal cortices represent the association of sensory items of more than one sensory modality, integrate these items across time and participate in performing tasks requiring reasoning and manipulation of complex relational structures (Kroger et al., 2002). Construction and manipulation of complex relational structures underlies understanding. More precisely, understanding enables construction of models expressing unlikely correlations (like cats in hats), while sometimes failing to register some precise and routinely encountered ones (e.g., medieval medicine for centuries failed to see the relation between a beating heart and blood circulation, placing the source of circulation in the liver). This paper offers ideas seeking to account for both the strengths and the weaknesses of the understanding capacity. Three pivotal notions (work, switching, and arousal) are referenced in Figure 2 (labeled in italics).

Operations on mental models demand effort and energy, in the same manner as any bodily (i.e., thermodynamic) work, such as running or lifting weights.

The functional architecture in Figure 2 is shared across many species, except for the capability to temporarily decouple mental models from the motor-sensory periphery and environmental feedback. The emergence of this regulatory capacity, which allows such decoupling, underwrites the development of an understanding capacity that is uniquely human and enables uniquely human skills, such as extending the horizon of prediction from the immediate to an indefinitely remote future and extending the reach of action from objects in the immediate surroundings to indefinitely distant ones.

Regulation of arousal (energy distribution in the cortices) is an integral and critical ingredient of mental modeling. In particular, modeling is contingent on maintaining the stability and integrity of the neuronal structures in the cortices that implement the models. Resisting entropic erosion and disintegration of these structures requires sustained inflows of metabolic energy. These ideas will be given precise definitions and mapped onto a simple mathematical formalism.

To summarize, three different brain mechanisms have been identified: those that circumvent the cortex, those that engage the cortex, and those that are realized in the cortex and temporarily disengaged from the motor-sensory periphery (switching). The first two mechanisms underlie learning and are shared among multiple species, including humans, while the third is unique to humans and underlies understanding. The proposal so far is derived from the following conceptualization: (a) the world is a process or stream (not a “static pond”), (b) cognition is a process of adapting an organism’s state and behavior to variations in the stream, and (c) the adaptation is powered by energy (work) extracted from the stream and distributed inside the system (regulation of arousal). Understanding complements learning: learning extrapolates from past experiences, while understanding overcomes the inertia of learning when encountering new conditions with no precedents. Overcoming inertia is an effortful process that can fail but provides unique performance advantages when it succeeds. It was noted that AI and the cognitivist school of thought have downplayed the role of understanding in performance.

The concept of Situation Awareness in Figure 1 predicates awareness on understanding, consistent with the notion of understanding-based awareness introduced in this section (note that Figure 1 does not address learning; accordingly, this article does not expand on the relations between learning and understanding, except for the comments in the preceding paragraph). The next section takes a closer look at the process of understanding and provides examples of its successes and failures.

Colloquially, “understanding” denotes an ability to figure out what to do when there is no recipe available and no precedent or aid to consult. The dictionary formulation captures the essence of that ability, defining understanding (comprehension, grasp) as “apprehending general relations in a multitude of particulars” (Webster’s Collegiate Dictionary). In science, relations are expressed by equations. Accordingly, in understanding a scientific theory T, apprehending general relations takes the form of “recognizing qualitatively characteristic consequences of T without performing exact calculations” (Criterion for Intelligibility of Theories) (de Regt, 2017, p. 102). The experience of attaining scientific understanding was described by Richard Feynman as having

“some feel for the character of the solution in different circumstances. … if we have a way of knowing what should happen in given circumstances without actually solving the equations, then we ‘understand’ the equation, as applied to the circumstances. A physical understanding is a completely unmathematical, imprecise, and inexact thing, but absolutely necessary for a physicist” (Feynman, cf. de Regt, 2017, p. 102).

The Criterion subsumes epistemic and pragmatic aspects of theoretical understanding, i.e., producing explanations of various phenomena and applications in various situations. Figuratively, understanding cuts through the “fog of war” (Clausewitz, 2015/1835) when apprehending battlefield situations and the “fog of mathematics” when apprehending scientific theories.

These notions are consistent with conceptualizations of understanding in psychology [the theory of understanding (Piaget, 1975, 1978) and the theory of fluid and crystallized intelligence (Cattell, 1971, 1978)], which emphasize the ability to apprehend relations under novel conditions and in the absence of practice or instruction [fluid intelligence (Cattell, 1971, 1978)]. The term “situational understanding” connotes changing conditions, with situations transforming fluidly into each other (e.g., attack–halt–withdraw–attack, etc.). The remainder of this section presents examples of situational understanding, prefaced by a brief analysis (anatomy of the process) in the next two paragraphs. These examples are followed by preliminary suggestions regarding the underlying mechanisms.

Reduce a multitude of objects to just two, A and B, and consider the situation “A moves towards B.” In reaching the decision that A attacks B, three stages can be identified; the first is readily apparent, while the significance of the second is easily overlooked. First, one must perceive A and B, which involves distinct activities (alternating attention between A and B) producing two distinct memory elements (percepts). Second, percept A and percept B must be juxtaposed (grouped), i.e., brought together and held together in memory (call it “working memory”). The task appears to be easy when the activities follow in tight succession (e.g., both A and B are within the field of view) but not so easy when they are separated by large time intervals. The third stage involves establishing a relation, which is predicated on the success of the preceding stages. The second stage is crucial: arguably, the development of understanding was launched by the emergence of mechanisms in the brain allowing the juxtaposition of percepts separated by large stretches of time. At this point, it is informative to note that a recent theory of the origins of the language capacity in humans associates this capacity with the emergent availability of a mental operation (called Merge) in which disjoint units A and B are brought together to produce a new unit, (A B) → C, amenable to subsequent Merge, (C Q) → Z, and so on (Chomsky, 2007; Berwick and Chomsky, 2016).
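The recursive character of Merge, where the product of one Merge is itself available to the next, can be shown in a few lines. This is a minimal sketch assuming merged units are represented as ordered pairs; Chomsky’s formal definition uses the unordered set {A, B}, and tuples are used here only for readable output. The names `merge` and `depth` are illustrative, not part of any cited formalism.

```python
from typing import Tuple, Union

# A unit is either an atomic item (a string) or the product of an earlier Merge.
Unit = Union[str, Tuple["Unit", "Unit"]]


def merge(a: Unit, b: Unit) -> Unit:
    """Bring two units together into a new unit: (A B) -> C."""
    return (a, b)


def depth(unit: Unit) -> int:
    """Number of nested Merge steps inside a unit (0 for an atomic item)."""
    if isinstance(unit, str):
        return 0
    left, right = unit
    return 1 + max(depth(left), depth(right))


c = merge("A", "B")  # (A B) -> C
z = merge(c, "Q")    # (C Q) -> Z: the output of Merge is itself input to Merge
print(z)             # (('A', 'B'), 'Q')
print(depth(z))      # 2
```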

Identifying stages in the understanding process helps to appreciate the staggering challenges it faces. When experiencing A, how does the idea of relating A to B come to mind? Figuring out this relation takes effort, but the very expression “coming to mind” connotes spontaneity. Accordingly, understanding can break down if the effort is insufficient and/or spontaneous mechanisms fail to deliver. The point is that understanding involves a dynamic interplay of deliberate operations and automatic memory processes triggered by those operations, which might or might not converge in a grasp. To exemplify the point, consider a syllogism (say, “all humans are mortal, Socrates is a human, therefore Socrates is mortal”). It might appear that the conclusion inescapably follows from the premises, but that is an illusion: one might be aware of each of the premises individually but fail to bring them together, and/or the conclusion might either not come to mind or get suppressed upon arrival. Some extreme examples of failed and successful situational understanding are listed next.
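The observation that premises known individually may still fail to yield the conclusion can be made concrete with a toy forward-chaining sketch. It assumes, purely for illustration, that a conclusion can be drawn only from items held in working memory at the same time; the tuple encoding of premises and the name `conclude` are hypothetical and not part of the proposal.

```python
from typing import Set, Tuple

# Hypothetical encoding: "all X are Y" is ("all", X, Y); "s is X" is ("is", s, X).
Item = Tuple[str, str, str]


def conclude(working_memory: Set[Item]) -> Set[Item]:
    """Derive 's is Y' only from a fact and a universal premise held together."""
    derived: Set[Item] = set()
    for kind, subject, category in working_memory:
        if kind != "is":
            continue
        for kind2, x, y in working_memory:
            if kind2 == "all" and x == category:
                derived.add(("is", subject, y))
    return derived - working_memory  # report only new items


major = ("all", "human", "mortal")
minor = ("is", "Socrates", "human")

print(conclude({major}))         # set(): the premise is known, but alone
print(conclude({minor}))         # set()
print(conclude({major, minor}))  # {('is', 'Socrates', 'mortal')}
```

Each premise on its own is “known” to the system, yet nothing follows until both are juxtaposed in the same working set, mirroring the second stage described above.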

On May 17, 1987, the USS Stark, on patrol in the Persian Gulf, was struck by two Exocet AM-39 cruise missiles fired from an Iraqi F-1 Mirage fighter. An investigation revealed that the aircraft was detected by an AWACS (Airborne Warning and Control System) aircraft patrolling in the area and identified as “friendly.” Due to the erroneous initial identification, the captain and crewmembers of the frigate ignored subsequent aircraft maneuvers that were unambiguously hostile (turning, descending, and accelerating in the direction of the ship), which resulted in the loss of 37 lives and severe damage to the ship (Miller and Shattuck, 2004).

Between May 10 and June 14, 1940, France was invaded by the German army. France was one of the major military powers in Europe; it maintained adequately equipped forces and, besides, invested tremendous resources in erecting state-of-the-art fortifications on its northeastern border (the Maginot Line). Despite these preparations, France suffered a historic defeat. A massive literature has been produced over many decades, analyzing the course of events and suggesting various reasons for this colossal and catastrophic failure. A book published in 1941 by a competent French author (who served as a liaison officer with the British army during WWI) summarized discussions with French and British officers and political figures before and after the events in question. His analysis offers what appears to be a plausible account and explanation (Maurois, 1941). In particular, the book pointed out that French military and political authorities overestimated the efficiency of the Maginot defenses, an overestimate that stemmed, interestingly, from French technical advances and a sense of engineering superiority. French generals judged the Maginot fortifications to be impenetrable on the grounds that they “can be built so rapidly that, in the time necessary for an enemy to take a first line, the defending army can construct a second …” (Maurois, 1941, p. 42). A full range of state-of-the-art technologies (reconnaissance photography, advanced communications, etc.) was employed, the terrain was meticulously examined and mapped, and artillery ranges were calculated in advance. “These painstaking labors assured absolute precision of fire. The spotters in front of the forts had before them photographs of the country divided into numbered squares. Perceiving the enemy in square 248-B, all they would have to do was murmur ‘248-B’ into the telephone, and 10 s later the occupied zone would have been deluged with shells and bullets” (Maurois, 1941, p. 48).
In short, a confident consensus predicted that the Maginot fortifications would never be broken through. These predictions turned out to be correct: the Germans went around and bypassed the Maginot Line entirely, entering Paris on June 14, 1940.

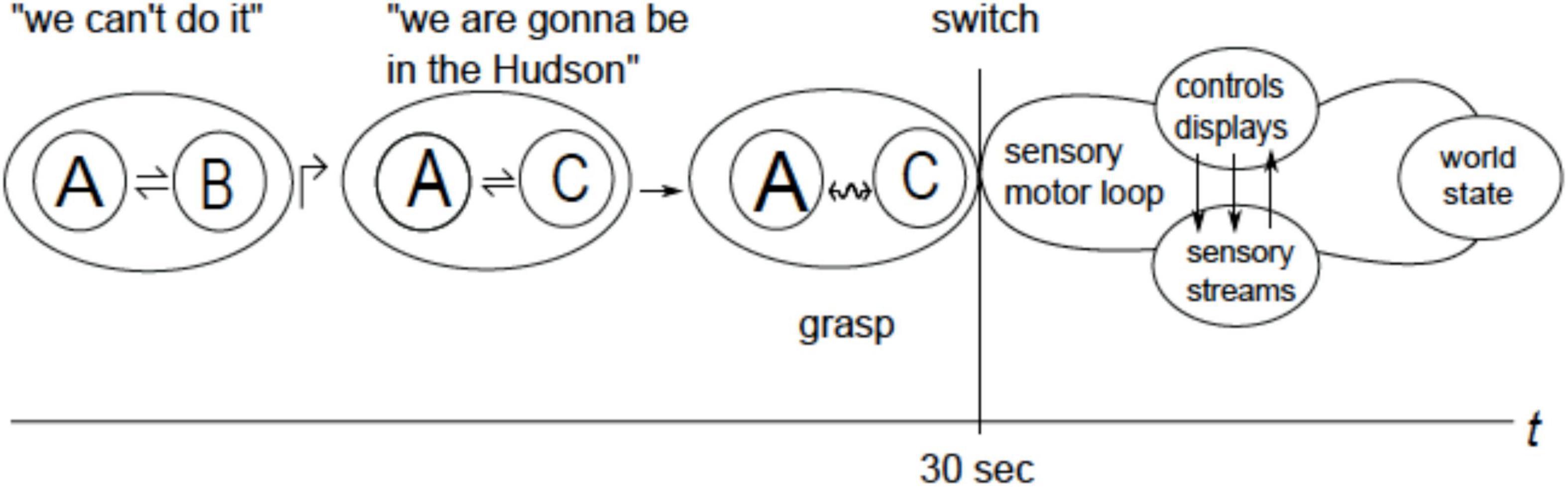

On January 15, 2009, an Airbus A320-214 flying from LaGuardia Airport in New York struck a flock of geese during its initial climb. The plane lost engine power and was ditched in the Hudson River off midtown Manhattan just 6 min after takeoff. The pilot in command was Captain Sullenberger (CS); the first officer was Skiles. The bird strike occurred 3 min into the flight and resulted in an immediate and complete loss of thrust from both engines. At that instant, Skiles began going through the three-page emergency procedures checklist and CS took over the controls. Within about 30 s, he requested permission for an emergency landing at a nearby airport in New Jersey (NJ) but decided on a different course of action after the permission was granted. Having informed controllers on the ground about his reasons (“We can’t do it”) and intent (“We’re gonna be in the Hudson”), CS proceeded to glide along and then ditch the aircraft in the river. All 155 people on board survived against staggeringly bad odds (Suhir, 2019).

The underlying mental process in all three incidents involves item grouping, and success or failure in overall task performance depended on how that step was accomplished. One more example will help to illustrate this contention. Analysis of eye-tracking records of air traffic controllers revealed latent grouping of aircraft signatures on ATC displays, which appeared to be motivated by gestalt criteria (e.g., relative proximity). The probability of detecting a possible collision was higher for aircraft residing in the same group, (A B), than for those residing in different groups, (A B) (C D). It was hypothesized that novice controllers could not disable gestalt grouping, whereas the more skilled ones developed a capacity for overcoming its impact on performance (Landry et al., 2001; Yufik and Sheridan, 2002). We now turn to analyzing these examples.
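The grouping effect reported for controllers can be sketched under loudly stated assumptions: display signatures are grouped by proximity alone (single-linkage via union-find with a hypothetical distance threshold), a “novice” scan checks for conflicts only inside a group, and a “skilled” scan checks all pairs. Positions, threshold, conflict pairs, and the names `group_by_proximity` and `detected` are made up for illustration and are not taken from the cited studies.

```python
from itertools import combinations
from math import dist
from typing import Dict, FrozenSet, List, Set, Tuple


def group_by_proximity(positions: Dict[str, Tuple[float, float]],
                       threshold: float) -> List[Set[str]]:
    """Single-linkage grouping: signatures closer than `threshold` share a group."""
    parent = {name: name for name in positions}

    def find(x: str) -> str:
        while parent[x] != x:
            parent[x] = parent[parent[x]]  # path halving
            x = parent[x]
        return x

    for a, b in combinations(positions, 2):
        if dist(positions[a], positions[b]) < threshold:
            parent[find(a)] = find(b)

    groups: Dict[str, Set[str]] = {}
    for name in positions:
        groups.setdefault(find(name), set()).add(name)
    return list(groups.values())


def detected(conflicts: Set[FrozenSet[str]], groups: List[Set[str]],
             within_groups_only: bool) -> Set[FrozenSet[str]]:
    """Conflicts a scan notices: all of them, or only those inside a single group."""
    if not within_groups_only:
        return set(conflicts)
    return {pair for pair in conflicts if any(pair <= g for g in groups)}


# Hypothetical display: two visual clusters; B and C are on converging tracks.
positions = {"A": (0.0, 0.0), "B": (1.0, 0.0), "C": (10.0, 0.0), "D": (11.0, 0.0)}
conflicts = {frozenset({"A", "B"}), frozenset({"B", "C"})}
groups = group_by_proximity(positions, threshold=2.0)

if __name__ == "__main__":
    print(sorted(sorted(g) for g in groups))                    # [['A', 'B'], ['C', 'D']]
    print(len(detected(conflicts, groups, within_groups_only=True)))   # 1
    print(len(detected(conflicts, groups, within_groups_only=False)))  # 2
```

The cross-group conflict between B and C is exactly the one a grouping-bound scan misses.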

In the USS Stark incident, three items had to be accounted for in the Captain’s decision process: A = own ship, B = AWACS, and C = F-1 Mirage. In the Captain’s mental model, grouping (A B) was the dominant one (i.e., attributing significance to any item C with respect to A relied entirely on B). The “friendly” determination rendered C irrelevant to A and removed it from consideration. Hence the “blind spot” regarding the Iraqi F-1 Mirage fighter, whose behavior displayed signs of attack that could not have been clearer: the aircraft was ascending away from the ship but then sharply changed its course and started descending and accelerating toward the ship.

French military planners recognized the possibility of German bypassing maneuvers (e.g., attacking through Belgium) but “rationalized them away,” i.e., worked out lines of reasoning that rendered them highly unlikely and, ultimately, forced them out of consideration. French strategic thinking was structured by the experience of trench warfare in WWI, when opponents faced each other from fortified positions and conducted frontal assaults to break through each other’s defenses. As a result, the mental models of the leading strategists were focused on the fortifications and the defended areas in front of them (A B), while turning a “blind eye” to the adjacent areas (C). Because of the influence the generals had earned through their past victories, these models became the dominant view across the French military, intelligence, and political communities. Common sense would suggest that the Maginot Line needed to be “prolonged along the Belgian frontier by fortifications that were perhaps less strong but nevertheless formidable. I received one of the greatest shocks of my life when I saw the pathetic line…which was all that separated us from invasion and defeat” (Maurois, 1941, p. 19). The point is that experience-sculpted models can produce pathological tunnel vision that cannot be remedied by reasoning; on the contrary, reasoning confined to the same tunnels can only make them more rigid. Practical validation, an otherwise uncompromisingly reliable criterion, could also do a disservice (one can imagine placing targets in front of the fortifications and, after some extra practice, having them destroyed not in 10 but in 8 s).

The Airbus A320-214 incident prompted a thorough investigation and analysis that engaged the most advanced investigative and analytic tools available (Suhir, 2012, 2018, 2019; Suhir et al., 2021). Unlike in the previously cited scenarios, this analysis had unlimited access to complete records and could use computer modeling and testing in flight simulators to validate its conclusions. The analysis was centered on probabilistic risk estimates accounting for human error stemming from imbalances between human capacities (Human Capacity Factor, or HCF) and mental workload (MWL). Ten major contributors to HCF were identified: (1) psychological suitability for the given task, (2) professional qualifications and experience, (3) level, quality, and timeliness of past and recent training, (4) mature (realistic) and independent thinking, (5) performance sustainability (predictability, consistency), (6) ability to concentrate and act in cold blood (“cool demeanor”) in hazardous and even life-threatening situations, (7) ability to anticipate (“expecting the unexpected”), (8) ability to operate effectively under pressure, (9) self-control in hazardous situations, and (10) ability to make a substantiated decision in a short period of time. Captain Sullenberger was expected to score high on the majority of these factors. In simulator tests, four pilots were briefed in advance about the entire scenario in full detail and then exposed to simulated conditions immediately after the bird strike. Knowing in advance what to expect, all four were able to land the aircraft. However, when a 30 s delay was imposed (the time it took Sullenberger to assess the situation and decide on the course of action), all four pilots crashed (Suhir, 2013).

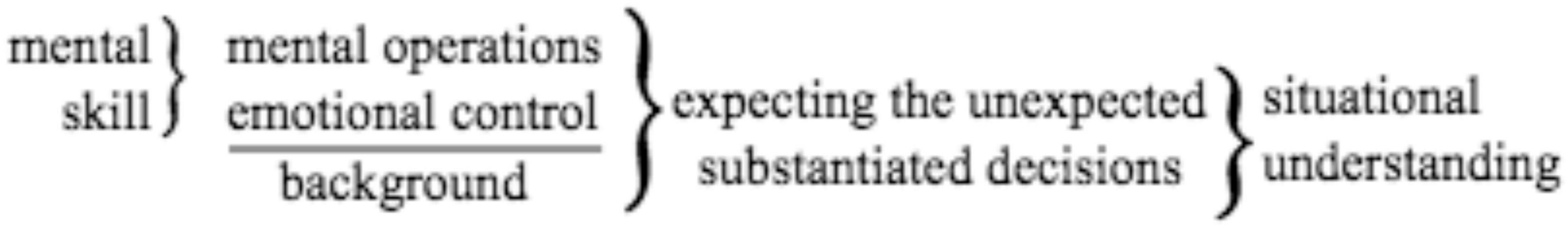

Applying the HCF metric to the other examples, it can be suggested that HCF scores reflect the capacity for situational understanding, ranging from very low to exceptionally high. For the purposes of this paper, the ten factors can be divided into four groups, three of which can be roughly mapped onto components of the architecture in Figure 2 (factors 2 and 3 relate to Memory, factors 1, 5, and 6 relate to Activation, and factors 4 and 9 relate to Regulation), while the fourth group, made up of factors 7 (the ability to anticipate, “expecting the unexpected”) and 10 (the ability to make a substantiated decision in a short period of time), relates to Situational Understanding, conceptualized here as a product of the interplay among the other three groups. Figure 3 rephrases this suggestion.
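The proposed grouping of the ten HCF factors can be restated as a simple lookup table. The dictionaries and the function `unassigned_factors` are illustrative; factor numbers follow the list of ten contributors given earlier, with abbreviated labels. Writing the mapping out makes visible that factor 8 (the ability to operate effectively under pressure) is not placed in any of the four groups as stated.

```python
from typing import Dict, List

# Ten HCF contributors, numbered as in the list of contributors (abbreviated labels).
HCF_FACTORS: Dict[int, str] = {
    1: "psychological suitability",
    2: "qualifications and experience",
    3: "training",
    4: "mature and independent thinking",
    5: "performance sustainability",
    6: "cool demeanor",
    7: "expecting the unexpected",
    8: "operating under pressure",
    9: "self-control",
    10: "quick substantiated decisions",
}

# Rough mapping onto Figure 2 components, plus Situational Understanding.
FACTOR_GROUPS: Dict[str, List[int]] = {
    "Memory": [2, 3],
    "Activation": [1, 5, 6],
    "Regulation": [4, 9],
    "Situational Understanding": [7, 10],
}


def unassigned_factors() -> List[int]:
    """Factor numbers that do not appear in any group."""
    assigned = {f for members in FACTOR_GROUPS.values() for f in members}
    return sorted(set(HCF_FACTORS) - assigned)


print(unassigned_factors())  # [8]
```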

Figure 3. Situational Understanding is a product of background (knowledge, training) and mental skills. A solid horizontal line underscores that skills operate on top of the background.

Mental skills operate on top of background, including knowledge and skills acquired in training, but are qualitatively different from those. The distinction extends from responding to unexpected eventualities to constructing scientific proofs or theories, where the process of selecting and applying the rules of the theory at each stage cannot itself be governed by another set of rules (de Regt, 2017). In the Airbus incident, emergency rules and training dictated either consulting the emergency checklists or seeking possibilities for heading to the nearest airport. Following either of these courses of action would have been both rational (not random or unreasonable) and in line with the cumulative experience of the aviation community, but would surely have killed all on board.

There are five points to be made here. First, an NJ landing was initially considered by CS and implicitly supported by controllers on the ground, as evidenced by the granting of landing permission. Second, CS could not even start analyzing the NJ option (i.e., considering the current altitude, airspeed, distance, wind, aircraft characteristics, etc.) but could only develop a “feel” that it would not work out. Third, despite the absence of analysis, the “feel” allowed a substantive decision (“We can’t do it”). Fourth, having developed the “feel,” CS acted on it resolutely, entailing another substantive decision (“We’re gonna be in the Hudson”). Fifth, CS did not know the future but performed comparably to or better than pilots who knew the scenario in advance. In short, CS understood the situation through a process involving three distinct mental operations, as follows.

1. Forceful re-grouping, not derived from any rule or precedent (jump, ↱).

(A B) ↱ (A C), where A is the aircraft, B is the New Jersey airport, and C is the Hudson River.

The expression reads as “group (A B) is jumped to group (A C).”

2. Alternating attention between members inside a group while envisioning variations in their characteristics (coordination, ⇌).

[var(A) ⇌ var(C)]: attention alternates between envisioning variations in the aircraft behavior, var(A) (e.g., changes in angle of attack), and changes along the riverbed, var(C) (e.g., changes in width, curvature, etc.). Reads as “A is coordinated with C.”

3. Forcefully iterating coordination until a particular coordination pattern (relation) is apprehended (blending, ↭).

[var(A) ↭ var(C)], reads as “A is blended with C.” Blending transforms a coordinated group into a cohesive and coherent functional whole so that, e.g., envisioning variations in one member brings to mind the corresponding variations in the other (thinking of ditching near a particular spot brings to mind the required changes in aircraft behavior and, vice versa, envisioning changes in the behavior brings to mind the corresponding changes in the location of the spot). Blending establishes relation R on the group, [var(A) ⇌ var(C)] → (A R C), which gets expressed in substantive decisions (“We’re gonna be in the Hudson”) and gives rise to probability estimates for coordinated activities (“chances of a successful ditching are not too bad”) and their outcomes (more on that in the next section).
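The three operations can be sketched as functions on toy data structures. Everything here is an illustrative assumption rather than the VAN formalism: a group is a tuple of member names, var(A) and var(C) are short lists of envisioned variations, coordination is represented as stepping through pairings of variations, and blending as repeating coordination until a one-to-one relation R holds in both directions, so that picking a variation of either member retrieves its counterpart. The glide-angle and river-spot numbers and the names `jump`, `coordinate`, `blend`, and `reaches` are hypothetical.

```python
import math
from typing import Callable, Dict, Hashable, Iterator, Optional, Sequence, Tuple

Group = Tuple[str, ...]
Relation = Tuple[Dict[Hashable, Hashable], Dict[Hashable, Hashable]]


def jump(group: Group, old: str, new: str) -> Group:
    """(A B) ↱ (A C): swap one member; `new` is supplied, not derived from a rule."""
    return tuple(new if member == old else member for member in group)


def coordinate(var_a: Sequence[Hashable],
               var_c: Sequence[Hashable]) -> Iterator[Tuple[Hashable, Hashable]]:
    """var(A) ⇌ var(C): step through pairings of envisioned variations of A and C."""
    for a in var_a:
        for c in var_c:
            yield a, c


def blend(var_a: Sequence[Hashable], var_c: Sequence[Hashable],
          fits: Callable[[Hashable, Hashable], bool],
          max_rounds: int = 10) -> Optional[Relation]:
    """var(A) ↭ var(C): repeat coordination until a two-way one-to-one relation stabilizes."""
    forward: Dict[Hashable, Hashable] = {}
    backward: Dict[Hashable, Hashable] = {}
    for _ in range(max_rounds):
        changed = False
        for a, c in coordinate(var_a, var_c):
            if a not in forward and c not in backward and fits(a, c):
                forward[a], backward[c] = c, a
                changed = True
        if not changed:
            break
    if len(forward) == len(var_a) and len(backward) == len(var_c):
        return forward, backward
    return None  # no coherent relation R apprehended


# Hypothetical numbers: altitude in meters, river spots at distances in meters.
ALTITUDE_M = 1000.0
SPOTS_M = {"north": 19000.0, "mid": 14300.0, "south": 11430.0}


def reaches(angle_deg: Hashable, spot: Hashable) -> bool:
    """Does a glide at `angle_deg` end within 200 m of `spot`? (toy geometry)"""
    glide_distance = ALTITUDE_M / math.tan(math.radians(float(angle_deg)))
    return abs(glide_distance - SPOTS_M[spot]) < 200.0


group = jump(("aircraft", "NJ airport"), "NJ airport", "Hudson")
relation = blend([3.0, 4.0, 5.0], list(SPOTS_M), reaches)
print(group)        # ('aircraft', 'Hudson')
print(relation[0])  # {3.0: 'north', 4.0: 'mid', 5.0: 'south'}
```

Returning both maps is the design choice that mirrors the text: once R is established, envisioning a spot retrieves the required glide (via `backward`) and envisioning a glide retrieves the spot (via `forward`).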

The operations of jump, coordination, and blending participate in the construction of mental models, culminating in blending, which makes one aware of (i.e., able to anticipate) the direct and indirect results of one’s actions without considering situational details. An intuitive appreciation of this dualistic relationship between awareness and understanding seems to be the motivation behind the Situational Awareness concept and the SA schema in Figure 1.

To summarize, understanding involves the construction of mental models that make an adequate performance possible when exploring unknown phenomena and/or dealing with unforeseeable eventualities in the otherwise familiar tasks. In the latter case, understanding enables decision processes that are substantive, short (as compared to the duration of the task), rely on minimal information intake, and achieve results approximating those one would achieve had all the eventualities been known in advance. Importantly, mental models not only generate likelihood estimates for future conditions but make one envision them and then actively regulate motor-sensory activities consistent with the anticipated conditions and in coordination with motor-sensory feedback (hence, the “expecting of the unexpected”). Note that simply to decide or choose immediately requires there to be a space of policies or narratives to select from. The position offered in this paper is that this necessarily entails the ability to represent the (counterfactual) consequences of two or more courses of action—and to select optimally among these representations. What brain mechanisms could underlie this capability?

The VAN model was motivated by one paramount question (“How does understanding work?”) and stems from three already familiar ideas, which can be restated as follows:

(1) The world is a stream, and brain processes are dynamically orchestrated to adapt the organism’s behavior to variations in the stream.

(2) The brain is a physical system, wherein all processes need to be powered by energy extracted from the stream.

(3) Physical systems are dissipative, so any re-organization takes time (instantaneous reorganization would require infinite energy). As a result, adaptive re-organizations are necessarily anticipatory.

Taken together, these ideas entailed the following two hypotheses:

(a) the evolution of biological intelligence has been (selectively) pressured to stabilize energy supplies above some life-sustaining thresholds and

(b) human intelligence was brought about by biophysical processes, discovered by evolution, that allowed two fundamental mechanisms to emerge: mechanisms that stabilized energy supplies from the outside and mechanisms that minimized dissipative losses and energy consumption inside the brain. These mechanisms culminate in the uniquely human capacity for understanding, as outlined in the remainder of this section. The next section will suggest a hand-in-glove relationship between thermodynamic efficiency and variational free energy minimization (VFEM) (Friston, 2009, 2010).

Note that the VAN approach is orthogonal to the one expressed in the perceptron (neural net) idea: dynamically orchestrated neuronal structures vs. structures that are fixed once the weights settle, and input streams in which stimulus combinations never recur exactly vs. recurring inputs. Crucially, accounting for energy and time is integral to the VAN model and alien to the perceptron framework. In short, the VAN and perceptron models reside in different conceptual terrains. The appeal of the former is the possibility of quickly reaching a point where a theory of understanding can be articulated. Technically, the distinction between the perceptron (and related reinforcement learning) and VAN is the distinction between an appeal to the Bellman optimality principle (any part of an optimal path between two configurations of a dynamical system is itself optimal) and a more generic principle of least action, where action corresponds to energy times time. VAN and the free energy principle (a.k.a. active inference) share exactly the same commitments. Note that formulating optimal behavior in terms of a principle of least action necessarily involves time and, with it, the consequences of behavior.
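The Bellman side of this contrast can be made concrete with a short dynamic-programming sketch on a small, hypothetical weighted graph (the least-action side is not modeled here). Cost-to-go values are computed by the Bellman recursion, and the last lines check the optimality principle quoted above: the tail of the optimal path from S is itself the optimal path from A.

```python
import math
from typing import Dict, List, Tuple

# Hypothetical weighted DAG: node -> list of (successor, cost)
Graph = Dict[str, List[Tuple[str, float]]]

def cost_to_go(graph: Graph, goal: str) -> Dict[str, float]:
    """Bellman recursion V(s) = min over edges (t, c) of c + V(t), with V(goal) = 0.
    Assumes the graph is acyclic so the recursion terminates."""
    values: Dict[str, float] = {goal: 0.0}

    def v(s: str) -> float:
        if s not in values:
            values[s] = min((c + v(t) for t, c in graph.get(s, [])), default=math.inf)
        return values[s]

    for node in graph:
        v(node)
    return values

def optimal_path(graph: Graph, start: str, goal: str) -> List[str]:
    """Greedily follow c + V(t); by the optimality principle this path is optimal."""
    values = cost_to_go(graph, goal)
    if math.isinf(values.get(start, math.inf)):
        raise ValueError(f"{goal!r} is unreachable from {start!r}")
    path = [start]
    while path[-1] != goal:
        nxt, _ = min(graph[path[-1]], key=lambda e: e[1] + values[e[0]])
        path.append(nxt)
    return path

g: Graph = {"S": [("A", 1.0), ("B", 4.0)], "A": [("B", 1.0), ("G", 5.0)], "B": [("G", 1.0)]}
full = optimal_path(g, "S", "G")  # ['S', 'A', 'B', 'G'], total cost 3.0
tail = optimal_path(g, "A", "G")  # ['A', 'B', 'G'], the tail of the optimal path
print(full, tail, tail == full[1:])
```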

To set the stage, return to Figure 2, and think of the world as a succession, or stream, of states S_i, S_j, … arriving at time interval τ_1, and think of the brain as a pool of N binary neurons. Interaction is driven by the need to extract energy from the world in amounts sufficient for the pool’s survival. Anthropomorphically, this entails recurring cycles of inquiring (What is the current state of affairs in the world?) and forming responses (What shall I do about it?). The sequence of “inquiries” at each cycle can be expanded: What is the state? What can I do about it? What shall I do about it? How shall I do it? And so on. Also, different types of neurons can be envisioned and mapped onto different components of the architecture in Figure 2 (sensory neurons, motor neurons, etc.).

Whatever the composition of the pool and the content and order of the inquiries, activities in the pool boil down to selectively flipping (exciting or inhibiting) neurons in a particular order. Make two assumptions: (a) each state S_i can emit an energy reward ranging from 0 to some maximum, depending on the order and composition of “flippings” in the pool, and (b) each “flip” consumes energy d (to a first approximation, let all flips be powered by the same energy amount). The problem facing the pool can now be defined as maximizing energy inflows while minimizing the number of flips. It will be argued, in four steps, that understanding involves a particular strategy for satisfying this dual objective (step 4 defines the architecture for understanding).
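A minimal sketch of the dual objective, assuming, as the text does, a uniform cost d per flip. The candidate responses, their rewards, and their flip counts are hypothetical numbers; the point is only that raising the flip cost can make a leaner response preferable to a richer but costlier one.

```python
from dataclasses import dataclass
from typing import Sequence

@dataclass(frozen=True)
class Response:
    """A hypothetical candidate response: one particular order/composition of flips."""
    label: str
    reward: float  # energy the world state emits for this response (0..maximum)
    flips: int     # number of neurons flipped to produce it

def net_energy(response: Response, d: float) -> float:
    """Energy balance of one cycle: inflow minus the uniform cost d of every flip."""
    return response.reward - d * response.flips

def choose(candidates: Sequence[Response], d: float) -> Response:
    """Pick the candidate with the largest net energy."""
    return max(candidates, key=lambda r: net_energy(r, d))

rich_but_costly = Response("rich_but_costly", reward=10.0, flips=8)
lean = Response("lean", reward=8.0, flips=2)
print(choose([rich_but_costly, lean], d=0.5).label)  # lean (7.0 vs. 6.0)
print(choose([rich_but_costly, lean], d=0.1).label)  # rich_but_costly (9.2 vs. 7.8)
```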

A pool of N binary neurons admits 2^N configurations, so that, in principle, selecting a rewarding configuration for a particular world state can pose a problem whose complexity grows with the size of the pool (taking the number of options as the complexity measure). The problem is alleviated when choices are dictated by the world state itself (i.e., each stimulus in the composition of S_i excites particular neurons); otherwise, the pool needs to choose among 2^N options.

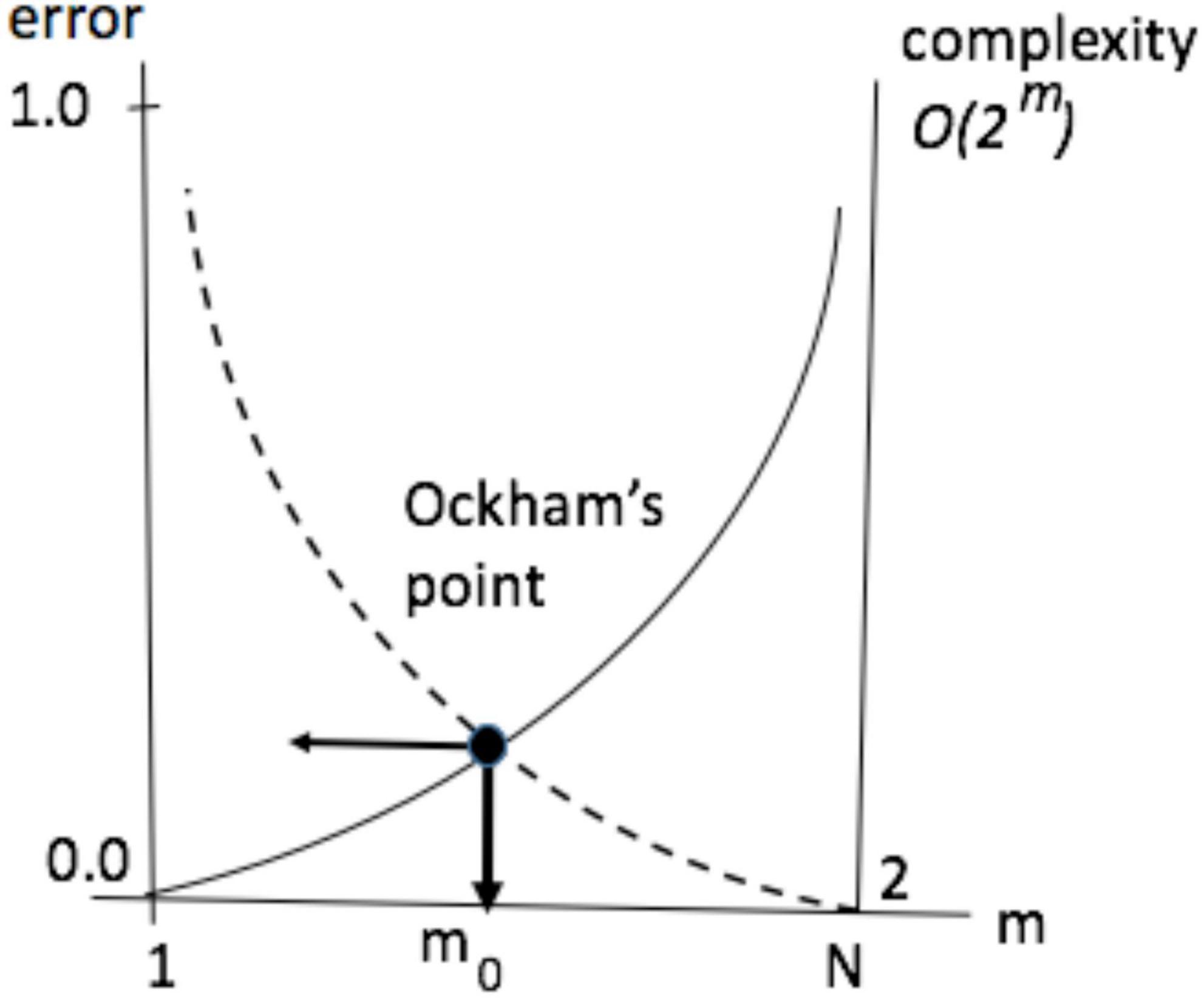

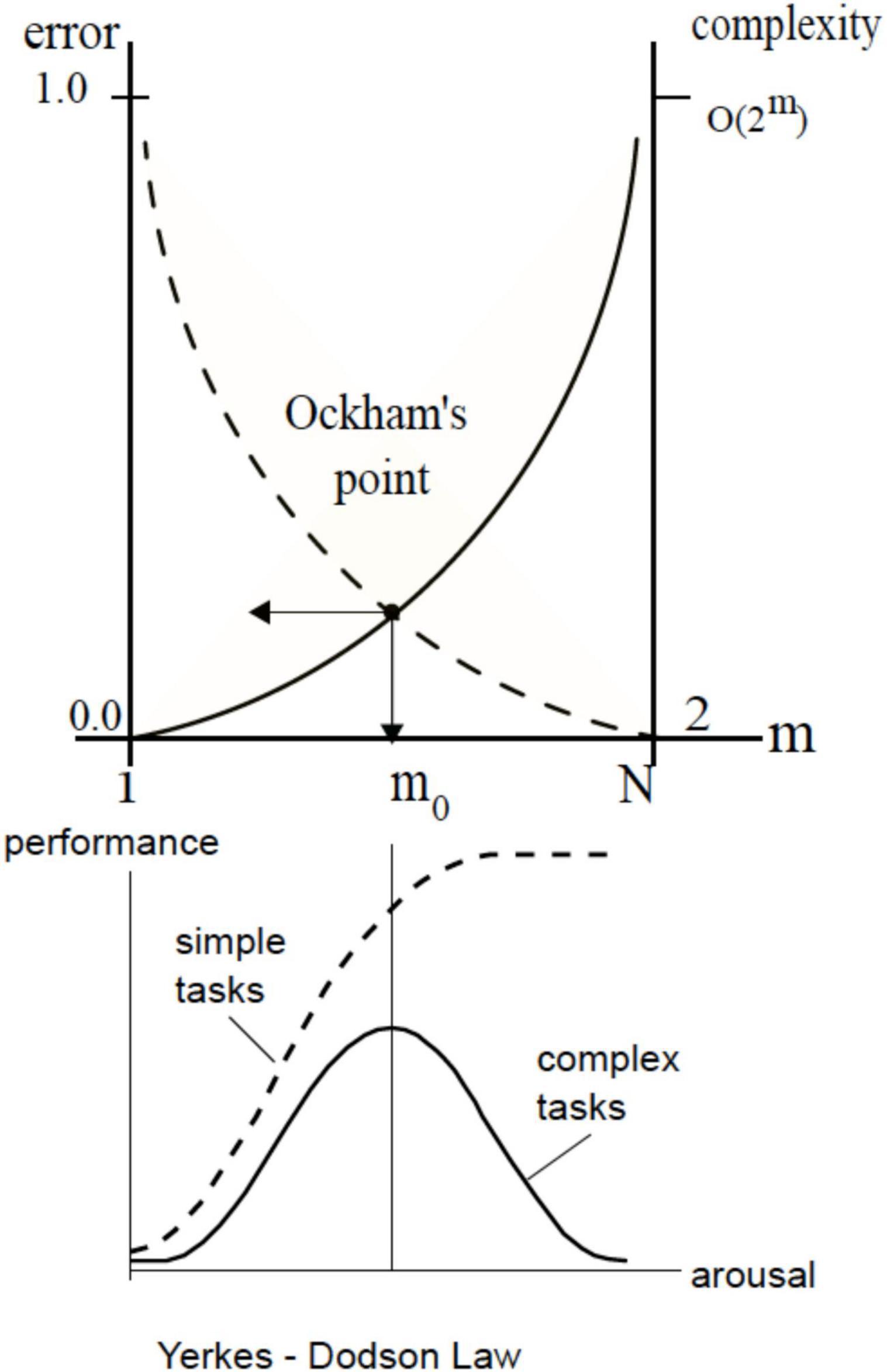

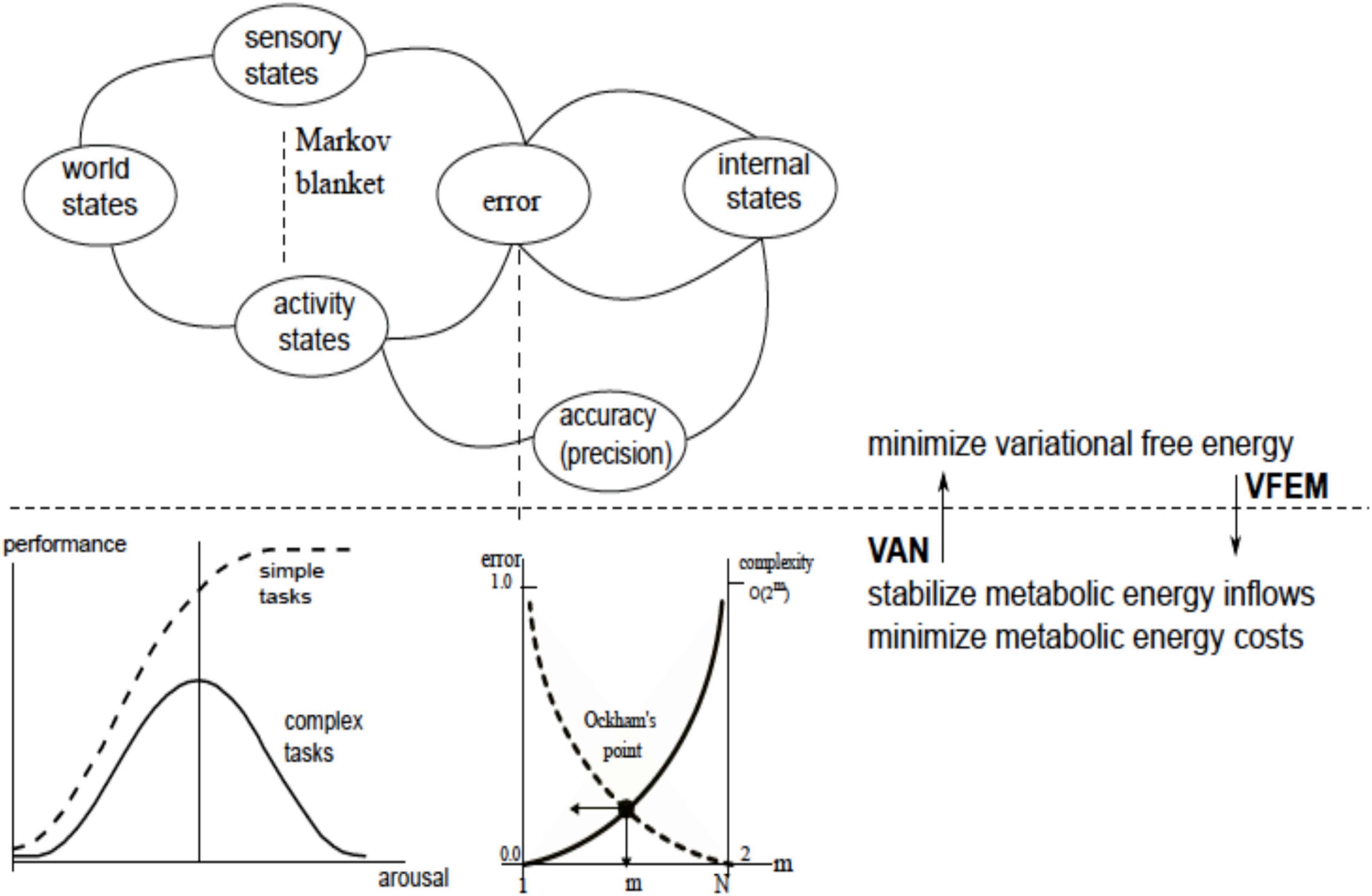

Assume that a mechanism exists to partition the pool into m groupings [call them neuronal assemblies (Hebb, 1949, 1980)] such that all the neurons in every group behave in unison. Such partitioning would offer more efficiency, reducing the number of choices from 2^N to 2^m. The remedy is radical because it not only puts a lid on complexity growth but causes complexity to decrease steeply with the size of the pool (e.g., partitioning a pool of 10 neurons into 5 groups yields a 2^5:1 reduction in the number of options, while having 5 groups in a pool of 100 neurons yields a 2^95:1 reduction). Complexity reduction translates into decision speedup (e.g., equating complexity with time complexity by assuming one choice per unit time) and internal energy savings. Indeed, complexity reduction can be regarded as underlying all (i.e., universal) computation, in the sense of algorithmic complexity and Solomonoff induction. The benefits of compression and complexity minimization come at a price: imploding complexity is accompanied by exploding error, as the loss of degrees of freedom precludes accurate prediction. This trade-off between accuracy and complexity is illustrated in the notional diagram in Figure 4 (error η_i is measured by the difference between the reward obtainable in the pool without partitioning and the reward yielded by m-partitioning).
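The option counts and reduction ratios quoted above follow from simple arithmetic, since each independently switchable unit doubles the option space. The function names below are illustrative only.

```python
def options(units: int) -> int:
    """Number of on/off configurations of `units` independently switchable units."""
    return 2 ** units

def reduction_ratio(n_neurons: int, m_groups: int) -> int:
    """Factor by which m-partitioning shrinks the option space: 2**N / 2**m = 2**(N - m)."""
    if not 1 <= m_groups <= n_neurons:
        raise ValueError("expected 1 <= m_groups <= n_neurons")
    return options(n_neurons) // options(m_groups)

print(reduction_ratio(10, 5) == 2 ** 5)    # True
print(reduction_ratio(100, 5) == 2 ** 95)  # True
# Assuming one choice per unit time, search time shrinks by the same factor.
```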

Figure 4. Self-partitioning in the neuronal pool radically affects the pool’s capacity to respond to world streams and involves trade-offs between time and accuracy, as a function of group size. The relationship is non-linear, creating long-tail areas where, on the one side, sacrificing speed (increasing the number of groups) produces no appreciable improvement in accuracy (“useless details”) and, on the other side, small speed gains produce rapidly increasing errors (“useless generalities”). A narrow inflection zone (Ockham’s point, or O-point) lies between the tail areas.

To illustrate, veering to the left of the O-point in the Airbus incident would be akin to CS receiving the advice “aviate, navigate, communicate” from the ground controllers, which is a paramount principle in aviation human factors (Wiener and Nagler, 1988) but hardly useful guidance under the circumstances, while veering to the right would be like offering a refresher course in aircraft aerodynamics. Depending on the task, the relative width of Ockham’s zone on the group-size axis can be very small, so the ability to stay within it (e.g., not going through emergency checklists, discontinuing communications, etc.) can make vital differences in performance outcomes. Put simply, there is a right level of “grouping” or “coarse graining” that provides the right balance between accuracy and complexity. Statistically speaking, this corresponds to maximizing marginal likelihood or model evidence.
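The accuracy-complexity trade-off can be imitated with a toy model, which is an assumption of this sketch rather than the authors’ formulation: a world state is a target on/off pattern over N neurons, the pool is cut into m contiguous groups that must fire in unison, error counts the neurons that then mismatch the target, and complexity is charged as a penalty times m (m being log2 of the 2^m options), a crude stand-in for the accuracy-minus-complexity form of model evidence. The minimizing m plays the role of the O-point.

```python
from typing import List, Sequence

def partition(n: int, m: int) -> List[range]:
    """Split neuron indices 0..n-1 into m contiguous, near-equal groups (1 <= m <= n)."""
    bounds = [k * n // m for k in range(m + 1)]
    return [range(bounds[k], bounds[k + 1]) for k in range(m)]

def unison_error(target: Sequence[int], m: int) -> int:
    """Fewest mismatched neurons when every group must take a single on/off value;
    the best value for each group is its majority bit."""
    error = 0
    for group in partition(len(target), m):
        ones = sum(target[i] for i in group)
        error += min(ones, len(group) - ones)
    return error

def o_point(target: Sequence[int], penalty: float) -> int:
    """Group count minimizing error + penalty * m (toy accuracy/complexity score)."""
    return min(range(1, len(target) + 1),
               key=lambda m: unison_error(target, m) + penalty * m)

state = [1, 1, 1, 1, 0, 0, 0, 0]  # hypothetical target firing pattern
print([unison_error(state, m) for m in range(1, 9)])  # [4, 0, 1, 0, 0, 0, 0, 0]
print(o_point(state, penalty=0.5))  # 2
print(o_point(state, penalty=5.0))  # 1: complexity so costly that a coarse model wins
```

Because the partitions for different m are not nested, error need not fall monotonically (m = 3 does worse than m = 2 here); raising the penalty pushes the minimum toward fewer, coarser groups.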

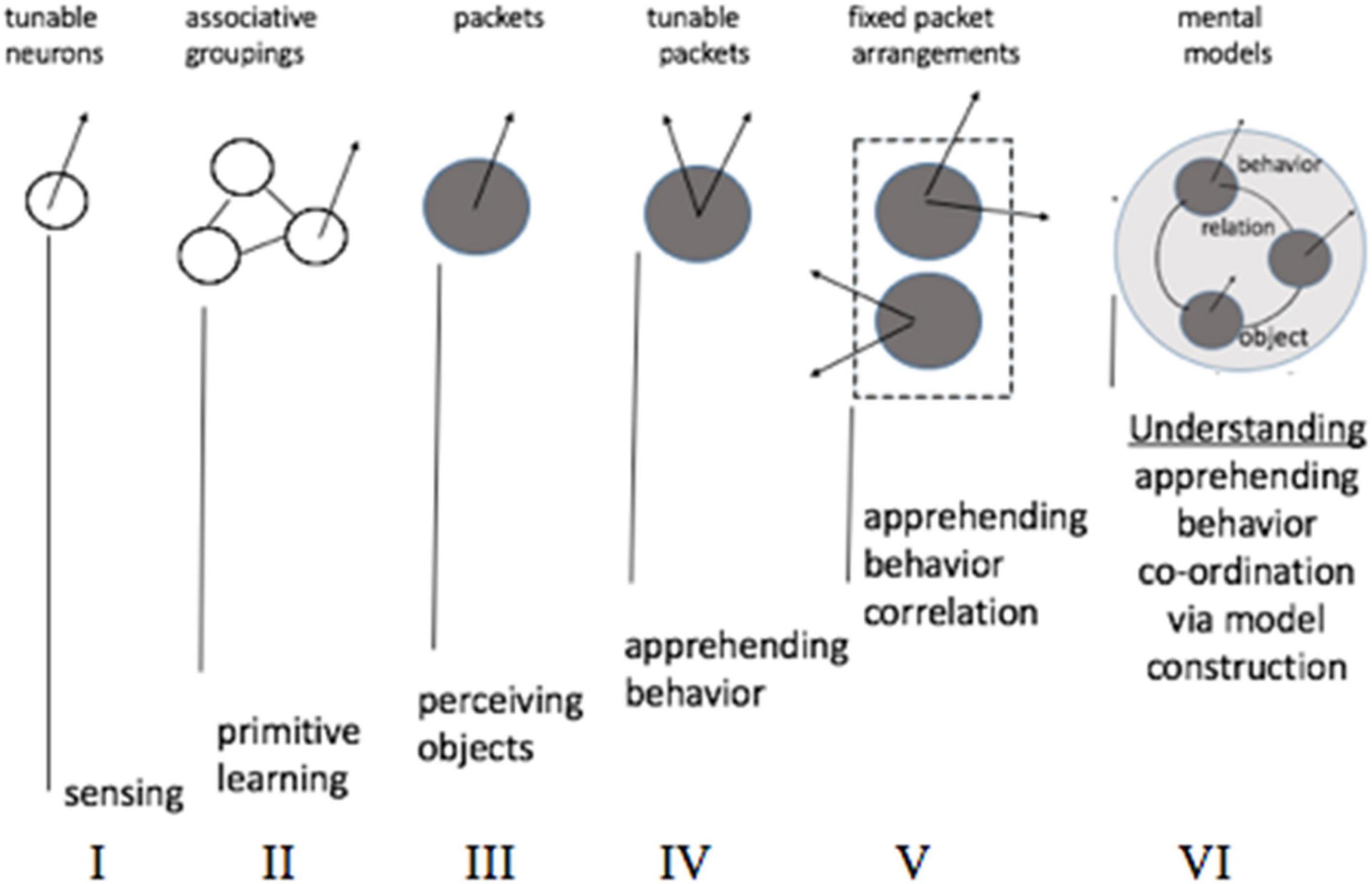

Arguably, the emergence of grouping mechanisms in the neuronal substrate was a major discovery in the evolution of biological intelligence (from sensing to understanding). Accordingly, the concept of neuronal assembly remains the single most revealing idea at the foundation of neuroscience (Hebb, 1949, 1980). Neuronal grouping opened new avenues for development, via fine-tuning and manipulation of the groups. Pursuing such adaptive improvements equates to bending curves and “pushing” Ockham’s point toward obtaining minimal error with the smallest number of groups (see Figure 3). It was subsequently argued that thermodynamics has been doing the “pushing” (Yufik, 1998, 2013); we will touch on that later.

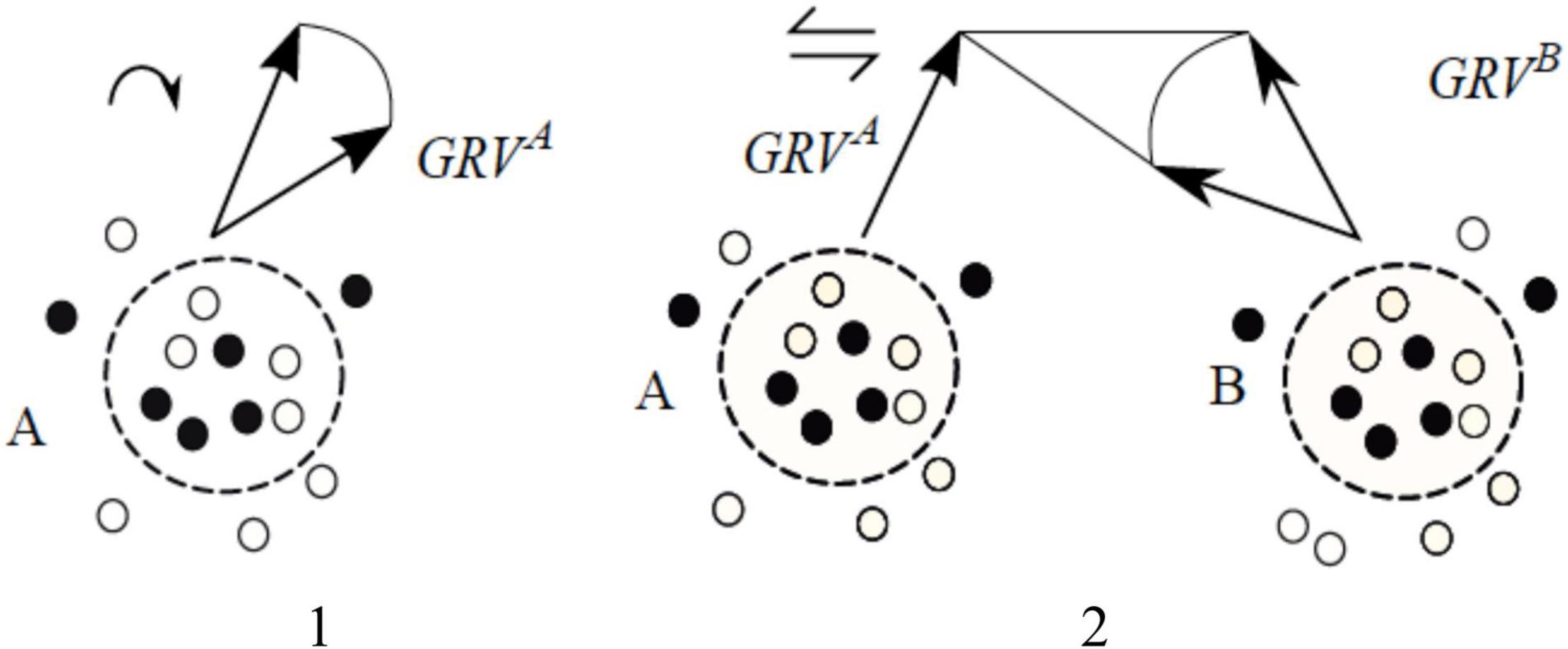

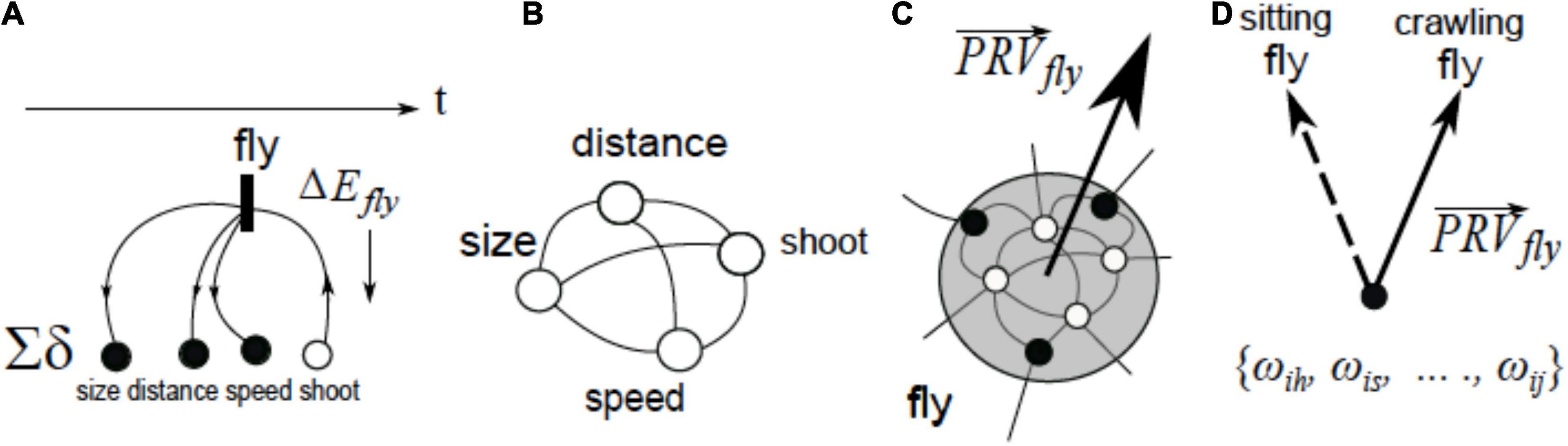

On-off decisions on neuronal groups can be dynamically nuanced to allow closer tracking of the world stream, first, by tuning receptive fields in individual neurons and, second, by varying the excitation–inhibition balance within each group. A convenient expression of that strategy can be obtained by summing the response vectors of all the participating neurons in a group to obtain a “group response vector” (GRV) and then characterizing activity variations inside a group as patterns in the movement of the GRV. Finally, mechanisms for inter-group coordination would develop on top of the mechanisms for controlling intra-group variations. Coordination involves mutual constraints, i.e., variations in one group can both trigger and limit the range of variations in another. Mutual constraints reduce the number of options, thus shifting the O-point down and to the left. Figure 5 depicts the progression from intra-group variation to inter-group coordination.
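How mutual constraints shrink the option space can be counted directly. In this sketch each group response vector takes one of a fixed number of discrete directions, and coordination is modeled, hypothetically, as B being admissible only within a few steps of A; both the discretization and the coupling rule are assumptions.

```python
def joint_options(positions: int, width: int) -> int:
    """Count admissible (A, B) pairs when GRV_A and GRV_B each take one of
    `positions` discrete directions and the (hypothetical) coupling rule lets B
    sit at most `width` steps, measured around the circle, from A."""
    count = 0
    for a in range(positions):
        for b in range(positions):
            gap = abs(a - b)
            if min(gap, positions - gap) <= width:
                count += 1
    return count

print(joint_options(12, 6))  # 144: no effective constraint, 12 * 12 pairs
print(joint_options(12, 1))  # 36: each position of A admits only 3 positions of B
```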

Figure 5. (1) Patterns of excitation-inhibition within groups can be varied, which can be expressed as rotation of group response vectors (symbol ↷ denotes the operation “rotation of group response vector”). (2) Movements of group response vectors can be coordinated: every position of GRV_A determines a range of admissible positions for GRV_B and, vice versa, movement of one vector causes repositioning of the other (i.e., thinking of changes in A brings to mind the corresponding changes in B).

One of the cornerstone findings in neuroscience revealed that movement control (e.g., extending a hand toward a target) involves rotation of response vectors in groups of motor neurons, as in Figure 5.1 (Georgopoulos and Massey, 1987; Georgopoulos et al., 1988, 1989, 1993). Accordingly, complex coordinated movements can involve coordinated rotation of group response vectors in synergistic structures in the motor cortex comprising multiple neuronal groups (Latash, 2008).
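The population-vector computation behind these findings can be sketched as follows, using one common formulation: cosine-tuned neurons, with the population vector obtained as the sum of preferred-direction unit vectors weighted by each neuron’s rate change from baseline. The neuron count, baseline, and gain are hypothetical values; changing the pattern of rates across the group is what rotates the vector.

```python
import math
from typing import List, Sequence

def cosine_rate(preferred: float, movement: float,
                baseline: float = 10.0, gain: float = 8.0) -> float:
    """Firing rate of a cosine-tuned neuron (radians; baseline and gain hypothetical)."""
    return baseline + gain * math.cos(movement - preferred)

def population_vector(preferred: Sequence[float], rates: Sequence[float],
                      baseline: float = 10.0) -> float:
    """Direction of the sum of preferred-direction unit vectors, each weighted
    by the neuron's rate change from baseline."""
    x = sum((r - baseline) * math.cos(p) for p, r in zip(preferred, rates))
    y = sum((r - baseline) * math.sin(p) for p, r in zip(preferred, rates))
    return math.atan2(y, x)

prefs: List[float] = [2 * math.pi * i / 8 for i in range(8)]  # 8 evenly spaced neurons
for target in (0.3, 1.0, 2.5):
    rates = [cosine_rate(p, target) for p in prefs]
    print(round(population_vector(prefs, rates), 6))  # prints 0.3, 1.0, 2.5 in turn
```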

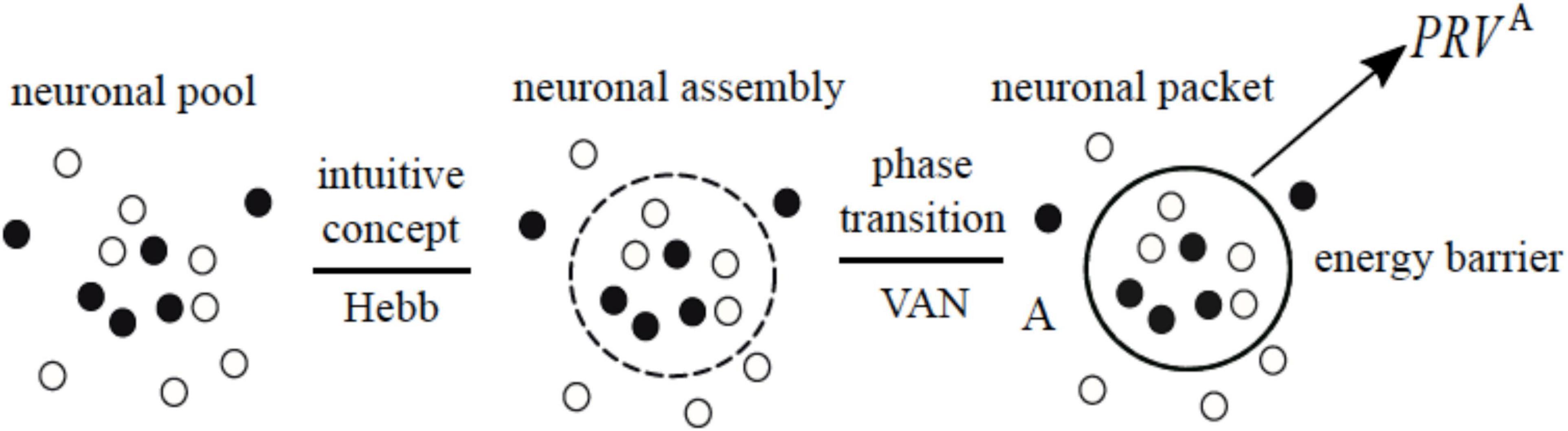

The following hypothesis is central to the VAN model: neuronal assemblies are formed as a result of phase transitions (Kozma et al., 2005; Berry et al., 2018) in associative networks, when tightly associated subnets become separated from their surroundings by energy barriers (cf. the formation of droplets in supersaturated vapor). The term “neuronal packet” was coined in Yufik (1998) to denote neuronal assemblies bounded by energy barriers. It can be argued that Hebb’s insight recognizing assemblies as functional units in the nervous system (as opposed to attributing this role to individual neurons) necessarily implied the existence of biophysical mechanisms that keep such assemblies together, separate them from the surrounding network, and make it possible to manipulate them without violating their integrity and separation. On that argument, the VAN theory only makes explicit what was already implied in the idea of neuronal assembly. Figure 6 elaborates this contention.

Figure 6. The idea of assembly expresses the notion that groups of tightly associated neurons form cohesive units distinct from their surroundings in the network (associative links are not shown). The notion of a neuronal packet expresses, in the most general terms, a mechanism for forming and stabilizing such units in a material substrate (i.e., phase transition and emergence of an energy barrier at the interface between the phases). PRV_A denotes “packet response vector.”

Associating boundary energy barriers with biological neuronal groups expresses a non-negotiable mandate: operations on such groups, including accessing the neurons inside, varying excitation–inhibition patterns in the groups, removing neurons from a group, etc., all involve work and thus require a focused energy supply to the group’s vicinity sufficient for performing that work. Multiple packets establish an energy landscape in the associative network, as shown in Figure 7.

Figure 7. Associative structures reside in continuous energy landscape. Coordinating objects A and B occupying different minima (A B) → (A ⇋B) requires repetitive climbing over the energy “hill” between the minima. Deformation in the landscape (lowering the “hill”) enables blending (A ⇋B) → (A ↭B), producing a structure where A and B remain distinct and, at the same time, capable of constraining each other’s behavior.

Packets are internally cohesive and externally weakly coupled (i.e., neurons in a packet are strongly connected with each other and weakly connected with the neurons in other packets). The cohesion/coupling ratio of a packet determines the depth of the energy “well” in which it resides: the deeper the well, the more stable the packet, which translates into reduced amounts of processing and a higher degree of subjective confidence when packet contents are matched against the stream [packets respond to correlated stimulus groupings, and the number of matches sufficient for confidently identifying the current input decreases as the cohesion/coupling ratio increases (Malhotra and Yufik, 1999)]. Changes in the landscape, as in Figure 6, result from changes in arousal accompanying changes in the subjective values (importance) attributed to the input (objects, situation): the higher the value attributed to an object, the deeper the corresponding well becomes (more on that shortly).
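One way to make the cohesion/coupling ratio concrete, assuming it is measured as the mean association weight inside a packet divided by the mean weight of the links leaving it (the text does not fix a formula), is sketched below on a hypothetical four-neuron network. The mapping from ratio to well depth is not modeled.

```python
from typing import Sequence, Set

def cohesion_coupling_ratio(weights: Sequence[Sequence[float]], packet: Set[int]) -> float:
    """Mean link weight inside `packet` divided by mean weight of links leaving it.
    `weights` is assumed to be a symmetric association matrix."""
    n = len(weights)
    inside = sorted(packet)
    outside = [j for j in range(n) if j not in packet]
    if len(inside) < 2 or not outside:
        raise ValueError("need >= 2 packet members and >= 1 outside node")
    intra = [weights[i][j] for k, i in enumerate(inside) for j in inside[k + 1:]]
    inter = [weights[i][j] for i in inside for j in outside]
    cohesion = sum(intra) / len(intra)
    coupling = sum(inter) / len(inter)
    return float("inf") if coupling == 0 else cohesion / coupling

# Hypothetical network: two tightly knit pairs {0, 1} and {2, 3}, weakly linked.
W = [
    [0.0, 0.9, 0.1, 0.1],
    [0.9, 0.0, 0.1, 0.1],
    [0.1, 0.1, 0.0, 0.9],
    [0.1, 0.1, 0.9, 0.0],
]
print(round(cohesion_coupling_ratio(W, {0, 1}), 6))  # 9.0
print(round(cohesion_coupling_ratio(W, {0, 2}), 6))  # 0.2: not a cohesive packet
```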

The notions of neuronal packets and energy landscape in Yufik (1996, 1998); Yufik and Sheridan (2002) anticipated experimental and theoretical investigations of cortical energy landscapes (Watanabe et al., 2014; Gu et al., 2017, 2018; Kang et al., 2019). However, packet energy barriers are amenable to direct experience, as was first intimated by William James in his classic “The Principles of Psychology” back in 1890, as follows. To access an item in memory, one must make attention

“linger over those which seem pertinent, and ignore the rest. Through this hovering of the attention in the neighborhood of the desired object, the accumulation of associates become so great that the combined tensions of their neural processes break through the bar, and the nervous wave pours into the track which has so long been awaiting its advent” (James, 1950/1890, v. 1, p. 586).

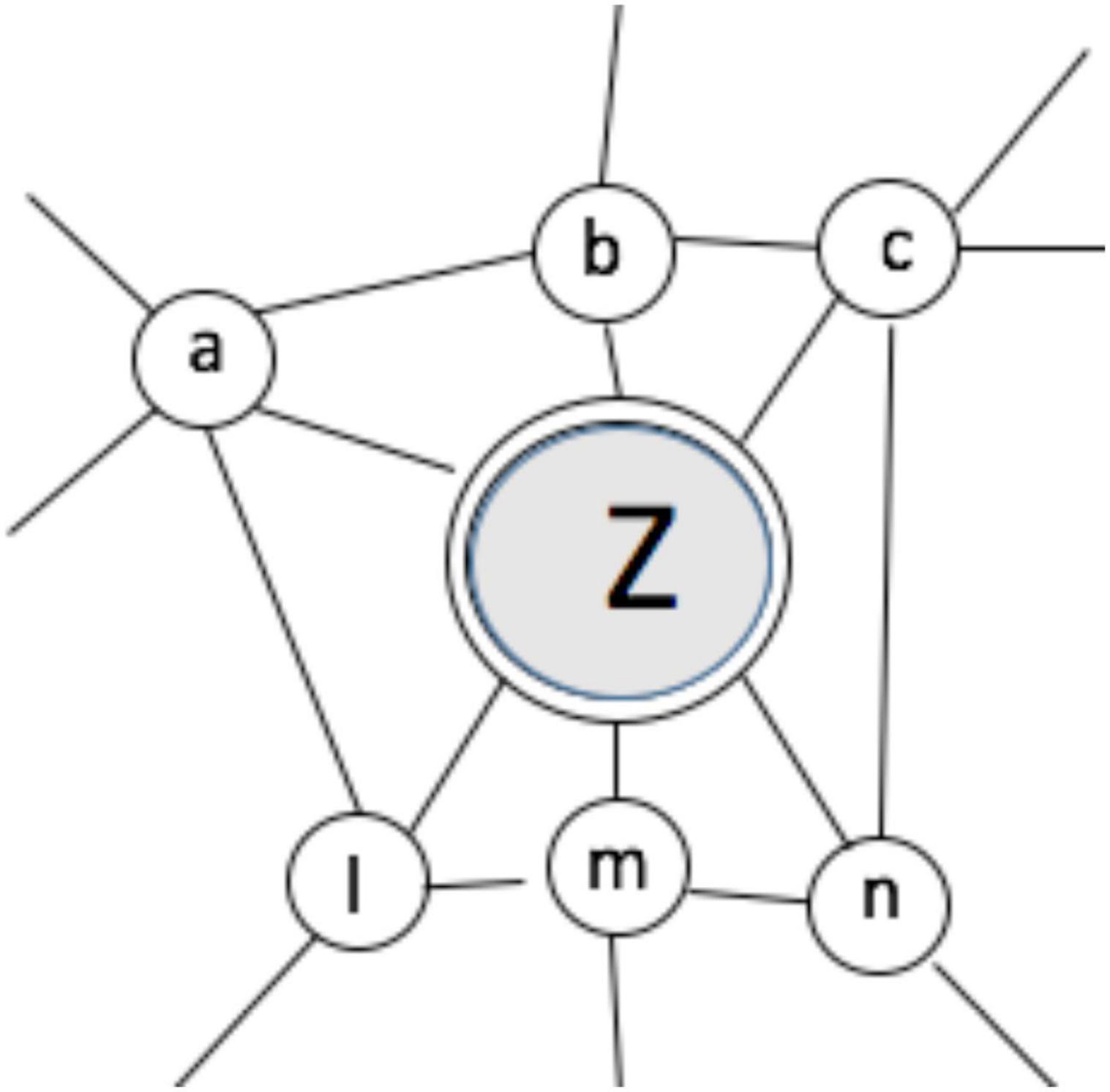

To appreciate the insightful metaphor “breaking through the bar,” think of desperately trying to recollect the name of an acquaintance that escaped you just as you were making an introduction. With striking insight and vividness (Figure 8), James describes the experience of mounting mental effort to access a packet’s internals from the surrounding associative structure:

Figure 8. Accessing the contents of packet Z requires sustained attention in the associative neighborhood until sufficient effort is mounted to overcome “resistance” (i.e., the boundary energy barrier) (adapted from James, 1950/1890, v. 1, p. 586).

“Call the forgotten thing Z, the first facts with which we felt it was related, a, b, c and the details finally operative in calling it up, l, m and n. …The activity in Z will at first be a mere tension, but as the activities in a, b and c little by little irradiate into l, m, n, and as all these processes are somehow connected with Z, their combined irradiation upon Z …succeed in helping the tension there to overcome the resistance, and in rousing Z to full activity” (James, 1950/1890, v. 1, p. 586).

Building on the notions in Figure 7, assume, first, that Z admits a number of distinguishable states, Z = {Z_1, Z_2, …, Z_k}; second, that another packet, Q = {Q_1, Q_2, …, Q_m}, exists somewhere in the associative network; and third, that attention alternates between varying the states of Z (Z_1 → Z_2 → … → Z_k) and those of Q (Q_1 → Q_2 → … → Q_m) (i.e., rotating packet vectors) until, finally, a particular form of coordination between the variation patterns is established (relation r), producing a coordinated relational structure Z r Q. With that, a model is formed expressing variations in the world stream in terms of objects, their behavior, and inter-object relations (more on that shortly). Transporting James’ vivid account into a modern context, “hovering of the attention” can be compared to burning fuel in a helicopter hovering over a particular spot, and inter-packet coordination is like keeping two helicopters airborne while they execute different but coordinated flight patterns. Finally, forcing changes in the landscape and establishing coordination, as in Figure 6, is analogous to letting the helicopters roll on the ground, connected by a rod that coordinates their moves. The following two suggestions restate these notions more precisely.

First, alternating between the packets is an effortful process critically dependent on the strength of “resistance” offered by the energy barrier: excessive height will make the packets mutually inaccessible while low barriers will make them less stable and thus disallow sustained and reproducible variations. In short, the process is contingent on maintaining a near-optimal height of energy barriers throughout the landscape, as suggested in Figure 6.

Second, establishing relations replaces effortful alternation between packets with effortless (automatic) “facilitation” (the term is due to Hebb, 1949). In other words, a rule “varied together, coordinated together” can be suggested as a complement to Hebb’s “fire together, wire together” rule, extending its application from neurons to packets. Facilitation underlies the experience in which thinking of changes in Z brings to mind the corresponding changes in Q, as in Figure 5.2. More generally, packets become organized (blended) into a model yielding the capacity to “have some feel for the character of the solution ….without actually solving the equations” (Feynman, see section “The Virtual Associative Network Theory of Mental Modeling”). Put differently, one becomes aware of the direction in which changes in one model component affect the behavior of the other components and of the entire composition, consistent with the insight expressed in Figure 1. Situational “feeling” is coextensive with reaching understanding and obtaining complexity reduction in the modeling process, on a scale ranging from small in simple situations to astronomical in complex ones.
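The suggested “varied together, coordinated together” rule can be sketched by analogy with Hebb’s rule for single neurons (Δw = η·x·y), replacing firing with packet state changes. The learning rate, the facilitation threshold, and the function names are hypothetical; this illustrates the idea and is not a model proposed in the text.

```python
from typing import Sequence

def coordinate_update(w: float, dz: Sequence[float], dq: Sequence[float],
                      eta: float = 0.1) -> float:
    """Toy packet-level analogue of Hebb's rule: strengthen the Z-Q link in
    proportion to how the packets' state changes co-vary over alternations."""
    return w + eta * sum(a * b for a, b in zip(dz, dq))

def facilitated_change(w: float, dz: float, threshold: float = 0.3) -> float:
    """Once the link exceeds a (hypothetical) threshold, a change in Z evokes a
    change in Q without effortful alternation; below it, nothing is evoked."""
    return w * dz if w >= threshold else 0.0

together = coordinate_update(0.0, [1, -1, 1, -1], [1, -1, 1, -1])   # strengthened link
unrelated = coordinate_update(0.0, [1, -1, 1, -1], [1, 1, -1, -1])  # no net co-variation
print(facilitated_change(together, 1.0) > 0)   # True: Z now brings Q to mind
print(facilitated_change(unrelated, 1.0))      # 0.0
```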

To appreciate the significance of this benefit, consider a rudimentary task, e.g., a chimpanzee connecting sticks and climbing on top of piled boxes to reach some fruit. Connecting sticks involves trying out different random variations until the proper coordination is encountered (Koehler, 1999). Connected sticks become a physical unit that can be physically coordinated with other units (i.e., carried to the top of the boxes), which is contingent on forming and coordinating the corresponding memory units (pairwise coordinations, i.e., stick1-fruit, stick2-fruit, box1-fruit, etc., might never amount to a solution). The ability to temporarily decouple mental operations from their motor-sensory expressions and to combine coordinated packets into stable functional units amenable to further coordination (that is, the ability to think and understand) separates humans from other species. Piaget articulated these notions convincingly, pointing to the “contrast between step-by-step material coordinations and co-instantaneous mental coordinations” and demonstrating in multiple experiments that “mental co-ordinations succeed in combining all the multifarious data and successive data into an overall, simultaneous picture which vastly multiplies their power of spatio-temporal extensions…” (Piaget, 1978, p. 218).

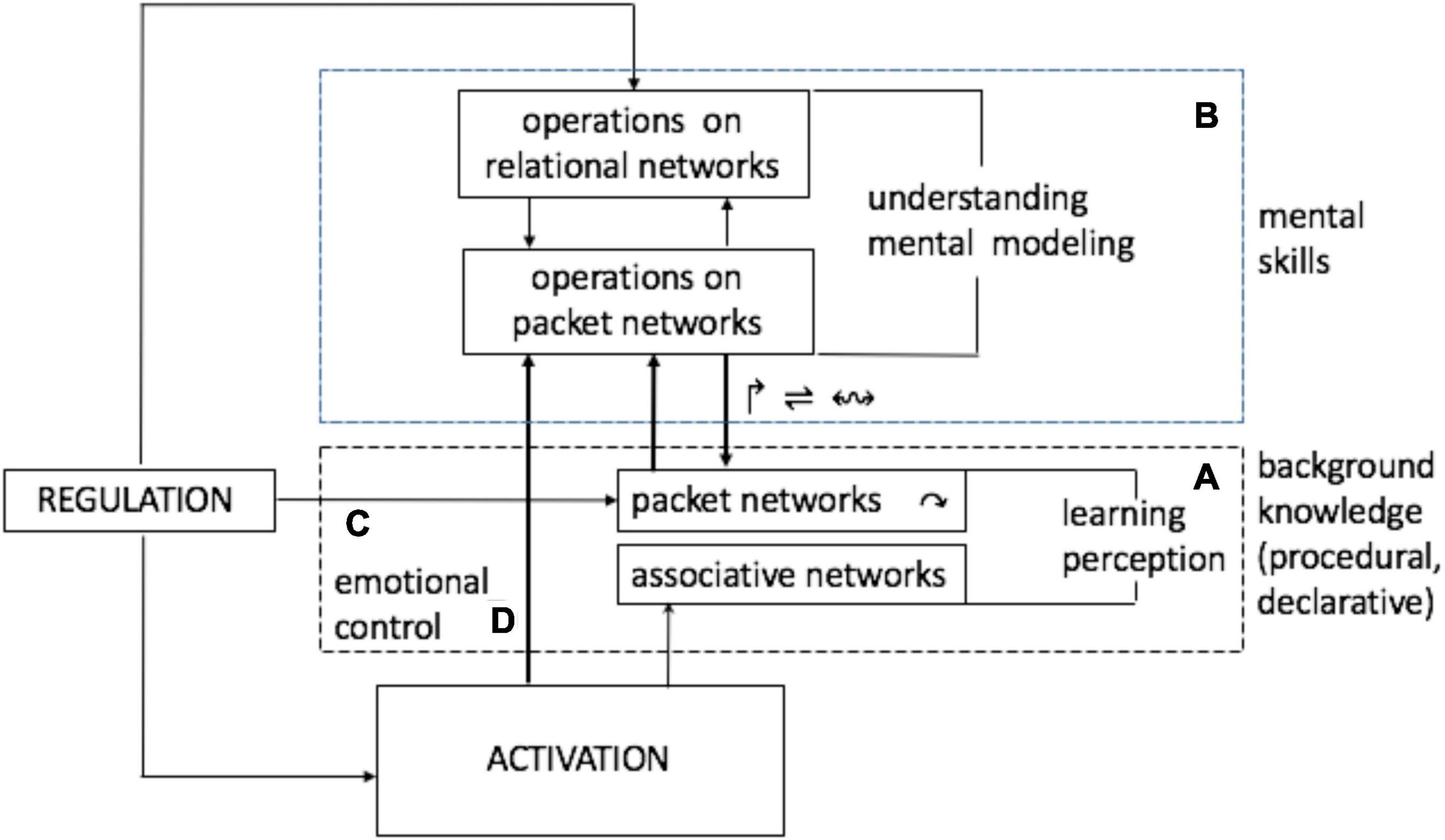

Figure 9 positions the mechanisms of packet manipulation in the tripartite brain architecture of Figure 2, superposed on the schema of Situational Understanding in Figure 3.

Figure 9. Architectures for understanding. This diagram represents cognition as a regulatory process that is directed at adapting (matching) behavior variations in the organism to condition variations in the world stream and is powered by energy inflows extracted from the stream. Organization in the system comprises different structures submitted to regulation [from tuning receptive fields in individual neurons (Fritz et al., 2003, 2005), to rotating packet vectors, to constructing and manipulating mental models], seeking to stabilize energy inflows while minimizing metabolic costs.

Two blocks are identified, denoting two classes of memory processes and operations: block A is shared across many species while block B is exclusively human, as follows. Block A limits memory processes to the formation of associative networks and packets, and allows for the rotation of packet vectors. Block B allows other operations leading to construction of mental models and operations on them.

Block A (block B is absent or underdeveloped) reflects cognitive capacities in non-humans, from simple organisms to advanced animals. Rudimentary forms of learning reduced to selective formation and strengthening of associative links are available in simple organisms (e.g., worms, frogs) and decorticated animals [e.g., rats having 99.8% of the cortex surgically removed (Oakley, 1981)]. Intact rodents occupy an intermediate position in the capacity ladder [learning involves formation of a few neuronal groups that get selectively re-combined depending on changes in the situation (Lin et al., 2006)]. Apes and some avians can learn to coordinate a few objects (link C).

Block B operates on the associative and packet network in block A while leaving the mosaic of associative links intact. Flexible neuronal “maneuvers” [fluid intelligence (Cattell, 1971, 1978)] underlie the management of competing goals and other executive functions (Mansouri et al., 2009, 2017) and involve selective re-combination of packets, producing a hierarchy of relational models (a hierarchy of flexible relational structures developing on top of an associative network is called a virtual associative network). Interactions between levels are bidirectional, with top-down processes selectively engaging lower levels, down to the deployment of sensorimotor resources, which can entail changes in the bottom associative network due to sensorimotor feedback (please see below).

Link D places energy distribution across the packet network under regulatory influence (volitional control), thus making it an integral part of a human cognitive system, as suggested in Figure 10.

Figure 10. The shape of the energy landscape is a function of interplay between arousal and value distribution across the packets (reflecting value distribution in the corresponding objects). Heightened arousal lowers energy barriers across the landscape enabling coordination of distant packets, as might be necessary for unfamiliar and complex (creative) tasks, while decreasing arousal elevates the barriers thus restricting coordination to proximal packets (which might suffice for simple and familiar tasks).

In the extreme, low barriers allow floods of irrelevant associations while high barriers confine attention to a few familiar associations. Accordingly, optimal arousal obtains optimal task space partitioning (m_0) yielding optimal performance.
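The effect of arousal on accessibility can be illustrated with a toy landscape in which arousal lowers every barrier by the same amount and a barrier can be crossed only if what remains does not exceed the available effort. The linear lowering rule, the effort budget, and the barrier heights are assumptions of this sketch.

```python
from collections import deque
from typing import Dict, List, Set, Tuple

Barrier = Tuple[str, str, float]  # (packet, packet, barrier height), hypothetical

def accessible(barriers: List[Barrier], start: str, arousal: float,
               effort: float = 1.0) -> Set[str]:
    """Packets reachable from `start` when arousal lowers every barrier by
    `arousal` and a barrier is crossable only if what remains <= `effort`."""
    neighbours: Dict[str, List[Tuple[str, float]]] = {}
    for a, b, height in barriers:
        neighbours.setdefault(a, []).append((b, height))
        neighbours.setdefault(b, []).append((a, height))
    seen = {start}
    queue = deque([start])
    while queue:
        here = queue.popleft()
        for there, height in neighbours.get(here, []):
            if there not in seen and height - arousal <= effort:
                seen.add(there)
                queue.append(there)
    return seen

landscape = [("A", "B", 1.0), ("B", "C", 3.0), ("A", "D", 5.0)]
for level in (0.0, 2.0, 4.0):
    print(level, sorted(accessible(landscape, "A", level)))
# 0.0 ['A', 'B']             fragmented access
# 2.0 ['A', 'B', 'C']
# 4.0 ['A', 'B', 'C', 'D']   flat, fully accessible landscape
```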

Arousal-induced changes in the landscape account for the levels of awareness, from vegetative wakefulness (flat landscape) to understanding-based awareness (optimal landscape, see Figure 1). The subjective experience of arousal varies from fear, stress, and anxiety at one end of the spectrum to excitement and exhilaration at the other. Accordingly, moving along the spectrum changes the topological characteristics of the energy landscape: from fragmented access (i.e., some areas are inaccessible) to the unrestricted accessibility of a flat surface. Stress-induced changes in landscape topology are likely to underlie the idea of “suppressed memories” treated in psychoanalysis: disturbing memories are not erased or degraded but become “walled off” behind high barriers, so access to them can be restored if the barriers are lowered. Treatment that concentrates on the associative neighborhood (see Figure 6), as in dream analysis, seems appropriate for that purpose. Methods of memory recovery have been disputed on the grounds that they might be as likely to conjure false memories as to restore access to lost ones (Loftus and Ketcham, 1996). However, creation and suppression are two sides of the same coin, i.e., the same mechanism that facilitates creative re-combination of memory structures can block access to some of them. Stress-induced landscape distortions can be responsible for other psychological symptoms, such as obsessive thoughts.

We will now return to the examples above, this time applying the notions of the VAN framework. The USS Stark incident and the Maginot catastrophe were not products of insufficient training or illogical reasoning but resulted from a failure of understanding, that is, the inability to form “mental co-ordinations … combining all the multifarious data and successive data into an overall, simultaneous picture” (Piaget, 1978, p. 218). Despite differences in circumstances, the nature of the cognitive deficiency was the same in both scenarios: an inability to overcome the resistance of elevated energy barriers, which resulted in fragmented (as opposed to simultaneous) “pictures.” On the VAN account, the captain’s mental model in the first scenario comprised two uncoordinated packets: A = the ship and objects relevant to the ship, and B = all other objects. A highly valued but erroneous AWACS classification placed the Iraqi jet in the second group, and the captain’s mental skills did not allow crossing the A | B barrier and coordinating members of B with members of A. In the second scenario, the mental model of the high command comprised A = the fortifications and the defended area in front of them, and B = the adjacent areas and the objects in them, separated by an energy barrier that turned out to be insurmountable due to the overvalued significance of past experience. The French high command, as a collective entity, demonstrated a low level of self-control under the fear and anxiety brought about by the anticipated German attack, which caused them to fall back on past tactics and made them “fanatically uninterested” in deviating from them.

By contrast, the high degree of self-control (“ability to operate effectively under pressure, self-control in hazardous situations,” Suhir, 2013) demonstrated in the Airbus incident made it possible to suppress fear and bring arousal to a level enabling situational understanding, manifested in overcoming the inertia of training and customary practices (regulations, authority of the ground control, etc.), “feeling” the appropriate course of action, and making decisions at a substantive level (“we can’t do it,” “we’re gonna be in the Hudson”). The well-coordinated mental model regulated subsequent activities in a top-down fashion, selectively engaging the skills and knowledge in the pilot’s background repertoire as necessary for coordinating the flight pattern with the river’s characteristics to enable a safe ditching. Figure 11 depicts the succession of mental operations.

Figure 11. Here A - aircraft, B - New Jersey airport, C - Hudson River. Mental operations are accompanied by imagery and remain decoupled from the motor-sensory feedback until, following grasp, the motor-sensory system gets engaged.

Figure 10 underscores that mental models are regulatory structures that, besides supplying “pictures,” control their own execution via dynamic coordination of various data streams in the motor-sensory loop, which is completed via environmental feedback [sensory streams include visual input (e.g., river shape), motor-kinesthetic input, etc.]. Execution is accompanied by a feeling of confidence in reaching the objective (e.g., safe ditching) that varies with the degree of correspondence between the envisioned outcomes of control actions and the actually observed ones. Technically, grasp can be said to establish a functional on the space of packet vectors that returns confidence values for different patterns of inter-packet coordination. The behavior of the functional depends on the vector space topology, i.e., on accessibility between packets.

Following grasp, repeated successful exercise of a newly formed model stabilizes it, which is captured, to an extent, in the concept of a frame (schema, script, etc.), defined as a fixed memory arrangement composed of components (slots) with variable contents (e.g., the script of visiting a restaurant comprises the slots “entering,” “being seated,” “studying menu,” etc.; Schank and Abelson, 1977; Norman, 1988). A few comments on the frame idea are offered in the discussion section.
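The frame idea maps naturally onto a record with a fixed set of slots and variable fillers. The class below is a hypothetical sketch of that data structure using the restaurant-script slots mentioned above; it is not taken from Schank and Abelson’s own formalism.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Tuple

@dataclass
class Frame:
    """A frame/script: a fixed arrangement of slots whose contents vary."""
    name: str
    slots: Tuple[str, ...]
    fillers: Dict[str, str] = field(default_factory=dict)

    def fill(self, slot: str, value: str) -> None:
        if slot not in self.slots:  # the arrangement itself is fixed
            raise KeyError(f"{self.name!r} has no slot {slot!r}")
        self.fillers[slot] = value

    def unfilled(self) -> List[str]:
        """Slots still awaiting content, in their fixed order."""
        return [s for s in self.slots if s not in self.fillers]

restaurant = Frame("visiting a restaurant", ("entering", "being seated", "studying menu"))
restaurant.fill("entering", "walk in, wait to be greeted")  # hypothetical filler
print(restaurant.unfilled())  # ['being seated', 'studying menu']
```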

Since the VAN theory pivots on the notion of energy efficiency in the brain, a brief excursion into that subject is in order. The notion that the neuronal system optimizes energy processes (Yufik, 1998, 2013; Yufik and Sheridan, 2002) is consistent with later theoretical proposals (e.g., Niven and Laughlin, 2008; Vergara et al., 2019; Pepperell, 2018, 2020) and an increasing number of experimental findings (the discussion section offers a brief review of some data). To appreciate the sources of energy efficiency inherent in the VAN concept, consider the following. In an associative network, excitation in any node or group of nodes can propagate throughout the entire network. By contrast, propagation of excitations induced within a packet is obstructed by boundary energy barriers (i.e., crossing a barrier incurs energy costs). Moreover, seeking further energy savings drives the system toward constraining intra-packet activities to packet subsets and, when crossing the barriers, toward engaging only packets amenable to mutual coordination. In this way, the formation of mental models comprising entities (packets), behavior (transitions between intra-packet activity patterns), and relations (inter-packet coordination) expresses the dual tendency to increase the efficacy of action plans (enabled by situational understanding) while decreasing the costs of such planning. A reference to neuronal processes that might be responsible for some of these phenomena will conclude this section.
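The energy argument can be illustrated by counting excited nodes, assuming a constant cost d per excitation as in the pool model. In the sketch, activity spreads breadth-first along associative links; when barrier crossing is not paid for, links between packets are not followed. The network, the packet labels, and d are hypothetical, and the extra cost of actually crossing a barrier is left out.

```python
from collections import deque
from typing import Dict, List, Set

def spread(adjacency: Dict[int, List[int]], packet_of: Dict[int, str], seed: int,
           cross_barriers: bool) -> Set[int]:
    """Nodes excited by activity starting at `seed`. Without packets (or when
    barrier crossing is paid for) activity follows every link; otherwise links
    between different packets are blocked."""
    excited = {seed}
    queue = deque([seed])
    while queue:
        node = queue.popleft()
        for nxt in adjacency.get(node, []):
            if nxt in excited:
                continue
            if packet_of[nxt] != packet_of[node] and not cross_barriers:
                continue  # boundary barrier blocks propagation
            excited.add(nxt)
            queue.append(nxt)
    return excited

chain = {0: [1], 1: [0, 2], 2: [1, 3], 3: [2, 4], 4: [3, 5], 5: [4]}
packets = {i: ("P" if i < 3 else "Q") for i in range(6)}  # hypothetical packets
d = 1.0  # energy per excitation, as in the pool model
for crossing in (True, False):
    print(crossing, d * len(spread(chain, packets, 0, crossing)))
# True 6.0   activity floods the whole network
# False 3.0  activity stays inside packet P
```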

Interaction between neuronal cells is mediated by several types of substances, including neurotransmitters and neuromodulators. Neurotransmitters act strictly locally, i.e., they are released by a pre-synaptic neuron and facilitate (or inhibit) the generation of action potentials in a single post-synaptic target. By contrast, neuromodulators act diffusely, i.e., they are released into a neighborhood rather than at a specific synapse and affect the population of neurons in that neighborhood that possess a particular receptor type (metabotropic receptors). Neuromodulators control the amount of neurotransmitter synthesized and released by the neurons, thus allowing up- or down-regulation of interaction intensity. Neurotransmitters act through fast-acting receptors, whereas metabotropic receptors are slow-acting receptors that modulate the functioning of the neuron over longer periods (Avery and Krichmar, 2017; Pedrosa and Clopath, 2017). Neuromodulators were found to provide emotional content to sensory inputs, such as feelings of risk, reward, novelty, effort and, perhaps, other feelings in the arousal spectrum (Nadim and Bucher, 2014). It can be suggested that James’ vivid depiction of “hovering of the attention in the neighborhood of the desired object” provides an accurate introspective account of the work invested in regulating neuromodulator concentration and neurotransmitter production at the packet boundary, which amounts to lowering the energy barrier until “the combined tensions of neural processes break through the bar” (James, 1950/1890, v. 1, p. 586). Since neuromodulators are slow acting, the packet remains accessible for a period of time sufficient for the task at hand.

To summarize, psychology usually treats awareness as a necessary but insufficient prerequisite for reaching understanding (e.g., one can be fully aware of all the pieces and their positions on the chessboard yet fail to understand the situation). According to the present theory, predicating situation awareness on situation understanding, as in Figure 1, refers to understanding-based awareness (see section “Levels of Awareness”) and expresses a keen insight consistent with one of the key assertions of the VAN theory: the experience of attaining understanding accompanies the emergence of a synergistic (coherent and cohesive) mental model, and simulating (envisioning) possible actions on particular elements of such a model generates awareness of the constraints on, and likely consequences of, those actions in the other elements throughout the model (hence, situation awareness).

The Variational Free Energy Minimization (VFEM) principle offers a “rough guide to the brain” (Friston, 2009) and extends to any biological system, from single-cell organisms to social networks (Friston, 2010). The central tenets of VFEM come from the realization that any living system must resist tendencies toward disorder, including those emanating from the environment, while obtaining the means for that resistance from the same environment:

“The motivation for the free-energy principle … rests upon the fact that self-organizing biological agents resist a tendency to disorder and therefore minimize the entropy of their sensory states” (Friston, 2010, p. 293).

The success or failure of this enterprise depends on the system’s ability to adapt by forming models of the world that are used to predict forthcoming conditions. The VFEM principle expresses this insight in information-theoretic terms, via the notion of variational free energy, defined as follows:

“Free-energy is an information theory quantity that bounds the evidence for a model of data … Here, the data are sensory inputs and the model is encoded by the brain. ….. In fact, under simplifying assumptions…it is just the amount of prediction error” (Friston, 2010, p. 293).

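Technically, variational free energy F is defined as the surprise (or self-information), −ln p(y | m), associated with observation y under model m, plus a Kullback-Leibler divergence D_KL (a relative entropy, which quantifies the distinguishability of two probability distributions) between the approximate (recognition) density q(ϑ) that the brain maintains over the hidden causes ϑ of its sensations and the true posterior density over those causes:

$$F = -\ln p(y \mid m) + D_{KL}\left[\, q(\vartheta) \,\|\, p(\vartheta \mid y, m) \,\right]$$

Because the divergence is never negative, F is an upper bound on surprise. In the informal reading adopted here, the divergence term stands for the mismatch between the expected and the actual observations, i.e., the prediction error under model m.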
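The identity behind this definition, that F = Σ_ϑ q(ϑ)[ln q(ϑ) − ln p(y, ϑ)] equals surprise −ln p(y) plus D_KL[q(ϑ) ‖ p(ϑ | y)], can be checked numerically. This is a minimal sketch assuming a discrete hidden cause with two states and made-up probabilities; the helper names `free_energy` and `kl` are hypothetical.

```python
import math


def kl(q, p):
    """Kullback-Leibler divergence D_KL[q || p] for discrete distributions."""
    return sum(qi * math.log(qi / pi) for qi, pi in zip(q, p) if qi > 0)


def free_energy(q, prior, likelihood_y):
    """F = sum_theta q(theta) * [ln q(theta) - ln p(y, theta)], with p(y, theta) = p(y|theta) p(theta)."""
    return sum(qi * (math.log(qi) - math.log(li * pi))
               for qi, pi, li in zip(q, prior, likelihood_y) if qi > 0)


prior = [0.7, 0.3]          # p(theta) over two hypothetical hidden causes
likelihood_y = [0.2, 0.9]   # p(y | theta) for the single observation y received
evidence = sum(l * p for l, p in zip(likelihood_y, prior))           # p(y | m)
posterior = [l * p / evidence for l, p in zip(likelihood_y, prior)]  # p(theta | y, m)
q = [0.5, 0.5]              # the (approximate) recognition density

surprise = -math.log(evidence)
F = free_energy(q, prior, likelihood_y)
print(f"surprise = {surprise:.4f}, D_KL = {kl(q, posterior):.4f}, F = {F:.4f}")
```

When q equals the true posterior the divergence vanishes and F collapses to surprise; any other q makes F strictly larger, which is why minimizing F both improves the recognition density and bounds surprise.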

This section adopts the simplifying assumptions and equates variational free energy to prediction error. The VFEM principle conceptualizes minimization of prediction error as a causal factor guiding interaction with the environment, as follows:

“We are open systems in exchange with the environment; the environment acts on us to produce sensory impressions and we act on the environment to change its states. This exchange rests upon sensory and effector organs (like photoreceptors and oculomotor muscles). If we change the environment or our relationship to it, sensory input changes. Therefore, action can reduce free-energy (i.e., prediction errors) by changing sensory input, whereas perception reduces free-energy by changing predictions” (Friston, 2010, p. 295).

Adaptive capacities culminate in the ability to adjust accuracy, or precision, to optimally match the amplitude of prediction errors, as follows:

“Conceptually, precision is a key determinant of free energy minimization and the enabling – or activation – of prediction errors. In other words, precision determines which prediction errors are selected and, ultimately, how we represent the world and our actions upon it. ….it is evident that there are three ways to reduce free energy or prediction error. First, one can act to change sensations, so they match predictions (i.e., action). Second, one can change internal representations to produce a better prediction (i.e., perception). Finally, one can adjust the precision to optimally match the amplitude of prediction errors” (Solms and Friston, 2018).
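The three routes named in this passage (action, perception, precision adjustment) can be made concrete under a common simplifying assumption: a single Gaussian observation y with prediction μ and precision π, for which free energy reduces to ½(π·ε² − ln π + ln 2π) with prediction error ε = y − μ. The function name and all numbers below are illustrative assumptions, not taken from Solms and Friston (2018).

```python
import math


def gaussian_free_energy(y, mu, precision):
    """Free energy of one observation under a Gaussian model (simplifying assumption):
    F = 0.5 * (precision * (y - mu)**2 - ln(precision) + ln(2*pi)),
    i.e. the surprise -ln N(y; mu, 1/precision) with the prediction mu held fixed.
    """
    error = y - mu
    return 0.5 * (precision * error ** 2 - math.log(precision) + math.log(2 * math.pi))


y, mu, precision = 3.0, 1.0, 1.0
baseline = gaussian_free_energy(y, mu, precision)

# 1. Action: change the sensation so that it moves toward the prediction.
after_action = gaussian_free_energy(2.0, mu, precision)
# 2. Perception: change the prediction so that it moves toward the sensation.
after_perception = gaussian_free_energy(y, 2.5, precision)
# 3. Precision: for a fixed error e, dF/dprecision = 0.5 * (e**2 - 1/precision) = 0
#    gives the optimal precision 1 / e**2.
error = y - mu
after_precision = gaussian_free_energy(y, mu, 1.0 / error ** 2)

for label, value in [("baseline", baseline), ("action", after_action),
                     ("perception", after_perception), ("precision", after_precision)]:
    print(f"{label:>10}: F = {value:.3f}")
```

Each route lowers F relative to the baseline. Note that the precision route does not shrink the error itself; it rescales how much the error counts, which is the sense in which precision "determines which prediction errors are selected."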

The VAN theory instantiates the VFEM principle for the human brain, identifying understanding with a particular strategy for prediction error reduction and a particular form of precision adjustment. In this way, the VAN theory proposes several substantive contributions to the VFEM framework, including the following. First, the VFEM principle envisions changing actions to change sensations, and changing internal representations to change perceptions; the VAN theory envisions, in addition, changes in the internal models to produce and change understanding. Second, “the motivation for the free-energy principle …. rests upon the fact that self-organizing biological agents resist a tendency to disorder and therefore minimize the entropy of their sensory states” (Friston, 2010, p. 293). The VAN theory postulates that self-directed construction of mental models constitutes a form of self-organization in the brain that reduces the entropy of its internal states (Yufik, 2013, 2019) (more on this important point in the next section). Third, according to VFEM, error minimization brings about the minimization of energy consumption in the brain. By contrast, VAN attributes ontological primacy to energy processes and derives error reduction from the pressure to reduce energy consumption.

Technically, VAN and VFEM share the same commitment to finding the right balance between accuracy and complexity, i.e., the right kind of grouping or coarse graining, in conformity with Occam’s principle. This follows because variational free energy bounds the log marginal likelihood, or model evidence (i.e., negative surprise or self-information): negative free energy is a lower bound on log evidence and, as noted above, this bound can always be decomposed into accuracy and complexity. This means that the energy landscapes above map gracefully onto the variational free energy landscapes that attend the free energy principle. The link between the informational imperative of minimizing prediction errors and the thermodynamic imperative of efficient processing rests upon the complexity cost, which can be expressed as a thermodynamic cost (via the Jarzynski equality). An example will illustrate the underlying notion of efficiency from both a statistical and a thermodynamic perspective:
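The accuracy/complexity decomposition can be verified on a toy discrete model: complexity is D_KL[q(ϑ) ‖ p(ϑ)], how far beliefs move away from the prior, and accuracy is the expected log-likelihood E_q[ln p(y | ϑ)]. Free energy is complexity minus accuracy, and it equals surprise plus the posterior divergence. This is a minimal sketch assuming two hidden states and made-up probabilities; the helper `kl` is hypothetical.

```python
import math


def kl(q, p):
    """Kullback-Leibler divergence D_KL[q || p] for discrete distributions."""
    return sum(qi * math.log(qi / pi) for qi, pi in zip(q, p) if qi > 0)


prior = [0.7, 0.3]          # p(theta), illustrative values
likelihood_y = [0.2, 0.9]   # p(y | theta) for the observed y, illustrative values
q = [0.4, 0.6]              # approximate posterior (recognition density)

complexity = kl(q, prior)                                           # cost of departing from the prior
accuracy = sum(qi * math.log(li) for qi, li in zip(q, likelihood_y))  # expected log-likelihood
F = complexity - accuracy

# Cross-check against the "surprise + posterior divergence" form of F.
evidence = sum(l * p for l, p in zip(likelihood_y, prior))
posterior = [l * p / evidence for l, p in zip(likelihood_y, prior)]
print(f"complexity = {complexity:.4f}, accuracy = {accuracy:.4f}, F = {F:.4f}")
print(f"-ln p(y) + D_KL[q || posterior] = {-math.log(evidence) + kl(q, posterior):.4f}")
```

A model that explains the data with beliefs close to its prior pays little complexity, which is the Occam-style pressure toward coarse graining referred to in the text.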

Consider a frog trying to catch flies and getting disappointed by the results (too many misses). To secure a better energy supply, the frog can start shooting its tongue faster, more often, etc. If the hit/miss ratio does not improve and the frog keeps shooting its tongue in vain, it will soon sense the amplitude of prediction error unambiguously, by dying from exhaustion. Suppose that neuronal mechanisms emerge that improve the score by improving sensory-motor coordination. In principle, this line of improvement could continue indefinitely, making the frog a progressively more sophisticated hunter, except that the mechanisms may require more neurons engaged in more intense activities, resulting in increasing energy demands that can outweigh increases in intake (besides, there are obvious physiological and physical limits on brain size: neurons cannot become smaller, nor can the underlying chemical processes run faster).
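The energy trade-off in the frog example can be made concrete with a toy calculation. Everything here is a hypothetical assumption for illustration: the saturating hit-rate curve, the per-shot and per-neuron costs, and the function name `net_energy`. The point is only qualitative: if better coordination requires more neurons, net energy peaks at a finite neuronal pool and then declines.

```python
import math


def net_energy(neurons, shots=100, fly_energy=1.0, shot_cost=0.2,
               neuron_cost=0.05, scale=200.0):
    """Net energy for a hypothetical frog: intake from hits minus hunting and neural costs.

    All parameters and the saturating hit-rate curve are illustrative assumptions.
    """
    hit_rate = 1.0 - math.exp(-neurons / scale)   # coordination improves, with diminishing returns
    intake = shots * hit_rate * fly_energy
    return intake - shots * shot_cost - neurons * neuron_cost


best = max(range(0, 2001, 10), key=net_energy)
print(f"no coordination: {net_energy(0):.2f}")
print(f"best pool size: {best} neurons, net {net_energy(best):.2f}")
print(f"oversized pool: 2000 neurons, net {net_energy(2000):.2f}")
```

Without coordination the frog runs an energy deficit (it shoots in vain); a moderate pool yields a surplus; a much larger pool hits almost every fly yet runs a deficit again, because neural costs grow linearly while intake saturates.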

Consequently, radical improvements in behavior are predicated on discovering mechanisms that deliver them without increasing the size of the neuronal pool and/or neuronal activity, that is, without increasing internal energy consumption or, better yet, while yielding energy savings. The point is that such mechanisms might or might not emerge, and error reduction is a consequence of their development, rather than such mechanisms being a guaranteed accompaniment of error reduction. With these caveats, Figure 12 suggests a straightforward integration of the VAN model into the VFEM framework.

Figure 12. VAN theory instantiates the VFEM framework by accounting for the error optimization mechanisms underlying human understanding. The part of the figure above the horizontal dotted line depicts a VFEM construct in which adaptive interaction between world states and the brain (internal states) is conducted via the motor-sensory loop and pivots on the mechanisms of error minimization and precision adjustment (adapted from Solms and Friston, 2018). The part below the horizontal dotted line summarizes the VAN approach, which derives error optimization from capabilities inherent in self-directed packet manipulation (see Figure 9). Vertical dotted lines suggest the mapping between VFEM and VAN constructs. The sensory and activity states constitute a Markov blanket that shields internal states from world states and, at the same time, mediates interaction between them.

This section illustrates the function of machine situational understanding and discusses approaches toward its implementation.

A machine can be said to possess situational understanding to the extent it can:

(a) accept a task definition from the operator expressed in substantive terms,

(b) evaluate a novel, unfamiliar situation and develop a course of action consistent with the task and situational constraints (the available time, data sources, etc.) and

(c) communicate its decisions and their reasons to the operator in substantive terms.

In other words, a decision aid is credited with a degree of situational understanding if the operator feels that the machine's input contributes to his/her situational awareness and can be trusted sufficiently to adjust his/her own situational understanding and to act on machine advice. In the VAN framework, substantive expressions address objects (entities), their behavior, and forms of behavior coordination (relations). The same three examples will illustrate these suggestions.
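Capabilities (a) to (c), together with the entity/behavior/relation vocabulary, can be read as an interface contract for a decision aid. The sketch below is purely hypothetical: the names `Relation`, `CourseOfAction`, `SituationalUnderstanding`, and `ToyAid` are ours, and the toy implementation only shows the shape of the interaction, not any method for actually achieving understanding.

```python
from dataclasses import dataclass, field
from typing import Protocol, runtime_checkable


@dataclass(frozen=True)
class Relation:
    """Substantive term: entities, their behaviors, and how those behaviors are coordinated."""
    entities: tuple[str, ...]
    behaviors: tuple[str, ...]
    coordination: str


@dataclass
class CourseOfAction:
    actions: list[str]
    reasons: list[Relation] = field(default_factory=list)


@runtime_checkable
class SituationalUnderstanding(Protocol):
    def accept_task(self, task: str) -> None: ...                          # capability (a)
    def plan(self, constraints: dict[str, float]) -> CourseOfAction: ...   # capability (b)
    def explain(self, course: CourseOfAction) -> str: ...                  # capability (c)


class ToyAid:
    """Placeholder implementation that returns a canned relation."""

    def __init__(self) -> None:
        self.task = ""

    def accept_task(self, task: str) -> None:
        self.task = task

    def plan(self, constraints: dict[str, float]) -> CourseOfAction:
        threat = Relation(("aircraft", "own ship"), ("descending and accelerating",), "closing in on")
        return CourseOfAction([f"alert operator ({self.task})"], [threat])

    def explain(self, course: CourseOfAction) -> str:
        r = course.reasons[0]
        return f"The {r.entities[0]} is {r.behaviors[0]}, {r.coordination} {r.entities[1]}."


aid = ToyAid()
assert isinstance(aid, SituationalUnderstanding)   # checks method names only, not behavior
aid.accept_task("defend own ship")
print(aid.explain(aid.plan({"time_available_s": 60.0})))
```

Keeping explanations in terms of `Relation` objects, rather than raw scores, is one way to make capability (c) answer "How do you know?" in the operator's own vocabulary.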

In the USS Stark incident, an on-board situation understanding aid (SUA) could overrule the AWACS target classification and issue a warning like “Attention: there is 0.92 probability that this is enemy aircraft.” The chances that the warning will be trusted and acted upon would improve significantly if, when asked “How do you know?”, the system replied “The aircraft was ascending but then turned sharply and started descending and accelerating toward you.” Assuming that the captain interacts with the ship systems via the aid, the SUA would accept the captain’s command “Engage the target” and initiate activities according to the engagement protocol [note that learning systems (e.g., deep learning) are capable of reliably detecting and identifying objects but are limited in their ability to apprehend relations and explain their decisions to the user].
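The kind of answer to “How do you know?” described here can be illustrated by turning a raw track into a sequence of behavior phrases, including one relation (range to own ship decreasing). This is a hypothetical sketch: the `TrackPoint` fields, the 30-degree sharp-turn threshold, the track values, and the function names are all assumptions, and the 0.92 probability is simply passed in, standing for the output of some upstream classifier that is not modeled here.

```python
from dataclasses import dataclass


@dataclass
class TrackPoint:
    altitude: float        # meters
    speed: float           # meters per second
    heading: float         # degrees
    range_to_ship: float   # meters


def describe_track(track, turn_threshold=30.0):
    """Convert consecutive track points into a deduplicated list of behavior phrases."""
    events = []
    for prev, cur in zip(track, track[1:]):
        turn = abs((cur.heading - prev.heading + 180.0) % 360.0 - 180.0)  # smallest angle
        if turn >= turn_threshold:
            events.append("turned sharply")
        if cur.altitude > prev.altitude:
            motion = "ascending"
        elif cur.altitude < prev.altitude:
            motion = "descending"
        else:
            motion = "flying level"
        if cur.speed > prev.speed:
            motion += " and accelerating"
        if cur.range_to_ship < prev.range_to_ship:
            motion += " toward own ship"   # a relation between two entities
        events.append(motion)
    # Collapse consecutive repeats so the explanation reads as a sequence of changes.
    return [e for i, e in enumerate(events) if i == 0 or e != events[i - 1]]


def warning(probability, events):
    return (f"Attention: there is {probability:.2f} probability that this is enemy aircraft. "
            f"Observed sequence: {', then '.join(events)}.")


track = [TrackPoint(3000, 200, 90, 40000), TrackPoint(3500, 200, 90, 41000),
         TrackPoint(3200, 230, 270, 39000), TrackPoint(2800, 260, 270, 36000)]
print(warning(0.92, describe_track(track)))
```

The explanation is built from behaviors and their coordination with own ship rather than from classifier internals, which is the property the text singles out as missing from purely learning-based detectors.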

In the Airbus incident, the SUA could be tasked with interacting with ground control to request permission to land in NJA, and could respond with “We are not going to make it.” Improving situation understanding in the Maginot scenario would require breaking a rigid mental template; some (tentative) suggestions for a possible role of the SUA will be made shortly, after the VAN computational framework has been introduced.

The VAN computational framework was dubbed “gnostron” (Yufik, 2018) to underscore its distinction from the “perceptron”: a perceptron has a fixed neuronal structure, while a gnostron is a neuronal pool whose structure evolves gradually and remains flexible. The gnostron formalism is a straightforward expression of the VAN considerations summarized in section “Integrating Virtual Associative Network into the Variational Free Energy Minimization Framework,” as follows.

The world is a stream of stimuli $S = \{s_1, s_2, \dots, s_M\}$ arriving in different combinations at a pool composed of $N$ neurons $X = \{x_1, x_2, \dots, x_N\}$, with each neuron responding probabilistically to a subset of stimuli. In turn, the stimuli respond probabilistically to the neurons that the pool mobilizes and “fires” at them, by releasing energy deposits (neuron $x_i$ has receptive field $\{\mu_{ij}, \mu_{ih}, \dots, \mu_{ik}\}$, where $\mu_{ih}$ denotes the probability that stimulus $s_h$ will release deposit $E_h$ in response to the pool having fired $x_i$). Mobilization (selecting neurons and preparing them to fire) takes time, and neurons, after having fired, need time to recover, which forces the pool to engage in anticipatory mobilization. Engaging $x_i$ consumes energy $\delta_i$, comprising the work of mobilization $\rho_i$ and the work of firing $\nu_i$: $\delta_i = \rho_i + \nu_i$ (note that mental operations are constituents of mobilization).
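The quantities just defined suggest a simple energy balance for engaging a single neuron: the expected return Σ_h μ_ih·E_h over the stimuli present in its receptive field, minus the cost δ_i = ρ_i + ν_i. The sketch below computes this balance and checks it by simulation. It is an illustration under our own assumptions, not the gnostron formalism itself: deposits are assumed to be released independently, the `Neuron` class and helper functions are hypothetical, all numeric values are made up, and anticipatory mobilization and recovery times are not modeled.

```python
import random
from dataclasses import dataclass


@dataclass
class Neuron:
    receptive_field: dict[str, float]  # mu_ih: P(stimulus s_h releases deposit E_h | x_i fired)
    mobilization: float                # rho_i, work of mobilization
    firing: float                      # nu_i, work of firing

    @property
    def cost(self) -> float:
        """delta_i = rho_i + nu_i."""
        return self.mobilization + self.firing


def expected_net_gain(neuron, present, deposits):
    """Expected energy collected by firing `neuron` at the stimuli `present`, minus delta_i."""
    gain = sum(mu * deposits[s] for s, mu in neuron.receptive_field.items() if s in present)
    return gain - neuron.cost


def sampled_net_gain(neuron, present, deposits, rng):
    """One stochastic firing: each present stimulus in the receptive field releases
    its deposit independently with probability mu_ih (an assumption)."""
    gain = sum(deposits[s] for s, mu in neuron.receptive_field.items()
               if s in present and rng.random() < mu)
    return gain - neuron.cost


deposits = {"s1": 2.0, "s2": 1.0, "s3": 4.0}          # E_h, hypothetical values
x1 = Neuron({"s1": 0.5, "s3": 0.25}, mobilization=0.3, firing=0.2)
present = {"s1", "s2", "s3"}

rng = random.Random(0)
trials = 100_000
mean = sum(sampled_net_gain(x1, present, deposits, rng) for _ in range(trials)) / trials
print(f"expected {expected_net_gain(x1, present, deposits):.3f}, simulated {mean:.3f}")
```

A pool operating under energy pressure would, on this reading, favor mobilizing neurons whose expected net gain is positive; stimulus $s_2$ contributes nothing here because it lies outside the receptive field of $x_1$.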