ORIGINAL RESEARCH article

Front. Syst. Neurosci., 12 May 2016

Volume 10 - 2016 | https://doi.org/10.3389/fnsys.2016.00039

This article is part of the Research Topic Perisaccadic vision

In the oculomotor system, spatial updating is the ability to aim a saccade toward a remembered visual target position despite intervening eye movements. Although this has been the subject of extensive experimental investigation, there is still no unifying theoretical framework to explain the neural mechanism for this phenomenon and how it influences visual signals in the brain. Here, we propose a unified state-space model (SSM) to account for the dynamics of spatial updating during two types of eye movement: saccades and smooth pursuit. Our proposed model is a non-linear SSM implemented through a recurrent radial-basis-function neural network in a dual Extended Kalman filter (EKF) structure. The model parameters and internal states (remembered target position) are estimated sequentially using the EKF method. The proposed model replicates two fundamental experimental observations: continuous gaze-centered updating of visual memory-related activity during smooth pursuit, and predictive remapping of visual memory activity before and during saccades. Moreover, our model makes the new prediction that, when uncertainty of input signals is incorporated in the model, neural population activity and receptive fields expand just before and during saccades. These results suggest that visual remapping and motor updating are part of a common visuomotor mechanism, and that subjective perceptual constancy arises in part from training the visual system on motor tasks.

Spatial updating is the ability to locate targets that were perceived before an intervening self-motion displaces the original sensory frame of reference (Baker et al., 2003; Klier and Angelaki, 2008; Sommer and Wurtz, 2008; Crawford et al., 2011). In the oculomotor system, this is often studied using the “double step” task, where subjects are required to make an accurate saccade toward a remembered visual target after an intervening eye motion. Such experiments have shown that humans and monkeys are able to do this despite intervening saccades (Hallett and Lightstone, 1976; Mays and Sparks, 1980), head-unrestrained gaze shifts (Munoz et al., 1991), smooth pursuit eye movements (Lisberger et al., 1987; Schlag et al., 1990; Herter and Guitton, 1998; Baker et al., 2003; Medendorp et al., 2003; Blohm et al., 2005; Klier and Angelaki, 2008), translational motion of the head/body (Angelaki and Yakusheva, 2009; Clemens et al., 2012), and torsional rotation of the head/body (Medendorp et al., 2003; Klier and Angelaki, 2008). The detailed neurophysiological mechanisms are not yet known, but it is thought that this involves the use of internal efference copies of eye motion (Mays and Sparks, 1980; Sommer and Wurtz, 2006), and results in the recalculation of an appropriate motor saccade vector for the final eye position in areas such as the superior colliculus (SC) (Groh and Sparks, 1996).

Spatial updating in the oculomotor system may be intimately related to visual space constancy (the perception of constant spatial locations despite frequent displacement of the retina by saccades), and the two may share common mechanisms (Wurtz, 2008). The neurophysiology of visual space constancy has mainly been studied using the single-step saccade task, in which a task-irrelevant stimulus is presented just before a saccade. This often results in the phenomenon known as predictive remapping, which has been observed in several higher-level visual and visuomotor areas of the brain (Duhamel et al., 1992; Walker et al., 1995; Umeno and Goldberg, 1997; Nakamura and Colby, 2002; Sommer and Wurtz, 2006). Neurons that show predictive remapping are activated by saccades that will bring a visual stimulus into their receptive fields (RFs), even before the eyes actually move (Duhamel et al., 1992). This is often interpreted in terms of the visual properties of single neurons, i.e., as a transient extension of the RF toward the future location of the stimulus (the future RF). However, some neurons that show predictive remapping also show sustained activity at the new eye position, once the standard RF has stabilized over the previous stimulus location (Duhamel et al., 1992). Further, both of these phenomena can be explained as the trans-saccadic transfer of activity from the population of neurons representing the visual stimulus relative to the initial gaze position to another population coding for the stimulus relative to the final gaze position (Keith and Crawford, 2008). This retained gaze-centered information could then be used for a variety of purposes, including saccade generation. Thus, remapping, spatial memory, and sensorimotor updating may share several common or inter-related features (Mays and Sparks, 1980; Duhamel et al., 1992; Walker et al., 1995; Batista et al., 1999; Tian et al., 2000; Nakamura and Colby, 2002; Balan and Ferrera, 2003a,b).

There is also reason to expect somewhat different mechanisms for updating visual space during behaviors that involve slow, continuous motion of the eye in space, such as smooth pursuit eye movements or translations of the head (Angelaki and Yakusheva, 2009; Clemens et al., 2012; Dash et al., 2015). First, unlike saccades, slow, continuous eye movements often have unpredictable trajectories. Second, unlike saccades, slower eye movements do not suppress vision, presumably because the retina still provides useful information, so it is important to maintain vision during longer-duration eye motion. For such movements, predictive remapping is not ideal; rather, spatial updating should occur continuously. This prediction was recently confirmed by recording from neurons in the SC during a double-step smooth pursuit-saccade task. In this experiment, most visual cells showed gaze-centered memory-related activity for the saccade target, such that the population showed a “moving hill” of activity across the SC topographic map during pursuit (Dash et al., 2015). In that study, visual memory activity was spatially specific, occurring only when the remembered target crossed the visual RF, and motor activity occurred only at the end of pursuit, just before the saccade.

Despite numerous investigations on this topic, many questions about the theory and mechanisms of visual and visuomotor updating remain unanswered (Thier and Ilg, 2005; Ibbotson and Krekelberg, 2011). Because experimental work is extremely difficult and often (in animal experiments) can only target selected areas and signals at one time, it is important to have a theoretical framework to guide such experiments. Past theoretical efforts have used control-system models to explain the spatiotemporal and geometric aspects of updating (Quaia et al., 1998; Optican and Quaia, 2002; Blohm et al., 2006; Cromer and Waitzman, 2006; Van Pelt and Medendorp, 2007), and neural network models to predict specific signals (Zipser and Andersen, 1988; White and Snyder, 2004, 2007; Keith et al., 2010). However, there is still no general theoretical framework for spatial updating and remapping. Here, we took a step in this direction by examining whether training on the motor aspects of spatial updating can produce the updating/remapping signals that have been observed in visual neurons. To do this, we developed a state-space model (SSM) for updating target-related spatial information in gaze-centered coordinates. SSMs provide an effective way to model dynamical systems and can represent their internal (hidden) states.

Here, we constrained our model to receive simulated inputs (visual RFs, eye position signals, and eye movement signals) that have already been physiologically verified (Walker et al., 1995; Hanes and Schall, 1996; Sommer and Wurtz, 2002; Marino et al., 2008; Morris et al., 2012), and trained it to update the location of the saccade target after an intervening saccade or smooth pursuit movement. After training, the RFs of the state-space units replicated both predictive remapping during saccades (Duhamel et al., 1992) and continuous eye-centered updating during smooth pursuit (Dash et al., 2015). In addition, during trans-saccadic remapping, the RFs also expanded (a prediction that, to our knowledge, has not yet been reported in the published literature). These findings demonstrate that, in principle, the neural phenomena associated with the remapping of visual responses can arise from training on a motor task, and thus suggest a strong association between visual remapping and motor updating.

The proposed model aims to study the dynamics of spatial updating through time in two types of eye movements: saccades and smooth pursuit. As in previous models on this general topic (Zipser and Andersen, 1988; White and Snyder, 2004, 2007; Keith et al., 2010), we aimed to model this system at a level that bridges the computational and algorithmic levels (Marr, 1982), and made no attempt to model mechanisms at the biophysical level. To simulate the dynamics of the neural mechanism during smooth pursuit and saccades, we used two novel approaches: we developed an SSM, and we used a dual Extended Kalman filter (DEKF) approach (Wan and Nelson, 1997, 2001). More specifically, we used two interleaved Extended Kalman filters (EKFs): (1) one for state estimation (signal estimation) and (2) the other for weight estimation (model estimation). With the proposed model, we aimed not only to replicate the behavioral aspects of updating but also to reveal the neural mechanism underlying this phenomenon. In this section, we first explain the task widely used to study spatial updating and then give an intuitive explanation of the proposed model.

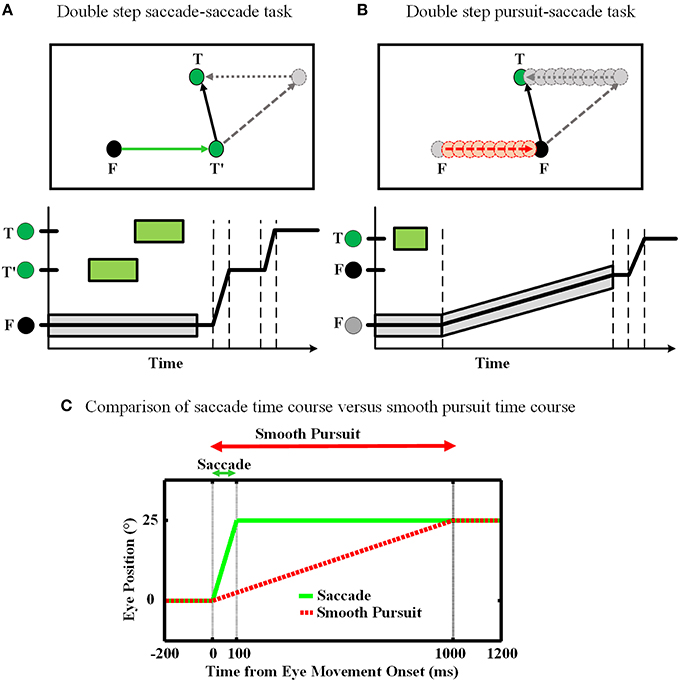

The double-step task is a common approach to studying spatial updating. In our work, we consider two forms of this task: (1) saccade-saccade (Hallett and Lightstone, 1976; Mays and Sparks, 1980), and (2) pursuit-saccade (Schlag et al., 1990; Herter and Guitton, 1998; Baker et al., 2003; Medendorp et al., 2003; Blohm et al., 2005; Klier and Angelaki, 2008). Figure 1 illustrates the spatial geometry of these tasks (A and B) and compares their relative timing (C). In the saccade-saccade task (Figure 1A), subjects fixate a point (F) while a target (T′) briefly appears (and then disappears) in the visual periphery. Then a second target (T) briefly appears. When the fixation point (F) disappears, the subject must make two successive saccades, first to the remembered position of T′ and then to that of T. The pursuit-saccade task (Figure 1B) is similar, except that there is only one saccade target (T), and after it disappears, F starts to move slowly. The subject must follow F until it disappears, and then makes a saccade to the remembered position of the previously shown target T.

Figure 1. Spatial updating of second target position in retinotopic coordinates. (A) Spatial updating of the remembered second target position during a saccadic eye movement. The upper panel shows the configuration of the double-step saccade-saccade task in 2D spatial coordinates. While the subject fixates the fixation point F (black circle), the locations of the two visual targets T′ and T (green circles) are coded in retinotopic coordinates relative to F. After completion of the first, intervening saccade to T′, if spatial updating did not occur, the gray circle would be the destination of the second saccade. However, primates are able to compensate for the first saccade (dotted arrow), update the location of T relative to their new gaze position (T′), and thus make a correct saccade to T. The lower panel shows eye position over time during the saccade-saccade task. Green rectangles indicate the duration of each target presentation, and the gray rectangle indicates the duration of the fixation point presentation. (B) Spatial updating of the remembered target location during a slow, continuous eye movement. While the subject fixates F, the position of the remembered target T is coded in retinotopic coordinates relative to F. During the smooth pursuit phase, while the subject follows the slowly moving fixation point (red dashed arrow), the position of the previously perceived target T is updated continuously and gradually (gray dotted arrow) as the eyes move. Therefore, at the end of pursuit the subject can make a saccade to the correct position of T. The lower panel shows eye position over time during the pursuit-saccade task. The green rectangle shows the duration of the target presentation.
The timing of the fixation point presentation is shown by a gray shadow around the position curve, which also shows the slow movement of the fixation point. (C) Comparison of the two tasks in terms of timing. A 10° saccade lasts around 50 ms, much less than a typical smooth pursuit movement, which can last around 1000 ms.

Smooth pursuit differs from saccades in several fundamental ways (Figure 1C). First, saccades are ballistic: they are very brief and rapid (often ≥ 500°/s) and thus do not receive or require visual feedback (Straube et al., 1997). They are internally driven by a discrete burst of activity and obey lawful behavioral relationships (Robinson, 1964; Fuchs and Luschei, 1970). This provides the necessary conditions for a mechanism that predicts both saccade duration and final eye position (Duhamel et al., 1992; Walker et al., 1995; Umeno and Goldberg, 1997; Nakamura and Colby, 2002; Sommer and Wurtz, 2006). In contrast, pursuit requires visual feedback based on the movement of the fixation point, which may be unpredictable in speed, length, and duration. However, pursuit is also slower (≤ 100°/s), allowing ample time for online feedback not only from retinal signals but also from extra-retinal signals, such as an accurate sense of current eye position and velocity. This provides the necessary conditions for a mechanism that continuously updates remembered target position.

Humans and animals are able to perform both of these tasks reasonably accurately in the dark, i.e., based on egocentric cues in the absence of allocentric cues (Klier and Angelaki, 2008; Sommer and Wurtz, 2008). This means that the final saccade target (T) must be stored and updated within some egocentric frame of reference. Otherwise, if subjects simply used the original visual vector for T, they would generate the wrong saccade (gray dashed lines in Figures 1A,B). Most experimental evidence suggests that visual targets like T′ and T are coded in short-term memory using gaze-centered coordinates (Walker et al., 1995; Sommer and Wurtz, 2002; Marino et al., 2008). As a result, there has to be a mechanism in the brain that updates the remembered position of T in spite of the intervening change in gaze position during the first eye movement. In effect, the original visual vector (gray dashed vectors in Figures 1A,B) must be transformed by the reverse of the first eye movement vector (gray dotted lines) into the correct second saccade vector (black solid lines). In the current study, we aimed to simulate both the behavior and the emergent neural mechanisms associated with spatial updating during the pursuit-saccade and saccade-saccade tasks, using the model described in the next subsection.
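The vector arithmetic described above fits in a few lines of code. This is an illustration under simplifying assumptions, not the model itself. It treats gaze shifts as pure 2D translations, ignoring rotational eye geometry. The helper `update_target` and all target coordinates are hypothetical.

```python
import numpy as np


def update_target(target_retinal, eye_displacement):
    """Gaze-centered target vector after an intervening eye movement.

    Assumes a flat 2D frame in which an eye displacement shifts every
    retinal vector by the opposite amount (no rotational geometry).
    """
    return np.asarray(target_retinal, dtype=float) - np.asarray(eye_displacement, dtype=float)


# Hypothetical saccade-saccade trial; vectors in degrees relative to F.
t_prime = np.array([10.0, 0.0])   # first target T'
t = np.array([10.0, 10.0])        # second target T
first_saccade = t_prime           # the first saccade lands on T'

naive_second = t                                  # gray dashed vector: no updating
correct_second = update_target(t, first_saccade)  # black solid vector
print("without updating:", naive_second)
print("with updating:   ", correct_second)

# Hypothetical pursuit-saccade trial: 20 deg/s rightward pursuit for 1 s,
# updated continuously in 1 ms steps.
dt, velocity = 0.001, np.array([20.0, 0.0])
t_pursuit = np.array([5.0, 5.0])
for _ in range(1000):
    t_pursuit = update_target(t_pursuit, velocity * dt)
print("after pursuit:   ", t_pursuit)
```

Under this simplification, the continuous updating in Figure 1B is the same subtraction applied in many small steps rather than once.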

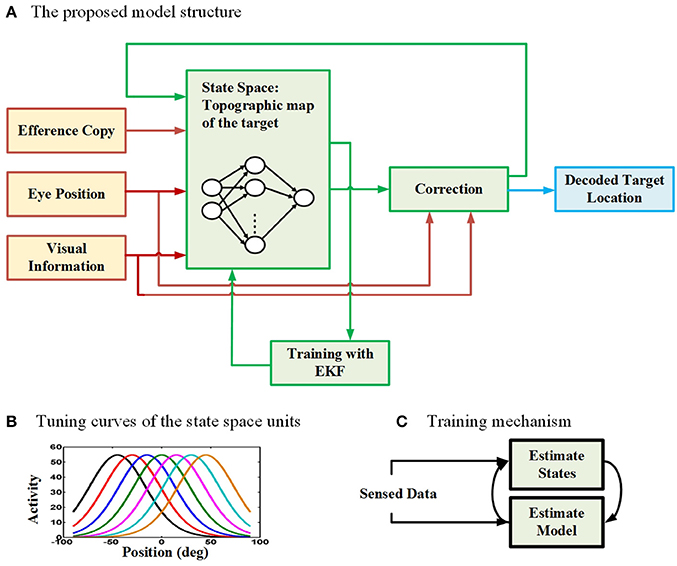

To model neural activity in the spatial updating paradigm, we developed a recurrent radial basis function neural network (RBFNN) trained with an EKF method (Wan and Nelson, 1997, 2001; Huang et al., 2005; Vuković and Miljković, 2013). Figure 2 shows the general structure of our proposed model. The proposed model aims to update the location of a perceived target while an eye movement is occurring. The main part of the model is the box consisting of a three-layer neural network. The input layer takes three types of input (red lines): (1) an efference copy signal (depending on the task, a motor burst signal or an eye velocity signal), (2) an eye position signal, and (3) visual target information. The input layer distributes the input signals through full connections to an intermediate layer. As mentioned above, the model aims to keep track of the second target location during the saccade-saccade task, or of the remembered saccade target position in the pursuit-saccade task. This target position is encoded in the brain in a gaze-centered population code; it is an internal signal that cannot be sensed or measured directly. The population of neurons encoding target position in gaze-centered coordinates constitutes the state space of our model and is implemented as a layer of radial basis function (RBF) units (intermediate layer in Figure 2A). Each neuron in this layer has a bell-shaped tuning curve with a specific preferred position (Figure 2B). The output layer produces the inferred location of the second target based on the activity of the neurons in the intermediate layer. This prediction is corrected using the input signals and fed back to the input layer of the neural network (a recurrent architecture).
The output of the correction box is the updated location of the second target after the first, intervening eye movement. The brain can use this inferred, updated position to issue the command for the second eye movement (saccade) in both types of double-step task. Importantly, the model was designed and trained so that it could update remembered targets from one trial to the next in either task (pursuit-saccade and saccade-saccade) using the same set of internal parameters (see Mathematical formalization and Supplementary materials for details). In the following sections, we explain the model input signals, training procedure, and mathematical formalization in more detail.

Figure 2. The proposed model for spatial updating during eye movements. (A) The architecture of the proposed model, which takes eye position, efference copy, and visual information as input signals (input connections and boxes are shown in red) and produces the decoded location of the remembered target through time as output (output connection and box shown in blue). A three-layer neural network models the state-space units (internal connections of the model are shown in green). (B) The tuning curves of the state-space units in the hidden layer of the neural network are spatially selective radial basis functions in gaze-centered coordinates. (C) The training mechanism of the proposed model. The model uses an expectation-maximization approach to estimate both the model parameters (parameters of the neural network) and the states (position of the second target).

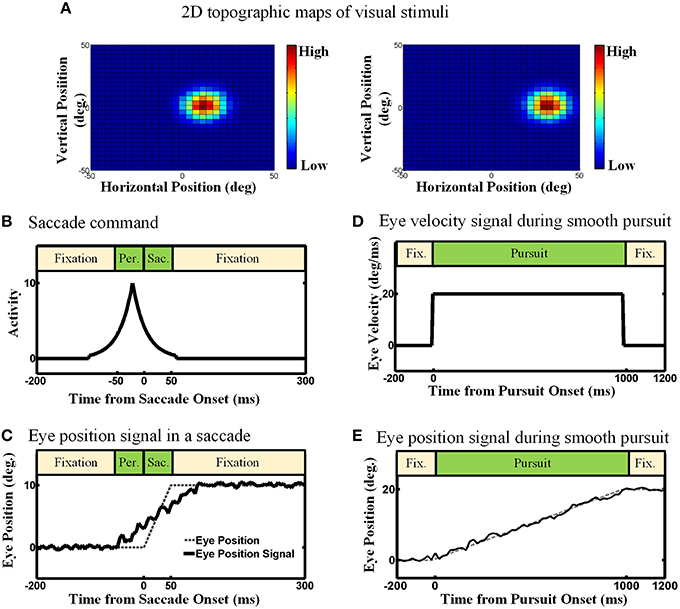

We considered three types of input in our proposed model: (1) an efference copy signal, which, depending on the type of eye movement, is either a signal inspired by the motor burst in the SC (for saccades) or an eye velocity signal (for smooth pursuit; see footnote 1); (2) an eye position signal, found in the lateral intraparietal (LIP), ventral intraparietal (VIP), middle temporal (MT), and medial superior temporal (MST) areas (Morris et al., 2012); and (3) topographic maps of visual stimuli in the SC (Walker et al., 1995; Sommer and Wurtz, 2002; Marino et al., 2008). Figure 3 illustrates, through examples, the detailed nature and physiological inspiration of the input signals used in our computational model. In a double-step task, visual information is the first input that the subject receives, and it is encoded as a topographic map in the population activity of midbrain SC neurons, as depicted in Figure 3A (Mays and Sparks, 1980; Sparks and Porter, 1983; Walker et al., 1995; Sommer and Wurtz, 2002; Marino et al., 2008). In other words, the visual input is used to initialize the neurons in the state space. Note that, like most previous models of spatial updating (e.g., Keith et al., 2010), we used a homogeneous retinotopic map in our model, which is a simplification of the actual SC map (Cynader and Berman, 1972; Munoz and Wurtz, 1993a,b). This simplification reduced the computational complexity of the model without interfering with its ability to simulate spatial updating (see Section 3). The eye position signal is the second input to our model. For simplicity and clarity, we illustrate these input signals in one dimension. Depending on the type of double-step task (pursuit-saccade or saccade-saccade), we used a different eye position signal to train our model. For the double-step saccade-saccade task, we used the eye position signal found in many neurons in LIP, VIP, MT, and MST.
As explained in Morris et al. (2012), the main feature of this signal is that it leads the actual eye movement (dashed line in Figure 3C) and lags behind it as the saccade completes. Figure 3C depicts the eye position signal (solid curve) for a 10° saccade lasting 50 ms. We assumed a duration of 50 ms for a 10° saccade and 100 ms for a 25° saccade, and calculated the durations of other saccade sizes from a linear relation through these values (Carpenter, 1988). In the double-step pursuit-saccade task, the subject's sensorimotor system must have an accurate representation of eye position (Noda and Warabi, 1982; Squatrito and Maioli, 1997; Tanaka and Fukushima, 1998); moreover, the eye movement lasts around 1000 ms. Therefore, we used the eye position signal depicted in Figure 3E. This figure shows an eye movement of 20° in 1000 ms (dashed line) and the corresponding eye position signal (solid line), which can be found in many neurons in MT, MST, LIP, and VIP (Bremmer et al., 1997a,b). The signal is produced by adding noise to the actual, noise-free eye position (dashed line in Figure 3E). A smooth pursuit eye movement lasts much longer than a saccadic eye movement. The third input is an efference copy signal, which is believed to play the major role in spatial updating in the brain. This signal also depends on the task. In the pursuit-saccade task, because the brain must have an accurate representation of eye velocity, we used the eye velocity signal (Figure 3D) as the efference copy to train our model. In the saccade-saccade task, which involves rapid, jerky eye movements, the intended eye displacement is used as the efference copy signal (Figure 3B). It takes the form of a motor burst signal of the kind found in the SC (Hanes and Schall, 1996).
The peak height of this signal determines the intended eye displacement. As depicted in Figure 3B, the signal leads saccade onset, as expected of an efference copy.
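The sketch below writes out the linear amplitude-duration rule stated above (50 ms at 10°, 100 ms at 25°) and the constant-velocity pursuit signals of Figures 3D,E. The function names, the 1 ms sampling, and the `noise_sd` parameter are illustrative assumptions. The text does not specify the noise level added to the eye position signal.

```python
import numpy as np

# Anchor points stated in the text: 10 deg -> 50 ms, 25 deg -> 100 ms.
AMP_1, DUR_1 = 10.0, 50.0
AMP_2, DUR_2 = 25.0, 100.0
SLOPE = (DUR_2 - DUR_1) / (AMP_2 - AMP_1)  # ms per degree (10/3)
INTERCEPT = DUR_1 - SLOPE * AMP_1           # ms (50/3)


def saccade_duration_ms(amplitude_deg):
    """Saccade duration from the linear relation through the two anchor points."""
    return INTERCEPT + SLOPE * np.abs(amplitude_deg)


def pursuit_signals(total_deg=20.0, duration_ms=1000, noise_sd=0.0, seed=0):
    """Constant-velocity pursuit sampled every 1 ms (cf. Figures 3D,E).

    noise_sd is a hypothetical parameter: the text states only that noise is
    added to the true eye position to form the eye position signal.
    """
    rng = np.random.default_rng(seed)
    t_ms = np.arange(duration_ms + 1)
    true_pos = total_deg * t_ms / duration_ms                          # deg
    velocity = np.full(t_ms.shape, 1000.0 * total_deg / duration_ms)  # deg/s
    eye_pos_signal = true_pos + rng.normal(0.0, noise_sd, t_ms.shape)
    return t_ms, true_pos, eye_pos_signal, velocity


for amp in (5, 10, 15, 20, 25):
    print(f"{amp:2d} deg saccade -> {saccade_duration_ms(amp):.1f} ms")

t_ms, true_pos, eye_pos_signal, velocity = pursuit_signals(noise_sd=0.2)
print("pursuit velocity:", velocity[0], "deg/s; final position:", true_pos[-1], "deg")
```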

Figure 3. The model inputs (examples). (A) 2D topographic maps of visual stimuli. (B) Efference copy signal for saccadic eye movements. The peak of this signal depends on the size of the saccade, and it occurs before saccade onset. (C) Eye position signal for a saccadic eye movement. This eye position signal can be found in many brain areas, such as LIP, VIP, MT, and MST. It leads the onset of the saccade and lags its completion. (D) Eye velocity signal during smooth pursuit. For simplicity, eye velocity is modeled as constant during smooth pursuit because the fixation point moves with a constant velocity. (E) Eye position signal during smooth pursuit. Because the eyes track the fixation point perfectly in our simulation, the eye position signal follows the position of the fixation point exactly.

The proposed model aims to update the stored location of a perceived visual target, given that an eye movement is occurring. To this end, the model finds the retinotopic location of the target at each time step by integrating the issued efference copy command with the target location at the previous time step. The intermediate layer of the proposed network is initialized with the retinotopic population code of the SC. The activity of neurons in this layer is then estimated through time using the EKF approach. From the estimated population activities, the model infers the gaze-centered location of the target using a second Kalman filter estimation. This estimate is fed back to the input layer through the recurrent connection and used to estimate the population activities at the next time step. In other words, the proposed recurrent neural network computes online estimates of both the target location and the population activities through time. We used a dual estimation approach that alternates between estimating the model parameters based on the estimated, denoised states and estimating the states using the current model parameters (Figure 2C). Details about the convergence of this approach can be found in Wan and Nelson (2001). The next section provides a brief mathematical formalization of the proposed model; readers not interested in computational details can skip it.

In this section, we present the details of our proposed model for spatial updating of remembered visual targets across saccadic and smooth pursuit eye movements. This model aims to study the dynamics of spatial updating and to describe the effect of eye position uncertainty on these dynamics. The model presented in this section can be considered to be at the first level of Marr's levels of analysis (Marr, 1982). It is designed to answer questions about the effects of uncertainty on spatial updating at the system level. We formulate spatial updating in the double-step task as the problem of estimating the current location of the second target in a gaze-centered frame of reference. The estimate is based on the previous estimate of this location and on the efference copy signal describing the eye movement. We can therefore express the dynamics of spatial updating with the following equation:

$$TR_{k+1} = TR_k - EC_k + \nu_k \tag{1}$$

where $TR_k$ is a D-dimensional vector representing the second target (memory target) position in the retinotopic frame of reference, and $EC_k$ denotes the efference copy signal describing the eye movement. For saccadic eye movements, $EC_k$ is a motor burst-like signal representing the intended eye displacement; for smooth pursuit, it is an eye velocity signal describing the constant velocity of the slow eye motion. Any such estimate contains error, which is captured by zero-mean additive noise $\nu_k$ with variance $\sigma_\nu^2$. Saccadic over- and undershoots are outside the scope of the current work; however, they could be accommodated by adding an offset term to Equation (1). Because the literature suggests that this offset depends on target distance (Deubel et al., 1984; Becker, 1989; Harris, 1995), it could be modeled as an additional input in Equation (1).
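Iterating Equation (1) on its own, that is, dead reckoning from the efference copy alone, shows why additional observations are useful. The mean estimate follows the intended eye displacement, but its variance grows linearly with the number of steps. The sketch below assumes a scalar target (D = 1). It treats the pursuit efference copy as a displacement per time step (velocity times a hypothetical 10 ms step) and uses an illustrative standard deviation of 0.1° for $\nu_k$. None of these values come from the paper's simulations.

```python
import numpy as np

rng = np.random.default_rng(0)


def propagate_open_loop(tr0, ec, sigma_nu, n_trials):
    """Iterate TR_{k+1} = TR_k - EC_k + nu_k (Eq. 1) for many trials at once.

    tr0      : initial retinal target position (deg), e.g., from visual input
    ec       : efference copy per step, as a displacement (deg)
    sigma_nu : standard deviation of nu_k (deg)
    Returns an array of shape (n_trials, len(ec) + 1).
    """
    ec = np.asarray(ec, dtype=float)
    steps = -ec + rng.normal(0.0, sigma_nu, (n_trials, ec.size))
    start = np.zeros((n_trials, 1))
    return tr0 + np.concatenate([start, np.cumsum(steps, axis=1)], axis=1)


# Hypothetical pursuit: 20 deg/s for 1 s in 10 ms steps -> 0.2 deg per step.
ec = np.full(100, 0.2)
tr = propagate_open_loop(tr0=15.0, ec=ec, sigma_nu=0.1, n_trials=5000)

print("mean final TR:", tr[:, -1].mean())        # expected near 15 - 20 = -5 deg
print("variance of final TR:", tr[:, -1].var())  # expected near 100 * 0.1**2 = 1
```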

The brain has access to different sources of observations that can be used to improve the estimate described by Equation (1). The eye position signal, which has been found in many brain areas such as LIP, VIP, MT, and MST (Morris et al., 2012), appears to be the most relevant noisy observation available in the brain. This signal can be related to the location of the memory target with the following equation:

$$EP_k = TS - TR_k + n_k \tag{2}$$

where $EP_k$ is the eye position signal at time step k, and $TS$ denotes the position of the memory target in the head frame of reference. The term $n_k$ is additive observation noise, with variance $\sigma_n^2$, reflecting the uncertainty about the eye position signal in the brain. $TS$ is required because eye position is expressed in a head frame of reference, whereas $TR$ is expressed in an eye frame of reference. Writing an equation that relates $TR$ to $EP$ therefore requires a common reference frame, and $TS$ provides one. We include it only to simplify the calculation: previous neural network studies have shown that such reference frame transformations can be performed without ever having an explicit representation of space in head or space coordinates (Smith and Crawford, 2005; Blohm et al., 2009).

Equations (1) and (2) form the SSM for our variable of interest, $TR$. Note that Equation (2) relates the available noisy observation $EP$ to the variable of interest $TR$. The aim is to find an optimal estimate of $TR$ using both the available observations $EP$ and the dynamics described in Equation (1). Because this SSM is linear, the optimal estimate of the memory target location can be found with the Kalman filtering approach. Kalman filters provide the optimal estimate for a linear SSM, assuming that the noise follows a Gaussian distribution (Kalman, 1960). For our model, described by Equations (1) and (2) and written here for the scalar case (D = 1), the optimal estimate of the target location is obtained with the following recursive equations:

$$\widehat{TR}_k^{-} = \widehat{TR}_{k-1} - EC_{k-1}, \qquad \widehat{TR}_k = \widehat{TR}_k^{-} + KG_k \left( TS - EP_k - \widehat{TR}_k^{-} \right) \tag{3}$$

where $\widehat{TR}_k^{-}$ is the estimate of the target location based on the dynamics described in Equation (1), and $\widehat{TR}_k$ denotes the estimate corrected using the available measurement $EP_k$ (this process corresponds to the “correction” box in Figure 2A). Following Kalman filter theory, the optimal value of $KG_k$, known as the Kalman gain, can be calculated iteratively with the following equation:

$$KG_k = \frac{P_k^{-}}{P_k^{-} + \sigma_n^2} \tag{4}$$

with

$$P_k^{-} = P_{k-1} + \sigma_\nu^2, \qquad P_k = \left( 1 - KG_k \right) P_k^{-} \tag{5}$$

where $P_k$ denotes the estimated error variance. We use this quantity as a measure of the uncertainty of the estimate $\widehat{TR}_k$. We used this model for both saccadic and smooth pursuit eye movements. These two kinds of eye motion differ in several respects. The first important difference, as already mentioned, is the efference copy signal that drives them. The other difference is the uncertainty about the noisy observations, i.e., eye position. Because saccades are rapid, jerky eye movements, the uncertainty about the eye position signal increases around the time of the saccade. Smooth pursuit, in contrast, is slow and nearly accurate, so the uncertainty about the eye position signal is much lower than for saccades. This uncertainty is captured by the variance $\sigma_n^2$ of the additive Gaussian noise $n_k$. In our simulations, we therefore used a larger value of $\sigma_n^2$ during saccades than during smooth pursuit eye motion.
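The recursion for the linear model can be sketched as a scalar Kalman filter. Each step predicts with the efference copy, corrects with the pseudo-measurement TS − EP weighted by the Kalman gain, and propagates the error variance. The trial structure, the noise variances, and the larger observation variance during the saccade are hypothetical values. They were chosen only to show the qualitative effect described above: the error variance grows while the eye position signal is unreliable.

```python
import numpy as np


def kalman_track_target(tr0, p0, ec, ep, ts, var_nu, var_n):
    """Scalar Kalman filter for the linear SSM of Eqs. (1)-(2).

    ec[k]    : efference copy (eye displacement, deg) applied just before sample k
    ep[k]    : noisy eye position signal at sample k
    var_n[k] : observation-noise variance at sample k (may change over time)
    Returns the corrected estimates and their error variances.
    """
    tr_hat, p = float(tr0), float(p0)
    est, err_var = np.empty(len(ep)), np.empty(len(ep))
    for k in range(len(ep)):
        tr_prior = tr_hat - ec[k]              # prediction from the dynamics
        p_prior = p + var_nu                   # prior error variance
        kg = p_prior / (p_prior + var_n[k])    # Kalman gain
        tr_hat = tr_prior + kg * (ts - ep[k] - tr_prior)  # correction with EP_k
        p = (1.0 - kg) * p_prior               # posterior error variance
        est[k], err_var[k] = tr_hat, p
    return est, err_var


# Hypothetical trial: fixation, then a 10 deg saccade spread over 5 samples.
rng = np.random.default_rng(1)
n, ts, tr0, var_nu = 100, 5.0, 12.0, 0.01
ec = np.zeros(n)
ec[40:45] = 2.0
var_n = np.full(n, 0.25)
var_n[40:45] = 25.0  # assumed: eye position signal far less reliable mid-saccade
tr_true = tr0 - np.cumsum(ec) + np.cumsum(rng.normal(0.0, np.sqrt(var_nu), n))
ep = ts - tr_true + rng.normal(0.0, np.sqrt(var_n))

est, err_var = kalman_track_target(tr0, 0.0, ec, ep, ts, var_nu, var_n)
print("error variance before / at end of saccade:", err_var[39], err_var[44])
print("final estimate vs. truth:", est[-1], tr_true[-1])
```

The variance recursion does not depend on the measured values, only on the assumed noise variances. The rise in uncertainty around the saccade is therefore set entirely by the larger observation variance chosen for those samples.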

In the previous section, we described the linear SSM that captures the system-level dynamics of spatial updating of remembered visual targets during both saccadic and smooth pursuit eye movements. Here, we go a step further and ask how these dynamics can be represented at the neural level. We therefore propose the following non-linear SSM for spatial updating of remembered visual targets. It bridges Marr's first-level (computational) model and a second-level (algorithmic) model:

$$TR_{k+1} = f\left( TR_k, EC_k; \mathbf{w} \right) + \nu_k \tag{6}$$

where $TR_k$ (as defined before) is a D-dimensional vector representing the memory target position in the retinotopic frame of reference. Here we present the mathematics for D = 1; the extension to D = 2 is straightforward and is presented in the Supplementary materials. $EC_k$ again denotes the efference copy signal, and $f$ is a non-linear function representing the model dynamics. In our model, $f$ is implemented through a radial-basis-function neural network (RBFNN). More intuitively, the RBFNN represents a population of neurons with Gaussian tuning curves. We are interested in the dynamics of the population of neurons that represents our state space during saccadic and smooth pursuit eye movements. As explained in the previous section, $\nu_k$ is the error in the estimate of $TR_k$. Finally, $\mathbf{w}$ is a vector of the neural network parameters, which we refer to as “the model parameters” in the rest of the article.

The architecture of our non-linear SSM is depicted in Figure 2. The core of the model is the box containing the three-layer RBF neural network, which represents the state space. The inputs are fed into the input neurons and propagate directly to the intermediate layer. This layer consists of a population of RBF neurons with the following bell-shaped tuning curves (Zhang, 1996):

where ai is the activity of the ith neuron in response to an input x at time t, P denotes the number of neurons in the population, μi is the gaze-centered preferred position of the ith neuron, Ai and Bi are its peak and background firing rates, respectively, and σi is the width of its tuning curve. Figure 2B shows the tuning curves of the neurons in the population. In this simple structure, the tuning curve of each RBF-layer neuron can be considered equivalent to its receptive field. The output of the neural network is the predicted location of the remembered target, based on the population activity in the intermediate layer:

where the post-connection weights are fixed. Each weight is proportional to the center (preferred position μi) of the Gaussian tuning curve of the intermediate-layer unit it originates from. This choice ensures a decoding mechanism similar to a center-of-mass calculation (see the Supplementary Materials). Equation (8) assumes that the estimated target position is integrated with the efference copy at the current time point and activates the neural population so as to estimate the target position at the next time point. νk denotes the error in this estimation.
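The following sketch shows how such a population can encode a target position and read it back out with a center-of-mass decoder. It assumes the common bell-shaped form a_i(x) = B_i + A_i·exp(−(x − μ_i)²/(2σ_i²)), with amplitude Ai, background Bi, and width σi. The exact expression of Equation (7) may differ. The output weights are taken to equal the preferred positions μi, and the weighted sum is normalized by total activity. Subtracting the background rate before normalizing is an assumption of this sketch. The population layout and all numerical values are hypothetical.

```python
import math

def tuning_curve(x, mu, amp, base, sigma):
    """Bell-shaped tuning: background rate plus a Gaussian bump centered on mu."""
    return base + amp * math.exp(-((x - mu) ** 2) / (2.0 * sigma ** 2))

def encode(x, centers, amp, base, sigma):
    """Activity of every RBF unit for a target at retinotopic position x (deg)."""
    return [tuning_curve(x, mu, amp, base, sigma) for mu in centers]

def decode_center_of_mass(activity, centers, base):
    """Activity-weighted mean of preferred positions.

    Background firing is subtracted first (an assumption of this sketch) so that
    untuned baseline activity does not pull the estimate toward the map center.
    """
    drive = [max(a - base, 0.0) for a in activity]
    total = sum(drive)
    if total == 0.0:
        raise ValueError("no stimulus-driven activity to decode")
    return sum(mu * d for mu, d in zip(centers, drive)) / total

# Hypothetical 1-D population: preferred positions tiling -40..40 deg in 1-deg steps.
CENTERS = [float(c) for c in range(-40, 41)]
AMP, BASE, SIGMA = 1.0, 0.05, 3.0

activity = encode(10.4, CENTERS, AMP, BASE, SIGMA)
decoded = decode_center_of_mass(activity, CENTERS, BASE)
print(round(decoded, 3))  # 10.4
```

Because the Gaussian is sampled densely relative to its width and lies far from the map edges, the normalized weighted sum recovers the encoded position essentially exactly. Near the edges of the map, the estimate would be biased inward.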

To estimate the remembered target position (TRk) and the neural activity parameters, we again used a Kalman filtering approach. The extended Kalman filter (EKF) is an efficient extension of the Kalman filter that provides approximately optimal state estimates for a non-linear SSM (Anderson and Moore, 2012). Our model is non-linear, and its parameters are unknown. These are the parameters of the RBF units, which determine the activity of these neurons through time. The model parameters (w; see footnote 2) must therefore be estimated at each time point k along with the remembered target position (TRk), so a standard Kalman filter cannot be applied. Instead, we employed a dual estimation method (Wan and Nelson, 2001). At each time point, this approach proceeds in two stages. First, the model parameters (w) are estimated from the input data and the states (TRk). Then, the states are predicted from the input data and the parameters estimated in the first stage. This process is shown schematically in Figure 2C. This interleaved approach, alternating between two stages, is known as Expectation-Maximization in the signal processing literature (Gupta and Chen, 2011). With it, both the model parameters and the hidden states can be estimated from the noisy sensory inputs alone. As mentioned before, this model bridges Marr's first (computational) level and second (algorithmic) level. Explaining the biophysical mechanism by which the Kalman filter might be implemented in neural circuitry is therefore beyond the scope of this paper.

To estimate the remembered target position (TRk) and the model parameters (w) optimally with a Kalman filtering approach, we need a generative model describing how these quantities evolve and how they are observed. The evolution of TRk is described by Equations (6) or (8). The noisy observation that the brain receives is the eye position signal described in Equation (2).

We assume a simple random walk for the evolution of the neural activity parameters (w):

where w = [A1, σ1, A2, σ2, …, AP, σP] is the model parameter vector and rk ~ N(0, Σw) is the process noise in the estimation of the model parameters. Equation (6) serves as the observation equation for the model parameter space.
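The random walk and its effect on the EKF prediction step for w can be written in the standard form below. The time indexing and the hat/covariance notation are assumptions of this sketch.

```latex
\begin{aligned}
\mathbf{w}_k &= \mathbf{w}_{k-1} + \mathbf{r}_k, \qquad \mathbf{r}_k \sim \mathcal{N}(\mathbf{0}, \Sigma_w) \\
\hat{\mathbf{w}}_{k\mid k-1} &= \hat{\mathbf{w}}_{k-1}, \qquad P^{w}_{k\mid k-1} = P^{w}_{k-1} + \Sigma_w
\end{aligned}
```

Because the mean is carried over unchanged, the random walk only inflates the parameter covariance by Σw at each step. This lets the second filter keep tracking slowly changing tuning parameters instead of freezing them.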

Having developed generative models for both the target position space and the model parameter space, we can now estimate these variables optimally through time with the Kalman filtering approach. The inputs are the observed eye position signal EPk, the previous estimate of the remembered target position, and the statistics of the observation noise in Equation (2). From these, the estimate of the remembered target position for the next time step can be calculated using Equations (3) and (4), where the prediction is now obtained from Equation (8), together with

where the estimated error variance again serves as our measure of uncertainty about the estimated target position. Fk−1 is the first-order Taylor (linear) approximation of the non-linear function f around the previous estimate, and details of its calculation are given in the Supplementary Materials. Finally, the current estimate of the target position is fed back to the input neurons. These neurons propagate it to the RBF layer, where it is coded as population activity according to Equation (7).
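One cycle of this EKF for a scalar target position can be sketched as follows. Two simplifications are assumptions of the sketch and are not taken from the article. First, the linearization Fk−1 is obtained by a central finite difference rather than analytically. Second, the observation is taken to measure the state directly, with noise variance r. The caller supplies the transition function f, and the demo uses a hypothetical, mildly non-linear f_demo.

```python
import math

def ekf_state_step(tr_est, p_est, ec, z, f, q, r, eps=1e-5):
    """One extended-Kalman-filter cycle for a scalar state with transition f(tr, ec)."""
    # Linearize f around the previous estimate (F_{k-1} in the text), here
    # numerically with a central difference.
    jac = (f(tr_est + eps, ec) - f(tr_est - eps, ec)) / (2.0 * eps)
    # Predict: propagate the mean through f and the variance through the Jacobian.
    tr_pred = f(tr_est, ec)
    p_pred = jac * p_est * jac + q
    # Update: the observation z is assumed to equal the state plus noise of variance r.
    gain = p_pred / (p_pred + r)
    tr_new = tr_pred + gain * (z - tr_pred)
    p_new = (1.0 - gain) * p_pred
    return tr_new, p_new

def f_demo(tr, ec):
    """Hypothetical transition: shift by the efference copy, plus a small distortion."""
    return (tr - ec) + 0.1 * math.sin(tr - ec)

tr_new, p_new = ekf_state_step(5.0, 0.1, 1.0, 4.2, f_demo, q=0.01, r=0.05)
print(f"estimate={tr_new:.3f}, variance={p_new:.4f}")
```

For a linear f, the Jacobian is constant and the step reduces to an ordinary Kalman filter update. This is a convenient sanity check when substituting the RBF network for f_demo.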

A similar procedure is used to estimate the model parameters, wk, through time, using a second extended Kalman filter:

where the gain term is the Kalman gain of this second filter, optimally calculated as

with

in which the covariance term is the estimated error covariance matrix of the parameters w. The Jacobian term is the linear approximation of the function f around the current parameter estimate.
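The two filters can be interleaved as in the dual estimation scheme described above. In each step, the parameters are updated first, and the state is then predicted and updated with the new parameters. The sketch below does this for a deliberately tiny, hypothetical model. Instead of the RBF parameters [A1, σ1, …, AP, σP], it uses a single scalar gain w in f(tr, ec; w) = w·(tr − ec), and the observations are assumed to measure the target position directly. The function names, noise levels, and simulated data are assumptions for illustration only.

```python
import random

def f(tr, ec, w):
    """Hypothetical one-parameter transition: gain w applied to the shifted target."""
    return w * (tr - ec)

def dual_ekf(zs, ecs, tr0, w0, q=1e-4, r=0.0025, q_w=1e-6):
    """Interleave a parameter EKF and a state EKF over a sequence of observations."""
    tr, p = tr0, 1e-2      # state estimate and its variance
    w, p_w = w0, 1.0       # parameter estimate and its variance
    for z, ec in zip(zs, ecs):
        # Stage 1 (parameters): random-walk prediction, then an update that
        # linearizes f with respect to w at the previous state estimate.
        p_w += q_w
        h_w = tr - ec                              # df/dw
        k_w = p_w * h_w / (h_w * p_w * h_w + r)
        w += k_w * (z - f(tr, ec, w))
        p_w *= 1.0 - k_w * h_w
        # Stage 2 (state): predict with the freshly updated parameter, then update.
        jac = w                                    # df/dtr
        tr_pred = f(tr, ec, w)
        p_pred = jac * p * jac + q
        k = p_pred / (p_pred + r)
        tr = tr_pred + k * (z - tr_pred)
        p = (1.0 - k) * p_pred
    return tr, w

# Simulated data: ideal updating (true w = 1) with noisy observations of the target.
rng = random.Random(0)
true_tr, zs, ecs = 10.0, [], []
for _ in range(200):
    ec = rng.uniform(-1.0, 1.0)
    true_tr -= ec
    ecs.append(ec)
    zs.append(true_tr + rng.gauss(0.0, 0.05))

tr_hat, w_hat = dual_ekf(zs, ecs, tr0=10.0, w0=0.5)
print(f"w_hat = {w_hat:.3f}, state error = {tr_hat - true_tr:+.3f}")
```

Starting from a wrong gain (w0 = 0.5), the parameter filter converges toward the true value while the state filter keeps tracking the target. In the full model, the scalar gain would be replaced by the vector of tuning-curve parameters, and h_w by the corresponding Jacobian row.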

We use the same architecture to model spatial updating across both saccadic and smooth pursuit eye movements. The model is designed to estimate the remembered target position online during both kinds of eye movement. However, the dual EKF described above has a drawback: it produces overconfident estimates of the model parameters, so the effect of increased uncertainty on the model dynamics cannot be seen. For this reason, we combined the approach described above with a sequential “growing and pruning” learning strategy, a modified version of the method presented by Huang et al. (2005). Its short training time and fast convergence make it efficient for real-time estimation of the model parameters. The SC contains a large number of visual neurons (Mays and Sparks, 1980), each spatially selective for visual stimuli presented at a specific position relative to gaze. In the proposed method, we estimate the model parameters only for the subset of neurons activated in response to the remembered target, which makes online estimation more efficient. The details of the learning method are given below.

In this method, we define a criterion named neuron contribution (similar to the neuron significance criterion of Huang et al., 2005), which quantifies the contribution of each intermediate-layer neuron to the model output as

where the first term is the tuning curve of the ith neuron (Equation 7) and p(x) is the probability density function of the input signal x. The Kalman filter estimates a Gaussian distribution over the hidden states, which are also the feedback input in our model (the dynamics of the model parameters are given in Equation 9). Therefore, at each step of the dual Kalman filter, the first Kalman filter estimates the distribution p(x), and the second Kalman filter can use it to estimate the model parameters. In the growing and pruning method, a neuron in the intermediate layer is activated or deactivated based on its contribution to the model output. By activation, we mean that the neuron is allocated to the model and its parameters are estimated with the Kalman filtering approach described in Equations (11)–(13). Given the kth input to each neuron in the intermediate layer and the most recent output of the neural network, the error is defined as

Consider the ith neuron of the population, whose Gaussian tuning curve has preferred position μi and width σi. For an input TRk that is Gaussian-distributed with the mean and variance estimated by the first Kalman filter, the neuron contribution defined in Equation (14) can be calculated as

A neuron in the intermediate layer is activated if

where emin is the minimum expected error. Activating the ith neuron means setting Ai = A0 and σi = σ0 (the initial values for an activated neuron). The condition in Equation (17) ensures that a hidden-layer neuron is activated only when its activation improves learning accuracy. After the growing step, the network parameters are updated and the intermediate-layer neurons are checked for pruning that would improve learning accuracy. In other words, all model parameters are updated with the extended Kalman filter learning method of Equations (11)–(13). Neurons whose contribution is less than emin are then deactivated in the intermediate layer (i.e., Ai = 0). Further details of the method are given in the Supplementary Materials.
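The sketch below computes the neuron contribution and then applies one growing/pruning pass. It takes the contribution to be the expected tuning-curve response under p(x), with the background rate excluded. This is an assumption of the sketch, and Equation (16) may include further factors. Under this assumption, for an input x ~ N(m, s²) the integral has the closed form A·σi/√(σi² + s²)·exp(−(m − μi)²/(2(σi² + s²))). The pruning rule (deactivate units whose contribution falls below emin) follows the text. The growing rule is a hypothetical stand-in for Equation (17): while the output error exceeds emin, the inactive unit with the largest contribution is allocated with initial values A0 and σ0, provided its contribution also exceeds emin. Dropping a unit from the dictionary plays the role of setting Ai = 0. All parameter values are hypothetical.

```python
import math

def contribution(amp, mu, sigma, mean, var):
    """Closed-form integral of amp*exp(-(x-mu)^2/(2 sigma^2)) against N(mean, var)."""
    s2 = sigma ** 2 + var
    return amp * sigma / math.sqrt(s2) * math.exp(-((mean - mu) ** 2) / (2.0 * s2))

def grow_and_prune(active, contribs, error, e_min, a0, sigma0):
    """One growing/pruning pass; `active` maps unit index -> (A_i, sigma_i)."""
    updated = dict(active)
    # Growing (hypothetical rule): allocate the most useful inactive unit
    # while the output error is still above e_min.
    inactive = [i for i in contribs if i not in updated]
    if abs(error) > e_min and inactive:
        best = max(inactive, key=contribs.__getitem__)
        if contribs[best] > e_min:
            updated[best] = (a0, sigma0)
    # The EKF parameter update (Equations 11-13) would run here before pruning.
    # Pruning (as described in the text): drop units contributing less than e_min.
    return {i: p for i, p in updated.items() if contribs[i] >= e_min}

# Hypothetical units (preferred positions, deg) and input estimate N(mean, var).
CENTERS = {0: 0.0, 1: 6.0, 2: 30.0}
A0, SIGMA0, E_MIN = 1.0, 3.0, 0.01
mean, var = 1.0, 4.0
contribs = {i: contribution(A0, mu, SIGMA0, mean, var) for i, mu in CENTERS.items()}

# Unit 2 starts active but lies far from the input; unit 0 is the best inactive unit.
active = {2: (A0, SIGMA0)}
print(grow_and_prune(active, contribs, error=0.5, e_min=E_MIN, a0=A0, sigma0=SIGMA0))
```

The input variance s² enters both the prefactor and the exponent. Increasing it therefore spreads contribution to units farther from the mean estimate, while lowering the contribution of units at the mean.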

In this section, we test whether the model described above can replicate the behavioral and neurophysiological evidence for spatial updating in the oculomotor system. We also ask whether it makes further predictions about the single-neuron and population dynamics of visual remapping. We first consider the pursuit-saccade task and present the results of the model trained on this task. We then consider the results when the model is trained on the saccade-saccade task. As we shall show, the model accounts for different aspects of these two types of eye movement at both the behavioral and neural levels.

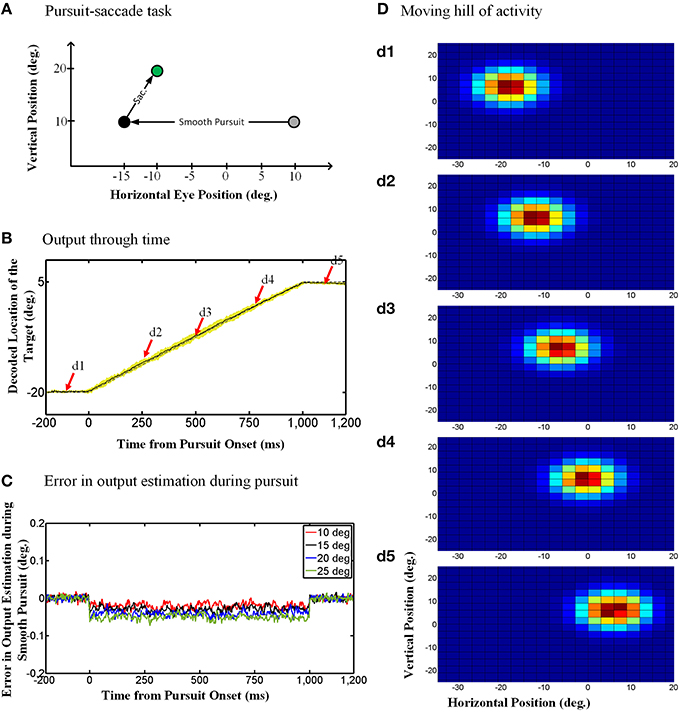

In this section, we evaluated our model on spatial updating during slow, continuous eye movements using a double-step pursuit-saccade task (Figure 1B). Examples of the signals used to evaluate the model are shown in Figures 3D,E. In this case there is no “amplitude burst,” so the updating model had to rely on velocity and position signals.

The “behavioral” outputs of the model are presented in Figure 4. Figure 4A depicts the pursuit-saccade task used to test the model, similar to that shown in Figure 1B. Figure 4B shows the model output for this task: the estimated location of the previously viewed target in gaze-centered coordinates through time. The simulations were two-dimensional, but vertical eye position remained stable in this task, so Figure 4B shows only the horizontal position of the target relative to current gaze. The solid black curve shows the target location averaged over 100 trials of the paradigm in Figure 4A, and the yellow shading shows one standard deviation over these 100 trials. The dashed gray curve shows the target position relative to current gaze direction in the absence of noise (ideal behavior). The model shows continuous gaze-centered tracking rather than predictive updating, and follows the ideal behavior very closely.

Figure 4. Continuous spatial updating during smooth pursuit eye movements. (A) Configuration of the double-step pursuit-saccade task used to evaluate the model. The initial fixation point (gray circle) is at (10°, 10°) and the remembered saccade target (green circle) is at (−10°, 20°) in space coordinates. The subject pursues the fixation point from the gray circle to the black one [at (−15°, 10°)], where the subject must make a saccade to the remembered target (green circle). (B) Model output through time. While the subject pursues the fixation point from the gray circle to the black one, the position of the remembered target (solid curve) is updated continuously. The diagram shows the horizontal retinotopic position of the target through time (solid curve), and the yellow shading shows the standard deviation of this output over 100 trials. (C) Error in the estimation of the target position during smooth pursuit eye movements (for different pursuit amplitudes). Negative values indicate tracking rather than predictive behavior in smooth pursuit. The error also increases with the amplitude of the smooth pursuit. (D) Moving hill of population activity through time. The population activity coding the retinotopic position of the saccade target moves continuously from its initial position in (d1) to its final position in (d5). The times of (d1–d5) are marked in (B) with red arrows. The color scale indicates activity in individual units, with blue corresponding to minimal and dark red to maximal activity, as depicted in Figure 3A.

We next evaluated the consistency of these results across trials and across pursuit velocities/amplitudes, and assessed their potential implications for vision. To do so, we calculated the error between the model's estimated output and the output based on the actual eye movement. We first averaged the estimated output over 100 trials and then calculated the difference between the output based on the actual eye movement and this averaged estimate. Figure 4C shows this error in degrees for smooth pursuit eye movements with the same duration but different velocities. The error is constant and negative during the eye movement. This means that the internal representation consistently lagged behind the eye movement, but the lag did not accumulate. The error increased with pursuit velocity but was always very small (range [−0.1°, 0.02°]), indicating near-ideal tracking during slow continuous eye movement. Similar results were obtained when we simulated pursuit of different amplitudes and velocities (Figure 4C). In other words, regardless of pursuit kinematics and saccade target location, the remembered location of the target was updated continuously, with a short delay relative to the actual pursuit eye movement.
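The error measure can be written in a few lines. Following the wording above, the sketch subtracts the across-trial mean estimate from the ideal (noise-free) output at each time step. The text reports lags as negative (Figure 4C) and errors in the direction of the saccade as positive (Figure 6C). To reproduce that convention regardless of movement direction, the sketch also multiplies by the sign of the eye movement. That direction convention and the function name updating_error are assumptions.

```python
def updating_error(ideal, trials, direction=1):
    """Per-time-step error between ideal output and the across-trial mean estimate.

    ideal:     noise-free gaze-centered target position at each time step (deg)
    trials:    list of per-trial model estimates, each the same length as ideal
    direction: +1 for rightward eye motion, -1 for leftward (assumed convention),
               so that lags come out negative and leads positive
    """
    n = len(trials)
    mean_est = [sum(trial[t] for trial in trials) / n for t in range(len(ideal))]
    return [direction * (a - m) for a, m in zip(ideal, mean_est)]

# Rightward pursuit: the gaze-centered target moves leftward; the estimate lags slightly.
ideal = [0.0, -1.0, -2.0]
trials = [[0.0, -0.9, -1.9], [0.0, -0.9, -1.9]]
errs = updating_error(ideal, trials, direction=1)
print([round(e, 3) for e in errs])
```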

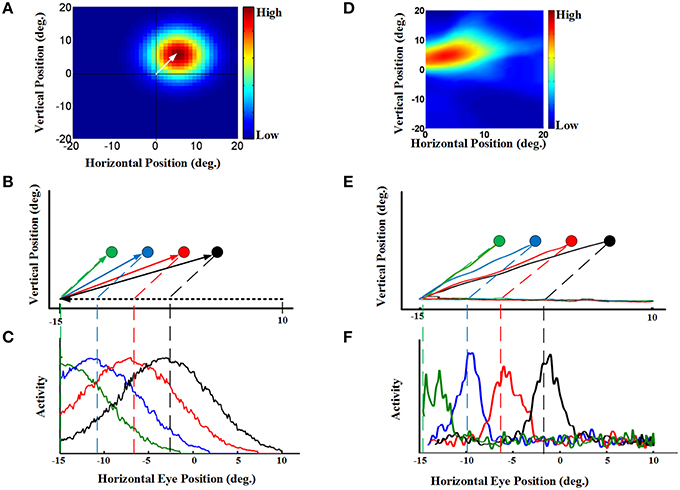

To understand how the model produced the behavior described above, we examined both population activity (Figure 4D) and the properties of individual “neurons” in the hidden layer of the RBF neural network (Figure 5). For the single-unit analysis, we used the same conventions as experimental neurophysiologists. Thus, Figures 5A–C (left column) show simulations that use the conventions Dash et al. (2015) used to analyze their single-unit SC data. The adjacent right column (Figures 5D–F) shows corresponding plots for an actual example neuron from Dash et al. (2015), with similar properties and recorded in a similar task. Figure 5A shows the eye-fixed Gaussian RF of a typical model neuron, which in this case peaked 5° to the right of and 5° above the fovea/gaze fixation point (similar to the example SC neuron RF in Figure 5D). The task was then arranged so that remembered saccade targets would pass through the neuron's RF at different times during the pursuit eye movement. Figure 5B illustrates a series of four 25° leftward smooth pursuit eye movements, each followed by a saccade to the remembered location of one of four different targets (Figure 5E shows similar monkey eye trajectories from Dash et al., 2015). Finally, Figure 5C plots the activity of the model neuron as a function of the same horizontal positions plotted in Figure 5B. As each target entered the neuron's RF, the neuron became active. Its activity peaked when the remembered target's location crossed the peak of the RF (vertical dashed lines) and then dissipated as the eye movement carried the target out of the RF. The response was spatially selective, depending on both the RF and the target location. This pattern of simulated activity was similar for all of our hidden units and closely matches actual SC visual neuron responses, such as those shown in Figure 5F.
The model and data also showed similar results when divided into short, medium, and long pursuit ramps (Figure S1).

Figure 5. Continuous updating of an example neuron's activity during smooth pursuit eye movement [a comparison of our model with the experimental SC data of Dash et al. (2015)]. (A) 2D receptive field of a model neuron centered at 5° right and 5° up relative to gaze position. (B) Eye movement trajectories in 2D spatial coordinates, showing four trials of a 25° leftward smooth pursuit followed by a saccade to different previously shown visual targets. The circles indicate the remembered target positions. Green, blue, red, and black show the remembered positions of targets previously shown at horizontal positions −10°, −6°, −2°, and 2°, respectively. All targets are at a vertical position of 5°. (C) Neural activity of an example model neuron with receptive field centered at 5° right and 5° up, plotted against horizontal eye position for the trials in (B). The activity for different trials is color coded. The neuron responds as the remembered position of the target passes through its receptive field. (D) An example SC neuron with a 2D receptive field also centered at 5° right and 5° up relative to gaze position. (E) Actual eye movement trajectories in 2D spatial coordinates, in a configuration similar to (B). (F) Neural activity (spike density) of the example SC neuron whose receptive field is shown in (D), plotted with the same conventions as (C). The activity for different target positions is color coded. These SC data are presented for comparison of the model neuron (C) with the SC neuron (F). For complete details of the experimental methods used to obtain the data in (D–F), see Dash et al. (2015). Those experiments were done in accordance with Canadian Council for Animal Care guidelines and were approved by the York Animal Care Committee.

Dash et al. (2015) could not directly reconstruct topographic population activity, because they varied the task for each neuron in their experiment. However, they argued that single-unit properties can be used to predict population behavior (Dash et al., 2015). Specifically, the updating activity at the single-unit level illustrated in Figure 5 (for both the model simulations and real data) should correspond to a moving hill of activity at the population level. Here, we demonstrate this directly by simulating SC population activity through time during the updating task in our model. This is illustrated in Figure 4D, using the same task as in Figure 4A and taking temporal “snapshots” at the times (d1–d5) indicated by the arrows in Figure 4B. Figure 4D shows the entire population of responses in the hidden layer of our RBF neural network. Our simulated hidden units have no physical topography. For illustration, however, they are arranged in the figure according to the peaks of their RFs in a gaze-centered retinotopic map, similar to that observed in the superficial layers of the SC (Walker et al., 1995; Sommer and Wurtz, 2002; Marino et al., 2008). Figure 4d1 shows the population activity 100 ms before pursuit onset. It has a “hill-like” pattern, with peak activation corresponding to the saccade target position relative to initial gaze, dissipating spatially in the surrounding circle (this circle is stretched horizontally by the scale of our illustration). This pattern of population activity arises naturally from Gaussian RFs and is typical of topographically arranged visual and motor responses in the SC (Walker et al., 1995; Sommer and Wurtz, 2002; Marino et al., 2008).

More importantly, Figures 4d2–d4 depict the population activity at 250, 500, and 750 ms, respectively, after the onset of the rightward pursuit movement. These panels are snapshots of a continuously “moving hill” of activity during smooth pursuit. The hill moves from right to left, opposite to the direction of eye motion, maintaining the location of the saccade target in gaze-centered coordinates. As a result, whenever pursuit stops (Figure 4d5), the population activity needed for transformation into a saccade is retained. In other words, the neural population code for the remembered saccade target was updated continuously in gaze-centered coordinates during smooth pursuit, always ready for behavior.
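The moving hill can be illustrated with a one-dimensional population. During pursuit, the remembered target's gaze-centered position is its spatial position minus the current eye position, and the most active unit follows that position. The sketch assumes Gaussian tuning. The pursuit profile (25° rightward at a constant 25°/s), the target position, the tuning width, and the 0.25° unit spacing are all hypothetical.

```python
import math

CENTERS = [-40.0 + 0.25 * i for i in range(321)]  # preferred positions, 0.25 deg apart
SIGMA = 3.0                                       # hypothetical tuning width (deg)

def population(tr):
    """Gaussian activity of every unit for a gaze-centered target position tr."""
    return [math.exp(-((tr - mu) ** 2) / (2.0 * SIGMA ** 2)) for mu in CENTERS]

def hill_peak(tr):
    """Preferred position of the most active unit (the peak of the hill)."""
    act = population(tr)
    return CENTERS[max(range(len(act)), key=act.__getitem__)]

TARGET = 5.0                        # remembered target, spatial horizontal position (deg)
EYE_START, VELOCITY = -10.0, 25.0   # rightward pursuit (deg, deg/s)

peaks = []
for t in (0.0, 0.25, 0.5, 0.75, 1.0):   # seconds after pursuit onset
    eye = EYE_START + VELOCITY * t
    peaks.append(hill_peak(TARGET - eye))
print(peaks)  # [15.0, 8.75, 2.5, -3.75, -10.0]
```

The peak moves by the same amount as the eye but in the opposite direction. This is the gaze-centered bookkeeping that the snapshots in Figure 4D visualize.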

As argued by Dash et al. (2015), this is simply the necessary population corollary of the single-unit properties reported above. The peak of the moving hill corresponds to the target entering the peak of the eye-fixed RF of some cell at one point in time (vertical dashed lines in Figures 5B,C). The surrounding cells (in our virtual topographic map) show decreasing activity as a function of the distance of the remembered target from the peaks of their RFs. This population response, combined with the output decoding process incorporated into our model, results in the updating behavior shown in Figure 4B. In physiological terms, this decoding process could be implemented by transfer of this visual memory activity into saccade motor activity (as also observed by Dash et al., 2015).

The preceding phenomenology is similar to what neurophysiologists have reported. Because we designed the model, however, we know both what it does and how it works. Thus, we know that the purpose of the continuous updating responses in our hidden units is to update the memory of the saccade target, and that these responses are causally related to this function. We also know that this works through the continuous transfer of activity across the virtual retinotopic map of our hidden layer.

We also used the model to simulate the saccade-saccade task. To this end, we used the efference copy signal in Figure 3B and the eye position signal in Figure 3C as model inputs. As explained in Section 2.3, the eye velocity or position signal is less reliable during a saccade than during smooth pursuit, and the intended eye displacement becomes a more dominant factor in updating.

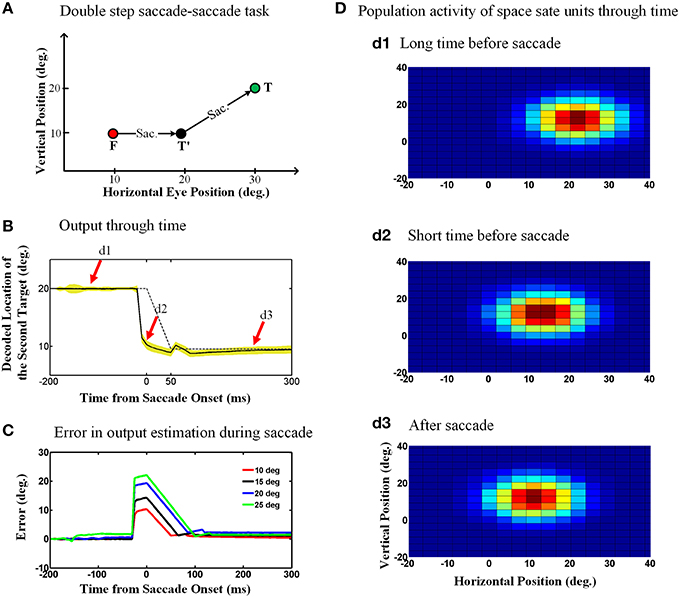

Figure 6A shows the double-step saccade-saccade paradigm used to test our model. In space coordinates, the initial fixation point is at (10°, 10°), the first target at (20°, 10°), and the second target at (30°, 20°). The second target is therefore at (20°, 10°) in gaze-centered coordinates at the initial fixation point, and at (10°, 10°) relative to gaze after the first saccade. Figure 6B shows the model output, i.e., the estimated position of the second target relative to gaze, from 200 ms before saccade onset to 250 ms after saccade completion. The duration of this 10° saccade was set to 50 ms. The solid curve is the output averaged over 100 trials with the configuration in Figure 6A, and the yellow shading is the standard deviation of the predicted output over these 100 trials. The dashed curve shows the second target position relative to gaze in the ideal, noise-free case. As Figure 6B shows, the model's estimate of the second target position is predictive. The output (solid line) jumps to its future position before saccade onset (dashed line; actual eye movement). This is because the efferent saccade displacement signal, which is largely responsible for the updating, arises before the actual saccade (Figure 3).
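The gaze-centered coordinates quoted above follow from simple vector subtraction, assuming purely translational (small-angle) geometry with no ocular torsion. The sketch checks the numbers for the task in Figure 6A, together with the ideal updating rule (remembered vector minus saccade vector). The helper name gaze_centered is ours.

```python
def gaze_centered(target, gaze):
    """Target position relative to gaze, component-wise, in degrees (translation only)."""
    return tuple(t - g for t, g in zip(target, gaze))

FIXATION = (10.0, 10.0)
FIRST_TARGET = (20.0, 10.0)
SECOND_TARGET = (30.0, 20.0)

before = gaze_centered(SECOND_TARGET, FIXATION)     # (20.0, 10.0)
after = gaze_centered(SECOND_TARGET, FIRST_TARGET)  # (10.0, 10.0)
saccade = gaze_centered(FIRST_TARGET, FIXATION)     # (10.0, 0.0)

# Ideal updating: the remembered vector shifts by minus the first saccade vector.
print(before, after, gaze_centered(before, saccade) == after)
```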

Figure 6. Predictive remapping of the remembered second target position during saccades. (A) Configuration of the double-step saccade-saccade task used to evaluate the model. The initial fixation point is at (10°, 10°), and the first and second saccade targets are at (20°, 10°) and (30°, 20°) in space coordinates, respectively. The subject makes the first saccade from the fixation point (red circle) to the first target (black circle), and must then make a second saccade to the remembered second target (green circle). (B) Model output through time. When the subject makes the first saccade from the fixation point (red circle) to the first target (black circle), the retinotopic position of the second target is updated discretely and predictively. The solid curve shows the horizontal retinotopic position of the second target through time. This estimated position jumps to its future position even before saccade onset at time zero. The yellow shading shows the standard deviation of the output over 100 trials. (C) Error in the estimation of the second target position during saccadic eye movements (for different saccade amplitudes). Positive values indicate predictive behavior, and the peak of each curve increases in proportion to saccade amplitude. (D) Population activity of the state-space units through time. The panels show the population activity of neurons coding the retinotopic position of the second saccade target long before the saccade (d1), shortly before the saccade (d2), and long after the saccade (d3). The population activity jumps to its future position shortly before the upcoming first saccade, i.e., it shows predictive remapping.

One consequence of the predictive updating response is a transient perisaccadic mismatch between actual and remembered target position. To quantify this, we again calculated the error between the model's estimated output and the output based on the actual eye movement. Figure 6C shows this error in the estimated second target location through time for different saccade sizes. The error rises rapidly just before the saccade, peaks around saccade onset, and then drops back to zero (with some minor oscillations) after the saccade. Further, the error is always positive (in the direction of the saccade) and peaks at an amplitude approximately equal to the corresponding saccade amplitude. In our model, these observations result from two factors. The first is the predictive estimation of the second target position using the first saccade's displacement command. The second is the subsequent influence of the eye position signal, which has a slower time course. If this influenced perception, it would predict large errors in stimulus localization just before saccades and smaller errors just afterwards (discussed further in Section 4.2).
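A toy timeline reproduces the shape of this error. Suppose the estimate applies the full shift when the efference copy arrives, some time before saccade onset, while the ideal gaze-centered position changes only as the eye moves. The error (ideal minus estimate) is then zero long before the saccade. It equals the saccade amplitude between efference-copy arrival and saccade onset, and returns to zero at saccade end. The 30 ms lead is hypothetical. The 10° amplitude and 50 ms duration follow the example above. The slower eye-position correction is ignored, so the sketch has no post-saccadic oscillations.

```python
def ideal_tr(t, tr0, amp, dur):
    """Ideal gaze-centered target position (deg) at time t (ms from saccade onset)."""
    if t <= 0:
        return tr0
    if t >= dur:
        return tr0 - amp
    return tr0 - amp * t / dur  # eye assumed to move at constant speed during the saccade

def predictive_estimate(t, tr0, amp, lead):
    """Estimate that applies the whole shift when the efference copy arrives (t = -lead)."""
    return tr0 - amp if t >= -lead else tr0

TR0, AMP, DUR, LEAD = 20.0, 10.0, 50.0, 30.0
times = list(range(-100, 151, 10))
errors = [ideal_tr(t, TR0, AMP, DUR) - predictive_estimate(t, TR0, AMP, LEAD) for t in times]
print(max(errors), errors[0], errors[-1])  # 10.0 0.0 0.0
```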

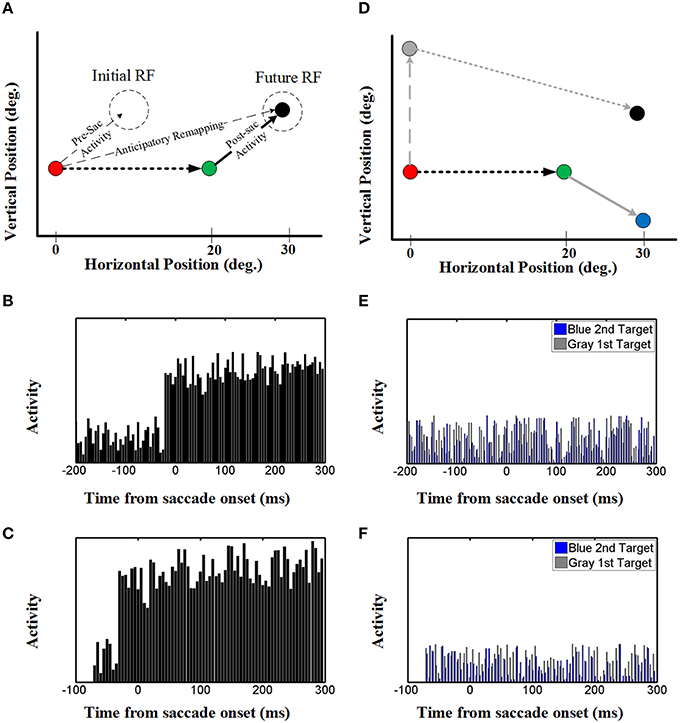

Once again, to understand how the decoded output of the model arose, we investigated both population behavior and individual neurons in the hidden layer, again using techniques from neurophysiology. The simulation illustrated in Figure 7A replicates the conditions of the classic Duhamel et al. (1992) experiment, with two visual stimuli presented before the first saccade (to the green target). The only difference is that we added a second saccade to the black target to clearly define its behavioral relevance (Figure 1A). These neurons again have eye-fixed Gaussian RFs. The geometry of the simulation was arranged so that the neuron's RF (initial RF) is initially far from any stimulus, whereas the first saccade brings it right over the black stimulus (future RF).

Figure 7. Effect of an upcoming saccade on an example neuron's activity. (A) The double-step saccade-saccade paradigm used to obtain the neural activity. The red, green, and black circles indicate the fixation point, the intervening saccade target, and the memory target, respectively. The memory target is at (30°, 5°) relative to the first fixation point. After a 20° rightward intervening saccade, it is at (10°, 5°) relative to the new gaze position. (B) Neural activity of a neuron with receptive field centered at (10°, 5°) relative to gaze position. The neural activity (black spikes) rises before saccade onset, showing predictive remapping in this neuron. (C) Neural activity of the neuron with receptive field centered at (10°, 5°) relative to gaze position when the second target is presented close to saccade onset (similar to the Duhamel et al., 1992, experiment). As in (B), the neural activity (black spikes) rises before saccade onset, showing predictive remapping. (D) Control conditions. The activity of the same neuron is also plotted (gray spikes) for a different memory target at (30°, −5°) (blue circle) and for a different intervening saccade target (gray circle). (E,F) In the control conditions, the neuron's activity shows no specific response. This is because the blue memory target is outside its receptive field before, during, and after the saccade.

Figure 7B shows the activity of this neuron with our standard 200 ms memory interval before saccade onset (Figures 1A,B). Initially, this “neuron” showed only random background activity, because there was nothing in its initial RF. Its activity then rose abruptly before saccade onset. This is predictive remapping, corresponding to the dashed line labeled “anticipatory remapping” in Figure 7A. To an experimenter recording from this neuron, its RF would appear to shift transiently (remap) toward the future RF. The neuron then shows sustained activity after the first saccade, corresponding to the location of the black target (now extinguished) in its normal eye-fixed RF. At the population level (Figure 6), neural activity is thus predictively transferred between different neurons, i.e., from the population activated at the original eye position to the appropriate population for the final eye position. At the single-unit level (Figure 7B), the same phenomenon manifests as a predictive remapping response followed by sustained activity in cells with RFs corresponding to the final target/eye position.

These observations are very similar to the data reported by Duhamel et al. (1992) in a single-step task (no saccade to the second target), except that in those experiments there was no memory lag between the visual stimulus and the first saccade. To show that this delay makes no difference in our model, we reduced the memory interval to 70 ms before saccade onset. This left only one time step between the arrival of the visual stimulus (Figure 3A) and the pre-saccadic efference copy (Figure 3B). (We could not reduce this further because our model structure does not allow simultaneous presentation of visual and motor inputs.) The results (Figure 7C) show that, apart from the visual activation arriving closer to the saccade, this simulation gave the same results as Figure 7B. Thus, the predictive and sustained activity of our model agrees with the neurophysiological evidence of Duhamel et al. (1992) and of subsequent remapping experiments (Walker et al., 1995; Umeno and Goldberg, 1997). Moreover, when these simulations are repeated over multiple trials (similar to multiple neurons), the uncorrelated noise cancels, providing a very clean updating response (Figure S2).

We also tested our neurons in two control conditions (Figure 7D). In the first, the final target was the same but the first saccade target was different (gray), so the first saccade did not bring the final target into the neuron's RF. In the second, the first saccade was the same but the final target was different (blue), lying outside the neuron's RF after the first saccade. In neither case did the neural activity change from baseline (blue and gray activity plots in Figures 7E,F), and, as Figures 7E,F show, this observation was not influenced by the memory delay in our model. The predictive and sustained remapping response in our model was therefore spatially selective, depending on the first saccade bringing the second saccade target into the neuron's RF.
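The spatial selectivity of the remapping response can be checked with a few lines of arithmetic. The neuron is driven only if the memory target's post-saccadic gaze-centered position falls inside its RF. The RF center (10°, 5°), the memory targets, and the 20° rightward saccade come from Figure 7. The Gaussian RF profile, its width RF_SIGMA, and the alternative 10° saccade used for the gray control are hypothetical.

```python
import math

RF_CENTER = (10.0, 5.0)  # deg, from Figure 7
RF_SIGMA = 3.0           # hypothetical RF width (deg)

def rf_response(retinal_pos):
    """Gaussian RF drive for a stimulus at a gaze-centered position (deg)."""
    d2 = sum((p - c) ** 2 for p, c in zip(retinal_pos, RF_CENTER))
    return math.exp(-d2 / (2.0 * RF_SIGMA ** 2))

def remapped_response(target_rel_initial, saccade_vec):
    """RF drive once the memory target is remapped by the upcoming saccade."""
    new_pos = tuple(t - s for t, s in zip(target_rel_initial, saccade_vec))
    return rf_response(new_pos)

main = remapped_response((30.0, 5.0), (20.0, 0.0))   # lands on the RF center
blue = remapped_response((30.0, -5.0), (20.0, 0.0))  # different memory target
gray = remapped_response((30.0, 5.0), (10.0, 0.0))   # hypothetical different saccade
print(f"main={main:.3f}, blue={blue:.4f}, gray={gray:.4f}")
```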

These single-unit properties again let one understand and predict what happens at the population level. The population activities are shown in Figure 6D, using the same plotting conventions described above for Figure 4D. Panels d1–d3 correspond to the task shown in Figure 6A, taken at the times indicated by the red arrows in Figure 6B. Figure 6d1 again shows the typical “hill” of population activity before saccade onset. Figure 6d2 depicts the population activity a short time before saccade onset, showing a predictive jump to its final position before the eye moves. Finally, Figure 6d3 shows the population activity long after saccade completion. Thus, the peak of the population activity “skips ahead” in the direction of the saccade and is then sustained after the saccade, giving rise to the decoded behavioral outputs illustrated in Figure 6B.
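The population-level picture can be sketched with a one-dimensional array of Gaussian-tuned units. This is a simplified illustration, not the network's RBF hidden layer: the preferred positions, tuning width, and center-of-mass readout are assumptions. Moving the encoded retinal target from T to T − S (S being the saccade vector) moves the whole hill of activity, and the readout follows it.

```python
import math

# Hypothetical population: preferred retinal positions from -40 to +40 deg in 1-deg steps.
PREFERRED = [float(p) for p in range(-40, 41)]

def population_activity(encoded_pos, sigma=5.0):
    """Gaussian 'hill' of activity centered on the encoded retinal position."""
    return [math.exp(-((p - encoded_pos) ** 2) / (2.0 * sigma ** 2)) for p in PREFERRED]

def decode_center_of_mass(activity):
    """Read out the encoded position as the activity-weighted mean of preferred positions."""
    total = sum(activity)
    return sum(a * p for a, p in zip(activity, PREFERRED)) / total

def peak_unit(activity):
    """Preferred position of the most active unit (the top of the hill)."""
    return PREFERRED[max(range(len(activity)), key=activity.__getitem__)]

target, saccade = 15.0, 10.0                   # hypothetical retinal target and saccade (deg)
before = population_activity(target)           # hill before the update
after = population_activity(target - saccade)  # hill after gaze-centered updating
print(peak_unit(before), peak_unit(after))     # prints: 15.0 5.0
print(round(decode_center_of_mass(after), 3))
```

In this picture a single unit near the new peak sees its activity rise (a "remapping" response), while the population as a whole simply shows the hill relocating.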

Again, we know the reason for this behavior in our model: it updates remembered activity relevant for future behavior (here, the second saccade), and its anticipatory aspect simply results from the timing of internal saccade motor signals relative to the actual delays of the eye movements.
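The anticipatory timing can be made concrete with a toy timeline. It assumes, hypothetically, that the internal representation is updated as soon as the efference copy arrives, that the eye itself moves only after a fixed motor delay, and that the saccade is instantaneous; the step indices and delay are illustrative, not the model's actual time steps.

```python
def simulate_timeline(n_steps, cd_step, motor_delay, target, saccade):
    """Return a list of (eye_position, remembered_retinal_target) per time step."""
    eye, remembered, timeline = 0.0, target, []
    for t in range(n_steps):
        if t == cd_step:
            remembered = target - saccade   # update triggered by the efference copy
        if t == cd_step + motor_delay:
            eye = saccade                   # the eye lands only after the motor delay
        timeline.append((eye, remembered))
    return timeline

def first_change(values):
    """Index of the first step whose value differs from the initial one (None if it never changes)."""
    return next((i for i, v in enumerate(values) if v != values[0]), None)

timeline = simulate_timeline(n_steps=20, cd_step=5, motor_delay=3, target=15.0, saccade=10.0)
eye_trace = [eye for eye, _ in timeline]
memory_trace = [mem for _, mem in timeline]
lead = first_change(eye_trace) - first_change(memory_trace)
print(lead)  # prints: 3
```

The memory update leads the eye by exactly the assumed motor delay, which is all that "anticipatory" requires in this account.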

Until now we have assumed that the input signals to our model are equally stable during fixations and saccades, but this is unlikely to be true. Saccades are very rapid and accompanied by large transient signals that are thought to add noise and uncertainty to the visual system (Harris and Wolpert, 1998; Niemeier et al., 2003; Prime et al., 2007). For example, around the time of saccades, eye position signals are probably unstable, inaccurate, and possibly out of sync with real time. Statistically speaking, the spread of the eye position signal's probability distribution increases around the time of a saccade relative to fixation. This motivated us to investigate how this increased uncertainty might influence spatial updating. To do so, we incorporated this input uncertainty into the model's learning procedure (details and mathematical aspects are provided in the Supplementary Materials).
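The effect of peri-saccadic input uncertainty on the estimated state can be illustrated with a scalar Kalman filter. This is a simplified linear stand-in for the model's dual EKF, not its actual equations: the remembered target is predicted forward using the eye-displacement input, whose noise variance `q` is assumed to be larger during a brief saccade window, and a noisy observation with variance `r` (assumed here, e.g. a training signal) arrives on each step. All numbers are hypothetical. Because the variance recursion of a linear Kalman filter does not depend on the observed values, only the variances are tracked.

```python
def posterior_variances(q_schedule, r=1.0, p0=1.0):
    """Scalar Kalman filter variance recursion for a time-varying input-noise schedule."""
    p, history = p0, []
    for q in q_schedule:
        p_pred = p + q                 # predict: the noisy eye-displacement input inflates uncertainty
        gain = p_pred / (p_pred + r)   # Kalman gain for an observation with variance r
        p = (1.0 - gain) * p_pred      # update: the observation shrinks uncertainty again
        history.append(p)
    return history

# Hypothetical schedule: low input noise during fixation, high noise in a 5-step saccade window.
Q_FIXATION, Q_SACCADE = 0.01, 1.0
schedule = [Q_FIXATION] * 20 + [Q_SACCADE] * 5 + [Q_FIXATION] * 20
variances = posterior_variances(schedule)
print([round(v, 3) for v in variances[17:28]])
```

The state variance rises sharply when the input noise increases and relaxes back to its fixation level afterwards, qualitatively like the time course of uncertainty in Figure 8A.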

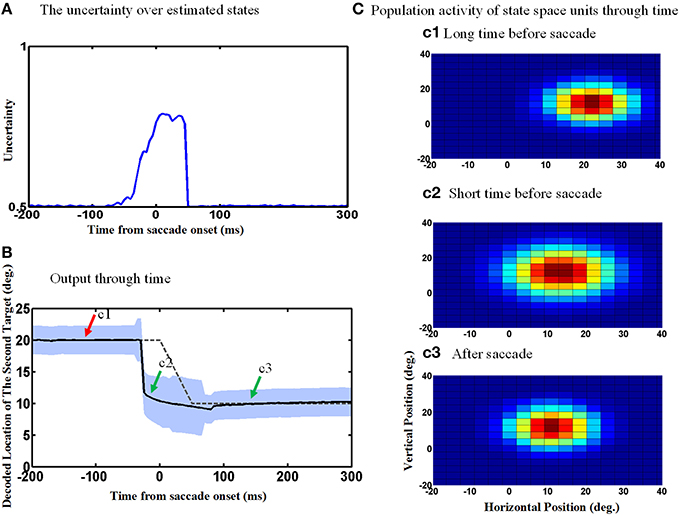

Figure 8 repeats the simulations shown in Figure 6, but now allows the uncertainty of the eye position signal to increase around the time of the saccade, which in turn increases the uncertainty of the estimated internal states around that time. This internal-state uncertainty (shown as the width of the Gaussian distribution of the estimated hidden states) is plotted over the time course of the spatial updating task in Figure 8A. Simulating the double-step saccade-saccade task under this new condition produced model output (Figure 8B) that still showed the anticipatory “jump” (solid black line relative to the dashed line for the actual saccade). Likewise, the population activity of hidden-layer neurons (Figure 8C) again jumps, but also expands a short time before the saccade (Figure 8c2). The time course of this expansion is represented by the vertical width of the blue shaded area in Figure 8B. The expansion begins at the onset of the predictive jump before the saccade (dashed line) and continues until just after the saccade, after which the population activity returns to its original size (Figure 8B, mark c3, and Figure 8c3).

Figure 8. Population activities expand during saccades due to the increase in eye position measurement uncertainty. (A) Uncertainty of the states estimated by the Kalman filter, measured as the width of the Gaussian distribution. (B) Model output through time. The estimated second target position jumps predictively to its future value even before the upcoming eye movement. Moreover, the width of the population activity increases before the upcoming saccade, and this broadening continues during the saccade, as shown by the blue shading. More neurons are activated as the width of the distribution (shown in A) increases. (C) Population activity of the state-space units through time. The population activity of neurons coding the retinotopic position of the second saccade target is shown long before the saccade (c1), a short time before the saccade (c2), and long after the saccade (c3). The population activity jumps to its future position and also expands a short time before the upcoming first saccade.

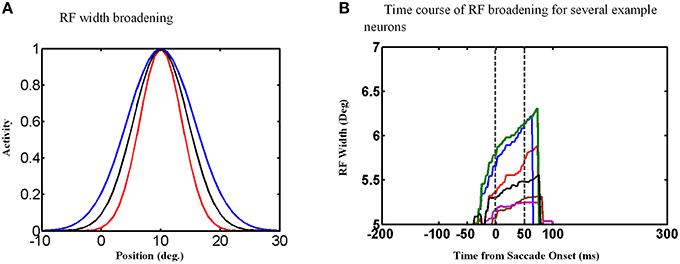

The behavior of single units explains the behavior of the population and its output. The width of the Gaussian RFs increases around the time of the saccade (Figure 9A). In this figure, the red curve shows the RF of an example neuron long before the saccade, the black curve shows its RF shortly before the saccade, and the blue curve shows its RF during the saccade. Figure 9B shows the time course of RF width for several example neurons whose RFs contain the remembered target. RF width starts to increase before saccade onset, broadens continuously during the saccade, and finally returns abruptly to its normal fixation size shortly after the saccade. As a result of this transient RF broadening, a wider swath of neurons is activated during the updating task (Figure 8c2). This leads to two predictions: expanding RFs and broader recruitment of neurons around the time of a saccade.

Figure 9. RF width of state-space units through time. (A) Broadening of the RF of an example neuron during the saccade. (B) RF widths through time of several neurons whose RFs contain the remembered target, for a 10° saccade. RF size starts to increase before saccade onset and returns to its fixation size shortly after the saccade completes.
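One simple way to see why broader uncertainty recruits more units: if a unit's Gaussian RF (width σ_RF) is combined with Gaussian uncertainty about the target's position (variance P), the effective tuning curve is the convolution of two Gaussians, whose width is √(σ_RF² + P). The sketch assumes this convolution, a peak-normalized response, a 0.5 recruitment threshold, and hypothetical variances for fixation and saccade; it illustrates the principle and is not the mechanism by which RF widths change in the trained network.

```python
import math

PREFERRED = [float(p) for p in range(-40, 41)]  # hypothetical preferred positions (deg)

def effective_width(sigma_rf, state_variance):
    """Width of a Gaussian RF convolved with Gaussian position uncertainty."""
    return math.sqrt(sigma_rf ** 2 + state_variance)

def recruited_units(encoded_pos, sigma_rf, state_variance, threshold=0.5):
    """Count units whose peak-normalized response to the encoded position exceeds the threshold."""
    width = effective_width(sigma_rf, state_variance)
    return sum(
        1 for p in PREFERRED
        if math.exp(-((p - encoded_pos) ** 2) / (2.0 * width ** 2)) > threshold
    )

# Hypothetical state variances: small during fixation, large around the saccade.
fixation = recruited_units(0.0, sigma_rf=5.0, state_variance=1.0)
perisaccadic = recruited_units(0.0, sigma_rf=5.0, state_variance=25.0)
print(fixation, perisaccadic)
```

Larger state variance widens every effective RF, so more units cross the threshold for the same encoded position, matching the two predictions of expanded RFs and broader recruitment.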

The primary aim of this study was to determine whether an artificial network developed to perform a simple double-step spatial updating task could also replicate the major neurophysiological phenomena associated with spatial updating and remapping during saccades and pursuit eye movements. To do this, we developed a state-space model (SSM) to study the dynamics of spatial updating across eye movements. The proposed model follows a dual EKF structure, which is well suited to studying both behavioral and neural population activity through time. Further, depending on its inputs, the model can switch between simulating the updating of saccade targets across smooth pursuit and across an intervening saccade, much like the actual brain. During smooth pursuit, the proposed model demonstrated a continuously moving hill of activity related to the remembered target for a subsequent saccade, replicating the results of Dash et al. (2015). Moreover, during saccades our model showed a predictive “jump” in memory-related population activity to its future position, replicating the results of several “predictive remapping” studies (Duhamel et al., 1992; Walker et al., 1995; Umeno and Goldberg, 1997). Thus, the same general model structure accounted for spatial updating/remapping during both saccadic and smooth pursuit eye movements, simply by using different input signals. Furthermore, the proposed model makes the new prediction that population activity expands during saccadic eye movements. To our knowledge, no other model has explained so many phenomena associated with updating/remapping based on the single unifying concept of using corollary discharge to retain visual information in a gaze-centered frame.
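The dual-filter idea can be sketched on a scalar toy system. Two Kalman filters run side by side: one estimates the hidden state x (standing in for the remembered target) using the current parameter estimate, and the other estimates the parameter w using the current state estimate. The system x_k = w·x_{k-1} + u_k + noise, the noise levels, and the function names are hypothetical. Because this toy system is linear in x for fixed w and linear in w for fixed x, each filter's linearization is exact, so this is a linear dual Kalman filter rather than the recurrent RBF network with a dual EKF used in our model. The weight filter also uses the static derivative x̂ and ignores the recurrent dependence of x̂ on w, a common simplification.

```python
import random

def simulate(n, w_true=0.8, q=0.001, r=0.01, seed=1):
    """Generate observations y_k of x_k = w_true * x_{k-1} + u_k + process noise."""
    rng = random.Random(seed)
    x, ys, us = 0.0, [], []
    for _ in range(n):
        u = rng.gauss(0.0, 1.0)                        # known input (e.g. a motor command)
        x = w_true * x + u + rng.gauss(0.0, q ** 0.5)  # hidden state update
        ys.append(x + rng.gauss(0.0, r ** 0.5))        # noisy observation of the state
        us.append(u)
    return ys, us

def dual_kalman(ys, us, q=0.001, r=0.01, q_w=1e-5, w0=0.5):
    """Alternate a weight filter and a state filter; return the final (x_hat, w_hat)."""
    x_hat, p = 0.0, 1.0      # state estimate and its variance
    w_hat, p_w = w0, 1.0     # parameter estimate and its variance
    for y, u in zip(ys, us):
        # Weight filter: random-walk prediction, then update from y ~ w * x_hat + u.
        p_w += q_w
        h = x_hat                                  # derivative of w * x_hat + u with respect to w
        k_w = p_w * h / (h * p_w * h + r + q)
        w_hat += k_w * (y - (w_hat * x_hat + u))
        p_w *= 1.0 - k_w * h
        # State filter: predict with the current weight estimate, then correct with y.
        x_pred = w_hat * x_hat + u
        p_pred = w_hat * p * w_hat + q
        k = p_pred / (p_pred + r)
        x_hat = x_pred + k * (y - x_pred)
        p = (1.0 - k) * p_pred
    return x_hat, w_hat

ys, us = simulate(2000)
x_hat, w_hat = dual_kalman(ys, us)
print(round(w_hat, 2))
```

Starting from a wrong guess (w0 = 0.5), the parameter estimate converges toward the true value while the state filter keeps tracking x, which is the sense in which parameters and internal states are estimated sequentially and jointly.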

Studies of spatial updating that examined motor activity in the double-step task make it clear that saccade plans are updated to compensate for intervening eye movements in a spatially specific fashion (e.g., Sparks and Mays, 1983; Medendorp et al., 2003). It is less clear how motor updating relates to the updating of visual signals during saccades (Duhamel et al., 1992; Zirnsak et al., 2014). However, it is noteworthy that most of the brain areas that show alterations in visual RFs around the time of saccades also carry saccade-related signals (Duhamel et al., 1992; Walker et al., 1995; Umeno and Goldberg, 1997; Dash et al., 2015). Therefore, an integrated visuomotor approach to this question may be most appropriate.

One structure implicated in both saccade production and spatial updating is the SC (Walker et al., 1995; Sommer and Wurtz, 2000; Dash et al., 2015). Dash et al. recently employed a double-step pursuit-saccade task to study the updating of a remembered visual target in the SC during smooth pursuit eye movements. The authors reported that almost all visual neurons in their dataset showed continuous updating during smooth pursuit, whereas motor saccade signals were only updated at the time of the saccade (Dash et al., 2015). Our model, trained on a similar task, showed single-neuron results similar to the visual activity reported by Dash et al. (2015). Moreover, in our model we could directly observe that the corresponding population of SC neurons shows a continuously moving hill of activity, as Dash et al. (2015) predicted from their data. Furthermore, our model showed that this continuous updating lags the actual eye movement, a prediction that has not yet been tested. As proposed by Dash et al. (2015), it is essentially certain that our model would produce similar predictions for updating across other types of slow movement, for example head motion through space, if these were included in our simulations (Medendorp et al., 2003; Klier and Angelaki, 2008).

The influence of saccades on visual signals has mainly been studied in single-step saccade experiments in which animals were not explicitly required to remember the additional visual target for any behavioral purpose. In 1992, Duhamel and colleagues showed that LIP neurons can respond to a visual stimulus in the location their RF will occupy after a saccade, even before the eyes move (Duhamel et al., 1992). In other words, they found that parietal RFs are predictively remapped to their future position to compensate for the upcoming eye movement. Subsequently, similar evidence for a compensatory predictive mechanism was found in the FEF (Umeno and Goldberg, 1997) and the SC (Walker et al., 1995). Our model produced similar remapping-like results at the single-neuron level (Figure 7), whereas at the population level it showed a predictive jump of the hill of activity to its future position (Figure 6). One can thus see how remapping and updating could appear to be two different things when viewed from these two perspectives, but in our model they were simply two ways of describing the same simulation. Further, in our model this did not depend on the timing of the stimulus: the same results occurred whether the saccade followed the visual stimulus immediately (as in the single-step experiments described above) or after a more prolonged delay. Finally, because our model was trained on the double-step task, we know a priori the purpose of these phenomena: to place neural activity at the appropriate location, ready for a second saccade from the future eye position.

The logical implication of these results is that there may also be no fundamental difference between the mechanisms for gaze-centered updating of visual memory responses and for remapping of visual responses in the real brain. One reason we used the double-step task was that it allowed us to compare the network's behavioral output against ideal behavior in order to train the network. Likewise, before ever entering a laboratory, a monkey (or human) has already undergone years of natural training on sensorimotor tasks that involve spatial integration across eye movements. Even in a single-step task where the remapped stimulus is irrelevant to the reward, it seems unlikely that the visual system could entirely ignore a highly salient stimulus flashed in the dark, or disengage circuits established through years of training. Indeed, the fact that some visual information is retained and updated relative to gaze direction after saccades in such tasks (Duhamel et al., 1992) suggests that it does not. Thus, visual remapping experiments may be tapping into part of a mechanism that was trained by behaviors resembling the double-step task. This could be tested further by directly comparing the results of single-step and double-step tasks in areas associated with remapping. Based on our results, we expect that anticipatory remapping and post-saccadic retention would be correlated (if they are part of the same mechanism), and that both would be enhanced when the visual stimulus becomes more relevant to the task. These considerations suggest that a more unified approach, like ours, is required to understand both the spatial updating of visual memory for action and visual remapping, which to date has mainly been interpreted in light of perceptual constancy (Wurtz, 2008; Higgins and Rayner, 2015).

In short, we suggest that visual remapping and motor updating are part of a common visuomotor mechanism, and that perceptual constancy at the subjective level is likely influenced by training the brain on motor tasks.

Recently, Zirnsak et al. (2014) tested a wider range of spatial combinations of visual stimuli and saccade metrics than those used in the original single-step remapping experiments. Although some responses were consistent with remapping, many were more consistent with a shift of attention toward eye movement targets, which might explain why perceived visual space is transiently compressed toward these targets. We did not observe such RF shifts toward the target in our model, but again it is important to note the differences in general context and task between our double-step study and both the Zirnsak et al. (2014) and classic remapping studies. First, in our model spatial updating was the only constraint, whereas the real system faces other constraints such as attention and saccade production. Second, as pointed out above, responses to task-irrelevant stimuli are difficult to interpret. These responses may have been influenced by subtle differences between labs that affect implicit training and attention to the stimuli (as well as by the selection criteria for different cell types). We did not attempt to model these factors here. Again, the best way to resolve these differences might be to repeat the Zirnsak recording paradigm in animals trained on a double-step task, where the task relevance of the stimulus is known, and then compare cell responses during single-step and double-step behavior.