
ORIGINAL RESEARCH article

Front. Synaptic Neurosci., 26 July 2022

Volume 14 - 2022 | https://doi.org/10.3389/fnsyn.2022.888214

This article is part of the Research Topic Subcellular Computations and Information Processing.

Jacob L. Yates1† Benjamin Scholl2*†

The synaptic inputs to single cortical neurons exhibit substantial diversity in their sensory-driven activity. What this diversity reflects is unclear, and it appears counter-productive for generating selective somatic responses to specific stimuli. One possibility is that this diversity reflects the propagation of information from one neural population to another. To test this possibility, we bridge population coding theory with measurements of synaptic inputs recorded in vivo with two-photon calcium imaging. We construct a probabilistic decoder to estimate stimulus orientation from the responses of a realistic, hypothetical input population of neurons, and we compare its weights with the synaptic inputs onto individual neurons of ferret primary visual cortex (V1). We find that optimal decoding requires diverse input weights and provides a straightforward mapping from decoder weights to excitatory synapses. Analytically derived weights for biologically realistic input populations closely matched the functional heterogeneity of dendritic spines imaged in vivo. Our results indicate that synaptic diversity is a necessary component of information transmission and reframe studies of connectivity through the lens of probabilistic population codes. These results suggest that the mapping from synaptic inputs to somatic selectivity may not be directly interpretable without considering input covariance, and they highlight the importance of population codes in pursuit of the cortical connectome.

Cortical neurons are driven by large populations of excitatory synaptic inputs. Synaptic populations ultimately shape how sensory signals are encoded, decoded, or transformed. The sensory representation or functional properties of an excitatory input population will define and constrain the operations a neuron can perform and reflect the rules neurons use to form connections. Electrophysiological and anatomical studies suggest that connections between excitatory neurons exhibit functional specificity, where inputs are tuned for similar features as the soma (Reid and Alonso, 1995; Ko et al., 2011; Cossell et al., 2015; Lee et al., 2016). In contrast, synaptic imaging techniques have revealed that synaptic populations exhibit functional diversity, deviating from canonical connectivity rules such as “like-connects-to-like” (Scholl and Fitzpatrick, 2020). This functional diversity within input populations has been observed in a variety of mammalian species, from rodents to primates, and for a variety of sensory cortical areas (Jia et al., 2010, 2011; Chen et al., 2011, 2013; Wertz et al., 2015; Wilson et al., 2016, 2018; Iacaruso et al., 2017; Scholl et al., 2017; Kerlin et al., 2019; Ju et al., 2020). This apparent discrepancy challenges our understanding of how synaptic inputs drive the selective outputs of cortical neurons and leads to a simple, fundamental question: if the goal is to produce selective somatic responses, why would a neuron have excitatory synaptic inputs tuned far away from the somatic preference?

To explore this question, we turn to population coding theory, starting with the idea that, to accurately represent sensory signals, cortical neurons integrate across, or decode, the activity of upstream neural populations. Many studies have examined how sensory variables might be decoded from cortical populations (Butts and Goldman, 2006; Jazayeri and Movshon, 2006; Shamir and Sompolinsky, 2006; Graf et al., 2011), an endeavor increasingly applied to larger population sizes with innovative recording techniques (Stringer et al., 2019; Rumyantsev et al., 2020). These decoding approaches are often used as a tool to quantify the information about a stimulus available in a neural population, carrying the assumption that downstream areas could perform such a process (Berens et al., 2011; DiCarlo et al., 2012). In real brain circuits, decoders must be composed of individual neurons driven by sets of synaptic inputs, akin to a decoder’s weights over a given input population. To date, few studies have explicitly examined the weight structure of population decoders (Jazayeri and Movshon, 2006; Rust et al., 2006; Zavitz and Price, 2019).

In this article, we examine how the functional diversity of synaptic inputs measured in vivo compares to the weights of a simple population decoder. Focusing on a single sensory variable, orientation, we derive the maximum-likelihood readout for a simulated input population that encodes stimuli with noisy tuning curves (e.g., Ecker et al., 2011). Under reasonable assumptions, the decoder weights can be interpreted as simple synaptic wiring from the input population to the downstream decoder neurons. This allows a direct comparison of population decoders with synaptic inputs measured in vivo. We then test the hypothesis that an optimal decoder will show substantial heterogeneity in its synaptic weights given a biologically realistic input population. We find that when input populations are shifted copies of the same tuning curve (homogeneous), the excitatory synaptic inputs closely resemble the somatic output. However, with a biologically realistic input population (heterogeneous), the expected inputs onto readout neurons exhibit functional diversity. We then compare the orientation tuning of simulated inputs with large populations of dendritic spines (excitatory synaptic inputs) onto individual neurons of ferret primary visual cortex (V1), recorded with two-photon calcium imaging in vivo. This revealed similar diversity in the orientation tuning of dendritic spines on ferret V1 neurons and in the simulated decoder weights. The similarity between the synaptic populations of actual V1 neurons and the optimal neural decoder suggests that the diversity and heterogeneity observed in dendritic spines across sensory cortices are, in fact, expected when considering how information propagates through neural circuits.

All procedures were performed according to NIH guidelines and approved by the Institutional Animal Care and Use Committee at Max Planck Florida Institute for Neuroscience. This study is reported in accordance with the ARRIVE guidelines.

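We construct a probabilistic decoder, represented by a population of neurons, that reports or estimates the identity of a stimulus from the spiking response of an input population of neurons. We assume an input population whose responses, R, are a function of the stimulus, f(θk), plus zero-mean Gaussian noise with covariance Q, and that the covariance is equal for all stimulus conditions, such that Q = Qk = Qi. Then, the posterior distribution can be written as

$$p(\theta_k \mid R) = \frac{p(R \mid \theta_k)\, p(\theta_k)}{\sum_{j=1}^{K} p(R \mid \theta_j)\, p(\theta_j)},$$

where a multivariate Gaussian is

$$p(R \mid \theta_k) = \frac{1}{\sqrt{(2\pi)^N \lvert Q \rvert}} \exp\left(-\tfrac{1}{2}\left(R - f(\theta_k)\right)^\top Q^{-1} \left(R - f(\theta_k)\right)\right).$$

This can be expanded and simplified (the normalization constant and the term quadratic in R are identical for every k and cancel) such that

$$p(\theta_k \mid R) = \frac{\exp\left(R^\top w_k + \beta_k + \ln p(\theta_k)\right)}{\sum_{j=1}^{K} \exp\left(R^\top w_j + \beta_j + \ln p(\theta_j)\right)},$$

where

$$w_k = Q^{-1} f(\theta_k), \qquad \beta_k = -\tfrac{1}{2} f(\theta_k)^\top Q^{-1} f(\theta_k).$$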

Here, wk is the vector of weights over the input population for decoder neuron k (one decoder neuron per stimulus orientation), and βk is a constant term for each k. Importantly, because we assume Gaussian input, w and β can be derived in closed form with this formulation. More generally, w and β can be estimated numerically using multinomial logistic regression, and this form remains optimal for any input population statistics within the exponential family (e.g., Poisson noise).
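A minimal NumPy sketch of the closed-form decoder, assuming a mean-response matrix `F` (K stimuli × N input neurons), a shared covariance `Q`, and a uniform prior unless one is supplied. It computes wk = Q⁻¹f(θk) and βk = −½ f(θk)ᵀQ⁻¹f(θk) and then the posterior as a normalized exponential. The function names are hypothetical and are not taken from the authors’ MATLAB repository.

```python
import numpy as np

def decoder_weights(F, Q):
    """Closed-form weights for the equal-covariance Gaussian decoder.

    F: (K, N) mean responses f(theta_k) of N input neurons to K stimuli.
    Q: (N, N) noise covariance, shared by all stimuli.
    Returns W (K, N), whose row k is w_k = Q^{-1} f(theta_k), and
    beta (K,), with beta_k = -0.5 * f(theta_k)^T Q^{-1} f(theta_k).
    """
    W = np.linalg.solve(Q, F.T).T         # solve() avoids forming Q^{-1} explicitly
    beta = -0.5 * np.sum(W * F, axis=1)   # row-wise quadratic form f_k^T Q^{-1} f_k
    return W, beta

def posterior(R, W, beta, log_prior=None):
    """Posterior p(theta_k | R) over the K stimuli for one response vector R (N,)."""
    if log_prior is None:
        log_prior = np.zeros(len(beta))   # uniform prior
    L = W @ R + beta + log_prior          # R^T w_k + beta_k + ln p(theta_k)
    L = L - L.max()                       # subtract the max for numerical stability
    p = np.exp(L)
    return p / p.sum()                    # normalized exponential

# Toy example: two stimuli, three independent unit-variance neurons
F = np.array([[5.0, 1.0, 0.0],
              [0.0, 1.0, 5.0]])
Q = np.eye(3)
W, beta = decoder_weights(F, Q)
p = posterior(np.array([5.0, 1.0, 0.0]), W, beta)
print(p)   # nearly all probability on the first stimulus
```

Using `np.linalg.solve` instead of an explicit inverse is only a numerical-stability choice; for non-Gaussian exponential-family inputs, the same linear-plus-softmax form would instead be fit numerically, as described above.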

To generate input populations (PIN), we simulated N neurons responding to a stimulus characterized by its orientation, θk ∈ [−π/2, π/2], discretized into K evenly spaced values. The response of each neuron, ri, depends on a tuning function, fi(θ), and an additive noise term, εi, describing trial-to-trial variability. Noise is correlated across the population and is drawn from a multivariate Gaussian distribution with zero mean and covariance C. Orientation tuning functions were defined as:

Here, α is the baseline firing rate, β scales the tuned response, κ scales the tuning bandwidth, and Φ is the orientation preference of each neuron. For a homogeneous PIN, all parameters except Φ were fixed: (α, β, κ) = (0, 5, 4). For a heterogeneous PIN, we sampled parameters to match measurements from macaque V1 (Ringach et al., 2002) and our ferret V1 data. Tuning bandwidth was generated by converting half-width at 1/√2 height (γ) values drawn from a lognormal distribution (μ = −1, σ = 0.6):
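Because the tuning-function and bandwidth equations are not reproduced here, the sketch below assumes a common von Mises form, f(θ) = α + β exp(κ(cos 2(θ − Φ) − 1)), and solves for κ from the half-width γ under that form. The functional form, the treatment of γ as radians, the cap at 90°, and the sizes K and N are all assumptions; only bandwidth is made heterogeneous here, whereas the article also samples baseline and gain to match V1 data.

```python
import numpy as np

def tuning_curves(theta, baseline, gain, kappa, pref):
    """ASSUMED von Mises orientation tuning, returned as a (K, N) array:
    baseline + gain * exp(kappa * (cos(2 * (theta - pref)) - 1)).
    The factor of 2 makes each curve pi-periodic (orientation, not direction).
    """
    d = theta[:, None] - pref[None, :]
    return baseline + gain * np.exp(kappa * (np.cos(2 * d) - 1))

def kappa_from_halfwidth(gamma):
    """Solve exp(kappa * (cos(2 * gamma) - 1)) = 1/sqrt(2) for kappa (gamma in radians)."""
    return np.log(2) / (2 * (1 - np.cos(2 * gamma)))

K, N = 16, 1000                                    # illustrative sizes
theta = np.linspace(-np.pi / 2, np.pi / 2, K, endpoint=False)
pref = np.linspace(-np.pi / 2, np.pi / 2, N, endpoint=False)
ones = np.ones(N)

# Homogeneous population: shifted copies of one curve, (alpha, beta, kappa) = (0, 5, 4)
F_hom = tuning_curves(theta, 0 * ones, 5 * ones, 4 * ones, pref)

# Heterogeneous bandwidths: gamma ~ lognormal(mu=-1, sigma=0.6); half-widths beyond
# 90 degrees are undefined for this form, so they are capped (an assumption)
rng = np.random.default_rng(0)
gamma = np.minimum(rng.lognormal(mean=-1.0, sigma=0.6, size=N), np.pi / 2)
F_het = tuning_curves(theta, 0 * ones, 5 * ones, kappa_from_halfwidth(gamma), pref)
```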

Limited-range correlations were included so that the noise correlation between two neurons depends on the difference between their preferred orientations (Ecker et al., 2011). A correlation matrix, C, was specified by the difference between preferred orientations of neurons and the maximum pairwise correlation, co:

where δ is the circular difference and

where I is the identity matrix of size N. We scaled the correlation matrix by the mean firing rate of each neuron to produce Poisson-like noise (Ecker et al., 2011).
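A sketch of limited-range correlations with Poisson-like scaling. The exponential fall-off with circular orientation difference, the length constant `length`, and scaling by the square root of each neuron’s stimulus-averaged mean rate (so that each variance equals the mean) are assumptions in the spirit of Ecker et al. (2011); the article’s exact equation is not reproduced here.

```python
import numpy as np

def circ_diff(a, b):
    """Circular orientation difference (period pi), wrapped to [-pi/2, pi/2)."""
    return (a - b + np.pi / 2) % np.pi - np.pi / 2

def limited_range_correlation(pref, c_o, length=1.0):
    """ASSUMED form: 1 on the diagonal, c_o * exp(-|delta| / length) elsewhere.

    `length` (radians) is a hypothetical length constant, not given in the text.
    """
    delta = circ_diff(pref[:, None], pref[None, :])
    E = np.exp(-np.abs(delta) / length)           # ones on the diagonal
    return c_o * E + (1 - c_o) * np.eye(len(pref))

def poisson_like_covariance(C, F):
    """Scale correlations so each neuron's variance equals its mean rate (Poisson-like)."""
    s = np.sqrt(F.mean(axis=0))                   # F: (K, N); mean rate over stimuli
    return C * np.outer(s, s)

pref = np.linspace(-np.pi / 2, np.pi / 2, 200, endpoint=False)
C = limited_range_correlation(pref, c_o=0.25)
# Toy tuning curves: 8 stimuli x 200 neurons, baseline 1 plus a tuned component
F = 1.0 + 4.0 * np.exp(2.0 * (np.cos(2 * (pref[::25, None] - pref[None, :])) - 1))
Q = poisson_like_covariance(C, F)
```

Writing C as co·E + (1 − co)·I keeps ones on the diagonal while off-diagonal entries decay with preference difference, which matches the role the identity matrix plays in the text.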

Derived weights for a given PIN were artificially smoothed using the following equation from Park and Pillow (2011):

Here, S+ is the pseudoinverse of S, δ(Φi−Φj) is the circular difference between preferred orientations of neurons, ρ1 scales the amplitude of smoothing, and ρ2 scales the functional range of smoothing.
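The smoothing equation itself is not reproduced here, so the sketch below substitutes a simpler, plainly different technique: Gaussian-kernel (Nadaraya-Watson) averaging of each weight with its neighbors in preferred-orientation space. It is not the Park and Pillow (2011) procedure; `width` and `mix` are hypothetical parameters chosen only to illustrate how smoothing removes weight diversity.

```python
import numpy as np

def smooth_weights(w, pref, width, mix=1.0):
    """STAND-IN smoother (not Park and Pillow, 2011): Gaussian-kernel averaging
    of weights over neighbors in preferred-orientation space.

    width: hypothetical kernel width (radians); mix: 0 keeps raw weights, 1 fully smooths.
    """
    delta = (pref[:, None] - pref[None, :] + np.pi / 2) % np.pi - np.pi / 2
    kernel = np.exp(-0.5 * (delta / width) ** 2)
    kernel /= kernel.sum(axis=1, keepdims=True)   # each row is a weighted average
    return (1 - mix) * w + mix * (kernel @ w)

rng = np.random.default_rng(0)
pref = rng.uniform(-np.pi / 2, np.pi / 2, 500)
# Toy weights: a smooth bump around 0 plus neuron-to-neuron diversity
w = np.exp(2 * (np.cos(2 * pref) - 1)) + rng.normal(0, 0.5, 500)
w_smooth = smooth_weights(w, pref, width=0.2)
print(w.std(), w_smooth.std())   # smoothing shrinks the spread of weights
```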

Decoding accuracy was calculated as the mean-squared error of the maximum a posteriori (MAP) estimate across t simulated trials of each stimulus (k):

Here, wk are the weights for a given decoder neuron and θk is the true stimulus.
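A sketch of the accuracy measure, assuming the posterior for each simulated trial is already available (for example, from the softmax decoder) and that angular errors are wrapped because orientation has a period of π. The wrapping and the function name are assumptions; the exact MSE equation is not reproduced here.

```python
import numpy as np

def map_mse(posteriors, theta, true_idx):
    """Mean-squared error (radians^2) of MAP orientation estimates.

    posteriors: (T, K) posterior over K orientations on each of T trials.
    theta: (K,) orientation grid; true_idx: (T,) index of the presented stimulus.
    Errors are wrapped to [-pi/2, pi/2) because orientation is pi-periodic (an assumption).
    """
    theta_map = theta[np.argmax(posteriors, axis=1)]   # MAP estimate per trial
    err = (theta_map - theta[true_idx] + np.pi / 2) % np.pi - np.pi / 2
    return np.mean(err ** 2)

theta = np.linspace(-np.pi / 2, np.pi / 2, 4, endpoint=False)   # -90, -45, 0, 45 degrees
posteriors = np.eye(4)[[3, 2]]    # trial 1 picks 45 deg, trial 2 picks 0 deg
# Trial 1 is off by 45 deg after wrapping (true -90 deg); trial 2 is exact
mse = map_mse(posteriors, theta, true_idx=np.array([0, 2]))
```

Decoder performance in the sense of Figure 3 (inverse mean-squared error) would then be `1 / mse`.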

Briefly, female ferrets aged P18–23 (Marshall Farms) were anesthetized with isoflurane (delivered in O2). Atropine was administered, and a 1:1 mixture of lidocaine and bupivacaine was administered subcutaneously. Animals were maintained at an internal temperature of 37°C. Under sterile surgical conditions, a small craniotomy (0.8 mm diameter) was made over the visual cortex (7–8 mm lateral and 2–3 mm anterior to lambda). A mixture of diluted AAV1.hSyn.Cre (1:25,000–1:50,000) and AAV1.Syn.FLEX.GCaMP6s (UPenn) was injected (125–202.5 nl) through beveled glass micropipettes (10–15 micron outer diameter) at 600, 400, and 200 microns below the pia. Finally, the craniotomy was filled with sterile agarose (Type IIIa, Sigma-Aldrich) and the incision site was sutured.

After 3–5 weeks of expression, ferrets were anesthetized with 50 mg/kg ketamine and isoflurane. Atropine and bupivacaine were administered, animals were placed on a feedback-controlled heating pad to maintain an internal temperature of 37°C, and animals were intubated to be artificially respirated. Isoflurane was delivered throughout the surgical procedure to maintain a surgical plane of anesthesia. An intravenous cannula was placed to deliver fluids. Tidal CO2, external temperature, and internal temperature were continuously monitored. The scalp was retracted and a custom titanium headplate was adhered to the skull (Metabond, Parkell). A craniotomy was performed and the dura retracted to reveal the cortex. A custom cover glass (3 mm diameter, 0.7 mm thickness, Warner Instruments), adhered to a custom insert with optical adhesive (71, Norland Products), was placed onto the brain to dampen biological motion during imaging. A 1:1 mixture of tropicamide ophthalmic solution (Akorn) and phenylephrine hydrochloride ophthalmic solution (Akorn) was applied to both eyes to dilate the pupils and retract the nictitating membranes. Contact lenses were inserted to protect the eyes. Upon completion of the surgical procedure, isoflurane was gradually reduced and pancuronium (2 mg/kg/h) was delivered IV.

Visual stimuli were generated using PsychoPy (Peirce, 2007). The monitor was placed 25 cm from the animal. Receptive field locations for each cell were hand mapped, and the spatial frequency was optimized (range: 0.04–0.25 cpd). For each soma and dendritic segment, square-wave drifting gratings were presented at 22.5-degree increments (2 s duration, 1 s ISI, 8–10 trials for each field of view).

Two-photon imaging was performed on a Bergamo II microscope (Thorlabs) running ScanImage (Pologruto et al., 2003; Vidrio Technologies) with 940 nm dispersion-compensated excitation provided by an InSight DS+ (Spectra-Physics). For spine and axon imaging, power after the objective was limited to <50 mW. Cells were selected for imaging on the basis of their position relative to large blood vessels, responsiveness to visual stimulation, and lack of prolonged calcium transients resulting from over-expression of GCaMP6s. Images were collected at 30 Hz using bidirectional scanning with 512 × 512 pixel resolution or with custom ROIs (frame rate range: 22–50 Hz). Somatic imaging was performed with a resolution of 2.05–10.24 pixels/micron. Dendritic spine imaging was performed with a resolution of 6.10–15.36 pixels/micron.

Imaging data were excluded from analysis if motion along the z-axis was detected. Dendrite images were corrected for in-plane motion via a 2D cross-correlation-based approach in MATLAB or using a piecewise non-rigid motion correction algorithm (Pnevmatikakis and Giovannucci, 2017). Regions of interest (ROIs) were drawn in ImageJ; dendritic ROIs spanned contiguous dendritic segments, and spine ROIs were fit with custom software. Mean pixel values for ROIs were computed over the imaging time series and imported into MATLAB (Sage et al., 2012; Hiner et al., 2017). To compute ΔF/Fo, the baseline fluorescence (Fo) was estimated with a time-averaged median or percentile filter (10th percentile). For spine signals, we subtracted a scaled version of the dendritic signal to remove back-propagating action potentials, as performed previously (Wilson et al., 2016). ΔF/Fo traces were synchronized to stimulus triggers sent from PsychoPy and collected by Spike2. Spines were included for analysis if the SNR of the preferred response exceeded two median absolute deviations above the baseline noise (measured during the blank) and if they were weakly correlated with the dendritic signal (Spearman’s correlation, r < 0.4). Some spine traces contained negative events after subtraction, so correlations were computed ignoring negative values. We then normalized each spine’s responses so that each spine had equal weight. The preferred orientation for each spine was calculated by fitting responses with a Gaussian tuning curve using lsqcurvefit (MATLAB). Tuning selectivity was measured as the vector strength index (v) for each neuron’s response:

Here, r is the mean response to each presented orientation (θk) for each spine (i). Note that the same index is used to characterize simulated input selectivity.
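The vector strength equation is not reproduced here; a common definition for orientation uses doubled angles, v = |Σk r(θk) e^(2iθk)| / Σk r(θk), which the sketch below assumes. Under this definition the index is 1 for a response confined to one orientation and 0 for a flat response over evenly spaced orientations.

```python
import numpy as np

def vector_strength(r, theta):
    """Orientation selectivity |sum_k r_k exp(2i theta_k)| / sum_k r_k (assumed definition).

    r: non-negative mean responses at orientations theta (radians); angles are
    doubled so that orientations 180 degrees apart map to the same point.
    """
    r = np.asarray(r, dtype=float)
    return np.abs(np.sum(r * np.exp(2j * theta))) / np.sum(r)

theta = np.deg2rad(np.arange(0, 180, 22.5))    # 8 orientations in 22.5-degree steps
print(vector_strength([0, 0, 1, 0, 0, 0, 0, 0], theta))  # perfectly selective
print(vector_strength(np.ones(8), theta))                # untuned
```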

To compare input tuning (derived synaptic population or measured dendritic spine population) with output tuning (downstream readout or measured somatic tuning), we computed the Pearson correlation coefficient (MATLAB). This correlation was computed on trial-averaged responses across different orientations. For dendritic spines and somata, measured responses across stimulus presentation trials were averaged. For simulated synaptic populations and corresponding downstream readout neurons, we simulated trials by adding noise to each synaptic tuning curve.
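A sketch of the input-output tuning correlation: trial-averaged tuning curves are compared with Pearson’s r, and simulated trials are produced by adding noise to mean tuning curves. The toy curves, the Gaussian noise, and its standard deviation (0.1) are hypothetical choices for illustration.

```python
import numpy as np

def tuning_correlation(input_trials, output_trials):
    """Pearson r between trial-averaged tuning curves; inputs are (trials, K) arrays."""
    a = input_trials.mean(axis=0)
    b = output_trials.mean(axis=0)
    return np.corrcoef(a, b)[0, 1]

rng = np.random.default_rng(0)
theta = np.deg2rad(np.arange(-90, 90, 22.5))               # 8 orientations, as imaged
soma = np.exp(2 * (np.cos(2 * theta) - 1))                 # toy output tuned to 0 deg
spine = np.exp(2 * (np.cos(2 * (theta - np.pi / 4)) - 1))  # toy input tuned to 45 deg
# Simulated trials: add Gaussian noise (hypothetical sd = 0.1) to each mean tuning curve
spine_trials = spine + rng.normal(0, 0.1, size=(10, theta.size))
soma_trials = soma + rng.normal(0, 0.1, size=(10, theta.size))
r = tuning_correlation(spine_trials, soma_trials)
```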

MATLAB code used to generate the input and readout populations is available at: https://github.com/schollben/SpineProbablisticModel2020.

Following several decades of work on population coding theory, we derive a Bayesian decoder to report the probability of a visual stimulus given inputs from a neural population (Figure 1). With this framework, and given the specifics of the encoding population, we can analytically derive the optimal decoding weights of a population of readout neurons. Here, we use “optimal” to refer to the maximum-likelihood solution. Previous work has shown that a population of neurons could perform such probabilistic decoding with weighted summation and divisive normalization, as long as their inputs exhibit Poisson-like noise (Jazayeri and Movshon, 2006; Ma et al., 2006). Starting from that basic framework, we derived a decoder that represents the probability that each possible stimulus orientation was present given the responses of a large population of upstream, input neurons (PIN). This is effectively a categorical decoder, where each possible orientation is a different category. Similar decoders have been used throughout the literature to estimate how much information is in a neural recording and suggest how downstream neurons might read it out (Graf et al., 2011; Stringer et al., 2019). Our decoder has weight vectors for each possible stimulus orientation, which integrate across PIN and are passed through a static nonlinearity (the exponential function) and normalized. As we will show below, given specific assumptions about the variability in PIN, the weights over PIN depend systematically on the tuning functions and covariance of PIN. Following characterization of this decoding framework, we will make direct comparisons with real data: defining an effective “synaptic input population” (PSYN) as nonzero, positive weights over PIN. Although our strategy applies to any one-dimensional stimulus variable, we describe this model in the context of the orientation of drifting gratings presented to V1 neurons for a direct comparison with in vivo measurements.

Figure 1. A population decoding framework to study synaptic diversity. An upstream population of neurons is tuned for a single stimulus variable (orientation; top). This input population is read out by downstream decoder neurons (bottom). Downstream neurons decode stimulus identity by reading out spikes from the upstream input population. Each decoder neuron is defined by a set of weights (middle) over the upstream population, which are summed and rectified to produce an output.

A categorical probabilistic decoder reports the probability that a particular stimulus orientation, θk, was present given the spiking responses of an input population, R. This can be expressed as a normalized exponential function of the log-likelihood plus the log prior for each θk,

$$p(\theta_k \mid R) = \frac{\exp L(\theta_k)}{\sum_{j=1}^{K} \exp L(\theta_j)},$$

where

$$L(\theta_k) = \ln p(R \mid \theta_k) + \ln p(\theta_k).$$

The likelihood, p(R|θk), is the probability of the observed responses in an input population given the stimulus k, and p(θk) is the prior probability of that stimulus class. If p(R|θk) is in the exponential family, then L(θk) can be written as a weighted sum of the input population response vector plus an offset, which can be estimated numerically via multinomial logistic regression (Ma et al., 2006). For simplicity, we assume the input population has a response that is a function of the stimulus plus Gaussian noise, with equal covariance across all stimulus conditions. Although this assumption about the covariance structure deviates from real neural activity, it allows the weights and offset to be solved analytically (see “Methods” Section), and, as will be shown below, such a simple model makes substantial headway in explaining biological phenomena. Our goal here is to provide a plausible alternative to “somatic selectivity” for the connectivity rules in the cortex. Under the Gaussian assumption, the decoder amounts to:

$$p(\theta_k \mid R) = \frac{\exp\left(R^\top w_k + \beta_k + \ln p(\theta_k)\right)}{\sum_{j=1}^{K} \exp\left(R^\top w_j + \beta_j + \ln p(\theta_j)\right)}, \qquad w_k = Q^{-1} f(\theta_k), \qquad \beta_k = -\tfrac{1}{2} f(\theta_k)^\top Q^{-1} f(\theta_k) \tag{1}$$

Here, f(θk) is the mean input population response to stimulus orientation k, K is the total number of orientations, and Q is the covariance matrix. The covariance term captures the influence of each neuron’s response variance (diagonal elements) and the variability shared with other neurons (off-diagonal elements). Intuitively, in the absence of covariability (i.e., when the off-diagonal elements are zero), the weights are proportional to the signal-to-noise ratio of each neuron (the mean divided by the variance). The term Rᵀwk is the dot product between the population response and the weights. The second term, βk, is an offset for each stimulus, and ln p(θk) is a constant reflecting the log prior probability of stimulus k. It is worth noting that if the covariance depends on the stimulus, the optimal readout is no longer a linear function of R but a quadratic one, which can be interpreted as a complex cell (Pagan et al., 2016; Jaini and Burge, 2017); this is a potentially fruitful future direction.
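A small numerical illustration of the intuition above, using toy numbers that are not from the article: with a diagonal Q, wk = Q⁻¹f(θk) reduces to each neuron’s mean divided by its variance, whereas a positive off-diagonal term can give a neuron with a positive mean response a negative weight.

```python
import numpy as np

# Diagonal covariance: w_k = Q^{-1} f(theta_k) is mean / variance for each neuron
f_k = np.array([2.0, 6.0, 1.0])          # toy mean responses to stimulus k
var = np.array([2.0, 6.0, 4.0])          # toy response variances
w_diag = np.linalg.solve(np.diag(var), f_k)
snr = f_k / var                          # signal-to-noise ratio as defined above

# Shared variability: a positively responding neuron can receive a negative weight
Q_corr = np.array([[1.0, 0.8],
                   [0.8, 1.0]])
w_corr = np.linalg.solve(Q_corr, np.array([1.0, 0.5]))
print(w_diag, snr, w_corr)
```

The second case shows, in two dimensions, why covariance alone can make optimal weights depart from the neurons’ own tuning.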

In this study, we focus on the weights of this simple Gaussian, equal covariance decoder in order to examine how synaptic tuning from such a simple decoder would arise. Because the optimal weights have an analytic solution (Equation 1), we can see how they depend on the parameters of PIN. The simplifying assumptions we use to derive the maximum-likelihood weights help build intuitions about what can be expected in biological circuits, and linear weights such as these could be learned by real neural systems (Dayan and Abbott, 2001). A key difference here from prior work is that rather than focus on discrimination (Haefner et al., 2013), we treat orientation estimation as a multiclass identification problem, discretizing θ such that for each possible θk, there is a separate weight vector. Thus, in this derivation, the optimal weights depend on the tuning curves themselves, not the derivative.

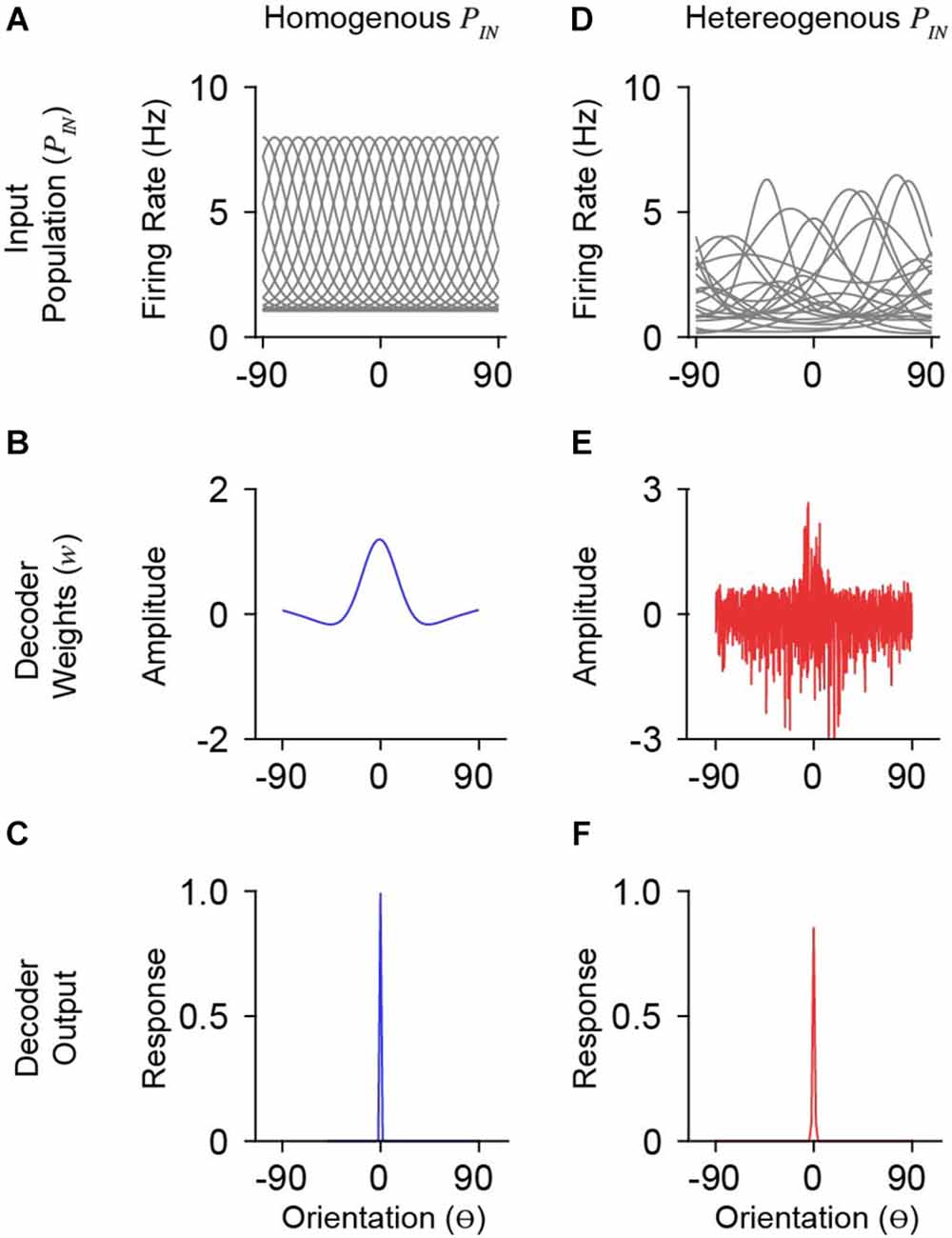

To understand how synaptic weights depend on input statistics, we derived maximum-likelihood weights for input populations, PIN, with different tuning and covariance. To generate PIN, we simulated N neurons responding to K oriented stimuli (θ from −90° to +90° in steps of 180°/K). We briefly describe the construction of PIN here (full details are described in the “Methods” Section). Each neuron is defined by a tuning function and a noise term describing trial-by-trial variability, which are summed to generate stimulus-driven responses. We compared two fundamentally different types of input populations that have been used in the literature, homogeneous and heterogeneous, and examined the role of correlated variability in shaping readout weights. A homogeneous PIN consists of shifted copies of a single tuning curve (Figure 2A). A heterogeneous PIN has diverse tuning functions, generated to match measurements from macaque V1 (Ringach et al., 2002). The heterogeneous PIN consisted of tuning curves closely resembling V1 physiology in terms of the variation in peak firing rate, bandwidth, and baseline firing rate (Figure 2D). Varying amounts of limited-range correlations were included such that the noise correlation between two neurons depends on the difference in their tuning preferences (Ecker et al., 2011; Kohn et al., 2016).

Figure 2. Model simulations with homogeneous and heterogeneous input populations. (A) Orientation tuning of a homogeneous input population. Shown is a subset of the total population (n = 20/1,000). The ordinate is orientation preference, restricted between −90° and 90°. (B) Derived weights for a single decoder neuron (preferring 0°) reading out the homogeneous (blue) input population in (A). Weights for homogeneous populations vary smoothly over orientation space. (C) Response output of the decoder neuron whose weights are shown in (B). (D–F) Same as in (A–C) for a heterogeneous input population with moderate correlation (co = 0.25). Note that decoder weights for heterogeneous input populations are not smooth.

The statistics of PIN responses, R, will dictate the weight structure for neurons in a decoding population. For a homogeneous PIN, the weights are smooth across orientation space and exhibit three primary features: a prominent peak about the preferred orientation of the output tuning, slight negative weights for orientations just outside the preferred, and near-zero weights at orthogonal orientations (Figure 2B). With more realistic tuning diversity (heterogeneous PIN), optimal weights are no longer smooth (Figure 2E). While the optimal weights roughly have the same overall shape as for a homogeneous PIN, there is considerable positive and negative weighting across orientation space. Despite substantial changes in the optimal weight vectors, the tuning of the decoder output (i.e., somatic response) remained narrow (Figure 2F), similar to the output for the homogeneous case (Figure 2C).

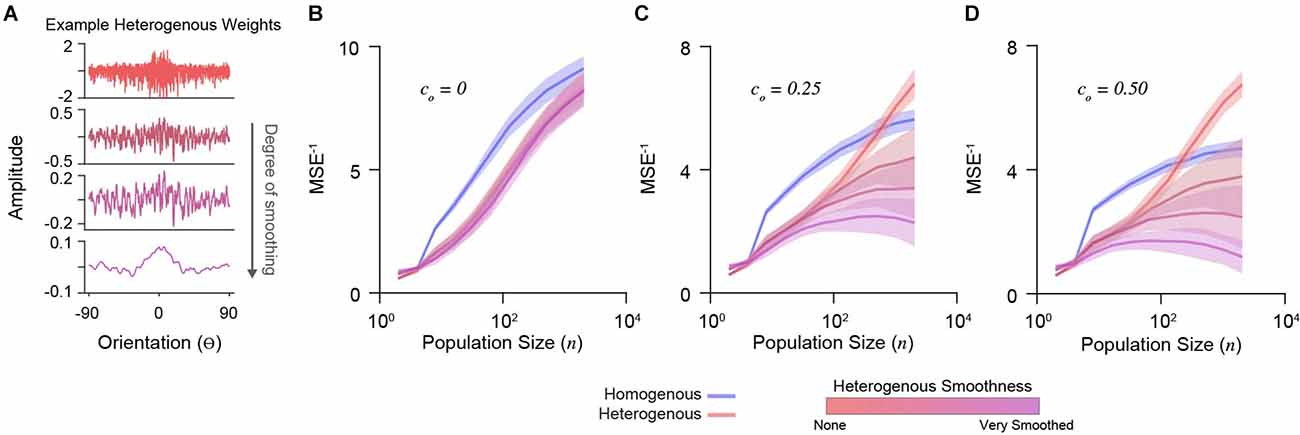

To explore the importance of decoding weight diversity, we imposed a smoothing penalty on weight vectors (Park and Pillow, 2011). We calculated cross-validated decoder accuracy using the mean-squared error between the maximum a posteriori estimate and the true stimulus (see “Methods” Section). Different degrees of smoothing are shown for an example set of weights in Figure 3A. We simulated a range of population sizes (n = 2–2,048) and correlations (co = 0, 0.25, 0.50).

Figure 3. Decoder performance of heterogeneous input populations depends on population size, correlations, and weight diversity. (A) Example weight distribution for a decoder neuron reading out a heterogeneous input population (top). Shown are the effects of progressively smoothing weights. Smoothing parameters (see “Methods” Section) from top to bottom: (0, 0), (0.1, 1), (0.2, 2), (1, 10). The ordinate is orientation preference, restricted between −90° and 90°. (B) Decoder performance (inverse mean-squared error) plotted for homogeneous and heterogeneous input populations of increasing size. Simulations here include no correlations (co = 0). Shading indicates standard error. (C) Same as in (B) for input populations with moderate correlation (co = 0.25). (D) Same as in (B) for input populations with stronger correlation (co = 0.50).

Without noise correlations, the accuracy of all decoders increases with population size, with a homogeneous PIN performing best (Figure 3B). In the presence of noise correlations, accuracy saturates for large homogeneous PIN (Figures 3C,D). As previously shown (Ecker et al., 2011), accuracy for heterogeneous populations with limited-range correlations does not saturate (Figures 3C,D). However, this depends on weight diversity: smoothing the weights for heterogeneous PIN caused saturation and decreased accuracy (Figures 3C,D), demonstrating that the diversity of weight amplitudes in the analytically derived weight distributions is critical for decoder performance.

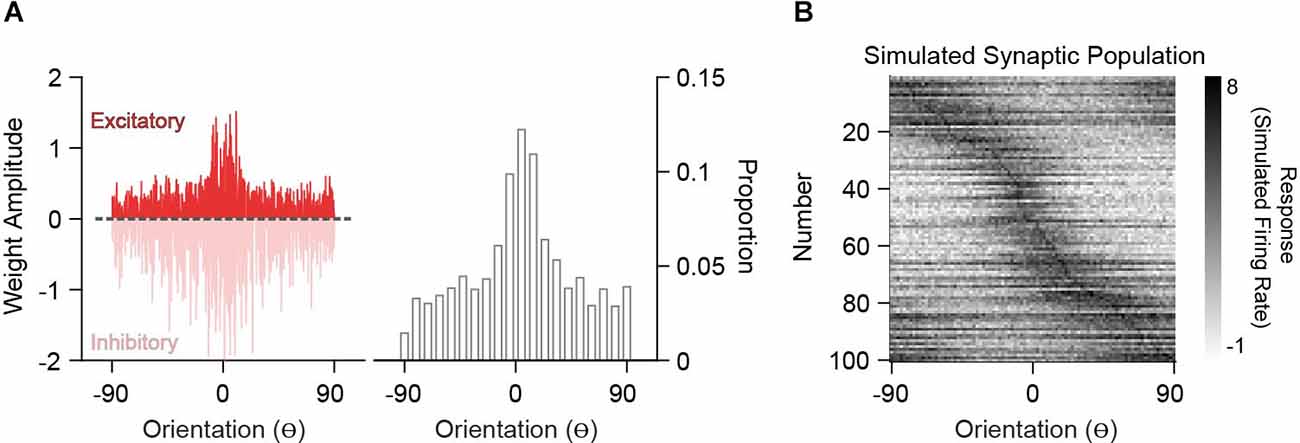

In order to compare analytically derived weights with the synaptic inputs onto V1 neurons measured in vivo, we generated excitatory synaptic input populations (PSYN). Under the assumption that synaptic integration is linear, two synapses of equal weight are equivalent to one synapse with double that weight. This creates a degeneracy in which synapse count and size trade off. Because current spine imaging techniques typically capture large synapses, and there is no relationship between synaptic strength and orientation preference (Scholl et al., 2021), we can assume that synapse size is fixed and convert the derived weights into a frequency distribution of “synaptic inputs” (Figure 4A). The tuning curve of such a synapse is the tuning curve of its input neuron; thus, the synaptic input population, PSYN, is the input population resampled with probabilities given by the derived (positive) weights. An example PSYN for a single decoder neuron is shown in Figure 4B (drawn from the heterogeneous PIN in Figure 2). PSYN in this example displays some specificity in orientation tuning relative to the somatic output, indicated by a larger proportion of simulated synapses with an orientation preference similar to the somatic output (0°) of the decoder neuron.
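A sketch of the weight-to-synapse conversion described above, assuming a fixed synapse size: negative weights are discarded, the positive weights are normalized into probabilities, and input neurons are drawn with replacement. The toy weights and the function name are hypothetical; with an input tuning matrix of shape (K, N), indexing its columns by the draws would give the tuning curves of PSYN.

```python
import numpy as np

def sample_synapses(w, n_syn, rng):
    """Draw input-neuron indices for n_syn fixed-size synapses from decoder weights w.

    Negative (inhibitory) weights are dropped; positive weights become sampling
    probabilities, so larger weights appear as more synapses.
    """
    p = np.clip(w, 0.0, None)
    p = p / p.sum()
    return rng.choice(len(w), size=n_syn, replace=True, p=p)

rng = np.random.default_rng(0)
w = np.array([0.5, -0.2, 1.5, 0.0, 2.0])          # toy weights over 5 input neurons
idx = sample_synapses(w, n_syn=100, rng=rng)      # 100 synapses, as in the comparisons
counts = np.bincount(idx, minlength=len(w))       # synapses per input neuron
```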

Figure 4. Simulation of synaptic populations from decoder neuron weight distributions. (A) Example weight distribution for a single decoder neuron tuned to 0° (left). Ordinates are orientation preference, restricted between −90° and 90°. The dashed line separates excitatory (positive) and inhibitory (negative) weights. The excitatory weight distribution over the input population is transformed into a frequency distribution, whereby greater amplitude equates to a greater frequency of occurrence (right). (B) Example simulated synaptic population (n = 100 spines) from the weight distribution in (A). Shown are the orientation tuning curves of each simulated synapse (normalized).

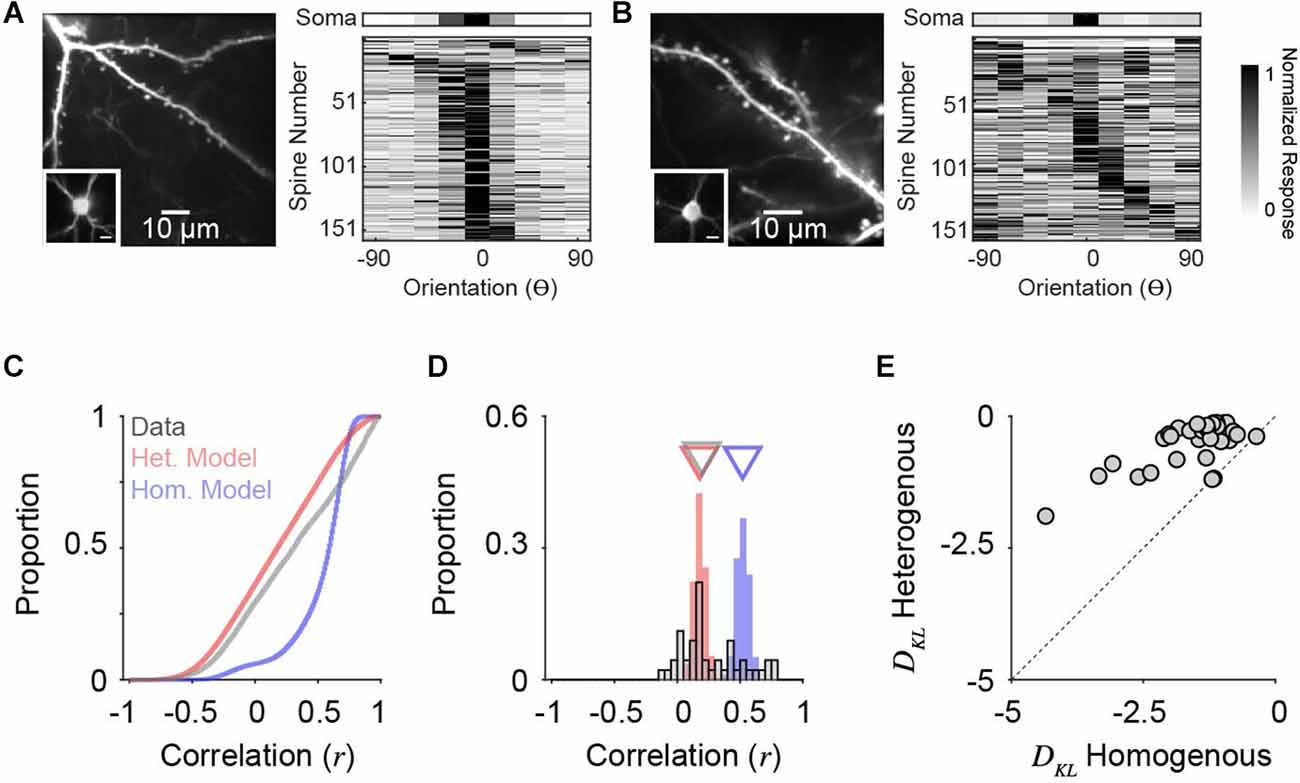

We analyzed two-photon calcium recordings from somata and corresponding dendritic spines of individual neurons in ferret V1 during the presentation of oriented drifting gratings (see “Methods” Section). While our model draws from a PIN matched to measurements from macaque V1, the orientation tuning of layer 2/3 neurons in ferret V1, as measured by two-photon cellular imaging, exhibits a similar range of selectivity (Wilson et al., 2016). Visually responsive and isolated dendritic spines (see “Methods” Section) typically exhibit diverse orientation tuning relative to the somatic output, although some individual cells show greater overall diversity (Figure 5B) than others (Figure 5A). To characterize PSYN diversity, both for real dendritic spines and simulated inputs, we computed the Pearson correlation coefficient between the tuning curves of individual inputs and the corresponding somatic output (Scholl et al., 2021). For these comparisons, we sampled orientation space in the model spines to match our empirical measurements (22.5° increments), and the number of total excitatory inputs recovered for each simulated downstream neuron was set to 100, similar to the average number of visually responsive spines recorded for each ferret V1 neuron (158.9 ± 73.2 spines/cell, n = 45 cells). Simulations were run 10,000 times, with n = 1,000 for PIN and co = 0.20.

Figure 5. Orientation tuning diversity of dendritic spine populations in ferret V1 matches simulations with correlated, heterogeneous input populations. (A) Two-photon standard-deviation projection of an example dendrite and spines recorded from a single cell (left). Inset: Two-photon standard-deviation projection of the corresponding soma. The scale bar is 10 microns. Orientation tuning of the soma (top) and all visually responsive dendritic spines from this single cell (n = 159) are shown (right). Spine responses are normalized peak ΔF/F. Orientation preferences are shown relative to the somatic preference (aligned to 0°). (B) Same as in (A) for another example cell (n = 162 visually responsive spines). (C) Cumulative distributions of tuning correlation between individual dendritic spines or simulated synaptic inputs and the corresponding somatic tuning or decoder output. Shown are correlations for simulations of homogeneous (blue) or heterogeneous (red) input populations, compared to empirical data (gray). (D) Distributions of average tuning correlation between synaptic input and somatic output across measured cells (n = 45). Also shown are distributions of average tuning correlation for simulated cells. Triangles denote median values for each distribution. (E) Comparison of Kullback-Leibler divergence (DKL) between data and each model type. Each data point represents an individual cell’s population of dendritic spines.

Across all simulated inputs, input-output tuning correlation was higher for homogeneous PSYN than for heterogeneous PSYN (median rhom = 0.60, median rhet = 0.18, n = 900,000; Figure 5C). Tuning correlation between all imaged dendritic spines and soma was low (median rcell = 0.31, n = 7,151 spines from 45 cells), more closely resembling our model with a heterogeneous PIN. As somatic orientation selectivity (i.e., tuning bandwidth) varies across single cells in ferret V1 (Goris et al., 2015; Wilson et al., 2016), we next examined the average input-output tuning correlation across individual cells (Figure 5D). Here, the homogeneous model exhibited greater specificity than the heterogeneous model (median rhom = 0.52; median rhet = 0.18, n = 90,000). For ferret V1 cells, we observed spine-soma correlations similar to the heterogeneous simulation (median rcell = 0.20, n = 45). Ferret V1 cells were not statistically different from neural decoders with a heterogeneous PSYN (p = 0.19, Mann-Whitney test), while neural decoders with a homogeneous PSYN were significantly more correlated with their inputs (p < 0.0001, Mann-Whitney test). A small percentage of imaged cells (11.1%, n = 5/45) had synaptic populations whose mean tuning correlation was within the 95% confidence interval of the homogeneous model distribution. This observation may be influenced by contamination from back-propagating action potentials or by the limited sensory space probed, or it may indicate a true functional specialization of a subpopulation of excitatory neurons. Additionally, some cells had negative average correlations with their spines, which never occurred in the models, potentially indicating nonlinearities between the spines and soma. It is also important to emphasize that both the measured synaptic populations and the heterogeneous model exhibit a positive bias in tuning correlations, illustrating that while inputs are functionally diverse, they are, on average, biased toward the tuning of the cell or decoder output.

Given the variability across ferret V1 neurons, we quantified the degree to which the synaptic population on each neuron matched the tuning correlation distributions from models with homogeneous and heterogeneous PIN by calculating the Kullback-Leibler divergence (DKL, bin size = 0.05, see “Methods” Section). Across our population, imaged neurons more closely resembled simulations with heterogeneous, rather than homogeneous, PIN (93.3%, n = 42/45; Figure 5E), and DKL from the homogeneous model was consistently larger (p < 0.0001, Wilcoxon signed-rank test). This trend held for a range of histogram bin sizes (0.001–0.20). Importantly, the models are not fit to data. They are derived entirely from the statistics of the input population, so the correspondence between the heterogeneous model and the data involves no free parameters.
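The DKL comparison can be sketched as below under stated assumptions: tuning correlations are binned on [-1, 1] with the 0.05 bin size given above, the divergence is taken from the data histogram to the model histogram (D_KL(data || model), in nats), and a small constant `eps` is added to every bin so that empty model bins do not produce infinite values. The bin range, the direction of the divergence, and the `eps` regularization are assumptions for illustration, not details taken from the Methods.

```python
import math


def correlation_histogram(values, bin_size=0.05, lo=-1.0, hi=1.0):
    """Normalized histogram of tuning correlations on [lo, hi]."""
    n_bins = int(round((hi - lo) / bin_size))
    counts = [0] * n_bins
    for v in values:
        if not lo <= v <= hi:
            raise ValueError(f"correlation {v} outside [{lo}, {hi}]")
        # min() places v == hi in the last bin instead of one past the end
        counts[min(int((v - lo) / bin_size), n_bins - 1)] += 1
    total = sum(counts)
    if total == 0:
        raise ValueError("no values to histogram")
    return [c / total for c in counts]


def kl_divergence(p, q, eps=1e-6):
    """D_KL(p || q) in nats, after adding eps to every bin and renormalizing."""
    p = [x + eps for x in p]
    q = [x + eps for x in q]
    sp, sq = sum(p), sum(q)
    return sum((a / sp) * math.log((a / sp) / (b / sq)) for a, b in zip(p, q))
```

In this sketch a cell would be counted as closer to whichever model histogram gives the smaller divergence, and the robustness check over bin sizes amounts to re-running with different `bin_size` values.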

In addition to the similarity in input-output tuning correlation, synaptic populations imaged in vivo showed several trends predicted by the heterogeneous model. Simulated excitatory inputs that were more strongly correlated with the decoder output were not more selective for orientation (see “Methods” Section; bootstrapped PCA slope = 0.001 ± 0.004 s.e., n = 10,000 simulation runs). For two-photon data, a minuscule but significant trend was evident (bootstrapped PCA slope = 0.03 ± 0.1 s.e., n = 7,151). Selective inputs have been proposed to provide more information about encoded stimulus variables (Seriès et al., 2004; Shamir and Sompolinsky, 2006; Zavitz and Price, 2019), and unselective (or poorly selective) inputs could convey information through their covariance with selective neurons (Zylberberg, 2017); our model and experimental data suggest that both co-tuned and orthogonally-tuned inputs exhibit a wide range of tuning selectivity. Response variability (i.e., standard deviation across trials) of simulated excitatory inputs was significantly smaller for “null” orientations (±90 deg) than for the “preferred” orientation (null: median = 0.30, IQR = 0.14; preferred: median = 0.38, IQR = 0.22; p < 0.001, Wilcoxon rank-sum test), and this trend was also observed in our two-photon data (null: median = 0.13, IQR = 0.15; preferred: median = 0.23, IQR = 0.31; p < 0.001, Wilcoxon rank-sum test). As both modeled and imaged neurons had “null”-tuned excitatory inputs with less response variability, these inputs may carry useful information about when the preferred stimulus is not present.
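The bootstrapped PCA slope can be sketched as follows, assuming it is the slope of the first principal axis of the (tuning correlation, orientation selectivity) point cloud, re-estimated on resampled data and summarized by the bootstrap mean and standard deviation (used here as the s.e.). How selectivity is quantified is not specified in this section, so the y values are left generic, and the function names are hypothetical. Unlike an ordinary regression slope, the principal-axis slope treats both variables symmetrically.

```python
import math
import random
import statistics


def pca_slope(x, y):
    """Slope of the first principal axis of the 2-D point cloud (x, y)."""
    mx, my = statistics.fmean(x), statistics.fmean(y)
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    # Angle of the leading eigenvector of [[sxx, sxy], [sxy, syy]]:
    # tan(2 * theta) = 2 * sxy / (sxx - syy), major axis chosen by atan2.
    theta = 0.5 * math.atan2(2.0 * sxy, sxx - syy)
    return math.tan(theta)


def bootstrap_pca_slope(x, y, n_boot=10_000, seed=0):
    """Mean and standard deviation of the PCA slope over bootstrap resamples."""
    rng = random.Random(seed)
    n = len(x)
    slopes = []
    for _ in range(n_boot):
        idx = [rng.randrange(n) for _ in range(n)]  # resample points with replacement
        slopes.append(pca_slope([x[i] for i in idx], [y[i] for i in idx]))
    return statistics.fmean(slopes), statistics.stdev(slopes)
```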

Taken together, our decoding framework with realistic (i.e., heterogeneous orientation tuning), noisy input populations suggests that the presence of orthogonally-tuned excitatory inputs on cortical neurons in vivo is not unexpected. Rather, the synaptic architecture of layer 2/3 neurons in the ferret visual cortex is likely optimized for the readout of upstream populations tuned to orientation. As functional heterogeneity and correlated noise are ubiquitous in sensory populations (Jia et al., 2010, 2011; Chen et al., 2011, 2013; Wertz et al., 2015; Wilson et al., 2016, 2018; Iacaruso et al., 2017; Scholl et al., 2017; Kerlin et al., 2019; Ju et al., 2020), the fact that a simple linear model utilizes orthogonally-tuned excitatory inputs suggests this is a general principle across mammalian species.

We used a population decoding framework (Pouget et al., 2000; Jazayeri and Movshon, 2006; Shamir, 2014; Kohn et al., 2016) to elucidate a possible source of synaptic diversity in functional response properties. We find that even simple decoders exhibit substantial heterogeneity in their weights when the inputs are noisy, correlated neural populations with heterogeneous orientation tuning. This observation is consistent with several studies reporting benefits of heterogeneity for population coding, computational capacity, and self-organization of global networks (Tripathy et al., 2013; Weigand et al., 2017; Duarte and Morrison, 2019). We argue that this could naturally explain the heterogeneity in synaptic inputs measured in vivo if these cortical neurons are decoding information from upstream input populations. We compared two neural decoders: one with homogeneous input (Jazayeri and Movshon, 2006) and one with heterogeneous input (Ecker et al., 2011). We show that dendritic spines recorded on individual cortical neurons in ferret V1 exhibit a similar amount of diversity in orientation tuning to that of simulated inputs (i.e., excitatory weights) from heterogeneous input populations. It may appear trivial that heterogeneous input populations would produce heterogeneous weights, but it was not immediately obvious that the weights would be non-smooth, nor that excitatory weights would appear at orthogonal orientations. Orthogonally-tuned or non-preferred inputs are often considered aberrant, to be pruned away during development or experience-dependent plasticity (Holtmaat and Svoboda, 2009). Our decoding approach suggests that these inputs are purposeful and could emerge during development as cortical circuits learn the statistics of their inputs (Avitan and Goodhill, 2018).
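To make the link between decoder weights and synapses concrete, here is the standard derivation of a likelihood-based linear readout. It assumes, for illustration, that input responses r to orientation θ are Gaussian with mean tuning f(θ) and a stimulus-independent covariance Σ; this is a sketch of the general idea, not a restatement of the derivation in the Methods. Under that assumption the log-likelihood is linear in r, so the readout unit for orientation θ weights its inputs by w(θ) = Σ⁻¹f(θ), and positive entries of w(θ) would map onto excitatory synapses. For independent Poisson inputs (the setting of Jazayeri and Movshon, 2006) the analogous weights are w_i(θ) = log f_i(θ).

```latex
\begin{aligned}
\log p(\mathbf{r}\mid\theta)
  &= -\tfrac{1}{2}\,\bigl(\mathbf{r}-\mathbf{f}(\theta)\bigr)^{\top}\Sigma^{-1}\bigl(\mathbf{r}-\mathbf{f}(\theta)\bigr) + \mathrm{const} \\
  &= \mathbf{r}^{\top}\Sigma^{-1}\mathbf{f}(\theta) - \tfrac{1}{2}\,\mathbf{f}(\theta)^{\top}\Sigma^{-1}\mathbf{f}(\theta) + \mathrm{const}(\mathbf{r}) \\
  &= \mathbf{w}(\theta)^{\top}\mathbf{r} + b(\theta),
  \qquad \mathbf{w}(\theta) = \Sigma^{-1}\mathbf{f}(\theta),
  \qquad b(\theta) = -\tfrac{1}{2}\,\mathbf{f}(\theta)^{\top}\Sigma^{-1}\mathbf{f}(\theta)
\end{aligned}
```

With a diagonal Σ, w(θ) is just each tuning curve scaled by its inverse variance, so the weights inherit the smoothness of f. Off-diagonal covariance mixes inputs through Σ⁻¹, which is one way non-smooth weights and positive weights on orthogonally tuned inputs can arise.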
Our approach also suggests that while V1 does not exhibit strict “like-to-like” connectivity, there is a functional bias in the distribution of inputs onto single neurons. Overall, our results shed light on a previously puzzling synaptic diversity, suggesting that it is, in fact, expected given the known properties of the input population.

We believe our study is a step forward in combining population coding theory (Pouget et al., 2000; Averbeck et al., 2006) with functional connectomics (Scholl et al., 2021). The ability to measure receptive field properties and statistics of sensory-driven responses of synapses in vivo provides a new testbed for population codes. The individual neurons onto which synapses converge are the physical realization of what has long been a hypothetical downstream population decoder. While we did not set out to build a computational or biophysical model of a neuron, we believe simple approaches such as ours are fruitful for understanding basic principles.

One limitation of our approach is that our model does not account for many aspects of cortical networks, such as stimulus-dependent correlations, recurrent connections, biophysics, inhibitory subnetworks, or nonlinear dendritic integration (Polsky et al., 2004; Lavzin et al., 2012; Goetz et al., 2021; Lagzi and Fairhall, 2022). The utility of our approach is that it demonstrates that even a simple linear model can produce heterogeneous weights without incorporating elements that introduce nonlinearities. Under more realistic visual stimulus conditions (e.g., natural stimuli or stimulus-dependent covariance), the optimal decoder is no longer linear and can be modeled with nonlinear subunits (Pagan et al., 2016; Jaini and Burge, 2017). Decoding frameworks extended to include realistic noise have captured many nonlinear features of neural responses, including divisive normalization, gain control, and contrast-dependent temporal dynamics, all of which fall naturally out of a normative framework (Chalk et al., 2017). These more sophisticated approaches may be able to make predictions about synaptic organization itself, whereby local clusters of synapses act as nonlinear subunits (Ujfalussy et al., 2018).
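The point that a purely linear readout can still yield heterogeneous weights can be explored with a small sketch that computes w(θ) = Σ⁻¹f(θ) for a hypothetical input population. Everything about that population is an assumption for illustration rather than a parameter from this study: von Mises-style orientation tuning with made-up gain, concentration, and baseline, and a limited-range noise covariance in which correlation decays with the difference in preferred orientation. The solver is plain Gaussian elimination so the example needs only the standard library; `numpy.linalg.solve` would do the same job.

```python
import math


def solve(A, b):
    """Solve A x = b by Gaussian elimination with partial pivoting."""
    n = len(A)
    M = [list(row) + [bi] for row, bi in zip(A, b)]  # augmented matrix copy
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(M[r][col]))
        if abs(M[pivot][col]) < 1e-12:
            raise ValueError("covariance matrix is singular")
        M[col], M[pivot] = M[pivot], M[col]
        for r in range(col + 1, n):
            factor = M[r][col] / M[col][col]
            for c in range(col, n + 1):
                M[r][c] -= factor * M[col][c]
    x = [0.0] * n
    for r in range(n - 1, -1, -1):  # back substitution
        tail = sum(M[r][c] * x[c] for c in range(r + 1, n))
        x[r] = (M[r][n] - tail) / M[r][r]
    return x


def orientation_tuning(theta_deg, pref_deg, amp=1.0, kappa=2.0, base=0.1):
    """Hypothetical von Mises-style tuning curve, 180-degree periodic."""
    d = math.radians(2.0 * (theta_deg - pref_deg))
    return base + amp * math.exp(kappa * (math.cos(d) - 1.0))


def circular_distance(a_deg, b_deg):
    """Distance between two orientations, in [0, 90] degrees."""
    d = abs(a_deg - b_deg) % 180.0
    return min(d, 180.0 - d)


def limited_range_covariance(prefs_deg, var=1.0, c0=0.3, length_deg=45.0):
    """Hypothetical noise covariance: correlation decays with preference difference."""
    return [[var if i == j else var * c0 * math.exp(-circular_distance(p, q) / length_deg)
             for j, q in enumerate(prefs_deg)]
            for i, p in enumerate(prefs_deg)]


def decoder_weights(theta_deg, prefs_deg, cov, amps=None):
    """Linear readout weights w = cov^{-1} f(theta) for the unit tuned to theta."""
    if amps is None:
        amps = [1.0] * len(prefs_deg)
    f = [orientation_tuning(theta_deg, p, a) for p, a in zip(prefs_deg, amps)]
    return solve(cov, f)


if __name__ == "__main__":
    prefs = [22.5 * k for k in range(8)]
    amps = [1.0, 0.4, 1.6, 0.7, 1.2, 0.5, 1.4, 0.9]  # hypothetical heterogeneous gains
    w = decoder_weights(0.0, prefs, limited_range_covariance(prefs), amps)
    for p, wi in zip(prefs, w):
        print(f"input pref {p:6.1f} deg  weight {wi:+.3f}")
```

With an identity covariance the weights reduce to the tuning values themselves; the interesting cases are those where correlations and heterogeneous gains reshape the weights away from the input tuning.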

Our model does not describe a cortical transformation. Instead, to limit complexity, we focused on the propagation of orientation selectivity from one neural population to another, akin to the propagation of basic receptive field properties from V1 to higher visual areas. Our approach was chosen to provide a starting point for predicting the tuning diversity of synaptic input populations relative to the tuning of their output or downstream cells. However, this model could be modified to study the convergence and transformation of cortical inputs. An obvious case study would be complex cells in layer 2/3 of V1 (Hubel and Wiesel, 1962; Movshon et al., 1978; Spitzer and Hochstein, 1988), which are thought to integrate across presynaptic cells with similarly oriented receptive fields and offset spatial subunits to produce polarity invariance. This extension would be better suited to a nonlinear quadratic decoder (Pagan et al., 2016; Jaini and Burge, 2017) than to the linear one used here. We hope that future studies build upon this modeling framework, exploring quadratic decoders and working toward richer visual stimuli and neural models (Chalk et al., 2017). We believe this will be critical for gaining insight, from large-scale measurements of cortical functional connectivity, into how information propagates between cortical areas.
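The complex-cell extension mentioned above is classically described by an energy model, a simple quadratic readout: two linear subunits with the same orientation and spatial frequency but phases 90° apart are squared and summed. The sketch below uses one-dimensional Gabor receptive fields with arbitrary parameters (assumptions for illustration, not a model from this study; the function names are hypothetical) to show why squaring yields polarity invariance, whereas a rectified linear, simple-cell-like unit does not.

```python
import math


def gabor(xs, freq, phase, sigma):
    """1-D Gabor receptive field sampled at positions xs (arbitrary units)."""
    return [math.exp(-x * x / (2.0 * sigma * sigma)) * math.cos(2.0 * math.pi * freq * x + phase)
            for x in xs]


def dot(a, b):
    return sum(u * v for u, v in zip(a, b))


def simple_cell(stimulus, rf):
    """Linear filter followed by half-wave rectification: polarity-sensitive."""
    return max(0.0, dot(stimulus, rf))


def complex_cell(stimulus, rf_even, rf_odd):
    """Energy model: squared outputs of a quadrature pair of subunits, summed."""
    return dot(stimulus, rf_even) ** 2 + dot(stimulus, rf_odd) ** 2


if __name__ == "__main__":
    xs = [0.1 * i for i in range(-30, 31)]
    even = gabor(xs, freq=0.5, phase=0.0, sigma=1.0)
    odd = gabor(xs, freq=0.5, phase=-math.pi / 2, sigma=1.0)  # 90 deg phase shift
    bright_bar = [1.0 if abs(x) < 0.3 else 0.0 for x in xs]
    dark_bar = [-v for v in bright_bar]  # same bar, opposite contrast polarity
    print(simple_cell(bright_bar, even), simple_cell(dark_bar, even))
    print(complex_cell(bright_bar, even, odd), complex_cell(dark_bar, even, odd))
```

Flipping contrast negates both subunit outputs, and squaring removes the sign, so the complex-cell response is unchanged while the rectified simple-cell response drops to zero.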

The raw data supporting the conclusions of this article will be made available by the authors, without undue reservation.

The animal study was reviewed and approved by the Institutional Animal Care and Use Committee at Max Planck Florida Institute for Neuroscience, and all procedures were performed according to NIH guidelines.

JY and BS conceived of the experiment, performed analysis, and wrote the manuscript. JY derived analytic solutions. All authors contributed to the article and approved the submitted version.

This work was supported by the NIH and Max Planck Florida Institute. JY is supported by K99EY032179. BS is supported by R00EY031137.

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

We thank Alex Huk, Richard Lange, Sabya Shivkumar, and Jan Kirchner for helpful comments and discussion.

Averbeck, B. B., Latham, P. E., and Pouget, A. (2006). Neural correlations, population coding and computation. Nat. Rev. Neurosci. 7, 358–366. doi: 10.1038/nrn1888

Avitan, L., and Goodhill, G. J. (2018). Code under construction: neural coding over development. Trends Neurosci. 41, 599–609. doi: 10.1016/j.tins.2018.05.011

Berens, P., Ecker, A. S., Gerwinn, S., Tolias, A. S., and Bethge, M. (2011). Reassessing optimal neural population codes with neurometric functions. Proc. Natl. Acad. Sci. USA 108, 4423–4428. doi: 10.1073/pnas.1015904108

Butts, D. A., and Goldman, M. S. (2006). Tuning curves, neuronal variability and sensory coding. PLoS Biol. 4:e92. doi: 10.1371/journal.pbio.0040092

Chalk, M., Masset, P., Deneve, S., and Gutkin, B. (2017). Sensory noise predicts divisive reshaping of receptive fields. PLoS Comput. Biol. 13:e1005582. doi: 10.1371/journal.pcbi.1005582

Chen, X., Leischner, U., Rochefort, N. L., Nelken, I., and Konnerth, A. (2011). Functional mapping of single spines in cortical neurons in vivo. Nature 475, 501–505. doi: 10.1038/nature10193

Chen, T.-W., Wardill, T. J., Sun, Y., Pulver, S. R., Renninger, S. L., Baohan, A., et al. (2013). Ultrasensitive fluorescent proteins for imaging neuronal activity. Nature 499, 295–300. doi: 10.1038/nature12354

Cossell, L., Iacaruso, M. F., Muir, D. R., Houlton, R., Sader, E. N., Ko, H., et al. (2015). Functional organization of excitatory synaptic strength in primary visual cortex. Nature 518, 399–403. doi: 10.1038/nature14182

Dayan, P., and Abbott, L. F. (2001). Theoretical neuroscience: computational and mathematical modeling of neural systems. Cambridge, MA: The MIT Press.

DiCarlo, J. J., Zoccolan, D., and Rust, N. C. (2012). How does the brain solve visual object recognition? Neuron 73, 415–434. doi: 10.1016/j.neuron.2012.01.010

Duarte, R., and Morrison, A. (2019). Leveraging heterogeneity for neural computation with fading memory in layer 2/3 cortical microcircuits. PLoS Comput. Biol. 15:e1006781. doi: 10.1371/journal.pcbi.1006781

Ecker, A. S., Berens, P., Tolias, A. S., and Bethge, M. (2011). The effect of noise correlations in populations of diversely tuned neurons. J. Neurosci. 31, 14272–14283. doi: 10.1523/JNEUROSCI.2539-11.2011

Goetz, L., Roth, A., and Häusser, M. (2021). Active dendrites enable strong but sparse inputs to determine orientation selectivity. Proc. Natl. Acad. Sci. USA 118:e2017339118. doi: 10.1073/pnas.2017339118

Goris, R. L. T., Simoncelli, E. P., and Movshon, J. A. (2015). Origin and function of tuning diversity in macaque visual cortex. Neuron 88, 819–831. doi: 10.1016/j.neuron.2015.10.009

Graf, A. B. A., Kohn, A., Jazayeri, M., and Movshon, J. A. (2011). Decoding the activity of neuronal populations in macaque primary visual cortex. Nat. Neurosci. 14, 239–245. doi: 10.1038/nn.2733

Haefner, R. M., Gerwinn, S., Macke, J. H., and Bethge, M. (2013). Inferring decoding strategies from choice probabilities in the presence of correlated variability. Nat. Neurosci. 16, 235–242. doi: 10.1038/nn.3309

Hiner, M. C., Rueden, C. T., and Eliceiri, K. W. (2017). ImageJ-MATLAB: a bidirectional framework for scientific image analysis interoperability. Bioinformatics 33, 629–630. doi: 10.1093/bioinformatics/btw681

Holtmaat, A., and Svoboda, K. (2009). Experience-dependent structural synaptic plasticity in the mammalian brain. Nat. Rev. Neurosci. 10, 647–658. doi: 10.1038/nrn2699

Hubel, D. H., and Wiesel, T. N. (1962). Receptive fields, binocular interaction and functional architecture in the cat’s visual cortex. J. Physiol. (Lond.) 160, 106–154. doi: 10.1113/jphysiol.1962.sp006837

Iacaruso, M. F., Gasler, I. T., and Hofer, S. B. (2017). Synaptic organization of visual space in primary visual cortex. Nature 547, 449–452. doi: 10.1038/nature23019

Jaini, P., and Burge, J. (2017). Linking normative models of natural tasks to descriptive models of neural response. J. Vis. 17:16. doi: 10.1167/17.12.16

Jazayeri, M., and Movshon, J. A. (2006). Optimal representation of sensory information by neural populations. Nat. Neurosci. 9, 690–696. doi: 10.1038/nn1691

Jia, H., Rochefort, N. L., Chen, X., and Konnerth, A. (2010). Dendritic organization of sensory input to cortical neurons in vivo. Nature 464, 1307–1312. doi: 10.1038/nature08947

Jia, H., Rochefort, N. L., Chen, X., and Konnerth, A. (2011). In vivo two-photon imaging of sensory-evoked dendritic calcium signals in cortical neurons. Nat. Protoc. 6, 28–35. doi: 10.1038/nprot.2010.169

Ju, N., Li, Y., Liu, F., Jiang, H., Macknik, S. L., Martinez-Conde, S., et al. (2020). Spatiotemporal functional organization of excitatory synaptic inputs onto macaque V1 neurons. Nat. Commun. 11:697. doi: 10.1038/s41467-020-14501-y

Kerlin, A., Mohar, B., Flickinger, D., MacLennan, B. J., Dean, M. B., Davis, C., et al. (2019). Functional clustering of dendritic activity during decision-making. eLife 8:e46966. doi: 10.7554/eLife.46966

Ko, H., Hofer, S. B., Pichler, B., Buchanan, K. A., Sjöström, P. J., Mrsic-Flogel, T. D., et al. (2011). Functional specificity of local synaptic connections in neocortical networks. Nature 473, 87–91. doi: 10.1038/nature09880

Kohn, A., Coen-Cagli, R., Kanitscheider, I., and Pouget, A. (2016). Correlations and neuronal population information. Annu. Rev. Neurosci. 39, 237–256. doi: 10.1146/annurev-neuro-070815-013851

Lagzi, F., and Fairhall, A. (2022). Tuned inhibitory firing rate and connection weights as emergent network properties. BioRxiv [Preprint]. doi: 10.1101/2022.04.12.488114

Lavzin, M., Rapoport, S., Polsky, A., Garion, L., and Schiller, J. (2012). Nonlinear dendritic processing determines angular tuning of barrel cortex neurons in vivo. Nature 490, 397–401. doi: 10.1038/nature11451

Lee, W.-C. A., Bonin, V., Reed, M., Graham, B. J., Hood, G., Glattfelder, K., et al. (2016). Anatomy and function of an excitatory network in the visual cortex. Nature 532, 370–374. doi: 10.1038/nature17192

Ma, W. J., Beck, J. M., Latham, P. E., and Pouget, A. (2006). Bayesian inference with probabilistic population codes. Nat. Neurosci. 9, 1432–1438. doi: 10.1038/nn1790

Movshon, J. A., Thompson, I. D., and Tolhurst, D. J. (1978). Receptive field organization of complex cells in the cat’s striate cortex. J. Physiol. (Lond.) 283, 79–99. doi: 10.1113/jphysiol.1978.sp012489

Pagan, M., Simoncelli, E. P., and Rust, N. C. (2016). Neural quadratic discriminant analysis: nonlinear decoding with v1-like computation. Neural Comput. 28, 2291–2319. doi: 10.1162/NECO_a_00890

Park, M., and Pillow, J. W. (2011). Receptive field inference with localized priors. PLoS Comput. Biol. 7:e1002219. doi: 10.1371/journal.pcbi.1002219

Peirce, J. W. (2007). PsychoPy—Psychophysics software in Python. J. Neurosci. Methods 162, 8–13. doi: 10.1016/j.jneumeth.2006.11.017

Pnevmatikakis, E. A., and Giovannucci, A. (2017). NoRMCorre: an online algorithm for piecewise rigid motion correction of calcium imaging data. J. Neurosci. Methods 291, 83–94. doi: 10.1016/j.jneumeth.2017.07.031

Pologruto, T. A., Sabatini, B. L., and Svoboda, K. (2003). ScanImage: flexible software for operating laser scanning microscopes. Biomed. Eng. Online 2:13. doi: 10.1186/1475-925X-2-13

Polsky, A., Mel, B. W., and Schiller, J. (2004). Computational subunits in thin dendrites of pyramidal cells. Nat. Neurosci. 7, 621–627. doi: 10.1038/nn1253

Pouget, A., Dayan, P., and Zemel, R. (2000). Information processing with population codes. Nat. Rev. Neurosci. 1, 125–132. doi: 10.1038/35039062

Reid, R. C., and Alonso, J. M. (1995). Specificity of monosynaptic connections from thalamus to visual cortex. Nature 378, 281–284. doi: 10.1038/378281a0

Ringach, D. L., Shapley, R. M., and Hawken, M. J. (2002). Orientation selectivity in macaque V1: diversity and laminar dependence. J. Neurosci. 22, 5639–5651. doi: 10.1523/JNEUROSCI.22-13-05639.2002

Rumyantsev, O. I., Lecoq, J. A., Hernandez, O., Zhang, Y., Savall, J., Chrapkiewicz, R., et al. (2020). Fundamental bounds on the fidelity of sensory cortical coding. Nature 580, 100–105. doi: 10.1038/s41586-020-2130-2

Rust, N. C., Mante, V., Simoncelli, E. P., and Movshon, J. A. (2006). How MT cells analyze the motion of visual patterns. Nat. Neurosci. 9, 1421–1431. doi: 10.1038/nn1786

Sage, D., Prodanov, D., and Tinevez, J. Y. (2012). MIJ: making interoperability between ImageJ and Matlab possible. Available online at: http://bigwww.epfl.ch.

Scholl, B., and Fitzpatrick, D. (2020). Cortical synaptic architecture supports flexible sensory computations. Curr. Opin. Neurobiol. 64, 41–45. doi: 10.1016/j.conb.2020.01.013

Scholl, B., Thomas, C. I., and Ryan, M. A. (2021). Cortical response selectivity derives from strength in numbers of synapses. Nature 590, 111–114. doi: 10.1038/s41586-020-03044-3

Scholl, B., Wilson, D. E., and Fitzpatrick, D. (2017). Local order within global disorder: synaptic architecture of visual space. Neuron 96, 1127–1138.e4. doi: 10.1016/j.neuron.2017.10.017

Seriès, P., Latham, P. E., and Pouget, A. (2004). Tuning curve sharpening for orientation selectivity: coding efficiency and the impact of correlations. Nat. Neurosci. 7, 1129–1135. doi: 10.1038/nn1321

Shamir, M. (2014). Emerging principles of population coding: in search for the neural code. Curr. Opin. Neurobiol. 25, 140–148. doi: 10.1016/j.conb.2014.01.002

Shamir, M., and Sompolinsky, H. (2006). Implications of neuronal diversity on population coding. Neural Comput. 18, 1951–1986. doi: 10.1162/neco.2006.18.8.1951

Spitzer, H., and Hochstein, S. (1988). Complex-cell receptive field models. Prog. Neurobiol. 31, 285–309. doi: 10.1016/0301-0082(88)90016-0

Stringer, C., Michaelos, M., and Pachitariu, M. (2019). High precision coding in mouse visual cortex. BioRxiv [Preprint]. doi: 10.1101/679324

Tripathy, S. J., Padmanabhan, K., Gerkin, R. C., and Urban, N. N. (2013). Intermediate intrinsic diversity enhances neural population coding. Proc. Natl. Acad. Sci. USA 110, 8248–8253. doi: 10.1073/pnas.1221214110

Ujfalussy, B. B., Makara, J. K., Lengyel, M., and Branco, T. (2018). Global and multiplexed dendritic computations under in vivo-like conditions. Neuron 100, 579–592.e5. doi: 10.1016/j.neuron.2018.08.032

Weigand, M., Sartori, F., and Cuntz, H. (2017). Universal transition from unstructured to structured neural maps. Proc. Natl. Acad. Sci. USA 114, E4057–E4064. doi: 10.1073/pnas.1616163114

Wertz, A., Trenholm, S., Yonehara, K., Hillier, D., Raics, Z., Leinweber, M., et al. (2015). Presynaptic networks. Single-cell-initiated monosynaptic tracing reveals layer-specific cortical network modules. Science 349, 70–74. doi: 10.1126/science.aab1687

Wilson, D. E., Scholl, B., and Fitzpatrick, D. (2018). Differential tuning of excitation and inhibition shapes direction selectivity in ferret visual cortex. Nature 560, 97–101. doi: 10.1038/s41586-018-0354-1

Wilson, D. E., Whitney, D. E., Scholl, B., and Fitzpatrick, D. (2016). Orientation selectivity and the functional clustering of synaptic inputs in primary visual cortex. Nat. Neurosci. 19, 1003–1009. doi: 10.1038/nn.4323

Zavitz, E., and Price, N. S. C. (2019). Weighting neurons by selectivity produces near-optimal population codes. J. Neurophysiol. 121, 1924–1937. doi: 10.1152/jn.00504.2018

Keywords: synapse, two-photon imaging, population coding, visual cortex, input - output analysis

Citation: Yates JL and Scholl B (2022) Unraveling Functional Diversity of Cortical Synaptic Architecture Through the Lens of Population Coding. Front. Synaptic Neurosci. 14:888214. doi: 10.3389/fnsyn.2022.888214

Received: 02 March 2022; Accepted: 21 June 2022;

Published: 26 July 2022.

Edited by:

Athanasia Papoutsi, Foundation of Research and Technology-Hellas (FORTH), Greece

Reviewed by:

Srikanth Ramaswamy, Newcastle University, United Kingdom

Copyright © 2022 Yates and Scholl. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Benjamin Scholl, benjamin.scholl@pennmedicine.upenn.edu

† These authors have contributed equally to this work
