
DATA REPORT article

Front. Neuroergonomics, 05 June 2024

Sec. Cognitive Neuroergonomics

Volume 5 - 2024 | https://doi.org/10.3389/fnrgo.2024.1411305

This article is part of the Research Topic Open Science to Support Replicability in Neuroergonomic Research.

One of the key challenges in the design of immersive virtual reality (VR) is to create an experience that mimics the natural, real world as closely as possible. The overarching goal is that users “treat what they perceive as real” and consequently feel present in the virtual world (Slater, 2009). To feel present in an environment, users need to establish a dynamic and precise interaction with their surroundings. This allows users to infer the causal structures in the (virtual) world they find themselves in and develop strategies to deal with uncertainties (Knill and Pouget, 2004).

Here, we present a data set that indexes interaction realism in VR. By violating users' predictions about the interaction behavior of the VR system in an “oddball-like” manner (Sutton et al., 1965), we obtained labels that describe the interaction with high temporal resolution; see our previous publications (Gehrke et al., 2019, 2022).

Today, the brain is frequently conceived of as creating a model of its environment in the constant game of predicting the causes of its available sensory data (Rao and Ballard, 1999; Friston, 2010; Clark, 2013). In this predictive coding conception, probabilistic analyses of previous experiences drive inferences about which actions and perceptual events are causally related. This is inherently tied to the body's capacity to act on the environment, rendering the action–perception cycle of cognition an embodied process (Friston, 2012). When all movement-related sensory data (i.e., sensorimotor data) are consistent with the predicted outcome of an action, the action is regarded as successful. However, when a discrepancy between the predicted and the actual sensorimotor data is detected, a prediction error occurs, and attention is directed toward correcting the discrepancy in real time (Savoie et al., 2018). In their work, Savoie et al. (2018) manipulated the control-to-display ratio in a quarter of the trials: in the manipulated trials, a dot moved at a 45° offset relative to the real hand motion during a reach to a target. The authors found that electroencephalographic (EEG) data reflected this prediction error in sensorimotor mapping.

Therefore, the fast and accurate detection of such discrepancies is crucial for performing precise interactions in the real as well as in virtual worlds.

The underlying mechanisms and neural foundations of predictive coding have been extensively studied; see, for example, Holroyd and Coles (2002), Bendixen et al. (2012), and Clark (2013). The frontal mismatch negativity (MMN), an event-related potential (ERP) component, has often been employed to probe the predictive brain hypothesis; see Stefanics et al. (2014) for a review. Lieder et al. (2013) showed that MMN activity is best explained by prediction errors computed under a Bayes-optimal generative model.

However, these research findings originate from stationary EEG protocols that require the user to passively observe presented stimuli, neglecting the embodied cognitive aspects of goal-directed behavior. As a consequence, the cortical activity patterns underlying predictive embodied processes during goal-directed movement are not fully established. How these electrocortical features reflect a perceived loss in physical immersion when interacting with virtual and augmented reality (VR/AR) is yet to be understood.

The presented mobile brain/body imaging data include brain recordings via EEG and behavioral indexes, as well as motion capture during an interactive VR experience (Makeig et al., 2009; Gramann et al., 2014; Jungnickel et al., 2019). Based on the idea that the brain has evolved to optimize motor behavior by detecting sensory mismatches, we have previously leveraged these data to use the frontal “prediction error” negativity (PEN) as a feature for detection of system errors in haptic VR (Gehrke et al., 2019, 2022).

The data set is available in the Brain Imaging Data Structure (BIDS) format (Pernet et al., 2019; Jeung et al., 2023). In an oddball-style paradigm, haptic realism was altered, resulting in a 2 (mismatch) × 3 (level of haptic immersion) design. This allows analyses of both main effects as well as their interaction. In the experiment, interaction realism was manipulated by adding temporally unexpected visual and haptic feedback. To this end, visuo-haptic glitches were introduced during a reaching task, similar to unexpected tones in classical auditory oddball paradigms (Sutton et al., 1965). Haptic realism was altered by adding haptic channels per condition in a blocked experimental design. Two haptic conditions were presented following a baseline, non-haptic condition. In one condition, touching a surface was rendered through a vibration motor under the fingertip; in the other, this was further combined with rendering object rigidity (force feedback) through electrical muscle stimulation (EMS).

After experiencing each haptic modality, participants rated their subjective level of presence on the Igroup Presence Questionnaire (IPQ; Schubert, 2003). This questionnaire is a scale for measuring the subjective sense of presence experienced in VR.

The experiment was approved by the local ethics committee of the Department of Psychology and Ergonomics at the TU Berlin (Ethics approval: GR1020180603). In total, 20 participants (12 female, mean age = 26.7 years, SD = 3.6 years) were recruited through an online tool provided by the Department of Psychology and Ergonomics of the Berlin Institute of Technology and local listings. In line with the ethics approval, only right-handed people between the ages of 18 and 65 were recruited.

All participants had normal or corrected-to-normal vision and had not previously experienced VR with either vibrotactile feedback at the fingertip or any form of force feedback, including EMS. Participants were informed about the nature of the experiment and the recording and anonymization procedures, and each participant signed a consent form. Participants were compensated with 10 euros or one hour of course credit per hour of participation.

Before further analysis, data from the first subject were removed due to data recording errors.

A virtual environment was designed in Unity3D (Unity Software Inc., San Francisco, CA, USA) and presented through the HTC Vive Pro (High Tech Computer Co., Taoyuan, Taiwan), which features a per-eye resolution of 1,440 × 1,600 pixels and a 98° horizontal field of view (for technical details, see: https://vr-compare.com/headset/htcvivepro). An HTC VIVE tracker (High Tech Computer Co., Taoyuan, Taiwan) was used to capture the position of the hand (for technical details, see: https://vr-compare.com/accessory/htcvivetracker3.0).

One vibrotactile actuator (Model 308–100 from Precision Microdrives, London, UK) worn on the fingertip generated vibrotactile feedback of 0.8 g at 200 Hz. With a diameter of 8 mm, this motor is well suited to the fingertip. The motor was driven at 70 mA through a 2N7000 metal-oxide-semiconductor field-effect transistor (MOSFET) connected to a 3 V Arduino output pin. To generate force feedback, we actuated the index finger via EMS delivered through two electrodes attached to the participant's extensor digitorum muscle. We used a medically compliant EMS device (Rehastim, Hasomed, Germany), which provides a maximum of 100 mA and is controllable via USB. The EMS was pre-calibrated per participant to ensure pain-free stimulation and robust actuation.

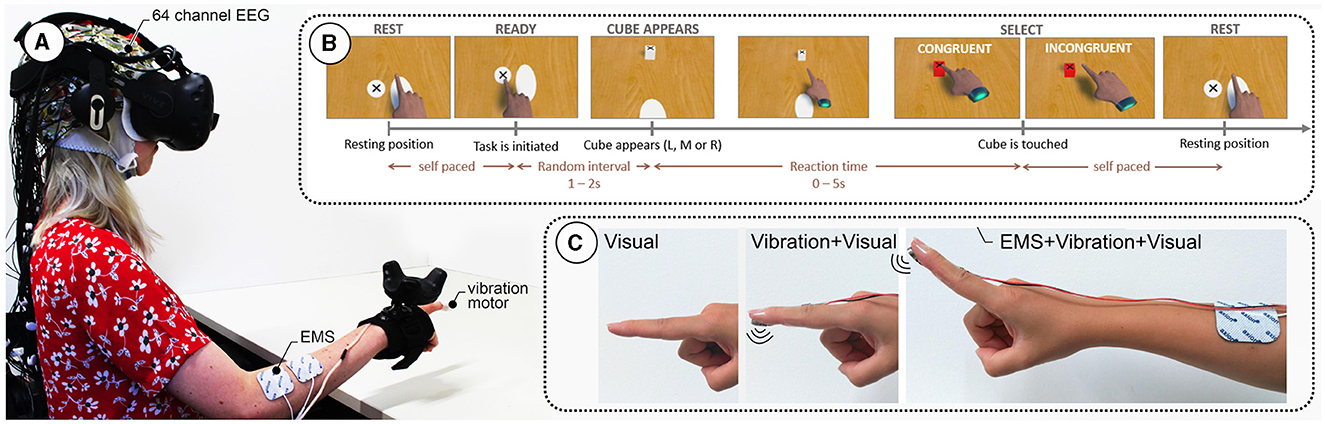

EEG data were recorded from 64 actively amplified electrodes using BrainAmp DC amplifiers from BrainProducts (BrainProducts GmbH, Gilching, Germany). Electrodes were placed according to the 10–20 system (Homan, 1988). Custom EEG cap spacers were used to ensure a good fit and to reduce the discomfort caused by combining the VR headset with the EEG cap. After fitting the cap, all electrodes were filled with conductive gel to ensure proper conductivity. Electrode impedance was brought below 5 kΩ when possible. See Figure 1A for the full experimental setup.

Figure 1. (A) Experimental setup showing a participant wearing a 64-channel electroencephalography (EEG) cap and a virtual reality (VR) headset. The participant's right arm is equipped with electrical muscle stimulation (EMS) electrodes and a vibration motor under the index finger. (B) Task sequence: The participant starts in a resting position and initiates the task at their own pace. After a random interval of 1–2 s, a cube appears in one of three positions (Left, Middle, Right). The participant reaches for the cube, with the feedback being either congruent or incongruent. The task ends when the cube is touched, followed by a return to the resting position. (C) Different feedback modalities used in the study: Visual feedback only, combined Vibration and Visual feedback, and EMS combined with Vibration and Visual feedback.

The data were collected during a repeated reach-to-tap task in a 2 × 3 study design with the within-subject factors feedback congruity and modality.

Participants performed the task seated at a table, both virtually and physically. The interaction flow, depicted in Figure 1B, was as follows: participants moved their hand from the resting position to the ready position to indicate that they were ready to start the next trial. They then waited for a new target (a cube) to appear in one of three possible positions (center, left, and right), all located at the same distance from the ready-position button on the table. The delay before a new target spawned was randomized between 1 and 2 s. A black cross on top of the cube indicated the location participants were instructed to tap. Participants then completed the trial by tapping the target with their index finger. Tapping success was indicated through three different sensory modality conditions (see Figure 1C):

Visual: Touching the virtual cube changed its color from white to red. No haptic feedback was given.

Vibro: In addition to the visual feedback, touching the virtual cube was confirmed by a 100 ms vibrotactile stimulus.

EMS: In addition to visual and vibrotactile feedback, participants received 100 ms of EMS via two electrodes at the extensor digitorum muscle.

After a target was tapped, participants moved back to the resting position. Here, they could rest before starting the next trial. To maximize EEG data quality, participants were instructed to remain in a calm upright seated position while carrying out the reaching movement. Furthermore, they were instructed to be precise and keep a good pace. However, no feedback was given on the accuracy and speed of their task completion.
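The trial flow described above can be illustrated with a minimal Python sketch that draws a target position and a spawn delay for each trial. This is not the authors' Unity implementation; the function name `make_schedule` is hypothetical, and drawing the three positions with equal probability is an assumption, since the text only states that targets appeared at one of three positions after a delay randomized between 1 and 2 s.

```python
import numpy as np

# The three target positions, all equidistant from the ready-position button
POSITIONS = ("left", "center", "right")

def make_schedule(n_trials, seed=0, min_delay_s=1.0, max_delay_s=2.0):
    """Return one (target_position, spawn_delay_s) tuple per trial.

    The spawn delay is drawn uniformly from [1, 2) s; choosing positions uniformly
    at random is an assumption, not a documented property of the protocol.
    """
    rng = np.random.default_rng(seed)
    positions = rng.integers(len(POSITIONS), size=n_trials)
    delays = rng.uniform(min_delay_s, max_delay_s, size=n_trials)
    return [(POSITIONS[p], float(d)) for p, d in zip(positions, delays)]

schedule = make_schedule(300)
for position, delay in schedule[:3]:
    print(f"spawn {position} target after {delay:.2f} s")
```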

The key experimental manipulation in these data is the introduction of prediction errors occurring at different levels of immersion rendered through the haptic modalities. Therefore, to allow assessment of the effects of flawed sensory feedback, the feedback congruity was manipulated in a subset of the trials; see Figure 1B.

Match: Feedback stimuli were presented upon tapping the object, exactly when participants expected them to occur based on the available visual information (finger touching the target in the virtual environment).

Mismatch: Feedback stimuli were triggered prematurely. Specifically, we introduced a temporal delta between the expected time of feedback, based on proprioceptive and visual information (finger touching the target in the virtual environment), and the actual time of feedback. This delta was realized by changing the size of the collision volume (sphere collider) around the virtual cube that triggered the feedback: while the match trials used a collision detection volume of the exact size of the cube, the radius of the feedback-triggering sphere was enlarged by 350% in the mismatch trials. This choice was based on the study by Singh et al. (2018), which showed that VR users can detect a visual mismatch at ~200% visual offset from the target. Based on pilot tests, we extended the offset to 350% to increase the salience of the mismatch. An alternative would be to alter the control-to-display ratio (Terfurth et al., 2024), which allows more precise timing of the violation with respect to the ballistic and corrective phases of the motion.
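To illustrate why an enlarged trigger volume produces premature feedback, the following sketch moves a simulated fingertip toward a cube at constant speed and reports when a cube-sized collider and an enlarged sphere collider would first fire. All geometry is hypothetical: a 5 cm cube, a 0.5 m/s straight approach sampled at 90 Hz, a base sphere radius equal to half the cube edge, and “enlarged by 350%” read as scaling the radius by 3.5. These are assumptions for illustration, not parameters of the published Unity protocol.

```python
import numpy as np

def first_hit_index(path, hit_test):
    """Return the index of the first fingertip sample for which hit_test(point) is True, else None."""
    for i, point in enumerate(path):
        if hit_test(point):
            return i
    return None

def in_cube(point, center, half_edge):
    # Match trials: the collision volume has exactly the size of the (axis-aligned) cube
    return bool(np.all(np.abs(point - center) <= half_edge))

def in_sphere(point, center, radius):
    # Mismatch trials: an enlarged spherical trigger volume around the cube
    return bool(np.linalg.norm(point - center) <= radius)

# Hypothetical geometry (assumptions, not the published protocol)
fs = 90.0                                  # tracker sampling rate in Hz
center = np.array([0.0, 0.0, 0.4])         # cube center, 40 cm in front of the start point
half_edge = 0.025                          # 5 cm cube
scale = 3.5                                # assumption: "enlarged by 350%" read as radius x 3.5
t = np.arange(0.0, 1.0, 1.0 / fs)
# Fingertip moves straight along z at 0.5 m/s and stops at the cube center
path = np.outer(np.clip(0.5 * t, 0.0, 0.4), [0.0, 0.0, 1.0])

i_match = first_hit_index(path, lambda p: in_cube(p, center, half_edge))
i_mismatch = first_hit_index(path, lambda p: in_sphere(p, center, scale * half_edge))
lead_s = (i_match - i_mismatch) / fs
print(f"feedback fires {lead_s * 1000:.0f} ms earlier with the enlarged sphere")
```

Under these assumptions the enlarged sphere fires roughly 120 ms before the fingertip reaches the cube surface; in the experiment, the actual lead would depend on each participant's reach velocity.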

The experiment consisted of five phases, starting with (1) a setup phase, (2) a calibration phase, and (3) a short training phase. For training, participants wore the VR headset for a maximum of 24 practice trials. Overall, EEG fitting, calibration, and practice trials took ~30 min (with two experimenters). In phase (4), the task itself, the procedure varied between participants, as described below.

Per participant, 300 trials were recorded for the Visual and Vibro feedback condition. For the EMS condition, 100 trials were recorded, as this condition was exploratory, and we did not want to put too much strain on participants with a feedback channel that not many people are familiar with.

The order of the Visual and Vibro conditions was counterbalanced across participants, with the EMS condition always presented as the last block. EMS trials were only collected for 11 participants; because the EMS condition was added as an exploratory part of the study, 11 participants were deemed sufficient for exploratory analyses. The EMS block was placed last to prevent its strong stimuli from overshadowing the other conditions, so the contrast between the Visual and Vibro (visuo-tactile) conditions was not expected to be affected by it. The blocked design of the interface conditions was chosen to emphasize the influence of the additional haptic channels while attenuating higher-order interactions, such as a prediction error about the upcoming interface condition.

At the end of each condition, we presented four items from the standard igroup presence questionnaire (IPQ; Schubert, 2003): the general presence item (G1), the second item of the realness subscale (REAL2), the fourth item of the spatial presence subscale, and the first item of the involvement subscale (INV1). The questionnaire was implemented in the virtual environment.

EEG, motion capture, and an experiment marker stream were recorded via the Lab Streaming Layer (https://github.com/sccn/labstreaminglayer) and synchronized on import using the “load_xdf” function. The XDF files were then converted to BIDS format (Gorgolewski et al., 2016; Pernet et al., 2019; Jeung et al., 2023), and the data are available online (https://openneuro.org/datasets/ds003846/versions/2.0.2). Motion data of a head and a hand rigid body conform to the BIDS-Motion specification (Jeung et al., 2023) as of March 26, 2024. EEG data were recorded at a sampling rate of 500 Hz with FCz as the reference electrode. Hand and head movements were sampled at 90 Hz at the output of the HTC VIVE tracking pipeline.

A full repository including links to the data, experimental VR protocol (Unity), and publication resources can be found at: https://osf.io/x7hnm/.

We provide the code to fully reproduce our results (https://github.com/lukasgehrke/2021-Scientific-Data-Prediction-Error), starting with the conversion of the raw .xdf files to the BIDS format. As a quality check of the data set, event-related potentials (ERPs) and event-related spectral perturbations (ERSPs) at moments of prediction violation are reported here.

Our pipeline uses parts of the BeMoBIL pipeline, which wraps and extends EEGLAB toolboxes (Delorme and Makeig, 2004; Klug et al., 2022). Statistical tests were then computed using MNE–Python (Gramfort et al., 2013).

After removing non-experiment segments at the beginning and end of the recording, EEG data were resampled to 250 Hz. Next, bad channels were detected using the PREP pipeline function “findNoisyChannels”, which flags bad channels based on amplitude, signal-to-noise ratio, and correlation with other channels (Bigdely-Shamlo et al., 2015). Rejected channels were then interpolated (ignoring the electrooculogram [EOG] channel), and the data were re-referenced to the average of all channels, including the original reference channel FCz. After applying a high-pass filter at 1.5 Hz, time-domain cleaning and outlier removal were performed using the automatic sample rejection of the adaptive mixture of independent component analyzers (AMICA; Palmer et al., 2011). Eye artifacts were removed by applying the ICLabel toolbox to the resulting AMICA decomposition (Pion-Tonachini et al., 2019). For this, the popularity approach was used: all components whose most probable class was the eye class were projected out of the sensor data.
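The popularity criterion for eye-component removal can be sketched in a few lines of numpy: each independent component is assigned its most probable ICLabel class, and components labeled as eye are dropped before back-projecting to the sensors. This is an illustrative re-implementation, not the BeMoBIL or EEGLAB code; the matrices are assumed to follow EEGLAB's conventions (mixing matrix as in `EEG.icawinv`, unmixing matrix as `EEG.icaweights * EEG.icasphere`), and the function name is hypothetical.

```python
import numpy as np

# ICLabel's seven output classes, in the order of its probability columns
ICLABEL_CLASSES = ("Brain", "Muscle", "Eye", "Heart", "Line Noise", "Channel Noise", "Other")

def remove_eye_components(data, mixing, unmixing, label_probs):
    """Project out components whose most probable ICLabel class is 'Eye'.

    data:        (n_channels, n_samples) sensor data
    mixing:      (n_channels, n_components) ICA mixing matrix A
    unmixing:    (n_components, n_channels) ICA unmixing matrix W
    label_probs: (n_components, 7) ICLabel class probabilities
    Returns the cleaned data and the indices of the removed components.
    """
    activations = unmixing @ data                      # component time courses S = W X
    winners = np.argmax(label_probs, axis=1)           # "popularity": most probable class per component
    keep = winners != ICLABEL_CLASSES.index("Eye")
    cleaned = mixing[:, keep] @ activations[keep, :]   # back-project only the retained components
    return cleaned, np.flatnonzero(~keep)

# Toy example with 4 channels and a full-rank decomposition
rng = np.random.default_rng(0)
data = rng.standard_normal((4, 1000))
unmixing = rng.standard_normal((4, 4))
mixing = np.linalg.inv(unmixing)
label_probs = np.full((4, 7), 0.1)
label_probs[:, 0] = 0.4        # components default to "Brain"
label_probs[1, 2] = 0.9        # component 1 is most likely "Eye"
cleaned, removed = remove_eye_components(data, mixing, unmixing, label_probs)
```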

Motion capture data were low-pass filtered at 6 Hz and resampled to match the EEG sampling rate. The first and second derivatives (velocity and acceleration) were computed and subsequently low-pass filtered at 18 Hz.
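The motion preprocessing steps can be sketched with SciPy as follows. The filter type and order (fourth-order, zero-phase Butterworth) are assumptions, as the text specifies only the cut-off frequencies; `resample_poly` is used here to convert the 90 Hz tracker stream to the 250 Hz EEG rate, which may differ from the resampling method used in the BeMoBIL pipeline.

```python
from math import gcd

import numpy as np
from scipy.signal import butter, filtfilt, resample_poly

def lowpass(x, cutoff_hz, fs, order=4):
    """Zero-phase Butterworth low-pass along the last axis (filter order is an assumption)."""
    b, a = butter(order, cutoff_hz, btype="low", fs=fs)
    return filtfilt(b, a, x, axis=-1)

def process_motion(pos, fs_in=90, fs_out=250):
    """pos: (n_axes, n_samples) positions at fs_in Hz.

    Returns position, velocity, and acceleration resampled to fs_out Hz.
    """
    pos = lowpass(pos, 6.0, fs_in)                               # 6 Hz low-pass on raw positions
    g = gcd(fs_out, fs_in)
    pos = resample_poly(pos, fs_out // g, fs_in // g, axis=-1)   # 90 Hz -> 250 Hz (up 25, down 9)
    vel = np.gradient(pos, 1.0 / fs_out, axis=-1)                # first derivative
    acc = np.gradient(vel, 1.0 / fs_out, axis=-1)                # second derivative
    return pos, lowpass(vel, 18.0, fs_out), lowpass(acc, 18.0, fs_out)

# Toy example: 4 s of a 0.5 Hz oscillation along x, sampled at 90 Hz
t_in = np.arange(360) / 90.0
pos_in = np.vstack([np.sin(np.pi * t_in), np.zeros_like(t_in), np.zeros_like(t_in)])
pos, vel, acc = process_motion(pos_in)
```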

We obtained the time of movement onset and subsequent peak velocity by applying a velocity-based algorithm to the hand-motion time series. The algorithm used a simple two-step threshold approach to obtain a robust onset of the outward reaching movement. First, a robust onset was defined as the time point at which the velocity first exceeded 50% of the maximum velocity between the trial start event and the successful object tap event. Next, a precise movement onset was defined by searching backward from the robust onset for the first time point at which the velocity fell below 10% of the robust threshold value.
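A minimal numpy sketch of the two-step onset criterion follows. It assumes a one-dimensional hand-speed trace and sample indices for trial start and tap; taking the precise onset as the last sub-threshold sample before the robust onset is our reading of the description, and the function name is hypothetical.

```python
import numpy as np

def detect_movement_onset(speed, start_idx, tap_idx, robust_frac=0.5, precise_frac=0.1):
    """Two-step threshold movement-onset detection on a 1-D hand-speed trace.

    Returns the sample index of the precise onset, or None if a threshold is never crossed.
    """
    window = speed[start_idx:tap_idx]
    robust_threshold = robust_frac * window.max()          # 50% of peak speed in the trial
    above = np.flatnonzero(window > robust_threshold)
    if above.size == 0:
        return None
    robust_onset = above[0]                                # first crossing of the robust threshold
    precise_threshold = precise_frac * robust_threshold    # 10% of the robust threshold
    below = np.flatnonzero(window[:robust_onset] < precise_threshold)
    if below.size == 0:
        return None
    return start_idx + below[-1]                           # last quiet sample before the robust onset

# Toy example: a Gaussian-shaped speed profile peaking at sample 100
samples = np.arange(200)
speed = np.exp(-((samples - 100) / 10.0) ** 2)
onset = detect_movement_onset(speed, start_idx=0, tap_idx=180)
```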

Subsequently, the peak velocity of the outward motion was determined by peak extraction using the MATLAB function “findpeaks” in the time window between the motion onset and the object tap event; see Knill and Pouget (2004).
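MATLAB's “findpeaks” has a close SciPy counterpart, `scipy.signal.find_peaks`. The sketch below uses it to locate peak velocity between movement onset and tap; selecting the highest local maximum when several exist is an assumption, as the text does not state how multiple peaks were resolved, and the function name is hypothetical.

```python
import numpy as np
from scipy.signal import find_peaks

def peak_velocity(speed, onset_idx, tap_idx):
    """Return (sample index, value) of the highest local maximum of speed in [onset_idx, tap_idx)."""
    segment = speed[onset_idx:tap_idx]
    peaks, _ = find_peaks(segment)                # indices of all local maxima in the segment
    if peaks.size == 0:
        return None
    best = peaks[np.argmax(segment[peaks])]       # assumption: keep the highest peak
    return onset_idx + int(best), float(segment[best])

# Toy example: main reach peak at sample 100 plus a small corrective bump at sample 140
samples = np.arange(200)
speed = np.exp(-((samples - 100) / 10.0) ** 2) + 0.3 * np.exp(-((samples - 140) / 5.0) ** 2)
idx, value = peak_velocity(speed, onset_idx=82, tap_idx=180)
```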

Event-related time courses were extracted from both the band-pass filtered (0.1–15 Hz) EEG at electrode FCz (ERP) and the hand velocity (event-related hand velocity, ERV). ERPs were extracted from –100 to 600 ms around the “tap” event. ERVs were extracted from –500 to 500 ms around the velocity peak; see Knill and Pouget (2004).
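Epoch extraction around the tap and peak-velocity events can be sketched as below. Filtering with second-order sections and `sosfiltfilt` is our choice for numerical stability at a 0.1 Hz cut-off, and the filter order is an assumption; the window limits follow the text (–100 to 600 ms for ERPs, –500 to 500 ms for ERVs) at the 250 Hz EEG rate. The input signals here are synthetic placeholders.

```python
import numpy as np
from scipy.signal import butter, sosfiltfilt

FS = 250  # Hz, EEG sampling rate after resampling

def bandpass(x, lo=0.1, hi=15.0, fs=FS, order=2):
    """Zero-phase Butterworth band-pass (second-order sections); the order is an assumption."""
    sos = butter(order, [lo, hi], btype="bandpass", fs=fs, output="sos")
    return sosfiltfilt(sos, x, axis=-1)

def epoch(signal, event_idx, tmin, tmax, fs=FS):
    """Cut windows [tmin, tmax) in seconds around each event sample.

    Events whose window would extend beyond the recording are skipped.
    Returns an array of shape (n_valid_events, n_times).
    """
    start, stop = int(round(tmin * fs)), int(round(tmax * fs))
    valid = [i for i in event_idx if i + start >= 0 and i + stop <= signal.shape[-1]]
    return np.stack([signal[i + start:i + stop] for i in valid])

# Toy example with synthetic signals (placeholders for FCz and hand speed)
rng = np.random.default_rng(1)
fcz = rng.standard_normal(5000)
speed = np.abs(rng.standard_normal(5000))
erp = epoch(bandpass(fcz), event_idx=[10, 1000, 2000], tmin=-0.1, tmax=0.6)  # event 10 is too early
erv = epoch(speed, event_idx=[1000, 2000], tmin=-0.5, tmax=0.5)
```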

Event-related spectral perturbations (ERSPs) were obtained by extracting epochs from trial onset (i.e., the spawn of the target) to the object tap. A pre-stimulus interval was included for later baseline correction. Single-trial spectrograms were computed using the EEGLAB function “newtimef” (3–100 Hz on a logarithmic scale, using a wavelet transformation with three cycles at the lowest frequency and a linear increase with frequency of 0.5 cycles). The resulting spectrograms were linearly time-warped to the movement onset and the time of peak velocity.
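Linear time-warping to behavioral landmarks can be sketched as a piecewise-linear remapping of each trial's time axis, applied to every frequency row of a single-trial spectrogram. The landmark choice (trial start, movement onset, peak velocity, tap) and the use of, for example, median landmark latencies as warp targets are assumptions; this is not EEGLAB's implementation, and the function name is hypothetical.

```python
import numpy as np

def time_warp(tf, landmarks, target_landmarks):
    """Piecewise-linearly warp a single-trial time-frequency map of shape (n_freqs, n_times).

    landmarks:        increasing sample indices of events in this trial,
                      e.g. [trial_start, movement_onset, peak_velocity, tap]
    target_landmarks: increasing sample indices where those events should fall after warping,
                      e.g. median landmark latencies across trials
    Returns an array of shape (n_freqs, target_landmarks[-1] + 1).
    """
    out_t = np.arange(target_landmarks[-1] + 1)
    # Fractional source sample for every output sample, linear between landmarks
    src_t = np.interp(out_t, target_landmarks, landmarks)
    orig_t = np.arange(tf.shape[1])
    return np.stack([np.interp(src_t, orig_t, row) for row in tf])

# Toy example: each row holds its own time index, so warped values reveal the mapping
tf = np.tile(np.arange(101, dtype=float), (3, 1))
warped = time_warp(tf, landmarks=[0, 40, 70, 100], target_landmarks=[0, 50, 75, 100])
```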

ERPs were baseline-corrected by subtracting the average amplitude of the last 100 ms preceding trial start. To test for effects on both ERP and ERV, the linear mixed-effects model “sample ~ condition + modality + (1|participantID)” was fit at each time point. The main effects were assessed using likelihood ratio tests, with Benjamini–Hochberg correction of p-values for the false discovery rate (Benjamini and Hochberg, 1995).
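The per-time-point inference can be sketched in two pieces: a likelihood ratio test comparing the full model with a model lacking one main effect, and Benjamini–Hochberg adjustment across time points. The log-likelihoods are assumed to come from maximum-likelihood (not REML) fits, for instance `statsmodels.formula.api.mixedlm("sample ~ condition + modality", data, groups=data["participantID"]).fit(reml=False).llf`; the text does not state which software fit the mixed models. With two mismatch levels and three modality levels, dropping condition removes one parameter and dropping modality removes two.

```python
import numpy as np
from scipy.stats import chi2

def lrt_pvalue(loglik_full, loglik_reduced, df_diff):
    """Likelihood ratio test: 2 * (LL_full - LL_reduced) ~ chi2(df_diff) under H0.

    Both models must be fit by maximum likelihood (not REML) for the comparison to be valid.
    """
    stat = 2.0 * (loglik_full - loglik_reduced)
    return chi2.sf(stat, df_diff)

def fdr_bh(pvals):
    """Benjamini-Hochberg adjusted p-values, returned in the input order."""
    p = np.asarray(pvals, dtype=float)
    m = p.size
    order = np.argsort(p)
    ranked = p[order] * m / np.arange(1, m + 1)
    # Enforce monotonicity, moving from the largest p-value downward
    adjusted = np.minimum.accumulate(ranked[::-1])[::-1]
    out = np.empty(m)
    out[order] = np.minimum(adjusted, 1.0)
    return out

# Toy example: one LRT at the 5% critical value, then BH over four time points
p_condition = lrt_pvalue(loglik_full=0.0, loglik_reduced=-1.920729410347062, df_diff=1)
adjusted = fdr_bh([0.01, 0.04, 0.03, 0.005])
```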

For ERSPs, spatiotemporal cluster tests were conducted for two contrasts: one against power values in a –300 to –100 ms pre-trial baseline window and one between conditions.
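MNE-Python implements cluster-based permutation tests (e.g., `mne.stats.permutation_cluster_1samp_test`). To show the logic only, the sketch below re-implements a simplified one-sample version with numpy and SciPy for a (participants × frequencies × times) array of differences, such as baseline-corrected power or mismatch-minus-match power per participant. The cluster-forming threshold, sign-flip permutation scheme, and cluster-mass statistic are common defaults assumed here, not settings reported in the text.

```python
import numpy as np
from scipy import ndimage
from scipy.stats import t as t_dist

def one_sample_t(x):
    """One-sample t statistic across the first axis (participants)."""
    n = x.shape[0]
    return x.mean(axis=0) / (x.std(axis=0, ddof=1) / np.sqrt(n))

def find_clusters(tmap, threshold):
    """Return (cluster mass, boolean mask) for positive and negative supra-threshold clusters."""
    clusters = []
    for sign in (1.0, -1.0):
        labels, n_clusters = ndimage.label(sign * tmap > threshold)
        for k in range(1, n_clusters + 1):
            mask = labels == k
            clusters.append((float(tmap[mask].sum()), mask))
    return clusters

def cluster_permutation_test(x, n_perm=1000, alpha_cluster=0.05, seed=0):
    """Sign-flip cluster permutation test on x with shape (n_subjects, n_freqs, n_times)."""
    n = x.shape[0]
    threshold = t_dist.ppf(1 - alpha_cluster / 2, df=n - 1)   # two-sided cluster-forming threshold
    observed = find_clusters(one_sample_t(x), threshold)
    rng = np.random.default_rng(seed)
    null_max = np.zeros(n_perm)
    for i in range(n_perm):
        signs = rng.choice([-1.0, 1.0], size=n)[:, None, None]  # flip each participant's sign
        perm_clusters = find_clusters(one_sample_t(x * signs), threshold)
        null_max[i] = max((abs(m) for m, _ in perm_clusters), default=0.0)
    pvals = np.array([(1 + np.sum(null_max >= abs(m))) / (n_perm + 1) for m, _ in observed])
    return observed, pvals

# Toy example: 12 participants, 10 frequencies x 20 time points, true effect in one patch
rng = np.random.default_rng(42)
x = rng.standard_normal((12, 10, 20))
x[:, 3:6, 5:10] += 2.0
clusters, pvals = cluster_permutation_test(x, n_perm=200)
```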

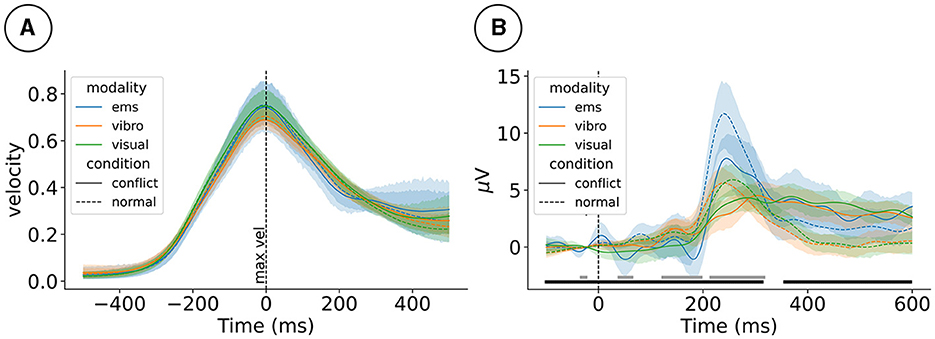

We observed similar motion profiles across the three different haptic modalities. The oddball-like mismatch manipulation did not change how participants moved. This can be seen in Figure 2A, which shows the hand velocity time-locked to the velocity peak of the motion.

Figure 2. (A) Event-related hand velocity (ERV) and (B) event-related potentials (ERP) at electrode FCz for the 2 (mismatch condition) × 3 (haptic modality) design. ERV is plotted from –500 to 500 ms around the peak velocity. ERP is plotted from –100 to 600 ms around the tap event. Gray (haptic modality) and black (mismatch condition) blocks at the bottom mark effects. ems, electrical muscle stimulation.

Visuo-haptic mismatches affected event-related processing as captured by the EEG. As reported in our previous studies, mismatch stimuli affected the ERP, for example, at electrode FCz 170 ms post-stimulus; see Figure 2B. Furthermore, the level of haptic immersion affected the ERP, for example, at electrode FCz 170 ms post-stimulus.

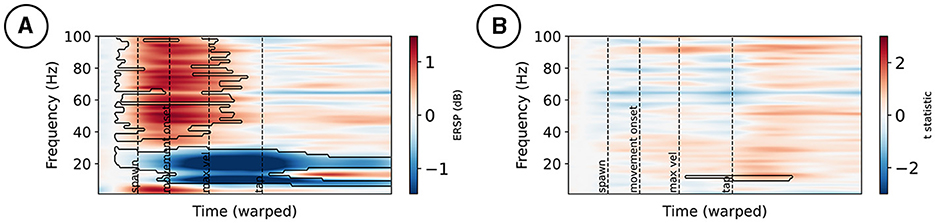

For simplicity, only the test against baseline and the main effect of the mismatch condition are plotted for electrode FCz, in Figures 3A and 3B, respectively. At FCz, a positive change in high-frequency power relative to baseline appears early in the movement. Furthermore, a negative change relative to baseline power appears in the alpha and beta frequency ranges during the movement, peaking after maximum velocity; see Figure 3A. Mismatch stimuli affected spectral power at FCz in lower frequency bands around the tap event; see Figure 3B.

Figure 3. (A) Event-related spectral perturbations at electrode FCz. Changes from a –300 to –100 ms pre-stimulus baseline are marked by a black contour. (B) t-statistic of the mismatch condition contrast, with effects marked by a black contour.

Publicly available datasets were analyzed in this study. These data can be found at: https://openneuro.org/datasets/ds003846.

The studies involving humans were approved by Ethik-Kommission (EK), Institut für Psychologie und Arbeitswissenschaft (IPA), TU Berlin. The studies were conducted in accordance with the local legislation and institutional requirements. The participants provided their written informed consent to participate in this study.

LG: Writing – review & editing, Writing – original draft, Visualization, Validation, Supervision, Software, Project administration, Methodology, Investigation, Formal analysis, Data curation, Conceptualization. LT: Writing – review & editing, Writing – original draft. SA: Writing – original draft, Methodology, Investigation, Data curation, Conceptualization. KG: Writing – review & editing, Resources, Project administration, Funding acquisition.

The author(s) declare that financial support was received for the research, authorship, and/or publication of this article. The research leading to these data being published has received funding from the Bundesministerium für Bildung und Forschung (01GQ1511) and the Deutsche Forschungsgemeinschaft (462163815). We acknowledge support by the Open Access Publication Fund of TU Berlin.

We thank Pedro Lopes (PL) and Albert Chen (AC) from the University of Chicago for conceptualizing and helping set up the experiment (PL), writing and reviewing earlier publications leveraging these data (PL and AC), and assisting with data collection (AC).

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

The author(s) declared that they were an editorial board member of Frontiers, at the time of submission. This had no impact on the peer review process and the final decision.

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

Bendixen, A., SanMiguel, I., and Schröger, E. (2012). Early electrophysiological indicators for predictive processing in audition: a review. Int. J. Psychophysiol. 83, 120–131. doi: 10.1016/j.ijpsycho.2011.08.003

Benjamini, Y., and Hochberg, Y. (1995). Controlling the false discovery rate: a practical and powerful approach to multiple testing. J. R. Stat. Soc. Ser. B 57, 289–300.

Bigdely-Shamlo, N., Mullen, T., Kothe, C., Su, K. M., and Robbins, K. A. (2015). The PREP pipeline: standardized preprocessing for large-scale EEG analysis. Front. Neuroinformat. 9:16. doi: 10.3389/fninf.2015.00016

Clark, A. (2013). Whatever next? Predictive brains, situated agents, and the future of cognitive science. Behav. Brain Sci. 36, 181–204. doi: 10.1017/S0140525X12000477

Delorme, A., and Makeig, S. (2004). EEGLAB: an open source toolbox for analysis of single-trial EEG dynamics including independent component analysis. J. Neurosci. Methods 134, 9–21. doi: 10.1016/j.jneumeth.2003.10.009

Friston, K. (2010). The free-energy principle: a unified brain theory? Nat. Rev. Neurosci. 11, 127–138. doi: 10.1038/nrn2787

Friston, K. (2012). Embodied inference and spatial cognition. Cogn. Process 13, 171–177. doi: 10.1007/s10339-012-0519-z

Gehrke, L., Akman, S., Lopes, P., Chen, A., Singh, A. K., Chen, H. T., et al. (2019). “Detecting visuo-haptic mismatches in virtual reality using the prediction error negativity of event-related brain potentials,” in Proceedings of the 2019 CHI Conference on Human Factors in Computing Systems, CHI '19 (New York, NY: Association for Computing Machinery), 1–11.

Gehrke, L., Lopes, P., Klug, M., Akman, S., and Gramann, K. (2022). Neural sources of prediction errors detect unrealistic VR interactions. J. Neural Eng. 19:e036002. doi: 10.1088/1741-2552/ac69bc

Gorgolewski, K. J., Auer, T., Calhoun, V. D., Craddock, R. C., Das, S., Duff, E. P., et al. (2016). The brain imaging data structure, a format for organizing and describing outputs of neuroimaging experiments. Sci. Data 3:160044. doi: 10.1038/sdata.2016.44

Gramann, K., Jung, T. P., Ferris, D. P., Lin, C. T., and Makeig, S. (2014). Toward a new cognitive neuroscience: modeling natural brain dynamics. Front. Hum. Neurosci. 8:444. doi: 10.3389/fnhum.2014.00444

Gramfort, A., Luessi, M., Larson, E., Engemann, D. A., Strohmeier, D., Brodbeck, C., et al. (2013). MEG and EEG data analysis with MNE—Python. Front. Neurosci. 7, 1–13. doi: 10.3389/fnins.2013.00267

Holroyd, C. B., and Coles, M. G. H. (2002). The neural basis of human error processing: reinforcement learning, dopamine, and the error-related negativity. Psychol. Rev. 109, 679–709. doi: 10.1037/0033-295X.109.4.679

Homan, R. W. (1988). The 10–20 electrode system and cerebral location. Am. J. EEG Technol. 28, 269–279.

Jeung, S., Cockx, H., Appelhoff, S., Berg, T., Gramann, K., Grothkopp, S., et al. (2023). Motion-BIDS: Extending the Brain Imaging Data Structure Specification to Organize Motion Data for Reproducible Research. doi: 10.31234/osf.io/w6z79

Jungnickel, E., Gehrke, L., Klug, M., and Gramann, K. (2019). “Chapter 10 - MoBI—mobile brain/body imaging,” in Neuroergonomics, eds. H. Ayaz and F. Dehais (Academic Press), 59–63. doi: 10.1016/B978-0-12-811926-6.00010-5

Klug, M., Jeung, S., Wunderlich, A., Gehrke, L., Protzak, J., Djebbara, Z., et al. (2022). The BeMoBIL Pipeline for Automated Analyses of Multimodal Mobile Brain and Body Imaging Data. doi: 10.1101/2022.09.29.510051

Knill, D. C., and Pouget, A. (2004). The Bayesian brain: the role of uncertainty in neural coding and computation. Trends Neurosci. 27, 712–719. doi: 10.1016/j.tins.2004.10.007

Lieder, F., Stephan, K. E., Daunizeau, J., Garrido, M. I., and Friston, K. J. (2013). A neurocomputational model of the mismatch negativity. PLoS Comput. Biol. 9:e1003288. doi: 10.1371/journal.pcbi.1003288

Makeig, S., Gramann, K., Jung, T. P., Sejnowski, T. J., and Poizner, H. (2009). Linking brain, mind and behavior. Int. J. Psychophysiol. 73, 95–100. doi: 10.1016/j.ijpsycho.2008.11.008

Palmer, J. A., Kreutz-Delgado, K., and Makeig, S. (2011). AMICA: an Adaptive Mixture of Independent Component Analyzers with Shared Components. Technical Reports. Swartz Center for Computational Neuroscience, University of California San Diego.

Pernet, C. R., Appelhoff, S., Gorgolewski, K. J., Flandin, G., Phillips, C., Delorme, A., et al. (2019). EEG-BIDS, an extension to the brain imaging data structure for electroencephalography. Sci. Data 6:103. doi: 10.1038/s41597-019-0104-8

Pion-Tonachini, L., Kreutz-Delgado, K., and Makeig, S. (2019). ICLabel: an automated electroencephalographic independent component classifier, dataset, and website. NeuroImage 198, 181–197. doi: 10.1016/j.neuroimage.2019.05.026

Rao, R. P. N., and Ballard, D. H. (1999). Predictive coding in the visual cortex: a functional interpretation of some extra-classical receptive-field effects. Nat. Neurosci. 2, 79–87.

Savoie, F. A., Thénault, F., Whittingstall, K., and Bernier, P.-M. (2018). Visuomotor prediction errors modulate EEG activity over parietal cortex. Sci. Rep. 8:12513. doi: 10.1038/s41598-018-30609-0

Schubert, T. W. (2003). The sense of presence in virtual environments:. Zeitschrift für Medienpsychologie 15, 69–71. doi: 10.1026/1617-6383.15.2.69

Singh, A. K., Chen, H. T., Cheng, Y. F., King, J. T., Ko, L. W., Gramann, K., et al. (2018). Visual appearance modulates prediction error in virtual reality. IEEE Access 6, 24617–24624. doi: 10.1109/ACCESS.2018.2832089

Slater, M. (2009). Place illusion and plausibility can lead to realistic behaviour in immersive virtual environments. Philos. Trans. Royal Soc. B 364, 3549–3557. doi: 10.1098/rstb.2009.0138

Stefanics, G., Kremláček, J., and Czigler, I. (2014). Visual mismatch negativity: a predictive coding view. Front. Hum. Neurosci. 8:666. doi: 10.3389/fnhum.2014.00666

Sutton, S., Braren, M., Zubin, J., and John, E. R. (1965). Evoked-potential correlates of stimulus uncertainty. Science 150, 1187–1188.

Keywords: neuroergonomics, BIDS, EEG, prediction error, motion, virtual reality

Citation: Gehrke L, Terfurth L, Akman S and Gramann K (2024) Visuo-haptic prediction errors: a multimodal dataset (EEG, motion) in BIDS format indexing mismatches in haptic interaction. Front. Neuroergon. 5:1411305. doi: 10.3389/fnrgo.2024.1411305

Received: 02 April 2024; Accepted: 20 May 2024;

Published: 05 June 2024.

Edited by:

Ranjana K. Mehta, University of Wisconsin-Madison, United States

Reviewed by:

Ronak Ranjitkumar Mohanty, University of Wisconsin-Madison, United States

Copyright © 2024 Gehrke, Terfurth, Akman and Gramann. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Lukas Gehrke, lukas.gehrke@tu-berlin.de
