DATA REPORT article

Front. Neuroergonomics, 24 August 2023

Sec. Neurotechnology and Systems Neuroergonomics

Volume 4 - 2023 | https://doi.org/10.3389/fnrgo.2023.1216440

This article is part of the Research Topic: Machine Learning and Signal Processing for Neurotechnologies and Brain-Computer Interactions Out of the Lab

The AMBER dataset aims to support researchers in developing signal-denoising techniques that mitigate the impact of noise sources such as eye blinks, eye gaze, talking, and body movements, and thereby improve the signal-to-noise characteristics of EEG (electroencephalography) measurements. The emphasis of this research is on enabling robust performance of brain-computer interface systems in naturalistic real-world settings, i.e., outside of the lab.

Prior studies (Manor et al., 2016; Huang et al., 2020; Ranjan et al., 2021) have typically relied on characteristics of the EEG signal in isolation, without contextual signal sources, to identify and ameliorate such artifacts, and have had limited success. Presently, however, no suitable dataset exists with which to train and evaluate approaches that make use of contextual signals.

Several research articles have discussed P300 RSVP datasets available to researchers (Lees et al., 2018). Notably, Won et al. (2022) collected P300 RSVP data from 50 participants using a 32-channel Biosemi ActiveTwo system, while another dataset was presented by Acqualagna and Blankertz (2013), acquired from 12 subjects. Additionally, an extensive dataset was introduced by Zhang et al. (2020), which involved 64 subjects recorded with a 64-channel Synamps2 system. However, these datasets share a common limitation in that they solely focus on EEG signals without incorporating any contextual signal sources. In contrast, our aim is to provide the research community with an extensive dataset that not only includes participant video data alongside EEG recordings, but also closely replicates real-world situations, bridging the gap between controlled laboratory settings and naturalistic environments.

The AMBER dataset incorporates a multimodal and contextual approach by capturing video data of the participant alongside the EEG recording. The inclusion of video data enriches the dataset by providing contextual information and additional modalities for analysis. By recording video data simultaneously with EEG signals, researchers can leverage a broader range of contextual cues, such as facial expressions, body movements, and eye movements.

EEG is an accessible and safe method for researchers and users to build and operate BCI (Brain-Computer Interface) applications (Awais et al., 2021). While the initial use of such techniques began in clinical/rehabilitative settings for the purposes of augmenting communication and control, a recent trend has been to use such signals and methods in new domains, such as the image annotation task, which relies on the identification of target brain events to trigger labeling (Bigdely-Shamlo et al., 2008; Pohlmeyer et al., 2011; Marathe et al., 2015).

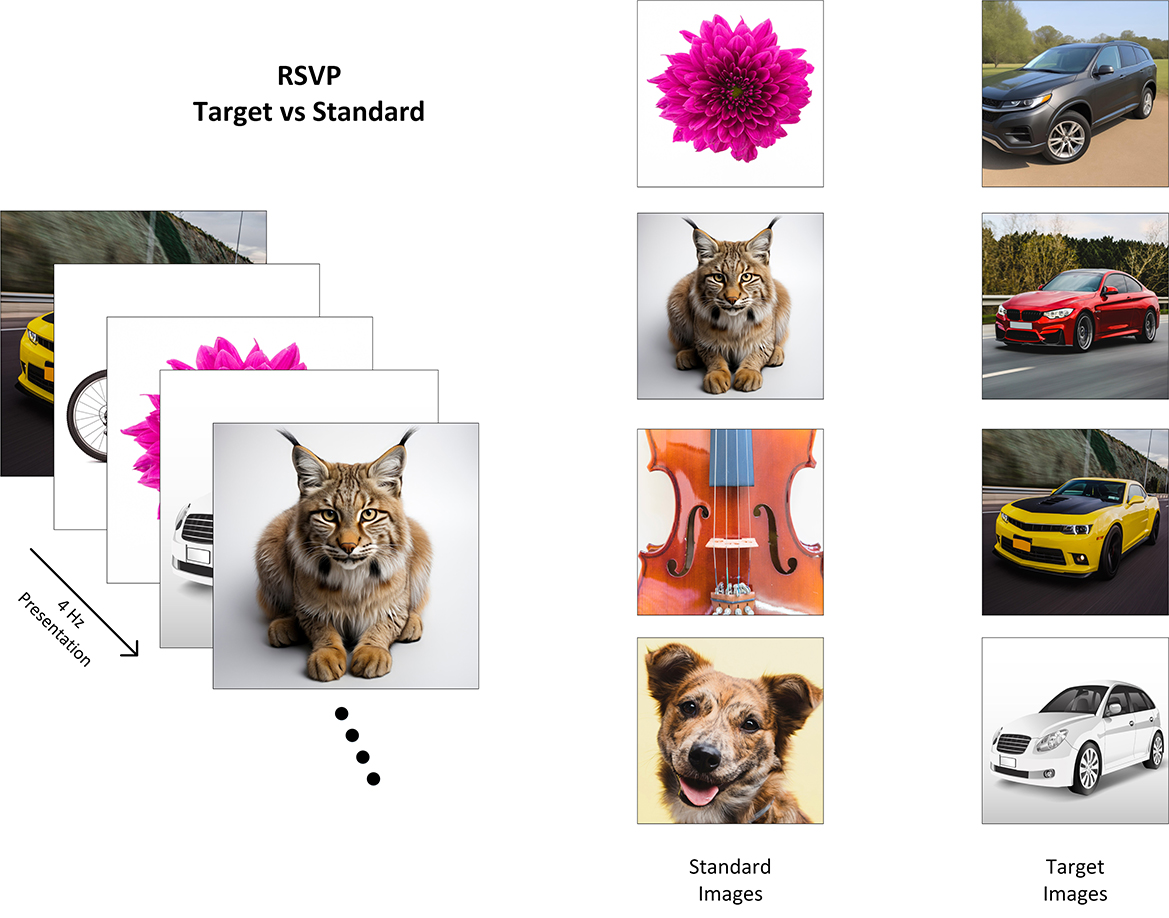

Rapid Serial Visual Presentation (RSVP) is an approach to BCI in which a series of images is displayed at high speed. Participants are asked to differentiate between a set of target images and a set of standard images; a P300 event-related potential (ERP) is evoked by a target image but not by standard images (Healy et al., 2017; Wang et al., 2018).

While the RSVP-BCI paradigm can be demonstrated in controlled lab-based environments, translation of this paradigm into consumer contexts requires a better understanding of real-world EEG artifacts that impact EEG signal quality in ecologically valid settings, e.g., in online worlds, metaverse, and gaming contexts. Such application scenarios are characterized by less constrained user behavior, some of which is entirely necessary for the normally expected interactions. Examples include talking, head and hand movements, all of which generate artifacts that impede the application of EEG in BCIs in real-world contexts.

By utilizing the P300/RSVP task in this dataset, we aim to differentiate the EEG results obtained in the presence of noise from those obtained in noise-free conditions. This differentiation allows for a comprehensive analysis of the impact of noise on EEG signals and facilitates the evaluation of signal-denoising techniques. The P300/RSVP task is particularly relevant as it involves measuring accuracy, making it an effective dependent variable to assess the efficacy of noise cleaning and evaluate the influence of behavioral artifacts on the EEG signals. Furthermore, we can gain insights into the relationship between noise, behavioral artifacts, and the quality of EEG signals, ultimately enhancing our understanding of the robustness of EEG data in real-world settings.

For the purpose of creating this dataset, participants were instructed to produce particular artifacts at particular times via a carefully controlled protocol, e.g., moving head left to right vs. up and down, eye movement, eye blinks, facial expressions, lip movement, body movement, etc. The specific artifacts that participants were instructed to produce during data recording reflect the most problematic artifacts encountered in real-world EEG recording (Urigüen and Garcia-Zapirain, 2015; Jiang et al., 2019; Rashmi and Shantala, 2022).

The AMBER dataset also gives researchers a resource for addressing one of the key challenges in EEG data analysis: signal denoising. EEG recordings are often contaminated by artifacts and noise, which can obscure the underlying neural signals and hinder accurate analysis. Effective denoising improves the quality and reliability of EEG measurements, enabling more accurate identification and interpretation of neural responses and, in turn, deeper insight into brain activity and cognitive processes. Denoising techniques are also crucial for developing robust BCIs that can reliably detect and interpret brain signals in real-world scenarios. The dataset therefore serves as a resource for exploring and developing novel signal-denoising techniques, paving the way for more effective and practical applications of BCIs in naturalistic settings.

Ten healthy participants aged between 20 and 35 years were recruited from Dublin City University to participate in the data collection. Among these participants, there were 6 males and 4 females, and each participant was assigned an alias ranging from “P1” to “P10”. Data acquisition was performed with approval from the Dublin City University (DCU) Research Ethics Committee (DCUREC/2021/175).

The hardware used for data collection was the ANT-Neuro eego sports mobile EEG system. A 32-channel EEG cap positioned according to the 10-20 international electrode system was used for the data acquisition. CPz was used as the online reference channel, and the impedance of all electrodes was kept under 15 kOhm. Data was collected at a sampling rate of 1,000 Hz (using a lowpass filter of 500 Hz) and saved in EDF format.

EEG was recorded from the ten participants while they followed a pre-defined protocol of tasks. Timestamp information for image presentation (via a photodiode and hardware trigger) was also captured to allow for precise epoching of the EEG signals for each trial (Wang et al., 2016).

In the AMBER dataset, we employ a multimodal approach that encompasses two primary signal sources: (1) EEG data collection and (2) video recording. Both EEG recordings and video are captured at the same time.

The dataset contains EEG responses to 10,500 images, in total. Each participant completed 4 sessions where each session contained 8 blocks followed by 3 different baselines. A description of each task in a single session is given in Table 1.

As seen in Table 1, the data collection is split into three sections:

1. The standard RSVP image search paradigm, in which the subjects perform the target search task while sitting still in front of the monitor (X1 and X2);

2. The RSVP paradigm with participant-induced noise, in which the participants follow a protocol to generate three distinct types of EEG artifactual noise through movement (i.e., head movement, body movement, and talking) while performing the RSVP task (X4, X6, and X8);

3. The noise paradigm, in which the participants generate three distinct types of noises throughout the trial without performing any RSVP image search task (X3, X5, and X7).

In the image search RSVP task, participants searched for a known type of target (e.g., a car) and were instructed to covertly count occurrences of target images in the RSVP sequence so as to maintain their attention on the task. In Figure 1, we show examples of the target search images used. In each 90-s RSVP block, images were presented successively at a rate of 4 Hz with target images randomly interspersed among standard images with a percentage of 10% across all blocks. In each block, 360 images (36 targets/324 standards) were presented in rapid succession on screen.

Figure 1. Illustration of the P300/RSVP task where images of cars have been used as the target class. Reproduced with permission from Freepik.com (see footnote 1).

There were 288/2592 target/standard trials captured for the standard RSVP task per participant, whereas, in the case of each noisy RSVP task (1-body movement, 2-talking, and 3-head movement), there were 144/1296 target/standard trials available.
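The trial counts above follow directly from the block design described in the text (90-s blocks at 4 Hz, 10% targets, four sessions, two standard blocks and one block per noise type in each session). A minimal sketch of that arithmetic is given below; the constant and function names are illustrative only and do not come from the dataset's code.

```python
# Trial-count arithmetic for the RSVP blocks, using only figures stated in the text.
BLOCK_SECONDS = 90                # duration of one RSVP block
RATE_HZ = 4                       # image presentation rate
TARGET_FRACTION = 0.10            # share of target images per block
SESSIONS = 4                      # sessions per participant
STANDARD_BLOCKS_PER_SESSION = 2   # X1 and X2
NOISY_BLOCKS_PER_SESSION = 1      # one block per noise type (e.g., X4)


def block_counts(seconds=BLOCK_SECONDS, rate_hz=RATE_HZ, fraction=TARGET_FRACTION):
    """Return (images, targets, standards) for a single RSVP block."""
    images = seconds * rate_hz
    targets = round(images * fraction)
    return images, targets, images - targets


images, targets, standards = block_counts()

# Per-participant totals for the standard RSVP task (two blocks per session).
standard_task = (
    targets * STANDARD_BLOCKS_PER_SESSION * SESSIONS,
    standards * STANDARD_BLOCKS_PER_SESSION * SESSIONS,
)

# Per-participant totals for each noisy RSVP task (one block per session).
noisy_task = (
    targets * NOISY_BLOCKS_PER_SESSION * SESSIONS,
    standards * NOISY_BLOCKS_PER_SESSION * SESSIONS,
)

print(images, targets, standards)   # images, targets, standards per block
print(standard_task, noisy_task)    # (targets, standards) per participant
```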

In addition to recording using the pre-defined RSVP paradigm, intentional artifacts were also generated by the participants through following a protocol. In the first scenario, participants generated noise in parallel with the RSVP task, and in the second scenario, they generated artifactual noise without performing the RSVP task.

These intentional artifacts were induced to simulate realistic scenarios and study the effects of body movement, talking, and head movement on EEG data. By performing these intentional artifact-generating tasks in parallel with the RSVP task, this dataset captures the effect of coincident noise on task performance and EEG data. The inclusion of intentionally generated artifacts also enables a more comprehensive analysis of the noise characteristics and their impact on EEG data, enabling researchers to develop more effective signal-denoising techniques.

To induce body movement artifacts, participants were instructed to repeatedly raise and wave their hands, followed by putting their hands down, and repeating this sequence of movements for the entire duration of the 90-s block. Participants were given the freedom to choose which hand(s) to raise and in what sequence, with the intention of inducing variability around artifact production. They were instructed to perform the task at a comfortable speed, neither too fast nor too slow, in order to maintain consistency across participants. This task was designed to simulate a real-world or metaverse-type environment where a person might be moving their hands and arms while using a virtual reality setup such as playing a game or engaging in any other activity that involves body movements.

To generate talking artifacts, participants were instructed to count aloud, mixing numbers and letters in random sequences, for the entire 90-s block. They were given the freedom to choose the order and sequence of their counting, with the aim of inducing variability.

During the RSVP task, participants were instructed to count and continuously repeat the number of target images out loud to create talking artifactual noise in the EEG. The aim of this instruction was to simulate a real-life scenario where individuals may need to focus on a task while simultaneously communicating verbally in situations like playing a game or collaborating with others in a virtual space.

To generate head movement artifacts, participants were instructed to move their heads in an up-down (nodding) or left-right motion, at a natural speed, throughout the entire 90-s block. Participants were specifically instructed to alternate between up-down and left-right head movements in a randomized manner. This task was designed to simulate real-world scenarios where a person might produce head movements in situations like nodding in agreement during a conversation or shaking their head in response to a question. Additionally, this task aimed to simulate virtual reality (VR) environments, where a person might use a VR headset and move their head to explore different directions while playing games or visiting virtual spaces.

Figure 2 illustrates the organization of the EEG recordings for each participant. It also provides an overview of the files (i.e., raw EEG data and labels) associated with each task.

We employed a multimodal approach that involved capturing video data of BCI participants while they produced specific artifacts.

To accurately capture the various artifacts, video data was recorded using a Logitech C920 webcam, which has a frame rate of 20 fps. The camera was calibrated using OpenCV (Zhang, 2000) to determine the camera matrix and distortion coefficients, ensuring the accuracy of the recorded video data.

During the recording of eye, head, and mouth-related artifacts, the participant's face was kept fully in the frame. This framing approach allowed for a clear and unobstructed view of the participant's movements, including pupil dilation, eye-opening size, and movement. For arm movements, the upper torso was maintained in full frame, ensuring proper visibility of the body movements.

Each video frame was timestamped and assigned a corresponding frame number, which was recorded in a corresponding CSV file. This file also contained information on frame size, zoom factors, and camera matrix. The timestamping process ensured that the video data could be accurately synchronized with the EEG data, allowing for a robust multimodal analysis.

The integration of video data into the Contextual EEG Dataset is critical for developing advanced signal-denoising techniques and improving BCI performance in real-world settings. Combining video data with electroencephalogram (EEG) recordings enables researchers to explore the correlations between facial expressions, head movements, and brain activity, leading to a better understanding of various (neuro-)physiological phenomena. By capturing these problematic artifacts encountered in naturalistic environments, researchers can better understand the factors affecting EEG data quality and develop solutions to mitigate their impact on BCI performance.

In this work, we collected EEG data from 10 participants, which is labeled as Dataset A. For illustrative purposes, we conducted basic pre-processing steps on the raw EEG data, resulting in a modified version referred to as Dataset B. Alongside the EEG recordings, we simultaneously captured video data, which was independently processed to extract relevant information. These video-derived extractions are denoted as Dataset C. The availability of multiple datasets enables comprehensive analysis, allowing us to explore the relationship between EEG signals, video data, and their potential combined insights. Detailed descriptions of each dataset are provided below.

The raw EEG data recorded during each task is stored in EDF file format, which contains all the relevant information, including the complete EEG signal as well as the events that occurred during the task. For each participant, there are 11 EDF files corresponding to each session, which results in a total of 44 files for all four sessions.

The RSVP tasks have additional information about the target and standard image triggers, which are given in the form of CSV files. There are five RSVP tasks per session (two standard and three noisy RSVP tasks), which results in 20 CSV marker files for each participant. This combination of EDF and CSV files provides a comprehensive dataset that allows for detailed analysis of EEG signals and their corresponding events during the RSVP tasks. An overview of the files (i.e., EDF and CSV) associated with each task in a session can be seen in Figure 2.

During each RSVP task, a total of 360 images were presented to participants. As a result, each corresponding CSV marker file contains 360 rows of information having two distinct labels, “1” and “2”. The label “1” indicates the presence of a target image in the corresponding epoch for the RSVP task, while the label “2” indicates the absence of a target image, and, therefore, a standard non-target image. These labels provide vital information for the analysis of the EEG data as they allow the identification of the specific moments in time when the target and non-target stimuli were presented during the task.
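As a rough illustration of how such a marker file might be read, the sketch below counts the "1" (target) and "2" (standard) labels. The column layout is an assumption: the text does not describe the file's columns, so the example uses a hypothetical single-column file with one label per row and builds synthetic content in memory rather than reading a real file from the dataset.

```python
import csv
import io
from collections import Counter

TARGET_LABEL = "1"     # target image, as described in the text
STANDARD_LABEL = "2"   # standard (non-target) image


def count_labels(csv_text, label_column=0):
    """Count marker labels in CSV text.

    label_column is a hypothetical parameter; adjust it to the real file layout.
    """
    reader = csv.reader(io.StringIO(csv_text))
    return Counter(row[label_column].strip() for row in reader if row)


# Synthetic stand-in for one marker file: 360 rows, every tenth row a target.
synthetic_rows = [TARGET_LABEL if i % 10 == 0 else STANDARD_LABEL for i in range(360)]
counts = count_labels("\n".join(synthetic_rows))

print(counts[TARGET_LABEL], counts[STANDARD_LABEL])
```

A quick check of this kind (36 targets and 324 standards per file) can help confirm that a marker file has been parsed with the right column before epoching.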

In addition to the raw data (i.e., Dataset A), an illustrative pipeline has been provided that encompasses crucial preprocessing steps to make the EEG data amenable to analysis, visualization, and machine learning. This transformation procedure was proposed as an example to restructure the continuous raw data into a more compact dataset and to make it easier to use.

The pipeline includes essential stages such as filtering, resampling, re-referencing, and epoching. By following this example pipeline, researchers can effectively transform and structure the data, allowing for a deeper understanding of its characteristics and facilitating further analysis. The processing was implemented mainly in Python (version 3.9.12) with MNE (version 0.24.0). The example pipeline is publicly available, allowing interested researchers to modify the processing setup as they wish.

A function was developed in Python to quickly load the raw EEG data for a particular participant and session. This function is helpful for efficiently accessing the EEG data for analysis and processing. The raw data is stored in the EDF file format, which contains all the relevant information related to the EEG recordings, including the event markers. Further information about event markers is stored in separate CSV files for each RSVP task.

A low pass filter with a cut-off frequency of 30 Hz was applied to the EEG data to remove any high-frequency noise or artifacts that may be present in the signal above 30 Hz. This filtering step helped to improve the signal-to-noise ratio and enhance the quality of the EEG data.
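To make the filtering step concrete, the following is a minimal NumPy sketch of a 30 Hz low-pass filter applied to a signal sampled at 1,000 Hz. It is not the authors' implementation, which used MNE; the windowed-sinc FIR design, the Hamming window, and the number of taps are assumptions chosen for illustration.

```python
import numpy as np


def lowpass_fir(signal, sfreq, cutoff_hz, num_taps=201):
    """Low-pass a 1-D signal with a symmetric windowed-sinc FIR kernel.

    num_taps and the Hamming window are illustrative choices, not values
    taken from the dataset's pipeline.
    """
    n = np.arange(num_taps) - (num_taps - 1) / 2
    kernel = np.sinc(2 * cutoff_hz / sfreq * n)   # ideal low-pass impulse response
    kernel *= np.hamming(num_taps)                # taper to limit ripple
    kernel /= kernel.sum()                        # unit gain at 0 Hz
    # mode="same" centres the symmetric kernel, so no net delay is introduced.
    return np.convolve(signal, kernel, mode="same")


# Synthetic test signal: a 10 Hz component plus 100 Hz "noise".
sfreq = 1000
t = np.arange(2000) / sfreq
slow = np.sin(2 * np.pi * 10 * t)
fast = 0.5 * np.sin(2 * np.pi * 100 * t)
filtered = lowpass_fir(slow + fast, sfreq, cutoff_hz=30)

# Away from the edges, the output should closely follow the 10 Hz component.
core = slice(200, -200)
max_error = np.max(np.abs(filtered[core] - slow[core]))
print(max_error)
```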

The signal, originally sampled at 1,000 Hz, was down-sampled to 100 Hz. Down-sampling reduces the computational load of subsequent processing steps while preserving the essential features of the signal.
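Because the data have already been low-pass filtered at 30 Hz, which is below the 50 Hz Nyquist limit of the new 100 Hz rate, the rate reduction can be pictured as keeping every tenth sample. The sketch below shows this on synthetic data; it is a simplification under that assumption and is not necessarily how the MNE-based pipeline resamples.

```python
import numpy as np


def downsample(data, sfreq_in, sfreq_out):
    """Keep every k-th sample along the last axis (k = sfreq_in / sfreq_out).

    Assumes the data were already low-pass filtered below sfreq_out / 2.
    """
    factor, remainder = divmod(sfreq_in, sfreq_out)
    if remainder:
        raise ValueError("sfreq_in must be an integer multiple of sfreq_out")
    return data[..., ::factor]


# Synthetic 32-channel, 90-s recording at 1,000 Hz containing a 10 Hz sine.
sfreq_in, sfreq_out = 1000, 100
t_in = np.arange(90 * sfreq_in) / sfreq_in
data = np.tile(np.sin(2 * np.pi * 10 * t_in), (32, 1))

decimated = downsample(data, sfreq_in, sfreq_out)
t_out = np.arange(decimated.shape[-1]) / sfreq_out

print(data.shape, decimated.shape)
```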

The raw EEG data was initially recorded using the default CPz reference. However, in order to improve the quality of the data, common average referencing (CAR) was applied using the MNE re-reference function.
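Common average referencing subtracts, at each time point, the mean across all channels from every channel. A minimal NumPy sketch on synthetic data is shown below; the authors used MNE's re-referencing function, so this only illustrates the underlying operation, and the array shape and random data are assumptions.

```python
import numpy as np


def common_average_reference(data):
    """Subtract the across-channel mean from each channel.

    data has shape (n_channels, n_samples).
    """
    return data - data.mean(axis=0, keepdims=True)


# Synthetic data: 32 channels sharing a common offset plus independent noise.
rng = np.random.default_rng(0)
common = rng.normal(size=1000)               # signal seen by every channel
data = common + 0.1 * rng.normal(size=(32, 1000))

car = common_average_reference(data)

# After CAR, the channel average is zero at every sample.
print(np.abs(car.mean(axis=0)).max())
```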

The continuous EEG recordings were segmented into epochs by extracting the time series data from −0.2 to 1 s relative to the onset of the visual stimulus.
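The epoching step can be sketched as slicing a fixed window around each stimulus onset. The example below assumes data at 100 Hz, onsets expressed as sample indices, and a window that includes both end points; these are illustrative choices, and the synthetic onsets stand in for the trigger information supplied with the dataset.

```python
import numpy as np


def extract_epochs(data, onsets, sfreq, tmin=-0.2, tmax=1.0):
    """Cut windows from tmin to tmax (seconds) around each onset sample.

    data has shape (n_channels, n_samples). Onsets whose window would run
    past either end of the recording are skipped. Both end points are
    included, which is a choice made for this sketch.
    """
    start = int(round(tmin * sfreq))
    stop = int(round(tmax * sfreq)) + 1
    windows = [
        data[:, onset + start:onset + stop]
        for onset in onsets
        if onset + start >= 0 and onset + stop <= data.shape[1]
    ]
    return np.stack(windows)   # (n_epochs, n_channels, n_times)


# Synthetic recording: every channel holds its own sample index, so each
# epoch's content reveals exactly where it was cut from.
sfreq = 100
n_channels, n_samples = 32, 9500
data = np.tile(np.arange(n_samples, dtype=float), (n_channels, 1))

# 360 onsets at 4 Hz (every 25 samples at 100 Hz), starting 1 s into the recording.
onsets = 100 + 25 * np.arange(360)

epochs = extract_epochs(data, onsets, sfreq)
print(epochs.shape)
```

With these settings, each epoch spans 121 samples, of which the first 20 precede stimulus onset and can serve as a baseline.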

After undergoing the aforementioned pre-processing steps, the data is saved into CSV files. Each session comprises 11 CSV files, resulting in a total of 44 files for each participant. Each file contains comprehensive information about the channels, epochs, and their respective categories. By providing access to this pre-processed data as an example, users can explore the raw datasets in a multitude of ways, unlocking various possibilities for analysis and interpretation.

In this section, we describe the process of extracting metadata from video recordings using OpenCV libraries and synchronizing it with EEG data recorded in the European Data Format (EDF).

Video recordings can provide valuable information on an individual's facial expressions, head position, and skeletal movements. To extract this information, we utilized the OpenCV libraries, which offer a wide range of functionalities for image and video analysis. The metadata extracted includes:

1. Three-dimensional head position.

2. Eye opening.

3. Mouth opening.

4. Two-dimensional eye position.

5. Three-dimensional skeleton movement.

Each of these metadata elements is timestamped to ensure accurate synchronization with the EEG data.

Once the metadata is extracted from the video, it is converted into a suitable format for integration with the EEG data. We represent each metadata element as a time series that can be plotted as a line alongside the EEG, which facilitates the visualization and analysis of the combined data. This graphical representation allows researchers to easily identify patterns and correlations between video metadata and EEG activity.

In order to accurately integrate the video metadata with the EEG data, we utilize the video frame timestamps as a reference for aligning the metadata with the EEG data. This ensures that each metadata element is correctly associated with the corresponding EEG activity.
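One way to picture this alignment is to interpolate a video-derived trace (for example, mouth opening per frame) onto the EEG sample times. The sketch below assumes that the frame timestamps are expressed in seconds on the same clock and with the same origin as the EEG recording; that assumption, along with the function name and the synthetic values, is illustrative and not taken from the dataset's code.

```python
import numpy as np


def resample_to_eeg(frame_times, frame_values, n_samples, sfreq):
    """Linearly interpolate a per-frame video trace onto EEG sample times.

    frame_times must be in seconds relative to the start of the EEG
    recording (an assumption of this sketch) and sorted in ascending order.
    """
    eeg_times = np.arange(n_samples) / sfreq
    return eeg_times, np.interp(eeg_times, frame_times, frame_values)


# Synthetic video trace: 100 frames at 20 fps carrying a linear ramp.
frame_times = np.arange(100) / 20.0
frame_values = 2.0 * frame_times

# Align to 4 s of EEG sampled at 100 Hz.
eeg_times, aligned = resample_to_eeg(frame_times, frame_values, n_samples=400, sfreq=100)

print(aligned.shape)
```

The aligned trace then has one value per EEG sample, so it can be epoched with the same onsets as the EEG or used as a regressor in a denoising model.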

The combined analysis of video metadata and EEG data has numerous applications in various fields, such as neuroscience, psychology, and human-computer interaction. By understanding the relationship between facial expressions, head movements, and brain activity, researchers can gain insights into emotion recognition, attention, and cognitive processes. Moreover, this approach can also be applied to develop advanced human-computer interfaces and improve the accuracy of brain-computer interfaces (Redmond et al., 2022).

The datasets presented in this study are available in an online repository at: https://github.com/meharahsanawais/AMBER-EEG-Dataset.

The studies involving humans were approved by Dublin City University (DCU) Research Ethics Committee (DCUREC/2021/175). The studies were conducted in accordance with the local legislation and institutional requirements. The participants provided their written informed consent to participate in this study.

MA, PR, GH, and TW have made substantial contributions to this research work. MA designed the EEG-based experimental paradigm and conducted the data acquisition. PR contributed by designing the video recording protocols. GH contributed by designing the RSVP task and reviewing the paper. TW reviewed and finalized the manuscript for publication. All authors contributed to the article and approved the submitted version.

This work was funded by Science Foundation Ireland under Grant Number SFI/12/RC/2289_P2 and CHIST-ERA under Grant Number (CHISTERA IV 2020 - PCI2021-122058-2A).

We would like to express our gratitude to all the individuals who participated in the EEG experiments for their valuable contribution to this research. We are thankful for their time and effort in making this research possible.

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

The author(s) TW declared that they were an editorial board member of Frontiers, at the time of submission. This had no impact on the peer review process and the final decision.

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

1. ^Freepik.com image source links: https://www.freepik.com/free-photo/red-luxury-sedan-road_6198664.htm#query=cars&position=0&from_view=search&track=sph; https://www.freepik.com/free-photo/yellow-sport-car-with-black-autotuning-road_6159512.htm#query=cars&position=2&from_view=search&track=sph#position=2&query=cars; https://www.freepik.com/free-vector/white-hatchback-car-isolated-white-vector_3602751.htm#from_view=detail_alsolike; https://www.freepik.com/free-photo/pink-flower-white-background_976070.htm#query=flower&position=5&from_view=search&track=sph; https://www.freepik.com/free-photo/close-up-fine-tuning-string-adjuster-violin_2978632.htm#query=wooden%20guitar%20on%20table&position=27&from_view=search&track=ais; https://www.freepik.com/free-photo/front-view-cute-domestic-dog-pet_5795881.htm#query=golden%20retriever%20face&position=1&from_view=search&track=ais; https://www.freepik.com/free-ai-image/scary-bobcat-indoors_59584025.htm#query=bengal%20cat%20close%20up&position=3&from_view=search&track=ais; https://www.freepik.com/free-ai-image/gray-suv-is-parked-road-front-field_40368536.htm#query=cars&position=36&from_view=search&track=sph.

Acqualagna, L., and Blankertz, B. (2013). Gaze-independent BCI-spelling using rapid serial visual presentation (RSVP). Clin. Neurophysiol. 124, 901–908. doi: 10.1016/j.clinph.2012.12.050

Awais, M. A., Yusoff, M. Z., Khan, D. M., Yahya, N., Kamel, N., and Ebrahim, M. (2021). Effective connectivity for decoding electroencephalographic motor imagery using a probabilistic neural network. Sensors 21, 6570. doi: 10.3390/s21196570

Bigdely-Shamlo, N., Vankov, A., Ramirez, R. R., and Makeig, S. (2008). Brain activity-based image classification from rapid serial visual presentation. IEEE Trans. Neural Syst. Rehabil. Eng. 16, 432–441. doi: 10.1109/TNSRE.2008.2003381

Healy, G., Ward, T., Gurrin, C., and Smeaton, A. F. (2017). “Overview of NTCIR-13 NAILS task,” in The 13th NTCIR Conference on Evaluation of Information Access Technologies (Tokyo).

Huang, G., Hu, Z., Zhang, L., Li, L., Liang, Z., and Zhang, Z. (2020). “Removal of eye-blinking artifacts by ICA in cross-modal long-term EEG recording,” in 2020 42nd Annual International Conference of the IEEE Engineering in Medicine & Biology Society (EMBC) (Montreal, QC: IEEE), 217–220.

Jiang, X., Bian, G.-B., and Tian, Z. (2019). Removal of artifacts from EEG signals: a review. Sensors 19, 987. doi: 10.3390/s19050987

Lees, S., Dayan, N., Cecotti, H., McCullagh, P., Maguire, L., Lotte, F., et al. (2018). A review of rapid serial visual presentation-based brain–computer interfaces. J. Neural Eng. 15, 021001. doi: 10.1088/1741-2552/aa9817

Manor, R., Mishali, L., and Geva, A. B. (2016). Multimodal neural network for rapid serial visual presentation brain computer interface. Front. Comput. Neurosci. 10, 130. doi: 10.3389/fncom.2016.00130

Marathe, A. R., Ries, A. J., Lawhern, V. J., Lance, B. J., Touryan, J., McDowell, K., et al. (2015). The effect of target and non-target similarity on neural classification performance: a boost from confidence. Front. Neurosci. 9, 270. doi: 10.3389/fnins.2015.00270

Pohlmeyer, E. A., Wang, J., Jangraw, D. C., Lou, B., Chang, S.-F., and Sajda, P. (2011). Closing the loop in cortically-coupled computer vision: a brain–computer interface for searching image databases. J. Neural Eng. 8, 036025. doi: 10.1088/1741-2560/8/3/036025

Ranjan, R., Sahana, B. C., and Bhandari, A. K. (2021). Ocular artifact elimination from electroencephalography signals: a systematic review. Biocybernet. Biomed. Eng. 41, 960–996. doi: 10.1016/j.bbe.2021.06.007

Rashmi, C., and Shantala, C. (2022). EEG artifacts detection and removal techniques for brain computer interface applications: a systematic review. Int. J. Adv. Technol. Eng. Explorat. 9, 354. doi: 10.19101/IJATEE.2021.874883

Redmond, P., Fleury, A., and Ward, T. (2022). “An open source multi-modal data-acquisition platform for experimental investigation of blended control of scale vehicles,” in 2022 IEEE International Conference on Metrology for Extended Reality, Artificial Intelligence and Neural Engineering (MetroXRAINE) (Rome), 673–678.

Urigüen, J. A., and Garcia-Zapirain, B. (2015). EEG artifact removal-state-of-the-art and guidelines. J. Neural Eng. 12, 031001. doi: 10.1088/1741-2560/12/3/031001

Wang, Z., Healy, G., Smeaton, A. F., and Ward, T. E. (2016). “An investigation of triggering approaches for the rapid serial visual presentation paradigm in brain computer interfacing,” in 2016 27th Irish Signals and Systems Conference (ISSC) (Londonderry: IEEE), 1–6.

Wang, Z., Healy, G., Smeaton, A. F., and Ward, T. E. (2018). Spatial filtering pipeline evaluation of cortically coupled computer vision system for rapid serial visual presentation. Brain Comput. Interfaces 5, 132–145. doi: 10.1080/2326263X.2019.1568821

Won, K., Kwon, M., Ahn, M., and Jun, S. C. (2022). EEG dataset for RSVP and P300 speller brain-computer interfaces. Sci. Data 9, 388. doi: 10.1038/s41597-022-01509-w

Zhang, S., Wang, Y., Zhang, L., and Gao, X. (2020). A benchmark dataset for RSVP-based brain–computer interfaces. Front. Neurosci. 14, 568000. doi: 10.3389/fnins.2020.568000

Keywords: EEG, BCI, artifacts, signal denoising, P300 ERPs, RSVP, noise, EEG dataset

Citation: Awais MA, Redmond P, Ward TE and Healy G (2023) AMBER: advancing multimodal brain-computer interfaces for enhanced robustness—A dataset for naturalistic settings. Front. Neuroergon. 4:1216440. doi: 10.3389/fnrgo.2023.1216440

Received: 03 May 2023; Accepted: 01 August 2023;

Published: 24 August 2023.

Edited by:

Anirban Dutta, University of Lincoln, United Kingdom

Reviewed by:

Diana Carvalho, University of Porto, Portugal

Copyright © 2023 Awais, Redmond, Ward and Healy. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Muhammad Ahsan Awais, muhammad.awais2@mail.dcu.ie
