ORIGINAL RESEARCH article

Front. Neuroergonomics, 06 April 2023

Sec. Neurotechnology and Systems Neuroergonomics

Volume 4 - 2023 | https://doi.org/10.3389/fnrgo.2023.1080200

This article is part of the Research Topic: Neurotechnologies and Brain-Computer Interaction for Neurorehabilitation

Brain-computer interfaces (BCI) have been developed to allow users to communicate with the external world by translating brain activity into control signals. Motor imagery (MI) has been a popular paradigm in BCI control, where the user imagines movements of, e.g., their left and right limbs, and classifiers are then trained to detect such intent directly from electroencephalography (EEG) signals. For some users, however, it is difficult to elicit patterns in the EEG signal that can be detected with existing features and classifiers. As such, new user control strategies and training paradigms have been highly sought-after to help improve motor imagery performance. Virtual reality (VR) has emerged as one potential tool, where improvements in user engagement and level of immersion have been shown to improve BCI accuracy. Motor priming in VR, in turn, has been shown to further enhance BCI accuracy. In this pilot study, we take the first steps to explore if multisensory VR motor priming, where haptic and olfactory stimuli are present, can improve motor imagery detection efficacy in terms of both improved accuracy and faster detection. Experiments with 10 participants equipped with a biosensor-embedded VR headset, an off-the-shelf scent diffusion device, and a haptic glove with force feedback showed that significant improvements in motor imagery detection could be achieved. Increased activity in the six common spatial pattern filters used was also observed, and peak accuracy could be achieved with analysis windows that were 2 s shorter. Combined, the results suggest that multisensory motor priming prior to motor imagery could improve detection efficacy.

Brain-computer interfaces (BCI) represent a burgeoning modality to control and communicate with peripheral devices via non-muscular motor control and directly through brain signals (Vidal, 1973). To establish a dialogue between the brain and computers, the first BCI systems were developed based on electroencephalography (EEG) signals in the early 1970s (Wolpaw et al., 2002). Their primary goals focused on clinical applications to repair, improve, or replace the natural output of the human central nervous system (Wolpaw and Wolpaw, 2012). Today, so-called passive/affective BCIs have emerged to monitor unintentional, involuntary, and spontaneous modulations in user cognitive states (Zander and Jatzev, 2011). For example, BCIs have been proposed to detect emotion states during meditation (Kosunen et al., 2017), to provide insights on a user's quality of experience (Gupta et al., 2016), and have recently been integrated into virtual reality headsets to allow for personalized experiences (Bernal et al., 2022; Moinnereau et al., 2022), just to name a few applications.

Reactive BCI systems, in turn, are driven by explicit communication between humans and computers by exploiting brain activity arising in reaction to external stimulation, which is indirectly modulated by the user to control an application (Wolpaw et al., 2020). For example, spellers using the P300 event-related potential (ERP) have been proposed and perfected over the years (e.g., Philip and George, 2020), as have games based on steady-state visual evoked potentials (SSVEP) (e.g., Lopez-Gordo et al., 2019; Filiz and Arslan, 2020). Active BCIs, on the other hand, derive their outputs from brain activity which is directly and consciously controlled by the user, independent of external events, to control an application. The most common mental task used is motor imagery (MI), where the person imagines moving their hands, feet, and/or tongue and these imagined movements produce modulations over the sensorimotor cortex.

MI-based BCIs are very popular (Zhang and Wang, 2021) as they have been shown to engage the same underlying neural circuits associated with executed motor actions (Abbruzzese et al., 2015). As such, they have been used to control, for example, wheelchairs, drones, and exoskeletons (Kim et al., 2016) or to improve attention levels (Yang et al., 2018) in both healthy and patient populations (Ruffino et al., 2017). In the medical field, they have been employed for stroke rehabilitation (Tang et al., 2018). In this case, the coupling of the BCI with a functional electrical stimulator allows for direct feedback to the user via muscle stimulation (Marquez-Chin and Popovic, 2020) once a successful imagery task is achieved. Research is showing that when the MI task is repeated many times, it can induce greater brain plasticity, reduce spasticity, and help patients restore movements more quickly (Sebastián-Romagosa et al., 2020).

Despite these reported benefits of using MI-based BCIs, studies have reported that detecting motor imagery tasks using off-the-shelf neuroimaging tools can be challenging for 15–30% of the population (Blankertz et al., 2010; Thompson, 2019). Earlier studies referred to this as “BCI illiteracy,” which insinuates the issue is on the user. While, indeed, studies have shown that MI-BCI accuracy can be affected by user-related factors, such as attention and frustration levels (Myrden and Chau, 2015), limitations in hardware (e.g., signal acquisition systems and signal quality) and software (e.g., accuracy of the classification algorithms) also play a crucial role (Allison and Neuper, 2010). As such, the terminology “BCI inefficiency” (Edlinger et al., 2015) has been more recently incorporated. To overcome this issue, recent research has focused on developing new filtering methods, feature extraction techniques, and newer and more complex machine learning algorithms to tackle the software aspect (Gaur et al., 2015; Zhou et al., 2020; Benaroch et al., 2022; Tibrewal et al., 2022). Moreover, improvements in bioamplifiers and electrodes (dry versus gel-based; active versus passive) have been explored to address the hardware issues (Cecotti and Rivet, 2014). Lastly, new training paradigms and presentation modalities (e.g., virtual reality), as well as priming methods have been explored to help users generate neural signals that can be better detected with existing technologies (Birbaumer et al., 2013).

Virtual reality (VR) has emerged as one particular presentation modality that has been shown to positively impact BCI performance (Vourvopoulos et al., 2016; Amini Gougeh and Falk, 2022a; Arpaia et al., 2022; Choy et al., 2022). VR-based presentation can increase the sense of embodiment, improve immersion, and foster greater engagement levels, which, in turn, could lead to the generation of neural signals with increased discriminability (Škola and Liarokapis, 2018; Vourvopoulos et al., 2019). In fact, Amini Gougeh and Falk (2022a) surveyed the literature and showed that VR coupled with MI-BCIs could lead to improved neurorehabilitation outcomes.

In addition to VR-based training, priming strategies have also been explored as a tool to further enhance motor imagery. For instance, Vourvopoulos et al. (2015) and Vourvopoulos and Bermúdez i Badia (2016) showed that motor priming, where a VR-based physical activity was performed prior to the MI task, could enhance the imagery task. In particular, subjects who received motor priming in VR had higher MI-BCI performance compared to a standard setup. Stoykov and Madhavan (2015), in turn, highlighted the potential of sensory priming and showed how incorporating vibration stimuli during priming could improve motor imagery and neurorehabilitation outcomes. Vibrotactile stimuli, however, cover only one modality in sensory priming, which leaves the question “Are there any benefits to including other sensory modalities during VR-based priming?” still unanswered. In this work, we wish to explore the impact of including olfactory and tactile stimuli on overall motor imagery performance in order to help answer this question.

It is known that smells can influence user behavior through affective priming (Smeets and Dijksterhuis, 2014). Multisensory stimuli can result in experiences that enhance the sense of embodiment, presence, immersion, engagement, and overall experience of the user relative to a conventional audio-visual experience (e.g., Melo et al., 2020; Amini Gougeh and Falk, 2022b; Amini Gougeh et al., 2022). As such, it is hypothesized that a multisensory immersive priming paradigm will further improve motor imagery detection accuracy. In fact, olfaction has been linked to improved relaxation states, increased attention, and more positive emotions (Gougeh et al., 2022; Lopes et al., 2022). Hence, inclusion of smells during priming may counter the negative effects that attention and frustration can have on motor imagery accuracy, as reported by Myrden and Chau (2015).

Moreover, sensory processing, including olfactory processing, has been shown to influence motor processing (Ebner, 2005). In a recent study using transcranial magnetic stimulation, the perception of a pleasant smell and its olfactory imagery were shown to be associated with primary motor cortex excitability (Infortuna et al., 2022). The results were in line with those of Rossi et al. (2008), which showed a cross-link between the olfactory and motor systems. The work by Tubaldi et al. (2011), in turn, suggested a superadditive effect on brain activity during action observation executed with a small odorant object (e.g., grasping a strawberry, almond, orange, or apple). Combined, these findings suggest that the combination of motor tasks in the presence of olfactory stimuli could have an impact on user behavior and on motor cortex excitability. Ultimately, this could lead to improved MI-BCI performance.

In light of these insights, our main research question (RQ) is whether VR training, combined with multisensory priming, can improve MI-BCI performance. Here, improvement will be explored across two dimensions: accuracy and timing. Improvements in accuracy suggest neural signatures that are more discriminative for existing signal processing and machine learning pipelines. Improvements in timing suggest that imagery can be detected faster. Timing is important, as it is known that mental fatigue from long task times can induce frustration and, ultimately, reduce overall BCI performance (Talukdar et al., 2019). In addition to this main RQ, several sub-RQs will be explored. These will investigate aspects such as the user experience and sense of presence provided by the multisensory priming paradigm, the differences in accuracy achieved on a per-subject versus global scale, the impact of varying distances and depth cues of the movement imagination on overall accuracy, as well as the impact of multisensory priming on different aspects of the signal processing pipelines, including window duration and time-from-cue, and their impact on overall accuracy.

To help answer these questions, a multisensory motor priming experiment was performed in VR. Two motor priming tasks took place between two motor imagery tasks: a multisensory one that included tactile, olfactory, and audio-visual stimuli, and a baseline that included only audio-visual. Different analyses are performed to help answer the RQs and sub-RQs mentioned above. The remainder of this paper is organized as follows. In Section 2, we detail the material and methods used in the study. Experimental results are then presented in Section 3 and discussed in light of existing literature. Lastly, Conclusions are presented in Section 4.

Eleven participants (three female, 25.81 ± 3.88 years old) were recruited to participate in this pilot study. Only healthy individuals were eligible. Participants with a history of severe cybersickness or sensitivity to scents were excluded. The experiment protocol was reviewed and approved by the Ethics Committee of the Institut national de la recherche scientifique (INRS), University of Quebec (number: CER-22-663). During data collection, COVID-19 safety measures were put in place, including maintaining social distance, wearing a face mask, and disinfecting all devices with alcohol wipes and a UV-C chamber. All participants were considered novice BCI users, and this was their first time performing a motor imagery task. It is important to emphasize that the data from one subject were considered too noisy and were discarded from the analysis.

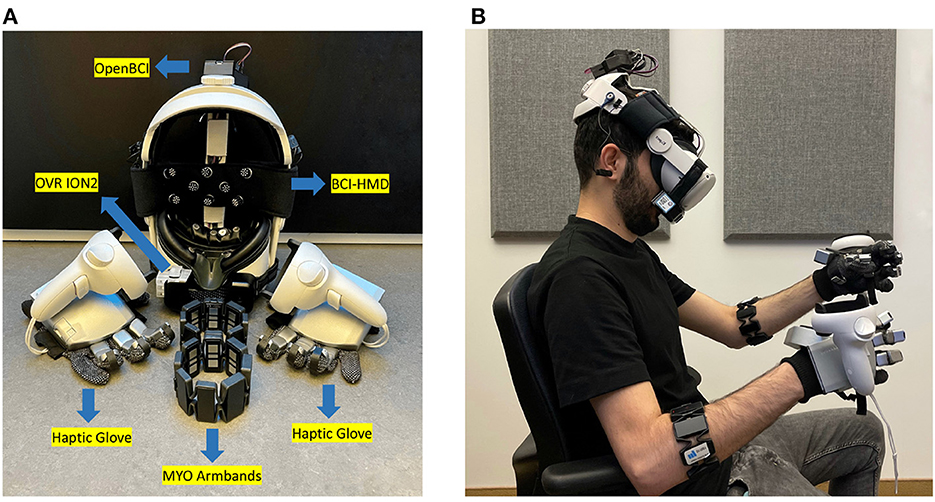

In this study, a VR head-mounted display (HMD) was coupled with force feedback haptic gloves, an electromyogram (EMG) armband, a portable scent diffusion device, and a wireless BCI system (henceforth referred to as “BCI-HMD”) embedded directly into the headset following guidelines described by Cassani et al. (2020). An illustration of the different components of the system is shown in Figure 1A, along with a visual of a user wearing them in Figure 1B. In addition, a VR game was created and synchronized with the hardware. More details about the instrumented HMD are given next.

Figure 1. (A) Instrumented BCI-HMD headset comprised of a HMD-VR and bioamplifier to monitor electroencephalography (EEG), electrooculography (EOG), facial electromyography (EMG), and photoplethysmography (PPG). Other devices include the OVR ION2 scentware, Myo armbands, and a Senseglove Nova haptic glove. (B) A participant wearing the BCI-HMD system.

A Meta Quest2 HMD (LCD display with a resolution of 1,920 × 1,832, 72 Hz refresh rate, and 89° field of view) was used. Three physiological signal modalities, namely electroencephalography (EEG), electrooculography (EOG), and photoplethysmography (PPG), were integrated into the facial foam and head straps of the VR headset and directly connected to an OpenBCI bioamplifier encased in a 3D-printed box and placed on top of the HMD straps (see Figure 1B). OpenBCI Cyton and Daisy bioamplifiers (OpenBCI, USA) were used to capture 11 EEG channels from frontal (Fp1, Fpz, Fp2, F3, F4, Fz, Fc1, Fc2) and central (C3, C4, Cz) regions following the international 10-20 system (Jasper, 1958) at a 125 Hz sample rate. In order to ensure participant comfort, softPulse™ soft dry EEG electrodes (Datwyler, Switzerland) were employed on the VR strap and flat sensors were placed directly on the faceplate cushion of the headset. A PPG sensor was used to monitor heart rate and was also integrated into the faceplate of the HMD, as were two pairs of vertical and horizontal EOG electrodes. A green LED light was used in the PPG sensor to ensure more accurate measurements (Castaneda et al., 2018). Moreover, a pair of Myo 8-channel armbands (Thalmic Labs, Canada) were placed on the participant's forearms in order to capture electromyography (EMG) signals at a rate of 200 Hz using dry electrodes. The different modalities and signal inputs were synchronized and recorded using lab streaming layer (LSL) to be used in post-experiment offline data processing.

A pair of Nova™ haptic gloves (SenseGlove, Netherlands) were employed to deliver accurate force feedback to each finger. The gloves could also track wrist, hand, and finger gestures using inertial measurement unit (IMU) sensors. Taking advantage of linear resonant actuators on the thumb and index fingers, participants could perceive the texture and stiffness of a 3D object in the virtual environment. Mounting the Meta Quest2 controllers on the gloves enabled 3D mapping of the hand locations onto the virtual space, as shown in Figure 1B. Furthermore, an OVR ION2 scentware device (OVR Technologies, USA) was connected to the BCI-HMD to provide an olfactory stimulus by dispersing aromas close to the user's nose. The employed scent kit contains nature-oriented scents including beach, flowers, earth dirt, pine forest, ocean breeze, wood, citrus, ozone, and grass smells. In this study, the citrus scent was chosen as an olfactory stimulus.

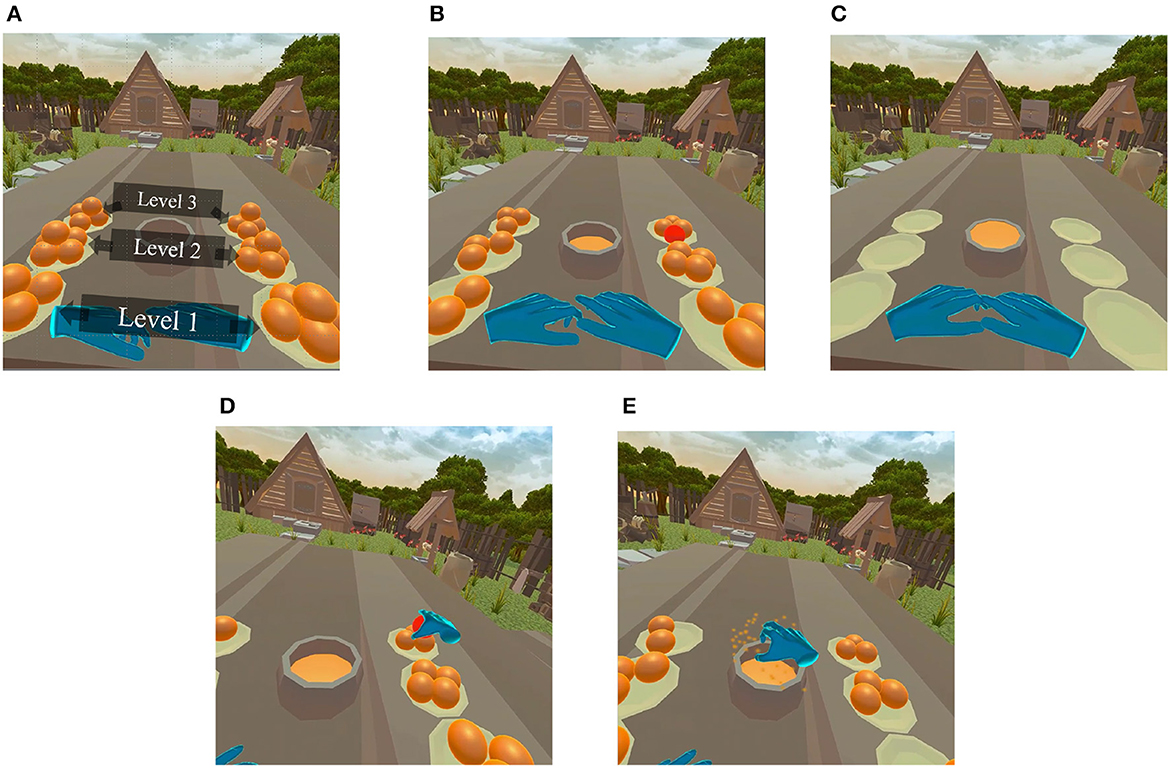

A custom virtual environment was designed in Unity3D 2021. As illustrated in Figure 2A, five oranges were placed on each of six plates positioned on top of a table. The participant was seated at the center, with three plates to the left and three to the right positioned at three different distances (three levels). The nearest plates (level 1) were positioned at a relative distance of 20 cm from the subject in the real world, the middle plates (level 2) at 40 cm, and the farthest plates (level 3) at 60 cm. With this arrangement, we could investigate the role of depth cues on sense of presence and, ultimately, on BCI performance. The environment and the distances were devised in a way that even the oranges placed on the farthest plate were accessible by full arm extension, without the need for any additional body movement.

Figure 2. (A) Developed game environment, five oranges placed in each of the 6 plates (overall 30 oranges). (B) A randomly cued orange in the MI session. The task in the MI session was to imagine grabbing a cued orange, moving it on top of the bowl placed in center, and squeezing it. (C) After a pre-determined amount of time, the bowl fills up with orange juice. (D) A randomly cued orange that participants were required to grab, move to the top of the center bowl, and squeeze. In the multisensory condition, participants felt the volume and texture of the 3D object, while in audio-visual, oranges could be grabbed and squeezed without any force. (E) After successfully squeezing the orange, the cued orange disappeared simultaneously with playing an animation. In the multisensory condition, this event was synchronized with the olfactory stimulus, whereas in the audio-visual condition, it was followed by auditory effects.

A repeated measures experimental design (so-called within-subject experimental protocol) was followed in which all the participants underwent the same experimental conditions (with counterbalanced ordering). Figure 3 illustrates the step-by-step protocol timelines and experimental blocks. In the pre-experiment phase, participants were first assessed for any known motion sickness or sensitivity to smells, as well as their comfort with the BCI-HMD and haptic gloves. Each participant was then given comprehensive instructions verbally, before wearing the Myo armbands and BCI-HMD. Afterwards, all the systems were calibrated, signal quality checked, and any adjustments were made to increase signal quality. Finally, participants received in-game training with and without multisensory stimuli.

Figure 3. An overview of the experiment timeline. Screening for motion sickness and smell sensitivity was part of the pre-assessment process. Afterwards, instructions were given, followed by system calibration and in-game training. The experiment began with a motor imagery session, where users only received visual cues as to which oranges to grab and when the imagery task was concluded. Subsequently, the motor priming block consisted of two experimental conditions with varying types of sensory stimuli. Next, another motor imagery session was conducted. A final post-experiment interview was conducted.

After the pre-experiment, participants were asked to perform a common BCI MI task where they imagined grabbing an orange using either their left or right hand (Chatterjee and Bandyopadhyay, 2016). In this MI session, the user was cued for a duration of 10 s to imagine reaching for and grabbing the orange that had turned red. Oranges were randomly turned red between the left and right sides, as shown in Figure 2B. During the 10 s, the participants were instructed to imagine grabbing the cued orange, moving it to the middle of the table, and squeezing it onto the center bowl. After the 10 s, the orange disappeared for a 5-s rest period, after which another orange was cued. This procedure was repeated until all 30 oranges (5 oranges × 6 plates) were squeezed, all plates became empty, and the center bowl filled with orange juice, as depicted in Figure 2C. It is important to emphasize that this was an offline MI-BCI task, without any online BCI classification model involved. The visuals were provided only as cues to which orange to imagine grabbing and when to finalize the imagery task.

Following this first motor imagery task, participants were instructed to perform the motor priming (MP) task twice, once with only audio-visual stimuli (audiovisual MP) and once with the multisensory (multisensory MP) stimuli. A counterbalanced ordering of conditions was applied across subjects to eliminate any biases. Given the high inter-subject variability of MI-BCI accuracy, as reported previously (Blankertz et al., 2010; Thompson, 2019), it was decided to have all participants perform both tasks, as opposed to having half of them perform the multisensory MP task and the other half the conventional task. The work by Wriessnegger et al. (2018) showed the benefits of motor execution training prior to MI. As such, we will refer to the condition preceding the last MI task as the motor priming condition in our analyses and compare the conventional and multisensory MP paradigms.

In the motor priming sessions, a randomly assigned orange was cued in red and users were instructed to grab it (Figure 2D), move it over the center bowl, and squeeze it until all juice was extracted, causing the orange to burst and vanish and the juice level in the bowl to rise (Figure 2E). Participants had their real-world hand movement mapped onto the virtual hand models (shown as blue hands in the figures) with a 100 Hz refresh rate. Unlike the MI sessions, where imagery was performed for a fixed period of time (i.e., 10 s), in the motor priming sessions participants were allowed to interact with the virtual environment at their own pace and a trial ended only when the orange was successfully squeezed. In the audiovisual MP experimental condition, squeezing an orange was followed by a “squish” sound effect synced with an animation without applying any physical force feedback. In the multisensory MP condition, on the other hand, users were able to feel the 3D shape and texture of the oranges in the virtual environment and had to apply force in order to successfully squeeze and extract the orange juice. In this condition, the audio-visual stimulus was also time-aligned with a 3-s burst of citrus scent. The experiment concluded when all oranges had been squeezed and all juice extracted.

After the two counterbalanced priming conditions were performed, users underwent a second (offline) motor imagery task following the same instructions and procedure as the first MI session described above. All the participants completed both training conditions; however, half of them completed the audiovisual MP first, whereas the other half completed the multisensory MP task first. Upon completion of each priming condition, participants responded to several questionnaires that appeared directly in the game environment (more details in the next section) as well as the EmojiGrid, a graphical tool to gauge user emotions. At the end, a post-experiment comparison questionnaire was answered and an open-ended interview was conducted.

After completion of each priming condition, participants answered questions about their quality of experience, including their perceived level of presence, immersion, realism, engagement, overall experience, and cybersickness, using a 5-point absolute category rating-like scale ranging from low/poor to high/excellent (ITU, 2008). The ratings and questions were embedded directly into the game environment to avoid any disruption in the experience; the toolkit developed by Feick et al. (2020) was used. Moreover, subjects also reported their valence and arousal state levels using the EmojiGrid (Toet and van Erp, 2019), a graphical instrument used to capture emotional states on a continuous Cartesian grid. After both MI sessions, participants also rated their perceived difficulty in performing each MI task using a 5-point scale with options “very easy,” “easy,” “neutral,” “difficult,” and “very difficult.” At the end of the overall experiment, users were asked to rate their preference for the audio-visual or multisensory MP conditions in terms of presence, immersion, realism, engagement, and overall experience. Lastly, an open-ended interview was conducted to gather candid feedback from the participants.

Physiological data including EEG, EOG, EMG, and PPG signals were synchronized with game events using lab streaming layer (LSL). In this paper, we focused only on the EMG and EEG signals. Using MATLAB (R2021a, The MathWorks, USA), the EMG signal was band-pass filtered between 10 and 500 Hz and full-wave rectified. The mean absolute value (MAV) of the EMG signal was then extracted for both left and right arms, as suggested by Alkan and Günay (2012), and finally averaged across arms to obtain a final overall muscle activity for each user.
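The rectification and MAV steps described above can be illustrated with a minimal Python sketch (the study itself used MATLAB). It assumes the EMG channels have already been band-pass filtered, and it assumes that each arm's value is the average MAV over that arm's channels, which the text does not specify. The function names and toy data are hypothetical.

```python
from statistics import fmean


def mean_absolute_value(samples):
    """Full-wave rectify one (already filtered) EMG channel and average it."""
    return fmean(abs(x) for x in samples)


def overall_muscle_activity(left_channels, right_channels):
    """Average the MAV over each arm's channels, then across the two arms.

    Averaging over channels within an arm is an assumption of this sketch.
    """
    left = fmean(mean_absolute_value(ch) for ch in left_channels)
    right = fmean(mean_absolute_value(ch) for ch in right_channels)
    return (left + right) / 2.0


# Toy data: two channels per arm (each Myo armband has eight).
left_arm = [[0.5, -0.5, 1.0, -1.0], [0.25, -0.25, 0.25, -0.25]]
right_arm = [[1.0, -1.0, 1.0, -1.0], [0.0, 0.0, 0.0, 0.0]]
activity = overall_muscle_activity(left_arm, right_arm)
```

In this toy example each arm averages to 0.5, so the overall activity is also 0.5.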

Using the EEGLAB toolbox (Delorme and Makeig, 2004), the EEG signals were band-pass filtered between 4 and 70 Hz, then zero-mean normalized, and spectral power from alpha (7–13 Hz) and beta (13–30 Hz) bands were computed, as suggested by Ahn et al. (2013). Artifact subspace reconstruction (ASR) was also employed to eliminate movement-related artifacts (Kothe and Jung, 2016). For offline classification, common spatial pattern (CSP) features were extracted from the MI trials (Nguyen et al., 2018; Jiang et al., 2020; Amini Gougeh et al., 2021) and fed to a support vector machine (SVM) classifier to discriminate between left or right hand motor imagery (Ramoser et al., 2000; Dornhege et al., 2005).

For binary tasks, CSP computes spatial filters that maximize the variance of one class while simultaneously minimizing the variance of the other class. The spatially filtered signal S of an EEG trial is given by:

$$S = WM$$

where W is an L × N matrix of spatial filters, L is the number of filters, and N is the number of EEG channels. M represents the EEG signal of a given trial, with N rows (channels) and T data points, so S has L rows and T data points. The first J rows of the W matrix reflect the maximum variance in the first class (and minimum variance in the second class) and the last J rows reflect the maximum variance in the second class. In this study, we used six spatial filters, three (J = 3) from each side, as suggested by Blankertz et al. (2007).
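As an illustration of the projection S = WM with L = 6 filters and N = 11 channels, the following NumPy sketch estimates CSP filters via whitening and eigendecomposition, one common way of computing CSP. It is not the authors' MATLAB implementation. The log of normalized variance used as the feature is an assumption (a frequent choice in the CSP literature), and the synthetic data and function names are hypothetical.

```python
import numpy as np


def mean_normalized_cov(trials):
    """Average trace-normalized spatial covariance; trials has shape (n, N, T)."""
    covs = []
    for m in trials:
        m = m - m.mean(axis=1, keepdims=True)  # remove each channel's mean
        c = m @ m.T
        covs.append(c / np.trace(c))
    return np.mean(covs, axis=0)


def csp_filters(trials_a, trials_b, j=3):
    """Return W with 2*j rows: first j favor class A, last j favor class B."""
    cov_a = mean_normalized_cov(trials_a)
    cov_b = mean_normalized_cov(trials_b)
    # Whiten the composite covariance so that it becomes the identity.
    evals, evecs = np.linalg.eigh(cov_a + cov_b)
    whitening = np.diag(evals ** -0.5) @ evecs.T
    # Eigenvalues of the whitened class-A covariance lie in (0, 1):
    # large values mean high variance for A and low variance for B.
    d, b = np.linalg.eigh(whitening @ cov_a @ whitening.T)
    order = np.argsort(d)[::-1]
    w_full = b[:, order].T @ whitening
    return np.vstack([w_full[:j], w_full[-j:]])


def csp_features(w, trial):
    """Project one trial (S = W M) and return log normalized variances."""
    s = w @ trial
    var = s.var(axis=1)
    return np.log(var / var.sum())


# Synthetic data: 15 trials per class, 11 channels, 10 s at 125 Hz.
rng = np.random.default_rng(0)
scale_left = np.ones(11)
scale_left[0] = 3.0    # "left" class: extra variance on the first channel
scale_right = np.ones(11)
scale_right[-1] = 3.0  # "right" class: extra variance on the last channel
left = rng.standard_normal((15, 11, 1250)) * scale_left[None, :, None]
right = rng.standard_normal((15, 11, 1250)) * scale_right[None, :, None]

W = csp_filters(left, right)           # shape (6, 11)
f_left = csp_features(W, left[0])
f_right = csp_features(W, right[0])
```

The six-element feature vectors are what would then be passed to a classifier; on this toy data the first feature is larger for left trials and the last feature is larger for right trials.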

Here, several tests were performed. First, we explored the accuracy achieved with CSP features computed over the entire 10-s (cue duration) motor imagery trial. Next, we explored the use of different window sizes to gauge if priming resulted in motor imagery patterns that could be more quickly discriminated by the available processing pipeline. Lastly, using a fixed window of 5 s duration (as per suggestions by Mzurikwao et al., 2019; Garcia-Moreno et al., 2020; Leon-Urbano and Ugarte, 2020), we explored the impact of different post-task-cue starting points on overall accuracy. As the task involved reaching for an orange, grabbing the orange, moving the orange to the center of the table and squeezing, this analysis could shed some light on what parts of the task may be more discriminative, thus resulting in shorter experimental protocols in the future. These analyses were performed per subject and then averaged to obtain an overall MI-BCI accuracy.
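The window analyses above amount to slicing each 10-s trial at different sample offsets. A minimal sketch follows, assuming the 125 Hz EEG sampling rate reported earlier. The 1-s step between candidate starting points is hypothetical, since the text does not state the step used.

```python
FS_HZ = 125      # EEG sampling rate reported in the text
TRIAL_S = 10.0   # cue (imagery) duration reported in the text


def window_bounds(start_s, duration_s, fs=FS_HZ, trial_s=TRIAL_S):
    """Return (first, last_exclusive) sample indices of an analysis window."""
    if start_s < 0 or duration_s <= 0 or start_s + duration_s > trial_s:
        raise ValueError("window must lie inside the trial")
    first = round(start_s * fs)
    return first, first + round(duration_s * fs)


def candidate_starts(duration_s, step_s=1.0, trial_s=TRIAL_S):
    """Post-cue start times (s) for a fixed-length window; step_s is hypothetical."""
    n = int((trial_s - duration_s) / step_s) + 1
    return [i * step_s for i in range(n)]


full_trial = window_bounds(0, 10)
five_second_windows = [window_bounds(s, 5) for s in candidate_starts(5)]
```

With these settings a 5-s window can start at 0, 1, ..., 5 s after the cue, and each window spans 625 samples.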

Classification was performed under three settings. In the first, all plates on the left and all plates on the right were aggregated into two classes: left and right. In the second, classification of left versus right imagery was done per level (i.e., plate distance, as shown in Figure 2A) to gauge if depth imagery played a role in motor imagery accuracy. For these two settings, a per-subject analysis was performed and a bootstrap methodology was applied to account for the small dataset size. As such, of the available 30 trials, 20 were randomly assigned to the training set and 10 were set aside for testing, and this resampling was repeated 200 times for each subject. An SVM classifier with a linear kernel and default parameters (with OptimizeHyperparameters option in MATLAB) was used for classification, resulting in 200 accuracy values per subject, which were then used for significance testing. Lastly, in the third setting we pooled all participants together to explore the impact of motor priming on a generic BCI combining neural patterns consistent across multiple subjects. In this case, a total of 300 trials were available (150 left-side oranges and 150 right-side). As done previously, a bootstrap methodology was applied. In this case, 70% of the data was assigned to the training set and 30% to the test set, and this was repeated 200 times. An SVM classifier with a linear kernel and default parameters (with OptimizeHyperparameters option in MATLAB) was used for classification, resulting in a total of 200 accuracy values, which were then used for significance testing.
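The per-subject resampling scheme (20 training and 10 test trials, repeated 200 times) can be sketched as follows. To keep the example dependency-free, a simple nearest-class-mean rule on a single feature stands in for the study's linear SVM. This stand-in, the function names, and the toy features are all hypothetical.

```python
import random
from statistics import fmean


def nearest_mean_accuracy(train, test):
    """Stand-in classifier (NOT the study's SVM): pick the closer class mean."""
    means = {
        label: fmean(x for x, y in train if y == label)
        for label in (0, 1)
    }
    correct = sum(
        1 for x, y in test
        if min(means, key=lambda c: abs(x - means[c])) == y
    )
    return correct / len(test)


def repeated_holdout(trials, n_train=20, n_repeats=200, seed=0):
    """Randomly split the trials n_repeats times; return one accuracy per split."""
    rng = random.Random(seed)
    accuracies = []
    for _ in range(n_repeats):
        shuffled = trials[:]
        rng.shuffle(shuffled)
        accuracies.append(
            nearest_mean_accuracy(shuffled[:n_train], shuffled[n_train:])
        )
    return accuracies


# Toy, perfectly separable features: 15 "left" (0) and 15 "right" (1) trials.
trials = [(-1.0 - 0.01 * i, 0) for i in range(15)]
trials += [(1.0 + 0.01 * i, 1) for i in range(15)]
accuracies = repeated_holdout(trials)
mean_accuracy = fmean(accuracies)
```

Because each class has 15 trials and only 10 are held out, every training split contains at least five trials per class, so the class means are always defined. The 200 accuracy values per subject are what would feed the significance tests.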

To test the significance of the differences of the two priming conditions, statistical analysis was performed using IBM SPSS 20. Normality of the variables was assessed using the Shapiro-Wilk (S-W) normality test, recommended for small sample size datasets (Elliott and Woodward, 2007). For measures found to be normally distributed, the paired sample t-test was used to determine whether there were statistically significant differences between the two priming conditions. For measures that did not exhibit a normal distribution, a Wilcoxon signed-rank test was performed. We report the obtained mean (M) and standard deviation (SD) across the 200 runs, as well as the p-value. For all analyses, a probability level of p < 0.05 was considered to be statistically significant.
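For normally distributed measures, the paired sample t-test reduces to a one-sample test on the per-participant differences, with n − 1 degrees of freedom (hence t(9) for ten participants). A minimal standard-library sketch of the statistic follows. The p-value lookup and the Shapiro-Wilk and Wilcoxon tests, which the authors ran in SPSS, are omitted, and the toy values are hypothetical rather than study data.

```python
from math import sqrt
from statistics import fmean, stdev


def paired_t(first, second):
    """Return (t, degrees of freedom) for paired samples of equal length."""
    diffs = [b - a for a, b in zip(first, second)]
    n = len(diffs)
    t = fmean(diffs) / (stdev(diffs) / sqrt(n))
    return t, n - 1


# Toy paired scores for ten hypothetical participants.
condition_a = [70.0 + i for i in range(10)]
condition_b = [a + (1.0 if i % 2 == 0 else 3.0) for i, a in enumerate(condition_a)]
t_value, dof = paired_t(condition_a, condition_b)
```

Here the differences alternate between 1 and 3, giving a mean difference of 2, a standard error of 1/3, and therefore t(9) = 6.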

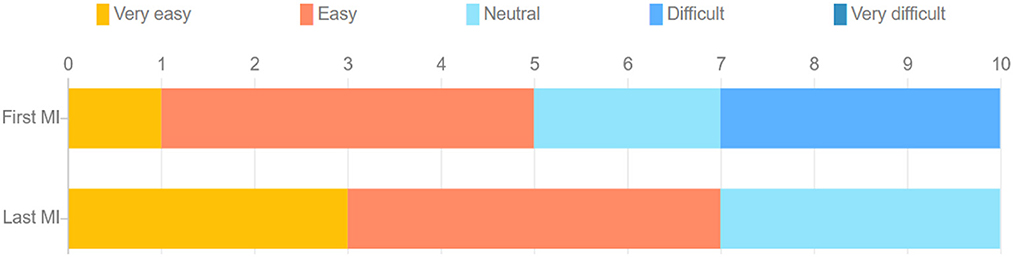

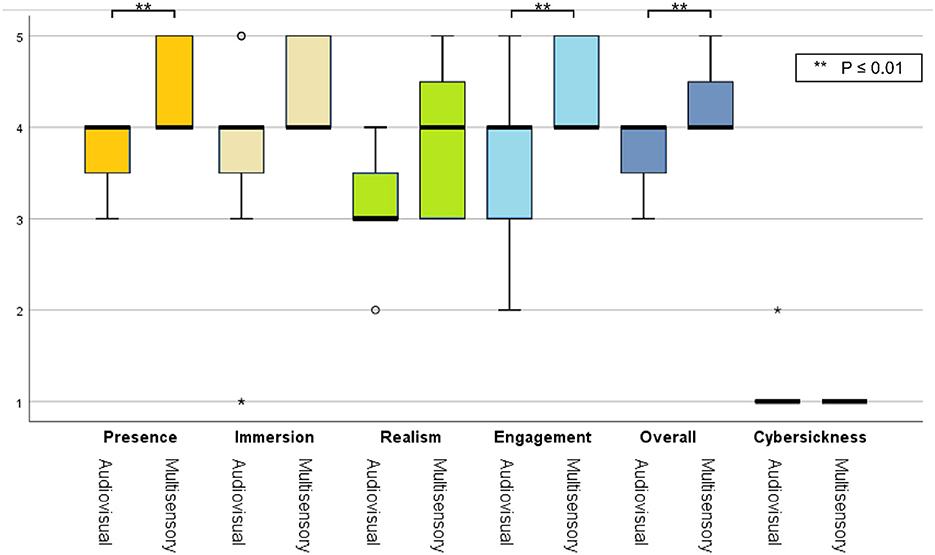

The stacked bar chart in Figure 4 depicts the perceived difficulty ratings reported by each subject after execution of the MI tasks. The S-W test indicated a non-normal distribution of these ratings. A statistically significant difference was observed between the first (M = 2.82, SD = 1.079) and the last (M = 2.00, SD = 0.775) MI session (Z = –2.165, p = 0.030), suggesting the latter task was perceived as being easier relative to the first. This was expected, as motor imagery tasks have been reported to become easier with training, especially in VR (Wang et al., 2019). For the first MI task, four participants rated it as “easy,” whereas three others rated it “difficult.” In the last MI task, on the other hand, all participants rated the task as either “very easy,” “easy,” or “neutral.” Figure 5 depicts bar plots of the average subjective ratings provided after each priming condition. The S-W test indicated a non-normal distribution of subjective ratings. The descriptive statistics observed for each motor priming condition are reported in Table 1.

Figure 4. Stacked bar chart comparing the perceived difficulty of performing the MI task during the first and last MI sessions.

Figure 5. Box plots of subjective ratings averaged across participants for both priming conditions. Asterisks represent statistically significant differences.

Using a Wilcoxon signed-rank test, a statistically significant difference was observed for the presence (Z = 2.449, p = 0.014), engagement (Z = 1.983, p = 0.047), and overall experience (Z = 2.121, p = 0.034) ratings; the multisensory MP condition resulted in higher ratings. As the virtual environment was stationary, all participants reported a low cybersickness level and only one participant reported mild dizziness symptoms during the audio-visual session. Lastly, from the EmojiGrid ratings, a statistically significant increase was reported in valence [t(9) = 2.882, p = 0.018] and arousal [t(9) = 7.258, p = 0.001] with the multisensory MP condition relative to the audio-visual condition, thus suggesting a more pleasant and exciting experience.

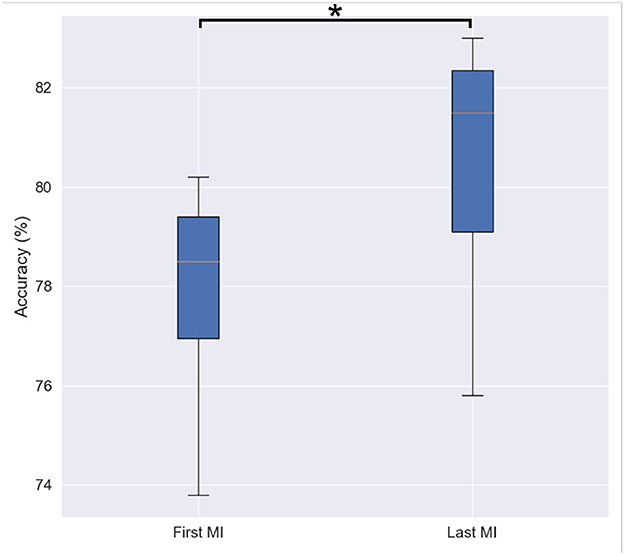

As previously described, each MI trial consisted of 10 s of imagery followed by 5 s of rest. Participants were asked to perform the MI task 15 times for all of the left oranges and 15 times for all of the right oranges, totalling 30 trials per subject. Figure 6 depicts the accuracy (averaged across participants and bootstrap instances) achieved for the first and last MI tasks. As can be seen, a paired sample t-test revealed a statistically significant difference between the initial (M = 77.76, SD = 2.23) and the final (M = 80.32, SD = 2.62) MI sessions [t(9) = 2.407, p = 0.039]. This finding, aligned with the subjective reports described above, highlights the importance of priming prior to the motor imagery task, thus corroborating the findings from Daeglau et al. (2020).
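The paired comparison above can be sketched in a few lines. This is a minimal illustration using only the Python standard library; the function name `paired_t` and the example numbers are hypothetical and are not the study's data or analysis code.

```python
import math
import statistics


def paired_t(first, last):
    """Return the paired-sample t statistic and its degrees of freedom.

    `first` and `last` hold one value per participant (e.g., accuracy in
    the first and last MI session), in the same participant order.
    """
    if len(first) != len(last) or len(first) < 2:
        raise ValueError("need two equally long samples with at least 2 pairs")
    diffs = [b - a for a, b in zip(first, last)]
    sd = statistics.stdev(diffs)  # sample standard deviation (n - 1)
    if sd == 0:
        raise ValueError("all paired differences are identical")
    t = statistics.mean(diffs) / (sd / math.sqrt(len(diffs)))
    return t, len(diffs) - 1


# Illustrative values only (not from the study).
t_stat, dof = paired_t([1, 2, 3, 4], [2, 4, 5, 9])
print(round(t_stat, 3), dof)
```

With 10 participants the degrees of freedom are 9, which matches the t(9) notation used in the text. Converting t to a p-value requires the Student t distribution, which is left to a statistics package.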

Figure 6. Box-plot of binary classification accuracy for the first and last motor imagery sessions. The asterisk indicates a statistically significant difference between the two conditions, as revealed by a paired sample t-test.

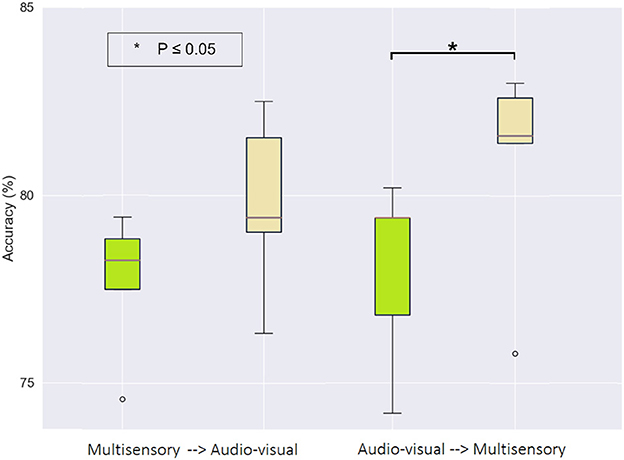

More importantly, we were interested in investigating which priming condition performed directly before the motor imagery task resulted in the greatest improvement. To this end, Figure 7 illustrates the accuracy achieved in the first (green) and last (beige) MI task, but now separated based on the subjects that performed the multisensory MP task first, followed by audio-visual (left graph), versus those that performed the audio-visual MP first and the multisensory last (right graph). The S-W test only revealed non-normal distribution for values of the first and last MI sessions in the group that received multisensory MP as their second condition. Using a Wilcoxon signed-rank test, a statistically significant difference was observed between the first (M = 78.02, SD = 2.48) and the last (M = 80.89, SD = 2.91) MI session (Z = 2.023, p = 0.043) in this group. This finding highlights the potential of multisensory MP directly preceding motor imagery as an interesting candidate to enhance MI-BCI accuracy. This short-term effect of priming on MI accuracy was also reported by Sun et al. (2022).
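For readers who want to see how a Z value of this kind arises, the following is a minimal sketch of the Wilcoxon signed-rank test using the large-sample normal approximation with a tie correction and no continuity correction. That choice of approximation is an assumption, since the source does not state how Z was computed, and the function name `wilcoxon_z` and the example data are hypothetical.

```python
import math


def wilcoxon_z(first, last):
    """Wilcoxon signed-rank test (normal approximation).

    Returns (Z, two-sided p). Zero differences are dropped and tied
    absolute differences receive their average rank.
    """
    diffs = [b - a for a, b in zip(first, last) if b != a]
    n = len(diffs)
    if n == 0:
        raise ValueError("all paired differences are zero")
    order = sorted(range(n), key=lambda i: abs(diffs[i]))
    ranks = [0.0] * n
    tie_term = 0.0
    i = 0
    while i < n:
        j = i
        while j + 1 < n and abs(diffs[order[j + 1]]) == abs(diffs[order[i]]):
            j += 1
        avg_rank = (i + j) / 2 + 1  # mean of ranks i+1 .. j+1
        for k in range(i, j + 1):
            ranks[order[k]] = avg_rank
        t = j - i + 1
        tie_term += t ** 3 - t
        i = j + 1
    w_plus = sum(r for r, d in zip(ranks, diffs) if d > 0)
    mean = n * (n + 1) / 4
    var = n * (n + 1) * (2 * n + 1) / 24 - tie_term / 48
    z = (w_plus - mean) / math.sqrt(var)
    p = math.erfc(abs(z) / math.sqrt(2))  # two-sided p-value
    return z, p


# Illustrative values only: five pairs that all increase.
z, p = wilcoxon_z([1, 2, 3, 4, 5], [2, 4, 6, 8, 10])
print(round(z, 3), round(p, 3))
```

With five pairs that all change in the same direction and no ties, this approximation gives Z ≈ 2.023 and p ≈ 0.043. That is consistent with the values reported above if every participant in that group improved, although the source does not list the individual outcomes.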

Figure 7. Box-plot of binary classification accuracy for the first (green) and last (beige) motor imagery sessions grouped by motor priming order. The * symbol indicates a statistically significant difference across experimental conditions using a paired sample t-test.

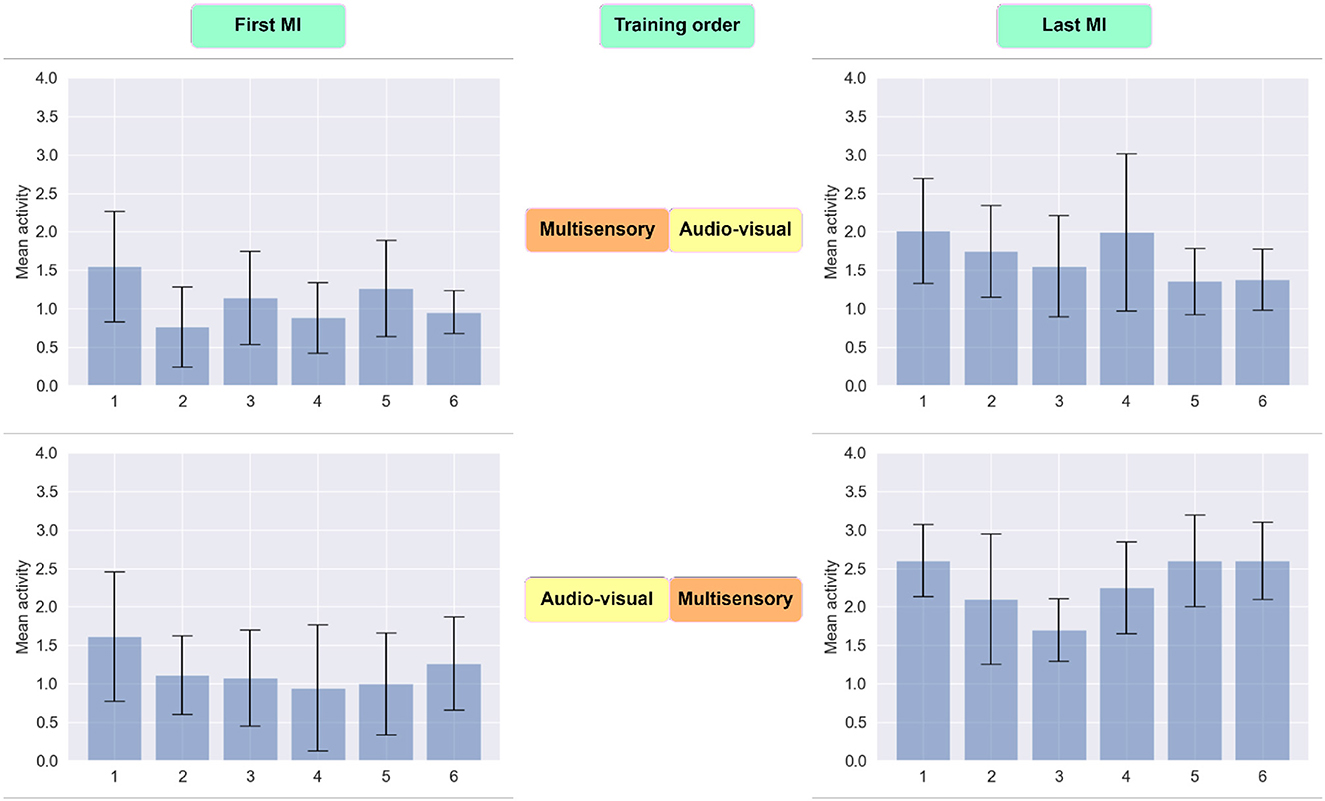

To gain further insight into how priming affects motor imagery detection accuracy, we examined its effect on the magnitude of the six CSP filters, where greater activity could be indicative of higher discriminability between the two classes. As shown in Figure 8, in both cases the CSP activity was higher in the last MI session relative to the first. This is expected given the effect of training and experience on BCI efficiency (Jochumsen et al., 2018). Notwithstanding, when multisensory MP was performed last, the CSP activity seen during the subsequent MI task was higher than when the audio-visual priming was performed last. This higher CSP activity corroborated the higher overall MI detection accuracy reported previously.
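One common way to summarize the activity of spatially filtered EEG is the normalized log-variance feature used in the CSP literature (e.g., Ramoser et al., 2000). The sketch below assumes the spatial filters have already been estimated; it is not necessarily the exact quantity plotted in Figure 8, and the function names and toy data are hypothetical.

```python
import math


def project(trial, w):
    """Apply one spatial filter `w` (one weight per channel) to a trial.

    `trial` is a list of channels, each a list of samples.
    """
    n_samples = len(trial[0])
    return [sum(w[c] * trial[c][t] for c in range(len(w)))
            for t in range(n_samples)]


def variance(signal):
    """Population variance of a 1-D signal."""
    m = sum(signal) / len(signal)
    return sum((s - m) ** 2 for s in signal) / len(signal)


def csp_log_variance(trial, filters):
    """Normalized log-variance of the trial after each spatial filter."""
    variances = [variance(project(trial, w)) for w in filters]
    total = sum(variances)
    return [math.log(v / total) for v in variances]


# Toy two-channel trial and two trivial filters (illustrative only).
trial = [[1, -1, 1, -1], [2, -2, 2, -2]]
filters = [[1, 0], [0, 1]]
print(csp_log_variance(trial, filters))
```

In the study six filters were used, so `filters` would hold six weight vectors and the function would return six features per trial.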

Figure 8. Distribution of extracted data using six CSP filters. The first row shows the average activity of the spatially filtered data during the first and last MI sessions for participants who received multisensory MP prior to the audio-visual experience. The second row shows the average activity of the spatially filtered data for subjects who were exposed to audio-visual MP as their first priming condition.

The results reported above relied on CSP features computed over the entire 10-s imagery window, and these features were used for offline BCI analysis. For real-time applications, however, shorter windows may be of interest. To this end, we explored the effect that window size has on overall accuracy. Shorter windows would mean that CSP filters could be computed faster and decisions could be made more quickly. Here, window size corresponds to the amount of time elapsed from the task cue.

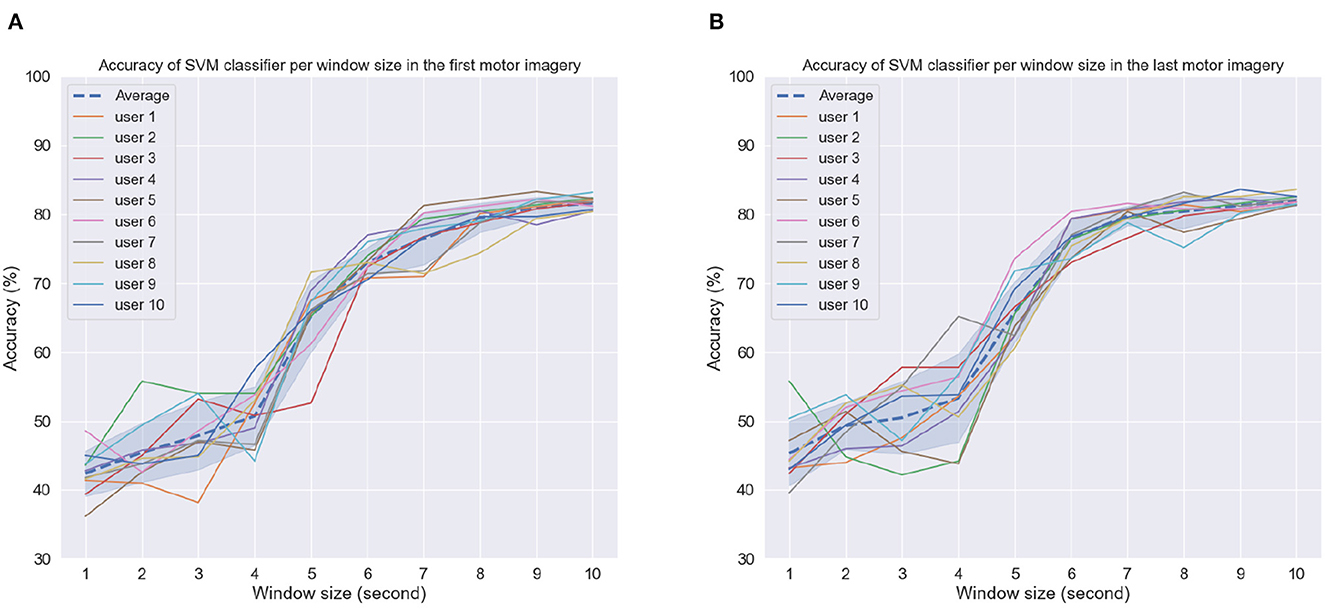

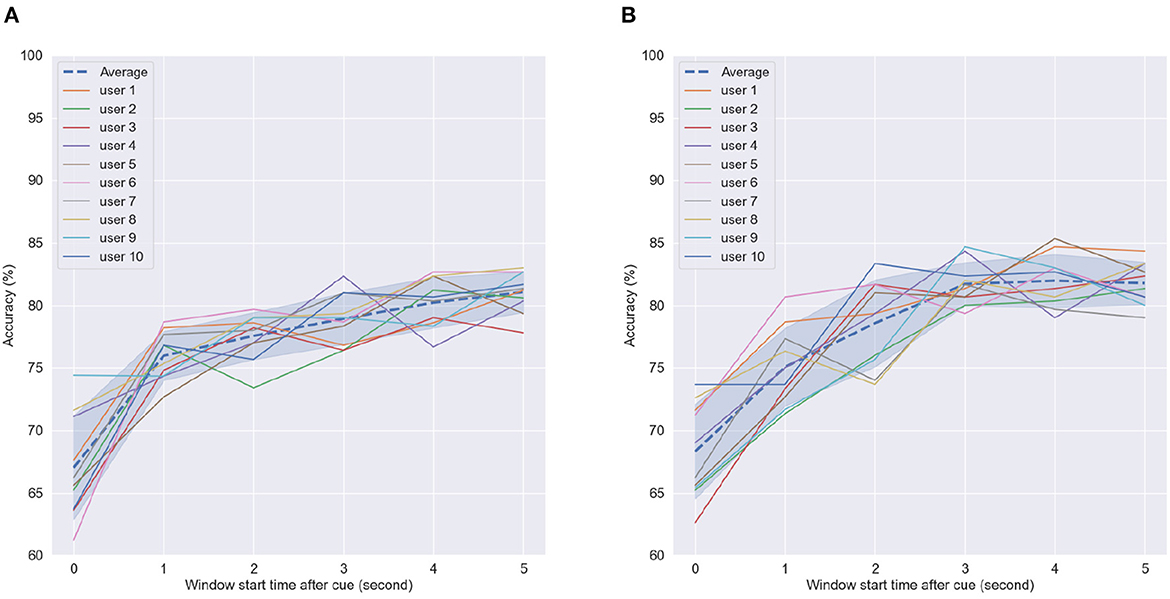

Figures 9A, B depict the achieved accuracy as a function of window size for the first and last MI tasks, respectively. Accuracy is reported per subject, as well as averaged across subjects (dashed line). As can be seen, for window sizes between 1 and 4 s, chance or below-chance levels were achieved on the unseen test dataset, suggesting that the imagery task should be performed for at least 5 s. However, this could be related to the time users needed to perform the MI task proposed here, i.e., reaching, grabbing, and squeezing the orange. In the first MI task, accuracy levels stabilized for some subjects for window sizes greater than 8 s, whereas in the last MI session, this could be achieved for most subjects around 7 s and for some even at 6 s. These findings suggest that after priming, peak MI-BCI accuracy could be achieved potentially 2 s faster than without priming for some subjects.

Figure 9. Accuracy as a function of window size per subject in the (A) first motor imagery session and (B) last motor imagery session. Dashed line indicates average accuracy across users per window length while shaded interval shows the standard deviation.

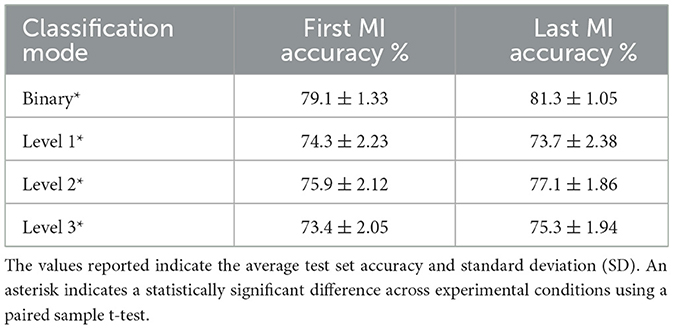

Next, we examined the effect of time-from-cue on overall accuracy. Time-from-cue is the delay between the moment subjects were cued to perform the task and the start of the window used for CSP computation. As the motor imagery task was fairly long (reach, grab an orange, move to the middle of the table, and then squeeze), certain parts of the imagery task may elicit stronger sensorimotor cortical activity. A longer time-from-cue duration will likely focus more on the imagery of moving the orange to the middle of the table and squeezing, and less on the reaching of the arm to grab the orange. For this analysis, we kept the window size constant and varied the starting point for analysis. A 5-s window length was used based on the previous analysis. Figure 10 shows the achieved accuracy per subject and averaged across all subjects (dashed line) as a function of time-from-cue in seconds.
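The fixed-length, variable-start windowing described above can be sketched as follows. The 10-s trial length and 5-s window come from the text; the 250 Hz sampling rate, the 1-s step between start points, and all function names are assumptions made for illustration.

```python
def window_bounds(start_s, length_s, fs, trial_s=10.0):
    """Convert a window (start and length in seconds) to sample indices."""
    start = int(round(start_s * fs))
    stop = start + int(round(length_s * fs))
    if start < 0 or stop > int(round(trial_s * fs)):
        raise ValueError("window falls outside the imagery period")
    return start, stop


def crop(trial, start_s, length_s, fs):
    """Cut the same time window out of every channel of a trial."""
    a, b = window_bounds(start_s, length_s, fs)
    return [channel[a:b] for channel in trial]


def start_points(length_s, trial_s=10.0, step_s=1.0):
    """All time-from-cue values for which the window still fits the trial."""
    n_steps = int((trial_s - length_s) / step_s + 1e-9)
    return [i * step_s for i in range(n_steps + 1)]


fs = 250  # hypothetical sampling rate in Hz
for t0 in start_points(5.0):
    print(t0, window_bounds(t0, 5.0, fs))
```

The earlier window-size analysis is the special case in which the start is fixed at the cue (`start_s = 0`) and `length_s` is varied instead.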

Figure 10. Accuracy of the SVM classifier using a fixed 5-s window with different start points after the cue in the (A) first motor imagery session and (B) last motor imagery session. Dashed line indicates average accuracy across users per window length while shaded interval shows the standard deviation.

As can be seen, the greatest gains were seen when 1 s or more were considered post cue presentation for CSP feature computation; such findings corroborate those reported previously in the literature (e.g., Blankertz et al., 2007). For the first MI task, average accuracy continued increasing, whereas in the last MI task, it plateaued after 3 s, where it reached peak values. These findings suggest that the arm reaching part done at the beginning of the imagery task may not generate sufficiently discriminative CSP patterns for the BCI processing pipeline. In fact, this part during priming received no tactile or olfactory stimuli. These only appeared later in the task during the grabbing and squeezing of the oranges. These findings further motivate the use of multisensory MP prior to MI.

Results reported up to now have been based on classifiers trained and tested on the same subject, thus were fine-tuned on their unique neural patterns. Here, we trained a global classifier where data from all subjects were pooled together. The first row of Table 2 reflects the average accuracy achieved on the test set for the first and last MI sessions. A paired sample t-test suggested a statistically significant difference [t(199) = –18.213, p ≤ 0.001].

Table 2. Global performance accuracy achieved once all left or right oranges were grouped together (binary), vs. when the classification was done per distance level.

Next, we were interested in observing if the distance of the imagined movement had an effect on overall MI-BCI accuracy. In this case, the left versus right classification task was performed three times, once for plates closest to the participant (level 1), at middle distance (level 2), and furthest away (level 3), each comprising 100 trials. As can be seen, for level 1 classification, the last MI session actually achieved slightly lower accuracy compared to the first MI session. For the other two levels, higher accuracy was achieved during the last MI task. This finding may be explained by the underlying effects of distance postulated by Fitts's law in previous studies (e.g., Lorey et al., 2010; Anema and Dijkerman, 2013; Gerig et al., 2018; Errante et al., 2019). For instance, the work by Decety and Jeannerod (1995) showed a linear relationship between the time taken to imagine a movement and the width of, and distance to, the cues. This influence could be a result of spatial presence in virtual environments (Ahn et al., 2019). Therefore, alongside duration and type of stimulus, the distance to the cued object from the participants or the depth of the 3D objects in the virtual environment could potentially play an important role in MI performance. Further studies are needed to validate this hypothesis.
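For reference, Fitts's law in its classic formulation (stated here as background and not taken from the present study) relates movement time to target distance and width as

$$MT = a + b \log_2\left(\frac{2D}{W}\right)$$

where $MT$ is the movement time, $D$ is the distance to the target, $W$ is the target width, and $a$ and $b$ are empirically fitted constants. Under this form, doubling the distance at a fixed width adds a constant $b$ to the predicted time, which is one way a change in plate distance could plausibly alter the timing of the imagined movement.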

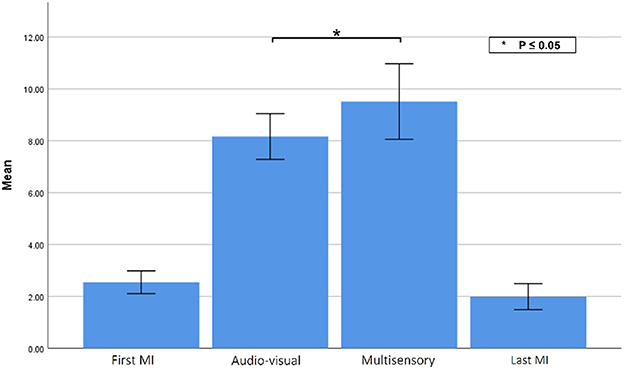

Lastly, to gauge the changes in EMG activity during the tasks, Figure 11 illustrates the changes in EMG MAV during the two MI tasks, as well as the two priming conditions. Using a paired sample t-test, a statistically significant difference was observed [t(9) = 2.935, p = 0.016] in muscle activity between the audio-visual (M = 8.16, SD = 1.40) and multisensory (M = 9.51, SD = 2.30) priming sessions, as the force feedback gloves were on only in the latter condition. The slight activity observed during the motor imagery tasks is likely indicative of the signal noise floor of the EMG sensors, but could also be an indicator of covert motor function that has been reported during motor imagery (Lebon et al., 2008; Dos Anjos et al., 2022).
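As a reminder of what the MAV feature measures, the sketch below computes the mean absolute value of a signal segment and of consecutive non-overlapping windows. It assumes the standard definition of MAV; the window length, function names, and sample values are hypothetical and do not reproduce the study's preprocessing.

```python
def mean_absolute_value(segment):
    """Mean absolute value (MAV) of one EMG segment."""
    if not segment:
        raise ValueError("segment is empty")
    return sum(abs(v) for v in segment) / len(segment)


def windowed_mav(signal, window):
    """MAV of consecutive non-overlapping windows of `window` samples.

    A trailing partial window is discarded.
    """
    if window <= 0:
        raise ValueError("window must be positive")
    return [mean_absolute_value(signal[i:i + window])
            for i in range(0, len(signal) - window + 1, window)]


# Illustrative samples only (arbitrary units).
emg = [1, -2, 3, -4, 0, 2, -2, 0]
print(mean_absolute_value(emg), windowed_mav(emg, 4))
```

Averaging the per-window values over a session yields one MAV figure per participant and condition, which is the kind of quantity a paired test such as the one above compares.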

Figure 11. EMG MAV during each experimental condition. Asterisks indicate statistically significant difference.

As mentioned previously, at the very end of the experiment an open-ended interview was conducted with each participant. All of the participants reported being able to perceive and recognize the olfactory and tactile stimuli in the multisensory condition. All confirmed they could feel the texture of the objects in the virtual environment and that this helped improve their sense of presence and the realism of the interaction. Regarding the citrus scent, some participants reported it as pleasant, while others suggested it was too intense. Such variability could be associated with age and/or gender related factors, as reported previously by Murray et al. (2013). The majority reported that the olfactory stimulus helped them enhance their sense of immersion, thus corroborating previous studies (Kreimeier et al., 2019; De Jesus et al., 2022).

The presented study was conducted during the sixth wave of the COVID-19 pandemic; therefore, several restrictions were in place to ensure participant safety. This limited our sample size, as well as the generalizability of the achieved results. As such, future work should aim to increase the participant pool size. Nevertheless, the observed significant changes already corroborate those found in previous studies with a higher number of participants; thus, the obtained results are promising. Future work should take advantage of multisensory priming directly preceding motor imagery tasks to improve MI-BCI performance, especially for inefficient users. Moreover, analysis of the impact of different window durations during the MI task suggested that for some subjects, decisions could be made up to 2 s faster. These benefits could lead to BCI protocols that are faster and more engaging for participants.

The methods used here relied on conventional feature extraction (i.e., CSPs) and classification (i.e., SVM) pipelines. Future work could explore the use of more recent algorithms, such as deep neural networks. In fact, deep learning approaches have provided opportunities for real-time analysis and interpretation of brain signals (Cho et al., 2021), as well as improved MI accuracy (Zhang et al., 2019), thus could further benefit inefficient users.

The development of the virtual environment for the MP task was driven by the available functionalities of the haptic glove and the available smells within the OVR kit, which was custom-developed for nature scenes. Therefore, future motor priming tasks could benefit from the use of multisensory VR systems that incorporate more realistic scenarios tailored to the preferences and interests of each user. Personalized environments could increase levels of engagement during the MP sessions and potentially further improve MI performance. By providing a more immersive and engaging experience, multisensory VR systems could help users sustain interest in the task at hand. This aspect is particularly important for rehabilitation purposes, where participants have been shown to struggle with motivation and adherence to conventional protocols (Oyake et al., 2020). Lastly, the proposed multisensory MP task requires users to have some functional motor control, which may limit its application for individuals with severe motor disabilities, such as those with locked-in syndrome or those recovering from stroke.

In this paper, we reported the results of a pilot study conducted on 10 participants to evaluate the impact of multisensory motor priming in VR (where olfactory and haptic stimuli were included) to improve motor imagery performance. To this end, a biosensor-instrumented head-mounted display was developed and coupled with an off-the-shelf scent diffuser and haptic glove. Experimental results showed the benefits of a multisensory VR-based priming task relative to a conventional audio-visual VR MP task, with significant improvements achieved in motor imagery detection accuracy. Insights on the impact of window size and time-from-cue duration were also obtained and reported. Overall, these preliminary results provided insights on the advantages of multisensory motor priming on motor imagery performance and offered new perspectives on how to potentially improve MI-BCI performance.

The raw data supporting the conclusions of this article will be made available by the authors, without undue reservation.

The studies involving human participants were reviewed and approved by Institut National de la Recherche Scientifique (INRS), University of Quebec (number: CER-22-663). The patients/participants provided their written informed consent to participate in this study.

Both authors listed have made a substantial, direct, and intellectual contribution to the work and approved it for publication.

This work was funded by the Natural Sciences and Engineering Research Council (NSERC) of Canada (RGPIN-2021-03246).

The authors wish to thank the reviewers whose thorough comments and insightful suggestions have helped significantly improve the manuscript.

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

Abbruzzese, G., Avanzino, L., Marchese, R., and Pelosin, E. (2015). Action observation and motor imagery: innovative cognitive tools in the rehabilitation of Parkinson's disease. Parkinsons Dis. 2015, 124214. doi: 10.1155/2015/124214

Ahn, M., Cho, H., Ahn, S., and Jun, S. C. (2013). High theta and low alpha powers may be indicative of BCI-illiteracy in motor imagery. PLoS ONE 8, e80886. doi: 10.1371/journal.pone.0080886

Ahn, S., Sah, Y. J., and Lee, S. (2019). “Moderating effects of spatial presence on the relationship between depth cues and user performance in virtual reality,” in International Conference on Ubiquitous Information Management and Communication (Cham: Springer), 333–340. doi: 10.1007/978-3-030-19063-7_27

Alkan, A., and Günay, M. (2012). Identification of EMG signals using discriminant analysis and SVM classifier. Expert Syst. Applic. 39, 44–47. doi: 10.1016/j.eswa.2011.06.043

Allison, B. Z., and Neuper, C. (2010). “Could anyone use a BCI?” in Brain-Computer Interfaces (London: Springer), 35–54. doi: 10.1007/978-1-84996-272-8_3

Amini Gougeh, R., and Falk, T. H. (2022a). HMD-based virtual reality and physiological computing for stroke rehabilitation: a systematic review. Front. Virtual Real. 3, 889271. doi: 10.3389/frvir.2022.889271

Amini Gougeh, R., and Falk, T. H. (2022b). “Multisensory immersive experiences: A pilot study on subjective and instrumental human influential factors assessment,” in 2022 14th International Conference on Quality of Multimedia Experience (QoMEX) (Lippstadt: IEEE), 1–6. doi: 10.1109/QoMEX55416.2022.9900907

Amini Gougeh, R., Jesus, B., Lopes, M. K. S., Moinnereau, M.-A., Schubert, W., and Falk, T. H. (2022). “Quantifying user behaviour in multisensory immersive experiences,” in 2022 IEEE International Conference on Metrology for eXtended Reality, Artificial Intelligence, and Neural Engineering (MetroXRAINE) (Rome: IEEE), 1–5.

Amini Gougeh, R., Rezaii, T. Y., and Farzamnia, A. (2021). “An automatic driver assistant based on intention detecting using EEG signal,” in Proceedings of the 11th National Technical Seminar on Unmanned System Technology 2019 (Singapore: Springer), 617–627. doi: 10.1007/978-981-15-5281-6_43

Anema, H. A., and Dijkerman, H. C. (2013). Multisensory Imagery: Motor and Kinesthetic Imagery. New York, NY: Springer New York, 93–113. doi: 10.1007/978-1-4614-5879-1_6

Arpaia, P., Coyle, D., Donnarumma, F., Esposito, A., Natalizio, A., and Parvis, M. (2022). “Non-immersive versus immersive extended reality for motor imagery neurofeedback within a brain-computer interfaces,” in International Conference on Extended Reality (Cham: Springer), 407–419. doi: 10.1007/978-3-031-15553-6_28

Benaroch, C., Yamamoto, M. S., Roc, A., Dreyer, P., Jeunet, C., and Lotte, F. (2022). When should MI-BCI feature optimization include prior knowledge, and which one? Brain Comput. Interfaces 9, 115–128. doi: 10.1080/2326263X.2022.2033073

Bernal, G., Hidalgo, N., Russomanno, C., and Maes, P. (2022). “Galea: a physiological sensing system for behavioral research in virtual environments,” in 2022 IEEE Conference on Virtual Reality and 3D User Interfaces (VR) (Christchurch: IEEE), 66–76. doi: 10.1109/VR51125.2022.00024

Birbaumer, N., Ruiz, S., and Sitaram, R. (2013). Learned regulation of brain metabolism. Trends Cogn. Sci. 17, 295–302. doi: 10.1016/j.tics.2013.04.009

Blankertz, B., Sannelli, C., Halder, S., Hammer, E. M., Kübler, A., Müller, K.-R., et al. (2010). Neurophysiological predictor of SMR-based BCI performance. Neuroimage 51, 1303–1309. doi: 10.1016/j.neuroimage.2010.03.022

Blankertz, B., Tomioka, R., Lemm, S., Kawanabe, M., and Muller, K.-R. (2007). Optimizing spatial filters for robust EEG single-trial analysis. IEEE Signal Process. Magaz. 25, 41–56. doi: 10.1109/MSP.2008.4408441

Cassani, R., Moinnereau, M.-A., Ivanescu, L., Rosanne, O., and Falk, T. (2020). Neural interface instrumented virtual reality headsets: toward next-generation immersive applications. IEEE Systems Man Cybernet. Magaz. 6, 20–28. doi: 10.1109/MSMC.2019.2953627

Castaneda, D., Esparza, A., Ghamari, M., Soltanpur, C., and Nazeran, H. (2018). A review on wearable photoplethysmography sensors and their potential future applications in health care. Int. J. Biosensors Bioelectron. 4, 195. doi: 10.15406/ijbsbe.2018.04.00125

Cecotti, H., and Rivet, B. (2014). Subject combination and electrode selection in cooperative brain-computer interface based on event related potentials. Brain Sci. 4, 335–355. doi: 10.3390/brainsci4020335

Chatterjee, R., and Bandyopadhyay, T. (2016). “EEG based motor imagery classification using SVM and MLP,” in 2016 2nd International Conference on Computational Intelligence and Networks (CINE) (Bhubaneswar: IEEE), 84–89. doi: 10.1109/CINE.2016.22

Cho, J.-H., Jeong, J.-H., and Lee, S.-W. (2021). NeuroGrasp: real-time EEG classification of high-level motor imagery tasks using a dual-stage deep learning framework. IEEE Trans. Cybernet. 52, 13279–13292. doi: 10.1109/TCYB.2021.3122969

Choy, C. S., Cloherty, S. L., Pirogova, E., and Fang, Q. (2022). Virtual reality assisted motor imagery for early post-stroke recovery: a review. IEEE Rev. Biomed. Eng. 16, 487–498. doi: 10.1109/RBME.2022.3165062

Daeglau, M., Zich, C., Emkes, R., Welzel, J., Debener, S., and Kranczioch, C. (2020). Investigating priming effects of physical practice on motor imagery-induced event-related desynchronization. Front. Psychol. 11, 57. doi: 10.3389/fpsyg.2020.00057

De Jesus, B. Jr, Lopes, M., Moinnereau, M.-A., Amini Gougeh, R., Rosanne, O. M., Schubert, W., et al. (2022). “Quantifying multisensory immersive experiences using wearables: is (stimulating) more (senses) always merrier?” in Proceedings of the 2nd Workshop on Multisensory Experiences-SensoryX'22 (Porto Alegre: SBC). doi: 10.5753/sensoryx.2022.20001

Decety, J., and Jeannerod, M. (1995). Mentally simulated movements in virtual reality: does Fitts's law hold in motor imagery? Behav. Brain Res. 72, 127–134. doi: 10.1016/0166-4328(96)00141-6

Delorme, A., and Makeig, S. (2004). EEGLAB: an open source toolbox for analysis of single-trial EEG dynamics including independent component analysis. J. Neurosci. Methods 134, 9–21. doi: 10.1016/j.jneumeth.2003.10.009

Dornhege, G., Blankertz, B., Krauledat, M., Losch, F., Curio, G., and Müller, K.-R. (2005). Optimizing spatio-temporal filters for improving brain-computer interfacing. Adv. Neural Inform. Process. Syst. 18.

Dos Anjos, T., Guillot, A., Kerautret, Y., Daligault, S., and Di Rienzo, F. (2022). Corticomotor plasticity underlying priming effects of motor imagery on force performance. Brain Sci. 12, 1537. doi: 10.3390/brainsci12111537

Ebner, F. F. (2005). Neural Plasticity in Adult Somatic Sensory-Motor Systems. Boca Raton: CRC Press. doi: 10.1201/9780203508039

Edlinger, G., Allison, B. Z., and Guger, C. (2015). How many people can use a BCI system? Clin. Syst. Neurosci. 33–66. doi: 10.1007/978-4-431-55037-2_3

Elliott, A. C., and Woodward, W. A. (2007). Statistical Analysis Quick Reference Guidebook: With SPSS Examples. Thousand Oaks, CA: Sage. doi: 10.4135/9781412985949

Errante, A., Bozzetti, F., Sghedoni, S., Bressi, B., Costi, S., Crisi, G., et al. (2019). Explicit motor imagery for grasping actions in children with spastic unilateral cerebral palsy. Front. Neurol. 10, 837. doi: 10.3389/fneur.2019.00837

Feick, M., Kleer, N., Tang, A., and Antonio, K. (2020). “The virtual reality questionnaire toolkit,” in The 33rd Annual ACM Symposium on User Interface Software and Technology Adjunct Proceedings, UIST '20 Adjunct (New York, NY: Association for Computing Machinery), 68–69. doi: 10.1145/3379350.3416188

Filiz, E., and Arslan, R. B. (2020). “Design and implementation of steady state visual evoked potential based brain computer interface video game,” in 2020 IEEE 20th Mediterranean Electrotechnical Conference (MELECON) (Palermo: IEEE), 335–338. doi: 10.1109/MELECON48756.2020.9140710

Garcia-Moreno, F. M., Bermudez-Edo, M., Rodríguez-Fórtiz, M. J., and Garrido, J. L. (2020). “A CNN-LSTM deep learning classifier for motor imagery EEG detection using a low-invasive and low-cost BCI headband,” in 2020 16th International Conference on Intelligent Environments (IE) (Madrid: IEEE), 84–91. doi: 10.1109/IE49459.2020.9155016

Gaur, P., Pachori, R. B., Wang, H., and Prasad, G. (2015). “An empirical mode decomposition based filtering method for classification of motor-imagery EEG signals for enhancing brain-computer interface,” in 2015 International Joint Conference on Neural Networks (IJCNN) (Killarney: IEEE), 1–7. doi: 10.1109/IJCNN.2015.7280754

Gerig, N., Mayo, J., Baur, K., Wittmann, F., Riener, R., and Wolf, P. (2018). Missing depth cues in virtual reality limit performance and quality of three dimensional reaching movements. PLoS ONE 13, e0189275. doi: 10.1371/journal.pone.0189275

Gougeh, R. A., De Jesus, B. J., Lopes, M. K., Moinnereau, M.-A., Schubert, W., and Falk, T. H. (2022). “Quantifying user behaviour in multisensory immersive experiences,” in 2022 IEEE International Conference on Metrology for Extended Reality, Artificial Intelligence and Neural Engineering (MetroXRAINE) (Rome: IEEE), 64–68.

Gupta, R., Laghari, K., Banville, H., and Falk, T. H. (2016). Using affective brain-computer interfaces to characterize human influential factors for speech quality-of-experience perception modelling. Hum. Centric Comput. Inform. Sci. 6, 1–19. doi: 10.1186/s13673-016-0062-5

Infortuna, C., Gualano, F., Freedberg, D., Patel, S. P., Sheikh, A. M., Muscatello, M. R. A., et al. (2022). Motor cortex response to pleasant odor perception and imagery: the differential role of personality dimensions and imagery ability. Front. Hum. Neurosci. 16, 943469. doi: 10.3389/fnhum.2022.943469

ITU. (2008). ITU-T Recommendation p. 910: Subjective Video Quality Assessment Methods for Multimedia Applications. Technical report.

Jasper, H. H. (1958). The ten-twenty electrode system of the international federation. Electroencephalogr. Clin. Neurophysiol. 10, 370–375.

Jiang, A., Shang, J., Liu, X., Tang, Y., Kwan, H. K., and Zhu, Y. (2020). Efficient CSP algorithm with spatio-temporal filtering for motor imagery classification. IEEE Trans. Neural Syst. Rehabil. Eng. 28, 1006–1016. doi: 10.1109/TNSRE.2020.2979464

Jochumsen, M., Niazi, I. K., Nedergaard, R. W., Navid, M. S., and Dremstrup, K. (2018). Effect of subject training on a movement-related cortical potential-based brain-computer interface. Biomed. Signal Process. Control 41, 63–68. doi: 10.1016/j.bspc.2017.11.012

Kim, K.-T., Suk, H.-I., and Lee, S.-W. (2016). Commanding a brain-controlled wheelchair using steady-state somatosensory evoked potentials. IEEE Trans. Neural Syst. Rehabil. Eng. 26, 654–665. doi: 10.1109/TNSRE.2016.2597854

Kosunen, I., Ruonala, A., Salminen, M., Järvelä, S., Ravaja, N., and Jacucci, G. (2017). “Neuroadaptive meditation in the real world,” in Proceedings of the 2017 ACM Workshop on An Application-Oriented Approach to BCI out of the Laboratory (New York, NY: Association for Computing Machinery), 29–33. doi: 10.1145/3038439.3038443

Kothe, C. A. E., and Jung, T.-P. (2016). Artifact Removal Techniques With Signal Reconstruction. US Patent App. 14/895,440.

Kreimeier, J., Hammer, S., Friedmann, D., Karg, P., Bühner, C., Bankel, L., et al. (2019). “Evaluation of different types of haptic feedback influencing the task-based presence and performance in virtual reality,” in Proceedings of the 12th ACM International Conference on Pervasive Technologies Related to Assistive Environments (New York, NY: Association for Computing Machinery), 289–298. doi: 10.1145/3316782.3321536

Lebon, F., Rouffet, D., Collet, C., and Guillot, A. (2008). Modulation of EMG power spectrum frequency during motor imagery. Neurosci. Lett. 435, 181–185. doi: 10.1016/j.neulet.2008.02.033

Leon-Urbano, C., and Ugarte, W. (2020). “End-to-end electroencephalogram (EEG) motor imagery classification with long short-term,” in 2020 IEEE Symposium Series on Computational Intelligence (SSCI) (Canberra: IEEE), 2814–2820. doi: 10.1109/SSCI47803.2020.9308610

Lopes, M. K., de Jesus, B. J., Moinnereau, M.-A., Gougeh, R. A., Rosanne, O. M., Schubert, W., et al. (2022). “Nat(UR)e: Quantifying the relaxation potential of ultra-reality multisensory nature walk experiences,” in 2022 IEEE International Conference on Metrology for Extended Reality, Artificial Intelligence and Neural Engineering (MetroXRAINE) (Rome: IEEE), 459–464. doi: 10.1109/MetroXRAINE54828.2022.9967576

Lopez-Gordo, M., Perez, E., and Minguillon, J. (2019). “Gaming the attention with a SSVEP-based brain-computer interface,” in International Work-Conference on the Interplay Between Natural and Artificial Computation (Cham: Springer), 51–59. doi: 10.1007/978-3-030-19591-5_6

Lorey, B., Pilgramm, S., Walter, B., Stark, R., Munzert, J., and Zentgraf, K. (2010). Your mind's hand: motor imagery of pointing movements with different accuracy. Neuroimage 49, 3239–3247. doi: 10.1016/j.neuroimage.2009.11.038

Marquez-Chin, C., and Popovic, M. R. (2020). Functional electrical stimulation therapy for restoration of motor function after spinal cord injury and stroke: a review. Biomed. Eng. Online 19, 1–25. doi: 10.1186/s12938-020-00773-4

Melo, M., Gonçalves, G., Monteiro, P., Coelho, H., Vasconcelos-Raposo, J., and Bessa, M. (2020). Do Multisensory Stimuli Benefit the Virtual Reality Experience? A Systematic Review. IEEE Transactions on Visualization and Computer Graphics.

Moinnereau, M.-A., Oliveira, A., and Falk, T. H. (2022). “Human influential factors assessment during at-home gaming with an instrumented VR headset,” in 2022 14th International Conference on Quality of Multimedia Experience (QoMEX) (Lippstadt: IEEE), 1–4. doi: 10.1109/QoMEX55416.2022.9900912

Murray, N., Qiao, Y., Lee, B., Muntean, G.-M., and Karunakar, A. K. (2013). “Age and gender influence on perceived olfactory & visual media synchronization,” in 2013 IEEE International Conference on Multimedia and Expo (ICME) (San Jose: IEEE), 1–6. doi: 10.1109/ICME.2013.6607467

Myrden, A., and Chau, T. (2015). Effects of user mental state on EEG-BCI performance. Front. Hum. Neurosci. 9, 308. doi: 10.3389/fnhum.2015.00308

Mzurikwao, D., Samuel, O. W., Asogbon, M. G., Li, X., Li, G., Yeo, W.-H., et al. (2019). “A channel selection approach based on convolutional neural network for multi-channel EEG motor imagery decoding,” in 2019 IEEE Second International Conference on Artificial Intelligence and Knowledge Engineering (AIKE) (Sardinia: IEEE), 195–202. doi: 10.1109/AIKE.2019.00042

Nguyen, T., Hettiarachchi, I., Khatami, A., Gordon-Brown, L., Lim, C. P., and Nahavandi, S. (2018). Classification of multi-class BCI data by common spatial pattern and fuzzy system. IEEE Access 6, 27873–27884. doi: 10.1109/ACCESS.2018.2841051

Oyake, K., Suzuki, M., Otaka, Y., and Tanaka, S. (2020). Motivational strategies for stroke rehabilitation: a descriptive cross-sectional study. Front. Neurol. 11, 553. doi: 10.3389/fneur.2020.00553

Philip, J. T., and George, S. T. (2020). Visual P300 mind-speller brain-computer interfaces: a walk through the recent developments with special focus on classification algorithms. Clin. EEG Neurosci. 51, 19–33. doi: 10.1177/1550059419842753

Ramoser, H., Muller-Gerking, J., and Pfurtscheller, G. (2000). Optimal spatial filtering of single trial EEG during imagined hand movement. IEEE Trans. Rehabil. Eng. 8, 441–446. doi: 10.1109/86.895946

Rossi, S., De Capua, A., Pasqualetti, P., Ulivelli, M., Fadiga, L., Falzarano, V., et al. (2008). Distinct olfactory cross-modal effects on the human motor system. PLoS ONE 3, e1702. doi: 10.1371/journal.pone.0001702

Ruffino, C., Papaxanthis, C., and Lebon, F. (2017). Neural plasticity during motor learning with motor imagery practice: review and perspectives. Neuroscience 341, 61–78. doi: 10.1016/j.neuroscience.2016.11.023

Sebastián-Romagosa, M., Cho, W., Ortner, R., Murovec, N., Von Oertzen, T., Kamada, K., et al. (2020). Brain computer interface treatment for motor rehabilitation of upper extremity of stroke patients: a feasibility study. Front. Neurosci. 14, 1056. doi: 10.3389/fnins.2020.591435

Škola, F., and Liarokapis, F. (2018). Embodied VR environment facilitates motor imagery brain-computer interface training. Comput. Graphics 75, 59–71. doi: 10.1016/j.cag.2018.05.024

Smeets, M., and Dijksterhuis, G. B. (2014). Smelly primes – when olfactory primes do or do not work. Front. Psychol. 5, 96. doi: 10.3389/fpsyg.2014.00096

Stoykov, M. E., and Madhavan, S. (2015). Motor priming in neurorehabilitation. J. Neurol. Phys. Ther. 39, 33. doi: 10.1097/NPT.0000000000000065

Sun, Z., Jiang, Y.-C., Li, Y., Song, J., and Zhang, M. (2022). Short-term priming effects: an EEG study of action observation on motor imagery. IEEE Trans. Cogn. Dev. Syst. 1. doi: 10.1109/TCDS.2022.3187777

Talukdar, U., Hazarika, S. M., and Gan, J. Q. (2019). Motor imagery and mental fatigue: inter-relationship and EEG based estimation. J. Comput. Neurosci. 46, 55–76. doi: 10.1007/s10827-018-0701-0

Tang, N., Guan, C., Ang, K., Phua, K., and Chew, E. (2018). Motor imagery-assisted brain-computer interface for gait retraining in neurorehabilitation in chronic stroke. Ann. Phys. Rehabil. Med. 61, e188. doi: 10.1016/j.rehab.2018.05.431

Thompson, M. C. (2019). Critiquing the concept of BCI illiteracy. Sci. Eng. Ethics 25, 1217–1233. doi: 10.1007/s11948-018-0061-1

Tibrewal, N., Leeuwis, N., and Alimardani, M. (2022). Classification of motor imagery EEG using deep learning increases performance in inefficient BCI users. PLoS ONE 17, e0268880. doi: 10.1371/journal.pone.0268880

Toet, A., and van Erp, J. B. (2019). The EmojiGrid as a tool to assess experienced and perceived emotions. Psych 1, 469–481. doi: 10.3390/psych1010036

Tubaldi, F., Turella, L., Pierno, A. C., Grodd, W., Tirindelli, R., and Castiello, U. (2011). Smelling odors, understanding actions. Soc. Neurosci. 6, 31–47. doi: 10.1080/17470911003691089

Vidal, J. J. (1973). Toward direct brain-computer communication. Annu. Rev. Biophys. Bioeng. 2, 157–180. doi: 10.1146/annurev.bb.02.060173.001105

Vourvopoulos, A., and Bermúdez i Badia, S. (2016). Motor priming in virtual reality can augment motor-imagery training efficacy in restorative brain-computer interaction: a within-subject analysis. J. Neuroeng. Rehabil. 13, 1–14. doi: 10.1186/s12984-016-0173-2

Vourvopoulos, A., Cardona, J. E. M., and i Badia, S. B. (2015). “Optimizing motor imagery neurofeedback through the use of multimodal immersive virtual reality and motor priming,” in 2015 International Conference on Virtual Rehabilitation (ICVR) (Valencia: IEEE), 228–234. doi: 10.1109/ICVR.2015.7358592

Vourvopoulos, A., Ferreira, A., and i Badia, S. B. (2016). “NeuRow: an immersive VR environment for motor-imagery training with the use of brain-computer interfaces and vibrotactile feedback,” in International Conference on Physiological Computing Systems, volume 2 (Madrid: SciTePress), 43–53. doi: 10.5220/0005939400430053

Vourvopoulos, A., Jorge, C., Abreu, R., Figueiredo, P., Fernandes, J.-C., and Bermudez i Badia, S. (2019). Efficacy and brain imaging correlates of an immersive motor imagery BCI-driven VR system for upper limb motor rehabilitation: a clinical case report. Front. Hum. Neurosci. 13, 244. doi: 10.3389/fnhum.2019.00244

Wang, W., Yang, B., Guan, C., and Li, B. (2019). “A VR combined with MI-BCI application for upper limb rehabilitation of stroke,” in 2019 IEEE MTT-S International Microwave Biomedical Conference (IMBioC), volume 1 (Nanjing: IEEE), 1–4. doi: 10.1109/IMBIOC.2019.8777805

Wolpaw, J. R., Birbaumer, N., McFarland, D. J., Pfurtscheller, G., and Vaughan, T. M. (2002). Brain–computer interfaces for communication and control. Clin. Neurophysiol. 113, 767–791. doi: 10.1016/S1388-2457(02)00057-3

Wolpaw, J. R., Millán, J. d. R., and Ramsey, N. F. (2020). Brain-computer interfaces: definitions and principles. Handb. Clin. Neurol. 168, 15–23. doi: 10.1016/B978-0-444-63934-9.00002-0

Wolpaw, J. R., and Wolpaw, E. W. (2012). Brain-computer interfaces: something new under the sun. Brain Comput. Interfaces Principles Pract. 14. doi: 10.1093/acprof:oso/9780195388855.001.0001

Wriessnegger, S. C., Brunner, C., and Müller-Putz, G. R. (2018). Frequency specific cortical dynamics during motor imagery are influenced by prior physical activity. Front. Psychol. 9, 1976. doi: 10.3389/fpsyg.2018.01976

Yang, C., Ye, Y., Li, X., and Wang, R. (2018). Development of a neuro-feedback game based on motor imagery EEG. Multimedia Tools Applic. 77, 15929–15949. doi: 10.1007/s11042-017-5168-x

Zander, T. O., and Jatzev, S. (2011). Context-aware brain-computer interfaces: exploring the information space of user, technical system and environment. J. Neural Eng. 9, 016003. doi: 10.1088/1741-2560/9/1/016003

Zhang, J., and Wang, M. (2021). A survey on robots controlled by motor imagery brain-computer interfaces. Cogn. Robotics 1, 12–24. doi: 10.1016/j.cogr.2021.02.001

Zhang, X., Yao, L., Wang, X., Monaghan, J., Mcalpine, D., and Zhang, Y. (2019). A survey on deep learning based brain computer interface: recent advances and new frontiers. arXiv preprint arXiv:1905.04149, 1–42. doi: 10.48550/arXiv.1905.04149

Keywords: brain-computer interface, motor imagery, multisensory priming, virtual reality, haptics, force feedback, olfaction

Citation: Amini Gougeh R and Falk TH (2023) Enhancing motor imagery detection efficacy using multisensory virtual reality priming. Front. Neuroergon. 4:1080200. doi: 10.3389/fnrgo.2023.1080200

Received: 26 October 2022; Accepted: 23 March 2023;

Published: 06 April 2023.

Edited by:

Athanasios Vourvopoulos, Instituto Superior Técnico (ISR), Portugal

Reviewed by:

Maryam Alimardani, Tilburg University, Netherlands

Copyright © 2023 Amini Gougeh and Falk. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Tiago H. Falk, tiago.falk@inrs.ca

Disclaimer: All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article or claim that may be made by its manufacturer is not guaranteed or endorsed by the publisher.
