- ¹ Neurophysiology of Everyday Life Group, Department of Psychology, University of Oldenburg, Oldenburg, Germany

- ² Pius-Hospital Oldenburg, University Hospital for Visceral Surgery, University of Oldenburg, Oldenburg, Germany

- ³ Research Center for Neurosensory Science, University of Oldenburg, Oldenburg, Germany

Introduction: In demanding work situations (e.g., during surgery), the processing of complex soundscapes varies over time and can be a burden for medical personnel. Here we study, using mobile electroencephalography (EEG), how humans process workplace-related soundscapes while performing a complex audio-visual-motor task (3D Tetris). Specifically, we wanted to know how the attentional focus changes the processing of the soundscape as a whole.

Method: Participants played a game of 3D Tetris in which they had to use both hands to control falling blocks. At the same time, they listened to a complex soundscape similar to that of an operating room (i.e., the sound of machinery, people talking in the background, alarm sounds, and instructions). In this within-subject design, participants had to react to instructions (e.g., “place the next block in the upper left corner”) and, depending on the experimental condition, to one of two target sounds: either a specific alarm sound originating from a fixed location or a beep sound originating from varying locations. Attention to the alarm reflected a narrow attentional focus, as the alarm was easy to detect and most of the soundscape could be ignored. Attention to the beep reflected a wide attentional focus, as detecting it required participants to monitor multiple different sound streams.

Results and discussion: Results show that the N1 and P3 event-related potential (ERP) responses are robust during this dynamic task with a complex auditory soundscape. Furthermore, we used temporal response functions (TRFs) to study auditory processing of the whole soundscape. This work is a step toward studying workplace-related sound processing in the operating room using mobile EEG.

1. Introduction

Auditory attention, i.e., focusing on relevant sounds and ignoring irrelevant sounds, is a fundamental skill at workplaces with both a high level of responsibility and a soundscape containing a variety of sounds. Surgical staff, for example, perform a highly complex task while being exposed to conversations, machine and tool sounds, and background music. Together, these sounds regularly reach sound pressure levels exceeding 50 dB(A) (Tsiou et al., 2008; Hasfeldt et al., 2010; Engelmann et al., 2014; Baltin et al., 2020). The soundscape can become a burden for the medical staff (Healey et al., 2007; Tsiou et al., 2008; Jung et al., 2020; Pleban et al., 2021; van Harten et al., 2021; Maier-Hein et al., 2022) and increases surgical complication rates (Kurmann et al., 2011; Engelmann et al., 2014). Interestingly, the focus of attention to sounds and their interpretation change throughout a surgery. For example, conversations of others are sometimes perceived as disturbing when concentration is high, while at other times they are attended to and even encouraged (van Harten et al., 2021). In the former case, auditory attention is focused only on task-relevant sounds (e.g., instructions or alarm sounds), and irrelevant sounds (e.g., chatting) are suppressed. In other words, the attentional focus is narrowed to the task. In the latter case, attention switches between multiple sound sources, such as task-relevant instructions and task-irrelevant chatting. In other words, the attentional focus is wide and a large extent of the soundscape is processed. Our goal was to compare a narrow with a wide focus to better understand auditory attention in a complex, multi-sensory environment.

Electroencephalography (EEG) can be used to measure auditory attention continuously, objectively, and without interrupting the person. Mobile EEG systems allow the brain to be studied in a working environment rather than in the lab (Wascher et al., 2014, 2021; Hölle et al., 2021) and have already been used to assess performance during laparoscopic training and simulation (Pugh et al., 2020; Shafiei et al., 2021; Thomaschewski et al., 2021; Maier-Hein et al., 2022; Suárez et al., 2022). Thus, with EEG we want to study auditory attention in the operating room and understand when sounds become a burden.

When transitioning from the lab to the operating room, we must consider that our expectations about auditory attention are mainly derived from highly controlled studies (Gramann et al., 2021). To generalize lab findings to more complex environments, we have to increase the environmental complexity. One approach to increasing complexity is naturalistic laboratory research, which provides a balance between stimulus control and ecological validity (Matusz et al., 2019). We therefore developed a complex and dynamic audio-visual-motor task while maintaining experimental control over the stimuli. Thereby, EEG responses related to auditory attention can be studied in a complex environment.

We first operationalized the soundscape of an operating room into five stimulus categories: a continuous background stream, task-relevant and task-irrelevant sounds, and task-relevant and task-irrelevant speech (Hasfeldt et al., 2010; Engelmann et al., 2014). The background stream represents sounds originating from running machines, ventilation, and people moving around. Task-relevant speech represents exchanges about the surgery as well as instructions. Task-irrelevant speech represents private conversations. Task-relevant sounds represent, e.g., alarm sounds and feedback from instruments. Task-irrelevant sounds represent, e.g., phones ringing or sounds from monitors.

We then combined our operationalization of the soundscape with a visual-motor task, namely the computer game Tetris. The game requires the use of both hands to navigate blocks. For the continuous background stream, we chose a hospital soundscape. For task-relevant speech, participants received instructions within the game. For task-irrelevant speech, a conversation unrelated to the game was presented. The task-relevant sound differed between the two conditions. For task-irrelevant sounds, monitor sounds from a surgery machine were presented.

Lastly, we manipulated the attentional focus of the participants by changing the task-relevant sound while keeping the complexity of the soundscape constant. In a narrow attentional focus condition (from here on, narrow condition), participants had to attend to an alarm sound (from here on, the alarm). This sound originated from a specific location, i.e., it was easy to detect. The rest of the soundscape (except the task-relevant speech) could be ignored. In a wide attentional focus condition (from here on, wide condition), we implicitly directed the participants' attention toward all sound streams. To this end, participants were instructed to attend to a sound that was embedded in any of the five streams. We refer to this sound as the beep; it served to manipulate participants' attention but was otherwise unrelated to the operating room soundscape.

Our study addressed two research questions. First, can we investigate well-known EEG responses, namely event-related potentials (ERPs) and temporal response functions (TRFs), in a dynamic task with a complex soundscape using a mobile EEG setup? Second, how does neural processing differ when the auditory attentional focus is narrow (i.e., most of the soundscape can be ignored) compared to wide (i.e., most of the soundscape must be attended to)? We used ERPs to study responses to distinct stimuli, i.e., relevant and irrelevant sounds, and focused on two components, the N1 and the P3. The N1 is an early negative deflection related to auditory processing and modulated by attention (Hillyard et al., 1973; Picton and Hillyard, 1974; Hansen and Hillyard, 1980; Näätänen and Picton, 1987; Luck, 2014). For our first hypothesis, we expected a larger (i.e., more negative) N1 for irrelevant sounds (i.e., non-target sounds in both conditions) in the wide condition than in the narrow condition. Attention to the beep, which was integrated into other sounds, should lead to stronger processing of the whole soundscape and, therefore, to stronger processing of the irrelevant sounds.

The P3 is a late positive deflection in response to target sounds (from here on, targets). Because this response is absent for non-targets, it marks attentional processes (Polich, 2007; Luck, 2014). For our second and third hypotheses, we expected a P3 to the target of the respective condition. The alarm was the target in the narrow condition; thus, we expected a larger P3 to the alarm in this condition than in the wide condition. The beep was the target in the wide condition; thus, we expected a larger P3 to the beep in this condition than in the narrow condition.

We used TRFs to investigate processing of the soundscape as a whole. A TRF describes how a continuous EEG signal relates to a continuous audio signal across a range of time lags and is estimated with regularized linear regression (Crosse et al., 2016). The resulting response is larger for attended compared to unattended signals (Mirkovic et al., 2015; O'Sullivan et al., 2015). For our fourth hypothesis, we expected a larger TRF in the wide compared to the narrow condition, as the beep should direct attention toward the whole sound environment.

2. Method

This study was preregistered prior to any human observation of the data (https://osf.io/sgvk6). Deviations from our preregistration are described in the Supplementary material. The experiment, as well as the code and data to reproduce the statistical analyses and figures, is available in Rosenkranz and Bleichner (2022).

2.1. Participants

Twenty-two participants (age range: 20–30 years; female: 16) were recruited through an online announcement on the University board. Due to the exploratory approach of this study, we based the sample size on previous studies showing P3 effects in naturalistic settings (e.g., Protzak and Gramann, 2018; Scanlon et al., 2019; Hölle et al., 2021). Prior to the experiment, all participants signed an informed consent form approved by the medical ethics committee of the University of Oldenburg; they received monetary reimbursement. Eligibility criteria included: normal hearing (self-reported), normal or corrected-to-normal vision, no psychological or neurological conditions, right-handedness, and compliance with current COVID-related hygiene regulations (e.g., this could include proof of vaccination).

Two participants were excluded from the final analysis. One participant showed high impedances (>100 kΩ) on 10 channels at the end of the experiment and overall poor data quality. The other had a very low hit rate, which indicated that this participant did not follow task instructions. The final sample consisted of 20 participants (female: 14).

2.2. Paradigm

Participants performed a complex audio-visual-motor task: an adapted 3D Tetris game. The basis for the game was developed by Kalarus (2021), and we adapted it to our needs. Below is a short description of the paradigm. For a detailed description of the game and the generation of the auditory stimulus material, see the Supplementary material.

Participants had to play a 3D Tetris game while reacting to different sounds and instructions (see Figure 1A). In 3D Tetris, players are presented with a three-dimensional space in which differently shaped, three-dimensional blocks are placed. The falling blocks must be placed so that they form a layer; when a layer is complete, it disappears. Participants controlled the rotation of the blocks with the left hand and their position with the right hand. The goal was to remove as many layers as possible to receive points. Unlike in the classic Tetris game, participants could not lose when the blocks were stacked too high. Instead, the game restarted at the bottom layer to allow for continuous game-play. When that happened, participants lost points.

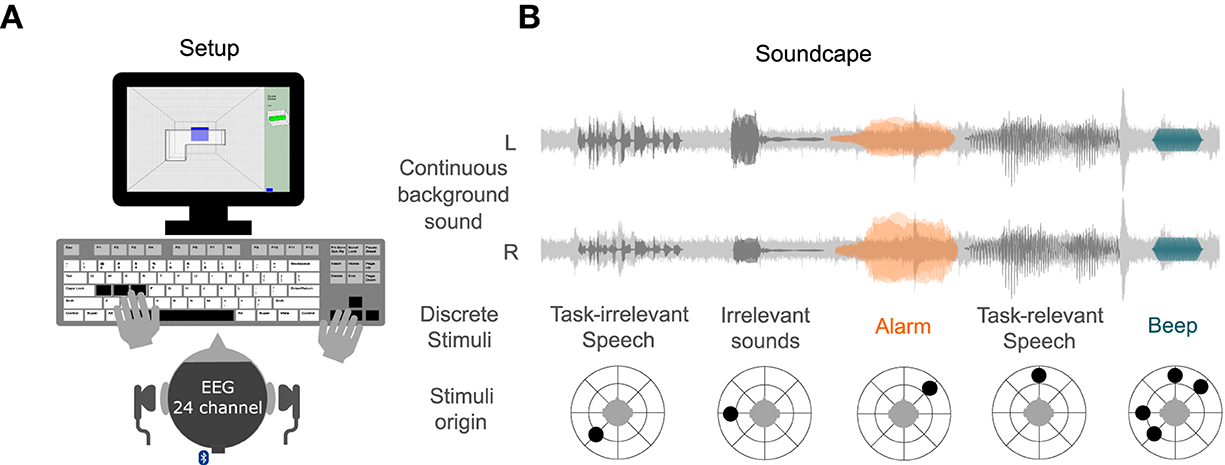

Figure 1. (A) Experimental setup: Participants played 3D Tetris (with their left hand, participants controlled the rotation of a block; with their right hand, its position). The soundscape was presented via headphones. EEG was recorded using a 24-channel mobile EEG setup. (B) Soundscape: A continuous background sound was presented throughout the task; in addition, discrete stimuli were presented. The alarm was the target in the narrow condition, while the beep was the target in the wide condition. The alarm was always presented from the same direction, while the beep could be presented from any of the directions of the other sounds. If participants detected a target, they were to press the space bar.

Furthermore, participants listened to a soundscape that included one continuous background sound and five discrete stimuli (see Figure 1B). The background sound consisted of hospital sounds, e.g., air conditioning and people moving around, and was presented from both sides. Task-irrelevant speech of two people talking in the background originated from behind the participant on the left (−135°). Two irrelevant sounds were presented from the left side (−90°). From time to time, participants also received instructions from the front (0°) on where to place the next block. Furthermore, an alarm was presented from the right (45°), and a beep could occur from the same directions as the other stimuli. All sounds were spatialized using head-related impulse responses (Kayser et al., 2009).
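Spatialization with head-related impulse responses (HRIRs) can be sketched as convolving each mono source with a left/right HRIR pair and summing the binaural results. This is a minimal sketch under stated assumptions. `spatialize` and `mix_scene` are hypothetical helpers. The toy HRIR (a pure interaural delay and level difference) stands in for measured responses such as those of Kayser et al. (2009), and loading those files is not shown.

```python
import numpy as np


def spatialize(mono: np.ndarray, hrir_left: np.ndarray, hrir_right: np.ndarray) -> np.ndarray:
    """Render a mono signal binaurally by convolving it with a left/right HRIR pair.

    Returns an array of shape (n_samples, 2) holding the left and right ear signals.
    """
    left = np.convolve(mono, hrir_left)
    right = np.convolve(mono, hrir_right)
    n = max(len(left), len(right))
    out = np.zeros((n, 2))
    out[: len(left), 0] = left
    out[: len(right), 1] = right
    return out


def mix_scene(sources: list) -> np.ndarray:
    """Sum binaural sources of unequal length into one scene, zero-padded to the longest."""
    n = max(s.shape[0] for s in sources)
    scene = np.zeros((n, 2))
    for s in sources:
        scene[: s.shape[0]] += s
    return scene


# Toy HRIR for a source on the left (hypothetical, NOT a measured HRIR):
# the right ear receives the sound ~0.6 ms later and at half the amplitude.
fs = 44100
itd_samples = int(round(0.0006 * fs))  # 26 samples
hrir_l = np.zeros(64)
hrir_l[0] = 1.0
hrir_r = np.zeros(64)
hrir_r[itd_samples] = 0.5

click = np.zeros(100)
click[0] = 1.0
binaural = spatialize(click, hrir_l, hrir_r)
scene = mix_scene([binaural, spatialize(np.ones(10), hrir_l, hrir_l)])
```

Because each source is convolved separately, every stream can receive its own direction before the streams are mixed into a single headphone signal.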

For the auditory task, participants had to attend to the task-relevant speech, which instructed them to place the next block in one of the four corners of the Tetris layer. Participants played the game twice (each game lasted approximately 18 min) and received a different instruction for each condition. Note that the soundscape was conceptually the same for both conditions. In the narrow condition, participants were instructed to additionally attend to the alarm, i.e., participants had to attend to the task-relevant speech and the alarm. The alarm was long, had a high intensity, and was always presented from the same direction; thus, it was not necessary to attend to the rest of the soundscape to detect it. In the wide condition, participants were instructed to attend to the beep, i.e., participants had to attend to the task-relevant speech and the beep. The beep was short, had a low intensity, and was integrated into other stimuli; thus, the whole soundscape had to be monitored to detect it. To summarize, the two conditions differed only in the instruction about which target to attend to. The targets of the narrow and wide conditions were the alarm and the beep, respectively. If participants detected a target, they were to press the space bar. Hitting a target, as well as following the speech instructions, earned points, while misses and not following instructions cost points.

All discrete auditory stimuli were initially presented 48 times in random order. However, when the beep was integrated into the alarm or the irrelevant sounds, the responses to these sounds overlapped. Therefore, all overlapping sounds were presented again to arrive at 48 non-overlapping presentations of each sound. Note that only responses to non-overlapping sounds were used in the ERP analyses.
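One way to identify overlapping presentations is to flag every event whose onset falls within a minimum gap of another event's onset. This is a minimal sketch, assuming onsets in seconds and a hypothetical 1-s minimum gap (matching the length of the ERP epochs in Section 2.4.1). The helper names are hypothetical, and the study's actual overlap criterion may differ.

```python
import numpy as np


def overlap_mask(onsets, min_gap=1.0):
    """Flag events whose onset lies within `min_gap` seconds of any other event onset."""
    onsets = np.asarray(onsets, dtype=float)
    gaps = np.abs(onsets[:, None] - onsets[None, :])  # pairwise onset distances
    np.fill_diagonal(gaps, np.inf)                     # ignore each event's distance to itself
    return (gaps < min_gap).any(axis=1)


def count_clean(onsets, labels, min_gap=1.0):
    """Count non-overlapping presentations per stimulus label."""
    clean = ~overlap_mask(onsets, min_gap)
    labels = np.asarray(labels)
    return {str(lab): int(np.sum(clean & (labels == lab))) for lab in np.unique(labels)}


onsets = [0.0, 0.5, 3.0, 10.0]
labels = ["beep", "alarm", "alarm", "beep"]
print(overlap_mask(onsets))         # [ True  True False False]
print(count_clean(onsets, labels))  # {'alarm': 1, 'beep': 1}
```

Counting clean presentations per label in this way shows how many extra presentations would be needed to reach 48 per sound.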

To get acquainted with the game, participants first received written instructions. Then, a general training without auditory stimuli and a training for the relevant speech instructions were performed. Before each condition, participants also performed a condition-specific training in which they received feedback on whether they correctly detected the target (see Supplementary material for a detailed description of the training games). EEG was not recorded during the training games. Before the game of each condition started, resting EEG was recorded by instructing participants to first focus on a fixation cross and then close their eyes, for 1 min each. Furthermore, two questionnaires were administered. At the beginning of the experiment, participants filled out a noise sensitivity questionnaire (NoiSeQ; Schutte et al., 2007; its results are not part of the current study). After each condition, they filled out a workload questionnaire (NASA-TLX; Hart and Staveland, 1988).

2.3. Data acquisition

Participants were asked to wash their hair on the day of recording. EEG data was recorded using a wireless 24-channel amplifier (SMARTING, mBrainTrain, Belgrade, Serbia) attached to the back of the EEG cap (EasyCap GmbH, Hersching, Germany) with Ag/AgCl passive electrodes (see Supplementary Figure S3 for the channel layout), with the reference and ground electrodes at positions Fz and AFz, respectively. The data was recorded at a sampling rate of 500 Hz and transmitted via Bluetooth from the amplifier to a Bluetooth dongle (BlueSoleil) that was plugged into a computer (Dell Optiplex 5070).

After fitting the cap, the skin was cleaned using 70% alcohol. To improve conductance between the scalp and the electrodes, abrasive gel (Abralyt HiCl, Easycap GmbH, Germany) was used. Impedances were kept below 20 kΩ at the beginning and checked again at the end of the recording using the SMARTING Streamer software (v3.4.3; mBrainTrain, Belgrade, Serbia). Recording took place in a quiet and electrically shielded room. Participants were seated in front of a screen (Samsung, SyncMaster P2470) and keyboard (Dell, KB 1421). Auditory stimuli were presented using Psychtoolbox 3 (v3.0.17, Kleiner et al., 2007). For each stimulus type, a sound marker was generated using the lab streaming layer library.¹ A key capture software² recorded which key was pressed on the keyboard. An audio capture software³ recorded the presented audio at a sampling rate of 44100 Hz; this recording served as input for the TRF analysis (see below). To synchronize all data streams, the transmitted EEG data, sound markers, keyboard markers, and computer audio were collected in the Lab Recorder software⁴, which is based on the Lab Streaming Layer, and saved as .xdf files. The same computer was used for data recording and experiment presentation.

2.4. Preprocessing

The EEG was analyzed using EEGLAB (v2021.0, Delorme and Makeig, 2004) in MATLAB R2020b (The MathWorks, Natick, MA, United States).

For each participant and condition, the continuous data was filtered with a Hamming-windowed FIR filter using the EEGLAB default settings: (1) high-pass: passband edge = 0.1 Hz⁵; (2) low-pass: passband edge = 30 Hz.⁶ These filter settings are recommended for ERP analyses (Luck, 2014) as well as TRF analyses (Crosse et al., 2021). The filtered data was resampled to 250 Hz. Channels were visually checked for flat lines and bad data quality (e.g., impedances above 20 kΩ). Bad channels were removed from both conditions. Afterwards, the data was cleaned of artifacts using infomax independent component analysis (ICA), and the rejected channels were interpolated.
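The filtering and resampling steps can be illustrated with a Hamming-windowed sinc FIR filter applied with delay compensation. This is a sketch under assumptions and not EEGLAB's `pop_eegfiltnew`. The cutoff (33.75 Hz) and transition width (7.5 Hz) used for the 30 Hz low-pass are assumed values. The helpers `hamming_sinc_kernel` and `zero_phase_filter` are hypothetical. Resampling is shown as simple decimation by 2, which is only safe because the signal has already been low-pass filtered well below the new Nyquist frequency of 125 Hz. For a 0.1 Hz high-pass, the same kernel function yields a very long filter (more than 16,000 taps at 500 Hz).

```python
import numpy as np


def hamming_sinc_kernel(fs, cutoff, transition_bw, highpass=False):
    """Hamming-windowed sinc FIR kernel with an odd number of taps.

    `cutoff` is the -6 dB point in Hz; the order follows the Hamming rule of thumb
    order ~= 3.3 / (transition_bw / fs).
    """
    order = int(np.ceil(3.3 * fs / transition_bw))
    order += order % 2                      # even order -> odd length -> integer group delay
    n = np.arange(order + 1) - order // 2   # sample indices centred on zero
    fc = cutoff / fs                        # normalised cutoff (cycles per sample)
    h = 2 * fc * np.sinc(2 * fc * n) * np.hamming(order + 1)
    h /= h.sum()                            # unity gain at 0 Hz for the low-pass
    if highpass:
        h = -h                              # spectral inversion: delta minus low-pass
        h[order // 2] += 1.0
    return h


def zero_phase_filter(x, h):
    """Apply a symmetric odd-length FIR kernel; mode='same' compensates its group delay."""
    if len(x) < len(h):
        raise ValueError("signal must be longer than the filter kernel")
    return np.convolve(x, h, mode="same")


fs = 500.0
t = np.arange(5000) / fs                                    # 10 s at 500 Hz
x = np.sin(2 * np.pi * 5 * t) + np.sin(2 * np.pi * 80 * t)  # 5 Hz "EEG" + 80 Hz noise
h_low = hamming_sinc_kernel(fs, cutoff=33.75, transition_bw=7.5)
y = zero_phase_filter(x, h_low)
y_250 = y[::2]  # keep every second sample: 500 Hz -> 250 Hz after the low-pass
```

Using an odd-length symmetric kernel with centred convolution keeps the filtered data aligned with the event markers, which matters for the latency-based ERP and TRF analyses.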

For the ICA, a copy of the preprocessed data was high-pass filtered (passband edge = 1 Hz⁷) and cut into consecutive 1-second (s) epochs. Epochs exceeding a global or local threshold of 2 standard deviations were automatically rejected. ICA decomposition was applied to the remaining epochs of both conditions. The resulting components were back-projected onto the original preprocessed, but uncleaned, data of each condition. Components related to eye blinks, eye movements, heartbeat, and muscle activity were identified and removed using the EEGLAB toolbox ICLabel (Pion-Tonachini et al., 2019) with a threshold of 0.9. On average, 2.8 (±1.32) components were rejected. Afterwards, previously rejected channels were interpolated using spherical interpolation. Then, channels were re-referenced to the linked mastoids (TP9/TP10). For all auditory stimuli, a constant delay of 19 milliseconds (ms) between the sound marker and the sound presentation was taken into account.
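The final two steps, re-referencing to linked mastoids and correcting for the marker-to-sound delay, reduce to simple arithmetic. The sketch below assumes the data are a channels × samples NumPy array sampled at 250 Hz. The function names are hypothetical. The sketch rounds the 19 ms delay (4.75 samples at 250 Hz) to the nearest sample, which may differ from how the original pipeline handled it.

```python
import numpy as np


def rereference_linked_mastoids(data, ch_names, left="TP9", right="TP10"):
    """Re-reference (n_channels, n_samples) data to the mean of two mastoid channels."""
    ref = 0.5 * (data[ch_names.index(left)] + data[ch_names.index(right)])
    return data - ref[np.newaxis, :]  # subtract the same reference from every channel


def onset_samples(marker_times_s, fs=250.0, delay_s=0.019):
    """Convert sound-marker times (s) to sample indices, shifted by the constant audio delay."""
    return np.round((np.asarray(marker_times_s, dtype=float) + delay_s) * fs).astype(int)


ch_names = ["Cz", "TP9", "TP10"]
data = np.array([[10.0, 10.0], [2.0, 2.0], [4.0, 4.0]])
print(rereference_linked_mastoids(data, ch_names))
print(onset_samples([1.0, 2.0]))  # [255 505]
```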

2.4.1. ERP analysis

ERP analyses were performed for the alarm, the beep, and the two irrelevant sounds. For each of the sounds, epochs from −200 to 800 ms relative to stimulus onset were generated, and a baseline correction from −200 to 0 ms prior to stimulus onset was performed. Epochs exceeding a global or local threshold of 3 standard deviations were automatically rejected. For targets (i.e., the alarm or beep), only hit trials were included in the analysis. A hit was defined as any space bar press within 3 s after target onset.
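Epoching with baseline correction and the hit definition can be sketched as follows. This is a minimal illustration with hypothetical helper names. It assumes data as a channels × samples array at 250 Hz, onsets given as sample indices far enough from the recording edges, and `tmin < 0`. The 3-SD epoch rejection is not shown.

```python
import numpy as np


def epoch_baseline(data, onsets, fs=250.0, tmin=-0.2, tmax=0.8):
    """Cut (n_channels, n_samples) data into (n_trials, n_channels, n_times) epochs
    and subtract the mean of the pre-stimulus interval [tmin, 0) from each epoch."""
    start = int(round(tmin * fs))  # -50 samples at 250 Hz
    stop = int(round(tmax * fs))   # 200 samples at 250 Hz
    epochs = np.stack([data[:, o + start : o + stop] for o in onsets])
    baseline = epochs[:, :, :-start].mean(axis=2, keepdims=True)
    times = np.arange(start, stop) / fs
    return epochs - baseline, times


def hit_mask(target_times, press_times, window=3.0):
    """A target counts as a hit if any space bar press occurs within `window` s after onset."""
    press = np.asarray(press_times, dtype=float)
    return np.array([np.any((press >= t) & (press <= t + window)) for t in target_times], dtype=bool)


rng = np.random.default_rng(1)
data = rng.standard_normal((2, 2000)) + 5.0  # constant offset that baseline correction removes
epochs, times = epoch_baseline(data, onsets=[300, 900, 1500])
hits = hit_mask([0.0, 10.0], [1.5, 14.0])    # first target hit, second missed
```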

We calculated ERP amplitudes as averages over individual time-windows. Our ERP analyses focused on the N1 and P3 components. The analyses of the two components were identical, except for the selection of channels and time-window. For each participant, an average response was calculated across the two conditions and the selected channels. The N1 is typically associated with a negative frontal deflection around 100 ms after stimulus presentation (Näätänen and Picton, 1987), and the P3 with a positive parietal deflection around 300 ms after stimulus presentation (Polich, 2007). For the N1, we selected channels Fz, FC1, FC2, Cz, C3, and C4; for the P3, we selected channels Pz, P3, P4, CPz, CP1, and CP2 (see Supplementary Figure S3). This average response was used to find each participant's component peaks. For the N1, we searched for a negative deflection between 50 and 150 ms after stimulus onset. For the P3, we searched for a positive deflection between 300 and 400 ms after stimulus onset. Following peak detection, the component time-window was set to ±25 ms around the N1 peak and ±50 ms around the P3 peak. Lastly, to arrive at trial-level data, the mean amplitude over the individual time-window was calculated for each participant, condition, selected channel, and trial.
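The peak-based windowing can be sketched as follows. It assumes that "searching for a deflection" means taking the most negative (N1) or most positive (P3) sample of the averaged ERP within the search range. The helper names and the synthetic data are hypothetical.

```python
import numpy as np


def individual_window(avg_erp, times, search, half_width, polarity):
    """Locate a participant's peak within `search` (s) and return the window of ±half_width around it.

    avg_erp: condition- and channel-averaged ERP (1-D); polarity: -1 for the N1, +1 for the P3.
    """
    in_search = (times >= search[0]) & (times <= search[1])
    seg_times = times[in_search]
    # most negative (N1) or most positive (P3) sample within the search range
    peak_t = seg_times[np.argmax(polarity * avg_erp[in_search])]
    return peak_t - half_width, peak_t + half_width


def trial_mean_amplitude(trials, times, window):
    """trials: (n_trials, n_times) for one channel -> mean amplitude per trial within `window`."""
    in_win = (times >= window[0]) & (times <= window[1])
    return trials[:, in_win].mean(axis=1)


times = np.arange(-50, 200) / 250.0
avg = -np.exp(-(((times - 0.1) / 0.02) ** 2))  # synthetic N1 peaking at 100 ms
n1_win = individual_window(avg, times, search=(0.05, 0.15), half_width=0.025, polarity=-1)
trials = np.tile(avg, (4, 1))                   # four identical synthetic trials
amps = trial_mean_amplitude(trials, times, n1_win)
```

The window is found once on the condition-averaged response and then applied identically to every trial of both conditions, so the window choice is independent of the condition contrast.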

2.4.2. TRF analysis

For the analysis of the soundscape as a whole, the mTRF toolbox (Crosse et al., 2016) was used. To this end, the recorded audio was preprocessed as follows. First, the absolute value of the Hilbert transform of the audio (i.e., its envelope) was low-pass filtered at 30 Hz⁶ and resampled to 250 Hz. Second, because we were interested in the response to the whole soundscape irrespective of the targets, the alarm and beep were excluded from the EEG and audio data of both conditions. For each alarm and beep, the data from its onset up to 1 s after onset were excluded, creating epochs of unequal length. Because the total length of data differed between conditions, we excluded epochs until the difference in total length between conditions was <1 min for each participant. Third, the EEG data was multiplied by a factor of 0.0313 for normalization (as suggested in the code provided by Crosse et al., 2016). Finally, a forward model was trained on the epoched EEG and audio data using the function mTRFtrain, with time lags from −200 to 800 ms and a regularization parameter (λ) of 0.1.
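The envelope extraction and the forward model can be sketched in NumPy. This is a simplified stand-in and not a re-implementation of `mTRFtrain`. It uses plain ridge regression, w = (XᵀX + λI)⁻¹Xᵀy, pooled over epochs of unequal length. It omits the bias term and other options of the mTRF toolbox, and it skips the 30 Hz low-pass and the resampling from 44100 Hz to 250 Hz. All function names are hypothetical, and λ = 0.1 is only meaningful relative to the data scaling described above.

```python
import numpy as np


def envelope(audio):
    """Magnitude of the analytic signal (FFT-based Hilbert transform), i.e. the amplitude envelope."""
    n = len(audio)
    gain = np.zeros(n)
    gain[0] = 1.0
    if n % 2 == 0:
        gain[n // 2] = 1.0
        gain[1 : n // 2] = 2.0
    else:
        gain[1 : (n + 1) // 2] = 2.0
    return np.abs(np.fft.ifft(np.fft.fft(audio) * gain))


def lag_matrix(stim, lags):
    """Design matrix whose column k holds `stim` delayed by lags[k] samples (zero-padded)."""
    n = len(stim)
    X = np.zeros((n, len(lags)))
    for k, lag in enumerate(lags):
        if lag >= 0:
            X[lag:, k] = stim[: n - lag]
        else:
            X[: n + lag, k] = stim[-lag:]
    return X


def train_forward_trf(stims, eegs, fs, tmin=-0.2, tmax=0.8, lam=0.1):
    """Ridge-regression forward TRF pooled over epochs of unequal length.

    stims: list of 1-D envelopes; eegs: list of (n_samples, n_channels) arrays.
    Returns lag times (s) and weights of shape (n_lags, n_channels).
    """
    lags = np.arange(int(round(tmin * fs)), int(round(tmax * fs)) + 1)
    XtX = np.zeros((len(lags), len(lags)))
    Xty = np.zeros((len(lags), eegs[0].shape[1]))
    for stim, eeg in zip(stims, eegs):
        X = lag_matrix(stim, lags)
        XtX += X.T @ X
        Xty += X.T @ eeg
    w = np.linalg.solve(XtX + lam * np.eye(len(lags)), Xty)
    return lags / fs, w


# Envelope of an amplitude-modulated tone recovers the modulator.
t_a = np.arange(1000) / 1000.0
mod = 1 + 0.5 * np.sin(2 * np.pi * 2 * t_a)
x_am = mod * np.sin(2 * np.pi * 100 * t_a)
env = envelope(x_am)

# Synthetic "EEG": a known response at +100 ms and +200 ms, two epochs of unequal length.
fs = 250.0
rng = np.random.default_rng(0)
lags = np.arange(-50, 201)
true_w = np.zeros((len(lags), 1))
true_w[lags == 25] = 1.0
true_w[lags == 50] = -0.5
stims = [rng.standard_normal(3000), rng.standard_normal(2200)]
eegs = [lag_matrix(s, lags) @ true_w for s in stims]
times, w = train_forward_trf(stims, eegs, fs)
```

Pooling XᵀX and Xᵀy across epochs, rather than concatenating the epochs, avoids creating artificial lagged samples that span the gaps where target sounds were cut out.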

The TRF usually reveals classic ERP peaks known from the auditory processing literature (Crosse et al., 2016; Mirkovic et al., 2019; Jaeger et al., 2020). Based on pilot data from three participants (not included in the final analyses), we expected these peaks at approximately 100, 200, and 300 ms time lag.

We verified these condition-independent peaks using a permutation-based approach implemented with the Mass Univariate ERP Toolbox (Groppe et al., 2011). First, the TRF of each participant, condition, and channel was baseline corrected within the function sets2GND using time lags from −100 to 0 ms. Second, two-sided t-values were calculated and corrected for multiple comparisons within the function tmaxGND using a time-window from 0 to 450 ms time lag. Finally, a time-window was considered significant when its t-values exceeded the significance threshold (p < 0.05).
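The logic of the tmax correction can be sketched as a sign-flip permutation test. Under the null hypothesis that the TRF is zero, each participant's TRF may be multiplied by −1. The maximum absolute t-value across all channels and time lags of each permutation then forms the null distribution. This is a simplified sketch and not the Mass Univariate ERP Toolbox code. The function names, the number of permutations, and the synthetic data are assumptions.

```python
import numpy as np


def one_sample_t(x):
    """t-values against zero along the first (participant) axis."""
    n = x.shape[0]
    return x.mean(axis=0) / (x.std(axis=0, ddof=1) / np.sqrt(n))


def tmax_permutation(data, n_perm=1000, alpha=0.05, seed=0):
    """Sign-flip permutation test with tmax correction.

    data: (n_participants, n_channels, n_times), e.g. baseline-corrected TRFs limited to 0-450 ms.
    Returns observed t-values, the critical |t|, and a boolean mask of significant points.
    """
    rng = np.random.default_rng(seed)
    n = data.shape[0]
    t_obs = one_sample_t(data)
    max_abs_t = np.empty(n_perm)
    for i in range(n_perm):
        signs = rng.choice([-1.0, 1.0], size=n)[:, None, None]  # flip each participant's sign
        max_abs_t[i] = np.abs(one_sample_t(data * signs)).max()  # max over all channels and lags
    t_crit = np.quantile(max_abs_t, 1 - alpha)                   # two-sided, family-wise threshold
    return t_obs, t_crit, np.abs(t_obs) > t_crit


rng = np.random.default_rng(42)
data = rng.standard_normal((20, 3, 50))
data[:, :, 20:30] += 2.0  # synthetic effect at time points 20-29
t_obs, t_crit, sig = tmax_permutation(data)
```

Taking the maximum over all channels and lags in each permutation is what controls the family-wise error rate across the whole 0 to 450 ms search space.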

Within the significant time-windows, we determined individual TRF peaks. For this, we first calculated the standard deviation over channels to derive the global field power (GFP) of the TRF. The GFP indicates the magnitude of a signal across channels; it thereby accounts for individual differences in spatial distribution and avoids channel selection (Murray et al., 2008). The resulting GFPs of the two conditions were averaged. Next, for each participant, we searched for the maximum of this condition-averaged GFP in each significant time-window. We then calculated the full width at half maximum around this peak to determine individual time-windows. Finally, we averaged the GFP of each condition over the individual time-windows. This resulted in an average GFP value for each participant, condition, and significant time-window.
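The GFP and the full-width-at-half-maximum window can be computed as shown below. This is a minimal sketch. It assumes that the half maximum is taken relative to zero (GFP is non-negative) and that the window may extend beyond the significant time-window. The helper names and synthetic GFP curves are hypothetical.

```python
import numpy as np


def gfp(trf):
    """Global field power: standard deviation across channels at each time lag.

    trf: (n_lags, n_channels) -> (n_lags,)
    """
    return trf.std(axis=1)


def fwhm_window(g, times, search):
    """Peak of `g` within `search` (s), then extend left/right while g stays >= half the peak."""
    idx = np.flatnonzero((times >= search[0]) & (times <= search[1]))
    peak = idx[np.argmax(g[idx])]
    half = g[peak] / 2.0
    lo, hi = peak, peak
    while lo > 0 and g[lo - 1] >= half:
        lo -= 1
    while hi < len(g) - 1 and g[hi + 1] >= half:
        hi += 1
    return times[lo], times[hi]


def mean_in_window(g, times, window):
    """Average of `g` over the samples inside `window` (s)."""
    in_win = (times >= window[0]) & (times <= window[1])
    return g[in_win].mean()


times = np.arange(0, 113) / 250.0
g_narrow = np.exp(-0.5 * ((times - 0.152) / 0.02) ** 2)  # synthetic GFP peaking at 152 ms
g_wide = 1.2 * g_narrow
win = fwhm_window((g_narrow + g_wide) / 2, times, search=(0.096, 0.192))
values = mean_in_window(g_narrow, times, win), mean_in_window(g_wide, times, win)
```

Because the window is derived from the condition-averaged GFP, both conditions are averaged over the same interval, and the comparison is not biased toward either condition.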

2.5. Statistics

2.5.1. Preregistered analyses

Condition differences in the responses to the auditory task were analyzed using linear mixed models (LMMs). The analyses were performed in RStudio (version 2021.09.0) using the R package lme4 (version 1.1-23). For all analyses, a categorical fixed factor 'condition' with two levels was used, i.e., narrow and wide, which were coded 0 and 1, respectively.

For the ERP analysis, the response amplitude was predicted for each trial. Participant and channel were included as random factors (Volpert-Esmond et al., 2021):

AMP ~ condition + (1|participant) + (1|channel)

For the ERP model of the beep, we encountered singularity issues. The random factor for channel showed a variance of 0, indicating over-specification of this random factor (Volpert-Esmond et al., 2021). We therefore excluded this factor when computing the model for the beep.

For the TRF analysis, the time-averaged GFP of the individual peaks was predicted. Participant was included as a random factor:

GFP ~ condition + (1|participant)

LMMs allow the investigation of the random factors participant and channel. For this, we used the intraclass correlation coefficient (ICC), which represents the proportion of variance in the predicted variable that is explained by a random factor (Lorah, 2018; Volpert-Esmond et al., 2021). Variances for each factor were calculated using intercept-only models for the analysis of ERPs (AMP ~ 1 + (1|participant) + (1|channel)) and TRFs (GFP ~ 1 + (1|participant)). ICCs were calculated by dividing the variance of participant or channel by the total variance.
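The ICC is a ratio of variance components. The sketch below assumes that the variance estimates have already been extracted from the fitted intercept-only models (e.g., with lme4's `VarCorr` in R). It also assumes that the total variance is the sum of the random-factor variances and the residual variance. The function name is hypothetical, and the numbers are made up for illustration.

```python
def icc(variances):
    """Share of the total variance attributable to each random factor.

    variances: dict of variance components from an intercept-only model,
    including the residual variance under the key 'residual'.
    """
    total = sum(variances.values())
    return {name: v / total for name, v in variances.items() if name != "residual"}


# Illustrative variance components (not values from this study)
print(icc({"participant": 2.0, "channel": 0.5, "residual": 7.5}))
# {'participant': 0.2, 'channel': 0.05}
```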

Fixed effects were evaluated using Satterthwaite approximations within the R package lmerTest, which estimates the degrees of freedom to calculate two-tailed p-values. Evidence for an effect was assumed for p-values below 0.05. We also report standard errors (SE) and 95% confidence intervals (CI).

2.5.2. Exploratory analyses

For a better understanding of performance on the Tetris and auditory tasks, we explored the results of the NASA-TLX and several behavioral measures. The NASA-TLX was used to investigate differences in perceived workload between conditions. The questionnaire has six subscales with scores ranging from 0 to 20; a high score is associated with high workload. We summed the scores of all subscales to obtain one workload score per condition and participant (i.e., total scores can range from 0 to 120; Hart and Staveland, 1988). We checked performance on the Tetris task by comparing the number of completed layers. We also checked performance on the speech instruction task by comparing the number of instructions that were correctly followed. Workload and behavioral scores were compared between conditions by computing an LMM for each score (i.e., workload, completed layers, followed instructions) with condition as a fixed factor and participant as a random factor (Score ~ condition + (1|participant)).

We further explored reaction time and hit rate in response to the targets, i.e., the alarm in the narrow condition and the beep in the wide condition. For this, we used all trial-level responses, including those that were not considered in the ERP analyses.

Reaction times were compared between conditions using a generalized linear mixed model (GLMM) with an inverse Gaussian distribution to account for the positive skew of reaction time data (Reactiontime ~ condition + (condition|participant)).

Hit rate followed a binomial distribution, as hits and misses were coded one and zero, respectively. Differences between conditions were therefore compared using a GLMM with a binomial distribution and logit link function (Hitrate ~ condition + (condition|participant)).

The statistical significance of differences in reaction times or hit rate between the alarm in the narrow and the beep in the wide condition was evaluated using the Wald Chi-square test.
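For a single coefficient, the Wald chi-square statistic is the squared ratio of the estimate to its standard error, compared against a chi-square distribution with one degree of freedom. This is a minimal sketch and not the R routine used in the study; the function name is hypothetical.

```python
import math


def wald_chi2(b, se):
    """Wald chi-square test (1 df) for a single fixed-effect estimate b with standard error se."""
    chi2 = (b / se) ** 2
    p = math.erfc(math.sqrt(chi2 / 2.0))  # P(chi2_1 > x) = P(|Z| > sqrt(x))
    return chi2, p


chi2, p = wald_chi2(-2.478, 0.344)  # hit-rate estimate reported in Section 3.1
```

With the reported hit-rate estimate (b = −2.478, SE = 0.344), this gives χ² ≈ 51.9 and a p-value far below 0.001, consistent with the result in Section 3.1.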

3. Results

3.1. Behavioral results

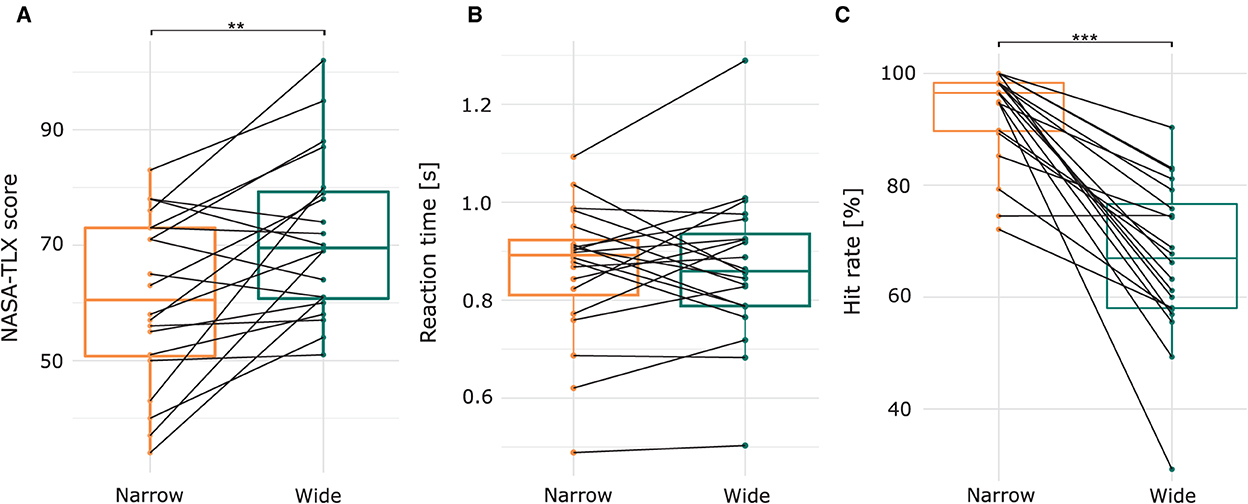

Figure 2A shows the subjective workload of each participant and condition. The average rating in the narrow condition was 60.6, which significantly increased in the wide condition to 71.45 (b = 10.85; SE = 3.22; p = 0.0016; CI = [4.92, 16.78]). There were no differences between conditions in the number of completed layers (Supplementary Figure S4A; b = −1.37; SE = 1.09; p = 0.222; CI = [−3.55, 0.797]) or followed speech instructions (Supplementary Figure S4B; b = −0.77; SE = 0.92; p = 0.458; CI = [−2.55, 1.15]). Figures 2B, C show the performance on the auditory task, i.e., reaction time and hit rate, in response to targets. Here, the response to the alarm in the narrow condition is compared to the response to the beep in the wide condition. Estimated mean reaction times were 0.814 s in response to the alarm in the narrow condition (b = 1.22; SE = 0.064) and 0.81 s in response to the beep in the wide condition. Reaction times did not differ between the two targets (Figure 2B; b = −0.008; SE = 0.045; p = 0.869). However, the estimated probability of hitting a target was 96.2% in the narrow condition and 68% in the wide condition. The beep was detected significantly less often than the alarm (Figure 2C; b = −2.478; SE = 0.344; p < 0.001).

Figure 2. (A) Subjective workload scores measured by the NASA-TLX. (B) Trial-averaged reaction time and (C) trial-averaged hit rate in response to the targets of the respective condition, i.e., the alarm and the beep in the narrow and wide condition, respectively. Each black line represents one subject. **p < 0.01 and ***p < 0.001.

3.2. Event-related potentials

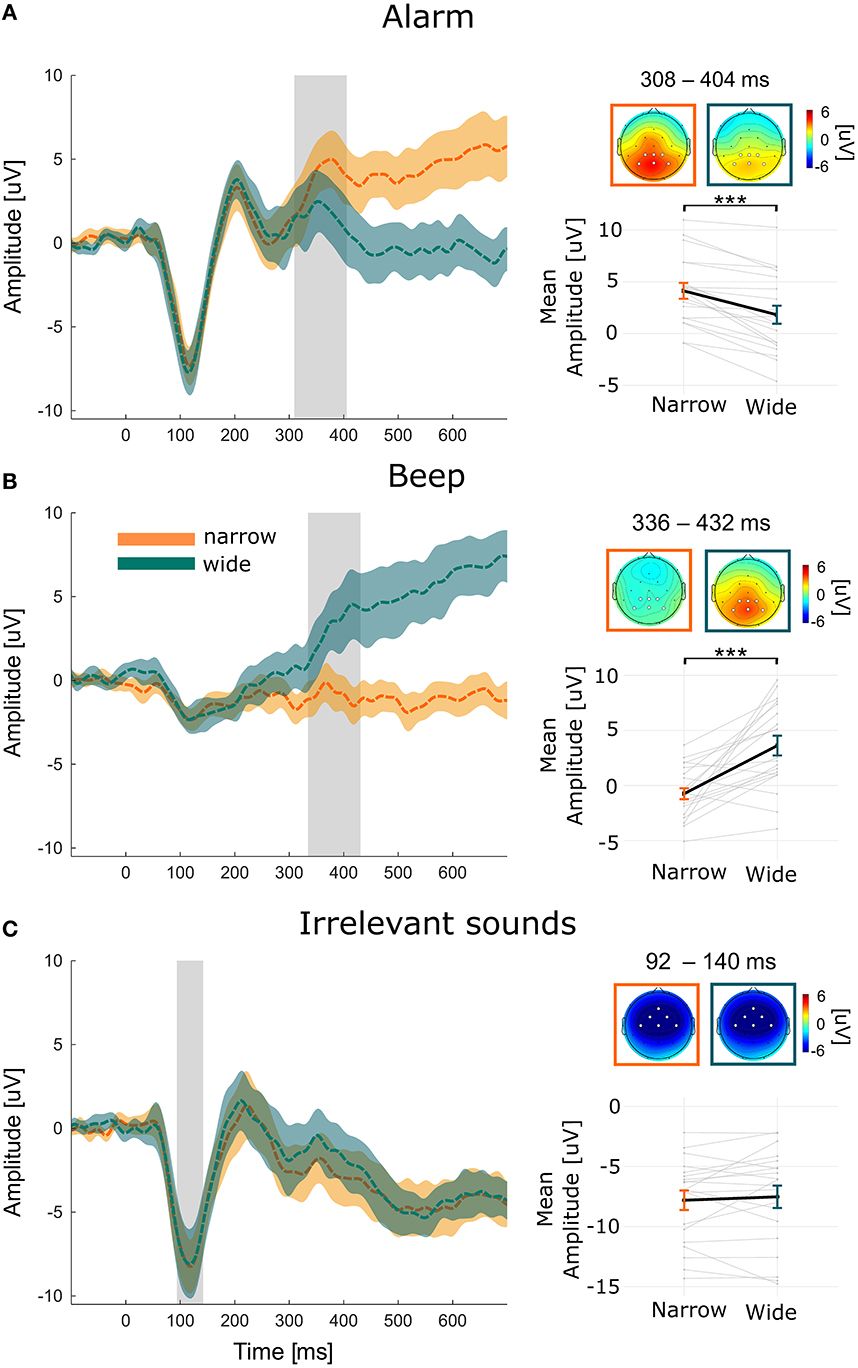

We investigated ERPs in response to task-relevant and task-irrelevant sounds. The alarm was relevant in the narrow condition and the beep in the wide condition. For these sounds, we investigated the P3 and expected larger P3 amplitudes when they were targets than when they were non-targets. The irrelevant sounds were to be ignored in both conditions. For these, we investigated the N1 and expected a larger (i.e., more negative) amplitude in the wide compared to the narrow condition.

3.2.1. The alarm

Figure 3A shows the grand average ERP (i.e., averaged over participants and selected channels) in response to the alarm in the two conditions. We see a clear N1 peak around 100 ms, a P2 peak around 200 ms, and a P3 that starts around 300 ms. The topography of the narrow condition shows a typical parietal P3 distribution (Polich, 2007). The mean amplitude of the P3 to the alarm in the narrow condition was 4.2 μV, with a significant mean amplitude decrease in the wide condition of −2.3 μV (SE = 0.53; CI = [−3.39, −1.27]; p < 0.001).

Figure 3. ERPs of the (A) alarm, (B) beep, and (C) irrelevant sounds for each condition, averaged over participants and selected channels. The selected channels are marked in white in the topographies. The narrow and wide conditions are marked in orange and green, respectively. Color shades indicate the 95% confidence interval. The gray area indicates the average time-window, and the topographies show the amplitudes averaged over this time-window. Note that individual time-windows were used for the statistical comparison. Below the topographies, the fixed effects (thick black lines) and the variability of the effect between individuals (i.e., each gray line corresponds to one participant) are displayed. ***p < 0.001.

Computing the ICC showed that the variance between participants and between channels accounted for 12.2% and 0.1% of the total variance, respectively (see Supplementary Table 2 for the results of the random-effect models).
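For a model with crossed random intercepts for participant and channel, the ICC of a component is its variance divided by the sum of all variance components including the residual, e.g., ICC_participant = σ²_participant / (σ²_participant + σ²_channel + σ²_residual). The sketch below turns variance estimates into such percentages under that assumption. The variance values are made up so that the shares reproduce the 12.2% and 0.1% reported above; they are not the fitted values from Supplementary Table 2.

```python
def variance_shares(variances):
    """Percentage of the total variance attributable to each component.

    variances: dict mapping a component name (random intercepts and the
    residual) to its estimated variance. The share of a random intercept
    is its ICC, e.g. var_participant / (var_participant + var_channel + var_residual).
    """
    total = sum(variances.values())
    return {name: 100.0 * v / total for name, v in variances.items()}

# Made-up variance components in μV^2, for illustration only.
shares = variance_shares({"participant": 1.22, "channel": 0.01, "residual": 8.77})
print({name: round(pct, 1) for name, pct in shares.items()})
# {'participant': 12.2, 'channel': 0.1, 'residual': 87.7}
```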

3.2.2. The beep

Figure 3B shows the grand average ERP in response to the beep. For the beep, there is neither a clear N1 nor a clear P2 peak, but there is a P3 that starts around 300 ms. The topography also reflects a P3 to the beep in the wide condition. The mean P3 amplitude for the beep was −0.609 μV in the narrow condition and increased significantly in the wide condition (difference: 4.1 μV; SE = 0.92; CI = [2.58, 6.16]; p < 0.001). The ICC showed that the variance between participants and between channels accounted for 6% and 0% of the total variance, respectively.

3.2.3. Irrelevant sounds

Figure 3C shows the grand average ERP in response to the irrelevant sounds. There is a clear N1 peak around 100 ms and a P2 peak around 200 ms. The mean N1 amplitude for the irrelevant sounds was −7.81 μV in the narrow condition and did not differ significantly in the wide condition (b = −0.28; SE = 0.48; CI = [−1.02, 0.87]; p = 0.558). The topography reflects a frontal N1 in both conditions. The ICC showed that the variance between participants and between channels accounted for 14.8% and 0.2% of the total variance, respectively.

3.2.4. Summary of ERP results

We found evidence for two of our three hypotheses. The alarm and the beep each elicited a P3 when they were the target, which shows that participants were able to detect these sounds. The P3 subsides after approximately 1.5 s (see Supplementary Figure S5). The N1 to the irrelevant sounds did not differ between conditions. In the following, we examine the processing of the soundscape as a whole.

3.3. Processing of the soundscape as a whole

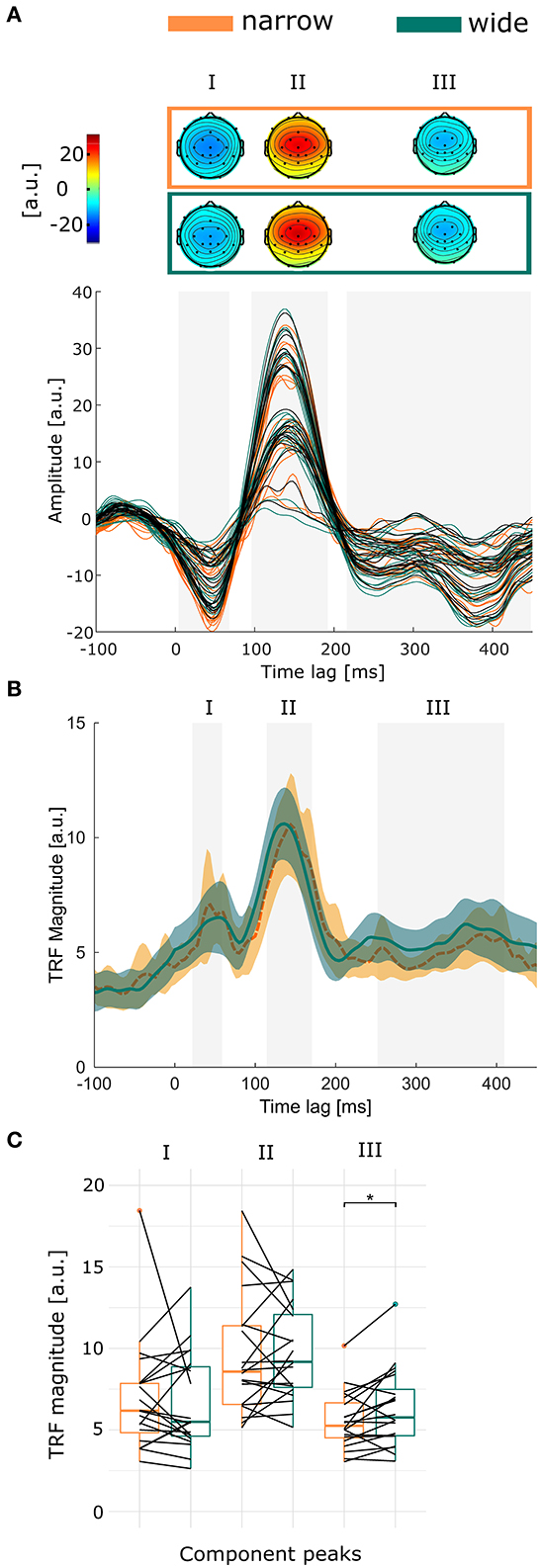

Figure 4A shows the condition-specific TRFs (colored) and the condition-independent TRF (black). Both show a typical TRF shape (Crosse et al., 2016). The gray areas indicate the three time-windows (time lags of 0–68 ms, 96–192 ms, and 216–448 ms) in which the TRF differed significantly from zero. The topographies show TRF values averaged over each significant time-window. In all time-windows, the largest values were found over fronto-central channels. Values were negative in the first and last time-windows and positive in the second time-window. Figure 4B shows the grand average GFP over all participants. Within each significant time-window, we determined an individual GFP time-window for each participant and calculated the average amplitude over this individual time-window. The results are shown in Figure 4C. In the third time-window, the individual GFP averaged 5.57 in the narrow condition and increased significantly to 6.43 in the wide condition (b = 0.77; SE = 0.3; CI = [0.15, 1.38]; p = 0.0211). We found no significant differences in the first (b = −0.26; SE = 0.68; CI = [−1.63, 1.1]; p = 0.705) or second (b = 0.11; SE = 0.59; CI = [−1.07, 1.3]; p = 0.853) time-window.
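A forward TRF maps a stimulus feature onto each EEG channel through a set of time-lagged weights. The sketch below estimates such weights with ridge regression on a lagged design matrix, in the spirit of the mTRF approach (Crosse et al., 2016). It is a simplified stand-in, not the mTRF toolbox itself (no bias term, no normalization of the ridge parameter, no cross-validation), and the stimulus feature, sampling rate, lag range, and regularization value `lam` are assumptions. The synthetic example plants a positive peak at 100 ms and a negative peak at 200 ms and checks that both are recovered.

```python
import numpy as np

def lag_matrix(stim, lags):
    """Design matrix whose column j is the stimulus delayed by lags[j] samples (zero-padded)."""
    n = len(stim)
    X = np.zeros((n, len(lags)))
    for j, lag in enumerate(lags):
        if lag >= 0:
            X[lag:, j] = stim[:n - lag]
        else:
            X[:n + lag, j] = stim[-lag:]
    return X

def fit_trf(stim, eeg, fs, tmin, tmax, lam):
    """Forward TRF by ridge regression.

    stim: 1-D stimulus feature; eeg: samples x channels.
    Returns the time lags (s) and the weights (lags x channels).
    """
    lags = np.arange(int(round(tmin * fs)), int(round(tmax * fs)) + 1)
    X = lag_matrix(stim, lags)
    w = np.linalg.solve(X.T @ X + lam * np.eye(len(lags)), X.T @ eeg)
    return lags / fs, w

# Synthetic check: white-noise "stimulus", positive peak at 100 ms, negative at 200 ms.
rng = np.random.default_rng(0)
fs = 100
stim = rng.standard_normal(6000)
true_w = np.zeros(31)
true_w[10], true_w[20] = 1.0, -0.5
eeg = lag_matrix(stim, np.arange(31)) @ true_w[:, None]
eeg += 0.5 * rng.standard_normal(eeg.shape)
times, w = fit_trf(stim, eeg, fs, tmin=0.0, tmax=0.3, lam=1.0)
print(times[np.argmax(w[:, 0])], times[np.argmin(w[:, 0])])  # 0.1 0.2
```

In practice, the ridge parameter would be chosen by cross-validation rather than fixed, and a real soundscape feature (e.g., an envelope) would replace the white noise.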

Figure 4. (A) The topographies show the significant time-windows averaged over time. Below are the TRFs for each channel, averaged over participants. Orange and green mark the narrow and wide conditions, respectively. Black marks the TRF calculated over both conditions. The significant time-windows are marked in gray. (B) The GFP of the TRF for each condition, averaged over participants. Color shades mark the 95% confidence interval. For each participant, an individual time-window within each significant time-window was calculated. The gray area marks the average individual time-window. (C) Boxplots show the condition differences in the individual time-window for each significant time-window. Each line represents one participant. *p < 0.05.

The ICC of the third time-window showed that the variance between participants accounted for 73.3% of the total variance, indicating a large between-person variance. Note that the higher between-person variance for the TRF than for the ERPs results from using averaged rather than trial-level data.

One participant showed extremely high standard deviations across all channels (see Supplementary Figure S9). We therefore excluded this participant and repeated the analyses; this did not change the results (first: b = 0.28; SE = 0.43; CI = [−0.58, 1.15]; p = 0.5174; second: b = 0.44; SE = 0.52; CI = [−0.61, 1.48]; p = 0.414; third: b = 0.84; SE = 0.31; CI = [0.22, 1.47]; p = 0.014).

Figure 4B and Supplementary Figure S9 suggest that the time-windows varied considerably between participants, especially for the last time-window. Therefore, we re-analyzed the data by averaging over the significant group-level time-windows shown in Figure 4A. Using the same time-window for every participant yielded the same pattern of results (first: b = −0.03; SE = 0.46; CI = [−0.96, 0.9]; p = 0.95; second: b = 0.13; SE = 0.48; CI = [−0.84, 1.1]; p = 0.791; third: b = 0.628; SE = 0.26; CI = [0.106, 1.15]; p = 0.026).
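GFP is the spatial standard deviation across channels at each time lag (Murray et al., 2008). The sketch below computes it for one participant's TRF and contrasts the two averaging schemes discussed above: a fixed group-level window versus an individual window. The rule for the individual window (±`half_width` around the participant's GFP peak inside the group window) is a hypothetical placeholder, since this section does not specify how individual windows were chosen, and the synthetic TRF is made up.

```python
import numpy as np

def gfp(trf):
    """Global field power: spatial standard deviation across channels at each lag.

    trf: lags x channels array (e.g., one participant's TRF weights).
    """
    return trf.std(axis=1)

def window_mean(x, times, t0, t1):
    """Mean of x over all lags with t0 <= time <= t1."""
    mask = (times >= t0) & (times <= t1)
    return x[mask].mean()

def individual_window(x, times, t0, t1, half_width):
    """Hypothetical rule: +/- half_width around the peak of x inside [t0, t1]."""
    mask = (times >= t0) & (times <= t1)
    peak = times[mask][np.argmax(x[mask])]
    return peak - half_width, peak + half_width

# Synthetic TRF: two channels with opposite polarity, GFP peaking at 300 ms.
times = np.arange(51) / 100  # 0 to 500 ms in 10 ms steps
g = np.exp(-((times - 0.3) / 0.05) ** 2)
trf = np.column_stack([g, -g])
power = gfp(trf)
fixed = window_mean(power, times, 0.216, 0.448)  # group-level third window
lo, hi = individual_window(power, times, 0.216, 0.448, half_width=0.05)
individual = window_mean(power, times, lo, hi)
print(round(lo, 2), round(hi, 2), individual > fixed)  # 0.25 0.35 True
```

A window centered on each participant's peak yields a higher mean than a wide fixed window when peak latencies vary, which is why the two schemes can give different effect sizes while pointing in the same direction.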

4. Discussion

We investigated auditory attention using a mobile EEG setup while participants completed a complex audio-visual-motor task with a rich soundscape. We manipulated auditory attention while keeping the complexity of the soundscape constant. In both conditions, participants attended to a target. In one condition, this target was a clearly audible alarm originating from a single direction, which required a narrow attentional focus. In the other condition, this target was a beep originating from different directions, which required attention to the whole soundscape, i.e., a wide attentional focus.

Behaviorally, we found that the sound that was assumed to be more difficult to detect (i.e., the beep) was indeed detected less often than the sound that was assumed to be easy to detect (i.e., the alarm). This was also reflected in perceived workload, which was higher in the wide than in the narrow condition. In contrast, performance in the Tetris task and in following the speech instructions was similar across conditions. It appears that a trade-off between the Tetris task and the auditory task occurred: when the auditory task was too difficult, participants concentrated on the Tetris task and the speech instructions instead.

Regarding the ERPs, we found a larger P3 when a sound was a target than when the same sound was not a target, i.e., the response was larger for the alarm in the narrow than in the wide condition and for the beep in the wide than in the narrow condition. This difference emerged around 300 ms after stimulus onset. Contrary to our expectation, we did not find a clear difference in the N1 to stimuli that were irrelevant in both conditions. We also found that the TRF, quantified as its GFP, was larger in the last time-window in the wide than in the narrow condition.

4.1. Processing of relevant stimuli

We observed a larger P3 for target than for non-target stimuli, which indicates that participants were generally performing the auditory task. The P3 is related to attentional processes and has two subcomponents, the P3a and the P3b (Polich, 2007). The P3a is typically associated with an attention switch to novel or salient stimuli and shows a central topography, whereas the P3b is typically elicited by task-relevant stimuli and shows a parietal topography (Polich, 2007; Luck, 2014). In this study, we focused on the P3b, as attention to different task-relevant sounds (i.e., the alarm or the beep) should lead to a narrow or wide attentional focus. Our findings show the robustness of the P3b even in a complex task with visual input, auditory instructions, and motor responses. They are thus in line with beyond-the-lab studies that showed the P3b during walking (Debener et al., 2012), biking (Scanlon et al., 2019), driving (Protzak and Gramann, 2018), and office work (Hölle et al., 2021).

Importantly, the P3b morphology was comparable between conditions (also in the individual participant data; see Supplementary Figures S6 and S7), even though the targets differed in their characteristics. The alarm was louder than the beep, always came from the same direction, and was the only sound coming from that direction. The beep originated from different directions and was embedded in the other sound streams. The behavioral results show that the alarm was easier to detect than the beep, whereas reaction times to detected sounds did not differ significantly. This shows that a sound that is hard to detect, i.e., acoustically not salient, can elicit a clear attentional response if it is task-relevant.

4.2. Processing of irrelevant stimuli

Regarding the irrelevant sounds, we did not find a difference between conditions in the N1. We expected that attention to the beep (i.e., the wide condition) would draw attention to the whole soundscape and in turn also lead to a stronger processing of the irrelevant sounds. This manipulation was apparently not strong enough to produce a difference in the N1 component.

Nevertheless, we can draw a conclusion from the observed ERP morphology. The alarm and the irrelevant sounds elicited an N1 with a clear peak and a strong deflection (~7–8 μV), whereas the beep elicited a smaller (~2–3 μV) and temporally smeared N1. Because the N1 is sensitive to sound intensity (Näätänen, 1982; Näätänen and Picton, 1987), we interpret the clear peak for the alarm and the irrelevant sounds as an indication that these sounds were more easily detectable (i.e., acoustically more salient) than the beep. Furthermore, early auditory responses indicate awareness of a stimulus (Schlossmacher et al., 2021). Thus, the clear peak for the alarm and the irrelevant sounds might indicate that these sounds underwent different early processing than the beep.

4.3. Processing of the soundscape as a whole

We found reliable TRFs in response to the complex soundscape (including speech and non-speech sounds) during this complex task, with three time-windows that differed significantly from zero. Such time-windows have repeatedly been reported for speech and music stimuli (e.g., Horton et al., 2013; O'Sullivan et al., 2015; Hausfeld et al., 2018), but not for other complex soundscapes.

We further expected a difference in the processing of the whole soundscape between the two conditions. We used the beep in the wide condition to implicitly direct the participants' attention toward the whole soundscape, whereas in the narrow condition most of the soundscape could be ignored. After ruling out that the effect was driven by the targets, we found a significant but small increase in processing in the wide condition. Interestingly, the difference appeared in the last time-window. When listeners track an attended continuous speech stream, enhanced responses in late time-windows are observed compared to an ignored stream (Horton et al., 2013; Kong et al., 2014; Petersen et al., 2017; Mirkovic et al., 2019; Jaeger et al., 2020; Holtze, 2021). Since we expected participants to attend more to the whole soundscape in the wide than in the narrow condition, it is plausible that the difference emerged in the last time-window.

There are two possible reasons why the observed difference was small. On the one hand, participants may not have attended to the whole soundscape much more in the wide than in the narrow condition, which would also explain the low hit rate for the beep. On the other hand, there was no incentive to ignore the soundscape in the narrow condition, which might have increased the response to the soundscape in that condition. We conclude that our results indicate that differences in the processing of the whole soundscape emerge in late time-windows.

4.4. Random effects of the models

We further investigated the random-effect structure to better understand the variance that contributed to our models (Lorah, 2018; Volpert-Esmond et al., 2021). Interestingly, the between-person ICC of the response to the alarm was about twice as large as that of the beep (12.2% vs. 6%). This indicates that, in naturalistic soundscapes, reliable sounds (such as the alarm, which was presented from the same direction with the same sound intensity) produce a more reliable trial-level response than unreliable sounds (such as the beep, which was presented from different directions and with different sound intensities).

The low between-channel variance indicates that the selected channels captured the investigated components (i.e., N1 and P3; Volpert-Esmond et al., 2018). Furthermore, the selected channels were located close to each other. For the beep, we even had to remove channel as a random factor because of singularity issues.

4.5. Translation to the operating room

We designed our study to contain several factors that characterize the working environment in an operating room, i.e., multiple sound streams from different locations with relevant and irrelevant sounds, speech and non-speech sounds, and a visual-motor task. Our results demonstrate that it is feasible to study auditory attention in such a complex scenario. We observed a clear N1 peak for sounds that were acoustically salient, a P3b for relevant sounds, and a TRF in response to the whole soundscape. Thus, our study is a step toward studying auditory responses in the operating room using mobile EEG.

We manipulated the attentional focus of participants who were naive to the soundscape of the operating room, so the soundscape was rather arbitrary to them. In this way, our results are generalizable to other scenarios with similarly complex soundscapes. A limitation of this approach is that medical staff might react differently to the soundscape because they are regularly exposed to it. Ultimately, we want to know how individuals perceive the soundscape in the operating room and when sounds become a burden. To this end, one must be aware of the challenges of studying sound processing in the operating room. First, the operating room is an uncontrolled setting in which presenting experimental sounds is not ethically viable. Therefore, the natural soundscape must be related to the EEG recording. We showed that meaningful EEG responses can be measured in a complex soundscape. As a next step, we suggest applying smartphone-based technology that enables the simultaneous recording of EEG and audio features in a data-protection-compliant way (Blum et al., 2021; Hölle et al., 2022) in the operating room. This way, responses to naturally occurring sounds can be measured. Second, surgical staff are exposed to the soundscape for several hours per day. Investigating changes in sound processing over the day requires long-term recordings. Here, one could use minimal and unobtrusive EEG setups, like the cEEGrid (Debener et al., 2015), which can be used to study EEG responses to auditory stimuli (Holtze et al., 2022; Meiser and Bleichner, 2022) over more than 6 h (Hölle et al., 2021). Lastly, cognitive load (e.g., working memory and attentional capacity) varies over the course of a surgery and between staff members, which likely affects auditory processing. van Harten et al. (2021) observed that surgical staff with high workload are more often distracted by irrelevant sounds than staff with low workload. However, this relationship is not straightforward, as high load can also reduce the processing of irrelevant sounds (Brockhoff et al., 2022). Studying the relationship between load and auditory processing in the operating room is therefore necessary to understand the effect that sounds have on surgical staff.

5. Conclusion

We showed that ERPs as well as TRFs are useful tools to study different aspects of sound perception in complex sound environments. To balance high control over the stimuli against the uncontrolled conditions of the operating room, we developed a laboratory experiment with a naturalistic soundscape. In this scenario, ERPs robustly capture attentional responses to specific sounds, while TRFs can capture responses to an uncontrolled soundscape. Our results demonstrate that mobile EEG can be used in a complex audio-visual-motor task to study auditory perception and are therefore an important step toward understanding auditory attention in uncontrolled settings.

Data availability statement

The datasets presented in this study can be found in online repositories. The names of the repository/repositories and accession number(s) can be found below: Zenodo (https://zenodo.org/record/7147701).

Ethics statement

The studies involving human participants were reviewed and approved by Medizinische Ethikkommission, University of Oldenburg, Oldenburg. The patients/participants provided their written informed consent to participate in this study.

Author contributions

MR and MB conceptualized the experiment. MR performed the data acquisition, analyzed the data, and wrote the manuscript to which TC, VU, and MB contributed with critical revisions. All authors approved the final version and agreed to be accountable for this work.

Funding

This work was funded by the Deutsche Forschungsgemeinschaft (DFG, German Research Foundation) under the Emmy-Noether program–BL 1591/1-1–Project ID 411333557 and by the Forschungspool Funding of the Oldenburg School of Medicine and Health Science.

Conflict of interest

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Publisher's note

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

Supplementary material

The Supplementary Material for this article can be found online at: https://www.frontiersin.org/articles/10.3389/fnrgo.2022.1062227/full#supplementary-material

Footnotes

1. https://github.com/labstreaminglayer/liblsl-Matlab, v1.14.

2. https://github.com/labstreaminglayer/App-Input, v1.15.

3. https://github.com/labstreaminglayer/App-AudioCapture, v1.14.

4. https://github.com/labstreaminglayer/App-LabRecorder, v1.14.

5. Filter order = 16,500, transition bandwidth = 0.1 Hz, cutoff frequency (−6 dB) = 0.05 Hz.

6. Filter order = 220, transition bandwidth = 7.5 Hz, cutoff frequency (−6 dB) = 33.75 Hz.

7. Filter order = 1,650, transition bandwidth = 1 Hz, cutoff frequency (−6 dB) = 0.5 Hz.
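The three filter specifications in footnotes 5–7 are mutually consistent with the Hamming-window heuristic used by EEGLAB's `pop_eegfiltnew` (filter order = 3.3 · fs / transition bandwidth, rounded up to an even number) if one assumes a sampling rate of 500 Hz, which is not stated in this section. Likewise, each −6 dB cutoff lies half a transition band from a passband edge, which implies passband edges of 0.1 Hz, 30 Hz, and 1 Hz; these edges are inferred, not reported. The following sketch reproduces the arithmetic under those assumptions.

```python
import math
from fractions import Fraction

def hamming_fir_order(fs, tbw):
    """Even filter order from the Hamming-window heuristic order = 3.3 / (tbw / fs)."""
    order = Fraction("3.3") / (Fraction(str(tbw)) / Fraction(str(fs)))
    return math.ceil(order / 2) * 2  # exact rational arithmetic avoids float rounding

def cutoff_6db(passband_edge, tbw, kind):
    """-6 dB cutoff: half a transition band below (high-pass) or above (low-pass) the passband edge."""
    half = Fraction(str(tbw)) / 2
    edge = Fraction(str(passband_edge))
    return float(edge - half if kind == "highpass" else edge + half)

fs = 500  # assumed sampling rate in Hz (not stated in this section)
# (kind, inferred passband edge in Hz, transition bandwidth in Hz) for footnotes 5-7
specs = [("highpass", 0.1, 0.1), ("lowpass", 30, 7.5), ("highpass", 1, 1)]
for kind, edge, tbw in specs:
    print(kind, hamming_fir_order(fs, tbw), cutoff_6db(edge, tbw, kind))
# highpass 16500 0.05
# lowpass 220 33.75
# highpass 1650 0.5
```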

References

Baltin, C. T., Wilhelm, H., Wittland, M., Hoelscher, A. H., Stippel, D., and Astvatsatourov, A. (2020). Noise patterns in visceral surgical procedures: analysis of second-by-second dBA data of 599 procedures over the course of one year. Sci. Rep. 10, 1–10. doi: 10.1038/s41598-020-59816-4

Blum, S., Hölle, D., Bleichner, M. G., and Debener, S. (2021). Pocketable labs for everyone: synchronized multi-sensor data streaming and recording on smartphones with the lab streaming layer. Sensors 21, 8135. doi: 10.3390/s21238135

Brockhoff, L., Schindler, S., Bruchmann, M., and Straube, T. (2022). Effects of perceptual and working memory load on brain responses to task-irrelevant stimuli: review and implications for future research. Neurosci. Biobehav. Rev. 135, 104580. doi: 10.1016/j.neubiorev.2022.104580

Crosse, M. J., Di Liberto, G. M., Bednar, A., and Lalor, E. C. (2016). The multivariate temporal response function (mTRF) toolbox: a MATLAB toolbox for relating neural signals to continuous stimuli. Front. Hum. Neurosci. 10, 1–14. doi: 10.3389/fnhum.2016.00604

Crosse, M. J., Zuk, N. J., Di Liberto, G. M., Nidiffer, A. R., Molholm, S., and Lalor, E. C. (2021). Linear modeling of neurophysiological responses to speech and other continuous stimuli: methodological considerations for applied research. Front. Neurosci. 15, 705621. doi: 10.3389/fnins.2021.705621

Debener, S., Emkes, R., De Vos, M., and Bleichner, M. (2015). Unobtrusive ambulatory EEG using a smartphone and flexible printed electrodes around the ear. Sci. Rep. 5, 16743. doi: 10.1038/srep16743

Debener, S., Minow, F., Emkes, R., Gandras, K., and de Vos, M. (2012). How about taking a low-cost, small, and wireless EEG for a walk? Psychophysiology 49, 1617–1621. doi: 10.1111/j.1469-8986.2012.01471.x

Delorme, A., and Makeig, S. (2004). EEGLAB: an open source toolbox for analysis of single-trial EEG dynamics including independent component analysis. J. Neurosci. Methods 134, 9–21. doi: 10.1016/j.jneumeth.2003.10.009

Engelmann, C. R., Neis, J. P., Kirschbaum, C., Grote, G., and Ure, B. M. (2014). A noise-reduction program in a pediatric operation theatre is associated with surgeon's benefits and a reduced rate of complications: a prospective controlled clinical trial. Ann. Surg. 259, 1025–1033. doi: 10.1097/SLA.0000000000000253

Gramann, K., McKendrick, R., Baldwin, C., Roy, R. N., Jeunet, C., Mehta, R. K., et al. (2021). Grand field challenges for cognitive neuroergonomics in the coming decade. Front. Neuroergon. 2, 643969. doi: 10.3389/fnrgo.2021.643969

Groppe, D. M., Urbach, T. P., and Kutas, M. (2011). Mass univariate analysis of event-related brain potentials/fields I: a critical tutorial review. Psychophysiology 48, 1711–1725. doi: 10.1111/j.1469-8986.2011.01273.x

Hansen, J. C., and Hillyard, S. A. (1980). Endogenous brain potentials associated with selective auditory attention. Electroencephalogr. Clin. Neurophysiol. 49, 277–290. doi: 10.1016/0013-4694(80)90222-9

Hart, S. G., and Staveland, L. E. (1988). Development of NASA-TLX (Task Load Index): results of empirical and theoretical research. Adv. Psychol. 52, 139–183. doi: 10.1016/S0166-4115(08)62386-9

Hasfeldt, D., Laerkner, E., and Birkelund, R. (2010). Noise in the operating room – what do we know? A review of the literature. J. Perianesth. Nurs. 25, 380–386. doi: 10.1016/j.jopan.2010.10.001

Hausfeld, L., Riecke, L., Valente, G., and Formisano, E. (2018). Cortical tracking of multiple streams outside the focus of attention in naturalistic auditory scenes. Neuroimage 181, 617–626. doi: 10.1016/j.neuroimage.2018.07.052

Healey, A. N., Primus, C. P., and Koutantji, M. (2007). Quantifying distraction and interruption in urological surgery. Qual. Saf. Health Care 16, 135–139. doi: 10.1136/qshc.2006.019711

Hillyard, S. A., Hink, R. F., Schwent, V. L., and Picton, T. (1973). Electrical signs of selective attention in the human brain. Science 182, 177–179. doi: 10.1126/science.182.4108.177

Hölle, D., Blum, S., Kissner, S., Debener, S., and Bleichner, M. G. (2022). Real-time audio processing of real-life soundscapes for EEG analysis: ERPs based on natural sound onsets. Front. Neuroergon. 3, 793061. doi: 10.3389/fnrgo.2022.793061

Hölle, D., Meekes, J., and Bleichner, M. G. (2021). Mobile ear-EEG to study auditory attention in everyday life: auditory attention in everyday life. Behav. Res. Methods 53, 2025–2036. doi: 10.3758/s13428-021-01538-0

Holtze, B. (2021). Are they calling my name? Attention capture is reflected in the neural tracking of attended and ignored speech. Front. Neurosci. 15, 643705. doi: 10.3389/fnins.2021.643705

Holtze, B., Rosenkranz, M., Jaeger, M., Debener, S., and Mirkovic, B. (2022). Ear-EEG measures of auditory attention to continuous speech. Front. Neurosci. 16, 869426. doi: 10.3389/fnins.2022.869426

Horton, C., D'Zmura, M., and Srinivasan, R. (2013). Suppression of competing speech through entrainment of cortical oscillations. J. Neurophysiol. 109, 3082–3093. doi: 10.1152/jn.01026.2012

Jaeger, M., Mirkovic, B., Bleichner, M. G., and Debener, S. (2020). Decoding the attended speaker from EEG using adaptive evaluation intervals captures fluctuations in attentional listening. Front. Neurosci. 14, 603. doi: 10.3389/fnins.2020.00603

Jung, J. J., Jüni, P., Lebovic, G., and Grantcharov, T. (2020). First-year analysis of the operating room black box study. Ann. Surg. 271, 122–127. doi: 10.1097/SLA.0000000000002863

Kalarus, M. (2021). Tetris3d. Available online at: https://www.mathworks.com/matlabcentral/fileexchange/92243-tetris3d

Kayser, H., Ewert, S. D., Anemüller, J., Rohdenburg, T., Hohmann, V., and Kollmeier, B. (2009). Database of multichannel in-ear and behind-the-ear head-related and binaural room impulse responses. EURASIP J. Adv. Signal Process. 2009, 298605. doi: 10.1155/2009/298605

Kleiner, M., Brainard, D., Pelli, D., Ingling, A., Murray, R., and Broussard, C. (2007). What's new in psychtoolbox-3. Perception 36, 1–16.

Kong, Y.-Y., Mullangi, A., and Ding, N. (2014). Differential modulation of auditory responses to attended and unattended speech in different listening conditions. Hear. Res. 316, 73–81. doi: 10.1016/j.heares.2014.07.009

Kurmann, A., Peter, M., Tschan, F., Mühlemann, K., Candinas, D., and Beldi, G. (2011). Adverse effect of noise in the operating theatre on surgical-site infection. Br. J. Surg. 98, 1021–1025. doi: 10.1002/bjs.7496

Lorah, J. (2018). Effect size measures for multilevel models: definition, interpretation, and TIMSS example. Large-scale Assess. Educ. 6, 8. doi: 10.1186/s40536-018-0061-2

Luck, S. (2014). An Introduction to the Event-related Potential Technique. Cambridge, MA: MIT Press.

Maier-Hein, L., Eisenmann, M., Sarikaya, D., März, K., Collins, T., Malpani, A., et al. (2022). Surgical data science – from concepts toward clinical translation. Med. Image Anal. 76, 102306. doi: 10.1016/j.media.2021.102306

Matusz, P. J., Dikker, S., Huth, A. G., and Perrodin, C. (2019). Are we ready for real-world neuroscience? J. Cogn. Neurosci. 31, 327–338. doi: 10.1162/jocn_e_01276

Meiser, A., and Bleichner, M. G. (2022). Ear-EEG compares well to cap-EEG in recording auditory ERPs: a quantification of signal loss. J. Neural Eng. 19, 026042. doi: 10.1088/1741-2552/ac5fcb

Mirkovic, B., Debener, S., Jaeger, M., and De Vos, M. (2015). Decoding the attended speech stream with multi-channel EEG: implications for online, daily-life applications. J. Neural Eng. 12, 046007. doi: 10.1088/1741-2560/12/4/046007

Mirkovic, B., Debener, S., Schmidt, J., Jaeger, M., and Neher, T. (2019). Effects of directional sound processing and listener's motivation on EEG responses to continuous noisy speech: do normal-hearing and aided hearing-impaired listeners differ? Hear. Res. 377, 260–270. doi: 10.1016/j.heares.2019.04.005

Murray, M. M., Brunet, D., and Michel, C. M. (2008). Topographic ERP analyses: a step-by-step tutorial review. Brain Topogr. 20, 249–264. doi: 10.1007/s10548-008-0054-5

Näätänen, R. (1982). Processing negativity: an evoked-potential reflection of selective attention. Psychol. Bull. 92, 605–640. doi: 10.1037/0033-2909.92.3.605

Näätänen, R., and Picton, T. (1987). The N1 wave of the human electric and magnetic response to sound: a review and an analysis of the component structure. Psychophysiology 24, 375–425. doi: 10.1111/j.1469-8986.1987.tb00311.x

O'Sullivan, J. A., Power, A. J., Mesgarani, N., Rajaram, S., Foxe, J. J., Shinn-Cunningham, B. G., et al. (2015). Attentional selection in a cocktail party environment can be decoded from single-trial EEG. Cereb. Cortex 25, 1697–1706. doi: 10.1093/cercor/bht355

Petersen, E. B., Wöstmann, M., Obleser, J., and Lunner, T. (2017). Neural tracking of attended versus ignored speech is differentially affected by hearing loss. J. Neurophysiol. 117, 18–27. doi: 10.1152/jn.00527.2016

Picton, T. W., and Hillyard, S. A. (1974). Human auditory evoked potentials. II: Effects of attention. Electroencephalogr. Clin. Neurophysiol. 36, 191–200. doi: 10.1016/0013-4694(74)90156-4

Pion-Tonachini, L., Kreutz-Delgado, K., and Makeig, S. (2019). ICLabel: an automated electroencephalographic independent component classifier, dataset, and website. Neuroimage 198, 181–197. doi: 10.1016/j.neuroimage.2019.05.026

Pleban, D., Radosz, J., Kryst, L., and Surgiewicz, J. (2021). Assessment of working conditions in medical facilities due to noise. Int. J. Occup. Saf. Ergon. 27, 1199–1206. doi: 10.1080/10803548.2021.1987692

Polich, J. (2007). Updating P300: an integrative theory of P3a and P3b. Clin. Neurophysiol. 118, 2128–2148. doi: 10.1016/j.clinph.2007.04.019

Protzak, J., and Gramann, K. (2018). Investigating established EEG parameter during real-world driving. Front. Psychol. 9, 2289. doi: 10.3389/fpsyg.2018.02289

Pugh, C. M., Ghazi, A., Stefanidis, D., Schwaitzberg, S. D., Martino, M. A., and Levy, J. S. (2020). How wearable technology can facilitate AI analysis of surgical videos. Ann. Surg. Open 1, e011. doi: 10.1097/AS9.0000000000000011

Rosenkranz, M., and Bleichner, M. (2022). Data and experiment from: Investigating the attentional focus to workplace-related soundscapes in a complex audio-visual-motor task using EEG. Zenodo. doi: 10.5281/zenodo.7147701

Scanlon, J. E., Townsend, K. A., Cormier, D. L., Kuziek, J. W., and Mathewson, K. E. (2019). Taking off the training wheels: measuring auditory P3 during outdoor cycling using an active wet EEG system. Brain Res. 1716, 50–61. doi: 10.1016/j.brainres.2017.12.010

Schlossmacher, I., Dellert, T., Bruchmann, M., and Straube, T. (2021). Dissociating neural correlates of consciousness and task relevance during auditory processing. Neuroimage 228, 117712. doi: 10.1016/j.neuroimage.2020.117712

Schutte, M., Marks, A., Wenning, E., and Griefahn, B. (2007). The development of the noise sensitivity questionnaire. Noise Health 9, 15–24. doi: 10.4103/1463-1741.34700

Shafiei, S. B., Jing, Z., Attwood, K., Iqbal, U., Arman, S., Hussein, A. A., et al. (2021). Association between functional brain network metrics and surgeon performance and distraction in the operating room. Brain Sci. 11, 468. doi: 10.3390/brainsci11040468

Suárez, J. X., Gramann, K., Ochoa, J. F., Toro, J. P., Mejía, A. M., and Hernández, A. M. (2022). Changes in brain activity of trainees during laparoscopic surgical virtual training assessed with electroencephalography. Brain Res. 1783, 147836. doi: 10.1016/j.brainres.2022.147836

Thomaschewski, M., Heldmann, M., Uter, J. C., Varbelow, D., Münte, T. F., and Keck, T. (2021). Changes in attentional resources during the acquisition of laparoscopic surgical skills. BJS Open 5, zraa012. doi: 10.1093/bjsopen/zraa012

Tsiou, C., Efthymiatos, G., and Katostaras, T. (2008). Noise in the operating rooms of Greek hospitals. J. Acoust. Soc. Am. 123, 757–765. doi: 10.1121/1.2821972

van Harten, A., Gooszen, H. G., Koksma, J. J., Niessen, T. J., and Abma, T. A. (2021). An observational study of distractions in the operating theatre. Anaesthesia 76, 346–356. doi: 10.1111/anae.15217

Volpert-Esmond, H. I., Merkle, E. C., Levsen, M. P., Ito, T. A., and Bartholow, B. D. (2018). Using trial-level data and multilevel modeling to investigate within-task change in event-related potentials. Psychophysiology 55, e13044. doi: 10.1111/psyp.13044

Volpert-Esmond, H. I., Page-Gould, E., and Bartholow, B. D. (2021). Using multilevel models for the analysis of event-related potentials. Int. J. Psychophysiol. 162, 145–156. doi: 10.1016/j.ijpsycho.2021.02.006

Wascher, E., Heppner, H., and Hoffmann, S. (2014). Towards the measurement of event-related EEG activity in real-life working environments. Int. J. Psychophysiol. 91, 3–9. doi: 10.1016/j.ijpsycho.2013.10.006

Keywords: auditory attention, mobile EEG, work strain, P3 ERP, N1 ERP, temporal response function (TRF)

Citation: Rosenkranz M, Cetin T, Uslar VN and Bleichner MG (2023) Investigating the attentional focus to workplace-related soundscapes in a complex audio-visual-motor task using EEG. Front. Neuroergon. 3:1062227. doi: 10.3389/fnrgo.2022.1062227

Received: 05 October 2022; Accepted: 16 December 2022;

Published: 02 February 2023.

Edited by:

Sara Riggs, University of Virginia, United States

Reviewed by:

Hiroshi Higashi, Kyoto University, Japan

Yuxiao Yang, Zhejiang University, China

Dan Furman, Arctop Inc., United States

Copyright © 2023 Rosenkranz, Cetin, Uslar and Bleichner. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Marc Rosenkranz, marc.rosenkranz@uni-oldenburg.de
