
EDITORIAL article

Front. Integr. Neurosci., 25 August 2023

Volume 17 - 2023 | https://doi.org/10.3389/fnint.2023.1271818

This article is part of the Research Topic Reproducibility in Neuroscience.

Editorial on the Research Topic

Reproducibility in neuroscience

Scientific progress depends on the ability to independently repeat and validate key scientific findings. To verify the rigor, robustness, and validity of a research study, scientists test whether they can reach the same conclusions using the same methods, data, and code (a reproducible result) or using a different, independent model, technology, or tool (a replicable result). Several large-scale efforts have revealed significant challenges in reproducing and replicating research studies, including lack of access to research reagents, detailed methodology, or the source code developed for the study (Manninen et al., 2018; Errington et al., 2021; Botvinik-Nezer and Wager, 2022). In addition, studies that “merely” repeat published work are often seen as lacking novelty and are therefore difficult to fund and publish, which further lowers researchers' incentive to undertake replication or reproducibility studies. This, however, is starting to change.

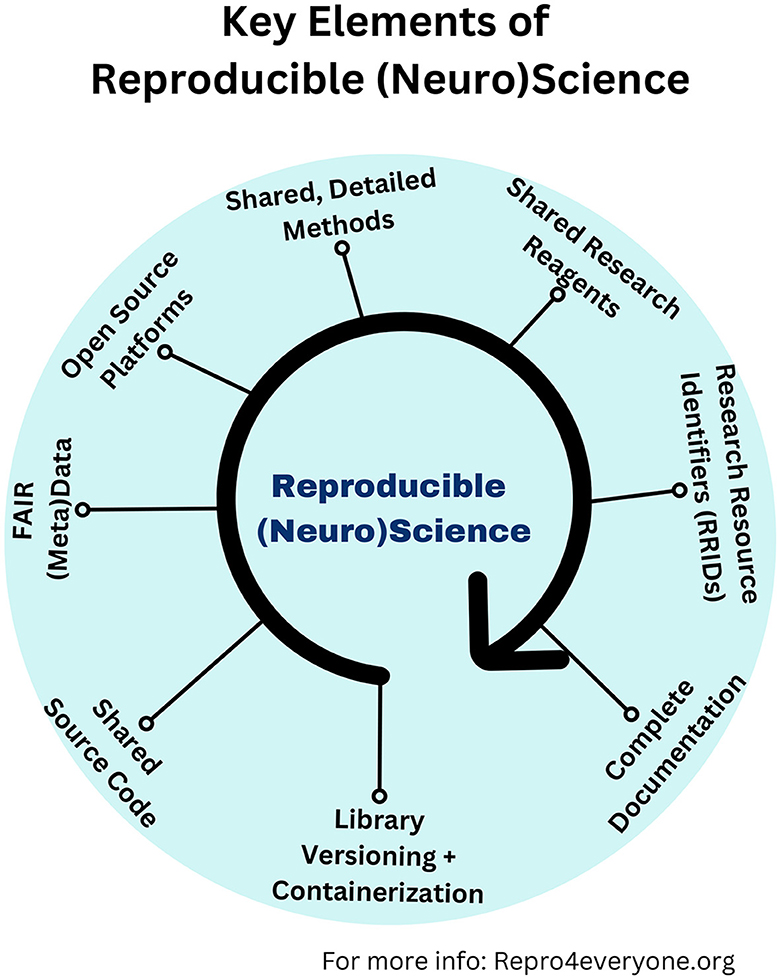

In recent years, several organizations worldwide have tried to raise awareness of the importance of reproducibility and replicability across disciplines. Myriad tools have been developed to support rigor and reproducibility, including open-source repositories for research resources, protocols, source code, and data (Figure 1). We initiated this Research Topic to raise awareness of this vital topic and to highlight how these tools support efforts to replicate basic and computational research studies in integrative neuroscience.

Figure 1. Key elements of reproducible (neuro)science. Graphical illustration of the critical ingredients that make a paper or research project reproducible and replicable (Auer et al., 2021). Library versioning refers to the practice of assigning unique identifiers (usually numbers or names) to the different releases, or versions, of a software library. Containerization is a technology that allows developers to package an application together with all its dependencies (such as libraries and configurations) into a single unit called a “container.” More information can be found at www.repro4everyone.org.
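Library versioning pays off only if the versions actually used are recorded alongside the results. The minimal Python sketch below is an illustration, not a tool used in the Research Topic articles: it records the interpreter version, the installed versions of a list of packages, and the random seed of a stochastic analysis. The package names and the `noisy_analysis` function are hypothetical placeholders.

```python
import json
import platform
import random
from importlib import metadata


def provenance_record(packages, seed):
    """Collect the software versions and random seed needed to rerun an analysis.

    `packages` lists distribution names as known to pip; a package that is
    not installed is recorded as None instead of raising an error.
    """
    versions = {}
    for name in packages:
        try:
            versions[name] = metadata.version(name)
        except metadata.PackageNotFoundError:
            versions[name] = None
    return {"python": platform.python_version(), "packages": versions, "seed": seed}


def noisy_analysis(seed, n=5):
    """Hypothetical stochastic analysis: a dedicated seeded generator makes it repeatable."""
    rng = random.Random(seed)
    return [rng.gauss(0.0, 1.0) for _ in range(n)]


if __name__ == "__main__":
    record = provenance_record(["numpy", "scipy"], seed=1234)
    # Store this record next to the results, e.g. as a JSON file.
    print(json.dumps(record, indent=2))
    # Same code, same data, same seed: the output is identical.
    assert noisy_analysis(record["seed"]) == noisy_analysis(record["seed"])
```

Recording versions is the weakest form of versioning; pinning them in a lock file, or shipping the whole environment as a container, lets others recreate the environment rather than merely read about it.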

Wirth et al. studied vascular health in older adults, aiming specifically to replicate the association between vascular risk factors and changes in resting state functional connectivity (RSFC) within functional brain networks reported by Köbe et al. (2021). The study examined 95 non-demented older adults from the IMAP+ cohort in France, with measurements taken at baseline and at 18 and 36 months. The researchers replicated the finding that RSFC increased over time. In addition, changes in RSFC correlated with diastolic blood pressure and glycated hemoglobin levels, both measures of vascular health, as well as with β-amyloid load. These findings suggest that good vascular health may help preserve brain health and cognitive resilience in older adults.

Moving toward the computational neuroscience realm, five of the papers in this Research Topic used NEST, a widely used open-source spiking neural network (SNN) simulator, to reproduce prominent articles, with the added benefit of implementing the neural models in up-to-date, reusable, and maintainable simulation and analysis pipelines. All authors made their code publicly available, giving the computational neuroscience community more accessible versions of these models.

Schulte to Brinke et al. successfully re-implemented the cortical column model originally proposed by Haeusler and Maass (2007). Their results confirm the findings of the original study, most notably that the data-based circuit outperforms control circuits that lack laminar structure. Going beyond the scope of the seminal work of Haeusler and Maass (2007), the study also investigated how robust these results are to the specifics of the neuron model. To do so, Schulte to Brinke et al. reduced the complexity of the neuron model by eliminating intrinsic noise and simplifying it to an integrate-and-fire neuron.
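The simplification step can be made concrete with a minimal leaky integrate-and-fire (LIF) neuron in plain Python. This is an illustration only: the parameter values and the forward-Euler integration are assumptions chosen for readability, not the neuron model or parameters of Haeusler and Maass (2007) or of Schulte to Brinke et al., who worked in NEST. Setting `noise_std=0` corresponds to eliminating intrinsic noise, which makes the model fully deterministic.

```python
import math
import random


def simulate_lif(i_ext, t_stop=1000.0, dt=0.1, tau_m=10.0, r_m=10.0,
                 v_rest=-65.0, v_th=-50.0, v_reset=-65.0, t_ref=2.0,
                 noise_std=0.0, seed=None):
    """Leaky integrate-and-fire neuron driven by a constant current (forward Euler).

    Units: ms, mV, nA, MOhm (so r_m * i_ext is in mV); noise_std is in mV/sqrt(ms).
    With noise_std=0 the model is deterministic; otherwise Gaussian voltage
    noise is added at every step. Returns the list of spike times in ms.
    """
    rng = random.Random(seed)
    v = v_rest
    refractory_until = -1.0
    spikes = []
    for step in range(int(round(t_stop / dt))):
        t = step * dt
        if t < refractory_until:
            continue  # membrane held at the reset value while refractory
        dv = (-(v - v_rest) + r_m * i_ext) / tau_m * dt
        if noise_std > 0.0:
            dv += noise_std * math.sqrt(dt) * rng.gauss(0.0, 1.0)
        v += dv
        if v >= v_th:  # threshold crossing: record a spike and reset
            spikes.append(t)
            v = v_reset
            refractory_until = t + t_ref
    return spikes


if __name__ == "__main__":
    print(len(simulate_lif(2.0)), "spikes in 1 s (deterministic)")
    print(len(simulate_lif(2.0, noise_std=1.0, seed=1)), "spikes in 1 s (noisy)")
```

For constant input and reset to rest, the interspike interval has the closed form t_ref + τ_m ln(R I / (R I − (v_th − v_rest))), about 15.9 ms for the defaults above (roughly 63 Hz), which is a useful sanity check on any re-implementation.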

Zajzon et al. also investigated the structure of cortical columnar circuits, focusing on cross-columnar communication. They conducted an extensive sensitivity analysis of the SNN originally implemented by Cone and Shouval (2021), identified limitations in the biological plausibility of the original model, and proposed three alternative solutions to address them.
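A sensitivity analysis asks how strongly a model's output depends on each of its parameters. The Python sketch below shows the simplest local, one-at-a-time variant, using central differences and a normalized sensitivity coefficient; it is not necessarily the method Zajzon et al. used. A closed-form LIF firing-rate formula stands in as a hypothetical model, and its parameter names and values are assumptions.

```python
import math


def lif_rate(p):
    """Stationary firing rate (Hz) of a LIF neuron under constant input (hypothetical stand-in model).

    Assumes reset to the resting potential, so the interspike interval is
    t_ref + tau_m * ln(drive / (drive - theta)), with drive = r_m * i_ext (mV)
    and theta the distance from rest to threshold (mV); times are in ms.
    """
    drive = p["r_m"] * p["i_ext"]
    if drive <= p["theta"]:
        return 0.0  # subthreshold input: the neuron never fires
    isi = p["t_ref"] + p["tau_m"] * math.log(drive / (drive - p["theta"]))
    return 1000.0 / isi


def one_at_a_time_sensitivity(model, base, rel_step=0.1):
    """Local, one-at-a-time sensitivity by central differences.

    Returns S_p = (dy / y) / (dp / p) for every parameter p: the relative change
    in the output per relative change in p, all other parameters held fixed.
    """
    y0 = model(base)
    if y0 == 0:
        raise ValueError("normalized sensitivity is undefined when the output is zero")
    result = {}
    for name, value in base.items():
        up = dict(base, **{name: value * (1 + rel_step)})
        down = dict(base, **{name: value * (1 - rel_step)})
        result[name] = (model(up) - model(down)) / y0 / (2 * rel_step)
    return result


BASE = {"tau_m": 10.0, "r_m": 10.0, "i_ext": 2.0, "theta": 15.0, "t_ref": 2.0}

if __name__ == "__main__":
    ranked = sorted(one_at_a_time_sensitivity(lif_rate, BASE).items(),
                    key=lambda item: -abs(item[1]))
    for name, s in ranked:
        print(f"{name:>6}: {s:+.2f}")
```

Local coefficients like these only describe behavior near one operating point; for a network model, a global analysis that varies several parameters jointly over wide ranges is usually needed to expose interactions.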

Tiddia et al. replicated the simulations of working memory proposed by Mongillo et al. (2008). Whereas the original study used a simple mean-field model to describe the firing-rate behavior of an excitatory population modulated by short-term plasticity, Tiddia et al. built an SNN that showed typical working memory behavior, driven by short-term synaptic plasticity, in a robust and energetically efficient manner.
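The short-term plasticity mechanism behind the Mongillo et al. (2008) model can be sketched for a single synapse. A utilization variable u (facilitation) jumps at each presynaptic spike and decays back to a baseline U with time constant τ_F, while a resource variable x (depression) is consumed by each spike and recovers toward 1 with time constant τ_D; the efficacy of a spike is proportional to u·x. The Python sketch below uses one common update ordering and assumed parameter values (U = 0.2, τ_F = 1.5 s, τ_D = 0.2 s); it is an illustration, not the NEST implementation used by Tiddia et al.

```python
import math


def relax(u, x, dt, U, tau_f, tau_d):
    """Exact solution of the u and x dynamics over a silent interval of length dt (s)."""
    u = U + (u - U) * math.exp(-dt / tau_f)       # facilitation decays back to U
    x = 1.0 - (1.0 - x) * math.exp(-dt / tau_d)   # resources recover toward 1
    return u, x


def on_spike(u, x, U):
    """Apply one presynaptic spike; returns updated (u, x) and the spike's relative efficacy."""
    u = u + U * (1.0 - u)   # utilization increases (facilitation)
    efficacy = u * x        # fraction of resources released by this spike
    x = x - efficacy        # released resources are depleted (depression)
    return u, x, efficacy


def run_train(spike_times, U=0.2, tau_f=1.5, tau_d=0.2, t_end=None):
    """Drive one synapse, starting from rest, with sorted spike times (s) >= 0."""
    u, x, t_prev = U, 1.0, 0.0
    efficacies = []
    for t in spike_times:
        u, x = relax(u, x, t - t_prev, U, tau_f, tau_d)
        u, x, eff = on_spike(u, x, U)
        efficacies.append(eff)
        t_prev = t
    if t_end is not None:  # let the synapse relax until t_end without spikes
        u, x = relax(u, x, t_end - t_prev, U, tau_f, tau_d)
    return efficacies, u, x


if __name__ == "__main__":
    burst = [0.05 * k for k in range(10)]  # 10 spikes at 20 Hz
    effs, u, x = run_train(burst, t_end=burst[-1] + 0.5)
    print(f"0.5 s after the burst: u = {u:.3f} (baseline 0.2), x = {x:.3f}")
```

Because τ_F is much longer than τ_D, u stays elevated for seconds after a burst while x recovers within a few hundred milliseconds; this lingering facilitation is the synaptic trace that lets the network hold an item in memory without persistent spiking.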

Modeling and simulating a biologically relevant temporal component in neural networks to study the spatiotemporal sequences observed in motor tasks has proven challenging, but a recent model developed by Maes et al. (2020) succeeded in creating a “neural clock” that can learn complex, higher-order sequences and behaviors. In a brief research report, Oberländer et al. re-implemented this SNN model and replicated the original study's key findings.

Finally, Trapani et al. embedded the cortical SNN model proposed by Wang (2002) in a virtual robotic agent to perform a simulated behavioral task. They ran multiple simulations to assess the equivalence of the re-implemented SNN with the original model and to validate its ability, when embedded in a neurorobotics environment, to perform an in silico behavioral task: discriminating between two stimuli.

Appukuttan and Davison attempted to reproduce a biologically constrained point-neuron model of CA1 pyramidal neurons originally developed for the Brian2 and NEURON simulators, relying purely on the information contained in the published research article. They replicated the core features of the model but found discrepancies they could not account for, possibly owing to details missing from the original paper. The authors adopted the SciUnit framework (Omar et al., 2014), which offers a generalized approach that can easily be employed in other replication and reproduction studies.
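SciUnit organizes validation around models, the capabilities they expose, tests that encode an experimental observation, and scores that compare a model's prediction with that observation. The plain-Python sketch below mimics that structure without using the SciUnit package itself; every class, method, and number in it is hypothetical, chosen only to show how a re-implemented model could be checked against a published value with a z-score.

```python
from abc import ABC, abstractmethod
from dataclasses import dataclass


class ProducesRestingPotential(ABC):
    """Capability: any model that can report its resting membrane potential (mV)."""

    @abstractmethod
    def resting_potential(self) -> float: ...


@dataclass
class ToyPointNeuron(ProducesRestingPotential):
    """Hypothetical re-implemented model under test."""
    e_leak: float

    def resting_potential(self) -> float:
        return self.e_leak  # for a purely passive point neuron, rest equals the leak reversal


@dataclass
class ZScoreTest:
    """Compares a model prediction with an observed mean and standard deviation."""
    observed_mean: float
    observed_std: float
    tolerance: float = 2.0  # pass if |z| <= tolerance (assumed criterion)

    def judge(self, model):
        if not isinstance(model, ProducesRestingPotential):
            raise TypeError("model lacks the required capability")
        prediction = model.resting_potential()
        z = (prediction - self.observed_mean) / self.observed_std
        return {"prediction": prediction, "z": z, "passed": abs(z) <= self.tolerance}


if __name__ == "__main__":
    # Hypothetical observation: mean and SD of a measured resting potential (mV).
    test = ZScoreTest(observed_mean=-65.0, observed_std=2.0)
    print(test.judge(ToyPointNeuron(e_leak=-66.0)))
```

Keeping the observation in the test and the prediction behind a capability means the same test can be run, unchanged, against the original model and any re-implementation that exposes the capability.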

In conclusion, the seven articles published in this Research Topic emphasize the strength and importance of replicating research studies to confirm and advance our knowledge in neuroscience. Independent review of the study design, source code, and/or research data is essential for confirming the robustness and generalizability of the original findings and for building on them. In addition, the re-implementation and adoption of computational models on open-source platforms, such as NEST, helps make these models more accessible to the broader research community. However, care should be taken when editing and reviewing replication studies, as we have found that not every researcher understands the need for, and importance of, publishing them. Moreover, while it may seem logical to invite the authors of the original study to review a replication, their underlying bias may cause problems, whether the results confirm or contradict the original findings. We hope this Research Topic and editorial demonstrate the importance and value of reproducibility and replicability in (neuro)science.

NJ: Writing—original draft, Writing—review and editing. NH: Writing—original draft, Writing—review and editing. RS: Writing—original draft, Writing—review and editing. AA: Conceptualization, Supervision, Writing—original draft, Writing—review and editing.

AA is funded by the Project EBRAINS-Italy granted by EU – NextGenerationEU (Italian PNRR, Mission 4, Education and Research - Component 2, From research to Business Investment 3.1 - Project IR0000011, CUP B51E22000150006). RS is funded by Rohini Nilekani Philanthropies, India (Center for Brain and Mind) and Department of Biotechnology, Ministry of Science and Technology, Government of India and the Pratiksha Trust (Accelerator Program for Discovery in Brain disorders using Stem cells).

The authors acknowledge the support of Denes Szucs in conceptualizing this Research Topic.

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

The author(s) declared that they were an editorial board member of Frontiers, at the time of submission. This had no impact on the peer review process and the final decision.

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

Auer, S., Haelterman, N. A., Weissberger, T. L., Erlich, J. C., Susilaradeya, D., Julkowska, M., et al.; Reproducibility for Everyone Team (2021). A community-led initiative for training in reproducible research. Elife. 10, e64719. doi: 10.7554/eLife.64719

Botvinik-Nezer, R., and Wager, T. D. (2022). Reproducibility in neuroimaging analysis: challenges and solutions. Biol. Psychiatry Cogn. Neurosci. Neuroimaging. 19, S2451. doi: 10.1016/j.bpsc.2022.12.006

Cone, I., and Shouval, H. Z. (2021). Learning precise spatiotemporal sequences via biophysically realistic learning rules in a modular, spiking network. Elife. 10, e63751. doi: 10.7554/eLife.63751

Errington, T. M., Denis, A., Perfito, N., Iorns, E., and Nosek, B. A. (2021). Challenges for assessing replicability in preclinical cancer biology. Elife. 10, e67995. doi: 10.7554/eLife.67995

Haeusler, S., and Maass, W. (2007). A statistical analysis of information-processing properties of lamina-specific cortical microcircuit models. Cereb. Cortex. 17, 149–162. doi: 10.1093/cercor/bhj132

Köbe, T., Binette, A. P., Vogel, J. W., Meyer, P.-F., Breitner, J. C. S., Poirier, J., et al. (2021). Vascular risk factors are associated with a decline in resting state functional connectivity in cognitively unimpaired individuals at risk for Alzheimer's disease: vascular risk factors and functional connectivity changes. NeuroImage 231, 117832. doi: 10.1016/j.neuroimage.2021.117832

Maes, A., Barahona, M., and Clopath, C. (2020). Learning spatiotemporal signals using a recurrent spiking network that discretizes time. PLoS Comput. Biol. 16, e1007606. doi: 10.1371/journal.pcbi.1007606

Manninen, T., Aćimović, J., Havela, R., Teppola, H., and Linne, M.-L. (2018). Challenges in reproducibility, replicability, and comparability of computational models and tools for neuronal and glial networks, cells, and subcellular structures. Front. Neuroinform. 12, 20. doi: 10.3389/fninf.2018.00020

Mongillo, G., Barak, O., and Tsodyks, M. (2008). Synaptic theory of working memory. Science. 319, 1543–1546. doi: 10.1126/science.1150769

Omar, C., Aldrich, J., and Gerkin, R. C. (2014). “Collaborative infrastructure for test-driven scientific model validation,” in Companion Proc. of the 36th International Conf. on Software Engineering, ICSE Companion 2014, New York, NY: ACM, 524–527.

Keywords: replicability, FAIR (findable accessible interoperable and reusable) principles, rigor and quality, research integrity, replication studies

Citation: Jadavji NM, Haelterman NA, Sud R and Antonietti A (2023) Editorial: Reproducibility in neuroscience. Front. Integr. Neurosci. 17:1271818. doi: 10.3389/fnint.2023.1271818

Received: 02 August 2023; Accepted: 11 August 2023;

Published: 25 August 2023.

Edited and reviewed by: Elizabeth B. Torres, Rutgers, The State University of New Jersey, United States

Copyright © 2023 Jadavji, Haelterman, Sud and Antonietti. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Alberto Antonietti, alberto.antonietti@polimi.it
