- 1 Laboratory of Auditory Neurophysiology, Department of Biomedical Engineering, School of Medicine, Johns Hopkins University, Baltimore, MD, USA

- 2 Cortical Systems and Behavior Laboratory, Department of Psychology, University of California, San Diego, La Jolla, CA, USA

- 3 Department of Neuroscience, Zanvyl Krieger Mind/Brain Institute, Johns Hopkins University, School of Medicine, Baltimore, MD, USA

All non-human primates communicate with conspecifics using vocalizations, a system involving both the production and perception of species-specific vocal signals. Much of the work on the neural basis of primate vocal communication in cortex has focused on the sensory processing of vocalizations, while relatively few data are available for vocal production. Earlier physiological studies in squirrel monkeys cast doubt on the involvement of primate cortex in vocal behaviors. The aim of the present study was to identify areas of common marmoset (Callithrix jacchus) cortex that are potentially involved in vocal communication. In this study, we quantified cFos expression in three areas of marmoset cortex – frontal, temporal (auditory), and medial temporal – under various vocal conditions. Specifically, we examined cFos expression in these cortical areas during the sensory, motor (vocal production), and sensory–motor components of vocal communication. Our results showed an increase in cFos expression in ventrolateral prefrontal cortex as well as the medial and lateral belt areas of auditory cortex in the vocal perception condition. In contrast, subjects in the vocal production condition showed increased cFos expression only in dorsal premotor cortex. During the sensory–motor condition (antiphonal calling), subjects exhibited cFos expression in each of the above areas, as well as increased expression in perirhinal cortex. Overall, these results suggest that various cortical areas outside primary auditory cortex are involved in primate vocal communication. These findings pave the way for further physiological studies of the neural basis of primate vocal communication.

Introduction

Vocal communication plays a central role in mediating conspecific social interactions in a broad range of species (Hauser, 1996). Amongst primates, vocal communication systems are ubiquitous and have clearly evolved in response to direct selection pressures on the species of this taxonomic group (Ghazanfar and Santos, 2004). Non-human primate vocalizations are produced in a diverse set of contexts and accordingly communicate a wide range of information to conspecifics (Seyfarth et al., 1980; Cheney and Seyfarth, 1982a,b, 2007; Cheney et al., 1996; Zuberbuhler et al., 1997, 1999). Despite the significance of vocal communication in primates, our understanding of how neural substrates in the cerebral cortex contribute to its various components is incomplete. In its most basic form, vocal communication consists of a combination of sensory (vocal perception) and motor (vocal production) events. Studies of vocalization processing consistently show responses in auditory and prefrontal cortices (Miller and Cohen, 2010), while evidence of the role cortex plays in vocal production is less clear. Historically, subcortical structures were thought to mediate primate vocal production (Jurgens, 2002a), but more recent behavioral evidence suggests that the decision-making and vocal control involved in call production requires neural mechanisms more generally associated with cortex (Mitani and Gros-Louis, 1998; Suguira, 1998; Miller et al., 2003; Egnor et al., 2006, 2007; Miller and Wang, 2006). Elucidating this issue requires more data on the role of cortex in vocal communication.

The earliest physiology studies on the neural basis of vocal communication focused on sensory neurons in the auditory cortex of awake squirrel monkeys (Saimiri sciureus; Wolberg and Newman, 1972; Newman and Wollberg, 1973; Winter and Funkenstein, 1973; Newman and Lindsley, 1976; Newman, 2003). Many of these studies sought to determine whether “call detector” neurons existed in the auditory cortex. After several years, the line of work was abandoned in large part due to the lack of evidence for such neurons (Newman, 2003). But interest in the topic did not entirely abate. Several years later, Rauschecker et al. (1995) recorded from neurons in both primary auditory cortex and lateral belt of anesthetized rhesus monkeys (Macaca mulatta) during presentation of vocalizations. They observed that neurons in lateral belt were driven more strongly by complex stimuli such as vocalizations, while A1 neurons responded most strongly to pure tones. Neurons in A1, however, appear to encode acoustic features specific to vocalizations as well. Single-units in common marmoset (Callithrix jacchus) primary auditory cortex were shown to be more responsive to forward played vocalizations than those in reverse for their own species-specific calls, while cat auditory cortex neurons showed no difference between these two classes of marmoset call stimuli (Wang and Kadia, 2001). A multi-unit neurophysiology study of area CM in medial belt showed that neurons are responsive to species-specific vocalizations, suggesting that several areas of primate auditory cortex may contribute to aspects of vocalization processing (Kajikawa et al., 2008). Building on these neurophysiology studies, recent fMRI data from awake rhesus monkey auditory cortex showed evidence of a potential voice-selective area in auditory cortex, similar to what is observed in humans (Petkov et al., 2008).
The overall picture emerging from these studies is that primary auditory cortex and other areas of the superior temporal gyrus likely play a significant role in processing vocalizations.

Several studies of vocalization processing in prefrontal cortex suggest the involvement of neurons in this area as well (Romanski and Goldman-Rakic, 2001; Gifford et al., 2005; Romanski et al., 2005; Cohen et al., 2006, 2007). Romanski et al. (2005) presented awake rhesus monkeys with a large corpus of vocalizations, including multiple exemplars of individual call types. While there was little evidence of neurons selective for individual call types, data did indicate that many neurons could be driven by vocalizations. In a related study, Gifford et al. (2005) presented awake rhesus monkeys with exemplars of vocalizations from two behavioral classes of sounds: low-quality food calls and high-quality food calls. Behavioral studies showed that these classes of vocalizations were categorized as different based on the communicative content of the signal (high- or low-quality food item) and not the acoustic structure (Gifford et al., 2003). Evidence suggested that the responses of prefrontal cortex neurons were related to the communicative content of the vocalizations rather than their acoustic structure. These studies suggest that neurons in ventrolateral prefrontal cortex are involved in several aspects of vocalization processing.

The exact role of cortex in primate vocal production is not clear. Early work using a combination of techniques showed that several subcortical areas, such as the periaqueductal gray (PAG), are involved in vocal production (Jurgens, 2002a). This line of work has been used to argue for vocal control in primates being primarily mediated by subcortical areas (Hammerschmidt and Fischer, 2008; Jurgens, 2009), a marked distinction from humans. At least three sources of evidence, however, suggest that the story is more complicated. First, squirrel monkey PAG neurons exhibit vocalization-correlated activity during vocal production, with changes in neural activity occurring before and/or during call emission (Larson, 1991). Larson and colleagues (Larson and Kistler, 1986; Larson, 1991), however, showed that PAG neurons also show correlated changes in activity related to respiration. As such, it is difficult to disambiguate whether the response during vocal production is specific to the motor act itself or more generally reflects the coordinated inhalation/exhalation and laryngeal movements necessary to produce a vocalization. The increase in PAG neuronal activity observed before vocal onset could simply reflect the fact that monkeys inhale just prior to vocalizing, while sustained activity could be related to the exhale. Moreover, PAG neurons show little correlation to particular acoustic features of a vocal signal (Jurgens, 2002b), calling into question whether these neurons truly represent the vocalization structure. Second, lesions to PAG are reported to disrupt vocal production (Jurgens and Pratt, 1979). Because of the location of PAG in the motor pathway, it is not clear whether the effects of the lesions are due to a breakdown in control over vocal production or simply because PAG innervates a range of muscles that are essential to vocal production (Larson, 1991).
In other words, the effects on vocal production from the lesion to PAG could occur because it effectively disrupts a top-down signal to produce a vocalization. Third, studies show that microstimulation of PAG elicits vocal production in non-human primates (Jurgens and Ploog, 1970), though a similar effect occurs in a range of mammalian species including humans (Jurgens, 2002a). Unfortunately, the spectrograms of stimulation-elicited vocalizations from this seminal paper in squirrel monkeys were not published, making it difficult to assess the similarity between elicited and naturally produced calls (Jurgens and Ploog, 1970). As a whole, evidence does suggest that the PAG and other subcortical areas along the motor pathway that show correlated vocalization activity, such as cingulate cortex (Sutton et al., 1974; MacLean and Newman, 1988) which receives direct projections from motor cortex (Showers, 1959), clearly play some role in vocal production, but it remains unclear whether these substrates are the ultimate source of all vocal control. Missing are data that show which underlying neural structures mediate the more sophisticated elements of primate vocal production, such as the decisions for if, when and which call type to produce.

The primate frontal cortex controls a range of coordinated motor actions (Fuster, 2008), including human speech (Hickok and Poeppel, 2004). Therefore, it is unsurprising that several studies have sought to test whether these cortical substrates contribute to elements of vocal control in primates. Thus far, data have been mixed. Several studies lesioned the purported homolog of Broca’s area in ventral premotor cortex and observed no changes in the monkeys’ vocalizations (Sutton et al., 1974; Aitken, 1981). These results were interpreted as evidence against a cortical involvement in vocal production, but there are two sources of data that should be considered before accepting this interpretation. First, a study by MacLean and Newman (1988) showed that following frontal cortex lesions, squirrel monkeys did not produce normal vocal behaviors for the first 2 weeks. Following this time, however, vocal behavior returned to roughly normal levels. Although the authors argued that these data provided evidence against frontal cortex playing a significant role in vocal production, there is another interpretation that is more consistent with what is currently known about this area of cortex. If frontal cortex played no role in vocal production, monkeys in this study should have shown no changes in vocal behavior. Even if these changes occurred only during the first 2 weeks, we must conclude that the lesion to the population disrupted some functions involved in these behaviors. Work by Miller and colleagues shows that neurons in prefrontal cortex are relatively plastic with respect to being utilized for a wide range of tasks (Freedman et al., 2001; Neider et al., 2002; Wallis and Miller, 2003). As such, the functions of the lesioned population may have been co-opted by neurons in the other hemisphere in order to continue the behavior.
Second, although historically Broca’s area has been lauded as a central locus for human speech and language production, this is not consistent with the modern understanding of the functional neuroanatomy of language (Hickok and Poeppel, 2004; Poeppel and Hickok, 2004). Rather, Broca’s area is one substrate of a widely distributed cortical system underlying speech production. In fact, lesions to Broca’s area do not cause Broca’s aphasia (Mohr et al., 1978). Therefore, we may not expect that lesions to this area in non-human primates would necessarily result in a cessation or significant alteration of vocal production.

Several sources of evidence show that a series of subcortical substrates along the motor pathway are involved in vocal production. Missing, however, are data that address a central question about the source of vocal control and whether these mechanisms reside in neocortical substrates. The historic view of vocal production in primates being mediated largely by subcortical structures was consistent with behavioral evidence at the time showing no substantive vocal control and learning (Egnor and Hauser, 2004; Hammerschmidt and Fischer, 2008). More recent data, however, show this earlier view of primate vocal behavior is not entirely accurate. Several lines of work show that primates can exert control over their vocalizations along multiple parameters, including the timing and acoustic structure of the call (Mitani and Gros-Louis, 1998; Suguira, 1998; Miller et al., 2003, 2009a,b; Egnor et al., 2006, 2007; Miller and Wang, 2006). In fact, neurophysiological evidence shows a cortical mechanism to mediate auditory feedback for vocal control (Eliades and Wang, 2008). Evidence of vocal learning is also available in the form of dialects and vocal convergence (Elowson and Snowdon, 1994; Snowdon and Elowsen, 1999; De la Torre and Snowdon, 2009), though ontogenetic learning of call structure remains limited. The growing body of behavioral data suggest a more sophisticated system of vocal production for primates than was previously thought, providing a more substantive empirical basis for cortical control of vocal production.

Results from neural studies are consistent with these more recent behavioral data in implicating a role for cortex, particularly frontal cortex, in vocal production. Gemba et al. (1995, 1999) showed a change in field potentials from surface electrodes in prefrontal, premotor, and motor cortex of Japanese macaques (Macaca fuscata) that precede vocal production. Microstimulation of ventral premotor cortex in rhesus monkeys resulted in orofacial movements (Petrides et al., 2005). Although no calls were elicited during stimulation, the authors suggested that the observed facial movements were consistent with the articulation occurring during vocal production. A functional neuroanatomy study in squirrel monkeys showed that subjects elicited to vocalize by stimulating PAG exhibited an increase in 2-Deoxyglucose uptake not only in the typical subcortical areas, but dorsal frontal cortex as well (Jurgens et al., 2002). In order to resolve the standing debate, more data are needed that show whether populations of neurons in primate frontal cortex are directly involved in vocal production.

Our aim here was to identify the cortical substrates underlying vocal communication in common marmosets. We exclusively examined cortex for the following reasons. The role of cortex in the sensory aspects of primate vocal communication is well established by the aforementioned previous research. But few data are available that describe the contributions of cortex to vocal production. A growing body of data is beginning to suggest that mechanisms for various aspects of vocal control might require more sophisticated cortical mechanisms. More data, however, are needed to test this possibility. To this end, we quantified expression of the IEG cFos, a functional neuroanatomy technique (Clayton, 2000), in three areas of marmoset cortex – frontal, temporal (auditory), and medial temporal – during three aspects of vocal communication: vocal perception, vocal production, and antiphonal calling. The first two experimental conditions tested the cortical substrates underlying the sensory and vocal-motor components independently. As antiphonal calling is a natural vocal behavior involving the reciprocal exchange of vocalizations (Miller and Wang, 2006), this behavior allowed us to examine whether distinct changes occurred across the cortex during sensory–motor interactions. Much of our impetus for this study was to determine whether particular areas of marmoset cortex would be of interest for our future neurophysiology studies of vocal communication.

Materials and Methods

Subjects

Six adult common marmosets (C. jacchus) served as subjects in this study. Two subjects contributed to each of the three test conditions: vocal perception, vocal production, and antiphonal calling. The common marmoset is a small-bodied (∼400 g), New World primate. This species’ vocal communication system has been studied at both the behavioral and neural levels (Norcross and Newman, 1993; Wang et al., 2003; Miller and Wang, 2006; Pistorio et al., 2006; Wang, 2007; Miller et al., 2009a,b, 2010). A small number of subjects was used because of the ethical concerns associated with working with non-human primates. All experimental protocols were approved by the Johns Hopkins University Animal Use and Care Committee.

Behavioral Procedures

Familiarization sessions

We familiarized subjects to the testing room over a period of 2 weeks. Each familiarization session lasted 60 min. For a familiarization session, we transported subjects directly from the home colony in a transport cage to the test room. Once in the test room, we placed subjects into a test cage. The test cage was situated 1 m in front of a curtain, behind which we placed a free-field speaker (Cambridge Soundworks M80, Frequency Range: 40–22,000 Hz). During each familiarization session, we broadcast phee vocalizations from subjects’ cagemates in an interactive playback paradigm (Miller and Wang, 2006). Subjects were run on familiarization sessions until they exhibited no signs of anxiety in the test room. We determined this based on the number of alarm call vocalizations and frenetic behavior apparent during the sessions. Overall, subjects were run on 5–7 familiarization sessions prior to testing. Following the final familiarization session, we did not remove subjects from the home colony for 3 days. On the fourth day, we conducted the “Test Session”. We selected subjects for the different test conditions based on their behavior during these familiarization sessions. Those subjects who engaged in high levels of antiphonal calling were run in either the “Vocal Production” or “Antiphonal Calling” condition, while subjects who did not produce vocalizations during these sessions were run in the “Vocal Perception” condition.

Test sessions

For these experiments, we sought not to disturb the typical behavioral patterns of subjects prior to the test session. The logic here followed Jarvis et al. (1998, 2000) in that we aimed to have the least amount of sensory and motor activity that could induce unintended IEG expression prior to testing, while not altering subjects from their typical daily patterns. To this end, we removed subjects from their home cages within 10 min of the beginning of the daily light cycle (7 am) on the day of the test session. As marmosets do not begin typical levels of vocal behavior within the first 30 min of the light cycle, removing the subjects during this time ensured the lowest levels of IEG expression related to vocal communication. Following removal from the home cage, we immediately transported subjects to the test room and performed one of the following test conditions. The duration of each test session was 60 min.

Test conditions

Vocal perception. Two subjects participated in this condition. In this condition, we presented subjects with phees produced by two foreign subjects (one male/one female). The presentation consisted of a phee vocalization being broadcast at a regular interval for the duration of the session. Specifically, 100 phees were broadcast in the first 30 min and again in the final 30 min. In this sequence of vocalizations, we switched the identity of the caller every 20 vocalizations. The number of phees presented was meant to match the minimum number of vocalizations subjects heard during the “Antiphonal Calling” condition (i.e., 50 self-produced and 50 stimulus presentations).
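The presentation schedule described above can be sketched in a few lines of Python. This is an illustrative reconstruction only, not the playback software used in the study: the even 18 s spacing, the start at time zero, the choice of the male caller first, and all names (`Playback`, `build_playback_schedule`) are assumptions introduced for the example.

```python
from dataclasses import dataclass

SESSION_HALF_S = 30 * 60   # each half of the 60 min session, in seconds
PHEES_PER_HALF = 100       # from the text: 100 phees per 30 min
SWITCH_EVERY = 20          # from the text: caller identity changes every 20 calls


@dataclass(frozen=True)
class Playback:
    onset_s: float  # time from session start, in seconds
    caller: str     # which foreign caller's phee is broadcast


def build_playback_schedule(callers=("male", "female")):
    """Return the playbacks for one 60 min Vocal Perception session.

    Assumptions (not stated in the text): onsets are evenly spaced,
    the first onset is at t = 0, and the first caller in `callers` starts.
    """
    interval_s = SESSION_HALF_S / PHEES_PER_HALF  # 18 s between onsets
    total = 2 * PHEES_PER_HALF                    # 200 phees over 60 min
    schedule = []
    for i in range(total):
        # Alternate caller identity in blocks of SWITCH_EVERY calls.
        caller = callers[(i // SWITCH_EVERY) % len(callers)]
        schedule.append(Playback(onset_s=i * interval_s, caller=caller))
    return schedule
```

Under these assumptions each caller contributes 100 of the 200 broadcasts, in alternating blocks of 20.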

Vocal production. Two subjects participated in this condition. During this condition, we presented no vocalizations to subjects but instead recorded their spontaneous vocal behavior during the test session. When isolated, subjects produce phee vocalizations, and these accounted for over 90% of vocalizations recorded during this condition. In order to proceed to the perfusion, we required subjects to produce at least 50 phee vocalizations during the first 30 min of the test session.

Antiphonal calling. Two subjects participated in this condition. Here we employed the interactive antiphonal calling playback paradigm for the duration of the test session (Miller and Wang, 2006). We presented subjects with phees produced by two foreign subjects (one male/one female). Specifically, we changed the identity of the caller following an antiphonal calling bout. Subjects heard approximately equal numbers of phees from each of the two foreign animal stimulus sets. In order to proceed to the perfusion, we required subjects to produce at least 50 phee calls during the first 30 min of the test session.
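The inclusion criterion shared by the Vocal Production and Antiphonal Calling conditions (at least 50 phees in the first 30 min) amounts to a simple count over call onset times. The sketch below assumes call onsets are available as seconds from session start; the function name and input format are hypothetical and are not taken from the study.

```python
MIN_PHEES = 50      # from the text: at least 50 phee calls
WINDOW_S = 30 * 60  # from the text: within the first 30 min, in seconds


def meets_production_criterion(phee_onsets_s, min_phees=MIN_PHEES, window_s=WINDOW_S):
    """Return True if enough phees were produced inside the initial window.

    `phee_onsets_s` is assumed to be an iterable of onset times in seconds,
    measured from the start of the test session.
    """
    early = [t for t in phee_onsets_s if 0 <= t < window_s]
    return len(early) >= min_phees
```

For example, a subject calling once every 30 s from session start would reach 50 calls after 25 min and so would meet the criterion.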

Immunocytochemistry

Immediately following the end of the behavioral period on the test day, the animal was quickly anesthetized with ketamine, euthanized with pentobarbital sodium, and perfused transcardially with phosphate-buffered heparin solution followed by 4% paraformaldehyde. The brain was impregnated with 20% phosphate-buffered sucrose and then blocked and frozen at −80°C. We sectioned frozen blocks on a sliding microtome at 30 μm thickness and processed the tissue for several standard stains (AChE, CO, Nissl, etc.) and immunohistochemical reactions (calcium-binding proteins, neurofilaments, IEGs). Sections to be processed for cFos were incubated in 1° antibody (cFos, Santa Cruz Biotechnology) followed by 2° antibody (biotinylated α-rabbit, Vector Labs). Avidin was fixed by incubating in an avidin-biotin system (ABC peroxidase standard kit, Vector Labs). We reacted the sections in DAB/peroxide and counterstained with methylene blue. Tissue was mounted and sections were visualized using a light microscope.

Data Analysis

Divisions of marmoset frontal cortex

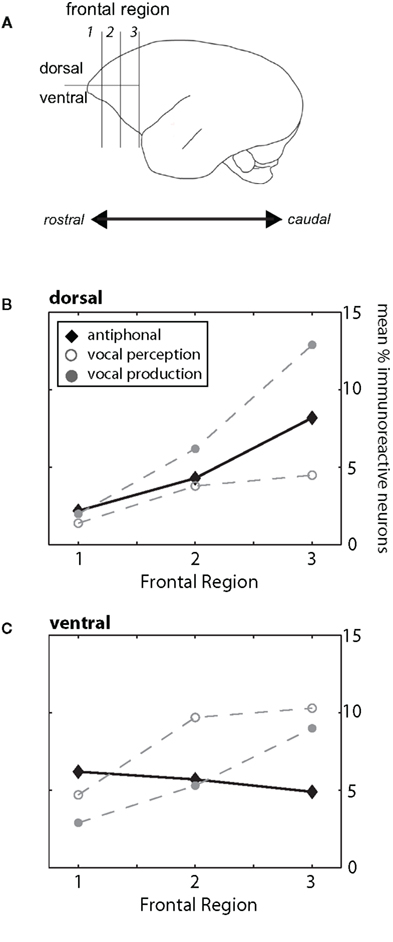

In contrast to many other primates, frontal cortex of marmosets does not have prominent landmark sulci (Figure 1A; Petrides and Pandya, 1999, 2002; Burman et al., 2006; Roberts et al., 2007). The lack of arcuate sulcus makes it particularly difficult to determine the anatomical boundary between prefrontal and premotor cortex. Previous anatomical studies of marmoset frontal cortex provided some evidence of the cytoarchitectural divisions in this cortical area (Brodman, 1909; Burman et al., 2006), but these boundaries are subtle and could not be readily identified in all subjects here. In light of these limitations, we divided frontal cortex into three regions along the rostral–caudal axis and partitioned these regions further along the dorsal–ventral plane (Figure 1A). Frontal cortex was considered the area rostral to motor cortex (Burman et al., 2008). For the rostral–caudal axis, each frontal region contained an equal number of sections. We divided the dorsal–ventrolateral axis by placing a midline along the lateral portion of each section. While the boundaries may not reflect the exact cytoarchitectural divisions in marmoset frontal cortex, partitioning the brain in this way allowed us, as a first step, to determine whether different regions of frontal cortex contribute to different functional aspects of vocal communication. Figure 1B shows the locations of the key immunoreactive populations found in frontal cortex in this study. Based on descriptions from previous research (Brodman, 1909; Burman et al., 2006), the two most rostral regions in our analysis (Frontal Regions 1 and 2) showing high levels of cFos expression likely correspond to ventral-prefrontal cortex area 12/45, whereas the populations in the most caudal region (Frontal Region 3) are likely premotor cortex areas 6V and 6D. Future research will aim to refine this analysis and ascertain the functional role and anatomical boundaries of these neuronal populations.

Figure 1. (A) Schematic drawing of the marmoset cortex. For our analysis we divided the frontal cortex, defined as the area of cortex rostral to motor cortex, into three regions (1, 2, 3) along the rostral–caudal plane and two areas along the dorsal/ventrolateral plane. These divisions are shown in the drawing. For both B and C, the three behavioral conditions are plotted (Antiphonal – black diamond; Vocal Perception – open gray circle; Vocal Production – filled gray circle). (B) Mean percentage of immunoreactive neurons in the dorsal (above) area of the frontal cortex. Data are shown for each of the three frontal regions (1–3). (C) Mean percentage of immunoreactive neurons in the ventrolateral area of the frontal cortex. Data are shown for each of the three frontal regions (1–3).
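The rostral–caudal partition described in this section, in which each frontal region contains an equal number of sections, can be illustrated as follows. This is a minimal sketch assuming sections are indexed from rostral to caudal; the function name is hypothetical, and the handling of section counts not divisible by three is an assumption, since the text does not say how remainders were treated.

```python
def assign_frontal_regions(n_sections):
    """Map rostral-to-caudal section indices to Frontal Regions 1-3.

    Returns a list of length `n_sections` whose i-th entry is the region
    (1 = most rostral, 3 = most caudal) of the i-th section.
    """
    if n_sections < 3:
        raise ValueError("need at least three sections")
    base, extra = divmod(n_sections, 3)
    # Assumption: any leftover sections are given to the most rostral regions.
    sizes = [base + (1 if r < extra else 0) for r in range(3)]
    labels = []
    for region, size in enumerate(sizes, start=1):
        labels.extend([region] * size)
    return labels
```

Each region would then be split further into dorsal and ventrolateral areas, as in Figure 1A; that step depends on the per-section midline and is not modeled here.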

Divisions of auditory cortex

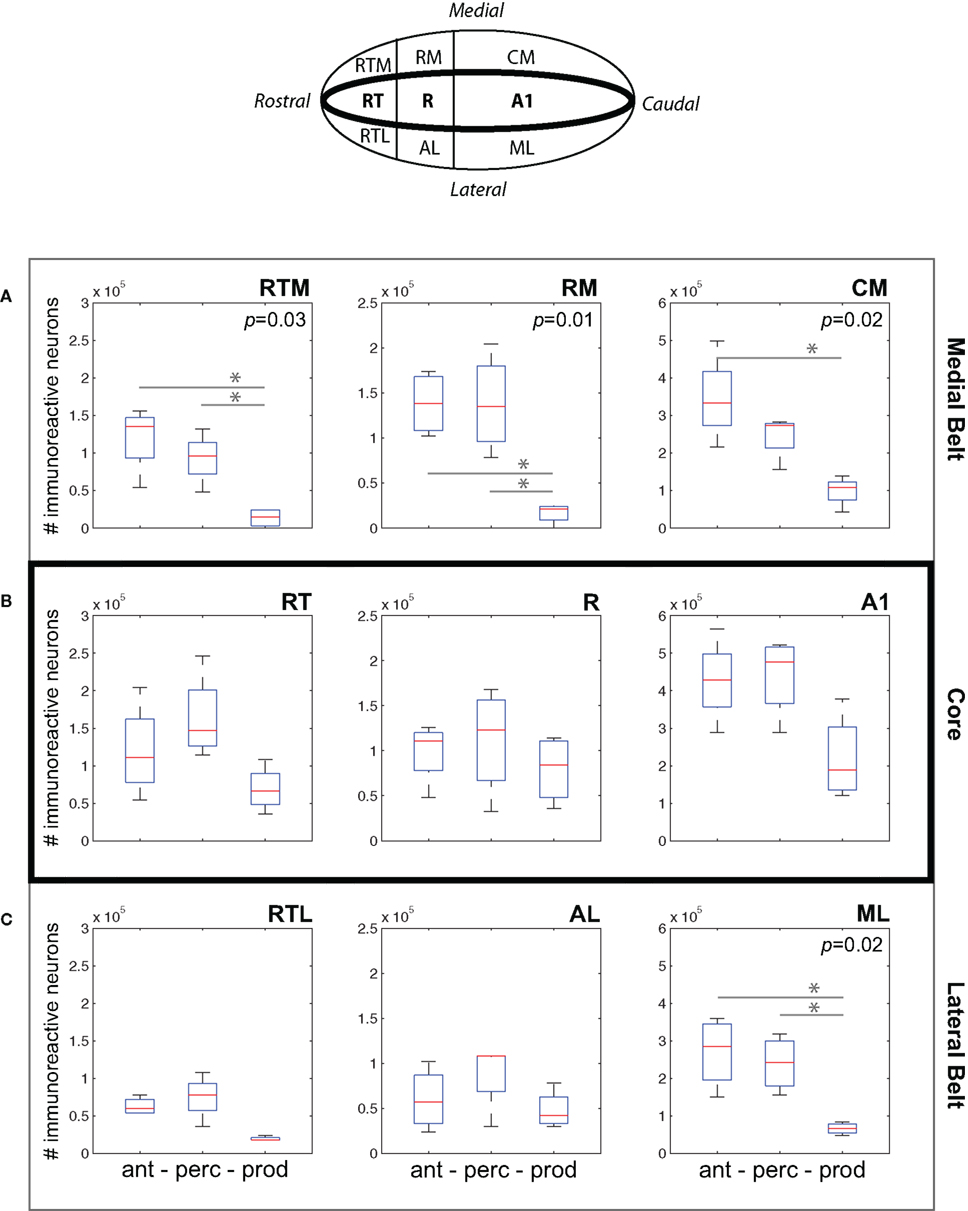

Following Kaas and Hackett (2000), we divided auditory cortex into medial belt, core, and lateral belt. In this study, we quantified cFos expression in each of these three regions of auditory cortex. We also analyzed the data based on IEG expression in three subfields within these areas. Specifically, the core of auditory cortex is divided into three areas – A1, R, RT – along a caudal–rostral axis that can be identified physiologically (Bendor and Wang, 2008) and through cytoarchitectonic differences (Morel et al., 1993; Kaas and Hackett, 1998). Each of the belt areas also consists of three areas. The medial belt comprises CM, RM, and RTM, while the lateral belt consists of areas ML, AL, and RTL. A further caudal field, CL, is also evident in the lateral belt, but was not included in this study.

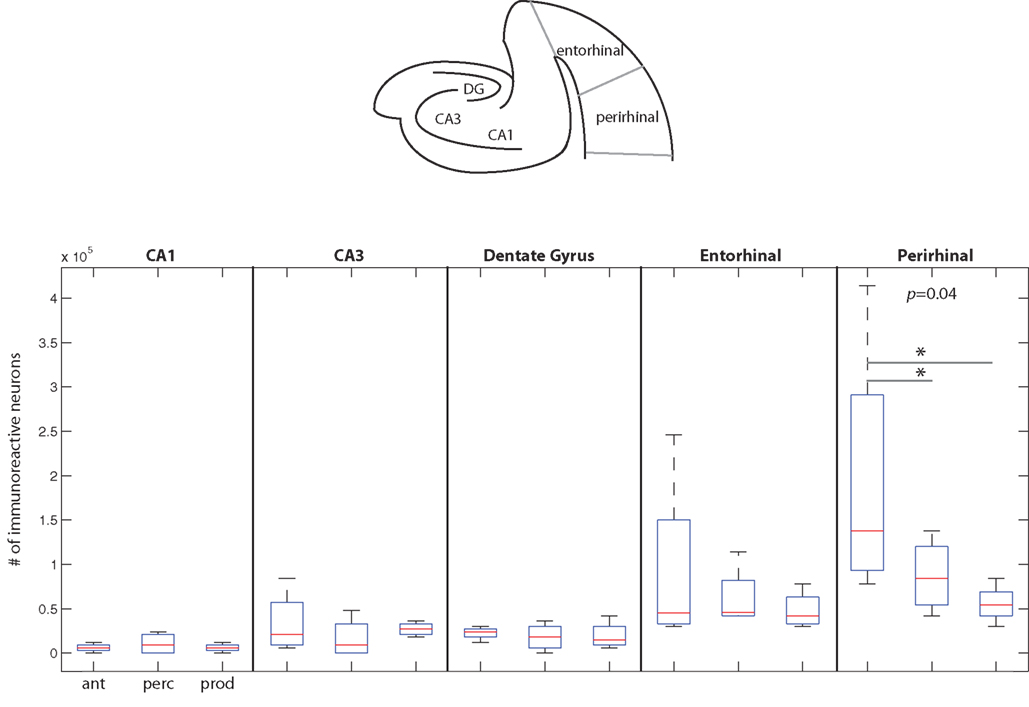

Divisions of medial temporal lobe

We quantified cFos expression in five areas of marmoset medial temporal cortex. Within hippocampus, we examined CA1, CA3, and the Dentate Gyrus (Amaral and Witter, 1989). We further analyzed IEG expression in entorhinal and perirhinal cortex (Suzuki and Amaral, 1994). Although both these latter areas can be further divided into more specific fields, we observed no difference in IEG expression in these fields. As such, we do not distinguish between areas of medial and lateral entorhinal cortex or areas 35 and 36 of perirhinal cortex.

cFos quantification

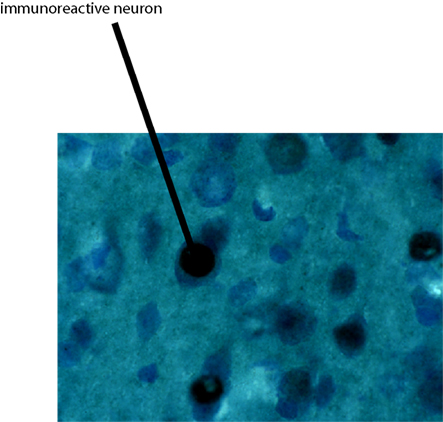

To quantify cFos expression, we conducted a stereological analysis of the tissue. Using Microbrightfield Stereoinvestigator, we employed the Optical Fractionator method (West et al., 1991; West, 2003). This method is used to systematically sample tissue sections and generate an unbiased estimate of immunoreactive cells. For this analysis, we used a 50 μm × 50 μm counting frame and sampled at 500 μm intervals. All sections were viewed at 100× magnification when counting immunoreactive neurons. An example of an immunoreactive neuron is shown in Figure 2.

Figure 2. An example of an immunoreactive neuron viewed at the same magnification (100×) and window size (50 μm × 50 μm) at which all stereology analyses were performed.
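For readers unfamiliar with the Optical Fractionator, the standard estimator multiplies the number of counted cells by the reciprocals of three sampling fractions: section (ssf), area (asf), and thickness (tsf). The sketch below shows that arithmetic only. It assumes the 500 μm sampling interval refers to the x–y grid step, which gives an area sampling fraction of (50 × 50)/(500 × 500) = 0.01; the section and thickness fractions are not reported in the text and must be supplied by the caller. The function names are hypothetical and do not correspond to the Stereoinvestigator software.

```python
def area_sampling_fraction(frame_um=50.0, grid_step_um=500.0):
    """Counting-frame area divided by the area of one sampling-grid cell.

    Assumption: the 500 um interval in the text is the x-y grid step.
    """
    return (frame_um * frame_um) / (grid_step_um * grid_step_um)


def optical_fractionator_estimate(counted, ssf, tsf, asf=None):
    """Estimate total cell number as N = counted / (ssf * asf * tsf).

    counted: number of cells counted across all sampled frames
    ssf:     section sampling fraction (not given in the text)
    tsf:     thickness sampling fraction (not given in the text)
    asf:     area sampling fraction; defaults to the value assumed above
    """
    if asf is None:
        asf = area_sampling_fraction()
    for name, fraction in (("ssf", ssf), ("asf", asf), ("tsf", tsf)):
        if not 0 < fraction <= 1:
            raise ValueError(f"{name} must be in (0, 1], got {fraction}")
    return counted / (ssf * asf * tsf)
```

Because the later analyses use percentages within each subject, the section and thickness fractions cancel whenever they are constant across areas, so the relative pattern does not depend on their exact values.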

A preliminary test showed that a subject who produced 23 phee vocalizations and heard 30 phee presentations exhibited minimal cFos expression. As such, we created a baseline criterion for subjects in each condition to ensure measurable levels of cFos expression. Since this experiment was conducted on a non-human primate, we were not able to systematically test the number of vocalizations produced and perceived necessary to maximize IEG expression in each condition. Although some variability in the extent of cFos expression could be the result of each subject producing and/or perceiving different numbers of calls, this is unlikely to account for the pattern of results observed here for at least two reasons. First, work on songbirds shows that once a minimum threshold for stimulation has been reached, additional song presentations do not result in significantly greater IEG expression (Kruse et al., 2000). Given that subjects showed strong IEG expression in all conditions, it is likely that we exceeded the requisite threshold for common marmosets. Second, as described under Statistical analysis, we normalized the cFos expression for each subject to account for any between-subject variability. As such, our analyses were conducted on the relative amount of IEG expression for each subject, rather than the absolute numbers of labeled cells. By normalizing the data in this way, we avoided biasing our analysis toward any subjects or conditions that may have produced or perceived more phee vocalizations.

Statistical analysis

As this study consisted of a small number of subjects, controlling for variation in the total amount of immunoreactivity across subjects when testing for statistical differences was critical. When comparing across areas of the brain, we performed Repeated-measures ANOVAs on the raw data. This test reveals area-specific immunoreactivity while inherently controlling for between-subject variation. Importantly, all analyses in the manuscript utilizing Repeated-Measures ANOVAs were performed on the raw data.

Due to the small number of subjects, individual variation in total IEG expression could mask comparisons within particular areas of cortex. As such, we normalized the data by generating the percentage of immunoreactive neurons in a particular sub-area relative to the total number of neurons for that individual. This allowed us to observe the general pattern of cFos expression while adjusting for total number of immunoreactive neurons in that individual. We used these normalized data for comparisons between the experimental conditions within particular areas of cortex. To test for differences between conditions within areas, we computed a One-way ANOVA. Direct paired comparisons of each condition for a given area were analyzed using independent samples t-tests.
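The normalization and the between-condition test described above can be sketched as follows. This is an illustration under stated assumptions, not the analysis code used in the study: it takes the denominator to be the subject's total immunoreactive count across the analyzed areas, and it computes the one-way ANOVA F statistic by hand to stay dependency-free. The function names and any input values a reader supplies are hypothetical.

```python
def normalize_counts(counts_by_area):
    """Convert per-area immunoreactive counts into percentages of the
    subject's total, so subjects with different overall labeling are comparable.

    Assumption: the denominator is the sum over all analyzed areas.
    """
    total = sum(counts_by_area.values())
    if total == 0:
        raise ValueError("subject has no immunoreactive cells")
    return {area: 100.0 * n / total for area, n in counts_by_area.items()}


def one_way_f(groups):
    """F statistic of a one-way ANOVA.

    `groups` holds one list of normalized values per condition
    (e.g. one value per hemisphere per subject).
    """
    k = len(groups)
    n_total = sum(len(g) for g in groups)
    means = [sum(g) / len(g) for g in groups]
    grand_mean = sum(sum(g) for g in groups) / n_total
    # Variability of condition means around the grand mean.
    ss_between = sum(len(g) * (m - grand_mean) ** 2 for g, m in zip(groups, means))
    # Variability of samples around their own condition mean.
    ss_within = sum((x - m) ** 2 for g, m in zip(groups, means) for x in g)
    return (ss_between / (k - 1)) / (ss_within / (n_total - k))
```

The resulting F value is compared against an F distribution with (k − 1, N − k) degrees of freedom; with three conditions and four hemispheres per condition that would be (2, 9).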

A Repeated-measures ANOVA showed no interaction between area of cortex, behavioral condition and hemisphere (F(40,120) = 1.16, p = 0.76) suggesting there is no difference in the pattern of activity in the two hemispheres of the brain across the test conditions. As such, we treated each hemisphere as an independent sample.

Results

Overall, we observed differences in the pattern of cFos expression across the areas of cortex during the three test conditions. These different aspects of vocal communication elicited distinct genomic responses in each of the three target areas of cortex – frontal, temporal and medial temporal. Below we detail the observed changes in each of the areas of cortex studied here.

Frontal Cortex

The pattern of cFos expression across frontal cortex was notably different across the behavioral conditions. With the exception of the rostral areas of dorsal frontal cortex, subjects in the Vocal Perception and Vocal Production conditions exhibited almost opposing cFos expression. In other words, in areas in which Vocal Perception condition subjects exhibited high levels of expression, subjects in the Vocal Production condition showed low levels, and vice versa. Antiphonal Calling animals largely showed a combination of the two other conditions, though several areas showed increased or decreased levels of cFos expression suggestive of sensory–motor interactions.

Analyses showed a significant difference between the regions of frontal cortex and behavioral condition (p < 0.0001) suggesting that the pattern of IEG expression in frontal cortex differed across the behavioral conditions (Figures 1B,C). We next analyzed the pattern of activity in each region of frontal cortex and compared IEG expression in the dorsal and ventrolateral areas. cFos expression was significantly different between the behavioral conditions in “Region 1” (p = 0.05), “Region 2” (p = 0.001) and “Region 3” (p = 0.001). These analyses suggest that the pattern of IEG expression was unique for each of the behavioral conditions.

We performed paired comparisons of the pattern of cFos expression across frontal cortex between the Vocal Perception and Vocal Production conditions. Our analyses show that cFos expression in these two conditions is strongly different (Figures 1B,C). Repeated-measures ANOVAs showed that cFos expression in the Vocal Perception and Vocal Production conditions across all six areas of frontal cortex was significantly different (p < 0.0001) suggesting that the overall pattern of expression between these conditions was statistically different. Further analyses showed a significant difference in the pattern of expression in the dorsal area across frontal cortex between these conditions (p = 0.02), while the effect in the ventrolateral area approached, but did not reach statistical significance (p = 0.06). This suggests that different neural substrates underlie the individual vocal perception and production processes in marmoset frontal cortex.

Building on these analyses, we compared cFos expression in the Antiphonal Calling condition with the other two behavioral conditions. As a whole, the pattern of expression in Antiphonal Calling differed from the other two conditions, but some overlap in cFos expression was evident (Figures 1B,C). Comparisons with the Antiphonal Calling condition showed a significant difference in IEG expression across the frontal cortex for both the Vocal Perception (p < 0.0001) and Vocal Production (p = 0.02) conditions, suggesting that the pattern of expression between these conditions was different. Across the dorsal region, cFos expression in the Antiphonal Calling condition was not significantly different from the Vocal Production condition, but approached significance when compared with the Vocal Perception condition (p = 0.06). This suggests that the pattern of cFos expression in the Antiphonal Calling condition was more similar to the Vocal Production condition in the dorsal regions of frontal cortex. In the ventrolateral regions of frontal cortex, however, cFos expression in the Antiphonal Calling condition was significantly different from both the Vocal Perception (p = 0.002) and Vocal Production (p = 0.005) conditions. These results suggest that the pattern of immunoreactivity in marmoset frontal cortex for subjects in the Antiphonal Calling condition was distinct from both of the other behavioral conditions.

We next compared the pattern of expression within the individual areas of frontal cortex across the behavioral conditions. These analyses corroborated the above analyses by showing two general trends. First, cFos expression in the Vocal Perception and Vocal Production conditions was largely the opposite of each other. Second, cFos expression in the Antiphonal Calling condition reflected both of the other behavioral conditions, but with a few unique patterns of expression (Figures 1B,C).

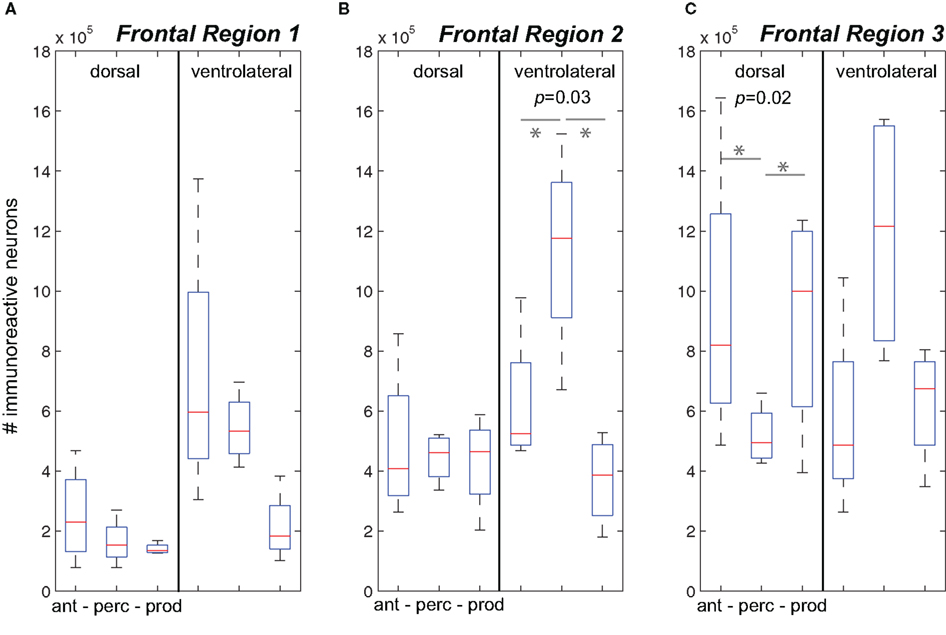

Figure 3A plots data for Frontal Region 1. Analyses showed no significant difference in cFos expression between the three behavioral conditions in either the dorsal or the ventrolateral region. We did observe a general increase in cFos expression in the Antiphonal Calling (p = 0.08) and Vocal Perception (p = 0.1) conditions relative to Vocal Production, but the analysis did not reach statistical significance. This suggests that conditions during which subjects were presented with phee stimuli trended toward an increase in cFos expression in the ventral area, though with the small sample size the effect did not reach statistical significance.

Figure 3. The number of immunoreactive neurons in the frontal cortex. (A–C) Data from the three behavioral conditions are shown in box plots for the dorsal and ventrolateral areas for Frontal Regions 1–3. Each box plot shows the median and upper and lower quartiles; the whiskers plot the range. Statistically significant p-values for One-way ANOVA tests comparing cFos expression across the behavioral conditions within a region are noted. “*” denotes significant differences in paired comparisons. (Ant, Antiphonal calling; Perc, Vocal perception; Prod, Vocal production).

Figure 3B plots data for Frontal Region 2. Here analyses showed significant differences between the behavioral conditions in the ventral (p = 0.03), but not the dorsal, region, suggesting that differences in the pattern of IEG expression were specific to the ventral area. Further analyses on the ventral area showed that subjects in the Vocal Perception condition exhibited a significantly higher number of immunoreactive neurons than subjects in both the Antiphonal Calling (p = 0.04) and Vocal Production (p = 0.03) conditions. These data suggest that the increase in cFos expression here was specific to the sole test condition in which subjects heard vocalizations, but did not utter vocal responses.

Figure 3C plots data for Frontal Region 3. Statistical tests revealed significant differences across the three behavioral conditions for only the dorsal region (p = 0.02), suggesting a different pattern of IEG expression in each of the behaviors in this area. When we compared cFos expression in the dorsal region between the specific behavioral conditions, analyses revealed significantly fewer immunoreactive neurons in the Vocal Perception condition than in both the Antiphonal Calling (p = 0.04) and Vocal Production (p = 0.02) conditions, though no difference was evident between these latter conditions. These data suggest that some aspect of vocal production is likely driving the gene expression observed in the dorsal area.

Auditory Cortex

A Repeated-Measures ANOVA showed a significant interaction between the behavioral conditions and the areas of auditory cortex (p = 0.002), suggesting that the pattern of IEG expression in auditory cortex varied depending on subjects’ behavior. Comparisons of cFos expression across auditory cortex for each behavioral condition showed a significant interaction between the areas of auditory cortex and subjects in the Vocal Perception and Vocal Production conditions (p = 0.01). Interestingly, IEG expression in the Antiphonal Calling condition was significantly different across auditory cortex from the Vocal Production condition (p = 0.0001) but not the Vocal Perception condition. This suggests that cFos expression in the Antiphonal Calling and Vocal Perception conditions was statistically indistinguishable, but that both were significantly different from the Vocal Production condition.

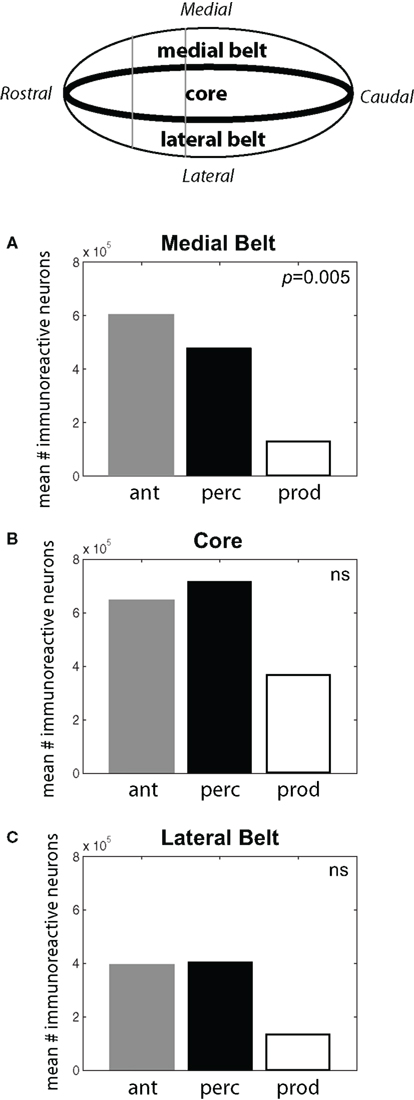

As shown in Figure 4, subjects in the Antiphonal Calling and Vocal Perception conditions showed a stronger genomic response across the auditory cortex. Figure 4A shows the mean # of immunoreactive neurons counted in the medial belt for each of the three behavioral conditions. Analyses revealed a significant difference in cFos expression across the behavioral conditions in medial belt (p = 005). Figure 4B shows cFos expression in the auditory cortex core for the three behavioral conditions. In contrast to medial belt, no difference was observed across the three behaviors. Figure 4C shows data for lateral belt. As in medial belt, cFos expression was higher in the Antiphonal Calling and Vocal Perception conditions than in the Vocal Production condition, but here the difference did not reach statistical significance. These data suggest that cFos expression differed between the three behavioral conditions primarily in the medial belt of auditory cortex.

Figure 4. (Top) A schematic drawing of the auditory cortex. The locations of the Medial Belt, Core, and Lateral Belt are highlighted. (A–C) Bar graphs plot the mean # of immunoreactive neurons measured in the three behavioral conditions in each of these three areas of the auditory cortex. Statistically significant differences are noted. [Ant, Antiphonal calling (gray bar); Perc, Vocal perception (black bar); Prod, Vocal production (white bar)].

Figure 5A plots data for three areas of medial belt: RTM, RM, and CM. We observed a consistent difference between cFos expression during the Vocal Production condition and both the Vocal Perception and Antiphonal Calling conditions. Analysis of cFos expression in each of the three areas of medial belt showed significant differences across the three behavioral conditions for all three areas: RTM (p = 0.03), RM (p = 0.01), CM (p = 0.02). Paired comparisons of each behavioral condition in each area showed that IEG expression in the Vocal Production condition was significantly lower than in the Vocal Perception condition in two areas [RTM (p = 0.03), RM (p = 0.01)] and significantly lower than in the Antiphonal Calling condition in all three areas [RTM (p = 0.03), RM (p = 0.01), and CM (p = 0.01)]. IEG expression did not differ in any of these areas between the Vocal Perception and Antiphonal Calling conditions. Overall, these data show that the number of immunoreactive neurons did not differ between the two conditions in which we presented phee calls (i.e., Vocal Perception and Antiphonal Calling), but that both of these conditions differed from the Vocal Production condition.

Figure 5. (Top) A schematic drawing of the auditory cortex. The anatomical locations of the fields within the (A) Medial Belt (RTM, RM, CM), (B) Core (RT, R, A1), and (C) Lateral Belt (RTL, AL, ML) are shown. (A–C) Data from the three behavioral conditions are shown in box plots for each of the nine auditory cortical fields measured here. Each box plot shows the median and upper and lower quartiles; the whiskers plot the range. Statistically significant p-values for One-way ANOVA tests comparing cFos expression across the behavioral conditions within a region are noted. “*” denotes significant differences in paired comparisons. (Ant, Antiphonal calling; Perc, Vocal perception; Prod, Vocal production).

Figure 5B shows data for the three areas of the auditory core: RT, R, and A1. In contrast to the two belt regions, cFos expression was relatively similar in the core across the three behavioral conditions. Comparisons of the IEG expression in each of the three areas showed no significant differences between the conditions. This may occur because all conditions involved hearing vocalizations either by self-generation or external presentation, both of which are known to modulate neural activity in the marmoset primary auditory cortex (Wang and Kadia, 2001; Eliades and Wang, 2003).

Figure 5C plots data for three areas of lateral belt: RTL, ML, and AL. As in medial belt, cFos expression in the Vocal Production condition was generally lower than in both the Vocal Perception and Antiphonal Calling conditions. Comparisons of IEG expression in each of the three areas of lateral belt showed significant differences across the behavioral conditions in only ML (p = 0.02), but showed no statistical difference in RTL or AL. Paired comparisons of the behavioral conditions for ML showed that subjects in the Vocal Production condition exhibited significantly less cFos expression than both the Antiphonal Calling (p = 0.02) and Vocal Perception (p = 0.008) animals. A similar pattern was observed in AL, though the effects did not reach statistical significance. As in the other areas of auditory cortex, the similar pattern of cFos expression in the Antiphonal Calling and Vocal Perception conditions likely results from the sensory processing demands involved in hearing conspecific vocalizations.

Medial Temporal Cortex

A Repeated-Measures ANOVA showed a significant interaction between the behavioral conditions and the areas of medial temporal cortex (p = 0.01) suggesting that the pattern of cFos expression differed across the behaviors in this area of the brain (Figure 6). When we contrasted IEG expression across all areas of medial temporal cortex, analyses showed a significant difference between the Vocal Production condition and both the Vocal Perception (p = 0.03) and Antiphonal Calling (p = 0.02) conditions. There was, however, no difference in the pattern of cFos expression between the Vocal Perception and Antiphonal Calling conditions. This suggests that as in auditory cortex, IEG expression in medial temporal cortex was statistically similar in the Vocal Perception and Antiphonal Calling conditions and both differed significantly from the Vocal Production condition.

Figure 6. The number of immunoreactive neurons in the medial temporal cortex. (Top) A schematic coronal section of the medial temporal cortex areas examined here. (Below) Data from the three behavioral conditions are shown in box plots for each of the five medial temporal cortex areas measured here. Each box plot shows the median and upper and lower quartiles; the whiskers plot the range. Statistically significant p-values for One-way ANOVA tests comparing cFos expression across the behavioral conditions within a region are noted. “*” denotes significant differences in paired comparisons. (Ant, Antiphonal calling; Perc, Vocal perception; Prod, Vocal production).

Figure 6 plots data for each area of the medial temporal cortex. Overall, cFos expression was lower in this area of the cortex than in the two other areas examined in this study. The one area showing a notable genomic response was perirhinal cortex, with subjects in the Antiphonal Calling condition showing the highest level. Comparisons of IEG expression within each of the five areas of medial temporal cortex measured in this study (i.e., CA1, CA3, dentate gyrus, entorhinal cortex, perirhinal cortex) showed significant differences between the behavioral conditions in only perirhinal cortex (p = 0.04; Figure 6). Paired comparisons of each of the behavioral conditions for the perirhinal cortex showed significantly more cFos expression in the Antiphonal Calling condition than in the Vocal Perception (p = 0.04) and Vocal Production (p = 0.05) conditions, but no difference between the Vocal Perception and Vocal Production animals. These data suggest that it is only during the active vocal behavior that a notable increase in cFos expression occurs in perirhinal cortex.

Discussion

Executing complex behaviors involves the coordination of multiple areas of cortex (Fuster, 1999; Buzsaki, 2006). Vocal communication is no exception, particularly since both sensory and motor processes are inherent to these communication systems. A number of critical subcortical areas that significantly contribute to primate vocal communication have been identified in previous work (Jurgens, 2002a, 2009). The aim of this study was to identify how substrates in primate cortex might also contribute to elements of vocal communication. We quantified cFos expression during three aspects of vocal communication – vocal perception, vocal production, and antiphonal calling – in frontal, auditory, and medial temporal cortex in common marmosets. Our logic was the following. IEG expression in the vocal perception and vocal production conditions would reflect the substrates in marmoset cortex involved in the individual sensory and vocal-motor components of vocal communication. As antiphonal calling is an active communicative behavior requiring both the perception and production of vocalizations (Miller and Wang, 2006), the pattern of cFos expression during this condition would reveal any unique patterns of neural activity that occur in a vocal behavior as a result of sensory–motor integration. These data will be used to guide current neurophysiology recordings aimed at determining the neural mechanisms underlying marmoset vocal communication.

The pattern of IEG expression in frontal cortex suggests that different areas are involved in the sensory and vocal-motor components of marmoset vocal communication (Figure 1). In the two conditions in which subjects produced vocalizations, Antiphonal Calling and Vocal Production, a significant increase in cFos expression occurred in the dorsal premotor cortex (Figure 3). Similarly, Jurgens et al. (2002) showed significant uptake of 2-Deoxyglucose in squirrel monkey (S. sciureus) dorsal frontal cortex during vocal production. Although the dorsal pathway of the cortex was classically thought to process spatial information (Ungerleider and Mishkin, 1982), more recent work suggests it is involved in various coordinated motor actions (Goodale et al., 1991; Murata et al., 1996; Rizzolatti et al., 1997), including speech production (Hickok and Poeppel, 2004, 2007). Results here are consistent with this pattern and suggest that dorsal frontal cortex may play a role in the control and coordination of marmoset vocal production. A second pattern emerging from the frontal cortex results pertained to the sensory aspect of vocal communication. Subjects in the Antiphonal Calling and Vocal Perception conditions showed increases in cFos expression in the rostral regions of ventral frontal cortex. This finding is consistent with previous work showing neurons in prefrontal cortex are involved in processing auditory signals, including vocalizations (Gifford et al., 2005; Romanski et al., 2005). Together these data suggest that different areas of marmoset frontal cortex are involved in the sensory and vocal-motor elements of vocal communication.

IEG expression in auditory cortex revealed several significant differences across the behavioral conditions. Analyses of the overall amount of cFos expression in core showed no differences across the behavioral conditions (Figure 4). This effect is likely the result of all three behaviors having some amount of sensory input either in the form of playback phee stimuli (Rauschecker et al., 1995; Wang and Kadia, 2001) and/or self-produced vocalizations (Eliades and Wang, 2003, 2008), both of which are known to elicit responses in auditory cortex neurons. The effect in the two belt regions, however, was quite different. For these areas, IEG expression was significantly less in the Vocal Production condition than in both the Antiphonal Calling and Vocal Perception conditions. Earlier work suggests that neurons in lateral belt likely process more complex elements of vocalization structure (Rauschecker et al., 1995). As such, the diminished genomic response in the Vocal Production condition likely results from the lack of additional sensory processing needed in this behavior. The medial belt of auditory cortex has not been the subject of many neurophysiology studies due to its anatomical location within the lateral sulcus for many species (Kaas and Hackett, 2000). Our data here, however, suggest that it may play an important role in vocal communication. Consistent with these data is a neurophysiology study reporting multi-unit responses to marmoset twitter calls in CM (Kajikawa et al., 2008). All three areas of medial belt showed significant cFos expression in both the Antiphonal Calling and Vocal Perception conditions (Figure 5), suggesting that medial belt in general, and CM more specifically, may contribute to the critical sensory recognition underlying many aspects of vocal communication.

Analyses revealed less overall cFos expression in the medial temporal lobe relative to the other two areas of cortex studied here. Despite the limited genomic response, one notable result did emerge in perirhinal cortex (Figure 6). Here our analysis showed a significant increase in cFos expression in both the Antiphonal Calling and Vocal Perception conditions relative to the Vocal Production condition, with Antiphonal Calling subjects showing the strongest response. This is important because it suggests that a significant IEG response was elicited only during behaviors in which subjects heard conspecific vocalizations. As perirhinal cortex is thought to play a role in forming associations and recognition memory (Tokuyama et al., 2000; Brown and Aggleton, 2001; Naya et al., 2003), the increased expression of cFos found in the present study may result from the recognition demands of the sensory-driven vocal behaviors studied here. Since the Antiphonal Calling condition elicited the strongest response, it may be that the functional contribution of this area is strongest during behaviors involving active conspecific social interactions. More explicit tests of this hypothesis are necessary, however, before a strong conclusion can be drawn.

The existence of immunoreactive neurons in frontal cortex for vocal communication, particularly the vocal-motor aspects, is noteworthy given the history of this field. At the same time, it is not surprising and is consistent with previous work (Jurgens et al., 2002; Petrides et al., 2005). In fact, recently Jurgens (2009) argued for a cortical pathway underlying vocal production. But he argues that the pathway is specific to the more sophisticated learned vocalizations which primates generally lack. This position is somewhat puzzling given that his own work shows the activation of frontal cortex in monkeys vocalizing from PAG stimulation (Jurgens et al., 2002), but the real source of contention may not be a product of the neural data. Rather it appears to be due to differences in interpreting the available behavioral data. As elegantly reviewed elsewhere (Egnor and Hauser, 2004), the view that primate vocalizations are largely innate and reflexive is inconsistent with current data. Certainly data do show that changes in the acoustic structure of vocalizations during ontogeny are limited (Hammerschmidt et al., 2001), but not altogether absent (Seyfarth and Cheney, 1986). Ontogenetic vocal production learning, however, is but one function of the primate vocal-motor system. Evidence of learning and behavioral control is available for many other aspects of this system (Elowson and Snowdon, 1994; Mitani and Gros-Louis, 1998; Suguira, 1998; Snowdon and Elowsen, 1999; Miller et al., 2003; Egnor et al., 2006, 2007; Miller and Wang, 2006; De la Torre and Snowdon, 2009). Even if the basic acoustic structure of a call is innately determined, the vocal behaviors themselves are not. Primates must decide if (Miller and Wang, 2006), when (Egnor et al., 2007) and in what context (Seyfarth and Cheney, 1986) to produce calls.
Controlling these aspects of vocal behavior would likely require the more complex cortical mechanisms used to guide other coordinated motor actions (Shadmehr and Wise, 2005). It is these processes that may be driving the IEG response in cortex observed during the vocal-motor conditions here. While non-human primates certainly lack the extent of vocal learning and control seen in human primates, it would be inaccurate to claim it is completely absent. Moreover, we should not limit our view of vocal production to the acoustic structure of the vocalizations, as the vocal behaviors appear to be guided by far more sophisticated mechanisms, ones likely requiring cortical control.

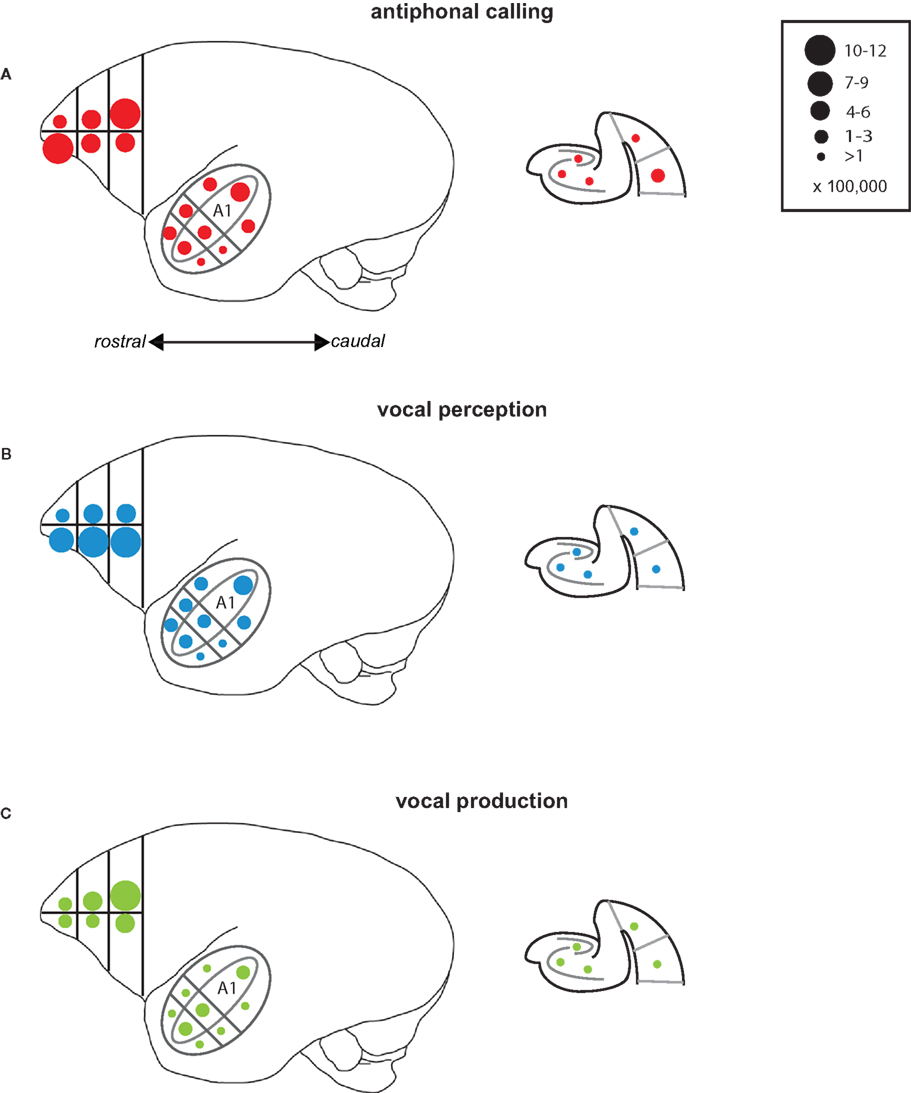

Several interesting patterns emerged in this study that may elucidate neural networks underlying aspects of vocal communication in primate cerebral cortex (Figure 7). Antiphonal calling was the only behavior studied here that involved both the sensory and vocal-motor demands of vocal communication as well as the various higher-level processes, such as decision-making and vocalization categorization (Miller et al., 2005; Miller and Wang, 2006). IEG expression during this condition yielded evidence of a pathway for call recognition and categorization. Specifically, three areas that showed an increased genomic response during antiphonal calling were ventrolateral prefrontal cortex, CM (and medial belt more generally), and perirhinal cortex. Anatomical evidence in primates shows direct connections between ventrolateral prefrontal cortex and rostral auditory cortex, including medial belt (Romanski et al., 1999). Further, a direct connection between entorhinal cortex and CM was recently reported (de la Mothe et al., 2006). Though connections between auditory cortex and perirhinal cortex have not been reported, entorhinal and perirhinal cortex are adjacent in the medial temporal lobe and share many synaptic connections (Suzuki and Amaral, 1994). In addition, evidence from rodents shows a connection between perirhinal and frontal cortex for acoustic signals, indicating a potential functional connection between these areas (Kyuhou et al., 2003). Interestingly, subjects in the Vocal Perception condition showed a similar pattern of IEG expression, though for most of the areas the extent of expression was somewhat weaker. The genomic response in the Vocal Production condition, however, was significantly weaker across all of these areas.
These data suggest that the pattern of expression across these areas is driven largely by the sensory demands of auditory behaviors, and that the active need to engage this sensory pathway, such as during antiphonal calling, increases the IEG response.

Figure 7. Summary of results. Schematic drawings of the areas of the marmoset cortex from which IEG expression was measured here. The drawing of the whole marmoset cortex to the left shows the Frontal Cortex and Auditory Cortex, while the schematic of the medial temporal cortex is shown to the right. Circles placed in each of the individual areas reflect the mean number of immunoreactive neurons measured. The number of immunoreactive neurons each circle size represents is shown in the key at the top right. Data are shown for each of the test conditions: (A) Antiphonal Calling, (B) Vocal Perception, and (C) Vocal Production.

Limitations of the Current Study

It is important to note that the scope of this study was limited in the following three ways. First, we used only a small number of subjects; two subjects contributed to each of the three test conditions. Although we were able to quantify the effects observed in the test conditions, more subjects are needed to refine the specific functions of the different areas exhibiting changes in neural activity across the conditions. Second, we may have biased the results by preselecting subjects based on their volubility for particular experimental conditions. Specifically, subjects were screened before testing to determine whether the particular animal produced high numbers of calls spontaneously, since there are large individual differences for this behavior in marmosets. Subjects who produced few or no calls were used in the vocal perception condition. It is possible that these individuals also possessed other behavioral traits that would affect the pattern of observed cFos expression. Third, the anatomical organization of marmoset frontal cortex is not well established. Although some previous work is available describing cytoarchitectural differences for areas of frontal cortex (Burman et al., 2006), many of these areas were not apparent in our sections for all animals. Because of the observed inconsistencies between subjects, we analyzed IEG expression by dividing frontal cortex into six regions (Figure 1A) along the rostral–caudal and ventral–dorsal planes. Although this allowed us to normalize frontal cortex across subjects, this analysis precludes specific claims about the functionality of particular areas during vocal communication. The data do, however, provide an assessment of regions of interest for future more detailed neurophysiology studies. Despite these limitations, the data presented here still have value as a starting point for more detailed neuroanatomical and neurophysiological investigations currently being conducted.

Conclusion

Research on the neural mechanisms underlying primate vocal communication has been underway for several decades. While work on the sensory aspects of vocal communication has made significant progress in parsing the relative contributions of the auditory system for vocalization processing, data on vocal production are less clear. There is, on some level, an inconsistency between earlier neurophysiology evidence and more recent studies showing control and flexibility in vocal behaviors not previously thought to exist. The functional neuroanatomy findings presented here suggest that multiple areas of marmoset cortex play a functional role in vocal communication. Although several caveats limit the breadth of the conclusions drawn here, at the very least the data presented in this study identify a series of neural substrates in marmoset cortex that appear to contribute to the different sensory and vocal-motor aspects of vocal communication. Future work will build on the results of this functional neuroanatomy study and record the activity of neurons in each of the key areas revealed here in order to refine our understanding of their role in vocal communication.

Conflict of Interest Statement

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Acknowledgments

We thank Dan Bendor and Yi Zhou for helpful comments on the manuscript. This work was supported by grants to Cory T. Miller (NIH F32 DC007022, NIH R03 DC008404, NIH K99/R00 DC009007, National Organization of Hearing Research Foundation) and Xiaoqin Wang (NIH R01 DC005808, DC008578).

References

Aitken, P. G. (1981). Cortical control of conditioned and spontaneous vocal behavior in rhesus monkeys. Brain Lang. 13, 171–184.

Amaral, D. G., and Witter, M. P. (1989). The three-dimensional organization of the hippocampal formation: a review of anatomical data. Neuroscience 31, 571–591.

Bendor, D. A., and Wang, X. (2008). Neural response properties of the primary, rostral and rostrotemporal core fields in the auditory cortex of marmoset monkeys. J. Neurophysiol. 100, 888–906.

Brodmann, K. (1909). Vergleichende Lokalisationslehre Der Grosshirnrinde In Ihren Prinzipien Dargestellt Auf Grund Des Zellenbaues. Leipzig: Barth.

Brown, M. W., and Aggleton, J. P. (2001). Recognition memory: what are the roles of the perirhinal cortex and hippocampus? Nat. Rev. Neurosci. 2, 51–61.

Burman, K. J., Palmer, S. M., Gamberini, M., and Rosa, M. G. P. (2006). Cytoarchitectural subdivisions of the dorsolateral frontal cortex of the marmoset monkey (Callithrix jacchus), and their projections to dorsal visual areas. J. Comp. Neurol. 495, 149–172.

Burman, K. J., Palmer, S. M., Gamberini, M., Spitzer, M. W., and Rosa, M. G. P. (2008). Anatomical and physiological definition of the motor cortex of the marmoset monkey. J. Comp. Neurol. 506, 860–876.

Cheney, D. L., and Seyfarth, R. M. (1982a). How vervet monkeys perceive their grunts: field playback experiments. Anim. Behav. 30, 739–751.

Cheney, D. L., and Seyfarth, R. M. (1982b). Recognition of individuals within and between groups of free ranging vervet monkeys. Am. Zool. 22, 519–529.

Cheney, D. L., and Seyfarth, R. M. (2007). Baboon Metaphysics: The Evolution of a Social Mind. Chicago: University of Chicago Press.

Cheney, D. L., Seyfarth, R. M., and Palombit, R. (1996). The function and mechanisms underlying baboon “contact” barks. Anim. Behav. 52, 507–518.

Cohen, Y. E., Hauser, M. D., and Russ, B. E. (2006). Spontaneous processing of abstract categorical information in the ventrolateral prefrontal cortex. Biol. Lett. 2, 261–265.

Cohen, Y. E., Theunissen, F. E., Russ, B. E., and Gill, P. (2007). Acoustic features of rhesus vocalizations and their representation in the ventrolateral prefrontal cortex. J. Neurophysiol. 97, 1470–1484.

de la Mothe, L. A., Blumell, S., Kajikawa, Y., and Hackett, T. A. (2006). Cortical connections of the auditory cortex in marmoset monkeys: core and medial belt regions. J. Comp. Neurol. 496, 27–71.

De la Torre, S., and Snowdon, C. T. (2009). Dialects in pygmy marmosets? Population variation in call structure. Am. J. Primatol. 71, 1–10.

Egnor, S. E. R., and Hauser, M. D. (2004). A paradox in the evolution of primate vocal learning. Trends Neurosci. 27, 649–654.

Egnor, S. E. R., Iguina, C., and Hauser, M. D. (2006). Perturbation of auditory feedback causes systematic perturbation in vocal structure in adult cotton-top tamarins. J. Exp. Biol. 209, 3652–3663.

Egnor, S. E. R., Wickelgren, J. G., and Hauser, M. D. (2007). Tracking silence: adjusting vocal production to avoid acoustic interference. J. Comp. Physiol. A 193, 477–483.

Eliades, S. J., and Wang, X. (2003). Sensory–motor interaction in the primate auditory cortex during self-initiated vocalizations. J. Neurophysiol. 89, 2185–2207.

Eliades, S. J., and Wang, X. (2008). Neural substrates of vocalization feedback monitoring in primate auditory cortex. Nature 453, 1102–1106.

Elowson, A. M., and Snowdon, C. T. (1994). Pygmy marmosets, Cebuella pygmaea, modify vocal structure in response to changed social environment. Anim. Behav. 47, 1267–1277.

Freedman, D. J., Anderson, K. C., and Miller, E. K. (2001). Categorical representation of visual stimuli in the primate prefrontal cortex. Science 291, 312–316.

Fuster, J. M. (1999). Memory in the Cerebral Cortex: An Empirical Approach to Neural Networks in the Human and Non-human Primate. Cambridge, MA: MIT Press.

Gemba, H., Kyuhou, S., Matsuzaki, R., and Amino, Y. (1999). Cortical field potentials with audio-initiated vocalization in monkeys. Neurosci. Lett. 272, 49–52.

Gemba, H., Miki, N., and Sasaki, K. (1995). Cortical field potentials preceding vocalization and influences of cerebellar hemispherectomy upon them in monkeys. Brain Res. 697, 143–151.

Ghazanfar, A. A., and Santos, L. R. (2004). Primate brains in the wild: the sensory basis for social interactions. Nat. Rev. Neurosci. 5, 603–616.

Gifford, G. W., Hauser, M. D., and Cohen, Y. E. (2003). Discrimination of functionally referential calls from laboratory-housed rhesus macaques: implications for neuroethological studies. Brain Behav. Evol. 61, 213–224.

Gifford, G. W., MacLean, K. A., Hauser, M. D., and Cohen, Y. E. (2005). The neurophysiology of functionally meaningful categories: macaque ventrolateral prefrontal cortex plays a critical role in spontaneous categorization of species-specific vocalizations. J. Cogn. Neurosci. 17, 1471–1482.

Goodale, M. A., Milner, A. D., Jakobson, L. S., and Carey, D. P. (1991). A neurological dissociation between perceiving objects and grasping them. Nature 349, 154–156.

Hammerschmidt, K., and Fischer, J. (2008). “Constraints in primate vocal production,” in Evolution of Communicative Flexibility, eds D. K. Oller and U. Griebel (Cambridge, MA: MIT Press), 93–120.

Hammerschmidt, K., Freudenstein, T., and Jurgens, U. (2001). Vocal development in squirrel monkeys. Behaviour 138, 1179–1204.

Hickok, G., and Poeppel, D. (2004). Dorsal and ventral streams: a framework for understanding aspects of the functional anatomy of language. Cognition 92, 67–99.

Hickok, G., and Poeppel, D. (2007). The cortical organization of speech processing. Nat. Rev. Neurosci. 8, 393–402.

Jarvis, E. D., Ribeiro, S., Vielliard, J., Da Silva, M., Ventura, D., and Mello, C. V. (2000). Behaviorally driven gene expression reveals hummingbird brain song nuclei. Nature 406, 628–632.

Jarvis, E. D., Scharff, C., Grossman, M., Ramos, J. A., and Nottebohm, F. (1998). For whom the bird sings: context-dependent gene expression. Neuron 21, 775–788.

Jurgens, U. (2002a). Neural pathways underlying vocal control. Neurosci. Biobehav. Rev. 26, 235–258.

Jurgens, U. (2002b). A study of central control of vocalization using the squirrel monkey. Med. Eng. Phys. 24, 473–477.

Jurgens, U., Ehrenreich, L., and De Lanerolle, N. C. (2002). 2-Deoxyglucose uptake during vocalization in the squirrel monkey brain. Behav. Brain Res. 136, 605–610.

Jurgens, U., and Ploog, D. (1970). Cerebral representation of vocalization in squirrel monkey. Exp. Brain Res. 10, 532–554.

Jurgens, U., and Pratt, R. (1979). Role of the periaqueductal grey in vocal expression of emotion. Brain Res. 167, 367–378.

Kaas, J. H., and Hackett, T. A. (1998). Subdivisions of auditory cortex and levels of processing in primates. Audiol. Neurootol. 3, 73–85.

Kaas, J. H., and Hackett, T. A. (2000). Subdivisions of auditory cortex and processing streams in primates. Proc. Natl. Acad. Sci. U.S.A. 97, 11793–11799.

Kajikawa, Y., de la Mothe, L. A., Blumell, S., Sterbing-D’Angelo, S. J., D’Angelo, W., Camalier, C. R., and Hackett, T. A. (2008). Coding of FM sweep trains and twitter calls in area CM of marmoset auditory cortex. Hear. Res. 239, 107–125.

Kruse, A. A., Stripling, R., and Clayton, D. F. (2000). Minimal experience required for immediate-early gene induction in the zebra finch neostriatum. Neurobiol. Learn. Mem. 74, 179–184.

Kyuhou, S., Matsuzaki, R., and Gemba, H. (2003). Perirhinal cortex relays auditory information to the frontal motor cortices in the rat. Neurosci. Lett. 353, 181–184.

Larson, C. (1991). On the relation of PAG neurons to laryngeal and respiratory muscles during vocalization in the monkeys. Brain Res. 552, 77–86.

Larson, C. R., and Kistler, M. K. (1986). The relationship of periaqueductal gray neurons to vocalization and laryngeal EMG in the behaving monkey. Exp. Brain Res. 63, 596–606.

MacLean, P. D., and Newman, J. D. (1988). Role of midline frontolimbic cortex in production of isolation call of squirrel monkeys. Brain Res. 450, 111–123.

Miller, C. T., Beck, K., Meade, B., and Wang, X. (2009a). Antiphonal call timing in marmosets is behaviorally significant: interactive playback experiments. J. Comp. Physiol. A Neuroethol. Sens. Neural. Behav. Physiol. 195, 783–789.

Miller, C. T., Eliades, S. J., and Wang, X. (2009b). Motor-planning for vocal production in common marmosets. Anim. Behav. 78, 1195–1203.

Miller, C. T., and Cohen, Y. E. (2010). “Vocalizations as auditory objects: behavior and neurophysiology,” in Primate Neuroethology, eds M. Platt and A. A. Ghazanfar (New York, NY: Oxford University Press), 237–255.

Miller, C. T., Flusberg, S., and Hauser, M. D. (2003). Interruptibility of cotton-top tamarin long calls: implications for vocal control. J. Exp. Biol. 206, 2629–2639.

Miller, C. T., Iguina, C., and Hauser, M. D. (2005). Processing vocal signals for recognition during antiphonal calling. Anim. Behav. 69, 1387–1398.

Miller, C. T., Mandel, K., and Wang, X. (2010). The communicative content of the common marmoset phee call during antiphonal calling. Am. J. Primatol. 72, 974–980.

Miller, C. T., and Wang, X. (2006). Sensory–motor interactions modulate a primate vocal behavior: antiphonal calling in common marmosets. J. Comp. Physiol. A 192, 27–38.

Mitani, J., and Gros-Louis, J. (1998). Chorusing and convergence in chimpanzees: tests of three hypotheses. Behaviour 135, 1041–1064.

Mohr, J. P., Pessin, M. S., Finkelstein, S., Funkenstein, H. H., Duncan, G. W., and Davis, K. R. (1978). Broca’s aphasia: pathologic and clinical. Neurology 28, 311–324.

Morel, A., Garraghty, P. E., and Kaas, J. H. (1993). Tonotopic organization, architectonic fields, and connections of auditory cortex in macaque monkeys. J. Comp. Neurol. 335, 437–459.

Murata, A., Gallese, V., Kaseda, M., and Sakata, H. (1996). Parietal neurons related to memory-guided hand manipulation. J. Neurophysiol. 75, 2180–2186.

Naya, Y., Yoshida, M., and Miyashita, Y. (2003). Forward processing of long-term associative memory in the monkey inferotemporal cortex. J. Neurosci. 23, 2861–2871.

Nieder, A., Freedman, D. J., and Miller, E. K. (2002). Representation of the quantity of visual items in the primate prefrontal cortex. Science 297, 1708–1711.

Newman, J. D. (2003). “Auditory communication and central auditory mechanisms in the squirrel monkeys: past and present,” in Primate Audition: Ethology and Neurobiology, ed. A. A. Ghazanfar (New York, NY: CRC Press), 227–246.

Newman, J. D., and Lindsley, D. (1976). Single unit analysis of auditory processing in squirrel monkey frontal cortex. Exp. Brain Res. 25, 169–181.

Newman, J. D., and Wollberg, Z. (1973). Responses of single neurons in the auditory cortex of squirrel monkeys to variants of a single call type. Exp. Neurol. 40, 821–824.

Norcross, J. L., and Newman, J. D. (1993). Context and gender specific differences in the acoustic structure of common marmoset (Callithrix jacchus) phee calls. Am. J. Primatol. 30, 37–54.

Petkov, C. I., Kayser, C., Steudel, T., Whittingstall, K., Augath, M., and Logothetis, N. K. (2008). A voice region in the monkey brain. Nat. Neurosci. 11, 367–374.

Petrides, M., Cadoret, G., and Mackey, S. (2005). Orofacial somatomotor responses in the macaque monkey homologue of Broca’s area. Nature 435, 1235–1238.

Petrides, M., and Pandya, D. N. (1999). Dorsolateral prefrontal cortex: comparative cytoarchitectonic analysis in the human and the macaque brain and corticocortical connection patterns. Eur. J. Neurosci. 11, 1011–1036.

Petrides, M., and Pandya, D. N. (2002). Comparative cytoarchitectonic analysis of the human and the macaque ventrolateral prefrontal cortex and corticocortical connection patterns in the monkey. Eur. J. Neurosci. 16, 291–310.

Pistorio, A., Vintch, B., and Wang, X. (2006). Acoustic analyses of vocal development in a new world primate, the common marmoset (Callithrix jacchus). J. Acoust. Soc. Am. 120, 1655–1670.

Poeppel, D., and Hickok, G. (2004). Towards a new functional anatomy of language. Cognition 92, 1–12.

Rauschecker, J. P., Tian, B., and Hauser, M. (1995). Processing of complex sounds in the macaque non-primary auditory cortex. Science 268, 111–114.

Rizzolatti, G., Fogassi, L., and Gallese, V. (1997). Parietal cortex: from sight to action. Curr. Opin. Neurobiol. 7, 562–567.

Roberts, A. C., Tomic, D. L., Parkinson, C. H., Roeling, T. A., Cutter, D. J., Robbins, T. W., and Everitt, B. J. (2007). Forebrain connectivity of the prefrontal cortex in the marmoset monkey (Callithrix jacchus): an anterograde and retrograde tract tracing study. J. Comp. Neurol. 502, 86–112.

Romanski, L. M., Averbeck, B. B., and Diltz, M. (2005). Neural representation of vocalizations in the primate ventrolateral prefrontal cortex. J. Neurophysiol. 93, 734–747.

Romanski, L. M., Bates, J. F., and Goldman-Rakic, P. S. (1999). Auditory belt and parabelt projections in the prefrontal cortex in the rhesus macaque. J. Comp. Neurol. 403, 141–157.

Romanski, L. M., and Goldman-Rakic, P. S. (2001). An auditory domain in primate prefrontal cortex. Nat. Neurosci. 5, 15–16.

Seyfarth, R. M., and Cheney, D. L. (1986). Vocal development in vervet monkeys. Anim. Behav. 34, 1640–1658.

Seyfarth, R. M., Cheney, D. L., and Marler, P. (1980). Monkey responses to three different alarm calls: evidence of predator classification and semantic communication. Science 210, 801–803.

Shadmehr, R., and Wise, S. P. (2005). Computational Neurobiology of Reaching and Pointing: A Foundation for Motor Learning. Cambridge, MA: MIT Press.

Showers, M. J. C. (1959). The cingulate gyrus: additional motor area and cortical autonomic regulator. J. Comp. Neurol. 112, 231–287.

Snowdon, C. T., and Elowson, A. M. (1999). Pygmy marmosets modify call structure when paired. Ethology 105, 893–908.

Sugiura, H. (1998). Matching of acoustic features during the vocal exchange of coo calls by Japanese macaques. Anim. Behav. 55, 673–687.

Sutton, D., Larson, C., and Lindeman, C. (1974). Neocortical and limbic lesion effects on primate phonation. Brain Res. 71, 61–75.

Suzuki, W. A., and Amaral, D. G. (1994). Topographic organization of the reciprocal connections between the monkey entorhinal and the perirhinal and parahippocampal cortices. J. Neurosci. 14, 1856–1877.

Tokuyama, W., Okuno, H., Hashimoto, T., Li, Y., and Miyashita, Y. (2000). BDNF upregulation during declarative memory formation in monkey inferior temporal cortex. Nat. Neurosci. 3, 1134–1142.

Ungerleider, L. G., and Mishkin, M. (1982). “Two cortical visual systems,” in Analysis of Visual Behavior, eds D. G. Ingle, M. A. Goodale, and R. J. Q. Mansfield (Cambridge: MIT Press), 549–586.

Wallis, J. D., and Miller, E. K. (2003). From rule to response: neuronal processes in the premotor and prefrontal cortex. J. Neurophysiol. 90, 1790–1806.

Wang, X., and Kadia, S. C. (2001). Differential representation of species-specific primate vocalizations in the auditory cortices of marmoset and cat. J. Neurophysiol. 86, 2616–2620.

Wang, X., Kadia, S. C., Lu, T., Liang, L., and Agamaite, J. A. (2003). “Cortical processing of complex sounds and species-specific vocalizations,” in Primate Audition: Ethology and Neurobiology, ed. A. A. Ghazanfar (Baton Rouge, LA: CRC Press LLC), 279–300.

West, M. J., Slomianka, L., and Gundersen, H. J. G. (1991). Unbiased stereological estimation of the total number of neurons in the subdivisions of the rat hippocampus using the optical fractionator. Anat. Rec. 231, 482–497.

Winter, P., and Funkenstein, H. H. (1973). The effect of species-specific vocalizations on the discharge of auditory cortical cells in the awake squirrel monkey. Exp. Brain Res. 18, 489–504.

Wollberg, Z., and Newman, J. D. (1972). Auditory cortex of squirrel monkeys: response patterns of single cells to species-specific vocalizations. Science 175, 212–214.

Zuberbuhler, K., Cheney, D. L., and Seyfarth, R. M. (1999). Conceptual semantics in a non-human primate. J. Comp. Psychol. 113, 33–42.

Keywords: immediate early gene expression, common marmoset, vocal communication, frontal cortex, auditory cortex, medial temporal cortex

Citation: Miller CT, DiMauro A, Pistorio A, Hendry S and Wang X (2010) Vocalization induced cFos expression in marmoset cortex. Front. Integr. Neurosci. 4:128. doi: 10.3389/fnint.2010.00128

Received: 17 August 2010;

Paper pending published: 08 October 2010;

Accepted: 30 November 2010;

Published online: 14 December 2010.

Edited by:

David C. Spray, Albert Einstein College of Medicine, USA

Reviewed by:

Dumitru A. Iacobas, Albert Einstein College of Medicine of Yeshiva University, USA

Jean Hebert, Albert Einstein College of Medicine, USA

Copyright: © 2010 Miller, DiMauro, Pistorio, Hendry and Wang. This is an open-access article subject to an exclusive license agreement between the authors and the Frontiers Research Foundation, which permits unrestricted use, distribution, and reproduction in any medium, provided the original authors and source are credited.

*Correspondence: Cory T. Miller, Cortical Systems and Behavior Laboratory, Department of Psychology, University of California, San Diego, 9500 Gilman Drive #109, La Jolla, CA 92093, USA. e-mail: corymiller@ucsd.edu