ORIGINAL RESEARCH article

Front. Neurosci., 13 November 2024

Sec. Translational Neuroscience

Volume 18 - 2024 | https://doi.org/10.3389/fnins.2024.1482849

This article is part of the Research Topic: Mechanism of Neural Oscillations and Their Relationship with Multiple Cognitive Functions and Mental Disorders

Qian Yang1†, Yanyan Fu1†, Qiuli Yang1, Dongqing Yin2, Yanan Zhao3, Hao Wang1, Han Zhang4, Yanran Sun5, Xinyi Xie1, Jian Du1*

Objective: Depression is a complex affective disorder characterized by high prevalence and severe impact, commonly presenting with cognitive impairment. The objective diagnosis of depression lacks precise standards. This study investigates eye movement characteristics during an emotional face recognition task (EFRT) in depressive patients to provide empirical support for objective diagnosis.

Methods: We recruited 43 patients with depression (Depressive patients, DP) from a psychiatric hospital and 44 healthy participants (Healthy Control, HC) online. All participants completed an EFRT comprising 120 trials. Each trial presented a gray screen for 800 ms followed by a stimulus image for judgment. Emotions were categorized as positive, neutral, or negative. Eye movement trajectories were recorded throughout the task. Three groups of metrics were used: Latency of First Fixation (LFF), LFF for the Eye area of interest (AOI), and LFF for the Mouth AOI as representative indicators of early attention; Proportion of Eye AOI and Proportion of Mouth AOI as measures of intermediate attention; and Accuracy (ACC) and Reaction Time (RT) as behavioral indicators of late-stage attention. These metrics were used to explore the differences between patients with depression and healthy individuals.

Results: Compared to healthy participants, individuals with depression exhibit longer first fixation latencies on the eyes and mouth during the early attention stage of emotional face recognition, indicating an avoidance tendency toward key facial recognition cues. In the mid-to-late attention stages, depressive individuals show an increased fixation ratio on the eyes and a decreased fixation ratio on the mouth, along with lower accuracy and longer response times. These findings suggest that, relative to healthy individuals, individuals with depression have deficits in facial recognition.

Conclusion: This study identified distinct attention patterns and cognitive deficits in emotional face recognition among individuals with depression compared to healthy individuals, providing an attention-based approach for exploring potential clinical diagnostic markers for depression.

Depression is a complex affective disorder with significant emotional, cognitive, and physical symptoms, characterized by persistent low mood, reduced activity, and slowed cognitive function. According to the Global Burden of Disease Study, approximately 280 million people worldwide suffer from depression, including 5% of adults and 5.7% of individuals over 60, and a 25% increase has been triggered by COVID-19 (World Health Organization, 2022). Depression is significantly associated with disability and can lead to severe consequences, including suicide, negatively impacting individuals’ mental and physical health (Iancu et al., 2020; Morin et al., 2020).

Most patients with depression experience cognitive dysfunction, including deficits in executive function, attention, memory, and processing speed (Yan and Li, 2018). These impairments manifest as reduced cognitive flexibility, decision-making, and inhibitory control, along with difficulties in maintaining attention, short-term memory loss, and slower reaction times (Hollon et al., 2006; Koenig et al., 2014; Lee et al., 2012; Wagner et al., 2012). Depression also causes a negative emotional bias, where individuals exhibit a preference for negative stimuli, leading to the misinterpretation of information through a negative lens. This bias is linked to deeply ingrained negative self-schemas that sustain depressive symptoms (Jiang, 2024). Studies show depressed individuals have slower reaction times when recognizing facial expressions, particularly neutral ones, and reduced accuracy in identifying positive expressions, often mistaking them for neutral or negative (Leppanen et al., 2004; Sfarlea et al., 2018). However, they generally retain the ability to recognize sad expressions (Dalili et al., 2015).

The clinical diagnosis of depression primarily relies on patients’ clinical symptoms supplemented by depression-related scale scores, lacking specific physiological and biochemical indicators as auxiliary diagnostic criteria (Cuijpers et al., 2020). This absence of a “gold standard” akin to the diagnosis of other organic diseases has prompted numerous studies in recent years to explore objective diagnostic indicators for depression using physiological signals, biochemical markers, and facial visual features (Byun et al., 2019; Koller-Schlaud et al., 2020; Rushia et al., 2020; Thoduparambil et al., 2020; Xing et al., 2019; Du et al., 2022).

The diagnostic approach based on facial visual features objectively assesses the severity of depression by analyzing relevant information from the patient’s face. It further summarizes behavioral characteristics specific to individuals with depression to guide clinical diagnoses made by doctors. The equipment required for this method is simple—a camera—making it cost-effective and easily accessible. Importantly, subjects do not need direct contact with the equipment during data collection, allowing them to maintain a natural state of mind without any hindrance and ensuring genuine mental state data can be captured. Some scholars believe that this method is particularly beneficial for patients experiencing reduced interest or pleasure due to its user-friendly nature. Consequently, it holds significant research value and potential for development (Du et al., 2022).

Recently, there have been significant findings from eye movement experiments conducted on patients with depression. In free-viewing tasks, patients with depression show fewer fixation points and shorter total fixation times on positive images compared to healthy controls. Additionally, the transition time from negative to neutral stimuli was longer in the depression group than in the healthy group (Qian et al., 2019). In dot-probe tasks, it was observed that after undergoing positive word training, patients with depression showed a significant reduction in both the number and duration of fixations on the negative portion of pictures when compared to their pre-training performance (Liu et al., 2015). In recent years, an increasing number of researchers have integrated machine learning algorithms into modeling various indicators derived from eye movement experiments as well as other cross-modal indicators. The aim is to identify a combination method that yields optimal recognition rates for diagnosing depression (Pan et al., 2019; Shen et al., 2021; Wang et al., 2018).

Therefore, this study focuses specifically on individuals with mild to moderate depression and utilizes eye tracking technology to investigate different eye movement indicators during an emotional face recognition task among depressive patients. A comparison will be made against healthy individuals to enhance support for behavioral experimental data related to objective diagnostic indicators for depression while providing valuable reference data for future research involving emotional face recognition and eye movements among depressive patients.

All participants were required to have normal or corrected-to-normal vision and to be free from color blindness or color weakness, to eliminate any potential impact of visual impairments on the experiment. Participants were aged between 18 and 60, right-handed, and were required to read the experimental instructions, agree to the procedures, and sign an informed consent form.

Depressed patients were recruited from a tertiary psychiatric hospital in Beijing. They were assessed by 2–3 psychiatrists, including one senior psychiatrist, using the 17-item Hamilton Depression Rating Scale (HAMD-17), and clinically diagnosed according to the criteria for depression outlined in the Diagnostic and Statistical Manual of Mental Disorders, Fifth Edition (DSM-5). Patients scoring >7 on the HAMD-17 and clinically diagnosed with depression were included in the depression group, while individuals with other psychiatric disorders such as schizophrenia, anxiety disorders, or bipolar disorder were excluded.

The healthy control group was recruited online via social media platforms and consisted of volunteers. In addition to meeting the vision, age, and handedness requirements, healthy participants were required to score < 7 on the HAMD-17 and have a total score of <160 on the Symptom Checklist-90 (SCL-90).

A total of 45 participants were recruited for the depression group and 51 for the healthy control group. Due to data quality issues, 2 depressed participants and 7 healthy participants were excluded, resulting in a final sample of 87 participants, with 43 in the depression group and 44 in the healthy control group.

This study was approved by the Ethics Committee of the Institute of Clinical Basic Medicine, China Academy of Chinese Medical Sciences, under the approval number P23009/PJ09.

The emotional facial images used in the Emotional Face Recognition Task were sourced from the Chinese Affective Face Picture System (CAFPS), developed by Professor Yuejia Luo’s team at Shenzhen University (Xu et al., 2011). All images were standardized in terms of color, brightness, and size and demonstrated good reliability and validity. A total of 120 images were selected from the CAFPS database for the experiment, with 40 images for each emotional category (positive, neutral, negative). The images were matched for gender, with 20 male and 20 female faces in each emotional category.

The experiment employed a Tobii Pro X3-120 eye tracker produced by Tobii Technology AB, Sweden. The device is 324 mm in length, weighs 118 g, and operates at a sampling rate of 120 Hz. It can be directly mounted on monitors or laptops with screens up to 25 inches using the accompanying adhesive stand (as shown in Figure 1). During the experiment, participants were seated approximately 65 cm from the screen. Fixations were calculated using Tobii’s built-in I-VT algorithm, which automatically identified fixations with a duration greater than 60 ms. Adjacent fixations separated by less than 75 ms and with an angular distance of less than 0.5 degrees were merged into a single fixation point. Eye-tracking data were collected and exported using Tobii Pro Lab Version 1.152. Behavioral data were collected, merged, and exported using E-Prime 3.0. Data preprocessing and statistical analysis were conducted in R version 4.3.2 (via RStudio), and data visualization was performed using GraphPad Prism version 10.1.2.
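The fixation post-processing described above can be illustrated with a minimal Python sketch. It is not Tobii's implementation: the `Fixation` class, the function names, the use of positions already expressed in degrees of visual angle, and the order of the two steps (merge first, then discard short fixations) are all assumptions made for illustration. Only the three thresholds (60 ms, 75 ms, 0.5 degrees) come from the text.

```python
import math
from dataclasses import dataclass
from typing import List


@dataclass
class Fixation:
    """Hypothetical fixation record; positions are assumed to be in degrees of visual angle."""
    start_ms: float
    end_ms: float
    x_deg: float
    y_deg: float

    @property
    def duration_ms(self) -> float:
        return self.end_ms - self.start_ms


def merge_adjacent(fixations: List[Fixation],
                   max_gap_ms: float = 75.0,
                   max_angle_deg: float = 0.5) -> List[Fixation]:
    """Merge consecutive fixations that are close in both time and space."""
    merged: List[Fixation] = []
    for fix in sorted(fixations, key=lambda f: f.start_ms):
        if merged:
            prev = merged[-1]
            gap_ms = fix.start_ms - prev.end_ms
            # Euclidean distance in degree space, used here as an
            # approximation of the angular distance between fixations.
            angle_deg = math.hypot(fix.x_deg - prev.x_deg, fix.y_deg - prev.y_deg)
            if gap_ms < max_gap_ms and angle_deg < max_angle_deg:
                total = prev.duration_ms + fix.duration_ms
                # Duration-weighted position of the merged fixation (an assumption).
                w = fix.duration_ms / total if total > 0 else 0.5
                merged[-1] = Fixation(
                    prev.start_ms,
                    fix.end_ms,
                    prev.x_deg + w * (fix.x_deg - prev.x_deg),
                    prev.y_deg + w * (fix.y_deg - prev.y_deg),
                )
                continue
        merged.append(fix)
    return merged


def discard_short(fixations: List[Fixation],
                  min_duration_ms: float = 60.0) -> List[Fixation]:
    """Keep only fixations lasting longer than the minimum duration."""
    return [f for f in fixations if f.duration_ms > min_duration_ms]


raw = [
    Fixation(0, 50, 1.0, 1.0),
    Fixation(60, 120, 1.2, 1.0),   # 10 ms gap, 0.2 deg away: merged with the first
    Fixation(400, 430, 5.0, 5.0),  # 30 ms long: discarded
    Fixation(600, 800, 3.0, 3.0),
]
fixations = discard_short(merge_adjacent(raw))
```

In this toy input the first two records merge into one 120 ms fixation, so two fixations remain after the short one is dropped.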

The experiment followed a 2 (group: depression patients/healthy controls) × 3 (emotional face type: positive/neutral/negative) mixed factorial design. Participants were required to complete the Emotional Face Recognition Task (EFRT), in which they were asked to identify the emotional attributes of randomly presented facial images. Meanwhile, the eye tracker recorded their eye movement trajectories.

Before the experiment, the eye-tracking equipment was calibrated. Upon arrival at the laboratory, participants were briefed on the task and informed of the relevant precautions. They then performed the Emotional Face Recognition Task. The experiment took place in a soundproof, windowless room, with the main light source being a ceiling light in the center. The computer faced the wall with its back to the light source to ensure soft, non-reflective lighting conditions.

The eye-tracking experiment followed an event-related design with a total of 120 trials presented in random order. The flow of a single trial is illustrated in Figure 1. Each trial began with a blank gray screen displayed for 800 ms, followed by the random presentation of an emotional face image at the center of the monitor. The duration of each image presentation was not fixed and depended on the participant’s response. Participants were required to make a judgment by pressing one of three keys: “1” for positive, “2” for neutral, and “3” for negative. Once a selection was made, the trial ended, and the next trial began.

AOIs were defined in Python. The shape predictor of the dlib library, together with the standard 68-point facial landmark model, was used to automatically locate the facial feature coordinates in each stimulus image. These locations were drawn on the image and written to an AOI coordinate table, and each fixation was assigned to an AOI by comparing its x and y coordinates with the AOI coordinates. In Figure 2, blue marks the Mouth AOI and red marks the Face AOI; all remaining areas are labeled Other.
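The coordinate comparison described above might look like the following sketch. It assumes rectangular AOIs built as bounding boxes around landmark points; the article does not state the AOI shape, and the `AOI` type, the function names, and the landmark coordinates below are hypothetical. The landmark detection itself (dlib) is left out, so the points are entered by hand.

```python
from typing import List, NamedTuple, Sequence, Tuple


class AOI(NamedTuple):
    """Hypothetical rectangular area of interest in screen pixels."""
    name: str
    left: float
    top: float
    right: float
    bottom: float


def bounding_box(name: str,
                 points: Sequence[Tuple[float, float]],
                 margin: float = 0.0) -> AOI:
    """Build a rectangular AOI around a set of landmark points."""
    xs = [x for x, _ in points]
    ys = [y for _, y in points]
    return AOI(name, min(xs) - margin, min(ys) - margin,
               max(xs) + margin, max(ys) + margin)


def classify_fixation(x: float, y: float, aois: List[AOI]) -> str:
    """Return the name of the first AOI containing the point, else 'Other'."""
    for aoi in aois:
        if aoi.left <= x <= aoi.right and aoi.top <= y <= aoi.bottom:
            return aoi.name
    return "Other"


# Made-up landmark points standing in for the detector output.
eye_aoi = bounding_box("Eye", [(100, 200), (300, 200), (200, 180), (200, 220)])
mouth_aoi = bounding_box("Mouth", [(150, 400), (250, 400), (200, 380), (200, 430)])
aoi_table = [eye_aoi, mouth_aoi]

labels = [classify_fixation(x, y, aoi_table)
          for x, y in [(200, 200), (200, 410), (10, 10)]]
```

A `margin` argument is included because landmark points sit on the feature outline, and a study may want the AOI to extend slightly beyond it; the value to use is a design decision not given in the text.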

In this study, to clearly differentiate between eye-tracking data and reaction time/accuracy data, we referred to the latter as “behavioral data.” Eye-tracking data were first stored in Tobii Pro Lab software, and during export, only data with a valid sampling rate of ≥60% were selected. Invalid sampling points during the experiment could result from undetected eye movements or unclassified samples, such as when participants blinked, closed their eyes, or looked away from the screen. During this process, data from two participants in the depression group were excluded.

Both eye-tracking and behavioral data were preprocessed and analyzed using R. Outliers beyond 1.5 times the interquartile range (IQR) were excluded. Missing values were imputed using mean substitution; after data cleaning, the proportion of imputed values for each metric was: Latency of first fixation (12%), LFF of eye (3%), LFF of mouth (3%), Look proportion of eye (2%), Look proportion of mouth (0%), Accuracy (9%), and Reaction time (5%).
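As a rough illustration of this cleaning step (the study itself used R), the following Python sketch applies the 1.5 × IQR rule and mean substitution to one metric. The function names, the choice of quartile convention (`method="inclusive"`), and the decision to treat excluded outliers as missing values are assumptions; the sample values are invented.

```python
import statistics
from typing import List, Optional, Tuple


def iqr_bounds(values: List[float], k: float = 1.5) -> Tuple[float, float]:
    """Lower and upper fences at k times the IQR beyond the quartiles."""
    q1, _, q3 = statistics.quantiles(values, n=4, method="inclusive")
    iqr = q3 - q1
    return q1 - k * iqr, q3 + k * iqr


def clean_and_impute(values: List[Optional[float]],
                     k: float = 1.5) -> Tuple[List[float], float]:
    """Drop outliers, then fill every gap with the mean of the retained values.

    Returns the imputed series and the proportion of imputed entries.
    """
    observed = [v for v in values if v is not None]
    low, high = iqr_bounds(observed, k)
    # Outliers are set to None so they are imputed like other missing values.
    kept = [v if v is not None and low <= v <= high else None for v in values]
    mean = statistics.fmean(v for v in kept if v is not None)
    imputed = [mean if v is None else v for v in kept]
    proportion = sum(v is None for v in kept) / len(values)
    return imputed, proportion


# Invented latencies in seconds: one extreme value and one missing trial.
latencies = [0.50, 0.52, 0.48, 0.51, 0.49, 2.00, None, 0.50]
cleaned, imputed_share = clean_and_impute(latencies)
```

Here two of the eight entries (the extreme value and the missing trial) are replaced by the mean of the remaining six, giving an imputed share of 25%.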

In this study, the analyzed data primarily comprised eye-tracking and behavioral data. Eye-tracking metrics were derived from the raw data, including the latency of the first fixation (LFF) for each trial, the LFF of the eye AOI (area of interest), the LFF of the mouth AOI, the proportion of fixation time on the eye AOI, and the proportion of fixation time on the mouth AOI. Behavioral data included reaction time and accuracy.

First, the timestamps from the raw data were used to record the stimulus start time, end time, and the start and end times of each fixation point. Statistical metrics were then calculated using the following formulas.

LFF-related eye-tracking metrics reflect early visual attention characteristics. By analyzing the LFF in different AOIs, early attention preferences can be inferred.

Latency of first fixation (LFF): the time from stimulus onset (STS, Start Time of Stimulus) to the onset of the first fixation (STFF, Start Time of First Fixation), that is, LFF = STFF − STS. This measures the time from when the image appears until the first meaningful fixation occurs. Note that fixations that started before but ended after stimulus onset were excluded to ensure that the first fixation was drawn by the stimulus image.

LFF of eye: the time from stimulus onset to the first fixation on the eye AOI.

LFF of mouth: the time from stimulus onset to the first fixation on the mouth AOI.
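The three latency metrics can be sketched with a single function. The tuple layout `(start_ms, end_ms, aoi)`, the function name, and the example timestamps are assumptions for illustration; the rule that fixations beginning before stimulus onset are ignored follows the description above.

```python
from typing import Optional, Sequence, Tuple

# Hypothetical fixation record: (start_ms, end_ms, aoi_label)
Fix = Tuple[float, float, str]


def latency_of_first_fixation(fixations: Sequence[Fix],
                              stimulus_onset_ms: float,
                              stimulus_end_ms: float,
                              aoi: Optional[str] = None) -> Optional[float]:
    """Time from stimulus onset to the first fixation that starts during the stimulus.

    If `aoi` is given, only fixations on that AOI are considered.
    Returns None when no qualifying fixation exists in the trial.
    """
    starts = [
        start for start, _end, label in fixations
        # Fixations that began before onset are excluded, so the first
        # fixation counted is one drawn by the stimulus image.
        if stimulus_onset_ms <= start < stimulus_end_ms
        and (aoi is None or label == aoi)
    ]
    return min(starts) - stimulus_onset_ms if starts else None


trial = [
    (700.0, 900.0, "Other"),   # started before onset: ignored
    (950.0, 1100.0, "Eye"),
    (1150.0, 1400.0, "Mouth"),
]
lff = latency_of_first_fixation(trial, 800.0, 2000.0)
lff_eye = latency_of_first_fixation(trial, 800.0, 2000.0, aoi="Eye")
lff_mouth = latency_of_first_fixation(trial, 800.0, 2000.0, aoi="Mouth")
```

Returning `None` for trials without a qualifying fixation keeps such trials distinguishable from a latency of zero, so they can be handled as missing values later.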

Fixation proportion is calculated by measuring the fixation time in different AOIs. Fixation duration is a common metric for mid-term fixation analysis, but since the stimulus presentation time was not fixed in this study, fixation duration is highly correlated with reaction time, making it less suitable as a statistical metric. Instead, we converted fixation duration to a proportion, allowing a comparison of depression characteristics across different AOIs.

Look proportion of eye: the fixation duration for each AOI in a single trial was first calculated, and these durations were summed to give the total fixation duration (total look time). The fixation proportion for each AOI was then obtained by dividing the fixation duration for that AOI by the total fixation duration: Look proportion of eye = Look time of eye AOI / Total look time.

Look proportion of mouth: calculated in the same way, Look proportion of mouth = Look time of mouth AOI / Total look time.
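A short sketch of the look proportion calculation for one trial follows. The tuple layout and function name are hypothetical, and the sketch does not clip fixations to the stimulus window, a detail the text does not specify.

```python
from collections import defaultdict
from typing import Dict, Sequence, Tuple

# Hypothetical fixation record: (start_ms, end_ms, aoi_label)
Fix = Tuple[float, float, str]


def look_proportions(fixations: Sequence[Fix]) -> Dict[str, float]:
    """Share of total fixation time spent on each AOI within one trial."""
    look_time: Dict[str, float] = defaultdict(float)
    for start, end, aoi in fixations:
        look_time[aoi] += end - start
    total = sum(look_time.values())
    if total == 0:
        return {}  # no fixation time recorded in this trial
    return {aoi: t / total for aoi, t in look_time.items()}


trial = [
    (0.0, 200.0, "Eye"),
    (250.0, 350.0, "Mouth"),
    (400.0, 500.0, "Eye"),
    (550.0, 650.0, "Other"),
]
proportions = look_proportions(trial)
```

For this invented trial the eye AOI accumulates 300 ms of a 500 ms total, so its look proportion is 0.6, while the mouth and Other regions each receive 0.2. Because the values are normalized by total look time, they remain comparable across trials of different lengths.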

For demographic data, age differences between the two groups were analyzed using a t-test, and gender differences were compared using a chi-square test. Since age differences were significant, age was controlled as a covariate in the analysis of eye-tracking and behavioral data, using a 2×3 ANCOVA design. Post-hoc tests and simple effects analyses were corrected using the Bonferroni method for p-values.

Demographic information is presented in Table 1. There was no difference in gender composition between the DP group and the HC group; however, participants in the DP group were significantly older than those in the HC group. Age was therefore entered as a covariate, and analysis of covariance was used for the dependent variables in all subsequent analyses.

Table 2 presents descriptive statistics for each dependent variable in the DP and HC groups under the different emotional face conditions, and Table 3 summarizes the ANCOVA results for all dependent variables.

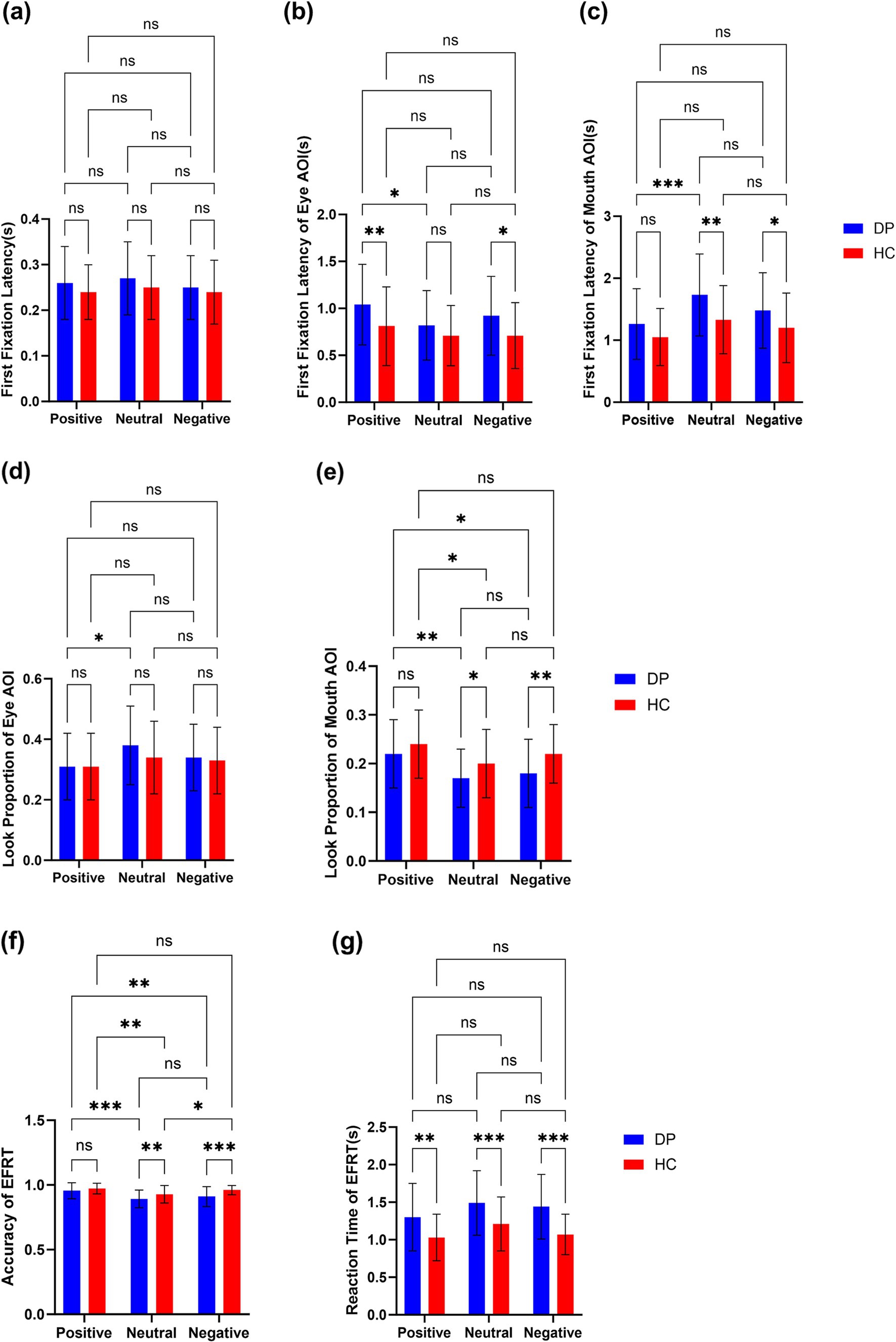

The main effect of GROUP was not significant in the 2 × 3 ANCOVA with LFF as the dependent variable, but it was significant in the ANCOVAs with LFF of the Eye AOI [F(1, 85) = 17.519, p < 0.001, η2p = 0.016] and LFF of the Mouth AOI [F(1, 85) = 21.329, p < 0.001, η2p = 0.027]. The first fixation latencies for the Eye AOI and the Mouth AOI were significantly longer in the DP group than in the HC group.

In the ANCOVA of look proportion, the main effect of GROUP was significant for both the Eye AOI [F(1, 85) = 4.819, p = 0.029, η2p = 0.048] and the Mouth AOI [F(1, 85) = 11.791, p = 0.001, η2p = 0.033]. The post-hoc test showed that the look proportion for the Mouth AOI was significantly lower in the DP group than in the HC group.

The main effect of GROUP was also significant in the analyses of Accuracy [F(1, 85) = 27.654, p < 0.001, η2p = 0.036] and Reaction Time [F(1, 85) = 49.430, p < 0.001, η2p = 0.060]. The post-hoc test showed that EFRT accuracy was significantly lower in the DP group than in the HC group, and that reaction time was significantly longer in the DP group than in the HC group.

The latency of first fixation yielded a notable pattern. For the whole picture (Figure 3a), there was no difference between the DP group and the HC group, but differences emerged when the latency of first fixation was analyzed separately for the different areas of interest. People with depression attend to the pictures as quickly as healthy people, but they attend to the key facial features later.

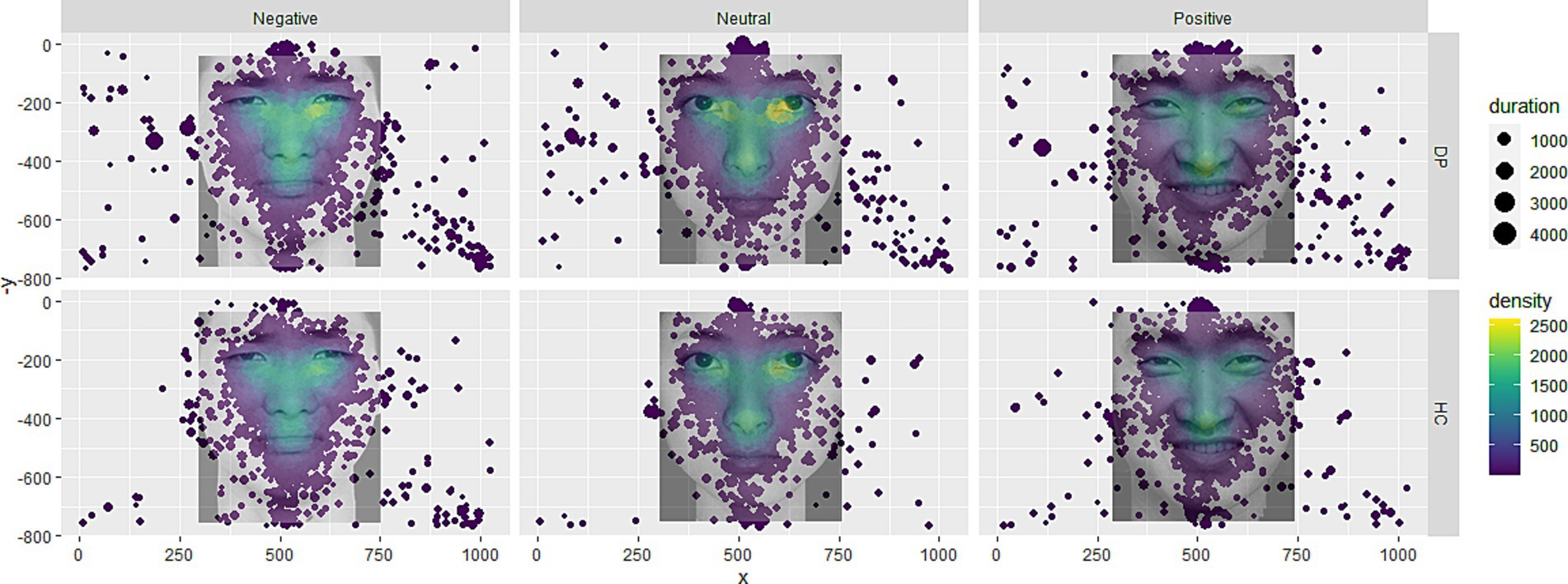

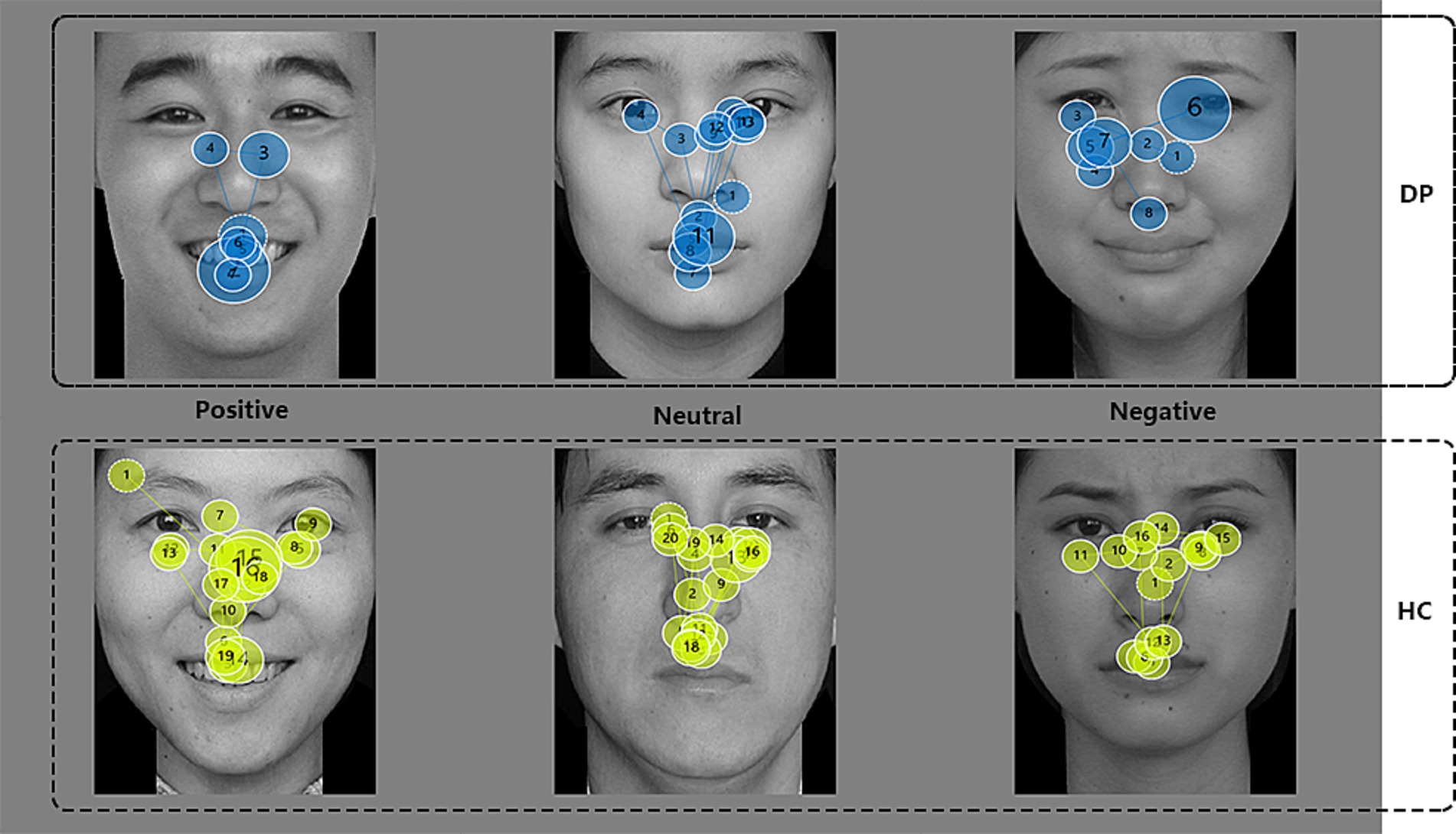

Previous studies have shown that emotional face recognition relies mainly on the eyes and mouth (Eisenbarth and Alpers, 2011). The eyes are a major cue for recognizing negative emotions (Franca et al., 2023; Grainger and Henry, 2020). The latency of the first fixation on the eyes was greater in the DP group than in the HC group, suggesting that depressed patients are slower to attend to the eye region when recognizing expressions. As Figure 3b shows, this difference was most marked when judging positive and negative faces, where the latency of the first fixation on the eyes was significantly greater in the DP group than in the healthy group. This suggests that people with depression avoid the eye region more than healthy people when recognizing faces with distinct emotional expressions. This is consistent with findings from a study on the effects of depressive tendencies on eye gaze in social interactions. The study found that while depressive tendencies do not affect attention to others’ faces as a whole, they do influence attention to others’ eyes, suggesting an avoidance of the eye region among individuals with depressive tendencies (Suslow et al., 2024). Furthermore, a comparison within the depression group revealed that the first fixation latency on the eye region was significantly longer for positive emotional faces than for neutral images. This suggests a stronger avoidance of the eye region in response to positive emotional faces among individuals with depression. It may also be due to the role of the eyes as a primary cue for recognizing negative emotions, which could counterbalance avoidance tendencies in response to negative faces, thus making avoidance comparatively more prominent for positive faces.

Similarly, the first fixation latency on the mouth was longer in the depression group than in the healthy control group, indicating a slower attention shift to the mouth region when identifying emotional faces compared to healthy individuals. As shown in Figure 3c, this delay is particularly evident in responses to neutral and negative expressions, which may be attributed to the mouth’s role as a key indicator of positive emotional expressions (Grainger and Henry, 2020). When judging positive emotional faces, people typically fixate on the mouth first, which might explain why differences in first fixation latency on the mouth between the depression and control groups are less pronounced for positive faces but more noticeable in neutral and negative conditions (Figures 4, 5).

Figure 3. (a–c) Latency of first fixation in EFRT(s). (d,e) Look proportion of AOIs (eye and mouth) in EFRT. (f,g) Accuracy and reaction time(s) of EFRT. ***p < 0.001; **p < 0.01; *p < 0.05; ns, non-significant.

Figure 4. Fixation point heat map. Larger size of the fixation point represents longer fixation time, and the higher the color heat, the higher the density.

Figure 5. Eye trace images of DP and HC groups. The number in circle represents the order of fixation points, the size of the circle represents the duration of fixation, and the larger the circle, the longer the duration.

The relevant LFF indicators reveal that, in the early attention stage, individuals with depression do not show a significant difference in response to stimulus images compared to healthy controls. However, they exhibit a certain delay in focusing on effective facial expression cues. This phenomenon reflects, to some extent, a slowing in attention direction and a reduction in efficiency for emotion recognition tasks among individuals with depression.

The look proportion is derived from fixation duration, which reflects the difficulty participants experience in completing the task—the longer the duration, the greater the difficulty (Goller et al., 2019). Some discrepancies exist between prior studies and the findings of this study. Most studies suggest that individuals experiencing sadness tend to focus less on the eyes during facial recognition (Hills and Lewis, 2011), and individuals with depression engage in less eye contact during conversations (Fiquer et al., 2018). In social interactions, eye contact represents crucial information in the dialogue, conveying social interest and closeness (Cui et al., 2019). By diverting their gaze from others’ eyes, individuals with depression may avoid deeper social engagement (Hames et al., 2013). The observed differences may be attributed to the specificity of this experimental task. Studies that use look proportion as a measure typically employ a free-viewing paradigm, where participants have ample time to view images. However, in this study, participants were required to respond to stimulus images as quickly as possible, with the images disappearing immediately after the response. Since facial expression recognition generally begins with the eyes and then moves to the mouth (Xue and Yantao, 2007), it is possible that limited viewing time in this study resulted in a focus advantage for the eyes as the first facial feature noticed. Given that both the depressed and healthy groups were under the same task conditions, a more plausible explanation may be an altered facial recognition pattern among individuals with depression.

In this study, Figures 3d,e reveal that individuals with depression show a higher look proportion on the eyes but a significantly lower look proportion on the mouth compared to the healthy control group. This suggests that during the task, participants in the depression group attempt to gather cues more from the eyes and less from the mouth. Research on facial expression recognition patterns in healthy individuals indicates a general preference to seek emotional cues from the mouth, with the eyes providing comparatively less prominent cues (Wang et al., 2011). Thus, these findings may suggest that individuals with depression have an altered facial expression recognition pattern compared to healthy individuals. When considering response time and accuracy, the accuracy rate in the depression group was lower than that in the healthy control group, while response times were longer. This indicates that individuals with depression are not only slower in recognizing emotional faces but also less accurate. These results imply that a facial emotion recognition pattern focused more heavily on the eyes is less effective for individuals with depression than for healthy individuals, highlighting deficits in emotional face recognition among those with depression compared to healthy controls.

Individuals with depression display certain disadvantages and deficits in attention characteristics during emotional face recognition tasks compared to healthy individuals. Specifically, depressive participants exhibit increased first fixation latency to key facial cue areas (eyes and mouth), indicating an avoidance of effective cues in facial recognition. They also show a decreased look proportion on the mouth and an increased look proportion on the eyes, accompanied by reduced accuracy and prolonged response times. These findings suggest changes in cognitive patterns and deficits in facial recognition during emotional processing in individuals with depression. The results of this study support the hypothesis that individuals with depression have cognitive impairments and facial recognition deficits, offering an attention-based approach to exploring clinical diagnostic markers for depression.

The ages of the healthy controls, who were recruited and then screened using scales, were relatively concentrated, creating a disparity with the experimental group. Future research should increase the sample size and match the ages of the healthy control group more closely with those of the experimental group. More importantly, the conclusions of this study need further validation in future research.

The raw data supporting the conclusions of this article will be made available by the authors, without undue reservation.

The studies involving humans were approved by the Ethics Committee of the Institute of Clinical Basic Medicine, China Academy of Chinese Medical Sciences. The studies were conducted in accordance with the local legislation and institutional requirements. The participants provided their written informed consent to participate in this study. Written informed consent was obtained from the individual(s) for the publication of any potentially identifiable images or data included in this article.

QiaY: Conceptualization, Data curation, Formal analysis, Investigation, Methodology, Project administration, Validation, Visualization, Writing – original draft, Writing – review & editing. YF: Conceptualization, Data curation, Formal analysis, Investigation, Methodology, Project administration, Validation, Visualization, Writing – original draft, Writing – review & editing. QiuY: Conceptualization, Funding acquisition, Resources, Supervision, Writing – review & editing. DY: Conceptualization, Data curation, Investigation, Methodology, Project administration, Resources, Supervision, Validation, Writing – review & editing. YZ: Conceptualization, Resources, Supervision, Writing – review & editing. HW: Funding acquisition, Supervision, Writing – review & editing. HZ: Investigation, Writing – review & editing, Validation. YS: Investigation, Writing – review & editing. XX: Investigation, Writing – review & editing. JD: Conceptualization, Funding acquisition, Investigation, Methodology, Project administration, Resources, Supervision, Validation, Visualization, Writing – review & editing.

The author(s) declare that financial support was received for the research, authorship, and/or publication of this article. This work was supported by the Beijing Natural Science Foundation (No. 7232327), Study on the Characteristics of NIR Brain Imaging in Depressive Patients with Deficiency and Excess TCM Syndrome after Resting-state and Emotional Activation, and the Excellent Young Talent Cultivation Program of China Academy of Chinese Medicine (No. ZZ16-YQ-059).

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

The reviewer XS declared a shared affiliation with the author YS to the handling editor at the time of review.

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

Byun, S., Kim, A. Y., Jang, E. H., Kim, S., Choi, K. W., Yu, H. Y., et al. (2019). Detection of major depressive disorder from linear and nonlinear heart rate variability features during mental task protocol. Comput. Biol. Med. 112:103381. doi: 10.1016/j.compbiomed.2019.103381

Cui, M., Zhu, M., Lu, X., and Zhu, L. (2019). Implicit perceptions of closeness from the direct eye gaze. Front. Psychol. 9:2673. doi: 10.3389/fpsyg.2018.02673

Cuijpers, P., Noma, H., Karyotaki, E., Vinkers, C. H., Cipriani, A., and Furukawa, T. A. (2020). A network meta-analysis of the effects of psychotherapies, pharmacotherapies and their combination in the treatment of adult depression. World Psychiatry 19, 92–107. doi: 10.1002/wps.20701

Dalili, M. N., Penton-Voak, I. S., Harmer, C. J., and Munafo, M. R. (2015). Meta-analysis of emotion recognition deficits in major depressive disorder. Psychol. Med. 45, 1135–1144. doi: 10.1017/S0033291714002591

Du, M., Shuang, L., Xiao-ya, L., Wen-quan, Z., and Dong, M. (2022). Research progress of facial visual features in depression diagnosis. J. Chin. Comput. Syst. 43, 483–489. doi: 10.20009/j.cnki.21-1106/TP.2021-0545

Eisenbarth, H., and Alpers, G. W. (2011). Happy mouth and sad eyes: scanning emotional facial expressions. Emotion 11, 860–865. doi: 10.1037/a0022758

Fiquer, J. T., Moreno, R. A., Brunoni, A. R., Barros, V. B., Fernandes, F., and Gorenstein, C. (2018). What is the nonverbal communication of depression? Assessing expressive differences between depressive patients and healthy volunteers during clinical interviews. J. Affect. Disord. 238, 636–644. doi: 10.1016/j.jad.2018.05.071

Franca, M., Bolognini, N., and Brysbaert, M. (2023). Seeing emotions in the eyes: a validated test to study individual differences in the perception of basic emotions. Cogn. Res. Princ. Impl. 8:67. doi: 10.1186/s41235-023-00521-x

Goller, J., Mitrovic, A., and Leder, H. (2019). Effects of liking on visual attention in faces and paintings. Acta Psychol. 197, 115–123. doi: 10.1016/j.actpsy.2019.05.008

Grainger, S. A., and Henry, J. D. (2020). Gaze patterns to emotional faces throughout the adult lifespan. Psychol. Aging 35, 981–992. doi: 10.1037/pag0000571

Hames, J. L., Hagan, C. R., and Joiner, T. E. (2013). Interpersonal processes in depression. Annu. Rev. Clin. Psychol. 9, 355–377. doi: 10.1146/annurev-clinpsy-050212-185553

Hills, P. J., and Lewis, M. B. (2011). Sad people avoid the eyes or happy people focus on the eyes? Mood induction affects facial feature discrimination. Br. J. Psychol. 102, 260–274. doi: 10.1348/000712610X519314

Hollon, S. D., Shelton, R. C., Wisniewski, S., Warden, D., Biggs, M. M., Friedman, E. S., et al. (2006). Presenting characteristics of depressed outpatients as a function of recurrence: preliminary findings from the STAR*D clinical trial. J. Psychiatr. Res. 40, 59–69. doi: 10.1016/j.jpsychires.2005.07.008

Iancu, S. C., Wong, Y. M., Rhebergen, D., van Balkom, A., and Batelaan, N. M. (2020). Long-term disability in major depressive disorder: a 6-year follow-up study. Psychol. Med. 50, 1644–1652. doi: 10.1017/S0033291719001612

Jiang, Y. (2024). A theory of the neural mechanisms underlying negative cognitive bias in major depression. Front. Psych. 15:1348474. doi: 10.3389/fpsyt.2024.1348474

Koenig, A. M., Bhalla, R. K., and Butters, M. A. (2014). Cognitive functioning and late-life depression. J. Int. Neuropsychol. Soc. 20, 461–467. doi: 10.1017/S1355617714000198

Koller-Schlaud, K., Strohle, A., Barwolf, E., Behr, J., and Rentzsch, J. (2020). EEG frontal asymmetry and Theta power in unipolar and bipolar depression. J. Affect. Disord. 276, 501–510. doi: 10.1016/j.jad.2020.07.011

Lee, R. S., Hermens, D. F., Porter, M. A., and Redoblado-Hodge, M. A. (2012). A meta-analysis of cognitive deficits in first-episode major depressive disorder. J. Affect. Disord. 140, 113–124. doi: 10.1016/j.jad.2011.10.023

Leppanen, J. M., Milders, M., Bell, J. S., Terriere, E., and Hietanen, J. K. (2004). Depression biases the recognition of emotionally neutral faces. Psychiatry Res. 128, 123–133. doi: 10.1016/j.psychres.2004.05.020

Liu, M. F., Huang, R. Z., Xu, X. L., and Liu, Q. S. (2015). Experimental manipulation of positive attention bias in remitted depression: evidence from eye movements. Chin. J. Clin. Psychol. 1, 48–51.

Morin, R. T., Nelson, C., Bickford, D., Insel, P. S., and Mackin, R. S. (2020). Somatic and anxiety symptoms of depression are associated with disability in late life depression. Aging Ment. Health 24, 1225–1228. doi: 10.1080/13607863.2019.1597013

Pan, Z., Ma, H., Zhang, L., and Wang, Y. (2019). Depression detection based on reaction time and eye movement. 2019 IEEE International Conference on Image Processing (ICIP). Taipei.

Qian, H., Lei, Z., and Yuqian, Q. (2019). A study on depression patients' eye tracking of attention bias on emotional pictures. Heihe Xueyuan Xuebao 10, 207–209.

Rushia, S. N., Shehab, A., Motter, J. N., Egglefield, D. A., Schiff, S., Sneed, J. R., et al. (2020). Vascular depression for radiology: a review of the construct, methodology, and diagnosis. World J. Radiol. 12, 48–67. doi: 10.4329/wjr.v12.i5.48

Sfarlea, A., Greimel, E., Platt, B., Dieler, A. C., and Schulte-Korne, G. (2018). Recognition of emotional facial expressions in adolescents with anorexia nervosa and adolescents with major depression. Psychiatry Res. 262, 586–594. doi: 10.1016/j.psychres.2017.09.048

Shen, R., Zhan, Q., Wang, Y., and Ma, H. (2021). Depression detection by analysing eye movements on emotional images. Paper presented at ICASSP 2021, the 2021 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), Toronto, ON.

Suslow, T., Hoepfel, D., Kersting, A., and Bodenschatz, C. M. (2024). Depressive symptoms and visual attention to others’ eyes in healthy individuals. BMC Psychiatry 24:184. doi: 10.1186/s12888-024-05633-2

Thoduparambil, P. P., Dominic, A., and Varghese, S. M. (2020). EEG-based deep learning model for the automatic detection of clinical depression. Phys. Eng. Sci. Med. 43, 1349–1360. doi: 10.1007/s13246-020-00938-4

Wagner, S., Doering, B., Helmreich, I., Lieb, K., and Tadic, A. (2012). A meta-analysis of executive dysfunctions in unipolar major depressive disorder without psychotic symptoms and their changes during antidepressant treatment. Acta Psychiatr. Scand. 125, 281–292. doi: 10.1111/j.1600-0447.2011.01762.x

Wang, L., Qian, E., Zhang, Q., and Pan, F. (2011). Eyes clue effect in facial expression recognition. J. Educ. Sci. Hun. Norm. Univ. 10, 115–119. doi: 10.3969/j.issn.1671-6124.2011.06.029

Wang, Q., Yang, H., and Yu, Y. (2018). Facial expression video analysis for depression detection in Chinese patients. J. Vis. Commun. Image Represent. 57, 228–233. doi: 10.1016/j.jvcir.2018.11.003

World Health Organization. (2024). COVID-19 pandemic triggers 25% increase in prevalence of anxiety and depression worldwide.

Xing, Y., Rao, N., Miao, M., Li, Q., Li, Q., Chen, X., et al. (2019). Task-state heart rate variability parameter-based depression detection model and effect of therapy on the parameters. IEEE Access 7, 105701–105709. doi: 10.1109/ACCESS.2019.2932393

Xue, S., and Yantao, R. (2007). Online processing of facial expression recognition. Acta Psychol. Sin. 39, 64–70.

Xu, G., Yu-Xia, H., Yan, W., and Yue-Jia, L. (2011). Revision of the Chinese facial affective picture system. Chin. Ment. Health J. 25, 40–46.

Keywords: eye movement, depression, AOI, emotional facial expression recognition, cognitive deficit

Citation: Yang Q, Fu Y, Yang Q, Yin D, Zhao Y, Wang H, Zhang H, Sun Y, Xie X and Du J (2024) Eye movement characteristics of emotional face recognizing task in patients with mild to moderate depression. Front. Neurosci. 18:1482849. doi: 10.3389/fnins.2024.1482849

Received: 18 August 2024; Accepted: 28 October 2024;

Published: 13 November 2024.

Edited by:

Chuanliang Han, The Chinese University of Hong Kong, China

Reviewed by:

Xunbing Shen, Jiangxi University of Chinese Medicine, China

Copyright © 2024 Yang, Fu, Yang, Yin, Zhao, Wang, Zhang, Sun, Xie and Du. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Jian Du

†These authors share first authorship

Disclaimer: All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article or claim that may be made by its manufacturer is not guaranteed or endorsed by the publisher.
