- 1Ningbo Polytechnic, Institute of Artificial Intelligence Application, Zhejiang, China

- 2Industrial Technological Institute of Intelligent Manufacturing, Sichuan University of Arts and Science, Sichuan, China

- 3Ningbo Vichnet Technology Co., Ltd., Zhejiang, China

Affective Brain-Computer Interface (aBCI) systems aim to improve prediction accuracy for individual subjects by leveraging data from multiple subjects. However, substantial differences in EEG (Electroencephalogram) feature patterns across subjects often prevent these systems from achieving the desired performance. Although subject-specific classifiers have been proposed to address this challenge, the scarcity of labeled data remains a major hurdle. Domain Adaptation (DA) has therefore emerged as a prominent approach to EEG-based emotion recognition and has attracted wide research interest. The crux of DA is resolving the distribution mismatch between training and testing data. Mainstream DA methods mitigate domain distribution discrepancies mainly by minimizing the Maximum Mean Discrepancy (MMD) or its variants. However, noisy samples in a dataset can shift the domain means considerably, which to some extent impairs the adaptation performance of MMD-based DA methods in practice. Research has shown that the traditional MMD metric can be transformed into a 1-center clustering problem, and possibilistic clustering models are adept at suppressing noise during clustering. Consequently, the conventional MMD metric can be further relaxed into a possibilistic clustering model.
Therefore, we construct a distribution distance measure based on a Discriminative Possibilistic Clustering criterion (DPC) with two objectives: (1) to make domain distribution alignment discriminative, by finding a shared subspace that minimizes the overall distribution distance between domains while maximizing the semantic distribution distance between different classes, following the principle of “sames attract and opposites repel”; and (2) to make the distribution distance measure robust, by introducing a fuzzy entropy regularization term. Theoretical analysis shows that DPC is an upper bound of the existing MMD metric under certain conditions, so minimizing DPC also drives down the MMD objective. Finally, we propose EDPC, a DPC-based domain adaptation method for Emotion recognition, which introduces a graph Laplacian matrix to preserve the consistency of the geometric structure of data within the source and target domains and thereby improves label propagation. In addition, by making full use of source-domain discriminative information to minimize the discrimination error in the target domain, EDPC further improves the generalization performance of the DA model. Comparative experiments against several representative domain adaptation methods on EEG datasets (i.e., SEED and SEED-IV) show that, in most cases, the proposed method achieves better or comparable generalization performance.

1 Introduction

Within affective computing (Mühl et al., 2014), Automatic Emotion Recognition (AER; Dolan, 2002) has attracted extensive attention from researchers in computer vision (Kim et al., 2013; Zhang et al., 2017). Numerous emotion recognition methods based on the Electroencephalogram (EEG) have been proposed (Zheng, 2017; Li X. et al., 2018; Pandey and Seeja, 2019; Jenke et al., 2014; Musha et al., 1997). From a machine learning perspective, EEG-based AER can be formulated as a classification or regression problem (Kim et al., 2013; Zhang et al., 2017). State-of-the-art AER techniques often train classifiers on data from multiple subjects to achieve precise emotion recognition. However, because emotional expression patterns vary significantly across subjects, classifiers trained on specific subjects typically generalize poorly (Pandey and Seeja, 2019). Optimized feature representations and learning models (Li et al., 2018a, 2018b, 2019; Du et al., 2020; Song et al., 2018; Zhong et al., 2020; Zheng and Lu, 2015a, Zheng et al., 2015b) have significantly improved recognition accuracy. Nevertheless, given the inherent individual differences in EEG-based AER, qualitative and empirical observations show that applying the learned classifiers to unseen subjects may yield unsatisfactory results (Ghifary et al., 2017; Lan et al., 2018; Jayaram et al., 2016; Zheng and Lu, 2016; Wang et al., 2022). One potential solution is to adopt subject-specific classifiers, but this is often impractical because training data are scarce. Moreover, even where this approach is feasible, the classifier must be fine-tuned to maintain good recognition performance, partly because EEG signals from the same subject change over time (Zhou et al., 2022).
To tackle these challenges, the Domain Adaptation (DA) learning paradigm has emerged and has been widely and effectively applied (Patel et al., 2015; Dan et al., 2022; Tao et al., 2017, 2021, 2022; Zhang Y. et al., 2019). This paradigm aims to enhance the learning performance of the target domain (where labeled samples are scarce or absent) by transferring and leveraging prior knowledge from other related but differently distributed domains (i.e., source or auxiliary domains).

To achieve effective knowledge transfer across domains, the key is to make the data distributions of the source and target domains similar or consistent. Because distribution discrepancies are particularly pronounced and complex in sentiment analysis and other emotion-related fields, they currently pose a significant challenge for Domain Adaptation (DA) learning. A common approach in DA is to address distribution differences by identifying features (or samples) that are invariant across domains (Pan and Yang, 2010; Patel et al., 2015). To exploit such domain-invariant features more effectively, traditional shallow DA models have gradually evolved into deep DA models. With their powerful feature transformation capabilities, these deep DA models (Long et al., 2015, 2016; Chen et al., 2019; Lee et al., 2019; Ding et al., 2018; Tang and Jia, 2019) have made notable advances in adaptive learning. Although deep DA models can mitigate the impact of inter-domain distribution differences on large datasets, they have not fully resolved the problem of domain shift. Moreover, while deep DA methods perform robustly, the mechanisms behind this remain unclear, because the gains may stem from several factors such as deep feature representations, model fine-tuning, or adaptive regularization. More importantly, the learning outcomes of these methods still lack adequate explanation and validation at both the theoretical and the practical level.

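To better characterize the generalization capability of shallow DA algorithms, existing DA theory provides a generalization error bound (Ben-David et al., 2010). For a hypothesis $h$, source and target distributions $\mathcal{D}_S$ and $\mathcal{D}_T$, and source and target labeling functions $f_S$ and $f_T$, the bound reads:

$$\epsilon_T(h) \le \epsilon_S(h) + d_1(\mathcal{D}_S, \mathcal{D}_T) + \min\left\{\mathbb{E}_{x \sim \mathcal{D}_S}\left[|f_S(x) - f_T(x)|\right],\ \mathbb{E}_{x \sim \mathcal{D}_T}\left[|f_S(x) - f_T(x)|\right]\right\} \tag{1}$$

where $\epsilon_S(h)$ and $\epsilon_T(h)$ are the expected errors of $h$ on the source and target domains, and $d_1$ is the variation divergence between the two distributions.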

Based on Equation 1, it can be seen that the upper bound of the expected error of the target hypothesis is mainly determined by three aspects: the expected error of the source domain hypothesis, the distribution discrepancy between the source and target domains, and the discrepancy in the labeling functions between the two domains. Therefore, to reduce the DA generalization error, in addition to retaining the discriminative information of the source domain (the first aspect), it is also necessary to consider reducing the distribution discrepancy between domains (the second aspect) and the discrepancy in the labeling functions between domains (the third aspect). Accordingly, current mainstream DA methods can be divided into those based on distribution alignment (including instance weighting and feature transformation) and those based on classifier model alignment (Gretton et al., 2007; Chu et al., 2013; Pan et al., 2011; Long et al., 2013; Luo et al., 2020; Baktashmotlagh et al., 2013; Ganin et al., 2016; Kang et al., 2022; Liang et al., 2019; Tao et al., 2012; Tao et al., 2015; Tao et al., 2016; Tao et al., 2019).

To address domain distribution shift, early research adopted an instance weighting strategy. This strategy estimates the probability that each sample belongs to the source or the target domain, referred to as the instance’s membership weight, and then mitigates domain shift by re-weighting the samples. Among these techniques, the Maximum Mean Discrepancy (MMD; Gretton et al., 2007) has been widely applied because of its simplicity and effectiveness. However, its optimization is often separated from classifier training, which makes it difficult to optimize both simultaneously. To overcome this limitation, Chu et al. (2013) proposed a domain-adaptive classifier that integrates instance weighting. To further relax the assumption of conditional distribution consistency made by instance weighting methods, feature transformation methods have become a research focus in recent years (Pan et al., 2011; Long et al., 2013; Luo et al., 2020; Baktashmotlagh et al., 2013; Kang et al., 2022; Liang et al., 2019). For instance, Pan et al. (2011) introduced Transfer Component Analysis (TCA), which learns a transformation matrix that minimizes the MMD between the source and target distributions while preserving the variance of the original data. However, TCA does not consider semantic (class-level) alignment across domains. To address this, Long et al. (2013) proposed Joint Distribution Adaptation (JDA), which aligns both the marginal and the conditional distributions and initializes the category labels of the target domain with pseudo-labels. More recently, Luo et al. (2020) presented DGA-DA, a unified domain adaptation framework that synthesizes the ideas of TCA and JDA.
This framework introduces an inter-domain exclusion strategy for dissimilar classes and retains the geometric structure of domain data, thereby effectively facilitating the propagation of target labels. Most existing affective models are built on deep transfer learning with domain-adversarial neural networks (DANN; Ganin et al., 2016), as in Li et al. (2018c), Li et al. (2018d), Du et al. (2020), Luo et al. (2018), and Sun et al. (2022). DANN seeks a feature representation shared by the source and target domains in which their distributions are indistinguishable, while keeping the features predictive of the source labels for the classification task. Baktashmotlagh et al. (2013) introduced the Domain Invariant Projection (DIP) algorithm, which applies a polynomial kernel to the MMD metric to construct a compact shared feature space and minimizes intra-class scatter through a clustering-based method.

A review of current Domain Adaptation (DA) research shows that Maximum Mean Discrepancy (MMD) is a widely used distribution distance in feature transformation methods. Traditional MMD-based DA methods focus mainly on reducing the distribution discrepancies between domains. However, they often overlook the statistical (clustering) structure of the target data, which can adversely affect the inference of target labels. To address this issue, Kang et al. (2022) proposed the Contrastive Adaptation Network, an unsupervised DA method. It estimates target labels through clustering and uses a contrastive domain discrepancy, measured over multiple fully connected layers, to adjust the feature representations so that intra-class domain discrepancy is minimized and inter-class domain discrepancy is maximized. During training, the target label hypotheses and the feature representations are optimized alternately to enhance generalization. Similarly inspired by clustering, Liang et al. (2019) developed an effective Domain Invariant Projection Ensemble method, which uses clustering principles to seek optimal projections among the categories within each domain, thereby narrowing the semantic gap between domains and increasing the compactness of categories within each domain. Nevertheless, these methods essentially remain MMD-based feature transformation DA methods.

It is worth noting that existing MMD-based methods do not fully consider the impact of intra-domain noise when measuring domain distribution distance. In real scenarios, domains inherently contain noise, which can cause mean shift in the distances measured by traditional MMD methods and their variants. This degrades, to some extent, the generalization performance of MMD-based DA methods (Tao et al., 2023).

Fortunately, possibilistic clustering models (Krishnapuram and Keller, 1993; Dan et al., 2021) offer a way to address these issues. Unlike hard clustering models, possibilistic clustering effectively suppresses noise interference during clustering (Dan et al., 2021). Inspired by this, we relax the traditional MMD metric into a one-center clustering objective to tackle the mean-shift problem of domain distributions in noisy environments, and we reformulate it as a possibilistic one-center model with a fuzzy entropy regularization term. We then propose a distribution distance measure based on a Discriminative Possibilistic Clustering (DPC) criterion, and further develop a DPC-based domain adaptation model for Emotion recognition (EDPC). EDPC comprises two jointly optimized components: DPC and a classifier. First, DPC seeks a shared latent space that achieves discriminative alignment of both the overall domain distribution and the inter-domain semantic distribution, so that samples of the same class attract each other and samples of different classes repel each other. Clustering memberships indicate how likely each sample is to be consistent with the overall domain distribution; they suppress the impact of noisy data during domain matching and improve the robustness and effectiveness of the clustering-based distribution metric. Second, in the classifier learning phase, a graph Laplacian regularization term preserves the local geometric structure of samples in the latent space to improve label propagation. Finally, EDPC achieves superior knowledge transfer by making full use of source-domain discriminative information while minimizing the discrimination error in the target domain. We evaluate EDPC on emotion recognition.
Compared to other state-of-the-art methods, this approach demonstrates significant performance improvements in most cases. The major novelties of the proposed EDPC are summarized as follows:

1. To address the noise sensitivity of existing methods, we establish a robust distribution distance measure based on possibilistic clustering by relaxing the traditional MMD criterion into a possibilistic one-center model. Theoretically, the proposed measure is an upper bound of the traditional MMD metric under certain conditions.

2. Following the principle that samples of the same class attract each other and samples of different classes repel each other, we extend this measure into a robust Discriminative Possibilistic Clustering (i.e., DPC) criterion. Based on it, we optimize a domain-invariant subspace that jointly aligns the overall domain distribution and the inter-domain semantic distribution.

3. We propose a unified DPC-based domain adaptation model for Emotion recognition (i.e., EDPC) together with an iterative optimization algorithm. We prove the convergence of the algorithm and derive a generalization error bound for the model based on Rademacher complexity theory.

4. Extensive experiments on six real-world datasets validate the robust effectiveness of the proposed method.

2 Proposed method

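In DA learning, the source domain is defined as $\mathcal{D}_s = \{X_s, Y_s\}$, where the sample set is $X_s = [x_1, \ldots, x_n] \in \mathbb{R}^{d \times n}$ and the corresponding class labels are $Y_s = [y_1, \ldots, y_n] \in \{0, 1\}^{C \times n}$. Here, $y_i$ is a one-hot vector: if $x_i$ belongs to the $c$-th class, then $y_{ic} = 1$ and all other entries of $y_i$ are 0. The unlabeled target domain is defined as $\mathcal{D}_t = \{X_t\}$, where the sample set and the labels unknown during training are $X_t = [x_{n+1}, \ldots, x_{n+m}] \in \mathbb{R}^{d \times m}$ and $Y_t \in \{0, 1\}^{C \times m}$, respectively. We further define $X = [X_s, X_t] \in \mathbb{R}^{d \times N}$ and $Y = [Y_s, Y_t]$, where $N = n + m$. Let $\mu_s$ and $\mu_t$ be the mean values of the samples in the source and target domains, respectively. Our work makes the following assumptions: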

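1. The source distribution $P_s(X_s)$ and the target distribution $P_t(X_t)$ are different, but the two domains share the same feature space $\mathcal{X}$, i.e., $P_s(X_s) \neq P_t(X_t)$ and $\mathcal{X}_s = \mathcal{X}_t = \mathcal{X}$, where $\mathcal{X}_s$ and $\mathcal{X}_t$ are the feature spaces of the source and target domains, respectively.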

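2. The class-conditional probability distributions of the two domains are different, but the domains share the same label space $\mathcal{Y}$, i.e., $P_s(X_s \mid Y_s) \neq P_t(X_t \mid Y_t)$ and $\mathcal{Y}_s = \mathcal{Y}_t = \mathcal{Y}$, where $\mathcal{Y}_s$ and $\mathcal{Y}_t$ are the label spaces of the source and target domains, respectively.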

The rest of this section is organized as follows. Subsection 2.1 gives the general formulation of the proposed method. Subsections 2.2 and 2.3 detail its two components: the discriminative possibilistic clustering (i.e., DPC) formulation and the emotion-recognition classifier built on DPC, respectively. The complete formulation is given in Subsection 2.4.

2.1 General formulation

To align the domain distributions effectively and maximize knowledge transfer, this paper seeks a domain-invariant subspace $W$ with $k$ columns, where $k$ is the subspace dimension, and constrains $W$ to be orthogonal, i.e., $W^\top W = I_k$, where $I_k$ is the $k \times k$ identity matrix. In other words, our goal is to achieve $P_s(W^\top X_s) \approx P_t(W^\top X_t)$ and $P_s(W^\top X_s \mid Y_s) \approx P_t(W^\top X_t \mid Y_t)$ in the optimized domain-invariant subspace.

To handle DA with complex data structures in noisy environments, we aim to make the distribution distance metric for DA more robust and to improve generalization in the target domain. Based on DA generalization error theory (Ben-David et al., 2010), we pursue two core objectives. First, we construct a robust distribution distance metric that resists noise and thereby addresses domain mean shift, so that distribution differences between domains can be corrected selectively. Second, we perform semantic inference in the target domain by preserving the geometric structure of samples within each domain, exploiting the discriminative information of the source domain, and minimizing the discrimination error in the target domain, which yields a highly generalizable target classifier. Our general framework can therefore be described as follows:

where the first term is the empirical risk of the decision function on the domain samples, which depends on the model parameters through an empirical loss function that can be chosen according to the application. The second term is a regularization term that measures the semantic distribution discrepancy between the source and target domains in the domain-invariant subspace, with clustering membership degrees weighting the samples. The last term enhances the discriminative ability of the second term. The orthogonality constraint acts as a regularizer on the subspace and is imposed as a constraint rather than as a term in the objective function. Two hyper-parameters weight the semantic distribution discrepancy and the inter-class distribution discrepancy of the two domains, respectively. Hence, the subspace, the membership degrees, and the decision function can be learned simultaneously when optimizing Problem 2.

2.2 Discriminative possibilistic clustering formulation

The rest of this subsection is organized as follows. Subsection 2.2.1 presents the motivation for DPC and proves that the MMD metric can be relaxed and modeled as a special one-center clustering problem. Subsection 2.2.2 then details the DPC metric.

2.2.1 Motivation

In a Reproducing Kernel Hilbert Space (RKHS) $\mathcal{H}$, data in the original space are transformed into feature representations through a nonlinear mapping $\phi: \mathcal{X} \to \mathcal{H}$, which maps samples from the original space to a high-dimensional or even infinite-dimensional space. The corresponding kernel function is $k(x_i, x_j) = \langle \phi(x_i), \phi(x_j) \rangle_{\mathcal{H}}$, where $x_i, x_j \in \mathcal{X}$. For a uniform representation, the mapping result is defined as follows:

where the mapping is called the empirical kernel mapping [32]. The mapped source and target data therefore give a unified representation that covers both linear and nonlinear kernel mappings.
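The empirical kernel mapping can be illustrated with a short NumPy sketch. It assumes samples are stored as columns and represents each sample by its kernel values against all training samples, so a linear and a Gaussian kernel share one code path. The function names `rbf_kernel` and `empirical_kernel_map` and the bandwidth `sigma` are hypothetical choices for illustration, not the authors' implementation.

```python
import numpy as np


def rbf_kernel(A: np.ndarray, B: np.ndarray, sigma: float = 1.0) -> np.ndarray:
    """Gaussian kernel matrix K[i, j] = exp(-||a_i - b_j||^2 / (2 sigma^2)); columns are samples."""
    sq = (np.sum(A**2, axis=0)[:, None]
          + np.sum(B**2, axis=0)[None, :]
          - 2.0 * A.T @ B)
    return np.exp(-np.maximum(sq, 0.0) / (2.0 * sigma**2))


def empirical_kernel_map(X: np.ndarray, Z: np.ndarray,
                         kernel: str = "linear", sigma: float = 1.0) -> np.ndarray:
    """Represent each column z of Z by [k(z, x_1), ..., k(z, x_N)]^T over the N columns of X."""
    if kernel == "linear":
        return X.T @ Z  # linear kernel: k(x, z) = x^T z
    if kernel == "rbf":
        return rbf_kernel(X, Z, sigma)
    raise ValueError(f"unknown kernel: {kernel}")


rng = np.random.default_rng(0)
Xs = rng.normal(size=(5, 8))   # 5 features, 8 source samples
Xt = rng.normal(size=(5, 6))   # 6 target samples
X = np.hstack([Xs, Xt])        # all 14 samples as columns
Phi = empirical_kernel_map(X, X, kernel="rbf", sigma=2.0)
print(Phi.shape)               # one 14-dimensional representation per sample
```

With the linear kernel the map reduces to inner products with the training samples, which mirrors the unified linear/nonlinear representation described above.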

We therefore seek a DA learning machine in this representation, and Problem 2 can be rewritten formally as Equation (3):

In classical DA research, a common strategy for the domain mean-shift problem is to reduce the distribution distance between the source and target domains in a latent feature space. MMD is a frequently used measure of the distribution difference between two domains in such a space; it uses the RKHS framework to quantify the gap between two distributions (Bruzzone and Marconcini, 2010; Gretton et al., 2010). Assume that this framework contains a set of all domain-invariant transformation matrices. The maximum empirical mean discrepancy between the source and target distributions can then be defined as in Equation (4):

By Hoeffding’s inequality, when the domain sample size is sufficiently large, the empirical mean difference approximates the expected difference, and the two coincide as the sample size tends to infinity. To illustrate the connection between the traditional MMD criterion and the mean clustering model, we present the following theorem:

Theorem 1. The MMD metric can be relaxed and modeled as a special one-center clustering problem, where the clustering center of the one-center is and the sample clustering membership is the vector .

Proof: From the definition of empirical MMD, we have (let for simplicity):

where the cluster center is defined as , ; when n = m, let ; the sample membership of the one-center model is defined in Equation (6):

Equation 5 shows that the one-center objective constitutes an upper bound of the traditional MMD metric. In other words, MMD can be relaxed into a special form of the one-center objective function, so the MMD between domains can be minimized by optimizing this special clustering objective.
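A small numerical check can make the upper-bound idea concrete. The sketch uses one derivation that is easy to verify by hand and is not necessarily identical to Equations 5 and 6. With a linear kernel and projected samples $z = W^\top x$ (here $W$ is a random orthonormal projection), let $\mu_s$ and $\mu_t$ denote the projected domain means and choose the center $c = (\mu_s + \mu_t)/2$. Then $\|\mu_s - \mu_t\|^2 = 2\|\mu_s - c\|^2 + 2\|\mu_t - c\|^2$, and by Jensen's inequality $\|\mu_s - c\|^2 \le \frac{1}{n}\sum_i \|z_i - c\|^2$ (likewise for the target). MMD is therefore bounded by a one-center objective whose memberships are $2/n$ for source samples and $2/m$ for target samples. The data, the projection, and the function names are hypothetical.

```python
import numpy as np


def linear_mmd(Zs: np.ndarray, Zt: np.ndarray) -> float:
    """Empirical MMD with a linear kernel: squared distance between domain means (columns are samples)."""
    return float(np.sum((Zs.mean(axis=1) - Zt.mean(axis=1)) ** 2))


def one_center_objective(Zs: np.ndarray, Zt: np.ndarray, center: np.ndarray) -> float:
    """Weighted one-center objective 2/n * sum_i ||z_i - c||^2 + 2/m * sum_j ||z_j - c||^2."""
    n, m = Zs.shape[1], Zt.shape[1]
    src = np.sum((Zs - center[:, None]) ** 2)
    tgt = np.sum((Zt - center[:, None]) ** 2)
    return float(2.0 / n * src + 2.0 / m * tgt)


rng = np.random.default_rng(1)
d, k = 10, 3
W, _ = np.linalg.qr(rng.normal(size=(d, k)))   # orthonormal columns: W^T W = I_k
Xs = rng.normal(0.0, 1.0, size=(d, 50))        # 50 source samples
Xt = rng.normal(0.5, 1.0, size=(d, 40))        # 40 shifted target samples
Zs, Zt = W.T @ Xs, W.T @ Xt                    # project both domains into the subspace

c = 0.5 * (Zs.mean(axis=1) + Zt.mean(axis=1))  # midpoint of the two domain means
mmd = linear_mmd(Zs, Zt)
bound = one_center_objective(Zs, Zt, c)
print(mmd, bound, mmd <= bound)
```

Because the inequality holds for any data, minimizing such a clustering objective also pushes the MMD down, which is the property the relaxation relies on.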

Theorem 1 and the explanation in Baktashmotlagh et al. (2013) show that the MMD criterion for domain distributions is essentially related to clustering. Clustering the domain data can therefore align the distributions of different domains more effectively and promote domain adaptation. Note, however, that traditional clustering models are usually sensitive to noise (Krishnapuram and Keller, 1993), which limits MMD-based DA methods and makes them prone to domain mean shift in noisy environments. To address this challenge, we explore a more robust clustering method and propose a new discriminative domain distribution distance criterion in the following subsection.

2.2.2 Discriminative possibilistic clustering

2.2.2.1 Possibilistic clustering

Recently proposed possibilistic clustering methods have been shown to effectively mitigate the negative impact of noise on clustering results (Dan et al., 2021). In light of this, this section extends the original one-center method to a possibilistic one-center model and proposes a Possibilistic Clustering distribution distance metric in the domain-invariant subspace, namely PC. We extend the hard clustering form of MMD to a soft clustering form by incorporating possibilistic clustering memberships. In this framework, each sample's contribution depends on its distance from the overall domain mean: the farther a sample lies from the mean, the lower its contribution and the more likely it is to be regarded as noise. In this way, PC attenuates the mean shift caused by noise during domain alignment. The possibilistic clustering distribution distance metric is defined as follows:

where the possibilistic clustering membership vector holds one membership per sample, and its weight exponent adjusts the degree of uncertainty with which samples belong to clusters. To avoid trivial solutions, this exponent is fixed in the subsequent formulas; see Krishnapuram and Keller (1993) for a detailed discussion of its possible values. According to Equation 7, PC is a possibilistic one-center objective function, and for a particular choice of memberships it reduces to the special one-center form described above. Next, we show through the following theorem that the proposed PC is an upper bound of the traditional MMD metric under a certain condition:

Theorem 2. When the possibilistic clustering membership satisfies ( ), the possibilistic distribution distance metric is an upper bound of the traditional MMD metric.

Proof: Combining Equations 5 and 7, we can obtain:

According to the value range of , when and , the second inequality in Equation 8 holds true and the conclusion is proved.
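The noise-suppressing behavior of possibilistic memberships can be seen in a one-cluster version of the classic possibilistic c-means update of Krishnapuram and Keller (1993), $u_i = 1 / \left(1 + (d_i^2/\eta)^{1/(p-1)}\right)$, where $d_i$ is the distance of sample $i$ to the center and $p$ is the weight exponent. This is a sketch under assumptions: the scale `eta`, the exponent `p = 2`, and the alternating center update are illustrative choices, and Equation 7 may weight or normalize the memberships differently.

```python
import numpy as np


def pcm_memberships(Z: np.ndarray, center: np.ndarray, eta: float, p: float = 2.0) -> np.ndarray:
    """Possibilistic memberships u_i = 1 / (1 + (d_i^2 / eta)^(1/(p-1))) for a single cluster."""
    d2 = np.sum((Z - center[:, None]) ** 2, axis=0)
    return 1.0 / (1.0 + (d2 / eta) ** (1.0 / (p - 1.0)))


def pcm_one_center(Z: np.ndarray, eta: float, p: float = 2.0, n_iter: int = 50):
    """Alternate membership and center updates; the center is the u^p-weighted mean."""
    center = Z.mean(axis=1)  # start from the ordinary (hard, MMD-style) mean
    for _ in range(n_iter):
        u = pcm_memberships(Z, center, eta, p)
        w = u ** p
        center = (Z * w).sum(axis=1) / w.sum()
    return center, pcm_memberships(Z, center, eta, p)


rng = np.random.default_rng(2)
clean = rng.normal(0.0, 1.0, size=(2, 100))  # 100 clean samples around the origin
outliers = np.full((2, 5), 20.0)             # 5 far-away noisy samples
Z = np.hstack([clean, outliers])

center, u = pcm_one_center(Z, eta=2.0)
print(np.linalg.norm(Z.mean(axis=1)), np.linalg.norm(center))
print(u[:100].mean(), u[100:].max())
```

The ordinary mean is pulled toward the five outliers, whereas the possibilistic center stays near the clean samples because the outliers receive memberships close to zero.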

Guided by Theorems 1 and 2, we can reformulate the traditional MMD metric as a 1-center possibilistic clustering objective. From this perspective, the possibilistic distribution distance measure not only aligns feature distributions across domains effectively but also mitigates the negative transfer caused by intra-domain noisy data during training.

The PC in Equation 7 mainly reduces the statistical distribution discrepancy between the source and target domains. However, it does not preserve the semantic structure of instances during distribution matching, which may weaken the separability of categories within the domains. To preserve discriminative statistical distribution structures between domains, we further propose a Discriminative PC model (i.e., DPC), which performs discriminative alignment of the statistical distributions of the two domains while adjusting the semantic distribution through a possibility-based clustering hypothesis.

Following the principle that samples of the same class attract each other and samples of different classes repel each other, the DPC criterion pursues two objectives: first, it reduces the distribution discrepancy between same-class samples from different domains, thereby minimizing semantic differences between domains; second, it enlarges the distribution gap between samples of different classes across domains, thereby enhancing the separability of domain samples.

2.2.2.2 DPC with Intra-class alignment

In the PC model of Equation 7, domain distribution alignment ignores the semantic structure of samples, which may damage the local discriminative structure between categories within a domain. To address this issue, Tao et al. (2016) proposed considering the semantic distribution structure between domains during alignment and evaluating the contribution of each sample to semantic matching. Extending the PC model accordingly yields the following DPC framework with semantic alignment:

where the quantities in Equation 9 include the number of classes in the target domain and the numbers of samples in the $c$-th class of the source and target domains. In the domain-level term, the two means are those of the entire source domain and the entire target domain, respectively, so Equation 9 performs feature distribution alignment. In the class-level terms, the two means are those of the corresponding classes in the source and target domains, and each sample's matching contribution value measures how strongly it belongs to the $c$-th category in its domain.
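The class-level part of this alignment can be sketched as follows, assuming that target pseudo-labels are available (for example, from a classifier trained on the source domain) and that every sample carries a nonnegative matching weight. The function names and the plain sum of squared distances between membership-weighted class means are illustrative assumptions; Equation 9 may combine these quantities differently. Classes missing from either domain are skipped.

```python
import numpy as np


def weighted_class_means(Z, labels, u, n_classes):
    """Membership-weighted mean of every class; columns of Z are samples. Missing classes give NaN."""
    means = np.full((Z.shape[0], n_classes), np.nan)
    for c in range(n_classes):
        mask = labels == c
        if mask.any() and u[mask].sum() > 0:
            w = u[mask]
            means[:, c] = (Z[:, mask] * w).sum(axis=1) / w.sum()
    return means


def intra_class_discrepancy(Zs, ys, us, Zt, yt_pseudo, ut, n_classes):
    """Sum over classes present in both domains of ||mu_s^c - mu_t^c||^2."""
    Ms = weighted_class_means(Zs, ys, us, n_classes)
    Mt = weighted_class_means(Zt, yt_pseudo, ut, n_classes)
    present = ~(np.isnan(Ms).any(axis=0) | np.isnan(Mt).any(axis=0))
    return float(np.sum((Ms[:, present] - Mt[:, present]) ** 2))


rng = np.random.default_rng(3)
ys = np.repeat(np.arange(3), 20)   # 3 classes, 20 source samples each
Zs = rng.normal(size=(4, 60)) + ys # class-dependent offset
Zt = Zs + 0.5                      # target = shifted copy of the source
us = np.ones(60)
ut = np.ones(60)
disc = intra_class_discrepancy(Zs, ys, us, Zt, ys, ut, n_classes=3)
print(disc)
```

Setting every weight to 1 recovers ordinary class means; lowering the weights of suspected noisy samples reduces their pull on the class means being aligned.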

To make the possibilistic clustering distribution distance measure more robust and effective on noisy data, we add to Equation 9 a fuzzy entropy regularization term with its own balancing parameter. The DPC for semantic alignment is then redefined as follows:

where this parameter is an adjustable balancing factor that keeps the memberships of relevant data at a high level and thus avoids trivial solutions that lack discriminative power. The improved DPC model now becomes a function that decreases monotonically as the value of decreases. The second term of Equation 10, the fuzzy entropy, mitigates the adverse impact of noisy data on the model’s classification decisions. Higher fuzzy entropy indicates more discriminative information in the samples, which strengthens the robustness of the distribution distance measure. Furthermore, the fuzzy entropy-regularized possibilistic distribution distance measure limits the influence of noisy data on domain distribution alignment, reducing the interference of noise and outliers in domain adaptation learning. For more details and empirical analysis of how fuzzy entropy improves robustness, refer to Gretton et al. (2010).
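If the fuzzy entropy term takes the common form $\gamma \sum_i (u_i \ln u_i - u_i)$ (an assumption, since Equation 10 is not reproduced here), the membership update has a closed form. Setting the derivative of $u_i d_i^2 + \gamma (u_i \ln u_i - u_i)$ with respect to $u_i$ to zero gives $d_i^2 + \gamma \ln u_i = 0$, that is, $u_i = \exp(-d_i^2/\gamma)$. This value lies in $(0, 1]$, decays smoothly with the squared distance $d_i^2$ to the domain center, and is a minimum because the second derivative $\gamma / u_i$ is positive. The sketch below, with a hypothetical balancing parameter `gamma`, checks the closed form against a brute-force grid search.

```python
import numpy as np


def entropy_memberships(d2: np.ndarray, gamma: float) -> np.ndarray:
    """Closed-form minimizer of u*d2 + gamma*(u*log(u) - u) for each squared distance d2."""
    return np.exp(-d2 / gamma)


def per_sample_objective(u: np.ndarray, d2: float, gamma: float) -> np.ndarray:
    """Entropy-regularized possibilistic cost of one sample for candidate memberships u > 0."""
    return u * d2 + gamma * (u * np.log(u) - u)


gamma = 2.0
d2 = np.array([0.0, 1.0, 4.0, 25.0])  # squared distances to the domain center
u = entropy_memberships(d2, gamma)
print(np.round(u, 4))                 # far samples get memberships near 0

# Brute-force check of the closed form for one distance (d2 = 4).
grid = np.linspace(1e-6, 1.0, 200001)
best = grid[np.argmin(per_sample_objective(grid, 4.0, gamma))]
print(best, np.exp(-4.0 / gamma))
```

A larger `gamma` flattens the decay, so more distant samples keep non-negligible memberships; a smaller `gamma` discounts them more aggressively.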

2.2.2.3 DPC with Inter-class discrimination

Intra-class alignment alone neglects inter-class discrimination. We therefore add an inter-class repulsion term to the DPC model to increase the distance between different classes across domains. This enhances the semantic discriminability of samples in the domain-invariant subspace and improves the robustness and effectiveness of domain adaptation learning. Specifically, let the inter-domain repulsion term for different classes be defined as the total difference between the mean of each class and the mean of all other classes (excluding class c), that is:

where,
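One possible reading of this repulsion term, using source labels and target pseudo-labels, compares the mean of each source class with the mean of the target samples assigned to all other classes. The sketch below implements that hypothetical form with unweighted means; the exact pairing of domains and any membership weighting in the authors' formula may differ. This quantity is meant to be made large, so that different classes are pushed apart.

```python
import numpy as np


def inter_class_repulsion(Zs, ys, Zt, yt_pseudo, n_classes):
    """Hypothetical repulsion: sum_c ||mean(source class c) - mean(target samples not in class c)||^2."""
    total = 0.0
    for c in range(n_classes):
        src_c = Zs[:, ys == c]
        tgt_rest = Zt[:, yt_pseudo != c]
        if src_c.shape[1] == 0 or tgt_rest.shape[1] == 0:
            continue  # nothing to compare for this class
        total += float(np.sum((src_c.mean(axis=1) - tgt_rest.mean(axis=1)) ** 2))
    return total


rng = np.random.default_rng(4)
y = np.repeat(np.arange(3), 20)
separated_s = rng.normal(size=(2, 60)) + 5.0 * y  # class means far apart
separated_t = rng.normal(size=(2, 60)) + 5.0 * y
collapsed_s = rng.normal(size=(2, 60))            # all classes overlap
collapsed_t = rng.normal(size=(2, 60))
sep = inter_class_repulsion(separated_s, y, separated_t, y, 3)
coll = inter_class_repulsion(collapsed_s, y, collapsed_t, y, 3)
print(sep, coll)
```

Well-separated classes give a much larger value than overlapping ones, which is the behavior a repulsion term should reward.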

2.3 Induction Learning

The DPC criterion addresses the challenges of domain distribution alignment and noise interference. Building on it, we pursue two core objectives when inferring target-domain knowledge: (1) preserving the consistency of geometric structure between the source and target domains, so that neighboring samples receive consistent label information; and (2) minimizing the structural risk loss on both the source and target domains. Accordingly, the target risk function takes the general form of Equation (12):

where the first term represents the joint knowledge transfer and label propagation loss, which preserves the geometric structure of the samples in both the source and target domains, and the second term comprises the structural risk losses of the source and target domains. We design these two terms separately below.

2.3.1 Label Propagation

First, we define the undirected weighted graph over the entire domain as , and let be its weight matrix, with . The entries of are computed as in Equation (13):

where indicates that is a neighbor of , and controls the local influence range of the Gaussian kernel function and is also a hyperparameter. The larger this kernel width, the wider the local influence range; the smaller it is, the narrower the range. For a fixed kernel width, the value of decreases monotonically as the distance between and increases.
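Equation 13 is not reproduced here, so the following Python sketch assumes the widely used heat-kernel weighting W_ij = exp(−‖x_i − x_j‖²/(2σ²)) on a symmetric k-nearest-neighbor graph; `k` and `sigma` are illustrative parameter names. It also checks the two properties stated above: weights shrink as distance grows, and a larger kernel width widens the range of influence.

```python
import math


def knn_heat_kernel_weights(X, k, sigma):
    """Symmetric k-NN graph with heat-kernel weights (assumed form of Equation 13):
    W[i][j] = exp(-||x_i - x_j||^2 / (2 * sigma^2)) if either point is among the
    other's k nearest neighbours, and 0 otherwise."""
    n = len(X)
    d2 = [[sum((a - b) ** 2 for a, b in zip(X[i], X[j])) for j in range(n)] for i in range(n)]
    W = [[0.0] * n for _ in range(n)]
    for i in range(n):
        # k nearest neighbours of x_i, excluding x_i itself (ties keep index order).
        neighbours = sorted((j for j in range(n) if j != i), key=lambda j: d2[i][j])[:k]
        for j in neighbours:
            w = math.exp(-d2[i][j] / (2.0 * sigma ** 2))
            W[i][j] = W[j][i] = w  # symmetrise the graph
    return W


X = [(0.0,), (1.0,), (2.0,), (10.0,)]
W = knn_heat_kernel_weights(X, k=1, sigma=1.0)
W_wide = knn_heat_kernel_weights(X, k=1, sigma=2.0)
print(W[0][1], W[2][3], W_wide[2][3])  # closer pairs weigh more; a larger sigma widens the reach
```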

By combining source domain knowledge transfer and the graph Laplacian matrix (Long et al., 2013; Wang et al., 2018), label propagation modeling is performed as:

where , is the label matrix of the target domain; if a target domain sample is unlabeled, its corresponding label vector in is all zeros. is the label matrix of the source domain, is the graph Laplacian matrix (Long et al., 2013), and is a diagonal matrix with .
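As a hedged illustration of how a graph Laplacian drives label propagation, the sketch below builds L = D − W and minimizes a generic objective, tr(FᵀLF) + μ Σ_{labelled i} ‖F_i − Y_i‖², whose optimum satisfies (L + μU)F = μUY with U the diagonal indicator of labelled rows. This objective is an assumption for illustration only: the paper's Equation 14 also couples source knowledge transfer and is not reproduced here. `mu` and `propagate_labels` are hypothetical names.

```python
def laplacian(W):
    # Unnormalised graph Laplacian L = D - W, where D is diagonal with D_ii = sum_j W_ij.
    n = len(W)
    return [[(sum(W[i]) if i == j else 0.0) - W[i][j] for j in range(n)] for i in range(n)]


def solve(A, B):
    """Solve A X = B (A square, B a list of rows) by Gauss-Jordan elimination with partial pivoting."""
    n = len(A)
    M = [list(map(float, A[i])) + list(map(float, B[i])) for i in range(n)]  # augmented matrix
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(M[r][col]))
        M[col], M[pivot] = M[pivot], M[col]
        p = M[col][col]
        M[col] = [v / p for v in M[col]]
        for r in range(n):
            if r != col and M[r][col] != 0.0:
                f = M[r][col]
                M[r] = [a - f * b for a, b in zip(M[r], M[col])]
    return [row[n:] for row in M]


def propagate_labels(W, Y, labelled, mu):
    """Minimise tr(F^T L F) + mu * sum_{i labelled} ||F_i - Y_i||^2 (an assumed objective).
    The zero-gradient condition is (L + mu * U) F = mu * U Y with U = diag(labelled)."""
    n = len(W)
    L = laplacian(W)
    A = [[L[i][j] + (mu if i == j and labelled[i] else 0.0) for j in range(n)] for i in range(n)]
    B = [[mu * y if labelled[i] else 0.0 for y in Y[i]] for i in range(n)]
    return solve(A, B)


# Chain graph 0-1-2-3: node 0 is labelled class 0, node 3 class 1, nodes 1 and 2 are unlabelled.
W = [[0, 1, 0, 0], [1, 0, 1, 0], [0, 1, 0, 1], [0, 0, 1, 0]]
Y = [[1, 0], [0, 0], [0, 0], [0, 1]]  # unlabelled rows are all zeros
F = propagate_labels(W, Y, [True, False, False, True], mu=10.0)
print([max(range(2), key=row.__getitem__) for row in F])  # [0, 0, 1, 1]
```

Each unlabelled node inherits the label of the labelled node it is closer to along the graph, which is the geometric-consistency behavior the Laplacian term is meant to enforce. Note that the system is singular if a connected component contains no labelled node.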

2.3.2 Design of Structural Risk

In our method, the source domain classifier and target domain classifier are defined as and , where ( ) is the bias term for the source domain (target domain), and ( ) is the parameter for the source domain (target domain). Let , , , and . Then, the two classifiers can be rewritten as and . Let and . By combining the two classifiers into a single classifier, we get: .

According to the least squares loss function, the problem of minimizing structural risk for both domains can be described as:

where the first term is the structural risk loss with . The second term regularizes the classification model: applying ℓ2,1-norm regularization enables feature selection, and it also helps control model complexity, preventing the target classification model from overfitting.

Because the classification task ensures reliable predictions through two complementary predictors, the label matrix and the decision function , combining Equations 14 and 15 yields the target classification function:

2.4 Final formulation

Combining the intra-class attraction term in Equation 10, the inter-class repulsion term in Equation 11, and the induction learning term in Equation 16, the final optimization problem of EDPC is:

where , , , and are the balance parameters.

Once all model parameters are obtained, knowledge inference in the target domain can be performed. To make the fullest use of the discriminative information in the source domain, the two classifiers and are linearly combined, and this fused model is used for target domain inference. The fusion takes the following form:

where is an adjustable parameter that balances the two classifiers. To emphasize the importance of discriminative information from the source domain as prior knowledge, we let based on experience.
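The fusion rule can be sketched in Python as follows, assuming both classifiers are linear score functions f(x) = Wᵀx + b and that the fused prediction is the arg-max class. The weight `alpha` used in the example is hypothetical, since the value chosen in the paper is not reproduced here.

```python
def linear_scores(W, b, x):
    # Scores of a linear classifier f(x) = W^T x + b, with W given as d rows of C weights.
    return [sum(W[d][c] * x[d] for d in range(len(x))) + b[c] for c in range(len(b))]


def fused_predict(Ws, bs, Wt, bt, x, alpha):
    """Fuse source and target classifiers as alpha * f_s(x) + (1 - alpha) * f_t(x)
    and return the arg-max class together with the fused scores."""
    fs = linear_scores(Ws, bs, x)
    ft = linear_scores(Wt, bt, x)
    fused = [alpha * s + (1.0 - alpha) * t for s, t in zip(fs, ft)]
    return max(range(len(fused)), key=fused.__getitem__), fused


# Toy 2-feature, 2-class example where the two classifiers disagree.
Ws, bs = [[1.0, 0.0], [0.0, 1.0]], [0.0, 0.0]  # source classifier votes class 0 for x
Wt, bt = [[0.0, 1.0], [1.0, 0.0]], [0.0, 0.0]  # target classifier votes class 1 for x
x = [1.0, 0.0]
print(fused_predict(Ws, bs, Wt, bt, x, alpha=0.7)[0])  # 0: the source classifier dominates
print(fused_predict(Ws, bs, Wt, bt, x, alpha=0.3)[0])  # 1: the target classifier dominates
```

Choosing alpha above 0.5 gives the source classifier the deciding vote when the two disagree, which matches the stated intent of treating source discriminative information as prior knowledge.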

3 Optimization

The optimization problem of EDPC is non-convex with respect to , , , and . This paper therefore adopts an alternating iterative optimization strategy in which each variable, with the others fixed, has a closed-form analytical solution.

3.1 Update as given , , and

Since the third and fifth terms in Equation 17 do not involve , the corresponding subproblem of EDPC is:

Theorem 3. The optimal solution to the original optimization problem of the objective function (18) is:

where,

Proof: Taking the partial derivative of Equation 18 with respect to the variable and setting it to zero, we get:

By combining like terms and rearranging Equation 20, the solution for can be obtained as Equation 19, thus proving the theorem.

According to Theorem 3, the matching contribution of any sample can be derived from Equation 19.

3.2 Update as given , , and

Since the first to third terms in Equation 17 do not involve , the corresponding subproblem of EDPC is:

where, and each element is . represents the membership value of belonging to the c-th category.

Theorem 4. The optimal solution to the original optimization problem of the objective Function (21) is:

where .

Proof: From Equation 21, let and solve for , that is:

where , in which is a diagonal matrix with diagonal elements , and is the i-th row vector of matrix . Rearranging Equation 23 yields the analytical solution given in Equation 22.
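The diagonal reweighting matrix in this proof resembles the standard iteratively reweighted scheme for ℓ2,1-norm minimization. The sketch below applies that scheme to a plain regularized least-squares problem, min_W ‖XW − Y‖²_F + λ‖W‖_{2,1}, rather than to the full EDPC subproblem; this substitution is an assumption made for clarity. `lam` and `eps` (a small constant that avoids division by zero) are illustrative names.

```python
import math


def transpose(A):
    return [list(row) for row in zip(*A)]


def matmul(A, B):
    Bt = transpose(B)
    return [[sum(a * b for a, b in zip(row, col)) for col in Bt] for row in A]


def solve(A, B):
    """Solve A X = B by Gauss-Jordan elimination with partial pivoting."""
    n = len(A)
    M = [list(map(float, A[i])) + list(map(float, B[i])) for i in range(n)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(M[r][col]))
        M[col], M[pivot] = M[pivot], M[col]
        p = M[col][col]
        M[col] = [v / p for v in M[col]]
        for r in range(n):
            if r != col and M[r][col] != 0.0:
                f = M[r][col]
                M[r] = [a - f * b for a, b in zip(M[r], M[col])]
    return [row[n:] for row in M]


def l21_least_squares(X, Y, lam, iters=50, eps=1e-12):
    """Iteratively reweighted solver for min_W ||X W - Y||_F^2 + lam * ||W||_{2,1}.
    Each step solves (X^T X + lam * D) W = X^T Y with D_ii = 1 / (2 * ||w^i||_2)."""
    Xt = transpose(X)
    XtX, XtY = matmul(Xt, X), matmul(Xt, Y)
    d = len(XtX)
    D = [1.0] * d  # D = I gives a ridge-regression starting point
    W = None
    for _ in range(iters):
        A = [[XtX[i][j] + (lam * D[i] if i == j else 0.0) for j in range(d)] for i in range(d)]
        W = solve(A, XtY)
        # Rows with a small norm receive a large penalty and are pushed further toward zero.
        D = [1.0 / (2.0 * math.sqrt(sum(v * v for v in row) + eps)) for row in W]
    return W


# Three mutually orthogonal features; the target depends on feature 0 only.
cols = [[1, 1, -1, -1, 1, -1], [1, -1, 1, -1, 0, 0], [0, 0, 0, 0, 1, 1]]
X = transpose(cols)               # 6 samples x 3 features
Y = [[2.0 * v] for v in cols[0]]  # 6 samples x 1 output
W = l21_least_squares(X, Y, lam=1.2)
print(W)  # row 0 near 2 - lam/12 = 1.9; rows 1 and 2 are zero (those features are deselected)
```

The orthogonal design makes the answer checkable by hand: the fixed point of row 0 satisfies 6w + λ/2 = 12, so w = 2 − λ/12, while the irrelevant rows stay at zero.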

3.3 Update by fixing , , and

The first, second, and fifth terms in Equation 17 do not involve . After substituting the result into Equation 17 and imposing the constraint , which reduces interfering information in the obtained label matrix , we obtain the following subproblem:

where and . Problem (24) is a standard problem solvable by singular value decomposition: the optimal consists of the eigenvectors of matrix , which are obtained from the singular value decomposition of .
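Assuming Problem (24) has the common trace form max tr(FᵀMF) subject to FᵀF = I with M symmetric positive semi-definite (the text's reference to eigenvectors suggests this, but the equation is not reproduced), the optimum stacks the leading eigenvectors of M. The sketch below finds them by power iteration with deflation; for such an M the eigenvectors and singular vectors coincide up to sign. `top_eigenvectors` is a hypothetical helper, not the paper's solver.

```python
import math


def top_eigenvectors(M, k, iters=500):
    """Columns maximising tr(F^T M F) subject to F^T F = I for symmetric PSD M:
    the k leading eigenvectors, found here by power iteration with deflation."""
    n = len(M)
    A = [list(map(float, r)) for r in M]
    vectors, values = [], []
    for j in range(k):
        v = [1.0 / math.sqrt(n)] * n
        v[j % n] += 1.0  # perturb the start so it is unlikely to be orthogonal to the target
        for _ in range(iters):
            w = [sum(A[i][c] * v[c] for c in range(n)) for i in range(n)]
            norm = math.sqrt(sum(x * x for x in w))
            if norm == 0.0:
                break
            v = [x / norm for x in w]
        # Rayleigh quotient gives the eigenvalue associated with v.
        lam = sum(v[i] * sum(A[i][c] * v[c] for c in range(n)) for i in range(n))
        vectors.append(v)
        values.append(lam)
        # Deflate: remove the found component so the next run converges to the next eigenvector.
        A = [[A[i][c] - lam * v[i] * v[c] for c in range(n)] for i in range(n)]
    return vectors, values


M = [[2.0, 1.0], [1.0, 2.0]]  # eigenvalues 3 and 1
vecs, vals = top_eigenvectors(M, k=2)
print(vals)  # approximately [3.0, 1.0]
```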

3.4 Update by fixing , , and

From Equation 17, the corresponding subproblem of EDPC is:

Theorem 5. The optimal solution to the original optimization problem of the objective Function (25) is:

where is the balancing parameter of the constraint term .

Proof: From Equation 25, let and solve for , which gives Equation (27):

The solution for can be obtained as Equation 26, thus Theorem 5 is proved.

4 Algorithm

4.1 Algorithm description

In unsupervised domain adaptation, where the target domain lacks labeled data, semantic alignment between domains relies on initial label information for the target domain. This information can be obtained through one of three strategies (Liang et al., 2019): (1) random assignment; (2) setting all labels to zero; or (3) clustering the target domain data with a model trained on the source domain data. Strategies (1) and (2) are cold-start methods, whereas strategy (3) is a warm-start method that is usually more beneficial for the subsequent learning process. We therefore use the third strategy to initialize the prior information of the target domain, thereby initializing , , , and . EDPC uses an iterative optimization strategy, a common approach in multi-objective optimization. The iteration stops when , where is the value of the objective function at the z-th iteration and is a predefined threshold. The complete learning procedure of the proposed method is given in Algorithm 1.
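The warm start and the class means computed in steps 2 and 3 of Algorithm 1 can be sketched as follows. Two simplifications are assumptions rather than the paper's exact procedure: target samples are pseudo-labelled by their nearest source class mean (one simple way of letting a source-trained model label target data), and the overall-domain class mean is taken as β·(source mean) + (1 − β)·(target mean), which is consistent with the discussion of Figure 1c but not confirmed by the text. `beta` and the function names are hypothetical.

```python
def class_means(X, labels, num_classes):
    # Per-class mean vectors; classes without samples get None.
    dim = len(X[0])
    sums = [[0.0] * dim for _ in range(num_classes)]
    counts = [0] * num_classes
    for x, y in zip(X, labels):
        counts[y] += 1
        sums[y] = [s + v for s, v in zip(sums[y], x)]
    return [[s / counts[c] for s in sums[c]] if counts[c] else None for c in range(num_classes)]


def warm_start_labels(Xs, ys, Xt, num_classes):
    """Pseudo-label each target sample with the class of its nearest source class mean."""
    means = class_means(Xs, ys, num_classes)

    def nearest(x):
        return min((c for c in range(num_classes) if means[c] is not None),
                   key=lambda c: sum((a - b) ** 2 for a, b in zip(x, means[c])))

    return [nearest(x) for x in Xt]


def overall_class_means(Xs, ys, Xt, yt, num_classes, beta):
    """beta * source class mean + (1 - beta) * target class mean (source mean if the target class is empty)."""
    ms, mt = class_means(Xs, ys, num_classes), class_means(Xt, yt, num_classes)
    out = []
    for c in range(num_classes):
        if mt[c] is None:
            out.append(ms[c])
        else:
            out.append([beta * a + (1 - beta) * b for a, b in zip(ms[c], mt[c])])
    return out


Xs = [(0.0, 0.0), (0.0, 1.0), (4.0, 4.0), (4.0, 5.0)]
ys = [0, 0, 1, 1]
Xt = [(1.0, 0.0), (5.0, 5.0), (3.0, 4.0)]
yt = warm_start_labels(Xs, ys, Xt, 2)                       # [0, 1, 1]
means = overall_class_means(Xs, ys, Xt, yt, 2, beta=0.5)    # [[0.5, 0.25], [4.0, 4.5]]
print(yt, means)
```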

ALGORITHM 1 : Domain adaptation learning based on EDPC

Input: The source domain data , the target domain data , unknown target domain labels (initialized via clustering), and parameters , the threshold for iteration termination , and the maximum number of iterations .

Output: The contribution matrix , which represents the matching contribution of each instance to the class mean points of the overall domain; the shared subspace ; the decision function on the source and target domain datasets; and the label matrix .

Procedure:

1. Initialize the label values for the unlabeled data in the target domain.

2. Calculate the mean values and for different categories in the target domain and source domain, respectively, where .

3. Then calculate the mean values for different categories in the overall domain as .

4. Obtain the initial values for using Equation 19.

5. Obtain the initial values for using Equation 22.

6. Obtain the initial values for using Equation 24.

7. Obtain the initial values for using Equation 26.

8. Compute the value of the objective function .

9. Repeat the following steps sequentially from :

{

1. Update the values of to using Equation 19.

2. Obtain the updated values for using Equation 22.

3. Obtain the updated values for using Equation 24.

4. Obtain the updated values P2 for using Equation 26.

5. Update the value of the objective function to .

6. If , terminate the loop and return the matrices , , , and ; otherwise, return to step 9.1 and continue until this condition is satisfied.

}
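The loop in Algorithm 1 is block-coordinate descent with an objective-change stopping rule. The Python sketch below shows that control flow on a hypothetical two-block quadratic, f(x, y) = (x − 1)² + (y − 2)² + (x − y)², whose per-block minimizers x = (1 + y)/2 and y = (2 + x)/2 stand in for EDPC's closed-form updates (Equations 19, 22, 24, and 26); `eps` and `max_iter` play the roles of the termination threshold and the maximum number of iterations.

```python
def alternating_minimize(objective, updates, state, eps=1e-10, max_iter=100):
    """Block-coordinate descent: apply each closed-form block update in turn and stop
    when the objective changes by less than eps (or after max_iter sweeps)."""
    history = [objective(state)]
    for _ in range(max_iter):
        for update in updates:
            state = update(state)  # each update returns a new state with one block re-solved
        history.append(objective(state))
        if abs(history[-1] - history[-2]) < eps:
            break
    return state, history


def objective(s):
    return (s["x"] - 1) ** 2 + (s["y"] - 2) ** 2 + (s["x"] - s["y"]) ** 2


updates = [
    lambda s: {**s, "x": (1 + s["y"]) / 2},  # argmin over x with y fixed
    lambda s: {**s, "y": (2 + s["x"]) / 2},  # argmin over y with x fixed
]
state, history = alternating_minimize(objective, updates, {"x": 0.0, "y": 0.0})
print(state, len(history))  # x approaches 4/3 and y approaches 5/3; the objective never increases
```

Because every block update is an exact minimizer, the recorded objective values are non-increasing, which is the property the convergence argument in Section 6.4 relies on.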

4.2 Generalization Analysis

Rademacher complexity is an effective measure of a function set’s capacity to fit noise (Ghifary et al., 2017; Tao and Dan, 2021). We therefore derive the generalization error bound of the proposed method using Rademacher complexity. Let be a set of hypothesis functions in the RKHS , where is a compact set and is a label space. Given a loss function and a neighborhood distribution on , the expected loss between two hypothesis functions is defined as:

The discrepancy between the source domain distribution and the target domain distribution is defined in Equation (29):

Let and be the true label functions for and , respectively, and let the corresponding optimized hypothesis functions be:

Their corresponding expected loss is denoted as . Our EDPC method aims to achieve the empirical loss target through the objective function .

The following theorem provides the generalization error bound for the proposed method:

Theorem 6 (Generalization Error Bound; Nie et al., 2010). Let be a function set in the reproducing kernel Hilbert space (RKHS) . Consider and as datasets from the source domain and the target domain, respectively. Assume the loss function loss (.) is , mapping . For , the condition holds. The generalization error bound for any hypothesis function , with a probability of at least , having Rademacher complexity on , is shown in Equation (30):

where is the Rademacher complexity, and is the discriminative possibilistic clustering distribution distance measure, composed of the possibilistic clustering distribution distance measure with the intra-class alignment term and the discriminative term (i.e., ).

Theorem 6 shows that the discriminative possibilistic clustering distribution distance measure and the model alignment function jointly control the generalization error bound of the proposed method. Consequently, by minimizing both the discriminative possibilistic distribution distance between domains and the model bias, the proposed method can enhance its generalization performance in domain adaptation. Experimental results on real-world datasets also support this conclusion.
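To make the role of Rademacher complexity in Theorem 6 concrete, the sketch below computes the empirical Rademacher complexity of a finite hypothesis class exactly, by enumerating all 2ⁿ sign vectors on n points (feasible only for small n). It illustrates the "capacity to fit noise" interpretation: a single fixed hypothesis has complexity 0, while a class containing every ±1 labelling reaches 1. The hypothesis classes are toy examples, not the RKHS class used in the bound.

```python
from itertools import product


def empirical_rademacher(hypotheses, n):
    """Exact empirical Rademacher complexity E_sigma[ max_h (1/n) sum_i sigma_i h(x_i) ]
    of a finite class, where each hypothesis is given by its outputs on the n sample points."""
    sign_vectors = list(product((-1, 1), repeat=n))
    total = 0.0
    for sigma in sign_vectors:
        total += max(sum(s * v for s, v in zip(sigma, h)) / n for h in hypotheses)
    return total / len(sign_vectors)


n = 4
single = [(1, 1, 1, 1)]                                      # one fixed hypothesis
pair = [(1, 1, 1, 1), (-1, -1, -1, -1)]                      # a hypothesis and its negation
everything = [tuple(h) for h in product((-1, 1), repeat=n)]  # every +/-1 labelling
print(empirical_rademacher(single, n), empirical_rademacher(pair, n), empirical_rademacher(everything, n))
# 0.0 0.375 1.0
```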

5 Experiments

To validate the effectiveness of the proposed EDPC in cross-domain emotion recognition, this section systematically compares EDPC with state-of-the-art unsupervised domain adaptation techniques on two key EEG datasets (i.e., SEED and SEED-IV).

5.1 Databases description

To ensure a fair comparison with state-of-the-art (SOTA) methods, we conducted extensive experiments on two well-known public datasets: SEED (Zheng and Lu, 2015a) and SEED-IV (Zheng et al., 2018). The SEED dataset comprises data collected from 15 subjects, each participating in three sessions held at different times. Each session includes 15 trials covering three types of emotional stimuli: negative, neutral, and positive. Similarly, the SEED-IV dataset involves 15 subjects, each undergoing three sessions at different times. Each SEED-IV session consists of 24 trials with four emotional stimuli: happy, sad, fearful, and peaceful.

EEG signals for both datasets (SEED and SEED-IV) were simultaneously recorded using a 62-channel ESI Neuroscan system. During preprocessing, the data were down-sampled to 200 Hz, environmental noise was manually removed, and the data were filtered with a 0.3–50 Hz band-pass filter. For a fair comparison with existing studies on the two benchmark databases, we also use the pre-computed differential entropy (DE) features as the model input. Specifically, the EEG data of each trial were divided into 1-s segments, and DE features were extracted from each segment in five frequency bands [Delta (1–3 Hz), Theta (4–7 Hz), Alpha (8–13 Hz), Beta (14–30 Hz), and Gamma (31–50 Hz)] for all 62 channels. Each 1-s segment is thus represented by a 310-dimensional feature vector (5 frequency bands × 62 channels), which is further smoothed with a linear dynamic system method (Shi and Lu, 2010).
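Under the Gaussian assumption commonly used for these features, the DE of a band-filtered segment with variance σ² is ½ ln(2πeσ²). The sketch below computes it and assembles one 310-dimensional vector from a 1-s window (200 samples at 200 Hz). It assumes band-pass filtering has already been applied, the band-major ordering of the vector is an illustrative choice rather than the datasets' documented layout, and linear dynamic system smoothing is omitted.

```python
import math

BANDS = ("delta", "theta", "alpha", "beta", "gamma")  # 1-3, 4-7, 8-13, 14-30, 31-50 Hz


def differential_entropy(segment):
    """DE of a band-limited segment under a Gaussian assumption: 0.5 * ln(2 * pi * e * var)."""
    n = len(segment)
    mean = sum(segment) / n
    var = sum((v - mean) ** 2 for v in segment) / n  # must be > 0
    return 0.5 * math.log(2 * math.pi * math.e * var)


def de_feature_vector(band_segments):
    """band_segments[band][channel] holds the samples of one 1-s window that has already been
    band-pass filtered. Returns a band-major vector: 5 bands x 62 channels = 310 values."""
    return [differential_entropy(ch) for band in BANDS for ch in band_segments[band]]


# Synthetic 1-s window at 200 Hz: an alternating +/-1 signal has variance exactly 1.
window = [1.0 if i % 2 == 0 else -1.0 for i in range(200)]
segments = {band: [window] * 62 for band in BANDS}
features = de_feature_vector(segments)
print(len(features), features[0])  # 310 and 0.5 * ln(2*pi*e), about 1.4189
```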

5.2 Experiment Settings

Before analyzing the experimental results in detail, the hyperparameters of EDPC must be tuned. Empirically, the hyperparameters and balance the trade-off between fuzziness and local structure preservation in the objective Function (17) for the source and target domains, while the other two hyperparameters and balance the influence of class exclusion and feature selection, respectively. These hyperparameters therefore play a crucial role in the final performance of the algorithm.

Because parameter setting remains a challenging issue in machine learning, this study adopts an experience-based exploration of the parameter space to determine the optimal configuration, evaluates each method on the datasets accordingly, and records its best performance. Except in special cases, all related methods are fine-tuned to achieve optimal results. The underlying geometric structure of the data depends on the neighborhood size used to construct the Laplacian matrix, and we observed that model performance is slightly sensitive to the neighborhood size when the neighborhood is small. Therefore, when constructing the nearest neighbor graph in EDPC, we use a grid search within the range to determine the optimal number of neighbors and report the highest recognition accuracy obtained under this configuration.

Additionally, for all methods implemented in this paper, a Gaussian kernel is used in both the source and target domains, where is determined by minimizing the Maximum Mean Discrepancy (MMD) to establish a benchmark. Based on prior experience, is initially set to the square root of the average norm of the binary training data, and for multiclass classification it is adjusted to (where C is the number of classes). The underlying geometric structure relies on the k neighbors used to compute the Laplacian matrix; in our experiments, performance varies only slightly when k is not large. Consequently, to construct the nearest neighbor graph in EDPC, we conduct a grid search over the number of nearest neighbors k in the set and report the best recognition accuracy under the optimal parameter configuration.

Before presenting the detailed evaluation, we explain how the hyperparameters of EDPC are tuned. Based on prior experience, the parameter balances the fuzzy entropy and the alignment of domain probability distributions in the objective Function (17). The parameters and are adjustable and balance the importance of structure description and feature selection. Since parameter selection remains an open issue in machine learning, we rely on previous work to determine these parameters: all methods are evaluated on the datasets by empirically searching the parameter space, and the best result of each method is reported. Except in special cases, all parameters of the relevant methods are fine-tuned to achieve optimal results.

Since unsupervised domain adaptation lacks target labels for standard cross-validation, we employ a leave-one-subject-out strategy on the SEED and SEED-IV datasets (detailed in Section 5.3). With this strategy, we select the parameter values from the set { , , … , } that achieve the highest average accuracy on these datasets. This strategy typically yields an effective EDPC model for unsupervised domain adaptation, and a similar approach is used to find the optimal parameter values of the other domain adaptation methods. Section 6.1 tests the sensitivity of EDPC to parameter selection, verifying that EDPC maintains stable performance across a wide range of parameter values. The remaining hyperparameters of the other methods are set according to their original literature.

5.3 Experimental protocols

To fully verify the robustness and stability of the proposed method, we compare it with the latest methods under three validation protocols (Zhang et al., 2020):

1. Single-subject cross-session leave-one-session-out cross-validation. In line with existing methods, a time-series cross-validation approach is used here, in which past data are used to predict current or future data. For each subject, the first two sessions serve as the source domain and the last session as the target domain. The final results are the average accuracy and standard deviation across all subjects.

2. Cross-subject single-session leave-one-subject-out cross-validation. This validation scheme is the most widely used in emotion recognition tasks based on EEG data (Li J. et al., 2020; Luo et al., 2018). In this approach, one session’s data from a subject is treated as the target domain, while the data from the remaining subjects serve as the source domain. The training and validation process is repeated until each subject has been used as the target once. Consistent with other studies, we only consider the first session for this type of cross-validation.

3. Cross-subject cross-session leave-one-subject-out cross-validation. To comprehensively assess the robustness of the model on unseen subjects and trials, we adopt a rigorous cross-subject cross-session leave-one-subject-out evaluation. All session data from a single subject are designated as the target domain, while all sessions of the remaining subjects serve as the source domain. The training and validation process is repeated until each subject’s sessions have been used as the target domain once. Given the variations across subjects and sessions, this protocol poses a substantial challenge to EEG-based emotion recognition models.
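The three protocols differ only in how (subject, session) pairs are split into source and target. A minimal generator for each is sketched below, assuming subjects and sessions are identified by integers; it only enumerates folds and leaves training and evaluation to the caller. The function names are illustrative.

```python
def single_subject_cross_session(subjects, sessions=(1, 2, 3)):
    # Protocol 1: per subject, the earlier sessions are the source and the last session the target.
    for s in subjects:
        yield [(s, k) for k in sessions[:-1]], [(s, sessions[-1])]


def cross_subject_single_session(subjects, session=1):
    # Protocol 2: one subject's session is the target; the same session of all others is the source.
    for s in subjects:
        yield [(o, session) for o in subjects if o != s], [(s, session)]


def cross_subject_cross_session(subjects, sessions=(1, 2, 3)):
    # Protocol 3: all sessions of one subject are the target; all sessions of the others the source.
    for s in subjects:
        yield ([(o, k) for o in subjects if o != s for k in sessions],
               [(s, k) for k in sessions])


subjects = list(range(1, 16))  # 15 subjects, as in SEED and SEED-IV
p1 = list(single_subject_cross_session(subjects))
p2 = list(cross_subject_single_session(subjects))
p3 = list(cross_subject_cross_session(subjects))
print(p1[0], len(p2[0][0]), len(p3[0][0]))  # ([(1, 1), (1, 2)], [(1, 3)]) 14 42
```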

5.4 Experimental results

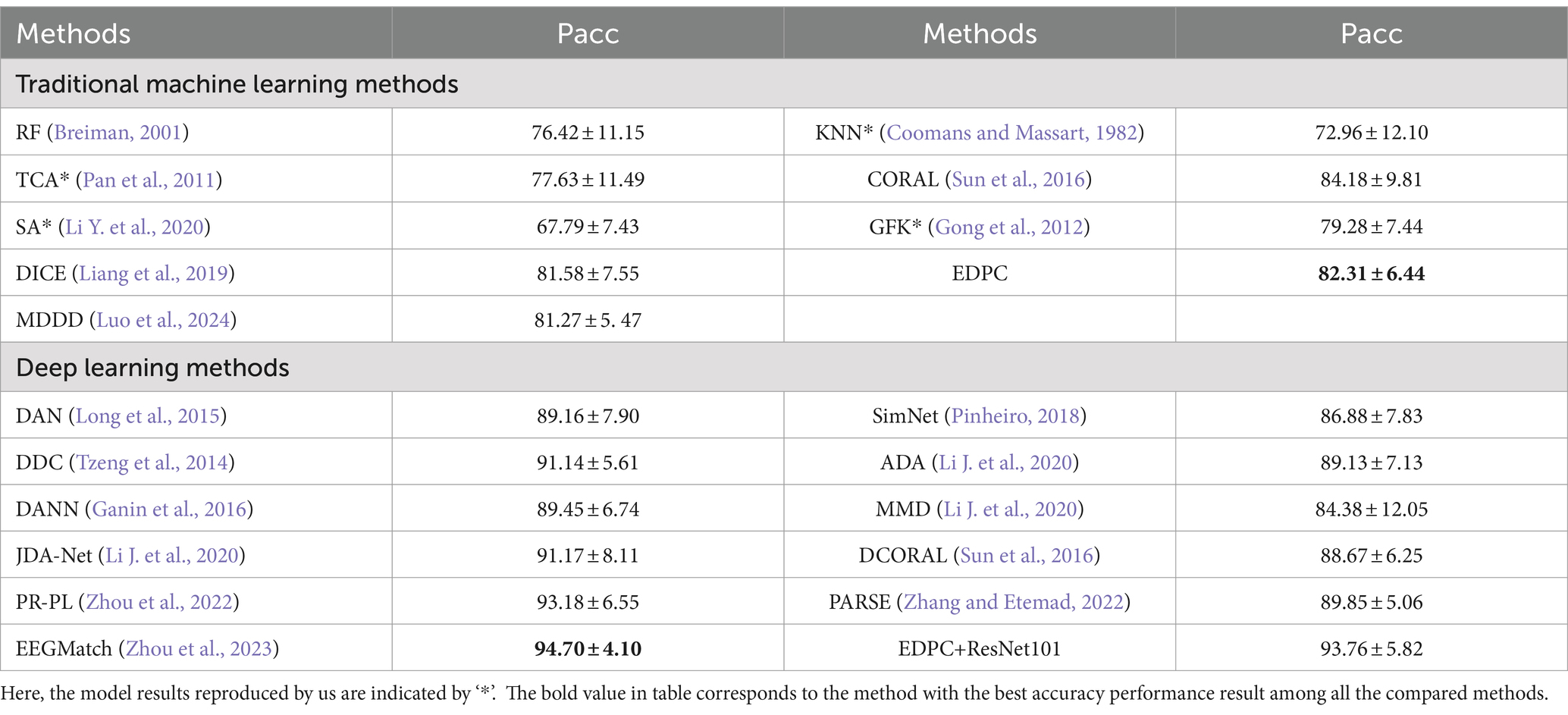

In the following tables, bold values indicate the best accuracy among the compared methods, Pacc denotes the average accuracy of each method, and EDPC denotes the proposed method. By default, data features were extracted with shallow techniques; EDPC+ResNet101 indicates that the deep neural network ResNet101 was used for feature extraction.

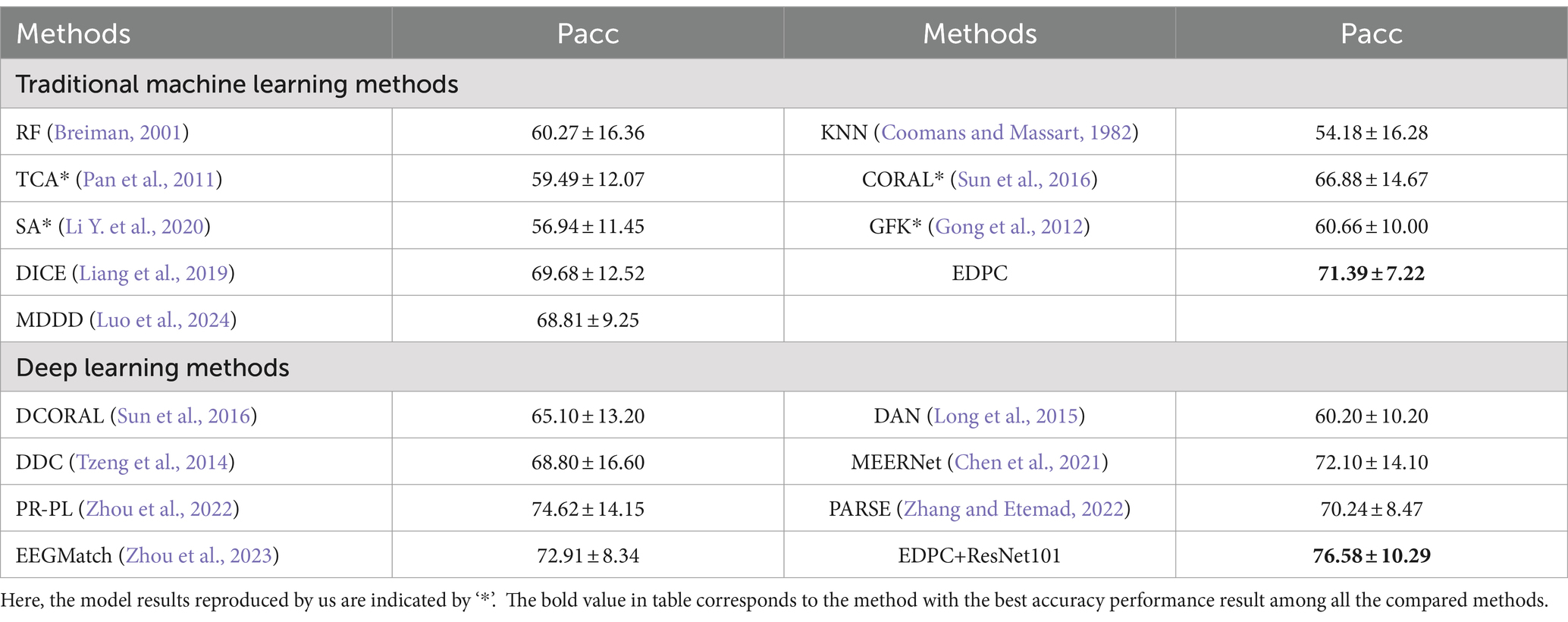

5.4.1 Single-subject cross-session

We compute the mean and standard deviation of the results across subjects; the cross-session validation results on SEED and SEED-IV are shown in Tables 1 and 2, respectively. Compared with traditional machine learning methods on both datasets, EDPC achieves the best accuracy, outperforming even DICE. This suggests that the discriminative design of EDPC provides a stronger noise suppression effect.

Table 1. The mean accuracies (%) and standard deviations (%) of emotion recognition on SEED database using single-subject cross-session leave-one-session-out cross-validation.

Table 2. The mean accuracies (%) and standard deviations (%) of emotion recognition on SEED-IV database using single-subject cross-session leave-one-session-out cross-validation.

Additionally, on the SEED dataset, EEGMatch achieved the best performance among the deep learning methods, possibly because its mixup technique provides richer and more effective data for model training, although the increased data volume also raises computational costs. Nevertheless, EDPC still obtained comparable performance and ranked closely behind it, demonstrating its advantage in single-subject cross-session recognition.

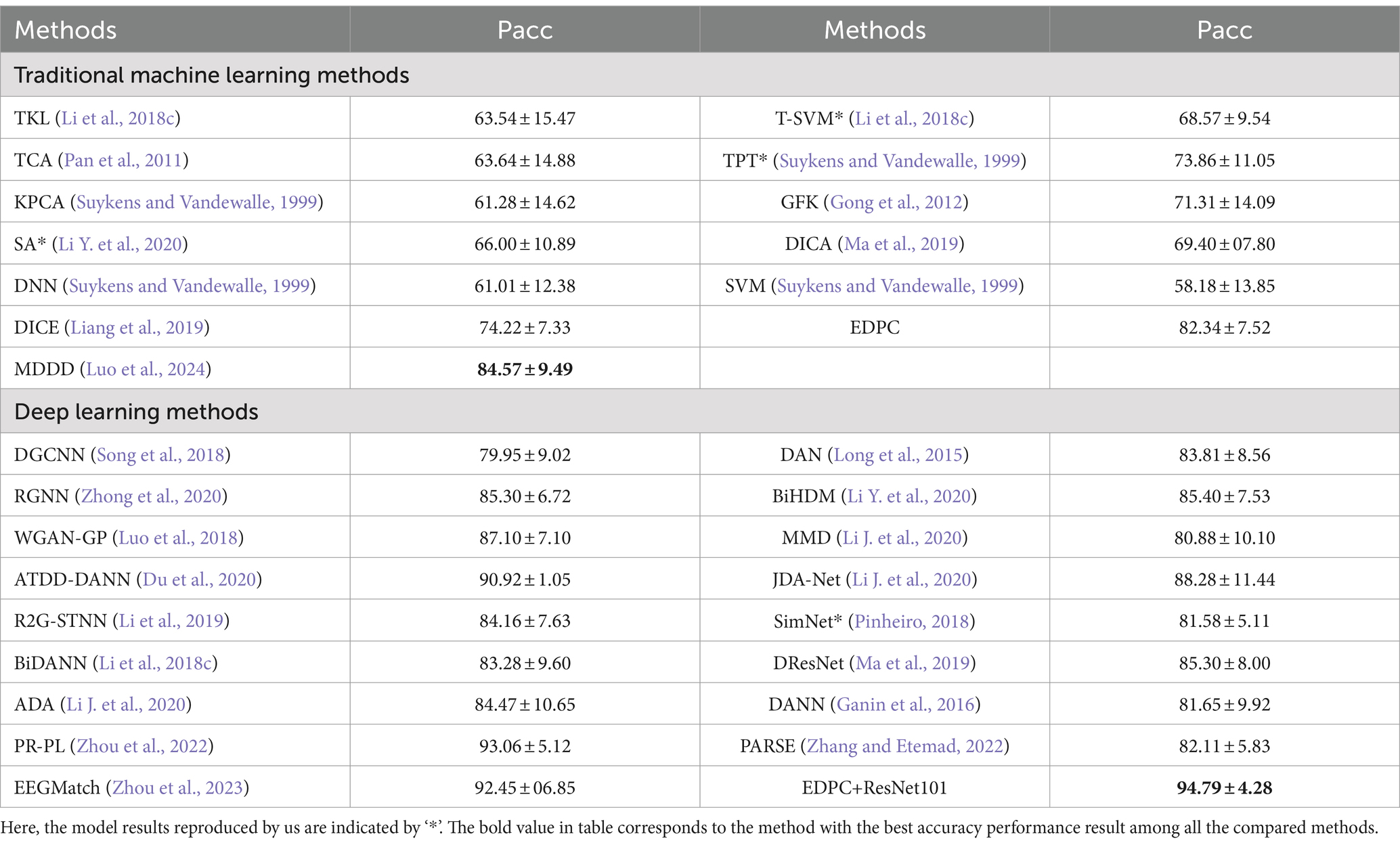

As Table 2 shows, EDPC achieved the best performance on the SEED-IV dataset, which contains four emotions (SEED has three), compared with both traditional machine learning and deep learning methods. This suggests that the “sames attract and opposites repel” characteristic of EDPC yields higher accuracy in finer-grained emotion recognition and that EDPC scales better to more nuanced emotion recognition tasks.

Table 3. The mean accuracies (%) and standard deviations (%) of emotion recognition on SEED database using cross-subject single-session leave-one-subject-out cross-validation.

5.4.2 Cross-subject single-session

Table 3 compares the results of the leave-one-subject-out recognition task within a single session with those of the latest methods in the literature. All results are presented as mean ± standard deviation. The MDDD method achieved the best performance. A possible reason is that subjects in the same session face a consistent environment, so the impact of noise is more balanced, and the ensemble learning approach adopted by MDDD handles this kind of data effectively. However, MDDD incurs higher computational costs because it requires training and combining multiple models.

Apart from MDDD, EDPC achieved the best accuracy (82.34) with a standard deviation of 7.52, and it retains an overall performance advantage among traditional machine learning methods. EDPC also outperforms DICE, suggesting that it handles noise better. This indicates that the possibilistic clustering assumption with fuzzy entropy can mitigate the influence of noise and outliers in unsupervised classification.

Compared with the latest deep learning methods, particularly DANN-based deep transfer learning networks (e.g., ATDD-DANN, R2GSTNN, BiHDM, BiDANN, WGAN-GP, PR-PL, EEGMatch), EDPC achieved the best performance among these methods in Table 3. This indicates that EDPC effectively addresses individual differences and noisy labels in aBCI applications and generalizes and discriminates better across subjects within the same session.

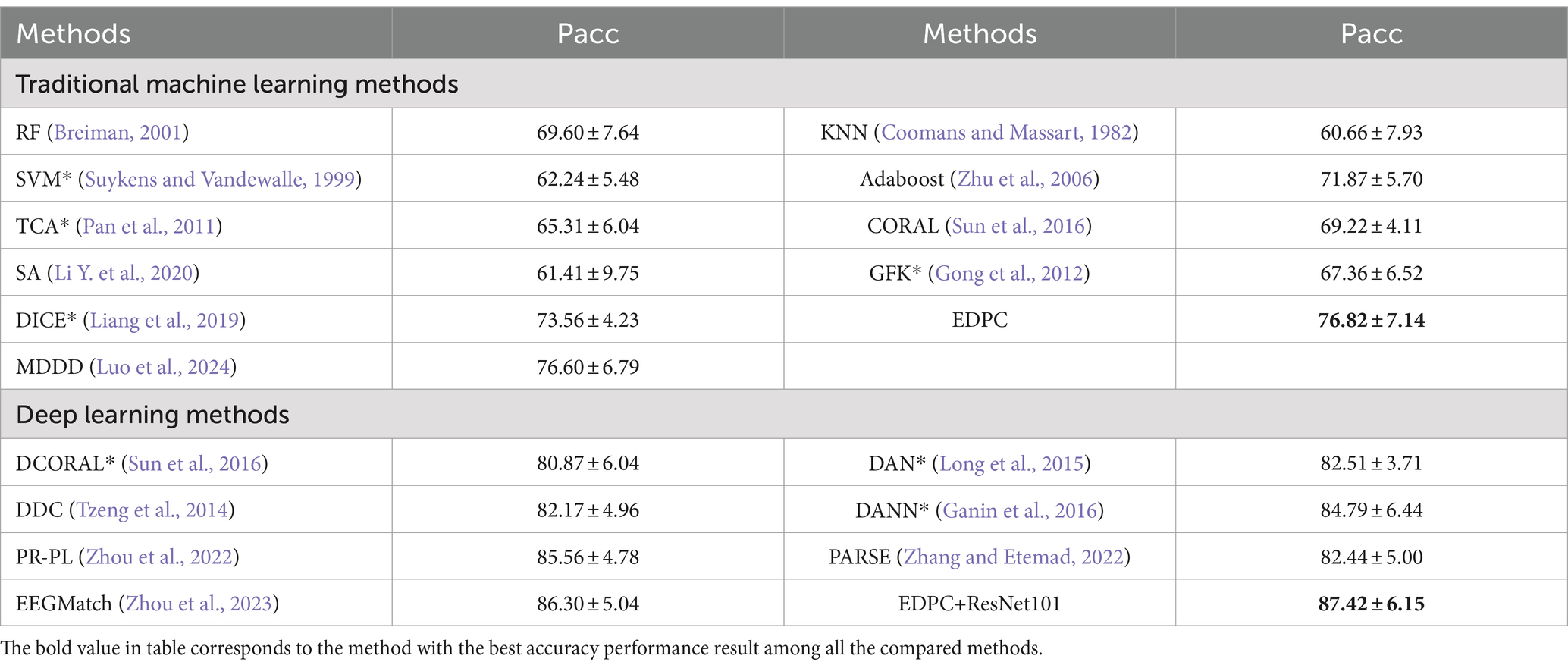

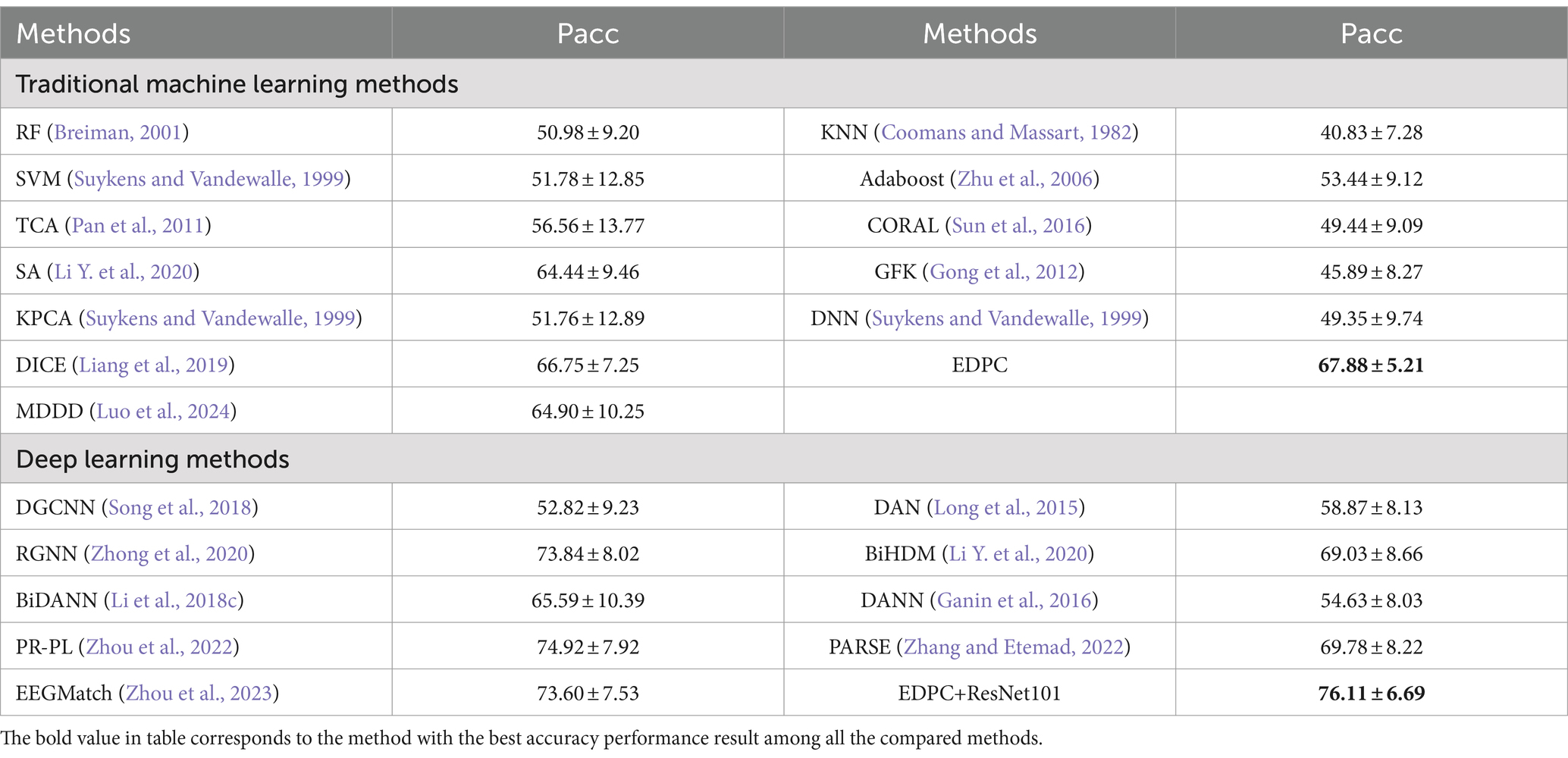

5.4.3 Cross-subject cross-session

To validate the efficiency and stability of EDPC under cross-subject and cross-session conditions, we apply cross-subject cross-session leave-one-subject-out cross-validation on the SEED and SEED-IV databases. As shown in Tables 4 and 5, EDPC achieves the best performance compared with both traditional machine learning and deep learning methods. Moreover, the accuracies in Table 3 are higher than those in Table 5, since the cross-subject cross-session setting is more complicated. EDPC also clearly outperforms MDDD in this setting. These results demonstrate that EDPC achieves higher recognition accuracy and better generalization when facing more complex individual and environmental differences, indicating its effectiveness for emotion recognition.

Table 4. The mean accuracies (%) and standard deviations (%) of emotion recognition on SEED database using cross-subject cross-session leave-one-subject-out cross-validation.

Table 5. The mean accuracies (%) and standard deviations (%) of emotion recognition on SEED-IV database using cross-subject cross-session leave-one-subject-out cross-validation.

6 Discussion

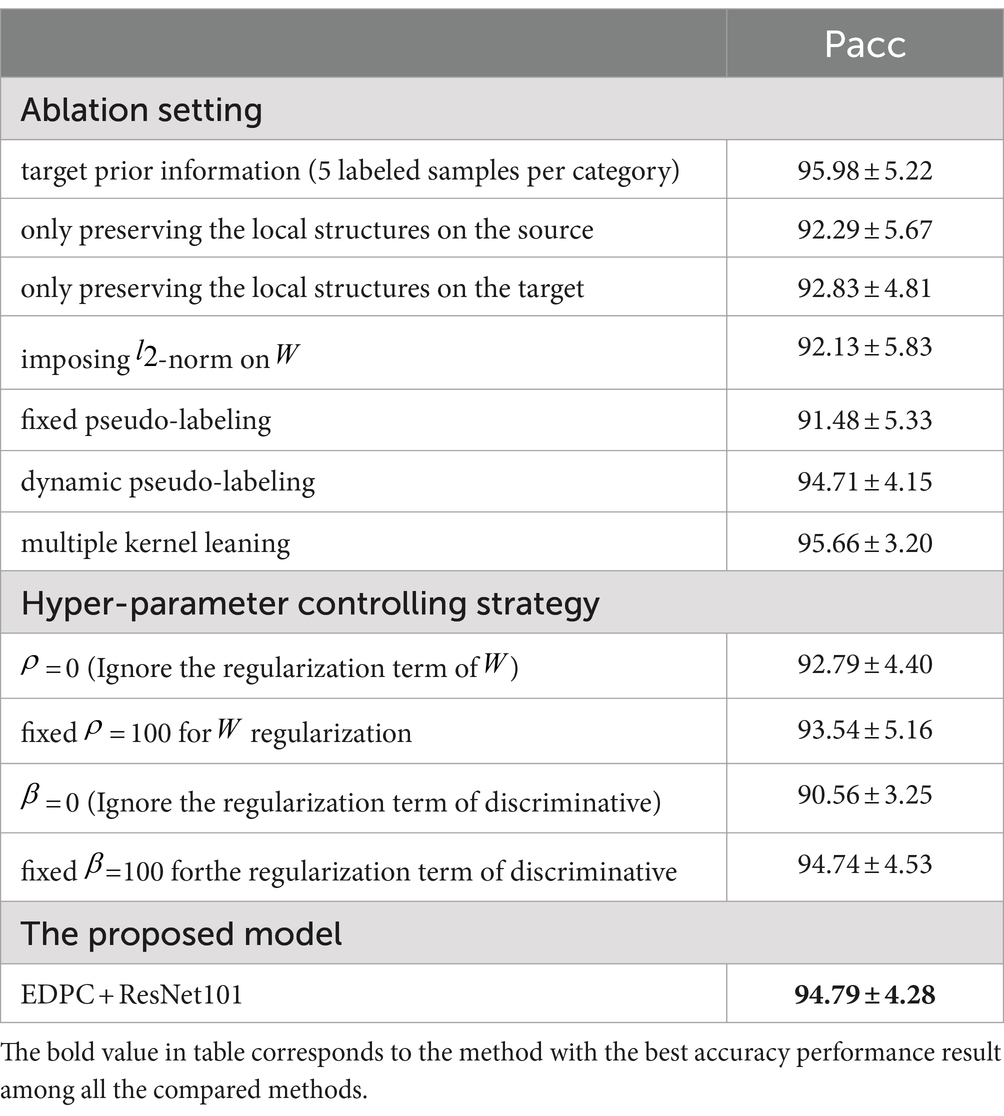

To comprehensively study the model’s performance, this section evaluates the effects of different settings in EDPC. All results in this section are based on the SEED dataset under the cross-subject single-session cross-validation protocol. Bold values in Table 6 indicate the best accuracy achieved by this method. Figures are best viewed in color.

6.1 Ablation study

This ablation study examines the contribution of each component of the proposed model to its overall performance. As shown in Table 6, adding 5 labeled samples per category in the target domain yields a recognition accuracy of 95.98 ± 5.22, compared with 92.19 ± 4.70 for unsupervised EDPC. This gap indicates that prior label information in the target domain significantly enhances model performance and highlights the great potential of transfer learning in aBCI applications. Moreover, preserving the local structure of data in both the source and target domains improves model performance; otherwise, the recognition accuracy decreases (92.29 ± 5.67 and 92.83 ± 4.81, respectively). When the ℓ2,1 norm on W is replaced with the ℓ2 norm, the recognition accuracy drops to 92.13 ± 5.83, demonstrating that the ℓ2,1 constraint achieves better sample selection and denoising.

For the pseudo-labeling method, switching from a fixed mode to a linear dynamic update increases the accuracy from 91.48 to 94.71, and an adaptive pseudo-labeling method based on nonlinear dynamics further improves it to 94.79. Employing multi-kernel learning increases the accuracy further to 95.66. These results indicate that multi-kernel learning helps weigh the importance of different kernels in various scenarios and enhances the model’s generalization ability.

Next, we analyze the impact of different hyperparameters on the overall performance of the model. When the dynamic learning rate varies from 0 to 100, the model accuracy steadily improves from 92.79 to 93.54, indicating that a dynamic learning rate outperforms a fixed value in recognition accuracy. Additionally, the value of directly affects the importance of the discriminative term in the model: removing the discriminative term drops the accuracy to 90.56, whereas setting = 100 yields 94.74, close to the performance of EDPC. This demonstrates that the discriminative term plays an indispensable role in the model.

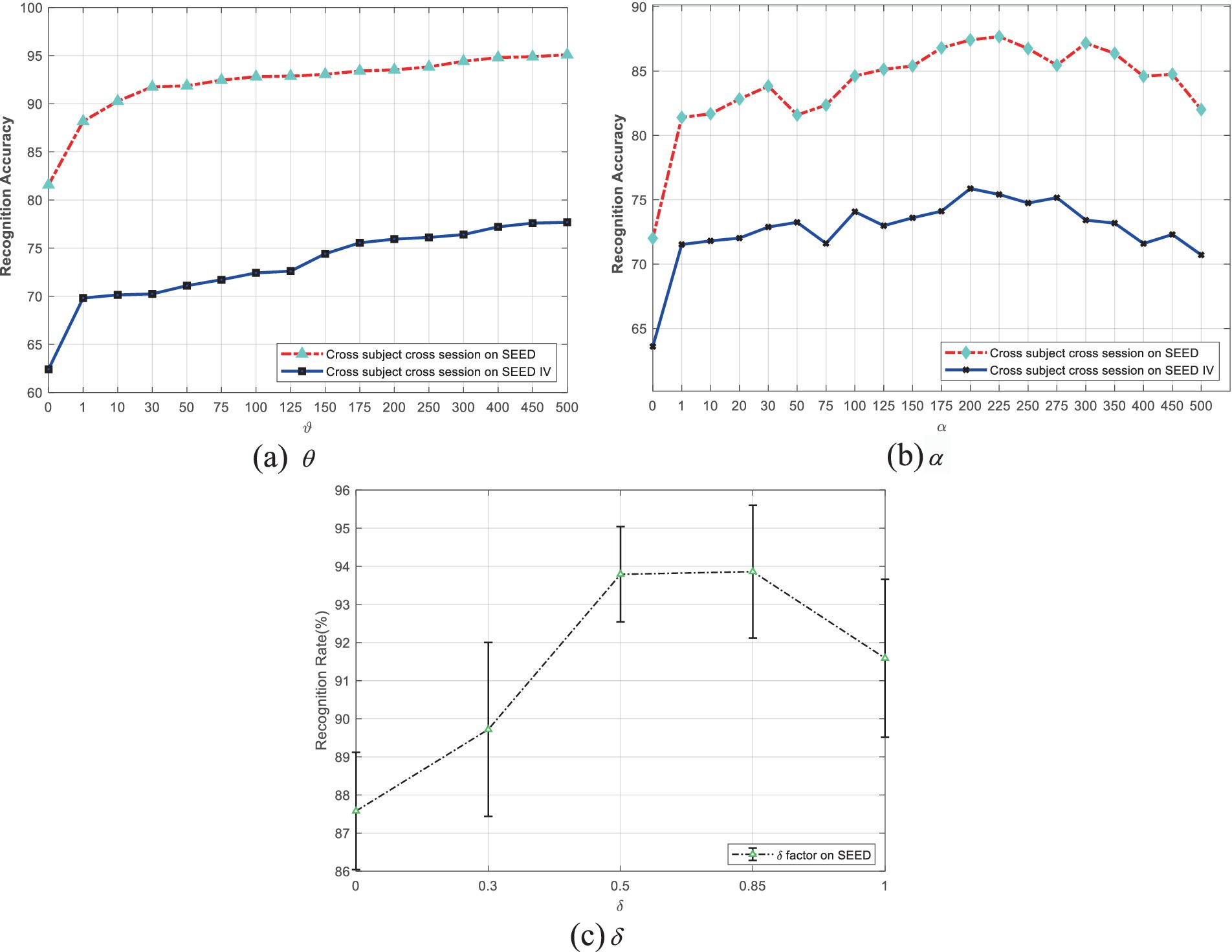

Additionally, and are two balancing parameters that optimize the performance of EDPC by weighting the fuzzy entropy and local structure preservation (for the source and target domains). As shown in Figure 1a, performance is poor when is 0, jumps significantly when is 1, and continues to improve steadily as increases, stabilizing once reaches 400 or higher. Therefore, setting to around 400 achieves the best EDPC performance.

Figure 1. Performance variations of the EDPC method with changes in parameters , , and . (a) Parameter for fuzzy entropy; (b) parameter for label propagation; (c) weight of the class centers of the source domain.

Figure 1b shows that performance is poor when is 0 and jumps when is 1. As increases further, performance improves slowly and fluctuates. Based on this trend, can be set within [100, 225] to achieve optimal EDPC performance.

Figure 1c shows that when is within the range of [0.5, 0.85], the performance is high, indicating that the source domain contains a significant amount of discriminative information, and the means of different categories play a more important role. When takes larger values up to 1 (completely removing the target domain’s mean information), the performance declines, further demonstrating that the mean information of the target domain also plays an auxiliary role.

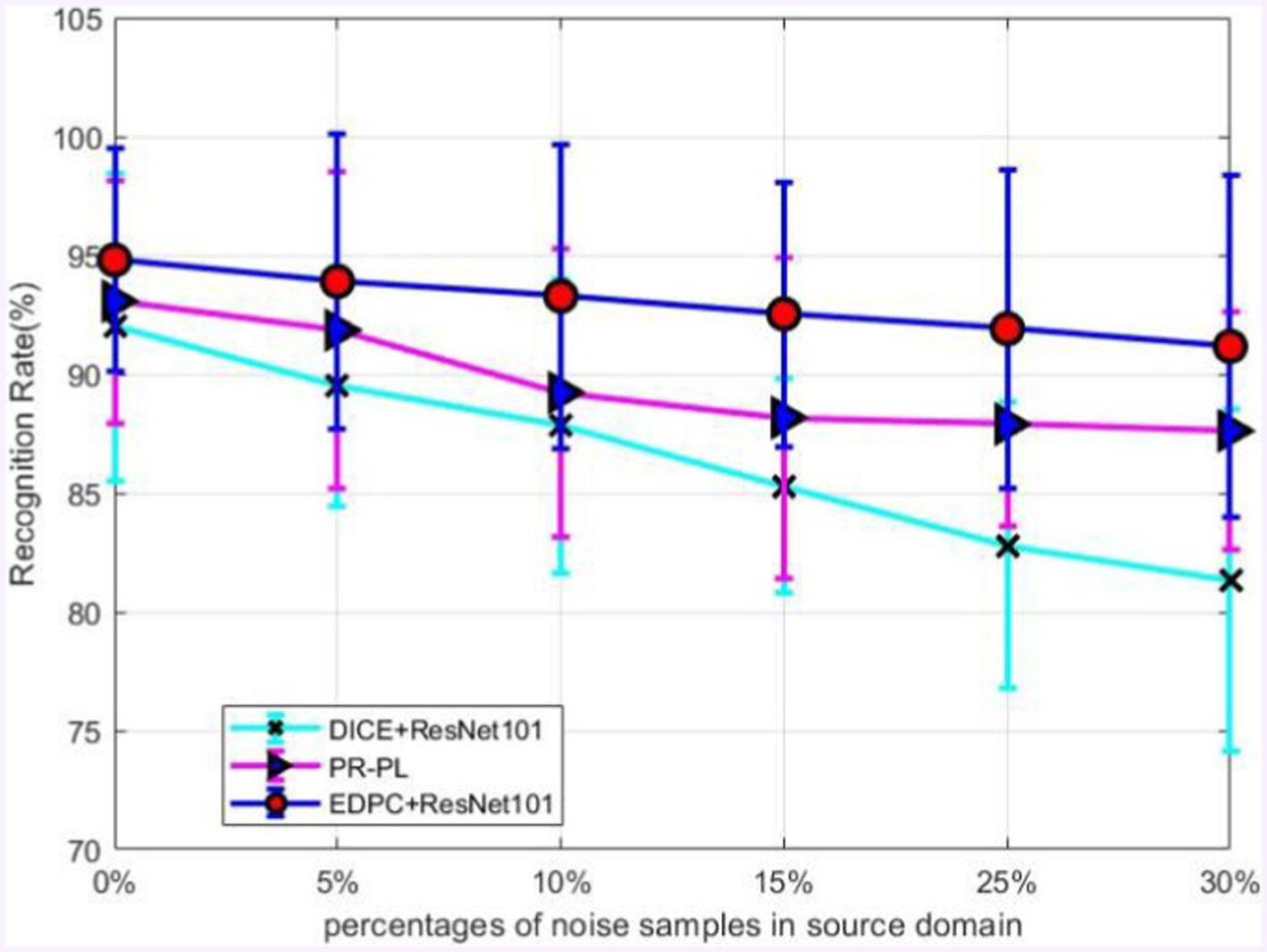

6.2 Effect of noisy labels

To further validate the robustness of the model in the presence of noisy labels, we conducted an experiment where noise was randomly added to the source labels at different proportions, and the corresponding model performance on unseen target data was tested. Specifically, we replaced a certain proportion of the true labels with randomly generated labels and trained the model using semi-supervised learning. Then, the trained model’s performance was evaluated on the target domain. It is important to note that noise was only added to the source domain data, while the target domain was used for model evaluation. In the implementation, the noise proportions were adjusted to 5, 15, 25, and 30% of the source domain data.
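The noise-injection step can be sketched as follows. One assumption differs from a literal reading of the text: each selected label is replaced by a different class so that the realised noise rate is exact, whereas a purely random replacement may occasionally reproduce the true label. The function name and `seed` parameter are hypothetical.

```python
import random


def inject_label_noise(labels, rate, num_classes, seed=0):
    """Return a copy of labels in which round(rate * n) randomly chosen entries are
    replaced by a different, uniformly drawn class (so the realised noise rate is exact)."""
    rng = random.Random(seed)
    noisy = list(labels)
    k = round(rate * len(labels))
    for i in rng.sample(range(len(labels)), k):
        choices = [c for c in range(num_classes) if c != labels[i]]
        noisy[i] = rng.choice(choices)
    return noisy


labels = [i % 3 for i in range(200)]  # toy 3-class source labels
for rate in (0.05, 0.15, 0.25, 0.30):  # the proportions used in the experiment
    noisy = inject_label_noise(labels, rate, num_classes=3, seed=42)
    print(rate, sum(a != b for a, b in zip(labels, noisy)))  # 10, 30, 50, 60 labels changed
```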

The experimental results shown in Figure 2 indicate that as the amount of noise increases, the accuracy of the proposed EDPC decreases at the slowest rate, demonstrating that EDPC is a reliable model with a high tolerance for noisy data. In future work, recent methods such as [52, 53] could be integrated to further reduce the more prevalent noise in EEG signals and enhance the model’s stability in cross-corpus applications.

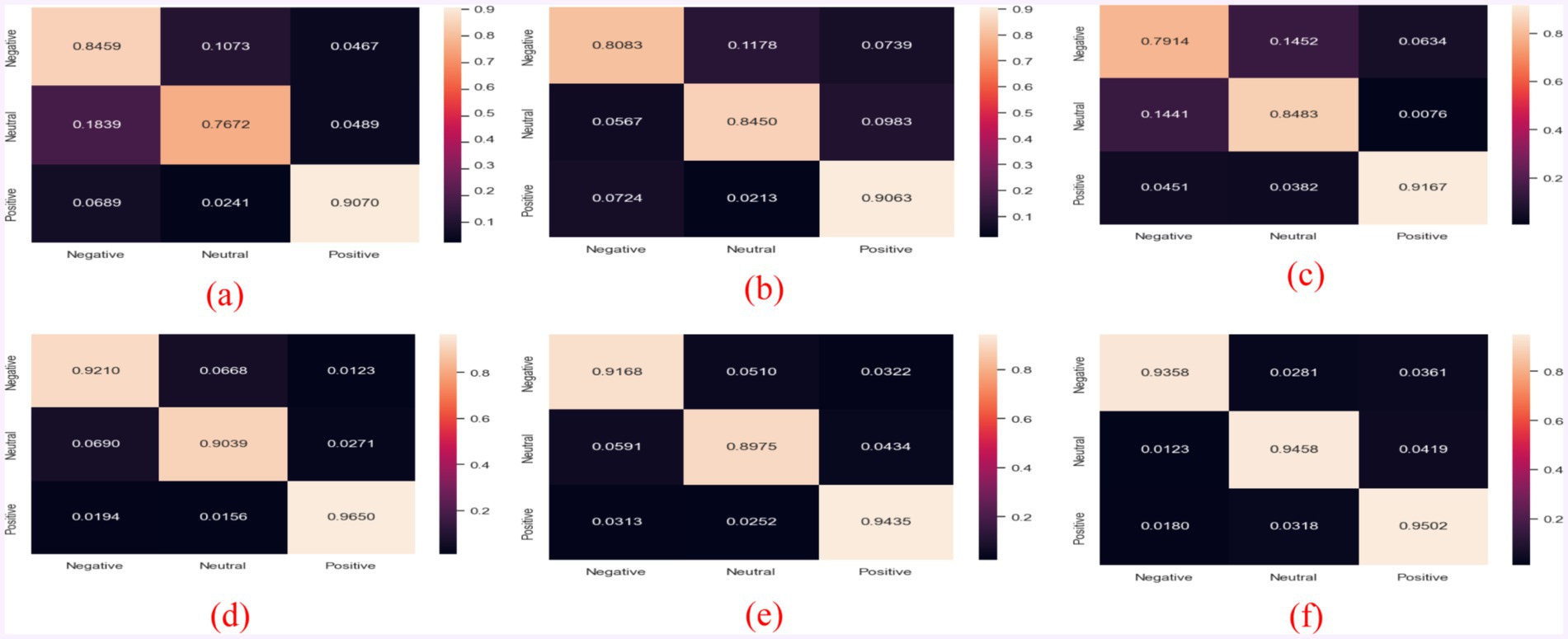

6.3 Confusion matrices

To qualitatively study the model’s performance on each emotion category, this section visualizes the confusion matrices and compares them with those of state-of-the-art models (i.e., BiDANN, BiHDM, RGNN, PR-PL, and DICE+ResNet101). As shown in Figure 3, all models distinguish positive emotions from the other emotions well (with recognition rates above 90%), but they perform relatively poorly in distinguishing negative from neutral emotions. For example, the corresponding recognition rate of RGNN [25] is even below 80% (specifically, 79.14%).

Figure 3. Confusion matrices of different models: (a) BiDANN; (b) BiHDM; (c) RGNN; (d) PR-PL; (e) DICE+ResNet101; and (f) EDPC+ResNet101.

Additionally, PR-PL slightly outperforms EDPC in recognizing positive emotions, likely owing to its use of adversarial networks, which also increase its computational cost. Compared with the other existing methods (Figures 3a–c,e), EDPC performs best, particularly in distinguishing neutral from negative emotions (where it even surpasses PR-PL). Furthermore, its overall performance is superior to that of DICE (compare Figures 3e,f).

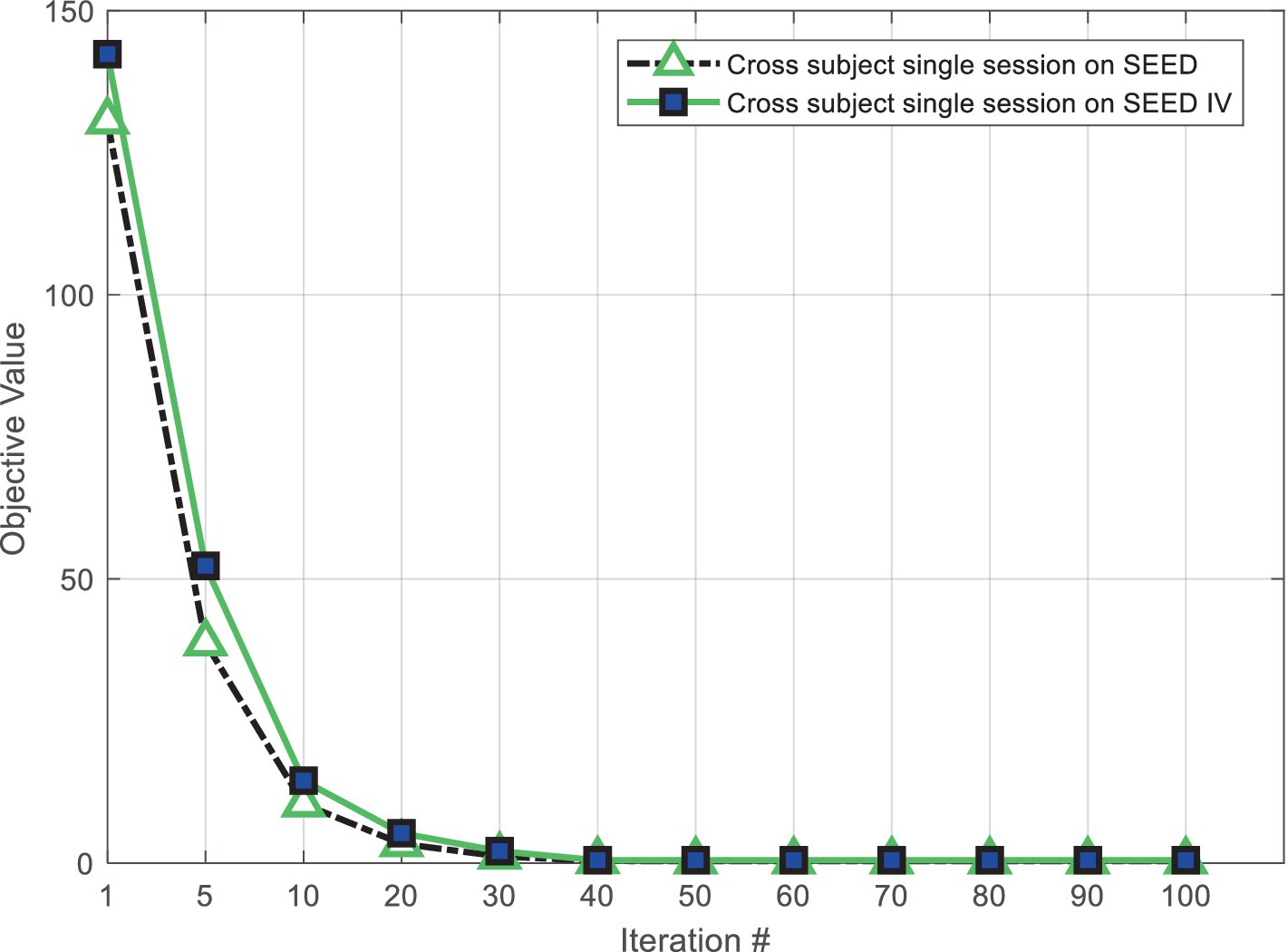

6.4 Convergence

The proposed algorithm employs an iterative optimization strategy. To examine its convergence, experiments were conducted on the MATLAB platform using a machine with 64 GB of memory and an 8-core 2.5 GHz Intel i7-11850H processor. Figure 4 shows the convergence of EDPC over iterations; the algorithm approaches convergence at around 30 iterations. Because each subproblem is solved in closed form, the objective function does not increase from one iteration to the next, which supports the good convergence behavior of EDPC.

7 Conclusion

This article analyzes the mean shift that noisy samples can cause when measuring distribution distances in domain adaptation, and proposes a novel distribution distance measure based on the DPC criterion. The measure assigns each instance a membership degree according to its distance from the overall domain mean: the closer an instance is to the mean, the more likely it is a clean (non-noisy) sample. Membership degrees and fuzzy trimming are then used to reduce the influence of noisy data on the final performance. In addition, an outlier repulsion term is introduced during domain adaptation to further improve the discriminative accuracy of the classifier. Building on DPC, we propose EDPC, a DA method for emotion recognition. EDPC minimizes the distribution discrepancy between domains, introduces a graph Laplacian matrix to learn the target-domain label matrix, and minimizes the domain discrimination error to learn a classifier for both the source and target domains. Theoretical analysis shows that the proposed algorithm is convergent and admits an effective generalization error bound. Finally, EDPC is compared extensively with recent shallow and deep domain adaptation learning (DAL) methods on real datasets, validating its robustness and classification accuracy. Existing research indicates that multi-source domain adaptation can effectively avoid the “negative transfer” that a single source domain may cause, although it increases computational complexity. Extending EDPC to multi-source domain adaptation is therefore a direction worth further research.
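The idea of down-weighting instances far from the domain mean can be illustrated with an entropy-regularized possibilistic 1-center. The Python sketch below is a simplified illustration, not the DPC criterion itself: it assumes the membership form u_i = exp(-||x_i - m||^2 / γ), a median heuristic for γ, and a linear kernel (for which the biased squared MMD is the squared distance between domain means), and it omits the discriminative repulsion, graph Laplacian, and classifier terms of EDPC. The two domains are drawn from the same distribution, and a few gross outliers are added to the target only.

```python
import numpy as np

def possibilistic_center(X, gamma=None, n_iter=100, tol=1e-8):
    """Entropy-regularized possibilistic 1-center (illustrative sketch).

    Alternately minimizes
        J(m, u) = sum_i u_i * ||x_i - m||^2 + gamma * sum_i (u_i * log(u_i) - u_i),
    whose closed-form updates are
        u_i = exp(-||x_i - m||^2 / gamma)  and  m = sum_i u_i * x_i / sum_i u_i.
    Returns the center and the memberships computed in the last iteration.
    """
    X = np.asarray(X, dtype=float)
    m = X.mean(axis=0)  # start from the ordinary (unweighted) domain mean
    if gamma is None:
        # Assumed heuristic: median squared distance to the plain mean.
        gamma = float(np.median(np.sum((X - m) ** 2, axis=1)))
        if gamma <= 0.0:
            gamma = 1.0
    for _ in range(n_iter):
        d2 = np.sum((X - m) ** 2, axis=1)
        u = np.exp(-d2 / gamma)  # distant (likely noisy) samples get near-zero membership
        m_new = u @ X / u.sum()
        converged = np.linalg.norm(m_new - m) < tol
        m = m_new
        if converged:
            break
    return m, u


def linear_mmd2(ms, mt):
    """With a linear kernel, the biased squared MMD is the squared distance between means."""
    return float(np.sum((ms - mt) ** 2))


rng = np.random.default_rng(0)
Xs = rng.normal(0.0, 1.0, size=(200, 2))              # source domain
Xt = np.vstack([rng.normal(0.0, 1.0, size=(200, 2)),  # target: same distribution...
                rng.normal(15.0, 1.0, size=(10, 2))]) # ...plus 10 gross outliers

plain = linear_mmd2(Xs.mean(axis=0), Xt.mean(axis=0))
ms, _ = possibilistic_center(Xs)
mt, u_t = possibilistic_center(Xt)
robust = linear_mmd2(ms, mt)
print(f"MMD^2 with plain means:           {plain:.3f}")
print(f"MMD^2 with possibilistic centers: {robust:.3f}")
print(f"largest outlier membership: {u_t[200:].max():.2e}")
```

Here the ten outliers shift the plain target mean enough to inflate the discrepancy between two otherwise identical domains, whereas their memberships are essentially zero in the possibilistic center, so the membership-weighted discrepancy stays small. The choice of γ controls how quickly membership decays with distance; the median heuristic is only an assumption.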

Data availability statement

The raw data supporting the conclusions of this article will be made available by the authors, without undue reservation.

Author contributions

YD: Formal analysis, Methodology, Validation, Writing – original draft. DZ: Data curation, Validation, Writing – review & editing. ZW: Data curation, Validation, Writing – review & editing.

Funding

The author(s) declare that financial support was received for the research, authorship, and/or publication of this article. This work was supported by the Ningbo Natural Science Foundation project (No. 2022J180), the Basic Public Welfare Research Program of Zhejiang Province (No. ZCLTGY24F0301), the Special Polymer Materials for Automobile Key Laboratory of Sichuan Province (No. TZGC2023ZB-06), and the Key Laboratories of Sensing and Application of Intelligent Optoelectronic System in Sichuan Provincial Universities (No. ZNGD2309).

Conflict of interest

ZW was employed by Ningbo Vichnet Technology Co., Ltd.

The remaining authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Publisher’s note

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

Footnotes

1. When , we can draw an equal number of samples from the source domain and the target domain, respectively.

References

Baktashmotlagh, M., Harandi, M. T., Lovell, B. C., and Salzmann, M. (2013). “Unsupervised domain adaptation by domain invariant projection,” in Proceedings of the 2013 IEEE International Conference on Computer Vision (IEEE Computer Society).

Ben-David, S., Blitzer, J., Crammer, K., Kulesza, A., Pereira, F., and Vaughan, J. W. (2010). A theory of learning from different domains. Mach. Learn. 79, 151–175. doi: 10.1007/s10994-009-5152-4

Bruzzone, L., and Marconcini, M. (2010). Domain adaptation problems: A DASVM classification technique and a circular validation strategy. IEEE Trans. Pattern Anal. Mach. Intell. 32, 770–787. doi: 10.1109/TPAMI.2009.57

Chen, Z. L., Zhang, J. Y., Liang, X. D., and Lin, L. (2019). “Blending-target domain adaptation by adversarial meta-adaptation networks,” in Proceedings of the 2019 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Long Beach, CA, USA, June 15–20, 2019.

Chen, H., Li, Z., Jin, M., and Li, J. (2021). “MEERNet: Multi-source EEG-based emotion recognition network for generalization across subjects and sessions,” in Proceedings of the 2021 43rd Annual International Conference of the IEEE Engineering in Medicine & Biology Society (EMBC) (IEEE), pp. 6094–6097.

Chu, W. S., De la Torre, F., and Cohn, J. F. (2013). “Selective transfer machine for personalized facial action unit detection,” in Proceedings of the 2013 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Portland, OR, USA, June 23–28, 2013, pp. 3515–3522.

Coomans, D., and Massart, D. L. (1982). Alternative k-nearest neighbour rules in supervised pattern recognition: Part 1. k-nearest neighbour classification by using alternative voting rules. Anal. Chim. Acta 136, 15–27. doi: 10.1016/S0003-2670(01)95359-0

Dan, Y. F., Tao, J. W., Fu, J. J., and Zhou, D. (2021). Possibilistic clustering-promoting semi-supervised learning for EEG-based emotion recognition. Front. Neurosci. 15:690044. doi: 10.3389/fnins.2021.690044

Dan, Y. F., Tao, J. W., and Zhou, D. (2022). Multi-model adaptation learning with possibilistic clustering assumption for EEG-based emotion recognition. Front. Neurosci. 16:855421. doi: 10.3389/fnins.2022.855421

Ding, Z. M., Li, S., Shao, M., and Fu, Y. (2018). “Graph adaptive knowledge transfer for unsupervised domain adaptation,” in Proceedings of the European Conference on Computer Vision (ECCV), Munich, Germany, September 8–14, 2018, pp. 36–52.

Dolan, R. J. (2002). Emotion, cognition, and behavior. Science 298, 1191–1194. doi: 10.1126/science.1076358

Du, X., Ma, C., Zhang, G., Li, J., Lai, Y.-K., Zhao, G., et al. (2020). An efficient LSTM network for emotion recognition from multichannel EEG signals. IEEE Trans. Affect. Comput. 13:1. doi: 10.1109/TAFFC.2020.3013711

Ganin, Y., Ustinova, E., Ajakan, H., Germain, P., Larochelle, H., Laviolette, F., et al. (2016). Domain-adversarial training of neural networks. J. Mach. Learn. Res. 17, 2096–2030. doi: 10.48550/arXiv.1505.07818

Ghifary, M., Balduzzi, D., Kleijn, W. B., and Zhang, M. (2017). Scatter component analysis: a unified framework for domain adaptation and domain generalization. IEEE Trans. Pattern Anal. Mach. Intell. doi: 10.1109/TPAMI.2016.2599532

Gong, B., Shi, Y., Sha, F., and Grauman, K. (2012). Geodesic flow kernel for unsupervised domain adaptation. IEEE Conf. Comp. Vision Pattern Recogn. 2012, 2066–2073. doi: 10.1109/CVPR.2012.6247911

Gretton, A., Borgwardt, K. M., Rasch, M., Scholkopf, B., and Smola, A. J. (2007). “A kernel method for the two-sample-problem,” in Proceedings of the 21st Annual Conference on Neural Information Processing Systems, Vancouver, B.C., Canada, Dec. 3–6.

Gretton, A., Harchaoui, Z., Fukumizu, K., and Sriperumbudur, B. K. (2010). “A fast, consistent kernel two-sample test,” in Proceedings of the 22nd International Conference on Neural Information Processing Systems, pp. 673–681.

Jayaram, V., Alamgir, M., Altun, Y., Scholkopf, B., and Grosse-Wentrup, M. (2016). Transfer learning in brain-computer interfaces. IEEE Comput. Intell. Mag. 11, 20–31. doi: 10.1109/MCI.2015.2501545

Jenke, R., Peer, A., and Buss, M. (2014). Feature extraction and selection for emotion recognition from EEG. IEEE Trans. Affect. Comput. 5, 327–339. doi: 10.1109/TAFFC.2014.2339834

Kang, G. L., Jiang, L., Wei, Y., Yang, Y., and Hauptmann, A. (2022). Contrastive adaptation network for single- and multi-source domain adaptation. IEEE Trans. Pattern Anal. Mach. Intell. 44, 1793–1804. doi: 10.1109/TPAMI.2020.3029948

Kim, M. K., Kim, M., Oh, E., and Kim, S. P. (2013). A review on the computational methods for emotional state estimation from the human EEG. Comput. Math. Methods Med. 2013, 1–13. doi: 10.1155/2013/573734

Krishnapuram, R., and Keller, J. M. (1993). A possibilistic approach to clustering. IEEE Trans. Fuzzy Syst. 1, 98–110. doi: 10.1109/91.227387

Lan, Z., Sourina, O., Wang, L., Scherer, R., and Muller-Putz, G. R. (2018). Domain adaptation techniques for EEG-based emotion recognition: a comparative study on two public datasets. IEEE Trans. Cogn. Dev. Syst. 11, 85–94. doi: 10.1109/TCDS.2018.2826840

Lee, S. M., Kim, D. W., Kim, N., and Jeong, S.-G. (2019). “Drop to adapt: Learning discriminative features for unsupervised domain adaptation,” in Proceedings of the 2019 IEEE/CVF International Conference on Computer Vision (ICCV), Seoul, Korea (South), October 27–November 2, 2019, pp. 90–100.

Li, X., Song, D., Zhang, P., Zhang, Y., Hou, Y., and Hu, B. (2018). Exploring EEG features in cross-subject emotion recognition. Front. Neurosci. 12:162. doi: 10.3389/fnins.2018.00162

Li, Y., Zheng, W., Cui, Z., Zong, Y., and Ge, S. (2018a). EEG emotion recognition based on graph regularized sparse linear regression. Neural. Process. Lett. 47, 1–19. doi: 10.1007/s11063-017-9609-3

Li, Y., Zheng, W., Cui, Z., Zhang, T., and Zong, Y. (2018b). “A novel neural network model based on cerebral hemispheric asymmetry for EEG emotion recognition,” in Proceedings of the 27th International Joint Conference on Artificial Intelligence (IJCAI).

Li, Y., Zheng, W., Zong, Y., Cui, Z., Zhang, T., and Zhou, X. (2018c). A bi-hemisphere domain adversarial neural network model for EEG emotion recognition. IEEE Trans. Affect. Comput. 12, 494–504. doi: 10.1109/TAFFC.2018.2885474

Li, Y., Jin, M., Zheng, W. L., and Lu, B. L. (2018d). Cross-subject emotion recognition using deep adaptation networks. Neural Inform. Proces. 11305, 403–413. doi: 10.1007/978-3-030-04221-9_36

Li, Y., Zheng, W., Wang, L., Zong, Y., and Cui, Z. (2019). From regional to global brain: a novel hierarchical spatial-temporal neural network model for EEG emotion recognition. IEEE Trans. Affect. Comput. 13, 568–578. doi: 10.1109/TAFFC.2019.2922912

Li, J., Qiu, S., Du, C., Wang, Y., and He, H. (2020). Domain adaptation for EEG emotion recognition based on latent representation similarity. IEEE Trans. Cogn. Dev. Syst. 12, 344–353. doi: 10.1109/TCDS.2019.2949306

Li, Y., Wang, L., Zheng, W., Zong, Y., Qi, L., Cui, Z., et al. (2020). A novel bi-hemispheric discrepancy model for EEG emotion recognition. IEEE Trans. Cogn. Dev. Syst. 13, 354–367. doi: 10.1109/TCDS.2020.2999337

Liang, J., He, R., Sun, Z. N., and Tan, T. (2019). Aggregating randomized clustering-promoting invariant projections for domain adaptation. IEEE Trans. Pattern Anal. Mach. Intell. 41, 1027–1042. doi: 10.1109/TPAMI.2018.2832198

Long, M. S., Wang, J. M., Ding, G. G., Sun, J., and Yu, P. S. (2013). “Transfer feature learning with joint distribution adaptation,” in Proceedings of the 2013 IEEE International Conference on Computer Vision (IEEE).

Long, M. S., Cao, Y., Wang, J. M., and Jordan, M. I. (2015). “Learning transferable features with deep adaptation networks,” in Proceedings of the 32nd International Conference on International Conference on Machine Learning, Lille, France, pp. 97–105.

Long, M. S., Wang, J. M., and Jordan, M. I. (2016). “Unsupervised domain adaptation with residual transfer networks,” in Proceedings of the 30th Annual Conference on Neural Information Processing Systems, Barcelona, Spain, December 5–10, 2016, pp. 136–144.

Luo, Y., Zhang, S. Y., Zheng, W. L., and Lu, B. L. (2018). “WGAN domain adaptation for EEG-based emotion recognition,” in International Conference on Neural Information Processing (ICONIP).

Luo, L. K., Chen, L. M., Hu, S. Q., Lu, Y., and Wang, X. (2020). Discriminative and geometry aware unsupervised domain adaptation. IEEE Trans. Cybernetics 50, 3914–3927. doi: 10.1109/TCYB.2019.2962000

Luo, T., Zhang, J., Qiu, Y. W., Zhang, L., Hu, Y., Yu, Z., et al. (2024). MDDD: manifold-based domain adaptation with dynamic distribution for non-deep transfer learning in cross-subject and cross-session EEG. arXiv:2404.15615v1

Ma, B. Q., Li, H., Zheng, W. L., and Lu, B. L. (2019). “Reducing the subject variability of EEG signals with adversarial domain generalization,” in Neural Information Processing: 26th International Conference, ICONIP 2019, Sydney, NSW, Australia, December 12–15, 2019, Proceedings, Part I, pp. 30–42.

Mühl, C., Allison, B., Nijholt, A., and Chanel, G. (2014). A survey of affective brain computer interfaces: principles, state-of-the-art, and challenges. Brain-Comput. Interfaces 1, 66–84. doi: 10.1080/2326263X.2014.912881

Musha, T., Terasaki, Y., Haque, H. A., and Ivanitsky, G. A. (1997). Feature extraction from EEGs associated with emotions. Artif. Life Robot. 1, 15–19. doi: 10.1007/BF02471106

Nie, F. P., Huang, H., Cai, X., and Ding, C. (2010). “Efficient and robust feature selection via joint ℓ2,1-norms minimization,” in Proceedings of the 23rd International Conference on Neural Information Processing Systems (Curran Associates Inc.), pp. 1813–1821.

Pan, S. J., and Yang, Q. (2010). A survey on transfer learning. IEEE Trans. Knowl. Data Eng. 22, 1345–1359. doi: 10.1109/TKDE.2009.191

Pan, S. J., Tsang, I. W., Kwok, J. T., and Yang, Q. (2011). Domain adaptation via transfer component analysis. IEEE Trans. Neural Netw. 22, 199–210. doi: 10.1109/TNN.2010.2091281

Pandey, P., and Seeja, K. (2019). “Emotional state recognition with EEG signals using subject independent approach,” in Data Science and Big Data Analytics (Springer), Women In Research, pp. 117–124. Available at: https://api.semanticscholar.org/CorpusID:70190352

Patel, V. M., Gopalan, R., Li, R., and Chellappa, R. (2015). Visual domain adaptation: a survey of recent advances. IEEE Signal Process. Mag. 32, 53–69. doi: 10.1109/MSP.2014.2347059

Pinheiro, P. O. (2018). “Unsupervised domain adaptation with similarity learning,” in Proceedings of the 2018 IEEE/CVF Conference on Computer Vision and Pattern Recognition, pp. 8004–8013.

Shi, L. C., and Lu, B. L. (2010). “Off-line and on-line vigilance estimation based on linear dynamical system and manifold learning,” in Proceedings of the Annual International Conference of the IEEE Engineering in Medicine and Biology, 2010, pp. 6587–6590.