REVIEW article

Front. Neurosci., 10 April 2024

Sec. Neuromorphic Engineering

Volume 18 - 2024 | https://doi.org/10.3389/fnins.2024.1279708

This article is part of the Research Topic: From Theory to Practice: The Latest Developments in Neuromorphic Computing Applications

A neuromorphic system is composed of hardware-based artificial neurons and synaptic devices, designed to improve the efficiency of neural computations inspired by energy-efficient and parallel operations of the biological nervous system. A synaptic device-based array can compute vector–matrix multiplication (VMM) with given input voltage signals, as a non-volatile memory device stores the weight information of the neural network in the form of conductance or capacitance. However, unlike software-based neural networks, the neuromorphic system unavoidably exhibits non-ideal characteristics that can have an adverse impact on overall system performance. In this study, the characteristics required for synaptic devices and their importance are discussed, depending on the targeted application. We categorize synaptic devices into two types, conductance-based and capacitance-based, and thoroughly explore the operations and characteristics of each device. The array structure according to the device structure and the VMM operation mechanism of each structure are analyzed, including recent advances in array-level implementation of synaptic devices. Furthermore, we review studies that aim to minimize the effect of hardware non-idealities, which degrade the performance of hardware neural networks. These studies introduce techniques in hardware and signal engineering, as well as software-hardware co-optimization, to address these non-idealities through compensation approaches.

The neuromorphic system, designed to mimic the neuron-synapse connections of the human neural network, aims to achieve a robust and efficient operation in big data-based deep learning and artificial intelligence (AI) systems (Mead, 1990; Ham et al., 2021). To accomplish this goal, it focuses on key characteristics of biological neural networks, such as large-scale parallel signal processing and ultra-low power consumption. Over the past few decades, extensive research has been conducted on artificial neural network (ANN) algorithms, which emulate biological neural networks using a mathematical perceptron (Jain et al., 1996; Maass, 1997; Gardner and Dorling, 1998). These ANN algorithms include various network structures like convolutional neural networks (CNNs), used for image classification through kernel-based feature extraction, and fully connected networks (FCNs) with multiple perceptron layers (Albawi et al., 2017; Gu et al., 2018). In general, ANNs operate in two phases: the ‘training’ phase, where interconnected synaptic weights are adjusted in the direction of the desired output based on gradient descent with respect to the loss function, and the ‘inference’ phase, where output values are determined through the vector–matrix multiplication (VMM) of input data and weights. Throughout this phase, neuron outputs are represented using activation functions such as sigmoid, ReLU, tanh, and others.

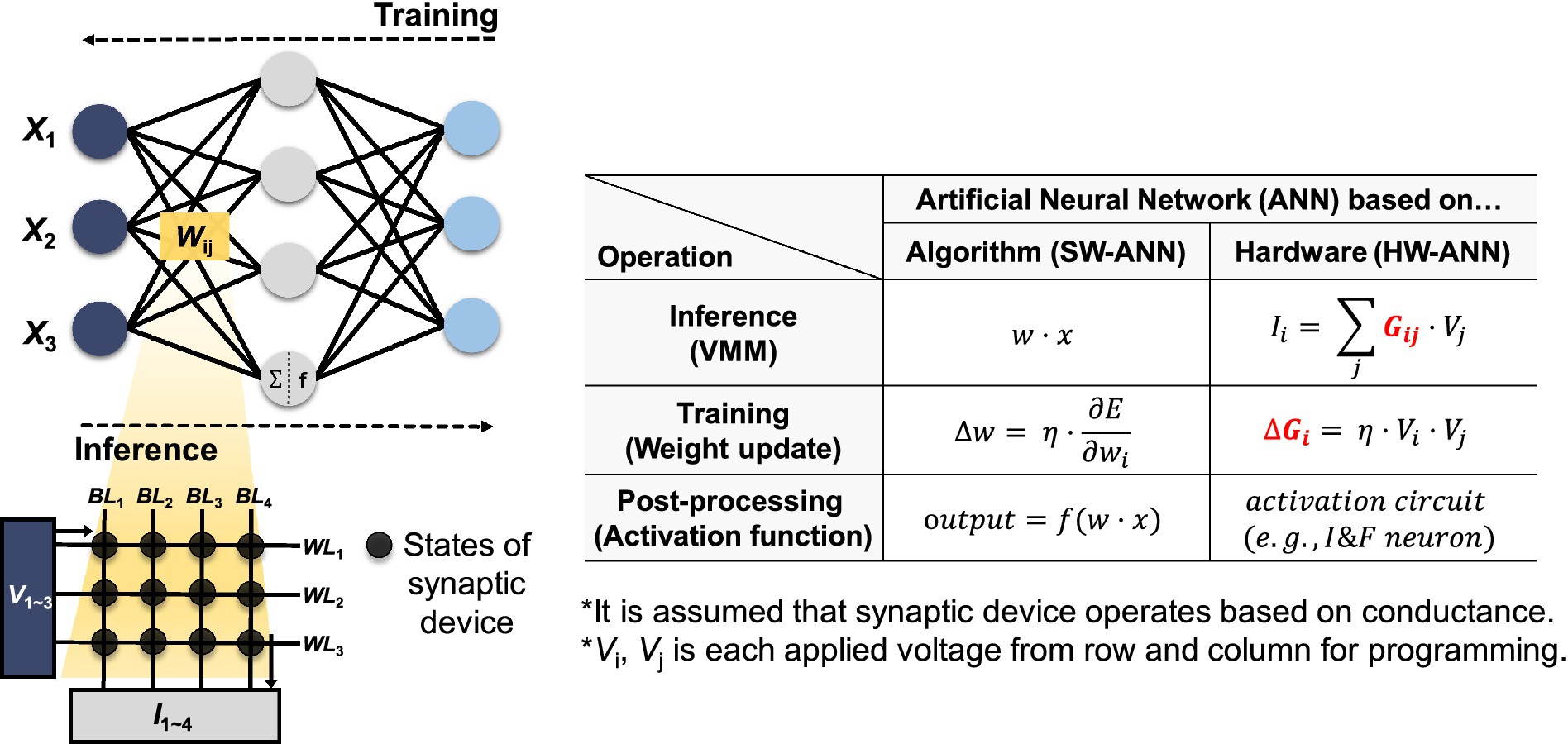

Neuromorphic systems can perform powerful and efficient neural computations through hardware implementations of ANNs (Misra and Saha, 2010; Indiveri and Liu, 2015; Schuman et al., 2017), and the basic concept of hardware-based neural networks (HW-ANNs) with synaptic devices is described in Figure 1. In HW-ANNs, the two phases of the ANN algorithm can be realized by reading and adjusting the states of synaptic devices for inference and training, respectively. A software-based ANN (SW-ANN) model can be implemented as array-based hardware consisting of many synaptic elements. The ANN model has three core components: input vector x, weight matrix w, and output vector f(w·x), where f is the activation function. When these components are implemented in hardware, they have the following correspondence: (1) Input vector x corresponds to signals applied to the wordlines (WLs) of the synapse device array. For example, in the case of the Modified National Institute of Standards and Technology database (MNIST), images are composed of 28 × 28 pixels with 8 bits per pixel. Such images are encoded into waveforms based on pulse amplitude or repetition count and then presented as input to the neural network. (2) The weight matrix, denoted as w, is implemented by the state of synapse devices. Assuming a conductance-based device, it is symbolized as G. (3) The output vector, denoted as f(w·x), which serves as the input vector to the next layer, corresponds to the output of the CMOS peripheral circuit. The output current (Ii) at each bitline (BL) can be determined by the VMM operation of the input voltage signals (Vj) applied to the WLs and the device state. Neuron circuits in HW-ANNs correspond to activation functions in SW-ANNs and are implemented in analog or mixed-signal circuits to represent the output signal generation or firing of neurons. These circuits receive presynaptic signals from synaptic devices and then generate corresponding output signals when the integrated signals exceed a certain threshold.
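The correspondence described above can be sketched in a few lines of Python. This is a minimal illustration, assuming an ideal conductance-based array in which each bitline current is the sum of conductance times wordline voltage; the array size, conductance values, input voltages, and the firing threshold `I_THRESHOLD` are hypothetical and are not taken from the article.

```python
def crossbar_vmm(voltages, conductances):
    """Return bitline currents I_i = sum_j G[j][i] * V_j for an ideal array.

    conductances[j][i] is the device between wordline j and bitline i (siemens);
    voltages[j] is the input applied to wordline j (volts).
    """
    n_wl = len(conductances)
    n_bl = len(conductances[0])
    if len(voltages) != n_wl:
        raise ValueError("one input voltage per wordline is required")
    return [
        sum(conductances[j][i] * voltages[j] for j in range(n_wl))
        for i in range(n_bl)
    ]


# Hypothetical 3-wordline x 2-bitline array (values chosen for illustration only).
G = [
    [1e-6, 2e-6],
    [3e-6, 4e-6],
    [5e-6, 6e-6],
]
V = [0.1, 0.2, 0.0]

currents = crossbar_vmm(V, G)

# Toy neuron circuit: fire when the summed current exceeds a threshold.
I_THRESHOLD = 8e-7  # amperes, hypothetical
fires = [i_bl > I_THRESHOLD for i_bl in currents]
```

A real array departs from this ideal picture through the non-idealities discussed later (wire resistance, device variation, limited precision), which is why the threshold comparison here stands in for a much richer peripheral circuit.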

Figure 1. Correspondence between SW-ANN and HW-ANN realized by a synaptic device array. Red text indicates components implemented by synaptic devices.

Generally, incorporating synaptic devices into large-scale neuromorphic systems demands consideration of specific performance metrics aligned with industrial requirements. These metrics include (1) non-volatility, which is essential for maintaining network function by reliably storing weight values; (2) high integration density, which is necessary to efficiently accommodate a large neural network in a limited area; (3) high operating speed, which is needed for fast inference (read) and training (write) operations in HW-ANNs; and (4) low power consumption, which is desired to achieve energy-efficient neural network operations, including both inference and training. Various types of synaptic devices have been investigated for these purposes and can be categorized into two types: conductance-based and capacitance-based. Among conductance-based devices, there are two-terminal devices exhibiting memristive behaviors (Strukov et al., 2008) and three-terminal devices with an additional selecting electrode such as a gate for the channel conductivity. Synaptic array architectures, which consist of synaptic device cells, can vary based on the device operation mechanism and structure, and each has its own VMM operation depending on the array structure. Furthermore, the neuromorphic system can be affected by the inherent non-ideal characteristics of synaptic devices. Due to material and process-related limitations, synaptic devices may exhibit non-linear and asymmetric conductance modulations, a narrow on/off ratio, low precision of device states, poor reliability, and device-to-device or cycle-to-cycle variations. These challenges make it difficult to achieve reliable and accurate analog computing operations, including computations with analog states, compared to conventional digital computing. To mitigate this issue, device-to-circuit- and circuit-to-algorithm-level studies have been conducted to explore compensation methods for addressing hardware non-idealities.

This article provides a comprehensive investigation into neuromorphic systems with non-volatile memory devices and discusses the significance of synaptic device metrics in various applications. First, synaptic devices are discussed according to their operation mechanism and device structure in Section 2. The corresponding array structures are also explored, including weight mapping schemes and recently reported advances such as capacitor-based synapses. In Section 3, we introduce studies that explore compensation methods to mitigate hardware non-idealities from two perspectives. Given that non-idealities are inevitable, compensation approaches are discussed, including hardware and signal engineering as well as software-hardware co-optimization methods, to address non-idealities and enhance neuromorphic system performance. We believe that this review article could contribute to a better understanding of recent advances in neuromorphic system engineering and the development of hardware-driven neural network systems, even in the presence of non-idealities.

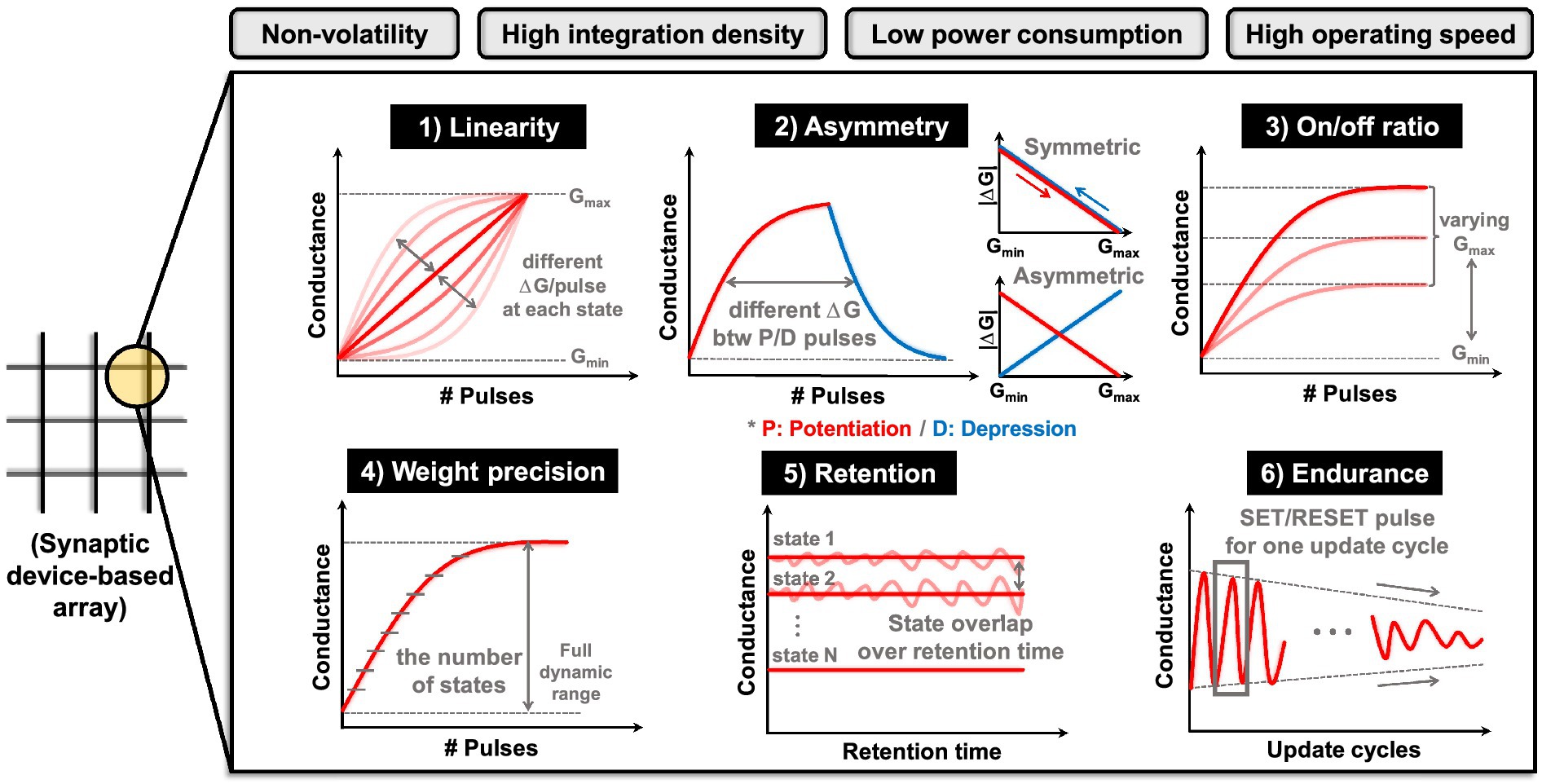

The essence of a neuromorphic system resides in synaptic devices that not only fulfill ‘store’ functions but also execute ‘computational’ operations. In other words, synaptic devices must be capable of both storing the states and accurately representing analog values while also performing precise VMM operations. These functionalities hold immense importance as they directly influence the performance of neural network applications. The following are the required performance metrics that synaptic devices should exhibit, as depicted in Figure 2 (Misra and Saha, 2010; Chen et al., 2017; Yu, 2018). (1) Linearity: this indicates how consistently the conductance of a device changes in relation to the applied pulses. Better linearity means that the amount of weight change is consistent in relation to the number of applied pulses. This factor plays a critical role in weight modulation to reach a target device state. It also affects the precision of weight states, along with the time and energy necessary for weight modulation. Linearity can be analyzed through the conductance response (G response) according to the number of pulses. (2) Asymmetry: this refers to the disparity in the amount of conductance change (ΔG) when the device undergoes potentiation (increasing conductance) or depression (decreasing conductance). This implies that ΔG depends on the current state of the device. Thus, when certain pulses are applied to the current state, an imbalance arises between the conductance increase and decrease, which subsequently affects the precision of weight adjustment. Asymmetry can be determined from the G response, and G versus ΔG response data can provide a more intuitive interpretation. (3) On/off ratio: also referred to as the dynamic range, this is the ratio between the maximum conductance (Gmax) and the minimum conductance (Gmin) achievable by the device. A higher dynamic range provides sufficient margins between weight states, guaranteeing weight precision and establishing a more stable state representation. (4) Precision: this is the number of states that a device can exhibit within its dynamic range, corresponding to the concept of multi-bit characteristics in conventional memory devices. It entails maintaining intervals between device states so that the states do not overlap. The attainable number of states relies on the distribution of states resulting from device variability. Weight precision is closely related to the capability of analog value implementation. (5) Retention: this is a reliability metric for memory devices, indicating their ability to maintain their current weight states. To assess it over an extended period, changes in conductance state are analyzed under high-temperature conditions. (6) Endurance: this is also a reliability metric, which quantifies how many switching cycles a memory device can endure. It evaluates the number of times the device can switch during set/reset or program/erase pulse cycles, serving as a measure of the weight update lifetime and directly impacting the overall performance of HW-ANNs.
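Linearity, asymmetry, and the on/off ratio can be made concrete with a toy conductance-response model. The sketch below is an assumption for illustration, not a model from the article: each pulse moves the conductance by a fixed fraction `ALPHA` of the remaining range, which produces a saturating (non-linear) G response and a state-dependent ΔG. The bounds `G_MIN` and `G_MAX` are hypothetical.

```python
G_MIN, G_MAX = 1e-6, 10e-6  # hypothetical conductance bounds (siemens)
ALPHA = 0.1                 # hypothetical fraction of remaining range per pulse


def potentiate(g):
    """One potentiation pulse: the step shrinks as G approaches G_MAX."""
    return g + ALPHA * (G_MAX - g)


def depress(g):
    """One depression pulse: the step shrinks as G approaches G_MIN."""
    return g - ALPHA * (g - G_MIN)


def pulse_train(g0, step, n_pulses):
    """Apply n_pulses identical pulses and record the G response."""
    trace = [g0]
    for _ in range(n_pulses):
        trace.append(step(trace[-1]))
    return trace


pot = pulse_train(G_MIN, potentiate, 20)
dep = pulse_train(pot[-1], depress, 20)

# On/off ratio (dynamic range) of the modeled device.
on_off_ratio = G_MAX / G_MIN

# Non-linearity: the first potentiation step is larger than the last one.
first_dg = pot[1] - pot[0]
last_dg = pot[-1] - pot[-2]

# Asymmetry: at the same state, |dG| differs between potentiation and depression.
g_high = 8e-6
dg_up = potentiate(g_high) - g_high
dg_down = g_high - depress(g_high)
```

Plotting `pot` and `dep` against pulse number gives the G response, and plotting `dg_up` and `dg_down` over a sweep of states gives the G versus ΔG view mentioned above.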

Figure 2. Requirements for large-scale neuromorphic systems and each performance metric for synaptic device.

In addition to these considerations, the switching (write/read) speed of synapse devices, which represents the fundamental level of the overall system, must be carefully addressed to efficiently implement large-scale HW-ANNs. Slow operation of synaptic devices can lead to decreased throughput and increased latency, making it a critical metric for real-time applications across the entire system (Zhang W. et al., 2019). Moreover, power consumption is a crucial requirement at the device level. Energy efficiency, from a device design perspective, is closely tied to the switching operating voltage of memory devices. Balancing power consumption and operational stability requires careful design and configuration of switching/read voltage amplitudes, especially to achieve selective operation on target cells (van De Burgt et al., 2018). However, it is important to note that the metrics associated with synaptic devices, while critical, do not always collectively fulfill ideal characteristics. For instance, achieving excellent retention may come at the cost of low endurance, and weight precision may vary based on factors such as on/off ratio and programming characteristics. Furthermore, consistently defining optimal synapse device characteristics proves challenging due to diverse considerations such as target application, network topology, and system-wide optimization. More details on these challenges are provided below.

There are two HW-ANN learning methods: in-situ (online) learning and ex-situ (offline) learning. In-situ learning involves conducting training directly on the hardware itself. This method possesses tolerance and self-adapting capabilities toward hardware imperfections (Li et al., 2018). On the other hand, the ex-situ approach involves training in software and then importing the pre-trained weights into the neuromorphic system. Ex-situ learning benefits from the direct use of training algorithm in software-based artificial intelligence, resulting in higher performance. Figure 3 explains the operation phases of each learning method. The primary action in in-situ learning is weight update, while ex-situ learning focuses on transferring the pre-trained weights, referred to as weight import. Consequently, the importance of specific metrics in the synaptic devices varies according to the learning method. In other words, the required metrics for synaptic devices are application-dependent.
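The weight import step of ex-situ learning can be sketched as follows. This is a simplified illustration under stated assumptions: a linear mapping from the software weight range to the conductance range, one device per weight, and evenly spaced device states. The function name `import_weight` and all numeric values are hypothetical.

```python
def import_weight(w, w_min, w_max, g_min, g_max, n_states):
    """Map a pre-trained weight to the nearest of n_states conductance levels.

    Assumes a linear weight-to-conductance mapping and evenly spaced states;
    real devices may need a different mapping and iterative tuning.
    """
    if w_min >= w_max or n_states < 2:
        raise ValueError("need w_min < w_max and at least two states")
    w = min(max(w, w_min), w_max)          # clip to the representable range
    frac = (w - w_min) / (w_max - w_min)   # position within the weight range
    level = round(frac * (n_states - 1))   # nearest discrete device state
    return g_min + level * (g_max - g_min) / (n_states - 1)


# Hypothetical pre-trained weights and a 5-state device spanning 1 to 9 microsiemens.
weights = [-1.0, 0.0, 0.3, 1.0]
targets = [import_weight(w, -1.0, 1.0, 1e-6, 9e-6, 5) for w in weights]
```

With only five states, the weight 0.3 is rounded to the same level as 0.5 would be, which illustrates why tuning accuracy and weight precision matter for ex-situ learning.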

The requirements of each metric can be prioritized based on the characteristics of the target application. (1) Frequency of state writing/reading: In classification applications (or inference machines, write-once-read-only systems), the stored memory state is read repeatedly, necessitating uniform reading at every step. Therefore, metrics such as retention, read stability, read speed, and a wide on/off ratio are of greater importance. Conversely, for on-chip training applications (such as pulse modulation and online learning for DNNs), frequent weight updates (state writing) are required in hardware. Achieving this necessitates the use of a constant programming pulse, thus requiring synapse devices with robust endurance to sustain the operational range during frequent and swift switching. Precise linear and symmetric programming, along with high bit precision, is essential to clearly represent different states (Sun and Yu, 2019; Zhao et al., 2020). (2) Network topology: The size of the network and the defined form of input/weight/output also influence metric requirements. As the network size increases, the array size grows accordingly, and design considerations must extend beyond single-device operation to the array level. With an increase in the number of cells, desired values at the single-cell level may change due to inter-cell influence, necessitating faster switching speeds. Furthermore, depending on whether the input/weight/output form of the neural network is binary or analog, requirements for weight precision vary according to the on/off ratio and programming characteristics. (3) Systematic design interface: Considering the hierarchy of the overall neuromorphic system, the specifications of the signal conversion peripheral circuit connected to the synaptic device (such as DAC/ADC precision and parasitic resistance) should be considered together with the on/off ratio, weight precision, and speed of the synaptic device.

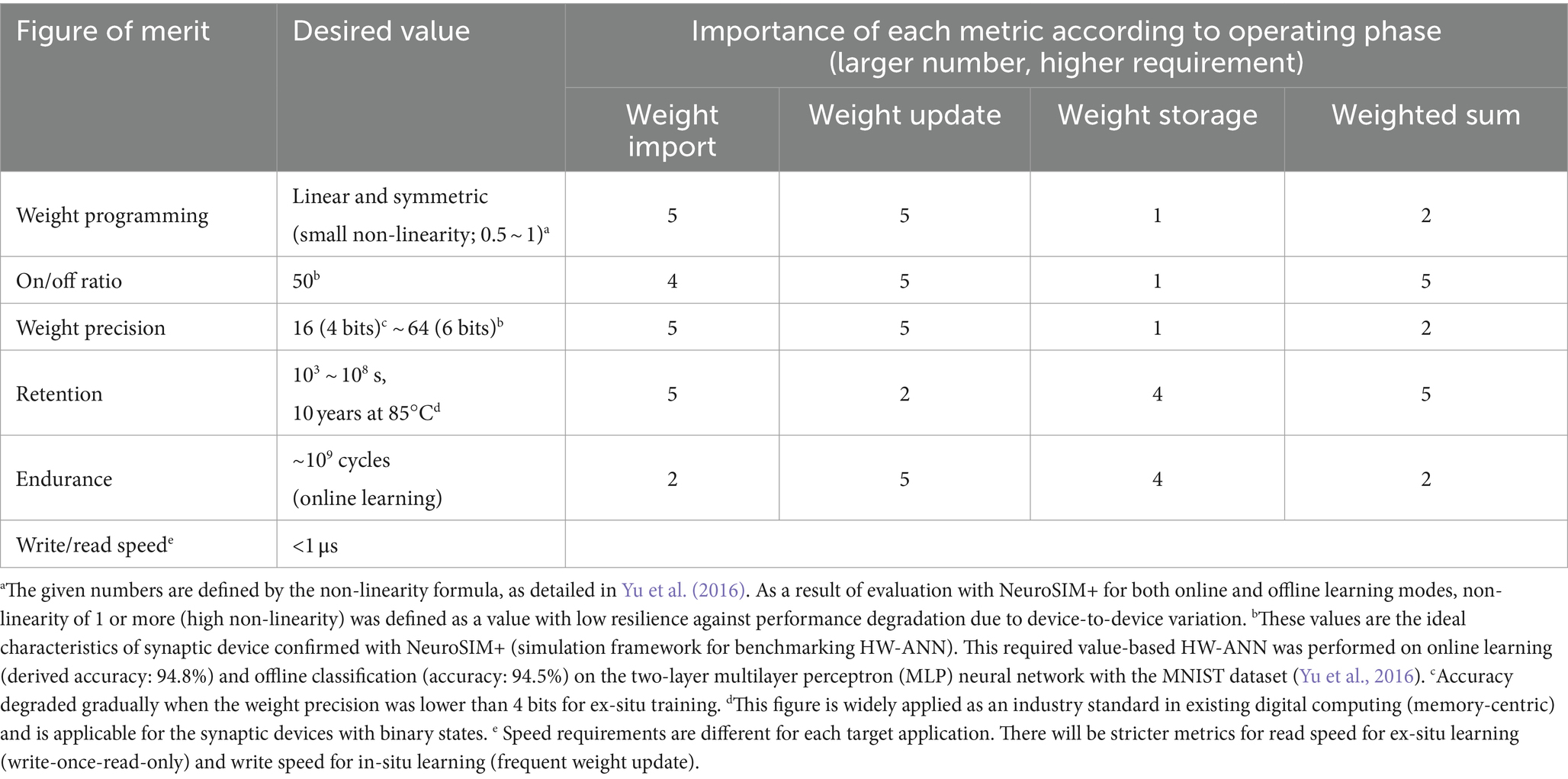

The desired metric values are outlined in Table 1, where their significance varies depending on the target application, often necessitating trade-offs. Key metrics for enhancing synaptic device characteristics include tuning accuracy during weight import, conductance response for weight updating, long retention time for weight storage, and evaluating the difference in VMM current during weighted sum operations (VMM operations). The importance of each metric can fluctuate depending on specific operational requirements, such as read speed for inference and write speed for frequent weight updates. We will explore the operational principles and metrics of conductance-based devices (both two- and three-terminal devices) as well as capacitance-based devices to achieve these desired synaptic device characteristics.

Table 1. Desired figure of merit as synaptic devices and significance of each metric according to operating phase in a hardware-based neural network.

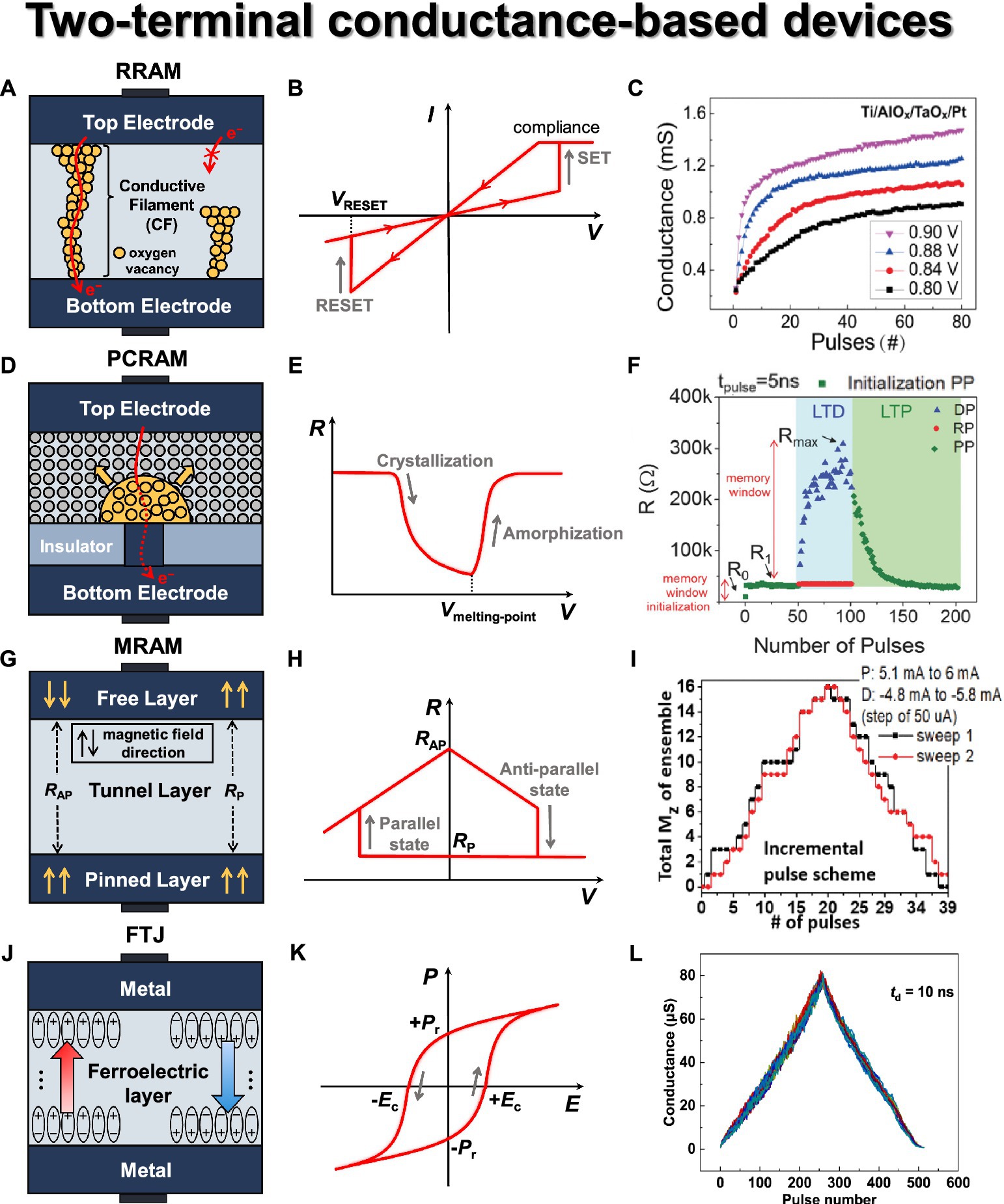

Resistive random-access memory (RRAM) is one of the most representative two-terminal devices with a metal–insulator–metal (MIM) structure in general, as shown in Figure 4A. Resistance changes occur within an intermediate material known as the switching layer, utilizing a variety of metal oxide materials such as TiOx, HfOx, AlOx, WOx, TaOx, and others. RRAM can be classified into filamentary type and non-filamentary type (also referred to as interfacial type; Lee et al., 2008; Jang et al., 2015; Wang et al., 2015; Hu et al., 2022). The filamentary type includes OxRAM (metal oxide RRAM) and CBRAM (conductive bridge RAM). OxRAM utilizes metal oxide materials as the switching layer, and oxygen ions migrate toward the top electrode when switching voltage is applied, leading to the formation of conductive filaments (CFs) consisting of oxygen vacancies within the switching layer. Various metal oxide materials can be employed, and in certain cases, a thin bilayer can be introduced to facilitate the development of multiple weaker filaments, enabling gradual switching operations (Gao et al., 2017). CBRAM forms a CF consisting of metal ions and employs materials such as metal oxides, amorphous silicon, and solid electrolytes as the switching layer. Due to its operation mechanism, it is believed to have the potential to be scaled down to an atomic-level dimension. This type of RRAM device exhibits stochastic and abrupt switching characteristics due to the randomness of CF generation. Consequently, this leads to challenges in achieving the desired state because of non-linearity in the conductance response and variations in device reliability caused by fluctuations in the device state. In addition, CBRAM tends to have a relatively high LRS (low resistance state) current due to the metal path within the CF, resulting in increased leakage current. 
In contrast, the switching materials employed in non-filamentary type devices are not as widespread as those used in filamentary types, leading to increased costs, reduced retention, and slower switching speeds (Gao et al., 2016).

Figure 4. Two terminal conductance-based synaptic devices. (A) RRAM basic structure. (B) RRAM I-V curve. (C) Conductance modulation on AlOx/TaOx-based RRAM under identical pulses with different amplitudes. Reproduced with permission (Sun et al., 2018), Copyright 2018 IEEE. (D) PCRAM basic structure. (E) PCM R-V curve. (F) Conductance modulation on narrow heater electrode-based PCM with identical fast programming pulses of depression, potentiation, and read, resulting in gradual amorphization and crystallization (La Barbera et al., 2018), Copyright 2018 John Wiley & Sons. (G) MRAM basic structure. (H) MRAM R-V house curve. (I) Conductance modulation on CoFeB/MgO-based MRAM under incremental pulse scheme. Reproduced under the terms of the CC-BY Creative Commons Attribution 4.0 International License (Ostwal et al., 2019), Copyright 2019 IEEE. (J) FTJ basic structure. (K) FTJ P-E hysteresis curve. (L) Symmetric conductance modulation on FTJ. Reproduced under the terms of the CC-BY Creative Commons Attribution 4.0 International License (Luo et al., 2022), Copyright 2022 Springer Nature.

The operation of RRAM can be divided into three phases (forming, set, and reset), which are determined by the states of CFs within the switching layer. Initially, RRAM is in the pristine state, where little to no conductive filament is present in the switching region, resulting in minimal current flow. When voltage is applied to the top electrode, oxygen ions (O²⁻) migrate toward the top electrode, generating oxygen vacancies in the switching region. This results in the formation of a CF through which electrons can flow, a process known as the forming phase. During the forming phase, RRAM transitions to the LRS, allowing current to flow easily through the CF. In the LRS, applying VRESET to the top electrode induces recombination of oxygen ions near the top electrode, filling the oxygen vacancies and leading to the rupture of the CF. As a result, the device switches to the HRS (high resistance state) with reduced current flow. This process is called the reset operation. In the HRS, applying VSET to recreate the conductive filament is known as the set operation. The current–voltage (I-V) curve of a typical RRAM device, exhibiting its representative switching behavior, is shown in Figure 4B. Based on this characteristic, it is necessary to determine an appropriate read voltage (Vread) that does not alter the device state.
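The forming, set, and reset sequence can be summarized as a small state machine. This is a deliberately idealized sketch: it assumes abrupt threshold switching and a bipolar device in which reset uses the opposite polarity to set, which the text above does not specify. The threshold values `V_FORMING`, `V_SET`, `V_RESET`, and `V_READ` are hypothetical.

```python
from enum import Enum


class RRAMState(Enum):
    PRISTINE = "pristine"
    LRS = "low resistance state"
    HRS = "high resistance state"


# Hypothetical voltages on the top electrode (volts); bipolar switching assumed.
V_FORMING = 3.0
V_SET = 1.5
V_RESET = -1.5
V_READ = 0.2  # small enough that no transition below is triggered


def apply_voltage(state, v):
    """Return the next device state for an applied voltage (ideal thresholds)."""
    if state is RRAMState.PRISTINE and v >= V_FORMING:
        return RRAMState.LRS   # forming: first filament is created
    if state is RRAMState.HRS and v >= V_SET:
        return RRAMState.LRS   # set: filament is recreated
    if state is RRAMState.LRS and v <= V_RESET:
        return RRAMState.HRS   # reset: filament is ruptured
    return state               # otherwise the state is preserved


state = RRAMState.PRISTINE
history = [state]
for v in (V_READ, V_FORMING, V_READ, V_RESET, V_READ, V_SET):
    state = apply_voltage(state, v)
    history.append(state)
```

Each `V_READ` step leaves the state unchanged, which is the property the read voltage must satisfy; real filamentary devices switch stochastically rather than at fixed thresholds.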

RRAM offers several advantages, including BEOL (back-end-of-line) compatibility, scalability (4F²/n, where n refers to the number of layers in the 3-D structure), nanosecond-level read/write speed, 10⁶ endurance cycles, and low power consumption (Wong et al., 2012). However, RRAM exhibits stochastic resistance states resulting from the randomness of oxygen vacancy generation by oxygen ion trapping and de-trapping. This leads to device-to-device and cycle-to-cycle variations in conductance values rather than a stable conductance level. Liao et al. (2020) incorporated the number of conductive filaments (N), filament gaps (Tgap), and applied voltage (V) into the I-V characteristic of the switching region of multiple-weak-filament-based analog RRAM. This makes it possible to anticipate the dynamic switching behavior of RRAM devices with stochastic characteristics. Furthermore, the mathematical model provides a reasonable explanation for the weight update behavior within the array structure, and its consistency with experimental results validates the soundness of the model. Kang et al. (2022) proposed a novel cluster-type CBRAM to tackle the challenge of achieving analog resistance changes caused by the electric field feedback effect in conventional filament-type CBRAM. The proposed solution involves precise control over the amount of metal ions in the cluster type. By utilizing a reducing agent, Ti, with a lower standard reduction potential than Ag, the oxidation and reduction processes of Ag cations are carefully regulated. This approach mitigates the electric field feedback effect, resulting in a balance between linear switching characteristics and a high on/off ratio, demonstrating its potential as an analog synaptic device.

Efforts to achieve gradual switching characteristics in RRAM have primarily focused on the utilization of additive layers such as bilayers (commonly referred to as multilayers; Wang Z. et al., 2018; Kim S. et al., 2022). Sun et al. (2018) fabricated AlOx/TaOx-based RRAM devices and achieved high uniformity, excellent analog switching characteristics (~200 states), and impressive retention properties (~30,000 s). They conducted a comparison between identical and non-identical pulse inputs to modulate conductance, demonstrating that the modulation of RRAM device weights can be achieved through pulse parameters, as shown in Figure 4C. They observed different conductance responses based on amplitude (VSET: 0.8–0.9 V) and width (1–15 μs), confirming the potential for weight modulation of RRAM devices. Yeon et al. (2020) fabricated Si-alloy:Ag electrochemical RRAM devices and achieved highly stable operational characteristics. By employing an optimized alloying ratio, they successfully controlled the movement of mobile Ag ions with Cu, resulting in spatial and temporal switching uniformity. They also obtained a conductance range exceeding 10² and significantly enhanced programmed symmetry characteristics.

The fundamental mechanism for the recently reported random telegraph noise (RTN) of RRAM has been demonstrated through conductive atomic force microscopy (C-AFM) and simulations based on the electron wave energy function. RTN arises from electron transport obstruction by trapped charges within incomplete channels, in addition to the main conduction channels. A solution to this issue involves the removal of the RTN component by applying a subthreshold voltage significantly smaller than the set/reset voltage, thereby eliminating incomplete channel islands and phase boundaries (a process known as phase field relaxation). Consequently, high-precision programming is feasible through a stabilization process that applies a denoising voltage to the RTN components generated within the synapse device. In essence, the development of an RRAM-based neuromorphic chip with remarkable analog functionality, capable of expressing up to 2,048 states through fundamental-level operation analysis from a device perspective, underscores the potential for the commercialization of memristor device-based arrays (Rao et al., 2023).

Phase-change memory (PCM) is a two-terminal device where the resistance state is determined by the phase change of a material located between the two electrodes through the application of heat, as shown in Figure 4D (Raoux et al., 2008; Bruns et al., 2009; Wong et al., 2010). It is simply composed of electrodes, a heater, and phase-change material. Typically, the material of the bottom electrode is deposited and then etched into a trench shape, with its surroundings encapsulated by an insulating material (e.g., SiO2). Commonly used phase-change materials include chalcogenide-based materials (e.g., Ge2Sb2Te5 or GST). GST can readily switch between amorphous and crystalline states bidirectionally and can maintain each state for an extended period. When the phase-change material is in the crystalline state, it exhibits LRS; when it is in the amorphous state, on the other hand, it blocks the conduction path between the two electrodes, as depicted in Figure 4D as a dot-dash line, resulting in HRS. When a specific voltage is applied between the top and bottom electrodes, the current flows through the GST material, heating and changing its phase. This region where the change occurs is referred to as the programming region or active region, and it typically exhibits a mushroom-shaped profile due to the current crowding effect.

PCM starts in its initial state (as-fabricated device) with a low-resistive crystalline phase. When relatively small-amplitude voltage pulses are applied over an extended period, the GST material undergoes crystallization due to Joule heating. This heating aligns the atomic arrangements within the material without reaching its melting point. It causes the device state to transition from HRS to LRS, a process known as set (see Figure 4E). On the contrary, when relatively large-amplitude but short-duration voltage pulses are applied, a portion of the GST material undergoes local melting (the melt-quench process), resulting in consequent amorphization and a transition to HRS, a process known as reset. For a read operation, the current state of PCM is sensed by applying a weak electrical pulse that does not induce a significant phase change. PCM has several advantages, including fast SET speed, scalability, high endurance, long data retention over 10 years, and a high dynamic range around 10³ (Burr et al., 2016). However, PCM exhibits abrupt RESET characteristics due to the crystallization/amorphization of the phase-change material, as reported by Burr et al. (2015). This implies that the conductance modulation is not consistent with the number of pulses applied. Additionally, a prominent issue in PCM is ‘resistance drift’, where the resistance value increases over time.
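Resistance drift is commonly described in the PCM literature with an empirical power law, R(t) = R0 · (t/t0)^ν. The sketch below uses that form as an assumption; it is not stated in the text above, and the drift exponent `nu`, the initial resistance `R0`, and the reference time `t0` are hypothetical values chosen for illustration.

```python
def drifted_resistance(r0, t, t0=1.0, nu=0.1):
    """Empirical power-law drift: R(t) = r0 * (t / t0) ** nu for t >= t0.

    r0 is the resistance measured at reference time t0 (same time unit as t);
    nu is the drift exponent (hypothetical default, device dependent).
    """
    if t0 <= 0 or t < t0:
        raise ValueError("require 0 < t0 <= t")
    return r0 * (t / t0) ** nu


R0 = 1e6  # ohms, hypothetical amorphous-state resistance measured at t0 = 1 s
times = [1.0, 10.0, 100.0, 1000.0]  # seconds after programming
resistances = [drifted_resistance(R0, t) for t in times]

# Relative increase after three decades of time.
drift_factor = resistances[-1] / resistances[0]
```

Because the increase is state dependent in practice, intermediate states used as analog weights can shift relative to one another over time, which is what makes drift a concern for stored weights.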

Kuzum et al. (2012) fabricated a PCM with W/TiN/GST/TiN stack that shows no degradation up to 10⁷ cycles and demonstrates gradual set/reset operations with progressively increasing voltage pulses, enabling analog behavior. For this device, spike-timing-dependent plasticity (STDP) was experimentally verified, indicating the potential for extending to synaptic PCM arrays in terms of nanoscale and energy efficiency. Ding et al. (2019) fabricated a phase-change heterostructure (PCH) device to achieve continuous resistance states. The PCH is a multilayer structure where phase-change materials and confinement nanolayers (TiTe2/Sb2Te3) are alternately deposited. Given that the nanoscale amorphous Sb2Te3 layers restrict structural relaxation, this device significantly reduced the resistance drift problem compared to traditional GST-based PCMs (Ielmini et al., 2007). As a result, the enhanced weight resolution achieved through stable set/reset operations makes PCH-based devices promising candidates for synaptic applications in neuromorphic systems. La Barbera et al. (2018) demonstrated the potential for synaptic devices using a narrower bottom electrode-based PCM device. With a memory initialization step before applying switching pulses, they employed identical pulses (VSET: 1.25 V, VRESET: 1.6 V) for 50 cycles each, resulting in the switching characteristics shown in Figure 4F. These conditions allowed for conductance modulation to initiate without fully covering the amorphous region above the heater, leading to a more gradual switching behavior. The device exhibited endurance of at least 10⁶ cycles across 145 cells.

Magneto-resistive RAM (MRAM) is a two-terminal device featuring two ferromagnetic layers separated by a non-magnetic layer (Akerman, 2005; Bhatti et al., 2017). The upper ferromagnetic layer is referred to as the free layer, while the lower ferromagnetic layer is known as the pinned or fixed layer. A thin insulating tunnel barrier is placed between these two layers, as shown in Figure 4G. To change the magnetization direction within the magnetic layers, the current direction in the word line (WL) connected to the lower layer is fixed, while the current direction in the bit line (BL) connected to the upper layer is varied. Through this process, the magnetization direction of the free layer can be freely changed based on the programming voltage. The ferromagnetic layers typically consist of transition metal elements (Fe, Co, Ni, etc.) and their alloys (CoFeB, NiFe, etc.), while the non-magnetic layer mainly consists of insulating materials such as MgO.

The resistance state of MRAM is determined based on the alignment of the magnetic orientations in the two ferromagnetic layers, whether they are parallel or antiparallel. If the magnetic orientations are in the same direction, it results in LRS, also known as the parallel state (RP). Conversely, if they are in opposite directions, it leads to HRS, or the antiparallel state (RAP), as depicted in Figure 4H. With increasing integration, interference between adjacent cells has led to read errors and energy inefficiencies, prompting the adoption of spin transfer torque MRAM (STT-MRAM). STT involves directly injecting current into the magnetic layer, where the spin carried by the injected electrons is transferred to the spins in the magnetic layer. This allows for the direct switching of the magnetic orientation. Another type of MRAM that utilizes a different mechanism for magnetization inversion is spin-orbit torque MRAM (SOT-MRAM). It generates spin-orbit effects, including a spin Hall effect within the device that enables the generation of spin currents and changes the magnetic orientation of the free layer to enable switching operations. SOT-MRAM injects current in the horizontal direction, producing more spin current for the magnetic layer. This results in faster operation and lower power consumption compared to STT-MRAM, making it the focus of recent extensive research.

In MRAM, electrons traverse between the two ferromagnetic layers by quantum tunneling through the thin insulating layer. The switching operation is achieved by applying different current directions. When the current flows from the pinned layer to the free layer, the spins of the free electrons align themselves according to the magnetization direction of the pinned layer due to the magnetic exchange coupling energy. This spin-polarized current exerts a torque on the free layer, causing its magnetization direction to align in parallel with that of the pinned layer. This results in LRS (RP) during the SET operation, as illustrated in Figure 4H. On the contrary, when the current flows from the free layer to the pinned layer, the free electrons with different spin orientations from the pinned layer exert a torque on the free layer in the opposite direction of its magnetization. This counteracting torque changes the magnetization direction of the free layer, leading to HRS (RAP) during the RESET operation.

MRAM has several advantages, including high endurance (>10⁹ cycles) and fast switching speed (<100 ns). However, it also has some limitations, such as a limited number of distinct resistance states and a small on/off ratio, which can be drawbacks in its state representation capabilities. The key metric that represents the performance of MRAM is the magnetoresistance (MR) ratio, which indicates the ratio of resistance values between LRS (RP) and HRS (RAP), so that MR is defined as (RAP − RP)/RP. To achieve high performance during the inference process, high tunnel magnetoresistance (TMR), low write error rate (WER, <10⁻⁶), and low read disturbance rate (RDR, <10⁻⁶) are required during the weight import and weighted sum phases (Xu et al., 2018). Zhang et al. (2021) fabricated a high-TMR perpendicular MTJ device with W inserted into the free layer using the following stack: W/CoFeB/MgO/CoFeB/W/CoFeB/MgO/Ta. The device exhibited a 200% TMR at the nanoscale level, and the strong domain wall pinning effect in the free layer facilitated memristive behavior. The plasticity characteristics (resistance changes over time) for ramped and constant voltage pulse sequences were studied. By applying a subthreshold voltage to the free layer with a positive or negative delay Δt following a pre-spike, the resistance was effectively increased or decreased, simulating the STDP characteristic of a biological synapse.
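
The MR definition above can be checked with a few lines of arithmetic. The following is a minimal sketch: the function names and the two resistance values are hypothetical, chosen only so that the MR ratio matches the 200% TMR quoted above.

```python
def mr_ratio(r_ap: float, r_p: float) -> float:
    """Magnetoresistance ratio (R_AP - R_P) / R_P, returned as a fraction."""
    if r_p <= 0:
        raise ValueError("R_P must be positive")
    return (r_ap - r_p) / r_p


def on_off_ratio(r_ap: float, r_p: float) -> float:
    """Resistance on/off ratio R_AP / R_P, which equals 1 + MR."""
    if r_p <= 0:
        raise ValueError("R_P must be positive")
    return r_ap / r_p


# Illustrative (assumed) resistances, not measured values from any cited device.
r_p = 5_000.0    # ohms, parallel state (LRS)
r_ap = 15_000.0  # ohms, antiparallel state (HRS)

print(f"MR = {mr_ratio(r_ap, r_p) * 100:.0f} %")
print(f"on/off ratio = {on_off_ratio(r_ap, r_p):.1f}")
```

Because the on/off ratio is 1 + MR, a 200% MR ratio corresponds to only a threefold resistance window, which illustrates why the small on/off ratio is listed as a limitation.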

Siddiqui et al. (2019) demonstrated the fabrication of MTJ devices utilizing the magnetic domain wall effect with CoFeB ferromagnetic layers and MgO spacer, which enabled the generation of linear multilevel weights. Additionally, non-linear activation functions were implemented using MTJ devices with sigmoid-like behavior, indicating the versatility of MTJ devices in neuromorphic systems. The linear resistance (weight) changes were observed in a parallel connection of seven MTJ devices when subjected to positive/negative currents in the CoFeB/Ta wire. Ostwal et al. (2019) fabricated a perpendicular SOT-MRAM device based on a Ta/CoFeB/MgO/Ta stack. This configuration yielded a high TMR and gradual potentiation/depression characteristics, as depicted in Figure 4I. In the case of an identical pulse scheme, they repetitively applied a current pulse of 5.4 mA for potentiation and a current pulse of 5.2 mA for depression. In the case of an incremental pulse scheme, they applied a current pulse ranging from 5 mA to 6 mA for potentiation and pulses ranging from −4.8 mA to −5.8 mA for depression, with increments and decrements of ±0.05 mA. Through this approach, they achieved improved linearity and demonstrated the potential of SOT-MRAM as synaptic devices.
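
The two pulse schemes described above differ only in how the amplitude sequence is generated. The sketch below builds both sequences from the amplitudes quoted in the text; the helper names and the pulse count used for the identical scheme are assumptions made for illustration, and amplitudes are handled as integer microamps to avoid floating-point drift.

```python
def identical_pulses(amplitude_ua: int, count: int) -> list[float]:
    """Return `count` pulses of the same amplitude, in mA."""
    return [amplitude_ua / 1000] * count


def incremental_pulses(start_ua: int, stop_ua: int, step_ua: int) -> list[float]:
    """Return pulse amplitudes in mA from start to stop (inclusive)."""
    if step_ua == 0:
        raise ValueError("step must be non-zero")
    if (stop_ua - start_ua) * step_ua < 0:
        raise ValueError("step sign must point from start towards stop")
    count = (stop_ua - start_ua) // step_ua + 1
    return [(start_ua + k * step_ua) / 1000 for k in range(count)]


# Incremental scheme: 5 mA to 6 mA and -4.8 mA to -5.8 mA in 0.05 mA steps.
potentiation = incremental_pulses(5000, 6000, 50)
depression = incremental_pulses(-4800, -5800, -50)

# Identical scheme: the pulse count (21) is an assumption, not from the source.
identical = identical_pulses(5400, 21)

print(len(potentiation), potentiation[0], potentiation[-1])
print(len(depression), depression[0], depression[-1])
```

With a 0.05 mA step, each incremental sweep spans a 1 mA range and therefore contains 21 pulses.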

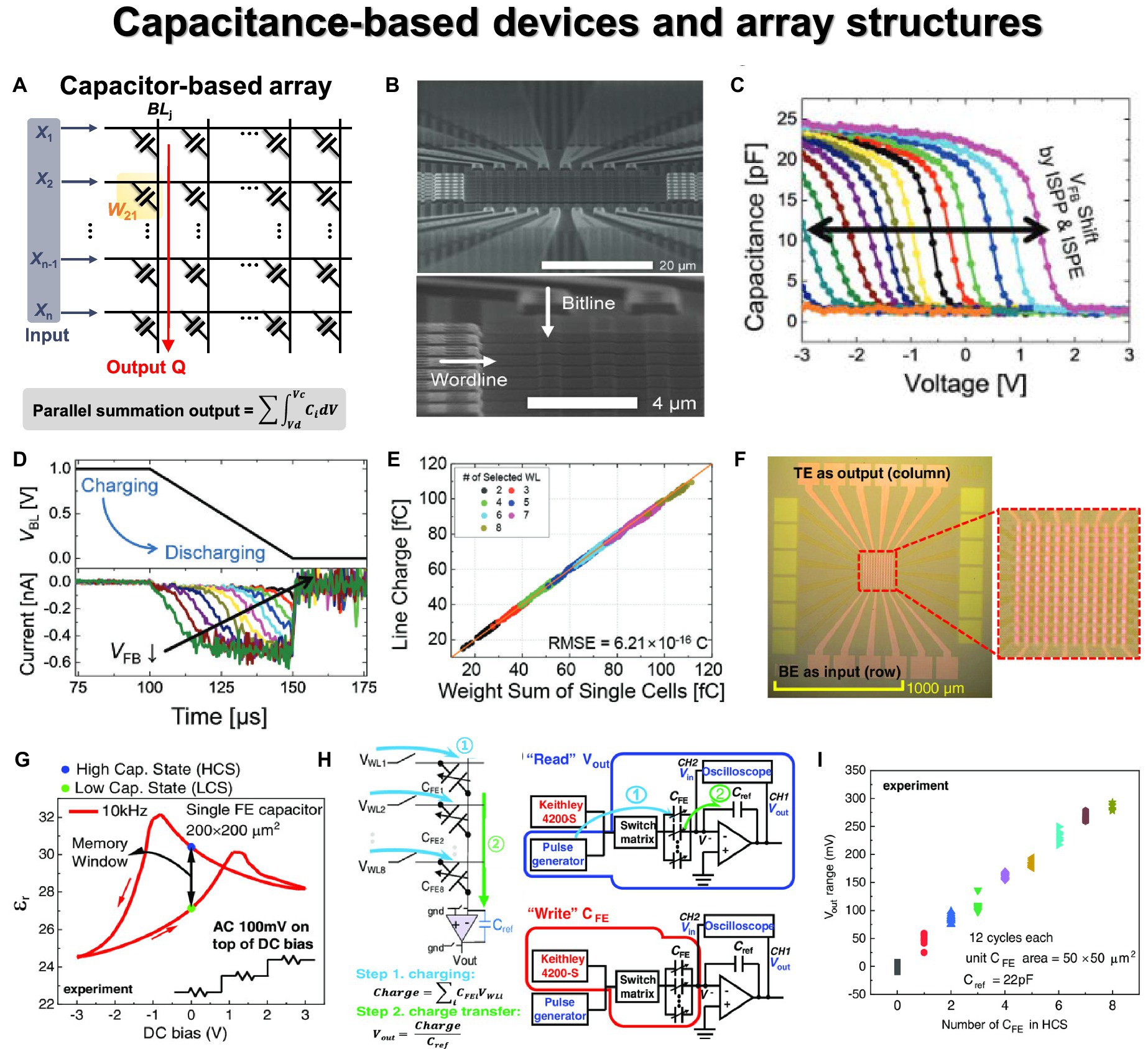

Ferroelectric memory can be utilized as both capacitor-based types, such as ferroelectric random-access memory (FeRAM) and ferroelectric capacitor, and conductance-based types, including ferroelectric field-effect transistor (FeFET) and ferroelectric tunneling junction (FTJ; Oh et al., 2019; Slesazeck et al., 2019; Mikolajick et al., 2022). Ferroelectric memory functions by storing information through the polarization of ferroelectric material when an external electric field is applied, as illustrated in Figure 4J. Ferroelectric materials have a polarization-electric field (P–E) hysteresis due to their non-centrosymmetric structure, as shown in Figure 4K. Unlike most materials that lose their polarization once the electric field is removed, non-centrosymmetric FE materials maintain polarization even without the electric field. The polarization state when the electric field is zero is termed remnant polarization (Pr), and the lattice structure and the presence of electric dipoles give rise to two distinct polarization states (+Pr and −Pr). The absence of a central positive charge leads to an electric dipole moment, often resulting in two stable configurations. Altering the state of Pr involves applying an electric field greater than a threshold value known as the coercive field, which signifies the reverse field necessary to nullify the polarization state.

Among ferroelectric devices, FTJ is a two-terminal device that utilizes ferroelectric materials for practical memory applications (Kohlstedt et al., 2005; Böscke et al., 2011; Garcia and Bibes, 2014). Operating on the principle of electron tunneling, this memory necessitates an exceedingly thin ferroelectric film. Thus, HfO2-based material capable of deposition in ultrathin layers has predominantly been used. The FTJ features a metal-ferroelectric-metal (MFM) sandwich structure and shares similarities in configuration with RRAM. The process of polarization reversal is employed to alter the effective tunneling barrier between the electrodes. Charge carriers accumulate or deplete within the interface layers of electrodes to screen bound polarization charges, depending on the polarization direction within the tunneling barrier. In junctions employing distinct electrodes, this screening effect induces an uneven barrier potential. By using top and bottom electrodes with two different screening lengths, the tunneling probability varies depending on the polarization direction. The state is classified based on the difference in tunneling current generated from the read bias, and tunneling electro-resistance (TER) refers to this polarization-controlled change in the tunneling current. TER is quantified as the resistance ratio between LRS and HRS (Gruverman et al., 2009; Zhuravlev et al., 2009). In the MFM structure, the ferroelectric layer needs to be as thin as 3–4 nm to ensure a reasonable tunneling current, but such thickness leads to reduced polarization and TER ratio. Additionally, the traps generated by the tunneling current can reduce the memory window. To address this problem, researchers are investigating a metal-ferroelectric-interlayer-metal (MFIM) structure, which introduces an additional thin tunneling layer to the MFM structure. The MFIM structure improves TER by creating an extra energy barrier through the insertion of an interlayer (IL) with a high bandgap into the MFM structure. Because the tunneling function is separated from the ferroelectric material, electron tunneling occurs through the thin tunneling layer, which allows the tunneling to be optimized and enables high-speed operation.

As a synaptic device, it has been investigated for its notable advantages, including high-speed switching, multi-value cell operation, and low power consumption attributed to low current levels and operating voltage (Chen et al., 2018; Majumdar et al., 2019; Ryu et al., 2019; Max et al., 2020; Song et al., 2022). In addition, it offers good endurance of 10⁵ cycles or more and, because it uses a TER-based current mechanism, can operate at lower power than other synaptic devices. Information is stored through changes in polarization, and various states can be implemented by adjusting the polarization of the domains through the programming voltage. Ryu et al. (2019) improved the TER ratio by fabricating an HZO-based FTJ with an added interfacial Al2O3 layer. The reliability of the synaptic device was measured to secure endurance (>10⁷) and retention (10 years) characteristics. Using incremental step pulse programming (ISPP) with 10 μs pulses, linear and symmetric characteristics were obtained for long-term potentiation (LTP) and long-term depression (LTD). Luo et al. (2022) fabricated an FTJ with a PZT-based metal-ferroelectric-semiconductor (MFS) structure. Owing to the ultra-fine polydomain structure of the (111)-oriented PZT, multilevel operation was possible at a very high speed (10 ns). Using 10 ns ISPP pulses, 256 conductance states, a high dynamic range (~100), linearity close to 1, and symmetric characteristics were secured, as depicted in Figure 4L. In addition, the device showed very high endurance (10⁹) and retention (10⁴ s). Goh et al. (2021) fabricated an FTJ with a TiN/HZO/TaN/W structure. The TaN layer suppressed diffusion and leakage current, improving the TER value (~100). The endurance (~10⁸) and retention (10 years) characteristics of the synaptic device were secured, and 30 multilevel states were obtained through LTP and LTD measurements.

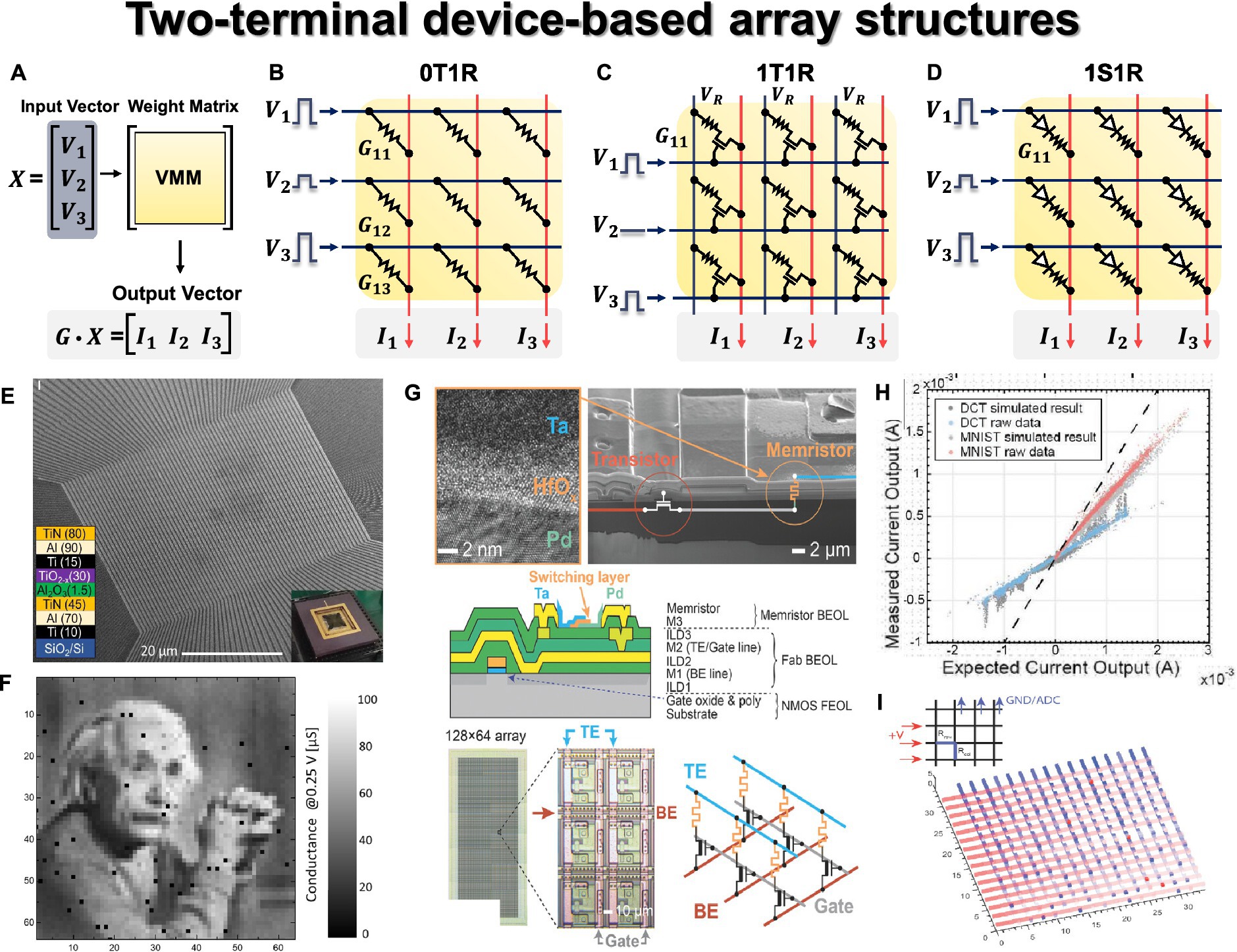

The key operation of neural networks is VMM. During inference, large-scale VMM operations need to be efficiently implemented on hardware using a crossbar array (CBA) structure. The CBA structure is inherently suitable for VMM based on synaptic devices (Gao et al., 2016, 2017). As depicted in Figure 5A, input voltages ($V_j$) encoded from input data are applied to each row ($j$th) or each WL of the CBA. Through Ohm’s law at each device cell ($I_{ij} = G_{ij} V_j$) and Kirchhoff’s current law ($I_i = \sum_j G_{ij} V_j$), the BL currents are obtained as a result of VMM operation. These currents contribute to the weighted sum within the activation neuron circuits. Furthermore, to account for negative weights in the CBA, a commonly employed technique is the use of a differential pair, involving a pair of devices for each weight.
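
The crossbar VMM and the differential-pair technique can be sketched in a few lines. This is an idealized illustration that ignores wire resistance, sneak currents, and device non-linearity; the function names and the example values are hypothetical. Conductances are stored as `conductances[j][i]`, where `j` indexes the word line (row) and `i` the bit line (column).

```python
def crossbar_vmm(voltages, conductances):
    """Ideal bit-line currents: I_i = sum over rows j of G[j][i] * V[j]."""
    if len(voltages) != len(conductances):
        raise ValueError("one input voltage is needed per word line")
    n_cols = len(conductances[0])
    currents = [0.0] * n_cols
    for v, row in zip(voltages, conductances):
        if len(row) != n_cols:
            raise ValueError("all rows must have the same number of columns")
        for i, g in enumerate(row):
            # Ohm's law gives the cell current; Kirchhoff's current law
            # sums the cell currents that share a bit line.
            currents[i] += g * v
    return currents


def differential_vmm(voltages, g_plus, g_minus):
    """Signed weighted sum using one (G+, G-) device pair per weight."""
    i_plus = crossbar_vmm(voltages, g_plus)
    i_minus = crossbar_vmm(voltages, g_minus)
    return [p - m for p, m in zip(i_plus, i_minus)]


# Illustrative values: voltages in V, conductances in uS, currents in uA.
v_in = [1.0, 0.5]
g_pos = [[2.0, 1.0], [4.0, 2.0]]
g_neg = [[1.0, 3.0], [2.0, 2.0]]

print(crossbar_vmm(v_in, g_pos))
print(differential_vmm(v_in, g_pos, g_neg))
```

The second bit line in this example yields a negative result, which a single array of non-negative conductances could not represent on its own.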

Figure 5. Two terminal device array structures. (A) Schematic of VMM operation with input vector, weight matrix, and output vector. (B) 0T1R passive crossbar array structure. (C) 1T1R array structure. (D) 1S1R array structure. Yellow panel indicates the weight matrix implemented by the conductance of two-terminal devices, and red lines indicate the bitline where output currents are obtained through Ohm’s law and Kirchhoff’s current law. (E) Scanning electron microscopy image of 64 × 64 0T1R crossbar array. (F) Weight tuning results in crossbar array. (E,F) Reproduced under the terms of the CC-BY Creative Commons Attribution 4.0 International License (Kim H. et al., 2021), Copyright 2021 Springer Nature. (G) Fabricated Ta/HfO2/Pd memristor 1T1R array. (H) VMM results in 1T1R array. To check the DPE VMM error, circuit simulation (gray) and raw data (colored) from experimentally measured VMM data were compared for both a signal processing application of the discrete cosine transform and neural network inference for the MNIST database. (I) Impact of ‘stuck on’ defects and parasitic of 16 × 16 array, resulting in the signal degradation from left to right and from top to bottom. Red lines indicate the rows of 16 × 16 array with applying voltages from left, and blue lines indicate the columns grounded on top, and red dots indicate “stuck on” defects. (G–I) Reproduced under the terms of the CC-BY Creative Commons Attribution 4.0 International License (Hu et al., 2018), Copyright 2018 John Wiley & Sons.

The most basic two-terminal device-based CBA structure is the 0T1R passive array, consisting of memristor elements (1R) in each unit cell. As depicted in Figure 5B, the passive CBA structure positions a two-terminal element at the crosspoint where WL and BL intersect perpendicularly. In this configuration, where two-terminal elements are located in parallel, an appropriate bias scheme is necessary to program and read only the selected cell. Accurate array operation for the selected cell is crucial for efficient performance of the entire HW-ANN, including inference accuracy, speed, and power efficiency. The half-V scheme represents a standard biasing method commonly employed in passive CBA. It aims to enhance cell selectivity by applying full bias (V) to the WL of the selected cell and 0 V to its BL. Meanwhile, unselected WLs and BLs receive half of the full bias (V/2). Specifically, when reading a certain cell, Vread is applied to the top electrode (WL, row), while 0 V is applied to the bottom electrode (BL, column). However, for the remaining unselected cells, half-Vread (Vread/2) is applied to the remaining rows and columns, ensuring that the voltage across those cells remains at 0 V. For instance, when reading the first column in Figure 5B, Vread is applied to all rows, 0 V is applied to the first column, and Vread/2 is applied to the rest of the columns simultaneously. In this scenario, other unselected cells located in the same row or column as the selected cell experience Vread/2. The better the device I-V non-linearity (or I-V selectivity) that ensures low OFF current for unselected cells compared to the ON current for selected cells, the more effectively the off-switch operation can be carried out on those cells. This approach enables parallel operations with the weight matrix implemented in the CBA when a specific input voltage vector is applied. 
However, if sufficient I-V selectivity (I@Vread/I@Vread/2) is not guaranteed, passive arrays with high integration and low process costs encounter issues such as unwanted current flow in unselected cells (sneak current issue) and read errors during the half-Vread scheme (half-select disturbance). This results in challenges in high-precision weight programming/reading in passive arrays. Nonetheless, this can be mitigated with an additional non-linear selection device (referred to as an extra access device, selection device, or series device), including transistor, diode (with unipolar memristor), or selector (with bipolar memristor) devices.
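
The half-V bias scheme and the I-V selectivity metric described above can be made concrete with a short sketch. The function names are hypothetical, and the two device models (a linear resistor and a cubic I-V law) are assumptions used only to contrast a non-selective cell with a non-linear one.

```python
def half_v_cell_voltages(n_rows, n_cols, sel_row, sel_col, v_full):
    """Voltage across every cell (WL minus BL) under the half-V scheme."""
    # Selected WL gets the full bias, every other WL gets half of it.
    wl = [v_full if r == sel_row else v_full / 2 for r in range(n_rows)]
    # Selected BL is grounded, every other BL gets half of the full bias.
    bl = [0.0 if c == sel_col else v_full / 2 for c in range(n_cols)]
    return [[wl[r] - bl[c] for c in range(n_cols)] for r in range(n_rows)]


def iv_selectivity(current_at, v_read):
    """Selectivity I(V_read) / I(V_read / 2) for a device model `current_at`."""
    return current_at(v_read) / current_at(v_read / 2)


def linear_device(v):
    """Assumed ohmic cell: current proportional to voltage."""
    return v


def cubic_device(v):
    """Assumed non-linear cell: current proportional to voltage cubed."""
    return v ** 3


grid = half_v_cell_voltages(3, 3, sel_row=0, sel_col=0, v_full=1.0)
for row in grid:
    print(row)
print(iv_selectivity(linear_device, 1.0), iv_selectivity(cubic_device, 1.0))
```

Only the selected cell sees the full bias, cells sharing its row or column see half of it, and all remaining cells see 0 V. An ohmic cell has a selectivity of only 2, so half-selected cells still conduct half of the selected-cell current, whereas a steeper I-V law suppresses that contribution.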

A 1T1R structure is one of the active arrays having a transistor with a gate terminal that provides switch and selective operation for the selected device, as shown in Figure 5C. Typically, a two-terminal memristor is deposited on the drain end of a transistor co-integrated through a mature CMOS integration process. Due to the maturity and controllability of the CMOS process and transistor operation, many neural network applications have adopted the 1T1R-based CBA structure (Yu et al., 2016; Yao et al., 2017; Hu et al., 2018; Cai et al., 2019; Xue et al., 2020; Yao et al., 2020). Excellent weight programming is possible for the desired cell, but this comes at the cost of a larger cell footprint set by the transistor (at least 6F² when W/L = 1, compared with 4F² for the passive 0T1R structure) and difficulty in 3-D stacking. To resolve this tradeoff, the need for a two-terminal synaptic device and a serially stackable two-terminal selection device emerged. A 1D1R structure addresses this need, where a diode, a representative non-linear device, is utilized as a selection device. The diode stack can be deposited in series with the memristor, each stackable on top, solving the low integration density of 1T1R (Kim et al., 2013; Gül, 2019; Li et al., 2021). Additionally, the rectifying characteristic of the diode can guarantee I-V selectivity, but resistive devices connected in series must have unipolar characteristics, enabling set/reset switching with voltages of one polarity. However, the unipolar memristor device faces challenges such as low program margin (weight precision) due to a narrow switching voltage window (Huang et al., 2011). Additionally, in the case of 1D1R, reverse bias is applied to unselected WL/BL, which imposes stricter requirements on non-linearity factors compared to 1S1R.

A 1S1R configuration, depicted in Figure 5D, involves stacking selector materials and a memristor in series, effectively alleviating the sneak current problem. This configuration combines the advantages of both active and passive arrays, meaning that it possesses the benefits of read/write selectivity while improving integration density. The selector is expected to perform a role similar to that of a transistor in a 1T1R configuration, exhibiting high non-linearity in its I-V characteristics and offering electroforming-free behavior and scalability. The selector exhibits bipolar switching characteristics, with various material stacks studied to fulfill this function (Wong et al., 2012; Burr et al., 2014; Aluguri and Tseng, 2016; Li et al., 2021; Woo et al., 2022). From a stack perspective, these are referred to as two-terminal selector ‘materials’, and from a device perspective, they can be expressed as a ‘bidirectional diode’ (Jeon et al., 2024). To distinguish it from the diode used for the unipolar memristor device in the 1D1R, selector material compositions with bidirectional diode characteristics are included in the 1S1R configuration. Representative types include ovonic threshold switching (OTS) with high compatibility of PCM devices (Kau et al., 2009; Lee et al., 2012, 2013; Song et al., 2015; Chen et al., 2016; Velea et al., 2017; Hua et al., 2019), mixed ionic-electronic conductors (Gopalakrishnan et al., 2010; Burr et al., 2012; Shenoy et al., 2014; Luo et al., 2015), metal–insulator-transition (Lee et al., 2007; Son et al., 2011; Kim S. et al., 2012; Lee et al., 2015; Cha et al., 2016), tunnel barrier (Lee et al., 2012; Choi et al., 2016; Upadhyay et al., 2020), and field-assisted super-linear threshold switching materials (Jo et al., 2014, 2015).

The 1S1R structure requires a balance between the conductivity of the selection device and the memristor device. Devices with excessively high conductivity may struggle to function effectively as selectors, while those with low conductivity may require higher operating voltages and could reduce the programming window, complicating the design process for optimized read/write voltage margins (Wong et al., 2012; Li et al., 2021). Furthermore, additional selector devices or materials may pose challenges in terms of material optimization and fabrication compatibility with the memristor device. In response to these challenges, there is active research into self-rectifying memristor (or self-rectifying cell) passive arrays. These arrays eliminate the need for extra access devices for memory elements found in existing 0T1R passive arrays. The memristor cell itself exhibits I-V non-linearity characteristics and offers an alternative to the area overhead of 1T1R structures and the design complexity of 1S1R configurations (Kim K.-H. et al., 2012; Hsu et al., 2013; Kim et al., 2016; Li and Xia, 2019; Sun et al., 2019; Jeon et al., 2024). However, there is a need for further research into CMOS-compatible selector-based large-scale 1S1R crossbar array structures.

The fabrication of a large-scale hardware synaptic device-based array, a 64 × 64 Al2O3/TiOx RRAM passive array, is presented (see Figure 5E) for ex-situ training (Kim H. et al., 2021). By utilizing low-temperature processes, a high yield of 99% is achieved, ensuring the array’s high potential for synaptic operations. Additionally, three voltage-programming techniques (bound reducing, high-voltage devices aware, and shifting conductance) are proposed for precise state writing within the array, and the result of weight import (write) on CBA is shown in Figure 5F. In particular, this work shows that the half-select disturbance that arises in passive array configurations can be mitigated, and it validates the robust operation of the passive array. These techniques take into consideration hardware characteristics, securing the required multilevel state in synaptic devices by ensuring a reliable weight import, a critical operation in ex-situ training. Hu et al. (2018) fabricated a 128 × 64 Ta/HfO2-based 1T1R array (Figure 5G) and experimentally demonstrated its performance in analog computing for the VMM operation in a CBA. In Figure 5H, the linear relationship between VMM experimental raw data and ideal circuit simulation, including circuit parasitics, was shown for both discrete cosine transform signal processing applications and neural network inference for the MNIST dataset. It suggests a close match between the simulation-based VMM results and those measured from the fabricated array, demonstrating 6-bit precision, re-programmability, and stable operation. In addition, it includes simulations displaying the impact of faulty cells on VMM performance, as shown in Figure 5I. This article showcases the potential of a memristor CBA-based Dot Product Engine (DPE) for analog computing.
Li et al. (2022) demonstrated the potential of HW-ANNs using a CBA based on a 2-D material, HfSe2. Traditional bulk-based and transition metal oxide (TMO)-based memristor devices suffer from limited resistive switching ratios and challenges related to variations. To address the hard-breakdown issue caused by the forming voltage in bulk material-based RRAM devices in CBAs, a 3 × 3 memristor array was fabricated using polycrystalline HfSe2 thin films with defects and dislocations. Through stable control of conductive filaments via defect paths, the HfSe2-based 3 × 3 array was experimentally shown to enable hardware neural network operations in ex-situ training for edge extraction.

Giannopoulos et al. (2018) experimentally demonstrated VMM on a projected PCM array with 8-bit precision and low power (60 nW). A single-layer neural network implemented on a 10 × 3 GeTe-based projected PCM array successfully performed inference without errors even under varying external temperatures. The projected PCM devices exhibit much weaker field dependency compared to conventional PCM (Koelmans et al., 2015), thereby resolving the conductance drift issue caused by structural relaxation in the amorphous state of conventional PCM. Consequently, they enable more accurate VMM. Figure 4L shows the VMM results obtained from 2,000 experimental runs of 4 × 3 VMM, which closely approximates the performance of 8-bit fixed-point arithmetic with the inclusion of temperature compensation techniques. A three-layer neural network consisting of 164,885 synapses was experimentally implemented using a 500 × 661 1T1R PCM array for in-situ training (Burr et al., 2015). Each synapse utilized two PCM devices (G+, G−) as a differential pair, and the weighted sum operation was performed by the software-based neurons with sense amplifiers to process the column currents. The measurements obtained from the hardware implementation were compared with simulation results based on parameter values, and a close match was observed, confirming the predictability of the array’s behavior and consideration of non-ideal effects.

An experimental implementation of ex-situ training was achieved using a 64 × 64 1T1MTJ CBA-based hardware neural network (Jung et al., 2022). To address the power consumption issue in large-scale arrays caused by the low resistance of MRAM, a new cell-based CBA was fabricated, proposing an energy-efficient synaptic hardware system. The 1-bit cell, composed of two complementary devices, represents the 1-bit state with the combination of FET’s gate voltage and the state of MTJ. In conventional VMM operations, Kirchhoff’s current law is used to calculate the current sum. By contrast, the new structure measures the RC time delay and determines the neuron output based on the resistance sum of each column. The time taken to charge the capacitor at the end of each column to Vref varies depending on the resistance of each column, allowing VMM operations to be performed based on this time difference. Berdan et al. (2020) fabricated an FTJ in which a thin SiO2 layer was added to a ferroelectric layer of SiO2-doped HfOx and confirmed the VMM operation in a neuromorphic system in a passive 5 × 5 crossbar array. An FTJ array without a selector was fabricated, and analog voltage-amplitude VMM operation was demonstrated using the non-linear and very low current characteristics of the FTJ. The non-linear I-V characteristics were linearized using a word line driver, and a highly linear I-V response to the input voltage in 16 conductance states was confirmed through experiments. VMM operation was performed for 100 inputs by adjusting the state with different weight maps, and a very accurate result was obtained with an error of 0.77% between the value calculated through effective conductance and the actual VMM operation.
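
The time-based readout described for the 1T1MTJ array can be illustrated with a first-order RC model. This is a sketch under stated assumptions, not the circuit of Jung et al. (2022): it assumes the column behaves as a single series resistance charging an ideal capacitor from a supply `v_dd`, and all names and component values are hypothetical.

```python
import math


def column_resistance(cell_resistances):
    """Resistance sum of one column, assuming the cells add in series."""
    return sum(cell_resistances)


def charge_time(r_total, capacitance, v_dd, v_ref):
    """Time for an RC node charged from v_dd to rise from 0 V to v_ref."""
    if not 0 < v_ref < v_dd:
        raise ValueError("v_ref must lie strictly between 0 and v_dd")
    # v(t) = v_dd * (1 - exp(-t / RC)), solved for v(t) = v_ref.
    return r_total * capacitance * math.log(v_dd / (v_dd - v_ref))


# Illustrative (assumed) values: two columns with different MTJ states.
R_LOW, R_HIGH = 10e3, 20e3   # ohms
C_COL = 1e-12                # farads
V_DD, V_REF = 1.0, 0.5       # volts

col_a = [R_LOW, R_LOW, R_HIGH]
col_b = [R_HIGH, R_HIGH, R_HIGH]

t_a = charge_time(column_resistance(col_a), C_COL, V_DD, V_REF)
t_b = charge_time(column_resistance(col_b), C_COL, V_DD, V_REF)
print(f"column A: {t_a * 1e9:.2f} ns, column B: {t_b * 1e9:.2f} ns")
```

In this model the charging time is proportional to the column resistance sum, so comparing arrival times at Vref ranks the columns by their summed resistance.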

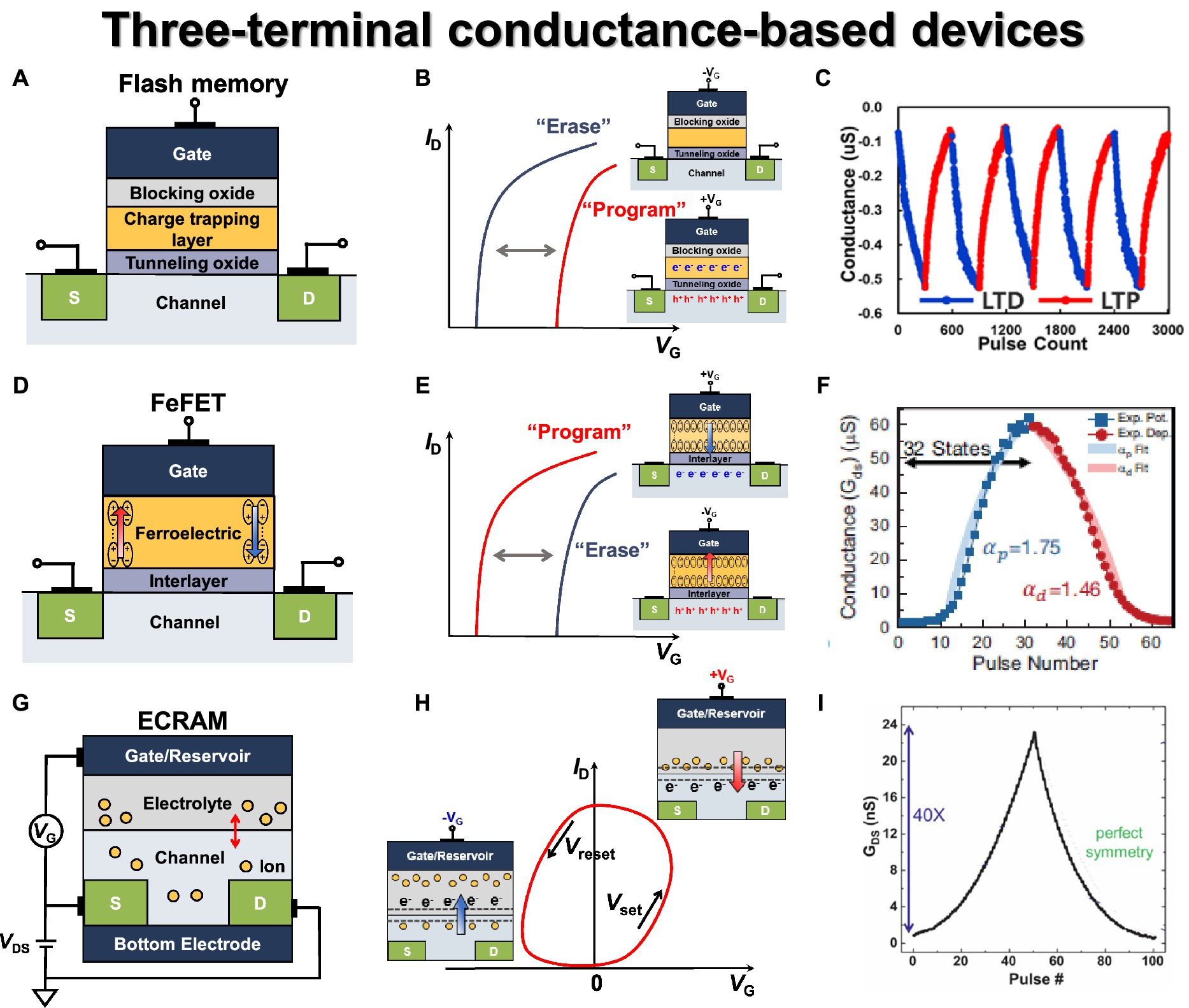

Flash memory is one of the most representative and successfully commercialized three-terminal non-volatile memory devices. It has gained popularity due to its scalability, high reliability (Mizoguchi et al., 2017), and multilevel capabilities (Wang F. et al., 2020). However, both charge-trapping layer (CTL)-based and floating gate (FG)-based devices suffer from the drawback of requiring high voltage during program and erase operations, which can lead to a decline in endurance characteristics (Lee et al., 2003). As technology scales down, researchers have investigated solutions like 3-D NAND flash or closer cell spacing to ensure better scalability and to overcome cell-to-cell interference (Jang et al., 2009; Compagnoni and Spinelli, 2019). To achieve better scaling and performance, the flash memory structure has evolved from the traditional MOSFET to a configuration involving blocking oxide/charge trapping layer/tunneling oxide, as illustrated in Figure 6A. In the past, FG was used as the charge-trapping layer, but this approach became impractical due to cell-to-cell interference as scaling continued (Lu, 2012). Thus, insulator materials with high trap density like Si3N4 and HfO2 have been adopted as CTLs (Jung et al., 2006; You and Cho, 2010). Each material offers distinct advantages and disadvantages, with HfO2 having good memory window but poor retention, while Si3N4 has excellent retention due to the absence of shallow traps.

Figure 6. Three terminal conductance-based synaptic devices. (A) Flash memory basic structure. (B) Flash memory transfer curve. (C) Conductance modulation on flash memory under incremental step pulse programming (ISPP). Reproduced with permission (Zhou et al., 2022), Copyright 2022 IEEE. (D) FeFET basic structure. (E) FeFET transfer curve. (F) Conductance modulation under ISPP. Reproduced with permission (Jerry et al., 2017), Copyright 2017 IEEE. (G) ECRAM basic structure. (H) ECRAM transfer curve. (I) Conductance modulation on ECRAM under identical pulses. Reproduced with permission (Tang et al., 2018), Copyright 2018 IEEE.

In general, flash memory devices can be integrated into NAND and NOR array structures based on the connection method, leading to a distinct program/erase method for each cell (Bez et al., 2003). NOR flash enables parallel computation for ANN applications, but it suffers from low area efficiency. On the other hand, NAND flash exhibits excellent area efficiency but lacks parallel summation capability due to its serial connection structure. As a result, to read the entire current of one bit line, the cells in the string must be read sequentially, leading to a significant disadvantage in terms of speed. Both NAND and NOR structures utilize Fowler–Nordheim (F-N) tunneling for the erase method. However, they differ in their programming methods. In NOR flash, programming relies on channel hot electron injection: a high positive voltage applied to the gate and drain causes electrons to move to the CTL in the drain pinch-off region. In NAND flash, programming relies on F-N tunneling: a strong positive voltage applied to the gate bends the energy band of the tunneling oxide, enabling electron injection into the CTL. When electrons are accumulated in the charge-trapping layer, the formation of the inversion layer is hindered. As a result, the threshold voltage is increased, and the threshold voltage is decreased in the opposite case, as shown in Figure 6B.

As a synaptic device, it offers significant advantages for analog computing, such as excellent endurance and retention characteristics, a wide memory window enabling numerous states, large on/off ratio, and good linearity. Zhou et al. (2022) demonstrated the conductance modulation of 3-D NAND differential pair with identical LTP and LTD pulses, as shown in Figure 6C. Due to the block erase operation in the NAND flash architecture, the synaptic weight was decreased or increased by simply programming one of the devices within the differential pair. However, in the NAND flash structure, cells are connected in series, and multiple cells are connected to a single WL. Due to the structural features of the NAND flash array, performing an inference operation may alter the weights of unintended cells due to the read and pass voltage. Therefore, it is essential to assess the disturbance characteristics caused by read and pass voltages. The repeated weight modulations were performed using an identical pulse (12 V, 13 μs), and excellent disturbance characteristics were confirmed for read (10⁸) and program (10⁶) for reliability characteristics as a synapse device. Lee et al. (2019b) employed a 2-D NAND flash string fabricated in the industry using 26 nm technology and confirmed the characteristics as synaptic devices. By changing the drain voltages, 30 multilevel states were secured, and the LTP and LTD characteristics were repeatedly checked using identical pulses (14 V, 100 μs). The retention (>10⁴ s) and conductance response (after 1 k endurance cycling) were checked in 30 states for reliability characteristics.
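
The program-only weight update in a differential pair can be sketched as follows. This is an illustrative model under stated assumptions, not the scheme of Zhou et al. (2022): it assumes the weight is read as G+ minus G−, that each identical program pulse lowers a cell's conductance by a fixed step (programming raises the threshold voltage, as described earlier), and that no per-cell erase is available. The class and attribute names are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class DifferentialPair:
    """Two cells whose conductance difference encodes one signed weight."""

    g_plus: float
    g_minus: float
    step: float = 1.0    # assumed conductance drop per program pulse
    g_min: float = 0.0   # assumed lowest reachable conductance

    @property
    def weight(self) -> float:
        return self.g_plus - self.g_minus

    def _program(self, g: float) -> float:
        # A program pulse can only lower the conductance, down to g_min.
        return max(self.g_min, g - self.step)

    def increase(self) -> None:
        """Raise the weight by programming the G- cell."""
        self.g_minus = self._program(self.g_minus)

    def decrease(self) -> None:
        """Lower the weight by programming the G+ cell."""
        self.g_plus = self._program(self.g_plus)


pair = DifferentialPair(g_plus=10.0, g_minus=10.0)
pair.increase()
print(pair.weight)
pair.decrease()
pair.decrease()
print(pair.weight)
```

Both update directions use only program pulses, so the weight can move up or down without erasing an individual cell; the usable range shrinks as both cells approach `g_min`, at which point a block erase would be needed.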

FeFET has a three-terminal structure with a ferroelectric layer as the gate dielectric. FeFET controls the polarization direction of the ferroelectric layer through the gate voltage, and the threshold voltage is modulated in the opposite direction compared to flash memory, as depicted in Figure 6D. When a positive voltage is applied, the threshold voltage is lowered due to polarization and positive charge on the channel side, whereas the threshold voltage increases when a negative voltage is applied, as shown in Figure 6E. Unlike a flash memory device, which necessitates high voltage for F-N tunneling during program and erase operations, FeFET takes advantage of polarization induced by low switching voltage, resulting in lower power consumption. While perovskite materials have been studied for their good endurance and high-speed operation, hafnium oxide-based ferroelectric layers have gained attention due to their good CMOS process compatibility and scalability (Böscke et al., 2011). FeFETs can be fabricated by replacing the gate dielectric layer with HfOx or HfZrOx (HZO) layers through low-temperature atomic layer deposition (ALD), following the conventional CMOS process flow (Ali et al., 2018; Mulaosmanovic et al., 2021).

In contrast to the early Si channel-based MFS structure of the FeFET device (Yoon et al., 1999), HZO-based devices acquire a SiO2 interlayer between the ferroelectric gate dielectric and the Si channel during the annealing process used to create the orthorhombic phase of the HZO layer. When an intrinsically grown SiO2 interlayer is formed, applying voltage to the gate results in most of the electric field being confined within the SiO2 layer because its dielectric constant (3.9) is much lower than that of HZO (20–40). This disparity in dielectric constants can potentially lead to charge trapping/de-trapping, interface reaction, and inter-diffusion issues (Tokumitsu et al., 2000; Anderson et al., 2018), which can contribute to poor endurance, poor retention, and a small dynamic range of FeFETs. To address the interface reaction and diffusion issues observed in the MFS structure, metal-ferroelectric-insulator-semiconductor (MFIS) structures have been explored, in which an interlayer is inserted between the ferroelectric layer and the Si layer (Mueller et al., 2013; Mulaosmanovic et al., 2017; Ni et al., 2018). The interlayer offers the advantage of preventing interdiffusion and interface reactions, thus reducing gate leakage current. In the MFIS structure, the ferroelectric layer and the dielectric interlayer form two capacitors connected in series. This configuration decreases the potential difference across the ferroelectric layer, so a higher gate voltage is needed for program and erase operations. To increase the share of the gate voltage that drops across the ferroelectric layer, the voltage across the interlayer can be reduced by employing a high-k material for the interlayer, which has motivated extensive exploration of suitable stack configurations. The metal-ferroelectric-metal-insulator-semiconductor (MFMIS) structure enhances the MFIS design by incorporating a floating gate metal layer between the ferroelectric layer and the Si substrate. The top MFM and bottom MIS components are independently designed, which effectively mitigates voltage drop issues in the MFM capacitor. This leads to a reduction in the operating voltages required for program and erase operations, addressing a pre-existing concern and significantly improving data retention characteristics.
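The series-capacitor picture above can be made concrete with a small calculation. The following is a minimal sketch that treats the ferroelectric layer and the interlayer as ideal, linear parallel-plate capacitors, which ignores polarization switching and the semiconductor capacitance. It uses the dielectric constants quoted above (3.9 for SiO2, and 30 as a midpoint of the 20–40 range for HZO); the layer thicknesses, the permittivity of the high-k interlayer, and the function name are illustrative assumptions and are not taken from the cited works.

```python
def ferroelectric_voltage_fraction(k_fe, t_fe_nm, k_il, t_il_nm):
    """Fraction of the gate voltage dropped across the ferroelectric layer.

    Models the gate stack as two ideal parallel-plate capacitors in series
    (linear dielectrics only; a deliberate simplification).
    """
    c_fe = k_fe / t_fe_nm  # capacitance per unit area, up to a common factor
    c_il = k_il / t_il_nm
    # Series capacitors carry the same charge, so V_FE / V_G = C_IL / (C_FE + C_IL).
    return c_il / (c_fe + c_il)


# Assumed geometry: 10 nm HZO (k = 30) on a 1 nm interlayer.
frac_sio2 = ferroelectric_voltage_fraction(30.0, 10.0, 3.9, 1.0)    # SiO2 interlayer
frac_highk = ferroelectric_voltage_fraction(30.0, 10.0, 20.0, 1.0)  # hypothetical high-k interlayer

print(f"SiO2 interlayer:   {frac_sio2:.2f} of V_G across the ferroelectric")
print(f"high-k interlayer: {frac_highk:.2f} of V_G across the ferroelectric")
```

Under this linear model, the ratio of the field in the interlayer to the field in the ferroelectric equals k_fe / k_il regardless of thickness, which is why a low-k SiO2 interlayer carries most of the field and why a high-k interlayer shifts the voltage back toward the ferroelectric layer.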

FeFET emerges as a strong candidate for a synaptic device given its advantages, including low operating voltage and power consumption. Additionally, it exhibits effective conductance control, a high on/off ratio, a minimal sneak path, and rapid operation speed (Mulaosmanovic et al., 2017; Jerry et al., 2018). Jerry et al. (2017) fabricated an HZO-based MFIS-structured FeFET and demonstrated multilevel characteristics with a high dynamic range (45) and 32 conductance states by using multi-domain polarization characteristics. Linearity was evaluated with three pulse schemes (identical pulses, ISPP, and pulse width modulation), and linear and symmetric weight update characteristics were obtained with ISPP pulses (75 ns, 50 mV step), as shown in Figure 6F. Dutta et al. (2020) fabricated a BEOL-compatible FeFET capable of 3-D integration with a W-doped In2O3 (IWO) channel and an HZO layer. A wide memory window of 1.2 V, a fast write speed of 100 ns, endurance (>10^8 cycles), and memory retention (>10^3 s) were confirmed through gate voltage pulses. In addition, uniform cycle-to-cycle variation was secured for the 2-bit states according to the program voltage. Kim M.-K. et al. (2021) fabricated a FeFET using an HZO ferroelectric layer and an IZTO channel. The conductance modulation characteristics were confirmed through the gate voltage, and an on/off ratio (>10) and endurance (>10^7 cycles) were secured. To obtain linear and symmetric characteristics, the ISPP method was used, securing 64 conductance states and symmetric characteristics of 0.98 and 1.1795.
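To illustrate what a finite number of states and a finite dynamic range imply for weight storage, the sketch below maps a normalized weight onto one of 32 conductance levels with G_max/G_min = 45, the figures reported above for the device of Jerry et al. (2017). Equal spacing of the levels, the absolute value of G_min, and all function names are assumptions made for illustration; measured devices show nonuniform and noisy levels.

```python
def conductance_levels(g_min, dynamic_range, n_levels):
    """Return n_levels equally spaced conductances from g_min to g_min * dynamic_range."""
    g_max = g_min * dynamic_range
    step = (g_max - g_min) / (n_levels - 1)
    return [g_min + i * step for i in range(n_levels)]


def program_weight(w, levels):
    """Map a weight in [0, 1] to the nearest available conductance level."""
    if not 0.0 <= w <= 1.0:
        raise ValueError("weight must be normalized to [0, 1]")
    g_target = levels[0] + w * (levels[-1] - levels[0])
    return min(levels, key=lambda g: abs(g - g_target))


# g_min = 1 uS is an assumed value, used only to give the levels a scale.
levels = conductance_levels(g_min=1e-6, dynamic_range=45, n_levels=32)
g = program_weight(0.4, levels)
print(f"{len(levels)} levels, programmed conductance = {g:.3e} S")
```

The quantization error of this mapping is at most half a level spacing, so more states or a wider dynamic range both reduce the error relative to the weight range.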

Electrochemical RAM (ECRAM) is a non-volatile, three-terminal device operating with electrochemical switching behaviors. It consists of a gate, ionic reservoir, source/drain, electrolyte, and conductive channel, as depicted in Figure 6G (Nitta et al., 2015; Van De Burgt et al., 2017; Jeong et al., 2021; Kang and Woo, 2021; Talin et al., 2022). It is also known as an ion-based synaptic transistor. In ECRAM, the conventional gate dielectric of a three-terminal transistor is replaced with an electrolyte layer. Depending on the applied gate voltage, ions from the electrolyte diffuse into the channel, inducing an electrochemical change that controls the conductance of the channel, as shown in Figure 6H. In general, the electrolyte ions include Li+, H+, O2−, etc., and lithium-ion-based synaptic transistors have been extensively studied (Fuller et al., 2017; Yang et al., 2018). By allowing ions to leave or enter the channel, ECRAM can modulate the conductivity of the channel, enabling it to function as an analog synaptic device. The conductivity of the channel is monitored by applying a fixed source-drain voltage during read and write operations. ECRAM exhibits relatively symmetric switching characteristics and low stochasticity because the electrochemical reactions are controlled by the amount of charge generated in response to the applied gate voltage.

Tang et al. (2018) demonstrated an ECRAM device based on a WO3 channel with a LiPON electrolyte and reported its operational characteristics. By applying positive and negative gate current pulses, the conductance of the WO3 channel was modulated through the intercalation of lithium ions, achieving potentiation and depression responses, as shown in Figure 6I. The measured conductance response exhibited excellent symmetry, a large dynamic range (up to 10^3), and minimal stochasticity, allowing for precise conductance state representation. Furthermore, the ECRAM device showed no degradation in endurance tests of up to 10^5 pulses. Additionally, the ECRAM device showed high-speed programming capability (down to 5 ns) and scalability potential, indicating its promising prospects as a synaptic device. Kim et al. (2019) demonstrated a metal oxide ECRAM array based on a WO3 channel, HfO2 electrolyte, and metal oxide reservoir stack, and both array operations and weight update characteristics based on the stochastic update algorithm were experimentally presented. The fabricated devices exhibited reliable synaptic characteristics, including retention of over 14 h after programming, endurance of 20 million pulses, and an on/off ratio of 2. The metal oxide ECRAM-based 2 × 2 array was configured with the drain terminals of each device connected in one row and the source terminals connected in one column, and the half-V scheme was employed for weight updates. The array was trained on a linear regression problem, and the learning algorithm tuned the weights toward the target values. As the training progressed, the weight values converged to the target values, and the experimentally measured error approached zero over the epochs.
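The linear regression experiment described above can be mimicked in software to show why the error decays toward zero as the weights approach their targets. The sketch below is a software-only illustration under strong assumptions: it uses plain per-sample gradient descent with ideal, linear, symmetric updates in place of the stochastic update algorithm and the half-V scheme, and the target weights, learning rate, and sample count are hypothetical values rather than values from Kim et al. (2019).

```python
import random


def train_2x2(target, epochs=200, samples_per_epoch=8, lr=0.1, seed=0):
    """Fit a 2x2 weight array to y = target . x with outer-product updates."""
    rng = random.Random(seed)
    w = [[0.0, 0.0], [0.0, 0.0]]
    errors = []
    for _ in range(epochs):
        sq_err = 0.0
        for _ in range(samples_per_epoch):
            x = [rng.uniform(-1.0, 1.0), rng.uniform(-1.0, 1.0)]
            y_ref = [sum(target[i][j] * x[j] for j in range(2)) for i in range(2)]
            y = [sum(w[i][j] * x[j] for j in range(2)) for i in range(2)]
            delta = [y_ref[i] - y[i] for i in range(2)]
            # Outer-product rule: cell (i, j) changes by lr * delta_i * x_j,
            # the update that row/column-addressed schemes aim to realize in parallel.
            for i in range(2):
                for j in range(2):
                    w[i][j] += lr * delta[i] * x[j]
            sq_err += sum(d * d for d in delta)
        errors.append(sq_err / samples_per_epoch)
    return w, errors


target = [[0.5, 0.3], [0.2, 0.8]]  # hypothetical target weights
w, errors = train_2x2(target)
print("first-epoch error:", errors[0])
print("last-epoch error: ", errors[-1])
```

In hardware, each update would be limited by the device's step size, asymmetry, and noise, so the residual error would settle at a nonzero floor rather than at numerical precision.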

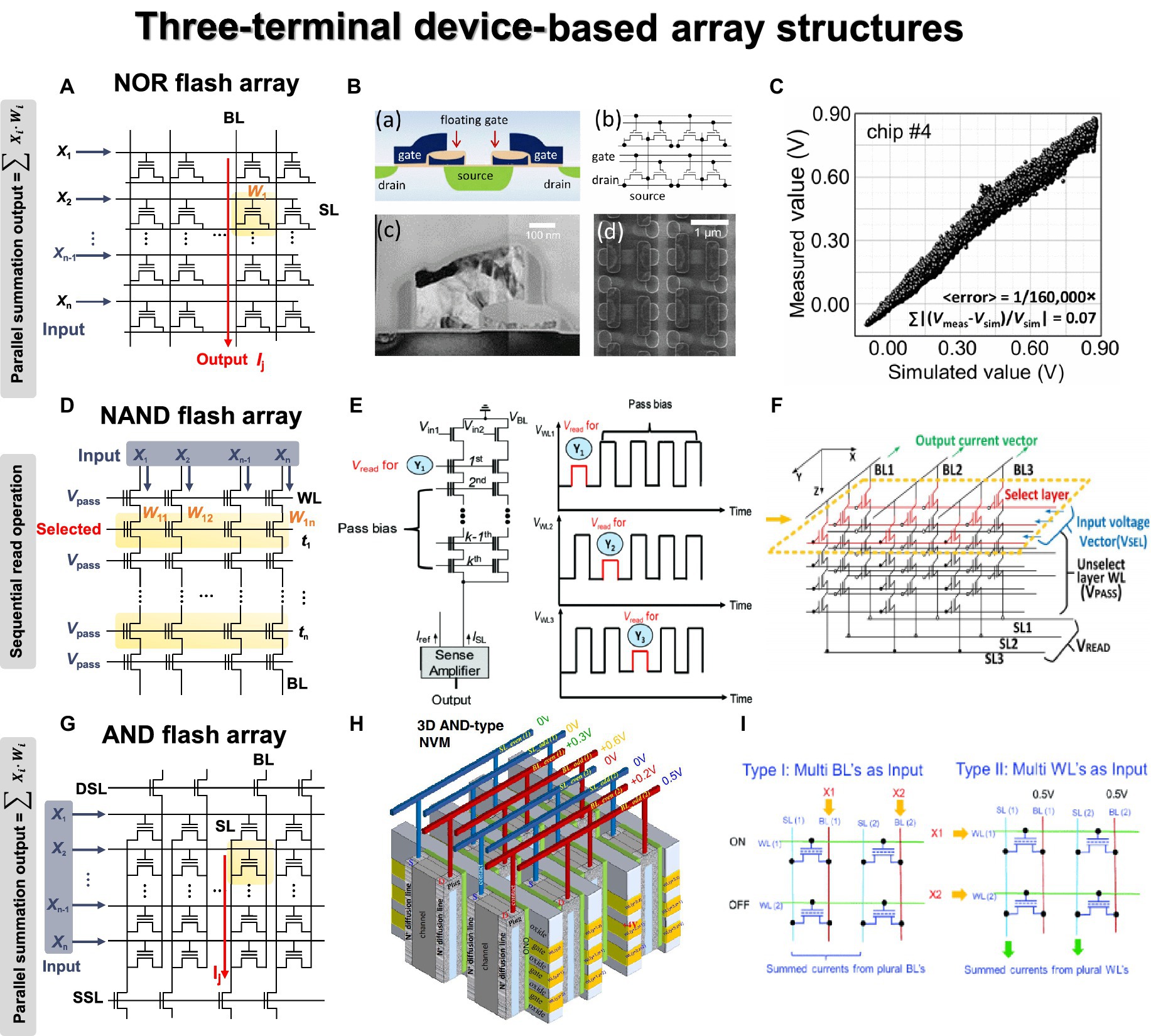

Most three-terminal devices are integrated into flash memory arrays, and representative structures are NOR, NAND, and AND flash arrays. Additionally, some studies have demonstrated the use of 3-D stacked arrays, building on commercialized 3-D NAND flash memory. Figure 7A illustrates the structure of a 2-D NOR flash array and the VMM operation mechanism. Similar to the memristor CBA, the cells are connected in parallel, enabling simultaneous VMM operations. When input signals are applied to the WLs, the drain currents under a constant bias can be summed along the BL or source line (SL) direction. The input signals can also be applied to the BLs, in which case the currents are summed along the SLs. Guo et al. (2017) employed embedded NOR flash memory technology for neuromorphic classifiers, as shown in Figure 7B. For analog computing, the synaptic devices in the array exhibited near analog-grade weight levels achieved by a weight-tuning process that includes a verification step. Accurate VMM results were experimentally demonstrated with 10,000 MNIST test images, as depicted in Figure 7C. Reliable classification operation was also verified by comparing the output voltages originally measured for all 10,000 MNIST test images with those measured 7 months later.
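The parallel current summation described above amounts to a multiply-accumulate per output line. The following is a minimal sketch that assumes each cell behaves as an ideal linear conductance and that inputs are encoded as voltages; real flash cells are nonlinear transistors, and the function name and numerical values are illustrative assumptions.

```python
def vmm_currents(voltages, conductances):
    """Output-line currents of a parallel (NOR-type) array.

    voltages[i]        : input voltage on input line i (V)
    conductances[i][j] : conductance of the cell joining input i to output j (S)
    Returns I[j] = sum_i voltages[i] * conductances[i][j]:
    Ohm's law per cell, Kirchhoff's current law per output line.
    """
    n_rows, n_cols = len(conductances), len(conductances[0])
    if len(voltages) != n_rows:
        raise ValueError("one input voltage per input line is required")
    return [
        sum(voltages[i] * conductances[i][j] for i in range(n_rows))
        for j in range(n_cols)
    ]


# Hypothetical 2 x 2 example: inputs in volts, weights in siemens.
v_in = [0.1, 0.2]
g = [[1e-6, 2e-6],
     [3e-6, 4e-6]]
currents = vmm_currents(v_in, g)
print(currents)
```

All output currents are available in a single read step, which is the property that distinguishes the NOR-type array from a series-connected string.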

Figure 7. Three-terminal device array structures. The yellow panel indicates the weight matrix implemented by the conductance of three-terminal devices, and the red lines indicate the bitlines where output currents are obtained through Ohm’s law and Kirchhoff’s current law. (A) NOR flash array. (B) Analog VMM circuit schematic using floating gate NOR flash structure. (C) Simulated and measured VMM results for 10,000 inputs. (B,C) Reproduced with permission (Guo et al., 2017), Copyright 2017 IEEE. (D) NAND flash array. (E) Circuit diagram and bias scheme of 3-D NAND array architecture for VMM operation with sequential read method. Reproduced with permission (Lee et al., 2019a), Copyright 2019 IEEE. (F) The circuit diagram of VMM operations in 3-D NAND array structure. For the selected layer, the selected input voltage vector is applied to WL, and a pass voltage is applied to WL of the remaining layers. Vread is applied to SL, and GND is applied to BL to read the BL current. Reproduced with permission (Wang P. et al., 2018), Copyright 2018 IEEE. (G) AND flash array. (H) Schematic diagram of 3-D AND-type NVM. (I) Two VMM methods proposed in the 3-D AND flash array structure. Method 1: Insert analog input into BL to read current from SL. Method 2: Apply binary input to WL to enable high-density, fully connected operation. (H,I) Reproduced with permission (Lue et al., 2018), Copyright 2018 IEEE.

In contrast to the NOR flash array, the NAND flash structure connects cells in series to form a string, as shown in Figure 7D. This series connection prevents parallel current summation within a string, allowing only one cell to be read at a time. To read the state of one cell, a pass voltage must be applied to all unselected cells in the same string so that they act as conducting paths. During program or erase operations, a high voltage is applied to all cells on the same page due to the shared WL structure, and an inhibit voltage is necessary to prevent disturbance of unselected cells. Because of this structure, simultaneous VMM operation is challenging in a NAND flash array. The input signals can be applied to either the WL or the BL, while the other one is biased. In both scenarios, however, each cell needs to be read sequentially due to the serial connection of the NAND flash string. Lee et al. (2019a) presented a method for implementing the XNOR operation using a 2-D NAND string within the group. Figure 7E shows the process of performing the VMM operation on a 2-D NAND string, along with the distribution of string current output based on the XNOR operation results. When two cells are utilized as a pair, the output is +1 when the left cell is in the on state. The input signals were applied to the input transistors of the string cells, and the VMM operation was executed while sequentially reading the WLs. The distribution of outputs +1 and −1 confirmed the proper execution of the XNOR operation based on the state of each cell.
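The paired-cell XNOR readout and the sequential accumulation over WLs can be sketched as follows. This is one plausible reading of the scheme, not the circuit of Lee et al. (2019a): the complementary encoding of each weight, the rule that the input selects the left or right cell, and all function names are assumptions made for illustration.

```python
def encode_weight(w):
    """Store a binary weight as a complementary cell pair (left_on, right_on)."""
    if w not in (+1, -1):
        raise ValueError("weights must be +1 or -1")
    return (True, False) if w == +1 else (False, True)


def read_pair(x, pair):
    """Read one cell pair for a binary input x in {+1, -1}.

    Assumed convention: input +1 enables the left cell, input -1 the right one.
    Current flows only if the enabled cell is in the on state, so the result is
    +1 when input and weight agree (XNOR) and -1 otherwise.
    """
    left_on, right_on = pair
    conducts = left_on if x == +1 else right_on
    return +1 if conducts else -1


def sequential_dot(inputs, weights):
    """Accumulate XNOR results one word line at a time, as a series string requires."""
    pairs = [encode_weight(w) for w in weights]
    total = 0
    for x, pair in zip(inputs, pairs):  # one WL read per loop iteration
        total += read_pair(x, pair)
    return total


x_vec = [+1, -1, +1, -1]
w_vec = [+1, +1, -1, -1]
result = sequential_dot(x_vec, w_vec)
print(result)
```

The loop makes the cost of the series connection explicit: the number of read steps grows with the number of WLs in the string, whereas a parallel array produces the same sum in one step.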

This issue can be addressed with a 3-D NAND flash structure, where the strings are connected in parallel through the BL. This structural feature allows the string currents to be summed along the BL direction, enabling simultaneous VMM operations (Wang P. et al., 2020). The VMM method varies slightly depending on which planes of the 3-D structure are used for weight mapping. In addition, the technologically mature 3-D NAND flash structure offers significant advantages for implementing large-scale DNNs with numerous synaptic weights. Wang P. et al. (2018) demonstrated a method for VMM operation based on 3-D NAND flash architecture, as shown in Figure 7F. The weight matrix was mapped on one WL layer (XY plane), so the selection voltage was needed for the selected WL, while a pass voltage was applied to the unselected WLs. The input signals were applied to the SL in the form of a read voltage so that the string currents could be summed along the BL. The result of the VMM calculation performed in the 256 × 256 × 8 structure was demonstrated with SPICE simulation, showing a linear increase in BL current as the number of cells increased. Kim I.-J. et al. (2023) developed a 3-D NAND structure using FeFETs and conducted VMM operations with 4 × 2 images. The fabricated 3-D Fe-NAND structure demonstrated various electrical characteristics, and array operations were successfully verified. Like traditional NAND flash, a pass voltage of 2 V was applied to unread cells, while a −5 V pulse was used for erasing, and a program pulse of 4 V was applied to the selected cell with 0 V. A voltage of 2.5 V was applied to the BL of the unselected string. Through repetitive device measurements, program, erase, and inhibit characteristics were confirmed to work effectively. The device also exhibited reliable retention (10^6 s) and endurance (10^6 cycles) in each state, proving its usability as a synaptic device. As a synapse array, the input signal was applied to the BL in the 3-D NAND structure, and the string currents were summed along the SL as a result of the multiplication between the BL voltage and the cell conductance.

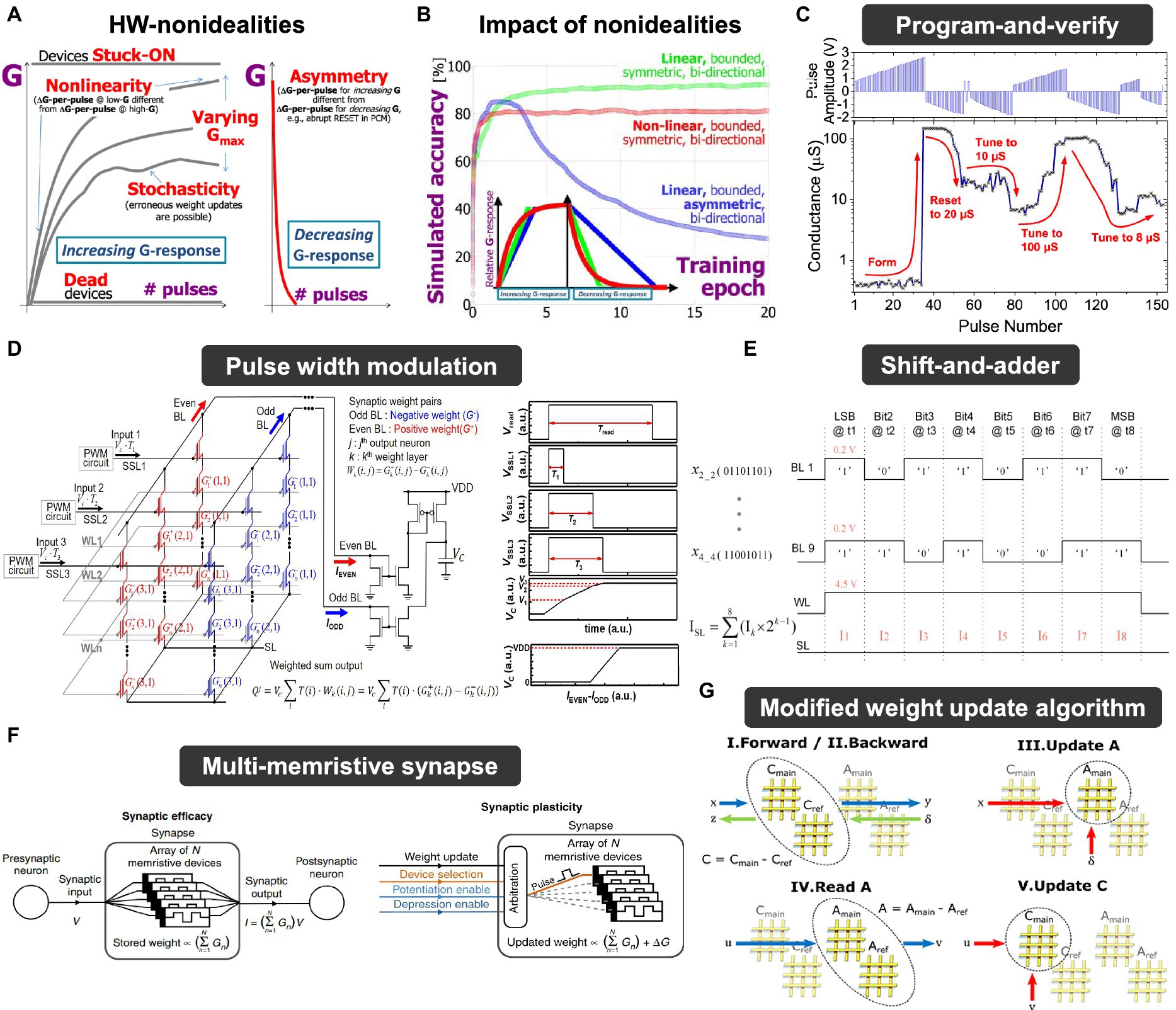

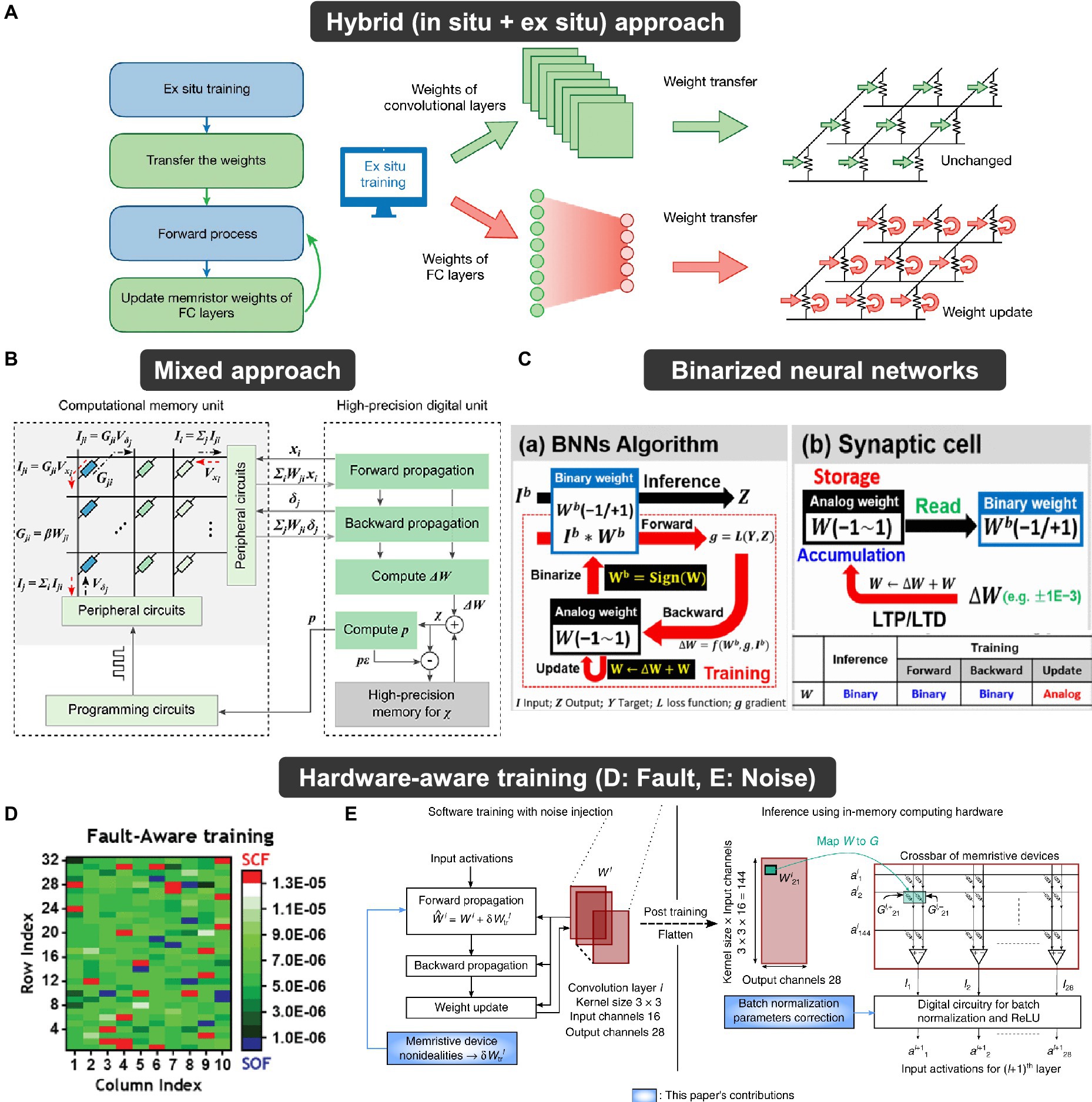

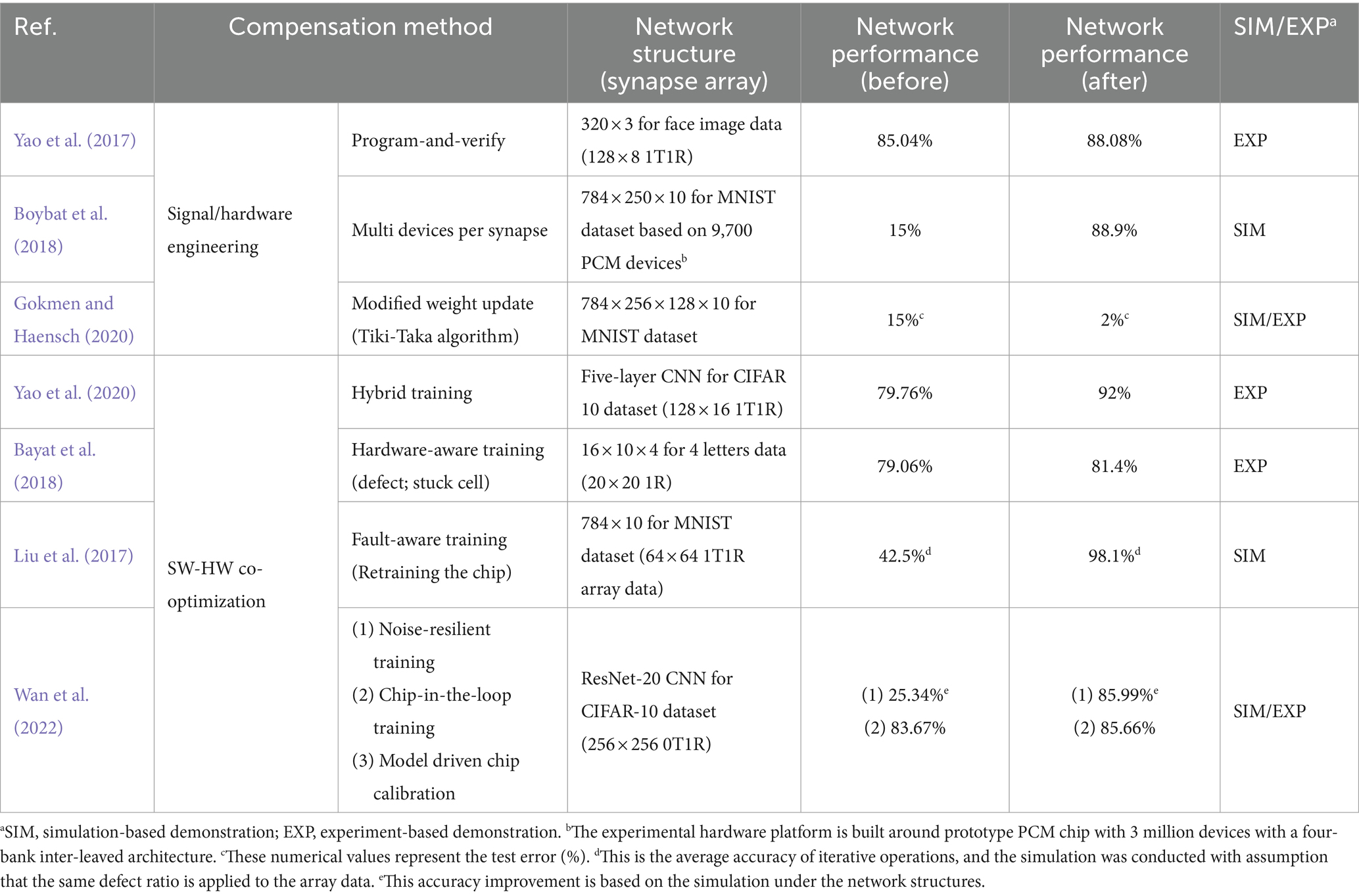

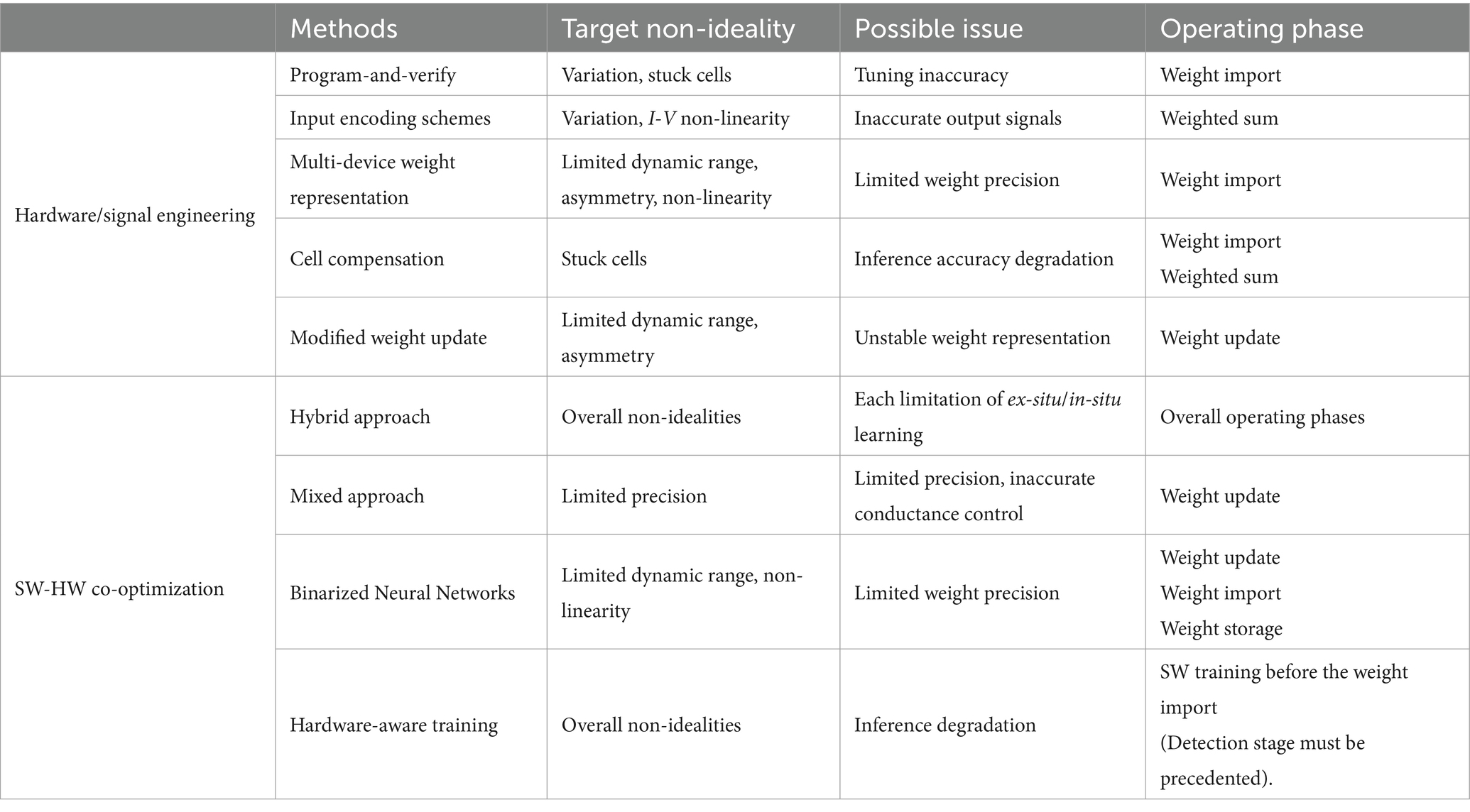

The AND flash array is another potential candidate for a synapse array of three-terminal devices, as shown in Figure 7G (Jang et al., 2020; Lue et al., 2020; Seo et al., 2021). Its VMM operation is very similar to that of the NOR flash array. The input signals can be applied to either the WL or the BL, and the cell currents can be summed along the SL. Since the cells are connected in parallel along the BL and SL directions, parallel current summation and VMM operations can be conducted. Due to the parallel BLs and SLs, sneak paths can be prevented during programming, and 3-D integration is possible in a structure similar to that of the 3-D NAND flash architecture, with common drain and source plugs. Lue et al. (2018) presented a 3-D AND-type array for in-memory VMM computations, as shown in Figure 7H. The currents were summed along the SL as a result of the multiplication between the BL voltage and the device conductance. In addition, two VMM methods were proposed for the 3-D AND flash array depending on whether the BL or the WL is used for the input signal, as shown in Figure 7I. The former method enabled high-resolution operation because an analog input was applied to the BL and the current was read from the SL. The latter method enabled high-density and fully connected operation by applying a binary input to the WL.