- ¹School of Information Science and Electrical Engineering, Shandong Jiaotong University, Jinan, China

- ²School of Science, Shandong Jianzhu University, Jinan, China

Introduction: Although the current method of monitoring sleep disorders can provide scientific guidance for safeguarding sleep quality worldwide, it is complex, time-consuming, and uncomfortable. This study aims to find a comfortable and convenient method for identifying sleep apnea syndrome.

Methods: In this work, a one-dimensional convolutional neural network model was established to classify this condition. The model was trained with the photoplethysmographic (PPG) signals of 20 healthy people and 39 sleep apnea syndrome (SAS) patients, and the influence of noise on the model was tested by anti-interference experiments.

Results and Discussion: The results showed that the accuracy of the model for SAS classification exceeds 90% and that the model has some anti-interference ability. This paper provides a PPG-based SAS detection method, which is helpful for portable wearable detection.

1. Introduction

According to the World Health Organization, more than one-third of the world's population suffers from sleep disorders, which seriously affect health. SAS is a common sleep disorder, and the recognized standard for its diagnosis is polysomnography. However, this method requires multiple sensors, which causes discomfort during recording, can disturb the patient's natural sleep, and is costly (Phan and Mikkelsen, 2022). Finding a simple and comfortable diagnostic method for SAS is therefore an urgent problem. To make diagnosis more comfortable, non-contact approaches based on thermal infrared imaging, radio frequency (RF) architectures, and sound detection have been introduced (Murthy et al., 2009; Norman et al., 2014; Penzel, 2017; Tran et al., 2019); however, body position, limb movement, and noise can easily interfere with their monitoring results. In recent years, some researchers have focused on SAS detection with wearable devices that collect chest bioimpedance, electrocardiogram (ECG), or PPG signals, and have applied machine learning or deep learning to these signals, with accuracies generally around 70–85% (Baty et al., 2020; Hsu et al., 2020; Papini et al., 2020; van Steenkiste et al., 2020).

Many researchers have studied convenient SAS detection based on neural networks, and convolutional neural networks have gradually been applied to sleep analysis. Song and other researchers built classifiers from single-channel electrocardiogram or electroencephalogram signals, mostly for sleep staging (Song et al., 2016; Sors et al., 2018; Wang et al., 2019; Eldele et al., 2021; Haghayegh et al., 2023). Guo et al. (2022) proposed a pseudo-3D convolutional neural network to detect nocturnal sleep behavior, with an accuracy of 90.67% on the test set. du-Yan et al. (2022) used convolutional neural networks to classify sleep stages from heart rate variability. Casal et al. (2022) built a temporal convolutional network and a transformer that classify sleep stages from pulse oximeter signals. Most of these methods extract classification features from ECG signals. However, ECG signals are easily affected by the low-frequency, large-amplitude P and T waves, and most of these studies address sleep staging rather than SAS detection.

Pulse signals carry rich physiological information and are easy to acquire, so monitoring them is valuable for assessing the risk of various diseases (Allen and Hedley, 2019). Pulse wave amplitude and pulse rate variability have been used for SAS diagnosis and detection (Haba-Rubio et al., 2005; Liu, 2017). However, when the signal is disturbed or weak, these methods have great difficulty extracting local waveform features. Gaussian and lognormal fitting functions, which use the global information of the signal, have been used to extract pulse-wave features for SAS research (Jiang et al., 2021), but this approach requires normalizing the data, which lengthens processing. Shen et al. (2022) built a convolutional network that detects sleep apnea syndrome from PPG signals collected with a wearable smart bracelet, but its segment-level detection accuracy is only about 80%.

In this study, a one-dimensional convolutional neural network (1D-CNN) was established by using PPG signals for the recognition of SAS, with a classification accuracy of over 90%. The results indicate that the convolutional model based on PPG has satisfactory recognition performance for SAS. This means that SAS can be identified using PPG signals by a one-dimensional convolutional model, which can make the detection process of SAS convenient and comfortable.

2. Methods

2.1. Subjects and data

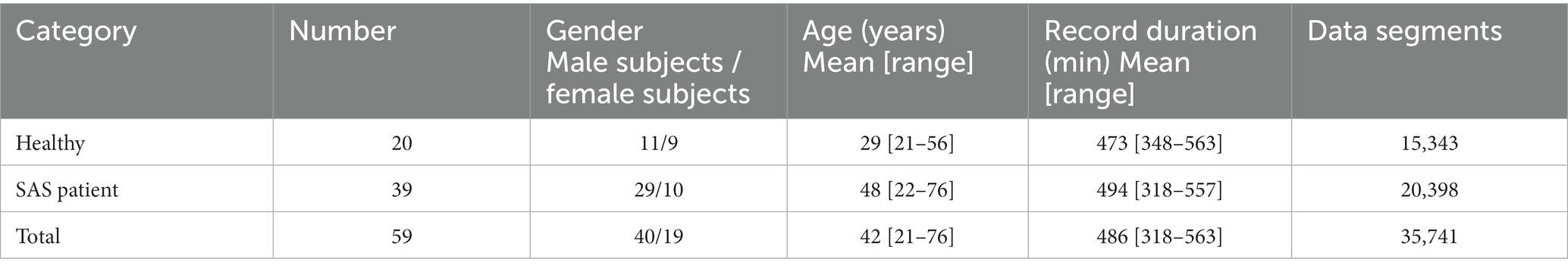

In this study, signals were collected from 59 subjects: 20 healthy people and 39 SAS patients. The data analyzed were finger PPG signals recorded by the Alice 5 polysomnography system at the Sleep Center of Shandong Provincial Hospital. The study was approved by the committee of our research institute as a retrospective study, with the subjects' informed consent. Each subject's PPG signal (sampling frequency 100 Hz) was divided into segments of 1,500 points (15 s). Table 1 shows the clinical information of the 59 subjects and a summary of the PPG datasets.
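The segmentation step can be sketched as follows. This is a minimal illustration rather than the authors' code: it assumes non-overlapping segments and discards any remainder shorter than 1,500 points, neither of which the paper states. The function name `segment_signal` is hypothetical.

```python
# Minimal sketch: split a PPG recording into fixed-length segments.
# Assumptions (not stated in the paper): segments do not overlap, and a
# trailing remainder shorter than one segment is discarded.

FS_HZ = 100      # sampling frequency reported in the paper
SEG_LEN = 1500   # points per segment reported in the paper


def segment_signal(signal, seg_len=SEG_LEN):
    """Return non-overlapping segments of exactly seg_len samples each."""
    n_full = len(signal) // seg_len
    return [signal[i * seg_len:(i + 1) * seg_len] for i in range(n_full)]


if __name__ == "__main__":
    # Hypothetical 1-minute recording: 60 s * 100 Hz = 6,000 samples.
    recording = [0.0] * (60 * FS_HZ)
    segments = segment_signal(recording)
    print(len(segments), "segments of", SEG_LEN / FS_HZ, "s each")
```

With a 6,000-sample recording this yields four 15-s segments.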

As shown in Table 1, 35,741 data segments were used in this study; they were randomly divided into training, validation, and test sets at a ratio of 6:2:2. To reduce the influence of chance on the experimental results, five-fold cross-validation was used for training.
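A random 6:2:2 split at the segment level might look like the sketch below. The function name `split_indices` and the fixed seed are hypothetical choices for illustration, and repeating the split with different seeds is only one possible reading of the five cross-validation runs. Because segments are shuffled individually, segments from one subject may fall into more than one set; grouping segments by subject before splitting would be a stricter alternative.

```python
import random


def split_indices(n_items, ratios=(0.6, 0.2, 0.2), seed=0):
    """Shuffle indices 0..n_items-1 and cut them into train/val/test lists.

    Rounding down is applied to the training and validation sizes, so any
    leftover items go to the test set.
    """
    if abs(sum(ratios) - 1.0) > 1e-9:
        raise ValueError("ratios must sum to 1")
    idx = list(range(n_items))
    random.Random(seed).shuffle(idx)      # reproducible shuffle
    n_train = int(n_items * ratios[0])
    n_val = int(n_items * ratios[1])
    train = idx[:n_train]
    val = idx[n_train:n_train + n_val]
    test = idx[n_train + n_val:]
    return train, val, test


if __name__ == "__main__":
    train, val, test = split_indices(35741)   # segment count from Table 1
    print(len(train), len(val), len(test))
```

For 35,741 segments this gives 21,444 training, 7,148 validation, and 7,149 test segments.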

2.2. One-dimensional convolutional neural network

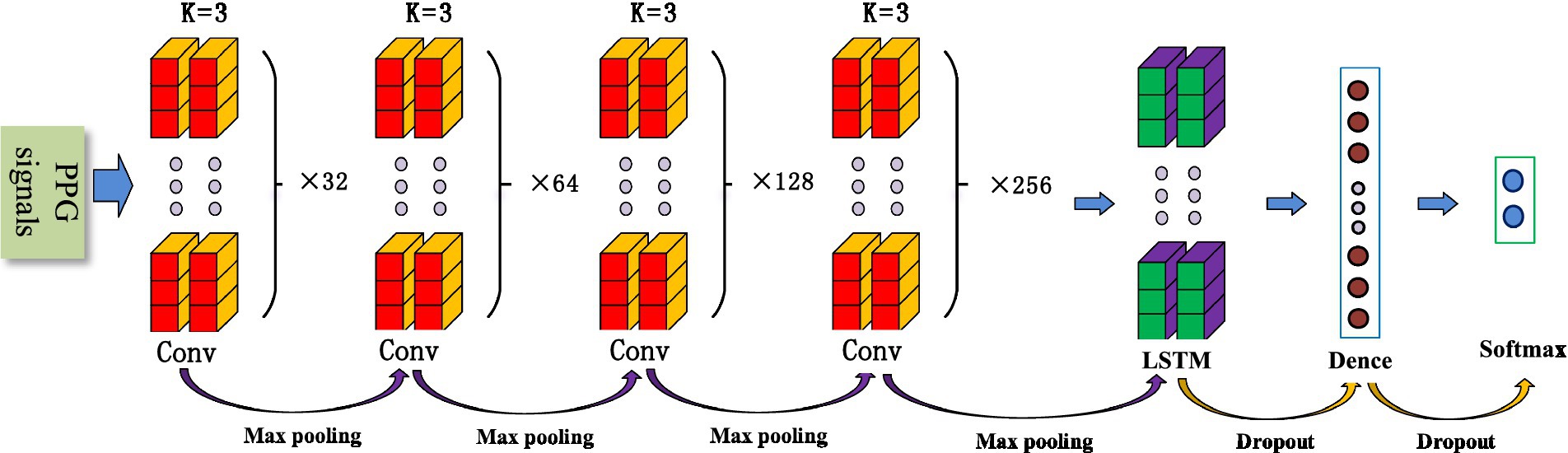

The convolutional neural network (CNN) is a common deep learning model whose convolutional kernels can extract intrinsic features from different dimensions. Through local connections and weight sharing, a CNN can merge local features from different receptive fields, which improves learning efficiency and accuracy; the same properties reduce the number of weights, facilitate network optimization, and lower model complexity and the risk of overfitting. Because pulse wave data are one-dimensional time series, this study proposes a 1D-CNN model for SAS classification and detection that includes eight convolutional layers, four max pooling layers, two LSTM layers, and two fully connected layers. Figure 1 shows the structure of the 1D-CNN model.
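To see how a 1,500-point segment shrinks through the feature extractor, the sketch below traces tensor shapes layer by layer. The input length, the four groups of two convolutions, the kernel size of 3, and the 32/64/128/256 filters come from the paper; the padding of 1 and the max pooling window and stride of 2 are assumptions, since the paper does not report them.

```python
# Hypothetical shape walk-through of the 1D-CNN feature extractor.
# From the paper: 1,500-point input, 4 groups of 2 conv layers,
# kernel size 3, and 32/64/128/256 filters per group.
# Assumed (not stated in the paper): stride 1 and padding 1 for every
# convolution, and max pooling with window 2 and stride 2 after each group.

IN_LEN = 1500
GROUP_FILTERS = [32, 64, 128, 256]


def conv1d_len(length, kernel=3, padding=1, stride=1):
    """Output length of a 1D convolution."""
    return (length + 2 * padding - kernel) // stride + 1


def maxpool_len(length, window=2, stride=2):
    """Output length of 1D max pooling without padding."""
    return (length - window) // stride + 1


def trace_shapes(in_len=IN_LEN):
    """Return (channels, time steps) for the input and after each conv group."""
    shapes = [(1, in_len)]
    length = in_len
    for filters in GROUP_FILTERS:
        for _ in range(2):              # two convolutional layers per group
            length = conv1d_len(length)
        length = maxpool_len(length)    # one max pooling layer per group
        shapes.append((filters, length))
    return shapes


if __name__ == "__main__":
    for channels, steps in trace_shapes():
        print(f"{channels:>4} channels x {steps:>4} time steps")
```

Under these assumptions the final feature map is 256 channels by 93 time steps; one plausible arrangement would pass it to the LSTM layers as 93 steps of 256 features each.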

2.2.1. Convolutional layers

Convolutional layers are mainly used for feature extraction and learn features automatically. Different convolutional kernels extract different local features, so using more kernels increases the number of features learned. Deeper convolutional networks typically have stronger feature extraction capabilities and yield better results. However, more convolutional kernels significantly raise the computational cost and make the network harder to train, and greater depth makes vanishing gradients and overfitting more likely. To avoid these problems while obtaining accurate results, this paper designs a scheme in which the number of kernels increases progressively layer by layer. As shown in Figure 1, the model consists of eight convolutional layers divided into four groups of two. A pooling layer and the ELU activation function are added between the convolutional layer groups. Each convolutional kernel has size k = 3, and the numbers of kernels (filters) in the four groups are 32, 64, 128, and 256, respectively.

2.2.2. Pooling layers

Pooling reduces the dimensionality of feature maps and the number of parameters in the network. The pooling layers gradually shrink the feature maps produced by the network and improve learning efficiency. In this study, four max pooling layers were designed. Max pooling reduces dimensionality rapidly while retaining the most salient features and providing a degree of translation invariance.
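Max pooling itself is simple to state in code. The sketch below assumes a window and stride of 2, which the paper does not specify; it shows both the halving of the feature length and the tolerance to a small shift within a window.

```python
def max_pool1d(x, window=2, stride=2):
    """1D max pooling: keep the largest value in each window.

    Trailing samples that do not fill a complete window are dropped,
    matching pooling without padding.
    """
    return [max(x[i:i + window]) for i in range(0, len(x) - window + 1, stride)]


# Toy feature map: pooling halves its length.
print(max_pool1d([0.1, 0.9, 0.4, 0.3, 0.7, 0.2]))   # -> [0.9, 0.4, 0.7]

# A one-sample shift inside a window leaves the pooled output unchanged.
print(max_pool1d([0.0, 1.0, 0.0, 0.0]), max_pool1d([1.0, 0.0, 0.0, 0.0]))
```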

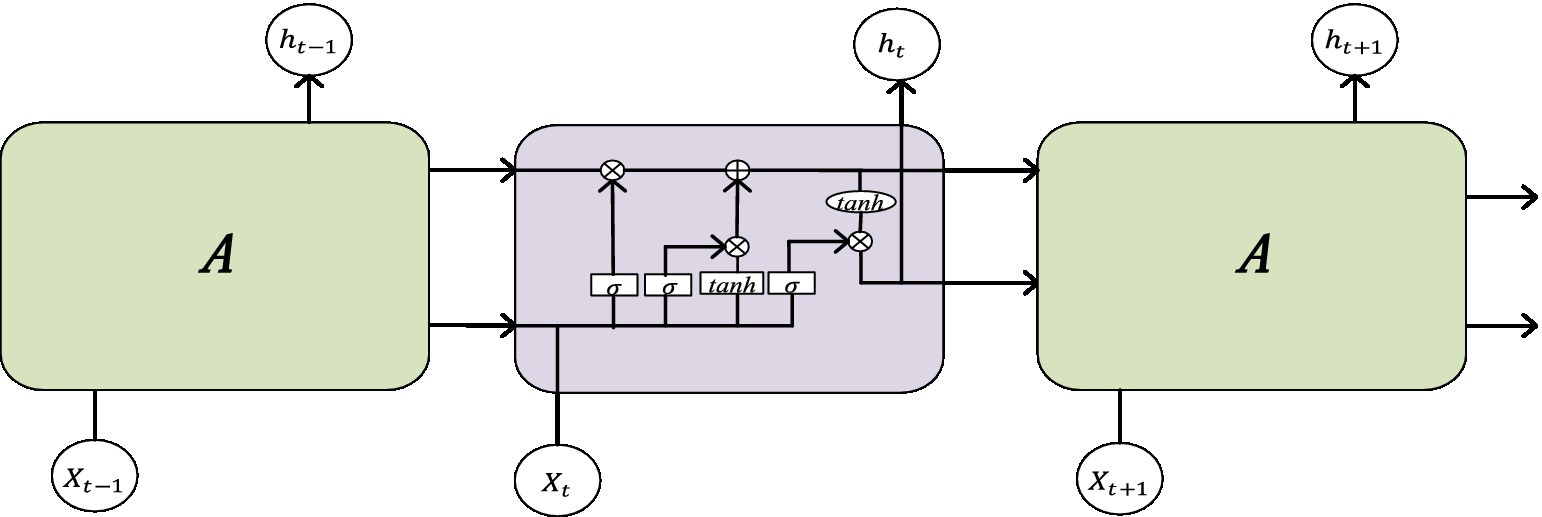

2.2.3. LSTM

The long short-term memory (LSTM) network is an improved recurrent neural network (RNN). Traditional RNNs are prone to vanishing and exploding gradients during training, which makes them poorly suited to learning long-term temporal dependencies. LSTM networks address this problem with gate structures, which allows deeper networks to be built. The LSTM structure is shown in Figure 2. The input gate controls the flow of new information and updates the cell state. The output gate controls the information that flows out and determines the next hidden state. The forget gate decides whether information in the previous cell state is discarded or retained. The sigmoid function maps values to the range between 0 and 1 and thus filters the information to be updated, while the previous hidden state and the current input are passed together to the tanh function to produce a new candidate value. Finally, the outputs of these two functions are multiplied.
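The gate interplay described above can be made concrete with one time step of a standard LSTM cell. This sketch uses a hidden size of 1 so every quantity is a plain number; the weights are hypothetical, and the paper does not report its LSTM hidden size or initialization.

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def lstm_step(x, h_prev, c_prev, params):
    """One time step of a standard LSTM cell with hidden size 1.

    params maps each gate name to a (w_x, w_h, b) triple: input weight,
    recurrent weight, and bias. All values used below are hypothetical.
    """
    def pre(gate):
        w_x, w_h, b = params[gate]
        return w_x * x + w_h * h_prev + b

    f = sigmoid(pre("forget"))        # fraction of the old cell state to keep
    i = sigmoid(pre("input"))         # fraction of the candidate to write
    g = math.tanh(pre("candidate"))   # candidate value in (-1, 1)
    o = sigmoid(pre("output"))        # fraction of the cell state to expose
    c = f * c_prev + i * g            # updated cell state
    h = o * math.tanh(c)              # new hidden state
    return h, c


params = {name: (0.5, 0.5, 0.0) for name in ("forget", "input", "candidate", "output")}
h, c = 0.0, 0.0
for x_t in [1.0, 0.5, -0.5]:          # a toy input sequence
    h, c = lstm_step(x_t, h, c, params)
print(h, c)
```

The sigmoid gates act as soft switches between 0 and 1, and the tanh candidate is multiplied by the input gate before being added to the retained cell state.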

The pulse wave is a temporal signal, so this study uses two LSTM layers to extract temporal features from the data.

2.2.4. Cross-entropy loss function

The loss function guides the optimization direction of the neural network parameters: the parameters of the model are updated by backpropagating the loss. The cross-entropy loss uses the logistic (sigmoid) function to obtain probabilities and, through competition between classes, effectively learns inter-class information. Because the problem addressed in this paper is binary classification, the binary cross-entropy loss is used, defined in Formula 1 as:

$$L = -\frac{1}{N}\sum_{i=1}^{N}\left[y_i \log p_i + (1 - y_i)\log(1 - p_i)\right] \quad (1)$$

where N is the number of samples, y_i ∈ {0, 1} is the true label of sample i, and p_i is the predicted probability that sample i belongs to the positive class.
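The standard binary cross-entropy, L = -(1/N) Σ [y log p + (1 - y) log(1 - p)], can be computed directly as in the sketch below. Treating label 1 as SAS and the clipping constant `eps` are illustrative assumptions; deep learning frameworks provide their own numerically stable versions.

```python
import math


def binary_cross_entropy(y_true, p_pred, eps=1e-12):
    """Mean binary cross-entropy.

    y_true holds labels in {0, 1} (here 1 is assumed to mean SAS) and
    p_pred holds predicted probabilities of label 1.
    """
    if not y_true or len(y_true) != len(p_pred):
        raise ValueError("inputs must be non-empty and of equal length")
    total = 0.0
    for y, p in zip(y_true, p_pred):
        p = min(max(p, eps), 1.0 - eps)   # clip to avoid log(0)
        total += y * math.log(p) + (1 - y) * math.log(1.0 - p)
    return -total / len(y_true)


print(binary_cross_entropy([1, 0], [0.5, 0.5]))   # uninformative guesses: log 2
print(binary_cross_entropy([1, 0], [0.9, 0.1]))   # confident, correct: much lower
```

Confident wrong predictions are penalized heavily because log p grows without bound as p approaches 0.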

3. Results

3.1. Evaluation indicators

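To validate the model, four indicators were used to evaluate its classification performance: accuracy (ACC), precision (PRE), sensitivity (SE), and specificity (SP). They are calculated as follows:

$$ACC = \frac{TP + TN}{TP + TN + FP + FN}, \quad PRE = \frac{TP}{TP + FP}, \quad SE = \frac{TP}{TP + FN}, \quad SP = \frac{TN}{TN + FP}$$

where TP, TN, FP, and FN are the numbers of true positives, true negatives, false positives, and false negatives, respectively.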
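The four indicators follow directly from the confusion-matrix counts using their standard definitions. The counts in the usage line are hypothetical, and treating SAS as the positive class is an assumption of this sketch.

```python
def classification_metrics(tp, fp, tn, fn):
    """ACC, PRE, SE (recall) and SP from binary confusion-matrix counts.

    Values are returned as fractions; multiply by 100 for percentages.
    """
    return {
        "ACC": (tp + tn) / (tp + tn + fp + fn),
        "PRE": tp / (tp + fp),
        "SE": tp / (tp + fn),
        "SP": tn / (tn + fp),
    }


# Hypothetical counts: 100 SAS segments and 100 healthy segments.
print(classification_metrics(tp=90, fp=20, tn=80, fn=10))
```

SE and SP each look at only one true class, whereas PRE depends on how many healthy segments are misclassified relative to the correctly detected SAS segments.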

3.2. Result comparison

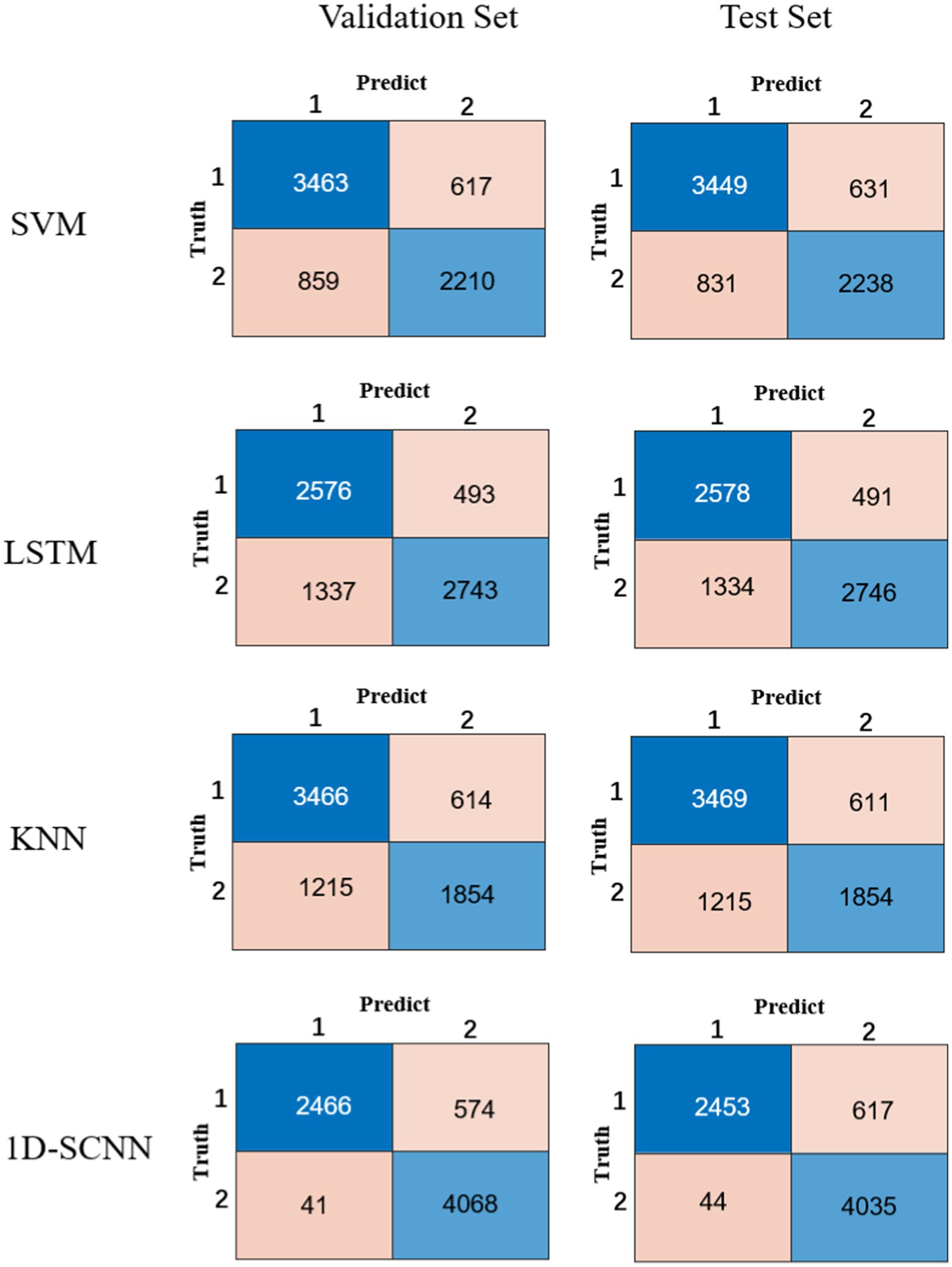

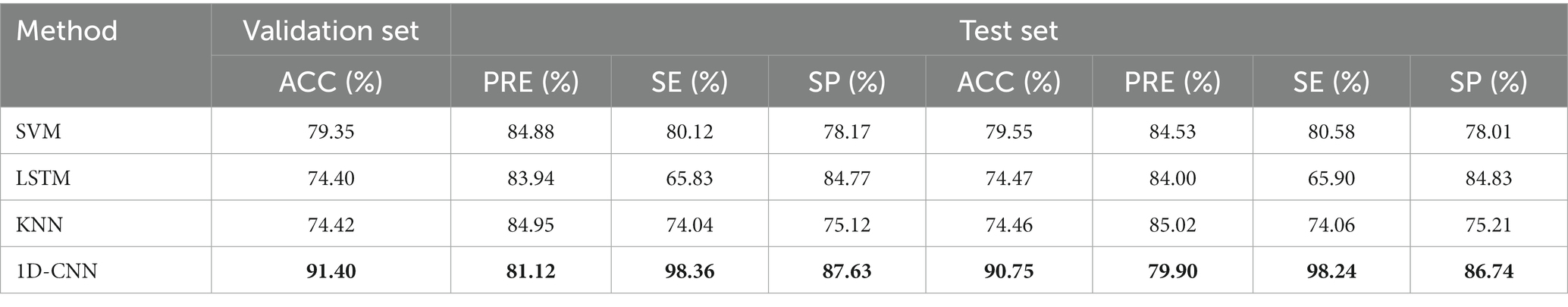

To verify the performance of the 1D-CNN model, SVM, LSTM, and KNN models were also built using the same data. Figure 3 compares the confusion matrices of the four models, and Table 2 lists the evaluation indicators of each model.

Table 2 shows that the 1D-CNN model established in this paper performed better than the other three models. Apart from PRE, it achieved the highest values of all four models for ACC, SE, and SP: 91.40, 98.36, and 87.63%, respectively, on the validation set, and 90.75, 98.24, and 86.74%, respectively, on the test set.

3.3. Anti-interference experiment

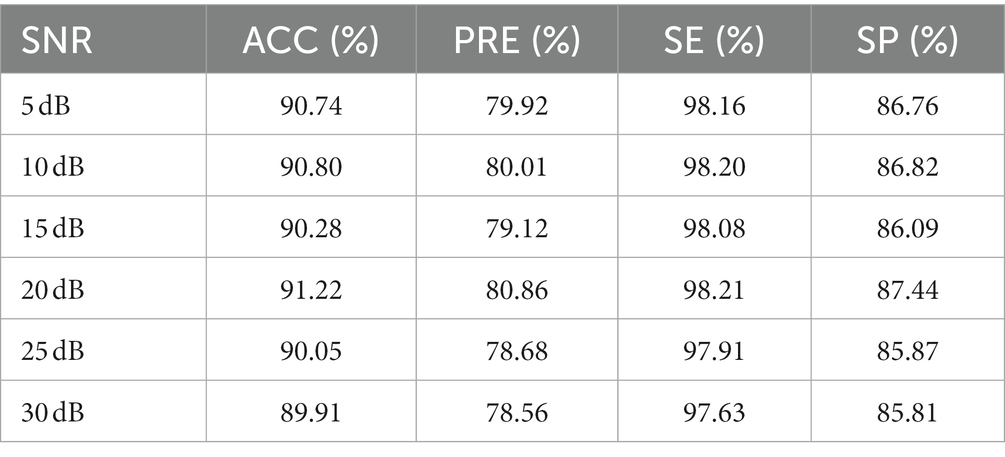

To test the influence of noise on the performance of the 1D-CNN, an anti-interference experiment was designed in which Gaussian white noise was added to the original signal at signal-to-noise ratios (SNRs) of 5, 10, 15, 20, 25, and 30 dB. The data segments were again randomly divided into training, validation, and test sets at a ratio of 6:2:2. Table 3 shows the anti-interference results on the test set. The results indicate that noise has little effect on the performance indicators of the 1D-CNN and that the model has a certain anti-interference ability.
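Adding white Gaussian noise at a target SNR amounts to scaling the noise variance to P_signal / 10^(SNR/10). The sketch below is a hypothetical illustration: it computes signal power as the mean of the squared raw samples (a real PPG with a DC offset might need detrending first, and the paper does not state its power definition) and uses a synthetic 1.2 Hz sinusoid in place of real PPG data.

```python
import math
import random


def add_white_noise(signal, snr_db, seed=None):
    """Return signal plus zero-mean Gaussian noise at the requested SNR (dB).

    Noise power is set to P_signal / 10**(snr_db / 10), where P_signal is
    the mean squared value of the input samples.
    """
    rng = random.Random(seed)
    p_signal = sum(s * s for s in signal) / len(signal)
    sigma = math.sqrt(p_signal / (10 ** (snr_db / 10)))
    return [s + rng.gauss(0.0, sigma) for s in signal]


def measured_snr_db(clean, noisy):
    """SNR actually realized, from the clean signal and the added noise."""
    p_signal = sum(s * s for s in clean) / len(clean)
    p_noise = sum((n - s) ** 2 for s, n in zip(clean, noisy)) / len(clean)
    return 10 * math.log10(p_signal / p_noise)


# Hypothetical PPG-like test signal: 15 s of a 1.2 Hz sinusoid sampled at 100 Hz.
clean = [math.sin(2 * math.pi * 1.2 * t / 100) for t in range(1500)]
for snr in (5, 10, 15, 20, 25, 30):
    noisy = add_white_noise(clean, snr, seed=snr)
    print(snr, "dB target ->", round(measured_snr_db(clean, noisy), 2), "dB measured")
```

Because the noise is random, the realized SNR of a 1,500-point segment scatters slightly around the target.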

3.4. Ablation experiment

To verify the role of the LSTM layers, we designed an ablation experiment: the LSTM layers were removed from the 1D-CNN model, and the resulting model was trained on the original data following the same procedure. Without the LSTM layers, the test-set ACC, PRE, SE, and SP were 89.94, 78.57, 97.71, and 85.81%, respectively; the accuracy was 0.81 percentage points lower than that of the full 1D-CNN model (90.75%). These results show that the LSTM layers improve performance, although the gain in accuracy is modest.

4. Discussion

In this study, we constructed a 1D-CNN model for SAS detection and compared its performance with SVM, LSTM, and KNN models. The 1D-CNN model reached an accuracy of 90.75% on the test set, 11.20 percentage points higher than the SVM model (79.55%). These results indicate that the 1D-CNN model constructed in this study performs better in SAS classification. We also designed anti-interference and ablation experiments to test the model's robustness to noise and the role of the LSTM layers, respectively. The results indicate that the model has a certain anti-interference ability and that the LSTM layers help improve its performance.

Shen et al. (2022) proposed a multitask residual shrinkage convolutional neural network that detects SAS from PPG signals, with a segment-level detection accuracy of 81.82%. Lazazzera et al. (2021) also proposed a method to detect and classify sleep apnea and hypopnea using photoplethysmography (PPG) and peripheral oxygen saturation (SpO2) signals. However, there is significant room for improvement in the accuracy of their models. In our previous work (Jiang et al., 2021), Gaussian and lognormal functions were used to build SVM models based on PPG signals to classify SAS. The SVM model with the lognormal function reached an accuracy of 95.00% in the awake period, and the SVM model with the Gaussian function reached 93% in rapid eye movement periods. However, that study captured only 10 pulse cycles from each subject, the numbers of healthy subjects and patients were highly imbalanced, and the SVM method did not separate further subtypes. All these factors weaken the generalization ability of those SVM models.

This study has several limitations. First, the sample size is small: only 59 subjects, with a total of 35,741 data segments, which may have affected model performance. Second, the healthy subjects are younger than the SAS patients. Previous studies have shown that age affects PPG signals (Millasseau et al., 2002; Liu et al., 2015), so age-related differences in PPG signals may also affect the classification performance of the model. However, we consider the impact of these factors to be small, as the overall performance of the model did not change much.

5. Conclusion

In this study, a 1D-CNN model based on PPG signals was established for SAS classification. Compared with the other models tested, it performed best, with a test-set accuracy of over 90%. Our results indicate that SAS classification using only PPG signals is feasible, which provides a foundation for developing convenient and comfortable SAS detection methods and can support portable wearable detection.

Data availability statement

The raw data supporting the conclusions of this article will be made available by the authors, without undue reservation.

Ethics statement

Written informed consent was obtained from the individual(s) for the publication of any potentially identifiable images or data included in this article.

Author contributions

XJ was mainly responsible for data analysis and writing of the manuscript. YR was mainly responsible for data collection and analysis of the manuscript. HW was mainly responsible for algorithms. YL was mainly responsible for organizing data. FL was mainly responsible for the structural design and revision of the manuscript. All authors contributed to the article and approved the submitted version.

Funding

This work was supported by the National Natural Science Foundation of China (Grant nos. 82072014 and 61901114), the National Key R&D Program of China (Grant no. 2019YFE010670), the Natural Science Foundation of Shandong Province (Grant no. ZR2020MF028), and the Key R&D Program of Shandong Province (Grant no. 2020CXGC010110).

Acknowledgments

The authors acknowledge the support of the Southeast-Lenovo Wearable Heart-Sleep-Emotion Intelligent Monitoring Lab.

Conflict of interest

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Publisher’s note

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

References

Allen, J., and Hedley, S. (2019). Simple photoplethysmography pulse encoding technique for communicating the detection of peripheral arterial disease-a proof of concept study. Physiol. Meas. 40:08NT01. doi: 10.1088/1361-6579/ab3545

Baty, F., Boesch, M., Widmer, S., Annaheim, S., Fontana, P., Camenzind, M., et al. (2020). Classification of sleep apnea severity by electrocardiogram monitoring using a novel wearable device. Sensors 20, 286–298. doi: 10.3390/s20010286

Casal, R., Di Persia, L. E., and Schlotthauer, G. (2022). Temporal convolutional networks and transformers for classifying the sleep stage in awake or asleep using pulse oximetry signals. J. Comput. Sci. 59:101544. doi: 10.1016/j.jocs.2021.101544

du-Yan, G., Jia-Xing, W., Yan, W., and Xuan-Yu, L. (2022). Convolutional neural network is a good technique for sleep staging based on HRV: a comparative analysis. Neurosci. Lett. 779:136550. doi: 10.1016/j.neulet.2022.136550

Eldele, E., Chen, Z., Liu, C., Wu, M., Kwoh, C. K., Li, X., et al. (2021). An attention-based deep learning approach for sleep stage classification with single-channel EEG. IEEE Trans. Neural Syst. Rehabil. Eng. 29, 809–818. doi: 10.1109/TNSRE.2021.3076234

Guo, R., Zhai, C., Zheng, L., and Zhang, L. (2022). Sleep behavior detection based on pseudo-3d convolutional neural network and attention mechanism. IEEE Access 10, 90101–90110. doi: 10.1109/ACCESS.2022.3201496

Haba-Rubio, J., Darbellay, G., Herrmann, F. R., Frey, J. G., Fernandes, A., Vesin, J. M., et al. (2005). Obstructive sleep apnea syndrome: effect of respiratory events and arousal on pulse wave amplitude measured by photoplethysmography in NREM sleep. Sleep Breath. 9, 73–81. doi: 10.1007/s11325-005-0017-y

Haghayegh, S., Hu, K., Stone, K., Redline, S., and Schernhammer, E. (2023). Automated sleep stages classification using convolutional neural network from raw and time-frequency electroencephalogram signals: systematic evaluation study. J. Med. Internet Res. 25:e40211. doi: 10.2196/40211

Hsu, Y.-S., Chen, T. Y., Wu, D., Lin, C. M., Juang, J. N., and Liu, W. T. (2020). Screening of obstructive sleep apnea in patients who snore using a patch-type device with electrocardiogram and 3-axis accelerometer. J. Clin. Sleep Med. 16, 1149–1160. doi: 10.5664/jcsm.8462

Jiang, X., Wei, S., Zhao, L., Liu, F., and Liu, C. (2021). Analysis of photoplethysmographic morphology in sleep apnea syndrome patients using curve fitting and support vector machine. J. Mech. Med. Biol. 21:2140019. doi: 10.1142/S0219519421400194

Lazazzera, R., Deviaene, M., Varon, C., Buyse, B., Testelmans, D., Laguna, P., et al. (2021). Detection and classification of sleep apnea and hypopnea using PPG and SpO2 signals. IEEE Trans. Biomed. Eng. 68, 1496–1506. doi: 10.1109/TBME.2020.3028041

Liu, S. (2017). Comparison between heart rate variability and pulse rate variability during different sleep stages for sleep apnea patients. Technol. Health Care 25, 435–445. doi: 10.3233/THC-161283

Liu, C., Zheng, D., and Murray, A. (2015). Arteries stiffen with age, but can retain an ability to become more elastic with applied external cuff pressure. Medicine 94:e1831. doi: 10.1097/MD.0000000000001831

Millasseau, S. C., Kelly, R. P., Ritter, J. M., and Chowienczyk, P. J. (2002). Determination of age-related increases in large artery stiffness by digital pulse contour analysis. Clin. Sci. 103, 371–377. doi: 10.1042/cs1030371

Murthy, J. N., van Jaarsveld, J., Fei, J., Pavlidis, I., Harrykissoon, R. I., Lucke, J. F., et al. (2009). Thermal infrared imaging: a novel method to monitor airflow during polysomnography. Sleep 32, 1521–1527. doi: 10.1093/sleep/32.11.1521

Norman, M. B., Middleton, S., Erskine, O., Middleton, P. G., Wheatley, J. R., and Sullivan, C. E. (2014). Validation of the sonomat: a contactless monitoring system used for the diagnosis of sleep disordered breathing. Sleep 37, 1477–1487. doi: 10.5665/sleep.3996

Papini, G. B., Fonseca, P., van Gilst, M. M., Bergmans, J. W. M., Vullings, R., and Overeem, S. (2020). Wearable monitoring of sleep-disordered breathing: estimation of the apnea–hypopnea index using wrist-worn reflective photoplethysmography. Sci. Rep. 10:13512. doi: 10.1038/s41598-020-69935-7

Penzel, T. (2017). “Home sleep testing” in Principles and practice of sleep medicine. eds. M. Kryger, T. Roth, and C. William. 6th ed, (Elsevier), 1610–1614.

Phan, H., and Mikkelsen, K. (2022). Automatic sleep staging of EEG signals: recent development, challenges, and future directions. Physiol. Meas. 43:04TR01. doi: 10.1088/1361-6579/ac6049

Shen, Q., Yang, X., Zou, L., Wei, K., Wang, C., and Liu, G. (2022). Multitask residual shrinkage convolutional neural network for sleep apnea detection based on wearable bracelet photoplethysmography. IEEE Internet Things J. 9, 25207–25222. doi: 10.1109/JIOT.2022.3195777

Song, C., Liu, K., Zhang, X., Chen, L., and Xian, X. (2016). An obstructive sleep apnea detection approach using a discriminative hidden Markov model from ECG signals. IEEE Trans. Biomed. Eng. 63, 1532–1542. doi: 10.1109/TBME.2015.2498199

Sors, A., Bonnet, S., Mirek, S., Vercueil, L., and Payen, J. F. (2018). A convolutional neural network for sleep stage scoring from raw single-channel EEG. Biomed. Signal Proces. Control 42, 107–114. doi: 10.1016/j.bspc.2017.12.001

Tran, T. H., Nguyen, T. T., Yuldashev, Z. M., Sadykova, E. V., and Nguyen, M. T. (2019). The method of smart monitoring and detection of sleep apnea of the patient out of the medical institution. Proc. Comput. Sci. 150, 397–402. doi: 10.1016/j.procs.2019.02.069

van Steenkiste, T., Groenendaal, W., Dreesen, P., Lee, S., Klerkx, S., de Francisco, R., et al. (2020). Portable detection of apnea and hypopnea events using bio-impedance of the chest and deep learning. IEEE J. Biomed. Health Inform. 24, 2589–2598. doi: 10.1109/JBHI.2020.2967872

Keywords: sleep apnea syndrome (SAS), convolutional neural networks (CNN), photoplethysmographic (PPG) signals, sleep apnea syndrome (SAS) recognition, cross entropy

Citation: Jiang X, Ren Y, Wu H, Li Y and Liu F (2023) Convolutional neural network based on photoplethysmography signals for sleep apnea syndrome detection. Front. Neurosci. 17:1222715. doi: 10.3389/fnins.2023.1222715

Edited by:

Chang Yan, Southeast University, China

Reviewed by:

Liting Zhang, Shandong Provincial Hospital, China

L. C. Yang, Shandong University, China

Copyright © 2023 Jiang, Ren, Wu, Li and Liu. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Feifei Liu, feifeiliu1987@gmail.com

Xinge Jiang, YongLian Ren, Hua Wu¹