
ORIGINAL RESEARCH article

Front. Neurosci., 15 September 2023

Sec. Visual Neuroscience

Volume 17 - 2023 | https://doi.org/10.3389/fnins.2023.1170951

This article is part of the Research Topic Eye Movement Tracking in Ocular, Neurological, and Mental Diseases.

Fanchao Meng1,2†, Fenghua Li3†, Shuxian Wu2, Tingyu Yang2, Zhou Xiao4, Yujian Zhang5, Zhengkui Liu3, Jianping Lu4*, Xuerong Luo2*

Background: Studies of eye movements have found that children with autism spectrum disorder (ASD) show abnormal gaze behavior toward social stimuli. The current study aimed to investigate whether their eye movement patterns in response to cartoon characters or real people could be useful in identifying children with ASD.

Methods: Eye-tracking tests based on videos of cartoon characters and real people were performed for ASD and typically developing (TD) children aged between 12 and 60 months. A three-level hierarchical structure including participants, events, and areas of interest was used to arrange the data obtained from eye-tracking tests. Random forest was adopted as the feature selection tool and classifier, and the flattened vectors and diagnostic information were used as features and labels. A logistic regression was used to evaluate the impact of the most important features.

Results: A total of 161 children (117 ASD and 44 TD) with a mean age of 39.70 ± 12.27 months were recruited. The overall accuracy, precision, and recall of the model were 0.73, 0.73, and 0.75, respectively. Attention to human-related elements was positively related to the diagnosis of ASD, while fixation time for cartoons was negatively related to the diagnosis.

Conclusion: Using eye-tracking techniques with machine learning algorithms might be promising for identifying ASD. The value of artificial faces, such as cartoon characters, in the field of ASD diagnosis and intervention is worth further exploring.

Social interaction impairment is the most common clinical manifestation of autism spectrum disorder (ASD), which is characterized by verbal and nonverbal communication difficulties as well as stereotyped, obsessive behaviors (Volkmar et al., 2004). Abnormal eye contact during social situations is among the most noticeable signs of social interaction difficulties in people with ASD (Leekam et al., 1998; Spezio et al., 2007). Early screening remains one of the major challenges in ASD research. According to a recent meta-analysis of 30 studies with over 60,000 ASD participants from 35 countries, the average age at diagnosis was approximately 60 months, which is late for initiating early intervention (Van’t Hof et al., 2021). Delayed diagnosis and intervention have a negative impact on children’s prognoses and may lead to lifelong adverse outcomes, placing a significant burden on families and society.

Clinicians have made tremendous efforts to develop techniques for the early screening of ASD, and numerous tools are available (Sappok et al., 2015). However, because existing instruments are not widely disseminated and are sometimes administered incorrectly, cases may be missed in regions with exceptionally large populations and few or no community health workers (James et al., 2014). These findings have drawn the attention of professionals involved in the early detection of ASD.

Eye-tracking technology has become an increasingly important tool in the early screening and diagnosis of ASD in recent years. In contrast to electroencephalography (EEG) and magnetic resonance imaging (MRI), which are time-consuming and difficult to perform, eye tracking is regarded as a very child-friendly tool that supports a variety of original designs for investigating visual exploration patterns and their underlying mechanisms. Evidence suggests that eye-tracking techniques combined with machine learning algorithms might be promising for the early and objective diagnosis of ASD (Kollias et al., 2021). Because children with ASD have difficulties with social interaction, stimulus paradigms that contrast stimuli of high and low social significance allow eye tracking to capture differences in how these children allocate their attention. Studies investigating the factors influencing social attention in ASD found decreased gaze to stimuli with high social significance and increased gaze to stimuli with low social significance (Chita-Tegmark, 2016a; Frazier et al., 2017). For example, individuals with ASD showed decreased attention to the entire face, the upper face, and the lower face (mouth), and increased attention to body regions and other unimportant or extraneous aspects of the stimuli (Chita-Tegmark, 2016b; Frazier et al., 2017).

Total fixation time on stimuli of low social significance (such as geometric figures) has been successfully applied as a criterion for identifying ASD (Shi et al., 2015; Pierce et al., 2016; Moore et al., 2018). Multiple studies using machine learning with eye-tracking data to identify ASD reported accuracies of 67–98% in non-toddler groups (Kollias et al., 2021). To the best of our knowledge, no previous study has combined eye tracking using cartoon stimuli with machine learning algorithms. Using cartoons as stimuli has several advantages. First, cartoons might better capture the attention of toddlers, who are easily distracted by their surroundings, especially when they are not interested in the presented stimuli (Masedu et al., 2022). Second, a recent study found that ASD children had lower levels of social orienting (SO) than typically developing (TD) children in a realistic task but comparable levels in a cartoon task. Nonetheless, the cartoon task effectively captured developmental and adaptive delay, showing numerous correlations with visual exploration parameters such as social prioritization, fixation duration, and percentage of SO (Robain et al., 2022). In addition, studies investigating factors that influence social attention in ASD found that ASD children seem to process cartoon faces in a manner similar to TD children; they tend to look more at cartoon characters than at other objects in cartoon scenes (Van der Geest et al., 2002). These group differences make it easier to capture the complexity of social interaction in ASD and to infer how ASD and TD children differ in social interaction, which can then serve as a diagnostic signal. Therefore, given that developmental delay and abnormal gaze are early markers of ASD, we hypothesized that the cartoon task, like other minimally social stimuli, could be a useful tool for early screening.

Participants were recruited between 2019 and 2021 in Changsha and Shenzhen, China. The inclusion criteria for children with ASD were as follows: (1) aged 12–60 months; (2) met the diagnostic criteria for ASD in the Diagnostic and Statistical Manual of Mental Disorders, fifth edition (DSM-V) (American Psychological Association, 2009), with the diagnosis confirmed by the Autism Diagnostic Observation Schedule (ADOS) (Lord et al., 1999); and (3) had normal vision and hearing and could complete the eye movement tests. Children with other major mental disorders or serious physical health problems were excluded. Toddlers who were younger than 24 months when they participated were classified as having global developmental delay based on their performance on the Chinese version of the Gesell Development Scale (GDS) (Yang, 2016). They were followed up and re-evaluated every 3–6 months until they were 2 years old. TD children aged 12–60 months were recruited without gender restrictions; according to their parents or caregivers, they had no evidence of developmental disabilities or neuropsychiatric conditions.

The research was carried out in accordance with the Declaration of Helsinki’s ethical principles. The experimental procedures had been explained to all participants’ parents or caregivers, and written informed consent was obtained from all of them. The ethics committee of the Second Xiangya Hospital, Central South University, reviewed and approved the study (No. 2017YFC1309904).

ADOS is a semi-structured, standardized observational tool that is frequently used in the diagnosis of ASD. It can accurately assess ASD through a variety of play-based activities that target communication, social engagement, play, and imaginative use of materials, as well as restricted and repetitive behaviors (González et al., 2019).

The GDS was used to assess the children’s development. It comprises five domains: adaptability, gross motor, fine motor, language, and social–emotional responses (Yang, 2016). Each participant’s development quotient (DQ) was calculated for each domain. Based on the full-scale DQ, development was classified as normal (DQ ≥ 85), deficient (DQ ≤ 75), or borderline (75 < DQ < 85). In addition, a DQ below 75 in any single domain was considered deficient.
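The DQ cut-offs above can be written as two small helpers. This is a minimal sketch, not the authors’ code: the function names and example scores are hypothetical, and the sketch keeps the text’s two thresholds exactly as stated (DQ ≤ 75 for the full-scale category, DQ < 75 for a single domain).

```python
from typing import Dict, List


def classify_full_scale_dq(dq: float) -> str:
    """Classify a full-scale GDS development quotient with the cut-offs given in the text."""
    if dq >= 85:
        return "normal"
    if dq <= 75:
        return "deficient"
    return "borderline"  # 75 < DQ < 85


def deficient_domains(domain_dq: Dict[str, float], cutoff: float = 75.0) -> List[str]:
    """Return the GDS domains whose DQ falls below the single-domain cut-off."""
    return [domain for domain, dq in domain_dq.items() if dq < cutoff]


# Hypothetical scores for one child
print(classify_full_scale_dq(80))
print(deficient_domains({"adaptability": 80, "language": 70, "gross motor": 74.9}))
```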

The eye-tracking tests were carried out in a quiet environment. A SensoMotoric Instruments (SMI) RED500 remote eye tracker (Teltow, Germany) was attached to the frame of a 22-inch LCD stimulus presentation monitor with a resolution of 1,680 × 1,050 pixels. The spatial resolution and gaze position accuracy were 0.1° and 0.4°, respectively. The capture range for eye movement was 40° horizontally (±20°) and 60° vertically (±40°). The head-movement tracking range was 40 × 20 cm at a participant-to-screen distance of 70 cm. Throughout the experiment, two 5-point calibrations were performed at fixed times.
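To put the 0.4° accuracy in screen terms, the standard visual-angle relation s = 2d·tan(θ/2) converts an angle θ at viewing distance d into an on-screen size s. A minimal sketch, assuming the 22-inch figure is the exact diagonal, pixels are square, and the 70 cm distance quoted for head tracking is also the viewing distance (none of which the text states explicitly):

```python
import math


def visual_angle_to_cm(angle_deg: float, distance_cm: float) -> float:
    """On-screen extent (cm) subtended by a visual angle at a given viewing distance."""
    return 2.0 * distance_cm * math.tan(math.radians(angle_deg) / 2.0)


def pixel_pitch_cm(diagonal_in: float, width_px: int, height_px: int) -> float:
    """Physical size of one pixel, assuming square pixels and a nominal diagonal."""
    return diagonal_in * 2.54 / math.hypot(width_px, height_px)


accuracy_cm = visual_angle_to_cm(0.4, 70.0)                      # roughly half a centimetre
accuracy_px = accuracy_cm / pixel_pitch_cm(22.0, 1680, 1050)     # roughly 17 pixels
print(f"{accuracy_cm:.2f} cm, {accuracy_px:.1f} px")
```

Under these assumptions, a 0.4° gaze error corresponds to an error of about 17 pixels, which is small relative to the two large side-by-side stimulus areas.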

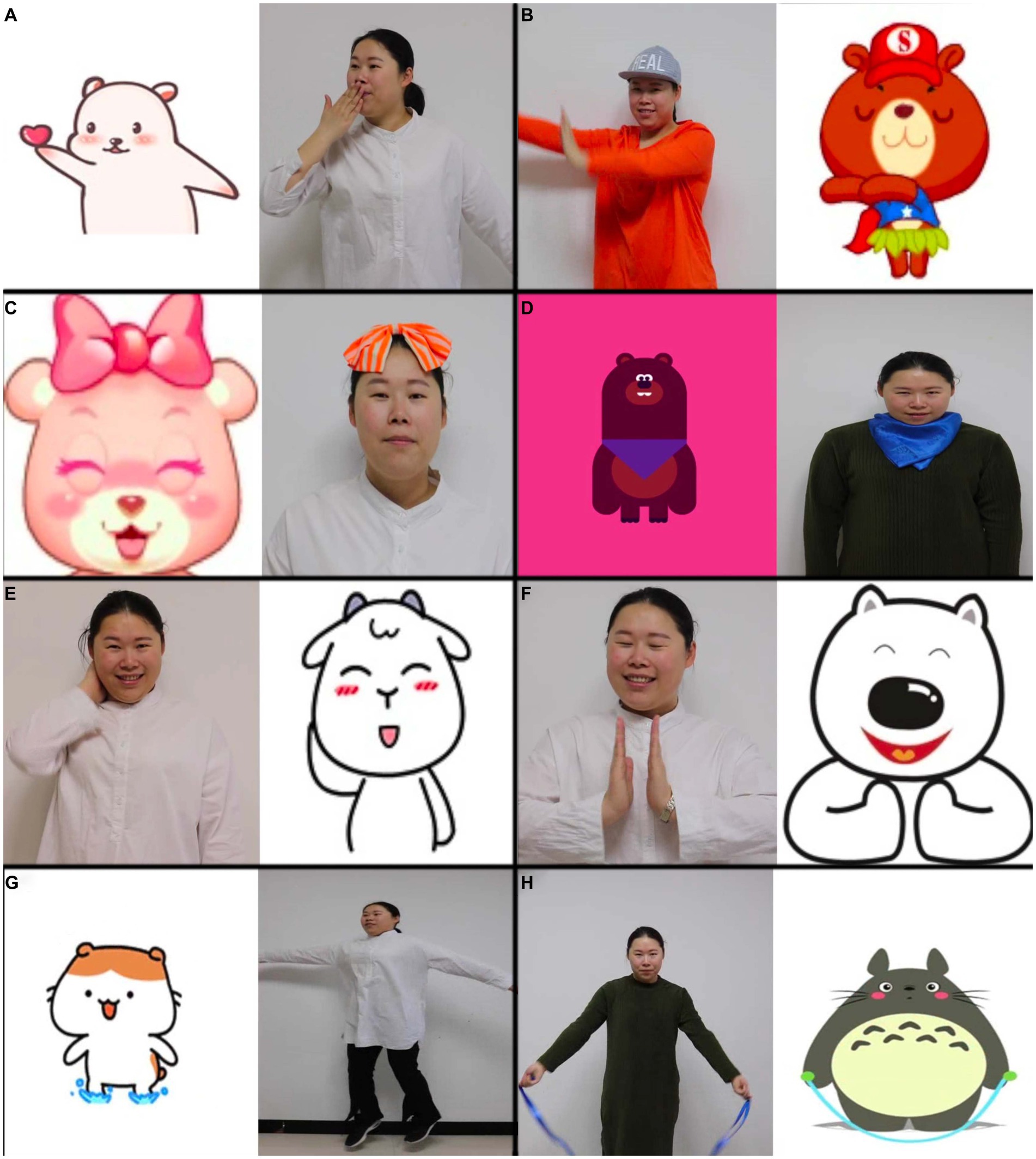

Eight videos were presented using SMI Experiment Center. In each video, the screen was divided into two large rectangular areas, side by side. A cartoon character on one side (left or right) performed an action: dancing, nodding, blowing a kiss, scratching the neck, clapping hands, bouncing, skipping rope, or nodding while stretching thumbs. The opposite side showed a real person imitating the cartoon character. During the imitation, the person tried to match the cartoon character’s expression (smiling or no obvious expression), movement, clothing (color and accessories), size, and appearance (half body or whole body) (Figures 1A–H). All videos were silent, and SMI Experiment Center was configured with a random playback option so that each child saw the eight videos in a different order.

Figure 1. Stills from the eight videos used in the eye-tracking tests; (A–H) show the different scenarios.
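The randomized playback can be sketched as a per-child random permutation of the eight videos. This is a hypothetical illustration, not SMI Experiment Center’s implementation: seeding the generator with a child ID is an assumption made here so that an order can be reproduced later. Because the order is random, different children receive different orders with high probability (there are 8! = 40,320 possible orders) rather than by guarantee.

```python
import random
from typing import List

# Action names as listed in the text; the order of this list is arbitrary.
VIDEOS = ["dancing", "nodding", "blowing a kiss", "scratching the neck",
          "clapping hands", "bouncing", "skipping rope", "nodding while stretching thumbs"]


def playback_order(child_id: str, seed: int = 0) -> List[str]:
    """Return a reproducible random permutation of the eight videos for one child."""
    rng = random.Random(f"{seed}:{child_id}")  # hypothetical seeding scheme
    order = list(VIDEOS)
    rng.shuffle(order)
    return order


print(playback_order("child-001"))
```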

A three-level hierarchical structure [participants, events, and areas of interest (AOIs)] was used to organize the data exported from the SMI BeGaze program (Figure 2). Each participant had eight event data entities, each corresponding to one of the eight videos used in the trials. A total of 24 data items were recorded for each event data entity, including fixation frequency, saccade amplitude, count, frequency, average latency, fixation dispersion, saccade length, saccade velocity, and the total, average, maximum, and minimum fixation times. Three events (nodding, clapping hands, and nodding while stretching thumbs) had four AOIs (cartoon, people, people’s heads, and people’s bodies) (Figure 3). Five events (dancing, blowing a kiss, scratching the neck, bouncing, and skipping rope) had two AOIs (cartoon and people) (Figure 4). Each AOI data entity contained 14 data items, including entry time, visible time (equivalent to the duration of the event in this study), net dwell time (time of all gazes that hit the AOI), dwell time (net dwell time plus the time of saccades that hit the AOI), glance duration (dwell time plus the duration of the saccade entering the AOI), and diversion duration (glance duration plus the duration of the saccade leaving the AOI). In this study, gaze refers to any non-saccadic eye movement state, and a fixation refers to a cluster of gaze points that are close in space and time (60 ms).
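The four nested AOI durations each add one saccade component to the previous measure. A minimal sketch of that nesting, assuming a single set of totals per AOI; the class and field names are hypothetical and are not BeGaze export column names:

```python
from dataclasses import dataclass


@dataclass
class AOIDurations:
    """Duration components (ms) for one AOI; field names are hypothetical."""
    gaze_ms: float            # time of all gazes that hit the AOI
    saccade_inside_ms: float  # time of saccades that hit the AOI
    entry_saccade_ms: float   # duration of the saccade entering the AOI
    exit_saccade_ms: float    # duration of the saccade leaving the AOI

    @property
    def net_dwell_time(self) -> float:
        return self.gaze_ms

    @property
    def dwell_time(self) -> float:
        return self.net_dwell_time + self.saccade_inside_ms

    @property
    def glance_duration(self) -> float:
        return self.dwell_time + self.entry_saccade_ms

    @property
    def diversion_duration(self) -> float:
        return self.glance_duration + self.exit_saccade_ms


visit = AOIDurations(gaze_ms=1200, saccade_inside_ms=150, entry_saccade_ms=40, exit_saccade_ms=35)
print(visit.dwell_time, visit.glance_duration, visit.diversion_duration)
```

By construction, net dwell time ≤ dwell time ≤ glance duration ≤ diversion duration, so these four items are strongly correlated features.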

To make full use of the data items collected, we flattened each participant’s hierarchical data structure into a single vector of 688 elements, with the event items and AOI entities of each participant arranged consecutively in a fixed order (Figure 5). The flattened vectors and diagnostic information were used as features and labels, respectively, and random forest (RF) was used as both the feature selection method and the classifier.
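The flattening step can be sketched as follows, assuming every participant has the same events and AOIs so that position i in the vector always refers to the same measurement. The nested-dictionary layout, item names and values are hypothetical, and the toy example does not attempt to reproduce the paper’s exact 688-element layout. A parallel list of column names keeps the flattened features interpretable, which matters when results are reported per feature.

```python
from typing import Dict, List


def flatten_participant(data: Dict[str, dict], event_order: List[str],
                        aoi_order: Dict[str, List[str]],
                        event_items: List[str], aoi_items: List[str]) -> List[float]:
    """Concatenate each event's items, then each of its AOIs' items, event by event."""
    vector: List[float] = []
    for event in event_order:
        entry = data[event]
        vector.extend(float(entry["items"][name]) for name in event_items)
        for aoi in aoi_order[event]:
            vector.extend(float(entry["aois"][aoi][name]) for name in aoi_items)
    return vector


def feature_names(event_order: List[str], aoi_order: Dict[str, List[str]],
                  event_items: List[str], aoi_items: List[str]) -> List[str]:
    """Column names in the same order as flatten_participant."""
    names: List[str] = []
    for event in event_order:
        names += [f"{event}/{item}" for item in event_items]
        for aoi in aoi_order[event]:
            names += [f"{event}/{aoi}/{item}" for item in aoi_items]
    return names


EVENTS = ["dancing", "nodding"]
AOIS = {"dancing": ["cartoon", "people"], "nodding": ["cartoon"]}
EVENT_ITEMS = ["saccade_count"]
AOI_ITEMS = ["entry_time", "net_dwell_time"]
child = {
    "dancing": {"items": {"saccade_count": 12},
                "aois": {"cartoon": {"entry_time": 250, "net_dwell_time": 3100},
                         "people": {"entry_time": 900, "net_dwell_time": 1800}}},
    "nodding": {"items": {"saccade_count": 7},
                "aois": {"cartoon": {"entry_time": 400, "net_dwell_time": 2500}}},
}
vec = flatten_participant(child, EVENTS, AOIS, EVENT_ITEMS, AOI_ITEMS)
cols = feature_names(EVENTS, AOIS, EVENT_ITEMS, AOI_ITEMS)
print(list(zip(cols, vec)))
```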

RF is a common ensemble classifier and feature selection technique (Menze et al., 2009; Boulesteix et al., 2012; Biau and Scornet, 2016). An RF consists of multiple independent decision trees. During training, each tree is fitted to a random subset of the training examples using a random subset of the input features. The final classification of an RF is obtained by averaging the predictions of all fitted trees. In addition to the final prediction, an RF produces an importance value for each feature (e.g., based on the decrease in entropy or Gini impurity).
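A minimal sketch of RF-based feature ranking with scikit-learn, the library the authors report using. The helper name, the synthetic data and the choice of keeping the top three features are illustrative assumptions, not the authors’ pipeline. In scikit-learn, `feature_importances_` is the impurity-based (mean decrease in impurity) importance, normalized to sum to 1.

```python
import numpy as np
from sklearn.ensemble import RandomForestClassifier


def rank_features_by_gini(X, y, feature_names, n_trees=800, seed=0):
    """Fit a Gini-criterion RF and return (name, importance) pairs, most important first."""
    forest = RandomForestClassifier(n_estimators=n_trees, criterion="gini", random_state=seed)
    forest.fit(X, y)
    importances = forest.feature_importances_  # impurity-based, normalized to sum to 1
    order = np.argsort(importances)[::-1]
    return [(feature_names[i], float(importances[i])) for i in order]


rng = np.random.default_rng(0)
X = rng.normal(size=(88, 5))   # 88 = 44 + 44 rows, as after undersampling; values are synthetic
y = (X[:, 2] > 0).astype(int)  # the label depends only on feature "f2"
ranking = rank_features_by_gini(X, y, [f"f{i}" for i in range(5)], n_trees=100)
top_features = [name for name, _ in ranking[:3]]  # keep the top k features (k = 3, arbitrary)
print(ranking)
```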

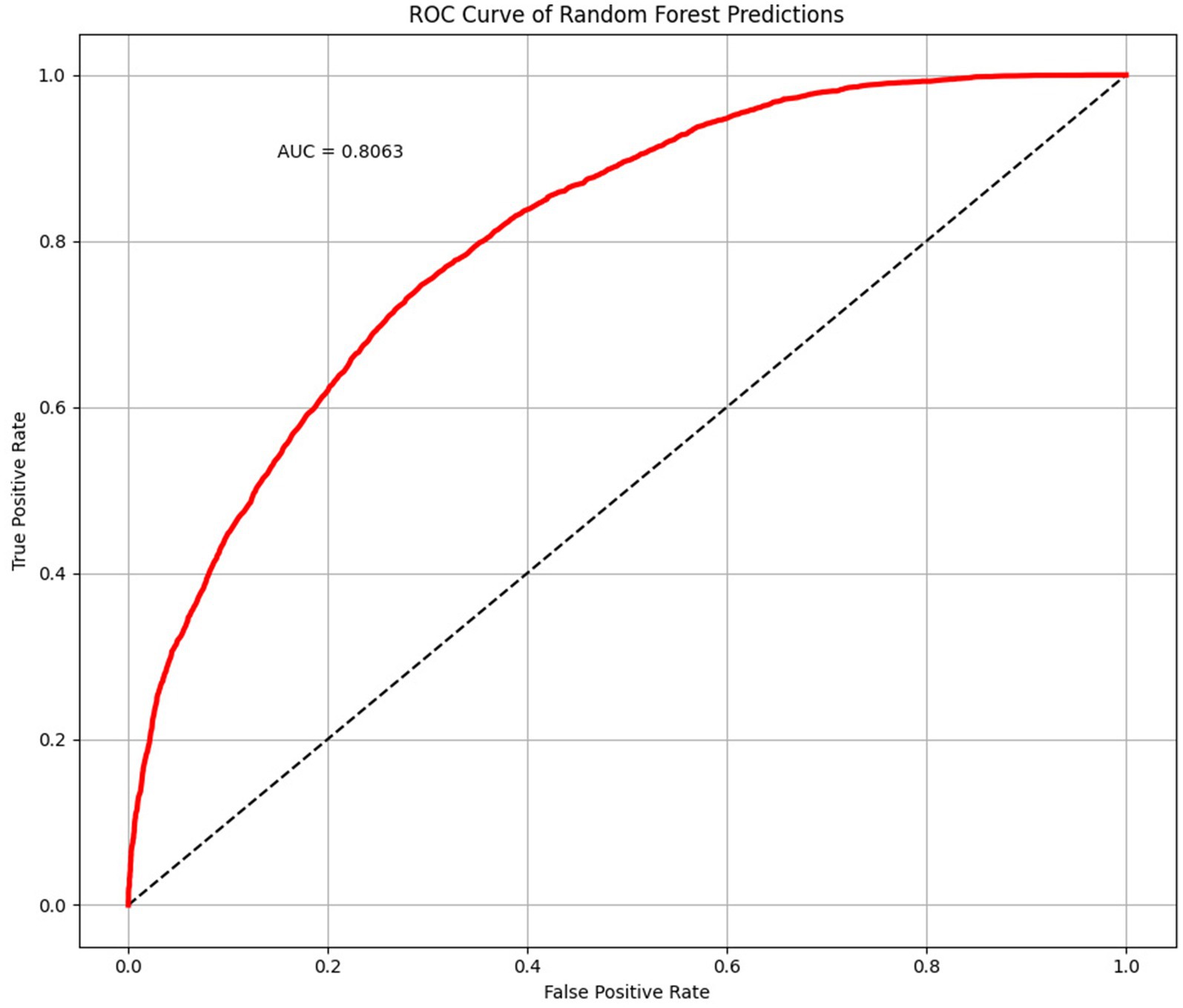

In our research, we used random undersampling to create equal-sized ASD and TD groups and then constructed RF models. Gini impurity was used as the split criterion, and each forest contained 800 decision trees. The ratio of training to validation cases was 7:3. Average accuracy, precision, and recall were obtained over 500 repetitions of the fitting process (Powers, 2020). Additionally, we plotted the receiver operating characteristic (ROC) curve using the pooled predictions from all repetitions and calculated the area under the curve (AUC). The undersampling and the training–validation split were re-randomized before each of the 500 fitting processes.
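The validation protocol (random undersampling to equal class sizes, a 7:3 training/validation split, metrics averaged over repetitions, and an AUC over the pooled predictions) can be sketched without committing to a particular classifier. This is a minimal sketch that assumes a 0.5 decision threshold and an unstratified split, neither of which the text specifies. The model is passed in as a function; in the paper’s setting that function would wrap an 800-tree, Gini-criterion RF. The names `repeated_balanced_validation` and `toy_fit_score` are hypothetical, and the toy scorer ignores its training data so that the sampling logic can be checked on its own.

```python
import math
import random
from statistics import mean
from typing import Callable, Dict, List, Sequence

# fit_score(train_X, train_y, test_X) -> predicted probability of ASD for each test row
FitScore = Callable[[List[Sequence[float]], List[int], List[Sequence[float]]], List[float]]


def roc_auc(y_true: List[int], scores: List[float]) -> float:
    """Rank-based (Mann-Whitney) AUC; tied scores receive their average rank."""
    order = sorted(range(len(scores)), key=lambda i: scores[i])
    ranks = [0.0] * len(scores)
    i = 0
    while i < len(order):
        j = i
        while j + 1 < len(order) and scores[order[j + 1]] == scores[order[i]]:
            j += 1
        for k in range(i, j + 1):
            ranks[order[k]] = (i + j) / 2 + 1  # average 1-based rank of the tie group
        i = j + 1
    n_pos = sum(y_true)
    n_neg = len(y_true) - n_pos
    rank_sum = sum(r for r, t in zip(ranks, y_true) if t == 1)
    return (rank_sum - n_pos * (n_pos + 1) / 2) / (n_pos * n_neg)


def repeated_balanced_validation(X, y, fit_score: FitScore, n_repeats: int = 500,
                                 train_frac: float = 0.7, seed: int = 0) -> Dict[str, float]:
    rng = random.Random(seed)
    pos = [i for i, t in enumerate(y) if t == 1]
    neg = [i for i, t in enumerate(y) if t == 0]
    n = min(len(pos), len(neg))  # e.g. 44 per class for 117 ASD vs 44 TD
    accs, precs, recs, pooled_y, pooled_s = [], [], [], [], []
    for _ in range(n_repeats):
        sample = rng.sample(pos, n) + rng.sample(neg, n)  # random undersampling
        rng.shuffle(sample)
        cut = round(train_frac * len(sample))  # 7:3 training/validation split
        train, test = sample[:cut], sample[cut:]
        scores = fit_score([X[i] for i in train], [y[i] for i in train], [X[i] for i in test])
        truth = [y[i] for i in test]
        pred = [1 if s >= 0.5 else 0 for s in scores]
        tp = sum(1 for p, t in zip(pred, truth) if p == 1 and t == 1)
        fp = sum(1 for p, t in zip(pred, truth) if p == 1 and t == 0)
        fn = sum(1 for p, t in zip(pred, truth) if p == 0 and t == 1)
        accs.append(sum(1 for p, t in zip(pred, truth) if p == t) / len(truth))
        precs.append(tp / (tp + fp) if tp + fp else 0.0)
        recs.append(tp / (tp + fn) if tp + fn else 0.0)
        pooled_y.extend(truth)
        pooled_s.extend(scores)
    return {"accuracy": mean(accs), "precision": mean(precs),
            "recall": mean(recs), "pooled_auc": roc_auc(pooled_y, pooled_s)}


def toy_fit_score(train_X, train_y, test_X):
    """Placeholder model that ignores training data: logistic squashing of feature 0."""
    return [1.0 / (1.0 + math.exp(-x[0])) for x in test_X]


data_rng = random.Random(1)
X = [[data_rng.gauss(1.0, 1.0)] for _ in range(117)] + [[data_rng.gauss(-1.0, 1.0)] for _ in range(44)]
y = [1] * 117 + [0] * 44
result = repeated_balanced_validation(X, y, toy_fit_score, n_repeats=50)
print(result)
```

Keeping the classifier pluggable separates the resampling logic from the model, so the same loop can be reused for other classifiers or thresholds.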

Logistic regression was applied to examine whether the features were associated with the diagnoses. The model was built using forward stepwise selection, with the most important features from the RF models as candidate independent variables. Features were added to the model one at a time. A feature was retained if it was itself statistically significant or if adding it did not affect the statistical significance of previously added features. Python 3.8.10, scikit-learn 1.1.1, and R 4.1.1 were used for all data organization and analysis.
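A simplified forward stepwise procedure can be sketched in Python with NumPy, although the authors report using R for their analysis. This sketch swaps in a plainer criterion than the one described above: at each step it adds the candidate with the smallest one-degree-of-freedom likelihood-ratio-test p-value while that p-value stays below 0.05, and it starts from forced covariates (here a column standing in for age, which the authors entered before selection). All names and the synthetic data are hypothetical.

```python
import math

import numpy as np


def logit_loglik(X: np.ndarray, y: np.ndarray, n_iter: int = 50) -> float:
    """Fit a logistic regression (with intercept) by Newton-Raphson; return its log-likelihood."""
    Xc = np.column_stack([np.ones(len(y)), X])
    beta = np.zeros(Xc.shape[1])
    for _ in range(n_iter):
        p = 1.0 / (1.0 + np.exp(-Xc @ beta))
        grad = Xc.T @ (y - p)
        hess = Xc.T @ (Xc * (p * (1.0 - p))[:, None])
        step = np.linalg.solve(hess, grad)
        beta += step
        if np.max(np.abs(step)) < 1e-8:
            break
    p = np.clip(1.0 / (1.0 + np.exp(-Xc @ beta)), 1e-12, 1.0 - 1e-12)
    return float(np.sum(y * np.log(p) + (1.0 - y) * np.log(1.0 - p)))


def lrt_p_value(ll_reduced: float, ll_full: float) -> float:
    """One-df likelihood-ratio test: P(chi2_1 > 2 * (ll_full - ll_reduced))."""
    stat = max(0.0, 2.0 * (ll_full - ll_reduced))
    return math.erfc(math.sqrt(stat / 2.0))


def forward_stepwise(X, y, names, forced, alpha=0.05):
    """Start from the forced columns; greedily add the most significant candidate."""
    selected = list(forced)
    remaining = [j for j in range(X.shape[1]) if j not in selected]
    current_ll = logit_loglik(X[:, selected], y)
    while remaining:
        best = None
        for j in remaining:
            ll = logit_loglik(X[:, selected + [j]], y)
            p = lrt_p_value(current_ll, ll)
            if best is None or p < best[0]:
                best = (p, j, ll)
        p_best, j_best, ll_best = best
        if p_best >= alpha:
            break
        selected.append(j_best)
        remaining.remove(j_best)
        current_ll = ll_best
    return [names[j] for j in selected]


rng = np.random.default_rng(0)
X = rng.normal(size=(161, 4))  # synthetic; column 0 stands in for age
y = (rng.random(161) < 1.0 / (1.0 + np.exp(-1.5 * X[:, 1]))).astype(float)
print(forward_stepwise(X, y, ["age", "x1", "x2", "x3"], forced=[0]))
```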

The characteristics of the participants are presented in Table 1. A total of 161 children (47 girls) with a mean age of 39.70 ± 12.27 months were recruited. Of these, 117 were diagnosed with ASD and 44 were TD. The ASD group comprised 91 boys and 26 girls, and the TD group comprised 23 boys and 21 girls. Children in the ASD group were significantly younger than those in the TD group and had significantly lower GDS scores.

The average accuracy, precision, and recall over the 500 repeated validations were 0.73, 0.73, and 0.75, respectively, and the AUC was 0.81 (Figure 6). Randomized undersampling and the training–validation split were performed before every fitting. After undersampling, the ASD and TD groups each contained 44 children, and the training and validation sets were split in a 7:3 ratio.

Figure 6. Receiver operating characteristic (ROC) curve and area under the curve (AUC), plotted from the results of 500 randomized validations.

In the logistic regression analysis, we entered age as an independent variable before stepwise selection because the ASD and TD groups differed significantly in age. In addition to age, the best-fitting logistic model identified nine features that were significantly associated with an ASD diagnosis (Table 2). Features negatively associated with ASD were body-revisits and head-glance-duration when the cartoon character was clapping hands, cartoon-sequence when scratching the neck, people-glances-count when nodding while stretching thumbs, and people-net-dwell-time when dancing. Features positively associated with ASD were people-first-fixation-duration when the person was dancing, cartoon-sequence when the character was nodding, and cartoon-fixation-time when blowing a kiss. In addition, the maximum saccade velocity was positively associated with an ASD diagnosis (Table 2).

In this study, we developed a novel ASD identification framework for children that combines a cartoon-based stimulus paradigm, eye movement data reflecting cartoon preferences, and machine learning (ML). Our task was based on Pierce et al. (2011), in which videos of children moving or dancing were pitted against repetitively moving geometric patterns. In our stimulus design, cartoon and real human figures were shown simultaneously, and the clothing and actions of the cartoon characters were matched to those of the real figures. Previous studies have shown that ASD and TD children have different visual preferences for cartoon and real human faces, with ASD children preferring cartoon faces much more than TD children (Van der Geest et al., 2002; Rosset et al., 2008). Our results indicate that this stimulus paradigm distinguished ASD from TD children with satisfactory efficacy. The ML model achieved an average accuracy, precision, and recall of 0.73, 0.73, and 0.75, respectively, and an AUC of 0.81.

Other studies have similarly combined eye-tracking technology with ML algorithms for the objective diagnosis of ASD. For example, Liu et al. (2016) used a data-driven approach to extract features from face-scanning data and applied a support vector machine (SVM) for classification. Although this study achieved a maximum classification accuracy of 88.5%, its sample was relatively small, with only 29 ASD children. Kang et al. (2020) recruited 77 low-functioning autistic children and 80 TD children to watch a random sequence of face photos. Using SVM, they achieved a maximum classification accuracy of 72.5% (AUC = 0.77). However, all of their participants were aged 3–6 years, and no younger children were included. Compared with previous studies (Tao and Shyu, 2019; Tsuchiya et al., 2021), our study has the advantage of including the largest sample of children under the age of three. Other researchers have pursued computer-aided early diagnosis and special education based on EEG signals and/or brain imaging; however, results vary across studies. Wee et al. (2014) reported a maximum accuracy of 96% using SVM as a classifier in a study based on structural magnetic resonance imaging (sMRI). Haar et al. (2016) conducted a large-scale investigation with 245 ASD and 245 control subjects, using cortical surface area as a feature and linear discriminant analysis as a classifier, and reported a low accuracy of 60%. Rane et al. (2017) conducted the largest fMRI study, with over a thousand participants, and obtained a low accuracy of 60.56%. Wang et al. (2019) also included over a thousand participants and reported a higher accuracy of 93.59%. Although these findings are encouraging, we favor eye tracking for clinical use because MRI is considerably more difficult to implement in clinical practice.

In the current study, we found that several gaze behaviors related to real humans or human body parts, such as body-revisits, head-glance-duration, people-glance-count, and people-net-dwell-time, were negatively associated with ASD. In contrast, gaze behaviors related to cartoon characters, such as cartoon-sequence and cartoon-fixation-time, were positively associated with ASD. These behaviors have social significance: children who were less interested in real humans or human body parts and more interested in cartoon characters were more likely to have ASD. These results are similar to previous findings that individuals with ASD were slow to respond to social stimuli, especially when non-social stimuli related to their narrow interests competed with the social stimuli (Sasson and Touchstone, 2014; Wang et al., 2014). People with more autistic traits showed greater interest in non-human social agents such as animals, robots, or cartoons (Atherton and Cross, 2018). One possible explanation for the cartoon preference of ASD children is that cartoons do not require social interaction. In other words, the relatively typical processing of cartoons in ASD may be related to impairments in social communication skills (Rosset et al., 2008).

However, we found that people-first-fixation-duration when dancing was positively associated with an ASD diagnosis. One reason might be that the real person had a greater range of motion and children were more attracted to this motion. Another unexpected result was that when watching the scratching-the-neck video, ASD children attended quickly to the cartoon area, whereas when watching the nodding video, they attended slowly to the cartoon area. Comparing these two videos, we found that in the nodding video, the cartoon character’s face was significantly larger than the real person’s. This suggests that although cartoon characters have lower social intensity than real people, ASD children may show similar face avoidance when the face is relatively large (Falck-Ytter and von Hofsten, 2011).

This study used a non-linear ML model to select a set of indicators, which were summarized automatically over multiple iterations of model fitting. These indicators are strongly data-driven and do not depend heavily on the specific features of the selection method itself. According to our statistics, the predictive ability of the indicators decreases down the ranked list. However, it is essential to note that the selection relied on a non-linear ML method (RF). Although we have attempted to explain why the selected indicators are reasonable, using them individually or in a linear combination to construct predictive models may not achieve the same performance as combining them within the RF. This follows from how the decision trees in an RF work: the same indicator may be used multiple times, under different conditions, at different decision nodes.
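The point about decision nodes can be checked directly. In scikit-learn, each fitted tree exposes `tree_.feature`, the index of the feature used at each node (negative for leaves). A minimal sketch on synthetic XOR-style data counts how often each feature is reused across all nodes of a forest. The XOR label is an assumption chosen for illustration because neither feature predicts it on its own, which mirrors why individual or linear use of RF-selected indicators can underperform. The helper `split_usage` is hypothetical.

```python
from collections import Counter

import numpy as np
from sklearn.ensemble import RandomForestClassifier


def split_usage(forest: RandomForestClassifier) -> Counter:
    """Count, over every tree in a fitted forest, how many internal nodes split on each feature."""
    counts: Counter = Counter()
    for tree in forest.estimators_:
        # tree_.feature holds the split feature of each node; leaves carry a negative sentinel.
        counts.update(int(f) for f in tree.tree_.feature if f >= 0)
    return counts


rng = np.random.default_rng(0)
X = rng.normal(size=(200, 3))
# XOR-style label: neither feature 0 nor feature 1 predicts y on its own.
y = ((X[:, 0] > 0) ^ (X[:, 1] > 0)).astype(int)
forest = RandomForestClassifier(n_estimators=50, random_state=0).fit(X, y)
usage = split_usage(forest)
print(dict(sorted(usage.items())))
```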

This study has several limitations. First, artificial undersampling may increase false-positive judgments in ecological settings, especially when prevalence is extremely low. However, the class distribution in our sample (117 positive and 44 negative cases) differs markedly from the real-world prevalence of ASD. Without undersampling to balance the classes, it would therefore not have been possible to build a model better suited to large-scale screening. Moreover, an excess of positive samples might bias the model, which is another reason why we ultimately decided to use balanced samples. In future research, the extracted indicators should be used to fit a screening model better suited to ecological settings in larger samples (for example, province-level screening studies). Second, age and developmental level differed between the ASD and TD children, and these differences might affect face-scanning patterns (Yi et al., 2014). We did not include age in the prediction model and focused only on whether task performance differed. With balanced age distributions and cognitive levels, incorporating age as a factor might further improve model performance; however, owing to the limited sample size and imbalanced age distribution, this study could not take this step. Third, although we found correlations between eye movement features and ASD diagnosis, the complex non-linear classification behavior of RF means that the actual eye movement patterns of ASD children, especially when viewing cartoon characters, are still not fully clear. Fourth, even though our study included the largest sample of children under the age of three, larger samples are still needed in future studies; in particular, children who are at high risk of ASD and younger than 2 years are needed to validate our results and model.
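The false-positive concern follows from Bayes’ rule: the positive predictive value is PPV = sens·π / (sens·π + (1 − spec)(1 − π)), where π is prevalence. A minimal sketch using the reported mean recall (0.75) as sensitivity. Specificity is not reported, so the value 0.72 below is an assumption: it is roughly what a precision of 0.73 implies when the classes are balanced, but averaged ratios make this only approximate.

```python
def positive_predictive_value(sensitivity: float, specificity: float, prevalence: float) -> float:
    """Bayes' rule: probability of ASD given a positive screen."""
    true_pos = sensitivity * prevalence
    false_pos = (1.0 - specificity) * (1.0 - prevalence)
    return true_pos / (true_pos + false_pos)


SENSITIVITY = 0.75  # reported mean recall
SPECIFICITY = 0.72  # ASSUMPTION: not reported; approximate value implied by precision 0.73 at balance

for prevalence in (0.5, 0.1, 0.01):
    ppv = positive_predictive_value(SENSITIVITY, SPECIFICITY, prevalence)
    print(f"prevalence {prevalence:.2f}: PPV = {ppv:.3f}")
```

Under these assumptions, a PPV near 0.73 in a balanced sample falls to roughly 0.03 at 1% prevalence, which illustrates why the model would need recalibration before population screening.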

Despite these limitations, the current study suggests that using eye-tracking techniques with ML algorithms might be promising for identifying ASD. The value of artificial faces, such as cartoon characters, in the field of ASD diagnosis and intervention is worth further exploring.

The raw data supporting the conclusions of this article will be made available by the authors, without undue reservation.

The studies involving humans were reviewed and approved by the ethics committee of the Second Xiangya Hospital, Central South University (No. 2017YFC1309904). The studies were conducted in accordance with the local legislation and institutional requirements. Written informed consent for participation in this study was provided by the participants’ legal guardians/next of kin.

FM, SW, TY, ZX, and YZ performed the experiments. FL and ZL performed the statistical analysis. FM revised the manuscript. JL drafted the manuscript. XL designed the study. All authors have given final approval for the version to be published.

This work was supported by Youth Talent Training “Green Seedling Program of Beijing Hospital Management Center” (No. QML20231906 to FM), Shenzhen Fund for Guangdong Provincial High-level Clinical Key Specialties (No. SZGSP013 to JL), National Key R&D Program of China (No. 2017YFC1309900 to XL), and Key Research and Development Program of Hunan Province (No. 2019SK2081 to XL).

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

American Psychological Association (2009). Diagnostic and statistical manual of mental disorders. 5th Edn. Washington, DC: American Psychological Association.

Atherton, G., and Cross, L. (2018). Seeing more than human: autism and anthropomorphic theory of mind. Front. Psychol. 9:528. doi: 10.3389/fpsyg.2018.00528

Biau, G., and Scornet, E. (2016). A random forest guided tour. TEST 25, 197–227. doi: 10.1007/s11749-016-0481-7

Boulesteix, A. L., Janitza, S., Kruppa, J., and König, I. R. (2012). Overview of random forest methodology and practical guidance with emphasis on computational biology and bioinformatics. Wiley Interdiscip. Rev. Data Min. Knowl. Discov. 2, 493–507. doi: 10.1002/widm.1072

Chita-Tegmark, M. (2016a). Social attention in ASD: a review and meta-analysis of eye-tracking studies. Res. Dev. Disabil. 48, 79–93. doi: 10.1016/j.ridd.2015.10.011

Chita-Tegmark, M. (2016b). Attention allocation in ASD: a review and meta-analysis of eye-tracking studies. Rev. J. Autism Dev. Disord. 3:400. doi: 10.1007/s40489-016-0089-6

Falck-Ytter, T., and von Hofsten, C. (2011). How special is social looking in ASD: a review. Prog. Brain Res. 189, 209–222. doi: 10.1016/B978-0-444-53884-0.00026-9

Frazier, T. W., Strauss, M., Klingemier, E. W., Zetzer, E. E., Hardan, A. Y., Eng, C., et al. (2017). A meta-analysis of gaze differences to social and nonsocial information between individuals with and without autism. J. Am. Acad. Child Adolesc. Psychiatry 56, 546–555. doi: 10.1016/j.jaac.2017.05.005

González, M. C., Vásquez, M., and Hernández-Chávez, M. (2019). Autism spectrum disorder: clinical diagnosis and ADOS test. Rev. Chil. Pediatr. 90, 485–491. doi: 10.32641/rchped.v90i5.872

Haar, S., Berman, S., Behrmann, M., and Dinstein, I. (2016). Anatomical abnormalities in autism? Cereb. Cortex 26, 1440–1452. doi: 10.1093/cercor/bhu242

James, L. W., Pizur-Barnekow, K. A., and Schefkind, S. (2014). Online survey examining practitioners’ perceived preparedness in the early identification of autism. Am. J. Occup. Ther. 68, e13–e20. doi: 10.5014/ajot.2014.009027

Kang, J., Han, X., Hu, J.-F., Feng, H., and Li, X. (2020). The study of the differences between low-functioning autistic children and typically developing children in the processing of the own-race and other-race faces by the machine learning approach. J. Clin. Neurosci. 81, 54–60. doi: 10.1016/j.jocn.2020.09.039

Kollias, K.-F., Syriopoulou-Delli, C. K., Sarigiannidis, P., and Fragulis, G. F. (2021). The contribution of machine learning and eye-tracking technology in autism spectrum disorder research: a systematic review. Electronics 10:2982. doi: 10.3390/electronics10232982

Leekam, S. R., Hunnisett, E., and Moore, C. (1998). Targets and cues: gaze-following in children with autism. J. Child Psychol. Psychiatry 39, 951–962. doi: 10.1111/1469-7610.00398

Liu, W., Li, M., and Yi, L. (2016). Identifying children with autism spectrum disorder based on their face processing abnormality: a machine learning framework. Autism Res. 9, 888–898. doi: 10.1002/aur.1615

Lord, C., Rutter, M., DiLavore, P. C., and Risi, S. (1999). Autism diagnostic observation schedule – WPS (ADOS-WPS). Los Angeles, CA: Western Psychological Services.

Masedu, F., Vagnetti, R., Pino, M. C., Valenti, M., and Mazza, M. (2022). Comparison of visual fixation trajectories in toddlers with autism spectrum disorder and typical development: a Markov chain model. Brain Sci. 12:10. doi: 10.3390/brainsci12010010

Menze, B. H., Kelm, B. M., Masuch, R., Himmelreich, U., Bachert, P., Petrich, W., et al. (2009). A comparison of random forest and its Gini importance with standard chemometric methods for the feature selection and classification of spectral data. BMC Bioinformatics 10:213. doi: 10.1186/1471-2105-10-213

Moore, A., Wozniak, M., Yousef, A., Barnes, C. C., Cha, D., Courchesne, E., et al. (2018). The geometric preference subtype in ASD: identifying a consistent, early-emerging phenomenon through eye tracking. Mol. Autism. 9:19. doi: 10.1186/s13229-018-0202-z

Pierce, K., Conant, D., Hazin, R., Stoner, R., and Desmond, J. (2011). Preference for geometric patterns early in life as a risk factor for autism. Arch. Gen. Psychiatry 68, 101–109. doi: 10.1001/archgenpsychiatry.2010.113

Pierce, K., Marinero, S., Hazin, R., Mckenna, B. S., Barnes, C. C., and Malige, A. (2016). Eye tracking reveals abnormal visual preference for geometric images as an early biomarker of an autism spectrum disorder subtype associated with increased symptom severity. Biol. Psychiatry 79, 657–666. doi: 10.1016/j.biopsych.2015.03.032

Powers, D. M. (2020). Evaluation: From precision, recall and F-measure to ROC, informedness, markedness and correlation. arXiv. doi: 10.48550/arXiv.2010.16061

Rane, S., Jolly, E., Park, A., Jang, H., and Craddock, C. (2017). Developing predictive imaging biomarkers using whole-brain classifiers: application to the ABIDE I dataset. Res. Ideas Outcomes 3:e12733. doi: 10.3897/rio.3.e12733

Robain, F., Godel, M., Kojovic, N., Franchini, M., Journal, F., and Schaer, M. (2022). Measuring social orienting in preschoolers with autism spectrum disorder using cartoons stimuli. J. Psychiatr. Res. 156, 398–405. doi: 10.1016/j.jpsychires.2022.10.039

Rosset, D. B., Rondan, C., Da Fonseca, D., Santos, A., Assouline, B., and Deruelle, C. (2008). Typical emotion processing for cartoon but not for real faces in children with autistic spectrum disorders. J. Autism Dev. Disord. 38, 919–925. doi: 10.1007/s10803-007-0465-2

Sappok, T., Heinrich, M., and Underwood, L. (2015). Screening tools for autism spectrum disorders. Adv. Autism 1, 12–29. doi: 10.1108/AIA-03-2015-0001

Sasson, N., and Touchstone, E. (2014). Visual attention to competing social and object images by preschool children with autism spectrum disorder. J. Autism Dev. Disord. 44, 584–592. doi: 10.1007/s10803-013-1910-z

Shi, L., Zhou, Y., Ou, J., Gong, J., Wang, S., Cui, X., et al. (2015). Different visual preference patterns in response to simple and complex dynamic social stimuli in preschool-aged children with autism spectrum disorders. PLoS One 10:e0122280. doi: 10.1371/journal.pone.0122280

Spezio, M. L., Huang, P.-Y. S., Castelli, F., and Adolphs, R. (2007). Amygdala damage impairs eye contact during conversations with real people. J. Neurosci. 27, 3994–3997. doi: 10.1523/JNEUROSCI.3789-06.2007

Tao, Y., and Shyu, M.-L. (2019). SP-ASDNet: CNN-LSTM based ASD classification model using observer scanpaths. 2019 IEEE International Conference on Multimedia & Expo Workshops (ICMEW), 641–646. doi: 10.1109/ICMEW.2019.00124

Tsuchiya, K. J., Hakoshima, S., Hara, T., Ninomiya, M., Saito, M., Fujioka, T., et al. (2021). Diagnosing autism spectrum disorder without expertise: a pilot study of 5- to 17-year-old individuals using Gazefinder. Front. Neurol. 11:603085. doi: 10.3389/fneur.2020.603085

Van der Geest, J. N., Kemner, C., Camfferman, G., Verbaten, M., and van Engeland, H. (2002). Looking at images with human figures: comparison between autistic and normal children. J. Autism Dev. Disord. 32, 69–75. doi: 10.1023/A:1014832420206

Van’t Hof, M., Tisseur, C., van Berckelaer-Onnes, I., van Nieuwenhuyzen, A., Daniels, A. M., Deen, M., et al. (2021). Age at autism spectrum disorder diagnosis: a systematic review and meta-analysis from 2012 to 2019. Autism 25, 862–873. doi: 10.1177/1362361320971107

Volkmar, F. R., Lord, C., Bailey, A., Schultz, R. T., and Klin, A. (2004). Autism and pervasive developmental disorders. J. Child Psychol. Psychiatry 45, 135–170. doi: 10.1046/j.0021-9630.2003.00317.x

Wang, C., Xiao, Z., Wang, B., and Wu, J. (2019). Identification of autism based on SVM-RFE and stacked sparse auto-encoder. IEEE Access 7, 118030–118036. doi: 10.1109/ACCESS.2019.2936639

Wang, S., Xu, J., Jiang, M., Zhao, Q., Hurlemann, R., Adolphs, R., et al. (2014). Autism spectrum disorder, but not amygdala lesions, impairs social attention in visual search. Neuropsychologia 63, 259–274. doi: 10.1016/j.neuropsychologia.2014.09.002

Wee, C. Y., Wang, L., Shi, F., Yap, P. T., and Shen, D. (2014). Diagnosis of autism spectrum disorders using regional and interregional morphological features. Hum. Brain Mapp. 35, 3414–3430. doi: 10.1002/hbm.22411

Yang, Y. (2016). Rating scales for children’s developmental behavior and mental health (version 1). Beijing: People's Medical Publishing House, 71.

Keywords: autism spectrum disorder, eye-tracking, cartoon character, machine learning, random forest

Citation: Meng F, Li F, Wu S, Yang T, Xiao Z, Zhang Y, Liu Z, Lu J and Luo X (2023) Machine learning-based early diagnosis of autism according to eye movements of real and artificial faces scanning. Front. Neurosci. 17:1170951. doi: 10.3389/fnins.2023.1170951

Received: 21 February 2023; Accepted: 17 August 2023;

Published: 15 September 2023.

Edited by:

Xuemin Li, Peking University Third Hospital, China

Reviewed by:

Kotaro Yuge, Kurume University Hospital, Japan

Copyright © 2023 Meng, Li, Wu, Yang, Xiao, Zhang, Liu, Lu and Luo. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Xuerong Luo, luoxuerong@csu.edu.cn; Jianping Lu, szlujianping@126.com

†These authors have contributed equally to this work

Disclaimer: All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article or claim that may be made by its manufacturer is not guaranteed or endorsed by the publisher.
