ORIGINAL RESEARCH article

Front. Neurosci., 27 March 2023

Sec. Visual Neuroscience

Volume 17 - 2023 | https://doi.org/10.3389/fnins.2023.1143006

This article is part of the Research Topic Eye Movement Tracking in Ocular, Neurological, and Mental Diseases

Zhili Tang¹, Xiaoyu Liu¹,²*, Hongqiang Huo¹, Min Tang¹, Xiaofeng Qiao¹, Duo Chen¹, Ying Dong¹, Linyuan Fan¹, Jinghui Wang¹, Xin Du¹, Jieyi Guo¹, Shan Tian¹, Yubo Fan¹,²*

Introduction: Eye-tracking technology provides a reliable and cost-effective approach to characterize mental representation according to specific patterns. Mental rotation tasks, referring to the mental representation and transformation of visual information, have been widely used to examine visuospatial ability. In these tasks, participants visually perceive three-dimensional (3D) objects and mentally rotate them until they identify whether the paired objects are identical or mirrored. In most studies, 3D objects are presented using two-dimensional (2D) images on a computer screen. Currently, visual neuroscience tends to investigate visual behavior responding to naturalistic stimuli rather than image stimuli. Virtual reality (VR) is an emerging technology used to provide naturalistic stimuli, allowing the investigation of behavioral features in an immersive environment similar to the real world. However, mental rotation tasks using 3D objects in immersive VR have been rarely reported.

Methods: Here, we designed a VR mental rotation task using 3D stimuli presented in a head-mounted display (HMD). An eye tracker incorporated into the HMD was used to record eye movement characteristics synchronously during the task. The stimuli were virtual paired objects oriented at specific angular disparities (0, 60, 120, and 180°). We recruited thirty-three participants who were required to determine whether the paired 3D objects were identical or mirrored.

Results: Behavioral results demonstrated that response times were longer when comparing mirrored objects than when comparing identical objects. Eye-movement results showed that the percent fixation time, the number of within-object fixations, and the number of saccades for the mirrored objects were significantly lower than those for the identical objects, providing further explanations for the behavioral results.

Discussion: In the present work, we examined behavioral and eye movement characteristics during a VR mental rotation task using 3D stimuli. Significant differences were observed in response times and eye movement metrics between identical and mirrored objects. The eye movement data provided further explanation for the behavioral results in the VR mental rotation task.

Mental rotation is the ability to mentally represent and rotate two-dimensional (2D) images or three-dimensional (3D) objects (Shepard and Metzler, 1971) and has been widely used to examine visuospatial ability (Pletzer et al., 2019; Ito et al., 2022). In classical mental rotation tasks, participants visually perceive 3D objects and mentally rotate them until the objects are identified. It is generally accepted that the mental rotation process includes five cognitive stages (Desrocher et al., 1995): (i) processing of visual information and creating mental images of the presented objects (imagining and evaluating the presented objects from different angles); (ii) mentally rotating the objects or images; (iii) comparing the presented objects; (iv) determining whether the presented objects are identical; (v) making a decision (indicated by a button). Response times and accuracy rates are widely employed in examining a mental rotation effect and mental rotation performance (Shepard and Metzler, 1971; Berneiser et al., 2018), but these behavioral indices are not sufficient to fully understand the related complex cognitive processes. Recent studies have provided evidence that eye movement characteristics are promising for examining these mental processes (Xue et al., 2017; Toth and Campbell, 2019; Tiwari et al., 2021).

Eye-tracking technology is an effective tool for examining the cognitive processes required for complex cognitive tasks (Lancry-Dayan et al., 2023). Eye movements captured by eye tracking systems can provide comprehensive information on mental processes in mental rotation tasks and have been effective in revealing brain activity (Toth and Campbell, 2019). It has been suggested that eye movement parameters could characterize mental representation according to specific patterns (Nazareth et al., 2019). Eye movement metrics, including fixations and saccades, have been used in studies using mental rotation tasks (Suzuki et al., 2018). Identifying fixations allows researchers to examine objects of interest (Mast and Kosslyn, 2002); fixations are mainly responsible for the acquisition of visual information in mental rotation tasks (Yarbus, 1967). A recent study indicated that fixation metrics could illustrate mental rotation strategies, and that fixation patterns were related to mental rotation performance (Nazareth et al., 2019). Saccades are the rapid eye movements between fixations (Kowler et al., 1995). Saccades serve to rapidly shift the fovea to a new target to integrate visual information from fixations. This integration allows the brain to compare the visual information obtained from fixations with the remembered image of the object (Ibbotson and Krekelberg, 2011). In addition, eye tracking data can be used to characterize different mental rotation strategies. Holistic and piecemeal strategies have been extensively investigated in previous studies on mental rotation (Khooshabeh et al., 2013; Hsing et al., 2023). The holistic strategy refers to mentally rotating one of two 3D objects as a whole and encoding the spatial information of the object. For instance, when comparing two objects, one object is holistically rotated along a vertical axis for comparison with the other object.
The piecemeal strategy refers to segmenting an object into several pieces and encoding only part of its spatial information. For instance, one of the two objects would be segmented into several independent pieces, and participants may mentally rotate one piece of both objects and see if the two pieces match. The strategy ratio is commonly used to reflect which strategy is used during mental rotation (Khooshabeh and Hegarty, 2010). The strategy ratio refers to the ratio of the number of fixations within an object to the number of saccades between the two objects. In a holistic strategy, the ratio would be close to one; in a piecemeal strategy, the ratio would be greater than one. Although previous studies have provided insights into eye movement characteristics in mental rotation tasks, these findings were mainly obtained using 2D images presented on computer screens (Xue et al., 2017; Campbell et al., 2018). Previously, the use of visual stimuli has relied heavily on simplified image stimuli (Snow and Culham, 2021), which are distinctly different from naturalistic stimuli.

Recently, visual neuroscience studies have focused on visual behavior in response to naturalistic stimuli rather than simplified images (Haxby et al., 2020; Jaaskelainen et al., 2021; Musz et al., 2022). Visual behavior and brain activity evoked by planar images are different from those evoked by natural 3D objects (Marini et al., 2019; Chiquet et al., 2020). Natural 3D objects are rich in depth cues and visual input from the surrounding environment (e.g., edges) (Chiquet et al., 2020). For example, recent studies showed that 3D objects triggered stronger brain responses than 2D images (Marini et al., 2019; Tang et al., 2022).

Virtual reality (VR) is an emerging technology used to provide naturalistic stimuli, allowing us to understand behavioral characteristics in an immersive environment similar to the real world (Hofmann et al., 2021). This technology serves to fill the gap between traditional presentations based on 2D computer screens and naturalistic visual presentations close to the real world (Wenk et al., 2022). Virtual environments can simulate real-world visual inputs (Robertson et al., 1993; Minderer and Harvey, 2016), allowing more naturalistic 3D objects with depth cues (El Jamiy and Marsh, 2019). Compared with mental rotation tasks using 2D images, the VR version of the mental rotation task using 3D objects provides new opportunities to quantify visuospatial ability in environments similar to the real world. For example, a recent study has shown the impact of virtual environments on mental rotation performance. The authors assessed differences in mental rotation ability based on the dimensionality and complexity of virtual environments, which provided new insights into the impact of stereo 3D objects on mental rotation performance (Lochhead et al., 2022). Their results suggested that the performance advantage of 3D objects could be greater than that of conventional 2D media. In addition, previous work from our lab characterized behavioral performance and neural oscillations in mental rotation tasks using 2D images and stereoscopic 3D objects (Tang et al., 2022). These studies motivated extensions of the VR version of the mental rotation task, such as the exploration of eye movement characteristics. Eye-tracking technology has been incorporated into a head-mounted display (HMD), providing a new opportunity to understand human visual behavior in VR (Clay et al., 2019; Chiquet et al., 2020). Eye movement characteristics based on 3D objects help to understand visual behavior in a natural context close to the real world (Chiquet et al., 2020).
However, the eye movement characteristics in mental rotation tasks using naturalistic 3D objects with depth cues have been rarely reported.

Here, we designed a VR mental rotation task using 3D stimuli presented in an HMD with an integrated eye tracker to record eye movements synchronously during the task. We first used behavioral performance to validate a mental rotation effect in the VR environment. Further, we examined the eye movement characteristics in this task. As expected, our results indicated that the VR task also evoked a mental rotation effect, suggesting that the virtual task was effective and feasible. We analyzed the eye movement data to explain the behavioral results observed in the VR mental rotation task.

Thirty-three participants were enrolled [17 females and 16 males; mean age = 28.59 years, standard deviation (SD) = 2.41]. All participants were recruited from a university, were right-handed, and had normal or corrected-to-normal vision. Written informed consent was obtained from all participants, and ethical approval was granted by the local ethical committee in accordance with the Declaration of Helsinki. Data from one participant were excluded because of low eye tracking data quality. Thus, data from 32 participants were included in the analysis.

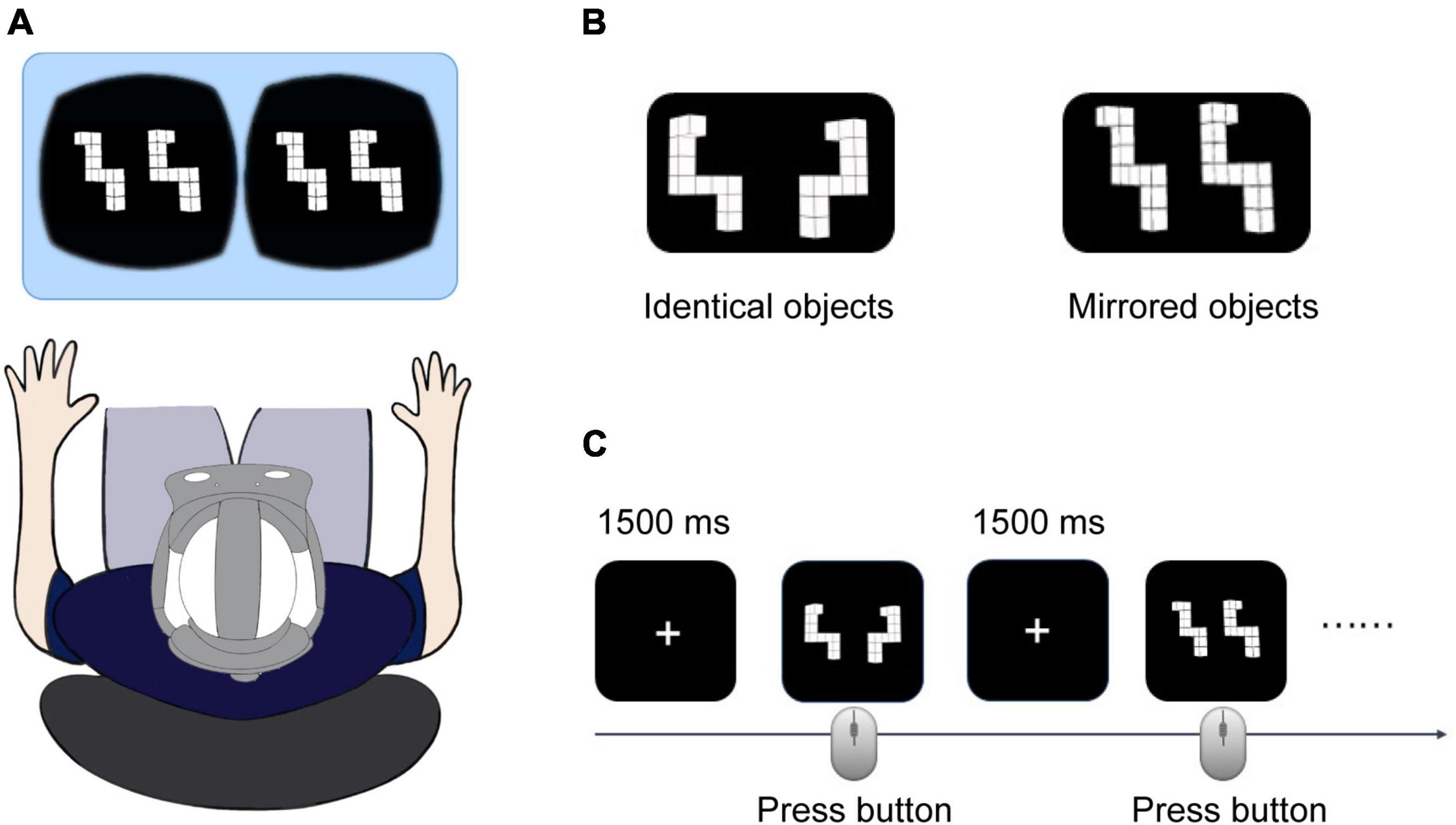

We constructed 120 pairs of 3D objects based on original stimuli from the mental-rotation stimulus library (the 4th prototype) (Peters and Battista, 2008). The 3D paired objects were oriented at specific angular disparities (0, 60, 120, and 180°). For each pair, the two stimuli were identical or mirrored objects (Figure 1B). The 120 stimuli consisted of pairs of objects (identical, mirrored) in four angular disparities (0, 60, 120, and 180°).

Figure 1. (A) Rendered 3D visual stimuli presented by a head-mounted display (HMD). (B) Sample of three-dimensional (3D) identical and mirrored stimuli. (C) Schematic diagram of the mental rotation task. The participants were asked to judge whether the paired objects were identical or mirrored and respond by pressing a mouse button (left: identical and right: mirrored) as quickly and accurately as possible.

Each 3D object was constructed using 3D Studio Max (Autodesk Inc., San Rafael, CA, USA) and stored as .obj files. We used the Unity game engine (Unity Software, Inc., San Francisco, CA, USA) to render the 3D objects. The rendered 3D visual stimuli were presented in a HMD (VIVE Pro Eye; HTC Corporation, Taipei, Taiwan) in randomized sequences [generated using MATLAB (The MathWorks, Natick, MA, USA)].

All participants completed the VR mental rotation task with 3D stimuli presented in the HMD in a quiet room (Figure 1A). Participants' information, including demographic data and VR experience, was collected before the experiment. During the experiment, participants were seated comfortably in a chair. The experimenter placed the VR headset on the participant's head, and a five-point calibration of the eye tracker was performed before the experiment. We started the experiment once the calibration was successful.

The VR mental rotation task consisted of 120 trials separated into two blocks of 60 trials. Short breaks of approximately 5 min were provided between blocks to prevent participant fatigue. For each trial, a white fixation cross was displayed in the center of the HMD for 1,500 ms, followed by the presentation of a pair of 3D visual stimuli. The participants were asked to judge whether the paired objects were identical or mirrored and to respond by pressing a mouse button (left button for identical and right button for mirrored) as quickly and accurately as possible. The trial ended once the participants indicated their decision by pressing a button (Figure 1C).
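The trial structure described above can be sketched in a few lines of Python. This is only an illustration, not the authors' MATLAB script: the function name `build_trial_blocks`, the seed, and in particular the assumption that the 120 trials are balanced across the eight Stimulus Type × Angular Disparity conditions (15 trials each) are hypothetical, since the text does not state how many pairs fall in each condition.

```python
import random

STIMULUS_TYPES = ("identical", "mirrored")
ANGLES_DEG = (0, 60, 120, 180)
N_TRIALS = 120   # total trials, as stated in the text
N_BLOCKS = 2     # two blocks of 60 trials, as stated in the text


def build_trial_blocks(seed=0):
    """Return N_BLOCKS lists of (stimulus_type, angle_deg) trials in random order."""
    conditions = [(s, a) for s in STIMULUS_TYPES for a in ANGLES_DEG]
    # Assumption (not stated in the source): equal repetitions per condition.
    reps = N_TRIALS // len(conditions)
    trials = conditions * reps
    rng = random.Random(seed)  # seeded so the sequence is reproducible
    rng.shuffle(trials)
    block_size = N_TRIALS // N_BLOCKS
    return [trials[i:i + block_size] for i in range(0, N_TRIALS, block_size)]
```

Shuffling the full list before splitting randomizes condition order across both blocks; a design that balances conditions within each block would shuffle per block instead.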

We used a customized script in the Unity 3D platform to record behavioral data including response times and accuracy rates. Stimulus onsets and trial completion times were marked by the script. The response time was defined as the duration from stimulus onset to trial completion. The accuracy rate was defined as the ratio of trials correctly judged out of the total number of trials.
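As a minimal sketch of these two definitions (in Python rather than the Unity script the study used), the response time is the difference between two logged timestamps, and the accuracy rate is the fraction of trials whose button press matches the true stimulus type. The `Trial` record and its field names are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Trial:
    onset_ms: float          # stimulus onset timestamp
    response_ms: float       # button-press (trial completion) timestamp
    is_mirrored: bool        # ground truth for the stimulus pair
    answered_mirrored: bool  # True if the right mouse button was pressed


def response_time_ms(trial):
    """Duration from stimulus onset to trial completion."""
    return trial.response_ms - trial.onset_ms


def accuracy_rate(trials):
    """Fraction of trials judged correctly out of all trials."""
    correct = sum(t.is_mirrored == t.answered_mirrored for t in trials)
    return correct / len(trials)
```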

The eye tracker was incorporated into the HMD. Eye movement data were collected using an eye tracking SDK (SRanipal), with a maximum frequency of 120 Hz. A collider (i.e., a Unity object) was added to the surface of each 3D object presented in the HMD. The eye tracker in the HMD could capture all the possible gazes on the surface of the collider.

Eye movement events, including fixations and saccades, were identified to represent the eye movement characteristics in the VR mental rotation task. For each trial, we applied an identification by dispersion threshold (IDT) algorithm to group the collected gaze data into fixations (Salvucci and Goldberg, 2000; Llanes-Jurado et al., 2020). The end of each fixation was marked as a saccade event. The IDT algorithm requires two parameters: the dispersion threshold and the minimum fixation duration (Blignaut, 2009). This algorithm has been found to be the most similar to manual detection by human experts (Andersson et al., 2017). In the present study, fixations were marked using a 1° spatial dispersion threshold (Blignaut, 2009; Arthur et al., 2021) and a minimum duration of 60 ms (Komogortsev et al., 2010). To test the reliability of our results, we compared the eye movement metrics obtained from the IDT algorithm with three dispersion thresholds (1, 1.2, and 1.4°). These analyses showed that the eye movement results obtained with the 1° dispersion threshold were consistent with those obtained with the 1.2 and 1.4° thresholds (Supplementary Figures 1–4). Detailed statistical results are listed in Supplementary Tables 1–4. These results support the choice of the 1° dispersion threshold in our study.
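The dispersion-threshold procedure can be illustrated with a short Python sketch. It is not the study's implementation: it assumes that gaze samples have already been converted to horizontal and vertical angles in degrees, and it uses the common definition of dispersion as the sum of the horizontal and vertical ranges within the window. The function names and the tuple layout of the returned fixations are hypothetical; the 1° threshold and 60 ms minimum duration are the values stated above.

```python
def dispersion(xs, ys):
    """Dispersion of a window: horizontal range plus vertical range (degrees)."""
    return (max(xs) - min(xs)) + (max(ys) - min(ys))


def idt_fixations(t, x, y, dispersion_deg=1.0, min_duration_s=0.060):
    """Group gaze samples into fixations with a dispersion-threshold (I-DT) scheme.

    t: timestamps in seconds; x, y: gaze angles in degrees (assumed inputs).
    Returns a list of (start_s, end_s, mean_x, mean_y) tuples.
    """
    fixations = []
    n = len(t)
    start = 0
    while start < n:
        # Find the smallest window that spans at least the minimum duration.
        end = start
        while end < n and t[end] - t[start] < min_duration_s:
            end += 1
        if end >= n:
            break  # not enough samples left to form a fixation
        if dispersion(x[start:end + 1], y[start:end + 1]) <= dispersion_deg:
            # Grow the window while the dispersion stays under the threshold.
            while (end + 1 < n and
                   dispersion(x[start:end + 2], y[start:end + 2]) <= dispersion_deg):
                end += 1
            count = end - start + 1
            fixations.append((t[start], t[end],
                              sum(x[start:end + 1]) / count,
                              sum(y[start:end + 1]) / count))
            start = end + 1  # the end of a fixation marks a saccade event
        else:
            start += 1  # drop the first sample and try again
    return fixations
```

At a 120 Hz sampling rate, the 60 ms minimum duration corresponds to a starting window of nine samples. Recomputing the dispersion for each window keeps the sketch readable at the cost of speed; a production version would update the running minimum and maximum incrementally.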

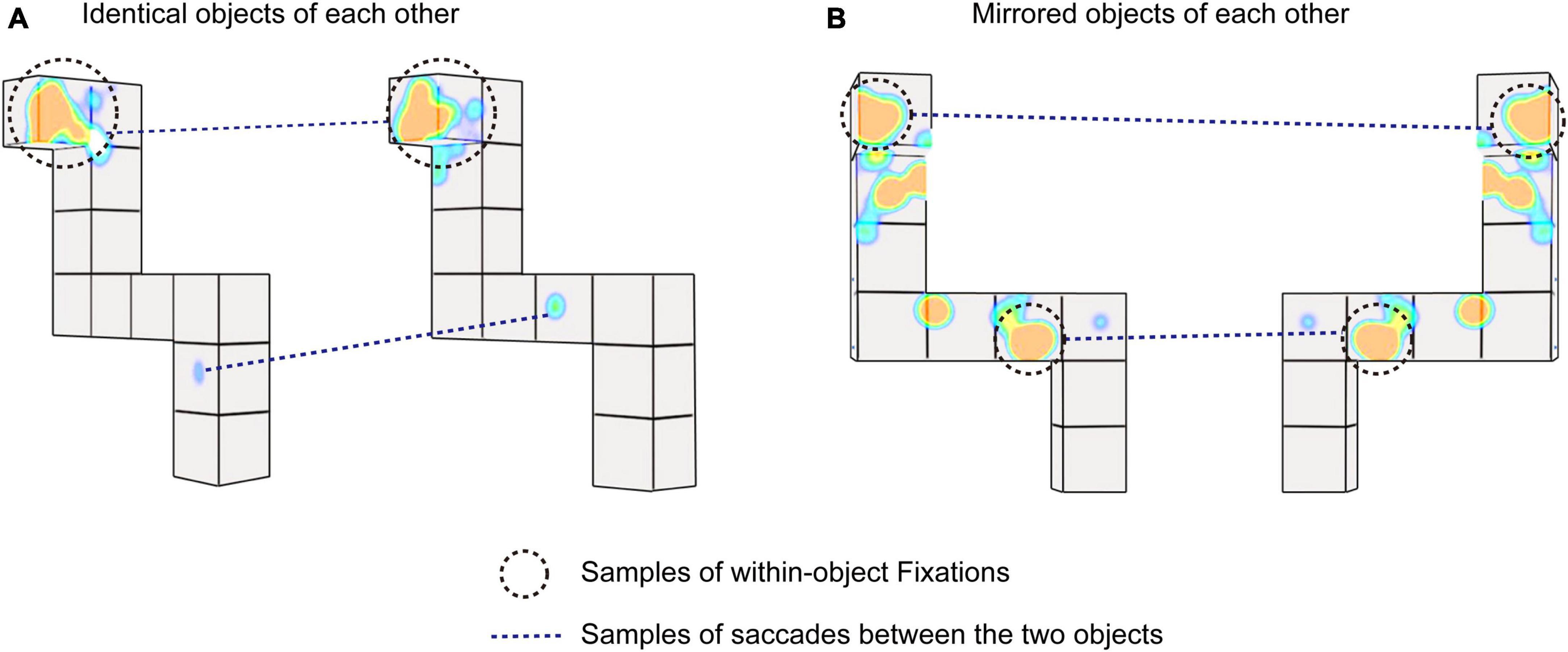

Four eye movement metrics were used based on prior studies. The percent fixation time, the number of within-object fixations, the number of saccades, and the strategy ratio were used to quantify visual behavior (Khooshabeh and Hegarty, 2010; Khooshabeh et al., 2013; Lavoie et al., 2018; Nazareth et al., 2019; Hsing et al., 2023). Examples of the eye movement metrics (e.g., fixations and saccades) are presented in Figure 2. The eye movement metrics were defined as follows.

Figure 2. Examples of eye movements during the virtual reality (VR) mental rotation. (A) Sample three-dimensional (3D) identical stimuli. (B) Sample 3D mirrored stimuli. Black circles with dotted lines indicate samples of fixations within one object. Dotted lines indicate samples of saccades between the two objects.

Percent fixation time: The amount of time fixated on the two objects during the mental rotation task divided by the total duration of the task (i.e., response time), multiplied by 100.

Number of within-object fixations: The number of fixations per second made on either of the two objects.

Number of saccades: The number of saccades per second that a participant made from one 3D object to the other.

Strategy ratio: The ratio of the number of fixations within an object to the number of saccades made between the two objects. The strategy ratio was used to reflect holistic or piecemeal strategies. A strategy ratio close to one indicates a holistic strategy, and a strategy ratio greater than one suggests a piecemeal strategy.
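To make the four definitions concrete, the following Python sketch computes them for a single trial. It is an illustration under stated assumptions, not the authors' analysis code: each fixation is assumed to carry a duration and the identifier of the object it landed on (`None` for off-object fixations), and a between-object saccade is counted whenever two consecutive on-object fixations land on different objects. The function name and input layout are hypothetical.

```python
def eye_metrics(fixations, response_time_s):
    """Compute per-trial eye movement metrics.

    fixations: list of (duration_s, object_id) in temporal order, where
    object_id is None for fixations that hit neither object (assumed layout).
    """
    on_object = [(d, obj) for d, obj in fixations if obj is not None]
    fixation_time = sum(d for d, _ in on_object)
    n_fixations = len(on_object)
    # Assumption: a between-object saccade is a change of fixated object.
    n_switches = sum(1 for (_, a), (_, b) in zip(on_object, on_object[1:])
                     if a != b)
    return {
        "percent_fixation_time": 100.0 * fixation_time / response_time_s,
        "fixations_per_s": n_fixations / response_time_s,
        "saccades_per_s": n_switches / response_time_s,
        # Undefined when the gaze never moves between the two objects.
        "strategy_ratio": (n_fixations / n_switches
                           if n_switches else float("nan")),
    }
```

For example, five on-object fixations and two between-object saccades give a strategy ratio of 2.5, which under the definition above would point toward a piecemeal rather than a holistic strategy.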

All statistical analyses were conducted using SPSS software (IBM Corp., Armonk, NY, USA). Two-way repeated-measures analysis of variance (ANOVA) was used to analyze behavioral metrics (response time and accuracy rate) and eye movement metrics (fixations and saccades). Stimulus Type (identical and mirrored) and Angular Disparity (0, 60, 120, and 180°) served as within-subject factors. Sphericity was examined using Mauchly's test of sphericity; if the assumption of sphericity was violated, Greenhouse-Geisser-corrected results were reported. Bonferroni correction was used to account for multiple comparisons. The effect size was evaluated using partial eta squared ($\eta_p^2$). All data are presented as mean ± standard error of the mean (SE). The detailed ANOVA results are listed in Supplementary Table 5.
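Partial eta squared can be recovered from an F statistic and its degrees of freedom, which gives a quick consistency check on reported effect sizes. The Python sketch below uses the standard identity, effect size = (F × df_effect) / (F × df_effect + df_error); the function name is hypothetical, and the study itself obtained these values from SPSS.

```python
def partial_eta_squared(f_value, df_effect, df_error):
    """Partial eta squared from an F statistic and its degrees of freedom."""
    return (f_value * df_effect) / (f_value * df_effect + df_error)
```

For instance, the main effect of Stimulus Type on response times, F(1, 31) = 22.147, yields `round(partial_eta_squared(22.147, 1, 31), 3)` = 0.417, matching the value reported in the Results.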

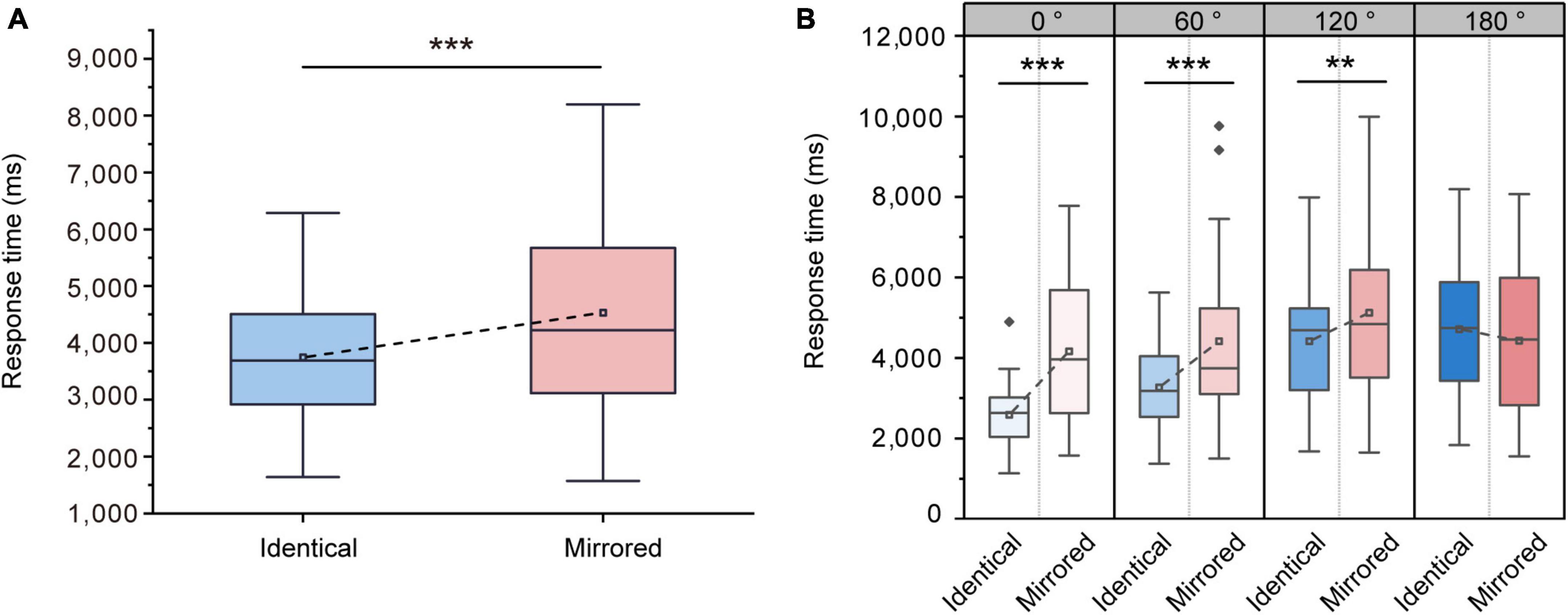

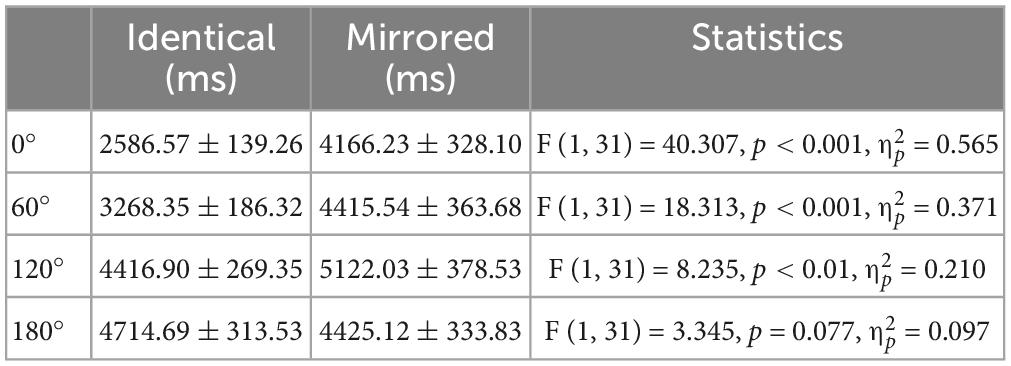

Response times were used to examine whether there is a mental rotation effect during the VR task. The ANOVA on the response times showed significant main effects of Stimulus Type [F (1, 31) = 22.147, p < 0.001, $\eta_p^2$ = 0.417] and Angular Disparity [F (3, 93) = 39.530, p < 0.001, $\eta_p^2$ = 0.560]. The significant main effect of Stimulus Type was caused by lower response times when presented with identical objects (3746.63 ± 207.94 ms, mean ± SE) compared to mirrored objects (4532.23 ± 327.65 ms, mean ± SE; Figure 3A). The interaction between Stimulus Type and Angular Disparity was also significant [F (3, 93) = 17.854, p < 0.001, $\eta_p^2$ = 0.365]. Pairwise comparisons revealed significant differences in response times between identical and mirrored objects at 0, 60 and 120° (all p < 0.01; Table 1 and Figure 3B). There was no significant difference in response times between identical and mirrored objects at 180° (p = 0.077; Table 1 and Figure 3B).

Figure 3. Changes in response times during the virtual reality (VR) mental rotation task. (A) Boxplots present response times for identical and mirrored objects. (B) Grouped boxplots present response times for identical and mirrored objects at 0, 60, 120 and 180°. The boxplots illustrate the first quartile, median, and third quartile and 1.5 times the interquartile range for both the upper and lower ends of the box. Black horizontal lines and asterisks denote significant differences (**p < 0.01, ***p < 0.001).

Table 1. Overview of response times (mean ± standard error) for identical and mirrored objects at four angular disparities.

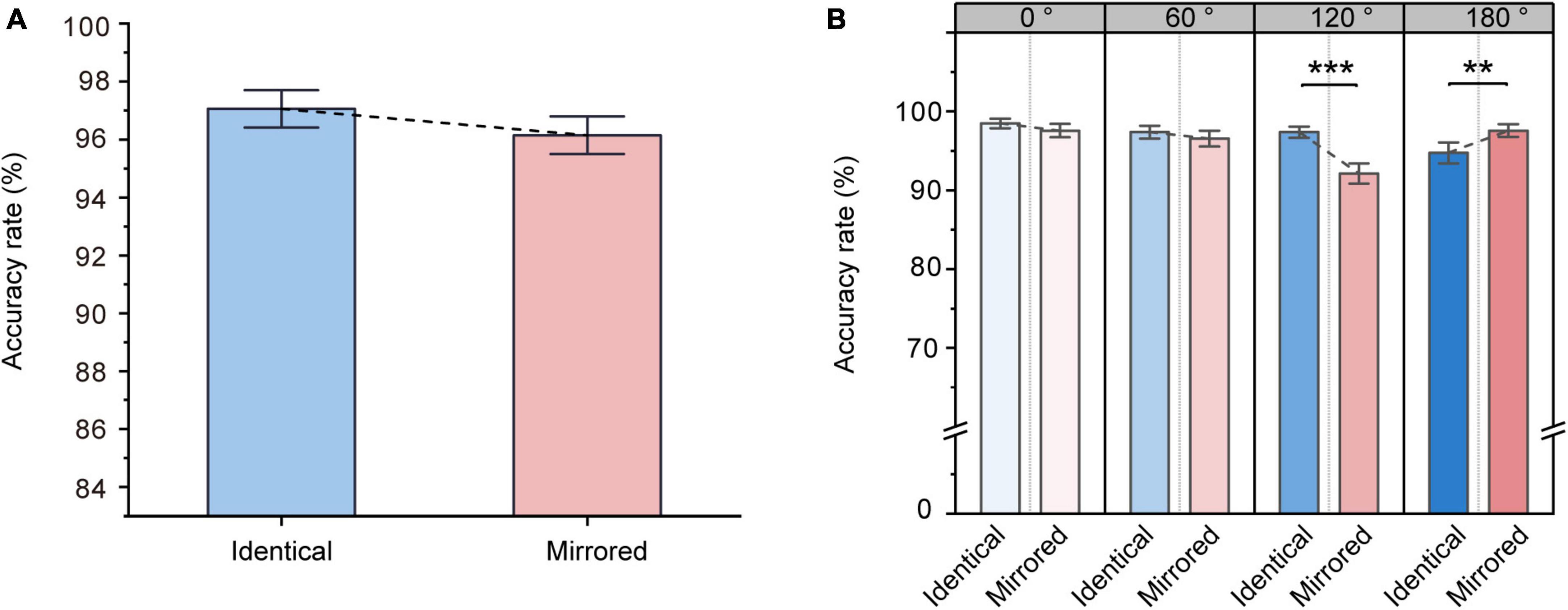

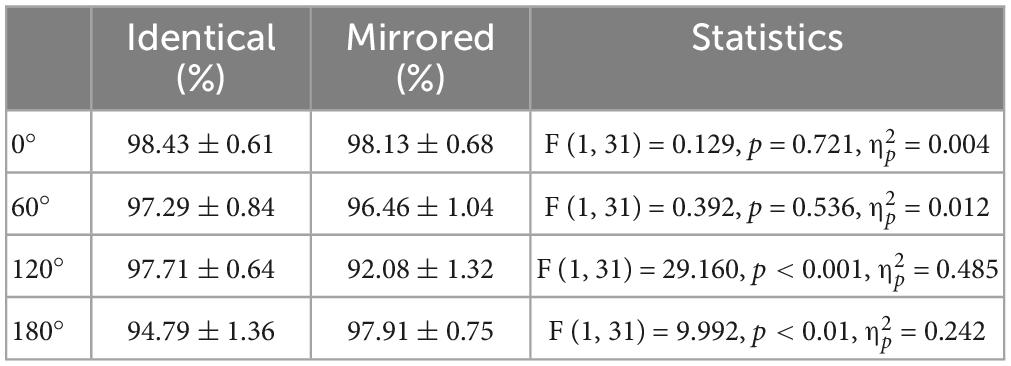

The ANOVA on the accuracy rates showed no significant main effect of Stimulus Type [F (1, 31) = 2.519, p = 0.123, $\eta_p^2$ = 0.075]. The accuracy rates for identical objects (97.05 ± 0.64 %, mean ± SE) were not significantly higher than those for mirrored objects (96.14 ± 0.65 %, mean ± SE; Figure 4A). There was a significant main effect of Angular Disparity [F (3, 93) = 5.255, p = 0.002, $\eta_p^2$ = 0.145] and a significant interaction between Angular Disparity and Stimulus Type [F (3, 93) = 11.873, p < 0.001, $\eta_p^2$ = 0.277]. We therefore compared identical and mirrored objects at each angular disparity. Pairwise comparisons revealed significant differences in accuracy rates between identical and mirrored objects at 120 and 180° (all p < 0.01; Table 2 and Figure 4B). There was no difference (all p > 0.05; Table 2 and Figure 4B) in accuracy rates between identical and mirrored objects at 0 and 60°.

Figure 4. Changes in accuracy rates during the virtual reality (VR) mental rotation task. (A) Bar graphs present average accuracy rates for identical and mirrored objects. (B) Grouped bar graphs present accuracy rates for identical and mirrored objects at 0, 60, 120 and 180°. Error bars denote standard errors. Black lines and asterisks denote significant differences (**p < 0.01, ***p < 0.001).

Table 2. Accuracy rates (mean ± standard error) for identical and mirrored objects at four angular disparities.

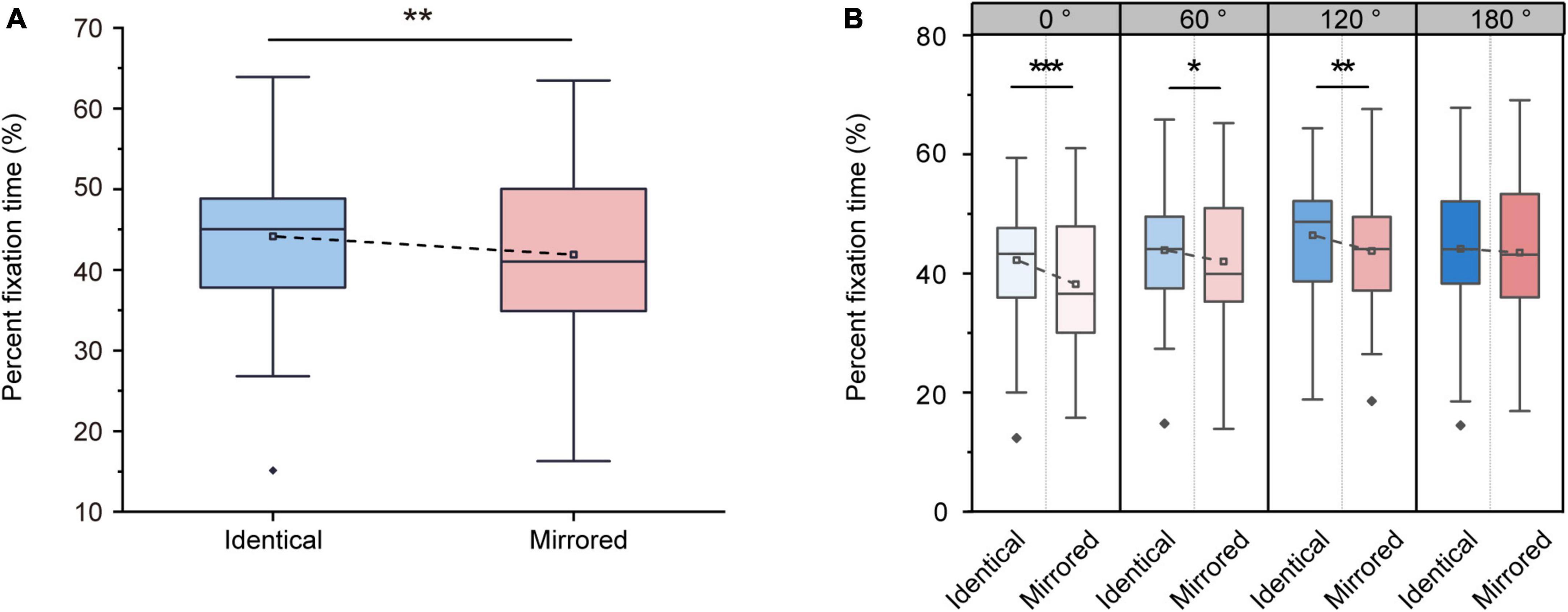

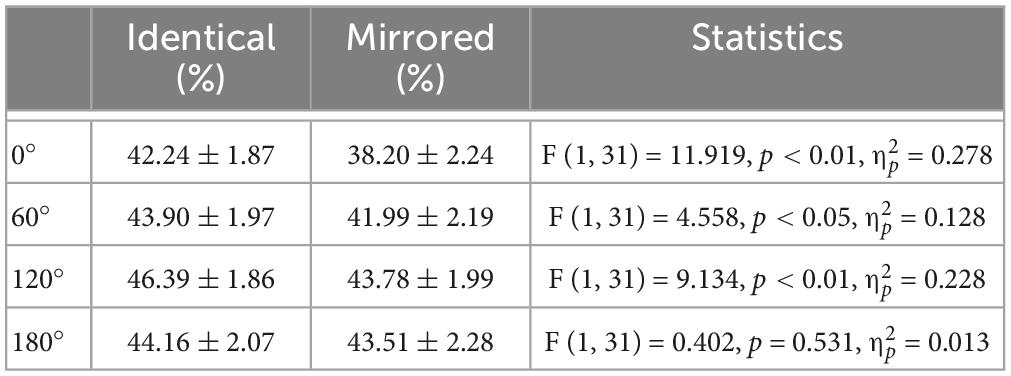

The ANOVA on the percent fixation time revealed significant main effects of Stimulus Type [F (1, 31) = 11.658, p = 0.002, $\eta_p^2$ = 0.273] and Angular Disparity [F (3, 93) = 14.334, p < 0.001, $\eta_p^2$ = 0.316]. The significant main effect of Stimulus Type demonstrated that the percent fixation time was significantly higher for identical objects (44.18 ± 1.84 %, mean ± SE) than for mirrored objects (41.87 ± 2.11 %, mean ± SE; Figure 5A). Moreover, there was also a significant interaction between Angular Disparity and Stimulus Type [F (3, 93) = 2.789, p = 0.045, $\eta_p^2$ = 0.083]. Pairwise comparisons revealed significant differences between identical and mirrored objects at 0, 60 and 120° (all p < 0.05; Table 3 and Figure 5B). There was no difference in the percent fixation time (p = 0.531; Table 3 and Figure 5B) between identical and mirrored objects at 180°.

Figure 5. Changes in percent fixation time during the virtual reality (VR) mental rotation task. (A) Boxplots present percent fixation times for identical and mirrored objects. (B) Grouped boxplots present percent fixation times for identical and mirrored objects at 0, 60, 120 and 180°. The boxplots illustrate the first quartile, median, and third quartile and 1.5 times the interquartile range for both the upper and lower ends of the box. Black horizontal lines and asterisks denote significant differences (*p < 0.05, **p < 0.01, ***p < 0.001).

Table 3. Overview of percent fixation time (mean ± standard error) for identical and mirrored objects at four angular disparities.

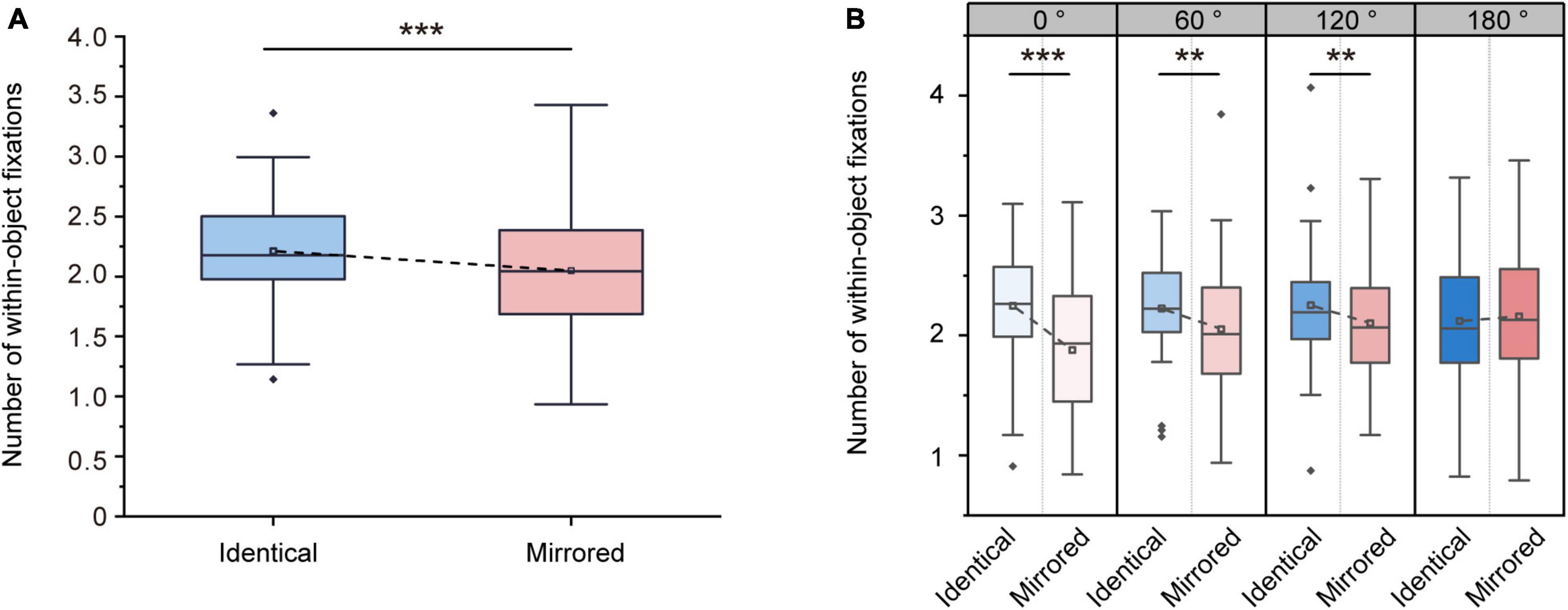

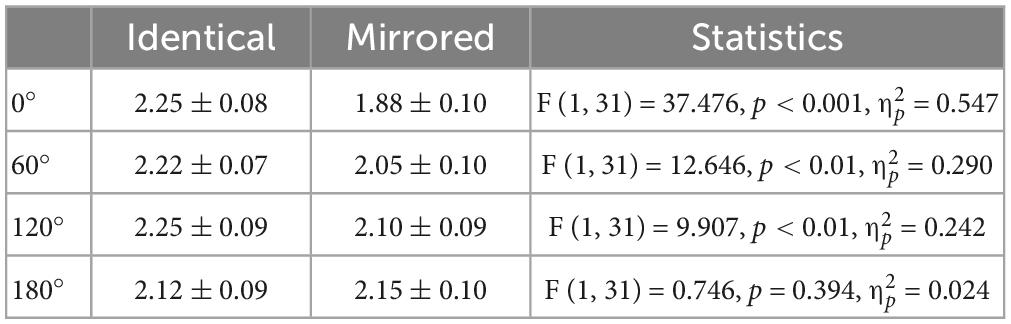

Repeated-measures ANOVA on the number of within-object fixations revealed a significant main effect of Stimulus Type [F (1, 31) = 32.853, p < 0.001, $\eta_p^2$ = 0.515]. The number of within-object fixations was significantly higher for identical objects (2.21 ± 0.08, mean ± SE) than for mirrored objects (2.05 ± 0.09, mean ± SE; Figure 6A), suggesting that the participants might make multiple comparisons within an object when comparing identical objects. This was akin to a piecemeal strategy (Khooshabeh et al., 2013), in which the participants might break the objects into pieces and encode partial spatial information. We also observed a significant main effect of Angular Disparity [F (3, 93) = 3.225, p = 0.026, $\eta_p^2$ = 0.094] and a significant interaction between Angular Disparity and Stimulus Type [F (3, 93) = 11.929, p < 0.001, $\eta_p^2$ = 0.278]. Pairwise comparisons revealed significant differences in the number of within-object fixations between identical and mirrored objects at 0, 60 and 120° (all p < 0.01; Table 4 and Figure 6B). However, there was no difference (p = 0.394; Table 4 and Figure 6B) in the number of within-object fixations between identical and mirrored objects at 180°.

Figure 6. Changes in number of within-object fixations during the virtual reality (VR) mental rotation task. (A) Boxplots present the number of within-object fixations for identical and mirrored objects. (B) Grouped boxplots present the number of within-object fixations for identical and mirrored objects at 0, 60, 120 and 180°. The boxplots illustrate the first quartile, median, and third quartile and 1.5 times the interquartile range for both the upper and lower ends of the box. Black horizontal lines and asterisks denote significant differences (**p < 0.01, ***p < 0.001).

Table 4. Overview of number of within-object fixations (mean ± standard error) for identical and mirrored objects at four angular disparities.

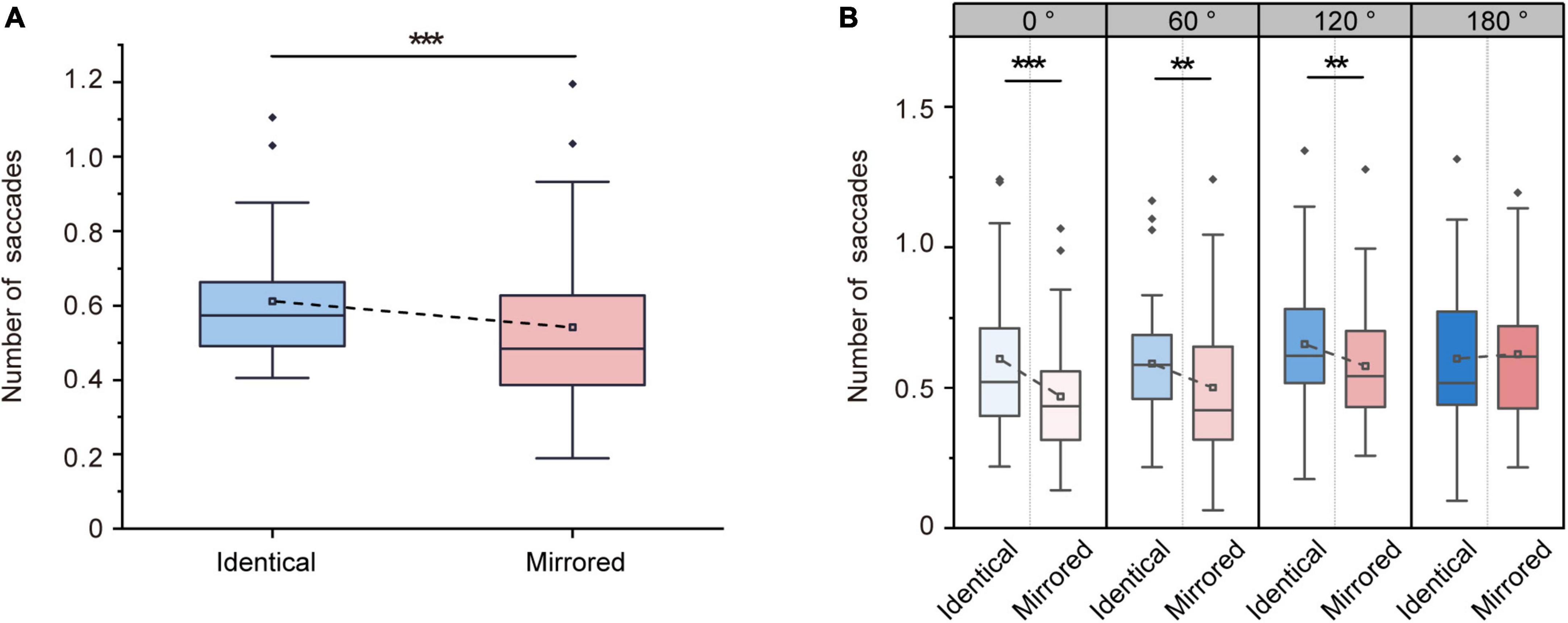

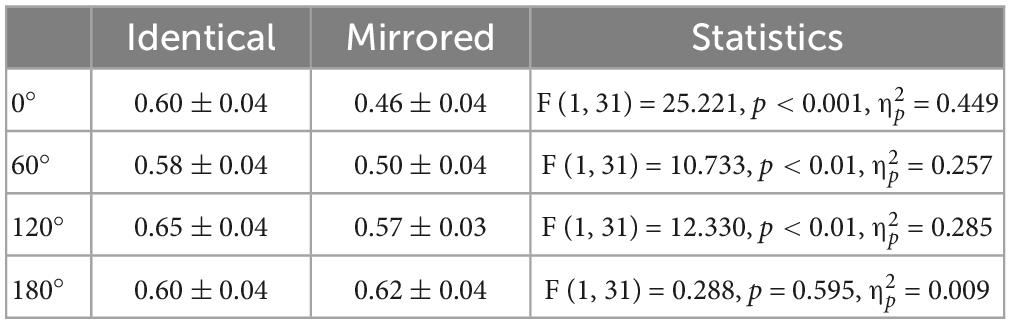

Repeated-measures ANOVA on the number of saccades revealed significant main effects of Stimulus Type [F (1, 31) = 20.299, p < 0.001, $\eta_p^2$ = 0.396] and Angular Disparity [F (3, 93) = 11.343, p < 0.001, $\eta_p^2$ = 0.268]. The significant main effect of Stimulus Type was due to a higher number of saccades for identical objects (0.61 ± 0.03, mean ± SE) than for mirrored objects (0.54 ± 0.04, mean ± SE; Figure 7A). A significant interaction between Angular Disparity and Stimulus Type was also observed [F (3, 93) = 6.760, p < 0.001, $\eta_p^2$ = 0.179]. Pairwise comparisons revealed significant differences in the number of saccades between identical and mirrored objects at 0, 60 and 120° (all p < 0.01; Table 5 and Figure 7B). However, there was no difference in the number of saccades (p = 0.595; Table 5 and Figure 7B) between identical and mirrored objects at 180°.

Figure 7. Changes in number of saccades during the virtual reality (VR) mental rotation task. (A) Boxplots present the number of saccades for identical and mirrored objects. (B) Grouped boxplots present the number of saccades for identical and mirrored objects at 0, 60, 120 and 180°. The boxplots illustrate the first quartile, median, and third quartile and 1.5 times the interquartile range for both the upper and lower ends of the box. Black horizontal lines and asterisks denote significant differences (**p < 0.01, ***p < 0.001).

Table 5. Overview of number of saccades (mean ± standard error) for identical and mirrored stimuli at four angular disparities.

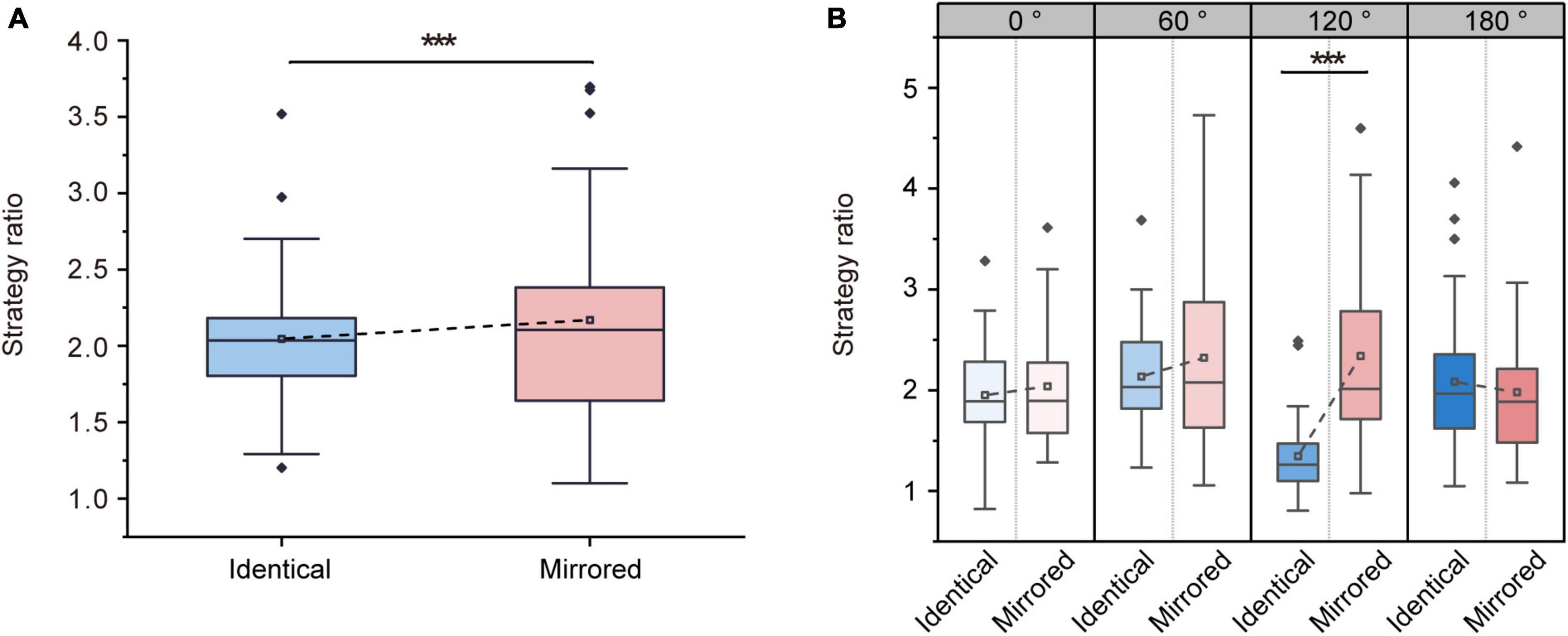

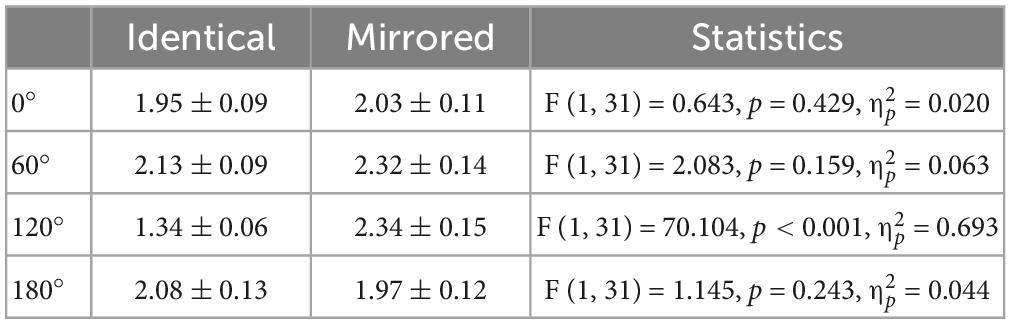

Repeated-measures ANOVA on strategy ratios revealed significant main effects of Stimulus Type [F (1, 31) = 17.008, p < 0.001, $\eta_p^2$ = 0.354] and Angular Disparity [F (3, 93) = 9.987, p < 0.001, $\eta_p^2$ = 0.244]. The significant main effect of Stimulus Type demonstrated that the strategy ratio for identical objects (2.04 ± 0.08, mean ± SE) was significantly lower than that for mirrored objects (2.17 ± 0.12, mean ± SE; Figure 8A). Moreover, there was a significant interaction between Angular Disparity and Stimulus Type [F (3, 93) = 23.035, p < 0.001, $\eta_p^2$ = 0.426]. Pairwise comparisons revealed significant differences in strategy ratios between identical and mirrored objects at 120° (p < 0.001; Table 6 and Figure 8B). There was no difference in strategy ratios (p > 0.05; Table 6 and Figure 8B) between identical and mirrored objects at 0, 60 and 180°.

Figure 8. Changes in strategy ratio during the virtual reality (VR) mental rotation task. (A) Boxplots present average strategy ratio for identical and mirrored objects. (B) Grouped boxplots present strategy ratio for identical and mirrored objects at 0, 60, 120 and 180°. The boxplots illustrate the first quartile, median, and third quartile and 1.5 times the interquartile range for both the upper and lower ends of the box. Black horizontal lines and asterisks denote significant differences (***p < 0.001).

Table 6. Overview of strategy ratio (mean ± standard error) for identical and mirrored objects at four angular disparities.

In the present study, we presented behavioral and eye movement characteristics during a VR mental rotation task using 3D objects. We found that the VR mental rotation task also evoked a mental rotation effect. Significant differences were observed in response times and eye movement metrics between identical and mirrored objects. The eye movement data helped explain why response times were longer when comparing mirrored objects than when comparing identical objects.

Building on mental rotation tasks using 2D images, we conducted a VR mental rotation task using 3D objects. The 2D images presented via a computer screen provide an illusion of depth (Snow and Culham, 2021). Compared to 2D images of 3D objects (Griksiene et al., 2019; Toth and Campbell, 2019), 3D objects presented in VR can provide real depth cues, enhancing the realness of visual stimuli (Tang et al., 2022). VR allows a balance between experimental control and ecological validity (Snow and Culham, 2021). The stereo 3D objects presented in VR in the current task are more ecologically valid than simple 2D images, which can improve our understanding of the neural mechanisms associated with naturalistic visual stimuli. Previously, some studies have compared mental rotation performance between 2D and stereo 3D forms (Neubauer et al., 2010; Price and Lee, 2010; Lochhead et al., 2022; Tang et al., 2022). Although some factors (e.g., experimental paradigm, sample size, and experimental environment) may affect the results of comparisons between conventional 2D and stereo 3D forms, these studies may provide support for the VR mental rotation task with eye tracking.

As expected, we observed a significant mental rotation effect (Shepard and Metzler, 1971), indicating that the VR mental rotation task is effective and feasible. Interestingly, behavioral performance differed between the identical and mirrored stimuli, which is consistent with the literature (Hamm et al., 2004; Paschke et al., 2012; Chen et al., 2014). Our results demonstrated that the accuracy rate for identical objects decreased at 180° and the accuracy rate for mirrored objects increased at 180°, which was in line with the findings of a prior study (Chen et al., 2014). The differences in accuracy rate between identical and mirrored objects might be associated with differences in cognitive processing and a decrease in task difficulty at higher angular disparities for mirrored objects (Paschke et al., 2012). Due to opposite arm positions, the paired mirrored stimulus at 180° could be directly perceived as a mirrored object without any rotation manipulation. That is, the participants probably identified the mirrored objects by only comparing the arm positions of the objects, which might increase correct responses. By contrast, a rotation operation is required at 120°. Response-preparation theory (Cooper and Shepard, 1973) suggests that the motor response during mental rotation is planned on the basis of the expectancy that the paired objects are identical. For mirrored objects, this expectancy may mean that an already planned motor response has to be inhibited and re-planned. Moreover, the expectancy would contribute to a lower accuracy rate because the planned motor responses were probably difficult to inhibit. Therefore, the accuracy rates for identical objects were higher than those for mirrored objects at 120°. Response times were longer when comparing mirrored objects than when comparing identical objects, which might be attributed to additional cognitive processing.
In the 3D mental rotation task, participants were asked to decide whether the paired objects were identical or mirrored. The participants mentally represented the paired objects and rotated them and simultaneously made a direct match between their internal mental representation and the external visual stimuli. A decision of “mirrored” would be made if the participants discovered that some parts of the paired objects were different. When the participants identified differences between objects, more response time was required to choose the “mirrored” response. This was possibly associated with the strategy of visual processing during mental rotation (Toth and Campbell, 2019), which was further supported by the eye tracking data.

Eye movements allow us to scan the visual field with high resolution (Mast and Kosslyn, 2002), and can provide insights into visual behavior in mental rotation tasks using 2D images (Nazareth et al., 2019). However, few studies have reported eye movements in mental rotation tasks involving naturalistic 3D stimuli. In the present work, we analyzed eye movement parameters, including fixations and saccades, to quantify the visual processing of 3D objects. We found that percent fixation time was higher when participants compared identical objects than when they compared mirrored objects. Eye fixations provide information on the active processing of information (Mast and Kosslyn, 2002) and are associated with visual attention (Verghese et al., 2019; Skaramagkas et al., 2023). The 3D objects were encoded at each fixation and were then reconstructed in the brain based on input from multiple fixations. Effective visual information can be extracted around these fixations (Ikeda and Takeuchi, 1975). The higher percent fixation time for identical objects showed that the participants spent more time fixating on the objects, suggesting that they might allocate more attention to the objects when comparing identical objects during the mental rotation task. The increased attention suggested that more visual information was obtained when the participants compared identical 3D objects. In addition, the number of fixations made on the 3D objects per second was higher for identical objects than for mirrored objects. Because visual information was obtained from each fixation, the participants processed more visual information per second when comparing identical objects. This may indicate that the efficiency of visual information acquisition was higher for identical objects than for mirrored objects.
These results further illustrated that faster response times for identical objects could be attributed to increased attention and higher efficiency of visual information acquisition.
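
To make the two fixation metrics discussed above concrete, the following is a minimal sketch of how they could be computed for a single trial. It is an illustration under stated assumptions and not the analysis pipeline used in this study: the `Fixation` record, the `fixation_metrics` function, the definition of percent fixation time as on-object fixation time divided by trial duration, and the example values are all hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Fixation:
    """One detected fixation (hypothetical record layout)."""
    onset_s: float   # fixation start, seconds from trial onset
    offset_s: float  # fixation end, seconds from trial onset
    target: str      # "left", "right", or "none" (gaze not on an object)


def fixation_metrics(fixations, trial_duration_s):
    """Return (percent fixation time, fixations per second) for one trial.

    Assumption: only fixations that land on one of the two objects are
    counted, and both metrics are normalized by the trial duration.
    """
    if trial_duration_s <= 0:
        raise ValueError("trial_duration_s must be positive")
    on_object = [f for f in fixations if f.target != "none"]
    fixation_time_s = sum(f.offset_s - f.onset_s for f in on_object)
    percent_fixation_time = 100.0 * fixation_time_s / trial_duration_s
    fixations_per_second = len(on_object) / trial_duration_s
    return percent_fixation_time, fixations_per_second


# Illustrative values only; these are not data from the study.
trial = [
    Fixation(0.0, 0.5, "left"),
    Fixation(0.6, 1.0, "right"),
    Fixation(1.1, 1.3, "none"),
    Fixation(1.4, 2.0, "left"),
]
pct, rate = fixation_metrics(trial, trial_duration_s=2.0)
print(f"{pct:.1f}% fixation time, {rate:.2f} fixations/s")
```

Normalizing by trial duration keeps both metrics comparable across trials with different response times, which matters here because response times differed between identical and mirrored objects.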

Visual strategies during mental rotation have been associated with behavioral performance (Heil and Jansen-Osmann, 2008). Strategy dichotomies, including holistic and piecemeal strategies, have been extensively discussed in previous studies (Heil and Jansen-Osmann, 2008; Khooshabeh et al., 2013). The piecemeal strategy generally results in longer response times than the holistic strategy. These visual strategies can be reflected by the strategy ratio, which is the ratio of the number of within-object fixations to the number of between-object saccades. The present study showed a difference in strategy ratios between mirrored objects and identical objects. Previous studies demonstrated that a strategy ratio close to 1 indicates a holistic strategy and a strategy ratio greater than 1 indicates a piecemeal strategy (Khooshabeh and Hegarty, 2010). Thus, the participants in the present study relied more on a piecemeal strategy for mirrored objects than for identical objects. For mirrored objects, participants made multiple fixations within one object to compare different parts of the object before they switched to the other. Fixation switches were more frequently observed when comparing mirrored objects than when comparing identical objects. Fixation switches between the two 3D objects could be regarded as constant updates, which are necessary to maintain object perception (Hyun and Luck, 2007). These constant updates may be more difficult during the mental rotation of mirrored objects. These findings provided further explanation for the behavioral results observed in the VR mental rotation task.
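
The strategy ratio described above can be sketched in a few lines. This is a hedged illustration, not the implementation used in this study: the function name `strategy_ratio`, the labels `"A"` and `"B"` for the two objects, and the example sequences are hypothetical. The sketch assumes that every fixation landing on an object counts as a within-object fixation and that every change of fixated object between consecutive fixations counts as one between-object saccade, which is one reading under which a strictly alternating gaze pattern yields a ratio close to 1.

```python
import math


def strategy_ratio(fixated_objects):
    """Ratio of within-object fixations to between-object saccades.

    fixated_objects: the object fixated at each successive fixation,
    e.g. ["A", "A", "B"]. Assumed counting rule: every entry is one
    within-object fixation; every change of object between consecutive
    entries is one between-object saccade.
    """
    within_object_fixations = len(fixated_objects)
    between_object_saccades = sum(
        1 for prev, curr in zip(fixated_objects, fixated_objects[1:])
        if prev != curr
    )
    if between_object_saccades == 0:
        # Gaze never switched objects, so the ratio is undefined;
        # report infinity rather than dividing by zero.
        return math.inf
    return within_object_fixations / between_object_saccades


# Illustrative sequences only; these are not data from the study.
alternating = ["A", "B", "A", "B", "A"]                # switch after every fixation
clustered = ["A", "A", "A", "B", "B", "B", "A", "A"]   # several fixations per visit

print(strategy_ratio(alternating))  # 5 fixations / 4 switches = 1.25
print(strategy_ratio(clustered))    # 8 fixations / 2 switches = 4.0
```

Under this counting rule, the alternating sequence sits near 1 (holistic-like), whereas the clustered sequence is well above 1 (piecemeal-like), matching the interpretation given in the text.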

This study presents the behavioral and eye movement characteristics in a VR mental rotation task with eye tracking, but it has some limitations. Although the present VR mental rotation task elicits a mental rotation effect, it is also important to compare the VR task with a 2D mental rotation task. Such a comparison could provide evidence as to whether the VR mental rotation task is a better alternative. Future studies could add a conventional 2D mental rotation task with eye tracking and compare the behavioral and eye movement characteristics between the 2D and VR mental rotation tasks.

In the present work, we examined behavioral and eye movement characteristics during a VR mental rotation task using 3D stimuli. Significant differences were obtained in behavioral performance and eye movement metrics in the rotation and comparison of identical and mirrored objects. Eye movement metrics, including the percent fixation time, the number of within-object fixations, and the number of saccades, were significantly lower when comparing mirrored objects than identical objects. The eye movement data provided further explanation for the behavioral results in the VR mental rotation task.

The original contributions presented in this study are included in the article/Supplementary material; further inquiries can be directed to the corresponding authors.

The studies involving human participants were reviewed and approved by Beihang University (BM20200183) and adhered to the tenets of the Declaration of Helsinki. All participants provided written informed consent to participate before the study.

ZT: formal analysis, writing—original draft, and visualization. XL: conceptualization, funding acquisition, project administration, and writing—review and editing. HH and XD: formal analysis. MT and LF: software. XQ, DC, JW, and JG: visualization. YD: writing—original draft. ST: writing—review and editing. YF: conceptualization, funding acquisition, project administration, supervision, and writing—review and editing. All authors contributed to the article and approved the submitted version.

This work was supported by the National Key Research and Development Plan of China (2020YFC2005902) and the National Natural Science Foundation of China (T2288101, U20A20390, and 11827803).

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

The Supplementary Material for this article can be found online at: https://www.frontiersin.org/articles/10.3389/fnins.2023.1143006/full#supplementary-material

Andersson, R., Larsson, L., Holmqvist, K., Stridh, M., and Nystrom, M. (2017). One algorithm to rule them all? An evaluation and discussion of ten eye movement event-detection algorithms. Behav. Res. Methods 49, 616–637. doi: 10.3758/s13428-016-0738-9

Arthur, T., Harris, D. J., Allen, K., Naylor, C. E., Wood, G., Vine, S., et al. (2021). Visuo-motor attention during object interaction in children with developmental coordination disorder. Cortex 138, 318–328. doi: 10.1016/j.cortex.2021.02.013

Berneiser, J., Jahn, G., Grothe, M., and Lotze, M. (2018). From visual to motor strategies: Training in mental rotation of hands. Neuroimage 167, 247–255. doi: 10.1016/j.neuroimage.2016.06.014

Blignaut, P. (2009). Fixation identification: The optimum threshold for a dispersion algorithm. Atten. Percept. Psychophys. 71, 881–895. doi: 10.3758/APP.71.4.881

Campbell, M. J., Toth, A. J., and Brady, N. (2018). Illuminating sex differences in mental rotation using pupillometry. Biol. Psychol. 138, 19–26. doi: 10.1016/j.biopsycho.2018.08.003

Chen, H., Guo, X., Lv, Y., Sun, J., and Tong, S. (2014). “Mental rotation process for mirrored and identical stimuli: A beta-band ERD study,” in Proceedings of the 2014 36th annual international conference of the IEEE engineering in medicine and biology society, EMBC 2014, (Piscataway, NJ: Institute of Electrical and Electronics Engineers Inc), 4948–4951. doi: 10.1109/EMBC.2014.6944734

Chiquet, S., Martarelli, C. S., and Mast, F. W. (2020). Eye movements to absent objects during mental imagery and visual memory in immersive virtual reality. Virtual Real. 25, 655–667. doi: 10.1007/s10055-020-00478-y

Clay, V., König, P., and König, S. (2019). Eye tracking in virtual reality. J. Eye Mov. Res. 12, 1–8. doi: 10.16910/jemr.12.1.3

Cooper, L. A., and Shepard, R. N. (1973). “Chronometric studies of the rotation of mental images,” in Visual information processing, ed. W. G. Chase (Amsterdam: Elsevier), 75–176.

Desrocher, M. E., Smith, M. L., and Taylor, M. J. (1995). Stimulus and sex-differences in performance of mental rotation–evidence from event-related potentials. Brain Cogn. 28, 14–38. doi: 10.1006/brcg.1995.1031

El Jamiy, F., and Marsh, R. (2019). Survey on depth perception in head mounted displays: Distance estimation in virtual reality, augmented reality, and mixed reality. IET Image Proc. 13, 707–712. doi: 10.1049/iet-ipr.2018.5920

Griksiene, R., Arnatkeviciute, A., Monciunskaite, R., Koenig, T., and Ruksenas, O. (2019). Mental rotation of sequentially presented 3D figures: Sex and sex hormones related differences in behavioural and ERP measures. Sci. Rep. 9:18843. doi: 10.1038/s41598-019-55433-y

Hamm, J. P., Johnson, B. W., and Corballis, M. C. (2004). One good turn deserves another: An event-related brain potential study of rotated mirror-normal letter discriminations. Neuropsychologia 42, 810–820. doi: 10.1016/j.neuropsychologia.2003.11.009

Haxby, J. V., Gobbini, M. I., and Nastase, S. A. (2020). Naturalistic stimuli reveal a dominant role for agentic action in visual representation. Neuroimage 216:116561. doi: 10.1016/j.neuroimage.2020.116561

Heil, M., and Jansen-Osmann, P. (2008). Sex differences in mental rotation with polygons of different complexity: Do men utilize holistic processes whereas women prefer piecemeal ones? Q. J. Exp. Psychol. 61, 683–689. doi: 10.1080/17470210701822967

Hofmann, S. M., Klotzsche, F., Mariola, A., Nikulin, V. V., Villringer, A., and Gaebler, M. (2021). Decoding subjective emotional arousal from EEG during an immersive virtual reality experience. Elife 10:e64812. doi: 10.7554/eLife.64812

Hsing, H. W., Bairaktarova, D., and Lau, N. (2023). Using eye gaze to reveal cognitive processes and strategies of engineering students when solving spatial rotation and mental cutting tasks. J. Eng. Educ. 112, 125–146. doi: 10.1002/jee.20495

Hyun, J. S., and Luck, S. J. (2007). Visual working memory as the substrate for mental rotation. Psychonom. Bull. Rev. 14, 154–158. doi: 10.3758/BF03194043

Ibbotson, M., and Krekelberg, B. (2011). Visual perception and saccadic eye movements. Curr. Opin. Neurobiol. 21, 553–558. doi: 10.1016/j.conb.2011.05.012

Ikeda, M., and Takeuchi, T. (1975). Influence of foveal load on the functional visual field. Percept. Psychophys. 18, 255–260. doi: 10.3758/BF03199371

Ito, T., Kamiue, M., Hosokawa, T., Kimura, D., and Tsubahara, A. (2022). Individual differences in processing ability to transform visual stimuli during the mental rotation task are closely related to individual motor adaptation ability. Front. Neurosci. 16:941942. doi: 10.3389/fnins.2022.941942

Jaaskelainen, I. P., Sams, M., Glerean, E., and Ahveninen, J. (2021). Movies and narratives as naturalistic stimuli in neuroimaging. Neuroimage 224:117445. doi: 10.1016/j.neuroimage.2020.117445

Khooshabeh, P., and Hegarty, M. (2010). “Representations of shape during mental rotation,” in Proceedings of the 2010 AAAI spring symposium, (San Francisco, CA: AI Access Foundation), 15–20.

Khooshabeh, P., Hegarty, M., and Shipley, T. F. (2013). Individual differences in mental rotation: Piecemeal versus holistic processing. Exp. Psychol. 60, 164–171. doi: 10.1027/1618-3169/a000184

Komogortsev, O. V., Gobert, D. V., Jayarathna, S., Koh, D. H., and Gowda, S. M. (2010). Standardization of automated analyses of oculomotor fixation and saccadic behaviors. IEEE Trans. Biomed. Eng. 57, 2635–2645. doi: 10.1109/tbme.2010.2057429

Kowler, E., Anderson, E., Dosher, B., and Blaser, E. (1995). The role of attention in the programming of saccades. Vis. Res. 35, 1897–1916. doi: 10.1016/0042-6989(94)00279-U

Lancry-Dayan, O. C., Ben-Shakhar, G., and Pertzov, Y. (2023). The promise of eye-tracking in the detection of concealed memories. Trends Cogn. Sci. 27, 13–16. doi: 10.1016/j.tics.2022.08.019

Lavoie, E. B., Valevicius, A. M., Boser, Q. A., Kovic, O., Vette, A. H., Pilarski, P. M., et al. (2018). Using synchronized eye and motion tracking to determine high-precision eye-movement patterns during object-interaction tasks. J. Vis. 18:18. doi: 10.1167/18.6.18

Llanes-Jurado, J., Marin-Morales, J., Guixeres, J., and Alcaniz, M. (2020). Development and calibration of an eye-tracking fixation identification algorithm for immersive virtual reality. Sensors 20:4956. doi: 10.3390/s20174956

Lochhead, I., Hedley, N., Çöltekin, A., and Fisher, B. (2022). The immersive mental rotations test: Evaluating spatial ability in virtual reality. Front. Virtual. Real. 3:820237. doi: 10.3389/frvir.2022.820237

Marini, F., Breeding, K. A., and Snow, J. C. (2019). Distinct visuo-motor brain dynamics for real-world objects versus planar images. Neuroimage 195, 232–242. doi: 10.1016/j.neuroimage.2019.02.026

Mast, F. W., and Kosslyn, S. M. (2002). Eye movements during visual mental imagery. Trends Cogn. Sci. 6, 271–272. doi: 10.1016/s1364-6613(02)01931-9

Minderer, M., and Harvey, C. D. (2016). Forum neuroscience virtual reality explored. Nature 533, 324–324. doi: 10.1038/nature17899

Musz, E., Loiotile, R., Chen, J., and Bedny, M. (2022). Naturalistic audio-movies reveal common spatial organization across “visual” cortices of different blind individuals. Cereb. Cortex 33, 1–10. doi: 10.1093/cercor/bhac048

Nazareth, A., Killick, R., Dick, A. S., and Pruden, S. M. (2019). Strategy selection versus flexibility: Using eye-trackers to investigate strategy use during mental rotation. J. Exp. Psychol. Learn. Mem. Cogn. 45, 232–245. doi: 10.1037/xlm0000574

Neubauer, A. C., Bergner, S., and Schatz, M. (2010). Two- vs. Three-dimensional presentation of mental rotation tasks: Sex differences and effects of training on performance and brain activation. Intelligence 38, 529–539. doi: 10.1016/j.intell.2010.06.001

Paschke, K., Jordan, K., Wüstenberg, T., Baudewig, J., and Leo Müller, J. (2012). Mirrored or identical–is the role of visual perception underestimated in the mental rotation process of 3D-objects?: A combined fMRI-eye tracking-study. Neuropsychologia 50, 1844–1851. doi: 10.1016/j.neuropsychologia.2012.04.010

Peters, M., and Battista, C. (2008). Applications of mental rotation figures of the Shepard and Metzler type and description of a mental rotation stimulus library. Brain Cogn. 66, 260–264. doi: 10.1016/j.bandc.2007.09.003

Pletzer, B., Steinbeisser, J., Van Laak, L., and Harris, T. (2019). Beyond biological sex: Interactive effects of gender role and sex hormones on spatial abilities. Front. Neurosci. 13:675. doi: 10.3389/fnins.2019.00675

Price, A., and Lee, H. S. (2010). The effect of two-dimensional and stereoscopic presentation on middle school students’ performance of spatial cognition tasks. J. Sci. Educ. Technol. 19, 90–103. doi: 10.1007/s10956-009-9182-2

Robertson, G. G., Card, S. K., and Mackinlay, J. D. (1993). Three views of virtual reality: Nonimmersive virtual reality. Computer 26:81. doi: 10.1109/2.192002

Salvucci, D. D., and Goldberg, J. H. (2000). “Identifying fixations and saccades in eye-tracking protocols,” in Proceedings of the eye tracking research and applications symposium 2000, ed. S. N. Spencer (New York, NY: Association for Computing Machinery (ACM)), 71–78.

Shepard, R. N., and Metzler, J. (1971). Mental rotation of 3-dimensional objects. Science 171, 701–703. doi: 10.1126/science.171.3972.701

Skaramagkas, V., Giannakakis, G., Ktistakis, E., Manousos, D., Karatzanis, I., Tachos, N. S., et al. (2023). Review of eye tracking metrics involved in emotional and cognitive processes. IEEE Rev. Biomed. Eng. 16, 260–277. doi: 10.1109/RBME.2021.3066072

Snow, J. C., and Culham, J. C. (2021). The treachery of images: How realism influences brain and behavior. Trends Cogn. Sci. 25, 506–519. doi: 10.1016/j.tics.2021.02.008

Suzuki, A., Shinozaki, J., Yazawa, S., Ueki, Y., Matsukawa, N., Shimohama, S., et al. (2018). Establishing a new screening system for mild cognitive impairment and Alzheimer’s disease with mental rotation tasks that evaluate visuospatial function. J. Alzheimers Dis. 61, 1653–1665. doi: 10.3233/jad-170801

Tang, Z. L., Liu, X. Y., Huo, H. Q., Tang, M., Liu, T., Wu, Z. X., et al. (2022). The role of low-frequency oscillations in three-dimensional perception with depth cues in virtual reality. Neuroimage 257:119328. doi: 10.1016/j.neuroimage.2022.119328

Tiwari, A., Pachori, R. B., and Sanjram, P. K. (2021). Isomorphic 2D/3D objects and saccadic characteristics in mental rotation. Comput. Mater. Continua 70, 433–450. doi: 10.32604/cmc.2022.019256

Toth, A. J., and Campbell, M. J. (2019). Investigating sex differences, cognitive effort, strategy, and performance on a computerised version of the mental rotations test via eye tracking. Sci. Rep. 9:19430. doi: 10.1038/s41598-019-56041-6

Verghese, P., McKee, S. P., and Levi, D. M. (2019). Attention deficits in amblyopia. Curr. Opin. Psychol. 29, 199–204. doi: 10.1016/j.copsyc.2019.03.011

Wenk, N., Buetler, K. A., Penalver-Andres, J., Müri, R. M., and Marchal-Crespo, L. (2022). Naturalistic visualization of reaching movements using head-mounted displays improves movement quality compared to conventional computer screens and proves high usability. J. Neuroeng. Rehabil. 19:137. doi: 10.1186/s12984-022-01101-8

Xue, J., Li, C., Quan, C., Lu, Y., Yue, J., and Zhang, C. (2017). Uncovering the cognitive processes underlying mental rotation: An eye-movement study. Sci. Rep. 7:10076. doi: 10.1038/s41598-017-10683-6

Keywords: eye movements, virtual reality, naturalistic stimuli, mental rotation, three-dimensional stimuli, visual perception

Citation: Tang Z, Liu X, Huo H, Tang M, Qiao X, Chen D, Dong Y, Fan L, Wang J, Du X, Guo J, Tian S and Fan Y (2023) Eye movement characteristics in a mental rotation task presented in virtual reality. Front. Neurosci. 17:1143006. doi: 10.3389/fnins.2023.1143006

Received: 12 January 2023; Accepted: 13 March 2023; Published: 27 March 2023.

Edited by:

Jiawei Zhou, Wenzhou Medical University, China

Reviewed by:

Rong Song, Sun Yat-sen University, China

Copyright © 2023 Tang, Liu, Huo, Tang, Qiao, Chen, Dong, Fan, Wang, Du, Guo, Tian and Fan. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Xiaoyu Liu, x.y.liu@buaa.edu.cn; Yubo Fan, yubofan@buaa.edu.cn
