- Cyberpsychology Laboratory, Faculty of Social Sciences, Lobachevsky State University, Nizhny Novgorod, Russia

Music is increasingly being used as a therapeutic tool in rehabilitation medicine and psychophysiology. One of the key components of music is its temporal organization. Using the event-related potential (ERP) technique, we studied the neurocognitive processes underlying the perception of musical meter at different tempi. The study involved 20 volunteers (6 men; median age 23 years). Participants listened to 4 experimental series that differed in tempo (fast vs. slow) and meter (duple vs. triple). Each series consisted of 625 audio stimuli, 85% of which had a standard metric structure (standard stimuli), while 15% included unexpected accents (deviant stimuli). The results revealed that the type of metric structure influences the detection of changes in the stimuli. The N200 wave occurred significantly earlier for stimuli with duple meter and fast tempo and latest for stimuli with triple meter and fast tempo.

1. Introduction

Music has been an integral part of human life throughout documented history. The same holds true for musical ability, i.e., the ability to perceive tone, which is inherent to human beings to varying degrees. Human beings are capable of perceiving and responding to rhythmic structures from infancy. Studies have shown that both the rhythm young children find most comfortable and their rate of synchronization with an external rhythm change with age: the internal rhythm slows down and the rate of synchronization with the external rhythm decreases (Drake et al., 2000).

The physiological basis for rhythm perception lies in the motor networks, particularly the basal ganglia. Grahn and Brett (2007) found that the basal ganglia and the supplementary motor area (SMA) showed increased activity in response to rhythms with integer interval ratios and regular accents. At the same time, these areas are active even when there is no movement to the beat of the music (Merchant et al., 2015). Rhythm perception is also associated with interactions between auditory and motor brain areas (Zatorre et al., 2007). Investigating beta-band fluctuations during rhythm perception, Fujioka et al. (2009) suggested that, alongside its sensorimotor function, the motor system also contributes to the processing of auditory information. Hence, for auditory information to influence the motor system, mechanisms of monitoring and control are required. Such control is carried out by modeling upcoming events, which allows the brain not only to anticipate them but also to save resources for processing incoming information (Schubotz, 2007). Engel and Fries (2010) also reported a large network in sensorimotor areas that is engaged when tracking the beat tempo even without the intention to synchronize movement with the rhythm, and concluded that the motor system is activated during passive perception of rhythms.

The process of predicting rhythmic events is reflected in event-related potentials (Bouwer and Honing, 2015). Three ERP components are associated with prediction generation: MMN, N2b, and P3a (Bouwer et al., 2016). In particular, the MMN (mismatch negativity) component reflects the memory system's encoding of information beyond the acoustic properties of sound and is related to rhythm, melody, and harmony (Näätänen et al., 2007). Accordingly, a common research approach is to violate the predictions that the listener's brain forms about the stimulus being played (Brochard et al., 2003; Honing et al., 2014; Bouwer et al., 2016). This approach involves different types of stimulus material, such as music-like sounds (Bouwer et al., 2014), rhythms made of identical sounds separated by different time intervals (Geiser et al., 2010), stimuli with changes in tone and rhythm (Geiser et al., 2009), monotone isochronous sequences (Schwartze et al., 2011), and irregular ("jittered") sequences with skipped sounds or changes in tone in unexpected places (Teki et al., 2011).

Music perception can be influenced by various factors. For example, Bouwer and Honing (2015) demonstrated that temporal attending and temporal prediction affect the way a metrical rhythm is processed: intensity increments that fall on the expected beat are detected faster than those that fall off the beat. Brochard et al. (2003) provided physiological evidence for subjective accentuation, the phenomenon whereby identical sound events in isochronous sequences are perceived by the listener as unequal. Based on an analysis of ERP components, it has been suggested that these perceived differences are related to the dynamic unfolding of attention.

Two questions regarding music perception remain unclear: how it is affected by attention, and how it is affected by the listener's musical training. Geiser et al. (2009) showed that musicians are faster and more accurate at detecting errors in rhythm and meter tasks, but that the neurophysiological processes accompanying the perception of rhythm and meter did not differ between individuals with and without musical training. However, musical skills are associated with increased sensitivity to meter, which can be traced in the MMN component at the physiological level (Geiser et al., 2010). Bouwer et al. (2014) found that simple rhythms can be perceived regardless of attention or musical training, and that consistent training improves rhythm perception even when attention is not directed to the musical stimuli. However, differences in rhythm perception between musicians and non-musicians are evident only when attention is directed to the rhythm (Bouwer et al., 2016). In other words, conscious effort appears to be required for musical abilities or skills to manifest.

Geiser et al. (2010) define rhythm and meter perception as the ability to extract from a sequence of sound stimuli not only a regular pulse but also a hierarchical structure of accented tones. Thus, studying the neural correlates of meter perception in musicians can serve as a useful model for studying patterns at higher hierarchical levels. It is believed that studying the perception of rhythmic series can provide more detailed insight into the dynamic deployment of attention and its physiological correlates (Schwartze et al., 2011). In addition, recent evidence suggests that musical rhythm and speech rhythm share neurobiological processing resources, which is reflected in reading. Fotidzis et al. (2018) applied the expected rhythm disturbance model to reading and found a relationship between musical rhythm abilities and reading comprehension skills, reflected in EEG measures.

The N200 wave is associated with the detection of novelty or mismatch in the presented stimuli. Its amplitude is assumed to reflect the degree of mismatch between the processed event and the representation of the "expected" event in sensory memory (Folstein and Van Petten, 2008). In our previous studies, we showed that the amplitude of the N200 wave is related to the processing of tonal harmony (the relationships between tones in musical sounds), which, along with rhythmic organization, is one of the key elements of musical syntax in traditional Western music (Radchenko et al., 2019). This suggests that the N200 wave should also be sensitive to changes in temporal organization, reflecting its representation in auditory sensory memory. The overall aim of the study is to examine the neurocognitive processing of the temporal organization of music in non-musician listeners. This may contribute to a better understanding of the effects observed when listening to music and increase the effectiveness of music-based therapeutic procedures.

The primary goals of the current study are:

1. Comparison of the amplitude-temporal characteristics of the N200 wave of event-related potentials (ERPs) when listening to musical fragments with changing rhythmic structure (duple/triple meter);

2. Comparison of the amplitude-temporal characteristics of the N200 wave of the ERP when listening to musical fragments with changing rhythmic structure in the context of fast/slow playback tempo.

The general goal of this study is to investigate how the brain processes the rhythmic structure of music in relation to the tempo (fast vs. slow) and meter (duple vs. triple) factors.

2. Materials and methods

2.1. Study sample

Twenty participants (6 men) aged 18–42 years (median age 23 years) took part in the study. None of them was a professional musician, and none had any musical education beyond that provided at general secondary school. All participants gave written informed consent to participate in the study.

2.2. Stimuli

Audio signals with the following characteristics were generated to assess the neural processing of metrical structure and tempo. The rhythmic structure was defined by differences in dynamics (volume) between strong and weak beats. A synthesized complex tone was used as the sample signal. The tone duration was 100 ms and its frequency was 1,046 Hz, corresponding to C6 (two octaves above middle C). The sampling rate was 44.1 kHz. The stimuli were recorded using the Ableton Live 10 sequencer and the Addictive Drums 2 plug-in. The "downbeat" (strong) and "upbeat" (weak) beats were realized with the same complex tone, with the volume of the weak beats lowered by 10 dB. A piano preset (Native Instruments Kontakt 5 plug-in with The Giant library) was used for the timbre of the tone.
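
To make the 10 dB level difference concrete, the following Python sketch synthesizes one strong and one weak beat with the timing parameters listed above (100 ms tone, 44.1 kHz). It is only an approximation under stated assumptions: the actual stimuli were rendered with a piano preset in Ableton Live, whereas the sketch assumes a plain sine at C6 (about 1046.5 Hz); the function names, the peak level of 0.8, and the 5-ms linear on/off ramps are hypothetical choices. A −10 dB change corresponds to multiplying the amplitude by 10^(−10/20) ≈ 0.316.

```python
import math

SAMPLE_RATE = 44_100   # Hz, as reported for the stimuli
TONE_MS = 100          # tone duration in ms
FREQ_HZ = 1046.5       # C6; assumed pure sine instead of the piano timbre
WEAK_BEAT_DB = -10.0   # weak beats are 10 dB quieter than strong beats


def db_to_gain(db: float) -> float:
    """Convert a level change in dB to a linear amplitude factor."""
    return 10 ** (db / 20)


def make_tone(gain: float = 1.0, peak: float = 0.8, ramp_ms: float = 5.0) -> list:
    """Return one sine tone as float samples in [-1, 1], with linear on/off ramps."""
    n = SAMPLE_RATE * TONE_MS // 1000            # 4410 samples
    ramp = int(SAMPLE_RATE * ramp_ms / 1000)     # hypothetical 5-ms ramp to avoid clicks
    samples = []
    for i in range(n):
        env = min(1.0, i / ramp, (n - 1 - i) / ramp)
        samples.append(peak * gain * env * math.sin(2 * math.pi * FREQ_HZ * i / SAMPLE_RATE))
    return samples


strong = make_tone()
weak = make_tone(db_to_gain(WEAK_BEAT_DB))
print(len(strong), round(db_to_gain(WEAK_BEAT_DB), 3))  # 4410 0.316
```

The weak tone is the strong tone scaled by a constant factor, so the two differ only in level, which is the property the metrical accent manipulation relies on.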

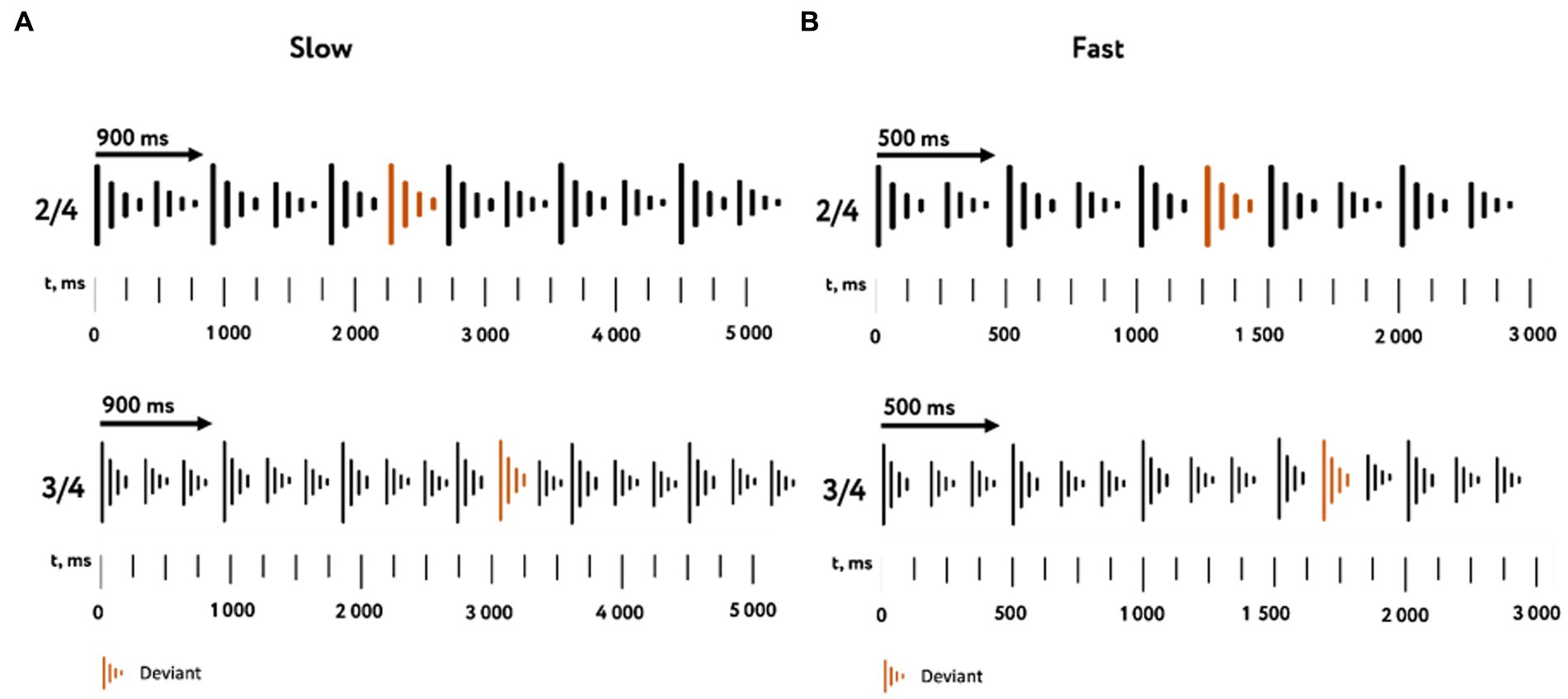

Using these tones, four variations of rhythmic sequences were composed: duple meter at slow and fast tempo, and triple meter at slow and fast tempo. For each variation, a standard and a deviant version were created. The standard version was a successive alternation of strong and weak beats. In the deviant version, all weak beats were replaced by strong ones, thus altering the accent pattern of strong and weak beats. Depending on the tempo, the beat duration was 500 ms (fast tempo) or 900 ms (slow tempo) (Figure 1).
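
The four sequence types and their deviant versions can be summarized as accent patterns. The sketch below assumes that one stimulus corresponds to one bar (two beats in duple, three in triple meter) and that the reported beat duration is the inter-onset interval between beats; neither assumption is stated explicitly in the text, and the names are illustrative only.

```python
# Hypothetical sketch: one stimulus is assumed to be one bar of the given meter.
BEAT_MS = {"fast": 500, "slow": 900}      # beat durations reported in the text
BEATS_PER_BAR = {"duple": 2, "triple": 3}


def accent_pattern(meter: str, deviant: bool) -> list:
    """Return 'S' (strong) / 'W' (weak, -10 dB) labels for one bar."""
    n = BEATS_PER_BAR[meter]
    if deviant:
        return ["S"] * n                  # every weak beat replaced by a strong one
    return ["S"] + ["W"] * (n - 1)        # standard: strong beat followed by weak beat(s)


def beat_onsets_ms(meter: str, tempo: str) -> list:
    """Onset of each beat within the bar, assuming the beat duration is the inter-onset interval."""
    return [k * BEAT_MS[tempo] for k in range(BEATS_PER_BAR[meter])]


for meter in ("duple", "triple"):
    for tempo in ("fast", "slow"):
        print(meter, tempo, accent_pattern(meter, False), accent_pattern(meter, True),
              beat_onsets_ms(meter, tempo))
```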

Figure 1. Characteristics of the stimulus material. The variations of rhythmic sequences used in slow (A) and fast (B) tempos with duple (top) and triple (bottom) meters are shown. Color indicates tone replacement in deviant stimuli.

2.3. Study design

The study consisted of four experimental series that differed in tempo (fast vs. slow) and meter (duple vs. triple). The order of the series was randomized for each participant. A break was taken between series to allow the participant to rest.

Each experimental series consisted of 625 audio stimuli, 85% of which had a standard metric structure (standard stimuli) and 15% a modified metric structure (deviant stimuli). The stimuli were presented in pseudorandom order, with at least 4 standard stimuli between any 2 deviant stimuli and at least 10 standard stimuli at the beginning of the sequence.
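
The ordering constraints above can be met with a simple constrained shuffle. The following sketch is not the procedure implemented in Presentation; it is a hypothetical illustration (the function name and its parameters are assumptions) that first reserves the mandatory standards (10 before the first deviant, 4 between consecutive deviants) and then drops the remaining standards one at a time into randomly chosen gaps. With 625 stimuli and a 15% deviant rate, this gives 94 deviants and 531 standards.

```python
import random


def make_sequence(n_total=625, p_deviant=0.15, min_gap=4, min_lead=10, seed=None):
    """Hypothetical helper: return 'std'/'dev' labels that satisfy the ordering constraints."""
    rng = random.Random(seed)
    n_dev = round(n_total * p_deviant)           # 94 deviants for 625 stimuli
    n_std = n_total - n_dev                      # 531 standards
    # Mandatory standards: min_lead before the first deviant, min_gap between
    # consecutive deviants, none required after the last deviant.
    gaps = [min_lead] + [min_gap] * (n_dev - 1) + [0]
    spare = n_std - sum(gaps)
    if spare < 0:
        raise ValueError("constraints cannot be satisfied")
    for _ in range(spare):                       # scatter the remaining standards
        gaps[rng.randrange(len(gaps))] += 1
    seq = []
    for i, gap in enumerate(gaps):
        seq.extend(["std"] * gap)
        if i < n_dev:                            # a deviant closes every gap but the last
            seq.append("dev")
    return seq


seq = make_sequence(seed=1)
print(len(seq), seq.count("dev"))                # 625 94
```

Because each spare standard is assigned to a gap independently, the resulting orderings are not uniformly distributed over all valid sequences; for an oddball design this is usually acceptable, but it is a design choice rather than a requirement.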

Because the study of the temporal characteristics of musical signals is highly sensitive to any micro-delays between notes, a single sound file containing all 625 stimuli was generated and then played back, eliminating such delays. In addition, event markers containing the playback time of each stimulus were written into the generated file. Stimulus playback was synchronized with the EEG recording via a cable connecting the COM port of the computer that played the sound file to the peripheral port of the electroencephalograph.
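
Generating a single file means that every stimulus onset is fixed in advance relative to the start of the file, so the event list can be computed rather than measured. The sketch below assumes that stimuli follow each other back to back with no extra silence (the text does not say whether there were inter-stimulus gaps) and reuses the one-bar-per-stimulus assumption; all names are hypothetical. It also shows the timing resolution on the EEG side: at 250 Hz, one sample spans 4 ms.

```python
# Hypothetical sketch: stimuli are assumed to be contiguous bars without extra silence.
AUDIO_RATE_HZ = 44_100
EEG_RATE_HZ = 250
BEAT_MS = {"fast": 500, "slow": 900}
BEATS_PER_BAR = {"duple": 2, "triple": 3}


def planned_onsets_ms(n_stimuli: int, meter: str, tempo: str) -> list:
    """Onset of every stimulus relative to the start of the single sound file."""
    bar_ms = BEATS_PER_BAR[meter] * BEAT_MS[tempo]
    return [i * bar_ms for i in range(n_stimuli)]


def to_sample(t_ms: float, rate_hz: int) -> int:
    """Nearest sample index for a time in ms at the given sampling rate."""
    return round(t_ms * rate_hz / 1000)


onsets = planned_onsets_ms(625, "triple", "slow")
events = [(t, to_sample(t, AUDIO_RATE_HZ), to_sample(t, EEG_RATE_HZ)) for t in onsets]
print(events[1])                          # (2700, 119070, 675)
print(625 * 2700 / 60_000)                # file duration in minutes: 28.125
```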

The whole procedure of stimulus randomization, audio file generation, audio file playback, and event synchronization via the COM port was performed in Presentation software (Neurobehavioral Systems; see Footnote 1).

All stimuli were played from a separate presentation computer running Presentation software, through a Steinberg UR-12 audio interface and Sennheiser HD 569 headphones at 80 dB. Participants were seated in a comfortable chair with an adjustable backrest and armrests and watched a silent video of natural landscapes.

The experimental procedure consisted of the following stages:

• Instruction of the subject;

• Listening to four experimental series, with a break between them for rest.

EEG was recorded using an EEGA-21-26 "Encephalan-103" electroencephalograph-analyzer (Medikom-MTD, Taganrog). Recordings were made from 19 electrodes (F7, F3, Fz, F4, F8, T3, C3, Cz, C4, T4, T5, P3, Pz, P4, T6, Fp1, Fp2, O1, and O2) placed according to the international 10–20 system, with reference electrodes on the mastoids, a ground electrode at the vertex, and a sampling frequency of 250 Hz. The raw signal was filtered with a 0.5–70 Hz bandpass filter and a 50 Hz notch filter. Electrode impedance did not exceed 10 kΩ during recording.

2.4. Data analysis

EEG data were processed using Brainstorm software (version of 20.10.2021; http://neuroimage.usc.edu/brainstorm; Tadel et al., 2011). The signal was first converted to EDF format and imported into Brainstorm. A high-pass filter with a cutoff frequency of 1 Hz and a low-pass filter with a cutoff frequency of 40 Hz were applied. The EEG channels were re-referenced to the average of the two mastoid signals. Epochs time-locked to standard and deviant stimuli were extracted in a [−100; 500] ms window, and each epoch was baseline-corrected using the [−50; 0] ms interval to remove constant amplitude offsets. To eliminate eye-movement artifacts, the EOG channels were scanned with a 200-ms sliding window, and epochs containing segments with a standard deviation above 35 µV and an amplitude above 100 µV were excluded from further analysis. On average, 15% of epochs were excluded. To reduce the Type I error rate, statistical analysis was restricted to the difference between standard and deviant stimuli at electrode Cz, as the electrode least affected by noise and showing a pronounced MMN effect (Luck and Gaspelin, 2017). For this difference signal, the weighted mean amplitude and the fractional area latency were calculated in the 150–250 ms post-stimulus window. The weighted mean amplitude was calculated as the average amplitude within the analysis window. The fractional area latency is the time point that divides the area under the curve within the analysis window into two parts of a specified proportion (here, 50%). Statistical analysis used a two-way repeated-measures analysis of variance (ANOVA) with tempo (fast vs. slow) and meter (duple vs. triple) as within-subject factors; Tukey's correction for multiple comparisons was additionally applied. Statistical analysis was performed in RStudio (v. 2022.07.2 Build 576).
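
For readers who want to reproduce the preprocessing outside Brainstorm, the steps above (mastoid re-referencing, baseline correction over [−50; 0] ms, and sliding-window EOG screening) are sketched below in plain Python. This is not Brainstorm's implementation: the one-sample window step, the handling of the baseline edges on the 4-ms grid, and the way the two thresholds are combined are assumptions, and the thresholds are taken in µV, since EEG and EOG amplitudes are on the order of microvolts.

```python
import math
import statistics

FS_HZ = 250                    # EEG sampling rate
EPOCH_START_MS = -100          # epochs span [-100, 500] ms
BASELINE_MS = (-50, 0)
WIN_MS = 200                   # sliding-window length for EOG screening
SD_LIMIT_UV = 35.0
AMP_LIMIT_UV = 100.0


def rereference(channel: list, m1: list, m2: list) -> list:
    """Subtract the average of the two mastoid signals from a channel, sample by sample."""
    return [x - (a + b) / 2 for x, a, b in zip(channel, m1, m2)]


def baseline_correct(epoch: list) -> list:
    """Remove the mean of the samples inside [-50, 0] ms from every sample of the epoch."""
    lo = math.ceil((BASELINE_MS[0] - EPOCH_START_MS) * FS_HZ / 1000)   # -48 ms on the grid
    hi = math.floor((BASELINE_MS[1] - EPOCH_START_MS) * FS_HZ / 1000)  # 0 ms
    base = statistics.fmean(epoch[lo:hi + 1])
    return [x - base for x in epoch]


def is_eog_artifact(eog: list, require_both: bool = True) -> bool:
    """Slide a 200-ms window (1-sample step) over the EOG trace and flag out-of-bounds windows.

    require_both=True follows the literal wording (SD above 35 uV and amplitude above
    100 uV); set it to False to reject when either limit is exceeded.
    """
    w = WIN_MS * FS_HZ // 1000                 # 50 samples at 250 Hz
    for start in range(len(eog) - w + 1):
        seg = eog[start:start + w]
        sd_bad = statistics.pstdev(seg) > SD_LIMIT_UV
        amp_bad = max(abs(x) for x in seg) > AMP_LIMIT_UV
        if (sd_bad and amp_bad) if require_both else (sd_bad or amp_bad):
            return True
    return False
```
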
Figures were plotted in Jupyter Notebook, launched from the Anaconda Navigator 1.9.12 desktop interface.
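
The two ERP measures defined above can be computed from a difference wave as follows. The sketch assumes epochs sampled at 250 Hz that start at −100 ms, and keeps only samples strictly inside the 150–250 ms window (152–248 ms on the 4-ms grid). As an assumption not stated in the text, the fractional area is computed over the negative-going part of the wave only, as is common for negative components such as the N200; the helper names are hypothetical.

```python
import math
from typing import List, Optional, Tuple

FS_HZ = 250
EPOCH_START_MS = -100          # epochs span [-100, 500] ms
WINDOW_MS = (150, 250)         # analysis window from the text


def _window(wave: List[float]) -> Tuple[List[float], List[float]]:
    """Times (ms) and samples of the difference wave that fall inside the analysis window."""
    i0 = math.ceil((WINDOW_MS[0] - EPOCH_START_MS) * FS_HZ / 1000)
    i1 = math.floor((WINDOW_MS[1] - EPOCH_START_MS) * FS_HZ / 1000)
    times = [EPOCH_START_MS + 1000 * i / FS_HZ for i in range(i0, i1 + 1)]
    return times, wave[i0:i1 + 1]


def mean_amplitude(wave: List[float]) -> float:
    """Average amplitude inside the analysis window."""
    _, seg = _window(wave)
    return sum(seg) / len(seg)


def fractional_area_latency(wave: List[float], fraction: float = 0.5) -> Optional[float]:
    """First time point at which the cumulative negative area reaches `fraction` of the total."""
    times, seg = _window(wave)
    area = [max(0.0, -x) for x in seg]     # assumption: only the negative-going part counts
    total = sum(area)
    if total == 0:
        return None                        # no negative deflection in the window
    running = 0.0
    for t, a in zip(times, area):
        running += a
        if running >= fraction * total:
            return t
    return times[-1]
```

With `fraction=0.5`, the returned time splits the negative area in the window into two equal halves; approximating the area by a sum of samples limits the latency resolution to one sampling interval (4 ms).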

3. Results

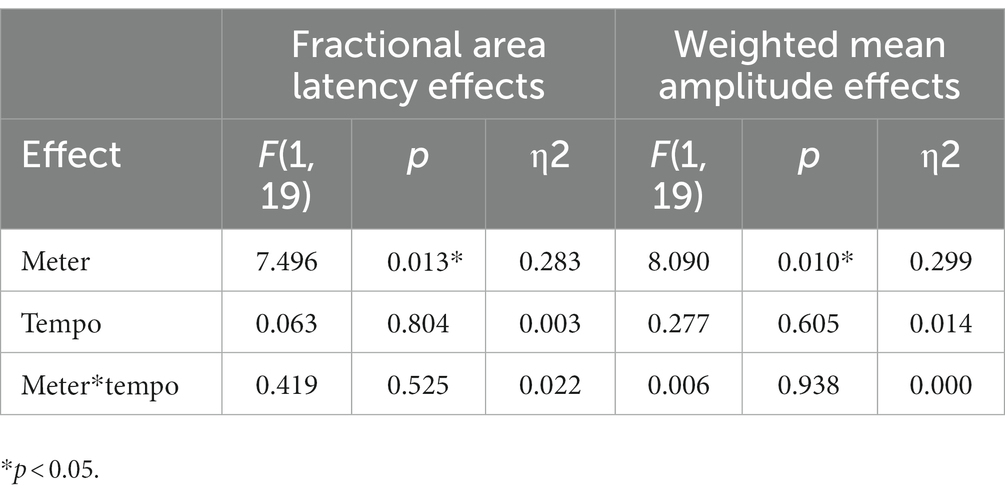

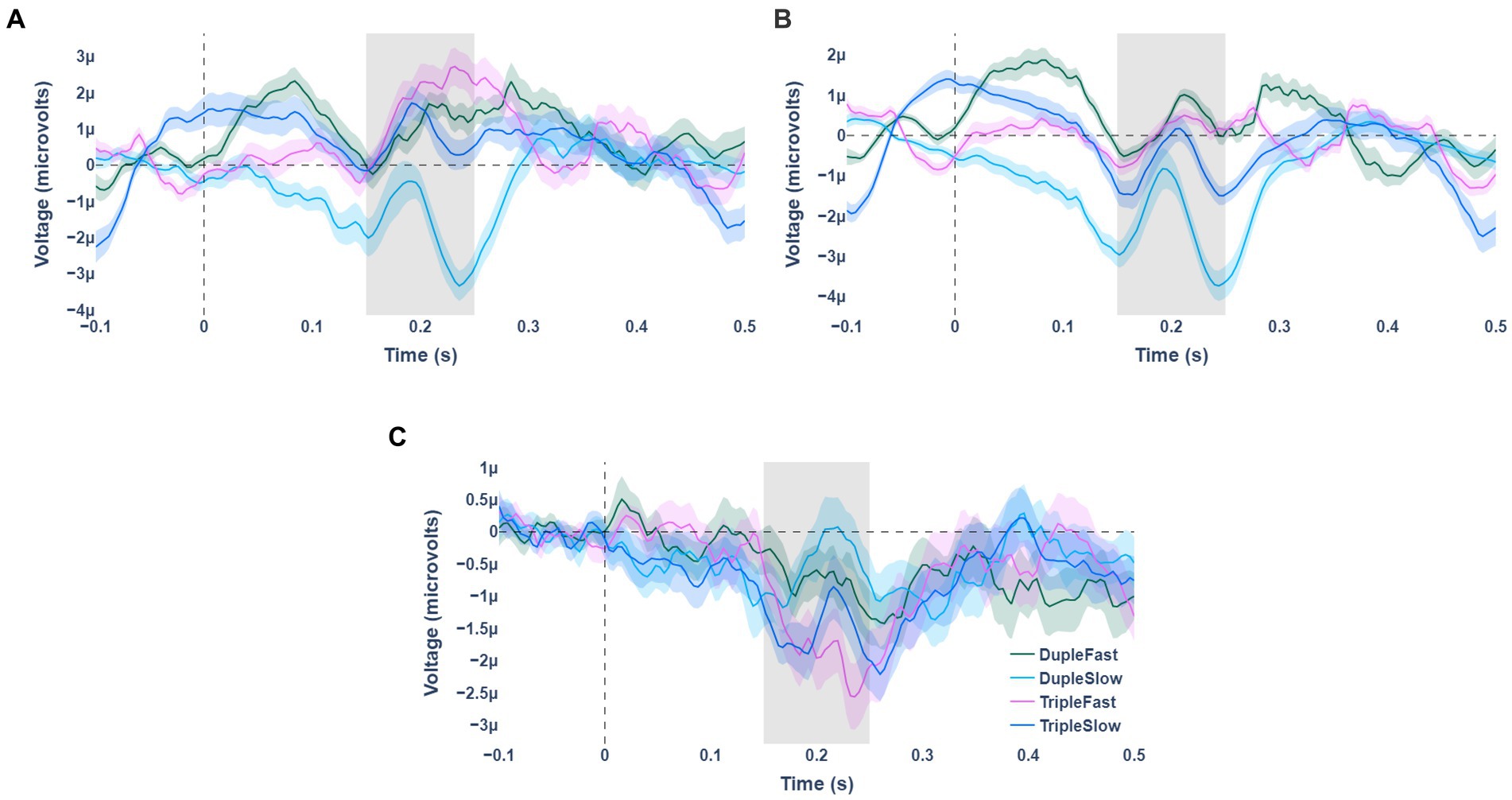

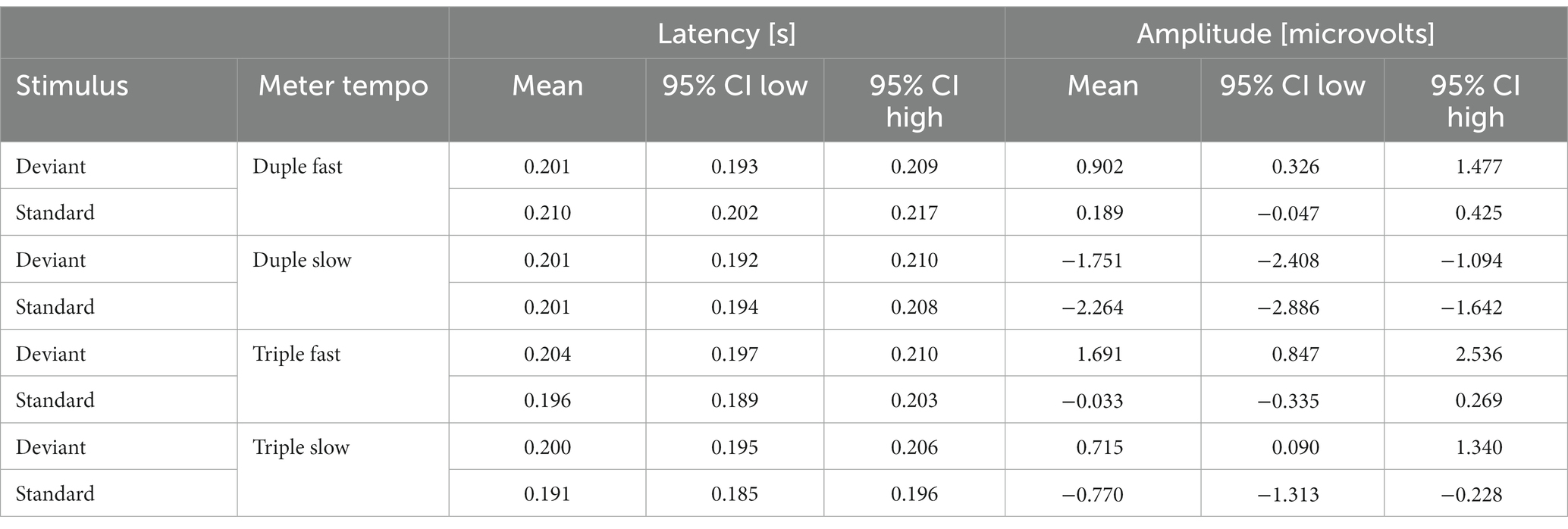

Statistically significant effects on fractional area latency were found for the meter factor [F(1, 19) = 7.496, p = 0.013, partial η² = 0.283]. No significant effects were observed for the tempo factor or for the meter × tempo interaction (see Table 1). The N200 wave occurred significantly earlier for stimuli with duple meter and fast tempo and latest for stimuli with triple meter and fast tempo. Analysis of the weighted mean amplitude values also showed a significant effect of the meter factor [F(1, 19) = 8.090, p = 0.010, partial η² = 0.299]; no significant effects were observed for the tempo factor or for the meter × tempo interaction (see Table 1). The N200 waves averaged over all participants for standard and deviant stimuli, and their differences, are shown in Figure 2. Mean values and confidence intervals of latencies and amplitudes for each stimulus category are given in Table 2.
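
The reported effect sizes can be cross-checked against the F statistics: for an effect with df_effect numerator and df_error denominator degrees of freedom, partial η² = F·df_effect / (F·df_effect + df_error). The small helper below (a hypothetical name) applies this identity and reproduces both values reported above.

```python
def partial_eta_squared(f: float, df_effect: int, df_error: int) -> float:
    """Partial eta squared recovered from an F ratio and its degrees of freedom."""
    return f * df_effect / (f * df_effect + df_error)


print(round(partial_eta_squared(7.496, 1, 19), 3))  # latency, meter effect: 0.283
print(round(partial_eta_squared(8.090, 1, 19), 3))  # amplitude, meter effect: 0.299
```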

Table 1. Fractional area latency and weighted mean amplitude effects for the meter and tempo factors and their interaction.

Figure 2. Averaged waves of event-related potentials for the 4 stimulus presentation variants (indicated by color). Panel (A) shows the waves for deviant types of stimuli. Panel (B) is for standard stimulus types. Panel (C) represents the difference between the standard and deviant stimulus types.

Table 2. Mean values and confidence intervals (CI) of latencies and amplitudes for each category of stimuli.

4. General conclusions

The results revealed that the type of metric structure influences the detection of changes in it: the N200 wave is larger and occurs later for the triple meter. We interpret these results as indicating that under these conditions the disturbances are more prominent and more perceptible, reflecting a greater degree of mismatch detected by the nervous system (Winkler et al., 2009). The tempo effects previously reported for a similar type of stimulus (Zhao et al., 2017) did not reach statistical significance in our study: we observed no significant effects of tempo, whereas Zhao et al. observed differences for both the tempo and the meter factors. This discrepancy may be due to differences in the musical experience of the non-musician participants in the two studies. Zhao et al. (2017) specified as criteria the total duration of private music lessons (less than 2 years) and the time elapsed since the lessons ended (more than 5 years). In our study, none of the participants had specialized music education. Both criteria are quite general and do not allow a homogeneous comparison of participants' musical experience.

The level of musicality of non-musician participants needs to be assessed further, which would allow their experience and degree of involvement with the musical environment to be evaluated. The cultural background of the participants should also be considered, as several studies have shown that differences in cultural background affect the way people process auditory cues. For example, there is evidence that listeners from tonal-language cultures (e.g., Chinese) perform better than listeners from non-tonal-language cultures (e.g., French) at discriminating pitch (Yu et al., 2015).

5. Study limitations

The participants' level of musical training was not assessed with a questionnaire; we plan to do so in future work. The current study may also be limited by the lack of control over participants' overall level of attention. Although all participants watched a silent video to reduce blinking and were instructed to ignore the sounds in their headphones, musically trained individuals may unconsciously allocate a different amount of attentional resources to sounds than non-musicians. Further experiments should include professional musicians and school-age children in order to evaluate the developmental dynamics of the N200 wave.

Data availability statement

The raw data supporting the conclusions of this article will be made available by the authors, without undue reservation.

Ethics statement

The studies involving human participants were reviewed and approved by the Institutional Review Board of the Faculty of Social Sciences of Lobachevsky State University. The participants provided their written informed consent to participate in this study.

Author contributions

GR: experiment design and methodology, data analysis, and preparation of first draft. VD: experiment administration, literature review, data processing, and manuscript preparation. IZ, MZ, AD, and AR: assistance with experiment administration and data collection. KG: experiment pipeline preparation and data processing. All authors contributed to the article and approved the submitted version.

Funding

This research was funded by a grant from the Federal Academic Leadership Program Priority 2030.

Conflict of interest

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Publisher’s note

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

Footnotes

1. ^ www.neurobs.com

References

Bouwer, F. L., and Honing, H. (2015). Temporal attending and prediction influence the perception of metrical rhythm: evidence from reaction times and ERPs. Front. Psychol. 6:1094. doi: 10.3389/fpsyg.2015.01094

Bouwer, F. L., Van Zuijen, T. L., and Honing, H. (2014). Beat processing is pre-attentive for metrically simple rhythms with clear accents: an ERP study. PLoS One 9:e97467. doi: 10.1371/journal.pone.0097467

Bouwer, F. L., Werner, C. M., Knetemann, M., and Honing, H. (2016). Disentangling beat perception from sequential learning and examining the influence of attention and musical abilities on ERP responses to rhythm. Neuropsychologia 85, 80–90. doi: 10.1016/j.neuropsychologia.2016.02.018

Brochard, R., Abecasis, D., Potter, D., Ragot, R., and Drake, C. (2003). The “Ticktock” of our internal clock. Psychol. Sci. 14, 362–366. doi: 10.1111/1467-9280.24441

Drake, C., Jones, M. R., and Baruch, C. (2000). The development of rhythmic attending in auditory sequences: attunement, referent period, focal attending. Cognition 77, 251–288. doi: 10.1016/S0010-0277(00)00106-2

Engel, A. K., and Fries, P. (2010). Beta-band oscillations – signalling the status quo? Curr. Opin. Neurobiol. 20, 156–165. doi: 10.1016/j.conb.2010.02.015

Folstein, J. R., and Van Petten, C. (2008). Influence of cognitive control and mismatch on the N2 component of the ERP: a review. Psychophysiology 45, 152–170. doi: 10.1111/j.1469-8986.2007.00602.x

Fotidzis, T., Moon, H., Steele, J., and Magne, C. (2018). Cross-modal priming effect of rhythm on visual word recognition and its relationships to music aptitude and reading achievement. Brain Sci. 8:210. doi: 10.3390/brainsci8120210

Fujioka, T., Trainor, L. J., Large, E. W., and Ross, B. (2009). Beta and gamma rhythms in human auditory cortex during musical beat processing. Ann. N. Y. Acad. Sci. 1169, 89–92. doi: 10.1111/j.1749-6632.2009.04779.x

Geiser, E., Sandmann, P., Jäncke, L., and Meyer, M. (2010). Refinement of metre perception—training increases hierarchical metre processing. Eur. J. Neurosci. 32, 1979–1985. doi: 10.1111/j.1460-9568.2010.07462.x

Geiser, E., Ziegler, E., Jäncke, L., and Meyer, M. (2009). Early electrophysiological correlates of meter and rhythm processing in music perception. Cortex 45, 93–102. doi: 10.1016/j.cortex.2007.09.010

Grahn, J. A., and Brett, M. (2007). Rhythm and beat perception in motor areas of the brain. J. Cogn. Neurosci. 19, 893–906. doi: 10.1162/jocn.2007.19.5.893

Honing, H., Bouwer, F. L., and Háden, G. P. (2014). “Perceiving temporal regularity in music: the role of auditory event-related potentials (ERPs) in probing beat perception” in Neurobiology of interval timing. eds. H. Merchant and V. de Lafuente (New York: Springer)

Luck, S. J., and Gaspelin, N. (2017). How to get statistically significant effects in any ERP experiment (and why you shouldn’t). Psychophysiology 54, 146–157. doi: 10.1111/psyp.12639

Merchant, H., Grahn, J. A., Trainor, L. J., Rohrmeier, M. A., and Fitch, W. T. (2015). Finding the beat: a neural perspective across humans and non-human primates. Philos. Trans. R. Soc. Lond. Ser. B Biol. Sci. 370:20140093. doi: 10.1098/rstb.2014.0093

Näätänen, R. N., Paavilainen, P., Rinne, T., and Alho, K. (2007). The mismatch negativity (MMN) in basic research of central auditory processing: a review. Clin. Neurophysiol. 118, 2544–2590. doi: 10.1016/j.clinph.2007.04.026

Radchenko, G. S., Gromov, K. N., Korsakova-Kreyn, M. N., and Fedotchev, A. I. (2019). Investigation of the amplitude-time characteristics of the N200 and P600 waves of event-related potentials during processing of the distance of tonal modulation. Hum. Physiol. 45, 137–144. doi: 10.1134/S0362119719020105

Schubotz, R. I. (2007). Prediction of external events with our motor system: towards a new framework. Trends Cogn. Sci. 11, 211–218. doi: 10.1016/j.tics.2007.02.006

Schwartze, M., Rothermich, K., Schmidt-Kassow, M., and Kotz, S. A. (2011). Temporal regularity effects on pre-attentive and attentive processing of deviance. Biol. Psychol. 87, 146–151. doi: 10.1016/j.biopsycho.2011.02.021

Tadel, F., Baillet, S., Mosher, J. C., Pantazis, D., and Leahy, R. M. (2011). Brainstorm: a user-friendly application for MEG/EEG analysis. Comput. Intell. Neurosci. 2011:879716. doi: 10.1155/2011/879716

Teki, S., Grube, M., Kumar, S., and Griffiths, T. D. (2011). Distinct neural substrates of duration-based and beat-based auditory timing. J. Neurosci. 31, 3805–3812. doi: 10.1523/JNEUROSCI.5561-10.2011

Winkler, I., Denham, S. L., and Nelken, I. (2009). Modeling the auditory scene: predictive regularity representations and perceptual objects. Trends Cogn. Sci. 13, 532–540. doi: 10.1016/j.tics.2009.09.003

Yu, X., Liu, T., and Gao, D. (2015). The mismatch negativity: an indicator of perception of regularities in music. Behav. Neurol. 2015:469508. doi: 10.1155/2015/469508

Zatorre, R. J., Chen, J. L., and Penhune, V. B. (2007). When the brain plays music: auditory–motor interactions in music perception and production. Nat. Rev. Neurosci. 8, 547–558. doi: 10.1038/nrn2152

Keywords: musical meter, temporal structure, tempo, duple vs. triple meter, EEG, ERP

Citation: Radchenko G, Demareva V, Gromov K, Zayceva I, Rulev A, Zhukova M and Demarev A (2023) Neural mechanisms of temporal and rhythmic structure processing in non-musicians. Front. Neurosci. 17:1124038. doi: 10.3389/fnins.2023.1124038

Edited by:

Valeri Makarov, Complutense University of Madrid, Spain

Reviewed by:

Max Talanov, Institute for Artificial Intelligence R&D, Serbia

Alexander E. Hramov, Immanuel Kant Baltic Federal University, Russia

Anthony Kroyt Brandt, Rice University, United States

Copyright © 2023 Radchenko, Demareva, Gromov, Zayceva, Rulev, Zhukova and Demarev. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Valeriia Demareva, valeriia.demareva@fsn.unn.ru

Grigoriy Radchenko

Valeriia Demareva

Kirill Gromov

Irina Zayceva

Marina Zhukova

Andrey Demarev