ORIGINAL RESEARCH article

Front. Neurosci., 18 October 2022

Sec. Brain Imaging Methods

Volume 16 - 2022 | https://doi.org/10.3389/fnins.2022.983602

Today, most neurocognitive studies in humans employ the non-invasive neuroimaging techniques functional magnetic resonance imaging (fMRI) and electroencephalogram (EEG). However, how the data provided by fMRI and EEG relate exactly to the underlying neural activity remains incompletely understood. Here, we aimed to understand the relation between EEG and fMRI data at the level of neural population codes using multivariate pattern analysis. In particular, we assessed whether this relation is affected when we change stimuli or introduce identity-preserving variations to them. For this, we recorded EEG and fMRI data separately from 21 healthy participants while participants viewed everyday objects in different viewing conditions, and then related the data to electrocorticogram (ECoG) data recorded for the same stimulus set from epileptic patients. The comparison of EEG and ECoG data showed that object category signals emerge swiftly in the visual system and can be detected by both EEG and ECoG at similar temporal delays after stimulus onset. The correlation between EEG and ECoG was reduced when object representations tolerant to changes in scale and orientation were considered. The comparison of fMRI and ECoG overall revealed a tighter relationship in occipital than in temporal regions, related to differences in fMRI signal-to-noise ratio. Together, our results reveal a complex relationship between fMRI, EEG, and ECoG signals at the level of population codes that critically depends on the time point after stimulus onset, the region investigated, and the visual contents used.

Any human cognitive function is realized by complex neural dynamics that evolve both in time and in locus (Haxby et al., 2014; Buzsáki and Llinás, 2017) across the brain. No currently available non-invasive neuroimaging technique can resolve such complex brain dynamics both in space and time with high resolution simultaneously. Instead, different techniques are used to pursue one goal or the other. Specifically, BOLD-fMRI resolves brain activity with high spatial resolution up to and beyond the millimeter scale, but low temporal resolution (Gosseries et al., 2008; Feinberg and Yacoub, 2012; Kim et al., 2020; Bollmann and Barth, 2021). In contrast, Magneto/Electroencephalogram (M/EEG) resolves brain activity with high temporal resolution in the millisecond range, but its spatial resolution is limited (Michel and Murray, 2012; Burle et al., 2015). To better understand brain function in humans across time and space, we require integration of non-invasively available information from multiple brain imaging techniques. The data provided by M/EEG and functional magnetic resonance imaging (fMRI) have a complex and incompletely understood relationship to the underlying neural sources. This complicates their interpretation on their own as well as in combination, introducing methodological uncertainty in our knowledge of the spatiotemporal neural dynamics underlying cognitive functions.

Interpreting the results of fMRI and EEG can benefit from comparison with results from invasive techniques that sample brain activity with high temporal and spatial specificity simultaneously, such as the electrocorticogram (ECoG). A large body of research has taken this route, relating invasive electrophysiological recordings and EEG or fMRI, respectively.

The relationship between EEG signals and invasive electrophysiological recordings has been investigated before mostly in the context of seizure detection (Kokkinos et al., 2019; Meisel and Bailey, 2019) and activity localization (Yamazaki et al., 2012; Hnazaee et al., 2020). Further studies have also explored frequency-band links between the two modalities (Petroff et al., 2016; Haufe et al., 2018; Meisel and Bailey, 2019). An emerging pattern from these studies is that the analytical method used for the comparison (Ding et al., 2007; Hnazaee et al., 2020) and the signal components of EEG and ECoG selected (Yamazaki et al., 2012; Meisel and Bailey, 2019) play a key role in achieving a reliable correspondence between the two modalities.

The relationship between fMRI signals and electrophysiological recordings has been investigated in a set of seminal studies in non-human primates during visual processing (Logothetis et al., 2001; Niessing et al., 2005; Magri et al., 2012; Klink et al., 2021). In the visual cortex, overall Blood Oxygen Level Dependent (BOLD) responses reflect Local Field Potentials (LFPs) more than the spiking output of the neurons and lower frequency activities (Logothetis et al., 2001; Niessing et al., 2005; Magri et al., 2012; Klink et al., 2021), but the correspondence also varies across brain regions. Studies in humans comparing anatomical and functional correspondence of fMRI and invasive electrophysiological signals show frequency (Huettel et al., 2004; Privman et al., 2007; Khursheed et al., 2011; Engell et al., 2012; Hermes et al., 2012; van Houdt et al., 2013), region (Huettel et al., 2004; Khursheed et al., 2011; Sanada et al., 2021), and time-specific (Jacques et al., 2016b) correspondence, for both task responses and resting state activity (Khursheed et al., 2011; Hermes et al., 2012; Hacker et al., 2017).

Common to most of the aforementioned studies is that the links between non-invasive neuroimaging signals and ECoG signals were established using univariate analysis. However, the brain codes information in population codes that cannot be captured by univariate analysis. In contrast, multivariate analysis that assesses neural signals at the level of activation patterns can provide complementary information to univariate analysis with potentially higher sensitivity (Kriegeskorte and Kievit, 2013; Cichy and Pantazis, 2017; Guggenmos et al., 2018).

Here, we thus investigated the relationship between EEG, fMRI, and ECoG signals at the level of population codes using multivariate analysis techniques (Cichy and Pantazis, 2017; Guggenmos et al., 2018). Our analyses build on previously published ECoG data that was recorded while participants viewed object images from five different object categories, different scales and rotations (Liu et al., 2009). We additionally recorded event-related fMRI and EEG responses separately to the same object images. This allowed for a direct content-sensitive comparison between ECoG, EEG, and fMRI signals, detailing how much the correspondence depends on the content (object category) as well as variations in object scale and orientation.

Twenty-one healthy volunteers (13 females, age: mean ± SD = 24.61 ± 3.47) with no history of visual or neurological problems participated in this study. All participants went through a health examination before each session and signed a written consent form, declaring that their anonymized data can be used for research purposes. The study was approved by the Iran University of Medical Sciences Ethics Committee and was conducted in accordance with the Declaration of Helsinki.

We used a publicly available stimulus set used previously to record ECoG data in humans (for example stimuli, see Supplementary Figure 1 in Liu et al., 2009). The stimulus set consisted of 125 grayscale images, with 25 images from each of five object categories: animals, chairs, faces, fruits, and vehicles. Each set of 25 images consisted of 5 different objects under 5 different viewing conditions that were defined by rendered object size and rotation angle: (1) 3° visual angle size, 0° depth rotation, (2) 3° visual angle size, 45° depth rotation, (3) 3° visual angle size, 90° depth rotation, (4) 1.5° visual angle size, 0° depth rotation, and (5) 6° visual angle size, 0° depth rotation.
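As an illustration of how the 125 images arise from the design, the following minimal sketch enumerates every combination of category, object, and viewing condition. The variable names and the tuple layout are hypothetical and chosen for readability; they are not taken from the original stimulus files.

```python
from itertools import product

CATEGORIES = ["animals", "chairs", "faces", "fruits", "vehicles"]
OBJECTS_PER_CATEGORY = 5
# (size in degrees of visual angle, depth rotation in degrees),
# in the order of the five viewing conditions listed above.
VIEWING_CONDITIONS = [(3.0, 0), (3.0, 45), (3.0, 90), (1.5, 0), (6.0, 0)]


def build_stimulus_table():
    """Enumerate every image as (category, object index, size, rotation)."""
    return [
        (category, obj, size, rotation)
        for category, obj, (size, rotation) in product(
            CATEGORIES, range(OBJECTS_PER_CATEGORY), VIEWING_CONDITIONS
        )
    ]


stimuli = build_stimulus_table()
print(len(stimuli))  # 5 categories x 5 objects x 5 viewing conditions
```

Each category contributes 25 entries, which matches the 25 images per category described above.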

The duration of the experiment was about one hour. The experiment consisted of 32 runs, and participants were allowed to rest after every 4 runs. Stimuli were presented on a computer screen subtending 10.69° of visual angle. In each run, all 125 images were presented once in a random order. On each trial, an image was presented for 200 ms followed by 800 ms of blank screen. A fixation cross was presented throughout the experiment, and participants were asked to fixate it. Participants conducted a one-back task on object identity independent of the object’s orientation or size, indicating their response via a keyboard button press. The experiment was programmed using MATLAB 2016 and the Psychophysics Toolbox (Brainard, 1997; Pelli, 1997).

We recorded EEG signals from 64 sensors with a g.GAMMAsys cap and g.LADYbird electrodes with a g.HIamp amplifier using the 10-10 system. Continuous EEG was digitized at 1,100 Hz without applying any online filters. The electrode placed at the left mastoid was used as the reference electrode. The forehead (FPz) electrode was used as the ground electrode. We further used three EOG channels to record vertical and horizontal eye movements.

We used EEGLAB14 (Delorme and Makeig, 2004) for preprocessing. In the first step, we concatenated all EEG data from a given recording and filtered them using a low-pass filter (FIR filter with an order of 396) with a cutoff frequency of 40 Hz. Looking at every ms in the EEG after filtering at 40 Hz means looking at a sliding window with a length of 25 ms, making it more comparable to ECoG data. In EEG, higher frequencies are difficult to measure as the signal is attenuated more than in lower frequencies. Consistent with this, explorative classification analysis of the EEG data resolved in frequency showed no object-related information above 25 Hz (Supplementary Figure 1). Further, high-frequency neural activity overlaps strongly with the spectral bandwidth of muscle activity (Muthukumaraswamy, 2013). Applying a 40 Hz cutoff point in EEG – rather than the 100 Hz cutoff point as in ECoG – does not seem to affect the results of the classification analysis. We did not high-pass filter the data because it is known that it creates artifacts that result in the displacement of multivariate patterns into activity-silent periods (van Driel et al., 2021). We then resampled the signals to 1,000 Hz, re-referenced them to the electrode placed on the left mastoid, and extracted epochs from −100 to +600 ms with respect to the stimulus onset. Last, we applied Infomax Independent Component Analysis (ICA) on each individual dataset. Then the spatial pattern of components and their associated timecourses were explored visually to remove eye blink and movement artifacts. For each participant, this procedure yielded 32 preprocessed trials for each of the 125 images.

We used time-resolved multivariate pattern classification to estimate the amount of information about object category in EEG signals at each time point of the epoch. All analyses were conducted independently for each participant. We classified object categories in a one-vs.-all procedure, that is, one category (e.g., faces) vs. all others (animals, chairs, fruits, and vehicles). We conducted the analyses in three schemes equivalent to the ones used in Liu et al. (2009) to evaluate the classification performance for ECoG data: category selectivity, rotation invariance, and scale invariance. The schemes are described in detail below.

The scheme “category selectivity” refers to the training and testing of the classifier during category classification using EEG data for all versions of the stimuli independent of stimulus variations (i.e., images with size of 3° visual angle, and 0°, 45°, and 90° depth rotation, sizes of 1.5° and 6° visual angle, and 0° depth rotation). The analysis was conducted separately for each time point of the epoch and for each category. For each category, there were 800 trials (5 identities × 5 variations × 32 repetitions) resulting in two partitions of 800 vs. 3,200 trials in the one-vs.-other classification scheme. To compensate for the imbalanced class ratio, we randomly selected 800 trials from the 3,200 trials belonging to the other four categories. To increase the signal-to-noise ratio, we randomly assigned the 800 trials to 150 sets (with five or six trials in each set) and averaged the trials in each set to generate 150 pseudo-trials. We further applied multivariate noise normalization (Guggenmos et al., 2018). Then, 149 pseudo-trials were used to train the linear support vector machine (SVM) classifier, and data from the left-out pseudo-trial were used for testing. We repeated the above procedure 300 times, each time with a different, random selection of 800 trials from the other category class.
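The class balancing and pseudo-trial averaging described above can be sketched as follows. This is a minimal illustration on synthetic data, not the analysis code used in the study: the function names are hypothetical, the 64 features per trial stand in for the sensor values at one time point, and noise normalization and the SVM itself are omitted.

```python
import random


def balance_classes(target_trials, other_trials, rng):
    """Randomly subsample the larger 'other' class to the target class size."""
    return target_trials, rng.sample(other_trials, len(target_trials))


def make_pseudo_trials(trials, n_sets, rng):
    """Shuffle trials, deal them into n_sets groups, and average each group.

    Each trial is a list of feature values (e.g., one value per sensor).
    """
    shuffled = trials[:]
    rng.shuffle(shuffled)
    groups = [shuffled[i::n_sets] for i in range(n_sets)]
    return [[sum(col) / len(group) for col in zip(*group)] for group in groups]


rng = random.Random(0)
N_SENSORS = 64  # assumption: one feature per EEG sensor
target = [[rng.gauss(0.2, 1.0) for _ in range(N_SENSORS)] for _ in range(800)]
other = [[rng.gauss(0.0, 1.0) for _ in range(N_SENSORS)] for _ in range(3200)]

target, other_balanced = balance_classes(target, other, rng)
target_pseudo = make_pseudo_trials(target, 150, rng)
other_pseudo = make_pseudo_trials(other_balanced, 150, rng)
print(len(other_balanced), len(target_pseudo), len(other_pseudo))
```

Dealing 800 shuffled trials into 150 groups yields 50 groups of six and 100 groups of five, consistent with the "five or six trials" per set stated above.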

The scheme “rotation invariance” refers to classifying object categories across orientation changes. For this, we trained the classifier using EEG data for images with size of 3° visual angle and 0° depth rotation and tested it with EEG data for images of size of 3° visual angle and either 90° or 45° depth rotation, and averaged the results.

Finally, the scheme “scale invariance” refers to classifying object categories across scale changes. For this, we trained the classifier using EEG data for images with size of 3° visual angle and 0° depth rotation and tested it with EEG data for images of size of 1.5° or 6° visual angle and 0° depth rotation, before averaging the results.

For both the rotation and scale invariance schemes, class ratios were imbalanced in the following way: for each category, there were 160 (5 identities × 1 variation × 32 repetitions) trials per participant and thus 160 vs. 640 trials in the one-vs.-all class sets. To balance the ratio, we randomly selected 160 trials from the set of 640 trials. After applying multivariate noise normalization, we conducted leave-one-trial-out classification. We repeated the procedure 150 times and averaged the results.

A detailed description of the ECoG data recording is available in the original publication (Liu et al., 2009). Here, we provide a short summary. The ECoG data were recorded from 912 subdural electrodes implanted in 11 participants (6 male, 9 right-handed, age range 12–34 years) for evaluation of surgical approaches to alleviate a resilient form of epilepsy. Participants were presented with images of the same set as described above in pseudorandom order. On each trial, an image was presented for 200 ms, followed by 600 ms of blank screen. Out of the 912 electrodes evaluated, 111 (12%) showed visual selectivity. These electrodes were non-uniformly distributed across the different lobes (occipital: 35%; temporal: 14%; frontal: 7%; parietal: 4%). The areas with the highest proportions of selective electrodes were inferior occipital gyrus (86%), fusiform gyrus (38%), parahippocampal portion of the medial temporal lobe (26%), midoccipital gyrus (22%), lingual gyrus in the medial temporal lobe (21%), inferior temporal cortex (18%), and temporal pole (14%).

Liu et al. (2009) used an SVM classifier with a linear kernel to calculate category selectivity, rotation, and scale invariance. They built a neural ensemble vector that contained the range of the signal [max(x)–min(x)] in individual bins of 25 ms duration using 11 selective electrodes and the 50–300 ms interval post stimulus onset. They tested for significance by randomly shuffling the category labels. Here, rather than re-analyzing the data, we reused the results as reported in Liu et al. (2009).
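To make the ensemble-vector construction concrete, the sketch below computes the signal range, max(x) − min(x), in consecutive 25 ms bins between 50 and 300 ms and concatenates the bins of all electrodes. It is an illustration under stated assumptions rather than the code of Liu et al. (2009): the 1,000 Hz sampling rate, the function names, and the synthetic signals are hypothetical.

```python
import math


def range_features(signal, sampling_rate_hz, start_ms=50, stop_ms=300, bin_ms=25):
    """Return max - min of the signal in consecutive bins after stimulus onset.

    `signal` is one electrode's response for one trial, with sample 0 at
    stimulus onset.
    """
    samples_per_ms = sampling_rate_hz / 1000.0
    features = []
    for bin_start in range(start_ms, stop_ms, bin_ms):
        lo = int(round(bin_start * samples_per_ms))
        hi = int(round((bin_start + bin_ms) * samples_per_ms))
        window = signal[lo:hi]
        features.append(max(window) - min(window))
    return features


def ensemble_vector(trial_by_electrode, sampling_rate_hz):
    """Concatenate the per-bin ranges of all electrodes into one vector."""
    vector = []
    for signal in trial_by_electrode:
        vector.extend(range_features(signal, sampling_rate_hz))
    return vector


SAMPLING_RATE_HZ = 1000  # assumption for illustration only
trial = [
    [math.sin(0.05 * t + electrode) for t in range(300)]
    for electrode in range(11)  # 11 selective electrodes
]
vector = ensemble_vector(trial, SAMPLING_RATE_HZ)
print(len(vector))  # 10 bins per electrode x 11 electrodes
```

With these settings each trial is described by 110 features, which is the vector a linear classifier would receive.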

Each participant completed one session consisting of 8 functional runs of data recording. During each run, the same set of images used in EEG and ECoG was presented once in a random order. On each trial, the image was presented for 200 ms followed by a 2,800 ms blank screen. In each run, there were also 30 null trials during which a gray screen with a fixation cross at the center was presented for 3,000 ms. As in the EEG design, participants conducted a one-back task on object identity independent of the object’s orientation or size. The stimulus set was presented to the participants through a mirror placed in the head coil.

We recorded fMRI data using a Siemens Magnetom Prisma 3 Tesla with a 64-channel head coil. MRI head cushions and pillows were used to comfort participants and minimize head movements. To dampen the scanner noise, all participants were provided with a set of earplugs. Structural T1-weighted images were acquired at the beginning of the session for all participants (voxel size = 0.8 mm × 0.8 mm × 0.8 mm, slices = 208, TR = 1,800 ms, TE = 2.41 ms, flip angle = 8°). We obtained functional images using an EPI sequence with the whole brain coverage (voxel size = 3.5 mm × 3.5 mm × 3.5 mm, TR = 2,000 ms, TE = 30 ms, flip angle = 90°, FOV read = 250 mm, FOV phase = 100%).

SPM8 (Penny et al., 2011) and MATLAB 2016 were used to preprocess functional and structural magnetic resonance images. The slices of the functional images were temporally realigned to the middle slice. Then, the volumes of the whole session were aligned to the first volume and co-registered to the structural images.

We used a General Linear Model (GLM) to estimate condition-specific activations. For each run, the onsets of image presentation entered the GLM separately as regressors of interest and were convolved with a canonical hemodynamic response function. We included motion regressors as regressors of no interest. This procedure yielded 125 parameter estimates per run, indicating the responses of each voxel to the 125 images presented (25 different objects, each under 5 viewing conditions).

We used the Freesurfer package (Dale et al., 1999) with the Destrieux and Desikan-Killiany atlases to extract six visual regions of interest (ROIs) from surface-based parcellations derived from the T1 images: occipital-inferior, fusiform, lingual, parahippocampal, inferior temporal, and temporal pole cortex. We constructed a binary mask for each region based on the individual’s structural image parcellation. We then selected the voxels’ beta values resulting from the fMRI GLM analysis that overlapped with the binary mask for further ROI-specific analysis.

We used multivariate pattern classification to estimate category selectivity, rotation invariance, and scale invariance for each participant and ROI. Equivalent to the EEG and ECoG analysis, we conducted three analysis schemes: category selectivity, scale invariance, and rotation invariance. We thus do not describe the general setup again, but restrict ourselves to the particulars of the fMRI analysis.

In the “category selectivity” scheme, we employed a leave-one-run-out cross-validation procedure to train and test the classifier. There were eight repetitive functional runs of data recording. For each category, there were 175 trials (5 identities × 5 variations × 7 repetitions) in the training set, resulting in two partitions of 175 vs. 700 trials in the one-vs.-other classification scheme. To compensate for the imbalanced class ratio, we randomly selected 175 trials from the 700 trials belonging to the other four categories. We tested the classifier with the left-out run to discriminate between each category and all other four categories. We repeated the random selection of trials 300 times. The classification performances reported here are the average values across the repetitions.
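The leave-one-run-out partitioning and the resulting trial counts can be sketched as follows. The function and constant names are hypothetical; the sketch only reproduces the bookkeeping of the folds, not the classification itself.

```python
def leave_one_run_out(n_runs):
    """Yield (training runs, held-out test run) for every run."""
    for test_run in range(n_runs):
        train_runs = [run for run in range(n_runs) if run != test_run]
        yield train_runs, test_run


N_RUNS, N_IDENTITIES, N_VARIATIONS, N_CATEGORIES = 8, 5, 5, 5

folds = list(leave_one_run_out(N_RUNS))
for train_runs, test_run in folds:
    # Trials of the target category and of the other four categories
    # available for training in this fold.
    target_train = N_IDENTITIES * N_VARIATIONS * len(train_runs)
    other_train = target_train * (N_CATEGORIES - 1)
    print(test_run, target_train, other_train)
```

Every fold trains on seven runs, giving the 175 vs. 700 training trials stated above before the larger class is subsampled.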

In scale and rotation invariance analyses, for each category and participant, there were 40 (5 identities × 1 variation × 8 repetitions) trials and thus 40 vs. 160 trials in the one-vs.-other class sets. To balance the number of trials for two classes (one category vs. the other four categories) in the training set, we randomly selected 40 trials from the other four categories (160 trials). We tested the classifier using a one-trial-out procedure, repeated these steps 300 times, and averaged the results.

We used two principled ways to relate EEG, fMRI, and ECoG data to each other: in terms of classification performance resulting from multivariate pattern analysis, and in terms of similarity relations between multivariate activation patterns using representational similarity analysis (RSA). We detail each approach below.

To relate EEG and ECoG, we compared their classification time courses with each other. To enable this step, for EEG, we averaged the EEG classification time courses across all participants, yielding one classification time course for each analysis scheme. For ECoG, we used the classification time courses as provided by Liu et al. (2009).

In detail, we compared EEG and ECoG signals for each classification scheme in two ways. In the first analysis, we calculated the correlation (Spearman’s R) between the complete accuracy time courses. The second analysis was more fine grained in that it was time-resolved and depended on category-specific time courses. For every time point, we aggregated the classification accuracies for each of the five categories into a 1 (time point) × 5 (category) vector. We did so independently for ECoG and EEG and then compared the vectors using correlation (Spearman’s R). This yielded one correlation value at every millisecond.
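The time-resolved, category-wise comparison can be illustrated with a small self-contained sketch. It implements Spearman's correlation from ranks (ties receive their mean rank) and applies it to the five category accuracies at each time point. The accuracy values are made up for illustration and the function names are hypothetical.

```python
import math


def rank(values):
    """Return 1-based ranks; tied values share their mean rank."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    start = 0
    while start < len(order):
        end = start
        while end + 1 < len(order) and values[order[end + 1]] == values[order[start]]:
            end += 1
        for position in range(start, end + 1):
            ranks[order[position]] = (start + end) / 2 + 1
        start = end + 1
    return ranks


def spearman(x, y):
    """Spearman's correlation: Pearson correlation of the ranks."""
    rx, ry = rank(x), rank(y)
    mean_x, mean_y = sum(rx) / len(rx), sum(ry) / len(ry)
    cov = sum((a - mean_x) * (b - mean_y) for a, b in zip(rx, ry))
    var_x = sum((a - mean_x) ** 2 for a in rx)
    var_y = sum((b - mean_y) ** 2 for b in ry)
    if var_x == 0 or var_y == 0:
        return float("nan")  # undefined for constant input
    return cov / math.sqrt(var_x * var_y)


def time_resolved_correlation(eeg_by_category, ecog_by_category):
    """Correlate the category-accuracy vectors of two modalities per time point."""
    n_times = len(eeg_by_category[0])
    return [
        spearman([c[t] for c in eeg_by_category], [c[t] for c in ecog_by_category])
        for t in range(n_times)
    ]


# Made-up accuracies: 5 categories (rows) x 3 time points (columns).
eeg = [[0.51, 0.62, 0.58], [0.50, 0.55, 0.54], [0.53, 0.74, 0.66],
       [0.49, 0.56, 0.52], [0.52, 0.60, 0.57]]
ecog = [[0.52, 0.60, 0.55], [0.50, 0.54, 0.53], [0.51, 0.80, 0.70],
        [0.49, 0.57, 0.56], [0.53, 0.58, 0.54]]
print(time_resolved_correlation(eeg, ecog))
```

The first analysis described above corresponds to a single call of `spearman` on two complete accuracy time courses instead of on per-time-point category vectors.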

Relating fMRI and ECoG, we considered each classification scheme and ROI separately. To enable this step, for fMRI, we averaged the classification results across participants. For ECoG, we again used the classification time courses as provided by Liu et al. (2009).

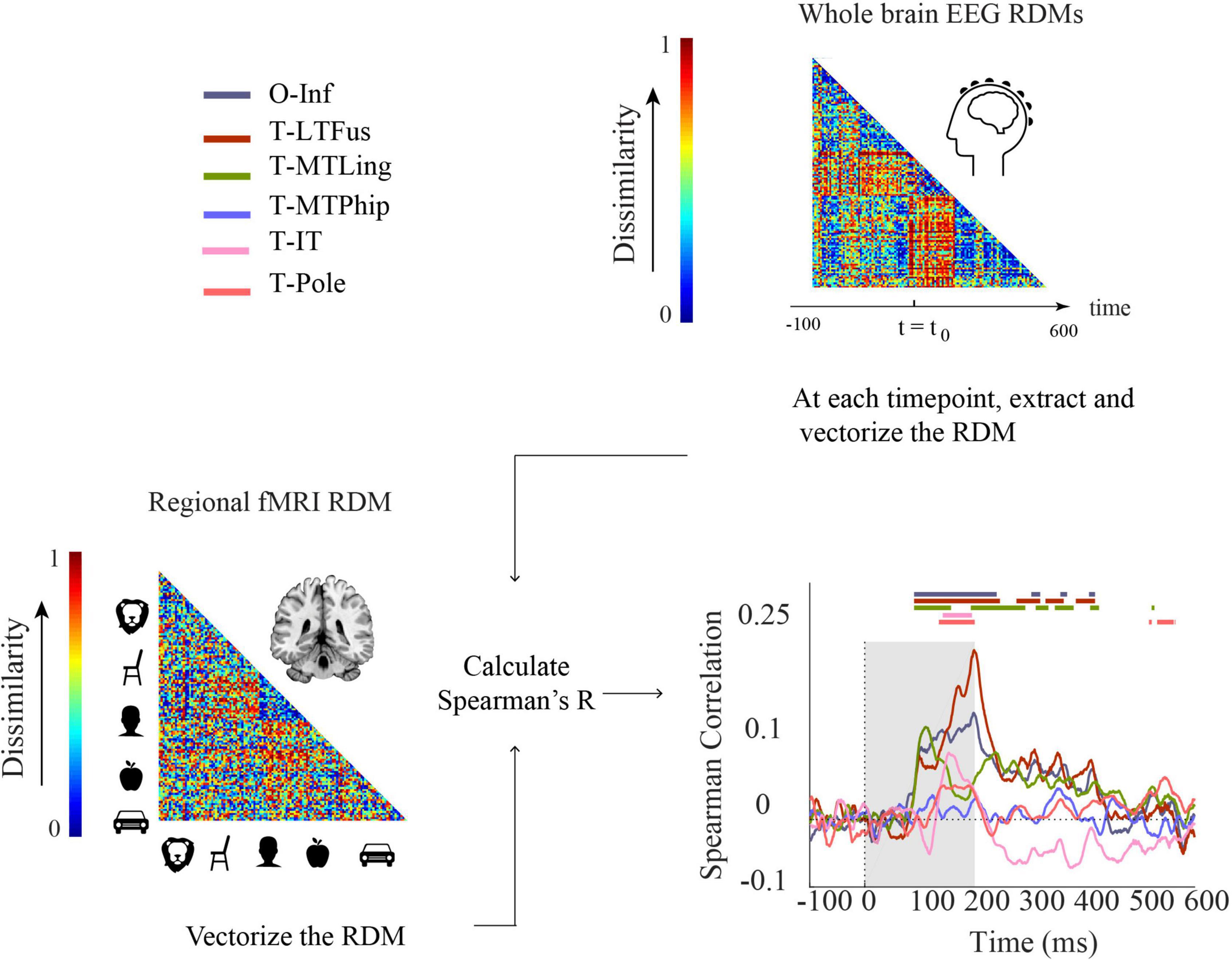

We used RSA (Edelman, 1998; Kriegeskorte et al., 2008; Carlson et al., 2013) to relate fMRI, EEG, and ECoG to each other. RSA abstracts from the incommensurate signal spaces of the neuroimaging modalities into a similarity space defined through representational similarity of activation patterns between experimental conditions in each modality. As the 125 experimental conditions were the same in fMRI, EEG, and ECoG, their similarity space is directly comparable. RSA uses dissimilarity rather than similarity by convention which otherwise leaves the rationale of the procedure untouched. Dissimilarities between conditions are stored in representational dissimilarity matrices (RDMs). RDMs are indexed in rows and columns by the experimental conditions compared, are symmetric across the diagonal, and the diagonal is undefined. Here defined by our stimulus set, the RDMs are of dimensions 125 × 125.

We constructed RDMs using 1-Spearman’s R as a dissimilarity measure in each case. For each participant, for each condition, we averaged trials across repetitions and used the resulting averages to compute dissimilarity matrices (RDMs). In detail, for EEG, for every millisecond of the epoch separately, we constructed one RDM from sensor-level activation patterns per subject. We used the mean RDM across subjects to compare the results of EEG/fMRI and ECoG modalities. For ECoG, we calculated two different RDM versions. The first version we call “ECoG RDMs”: for every time point, we used data aggregated across all participants in all electrodes (Supplementary Figure 2) to calculate pairwise dissimilarities between experimental conditions. The second we call “ECoG regional RDMs.” Here, we differentiated between electrodes by ROIs, calculating RDMs separately for each ROI rather than across all electrodes. In the fMRI analysis, for each participant and ROI, we constructed only one RDM based on ROI-specific beta activation patterns resulting from a GLM using a classical hrf (hemodynamic response function). The fMRI analysis thus collapses over time – all time resolution comes from the ECoG or EEG signal.
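A minimal sketch of the RDM construction and of an RDM-to-RDM comparison follows. It uses 1 − Spearman's R between condition-wise activation patterns, leaves the diagonal undefined (NaN), and compares two RDMs through their upper triangles. The toy patterns and all function names are hypothetical; real RDMs here would be 125 × 125.

```python
import math


def rank(values):
    """Return 1-based ranks; tied values share their mean rank."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    start = 0
    while start < len(order):
        end = start
        while end + 1 < len(order) and values[order[end + 1]] == values[order[start]]:
            end += 1
        for position in range(start, end + 1):
            ranks[order[position]] = (start + end) / 2 + 1
        start = end + 1
    return ranks


def spearman(x, y):
    """Spearman's correlation: Pearson correlation of the ranks."""
    rx, ry = rank(x), rank(y)
    mean_x, mean_y = sum(rx) / len(rx), sum(ry) / len(ry)
    cov = sum((a - mean_x) * (b - mean_y) for a, b in zip(rx, ry))
    var_x = sum((a - mean_x) ** 2 for a in rx)
    var_y = sum((b - mean_y) ** 2 for b in ry)
    if var_x == 0 or var_y == 0:
        return float("nan")  # undefined for constant input
    return cov / math.sqrt(var_x * var_y)


def build_rdm(patterns):
    """patterns[i] is the activation pattern of condition i (e.g., per sensor)."""
    n = len(patterns)
    rdm = [[float("nan")] * n for _ in range(n)]  # diagonal stays undefined
    for i in range(n):
        for j in range(i + 1, n):
            rdm[i][j] = rdm[j][i] = 1.0 - spearman(patterns[i], patterns[j])
    return rdm


def upper_triangle(rdm):
    """Vectorize the entries above the diagonal."""
    n = len(rdm)
    return [rdm[i][j] for i in range(n) for j in range(i + 1, n)]


def compare_rdms(rdm_a, rdm_b):
    """Relate two RDMs by correlating their upper triangles."""
    return spearman(upper_triangle(rdm_a), upper_triangle(rdm_b))


# Toy example: 4 conditions x 4 measurement channels.
patterns = [[1, 2, 3, 4], [2, 4, 6, 8], [4, 3, 2, 1], [1, 3, 2, 4]]
rdm = build_rdm(patterns)
print(upper_triangle(rdm))
```

Because the RDM is symmetric, only the upper triangle enters the comparison, so each condition pair is counted once.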

In fMRI recordings, the signal-to-noise ratio (SNR) is known to differ across the brain. For example, due to artifacts related to the closeness of the ear canal, temporal brain regions often suffer from SNR loss (Murphy et al., 2007; Triantafyllou et al., 2011; Welvaert and Rosseel, 2013; Sanada et al., 2021). We therefore explored if there is a significant relation between the SNR in a given ROI and the relationship between ECoG and fMRI in the classification performance-based analysis. To this end, we computed fMRI SNR by averaging the correlation of each participant’s RDM with the group mean RDM. Then for fMRI, we averaged classification accuracies across participants in each region. Then, we calculated Spearman’s correlation between mean classification accuracies for ECoG and fMRI. This gave one correlation coefficient per region (i.e., a 1 × 6 vector). Finally, the relation between ECoG-fMRI correlation and SNR was obtained using Spearman’s correlation. We used the upper bound of the noise ceiling as defined in Nili et al. (2014) as a proxy for SNR in the fMRI data.

We used non-parametric statistics that do not make assumptions about the distribution of the data for inferential analysis.

Peak latency was defined as the time point at which decoding accuracy reached its maximum.

We used bootstrap resampling of participants (21 participants with 10,000 repetitions) to examine whether peak latencies between EEG and ECoG classification time courses were significantly different.
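The bootstrap over participants can be sketched as below: participants are resampled with replacement, the resampled time courses are averaged, and the peak latency of each average is recorded. This is a sketch under assumptions, since the exact test statistic is not spelled out here: the percentile interval, the synthetic data, the 10 ms time grid, and the reduced number of repetitions (1,000 instead of 10,000, to keep the example fast) are all illustrative choices.

```python
import math
import random


def mean_time_course(time_courses):
    """Average accuracy time courses across participants."""
    n = len(time_courses)
    return [sum(values) / n for values in zip(*time_courses)]


def peak_latency(time_course, times_ms):
    """Time point at which the time course reaches its maximum."""
    peak_index = max(range(len(time_course)), key=time_course.__getitem__)
    return times_ms[peak_index]


def bootstrap_peak_latencies(time_courses, times_ms, n_boot, rng):
    """Resample participants with replacement; return one peak latency per sample."""
    n = len(time_courses)
    latencies = []
    for _ in range(n_boot):
        sample = [time_courses[rng.randrange(n)] for _ in range(n)]
        latencies.append(peak_latency(mean_time_course(sample), times_ms))
    return latencies


def percentile_interval(values, coverage=0.95):
    """Percentile interval of the bootstrap distribution (an assumed summary)."""
    ordered = sorted(values)
    tail = (1 - coverage) / 2
    low = ordered[int(tail * len(ordered))]
    high = ordered[min(len(ordered) - 1, int((1 - tail) * len(ordered)))]
    return low, high


rng = random.Random(0)
times_ms = list(range(-100, 601, 10))
# Synthetic participants: a bump peaking near 120 ms plus small noise.
participants = [
    [0.5 + 0.1 * math.exp(-((t - 120) / 60) ** 2) + rng.gauss(0, 0.005)
     for t in times_ms]
    for _ in range(21)
]
boot = bootstrap_peak_latencies(participants, times_ms, n_boot=1000, rng=rng)
print(percentile_interval(boot))
```

A fixed ECoG peak latency taken from Liu et al. (2009) could then be compared against this bootstrap distribution; the same resampling scheme applies to onset latencies.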

We defined onset latency as the earliest time where performance became significantly above chance for at least 15 consecutive time-points. We used the non-parametric Wilcoxon signed-rank test (one-sided) across participants to determine time-points with significantly above chance decoding accuracy. To adjust p-values for multiple comparisons (i.e., across time), we further applied the false discovery rate (FDR) correction.
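The onset criterion can be sketched in two steps: an FDR correction across time points, followed by a search for the first run of at least 15 consecutive significant time points. The sketch assumes the Benjamini-Hochberg procedure, which the text does not name explicitly, and takes the per-time-point p-values as given (here they are synthetic rather than produced by a signed-rank test). Function names are hypothetical.

```python
def fdr_bh(p_values, alpha=0.05):
    """Benjamini-Hochberg procedure: True where the null is rejected."""
    m = len(p_values)
    order = sorted(range(m), key=lambda i: p_values[i])
    max_k = 0
    for k, idx in enumerate(order, start=1):
        if p_values[idx] <= alpha * k / m:
            max_k = k  # largest k whose p-value passes its threshold
    significant = [False] * m
    for idx in order[:max_k]:
        significant[idx] = True
    return significant


def onset_latency(significant, times_ms, min_run=15):
    """First time point that starts a run of >= min_run significant points."""
    run_start, run_length = None, 0
    for i, flag in enumerate(significant):
        if flag:
            if run_length == 0:
                run_start = i
            run_length += 1
            if run_length >= min_run:
                return times_ms[run_start]
        else:
            run_length = 0
    return None  # no sufficiently long run


times_ms = list(range(-100, 601))
# Synthetic p-values: a short spurious burst, then a sustained effect from 60 ms.
p_values = [0.5] * len(times_ms)
for i in range(150, 155):
    p_values[i] = 0.001
for i in range(160, 400):
    p_values[i] = 0.001
print(onset_latency(fdr_bh(p_values), times_ms))
```

The five-sample burst before 60 ms survives the FDR correction but is ignored by the consecutive-run criterion, which is the purpose of requiring 15 consecutive significant time points.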

We further used bootstrap resampling of participants (21 participants with 10,000 repetitions) to examine whether onset latencies between EEG and ECoG classification time courses were significantly different.

To assess the statistical significance of RDM-to-RDM Spearman’s R correlation, we used a random permutation test based on 10,000 randomizations of the condition labels; where required, the results were FDR-corrected at p < 0.05.

We used bootstrap resampling of participants (21 participants with 10,000 repetitions) to investigate whether EEG-ECoG RDM correlations were significant.

We used bootstrap resampling of participants (21 participants with 10,000 repetitions) to examine if decoding accuracies in fMRI were significantly higher than chance level.

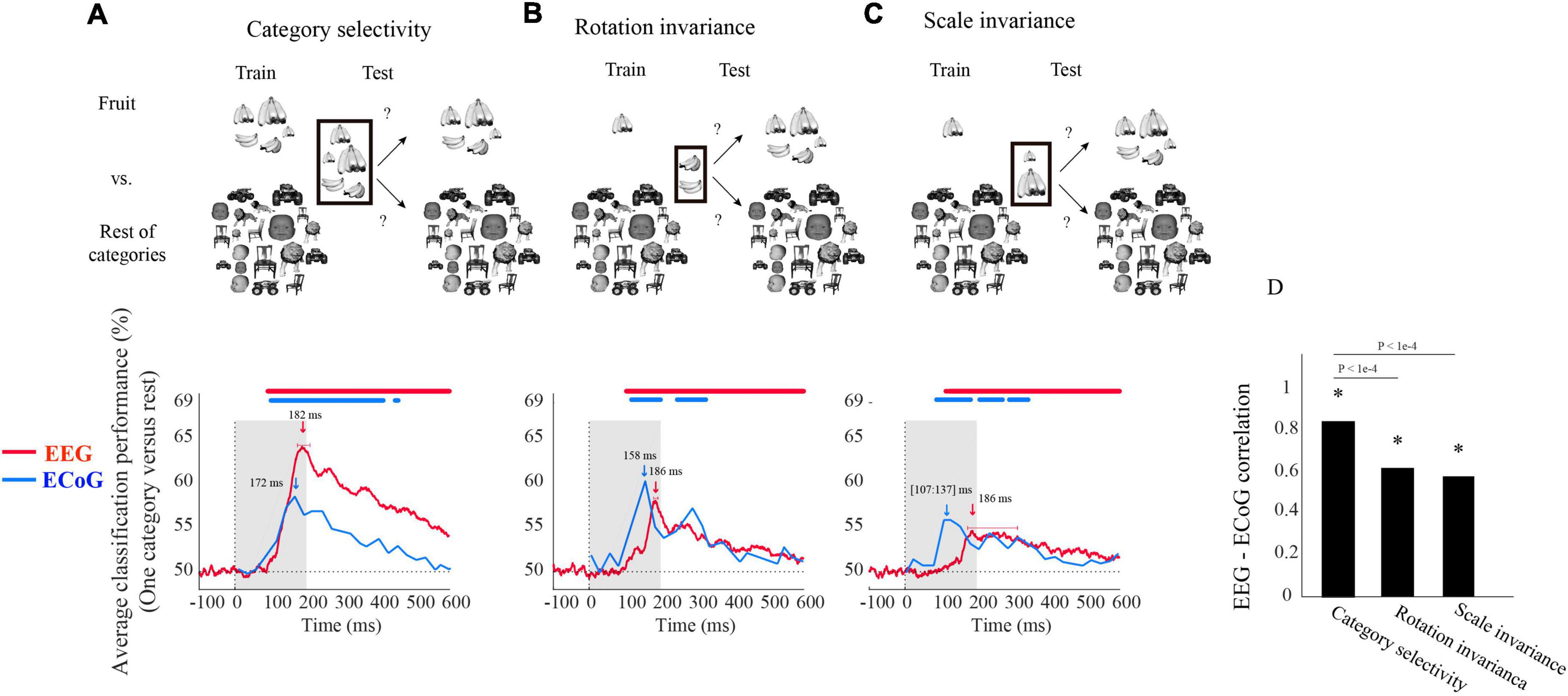

We determined similarities and differences with which EEG and ECoG reveal the temporal dynamics of visual category representations in the human brain. For this, we investigated time-resolved object category sensitivity in neural signals and their robustness to changes in viewing conditions using multivariate pattern analysis. In particular, we classified object category in three different classification schemes (Figures 1A–C). In the first analysis, we classified the object category based on neural signals lumped together for objects of all sizes and rotations, indexing overall object category selectivity of the neural signals (analysis from here on termed “category selectivity”). In the second and third analyses, we classified the object category across changes in rotation and scale of the objects. This indexes category selectivity of the neural signals tolerant to changes in viewing conditions.

Figure 1. Electroencephalogram and ECoG average classification performance (one category vs. rest) assessing (A) category selectivity, (B) rotation invariance, and (C) scale invariance over time. The sketches in the top row visualize the rationale of the three classification schemes (i.e., determining category selectivity, rotation invariance, and scale invariance) by showing example images for data entering the classifications. The bottom row shows the classification results. The red curves show EEG classification performance averaged over five categories and participants (N = 21). The blue curves show the mean ECoG classification performance over 5 categories using 11 selective electrodes and IFP (intracranial field potential) power in bins of 25 ms. The horizontal lines above the curves indicate significant time points [EEG: bootstrap test of 21 participants with 10,000 repetitions, FDR-corrected at p < 0.05; ECoG: as in Liu et al. (2009), i.e., permutation test over stimuli, not corrected; for details on peak latency comparison, see Supplementary Table 1]. (D) Correlation between EEG and ECoG classification time courses. Stars show significance, and the values above the bar charts indicate the p-values of the differences between the correlation coefficients (bootstrap test of 21 participants with 10,000 repetitions; for more details, see Supplementary Table 2).

We begin the comparison of EEG vs. ECoG signals at a coarse level by inspecting the shape of the grand average classification results from the above-mentioned classification schemes. We found that object category was classified from neural signals in all three analysis schemes, with the highest classification accuracy of 64% in category selectivity for EEG and 60% in rotation invariance for ECoG (Figures 1A–C). In each case, we observed a rapid rise of classification accuracy after image presentation to a peak followed by a gradual decline, with no significant differences in the onset latencies for EEG and ECoG (bootstrap test of 21 participants with 10,000 repetitions and signed-rank test, all p > 2e−1). This shows that both measurement techniques equally reveal the swift emergence of object category signals in the visual system.

Based on this finding, we quantitatively compared the dynamics with which object category signals become visible in EEG and ECoG for similarity. For this, we correlated their respective time courses across time for each analysis scheme separately. There was a significant relationship in all three cases (Figure 1D), being highest in the general category selectivity analysis and reduced for the scale- and rotation-tolerant analyses (bootstrap test of 21 participants with 10,000 repetitions; for details, see Supplementary Table 1).

Besides similarities, we also observed two marked differences between the classification curves for EEG and ECoG. For one, the peak latency for ECoG was significantly shorter than that of EEG in all three analysis schemes (bootstrap test of 21 participants with 10,000 repetitions; for details, see Supplementary Table 2). Second, peak classification accuracy was significantly higher for EEG than for ECoG in the category selectivity analysis (Figure 1A; bootstrap test of 21 participants with 10,000 repetitions, p < 9.9e−4), but lower in the rotation and scale invariant analysis (Figures 1B,C; difference not significant, p > 9.3e−1). This refines the point that neural signals recorded with ECoG and EEG differ in that the ECoG signals reflect visual object representations tolerant to changes in visual processing proportionally more strongly than the EEG signals.

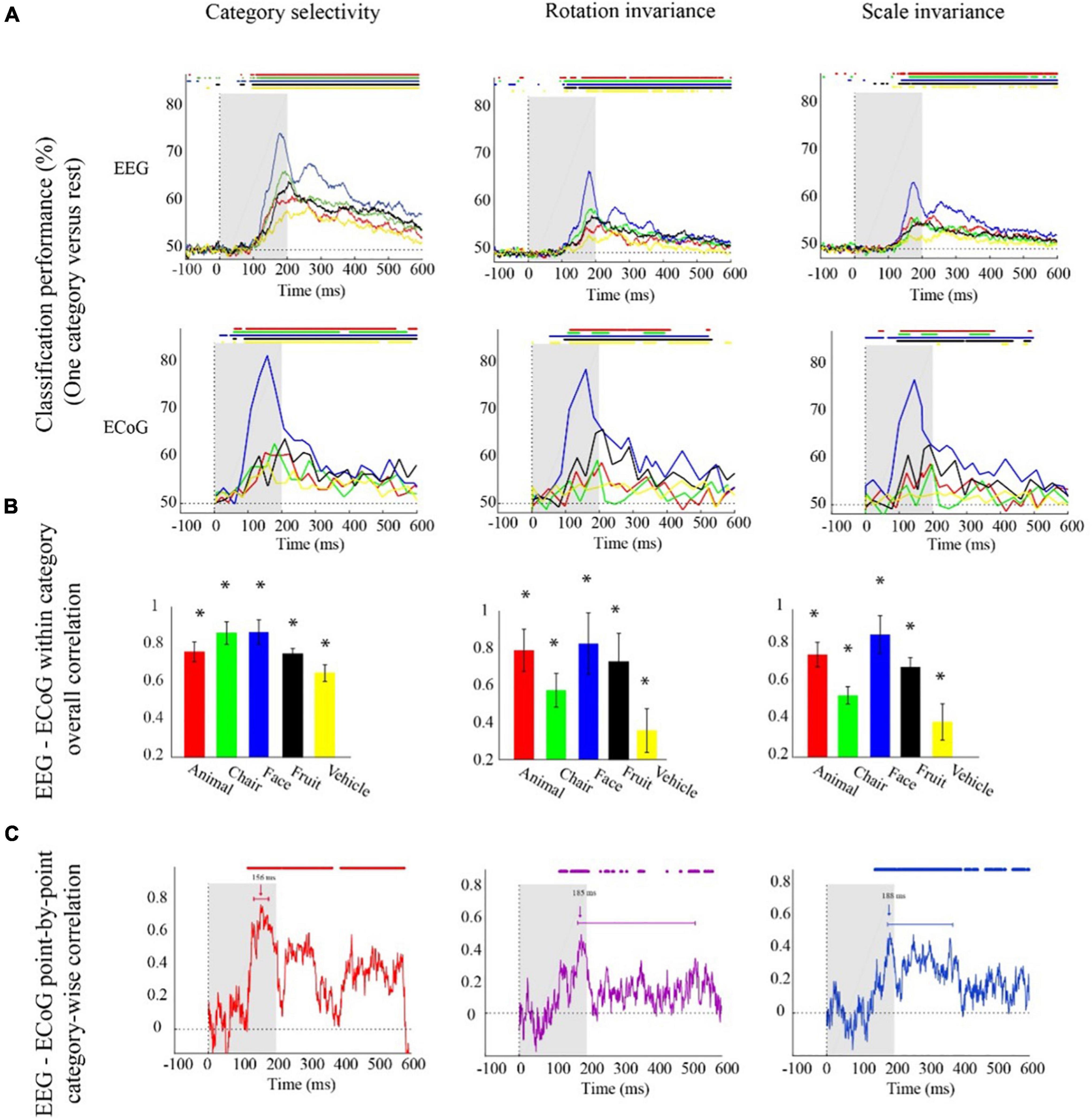

We continue the comparison of EEG vs. ECoG signals at a finer level by inspecting the shape of the classification results for each object category separately (Figure 2) to refine the conceptual resolution of the analysis. Consistent with the previous analyses, we observed that each single category was classified in all three analysis schemes from both EEG and ECoG data, with the highest classification accuracies of 74% and 80%, respectively, for the face category (Figure 2A). Further consistent with the previous analysis, we found significantly shorter peak latencies for ECoG than for EEG signals for all categories except fruits in the category selectivity scheme, but the difference was not significant for category selectivity under rotation and scale changes (bootstrap test of 21 participants with 10,000 repetitions; for details, see Supplementary Table 3).

Figure 2. Electroencephalogram and ECoG category-specific classification performances over time. (A) Classification performances across all channels of EEG averaged over 21 participants, and ECoG classification performances using 11 selective electrodes and IFP (intracranial field potential) power in bins of 25 ms. The horizontal lines above the curves indicate significant time points (EEG: bootstrap test of 21 participants with 10,000 repetitions, FDR-corrected at p < 0.05; ECoG: permutation test over stimuli, not corrected). (B) EEG–ECoG category-wise overall correlation. Bar charts show the correlation coefficients between the EEG and ECoG classification time courses for each category separately. The bars are color-coded, and the stars indicate significance (bootstrap test of 21 participants with 10,000 repetitions). (C) EEG–ECoG time-resolved point-by-point category-wise correlation. For each time point, we compared classification accuracies across categories between EEG and ECoG. The horizontal lines above the curves indicate significant time points (bootstrap test of 21 participants with 10,000 repetitions, FDR-corrected at p < 0.05). For ECoG, the results are presented as reported in Liu et al. (2009). We did not re-analyze the ECoG data; we reproduced the figures in Liu et al. (2009).

With respect to onset latencies, ECoG had a significantly earlier onset compared to EEG for all object categories under the category selectivity scheme; but this was not the case under scale and orientation variations (bootstrap test of 21 participants with 10,000 repetitions; p-values are given in Supplementary Table 4). However, in the grand average analysis (Figures 1A–C), there were no significant differences between ECoG and EEG onset latencies in any of the three schemes.
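To make the notion of an onset latency concrete, the following minimal sketch derives it from a time course of p-values. It is an illustration under stated assumptions, not the analysis code of this study: the Benjamini-Hochberg procedure is assumed as the FDR method, the onset is assumed to be the first time point surviving correction, and the function names `fdr_bh` and `onset_latency` are hypothetical.

```python
import numpy as np

def fdr_bh(p_values, alpha=0.05):
    """Benjamini-Hochberg procedure; returns a boolean mask of rejected tests."""
    p = np.asarray(p_values, dtype=float)
    n = p.size
    order = np.argsort(p)
    thresholds = alpha * np.arange(1, n + 1) / n
    passed = p[order] <= thresholds
    reject = np.zeros(n, dtype=bool)
    if passed.any():
        k = np.max(np.nonzero(passed)[0])  # largest rank meeting its threshold
        reject[order[:k + 1]] = True
    return reject

def onset_latency(p_values, times_ms, alpha=0.05):
    """First time point surviving FDR correction (assumed onset definition).

    Returns None if no time point is significant.
    """
    significant = fdr_bh(p_values, alpha)
    idx = np.nonzero(significant)[0]
    return None if idx.size == 0 else float(np.asarray(times_ms)[idx[0]])
```

Other onset definitions, for example requiring several consecutive significant time points, would yield later and more conservative estimates.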

The finer level of inspection at the level of single categories allowed us also to compare time courses of classification between different categories. We found that in both EEG and ECoG and across all the three classification schemes, the face category was discriminated earlier and had a significantly higher peak amplitude compared to other categories (Figure 2A; bootstrap test of 21 participants with 10,000 repetitions, all ps < 1e−3). This shows that the consistency between ECoG and EEG also depends on the content, i.e., the category used to probe the visual system.

To further quantitatively determine similarities between EEG and ECoG at this finer level, we conducted two analyses. First, analogous to the analysis above, we correlated the respective time courses for each category and analysis scheme separately (Figure 2B). We found a positive relationship in all cases (bootstrap test of 21 participants with 10,000 repetitions; for details, see Supplementary Table 5). Our results show that after introducing changes in size and orientation, the correlation between EEG and ECoG decoding accuracies was significantly reduced for the chair and the vehicle category (Figure 2B; bootstrap test of 21 participants with 10,000 repetitions, all ps < 4e−3). Second, we related EEG and ECoG by correlating the vector of category-specific classification results for each time point separately. Again, we found positive relationships in all cases. Highest similarity and shortest onset latencies were observed in the general category selectivity analysis compared to the scale and rotation tolerant analyses (bootstrap test of 21 participants with 10,000 repetitions; for details, see Supplementary Tables 6, 7). Consistent with the grand average analysis (Figure 1D), we see that the similarity between EEG and ECoG is reduced and delayed after introducing variations in object scale and orientation.
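The two comparisons described above can be sketched as follows. This is a minimal illustration rather than the code used in this study: the array layout (categories by time points), a common time axis for both modalities, the use of Pearson correlation, and the function names are all assumptions.

```python
import numpy as np

def timecourse_correlation(eeg_acc, ecog_acc):
    """First analysis: correlate EEG and ECoG accuracy time courses per category.

    Both inputs are assumed to have shape (n_categories, n_timepoints).
    Returns one correlation coefficient per category.
    """
    eeg_acc = np.asarray(eeg_acc, dtype=float)
    ecog_acc = np.asarray(ecog_acc, dtype=float)
    return np.array([np.corrcoef(e, c)[0, 1] for e, c in zip(eeg_acc, ecog_acc)])

def pointwise_category_correlation(eeg_acc, ecog_acc):
    """Second analysis: at each time point, correlate the vectors of
    category-specific accuracies. Returns one coefficient per time point.
    """
    eeg_acc = np.asarray(eeg_acc, dtype=float)
    ecog_acc = np.asarray(ecog_acc, dtype=float)
    n_timepoints = eeg_acc.shape[1]
    return np.array([np.corrcoef(eeg_acc[:, t], ecog_acc[:, t])[0, 1]
                     for t in range(n_timepoints)])
```

The first function summarizes how similarly a category evolves over time in the two modalities; the second asks, at each time point, whether the categories that are decoded well in EEG are also decoded well in ECoG.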

In the previous sections, we compared EEG to ECoG in terms of object category classification time courses in classification schemes that assess the effect of changes in scale and orientation.

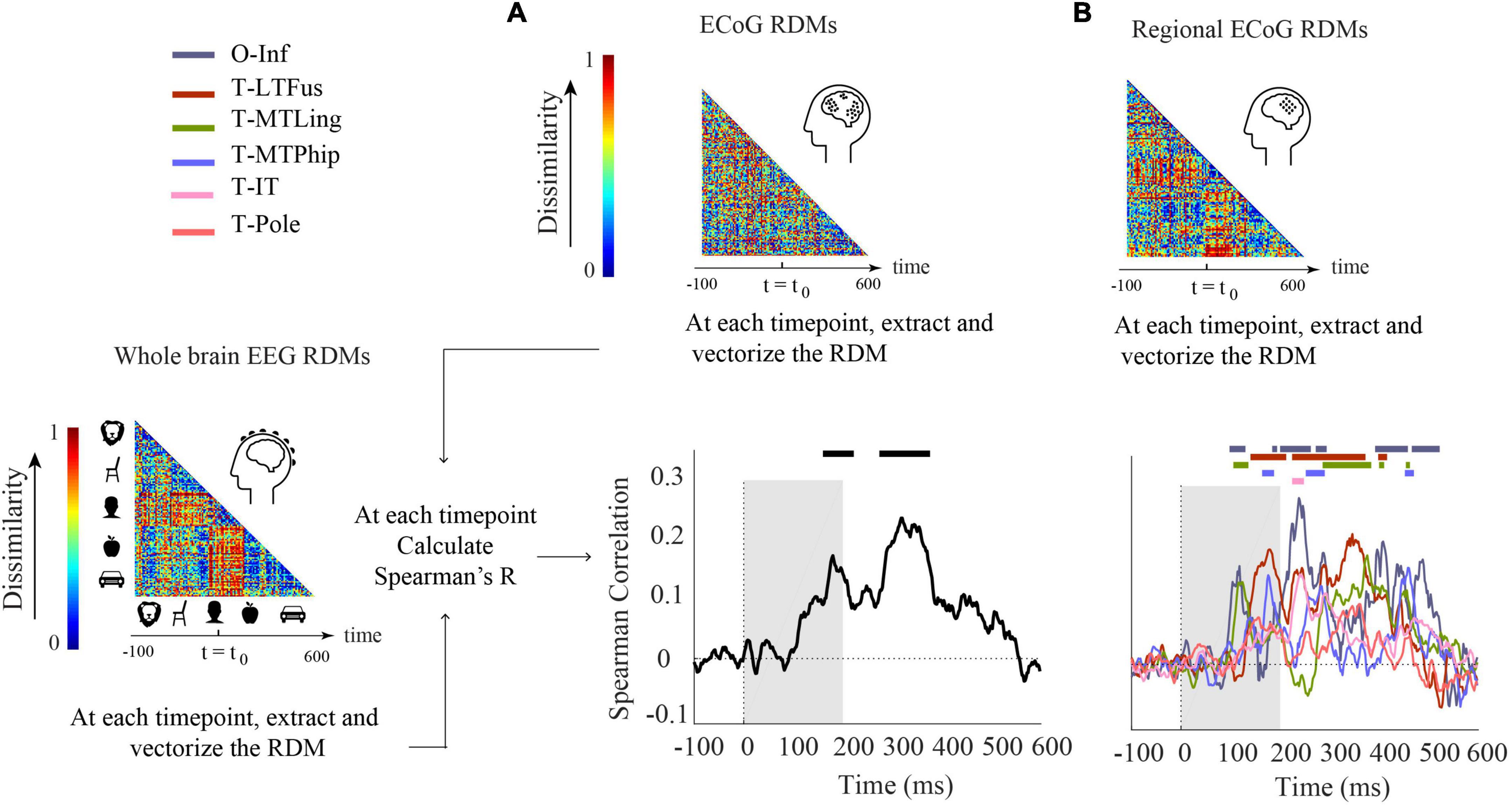

Here, we use RSA (Edelman, 1998; Kriegeskorte et al., 2008; Carlson et al., 2013) to compare EEG and ECoG representations across time and selected brain regions, regardless of the object category and changes in scale and orientation.

We conducted two analyses defined by the way ECoG data were aggregated in RDMs. In the first analysis (Figure 3A), ECoG RDMs were calculated from data of all ECoG electrodes (called here “ECoG RDMs”), giving a spatially unspecific large-scale statistical summary of representational relations in the ECoG signal. We observed two time periods at which EEG and ECoG representations were significantly similar (i.e., 154–222 and 274–376 ms) (Figure 3A). We note that the first time period overlaps with both EEG and ECoG peak decoding accuracies at 182 and 172 ms, respectively (Figure 1A).
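A time-resolved comparison of two RDM series of this kind can be sketched as below. The sketch assumes that both modalities have been brought to a common time axis and that RDMs are compared with Spearman's correlation over their off-diagonal upper triangles; the array shapes and function names are hypothetical.

```python
import numpy as np
from scipy.stats import spearmanr

def upper_triangle(rdm):
    """Vectorize the off-diagonal upper triangle of a square RDM."""
    rdm = np.asarray(rdm, dtype=float)
    rows, cols = np.triu_indices(rdm.shape[0], k=1)
    return rdm[rows, cols]

def rdm_similarity_over_time(rdms_a, rdms_b):
    """Spearman correlation between two RDM time series, one value per time point.

    Both inputs are assumed to have shape
    (n_timepoints, n_conditions, n_conditions).
    """
    return np.array([spearmanr(upper_triangle(a), upper_triangle(b))[0]
                     for a, b in zip(rdms_a, rdms_b)])
```

Only the upper triangle is used because an RDM is symmetric and its diagonal is zero by construction, so including those entries would add no information.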

Figure 3. Comparison of EEG and ECoG by representational similarity. We compared the EEG RDM (mean over participants) to (A) the ECoG RDM, constructed using all electrodes from all participants and (B) the regional ECoG RDM, constructed from electrodes limited to specific ROIs. The horizontal lines above the curves indicate significant time points (permutation test over stimuli, 10,000 iterations, FDR-corrected at p < 0.05). The ROIs include occipital-inferior (O-Inf), fusiform (T-LFus), lingual (T-MLing), parahippocampal (T-MTPhip), inferior-temporal (T-IT), and pole-temporal cortex (T-Pole).

In the second analysis (Figure 3B), we limited the analysis to electrodes in predefined visual ROIs, yielding ECoG RDMs that enable spatially specific assessment of the ECoG data per region (called here “Regional ECoG RDMs”).

We observed a significant representational similarity between EEG and ECoG in occipital-inferior, lingual, fusiform, parahippocampal, and inferior-temporal regions (Figure 3B). Comparison of the results in Figures 3A,B suggests how each region may have contributed differently to the overall EEG-ECoG correlation across time. Occipital-inferior and lingual regions are the regions that show the first significant correlation value at 100 and 106 ms, respectively. Then the fusiform region shows first significant correlations at 119 ms, followed by the parahippocampal and temporal-inferior regions at 177 and 230 ms. Furthermore, we find that there were significant differences between the regions’ latencies of the first peak; occipital-inferior and lingual peaked at 118 and 116 ms, followed by the fusiform (178 ms), parahippocampal (142 ms), and temporal-inferior region (186 ms) (bootstrap test of 21 participants with 10,000 repetitions; for details, see Supplementary Table 8). Together, this suggests that the fusiform region contributes to the overall pattern of results more than the other regions investigated here (Figure 3A vs. red curve in Figure 3B).

We began the comparison of fMRI with ECoG signals by evaluating the results of category classification in the same three classification schemes as used previously. We focused the analysis on the same six ROIs as in the ECoG-EEG comparison: occipital-inferior, fusiform, lingual, parahippocampal, inferior-temporal, and pole-temporal cortex. Focusing on spatial rather than temporal specificity, for ECoG, we used the classification performance in the time range of 50–300 ms after stimulus onset as calculated in Liu et al. (2009), as this period captures the visual system’s first response to external stimuli as detected by electrophysiological modalities.

The results (Figure 4) revealed a nuanced picture showing both similarities and differences between fMRI and ECoG, depending on category classified, region, and analysis scheme.

Figure 4. Classification performance (one category vs. rest) for the classification schemes (A) category selectivity, (B) rotation invariance, and (C) scale invariance. First column: cartoon visualization of stimuli for data used to train and test the classifier in each analysis scheme. Second column: region-specific classification results for fMRI (average performances across 21 participants). Third column: region-specific classification results for ECoG. Error bars indicate one standard deviation, and stars above bars indicate statistical significance (fMRI: bootstrap test of 21 participants with 10,000 repetitions, FDR-corrected at p < 0.05; ECoG: permutation test over stimuli, not corrected). Fourth column: correlation between signal-to-noise ratio in fMRI and ECoG classification results in the category selectivity scheme across regions. The ROIs include occipital-inferior (O-Inf), fusiform (T-LFus), lingual (T-MLing), parahippocampal (T-MTPhip), inferior-temporal (T-IT), and pole-temporal cortex (T-Pole).

First, decoding accuracies in ECoG were significantly higher than those in fMRI for all categories and for all three classification schemes (bootstrap test of 21 participants with 10,000 repetitions, FDR-corrected at p < 0.05). Further, compared to ECoG, fewer categories could be decoded in fMRI in the orientation and scale invariance schemes, suggesting that some of the information relevant for invariant object recognition was captured less well by the fMRI signal in our experiment. Second, in all three analysis schemes, animal, chair, face, fruit, and vehicle categories were decoded significantly in both fMRI and ECoG in the occipital-inferior region. Third, in the fusiform region, when examining results for the rotation and scale invariance analysis schemes, we observed that all categories could be decoded using fMRI, but in ECoG, the chair category could not be decoded.

This complicated picture across regions led us to ask about underlying factors that could explain those results. We hypothesized that the differential signal-to-noise ratio (SNR) of fMRI across regions might play a role. To investigate this, we plotted SNR in fMRI against the similarity in result patterns for fMRI and ECoG for each region and classification scheme (Figure 4, last column). Correlating SNR with fMRI-ECoG similarity values, we observed strong positive relationships (bootstrap test of 21 participants with 10,000 repetitions, all ps < 0.01): regions with low SNR in fMRI such as the ventral occipital temporal and inferior lateral temporal region showed low correlation with ECoG in their patterns of classification results, whereas regions with high SNR in fMRI such as the occipital inferior and temporal fusiform region showed high correlations. This shows that SNR differences in fMRI across regions strongly contribute to whether positive relationships with ECoG can be established.
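The relation between regional SNR and between-modality similarity can be illustrated with the sketch below. How SNR was computed is not specified in this section, so temporal SNR (the mean of each voxel's time series divided by its standard deviation, averaged within an ROI) is used here purely as an assumed stand-in; the function names and array layout are likewise hypothetical.

```python
import numpy as np

def temporal_snr(roi_timeseries):
    """Mean temporal SNR of an ROI (an assumed SNR definition).

    `roi_timeseries` is assumed to have shape (n_volumes, n_voxels). For each
    voxel, the mean over time is divided by the standard deviation over time;
    the result is averaged across voxels.
    """
    ts = np.asarray(roi_timeseries, dtype=float)
    return float(np.mean(ts.mean(axis=0) / ts.std(axis=0, ddof=1)))

def snr_similarity_correlation(snr_per_roi, similarity_per_roi):
    """Pearson correlation, across ROIs, between SNR and fMRI-ECoG similarity."""
    return float(np.corrcoef(snr_per_roi, similarity_per_roi)[0, 1])
```

With only six ROIs, a correlation of this kind rests on very few data points, which is one reason to assess it with a resampling test rather than a parametric one.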

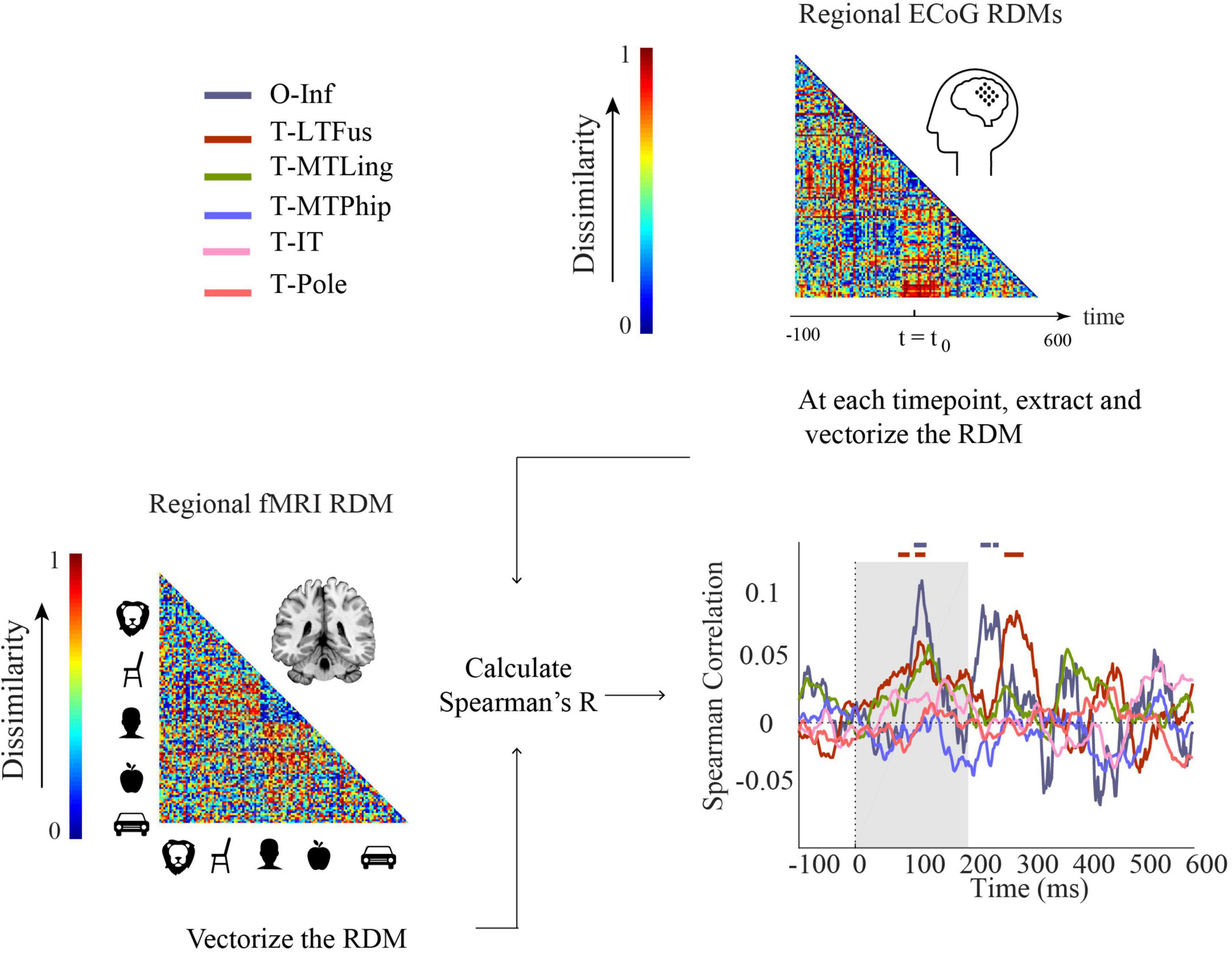

We further studied the relationship between fMRI and ECoG in a region-specific way using RSA (Figure 5) in a similar way as delineated above for the relationship between EEG and ECoG (Figure 3). In detail, we compared regional fMRI RDMs with regional ECoG RDMs across time.

Figure 5. Comparison of fMRI and ECoG in representational similarity by cortical regions. For each participant, fMRI RDMs were calculated using 1-Spearman’s correlation. We then compared the mean fMRI RDM to the ECoG regional RDMs. The horizontal lines above the curves indicate significant time points (permutation test over stimuli, 10,000 repetitions, FDR-corrected at p < 0.05). The ROIs include occipital-inferior (O-Inf), fusiform (T-LFus), lingual (T-MLing), parahippocampal (T-MTPhip), inferior-temporal (T-IT), and pole-temporal cortex (T-Pole).

We found representations as measured by fMRI and ECoG to be similar in occipital-inferior and fusiform regions (Figure 5). The correlation time courses indicating representational similarity showed two peaks around 100 and 250 ms for the occipital-inferior region and around 100 and 300 ms for the fusiform region (for details see Supplementary Table 9). This suggests that two temporally distinct neural processes occur in those regions, possibly related to feed-forward and feedback processing.

Finally, aiming to reveal neural dynamics in humans resolved both in space and time using non-invasive techniques only, we used fMRI-M/EEG representational fusion (Cichy et al., 2014, 2016; Cichy and Oliva, 2020). In detail, we compared regional fMRI RDMs with EEG RDMs across time (Figure 6).
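The core steps of such a fusion analysis at a single time point can be sketched as follows: build RDMs as 1 minus Spearman's correlation between condition patterns, correlate the fMRI and EEG RDMs, and assess significance by permuting stimulus labels. This is a minimal sketch under assumptions, not the pipeline used here: the input layout, the one-sided test, and all function names are hypothetical.

```python
import numpy as np
from scipy.stats import rankdata

def spearman_rdm(patterns):
    """RDM with entries 1 - Spearman's rho between condition patterns.

    `patterns` is assumed to have shape (n_conditions, n_features).
    Spearman's rho is computed as Pearson correlation of the ranks.
    """
    ranks = np.apply_along_axis(rankdata, 1, np.asarray(patterns, dtype=float))
    return 1.0 - np.corrcoef(ranks)

def rdm_spearman(rdm_a, rdm_b):
    """Spearman correlation between the upper triangles of two RDMs."""
    iu = np.triu_indices(rdm_a.shape[0], k=1)
    return np.corrcoef(rankdata(rdm_a[iu]), rankdata(rdm_b[iu]))[0, 1]

def permutation_test_over_stimuli(fmri_rdm, eeg_rdm, n_perm=10000, seed=0):
    """One-sided p-value obtained by shuffling the stimulus labels of one RDM.

    Rows and columns are permuted together so the RDM stays a valid RDM.
    """
    rng = np.random.default_rng(seed)
    observed = rdm_spearman(fmri_rdm, eeg_rdm)
    n_cond = fmri_rdm.shape[0]
    exceed = 0
    for _ in range(n_perm):
        perm = rng.permutation(n_cond)
        shuffled = fmri_rdm[np.ix_(perm, perm)]
        if rdm_spearman(shuffled, eeg_rdm) >= observed:
            exceed += 1
    return observed, (exceed + 1) / (n_perm + 1)
```

Repeating the test for every EEG time point and every ROI, followed by correction for multiple comparisons, would give a time course of significant correspondence per region.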

Figure 6. Comparing fMRI and EEG by representational similarity. For each participant, EEG and fMRI RDMs were calculated using 1-Spearman’s correlation. We then compared the mean fMRI RDM to the mean EEG RDM. The horizontal lines above the curves indicate significant time points (permutation test over stimuli, 10,000 repetitions, FDR-corrected at p < 0.05). The ROIs include occipital-inferior (O-Inf), fusiform (T-LFus), lingual (T-MLing), parahippocampal (T-MTPhip), inferior-temporal (T-IT), and pole-temporal cortex (T-Pole).

We found significant correlations between EEG and fMRI RDMs from about 100 ms onward in occipital-inferior, fusiform, and lingual regions, and from about 130 ms onward in inferior-temporal and pole-temporal regions (for details, see Supplementary Table 10). We also observed later representational correspondence between EEG and fMRI from about 280 to about 420 ms in the fusiform region, from about 190 to about 430 ms in the lingual region, and from 530 to 560 ms in the inferior-temporal region. These results provide an important reference point for assessing how integration methods based on non-invasive EEG and fMRI results perform in comparison to ECoG. For example, we found that occipital inferior, fusiform and lingual were among the first regions to emerge in both ECoG (Supplementary Figure 3) and EEG-fMRI fusion. According to the ECoG results, parahippocampal cortex was the last region that showed selectivity. However, this region showed no significant time point in EEG-fMRI fusion. Moreover, the temporal pole had the longest onset latency in ECoG. This was consistent with EEG-ECoG results which showed that the temporal pole and inferior temporal cortex had longer onset latencies compared to the other regions.

In this study, we investigated the correspondence between EEG, fMRI, and ECoG data recorded during object vision in humans at the level of population codes using multivariate analysis methods. Our findings are threefold. First, the EEG-ECoG comparison revealed a correspondence that drops when viewing-condition invariant representations are assessed. Second, using multivariate pattern analysis, we showed that the fMRI-ECoG relation is region dependent; in regions with lower fMRI signal-to-noise ratio, the fMRI-ECoG correlation decreases. Last, comparing the results of EEG-fMRI fusion with those of EEG-ECoG and fMRI-ECoG, we observed both consistencies and inconsistencies across time and region.

Our study adds to the large body of research investigating the relationship between EEG and ECoG signals by detailing the relationship in the context of invariant object representations (Eger et al., 2005; Cichy and Oliva, 2020). We observed that when assessing object representations independent of size and orientation, the correlation between EEG and ECoG decoding accuracies was significantly reduced for the chair and the vehicle category. This drop suggests that invariant object representations are located in brain areas that are less accessible by EEG compared to ECoG (Figure 2). This conjecture is further corroborated by the observation that after changing the size and orientation of the objects, the EEG classification accuracy decreased, but the ECoG classification accuracy did not (Figure 1).

Previous studies on fMRI signal quality comparing different brain regions suggest that fMRI has low SNR in temporal regions (Murphy et al., 2007; Triantafyllou et al., 2011; Welvaert and Rosseel, 2013; Sanada et al., 2021). Consistent with these results, here we observed that in temporal areas of the brain (pole temporal and inferior temporal), the fMRI-ECoG correlation is lower, whereas in regions with higher SNR, such as occipital-inferior and fusiform, the fMRI-ECoG correlation is higher. Together this further highlights the importance of taking the specific sensitivities of different imaging modalities into account when relating and interpreting their results.

When conducting EEG-fMRI representational fusion, i.e., correlating the whole-brain EEG RDMs with the regional fMRI RDMs (Cichy et al., 2014, 2016), we find that, consistent with EEG-ECoG RDM correlations across time, occipital-inferior and lingual cortex are among the first regions that emerge around 100 ms. The EEG-fMRI fusion results further suggest that in regions closer to the skull and thus to the EEG electrodes, EEG-fMRI fusion may give more reliable results than in regions further away.

Comparing EEG-fMRI fusion with fMRI-ECoG, we find that pole temporal and temporal inferior fMRI RDMs both had a significant correlation with EEG around 150 ms. However, there was no significant correlation between fMRI and ECoG RDMs for these regions. Pole temporal and temporal inferior are regions typically reported to have lower SNR and to be more difficult for fMRI to read from (Sanada et al., 2021), which may explain the inconsistency between fMRI-ECoG (Figure 5) and fMRI-EEG (Figure 6) results with regard to these regions.

While ECoG provides high density coverage over a limited cortical region, EEG and fMRI can provide broad coverage over all cortical areas, including deep brain structures in fMRI. This is a key consideration when comparing EEG-fMRI fusion and ECoG results. For areas and time points where EEG and fMRI have reliable readings from the brain and we have enough electrodes from ECoG to read out data, we can expect the best between-modality consistency.

One of the study’s limitations is that the EEG and fMRI data were recorded from a different set of participants than the ECoG data. Thus, subject-specific signal components could not be assessed. While challenging, future studies may attempt to record EEG, fMRI, and ECoG data from the same participants to enable a more direct comparison of the modalities at the level of single individuals.

While EEG and ECoG both measure neural signals, there are significant differences between the signals’ SNR, noise factors, and coverage of brain areas. Due to these differences, the modalities require different preprocessing and processing steps. First, EEG can provide whole-brain coverage, and thus participant-specific data are used in EEG (Karimi et al., 2022; Moon et al., 2022; Xu et al., 2022). ECoG, in contrast, has very limited brain coverage, as electrode placement is dictated by medical reasons for each individual; as such, the data are usually pooled across participants to provide wider coverage (Sellers et al., 2019; Yang et al., 2019; Ahmadipour et al., 2021). Second, single-trial ECoG data can provide reliable information (Jacques et al., 2016b; Haufe et al., 2018), whereas in EEG it is typical to average several trials to reduce noise and obtain a more reliable signal with higher SNR (Wardle et al., 2016; Guggenmos et al., 2018; Kong et al., 2020; Ashton et al., 2022). When the study design and duration of recording allow, it is preferable to record more repetitions to average across. Here, the EEG category selectivity results possibly had higher SNR than the rotation and scale invariance analyses, given that there were more repetitions.
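The effect of trial averaging on noise can be made concrete with a short sketch. For independent, zero-mean noise, averaging n trials reduces the noise standard deviation by a factor of the square root of n. The function below forms such averaged pseudo-trials; the array layout, the grouping of consecutive trials, and the function name are assumptions for illustration only.

```python
import numpy as np

def average_in_groups(trials, group_size):
    """Average consecutive groups of trials into pseudo-trials.

    `trials` is assumed to have shape (n_trials, n_channels, n_timepoints).
    Trials that do not fill a complete group are dropped.
    """
    trials = np.asarray(trials, dtype=float)
    n_groups = trials.shape[0] // group_size
    kept = trials[: n_groups * group_size]
    grouped = kept.reshape(n_groups, group_size, *trials.shape[1:])
    return grouped.mean(axis=1)
```

For example, averaging groups of four trials of pure unit-variance noise yields pseudo-trials with a standard deviation of about 0.5, which is why conditions with more repetitions can be expected to support higher decoding accuracies.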

Third, we used a different statistical test (i.e., bootstrap resampling of participants) for EEG results compared to the permutation test (permutation of labels) that was used in ECoG. The way ECoG data was collected did not allow for an equivalent analysis. Instead, Liu et al. chose selective electrodes across several subjects, creating a super subject. In EEG, having more subjects and comparable data across subjects enabled us to run random effect analysis across them, which is the preferred method for analyzing the EEG data (Jacques et al., 2016a; Karimi et al., 2022).
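A bootstrap test that resamples participants can be sketched as below. It is a generic illustration of the approach and not the exact procedure of this study: the chance level, the one-sided formulation, and the function name are assumptions.

```python
import numpy as np

def bootstrap_p_above_chance(subject_values, chance=0.5, n_boot=10000, seed=0):
    """One-sided bootstrap p-value for the group mean exceeding chance.

    Participants are resampled with replacement; the p-value is the fraction
    of bootstrap means at or below the (assumed) chance level.
    """
    values = np.asarray(subject_values, dtype=float)
    rng = np.random.default_rng(seed)
    idx = rng.integers(0, values.size, size=(n_boot, values.size))
    boot_means = values[idx].mean(axis=1)
    return (np.sum(boot_means <= chance) + 1) / (n_boot + 1)
```

Because the resampling unit is the participant, this test treats participants as a random effect. A permutation of stimulus labels within a pooled data set, by contrast, supports inference about the recorded sample only.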

Last, the data were averaged in different ways in ECoG and EEG/fMRI, following the recommended analysis method for each modality. In ECoG, the data were pooled across subjects and the average trial across repetitions was used to construct RDMs. In EEG, however, we calculated RDMs separately for each subject. Combining all EEG electrodes from all subjects before creating RDMs would have been possible, but it would not have made the analysis more comparable. It is not desirable to apply the same pipeline to both EEG and ECoG; instead, modality-specific processing steps are best suited here to bring out reliable results with high sensitivity. In sum, due to the differences mentioned above, we used different preprocessing pipelines to prepare the data for classification and RSA.

We appreciate that each of those analysis choices and steps can influence the results to an unknown degree. For example, the category selectivity analysis and the rotation/scale invariance analyses can be affected by differences in the SNR of EEG and ECoG that arise from trial averaging in EEG or from using electrodes mixed across subjects in ECoG. Similarly, EEG-ECoG comparisons regarding the onset latencies could have been affected by the different statistical procedures run in each modality. Our general approach in this study was to follow the best-practice analysis for each modality. Alternatively, one could have forced the pre-processing steps in ECoG and EEG to be identical. In that case, one would still need to decide whether to follow a procedure optimized for the ECoG data, for the EEG data, or a neutral procedure optimized for neither. Each of those decisions would have implications for the results and their interpretation. Future work systematically investigating the influence of analysis choices on the results in ECoG and EEG is required to resolve these open questions. This would be more achievable in non-human primates, where EEG is being established and ECoG can be placed more freely. For example, similar cut-off frequencies were used in Sandhaeger et al. (2019) to compare EEG, ECoG, and MEG signals in monkeys.

Our findings of a spatiotemporal correspondence between patterns of category-selective responses across ECoG and fMRI/EEG are in line with previous human studies reporting on between-modality correspondence in visual areas (Puce et al., 1997; Parvizi et al., 2012; Jacques et al., 2016b; Haufe et al., 2018). However, these studies did not investigate how the correspondence changes under variations in object size and/or orientation. Here, we highlighted key differences between EEG-ECoG and fMRI-ECoG, both temporally and spatially, when object variations are introduced. Our study guides interpretation of neuroimaging studies of invariant object recognition when using M/EEG and fMRI by showing when and where we can be more confident about the results.

The datasets presented in this study can be found in online repositories. The EEG and fMRI data recorded here are available in Brain Imaging Data Structure (BIDS) (Gorgolewski et al., 2016; Pernet et al., 2019) format on the OpenNeuro repository (Ebrahiminia et al., 2022): https://openneuro.org/datasets/ds002814/versions/1.3.0. The ECoG data is made publicly accessible by Kreiman’s Lab2 (Liu et al., 2009).

The studies involving human participants were reviewed and approved by the Iran University of Medical Sciences Ethics Committee and were conducted in accordance with the Declaration of Helsinki. The patients/participants provided their written informed consent to participate in this study.

S-MK-R and FE conceived the study and experimental design. FE collected and processed the data. FE analyzed the data with S-MK-R and RC supervision. FE, RC, and S-MK-R wrote the manuscript. All authors contributed to the article and approved the submitted version.

This study was supported by the German Research Council (DFG) (CI241/1-1 and CI241/3-1, RC), the European Research Council (ERC-StG-2018-803370, RC), and the Iranian National Science Foundation (grant number: 96007585, S-MK-R).

The EEG and fMRI data were recorded at the Iranian National Brain Mapping Laboratory (NBML). We thank Mahdiyeh Khanbagi and Morteza Mahdiani for their support and help with some of the data collection and analysis.

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

The Supplementary Material for this article can be found online at: https://www.frontiersin.org/articles/10.3389/fnins.2022.983602/full#supplementary-material

Ahmadipour, P., Yang, Y., Chang, E. F., and Shanechi, M. M. (2021). Adaptive tracking of human ECoG network dynamics. J. Neural Eng. 18:016011. doi: 10.1088/1741-2552/abae42

Ashton, K., Zinszer, B. D., Cichy, R. M., Nelson, C. A. III, Aslin, R. N., and Bayet, L. (2022). Time-resolved multivariate pattern analysis of infant EEG data: A practical tutorial. Dev. Cogn. Neurosci. 54:101094. doi: 10.1016/j.dcn.2022.101094

Bollmann, S., and Barth, M. (2021). New acquisition techniques and their prospects for the achievable resolution of fMRI. Prog. Neurobiol. 207:101936. doi: 10.1016/j.pneurobio.2020.101936

Brainard, D. H. (1997). The psychophysics toolbox. Spat. Vis. 10, 433–436. doi: 10.1163/156856897X00357

Burle, B., Spieser, L., Roger, C., Casini, L., Hasbroucq, T., and Vidal, F. (2015). Spatial and temporal resolutions of EEG: Is it really black and white? A scalp current density view. Int. J. Psychophysiol. 97, 210–220. doi: 10.1016/j.ijpsycho.2015.05.004

Buzsáki, G., and Llinás, R. (2017). Space and time in the brain. Science 358, 482–485. doi: 10.1126/science.aan8869

Carlson, T., Tovar, D. A., Alink, A., and Kriegeskorte, N. (2013). Representational dynamics of object vision: The first 1000 ms. J. Vis. 13:1. doi: 10.1167/13.10.1

Cichy, R. M., and Oliva, A. (2020). A M/EEG-fMRI fusion primer: Resolving human brain responses in space and time. Neuron 107, 772–781. doi: 10.1016/j.neuron.2020.07.001

Cichy, R. M., and Pantazis, D. (2017). Multivariate pattern analysis of MEG and EEG: A comparison of representational structure in time and space. Neuroimage 158, 441–454. doi: 10.1016/j.neuroimage.2017.07.023

Cichy, R. M., Pantazis, D., and Oliva, A. (2014). Resolving human object recognition in space and time. Nat. Neurosci. 17, 455–462. doi: 10.1038/nn.3635

Cichy, R. M., Pantazis, D., and Oliva, A. (2016). Similarity-based fusion of MEG and fMRI reveals spatio-temporal dynamics in human cortex during visual object recognition. Cereb. Cortex 26, 3563–3579. doi: 10.1093/cercor/bhw135

Dale, A. M., Fischl, B., and Sereno, M. I. (1999). Cortical surface-based analysis: I. Segmentation and surface reconstruction. Neuroimage 9, 179–194. doi: 10.1006/nimg.1998.0395

Delorme, A., and Makeig, S. (2004). EEGLAB: An open source toolbox for analysis of single-trial EEG dynamics including independent component analysis. J. Neurosci. Methods 134, 9–21. doi: 10.1016/j.jneumeth.2003.10.009

Ding, L., Wilke, C., Xu, B., Xu, X., van Drongelen, W., Kohrman, M., et al. (2007). EEG source imaging: Correlating source locations and extents with electrocorticography and surgical resections in epilepsy patients (in Eng). J. Clin. Neurophysiol. 24, 130–136. doi: 10.1097/WNP.0b013e318038fd52

Ebrahiminia, F., Mahdiani, M., and Khaligh-Razavi, S.-M. (2022). A multimodal neuroimaging dataset to study spatiotemporal dynamics of visual processing in humans. bioRxiv [Preprint]. doi: 10.1101/2022.05.12.491595

Edelman, S. (1998). Representation is representation of similarities. Behav. Brain Sci. 21, 449–467. doi: 10.1017/S0140525X98001253

Eger, E., Schweinberger, S. R., Dolan, R. J., and Henson, R. N. (2005). Familiarity enhances invariance of face representations in human ventral visual cortex: fMRI evidence. Neuroimage 26, 1128–1139. doi: 10.1016/j.neuroimage.2005.03.010

Engell, A. D., Huettel, S., and McCarthy, G. (2012). The fMRI BOLD signal tracks electrophysiological spectral perturbations, not event-related potentials. Neuroimage 59, 2600–2606. doi: 10.1016/j.neuroimage.2011.08.079

Feinberg, D. A., and Yacoub, E. (2012). The rapid development of high speed, resolution and precision in fMRI. Neuroimage 62, 720–725. doi: 10.1016/j.neuroimage.2012.01.049

Gorgolewski, K. J., Auer, T., Calhoun, V. D., Craddock, R. C., Das, S., Duff, E. P., et al. (2016). The brain imaging data structure, a format for organizing and describing outputs of neuroimaging experiments. Sci. Data 3:160044. doi: 10.1038/sdata.2016.44

Gosseries, O., Demertzi, A., Noirhomme, Q., Tshibanda, J., Boly, M., Op de Beeck, M., et al. (2008). Functional neuroimaging (fMRI, PET and MEG): What do we measure? Rev. Méd. Liège 63, 231–237.

Guggenmos, M., Sterzer, P., and Cichy, R. M. (2018). Multivariate pattern analysis for MEG: A comparison of dissimilarity measures. Neuroimage 173, 434–447. doi: 10.1016/j.neuroimage.2018.02.044

Hacker, C. D., Snyder, A. Z., Pahwa, M., Corbetta, M., and Leuthardt, E. C. (2017). Frequency-specific electrophysiologic correlates of resting state fMRI networks. Neuroimage 149, 446–457. doi: 10.1016/j.neuroimage.2017.01.054

Haufe, S., DeGuzman, P., Henin, S., Arcaro, M., Honey, C. J., Hasson, U., et al. (2018). Elucidating relations between fMRI, ECoG, and EEG through a common natural stimulus. Neuroimage 179, 79–91. doi: 10.1016/j.neuroimage.2018.06.016

Haxby, J. V., Connolly, A. C., and Guntupalli, J. S. (2014). Decoding neural representational spaces using multivariate pattern analysis. Annu. Rev. Neurosci. 37, 435–456. doi: 10.1146/annurev-neuro-062012-170325

Hermes, D., Miller, K. J., Vansteensel, M. J., Aarnoutse, E. J., Leijten, F. S., and Ramsey, N. F. (2012). Neurophysiologic correlates of fMRI in human motor cortex. Hum. Brain Mapp. 33, 1689–1699. doi: 10.1002/hbm.21314

Hnazaee, M. F., Wittevrongel, B., Khachatryan, E., Libert, A., Carrette, E., Dauwe, I., et al. (2020). Localization of deep brain activity with scalp and subdural EEG. Neuroimage 223:117344. doi: 10.1016/j.neuroimage.2020.117344

Huettel, S. A., McKeown, M. J., Song, A. W., Hart, S., Spencer, D. D., Allison, T., et al. (2004). Linking hemodynamic and electrophysiological measures of brain activity: Evidence from functional MRI and intracranial field potentials. Cereb. Cortex 14, 165–173. doi: 10.1093/cercor/bhg115

Jacques, C., Witthoft, N., Weiner, K. S., Foster, B. L., Rangarajan, V., Hermes, D., et al. (2016b). Corresponding ECoG and fMRI category-selective signals in human ventral temporal cortex. Neuropsychologia 83, 14–28. doi: 10.1016/j.neuropsychologia.2015.07.024

Jacques, C., Retter, T. L., and Rossion, B. (2016a). A single glance at natural face images generate larger and qualitatively different category-selective spatio-temporal signatures than other ecologically-relevant categories in the human brain. Neuroimage 137, 21–33. doi: 10.1016/j.neuroimage.2016.04.045

Karimi, H., Marefat, H., Khanbagi, M., Kalafatis, C., Modarres, M. H., Vahabi, Z., et al. (2022). Temporal dynamics of animacy categorization in the brain of patients with mild cognitive impairment. PLoS One 17:e0264058. doi: 10.1371/journal.pone.0264058

Khursheed, F., Tandon, N., Tertel, K., Pieters, T. A., Disano, M. A., and Ellmore, T. M. (2011). Frequency-specific electrocorticographic correlates of working memory delay period fMRI activity. Neuroimage 56, 1773–1782. doi: 10.1016/j.neuroimage.2011.02.062

Kim, S.-G., Jin, T., and Fukuda, M. (2020). “Spatial resolution of fMRI techniques,” in fMRI, eds S. Ulmer and O. Jansen (Berlin: Springer), 65–72. doi: 10.1007/978-3-030-41874-8_6

Klink, P. C., Chen, X., Vanduffel, W., and Roelfsema, P. R. (2021). A comparison of population receptive fields from fMRI and large-scale neurophysiological recordings in non-human primates. bioRxiv [Preprint]. doi: 10.1101/2020.09.05.284133

Kokkinos, V., Zaher, N., Antony, A., Bagić, A., Richardson, R. M., and Urban, A. (2019). The intracranial correlate of the 14&6/sec positive spikes normal scalp EEG variant. Clin. Neurophysiol. 130, 1570–1580. doi: 10.1016/j.clinph.2019.05.024

Kong, N. C., Kaneshiro, B., Yamins, D. L., and Norcia, A. M. (2020). Time-resolved correspondences between deep neural network layers and EEG measurements in object processing. Vis. Res. 172, 27–45. doi: 10.1016/j.visres.2020.04.005

Kriegeskorte, N., and Kievit, R. A. (2013). Representational geometry: Integrating cognition, computation, and the brain. Trends Cogn. Sci. 17, 401–412. doi: 10.1016/j.tics.2013.06.007

Kriegeskorte, N., Mur, M., and Bandettini, P. A. (2008). Representational similarity analysis - connecting the branches of systems neuroscience. Front. Syst. Neurosci. 2:4. doi: 10.3389/neuro.06.004.2008

Liu, H., Agam, Y., Madsen, J. R., and Kreiman, G. (2009). Timing, timing, timing: Fast decoding of object information from intracranial field potentials in human visual cortex. Neuron 62, 281–290.

Logothetis, N. K., Pauls, J., Augath, M., Trinath, T., and Oeltermann, A. (2001). Neurophysiological investigation of the basis of the fMRI signal. Nature 412, 150–157. doi: 10.1038/35084005

Magri, C., Schridde, U., Murayama, Y., Panzeri, S., and Logothetis, N. K. (2012). The amplitude and timing of the BOLD signal reflects the relationship between local field potential power at different frequencies. J. Neurosci. 32, 1395–1407. doi: 10.1523/JNEUROSCI.3985-11.2012

Meisel, C., and Bailey, K. A. (2019). Identifying signal-dependent information about the preictal state: A comparison across ECoG, EEG and EKG using deep learning. EBioMedicine 45, 422–431. doi: 10.1016/j.ebiom.2019.07.001

Michel, C. M., and Murray, M. M. (2012). Towards the utilization of EEG as a brain imaging tool. Neuroimage 61, 371–385. doi: 10.1016/j.neuroimage.2011.12.039

Moon, A., He, C., Ditta, A. S., Cheung, O. S., and Wu, R. (2022). Rapid category selectivity for animals versus man-made objects: An N2pc study. Int. J. Psychophysiol. 171, 20–28. doi: 10.1016/j.ijpsycho.2021.11.004

Murphy, K., Bodurka, J., and Bandettini, P. A. (2007). How long to scan? The relationship between fMRI temporal signal to noise ratio and necessary scan duration. Neuroimage 34, 565–574. doi: 10.1016/j.neuroimage.2006.09.032

Muthukumaraswamy, S. D. (2013). High-frequency brain activity and muscle artifacts in MEG/EEG: A review and recommendations. Front. Hum. Neurosci. 7:138. doi: 10.3389/fnhum.2013.00138

Niessing, J., Ebisch, B., Schmidt, K. E., Niessing, M., Singer, W., and Galuske, R. A. (2005). Hemodynamic signals correlate tightly with synchronized gamma oscillations. Science 309, 948–951. doi: 10.1126/science.1110948

Nili, H., Wingfield, C., Walther, A., Su, L., Marslen-Wilson, W., and Kriegeskorte, N. (2014). A toolbox for representational similarity analysis. PLoS Comput. Biol. 10:e1003553. doi: 10.1371/journal.pcbi.1003553

Parvizi, J., Jacques, C., Foster, B. L., Witthoft, N., Rangarajan, V., Weiner, K. S., et al. (2012). Electrical stimulation of human fusiform face-selective regions distorts face perception. J. Neurosci. 32, 14915–14920. doi: 10.1523/JNEUROSCI.2609-12.2012

Pelli, D. G. (1997). The VideoToolbox software for visual psychophysics: Transforming numbers into movies. Spat. Vis. 10, 437–442. doi: 10.1163/156856897X00366

Penny, W. D., Friston, K. J., Ashburner, J. T., Kiebel, S. J., and Nichols, T. E. (2011). Statistical parametric mapping: The analysis of functional brain images. Amsterdam: Elsevier.

Pernet, C. R., Appelhoff, S., Gorgolewski, K. J., Flandin, G., Phillips, C., Delorme, A., et al. (2019). EEG-BIDS, an extension to the brain imaging data structure for electroencephalography. Sci. Data 6:103. doi: 10.1038/s41597-019-0104-8

Petroff, O. A., Spencer, D. D., Goncharova, I. I., and Zaveri, H. P. (2016). A comparison of the power spectral density of scalp EEG and subjacent electrocorticograms. Clin. Neurophysiol. 127, 1108–1112. doi: 10.1016/j.clinph.2015.08.004

Privman, E., Nir, Y., Kramer, U., Kipervasser, S., Andelman, F., Neufeld, M. Y., et al. (2007). Enhanced category tuning revealed by intracranial electroencephalograms in high-order human visual areas. J. Neurosci. 27, 6234–6242. doi: 10.1523/JNEUROSCI.4627-06.2007

Puce, A., Allison, T., Spencer, S. S., Spencer, D. D., and McCarthy, G. (1997). Comparison of cortical activation evoked by faces measured by intracranial field potentials and functional MRI: Two case studies. Hum. Brain Mapp. 5, 298–305. doi: 10.1002/(SICI)1097-0193(1997)5:4<298::AID-HBM16>3.0.CO;2-A

Sanada, T., Kapeller, C., Jordan, M., Grünwald, J., Mitsuhashi, T., Ogawa, H., et al. (2021). Multi-modal mapping of the face selective ventral temporal cortex–a group study with clinical implications for ECS, ECoG, and fMRI. Front. Hum. Neurosci. 15:616591. doi: 10.3389/fnhum.2021.616591

Sandhaeger, F., Von Nicolai, C., Miller, E. K., and Siegel, M. (2019). Monkey EEG links neuronal color and motion information across species and scales. Elife 8:e45645. doi: 10.7554/eLife.45645

Sellers, K. K., Schuerman, W. L., Dawes, H. E., Chang, E. F., and Leonard, M. K. (2019). “Comparison of common artifact rejection methods applied to direct cortical and peripheral stimulation in human ECoG,” in Proceedings of the 2019 9th international IEEE/EMBS conference on neural engineering (NER) (San Francisco, CA: IEEE), 77–80. doi: 10.1109/NER.2019.8716980

Triantafyllou, C., Polimeni, J. R., and Wald, L. L. (2011). Physiological noise and signal-to-noise ratio in fMRI with multi-channel array coils. Neuroimage 55, 597–606. doi: 10.1016/j.neuroimage.2010.11.084

van Driel, J., Olivers, C. N., and Fahrenfort, J. J. (2021). High-pass filtering artifacts in multivariate classification of neural time series data. J. Neurosci. Methods 352:109080. doi: 10.1016/j.jneumeth.2021.109080

van Houdt, P. J., de Munck, J. C., Leijten, F. S. S., Huiskamp, G. J. M., Colon, A. J., Boon, P. A. J. M., et al. (2013). EEG-fMRI correlation patterns in the presurgical evaluation of focal epilepsy: A comparison with electrocorticographic data and surgical outcome measures. Neuroimage 75, 238–248. doi: 10.1016/j.neuroimage.2013.02.033

Wardle, S. G., Kriegeskorte, N., Grootswagers, T., Khaligh-Razavi, S.-M., and Carlson, T. A. (2016). Perceptual similarity of visual patterns predicts dynamic neural activation patterns measured with MEG. Neuroimage 132, 59–70. doi: 10.1016/j.neuroimage.2016.02.019

Welvaert, M., and Rosseel, Y. (2013). On the definition of signal-to-noise ratio and contrast-to-noise ratio for fMRI data. PLoS One 8:e77089. doi: 10.1371/journal.pone.0077089

Xu, Z., Bai, Y., Zhao, R., Hu, H., Ni, G., and Ming, D. (2022). Decoding selective auditory attention with EEG using a transformer model. Methods 204, 410–417. doi: 10.1016/j.ymeth.2022.04.009

Yamazaki, M., Tucker, D. M., Fujimoto, A., Yamazoe, T., Okanishi, T., Yokota, T., et al. (2012). Comparison of dense array EEG with simultaneous intracranial EEG for interictal spike detection and localization. Epilepsy Res. 98, 166–173. doi: 10.1016/j.eplepsyres.2011.09.007

Keywords: multivariate analysis, fMRI, EEG, ECoG, visual object representation

Citation: Ebrahiminia F, Cichy RM and Khaligh-Razavi S-M (2022) A multivariate comparison of electroencephalogram and functional magnetic resonance imaging to electrocorticogram using visual object representations in humans. Front. Neurosci. 16:983602. doi: 10.3389/fnins.2022.983602

Received: 01 July 2022; Accepted: 23 September 2022; Published: 18 October 2022.

Edited by:

Jürgen Dammers, Institute of Neuroscience and Medicine, Jülich Research Center, Helmholtz Association of German Research Centres (HZ), Germany

Reviewed by:

Ravichandran Rajkumar, University Hospital RWTH Aachen, Germany

Copyright © 2022 Ebrahiminia, Cichy and Khaligh-Razavi. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Radoslaw Martin Cichy; Seyed-Mahdi Khaligh-Razavi

Disclaimer: All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article or claim that may be made by its manufacturer is not guaranteed or endorsed by the publisher.
