- 1Department of Digital Medicine, School of Biomedical Engineering and Imaging Medicine, Army Medical University, Third Military Medical University, Chongqing, China

- 2Department of Neurosurgery, First Affiliated Hospital, Southwest Hospital, Army Medical University, Third Military Medical University, Chongqing, China

- 3School of Basic Medicine, Army Medical University, Third Military Medical University, Chongqing, China

This study aims to improve the accuracy and practicability of CT image segmentation and volume measurement of intracerebral hemorrhage (ICH) using deep learning. A dataset of brain CT images and clinical data from 1,027 patients with spontaneous ICH treated between January 2010 and December 2020 was retrospectively analyzed. We propose a deep segmentation network, AttFocusNet, that integrates the focus structure and the attention gate (AG) mechanism to enable automatic, accurate CT image segmentation and volume measurement of ICH.

In the internal validation set, AttFocusNet achieved a Dice coefficient of 0.908, an intersection-over-union (IoU) of 0.874, a sensitivity of 0.913, a positive predictive value (PPV) of 0.957, and a 95% Hausdorff distance (HD95) of 5.960 mm. The intraclass correlation coefficient (ICC) of ICH volume measurement between AttFocusNet and the ground truth was 0.997. The average time per case required by AttFocusNet, the Coniglobus formula, and manual segmentation was 5.6, 47.7, and 170.1 s, respectively.

In the two external validation sets, AttFocusNet achieved Dice coefficients of 0.889 and 0.911, IoUs of 0.800 and 0.836, sensitivities of 0.817 and 0.849, PPVs of 0.976 and 0.981, and HD95 values of 5.331 and 4.220 mm, respectively. The corresponding ICCs of ICH volume measurement between AttFocusNet and the ground truth were 0.939 and 0.956.

AttFocusNet significantly outperforms the Coniglobus formula in ICH volume measurement. It produces results closer to the true ICH volume while substantially reducing the clinical workload.

Introduction

Intracerebral hemorrhage (ICH) is bleeding into the brain parenchyma caused by primary, non-traumatic vascular rupture. In China, the annual incidence of ICH is approximately 69.6 per 100,000 people (GBD 2016 Stroke Collaborators, 2019), and the 30-day mortality rate is 40%. Only 12–39% of patients achieve long-term functional independence (Zhang et al., 2021), placing an enormous burden on society and families. Early and accurate assessment of ICH severity is therefore vital for guiding treatment decisions and predicting patients' long-term outcomes.

The larger the hematoma, the more severe the damage to brain tissue. In general, emergency intervention (intubation, ventilation, and neuromonitoring) should be applied for acute ICH (Sheng et al., 2022). Surgery should be considered when the hematoma volume exceeds 30 mL (Rodriguez-Luna et al., 2017). Accurate measurement of ICH volume is therefore essential for determining the severity of brain injury.

Computed tomography (CT) is the preferred examination for the clinical diagnosis of ICH because it is fast, convenient, and reliable. In clinical practice, ICH volume is currently measured mostly with the “Coniglobus formula” (Kwak et al., 1983). The formula approximates the hematoma as an ellipsoid and calculates its volume as V = A × B × C × 1/2, where:

- V is the ICH volume;
- A is the largest diameter of the lesion on the CT slice with the largest hematoma area;
- B is the largest width perpendicular to A on the same slice;
- C is the number of slices containing ICH multiplied by the slice thickness.

The “Coniglobus formula” is simple and fast, and its accuracy is acceptable for ellipsoid-shaped ICHs. However, most ICHs seen in clinical practice are not ellipsoidal, and some radiologists have limited experience. Both factors cause large errors in the measured ICH volume (Huttner et al., 2006; Freeman et al., 2008), introducing uncertainty into clinical decisions.

The quantitative CT method delineates the hemorrhage slice by slice on the CT image and computes its volume from that delineation. It is regarded as the gold standard for non-invasive measurement of ICH volume (Yan et al., 2013). However, it is complex and time-consuming, which makes it difficult to apply in routine clinical practice.
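Below is a minimal sketch of the Coniglobus (ABC/2) calculation. The function name and the example measurements are hypothetical. Lengths are assumed to be in centimeters, so the result is directly in milliliters (1 cm³ = 1 mL).

```python
def coniglobus_volume_ml(a_cm: float, b_cm: float, n_slices: int,
                         slice_thickness_cm: float) -> float:
    """Estimate hematoma volume with the ABC/2 (Coniglobus) formula.

    a_cm: largest lesion diameter on the slice with the largest hematoma area
    b_cm: largest width perpendicular to A on that same slice
    n_slices, slice_thickness_cm: C = number of ICH slices x slice thickness
    Lengths are in cm, so the result is in cm^3, which equals mL.
    """
    c_cm = n_slices * slice_thickness_cm
    return a_cm * b_cm * c_cm / 2.0


# Hypothetical lesion: A = 5 cm, B = 4 cm, visible on 8 slices of 0.4 cm (4.0 mm)
volume = coniglobus_volume_ml(5.0, 4.0, 8, 0.4)
print(f"{volume:.1f} mL")
```

This hypothetical lesion gives about 32 mL, just above the 30 mL threshold mentioned above. The example shows how a modest error in A, B, or C can move a threshold-based decision either way.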

Artificial intelligence, led by deep learning (DL), has developed rapidly in recent years, and DL-based segmentation methods have been widely applied to the segmentation and measurement of brain tissues (Valliani et al., 2019). Studies on ICH include the following:

- Cho et al. (2019) constructed a cascaded DL model for ICH detection and segmentation, which achieved an accuracy of only 80.19% in ICH segmentation.
- Chilamkurthy et al. (2018) developed a DL model combined with natural language processing and trained it on more than 300,000 brain CT images. They used it to identify various ICH subtypes, skull fractures, and midline shifts, with an area under the receiver operating characteristic curve (AUC) >0.9.
- Ye et al. (2019) combined the convolutional neural network (CNN) VGG-16 with a bidirectional gated recurrent unit (Bi-GRU) to form an end-to-end deep neural network (DNN). Trained and tested on 2,836 brain CT images from three hospitals, it achieved an AUC above 0.8 for identifying different ICH subtypes.
- Kuo et al. (2019) proposed an end-to-end DNN model for ICH classification and segmentation that reached near-expert performance in detecting ICH subtypes.
- Arbabshirani et al. (2018) collected approximately 50,000 brain CT images to validate a 3D CNN for ICH detection.
- Chang et al. (2020) established a CNN-based method for ICH segmentation.
- Zhao et al. (2021) applied the nnU-Net framework to the segmentation and volume calculation of ICH and peripheral edema.
- Xu et al. (2021) evaluated a Dense U-Net framework for the segmentation and quantification of ICH, extradural hemorrhage (EDH), and subdural hemorrhage (SDH).
- Rava et al. (2021) evaluated the Canon automatic stroke detection system and the automatic ICH segmentation tool in Vitrea. They assessed the system's ICH detection performance and how ICH volume affects it.
Compared with the Coniglobus formula, DL measures intracerebral hematoma volume more accurately (Lai et al., 2020; Jia et al., 2021). However, most studies of ICH segmentation have applied existing deep networks without modifying their architecture to further improve performance.

In this study, we established a large-scale dataset of ICH CT images and constructed a deep learning network (DLN), AttFocusNet, for accurate segmentation of ICH on CT images. We compared its segmentation performance with that of other typical DLNs. In both internal and external validation sets, AttFocusNet:

- outperformed the other networks in segmentation;
- measured ICH volume far more accurately than the Coniglobus formula;
- substantially reduced the workload of ICH volume measurement in clinical practice.

These results offer strong support for the clinical diagnosis of ICH.

Materials and methods

Clinical data

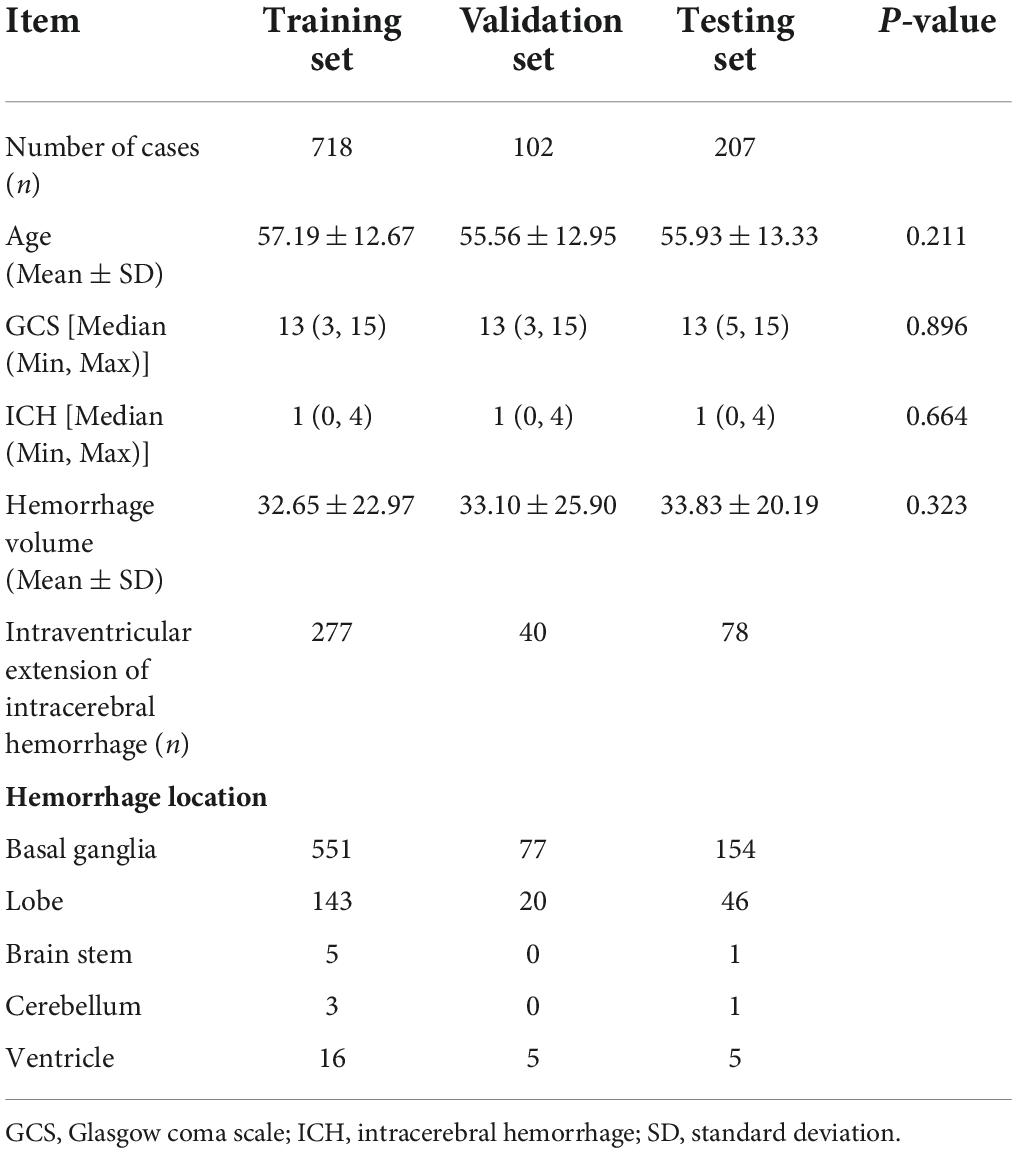

The data used in this study were head CT images of 1,027 patients with ICH. The images were acquired within 24 h of admission to the First Affiliated Hospital of Army Medical University (Southwest Hospital), with a slice thickness of 4.0 mm. All CT scans were randomly assigned to training, validation, and testing datasets at a ratio of 7:1:2.

Table 1 shows detailed statistics for each dataset. Age and hematoma volume are reported as mean ± standard deviation (SD). The Glasgow Coma Scale (GCS) score and ICH score are reported as median (minimum, maximum). ICH was located in the basal ganglia in approximately 76% of cases and in other locations, such as the lobes, in the remaining cases. When an ICH involved multiple locations, it was classified by the first location listed in the pathology report.
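A 7:1:2 random split could be implemented as below. Several details are assumptions rather than the authors' procedure: splitting by patient (so that slices of one patient never appear in two subsets), the fixed seed, and the function name `split_patients`.

```python
import random


def split_patients(patient_ids, ratios=(0.7, 0.1, 0.2), seed=0):
    """Shuffle patient IDs and cut them into train/validation/test lists."""
    ids = list(patient_ids)
    random.Random(seed).shuffle(ids)   # fixed seed for a reproducible split
    n_train = round(len(ids) * ratios[0])
    n_val = round(len(ids) * ratios[1])
    # The test set takes the remainder (ratios[2] is implied), so every
    # patient lands in exactly one subset
    return ids[:n_train], ids[n_train:n_train + n_val], ids[n_train + n_val:]


train_ids, val_ids, test_ids = split_patients(range(1027))
print(len(train_ids), len(val_ids), len(test_ids))
```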

All CT images were resampled to a 512 × 512 matrix, and slices covering the chest and abdomen were removed, leaving 40 slices per patient. The CT values of ICH fell mainly within [−60, 140] HU. To improve segmentation, the CT images were windowed to this range and then normalized before being fed into the DLN. Data collection was reviewed and approved by the Ethics Committee of the First Affiliated Hospital of Army Medical University (Southwest Hospital) (No. KY2021185).
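The windowing and normalization step can be sketched as follows. The window bounds come from the text. Linear rescaling to [0, 1] is an assumed normalization scheme because the exact method is not stated, and the function name is hypothetical.

```python
def window_and_normalize(slice_hu, lower=-60.0, upper=140.0):
    """Clip a 2D slice of CT values (HU) to [lower, upper] and scale to [0, 1]."""
    width = upper - lower
    return [[(min(max(v, lower), upper) - lower) / width for v in row]
            for row in slice_hu]


print(window_and_normalize([[-1000, -60], [40, 1000]]))
```

Values outside the window saturate at 0 or 1. Contrast within [−60, 140] HU therefore occupies the full input range of the network.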

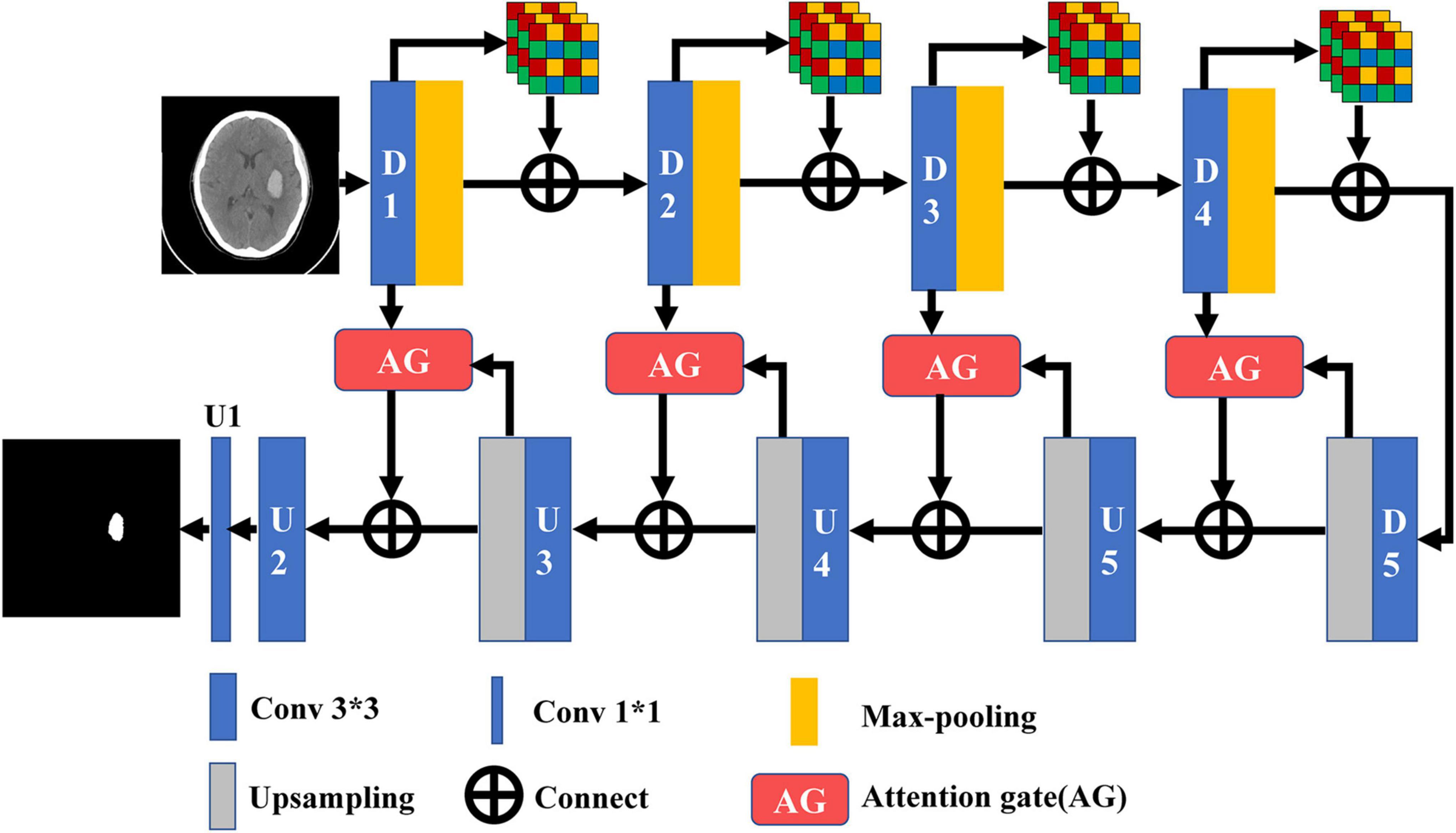

Development of the deep learning model for intracerebral hemorrhage segmentation

The attention gate (AG) is an attention mechanism proposed by Oktay et al. (2018) for three-dimensional (3D) segmentation of the pancreas on CT images. During training, the AG learns to suppress regions irrelevant to the task and to focus on task-relevant features. In this study, we implemented a two-dimensional (2D) version of the AG and combined it with U-Net to obtain a 2D segmentation network, AttUNet. Building on AttUNet, we propose AttFocusNet, a 2D segmentation network that adds the focus structure (Figure 1). CT images are input slice by slice, and an encoder containing the focus structure extracts the image features. The AG is then applied to these encoder features to produce the final ICH segmentation.

Figure 1. The structure diagram of AttFocusNet based on focus and the AG (D* and U* represent the convolutions in the encoding process and decoding process, respectively).

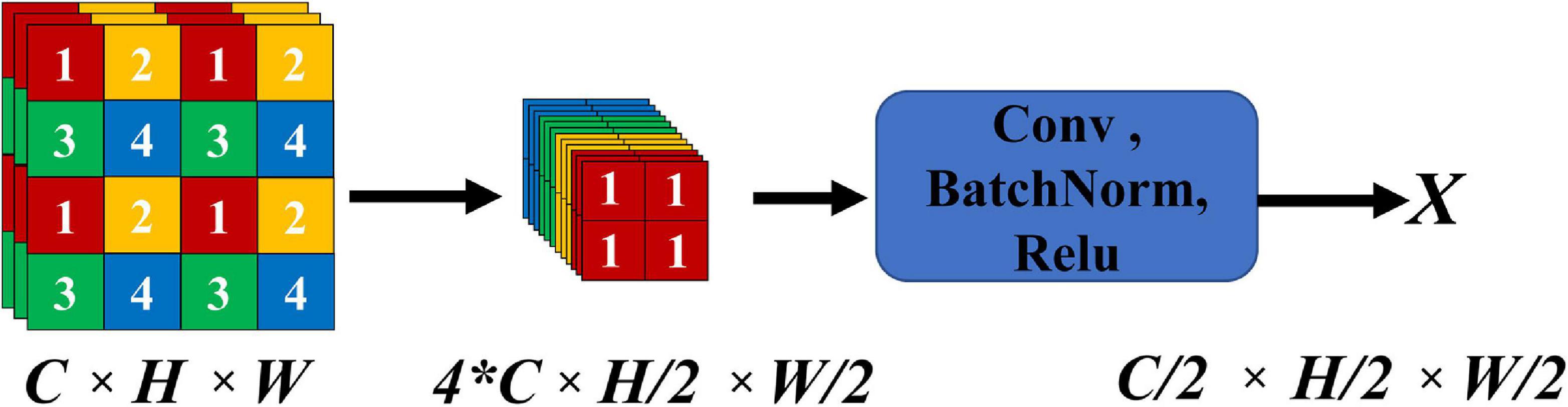

In DL-based image segmentation, encoders mostly reduce feature dimensionality through convolution and pooling. Small hemorrhage foci produce only weak signals on CT images, so they may be treated as redundant information and discarded during feature extraction.

We therefore introduce the focus structure from YOLOv5, which rearranges image information into the channel dimension before convolution. The complete, unpooled image features are fused with the features obtained after convolution and pooling, preserving the overall ICH features during encoding.

Figure 2 shows the focus structure, where C, H, and W denote the number of channels, height, and width, respectively. A feature map with C channels is sampled with a stride of 2, distributing its features evenly into 4C channels. A convolution then outputs a feature map with C/2 channels.
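The slicing step of the focus structure is a space-to-depth rearrangement. The pure-Python sketch below applies it to a nested-list feature map of shape C × H × W; the function name `focus_slice` is hypothetical. YOLOv5's Focus layer performs the same slicing on tensors as `torch.cat([x[..., ::2, ::2], x[..., 1::2, ::2], x[..., ::2, 1::2], x[..., 1::2, 1::2]], 1)` before its convolution, which is omitted here.

```python
def focus_slice(feature_map):
    """Space-to-depth slicing used by a Focus-style layer.

    feature_map: nested list of shape C x H x W (H and W even).
    Returns a nested list of shape 4C x H/2 x W/2 in which every input value
    appears exactly once, so nothing is discarded (unlike pooling).
    """
    offsets = [(0, 0), (1, 0), (0, 1), (1, 1)]  # (row offset, column offset)
    out = []
    for dr, dc in offsets:
        for channel in feature_map:
            # take every second row starting at dr, every second column at dc
            out.append([row[dc::2] for row in channel[dr::2]])
    return out


x = [[[1, 2, 3, 4],
      [5, 6, 7, 8],
      [9, 10, 11, 12],
      [13, 14, 15, 16]]]  # C = 1, H = W = 4
y = focus_slice(x)        # 4 channels of size 2 x 2
```

Each 2 × 2 neighborhood is spread across four channels rather than summarized by a single pooled value. As a result, small foci are not averaged away at this stage.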

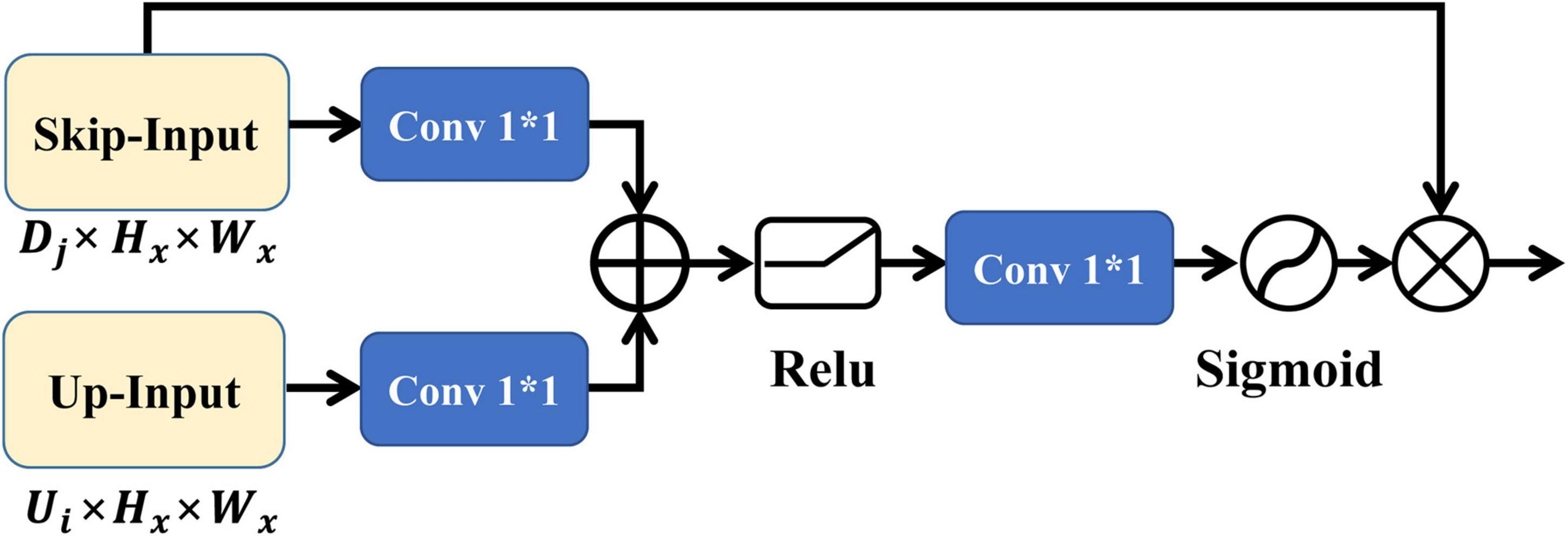

The output of the focus structure contains redundant low-level features. The AG suppresses these effectively, so the network captures the features most relevant to the task. The 2D implementation of the AG is shown in Figure 3. Its two inputs, skip-input and up-input, have the same feature size:

- up-input is the upsampled feature from decoder stage Ui;
- skip-input is the feature from the encoder stage Dj corresponding to Ui.

After convolution, up-input and skip-input are fused, passed through the rectified linear unit (ReLU) activation function, and convolved again. Finally, the attention coefficients produced by the sigmoid activation function are applied to skip-input to obtain the output of the 2D AG.
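The gating step can be illustrated at a single pixel, where a 1 × 1 convolution reduces to a matrix-vector product. This sketch follows the additive attention gate of Oktay et al. (2018), in which the transformed skip and gating features are summed. The text describes the fusion step only as feature splicing. The summation, the weight shapes, and the function name `attention_gate_pixel` are therefore assumptions, not the authors' implementation.

```python
import math


def attention_gate_pixel(skip, gate, w_x, w_g, psi, b=0.0):
    """Additive attention gate at one pixel (sketch after Oktay et al., 2018).

    skip: skip-input feature vector at this pixel (length Cx)
    gate: up-input feature vector at this pixel (length Cg)
    w_x, w_g: 1x1-conv weights as matrices of shape Cint x Cx and Cint x Cg
    psi, b: 1x1-conv weights and bias mapping Cint -> 1
    Returns (gated skip features, attention coefficient alpha).
    """
    hidden = []
    for row_x, row_g in zip(w_x, w_g):
        z = (sum(w * s for w, s in zip(row_x, skip))
             + sum(w * g for w, g in zip(row_g, gate)))
        hidden.append(max(z, 0.0))                   # ReLU
    logit = sum(p * h for p, h in zip(psi, hidden)) + b
    alpha = 1.0 / (1.0 + math.exp(-logit))           # sigmoid -> (0, 1)
    return [alpha * s for s in skip], alpha          # reweight skip-input


out, alpha = attention_gate_pixel([1.0, 2.0], [0.5],
                                  [[1.0, 0.0], [0.0, 1.0]], [[1.0], [1.0]],
                                  [1.0, 1.0])
```

A coefficient near 1 passes the skip features through almost unchanged, while a coefficient near 0 suppresses them.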

AttFocusNet was implemented in the PyTorch DL framework and trained with the RMSprop optimizer on a workstation with an NVIDIA Quadro RTX 5000 GPU (16 GB memory). The initial learning rate, number of epochs, and batch size were set to 0.00001, 40, and 2, respectively. The learning rate was adjusted with a conditional trigger strategy, which was activated when the model failed to converge for two consecutive epochs.
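The conditional trigger strategy is described only briefly, and the sketch below shows one plausible reading. It assumes that "failed to converge" means the monitored loss did not decrease, and that the learning rate is multiplied by 0.1 when the rule fires. The class name `PlateauLR` and the factor are assumptions. PyTorch's built-in `torch.optim.lr_scheduler.ReduceLROnPlateau` (with `mode`, `factor`, and `patience` arguments) implements a comparable rule. Note that its `patience` counts the epochs without improvement that are tolerated before reducing.

```python
class PlateauLR:
    """Multiply the learning rate by `factor` after `patience` consecutive
    epochs in which the monitored loss did not improve on its best value."""

    def __init__(self, lr=1e-5, factor=0.1, patience=2):
        self.lr = lr                # initial learning rate from the text
        self.factor = factor        # assumed reduction factor
        self.patience = patience    # "two consecutive epochs"
        self.best = float("inf")
        self.bad_epochs = 0

    def step(self, loss):
        if loss < self.best:
            self.best = loss
            self.bad_epochs = 0
        else:
            self.bad_epochs += 1
            if self.bad_epochs >= self.patience:
                self.lr *= self.factor
                self.bad_epochs = 0  # restart the count after a reduction
        return self.lr


sched = PlateauLR()
for epoch_loss in [0.50, 0.42, 0.43, 0.44, 0.40]:
    lr = sched.step(epoch_loss)
print(lr)
```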

Volume measurement

Manual segmentations of CT slices by neurosurgeons were taken as the ground truth (GT) for evaluating the segmentation performance of the deep models. Each CT slice was segmented in the Mimics software by a junior neurosurgeon with 7 years of experience. A senior neurosurgeon with 20 years of experience then corrected the result. The voxel spacings X and Y (horizontal and vertical directions) and the slice thickness T can be read from the Digital Imaging and Communications in Medicine (DICOM) file header of the CT images. Once the GT of all slices was obtained, the ICH volume of each patient was calculated as follows:

$$V=\sum_{i=1}^{L} W_i \cdot X \cdot Y \cdot T$$

where $W_i$ is the number of ICH voxels in the $i$-th slice ($1 \le i \le L$) and $L$ is the total number of slices in the CT scan. With X, Y, and T in mm, V is in mm³; dividing by 1,000 gives mL. The volume calculated from the GT masks of all slices is taken as the ground-truth ICH volume (V-GT).
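The volume formula can be sketched as follows. The sketch assumes that each slice's GT mask is a 2D list of 0/1 values and that X, Y, and T have already been read from the DICOM header in millimeters. With pydicom, for example, these values usually come from the `PixelSpacing` and `SliceThickness` attributes. The function name is hypothetical.

```python
def ich_volume_ml(masks, x_mm, y_mm, t_mm):
    """V = sum_i W_i * X * Y * T, returned in mL.

    masks: one 2D binary mask (list of lists of 0/1) per slice.
    x_mm, y_mm: in-plane pixel spacing; t_mm: slice thickness (all in mm).
    """
    voxel_mm3 = x_mm * y_mm * t_mm
    # W_i = number of ICH pixels in slice i, summed over all L slices
    total_voxels = sum(sum(sum(row) for row in mask) for mask in masks)
    return total_voxels * voxel_mm3 / 1000.0  # 1 mL = 1000 mm^3


# Hypothetical two-slice example: 3 + 2 ICH pixels, 0.5 x 0.5 x 4.0 mm voxels
masks = [[[0, 1, 1], [0, 1, 0]],
         [[1, 0, 0], [0, 0, 1]]]
print(ich_volume_ml(masks, 0.5, 0.5, 4.0))
```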

Evaluation of performance

ICH segmentation performance was evaluated using Dice, intersection-over-union (IoU), sensitivity, positive predictive value (PPV), and the 95% Hausdorff distance (HD95). Let:

- true positive (TP) be the number of ICH pixels correctly predicted as ICH;
- false positive (FP) be the number of non-ICH pixels incorrectly predicted as ICH;
- false negative (FN) be the number of ICH pixels incorrectly predicted as non-ICH.

The metrics are then calculated as follows:

$$\mathrm{Dice}=\frac{2TP}{2TP+FP+FN}$$

$$\mathrm{IoU}=\frac{TP}{TP+FP+FN}$$

$$\mathrm{Sensitivity}=\frac{TP}{TP+FN}$$

$$\mathrm{PPV}=\frac{TP}{TP+FP}$$
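The following is a minimal sketch of the four overlap metrics. It assumes the predicted and ground-truth masks are flattened into equal-length lists of 0/1 values; the function name `overlap_metrics` is hypothetical. The text does not say how empty masks were handled, so returning NaN when a denominator is zero is also an assumption.

```python
def _ratio(num, den):
    """Safe division: NaN when the denominator is zero (e.g. empty masks)."""
    return num / den if den else float("nan")


def overlap_metrics(pred, gt):
    """Dice, IoU, sensitivity and PPV from two flattened binary masks."""
    tp = sum(1 for p, g in zip(pred, gt) if p and g)
    fp = sum(1 for p, g in zip(pred, gt) if p and not g)
    fn = sum(1 for p, g in zip(pred, gt) if g and not p)
    return {
        "dice": _ratio(2 * tp, 2 * tp + fp + fn),
        "iou": _ratio(tp, tp + fp + fn),
        "sensitivity": _ratio(tp, tp + fn),
        "ppv": _ratio(tp, tp + fp),
    }


# Hypothetical 5-pixel masks: TP = 2, FP = 1, FN = 1
print(overlap_metrics([1, 1, 1, 0, 0], [1, 1, 0, 1, 0]))
```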

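The Hausdorff distance (HD) measures the distance between the boundary (surface) point sets of the ground truth and the prediction. Let G denote the set of ground-truth ICH boundary points and P the set of predicted ICH boundary points. HD is calculated as follows:

$$\mathrm{HD}(G,P)=\max\{h(G,P),\,h(P,G)\}$$

where

$$h(G,P)=\max_{g\in G}\min_{p\in P}\lVert g-p\rVert,\qquad h(P,G)=\max_{p\in P}\min_{g\in G}\lVert p-g\rVert$$

Because the maximum is sensitive to a few outlying points, HD95 is used in practice. It replaces the maximum with the 95th percentile of the boundary distances, eliminating the influence of a very small subset of outliers.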
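Below is a minimal sketch of HD and HD95 on boundary point sets. It assumes the points are coordinate tuples already scaled to millimeters by the pixel spacing. Several HD95 conventions exist. This one pools the nearest-neighbor distances from both directions and takes their 95th percentile with linear interpolation, as `numpy.percentile` does by default. Whether the authors used this exact convention is not stated, and the function names are hypothetical.

```python
import math


def _nearest_distances(src, dst):
    """Distance from every point in src to its nearest point in dst (brute force)."""
    return [min(math.dist(p, q) for q in dst) for p in src]


def _percentile(values, q):
    """q-th percentile with linear interpolation between sorted values."""
    s = sorted(values)
    pos = (len(s) - 1) * q / 100.0
    lo, hi = math.floor(pos), math.ceil(pos)
    return s[lo] + (s[hi] - s[lo]) * (pos - lo)


def hausdorff(g_points, p_points):
    """Classic HD: the larger of the directed distances h(G,P) and h(P,G)."""
    return max(max(_nearest_distances(g_points, p_points)),
               max(_nearest_distances(p_points, g_points)))


def hd95(g_points, p_points):
    """95th percentile of the pooled nearest-neighbor distances (both directions)."""
    d = _nearest_distances(g_points, p_points) + _nearest_distances(p_points, g_points)
    return _percentile(d, 95)


# Hypothetical boundary points in mm; the prediction has one outlying point
G = [(0.0, 0.0), (0.0, 1.0), (1.0, 0.0), (1.0, 1.0)]
P = G + [(10.0, 0.0)]
print(hausdorff(G, P), hd95(G, P))
```

Here a single outlying predicted point sets HD to 9 mm, but HD95 is only about 5.4 mm. With realistic contours containing many more points, a few outliers fall above the 95th percentile and have little effect. The brute-force search is quadratic in the number of points, which is acceptable for a sketch.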

Results

Metrics evaluation

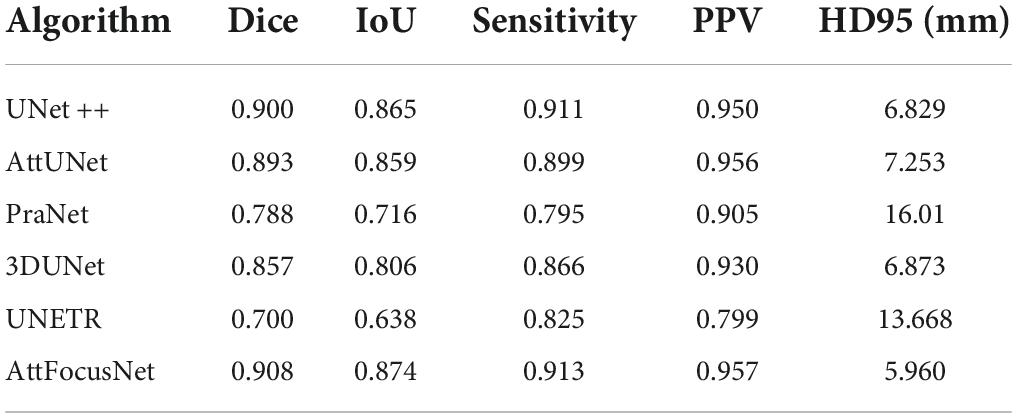

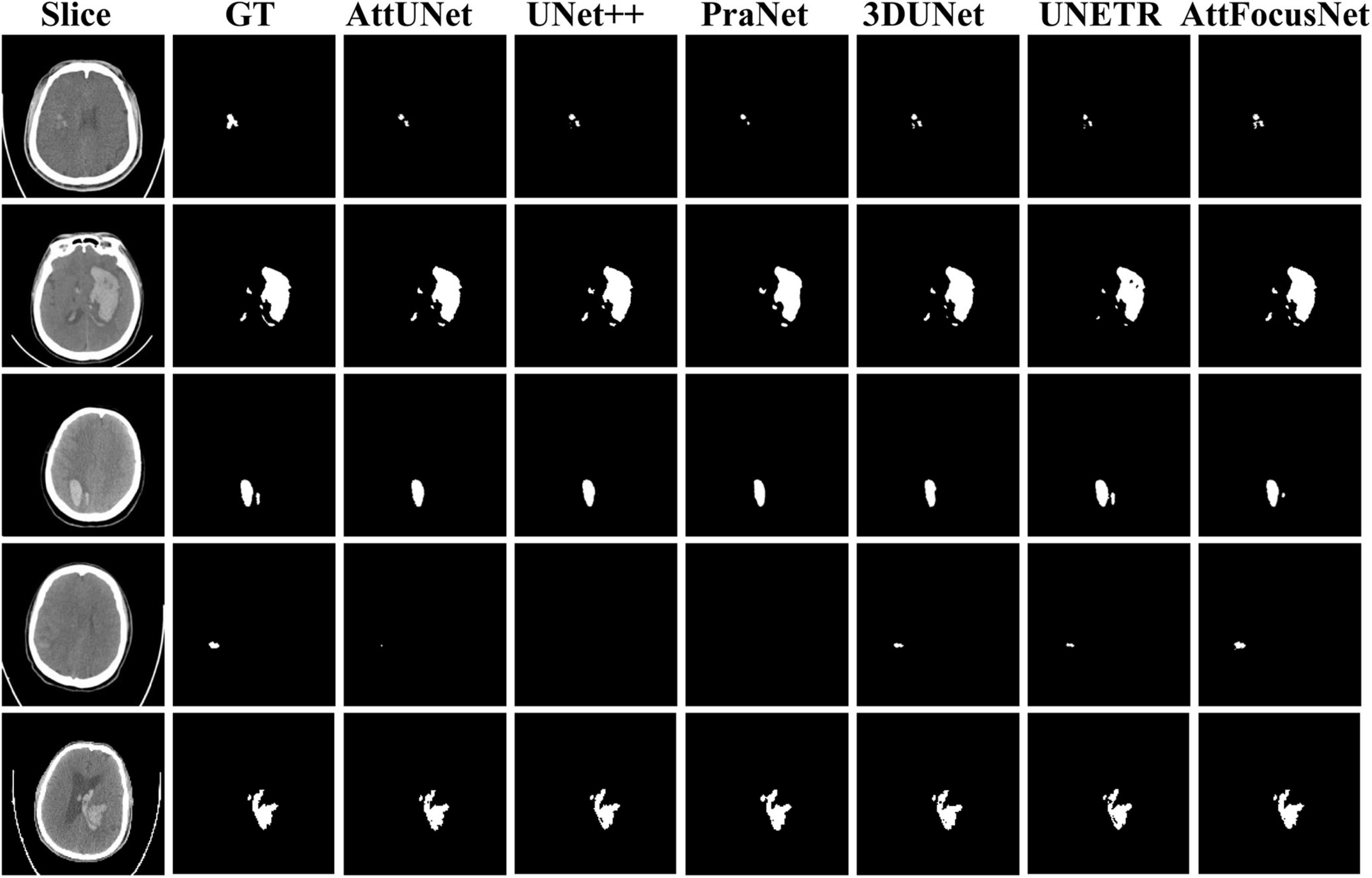

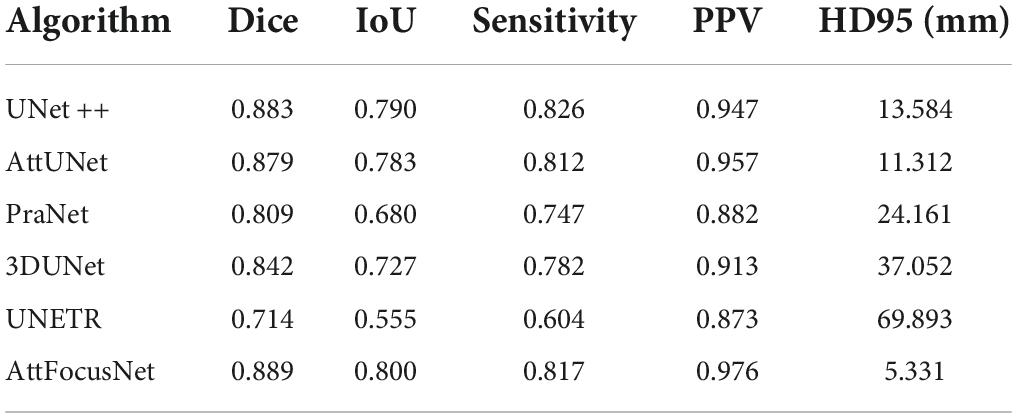

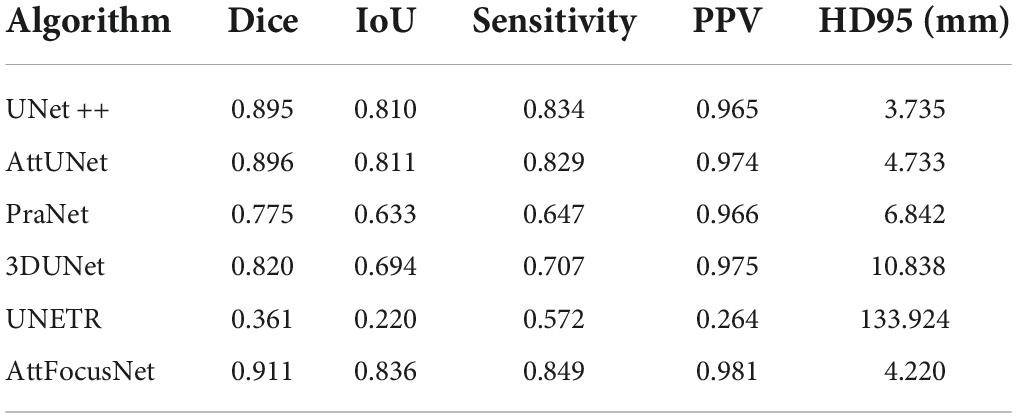

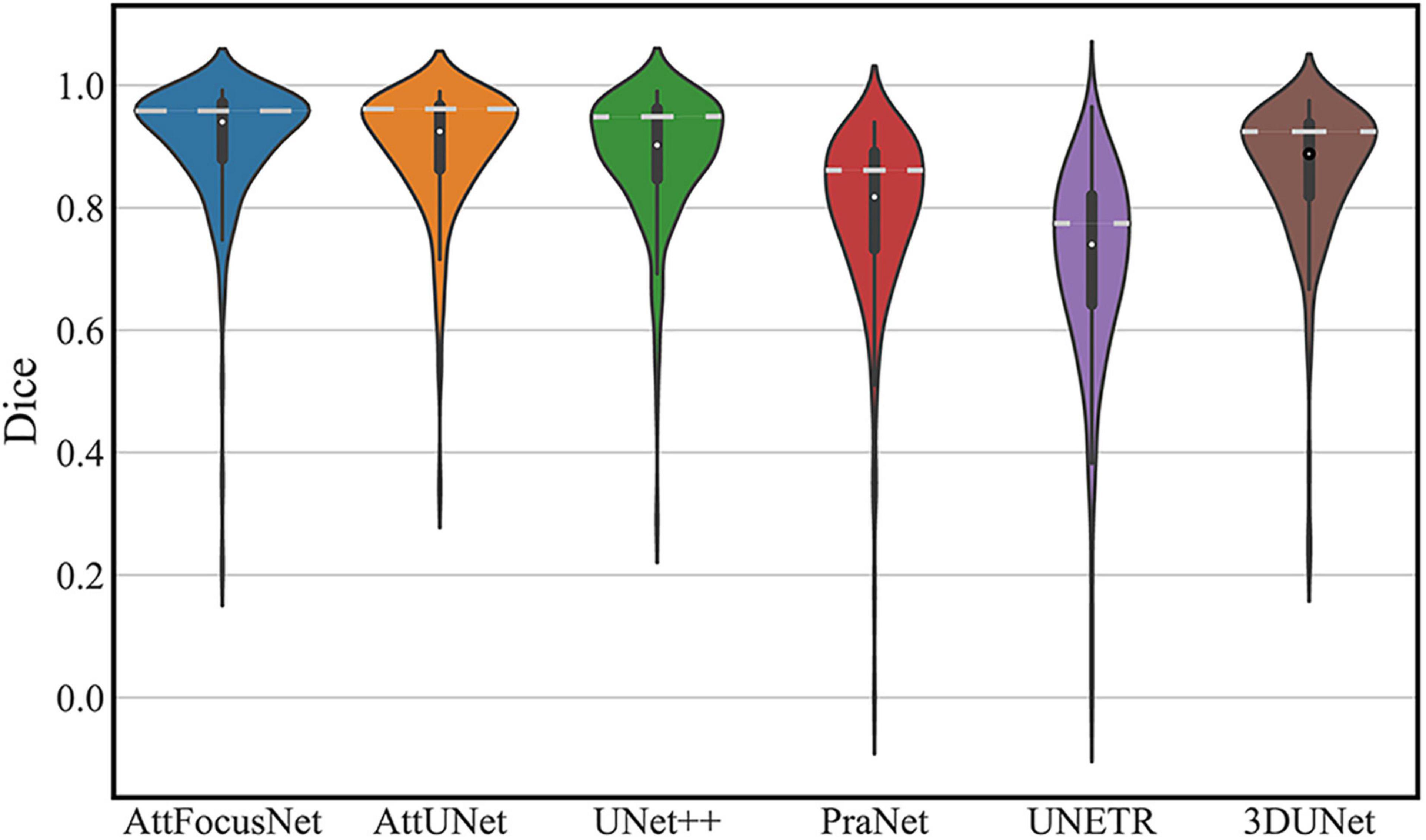

AttFocusNet was compared with UNet++ (Zhou et al., 2020), AttUNet, PraNet (Fan et al., 2020), 3D U-Net (Çiçek et al., 2016), and UNETR (Hatamizadeh et al., 2022) in terms of segmentation performance (Table 2). AttFocusNet showed the best overall performance. It outperformed the other networks in Dice, IoU, sensitivity, PPV, and, most prominently, HD95, demonstrating its effectiveness.

The segmentation performance of each network on the testing dataset is shown in a violin plot (Figure 4), where the width between the left and right peaks (as shown by the white dashed lines) indicates the density of the data distribution.

Figure 4. Distribution of the segmentation performance of different deep models (White dots, squares, vertical lines, and peaks represent the median, interquartile range, 95% confidence interval, and data density distribution, respectively).

Segmentation effect

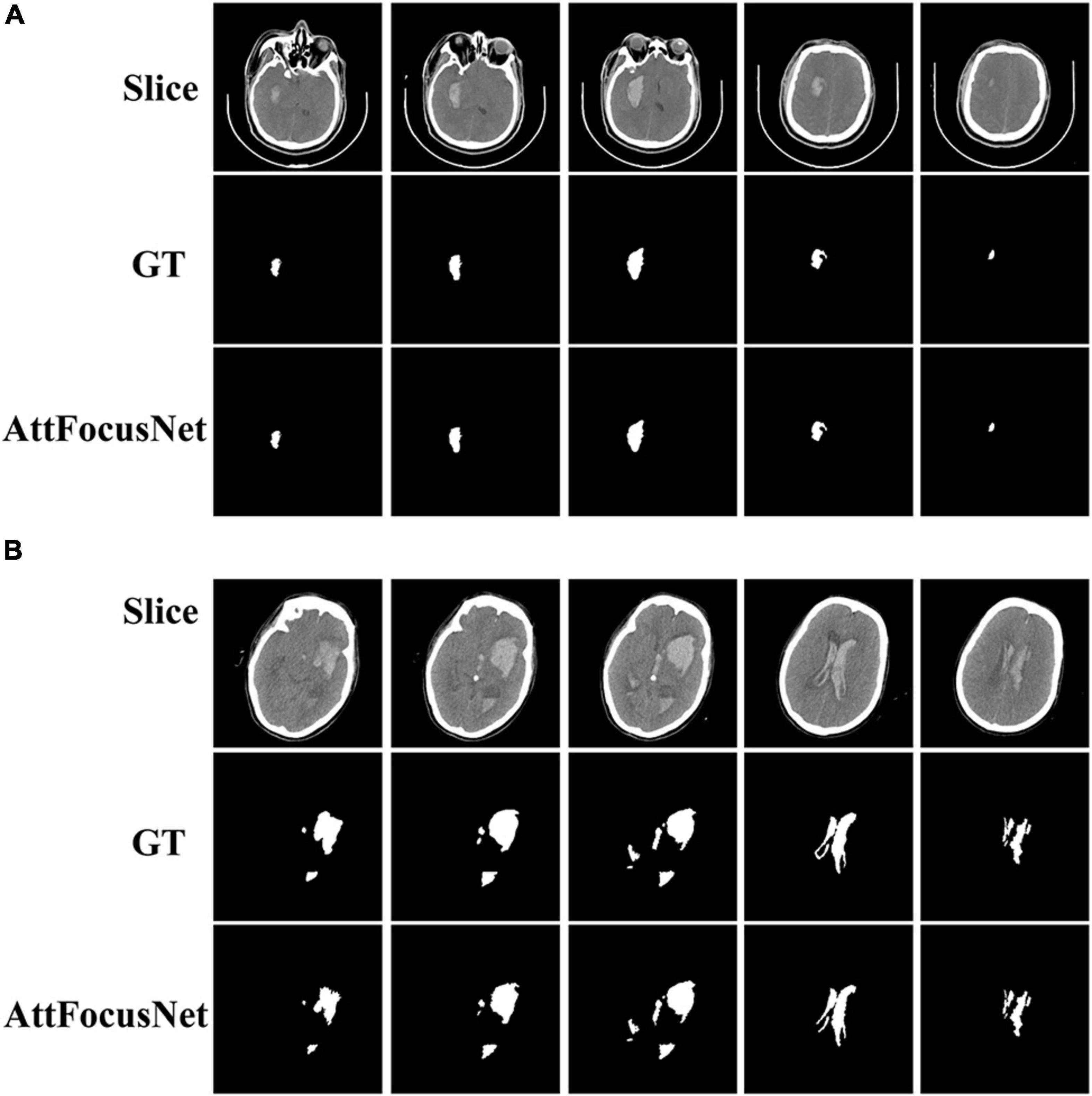

Figure 5 shows segmentation results from the different methods. The first row shows the original CT images of different slices, and the second row shows the corresponding GT for each slice. Rows 3–8 show the segmentation results of the different methods.

Figure 6 presents CT images and segmentation results for two patients whose ICH volumes, as measured by AttFocusNet and by the Coniglobus formula, differed by 1.86 and 74.05 mL, respectively.

Figure 6. Computed tomography (CT) images and segmentation results corresponding to the difference in volume measurements between AttFocusNet and the Coniglobus formula. (A) A difference of 1.86 mL between volume measurements. (B) A difference of 74.05 mL between volume measurements.

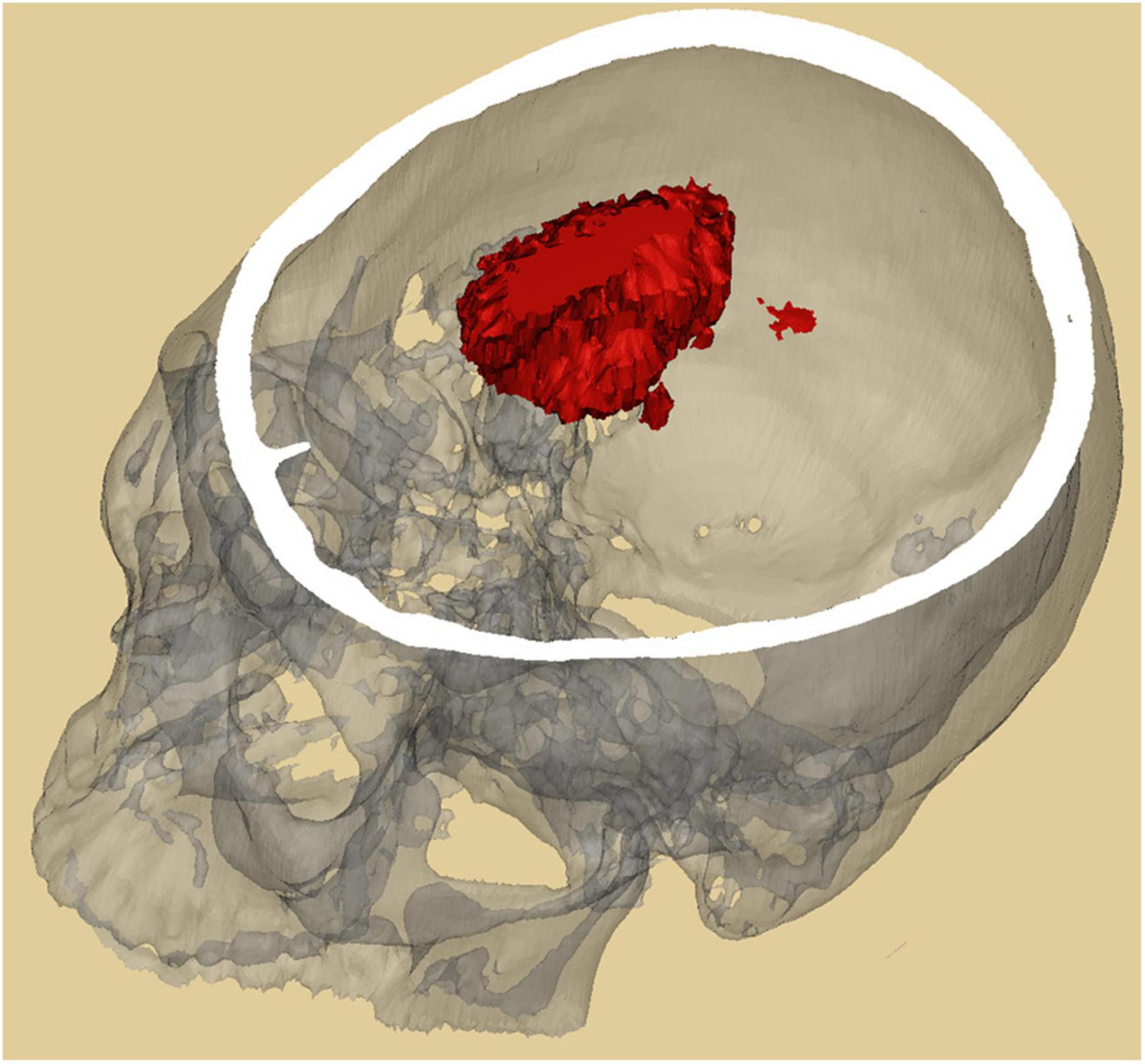

The segmentation results obtained by AttFocusNet were used to generate the 3D visualization of the ICH via Mimics software, as shown in Figure 7.

Consistency evaluation

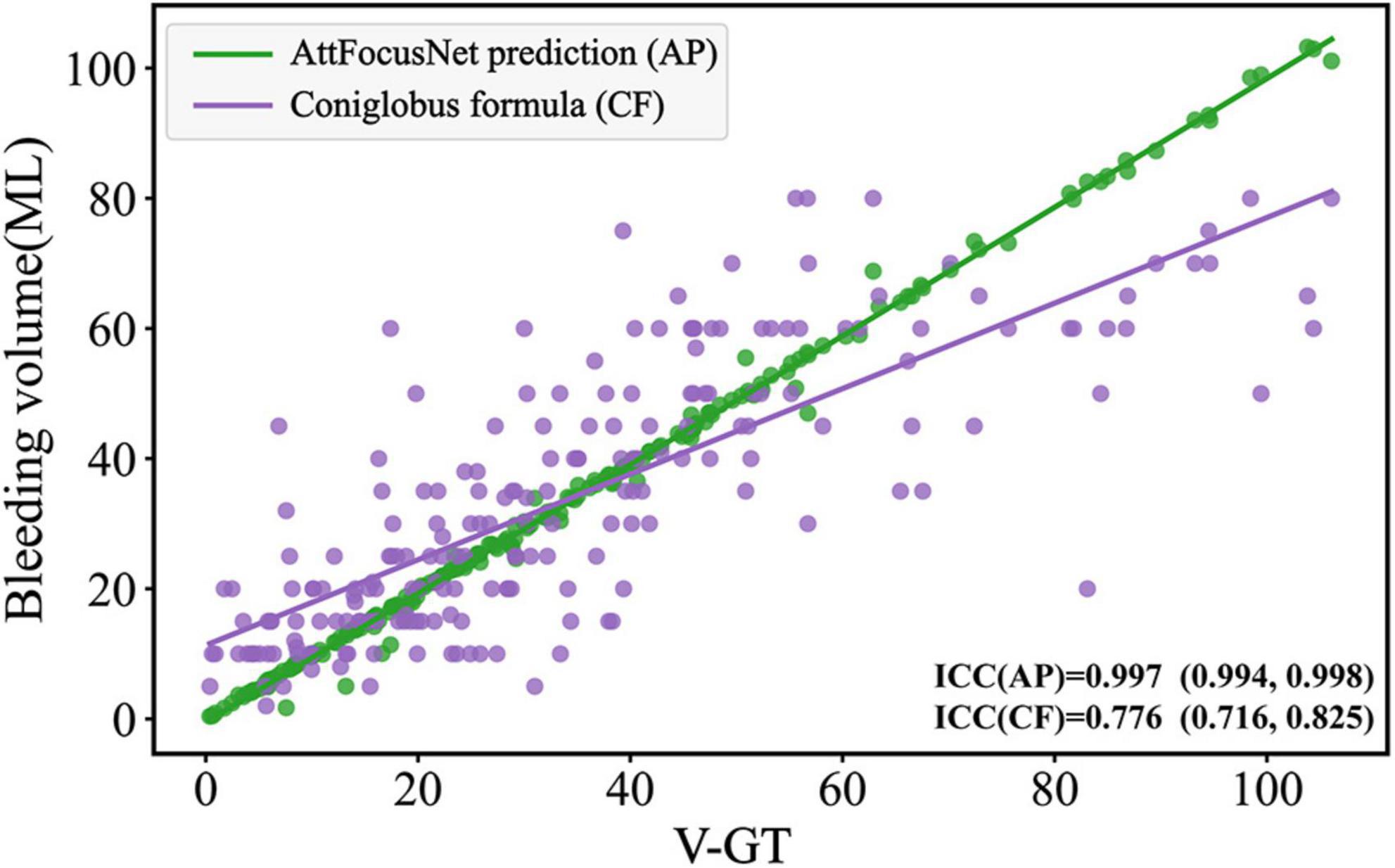

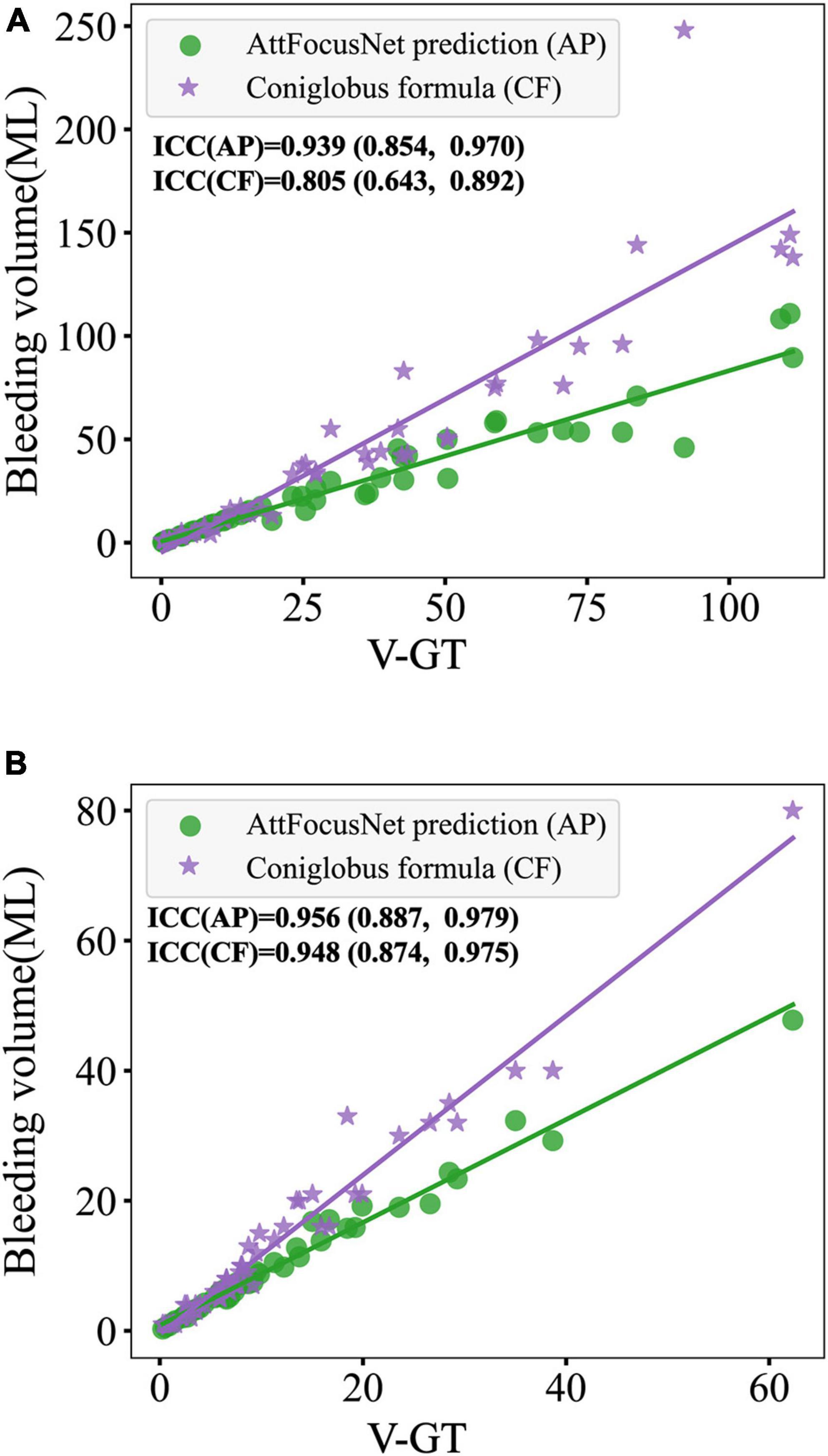

The consistency of the different methods was evaluated by linear regression (Figure 8). The x-axis represents V-GT, and the y-axis represents the ICH volume measured by each method.

External validation

To further evaluate AttFocusNet, we selected CT images of 50 patients from each of two datasets for external validation: CQ500 (Chilamkurthy et al., 2018) and RSNA2019 (Flanders et al., 2020). The results are shown in Tables 3, 4. AttFocusNet outperformed the other deep models on most metrics, further demonstrating its effectiveness.

As for the internal data (Figure 8), the consistency of the different methods in the external validation sets was evaluated by linear regression (Figure 9). On CQ500 and RSNA2019, the ICCs of ICH volume measurement between AttFocusNet and the ground truth were 0.939 and 0.956, respectively. The corresponding ICCs between the Coniglobus formula and the ground truth were 0.805 and 0.948.

Discussion

A major objective of our study was to segment the ICH area accurately from CT scans using DL, as the basis for accurate volume calculation. We constructed a large-scale dataset of ICH CT scans, which is essential for the segmentation performance of the DL model. The proposed AttFocusNet combines the focus structure with AttUNet. It outperformed the other five DL models on all five metrics, especially HD95, demonstrating its effectiveness for ICH segmentation.

AttFocusNet segments ICH from CT scans slice by slice and performed significantly better than the 3D DL models in overall ICH segmentation. Training a 3D segmentation model is considerably harder than training a 2D model, and 3D models usually segment less efficiently. The slice-by-slice mode of AttFocusNet is therefore a good choice for clinical ICH volume measurement.

As shown in Figure 4, the Dice coefficients of both AttUNet and UNet++ were distributed between 0.8 and 1.0. However, AttUNet's considerably broader width indicated better overall performance on the testing dataset. The Dice coefficients of PraNet, 3D U-Net, and UNETR lay mainly between 0.7 and 0.9 with a narrow width, indicating poorer segmentation performance. AttFocusNet performed best, with Dice coefficients close to 1.0 and the broadest width, indicating excellent segmentation performance.

As shown in Figure 5, AttFocusNet consistently achieved the best segmentation among the evaluated DL models. This held whether the bleeding area was large or small and whether its shape was ellipsoidal or not, providing a sound basis for calculating ICH volume.

When the ICH was roughly ellipsoidal (Figure 6A), the Coniglobus formula and AttFocusNet gave similar volumes. When the ICH was highly non-ellipsoidal (Figure 6B), the two measurements differed considerably, and the Coniglobus formula was less accurate. These results are consistent with the ellipsoid assumption underlying the Coniglobus formula. Indeed, for non-ellipsoidal ICHs, comparing AttFocusNet with the Coniglobus formula essentially means validating it against an inaccurate method. Because most ICHs are non-ellipsoidal, our method has good prospects for clinical application.

The ICH score (Hemphill et al., 2001) is a strong predictor of 30-day mortality. It comprises five independent components: GCS score, age, ICH volume, intraventricular hemorrhage (IVH), and ICH origin (infratentorial or supratentorial). Apart from the GCS score, which must be evaluated by clinicians, this information can be obtained from CT scans. AttFocusNet measures ICH volume accurately regardless of hematoma shape, so it could help determine the ICH score accurately.

Based on the segmentation results, a 3D model of the ICH can easily be built in Mimics for visualization (Figure 7). 3D visualization lets clinicians observe and analyze the ICH comprehensively from a three-dimensional perspective. This can help them design accurate treatment plans and make fuller use of medical imaging.
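The ICH score of Hemphill et al. (2001) assigns points as follows, for a total of 0–6:

| Component | Points |
| --- | --- |
| GCS 3–4 | 2 |
| GCS 5–12 | 1 |
| GCS 13–15 | 0 |
| ICH volume ≥30 mL | 1 |
| IVH present | 1 |
| Infratentorial origin | 1 |
| Age ≥80 years | 1 |

The sketch below shows where an automatically measured volume would enter the calculation; the function name is hypothetical.

```python
def ich_score(gcs: int, volume_ml: float, ivh: bool,
              infratentorial: bool, age: int) -> int:
    """ICH score (0-6) following the point scheme of Hemphill et al. (2001)."""
    if not 3 <= gcs <= 15:
        raise ValueError("GCS must be between 3 and 15")
    score = 2 if gcs <= 4 else (1 if gcs <= 12 else 0)
    score += 1 if volume_ml >= 30 else 0   # volume could come from segmentation
    score += 1 if ivh else 0
    score += 1 if infratentorial else 0
    score += 1 if age >= 80 else 0
    return score


# Hypothetical patient: GCS 10, 35 mL supratentorial ICH with IVH, age 70
print(ich_score(10, 35.0, True, False, 70))
```

Because the volume criterion is a hard threshold, an error near 30 mL changes the score by one point. This is one place where measurement accuracy directly affects the grading.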

In the linear regression analysis, the volumes measured by the Coniglobus formula were widely scattered, with many outliers and poor consistency with V-GT. The intraclass correlation coefficient (ICC) was 0.776, indicating weak agreement between the Coniglobus formula and V-GT. In contrast, the volumes measured by AttFocusNet fit closely, with few outliers and high consistency with V-GT. The ICC was 0.997, indicating strong agreement between AttFocusNet and V-GT.
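The text does not state which ICC form was used. As an illustration, the sketch below computes ICC(A,1): two-way model, absolute agreement, single measurement, in McGraw and Wong's notation. This is one common choice when comparing a measurement method with a reference. The function name `icc_a1` and the input layout are assumptions.

```python
def icc_a1(data):
    """ICC(A,1): two-way model, absolute agreement, single measurement.

    data: one row per patient; each row holds the k measurements being
    compared, e.g. [V_GT, V_method]. Requires n >= 2 patients and k >= 2.
    """
    n, k = len(data), len(data[0])
    grand = sum(sum(row) for row in data) / (n * k)
    row_means = [sum(row) / k for row in data]                       # per patient
    col_means = [sum(row[j] for row in data) / n for j in range(k)]  # per method
    ss_rows = k * sum((m - grand) ** 2 for m in row_means)
    ss_cols = n * sum((m - grand) ** 2 for m in col_means)
    ss_total = sum((x - grand) ** 2 for row in data for x in row)
    ss_error = ss_total - ss_rows - ss_cols
    ms_rows = ss_rows / (n - 1)
    ms_cols = ss_cols / (k - 1)
    ms_error = ss_error / ((n - 1) * (k - 1))
    return (ms_rows - ms_error) / (
        ms_rows + (k - 1) * ms_error + k * (ms_cols - ms_error) / n
    )


# Hypothetical volumes (mL): [V-GT, method]
agree = [[10.0, 10.0], [20.0, 20.0], [30.0, 30.0]]
offset = [[10.0, 12.0], [20.0, 22.0], [30.0, 32.0]]
print(round(icc_a1(agree), 3), round(icc_a1(offset), 3))
```

In the example, a constant 2 mL offset leaves the ranking of patients unchanged but still lowers the absolute-agreement ICC below 1. The agreement form therefore matters when a method systematically over- or underestimates volume.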

In terms of efficiency, AttFocusNet took approximately 5.6 s per patient for automatic segmentation and volume analysis. By contrast, the Coniglobus formula required 47.7 s and manual segmentation by a neurosurgeon (in Mimics) required 170.1 s per patient on average. AttFocusNet can therefore reduce the clinical workload significantly while keeping ICH volume measurement highly accurate. Once the ICH segmentation is obtained, the ICH volume can be calculated accurately. In the consistency evaluation, the volume calculated by AttFocusNet was closer to V-GT than that from the Coniglobus formula, further confirming the effectiveness of the segmentation algorithm.

As in the internal validation, AttFocusNet outperformed the other deep models on most metrics in the external validation, indicating better generalization. The ICC values also show that ICH volumes from AttFocusNet agreed better with V-GT than those from the Coniglobus formula, further supporting the effectiveness of the proposed network.

Our work has several limitations. First, the included hematoma subtypes were limited to ICH and IVH. Other subtypes, including extradural hemorrhage (EDH), subdural hemorrhage (SDH), and subarachnoid hemorrhage (SAH), were excluded. Patients under 18 years of age were also excluded, which may limit the generalizability of our model. Second, the ground-truth masks were not based on a consensus of multiple independent experts. Such a consensus would reduce errors caused by fatigue, technical overload, lack of concentration, and other factors.

In conclusion, AttFocusNet provides automatic segmentation and volume measurement of ICH. It achieved high accuracy in ICH volume measurement regardless of ICH shape and significantly reduced the clinical workload, making it a promising approach for clinical practice. Transformers are increasingly used for feature extraction from 3D data in DLNs, and combining AttFocusNet with a Transformer may further improve segmentation performance in the future.

Data availability statement

The original contributions presented in this study are included in the article/supplementary material; further inquiries can be directed to the corresponding authors.

Ethics statement

This study was approved by the Ethics Committee of First Affiliated Hospital of Army Medical University (Southwest Hospital) (No. KY2021185). Written informed consent was not required for the study on human participants in accordance with the local legislation and institutional requirements.

Author contributions

YN, RH, and HF are responsible for the design of the study and review of the manuscript. QP and CZ contributed to data collection. XC and JL contributed to data analysis. QP, XC, and YN contributed to manuscript preparation. YW, WL, and TS contributed to manuscript revision. All authors have read and approved the final manuscript.

Funding

This study was supported by the Program for Innovation Research Group of Universities in Chongqing (No. CXQT19012), Undergraduate Scientific Research Training Program of Army Medical University (Third Military Medical University) (No. 2021XBK13), and the Key Projects of Chongqing Natural Science Foundation (No. cstc2020jcyj-zdxmX0025).

Acknowledgments

We thank the clinicians in the Department of Neurosurgery of the First Affiliated Hospital of Army Medical University (Southwest Hospital) for their support and assistance with data collection. We also thank everyone who contributed to this study.

Conflict of interest

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Publisher’s note

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

References

Arbabshirani, M. R., Fornwalt, B. K., Mongelluzzo, G. J., Suever, J. D., Geise, B. D., Patel, A. A., et al. (2018). Advanced machine learning in action: Identification of intracranial hemorrhage on computed tomography scans of the head with clinical workflow integration. NPJ. Digit Med. 1, 1–7. doi: 10.1038/s41746-017-0015-z

Chang, J. B., Jiang, S. Z., Chen, X. J., Luo, J. X., Li, W. L., Zhang, Q. H., et al. (2020). Consistency evaluation of an automatic segmentation for quantification of intracerebral hemorrhage using convolution neural network. Chin. J. Contemp. Neurol. Neurosurg. 20, 585–590. doi: 10.3969/j.issn.1672-6731.2020.07.005

Chilamkurthy, S., Ghosh, R., Tanamala, S., Biviji, M., Campeau, N. G., Venugopal, V. K., et al. (2018). Deep learning algorithms for detection of critical findings in head CT scans: A retrospective study. Lancet 392, 2388–2396. doi: 10.1016/S0140-6736(18)31645-3

Cho, J., Park, K. S., Karki, M., Lee, E., Ko, S., Kim, J. K., et al. (2019). Improving sensitivity on identification and delineation of intracranial hemorrhage lesion using cascaded deep learning models. J. Digit Imaging 32, 450–461. doi: 10.1007/s10278-018-00172-1

Çiçek, Ö, Abdulkadir, A., Lienkamp, S. S., Brox, T., and Ronneberger, O. (2016). “3D U-Net: Learning Dense Volumetric Segmentation from Sparse Annotation,” in Proceedings Of International Conference On Medical Image Computing And Computer-Assisted Intervention, (Berlin) 424–432. doi: 10.1007/978-3-319-46723-8_49

Fan, D. P., Ji, G. P., Zhou, T., Chen, G., Fu, H. Z., Shen, J. B., et al. (2020). “PraNet: Parallel reverse attention network for polyp segmentation,” in Proceedings Of International Conference On Medical Image Computing And Computer-Assisted Intervention, (Cham) 263–273. doi: 10.1007/978-3-030-59725-2_26

Flanders, A. E., Prevedello, L. M., Shih, G., Halabi, S. S., Cramer, J. K., Ball, R., et al. (2020). Construction of a machine learning dataset through collaboration: The RSNA 2019 Brain CT Hemorrhage Challenge. Radiol. Artif. Intell. 2:e190211. doi: 10.1148/ryai.2020190211

Freeman, W. D., Barrett, K. M., Bestic, J. M., Meschia, J. F., Broderick, D. F., Brott, T. G., et al. (2008). Computer-assisted volumetric analysis compared with ABC/2 method for assessing warfarin-related intracranial hemorrhage volumes. Neurocritical Care 9, 307–312. doi: 10.1007/s12028-008-9089-4

GBD 2016 Stroke Collaborators. (2019). Global, regional, and national burden of stroke, 1990-2016: A systematic analysis for the Global Burden of Disease Study 2016. Lancet Neurol. 18, 439–458. doi: 10.1016/S1474-4422(18)30499-X

Hatamizadeh, A., Tang, Y. C., Nath, V., Yang, D., Myronenko, A., Landman, B., et al. (2022). “UNETR: Transformers for 3D medical image segmentation,” in Proceedings of the IEEE/CVF Winter Conference on Applications of Computer Vision, (Piscataway, NJ) 574–584.

Hemphill, J. C., Bonovich, D. C., Besmertis, L., Manley, G. T., and Johnston, S. C. (2001). The ICH score: A simple, reliable grading scale for intracerebral hemorrhage. Stroke 32, 891–897. doi: 10.1161/01.str.32.4.891

Huttner, H. B., Steiner, T., Hartmann, M., Köhrmann, M., Juettler, E., and Mueller, S. (2006). Comparison of ABC/2 estimation technique to computer-assisted planimetric analysis in warfarin-related intracerebral parenchymal hemorrhage. Stroke 37, 404–408. doi: 10.1161/01.STR.0000198806.67472.5c

Jia, Y. J., Yu, N., Yu, Y., Yang, C. B., and Ma, G. M. (2021). Accuracy of deep learning-based computer aided diagnosis system for measuring the intracranial hematoma volume. Chin. Imaging J. Integr. Tradit. West. Med. 19, 180–183. doi: 10.3969/j.issn.1672-0512.2021.02.022

Kuo, W., Häne, C., Mukherjee, P., Malik, J., and Yuh, E. L. (2019). Expert-level detection of acute intracranial hemorrhage on head computed tomography using deep learning. Proc. Natl. Acad. Sci. U.S.A. 116, 22737–22745. doi: 10.1073/pnas.1908021116

Kwak, R., Kadoya, S., and Suzuki, T. (1983). Factors affecting the prognosis in thalamic hemorrhage. Stroke 14, 493–500. doi: 10.1161/01.STR.14.4.493

Lai, J. D., Wang, N., Luo, K., Pan, N., Zhu, Y. N., Zhou, H. P., et al. (2020). Measuring volume of cerebral hemorrhage based on deep learning computer aided diagnostic system. Chin. J. Med. Imaging Technol. 36, 1781–1785. doi: 10.13929/j.issn.1003-3289.2020.12.005

Oktay, O., Schlemper, J., Folgoc, L. L., Lee, M., Heinrich, M., Misawa, K., et al. (2018). Attention U-Net: Learning where to look for the pancreas. arXiv [Preprint]. doi: 10.48550/arXiv.1804.03999

Rava, R. A., Seymour, S. E., Laque, M. E., Peterson, B. A., Snyder, K. V., Mokin, M., et al. (2021). Assessment of an artificial intelligence algorithm for detection of intracranial hemorrhage. World Neurosurg. 150, 209–217. doi: 10.1016/j.wneu.2021.02.134

Rodriguez-Luna, D., Coscojuela, P., Rodriguez-Villatoro, N., Juega, J. M., Boned, S., Muchada, M., et al. (2017). Multiphase CT angiography improves prediction of intracerebral hemorrhage expansion. Radiology 285, 932–940. doi: 10.1148/radiol.2017162839

Sheng, J. T., Chen, W. Q., Zhuang, D. Z., Li, T., Yang, J. H., Cai, S. R., et al. (2022). A clinical predictive nomogram for traumatic brain parenchyma hematoma progression. Neurol. Ther. 11, 185–203. doi: 10.1007/s40120-021-00306-8

Valliani, A. A., Ranti, D., and Oermann, E. K. (2019). Deep learning and neurology: A systematic review. Neurol. Ther. 8, 351–365. doi: 10.1007/s40120-019-00153-8

Xu, J., Zhang, R. G., Zhou, Z. J., Wu, C. X., Zhang, H. L., et al. (2021). Deep network for the automatic segmentation and quantification of intracranial hemorrhage on CT. Front. Neurosci. 14:541817. doi: 10.3389/fnins.2020.541817

Yan, J., Zhao, K. J., Sun, J. L., Yang, W. L., Qiu, Y. L., Kleinig, T., et al. (2013). Comparison between the formula 1/2ABC and 2/3Sh in intracerebral parenchyma hemorrhage. Neurol. Res. 35, 382–388. doi: 10.1179/1743132812Y.0000000141

Ye, H., Gao, F., Yin, Y., Guo, D. F., Zhao, P. F., Lu, Y., et al. (2019). Precise diagnosis of intracranial hemorrhage and subtypes using a three-dimensional joint convolutional and recurrent neural network. Eur. Radiol. 29, 6191–6201. doi: 10.1007/s00330-019-06163-2

Zhang, C., Ge, H. F., Zhang, S. X., Liu, D., Jiang, Z. Y., Lan, C., et al. (2021). Hematoma evacuation via image-guided para-corticospinal tract approach in patients with spontaneous intracerebral hemorrhage. Neurol. Ther. 10, 1001–1013. doi: 10.1007/s40120-021-00279-8

Zhao, X. J., Chen, K. X., Wu, G., Zhang, G. Y., Zhou, X., Lv, C. F., et al. (2021). Deep learning shows good reliability for automatic segmentation and volume measurement of brain hemorrhage, intraventricular extension, and peripheral edema. Eur. Radiol. 31, 5012–5020. doi: 10.1007/s00330-020-07558-2

Keywords: deep learning, intracerebral hemorrhage, computed tomography, segmentation, volume measurement

Citation: Peng Q, Chen X, Zhang C, Li W, Liu J, Shi T, Wu Y, Feng H, Nian Y and Hu R (2022) Deep learning-based computed tomography image segmentation and volume measurement of intracerebral hemorrhage. Front. Neurosci. 16:965680. doi: 10.3389/fnins.2022.965680

Received: 10 June 2022; Accepted: 06 September 2022;

Published: 03 October 2022.

Edited by:

Doris Lin, School of Medicine, Johns Hopkins Medicine, United States

Reviewed by:

Rongguo Zhang, Advanced Institute, Infervision, China

Leonardo Augusto Carbonera, Hospital Moinhos de Vento, Brazil

Copyright © 2022 Peng, Chen, Zhang, Li, Liu, Shi, Wu, Feng, Nian and Hu. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Yongjian Nian, yjnian@tmmu.edu.cn; Rong Hu, huchrong@tmmu.edu.cn

†These authors have contributed equally to this work

Qi Peng1†, Yi Wu, Hua Feng, Rong Hu