- ¹Department of Physics, University of Bucharest, Bucharest, Romania

- ²Epilepsy Monitoring Unit, Department of Neurology, Emergency University Hospital Bucharest, Bucharest, Romania

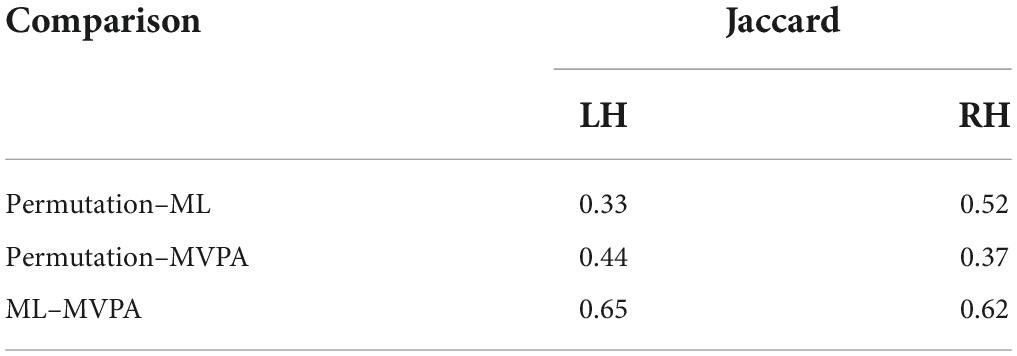

Cognitive tasks are commonly used to identify brain networks involved in the underlying cognitive process. However, inferring the brain networks from intracranial EEG data presents several challenges related to the sparse spatial sampling of the brain and the high variability of the EEG trace due to concurrent brain processes. In this manuscript, we use a well-known facial emotion recognition task to compare three different ways of analyzing the contrasts between task conditions: permutation cluster tests, machine learning (ML) classifiers, and a searchlight implementation of multivariate pattern analysis (MVPA) for intracranial sparse data recorded from 13 patients undergoing presurgical evaluation for drug-resistant epilepsy. Using all three methods, we aim to highlight the brain structures with significant contrast between conditions. In the absence of ground truth, we use the scientific literature to validate our results. The comparison of the three methods’ results shows moderate agreement, measured by the Jaccard coefficient, between the permutation cluster tests and the ML classifiers [0.33 and 0.52 for the left (LH) and right (RH) hemispheres, respectively], and between the permutation cluster tests and MVPA (0.44 for the LH and 0.37 for the RH). The agreement between ML and MVPA is higher: 0.65 for the LH and 0.62 for the RH. To put these results in context, we performed a brief review of the literature and discuss each brain structure’s involvement in the facial emotion recognition task.

Highlights

- Intracranial EEG recordings during a facial emotion recognition task.

- The first implementation of searchlight MVPA with sparse intracranial electrodes.

- Comparison of permutation cluster tests, ML classification, and searchlight MVPA.

Introduction

Our understanding of how the human brain works is currently at its peak, driven by significant technological and methodological advances. The 19th century marked the transition from non-scientific, and often superstitious, approaches to treating and diagnosing brain-related diseases to evidence-driven scientific research. The pioneering work of Paul Broca on aphasia patients resulted in the first evidence of a region in the left frontal cortex being involved in the articulation of speech, and hence supported the hypothesis of localization of brain functions (Finger, 2004). Following the same reasoning, lesion studies led to many brain functions being linked to specific brain regions (Rorden et al., 2007; Karnath et al., 2018; Vaidya et al., 2019), resulting in what we currently know as the “localizationist view.” However, a “holistic view,” which argues that brain functions are widely distributed across the cortex, emerged and gained traction in the last couple of decades, fueled by the advances in technology (fMRI, MEG, etc.) and numerical methods (multivariate pattern analysis, machine learning based decoders, etc.) (Shehzad and McCarthy, 2018; Vaidya et al., 2019).

A study on brain connectivity and the networks involved in natural vision (Di and Biswal, 2020) has shown that the intersubject variability of the brain connectivity is low for the visual areas and the default mode network, but significantly higher for widespread brain networks that include brain regions that participate in the realization of a large number of functions, like the prefrontal cortex (Miller and Cohen, 2001; Lara and Wallis, 2015; Funahashi, 2017) and the anterior temporal lobe (Tsapkini et al., 2011; Rice et al., 2015). When attempting to identify the brain networks involved in a specific cognitive task, despite using the same raw data for analysis, the analysis itself may aim to highlight correlations between the task and the activation of various brain structures in an exploratory fashion (Price, 2010; Woolnough et al., 2020), or may aim to add a predictive dimension to the analysis, like in most brain-computer interface (BCI) applications (Nicolas-Alonso and Gomez-Gil, 2012; Saha et al., 2021), to generalize and use the observations to accurately predict an outcome in prospective subjects. One of the most common misconceptions is that the exploratory analysis, if performed rigorously in a well-controlled experimental setup, can be used to make predictions on prospective subjects. However, the p-values associated with various variables of interest identified in the exploratory analysis do not measure the predictive accuracy of the model, but merely the contribution of that variable to the realization of an outcome at a certain chance level (Bzdok and Ioannidis, 2019). Moreover, the methods relying on p-values are not suitable when there are multiple strategies to perform a certain cognitive task and the strategies are all represented in the study cohort. 
For example, to compute the sum of the first n integers, one may perform a serial summation to reach the result, may apply the formula n(n + 1)/2, or, if n is small enough, may rely on mental imagery to calculate the result (Seghier and Price, 2018).

In this manuscript, we aim at identifying the brain areas that exhibit differential activation during a cognitive task. We chose a facial emotion recognition task, for which multiple theoretical models (Pessoa and Adolphs, 2010; You and Li, 2016; LeDoux and Brown, 2017) and significant evidence of different brain structures’ participation in the task already exist (Guillory and Bujarski, 2014), thus making it easier for us to interpret the results. At the same time, recent studies (Wager et al., 2015; Kragel and LaBar, 2016) have shown that the emotional brain response is widespread across the cortex, and requires a complex pattern of activation to identify a type of emotion. In our study, we chose representative methods for both exploratory and predictive types of analyses. We use three different methods for identifying condition contrasts: (1) a permutation cluster test, which is a commonly used technique for the analysis of EEG data (Maris and Oostenveld, 2007) in an exploratory fashion, (2) a machine learning (ML) classifier (Graimann et al., 2003) which was successfully used for epileptic seizure type prediction in prospective subjects (Donos et al., 2018) and in BCI applications (Steyrl et al., 2016), and (3) a searchlight multivariate pattern analysis (MVPA) (Haxby et al., 2001) which is a method that was successfully used in fMRI studies for identifying brain regions participating in a cognitive task in both exploratory (Kriegeskorte et al., 2006) and predictive type of analyses (Mitchell et al., 2008).

Although we will discuss the results of the three methods in the context of the existing literature on facial emotion recognition (Pessoa and Adolphs, 2010; Guillory and Bujarski, 2014), this manuscript does not aim to study the cognitive processes behind the facial emotion recognition task, but rather to highlight which analytical method is better suited for doing so. To support our findings, we present a brief review of the literature, showing that the brain regions deemed important for the facial emotion recognition task were indeed reported by other studies as well, in the context of emotion processing or supporting or co-occurring functions, such as the working memory or inner speech (Miendlarzewska et al., 2013).

Materials and methods

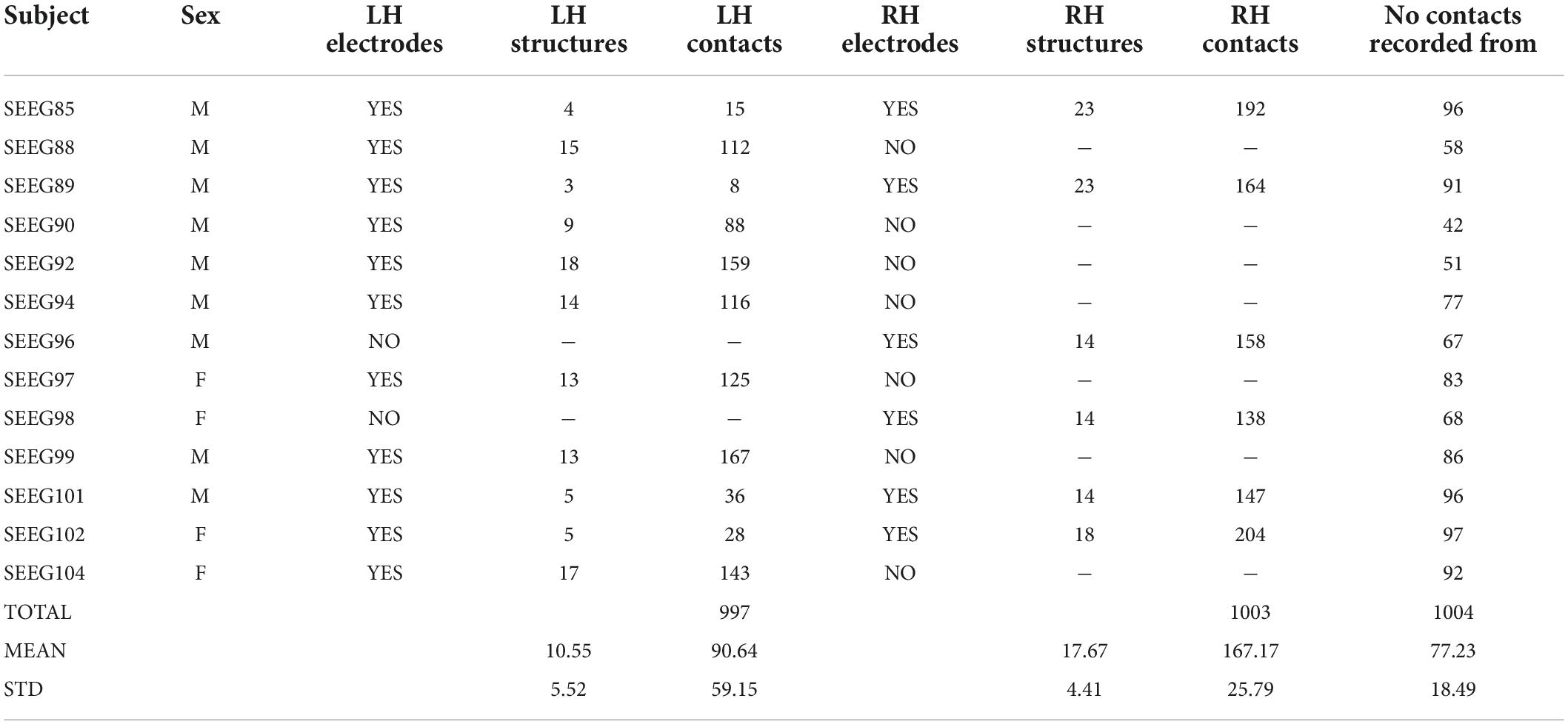

Thirteen subjects undergoing stereo-EEG (SEEG) presurgical evaluation for drug-resistant epilepsy at the Bucharest University Hospital were recruited for this study. All subjects provided informed consent and the investigation was performed under the Ethical Committee approval 43/02.10.2019. All subjects were implanted with Dixi depth electrodes (Dixi, Chaudefontaine, France) having 8–18 contacts per electrode, 2 mm contact length, 3.5 mm contact spacing, and 0.8 mm diameter. The location of depth electrodes was chosen solely based on the clinical hypothesis for the epileptogenic focus. At the group level, 7 subjects had electrodes implanted in the left hemisphere, 2 subjects had electrodes implanted in the right hemisphere, and 4 subjects had bilateral implantations. The depth electrodes and their contacts were precisely localized using post-operative CT images registered on top of 1.5T or 3T presurgical T1 MRIs. The presurgical MRI was also used for brain segmentation (Dale et al., 1999), parcellation (Desikan et al., 2006; Destrieux et al., 2010), and non-linear registration (Postelnicu et al., 2009) to the “cvs_avg35_inMNI152” brain template, which is available in Freesurfer. Each electrode contact was assigned to a voxel whose 3D coordinates were further used to represent it on the group template. The anatomical label of each electrode contact, according to the Desikan–Killiany atlas (Desikan et al., 2006), was chosen as the label with the largest number of voxels in a 3×3×3 cube centered on the electrode contact. We used this procedure to minimize the chance of mislabeling contacts due to noise in the MRI image or contacts lying at the border of two or more brain structures. An average of 11.31 ± 2.75 depth electrodes were implanted per subject, sampling on average 10.55 ± 5.52 left hemisphere (LH) and 17.67 ± 4.41 right hemisphere (RH) brain structures. 
The average number of depth electrode contacts implanted per subject was 90.64 ± 59.15 in the LH, and 167.17 ± 25.76 in the RH (Table 1).
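The majority-vote labeling of contacts described above can be illustrated with a minimal sketch. The function name `majority_label`, the toy label volume, and the label values are hypothetical and chosen only for illustration; the sketch assumes the parcellation is available as an integer-valued 3D array and that the contact has already been mapped to a voxel index.

```python
import numpy as np


def majority_label(label_volume, center_voxel, half_width=1):
    """Return the most frequent label in a cube centered on a voxel.

    half_width=1 gives the 3x3x3 cube described in the text.
    Ties are resolved in favor of the smallest label value (an assumption).
    """
    # Clip the cube to the volume bounds so that border voxels are handled.
    lo = [max(c - half_width, 0) for c in center_voxel]
    hi = [min(c + half_width + 1, s)
          for c, s in zip(center_voxel, label_volume.shape)]
    cube = label_volume[lo[0]:hi[0], lo[1]:hi[1], lo[2]:hi[2]]
    labels, counts = np.unique(cube, return_counts=True)
    return int(labels[np.argmax(counts)])


# Toy parcellation: label 17 fills one half of the volume, label 53 the other.
volume = np.full((6, 6, 6), 17, dtype=int)
volume[3:, :, :] = 53

# A contact at x = 2 lies next to the border between the two structures:
# its cube holds 18 voxels labeled 17 and 9 voxels labeled 53.
contact_label = majority_label(volume, (2, 3, 3))
```

In this toy case the contact is assigned to the structure that occupies most of its neighborhood, which is the behavior the 3×3×3 vote is meant to provide for contacts near a structure border.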

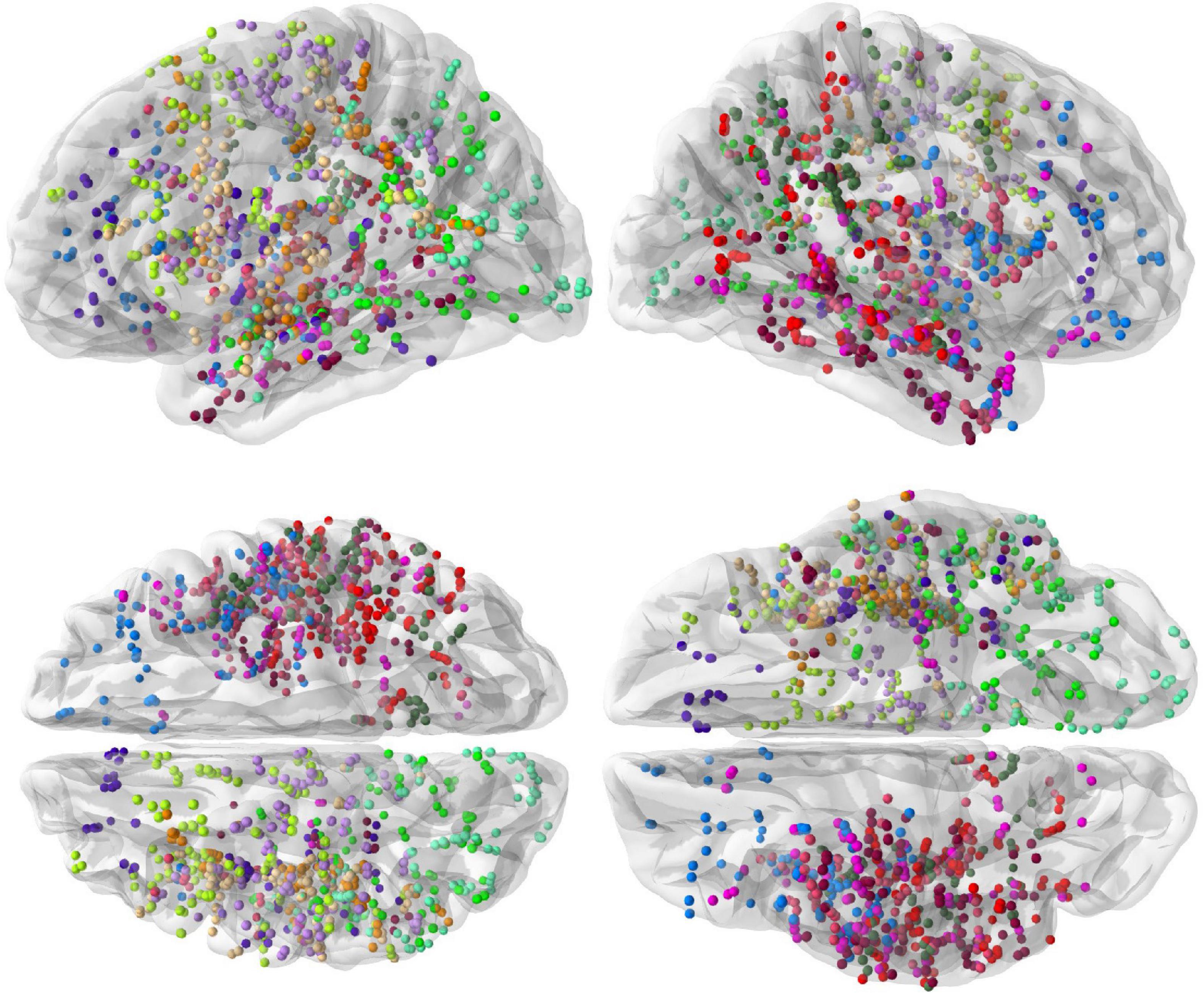

The spatial coverage of the brain with depth electrodes, represented on the “fsaverage” brain template at the group level, is shown in Figure 1.

Figure 1. Spatial coverage of the brain by intracranial electrodes (top row: left and right hemisphere views, bottom row: dorsal and ventral views). Intracranial electrode contacts of each of the 13 subjects are shown with a different color.

Cognitive task

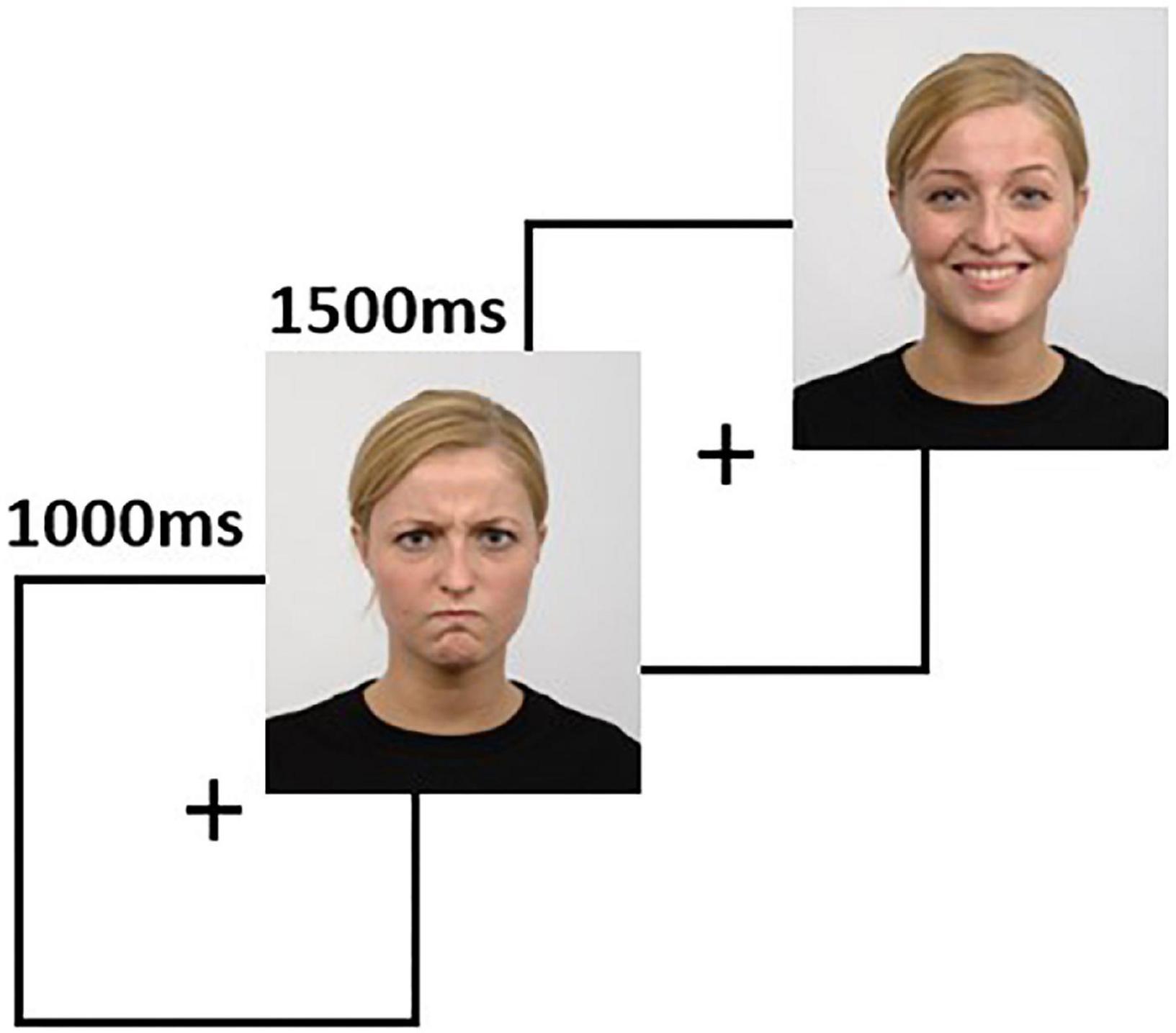

The task was developed using the stimuli available in the Radboud Faces Database (Langner et al., 2010). The stimuli are pictures of 67 actors posing with neutral, negative (angry), and positive (happy) faces, with matched facial landmarks used to recreate the facial expressions. The task contains 67 trials for each condition, each trial consisting of a 1-s fixation cross, followed by 1.5 s of a face image (Figure 2). The subjects were instructed to press one of three predefined keyboard buttons to indicate if the face has a neutral, negative, or positive expression. The task presentation was accomplished using PsychoPy (Peirce et al., 2019) and a 24-inch LCD monitor placed at 114 centimeters from the subject. A photodiode was used to synchronize the rendering of the visual stimuli with the EEG recording system.

Figure 2. The facial emotion recognition task is comprised of sequences of 1000 ms fixation cross, followed by 1500 ms of a face image. In this figure, we show two sample trials. Source for the images in this figure: Radboud Faces Database.

Intracranial EEG recordings

Intracranial EEG (iEEG) was recorded from 93.69 ± 15.26 contacts per subject, on average, while the subjects performed the cognitive task. The iEEG was recorded with a sampling rate of 4096 Hz using 128- or 256-channel XLTek Quantum amplifiers (Natus Neuro, Middleton, WI, USA).

The iEEG processing pipeline contains a mix of open-source software and in-house code developed in Matlab and Python. The raw recordings in XLTek format were loaded in Matlab, exported in ADES format, and then loaded in Anywave (Colombet et al., 2015) for visual inspection and for marking individual trials or EEG channels exhibiting epileptic activity or non-physiological artifacts for removal. The remaining pipeline steps were all performed in Python, using MNE (Gramfort et al., 2013) as the main framework for creating our custom processing steps. The EEG was notch filtered at 50 Hz and its second (100 Hz) and third (150 Hz) harmonics, using a finite impulse response filter with a Hamming window. A common-average reference was computed using the good EEG channels, then the EEG was cropped into epochs of [−0.3; 1] s relative to the stimulus onset and resampled to 256 Hz. An additional buffer of 1 s was added to each epoch to mitigate filtering artifacts that are expected to occur at the edges of the epochs during the time-frequency transformation, which is necessary to extract the gamma-band spectral content of the signal. The time-frequency decomposition was accomplished with Morlet wavelets having a variable number of cycles for each frequency in the [1; 125] Hz range. The time-frequency representation of individual trials was cropped to [−0.3; 1] s to remove filtering artifacts and was baseline corrected. The power in the gamma band was computed by averaging the power of each frequency in the [55; 115] Hz range. These gamma power traces (GPTs) represent the inputs for all subsequent analysis methods, which we describe in detail below.
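The last two steps of this pipeline (baseline correction and averaging over the gamma band) can be sketched as follows. This is an illustration under stated assumptions, not the code used in the study: the function name `gamma_power_trace` is hypothetical, the input is assumed to be a single-channel power array already produced by a time-frequency transform, and the baseline window ([−0.3; 0] s) and baseline mode (relative change) are assumptions, since the text does not specify them.

```python
import numpy as np


def gamma_power_trace(power, freqs, times,
                      band=(55.0, 115.0), baseline=(-0.3, 0.0)):
    """Average baseline-corrected power over a frequency band.

    power: array of shape (n_trials, n_freqs, n_times) for one channel.
    Returns an array of shape (n_trials, n_times).
    """
    freqs = np.asarray(freqs)
    times = np.asarray(times)
    base_mask = (times >= baseline[0]) & (times < baseline[1])
    # Assumed baseline mode: relative change with respect to the mean
    # pre-stimulus power of each trial and frequency.
    base = power[:, :, base_mask].mean(axis=-1, keepdims=True)
    corrected = (power - base) / base
    band_mask = (freqs >= band[0]) & (freqs <= band[1])
    return corrected[:, band_mask, :].mean(axis=1)


# Synthetic stand-in for a Morlet power array: 5 trials, 1-125 Hz, 256 Hz.
rng = np.random.default_rng(0)
freqs = np.arange(1, 126)
times = np.arange(-0.3, 1.0, 1 / 256)
power = rng.gamma(shape=2.0, scale=1.0, size=(5, freqs.size, times.size))
gpt = gamma_power_trace(power, freqs, times)
```

With a relative-change baseline, each trace averages to zero over the pre-stimulus window, so post-stimulus values can be read as fractional changes in gamma power.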

Permutation cluster analysis for task contrasts

For each EEG channel, we identified significant differences between the angry and happy task conditions in the GPTs using a non-parametric cluster-level statistical permutation test (Maris and Oostenveld, 2007). The permutation cluster test was implemented using 1,000 permutations. To assess the participation of different brain structures in the realization of the task, we aggregated at the group level the trials from all contacts within the same brain structure, and we performed the permutation cluster test as described previously. Only clusters showing significant differences between task conditions at p-values below 0.05 were further considered.
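To make the logic of the cluster-level permutation test concrete, the sketch below implements a simplified one-dimensional version in NumPy. It is not the implementation used in the study: the function names are hypothetical, the cluster-forming threshold (|t| > 2) and the Welch t-statistic are assumptions, and the p-value is the plain proportion of permutations whose largest cluster mass reaches the observed one.

```python
import numpy as np


def _t_values(a, b):
    """Welch t-statistic per time point for two (n_trials, n_times) arrays."""
    va = a.var(axis=0, ddof=1) / a.shape[0]
    vb = b.var(axis=0, ddof=1) / b.shape[0]
    return (a.mean(axis=0) - b.mean(axis=0)) / np.sqrt(va + vb)


def _clusters(t, threshold):
    """Return (start, stop, mass) for each run of same-sign |t| > threshold."""
    sign = np.sign(t) * (np.abs(t) > threshold)
    found, start = [], None
    for i in range(len(t) + 1):
        s = sign[i] if i < len(t) else 0.0
        if start is not None and s != sign[start]:
            found.append((start, i, float(np.abs(t[start:i]).sum())))
            start = None
        if start is None and s != 0:
            start = i
    return found


def cluster_permutation_test(a, b, threshold=2.0, n_permutations=1000, seed=0):
    """Return (start, stop, mass, p_value) for each observed cluster."""
    rng = np.random.default_rng(seed)
    observed = _clusters(_t_values(a, b), threshold)
    pooled = np.concatenate([a, b])
    n_a = a.shape[0]
    null_max = np.zeros(n_permutations)
    for p in range(n_permutations):
        # Shuffle condition labels and keep the largest cluster mass.
        perm = rng.permutation(pooled.shape[0])
        t_perm = _t_values(pooled[perm[:n_a]], pooled[perm[n_a:]])
        null_max[p] = max((m for _, _, m in _clusters(t_perm, threshold)),
                          default=0.0)
    return [(start, stop, mass, float((null_max >= mass).mean()))
            for start, stop, mass in observed]


# Synthetic gamma power traces: 50 trials per condition, 100 time samples.
rng = np.random.default_rng(42)
angry = rng.normal(size=(50, 100))
happy = rng.normal(size=(50, 100))
angry[:, 40:60] += 2.0  # simulated condition effect in samples 40 to 59
clusters = cluster_permutation_test(angry, happy)
significant = [c for c in clusters if c[3] < 0.05]
```

Because only the largest cluster mass of each permutation enters the null distribution, the test controls the family-wise error rate across time points without a separate correction, at the cost of reduced sensitivity to short or weak clusters.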

Machine learning classification for task conditions

Machine learning (ML) classification for task conditions takes the idea of identifying contrasts one step further, in the sense that once an ML model is trained, it can identify the task condition of new trials. This is a very strong outcome, as it proves that the underlying neuronal population that produces the iEEG recorded by a single contact has specific responses for different conditions, and the effect size is large enough to be identified at a single-trial level.

The ML classification was implemented using the “pipeline” feature of “scikit-learn” (Buitinck et al., 2013), which allows training of a model with cross-validation (CV) while performing data augmentation and transformation separately for each fold.

In the data augmentation step, we compute the average GPT for the angry and happy conditions, then for each trial, we create two new time series by subtracting the condition averages. Next, during the data transformation step, we split the time series into three intervals, [0.2; 0.4], [0.4; 0.6], and [0.6; 1] s, and for each interval, we compute five statistical measures: mean, standard deviation, median, skewness, and kurtosis. The data augmentation and transformation steps are encapsulated into a Transformer interface (Buitinck et al., 2013) so that they can be re-fitted and re-applied separately to each fold of the CV without information leakage. The transformed data is fed into a random forest classifier (Breiman, 2001), whose hyperparameters are obtained through hyperparameter optimization. The optimization is performed using Bayesian search with 10-fold stratified cross-validation (Snoek et al., 2012), as implemented by “BayesSearchCV” in “scikit-optimize” (Head et al., 2021). The parameters we optimized are the number of estimators (n_estimators ∈ {250, 500, 1000}), the maximum tree depth (integer values in the range [2; 10]), and the proportion of features randomly chosen for growing each individual tree (p_features ∈ {0.15, 0.5, 0.75}). The optimizer performed 100 iterations using 6 out of 8 CPU cores of a 3.40 GHz Intel Core i7-6700 CPU with 16 GB RAM. Once the best parameters for the random forest classifier were identified, we used a 10-fold stratified CV to evaluate the classification performance. The evaluation metric of choice was the normalized Matthews Correlation Coefficient (NMCC) (Chicco and Jurman, 2020):

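$$
\mathrm{MCC} = \frac{TP \cdot TN - FP \cdot FN}{\sqrt{(TP + FP)(TP + FN)(TN + FP)(TN + FN)}}, \qquad \mathrm{NMCC} = \frac{\mathrm{MCC} + 1}{2}
$$

where TP = true positives; TN = true negatives; FP = false positives; FN = false negatives.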

An NMCC value of 0.5 represents the random chance level, and to assess if the performance of our classifier is significantly better than chance, we employed a t-test against the null hypothesis that the mean of the 10 NMCC values resulting during the CV is 0.5.
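As a worked illustration of this evaluation, the sketch below computes the NMCC from confusion-matrix counts, following the definition in Chicco and Jurman (2020) in which the Matthews Correlation Coefficient is rescaled from [−1, 1] to [0, 1] as (MCC + 1)/2, and then computes the one-sample t-statistic of a set of fold scores against the chance level of 0.5. The function names and the fold scores are hypothetical, and returning an MCC of 0 for a degenerate confusion matrix is an assumed convention.

```python
import math


def nmcc(tp, tn, fp, fn):
    """Normalized Matthews Correlation Coefficient, in [0, 1]."""
    denom = math.sqrt((tp + fp) * (tp + fn) * (tn + fp) * (tn + fn))
    # Assumed convention: MCC is 0 when a row or column of the
    # confusion matrix is empty and the ratio is undefined.
    mcc = (tp * tn - fp * fn) / denom if denom > 0 else 0.0
    return (mcc + 1) / 2


def one_sample_t(values, mu=0.5):
    """t-statistic for the null hypothesis that the mean of values is mu."""
    n = len(values)
    mean = sum(values) / n
    var = sum((v - mean) ** 2 for v in values) / (n - 1)
    return (mean - mu) / math.sqrt(var / n)


# Hypothetical NMCC values from a 10-fold cross-validation.
fold_scores = [0.6, 0.7] * 5
t_stat = one_sample_t(fold_scores)  # compared against a t distribution, df = 9
```

A perfect classifier gives `nmcc(50, 50, 0, 0) == 1.0`, a fully inverted one gives 0.0, and a classifier at chance gives 0.5, which is why 0.5 is used as the null value in the t-test.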

The average training and evaluation time per intracranial contact was 406.8 ± 63.2 s.
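The data augmentation and transformation steps of this section can be sketched in NumPy as shown below. The function names are hypothetical, and several details are assumptions because the text does not specify them: only the two mean-subtracted series are used as inputs to the features, the intervals are treated as half-open, skewness and kurtosis are computed from population moments, and kurtosis is reported as excess kurtosis. For brevity, the condition averages are computed here on all trials; in a cross-validated setting they would be computed on the training fold only, as described above.

```python
import numpy as np

INTERVALS = ((0.2, 0.4), (0.4, 0.6), (0.6, 1.0))  # seconds after stimulus onset


def augment(trials, mean_angry, mean_happy):
    """Subtract each condition average: returns (n_trials, 2, n_times)."""
    return np.stack([trials - mean_angry, trials - mean_happy], axis=1)


def summary_features(series, times, intervals=INTERVALS):
    """Five statistics per interval for arrays shaped (..., n_times)."""
    feats = []
    for start, stop in intervals:
        seg = series[..., (times >= start) & (times < stop)]
        mean = seg.mean(axis=-1)
        std = seg.std(axis=-1)
        z = (seg - mean[..., None]) / std[..., None]
        skewness = (z ** 3).mean(axis=-1)
        kurtosis = (z ** 4).mean(axis=-1) - 3.0  # excess kurtosis (assumed)
        feats += [mean, std, np.median(seg, axis=-1), skewness, kurtosis]
    return np.stack(feats, axis=-1)


# Synthetic gamma power traces: 20 trials sampled at 256 Hz on [-0.3; 1) s.
rng = np.random.default_rng(0)
times = np.arange(-0.3, 1.0, 1 / 256)
trials = rng.normal(size=(20, times.size))
labels = np.array([0] * 10 + [1] * 10)  # 0 = angry, 1 = happy

mean_angry = trials[labels == 0].mean(axis=0)
mean_happy = trials[labels == 1].mean(axis=0)
augmented = augment(trials, mean_angry, mean_happy)
X = summary_features(augmented, times).reshape(len(trials), -1)
```

Each trial is thus described by 2 series × 3 intervals × 5 statistics = 30 features, which would form the input of the classifier.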

Searchlight decoding for task conditions

The multivariate searchlight was initially developed for fMRI data, for localizing functional brain regions that are informative for brain processes (Kriegeskorte et al., 2006) triggered by a cognitive task. Searchlight maps the information over a set of neighboring voxels in fMRI, within a predefined spherical volume, therefore mitigating the issues related to the multiple comparison problem using a spatial smoothing approach. In this study, we apply the same reasoning to iEEG data. A searchlight radius of 25 mm was chosen. While there is no rule of thumb for choosing the searchlight radius, previous studies (Wang et al., 2020) have shown that increasing the search radius results in the same clusters being identified, but with larger cluster sizes. This behavior is welcome given the sparsity of the intracranial electrodes and the distance of 3.5 mm between two adjacent electrode contacts on the same depth electrode. For each electrode contact, we identified all electrode contacts that were within the searchlight volume defined by the searchlight radius, regardless of whether they were located on the same or on different depth electrodes, and we used MVPA to decode the task conditions from the gamma traces (King and Dehaene, 2014; King et al., 2018). The MVPA pipeline took as input the gamma power traces for both task conditions and the condition labels associated with the gamma traces, then performed scaling, concatenation across the channel dimension, and classification using logistic regression. Therefore, the MVPA decoder aggregated information across time and space. The MVPA decoder was trained with 10-fold cross-validation and evaluated by the NMCC, and the significance of its performance relative to the chance level was assessed using a t-test, as described in the ML classification paragraph.
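The spatial part of the searchlight, i.e., selecting for each contact all contacts that fall within the searchlight radius, can be sketched as follows. The function name and the toy coordinates are hypothetical; the sketch assumes contact coordinates are expressed in millimeters in a common space and that a contact on the sphere boundary is included.

```python
import math


def searchlight_neighbors(coords, radius_mm=25.0):
    """For each contact, list the indices of all contacts within radius_mm.

    The center contact itself is always part of its own searchlight.
    """
    return [
        [j for j, other in enumerate(coords)
         if math.dist(center, other) <= radius_mm]
        for center in coords
    ]


# Toy layout: three contacts spaced 3.5 mm apart on one electrode,
# one distant contact, and one contact on a second electrode 20 mm away.
coords = [
    (0.0, 0.0, 0.0),
    (3.5, 0.0, 0.0),
    (7.0, 0.0, 0.0),
    (40.0, 0.0, 0.0),
    (0.0, 20.0, 0.0),
]
neighbors = searchlight_neighbors(coords)
```

Here `neighbors[0]` groups contacts from two different electrodes, while the distant contact forms a searchlight containing only itself. The gamma power traces of the contacts in each group would then be scaled, concatenated, and passed to the decoder.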

Comparison of the three methods

The results of the three methods for identifying task contrasts were compared at the group level. For each method, we identified the brain structures that contained at least one contact with a significant contrast between the two conditions. We used the Jaccard index (Jaccard, 1901) to quantify the agreement between every pair of methods.
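For two sets of brain structures A and B, the Jaccard index is the size of their intersection divided by the size of their union, |A ∩ B| / |A ∪ B|. A minimal sketch follows; the structure names are illustrative only and do not reproduce the results of this study, and returning 1.0 for two empty sets is an assumed convention.

```python
def jaccard(a, b):
    """Jaccard index between two collections of labels."""
    a, b = set(a), set(b)
    union = a | b
    # Assumed convention: two empty sets are in perfect agreement.
    return len(a & b) / len(union) if union else 1.0


# Hypothetical sets of structures flagged as significant by two methods.
method_1 = {"amygdala", "fusiform", "precuneus"}
method_2 = {"amygdala", "fusiform", "insula", "superiortemporal"}
agreement = jaccard(method_1, method_2)  # 2 shared structures out of 5
```

The index ranges from 0 (no structure in common) to 1 (identical sets), and it penalizes structures found by only one of the two methods regardless of which method found them.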

Results

Task performance

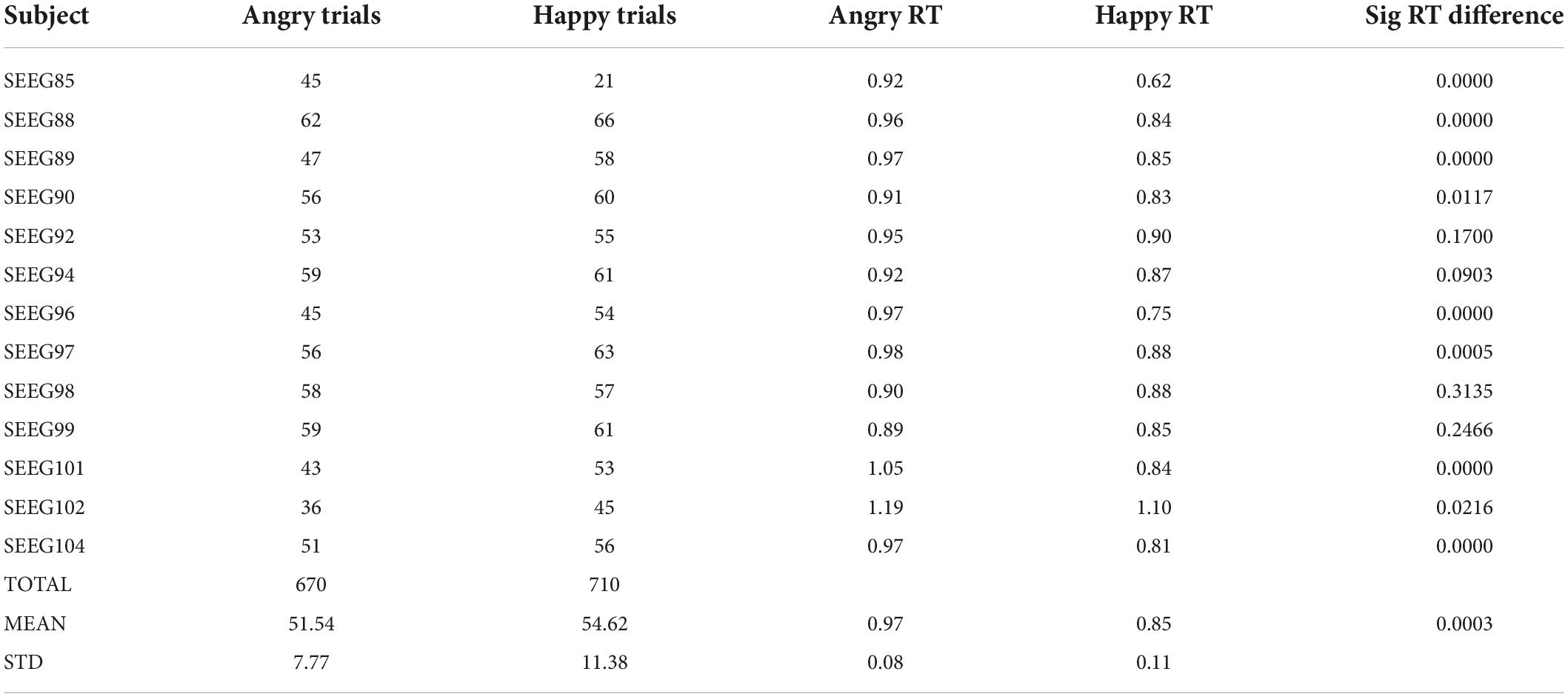

The average response time was 0.97 ± 0.08 s and 0.85 ± 0.11 s for the angry and happy conditions, respectively (Table 2). The average number of trials per subject that were free of interictal epileptic spikes and other non-physiological artifacts, and for which the subjects correctly identified the emotion, was 51.54 ± 7.77 and 54.62 ± 11.38 for the angry and happy conditions, respectively. All subjects took a longer time to correctly identify the angry condition. For 10 out of 13 subjects, the difference in reaction time between the angry and happy conditions was significant (Mann–Whitney’s U-test, p < 0.05). At the group level, the difference in reaction times was significant as well (Mann–Whitney’s U-test, p < 0.05) (Table 2).

Brain sampling

Thirty-two brain structures were sampled by depth electrodes at the group level (32 LH and 31 RH), and a total of 1,004 electrode contacts (531 LH and 473 RH) were free of physiological and non-physiological artifacts and used in the analysis. The exact distribution of electrode contacts per implanted structure is detailed in Table 3.

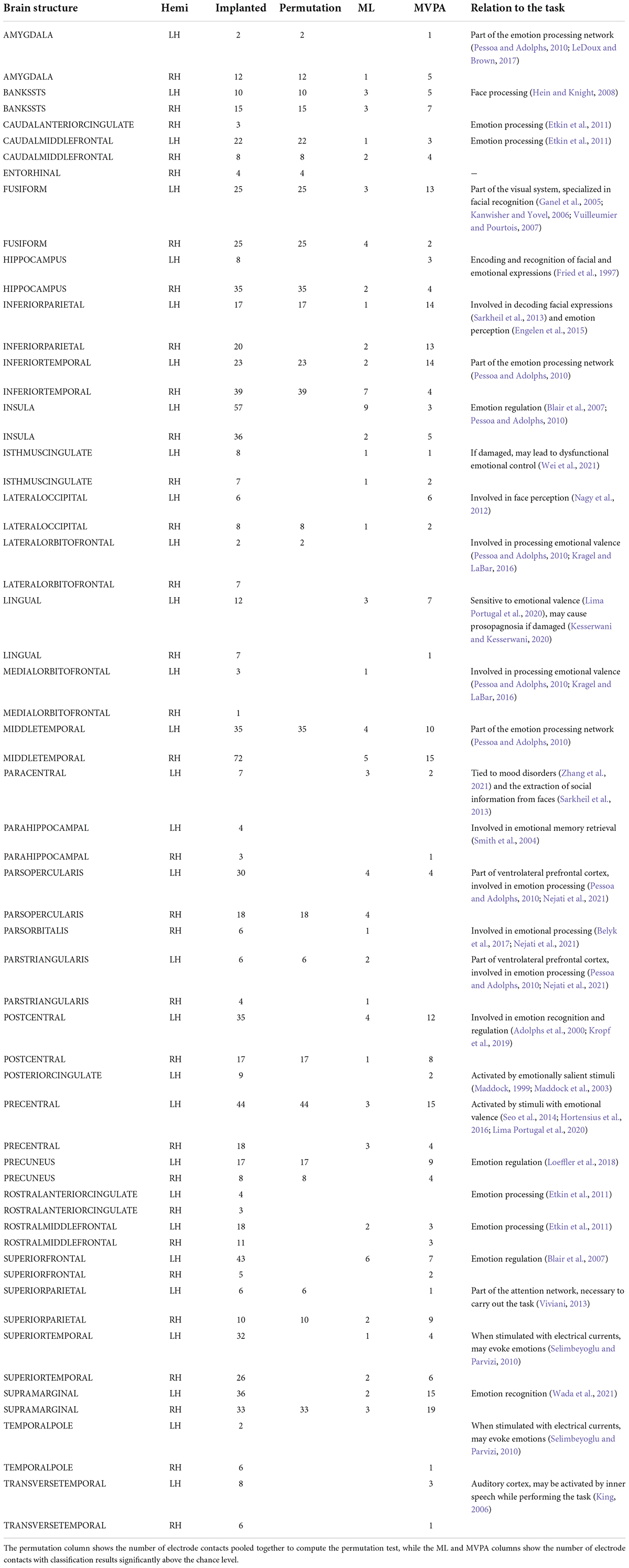

Table 3. Per structure distribution of implanted electrode contacts, and the number of significant contacts per each analysis method.

Comparison of methods for assessing condition contrasts

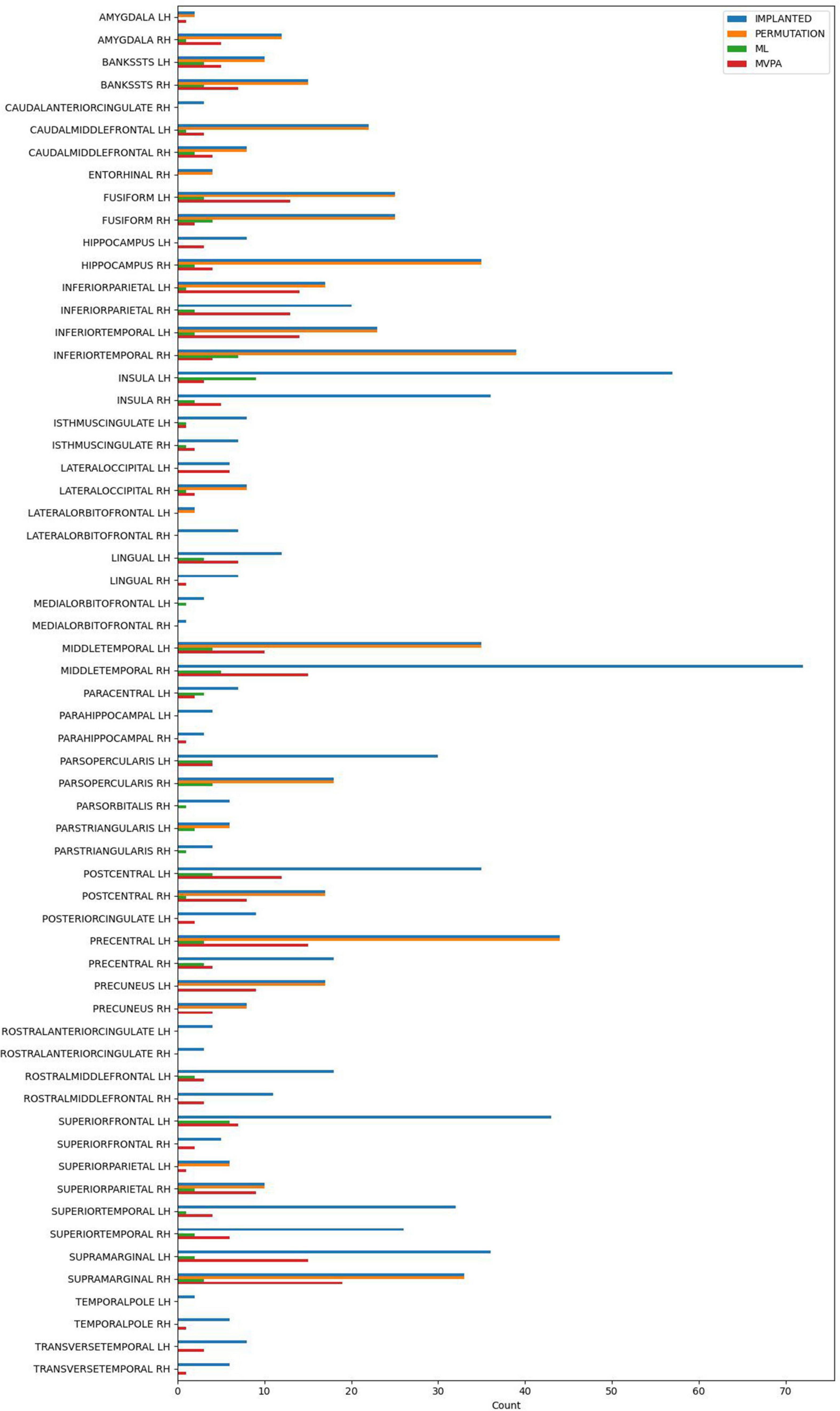

All the methods were compared at the same significance level of p < 0.05. No multiple comparison correction was used, as each method computes its p-value using a different approach, described in detail in the corresponding method’s paragraph above. The number of electrode contacts deemed significant in each structure by each method is shown in Figure 3.

Figure 3. The number of implanted electrode contacts, and the number of electrode contacts exhibiting significant contrast between conditions, per brain structure and analysis method.

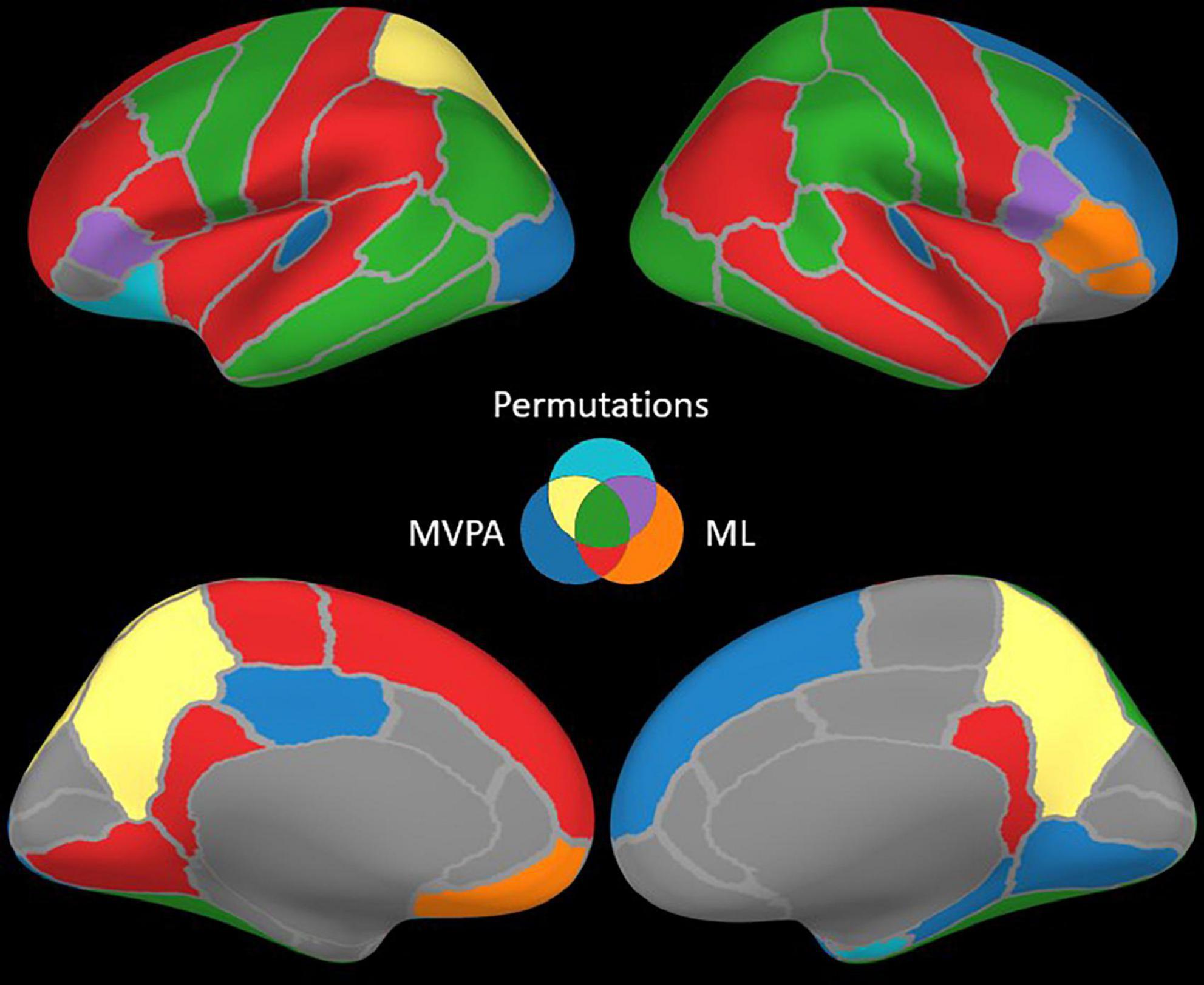

The permutation cluster test identified 12 LH and 13 RH brain structures exhibiting significant contrasts between the angry and happy task conditions (Figure 4). 209 LH and 232 RH electrode contacts were grouped by structures to compute these contrasts. The two conditions resulted in significant contrasts in the bilateral amygdala and the right hippocampus, left lateral occipital cortex, parts of the parietal lobe (bilateral precuneus and superior parietal cortex, left inferior parietal cortex, and right supramarginal and postcentral gyri), parts of the temporal lobe (right entorhinal cortex and bilateral inferior temporal gyri, banks of the superior temporal sulcus and fusiform cortex, and left middle temporal), and parts of the frontal lobe (bilateral caudal middle frontal gyrus, left lateral orbitofrontal cortex, right parsopercularis, and left parstriangularis) (Table 3).

The ML classifier identified angry-happy contrasts in more brain structures (19 LH and 19 RH) than the permutation cluster test (Figure 4). Within these brain structures, a total of 55 LH and 47 RH electrode contacts exhibited an NMCC that was significantly different from random chance. In comparison with the permutation cluster test results, the ML classifier additionally identified significant contrasts in the bilateral superior temporal gyrus, in the right middle temporal gyrus, in the bilateral insula, and in the bilateral isthmus cingulate. It also identified contrasts in the following structures of the left hemisphere: lingual gyrus, superior and rostral middle frontal gyri, the parsopercularis, the postcentral gyrus, and the paracentral lobule; and in the following structures of the right hemisphere: parsorbitalis, parstriangularis, and the precentral gyrus. Interestingly, several brain structures were identified by the permutation cluster test, but not by the ML classifier: the left amygdala, the left lateral orbitofrontal gyrus, the left superior parietal cortex, the right entorhinal cortex, and the bilateral precuneus (Table 3). The Jaccard coefficient computed between the structures that exhibited significant contrasts during permutation cluster tests and ML classification was 0.33 for the LH and 0.52 for the RH (Table 4).

The searchlight MVPA approach identified contrasts in a large number of brain structures (24 LH and 23 RH) (Figure 4), with searchlight clusters centered on 157 LH and 122 RH electrode contacts. Only the left lateral orbitofrontal cortex, right entorhinal cortex, the left parstriangularis, and the right parsopercularis were found significant by the permutation cluster test, but not by the searchlight MVPA. However, many additional structures were found significant by the searchlight MVPA, that have not been identified by either permutation cluster test or the ML classifier: the left hippocampus, the left posterior cingulate, and the left lateral occipital cortex, as well as the right lingual and parahippocampal gyri, the superior and rostral middle frontal cortices, the right temporal pole and the bilateral transverse temporal cortex (Table 3). The Jaccard coefficient between permutation cluster test results and searchlight MVPA results was 0.44 for the LH and 0.37 for the RH, and 0.65 for the LH and 0.62 for the RH between ML classifier and searchlight MVPA results (Table 4).

Discussion

Building on the idea that humans developed a survival mechanism for immediate threat detection, it was hypothesized that two pathways are involved in the process of threat detection (LeDoux and Brown, 2017). In LeDoux’s model, the first pathway enables fast access from the retina to the amygdala, using the superior colliculus and pulvinar as relay nodes, while the second pathway is cortical and it involves the visual cortex and the fusiform gyrus. An alternative model was proposed (Pessoa and Adolphs, 2010), in which the cortex plays, through different cortical and subcortical routes, a more important role in driving visual inputs to (and back-propagated from) the amygdala through additional hubs located in the insula and orbitofrontal, frontal cingulate and posterior parietal cortices. A recent review of intracranial studies performed over the last 60 years on the topic of emotion (Guillory and Bujarski, 2014) signals the lack of intracranial data: 10 studies described the amygdala’s and 3 studies described the fusiform gyrus’ involvement in emotion processing. Moreover, only one study described the interaction between the amygdala and fusiform gyrus (Pourtois et al., 2010) at the time of the review, with a second one being published in 2016 (Méndez-Bértolo et al., 2016). Therefore, the emotion network, as described by LeDoux’s and Pessoa’s models, is understudied using intracranial methods.

Intracranial EEG studies present a specific set of challenges. The first challenge is that intracranial EEG can only be recorded from epileptic patients undergoing presurgical evaluation. In these patients, the intracranial electrodes are placed solely to localize the epileptogenic focus, therefore they may not cover all brain regions of interest for a given cognitive task, such as the facial emotion recognition task. Moreover, an emotion network such as the one hypothesized by Pessoa is unlikely to be fully observed with intracranial electrodes for two reasons: (1) the network extends over multiple lobes, while the intracranial implantation schemes are usually focused on 1–2 lobes, and (2) the subcortical nuclei, such as the pulvinar, are not common targets for presurgical evaluation for epilepsy. Therefore, emotion networks need to be studied at the group level to overcome the spatial sampling limitations. A second challenge relates to the effect size that is to be observed as the contrast between two conditions. It was estimated that such contrast can be as low as 3% of the signal-to-noise ratio (Selimbeyoglu and Parvizi, 2010). A third challenge is that there is no ground truth for which brain network is activated by the facial emotion recognition task. In our study, we leverage previous studies and theoretical models (Pessoa and Adolphs, 2010) to explain why one of the three methods might highlight contrasts in a given brain structure. This approach is, however, a rough approximation, as most relevant studies were performed using fMRI, and the intracranial EEG literature on emotion processing is still limited (Guillory and Bujarski, 2014).

The permutation cluster test showed a larger number of contrasts in the RH than in the LH, a finding that is consistent with the “Right Hemisphere” model for emotional processing (Silberman and Weingartner, 1986; Demaree et al., 2005). Of the LH contrasts observed, those in the amygdala, the fusiform gyrus, and the inferior parietal, superior parietal, lateral orbitofrontal, and caudal middle frontal cortices are worth mentioning, as they partially outline the non-occipital parts of the Pessoa model. In addition, we observed contrasts that are likely related to the task execution: decision-making in the lateral orbitofrontal and the caudal middle frontal cortex (Talati and Hirsch, 2005; Nogueira et al., 2017) and movement execution for the button-press in the precentral gyrus (Li et al., 2015). The same network was also observed in the RH, with additional contrasts in the hippocampus and entorhinal cortex [structures associated with the encoding and recognition of facial expressions (Fried et al., 1997)], and in the parsopercularis and the supramarginal gyrus, which take part in the perception (Belyk et al., 2017) and recognition of emotion (Wada et al., 2021), respectively.

Moving from the permutation cluster test to the univariate (ML) and the multivariate (searchlight MVPA) classification methods, we observed an increase in the number of brain structures with significant contrasts (Figure 4 for qualitative results and Table 3 for the exact number of intracranial electrode contacts per brain structure). While some of these are part of Pessoa’s model, like the insula and the cingulate gyrus, other structures are surprising and not commonly associated with the processing of faces and emotions. However, these findings support the idea of larger and distributed networks for emotion processing, which encode the different types of emotions as activation patterns (Wager et al., 2015). The generalization power of classification methods is, in our view, superior to that of the permutation cluster test (Figure 4), as classifiers can be shown to classify trial conditions in unseen data (Cauchoix et al., 2014; Kragel and LaBar, 2016). However, while the participation of such brain structures in the realization of the cognitive task is undeniable, it is still debatable if they are part of the core emotion processing network, or if they participate in more general aspects of the task such as low-level visual processing, movement planning, inner speech evoked unconsciously by the images, etc. The banks of the superior temporal sulcus, which appeared significant for both ML and searchlight MVPA bilaterally, are considered an integration hub for audiovisual stimuli, inner speech, motion, and face processing (Hein and Knight, 2008). The Jaccard index showed a good agreement (0.65 for the LH and 0.62 for the RH) between the ML and MVPA methods, which is expected as both methods rely on different, yet similar, machine learning classifiers, and the input data are overlapping. Lower Jaccard index values (0.33 to 0.52, ∼0.42 on average) are observed between the permutation cluster test and each of the other two methods. 
The searchlight MVPA approach integrates information from a larger brain volume than the single-contact ML classifier, which resulted in a larger Jaccard index when compared to the permutation cluster test in the LH (0.44 vs. 0.33), but not in the RH (0.37 vs. 0.52).

Several other fMRI studies have reported widely distributed brain regions that encode recognition of different emotional states through patterns of activations that were identified using MVPA (Kassam et al., 2013; Kragel and LaBar, 2016). Despite MVPA’s popularity in the fMRI field and its applicability to scalp EEG (Cauchoix et al., 2014), to our knowledge only four studies to date have leveraged MVPA techniques with intracranial EEG, to study rapid visual categorization in the ventral stream in rhesus macaques (Cauchoix et al., 2016), fast visual recognition memory systems (Despouy et al., 2020), and semantic coding in humans (Chen et al., 2016; Rogers et al., 2021). The searchlight MVPA method we implemented in this study allowed us to explore for the first time arbitrary spherical brain volumes centered on an intracranial depth electrode contact. Despite the variable number of electrode contacts contributing to this analysis at the patient level, we observed that the electrode contacts exhibiting statistically significant accuracies above the chance level tend to cluster at the group level.

Multivariate pattern analysis and its searchlight implementation appear to be more sensitive to small differences between conditions and therefore reveal a widespread brain network involved in emotion processing, which contradicts the “standard hypothesis” model that considers the emotion network to have a cortical and a subcortical route connecting the visual cortex to the amygdala (Pessoa and Adolphs, 2010), but supports the “multiple waves” model (Pessoa and Adolphs, 2010). A wider brain network provides more opportunities to modulate brain functions, an effort that has previously focused on amygdala stimulation (Inman et al., 2018), and may explain the success of various transcranial magnetic stimulation studies of emotion regulation that targeted the prefrontal cortex (De Wit et al., 2015; Lantrip et al., 2017), a brain region that is part of the “multiple waves” model and was found to play an active role in the processing of different types of emotions as part of a widespread brain network (Wager et al., 2015).

We observed that the permutation cluster test identified the fewest brain structures exhibiting a significant contrast between task conditions, followed by the ML classifier and then the searchlight MVPA. This behavior is expected and is explained by the particularities of each method. The permutation cluster test is appropriate for detecting large differences (clusters), but has low sensitivity for the small clusters that are commonly observed in EEG data (Nichols and Holmes, 2002; Huang and Zhang, 2017). The machine learning classifier can have better sensitivity, as even a single feature that is systematically different between the two task conditions is enough to provide good classification performance. In our study, we computed features over 200 and 400 ms intervals, yet the five features we used describe the data underlying these intervals sufficiently well to identify more brain structures with significant task contrasts than the permutation cluster test. The searchlight MVPA has all the benefits of the ML classifier and adds the spatial dimension to the analysis: it considers the multivariate changes in the features computed for all intracranial contacts within a predefined search radius. As expected, searchlight MVPA identified the largest number of brain structures with significant task condition contrasts, all of them in agreement with the existing scientific literature on face recognition and emotion processing (Table 3).
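The logic of a cluster-based permutation test, and the reason small clusters rarely survive it, can be illustrated with a simplified sketch for a single channel. The code below is an illustration under stated assumptions rather than the analysis used in this study: it uses the difference of trial averages instead of a t-statistic, a fixed hypothetical threshold, unsigned clusters, and synthetic data.

```python
import random
from statistics import mean


def cluster_masses(diff, threshold):
    """Sum |diff| over each run of consecutive samples with |diff| > threshold.

    Simplification: the sign of the difference is ignored when forming clusters.
    """
    masses, current = [], 0.0
    for d in diff:
        if abs(d) > threshold:
            current += abs(d)
        elif current:
            masses.append(current)
            current = 0.0
    if current:
        masses.append(current)
    return masses


def condition_difference(trials_a, trials_b):
    """Pointwise difference between the trial-averaged signals of two conditions."""
    return [mean(a) - mean(b) for a, b in zip(zip(*trials_a), zip(*trials_b))]


def cluster_permutation_test(trials_a, trials_b, threshold, n_perm=200, seed=0):
    """Return observed cluster masses and their permutation p-values."""
    rng = random.Random(seed)
    observed = cluster_masses(condition_difference(trials_a, trials_b), threshold)
    pooled = trials_a + trials_b
    n_a = len(trials_a)
    null_max = []
    for _ in range(n_perm):
        shuffled = pooled[:]
        rng.shuffle(shuffled)  # reassign condition labels at random
        masses = cluster_masses(
            condition_difference(shuffled[:n_a], shuffled[n_a:]), threshold
        )
        null_max.append(max(masses, default=0.0))  # largest cluster under the null
    p_values = [
        (1 + sum(m >= obs for m in null_max)) / (n_perm + 1) for obs in observed
    ]
    return observed, p_values


# Synthetic data (hypothetical): 20 trials per condition, 30 samples per trial,
# with an added offset in condition A between samples 10 and 19.
data_rng = random.Random(1)


def make_trials(effect, n_trials=20, n_samples=30):
    return [
        [data_rng.gauss(0, 1) + (effect if 10 <= t < 20 else 0.0)
         for t in range(n_samples)]
        for _ in range(n_trials)
    ]


trials_a = make_trials(effect=2.0)
trials_b = make_trials(effect=0.0)
observed, p_values = cluster_permutation_test(trials_a, trials_b, threshold=1.0)
print(list(zip(observed, p_values)))
```

Each observed cluster is compared with the distribution of the largest cluster mass obtained under shuffled condition labels. A short or weak cluster must therefore exceed the largest cluster that chance alone can produce, which is the source of the low sensitivity for small clusters noted above.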

Conclusion

This manuscript provides the first side-by-side methodological comparison of three methods for identifying task contrasts in EEG data and exemplifies the use of searchlight MVPA with intracranial depth electrodes. However, an in-depth analysis of the brain networks identified by searchlight MVPA and the neuroscientific interpretation of the findings are beyond the scope of the current study, which aims only at validating the results against the existing literature. We have shown that the permutation cluster analysis, which is commonly used for the analysis of intracranial EEG data, is less sensitive to task contrasts than ML classification, and that both are less sensitive than the searchlight MVPA method. None of these three methods identified significant task-related contrasts in all brain structures featured in Table 3, even though each of those structures is involved in one way or another in the processing of faces or emotions, according to the previous studies we have referenced.

At the same time, our study is the first intracranial EEG study to reinforce the idea that the emotion network is widespread and relies on activation patterns to process various emotions, as demonstrated by an fMRI study (Kragel and LaBar, 2016) and a meta-analysis of 148 emotion-related studies (Wager et al., 2015).

A detailed analysis of the searchlight MVPA brain networks and their temporal dynamics through time generalization (King and Dehaene, 2014; Rogers et al., 2021) will be addressed in a future study.

Data availability statement

The raw data supporting the conclusions of this article will be made available by the authors, without undue reservation.

Ethics statement

The studies involving human participants were reviewed and approved by the Ethical Committee of the University of Bucharest, approval 43/02.10.2019. The patients/participants provided their written informed consent to participate in this study.

Author contributions

CD: conceptualization, methodology, validation, software, formal analysis, writing—original draft, visualization, supervision, and funding acquisition. BB: software. CP: software and data curation. IO: data curation. IM: validation, writing—review and editing, and supervision. AB: validation, writing—review and editing, supervision, and funding acquisition. All authors contributed to the article and approved the submitted version.

Funding

This work was supported by Romanian UEFISCDI PN-III-P1-1.1-TE-2019-0502 and PN-III-P4-ID-PCE-2020-0935.

Acknowledgments

We thank our patients for their voluntary participation in our study and the surgical and neurology teams at the Bucharest Emergency Hospital for the clinical care and support provided throughout this study.

Conflict of interest

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Publisher’s note

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

Abbreviations

ML, machine learning; MVPA, multivariate pattern analysis; fMRI, functional magnetic resonance imaging.

References

Adolphs, R., Damasio, H., Tranel, D., Cooper, G., and Damasio, A. R. (2000). A role for somatosensory cortices in the visual recognition of emotion as revealed by three-dimensional lesion mapping. J. Neurosci. 20, 2683–2690. doi: 10.1523/JNEUROSCI.20-07-02683.2000

Belyk, M., Brown, S., Lim, J., and Kotz, S. A. (2017). Convergence of semantics and emotional expression within the IFG pars orbitalis. Neuroimage 156, 240–248. doi: 10.1016/j.neuroimage.2017.04.020

Blair, K. S., Smith, B. W., Mitchell, D. G. V., Morton, J., Vythilingam, M., Pessoa, L., et al. (2007). Modulation of emotion by cognition and cognition by emotion. Neuroimage 35, 430–440. doi: 10.1016/j.neuroimage.2006.11.048

Buitinck, L., Louppe, G., Blondel, M., Pedregosa, F., Müller, A. C., Grisel, O., et al. (2013). API design for machine learning software: Experiences from the scikit-learn project. arXiv [Preprint].

Bzdok, D., and Ioannidis, J. P. A. (2019). Exploration, inference, and prediction in neuroscience and biomedicine. Trends Neurosci. 42, 251–262. doi: 10.1016/j.tins.2019.02.001

Cauchoix, M., Barragan-Jason, G., Serre, T., and Barbeau, E. J. (2014). The Neural Dynamics of Face Detection in the Wild Revealed by MVPA. J. Neurosci. 34, 846–854. doi: 10.1523/JNEUROSCI.3030-13.2014

Cauchoix, M., Crouzet, S. M., Fize, D., and Serre, T. (2016). Fast ventral stream neural activity enables rapid visual categorization. Neuroimage 125, 280–290. doi: 10.1016/j.neuroimage.2015.10.012

Chen, Y., Shimotake, A., Matsumoto, R., Kunieda, T., Kikuchi, T., Miyamoto, S., et al. (2016). The ‘when’ and ‘where’ of semantic coding in the anterior temporal lobe: Temporal representational similarity analysis of electrocorticogram data. Cortex 79:1. doi: 10.1016/j.cortex.2016.02.015

Chicco, D., and Jurman, G. (2020). The advantages of the Matthews correlation coefficient (MCC) over F1 score and accuracy in binary classification evaluation. BMC Genom. 21:6. doi: 10.1186/s12864-019-6413-7

Colombet, B., Woodman, M., Badier, J. M., and Bénar, C. G. (2015). AnyWave: A cross-platform and modular software for visualizing and processing electrophysiological signals. J. Neurosci. Methods 242, 118–126. doi: 10.1016/j.jneumeth.2015.01.017

Dale, A. M., Fischl, B., and Sereno, M. I. (1999). Cortical surface-based analysis. Neuroimage 9, 179–194. doi: 10.1006/nimg.1998.0395

De Wit, S. J., Van Der Werf, Y. D., Mataix-Cols, D., Trujillo, J. P., Van Oppen, P., Veltman, D. J., et al. (2015). Emotion regulation before and after transcranial magnetic stimulation in obsessive compulsive disorder. Psychol. Med. 45, 3059–3073. doi: 10.1017/S0033291715001026

Demaree, H. A., Everhart, D. E., Youngstrom, E. A., and Harrison, D. W. (2005). Brain lateralization of emotional processing: Historical roots and a future incorporating “Dominance”. Behav. Cogn. Neurosci. Rev. 4, 3–20. doi: 10.1177/1534582305276837

Desikan, R. S., Ségonne, F., Fischl, B., Quinn, B. T., Dickerson, B. C., Blacker, D., et al. (2006). An automated labeling system for subdividing the human cerebral cortex on MRI scans into gyral based regions of interest. Neuroimage 31, 968–980. doi: 10.1016/j.neuroimage.2006.01.021

Despouy, E., Curot, J., Deudon, M., Gardy, L., Denuelle, M., Sol, J. C., et al. (2020). A Fast visual recognition memory system in humans identified using intracerebral ERP. Cereb. Cortex 30, 2961–2971. doi: 10.1093/cercor/bhz287

Destrieux, C., Fischl, B., Dale, A., and Halgren, E. (2010). Automatic parcellation of human cortical gyri and sulci using standard anatomical nomenclature. Neuroimage 53, 1–15. doi: 10.1016/j.neuroimage.2010.06.010

Di, X., and Biswal, B. B. (2020). Intersubject consistent dynamic connectivity during natural vision revealed by functional MRI. Neuroimage 216:116698. doi: 10.1016/j.neuroimage.2020.116698

Donos, C., Maliia, M. D., Dümpelmann, M., and Schulze-Bonhage, A. (2018). Seizure onset predicts its type. Epilepsia 59, 650–660. doi: 10.1111/epi.13997

Engelen, T., de Graaf, T. A., Sack, A. T., and de Gelder, B. (2015). A causal role for inferior parietal lobule in emotion body perception. Cortex 73, 195–202. doi: 10.1016/j.cortex.2015.08.013

Etkin, A., Egner, T., and Kalisch, R. (2011). Emotional processing in anterior cingulate and medial prefrontal cortex. Trends Cogn. Sci. 15:85. doi: 10.1016/j.tics.2010.11.004

Fried, I., MacDonald, K. A., and Wilson, C. L. (1997). Single neuron activity in human hippocampus and amygdala during recognition of faces and objects. Neuron 18, 753–765. doi: 10.1016/S0896-6273(00)80315-3

Funahashi, S. (2017). Prefrontal contribution to decision-making under free-choice conditions. Front. Neurosci. 11:431. doi: 10.3389/fnins.2017.00431

Ganel, T., Valyear, K. F., Goshen-Gottstein, Y., and Goodale, M. A. (2005). The involvement of the “fusiform face area” in processing facial expression. Neuropsychologia 43, 1645–1654. doi: 10.1016/j.neuropsychologia.2005.01.012

Graimann, B., Huggins, J. E., Schlögl, A., Levine, S. P., and Pfurtscheller, G. (2003). Detection of movement-related desynchronization patterns in ongoing single-channel electrocorticogram. IEEE Trans. Neural Syst. Rehabil. Eng. 11, 276–281. doi: 10.1109/TNSRE.2003.816863

Gramfort, A., Luessi, M., Larson, E., Engemann, D. A., Strohmeier, D., Brodbeck, C., et al. (2013). MEG and EEG data analysis with MNE-Python. Front. Neurosci. 7:267. doi: 10.3389/fnins.2013.00267

Guillory, S. A., and Bujarski, K. A. (2014). Exploring emotions using invasive methods: Review of 60 years of human intracranial electrophysiology. Soc. Cogn. Affect. Neurosci. 9, 1880–1889. doi: 10.1093/scan/nsu002

Haxby, J. V., Gobbini, M. I., Furey, M. L., Ishai, A., Schouten, J. L., and Pietrini, P. (2001). Distributed and overlapping representations of faces and objects in ventral temporal cortex. Science 293, 2425–2430. doi: 10.1126/science.1063736

Head, T., Kumar, M., Nahrstaedt, H., Louppe, G., and Shcherbatyi, I. (2021). Scikit-optimize/scikit-optimize. Zenodo doi: 10.5281/zenodo.5565057

Hein, G., and Knight, R. T. (2008). Superior temporal sulcus–It’s my area: Or is it? J. Cogn. Neurosci. 20, 2125–2136. doi: 10.1162/jocn.2008.20148

Hortensius, R., Terburg, D., Morgan, B., Stein, D. J., van Honk, J., and de Gelder, B. (2016). The role of the basolateral amygdala in the perception of faces in natural contexts. Philos. Trans. R. Soc. B Biol. Sci. 371:20150376. doi: 10.1098/rstb.2015.0376

Huang, G., and Zhang, Z. (2017). “Improving sensitivity of cluster-based permutation test for EEG/MEG data,” in Proceedings of the 2017 8th International IEEE/EMBS Conference on Neural Engineering (NER) (Shanghai: IEEE), 9–12. doi: 10.1109/NER.2017.8008279

Inman, C. S., Bijanki, K. R., Bass, D. I., Gross, R. E., Hamann, S., and Willie, J. T. (2018). Human amygdala stimulation effects on emotion physiology and emotional experience. Neuropsychologia 145:106722. doi: 10.1016/j.neuropsychologia.2018.03.019

Jaccard, P. (1901). Distribution de la flore alpine dans le bassin des Dranses et dans quelques régions voisines. Bull. Soc. Vaudoise Sci. Nat. 37, 241–272.

Kanwisher, N., and Yovel, G. (2006). The fusiform face area: A cortical region specialized for the perception of faces. Philos. Trans. R. Soc. Lond. B Biol. Sci. 361, 2109–2128. doi: 10.1098/rstb.2006.1934

Karnath, H. O., Sperber, C., and Rorden, C. (2018). Mapping human brain lesions and their functional consequences. Neuroimage 165, 180–189. doi: 10.1016/j.neuroimage.2017.10.028

Kassam, K. S., Markey, A. R., Cherkassky, V. L., Loewenstein, G., and Just, M. A. (2013). Identifying emotions on the basis of neural activation. PLoS One 8:66032. doi: 10.1371/journal.pone.0066032

Kesserwani, H., and Kesserwani, A. (2020). Apperceptive prosopagnosia secondary to an ischemic infarct of the lingual gyrus: A Case report and an update on the neuroanatomy, neurophysiology, and phenomenology of prosopagnosia. Cureus 12:e11272. doi: 10.7759/cureus.11272

King, A. J. (2006). Auditory neuroscience: Activating the cortex without sound. Curr. Biol. 16, R410–R411. doi: 10.1016/j.cub.2006.05.012

King, J. R., and Dehaene, S. (2014). Characterizing the dynamics of mental representations: The temporal generalization method. Trends Cogn. Sci. 18, 203–210. doi: 10.1016/j.tics.2014.01.002

King, J.-R., Gwilliams, L., Holdgraf, C., Sassenhagen, J., Barachant, A., Engemann, D., et al. (2018). Encoding and decoding neuronal dynamics: Methodological framework to uncover the algorithms of cognition. Lyon: HAL open Science.

Kragel, P. A., and LaBar, K. S. (2016). Decoding the nature of emotion in the brain. Trends Cogn. Sci. 20:444. doi: 10.1016/j.tics.2016.03.011

Kriegeskorte, N., Goebel, R., and Bandettini, P. (2006). Information-based functional brain mapping. Proc. Natl. Acad. Sci. U.S.A. 103, 3863–3868. doi: 10.1073/pnas.0600244103

Kropf, E., Syan, S. K., Minuzzi, L., and Frey, B. N. (2019). From anatomy to function: The role of the somatosensory cortex in emotional regulation. Rev. Bras. Psiquiatr. 41:261. doi: 10.1590/1516-4446-2018-0183

Langner, O., Dotsch, R., Bijlstra, G., Wigboldus, D. H. J., Hawk, S. T., and van Knippenberg, A. (2010). Presentation and validation of the Radboud Faces Database. Cogn. Emot. 24, 1377–1388. doi: 10.1080/02699930903485076

Lantrip, C., Gunning, F. M., Flashman, L., Roth, R. M., and Holtzheimer, P. E. (2017). Effects of transcranial magnetic stimulation on the cognitive control of emotion: Potential antidepressant mechanisms. J. ECT 33, 73–80. doi: 10.1097/YCT.0000000000000386

Lara, A. H., and Wallis, J. D. (2015). The role of prefrontal cortex in working memory: A mini review. Front. Syst. Neurosci. 9:173. doi: 10.3389/fnsys.2015.00173

LeDoux, J. E., and Brown, R. (2017). A higher-order theory of emotional consciousness. Proc. Natl. Acad. Sci. U.S.A. 114, E2016–E2025. doi: 10.1073/pnas.1619316114

Li, N., Chen, T. W., Guo, Z. V., Gerfen, C. R., and Svoboda, K. (2015). A motor cortex circuit for motor planning and movement. Nature 519, 51–56. doi: 10.1038/nature14178

Lima Portugal, L. C., Alves, R. C. S., Junior, O. F., Sanchez, T. A., Mocaiber, I., Volchan, E., et al. (2020). Interactions between emotion and action in the brain. Neuroimage 214:116728. doi: 10.1016/j.neuroimage.2020.116728

Loeffler, L. A. K., Radke, S., Habel, U., Ciric, R., Satterthwaite, T. D., Schneider, F., et al. (2018). The regulation of positive and negative emotions through instructed causal attributions in lifetime depression–A functional magnetic resonance imaging study. Neuroimage Clin. 20, 1233–1245. doi: 10.1016/j.nicl.2018.10.025

Maddock, R. J. (1999). The retrosplenial cortex and emotion: New insights from functional neuroimaging of the human brain. Trends Neurosci. 22, 310–316. doi: 10.1016/S0166-2236(98)01374-5

Maddock, R. J., Garrett, A. S., and Buonocore, M. H. (2003). Posterior cingulate cortex activation by emotional words: FMRI evidence from a valence decision task. Hum. Brain Mapp. 18:30. doi: 10.1002/hbm.10075

Maris, E., and Oostenveld, R. (2007). Nonparametric statistical testing of EEG- and MEG-data. J. Neurosci. Methods 164, 177–190. doi: 10.1016/j.jneumeth.2007.03.024

Méndez-Bértolo, C., Moratti, S., Toledano, R., Lopez-Sosa, F., Martínez-Alvarez, R., Mah, Y. H., et al. (2016). A fast pathway for fear in human amygdala. Nat. Neurosci. 19, 1041–1049. doi: 10.1038/nn.4324

Miendlarzewska, E. A., van Elswijk, G., Cannistraci, C. V., and van Ee, R. (2013). Working memory load attenuates emotional enhancement in recognition memory. Front. Psychol. 4:112. doi: 10.3389/fpsyg.2013.00112

Miller, E. K., and Cohen, J. D. (2001). An integrative theory of prefrontal cortex function. Annu. Rev. Neurosci. 24, 167–202. doi: 10.1146/annurev.neuro.24.1.167

Mitchell, T. M., Shinkareva, S. V., Carlson, A., Chang, K. M., Malave, V. L., Mason, R. A., et al. (2008). Predicting human brain activity associated with the meanings of nouns. Science 320, 1191–1195. doi: 10.1126/science.1152876

Nagy, K., Greenlee, M. W., and Kovács, G. (2012). The lateral occipital cortex in the face perception network: An effective connectivity study. Front. Psychol. 3:141. doi: 10.3389/fpsyg.2012.00141

Nejati, V., Majdi, R., Salehinejad, M. A., and Nitsche, M. A. (2021). The role of dorsolateral and ventromedial prefrontal cortex in the processing of emotional dimensions. Sci. Rep. 11, 1–12. doi: 10.1038/s41598-021-81454-7

Nichols, T. E., and Holmes, A. P. (2002). Nonparametric permutation tests for functional neuroimaging: A primer with examples. Hum. Brain Mapp. 15:1. doi: 10.1002/hbm.1058

Nicolas-Alonso, L. F., and Gomez-Gil, J. (2012). Brain computer interfaces, a review. Sensors (Basel) 12:1211. doi: 10.3390/s120201211

Nogueira, R., Abolafia, J. M., Drugowitsch, J., Balaguer-Ballester, E., Sanchez-Vives, M. V., and Moreno-Bote, R. (2017). Lateral orbitofrontal cortex anticipates choices and integrates prior with current information. Nat. Commun. 8:14823. doi: 10.1038/ncomms14823

Peirce, J., Gray, J. R., Simpson, S., MacAskill, M., Höchenberger, R., Sogo, H., et al. (2019). PsychoPy2: Experiments in behavior made easy. Behav. Res. Methods 51, 195–203. doi: 10.3758/s13428-018-01193-y

Pessoa, L., and Adolphs, R. (2010). Emotion processing and the amygdala: From a “low road” to “many roads” of evaluating biological significance. Nat. Rev. Neurosci. 11, 773–783. doi: 10.1038/nrn2920

Postelnicu, G., Zollei, L., and Fischl, B. (2009). Combined volumetric and surface registration. IEEE Trans. Med. Imaging 28, 508–522. doi: 10.1109/TMI.2008.2004426

Pourtois, G., Spinelli, L., Seeck, M., and Vuilleumier, P. (2010). Modulation of face processing by emotional expression and gaze direction during intracranial recordings in right fusiform cortex. J. Cogn. Neurosci. 22, 2086–2107. doi: 10.1162/jocn.2009.21404

Price, C. J. (2010). The anatomy of language: A review of 100 fMRI studies published in 2009. Ann. N. Y. Acad. Sci. 1191, 62–88. doi: 10.1111/j.1749-6632.2010.05444.x

Rice, G. E., Ralph, M. A. L., and Hoffman, P. (2015). The Roles of left versus right anterior temporal lobes in conceptual knowledge: An ALE meta-analysis of 97 functional neuroimaging studies. Cereb. Cortex (New York, NY) 25:4374. doi: 10.1093/cercor/bhv024

Rogers, T. T., Cox, C. R., Lu, Q., Shimotake, A., Kikuchi, T., Kunieda, T., et al. (2021). Evidence for a deep, distributed and dynamic code for animacy in human ventral anterior temporal cortex. Elife 10:66276. doi: 10.7554/eLife.66276

Rorden, C., Karnath, H.-O., and Bonilha, L. (2007). Improving lesion-symptom mapping. J. Cogn. Neurosci. 19, 1081–1088. doi: 10.1162/jocn.2007.19.7.1081

Saha, S., Mamun, K. A., Ahmed, K., Mostafa, R., Naik, G. R., Darvishi, S., et al. (2021). Progress in brain computer interface: Challenges and opportunities. Front. Syst. Neurosci. 15:578875. doi: 10.3389/fnsys.2021.578875

Sarkheil, P., Goebel, R., Schneider, F., and Mathiak, K. (2013). Emotion unfolded by motion: A role for parietal lobe in decoding dynamic facial expressions. Soc. Cogn. Affect. Neurosci. 8:950. doi: 10.1093/scan/nss092

Seghier, M. L., and Price, C. J. (2018). Interpreting and utilising intersubject variability in brain function. Trends Cogn. Sci. 22:517. doi: 10.1016/j.tics.2018.03.003

Selimbeyoglu, A., and Parvizi, J. (2010). Electrical stimulation of the human brain: Perceptual and behavioral phenomena reported in the old and new literature. Front. Hum. Neurosci. 4:46. doi: 10.3389/fnhum.2010.00046

Seo, D., Olman, C. A., Haut, K. M., Sinha, R., Macdonald, A. W., and Patrick, C. J. (2014). Neural correlates of preparatory and regulatory control over positive and negative emotion. Soc. Cogn. Affect. Neurosci. 9, 494–504. doi: 10.1093/scan/nst115

Shehzad, Z., and McCarthy, G. (2018). Neural circuits: Category representations in the brain are both discretely localized and widely distributed. J. Neurophysiol. 119:2256. doi: 10.1152/jn.00912.2017

Silberman, E. K., and Weingartner, H. (1986). Hemispheric lateralization of functions related to emotion. Brain Cogn. 5, 322–353. doi: 10.1016/0278-2626(86)90035-7

Smith, A. P. R., Henson, R. N. A., Dolan, R. J., and Rugg, M. D. (2004). fMRI correlates of the episodic retrieval of emotional contexts. Neuroimage 22, 868–878. doi: 10.1016/j.neuroimage.2004.01.049

Snoek, J., Larochelle, H., and Adams, R. P. (2012). Practical Bayesian optimization of machine learning algorithms. Adv. Neural Inf. Process. Syst. 25, 2960–2968.

Steyrl, D., Scherer, R., Faller, J., and Müller-Putz, G. R. (2016). Random forests in non-invasive sensorimotor rhythm brain-computer interfaces: A practical and convenient non-linear classifier. Biomed. Tech. (Berl) 61, 77–86. doi: 10.1515/bmt-2014-0117

Talati, A., and Hirsch, J. (2005). Functional specialization within the medial frontal gyrus for perceptual go/no-go decisions based on “what,” “when,” and “where” related information: An fMRI study. J. Cogn. Neurosci. 17, 981–993. doi: 10.1162/0898929054475226

Tsapkini, K., Frangakis, C. E., and Hillis, A. E. (2011). The function of the left anterior temporal pole: Evidence from acute stroke and infarct volume. Brain 134:3094. doi: 10.1093/brain/awr050

Vaidya, A. R., Pujara, M. S., Petrides, M., Murray, E. A., and Fellows, L. K. (2019). Lesion studies in contemporary neuroscience. Trends Cogn. Sci. 23:653. doi: 10.1016/j.tics.2019.05.009

Viviani, R. (2013). Emotion regulation, attention to emotion, and the ventral attentional network. Front. Hum. Neurosci. 7:746. doi: 10.3389/fnhum.2013.00746

Vuilleumier, P., and Pourtois, G. (2007). Distributed and interactive brain mechanisms during emotion face perception: Evidence from functional neuroimaging. Neuropsychologia 45, 174–194. doi: 10.1016/j.neuropsychologia.2006.06.003

Wada, S., Honma, M., Masaoka, Y., Yoshida, M., Koiwa, N., Sugiyama, H., et al. (2021). Volume of the right supramarginal gyrus is associated with a maintenance of emotion recognition ability. PLoS One 16:e0254623. doi: 10.1371/journal.pone.0254623

Wager, T. D., Kang, J., Johnson, T. D., Nichols, T. E., Satpute, A. B., and Barrett, L. F. (2015). A Bayesian model of category-specific emotional brain responses. PLoS Comput. Biol. 11:e1004066. doi: 10.1371/journal.pcbi.1004066

Wang, Q., Cagna, B., Chaminade, T., and Takerkart, S. (2020). Inter-subject pattern analysis: A straightforward and powerful scheme for group-level MVPA. Neuroimage 204:116205. doi: 10.1016/j.neuroimage.2019.116205

Wei, G. X., Ge, L., Chen, L. Z., Cao, B., and Zhang, X. (2021). Structural abnormalities of cingulate cortex in patients with first-episode drug-naïve schizophrenia comorbid with depressive symptoms. Hum. Brain Mapp. 42, 1617–1625. doi: 10.1002/hbm.25315

Woolnough, O., Donos, C., Rollo, P. S., Forseth, K. J., Lakretz, Y., Crone, N. E., et al. (2020). Spatiotemporal dynamics of orthographic and lexical processing in the ventral visual pathway. Nat. Hum. Behav. 5, 389–398. doi: 10.1101/2020.02.18.955039

You, Y., and Li, W. (2016). Parallel processing of general and specific threat during early stages of perception. Soc. Cogn. Affect. Neurosci. 11, 395–404. doi: 10.1093/scan/nsv123

Keywords: intracranial EEG (iEEG), brain network, searchlight analysis, multivariate pattern analysis (MVPA), facial emotion recognition (FER), machine learning

Citation: Donos C, Blidarescu B, Pistol C, Oane I, Mindruta I and Barborica A (2022) A comparison of uni- and multi-variate methods for identifying brain networks activated by cognitive tasks using intracranial EEG. Front. Neurosci. 16:946240. doi: 10.3389/fnins.2022.946240

Received: 17 May 2022; Accepted: 08 September 2022; Published: 26 September 2022.

Edited by:

Marta Molinas, Norwegian University of Science and Technology, Norway

Reviewed by:

Maximiliano Bueno-Lopez, University of Cauca, Colombia

Soumyajit Mandal, Brookhaven National Laboratory (DOE), United States

Copyright © 2022 Donos, Blidarescu, Pistol, Oane, Mindruta and Barborica. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Cristian Donos, cristian.donos@g.unibuc.ro
