- ¹School of Sport, Exercise and Rehabilitation Sciences, University of Birmingham, Birmingham, United Kingdom

- ²School of Health & Life Sciences, Teesside University, Middlesbrough, United Kingdom

Background: To date, no gold standard exists for the assessment of unilateral spatial neglect (USN), a common post-stroke cognitive impairment, and the clinical assessments currently in use offer limited sensitivity. Extensive research has shown that computer-based (CB) assessments can be more sensitive, but they have not yet been adopted by stroke services.

Objective: We conducted a systematic review providing an overview of existing CB tests for USN to identify knowledge gaps and positive/negative aspects of different methods. This review also investigated the benefits and barriers of introducing CB assessment tasks to clinical settings and explored practical implications for optimizing future designs.

Methodology: We included studies that investigated the efficacy of CB neglect assessment tasks compared to conventional methods in detecting USN in adults with brain damage. Studies were identified through electronic database searches (e.g., Scopus), using keywords and combinations of standardized terms, without date limitation (last search: 08/06/2022). Literature review and study selection were based on prespecified inclusion criteria. The quality of studies was assessed with the quality assessment of diagnostic accuracy studies tool (QUADAS-2). Data synthesis included a narrative synthesis, a table summarizing the evidence, and a vote counting analysis based on a direction of effect plot.

Results: A total of 28 studies met the eligibility criteria and were included in the review. Of these, 13/28 studies explored CB versions of conventional tasks, 11/28 involved visual search tasks, and 5/28 involved other types of tasks. The vote counting analysis revealed that 17/28 studies found CB tasks had equal or higher sensitivity than conventional methods, and 15/28 studies found a positive correlation with conventional methods. Finally, 20/28 studies showed that CB tasks effectively distinguished patients with USN from other patient groups, and 17/28 from control groups.

Conclusions: The findings of this review provide practical implications for the future implementation of CB assessment, offering important information for addressing a variety of methodological issues. The study adds to our understanding of using CB tasks for USN assessment, exploring their efficacy and benefits compared to conventional methods, and considers their adoption in clinical environments.

Introduction

Unilateral spatial neglect (USN) is one of the most common post-stroke cognitive impairments, with a prevalence of up to 80% early after stroke (Stone et al., 1993; Hammerbeck et al., 2019) and around 30% in the chronic phase of stroke (Esposito et al., 2021). USN, as defined by Heilman et al. (1987), is an inability to explore or respond to a stimulus on the contralesional side of space, provided that this failure is not caused by lower-level sensory, motor, or visual impairment. USN can be observed on either the left or the right side of space, with higher frequency and more severe lateralized attention deficits on the left side (Ten Brink et al., 2017). In the early stages of stroke, the severity of USN can be a prognostic factor of increased hospital length of stay, worse rehabilitation outcome, family burden, and long-lasting impairments (Buxbaum et al., 2004; Luvizutto et al., 2018; Hammerbeck et al., 2019). The severity of neglect has also been associated with a higher risk of falls, reduced quality of life, reduced functional outcome, and reduced independence in the chronic stages (Jehkonen et al., 2006; Chen et al., 2015). The underlying mechanisms of USN have been intensively investigated, with proposed theories mainly focused on representational (Bisiach and Luzzatti, 1978; Milner et al., 1993) and attentional factors (Heilman and Van Den Abell, 1980; Posner et al., 1982; Kinsbourne, 1993). However, some aspects of the theoretical underpinnings of USN remain controversial in the literature (Karnath and Rorden, 2012; Baldassarre et al., 2014; Montedoro et al., 2018), possibly as a result of the complex and heterogeneous nature of the neglect syndrome: diverse subtypes might be associated with different brain areas and forms of the disorder (Rode et al., 2017; Gammeri et al., 2020). For example, USN can be subdivided based on spatial domains and frames of reference (Buxbaum et al., 2004; Caggiano and Jehkonen, 2018). In the last 20 years, neuroanatomical studies have also highlighted associations with parietal, temporal, and frontal lobe brain lesions in affected patients (Corbetta and Shulman, 2011; Lunven and Bartolomeo, 2017; Zebhauser et al., 2019).

An additional challenge in our understanding of USN is diagnosis, which is complicated by both the lack of a gold standard assessment and the use of numerous and varied diagnostic tools (Azouvi et al., 2016). Menon and Korner-Bitensky (2004) identified more than 60 behavioral tests and functional assessment tools that vary widely in apparatus, task requirements/design, and diagnostic accuracy, and that highlight different neglect subtypes or syndrome components (Verdon et al., 2010; Grattan and Woodbury, 2017). National clinical guidelines for stroke suggest using standardized assessments to assess USN, including paper-and-pencil (PnP) tests (Royal College of Physicians, 2016; Canadian Stroke Best Practice Recommendations, 2019). A multidisciplinary international survey (Checketts et al., 2021) reported that the most widely used USN assessments currently include the Behavioral Inattention Test (BIT), developed by Wilson et al. (1987), and the Catherine Bergego Scale (CBS), developed by Azouvi (1996). Even though these tests are used on a daily basis in clinical practice, there remains criticism regarding how optimal they are, and studies have demonstrated that they still suffer from many limitations related to lack of precision, limited ecological validity, reduced sensitivity, and high rates of false-negative results (Rengachary et al., 2009; Bonato and Deouell, 2013; Kaufmann et al., 2020).

There remains a need for higher-quality assessment methods to be introduced to clinical practice, and in the last two decades a variety of studies have demonstrated that computer-based (CB) tasks may be able to make a significant contribution. These tasks can provide higher sensitivity and diagnostic accuracy (also detecting mild and chronic cases) and better psychometric properties (especially diagnostic validity) with low cost and short administration time (Schendel and Robertson, 2002; Deouell et al., 2005; Erez et al., 2009; Rengachary et al., 2009; Bonato, 2012; Villarreal et al., 2020). CB tasks appear to be well accepted by patients, offer design flexibility, stimulus modification, and adjustment of difficulty, and may also limit the use of compensatory strategies by patients. They may also be less prone to floor and ceiling effects (Bonato et al., 2010, 2013; Bonato, 2012). These tasks can also provide important patient information regarding the subtype and severity of neglect, providing data to inform a more specific, individually tailored rehabilitation program (Ulm et al., 2013; Dalmaijer et al., 2015). Computer-based tasks may therefore provide an opportunity to augment USN assessment in clinical practice. PnP tests are nevertheless likely to remain important because of practical limitations of some CB tests, such as the need for specific hardware or software and the current requirement for basic programming/statistical skills to implement them (Bonato and Deouell, 2013). However, these practical limitations have gradually been reduced, and the past decade has seen a rapid increase in the accessibility of computers and in their role in everyday life (e.g., education and entertainment), providing a more supportive environment for their implementation in clinical practice.

This review draws a distinction between CB assessment and assessment using virtual reality (VR). Previous reviews have focused on VR tasks (immersive and non-immersive) used in the assessment and rehabilitation of USN, demonstrating that VR assessment can effectively detect USN (Tsirlin et al., 2009; Pedroli et al., 2015; Ogourtsova et al., 2017). In contrast, this is the first study to provide an evaluation and overview of existing CB assessment tools for USN that do not involve VR. The reviewers were not able to find an existing operational definition of CB assessment in the related literature, or of how it differs from non-immersive VR tasks; however, the two appear to be distinct in practice, since this review overlapped only minimally (1 out of 28 studies) with a VR-focused systematic review (Ogourtsova et al., 2017). For the purpose of this systematic review, CB tasks were defined as screen-based tasks that the authors of the included studies described as CB tasks (even if they could potentially be placed in the gray area between CB tasks and non-immersive VR tasks), using a variety of different displays (e.g., monitor, projector, tablet).

This systematic review will shed light on the methodological inconsistencies in related studies and provide an evaluation and overview of the existing evidence around the CB assessment of USN. The results will offer future researchers essential background knowledge for designing and optimizing CB tasks. This review will also explore the advantages and challenges for stroke services in adopting CB assessment for USN. To our knowledge, no previous study has conducted a systematic review on CB assessment for USN. To define the review question, a PICO (population, intervention, comparison, and outcome) framework was adopted (Higgins et al., 2019).

“Do CB tasks enhance the ability to detect USN in stroke survivors compared with conventional tests?”

PICO:

Participants: Studies including adult (aged over 18 years) stroke survivors or patients with other types of brain damage with or without USN.

Intervention: CB tasks (screen-based tasks that are categorized by the authors of the included studies as CB assessment/testing or computerized tasks etc., using displays such as monitor, projector, tablet etc.).

Comparison: Conventional tests (e.g., PnP, or functional assessment) or CB design based on PnP.

Outcomes: A variety of outcomes were accepted, such as diagnostic accuracy measures (e.g., sensitivity, specificity), psychometric properties (e.g., validity, reliability), correlation coefficients, subjects' performance data, and narrative reports.

Methodology

The review was conducted in accordance with the Preferred Reporting Items for Systematic Reviews and Meta-Analyses (PRISMA) guidelines (Moher et al., 2009).

Search strategy

This review considered studies focusing on evaluating the diagnostic accuracy and performance of CB tasks in identifying USN in stroke survivors and patients with other types of brain damage.

A comprehensive search of electronic databases (PsycINFO, EMBASE, MEDLINE (OVID), AMED, CINAHL (EBSCO), Web of Science, PubMed, Scopus) was conducted using keywords and combinations of standardized terms.

The last search was conducted on 08/06/2022 with the following search strategy keywords:

- (“brain damage” OR “brain lesion” OR aneurysm OR “transient ischaemic attack” OR “ischemic attack” OR TIA OR “cerebrovascular accident” OR “cerebral vascular accident” OR CVA OR “traumatic brain injury” OR TBI OR stroke OR “brain tumor”) AND

- (neglect OR “visuospatial neglect” OR inattention OR “unilateral neglect” OR “unilateral inattention” OR hemineglect OR “unilateral spatial neglect” OR “hemispatial neglect” OR “visual neglect” OR “hemi-inattention” OR “perceptual disorder*”) AND

- (assess* OR measur* OR evaluat* OR test* OR screen* OR diagnos* OR assessment OR measurement OR evaluation OR diagnosis OR screening) AND

- (computer* OR computer OR computerized OR computer-based OR phone OR smartphone OR tablet)
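The four concept blocks above are combined with AND, while the terms inside each block are combined with OR. As a minimal sketch of how such a query string could be assembled programmatically, the snippet below uses abbreviated term lists; the helper names `quote` and `build_query` and the quoting rule are illustrative assumptions, not the procedure used by the reviewers, and database-specific syntax (field tags, proximity operators) is not handled.

```python
def quote(term: str) -> str:
    """Wrap multi-word phrases in double quotes; leave single words as-is."""
    return f'"{term}"' if " " in term else term


def build_query(blocks: list[list[str]]) -> str:
    """OR the terms inside each block, then AND the blocks together."""
    groups = ["(" + " OR ".join(quote(t) for t in block) + ")" for block in blocks]
    return " AND ".join(groups)


# Abbreviated, illustrative term lists; the full lists are those given above.
population = ["brain damage", "stroke", "traumatic brain injury", "TBI"]
condition = ["neglect", "unilateral spatial neglect", "hemi-inattention"]
assessment = ["assess*", "diagnos*", "screen*"]
modality = ["computer*", "computer-based", "tablet"]

query = build_query([population, condition, assessment, modality])
print(query)
```

Keeping each concept block as a separate list makes it easier to document and rerun the same strategy across databases.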

To retrieve further published, unpublished, and ongoing studies, the reference lists of included articles were searched manually, gray literature was identified through Google Scholar, and registered ongoing trials were searched on ClinicalTrials.gov (http://clinicaltrials.gov/).

No restrictions were applied regarding publication date or status. The search was not limited to studies written in English, although all eligible studies were written in English.

Eligibility criteria

• Inclusion criteria

- Context: studies that present or evaluate the diagnostic accuracy and performance of CB tests for USN in stroke survivors and patients with other types of brain damage (e.g., brain lesion, aneurysm, traumatic brain injury, or brain tumor etc.).

- Studies that fulfill the criteria established by the PICO framework.

- The term “CB tasks” was not consistent in the literature, so the reviewers decided to include studies exploring screen-based tasks presented by the authors of the included studies as CB assessment/testing (or using related synonyms such as computerized or digitized/computer version of PnP etc.). We included CB tasks that followed these criteria even if they could be potentially classified in the gray area of CB tasks and non-immersive VR tasks, using a variety of different apparatus (e.g., computer-monitor, projectors, tablet).

• Exclusion criteria

- Studies not including stroke survivors or patients with other types of brain damage.

- Studies that did not focus on USN assessment.

- Studies where CB task is not the index test.

- Studies where the design of the CB task and apparatus are not substantially defined so that the reviewers can decide whether it can be included in the review. Studies that did not clarify the type of apparatus (e.g., response box, computer-monitor, projector) or specific design details (e.g., task demands and outcome measures) were excluded.

- Studies that did not clarify the use of a comparison tool.

- Studies that did not report or evaluate any form of diagnostic accuracy or performance of the CB test.

- Studies exploring VR based tasks (as defined by the authors and/or as interpreted by the reviewers).

Data extraction and selection

Duplicates were removed and IG screened the titles and abstracts of all identified studies, excluding irrelevant studies. The remaining studies were screened in full text and excluded based on the eligibility criteria. The screening decisions were verified by DL. Conflicts were resolved by discussion; a third author (DP) was available to resolve any remaining discrepancy, but this was not needed.

IG and DL performed data extraction using a data extraction sheet (Supplementary Table 1), which was developed to accurately collect study characteristics and data. Data collection was verified, and disagreements were resolved by discussion and consensus.

Extracted data included:

- Study characteristics: study type, population, sample size, year, number of participants with USN (+) and without USN (-), patients with right brain damage (RBD) or left brain damage (LBD), follow-up, inclusion/exclusion criteria, etc.

- CB task information (e.g., apparatus, outcome measures, administration time) and reference standards comparison for diagnosing USN.

- Study data: diagnostic accuracy measures, psychometric properties, outcome measures efficacy, patient history data, and test performance data, etc.

- Information for risk of bias assessment.

Risk of bias (quality) assessment

IG and DL independently used the quality assessment tool for diagnostic accuracy studies (QUADAS-2) for systematic reviews to evaluate the quality of evidence for the identified studies (Whiting et al., 2011).

Data synthesis

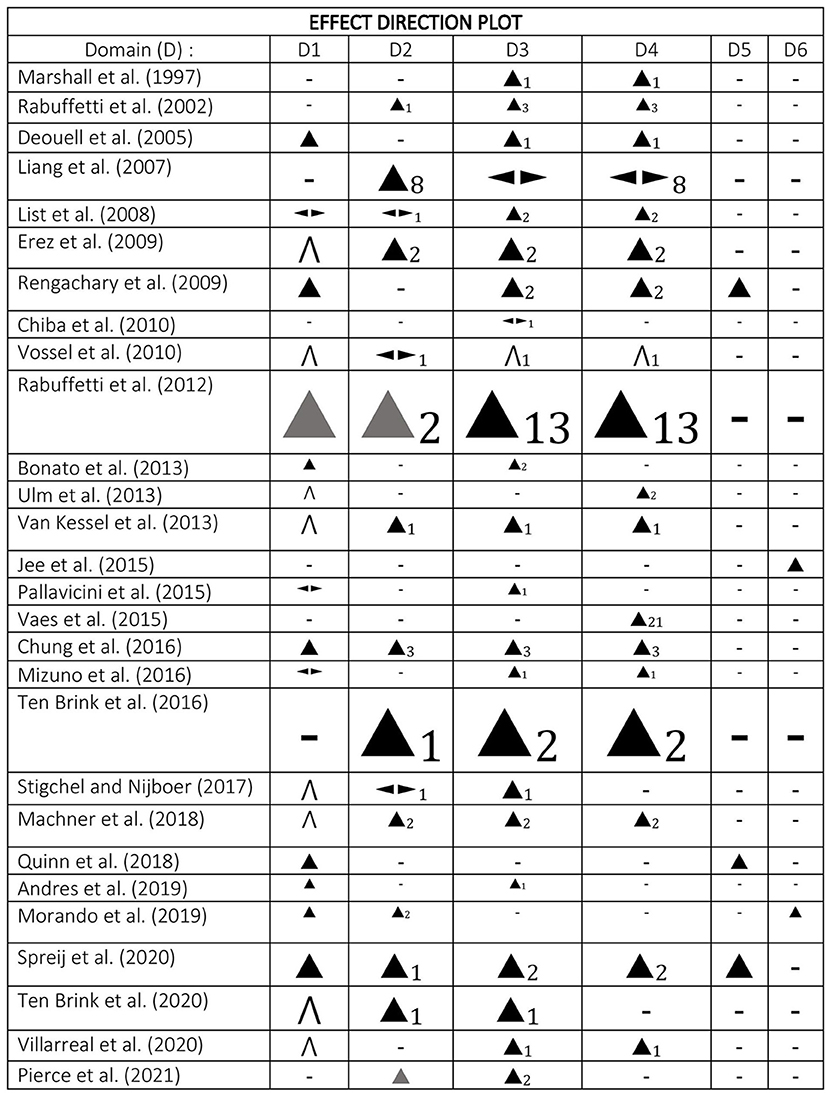

This review includes a narrative synthesis and analysis of the results of the included studies (McKenzie and Brennan, 2019). Studies were tabulated and compared in groups based on the index test type (e.g., CB version of PnP, visual search tasks, and other types) and heterogeneity was explored according to the type of analysis, data, and index test (Campbell et al., 2020). Study characteristics were synthesized and presented in a table (Supplementary Table 2) containing summaries of the outcome measures, patient history data, study details, and risk of bias. The main results were tabulated (Supplementary Table 3) and data were analyzed and presented as an effect direction plot (Figure 1) for 6 domains (sensitivity, correlation coefficient with conventional task or neglect symptoms, ability to distinguish among patient groups, ability to distinguish patients from control groups, specificity, reliability) (Boon and Thomson, 2021). In order to gain a better understanding and deeper analysis of the data, a vote counting technique of effect direction was conducted (McKenzie and Brennan, 2019).
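As a minimal sketch of vote counting based on direction of effect, the snippet below tallies the direction reported by each study for one domain and applies a two-sided exact binomial (sign) test against an equal split. The function name `sign_test`, the vote labels, and the example votes are hypothetical and are not data from this review; leaving mixed or unclear studies out of the test is an assumption of this sketch.

```python
from math import comb


def sign_test(positive: int, total: int) -> float:
    """Two-sided exact binomial (sign) test against a 50/50 split of directions."""
    if total <= 0 or not 0 <= positive <= total:
        raise ValueError("need total > 0 and 0 <= positive <= total")
    k = max(positive, total - positive)
    upper_tail = sum(comb(total, i) for i in range(k, total + 1)) / 2 ** total
    return min(1.0, 2 * upper_tail)


# Hypothetical effect directions for one domain ("+" favors the CB task,
# "-" favors the conventional task, "mixed" has no clear direction).
votes = ["+", "+", "+", "-", "+", "+", "mixed", "+", "+", "-", "+"]

n_pos = votes.count("+")
n_neg = votes.count("-")
n_clear = n_pos + n_neg  # mixed/unclear studies are excluded (assumption)

print(f"{n_pos}/{n_clear} studies favor the CB task")
print(f"sign test p = {sign_test(n_pos, n_clear):.4f}")
```

Vote counting of this kind summarizes only the direction of effects, not their size, which is why it is presented alongside the narrative synthesis and not as a replacement for a meta-analysis.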

Ideally, we would have performed a meta-analysis of the data from the included studies to compare outcomes of the CB assessment tools, including properties (e.g., sensitivity, specificity) and outcome measures [e.g., reaction times (RT) and accuracy]. However, there were insufficient data with acceptable homogeneity to undertake a meta-analysis (Deeks et al., 2019).

Results

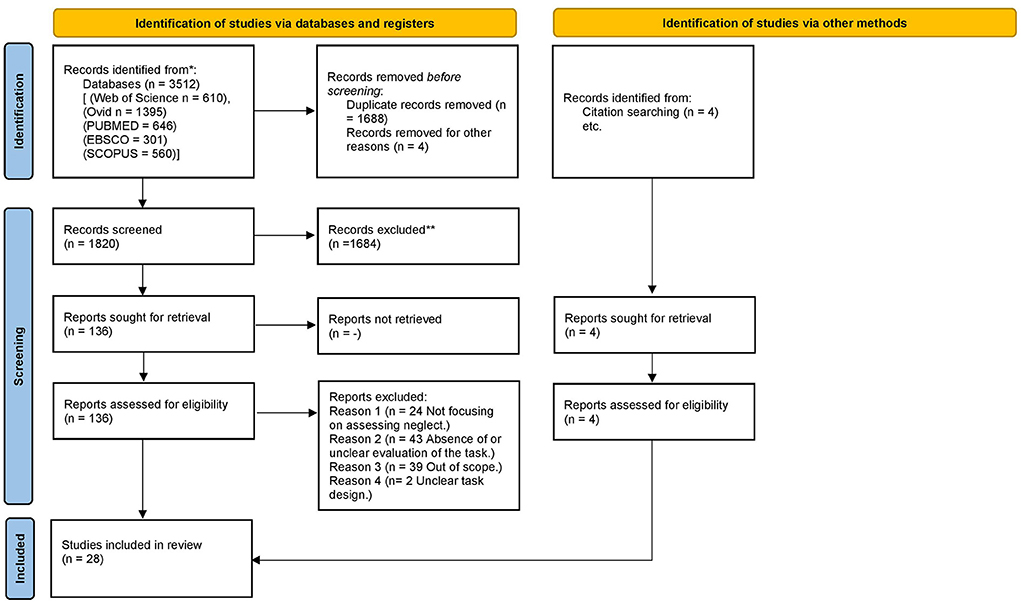

In the current systematic review, we aimed to provide a review of studies evaluating CB assessment of USN. The screening process is summarized in a PRISMA flow diagram (Figure 2) (Page et al., 2021). In total, 136 studies were screened in full text, of which 28 met the inclusion criteria and were critically appraised. The excluded citations and the reasons for exclusion are tabulated in Supplementary Table 4. There were three main reasons for excluding studies that were otherwise within scope. First, the use of a comparison tool was either absent or unclear. Second, the authors did not clarify whether USN was assessed. Lastly, the design of the CB tasks was not explained thoroughly enough to allow the reviewers to decide whether the study was eligible for inclusion. This process led to the exclusion of some important studies that explored CB assessment methods using overlays of PnP tests on graphic tablets (Donnelly et al., 1999; Guest et al., 2002), CB versions of PnP tests (Halligan and Marshall, 1989; Kerkhoff and Marquardt, 1995; Chiba et al., 2006; Smit et al., 2013; Van der Stoep et al., 2013; Hopfner et al., 2015) and software for analysis (Rorden and Karnath, 2010; Dalmaijer et al., 2015). Moreover, the reviewers had to exclude a large body of work that investigated target/stimulus detection tasks (Beis et al., 1994; Tipper and Behrmann, 1996; Baylis et al., 2004), RT tasks (Anderson et al., 2000; Schendel and Robertson, 2002; Sacher et al., 2004), visual search tasks (Laeng et al., 2002; Toba et al., 2018; Borsotti et al., 2020), visual attention theory focused tasks (Habekost and Bundesen, 2003; Bublak et al., 2005) and the test battery of attentional performance (TAP) (Zimmermann and Fimm, 2002).
Supplementary Table 2 presents the main characteristics of the included studies (risk of bias, subjects, conventional tool comparison, task details, results, and conclusions). The studies were grouped by CB assessment type into three groups [1. CB versions of conventional tasks, 2. Visual search tasks (2a. Dynamic & dual tasks, 2b. Feature and conjunction tasks, 2c. Static tasks), 3. Different types of tasks]. In our synthesis, we explored the apparatus, response method, outcome measure, comparison, and properties of the CB tools. Supplementary Table 3 presents a summary of the main results of the studies, and Figure 1 is an effect direction plot based on the vote counting analysis.

Computer-based task type

We identified 13 studies that investigated test batteries of CB versions of conventional tasks, including tasks similar to line bisection (Chiba et al., 2010; Jee et al., 2015), cancellation (Rabuffetti et al., 2002, 2012), baking tray (Chung et al., 2016) or combinations of different types of tasks (Liang et al., 2007; Ulm et al., 2013; Pallavicini et al., 2015; Vaes et al., 2015; Mizuno et al., 2016; Ten Brink et al., 2016; Quinn et al., 2018; Morando et al., 2019). Our synthesis included 11 studies exploring visual search tasks such as static (Mizuno et al., 2016; Machner et al., 2018; Ten Brink et al., 2020), feature and conjunction (List et al., 2008; Erez et al., 2009), and dynamic and dual tasks (Marshall et al., 1997; Deouell et al., 2005; Bonato et al., 2013; Van Kessel et al., 2013; Andres et al., 2019; Villarreal et al., 2020). We found five studies that examined other types of tasks, such as the widely investigated Posner cueing paradigm (Rengachary et al., 2009), a neglect/extinction task (Vossel et al., 2010), a temporal order judgment (TOJ) task (Stigchel and Nijboer, 2017), a driving simulator task (Spreij et al., 2020) and a manual spatial exploration task (Pierce et al., 2021).

CB functional tasks (Pallavicini et al., 2015) and visual search tasks were shown to be more sensitive than CB versions of PnP tasks (Ten Brink et al., 2020). Moreover, combining different types of tasks can capture a wider range of neglect symptoms (Erez et al., 2009; Mizuno et al., 2016; Spreij et al., 2020). An interesting finding was that different types of tasks were associated with different types of errors: for example, cancellation tasks were more sensitive to viewer-centered (egocentric) errors, whereas visual search and line bisection tasks were more sensitive to stimulus-centered (allocentric) errors; similarly, RT and TOJ tasks also detected spatial bias deficits (Van Kessel et al., 2013; Mizuno et al., 2016; Stigchel and Nijboer, 2017).

Some studies compared diagnostic accuracy between CB tests and, as expected, the more complex CB tasks (e.g., higher demands, dual tasks, increased number of targets, conjunction tasks) were more sensitive and could detect more cases (chronic/subclinical) than simpler versions (e.g., feature tasks) (Marshall et al., 1997; List et al., 2008; Erez et al., 2009; Bonato et al., 2013; Van Kessel et al., 2013; Andres et al., 2019; Ten Brink et al., 2020; Villarreal et al., 2020).

Outcome measures

Most cancellation tasks obtained measures including the number of touched (canceled) targets, revisits, intersections, omissions, center of cancellation (CoC) (Rabuffetti et al., 2002, 2012; Ulm et al., 2013; Pallavicini et al., 2015; Ten Brink et al., 2016). Line bisection tasks usually captured the mean deviation (Chiba et al., 2010; Ulm et al., 2013; Jee et al., 2015).
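To make two of these measures concrete, the sketch below computes a simplified center of cancellation and a mean signed bisection deviation from logged coordinates. The function names, the coordinate conventions, and in particular the rescaling of target positions to the range -1 to +1 are assumptions made for illustration; the included studies and published CoC software may normalize differently.

```python
from statistics import mean


def center_of_cancellation(target_xs, cancelled):
    """Mean horizontal position of cancelled targets, rescaled to [-1, 1].

    Assumption: positions are rescaled so that the leftmost target is -1 and
    the rightmost is +1. Returns None when no target was cancelled.
    """
    lo, hi = min(target_xs), max(target_xs)
    if hi == lo:
        raise ValueError("targets must span some horizontal extent")
    mid, half = (lo + hi) / 2, (hi - lo) / 2
    hits = [(x - mid) / half for x, done in zip(target_xs, cancelled) if done]
    return mean(hits) if hits else None


def mean_bisection_deviation(marks, true_midpoints):
    """Mean signed deviation of bisection marks; positive = rightward shift."""
    return mean([m - t for m, t in zip(marks, true_midpoints)])


# Hypothetical trial data (arbitrary horizontal units, left to right).
xs = [0, 10, 20, 30, 40]
cancelled = [False, False, True, True, True]  # left-sided omissions
print(center_of_cancellation(xs, cancelled))  # 0.5, i.e. a rightward bias

print(mean_bisection_deviation([52, 55, 53], [50, 50, 50]))
```

A value near 0 indicates no lateral bias in either measure, while values further from 0 indicate cancellations or bisection marks shifted toward one side.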

Visual search and exploration tasks included measures based on false alarms/catch trials responses, target detection (capturing accuracy, detection rate/probability) and time (such as RT, search time and task duration); data analysis in these tasks was performed based on target position [e.g., left/right (L/R)] and task demands (e.g., level of difficulty, number of distractors) (Marshall et al., 1997; Deouell et al., 2005; List et al., 2008; Erez et al., 2009; Vossel et al., 2010; Rengachary et al., 2011; Bonato et al., 2013; Van Kessel et al., 2013; Vaes et al., 2015; Mizuno et al., 2016; Machner et al., 2018; Andres et al., 2019; Ten Brink et al., 2020; Villarreal et al., 2020).

In the course of this work, we found that (L/R) target detection (Bonato et al., 2013; Van Kessel et al., 2013; Pallavicini et al., 2015; Machner et al., 2018; Andres et al., 2019), hit rate (response rate) (Erez et al., 2009; Ten Brink et al., 2020), RT asymmetry scores (Deouell et al., 2005; Rengachary et al., 2009; Van Kessel et al., 2013; Machner et al., 2018) and the number of intersections (disorganized search) (Ten Brink et al., 2016) were all shown to be among the most sensitive measures for detecting spatial bias and visual search deficits in patients with brain damage.
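As an illustration of how left/right asymmetry measures of this kind can be derived from trial-level data, the sketch below computes a normalized asymmetry index for reaction times and for detection rates. The formula (difference divided by sum), the sign convention, and the function names are assumptions chosen for illustration; the included studies define their asymmetry scores in different ways.

```python
from statistics import mean


def asymmetry_index(worse_if_higher_left: float, right: float) -> float:
    """Normalized difference (left - right) / (left + right), in [-1, 1]."""
    total = worse_if_higher_left + right
    if total == 0:
        raise ValueError("left and right values must not both be zero")
    return (worse_if_higher_left - right) / total


def rt_asymmetry(left_rts, right_rts):
    """Positive values mean slower responses to left-sided targets."""
    return asymmetry_index(mean(left_rts), mean(right_rts))


def detection_asymmetry(left_hits, left_total, right_hits, right_total):
    """Positive values mean fewer detections of left-sided targets."""
    left_rate = left_hits / left_total
    right_rate = right_hits / right_total
    # Arguments are swapped so that a left-sided deficit is positive,
    # matching the sign convention of rt_asymmetry.
    return asymmetry_index(right_rate, left_rate)


# Hypothetical data: reaction times in ms and hit counts per hemifield.
print(rt_asymmetry([600, 700], [400, 500]))
print(detection_asymmetry(left_hits=6, left_total=10, right_hits=9, right_total=10))
```

Using the same sign convention for both indices means that a left-sided deficit shows up as a positive score in each, which simplifies comparison across measures.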

Apparatus and response type

A number of studies used manual response displays such as touchscreen monitors (Rabuffetti et al., 2002, 2012; Ulm et al., 2013; Ten Brink et al., 2016, 2020), smartphone/tablet devices (Pallavicini et al., 2015; Chung et al., 2016; Quinn et al., 2018; Morando et al., 2019; Pierce et al., 2021) or graphic tablets (Liang et al., 2007; Vaes et al., 2015). Several authors explored the efficacy of using projectors/large screens (Van Kessel et al., 2013; Machner et al., 2018; Spreij et al., 2020; Villarreal et al., 2020), or a PC/laptop with a mouse (Marshall et al., 1997) or with a response box (Deouell et al., 2005; Erez et al., 2009; Rengachary et al., 2009; Chiba et al., 2010; Vossel et al., 2010; Stigchel and Nijboer, 2017). Some tests required verbal responses (List et al., 2008; Bonato et al., 2013; Andres et al., 2019) or both verbal and manual responses (Chiba et al., 2010). Various tasks captured performance with more than one type of response or apparatus (Van Kessel et al., 2013; Jee et al., 2015; Vaes et al., 2015).

Although some methods may be more accurate than others, the reviewed literature did not show any apparatus or response type to be superior to the others.

Specific details

Individual tasks took either 5–10 min (Marshall et al., 1997; Van Kessel et al., 2013) or 10–20 min to complete (List et al., 2008; Rengachary et al., 2009; Vossel et al., 2010; Ulm et al., 2013), while test batteries could last more than 20 min (Vaes et al., 2015). The CB tasks were considered to provide accurate results within a short administration time (Ulm et al., 2013; Pierce et al., 2021). Authors suggest that they also reduce overall assessment time compared to conventional methods (e.g., batteries of pen and paper tasks), which is important because long administration times can fatigue patients and influence the results (Liang et al., 2007; List et al., 2008).

The diagonal size of the screen/display varied from 13–15 inches (Deouell et al., 2005; Chiba et al., 2010; Ten Brink et al., 2020) to 17–19 inches (Rengachary et al., 2009; Rabuffetti et al., 2012; Andres et al., 2019) to 24–33 inches (Mizuno et al., 2016; Machner et al., 2018). The viewing distance was around 30–46 cm (Chiba et al., 2010; Mizuno et al., 2016; Quinn et al., 2018), 60–70 cm (List et al., 2008; Andres et al., 2019) or 90–100 cm (Deouell et al., 2005; Stigchel and Nijboer, 2017). Neither factor was found to affect the tasks' accuracy or the participants' performance.

Sensitivity

Sensitivity was reported in 20/28 studies, with four reporting it as a percentage (Bonato et al., 2013; Chung et al., 2016; Quinn et al., 2018; Spreij et al., 2020), four with statistical significance values (Deouell et al., 2005; Rengachary et al., 2009; Rabuffetti et al., 2012; Andres et al., 2019) and 11 in a narrative manner (List et al., 2008; Erez et al., 2009; Vossel et al., 2010; Ulm et al., 2013; Van Kessel et al., 2013; Pallavicini et al., 2015; Mizuno et al., 2016; Stigchel and Nijboer, 2017; Machner et al., 2018; Ten Brink et al., 2020; Villarreal et al., 2020). Some CB tools had varied results, with both positive and negative or neutral outcomes reported (List et al., 2008; Pallavicini et al., 2015; Mizuno et al., 2016). Overall, 17/28 CB tasks showed superior or equal sensitivity in detecting USN symptoms when compared to a variety of conventional tasks (Deouell et al., 2005; Erez et al., 2009; Vossel et al., 2010; Rabuffetti et al., 2012; Ulm et al., 2013; Van Kessel et al., 2013; Chung et al., 2016; Stigchel and Nijboer, 2017; Quinn et al., 2018; Morando et al., 2019; Spreij et al., 2020; Ten Brink et al., 2020; Villarreal et al., 2020). The CB methods were able to detect USN cases that the PnP tests did not, either because of compensatory strategies implemented by the patients or because of ceiling effects (Marshall et al., 1997; Deouell et al., 2005; Rengachary et al., 2009; Rabuffetti et al., 2012; Bonato et al., 2013; Van Kessel et al., 2013), especially in chronic (Andres et al., 2019), mild (Rengachary et al., 2009; Mizuno et al., 2016) and subclinical cases (Van Kessel et al., 2013; Machner et al., 2018).

Specificity/reliability

Specificity was reported in 3/28 studies; however, only two of these reported specificity as a percentage (high in both cases) (Quinn et al., 2018; Spreij et al., 2020), and only the latter included predictive values. Similarly, 2/28 studies reported high reliability; both reported intra-rater reliability measurements (Jee et al., 2015; Morando et al., 2019), but only one also reported inter-rater reliability scores.
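For reference, sensitivity, specificity, and predictive values all follow from a 2×2 table that crosses the index (CB) test result with the reference standard. The sketch below uses hypothetical counts, not data from any included study, and the function name `diagnostic_accuracy` is illustrative.

```python
def diagnostic_accuracy(tp: int, fn: int, fp: int, tn: int) -> dict:
    """Accuracy measures from a 2x2 table (index test vs. reference standard).

    tp: USN on reference, detected by the CB task
    fn: USN on reference, missed by the CB task
    fp: no USN on reference, flagged by the CB task
    tn: no USN on reference, not flagged by the CB task
    """
    def ratio(numerator, denominator):
        # Return None when a measure is undefined (empty row or column).
        return numerator / denominator if denominator else None

    return {
        "sensitivity": ratio(tp, tp + fn),
        "specificity": ratio(tn, tn + fp),
        "ppv": ratio(tp, tp + fp),  # positive predictive value
        "npv": ratio(tn, tn + fn),  # negative predictive value
    }


# Hypothetical counts for illustration only.
result = diagnostic_accuracy(tp=18, fn=2, fp=3, tn=27)
for name, value in result.items():
    print(f"{name}: {value:.3f}")
```

Unlike sensitivity and specificity, the predictive values depend on how common USN is in the sample, so they do not transfer directly between acute and chronic cohorts.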

Group differences

The ability of the CB task indices/variables to distinguish among patient groups (LBD+, LBD-, RBD+, RBD-) was explored by 22/28 studies, with 2/28 reporting limited ability (Liang et al., 2007; Chiba et al., 2010) and 20/28 reporting stronger ability (Marshall et al., 1997; Rabuffetti et al., 2002, 2012; Deouell et al., 2005; List et al., 2008; Erez et al., 2009; Rengachary et al., 2009; Vossel et al., 2010; Bonato et al., 2013; Van Kessel et al., 2013; Pallavicini et al., 2015; Chung et al., 2016; Mizuno et al., 2016; Ten Brink et al., 2016, 2020; Stigchel and Nijboer, 2017; Machner et al., 2018; Andres et al., 2019; Spreij et al., 2020; Villarreal et al., 2020; Pierce et al., 2021). Similarly, 18/28 studies discussed the ability of CB tasks to distinguish patients with USN from control groups, with 17/28 reporting positive results (Marshall et al., 1997; Rabuffetti et al., 2002, 2012; Deouell et al., 2005; List et al., 2008; Erez et al., 2009; Rengachary et al., 2009; Vossel et al., 2010; Ulm et al., 2013; Van Kessel et al., 2013; Vaes et al., 2015; Chung et al., 2016; Mizuno et al., 2016; Ten Brink et al., 2016; Machner et al., 2018; Spreij et al., 2020; Villarreal et al., 2020).

Conventional or PnP comparison

Many studies compared CB tasks with the BIT (Deouell et al., 2005; Liang et al., 2007; Vossel et al., 2010; Van Kessel et al., 2013; Mizuno et al., 2016) or with a combination of two or more original or similar subtests such as letter/star/line cancellation, line bisection, drawing and reading tasks (Marshall et al., 1997; Rabuffetti et al., 2002, 2012; Chiba et al., 2010; Ulm et al., 2013; Pallavicini et al., 2015; Morando et al., 2019). Some studies used only cancellation tasks (List et al., 2008; Bonato et al., 2013) or cancellation task(s) and line bisection (Quinn et al., 2018; Morando et al., 2019; Pierce et al., 2021). Other comparison tasks included the baking tray and clock drawing tasks (Rengachary et al., 2009). Some studies compared CB tasks with both functional (e.g., CBS) (Erez et al., 2009; Ten Brink et al., 2016; Machner et al., 2018; Spreij et al., 2020) and PnP assessment (e.g., BIT), or with other widely used CB tools (e.g., TAP, CB cancellation and line bisection) (Stigchel and Nijboer, 2017; Andres et al., 2019).

Correlation coefficient

Correlation coefficients were reported by 15/28 studies using data from the CB tasks comparing them to conventional methods. Most of these report positive correlations (Rabuffetti et al., 2002; Liang et al., 2007; Erez et al., 2009; Van Kessel et al., 2013; Chung et al., 2016; Ten Brink et al., 2016, 2020; Machner et al., 2018; Morando et al., 2019; Spreij et al., 2020; Pierce et al., 2021), whereas in some cases there were more mixed results (List et al., 2008; Vossel et al., 2010; Stigchel and Nijboer, 2017). A variety of CB tasks (e.g., TOJ, conjunction/feature, Posner, etc.) were found to be highly correlated with cancellation tasks (Erez et al., 2009; Vossel et al., 2010; Stigchel and Nijboer, 2017; Machner et al., 2018; Ten Brink et al., 2020) and similarly with the CBS test (Erez et al., 2009; Machner et al., 2018; Spreij et al., 2020; Ten Brink et al., 2020).
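As a minimal sketch of how agreement between a CB measure and a conventional score can be quantified, the snippet below computes a Pearson correlation coefficient in plain Python. The choice of Pearson's r is an assumption (the included studies used a variety of coefficients), and the paired scores are hypothetical.

```python
from math import sqrt
from statistics import mean


def pearson_r(xs, ys):
    """Pearson correlation coefficient between two paired score lists."""
    if len(xs) != len(ys) or len(xs) < 2:
        raise ValueError("need two equally long lists with at least 2 values")
    mx, my = mean(xs), mean(ys)
    sxy = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    sxx = sum((x - mx) ** 2 for x in xs)
    syy = sum((y - my) ** 2 for y in ys)
    if sxx == 0 or syy == 0:
        raise ValueError("correlation is undefined for constant scores")
    return sxy / sqrt(sxx * syy)


# Hypothetical paired scores for five patients (e.g., omissions per test).
cb_omissions = [0, 2, 5, 9, 12]
conventional_omissions = [1, 1, 4, 8, 10]

print(f"r = {pearson_r(cb_omissions, conventional_omissions):.2f}")
```

A high correlation shows that the two tools rank patients similarly; it does not by itself show that the CB task is the more sensitive of the two.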

General benefits of CB assessment

The results of this review show that CB methods are, in many cases, feasible (Rabuffetti et al., 2002; Deouell et al., 2005; Vossel et al., 2010; Jee et al., 2015; Chung et al., 2016; Morando et al., 2019), flexible (List et al., 2008; Vaes et al., 2015), valid (Erez et al., 2009; Ulm et al., 2013; Jee et al., 2015; Morando et al., 2019; Villarreal et al., 2020), reliable (Vossel et al., 2010; Jee et al., 2015; Morando et al., 2019) and user-friendly tools (List et al., 2008; Rabuffetti et al., 2012; Ulm et al., 2013; Pallavicini et al., 2015; Vaes et al., 2015).

This review confirms that CB assessment can provide important patient information and reveal more aspects of neglect symptoms than PnP assessment (Liang et al., 2007; Erez et al., 2009; Chung et al., 2016; Quinn et al., 2018), such as severity (Rengachary et al., 2009; Pierce et al., 2021) and quantitative and qualitative data regarding patient behavior (Andres et al., 2019). Moreover, CB tasks can identify temporal, spatial, and non-spatial search strategy dynamics, helping to differentiate between subtypes (Mizuno et al., 2016; Stigchel and Nijboer, 2017) with a relatively short administration time (Liang et al., 2007; List et al., 2008; Vossel et al., 2010; Ulm et al., 2013; Pierce et al., 2021).

Limitations of the studies

The majority of the studies reviewed had a relatively small sample size (e.g., fewer than 50 participants) for demonstrating the clinical validity of the CB tests and for controlling demographics among patients (List et al., 2008; Ulm et al., 2013; Jee et al., 2015; Vaes et al., 2015; Machner et al., 2018; Quinn et al., 2018). However, some studies had even smaller sample sizes (Rabuffetti et al., 2002; Deouell et al., 2005; Chiba et al., 2010; Bonato et al., 2013; Pallavicini et al., 2015; Mizuno et al., 2016; Andres et al., 2019; Morando et al., 2019; Pierce et al., 2021), and a number of authors did not include an unimpaired control group (Marshall et al., 1997; List et al., 2008; Chiba et al., 2010; Bonato et al., 2013; Pallavicini et al., 2015; Stigchel and Nijboer, 2017; Quinn et al., 2018; Andres et al., 2019; Morando et al., 2019; Ten Brink et al., 2020). In specific studies, no clear attempt was made to compare with a PnP task (Vaes et al., 2015; Ten Brink et al., 2016) or only one or two comparison tasks were conducted (Marshall et al., 1997; Jee et al., 2015; Vaes et al., 2015; Chung et al., 2016; Morando et al., 2019); comparing the CB task with a battery of tasks would have increased sensitivity. Practical issues are among the most important drawbacks; for example, CB tasks can be impractical to perform in a clinical setting, especially where these require large/expensive equipment rather than a typical computer and monitor (Ulm et al., 2013; Van Kessel et al., 2013; Jee et al., 2015; Vaes et al., 2015; Spreij et al., 2020). Selection bias is another potential concern in cases where samples do not represent the entire stroke population (e.g., acute/subacute/chronic or RBD/LBD) (Deouell et al., 2005; List et al., 2008; Erez et al., 2009; Vossel et al., 2010; Chung et al., 2016; Quinn et al., 2018).
Other limitations include the absence of follow-up, covering only a specific neglect component (Jee et al., 2015; Pallavicini et al., 2015; Vaes et al., 2015), and not controlling for demographics (Van Kessel et al., 2013; Jee et al., 2015; Mizuno et al., 2016) or for factors such as hemianopia (Vossel et al., 2010; Stigchel and Nijboer, 2017; Spreij et al., 2020). Only 7/28 studies had a low risk of bias (Rengachary et al., 2009; Rabuffetti et al., 2012; Ten Brink et al., 2016, 2020; Machner et al., 2018; Villarreal et al., 2020; Pierce et al., 2021); the rest had a moderate (Rabuffetti et al., 2002; Deouell et al., 2005; Liang et al., 2007; List et al., 2008; Erez et al., 2009; Vossel et al., 2010; Bonato et al., 2013; Ulm et al., 2013; Van Kessel et al., 2013; Chung et al., 2016; Stigchel and Nijboer, 2017; Quinn et al., 2018; Spreij et al., 2020) or unclear (Marshall et al., 1997; Chiba et al., 2010; Jee et al., 2015; Pallavicini et al., 2015; Vaes et al., 2015; Mizuno et al., 2016; Andres et al., 2019; Morando et al., 2019) risk of bias.

Discussion

The main objective of this review was to provide a summary and critical analysis of the existing evidence on CB assessment of USN by investigating the shortcomings and strengths of the approaches followed by previous studies. A further aim was to generate fresh insight into CB tasks and to inform future designs by identifying key indications regarding the clinical applicability and utility of CB assessment of USN.

One of our most important findings relates to task type: most of the studies used CB versions of conventional tasks; however, CB tasks with more advanced designs, such as RT and visual search tasks (e.g., feature and conjunction), can be more effective in detecting neglect symptoms. These types of tasks can capture more mild/chronic/subclinical cases than simple versions. Similarly, more complex designs, combining a variety of task types with different task demands, can maximize sensitivity by collecting a wider range of data. These results are in line with existing evidence that greater task difficulty can enhance sensitivity (Bonato, 2012; Buxbaum et al., 2012). These findings also support the work of other studies in the area demonstrating that divergent tasks can capture distinct components of this multifactorial syndrome (Sacher et al., 2004) and that diversity of task demands can highlight disparate deficits associated with USN (Dukewich et al., 2012).

The results of this review point to the potentially superior sensitivity of some outcome measures for detecting spatial bias and visual search deficits, such as RT and accuracy in visual search and comparable target detection tasks. This has previously been observed in a variety of other studies exploring the advantages of these measures (Bartolomeo et al., 1998; Anderson et al., 2000; Schendel and Robertson, 2002). Similarly, our review revealed that in cancellation tasks and other CB versions of PnP tasks, the CoC was among the most sensitive measures. These results are also in accordance with a wide background of evidence highlighting the benefits of capturing CoC in cancellation tasks (Rorden and Karnath, 2010; Suchan et al., 2012; Dalmaijer et al., 2015).
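To make the CoC measure concrete, the following is a simplified sketch of a horizontal center of cancellation: the mean horizontal position of the cancelled targets, expressed relative to the mean position of all targets and scaled by half the horizontal extent of the target array. It follows the general idea described by Rorden and Karnath (2010), but the exact normalization used in that work or in dedicated software may differ; the function name, coordinate convention (x increasing to the right), and example layout are assumptions made for illustration.

```python
def center_of_cancellation(targets_x, cancelled):
    """Simplified horizontal center of cancellation (CoC).

    targets_x -- horizontal position of every target on the sheet/screen
    cancelled -- parallel sequence of booleans, True if the target was marked

    Returns 0 when the cancelled targets are centred on the array as a whole.
    Positive values indicate that cancelled targets lie mostly to the right,
    negative values mostly to the left. For a target array that is symmetric
    about its mean, values lie between -1 and +1.
    """
    if len(targets_x) != len(cancelled):
        raise ValueError("targets_x and cancelled must have the same length")
    found = [x for x, hit in zip(targets_x, cancelled) if hit]
    if not found:
        raise ValueError("no targets cancelled; CoC is undefined")
    half_range = (max(targets_x) - min(targets_x)) / 2
    if half_range == 0:
        raise ValueError("targets have no horizontal spread")
    array_mean = sum(targets_x) / len(targets_x)
    found_mean = sum(found) / len(found)
    return (found_mean - array_mean) / half_range


# Hypothetical layout: eight targets, symmetric about the screen midline.
targets = [-4, -3, -2, -1, 1, 2, 3, 4]

# A patient who marks only the four right-sided targets.
right_only = [False, False, False, False, True, True, True, True]
coc_right_only = center_of_cancellation(targets, right_only)

# A participant who marks every target.
coc_all = center_of_cancellation(targets, [True] * len(targets))
```

Because the score is continuous, it can separate a patient who misses only the leftmost targets from one who misses the whole left half, whereas a simple omission count may give similar totals for quite different spatial patterns.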

One of our objectives was to investigate features that optimize CB task design, but we were unable to demonstrate how factors such as task duration, display size, and apparatus affect the effectiveness of these tasks. However, we found that most of the tasks last between 10 and 20 min and use a display with a 13–19 inch diagonal screen size, at a distance of 40–70 cm from the subject, depending on the task design. Previous studies have reported that a short administration time can be more practical for a clinical environment and can avoid fatigue effects, which can affect patients' outcomes (Pedroli et al., 2015; Grattan and Woodbury, 2017). We also observed three main types of apparatus: the most used was a classic PC/laptop-monitor combination, the second most explored was the graphic tablet, and, finally, the last decade has seen the introduction of touchscreen smartphone/tablet app tasks. It is important to consider various factors regarding hardware selection to enhance cost-effectiveness and feasibility and to minimize practical issues affecting the clinician and patient (Tsirlin et al., 2009; Bonato and Deouell, 2013). The importance of minimizing motor and visuomotor task demands, in order to avoid any contributing factors related to movement limitations, is highlighted by the majority of the tests requiring a simple manual response through a touchscreen, mouse, or response box/button. Previous research has established that increased motor demands can affect performance or cause motor bias in neglect patients with coexisting conditions such as directional hypokinesia (Mattingley et al., 1998; Sapir et al., 2007). One challenge influencing the optimization of CB task design is finding the right balance between the quantity and quality of data.
For instance, collecting a wider range of data would provide more information about the patient but could also increase task duration, which could cause fatigue and affect the quality of the data.

As mentioned in the review, most of the studies chose the CBS, cancellation, and line bisection tasks as conventional comparison tools; these tests are among the most widely investigated existing neglect assessment tools, with relatively high sensitivity scores (Bailey et al., 2000; Sarri et al., 2009; Chen et al., 2012; Azouvi, 2017). Several CB tasks were highly correlated with the CBS and cancellation tasks, although some studies reported varied results, which can be explained by the lack of a gold standard and by the comparison with tasks requiring different demands and performance components. However, in order to accurately evaluate the diagnostic accuracy of a task in the absence of a gold standard, it is recommended to follow methodologies such as the ones summarized by Umemneku Chikere et al. (2019). The CB tasks were overall more sensitive than conventional tools and could distinguish different patient groups (LBD, RBD+, RBD-, etc.) from each other and from unimpaired control subjects, which corroborates the findings of a great deal of previous work (Schendel and Robertson, 2002; Dawson et al., 2008; Bonato, 2012; Bonato and Deouell, 2013). In the course of this review, we also discovered that CB tasks can collect a broad spectrum of data and provide more information about the patient's profile and behavior than PnP tasks. However, very little data were found in the literature on the specificity and reliability of CB tasks, and it was not possible to draw a conclusion regarding these. As presented in the review, CB tasks can provide greater flexibility than PnP (e.g., by projecting stimuli and testing neglect in near vs. far space). However, they operate in extra-personal space, in a similar way to PnP tasks, and cannot address all spatial representations relevant to the neglect syndrome (e.g., personal neglect).

The results presented here highlight the superiority of CB tasks compared to conventional methods in many domains, which is not unexpected considering that most conventional tasks were designed and revised many years ago (e.g., the widely used BIT created by Wilson et al. in 1987). In recent years, the global technological expansion and digitalization of everyday life have minimized the practical constraints of CB assessment concerning requirements for hardware access. Additionally, technological advances have allowed the creation of more accessible designs, with some authors further reducing practical barriers by providing access to online/offline software for analysis and assessment (Rorden and Karnath, 2010; Dalmaijer et al., 2015). The reduction in barriers to the implementation of CB tasks and the evidence supporting their advantage in many domains, especially in detecting mild and chronic neglect cases, demonstrate that this method should be introduced to clinical settings. However, we do not suggest the total removal of conventional methods, since they can overcome practical restrictions (e.g., need for hardware) when CB methods are not necessary, such as in severe and acute cases. It can therefore be assumed that an optimized future model will include a combination of both conventional and CB assessment tools.

The evidence presented in this review demonstrates that CB neglect assessment methods are feasible, valid, flexible, reliable, and user-friendly tools. Our results are in accordance with the findings of multiple studies exploring the advantages of these methods (Halligan and Marshall, 1989; Kerkhoff and Marquardt, 1995; Donnelly et al., 1999; Guest et al., 2000; Smit et al., 2013; Van der Stoep et al., 2013; Hopfner et al., 2015).

Limitations

Most of the included studies suffer from similar limitations. To overcome these issues and minimize bias, it is recommended that future studies avoid selection bias by including a large consecutive or random sample of control subjects and patients from a wide stroke population with equivalent demographics (Chassé and Fergusson, 2019). It is also important to include more than two sensitive conventional tools as comparisons, since there is no accepted gold standard. Follow-up testing to effectively explore the clinical validity, psychometric properties, and accuracy of the tasks would also be welcome (Umemneku Chikere et al., 2019). The main weakness of our study is that we could not perform a meta-analysis due to the high heterogeneity among index/comparison tests, study data types, and methodologies of analysis. However, we performed a vote counting analysis based on a direction of effect plot, which can be used to synthesize evidence when there is a lack of data consistency across the selected studies; this method is considered appropriate though less powerful than methods that include p-values (McKenzie and Brennan, 2019). Even though the QUADAS-2 tool seemed the optimal choice, it was designed for diagnostic accuracy studies, and the heterogeneity of the included studies affected the risk of bias decisions, since the authors could not answer the questions confidently. The reviewers did not expand the inclusion criteria to include VR assessment studies, which would have increased the quantity of data. However, this allowed a more distinctive focus to be applied to the review on CB assessment. Virtual reality-based assessment of USN has already been the focus of similar previous work (Tsirlin et al., 2009).
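Vote counting based on direction of effect is sometimes accompanied by a sign test, which asks whether the split between studies favouring and not favouring the index test departs from the 50/50 split expected if there were no effect. The sketch below illustrates that calculation with an exact two-sided binomial test. This review does not state that such a test was run, and the counts in the example are hypothetical rather than taken from the direction of effect plot; studies with an unclear or null direction would have to be excluded before counting.

```python
from math import comb


def sign_test_p_value(n_favourable, n_unfavourable):
    """Exact two-sided sign test against a 50/50 split.

    n_favourable   -- studies whose effect direction favours the index test
    n_unfavourable -- studies whose effect direction favours the comparator
    """
    n = n_favourable + n_unfavourable
    if n == 0:
        raise ValueError("at least one study with a clear direction is needed")
    k = max(n_favourable, n_unfavourable)
    # Probability of a split at least as extreme as k out of n in one tail.
    upper_tail = sum(comb(n, i) for i in range(k, n + 1)) / 2 ** n
    # The null distribution is symmetric, so double the tail and cap at 1.
    return min(1.0, 2 * upper_tail)


# Hypothetical counts for illustration only.
p_value = sign_test_p_value(n_favourable=12, n_unfavourable=3)
print(f"two-sided sign test p = {p_value:.4f}")
```

The test uses only the direction of each study's effect, not its size or precision, which is why vote counting is regarded as a less powerful synthesis than methods that combine effect estimates or p-values.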

Conclusion

Our review of major studies confirmed that CB assessment of USN can offer higher acceptability, flexibility, and feasibility compared to conventional methods. Extensive data show that CB methods provide a wider variety of data than PnP tasks, which can be crucial in understanding patients' profile and severity, as well as in monitoring progress and the effect of rehabilitation. There is a strong body of evidence demonstrating that CB assessment can be more sensitive and can overcome issues that affect conventional methods, such as compensatory strategies and ceiling effects, especially with more complex designs and combinations of different task types. The results obtained here may have implications for understanding essential features affecting the efficacy of CB tasks, and the indications identified can serve as a guide to improving future designs. The findings of this review complement those of earlier studies and suggest that CB tasks for USN assessment should be implemented in clinical settings.

Data availability statement

The original contributions presented in the study are included in the article/Supplementary material; further inquiries can be directed to the corresponding author/s.

Author contributions

IG and DP conceived the presented idea. IG developed the methodology, conducted a comprehensive literature search, performed and expressed the data synthesis of the selected studies, and wrote the original draft. IG and DL performed the final study selection, data extraction, and quality appraisal of the studies. DP verified the findings of this work and was involved in expert review and editing. All authors contributed to the article and approved the final manuscript.

Funding

The authors received financial support for the publication of this article from the University of Birmingham.

Conflict of interest

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Publisher's note

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

Supplementary material

The Supplementary Material for this article can be found online at: https://www.frontiersin.org/articles/10.3389/fnins.2022.912626/full#supplementary-material

Abbreviations

BIT, behavioral inattention test; CB, computer-based; CBS, Catherine Bergego scale; CoC, center of cancellation; CVA, cerebrovascular accident; LBD, left brain damaged; PnP, paper-and-pencil; PRISMA, preferred reporting items for systematic reviews and meta-analyses; QUADAS-2, quality assessment tool for diagnostic accuracy studies-2; RBD, right brain damaged; RT, reaction time; TAP, test of attentional performance; TBI, traumatic brain injury; TIA, transient ischaemic attack; TOJ, temporal order judgment; USN, unilateral spatial neglect; –, without USN; +, with USN; VR, virtual reality.

References

Anderson, B., Mennemeier, M., and Chatterjee, A. (2000). Variability not ability: another basis for performance decrements in neglect. Neuropsychologia 38, 785–796. doi: 10.1016/S0028-3932(99)00137-2

Andres, M., Geers, L., Marnette, S., Coyette, F., Bonato, M., Priftis, K., et al. (2019). Increased cognitive load reveals unilateral neglect and altitudinal extinction in chronic stroke. J. Int. Neuropsychol. Soc. 25, 644–653. doi: 10.1017/S1355617719000249

Azouvi, P. (1996). Functional consequences and awareness of unilateral neglect: study of an evaluation scale. Neuropsychol. Rehabil. 6, 133–150. doi: 10.1080/713755501

Azouvi, P. (2017). The ecological assessment of unilateral neglect. Ann. Phys. Rehabil. Med. 60, 186–190. doi: 10.1016/j.rehab.2015.12.005

Azouvi, P., Jacquin-Courtois, S., and Luaute, J. (2016). Rehabilitation of unilateral neglect: evidence-based medicine. Ann. Phys. Rehabil. Med. 60, 191–197. doi: 10.1016/j.rehab.2016.10.006

Bailey, M. J., Riddoch, M. J., and Crome, P. (2000). Evaluation of a test battery for hemineglect in elderly stroke patients for use by therapists in clinical practice. NeuroRehabilitation 14, 139–150. doi: 10.3233/NRE-2000-14303

Baldassarre, A., Ramsey, L., Hacker, C. L., Callejas, A., Astafiev, S. V., Metcalf, N. V., et al. (2014). Large-scale changes in network interactions as a physiological signature of spatial neglect. Brain 137(Pt 12), 3267–3283. doi: 10.1093/brain/awu297

Bartolomeo, P., D'Erme, P., Perri, R., and Gainotti, G. (1998). Perception and action in hemispatial neglect. Neuropsychologia 36, 227–237. doi: 10.1016/S0028-3932(97)00104-8

Baylis, G. C., Baylis, L. L., and Gore, C. L. (2004). Visual neglect can be object-based or scene-based depending on task representation. Cortex 40, 237–246. doi: 10.1016/S0010-9452(08)70119-9

Beis, J. M., André, J. M., and Saguez, A. (1994). Detection of visual field deficits and visual neglect with computerized light emitting diodes. Arch. Phys. Med. Rehabil. 75, 711–714. doi: 10.1016/0003-9993(94)90201-1

Bisiach, E., and Luzzatti, C. (1978). Unilateral neglect of representational space. Cortex 14, 129–133. doi: 10.1016/S0010-9452(78)80016-1

Bonato, M. (2012). Neglect and extinction depend greatly on task demands: a review. Front. Hum. Neurosci. 6, 195. doi: 10.3389/fnhum.2012.00195

Bonato, M., and Deouell, L. Y. (2013). Hemispatial neglect: computer-based testing allows more sensitive quantification of attentional disorders and recovery and might lead to better evaluation of rehabilitation. Front. Hum. Neurosci. 7, 162. doi: 10.3389/fnhum.2013.00162

Bonato, M., Priftis, K., Marenzi, R., Umiltà, C., and Zorzi, M. (2010). Increased attentional demands impair contralesional space awareness following stroke. Neuropsychologia 48, 3934–3940. doi: 10.1016/j.neuropsychologia.2010.08.022

Bonato, M., Priftis, K., Umiltà, C., and Zorzi, M. (2013). Computer-based attention-demanding testing unveils severe neglect in apparently intact patients. Behav. Neurol. 179–181. doi: 10.1155/2013/139812

Boon, M. H., and Thomson, H. (2021). The effect direction plot revisited: Application of the 2019 Cochrane Handbook guidance on alternative synthesis methods. Res. Synth. Methods 12, 29–33. doi: 10.1002/jrsm.1458

Borsotti, M., Mosca, I. E., Di Lauro, F., Pancani, S., Bracali, C., Dore, T., et al. (2020). The visual scanning test: a newly developed neuropsychological tool to assess and target rehabilitation of extrapersonal visual unilateral spatial neglect. Neurol. Sci. 41, 1145–1152. doi: 10.1007/s10072-019-04218-2

Bublak, P., Finke, K., Krummenacher, J., Preger, R., Kyllingsbaek, S., Müller, H. J., et al. (2005). Usability of a theory of visual attention (TVA) for parameter-based measurement of attention II: evidence from two patients with frontal or parietal damage. J. Int. Neuropsychol. Soc. 11, 843–854. doi: 10.1017/S1355617705050988

Buxbaum, L. J., Dawson, A. M., and Linsley, D. (2012). Reliability and validity of the virtual reality lateralized attention test in assessing hemispatial neglect in right-hemisphere stroke. Neuropsychology 26, 430–441. doi: 10.1037/a0028674

Buxbaum, L. J., Ferraro, M. K., Veramonti, T., Farne, A., Whyte, J., Ladavas, E., et al. (2004). Hemispatial neglect: subtypes, neuroanatomy, and disability. Neurology 62, 749–756. doi: 10.1212/01.WNL.0000113730.73031.F4

Caggiano, P., and Jehkonen, M. (2018). The ‘neglected’ personal neglect. Neuropsychol. Rev. 28, 417–435. doi: 10.1007/s11065-018-9394-4

Campbell, M., McKenzie, J. E., Sowden, A., Katikireddi, S. V., Brennan, S. E., Ellis, S., et al. (2020). Synthesis without meta-analysis (SWiM) in systematic reviews: reporting guideline. BMJ 368, l6890. doi: 10.1136/bmj.l6890

Canadian Stroke Best Practice Recommendations. (2019). Rehabilitation and Recovery Following Stroke: Recommendations Suggested Stroke Rehabilitation Screening and Assessment Tools, 6th Edn. Ottawa, ON: Heart and Stroke Foundation.

Chassé, M., and Fergusson, D. A. (2019). Diagnostic accuracy studies. Semin. Nucl. Med. 49, 87–93. doi: 10.1053/j.semnuclmed.2018.11.005

Checketts, M., Mancuso, M., Fordell, H., Chen, P., Hreha, K., Eskes, G. A., et al. (2021). Current clinical practice in the screening and diagnosis of spatial neglect post-stroke: Findings from a multidisciplinary international survey. Neuropsychol. Rehabil. 31, 1495–1526. doi: 10.1080/09602011.2020.1782946

Chen, P., Hreha, K., Fortis, P., Goedert, K. M., and Barrett, A. M. (2012). Functional assessment of spatial neglect: a review of the Catherine Bergego scale and an introduction of the Kessler foundation neglect assessment process. Top. Stroke Rehabil. 19, 423–435. doi: 10.1310/tsr1905-423

Chen, P., Hreha, K., Kong, Y., and Barrett, A. M. (2015). Impact of spatial neglect on stroke rehabilitation: evidence from the setting of an inpatient rehabilitation facility. Arch. Phys. Med. Rehabil. 96, 1458–1466. doi: 10.1016/j.apmr.2015.03.019

Chiba, Y., Nishihara, K., and Haga, N. (2010). Evaluating visual bias and effect of proprioceptive feedback in unilateral neglect. J. Clin. Neurosci. 17, 1148–1152. doi: 10.1016/j.jocn.2010.02.017

Chiba, Y., Yamaguchi, A., and Eto, F. (2006). Assessment of sensory neglect: a study using moving images. Neuropsychol. Rehabil. 16, 641–652. doi: 10.1080/09602010543000073

Chung, S., Park, E., Ye, B. S., Lee, H., Chang, H.-J., Song, D., et al. (2016). The computerized table setting test for detecting unilateral neglect. PLoS ONE 11, e0147030. doi: 10.1371/journal.pone.0147030

Corbetta, M., and Shulman, G. L. (2011). Spatial neglect and attention networks. Annu. Rev. Neurosci. 34, 569–599. doi: 10.1146/annurev-neuro-061010-113731

Dalmaijer, E. S., Van der Stigchel, S., Nijboer, T. C., Cornelissen, T. H., and Husain, M. (2015). CancellationTools: all-in-one software for administration and analysis of cancellation tasks. Behav. Res. Methods 47, 1065–1075. doi: 10.3758/s13428-014-0522-7

Dawson, A. M., Buxbaum, L. J., and Rizzo, A. A. (2008). “The Virtual reality lateralized attention test: sensitivity and validity of a new clinical tool for assessing hemispatial neglect,” in 2008 Virtual Rehabilitation (Vancouver, BC). 77–82. doi: 10.1109/ICVR.2008.4625140

Deeks, J. J., Higgins, J. P., and Altman, D. G. (2019). “Analysing data and undertaking meta-analyses,” in Cochrane Handbook for Systematic Reviews of Interventions, eds J.P. Higgins, J. Thomas, J. Chandler, M. Cumpston, T. Li, M. J. Page, and V. A. Welch. 241–284. doi: 10.1002/9781119536604.ch10

Deouell, L. Y., Sacher, Y., and Soroker, N. (2005). Assessment of spatial attention after brain damage with a dynamic reaction time test. J. Int. Neuropsychol. Soc. 11, 697–707. doi: 10.1017/S1355617705050824

Donnelly, N., Guest, R., Fairhurst, M., Potter, J., Deighton, A., and Patel, M. (1999). Developing algorithms to enhance the sensitivity of cancellation tests of visuospatial neglect. Behav. Res. Meth. Instrum. Comput. 31, 668–673. doi: 10.3758/BF03200743

Dukewich, K. R., Eskes, G. A., Lawrence, M. A., Macisaac, M.-B., Phillips, S. J., and Klein, R. M. (2012). Speed impairs attending on the left: comparing attentional asymmetries for neglect patients in speeded and unspeeded cueing tasks. Front. Hum. Neurosci. 6, 232. doi: 10.3389/fnhum.2012.00232

Erez, A., Katz, N., Ring, H., and Soroker, N. (2009). Assessment of spatial neglect using computerised feature and conjunction visual search tasks. Neuropsychol. Rehabil. 19, 677–695. doi: 10.1080/09602010802711160

Esposito, E., Shekhtman, G., and Chen, P. (2021). Prevalence of spatial neglect post-stroke: A systematic review. Ann. Phys. Rehabil. Med. 64, 101459. doi: 10.1016/j.rehab.2020.10.010

Gammeri, R., Iacono, C., Ricci, R., and Salatino, A. (2020). Unilateral spatial neglect after stroke: current insights. Neuropsychiatr. Dis. Treat. 16, 131–152. doi: 10.2147/NDT.S171461

Grattan, E. S., and Woodbury, M. L. (2017). Do neglect assessments detect neglect differently? Am. J. Occup. Ther. 71, 7103190050p1–7103190050p9. doi: 10.5014/ajot.2017.025015

Guest, R. M., Fairhurst, M. C., and Potter, J. M. (2002). Diagnosis of visuo-spatial neglect using dynamic sequence features from a cancellation task. Pattern Anal. Appl. 5, 261–270. doi: 10.1007/s100440200023

Guest, R. M., Fairhurst, M. C., Potter, J. M., and Donnelly, N. (2000). Analysing constructional aspects of figure completion for the diagnosis of visuospatial neglect. Proc. – Int. Conf. Pattern Recognit. 15, 316–319. doi: 10.1109/ICPR.2000.902922

Habekost, T., and Bundesen, C. (2003). Patient assessment based on a theory of visual attention (TVA): subtle deficits after a right frontal-subcortical lesion. Neuropsychologia 41, 1171–1188. doi: 10.1016/S0028-3932(03)00018-6

Halligan, P. W., and Marshall, J. C. (1989). Two techniques for the assessment of line bisection in visuo-spatial neglect: a single case study. J. Neurol. Neurosurg. Psychiatry 52, 1300–1302. doi: 10.1136/jnnp.52.11.1300

Hammerbeck, U., Gittins, M., Vail, A., Paley, L., Tyson, S. F., and Bowen, A. (2019). Spatial neglect in stroke: identification, disease process and association with outcome during inpatient rehabilitation. Brain Sci. 9, 374. doi: 10.3390/brainsci9120374

Heilman, K. M., and Van Den Abell, T. (1980). Right hemisphere dominance for attention: the mechanism underlying hemispheric asymmetries of inattention (neglect). Neurology 30, 327–330. doi: 10.1212/WNL.30.3.327

Heilman, K. M., Bowers, D., Valenstein, E., and Watson, R. T. (1987). “Hemispace and hemispatial neglect,” in Advances in Psychology, ed M. Jeannerod (North-Holland: Elsevier Science), 115–150. doi: 10.1016/S0166-4115(08)61711-2

Higgins, J. P. T., López-López, J. A., Becker, B. J., Davies, S. R., Dawson, S., Grimshaw, J. M., et al. (2019). Synthesising quantitative evidence in systematic reviews of complex health interventions. BMJ Glob. Health 4, e000858. doi: 10.1136/bmjgh-2018-000858

Hopfner, S., Kesselring, S., Cazzoli, D., Gutbrod, K., Laube-Rosenpflanzer, A., Chechlacz, M., et al. (2015). Neglect and motion stimuli–insights from a touchscreen-based cancellation task. PLoS ONE 10, e0132025. doi: 10.1371/journal.pone.0132025

Jee, H., Kim, J., Kim, C., Kim, T., and Park, J. (2015). Feasibility of a semi-computerized line bisection test for unilateral visual neglect assessment. Appl. Clin. Inform. 6, 400–417. doi: 10.4338/ACI-2015-01-RA-0002

Jehkonen, M., Laihosalo, M., and Kettunen, J. E. (2006). Impact of neglect on functional outcome after stroke – a review of methodological issues and recent research findings. Restor. Neurol. Neurosci. 24, 209–215.

Karnath, H.-O., and Rorden, C. (2012). The anatomy of spatial neglect. Neuropsychologia 50, 1010–1017. doi: 10.1016/j.neuropsychologia.2011.06.027

Kaufmann, B. C., Cazzoli, D., Pflugshaupt, T., Bohlhalter, S., Vanbellingen, T., Müri, R. M., et al. (2020). Eyetracking during free visual exploration detects neglect more reliably than paper-pencil tests. Cortex 129, 223–235. doi: 10.1016/j.cortex.2020.04.021

Kerkhoff, G., and Marquardt, C. (1995). VS - A new computer program for detailed offline analysis of visual-spatial perception. J. Neurosci. Methods 63, 75–84. doi: 10.1016/0165-0270(95)00090-9

Kinsbourne, M. (1993). “Orientational bias model of unilateral neglect: evidence from attentional gradients within hemispace,” in Unilateral Neglect: Clinical And Experimental Studies (Brain Damage, Behaviour and Cognition), eds. J. Marshall and I. Robertson (Psychology Press), 63–86.

Laeng, B., Brennen, T., and Espeseth, T. (2002). Fast responses to neglected targets in visual search reflect pre-attentive processes: an exploration of response times in visual neglect. Neuropsychologia 40, 1622–1636. doi: 10.1016/S0028-3932(01)00230-5

Liang, Y., Guest, R. M., Fairhurst, M. C., and Potter, J. M. (2007). Feature-based assessment of visuo-spatial neglect patients using hand-drawing tasks. Pattern Anal. Appl. 10, 361–374. doi: 10.1007/s10044-007-0074-x

List, A., Brooks, J. L., Esterman, M., Flevaris, A. V., Landau, A. N., Bowman, G., et al. (2008). Visual hemispatial neglect, re-assessed. J. Int. Neuropsychol. Soc. 14, 243–256. doi: 10.1017/S1355617708080284

Lunven, M., and Bartolomeo, P. (2017). Attention and spatial cognition: neural and anatomical substrates of visual neglect. Ann. Phys. Rehabil. Med. 60, 124–129. doi: 10.1016/j.rehab.2016.01.004

Luvizutto, G. J., Moliga, A. F., Rizzatti, G. R. S., Fogaroli, M. O., Moura Neto, E., Nunes, H. R. C., et al. (2018). Unilateral spatial neglect in the acute phase of ischemic stroke can predict long-term disability and functional capacity. Clinics (São Paulo) 73, e131. doi: 10.6061/clinics/2018/e131

Machner, B., Koenemund, I., von der Gablent, J., Bays, P. M., and Sprenger, A. (2018). The ipsilesional attention bias in right-hemisphere stroke patients as revealed by a realistic visual search task: neuroanatomical correlates and functional relevance. Neuropsychology 32, 850–865. doi: 10.1037/neu0000493

Marshall, S. C., Grinnell, D., Heisel, B., Newall, A., and Hunt, L. (1997). Attentional deficits in stroke patients: a visual dual task experiment. Arch. Phys. Med. Rehabil. 78, 7–12. doi: 10.1016/S0003-9993(97)90002-2

Mattingley, J., Husain, M., Rorden, C., Kennard, C., and Driver, J. (1998). Motor role of human inferior parietal lobe revealed in unilateral neglect patients. Nature 392, 179–182. doi: 10.1038/32413

McKenzie, J. E., and Brennan, S. E. (2019). “Synthesizing and presenting findings using other methods,” in Cochrane Handbook for Systematic Reviews of Interventions, 2nd Edn, eds J. P. T. Higgins, J. Thomas, J. Chandler, M. Cumpston, T. Li, M. J. Page, and V. A. Welch (Chichester: John Wiley & Sons), 321–347. doi: 10.1002/9781119536604.ch12

Menon, A., and Korner-Bitensky, N. (2004). Evaluating unilateral spatial neglect post stroke: working your way through the maze of assessment choices. Top. Stroke Rehabil. 11, 41–66. doi: 10.1310/KQWL-3HQL-4KNM-5F4U

Milner, A. D., Harvey, M., Roberts, R. C., and Forster, S. V. (1993). Line bisection errors in visual neglect: misguided action or size distortion? Neuropsychologia 31, 39–49. doi: 10.1016/0028-3932(93)90079-F

Mizuno, K., Kato, K., Tsuji, T., Shindo, K., Kobayashi, Y., and Liu, M. (2016). Spatial and temporal dynamics of visual search tasks distinguish subtypes of unilateral spatial neglect: comparison of two cases with viewer-centered and stimulus-centered neglect. Neuropsychol. Rehabil. 26, 610–634. doi: 10.1080/09602011.2015.1051547

Moher, D., Liberati, A., Tetzlaff, J., and Altman, D. G. (2009). Preferred reporting items for systematic reviews and meta-analyses: the PRISMA statement. BMJ 339, b2535. doi: 10.1136/bmj.b2535

Montedoro, V., Alsamour, M., Dehem, S., Lejeune, T., Dehez, B., and Edwards, M. G. (2018). Robot diagnosis test for egocentric and allocentric hemineglect. Archiv. Clinic. Neuropsychol. 34, 481–494. doi: 10.1093/arclin/acy062

Morando, M., Bonotti, E., Giannarelli, G., Olivieri, S., Dellepiane, S., and Cecchi, F. (2019). “Monitoring home-based activity of stroke patients: a digital solution for visuo-spatial neglect evaluation,” in Proceedings of the 4th International Conference on NeuroRehabilitation (ICNR2018), October 16-20, 2018, Pisa (Italy), 696–701. doi: 10.1007/978-3-030-01845-0_139

Ogourtsova, T., Silva, W. S., Archambault, P. S., and Lamontagne, A. (2017). Virtual reality treatment and assessments for post-stroke unilateral spatial neglect: a systematic literature review. Neuropsychol. Rehabil. 27, 409–454. doi: 10.1080/09602011.2015.1113187

Page, M., McKenzie, J., Bossuyt, P., Boutron, I., Hoffmann, T., Mulrow, C., et al. (2021). The PRISMA 2020 statement: an updated guideline for reporting systematic reviews. BMJ 372, n71. doi: 10.1136/bmj.n71

Pallavicini, F., Pedroli, E., Serino, S., Dell'Isola, A., Cipresso, P., Cisari, C., et al. (2015). Assessing unilateral spatial neglect using advanced technologies: the potentiality of mobile virtual reality. Technol. Health Care 23, 795–807. doi: 10.3233/THC-151039

Pedroli, E., Serino, S., Cipresso, P., Pallavicini, F., and Riva, G. (2015). Assessment and rehabilitation of neglect using virtual reality: a systematic review. Front. Behav. Neurosci. 9, 226. doi: 10.3389/fnbeh.2015.00226

Pierce, J. E., Ronchi, R., Thomasson, M., Rossi, I., Casati, C., Saj, A., et al. (2021). A novel computerized assessment of manual spatial exploration in unilateral spatial neglect. Neuropsychol. Rehabil. 21, 1–22. doi: 10.1080/09602011.2021.1875850

Posner, M. I., Cohen, Y., and Rafal, R. D. (1982). Neural systems control of spatial orienting. Philos. Trans. R. Soc. Lond. B. Biol. Sci. 298, 187–198. doi: 10.1098/rstb.1982.0081

Quinn, T. J., Livingstone, I., Weir, A., Shaw, R., Breckenridge, A., McAlpine, C., et al. (2018). Accuracy and feasibility of an Android-based digital assessment tool for post stroke visual disorders-the StrokeVision app. Front. Neurol. 9, 146. doi: 10.3389/fneur.2018.00146

Rabuffetti, M., Farina, E., Alberoni, M., Pellegatta, D., Appollonio, I., Affanni, P., et al. (2012). Spatio-temporal features of visual exploration in unilaterally brain-damaged subjects with or without neglect: results from a touchscreen test. PLoS ONE 7, e31511. doi: 10.1371/annotation/bf311a56-bc48-44b6-9b0f-7ccc97fc290f

Rabuffetti, M., Ferrarin, M., Spadone, R., Pellegatta, D., Gentileschi, V., Vallar, G., et al. (2002). Touch-screen system for assessing visuo-motor exploratory skills in neuropsychological disorders of spatial cognition. Med. Biol. Engineer. Comput. 40, 675–686. doi: 10.1007/BF02345306

Rengachary, J., d'Avossa, G., Sapir, A., Shulman, G. L., and Corbetta, M. (2009). Is the Posner reaction time test more accurate than clinical tests in detecting left neglect in acute and chronic stroke? Arch. Phys. Med. Rehabil. 90, 2081–2088. doi: 10.1016/j.apmr.2009.07.014

Rengachary, J., He, B. J., Shulman, G. L., and Corbetta, M. (2011). A behavioral analysis of spatial neglect and its recovery after stroke. Front. Hum. Neurosci. 5, 29. doi: 10.3389/fnhum.2011.00029

Rode, G., Pagliari, C., Huchon, L., Rossetti, Y., and Pisella, L. (2017). Semiology of neglect: an update. Ann. Phys. Rehabil. Med. 60, 177–185. doi: 10.1016/j.rehab.2016.03.003

Rorden, C., and Karnath, H.-O. (2010). A simple measure of neglect severity. Neuropsychologia 48, 2758–2763. doi: 10.1016/j.neuropsychologia.2010.04.018

Sacher, Y., Serfaty, C., Deouell, L., Sapir, A., Henik, A., and Soroker, N. (2004). Role of disengagement failure and attentional gradient in unilateral spatial neglect – a longitudinal study. Disabil. Rehabil. 26, 746–755. doi: 10.1080/09638280410001704340

Sapir, A., Kaplan, J., He, B., and Corbetta, M. (2007). Anatomical correlates of directional hypokinesia in patients with hemispatial neglect. J. Neurosci. 27, 4045–4051. doi: 10.1523/JNEUROSCI.0041-07.2007

Sarri, M., Greenwood, R., Kalra, L., and Driver, J. (2009). Task-related modulation of visual neglect in cancellation tasks. Neuropsychologia 47, 91–103. doi: 10.1016/j.neuropsychologia.2008.08.020

Schendel, K. L., and Robertson, L. C. (2002). Using reaction time to assess patients with unilateral neglect and extinction. J. Clin. Exp. Neuropsychol. 24, 941–950. doi: 10.1076/jcen.24.7.941.8390

Smit, M., Van der Stigchel, S., Visser-Meily, J. M. A., Kouwenhoven, M., Eijsackers, A. L. H., and Nijboer, T. C. W. (2013). The feasibility of computer-based prism adaptation to ameliorate neglect in sub-acute stroke patients admitted to a rehabilitation center. Front. Hum. Neurosci. 7, 353. doi: 10.3389/fnhum.2013.00353

Spreij, L. A., Ten Brink, A. F., Visser-Meily, J. M. A., and Nijboer, T. C. W. (2020). Simulated driving: the added value of dynamic testing in the assessment of visuo-spatial neglect after stroke. J. Neuropsychol. 14, 28–45. doi: 10.1111/jnp.12172

Stigchel, S., and Nijboer, T. (2017). Temporal order judgements as a sensitive measure of the spatial bias in patients with visuospatial neglect. J. Neuropsychol. 12, 427–441. doi: 10.1111/jnp.12118

Stone, S. P., Halligan, P. W., and Greenwood, R. J. (1993). The incidence of neglect phenomena and related disorders in patients with an acute right or left hemisphere stroke. Age Ageing 22, 46–52. doi: 10.1093/ageing/22.1.46

Suchan, J., Rorden, C., and Karnath, H.-O. (2012). Neglect severity after left and right brain damage. Neuropsychologia 50, 1136–1141. doi: 10.1016/j.neuropsychologia.2011.12.018

Ten Brink, A. F., Elshout, J., Nijboer, T. C., and Van der Stigchel, S. (2020). How does the number of targets affect visual search performance in visuospatial neglect? J. Clin. Exp. Neuropsychol. 42, 1010–1027. doi: 10.1080/13803395.2020.1840520

Ten Brink, A. F., van der Stigchel, S., Visser-Meily, J. M. A., and Nijboer, T. C. W. (2016). You never know where you are going until you know where you have been: disorganized search after stroke. J. Neuropsychol. 10, 256–275. doi: 10.1111/jnp.12068

Ten Brink, A. F., Verwer, J. H., Biesbroek, J. M., Visser-Meily, J. M. A., and Nijboer, T. C. W. (2017). Differences between left- and right-sided neglect revisited: a large cohort study across multiple domains. J. Clin. Exp. Neuropsychol. 39, 707–723. doi: 10.1080/13803395.2016.1262333

Tipper, S. P., and Behrmann, M. (1996). Object-centered not scene-based visual neglect. J. Exp. Psychol. Hum. Percept. Perform. 22, 1261–1278. doi: 10.1037/0096-1523.22.5.1261

Toba, M. N., Rabuffetti, M., Duret, C., Pradat-Diehl, P., Gainotti, G., and Bartolomeo, P. (2018). Component deficits of visual neglect: “magnetic” attraction of attention vs. impaired spatial working memory. Neuropsychologia 109, 52–62. doi: 10.1016/j.neuropsychologia.2017.11.034

Tsirlin, I., Dupierrix, E., Chokron, S., Coquillart, S., and Ohlmann, T. (2009). Uses of virtual reality for diagnosis, rehabilitation and study of unilateral spatial neglect: review and analysis. CyberPsychol. Behav. 12, 175–181. doi: 10.1089/cpb.2008.0208

Ulm, L., Wohlrapp, D., Meinzer, M., Steinicke, R., Schatz, A., Denzler, P., et al. (2013). A circle-monitor for computerised assessment of visual neglect in peripersonal space. PLoS ONE 8, e82892. doi: 10.1371/journal.pone.0082892

Umemneku Chikere, C. M., Wilson, K., Graziadio, S., Vale, L., and Allen, A. J. (2019). Diagnostic test evaluation methodology: a systematic review of methods employed to evaluate diagnostic tests in the absence of gold standard - an update. PLoS ONE 14, e0223832. doi: 10.1371/journal.pone.0223832

Vaes, N., Lafosse, C., Nys, G., Schevernels, H., Dereymaeker, L., Oostra, K., et al. (2015). Capturing peripersonal spatial neglect: an electronic method to quantify visuospatial processes. Behav. Res. Methods 47, 27–44. doi: 10.3758/s13428-014-0448-0

Van der Stoep, N., Visser-Meily, J. M., Kappelle, L. J., de Kort, P. L., Huisman, K. D., Eijsackers, A. L., et al. (2013). Exploring near and far regions of space: distance-specific visuospatial neglect after stroke. J. Clin. Exp. Neuropsychol. 35, 799–811. doi: 10.1080/13803395.2013.824555

Van Kessel, M. E., Van Nes, I. J. W., Geurts, A. C. H., Brouwer, W. H., and Fasotti, L. (2013). Visuospatial asymmetry in dual-task performance after subacute stroke. J. Neuropsychol. 7, 72–90. doi: 10.1111/j.1748-6653.2012.02036.x

Verdon, V., Schwartz, S., Lovblad, K. O., Hauert, C. A., and Vuilleumier, P. (2010). Neuroanatomy of hemispatial neglect and its functional components: a study using voxel-based lesion-symptom mapping. Brain 133(Pt 3), 880–894. doi: 10.1093/brain/awp305

Villarreal, S., Linnavuo, M., Sepponen, R., Vuori, O., Jokinen, H., and Hietanen, M. (2020). Dual-task in large perceptual space reveals subclinical hemispatial neglect. J. Int. Neuropsychol. Society 26, 993–1005. doi: 10.1017/S1355617720000508

Vossel, S., Eschenbeck, P., Weiss, P., and Fink, G. (2010). Assessing visual extinction in right-hemisphere stroke patients with and without neglect. Klinische Neurophysiologie. Conference 41, ID32. doi: 10.1055/s-0030-1250861

Whiting, P. F., Rutjes, A. W., Westwood, M. E., Mallett, S., Deeks, J. J., Reitsma, J. B., et al. (2011). QUADAS-2: a revised tool for the quality assessment of diagnostic accuracy studies. Ann. Intern. Med. 155, 529–536. doi: 10.7326/0003-4819-155-8-201110180-00009

Wilson, B., Cockburn, J., and Halligan, P. (1987). Development of a behavioral test of visuospatial neglect. Arch. Phys. Med. Rehabil. 68, 98–102.

Zebhauser, P. T., Vernet, M., Unterburger, E., and Brem, A.-K. (2019). Visuospatial neglect - a theory-informed overview of current and emerging strategies and a systematic review on the therapeutic use of non-invasive brain stimulation. Neuropsychol. Rev. 29, 397–420. doi: 10.1007/s11065-019-09417-4

Keywords: attention, unilateral spatial neglect (USN), computer-based assessment, stroke, visual search, systematic review

Citation: Giannakou I, Lin D and Punt D (2022) Computer-based assessment of unilateral spatial neglect: A systematic review. Front. Neurosci. 16:912626. doi: 10.3389/fnins.2022.912626

Received: 05 April 2022; Accepted: 02 August 2022; Published: 19 August 2022.

Edited by:

Notger G. Müller, University of Potsdam, Germany

Reviewed by:

Tatiana Ogourtsova, McGill University, Canada

Pavel Bobrov, Institute of Higher Nervous Activity and Neurophysiology (RAS), Russia

Copyright © 2022 Giannakou, Lin and Punt. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Ioanna Giannakou
