METHODS article

Front. Neurosci., 02 June 2022

Sec. Brain Imaging Methods

Volume 16 - 2022 | https://doi.org/10.3389/fnins.2022.911957

This article is part of the Research Topic Multimodal Brain Image Fusion: Methods, Evaluations, and Applications.

In this paper, a medical image registration method based on the bounded generalized Gaussian mixture model (BGGMM) is proposed. The BGGMM is used to approximate the distribution of the joint intensity of the source medical images. The mixture model is formulated within a maximum likelihood framework and solved with an expectation-maximization (EM) algorithm. The registration performance of the proposed approach on different medical images is verified through extensive computer simulations. Empirical results show that the proposed approach performs significantly better than the conventional methods it is compared with.

Image registration is an essential part of computer vision and image processing (Visser et al., 2020) and is widely used in medical image analysis and intelligent vehicles (Zhu et al., 2013, 2017, 2021a,b, 2022). Medical image analysis underpins the assessment of a patient's condition in intelligent and computer-aided diagnosis and treatment (Weissler et al., 2015; Yang et al., 2018). Moreover, image registration is a prerequisite for subsequent image segmentation and fusion (Saygili et al., 2015; Zhu et al., 2019). Current clinical practice typically involves printing images onto radiographic film and viewing them on a lightbox. A computerized approach offers potential benefits. In particular, it can accurately align the information in different images and provide tools to visualize the composite image. A key stage in this process is the alignment, or registration, of the images (Hill et al., 2001).

Image registration presupposes that the reference image and the floating image share a common part, such as the same anatomical structure (Gholipour et al., 2007; Reaungamornrat et al., 2016). Registration determines the spatial coordinate transformation between the pixels of the two images so that corresponding regions of the reference and floating images coincide in space (Zhang et al., 2019). After registration, the same anatomical point of the human body has the same spatial position (location, orientation, and size) in both images (Gefen et al., 2007).

Medical image registration methods fall into two categories: feature-based registration and gray-level-based registration (Sengupta et al., 2021). Feature-based registration does not use the gray-level information of the image directly. Instead, it extracts geometric features that remain invariant across the images to be registered, such as corners, centers of closed regions, edges, and contours. The parameters of the transformation model are obtained by describing the features of each image and establishing correspondences between them (Huang, 2015). Feature-based registration requires less computation, runs faster, and is robust to gray-level changes. However, its accuracy is usually lower than that of gray-level-based registration (Li et al., 2020; Ran and Xu, 2020).

In gray-level-based medical image registration, a similarity measure between images is constructed from the gray-level information of the entire image (Yan et al., 2020). The parameters of the transformation model are obtained by maximizing or minimizing this measure (Zhang et al., 2019). Because such algorithms use all of the gray-level information in the image, the resulting transformation model is generally more precise and robust than that of feature-based registration (Frakes et al., 2008). Commonly used gray-level-based methods include the sequential similarity detection algorithm (SSDA), cross-correlation, mutual information, and phase correlation (Gupta et al., 2021). Building on traditional algorithms, Yan et al. (2010) developed a fast and effective SSDA-based registration algorithm. Anuta (1970) computed the cross-correlation with the Fourier transform to speed up registration. Evangelidis and Psarakis (2008) proposed a modified (enhanced) correlation coefficient as the performance criterion for image alignment. Zheng et al. (2011) proposed a cross-correlation registration algorithm based on image rotation projection. It avoids the rotation and interpolation steps of image registration and reduces data dimensionality and computational complexity. For registration with mutual information as the similarity measure, Pluim et al. (2000) combined mutual information with spatial information in the form of image gradients, which addressed the problem of finding the global optimum during registration. Dame and Marchand (2012) proposed a direct image registration method that uses mutual information (MI) as the alignment metric. By maximizing MI with a second-order optimization, it estimates a set of two-dimensional motion parameters accurately in real time.

Lu et al. (2008) proposed a joint histogram estimation method that uses a Hanning-windowed sinc approximation as the kernel function of partial volume interpolation. Orchard and Mann (2009) applied maximum-likelihood clustering to the joint intensity scatter plot. They modeled the clusters with a Gaussian mixture model (GMM) and solved it iteratively with the expectation-maximization (EM) algorithm. Sotiras et al. (2013) systematically surveyed deformable registration techniques for medical images. Pluim et al. (2004) compared mutual information with other f-information measures as registration measures. They found that several of these measures can potentially yield significantly more accurate results than mutual information. Klein et al. (2007) compared eight optimization methods for non-rigid medical image registration and found that the Robbins–Monro method is the best choice in most applications. With this method, the computation time per iteration can be reduced by a factor of approximately 500 without affecting the rate of convergence. However, each Gaussian component has unbounded support (−∞, +∞). The GMM used by Orchard and Mann (2009) therefore cannot confine the modeled intensities to a fixed, bounded range.

In computer vision, the pixel values of 8-bit images lie in the bounded range [0, 255]. The bounded generalized Gaussian mixture model (BGGMM) (Nguyen et al., 2014) is therefore used to model the image. It describes the distribution of the joint intensity vectors of the image pixels more accurately, highlights image details, and is also robust. Based on the BGGMM, this paper models both single-modality and multimodal image registration and solves the model within a maximum likelihood estimation framework (Zhu and Cochoff, 2002). Experiments on public image datasets show that the proposed method improves registration accuracy compared with existing gray-level-based medical image registration algorithms.

Suppose that two medical images are to be registered. One is the reference image, denoted by A, and the other is the floating image, denoted by B. The two images may come from different sensors. Each pixel position x in the common image space corresponds to one pixel value in each image. The joint intensity vector collects the intensities of both images at that position:

$$I_x=\left[A_x,\;B_x\right]^{T}$$

where $A_x$ and $B_x$ denote the pixel values of the reference image and the floating image at position x, respectively. To register the two images, N registration parameters are assigned to each image to describe its spatial transformation, and θ denotes the set of all registration parameters. The joint intensity vector after applying the registration parameters is then written as $I_x(\theta)$.
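
As a concrete illustration of the joint intensity vector, the sketch below stacks the reference intensities with the intensities of a warped floating image. It assumes a rigid parameterization θ = (tx, ty, angle) with the angle in degrees, rotation about the image center, and bilinear interpolation via SciPy. The function name `joint_intensity` is hypothetical, and the paper's own transformation model may differ.

```python
import numpy as np
from scipy import ndimage


def joint_intensity(ref, flt, theta):
    """Return an (X, 2) array of joint intensity vectors I_x(theta) = [A_x, B_x(theta)].

    theta = (tx, ty, angle_deg) is an assumed rigid parameterization: the
    floating image is rotated about its center, then shifted by (tx, ty).
    """
    tx, ty, angle_deg = theta
    # Rotate about the array center without changing its shape (order=1: bilinear).
    warped = ndimage.rotate(flt, angle_deg, reshape=False, order=1, mode="nearest")
    # ndimage.shift expects shifts in (row, col) order, i.e. (ty, tx).
    warped = ndimage.shift(warped, (ty, tx), order=1, mode="nearest")
    # One row per pixel position x: [reference intensity, warped floating intensity].
    return np.stack([ref.ravel(), warped.ravel()], axis=1)
```

Plotting the two columns against each other gives the joint intensity scatter plot that the mixture model is fitted to.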

The BGGMM is used to describe the distribution of the joint intensity. The probability distribution of the joint intensity vector is

$$p\big(I_x(\theta)\mid\rho\big)=\sum_{m=1}^{M}\tau_m\,\mathrm{BG}\big(I_x(\theta)\mid u_m,\sigma_m,\Lambda_m\big)$$

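where $\rho=\{u_m,\sigma_m,\Lambda_m,\tau_m\}_{m=1}^{M}$ denotes the model parameters, and M is the number of bounded generalized Gaussian (BGG) components in the mixture model. $u_m$, $\sigma_m$, and $\Lambda_m$ are the mean, covariance, and shape parameters of the m-th BGG component, respectively. $\tau_m$ is the weight of that component in the mixture and satisfies $\tau_m\ge 0$ and $\sum_{m=1}^{M}\tau_m=1$. BG(·) denotes a BGG distribution, i.e.,

$$\mathrm{BG}\big(I_x\mid u_m,\sigma_m,\Lambda_m\big)=\frac{\mathrm{GG}\big(I_x\mid u_m,\sigma_m,\Lambda_m\big)\,H\big(I_x\mid\partial_m\big)}{\int_{\partial_m}\mathrm{GG}\big(y\mid u_m,\sigma_m,\Lambda_m\big)\,dy},\qquad H\big(I_x\mid\partial_m\big)=\begin{cases}1, & I_x\in\partial_m\\ 0, & \text{otherwise}\end{cases}$$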

where $\partial_m$ denotes the bounded support region of the m-th component, and the generalized Gaussian distribution GG(·) is written as

and

Where Γ(⋅) is the gamma function.
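
The bounded density can be sketched in one dimension as follows. We assume the generalized Gaussian parameterization commonly used for such mixtures, GG(x) = λA(λ)/(2σΓ(1/λ))·exp(−[A(λ)|x − μ|/σ]^λ) with A(λ) = [Γ(3/λ)/Γ(1/λ)]^{1/2}. Under this form σ is the standard deviation and λ = 2 gives a Gaussian. This may not match the paper's equations exactly. The normalizing integral over the support [0, 255] is computed with a simple trapezoidal rule. For the two-dimensional joint intensity, one could take the product over the two intensity dimensions, which is a further assumption.

```python
import numpy as np
from scipy.integrate import trapezoid
from scipy.special import gammaln


def gg_logpdf(x, mu, sigma, lam):
    """Log-density of a univariate generalized Gaussian (assumed parameterization).

    GG(x) = lam * A / (2 * sigma * Gamma(1/lam)) * exp(-(A * |x - mu| / sigma) ** lam),
    A(lam) = sqrt(Gamma(3/lam) / Gamma(1/lam)); sigma is the standard deviation.
    """
    log_a = 0.5 * (gammaln(3.0 / lam) - gammaln(1.0 / lam))
    z = np.exp(log_a) * np.abs(np.asarray(x, dtype=float) - mu) / sigma
    return np.log(lam) + log_a - np.log(2.0 * sigma) - gammaln(1.0 / lam) - z ** lam


def bgg_pdf(x, mu, sigma, lam, lo=0.0, hi=255.0, n_grid=20001):
    """Bounded GG on [lo, hi]: GG(x) * H(x) / (GG probability mass inside [lo, hi])."""
    grid = np.linspace(lo, hi, n_grid)
    mass = trapezoid(np.exp(gg_logpdf(grid, mu, sigma, lam)), grid)  # normalizer
    x = np.asarray(x, dtype=float)
    inside = (x >= lo) & (x <= hi)                                   # indicator H
    return np.where(inside, np.exp(gg_logpdf(x, mu, sigma, lam)) / mass, 0.0)
```

Near the bounds the renormalization matters. With a mean of 250 the unbounded density leaks mass above 255, and the bounded version redistributes that mass inside the support.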

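Letting X denote the number of pixels, the log-likelihood function of image registration is

$$\mathcal{L}(\theta,\rho)=\sum_{x=1}^{X}\log\sum_{m=1}^{M}\tau_m\,\mathrm{BG}\big(I_x(\theta)\mid u_m,\sigma_m,\Lambda_m\big)$$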
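
Evaluating this log-likelihood directly can underflow when component densities are tiny. The sketch below therefore works in the log domain with `scipy.special.logsumexp`. It assumes the per-pixel, per-component log-densities log BG(I_x(θ) | u_m, σ_m, Λ_m) have already been computed into an X × M array. `mixture_loglik` is a hypothetical helper name.

```python
import numpy as np
from scipy.special import logsumexp


def mixture_loglik(log_comp, tau):
    """Log-likelihood sum_x log sum_m tau_m * BG(I_x(theta) | rho_m).

    log_comp: (X, M) array of log BG(I_x(theta) | u_m, sigma_m, Lambda_m).
    tau:      (M,) mixing weights, nonnegative and summing to one.
    log-sum-exp over the components avoids underflow for very small densities.
    """
    return float(np.sum(logsumexp(log_comp + np.log(tau), axis=1)))
```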

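Within the maximum likelihood framework, a hidden variable $z_{xm}$ is introduced to indicate the cluster to which $I_x(\theta)$ belongs: $z_{xm}=1$ if it belongs to the m-th BGG component and $z_{xm}=0$ otherwise. The complete-data log-likelihood of the model can then be written as

$$\mathcal{L}_c(\theta,\rho)=\sum_{x=1}^{X}\sum_{m=1}^{M}z_{xm}\Big[\log\tau_m+\log\mathrm{BG}\big(I_x(\theta)\mid u_m,\sigma_m,\Lambda_m\big)\Big]$$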

Based on this model, the EM algorithm is used to estimate the model parameters. Each EM iteration consists of two steps, the E-step and the M-step.

Step E:

$$Q\big(\rho,\rho^{(t)}\big)=\mathbb{E}_{z\mid I,\rho^{(t)}}\big[\mathcal{L}_c(\theta,\rho)\big]$$

Step M:

$$\rho^{(t+1)}=\arg\max_{\rho}\,Q\big(\rho,\rho^{(t)}\big)$$

Here, t denotes the t-th iteration. The final model parameters are obtained by alternating these two steps until convergence.

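In the E-step, the posterior probability that $I_x(\theta)$ belongs to the m-th cluster is

$$\eta(z_{xm})=\frac{\tau_m^{(t)}\,\mathrm{BG}\big(I_x(\theta)\mid u_m^{(t)},\sigma_m^{(t)},\Lambda_m^{(t)}\big)}{\sum_{k=1}^{M}\tau_k^{(t)}\,\mathrm{BG}\big(I_x(\theta)\mid u_k^{(t)},\sigma_k^{(t)},\Lambda_k^{(t)}\big)}$$

Using the posterior distribution $\eta(z_{xm})$ and the current parameters $\rho^{(t)}$, the expected complete-data log-likelihood becomes

$$Q\big(\rho,\rho^{(t)}\big)=\sum_{x=1}^{X}\sum_{m=1}^{M}\eta(z_{xm})\Big[\log\tau_m+\log\mathrm{BG}\big(I_x(\theta)\mid u_m,\sigma_m,\Lambda_m\big)\Big]$$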
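
The E-step can be sketched in the same log-domain style. The posterior η(z_xm) is a row-wise normalization of τ_m·BG(·), and Q is the η-weighted sum of log τ_m + log BG(·). The inputs follow the same assumed X × M log-density layout, and the function names are illustrative.

```python
import numpy as np
from scipy.special import logsumexp


def e_step(log_comp, tau):
    """Posterior eta(z_xm) = tau_m BG_m(I_x) / sum_k tau_k BG_k(I_x), computed in log space."""
    log_w = log_comp + np.log(tau)                       # (X, M): log tau_m + log BG_m
    log_eta = log_w - logsumexp(log_w, axis=1, keepdims=True)
    return np.exp(log_eta)


def expected_complete_loglik(log_comp, tau, eta):
    """Q = sum_x sum_m eta_xm * (log tau_m + log BG_m(I_x))."""
    return float(np.sum(eta * (log_comp + np.log(tau))))
```

Q is evaluated at the same parameters that produced η, so it never exceeds the log-likelihood. The gap is the entropy of η, which makes this a cheap sanity check.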

In the M-step, the parameters at iteration t + 1 are obtained by maximizing Equation (10). The results are as follows:

Where Rm represents:

In formula (12), sign(x) equals 1 when x ≥ 0 and 0 otherwise. $s_{mo}$ denotes the o-th random sample drawn from the probability distribution of the m-th component, and O is the number of samples $s_{mo}$. O must be a large integer, and $O = 10^6$ is used in this paper.
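
The sampling-based normalizer described in this paragraph can be sketched as follows. We assume that the samples s_mo are drawn from the unbounded generalized Gaussian of component m, using the same assumed parameterization as the density sketch above. We also assume that the indicator sign(·) is applied to s − lo and hi − s to test membership in the support [lo, hi]. The sampler relies on the standard identity |x − μ| = (σ/A)·W^{1/λ} with W ~ Gamma(1/λ, 1). The default O = 10^6 follows the paper.

```python
import numpy as np
from scipy.special import gammaln


def sample_gg(mu, sigma, lam, size, rng):
    """Draw from GG(mu, sigma, lam) via |x - mu| = (sigma / A) * W**(1/lam), W ~ Gamma(1/lam, 1)."""
    a = np.exp(0.5 * (gammaln(3.0 / lam) - gammaln(1.0 / lam)))
    w = rng.gamma(shape=1.0 / lam, scale=1.0, size=size)
    signs = rng.choice([-1.0, 1.0], size=size)        # the density is symmetric about mu
    return mu + signs * (sigma / a) * w ** (1.0 / lam)


def indicator(x):
    """The paper's sign(x): 1 when x >= 0, otherwise 0."""
    return (np.asarray(x) >= 0).astype(float)


def support_mass_mc(mu, sigma, lam, lo=0.0, hi=255.0, O=10**6, seed=0):
    """Monte Carlo estimate of the GG probability mass inside [lo, hi] from O samples s_mo."""
    rng = np.random.default_rng(seed)
    s = sample_gg(mu, sigma, lam, O, rng)
    # A sample lies in [lo, hi] exactly when s - lo >= 0 and hi - s >= 0.
    return float(np.mean(indicator(s - lo) * indicator(hi - s)))
```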

Where Gm represents:

With the other parameters fixed, Λm is estimated with the Newton-Raphson method. Each iteration requires the first and second derivatives of $Q(\rho,\rho^{(t)})$ with respect to Λm. The next iterate of Λm is

$$\Lambda_m^{(t+1)}=\Lambda_m^{(t)}-\vartheta\,\frac{\partial Q\big(\rho,\rho^{(t)}\big)/\partial\Lambda_m}{\partial^{2}Q\big(\rho,\rho^{(t)}\big)/\partial\Lambda_m^{2}}$$

where ϑ is a scale factor, and the derivative of $Q(\rho,\rho^{(t)})$ with respect to Λm is given by
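
The damped Newton-Raphson update for the shape parameter can be illustrated on a simplified objective. This sketch uses the η-weighted log-density of a single unbounded generalized Gaussian component as a stand-in for Q and ignores the bounded-support normalizer. It also replaces the closed-form first and second derivatives with central finite differences and adds a backtracking safeguard. Here `step` plays the role of the scale factor ϑ. All names are hypothetical.

```python
import numpy as np
from scipy.special import gammaln


def weighted_gg_loglik(lam, x, w, mu, sigma):
    """Stand-in for Q in the shape parameter: sum_x w_x * log GG(x | mu, sigma, lam).

    The bounded-support normalizer is ignored here (simplifying assumption).
    """
    log_a = 0.5 * (gammaln(3.0 / lam) - gammaln(1.0 / lam))
    z = np.exp(log_a) * np.abs(x - mu) / sigma
    logpdf = np.log(lam) + log_a - np.log(2.0 * sigma) - gammaln(1.0 / lam) - z ** lam
    return float(np.sum(w * logpdf))


def newton_shape(x, w, mu, sigma, lam0=2.0, step=1.0, iters=50, h=1e-3, tol=1e-8):
    """Damped Newton-Raphson: lam <- lam - step * Q'(lam) / Q''(lam), with backtracking."""
    def f(lam_value):
        return weighted_gg_loglik(lam_value, x, w, mu, sigma)

    lam = lam0
    for _ in range(iters):
        f0, fp, fm = f(lam), f(lam + h), f(lam - h)
        d1 = (fp - fm) / (2.0 * h)              # first derivative (central difference)
        d2 = (fp - 2.0 * f0 + fm) / h ** 2      # second derivative
        # Newton direction where Q is locally concave, else a small ascent step.
        direction = -d1 / d2 if d2 < 0 else 0.1 * np.sign(d1)
        t = step
        new = max(lam + t * direction, 0.1)     # keep the shape parameter positive
        while f(new) < f0 and t > 1e-6:         # backtrack until Q does not decrease
            t *= 0.5
            new = max(lam + t * direction, 0.1)
        if f(new) < f0:
            return lam                          # no ascent found along this direction
        if abs(new - lam) < tol:
            return new
        lam = new
    return lam
```

The backtracking loop is a design choice of this sketch rather than part of the paper. It keeps each update from decreasing the objective when a full Newton step overshoots.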

Where:

The second derivative of $Q(\rho,\rho^{(t)})$ with respect to Λm is

Where,

Finally, the prior probability (mixing weight) of each component is updated as

$$\tau_m^{(t+1)}=\frac{1}{X}\sum_{x=1}^{X}\eta(z_{xm})$$

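The registration parameter θ is optimized by setting the derivative of $Q(\rho,\rho^{(t)})$ with respect to θ to zero:

$$\frac{\partial Q\big(\rho,\rho^{(t)}\big)}{\partial\theta}=0$$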

To find a motion parameter θ that satisfies Equation (23), a small motion increment Δθ is introduced and θ + Δθ is used as the estimated parameter. A linear approximation of the spatial transformation gives

$$I_x(\theta+\Delta\theta)\approx I_x(\theta)+\frac{\partial I_x(\theta)}{\partial\theta}\,\Delta\theta$$

Substituting Equation (24) into Equation (23) gives the following:

The registration parameters are then optimized by solving Equation (25) for the motion increment.
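
The linearize-and-solve idea can be illustrated with a simpler stand-in objective. The sketch below estimates a pure translation. At each iteration it linearizes the warped floating image and solves the least-squares normal equations for the increment, which is a Gauss-Newton step on the sum of squared differences rather than on the BGGMM objective used in the paper. It is meant only to show how a small increment Δθ follows from a first-order expansion.

```python
import numpy as np
from scipy import ndimage


def gauss_newton_translation(ref, flt, iters=20, tol=1e-6):
    """Estimate delta = (d_row, d_col) such that flt(x + delta) ~ ref(x).

    Each iteration warps flt by the current delta, linearizes
    flt(x + delta + d) ~ W(x) + grad W(x) . d, and solves the normal
    equations (J^T J) d = J^T (ref - W) for the small increment d.
    """
    delta = np.zeros(2)
    for _ in range(iters):
        warped = ndimage.shift(flt, -delta, order=3, mode="nearest")  # W(x) = flt(x + delta)
        g_row, g_col = np.gradient(warped)                             # spatial gradient of W
        J = np.stack([g_row.ravel(), g_col.ravel()], axis=1)           # (X, 2) Jacobian
        r = (ref - warped).ravel()                                     # residual
        d = np.linalg.solve(J.T @ J, J.T @ r)                          # increment
        delta += d
        if np.linalg.norm(d) < tol:
            break
    return delta
```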

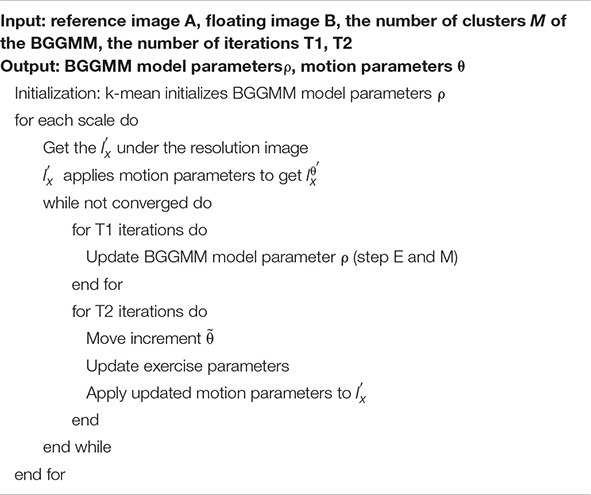

In summary, the proposed BGGMM-based image registration algorithm is shown in Algorithm 1 and Figure 1. The M BGG components in the joint intensity scatter plot of the images to be registered are treated as M clusters. The k-means method is used to find the cluster centers, which initialize the parameters of the BGGMM. The shape parameter Λm is initialized to 2, which corresponds to the Gaussian case. In addition, multi-resolution registration is used, with resolution scales of 0.1, 0.2, and 1. The images are first registered at low resolution and then at higher resolution, and the result at each resolution initializes the registration at the next one. This reduces computation time and accelerates the convergence of the iterative algorithm.
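
The coarse-to-fine schedule can be sketched as below. It assumes rigid parameters (ty, tx, angle). Translations are measured in pixels and therefore rescale with the resolution, while the angle does not. `register_level` is a hypothetical per-level routine, for example the EM and motion updates of Algorithm 1. The images are resampled with `scipy.ndimage.zoom`.

```python
import numpy as np
from scipy import ndimage


def propagate(theta, s_from, s_to):
    """Map rigid parameters (ty, tx, angle) from one resolution scale to another."""
    ty, tx, angle = theta
    k = s_to / s_from                   # pixel translations scale with resolution
    return (ty * k, tx * k, angle)      # the rotation angle is scale-invariant


def coarse_to_fine(ref, flt, register_level, scales=(0.1, 0.2, 1.0)):
    """Register at each scale in turn, seeding each level with the previous result.

    register_level(ref_s, flt_s, theta0) -> theta is a hypothetical per-level routine.
    """
    theta = (0.0, 0.0, 0.0)
    prev = scales[0]
    for s in scales:
        theta = propagate(theta, prev, s)
        ref_s = ndimage.zoom(ref, s, order=1) if s != 1.0 else ref
        flt_s = ndimage.zoom(flt, s, order=1) if s != 1.0 else flt
        theta = register_level(ref_s, flt_s, theta)
        prev = s
    return theta
```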

Algorithm 1. Description of algorithm for medical image registration based on BGGMM.

First, the EM algorithm estimates the BGGMM parameters ρ on the joint intensity scatter plot for T1 iterations. The motion parameters are then updated for T2 iterations using a small motion increment. These two stages are repeated until convergence to achieve image registration.
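
The alternation in Algorithm 1 can be outlined as a small driver loop. The sketch assumes two hypothetical callables: `em_step` performs one EM update of ρ, and `motion_step` performs one motion-increment update of θ. It treats θ as a flat sequence of floats and uses a simple maximum-change convergence test. T1, T2, and the tolerance are placeholder values, not those used in the paper.

```python
def bggmm_register(rho, theta, em_step, motion_step, T1=10, T2=5, max_outer=100, tol=1e-4):
    """Outer loop of Algorithm 1 (structure only).

    em_step(rho, theta) -> rho       : one EM iteration for the BGGMM parameters (hypothetical)
    motion_step(rho, theta) -> theta : one motion-increment update of theta (hypothetical)
    """
    for _ in range(max_outer):
        old = list(theta)
        for _ in range(T1):              # estimate rho with theta fixed
            rho = em_step(rho, theta)
        for _ in range(T2):              # update theta with rho fixed
            theta = motion_step(rho, theta)
        # Stop once no motion parameter changed by more than tol in a full outer pass.
        if max(abs(a - b) for a, b in zip(theta, old)) < tol:
            break
    return rho, theta
```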

The experiments in this paper are run on a computer with an Intel(R) Core(TM) i5-7300HQ CPU @ 2.50 GHz and 8 GB RAM under 64-bit Windows 10. All simulations are implemented in MATLAB R2020b.

The mutual information method (MI) (Lu et al., 2008), the enhanced correlation coefficient (ECC) method (Evangelidis and Psarakis, 2008), and the ensemble registration approach (ER) (Orchard and Mann, 2009) are used as baselines to evaluate the performance of the proposed method. The average pixel displacement (PAD) (Li et al., 2016) serves as the registration error and gives an objective measure of each approach's performance. PAD is zero for a perfect registration. The larger the PAD, the larger the deviation and the lower the registration accuracy. A registration with PAD greater than 3 pixels is considered to have failed.
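
A minimal sketch of the PAD metric follows. It assumes PAD is the mean Euclidean distance between the positions to which the true and the estimated rigid transforms map each pixel of the image grid. The exact definition in Li et al. (2016) may differ. The transform is assumed to rotate about the image center by an angle in degrees and then translate by (tx, ty).

```python
import numpy as np


def rigid_map(points, theta, center):
    """Apply x -> R(angle) (x - center) + center + t to (N, 2) (x, y) points; theta = (tx, ty, angle_deg)."""
    tx, ty, angle_deg = theta
    a = np.deg2rad(angle_deg)
    R = np.array([[np.cos(a), -np.sin(a)], [np.sin(a), np.cos(a)]])
    return (points - center) @ R.T + center + np.array([tx, ty])


def pad(theta_true, theta_est, shape):
    """Mean distance (pixels) between where the true and estimated transforms send each pixel."""
    h, w = shape
    xs, ys = np.meshgrid(np.arange(w, dtype=float), np.arange(h, dtype=float))
    pts = np.stack([xs.ravel(), ys.ravel()], axis=1)
    center = np.array([(w - 1) / 2.0, (h - 1) / 2.0])
    diff = rigid_map(pts, theta_true, center) - rigid_map(pts, theta_est, center)
    return float(np.mean(np.linalg.norm(diff, axis=1)))


def registration_failed(pad_value, threshold=3.0):
    """Failure criterion used in the experiments: PAD greater than 3."""
    return pad_value > threshold
```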

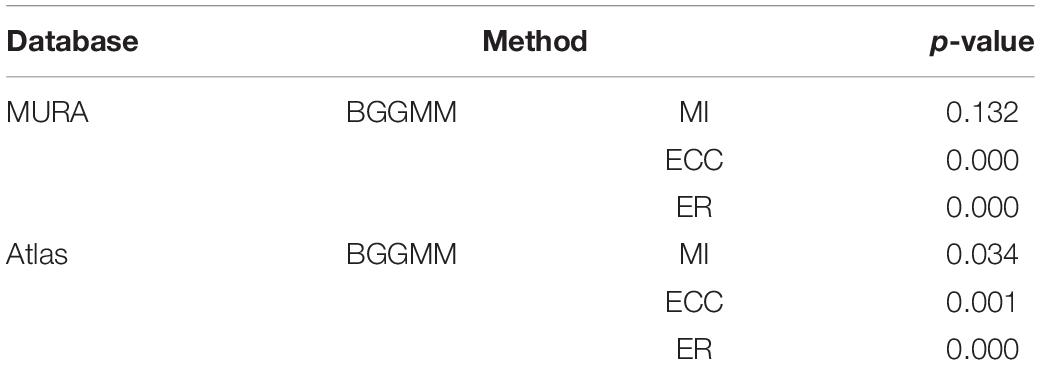

The performance of these methods is verified on the public MURA (Rajpurkar et al., 2017) and Atlas (Yu and Zheng, 2016) image datasets. Table 1 summarizes the PAD results on the two datasets, with bold values indicating the best results. A t-test is used to assess whether the PAD results of the BGGMM method differ significantly from those of the other three registration methods, with P < 0.05 taken to indicate statistical significance. The results are summarized in Table 2. On both the MURA and Atlas datasets, the BGGMM method achieved the smallest PAD, and its differences from the ECC and ER methods were statistically significant (P < 0.05). On the MURA dataset, the difference between the PAD results of the BGGMM and MI methods was not statistically significant (P > 0.05). On the Atlas dataset, however, the PAD of the BGGMM method was smaller than that of the MI method, and the difference was statistically significant (P < 0.05).
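
The significance test can be sketched with SciPy. The paper does not state which t-test variant is used. The sketch therefore defaults to a paired test over per-image PAD values (`scipy.stats.ttest_rel`), which assumes both methods are evaluated on the same images. Otherwise it falls back to an independent two-sample test (`scipy.stats.ttest_ind`).

```python
import numpy as np
from scipy import stats


def compare_pad(pad_bggmm, pad_other, alpha=0.05, paired=True):
    """Two-sided t-test on per-image PAD values; returns (t, p, significant)."""
    a = np.asarray(pad_bggmm, dtype=float)
    b = np.asarray(pad_other, dtype=float)
    res = stats.ttest_rel(a, b) if paired else stats.ttest_ind(a, b)
    # A negative t means the first method (BGGMM) has the smaller mean PAD.
    return float(res.statistic), float(res.pvalue), bool(res.pvalue < alpha)
```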

Table 2. t-test results comparing the PAD of BGGMM with that of other image registration methods on public datasets.

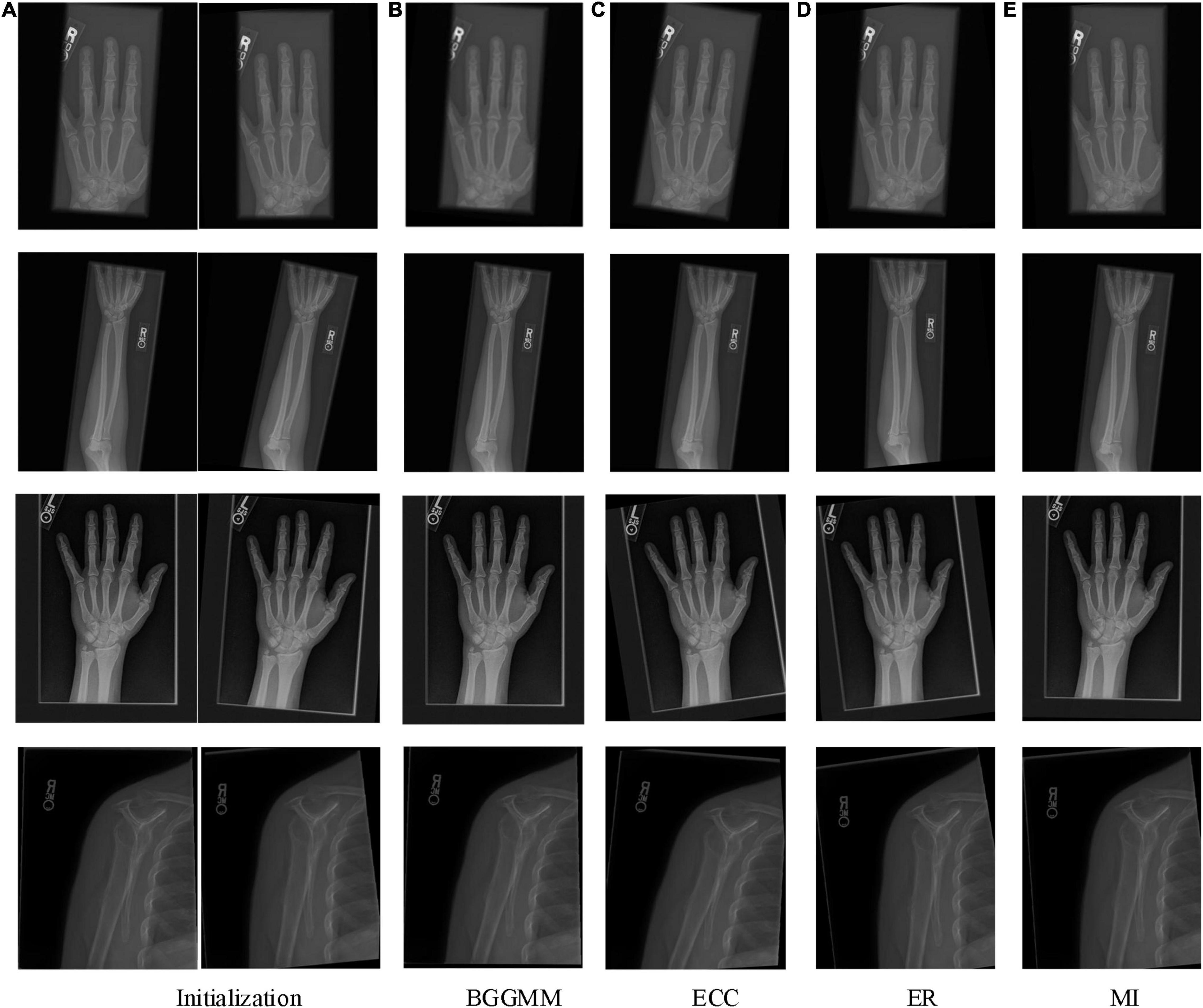

The proposed approach is tested on an ensemble of MURA images taken from the training set of the MURA (Large Dataset for Abnormality Detection in Musculoskeletal Radiographs) project. The MURA dataset includes 12,173 patients, 14,863 studies, and 40,561 multi-view radiographs. Each study belongs to one of seven standard upper-limb radiographic study types: finger, elbow, forearm, hand, humerus, shoulder, and wrist. Radiologists manually labeled each study as normal or abnormal. One slice of this dataset is depicted in Figure 2. The initial image to be registered is generated by a random translation and rotation, with translation parameters in the range [−20, 20] pixels and rotation angles in the range [−10, 10]. The number of BGG components in the initial model is set to M = 6.
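
Test pairs of this kind can be generated as sketched below. The sketch assumes the rotation angle is in degrees and that parameters are drawn uniformly from the stated ranges. It rotates the image about its center before shifting it, with zero fill outside the field of view. The function names are hypothetical.

```python
import numpy as np
from scipy import ndimage


def random_rigid(rng, max_shift=20.0, max_angle=10.0):
    """Draw (tx, ty, angle) uniformly from [-max_shift, max_shift]^2 x [-max_angle, max_angle]."""
    tx, ty = rng.uniform(-max_shift, max_shift, size=2)
    angle = rng.uniform(-max_angle, max_angle)
    return float(tx), float(ty), float(angle)


def make_floating(image, theta):
    """Rotate about the center by angle (degrees, assumed), then shift by (tx, ty) pixels."""
    tx, ty, angle = theta
    out = ndimage.rotate(image, angle, reshape=False, order=1, mode="constant", cval=0.0)
    return ndimage.shift(out, (ty, tx), order=1, mode="constant", cval=0.0)
```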

The PAD values on the MURA dataset are summarized in the first column of Table 1. The average registration error of the proposed BGGMM method is lower than that of the other methods. The BGGMM method also better preserves the edges and information content of the source images. Figure 3 shows the registration results of the four methods, each of which registers the source image with a rotated and translated copy of it. Figure 3A shows the source image and the image to be registered.

Figure 3. Registration results of the four methods on four skeletal images from the MURA dataset. (A) Initialization. (B) BGGMM. (C) ECC. (D) ER. (E) MI.

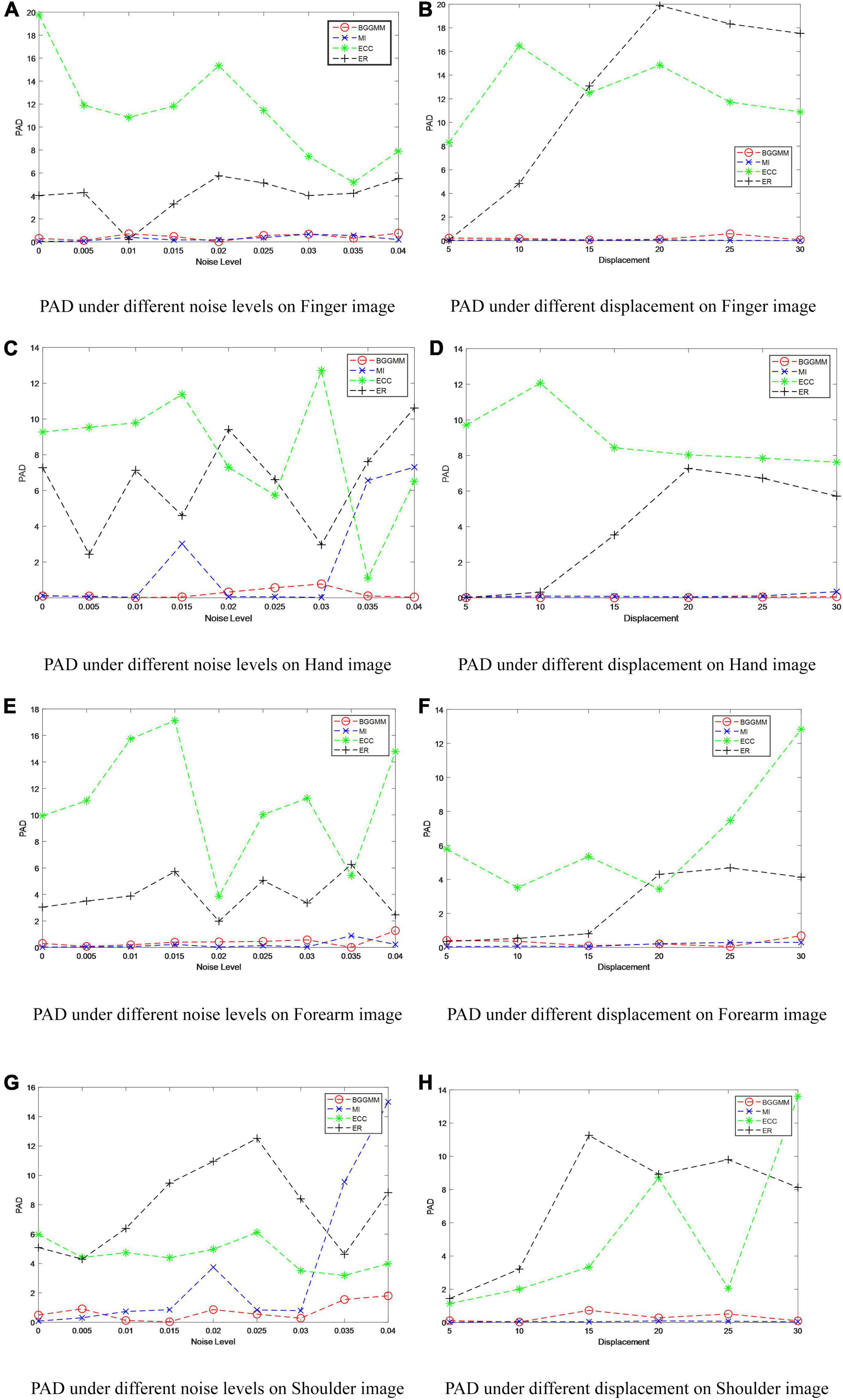

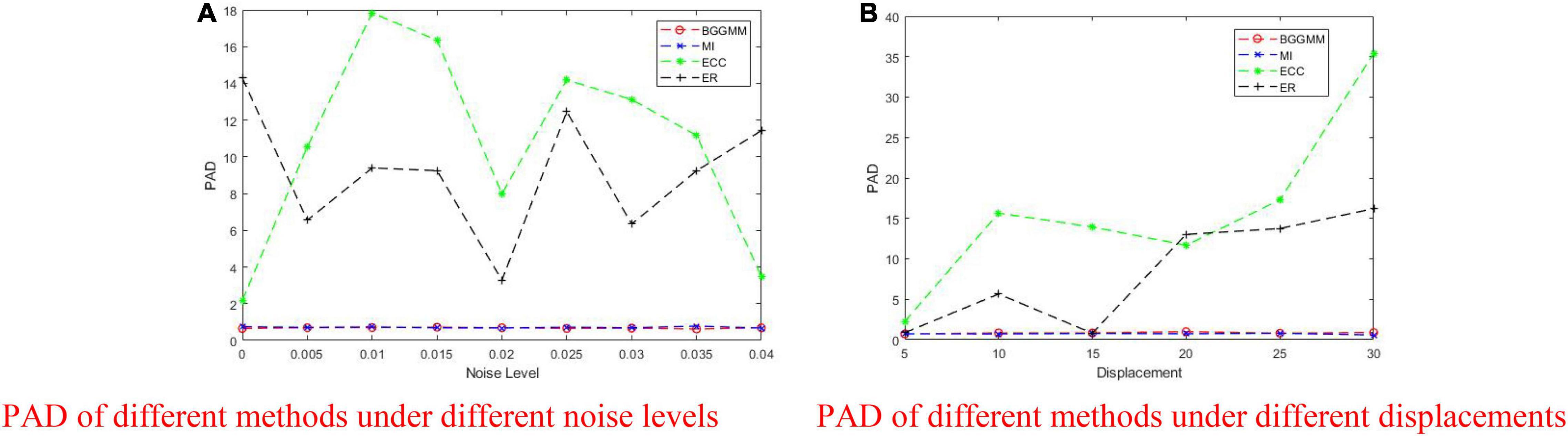

To test the robustness of BGGMM to noise, Gaussian noise of increasing level is added to the Finger image. The noise has zero mean, and its variance ranges from 0 to 0.04. Figure 4A compares the registration errors of the methods at these noise levels. The ER algorithm has the largest registration error, and the BGGMM algorithm has a lower registration error than the other methods at every noise level.
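
The noise sweep can be sketched as follows. It assumes that intensities are scaled to [0, 1] and that the stated variance refers to that scale. This matches our understanding of MATLAB's `imnoise(I, 'gaussian', 0, var)` for double-precision images, which clips the result to [0, 1]. The nine-point grid of variances is also an assumption, since the paper does not list the exact levels.

```python
import numpy as np


def add_gaussian_noise(image, var, rng):
    """Add zero-mean Gaussian noise of variance `var` to an image in [0, 1], then clip to [0, 1]."""
    noisy = image + rng.normal(0.0, np.sqrt(var), size=image.shape)
    return np.clip(noisy, 0.0, 1.0)


# Variances spanning the stated 0 to 0.04 range; the step size is an assumption.
variances = np.linspace(0.0, 0.04, 9)
```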

Figure 4. PAD of different methods under different noise levels and different displacements in MURA dataset. (A) PAD under different noise levels on Finger image. (B) PAD under different displacement on Finger image. (C) PAD under different noise levels on Hand image. (D) PAD under different displacement on Hand image. (E) PAD under different noise levels on Forearm image. (F) PAD under different displacement on Forearm image. (G) PAD under different noise levels on Shoulder image. (H) PAD under different displacement on Shoulder image.

The registration performance on the Finger image is also tested under different displacements, as shown in Figure 4B. The displacement is introduced by moving the image t pixels horizontally and vertically, with t ranging from 0 to 30 (the horizontal axis in Figure 4B). The proposed algorithm outperforms the other algorithms under the different displacements. The ECC algorithm is sensitive to displacement, and its results are regarded as registration failures. The ER algorithm registers well when the displacement is small, whereas the BGGMM algorithm performs best when the displacement is large. Similarly, Figures 4C–H show the PAD values of the different methods on the Hand, Forearm, and Shoulder images under different noise levels and displacements. In these cases the proposed method also has the lowest registration error and the best registration performance.

The Atlas dataset is a multimodal dataset that includes more than 13,000 MRI and CT images of patients with brain diseases. Its MRI images include T1-, T2-, and PD-weighted images. It also includes lesion images of patients acquired at different time points of the lesion. T1-, T2-, and PD-weighted MRI images are selected for this experiment, as shown in Figure 5. The initial image to be registered is generated by a random translation and rotation, with translation parameters in the range [−20, 20] pixels and rotation angles in the range [−10, 10]. The number of BGG components in the initial model is set to M = 6.

The PAD values on the Atlas dataset are summarized in the second column of Table 1. The average registration error of the proposed BGGMM method is significantly lower than that of the other methods. The BGGMM method also better preserves the edge information of the source image. Figure 6 shows the registration results of the four methods, in which images of two different modalities are registered with each other.

The registration performance of the BGGMM, ECC, and ER methods is also tested under different levels of Gaussian noise with zero mean and variance ranging from 0 to 0.04. Figure 7A compares the registration results at these noise levels. The ECC algorithm has the largest registration error. The PAD values of the other compared algorithms in this experiment exceed 3, so their results are regarded as registration failures. The BGGMM algorithm has the lowest PAD value and good registration performance.

Figure 7. PAD of BGGMM, ECC, ER, and MI methods under different noise levels and different displacements. (A) PAD of different methods under different noise levels. (B) PAD of different methods under different displacements.

As shown in Figure 7B, the displacement is introduced by moving the image t pixels horizontally and vertically, with t ranging from 0 to 30. When the displacement is large, the error of the ER algorithm grows and exceeds the range of successful registration. As the displacement increases, the PAD of the BGGMM algorithm remains low, and BGGMM performs best among the four algorithms.

A medical image registration method based on a BGGMM is proposed in this paper. First, a BGGMM is used to model the distribution of the joint intensity vectors of the medical images. The model is then formulated within a maximum likelihood (ML) framework, and its parameters are estimated with an EM algorithm. The experimental results indicate that the proposed BGGMM significantly improves registration performance on medical images compared with benchmark methods. The advantage is more pronounced for source images with more noise and larger displacements. Future work will build medical image fusion on BGGMM-based image registration to support medical image analysis.

The original contributions presented in this study are included in the article/supplementary material; further inquiries can be directed to the corresponding author(s).

YX and HZ conceived and designed the study. JW and KX conducted most of the experiments and data analysis and wrote the manuscript. KC, RL, and RN participated in collecting materials and assisting in drafting manuscripts. All authors reviewed and approved the manuscript.

This study was supported by the Talents’ Innovation Ability Training Program of Army Medical Center of PLA of China (Clinical Medicine Technological Innovation Ability Training Project, Grant No. 2019CXLCC017), the Key Talents Support Project of Army Medical University (Grant No. B-3261), the General Program of the National Natural Science Foundation of China (Grant Nos. 62073052 and 61905033), and the General Program of the Chongqing Natural Science Foundation of China (Grant No. cstc2021jcyj-msxmX0373).

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

Anuta, P. E. (1970). Spatial registration of multispectral and multitemporal digital imagery using fast Fourier transform techniques. IEEE Trans. Geosci. Electron. 8, 353–368. doi: 10.1109/tge.1970.271435

Dame, A., and Marchand, E. (2012). Second-order optimization of mutual information for real-time image registration. IEEE Trans. Image Process. 21, 4190–4203. doi: 10.1109/TIP.2012.2199124

Evangelidis, G. D., and Psarakis, E. Z. (2008). Parametric image alignment using enhanced correlation coefficient maximization. IEEE Trans. Pattern Anal. Mach. Intell. 30, 1858–1865. doi: 10.1109/TPAMI.2008.113

Frakes, D. H., Dasi, L. P., Pekkan, K., Kitajima, H. D., Sundareswaran, K., Yoganathan, A. P., et al. (2008). A new method for registration-based medical image interpolation. IEEE Trans. Med. Imaging 27, 370–377. doi: 10.1109/TMI.2007.907324

Gefen, S., Kiryati, N., and Nissanov, J. (2007). Atlas-based indexing of brain sections via 2-D to 3-D image registration. IEEE Trans. Biomed. Eng. 55, 147–156. doi: 10.1109/TBME.2007.899361

Gholipour, A., Kehtarnavaz, N., Briggs, R., Devous, M., and Gopinath, K. (2007). Brain functional localization: a survey of image registration techniques. IEEE Trans. Med. Imaging 26, 427–451. doi: 10.1109/TMI.2007.892508

Gupta, S., Gupta, P., and Verma, V. S. (2021). Study on anatomical and functional medical image registration methods. Neurocomputing 452, 534–548. doi: 10.1016/j.neucom.2020.08.085

Hill, D. L., Batchelor, P. G., Holden, M., and Hawkes, D. J. (2001). Medical image registration. Phys. Med. Biol. 46, R1–R45. doi: 10.1088/0031-9155/46/3/201

Huang, K. T. (2015). Feature based deformable registration of three-dimensional medical images for automated quantitative analysis and adaptive image guidance. Int. J. Radiat. Oncol. Biol. Phys. 93, E558–E559.

Klein, S., Staring, M., and Pluim, J. P. W. (2007). Evaluation of optimization methods for nonrigid medical image registration using mutual information and B-splines. IEEE Trans. Image Process. 16, 2879–2890. doi: 10.1109/tip.2007.909412

Li, Q., Li, S., Wu, Y., Guo, W., Qi, S., Huang, G., et al. (2020). Orientation-independent feature matching (OIFM) for multimodal retinal image registration. Biomed. Signal Process. Control 60:101957. doi: 10.1016/j.bspc.2020.101957

Li, Y., He, Z., Zhu, H., and Wu, Y. (2016). Jointly registering and fusing images from multiple sensors. Inform Fus. 27, 85–94. doi: 10.1016/j.inffus.2015.05.007

Lu, X., Zhang, S., Su, H., and Chen, Y. (2008). Mutual information-based multimodal image registration using a novel joint histogram estimation. Comput. Med. Imaging Graph. 32, 202–209. doi: 10.1016/j.compmedimag.2007.12.001

Nguyen, T. M., Wu, Q. J., and Zhang, H. (2014). Bounded generalized Gaussian mixture model. Pattern Recognit. 47, 3132–3142.

Orchard, J., and Mann, R. (2009). Registering a multisensor ensemble of images. IEEE Trans. Image Process. 19, 1236–1247. doi: 10.1109/tip.2009.2039371

Pluim, J. P. W., Maintz, J. B. A., and Viergever, M. A. (2000). Image registration by maximization of combined mutual information and gradient information. IEEE Trans. Med. Imaging 19, 809–814. doi: 10.1109/42.876307

Pluim, J. P. W., Maintz, J. B. A., and Viergever, M. A. (2004). f-information measures in medical image registration. IEEE Trans. Med. Imaging 23, 1508–1516. doi: 10.1109/TMI.2004.836872

Rajpurkar, P., Irvin, J., Bagul, A., Ding, D., Duan, T., Mehta, H., et al. (2017). MURA dataset: towards radiologist-level abnormality detection in musculoskeletal radiographs. arXiv [Preprint]. doi: 10.48550/arXiv.1712.06957

Ran, Y., and Xu, X. (2020). Point cloud registration method based on SIFT and geometry feature. Optik 203:163902. doi: 10.1016/j.ijleo.2019.163902

Reaungamornrat, S., De Silva, T., Uneri, A., Vogt, S., Kleinszig, G., Khanna, A. J., et al. (2016). MIND demons: symmetric diffeomorphic deformable registration of MR and CT for image-guided spine surgery. IEEE Trans. Med. Imaging 35, 2413–2424. doi: 10.1109/TMI.2016.2576360

Saygili, G., Staring, M., and Hendriks, E. A. (2015). Confidence estimation for medical image registration based on stereo confidences. IEEE Trans. Med. Imaging 35, 539–549. doi: 10.1109/TMI.2015.2481609

Sengupta, D., Gupta, P., and Biswas, A. (2021). A survey on mutual information based medical image registration algorithms. Neurocomputing 486, 174–188.

Sotiras, A., Davatzikos, C., and Paragios, N. (2013). Deformable medical image registration: a survey. IEEE Trans. Med. Imaging 32, 1153–1190. doi: 10.1109/TMI.2013.2265603

Visser, M., Petr, J., Müller, D. M., Eijgelaar, R. S., Hendriks, E. J., Witte, M., et al. (2020). Accurate MR image registration to anatomical reference space for diffuse glioma. Front. Neurosci. 14:585. doi: 10.3389/fnins.2020.00585

Weissler, B., Gebhardt, P., Dueppenbecker, P. M., Wehner, J., Schug, D., Lerche, C. W., et al. (2015). A digital preclinical PET/MRI insert and initial results. IEEE Trans. Med. Imaging 34, 2258–2270. doi: 10.1109/TMI.2015.2427993

Yan, X. C., Wei, S. M., Wang, Y. E., and Xue, Y. (2010). AGV’s image registration algorithm based on SSDA. Sci. Technol. Eng. 10, 696–699.

Yan, X., Zhang, Y., Zhang, D., and Hou, N. (2020). Multimodal image registration using histogram of oriented gradient distance and data-driven grey wolf optimizer. Neurocomputing 392, 108–120. doi: 10.1016/j.neucom.2020.01.107

Yang, W., Zhong, L., Chen, Y., Lin, L., Lu, Z., Liu, S., et al. (2018). Predicting CT image from MRI data through feature matching with learned nonlinear local descriptors. IEEE Trans. Med. Imaging 37, 977–987. doi: 10.1109/TMI.2018.2790962

Yu, W., and Zheng, G. (2016). “Atlas-Based Reconstruction of 3D Volumes of a Lower Extremity from 2D Calibrated X-ray Images,” in International Conference on Medical Imaging and Augmented Reality, (Cham: Springer International Publishing), 366–374. doi: 10.1007/978-3-319-43775-0_33

Zhang, J., Ma, W., Wu, Y., and Jiao, L. (2019). Multimodal remote sensing image registration based on image transfer and local features. IEEE Geosci. Remote Sens. Lett. 16, 1210–1214. doi: 10.1109/lgrs.2019.2896341

Zheng, L., Wang, Y., and Hao, C. (2011). "Cross-correlation registration algorithm based on the image rotation and projection," in 2011 4th International Congress on Image and Signal Processing, (Shanghai, China: IEEE), 1095–1098.

Zhu, H., Leung, H., and He, Z. (2013). State estimation in unknown non-Gaussian measurement noise using variational Bayesian technique. IEEE Trans. Aerosp. Electron. Syst. 49, 2601–2614. doi: 10.1109/TAES.2013.6621839

Zhu, H., Mi, J., Li, Y., Yuen, K. V., and Leung, H. (2021a). VB-Kalman Based Localization for Connected Vehicles with Delayed and Lost Measurements: theory and Experiments. IEEE ASME Trans. Mech. 49, 2601–2614. doi: 10.1109/TMECH.2021.3095096

Zhu, H., Zhang, G., Li, Y., and Leung, H. (2021b). A novel robust Kalman filter with unknown non-stationary heavy-tailed noise. Automatica 127:109511. doi: 10.1016/j.automatica.2021.109511

Zhu, H., Yuen, K. V., Mihaylova, L., and Leung, H. (2017). Overview of environment perception for intelligent vehicles. IEEE Trans. Intell. Transp. Syst. 18, 2584–2601. doi: 10.1109/TITS.2017.2658662

Zhu, H., Zhang, G., Li, Y., and Leung, H. (2022). An adaptive kalman filter with inaccurate noise covariances in the presence of outliers. IEEE Trans. Automat. Control 67, 374–381. doi: 10.1109/TAC.2021.3056343

Zhu, H., Zou, K., Li, Y., Cen, M., and Mihaylova, L. (2019). Robust non-rigid feature matching for image registration using geometry preserving. Sensors 19:2729. doi: 10.3390/s19122729

Keywords: medical image registration, gray-level-based registration, multimodal, Gaussian mixture model, bounded generalized Gaussian mixture model

Citation: Wang J, Xiang K, Chen K, Liu R, Ni R, Zhu H and Xiong Y (2022) Medical Image Registration Algorithm Based on Bounded Generalized Gaussian Mixture Model. Front. Neurosci. 16:911957. doi: 10.3389/fnins.2022.911957

Received: 03 April 2022; Accepted: 04 May 2022;

Published: 02 June 2022.

Edited by:

Yu Liu, Hefei University of Technology, China

Reviewed by:

Xin Jin, Yunnan University, China

Copyright © 2022 Wang, Xiang, Chen, Liu, Ni, Zhu and Xiong. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Hao Zhu, zhuhao@cqupt.edu.cn; Yan Xiong, xiongyan815@163.com

†These authors have contributed equally to this work
