- 1School of Systems and Enterprises, Stevens Institute of Technology, Hoboken, NJ, United States

- 2College of Electrical Engineering, Qingdao University, Qingdao, China

- 3Department of Dermatology, Massachusetts General Hospital, Harvard Medical School, Boston, MA, United States

- 4Department of Biomedical Informatics, Harvard Medical School, Boston, MA, United States

- 5Department of Electrical and Computer Engineering, Stevens Institute of Technology, Hoboken, NJ, United States

- 6MEG Center, Division of Neurology, Cincinnati Children’s Hospital Medical Center, Cincinnati, OH, United States

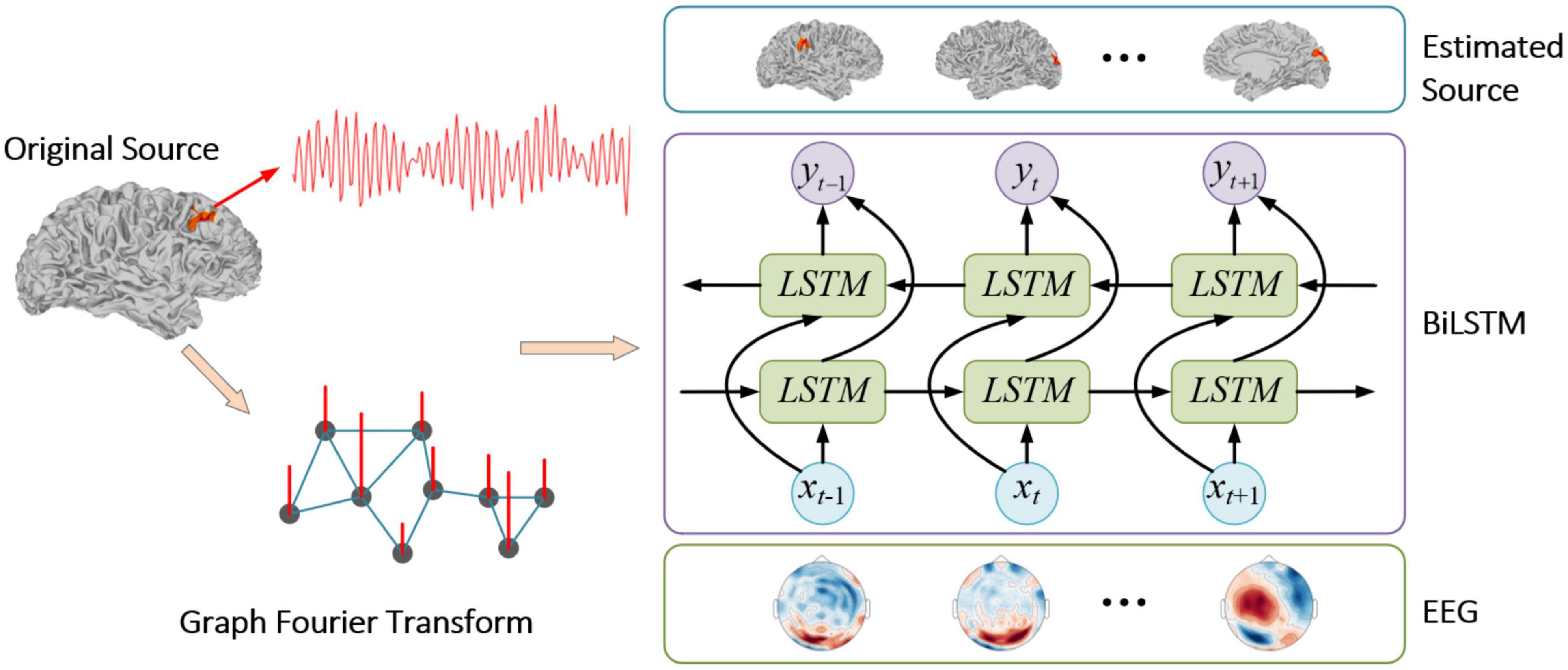

Electrophysiological source imaging (ESI) refers to the process of reconstructing underlying activated sources on the cortex given the brain signal measured by Electroencephalography (EEG) or Magnetoencephalography (MEG). Due to the ill-posed nature of ESI, solving ESI requires the design of neurophysiologically plausible regularization or priors to guarantee a unique solution. Recovering focally extended sources is more challenging, and traditionally uses a total variation regularization to promote spatial continuity of the activated sources. In this paper, we propose to use graph Fourier transform (GFT) based bidirectional long-short term memory (BiLSTM) neural network to solve the ESI problem. The GFT delineates the 3D source space into spatially high, medium and low frequency subspaces spanned by corresponding eigenvectors. The low frequency components can naturally serve as a spatially low-band pass filter to reconstruct extended areas of source activation. The BiLSTM is adopted to learn the mapping relationship between the projection of low-frequency graph space and the recorded EEG. Numerical results show the proposed GFT-BiLSTM outperforms other benchmark algorithms in synthetic data under varied signal-to-noise ratios (SNRs). Real data experiments also demonstrate its capability of localizing the epileptogenic zone of epilepsy patients with good accuracy.

Introduction

EEG/MEG source imaging (ESI), also known as EEG/MEG source localization, is a non-invasive neuroimaging technology that infers the location, direction, and distribution of the corresponding brain sources from the EEG or MEG data (He et al., 2018). Compared with the invasive modalities, the recording of EEG/MEG signals imposes minimum risks of blooding and inflammation of the brain (Portillo-Lara et al., 2021). Compared to other non-invasive brain imaging modalities, like computed tomography (CT), positron emission tomography (PET), functional magnetic resonance imaging (fMRI), and functional near-infrared spectroscopy (fNIRS), the temporal resolution of EEG is up to a millisecond (He et al., 2018), which allows it to track the electrical activity of neurons in smaller temporal granularity (Numata et al., 2019). The study of ESI is of great significance in both neuroscience and clinical applications (Congedo and Sherlin, 2011). Accurate estimation of brain sources can not only help neuroscientists to better understand the brain mechanism (Liu et al., 2019) and the pathological characteristics of brain injury or mental disorders (da Silva, 2013), but also help doctors to identify the lesion areas of brain diseases such as epilepsy focal regions, which can contribute to the improvement of the accuracy of presurgical evaluations (Sanei and Chambers, 2013).

However, the inverse problem of ESI is highly ill-posed (Qin et al., 2017; He et al., 2018; Cui et al., 2019), and there can be infinite numbers of source configurations that explain the EEG recording since the number of EEG sensors on the scalp is far less than the number of brain sources (Liu et al., 2017; Hecker et al., 2021). Consequently, numerous methods have been proposed to solve the ESI problem by incorporating different regularizations or prior information to seek a unique solution, as further discussed in see section “Related Work.” In recent years, deep learning has achieved great success in the fields of computer vision (Voulodimos et al., 2018), natural language processing (Young et al., 2018), bioinformatics (Min et al., 2017), etc., by employing its end-to-end feature extraction and representation capability (Deng and Yu, 2014; LeCun et al., 2015). Solving the inverse problems in the computer vision domain such as image reconstruction (Schlemper et al., 2017), super resolution (Dong et al., 2015), etc., has achieved great success by using a variety of artificial neural network (ANN) architectures such as the convolutional neural network (CNN) (LeCun and Bengio, 1995), the recurrent neural network (RNN) (Rodriguez et al., 1999), and the RNN with long short-term memory (LSTM) (Hochreiter and Schmidhuber, 1997).

To solve the ESI problem, deep learning frameworks have also been proposed in the past years, but with only a few existing works available. For example, Bore et al. (2021) introduced an RNN with LSTM units for spatiotemporal EEG source imaging and the proposed approach achieved good performance against the benchmark algorithms. Hecker et al. (2021) constructed a novel CNN-based structure, named ConvDip, to detect multiple sources, and this architecture is shown to outperform state-of-the-art methods. Wei et al. (2021) proposed an edge sparse basis network to learn the mapping between edge sparse source activation and recorded EEG signal.

As the source signal is defined on an irregular source space, where each source is defined as a vertex in a 3D source space (Liu et al., 2018), there exists a spatially connected graph structure among sources that have not been fully explored in the existing literature, especially with the recent advance of graph signal processing (Huang et al., 2016). In this work, we propose to employ the spatial-temporal structure of EEG source signal and come up with a new framework based on spatial graph Fourier transform (GFT) (Sandryhaila and Moura, 2013), and bidirectional LSTM (BiLSTM) neural network (Schuster and Paliwal, 1997), termed as GFT-BiLSTM to solve the ESI inverse problem. The main contributions of this paper are as follows:

(i) We propose to use the GFT on the 3D source space, and delineate the source space into spatially high, medium and low frequency subspaces spanned by corresponding eigenvectors, and the low frequency components naturally serve as a basis to estimate an extended areas of source activation.

(ii) By projecting the original source signal into a reduced dimensional subspace with low frequency eigenvectors, the dimension of output layer of BiLSTM can be greatly reduced.

(iii) The numerical experiments show that the proposed GFT-BiLSTM outperforms the benchmark algorithms based on area under the curve (AUC) and the localization error (LE).

Related Work

Given the ill-posedness nature of ESI, traditional methods typically adopt parsimonious models to get a unique solution by introducing priors or regularizations based on the assumptions from neural physiology, brain anatomy, etc. (Scherg and Berg, 1991). The first category of ESI approaches is the equivalent current dipole (ECD) source localization (Cover et al., 2007). This method treats the neural electrical activity of the cerebral cortex as one of several ECDs. With such a constraint, the spatial location and orientation of each ECD can be optimized to best interpret the measured EEG signals. The ECD model has played a certain role in the localization of focal brain activity. However, the real brain sources can have multiple source activations (Sanei and Chambers, 2013), while the ECD method can only locate a single source point which makes it unable to reconstruct the distributed pattern of activated sources (Zumer et al., 2007). Another category of ESI methods, namely distributed source localization framework, has become more widely used in recent years. The current density distribution (CDD) model-based approach does not make any prior assumptions on the number of dipoles but divides the cerebral cortex into numerous triangular grids (Liao et al., 2012). The neural electrical activity on the brain voxels is represented by brain sources defined on the 3D mesh grid. Since the location of each source in the CDD model is fixed, the distributed source imaging only needs to solve a linear inverse problem (Astolfi et al., 2004). Over the past few decades, many distributed source imaging algorithms have been developed. The most popular ones are based on L2 norm constraints such as the minimum norm estimate (MNE) (Koles, 1998), the dynamic statistical parametric mapping (dSPM) (Tanaka et al., 2009), the low-resolution electromagnetic tomography analysis (LORETA), and the exact LORETA (eLORETA), (Jatoi et al., 2014), etc. The computation of these methods is simple, but the resulting solutions can be overdiffuse (Ou et al., 2009). Consequently, the sparsity constraints-based source imaging algorithms have been proposed by many researchers, such as the minimum current estimate (MCE) (Wen et al., 1998), the focal underdetermined system solver (FOCUSS) (Murray and Kreutz-Delgado, 2001), etc. Another class of methods for the ESI inverse problem is the data-driven method, which mainly includes the subspace-based classic Multiple Signal Classification (MUSIC) (Vergallo and Lay-Ekuakille, 2013) and the beamforming approaches, such as the linearly constrained minimum variance (LCMV) beamformer (Lin et al., 2008). MUSIC is the version of the Spatio-temporal approach. Multiple dipoles can be found in this technique via scanning potential locations through one dipole model (Mosher et al., 1992). The LCMV beamformer is a type of adaptive spatial filter that localizes activity sources by minimizing the contributions of other uncorrelated sources (Wong and Gordon, 2009). Recent developments on ESI include some interesting works such as utilizing more sophisticated edge-sparse regularization (Sohrabpour et al., 2020), or multitask framework for source localization among multiple subjects (Janati et al., 2020), or employing manifold graph structure in the EEG source space (Liu et al., 2021), source localization using multimodality of fMRI and EEG (Nguyen et al., 2018). However, the graph structure of the spatially connected sources is not fully explored in the literature, as the graph signal processing technique (Ortega et al., 2018) can have a principled way to decompose the spatial graph signal into components with different spatial frequencies. In this work, we come up with a new framework based on spatial graph Fourier transform and bidirectional LSTM (BiLSTM) neural network to efficiently solve the brain source extents reconstruction problem.

Materials and Methods

In this section, we first give a brief introduction of the forward problem, then the spatial graph signal processing technique is explained, followed by structure of the BiLSTM neural network and finally, the GFT-BiLSTM model is introduced.

Forward Problem

The relationship between the scalp potential measured by the electrodes and the brain source distribution can be expressed as follows:

where t represents the time, vector x(t) ∈ Rn × 1 represents the EEG or MEG signal measured by n electrodes, matrix H ∈ Rn × m represents the lead field, vector s(t) ∈ Rm × 1 represents the source signal generated by m brain sources, and vector ε(t) ∈ Rn × 1 represents the additive noise from observation.

The forward model models the linear mapping between scalp potential measured by the electrodes and the brain source signal (Birot et al., 2014). The solution to the forward problem relies on the establishment of the head model, which is determined by the geometry and corresponding electrical conductivity of different head tissues such as brain, skull, scalp, etc. (Acar and Makeig, 2010). In the early days, the mainly used head models are the spherical model and the ellipsoid model (Gutiérrez et al., 2005). With the development of brain imaging technology, real head models, which can be calculated by the boundary element method (BEM), the finite elements method (FEM), and the finite difference method (FDM) are increasingly used (Akalin-Acar and Gençer, 2004). Once the head model is established, the lead field matrix can be determined.

Graph Signal Processing in the Brain Source Space

The connectivity relationship between m sources can be represented by an undirected graph, which can be defined as follows:

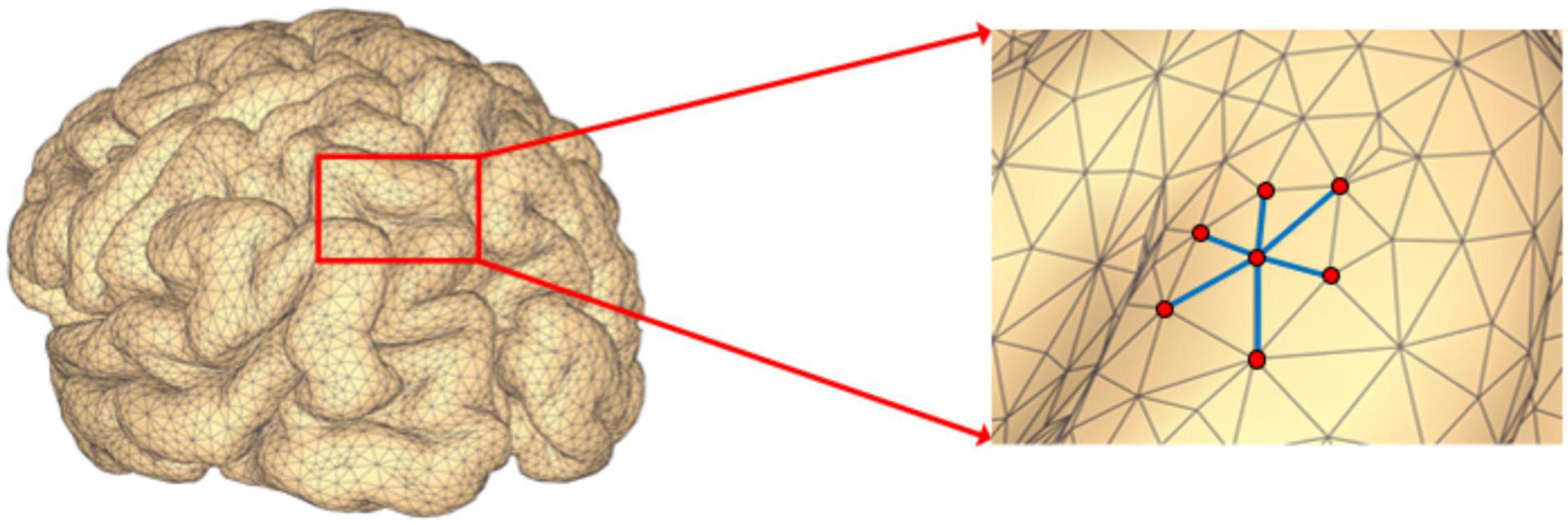

where V ∈ Rm × 1 is a set of m nodes, A ∈ Rm × m is the corresponding adjacency matrix. If there is no edge connecting nodes i and j, then aij = 0; otherwise, aij > 0, and its value represents the weight of the edge between the two nodes. In addition, since G is an undirected graph, then aij = aji, which means the adjacency matrix A is symmetric. In the EEG source space, all the potential source locations are represented by the nodes defined on a 3D mesh as is illustrated in Figure 1. When the source locations i and j are neighbors on the 3D mesh, then we set aij > 0.

The graph signal is defined on the set of graph nodes V, which is represented by a vector, and each element represents the signal value at the corresponding node. The brain source signal s = [s1, s2, …, sm]T, in which each element si represents the signal value of the i-th source voxel, is defined on the nodes of graph G. The traditional Fourier transform calculates the projection of a function f(t) on the basis function e−iwt, and the projected value of a time series signal using Fourier transform F(w) represents its magnitude at the basis of a specific frequency. Different from the traditional Fourier transform defined in the temporal domain, for signals defined on a graph, the eigenvectors of the Laplacian matrix L of the graph can be used as the basis vectors of the GFT, where the Laplacian matrix L can be calculated as follows:

where L ∈ Rm × m, and D ∈ Rm × m is a diagonal matrix called the degree matrix, in which the diagonal elements satisfy , that is, the sum of elements in the i-th row of A. Since A and L is real and symmetric, therefore, L can be decomposed as follows:

where Λ ∈ Rm × m is a diagonal matrix and the diagonal elements λi, (i = 1, 2, …, m) are the eigenvalues of L and satisfy λ1 ≤ λ2 ≤ … ≤ λm, U ∈ Rm × m is the eigenvector matrix, which is also an orthogonal matrix satisfying UUT = I, each column in U is an eigenvector of L and corresponds to the eigenvalue in Λ. With the eigenvectors of the Laplacian matrix L as the Fourier basis vectors, each eigenvector can be regarded as a graph basis with a certain frequency, and this frequency corresponds to the eigenvalue. The smaller the eigenvalue, the lower the frequency of the corresponding eigenvector, which is manifested as a small difference between the signals of adjacent nodes on the graph; on the contrary, a larger variation among neighboring signals. The value of a graph Fourier coefficient can measure the amplitude of the graph signal at different frequencies. With the eigenvectors as the Fourier basis vectors, the GFT of a given graph signal s can be defined as follows:

where vector is the graph Fourier coefficient. Further, the inverse graph Fourier transform (IGFT) of s can be defined as:

The above two formulas show that a graph signal can be decomposed into components with different frequencies through the GFT, and can also be recovered through the IGFT.

To characterize the graph frequency, we introduce the following definition:

Definition 1: Graph Frequency (GF): GF, denoted as fG, is a function of ui which represents the total number of sign flips of ui between any two connected nodes on G, it is defined as follows:

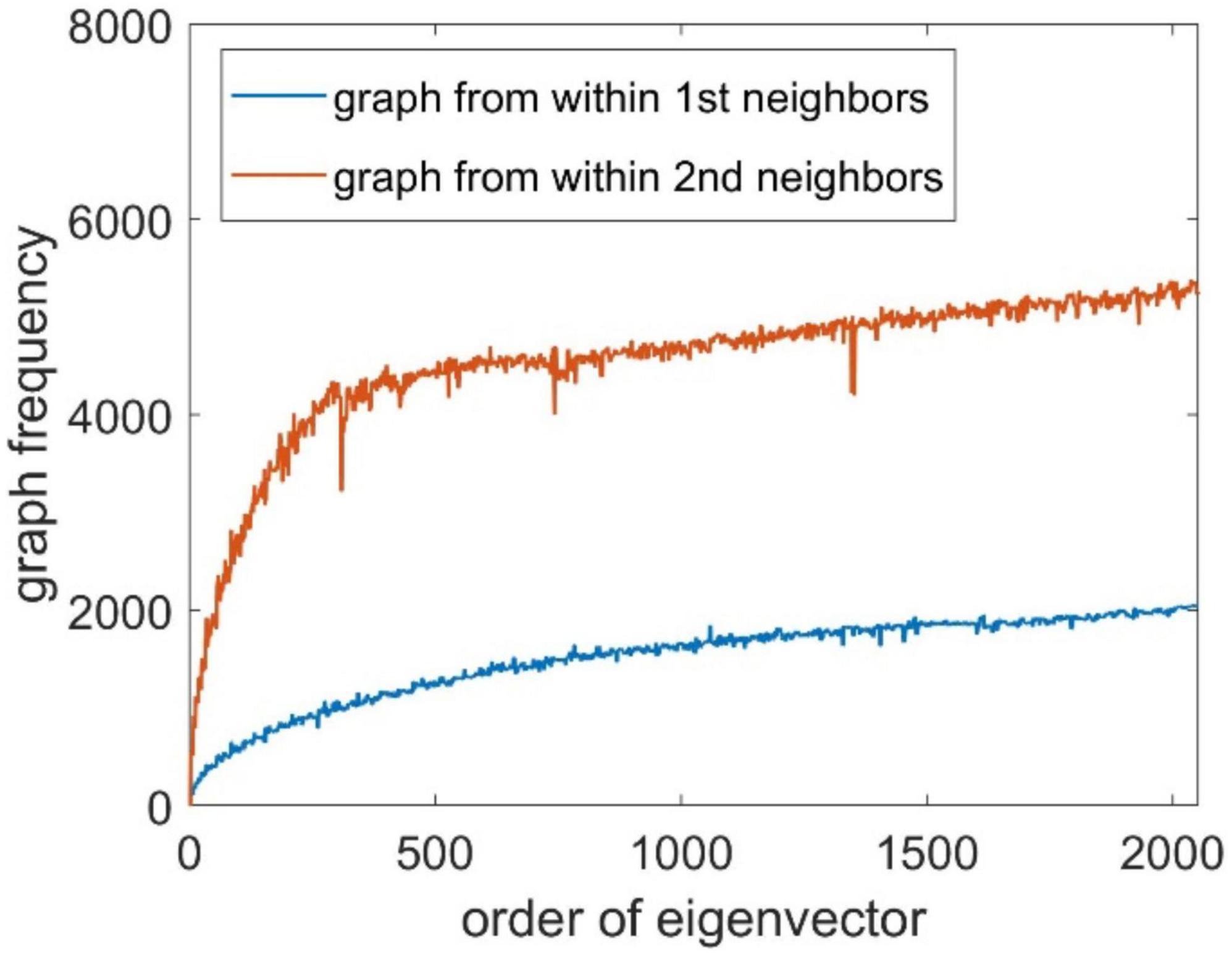

where Ω(j) represents all neighbors of node j, and I(⋅) is an indicator function to check whether the values of ui at nodes j and p have a sign flip. The number of sign flips is analogous to counting the number of zero crossings of the basis signal within a given window for a time series data. We constructed the Laplacian matrices within first-order neighbors and second-order neighbors, and the associated GF spectrum is shown in Figure 2. It can be seen that the GF value of the eigenvector increases as the eigenvalue increases.

Similar to the counterpart in the time domain, the spatial frequency basis matrix U can be similarly decomposed into different spatial frequency bands, such as U = [Ulow, Umedium, Uhigh]. The graph signal s can be projected into a subspace of U. For example, is the projection of s into a space spanned by low frequency eigenvectors. In our work, we use the spatially low frequency components as a filter to reconstruct the focally extended sources.

Bidirectional Long-Short Term Memory Neural Network

Bidirectional long-short term memory neural network is an extension of the traditional RNN (Schuster and Paliwal, 1997). For the time series, it is recognized that RNN can effectively estimate the information at the future moment based on the previous states. However, for the time series with a long sequence of states, the estimation performance of RNN will be greatly discounted, because the future information in a long time series usually depends on the information from distant history moments, which is the long-term dependence. However, the superior structure of the LSTM unit equips the network with the ability to solve long-term dependence. The RNN with LSTM units can filter the information by a unique structure called “gate” and store the valid information by the so-called “memory cell.” The elements in a gate vector have values in the interval [0,1]. When preceding time series information arrives at the gate, it will be multiplied with the gate vector element-wise, if the element value in the gate vector is 1, then the timing information multiplied with it will be retained, and if the element value is 0, the information will be discarded after a multiplication with 0. In this way, the filtering of information propagated in the LSTM unit is achieved. The valid information obtained after filtering is then stored in the memory cell and passed on to the next moment to prevent being lost over time, thus effectively addressing the long-term dependence existing in traditional RNNs.

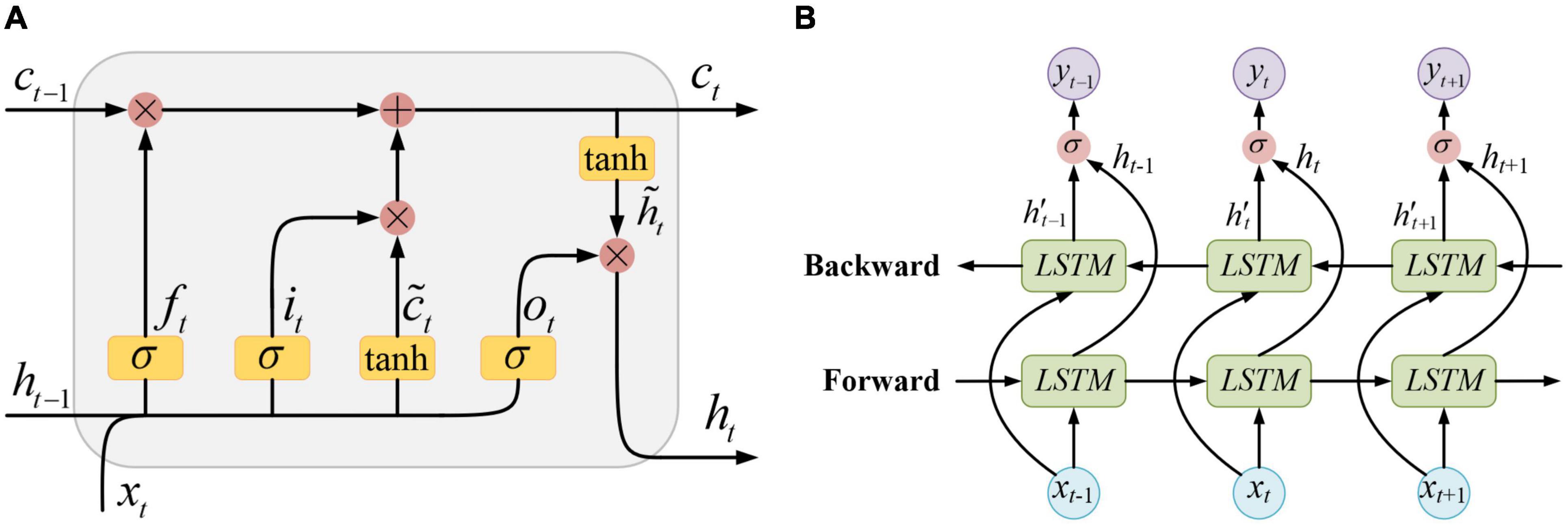

The structure of a standard LSTM unit is shown in Figure 3A, where xt, ht−1, and ct−1, respectively, represent the input sample at the current moment, the unit output and the memory state at the previous moment. The information contained in xt and ht−1 is first activated by σ(⋅) function and got the forget gate ft, the input gate it, and the output gate ot. At the same time, xt and ht−1 are also activated by tanh(⋅) function and got a temporary state . On the one hand, the information passed from the previous moment which contained in ct−1 is filtered by the forget gate ft. On the other hand, the newly input information contained in is filtered by the input gate it. Then, the valid information retained by the above two filtering processes is integrated together as a new memory state ct. This newly updated memory state is passed along time to the next moment, and simultaneously, it is also filtered by the output gate ot. Finally, the new output of the LSTM unit ht is obtained.

This propagation process can be formulated as follows:

where Wf, Wi, Wc, Wo are weight matrices; bf, bi, bc, bo are bias vectors; the symbol * stands for the element-wise multiplication.

The hidden layer of the BiLSTM neural network is composed of two layers of LSTM units that are reversely connected, and its structure is shown in Figure 3B. In a BiLSTM layer, the time series performs both forward propagation and backward propagation. Therefore, both the information at the previous moments and that at the future moments can be fully utilized.

The output of the BiLSTM neural network can be calculated as follows:

where yt is the final output, is the unit output of the backward propagation, Ws and bs are the weight matrix and the bias vector of the output layer, respectively, and the symbol ⊕ stands for the vector concatenation.

Graph Fourier Transform-Bidirectional Long-Short Term Memory for Electrophysiological Source Imaging

Graph Fourier Transform-Bidirectional Long-Short Term Memory Training Procedure

Generally, the brain is divided into smaller voxels, and each voxel can be activated and regarded as a source. Therefore, when a BiLSTM network is adopted to solve the inverse problem of ESI with the recorded EEG signal as the inputs and the source signal as the outputs, the number of nodes in the output layer of the network equals to the number of sources. This will lead to a significant number of parameters in the network. In order to improve the training speed of the BiLSTM network, in this paper, we reduce its output nodes by using projected coefficients as the output dimension based on low frequency eigenvectors, given the extended source activation pattern mainly contains signal from the low frequency subspace. With the training dataset {xi, si}, the training setup procedure is as follows:

Step 1: Perform the GFT on the original brain source signal s according to (5), then the Fourier coefficient s̃ is obtained.

Step 2: With the eigenvectors in U as the Fourier basis vectors, and the corresponding eigenvalues in Λ. Then, set the eigenvalue threshold as Tf, and the number of eigenvalues less than Tf is k.

Step 3: Take the first k columns of eigenvectors in U, denoted as Uk and the first k elements in the Fourier coefficients s̃ as s̃′.

Step 4: Set the number of the input nodes in the BiLSTM network as n, the number of the output nodes as k, and the number of the BiLSTM units in the hidden layer as l. Then take the EEG signal x as the input, s̃′ as the output to train the BiLSTM network.

The mean square error (MSE) is chosen as the loss function:

where N is the number of data points, is the true values, and is the estimated values by the network. The Nadam optimizer is adopted during the training process.

In general, we take the projections of the brain source signal on the basis spanned by the low frequency eigenvectors instead of the brain source signal itself as the output of the BiLSTM network. By doing this, the number of output nodes in the network can be reduced from m to k, which can make the parameters in the network significantly decrease and the training speed increase. In the meanwhile, the source extent pattern is recovered better after removing the spatial high frequency noise.

Source Signal Recovery

When the network training is completed, perform the IGFT on the estimated values as follows:

where is the estimated source signal. The whole process is summarized in Figure 4.

Experiments

In this section, the proposed GFT-BiLSTM is evaluated using both the synthetic data and the real data. The benchmark ESI algorithms, including dSPM, MNE, and sLORETA, are used for comparison.

In the simulated data, the number of brain sources is 2,052 and the number of electrodes is 128, then these source regions are activated in turn with one level neighborhood sources (sources that are directly connected to activated source in 3D mesh) activated at the same time, and the source signal time series is generated based on the 5th-order autoregressive (AR) model (Haufe and Ewald, 2019), with 100 Hz sampling rate and 1 s of length, and the simulated source signal s is obtained. Given the lead field matrix H, by using the forward model, the EEG data x can be calculated according to Eq. (1), in which the sensor noise ε is generated based on different signal-to-noise ratio (SNR) levels (20 dB, 30 dB, and 40 dB), where SNR is defined based on the ratio of the power of signal Psignal to the power of noise Pnoise, as prescribed below:

The Laplacian matrix L of the brain source signal is calculated according to Eq. 3, then decomposed according to Eq. 4 to obtain the eigenvalue matrix Λ and the corresponding eigenvector matrix U. Use the eigenvectors as the basis vectors of the GFT, then the Fourier coefficient is obtained according to Eq. 5. We set k = 615 as the number of eigenvectors, based on the frequency spectrum illustrate in Figure 2. We use the first k values of as the model output , and the EEG data is taken as the model input. The simulated data is divided into training, validation, and testing datasets according to the proportion of 70%, 15%, and 15%, respectively. The number of input nodes in the BiLSTM neural network is set to be 128, the hidden nodes is set to be 2,560, and the number of output nodes is 615. Adopt the MSE as the loss function and Nadam optimizer to train the BiLSTM neural network on the training set. After training, the testing dataset is used for model testing. The following two metrics are used as the metrics for model evaluation:

• Localization error: LE can be quantified as the distance between the true peak source point and estimated peak source point.

• Area under the curve: AUC measures the area underneath the receiver operating characteristic (ROC) curve.

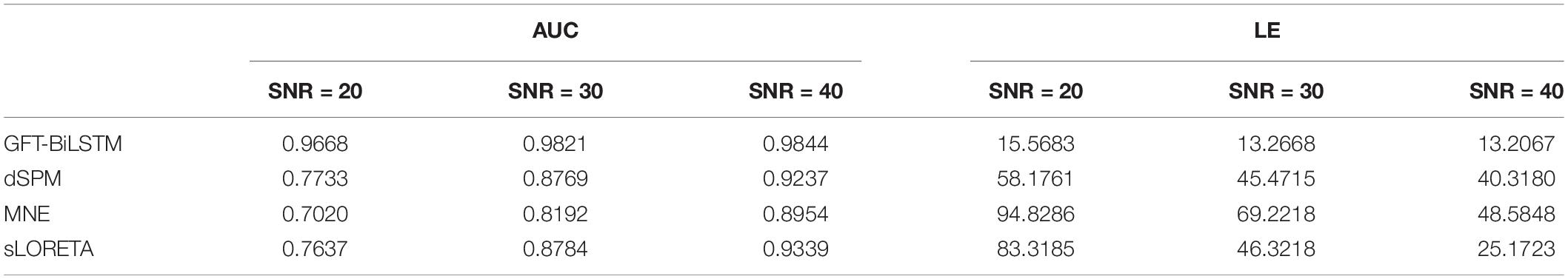

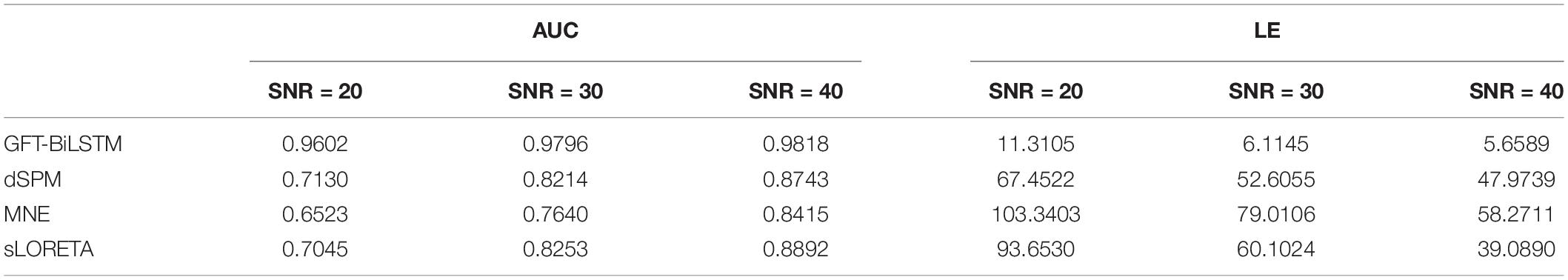

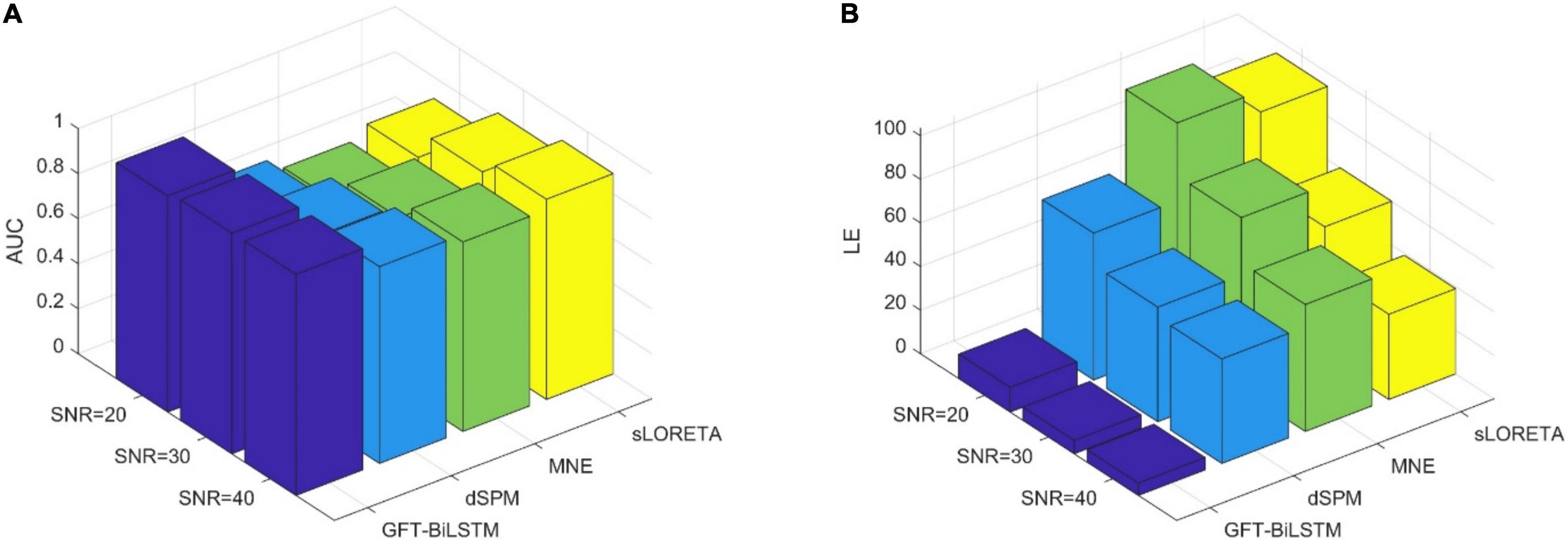

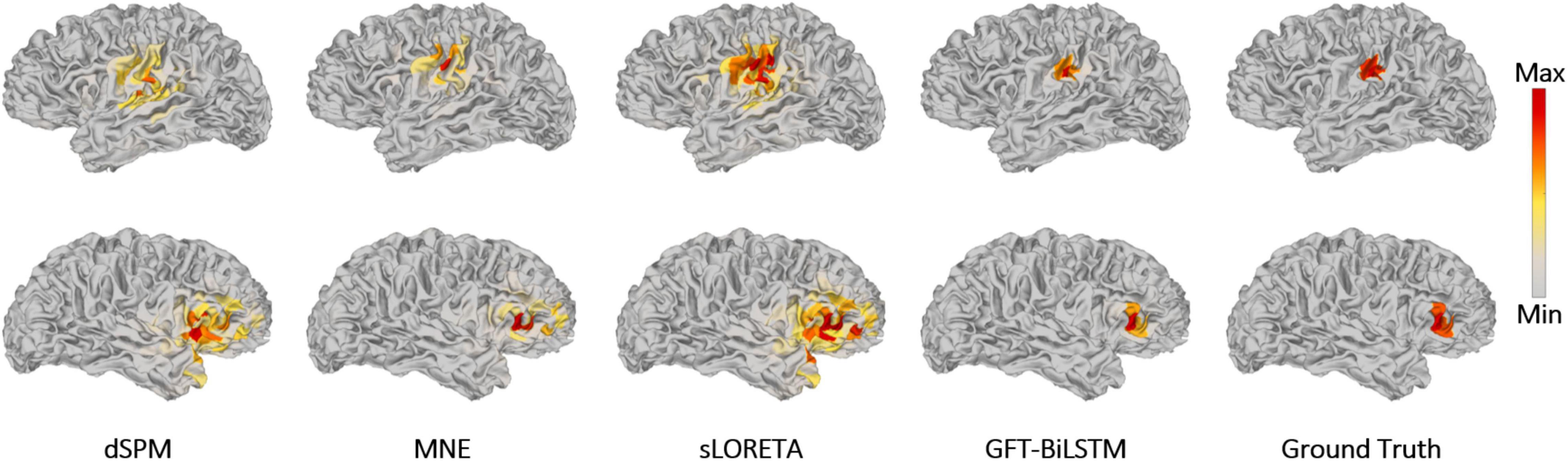

Evaluation With Single Source

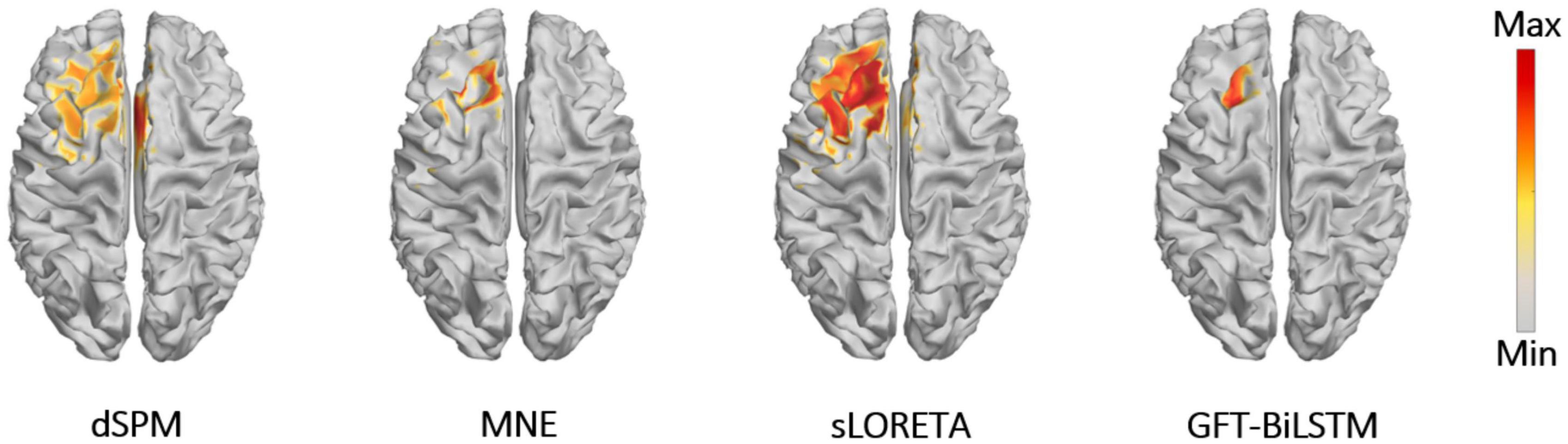

To render source extents activation, the adjacent sources along with a central source are activated at the same time. The signal strength of the adjacent sources is set to be lower than that of the central region. All the 2,052 potential source locations are chosen as the central source in turn to generate the scalp EEG data. In the first experiment, we test the proposed algorithm on the simulated EEG data and the true source activation pattern with one source extents activated. Apply the training and validation dataset to train and validate the proposed GFT-BiLSTM model, and then test it on the testing dataset. The performance of the GFT-BiLSTM is compared with that of dSPM, MNE, and sLORETA. The evaluation metrics of each model are shown in Table 1 and Figure 5. The comparison between the ground truth source and the reconstructed sources by different algorithms is shown in Figure 6.

Table 1. The evaluation metrics corresponding to different ESI inverse solutions with a single activated area.

Figure 5. The performance comparison of different ESI inverse solutions with a single activated area. (A) The comparison of AUC at different SNR levels. (B) The comparison of LE at different SNR levels.

Figure 6. Brain source activations estimated by different ESI algorithms with a single activated area.

From Table 1 and Figure 5, it can be seen that the proposed GFT-BiLSTM shows the better performance when compared to other methods. For different SNR levels, the AUC corresponding to GFT-BiLSTM is the highest while the LE is the lowest. The brain source distribution estimated by the proposed GFT-BiLSTM is closer to the ground truth as illustrated in Figure 6. The numerical result demonstrates the superiority of the GFT-BiLSTM when applied to solve the ESI inverse problem.

Evaluation With Multiple Sources

In order to study the performance of the proposed GFT-BiLSTM when there are multiple activated sources, we randomly select 2 out of 2,052 brain source locations to be activated and the first level neighboring sources of these two source locations are also activated with a lower signal magnitude. Apply this simulated dataset to train and validate the GFT-BiLSTM, and then test it on the testing dataset. The performance of the GFT-BiLSTM is compared with that of dSPM, MNE, and sLORETA. The evaluation metrics of each model are shown in Table 2 and Figure 7. The comparison between the real source and the estimated source is shown in Figure 8.

Table 2. The evaluation metrics corresponding to different ESI inverse solutions with multiple activated areas.

Figure 7. The performance metrics comparison of different ESI inverse solutions with multiple activated areas. (A) The comparison of AUC at different SNR levels. (B) The comparison of LE at different SNR levels.

Figure 8. Brain source activation reconstructed by different ESI algorithms with multiple activated areas. The upper figures correspond to the activated area in the left side of the brain, the bottom figures correspond to the activated area in the right side of the brain.

From Table 2 and Figure 7, the proposed GFT-BiLSTM demonstrates better performance when compared with other methods. In addition, with the increased number of activated source, the estimation performance of all methods except the GFT-BiLSTM deteriorates significantly. This is demonstrated as an increase in LE and a decreased AUC value. There is also a situation for other method in which the localization of the central region is accurate while the localization of its adjacent regions is slightly deviated. The reason is that as the number of activated regions increases, the distribution of the sources is no longer concentrated, and it is more challenging to accurately estimate the locations of all active regions. The reduction in performance for AUC is much more pronounced for the benchmark algorithms. In contrast, the performance of the GFT-BiLSTM is more stable and robust when it comes to multiple activated sources.

Evaluation With Real Epilepsy Data

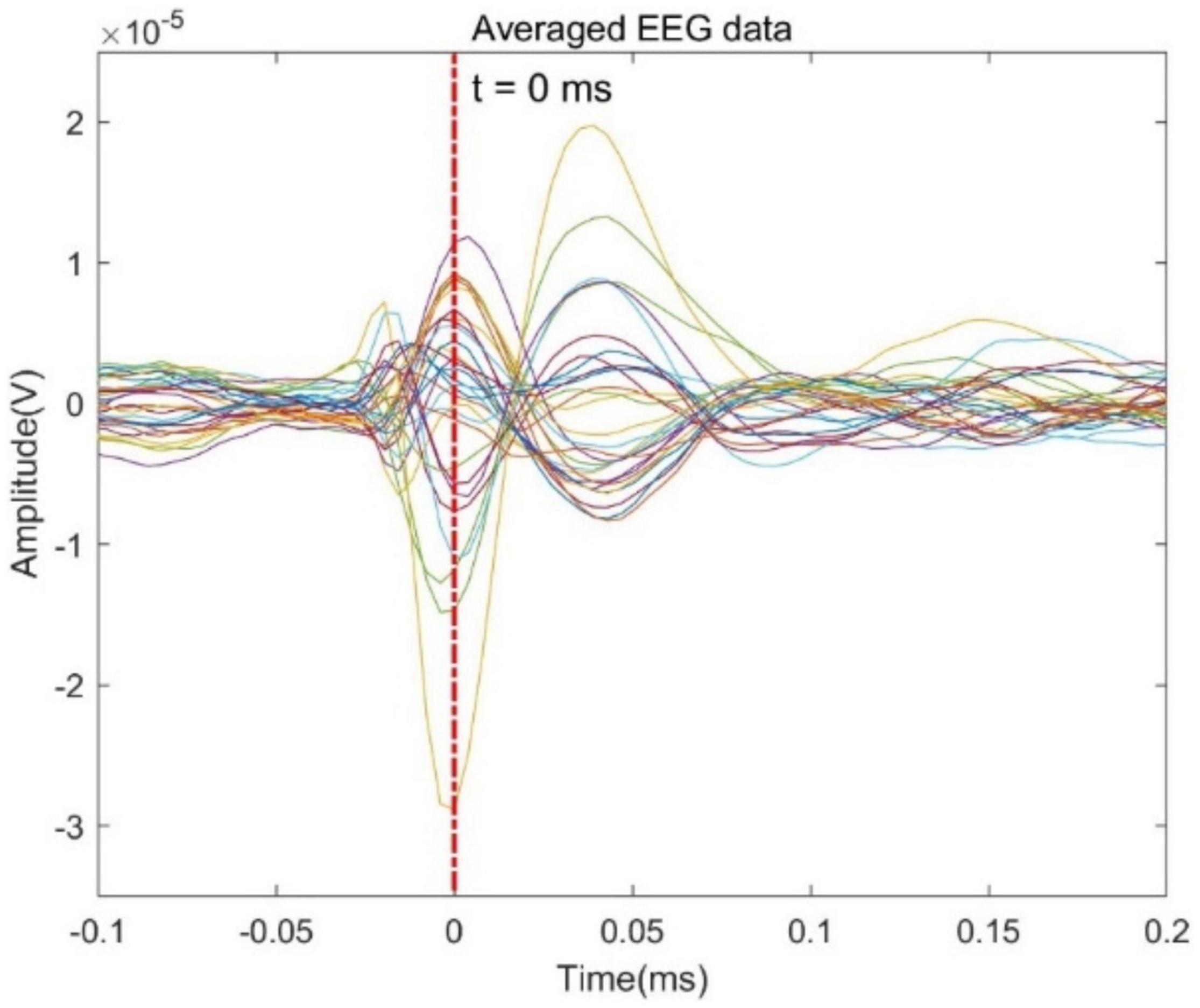

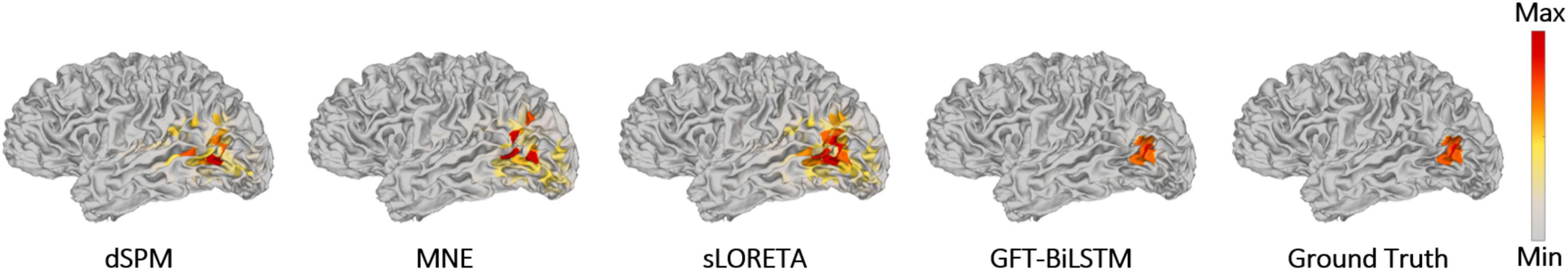

In order to further evaluate the performance of the proposed GFT-BiLSTM, we applied it on the public epilepsy EEG dataset from the Brainstorm tutorial datasets (Tadel et al., 2011). This dataset was recorded from a patient who suffered from focal epilepsy. The patient underwent invasive EEG to identify the epileptogenic area then underwent a left frontal tailored resection and was seizure-free during a 5-year follow-up period. We followed the Brainstorm tutorial to obtain the head model, and the lead field matrix. Then we calculated the average spikes (as shown in Figure 9) of the provided EEG measurements with 29 channels. Apply the averaged EEG data for brain source localization, and the estimated sources at peak (0 ms) from different methods are shown in Figure 10, as compared to other methods including dSPM, MNE, sLORETA.

It can be seen from Figure 10 that the proposed GFT-BiLSTM provides a good reconstruction of the epileptogenic zone which was validated by the follow-up survey after resection on the left frontal region. The source area estimated by dSPM and sLORETA spans a wide range cortical areas and includes part of the right frontal lobe which is not related to the epilepsy lesion. In contrast, the source location estimated by the MNE method and the GFT-BiLSTM proposed in this paper is more accurate. However, compared between the two methods, the range of sources estimated by the GFT-BiLSTM is smaller, and the source estimated by GFT-BiLSTM shows better continuity of the spatial signal, due to the benefit of using GFT.

Conclusion

The inverse problem of source extents reconstruction is challenging due to its highly ill-posed nature. In this paper, we present a novel ESI framework, named GFT-BiLSTM, which is based on the delineation of spatial graph frequency using graph Fourier transform and BiLSTM, to solve the ESI problem in a more efficient and robust way. Our numerical results based on the synthetic data and real data show that the proposed GFT-BiLSTM has a superior performance compared to other benchmark methods. The future work can further explore more clinical applications using the proposed framework. A more rigorous selection of the low frequency set of eigenvectors can also be investigated.

Data Availability Statement

The original contributions presented in the study are included in the article/supplementary material, further inquiries can be directed to the corresponding author.

Author Contributions

MJ: methodology, software, investigation, data curation, and writing – original draft. GW: methodology, conceptualization, writing, review, and editing. YG: methodology and writing – original draft. DW and HL: methodology, writing, review, and editing. JX: conceptualization, writing, review, and editing. FL: conceptualization, methodology, writing – original draft, review, editing, and supervision. All authors contributed to the article and approved the submitted version.

Conflict of Interest

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Publisher’s Note

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

Acknowledgments

The authors are grateful to Andreas Schulze-Bonhage and Marcel Heers at the Epilepsy Center Freiburg for their permission to use the epilepsy dataset in this work.

References

Acar, Z. A., and Makeig, S. (2010). Neuroelectromagnetic forward head modeling toolbox. J. Neurosci. Methods 190, 258–270. doi: 10.1016/j.jneumeth.2010.04.031

Akalin-Acar, Z., and Gençer, N. G. (2004). An advanced boundary element method (BEM) implementation for the forward problem of electromagnetic source imaging. Phys. Med. Biol. 49:5011. doi: 10.1088/0031-9155/49/21/012

Astolfi, L., Cincotti, F., Mattia, D., Salinari, S., Babiloni, C., Basilisco, A., et al. (2004). Estimation of the effective and functional human cortical connectivity with structural equation modeling and directed transfer function applied to high-resolution EEG. Magn. Reson. Imaging 22, 1457–1470. doi: 10.1016/j.mri.2004.10.006

Birot, G., Spinelli, L., Vulliémoz, S., Mégevand, P., Brunet, D., Seeck, M., et al. (2014). Head model and electrical source imaging: a study of 38 epileptic patients. NeuroImage: Clin. 5, 77–83. doi: 10.1016/j.nicl.2014.06.005

Bore, J. C., Li, P., Jiang, L., Ayedh, W. M., Chen, C., Harmah, D. J., et al. (2021). A long short-term memory network for sparse spatiotemporal EEG source imaging. IEEE Trans. Med. Imaging 40, 3787–3800. doi: 10.1109/TMI.2021.3097758

Congedo, M., and Sherlin, L. (2011). “EEG source analysis: methods and clinical implications,” in Neurofeedback and Neuromodulation Techniques and Applications, eds R. Coben and J. R. Evans (Cambridge, CA: Academic Press), 25–433.

Cover, K. S., Verbunt, J. P., de Munck, J. C., and van Dijk, B. W. (2007). Fitting a single equivalent current dipole model to MEG data with exhaustive search optimization is a simple, practical and very robust method given the speed of modern computers. Int. Cong. Ser. 1300, 121–124. doi: 10.1016/j.ics.2007.01.026

Cui, S., Duan, L., Gong, B., Qiao, Y., Xu, F., Chen, J., et al. (2019). EEG source localization using spatio-temporal neural network. China Commun. 16, 131–143. doi: 10.23919/jcc.2019.07.011

da Silva, F. L. (2013). EEG and MEG: relevance to neuroscience. Neuron 80, 1112–1128. doi: 10.1016/j.neuron.2013.10.017

Deng, L., and Yu, D. (2014). Deep learning: methods and applications. Found Trends Signal Process. 7, 197–387.

Dong, C., Loy, C. C., He, K., and Tang, X. (2015). Image super-resolution using deep convolutional networks. IEEE Trans. Pattern Anal Mac. Intell. 38, 295–307.

Gutiérrez, D., Nehorai, A., and Preissl, H. (2005). Ellipsoidal head model for fetal magnetoencephalography: forward and inverse solutions. Phys. Med. Biol. 50:2141. doi: 10.1088/0031-9155/50/9/015

Haufe, S., and Ewald, A. (2019). A simulation framework for benchmarking EEG-based brain connectivity estimation methodologies. Brain Topogr. 32, 625–642. doi: 10.1007/s10548-016-0498-y

He, B., Sohrabpour, A., Brown, E., and Liu, Z. (2018). Electrophysiological source imaging: a noninvasive window to brain dynamics. Ann. Rev. Biomed. Eng. 20, 171–196. doi: 10.1146/annurev-bioeng-062117-120853

Hecker, L., Rupprecht, R., Tebartz van Elst, L., and Kornmeier, J. (2021). ConvDip: a convolutional neural network for better EEG Source Imaging. Front. Neurosci. 15:569918. doi: 10.3389/fnins.2021.569918

Huang, W., Goldsberry, L., Wymbs, N. F., Grafton, S. T., Bassett, D. S., and Ribeiro, A. (2016). Graph frequency analysis of brain signals. IEEE J. Sel.Top. Signal Process. 10, 1189–1203. doi: 10.1109/JSTSP.2016.2600859

Janati, H., Bazeille, T., Thirion, B., Cuturi, M., and Gramfort, A. (2020). Multi-subject MEG/EEG source imaging with sparse multi-task regression. NeuroImage 220:116847. doi: 10.1016/j.neuroimage.2020.116847

Jatoi, M. A., Kamel, N., Malik, A. S., and Faye, I. (2014). EEG based brain source localization comparison of sLORETA and eLORETA. Australas. Phys Eng. Sci. Med. 37, 713–721. doi: 10.1007/s13246-014-0308-3

Koles, Z. J. (1998). Trends in EEG source localization. Electroencephalogr. Clin. Neurophysiol. 106, 127–137. doi: 10.1016/s0013-4694(97)00115-6

LeCun, Y., and Bengio, Y. (1995). Convolutional networks for images, speech, and time series. Handbook Brain Theory Neural Netw. 3361:1995.

Liao, K., Zhu, M., Ding, L., Valette, S., Zhang, W., and Dickens, D. (2012). Sparse imaging of cortical electrical current densities via wavelet transforms. Phys. Med. Biol. 57:6881. doi: 10.1088/0031-9155/57/21/6881

Lin, F. H., Witzel, T., Zeffiro, T. A., and Belliveau, J. W. (2008). Linear constraint minimum variance beamformer functional magnetic resonance inverse imaging. Neuroimage 43, 297–311. doi: 10.1016/j.neuroimage.2008.06.038

Liu, F., Rosenberger, J., Lou, Y., Hosseini, R., Su, J., and Wang, S. (2017). Graph regularized EEG source imaging with in-class consistency and out-class discrimination. IEEE Trans. Big Data 3, 378–391. doi: 10.1109/tbdata.2017.2756664

Liu, F., Stephen, E. P., Prerau, M. J., and Purdon, P. L. (2019). “Sparse multi-task inverse covariance estimation for connectivity analysis in EEG source space,” in In Proceedings of the 2019 9th International IEEE/EMBS Conference on Neural Engineering (NER), (Francisco, CA: IEEE), 299–302. doi: 10.1109/NER.2019.8717043

Liu, F., Wang, L., Lou, Y., Li, R. C., and Purdon, P. L. (2021). Probabilistic structure learning for EEG/MEG source imaging with hierarchical graph priors. IEEE Trans. Med. Imaging 40, 321–334. doi: 10.1109/TMI.2020.3025608

Liu, F., Wang, S., Qin, J., Lou, Y., and Rosenberger, J. (2018). Estimating latent brain sources with low-rank representation and graph regularization. Int Conf. Brain Inform. 11309, 304–316. doi: 10.1007/978-3-030-05587-5_29

Min, S., Lee, B., and Yoon, S. (2017). Deep learning in bioinformatics. Brief. Bioinform. 18, 851–869.

Mosher, J. C., Lewis, P. S., and Leahy, R. M. (1992). Multiple dipole modeling and localization from spatio-temporal MEG data. IEEE Trans. Biomed. Eng. 39, 541–557. doi: 10.1109/10.141192

Murray, J. F., and Kreutz-Delgado, K. (2001). “An improved FOCUSS-based learning algorithm for solving sparse linear inverse problems,” in Proceedings of the Conference Record of Thirty-Fifth Asilomar Conference on Signals, Systems and Computers (Cat. No. 01CH37256), Vol 1, (Grove, CA: Institute of Electrical and Electronics Engineers, IEEE), 347–351.

Nguyen, T., Potter, T., Grossman, R., and Zhang, Y. (2018). Characterization of dynamic changes of current source localization based on spatiotemporal fMRI constrained EEG source imaging. J. Neural Eng. 15:036017. doi: 10.1088/1741-2552/aa9fb2

Numata, T., Kiguchi, M., and Sato, H. (2019). Multiple-Time-Scale analysis of attention as revealed by EEG. NIRS, and pupil diameter signals during a free recall task: a multimodal measurement approach. Front. Neurosci. 13:1307. doi: 10.3389/fnins.2019.01307

Ortega, A., Frossard, P., Kovačević, J., Moura, J. M., and Vandergheynst, P. (2018). “Graph signal processing: Overview, challenges, and applications,” in Proceedings of the IEEE 106, (Piscataway: Institute of Electrical and Electronics Engineers, IEEE), 808–828. doi: 10.1109/jproc.2018.2820126

Ou, W., Hämäläinen, M. S., and Golland, P. (2009). A distributed spatio-temporal EEG/MEG inverse solver. NeuroImage 44, 932–946. doi: 10.1016/j.neuroimage.2008.05.063

Portillo-Lara, R., Tahirbegi, B., Chapman, C. A., Goding, J. A., and Green, R. A. (2021). Mind the gap: State-of-the-art technologies and applications for EEG-based brain–computer interfaces. APL Bioeng. 5:031507. doi: 10.1063/5.0047237

Qin, J., Liu, F., Wang, S., and Rosenberger, J. (2017). “EEG source imaging based on spatial and temporal graph structures,” in Proceedings of the 2017 seventh International Conference on Image Processing Theory, Tools and Applications (IPTA), (Montreal, QC: Institute of Electrical and Electronics Engineers, IEEE), 1–6. doi: 10.1117/1.jei.28.4.043032

Rodriguez, P., Wiles, J., and Elman, J. L. (1999). A recurrent neural network that learns to count. Connect. Sci. 11, 5–40. doi: 10.1080/095400999116340

Sandryhaila, A., and Moura, J. M. (2013). “Discrete signal processing on graphs: Graph Fourier transform,” in Proceedings of the 2013 IEEE International Conference on Acoustics, Speech and Signal Processing, (Vancouver: Institute of Electrical and Electronics Engineers, IEEE), 6167–6170.

Scherg, M., and Berg, P. (1991). Use of prior knowledge in brain electromagnetic source analysis. Brain Topogr. 4, 143–150. doi: 10.1007/BF01132771

Schlemper, J., Caballero, J., Hajnal, J. V., Price, A. N., and Rueckert, D. (2017). A deep cascade of convolutional neural networks for dynamic MR image reconstruction. IEEE Trans. Med. Imaging 37, 491–503. doi: 10.1109/TMI.2017.2760978

Schuster, M., and Paliwal, K. K. (1997). Bidirectional recurrent neural networks. IEEE Trans. Signal Process. 45, 2673–2681. doi: 10.1109/78.650093

Sohrabpour, A., Cai, Z., Ye, S., Brinkmann, B., Worrell, G., and He, B. (2020). Noninvasive electromagnetic source imaging of spatiotemporally distributed epileptogenic brain sources. Nat. Commun. 11:1946. doi: 10.1038/s41467-020-15781-0

Tadel, F., Baillet, S., Mosher, J. C., Pantazis, D., and Leahy, R. M. (2011). Brainstorm: a user-friendly application for MEG/EEG analysis. Computat. Intell. Neurosci. 2011:879716. doi: 10.1155/2011/879716

Tanaka, N., Cole, A. J., von Pechmann, D., Wakeman, D. G., Hämäläinen, M. S., Liu, H., et al. (2009). Dynamic statistical parametric mapping for analyzing ictal magnetoencephalographic spikes in patients with intractable frontal lobe epilepsy. Epilepsy Res. 85, 279–286. doi: 10.1016/j.eplepsyres.2009.03.023

Vergallo, P., and Lay-Ekuakille, A. (2013). Brain source localization: a new method based on MUltiple SIgnal Classification algorithm and spatial sparsity of the field signal for electroencephalogram measurements. Rev. Sci. Instrum. 84:085117. doi: 10.1063/1.4818966

Voulodimos, A., Doulamis, N., Doulamis, A., and Protopapadakis, E. (2018). Deep learning for computer vision: A brief review. Computat. Intell. Neurosci. 2018, 1–13. doi: 10.1155/2018/7068349

Wei, C., Lou, K., Wang, Z., Zhao, M., Mantini, D., and Liu, Q. (2021). “Edge sparse basis network: a deep learning framework for EEG source localization,” in Proceedings of the 2021 International Joint Conference on Neural Networks (IJCNN), (Shenzhen: Institute of Electrical and Electronics Engineers, IEEE), 1–8.

Wen, P., He, F., and Sammut, K. (1998). “A pseudo-conductivity inhomogeneous head model for computation of EEG,” in Proceedings of the 20th Annual International Conference of the IEEE Engineering in Medicine and Biology Society. Vol. 20 Biomedical Engineering Towards the Year 2000 and Beyond (Cat. No. 98CH36286), Vol. 4, (Kong: Institute of Electrical and Electronics Engineers, IEEE), 2167–2170.

Wong, D. D., and Gordon, K. A. (2009). Beamformer suppression of cochlear implant artifacts in an electroencephalography dataset. IEEE Trans. Biomed. Eng. 56, 2851–2857. doi: 10.1109/TBME.2009.2029239

Young, T., Hazarika, D., Poria, S., and Cambria, E. (2018). Recent trends in deep learning based natural language processing. IEEE Comput. Intell. Mag. 13, 55–75. doi: 10.1109/mci.2018.2840738

Keywords: electroencephalography, source localization, inverse problem, graph Fourier transform, BiLSTM

Citation: Jiao M, Wan G, Guo Y, Wang D, Liu H, Xiang J and Liu F (2022) A Graph Fourier Transform Based Bidirectional Long Short-Term Memory Neural Network for Electrophysiological Source Imaging. Front. Neurosci. 16:867466. doi: 10.3389/fnins.2022.867466

Received: 01 February 2022; Accepted: 14 March 2022;

Published: 13 April 2022.

Edited by:

Tolga Cukur, Bilkent University, TurkeyReviewed by:

Guang Ling, Wuhan University of Technology, ChinaBoyu Wang, Western University, Canada

Copyright © 2022 Jiao, Wan, Guo, Wang, Liu, Xiang and Liu. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Feng Liu, ZmxpdTIyQHN0ZXZlbnMuZWR1

Meng Jiao

Meng Jiao Guihong Wan

Guihong Wan Yaxin Guo1

Yaxin Guo1 Dongqing Wang

Dongqing Wang Jing Xiang

Jing Xiang Feng Liu

Feng Liu