- Department of Mathematics and Statistics, Swarthmore College, Swarthmore, PA, United States

The notion that a neuron transmits the same set of neurotransmitters at all of its post-synaptic connections, typically known as Dale's law, is well supported throughout the majority of the brain and is assumed in almost all theoretical studies investigating the mechanisms for computation in neuronal networks. Dale's law has numerous functional implications in fundamental sensory processing and decision-making tasks, and it plays a key role in the current understanding of the structure-function relationship in the brain. However, since exceptions to Dale's law have been discovered for certain neurons and because other biological systems with complex network structure incorporate individual units that send both positive and negative feedback signals, we investigate the functional implications of network model dynamics that violate Dale's law by allowing each neuron to send out both excitatory and inhibitory signals to its neighbors. We show how balanced network dynamics, in which large excitatory and inhibitory inputs are dynamically adjusted such that input fluctuations produce irregular firing events, are theoretically preserved for a single population of neurons violating Dale's law. We further leverage this single-population network model in the context of two competing pools of neurons to demonstrate that effective decision-making dynamics are also produced, agreeing with experimental observations from honeybee dynamics in selecting a food source and artificial neural networks trained in optimal selection. Through direct comparison with the classical two-population balanced neuronal network, we argue that the one-population network demonstrates more robust balanced activity for systems with fewer computational units, such as honeybee colonies, whereas the two-population network exhibits a more rapid response to temporal variations in network inputs, as required by the brain. We expect this study will shed light on the role of neurons violating Dale's law found in experiment as well as shared design principles across biological systems that perform complex computations.

1. Introduction

Computation in the brain is closely tied to the complex network architecture of neuronal interactions and the host of electrochemical signals transmitted between neurons (Boccaletti et al., 2006; Stevenson et al., 2008; Gomez-Rodriguez et al., 2012). Studies relating network connectivity to biological function have revealed potential mechanisms for fundamental cognitive processes, characterizing important aspects of stimulus encoding in perception and evidence integration in choice (Hubel and Wiesel, 1972; Shadlen and Newsome, 2001; Hafting et al., 2005; Rangan et al., 2005; Bassett et al., 2010; Vázquez-Rodríguez et al., 2019). The network adjacency matrix dictates the general interaction structure for neuronal networks, revealing certain dynamical properties such as synchronizability and functional modules (Newman, 2003; Arenas et al., 2006; Estrada and Higham, 2010; Sporns, 2014; Barranca et al., 2015), but neurons also release diverse neurotransmitters that affect their neighbors in unique ways and this necessitates a more detailed portrait of neuronal communication beyond the binary adjacency matrix.

One classical organizing feature for neuronal interactions supported by strong experimental evidence is Dale's law, which typically states that a neuron releases the same types of neurotransmitters at all of its post-synaptic connections regardless of the nature of the post-synaptic neuron (Kandel, 1968; Strata and Harvey, 1999). Theoretical investigations and mathematical models almost unanimously reflect Dale's law by separating a neuronal network into two disjoint populations of neurons based on the collective action of their neurotransmitters, where one population is composed of excitatory neurons that upon firing increase the probability of their neighbors firing and the other population is composed of inhibitory neurons that analogously decrease the firing probability of their neighbors (Orlandi et al., 2014; Barranca and Zhou, 2019; Lian et al., 2019). However, experiments demonstrate that some neurons indeed violate Dale's law, for example through state-dependent co-transmission or the release of different neurotransmitters at distinct release sites (Jonas et al., 1998; Nicoll and Malenka, 1998; Ludwig and Leng, 2006; Svensson et al., 2018). The functional impact of such violations to Dale's law in the brain remains largely unexplored.

In a similar vein, biological systems, such as colonies of honeybees, also communicate via explicit excitatory and inhibitory feedback signals (Couzin, 2009; Marshall et al., 2009; Seeley et al., 2012). In collectively selecting a food source akin to decision making among neurons in the brain, each bee lacks knowledge of the detailed state of the colony, yet the entire group is able to effectively coordinate choices when accumulated positive feedback passes a threshold. Honeybees use waggle dances to encourage additional bees to forage at a profitable food source and also utilize stop signals to discourage other bees from foraging at a sub-optimal location (Seeley et al., 2012; Von Frisch, 2013; Borofsky et al., 2020). In contrast to the classical notion of Dale's law, a single honeybee can send out either type of feedback signal depending on its circumstances. In engineered systems, such as artificial neural networks, each unit generally has the potential to increase the activity of some of its neighbors while decreasing the activity of others, and this feature is typically preserved even upon sufficient learning for optimal task performance (Wang and Yeung, 2013; Deng et al., 2014; Chan et al., 2015; Schmidhuber, 2015; Barranca, 2021). Why do neuronal networks largely follow Dale's law unlike other systems and what classical brain computations are preserved by neuronal networks that violate it?

To address this key question, we formulate and analyze a mechanistic network model composed of a single population of neurons that can each transmit both excitatory and inhibitory signals. Like physiological neuronal networks, we demonstrate that this single-population model network exhibits asynchronous and irregular dynamics. In doing so, we provide a new generalization for the theory of balanced network dynamics (van Vreeswijk and Sompolinsky, 1996; Troyer and Miller, 1997; Vogels and Abbott, 2005), which posits that strong excitatory and inhibitory inputs into a given neuron are dynamically balanced in time such that infrequent input fluctuations result in the irregular activity typically observed in vivo (Haider et al., 2006; Miura et al., 2007; London et al., 2010). Such balanced dynamics are hypothesized to have numerous functional advantages, such as fast input tracking (van Vreeswijk and Sompolinsky, 1996), robust spatial working memory (Lim and Goldman, 2014), efficient predictive coding (Boerlin et al., 2013), and effective pattern learning (Ingrosso and Abbott, 2019). We show that the single-population model exhibits a higher degree of balance than the classical two-population model obeying Dale's law for relatively small network sizes, giving insight into why honeybee colonies and other smaller-scale systems may violate Dale's law. While we establish that the one-population model replicates well the rapid response to time-varying inputs found for classical balanced networks, we observe that the two-population network responds even more quickly, and we conjecture that this may explain the ubiquity of Dale's law in neuronal networks that require fast computations for survival. In comparing the computational properties of the two distinct network models, we further demonstrate that the single-population model fosters successful decision-making dynamics for two-alternative tasks, thereby carrying out a computation that is fundamental in numerous biological and engineered systems. We hypothesize that networks violating Dale's law are capable of more flexible computations, particularly for relatively small systems, and that functional or energetic constraints in large-scale systems may have promoted Dale's law among neurons.

The remainder of the paper is organized as follows. In Section 2.1, we briefly summarize the key aspects of balanced dynamics in the context of a two-population network of excitatory and inhibitory neurons, and then in Section 2.2, we formulate our one-population network model that violates Dale's law. We demonstrate that the one-population model exhibits robust balanced network dynamics, both theoretically and numerically, in Section 3.1 and compare how the balanced dynamics for the two different network models scale with network size in Section 3.2. The input-response properties for the network models are contrasted in Section 3.3, and we further show that the one-population model facilitates successful decision-making dynamics analogous to classical balanced networks in Section 3.4. Finally, we reflect on our findings and potential directions for future investigation in Section 4.

2. Models and Methods

2.1. Two-Population Model and Balanced Network Dynamics

We first introduce the conventional two-population network model that obeys Dale's law and summarize its dynamical properties in the balanced operating regime; these dynamical characteristics will provide a benchmark for comparison with the activity of the one-population model violating Dale's law that will be introduced in the next section. For concreteness and analytical tractability, we consider networks composed of the binary-state neurons with which balanced network theory was originally studied (van Vreeswijk and Sompolinsky, 1996, 1998). The two-population network is composed of N neurons, where NE neurons are excitatory (E) and NI neurons are inhibitory (I). The state of the ith neuron in the kth population (k = E, I) at time t is governed by

$$\sigma_i^k(t) = H\left(\mu_i^k(t) - \theta_k\right),$$

where θk is the firing threshold for the neurons in population k and H(·) is the Heaviside function. Each neuron, therefore, has two possible states, namely firing (σ = 1) or subthreshold (σ = 0), depending on whether its total input reaches a firing threshold. The total drive into the ith neuron in the kth population at time t is

$$\mu_i^k(t) = \sum_{l=E,I} \sum_{j=1}^{N_l} J_{ij}^{kl}\,\sigma_j^l(t) + \mu_0^k,$$

where $J_{ij}^{kl}$ denotes the connection strength between the ith post-synaptic neuron in the kth population and the jth pre-synaptic neuron in the lth population (l = E, I), and $\mu_0^k$ is the strength of the external input into a neuron in the kth population. The recurrent connection strength $J_{ij}^{kl}$ is chosen to be $J_{kl}/\sqrt{K}$ with probability K/Nl and 0 otherwise, abstracting over any detailed network structure to highlight the impact of Dale's law in particular. Hence, a given neuron is expected to receive K incoming synaptic connections of each type. Reflecting the nature of the two populations, the excitatory connection strength JkE is positive and the inhibitory connection strength JkI is negative. In evolving the state of each neuron, the mean time between subsequent updates is τE = 10 ms for excitatory neurons and τI = 9 ms for inhibitory neurons, based on experimental estimates of cortical membrane potential time constants (McCormick et al., 1985; Shelley et al., 2002).

Since biophysical noise sources are generally unable to account for the irregular neuronal dynamics observed in vivo (Softky and Koch, 1993; Faisal et al., 2008), the model requires that asynchronous dynamics are fully produced by the recurrent interactions among neurons. We, therefore, assume constant external inputs $\mu_0^E = f_E m_0 \sqrt{K}$ and $\mu_0^I = f_I m_0 \sqrt{K}$, where the positive external input strength is modulated by the non-negative, $\mathcal{O}(1)$ parameter m0. It is assumed that fE as well as fI are positive parameters scaling the relative external input into the excitatory and inhibitory populations, respectively. The recurrent connection strengths and firing thresholds are also $\mathcal{O}(1)$ parameters, and the relationship between them and the external input scalings primarily determines the network dynamical regime as will be further discussed in Section 3.1.

Considering that each neuron is expected to receive K excitatory incoming synaptic connections and K inhibitory incoming synaptic connections, if the excitatory and inhibitory inputs are not well balanced, the total drive is $\mathcal{O}(\sqrt{K})$. Since the total drive in this case is larger in magnitude than the firing threshold, each neuron would generally either fire or remain subthreshold for all time, thereby demonstrating highly regular and unrealistic dynamics. When the total drive is instead of the same order as the firing threshold, intermittent input fluctuations become largely responsible for the exact timing of firing events and their highly irregular distribution. This theoretically produces balanced network dynamics, whose key features we summarize in Figure 1 and later compare to those produced for the one-population model violating Dale's law.
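The scaling argument above can be illustrated with a short numerical sketch. It assumes the standard balanced-network scaling (recurrent strengths of size J/√K, K inputs of each type, and external input f m0 √K); the function name `mean_drive` and the perturbed rate 0.3 are illustrative choices, not taken from the paper. With the Figure 1 parameters for the excitatory population, the leading-order drive cancels at mE = mI = 0.2, whereas a modest imbalance produces a drive that grows like √K.

```python
import math

def mean_drive(K, m_E, m_I, J_kE, J_kI, f_k, m0):
    """Mean total drive into a neuron of population k.

    Assumes (as a sketch) strengths J_kl / sqrt(K), K inputs of each
    type, and external input f_k * m0 * sqrt(K).
    """
    return math.sqrt(K) * (J_kE * m_E + J_kI * m_I + f_k * m0)

# Figure 1 parameters for the excitatory population.
J_EE, J_EI, f_E, m0 = 1.0, -2.0, 1.0, 0.2

for K in (100, 400, 1600):
    balanced = mean_drive(K, 0.2, 0.2, J_EE, J_EI, f_E, m0)
    # Hypothetical imbalance: excitatory rate raised from 0.2 to 0.3.
    unbalanced = mean_drive(K, 0.3, 0.2, J_EE, J_EI, f_E, m0)
    print(f"K={K}: balanced drive {balanced:.3f}, unbalanced drive {unbalanced:.3f}")
```

Quadrupling K doubles the unbalanced drive, while the balanced drive stays at zero to leading order, so only the balanced case remains comparable to a threshold of order one.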

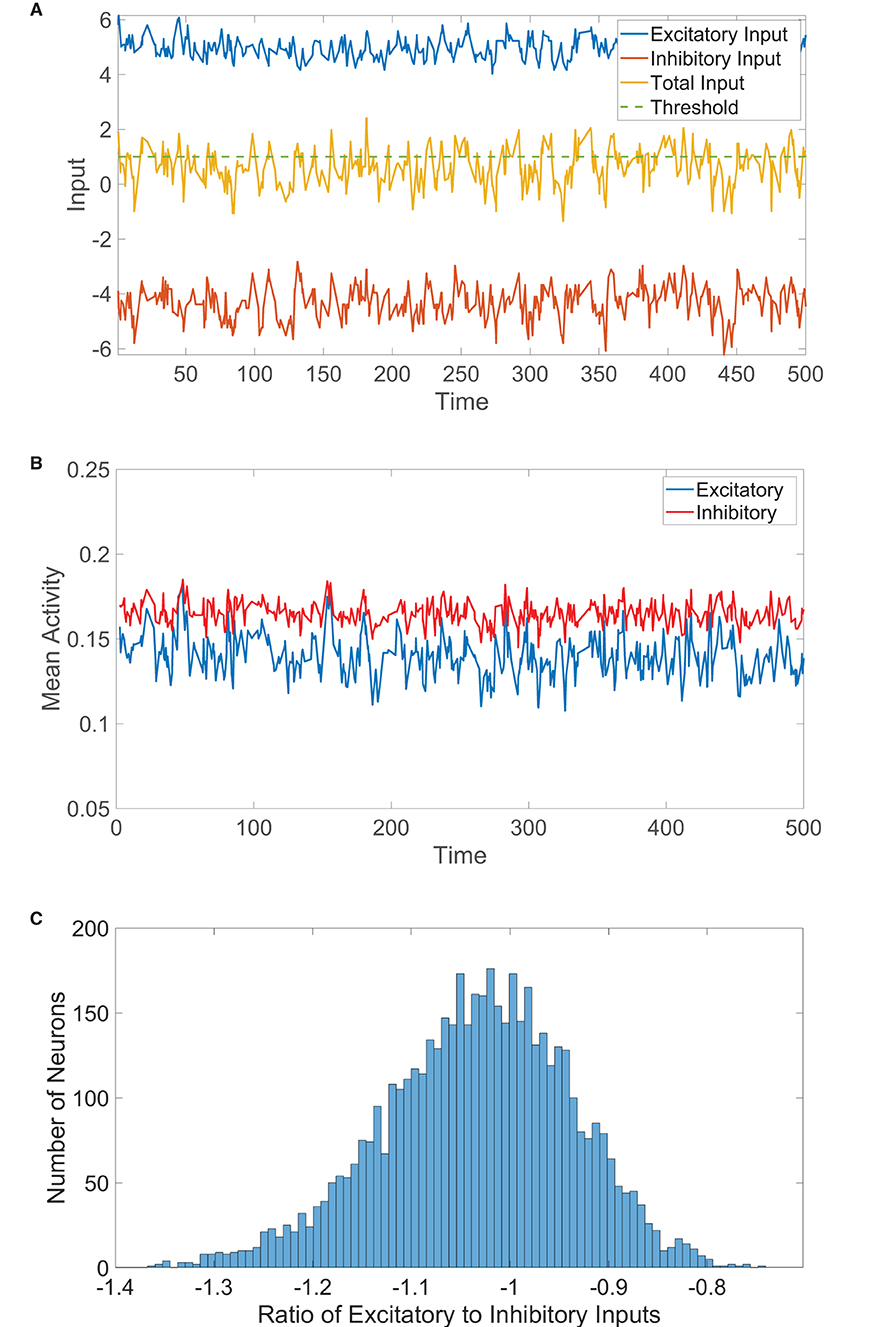

Figure 1. Two-population balanced network dynamics. (A) Excitatory (blue), inhibitory (red), and net (yellow) inputs into a sample excitatory neuron in a two-population balanced network. The dashed (green) line indicates the firing threshold. (B) The population-averaged state (mean activity) of the excitatory (blue) and inhibitory (red) populations as a function of time. (C) Histogram of the ratio between time-averaged excitatory and inhibitory inputs across the two-population neuronal network. Parameters utilized are JEE = JIE = 1, JII = −1.8, JEI = −2, NE = 4,000, NI = 1,000, fE = 1, fI = 0.8, m0 = 0.2, K = 200, θE = 1, and θI = 0.8.

In Figure 1A, we plot the total excitatory and inhibitory inputs over time for a sample neuron in the balanced state. The two input types are much larger in magnitude than the firing threshold, but the excitation and inhibition dynamically cancel and cause the total input to irregularly exceed threshold. We may similarly represent the resultant dynamics on the network level using the population-averaged state, which we will refer to as the mean activity and will serve to approximate the firing rate of each population. The mean activity for the kth population at time t is thus $m_k(t) = \frac{1}{N_k}\sum_{i=1}^{N_k} \sigma_i^k(t)$. The mean activity for each population is depicted over time in Figure 1B, exhibiting a high degree of statistical stationarity and relatively infrequent firing events. In comparison to the time-averaged mean activity over a long time horizon, mk, we see that fluctuations in the mean activity with time are quite small for each population and unpredictable in structure. Finally, we consider the time-averaged ratio between the total excitatory and total inhibitory input into each neuron, which we will refer to as the E/I ratio. We plot in Figure 1C a histogram of the E/I ratios across the network, which shows relatively tight clustering about a mean near −1. Since the excitatory and inhibitory inputs are nearly equal in magnitude for each neuron in this case, the total drive is quite small in magnitude across the network and the collective dynamics are thus well balanced.

2.2. One-Population Model Violating Dale's Law

In contrast to the conventional balanced network modeling framework that splits the full neuronal network into excitatory and inhibitory subpopulations to obey Dale's law, we now develop a single population network model in which each neuron is able to send out excitatory signals to a subset of its post-synaptic neighbors and inhibitory signals to other post-synaptic neighbors. In this case, the state of the ith neuron in the single population at time t is governed by

$$\sigma_i(t) = H\left(\mu_i(t) - \theta\right),$$

where the subscripts from the previous section are dropped since only one population is considered, and all terms without subscripts are given the same interpretations as described in the previous section. The total drive μi(t) into the ith neuron at time t is

$$\mu_i(t) = \sum_{j=1}^{N} J_{ij}\,\sigma_j(t) + \mu_0,$$

where Jij denotes the connection strength between the ith post-synaptic neuron and the jth pre-synaptic neuron and $\mu_0 = f_E m_0 \sqrt{K}$ is the total external input into a neuron. Reflecting the potential for each neuron to provide both excitation and inhibition, the recurrent connection strength Jij is now chosen to be $J_E/\sqrt{K}$ with probability K/N, $-J_I/\sqrt{K}$ with probability K/N, and 0 otherwise. As in the two-population network, each neuron is expected to receive K excitatory and K inhibitory incoming synaptic connections. Positive parameters JE and JI determine the relative strengths of the excitatory and inhibitory connections, respectively.

Since this one-population network model contains both positive and negative feedback loops, with inhibition and excitation of the same order of magnitude, it is intuitive to hypothesize that balanced dynamics may be achieved under appropriate constraints on the network structure. However, since both feedback types are simultaneously provided by a single computational unit in this case, whether the lack of separation in the control mechanisms impacts the robustness of the balanced operating state and its corresponding parameter regime will be investigated in the next section. Moreover, we will later examine if there are any functional differences between the one-population and two-population models, discussing potential reasons for why nature has largely selected the two-population setting that obeys Dale's law for neuronal networks in particular.
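A minimal simulation sketch of the one-population model may help make the construction concrete. It is not the authors' implementation: it assumes connection strengths of +JE/√K and −JI/√K, an external input of fE m0 √K, and a random sequential update scheme in which one sweep of N single-neuron updates stands in for one mean update interval τ. The network size, number of sweeps, and burn-in below are illustrative choices kept small so that the example runs quickly with the standard library alone.

```python
import math
import random

def build_one_population(N, K, J_E, J_I, rng):
    """For each neuron, list its incoming (presynaptic index, weight) pairs.

    Each pair (i, j) is excitatory (+J_E/sqrt(K)) with probability K/N,
    inhibitory (-J_I/sqrt(K)) with probability K/N, and absent otherwise.
    """
    p = K / N
    scale = 1.0 / math.sqrt(K)
    incoming = []
    for _ in range(N):
        row = []
        for j in range(N):
            u = rng.random()
            if u < p:
                row.append((j, J_E * scale))
            elif u < 2 * p:
                row.append((j, -J_I * scale))
        incoming.append(row)
    return incoming

def simulate(N=600, K=60, J_E=1.0, J_I=1.5, f_E=0.5, m0=0.2,
             theta=0.7, n_sweeps=30, burn_in=10, seed=0):
    """Return the mean activity averaged over the sweeps after burn-in."""
    rng = random.Random(seed)
    incoming = build_one_population(N, K, J_E, J_I, rng)
    ext = f_E * m0 * math.sqrt(K)   # assumed external-input scaling
    state = [0] * N                 # all neurons start subthreshold
    n_active = 0
    activity_sum = 0.0
    n_samples = 0
    for sweep in range(n_sweeps):
        for _ in range(N):          # one sweep ~ one mean update interval
            i = rng.randrange(N)
            drive = ext + sum(w for j, w in incoming[i] if state[j])
            new_state = 1 if drive > theta else 0
            n_active += new_state - state[i]
            state[i] = new_state
        if sweep >= burn_in:
            activity_sum += n_active / N
            n_samples += 1
    return activity_sum / n_samples

mean_activity = simulate()
print(f"time-averaged mean activity: {mean_activity:.3f}")
```

For these parameters the large-network prediction is a mean activity of 0.2; a network this small should land in the same neighborhood rather than match it exactly.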

3. Results

3.1. Balanced Network Dynamics in the One-Population Model

To address the question of whether the one-population model demonstrates balanced dynamics akin to the classical two-population model, we first derive the theoretical parameter regime for which the one-population network activity is indeed balanced. To achieve asynchronous dynamics, the balanced operating state requires the long-time mean activity of the population to remain positive and less than 1 in the large-network limit. Hence, we require 0 < m < 1 as N → ∞ and as K → ∞, with sparse connectivity such that 1 ≪ K ≪ N. We will show that this requirement yields theoretical bounds on the model parameters necessary in order to achieve balanced dynamics. In simulations that utilize finite network realizations, these bounds hold approximately so long as the network size is sufficiently large.

We consider the population-averaged total drive into the ith neuron in the one-population network, and use it to determine an analytical expression for the mean activity necessary to derive balance conditions. Assuming that the synaptic connections and states are independent, since correlations are weak in the large-network limit, $E[J_{ij}\sigma_j(t)] = E[J_{ij}]\,E[\sigma_j(t)]$. Since each neuron is expected to receive a total of K incoming connections of each type and because the population-averaged state is E[σj(t)] = m(t) regardless of neuron index, the population-averaged drive reduces to

$$E[\mu_i(t)] = \sqrt{K}\,(J_E - J_I)\,m(t) + f_E m_0 \sqrt{K}.$$

Computing the time-average in the long-time limit, such that μi(t) → μ and m(t) → m, yields

$$\mu = \sqrt{K}\left[(J_E - J_I)\,m + f_E m_0\right].$$

The long-time, time-averaged mean total input is, therefore, at most $\mathcal{O}(\sqrt{K})$. However, this implies that the time-averaged mean inputs potentially increase with network size and must be dynamically adjusted in order for the network to exhibit asynchronous and irregular dynamics. It is, therefore, necessary for the total excitatory and total inhibitory inputs to approximately cancel for balanced dynamics to be possible in the large-network limit. For μ to be $\mathcal{O}(1)$, we require

$$(J_E - J_I)\,m + f_E m_0 = \mathcal{O}\!\left(\frac{1}{\sqrt{K}}\right),$$

which must vanish in the large-network limit. In this case, the resultant theoretical time-averaged mean activity is

$$m = \frac{f_E\, m_0}{J_I - J_E}, \tag{7}$$

which linearly grows with the overall external input strength parameter m0. Since the mean activity needs to be positive for biological realism and fE > 0, we obtain the balance condition

$$J_E < J_I.$$

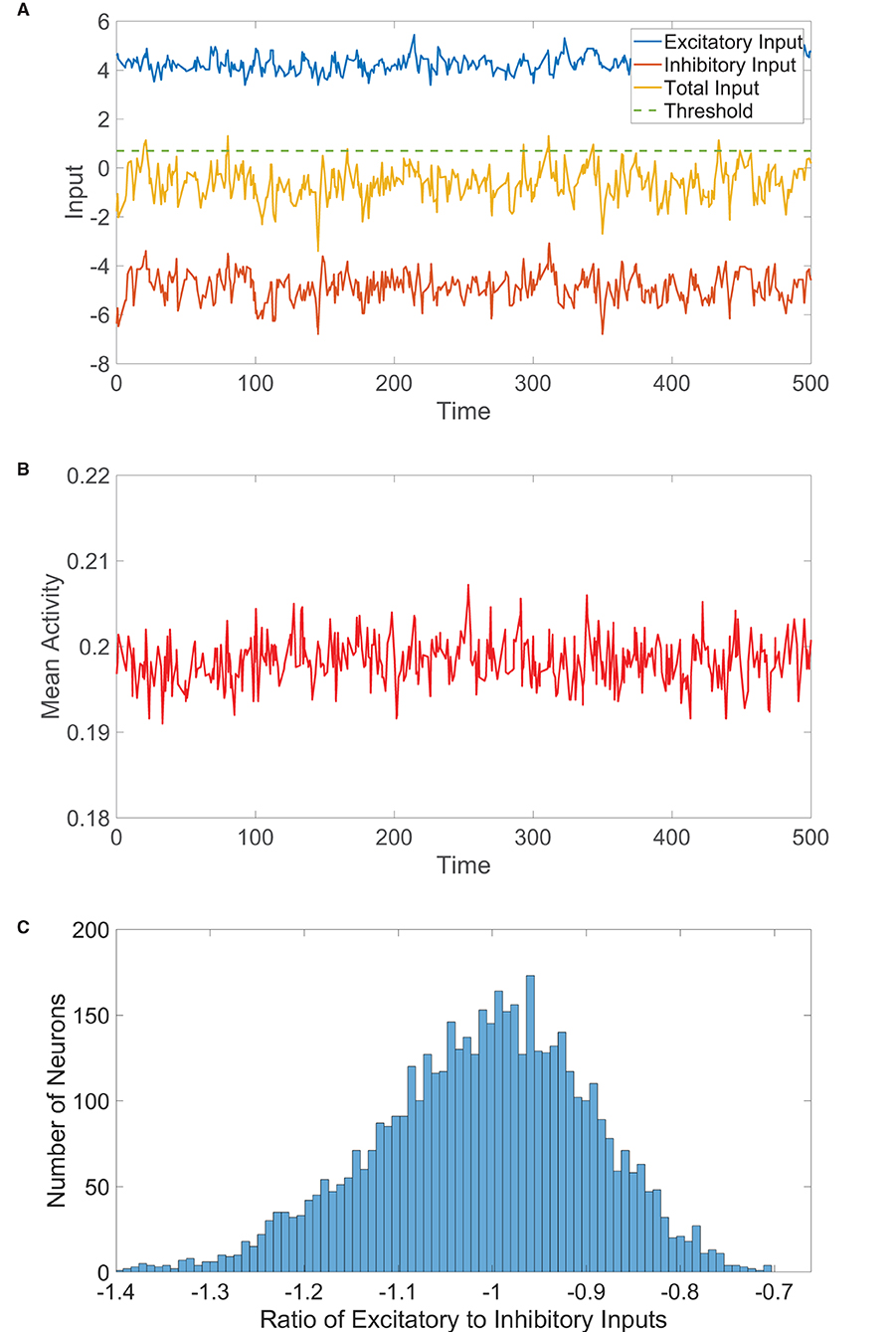

Intuitively, since the external input is excitatory, the synaptic inputs generated from within the network must be inhibition dominated to dynamically cancel the positive external input and produce irregular dynamics. In Figure 2A, we see that when the balance condition is satisfied, large excitatory and inhibitory inputs indeed cancel over time to produce irregular dynamics and infrequent firing events for a sample neuron in the single-population model.
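As a quick numerical check of the balance condition and the predicted mean activity, the sketch below evaluates the large-network relation m = fE m0 / (JI − JE). This relation is assumed here to follow from requiring the leading-order drive √K[(JE − JI) m + fE m0] to vanish; the function name is hypothetical.

```python
def one_population_mean_activity(J_E, J_I, f_E, m0):
    """Large-network mean activity of the one-population model.

    Assumes the balance relation (J_E - J_I) * m + f_E * m0 = 0, which
    only has a positive solution when inhibition dominates (J_I > J_E).
    """
    if J_I <= J_E:
        raise ValueError("balance requires inhibition-dominated recurrence: J_I > J_E")
    return f_E * m0 / (J_I - J_E)

# Parameters listed for the one-population network in Figure 2.
m = one_population_mean_activity(J_E=1.0, J_I=1.5, f_E=0.5, m0=0.2)
print(f"predicted mean activity: {m:.3f}")
```

With the Figure 2 parameters this gives a mean activity of 0.2, and doubling m0 doubles the prediction, reflecting the linear dependence on external input strength.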

Figure 2. One-population balanced network dynamics. (A) Excitatory (blue), inhibitory (red), and net (yellow) inputs into a sample neuron in a one-population balanced network. The dashed (green) line indicates the firing threshold. (B) The population-averaged state (mean activity) of the single population as a function of time. (C) Histogram of the ratio between time-averaged excitatory and inhibitory inputs across the one-population neuronal network. In evolving the state of each neuron in the one-population network, the mean time between subsequent updates is τ = 10 ms unless stated otherwise. Parameters utilized are JE = 1, JI = 1.5, N = 5,000, fE = 0.5, m0 = 0.2, K = 200, and θ = 0.7.

The variance of the synaptic inputs across the one-population network is also bounded in the large-network limit. With justification analogous to the computation of the population-averaged drive, the long-time limit of the input variance for an arbitrary ith neuron is

$$\mathrm{Var}[\mu_i] = (J_E^2 + J_I^2)\,m - \frac{K}{N}\,(J_E - J_I)^2\,m^2.$$

Therefore, in the limit of large N with K ≪ N, $\mathrm{Var}[\mu_i] \to (J_E^2 + J_I^2)\,m$, remaining bounded and $\mathcal{O}(1)$. As in the two-population case, such finite input fluctuations about the near-zero expected total drive are of comparable order to the firing threshold and generate irregular firing activity. We verify this for a large one-population network realization in Figure 2B, observing that the dynamics are asynchronous with relatively small fluctuations in time about the nearly statistically stationary mean activity. The E/I input ratios across the network, plotted in Figure 2C, are centered around −1, as expected in the balanced state.
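The boundedness of the input variance can be checked with a small Monte Carlo sketch. It assumes independent connections and states, connection strengths of +JE/√K and −JI/√K each drawn with probability K/N, and presynaptic neurons that are active independently with probability m. Under those assumptions the variance of the recurrent input works out to (JE² + JI²) m − (K/N)(JE − JI)² m², which is the expression the code compares against; the sample sizes are illustrative.

```python
import math
import random
import statistics

def sample_recurrent_input(N, K, J_E, J_I, m, rng):
    """One draw of sum_j J_ij * sigma_j under the independence assumption."""
    p = K / N
    scale = 1.0 / math.sqrt(K)
    total = 0.0
    for _ in range(N):
        if rng.random() >= m:       # presynaptic neuron is silent
            continue
        u = rng.random()
        if u < p:                   # excitatory connection
            total += J_E * scale
        elif u < 2 * p:             # inhibitory connection
            total -= J_I * scale
    return total

def predicted_variance(N, K, J_E, J_I, m):
    """Variance of the recurrent input implied by the assumptions above."""
    return (J_E**2 + J_I**2) * m - (K / N) * (J_E - J_I)**2 * m**2

rng = random.Random(0)
N, K, J_E, J_I, m = 200, 20, 1.0, 1.5, 0.2
samples = [sample_recurrent_input(N, K, J_E, J_I, m, rng) for _ in range(4000)]
empirical = statistics.pvariance(samples)
predicted = predicted_variance(N, K, J_E, J_I, m)
print(f"empirical variance {empirical:.3f}, predicted {predicted:.3f}")
```

Because the K/N correction is small for sparse connectivity, the variance is dominated by (JE² + JI²) m and does not grow with K, in contrast to the mean drive.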

Similarly, in the case of the two-population network composed of excitatory and inhibitory neurons obeying Dale's law, it is necessary for both 0 < mE < 1 and 0 < mI < 1 to avoid synchronous or completely quiescent network dynamics. As shown in the seminal work on balanced networks in the case of two populations (van Vreeswijk and Sompolinsky, 1996, 1998), this yields the following theoretical expressions for the time-averaged mean activities in the balanced state

$$m_E = \frac{f_I J_{EI} - f_E J_{II}}{J_{EE} J_{II} - J_{EI} J_{IE}}\, m_0, \qquad m_I = \frac{f_E J_{IE} - f_I J_{EE}}{J_{EE} J_{II} - J_{EI} J_{IE}}\, m_0, \tag{9}$$

which generates a linear scaling of the mean activities in each subpopulation with m0, as obtained for the one-population network in Equation (7).

Requiring that both the excitatory and inhibitory time-averaged mean activities are positive and finite, the parameter bounds

$$\frac{f_E}{f_I} > \frac{J_{EI}}{J_{II}} > \frac{J_{EE}}{J_{IE}}$$

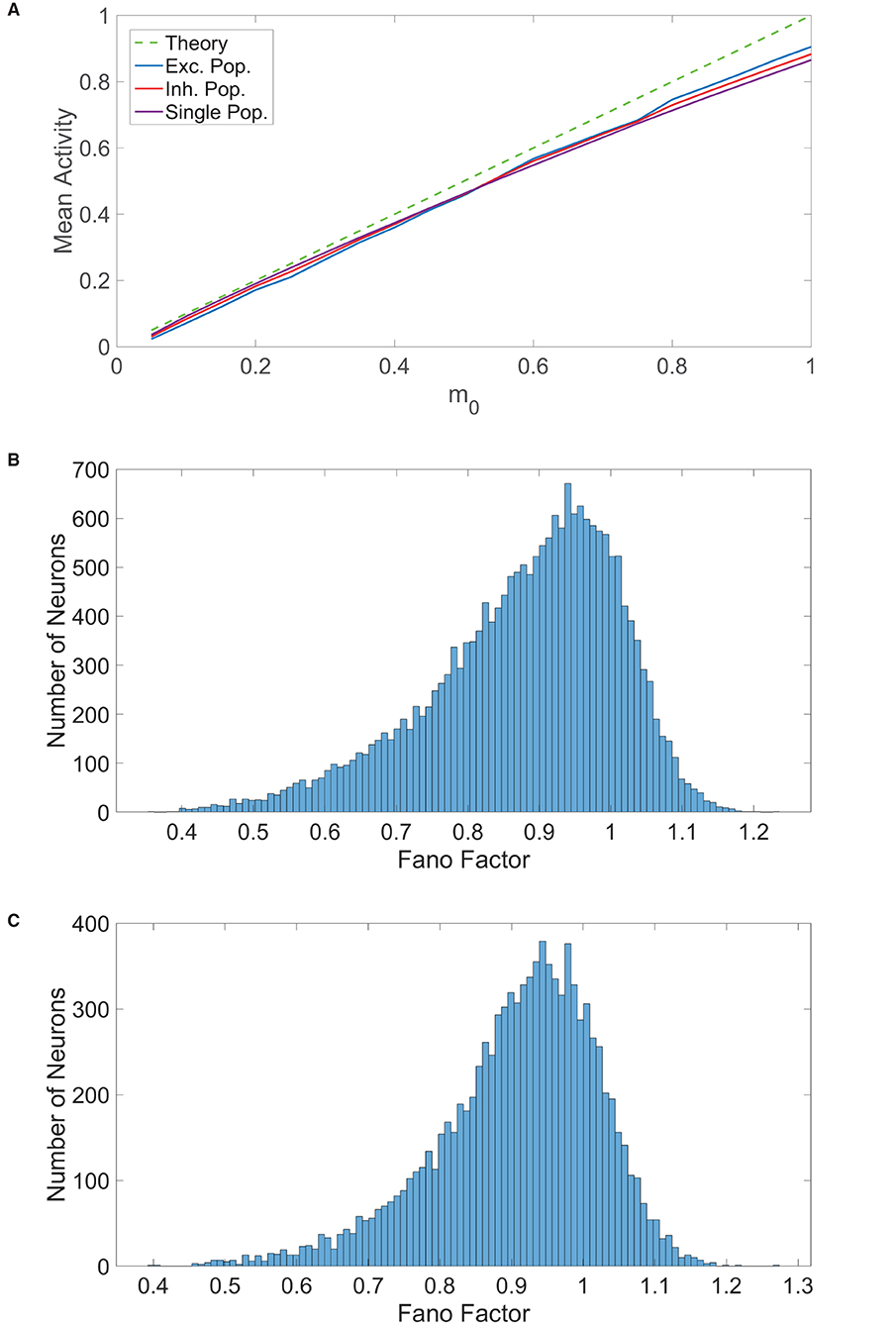

are necessary for balanced dynamics in the large-network limit for the two-population model. In Figure 3A, we see that as the external input is increased, by adjusting m0 for different network simulations, the time-averaged mean activity for both the one-population and two-population networks demonstrates highly linear growth. These strongly linear gain curves agree well with the theoretical predictions in the large-network limit given in Equations (7) and (9). Even when Dale's law is violated, we conclude that, although the activity of the individual neurons is extremely nonlinear, the mean activity of the network exhibits linear growth with external input strength for finite yet large network realizations in the balanced state. Experimental recordings of neuronal activity often exhibit a linear increase in firing rate with external input strength (Rauch et al., 2003; La Camera et al., 2006), but here we see that adherence to Dale's law is not in fact necessary to produce such a linear response. Similarly, the irregular neuronal dynamics observed in experiment (Haider et al., 2006; Miura et al., 2007; London et al., 2010) are reproduced theoretically and in simulation for the one-population model, capturing the hallmark dynamical features of classical two-population balanced networks.
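The two-population balanced rates can be reproduced with a short sketch that solves the pair of leading-order balance equations, JEE mE + JEI mI + fE m0 = 0 and JIE mE + JII mI + fI m0 = 0, by Cramer's rule. These equations are assumed here from the requirement that the order-√K drive into each population cancels; the function names are hypothetical.

```python
def two_population_mean_activity(J_EE, J_EI, J_IE, J_II, f_E, f_I, m0):
    """Solve the leading-order balance equations for (m_E, m_I).

    J_EI and J_II are expected to be negative (inhibitory) and
    J_EE and J_IE positive (excitatory).
    """
    det = J_EE * J_II - J_EI * J_IE
    if det == 0:
        raise ValueError("balance equations are degenerate for these couplings")
    m_E = m0 * (f_I * J_EI - f_E * J_II) / det
    m_I = m0 * (f_E * J_IE - f_I * J_EE) / det
    return m_E, m_I

def is_admissible(m_E, m_I):
    """Both mean activities must lie strictly between 0 and 1."""
    return 0.0 < m_E < 1.0 and 0.0 < m_I < 1.0

# Two-population parameters listed in Figure 3, for several input strengths.
for m0 in (0.05, 0.1, 0.2):
    m_E, m_I = two_population_mean_activity(1.0, -2.0, 1.0, -1.8, 1.0, 0.8, m0)
    print(f"m0={m0}: m_E={m_E:.3f}, m_I={m_I:.3f}, admissible={is_admissible(m_E, m_I)}")
```

For the Figure 3 parameters both rates equal m0, which matches the caption's statement that the parameters were chosen so the theoretical mean activity coincides across population types.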

Figure 3. Comparison of balanced network dynamics. (A) The mean activity averaged over a long time horizon for various choices of external input strength m0, plotted for the excitatory (blue), inhibitory (red), and single (purple) populations. This is compared to the expected mean activity curve from balanced network theory in the large-network limit. Parameters are chosen such that the theoretical mean activity is identical for all three population types. (B) Histogram of the spike count Fano factors across neurons in the two-population network. (C) Histogram of the spike count Fano factors across neurons in the one-population network. Parameters in the one-population network utilized are JE = 1, JI = 1.8, N = 20,000, fE = 0.8, K = 800, and θ = 1. Parameters in the two-population network utilized are JEE = JIE = 1, JII = −1.8, JEI = −2, NE = 10,000, NI = 10,000, fE = 1, fI = 0.8, m0 = 0.1, K = 800, θE = 1, and θI = 0.8.

Large spike count variability across controlled trials is commonly observed in cortical neurons in evoked conditions (Shadlen and Newsome, 1994; Churchland et al., 2011), and such stochasticity in spike emission is seen in balanced networks that obey Dale's law (Tsodyks et al., 1999; Litwin-Kumar and Doiron, 2012). To compare the variability in activity for the one-population and two-population networks in the balanced state, we determine the distribution of spike count Fano factors across each network. The spike count Fano factor for a neuron over time interval [t, t + Δt] is

$$F = \frac{\mathrm{Var}\left[N(t, t + \Delta t)\right]}{E\left[N(t, t + \Delta t)\right]},$$

which gives the ratio of the variance to the mean for the number of times the neuron fires over this time window, N(t, t + Δt), across trials. In each trial for a given model, an identical network is generated with different initial conditions and each neuron in the network is updated at identical times across trials. The time window in this case is Δt = 100 ms and Fano factors were computed using 100 trials. For a given neuron, we averaged the Fano factors corresponding to each time interval in a full simulation of 10000 ms to obtain a single neuronal Fano factor. We plot in Figure 3B the histogram of spike count Fano factors across neurons in the two-population network and similarly plot the Fano factor histogram for the one-population network in Figure 3C. For both network types, we observe that the mean spike count Fano factor is near 1, which is the Fano factor for a homogeneous Poisson process. Hence, we conclude that a high degree of spike count variability is indeed achievable by the one-population model violating Dale's law, facilitating rich computational capabilities similar to those of the two-population network.
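The Fano factor computation described above can be sketched as follows. The input layout (one list of per-trial spike counts for each time window) is an assumption made for illustration, as are the use of the sample variance across trials and the choice to skip windows in which the neuron never fires, where the ratio is undefined.

```python
import statistics

def fano_factor(counts):
    """Variance-to-mean ratio of spike counts across trials for one window.

    Uses the sample variance (n - 1 denominator); returns NaN when the
    neuron never fires in this window.
    """
    mean = statistics.mean(counts)
    if mean == 0:
        return float("nan")
    return statistics.variance(counts) / mean

def neuronal_fano_factor(counts_by_window):
    """Average the windowed Fano factors, skipping undefined windows."""
    values = [fano_factor(counts) for counts in counts_by_window]
    values = [v for v in values if v == v]   # NaN is the only value != itself
    return statistics.mean(values) if values else float("nan")

# Toy data: three windows, three trials each (hypothetical spike counts).
toy_counts = [[1, 2, 3], [0, 0, 0], [2, 2, 2]]
print(neuronal_fano_factor(toy_counts))
```

A value near 1 would indicate Poisson-like variability, while perfectly repeatable counts across trials give a Fano factor of 0.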

3.2. Scaling With Network Size

Since the key dynamical features explored thus far are comparable for the one-population and two-population networks, it still remains unclear why Dale's law is typically followed in the brain if not to help maintain balance. We now investigate several additional dynamical and functional properties for which the two network models potentially differ qualitatively to shed additional light on the ubiquity of Dale's law in the brain.

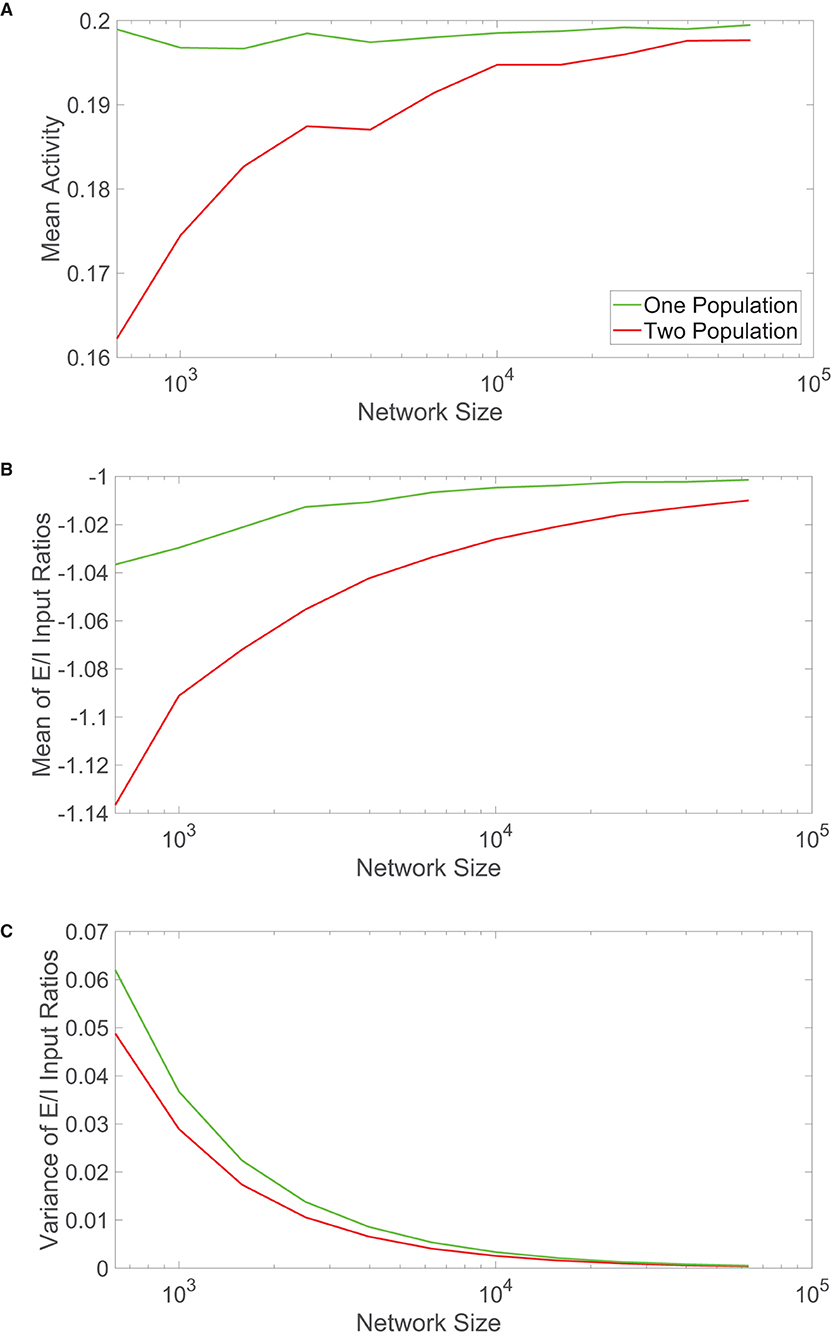

Previous sections only examined the activity of the networks theoretically in the large-network limit and compared their dynamics for relatively large-scale network realizations. Considering that other biological systems that disobey Dale's law are often significantly smaller than brain networks, such as colonies of honeybees charged with similar functional tasks as neuronal networks, we compare the scaling properties of the two network models as the number of constituent neurons is increased. In Figure 4A, we plot the time-averaged mean activity across each network as a function of the network size. We observe that for relatively small networks, with fewer than ten thousand neurons, the mean activity for the two-population model remains below the theoretical prediction and grows with network size. On the other hand, the mean activity of the one-population model remains nearly constant and close to the value expected theoretically over the same range of network sizes.

Figure 4. Comparison of scaling properties with network size. (A) The time-averaged mean activity as a function of the total network size for the one-population (green) and two-population (red) networks. The mean activity for each population expected from theory is 0.2. (B) Mean of the ratio between time-averaged excitatory and inhibitory inputs across each network model as a function of network size. (C) Variance of the ratio between time-averaged excitatory and inhibitory inputs across each network model as a function of network size. Parameters in the one-population network utilized are JE = 1, JI = 1.5, m0 = 0.2, fE = 0.5, K = 0.08N, and θ = 0.7. Parameters in the two-population network utilized are JEE = JIE = 1, JII = −1.5, JEI = −5/3, fE = 1, fI = 0.8, m0 = 0.2, K = 0.08N, NE = NI = 0.5N, θE = 1, and θI = 0.8. Each plot is averaged over 10 network realizations.

We analogously plot the mean and variance of the E/I input ratios with growing network sizes in Figures 4B,C, respectively. Particularly for small network sizes, the one-population network exhibits mean E/I input ratios closer to −1 than seen for the two-population model. The variances are relatively comparable across network sizes, with the one-population network displaying slightly more variability in the E/I input ratios across the network.

These scaling properties together suggest that the one-population model better maintains balanced dynamics for smaller network sizes, with comparable performance once the networks are sufficiently large. Considering that the results in Figure 4 are averaged over 10 different network realizations for each network size, this trend is robust and largely independent of a specific network simulation. For smaller networks outside of the realm of neuroscience, it is possible that evolution has selected systems that violate Dale's law in order to better achieve balanced dynamics and the accompanying functional benefits of the balanced operating state. While a computational unit in the one-population network has more diversity in functionality and may require a larger energetic cost, if the network is of moderate size, the price is likely worthwhile to pay for maintaining balanced dynamics. This leaves the remaining question of why neuronal networks primarily obey Dale's law if in the large-network limit, which well applies for the brains of complex species, the two network types thus far exhibit similar dynamical features. We explore this question in the context of two common functional tasks in the subsequent sections.

3.3. Fast Tracking of Inputs

A major functional advantage of the balanced state in the two-population setting is the ability to rapidly respond to changes in external input (van Vreeswijk and Sompolinsky, 1998). In particular, an $\mathcal{O}(1)$ change in external input scaling strength m0 results in a comparable adjustment in the steady-state mean activity for each population in accordance with Equation (9). We determine how the fast-tracking ability of the one-population model compares to that of the classical two-population network, underlining a qualitative functional difference between the two networks.

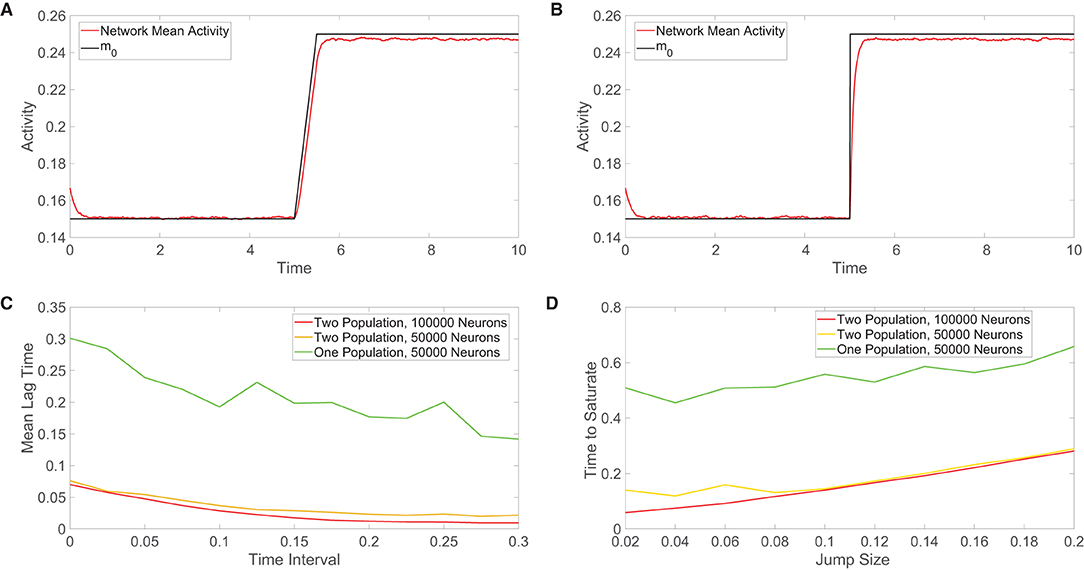

We consider the one-population network response to two classes of time-varying external inputs, and then compare the response speeds for the different network models across a family of external inputs of each class. In Figure 5A, the external input continuously increases in a linear fashion within a small time window and otherwise remains constant. For even a relatively high input slope, we see that the one-population network activity nearly identically follows the external input over all times, as expected from balanced network theory. We similarly address the case in which the external input instead demonstrates a jump discontinuity in Figure 5B, which can be viewed as the high-slope limit of the previous linearly-ramped input. The mean activity of the one-population network demonstrates a minor deviation from the external input immediately following the jump, but it calibrates to the new magnitude of the input after only a short amount of time.

Figure 5. Comparison of the tracking of network inputs. (A) Mean activity in the one-population model plotted over time (red) for an external input that evolves continuously in time. The external input strength parameter m0 varies over time and is plotted for comparison (black), while all other parameters are fixed. Here the external input is either constant or linearly increases in time. (B) Analogous plot as in (A), except now the external input changes discontinuously at t = 5 via a jump discontinuity and remains constant otherwise. The same range of m0 values is used in each plot. (C) Across a family of linearly-ramped inputs of the form in panel (A), the time interval over which the external input transitions from its fixed minimal value to its fixed maximal value is varied. For each such time interval, the mean lag time between the network response and the external input is plotted. The mean lag time plotted for each input is the time difference between the mean activity and the external input averaged from when the external input first begins to change in time until the network mean activity saturates (reaches the value of the long-time, time-averaged mean activity following input saturation). (D) Across a family of discontinuous inputs of the form in (B), the maximal value of the external input is varied. For each resultant input jump size, the time elapsed from the input jump until the network mean activity saturates is plotted. In (C,D), the fast-tracking properties are plotted in red for the two-population network with 100,000 neurons, in orange for the two-population network with 50,000 neurons, and in green for the one-population network with 50,000 neurons. Parameters in the one-population network utilized are JE = 1, JI = 1.5, m0 = 0.2, fE = 0.5, K = 0.08N, and θ = 0.7. Parameters in the two-population network utilized are JEE = JIE = 1, JII = −1.5, JEI = −5/3, fE = 1, fI = 0.8, m0 = 0.2, K = 0.08N, NE = NI = 0.5N, θE = 1, and θI = 0.8. The mean activity is computed over 0.01 time windows in each case and the plots in (C,D) are averaged over 10 network realizations.

For the class of linearly-ramped inputs akin to Figure 5A, we investigate how the response of each network model depends on the time interval over which the input linearly increases to achieve its fixed maximal value. We note that as this time interval becomes longer, the input increases more slowly. We compute the mean lag time across a family of such linearly-ramped inputs. To measure the lag time in the tracking of each external input, we compute the difference between the time when each external input value is realized and the time when the corresponding value of the network mean activity is reached, averaging over this time difference from when the external input first begins to change until the network activity saturates in order to obtain the mean lag time. For both the one-population and two-population networks, we observe in Figure 5C that the mean lag time decreases with the size of the time interval over which the input is linearly ramped, demonstrating less lag for inputs that change more slowly as expected. However, for each external input, the two-population network exhibits less lag than the one-population network, especially for more rapidly varying inputs.
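The lag measurement described above can be sketched in a few lines of Python. This is a minimal illustration rather than the code used for Figure 5C: the function name `mean_lag_time` and its arguments are hypothetical, the ramp is assumed to be increasing, and the mean activity is assumed to be expressed on the same scale as the input m0 so that the two traces can be compared value by value.

```python
import numpy as np

def mean_lag_time(t, m0, activity):
    """Mean delay between the input reaching a value and the activity first reaching it.

    Assumptions of this sketch: the input ramp is increasing, and `activity`
    is on the same scale as `m0`.
    """
    t = np.asarray(t, dtype=float)
    m0 = np.asarray(m0, dtype=float)
    activity = np.asarray(activity, dtype=float)

    # Samples at which the input has just increased, i.e., samples on the ramp.
    ramp = np.flatnonzero(np.diff(m0) > 0) + 1
    if ramp.size == 0:
        raise ValueError("the input never increases")
    start = ramp[0] - 1  # last sample before the input begins to change

    lags = []
    for i in ramp:
        # First time, from ramp onset onward, at which the activity reaches m0[i].
        reached = np.flatnonzero(activity[start:] >= m0[i])
        if reached.size == 0:
            continue  # this input level is never attained by the activity
        lags.append(t[start + reached[0]] - t[i])
    if not lags:
        raise ValueError("the activity never reaches any ramp value")
    return float(np.mean(lags))
```

For a response that is simply a delayed copy of the ramp, this estimate recovers the delay; for noisy simulated activity, the first-crossing rule used here is only one of several reasonable choices.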

For the discontinuous class of inputs represented in Figure 5B, we similarly investigate how the external input tracking ability of each network is affected by the size of the jump in m0, which determines the resultant magnitude of the instantaneous input change that the network must respond to and also the maximal external input value. Since the input jumps instantaneously, the prior method for measuring the time difference between the network response and input must be modified. In this case, for each external input, we compute the amount of time following the jump that elapses until the network mean activity saturates; here shorter saturation time is evidence of a faster response. In Figure 5D, we see that as the jump size increases, the two network models require more time to saturate in response to the new external input value. As observed for the continuous class of external inputs, the two-population network demonstrates superior tracking ability with a shorter saturation time for each discontinuous input utilized.
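A minimal sketch of the saturation-time measurement follows, assuming an upward jump in the input. The function name `saturation_time`, the `tail_fraction` used to estimate the long-time mean activity, and the numerical tolerance `tol` are all hypothetical choices made for illustration and are not taken from the study.

```python
import numpy as np

def saturation_time(t, activity, t_jump, tail_fraction=0.5, tol=1e-9):
    """Time after an upward input jump until the activity first reaches its long-time mean.

    The long-time mean is estimated from the final `tail_fraction` of the
    post-jump trace (an assumption of this sketch).
    """
    t = np.asarray(t, dtype=float)
    activity = np.asarray(activity, dtype=float)

    after = t >= t_jump
    if not after.any():
        raise ValueError("no samples at or after the jump time")
    t_after, a_after = t[after], activity[after]

    # Estimate the saturated (long-time, time-averaged) mean activity.
    n_tail = max(1, int(tail_fraction * a_after.size))
    target = a_after[-n_tail:].mean()

    # First post-jump sample at which the activity attains the saturated value.
    hit = np.flatnonzero(a_after >= target - tol)
    return float(t_after[hit[0]] - t_jump)
```

A shorter returned value corresponds to a faster response, matching the interpretation used for Figure 5D.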

This trend is true when the different network models each have the same total number of neurons as well as when the excitatory and inhibitory populations in the two-population model each have the same total number of neurons as the entire one-population network. Based on the diversity in the types of outputs that neurons can transmit to their neighbors, it can be argued that a neuron in the two-population network has less computational capability than a neuron in the one-population network. For this reason, we consider such a comparison in tracking performance when the two-population network has a larger total number of neurons. While doubling the number of neurons in the two-population network improves external input tracking, the gains depicted in Figure 5 are relatively marginal. We remark that in comparing the scaling properties for the one-population networks with two-population networks containing double the neurons in Figure 4, the overall trend of more balanced dynamics in the one-population model for small network sizes and comparable performance in the large-network limit still holds. Hence, the potentially high energetic costs of the large synaptic input currents necessary to maintain balanced dynamics garner a rapid response to changes in external inputs for both network types, but we hypothesize that evolution may have selected two-population networks obeying Dale's law in the brain in order to facilitate yet further improved input tracking necessary to respond to stimuli and make decisions over very short time scales. For smaller networks outside of the brain, where the response need not be quite so fast yet still efficient enough to carry out network functions, the one-population architecture likely suffices.

3.4. Decision-Making Dynamics

Activity in multiple brain areas, including the prefrontal cortex, thalamus, basal ganglia, and parietal cortex, plays an important role in decision-making (Platt and Glimcher, 1999; Munakata et al., 2011; Ding and Gold, 2013), and numerous studies have made significant parallels between the collective decision-making mechanisms used by social insects and neurons in the brain (Couzin, 2009; Marshall et al., 2009; Seeley et al., 2012). For each biological system, when a certain cluster of units displays sufficiently high activity relative to other competing groups, a decision is typically made. The classical leaky competing accumulator model for decision-making facilitates the competition between neuronal assemblies by directly including lateral inhibition between clusters (Usher and McClelland, 2001), and an attractor such that one cluster demonstrates significantly more activity than the others corresponds to a particular decision. Balanced networks containing excitatory and inhibitory neurons that incorporate competing pools of neurons are known to demonstrate such decision-making dynamics (Wang, 2008; Cohen et al., 2019), and we conclude by investigating if one-population networks violating Dale's law have analogous functionality.

We consider two competing pools of one-population neurons, A and B, with dynamics and connectivity within each pool as described in Section 2.2. We relabel the recurrent connection strengths within the kth pool as and (here k = A, B), to distinguish them from the cross-pool connections from the lth pool to the kth pool, whose strengths are similarly denoted and . We assume that each pool contains the same number of neurons, N, and that the cross-pool connection strength between the ith post-synaptic neuron in the kth pool and the jth pre-synaptic neuron in the lth pool is chosen to be with probability K/N, with probability K/N, and 0 otherwise. As a result, each neuron is expected to receive K excitatory and K inhibitory incoming synaptic connections from within its own pool and is also expected to receive the same number of incoming cross-pool connections of each type.
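The sampling rule for the cross-pool connections can be illustrated with a short Python sketch. The function name `sample_cross_pool_weights` and its arguments are hypothetical, inhibitory connections are stored as negative entries by assumption, and any scaling of the strengths with K (such as the 1/√K scaling conventional in balanced network models) is assumed to be folded into the strength arguments.

```python
import numpy as np

def sample_cross_pool_weights(n_post, n_pre, K, J_exc, J_inh, rng):
    """Sample a connectivity matrix from pre-synaptic pool l to post-synaptic pool k.

    Each entry is +J_exc with probability K/n_pre, -J_inh with probability
    K/n_pre, and 0 otherwise (sign convention assumed for this sketch).
    """
    p = K / n_pre
    if not 0.0 <= 2.0 * p <= 1.0:
        raise ValueError("K / n_pre must lie in [0, 0.5]")

    u = rng.random((n_post, n_pre))
    W = np.zeros((n_post, n_pre))
    W[u < p] = J_exc                      # excitatory connection
    W[(u >= p) & (u < 2.0 * p)] = -J_inh  # inhibitory connection
    return W

# Illustrative values only: each row should contain about K entries of each sign.
rng = np.random.default_rng(0)
W = sample_cross_pool_weights(1000, 1000, K=80, J_exc=1.0, J_inh=1.5, rng=rng)
mean_exc_in = (W > 0).sum(axis=1).mean()
mean_inh_in = (W < 0).sum(axis=1).mean()
```

Averaged over post-synaptic neurons, the numbers of excitatory and inhibitory incoming connections both concentrate near K, which is the property the text relies on.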

In facilitating an attractor state where relatively high activity in one pool corresponds to a particular decision, we assume that the external input into the kth pool is now scaled by parameter fk rather than fE as considered earlier, with the overall external input scaling strength m0 identical for each pool. For successful decision-making dynamics, we require that when fA > fB, the mean activity of pool A is greater than the mean activity of pool B, namely mA > mB.

Following an argument similar to Section 3.1, the long-time, population-averaged total drive into an arbitrary neuron in pool A and in pool B of the one-population network, respectively, is

In order for the dynamics to be balanced theoretically, it is necessary for E[μA] and E[μB] to remain O(1) in the large-network limit, requiring that

We assume symmetry in the connectivity parameters for the two pools, such that and . This implies that the main determining factor in the decision-making process is the difference in the external input scalings for the pools, fA and fB.

Solving linear system (11) yields the time-averaged mean activity for each pool

For balanced dynamics among at least one of the competing pools, it is required that 0 < mA < 1 or 0 < mB < 1. We assume that in the absence of any communications between the pools, each is internally balanced. From the analysis in Section 3.1, we conclude that and, therefore, Jin < 0. The cross-pool connection strength is the remaining parameter that determines whether balanced dynamics are achieved and whether the network carries out successful decision-making as well. We argue that this is determined by the sign of Jout and how the magnitude of Jout compares to |Jin|.
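As a worked sketch of the algebra behind these statements, suppose that, under the symmetry assumption, the leading-order balance condition is a symmetric linear system in mA and mB with effective within-pool strength Jin and effective cross-pool strength Jout. This specific form is an assumption made here for illustration; it is chosen because it is consistent with the sign conditions and the tied-alternatives bound discussed in this section, and the expressions for Jin and Jout in terms of the individual connection strengths are not restated.

```latex
% Assumed leading-order balance condition for the two pools
\begin{aligned}
J_{\mathrm{in}}\, m_A + J_{\mathrm{out}}\, m_B + f_A m_0 &= 0,\\
J_{\mathrm{out}}\, m_A + J_{\mathrm{in}}\, m_B + f_B m_0 &= 0.
\end{aligned}

% Solving the 2x2 system (requires J_in^2 \neq J_out^2)
m_A = \frac{m_0\left(J_{\mathrm{out}} f_B - J_{\mathrm{in}} f_A\right)}{J_{\mathrm{in}}^2 - J_{\mathrm{out}}^2},
\qquad
m_B = \frac{m_0\left(J_{\mathrm{out}} f_A - J_{\mathrm{in}} f_B\right)}{J_{\mathrm{in}}^2 - J_{\mathrm{out}}^2}.

% Sensitivities to the external input scalings
\frac{\partial m_A}{\partial f_A} = \frac{-\,m_0 J_{\mathrm{in}}}{J_{\mathrm{in}}^2 - J_{\mathrm{out}}^2},
\qquad
\frac{\partial m_A}{\partial f_B} = \frac{m_0 J_{\mathrm{out}}}{J_{\mathrm{in}}^2 - J_{\mathrm{out}}^2}.
```

With Jin < 0, the first sensitivity is positive exactly when |Jin| > |Jout|, and the second is then negative exactly when Jout < 0, so in this assumed form both the sign of Jout and the comparison of |Jout| with |Jin| matter.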

For the mean activity of pool A to increase with fA and the mean activity of pool B to increase with fB, as expected in effective decision-making, it is necessary for |Jin| > |Jout|. Similarly, for increased input into the competing pool B to suppress the mean activity of pool A, it is also necessary for Jout < 0. When fA > fB, this means that pool A is dominant with balanced dynamics such that 0 < mA < 1, whereas pool B is either relatively suppressed or quiescent. Winner-take-all dynamics emerge when unequal alternatives are presented, and the pool with greater external input is capable of exhibiting nonzero mean activity with balanced dynamics while the other pool is fully suppressed. Collectively, this yields the following conditions for effective decision-making in competing pools of single-population neurons

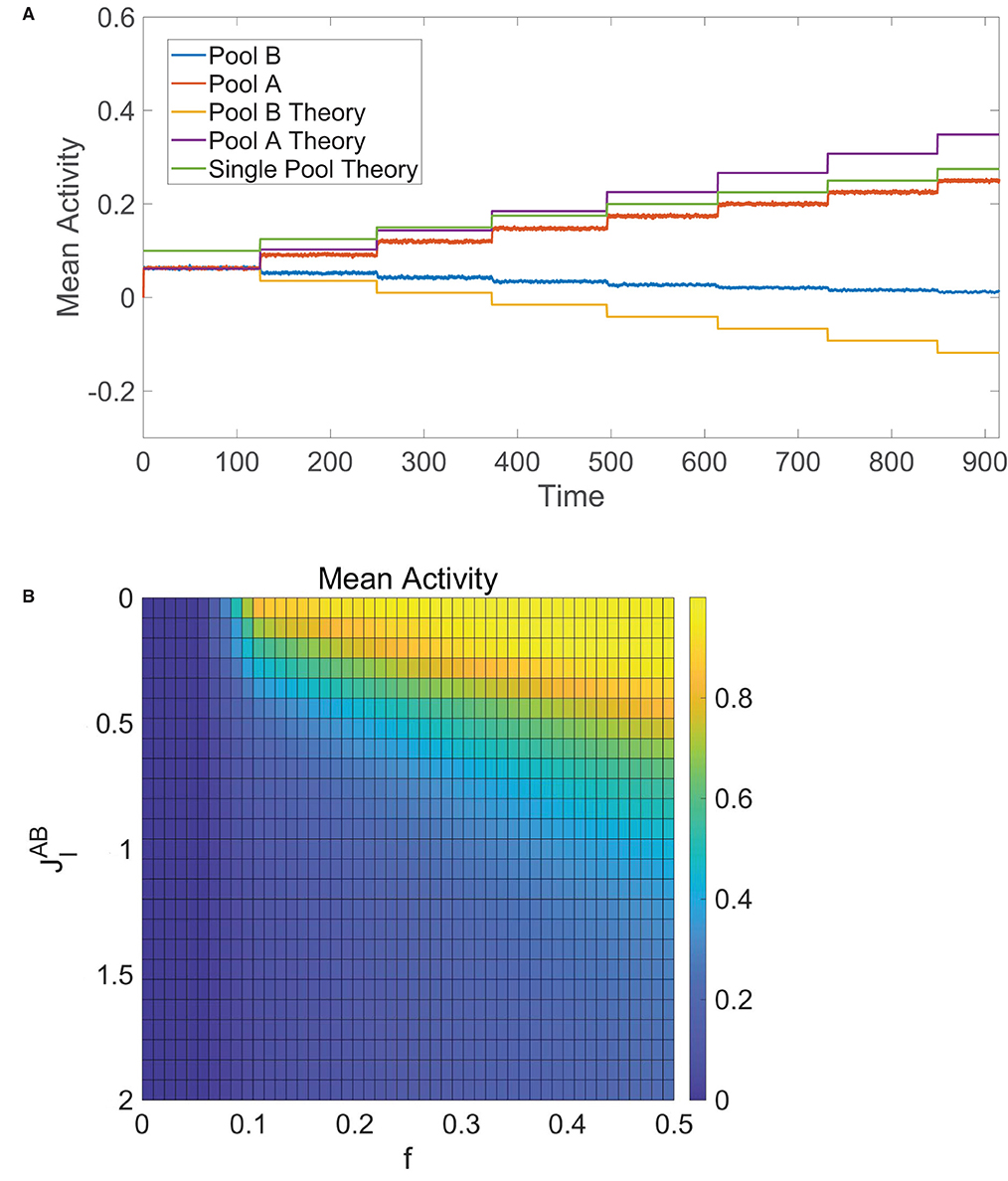

We verify this numerically in Figure 6A, where initially fA = fB and then fA jumps in value at several points in time. We see that the mean activities are equal and well agree with Equation (12) at the start of the simulation. As fA is first increased, the mean activity of pool A increases whereas the mean activity of pool B decreases yet remains nonzero, agreeing with theoretical predictions from Equation (12) as well. Once fA is sufficiently large, pool B becomes completely quiescent and dynamics of pool A are akin to the single-population balanced network theory for one pool given by Equation (7), since pool A is now effectively receiving no input from pool B.
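The two regimes described above, coexisting balanced pools and a quiescent losing pool, can be sketched numerically. The code below assumes that the balance condition is a symmetric 2×2 linear system with effective strengths `J_in` and `J_out`, and that a single isolated pool has mean activity f·m0/(−J_in); both forms are assumptions of this sketch, and the numerical values are hypothetical rather than those used for Figure 6.

```python
import numpy as np

def pool_mean_activities(f_A, f_B, m0, J_in, J_out):
    """Theoretical mean activities (m_A, m_B) for two competing pools.

    Assumed balance condition: J_in*m_A + J_out*m_B + f_A*m0 = 0 and
    J_out*m_A + J_in*m_B + f_B*m0 = 0. If one pool would have non-positive
    activity, it is treated as quiescent and the other pool follows the
    assumed single-pool expression f*m0/(-J_in).
    """
    if not (J_in < 0 and J_out < 0 and abs(J_in) > abs(J_out)):
        raise ValueError("requires J_in < 0, J_out < 0, and |J_in| > |J_out|")

    A = np.array([[J_in, J_out], [J_out, J_in]], dtype=float)
    b = -m0 * np.array([f_A, f_B], dtype=float)
    m_A, m_B = np.linalg.solve(A, b)

    if m_B <= 0:  # pool B is fully suppressed
        return f_A * m0 / (-J_in), 0.0
    if m_A <= 0:  # pool A is fully suppressed
        return 0.0, f_B * m0 / (-J_in)
    return float(m_A), float(m_B)

# Hypothetical parameters: f_B fixed, f_A stepped up by 0.025 as in the protocol above.
for step in range(5):
    f_A = 0.1 + 0.025 * step
    m_A, m_B = pool_mean_activities(f_A, 0.1, m0=1.0, J_in=-0.5, J_out=-0.3)
    print(f"f_A = {f_A:.3f}: m_A = {m_A:.4f}, m_B = {m_B:.4f}")
```

The sketch does not check the upper bound m < 1 required for balanced dynamics; a caller would need to verify that separately for the chosen parameters.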

Figure 6. Decision-making in competing pools of one-population neurons. (A) Mean activity for pool B (blue) and pool A (red) over time, where the input into pool B is fixed with fB = 0.1 and the input into pool A has fA = 0.1 initially with fA stepped up by 0.025 at several times. These simulated mean activities are compared to the theoretical mean activities in Equation (12) for pool B (orange) and pool A (purple) as well as the theoretical mean activity for a single pool of one-population neurons (green) given by Equation (7). (B) Time-averaged mean activity for pool A in the tied alternatives case as a function of external input scaling strength fA = fB = f and cross-pool inhibition strength, varied across runs. Unless specified as varying, the parameters utilized are N = 10,000 neurons in each pool and θ = 1.

In the special case of tied alternatives, when fA = fB = f, we note that Equation (12) reduces to mA = mB = fm0/(−Jin − Jout). Under the same parameter restrictions in Equation (13), the mean activity of each pool in this case increases with external stimulus scaling fm0 and decreases with the magnitude of the effective internal recurrent connectivity strength, |Jin|, and the magnitude of the effective cross-cluster connectivity strength, |Jout|. These trends can be verified numerically in Figure 6B, which tracks the time-averaged mean activity for pool A in the case of tied alternatives across simulations where the external input scaling, f, and the cross-pool inhibition strength are varied. Theoretically, balanced dynamics can persist as long as −Jout − Jin > fm0, namely when there is enough total recurrent inhibition between the neurons to counteract the excitatory external stimulus.

When the balance conditions in Equation (13) are satisfied, the one-population network with competing pools generally functions as follows: (1) under spontaneous conditions when fA = fB, both pools demonstrate balanced dynamics that are responsive to changes in external input and (2) under evoked conditions when fA ≠ fB, the pool with a stronger external stimulus, signaling a more profitable alternative, continues to demonstrate balanced dynamics while the other pool is suppressed. Considering that systems violating Dale's law are often required to make decisions effectively, though perhaps not as rapidly as neuronal networks in the brain, this functionality agrees with real-world constraints.

4. Discussion

While theoretical studies largely assume strict adherence to Dale's law, in light of notable exceptions in the brain and other biological systems with complex functionality, we have systematically studied the computational capabilities of neuronal networks that instead violate Dale's law. We demonstrated that, like classical two-population networks composed of excitatory and inhibitory subpopulations, one-population networks with neurons that can transmit both excitatory and inhibitory signals are capable of exhibiting balanced network dynamics resembling the irregular and asynchronous activity commonly observed experimentally. These one-population networks rapidly respond to changes in inputs and mediate effective decision-making dynamics in the balanced regime. In the case of smaller systems, the one-population networks in fact exhibit a higher degree of balance than two-population networks of the same size, suggesting a potential reason why systems with fewer computational units than the brain, such as honeybee colonies, do not adhere to Dale's law even though they operate under similar computational goals. However, for larger systems, where the dynamics are sufficiently balanced for both network models, the two-population networks demonstrate superior fast-tracking of inputs, and this suggests that evolution may have constructed neuronal systems that primarily obey Dale's law in order to prioritize the particularly rapid information processing necessary for survival.

This work aimed to examine the fundamental mechanistic properties imbued by the presence or absence of a Dale's law constraint, abstracting over more detailed biological assumptions to isolate the impact of this specific network characteristic. Focusing on network computations imparted by firing patterns as opposed to subthreshold voltage dynamics, each individual neuron in our analysis possessed only two possible states. However, there is a rich history of studies demonstrating analogous balanced dynamics for networks with more detailed single-neuron models (Renart et al., 2007; Rosenbaum and Doiron, 2014), and we expect nearly identical comparisons to be drawn for neurons with integrate-and-fire dynamics. Prior work shows that in the classical two-population setting, both binary-state and integrate-and-fire balanced neuronal networks furnish the same input-output mapping structure (Barranca et al., 2019b; Gu et al., 2019). Since the presence of other systems capable of complex computations, such as honeybee colonies and artificial neural networks, was a motivation for this work, an investigation of Dale's law in the context of single-unit dynamics more faithful to such systems, as opposed to the brain, would be a natural avenue for further study. While numerous recent advances in neuromorphic computing demonstrate biologically plausible mechanisms for learning and transmitting error information (Lillicrap et al., 2016; Barranca, 2021; Yang et al., 2021), this work does not directly seek to improve upon state-of-the-art methods. Instead, this study shows that, from a functional perspective, strict adherence to Dale's law does not necessarily significantly diminish performance and rather suggests that for artificial neural networks with a particularly large number of units some efficiencies may be gleaned from connectivity structures inspired by two-population balanced networks.

There is a host of different excitatory and inhibitory neurotransmitters in the brain (Yamada et al., 1989; McCormick et al., 1993; Koch, 1999), and though we have neglected their individual time-scales and the relative magnitude of their effects, it is possible to similarly construct network models violating Dale's law that are capable of sending out distinct sets of neurotransmitters to different neighbors. The release of a diversity of neurotransmitters by a single neuron likely grants flexibility to circuits in the brain, but the maintenance of a mechanism for selective neurotransmitter release may require a higher metabolic cost. It would be especially informative to explore an energy landscape, where the addition of neurons obeying or violating Dale's law incurs different energetic costs, and determine whether the relatively rare addition of neurons violating Dale's law as found in the brain indeed optimizes certain aspects of the network performance.

While we assumed statistically homogeneous random connectivity to be faithful to traditional balanced network theory and to facilitate analytical tractability, neuronal networks observed in experiment often exhibit complex network structure that can intimately impact the network dynamics (Massimini et al., 2005; Bonifazi et al., 2009; Markov et al., 2013). An indirect inhibitory circuit, mediated by an intermediate inhibitory neuron post-connected to an excitatory neuron, for example, may effectively function like a one-population neuron capable of sending out both excitatory and inhibitory signals in certain computations (Shpiro et al., 2007). Nevertheless, how this impacts more subtle functional properties affected by time lags and precise network structure in comparison to an analogous one-population model remains unexplored. It is also possible to similarly engineer one-population networks with the goal of effectively choosing among a large number of alternatives (Ganguli et al., 2008; Heekeren et al., 2008; Barranca et al., 2019a), and we expect such networks to exhibit functional properties similar to those we have demonstrated in the case of two options. Our analysis focused primarily on stimulus response properties and decision making, though it would be informative to study the computational properties of one-population networks in the context of other roles, such as predictive coding and short-term memory (Whalley, 2013; Ingrosso and Abbott, 2019). Since neurotransmitters and their co-transmission have been implicated in brain disorders, including Parkinson's disease (Svensson et al., 2018), the continued study of the functional consequences of neuroatypical characteristics, such as an imbalance of excitatory and inhibitory inputs or a surplus of neurons violating Dale's law, may chart out important advances in treating neurological disorders.

Data Availability Statement

The original contributions presented in the study are included in the article/supplementary material; further inquiries can be directed to the corresponding author.

Author Contributions

All authors listed have made a substantial, direct, and intellectual contribution to the work and approved it for publication.

Funding

This work was supported by NSF DMS-1812478 (VB) and by a Swarthmore Faculty Research Support Grant (VB).

Conflict of Interest

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Publisher's Note

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

References

Arenas, A., Diaz-Guilera, A., and Perez-Vicente, C. J. (2006). Synchronization reveals topological scales in complex networks. Phys. Rev. Lett. 96, 114102. doi: 10.1103/PhysRevLett.96.114102

Barranca, V. J. (2021). Neural network learning of improved compressive sensing sampling and receptive field structure. Neurocomputing 455, 368–378. doi: 10.1016/j.neucom.2021.05.061

Barranca, V. J., Huang, H., and Kawakita, G. (2019a). Network structure and input integration in competing firing rate models for decision-making. J. Comput. Neurosci. 46, 145–168. doi: 10.1007/s10827-018-0708-6

Barranca, V. J., Huang, H., and Li, S. (2019b). The impact of spike-frequency adaptation on balanced network dynamics. Cogn. Neurodyn. 13, 105–120. doi: 10.1007/s11571-018-9504-2

Barranca, V. J., and Zhou, D. (2019). Compressive sensing inference of neuronal network connectivity in balanced neuronal dynamics. Front. Neurosci. 13, 1101. doi: 10.3389/fnins.2019.01101

Barranca, V. J., Zhou, D., and Cai, D. (2015). Low-rank network decomposition reveals structural characteristics of small-world networks. Phys. Rev. E 92, 062822. doi: 10.1103/PhysRevE.92.062822

Bassett, D. S., Greenfield, D. L., Meyer-Lindenberg, A., Weinberger, D. R., Moore, S. W., and Bullmore, E. T. (2010). Efficient physical embedding of topologically complex information processing networks in brains and computer circuits. PLoS Comput. Biol. 6, e1000748. doi: 10.1371/journal.pcbi.1000748

Boccaletti, S., Latora, V., Moreno, Y., Chavez, M., and Hwang, D.-U. (2006). Complex networks: Structure and dynamics. Phys. Rep. 424, 175–308. doi: 10.1016/j.physrep.2005.10.009

Boerlin, M., Machens, C. K., and Deneve, S. (2013). Predictive coding of dynamical variables in balanced spiking networks. PLoS Comput. Biol. 9, e1003258. doi: 10.1371/journal.pcbi.1003258

Bonifazi, P., Goldin, M., Picardo, M. A., Jorquera, I., Cattani, A., Bianconi, G., et al. (2009). GABAergic hub neurons orchestrate synchrony in developing hippocampal networks. Science 326, 1419–1424. doi: 10.1126/science.1175509

Borofsky, T., Barranca, V. J., Zhou, R., von Trentini, D., Broadrup, R. L., and Mayack, C. (2020). Hive minded: like neurons, honey bees collectively integrate negative feedback to regulate decisions. Anim. Behav. 168, 33–44. doi: 10.1016/j.anbehav.2020.07.023

Chan, T.-H., Jia, K., Gao, S., Lu, J., Zeng, Z., and Ma, Y. (2015). PCANet: a simple deep learning baseline for image classification? IEEE Trans. Image Process. 24, 5017–5032. doi: 10.1109/TIP.2015.2475625

Churchland, A. K., Kiani, R., Chaudhuri, R., Wang, X. J., Pouget, A., and Shadlen, M. N. (2011). Variance as a signature of neural computations during decision making. Neuron 69, 818–831. doi: 10.1016/j.neuron.2010.12.037

Cohen, B. P., Chow, C. C., and Vattikuti, S. (2019). Dynamical modeling of multi-scale variability in neuronal competition. Commun. Biol. 2, 319. doi: 10.1038/s42003-019-0555-7

Couzin, I. D. (2009). Collective cognition in animal groups. Trends Cogn. Sci. 13, 36–43. doi: 10.1016/j.tics.2008.10.002

Deng, L., and Yu, D. (2014). Deep learning: methods and applications. Found. Trends Signal Process. 7, 197–387. doi: 10.1561/2000000039

Ding, L., and Gold, J. I. (2013). The basal ganglia's contributions to perceptual decision making. Neuron 79, 640–649. doi: 10.1016/j.neuron.2013.07.042

Estrada, E., and Higham, D. J. (2010). Network properties revealed through matrix functions. SIAM Rev. 52, 696–714. doi: 10.1137/090761070

Faisal, A. A., Selen, L. P., and Wolpert, D. M. (2008). Noise in the nervous system. Nat. Rev. Neurosci. 9, 292–303. doi: 10.1038/nrn2258

Ganguli, S., Bisley, J. W., Roitman, J. D., Shadlen, M. N., Goldberg, M. E., and Miller, K. D. (2008). One-dimensional dynamics of attention and decision making in LIP. Neuron 58, 15–25. doi: 10.1016/j.neuron.2008.01.038

Gomez-Rodriguez, M., Leskovec, J., and Krause, A. (2012). Inferring networks of diffusion and influence. Trans. Knowl. Disc. Data 5, 21. doi: 10.1145/2086737.2086741

Gu, Q., Li, S., Dai, W., Zhou, D., and Cai, D. (2019). Balanced active core in heterogeneous neuronal networks. Front. Comput. Neurosci. 12, 109. doi: 10.3389/fncom.2018.00109

Hafting, T., Fyhn, M., Molden, S., Moser, M. B., and Moser, E. I. (2005). Microstructure of a spatial map in the entorhinal cortex. Nature 436, 801–806. doi: 10.1038/nature03721

Haider, B., Duque, A., Hasenstaub, A. R., and McCormick, D. A. (2006). Neocortical network activity in vivo is generated through a dynamic balance of excitation and inhibition. J. Neurosci. 26, 4535–4545. doi: 10.1523/JNEUROSCI.5297-05.2006

Heekeren, H. R., Marrett, S., and Ungerleider, L. G. (2008). The neural systems that mediate human perceptual decision making. Nat. Rev. Neurosci. 9, 467–479. doi: 10.1038/nrn2374

Hubel, D., and Wiesel, T. (1972). Laminar and columnar distribution of geniculo cortical fibers in the macaque monkey. J. Comp. Neurol. 146, 421–450.

Ingrosso, A., and Abbott, L. F. (2019). Training dynamically balanced excitatory-inhibitory networks. PLoS ONE 14, e0220547. doi: 10.1371/journal.pone.0220547

Jonas, P., Bischofberger, J., and Sandkühler, J. (1998). Corelease of two fast neurotransmitters at a central synapse. Science 281, 419–424.

Kandel, E. R. (1968). “Dale's principle and the functional specificity of neurons,” in Psychopharmacology; A Review of Progress, 1957–1967 (Washington, DC: US Government Printing Office), 385–398.

La Camera, G., Rauch, A., Thurbon, D., Luscher, H. R., Senn, W., and Fusi, S. (2006). Multiple time scales of temporal response in pyramidal and fast spiking cortical neurons. J. Neurophysiol. 96, 3448–3464. doi: 10.1152/jn.00453.2006

Lian, Y., Grayden, D. B., Kameneva, T., Meffin, H., and Burkitt, A. N. (2019). Toward a biologically plausible model of LGN-V1 pathways based on efficient coding. Front. Neural Circuits 13, 13. doi: 10.3389/fncir.2019.00013

Lillicrap, T. P., Cownden, D., Tweed, D. B., and Akerman, C. J. (2016). Random synaptic feedback weights support error backpropagation for deep learning. Nat. Commun. 7, 13276. doi: 10.1038/ncomms13276

Lim, S., and Goldman, M. S. (2014). Balanced cortical microcircuitry for spatial working memory based on corrective feedback control. J. Neurosci. 34, 6790–6806. doi: 10.1523/JNEUROSCI.4602-13.2014

Litwin-Kumar, A., and Doiron, B. (2012). Slow dynamics and high variability in balanced cortical networks with clustered connections. Nat. Neurosci. 15, 1498–1505. doi: 10.1038/nn.3220

London, M., Roth, A., Beeren, L., Hausser, M., and Latham, P. E. (2010). Sensitivity to perturbations in vivo implies high noise and suggests rate coding in cortex. Nature 466, 123–127. doi: 10.1038/nature09086

Ludwig, M., and Leng, G. (2006). Dendritic peptide release and peptide-dependent behaviours. Nat. Rev. Neurosci. 7, 126–136. doi: 10.1038/nrn1845

Markov, N. T., Ercsey-Ravasz, M., Van Essen, D. C., Knoblauch, K., Toroczkai, Z., and Kennedy, H. (2013). Cortical high-density counterstream architectures. Science 342, 1238406. doi: 10.1126/science.1238406

Marshall, J. A., Bogacz, R., Dornhaus, A., Planqué, R., Kovacs, T., and Franks, N. R. (2009). On optimal decision-making in brains and social insect colonies. J. R. Soc. Interface 6, 1065–1074. doi: 10.1098/rsif.2008.0511

Massimini, M., Ferrarelli, F., Huber, R., Esser, S. K., Singh, H., and Tononi, G. (2005). Breakdown of cortical effective connectivity during sleep. Science 309, 2228–2232. doi: 10.1126/science.1117256

McCormick, D., Wang, Z., and Huguenard, J. (1993). Neurotransmitter control of neocortical neuronal activity and excitability. J. Neurophysiol. 68, 387–398.

McCormick, D. A., Connors, B. W., Lighthall, J. W., and Prince, D. A. (1985). Comparative electrophysiology of pyramidal and sparsely spiny stellate neurons of the neocortex. J. Neurophysiol. 54, 782–806.

Miura, K., Tsubo, Y., Okada, M., and Fukai, T. (2007). Balanced excitatory and inhibitory inputs to cortical neurons decouple firing irregularity from rate modulations. J. Neurosci. 27, 13802–13812. doi: 10.1523/JNEUROSCI.2452-07.2007

Munakata, Y., Herd, S. A., Chatham, C. H., Depue, B. E., Banich, M. T., and O'Reilly, R. C. (2011). A unified framework for inhibitory control. Trends Cogn. Sci. (Regul. Ed.) 15, 453–459. doi: 10.1016/j.tics.2011.07.011

Newman, M. E. J. (2003). The structure and function of complex networks. SIAM Rev. 45, 167–256. doi: 10.1137/S003614450342480

Orlandi, J. G., Stetter, O., Soriano, J., Geisel, T., and Battaglia, D. (2014). Transfer entropy reconstruction and labeling of neuronal connections from simulated calcium imaging. PLoS ONE 9, e98842. doi: 10.1371/journal.pone.0098842

Platt, M. L., and Glimcher, P. W. (1999). Neural correlates of decision variables in parietal cortex. Nature 400, 233–238.

Rangan, A. V., Cai, D., and McLaughlin, D. W. (2005). Modeling the spatiotemporal cortical activity associated with the line-motion illusion in primary visual cortex. Proc. Natl. Acad. Sci. U.S.A. 102, 18793–18800. doi: 10.1073/pnas.0509481102

Rauch, A., La Camera, G., Luscher, H.-R., Senn, W., and Fusi, S. (2003). Neocortical pyramidal cells respond as integrate-and-fire neurons to in vivo-like input currents. J. Neurophysiol. 90, 1598–1612. doi: 10.1152/jn.00293.2003

Renart, A., Moreno-Bote, R., Wang, X. J., and Parga, N. (2007). Mean-driven and fluctuation-driven persistent activity in recurrent networks. Neural Comput. 19, 1–46. doi: 10.1162/neco.2007.19.1.1

Rosenbaum, R., and Doiron, B. (2014). Balanced networks of spiking neurons with spatially dependent recurrent connections. Phys. Rev. X 4, 021039. doi: 10.1103/PhysRevX.4.021039

Schmidhuber, J. (2015). Deep learning in neural networks: an overview. Neural Netw. 61, 85–117. doi: 10.1016/j.neunet.2014.09.003

Seeley, T. D., Visscher, P. K., Schlegel, T., Hogan, P. M., Franks, N. R., and Marshall, J. A. (2012). Stop signals provide cross inhibition in collective decision-making by honeybee swarms. Science 335, 108–111. doi: 10.1126/science.1210361

Shadlen, M., and Newsome, W. (1994). Noise, neural codes and cortical organization. Curr. Opin. Neurobiol. 4, 569–579.

Shadlen, M. N., and Newsome, W. T. (2001). Neural basis of a perceptual decision in the parietal cortex (area LIP) of the rhesus monkey. J. Neurophysiol. 86, 1916–1936. doi: 10.1152/jn.2001.86.4.1916

Shelley, M., McLaughlin, D., Shapley, R., and Wielaard, J. (2002). States of high conductance in a large-scale model of the visual cortex. J. Comput. Neurosci. 13, 93–109. doi: 10.1023/a:1020158106603

Shpiro, A., Curtu, R., Rinzel, J., and Rubin, N. (2007). Dynamical characteristics common to neuronal competition models. J. Neurophysiol. 97, 462–473. doi: 10.1152/jn.00604.2006

Softky, W. R., and Koch, C. (1993). The highly irregular firing of cortical cells is inconsistent with temporal integration of random EPSPs. J. Neurosci. 13, 334–350.

Sporns, O. (2014). Contributions and challenges for network models in cognitive neuroscience. Nat. Neurosci. 17, 652–660. doi: 10.1038/nn.3690

Stevenson, I. H., Rebesco, J. M., Miller, L. E., and Kording, K. P. (2008). Inferring functional connections between neurons. Curr. Opin. Neurobiol. 18, 582–588. doi: 10.1016/j.conb.2008.11.005

Svensson, E., Apergis-Schoute, J., Burnstock, G., Nusbaum, M. P., Parker, D., and Schioth, H. B. (2018). General principles of neuronal co-transmission: insights from multiple model systems. Front. Neural Circuits 12, 117. doi: 10.3389/fncir.2018.00117

Troyer, T. W., and Miller, K. D. (1997). Physiological gain leads to high ISI variability in a simple model of a cortical regular spiking cell. Neural Comput. 9, 971–983.

Tsodyks, M., Kenet, T., Grinvald, A., and Arieli, A. (1999). Linking spontaneous activity of single cortical neurons and the underlying functional architecture. Science 286, 1943–1946.

Usher, M., and McClelland, J. L. (2001). The time course of perceptual choice: the leaky, competing accumulator model. Psychol. Rev. 108, 550–592. doi: 10.1037/0033-295x.108.3.550

van Vreeswijk, C., and Sompolinsky, H. (1996). Chaos in neuronal networks with balanced excitatory and inhibitory activity. Science 274, 1724–1726.

van Vreeswijk, C., and Sompolinsky, H. (1998). Chaotic balanced state in a model of cortical circuits. Neural Comput. 10, 1321–1371.

Vogels, T., and Abbott, L. (2005). Signal propagation and logic gating in networks of integrate-and-fire neurons. J. Neurosci. 25, 10786–10795. doi: 10.1523/JNEUROSCI.3508-05.2005

Von Frisch, K. (2013). The Dance Language and Orientation of Bees. Cambridge, MA: Harvard University Press.

Vázquez-Rodríguez, B., Suárez, L. E., Markello, R. D., Shafiei, G., Paquola, C., Hagmann, P., et al. (2019). Gradients of structure-function tethering across neocortex. Proc. Natl. Acad. Sci. U.S.A. 116, 21219–21227. doi: 10.1073/pnas.1903403116

Wang, N., and Yeung, D.-Y. (2013). “Learning a deep compact image representation for visual tracking,” in Advances in Neural Information Processing Systems (Red Hook, NY: Curran Associates Inc.), 809–817.

Wang, X. J. (2008). Decision making in recurrent neuronal circuits. Neuron 60, 215–234. doi: 10.1016/j.neuron.2008.09.034

Whalley, K. (2013). Neural coding: timing is key in the olfactory system. Nat. Rev. Neurosci. 14, 458. doi: 10.1038/nrn3532

Yamada, W., Koch, C., and Adams, P. (1989). “Multiple channels and calcium dynamics,” in Methods in Neuronal Modeling: From Synapses To Networks (Cambridge, MA: MIT Press), 97–133.

Keywords: neuronal networks, decision making, balanced networks, nonlinear dynamics, Dale's law

Citation: Barranca VJ, Bhuiyan A, Sundgren M and Xing F (2022) Functional Implications of Dale's Law in Balanced Neuronal Network Dynamics and Decision Making. Front. Neurosci. 16:801847. doi: 10.3389/fnins.2022.801847

Received: 25 October 2021; Accepted: 02 February 2022;

Published: 28 February 2022.

Edited by: Sisir Roy, National Institute of Advanced Studies, India

Reviewed by: Shuangming Yang, Tianjin University, China; Yi Sun, University of South Carolina, United States

Copyright © 2022 Barranca, Bhuiyan, Sundgren and Xing. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Victor J. Barranca
