ORIGINAL RESEARCH article

Front. Neurosci. , 11 February 2022

Sec. Neural Technology

Volume 16 - 2022 | https://doi.org/10.3389/fnins.2022.782367

This article is part of the Research Topic Currents in Biomedical Signals Processing - Methods and Applications

Electroencephalography (EEG) signals are disrupted by technical and physiological artifacts. One of the most common artifacts is the natural activity that results from the movement of the eyes and the blinking of the subject. Eye blink artifacts (EB) spread across the entire head surface and make EEG signal analysis difficult. Methods for the elimination of electrooculography (EOG) artifacts, such as independent component analysis (ICA) and regression, are known. The aim of this article was to implement the convolutional neural network (CNN) to eliminate eye blink artifacts. To train the CNN, a method for augmenting EEG signals was proposed. The results obtained from the CNN were compared with the results of the ICA and regression methods for the generated and real EEG signals. The results obtained indicate a much better performance of the CNN in the task of removing eye-blink artifacts, in particular for the electrodes located in the central part of the head.

Electroencephalography (EEG) is a method of examining brain activity commonly used in medical diagnostics (Levin et al., 2018; Browarska et al., 2021). Unfortunately, in some cases direct analysis of the EEG signal is very difficult or even impossible due to the presence of artifacts (Kilicarslan and Contreras-Vidal, 2017; Kawala-Sterniuk et al., 2020; Zhang C. et al., 2020). There are many types of physiological artifacts, for example those caused by muscle clenching, jaw or tongue movements, or eye movements. Among the strongest artifacts that interfere with the analysis of EEG signals are electrooculography (EOG) artifacts. EOG artifacts are generally high-amplitude patterns in the brain signal caused by blinking of the eyes or low-frequency patterns caused by movements (such as rolling) of the eyes (Anderer et al., 1999). EOG activity has a wide frequency range, being maximal at frequencies below 4 Hz, and is most prominent over the anterior head regions (McFarland et al., 1997). This article concerns the elimination of EOG artifacts created during blinking (Pham et al., 2017).

Generally, the concept of EOG artifacts is broader and covers both the activity of eye movement and blinking. For the purposes of this article, the authors equate the concept of EOG with eye blinks (EB). To eliminate them, the authors proposed a deep neural network-based method and compared its operation with the popular methods of artifact elimination – ICA and regression. During the research, the focus was on the analysis of real EEG signals recorded with the use of a professional biomedical signal amplifier. Twenty people participated in the experiment, and each session lasted about 60 min. The authors also used computer-generated signals to train and test the neural network. For this purpose, an algorithm was created to generate EEG/EOG signals.

Many methods are used to remove artifacts from the EEG signal (Mumtaz et al., 2021). The simplest of them just reject those fragments of EEG signals that contain artifacts. Unfortunately, this approach results in the loss of all information from the rejected signal fragments (Hasasneh et al., 2018; Khatwani et al., 2018; Nejedly et al., 2019; Tosun and Kasım, 2020; Iaquinta et al., 2021; Placidi et al., 2021). In addition, it requires a very good artifact detection algorithm to identify the fragments to reject. Artifacts can also be selected by an expert by visual inspection. This approach is not always possible and usually applies to offline analyses. Artifact removal approaches may require so-called reference channels (Mumtaz et al., 2021). The regression method requires such a reference channel, that is, the one based on which artifacts of the remaining channels are removed (Mannan et al., 2018). Usually, one of the channels from the "frontal" position or the EOG signal is chosen as the reference channel. Then, with the use of signals from the reference channel, the regression method eliminates artifacts from successive electrodes (propagated from the reference electrode to the others). This means that the artifacts are not removed from the reference electrode (it only serves to eliminate artifacts from other electrodes). When artifacts are removed using a reference electrode (Mannan et al., 2018), it is assumed that neural activity (EEG) and electro-oculographic signals (EOG) are not correlated. In turn, the independent component analysis method (ICA) (Jiang et al., 2019) does not require a reference channel. The ICA method allows for the determination of statistically independent signal components, which enables the rejection of artifacts and disturbances. This method allows the removal of artifacts from all electrodes. In the ICA method, rejected components are often selected on the basis of their visualization. This requires expert knowledge (Mannan et al., 2018) and signal recording with the use of multiple channels. However, there are methods that allow for automatic selection of rejected components (Li et al., 2017). Hybrid methods are also used to remove artifacts (Li et al., 2017; Mumtaz et al., 2021). Their idea is to use more than one algorithm to remove artifacts. An example is the combination of wavelet transform and blind signal separation (BSS) (Rakibul Mowla et al., 2015). By means of BSS, signals are decomposed into components, and then the components are subjected to the wavelet transform. The next step is to remove components that contain artifacts based on thresholding and then reconstruct the signal. Other examples of hybrid methods are the combination of adaptive filtering and BSS and the combination of BSS and a support vector machine (SVM).

Deep learning methods are becoming more and more popular every year. An example of this method may be the convolutional neural network (CNN), which has a very wide application in many different fields of science (Arora et al., 2020). An example may be the field related to computer vision and image recognition (Chen et al., 2019; Lou and Shi, 2020). CNN has also found application in neuroinformatics to recognize emotions (Zhang Y. et al., 2020) and detect mild depression (Li et al., 2020) using encephalography. Another application is the detection of myocardial infarction based on the ECG signal (Natesan et al., 2020). On the basis of existing applications, it is assumed that convolutional networks can also work well in tasks related to cleaning biomedical signals from artifacts. Moreover, CNN offers very wide possibilities to select structures and hyperparameters (Arora et al., 2020).

Garg et al. (2017) present a 10-layer convolutional neural network (CNN) that directly labels eye-blink artifacts. Thirty subjects were tested. The classification accuracy achieved was 99.67%, the sensitivity was 97.62%, the specificity was 99.77%, and the ROC AUC was 98.69%. The authors also showed that the learned spatial features correspond to those that human experts typically use, which corroborated the validity of the model. In Placidi et al. (2021) independent component analysis (ICA) is used to split the signal into independent components (ICs) whose re-projections on 2D scalp topographies (images), also called topoplots, allow artifacts to be separated from useful brain signals (UBS). In the article, a completely automatic and effective framework for EEG artifact recognition by IC topoplots is presented, based on 2D convolutional neural networks (CNNs), capable of dividing topoplots into four classes: three types of artifacts and UBS. Experiments carried out on public EEG datasets showed an overall accuracy of more than 98%. In Iaquinta et al. (2021) a reliable and user-independent algorithm is presented to detect and remove eye blinks in EEG signals using a CNN. For training and validation, three sets of public EEG data were used. All three sets contain samples obtained while the recruited subjects performed assigned tasks that included blinking voluntarily at specific moments, watching a video, and reading an article. The model used in this study was able to capture the features that distinguish an ordinary EEG signal from a signal contaminated with eye blink artifacts. In Sun et al. (2020) a one-dimensional residual convolutional neural network (1D-ResCNN) model for raw waveform-based EEG denoising is proposed. An end-to-end (i.e., waveform in and waveform out) manner was used to map a noisy EEG signal to a clean EEG signal. 
The proposed model was evaluated on the EEG signal from the CHB-MIT Scalp EEG Database, and the added noise signals were obtained from the database. The proposed model was compared with independent component analysis (ICA), fast independent component analysis (FICA), recursive least squares (RLS) filter, wavelet transform (WT), and deep neural network (DNN) models. Experimental results show that the proposed model can produce cleaner waveforms and achieve a significant improvement in SNR and RMSE. Meanwhile, the proposed model can also preserve the nonlinear characteristics of the EEG signals. In Yang et al. (2018) the use of the deep learning network (DLN) to remove ocular artifacts (OA) in EEG signals was investigated. The proposed method consists of an offline stage and an online stage. In the offline stage, training samples without OAs were intercepted and used to train a DLN to reconstruct the EEG signals. In the online stage, the trained DLN was used as a filter to automatically remove OAs from contaminated EEG signals. The advantages of the proposed method are that it needs no additional EOG reference signals, can analyze any number of EEG channels, saves time, and generalizes well. The proposed method was compared with the classic independent component analysis (ICA), kurtosis-ICA (K-ICA), second-order blind identification (SOBI), and a shallow network method. Experimental results show that the proposed method performs better even for very noisy EEG.

A large number of teaching examples are needed to train the CNN. Unfortunately, the number of recorded EEG signal examples is often too small. Therefore, there is a need to use a technique called augmentation to increase the number of training examples. Various methods of augmentation of EEG signals are presented in Lashgari et al. (2020). In Lashgari et al. (2020) the authors indicate that the most popular methods of augmentation are those based on noise addition, GAN networks, sliding window, sampling, Fourier transform, recombination of segmentation. Wang et al. (2018) added Gaussian white noise to training data (in the time domain) to obtain new samples for an emotion-recognition task. Differential entropy (DE) features were used to train classifiers. For EEG signals, the DE features are equivalent to the logarithm of the energy spectrum in the delta (1–3 Hz), theta (4–7 Hz), alpha (8–13 Hz), beta (14–30 Hz), and gamma (31–50 Hz) frequency bands. The authors opted for Gaussian noise due to concerns that adding some local noise, such as Poisson or salt-and-pepper, may change the intrinsic features of EEG signals. In Wang et al. (2018) two basic data augmentation approaches used in image processing were implemented: geometric transformation and noise addition. In Luo et al. (2020) methods based on two deep generative models, variational autoencoder (VAE) and generative adversarial network (GAN), and two data augmentation strategies were proposed. To evaluate the effectiveness of these methods, a systematic experimental study was carried out on two public EEG datasets for emotion recognition, namely SEED and DEAP. First, realistic-like EEG training data in two forms were generated: power spectral density and differential entropy. Then, the original training data sets were augmented with a different number of realistic-like generated EEG data. 
Finally, support vector machines and deep neural networks with shortcut layers were trained to build affective models using the original and augmented training datasets. In Bao et al. (2021) a data augmentation model named VAE-D2GAN was proposed for EEG-based emotion recognition using a generative adversarial network. EEG features representing different emotions were extracted as topological maps of differential entropy (DE) in five classical frequency bands. The proposed model was designed to learn the distributions of these features for real EEG signals and generate artificial samples for training. The variational autoencoder (VAE) architecture can learn the spatial distribution of the actual data through a latent vector and is introduced into the dual discriminator GAN to improve the diversity of the generated artificial samples.

We propose a method based on the convolutional neural network (CNN) that allows the removal of eye blink artifacts from the EEG signal. The results obtained with the use of CNN were compared with the most popular methods of artifact removal – ICA and regression. For the implementation of the CNN-based method, it was necessary to achieve the intermediate goal, which was the implementation of the EEG signal and EOG artifact generators. The use of only a real EEG signal does not give the possibility of direct evaluation of the obtained results because we do not have a reference (it is not known what the real EEG signal is). Generated signals also enable better training of the neural network.

Signal fragments from 2 channels are fed to the CNN input. The first channel contains the eye blink signal and the second channel contains the EEG signal from which we want to remove the eye blink artifacts. The idea is presented in Figure 1. In this case, at the CNN input, fragments of the signal from the Fp1 electrode (eye blink artifacts) and the signal from which we want to eliminate blinks (the C3 electrode) are fed. CNN eliminates the eye blink signal (C3 – CNN). Then the input signals are shifted. This operation can be performed for each electrode.
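The windowing of the two input channels can be sketched as follows. This is a minimal illustration, not the authors' code: the function name `make_cnn_inputs` and the hop size `step` are assumptions, since the text only states that 1-s fragments from Fp1 and from the channel to be cleaned are fed to the network and then shifted.

```python
import numpy as np

FS = 512  # sampling frequency from the article (Hz); one window = 1 s


def make_cnn_inputs(fp1, target, win=FS, step=FS):
    """Stack Fp1 (blink reference) and a target channel into 2-channel windows.

    `step` is an assumed hop size; the article only says the inputs are shifted.
    Returns an array of shape (n_windows, win, 2).
    """
    fp1 = np.asarray(fp1, dtype=float)
    target = np.asarray(target, dtype=float)
    if fp1.shape != target.shape:
        raise ValueError("both channels must have the same length")
    starts = range(0, len(fp1) - win + 1, step)
    return np.stack(
        [np.column_stack((fp1[s:s + win], target[s:s + win])) for s in starts]
    )


rng = np.random.default_rng(0)
fp1 = rng.normal(size=5 * FS)  # stand-in for 5 s of Fp1
c3 = rng.normal(size=5 * FS)   # stand-in for 5 s of C3
windows = make_cnn_inputs(fp1, c3)
print(windows.shape)  # (5, 512, 2)
```

Repeating the call with a different `target` channel corresponds to cleaning each electrode in turn.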

The article is organized as follows. In the section “Materials,” two types of EEG/EOG signals used during the experiments were presented: real and generated signals containing eye blink artifacts. Details on generating artificial EEG signals with eye blink artifacts are also provided. The section “Methods” describes the structure of the CNN proposed to remove artifacts and details of training the network. Furthermore, two commonly used methods for removing eye blink artifacts are presented, i.e., independent component analysis and regression. The section “Results and Discussion” presents the results of the comparison of ICA, REG, and CNN methods for removing eye blink artifacts. The advantages and disadvantages of using CNN for this task are discussed.

To develop and evaluate eye blink artifact removal algorithms, we decided to use two datasets. The first set contains the real signals recorded for the N-back experiment. The N-back task is a standard method used to examine memory and attention (Salimi et al., 2020). This data set has a long duration and contains registrations from multiple users. Thanks to our algorithm, it was possible to generate a second set of artificial EEG/EOG signals. This data set was of particular importance for CNN training and testing.

Real EEG signals were recorded during an EEG test conducted with 20 people during an N-back task. EEG signals were recorded for the purposes of previous research related to the detection of user fatigue (Kołodziej et al., 2020). However, its use for research on methods to remove EB artifacts was not accidental. EEG signals were recorded for a relatively long time. There are numerous eye blink (EB) artifacts in the EEG signal. Participants (women and men) were 19–25 years old. They were informed about the overall purpose and organization of the experiment. The whole experiment lasted about 80 min and the experiment was always carried out at 10:00 am in a single session. Participants were recruited through an advertisement on the Internet and social media. During recruitment, they were asked to complete a survey via the Internet to collect basic information about them, such as age, sex, education, and presence/absence of neurological and psychiatric diseases. We only invited those who met the basic requirements (including, but not limited to, written permission to participate in the experiment and confirmation of no medical burden).

The letters were presented to the participants on a computer screen (one at a time). The task was to indicate whether the letter presented currently is the same as N = 2 letters back. Each participant completed the N-back task for 60 min. To register the EEG signal, we used a professional biomedical signal amplifier g.USBamp and an EEG cap equipped with 16 electrodes. The distribution of electrodes and their names are presented in Figure 2. The sampling frequency was 512 Hz. Electrodes were arranged according to the international 10–20 system: Oz, O2, O1, Pz, P4, P3, C4, C3, Cz, F8, F7, Fz, F4, F3, Fp1, and F9. No preprocessing methods were used.

A fragment of the EEG signal from one of the subjects is shown in Figure 3. Eye blink artifacts are very clearly visible, located around 10 and 12.5 s. The highest amplitudes of artifacts were recorded on the Fp1 and P3 electrodes. The propagation of artifacts to other electrodes is also visible.

The 1-s window presenting the signal fragment from Figure 3 is shown in Figure 4. It is a zoom-in on the eye blink artifact occurrence.

The real EEG signal contains various types of artifacts that appear when the test is performed. However, we do not have a reference signal from which to conclude what the EEG signal should look like after the artifacts have been removed. To enable such an evaluation, we have developed software that allows the generation of artificial EEG signals without artifacts and the addition of EOG artifacts to them. Due to this, it is possible to compare the performance of each of the analyzed methods (we have an EEG signal contaminated with artifacts and a clean EEG signal that should be obtained after cleaning). Statistical parameters of the generated signals were determined on the basis of observations of real signals recorded during the tests. These are the standard deviation (5–15 μV), the peak-to-peak value (45–100 μV), the interval between the appearance of eye blinks (0.5–4 s), the amplitude of eye blinks (0–650 μV), and the length of the signal in seconds.

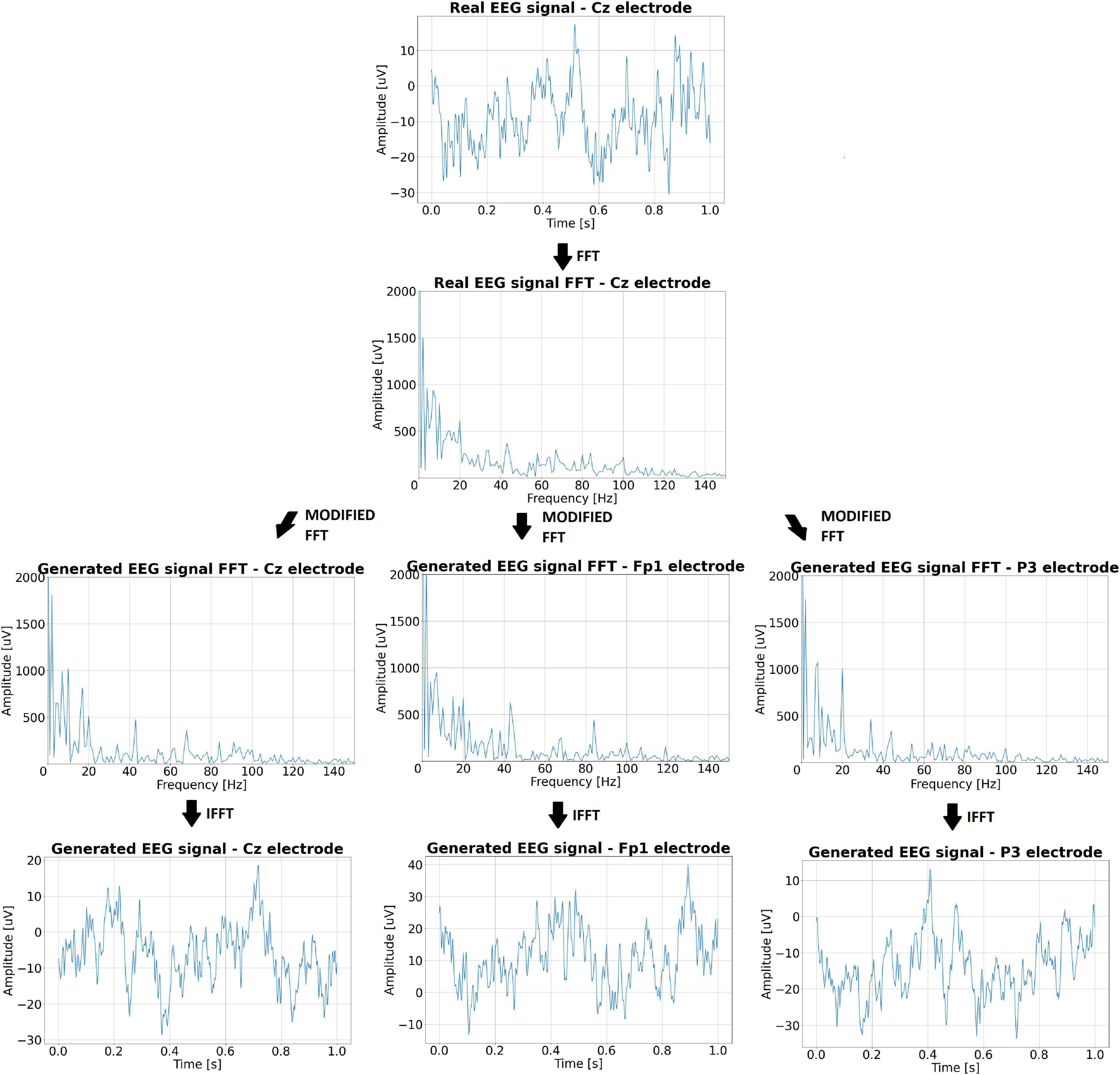

The first step is to generate an EEG signal and then add artifacts with the appropriate electrode-dependent gain to it. The EEG signal can be generated in many ways. One of them is to get pink noise with given parameters. Pink noise, also known as 1/f noise, is a random stochastic process or a signal whose mean power spectral density is inversely proportional to frequency (Isar and Gajitzki, 2016). According to Voytek et al. (2015), many natural phenomena, including electroencephalography, can be described by 1/f noise. We decided to use a different method of EEG signal generation (Sakai et al., 2017). This method generates an EEG signal based on random modifications of the spectrum of a real signal. As the EEG reference signal, the signal fragment from an electrode subjected to minimal EOG interference (e.g., the Cz electrode) is selected. Thus, the generated signal corresponds best to the undisturbed EEG signal. The signal generation process begins with the calculation of the spectrum of a given fragment of the real EEG signal using the fast Fourier transform (FFT). Then, random coefficients are generated and a modified spectrum is created by adding/subtracting the random values to the FFT coefficients of the real EEG signal. The spectrum-modifying coefficients are in the range of ±2 μV. The last step is to apply the inverse Fourier transform (IFFT), which enables us to obtain a time-domain signal similar to the real EEG signal. The generated EEG signal has a spectrum similar to pink noise.
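The spectrum-perturbation idea can be sketched in a few lines of NumPy. This is an illustration under stated assumptions rather than the authors' generator: the function name `generate_eeg_window` is hypothetical, the random values are drawn uniformly, and the real and imaginary parts of each FFT coefficient are perturbed independently; the text only specifies the ±2 μV range.

```python
import numpy as np


def generate_eeg_window(real_window, max_delta=2.0, rng=None):
    """Return a synthetic window derived from a real, artifact-free EEG window.

    Each FFT coefficient is shifted by a random value in [-max_delta, +max_delta].
    Uniform sampling and independent real/imaginary perturbation are assumptions.
    """
    rng = np.random.default_rng() if rng is None else rng
    real_window = np.asarray(real_window, dtype=float)
    spectrum = np.fft.rfft(real_window)                      # FFT of the real fragment
    noise = (rng.uniform(-max_delta, max_delta, spectrum.shape)
             + 1j * rng.uniform(-max_delta, max_delta, spectrum.shape))
    return np.fft.irfft(spectrum + noise, n=len(real_window))  # back to time domain


rng = np.random.default_rng(0)
cz = rng.normal(0.0, 10.0, 512)  # stand-in for a 1-s Cz fragment (uV)
synthetic = generate_eeg_window(cz, rng=rng)
```

Because the perturbation is small relative to the original coefficients, the synthetic window keeps the overall spectral shape of the source fragment.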

The generated EEG signal is in the form of a 1-s window that can be combined into a signal with a predetermined number of seconds. The generation algorithm ensures that the amplitude differences at the border of the joined windows are not too large. The incoming signal can differ up to 7 μV from the last sample of the signal already created – this value was determined based on the observation of real signals. The generated signal (on different electrodes) based on the real EEG signal from the Cz electrode is shown in Figure 5. The spectra of the individual signals are also shown there.
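One simple way to enforce the boundary constraint is to reject candidate windows whose first sample is too far from the last sample already generated. The text does not say how the algorithm enforces the 7 μV limit, so the rejection strategy, the function name `concatenate_windows`, and the `max_tries` guard are all assumptions of this sketch.

```python
import numpy as np


def concatenate_windows(make_window, n_windows, max_jump=7.0, max_tries=1000):
    """Join 1-s windows, rejecting candidates whose first sample differs from
    the last sample of the signal so far by more than `max_jump` (uV)."""
    signal = make_window()
    for _ in range(n_windows - 1):
        for _ in range(max_tries):
            candidate = make_window()
            if abs(candidate[0] - signal[-1]) <= max_jump:
                signal = np.concatenate((signal, candidate))
                break
        else:
            raise RuntimeError("no window satisfied the boundary constraint")
    return signal


rng = np.random.default_rng(1)
# Stand-in window generator; in practice this would be the EEG generator.
signal = concatenate_windows(lambda: rng.normal(0.0, 10.0, 512), n_windows=5)
```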

Figure 5. The real EEG signal from the Cz electrode, its spectrum, and the artificial signals generated on its basis along with the spectra.

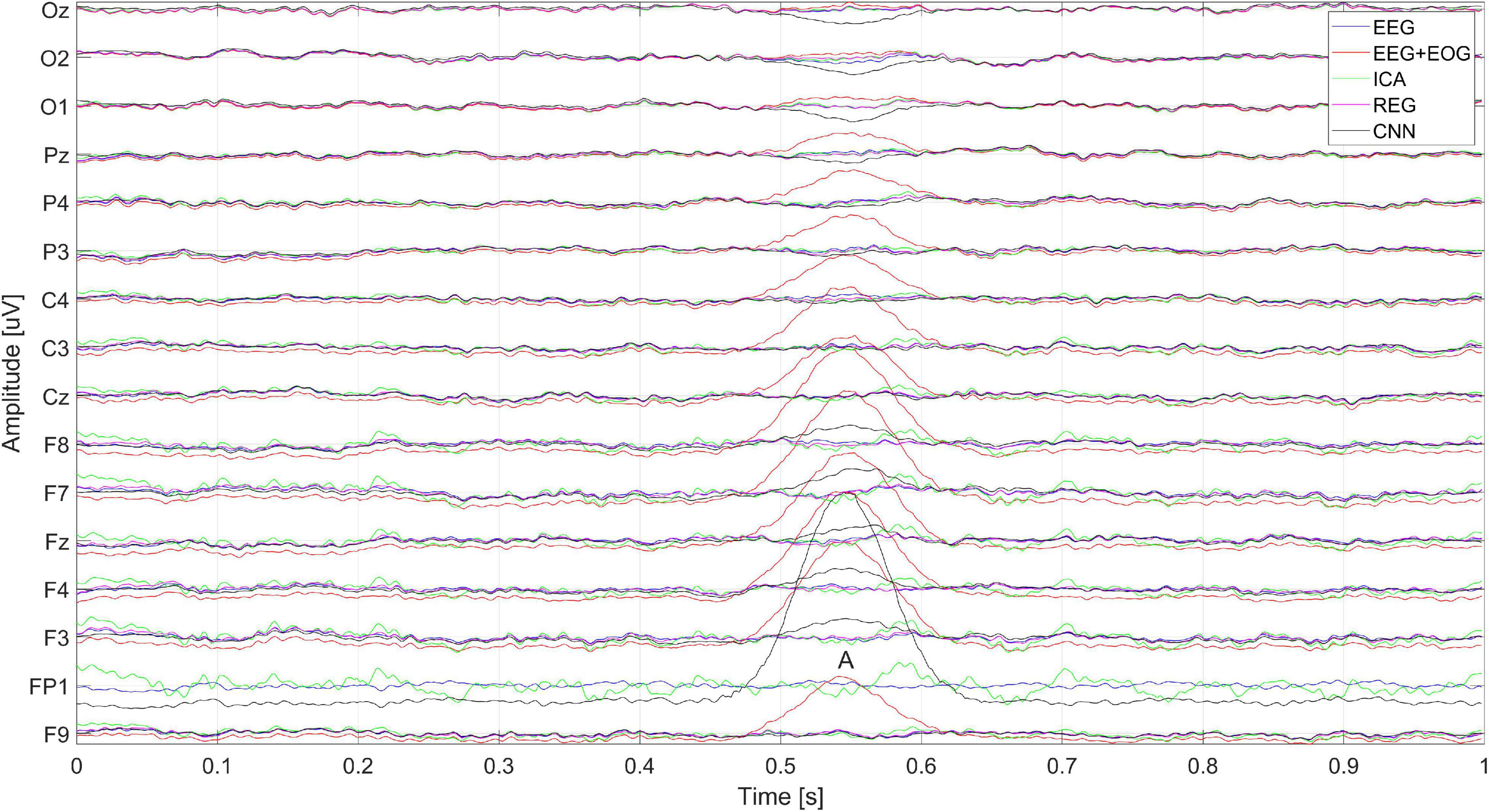

The next step is to generate eye blink artifacts, according to the parameters determined on the basis of observation of real signals. The propagation of artifacts on the EEG signal on individual electrodes is very important here. For this purpose, the range of coefficients responsible for artifact propagation was established for each of the electrodes, depending on their position. Artifacts were added to the pure signals after appropriate amplification or attenuation, depending on the coefficient specified for the given electrode. The eye blink artifact resembles the shape of a Gaussian window and this shape was used to generate the artifacts (Alquran et al., 2019). Eye blink artifacts were generated and added to the clean signal (with an appropriate time interval). Figure 6 presents 5 s of pure EEG signal and EEG signal with eye blink artifacts propagated on individual electrodes.
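A Gaussian-shaped blink and its propagation with electrode-dependent gains might look as follows. The blink duration, the Gaussian width, and the three gain values are hypothetical placeholders; the article determines the actual ranges from observations of real signals.

```python
import numpy as np

FS = 512  # sampling frequency from the article (Hz)


def gaussian_blink(amplitude_uv, duration_s=0.4, fs=FS):
    """Gaussian-shaped blink; duration and width are assumed values."""
    n = int(duration_s * fs)
    t = np.arange(n) - (n - 1) / 2
    sigma = n / 6  # about 99.7% of the bump lies inside the window
    return amplitude_uv * np.exp(-0.5 * (t / sigma) ** 2)


def add_blink(clean, onset, blink, gains):
    """Add one blink to every channel, scaled by its propagation coefficient.

    clean: (n_channels, n_samples); gains: one coefficient per channel.
    """
    noisy = np.array(clean, dtype=float, copy=True)
    stop = min(onset + len(blink), noisy.shape[1])
    noisy[:, onset:stop] += np.outer(gains, blink[:stop - onset])
    return noisy


clean = np.zeros((3, 2 * FS))  # three channels of (here empty) clean EEG
gains = [1.0, 0.3, 0.05]       # hypothetical gains, e.g. frontal, central, occipital
noisy = add_blink(clean, onset=300, blink=gaussian_blink(300.0), gains=gains)
```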

A zoom-in on the EEG signal from Figure 6, showing the occurrence of the EOG artifact and its propagation to the remaining electrodes, is presented in Figure 7.

The generated signals allow the individual methods to be checked and compared because we have a reference in the form of a pure EEG signal. In the case of cleaning the real EEG signal from artifacts, it is not possible to compare the waveforms with the reference signal (pure EEG) because it is not known. Artificially generated signals were also used to train the CNN.

In our research, we compared the use of the CNN method to remove EB artifacts with the two best-known methods: regression (REG) and independent component analysis (ICA). Each method works differently. The ICA method tries to find the most independent components. Based on expert knowledge or quantitative measures, we are able to identify ICA components responsible for artifacts and remove them. The regression method removes artifacts from individual channels. For this, it is required to indicate the signal in which the artifacts are found. We assumed that this is the signal from the Fp1 electrode.

The ICA method (Cheng et al., 2019; Jiang et al., 2019) allows the removal of artifacts from the EEG signal without the need for a reference channel. The ICA method works by decomposing the recorded signal into independent components. In principle, the components will include those responsible for the sources of artifacts. Such artifact-containing components are rejected automatically or by an expert, and the signal is then reconstructed by mixing the remaining components. As a result, we get signals without artifacts. The problem can be described by the equation (Jiang et al., 2019):

$$X = W S$$

We assume that X is the matrix of signals recorded by the measuring electrodes, W is the mixing matrix, and S is the matrix of source signals. After transforming the equation, we get the unknown source signals:

$$S = W^{-1} X$$

The assumption of the ICA method (Jiang et al., 2019) is the statistical independence of the source signals. The aim of ICA is to find such a mixing matrix W that allows one to obtain the most independent result signals. If the components responsible for artifacts (eye blink or other) are found, it is enough to set the corresponding weights of the W matrix to zero and then mix the other components:

$$X_{clean} = W_m S$$

The matrix Wm is the modified mixing matrix, and X_clean denotes the reconstructed signals.

Before making the transformation, the low frequencies were filtered out (high-pass filtering, cutoff frequency 1 Hz, Butterworth filter order 6). We decided to choose 15 ICA components. Two components 0 and 1 (associated with artifacts) were removed and then the signal without these components was reconstructed. Examples of the components calculated for the real signal are shown in Figure 8. Each component was visually assessed and the eye blink components were selected.
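The reconstruction step can be illustrated with a toy example in which the mixing matrix is known. This is only a sketch of the linear algebra: in practice W is estimated from the data by an ICA algorithm, and the sources, the matrix values, and the choice of component 0 as the blink are hypothetical.

```python
import numpy as np

rng = np.random.default_rng(0)
n_samples = 512
t = np.arange(n_samples)

# Three toy sources; row 0 plays the role of the blink component.
S = np.vstack([
    np.exp(-0.5 * ((t - 256) / 30.0) ** 2),  # blink-like bump
    np.sin(2 * np.pi * 10 * t / 512),        # 10 Hz rhythm
    rng.normal(size=n_samples),              # background activity
])

# Hypothetical mixing weights, one row per electrode.
W = np.array([[1.0, 0.2, 0.1],
              [0.4, 1.0, 0.3],
              [0.1, 0.5, 1.0]])

X = W @ S                     # signals recorded by the electrodes
S_est = np.linalg.inv(W) @ X  # recovered source signals
W_m = W.copy()
W_m[:, 0] = 0.0               # zero the weights of the artifact component
X_clean = W_m @ S_est         # remix the remaining components
```

Every electrode is cleaned at once, which is why ICA needs no reference channel but does need multichannel recordings.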

The regression method, according to Urigüen and Zapirain (2015), was very often used to remove EOG artifacts in the 1990s due to its simplicity and low computational requirements. Regression is still a popular and commonly used method of artifact removal (Ranjan et al., 2021). The method requires a reference channel, for which we chose Fp1 (the electrode closest to the eye). The regression method assumes (Urigüen and Zapirain, 2015) that each EEG measurement channel is the sum of a certain clean source signal and a reference signal (containing artifacts). The aim of regression is to estimate the optimal value of the propagation coefficient for each of the electrodes (except the reference one), allowing proper removal of artifacts. Removal of artifacts is the subtraction of a certain amount of the EOG reference signal (from the Fp1 electrode) from the contaminated EEG. As a result, we obtain a cleaned signal. In general, the regression equation (Jiang et al., 2019) can be written as:

$$\mathrm{EEG}_{clear} = \mathrm{EEG}_{noised} - B \cdot \mathrm{EOG}_{ref}$$

EEGclear is the artifact-cleaned EEG signal, EEGnoised is the signal before artifact removal, B is the propagation factor, and EOGref is the EOG reference signal. With multiple regression (Urigüen and Zapirain, 2015), the signals measured on individual electrodes are influenced by more than one reference signal, for example, horizontal, vertical, or radial ocular artifacts.

The linear regression method used by us takes the data from the Fp1 electrode (EOGref) and subtracts from each sample the mean of the signal recorded on that channel (EOGref_mean). Assuming that we have N samples, we can represent this as an equation:

$$\mathrm{EOG}_{ref}(n) \leftarrow \mathrm{EOG}_{ref}(n) - \mathrm{EOG}_{ref\_mean}, \quad n = 1, \ldots, N$$

The signal is then multiplied by its transposition to compute the cov factor:

$$cov = \mathrm{EOG}_{ref} \cdot \mathrm{EOG}_{ref}^{T}$$

In the next step, for each of the channels (separately, in order to reduce memory use), data is collected, and the average is calculated, which is subtracted from the entire signal for a given channel, similarly to the reference channel. To remove artifacts from a channel (other than Fp1) containing N samples, the recorded EEGnoised signal with an average equal to EEGnoised_mean is transformed as follows:

$$\mathrm{EEG}_{noised}(n) \leftarrow \mathrm{EEG}_{noised}(n) - \mathrm{EEG}_{noised\_mean}, \quad n = 1, \ldots, N$$

Then the coefficient B is calculated as a solution to the linear equation:

$$cov \cdot B = \mathrm{EOG}_{ref} \cdot \mathrm{EEG}_{noised}^{T}$$

After transforming the equation we get:

$$B = cov^{-1} \cdot \mathrm{EOG}_{ref} \cdot \mathrm{EEG}_{noised}^{T}$$

In the next step, the reference signal multiplied by factor B is subtracted from the signal from the analyzed channel (electrode). In this way, we obtain a signal cleaned of artifacts for a given electrode (EEGclear).

The algorithm works in this way until all channels are cleared (apart from the reference channel, which in our case is Fp1).
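The per-channel procedure can be sketched in NumPy as follows. It is a minimal illustration for a single reference channel, where the cov factor reduces to a scalar; the function name `regress_out` and the synthetic test signals are assumptions, not part of the article.

```python
import numpy as np


def regress_out(eeg, ref):
    """Remove the reference contribution from every row of `eeg`.

    eeg: (n_channels, n_samples) contaminated channels (reference excluded)
    ref: (n_samples,) reference channel, e.g. Fp1
    Returns the cleaned (mean-centered) channels and the coefficients B.
    """
    eeg = np.asarray(eeg, dtype=float)
    ref = np.asarray(ref, dtype=float)
    ref = ref - ref.mean()                 # center the reference
    cov = ref @ ref                        # scalar for a single reference
    cleaned = np.empty_like(eeg)
    coefs = np.empty(len(eeg))
    for i, channel in enumerate(eeg):      # one channel at a time
        centered = channel - channel.mean()
        coefs[i] = (ref @ centered) / cov  # solves cov * B = ref . channel^T
        cleaned[i] = centered - coefs[i] * ref
    return cleaned, coefs


rng = np.random.default_rng(0)
t = np.arange(512)
blink = 300.0 * np.exp(-0.5 * ((t - 256) / 30.0) ** 2)  # synthetic reference
brain = rng.normal(0.0, 10.0, (2, 512))                 # synthetic clean EEG
eeg = brain + np.outer([0.3, 0.05], blink)              # hypothetical propagation
cleaned, coefs = regress_out(eeg, blink)
```

In this toy case the reference contains no brain activity. With a real Fp1 signal the reference also carries EEG, so part of the neural signal is subtracted together with the artifact.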

Convolutional neural networks (CNN) (Arora et al., 2020) are most often used in problems related to computer vision. These networks can be used not only for classification but also for regression problems. A characteristic feature of CNN compared to the traditional neural network is the fact that during its operation it focuses on the extraction of features (Arora et al., 2020). Each CNN consists of four basic layers – the convolution layer (filters with given shapes that allow for the extraction of features), pooling layer (they are used to reduce the size of analyzed data, we distinguish several types of pooling, for example, MaxPooling or Average Pooling), fully connected layer and loss function (responsible for calculating errors between the current and the desired network output). There are many CNN structures, they can vary in the number of layers, shape and size of filters, activation functions, and other parameters. Examples of very popular networks are AlexNet, GoogLeNet, and VGGNet (Arora et al., 2020; Mutasa et al., 2021).

The operation of CNN is broken down into several stages (Mutasa et al., 2021). First, filters allow the designation of a feature map. This is done by the convolution layer (Vinayakumar et al., 2017). It is a key component of CNN. The process is repeated several times to filter the feature maps obtained with the use of subsequent convolutional kernels. Characteristic parameters of the convolution layer are the number and size of filters in individual layers, the step by which the window corresponding to the filter is moved (Murata et al., 2018). The pooling layer is usually placed between two convolutional layers (Zhao and Wang, 2019). The layer performs the pooling operation on feature maps, i.e., the reduction of data size while maintaining the most important features. For this purpose, the data is divided into cells of equal size and a certain value is kept for each cell (maximal – Max Pooling, average – Average Pooling). The pooling layer has two main hyperparameters. These are the size of the cell into which we divide the data and the step by which individual cells will be separated from each other. The ReLU correction layer allows you to convert all negative values to zero. It comes as an activation function (Tan and Pan, 2019). The fully connected layer is often the last layer of CNN. A feature vector is fed as an input, which is transformed into a new vector using a linear combination and an activation function. The network output is compared with the training data set and the resulting loss, depending on its degree, causes the network weights to be updated using gradient and backpropagation. During the training of the neural network, this process is repeated many times to improve the quality of the model.

Selecting the correct CNN structure requires a lot of research. We focus on ensuring a compromise between operating time and the effectiveness of cleaning the signal from artifacts. Due to the one-dimensional input data (signal to be cleaned and reference signal from Fp1), one-dimensional filters were used. Two convolutional layers were created. In the first one, the number of filters was 20, and the kernel size was assumed to be 40. The filter shift step was set to 2. The second convolution layer contained 10 filters and the kernel size was set to 20. The shift step was 1. In both convolutional layers, the activation function was the ReLU. Next, a densely connected layer was added, which also defined the size of the output data (1-s window, 512 samples). The ADAM optimizer was used in the training process. Table 1 shows the structure of the convolutional network. The network training set contained 70,000 1-s EEG/EOG signal windows, which were broken down into training data (80%) and validation data (20%).

Training the CNN required the determination of the number of epochs and of the number of examples fed to the input in each iteration (batch size). The selected batch size was 128. This allowed for the use of less memory. Furthermore, more frequent updates of the network weights were performed, which accelerated training. The number of epochs used in CNN training was 10. We considered adding batch normalization layers, but they did not improve the performance of the network. Therefore, we decided to omit them. The network structure generated with the use of the TensorFlow package is shown in Figure 9.

The ADAM optimization algorithm was used in the learning process. The parameters selected during the training are summarized in Table 2. The chosen loss function was the mean square error.

The learning rate determines how much the weights are modified in subsequent training iterations (Yoo et al., 2019). Beta_1 and Beta_2 are hyperparameters (decay rates) used for the first- and second-order moment estimates, respectively. Thanks to them, it is possible to correct the moments by removing the bias (Şen and Özkurt, 2020). The epsilon parameter prevents a possible division by zero when the weights are updated. Therefore, epsilon should be very small, so that it does not affect the result while still preventing division by zero.
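The role of each parameter can be seen in a single Adam update for one scalar weight, sketched below. The default values in the function signature are the commonly cited defaults of the Adam algorithm and are assumptions here; they are not necessarily the values listed in Table 2.

```python
import math


def adam_step(w, grad, m, v, t,
              lr=0.001, beta_1=0.9, beta_2=0.999, epsilon=1e-8):
    """One Adam update of weight `w` at iteration `t` (t starts at 1)."""
    # Exponential moving averages of the gradient and squared gradient.
    m = beta_1 * m + (1 - beta_1) * grad
    v = beta_2 * v + (1 - beta_2) * grad ** 2
    # Bias correction: m and v start at zero and are biased toward zero.
    m_hat = m / (1 - beta_1 ** t)
    v_hat = v / (1 - beta_2 ** t)
    # epsilon keeps the denominator non-zero when v_hat is zero.
    w = w - lr * m_hat / (math.sqrt(v_hat) + epsilon)
    return w, m, v


# First step: the bias-corrected update is close to lr * sign(grad).
w1, m1, v1 = adam_step(w=1.0, grad=0.5, m=0.0, v=0.0, t=1)

# Zero gradient: without epsilon this would divide zero by zero.
w0, _, _ = adam_step(w=1.0, grad=0.0, m=0.0, v=0.0, t=1)
```

With a zero gradient the weight is left unchanged, which is the case that epsilon protects against.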

To evaluate the effectiveness of the proposed CNN method for removing eye blink artifacts, comparisons were made with the ICA and regression methods. To make the comparison possible, a set of EEG signals and a set of signals containing eye blink artifacts were generated. The pure EEG signal served as the reference signal. Then, ten 1-s windows containing EOG artifacts were generated. For each window, statistical coefficients (Ckk, CFp1, MAPE, RMSE, and Skewness) were calculated, allowing a comparison of the effectiveness of artifact removal.

The Ckk is the correlation between the cleaned signal (with the use of one of the methods: CNN, ICA, or regression) and the original signal on the electrode k. The measure used is the Pearson correlation. The higher the absolute value of the Ckk, the better, because the signal after cleaning is closer to the real signal. CFp1 is the correlation between the samples of the signal from the Fp1 (reference) electrode and the samples of the cleaned signal for a specific electrode. In general, it is better to keep the CFp1 value as low as possible. MAPE determines the mean percentage error between the reference signal (EEG) and the signal cleaned by an algorithm. It is calculated as the arithmetic mean of the absolute differences between the samples of the real signal and the cleaned signal, each divided by the corresponding sample of the real signal.

The number of inputs (samples) is denoted by nsamples, yi is the value for the i-th sample, and ŷi is the model-predicted value for the i-th sample. RMSE is the root mean square error. It is calculated as the square root of the arithmetic mean of the squared differences between the samples of the raw signal (EEG) and the signal cleaned by the given method.

MAPE and RMSE errors should be kept as low as possible. The Skewness coefficient is the skewness calculated for the signal cleaned by a given method. For normally distributed data (a perfectly symmetric distribution), the skewness is zero. If the skewness is greater than zero, most of the data lie on the left side of the curve representing the probability distribution, which then has a longer right tail. A skewness that differs from zero may indicate a remaining eye blink artifact (Xiang et al., 2020).
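The coefficients described above can be sketched in a few lines of Python. This is an illustration under stated assumptions rather than the authors' code: MAPE is returned as a fraction (whether the article scales it by 100 is not stated here), samples of the reference signal equal to zero are not handled, and the skewness uses the biased moment-based estimator. Ckk and CFp1 are both Pearson correlations, computed against the original signal and against the Fp1 signal, respectively.

```python
import math


def pearson(x, y):
    """Pearson correlation coefficient (used for Ckk and CFp1)."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    cov = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sx = math.sqrt(sum((a - mx) ** 2 for a in x))
    sy = math.sqrt(sum((b - my) ** 2 for b in y))
    return cov / (sx * sy)


def mape(reference, cleaned):
    """Mean absolute percentage error, as a fraction (assumption)."""
    n = len(reference)
    return sum(abs((r - c) / r) for r, c in zip(reference, cleaned)) / n


def rmse(reference, cleaned):
    """Root mean square error."""
    n = len(reference)
    return math.sqrt(sum((r - c) ** 2 for r, c in zip(reference, cleaned)) / n)


def skewness(x):
    """Biased sample skewness: third central moment / variance^1.5."""
    n = len(x)
    mean = sum(x) / n
    m2 = sum((a - mean) ** 2 for a in x) / n
    m3 = sum((a - mean) ** 3 for a in x) / n
    return m3 / m2 ** 1.5


# Toy values (not EEG data) to exercise the functions.
reference = [1.0, 2.0, 4.0, 5.0]
cleaned = [1.5, 2.0, 4.0, 4.0]

c_kk = pearson(cleaned, reference)
err_mape = mape(reference, cleaned)
err_rmse = rmse(reference, cleaned)
skew_sym = skewness(reference)                     # symmetric data
skew_pos = skewness([0.0, 0.0, 0.0, 0.0, 10.0])    # long right tail
```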

We calculated Ckk, CFp1, MAPE, RMSE, and Skewness for all electrodes and all subjects. Detailed results are presented in Supplementary Appendix 1. The calculated values of the coefficients are plotted on the head surface (Figures 10–14). Such a representation allows for easier comparison of the results.

Figure 10 shows the Ckk value plotted on the head surface. A great similarity can be observed in terms of the distribution and values for the CNN and ICA methods. On the other hand, higher values of the Ckk coefficient occur for the REG method.

Figure 11 shows the values of CFp1 plotted on the head surface. The lowest values of the coefficients are observed for the REG method. This is due to the principle of the REG method, that is, minimizing the correlation between individual electrodes and the Fp1 electrode (associated with eye blink artifacts). We can observe an increase in the CFp1 value for the electrodes at the front of the head for the CNN method. On the other hand, lower values of CFp1 can be observed for electrodes placed in the back of the head. For the ICA method, the distribution of the CFp1 coefficient is more homogeneous. In this case we did not observe negative values of the CFp1 coefficient.
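Why regression drives CFp1 toward zero can be shown with a generic least-squares sketch. The authors' REG implementation is not given in this section, so the function below is an assumption: it estimates a single propagation coefficient from the Fp1 reference and subtracts the scaled reference from the channel. The toy signals are constructed so that the clean signal is uncorrelated with the reference.

```python
def regress_out(channel, reference):
    """Remove the part of `channel` linearly explained by `reference`."""
    n = len(channel)
    mc, mr = sum(channel) / n, sum(reference) / n
    cov = sum((c - mc) * (r - mr) for c, r in zip(channel, reference))
    var = sum((r - mr) ** 2 for r in reference)
    b = cov / var  # least-squares propagation coefficient
    cleaned = [c - b * r for c, r in zip(channel, reference)]
    return cleaned, b


# Toy data: an alternating "EEG" plus 30% of a blink-like reference.
eeg = [1.0, -1.0, 1.0, -1.0, 1.0, -1.0]
fp1 = [0.0, 0.0, 10.0, 10.0, 0.0, 0.0]
contaminated = [e + 0.3 * r for e, r in zip(eeg, fp1)]

cleaned, b = regress_out(contaminated, fp1)

# Covariance of the residual with the reference is (numerically) zero.
n = len(fp1)
mean_fp1 = sum(fp1) / n
residual_cov = sum(c * (r - mean_fp1) for c, r in zip(cleaned, fp1))
```

In real recordings the Fp1 channel also contains brain activity, so subtracting it can remove part of the EEG as well; the toy example does not model this.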

Figure 12 shows the Skewness plotted on the surface of the head. The smallest disproportions of the coefficient values (close to zero) are observed for the ICA and REG methods. However, we can observe significant disproportions for the CNN method. For the CNN method, we can observe positive values of the skewness coefficient for the electrodes at the front of the head, while negative values for the electrodes at the back of the head, in particular for the electrodes O1, O2, and Oz.

Figures 13, 14 show the RMSE and MAPE errors. We can observe an increase in the values of the errors for the electrodes in the front of the head for the ICA and REG methods. Much lower values of the RMSE and MAPE errors can be observed for the CNN method, especially in the front part of the head. We observe lower values of the MAPE error for the entire head area for the CNN method compared to the ICA and REG methods. In our opinion, the MAPE/RMSE measure best describes the effectiveness of artifact removal as it relates to the reference signal.

To discuss in more detail the values obtained for Ckk, CFp1, MAPE, RMSE and Skewness, four electrodes were selected, located in the central, parietal, frontal, and occipital parts of the head: Cz, P3, F3, and Oz. The Ckk, CFp1, MAPE, RMSE, and Skewness values for electrodes Cz, P3, F3, and Oz are presented in Tables 3–6.

Table 3 presents the coefficients related to cleaning the signal from the Cz electrode. This electrode is located in the center of the head. In this case, very good results achieved by the CNN method can be observed. The correlation Ckk (0.93) is very high. The errors MAPE (0.805) and RMSE (2.935) have low values. The CFp1 coefficient (−0.027) is low, which confirms the correct elimination of eye blink artifacts.

Table 4 shows the coefficients related to cleaning the signal from the P3 electrode. The electrode is located on the left side of the central part of the head. Very good removal of artifacts by the CNN method can also be observed here. The cleaned and real EEG signals are strongly and positively correlated: the Ckk coefficient is 0.869. The MAPE (1.219) and RMSE (4.381) errors for the CNN method are the lowest among the methods analyzed. The Skewness coefficient (−0.018) is also the smallest in magnitude, which indicates a nearly symmetric distribution. The correlation with the Fp1 electrode is negative and close to the value obtained with the ICA method (CFp1 equal to −0.321). In this case, the CNN method turned out to be comparable to the regression method, and even better in terms of errors. Additionally, the ICA method introduced changes to the signal skewness, which is not desirable for proper signal cleaning.

Table 5 presents the coefficients related to the cleaning of the signals from the F3 electrode. This electrode is located in the left front of the head. In this case, the CNN cleaning results are comparable to those of ICA. The CNN method achieved significantly smaller MAPE (2.712) and RMSE (11.975) errors compared to the other methods. However, the obtained values of Ckk (0.508) and a relatively high positive correlation with the Fp1 reference electrode (CFp1 equal to 0.790) indicate partial removal of artifacts. Furthermore, the Skewness index for the CNN method is high (1.499), which may indicate the existence of artifacts in the signal despite attempts to clean it.

Table 6 presents the average values of the coefficients for the Oz electrode. This electrode is located on the back of the subject’s head. In this case, the advantage of the ICA and regression methods over CNN can be observed. The Ckk coefficient that describes the correlation between the cleaned and the original signal is much lower for CNN (0.556) than for the other methods (0.944 for ICA and 0.980 for regression). This means that there is a discrepancy between the cleaned signal and the original one. The other coefficients, MAPE equal to 2.810 and RMSE 10.978, are also high for the CNN method. The CFp1 coefficient indicates a high content of artifacts in the cleaned signal – the correlation of the cleaned signals on individual electrodes with the Fp1 reference electrode is high (−0.725). The Skewness for the CNN method (−0.875) also indicates a higher occurrence of artifacts than for the ICA (−0.073) and regression methods (−0.036).

Analyzing Tables 3–6, it can be seen that for the CNN method, the Ckk coefficient is high for the Cz (0.93) and P3 (0.869) electrodes, which means that the signals are cleaned properly. A high correlation indicates a strong similarity between the cleaned signal and the original. The ICA and CNN methods are distinguished by relatively low MAPE and RMSE errors (for 3 out of 4 electrodes CNN achieves much lower errors). Furthermore, the cleaned signals are poorly correlated with the Fp1 reference electrode.

Figure 15 shows an artificially generated 1-s window (512 samples) of the EEG, EEG + EOG, and cleaned signals for each of the tested methods: CNN, ICA, and regression. In the case of generated signals, the CNN changes the polarity of the signals on the Oz, O2, and O1 electrodes at the location of the artifacts (marked A in Figure 15), which is not a desired effect. Figure 5 shows the differences in the cleaned signals obtained with the use of the tested methods. Discrepancies between the signal cleaned with the ICA method and the others can be observed, for example, on electrodes F7, F8, and Fz.

Figure 15. Artificially generated 1-s window of pure EEG, EEG + EOG, and cleaned signal using ICA, regression, CNN methods. The letter A represents the moment the blink artifact occurred.

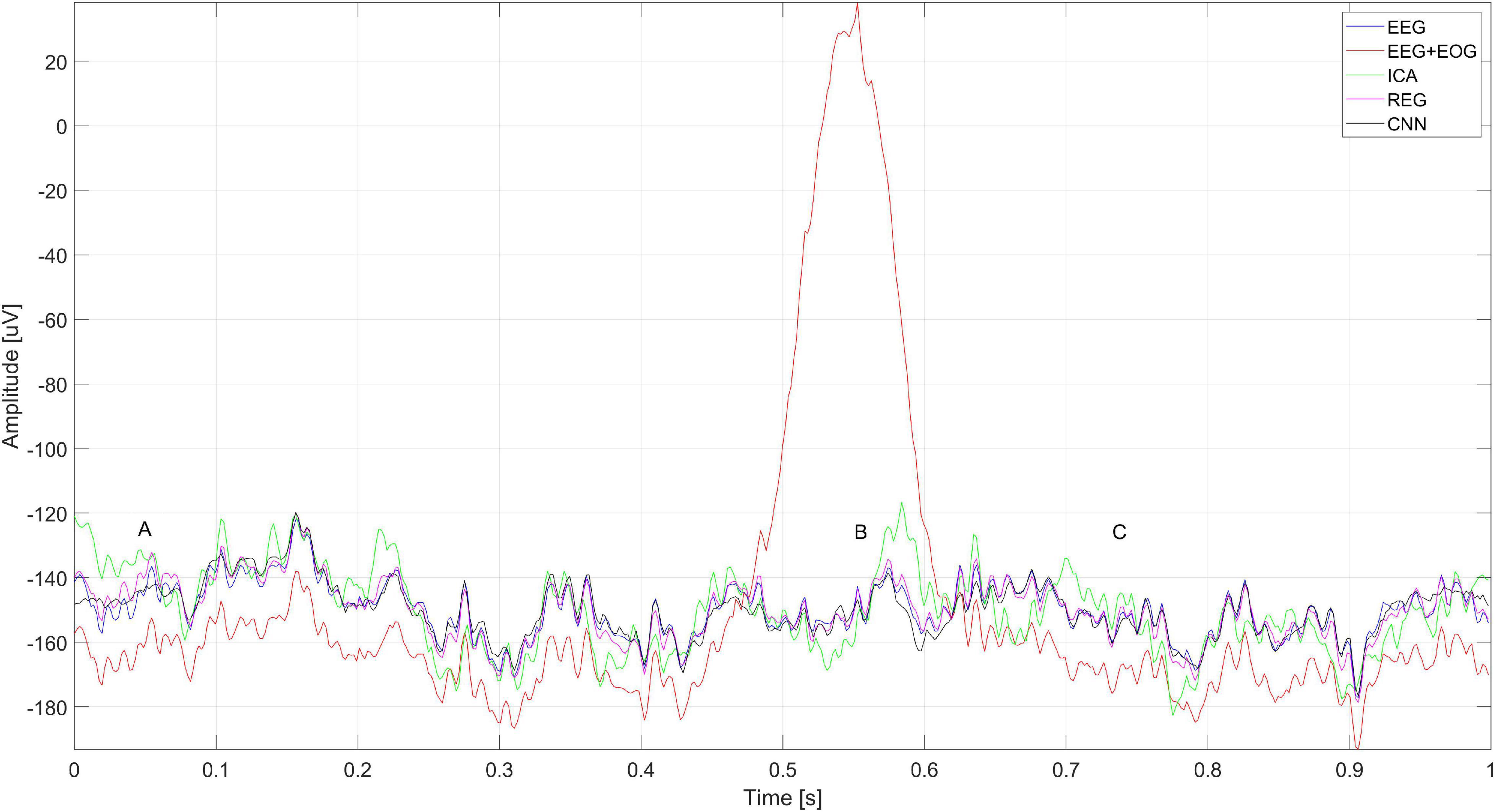

Figure 16 shows the spectrum of the signal from the Cz electrode (presented in Figure 15). EOG signals lie in the range of 0.1–20 Hz (Banerjee et al., 2013). In Figure 16, it can be seen that all the methods attenuated the low-frequency amplitudes. The CNN-based method performed very well: the spectrum of its cleaned signal is closest to the original one. It should be noted that the ICA method introduced a significant distortion of the spectrum in the 5–10 Hz range.

Figure 17 shows a close-up of the signal from the Cz electrode. At the times marked A, B, and C in Figure 17, there is a noticeable difference between the operation of ICA and that of the other methods. The changes in the signals at A and C are caused by the presence of a constant component, which does not matter in many cases of EEG signal analysis. It can also be seen that the cleaned signal overlaps the original EEG most closely (correct cleaning) for the regression and CNN methods. In the part marked B, a significant modification of the signal by the ICA method can be observed.

Figure 17. A fragment of the simulated EEG signal for the Cz electrode. The letters A–C represent selected moments: A and C the EEG signal fragment without artifacts and B with an eye blink artifact.

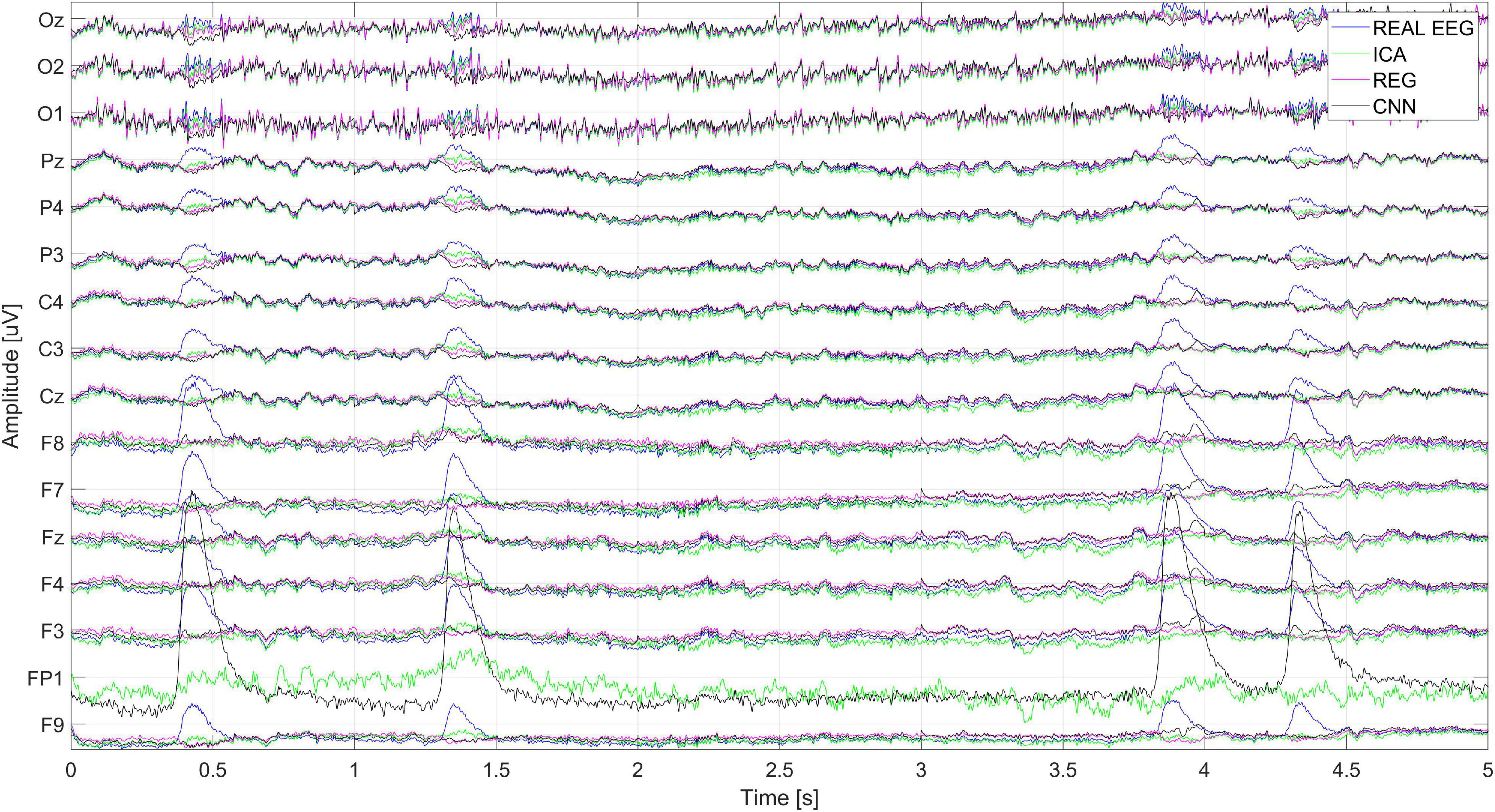

Figure 18 shows the cleaning effect on the real EEG signal (user S03). It can be seen that artifacts from the real EEG signal are correctly removed. The ICA method, as described above, also cleans the signal on the reference electrode. It can be seen that the removal of artifacts from the Fp1 reference electrode with ICA is much worse than the cleaning of signals on other electrodes.

Figure 18. The real EEG signal fragment recorded during the test and cleaned by ICA, regression, CNN method.

Figure 19 shows the spectrum of the signal from the Cz electrode (presented in Figure 18). There is a visible decrease in the amplitudes of successive bands of the spectrum in the low-frequency range, which indicates the correct operation of the methods used to eliminate eye blink artifacts. It can be seen that all the methods allow us to obtain a similar spectrum.

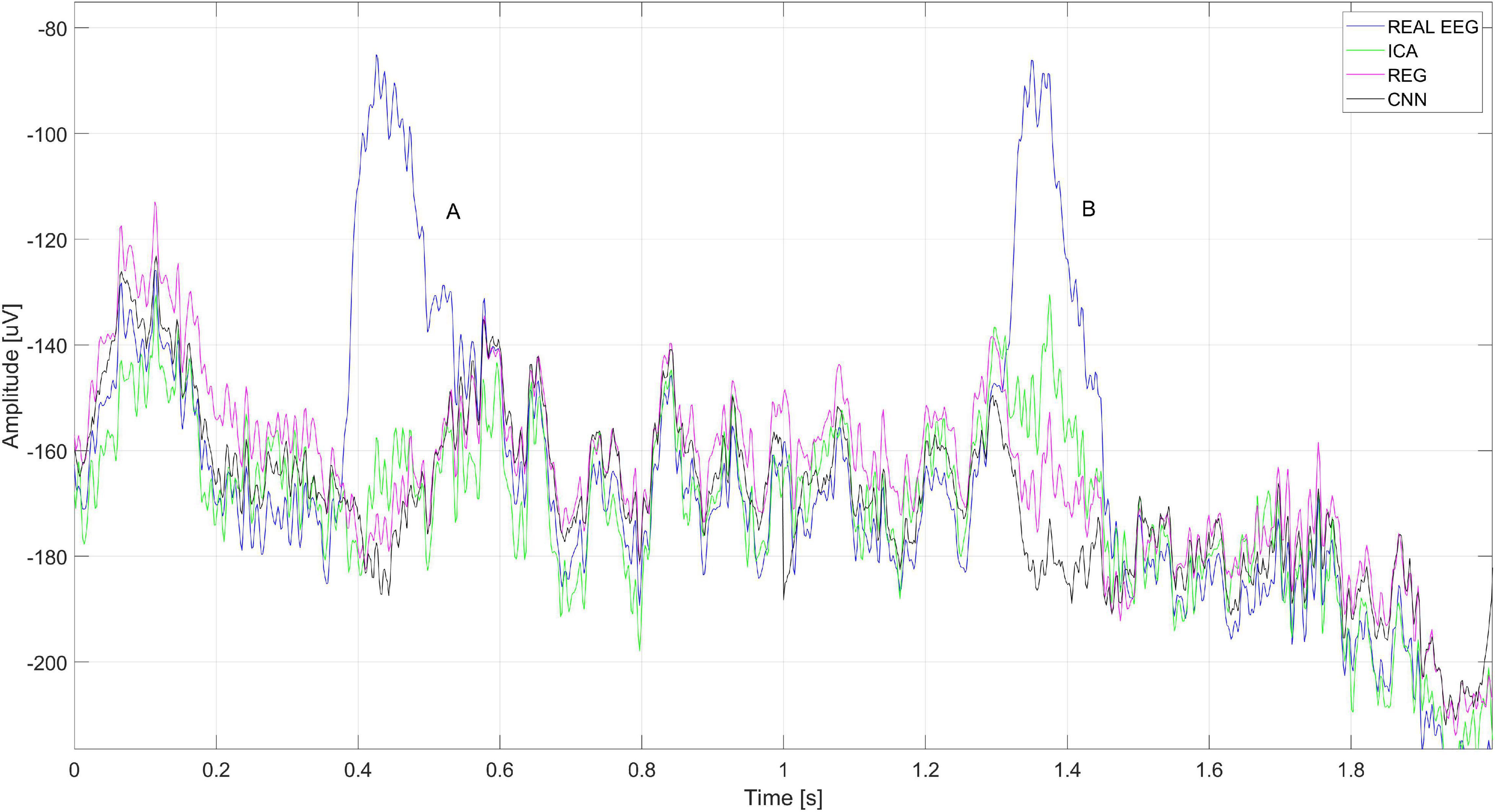

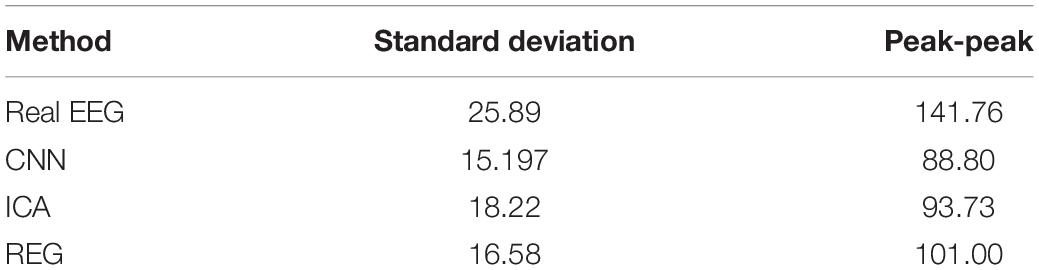

Figure 20 shows a close-up of the signals recorded on electrode Cz (presented in Figure 18). The figure shows two eye blink artifacts labeled A and B. The first was correctly removed with each of the analyzed methods. In the case of B, it can be seen that the artifact removal using the ICA method was not complete. Much better results were obtained using CNN and the regression method.

Figure 20. A fragment of the EEG signal recorded for the Cz electrode. The letters A and B represent the times when the blink artifact occurred.

The above discussion shows that the application of the CNN method gives very good results in the removal of eye blink artifacts, in particular for the electrodes placed in the central part of the head. Therefore, the application of the proposed method may be useful as a pre-processing in the analysis of the P300 potential or other event-related potential (ERP) occurring in the central part of the head. To verify the usefulness of the method to eliminate eye blink artifacts, we cleared the EEG signals from the Cz electrode for the signals registered during the experiments with the N-back task.

Table 7 presents the signal statistics – parameters describing the real signal and signals after artifact removal for the Cz electrode. Average values for standard deviation and peak-to-peak values are shown for all 20 users. The results obtained indicate that the artifacts are correctly removed. The peak-to-peak value for the tested methods is lower than that for the raw signal containing the artifacts. The peak-to-peak value of the signal before cleaning is 141.76 μV. After cleaning, it decreases for each method (CNN – 88.8 μV, ICA – 93.73 μV, regression – 101 μV). These indicate a good performance of the CNN and ICA methods and slightly worse for the regression method. In addition, a reduction in the standard deviation can be seen for each method. This indicates a reduction in the scattering of samples in the cleaned signal compared to the raw signal. The decrease is most noticeable for the CNN method (from 25.89 μV to 15.197 μV) and regression (from 25.89 μV to 16.58 μV).
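The two statistics reported in Table 7 are simple to compute; a minimal sketch is given below. The toy samples are illustrative and do not come from the recordings, and the use of the population standard deviation is an assumption, since the estimator used by the authors is not stated.

```python
import statistics


def peak_to_peak(signal):
    """Difference between the largest and smallest sample."""
    return max(signal) - min(signal)


# Toy samples in microvolts: one blink-like peak before cleaning.
raw = [2.0, -3.0, 40.0, 1.0, -2.0]
cleaned = [2.0, -3.0, 4.0, 1.0, -2.0]

p2p_raw, p2p_cleaned = peak_to_peak(raw), peak_to_peak(cleaned)
std_raw = statistics.pstdev(raw)          # population std (assumption)
std_cleaned = statistics.pstdev(cleaned)

print(p2p_raw, p2p_cleaned)  # 43.0 7.0
```

Removing a high-amplitude artifact lowers both values, which is the pattern reported for all three methods.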

Table 7. Statistical parameters describing 3 s of the real and cleaned signals (using CNN, ICA, and regression methods) for the Cz electrode.

Eye blink artifacts produce much larger amplitudes than the potentials of interest in the EEG signal. This is especially true for ERPs. During the N-back task, the users watched a computer monitor. Stimuli that are presented for a long time can cause discomfort and force the examined person to blink, which is a natural reaction. Blinks provoked by the presented stimuli can easily be mistaken for the desired potentials. Such an example can be observed in the recorded signals. For user S03, blinking occurs very frequently and regularly about 0.4 s after the stimulus is presented. It is observable on the Fp1 electrode but also on Cz, where we would expect, e.g., the P300 potential. Figure 21 shows an example of the averaged ERP after the N-back stimulus. The ERP without artifact removal (real) is shown in blue, the ERP after removing artifacts using CNN in orange, using the ICA method in green, and using regression in red. Even averaging, which is standard in this type of analysis, does not eliminate the problem of repetitive artifacts. This may result in incorrect interpretation of potentials.
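The reason averaging does not help against stimulus-locked blinks can be shown with a small, deliberately idealized sketch. The epoch values are invented for illustration: the background activity alternates in sign from trial to trial, while a blink-like deflection appears at the same post-stimulus sample in every trial.

```python
def average_epochs(epochs):
    """Sample-wise mean across trials (the averaged ERP)."""
    n_trials = len(epochs)
    return [sum(samples) / n_trials for samples in zip(*epochs)]


# Idealized epochs time-locked to the stimulus (illustrative values).
blink = [0.0, 0.0, 0.0, 10.0, 0.0]   # same position in every trial
noise = [1.0, -1.0, 1.0, -1.0, 1.0]  # sign flips between trials

epochs = [
    [b + sign * n for b, n in zip(blink, noise)]
    for sign in (1, -1, 1, -1)
]

erp = average_epochs(epochs)
print(erp)  # [0.0, 0.0, 0.0, 10.0, 0.0]
```

Activity that is not locked to the stimulus cancels out, whereas the repeated blink survives with its full amplitude and could be mistaken for an evoked potential.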

Next, we checked the operating times of the implemented CNN, ICA, and regression algorithms. From the real signal (S03_EEG), fragments of various lengths were selected: 10 s, 60 s, 10 min, 30 min, and 50 min. The operation of the methods was tested using a computer equipped with an Intel Core i7-9750H 2.60 GHz processor, 32 GB RAM, and a GeForce GTX 1660 Ti graphics card with 6 GB GDDR6 memory. Table 8 shows the operating times of each method needed to clean EEG signals of various lengths.

According to the data in Table 8, it can be seen that the CNN method is the slowest method. It takes about 26 min to clean 1 h of an EEG signal with 16 channels. The fastest method is regression – for a signal lasting 50 min, the cleaning lasted 2.176 s. The time differences are due to the computational complexity of the individual methods. Despite long training and long operating time, the CNN method gave very good results in cleaning the signals from the electrodes located in the center and slightly on the back of the head. The RMSE and MAPE errors for these electrodes are much lower than those obtained when using other methods. In the case of analysis of real signals, the CNN method does not introduce distortion into the cleaned signal, which shows its advantage over the ICA method.

It should be noted that in the experiments we used a previously recorded signal database with a fixed sampling frequency (fs = 512 Hz). A CNN trained for these conditions cannot be used for EEG signals recorded differently: changing the sampling rate or the amplifier requires retraining the CNN. However, the results presented show that it is a promising method of artifact removal. In future experiments, the authors plan to record EEG signals, EOG signals, and muscle activity. The network could then be trained to remove not only EB-type artifacts, but also artifacts related to the movement of the eyes, facial muscles, and neck. Future research should also include optimization of the number of samples of the EEG signal fed to the CNN. Currently, the number of samples is 512. This number is a compromise between the duration of the signal, which should be long enough to contain a blink pattern, and the number of samples at the input of the network. Too many samples make it difficult to train the network, but too few samples may fail to capture the shape of the eye blink.

Experiments have shown that the CNN method gives better results in the task of removing eye blink artifacts than regression or independent component analysis. The mean value of the MAPE error was 4.69 for the CNN method, 7.84 for the ICA method, and 7.76 for the REG method. The CNN method removes eye blink artifacts better, especially in the central and parietal parts of the head. An example is the Cz electrode, for which the errors of the CNN method (MAPE = 0.805, RMSE = 2.935) are much lower than those of ICA (MAPE = 4.485, RMSE = 13.140) and regression (MAPE = 4.795, RMSE = 12.145). Furthermore, visual inspection showed that the ICA method introduces distortion in the shape of the EEG signal. No such changes were observed for the regression method and CNN. On the other hand, better artifact removal results were obtained with the ICA and regression methods for the electrodes placed in the occipital area of the head (O1, O2, and Oz). In this case, the use of the CNN method is questionable. It should be noted that the CNN method is much better suited for offline removal of artifacts than for online removal, because a set of signals must first be available and the network must be trained on it. The time required by the CNN method to process short EEG signals is acceptable (a few minutes). For EEG signals that last several hours, the analysis may be too time-consuming. Further research should also consider other CNN structures and training the network using more examples and types of artifacts.

The original contributions presented in the study are included in the article/Supplementary Material, further inquiries can be directed to the corresponding author/s.

Ethical approval was not provided for this study on human participants because the studies on volunteers were non-interventional. The patients/participants provided their written informed consent to participate in this study.

MJ was responsible for the implementation of deep learning methods (CNN), program generating EEG/EOG signals, and software comparing the operation of the methods, and preparation of the text of the article – introduction and results. MK was responsible for recording the EEG signal during the presentation of the N-back task for 20 people, research concept, developing the CNN method to remove artifacts, and developing a methodology for comparing the operation of the methods. AM was responsible for the preparation of the software for the presentation of the N-back task and EEG signal registration, substantive evaluation of the results, editing the summary of the article, and developing a method for comparing artifacts removal algorithms: CNN, ICA, and regression. All authors contributed to the article and approved the submitted version.

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

The Supplementary Material for this article can be found online at: https://www.frontiersin.org/articles/10.3389/fnins.2022.782367/full#supplementary-material

Alquran, H., Alqudah, A., Abuqasmieh, I., and Almashaqbeh, S. (2019). “Gaussian model of electrooculogram signals,” in Proceeding of the 2019 IEEE Jordan International Joint Conference on Electrical Engineering and Information Technology (JEEIT), doi: 10.1109/JEEIT.2019.8717499

Anderer, P., Roberts, S., Schlögl, A., Gruber, G., Klösch, G., Herrmann, W., et al. (1999). Artifact processing in computerized analysis of sleep EEG – a review. Neuropsychobiology 40, 150–157. doi: 10.1159/000026613

Arora, D., Garg, M., and Gupta, M. (2020). “Diving deep in deep convolutional neural network,” in Proceeding of the 2020 2nd International Conference on Advances in Computing, Communication Control and Networking (ICACCCN), 749–751. doi: 10.1109/ICACCCN51052.2020.9362907

Banerjee, A., Datta, S., Pal, M., Konar, A., Tibarewala, D. N., and Janarthanan, R. (2013). Classifying electrooculogram to detect directional eye movements. Proc. Technol. 10, 67–75. doi: 10.1016/j.protcy.2013.12.338

Bao, G., Yan, B., Tong, L., Shu, J., Wang, L., Yang, K., et al. (2021). Data augmentation for EEG-based emotion recognition using generative adversarial networks. Front. Comput. Neurosci. 15:115. doi: 10.3389/fncom.2021.723843

Browarska, N., Zygarlicki, J., Pelc, M., Niemczynowicz, M., Zygarlicka, M., and Kawala-Sterniuk, A. (2021). “Pilot study on using innovative counting peaks method for assessment purposes of the EEG data recorded from a single-channel non-invasive brain-computer interface,” in Proceeding of the 2021 25th International Conference on Methods and Models in Automation and Robotics (MMAR) (Międzyzdroje, Poland: IEEE), 68–72. doi: 10.1109/MMAR49549.2021.9528447

Chen, G., Chen, Y., Yuan, Z., Lu, X., Zhu, X., and Li, W. (2019). “Breast Cancer Image Classification based on CNN and Bit-Plane slicing,” in Proceeding of the 2019 International Conference on Medical Imaging Physics and Engineering (ICMIPE), 1–4. doi: 10.1109/ICMIPE47306.2019.9098216

Cheng, J., Li, L., Li, C., Liu, Y., Liu, A., Qian, R., et al. (2019). Remove diverse artifacts simultaneously from a single-channel EEG based on SSA and ICA: a semi-simulated study. IEEE Access 7:2019. doi: 10.1109/ACCESS.2019.2915564

Garg, P., Davenport, E., Murugesan, G., Wagner, B., Whitlow, C., Maldjian, J., et al. (2017). “Using convolutional neural networks to automatically detect eye-blink artifacts in magnetoencephalography without resorting to electrooculography,” in Medical Image Computing and Computer Assisted Intervention - MICCAI 2017 Lecture Notes in Computer Science, eds M. Descoteaux, L. Maier-Hein, A. Franz, P. Jannin, D. L. Collins, and S. Duchesne (Cham: Springer International Publishing), 374–381. doi: 10.1007/978-3-319-66179-7_43

Hasasneh, A., Kampel, N., Sripad, P., Shah, N. J., and Dammers, J. (2018). Deep Learning Approach for Automatic Classification of Ocular and Cardiac Artifacts in MEG Data. J. Eng. 2018, 1–10. doi: 10.1155/2018/1350692

Iaquinta, A. F., Silva, A. C., de, S., Júnior, A. F., de Toledo, J. M., and von Atzingen, G. V. (2021). EEG multipurpose eye blink detector using convolutional neural network. ArXiv [preprint]. ArXiv210714235.

Isar, D., and Gajitzki, P. (2016). “Pink noise generation using wavelets,” in Proceeding of the 2016 12th IEEE International Symposium on Electronics and Telecommunications (ISETC), 261–264. doi: 10.1109/ISETC.2016.7781107

Jiang, X., Bian, G.-B., and Tian, Z. (2019). Removal of artifacts from EEG signals: a review. Sensors 19:987. doi: 10.3390/s19050987

Kawala-Sterniuk, A., Podpora, M., Pelc, M., Blaszczyszyn, M., Gorzelanczyk, E. J., Martinek, R., et al. (2020). Comparison of smoothing filters in analysis of EEG data for the medical diagnostics purposes. Sensors 20:807. doi: 10.3390/s20030807

Khatwani, M., Hosseini, M., Paneliya, H., Mohsenin, T., Hairston, W. D., and Waytowich, N. (2018). “Energy efficient convolutional neural networks for EEG artifact detection,” in Proceeding of the 2018 IEEE Biomedical Circuits and Systems Conference (BioCAS), 1–4. doi: 10.1109/BIOCAS.2018.8584791

Kilicarslan, A., and Contreras-Vidal, J. L. (2017). “Full characterization and removal of motion artifacts from scalp EEG recordings,” in Proceeding of the 2017 International Symposium on Wearable Robotics and Rehabilitation (WeRob), 1–1. doi: 10.1109/WEROB.2017.8383881

Kołodziej, M., Tarnowski, P., Sawicki, D. J., Majkowski, A., Rak, R. J., Bala, A., et al. (2020). Fatigue detection caused by office work with the use of EOG signal. IEEE Sens. J. 20, 15213–15223. doi: 10.1109/JSEN.2020.3012404

Lashgari, E., Liang, D., and Maoz, U. (2020). Data augmentation for deep-learning-based electroencephalography. J. Neurosci. Methods 346:108885. doi: 10.1016/j.jneumeth.2020.108885

Levin, A. R., Méndez Leal, A. S., Gabard-Durnam, L. J., and O’Leary, H. M. (2018). BEAPP: the batch electroencephalography automated processing platform. Front. Neurosci. 12:513. doi: 10.3389/fnins.2018.00513

Li, P., Chen, Z., and Hu, Y. (2017). “A method for automatic removal of EOG artifacts from EEG based on ICA-EMD,” in Proceeding of the 2017 Chinese Automation Congress (CAC), 1860–1863. doi: 10.1109/CAC.2017.8243071

Li, X., La, R., Wang, Y., Hu, B., and Zhang, X. (2020). A deep learning approach for mild depression recognition based on functional connectivity using electroencephalography. Front. Neurosci. 14:192. doi: 10.3389/fnins.2020.00192

Lou, G., and Shi, H. (2020). Face image recognition based on convolutional neural network. China Commun. 17, 117–124. doi: 10.23919/JCC.2020.02.010

Luo, Y., Zhu, L.-Z., Wan, Z.-Y., and Lu, B.-L. (2020). Data augmentation for enhancing EEG-based emotion recognition with deep generative models. ArXiv [preprint]. ArXiv200605331, doi: 10.1088/1741-2552/abb580

Mannan, M. M. N., Kamran, M. A., and Jeong, M. Y. (2018). Identification and removal of physiological artifacts from electroencephalogram signals: a review. IEEE Access 6, 30630–30652. doi: 10.1109/ACCESS.2018.2842082

McFarland, D. J., McCane, L. M., David, S. V., and Wolpaw, J. R. (1997). Spatial filter selection for EEG-based communication. Electroencephalogr. Clin. Neurophysiol. 103, 386–394. doi: 10.1016/S0013-4694(97)00022-2

Mumtaz, W., Rasheed, S., and Irfan, A. (2021). Review of challenges associated with the EEG artifact removal methods. Biomed. Signal Process. Control 68:102741. doi: 10.1016/j.bspc.2021.102741

Murata, K., Mito, M., Eguchi, D., Mori, Y., and Toyonaga, M. (2018). “A Single Filter CNN Performance for Basic Shape Classification,” in Proceedings of the 2018 9th International Conference on Awareness Science and Technology (iCAST) (Fukuoka: IEEE), 139–143. doi: 10.1109/ICAwST.2018.8517219

Mutasa, S., Sun, S., and Ha, R. (2021). Understanding artificial intelligence based radiology studies: CNN architecture. Clin. Imaging 80, 72–76. doi: 10.1016/j.clinimag.2021.06.033

Natesan, P., Priya, V. V., and Gothai, E. (2020). “Classification of multi-lead ECG signals to predict myocardial infarction using CNN,” in Proceeding of the 2020 Fourth International Conference on Computing Methodologies and Communication (ICCMC), 1029–1033. doi: 10.1109/ICCMC48092.2020.ICCMC-000192

Nejedly, P., Cimbalnik, J., Klimes, P., Plesinger, F., Halamek, J., Kremen, V., et al. (2019). Intracerebral EEG artifact identification using convolutional neural networks. Neuroinformatics 17, 225–234. doi: 10.1007/s12021-018-9397-6

Pham, V. T., Dang, N. D., Nguyen, D. A., Nguyen, T. A., Chu, D. H., and Tran, D.-T. (2017). “Automatic removal of EOG artifacts using SOBI algorithm combined with intelligent source identification technique,” in Proceeding of the 2017 International Conference on Advanced Technologies for Communications (ATC), 260–264. doi: 10.1109/ATC.2017.8167629

Placidi, G., Cinque, L., and Polsinelli, M. (2021). Convolutional neural networks for automatic detection of artifacts from independent components represented in scalp topographies of EEG signals. Comput. Biol. Med. 132:104347. doi: 10.1016/j.compbiomed.2021.104347

Rakibul Mowla, M., Ng, S.-C., Zilany, M. S. A., and Paramesran, R. (2015). Artifacts-matched blind source separation and wavelet transform for multichannel EEG denoising. Biomed. Signal Process. Control 22, 111–118. doi: 10.1016/j.bspc.2015.06.009

Ranjan, R., Chandra Sahana, B., and Kumar Bhandari, A. (2021). Ocular artifact elimination from electroencephalography signals: a systematic review. Biocybern. Biomed. Eng. 41, 960–996. doi: 10.1016/j.bbe.2021.06.007

Sakai, A., Minoda, Y., and Morikawa, K. (2017). “Data augmentation methods for machine-learning-based classification of bio-signals,” in Proceeding of the 2017 10th Biomedical Engineering International Conference (BMEiCON), 1–4. doi: 10.1109/BMEiCON.2017.8229109

Salimi, N., Barlow, M., and Lakshika, E. (2020). “Towards potential of N-back task as protocol and EEG net for the EEG-based biometric,” in Proceeding of the 2020 IEEE Symposium Series on Computational Intelligence (SSCI), 1718–1724. doi: 10.1109/SSCI47803.2020.9308487

Şen, S. Y., and Özkurt, N. (2020). “Convolutional neural network hyperparameter tuning with adam optimizer for ECG classification,” in Proceeding of the 2020 Innovations in Intelligent Systems and Applications Conference (ASYU), 1–6. doi: 10.1109/ASYU50717.2020.9259896

Sun, W., Su, Y., Wu, X., and Wu, X. (2020). A novel end-to-end 1D-ResCNN model to remove artifact from EEG signals. Neurocomputing 404, 108–121. doi: 10.1016/j.neucom.2020.04.029

Tan, Z., and Pan, P. (2019). “Network fault prediction based on CNN-LSTM hybrid neural network,” in Proceeding of the 2019 International Conference on Communications, Information System and Computer Engineering (CISCE) (Haikou: IEEE), 486–490. doi: 10.1109/CISCE.2019.00113

Tosun, M., and Kasım, Ö. (2020). Novel eye-blink artefact detection algorithm from raw EEG signals using FCN-based semantic segmentation method. IET Signal Process. Available online at: https://ietresearch.onlinelibrary.wiley.com/doi/10.1049/iet-spr.2019.0602 (accessed December 21, 2021).

Urigüen, J., and Zapirain, B. (2015). EEG artifact removal – state-of-the-art and guidelines. J. Neural Eng. 12:031001. doi: 10.1088/1741-2560/12/3/031001

Vinayakumar, R., Soman, K. P., and Poornachandran, P. (2017). “Applying convolutional neural network for network intrusion detection,” in Proceeding of the 2017 International Conference on Advances in Computing, Communications and Informatics (ICACCI) (Udupi: IEEE), 1222–1228. doi: 10.1109/ICACCI.2017.8126009

Voytek, B., Kramer, M. A., Case, J., Lepage, K. Q., Tempesta, Z. R., Knight, R. T., et al. (2015). Age-related changes in 1/f neural electrophysiological noise. J. Neurosci. 35, 13257–13265. doi: 10.1523/JNEUROSCI.2332-14.2015

Wang, F., Zhong, S., Peng, J., Jiang, J., and Liu, Y. (2018). “Data augmentation for EEG-based emotion recognition with deep convolutional neural networks,” in MultiMedia Modeling Lecture Notes in Computer Science, eds K. Schoeffmann, T. H. Chalidabhongse, C. W. Ngo, S. Aramvith, N. E. O’Connor, Y.-S. Ho, et al. (Cham: Springer International Publishing), 82–93. doi: 10.1007/978-3-319-73600-6_8

Xiang, J., Maue, E., Fan, Y., Qi, L., Mangano, F. T., Greiner, H., et al. (2020). Kurtosis and skewness of high-frequency brain signals are altered in paediatric epilepsy. Brain Commun. 2:fcaa036. doi: 10.1093/braincomms/fcaa036

Yang, B., Duan, K., Fan, C., Hu, C., and Wang, J. (2018). Automatic ocular artifacts removal in EEG using deep learning. Biomed. Signal Process. Control 43, 148–158. doi: 10.1016/j.bspc.2018.02.021

Yoo, J.-H., Yoon, H., Kim, H.-G., Yoon, H.-S., and Han, S.-S. (2019). “Optimization of hyper-parameter for CNN model using genetic algorithm,” in Proceedings of the 2019 1st International Conference on Electrical, Control and Instrumentation Engineering (ICECIE) (Kuala Lumpur: IEEE), 1–6. doi: 10.1109/ICECIE47765.2019.8974762

Zhang, C., Lian, Y., and Wang, G. (2020). “ARDER: an automatic EEG artifacts detection and removal system,” in Proceeding of the 2020 27th IEEE International Conference on Electronics, Circuits and Systems (ICECS), 1–2. doi: 10.1109/ICECS49266.2020.9294865

Zhang, Y., Chen, J., Tan, J. H., Chen, Y., Chen, Y., Li, D., et al. (2020). An investigation of deep learning models for EEG-based emotion recognition. Front. Neurosci. 14:1344. doi: 10.3389/fnins.2020.622759

Keywords: artifacts, electroencephalography, electrooculography, convolutional neural network, independent component analysis

Citation: Jurczak M, Kołodziej M and Majkowski A (2022) Implementation of a Convolutional Neural Network for Eye Blink Artifacts Removal From the Electroencephalography Signal. Front. Neurosci. 16:782367. doi: 10.3389/fnins.2022.782367

Received: 24 September 2021; Accepted: 10 January 2022; Published: 11 February 2022.

Edited by:

Mariusz Pelc, University of Greenwich, United Kingdom

Reviewed by:

Aleksandra Dagmara Kawala-Sterniuk, Opole University of Technology, Poland

Copyright © 2022 Jurczak, Kołodziej and Majkowski. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Marcin Kołodziej, marcin.kolodziej@pw.edu.pl

Disclaimer: All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article or claim that may be made by its manufacturer is not guaranteed or endorsed by the publisher.
