ORIGINAL RESEARCH article

Front. Neurosci., 09 June 2021

Sec. Brain Imaging Methods

Volume 15 - 2021 | https://doi.org/10.3389/fnins.2021.611653

This article is part of the Research Topic Brain-Computer Interfaces for Perception, Learning, and Motor Control.

As a physiological process and high-level cognitive behavior, emotion is an important subarea of neuroscience research. Cross-subject emotion recognition based on brain signals has attracted much attention. Because of individual differences across subjects and the low signal-to-noise ratio of EEG signals, conventional emotion recognition methods perform relatively poorly. In this paper, we propose a self-organized graph neural network (SOGNN) for cross-subject EEG emotion recognition. Unlike previous studies based on pre-constructed, fixed graph structures, SOGNN dynamically constructs a graph structure for each signal with a self-organized module. To evaluate the cross-subject EEG emotion recognition performance of our model, leave-one-subject-out experiments are conducted on two public emotion recognition datasets, SEED and SEED-IV. SOGNN achieves state-of-the-art emotion recognition performance. Moreover, we investigate how performance varies with different graph construction techniques and with features from different frequency bands. Furthermore, we visualize the graph structure learned by the proposed model and find that part of it coincides with previous neuroscience research. The experiments demonstrate the effectiveness of the proposed model for cross-subject EEG emotion recognition.

Human emotion is a complex psychophysiological process that plays an important role in daily communication. Emotion recognition is a significant and fundamental research topic in affective computing and neuroscience (Cowie et al., 2001). In general, human emotions can be recognized from data of different modalities, such as facial expression images, body language, textual information, and physiological signals such as the electromyogram (EMG), electrocardiogram (ECG), and electroencephalogram (EEG) (Busso et al., 2004; Shu et al., 2018). EEG is a widely used technique in neuroscience research that directly captures brain signals reflecting neural activity in real time. Therefore, EEG-based emotion recognition has received considerable attention in affective computing and neuroscience (Coan and Allen, 2004; Lin et al., 2010; Alarcao and Fonseca, 2017; Li et al., 2019).

To facilitate EEG-based emotion recognition research, the SJTU Emotion EEG Dataset (SEED) was released (Duan et al., 2013), followed by its successor, SEED-IV (Zheng et al., 2018). For both datasets, a series of film clips with different emotional tendencies was chosen as stimulation material. SEED covers happy, sad, and neutral emotions, while SEED-IV covers happy, sad, fear, and neutral emotions. During the experiments, each participant watched the film clips while his/her EEG signals were recorded with a 62-channel ESI NeuroScan System. The recorded EEG signals and the emotion labels of the corresponding film clips can then be used to train an emotion recognition model. If the trained model is effective, it can decode the emotions of a new participant watching a film. SEED and SEED-IV therefore provide common benchmarks for evaluating different emotion recognition methods.

In the past few years, many feature extraction and machine learning approaches have been proposed for EEG-based emotion recognition. In the original study on the SEED dataset, energy spectrum (ES), differential entropy (DE), rational asymmetry (RASM), and differential asymmetry (DASM) features were shown to be effective for EEG-based emotion recognition (Duan et al., 2013). To explore EEG features for cross-subject emotion recognition, 18 kinds of linear and non-linear EEG features were evaluated (Li et al., 2018b). A machine learning approach was also used to investigate stable EEG patterns for emotion recognition, achieving high performance on the SEED and DEAP datasets (Zheng et al., 2017). To reduce individual differences in EEG signals, a deep adaptation network (DAN) was proposed and applied to cross-subject emotion recognition on the SEED and SEED-IV datasets (Li et al., 2018a). In addition, a group sparse canonical correlation analysis (GSCCA) method was proposed for simultaneous EEG channel selection and emotion recognition (Zheng, 2016).

Recently, deep learning and graph representation methods have proven to be powerful tools for modeling structured data and have achieved strong performance in many applications (Linial et al., 1995; Even, 2011). A deep belief network (DBN) was applied to differential entropy features extracted from multichannel EEG signals (Zheng et al., 2014). A deep neural network was also used to investigate the critical frequency bands and channels for EEG-based emotion recognition (Zheng and Lu, 2015). Long short-term memory (LSTM) networks were used to learn features from EEG signals that were discriminative for emotion recognition on the DEAP dataset (Alhagry et al., 2017). Because EEG signals are recorded by electrodes arranged on the scalp, these data can be regarded as a typical kind of structured data (Micheloyannis et al., 2006). Accordingly, graph representation approaches have also achieved impressive performance on EEG emotion recognition. For example, a dynamic graph convolutional neural network (DGCNN) was proposed whose graph structure is determined by a dynamic adjacency matrix reflecting the intrinsic relationships between EEG electrodes (Song et al., 2019b). To explore deeper-level information in graph-structured EEG data, a graph convolutional broad network (GCB-net) was proposed and achieved high performance on the SEED and DREAMER datasets (Zhang et al., 2019). To capture both local and global interchannel relations, a regularized graph neural network (RGNN) was proposed and achieved state-of-the-art performance on the SEED and SEED-IV datasets (Zhong et al., 2020).

In this paper, we propose a novel model for cross-subject EEG emotion recognition and evaluate it on two public datasets. The main contributions of this paper are as follows:

1. A novel cross-subject emotion recognition model, termed the self-organized graph neural network (SOGNN), was proposed.

2. The SOGNN is able to achieve state-of-the-art emotion recognition performance with cross-subject accuracy of 86.81% on the SEED dataset and 75.27% on the SEED-IV dataset.

3. Interchannel connections and time-frequency features are aggregated by the self-organized graph construction module, graph convolution and hierarchical structure of the SOGNN to improve the cross-subject emotion recognition performance.

The remainder of this paper is organized as follows. Section 2 presents the EEG emotion recognition datasets (SEED and SEED-IV) and the proposed SOGNN model. Section 3 describes the emotion recognition experiments and compares the performance of existing methods with that of the proposed method. Section 4 discusses and analyzes the proposed model, and section 5 concludes the paper.

To facilitate EEG-based emotion recognition research, the SJTU Emotion EEG Dataset (SEED) was released at http://bcmi.sjtu.edu.cn/~seed/ (Duan et al., 2013), and its successor, SEED-IV, is also available (Zheng et al., 2018). For both datasets, a series of film clips with different emotional tendencies was chosen as stimulation material. The SEED dataset includes happy, sad, and neutral film clips, while the SEED-IV dataset includes happy, sad, fear, and neutral film clips. During the experiments, each participant watched the film clips while his/her EEG signals were recorded with a 62-channel ESI NeuroScan System.

In each of the SEED and SEED-IV datasets, 15 subjects (7 males and 8 females) participated in the experiments, and 62-channel EEG signals were recorded while each subject watched film clips with different emotion labels. The SEED dataset contains 675 EEG samples (45 samples × 15 subjects); for each subject, there are 15 samples each of happy, sad, and neutral emotion. The SEED-IV dataset contains 1,080 samples (72 samples × 15 subjects); for each subject, there are four emotion classes (happy, sad, fear, and neutral) with 18 samples each. Hence, the number of samples per class is balanced for every subject.

The signals were recorded synchronously at a sampling rate of 1,000 Hz. Bandpass filters of 0–75 Hz and 1–75 Hz were applied to remove unrelated artifacts for the SEED and SEED-IV datasets, respectively. To accelerate computation, the signals were downsampled to 200 Hz. In addition, the dataset providers applied a linear dynamic system approach to filter out noise and artifacts unrelated to the EEG features (Shi and Lu, 2010; Zheng et al., 2018). Both datasets provide the following EEG features: differential entropy (DE), power spectral density (PSD), asymmetry (ASM), differential asymmetry (DASM), differential caudality (DCAU), and rational asymmetry (RASM). The DE and PSD features describe the frequency content and the energy spectrum, respectively; the DASM and RASM features capture asymmetry between paired EEG channels, and the DCAU feature computes differences between channel pairs. Previous research has found the DE feature to be more discriminative for emotion recognition than the other features (Duan et al., 2013; Song et al., 2019b; Zhong et al., 2020).
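The asymmetry features listed above are all derived from per-channel DE values. A minimal sketch, assuming the definitions commonly used with SEED (DASM as left-minus-right DE, RASM as the left/right DE ratio, ASM as the concatenation of DASM and RASM, and DCAU as frontal-minus-posterior DE over electrode pairs), is shown below. The electrode pairs and DE values are hypothetical, and `asymmetry_features` is an illustrative name, not part of the dataset release.

```python
from typing import Dict, List, Tuple

Pair = Tuple[str, str]


def asymmetry_features(de: Dict[str, float], lr_pairs: List[Pair],
                       fb_pairs: List[Pair]) -> Dict[str, List[float]]:
    """Compute DASM, RASM, ASM and DCAU from per-channel DE values (one band, one window).

    Assumed definitions (commonly used with SEED, not restated in this article):
      DASM = DE(left) - DE(right), RASM = DE(left) / DE(right),
      ASM  = DASM concatenated with RASM, DCAU = DE(frontal) - DE(posterior).
    """
    dasm = [de[left] - de[right] for left, right in lr_pairs]
    rasm = [de[left] / de[right] for left, right in lr_pairs]
    asm = dasm + rasm
    dcau = [de[front] - de[back] for front, back in fb_pairs]
    return {"DASM": dasm, "RASM": rasm, "ASM": asm, "DCAU": dcau}


# Hypothetical DE values for four electrodes in one frequency band
de_values = {"F3": 1.2, "F4": 0.8, "P3": 0.5, "P4": 0.4}
feats = asymmetry_features(
    de_values,
    lr_pairs=[("F3", "F4"), ("P3", "P4")],   # hypothetical left/right pairs
    fb_pairs=[("F3", "P3"), ("F4", "P4")],   # hypothetical frontal/posterior pairs
)
print(feats)
```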

Therefore, we use DE features as the input data for our model. The DE features are frequency-domain features computed by a 512-point short-time Fourier transform with a non-overlapping 1-s Hanning window and averaged in five frequency bands, i.e., δ (1–3 Hz), θ (4–7 Hz), α (8–13 Hz), β (14–30 Hz), and γ (31–50 Hz). As a result, the DE feature of each EEG channel can be represented as a 5 × T matrix, where T is the number of time windows, which depends on the length of the film clip. T ranges from 185 to 265 in the SEED dataset and from 12 to 64 in the SEED-IV dataset. To obtain inputs of equal size, features with fewer time windows are zero-padded to a length of 265 for the SEED dataset and 64 for the SEED-IV dataset.
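The DE extraction and zero-padding steps can be sketched as follows. This assumes the Gaussian approximation often used for band-limited EEG, DE = ½ log(2πeσ²), with the mean band power of each windowed spectrum standing in for σ². The providers' exact scaling is not specified here, so values from this sketch will not match the released features numerically; the function names and the random test signal are hypothetical.

```python
import numpy as np

FS = 200      # sampling rate after downsampling (Hz)
N_FFT = 512   # 512-point FFT per 1-s window (200 samples, zero-padded by rfft)
BANDS = {"delta": (1, 3), "theta": (4, 7), "alpha": (8, 13),
         "beta": (14, 30), "gamma": (31, 50)}


def de_features(signal: np.ndarray) -> np.ndarray:
    """Return a 5 x T matrix of DE values for one channel (one row per band)."""
    n_win = len(signal) // FS                    # non-overlapping 1-s windows
    freqs = np.fft.rfftfreq(N_FFT, d=1.0 / FS)   # bin centres, 0.39 Hz spacing
    window = np.hanning(FS)
    out = np.zeros((len(BANDS), n_win))
    for t in range(n_win):
        seg = signal[t * FS:(t + 1) * FS] * window
        power = np.abs(np.fft.rfft(seg, n=N_FFT)) ** 2 / FS  # rough power estimate
        for b, (f_lo, f_hi) in enumerate(BANDS.values()):
            band_power = power[(freqs >= f_lo) & (freqs <= f_hi)].mean()
            # Assumed Gaussian approximation: DE = 0.5 * log(2 * pi * e * variance)
            out[b, t] = 0.5 * np.log(2 * np.pi * np.e * band_power)
    return out


def zero_pad(feat: np.ndarray, length: int) -> np.ndarray:
    """Zero-pad the time axis of a bands x T matrix to a fixed length."""
    padded = np.zeros((feat.shape[0], length))
    padded[:, :feat.shape[1]] = feat
    return padded


rng = np.random.default_rng(0)
x = rng.standard_normal(FS * 185)   # hypothetical 185-s single-channel recording
feat = de_features(x)               # shape (5, 185)
padded = zero_pad(feat, 265)        # SEED padding length
print(feat.shape, padded.shape)
```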

Based on the benchmark SEED and SEED-IV datasets, different EEG emotion recognition models can be evaluated and compared with each other.

EEG signals can generally be regarded as a typical kind of structured data defined on a graph (Micheloyannis et al., 2006). Graph representation techniques and graph neural networks have proven effective for processing brain signals (Petrosian et al., 2000; de Haan et al., 2009; Varatharajah et al., 2017; Zhang et al., 2020). Here, the EEG signal is defined on a graph model as follows:

$$\mathcal{G} = (\mathcal{V}, \mathcal{E}, A)$$

where $\mathcal{V}$ denotes the set of nodes (N nodes in total) of graph $\mathcal{G}$, $\mathcal{E}$ denotes the edges connecting different nodes, each node represents one EEG electrode, $A \in \mathbb{R}^{N \times N}$ is the adjacency matrix, and its element $a_{ij}$ denotes the connection weight between nodes $v_i$ and $v_j$. Consequently, the structure of a graph is determined by its adjacency matrix.

As shown in Figure 1, many previous studies predefine the brain graph structure with a distance function f between channels (Micheloyannis et al., 2006; Ktena et al., 2018; Wang et al., 2018; Zhang et al., 2019; Zhong et al., 2020). However, such predefined, fixed graph structures may not properly model the dynamic brain signals of different subjects in different emotional states.

Here, we propose a self-organized graph construction module for modeling EEG emotion features. Unlike the predefined graph structures used in many previous studies, the self-organized graph is determined by the input brain signals. The adjacency weight $a_{ij}$ of the self-organized graph is defined by a function $f(v_i, v_j)$ as

$$a_{ij} = f(v_i, v_j) = \frac{\exp\left(\theta(v_i W)\,\theta(v_j W)^{\top}\right)}{\sum_{k=1}^{N} \exp\left(\theta(v_i W)\,\theta(v_k W)^{\top}\right)}$$

where $v \in \mathbb{R}^{1 \times F}$ is the feature vector of one node (i.e., EEG electrode) in $\mathcal{G}$, there are N nodes (EEG electrodes) in total, $W \in \mathbb{R}^{F \times L}$ and θ are the weight and the tanh activation function of a linear layer, respectively, and the exponential function is part of the softmax function, which normalizes the weights so that they are positive and bounded. The linear layer works as a bottleneck to reduce computational cost.

To clarify the details of the self-organized graph construction module, we also present its matrix form in Figure 2. The self-organized adjacency matrix is calculated as follows:

$$G = \theta(XW), \qquad A = \mathrm{softmax}\left(G G^{\top}\right)$$

where $X \in \mathbb{R}^{N \times F}$ is the input EEG feature matrix whose row vectors are the node features of the graph to be built, $W \in \mathbb{R}^{F \times L}$ is the weight of a linear layer with tanh activation θ, $G \in \mathbb{R}^{N \times L}$ is the output of the linear layer, and the softmax function is applied to each row to obtain a positive, bounded adjacency matrix A. With the self-organized graph construction module, the graph structure is dynamically constructed from the corresponding input features.
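A minimal NumPy sketch of the matrix form above follows, assuming the softmax is applied row-wise (which matches the normalization over neighbours in the element-wise definition). The per-electrode feature width of 32 × 65 = 2,080 assumes the conv-pool 1 output for SEED is flattened per electrode; the 64-unit bottleneck follows the SO-graph settings given in the architecture description, and the random weights are placeholders for learned parameters.

```python
import numpy as np


def self_organized_adjacency(x: np.ndarray, w: np.ndarray) -> np.ndarray:
    """A = row-wise softmax(G G^T) with G = tanh(X W).

    x: N x F node features (one row per EEG electrode)
    w: F x L weight of the bottleneck linear layer
    """
    g = np.tanh(x @ w)                            # N x L projection
    scores = g @ g.T                              # N x N pairwise similarities
    scores -= scores.max(axis=1, keepdims=True)   # stability shift; softmax unchanged
    e = np.exp(scores)
    return e / e.sum(axis=1, keepdims=True)       # each row sums to 1


rng = np.random.default_rng(0)
n_nodes, n_feat, n_hidden = 62, 32 * 65, 64       # assumed SEED sizes
x = rng.standard_normal((n_nodes, n_feat))        # placeholder features
w = rng.standard_normal((n_feat, n_hidden)) * 0.01  # placeholder weights
a = self_organized_adjacency(x, w)
print(a.shape, a.sum(axis=1)[:3])
```

Because each row is normalized separately, A is generally not symmetric: the weight from electrode i to j need not equal the weight from j to i.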

Generally, the computational cost of sparse graphs is much lower than that of dense graphs. To construct a sparse graph, we adopt a top-k technique in which only the k largest weights in each row of the adjacency matrix are retained, while the smaller connection weights are set to zero. The top-k operation is applied as follows:

$$\mathrm{idx} = \operatorname{argtopk}\left(A[i,:]\right), \qquad A[i, \overline{\mathrm{idx}}] = 0, \qquad i = 1, \ldots, N$$

where argtopk(·) returns the indices of the k largest values of each row vector A[i, :] of the adjacency matrix A, and $\overline{\mathrm{idx}}$ denotes the indices of the values that are not among the k largest values in A[i, :]. As a result, only the k largest values in each row of A are retained, while the remaining values are set to zero. The top-k strategy can be viewed as a modified max-pooling layer: gradients flow only through the retained entries, so the network parameters can be updated by backpropagation in the same way as in networks with max-pooling layers.
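The row-wise top-k step can be sketched with NumPy as below. This is an illustrative forward pass only, and ties are broken arbitrarily by `np.argsort`. In a PyTorch implementation, `torch.topk` could supply the indices, but how the authors implemented this step is not stated here; `topk_sparsify` is a hypothetical name.

```python
import numpy as np


def topk_sparsify(a: np.ndarray, k: int) -> np.ndarray:
    """Keep the k largest entries in each row of A and set all others to zero."""
    out = np.zeros_like(a)
    idx = np.argsort(-a, axis=1)[:, :k]     # column indices of the k largest values per row
    rows = np.arange(a.shape[0])[:, None]   # broadcast row indices against idx
    out[rows, idx] = a[rows, idx]           # copy only the retained weights
    return out


rng = np.random.default_rng(1)
a = rng.random((6, 6)) + 0.1     # hypothetical dense, strictly positive adjacency matrix
a_sparse = topk_sparsify(a, k=2)
print(np.count_nonzero(a_sparse, axis=1))  # two nonzeros per row
```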

With the self-organized graph construction module, the graph structure is dynamically constructed by the corresponding input EEG features. Then, the newly built graphs can be processed by the graph convolutional layers to extract the local/global connection features for emotion recognition.

Based on the self-organized graph construction module, we propose SOGNN as shown in Figure 3. The SOGNN is composed of three conv-pool blocks, three self-organized graph layers, three graph convolution layers, one fully-connected layer and an output layer.

The input EEG feature of SOGNN is sized Electrodes × Bands × TimeFrames. To simplify the illustration, Figure 3 uses the SEED dataset, with a 62 × 5 × 265 input feature, as an example; *Maps indicates the number of output feature maps of each layer. In each conv-pool block, standard convolution and max-pooling layers are applied to extract features for each EEG electrode independently, so that the features of different electrodes are not mixed and the corresponding graph structure is preserved. In the conv-pool 1 block, a 5 × 5 convolutional kernel extracts features in a window of 5 frequency bands and 5 time frames, so the output features are sized 62 × 1 × 261 for the SEED dataset. A 1 × 4 max-pooling layer then downsamples the SEED features, so the output feature map of the conv-pool 1 block is sized 62 × 1 × 65 for SEED input. For the SEED-IV dataset, 1 × 2 max-pooling layers are used. Conv-pool blocks 2 and 3 use 1 × 5 convolutional kernels. Conv-pool blocks 1–3 contain 32, 64, and 128 convolutional kernels, producing 32, 64, and 128 output feature maps, respectively.
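The time-axis sizes quoted above follow from "valid" convolutions (stride 1, no padding) and non-overlapping pooling with floor division. The small sketch below reproduces 265 → 261 → 65 for conv-pool 1 on SEED. It assumes, since the text does not say so explicitly, that the same pooling size (1 × 4 for SEED, 1 × 2 for SEED-IV) is used in all three blocks, so the sizes for blocks 2 and 3 are illustrative; `conv_pool_lengths` is a hypothetical helper.

```python
from typing import List


def conv_pool_lengths(t_in: int, pool: int, kernel: int = 5, n_blocks: int = 3) -> List[int]:
    """Time-axis length after each conv-pool block.

    Assumes stride-1 'valid' convolution (length shrinks by kernel - 1) followed by
    non-overlapping max pooling that drops any incomplete final window.
    """
    lengths = []
    t = t_in
    for _ in range(n_blocks):
        t = t - kernel + 1   # valid convolution along time
        t = t // pool        # non-overlapping pooling
        lengths.append(t)
    return lengths


print(conv_pool_lengths(265, pool=4))  # SEED:    [65, 15, 2]
print(conv_pool_lengths(64, pool=2))   # SEED-IV: [30, 13, 4]
```

Under these assumptions, the flattened per-electrode feature after conv-pool 3 on SEED would be 128 maps × 2 frames.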

The output of each conv-pool block is reshaped into an electrodes × features matrix and fed into a self-organized graph layer (SO-graphs 1–3). In an SO-graph layer, the feature of each EEG electrode remains unchanged; only the adjacency weights between EEG electrodes are calculated according to (2)–(5). Each SO-graph layer has 64 linear units and 32 output units and retains the top-10 adjacency weights. Because their input features differ, SO-graph layers 1–3 produce different graphs. Graph convolution layers are then applied to process these graph features.

Following previous research (Bruna et al., 2013; Song et al., 2019b), spectral graph convolution filters a signal $x \in \mathbb{R}^{N}$ with a graph convolution kernel Θ by the graph convolution operator $*_{\mathcal{G}}$ as

$$x *_{\mathcal{G}} \Theta = U\,\Theta(\Lambda)\,U^{\top} x$$

where the graph Fourier basis $U \in \mathbb{R}^{N \times N}$ is the matrix of eigenvectors of the normalized graph Laplacian $L = I_N - D^{-1/2} A D^{-1/2}$ ($I_N$ is the identity matrix, $D \in \mathbb{R}^{N \times N}$ is the diagonal degree matrix with $D_{ii} = \sum_{j} a_{ij}$, and $A \in \mathbb{R}^{N \times N}$ is the adjacency matrix in Equation 1); $\Lambda \in \mathbb{R}^{N \times N}$ is the diagonal matrix of the eigenvalues of L, and the filter Θ(Λ) is also a diagonal matrix. By this definition, a graph signal x is filtered by multiplying Θ(Λ) with its graph Fourier transform $U^{\top}x$ and transforming the result back with U (Shuman et al., 2013).
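The filtering operation can be checked numerically with a short NumPy sketch. It assumes a symmetric adjacency matrix with no isolated nodes, because `np.linalg.eigh` expects a symmetric input and D^{-1/2} requires positive degrees. The row-normalized self-organized graph is not symmetric in general, so this illustrates the spectral definition rather than the exact layer used in SOGNN; the 4-node ring graph and the filter choices are hypothetical.

```python
from typing import Callable

import numpy as np


def normalized_laplacian(a: np.ndarray) -> np.ndarray:
    """L = I_N - D^{-1/2} A D^{-1/2}, with D_ii = sum_j a_ij (no isolated nodes assumed)."""
    d_inv_sqrt = 1.0 / np.sqrt(a.sum(axis=1))
    return np.eye(a.shape[0]) - d_inv_sqrt[:, None] * a * d_inv_sqrt[None, :]


def spectral_graph_conv(x: np.ndarray, a: np.ndarray,
                        theta: Callable[[np.ndarray], np.ndarray]) -> np.ndarray:
    """Filter one graph signal x (length N): U theta(Lambda) U^T x, for symmetric a."""
    lam, u = np.linalg.eigh(normalized_laplacian(a))  # L = U diag(lam) U^T
    x_hat = u.T @ x                                   # graph Fourier transform
    return u @ (theta(lam) * x_hat)                   # diagonal filter, then inverse transform


ring = np.array([[0, 1, 0, 1],
                 [1, 0, 1, 0],
                 [0, 1, 0, 1],
                 [1, 0, 1, 0]], dtype=float)          # hypothetical 4-node ring
x = np.array([1.0, 0.0, 0.0, 0.0])                   # impulse on node 0
y_smooth = spectral_graph_conv(x, ring, lambda lam: np.exp(-lam))  # low-pass filter
print(np.round(y_smooth, 3))
```

Two easy sanity checks: with θ(λ) = 1 the filter is the identity, and with θ(λ) = λ it reduces to multiplying x by L.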

The outputs of the graph convolution layers are flattened and concatenated into a feature vector, which is fed into a fully-connected (FC) layer with a softmax activation function to predict emotional states. The proposed SOGNN model is trained by minimizing the cross-entropy between its predictions and the ground truth, so the loss function is defined as

$$\mathcal{L} = -\sum_{i \in \Omega} \sum_{c=1}^{C} y_{ic} \log p_{ic}$$

where $p_{ic}$ is the output of the c-th output unit of SOGNN for the i-th training sample, which can be interpreted as the model's predicted probability of the c-th class, $y_{ic}$ is the corresponding ground-truth label, C is the number of emotion classes, and Ω denotes the set of training samples.
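The loss can be computed directly from its definition. This pure-Python sketch sums over samples and classes as written above, using hypothetical probabilities and one-hot labels; terms with y_ic = 0 contribute nothing and are skipped, which also avoids evaluating log(0).

```python
import math
from typing import List


def cross_entropy_loss(probs: List[List[float]], one_hot: List[List[int]]) -> float:
    """L = -sum_i sum_c y_ic * log(p_ic), summed (not averaged) over samples."""
    total = 0.0
    for p_i, y_i in zip(probs, one_hot):
        for p_ic, y_ic in zip(p_i, y_i):
            if y_ic:  # zero-label terms contribute nothing
                total -= y_ic * math.log(p_ic)
    return total


probs = [[0.7, 0.2, 0.1], [0.1, 0.8, 0.1]]   # hypothetical softmax outputs, 3 classes
one_hot = [[1, 0, 0], [0, 1, 0]]             # hypothetical ground truth
loss = cross_entropy_loss(probs, one_hot)
print(loss)
```

In PyTorch, `torch.nn.CrossEntropyLoss` expects unnormalized logits rather than probabilities and averages over the batch by default, which differs from the summed form above only by a constant factor.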

In this section, we conduct a series of experiments to evaluate the proposed model and compare its results with those of other methods. The model implementation will be publicly available at https://github.com/tailofcat/SOGNN. All experiments were run on a platform with an Nvidia Titan Xp GPU, Ubuntu 16.04, PyTorch 1.5.1, and PyTorch-geometric 1.5.0 (Fey and Lenssen, 2019).

To investigate cross-subject emotion recognition performance, a leave-one-subject-out (LOSO) cross-validation strategy was applied. In each LOSO run, the DE features of 14 subjects in SEED/SEED-IV are used as the training set, while the data of the remaining subject form the validation set. The features of each subject are normalized by subtracting that subject's mean and dividing by its standard deviation.
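The LOSO protocol and per-subject normalization can be sketched as follows. The text does not specify whether the statistics are computed per feature dimension or over all of a subject's values; this sketch assumes the latter for brevity. The toy feature lists and function names are hypothetical.

```python
from statistics import mean, pstdev
from typing import Dict, Iterator, List, Tuple


def zscore(values: List[float]) -> List[float]:
    """Normalize one subject's features with that subject's own mean and std."""
    mu, sd = mean(values), pstdev(values)
    return [(v - mu) / sd for v in values]


def loso_splits(subjects: List[int]) -> Iterator[Tuple[List[int], int]]:
    """Yield (training subjects, held-out validation subject) for each LOSO run."""
    for held_out in subjects:
        yield [s for s in subjects if s != held_out], held_out


# Hypothetical toy data: one flat list of feature values per subject (15 subjects)
features: Dict[int, List[float]] = {s: [float(s + i) for i in range(10)] for s in range(1, 16)}
normalized = {s: zscore(v) for s, v in features.items()}
runs = list(loso_splits(sorted(normalized)))
print(len(runs), len(runs[0][0]))  # 15 runs, 14 training subjects each
```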

The proposed SOGNN model was trained with the Adam optimizer using a learning rate of 0.00001, a weight decay of 0.0001, and a mini-batch size of 16. Dropout with a rate of 0.1 was applied during training to randomly block the output units of the internal layers. During training, we monitored the model's mean area under the receiver operating characteristic curve (AUC) over all emotion classes and stopped training once the average training AUC reached 0.99. The trained SOGNN model was then applied to the validation set. For the SEED/SEED-IV datasets with 15 subjects, the LOSO experiment consists of 15 runs, and the average validation accuracy over these runs is taken as the model's performance for comparison with other EEG-based emotion recognition models.
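The stopping criterion relies on a mean one-vs-rest AUC over emotion classes. The pure-Python sketch below computes each class's AUC as the probability that a randomly chosen positive sample scores higher than a randomly chosen negative one (ties count as one half), then averages over classes. The function names, the toy predictions, and the exact form of the threshold check are illustrative assumptions.

```python
from typing import List, Sequence


def binary_auc(scores: Sequence[float], is_positive: Sequence[bool]) -> float:
    """AUC via pairwise comparison; requires at least one positive and one negative."""
    pos = [s for s, p in zip(scores, is_positive) if p]
    neg = [s for s, p in zip(scores, is_positive) if not p]
    wins = 0.0
    for sp in pos:
        for sn in neg:
            if sp > sn:
                wins += 1.0
            elif sp == sn:
                wins += 0.5
    return wins / (len(pos) * len(neg))


def mean_ovr_auc(probs: List[List[float]], labels: List[int]) -> float:
    """Average of one-vs-rest AUCs over all classes."""
    n_classes = len(probs[0])
    aucs = [binary_auc([p[c] for p in probs], [y == c for y in labels])
            for c in range(n_classes)]
    return sum(aucs) / n_classes


def should_stop(mean_train_auc: float, threshold: float = 0.99) -> bool:
    return mean_train_auc >= threshold


probs = [[0.8, 0.1, 0.1], [0.1, 0.7, 0.2], [0.2, 0.2, 0.6], [0.6, 0.3, 0.1]]
labels = [0, 1, 2, 0]
auc = mean_ovr_auc(probs, labels)
print(auc, should_stop(auc))  # 1.0 True
```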

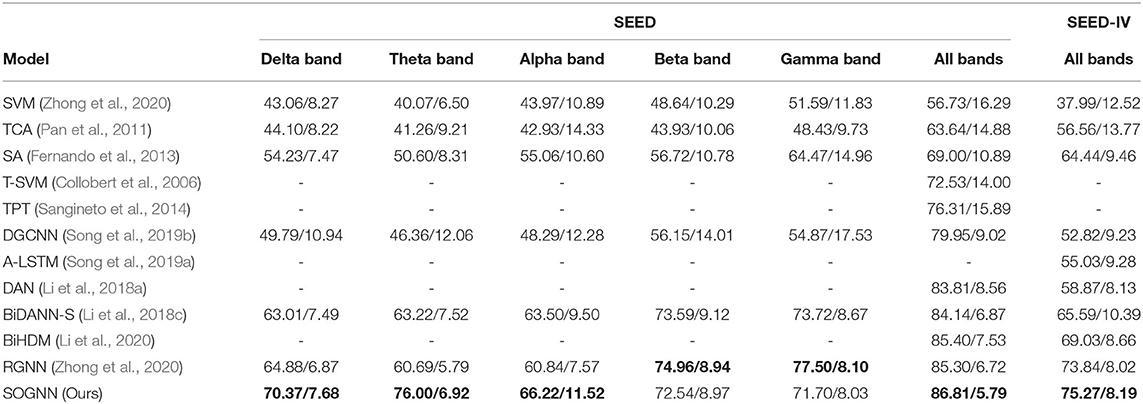

Table 1 presents the experimental results of the proposed SOGNN and many other methods on the SEED and SEED-IV datasets; bold values indicate the best result among all methods. For the single-band experiments, we changed the input features from 5 bands to 1 band, adjusted the input size of the model accordingly, and retrained the model to evaluate each sub-band. With delta or theta band features, SOGNN achieved higher accuracies than the other methods using the same features. With features from the other bands, SOGNN achieved relatively high performance, close to that of the best-performing methods.

Table 1. Leave-one-subject-out emotion recognition accuracy (mean/standard deviation) on SEED and SEED-IV.

With features from all bands, SOGNN achieved an average accuracy of 86.81% on the SEED dataset and 75.27% on the SEED-IV dataset, higher than the state-of-the-art methods, i.e., the BiHDM (Li et al., 2020) and RGNN (Zhong et al., 2020) models. On SEED, SOGNN achieved a macro-F1 score of 0.8669 and an AUC score of 0.9685, with F1 scores of 0.8556, 0.8577, and 0.8874 for the happy, sad, and neutral classes, respectively. On SEED-IV, it achieved a macro-F1 score of 0.7547 and an AUC score of 0.9162, with F1 scores of 0.7517, 0.7419, 0.7441, and 0.7810 for the happy, sad, fear, and neutral classes, respectively. As with other neural networks, the performance of SOGNN varies when the model is initialized with different random seeds. In our experiments, the average accuracy ranged from 0.83 to 0.88 on the SEED dataset and from 0.70 to 0.78 on the SEED-IV dataset; Table 1 reports the median results for both datasets. These results demonstrate the effectiveness of SOGNN for cross-subject emotion recognition.
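Because macro-F1 is the unweighted mean of the per-class F1 scores, the reported macro-F1 values can be cross-checked against the per-class scores. The small sketch below does this arithmetic and also shows F1 computed from confusion-matrix counts; the counts in the last line are hypothetical.

```python
from typing import Sequence


def f1_from_counts(tp: int, fp: int, fn: int) -> float:
    """F1 = 2TP / (2TP + FP + FN), the harmonic mean of precision and recall."""
    return 2 * tp / (2 * tp + fp + fn)


def macro_f1(per_class_f1: Sequence[float]) -> float:
    """Unweighted mean of per-class F1 scores."""
    return sum(per_class_f1) / len(per_class_f1)


print(round(macro_f1([0.8556, 0.8577, 0.8874]), 4))          # SEED: 0.8669
print(round(macro_f1([0.7517, 0.7419, 0.7441, 0.7810]), 4))  # SEED-IV: 0.7547
print(f1_from_counts(tp=8, fp=2, fn=2))                      # hypothetical counts: 0.8
```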

Many previous models, such as DGCNN and BiDANN, relied on predefined graph structures based on prior knowledge of EEG emotion signals. However, predefined, fixed graph structures may not properly model the dynamic brain signals of different subjects in different emotional states. The strength of SOGNN is that it automatically extracts the graph structure from the EEG features: the graph is constructed dynamically and independently for each input. As a result, SOGNN obtained more accurate and robust emotion recognition performance. In the next section, we discuss and analyze the proposed model.

In this section, we analyze the proposed method and its internal properties in detail. We will discuss the performance differences of the SOGNN model with different features, self-organized graphs with different top-k rates, different graph construction methods, interchannel connections, etc.

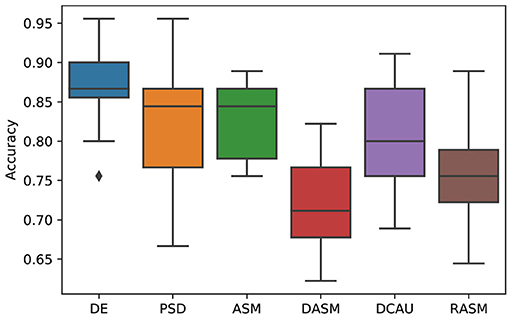

Figure 4 shows the emotion recognition accuracies of SOGNN with different features, including DE, PSD, ASM, DASM, DCAU, and RASM. DE is the most discriminative feature, while the other features perform much worse. This finding is consistent with previous research (Song et al., 2019b; Zhong et al., 2020).

Figure 4. Emotion recognition performance of SOGNN with DE, PSD, ASM, DASM, DCAU, and RASM features.

Dense graph convolution usually has a high computational cost, so constructing a sparse yet effective graph is important in practice. To obtain a sparse adjacency matrix, we applied the top-k technique, in which only the k largest connection weights of each EEG electrode in the adjacency matrix are retained while the remaining smaller weights are set to zero. Figure 5 shows the performance of SOGNN with different top-k sparse graphs. In the figure, k-10 denotes that only the 10 largest connection weights were retained while the remaining weights were set to zero; likewise, k-62 indicates that all connections among the 62 electrodes were retained. The model with k-10 connections achieved performance similar to that of the models with more connections, indicating that a sparse adjacency matrix is sufficient for the model to perform well.
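For a rough sense of the sparsity involved, the arithmetic below counts the retained adjacency entries for N = 62 electrodes with k = 10. It assumes that the cost of sparse graph convolution scales with the number of nonzero entries, which is typical for sparse matrix multiplication but is not measured in the text.

$$\frac{k}{N} = \frac{10}{62} \approx 0.161, \qquad kN = 10 \times 62 = 620 \ \text{nonzero entries vs.} \ N^2 = 62^2 = 3{,}844$$

That is, the k-10 graph keeps roughly 16% of the entries of the fully connected k-62 graph.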

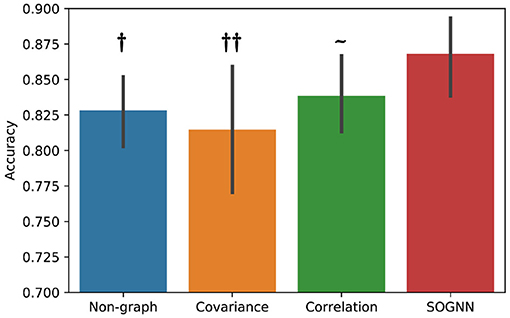

In SOGNN, a self-organized graph construction module is applied to dynamically learn the interchannel relationships of EEG signals across subjects. Here, we investigate different graph construction techniques and their performance. Figure 6 presents the emotion recognition accuracies of models with different graphs on the SEED dataset. To compare these models, we conducted statistical analyses. Because each model is evaluated on a dataset with only 15 subjects, its results may not follow a normal distribution. The Wilcoxon signed-rank test is a non-parametric test for paired samples that does not assume normally distributed data, so it allows us to determine whether the proposed model performs statistically significantly better than the other models. As shown in Figure 6, SOGNN performed significantly better than the non-graph model and the model with the covariance graph. The elements of the covariance graph's adjacency matrix are often so large that the graph convolutional layers saturate easily, which might explain the low performance of the model with the covariance graph. The correlation graph can be considered a normalized version of the covariance graph whose adjacency matrix is normalized to [0, 1]; as a result, its performance improves slightly. Here, we propose a straightforward method termed self-organized graph construction in (5). SOGNN achieves state-of-the-art emotion recognition performance on the SEED and SEED-IV datasets, and our experiments demonstrate the effectiveness of the proposed model and the self-organized graph construction method.
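With only 15 paired per-subject accuracies, the signed-rank test can be computed exactly. The sketch below enumerates all 2^n sign assignments of the ranked absolute differences to obtain a two-sided exact p-value, dropping zero differences and using average ranks for ties. It is an illustrative implementation, not necessarily the procedure or software the authors used (`scipy.stats.wilcoxon` is a library alternative); the accuracies in the usage example are hypothetical.

```python
from itertools import product
from typing import List, Sequence, Tuple


def average_ranks(values: Sequence[float]) -> List[float]:
    """Rank values 1..n, giving tied values the average of their ranks."""
    order = sorted(range(len(values)), key=lambda k: values[k])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        for k in range(i, j + 1):
            ranks[order[k]] = (i + j) / 2 + 1  # mean of 1-based ranks i+1..j+1
        i = j + 1
    return ranks


def wilcoxon_exact(x: Sequence[float], y: Sequence[float]) -> Tuple[float, float]:
    """Two-sided exact Wilcoxon signed-rank test for paired samples.

    Returns (W+, p). Zero differences are dropped before ranking.
    """
    d = [a - b for a, b in zip(x, y) if a != b]
    ranks = average_ranks([abs(v) for v in d])
    w_plus = sum(r for r, v in zip(ranks, d) if v > 0)
    center = sum(ranks) / 2          # mean of W+ under the null hypothesis
    observed = abs(w_plus - center)
    extreme = 0
    # Under H0 every signed rank is positive or negative with probability 1/2
    for signs in product((False, True), repeat=len(d)):
        w = sum(r for r, s in zip(ranks, signs) if s)
        if abs(w - center) >= observed - 1e-9:
            extreme += 1
    return w_plus, extreme / 2 ** len(d)


# Hypothetical per-subject accuracies: model A beats model B on all 15 subjects
model_a = [0.80 + 0.01 * i for i in range(15)]
model_b = [a - 0.03 - 0.001 * i for i, a in enumerate(model_a)]
w, p = wilcoxon_exact(model_a, model_b)
print(w, p)  # 120.0 6.103515625e-05
```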

Figure 6. Emotion recognition performance based on different graphs. Wilcoxon signed rank test: ~ non-significant, †p < 0.05, ††p < 0.01.

To analyze the interchannel relationships learned by the proposed model, we computed the average adjacency matrix of each self-organized graph (SO-graphs 1–3, as indicated in Figure 3) over the SEED samples. The average adjacency matrices of SO-graphs 1–3 were then normalized to [0, 1] for ease of analysis and are presented in Figure 7A. These graphs reflect the common connections of EEG electrodes for emotion recognition. SO-graph 1 is diagonally dominant: only a few diagonal elements are relatively large, while most of the other elements are close to zero. That is, only the features of a few EEG channels are discriminative for the first graph convolution layer. Moreover, the off-diagonal elements of SO-graphs 2 and 3 indicate that interchannel relationships also play important roles in classifying EEG signals of different emotions.

Furthermore, we analyzed the interchannel connections of the learned graphs for emotion recognition. We extracted the diagonal elements of the adjacency matrices of SO-graphs 1–3 and transformed them into topographic maps, which are presented in Figure 7B. According to these maps, prefrontal and centro-parietal electrodes (e.g., F7, CPZ, FP2) had the largest weights. The five electrodes with the largest connection weights to CPZ and FP2 are also presented. According to a previous study (Davidson et al., 1999), activation in regions of the prefrontal cortex is related to blunted positive and negative emotions. A positive waveform is enhanced over the centro-parietal electrode (CPZ) for emotional pictures (Lang and Bradley, 2010). In many related studies (Tyng et al., 2017; Alia-Klein et al., 2018; Pan et al., 2018), the prefrontal-parietal network is activated by emotion-related stimuli such as facial feelings, negative emotion processing, and anger. The interchannel relations between prefrontal, parietal, and occipital channels are discriminative for EEG-based emotion recognition. These findings coincide with the spatial distribution of emotion-related activity suggested by prior studies.
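The averaging, normalization, diagonal extraction, and neighbour ranking described in the two paragraphs above can be sketched with NumPy. This assumes min-max normalization over the whole averaged matrix, that "connected with" an electrode refers to that electrode's row of the adjacency matrix, and that the self-connection is excluded when ranking neighbours. The six channel names, the adjacency values, and `summarize_graph` are hypothetical.

```python
from typing import List, Tuple

import numpy as np


def summarize_graph(adjs: np.ndarray, channels: List[str], probe: str,
                    n_top: int = 5) -> Tuple[np.ndarray, np.ndarray, List[str]]:
    """Average, min-max normalize, and summarize one SO-graph layer.

    adjs: array of shape (n_samples, N, N) holding per-sample adjacency matrices.
    Returns the normalized mean matrix, its diagonal (for a topographic map),
    and the n_top electrodes most strongly connected to `probe`.
    """
    mean_adj = adjs.mean(axis=0)                    # average over samples
    lo, hi = mean_adj.min(), mean_adj.max()
    norm_adj = (mean_adj - lo) / (hi - lo)          # min-max normalization to [0, 1]
    diag = np.diag(norm_adj).copy()                 # self-connection weight per electrode
    i = channels.index(probe)
    row = norm_adj[i].copy()
    row[i] = -np.inf                                # ignore the self-connection
    top = np.argsort(row)[::-1][:n_top]             # indices of the largest weights
    return norm_adj, diag, [channels[j] for j in top]


channels = ["FP1", "FP2", "F7", "CPZ", "O1", "O2"]
base = np.full((6, 6), 0.05)
base[3] = [0.1, 0.5, 0.3, 0.9, 0.2, 0.4]            # hypothetical CPZ row
adjs = np.stack([base, base])                       # two identical hypothetical samples
norm_adj, diag, neighbours = summarize_graph(adjs, channels, "CPZ")
print(neighbours)  # ['FP2', 'O2', 'F7', 'O1', 'FP1']
```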

These experiments and analyses of SOGNN provide insight into EEG-based emotion recognition. As a novel graph processing method for brain signals, SOGNN may also inspire neuroscience research, such as graph-based processing of functional magnetic resonance imaging data.

In this paper, a novel model termed SOGNN was proposed for cross-subject emotion recognition. SOGNN dynamically learns the interchannel relationships of EEG emotion signals using a self-organized graph construction module. The proposed model achieved state-of-the-art performance on two open EEG emotion recognition datasets, i.e., SEED and SEED-IV. In addition, a series of analyses demonstrated the effectiveness of the proposed model for graph construction and emotion recognition. The experimental results indicate that SOGNN is not only an effective model for recognizing emotions but also a potential technique for other EEG-based applications. In the future, we would like to build more efficient networks to model brain signals and decode high-level cognitive behaviors. Moreover, newly emerging machine learning techniques may further inspire methods for emotion recognition and affective computing.

Publicly available datasets were analyzed in this study. These data can be found at https://bcmi.sjtu.edu.cn/resource.html.

The studies involving human participants were reviewed and approved by Ethics Committee of South China Normal University. The patients/participants provided their written informed consent to participate in this study. Written informed consent was obtained from the individual(s) for the publication of any potentially identifiable images or data included in this article.

JL proposed the idea, conducted the experiments, and wrote the manuscript. SL and FW provided advice on the research approaches, signal processing, and checked and revised the manuscript. JP offered important help that guided the experiments and analysis methods. All authors contributed to the article and approved the submitted version.

This work was supported by the National Natural Science Foundation of China (Grant Nos. 62006082, 61836003, and 61906019), the Key Realm R and D Program of Guangzhou (Grant No. 202007030005), the Guangdong Natural Science Foundation (Grant Nos. 2021A1515011600, 2020A1515110294, and 2021A1515011853), and Guangzhou Science and Technology Plan Project (Grant No. 202102020877).

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

We thank Prof. Zhu Liang Yu and his group at South China University of Technology for providing the computation platform. We thank Dr. Zhenfu Wen at New York University for taking care of our research work. Finally, we would also like to thank the editors, reviewers, and editorial staff who participated in the publication process of this paper.

Alarcao, S. M., and Fonseca, M. J. (2017). Emotions recognition using EEG signals: a survey. IEEE Trans. Affect. Comput. 10, 374–393. doi: 10.1109/TAFFC.2017.2714671

Alhagry, S., Fahmy, A. A., and El-Khoribi, R. A. (2017). Emotion recognition based on EEG using LSTM recurrent neural network. Int. J. Adv. Comput. Sci. Appl. 8, 355–358. doi: 10.14569/IJACSA.2017.081046

Alia-Klein, N., Preston-Campbell, R. N., Moeller, S. J., Parvaz, M. A., Bachi, K., Gan, G., et al. (2018). Trait anger modulates neural activity in the fronto-parietal attention network. PLOS ONE 13:e0194444. doi: 10.1371/journal.pone.0194444

Bruna, J., Zaremba, W., Szlam, A., and LeCun, Y. (2013). Spectral networks and locally connected networks on graphs. arXiv [Preprint]. arXiv:1312.6203.

Busso, C., Deng, Z., Yildirim, S., Bulut, M., Lee, C. M., Kazemzadeh, A., et al. (2004). “Analysis of emotion recognition using facial expressions, speech and multimodal information,” in Proceedings of the 6th International Conference on Multimodal Interfaces, 205–211. doi: 10.1145/1027933.1027968

Coan, J. A., and Allen, J. J. (2004). Frontal EEG asymmetry as a moderator and mediator of emotion. Biol. Psychol. 67, 7–50. doi: 10.1016/j.biopsycho.2004.03.002

Collobert, R., Sinz, F. H., Weston, J., and Bottou, L. (2006). Large scale transductive SVMs. J. Mach. Learn. Res. 7, 1687–1712.

Cowie, R., Douglas-Cowie, E., Tsapatsoulis, N., Votsis, G., Kollias, S., Fellenz, W., et al. (2001). Emotion recognition in human-computer interaction. IEEE Signal Process. Mag. 18, 32–80. doi: 10.1109/79.911197

Davidson, R. J., Abercrombie, H., Nitschke, J. B., and Putnam, K. (1999). Regional brain function, emotion and disorders of emotion. Curr. Opin. Neurobiol. 9, 228–234. doi: 10.1016/S0959-4388(99)80032-4

de Haan, W., Pijnenburg, Y. A., Strijers, R. L., van der Made, Y., van der Flier, W. M., Scheltens, P., et al. (2009). Functional neural network analysis in frontotemporal dementia and Alzheimer's disease using EEG and graph theory. BMC Neurosci. 10:101. doi: 10.1186/1471-2202-10-101

Duan, R.-N., Zhu, J.-Y., and Lu, B.-L. (2013). “Differential entropy feature for EEG-based emotion classification,” in 2013 6th International IEEE/EMBS Conference on Neural Engineering (NER) (San Diego, CA: IEEE), 81–84. doi: 10.1109/NER.2013.6695876

Fernando, B., Habrard, A., Sebban, M., and Tuytelaars, T. (2013). “Unsupervised visual domain adaptation using subspace alignment,” in 2013 IEEE International Conference on Computer Vision (Sydney, NSW), 2960–2967. doi: 10.1109/ICCV.2013.368

Fey, M., and Lenssen, J. E. (2019). “Fast graph representation learning with PyTorch Geometric,” in ICLR Workshop on Representation Learning on Graphs and Manifolds (New Orleans, LA).

Ktena, S. I., Parisot, S., Ferrante, E., Rajchl, M., Lee, M., Glocker, B., et al. (2018). Metric learning with spectral graph convolutions on brain connectivity networks. Neuroimage 169, 431–442. doi: 10.1016/j.neuroimage.2017.12.052

Lang, P. J., and Bradley, M. M. (2010). Emotion and the motivational brain. Biol. Psychol. 84, 437–450. doi: 10.1016/j.biopsycho.2009.10.007

Li, H., Jin, Y.-M., Zheng, W.-L., and Lu, B.-L. (2018a). “Cross-subject emotion recognition using deep adaptation networks,” in International Conference on Neural Information Processing (Siem Reap: Springer), 403–413. doi: 10.1007/978-3-030-04221-9_36

Li, J., Qiu, S., Shen, Y.-Y., Liu, C.-L., and He, H. (2019). Multisource transfer learning for cross-subject EEG emotion recognition. IEEE Trans. Cybernet. 50, 3281–3293. doi: 10.1109/TCYB.2019.2904052

Li, X., Song, D., Zhang, P., Zhang, Y., Hou, Y., and Hu, B. (2018b). Exploring EEG features in cross-subject emotion recognition. Front. Neurosci. 12:162. doi: 10.3389/fnins.2018.00162

Li, Y., Wang, L., Zheng, W., Zong, Y., Qi, L., Cui, Z., et al. (2020). A novel bi-hemispheric discrepancy model for EEG emotion recognition. IEEE Trans. Cogn. Dev. Syst. doi: 10.1109/TCDS.2020.2999337

Li, Y., Zheng, W., Zong, Y., Cui, Z., Zhang, T., and Zhou, X. (2018c). A bi-hemisphere domain adversarial neural network model for EEG emotion recognition. IEEE Trans. Affect. Comput. doi: 10.1109/TAFFC.2018.2885474

Lin, Y.-P., Wang, C.-H., Jung, T.-P., Wu, T.-L., Jeng, S.-K., Duann, J.-R., et al. (2010). EEG-based emotion recognition in music listening. IEEE Trans. Biomed. Eng. 57, 1798–1806. doi: 10.1109/TBME.2010.2048568

Linial, N., London, E., and Rabinovich, Y. (1995). The geometry of graphs and some of its algorithmic applications. Combinatorica 15, 215–245. doi: 10.1007/BF01200757

Micheloyannis, S., Pachou, E., Stam, C. J., Vourkas, M., Erimaki, S., and Tsirka, V. (2006). Using graph theoretical analysis of multi channel EEG to evaluate the neural efficiency hypothesis. Neurosci. Lett. 402, 273–277. doi: 10.1016/j.neulet.2006.04.006

Pan, J., Zhan, L., Hu, C., Yang, J., Wang, C., Gu, L., et al. (2018). Emotion regulation and complex brain networks: association between expressive suppression and efficiency in the fronto-parietal network and default-mode network. Front. Hum. Neurosci. 12:70. doi: 10.3389/fnhum.2018.00070

Pan, S. J., Tsang, I. W., Kwok, J. T., and Yang, Q. (2011). Domain adaptation via transfer component analysis. IEEE Trans. Neural Netw. 22, 199–210. doi: 10.1109/TNN.2010.2091281

Petrosian, A., Prokhorov, D., Homan, R., Dasheiff, R., and Wunsch, D. II (2000). Recurrent neural network based prediction of epileptic seizures in intra- and extracranial EEG. Neurocomputing 30, 201–218. doi: 10.1016/S0925-2312(99)00126-5

Sangineto, E., Zen, G., Ricci, E., and Sebe, N. (2014). “We are not all equal: personalizing models for facial expression analysis with transductive parameter transfer,” in Proceedings of the 22nd ACM International Conference on Multimedia (Orlando, FL), 357–366. doi: 10.1145/2647868.2654916

Shi, L.-C., and Lu, B.-L. (2010). "Off-line and on-line vigilance estimation based on linear dynamical system and manifold learning," in 2010 Annual International Conference of the IEEE Engineering in Medicine and Biology (IEEE), 6587–6590.

Shu, L., Xie, J., Yang, M., Li, Z., Li, Z., Liao, D., et al. (2018). A review of emotion recognition using physiological signals. Sensors 18:2074. doi: 10.3390/s18072074

Shuman, D. I., Narang, S. K., Frossard, P., Ortega, A., and Vandergheynst, P. (2013). The emerging field of signal processing on graphs: extending high-dimensional data analysis to networks and other irregular domains. IEEE Signal Process. Mag. 30, 83–98. doi: 10.1109/MSP.2012.2235192

Song, T., Zheng, W., Lu, C., Zong, Y., Zhang, X., and Cui, Z. (2019a). MPED: a multi-modal physiological emotion database for discrete emotion recognition. IEEE Access 7, 12177–12191. doi: 10.1109/ACCESS.2019.2891579

Song, T., Zheng, W., Song, P., and Cui, Z. (2019b). EEG emotion recognition using dynamical graph convolutional neural networks. IEEE Trans. Affect. Comput. 11, 532–541. doi: 10.1109/TAFFC.2018.2817622

Tyng, C. M., Amin, H. U., Saad, M. N., and Malik, A. S. (2017). The influences of emotion on learning and memory. Front. Psychol. 8:1454. doi: 10.3389/fpsyg.2017.01454

Varatharajah, Y., Chong, M. J., Saboo, K., Berry, B., Brinkmann, B., Worrell, G., et al. (2017). “EEG-GRAPH: a factor-graph-based model for capturing spatial, temporal, and observational relationships in electroencephalograms,” in Advances in Neural Information Processing Systems, eds I. Guyon, U. V. Luxburg, S. Bengio, H. Wallach, R. Fergus, S. Vishwanathan, and R. Garnett (Long Beach, CA: NeurIPS Proceedings), 5371–5380.

Wang, X. H., Zhang, T., Xu, X. M., Chen, L., Xing, X. F., and Chen, C. L. P. (2018). “EEG emotion recognition using dynamical graph convolutional neural networks and broad learning system,” in 2018 IEEE International Conference on Bioinformatics and Biomedicine (BIBM) (Madrid: IEEE), 1240–1244. doi: 10.1109/BIBM.2018.8621147

Zhang, D., Chen, K., Jian, D., and Yao, L. (2020). Motor imagery classification via temporal attention cues of graph embedded EEG signals. IEEE J. Biomed. Health Inform. 24, 2570–2579. doi: 10.1109/JBHI.2020.2967128

Zhang, T., Wang, X., Xu, X., and Chen, C. P. (2019). GCB-net: graph convolutional broad network and its application in emotion recognition. IEEE Trans. Affect. Comput. doi: 10.1109/TAFFC.2019.2937768

Zheng, W. (2016). Multichannel EEG-based emotion recognition via group sparse canonical correlation analysis. IEEE Trans. Cogn. Dev. Syst. 9, 281–290. doi: 10.1109/TCDS.2016.2587290

Zheng, W.-L., Liu, W., Lu, Y., Lu, B.-L., and Cichocki, A. (2018). Emotionmeter: a multimodal framework for recognizing human emotions. IEEE Trans. Cybernet. 49, 1110–1122. doi: 10.1109/TCYB.2018.2797176

Zheng, W.-L., and Lu, B.-L. (2015). Investigating critical frequency bands and channels for EEG-based emotion recognition with deep neural networks. IEEE Trans. Auton. Mental Dev. 7, 162–175. doi: 10.1109/TAMD.2015.2431497

Zheng, W.-L., Zhu, J.-Y., and Lu, B.-L. (2017). Identifying stable patterns over time for emotion recognition from EEG. IEEE Trans. Affect. Comput. 10, 417–429. doi: 10.1109/TAFFC.2017.2712143

Zheng, W.-L., Zhu, J.-Y., Peng, Y., and Lu, B.-L. (2014). “EEG-based emotion classification using deep belief networks,” in 2014 IEEE International Conference on Multimedia and Expo (ICME) (Chengdu: IEEE), 1–6. doi: 10.1109/ICME.2014.6890166

Keywords: SEED dataset, graph neural network, cross-subject, emotion recognition, graph construction

Citation: Li J, Li S, Pan J and Wang F (2021) Cross-Subject EEG Emotion Recognition With Self-Organized Graph Neural Network. Front. Neurosci. 15:611653. doi: 10.3389/fnins.2021.611653

Received: 29 September 2020; Accepted: 17 May 2021;

Published: 09 June 2021.

Edited by:

Haider Raza, University of Essex, United Kingdom

Reviewed by:

Archana Venkataraman, Johns Hopkins University, United States

Copyright © 2021 Li, Li, Pan and Wang. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Shuqi Li, surkyli@m.scnu.edu.cn

Disclaimer: All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article or claim that may be made by its manufacturer is not guaranteed or endorsed by the publisher.
