REVIEW article

Front. Neurosci., 09 June 2021

Sec. Neuroprosthetics

Volume 15 - 2021 | https://doi.org/10.3389/fnins.2021.581414

This article is part of the Research Topic “Wearable and Implantable Technologies in the Rehabilitation of Patients with Sensory Impairments”.

Cochlear implants (CIs) have been remarkably successful at restoring speech perception for severely to profoundly deaf individuals. Despite their success, several limitations remain, particularly in CI users’ ability to understand speech in noisy environments, locate sound sources, and enjoy music. A new multimodal approach has been proposed that uses haptic stimulation to provide sound information that is poorly transmitted by the implant. This augmentation of the electrical CI signal with haptic stimulation (electro-haptic stimulation; EHS) has been shown to improve speech-in-noise performance and sound localization in CI users. There is also evidence that it could enhance music perception. We review the evidence of EHS enhancement of CI listening and discuss key areas where further research is required. These include understanding the neural basis of EHS enhancement, understanding the effectiveness of EHS across different clinical populations, and optimizing signal-processing strategies. We also discuss the significant potential for a new generation of haptic neuroprosthetic devices to aid those who cannot access hearing-assistive technology, either because of biomedical or healthcare-access issues. While significant further research and development is required, we conclude that EHS represents a promising new approach that could, in the near future, offer a non-invasive, inexpensive means of substantially improving clinical outcomes for hearing-impaired individuals.

Cochlear implants (CIs) are among the most successful neuroprostheses, allowing those with severe-to-profound deafness to access sound through electrical stimulation of the cochlea. Over 18,000 people in the United Kingdom alone currently use a CI (Hanvey, 2020), although it has been estimated that only 1 in 20 adults who could benefit from a CI have accessed one (Raine et al., 2016). Despite the success of CIs, there remain significant limitations in the performance that can be achieved by users (Spriet et al., 2007; Dorman et al., 2016). Recently, however, a new multimodal approach to improve CI user performance has emerged (Huang et al., 2017; Fletcher et al., 2018, 2019, 2020a, 2020b, 2020c; Ciesla et al., 2019; Fletcher, 2020; Fletcher and Zgheib, 2020). This approach uses “electro-haptic stimulation” (EHS)¹, whereby the electrical CI signal is augmented by haptic stimulation that provides sound information missing from the CI signal. In addition to augmenting CI listening, new advances in haptic technology mean that haptic stimulation could provide a low-cost means to aid the many millions of people worldwide with disabling hearing loss who cannot access CI technology. In the following three sections of this review, we first examine the evidence of EHS benefits to CI listening, before reviewing the potential for a new generation of haptic aids to support those who are unable to access hearing-assistive devices. Finally, we discuss key areas in which further research is required, such as identifying the optimal signal-processing regime to maximize EHS benefit, establishing the effects of long-term training with EHS, and understanding the mechanisms that underlie EHS benefit.

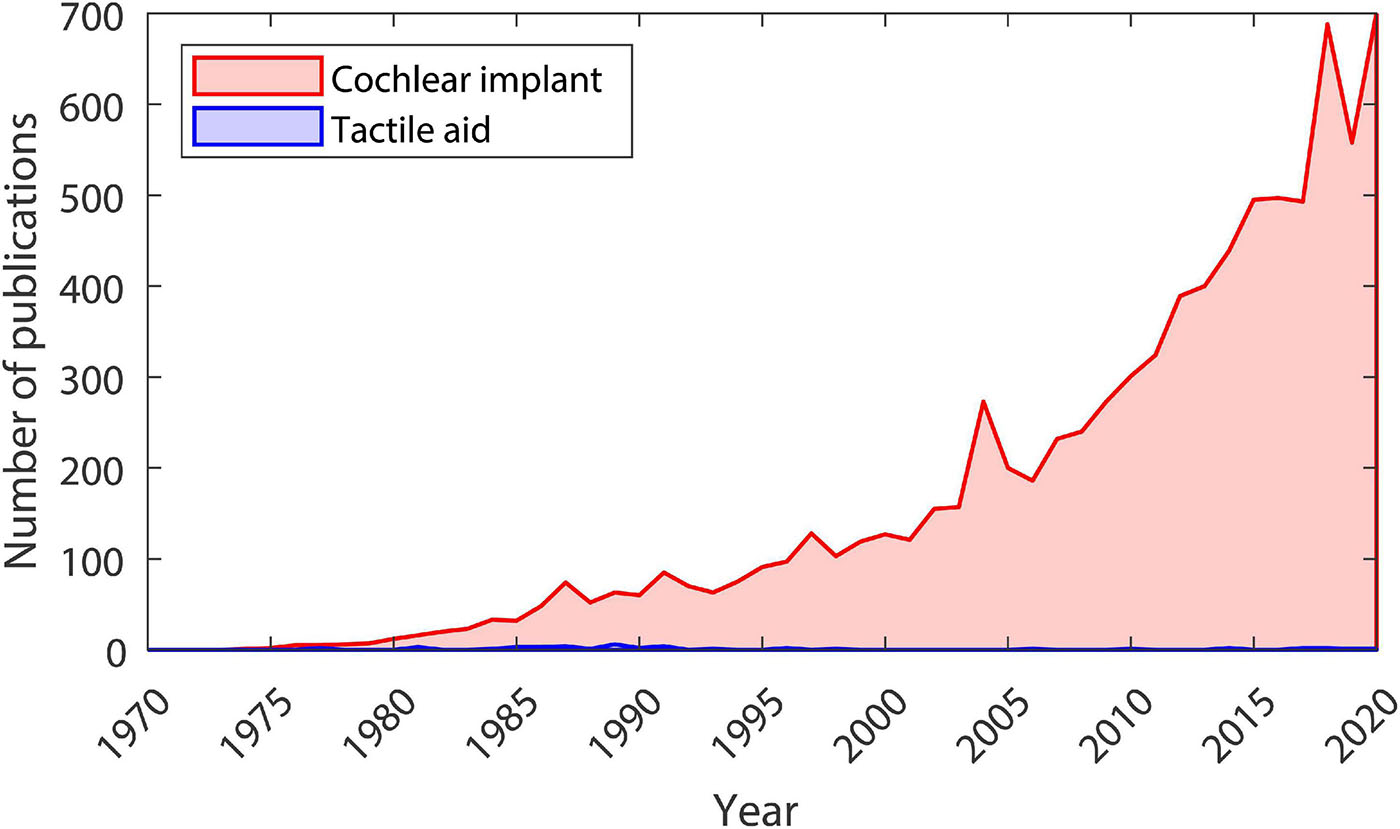

In the 1920s, the first “tactile aids” were developed to assist profoundly deaf children in the classroom (Gault, 1924, 1930). This was followed by influential work, beginning in the late 1960s, where visual information was delivered to blind individuals using haptic stimulation on the finger or back. Participants were able to recognize faces, complete complex inspection-assembly tasks, and judge the speed and direction of a rolling ball (Bach-y-Rita et al., 1969, 2003; Bach-y-Rita, 2004). Fascinatingly, after training, participants reported that objects became externalized, seeming as though they were outside of their body rather than being located on the skin (Bach-y-Rita, 2004). In the 1980s and 1990s, largely due to technological advances, interest in using tactile aids to treat deafness grew substantially. In the mid-1980s, one study showed that it was possible to learn a vocabulary of 250 words with a tactile aid (Brooks et al., 1985). This included the ability to discriminate words that differ only by place of articulation, such as “so” and “show” or “let” and “net.” Another set of studies showed that, for both hearing and post-lingually deafened individuals who are lip reading without auditory cues, haptic stimulation can increase the percentage of words recognized within a sentence by more than 15% (De Filippo, 1984; Brooks et al., 1986a; Hanin et al., 1988; Cowan et al., 1991; Reed et al., 1992). However, the development of tactile aids was halted by dramatic improvements in CI technology, which allowed users to achieve speech recognition far better than could conceivably be achieved using a tactile aid (Zeng et al., 2008). By the late 1990s, the use and development of tactile aids had almost completely ceased, and a rapid expansion in CI research began (see Figure 1).

Figure 1. Number of publications each year from 1970 to 2020. Data taken from Google Scholar searches for articles (including patents, not including citations) with the term “tactile aid” (shown in blue) or “cochlear implant” (shown in red) in the title. The search was conducted on 7 February 2021.

In recent decades, while the expansion of CI research has continued, the pace of improvements in patient outcomes has slowed (Zeng et al., 2008; Wilson, 2015). Despite the huge success of CIs, there remain significant limitations for even the best-performing users (Wilson, 2017), as well as substantial variation in performance between individuals (Tamati et al., 2019). For example, CI users have limited pitch perception (D’Alessandro and Mancini, 2019), frequency resolution (O’Neill et al., 2019), and dynamic range (Bento et al., 2005). These limitations in extracting basic sound properties translate into limitations in real-world listening, with CI users often struggling to understand speech in challenging listening conditions (Hazrati and Loizou, 2012), struggling to locate sounds (Dorman et al., 2016), and having substantially reduced music appreciation (McDermott, 2004; Dritsakis et al., 2017). For CI users with useful residual acoustic hearing, combining electrical CI stimulation with acoustic stimulation (electro-acoustic stimulation) has been shown to improve performance (O’Connell et al., 2017). Even impaired acoustic hearing can transmit important sound information, such as pitch, temporal fine structure, and dynamic changes in intensity, more effectively than a CI (Gifford et al., 2007; Gifford and Dorman, 2012). However, the proportion of CI users with useful residual acoustic hearing is small (Verschuur et al., 2016), and residual hearing deteriorates more rapidly after implantation (Wanna et al., 2018).

Electro-haptic stimulation has recently emerged as an alternative approach to improve CI outcomes. EHS uses haptic stimulation to augment the CI signal, rather than as an alternative to CI stimulation, as was the case with tactile aids. Early evidence suggests that EHS can improve speech-in-noise performance, sound localization, and music perception in CI users. Two recent studies showed improved speech-in-noise performance when the fundamental frequency (F0) of speech (an acoustic correlate of pitch) was presented through haptic stimulation on the finger. This was demonstrated both for CI users (Huang et al., 2017) and for normal-hearing participants listening to simulated CI audio (Ciesla et al., 2019). However, in these studies, the haptic signal was extracted from the clean speech signal, which would not be available in the real world.
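To make the F0 cue more concrete, the following is a minimal Python/NumPy sketch of one generic way to estimate F0 from a short frame of clean audio: pick the lag with the largest autocorrelation within a plausible pitch range. This is a textbook technique, not the extraction method used by Huang et al. (2017) or Ciesla et al. (2019); the function name and the 70–400 Hz search range are assumptions. Simple estimators of this kind are prone to errors, such as octave jumps, when background noise is present, which illustrates why F0 extraction from noisy real-world audio is a harder problem.

```python
import numpy as np


def estimate_f0_autocorr(frame, fs, f0_min=70.0, f0_max=400.0):
    """Estimate the F0 (Hz) of one short frame from its autocorrelation.

    Generic illustration (hypothetical helper): choose the lag with the
    largest autocorrelation inside the lag range that corresponds to
    [f0_min, f0_max] Hz. Returns 0.0 for a silent frame.
    """
    frame = np.asarray(frame, dtype=float)
    frame = frame - frame.mean()                  # remove any DC offset
    # Autocorrelation for non-negative lags only (index = lag in samples)
    ac = np.correlate(frame, frame, mode="full")[len(frame) - 1:]
    if ac[0] <= 0.0:
        return 0.0                                # no energy, so no estimate
    lag_min = int(fs / f0_max)                    # shortest period considered
    lag_max = min(int(fs / f0_min), len(ac) - 1)  # longest period considered
    best_lag = lag_min + int(np.argmax(ac[lag_min:lag_max + 1]))
    return fs / best_lag


# Example: a 40 ms harmonic complex with a 200 Hz fundamental
fs = 16000
t = np.arange(int(0.04 * fs)) / fs
tone = sum(np.sin(2 * np.pi * k * 200 * t) / k for k in range(1, 4))
print(round(estimate_f0_autocorr(tone, fs), 1))
```

Because the estimate is quantized to whole-sample lags, its resolution is coarse at higher F0s; practical trackers usually add interpolation around the peak and a voicing decision.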

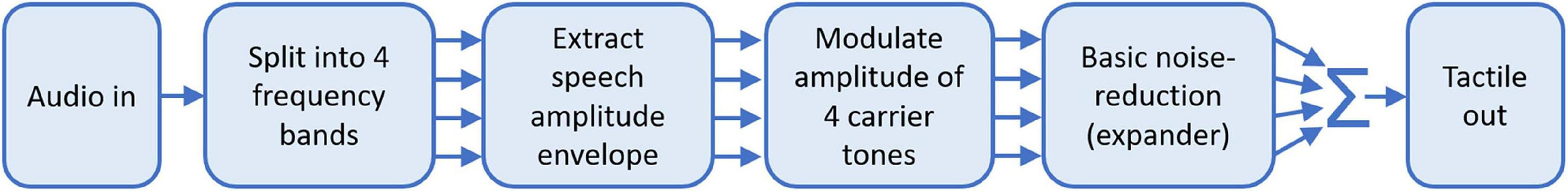

Fletcher et al. (2019) showed that presenting the speech amplitude envelope through haptic stimulation also improves speech-in-noise performance in CI users. In this study, the haptic signal was extracted from the speech-in-noise signal using a simple noise-reduction technique. Furthermore, the signal processing used could be applied in real time on a compact device, and haptic stimulation was delivered to the wrist, which is a more suitable site for a real-world application. A block diagram of the signal-processing strategy used is shown in Figure 2. The amplitude envelope is extracted from the audio in four frequency bands, which cover the frequency range where speech energy is maximal. Each of the four envelopes is then used to modulate the amplitude of one of four carrier tones. The carrier tone frequencies are focused where tactile sensitivity is highest and are spaced so that they are individually discriminable. Each tone is then passed through an expander, which exaggerates larger amplitude modulations and acts as a basic noise-reduction strategy. The tones are then delivered to each wrist through a single shaker contact. Using this approach, participants were able to recognize 8% more words in multi-talker noise with EHS than with their CI alone, with word recognition for some participants increasing by more than 20%. Similar benefit to speech-in-noise performance has also been found in normal-hearing participants listening to simulated CI audio (Fletcher et al., 2018). This study used a similar haptic signal-processing strategy, but haptic stimulation was delivered to the fingertip rather than the wrist.
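The processing chain described above (and in Figure 2) can be approximated offline in a few lines. The sketch below is a minimal illustration under stated assumptions: the band edges, the carrier frequencies (spaced roughly 30% apart), the 30 Hz envelope smoothing, and the power-law form of the expander are all hypothetical values chosen for readability, not the parameters of Fletcher et al. (2019). The expander is applied to each envelope before modulation, which for a simple power law is equivalent to expanding the modulated tone's amplitude. The brick-wall FFT filter is an offline shortcut, not a real-time filter bank.

```python
import numpy as np

# Hypothetical parameters, for illustration only (not those of Fletcher et al., 2019)
BANDS_HZ = ((100, 500), (500, 1000), (1000, 2000), (2000, 4000))
CARRIERS_HZ = (90.0, 120.0, 160.0, 210.0)  # adjacent carriers roughly 30% apart


def bandpass_fft(x, fs, lo, hi):
    """Brick-wall band-pass: zero every FFT bin outside [lo, hi) Hz (offline only)."""
    spectrum = np.fft.rfft(x)
    freqs = np.fft.rfftfreq(len(x), d=1.0 / fs)
    spectrum[(freqs < lo) | (freqs >= hi)] = 0.0
    return np.fft.irfft(spectrum, n=len(x))


def envelope(x, fs, smooth_hz=30.0):
    """Amplitude envelope: full-wave rectification, then a moving-average smoother."""
    win = max(1, int(fs / smooth_hz))
    return np.convolve(np.abs(x), np.ones(win) / win, mode="same")


def audio_to_haptic(x, fs, bands=BANDS_HZ, carriers=CARRIERS_HZ, exponent=2.0):
    """Band envelopes -> power-law expander -> amplitude-modulated carrier tones."""
    x = np.asarray(x, dtype=float)
    envs = [envelope(bandpass_fft(x, fs, lo, hi), fs) for lo, hi in bands]
    ref = max(e.max() for e in envs)     # common reference level across bands
    out = np.zeros(len(x))
    if ref <= 0.0:
        return out                       # silent input gives silent output
    t = np.arange(len(x)) / fs
    for env, fc in zip(envs, carriers):
        # Expander: with exponent > 1, low-level envelope values (e.g. a noise
        # floor) shrink more than high-level peaks, exaggerating large modulations.
        drive = ref * (env / ref) ** exponent
        out += drive * np.sin(2 * np.pi * fc * t)
    return out / len(bands)              # keeps the summed peak at or below ref
```

Feeding a 300 Hz tone through `audio_to_haptic` should yield an output dominated by the 90 Hz carrier, since only the lowest band contains energy. The exponent trades noise suppression against the loss of quieter speech cues; the value 2.0 here is arbitrary.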

Figure 2. Block diagram describing the haptic signal-processing strategy used by Fletcher et al. (2019).

In addition to these EHS studies, which used co-located speech and noise sources, Fletcher et al. (2020b) showed large benefits of EHS for spatially separated speech and noise in unilaterally implanted CI users. In this study, the audio received by devices behind each ear was converted to haptic stimulation on each wrist. A signal-processing strategy similar to that of Fletcher et al. (2019) was used, but without the expander. EHS was found to improve speech reception thresholds in noise by 3 dB when the speech was presented directly in front and the noise was presented either to the implanted or non-implanted side. This improvement is comparable to that observed when CI users use implants in both ears rather than one (van Hoesel and Tyler, 2003; Litovsky et al., 2009; see Fletcher et al., 2020b for discussion). Interestingly, no improvement in speech-in-noise performance with EHS was observed when the speech and noise were co-located. This suggests that the expander was critical to the enhancement measured for co-located speech and noise by Fletcher et al. (2019).

In addition to work showing benefits to speech-in-noise performance, EHS has also been shown to substantially improve sound localization in CI users (Fletcher and Zgheib, 2020; Fletcher et al., 2020a). As in Fletcher et al. (2020b), these studies extracted the speech amplitude envelope from audio received by hearing-assistive devices behind each ear and delivered it through haptic stimulation on each wrist. Remarkably, using this approach, unilaterally implanted CI users were able to locate speech more accurately than bilateral CI users and with accuracy comparable to that of bilateral hearing-aid users (Fletcher et al., 2020a). Furthermore, participants were found to perform better when audio and haptic stimulation were provided together than when either was provided alone. This suggests that participants were able to combine audio and haptic information effectively. Another study used a more sophisticated signal-processing strategy, which included individual correction for differences in tactile sensitivity, and gave extensive training (Fletcher and Zgheib, 2020). Using this approach, still greater haptic sound-localization accuracy was achieved and performance was found to improve continuously throughout an extended training regime.

Another recent set of studies has shown evidence that haptic stimulation might enhance music perception in CI users. Haptic stimulation on the fingertip (Huang et al., 2019) or wrist (Luo and Hayes, 2019) was found to improve melody recognition. In both of these studies, haptic stimulation was delivered via a single motor. For stimulation on the fingertip, the low-frequency portion of the audio signal was delivered. For stimulation on the wrist, the F0 of the audio was extracted and delivered through changes in the amplitude and frequency of the haptic signal, which varied together. This latter approach precludes the presentation of intensity information. In another study, intensity information was delivered through intensity and frequency variations and F0 information was delivered through changes in the location of stimulation along the forearm (Fletcher et al., 2020c). The mosaicOne_B device used in this study also incorporates a new noise-reduction strategy for F0 extraction. To assess the effectiveness of the mosaicOne_B, pitch discrimination was measured with and without background noise. On average, participants were able to discriminate sounds whose F0 differed by just 1.4%. This is well below a semitone (a change of about 5.9%), which is the minimum pitch change in most Western melodies, and is substantially better than is typically achieved by CI users (Kang et al., 2009; Drennan et al., 2015). In addition, pitch discrimination was found to be remarkably robust to background noise. Even when the noise was 7.5 dB louder than the signal, no reduction in performance was observed and some participants were still able to achieve pitch-discrimination thresholds of just 0.9%. It should be noted, however, that inharmonic background noise was used. Further work is required to establish the effectiveness of this approach for delivering pitch information when other harmonic sounds are also present, as is common in music and real-world listening scenarios.
Future studies should also assess whether the mosaicOne_B can be used to enhance speech-in-noise performance.
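The comparison with a semitone can be checked with a little arithmetic. One equal-tempered semitone is a frequency ratio of 2^(1/12), about a 5.9% change, so a 1.4% F0 difference corresponds to roughly 24 cents (about a quarter of a semitone) and 0.9% to roughly 15.5 cents. The helper name below is illustrative.

```python
import math

# Size of one equal-tempered semitone, expressed as a percentage change in F0
SEMITONE_PCT = 100.0 * (2.0 ** (1.0 / 12.0) - 1.0)


def percent_to_cents(pct):
    """Convert a relative F0 difference (%) to cents (100 cents = 1 semitone)."""
    return 1200.0 * math.log2(1.0 + pct / 100.0)


for pct in (1.4, 0.9):
    print(f"{pct}% = {percent_to_cents(pct):.1f} cents "
          f"({percent_to_cents(pct) / 100:.2f} semitone)")
print(f"1 semitone = {SEMITONE_PCT:.2f}%")
```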

While early evidence of EHS benefit to CI listening is highly promising, there are two key issues that should be addressed to fully assess its potential. Firstly, how effective is the tactile system at transferring sound information and, secondly, to what extent are haptic and CI signals linked together in the brain? These issues will be discussed in the following sections.

When assessing the potential of EHS and when designing haptic devices, it is important to understand the limits of the tactile system in transferring intensity, time, and frequency information. The tactile system is known to be highly sensitive to intensity differences. The just-noticeable intensity difference between two successive stimuli on the hand or index finger is around 1.5 dB (Craig, 1972; Gescheider et al., 1996b) and there is evidence that sensitivity is similar, or perhaps even greater, on the wrist (Summers et al., 2005). This sensitivity to intensity differences is comparable to that of the healthy auditory system (Harris, 1963; Penner et al., 1974; Florentine et al., 1987). When assessing the capacity of the tactile system to deliver intensity information, it is also important to consider its dynamic range and the number of discriminable intensity steps it contains. This determines how well the system can portray absolute intensity information, as well as how large a difference between stimuli it can represent. The dynamic range for electrical CI stimulation is around 10–20 dB (Zeng and Galvin, 1999; Zeng et al., 2002). The dynamic range of the tactile system at the fingertip or wrist, however, is around four times larger (∼60 dB; Verrillo et al., 1969; Fletcher et al., 2021a, b). Across the dynamic range, approximately 40 intensity steps can be discriminated with haptic stimulation (Gescheider et al., 1996b), whereas CI users can discriminate around 20 intensity steps (Kreft et al., 2004; Galvin and Fu, 2009). Given the high sensitivity to intensity differences and large dynamic range, the tactile system seems well suited to providing supplementary sound intensity information for CI users.
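The decibel figures above can be related with simple arithmetic. The sketch below converts a dynamic range in dB to an amplitude ratio, gives a naive step count that assumes a constant 1.5 dB JND across the whole range (a simplification; the ~40 steps reported by Gescheider et al. (1996b) were measured, not derived this way), and shows one hypothetical way a device might map an audio level range linearly in dB onto the tactile range. The -60 to 0 dB input range and the 0 to 60 dB output range are assumptions for illustration.

```python
def db_to_amplitude_ratio(db):
    """Amplitude (not power) ratio spanned by a range expressed in dB."""
    return 10.0 ** (db / 20.0)


def naive_step_count(range_db, jnd_db):
    """Crude count of discriminable steps, assuming a constant JND in dB."""
    return int(range_db // jnd_db)


def map_level_db(level_db, in_range, out_range):
    """Map a level linearly (in dB) from in_range onto out_range, with clipping."""
    in_lo, in_hi = in_range
    out_lo, out_hi = out_range
    frac = (level_db - in_lo) / (in_hi - in_lo)
    frac = min(max(frac, 0.0), 1.0)          # clip to the input range
    return out_lo + frac * (out_hi - out_lo)


print(db_to_amplitude_ratio(60))             # tactile range (~60 dB)
print(db_to_amplitude_ratio(15))             # mid-point of the CI range (10-20 dB)
print(naive_step_count(60, 1.5))
# Hypothetical: audio envelope level (dB re its maximum) -> tactile level (dB above threshold)
print(map_level_db(-30, (-60, 0), (0, 60)))
```

A 60 dB range spans an amplitude ratio of 1000, whereas 15 dB spans only about 5.6, which is one way to see how much more intensity detail the tactile range can in principle carry.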

In contrast to intensity sensitivity, the temporal precision of the tactile system is poorer than that of electrical CI stimulation. Temporal precision of CI stimulation is high, with gap detection thresholds typically 2–5 ms in CI users (Moore and Glasberg, 1988; Garadat and Pfingst, 2011), which is similar to that of normal-hearing listeners (Plomp, 1964; Penner, 1977). For haptic stimulation, however, gap detection thresholds are ∼10 ms (Gescheider, 1966, 1967). The tactile system is also more susceptible to masking from stimuli that are temporally remote. Masking sounds that precede a signal by 100 ms or more typically produce little masking for normal-hearing listeners (Elliot, 1962) or for CI users (Shannon, 1990). However, for haptic stimulation, some masking persists even when the masker precedes the signal by several hundred milliseconds (Gescheider et al., 1989).

In addition to having limited temporal precision, the tactile system is poor at discriminating stimulation at different frequencies. The healthy auditory system can detect frequency changes of just 1% at 100 Hz and 10% at 10 kHz (Moore, 1973). CI users are much poorer at frequency discrimination, being able to detect minimum frequency changes of ∼10–25% at 500 Hz and ∼10–20% at 4 kHz (Turgeon et al., 2015). The tactile system is poorer still, only able to detect changes of ∼20% at 50 Hz and of ∼35% at 200 Hz for stimulation on the finger (Goff, 1967) or forearm (Rothenberg et al., 1977).
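One way to see why frequency is a poor carrier of information on the skin is to count roughly how many just-noticeable steps fit into a frequency range. Assuming, for simplicity, a constant Weber fraction w across the range (the values quoted above actually vary with frequency), the count is log(f_hi / f_lo) / log(1 + w). The 50–200 Hz range and the Weber fractions below are loosely based on the figures above and are illustrative only.

```python
import math


def discriminable_steps(f_lo, f_hi, weber_fraction):
    """Approximate number of just-noticeable frequency steps between f_lo and f_hi,
    assuming the same relative JND (Weber fraction) across the whole range."""
    return int(math.log(f_hi / f_lo) / math.log(1.0 + weber_fraction))


# 50-200 Hz range; Weber fractions loosely based on the values quoted above
for label, w in (("tactile, 20%", 0.20), ("tactile, 35%", 0.35), ("auditory, 1%", 0.01)):
    print(label, discriminable_steps(50.0, 200.0, w))
```

Under these assumptions, only about four to seven tactile frequency steps fit between 50 and 200 Hz, compared with well over a hundred for a healthy ear at a 1% Weber fraction.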

The properties of the tactile system detailed above focus mainly on the finger, hand, wrist, or forearm (where most data are available). However, tactile aids have previously been mounted at various points around the body, including the sternum (Blamey and Clark, 1985), abdomen (Sparks et al., 1978), and back (Novich and Eagleman, 2015). Tactile sensitivity is known to vary markedly across body sites (e.g., Wilska, 1954). This is partly due to the different receptors and structure of glabrous (smooth) skin and non-glabrous (hairy) skin (Bolanowski et al., 1994; Cholewiak and Collins, 2003). Relatively few studies have compared sensitivity across sites. The available data suggest that sensitivity is highest at the fingertip and decreases with distance from it, through the palm, wrist, forearm, and biceps (Wilska, 1954; Verrillo, 1963, 1966, 1971; Cholewiak and Collins, 2003; Fletcher et al., 2021b). The sternum has been found to be approximately as sensitive as the forearm, with areas of the back being less sensitive, and the abdomen being less sensitive still (Wilska, 1954).

The aim of EHS is to use haptic stimulation to deliver important auditory cues that are not well perceived through a CI. For CI users, the amplitude envelope is particularly important, as spectral information is severely degraded and so cannot be fully utilized (Blamey and Clark, 1990). The amplitude envelope facilitates the segmentation of the speech stream and the separation of speech from background noise (by marking syllable and phonemic boundaries over time and giving information about syllable stress and number; Kishon-Rabin and Nir-Dankner, 1999; Won et al., 2014; Cameron et al., 2018). However, the coding of amplitude envelope information by the CI is highly susceptible to degradation both by external factors, such as background noise (Chen et al., 2020), and by internal factors, such as the limited dynamic range available through electrical stimulation (see previous section) and the interaction between electrode channels (Chatterjee and Oba, 2004).

The tactile system is well suited to providing amplitude envelope information. In addition to having a much larger dynamic range than electrical CI stimulation, the tactile system is highly sensitive to amplitude envelope differences across the range of modulation frequencies most important for speech recognition (Weisenberger, 1986; Drullman et al., 1994). Interestingly, there is evidence that the wrist (a site commonly used for haptic devices) is particularly sensitive to amplitude modulation (Summers et al., 1994, 2005). Because of this high sensitivity and the importance to speech perception, some tactile aids (Proctor and Goldstein, 1983; Spens and Plant, 1983) and EHS approaches (Fletcher et al., 2018, 2019, 2020b) have focused on the provision of amplitude envelope information.

A further crucial limitation for CI users is the poor transmission of pitch information, particularly for speech and music (McDermott, 2004; Chatterjee and Peng, 2008). Accurate coding of pitch information in speech (through F0 or its harmonics) is required for perception of supra-segmental and paralinguistic information, including intonation, stress, and identification of talker mood or identity (Traunmuller, 1988; Murray and Arnott, 1993; Summers and Gratton, 1995; Most and Peled, 2007; Meister et al., 2009). Pitch also serves as an important cue for talker segregation in noisy listening environments (Leclere et al., 2017). However, F0 changes in speech over time or between talkers are not well coded by CIs. This is because the F0 for speech typically varies within the frequency range coded by a single CI electrode, preventing the use of across-electrode pitch cues (Swanson et al., 2019; Pisanski et al., 2020).

The tactile system is poor at transferring information through changes in stimulation frequency. Nonetheless, some EHS approaches have used stimulation frequency to deliver spectral (Fletcher et al., 2018, 2019, 2020b) or F0 (Huang et al., 2017) information. An alternative approach has been used by some tactile aids (Brooks and Frost, 1983; Hanin et al., 1988) and the mosaicOne series of EHS devices (Fletcher, 2020; Fletcher et al., 2020c). In these devices, frequency or pitch information is transferred through changes in the location of stimulation either along the forearm or around the wrist (see Figure 3).

Figure 3. Image of the mosaicOne_C device currently being developed at the University of Southampton as part of the Electro-Haptics Project. Text and arrows highlight that the device has four motors (protruding from the wristband), which are faded between to create the sensation of haptic stimulation at a continuum of points around the wrist. Image reproduced with permission of Samuel Perry and Mark Fletcher.
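The idea of creating intermediate stimulation sites by fading between adjacent motors can be sketched in a few lines. The sketch assumes a hypothetical 80–400 Hz F0 range mapped logarithmically onto four motors treated as a line (no wrap-around), and an equal-power crossfade between the two nearest motors. These choices are assumptions for illustration; the mosaicOne_C's actual mapping and fading law are not described here.

```python
import math


def f0_to_position(f0, f_lo=80.0, f_hi=400.0, n_motors=4):
    """Map F0 (Hz) to a continuous position from 0 to n_motors - 1,
    on a log-frequency scale, clipping F0 to [f_lo, f_hi] (hypothetical range)."""
    f0 = min(max(f0, f_lo), f_hi)
    frac = math.log(f0 / f_lo) / math.log(f_hi / f_lo)
    return frac * (n_motors - 1)


def motor_gains(position, n_motors=4):
    """Equal-power crossfade between the two motors either side of `position`."""
    gains = [0.0] * n_motors
    i = min(int(math.floor(position)), n_motors - 2)  # index of the lower motor
    frac = position - i                                # 0 at motor i, 1 at motor i + 1
    gains[i] = math.cos(frac * math.pi / 2)
    gains[i + 1] = math.sin(frac * math.pi / 2)
    return gains


for f0 in (80.0, 150.0, 250.0, 400.0):
    pos = f0_to_position(f0)
    print(f0, round(pos, 2), [round(g, 2) for g in motor_gains(pos)])
```

The equal-power law keeps the summed squared gain constant as the virtual site moves between motors; a linear crossfade is an equally plausible alternative, and which one gives the smoothest perceived movement on the wrist would need to be tested.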

Amplitude envelope and F0 information have been shown to facilitate similar levels of speech recognition in quiet when provided through either haptic (Grant et al., 1985) or auditory stimulation (Summers and Gratton, 1995). These cues have also been found to provide similar benefit to speech-in-noise performance for CI users when provided through haptic (Huang et al., 2017; Fletcher et al., 2019, 2020b) or auditory (Brown and Bacon, 2009) stimulation. However, providing both amplitude envelope and F0 cues together has been shown to facilitate better speech recognition than providing either alone, as each provides different information (Summers and Gratton, 1995; Brown and Bacon, 2009).

Another auditory feature that is important to speech recognition is spectral shape (Guan and Liu, 2019). Accurate perception of spectral shape is critical for phoneme recognition, as it provides information about the place of articulation for consonants and the identity of vowels (Kewley-Port and Zheng, 1998; Li et al., 2012). Although CI users are able to access gross spectral information, their perception of spectral shape and corresponding phoneme identification abilities are limited compared with those of normal-hearing listeners (Sagi et al., 2010). Future EHS approaches might therefore enhance speech perception in CI users by providing access to information about spectral shape, such as flatness, spread, or centroid. Currently, EHS devices like the mosaicOne_C provide amplitude envelope and F0 information using a one-dimensional array of haptic stimulators (with amplitude encoded as stimulation intensity and F0 encoded as location along the array). Some tactile aids used two-dimensional arrays (typically coding sound intensity on one dimension and frequency on the other; Sparks et al., 1978; Snyder et al., 1982). This two-dimensional array approach could be used to extend existing EHS devices and allow for coding of additional spectral sound features.
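The spectral-shape features mentioned above have standard definitions. The sketch below computes the power-weighted spectral centroid and spread, and the spectral flatness (geometric mean divided by arithmetic mean of the power spectrum), for one Hann-windowed frame. It is a generic illustration of the features, not a proposal for how an EHS device should compute or encode them; the function name is hypothetical.

```python
import numpy as np


def spectral_shape(frame, fs):
    """Return (centroid_hz, spread_hz, flatness) of one frame's power spectrum.

    centroid: power-weighted mean frequency
    spread:   power-weighted standard deviation around the centroid
    flatness: geometric mean / arithmetic mean of the power spectrum
              (near 0 for a pure tone, larger for noise-like spectra)
    """
    frame = np.asarray(frame, dtype=float)
    power = np.abs(np.fft.rfft(frame * np.hanning(len(frame)))) ** 2
    freqs = np.fft.rfftfreq(len(frame), d=1.0 / fs)
    total = power.sum()
    if total <= 0.0:
        return 0.0, 0.0, 0.0                  # silent frame
    p = power / total                         # treat the spectrum as a distribution
    centroid = float(np.sum(freqs * p))
    spread = float(np.sqrt(np.sum((freqs - centroid) ** 2 * p)))
    eps = 1e-12                               # avoids log(0) in empty bins
    flatness = float(np.exp(np.mean(np.log(power + eps))) / (np.mean(power) + eps))
    return centroid, spread, flatness
```

For a 1 kHz tone, the centroid sits near 1000 Hz with a small spread and a flatness close to 0, whereas white noise gives a flatness of roughly one half.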

Finally, CI users tend to have limited access to cues that are critical to sound localization and segregation, such as time and intensity differences across the ears (van Hoesel and Tyler, 2003; Litovsky et al., 2009; Dorman et al., 2016). This is primarily because the majority of adult CI users are implanted in only one ear (Raine, 2013), but CI users implanted in both ears also have substantially limited spatial hearing (Dorman et al., 2016). This is because timing differences between the ears cannot be accessed or are highly degraded (Laback et al., 2004), and so bilaterally implanted CI users rely primarily or entirely on intensity differences (van Hoesel and Tyler, 2003). These intensity differences can be heavily distorted by independent pre-processing in each device (particularly automatic gain control; Potts et al., 2019). Additional factors that limit spatial hearing abilities in bilateral CI users are mismatches across devices in the perceived intensity and the place of electrical stimulation within the cochlea (Kan et al., 2019), as well as the impaired perception of spectral (e.g., pinna) cues (Fischer et al., 2020).

Previous EHS studies have used haptic stimulation to provide spatial-hearing cues to CI users. In these studies, the audio received by devices behind each ear was converted to haptic stimulation on each wrist. This meant that time and intensity differences across the ears were available as across-wrist time and intensity differences. Using this approach, large improvements were shown in both sound-localization accuracy (Fletcher and Zgheib, 2020; Fletcher et al., 2020a) and speech reception for spatially separated speech and noise (Fletcher et al., 2020b). Two recent studies have investigated sensitivity to across-wrist tactile time and intensity differences (Fletcher et al., 2021a, b). Encouragingly, participants could detect tactile intensity differences across the wrists of just 0.8 dB, which is similar to (or perhaps even better than) sensitivity to sound intensity differences across the ears (Grantham, 1984). Furthermore, no decline in this sensitivity with age was found for participants up to 60 years old. In contrast, sensitivity to tactile time differences across the wrists was found to be far worse than would be required to transfer across-ear time difference cues.
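The across-ear level cue that these devices pass to the wrists can be expressed as a level difference in dB. The sketch below computes a left-minus-right RMS level difference and compares it with the 0.8 dB across-wrist threshold quoted above. The `matched_haptic_drive` helper is hypothetical; it simply shows that applying identical linear processing to both sides preserves the level difference.

```python
import numpy as np

WRIST_LEVEL_JND_DB = 0.8  # across-wrist threshold quoted above (Fletcher et al., 2021a, b)


def level_difference_db(left, right, eps=1e-12):
    """Left-minus-right RMS level difference in dB (positive = left louder)."""
    rms_left = np.sqrt(np.mean(np.square(left)))
    rms_right = np.sqrt(np.mean(np.square(right)))
    return float(20.0 * np.log10((rms_left + eps) / (rms_right + eps)))


def matched_haptic_drive(left, right, gain=0.5):
    """Hypothetical drive: the same fixed gain on both sides keeps the level difference."""
    return gain * np.asarray(left, dtype=float), gain * np.asarray(right, dtype=float)


rng = np.random.default_rng(1)
sig = rng.standard_normal(16000)
left, right = sig, sig * 10 ** (-3 / 20)       # left ear 3 dB louder
hl, hr = matched_haptic_drive(left, right)
ild = level_difference_db(hl, hr)
print(round(ild, 2), abs(ild) >= WRIST_LEVEL_JND_DB)
```

Independent nonlinear processing on each side, such as separate automatic gain control, would not have this property, mirroring the distortion of across-ear intensity cues described earlier for bilateral CIs.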

Anatomical, physiological, and behavioral studies all indicate that audio and haptic signals are strongly linked in the brain. Anatomical and physiological studies have revealed extensive connections from somatosensory brain regions at numerous stages along the auditory pathway, from the first node (the cochlear nucleus) to the cortex (Aitkin et al., 1981; Foxe et al., 2000; Shore et al., 2000, 2003). Physiological studies have also shown that substantial populations of neurons in the auditory cortex can be modulated by haptic stimulation (Lakatos et al., 2007; Meredith and Allman, 2015). Behavioral studies have demonstrated that haptic stimulation can affect auditory perception. Haptic stimulation has been found to facilitate the detection of faint sounds (Schurmann et al., 2004) and to modulate loudness and syllable perception (Gillmeister and Eimer, 2007; Gick and Derrick, 2009). Recent studies using EHS (reviewed above) have also shown that haptic stimulation can be integrated to improve sound localization (Fletcher et al., 2020a) and speech-in-noise performance (Huang et al., 2017; Fletcher et al., 2018, 2019, 2020b).

Given that audio and haptic signals can be integrated in the brain, it is important to understand how this integration can be maximized to increase EHS benefit. One important principle of multisensory integration is the principle of inverse effectiveness (Wallace et al., 1996; Hairston et al., 2003; Laurienti et al., 2006). This principle states that multisensory integration is greatest when each sense, on its own, provides only low-quality information. This condition would appear to be well met in previous EHS studies, where participants received incomplete speech or sound-location information through both their CI and haptic stimulation. Another important principle for maximizing integration is temporal correlation between the signals (Ernst and Bulthoff, 2004; Fujisaki and Nishida, 2005; Burr et al., 2009; Parise and Ernst, 2016). Again, this condition would appear to be well met in many EHS studies, where both audio and haptic signals were temporally complex and highly correlated.

Following from earlier work with tactile aids, modern haptic devices might be used to assist those who could benefit from a CI but cannot access or effectively use one. It is estimated that around 2% of CI users become non-users or minimal users (Bhatt et al., 2005; Ray et al., 2006). A higher proportion of non-use is found among adult CI recipients who were born deaf or who became deaf early in childhood (Lammers et al., 2018). Additionally, some deafened individuals achieve no or minimal benefit from a CI, for example, when cochlear ossification has occurred following meningitis (Durisin et al., 2015). Haptic technology has the potential to benefit sound detection, discrimination, and localization, as well as speech perception, in these groups. It could also benefit the many millions of people around the world who do not have access to hearing-assistive technologies, such as CIs, because of inadequate health-care provision or burdensome cost (Bodington et al., 2020; Fletcher, 2020).

All CI recipients undergo a period of auditory deprivation following surgery, as hearing aid use is not possible directly after implantation. For those undergoing bilateral implant surgery (which includes the majority of children receiving a CI in the United Kingdom), this can mean complete loss of auditory stimulation for a period of up to a month between CI surgery and initial device tuning. Another group with a period of no or limited access to auditory stimulation is the 1–2% of CI users per year who experience device failure (Causon et al., 2013). These individuals typically face a wait of many months between the failure occurring and switch-on of a re-implanted device. Haptic stimulation could provide a means to maintain access to auditory information, including enhancing lip-reading, for these groups during these periods of auditory deprivation. The effectiveness of haptic stimulation in supporting lip-reading has already been demonstrated in work using tactile aids (Kishon-Rabin et al., 1996).

Recent advances in key technologies provide an opportunity to develop a new generation of haptic aids that give greater benefit and have higher acceptance than the tactile aids of the 1980s and 1990s. Particularly important are advances in micro-motor, micro-processor, wireless communication, and battery technology, as well as in manufacturing and prototyping techniques such as 3D printing. These technologies will allow modern haptic devices to avoid many of the pitfalls of early tactile aids, such as bothersome wires, large power and computing units, highly limited signal-processing capacity, and short battery lives (for a detailed review of haptic device design considerations see Fletcher, 2020). Battery and wireless technology and improved manufacturing techniques will also reduce many of the practical and esthetic issues faced by earlier haptic devices. For example, new devices would not require wires to connect device components (e.g., microphones, battery and signal processing units, and haptic motors), can be more compact and discreet, and would require far less regular battery charging. In addition, modern haptic devices can deliver haptic signals with higher precision and deploy cutting-edge signal-processing techniques to substantially improve auditory feature extraction, particularly in the presence of background noise. Finally, modern haptic devices could improve safety and awareness by interfacing with smart devices in the internet of things, such as doorbells, telephones, and intruder or fire alarms.

Several haptic devices have recently been developed that, with further refinement, could likely be deployed as effective haptic aids to hearing. The mosaicOne_B (Fletcher et al., 2020c) is worn as a sleeve (15 cm long), with a total of 12 motors arranged along the dorsal and palmar sides of the forearm. The mosaicOne_C (Fletcher, 2020; see Figure 3), Tasbi (Pezent et al., 2019), and Buzz (Perrotta et al., 2021) are all wrist-worn devices, with multiple motors arranged around the wrist. In addition to delivering vibration, the Tasbi device includes a mechanism for modulating the amount of pressure (“squeeze”) applied to the wrist. Each of these devices uses motor and haptic driver technology that overcomes many of the substantial haptic signal-reproduction issues faced by earlier tactile aids (Summers and Farr, 1989; Cholewiak and Wollowitz, 1992). One haptic motor design (used in the mosaicOne_B) is the eccentric rotating mass, in which an asymmetric mass is rotated to create vibration. These motors are low cost and able to produce high vibration intensity. However, they have quite low power efficiency, which limits their utility for real-world use. Because the vibration frequency is set by the rotation speed, the frequency and intensity of these motors change together and cannot be controlled independently. While this may be a limiting factor, it may also be advantageous for effective transfer of high-resolution information, as higher sensitivity to change has been observed when frequency and intensity are modulated together than when either is modulated alone (Summers et al., 2005). Another low-cost motor design (used in the Tasbi and Buzz) is the linear resonant actuator, in which a mass is moved by a voice coil to create vibration. Linear resonant actuators are often unable to produce intense vibration but are highly power efficient. Unlike eccentric rotating mass motors, they operate at a single fixed (resonant) frequency. A final alternative is the piezoelectric motor design, in which vibration is created by a material that bends and deforms as voltage is applied. Piezoelectric motors are able to produce complex waveforms (with the capacity to control the frequency spectrum and intensity independently) and are power efficient. However, they are currently much more expensive than linear resonant actuators or eccentric rotating mass motors.
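Why frequency and intensity are locked together in an eccentric rotating mass motor follows from basic mechanics: the motor vibrates at its rotation rate, and the centripetal force of the offset mass grows with the square of that rate, F = m·r·(2πf)². The sketch below assumes an idealized motor (rigid mounting, no friction or load effects), and the mass and eccentricity values are hypothetical, chosen only for illustration. It shows that doubling the vibration frequency quadruples the force, so neither quantity can be set without changing the other.

```python
import math


def erm_force(mass_kg: float, eccentricity_m: float, freq_hz: float) -> float:
    """Peak centripetal force (N) of an idealized eccentric rotating mass.

    The motor vibrates at its rotation rate, so force and frequency are
    coupled: F = m * r * omega**2, with omega = 2 * pi * f.
    """
    omega = 2.0 * math.pi * freq_hz
    return mass_kg * eccentricity_m * omega ** 2


# Hypothetical motor parameters (illustrative only, not from any real device).
MASS = 0.5e-3         # 0.5 g eccentric mass
ECCENTRICITY = 1e-3   # 1 mm offset of the mass from the shaft

for f in (100.0, 150.0, 200.0):
    print(f"{f:5.0f} Hz -> {erm_force(MASS, ECCENTRICITY, f):.3f} N")
```

A linear resonant actuator or piezoelectric motor breaks this coupling because its drive amplitude is set electrically rather than by rotation speed.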

The mosaicOne_B, mosaicOne_C, and Tasbi haptic devices are lab-based prototypes, with the haptic signal fed to the device through a separate unit that manages signal processing and audio capture. The Buzz, on the other hand, is available for real-world use. However, as discussed in Fletcher (2020), its current design has a number of important limitations. These include capturing audio from an onboard microphone that is highly susceptible to wind noise and to disruption from clothing moving across the device. Previous EHS studies have advocated streaming audio from behind-the-ear hearing-assistive devices, which already include technologies to address many of the issues faced by the Buzz (e.g., wind noise; Fletcher, 2020; Fletcher and Zgheib, 2020; Fletcher et al., 2020a, 2021a). This approach would also allow access to spatial-hearing cues and would increase the correspondence between audio and haptic stimulation, facilitating maximal multisensory integration. It could be readily implemented using existing wireless streaming technology (such as Bluetooth Low Energy), which is already built into the latest hearing-assistive devices. Alternatively, audio could be streamed from a remote microphone close to the sound source of interest to maximize the signal-to-noise ratio (e.g., Dorman and Gifford, 2017). This may be particularly effective in noisy environments, such as classrooms.
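The signal-to-noise benefit of a remote microphone can be estimated, to a first approximation, from the inverse-square law: in a free field the direct sound from a talker falls by about 6 dB per doubling of distance, while diffuse background noise stays roughly constant. The sketch below assumes exactly that (no reverberation) and uses hypothetical distances for a classroom; in a real room, reverberation would cap the achievable gain, so the figure is an upper bound rather than a prediction.

```python
import math


def direct_path_gain_db(far_distance_m: float, near_distance_m: float) -> float:
    """Level gain (dB) of a talker's direct sound when the microphone moves
    from far_distance_m to near_distance_m.

    Assumes free-field spherical spreading (inverse-square law), i.e. about
    -6 dB per doubling of distance, and ignores room reverberation.
    """
    if far_distance_m <= 0 or near_distance_m <= 0:
        raise ValueError("distances must be positive")
    return 20.0 * math.log10(far_distance_m / near_distance_m)


# Hypothetical classroom: listener 4 m from the teacher, remote microphone
# clipped 0.2 m from the teacher's mouth. If the noise level at both
# positions is the same, the SNR improves by the direct-path gain.
gain = direct_path_gain_db(4.0, 0.2)
print(f"Approximate SNR improvement: {gain:.1f} dB")
```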

It will be important for future work to establish how much EHS benefit can be achieved in different clinical populations. No study has yet aimed to compare EHS benefit across user groups. So far, EHS enhancement of speech-in-noise performance has been shown in unilaterally implanted CI users (Huang et al., 2017; Fletcher et al., 2019, 2020b) and in one bilaterally implanted participant [P9 in Fletcher et al. (2019)] for whom there was a large benefit (20.5% more words recognized in noise with EHS than with their CIs alone). A recent study that demonstrated EHS benefit to sound localization included only unilateral CI users, around half of whom also had a hearing aid in the non-implanted ear (Fletcher et al., 2020a). Although those without hearing aids benefitted most from EHS, substantial benefit was shown for both sets of participants.

Future work should also compare EHS benefit in those with congenital, early, and late deafness. Studies that have assessed multisensory integration in CI users have shown evidence that late-deafened CI recipients, as well as those with congenital or early deafness who are implanted early, are able to effectively integrate audio and visual information (Bergeson et al., 2005; Schorr et al., 2005; Tremblay et al., 2010). However, those implanted late (after a few years of deafness) integrate audio and visual information less effectively. Studies in non-human animals have also shown that extensive sensory experience in early development is required for multisensory integration networks to fully develop (Wallace and Stein, 2007; Yu et al., 2010). Although congenitally deaf CI recipients are able to effectively integrate audio and haptic information, some studies suggest that they do so less effectively than late-deafened CI recipients (Landry et al., 2013; Nava et al., 2014). This might suggest that congenitally deaf individuals will benefit less from EHS. However, there is also some evidence that congenitally deaf individuals have increased tactile sensitivity (Levanen and Hamdorf, 2001) and faster response times to tactile stimuli (Nava et al., 2014). This could mean that congenitally deaf people can access more information through haptic stimulation than those with late deafness and will therefore benefit more from EHS.

EHS benefit should also be assessed across different age groups. As with hearing, detection and frequency-discrimination thresholds for haptic stimulation (particularly at high frequencies) worsen with age (Verrillo, 1979, 1980; Moore, 1985; Stuart et al., 2003; Reuter et al., 2012; Valiente et al., 2014). The ability to discriminate haptic stimulation at different locations on the skin has also been found to worsen with age (Leveque et al., 2000). However, intensity discrimination both at a single stimulation site (Gescheider et al., 1996a) and across sites (Fletcher et al., 2021a) has been found to be robust to aging. The evidence of decline in some aspects of haptic performance might suggest that EHS benefit will be reduced in older populations. However, the ability to use haptic stimulation to achieve high sound-localization accuracy and enhanced speech-in-noise performance has been shown in both young (Fletcher et al., 2018; Fletcher and Zgheib, 2020) and older (Fletcher et al., 2019, 2020a, 2020b) adults. Furthermore, a range of evidence suggests that multisensory integration is increased in older adults (Laurienti et al., 2006; Diederich et al., 2008; de Dieuleveult et al., 2017), which could mean that EHS will be more effective in older CI users. In children, there may also be enhanced multisensory integration. One popular theory of brain development posits that infants are sensitive to a broad range of stimuli before becoming more specialized (a process known as “perceptual narrowing”; Slater and Kirby, 1998; Kuhl et al., 2006; Lewkowicz and Ghazanfar, 2009). There is evidence that a similar process occurs for multisensory integration (Lewkowicz and Ghazanfar, 2006). This could mean that EHS will be most effective in children, who have high tactile sensitivity and whose brains are most able to integrate novel multisensory stimuli.

To comprehensively assess EHS benefit, further testing with ecologically relevant outcome measures is required. This should include assessing EHS effects on speech prosody perception (rhythm, tone, intonation, and stress in speech) and listening effort. Speech prosody allows a listener to identify emotions and intentions (e.g., the presence of sarcasm), to distinguish statements from questions, and to distinguish nouns from verbs that differ only in stress (e.g., the noun “OBject” from the verb “obJECT”). CI users typically have impaired speech prosody perception (Xin et al., 2007; Meister et al., 2009; Everhardt et al., 2020) and report high levels of listening effort (Alhanbali et al., 2017). Access to pitch information has been shown to be critical to perception of speech prosody (Murray and Arnott, 1993; Banse and Scherer, 1996; Most and Peled, 2007; Xin et al., 2007; Peng et al., 2008; Meister et al., 2009). The mosaicOne_B haptic device, which was recently shown to transmit high-resolution pitch information (Fletcher et al., 2020c), would therefore appear to be a strong candidate for restoring speech prosody perception in CI users.
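Delivering pitch through a haptic device first requires estimating the voice fundamental frequency (F0) from the audio. The signal processing used by the mosaicOne_B is not described here; as a stand-in, the sketch below uses a plain autocorrelation estimator, one of the simplest pitch-tracking techniques. The function name, the 70–400 Hz search range, and the synthetic test frame are assumptions for illustration; a practical device would need voicing detection and far more robustness to noise.

```python
import numpy as np


def estimate_f0(frame: np.ndarray, fs: float,
                fmin: float = 70.0, fmax: float = 400.0) -> float:
    """Estimate F0 (Hz) of one voiced frame from its autocorrelation peak.

    Only lags corresponding to fmin..fmax are searched. Returns 0.0 for a
    silent frame. A basic illustration, not a robust pitch tracker.
    """
    x = frame - np.mean(frame)
    if not np.any(x):
        return 0.0
    # Full autocorrelation; keep non-negative lags only.
    ac = np.correlate(x, x, mode="full")[len(x) - 1:]
    lag_min = int(fs / fmax)
    lag_max = min(int(fs / fmin), len(ac) - 1)
    best_lag = lag_min + int(np.argmax(ac[lag_min:lag_max + 1]))
    return fs / best_lag


fs = 16000.0
t = np.arange(int(0.04 * fs)) / fs  # one 40-ms analysis frame
# Synthetic "voiced" frame: 200 Hz fundamental plus its second harmonic.
voiced = np.sin(2 * np.pi * 200.0 * t) + 0.5 * np.sin(2 * np.pi * 400.0 * t)
print(round(estimate_f0(voiced, fs)))
```

The estimated F0 could then be mapped to a haptic parameter such as stimulation site or vibration frequency, but that mapping is a separate design decision.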

Studies have so far shown that haptic stimulation can be used to accurately locate a single sound source (Fletcher and Zgheib, 2020; Fletcher et al., 2020a). It has also been shown that EHS improves speech recognition both for co-located and spatially separated speech and noise sources (Huang et al., 2017; Fletcher et al., 2018, 2019, 2020b). Future work should establish the robustness of haptic sound-localization to the presence of multiple simultaneous sounds and the extent to which EHS can enhance speech recognition in more complex acoustic environments, with numerous simultaneous sources at different locations.
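A common cue for locating a single sound source is the interaural level difference (ILD) between the two ears. One way haptic localization could work is to drive a motor on each wrist from the audio at the same-side ear, so that the ILD appears as a left-right difference in vibration intensity. The sketch below illustrates that idea under stated assumptions: the mapping in `wrist_drive_levels` is hypothetical and is not claimed to be the scheme used in the cited studies, and the 6 dB attenuation of the right channel is simulated for illustration.

```python
import numpy as np


def rms(x: np.ndarray) -> float:
    """Root-mean-square level of a signal."""
    return float(np.sqrt(np.mean(np.square(x))))


def ild_db(left: np.ndarray, right: np.ndarray, eps: float = 1e-12) -> float:
    """Interaural level difference in dB (positive = louder at the left ear)."""
    return 20.0 * np.log10((rms(left) + eps) / (rms(right) + eps))


def wrist_drive_levels(left: np.ndarray, right: np.ndarray,
                       max_drive: float = 1.0) -> tuple:
    """Hypothetical mapping: drive each wrist in proportion to the level at
    the same-side ear, scaled so the louder side receives max_drive."""
    l, r = rms(left), rms(right)
    peak = max(l, r)
    if peak == 0.0:
        return 0.0, 0.0
    return max_drive * l / peak, max_drive * r / peak


fs = 16000
t = np.arange(fs // 10) / fs                # 100-ms segment
source = np.sin(2 * np.pi * 1000.0 * t)
left = source                               # source on the listener's left
right = 0.5 * source                        # simulated 6 dB attenuation
print(f"ILD = {ild_db(left, right):.1f} dB")
print(wrist_drive_levels(left, right))
```

With several simultaneous sources, a single broadband ILD like this would mix their cues, which is one reason robustness to multiple sources needs to be tested.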

To maximize EHS benefit, it will be critical to establish which sound features are most important for enhancing CI listening, and the most effective way to map these features to haptic stimulation. As already discussed, to date, most studies with EHS or tactile aids have focused on either the fundamental frequency (F0) or the speech amplitude envelope, but the effectiveness of presenting other sound features, such as spectral flatness or spread, either in addition to or instead of these cues should also be explored. It will also be important to establish which noise-reduction and signal-enhancement strategies are most effective. As argued above, there is already a strong indication that an expander can allow EHS to improve speech-in-noise performance for co-located speech and noise sources (Fletcher et al., 2018, 2019, 2020b). However, more advanced noise-reduction techniques for enhancing speech-in-noise performance (e.g., Goehring et al., 2019; Keshavarzi et al., 2019) and music perception (e.g., Tahmasebi et al., 2020) should also be trialed, as well as techniques for enhancing spatial-hearing cues (Francart et al., 2011; Brown, 2014).
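The features and processing named in this paragraph can be made concrete with a short sketch. It extracts an amplitude envelope (full-wave rectification followed by a one-pole low-pass filter), applies a downward expander that pushes low-level portions of the envelope (often dominated by noise) further down, and computes spectral flatness (the ratio of the geometric to the arithmetic mean of the power spectrum). The cutoff, threshold, and ratio values are illustrative assumptions, not the parameters used in the cited EHS studies.

```python
import numpy as np


def amplitude_envelope(x: np.ndarray, fs: float, cutoff_hz: float = 30.0) -> np.ndarray:
    """Full-wave rectify, then smooth with a one-pole low-pass filter."""
    alpha = np.exp(-2.0 * np.pi * cutoff_hz / fs)
    env = np.empty(len(x))
    state = 0.0
    for i, v in enumerate(np.abs(x)):
        state = alpha * state + (1.0 - alpha) * v
        env[i] = state
    return env


def downward_expander(env: np.ndarray, threshold_db: float = -30.0,
                      ratio: float = 3.0, eps: float = 1e-12) -> np.ndarray:
    """Each dB of envelope level below threshold_db becomes `ratio` dB below
    it; levels at or above the threshold pass unchanged."""
    level_db = 20.0 * np.log10(env + eps)
    out_db = np.where(level_db < threshold_db,
                      threshold_db + ratio * (level_db - threshold_db),
                      level_db)
    return 10.0 ** (out_db / 20.0)


def spectral_flatness(frame: np.ndarray, eps: float = 1e-12) -> float:
    """Geometric mean / arithmetic mean of the power spectrum
    (near 0 for a pure tone, higher for noise-like frames)."""
    power = np.abs(np.fft.rfft(frame)) ** 2 + eps
    return float(np.exp(np.mean(np.log(power))) / np.mean(power))


fs = 16000
rng = np.random.default_rng(0)
noise_frame = rng.standard_normal(512)
# 1000 Hz falls exactly on an FFT bin for a 512-sample frame at 16 kHz.
tone_frame = np.sin(2 * np.pi * 1000.0 * np.arange(512) / fs)
print(spectral_flatness(tone_frame), spectral_flatness(noise_frame))
print(downward_expander(np.array([0.1, 0.01])))  # -20 dB kept, -40 dB pushed down
```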

In addition to determining the optimal signal extraction strategy, the importance of individual tuning of the haptic device should be explored. Substantial additional EHS benefit might be achieved if haptic devices are, for example, effectively tuned to the individual’s tactile sensitivity (as in Fletcher and Zgheib, 2020), amount of residual acoustic hearing, or the CI device type or fitting used. It may also be important to adjust devices depending on how tightly the individual has secured the haptic device to their body, as this will affect the coupling of the haptic motor with the skin. This could involve exploiting existing methods, or those currently under development, which allow automatic correction for the amount of pressure applied to each motor in a device (Dementyev et al., 2020).
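One simple form of individual tuning, loosely analogous to setting threshold and comfort levels when fitting a CI, would be to map the range of input feature levels into each user's vibrotactile dynamic range. The sketch below is a hypothetical illustration: the function name, the linear dB mapping, and the per-user threshold and comfort values (in dB relative to an arbitrary drive reference) are all assumptions, not a published fitting procedure.

```python
import numpy as np


def map_to_user_range(level_db, in_floor_db: float, in_ceiling_db: float,
                      user_threshold_db: float, user_comfort_db: float):
    """Linearly map input levels (dB) into one user's vibrotactile range.

    Inputs at or below in_floor_db map to the user's detection threshold;
    inputs at or above in_ceiling_db map to their maximum comfortable level.
    """
    frac = (np.asarray(level_db, dtype=float) - in_floor_db) / (in_ceiling_db - in_floor_db)
    frac = np.clip(frac, 0.0, 1.0)
    return user_threshold_db + frac * (user_comfort_db - user_threshold_db)


levels = np.array([20.0, 40.0, 60.0, 80.0])  # input envelope levels, dB
# Two hypothetical users with different tactile sensitivity.
user_a = map_to_user_range(levels, 30.0, 70.0, user_threshold_db=10.0, user_comfort_db=40.0)
user_b = map_to_user_range(levels, 30.0, 70.0, user_threshold_db=20.0, user_comfort_db=35.0)
print(user_a, user_b)
```

The same input then produces different drive levels for each user; a correction for how tightly the device is worn could, in principle, shift these per-motor values further.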

Another crucial consideration is how much time delay between the audio and haptic signals can be tolerated while maintaining EHS benefit. This will dictate the sophistication of the signal processing that can be used in EHS devices. One study explored the influence of haptic stimulation (air puffs) on the perception of aspirated and unaspirated syllables, with different delays between the audio and haptic signals (Gick et al., 2010). They found no significant change in the influence of haptic stimulation when it arrived up to 100 ms after the audio. This suggests that delays of several tens of milliseconds may be acceptable without reducing EHS benefit. On the other hand, a haptic signal can be delayed from an audio signal by only around 25 ms before the two are no longer perceived as simultaneous (Altinsoy, 2003), which may suggest that much shorter delays are required. However, there is significant evidence that the brain rapidly corrects for consistent delays between correlated sensory inputs so that perceptual synchrony is retained (referred to as “temporal recalibration”; Navarra et al., 2007; Keetels and Vroomen, 2008; Van der Burg et al., 2013). If haptic stimulation can be delayed by several tens of milliseconds without reducing EHS benefit, this would allow highly sophisticated signal-processing strategies to be implemented.
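The link between tolerable delay and processing sophistication can be illustrated with simple arithmetic. In block-based processing, a frame must be fully buffered before it can be analyzed, so the frame length alone adds frame_len / fs of delay, and computation and wireless transmission add more. The sketch below assumes a 16 kHz sample rate and hypothetical values of 2 ms for computation and 10 ms for transmission; these are placeholders, not measured figures for any device.

```python
def processing_latency_ms(frame_len: int, fs: float,
                          compute_ms: float = 0.0, transmit_ms: float = 0.0) -> float:
    """Rough audio-to-haptic delay for block-based processing.

    A frame must be fully buffered before it is processed, so buffering
    contributes frame_len / fs; computation and transmission add to it.
    Any algorithmic look-ahead would add further delay (ignored here).
    """
    return 1000.0 * frame_len / fs + compute_ms + transmit_ms


# Hypothetical budget: 2 ms computation, 10 ms wireless transmission.
for frame_len in (128, 256, 512):
    d = processing_latency_ms(frame_len, 16000.0, compute_ms=2.0, transmit_ms=10.0)
    within_25 = "yes" if d <= 25.0 else "no"
    print(f"{frame_len:4d} samples: {d:5.1f} ms, within ~25 ms: {within_25}")
```

Longer frames give finer frequency resolution for feature extraction and noise reduction, so a tolerance closer to 100 ms than 25 ms would leave considerably more room for such processing.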

It will be important to understand how and where along the auditory pathway haptic and audio information are combined. One study of audio-tactile integration found that somatosensory input reset the phase of ongoing neural oscillations in auditory cortex, shifting them into a phase that enhanced cortical responses to the auditory input (Lakatos et al., 2007). This may be a key neural mechanism through which EHS enhances CI listening. A better understanding of this mechanism could reveal how to maximize audio-tactile integration. This could inform how and where haptic stimulation is delivered, the choice of signal-processing approach, the design of training programs, and when in the CI care pathway EHS is introduced.

For EHS benefit to be maximized, optimal training regimes will need to be devised. EHS benefit has been shown to increase with training, both for enhancing speech-in-noise performance (Fletcher et al., 2018, 2019, 2020a) and for enhancing sound localization (Fletcher and Zgheib, 2020; Fletcher et al., 2020a). Earlier studies with tactile aids have also established that participants continue to improve their ability to identify speech presented through haptic stimulation (without concurrent audio) after months or even years of training (e.g., Sherrick, 1984; Brooks et al., 1986a, b; Weisenberger et al., 1987). So far, EHS studies have given only modest amounts of training and used simple training approaches. With more extensive training and more sophisticated training regimes, it seems likely that EHS can give even larger benefits than have already been observed.

Haptic aids for the hearing-impaired were rendered obsolete in the 1990s by the development and success of CIs. However, researchers have recently shown compelling evidence that haptic stimulation can augment the CI signal, leading to enhanced speech-in-noise performance, sound localization, and music perception. Furthermore, significant developments in technology mean that the time is right for a new generation of haptic devices to aid the large number of people who are unable to access or benefit from a CI, whether for biomedical reasons or because of inadequate healthcare provision. With investment in the development of a low-power, compact, inexpensive, and non-invasive haptic device, the EHS approaches that have recently shown great promise in laboratory studies could soon be made available for testing in real-world trials. This new technology could enhance communication and quality of life for the nearly one million individuals who use CI technology, as well as the many millions of people across the world with disabling deafness who cannot access hearing-assistive devices.

MF drafted the manuscript. MF and CV edited and reviewed the manuscript. Both authors contributed to the article and approved the submitted version.

Funding for the salary of author MF was provided by the William Demant Foundation.

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Our warmest thanks to Andrew Brookman for proofreading the text and Helen and Alex Fletcher for their support during the writing of this manuscript.

Aitkin, L. M., Kenyon, C. E., and Philpott, P. (1981). The representation of the auditory and somatosensory systems in the external nucleus of the cat inferior colliculus. J. Comp. Neurol. 196, 25–40. doi: 10.1002/cne.901960104

Alhanbali, S., Dawes, P., Lloyd, S., and Munro, K. J. (2017). Self-reported listening-related effort and fatigue in hearing-impaired adults. Ear Hear. 38, e39–e48. doi: 10.1097/AUD.0000000000000361

Altinsoy, M. E. (2003). “Perceptual aspects of auditory-tactile asynchrony,” in Proceedings of the 10th International Congress on Sound and Vibration (Stockholm: Institut für Kommunikationsakustik).

Bach-y-Rita, P. (2004). Tactile sensory substitution studies. Ann. N. Y. Acad. Sci. 1013, 83–91. doi: 10.1196/annals.1305.006

Bach-y-Rita, P., Collins, C. C., Saunders, F. A., White, B., and Scadden, L. (1969). Vision substitution by tactile image projection. Nature 221, 963–964. doi: 10.1038/221963a0

Bach-y-Rita, P., Tyler, M. E., and Kaczmarek, K. A. (2003). Seeing with the brain. Int. J. Hum. Comput. Int. 15, 285–295. doi: 10.1207/S15327590ijhc1502_6

Banse, R., and Scherer, K. R. (1996). Acoustic profiles in vocal emotion expression. J. Pers. Soc. Psychol. 70, 614–636. doi: 10.1037//0022-3514.70.3.614

Bento, R. F., De Brito Neto, R. V., Castilho, A. M., Gomez, M. V., Sant’Anna, S. B., Guedes, M. C., et al. (2005). Psychoacoustic dynamic range and cochlear implant speech-perception performance in Nucleus 22 users. Cochlear Implants Int. 6(Suppl 1), 31–34. doi: 10.1179/cim.2005.6.Supplement-1.31

Bergeson, T. R., Pisoni, D. B., and Davis, R. A. (2005). Development of audiovisual comprehension skills in prelingually deaf children with cochlear implants. Ear Hear. 26, 149–164. doi: 10.1097/00003446-200504000-00004

Bhatt, Y. M., Green, K. M. J., Mawman, D. J., Aplin, Y., O’Driscoll, M. P., Saeed, S. R., et al. (2005). Device nonuse among adult cochlear implant recipients. Otol. Neurotol. 26, 183–187. doi: 10.1097/00129492-200503000-00009

Blamey, P. J., and Clark, G. M. (1985). A wearable multiple-electrode electrotactile speech processor for the profoundly deaf. J. Acoust. Soc. Am. 77, 1619–1620. doi: 10.1121/1.392009

Blamey, P. J., and Clark, G. M. (1990). Place coding of vowel formants for cochlear implant patients. J. Acoust. Soc. Am. 88, 667–673. doi: 10.1121/1.399770

Bodington, E., Saeed, S. R., Smith, M. C. F., Stocks, N. G., and Morse, R. P. (2020). A narrative review of the logistic and economic feasibility of cochlear implants in lower-income countries. Cochlear Implants Int. 20, 1–10. doi: 10.1080/14670100.2020.1793070

Bolanowski, S. J., Gescheider, G. A., and Verrillo, R. T. (1994). Hairy skin: psychophysical channels and their physiological substrates. Somatosens Mot. Res. 11, 279–290. doi: 10.3109/08990229409051395

Brooks, P. L., and Frost, B. J. (1983). Evaluation of a tactile vocoder for word recognition. J. Acoust. Soc. Am. 74, 34–39. doi: 10.1121/1.389685

Brooks, P. L., Frost, B. J., Mason, J. L., and Chung, K. (1985). Acquisition of a 250-word vocabulary through a tactile vocoder. J. Acoust. Soc. Am. 77, 1576–1579. doi: 10.1121/1.392000

Brooks, P. L., Frost, B. J., Mason, J. L., and Gibson, D. M. (1986a). Continuing evaluation of the Queen’s University tactile vocoder II: identification of open set sentences and tracking narrative. J. Rehabil. Res. Dev. 23, 129–138.

Brooks, P. L., Frost, B. J., Mason, J. L., and Gibson, D. M. (1986b). Continuing evaluation of the Queen’s University tactile vocoder. I: identification of open set words. J. Rehabil. Res. Dev. 23, 119–128.

Brown, C. A. (2014). Binaural enhancement for bilateral cochlear implant users. Ear Hear. 35, 580–584. doi: 10.1097/AUD.0000000000000044

Brown, C. A., and Bacon, S. P. (2009). Low-frequency speech cues and simulated electric-acoustic hearing. J. Acoust. Soc. Am. 125, 1658–1665. doi: 10.1121/1.3068441

Burr, D., Silva, O., Cicchini, G. M., Banks, M. S., and Morrone, M. C. (2009). Temporal mechanisms of multimodal binding. Proc. R. Soc. B Bio. Sci. 276, 1761–1769. doi: 10.1098/rspb.2008.1899

Cameron, S., Chong-White, N., Mealings, K., Beechey, T., Dillon, H., and Young, T. (2018). The parsing syllable envelopes test for assessment of amplitude modulation discrimination skills in children: development, normative data, and test-retest reliability studies. J. Am. Acad. Audiol. 29, 151–163. doi: 10.3766/jaaa.16146

Causon, A., Verschuur, C., and Newman, T. A. (2013). Trends in cochlear implant complications: implications for improving long-term outcomes. Otol. Neurotol. 34, 259–265. doi: 10.1097/MAO.0b013e31827d0943

Chatterjee, M., and Oba, S. I. (2004). Across- and within-channel envelope interactions in cochlear implant listeners. J. Assoc. Res. Otolaryngol. 5, 360–375. doi: 10.1007/s10162-004-4050-5

Chatterjee, M., and Peng, S. C. (2008). Processing F0 with cochlear implants: modulation frequency discrimination and speech intonation recognition. Hear. Res. 235, 143–156. doi: 10.1016/j.heares.2007.11.004

Chen, B., Shi, Y., Zhang, L., Sun, Z., Li, Y., Gopen, Q., et al. (2020). Masking effects in the perception of multiple simultaneous talkers in normal-hearing and cochlear implant listeners. Trends Hear. 24:2331216520916106. doi: 10.1177/2331216520916106

Cholewiak, R. W., and Collins, A. A. (2003). Vibrotactile localization on the arm: effects of place, space, and age. Percept. Psychophys. 65, 1058–1077. doi: 10.3758/bf03194834

Cholewiak, R. W., and Wollowitz, M. (1992). “The design of vibrotactile transducers,” in Tactile Aids for the Hearing Impaired, ed. I. R. Summers (London: Whurr), 57–82.

Ciesla, K., Wolak, T., Lorens, A., Heimler, B., Skarzynski, H., and Amedi, A. (2019). Immediate improvement of speech-in-noise perception through multisensory stimulation via an auditory to tactile sensory substitution. Restor. Neurol. Neurosci. 37, 155–166. doi: 10.3233/RNN-190898

Cowan, R. S., Blamey, P. J., Sarant, J. Z., Galvin, K. L., Alcantara, J. I., Whitford, L. A., et al. (1991). Role of a multichannel electrotactile speech processor in a cochlear implant program for profoundly hearing-impaired adults. Ear Hear. 12, 39–46. doi: 10.1097/00003446-199102000-00005

Craig, J. C. (1972). Difference threshold for intensity of tactile stimuli. Percept. Psychophys. 11, 150–152. doi: 10.3758/Bf03210362

D’Alessandro, H. D., and Mancini, P. (2019). Perception of lexical stress cued by low-frequency pitch and insights into speech perception in noise for cochlear implant users and normal hearing adults. Euro Arch. Oto. Rhino. Laryngol. 276, 2673–2680. doi: 10.1007/s00405-019-05502-9

de Dieuleveult, A. L., Siemonsma, P. C., van Erp, J. B., and Brouwer, A. M. (2017). Effects of aging in multisensory integration: a systematic review. Front. Aging Neurosci. 9:80. doi: 10.3389/fnagi.2017.00080

De Filippo, C. L. (1984). Laboratory projects in tactile aids to lipreading. Ear Hear. 5, 211–227. doi: 10.1097/00003446-198407000-00006

Dementyev, A., Olwal, A., and Lyon, R. F. (2020). “Haptics with input: back-EMF in linear resonant actuators to enable touch, pressure and environmental awareness,” in Proceedings of the 33rd Annual ACM Symposium on User Interface Software and Technology: UIST ’20 (New York, NY: Association for Computing Machinery).

Diederich, A., Colonius, H., and Schomburg, A. (2008). Assessing age-related multisensory enhancement with the time-window-of-integration model. Neuropsychologia 46, 2556–2562. doi: 10.1016/j.neuropsychologia.2008.03.026

Dorman, M. F., and Gifford, R. H. (2017). Speech understanding in complex listening environments by listeners fit with cochlear implants. J. Speech Lang. Hear. Res. 60, 3019–3026. doi: 10.1044/2017_JSLHR-H-17-0035

Dorman, M. F., Loiselle, L. H., Cook, S. J., Yost, W. A., and Gifford, R. H. (2016). Sound source localization by normal-hearing listeners, hearing-impaired listeners and cochlear implant listeners. Audiol. Neurootol. 21, 127–131. doi: 10.1159/000444740

Drennan, W. R., Oleson, J. J., Gfeller, K., Crosson, J., Driscoll, V. D., Won, J. H., et al. (2015). Clinical evaluation of music perception, appraisal and experience in cochlear implant users. Int. J. Audiol. 54, 114–123. doi: 10.3109/14992027.2014.948219

Dritsakis, G., van Besouw, R. M., and O’Meara, A. (2017). Impact of music on the quality of life of cochlear implant users: a focus group study. Cochlear Implants Int. 18, 207–215. doi: 10.1080/14670100.2017.1303892

Drullman, R., Festen, J. M., and Plomp, R. (1994). Effect of temporal envelope smearing on speech reception. J. Acoust. Soc. Am. 95, 1053–1064. doi: 10.1121/1.408467

Durisin, M., Buchner, A., Lesinski-Schiedat, A., Bartling, S., Warnecke, A., and Lenarz, T. (2015). Cochlear implantation in children with bacterial meningitic deafness: the influence of the degree of ossification and obliteration on impedance and charge of the implant. Cochlear Implants Int. 16, 147–158. doi: 10.1179/1754762814Y.0000000094

Elliot, L. L. (1962). Backward and forward masking of probe tones of different frequencies. J. Acoust. Soc. Am. 34, 1116–1117. doi: 10.1121/1.1918254

Ernst, M. O., and Bulthoff, H. H. (2004). Merging the senses into a robust percept. Trends Cog. Sci. 8, 162–169. doi: 10.1016/j.tics.2004.02.002

Everhardt, M. K., Sarampalis, A., Coler, M., Baskent, D., and Lowie, W. (2020). Meta-analysis on the identification of linguistic and emotional prosody in cochlear implant users and vocoder simulations. Ear Hear. 41, 1092–1102. doi: 10.1097/AUD.0000000000000863

Fischer, T., Schmid, C., Kompis, M., Mantokoudis, G., Caversaccio, M., and Wimmer, W. (2020). Pinna-imitating microphone directionality improves sound localization and discrimination in bilateral cochlear implant users. Ear Hear. 42, 214–222. doi: 10.1097/AUD.0000000000000912

Fletcher, M. D. (2020). Using haptic stimulation to enhance auditory perception in hearing-impaired listeners. Exp. Rev. Med. Devic. 20, 1–12. doi: 10.1080/17434440.2021.1863782

Fletcher, M. D., and Zgheib, J. (2020). Haptic sound-localisation for use in cochlear implant and hearing-aid users. Sci. Rep. 10:14171. doi: 10.1038/s41598-020-70379-2

Fletcher, M. D., Cunningham, R. O., and Mills, S. R. (2020a). Electro-haptic enhancement of spatial hearing in cochlear implant users. Sci. Rep. 10:1621. doi: 10.1038/s41598-020-58503-8

Fletcher, M. D., Hadeedi, A., Goehring, T., and Mills, S. R. (2019). Electro-haptic enhancement of speech-in-noise performance in cochlear implant users. Sci. Rep. 9:11428. doi: 10.1038/s41598-019-47718-z

Fletcher, M. D., Mills, S. R., and Goehring, T. (2018). Vibro-tactile enhancement of speech intelligibility in multi-talker noise for simulated cochlear implant listening. Trends Hear. 22, 1–11. doi: 10.1177/2331216518797838

Fletcher, M. D., Song, H., and Perry, S. W. (2020b). Electro-haptic stimulation enhances speech recognition in spatially separated noise for cochlear implant users. Sci. Rep. 10:12723.

Fletcher, M. D., Thini, N., and Perry, S. W. (2020c). Enhanced pitch discrimination for cochlear implant users with a new haptic neuroprosthetic. Sci. Rep. 10:10354. doi: 10.1038/s41598-020-67140-0

Fletcher, M. D., Zgheib, J., and Perry, S. W. (2021a). Sensitivity to haptic sound-localisation cues. Sci. Rep. 11:312. doi: 10.1038/s41598-020-79150-z

Fletcher, M. D., Zgheib, J., and Perry, S. W. (2021b). Sensitivity to haptic sound-localization cues at different body locations. Sensors 21:3770. doi: 10.3390/s21113770

Florentine, M., Buus, S., and Mason, C. R. (1987). Level discrimination as a function of level for tones from 0.25 to 16-kHz. J. Acoust. Soc. Am. 81, 1528–1541. doi: 10.1121/1.394505

Foxe, J. J., Morocz, I. A., Murray, M. M., Higgins, B. A., Javitt, D. C., and Schroeder, C. E. (2000). Multisensory auditory-somatosensory interactions in early cortical processing revealed by high-density electrical mapping. Brain Res. Cogn. Brain Res. 10, 77–83. doi: 10.1016/s0926-6410(00)00024-0

Francart, T., Lenssen, A., and Wouters, J. (2011). Enhancement of interaural level differences improves sound localization in bimodal hearing. J. Acoust. Soc. Am. 130, 2817–2826. doi: 10.1121/1.3641414

Fujisaki, W., and Nishida, S. (2005). Temporal frequency characteristics of synchrony-asynchrony discrimination of audio-visual signals. Exp. Brain Res. 166, 455–464. doi: 10.1007/s00221-005-2385-8

Galvin, J. J. III, and Fu, Q. J. (2009). Influence of stimulation rate and loudness growth on modulation detection and intensity discrimination in cochlear implant users. Hear. Res. 250, 46–54. doi: 10.1016/j.heares.2009.01.009

Garadat, S. N., and Pfingst, B. E. (2011). Relationship between gap detection thresholds and loudness in cochlear-implant users. Hear. Res. 275, 130–138. doi: 10.1016/j.heares.2010.12.011

Gault, R. H. (1924). Progress in experiments on tactile interpretation of oral speech. J. Ab. Soc. Psychol. 19, 155–159. doi: 10.1037/h0065752

Gault, R. H. (1930). On the effect of simultaneous tactual-visual stimulation in relation to the interpretation of speech. J. Ab. Soc. Psychol. 24, 498–517. doi: 10.1037/h0072775

Gescheider, G. A. (1966). Resolving of successive clicks by the ears and skin. J. Exp. Psychol. 71, 378–381. doi: 10.1037/h0022950

Gescheider, G. A. (1967). Auditory and cutaneous temporal resolution of successive brief stimuli. J. Exp. Psychol. 75, 570–572. doi: 10.1037/h0025113

Gescheider, G. A., Bolanowski, S. J. Jr., and Verrillo, R. T. (1989). Vibrotactile masking: effects of stimulus onset asynchrony and stimulus frequency. J. Acoust. Soc. Am. 85, 2059–2064. doi: 10.1121/1.397858

Gescheider, G. A., Edwards, R. R., Lackner, E. A., Bolanowski, S. J., and Verrillo, R. T. (1996a). The effects of aging on information-processing channels in the sense of touch: III. Differential sensitivity to changes in stimulus intensity. Somatosens Mot. Res. 13, 73–80. doi: 10.3109/08990229609028914

Gescheider, G. A., Zwislocki, J. J., and Rasmussen, A. (1996b). Effects of stimulus duration on the amplitude difference limen for vibrotaction. J. Acoust. Soc. Am. 100(4 Pt 1), 2312–2319. doi: 10.1121/1.417940

Gick, B., and Derrick, D. (2009). Aero-tactile integration in speech perception. Nature 462, 502–504. doi: 10.1038/nature08572

Gick, B., Ikegami, Y., and Derrick, D. (2010). The temporal window of audio-tactile integration in speech perception. J. Acoust. Soc. Am. 128, 342–346. doi: 10.1121/1.3505759

Gifford, R. H., and Dorman, M. F. (2012). The psychophysics of low-frequency acoustic hearing in electric and acoustic stimulation (EAS) and bimodal patients. J. Hear. Sci. 2, 33–44.

Gifford, R. H., Dorman, M. F., Spahr, A. J., and Bacon, S. P. (2007). Auditory function and speech understanding in listeners who qualify for EAS surgery. Ear Hear. 28(Suppl. 2), 114S–118S. doi: 10.1097/AUD.0b013e3180315455

Gillmeister, H., and Eimer, M. (2007). Tactile enhancement of auditory detection and perceived loudness. Brain Res. 1160, 58–68. doi: 10.1016/j.brainres.2007.03.041

Goehring, T., Keshavarzi, M., Carlyon, R. P., and Moore, B. C. J. (2019). Using recurrent neural networks to improve the perception of speech in non-stationary noise by people with cochlear implants. J. Acoust. Soc. Am. 146, 705–718. doi: 10.1121/1.5119226

Goff, G. D. (1967). Differential discrimination of frequency of cutaneous mechanical vibration. J. Exp. Psychol. 74, 294–299. doi: 10.1037/h0024561

Grant, K. W., Ardell, L. H., Kuhl, P. K., and Sparks, D. W. (1985). The contribution of fundamental frequency, amplitude envelope, and voicing duration cues to speechreading in normal-hearing subjects. J. Acoust. Soc. Am. 77, 671–677. doi: 10.1121/1.392335

Grantham, D. W. (1984). Interaural intensity discrimination: insensitivity at 1000 Hz. J. Acoust. Soc. Am. 75, 1191–1194. doi: 10.1121/1.390769

Guan, J., and Liu, C. (2019). Speech perception in noise with formant enhancement for older listeners. J. Speech Lang. Hear. Res. 62, 3290–3301. doi: 10.1044/2019_JSLHR-S-18-0089

Hairston, W. D., Laurienti, P. J., Mishra, G., Burdette, J. H., and Wallace, M. T. (2003). Multisensory enhancement of localization under conditions of induced myopia. Exp. Brain Res. 152, 404–408. doi: 10.1007/s00221-003-1646-7

Hanin, L., Boothroyd, A., and Hnath-Chisolm, T. (1988). Tactile presentation of voice fundamental frequency as an aid to the speechreading of sentences. Ear Hear. 9, 335–341. doi: 10.1097/00003446-198812000-00010

Hanvey, K. (2020). BCIG Annual Data Collection Financial Year 2018-2019. Available online at: https://www.bcig.org.uk/wp-content/uploads/2020/02/BCIG-activity-data-FY18-19.pdf (accessed May 25, 2020).

Hazrati, O., and Loizou, P. C. (2012). The combined effects of reverberation and noise on speech intelligibility by cochlear implant listeners. Int. J. Audiol. 51, 437–443. doi: 10.3109/14992027.2012.658972

Huang, J., Lu, T., Sheffield, B., and Zeng, F. G. (2019). Electro-tactile stimulation enhances cochlear-implant melody recognition: effects of rhythm and musical training. Ear Hear. 41, 106–113. doi: 10.1097/AUD.0000000000000749

Huang, J., Sheffield, B., Lin, P., and Zeng, F. G. (2017). Electro-tactile stimulation enhances cochlear implant speech recognition in noise. Sci. Rep. 7:2196. doi: 10.1038/s41598-017-02429-1

Kan, A., Goupell, M. J., and Litovsky, R. Y. (2019). Effect of channel separation and interaural mismatch on fusion and lateralization in normal-hearing and cochlear-implant listeners. J. Acoust. Soc. Am. 146:1448. doi: 10.1121/1.5123464

Kang, R., Nimmons, G. L., Drennan, W., Longnion, J., Ruffin, C., Nie, K., et al. (2009). Development and validation of the University of Washington Clinical Assessment of Music Perception test. Ear Hear. 30, 411–418. doi: 10.1097/AUD.0b013e3181a61bc0

Keetels, M., and Vroomen, J. (2008). Temporal recalibration to tactile-visual asynchronous stimuli. Neurosci. Lett. 430, 130–134. doi: 10.1016/j.neulet.2007.10.044

Keshavarzi, M., Goehring, T., Turner, R. E., and Moore, B. C. J. (2019). Comparison of effects on subjective intelligibility and quality of speech in babble for two algorithms: a deep recurrent neural network and spectral subtraction. J. Acoust. Soc. Am. 145:1493. doi: 10.1121/1.5094765

Kewley-Port, D., and Zheng, Y. (1998). Auditory models of formant frequency discrimination for isolated vowels. J. Acoust. Soc. Am. 103, 1654–1666. doi: 10.1121/1.421264

Kishon-Rabin, L., and Nir-Dankner, M. (1999). The perception of phonologically significant contrasts using speech envelope cues. J. Basic Clin. Physiol. Pharmacol. 10, 209–219. doi: 10.1515/jbcpp.1999.10.3.209

Kishon-Rabin, L., Boothroyd, A., and Hanin, L. (1996). Speechreading enhancement: a comparison of spatial-tactile display of voice fundamental frequency (F0) with auditory F0. J. Acoust. Soc. Am. 100, 593–602. doi: 10.1121/1.415885

Kreft, H. A., Donaldson, G. S., and Nelson, D. A. (2004). Effects of pulse rate and electrode array design on intensity discrimination in cochlear implant users. J. Acoust. Soc. Am. 116(4 Pt 1), 2258–2268. doi: 10.1121/1.1786871

Kuhl, P. K., Stevens, E., Hayashi, A., Deguchi, T., Kiritani, S., and Iverson, P. (2006). Infants show a facilitation effect for native language phonetic perception between 6 and 12 months. Dev. Sci. 9, F13–F21. doi: 10.1111/j.1467-7687.2006.00468.x

Laback, B., Pok, S. M., Baumgartner, W. D., Deutsch, W. A., and Schmid, K. (2004). Sensitivity to interaural level and envelope time differences of two bilateral cochlear implant listeners using clinical sound processors. Ear Hear. 25, 488–500. doi: 10.1097/01.aud.0000145124.85517.e8

Lakatos, P., Chen, C. M., O’Connell, M. N., Mills, A., and Schroeder, C. E. (2007). Neuronal oscillations and multisensory interaction in primary auditory cortex. Neuron 53, 279–292. doi: 10.1016/j.neuron.2006.12.011

Lammers, M. J. W., Versnel, H., Topsakal, V., van Zanten, G. A., and Grolman, W. (2018). Predicting performance and non-use in prelingually deaf and late-implanted cochlear implant users. Otol. Neurotol. 39, e436–e442. doi: 10.1097/MAO.0000000000001828

Landry, S. P., Guillemot, J. P., and Champoux, F. (2013). Temporary deafness can impair multisensory integration: a study of cochlear-implant users. Psychol. Sci. 24, 1260–1268. doi: 10.1177/0956797612471142

Laurienti, P. J., Burdette, J. H., Maldjian, J. A., and Wallace, M. T. (2006). Enhanced multisensory integration in older adults. Neurobiol. Aging 27, 1155–1163. doi: 10.1016/j.neurobiolaging.2005.05.024

Leclere, T., Lavandier, M., and Deroche, M. L. D. (2017). The intelligibility of speech in a harmonic masker varying in fundamental frequency contour, broadband temporal envelope, and spatial location. Hear. Res. 350, 1–10. doi: 10.1016/j.heares.2017.03.012

Levanen, S., and Hamdorf, D. (2001). Feeling vibrations: enhanced tactile sensitivity in congenitally deaf humans. Neurosci. Lett. 301, 75–77. doi: 10.1016/s0304-3940(01)01597-x

Leveque, J. L., Dresler, J., Ribot-Ciscar, E., Roll, J. P., and Poelman, C. (2000). Changes in tactile spatial discrimination and cutaneous coding properties by skin hydration in the elderly. J. Invest. Dermatol. 115, 454–458. doi: 10.1046/j.1523-1747.2000.00055.x

Lewkowicz, D. J., and Ghazanfar, A. A. (2006). The decline of cross-species intersensory perception in human infants. Proc. Natl. Acad. Sci. U.S.A. 103, 6771–6774. doi: 10.1073/pnas.0602027103

Lewkowicz, D. J., and Ghazanfar, A. A. (2009). The emergence of multisensory systems through perceptual narrowing. Trends Cogn. Sci. 13, 470–478. doi: 10.1016/j.tics.2009.08.004

Li, F., Trevino, A., Menon, A., and Allen, J. B. (2012). A psychoacoustic method for studying the necessary and sufficient perceptual cues of American English fricative consonants in noise. J. Acoust. Soc. Am. 132, 2663–2675. doi: 10.1121/1.4747008

Litovsky, R. Y., Parkinson, A., and Arcaroli, J. (2009). Spatial hearing and speech intelligibility in bilateral cochlear implant users. Ear Hear. 30, 419–431. doi: 10.1097/AUD.0b013e3181a165be

Luo, X., and Hayes, L. (2019). Vibrotactile stimulation based on the fundamental frequency can improve melodic contour identification of normal-hearing listeners with a 4-channel cochlear implant simulation. Front. Neurosci. 13:1145. doi: 10.3389/fnins.2019.01145

McDermott, H. J. (2004). Music perception with cochlear implants: a review. Trends Amplif. 8, 49–82. doi: 10.1177/108471380400800203

Meister, H., Landwehr, M., Pyschny, V., Walger, M., and von Wedel, H. (2009). The perception of prosody and speaker gender in normal-hearing listeners and cochlear implant recipients. Int. J. Audiol. 48, 38–48. doi: 10.1080/14992020802293539

Meredith, M. A., and Allman, B. L. (2015). Single-unit analysis of somatosensory processing in the core auditory cortex of hearing ferrets. Eur. J. Neurosci. 41, 686–698. doi: 10.1111/ejn.12828

Moore, B. C. (1973). Frequency difference limens for short-duration tones. J. Acoust. Soc. Am. 54, 610–619. doi: 10.1121/1.1913640

Moore, B. C. (1985). Frequency selectivity and temporal resolution in normal and hearing-impaired listeners. Br. J. Audiol. 19, 189–201. doi: 10.3109/03005368509078973

Moore, B. C., and Glasberg, B. R. (1988). Gap detection with sinusoids and noise in normal, impaired, and electrically stimulated ears. J. Acoust. Soc. Am. 83, 1093–1101. doi: 10.1121/1.396054

Most, T., and Peled, M. (2007). Perception of suprasegmental features of speech by children with cochlear implants and children with hearing aids. J. Deaf Stud. Deaf Educ. 12, 350–361. doi: 10.1093/deafed/enm012

Murray, I. R., and Arnott, J. L. (1993). Toward the simulation of emotion in synthetic speech – a review of the literature on human vocal emotion. J. Acoust. Soc. Am. 93, 1097–1108. doi: 10.1121/1.405558

Nava, E., Bottari, D., Villwock, A., Fengler, I., Buchner, A., Lenarz, T., et al. (2014). Audio-tactile integration in congenitally and late deaf cochlear implant users. PLoS One 9:e99606. doi: 10.1371/journal.pone.0099606

Navarra, J., Soto-Faraco, S., and Spence, C. (2007). Adaptation to audiotactile asynchrony. Neurosci. Lett. 413, 72–76. doi: 10.1016/j.neulet.2006.11.027

Novich, S. D., and Eagleman, D. M. (2015). Using space and time to encode vibrotactile information: toward an estimate of the skin’s achievable throughput. Exp. Brain Res. 233, 2777–2788. doi: 10.1007/s00221-015-4346-1

O’Connell, B. P., Dedmon, M. M., and Haynes, D. S. (2017). Hearing preservation cochlear implantation: a review of audiologic benefits, surgical success rates, and variables that impact success. Curr. Otorhinolaryngol. Rep. 5, 286–294. doi: 10.1007/s40136-017-0176-y

O’Neill, E. R., Kreft, H. A., and Oxenham, A. J. (2019). Speech perception with spectrally non-overlapping maskers as measure of spectral resolution in cochlear implant users. J. Assoc. Res. Otolaryngol. 20, 151–167. doi: 10.1007/s10162-018-00702-2

Parise, C. V., and Ernst, M. O. (2016). Correlation detection as a general mechanism for multisensory integration. Nat. Commun. 7:11543. doi: 10.1038/ncomms11543

Peng, S. C., Tomblin, J. B., and Turner, C. W. (2008). Production and perception of speech intonation in pediatric cochlear implant recipients and individuals with normal hearing. Ear Hear. 29, 336–351. doi: 10.1097/AUD.0b013e318168d94d

Penner, M. J. (1977). Detection of temporal gaps in noise as a measure of the decay of auditory sensation. J. Acoust. Soc. Am. 61, 552–557. doi: 10.1121/1.381297

Penner, M. J., Leshowitz, B., Cudahy, E., and Ricard, G. (1974). Intensity discrimination for pulsed sinusoids of various frequencies. Percept. Psychophys. 15, 568–570. doi: 10.3758/BF03199303

Perrotta, M. V., Asgeirsdottir, T., and Eagleman, D. M. (2021). Deciphering sounds through patterns of vibration on the skin. Neuroscience 8, 1–25. doi: 10.1016/j.neuroscience.2021.01.008

Pezent, E., Israr, A., Samad, M., Robinson, S., Agarwal, P., Benko, H., et al. (2019). “Tasbi: multisensory squeeze and vibrotactile wrist haptics for augmented and virtual reality,” in Proceedings of the IEEE World Haptics Conference (WHC), Facebook Research, Tokyo.

Pisanski, K., Raine, J., and Reby, D. (2020). Individual differences in human voice pitch are preserved from speech to screams, roars and pain cries. R. Soc. Open Sci. 7:191642. doi: 10.1098/rsos.191642

Plomp, R. (1964). Rate of decay of auditory sensation. J. Acoust. Soc. Am. 36, 277–282. doi: 10.1121/1.1918946

Potts, W. B., Ramanna, L., Perry, T., and Long, C. J. (2019). Improving localization and speech reception in noise for bilateral cochlear implant recipients. Trends Hear. 23:2331216519831492. doi: 10.1177/2331216519831492

Proctor, A., and Goldstein, M. H. (1983). Development of lexical comprehension in a profoundly deaf child using a wearable, vibrotactile communication aid. Lang. Speech Hear. Serv. Sch. 14, 138–149. doi: 10.1044/0161-1461.1403.138

Raine, C. (2013). Cochlear implants in the United Kingdom: awareness and utilization. Cochlear Implants Int. 14(Suppl. 1), S32–S37. doi: 10.1179/1467010013Z.00000000077

Raine, C., Atkinson, H., Strachan, D. R., and Martin, J. M. (2016). Access to cochlear implants: time to reflect. Cochlear Implants Int. 17(Suppl. 1), 42–46. doi: 10.1080/14670100.2016.1155808

Ray, J., Wright, T., Fielden, C., Cooper, H., Donaldson, I., and Proops, D. W. (2006). Non-users and limited users of cochlear implants. Cochlear Implants Int. 7, 49–58. doi: 10.1179/cim.2006.7.1.49

Reed, C. M., Delhorne, L. A., and Durlach, N. I. (1992). "Results obtained with Tactaid II and Tactaid VII," in The 2nd International Conference on Tactile Aids, Hearing Aids, and Cochlear Implants, eds A. Risberg, S. Felicetti, G. Plant, and K. E. Spens (Stockholm: Royal Institute of Technology), 149–155.

Reuter, E. M., Voelcker-Rehage, C., Vieluf, S., and Godde, B. (2012). Touch perception throughout working life: effects of age and expertise. Exp. Brain Res. 216, 287–297. doi: 10.1007/s00221-011-2931-5

Rothenberg, M., Verrillo, R. T., Zahorian, S. A., Brachman, M. L., and Bolanowski, S. J. Jr. (1977). Vibrotactile frequency for encoding a speech parameter. J. Acoust. Soc. Am. 62, 1003–1012. doi: 10.1121/1.381610

Sagi, E., Meyer, T. A., Kaiser, A. R., Teoh, S. W., and Svirsky, M. A. (2010). A mathematical model of vowel identification by users of cochlear implants. J. Acoust. Soc. Am. 127, 1069–1083. doi: 10.1121/1.3277215

Schorr, E. A., Fox, N. A., van Wassenhove, V., and Knudsen, E. I. (2005). Auditory-visual fusion in speech perception in children with cochlear implants. Proc. Natl. Acad. Sci. U.S.A. 102, 18748–18750. doi: 10.1073/pnas.0508862102

Schurmann, M., Caetano, G., Jousmaki, V., and Hari, R. (2004). Hands help hearing: facilitatory audiotactile interaction at low sound-intensity levels. J. Acoust. Soc. Am. 115, 830–832. doi: 10.1121/1.1639909

Shannon, R. V. (1990). Forward masking in patients with cochlear implants. J. Acoust. Soc. Am. 88, 741–744. doi: 10.1121/1.399777

Sherrick, C. E. (1984). Basic and applied research on tactile aids for deaf people: progress and prospects. J. Acoust. Soc. Am. 75, 1325–1342. doi: 10.1121/1.390853

Shore, S. E., El Kashlan, H., and Lu, J. (2003). Effects of trigeminal ganglion stimulation on unit activity of ventral cochlear nucleus neurons. Neuroscience 119, 1085–1101. doi: 10.1016/s0306-4522(03)00207-0

Shore, S. E., Vass, Z., Wys, N. L., and Altschuler, R. A. (2000). Trigeminal ganglion innervates the auditory brainstem. J. Comp. Neurol. 419, 271–285. doi: 10.1002/(SICI)1096-9861(20000410)419:3<271::AID-CNE1>3.0.CO;2-M

Slater, A., and Kirby, R. (1998). Innate and learned perceptual abilities in the newborn infant. Exp. Brain Res. 123, 90–94. doi: 10.1007/s002210050548

Snyder, J. C., Clements, M. A., Reed, C. M., Durlach, N. I., and Braida, L. D. (1982). Tactile communication of speech. I. Comparison of Tadoma and a frequency-amplitude spectral display in a consonant discrimination task. J. Acoust. Soc. Am. 71, 1249–1254. doi: 10.1121/1.387774