- 1Department of Neurology, School of Medicine, Fukushima Medical University, Fukushima, Japan

- 2Department of Neurology, Faculty of Medicine, Universitas Indonesia, Cipto Mangunkusumo Hospital, Jakarta, Indonesia

- 3Department of Human Neurophysiology, Fukushima Medical University, Fukushima, Japan

- 4Department of Neurology, Takeda General Hospital, Fukushima, Japan

- 5Department of Neurology, Teikyo University, Tokyo, Japan

The decisions we make are sometimes influenced by interactions with other agents. Previous studies have suggested that the prefrontal cortex plays an important role in decision-making and that the dopamine system underlies processes of motivation, motor preparation, and reinforcement learning. However, the physiological mechanisms underlying how the prefrontal cortex and the dopaminergic system are involved in decision-making remain largely unclear. The present study aimed to determine how decision strategies influence event-related potentials (ERPs). We also tested the effect of levodopa, a dopamine precursor, on decision-making and ERPs in a randomized double-blind placebo-controlled investigation. The subjects performed a matching-pennies task against an opposing virtual computer player by choosing between right and left targets while their ERPs were recorded. According to the rules of the matching-pennies task, the subject won the trial when they chose the same side as the opponent, and lost otherwise. We set three different task rules: (1) with the alternation (ALT) rule, the computer opponent made alternating choices of right and left in sequential trials; (2) with the random (RAND) rule, the opponent randomly chose between right and left; and (3) with the GAME rule, the opponent analyzed the subject’s past choices to predict the subject’s next choice, and then chose the opposite side. A sustained medial ERP became more negative toward the time of the subject’s target choice. A biphasic potential appeared when the opponent’s choice was revealed after the subject’s response. The ERPs around the subject’s choice were greater in RAND and GAME than in ALT, and the negative peak was enhanced by levodopa. In addition to these medial ERPs, we observed lateral frontal ERPs tuned to the choice direction. The signals emerged around the choice period selectively in RAND and GAME when levodopa was administered. These results suggest that decision processes are modulated by the dopamine system when a complex and strategic decision is required, which may reflect decision updating with dopaminergic prediction error signals.

Introduction

Interactive decision-making is fundamental to our social activity. However, it is elusive, in that it may change dynamically depending on social contexts and knowledge about the intentions of others. For example, playing the rock-paper-scissors game involves complex interactive processes including decision variables such as likelihoods, priors, and values. The game theory provides a mathematical framework for the strategic interactions among decision-makers. In the game theory, a player is said to use a mixed strategy whenever they choose to randomize over the set of available actions (Dixit et al., 2009). Mixed-strategy games have been used for neurophysiological research on decision-making and identified neural signals related to the previous choices, previous rewards, expected reward value, and decision switching of subjects in the medial prefrontal cortex and other brain areas (Barraclough et al., 2004; Dorris and Glimcher, 2004; Seo and Lee, 2007, 2008; Seo et al., 2009; Thevarajah et al., 2009; Donahue et al., 2013). These neural signals may reflect strategic updating of decision variables based on past history.

There are several brain regions involved in decision-making, among which the dopamine system and prefrontal cortex appear to play pivotal roles, particularly when a decision involves a strategic interaction (Lee and Seo, 2016). There is converging evidence that the dopamine system is essential for adapting behavior based on previous outcomes, such as dopamine neurons emitting teaching signals that guide reinforcement learning (Schultz et al., 1997), dopamine activity reflecting the upcoming choices and actions of subjects (Morris et al., 2006; Yun et al., 2020), and the economic decisions of Parkinson’s disease (PD) patients being influenced by dopaminergic treatment (Kobayashi et al., 2019). Decision processes can be decomposed into multiple tasks, including integration of external information, outcome estimation, and movement preparation. The primate prefrontal cortex has been shown to be involved in each of these cognitive tasks, and is thus thought to be one of the main processors involved in decision-making to guide goal-directed behavior (Kim and Shadlen, 1999; Krawczyk, 2002; Matsumoto et al., 2003). Anatomically, dopamine neurons project to the prefrontal cortex via the mesocortical pathway. Given these anatomical and physiological backgrounds, it is tempting to speculate that decision substrates are formed in the prefrontal cortex under the influence of dopaminergic input that carries reinforcement signals and motivational drive. However, we are not aware of any previous study that has directly examined dopaminergic influences on behavior and electrophysiological activities during an interactive game.

We aimed to determine how dopamine influences event-related potentials (ERPs) while healthy subjects played a matching-pennies task, which is a type of mixed-strategy zero-sum game. ERPs are voltage changes in electroencephalography (EEG) recordings that occur before, during, or after physical or mental events (Picton et al., 2000). Neural correlates of decision-making in humans have been studied mainly using functional magnetic resonance imaging (fMRI). However, decision processes are dynamic and instantaneous, possibly occurring in the subsecond range, and so fMRI data with a low temporal resolution must be interpreted with caution. Recording ERPs is well suited to studying decision processes since it yields non-invasive data on cortical activity with excellent temporal resolution. Much of the ERP research on decision-making has focused on postdecision activity, such as error-related negativity (Falkenstein et al., 1991) and the central–parietal P3 component (Polich, 2007). We looked for ERP correlates of strategic decision-making, focusing on prefrontal activity and dopaminergic influences.

According to the rules of the matching-pennies task, the subject won the trial when they chose the same side as the opponent, and lost otherwise. We set three different task rules: (1) with the alternation (ALT) rule, the computer opponent made alternating choices of right and left in sequential trials; (2) with the RAND rule, the opponent randomly chose between right and left; and (3) with the GAME rule, the opponent analyzed the subject’s past choices in order to predict the subject’s next choice, and then chose the opposite side. Therefore, the subject had to make choice patterns as random as possible in order to reduce the likelihood of the computer opponent winning. We hypothesized that the prefrontal cortex would exhibit greater activation when decisions were based on complex priors rather than the simple alternation rule: in order words, when decision-making is based on intensive computation of complex priors and outcome prediction, dopaminergic input to the prefrontal cortex plays a critical role. We therefore tested whether the administration of levodopa, a precursor of dopamine, enhances decision-related ERPs.

Materials and Methods

Participants

Eighteen right-handed healthy adults (12 males; age 46.2 ± 12.8 years, mean ± SD) were recruited. One male subject was excluded because he withdrew from the experiment after the first session. Fukushima Medical University Ethical Committee approved the experimental design of the study, and informed consent was obtained from all subjects.

Procedures

Each subject participated in recording sessions twice, separated by an interval of at least 1 week. The subjects took either a placebo or 100 mg of levodopa 45 min before the start of each recording. Drug treatment was double-blinded and randomized.

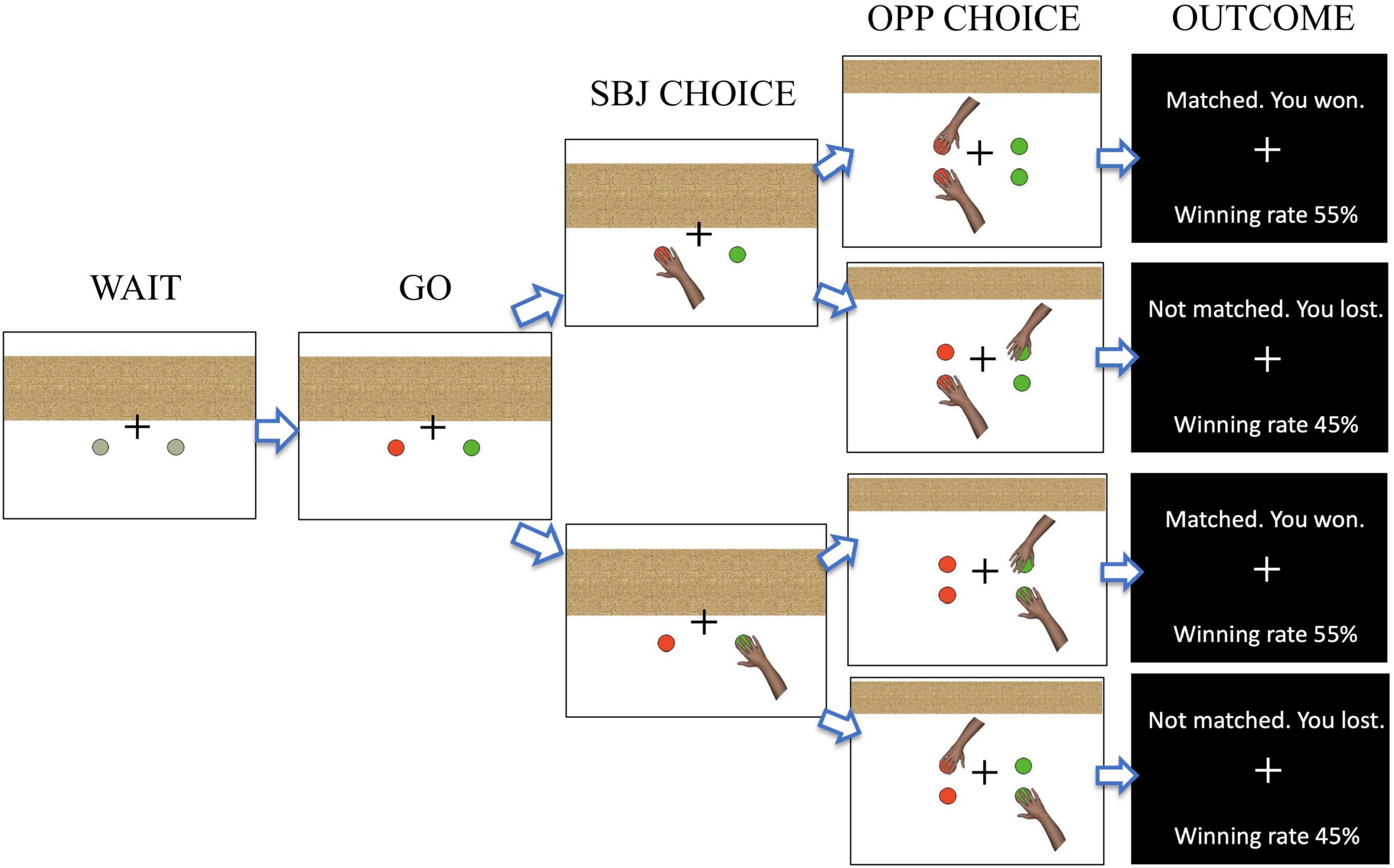

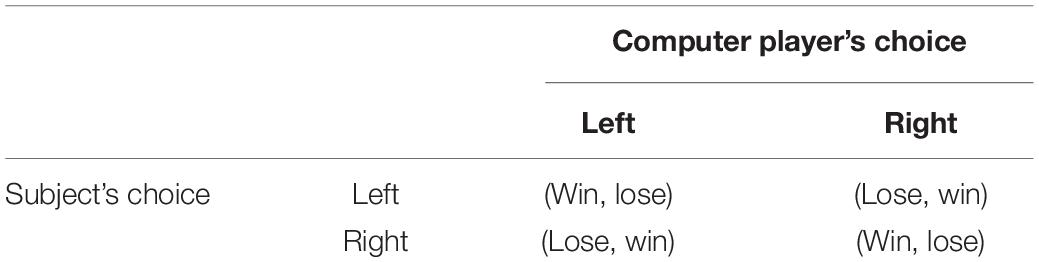

During EEG recordings, the subject was seated 70 cm from a computer monitor (60 cm × 30 cm) and played a computerized version of the matching-pennies task (Figure 1). At the beginning of each trial, two gray circles were displayed at the bottom of the monitor and the subject put the index and middle fingers of their right hand on the two designated keys on the keyboard (during the WAIT period). After 800 ms, the gray circles changed color, which signaled the subject to press either one of the two keys (the GO period). Immediately after they had made their choice, a hand illustration was displayed below the chosen side of the circles (designated as SBJ CHOICE). After 1,000 ms, the choice of the computer player was revealed by a hand illustration shown on the left or right side at the top of the monitor (OPP CHOICE). The subject won the trial if the side of their choice matched that of the computer player, and otherwise, the subject lost (Table 1). After 700 ms, the outcome of each trial was displayed on the monitor together with the total winning rate (OUTCOME). The subject was encouraged to win as much as possible. The trial was aborted if the subject responded during the WAIT period or did not respond within 5 s after the GO signal.

Figure 1. Illustration of the task flow in the matching-pennies task. The task started with the presentation of two gray circles (WAIT). A color change of the two circles signaled the subject to make a choice response (GO), with the subject having to choose either the left or right target (SBJ CHOICE). After a 1,000 ms delay, the choice made by the computer player was displayed (OPP CHOICE). After 700 ms, the outcome was shown (OUTCOME).

Table 1. Payoff matrix of the subject and the computer player for the choice of left vs. right key presses (subject, computer).

The following algorithms were implemented for the computer player: Each subject performed the matching-pennies task with three different rules. For the ALT rule, in which the computer player chose the right or left target alternatively, the subject could easily predict the opponent’s choice. However, the subject could not predict the opponent’s choice for the RAND rule, since the computer player chose the right or left target randomly with equal probability. The GAME rule was modified from a previous study (Barraclough et al., 2004), in which the computer could exploit systematic bias in the subject’s choice in the recent past to maximize the winning rates of the computer player. The computer saved the entire history of the subject’s choices in a given session and used the information to predict the subject’s next choice by testing a set of hypotheses. Multiple conditional probabilities were estimated to predict the subject’s choice, and the computer biased its selection according to these probabilities.

P(A, N) is the conditional probability of the subject choosing A (right or left) given that they have chosen A in the Nth previous trials. For example, if the subject chose the right target with a probability of 80% in the recent past (i.e., P(left, 1) = 0.8), the probability of the computer selecting the left target would be 80%. The subject’s choice may be conditional not only on their past choice but also on the game outcome (win vs. lose). Pwin(A, N) and Plose(A, N) are the conditional probabilities of the subject choosing A given that they chose A in the Nth previous trials and winning or losing the trial, respectively, for example, Pwin(A, 1) = 1 means a win-stay strategy and Plose(A, 1) = 0 means a lose-switch strategy. Among these conditional probabilities computed for N = 1–4, the probability with the highest value (Pmax) was taken to bias the choice of the computer player to the opposite side at the same probability:

The next choice of the computer player was opposite to the subject’s choice with a probability of Pmax.

All of the subjects played 450 trials, comprising six sessions with three different rules and 75 trials/session. Six blocks of trials were run in a fixed order (ALT–RAND–GAME–ALT–RAND–GAME) and there was a short break between the trial blocks. At the beginning of the experiment, we first provide general instructions about the task sequence and procedure of the matching-pennies task, including showing example screenshots. We then explained the three different rules and allowed 15 practice trials for each rule.

The following instructions were given for each task rule:

ALT rule: “In this session, the computer opponent will simply alternate its response between right and left. So, you can easily win by alternating your response to choose the side that the opponent will choose.”

RAND rule: “In this session, the computer opponent will randomly choose between right and left. So, its response is unpredictable. You will win or lose by chance.”

GAME rule: “In this session, the computer opponent will analyze your past choices in order to predict your next choice. For example, if you chose left repeatedly, the computer opponent would choose right to win. Therefore, you have to try to make unpredictable selection patterns to reduce the likelihood of the computer opponent winning. You are encouraged to win as much as possible.”

The three rules were applied in a fixed order to make it as easy for the subjects to understand which rule they were performing for a particular task. The sequence of ALT–RAND–GAME was repeated twice to avoid the possible order effect, training effect, and effects of changes in the recording conditions such as in the electrode impedances. We explicitly provided instructions about the relevant rule to the subjects before a new session began. We also instructed them to look at the cross mark at the center of the monitor to avoid eye movements. The behavioral task was controlled by MATLAB (version R2014a, The MathWorks, Natick, Massachusetts, United States) with Cogent 2000 and the Psychophysics Toolbox (Brainard, 1997) running on a Windows computer connected to a Macintosh computer that sampled the EEG data.

Data Acquisition and Analysis

Seventeen subjects completed the entire experiment, and all of their behavioral data were included in the behavioral analysis. We analyzed the winning rates of the subjects for each rule. We evaluated how much the choice of a subject was influenced by their own choices in the past by using the log likelihood ratio (LR):

where LRself(N) is the LR of choosing the same side in the current trial as in the Nth previous trials, rt and lt correspond to pressing the right- and left-side keys as the decision, respectively, and P(Aself, Bself) is the condition probability of the subject choosing A in the current trial and choosing B in the Nth previous trials. LRself(N) = 0 indicates that past choices did not influence the present choice. If the subjects tended to choose the same side as or the opposite side to the choice in the Nth previous trials, LRself(N) would be positive or negative, respectively.

The subject’s current choice could also be influenced by the opponent’s choice in the previous trials. The influence from the past choices of the computer player was evaluated in an analogous manner:

where LRpc(N) is the LR of choosing the same side in the current trial as the opponent’s choice in N previous trials, and P(Aself, Bpc) is the conditional probability of the subject choosing A in the current trial when the computer player chose B in the Nth previous trials. Logistic regression analysis was used to identify how the past choices influenced the current choice (see Supplementary Material 1 for details).

To measure the randomness of choosing between right and left, we calculated the permutation entropy of the numerical sequences of the chosen target using the MATLAB function “pec.m” (Bandt and Pompe, 2002; Ouyang, 2020).

EEG data were recorded from 129 scalp locations at a sampling rate of 1,000 Hz with a 24-bit resolution (Geodesic EEG System 400, Electrical Geodesic, Eugene, Oregon, United States). EEG data analysis was performed using EEGLAB (version 14.1.1) (Delorme and Makeig, 2004) and ERPLAB (version 6.1.4) (Lopez-Calderon and Luck, 2014). The data were downsampled to 250 Hz and bandpass filtered between 0.01 and 30 Hz, with a notch filter at 50 Hz. Noisy channels were removed, and the removed channels were interpolated. The sampled data were re-referenced to the average of all electrodes. Independent-components analysis and an automatic EEG artifact detector (Mognon et al., 2011) were used to correct for artifacts such as blinks and eye movements.

ERPs were analyzed in three periods. The first period (period I) was from the onset of the WAIT cue until the onset of the GO cue (800 ms), which corresponds to the period when the subject waited for the GO stimulus to appear on the monitor. The second period (period II) was from -800 to 0 ms from the subject’s choice response (SBJ CHOICE, 800 ms). The third period (period III) was from the disclosure of the opponent’s choice (OPP CHOICE) until the onset of the feedback cue (OUTCOME, 700 ms). The activity during the intertrial interval was used for baseline correction. We excluded EEG data with a large proportion of noise contamination (more than 25% of trials rejected by ERPLAB).

For periods I and II, the period-mean amplitude was defined as the amplitudes of ERPs averaged over the entire period. For periods I to III, the negative peak amplitude was defined as the minimum value of the ERP during each period. For period III, the positive peak amplitude was defined as the maximum value of the ERP during the period.

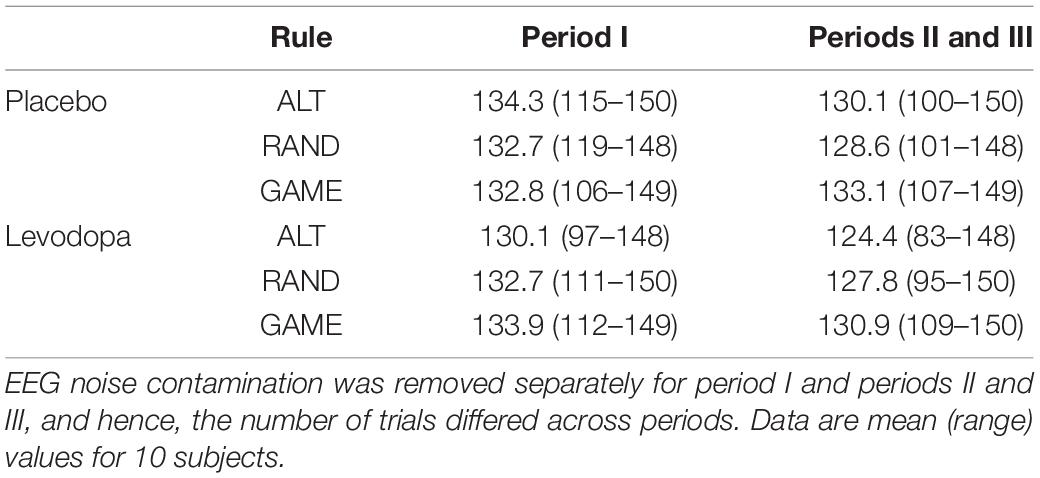

Initially, there were 17 subjects. Data from seven subjects were excluded because the number of trials was insufficient after artifact removal. Therefore, data from the remaining 10 subjects were analyzed. The numbers of trials averaged for the ERP analysis are summarized in Table 2.

To quantify the selectivity of the ERP signals to the direction of the subject choice, we calculated the mean ERP difference between choosing the right and left targets:

where ERPmean(rt) and ERPmean(rt) are the ERPs averaged for trials in which the subject chose the right and left, respectively. To quantify the ERP selectivity for behavioral outcomes, we calculated the mean ERP difference between winning and losing trials:

where ERPmean(win) and ERPmean(lose) are the ERPs averaged for trials in which the subject won and lost, respectively.

To examine whether ERPs were influenced by the choice history, we examined the effect of previous choices from the following aspects:

1. The influence of the previous choice was examined by calculating the mean ERP difference between trials in which subjects chose the right and left targets in the preceding trial (Supplementary Material 2).

2. The influence of the outcome in the previous trial was examined by calculating ERP(win–lose) based on the outcome in the preceding trial:

where ERPmean(won in previous trial) and ERPmean(lost in previous trial) are the ERPs averaged for trials just after winning and losing, respectively.

3. Whether subjects changed the choice direction from the preceding trial (switch) or chose the same direction as before (stay) was examined by calculating the mean ERP difference between switch and stay trials:

where ERPmean(switch) and ERPmean(stay) are the ERPs averaged for trials in which the subject had switched from or stayed with the previous trials, respectively.

Statistical Analysis

Behavioral Data

Average winning rates were subjected to two-way repeated-measures ANOVA for rule (RAND and GAME) × drug (levodopa and placebo). Behavioral data in the ALT condition were not included because the subjects won almost 100% of those trials. Because reaction time data were not normally distributed, the data were inverse Gaussian transformed. Data on winning rates, reaction time, and permutation entropy were analyzed using two-way repeated-measures ANOVA for rule × drug. When the permutation entropy was tested by ANOVA, data in the ALT condition were not included because behavioral responses were simple alternations and their permutation entropy was fixed at 0.69.

The significance criterion was set at p < 0.05 and the effect size was quantified using partial eta-squared (ηp2). We tested whether LRself(N) and LRpc(N) differed significantly from 0 using a two-tailed Student’s t-test with Bonferroni correction.

Electrophysiological Data

The effects of drug and rule on the ERPs were tested using two-way repeated-measures ANOVA for rule (ALT, RAND, and GAME) × drug (levodopa and placebo). The significance criterion was set at p < 0.05 with multiple comparisons corrected using Tukey HSD. We also tested ERPs using three-way ANOVA for rule (ALT, RAND, and GAME) × drug (levodopa and placebo) × outcome (win and lose) and three-way ANOVA for rule (ALT, RAND, and GAME) × drug (levodopa and placebo) × choice (switch and stay). The significance criterion was set at p < 0.05. The analyses were conducted using the MATLAB Statistics toolbox and SPSS statistical software (version 23, IBM, Armonk, New York, United States).

Results

Behavioral Results

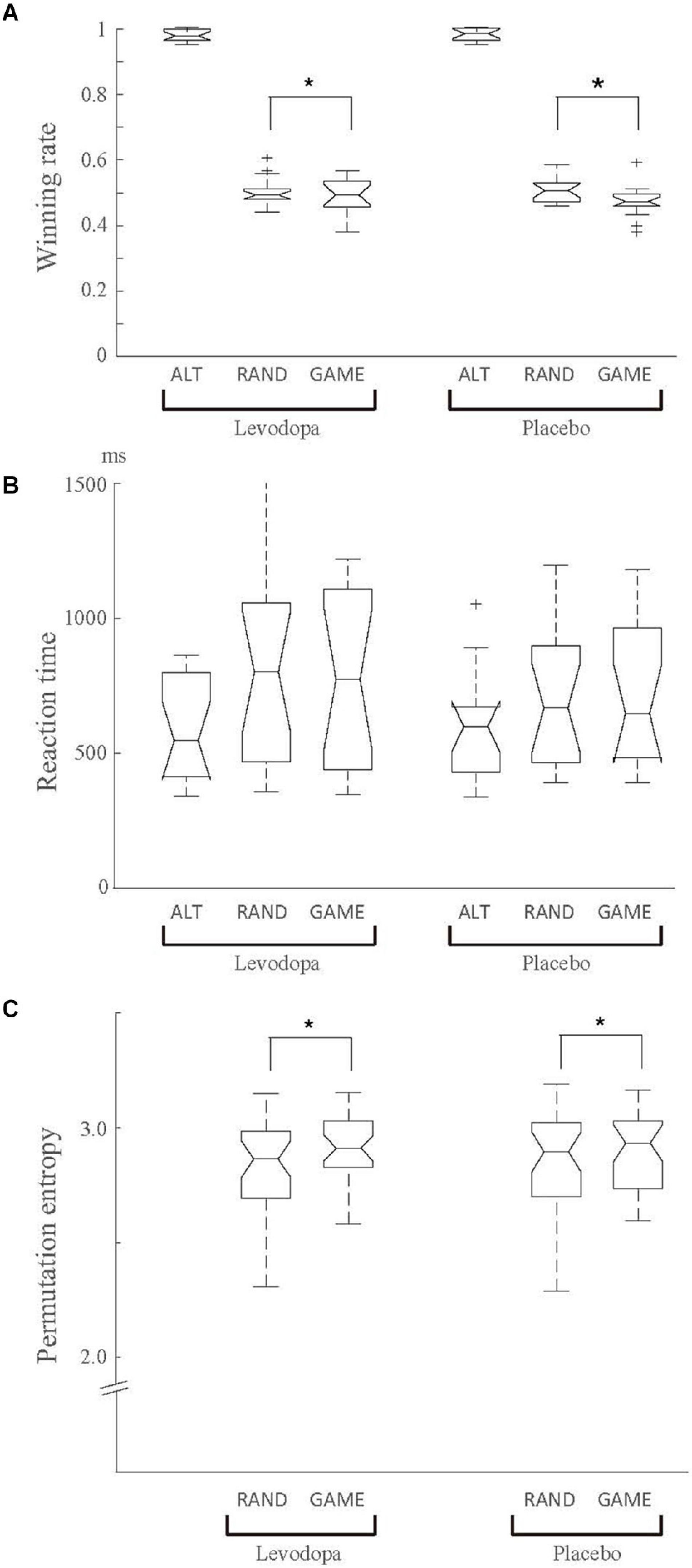

Figure 2A shows the winning rates for the three task rules in the levodopa and placebo groups. The behavioral performance in ALT sessions was nearly perfect, as expected, reflecting how easy it was to predict the opponent’s choice. The behavioral performance in RAND and GAME was close to the chance level, but significantly worse for GAME than for RAND, as supported by a significant main effect of rule in two-way repeated-measures ANOVA for rule × drug on winning rate [F(1, 16) = 8.515, p = 0.01, ηp2 = 0.068]. There was no significant main effect of drug or an interaction effect. The choice reaction time was 623.0 ± 301.4 ms overall, and it did not change significantly with the rules or levodopa treatment (two-way repeated-measures ANOVA, p > 0.05; Figure 2B).

Figure 2. Behavioral results for the winning rate (A), behavioral reaction time (B), and permutation entropy of the choice sequence (C). Data were averaged for each task rule and for each drug condition (levodopa or placebo) across 17 subjects. (A) There was a significant main effect of rule in two-way repeated-measures ANOVA (p < 0.05), indicating that the winning rate was higher in RAND than in GAME. There was no significant main effect of drug or an interaction effect (p > 0.05). (B) The choice reaction time did not change significantly with the rule or levodopa treatment. (C) The permutation entropy measured randomness of the choice sequence. Permutation entropy in ALT was fixed at 0.69 (not plotted). Entropy was slightly but significantly higher in GAME than in RAND (drug main effect, p = 0.02 in two-way repeated-measures ANOVA). In each box plot, the central line indicates the median, the bottom and top edges of the box indicate the 25th and 75th percentiles, respectively, and the whiskers extend to the extreme data points that were not considered outliers; the outliers are indicated separated by the “+” symbols. *p < 0.05 for the main effect in ANOVA.

In GAME sessions, the subjects could play better by choosing targets independently in each trial, because any choice bias could have been exploited by the computer. Figure 2C shows the permutation entropy (a measure of response randomness) in RAND and GAME. The behavioral response in ALT was a fixed sequence, and so its permutation entropy was fixed at 0.69. The permutation entropy was significantly higher in GAME than in RAND when applying two-way repeated-measures ANOVA for rule × drug [F(1, 16) = 6.374, p = 0.02, ηp2 = 0.056]. These results indicate that the subjects made more random choices in GAME (Figure 2C).

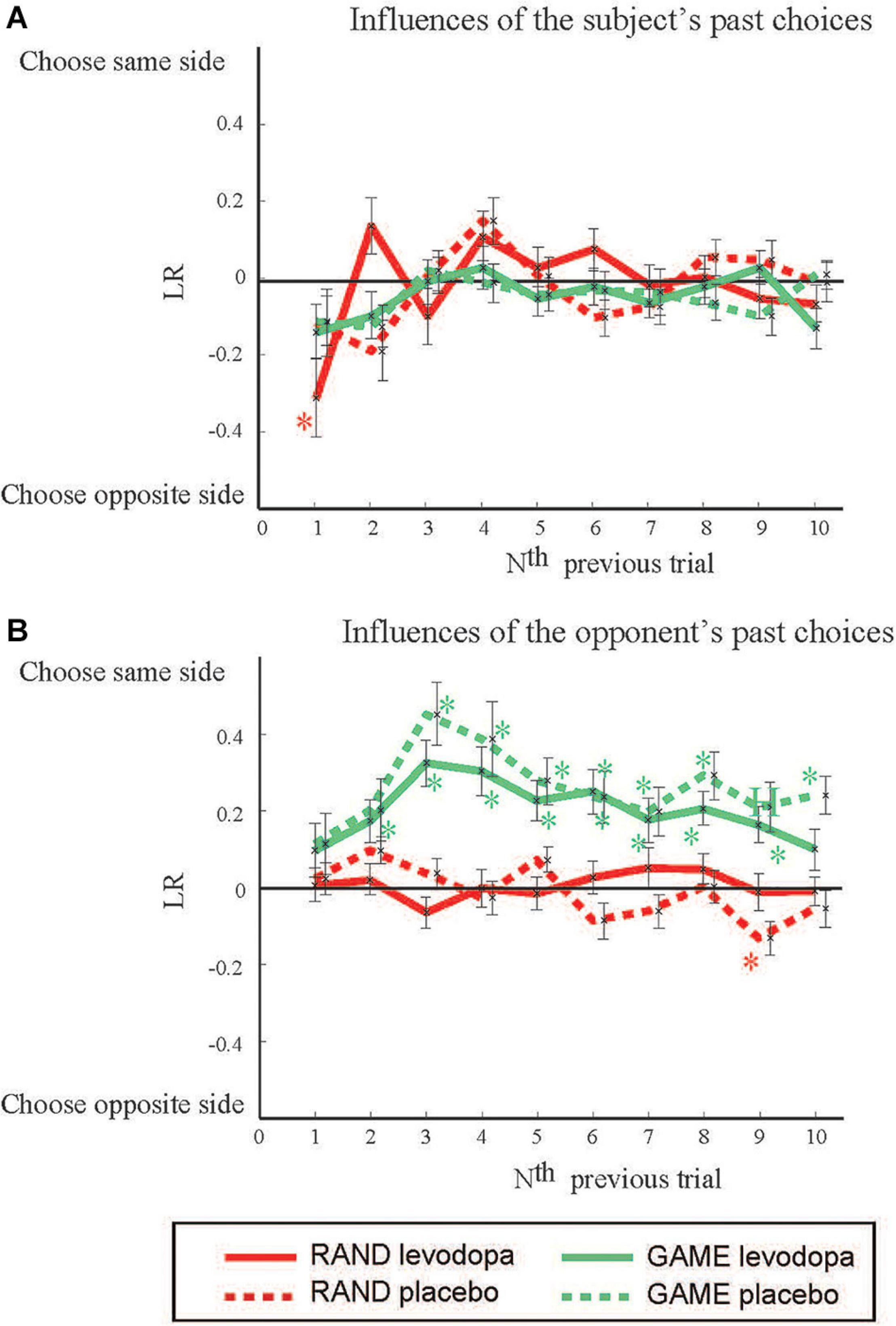

We evaluated the degree to which the choices made by subjects were influenced by their own choices in the past by using the LR method (cf. Supplementary Material 1 for the results by logistic regression analysis). LRself(N) expresses the influences from the past choices of the subject in the Nth previous trials (Figure 3A). LRself(1) was significantly negative in RAND sessions with levodopa [t(35) = 2.968, p = 0.05, Student’s t-test with Bonferroni correction], indicating that subjects tended to choose the target opposite to the one they chose in a previous trial. There was no significant past influence in GAME sessions. In a similar way, we analyzed the influences of the opponent’s past choices (Figure 3B). LRpc(N) was significantly positive in GAME sessions for N = 2–9 with levodopa [t(31) = 3.14, 5.49, 4.83, 4.38, 4.23, 3.11, 4.62, and 3.47, respectively; p < 0.005] and for N = 3 to 10 without levodopa [t(33) = 5.48, 3.90, 4.50, 3.42, 3.10, 4.67, 3.22, and 4.88, respectively, p < 0.05]. These results indicate that the subjects tended to choose the target on the same side as the target chosen by the computer player in the past.

Figure 3. The effects of previous choices on decision-making. The figure shows how past choices influenced the present choice by plotting the LR vs. how long ago the previous trial occurred. Positive and negative LRs mean that the subject tended to choose the same side as and the opposite side to that in the previous trials, respectively. (A) Influences from the subject’s own past choices. (B) Influences from the opposing player’s past choices. Data are mean and SD values. *p < 0.05 (Student’s t-test) for deviation from 0.

Electrophysiological Results

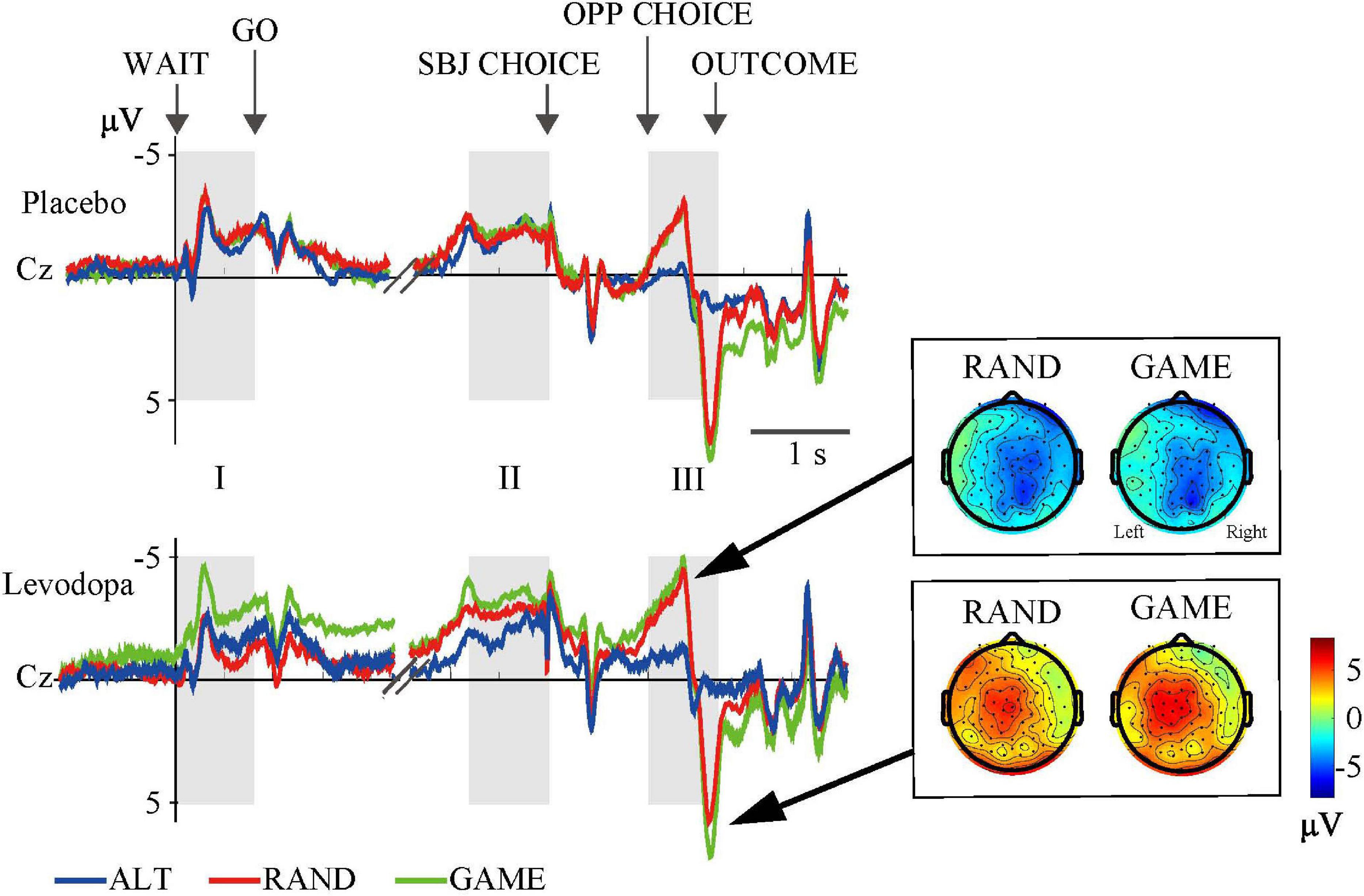

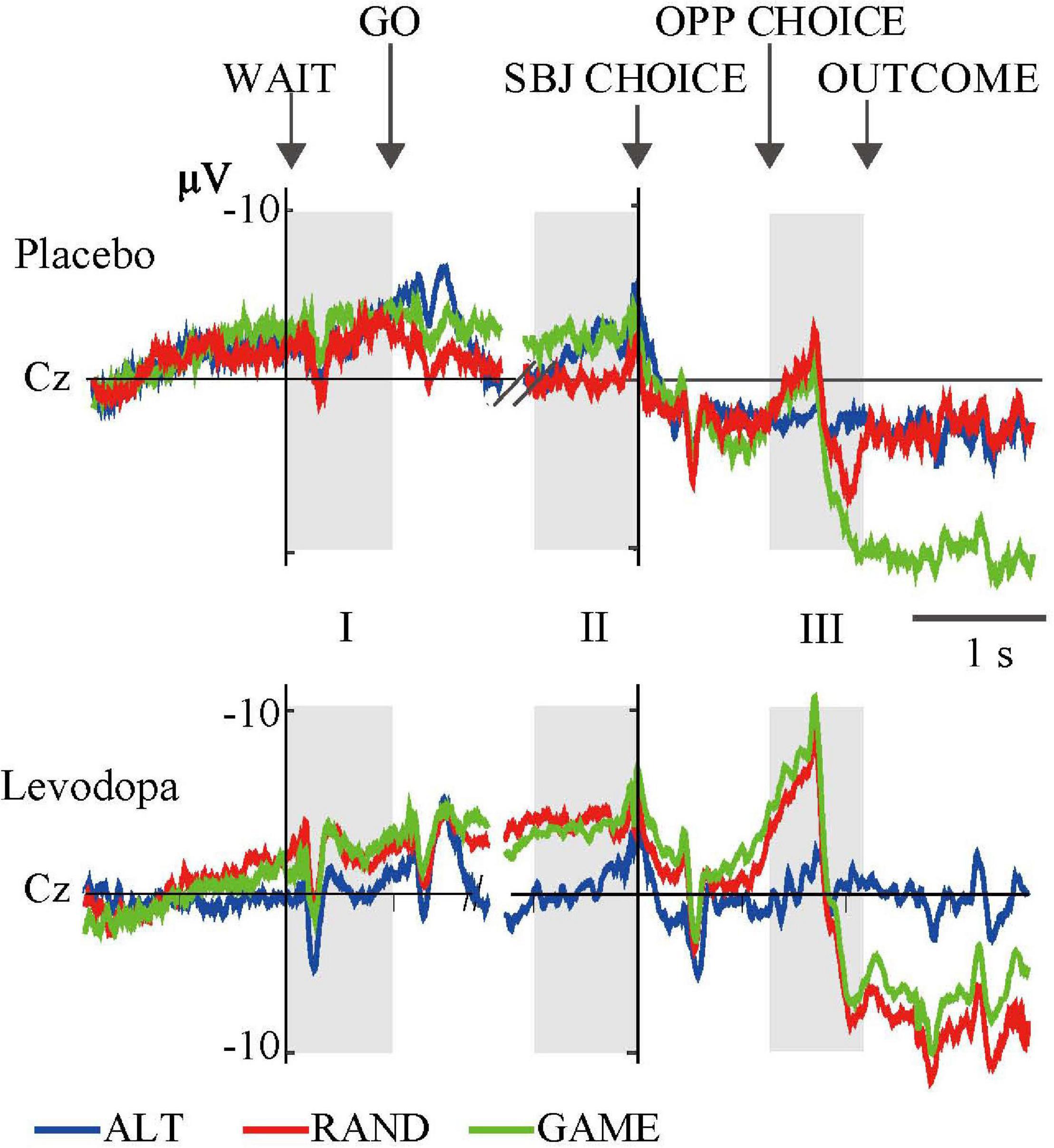

We averaged EEG activities from 10 subjects and aligned them to the trial start (WAIT) and the subject’s choice response (SBJ CHOICE). The activity recorded at the Cz electrode is plotted separately for the three different task rules with and without levodopa in Figure 4. The ERP profile of a representative single subject recorded at the Cz electrode is shown in Figure 5. Period I covers the movement preparation period from the WAIT cue until the GO cue (800 ms). After the phasic response to the WAIT cue, we observed sustained negativity particularly during GAME and RAND sessions with levodopa between periods I and II, which returned to the baseline after the subjects made the key-press response (SBJ CHOICE). During period III, when the opposing player’s choice was displayed on the monitor, the negative potential increased again, which resulted in an abrupt deflection toward positivity and a return to the baseline after the outcome information was displayed on the monitor. For the RAND and GAME rules, the negative and positive peaks were distributed around the vertex electrodes during period III (topographical maps in Figure 4).

Figure 4. Grand-averaged waveforms at the Cz electrode for the three rules. Event-related potentials (ERPs) were averaged from 10 subjects in three task conditions. Gray areas (I, II, and III) indicate time windows of the ERP analyses (cf. Table 3). Topographical maps show the distributions of negative (top) and positive (bottom) peaks during period III.

Figure 5. Grand-averaged waveforms of a representative single subject recorded at the Cz electrode for the three rules. The format is the same as in Figure 4.

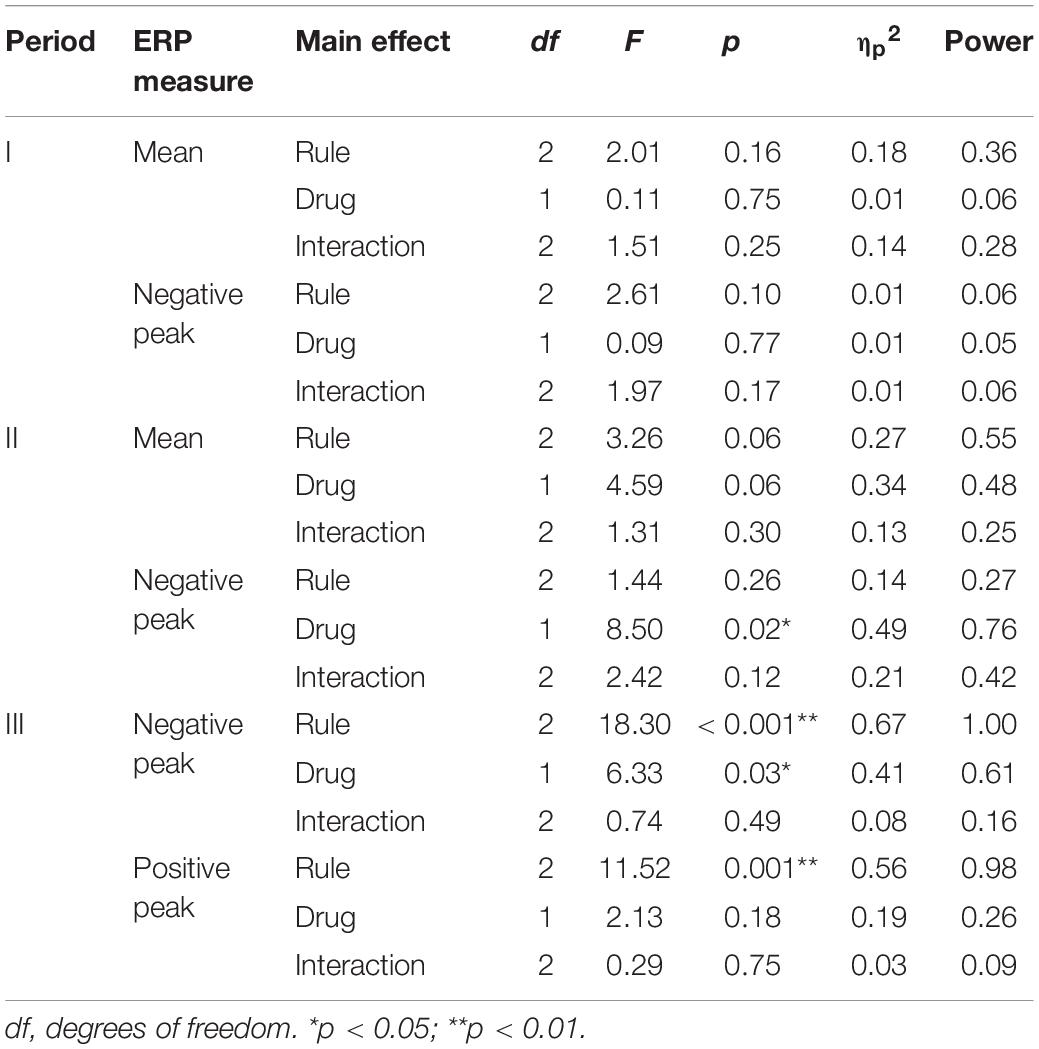

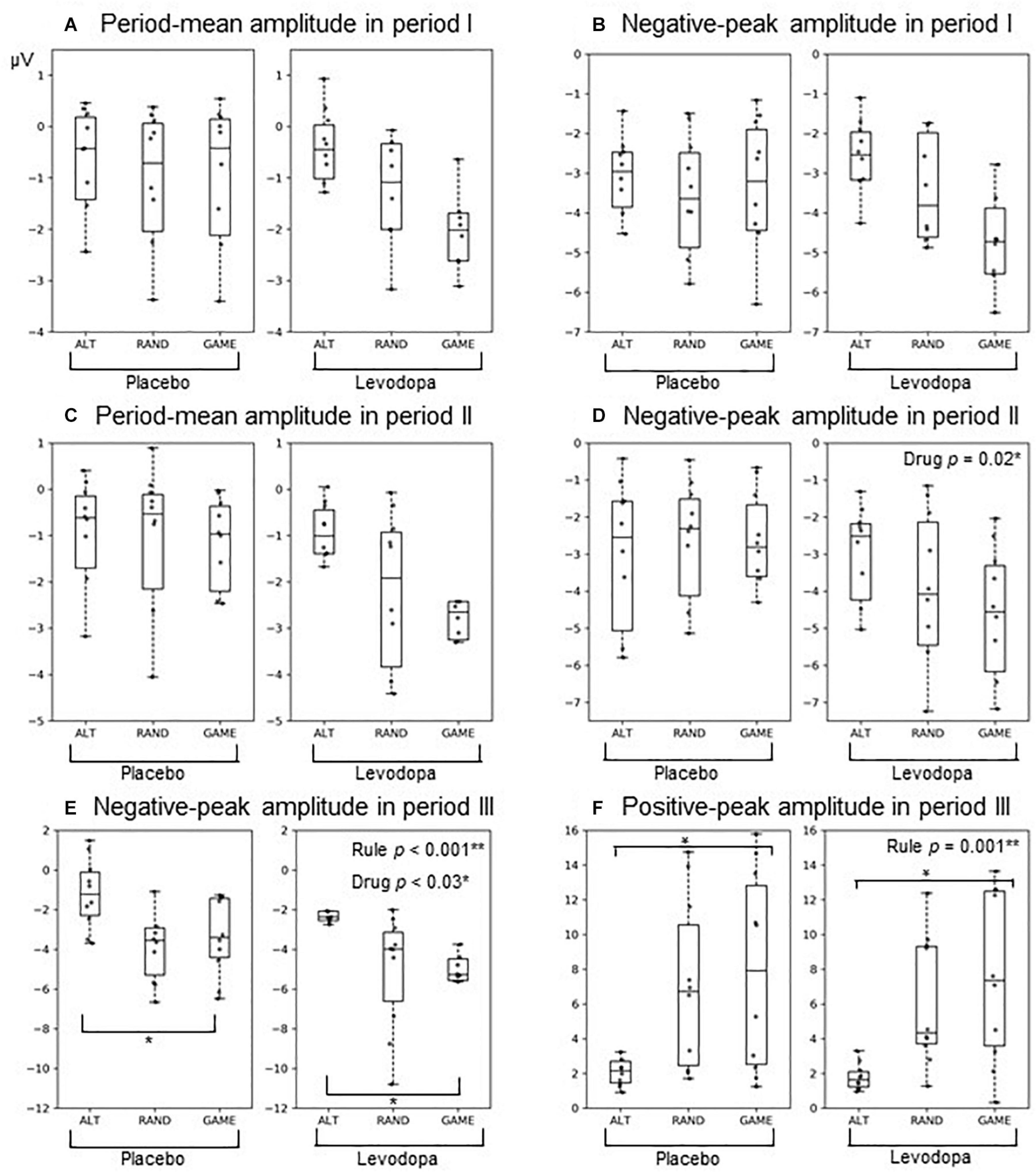

Figure 6 and Table 3 summarize the amplitudes of ERPs recorded at the Cz electrode during periods I to III and the results of the statistical tests, respectively. The period-mean ERP amplitude in period II showed a tendency of main effects of drug and rule (p = 0.06, two-way repeated-measures ANOVA). The negative peak in period II showed a significant main effect of drug (p = 0.02). During period III, the ERPs showed negative-to-positive biphasic peaks, and two-way repeated-measures ANOVA was applied to the amplitude of each peak. For the negative peak, there were significant main effects of rule (p < 0.001) and drug (p = 0.03), while for the positive peak, there was a significant main effect of rule (p = 0.001). The grand-averaged waveforms in Figure 4 show that the activity after the subject choice (the time between periods II and III) generally shifted to negative values with levodopa as compared with placebo. When the amplitude of the negative peak in period III was corrected for the baseline amplitude during period II, there was no significant effect of rule or drug (p > 0.05, two-way repeated-measures ANOVA).

Figure 6. Box plots of ERPs at the Cz electrode: (A,B) Period I amplitudes of the period mean (A) And negative peak (B). (C,D) Period II amplitudes of the period mean (C) And negative peak (D). (E,F) Period III amplitudes of the negative peak (E) And positive peak (F). The format of each box plot is the same as in Figure 2. Dots indicate individual data points.

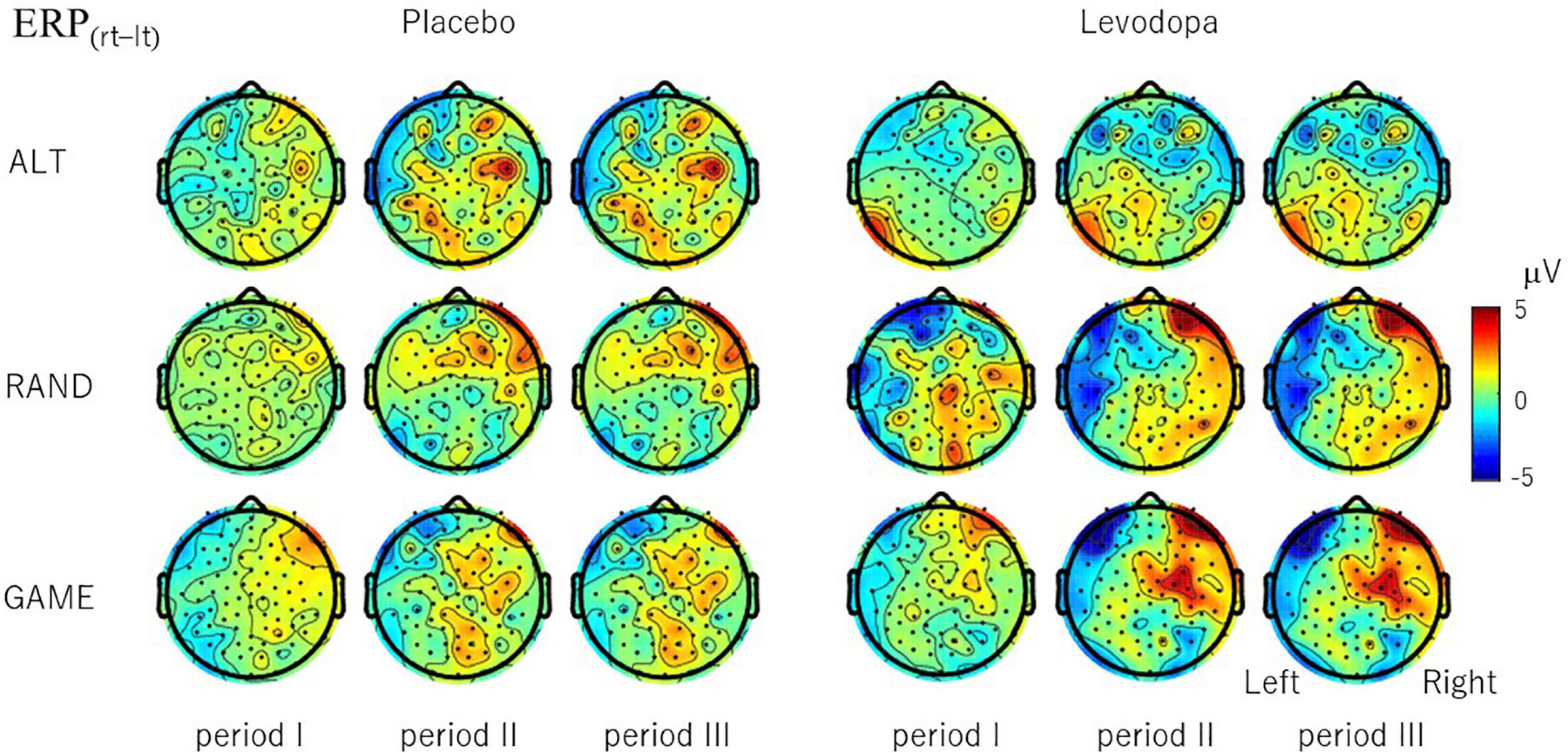

To examine whether EEG signals differentiate the subject’s choice direction (right–left), we examined the topographical distribution of ERP(rt–lt) (Figure 7). In RAND and GAME sessions with levodopa, clear hemispheric asymmetry emerged after period II, with ERP(rt–lt) generally being positive in the right hemisphere and negative in the left hemisphere. The choice signal was not clear in any of the tasks for placebo. Even with levodopa, the signal was absent in ALT sessions. We also examined whether EEG signals differentiated the subject’s choice direction in the previous trial by calculating ERP(rt–lt) based on the choice in the preceding trial (Supplementary Material 2). The hemispheric lateralized signal was not present except for in the ALT sessions, in which the previous choice and present choice were contingent on each other.

Figure 7. Topographical maps of choice signal ERP(rt–lt), which is the difference in the period-mean ERP amplitude between right-choice and left-choice trials. ERP(rt–lt) was calculated for each channel and averaged across 10 subjects. For RAND and GAME rules, positive signals evolved in the right hemisphere and negative signals evolved in the left hemisphere during periods II and III with levodopa, but the signal was weaker with placebo.

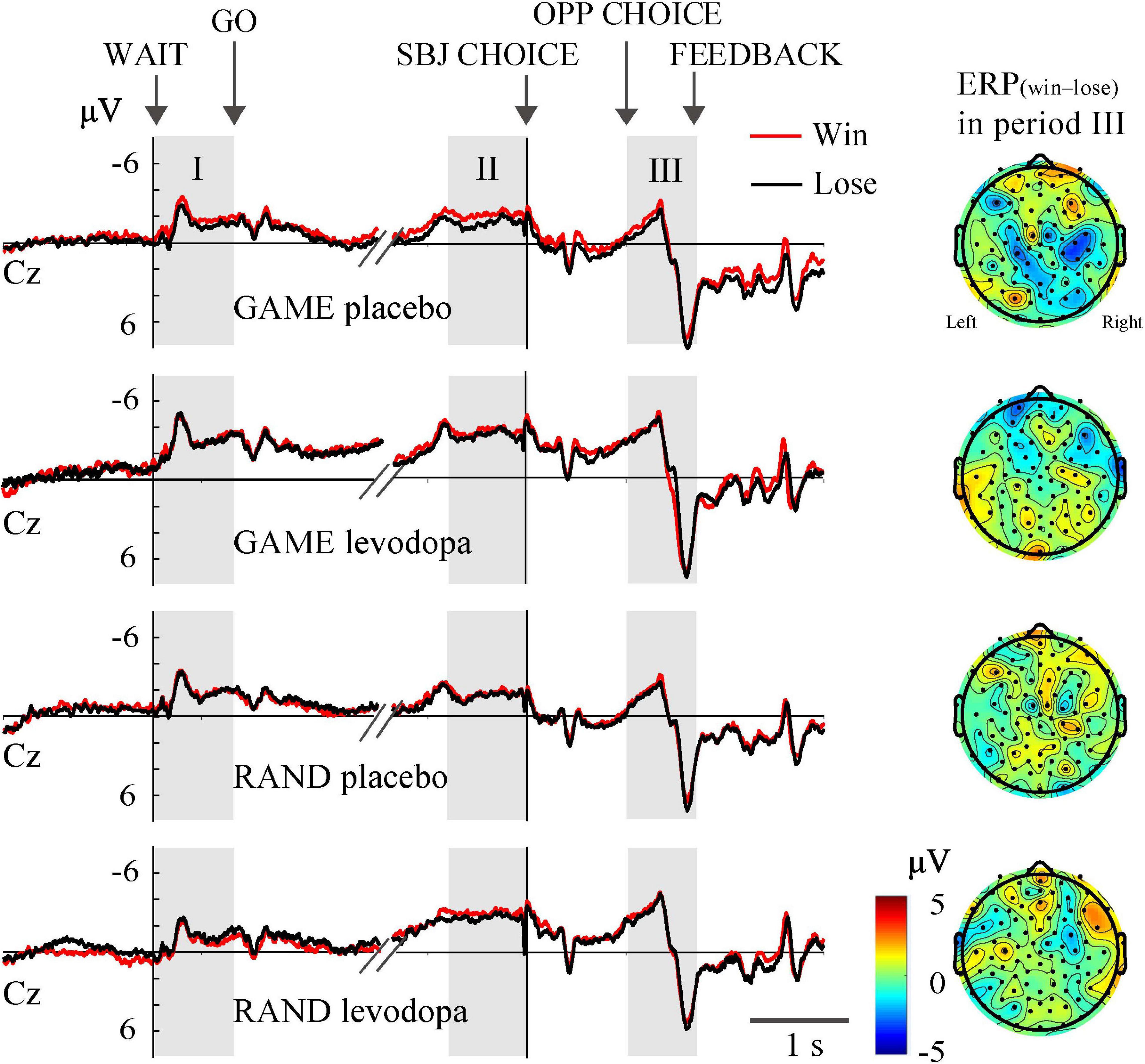

In RAND and GAME sessions, the behavioral winning rates were approximately 0.5. Trials in which the subjects won and lost against the computer player are merged in Figures 4, 5. To examine whether the ERPs differentiated the choice outcome, we plotted the average ERP waveform at the Cz electrode separately for each outcome and examined ERP(win–lose) in all recording channels during period III (Figure 8). Three-way ANOVA for rule (ALT, RAND, and GAME) × drug (levodopa and placebo) × outcome (win and lose) revealed that there was no significant main effect of the outcome on the ERPs at the Cz electrode during any periods (p > 0.05). Also, ERP(win–lose) did not deviate from 0 significantly in any condition (p > 0.05, Student’s t-test with Bonferroni correction). This meant that the ERPs did not differentiate the decision outcome. We also examined whether the outcome in the previous trial significantly influenced the ERPs by analyzing ERPprev(win–lose), which revealed that this parameter did not deviate from 0 in any condition (p > 0.05, Student’s t-test with Bonferroni correction).

Figure 8. Grand-averaged waveforms at the Cz electrode plotted separately for trials in which the subjects won and lost.

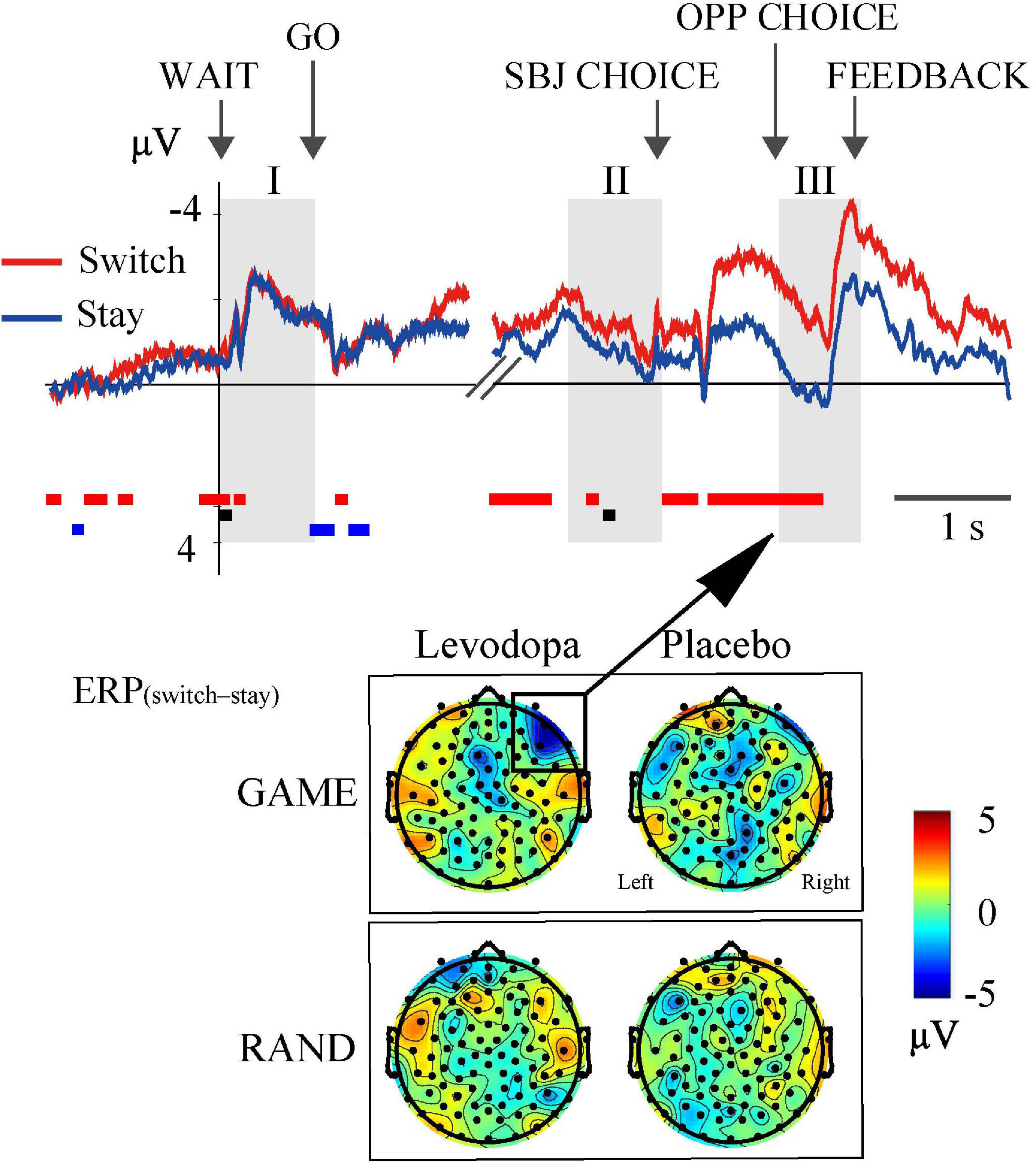

To examine whether ERPs reflect the switch-or-stay decision for the direction of target choice, we examined topographical maps of ERP(switch–stay) in the GAME and RAND sessions (Figure 9). ERP(switch–stay) was negative in the right lateral prefrontal area in GAME sessions with levodopa treatment during period III (top-left topographical map in Figure 9). The ERP selectivity for the switch-or-stay choice was not observed in the RAND sessions (topographical map in the bottom panel of Figure 9). The average ERP in this region in GAME (top waveforms in Figure 9) was significantly more negative on switching (red line) than on staying (blue line) after period II to period III (red rectangles below the waveforms; p < 0.05, three-way ANOVA for rule × drug × switch–stay).

Figure 9. ERP selectivity to switch-or-stay choices. The right lateral frontal area showed negative ERP(switch–stay) in GAME with levodopa, indicating that the ERP amplitude was more negative when switching than when staying with the previous target choice. The waveforms are the grand-averaged waveforms in the right lateral frontal area (average of 10 electrodes) in GAME sessions with levodopa. Rectangles below the waveforms indicate time periods where the main effects of choice (red) and drug (black) and the interaction effect were significant [p < 0.05; three-way ANOVA for rule × drug × choice (switch and stay)].

Discussion

We aimed to determine whether ERPs reflect decision strategies when performing a binary choice task. We found that the behavioral performance and reaction time changed systematically according to the decision rule for choosing between right and left targets (Figure 2). Although the subjects made more random choices in GAME than in RAND, the choices were partially predictable, with subjects tending to choose the target on the same side as that previously chosen by the computer player (Figures 2C, 3B). We found that levodopa did not significantly affect the behavioral performance in sessions of any rule. Previous studies that found an influence of levodopa on decision-making were often driven by reward incentives (Chowdhury et al., 2013). Thus, it is possible that cognitive decision-making that does not involve salient reward drive is less sensitive to dopaminergic treatment. In summary, the present behavioral analyses revealed the presence of strategic adaptation in binary decision-making based on the task rule.

We observed sustained negative ERPs after presentation of the WAIT cue. It has been reported that self-paced action induces premovement negative ERPs in the frontocentral region, which is called movement-related potentials (MRPs) (Kornhuber and Deecke, 1965, 2016). The early component of the MRPs is called the Bereitschaftspotential (BP), which is a negative potential that slowly increases a few seconds before executing a movement (Cunnington et al., 1995). There have been several reports of MRP amplitudes being smaller in PD patients than in healthy controls, with the reduced amplitude of the BP being restored by dopaminergic therapy (Amabile et al., 1986; Dick et al., 1987; Feve et al., 1992; Oishi et al., 1995). However, the result was not consistent with another report (Barrett et al., 1986), and the effect of levodopa on MRPs has not been studied in healthy subjects. We found that levodopa enhanced the ERP negativity from period II to period III, indicating the impact of exogenous dopamine on prefrontal activity in healthy human subjects.

Along with the progression of decision-making, ERPs may show selectivity to the chosen direction. To find ERP correlates of the directional decision, we introduced ERP(rt–lt) and found that it emerged and developed during the task period. The signal was distributed in the frontocentral region with clear hemispheric laterality (Figure 7). A particularly interesting observation was that the directional signal emerged more slowly in GAME than in RAND, possibly reflecting the difference in decision timing. Importantly, the directional signal was not present in ALT even though the subjects exhibited the same motor responses. These results suggest that the ERPs do not simply encode motor execution, with instead the lateralized directional signal possibly reflecting the cognitive strategy varying according to the task requirements. Another interesting point is that ERP(rt–lt) was much weaker without levodopa. Because behavioral measures were not changed significantly by levodopa, the behavioral implication of the levodopa-induced ERP change is unclear. Our speculation is that the prefrontal cortex in healthy subjects has sufficient capacity to perform the task, and so levodopa does not influence behavioral performance due to a ceiling effect. In PD patients whose frontal executive functioning is known to be compromised (Owen, 2004), levodopa treatment may ameliorate the dysfunction and improve the task performance. This hypothesis should be investigated in future studies.

While the present tasks required subjects to choose between right and left targets, it is possible that the decision is made in an alternative form, that is, choosing whether to switch from or stay with the previous choice. We introduced ERP(switch–stay) as an indicator of this form of decision-making and found that the ERP correlate was present in the right prefrontal cortex selectively during GAME sessions (Figure 9). Decades of primate neurophysiology research has elucidated the pivotal role of the prefrontal cortex in decision-making. The medial frontal cortex is involved in the voluntary switching of action (Shima and Tanji, 1998; Isoda and Hikosaka, 2007), while the dorsolateral prefrontal cortex (DLPFC) integrates perceptional inputs and reinforcement signals to realize adaptive behavior in a changing environment (Lauwereyns et al., 2001; Sakagami et al., 2001; Gold and Shadlen, 2007; Shadlen and Kiani, 2013). For example, when monkeys forage between two targets following Herrnstein’s matching law, DLPFC neurons encode the valuations of specific choices based on the previous rewards (Tsutsui et al., 2016). Also, when monkeys play a matching task, DLPFC neurons encode their past decisions and reward history (Barraclough et al., 2004). We found that past decisions influenced ERPs in the form of switching from or staying with the previous choice, whereas there was no influence of reward history. The inconsistent results for the same behavioral task are probably attributable to differences in methods. Besides physiological differences between the single-neuron activities and ERPs, the reinforcement method also differed, with the monkeys receiving an immediate liquid reward after their choice and our subjects receiving verbal feedback via the monitor as immediate reinforcement.

We observed large biphasic ERPs while the subjects perceived the choice of the opposing player and recognized the behavioral response (period III). These ERPs were prominent in GAME and RAND, but much smaller in ALT (Figure 4). The rule dependency of ERPs may be explained by the information value of the feedback stimulus. The choice of the opposing player was not predictable during RAND and GAME, and so its disclosure represented important information. However, in ALT, the alternating choice of the computer player was perfectly predictable, and disclosing it was not informative. A major finding of the present study is that rule-dependent ERPs were modulated by levodopa administration. This finding is consistent with the role of dopamine in reinforcement learning (Schultz, 2015). Upon receiving the reinforcement signal, the medial prefrontal cortex may compute the action value and update the decision strategy. More specifically, dopaminergic input to the prefrontal cortex might be used to compute the advantages and disadvantages of staying with or shifting the choice in the next trial.

The ERP literature indicates that contingent negative variation (CNV) is elicited in the frontocentral area when two sensory stimuli are paired and presented with a fixed interstimulus interval (Walter, 1964; Tecce, 1972; Rohrbaugh et al., 1976; Hamano et al., 1997). In our study, the intervals between the WAIT and GO cues and between SBJ CHOICE and OPP CHOICE events were fixed. Thus, the ERPs around these events can be conservatively classified as CNV. Whether these ERPs have cognitive components beyond the classical concept of CNV is an important question. To dissociate bottom-up sensory components and top-down cognitive components, researchers have examined how ERPs are modulated by behavioral performance (Gajewski and Falkenstein, 2013; Di Russo et al., 2016, 2017; Perri and Di Russo, 2017). For example, the activity of prefrontal pN and P3 components during a go/no-go task was inversely related to the reaction time variability, which was interpreted as reflecting top-down attention (Perri et al., 2016). Most studies of prefrontal ERPs have used operant tasks, such as the go/no-go task and the Stroop task, in which correct responses are determined by stimulus–response mapping. Thus, in operant tasks, response variability could manifest only in the reaction time and occasional errors. In contrast, the use of a mixed-strategy game in the present study allowed subjects to respond in a nondeterministic manner. We found ERPs that change according to the task rule, choice direction, and decision to switch or stay, and the obtained results provide strong evidence of the influence by top-down executive processes.

The primary factor restricting the generalizability of the present results is the smallness of the sample. Insignificant results in this study may be attributable to an insufficient sample size and the resulting lack of statistical power. For example, it is possible that a larger sample would have revealed significant main effects of rule and drug on the period-mean ERP amplitude during period II. Table 3 provides the power calculations used to estimate the probability of type II errors. The present results should be tested in future research involving a larger number of subjects. The advantages of this study include the relatively large number of trials in each session (Table 2) and its double-blind placebo-controlled design, which are expected to increase the data reliability and statistical power in reducing biases.

In conclusion, we found that prefrontal ERPs reflect different decision strategies when performing a binary choice task. Both the frontomedial negativity and frontolateral signal tuned to the choice direction were modulated by the task rule and levodopa. Important questions to be addressed in future research are how PD patients play mixed-strategy games and whether their ERPs differ from those of healthy subjects.

Data Availability Statement

The datasets presented in this article are not readily available because the data are still under study for separate analyses. Requests to access the datasets should be directed to SK, c2tvYmEtdGt5QHVtaW4ubmV0.

Ethics Statement

The studies involving human participants were reviewed and approved by the Fukushima Medical University ethical committee. The patients/participants provided their written informed consent to participate in this study.

Author Contributions

SK designed the study. F-YC, SK, and WW conducted the experiments. YU commented on the experimental data. F-YC and SK analyzed the data and wrote the manuscript. All authors contributed to the article and approved the submitted version.

Funding

This work was supported by a Grant-in-Aid for Scientific Research on Innovative Areas from MEXT to YU, a Grant-in-Aid for Scientific Research grant to SK, and a scholarship to F-YC from the Rotary Yoneyama Memorial Foundation.

Conflict of Interest

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Acknowledgments

We thank Yumiko Tanji, Shiho Takano, Nozomu Matsuda, Eiichi Ito, Takenobu Murakami, and Akira Yamashita at Fukushima Medical University.

Supplementary Material

The Supplementary Material for this article can be found online at: https://www.frontiersin.org/articles/10.3389/fnins.2021.552750/full#supplementary-material

References

Amabile, G., Fattapposta, F., Pozzessere, G., Albani, G., Sanarelli, L., Rizzo, P. A., et al. (1986). Parkinson disease: electrophysiological (CNV) analysis related to pharmacological treatment. Electroencephalogr. Clin. Neurophysiol. 64, 521–524. doi: 10.1016/0013-4694(86)90189-6

Bandt, C., and Pompe, B. (2002). Permutation entropy: a natural complexity measure for time series. Phys. Rev. Lett. 88:174102. doi: 10.1103/PhysRevLett.88.174102

Barraclough, D. J., Conroy, M. L., and Lee, D. (2004). Prefrontal cortex and decision making in a mixed-strategy game. Nat. Neurosci. 7, 404–410. doi: 10.1038/nn1209

Barrett, G., Shibasaki, H., and Neshige, R. (1986). Cortical potential shifts preceding voluntary movement are normal in parkinsonism. Electroencephalogr. Clin. Neurophysiol. 63, 340–348. doi: 10.1016/0013-4694(86)90018-0

Brainard, D. H. (1997). The psychophysics toolbox. Spatial Vision 10, 433–436. doi: 10.1163/156856897X00357

Chowdhury, R., Guitart-Masip, M., Lambert, C., Dayan, P., Huys, Q., Düzel, E., et al. (2013). Dopamine restores reward prediction errors in old age. Nat. Neurosci. 16, 648–653. doi: 10.1038/nn.3364

Cunnington, R., Iansek, R., Bradshaw, J. L., and Phillips, J. G. (1995). Movement-related potentials in Parkinson’s disease. Presence and predictability of temporal and spatial cues. Brain 118, 935–950. doi: 10.1093/brain/118.4.935

Delorme, A., and Makeig, S. (2004). EEGLAB: an open source toolbox for analysis of single-trial EEG dynamics including independent component analysis. J. Neurosci. Methods 134, 9–21. doi: 10.1016/j.jneumeth.2003.10.009

Di Russo, F., Berchicci, M., Bozzacchi, C., Perri, R. L., Pitzalis, S., and Spinelli, D. (2017). Beyond the “Bereitschaftspotential”: action preparation behind cognitive functions. Neurosci. Biobehav. Rev. 78, 57–81. doi: 10.1016/j.neubiorev.2017.04.019

Di Russo, F., Lucci, G., Sulpizio, V., Berchicci, M., Spinelli, D., Pitzalis, S., et al. (2016). Spatiotemporal brain mapping during preparation, perception, and action. Neuroimage 126, 1–14. doi: 10.1016/j.neuroimage.2015.11.036

Dick, J. P., Cantello, R., Buruma, O., Gioux, M., Benecke, R., Day, B. L., et al. (1987). The Bereitschaftspotential, l-DOPA and Parkinson’s disease. Electroencephalogr. Clin. Neurophysiol. 66, 263–274. doi: 10.1016/0013-4694(87)90075-7

Dixit, A. K., Skeath, S., and McAdams, D. (2009). Games of Strategy. New York, NY: W. W. Norton & Company.

Donahue, C. H., Seo, H., and Lee, D. (2013). Cortical signals for rewarded actions and strategic exploration. Neuron 80, 223–234. doi: 10.1016/j.neuron.2013.07.040

Dorris, M. C., and Glimcher, P. W. (2004). Activity in posterior parietal cortex is correlated with the relative subjective desirability of action. Neuron 44, 365–378. doi: 10.1016/j.neuron.2004.09.009

Falkenstein, M., Hohnsbein, J., Hoormann, J., and Blanke, L. (1991). Effects of crossmodal divided attention on late ERP components. II. Error processing in choice reaction tasks. Electroencephalogr. Clin. Neurophysiol. 78, 447–455. doi: 10.1016/0013-4694(91)90062-9

Feve, A. P., Bathien, N., and Rondot, P. (1992). Chronic administration of L-dopa affects the movement-related cortical potentials of patients with Parkinson’s disease. Clin. Neuropharmacol. 15, 100–108. doi: 10.1097/00002826-199204000-00003

Gajewski, P. D., and Falkenstein, M. (2013). Effects of task complexity on ERP components in Go/Nogo tasks. Int. J. Psychophysiol. 87, 273–278. doi: 10.1016/j.ijpsycho.2012.08.007

Gold, J. I., and Shadlen, M. N. (2007). The neural basis of decision making. Ann. Rev. Neurosci. 30, 535–574. doi: 10.1146/annurev.neuro.29.051605.113038

Hamano, T., Luders, H. O., Ikeda, A., Collura, T. F., Comair, Y. G., and Shibasaki, H. (1997). The cortical generators of the contingent negative variation in humans: a study with subdural electrodes. Electroencephalogr. Clin. Neurophysiol. 104, 257–268. doi: 10.1016/s0168-5597(97)96107-4

Isoda, M., and Hikosaka, O. (2007). Switching from automatic to controlled action by monkey medial frontal cortex. Nat. Neurosci. 10, 240–248. doi: 10.1038/nn1830

Kim, J. N., and Shadlen, M. N. (1999). Neural correlates of a decision in the dorsolateral prefrontal cortex of the macaque. Nat. Neurosci. 2, 176–185. doi: 10.1038/5739

Kobayashi, S., Asano, K., Matsuda, N., and Ugawa, Y. (2019). Dopaminergic influences on risk preferences of Parkinson’s disease patients. Cogn. Affect. Behav. Neurosci. 19, 188–197. doi: 10.3758/s13415-018-00646-3

Kornhuber, H. H., and Deecke, L. (1965). Hirnpotentialänderungen bei Willkürbewegungen und passiven bewegungen des menschen: bereitschaftspotential und reafferente potentiale. Pflügers Arch. 284, 1–17. doi: 10.1007/BF00412364

Kornhuber, H. H., and Deecke, L. (2016). Brain potential changes in voluntary and passive movements in humans: readiness potential and reafferent potentials. Pflügers Arch. 468, 1115–1124. doi: 10.1007/s00424-016-1852-3

Krawczyk, D. C. (2002). Contributions of the prefrontal cortex to the neural basis of human decision making. Neurosci. Biobehav. Rev. 26, 631–664. doi: 10.1016/S0149-7634(02)00021-0

Lauwereyns, J., Sakagami, M., Tsutsui, K. I., Kobayashi, S., Koizumi, M., and Hikosaka, O. (2001). Responses to task-irrelevant visual features by primate prefrontal neurons. J. Neurophysiol. 86, 2001–2010. doi: 10.1152/jn.2001.86.4.2001

Lee, D., and Seo, H. (2016). Neural basis of strategic decision making. Trends Neurosci. 39, 40–48. doi: 10.1016/j.tins.2015.11.002

Lopez-Calderon, J., and Luck, S. J. (2014). ERPLAB: an open-source toolbox for the analysis of event-related potentials. Front. Hum. Neurosci. 8:213. doi: 10.3389/fnhum.2014.00213

Matsumoto, K., Suzuki, W., and Tanaka, K. (2003). Neuronal correlates of goal-based motor selection in the prefrontal cortex. Science 301, 229–232. doi: 10.1126/science.1084204

Mognon, A., Jovicich, J., Bruzzone, L., and Buiatti, M. (2011). ADJUST: an automatic EEG artifact detector based on the joint use of spatial and temporal features. Psychophysiology 48, 229–240. doi: 10.1111/j.1469-8986.2010.01061.x

Morris, G., Nevet, A., Arkadir, D., Vaadia, E., and Bergman, H. (2006). Midbrain dopamine neurons encode decisions for future action. Nat. Neurosci. 9, 1057–1063. doi: 10.1038/nn1743

Oishi, M., Mochizuki, Y., Du, C., and Takasu, T. (1995). Contingent negative variation and movement-related cortical potentials in parkinsonism. Electroencephalogr. Clin. Neurophysiol. 95, 346–349. doi: 10.1016/0013-4694(95)00084-C

Ouyang, G. (2020). Permutation Entropy. https://www.mathworks.com/matlabcentral/fileexchange/37289-permutation-entropy. Retrieved December 10, 2020

Owen, A. M. (2004). Cognitive dysfunction in Parkinson’s disease: the role of frontostriatal circuitry. Neuroscientist 10, 525–537. doi: 10.1177/1073858404266776

Perri, R. L., Berchicci, M., Lucci, G., Spinelli, D., and Di Russo, F. (2016). How the brain prevents a second error in a perceptual decision-making task. Sci. Rep. 6:32058. doi: 10.1038/srep32058

Perri, R. L., and Di Russo, F. (2017). Executive functions and performance variability measured by event-related potentials to understand the neural bases of perceptual decision-making. Front. Hum. Neurosci. 11:556. doi: 10.3389/fnhum.2017.00556

Picton, T. W., Bentin, S., Berg, P., Donchin, E., Hillyard, S. A., and Johnson, R. Jr., et al. (2000). Guidelines for using human event-related potentials to study cognition: recording standards and publication criteria. Psychophysiology 37, 127–152. doi: 10.1017/S0048577200000305

Polich, J. (2007). Updating P300: an integrative theory of P3a and P3b. Clin. Neurophys. 118, 2128–2148. doi: 10.1016/j.clinph.2007.04.019

Rohrbaugh, J. W., Syndulko, K., and Lindsley, D. B. (1976). Brain wave components of the contingent negative variation in humans. Science 191, 1055–1057. doi: 10.1126/science.1251217

Sakagami, M., Tsutsui, K. I., Lauwereyns, J., Koizumi, M., Kobayashi, S., and Hikosaka, O. (2001). A code for behavioral inhibition on the basis of color, but not motion, in ventrolateral prefrontal cortex of macaque monkey. J. Neurosci. 21, 4801–4808. doi: 10.1523/jneurosci.21-13-04801.2001

Schultz, W. (2015). Neuronal reward and decision signals: from theories to data. Physiol. Rev. 95, 853–951. doi: 10.1152/physrev.00023.2014

Schultz, W., Dayan, P., and Montague, P. R. (1997). A neural substrate of prediction and reward. Science 275, 1593–1599. doi: 10.1126/science.275.5306.1593

Seo, H., Barraclough, D. J., and Lee, D. (2009). Lateral intraparietal cortex and reinforcement learning during a mixed-strategy game. J. Neurosci. 29, 7278–7289. doi: 10.1523/JNEUROSCI.1479-09.2009

Seo, H., and Lee, D. (2007). Temporal filtering of reward signals in the dorsal anterior cingulate cortex during a mixed-strategy game. J. Neurosci. 27, 8366–8377. doi: 10.1523/JNEUROSCI.2369-07.2007

Seo, H., and Lee, D. (2008). Cortical mechanisms for reinforcement learning in competitive games. Philos. Trans. R Soc. Lond B Biol. Sci. 363, 3845–3857. doi: 10.1098/rstb.2008.0158

Shadlen, M. N., and Kiani, R. (2013). Decision making as a window on cognition. Neuron 80, 791–806. doi: 10.1016/j.neuron.2013.10.047

Shima, K., and Tanji, J. (1998). Role for cingulate motor area cells in voluntary movement selection based on reward. Science 282, 1335–1338.

Tecce, J. J. (1972). Contingent negative variation (CNV) and psychological processes in man. Psychol. Bull. 77, 73–108. doi: 10.1037/h0032177

Thevarajah, D., Mikuliæ, A., and Dorris, M. C. (2009). Role of the superior colliculus in choosing mixed-strategy saccades. J. Neurosci. 29, 1998–2008. doi: 10.1523/JNEUROSCI.4764-08.2009

Tsutsui, K. I., Grabenhorst, F., Kobayashi, S., and Schultz, W. (2016). A dynamic code for economic object valuation in prefrontal cortex neurons. Nat. Commun. 7:12554. doi: 10.1038/ncomms12554

Walter, W. G. (1964). Slow potential waves in the human brain associated with expectancy, attention and decision. Arch. Psychiatr. Nervenkr. 206, 309–322. doi: 10.1007/BF00341700

Keywords: levodopa, feedback, readiness potential, game theory, Parkinson’s disease, high-density EEG, prefrontal cortex, executive function

Citation: Chang F-Y, Wiratman W, Ugawa Y and Kobayashi S (2021) Event-Related Potentials During Decision-Making in a Mixed-Strategy Game. Front. Neurosci. 15:552750. doi: 10.3389/fnins.2021.552750

Received: 16 April 2020; Accepted: 03 February 2021;

Published: 19 March 2021.

Edited by:

Daeyeol Lee, Johns Hopkins University, United StatesReviewed by:

Emmanuel Procyk, Institut National de la Santé et de la Recherche Médicale (INSERM), FranceYoungbin Kwak, University of Massachusetts Amherst, United States

Hyojung Seo, School of Medicine Yale University, United States

Copyright © 2021 Chang, Wiratman, Ugawa and Kobayashi. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Shunsuke Kobayashi, c2tvYmEtdGt5QHVtaW4ubmV0

Fang-Yu Chang

Fang-Yu Chang Winnugroho Wiratman

Winnugroho Wiratman Yoshikazu Ugawa

Yoshikazu Ugawa Shunsuke Kobayashi

Shunsuke Kobayashi