
DATA REPORT article

Front. Neurosci., 19 October 2020

Sec. Neural Technology

Volume 14 - 2020 | https://doi.org/10.3389/fnins.2020.566147

This article is part of the Research Topic Datasets for Brain-Computer Interface Applications.

Research studies in the field of Brain-Computer Interfaces (BCI) mostly take place in controlled lab environments. To move BCIs into the real world and everyday situations, it is crucial to take research out of these controlled environments and into more realistic scenarios.

Recently, various studies have been conducted in classrooms, cars, or realistic tugboat simulators (Blankertz et al., 2010; Brouwer et al., 2017; Ko et al., 2017; Miklody et al., 2017). Mobile BCIs even allow participants to move freely during the recording (Lotte et al., 2009; Castermans et al., 2011; De Vos et al., 2014; Wriessnegger et al., 2017; von Lühmann et al., 2019). Other studies have been carried out with paralyzed, locked-in, or completely locked-in users, or with participants recovering from stroke (Neuper et al., 2003; Ang et al., 2011; Leeb et al., 2013; Höhne et al., 2014; Hwang et al., 2017; Han et al., 2019; Lugo et al., 2020).

However, no BCI study so far has investigated distractions systematically. We recorded a motor imagery-based BCI study (N = 16) under five types of distraction that mimic out-of-lab environments, plus a control task without any added distraction. The secondary tasks were watching a flickering video, searching the room for a specific number, listening to the news, closing the eyes, and vibro-tactile stimulation.

Many BCI datasets have been published, e.g., in the context of the BNCI Horizon 2020 initiative1. Four BCI competitions have had a strong impact on the research community (Sajda et al., 2003; Blankertz et al., 2004, 2006; Tangermann et al., 2012), and new datasets continue to be made available (Shin et al., 2016; Cho et al., 2017; Kaya et al., 2018). We want to contribute further by publishing this BCI dataset with multiple distraction conditions. This report summarizes the design and experimental setup of the study. We also show group-level results on event-related synchronization and desynchronization, results of a standard classification pipeline, and power spectra for all secondary tasks. Apart from the dataset2, the analysis code is also publicly available3, and a more advanced analysis can be found in Brandl et al. (2016).

Sixteen participants (six female, average age 26.3 ± 1.9 years) volunteered for this study. Three of them had previously participated in another BCI experiment. All instructions were given in German, so basic German language skills were required. Volunteers were reimbursed for their participation, except for three employees of the TU Berlin Machine Learning Group. All participants were informed about the experimental procedures before signing an informed consent form. The study was conducted in accordance with the Declaration of Helsinki and was approved by the Ethics Committee of the Charité-Universitätsmedizin Berlin (approval number: EA4/012/12).

EEG signals were recorded with a Fast'n Easy Cap (EasyCap GmbH) with 63 wet Ag/AgCl electrodes placed at symmetrical positions according to the international 10–20 system (Jasper, 1958) and referenced to the nose. The signals were amplified with two 32-channel amplifiers (BrainAmp, BrainProducts) and sampled at 1,000 Hz. Data was recorded between 15 April and 18 July 2014 at TU Berlin, and the raw data, without any preprocessing, was made publicly available1.

During the experiment, the participants sat in a comfortable armchair 1 m in front of a 24-inch computer screen. Auditory instructions were given via headphones.

Each experimental session lasted about 3 h including preparation and about 90 min of signal recording. Before the main experiment, we recorded eight trials in which participants had to alternately keep their eyes open or closed for 15 s.

The main experiment was divided into seven runs of 10 min each, with 72 trials per run. Each trial lasted 4.5 s and consisted of one motor imagery task combined with a secondary task, except in the first run. The first run served as a calibration phase without feedback or distraction tasks. Each subsequent run contained three blocks of four trials (two left and two right) for each of the six secondary tasks, i.e., 6 × 3 × 4 = 72 trials per run. The blocks were presented in random order to minimize sequence effects.
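The run structure can be checked with a small sketch. It builds one hypothetical distraction run with three blocks per secondary task and four trials per block (two left, two right), with the blocks in random order. The condition labels in `TASKS` and the shuffling of trials within each block are assumptions, because the text does not specify them. The sketch also shows that 72 trials of 4.5 s, each followed by a 2.5 s break, take about 8.4 min, which fits within the roughly 10 min runs.

```python
import random

# Hypothetical labels for the six secondary-task conditions.
TASKS = ["clean", "eyes_closed", "news", "numbers", "flicker", "stimulation"]
BLOCKS_PER_TASK = 3
TRIAL_S = 4.5  # trial duration (from the text)
BREAK_S = 2.5  # break after the stop command (from the text)


def make_run_schedule(seed=None):
    """Return a list of (task, hand) tuples for one distraction run."""
    rng = random.Random(seed)
    # Three blocks per task, presented in random order.
    blocks = [task for task in TASKS for _ in range(BLOCKS_PER_TASK)]
    rng.shuffle(blocks)
    schedule = []
    for task in blocks:
        # Two left and two right trials per block; random within-block order is assumed.
        hands = ["left", "left", "right", "right"]
        rng.shuffle(hands)
        schedule.extend((task, hand) for hand in hands)
    return schedule


run = make_run_schedule(seed=1)
print(len(run))                             # 72
print(len(run) * (TRIAL_S + BREAK_S) / 60)  # 8.4 (minutes of trials plus breaks)
```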

At the beginning of each trial, the instruction for left- or right-hand motor imagery was given over headphones ("links" or "rechts", as the instructions were in German). This was the primary task of the study. At the end of the trial, the participant received a stop command followed by a 2.5 s break, after which the next trial started.

Participants were asked to choose one haptic hand movement from several motor imagery strategies presented to them. Most chose to imagine squeezing a soft ball; other strategies included opening a water tap, playing the piano, or using a salt shaker.

Auditory online feedback was given in the six runs following calibration to keep participants motivated. The online classifier was trained on the calibration data and was based on Laplacian filters of the C3 and C4 electrodes (McFarland et al., 1997) and regularized linear discriminant analysis (RLDA; Friedman, 1989). For this, EEG data was downsampled to 100 Hz, Laplacian filters of C3 and C4 were calculated, and the data was band-pass filtered in the ranges 9–13 and 18–26 Hz with a fifth-order Butterworth filter. The data was then cut into epochs spanning 750–3,500 ms after trial onset, and an RLDA classifier was trained on the logarithm of the variances as features. During the feedback phase, EEG data was downsampled and band-pass filtered as before and projected onto the Laplacian filters, and the trained classifier was applied to the log-variance features. In addition, we applied pooled-mean adaptation to keep updating the classifier during the feedback phase (Vidaurre et al., 2010). Online classification accuracy averaged across all participants was 57.05%. Auditory feedback was given after the stop command as "decision left" (Entscheidung links) or "decision right" (Entscheidung rechts) during the 2.5 s break. Online classification was performed with the BBCI toolbox in MATLAB4.
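The following is a minimal NumPy/SciPy sketch of the online features and classifier described above. It is not the BBCI toolbox implementation. It assumes the data is already downsampled to 100 Hz, with each trial stored as channels × samples starting at trial onset. The Laplacian neighbor indices, the fixed shrinkage parameter `lam` (shrinkage of the covariance toward a scaled identity), and the adaptation rate `eta` are hypothetical choices. Filtering each trial separately is a simplification; an online system would filter the continuous stream and so avoid the filter's start-up transient.

```python
import numpy as np
from scipy.signal import butter, sosfilt

FS = 100                     # Hz after downsampling (from the text)
BANDS = [(9, 13), (18, 26)]  # Hz (from the text)
EPOCH_S = (0.75, 3.5)        # s after trial onset (from the text)


def laplacian(eeg, center, neighbors):
    """Small Laplacian: center channel minus the mean of its neighbors."""
    return eeg[center] - eeg[neighbors].mean(axis=0)


def log_var_features(trial, laplace_defs):
    """trial: (channels, samples) starting at trial onset, sampled at FS.
    laplace_defs: [(center_idx, [neighbor_idx, ...]), ...] (hypothetical indices).
    Returns one log-variance per band and Laplacian channel."""
    start, stop = int(EPOCH_S[0] * FS), int(EPOCH_S[1] * FS)
    feats = []
    for lo, hi in BANDS:
        # Fifth-order Butterworth band-pass, causal as it would be online.
        sos = butter(5, [lo, hi], btype="bandpass", fs=FS, output="sos")
        for center, neighbors in laplace_defs:
            x = sosfilt(sos, laplacian(trial, center, neighbors))
            feats.append(np.log(np.var(x[start:stop])))
    return np.array(feats)


def train_rlda(X, y, lam=0.1):
    """LDA with the pooled covariance shrunk toward a scaled identity.
    X: (trials, features); y: 0 = left, 1 = right. Returns weights and pooled mean."""
    m0, m1 = X[y == 0].mean(axis=0), X[y == 1].mean(axis=0)
    centered = np.vstack([X[y == 0] - m0, X[y == 1] - m1])
    cov = np.cov(centered, rowvar=False)
    d = cov.shape[0]
    cov = (1 - lam) * cov + lam * np.trace(cov) / d * np.eye(d)
    w = np.linalg.solve(cov, m1 - m0)
    return w, (m0 + m1) / 2


class PooledMeanLDA:
    """Keeps w fixed and tracks the class-independent (pooled) mean that sets the bias."""

    def __init__(self, w, mu, eta=0.05):
        self.w, self.mu, self.eta = w, mu.copy(), eta

    def classify_and_adapt(self, x):
        score = self.w @ (x - self.mu)  # > 0 means "right"
        # Unsupervised update: exponential moving average of all incoming feature vectors.
        self.mu = (1 - self.eta) * self.mu + self.eta * x
        return "right" if score > 0 else "left"
```

For calibration, stack `log_var_features` of each calibration trial into `X` and call `train_rlda`. For the feedback runs, wrap the result in `PooledMeanLDA`. Only the bias is adapted, so no labels are needed during feedback.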

We simulated a pseudo-realistic environment by adding six secondary task conditions (five distractions and one distraction-free control) on top of the primary motor imagery task. The distractions were selected to cover different types of disturbance in an out-of-lab scenario.

1. Clean

This condition served as a control task where no additional distraction was added.

2. Eyes-Closed

Participants were asked to close their eyes before the motor imagery trial started and to keep them closed until the trial finished. We expected closing the eyes to increase power in the alpha band (8–12 Hz), which overlaps with the task-related mu rhythm (8–13 Hz). This task was also the main reason for giving all instructions and feedback auditorily rather than visually.

3. News

Short excerpts of a public newscast (Tagesschau), covering current news (January/February 2014) and news from 1994, were played over the headphones. Each excerpt was played only once per experiment. We expected the participants to be cognitively distracted and the auditory cortex to be activated during the motor imagery task, which might influence motor imagery performance. During the experiment, we did not assess whether participants were actively listening.

4. Numbers

For this task, 26 sheets of paper, each showing a random letter-number combination, were put up on the wall in front of the participants and on the left and right sides of the room, so that participants had to turn their heads to see the sheets. In each trial, a new window appeared on the screen asking the participants to search the room for the letter that matched a given number and to read it out loud. Each combination was shown 2–3 times to every participant. We counted how often the letters were found: out of 72 trials, 59.7 combinations were found on average. This task was expected to cause both strong cognitive distraction and additional muscle artifacts.

5. Flicker

A flickering stimulus alternating between gray shades at a frequency of 10 Hz was presented on the screen. We included this task to analyze the influence of the steady-state visually evoked potential (SSVEP; Morgan et al., 1996).
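To present an exact 10 Hz flicker on a monitor, each cycle must span a whole, even number of frames. The sketch below builds the resulting per-frame schedule. It assumes a 60 Hz refresh rate and hypothetical gray levels, neither of which is stated in the text. Under these assumptions, each 100 ms cycle consists of three light frames followed by three dark frames.

```python
import numpy as np


def flicker_frames(refresh_hz=60, flicker_hz=10, duration_s=4.5,
                   light=0.75, dark=0.25):
    """Per-frame gray level (0 = black, 1 = white) for a square-wave flicker.

    refresh_hz, light and dark are hypothetical; the text only gives 10 Hz.
    """
    frames_per_cycle, remainder = divmod(refresh_hz, flicker_hz)
    if remainder or frames_per_cycle % 2:
        raise ValueError("refresh rate must be an even multiple of the flicker frequency")
    half = frames_per_cycle // 2
    frame_idx = np.arange(int(round(duration_s * refresh_hz)))
    # First half of each cycle is light, second half is dark.
    return np.where((frame_idx // half) % 2 == 0, light, dark)


frames = flicker_frames()
print(frames[:6])  # three light frames, then three dark frames
```

A 144 Hz display, for example, could not show exactly 10 Hz with this scheme (14.4 frames per cycle), which is why the function rejects such combinations.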

6. Stimulation

On the inside of each forearm, we placed two coin vibration motors with a diameter of 3 cm, one over the wrist and the other just below the elbow. To investigate how the steady-state vibration somatosensory evoked potential (SSVSEP; Tobimatsu et al., 1999; Brouwer and Van Erp, 2010) interferes with the motor imagery task, vibrotactile stimulation was delivered with carrier frequencies of 50 and 100 Hz, each modulated at 9, 10, and 11 Hz.
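The text specifies only the carrier and modulation frequencies of the stimulation, not the drive waveform. The sketch below assumes sinusoidal amplitude modulation, s(t) = ½(1 + sin 2πf_m t) · sin 2πf_c t, and checks its spectrum. The energy lies at the carrier f_c and at the sidebands f_c ± f_m, not at the modulation frequency itself. Any cortical response at 9–11 Hz would therefore reflect the envelope rather than a spectral component of the drive signal. The sampling rate is a hypothetical choice.

```python
import numpy as np

FS_STIM = 10_000  # Hz, hypothetical output rate of the stimulation signal


def am_vibration(carrier_hz, mod_hz, duration_s=1.0, fs=FS_STIM):
    """Sinusoidally amplitude-modulated carrier with an envelope between 0 and 1 (assumed form)."""
    t = np.arange(int(duration_s * fs)) / fs
    envelope = 0.5 * (1 + np.sin(2 * np.pi * mod_hz * t))
    return envelope * np.sin(2 * np.pi * carrier_hz * t)


def amplitude_spectrum(signal, fs=FS_STIM):
    """Single-sided amplitude spectrum; 1 s of data gives 1 Hz bins."""
    amp = 2 * np.abs(np.fft.rfft(signal)) / len(signal)
    freqs = np.fft.rfftfreq(len(signal), d=1 / fs)
    return freqs, amp


# The six carrier/modulation combinations described in the text.
conditions = [(fc, fm) for fc in (50, 100) for fm in (9, 10, 11)]
freqs, amp = amplitude_spectrum(am_vibration(50, 10))
for f in (10, 40, 50, 60):
    print(f, round(float(amp[f]), 3))  # 0.0 at 10 Hz; 0.25, 0.5, 0.25 at 40, 50, 60 Hz
```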

We show group-level results of event-related synchronization and desynchronization (ERS/ERD, see Figure 1) which can be observed during motor imagination and execution (Pfurtscheller, 1992). Data analysis was also performed with the BBCI toolbox for MATLAB4.

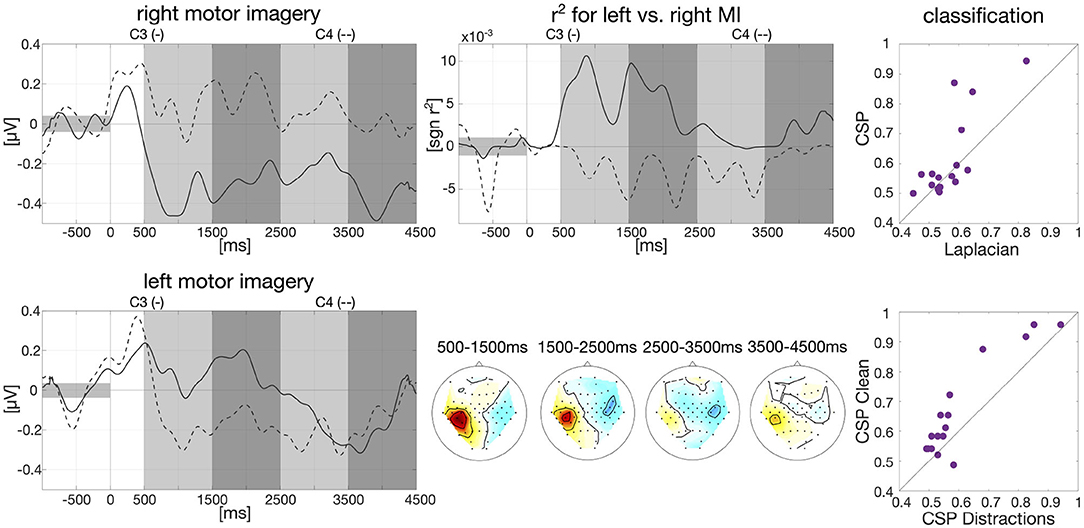

Figure 1. Baseline analysis for calibration data and first classification results. Left: Group-average envelopes of the C3 (solid) and C4 (dashed) electrodes for right and left motor imagery trials. Center: Group-average signed r² values over time within trials, shown as time series for C3 and C4 (upper) and as scalp patterns for four different intervals (lower). Right: Classification results for left vs. right motor imagery (one dot per participant): offline CSP vs. online Laplacian (upper) and CSP without distractions vs. CSP with distractions (lower).

Data from the calibration session was band-pass filtered at 9–13 Hz with a third-order zero-phase Butterworth filter and cut into epochs for each participant individually, from 1,000 ms before to 4,500 ms after trial onset. The group-average envelope was then calculated using the Hilbert transform and smoothed with a 200 ms moving-average window. For baseline correction, the average amplitude in the 1,000 ms before trial onset was subtracted. Figure 1 shows the resulting smoothed envelope for electrodes C3 and C4. Desynchronization is clearly visible in C3 for right-hand motor imagery and in C4 for left-hand motor imagery, starting around 500 ms after trial onset.
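A minimal sketch of the envelope computation for one participant, using SciPy's `butter`, `sosfiltfilt` (forward-backward, i.e., zero-phase) and `hilbert`. Several details are assumptions: the envelope is computed on the continuous band-passed signal before epoching, and trials are averaged per participant, so that averaging the returned arrays across participants gives the group curve. The exact order of operations in the BBCI analysis may differ.

```python
import numpy as np
from scipy.signal import butter, hilbert, sosfiltfilt


def erd_envelope(cont, fs, onsets, band=(9, 13), pre_s=1.0, post_s=4.5, smooth_s=0.2):
    """Trial-averaged, baseline-corrected band envelope for one participant.

    cont: (channels, samples) continuous EEG; onsets: sample indices of trial onsets.
    Returns an array (channels, epoch_samples) starting pre_s before onset.
    """
    # Third-order Butterworth applied forward and backward (zero phase).
    sos = butter(3, band, btype="bandpass", fs=fs, output="sos")
    filtered = sosfiltfilt(sos, cont, axis=-1)
    # Hilbert envelope, smoothed with a moving average.
    env = np.abs(hilbert(filtered, axis=-1))
    win = int(round(smooth_s * fs))
    kernel = np.ones(win) / win
    env = np.apply_along_axis(np.convolve, -1, env, kernel, mode="same")
    # Cut epochs from pre_s before to post_s after onset and average over trials.
    pre, post = int(round(pre_s * fs)), int(round(post_s * fs))
    epochs = np.stack([env[:, o - pre:o + post] for o in onsets])
    avg = epochs.mean(axis=0)
    # Baseline correction: subtract the mean envelope of the pre-onset interval.
    return avg - avg[:, :pre].mean(axis=1, keepdims=True)
```

A negative value after onset corresponds to desynchronization (ERD), and a positive value corresponds to synchronization (ERS).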

We further calculated signed squared biserial correlation coefficients (signed r²) on the smoothed group-average envelope to determine which EEG channels carry the most discriminative information for left- vs. right-hand motor imagery. As Figure 1 shows, the scalp patterns over both the left and the right motor cortex carry relevant class information, especially early in the trial, which matches findings in the literature (Pfurtscheller, 1992). Above the scalp patterns, we show the time course of the r² values for C3 and C4, computed over all epochs. On average, the two channels carry important information for separating right- from left-hand motor imagery between 500 and 2,000 ms after trial onset, as indicated by r².
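The signed r² can be computed independently at every channel and time point. The sketch below assumes the point-biserial form, which equals Pearson's correlation between the feature and a binary class label (with the population standard deviation), and keeps the sign of r while squaring its magnitude. Feeding it per-trial envelope values of shape (trials, channels, time) is an assumption about where in the pipeline it is applied.

```python
import numpy as np


def signed_r2(x_a, x_b):
    """Signed squared point-biserial correlation, computed element-wise.

    x_a, x_b: arrays of shape (trials, ...) for class A and class B.
    Positive values mean class A has larger values than class B.
    """
    n_a, n_b = len(x_a), len(x_b)
    pooled = np.concatenate([x_a, x_b], axis=0)
    r = (np.sqrt(n_a * n_b) / (n_a + n_b)
         * (x_a.mean(axis=0) - x_b.mean(axis=0)) / pooled.std(axis=0))
    return np.sign(r) * r ** 2
```

For envelopes of shape (trials, channels, time), the result has shape (channels, time). A row gives a time course such as the C3/C4 curves, and a column average over an interval gives a scalp pattern.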

We also performed an offline classification with Common Spatial Patterns (CSP; Ramoser et al., 2000) for comparison with the online classification based on Laplacian filters. For each participant, an individual frequency band between 8 and 30 Hz and a time interval between 250 and 4,500 ms after stimulus onset were selected as described in Blankertz et al. (2007). The data was then band-pass filtered in the selected frequency band with a third-order zero-phase Butterworth filter and cut into epochs. Six CSP filters, three per class, were selected based on the "ratio-of-medians" score described in Blankertz et al. (2007). The logarithm of the variance of the CSP-filtered signals was then used as features for an RLDA classifier. Offline classification accuracy averaged across all participants was 61.81%. Figure 1 compares CSP with Laplacian filters (61.81 vs. 57.05%) and CSP on the clean condition with CSP on the five distraction tasks (67.08 vs. 60.76%).
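The CSP step can be sketched as a generalized eigenproblem on the class covariance matrices (`scipy.linalg.eigh(a, b)`), followed by filter selection with the ratio-of-medians score, med_a / (med_a + med_b), of the per-trial variances. The sketch skips the individual band and interval selection and expects epochs that are already band-pass filtered. The RLDA uses a fixed shrinkage parameter `lam`, which is an assumption.

```python
import numpy as np
from scipy.linalg import eigh


def train_csp(epochs_a, epochs_b, n_per_class=3):
    """CSP filters selected by the ratio-of-medians score.

    epochs_*: (trials, channels, samples), already band-pass filtered.
    Returns W with shape (channels, 2 * n_per_class).
    """
    cov_a = np.mean([np.cov(trial) for trial in epochs_a], axis=0)
    cov_b = np.mean([np.cov(trial) for trial in epochs_b], axis=0)
    # Generalized eigenproblem: cov_a w = lambda (cov_a + cov_b) w.
    _, W = eigh(cov_a, cov_a + cov_b)
    var_a = np.var(np.einsum("ck,tcs->tks", W, epochs_a), axis=-1)
    var_b = np.var(np.einsum("ck,tcs->tks", W, epochs_b), axis=-1)
    med_a, med_b = np.median(var_a, axis=0), np.median(var_b, axis=0)
    # Score near 1: filter favors class a; near 0: favors class b.
    score = med_a / (med_a + med_b)
    order = np.argsort(score)
    picks = np.concatenate([order[:n_per_class], order[-n_per_class:]])
    return W[:, picks]


def log_var(epochs, W):
    """Log-variance of the CSP-filtered epochs: (trials, filters)."""
    return np.log(np.var(np.einsum("ck,tcs->tks", W, epochs), axis=-1))


def train_rlda(X, y, lam=0.1):
    """LDA with pooled covariance shrunk toward a scaled identity (fixed lam is assumed)."""
    m0, m1 = X[y == 0].mean(axis=0), X[y == 1].mean(axis=0)
    centered = np.vstack([X[y == 0] - m0, X[y == 1] - m1])
    cov = np.cov(centered, rowvar=False)
    d = cov.shape[0]
    cov = (1 - lam) * cov + lam * np.trace(cov) / d * np.eye(d)
    w = np.linalg.solve(cov, m1 - m0)
    return w, -w @ (m0 + m1) / 2  # predict class 1 when X @ w + b > 0
```

The ratio-of-medians score is used instead of the raw eigenvalues because it is less sensitive to single outlier trials, which is the motivation Blankertz et al. (2007) give for it.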

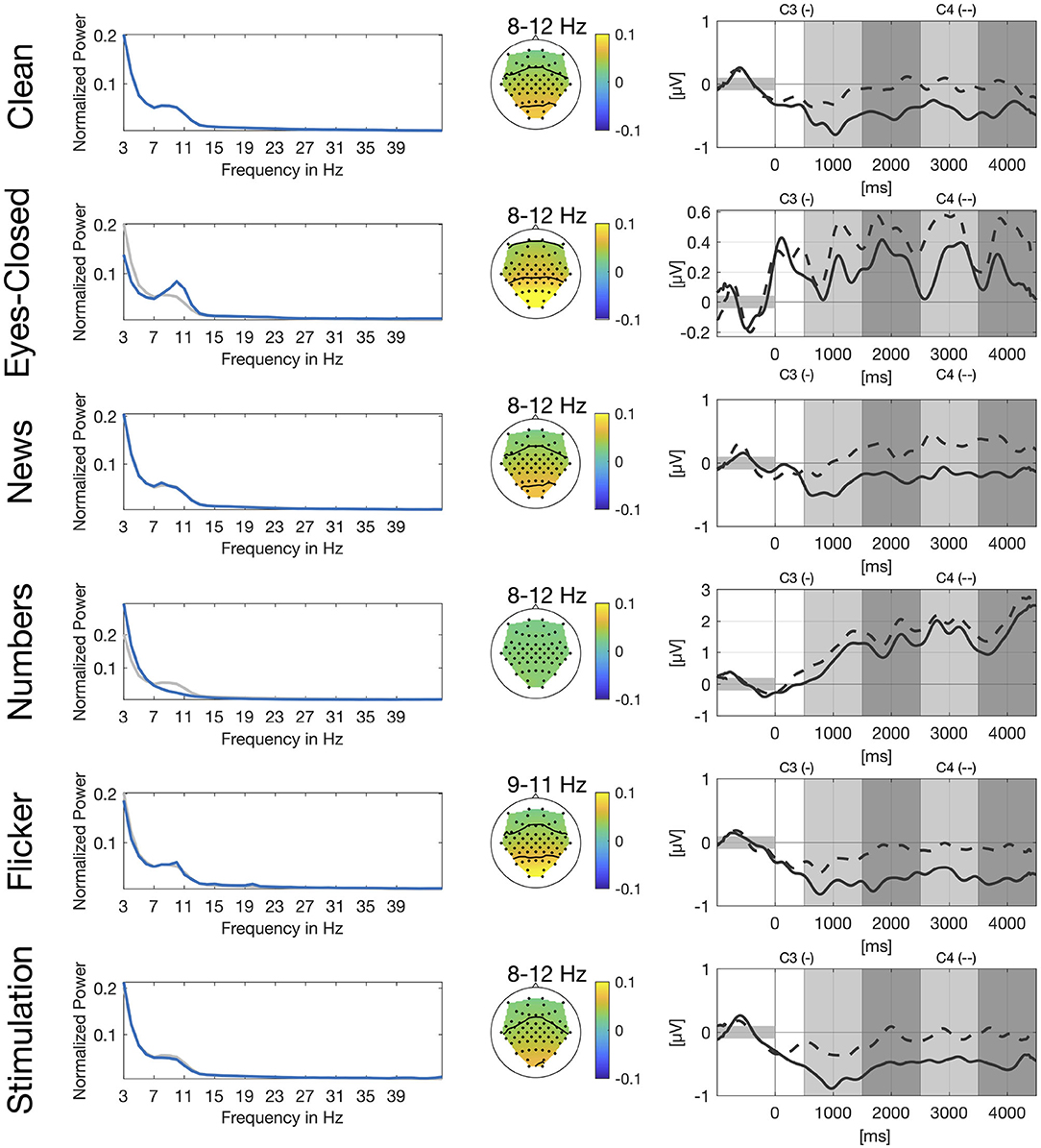

Figure 2 shows power spectra for all secondary tasks. For each participant, power spectra were averaged across trials and normalized channel-wise. We then extracted the power spectra for channels O1 and O2 and averaged them over the two channels and across participants. The alpha peaks for eyes-closed and numbers clearly differ from the clean condition. For the eyes-closed task, we see the expected alpha peak in the range of 8–12 Hz (Berger, 1929). For the numbers task, no clear alpha peak is visible in the occipital channels, in line with the expected suppression of the visual alpha rhythm during visual search. The power spectrum for the flicker task shows a small, sharp peak between 9 and 11 Hz, close to the 10 Hz flicker frequency, and an even smaller peak at 20 Hz, the second harmonic of the flicker frequency. The news and stimulation tasks do not show clear differences compared to clean.
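A sketch of the occipital spectrum for one participant and condition, using `scipy.signal.welch`. The text does not say how the channel-wise normalization was done. The sketch assumes that each channel's spectrum is divided by its summed power in 1–40 Hz, and the 1 s Welch segments are also a hypothetical choice. Averaging the returned spectra across participants gives the group curve.

```python
import numpy as np
from scipy.signal import welch


def occipital_spectrum(epochs, fs, occ_idx, fmax=40.0):
    """epochs: (trials, channels, samples) for one participant and condition.
    occ_idx: indices of O1 and O2 (hypothetical). Returns (freqs, spectrum)."""
    freqs, psd = welch(epochs, fs=fs, nperseg=int(fs), axis=-1)  # 1 Hz resolution
    keep = (freqs >= 1) & (freqs <= fmax)
    freqs = freqs[keep]
    psd = psd[..., keep].mean(axis=0)              # average over trials -> (channels, freqs)
    psd = psd / psd.sum(axis=-1, keepdims=True)    # channel-wise normalization (assumed form)
    return freqs, psd[occ_idx].mean(axis=0)        # average over the occipital channels
```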

Figure 2. Spectral, spatial, and temporal information for all secondary tasks. Left: Normalized power spectrum averaged across participants and channels O1 and O2, in blue for the respective secondary task and in gray for the clean condition. Center: Spatial distribution of power spectral values averaged across participants for different frequency bands. Right: Group-average envelopes in 9–13 Hz of C3 (solid) and C4 (dashed) electrodes for right hand motor imagery.

We also show the spatial distribution of power for different frequency bands in the alpha range, chosen based on the peaks in the power spectra. For eyes-closed and flicker, we see clear activation over the occipital and parietal cortex, whereas no clear pattern is visible for the numbers task. Again, the patterns for the news and stimulation tasks look very similar to that of the clean task.

As in Figure 1, we show envelopes of channels C3 and C4 for right-hand motor imagery. The modulation of the sensorimotor rhythm is still visible in all conditions as a stronger ERD in C3 than in C4. However, the effect is obscured by the different artifacts. The disturbances are smallest in the news, flicker, and stimulation tasks because their artifacts are stationary. For the flicker task, we still see a clear difference between the two channels, whereas the channels are closer for eyes-closed and closer still for the numbers task.

We recorded a motor imagery-based BCI study with 16 participants in which different distraction scenarios were added as secondary tasks to systematically investigate the influence of these noise sources on motor imagery performance. We presented group averages that show typical ERD/ERS effects over the motor cortex, especially during the first half of the trial, consistent with the literature. We further showed expected differences in occipital power spectra and in spatial patterns for different frequency bands in the alpha range for three of the secondary tasks. Finally, the results of a standard CSP + RLDA classification pipeline show that classification accuracy decreases in the distraction tasks. All data2 and code3 are publicly available, and a more advanced analysis has been published in Brandl et al. (2016).

The dataset recorded for this study can be found in DepositOnce1.

The studies involving human participants were reviewed and approved by Ethikkommission der Charité—Universitätsmedizin Berlin. The patients/participants provided their written informed consent to participate in this study.

Design of the study by SB and BB. Recording and analysis of the study by SB. SB wrote the manuscript which was revised by BB. All authors contributed to the article and approved the submitted version.

This work was funded by the German Ministry for Education and Research as BIFOLD—Berlin Institute for the Foundations of Learning and Data (Refs. 01IS18025A and 01IS18037A).

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

We thank all participants for taking part in the study. We also thank J. Höhne for his support and advice on recording the study. We further thank A. von Lühmann, D. Miklody, and the reviewers for valuable comments on the manuscript and N. Koreuber for code review.

1. ^https://bnci-horizon-2020.eu/database/data-sets

2. ^https://depositonce.tu-berlin.de/handle/11303/10934.2

3. ^https://github.com/stephaniebrandl/bci-under-distraction

Ang, K. K., Guan, C., Chua, K. S. G., Ang, B. T., Kuah, C. W. K., Wang, C., et al. (2011). A large clinical study on the ability of stroke patients to use an EEG-based motor imagery brain-computer interface. Clin. EEG Neurosci. 42, 253–258. doi: 10.1177/155005941104200411

Berger, H. (1929). Über das Elektroenkephalogramm des Menschen. Archiv. Psychiatr. Nervenkrankheit. 87, 527–570. doi: 10.1007/BF01797193

Blankertz, B., Müller, K. R., Curio, G., Vaughan, T. M., Schalk, G., Wolpaw, J. R., et al. (2004). The BCI competition 2003: progress and perspectives in detection and discrimination of EEG single trials. IEEE Trans. Biomed. Eng. 51, 1044–1051. doi: 10.1109/TBME.2004.826692

Blankertz, B., Müller, K. R., Krusienski, D. J., Schalk, G., Wolpaw, J. R., Schlögl, A., et al. (2006). The BCI competition III: validating alternative approaches to actual BCI problems. IEEE Trans. Neural Syst. Rehabil. Eng. 14, 153–159. doi: 10.1109/TNSRE.2006.875642

Blankertz, B., Tangermann, M., Vidaurre, C., Fazli, S., Sannelli, C., Haufe, S., et al. (2010). The Berlin brain-computer interface: non-medical uses of BCI technology. Front. Neurosci. 4:198. doi: 10.3389/fnins.2010.00198

Blankertz, B., Tomioka, R., Lemm, S., Kawanabe, M., and Muller, K.-R. (2007). Optimizing spatial filters for robust EEG single-trial analysis. IEEE Signal Process. Mag. 25, 41–56. doi: 10.1109/MSP.2008.4408441

Brandl, S., Frølich, L., Höhne, J., Müller, K.-R., and Samek, W. (2016). Brain-computer interfacing under distraction: an evaluation study. J. Neural Eng. 13:056012. doi: 10.1088/1741-2560/13/5/056012

Brouwer, A.-M., Snelting, A., Jaswa, M., Flascher, O., Krol, L., and Zander, T. (2017). “Physiological effects of adaptive cruise control behaviour in real driving,” in Proceedings of the 2017 ACM Workshop on an Application-Oriented Approach to BCI Out of the Laboratory (Limassol), 15–19. doi: 10.1145/3038439.3038441

Brouwer, A.-M., and Van Erp, J. B. (2010). A tactile P300 brain-computer interface. Front. Neurosci. 4:19. doi: 10.3389/fnins.2010.00019

Castermans, T., Duvinage, M., Petieau, M., Hoellinger, T., Saedeleer, C., Seetharaman, K., et al. (2011). Optimizing the performances of a P300-based brain-computer interface in ambulatory conditions. IEEE J. Emerg. Select. Top. Circuits Syst. 1, 566–577. doi: 10.1109/JETCAS.2011.2179421

Cho, H., Ahn, M., Ahn, S., Kwon, M., and Jun, S. C. (2017). EEG datasets for motor imagery brain-computer interface. GigaScience 6:gix034. doi: 10.1093/gigascience/gix034

De Vos, M., Gandras, K., and Debener, S. (2014). Towards a truly mobile auditory brain-computer interface: exploring the P300 to take away. Int. J. Psychophysiol. 91, 46–53. doi: 10.1016/j.ijpsycho.2013.08.010

Friedman, J. H. (1989). Regularized discriminant analysis. J. Am. Stat. Assoc. 84, 165–175. doi: 10.1080/01621459.1989.10478752

Han, C.-H., Kim, Y.-W., Kim, S. H., Nenadic, Z., Im, C.-H., et al. (2019). Electroencephalography-based endogenous brain-computer interface for online communication with a completely locked-in patient. J. Neuroeng. Rehabil. 16:18. doi: 10.1186/s12984-019-0493-0

Höhne, J., Holz, E., Staiger-Sälzer, P., Müller, K.-R., Kübler, A., and Tangermann, M. (2014). Motor imagery for severely motor-impaired patients: evidence for brain-computer interfacing as superior control solution. PLoS ONE 9:e104854. doi: 10.1371/journal.pone.0104854

Hwang, H.-J., Han, C.-H., Lim, J.-H., Kim, Y.-W., Choi, S.-I., An, K.-O., et al. (2017). Clinical feasibility of brain-computer interface based on steady-state visual evoked potential in patients with locked-in syndrome: case studies. Psychophysiology 54, 444–451. doi: 10.1111/psyp.12793

Jasper, H. (1958). The ten twenty electrode system of the international federation. EEG Clin. Neurophysiol. 10, 371–375.

Kaya, M., Binli, M. K., Ozbay, E., Yanar, H., and Mishchenko, Y. (2018). A large electroencephalographic motor imagery dataset for electroencephalographic brain computer interfaces. Sci. Data 5:180211. doi: 10.1038/sdata.2018.211

Ko, L.-W., Komarov, O., Hairston, W. D., Jung, T.-P., and Lin, C.-T. (2017). Sustained attention in real classroom settings: an EEG study. Front. Hum. Neurosci. 11:388. doi: 10.3389/fnhum.2017.00388

Leeb, R., Perdikis, S., Tonin, L., Biasiucci, A., Tavella, M., Creatura, M., et al. (2013). Transferring brain-computer interfaces beyond the laboratory: successful application control for motor-disabled users. Artif. Intell. Med. 59, 121–132. doi: 10.1016/j.artmed.2013.08.004

Lotte, F., Fujisawa, J., Touyama, H., Ito, R., Hirose, M., and Lécuyer, A. (2009). "Towards ambulatory brain-computer interfaces: a pilot study with P300 signals," in Proceedings of the International Conference on Advances in Computer Entertainment Technology (Athens), 336–339. doi: 10.1145/1690388.1690452

Lugo, Z. R., Pokorny, C., Pellas, F., Noirhomme, Q., Laureys, S., Müller-Putz, G., et al. (2020). Mental imagery for brain-computer interface control and communication in non-responsive individuals. Ann. Phys. Rehabil. Med. 63, 21–27. doi: 10.1016/j.rehab.2019.02.005

McFarland, D. J., McCane, L. M., David, S. V., and Wolpaw, J. R. (1997). Spatial filter selection for EEG-based communication. Electroencephalogr. Clin. Neurophysiol. 103, 386–394. doi: 10.1016/S0013-4694(97)00022-2

Miklody, D., Uitterhoeve, W. M., van Heel, D., Klinkenberg, K., and Blankertz, B. (2017). “Maritime cognitive workload assessment,” in Symbiotic Interaction. Symbiotic 2016. Lecture Notes in Computer Science, Vol. 9961, eds L. Gamberini, A. Spagnolli, G. Jacucci, B. Blankertz, and J. Freeman (Cham: Springer), 102–114. doi: 10.1007/978-3-319-57753-1_9

Morgan, S., Hansen, J., and Hillyard, S. (1996). Selective attention to stimulus location modulates the steady-state visual evoked potential. Proc. Natl. Acad. Sci. U.S.A. 93, 4770–4774. doi: 10.1073/pnas.93.10.4770

Neuper, C., Müller, G., Kübler, A., Birbaumer, N., and Pfurtscheller, G. (2003). Clinical application of an EEG-based brain-computer interface: a case study in a patient with severe motor impairment. Clin. Neurophysiol. 114, 399–409. doi: 10.1016/S1388-2457(02)00387-5

Pfurtscheller, G. (1992). Event-related synchronization (ERS): an electrophysiological correlate of cortical areas at rest. Electroencephalogr. Clin. Neurophysiol. 83, 62–69. doi: 10.1016/0013-4694(92)90133-3

Ramoser, H., Muller-Gerking, J., and Pfurtscheller, G. (2000). Optimal spatial filtering of single trial EEG during imagined hand movement. IEEE Trans. Rehabil. Eng. 8, 441–446. doi: 10.1109/86.895946

Sajda, P., Gerson, A., Müller, K.-R., and Blankertz, B. (2003). A data analysis competition to evaluate machine learning algorithms for use in brain-computer interfaces. IEEE Trans. Neural Syst. Rehabil. Eng. 11, 184–185. doi: 10.1109/TNSRE.2003.814453

Shin, J., von Lühmann, A., Blankertz, B., Kim, D.-W., Jeong, J., Hwang, H.-J., et al. (2016). Open access dataset for EEG + NIRS single-trial classification. IEEE Trans. Neural Syst. Rehabil. Eng. 25, 1735–1745. doi: 10.1109/TNSRE.2016.2628057

Tangermann, M., Müller, K. R., Aertsen, A., Birbaumer, N., Braun, C., Brunner, C., et al. (2012). Review of the BCI competition IV. Front. Neurosci. 6:55. doi: 10.3389/fnins.2012.00055

Tobimatsu, S., Zhang, Y. M., and Kato, M. (1999). Steady-state vibration somatosensory evoked potentials: physiological characteristics and tuning function. Clin. Neurophysiol. 110, 1953–1958. doi: 10.1016/S1388-2457(99)00146-7

Vidaurre, C., Kawanabe, M., von Bünau, P., Blankertz, B., and Müller, K.-R. (2010). Toward unsupervised adaptation of LDA for brain-computer interfaces. IEEE Trans. Biomed. Eng. 58, 587–597. doi: 10.1109/TBME.2010.2093133

von Lühmann, A., Boukouvalas, Z., Müller, K.-R., and Adalı, T. (2019). A new blind source separation framework for signal analysis and artifact rejection in functional near-infrared spectroscopy. Neuroimage 200, 72–88. doi: 10.1016/j.neuroimage.2019.06.021

Wriessnegger, S. C., Krumpl, G., Pinegger, A., and Mueller-Putz, G. (2017). “Mobile BCI technology: NeuroIS goes out of the lab, into the field,” in Information Systems and Neuroscience, eds F. Davis, R. Riedl, J. vom Brocke, P. M. Léger, and A. Randolph (Cham: Springer), 59–65. doi: 10.1007/978-3-319-41402-7_8

Keywords: brain-computer interface, motor imagery, out-of-lab scenarios, artifacts, steady-state visual evoked potential (SSVEP), vibro-tactile stimulation

Citation: Brandl S and Blankertz B (2020) Motor Imagery Under Distraction— An Open Access BCI Dataset. Front. Neurosci. 14:566147. doi: 10.3389/fnins.2020.566147

Received: 27 May 2020; Accepted: 21 August 2020;

Published: 19 October 2020.

Edited by:

Andrea Kübler, Julius Maximilian University of Würzburg, Germany

Reviewed by:

Pavel Bobrov, Institute of Higher Nervous Activity and Neurophysiology (RAS), Russia

Copyright © 2020 Brandl and Blankertz. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Stephanie Brandl, stephanie.brandl@tu-berlin.de

Disclaimer: All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article or claim that may be made by its manufacturer is not guaranteed or endorsed by the publisher.
