- 1JARA-Institute Molecular Neuroscience and Neuroimaging (INM-11), Jülich Research Centre, Jülich, Germany

- 2Child Neuropsychology Section, Department of Child and Adolescent Psychiatry, Psychosomatics and Psychotherapy, Medical Faculty, RWTH Aachen University, Aachen, Germany

- 3Department of Psychiatry, University of Münster, Münster, Germany

- 4Brain Innovation B.V., Research Department, Maastricht, Netherlands

- 5Faculty of Psychology and Neuroscience, Department of Cognitive Neuroscience, Maastricht University, Maastricht, Netherlands

- 6School of Psychological Science, University of Bristol, Bristol, United Kingdom

- 7MRC Integrative Epidemiology Unit, University of Bristol, Bristol, United Kingdom

Background: The effects of electroencephalography (EEG) and functional magnetic resonance imaging (fMRI)-neurofeedback on brain activation and behaviors have been studied extensively in the past. More recently, researchers have begun to investigate the effects of functional near-infrared spectroscopy-based neurofeedback (fNIRS-neurofeedback). FNIRS is a functional neuroimaging technique based on brain hemodynamics, which is easy to use, portable, inexpensive, and has reduced sensitivity to movement artifacts.

Method: We provide the first systematic review and database of fNIRS-neurofeedback studies, synthesizing findings from 22 peer-reviewed studies (including a total of N = 441 participants; 337 healthy, 104 patients). We (1) give a comprehensive overview of how fNIRS-neurofeedback training protocols were implemented, (2) review the online signal-processing methods used, (3) evaluate the quality of studies using pre-set methodological and reporting quality criteria and also present statistical sensitivity/power analyses, (4) investigate the effectiveness of fNIRS-neurofeedback in modulating brain activation, and (5) review its effectiveness in changing behavior in healthy and pathological populations.

Results and discussion: (1–2) Published studies are heterogeneous (e.g., neurofeedback targets, investigated populations, applied training protocols, and methods). (3) Large randomized controlled trials are still lacking. In view of the novelty of the field, the quality of the published studies is moderate. We identified room for improvement in reporting important information and statistical power to detect realistic effects. (4) Several studies show that people can regulate hemodynamic signals from cortical brain regions with fNIRS-neurofeedback and (5) these studies indicate the feasibility of modulating motor control and prefrontal brain functioning in healthy participants and ameliorating symptoms in clinical populations (stroke, ADHD, autism, and social anxiety). However, valid conclusions about specificity or potential clinical utility are premature.

Conclusion: Due to the advantages of practicability and relatively low cost, fNIRS-neurofeedback might provide a suitable and powerful alternative to EEG and fMRI neurofeedback and has great potential for clinical translation of neurofeedback. Together with more rigorous research and reporting practices, further methodological improvements may lead to a more solid understanding of fNIRS-neurofeedback. Future research will benefit from exploiting the advantages of fNIRS, which offers unique opportunities for neurofeedback research.

Introduction

Functional near-infrared spectroscopy (fNIRS) is a growing functional neuroimaging technique that exploits the principles of near-infrared (NIR) spectroscopy and brain hemodynamics. Human tissues, including brain tissue, are relatively transparent to light in the NIR range (650–1,000 nm). If NIR light is directed onto the surface of the head most of the light scatters within the underlying tissue, while some of the light is absorbed by pigmented compounds (chromophores). The main chromophore hemoglobin (red blood cells transporting oxygen) absorbs and attenuates the NIR light, and the absorption spectrum of hemoglobin is dependent on the oxygenation level, i.e., oxy-(HbO) > 800 nm and deoxyhemoglobin (HbR) <800 nm. This principle is utilized by fNIRS to detect relative changes of HbO and HbR levels and thereby indirectly estimating brain activation in the underlying brain tissue via optical sensors placed on the surface of the head (see Ferrari and Quaresima, 2012; Pinti et al., 2018b).

Compared to other neuroimaging modalities, such as functional magnetic resonance imaging (fMRI), electroencephalography (EEG), and magnetoencephalography (MEG), fNIRS shows both advantages and disadvantages. First, the spatial and temporal resolution of fNIRS lies between fMRI and EEG. It provides higher spatial resolution (between 2 and 3 cm) than EEG and potentially higher temporal resolution than fMRI, due to a higher sampling rate. The depth of fNIRS measurements is restricted to neocortical brain regions (Pinti et al., 2018b). FNIRS also has a lower spatial resolution and lower signal-to-noise ratio compared to fMRI (Cui et al., 2011). However, the practicability of fNIRS is a major advantage over fMRI: it is easier to use, portable, safe, nearly silent, inexpensive, and requires little setup time. Moreover, fNIRS measurements tolerate more head motion compared to EEG and fMRI measurements. This makes it possible to use fNIRS in more naturalistic environments/situations (e.g., allowing neural activity to be recorded during overt speech, movement, and direct interaction with another person). Furthermore, it permits populations to be investigated that are more likely to show head motion (e.g., neurological or psychiatric patients or infants) and situations that do not allow fMRI measurements (e.g., participants with ferromagnetic implants or claustrophobia). For recent reviews on the use of fNIRS in neuroscience see Pinti et al. (2018b) and Quaresima and Ferrari (2019).

Given the advantages of fNIRS over other neuroimaging modalities, this technique has been increasingly used as a tool for neurofeedback (Ehlis et al., 2018). During neurofeedback training, participants are trained to self-regulate their brain activity, generally with the ultimate goal of changing behavior or cognitive/emotional functions (for reviews see Thibault et al., 2016; Sitaram et al., 2017; Paret et al., 2019). Figure 2 (upper part) shows a typical fNIRS-neurofeedback setup. Changes in HbO, HbR, or total hemoglobin (tHb) are assessed via optodes placed on the participants' heads covering a certain brain region of interest and are usually fed back to the subject in the form of visual representations. Individuals can then use this feedback information to learn successful self-regulation of brain activity and ideally transfer this skill to daily life. Successful neurofeedback training usually requires several neurofeedback sessions [1–5 sessions for fMRI-neurofeedback and up to 30 sessions for EEG-neurofeedback (see Thibault et al., 2016)], which is costly and difficult to perform with fMRI.

To date, there has been no comprehensive systematic review of fNIRS-neurofeedback studies. The available reviews are either not systematic or are selective (not covering all published fNIRS-neurofeedback work). Some reviews focus more on general aspects of fNIRS-based brain-computer interfacing (e.g., Naseer and Hong, 2015; Thibault et al., 2016; Ehlis et al., 2018). For example, a recent review by Ehlis et al. (2018) reviewed several of their own studies alongside a few other experiments. They concluded that fNIRS-neurofeedback training can enable participants to regulate their hemodynamic responses deliberately and that this training may induce changes in brain functions over time. Further, Ehlis et al. (2018) conclude that if future studies confirmed initial findings, fNIRS-neurofeedback may become a complementary or even alternative treatment option for neuropsychiatric disorders.

The present systematic review is divided into five stand-alone sections. We (1) synthesize information about training protocols; (2) provide an overview of the methods used for online signal-processing to calculate the feedback signal; (3) critically evaluate the quality of published studies including experimental designs, reporting (Tufanaru et al., 2017; Ros et al., 2020), and statistical power; (4) assess and discuss the effectiveness of fNIRS-neurofeedback to regulate and induce pre-post changes in brain activity; and (5) assess and discuss its effectiveness in inducing changes in behavioral/cognitive/emotional1 outcome measurements in healthy and pathological populations and also review the clinical potential of fNIRS-neurofeedback. We finish the review with a discussion arising from the findings of the five sections and also touch on the future of fNIRS-neurofeedback research.

Methods

The study protocol for this systematic review was registered on PROSPERO and can be accessed at https://www.crd.york.ac.uk/PROSPERO/display_record.php?RecordID=141049. Data from this systematic review are available at the Open Science Framework (see https://osf.io/hnxfq/). We followed the Preferred Reporting Items for Systematic Reviews and Meta-Analyses (PRISMA) guidelines (Moher et al., 2009).

Search Strategy

We searched the following electronic bibliographic databases for studies published up until 3 July 2019: PubMed/MEDLINE, Web of Knowledge/Web of Science, Scopus, and EMBASE. Additional searches were conducted using the Real-time Functional Imaging and Neurofeedback (rtFIN) database (rtfin.org), Cochrane Reviews library database (cochranelibrary.com), Clinicaltrials.gov, scholar.google.de, and preprint servers: biorxiv.org, arxiv.org, psyarxiv.com, medrxiv.org, and osf.io. The following search terms were used: (“functional near infrared spectroscopy” OR “fNIRS” OR “near infrared spectroscopy” OR “NIRS”) AND (“real-time” OR “real time” OR “neurofeedback” OR “biofeedback” OR “Brain-Computer Interface” OR “Brain computer interface” OR “BCI” OR “Brain-Machine Interface” OR “Brain Machine Interface” OR “BMI”). More details of the search strategy can be found in the protocol or via the following link: https://www.crd.york.ac.uk/PROSPEROFILES/141049_STRATEGY_20190703.pdf.

Study Selection

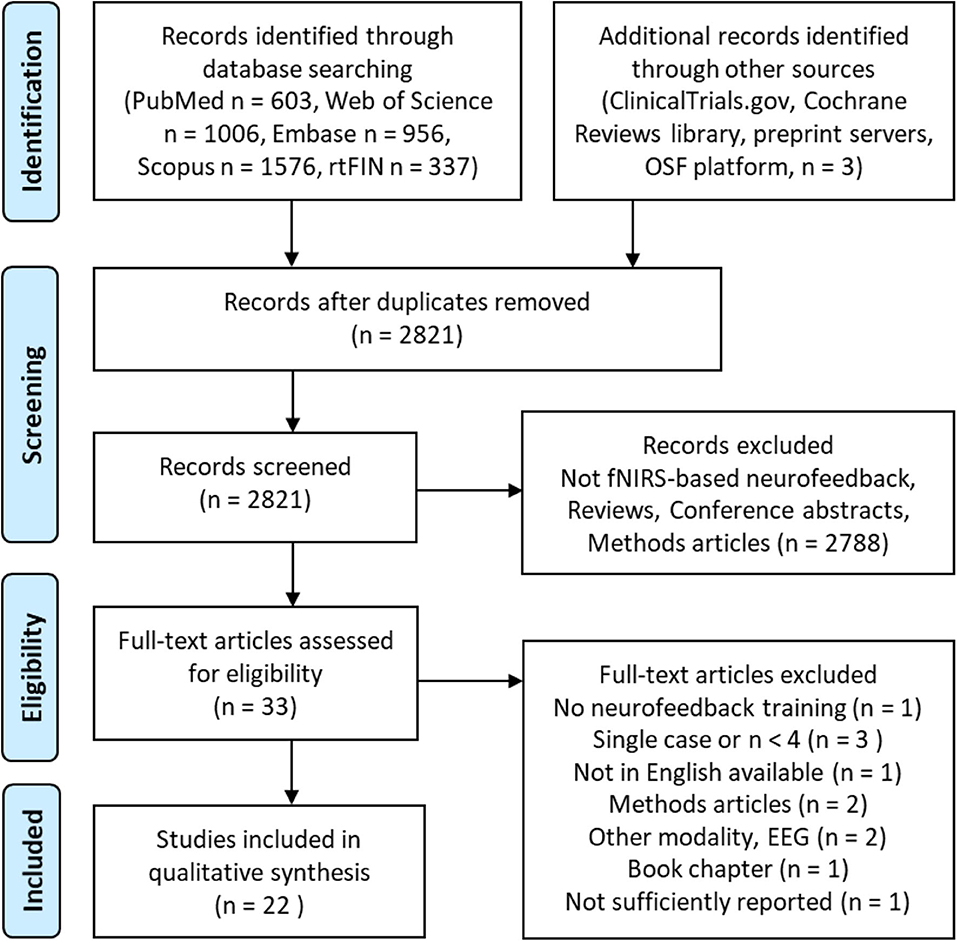

We included all published articles with study designs that applied fNIRS-neurofeedback training to regulate brain activity and/or behavior in healthy or patient populations. The field is very young and to date no randomized controlled trials have been published. Hence, we applied rather loose inclusion criteria, also including non-controlled, pilot, feasibility, and proof-of-concept studies involving at least four participants. Studies applying fNIRS only for the purpose of brain-based communication or control of devices were excluded. After removing duplicates, the titles and abstracts of 2,821 articles were screened and the full-text of the remaining 33 articles was retrieved and assessed for eligibility. Twenty-two studies met the inclusion criteria and were considered in the qualitative synthesis (see Figure 1), involving a total of 441 participants (337 healthy participants and 104 patients).

Figure 1. Search decision flow diagram according to preferred reporting items for systematic reviews and meta-analyses (PRISMA; Moher et al., 2009).

Data Extraction and Analysis

A spreadsheet was used to document data extracted from the studies. Extracted data included: information about study population and study design, details of the neurofeedback protocol and control conditions; methods used for online signal-processing to calculate the feedback information, and outcomes of the neurofeedback training categorized into behavioral and neural effects within and independent of targeted brain regions (see Supplementary Material). After finishing the data extraction, the spreadsheet was sent to all corresponding authors of the included studies to ask for corrections. Fifteen of 22 authors replied and either approved the data extraction or sent minor corrections. We also gave authors the opportunity to comment on a preprint version of the manuscript, which was uploaded at the Open Science Framework (see https://osf.io/hnxfq/) before submission. Further methodological details will be provided in the respective sections.

Results and Discussion

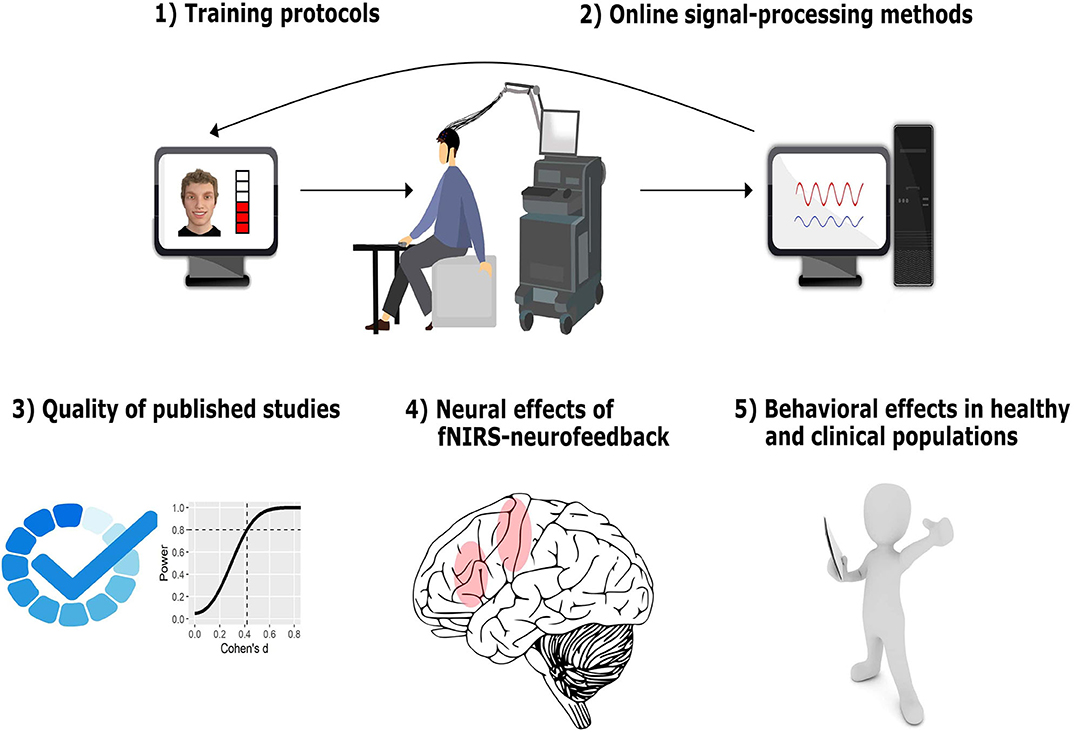

We present and discuss the results of this systematic review in five sections (depicted in Figure 2).

Figure 2. Structure of results and discussion. In the first two sections, we provide a comprehensive overview of how fNIRS-neurofeedback training is implemented, describing and discussing important features of neurofeedback-training protocols (1) and of the real-time signal-processing methods applied (2). In the third section, we critically evaluate the quality of published studies including experimental design and reporting quality as well as statistical power/sensitivity as an indicator of reliability of the reported findings (3). In the fourth section, we assess and discuss the effectiveness of fNIRS-neurofeedback to regulate and induce pre-post changes in brain activity (4). Finally, we assess and discuss its effectiveness in changing behavioral outcomes and we review the clinical potential of fNIRS-neurofeedback (5). The fNIRS illustration was created by Laura Bell.

1. Training Protocols

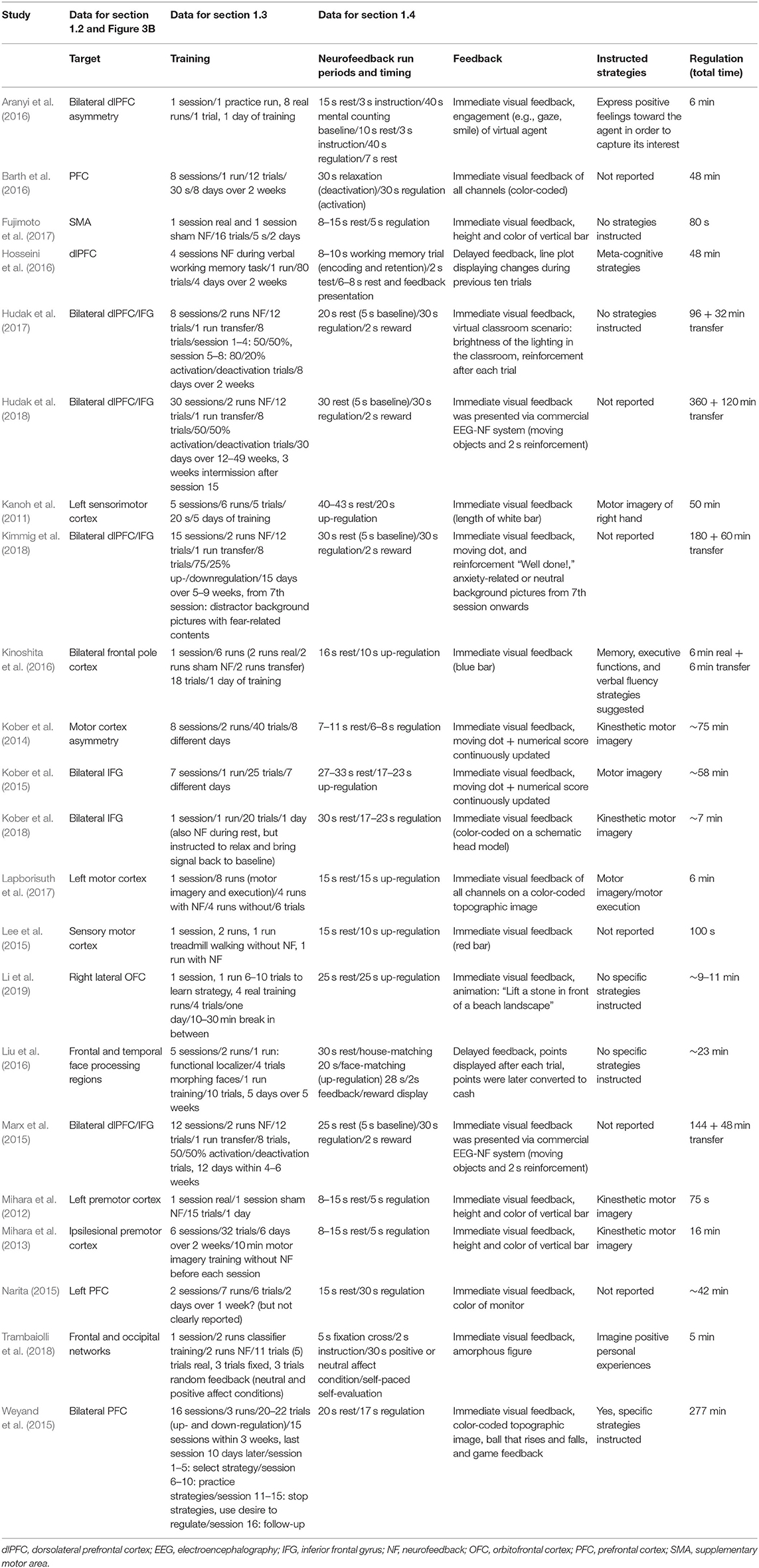

When designing a neurofeedback study and creating a new training protocol, there are a number of aspects to be considered. Some of these include: (1) population(s) to be studied (e.g., healthy or patients or both), (2) neural target for the neurofeedback training (e.g., a particular [set of] brain region(s) or a measure of connectivity), (3) control-group approach (see section 3.1), (4) duration of training, and (5) the neurofeedback procedure, including training conditions (e.g., regulation, rest, transfer), the kind of feedback presentation and task instructions. In this section of the review, we provide an overview of how previous fNIRS-neurofeedback studies established these protocol aspects. We also discuss implications and possible extensions for future studies. Table 1 shows details of the training protocols used in the studies included in our review.

1.1. Target Populations

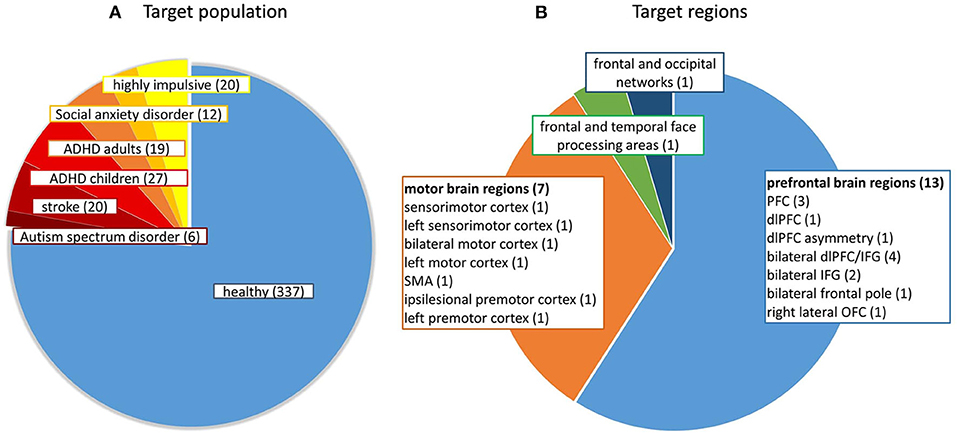

Figure 3A shows the different target populations investigated in the studies. Mostly healthy participants were investigated (N = 337), but also patients after stroke (N = 20), with social anxiety disorder (N = 12), autism spectrum disorder (N = 6), attention-deficit/hyperactivity disorder (N = 27 children and N = 19 adults), and adults with high impulsivity (N = 20).

Figure 3. (A) Number of participants from different target populations and (B) Number of studies targeting a certain brain region. ADHD, attention-deficit/hyperactivity disorder; dlPFC, dorsolateral prefrontal cortex; IFG, inferior frontal gyrus; OFC, orbitofrontal cortex; PFC, prefrontal cortex; SMA, supplementary motor area.

1.2. Target Regions

Figure 3B shows the distribution of the neurofeedback target regions of the included studies. The bulk of the studies trained participants to regulate parts of the prefrontal cortex (PFC), i.e., dorsolateral prefrontal cortex (dlPFC), inferior frontal gyrus (IFG), frontal pole or orbitofrontal cortex (OFC). Some studies broadly targeted the PFC using eight to fourteen target channels (Barth et al., 2016; Hudak et al., 2017, 2018; Kimmig et al., 2018) and others targeted specific subregions of the PFC using only one target channel (Li et al., 2019). Another large proportion of the studies trained participants to regulate activation within sensorimotor regions and enhance motor imagery-related brain activation (e.g., Fujimoto et al., 2017). One study reinforced up-regulation of temporal and frontal face-processing regions, as individually defined by a functional localizer (Liu et al., 2016) and another aimed to train a broad affective network of frontal and occipital brain regions using a multivariate classifier approach (Trambaiolli et al., 2018). The signal-to-noise (SNR) ratio likely differs between target regions and depends on scalp-brain or source-detector distance. The SNR is dependent on individual physical features such as individual brain anatomy, head size, skull thickness, and hair properties (e.g., thickness, density, length and color; Orihuela-Espina et al., 2010). On average, the scalp-brain distance is higher in parietal regions and lower in frontal and temporal regions (Cui et al., 2011). These factors should be taken into account when selecting target regions/channels for a neurofeedback study [see also section Selection of Target Regions (Channels of Interest)]. It should be noted that to date no study has used fNIRS-neurofeedback training to target connectivity between specific brain regions.

1.3. Training Duration

The length of training varied broadly between studies and ranged from short one-session designs (nine studies) up to 30 sessions (Hudak et al., 2018), resulting in a total duration of regulation training that varied between 75 s and up to 8 h. In particular, clinical treatment studies used a higher number of training sessions (Marx et al., 2015; Hudak et al., 2018; Kimmig et al., 2018). The majority of studies applied five sessions or less. While the optimal number of sessions for acquiring self-regulation of hemodynamic brain responses via fNIRS needs to be determined, successful regulation after even a single session has been reported in sham-controlled studies (Fujimoto et al., 2017; Li et al., 2019). Similar to fMRI-neurofeedback, fNIRS-neurofeedback targets spatially specific brain hemodynamics and might therefore offer a faster pace of learning compared to EEG-neurofeedback with most studies involving 20–40 training sessions (see also Marx et al., 2015; Thibault et al., 2018). However, the data of studies by (Marx et al., 2015; Mayer et al., 2015) that directly compared fNIRS- with EEG-neurofeedback still need to be published to shed further light on different learning mechanisms.

1.4. Neurofeedback Procedure

A neurofeedback procedure consists of at least three different aspects: (1) within-run task periods and their timing, (2) feedback presentation (i.e., sensory modality employed, timing, and complexity of feedback information, and (3) instructions provided to participants.

1.4.1. Neurofeedback Run Periods and Their Timing

A neurofeedback run procedure consists of at least two different kinds of periods, i.e., a regulation period during which participants receive neurofeedback and try to change brain activity, and a resting period during which no feedback is provided that can also serve as a baseline-control condition. Most studies instructed participants to rest during the control condition, except for one study that instructed participants to engage in mental counting in an attempt to control for potentially confounding mental processes (Aranyi et al., 2016). Some protocols also included additional reward periods (e.g., smiling faces or points) presented after each regulation trial (Liu et al., 2016; Hudak et al., 2017, 2018; Kimmig et al., 2018), which is a form of additional delayed feedback. Two studies applied a combination of neurofeedback with (socio-)cognitive training and presented delayed feedback on activity during a cognitive task after a block (Hosseini et al., 2016; Liu et al., 2016). To ensure or assess the transfer of self-regulation skills beyond the neurofeedback sessions, a few studies employed transfer (no-feedback) periods (Marx et al., 2015; Hudak et al., 2017, 2018; Kimmig et al., 2018), where participants received the same instructions as in the neurofeedback task, but without receiving any feedback on their brain activity. Unfortunately, these studies did not report regulation success specifically for transfer periods. While most protocols either trained up- or downregulation, some also trained regulation in both directions, where activation and deactivation periods were randomly presented. The lengths of the regulation and baseline conditions varied broadly across studies from 5–40 to 6–43 s, with most studies varying between 20 and 30 s.

Transfer indicates that a skill is transferred to different situations or tasks. Depending on the contextual factors of the transfer situation, we can distinguish between near and far transfer, with the latter being more important for the success of training (see Barnett and Ceci, 2002). However, some studies (Marx et al., 2015; Hudak et al., 2017, 2018; Kimmig et al., 2018) used the term transfer to describe task periods but presented a reward after each transfer trial. Hence, they switched from immediate to delayed neurofeedback training and are unable to ensure or demonstrate that participants are able to regulate brain activity without receiving feedback, i.e., to transfer the skill of brain regulation to a new situation and possibly beyond neurofeedback training (far transfer). When combining up- and down-regulation within a session, care should be taken to balance the randomization of up- and down-regulation periods in order to keep transition probabilities between periods equal. Otherwise, participants may anticipate and prepare for the following condition, i.e., regulate in the opposing direction during the baseline condition as demonstrated by Hudak et al. (2018). Similarly, anticipatory effects might be prevented by introducing variable onsets of the regulation conditions, as applied by some of the studies. This would render randomization of periods unnecessary and enable to present several up- and downregulation periods in a blocked fashion, which reduces cognitive demands and may facilitate shaping of individual strategies during the training. However, combining up- and downregulation in a single session might involve the risk of carry-over effects between periods, which may impede regulation performance. However, this speculation needs to be corroborated by a comparative study. The optimal trial structure for neurofeedback procedures needs further investigation. Since comparative studies including fMRI- and EEG-neurofeedback research are lacking, we mostly rely on theoretical considerations when designing neurofeedback tasks. Considering the time course of the hemodynamic response (Ogawa et al., 1992), which is delayed and peaks after 4–6 s, a reasonable duration of the regulation and baseline period is 20–30 s. Further research is needed to discover whether shorter or longer periods of up to 40 s (Aranyi et al., 2016) and above are beneficial. While a short duration of 5 s can be considered too short for a hemodynamic response to develop properly, these studies continue to show the feedback signal during a subsequent rest period (Mihara et al., 2012, 2013; Fujimoto et al., 2017). Moreover, we can assume that if feedback is presented immediately (a slowly developing hemodynamic signal), longer durations are required compared to delayed feedback, which is presented considering the activation of a whole previous trial.

1.4.2. Feedback Presentation

The presentation of feedback information may differ with regard to (1) sensory modality (visual, auditory, tactile), (2) timing (immediate vs. delayed), (3) complexity (simple vs. complex virtual environment), and (4) rewarding content (smiling faces or monetary reward). Most of the studies used a simple type of immediate visual feedback in the form of a bar (e.g., Fujimoto et al., 2017) or more complex animations of, for example, rising objects (e.g., Marx et al., 2015; Li et al., 2019), or smiling virtual agents (Aranyi et al., 2016). Others used color-coded topographic maps (Barth et al., 2016; Lapborisuth et al., 2017; Kober et al., 2018), displaying the signal change of all channels while instructing participants to focus on a certain region within the channel arrangement. Kimmig et al. (2018) started with a simple form of visual feedback (moving ball) and introduced neutral and anxiety-related pictures in the middle of the training period to provide a relevant context for patients with social anxiety disorder. Some studies presented delayed feedback in addition to immediate feedback in the form of a reward (e.g., smileys or points) after each trial (Hudak et al., 2017; Kimmig et al., 2018), or only delayed feedback in order to reduce distraction during the task/regulation period (Hosseini et al., 2016; Liu et al., 2016).

Whether immediate or delayed feedback is superior or whether they are equally effective is a matter of ongoing debate and there is only limited and as yet unclear evidence from a few comparative fMRI-neurofeedback studies (see Paret et al., 2019). In case of the noisier fNIRS-neurofeedback, feeding back mean or median activity at the end of each block may avoid confusing participants due to noisy fluctuations of the feedback signal and may also be beneficial with regard to timing (see above). The form of social neurofeedback used by Aranyi et al. (2016) may be experienced as more rewarding and may improve motivation, which is confirmed by preliminary evidence from an fMRI-neurofeedback study demonstrating that social neurofeedback outperforms simple visual feedback and leads to stronger activation of reward-related brain regions (Mathiak et al., 2015). In an effort to increase motivation and facilitate transfer to daily life or critical situations, neurofeedback has been embedded in a 3D virtual-reality environment. While the feasibility of this approach has been demonstrated in a subclinical adult population (Hudak et al., 2017), an ongoing clinical trial in children with attention-deficit/hyperactivity disorder (ADHD) is investigating whether virtual-reality-based neurofeedback is superior to simpler forms of feedback (Blume et al., 2017). Some researchers are opposed to complex feedback, arguing that neurofeedback should lead to knowledge of results and that gaming environments which are too complex may distract participants (Hinterberger et al., 2004; Sherlin et al., 2011). However, comparative studies provide evidence that the more engaging complex feedback embedded in a virtual environment may facilitate learning and is better received by users (Gruzelier et al., 2010; Cohen et al., 2016).

1.4.3. Instructions

In a neurofeedback study, participants are provided with general task instructions about the experiment and in most cases also instructions on how to regulate a certain brain region. Instructions can be very specific (e.g., use motor imagery to regulate motor brain regions) or rather loose (e.g., use mental strategies to change the feedback signal).

Twelve studies instructed participants to upregulate, one to downregulate, and six to up- and down-regulate a certain target region. Another study reinforced asymmetry, i.e., a difference between the activity of a target region and its homolog on the other hemisphere, and two used a multivariate analysis approach to calculate the feedback signal. While some studies provided explicit instructions about types of mental strategies to regulate brain activity, such as kinesthetic motor imagery (Kober et al., 2014) or affective strategies (Trambaiolli et al., 2018), others merely encouraged participants to learn self-regulation using a trial-and-error approach (e.g., Fujimoto et al., 2017; Hudak et al., 2017).

Even if no strategies are provided, study design and general instructions about the experiment may prime the use of certain strategies (Kohl et al., 2019). For example, in the study by Fujimoto et al. (2017), when participants were informed about the target region (supplementary motor area) they might have been tempted to use motor-imagery strategies. Therefore, care needs to be taken when giving task instructions and also when informing participants about the general purpose of the experiment. It is thus important that participants' strategies are documented thoroughly, ideally after each neurofeedback trial or run [see also section Experimental Design and Reporting Quality (CRED-nf Checklist)], although results need to be interpreted carefully, since retrospective self-reports may lack realiability (see Veenman, 2011), particularly in children (Stone and Lemanek, 1990). Whether explicit instructions are beneficial for neurofeedback learning or not remains an open question and may depend on the particular training protocol (see Paret et al., 2019). For instance, it has been suggested that explicit strategies are not necessary, and some work even indicated that they may be detrimental or at least not helpful in some cases (Birbaumer et al., 2013; Sepulveda et al., 2016; Shibata et al., 2019). This is also supported by a recent fNIRS-neurofeedback study (Weyand et al., 2015) where participants used two personalized mental strategies over ten sessions and were then instructed to stop using their strategies and instead to use only their desire to regulate brain activity. Interestingly, regulation performance remained the same after weaning off specific mental strategies and the majority of participants reported training to be less demanding and more intuitive. Nevertheless, strategy instruction may initially facilitate learning (Scharnowski et al., 2015) and may be helpful if successful strategies to regulate a certain brain region are clearly known, e.g., using motor imagery to regulate motor regions. Hence, the decision about instructions may depend on the targeted brain region, duration, and purpose of the neurofeedback-training experiment.

1.5. Conclusion—Training Protocols

FNIRS-neurofeedback has been applied in a variety of populations, including different patient populations, children, adolescents, and older adults. However, previous studies are heterogeneous in terms of (i) selected neurofeedback targets (mostly comprising prefrontal and sensory motor brain regions), (ii) duration of training, and (iii) design of the neurofeedback procedure, including timing, feedback display, and instructions. Generally, as fNIRS measures the same hemodynamic brain signal as fMRI, a large part of the fMRI-neurofeedback training procedures can be (and have already been) transferred to fNIRS-neurofeedback. Note, however, that also for neurofeedback in general there are a lot of open issues with regard to training protocol methods (see Paret et al., 2019). Further systematic research and discussion will help to achieve consensus and make neurofeedback training protocols more efficient.

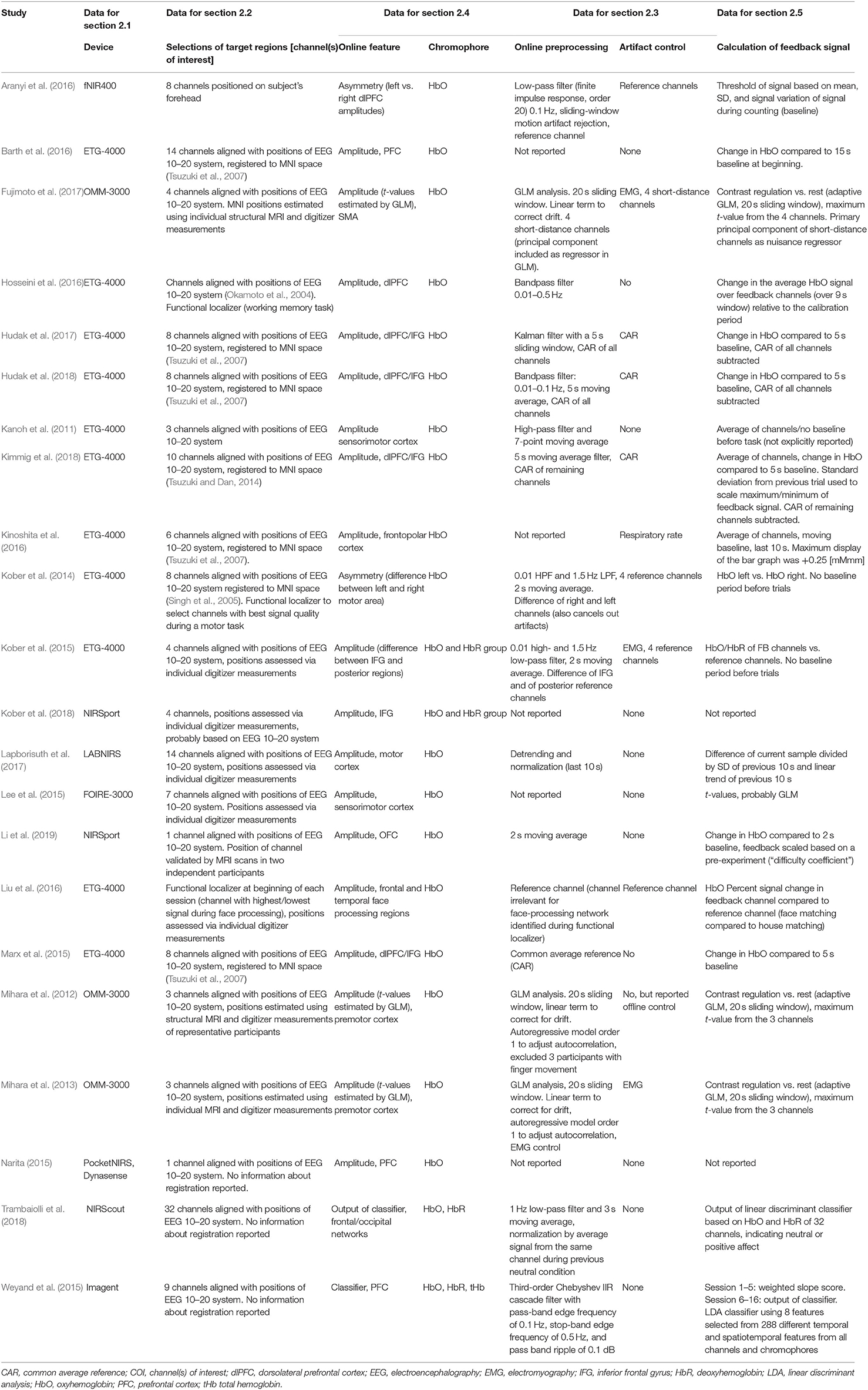

2. Online Signal-Processing Methods and Hardware

In this section, we give an overview of the different methods used for online signal-processing, including devices, selection of brain regions (fNIRS channels) of interest, online feature extraction (chromophores used), and preprocessing and artifact control (see also Table 2). Providing valid feedback of neural activity to the participant is a crucial component of successful neurofeedback applications. Therefore, methods have to be carefully selected to capture the neural activity of a target region and minimize strong extracranial artifacts, as are frequently present in the fNIRS signal (Caldwell et al., 2016).

2.1. Devices

Nine different devices were used in the studies: ETG-4000, OMM-3000, NIRSport, NIRScout, fNIR400, LABNIRS, FOIRE-3000, PocketNIRS, and Imagent. Some devices were used more frequently than others. However, this does not imply qualitative superiority, and it is beyond the scope of this review to judge the functionality of the devices used. Notably, other commercially available devices are used in cognitive neuroscience and custom-developed mobile fNIRS instrumentations (also available open-source: www.opennirs.org) are used for BCI applications (von Lühmann et al., 2015), and may be used for neurofeedback applications. For an overview of commercially available fNIRS systems and their features refer to Scholkmann et al. (2014) and Pinti et al. (2018a). Major differences between the devices are the wavelength used and the amount of wavelength exploited by the systems. Five of the devices used two wavelengths (ETG-4000, NIRSport, NIRScout, Imagent, fNIR400) whereas the other four devices support three wavelengths (OMM-3000, FOIRE-3000, LABNIRS, PocketNIRS). It should be noted that each of the devices used different wavelengths in the range from 690 to 860 nm and had different precisions (e.g., dynamic range or sensitivity, based on the hardware and its quality). These differences can potentially constitute confounds in the context of reproducibility of neurofeedback results. The use of more wavelengths can improve signal quality (Arifler et al., 2015) and might therefore be beneficial for fNIRS-neurofeedback applications.

2.2. Selection of Target Regions (Channels of Interest)

In order to target specific brain regions reliably, the studies applied different methods to verify appropriate channel selection for extracting the neurofeedback information. In fNIRS-neurofeedback studies, a certain number of optodes are placed on the participants' heads (general optode setup), then some of the channels (‘channels of interest') are selected to extract the neurofeedback information. All the studies used a priori knowledge about the assumed location of specific brain regions involved in the regulation task. Additionally, most studies used the EEG 10–20 system (Jasper, 1958) as a reference to place the optodes (20 out of 22 studies; Table 2) and the methods of Okamoto et al. (2004), Singh et al. (2005), or Tsuzuki et al. (2007) to register channels to the MNI space. Alternatively (or in combination), the positions were either verified by an individual or reference structural MRI scan combined with a digitizer measurement (Fujimoto et al., 2017) or a functional localization procedure was performed. Eight studies used additional digitizer measurements to verify channel positions post-hoc. Three studies used a functional localizer before the training session to select task-relevant channel(s) with individually good signal quality (Kober et al., 2014; Hosseini et al., 2016; Liu et al., 2016). In one study, the optodes were placed on the forehead of the subject without describing the use of other reference points (Aranyi et al., 2016). Three studies provided no further information about additional registration of the optodes. For the eight studies using digitizer measurements, the channels were positioned with respect to the neurofeedback target region based on MNI coordinates using virtual spatial registration or general head location in combination with a functional localizer (Liu et al., 2016). The input for the online feature was either a single channel or the average signal of channels covering the region of interest. The number of channels used for extracting the neurofeedback information ranged from 1 to 14 for studies using amplitude changes and 9 to 32 channels for studies using multivariate statistics as a feature for the feedback (see Table 2).

In sum, the selection of channels of interest relied mainly on the EEG 10–20 system in combination with MNI coordinates and a priori knowledge about specific brain regions that are involved in the regulation task, which is a suitable procedure for selecting target channels. A combination with functional localizers seems to be a more reliable solution (if applicable) for amplitude-based studies since it additionally takes subject-specific variance into account by selecting individualized channels. Additionally, using digitization of optode locations and alignment with (preferably individual) MRI scans allows even more details to be obtained on optimum placement of the optodes.

2.3. Online Pre-processing and Artifact Control

The fNIRS signal comprises different sources of noise. Most problematic in the context of real-time analysis seems to be the physiological noise that overlaps partly with task frequency, such as low-frequency oscillations of blood pressure (Mayer waves; Kamran et al., 2016). The studies applied different online preprocessing methods to deconfound the feedback signal from these sources of noise. Five studies did not report the online preprocessing methods they used and are thus not further considered in this review with respect to their preprocessing (Lee et al., 2015; Narita, 2015; Barth et al., 2016; Kinoshita et al., 2016; Kober et al., 2018). The majority of the studies which reported their online pre-processing steps applied different kinds of high- and low-pass filters (10 of 17; Table 2). Some studies did not use a high-pass filter (e.g., Aranyi et al., 2016; Kimmig et al., 2018; Li et al., 2019). For low-pass filtering, mainly finite impulse response (FIR) filters were used, most commonly a moving-average filter (window ranging from 2 to 5 s). Four studies (Marx et al., 2015; Hudak et al., 2017, 2018; Kimmig et al., 2018) used a common average reference (CAR) for data preprocessing, i.e., subtracting the average of all channels from the feedback channel, three of these in combination with additional filtering. Four studies used one or multiple reference channels (Kober et al., 2014, 2015; Aranyi et al., 2016; Liu et al., 2016). However, considering the lack of relevant information in other studies, it may be the case that some of these studies used additional filters but did not report them. Most of the studies did not use any explicit artifact control on top of the filtering methods (7 of 17). The studies that used artifact control to some degree most frequently employed either CAR or reference channels (8 out of 11) and one study (Kober et al., 2015) also included electromyography (EMG) measures for post-hoc artifact control. Only one of these eight studies used short-distance channels to control for artifacts (in combination with EMG measures, Fujimoto et al., 2017). Two studies used either only EMG (Mihara et al., 2013) or the respiration rate (Kinoshita et al., 2016) as a reference for post-hoc artifact control, which was implemented using, for example, visual inspection of the EMG signal or differences in the respiration rate. Aranyi et al. (2016) used a sliding-window motion artifact rejection (SMAR) procedure, which rejected motion-affected periods in the fNIRS signal. Other motion correction methods suitable for real-time applications, e.g., Cui et al. (2010), were not applied.

Surprisingly, only three out of the nine studies that targeted motor regions used EMG to control for subtle movements (Mihara et al., 2013; Kober et al., 2015; Fujimoto et al., 2017), which can confound the feedback signal, and details of the EMG analysis are rarely reported. Other studies did not control for this confound or only visually inspected movements of the participants (Mihara et al., 2012). It is important that future studies targeting motor regions control for this confound. To date, studies have only looked at motion artifacts post-hoc. Future studies could establish methods to control for EMG signals online, e.g., stop presenting feedback signal when movements occur or include the EMG signal as a nuisance regressor when using a general linear model (GLM) approach to calculate the feedback signal. Some studies did not apply high-pass filtering. It could be argued that if the feedback signal is compared to a preceding baseline in a short time frame, low-frequency drifts can be neglected, which would render high-pass filtering unnecessary. However, this remains to be confirmed by future research.

In general, the field would benefit from implementing more sophisticated artifact-control methods to account for potential confounding signals (Caldwell et al., 2016; Tachtsidis and Scholkmann, 2016; Pfeifer et al., 2018). Short-distance channels in combination with GLM seem to be the most efficient tool to correct for extracerebral physiological signal components (Brigadoi and Cooper, 2015; Tachtsidis and Scholkmann, 2016; von Lühmann et al., 2020). As already stated, only Fujimoto et al. (2017) used this technique, which may be because most of the fNIRS systems are not equipped with the appropriate hardware (Klein and Kranczioch, 2019). If this is the case, a potential alternative is the global component removal approach as introduced by Zhang et al. (2016). This technique seems to be promising to reduce global physiological signals from the fNIRS data and can be used for online artifact control as recently pointed out by Klein and Kranczioch (2019) specifically with respect to single-trial data. FNIRS-neurofeedback studies have not yet applied this method but have rather used CAR or other referencing to correct for evoked systemic cerebral and extracerebral components. However, referencing should be applied with care, as reference channels have to be independent of the target region and participants may modulate the feedback signal by regulating reference channels instead of feedback channels (Hudak et al., 2018). Therefore, the global component-removal approach could be a viable alternative. However, if none of those methods is available during the experiment it should be checked post-hoc that these global signals did not drive the neurofeedback signal change.

2.4. Online Feature—Chromophores Used

For the online feature, either the amplitude or a derivative of the HbO or HbR was used, or a classifier was trained to discriminate specific states. Most studies (20 of 22) used a type of amplitude change. In examining the studies that used the amplitude, we found three studies (Mihara et al., 2012, 2013; Fujimoto et al., 2017) which used the maximum t-value of the selected channels. All other studies used the HbO or HbR amplitude as a direct source for the feedback, scaled to a certain level, and referenced to a specific period before the feedback. For the studies using a classifier, a linear discriminant analysis (LDA) classifier was trained to discriminate two classes (e.g., neutral or positive affect; Weyand et al., 2015; Trambaiolli et al., 2018).

Even though there are multiple options for the source of neurofeedback information using fNIRS, most of the studies used HbO. Only two of the 22 studies used both chromophores and only two used HbR and HbO for different study groups (Kober et al., 2015, 2018). It is important to note that two of the four studies that used both chromophores employed a classifier approach (Trambaiolli et al., 2018), and one also included tHb (Weyand et al., 2015). All other studies used the direct amplitude of HbO/HbR or a derivative of it. Kober et al. (2018) showed that people can regulate both chromophores with fNIRS-neurofeedback, but depending on the regulation strategy (in this case motor imagery to alter brain activation) regulation ability may be restricted to the natural course of the HbO and HbR signal changes related to this strategy.

The best-suited chromophore for neurofeedback (and other BCI) applications is still a matter of debate and has not been intensively investigated. Most studies use HbO since it displays larger amplitudes than HbR (Stangl et al., 2013; Sato et al., 2016). On the other hand, Kirilina et al. (2012) reported HbR to be less sensitive to artifacts. However, a recent study (Klein and Kranczioch, 2019) demonstrated that HbR is also affected by a global signal component. The contrast-to-noise ratio seems to be comparable for HbO and HbR across different tasks (Cui et al., 2011), but according to Naseer and Hong (2015) HbO signals were more discriminative for BCI applications than those of HbR signals.

In sum, although less frequently used, current evidence does not imply that HbR or tHb are less suitable for fNIRS-neurofeedback. While all three options (HbO, HbR and tHb) seem to be suitable, future research is still needed to ascertain whether one option outperforms the others in the context of neurofeedback applications.

2.5. Calculation of Feedback Information

The calculation of the feedback signal greatly depends on the type of display used for the presentation (see section 1.4.2). Generally, the amplitude of the HbO signal during the feedback/task block was used and either compared to a preceding baseline (e.g., Hudak et al., 2017, 2018), the fNIRS-system baseline (Barth et al., 2016), or a baseline of the GLM (e.g., Fujimoto et al., 2017). The signal of interest can additionally be compared to a different channel not covering the region of interest (Liu et al., 2016) or asymmetry scores can be calculated, e.g., difference between right- and left-hemispheric channels (Aranyi et al., 2016). For the two studies using a multivariate approach, the feedback was based on the output of the classifier identifying neutral or positive affect (Weyand et al., 2015; Trambaiolli et al., 2018). Two studies (Narita, 2015; Kober et al., 2018) did not report the methods used for feedback calculation. Using the system baseline instead of a preceding baseline before each trial seems risky, since low-frequency drifts may confound the signal if the applied preprocessing methods do not capture them properly.

Another important aspect is the selection of feedback thresholds, i.e., defining the amount of signal change necessary for change and setting a minimum and maximum of the presented feedback. For example, thresholds were defined based on signal variation during the preceding control condition (Aranyi et al., 2016; Kimmig et al., 2018) on the basis of t-values, which was also used for feedback (Fujimoto et al., 2017), or based on a certain HbO change (Kinoshita et al., 2016). Li et al. (2019) calibrated thresholds based on a pre-experiment conducted in an independent sample. However, some studies did not transparently report how the threshold used for feedback was defined [see also section 3.2].

2.6. Conclusion—Online Signal-Processing Methods and Hardware

While the studies reviewed applied a considerable diversity of online signal-processing methods, some similarities across the studies were also evident, e.g., regarding online-feature and chromophore selection. Unfortunately, crucial information regarding online signal-processing procedures was often missing. Since there are no established standards for online or offline processing methods (Kamran et al., 2016; Pinti et al., 2018b), future studies are encouraged to explore different methods and provide sufficient information so that other studies can easily replicate successful methods.

Assuring signal quality is crucial for neurofeedback applications, particularly for fNIRS-neurofeedback, which suffers from strong extracranial artifacts. A careful selection of online signal-processing methods is necessary to avoid the presentation of invalid feedback information. Further developments and more systematic research on fNIRS online signal-processing methods are definitely needed to overcome this specific shortcoming of the fNIRS technology.

3. QUALITY OF PUBLISHED STUDIES

In this section, we assess and discuss the quality of published studies including (1) features of experimental designs and methodological quality according to the JBI ratings, (2) design and reporting quality according to the CRED-nf checklist, and (3) analysis of statistical power/sensitivity as an indicator of reliability of the reported findings.

3.1. Quality of Experimental Designs

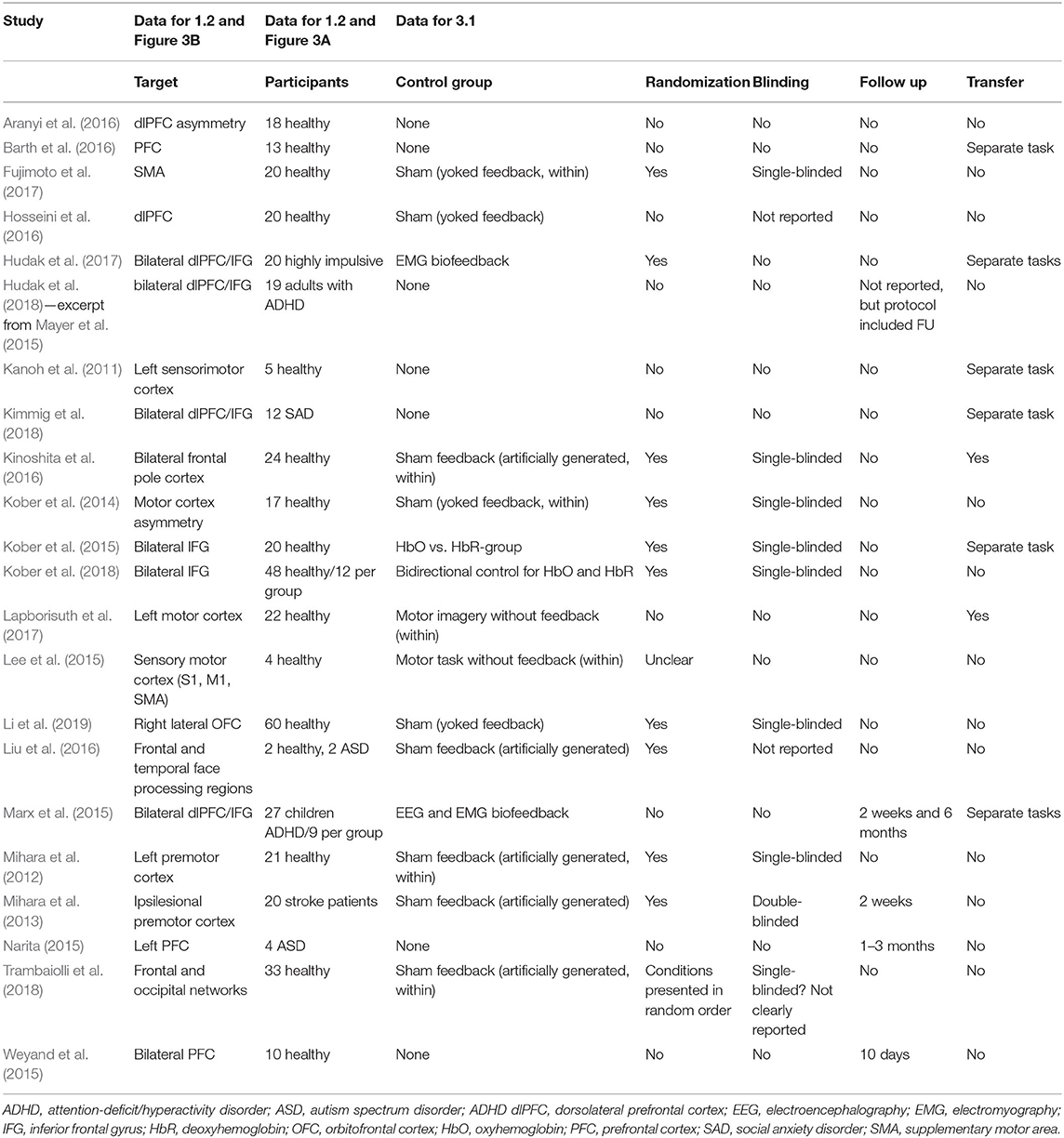

Table 3 shows important features of the experimental designs of the studies.

3.1.1. Control Conditions

Depending on the specific research aim, neurofeedback studies can make use of several different control conditions. Control conditions may include, treatment-as-usual, bidirectional-regulation control, feedback of an alternative brain signal, sham feedback, and mental-rehearsal control, and can be applied in a within- or between-subject design (see Sorger et al., 2019). Ideally, multiple control conditions are applied in order to disentangle neurofeedback-specific from unspecific processes. In this regard, Lubianiker et al. (2019) recently proposed an extension of established control conditions, in which participants of the control group are randomly assigned to a subset of different neural control targets (randomized ROI control condition). In this way, specific effects related to the control targets and neurofeedback-unspecific processes that likely differ for different neurofeedback targets may average out across all subjects of this control group. For extensive discussions on different control conditions in neurofeedback research, see Lubianiker et al. (2019) and Sorger et al. (2019).

Seven of the studies did not use any control condition. Nine studies used a sham feedback based on either artificially created signals (five studies) or based on a brain signal of another participant (yoked feedback, four studies). Two studies compared the effects with other forms of biofeedback (EEG- or EMG-based). One study compared the effects of motor-imagery neurofeedback with mental rehearsal (motor imagery only) and another compared neurofeedback during a motor task with motor task only in a within-subject design. Also, the effects of neurofeedback based on HbO and HbR were compared in a between-subject design without an additional sham-feedback condition (Kober et al., 2015) and in a bidirectional-control approach, investigating four different groups (Kober et al., 2018).

3.1.2. Randomization and Blinding

Of the fifteen studies with a control group, eleven studies randomized assignment to groups or order of conditions. Seven studies blinded participants to conditions and only one applied double-blinding. However, in some situations blinding is not possible, e.g., when comparing neurofeedback to another treatment. These studies attempted to reduce bias by not informing participants about any other treatment than that which they received (Marx et al., 2015; Hudak et al., 2017), which may at least help to keep levels of expectation and motivation equal across groups. Assessments of motivation, expectation, or other unspecific factors can be included in future studies to check this assumption.

3.1.3. Assessment of Transfer

To assess a transfer effect, four studies made use of transfer trials or no-feedback conditions, where participants received the same instructions as in the neurofeedback task, but without receiving any neurofeedback information. As described in section 1.4, some studies used a very liberal definition of the term transfer (if at all a very near transfer; see Barnett and Ceci, 2002). Five studies (Mihara et al., 2012; Kober et al., 2015; Barth et al., 2016; Hudak et al., 2017; Kimmig et al., 2018) assessed transfer using other computerized tasks and investigated whether activation within the targeted brain region changes after neurofeedback training, and were able to demonstrate transfer beyond neurofeedback training (far transfer).

3.1.4. Follow-Up Measures

Only four of the studies investigated long-term effects of neurofeedback in a follow-up (10 days up to 6 months after neurofeedback training) in order to investigate whether participants were still able to regulate brain activity after a period without training (Weyand et al., 2015) or stability of observed behavioral effects (Marx et al., 2015). Evidence of delayed effects of neurofeedback emerging after the primary endpoint of a study has been reported. Particularly when investigating clinical populations, follow-up measures may boost statistical power and should be applied where possible (Rance et al., 2018; Van Doren et al., 2019).

3.1.5. Methodological Quality (JBI Critical Appraisal Tool)

To assess the methodological quality of the included studies, we used the checklist for quasi-experimental studies of the Joanna Briggs Institute (JBI) critical appraisal tools (Tufanaru et al., 2017). Two of the authors (SK and DM) independently rated studies according to the nine criteria of the checklist. These items include: clarity of cause and effect (temporal relationship between variables), similar participants; similar treatment in compared groups; existence of a control group/condition; multiple measurement points of the outcome; completion of follow-up; similar outcome measurements in compared groups; reliability of outcome measurements; appropriate statistical methods. Each study was allocated points based on the number of criteria fulfilled. Disagreements between the review authors were resolved by discussion. For further details about rating criteria, see Supplementary Material.

Table S1 shows the results of the ratings for each study. On average 5.55 (SD = 2.15) of 9 required quality criteria were rated “yes.” It should be noted that only four studies used appropriate statistical methods according to this rating. Most of the studies were rated as not using appropriate statistical methods because they did not justify their sampling plan or omitted labeling their study a pilot, feasibility or proof-of-concept study.

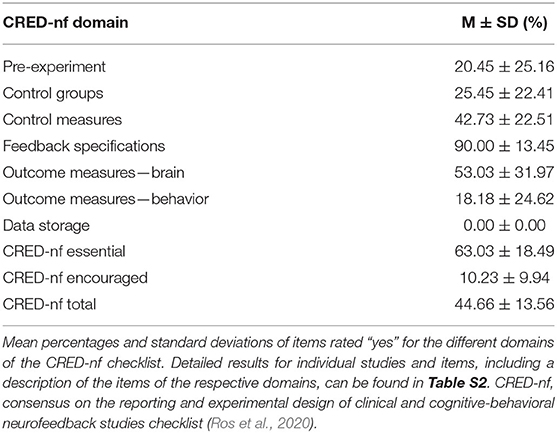

3.2. Experimental Design and Reporting Quality (CRED-nf Checklist)

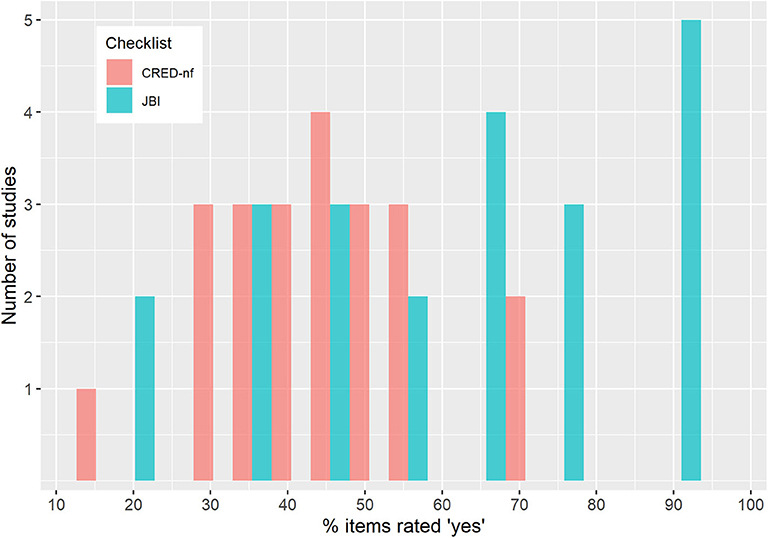

To assess experimental design and reporting quality we used the current version of the CRED-nf (Consensus on the reporting and experimental design of clinical and cognitive-behavioral neurofeedback studies) checklist (Ros et al., 2020). The CRED-nf checklist is designed to encourage best practice in terms of experimental designs and reporting of neurofeedback studies. It covers seven domains (Pre-experiment, Control groups, Control measures, Feedback specifications, Outcome measures brain, Outcome measures behavior, and Data storage), including 23 checklist items, fifteen of which are considered essential, and eight encouraged. In contrast to the JBI checklist, the CRED-nf checklist does not include subjective ratings. Instead, it assesses whether a study reports contents required by a respective item, e.g., “Report the feedback modality and content.” One of the authors (SK) filled in a checklist for each study with page numbers identifying where each point was addressed. The number of addressed items of both categories essential and encouraged are reported for each study. For further details see Supplementary Material.

Table 4 and Table S2 show detailed results of the CRED-nf checklist, including a short description of individual items of the respective CRED-nf domains. On average 63.03% (~9 of 15) of the essential items and 10.23% (~1 of 8) of encouraged items were reported. It should be noted that this best-practice checklist was published only very recently after all the included studies had been published. Hence, the authors of the included studies as well as ongoing studies could not make use of this resource in designing their experiments and publishing their results.

The domain Pre-experiment was not very well reported, on average only 20.45% of the items were included. Only one study reported a pre-registration of the experimental protocol (Hudak et al., 2018). However, this study deviated from the originally published registration (Mayer et al., 2015) and investigated a different research question including only a subsample of participants. None of the studies conducted a power analysis in order to justify sample size. Eight of the 22 studies were labeled as a pilot, feasibility, or proof-of-concept study, which renders a power analysis unnecessary.

On average only 25.45% of the items in the domain Control groups were reported, which is related to the fact that only a few clinical trials have yet been published and seven uncontrolled studies were included. Of the fifteen controlled studies, nine reported having used single- or double-blinding or at least discussed the fact that blinding was not possible (Marx et al., 2015; Hudak et al., 2017). Only one study reported having blinded raters of the outcomes or whether participants/experimenters remained blinded. Two of the clinical studies employed a standard-of-care intervention group as a benchmark for improvement (Mihara et al., 2013; Marx et al., 2015).

With respect to the domain Control measures, the studies reported on average 42.73% of the items. Six studies reported having used some measure of psychosocial or non-specific factors (e.g., motivation, expectation, effort). Since these measures are easy to implement and do not require much additional time, we recommend that future studies should make more use of this additional easy and low-cost method of controlling for non-specific effects. Almost a third of the studies did not report whether participants were provided with a strategy and only six studies reported strategies used by the participants. Even if no explicit strategies are provided, study design and general instructions about the experiment may prime the use of certain strategies. The use of mental strategies undoubtedly affects brain activity and may also induce behavioral effects on its own. Hence, better control and transparent and more detailed reporting of this factor is required, which may also contribute to solving outstanding issues of the utility of strategy instructions (see also section Neurofeedback Run Periods and Their Timing).

The domain Feedback specifications was well reported, on average 90% were included. All studies included feedback modality and content as well as the software and hardware used, and almost all studies reported the definition of the online-feature extraction. Sixteen of the studies also included at least some information about the reinforcement schedule, for example feedback-threshold criteria, but did not justify this in relation to the existing literature. Also, the amount of reward received by participants was rarely included. While most studies reported the essential contrast used for feedback, i.e., regulation vs. rest, only eight of the studies described both conditions of regulation and rest separately.

Studies reported on average 53.03% of the items related to Outcome measures—Brain. Most studies included neurofeedback regulation success based on the feedback signal. However, some studies did not report regulation success at all (Marx et al., 2015; Narita, 2015), while others only reported regulation success of representative participants or only based on significant channels and took surrounding channels that were not used for feedback calculation into account instead of reporting the average of all feedback channels (e.g., Mihara et al., 2012; Lapborisuth et al., 2017). This lack of transparency makes it difficult to conclude whether neurofeedback was at all effective on the brain level. Less than half of the studies plotted the feedback signal of within-session or between-session regulation blocks of the feedback signal, which would permit further insights into the dynamics of regulation performance over the course of the training period. Only seven of the studies statistically compared the experimental to the control condition or group. There were seven uncontrolled studies unable to make such a comparison. Hence, seven controlled studies did not undertake this comparison, but rather analyzed groups/conditions separately, which does not allow any conclusions to be drawn about group differences and makes it difficult to appraise the effectiveness of neurofeedback (Nieuwenhuis et al., 2011).

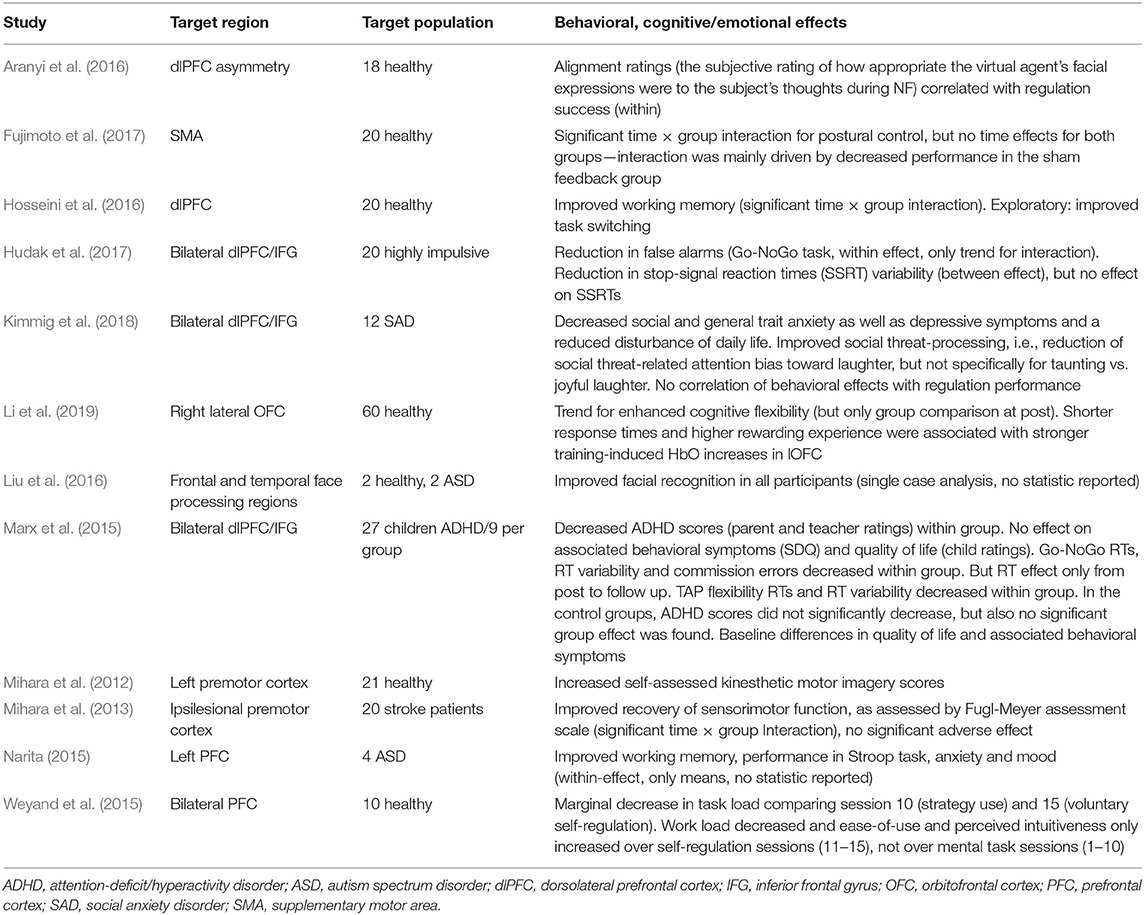

On average only 18.18% of items in the domain Outcome measures—Behavior were reported. However, this low rate can be explained by the fact that most studies were mainly interested in regulation performance, and behavioral variables did not represent the primary outcome or were not assessed at all. Of the 14 studies that actually assessed a behavioral variable, eight reported a correlation between regulation success and behavioral outcome. None of the studies included measures of clinical or behavioral significance, such as the minimal clinically important difference (MCID; Engel et al., 2018). However, there are only a few clinical studies in which such a measure is applicable.

None of the studies reported having uploaded materials, analysis scripts, code, or data to an open-access data repository.

3.2.1. CRED-nf Checklist—Conclusion

In sum, the reporting quality of published studies can be considered to be moderate to low in the light of current consensus guidelines. While some domains such as Feedback specifications were well reported, other such as Pre-experiment or Data Storage were not. The CRED-nf checklist provides guidelines for best practice with regard to experimental design and reporting of neurofeedback studies. The field of fNIRS-neurofeedback is still very young and some of the standards set by the CRED-nf checklist do not yet apply at this early stage. For example, double-blinding or a measure of clinical or behavioral significance should be included at the stage of randomized controlled trials, but not necessarily in early proof-of-concept studies that primarily aim to test neural regulation performance. However, we note that we applied more lenient criteria for some of the items, which resulted in higher ratings than if we had applied the original items. This should be considered in future comparisons applying the CRED-nf checklist. As stated above, this best-practice checklist was not available when the studies included in this review were published. Hence, a lack of reporting for some items should be expected at this early stage. Nevertheless, the incomplete reporting identified in the domain Outcome measures—Brain gives reason for concern. Without this information, we cannot clearly identify whether participants learned to control the brain signal of interest. As is common across the published literature, findings that do not meet a threshold for statistical significance may remain underreported in the fNIRS-neurofeedback literature. Future studies could benefit from orienting toward these new guidelines, while also abiding by other guidelines for reporting clinical trials, such as CONSORT (Schulz et al., 2010). We encourage researchers to use the CRED-nf checklist as an orientation when designing new studies and suggest they make use of the CRED-nf web application (rtfin.org/CREDnf) when submitting results for publication. The web application helps authors to standardize the reporting of CRED-nf items, which will facilitate future systematic reviews.

3.3. Statistical Power/Sensitivity

To further assess study quality, we extracted information about the reported sample sizes and estimated statistical power/sensitivity of the included studies. Statistical power, the probability of detecting an effect size of a certain magnitude with a given sample size and accepted threshold for the probability of a false-positive finding, is an important indicator for the reliability of reported findings and will therefore be investigated here.

The majority of neuroscientific studies, including neuroimaging studies, feature relatively small sample sizes and hence remarkably low statistical power for detecting realistic (i.e., small to moderate) effect sizes (Button et al., 2013; Poldrack et al., 2017). Furthermore, small sample sizes imply higher variability around effect size estimates. In combination with publication bias (i.e., the tendency to publish mainly significant findings), reported effects thus tend to be overestimated (Algermissen and Mehler, 2018), rendering the scientific literature in psychology and neuroscience an unreliable basis for conducting power analyses for future studies (Szucs and Ioannidis, 2017; Allen and Mehler, 2019; Schäfer and Schwarz, 2019).

Computing statistical power requires knowledge of the effect size that is considered relevant and worth detecting, i.e., the smallest effect size of interest (SESOI). Post-hoc power analyses that are commonly not based on SESOIs represent mere transformations of the p-value. Such an approach cannot therefore provide any information about the a priori power of the included fNIRS studies. Since SESOIs are highly experiment-specific (Lakens et al., 2018) and unknown to us, it proved difficult to set an SESOI for (1) regulation performance and (2) behavioral outcomes for our purpose. First, we note that neurofeedback experiments represent complex interventions (Sitaram et al., 2017) during which various factors, or their interaction, may determine the outcome (see also section 1.4). We also note that neurofeedback studies vary greatly in how they define and quantify successful regulation (Paret et al., 2019) and different definitions may reveal different effect sizes. Second, SESOIs for behavioral outcomes will depend on the specific paradigm (e.g., motor imagery or emotion regulation), outcome variable (e.g., reaction times or self-rated measures of emotion regulation), and study population (i.e., healthy participants or patient populations, and for patient groups the type of clinical population, e.g., stroke or depressed patients). Therefore, we did not set an SESOI to calculate the post-hoc statistical power of fNIRS-neurofeedback studies, but instead used Cohen's conventions, covering a range of effect sizes that may be comparable to potential SESOIs (d = 0.2, 0.5, and 0.8; Cohen, 1992).

Additionally, we assessed the statistical sensitivity, calculating the smallest effect size that individual studies were able to detect with certain probabilities (0.8 and 0.95), given their reported sample size. We conducted separate analyses for regulation performance and for a behavioral outcome, where applicable. Several studies employed a repeated-measure analysis of variance (ANOVA) but did not provide details of the correlation among repeated measures or violation of the sphericity assumption. For pragmatic reasons, we thus assumed for all studies that there was no violation of sphericity and a correlation of 0.8, which is considered a good general estimate for test-retest correlations in neuropsychological assessments (Calamia et al., 2013). To simplify analyses, we also used uncorrected p-values and ANOVA/t-tests instead of non-parametric tests, if used by a specific study. Additionally, instead of mixed models or ANOVAs with more than one within factor, two-factorial mixed ANOVAs were used for the sensitivity analysis. Overall, these measures should lead to higher power estimation, and we can assume that we overestimated the statistical power of the studies. Analyses were carried out using G*Power (Faul et al., 2007).

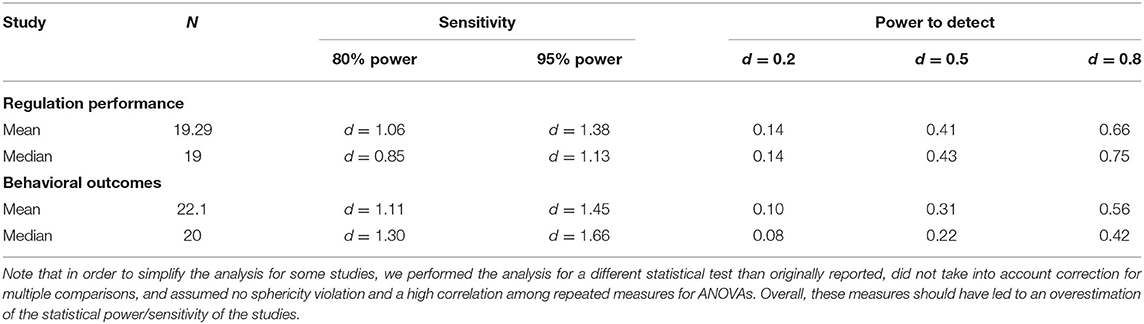

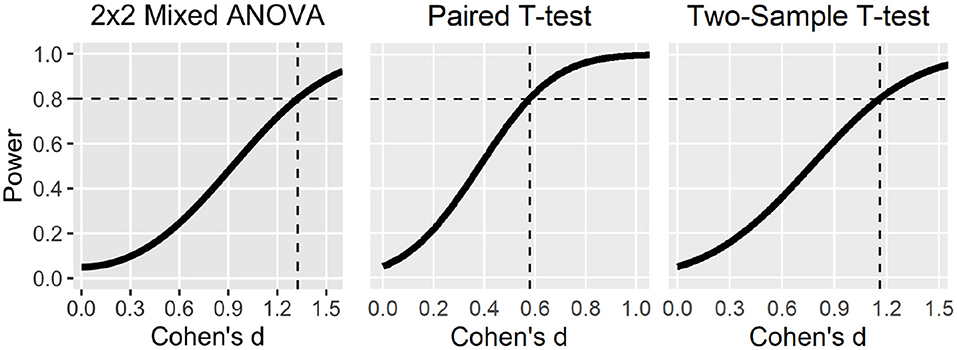

Overall, sample sizes varied across studies from our minimum inclusion size, i.e., four (two per cell), up to 60 (30 per cell). One single group study included 33 participants. The median sample size of the studies was 20 (12 per cell). We calculated the median effect size that was detectable with 80 and 95% power. For the outcome regulation performance, we found a value of d = 0.85 and d = 1.13, respectively. For behavioral outcomes, the value was d = 1.28 and d = 1.63, respectively. We further found that the median power to detect a small effect of d = 0.2 was low (0.14 and 0.08). Our results showed that the power was even insufficient to reliably detect large behavioral effects (0.43). Studies were only powered to reliably detect large effects in regulation performance (0.75; see Table 5 and Tables S3, S4 for more details).

Some studies that included control groups lacked a direct statistical comparison of regulation performance between the experimental and the control condition. Instead, these studies only reported the main effects within conditions and compared statistical significance between conditions instead of effect sizes. This statistical approach is erroneous for group comparisons (Nieuwenhuis et al., 2011) and makes it difficult to assess whether the experimental group outperformed the control group. If we assume that these studies found no statistically significant group effect, we note that due to insufficient statistical power we cannot come to valid conclusions about potential group effects. To check this assumption, we conducted sensitivity analyses for the respective group/interaction effects, using a statistical test that was appropriate for the respective study design (see Supplementary Material). Sensitivity for detecting a certain group/interaction effect size was lower as compared to detecting a within-group main effect. It is reasonable to assume that interaction effects are smaller and, depending on the underlying assumptions, require four to sixteen times more participants to achieve similar a priori statistical power (Gelman, 2018). Therefore, studies were likely underpowered for reliably detecting group differences in neurofeedback effects, which are very likely smaller than within-group effects.

As noted earlier, it remains difficult, if not impossible, to generalize these findings across paradigms because the smallest relevant effect sizes (SESOIs) may depend on the choice of the neurofeedback target region, population, control conditions, and other characteristics of the design. However, specific behavioral effects of neurofeedback, as assessed in placebo and motivation level-controlled designs, are possibly rather small (see Supplementary Material). This assumption is at least partly supported for the EEG-neurofeedback literature where well-controlled studies depending on population and rating report no specific group effect (e.g., Schabus et al., 2017; Schönenberg et al., 2017) or medium to small and non-significant effect sizes (Strehl et al., 2017).

Altogether, our results suggest the median sample size of published fNIRS-neurofeedback studies is relatively small at N = 20, which is comparable to sample sizes reported more broadly for neuroimaging (Poldrack et al., 2017). It is thus not surprising that most studies lack sufficient statistical sensitivity to detect (realistic) SESOIs. In contrast, most studies were biased toward finding only relatively large effects (Figure 4). We further note that the analyses we present are based on rather liberal statistical assumptions (e.g., no application of multiple-testing correction) and thus likely still overestimate the true statistical sensitivity and power in the field.

Figure 4. Statistical power curves to detect different effect sizes with 20 participants (median sample size) for different statistical tests. Dashed lines indicate smallest effect sizes detectable at 80% power. Note that the power curve for the 2 × 2 mixed ANOVA was based on liberal statistical assumptions (e.g., high correlation among repeated measures, sphericity, and uncorrected p-value of 0.05).

Particularly with regard to specific behavioral effects, studies still lack statistical sensitivity and thus allow only very limited conclusions to be drawn about the feasibility of the paradigms and no conclusions about the specificity of fNIRS-neurofeedback effects. The field mainly consists of small feasibility, pilot, or proof-of-concept studies, which do not have to fulfill the same requirements with regard to sample size since their purpose is to explore the potential of fNIRS-neurofeedback in self-regulating a target brain signal and modulating behavior. However, as described above, most studies are not sufficiently transparent about this and hence risk overstating their findings. We thus reiterate previous concerns and recommend that authors appropriately label pilot, feasibility, and proof-of-concept studies, ideally in their manuscript title. Furthermore, we recommend a clear distinction between planned and exploratory analyses.

3.4. Conclusion—Quality of Published Studies

Altogether, the design and reporting quality of the studies can be considered to be moderate. There were a few studies of lower quality, but also some high-quality studies including sham-control conditions, randomization, and blinding (Figure 5). Sample sizes of the studies were small and thus their statistical power to detect realistic effects was low. While similar results have been reported for other fields within the neurosciences (Nieuwenhuis et al., 2011; Button et al., 2013; Szucs and Ioannidis, 2017), fNIRS-neurofeedback is still in its infancy and we thus still have the chance to tackle these issues early on and lay a more robust foundation to build upon in future work. As the field moves on, well-designed, sufficiently powered confirmatory studies are necessary to reach valid conclusions about the effectiveness of fNIRS-neurofeedback.

4. Neural Effects of Neurofeedback

In this section, we assess and discuss the effectiveness of fNIRS-neurofeedback for regulating and inducing pre-post changes in brain activity.

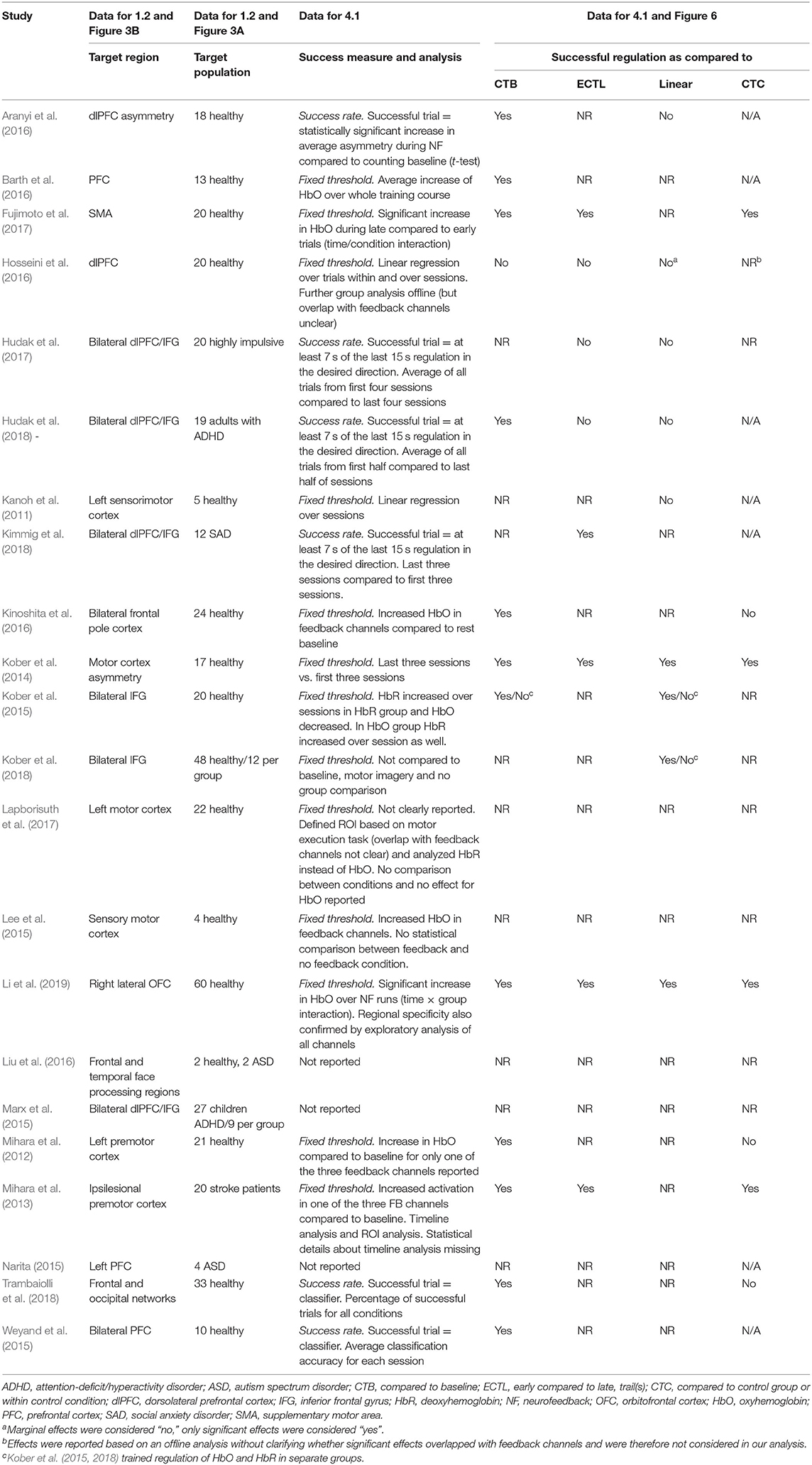

4.1. Neurofeedback Regulation Success

Before considering training effects in terms of changes in behavior or brain activity after neurofeedback, an essential question is whether neurofeedback training was successful, meaning was it effective for regulating the target brain signal. Unfortunately, no standard has been established so far, and there are a number of different ways to define and quantify neurofeedback regulation success. Paret et al. (2019) provide a taxonomy and discuss this issue in depth. In this section, we first discuss different success measures as applied by the studies and then systematically assess and critically discuss the effectiveness of fNIRS-neurofeedback for regulating a target brain signal, as reported by the studies (see Table 6).

4.1.1. Neurofeedback Regulation Success—Measures

While most fNIRS-neurofeedback studies define regulation success based on the magnitude of a signal change, some also define it on the basis of time, i.e., on the amount of time in a trial during which the feedback signal exceeds a defined threshold (Hudak et al., 2017, 2018; Kimmig et al., 2018). Most studies apply a fixed threshold approach and compare the regulation statistically to a baseline condition over a session, while some also report success rates, i.e., a ratio of successful (as previously defined) trial per session (Weyand et al., 2015; Aranyi et al., 2016; Trambaiolli et al., 2018) or for a group of sessions (Hudak et al., 2017, 2018; Kimmig et al., 2018). None of the studies calculated personal effect sizes, i.e., divided average signal change by the standard deviation of a session or run to account for individual noise (cf. Paret et al., 2019). Finally, to judge the regulation success of neurofeedback training, effects over time should be assessed for potential learning curves, rather than merely assessing average signal change across all trials compared to the baseline. For instance, comparisons can be made between early [first session(s)/trial(s)] and late parts of the training period [last sessions(s)/trial(s)] (Kober et al., 2014; Fujimoto et al., 2017; Kimmig et al., 2018). Alternatively, assuming linear improvement, regulation success can be assessed over all trials or sessions via linear regression (Kober et al., 2018; Li et al., 2019). Ultimately, success measures should be compared with a control group or within control conditions. This is important since also sham feedback induces activations, particularly in frontal brain regions (Ninaus et al., 2013), which is the target of the majority of fNIRS-neurofeedback studies. Certain studies compared some of the first sessions with some of the last sessions (Kober et al., 2014; Kimmig et al., 2018), which seems arbitrary. If certain sessions or trials are selected for comparison, this should be theoretically or empirically justified. For example Fujimoto et al. (2017) compared the first six trials with the last ten trials, because according to the existing literature participants should reach a plateau after the first six trials during a motor-learning task (Hatakenaka et al., 2007).

It should be noted that, with a few exceptions in fMRI-neurofeedback (e.g., Goldway et al., 2019), variability measures have been neglected in most neurofeedback success definitions. According to learning theories, the probability of a certain behavior (brain activity) should increase after learning (Skinner, 1963), but variability (noise) should also be reduced. Some theorists have compared neurofeedback learning to motor skill learning (Sitaram et al., 2017). From this perspective, it is assumed that in the course of learning a new skill variability is reduced to optimize performance (He et al., 2016).

4.1.2. Neurofeedback Regulation Success—Results

We assessed whether the studies reported an effect for each of the aforementioned comparisons (see Table 6 and Figure 6). Overall, results show that fNIRS-neurofeedback can be used to regulate brain activity, with some of the studies also demonstrating a greater increase over time as compared to a control group (Mihara et al., 2013; Kober et al., 2014; Fujimoto et al., 2017; Li et al., 2019). Particularly these studies were all of higher quality according to our ratings, at least single- or double-blinded and applied a sham-feedback approach (see section 3.1). The other studies reported mixed results, lacked a control group, or did not report results sufficiently well, making it difficult to draw definite conclusions. For example, some studies did not report regulation success based on the feedback signal, but rather results from an offline analysis of all channels, and did not clarify whether significant effects overlap with channels used for feedback (e.g., Mihara et al., 2012; Lapborisuth et al., 2017). This impedes any judgement about the success of a neurofeedback protocol. We encourage authors to report regulation success based on all feedback channels. If more than one channel is used, the average of the channels or an ROI analysis only based on feedback channels can be reported. Furthermore, some of the studies used time-based binary success criteria and did not additionally report brain activation during regulation. These criteria require the definition of a threshold to be surpassed and neglect information about the signal amplitude. Some studies (Hudak et al., 2017, 2018; Kimmig et al., 2018) used a threshold of zero, i.e., spent at least half of the time of the last 15 s of a trial in the desired direction. This threshold is very liberal and it can be expected that random fluctuation (only noise) of a signal should be half of the time above and half of the time below zero. Hence, high success rates can be expected by chance and are not informative. When using time-based criteria, the amplitudes of the feedback signal should be reported as well.

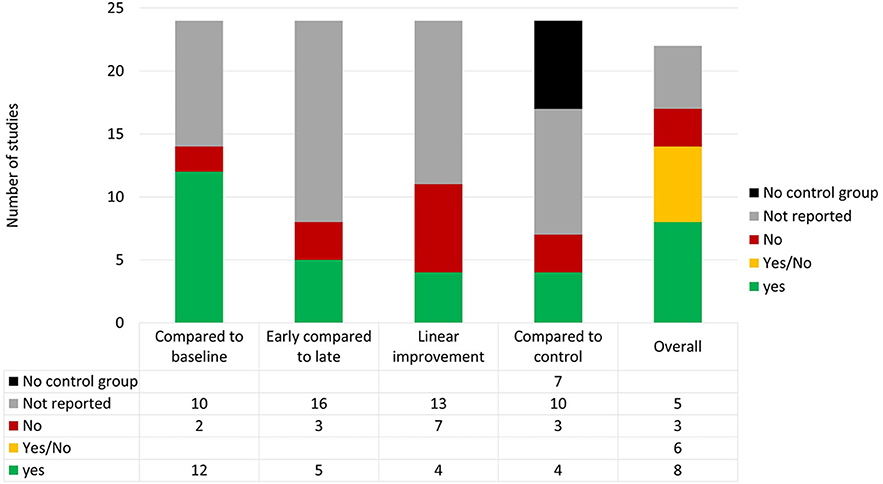

Figure 6. Neurofeedback regulation success. Overall regulation was classified “Yes” if a significant effect for one or more of the four measures were reported and “No” if no significant effect was reported. If both were reported, the overall regulation was classified “Yes/No.” Note that Kober et al. (2015, 2018) trained the regulation of HbO and HbR in different groups and found differential results for the groups. Therefore, the two studies were counted twice for the four measures. In overall regulation, the two studies were only counted once and were classified as “Yes/No”.

4.2. Neural Changes Over Time and Neural Mechanisms