
ORIGINAL RESEARCH article

Front. Neurosci., 27 April 2020

Sec. Neural Technology

Volume 14 - 2020 | https://doi.org/10.3389/fnins.2020.00348

This article is part of the Research Topic: Closed-loop Interfaces for Neuroelectronic Devices and Assistive Robots.

Raphael M. Mayer1*, Ricardo Garcia-Rosas1, Alireza Mohammadi1, Ying Tan1, Gursel Alici2,3, Peter Choong3,4, Denny Oetomo1,3

Appropriate sensory feedback is important for the success of an object grasping and manipulation task. In many scenarios, the need arises for multiple sets of feedback information to be conveyed to a prosthetic hand user simultaneously. The multiple sets of information may either (1) directly contribute to the performance of the grasping or object manipulation task, such as the feedback of the grasping force, or (2) simply form additional independent set(s) of information. In this paper, the efficacy of simultaneously conveying two independent sets of sensor information (the grasp force and a secondary set of information) through a single channel of feedback stimulation (vibrotactile via bone conduction) to the human user in a prosthetic application is investigated. The performance of the grasping task does not depend on the second set of information in this study. Subject performance in two tasks, regulating the grasp force and identifying the secondary information, was evaluated when subjects were provided with either the one corresponding set of information or both sets of feedback information. Visual feedback is involved in the training stage. The proposed approach is validated in human-subject experiments using a vibrotactile transducer worn on the elbow bony landmark (to realize a non-invasive bone conduction interface), carried out in a virtual reality environment to perform a closed-loop object grasping task. The experimental results show that the performance of the human subjects on either task, whilst perceiving two sets of sensory information, is not inferior to that when receiving only one set of corresponding sensory information, demonstrating the potential of conveying a second set of information through a bone conduction interface in an upper limb prosthetic task.

It is well-established that the performance of grasping and object manipulation tasks relies heavily on appropriate feedback. This has been established for human grasping with or without prostheses (Childress, 1980; Augurelle et al., 2003) and for robotic grasping algorithms (Dahiya et al., 2009; Shaw-Cortez et al., 2018, 2019). Within prosthetic applications, such feedback allows effective closed-loop control of the prosthesis by the human user (Saunders and Vijayakumar, 2011; Antfolk et al., 2013; Markovic et al., 2018; Stephens-Fripp et al., 2018). To date, prosthetic hand users rely on visual and incidental feedback for the closed-loop control of hand prostheses (Markovic et al., 2018), as explicit feedback mechanisms are not prevalent in commercial prostheses (Cordella et al., 2016). Incidental feedback can be obtained from vibrations transmitted through the socket (Svensson et al., 2017), proprioceptive information from the muscles (Antfolk et al., 2013), sound from the motor (Markovic et al., 2018), or the reaction forces transmitted by the actuating cable in body-powered prostheses (Shehata et al., 2018). Visual feedback has been the baseline feedback mechanism in prosthetic grasping exercises, as it is the only feedback naturally available with all commercial hand prostheses (Saunders and Vijayakumar, 2011; Ninu et al., 2014).

It is also established that a combination of feedback information is required, and required simultaneously, for effective grasping and manipulation to be realized. In Westling and Johansson (1984) and Augurelle et al. (2003), it was demonstrated that the maintenance of grip force as a function of the measured load in a vertical lifting scenario is accompanied by a slip detection function. It was argued that when moving a hand-held object, accidental slips rarely occur because “the grip force exceeds the minimal force required” by a safety margin factor. Exceedingly high values of grip force are avoided by a mechanism that measures the frictional condition using skin mechanoreceptors (Westling and Johansson, 1984). This argues for the use of two sets of information during the operation, namely the feedback of the grip force as well as the information on object slippage and friction, even if it is only to update an internal feed-forward model (Johansson and Westling, 1987). Other examples include an exercise in “sense and explore,” where proprioceptive information is required along with the tactile information relevant to the object/environment being explored. Information on temperature, in addition to proprioceptive and tactile information, could also be needed in specific applications to indicate dangerous temperatures, for example when drinking a hot beverage using a prosthetic hand: the user may not feel the temperature of the cup until it reaches the lips and causes a burn (Lederman and Klatzky, 1987).

Investigations in the prosthetic literature have so far focused on conveying each independent set of sensor information to the human user through a single transducer. The feedback is either continuous (Chaubey et al., 2014) or event driven (Clemente et al., 2017), and multiple transducers have been deployed via high density electrotactile arrays (Franceschi et al., 2017). The number of feedback transducers that can be deployed on the human is limited by physiology and by the available space. Physiologically, the minimum spatial resolution is determined by the two-point discrimination that can be discerned on the skin. The minimum spatial resolution is 40 mm for mechanotactile and vibrotactile feedback (on the forearm) and 9 mm for electrotactile feedback (Svensson et al., 2017). An improved result was shown in D'Alonzo et al. (2014) by colocating the vibrotactile and electrotactile transducers on the surface of the skin. Spatially, the number of transducers that can be fitted in a transhumeral or transradial socket is limited by the available space within the socket and the contact surface with the residual limb. The limitation of the available stimulation points is even more compelling when using bone conduction for vibrotactile sensation. For osseointegrated implants there is only one rigid abutment point (Clemente et al., 2017; Li and Brånemark, 2017), and for non-invasive bone conduction there are 2–3 usable bony landmarks near the elbow (Mayer et al., 2019). In all these experiments, each set of sensory information is still conveyed by one dedicated feedback channel.

A few studies have recognized the need for a more efficient use of the feedback channels and proposed conveying information from multiple sensors via a single feedback channel. Multiple sets of information have been transmitted in a sequential manner (Ninu et al., 2014), in an event-triggered manner (Clemente et al., 2016), or as only a discrete combination of the information from two sensors (Choi et al., 2016, 2017). Time-sequential (Ninu et al., 2014) or event-triggered feedback (Clemente et al., 2016) can be used for tasks or events where the need for each set of sensing information can be decoupled over the subsequent events, and therefore does not address the need described above for simultaneous feedback information.

Of the many facets of the challenges in closing the prosthetic control loop through the provision of effective feedback, we seek in this paper to improve the information density that can be conveyed through a single stimulation transducer to deliver multiple sets of feedback information simultaneously to the prosthetic user. Specifically, the amplitude and the frequency of the stimulus signal are used to convey different information. This concept was observed in Dosen et al. (2016), where a vibrotactile transducer was designed to produce independent control of the amplitude and the frequency of the stimulation signal. It was reported that a psychophysical experiment on four healthy subjects found 400 stimulation settings (a combination of amplitude and frequency of the stimulus signal—each termed a “vixel”) distinguishable by the subjects.

In this paper, the efficacy of this concept is further investigated in a closed-loop operation of a hand prosthesis in virtual reality. One set of information, the grasp force, is used in the closed-loop application, providing sensory feedback on the grasp force regulated by the motor input via surface electromyography (sEMG). The second set provides additional secondary information. Note that a closed-loop operation differs from a psychometric evaluation, as the sensory excitation is a function of the voluntary user effort in the given task. This study is carried out within the context of a non-invasive bone conduction interface, where the need for higher information bandwidth is compelling due to the spatial limitations in the placement of feedback transducers on the human user. It should be noted that the purpose of the study in this paper is to establish the ability for a second piece of information to be perceived. Once this is established, the second set of information may be used to (1) perceive an independent set of information, such as the temperature of the object grasped, or (2) improve the performance of the primary task with additional information. In this paper, the second set of information is not expected to improve the performance of the primary task, which is the closed-loop object grasping task.

It was found that the human subjects were able to discern the two sets of information even when applied simultaneously. The baseline for comparison is the case where only one set of sensor information was directly conveyed as feedback to the human user. Comparing the proposed technique to the baseline, a comparable performance in regulating the grasp force of the prosthesis (accuracy and repeatability) and in correctly identifying the secondary information (low, medium, or high) was achieved.

We define the sensor information as y ∈ ℝ^N, where N is the number of independent sets of sensor information and the measurements can be continuous-time signals or discrete events. The feedback stimulation to the prosthetic user is defined as x ∈ ℝ^M, where M is the number of channels (transducers) employed to provide the feedback stimulation. The scenario being addressed in this paper is that where N > M. The relationship between the measurement y and the feedback stimulation x can be written as

$$x = \phi(y) \tag{1}$$

where ϕ : ℝ^N → ℝ^M.

Four major sensing modalities are generally present in the upper limbs: touch, proprioception, pain, and temperature. The touch modality is further made up of a combination of information: contact, normal and shear force/pressure, vibration, and texture (Antfolk et al., 2013). To achieve a robust execution of a grasping and object manipulation task, only a subset of these sensing modalities is used as feedback. Recent studies have further isolated the types of feedback modalities and information that are pertinent to effective object grasping and manipulation, such as grip force and skin-object friction force (de Freitas et al., 2009; Ninu et al., 2014). Furthermore, the literature has explicitly determined that such a combination of feedback information is required simultaneously for effective grasping and manipulation (Westling and Johansson, 1984; Augurelle et al., 2003). In the context of an upper limb prosthesis, it is possible to equip a prosthetic hand with a large number of sensors (Kim et al., 2014; Mohammadi et al., 2019) so that N > M. It should be noted that the N sets of independent information can be constructed out of any number of sensing modalities, such as force sensing, grasp velocity sensing, and tactile information, e.g., for object roughness. They may even contain estimated quantities that cannot be directly measured by sensors; for example, estimating object stiffness may require measurements of both contact force and displacement.

The state of the art of non-invasive feedback in prosthetic technology generally utilizes electrotactile (ET), vibrotactile (VT), and mechanotactile (MT) modalities, placed in contact with the skin as a way to deliver the sensation (Stephens-Fripp et al., 2019). More novel feedback mechanisms have also been explored, such as using augmented reality (Markovic et al., 2014). Of these modalities, ET and VT present the challenge that the perceived stimulation varies with the location of application. VT also presents the challenge that its perception is static-force dependent (i.e., it depends on how hard the VT transducer is pressed against the skin), while MT is often bulky, with high power consumption (Svensson et al., 2017; Stephens-Fripp et al., 2018).

It was shown, however, that VT applied over bony landmarks does not suffer from the static force dependency (Mayer et al., 2018), is compact and does not suffer from high power consumption (Mayer et al., 2019). It does, however, restrict the locations that this technique can be applied to on the upper limb, as there are relatively fewer bony landmarks on the upper limb than skin surface. A psychophysical evaluation in Mayer et al. (2019) demonstrates comparable results in non-invasive vibrotactile feedback on the bone to the invasive (osseointegrated) study in Clemente et al. (2017). It is highlighted that personalization is required for the perception threshold in order to be used as an interface. A higher sensitivity has been reported for frequencies in the range of 100–200 Hz where lower stimulation forces are required. This allows the use of more compact transducers with lower power consumption (Mayer et al., 2019).

In order to demonstrate the concept of conveying multi-sensor information via fewer feedback channels, this paper uses one feedback channel (M = 1) to convey two sets of independent sensor information (N = 2), namely the grasp force fg and a secondary information s, which could be, e.g., skin-object friction or temperature. That is,

$$x = \phi(f_g, s) \tag{2}$$

where fg represents a continuous-time signal of the grasp force and s is a discrete class of the secondary information. The primary information, the grasp force, is used as feedback for the task of regulating the object grasp force. The secondary information does not directly contribute to the task of regulating the grasp force.

VT via bone conduction is selected as the feedback stimulation, applied on the elbow bony landmark. The sinusoidal waveform applied as the vibrotactile stimulus is:

$$x(t) = a(t)\,\sin\big(2\pi f(t)\,t\big) \tag{3}$$

where the amplitude a(t) is modulated as a linear function of the continuous-time grasp force signal fg(t):

$$a(t) = k_a f_g(t) + a_0 \tag{4}$$

while the frequency f(t) is modulated as a linear function of the secondary information s(t):

$$f(t) = k_s s(t) + f_0 \tag{5}$$

where s(t) ∈ {S1, S2, S3} is a discrete set describing the secondary information at time t. The offsets a0 and f0 denote the minimum amplitude and frequency detectable by human bone conduction perception. The constants ka and ks are positive.
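The encoding described above, with the grasp force carried by the amplitude and the secondary class carried by the frequency of a single sinusoid, can be illustrated with a minimal Python sketch. This is not the authors' implementation. The three class frequencies are the ones used later in the classification task; the function names, the lookup table used in place of the linear frequency map, the example threshold value, and the choice of scaling the amplitude so that full grasp force maps to `a_max` are all assumptions made for illustration.

```python
import math

# Class-to-frequency lookup (Hz), taken from the classification task in the article.
# Using a table instead of f = k_s * s + f_0 is an illustrative simplification.
CLASS_FREQ_HZ = {"low": 100.0, "mid": 400.0, "high": 750.0}


def amplitude(fg, a0, a_max):
    """Linear amplitude map a = k_a * fg + a0.

    Assumption: k_a is chosen so that fg = 1 maps to a_max.
    fg is the normalized grasp force and is clipped to [0, 1].
    """
    fg = min(max(fg, 0.0), 1.0)
    k_a = a_max - a0
    return k_a * fg + a0


def stimulus_sample(t, fg, s_class, a0, a_max=0.5):
    """One sample of the stimulus a * sin(2*pi*f*t) at time t (seconds).

    a0 is the subject-specific perception threshold (volts);
    a_max = 0.5 V is an assumed upper bound, not a confirmed setting.
    """
    f = CLASS_FREQ_HZ[s_class]
    return amplitude(fg, a0, a_max) * math.sin(2.0 * math.pi * f * t)


if __name__ == "__main__":
    # Hypothetical threshold of 0.05 V, mid-range grasp force, "mid" class.
    print(stimulus_sample(t=0.001, fg=0.5, s_class="mid", a0=0.05))
```

Because the two quantities occupy different signal parameters, either one can change without altering the other, which is the property the experiment sets out to test perceptually.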

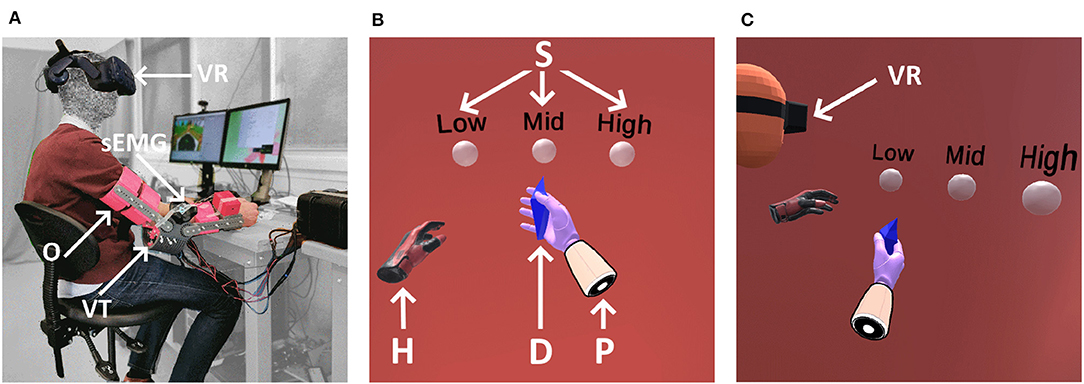

The proposed approach is validated in a human-subject experiment using a VT transducer worn on the elbow bony landmark to provide the feedback and a virtual reality based environment to simulate the grasping task, as shown in Figure 1A. This experiment seeks to verify that subjects can differentiate two sets of encoded sensory information conveyed via one bone conduction channel. This is done, firstly, by comparing the performance of the proposed approach against the baseline of carrying out the same task with only one set of information conveyed through the feedback channel. Secondly, the performance with and without the addition of visual feedback is compared.

Figure 1. The setup of the grasp task in virtual reality is shown in (A), where the subject is seated with the arm placed in the orthosis (O) on the table. The vibrotactile transducer (VT) is mounted on the ulnar olecranon, the EMG electrodes (sEMG) on the forearm, and the virtual reality headset (VR) is placed on the subject's head. The subject's first person view in virtual reality in (B) shows the prosthesis (P); the grasped object (D); and the non-dominant hand (H), used for commands such as activating/deactivating the EMG interface or touching the sphere (S) to report the secondary information class and to advance to the next task. (C) shows the top view of the virtual reality setup.

The experiment consists of three parts:

(1) A pre-evaluation of the psychophysics of the interface;

(2) Obtaining the bone conduction perception threshold at the ulnar olecranon for individual subjects;

(3) Evaluating the performance of the human subject in the task of grasping within a virtual reality environment.

The experiment was conducted on 10 able-bodied subjects (2 female, 8 male; age 28.7 ± 4 years). Informed consent was received from all subjects in the study. The experimental procedure was approved by the University of Melbourne Human Research Ethics Committee, project numbers 1852875.2 and 1750711.1.

This subsection describes the psychophysical evaluation of the bone conduction interface as sensory feedback. This is done to ensure that subjects can discriminate between the stimulation frequencies and amplitudes chosen later for the Grasp Force Regulation and Secondary Information Classification Tasks (see section 3.3). To this end, the minimum noticeable difference for subjects, later referred to as the “just noticeable difference” (JND), is obtained to quantify the capabilities of the bone conduction interface in the frequency and amplitude domains.

A custom elbow orthosis with adjustable bone conduction transducers was fitted to the subject's dominant hand for the experiment, as shown in Figure 1A. The orthosis (O) was fixed to the upper and lower arm of the subject with adjustable velcro straps. The position of the vibrotactile transducer (VT) was adjusted using a breadboard-style variable mounting in order to align it with, and bring it into contact with, the ulnar olecranon, which is the proximal end of the ulna located at the elbow. The VT was adjusted using two screws to ensure good contact with the bony landmark. The orthosis was placed on the desk (see Figure 1A) and kept static during the experiments.

The setup consists of a B81 transducer (RadioEar Corporation, USA), calibrated using an Artificial Mastoid Type 4930 (Brüel & Kjær, Denmark) at a static force of 5.4 N. The stimulation signals were updated at 90 Hz and amplified using a 15 W Public Address amplifier Type A4017 (Redback Inc., Australia), which has a 4–16 Ω output suitable for driving the 8 Ω B81 transducer and a low harmonic distortion of < 3% at 1 kHz. A calibrated force sensitive resistor (FSR) (Interlink Electronics 402 Round Short Tail) with a force sensitive area of A = 1.33 cm², placed between the transducer and the mounting plate, was used to measure the applied force. The calibration was done using three different weights [0.2, 0.5, 0.7] kg, measuring five repetitions and applying a linear interpolation to obtain the force/voltage relationship. The achieved force/voltage relationship has a variance of 5.4 ± 0.37 N. The stimulation signal was generated using a National Instruments NI USB-6343 connected to a Windows Surface Book 2 (Intel Core i7-8, 16 GB RAM, Windows 10™) as the control unit. A MATLAB® GUI was used to guide the user through the psychophysics and perception threshold experiments. The computer was connected via a Wi-Fi hotspot through a UDP connection to the head mounted virtual reality system for the experiment tasks.

It is noted that the JND of frequency (JNDf) as well as the JND of amplitude (JNDa) are different for each person (Dosen et al., 2016). Therefore, a sample of five subjects is employed to evaluate JNDf and JNDa and to show that the subjects can discriminate between the given stimulation frequencies and amplitudes. The JNDf is measured for three frequencies fref ∈ [100, 400, 750] Hz and three amplitudes aref ∈ [0.1, 0.3, 0.5] V, giving nine different combinations. For each combination, a standard two-interval forced-choice (2IFC) threshold procedure is used. For the 2IFC, the reference stimulus fref is selected out of the three predetermined frequencies and the target stimulus ft is varied in a stochastic approximation staircase (SAS) manner, where the variation is based on the subject's report of the perceived stimulus (Clemente et al., 2017). Therefore,

where ftn is the target stimulus during the previous trial, ftn+1 is the target stimulus of the upcoming trial, m is the number of reversals, counting how many times the answers change from wrong to right, and Zn is set to 1 for a correct answer and 0 for an incorrect answer. The initial target stimulus is set to 1.5 times the reference stimulus. The trials are stopped after 50 iterations and the value ft51 for the 51st trial is taken as the perception threshold (Clemente et al., 2017; Mayer et al., 2019).
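To make the staircase procedure concrete, the following Python sketch implements a generic accelerated stochastic approximation update, in which the step shrinks as reversals accumulate. It is a sketch under stated assumptions and not necessarily the exact rule used in the study: the step constant `step_c`, the target probability `phi`, and the `respond` callback are hypothetical names and values that do not appear in the text. The initialization at 1.5 times the reference, the wrong-to-right reversal count, and the 50 updates follow the description above.

```python
def sas_step(target, correct, reversals, step_c, phi):
    """One generic accelerated stochastic approximation update.

    target    : current target stimulus (e.g., frequency in Hz)
    correct   : True if the subject answered correctly (Z_n = 1), else False (Z_n = 0)
    reversals : number of wrong-to-right answer changes so far (m)
    step_c    : initial step constant (assumed, not given in the text)
    phi       : target probability of a correct answer (assumed)
    """
    z = 1.0 if correct else 0.0
    return target - step_c / (2.0 + reversals) * (z - phi)


def run_staircase(reference, respond, n_trials=50, step_c=None, phi=0.75):
    """Run the staircase and return the target after n_trials updates.

    respond(reference, target) -> bool is a hypothetical callback that
    presents both stimuli and returns whether the answer was correct.
    """
    if step_c is None:
        step_c = 0.5 * reference  # assumed starting step size
    target = 1.5 * reference      # initialization described in the text
    reversals = 0
    previous_correct = None
    for _ in range(n_trials):
        correct = respond(reference, target)
        if previous_correct is False and correct:
            reversals += 1        # answer changed from wrong to right
        target = sas_step(target, correct, reversals, step_c, phi)
        previous_correct = correct
    return target


if __name__ == "__main__":
    # Simulated, noise-free observer that detects differences of 20 Hz or more.
    observer = lambda ref, tgt: (tgt - ref) >= 20.0
    print(run_staircase(100.0, observer))
```

With a real subject the responses are noisy, so the value reached after the last update is an estimate of the stimulus level at which the proportion of correct answers equals `phi`.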

The JNDa is obtained similarly to the JNDf, where the target amplitude atn+1 is now varied in an SAS manner and the reference stimulus aref is chosen out of the given amplitudes.

The objective of this subsection is to determine the minimum stimulation amplitude a0 at which subjects can perceive a given stimulation frequency f. This will be referred to as the “perception threshold” henceforth. The amplitude threshold changes with frequency and differs from person to person, so it must be identified for each subject (Mayer et al., 2019).

The same setup as for the psychophysical evaluation was used, as explained in section 3.1.1.

The perception threshold is obtained using a method of adjustment test (Kingdom and Prins, 2016, Chapter 3). The subjects are presented n = 10 times with each frequency f ∈ [100, 200, 400, 750, 1500, 3000, 6000] Hz. At each presentation, the subject adjusts the amplitude to the lowest perceived stimulation. The subject can adjust the amplitude in small (ΔUsmall = 0.005 V) and large (ΔUlarge = 0.05 V) increments. The frequencies are presented in a randomized order.
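A minimal Python sketch of the method of adjustment is given below. The two step sizes are those stated above. How the ten repetitions per frequency are combined into one threshold is not stated in the text, so the use of the mean is an assumption, as are the function names and the key labels used to represent the subject's button presses.

```python
from statistics import mean

STEP_SMALL_V = 0.005  # small adjustment step from the text (volts)
STEP_LARGE_V = 0.05   # large adjustment step from the text (volts)

# Hypothetical labels for the four adjustment actions available to the subject.
STEPS = {
    "+small": STEP_SMALL_V,
    "-small": -STEP_SMALL_V,
    "+large": STEP_LARGE_V,
    "-large": -STEP_LARGE_V,
}


def adjust(start_v, presses):
    """Apply a sequence of adjustment presses; the amplitude never drops below 0 V."""
    amplitude = start_v
    for press in presses:
        amplitude = max(0.0, amplitude + STEPS[press])
    return amplitude


def threshold_per_frequency(settings_by_freq):
    """Combine repeated settings per frequency into one threshold.

    Assumption: the threshold a0 is the mean of the repeated settings.
    settings_by_freq maps frequency (Hz) to a list of final amplitudes (V).
    """
    return {freq: mean(values) for freq, values in settings_by_freq.items()}


if __name__ == "__main__":
    final = adjust(0.1, ["-large", "+small", "+small"])
    print(final)
```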

The obtained perception threshold value a0 for each subject is set in the bone conduction stimulation signal (Equation 4). The experiment then proceeded to the virtual reality based grasping tasks.

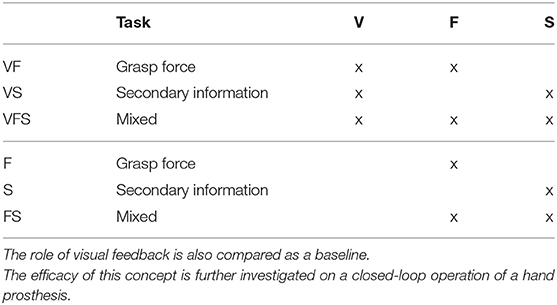

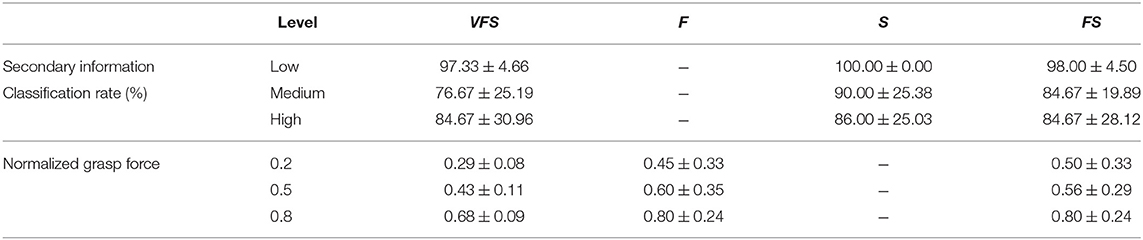

Subjects were asked to perform a set of grasp force regulation and secondary information classification tasks with a virtual prosthetic hand. The tasks involved regulating the grasp force of the virtual reality prosthetic hand through the use of an sEMG-based control interface and classifying the secondary information. Different combinations of feedback modalities [visual feedback (V), grasp force (F), and the secondary information (S)] were presented, as shown in Table 1.

Table 1. Experimental cases tested: encoding two sets of information onto the amplitude and frequency of the vibrotactile stimulation as the feedback to the subject through bone conduction.

Three grasping tasks were tested in each group (Table 1). The “Grasp Force Regulation Task” consisted purely of applying a grasp force to an object in-hand; this task is detailed in section 3.3.2.1. The “Secondary Information Classification Task” consisted of classifying the secondary information, with no grasp force involved; this task is detailed in section 3.3.2.2. The “Mixed Task” was a combination of the “Grasp Force Regulation Task” and the “Secondary Information Classification Task,” where subjects were required to apply a given grasp force and classify the secondary information; this task is detailed in section 3.3.2.3. Tasks VF and VS were considered as training for the users to familiarize themselves with the sEMG control interface and the feedback. Tasks VFS, F, S, and FS were used to show whether subjects can differentiate multiple sensory feedback signals encoded in one channel with and without visual feedback. The tasks are detailed in the following subsections.

The same setup as for the psychophysical evaluation was used, as explained in section 3.1.1.

MyoWare sensors with Ag-AgCl electrodes were used for sEMG data gathering. Data gathering and virtual reality update were performed at 90 Hz.

The virtual reality component of the experiment was performed on an HTC Vive Pro HMD with the application developed in Unity3D. The experimental platform runs on an Intel Core i7-8700K processor at 3.7 GHz, with 32 GB RAM, and GeForce GTX 1080Ti video card with 11 GB GDDR5. An HTC Vive Controller was used for tracking the non-dominant hand of the subject and to interact with the virtual reality application. The subjects report on the secondary information and navigate through the experiment with the non-dominant hand. An HTC Vive Tracker was used to determine the location of the dominant hand of the subject to determine the location of the virtual prosthesis. The application used for the experiment can be downloaded from https://github.com/Rigaro/VRProEP.

The average latency between a touch event generated in virtual reality and the activation of the feedback stimulus was estimated as tlatency = 66 ms by summing the individual latencies involved. The total delay results from the time delay of sending a command from the virtual reality setup via a UDP connection to the stimulation control unit, tUDP = 65 ms (measured), and the delay of sending the stimulation command to the NI USB-6343, tNI = 1 ms (datasheet).

Subjects performed a set of grasping and secondary information classification tasks in the virtual reality environment. The tasks were separated into two blocks (see Table 1) with a 2 min break between them. An HTC Vive Pro Head Mounted Display (HMD) was used to display the virtual reality environment to subjects. The virtual reality set-up is shown in Figure 1A, while the subject's first person view in virtual reality is shown in Figure 1B and a top view in Figure 1C. A Vive Controller was held by the subject in their non-dominant hand and was used to enable the EMG interface by a button press and to select the secondary information class in the classification task. A standard dual-site differential surface EMG proportional prosthetic interface was used to command the prosthetic hand closing velocity (Fougner et al., 2012). Muscle activation was gathered using sEMG electrodes placed on the forearm targeting wrist flexor and extensor muscles for hand closing and opening, respectively.

In the grasp force task, subjects were asked to use the sEMG control interface to regulate the grasp force to grip objects with a certain grasp force level. A fixed stimulation frequency was used, in line with the result of the psychophysical evaluation, while the amplitude a(t) is used to provide feedback on the grasp force produced by the human subject as determined by Equation (4).

The grasp force fg was calculated from the sEMG signal magnitude. Therefore, the sEMG signal magnitude uEMG is integrated in a recursive discrete manner, as given by

where uEMG(k) is the sEMG input, with the amplification adjusted per subject so that it ranges over [−100, 100], and Δfg = 0.005 is the scaling factor that converts the sEMG signal magnitude to a force rate of change. The grasp force fg is bounded to [0, 1]. The recursion is updated at 90 Hz.
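The recursive integration of the sEMG signal into a bounded grasp force can be sketched in Python as follows. The gain of 0.005, the input range of [−100, 100], the output bounds of [0, 1], and the 90 Hz update rate come from the text. Whether the published recursion multiplies the rate of change by the sampling period is not recoverable from the text; the sketch assumes that it does and exposes `dt` as a parameter, so setting `dt=1.0` gives a plain per-sample increment instead. The function name is hypothetical.

```python
def update_grasp_force(fg_prev, u_emg, gain=0.005, dt=1.0 / 90.0):
    """One step of a discrete integrator from sEMG magnitude to grasp force.

    fg_prev : previous normalized grasp force in [0, 1]
    u_emg   : sEMG input, clipped to [-100, 100] (positive closes, negative opens)
    gain    : scaling from sEMG magnitude to force rate of change (0.005 in the text)
    dt      : sampling period in seconds (assumed to enter the recursion)
    """
    u = min(max(u_emg, -100.0), 100.0)
    fg = fg_prev + gain * u * dt
    return min(max(fg, 0.0), 1.0)  # grasp force is bounded to [0, 1]


if __name__ == "__main__":
    fg = 0.0
    for _ in range(90):  # one second of full activation at 90 Hz
        fg = update_grasp_force(fg, 100.0)
    print(fg)
```

Under these assumed settings, full activation raises the normalized force from 0 to its upper bound in roughly two seconds, and the force holds its value whenever the input is zero.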

The grasp tasks were grouped in two parts (see Table 1). In the first part (VF), visual feedback related to the grasp force was given to the subjects; this part is considered as training. The visual feedback consisted of the grasped object changing color in a gradient depending on the applied grasp force fg(k). In the second part (F), no visual feedback was provided. Three different target grasp force levels were used for the task and each was repeated five times in a randomized manner. The target grasp force levels were [0.3, 0.5, 0.8]. The object starting color represented the target grasp force level; however, subjects did not explicitly know the exact target force.

In the secondary information classification task, subjects were asked to report which of the three different classes they perceived by touching one of three spheres in front of them, each representing one of the classes. The classes (s) were low, mid, and high, which translated to the frequencies [100, 400, 750] Hz. The grasp force was held constant at fg = 0.8, resulting in a constant amplitude a(t) in the feedback stimulus to the subject for this task. In other words, the amplitude was not regulated by the subject's sEMG activity. Each class was presented 5 times in a randomized manner. The secondary information classification tasks were grouped in two parts (see Table 1). In the first part (VS), visual feedback related to the correct class was shown to the subject through the color of the classification spheres whilst presenting the stimuli; this part is therefore considered as training. In the second part (S), no visual feedback was provided.

The mixed task combines both the grasp force regulation and secondary information classification tasks simultaneously, such that the grasp force regulation had to be executed and the subjects were then asked to report on which secondary information class they perceived. This means that the stimulation provided to the subjects had the grasp force fg(k) encoded in its amplitude a(t) and the secondary information class s encoded in its frequency f, simultaneously. A permutation of all force levels and secondary information classes was presented and each combination was repeated five times in a randomized manner. Force levels are [0.3, 0.5, 0.8] and secondary information classes are [100, 400, 750] Hz. The mixed tasks were grouped in two parts (see Table 1). In the first part (VFS), visual feedback related to the grasp force was given to the subjects. No visual feedback was given for the secondary information feedback. In the second part (FS), no visual feedback was provided.
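The trial structure of the mixed task, with every force level paired with every secondary class and each pairing repeated five times in random order, can be sketched in Python as below. The force levels, class frequencies, and repetition count are taken from the text. Shuffling the complete list at once (rather than, for example, randomizing within blocks) is an assumption, and the function name is hypothetical.

```python
import itertools
import random

FORCE_LEVELS = [0.3, 0.5, 0.8]     # normalized target grasp forces
CLASS_FREQS_HZ = [100, 400, 750]   # low, mid, high secondary classes


def build_mixed_trials(repetitions=5, seed=None):
    """Return a shuffled list of (force_level, class_frequency) trials.

    Every combination of force level and class appears `repetitions` times.
    Assumption: the whole list is shuffled in one pass.
    """
    trials = [
        combo
        for combo in itertools.product(FORCE_LEVELS, CLASS_FREQS_HZ)
        for _ in range(repetitions)
    ]
    random.Random(seed).shuffle(trials)
    return trials


if __name__ == "__main__":
    trials = build_mixed_trials(seed=0)
    print(len(trials))  # 3 force levels x 3 classes x 5 repetitions
```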

The grasp force fg (as calculated in Equation 7) and the actual sEMG activation levels were continuously recorded for all trials for the duration of each task, along with the desired force target. The subject's answer on the secondary information class was recorded for the “Secondary Information Classification Task” and the “Mixed Task,” along with the correct class.

The following performance measures were used:

Normalized Grasp Force: The normalized grasp force fg(kf) at the time kf, where kf is the time at which the subject finalizes the force adjustment by disabling the EMG interface with a button press. The mean and standard deviation are calculated over the repetitions of each task regulating the grasp force and represent the accuracy and repeatability of the grasp force regulation exercise.

Secondary Information Classification Rate: The rate with which the subject identifies the correct secondary information class was used as the performance measure in the secondary information classification task.
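Both performance measures reduce to simple summary statistics, as the following Python sketch shows. The function names are hypothetical, and the use of the sample standard deviation (rather than the population form) is an assumption, since the text does not specify which one was used.

```python
from statistics import mean, stdev


def grasp_force_summary(final_forces):
    """Mean and standard deviation of the final normalized grasp forces fg(kf).

    final_forces holds one value per repetition of the same target level.
    Assumption: the sample standard deviation is used.
    """
    return mean(final_forces), stdev(final_forces)


def classification_rate(reported, correct):
    """Fraction of trials in which the reported class equals the correct class."""
    if len(reported) != len(correct):
        raise ValueError("reported and correct must have the same length")
    hits = sum(1 for r, c in zip(reported, correct) if r == c)
    return hits / len(correct)


if __name__ == "__main__":
    # Illustrative placeholder values, not experimental data.
    print(grasp_force_summary([0.48, 0.52, 0.50, 0.47, 0.53]))
    print(classification_rate(["low", "mid", "high"], ["low", "mid", "mid"]))
```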

The achieved results of perception threshold, secondary information classification rate and normalized grasp force are visually presented using boxplots, showing the median, 25th and 75th percentiles and the whiskers indicating the most extreme points not considered outliers. Any outliers are plotted using the “+” symbol.

For statistical analysis, a Friedman test, which is a non-parametric analogue of ANOVA, was applied (Daniel, 1990), because the data were not normally distributed (Shapiro–Wilk test) and an ANOVA was therefore not suitable. This was followed up by a post-hoc analysis via the Wilcoxon signed rank test (Wilcoxon, 1945). The obtained p values are reported, and statistical significance is indicated in the plots.
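For readers unfamiliar with the Friedman test, the Python sketch below computes its test statistic from a table with one row per subject and one column per condition. It uses only the standard library and omits the tie correction and the p value (which would come from a chi-square distribution with k − 1 degrees of freedom), so it is an illustration of the ranking idea rather than a replacement for a statistics package. In practice, routines such as `scipy.stats.friedmanchisquare` and `scipy.stats.wilcoxon` could be used instead. The function names are hypothetical.

```python
def rank_row(values):
    """Rank the values of one subject across conditions (1 = smallest).

    Tied values receive the average of the ranks they span.
    """
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        average_rank = (i + j) / 2.0 + 1.0
        for pos in range(i, j + 1):
            ranks[order[pos]] = average_rank
        i = j + 1
    return ranks


def friedman_statistic(table):
    """Friedman chi-square statistic without tie correction.

    table: list of rows, one per subject, each with k condition values.
    """
    n = len(table)
    k = len(table[0])
    rank_sums = [0.0] * k
    for row in table:
        for col, rank in enumerate(rank_row(row)):
            rank_sums[col] += rank
    sum_sq = sum(r * r for r in rank_sums)
    return 12.0 / (n * k * (k + 1)) * sum_sq - 3.0 * n * (k + 1)


if __name__ == "__main__":
    # Illustrative placeholder values for 4 subjects and 3 conditions.
    demo = [[0.1, 0.2, 0.3], [0.2, 0.3, 0.4], [0.1, 0.3, 0.5], [0.0, 0.1, 0.2]]
    print(friedman_statistic(demo))
```

The statistic is largest when every subject orders the conditions identically and is zero when the rank sums of all conditions are equal.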

Before using the VT bone conduction feedback interface, a pre-evaluation of the psychophysics of this interface is conducted and the perception threshold of each subject is determined.

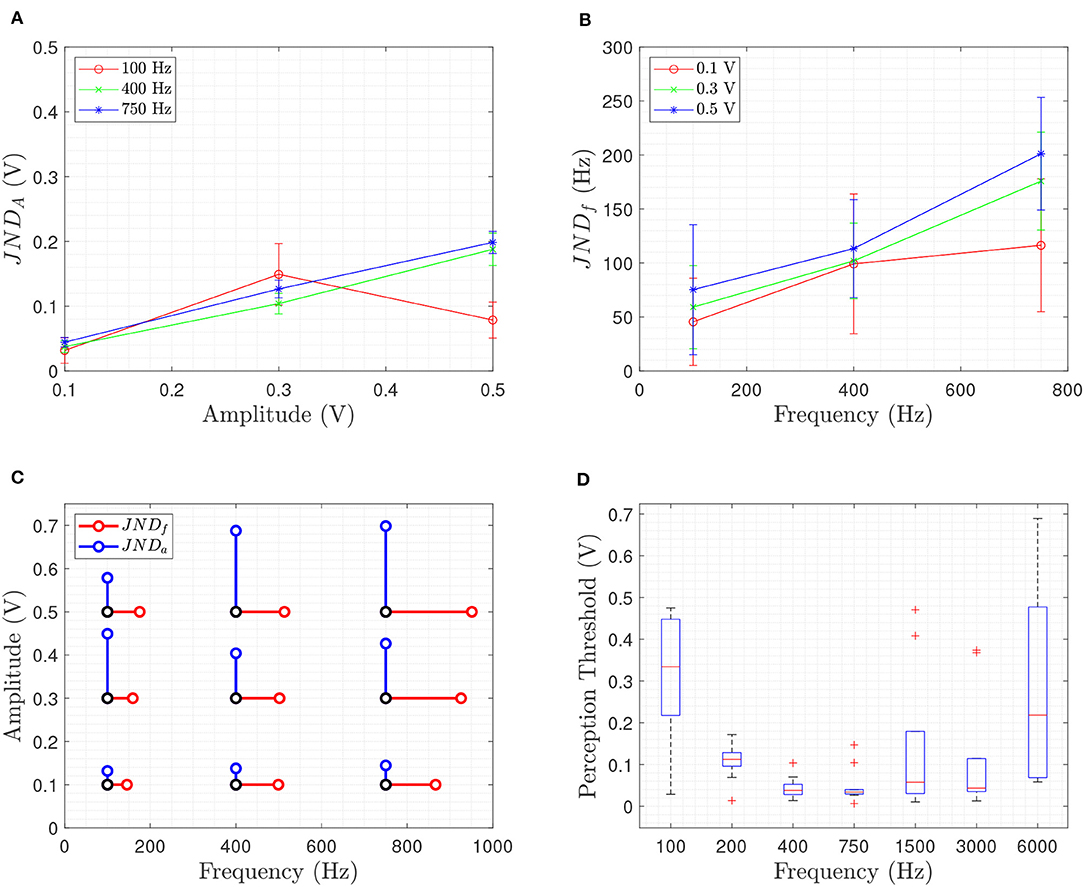

Figures 2A,B show the obtained mean and standard deviation for the JNDa and JNDf. In Figure 2C, the means of both JNDa and JNDf are plotted together to show the resolution of the proposed interface. The black dots in Figure 2C denote the reference stimulus of the SAS approach, and the red and blue dots show the obtained mean value of the JND. This plot therefore shows the next closest noticeable stimulation point (in frequency or amplitude) for each reference stimulus.

Figure 2. Results of the psychophysical evaluation of five subjects, showing the mean and standard deviation of (A) the JNDa, giving the amplitude resolution for three different frequencies at three different amplitudes, and (B) the JNDf, giving the frequency resolution for three different amplitudes at three different frequencies. (C) Summary plot of the obtained mean value of JNDa (blue) and JNDf (red) at each reference stimulus (black). (D) The identified perception threshold value a0 at the frequencies [100, 200, 400, 750, 1500, 3000, 6000] Hz for 10 subjects.

The results in Figure 2C show that the JNDa is smallest at the lower frequencies, except at the 100 Hz, 0.3 V reference stimulus. Hence, the fixed frequency of the grasp force regulation task, as discussed in section 3.3.2, was set to 100 Hz, since subjects had the best amplitude discrimination at this frequency. Compared with the results obtained for VT on skin in Dosen et al. (2016), Figures 2A,B show similar behavior, with the JND increasing linearly with increasing amplitude and frequency. The lower value of JNDa at 100 Hz indicates better sensitivity at lower frequencies for higher stimulation amplitudes in the case of bone conduction.

Before applying VT bone conduction feedback, the lowest perceived stimulation at the given frequencies was found using a method of adjustment. This threshold a0 was used in Equation (4) to fit the linear relation. The maximum was set to half of the maximum transducer voltage of 0.5 V. Figure 2D shows the obtained perception threshold for all subjects.
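The role of the perception threshold in the encoding can be illustrated with a short sketch. Equation (4) is not reproduced in this section, so the linear form used here (amplitude rising from a0 at zero force to a maximum a_max at full normalized force) is an assumption, as are the sinusoidal carrier, the function names, and the example values.

```python
import math


def stimulus_amplitude(fg, a0, a_max):
    """Map normalized grasp force fg in [0, 1] to a stimulation amplitude.

    Assumed linear relation: a = a0 + fg * (a_max - a0), where a0 is the
    subject's perception threshold at the stimulation frequency.
    """
    fg = min(max(fg, 0.0), 1.0)  # clamp to the normalized range
    return a0 + fg * (a_max - a0)


def stimulus_sample(fg, f_hz, t, a0, a_max):
    """One sample of an assumed sinusoidal stimulus at time t (seconds).

    Grasp force sets the amplitude; the secondary information class sets f_hz.
    """
    return stimulus_amplitude(fg, a0, a_max) * math.sin(2.0 * math.pi * f_hz * t)


# Hypothetical values: threshold 0.05 V, maximum 0.5 V, medium class (400 Hz)
print(stimulus_amplitude(0.5, a0=0.05, a_max=0.5))
print(stimulus_sample(0.5, f_hz=400, t=0.001, a0=0.05, a_max=0.5))
```

Anchoring the mapping at a0 means that any non-zero grasp force produces a stimulus at or above the subject's perception threshold, which is the apparent purpose of measuring a0 per subject and per frequency.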

In the following subsections, the performance of the Mixed Task, representing the proposed concept of conveying two sets of information simultaneously via one feedback channel to the human subject, is compared to the baseline performance of the Grasp Force Regulation Task and the Secondary Information Classification Task, using the defined performance measures.

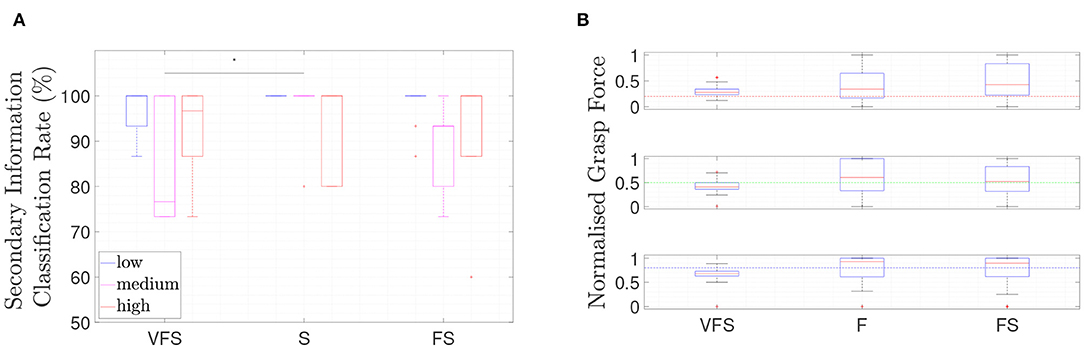

The obtained secondary information classification rates are shown in the boxplot of Figure 3A for the VFS, S, and FS tasks. VS and VF are the training tasks and therefore the obtained data is not considered in the plots. In VS, the subjects received visual feedback for the correct answer in order to learn how to interpret the secondary information feedback and therefore reached 100% secondary information classification rate. In S, only secondary information feedback via bone conduction is provided without visual feedback. In FS, the grasp force level has to be adjusted and the correct secondary information class chosen afterwards, with both grasp force and secondary information feedback provided simultaneously via the bone conduction mechanism.

Figure 3. Boxplots of the obtained results for 10 subjects: (A) secondary information classification rate, subdivided into the three secondary information classes, and (B) achieved grasp force, subdivided into the three target grasp force levels. *Asterisk indicates a statistically significant difference in the post-hoc analysis (p < 0.05).

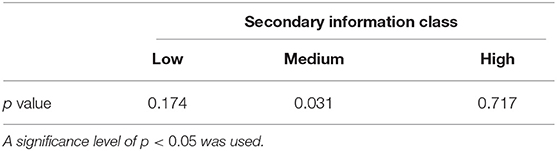

Mean secondary information classification rates of 86.22±18.17% for VFS (visual, force, and secondary information feedback), 92.00±16.57% for S (secondary information feedback) and 89.11±16.16% for FS (force and secondary information feedback) were observed. The mean secondary information classification rate and standard deviation for each class (low, medium, high) for the three different tasks are given in Table 2, and the corresponding boxplot is shown in Figure 3A. A Friedman test (VFS, S, FS) on the secondary information classification rate showed a statistically significant difference for the medium secondary information class (see Table 3).

Table 2. Mean and standard deviation of the obtained results for secondary information classification rate and normalized grasp force for the 4 tasks for 10 subjects.

Table 3. The p-values of the Friedman test for the secondary information classification rate for the three different classes.

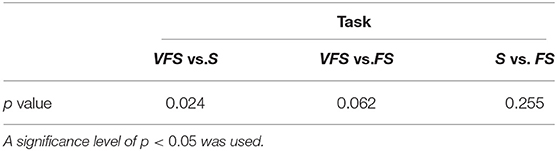

For the low and high secondary information classes, no statistically significant difference could be found, suggesting the data are compatible with all groups having the same distribution. For the medium secondary information class, a Wilcoxon signed rank test was applied as a post-hoc test, and the results are shown in Table 4. A statistically significant difference was found for VFS vs. S, but not for VFS vs. FS or S vs. FS, suggesting the data for the latter two pairs are compatible with the groups having the same distribution.
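The pairwise post-hoc comparison can be sketched as an exact two-sided Wilcoxon signed rank test, again with the standard library only. The text does not state how zero differences and ties were handled or whether exact p-values were used; here zero differences are dropped, ties receive average ranks, and the p-value is obtained by enumerating all sign assignments, which is feasible for 10 subjects (at most 1024 cases). All names are hypothetical.

```python
from itertools import product


def average_ranks(values):
    """Rank values from 1..n, giving tied values their average rank."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        avg = (i + j) / 2 + 1  # mean of the 1-based positions i+1 .. j+1
        for m in range(i, j + 1):
            ranks[order[m]] = avg
        i = j + 1
    return ranks


def wilcoxon_signed_rank_exact(x, y):
    """Exact two-sided Wilcoxon signed rank test. Returns (w_plus, p_value).

    Assumption: pairs with zero difference are discarded before ranking.
    """
    if len(x) != len(y):
        raise ValueError("x and y must be paired samples of equal length")
    diffs = [a - b for a, b in zip(x, y) if a != b]
    if not diffs:
        raise ValueError("all paired differences are zero")
    ranks = average_ranks([abs(d) for d in diffs])
    total = sum(ranks)
    w_plus = sum(r for r, d in zip(ranks, diffs) if d > 0)
    w_obs = min(w_plus, total - w_plus)
    # Count sign assignments that are at least as extreme as the observed one
    extreme = 0
    for signs in product((0, 1), repeat=len(ranks)):
        w = sum(r for r, s in zip(ranks, signs) if s)
        if min(w, total - w) <= w_obs:
            extreme += 1
    return w_plus, extreme / 2 ** len(ranks)


# Hypothetical paired classification rates (%) for five subjects
print(wilcoxon_signed_rank_exact([81, 72, 63, 94, 85], [80, 70, 60, 90, 80]))
```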

Table 4. The p-values of the post-hoc Wilcoxon signed rank test for medium class of the mean secondary information classification rate.

Figure 3B shows the boxplot of the achieved grasp force by the subjects during VFS, F, and FS. In VF, the subjects received visual feedback for the applied grasp force to learn how to associate grasp force to visual feedback as well as tactile feedback. In all cases, grasp force feedback is present, while visual feedback is present only in VFS (see Table 1). The result of each force level and each trial is given in Table 2.

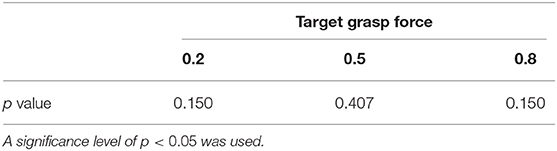

The results of the Friedman test (VFS, F, FS) for all force levels are shown in Table 5. No statistically significant difference could be found, suggesting the data are compatible with all groups having the same distribution.

Table 5. The p-values of the Friedman test for the Normalized Grasp Force for the different target levels.

In this subsection, we discuss the performance of the subjects in Tasks FS compared to S. The role of visual feedback (Task VFS) is discussed separately in the following subsection 5.2. Table 3 indicates a statistical difference for the performance in recognizing the medium secondary information class but not for low and high. However, the post-hoc test, Table 4, provides more details by showing no significant difference between the performance in Tasks S vs. FS for detecting the medium secondary information class. Therefore, no statistically significant difference is found between conveying two sets of information simultaneously through the single bone conduction channel in the context of recognizing the secondary information compared to conveying one set of information.

The Friedman test for the performance of the subjects in the grasp force regulation task as shown in Table 5 does not show any statistically significant difference across the cases of F, FS, and VFS. This is found consistently across the three levels of grasp force. Therefore, no statistically significant reduction in performance is found in the proposed approach against the baseline in the context of grasp force regulation, leading us to conclude that adding a second set of sensor information does not influence the ability to use the first set of sensor information in a closed-loop manner. The standard deviation is qualitatively decreasing for increasing force levels indicating a better repeatability for higher force levels in the case of no visual feedback (F and FS).

It should be noted that the grasping task for F is carried out at the stimulation frequency of 100 Hz, as justified by the psychophysical evaluation. In VFS and FS, the subjects regulated the grasp force alongside the secondary information classification, so the stimulation was delivered at [100, 400, 750] Hz. This difference did not significantly influence the ability to control the grasp force.

As visual feedback is present in a prosthetic system alongside incidental feedback, the influence of visual feedback is investigated, while incidental feedback is avoided by using a virtual reality setup. To investigate the influence of visual feedback whilst feeding back two sets of information, the grasp force was fed back as a color gradient of the grasped object. Although this is not a real-world scenario, it contains the same underlying set of information.

Comparing VFS to S showed a statistically significant increase in the secondary information classification rate in the absence of visual feedback (see Table 4), for the medium secondary information class, but not for low and high. It should be noted that the visual feedback was representing grasp force information and not the secondary information. Comparing VFS to FS does not yield any statistically significant difference in performance (see Tables 3, 4). Several explanations are possible. It could suggest that the subjects were able to learn the meaning of the feedback and perform better or that the reduced cognitive effort increased performance. However, the data collected in this study did not permit the authors to draw further conclusions.

The obtained normalized grasp force performance shows no statistically significant difference between the task involving visual feedback (VFS) and those with no visual feedback (F and FS). A smaller variance of the normalized grasp force is obtained for VFS compared to F and FS. It should be noted that VFS adds visual feedback for the same sensory information, namely the grasp force. A similar observation was reported in Patterson and Katz (1992), stating that the primary advantage of supplemental feedback is to reduce the variability of responses. This decrease cannot be observed for F compared to FS, as FS does not add more feedback of the same sensory information but rather superimposes other types of sensory information.

It should be noted that the results are obtained using a virtual reality setup. This allows the control of the provision of visual feedback while guiding the subjects through the grasp task experiment. Admittedly it abstracts the experiment from a practical grasping task. However, it does not take away the main premise from the study, which is to understand how well two sets of information can be conveyed in this novel manner.

This study investigated the efficacy of conveying multi-sensor information via fewer feedback channels in a prosthetics context. Two sets of sensor information, grasp force and a secondary set of information, are conveyed simultaneously to human users through one feedback channel (a vibrotactile transducer via bone conduction). A human-subject experiment was conducted using physical vibrotactile transducers on the elbow bony landmark and a virtual reality environment to simulate the prosthetic grasp force regulation and secondary information classification tasks. It was found that the subjects were able to discern the two sets of feedback information sufficiently well to perform the grasping and secondary information classification tasks at a performance not inferior to that achieved with only one set of feedback information. The addition of visual feedback, a common feedback mechanism present in prostheses, was found to improve the repeatability of grasp force regulation, as reported in the literature.

It is expected that the result generalizes to other types of information and modalities (not limited to grasp force and bone conduction stimulation) and allows more freedom in the selection of the number of independent sets of sensor information N and feedback stimulation channels M, as long as N > M. The second set of information was generalized and labeled secondary information, but in a real-world application it could represent several quantities, e.g., temperature or friction.

It should be noted that in this experiment, one set of sensor information was used explicitly in the closed-loop performance of grasp force regulation, while the other set constitutes additional information. Future work will investigate other modulation techniques to encode the multi-sets of information into the one feedback stimulation channel and algorithms to find an optimal matching between sensory information and provided feedback.

The datasets generated for this study are available on request to the corresponding author.

The studies involving human participants were reviewed and approved by Ethics Committee of the University of Melbourne. Project numbers are 1852875.2 and 1750711.1. The patients/participants provided their written informed consent to participate in this study.

RM, RG-R, AM, YT, and DO: literature, experiment, data analysis, and paper. GA and PC: paper design, experiment design, and paper review.

This project was funded by the Valma Angliss Trust and the University of Melbourne.

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

The authors acknowledge the assistance in the statistical analysis of the data in this paper by Cameron Patrick from the Statistical Consulting Centre at The University of Melbourne.

Antfolk, C., D'alonzo, M., Rosén, B., Lundborg, G., Sebelius, F., and Cipriani, C. (2013). Sensory feedback in upper limb prosthetics. Expert Rev. Med. Dev. 10, 45–54. doi: 10.1586/erd.12.68

Augurelle, A.-S., Smith, A. M., Lejeune, T., and Thonnard, J.-L. (2003). Importance of cutaneous feedback in maintaining a secure grip during manipulation of hand-held objects. J. Neurophysiol. 89, 665–671. doi: 10.1152/jn.00249.2002

Chaubey, P., Rosenbaum-Chou, T., Daly, W., and Boone, D. (2014). Closed-loop vibratory haptic feedback in upper-limb prosthetic users. J. Prosthet. Orthot. 26, 120–127. doi: 10.1097/JPO.0000000000000030

Childress, D. S. (1980). Closed-loop control in prosthetic systems: historical perspective. Ann. Biomed. Eng. 8, 293–303.

Choi, K., Kim, P., Kim, K. S., and Kim, S. (2016). “Two-channel electrotactile stimulation for sensory feedback of fingers of prosthesis,” in IEEE International Conference on Intelligent Robots and Systems (Daejeon), 1133–1138.

Choi, K., Kim, P., Kim, K. S., and Kim, S. (2017). Mixed-modality stimulation to evoke two modalities simultaneously in one channel for electrocutaneous sensory feedback. IEEE Trans. Neural Syst. Rehabil. Eng. 25, 2258–2269. doi: 10.1109/TNSRE.2017.2730856

Clemente, F., D'Alonzo, M., Controzzi, M., Edin, B. B., and Cipriani, C. (2016). Non-invasive, temporally discrete feedback of object contact and release improves grasp control of closed-loop myoelectric transradial prostheses. IEEE Trans. Neural Syst. Rehabil. Eng. 24, 1314–1322. doi: 10.1109/TNSRE.2015.2500586

Clemente, F., Håkansson, B., Cipriani, C., Wessberg, J., Kulbacka-Ortiz, K., Brånemark, R., et al. (2017). Touch and hearing mediate osseoperception. Sci. Rep. 7:45363. doi: 10.1038/srep45363

Cordella, F., Ciancio, A. L., Sacchetti, R., Davalli, A., Cutti, A. G., Guglielmelli, E., et al. (2016). Literature review on needs of upper limb prosthesis users. Front. Neurosci. 10:209. doi: 10.3389/fnins.2016.00209

Dahiya, R. S., Metta, G., Valle, M., and Sandini, G. (2009). Tactile sensing - from humans to humanoids. IEEE Trans. Robot. 26, 1–20. doi: 10.1109/TRO.2009.2033627

D'Alonzo, M., Dosen, S., Cipriani, C., and Farina, D. (2014). HyVE: Hybrid vibro-electrotactile stimulation for sensory feedback and substitution in rehabilitation. IEEE Trans. Neural Syst. Rehabil. Eng. 22, 290–301. doi: 10.1109/TNSRE.2013.2266482

de Freitas, P. B., Uygur, M., and Jaric, S. (2009). Grip force adaptation in manipulation activities performed under different coating and grasping conditions. Neurosci. Lett. 457, 16–20. doi: 10.1016/j.neulet.2009.03.108

Dosen, S., Ninu, A., Yakimovich, T., Dietl, H., and Farina, D. (2016). A novel method to generate amplitude-frequency modulated vibrotactile stimulation. IEEE Trans. Haptics 9, 3–12. doi: 10.1109/TOH.2015.2497229

Fougner, A., Stavdahl, O., Kyberd, P. J., Losier, Y. G., and Parker, P. A. (2012). Control of upper limb prostheses: terminology and proportional myoelectric control - a review. IEEE Trans. Neural Syst. Rehabil. Eng. 20, 663–677. doi: 10.1109/TNSRE.2012.2196711

Franceschi, M., Seminara, L., Dosen, S., Strbac, M., Valle, M., and Farina, D. (2017). A system for electrotactile feedback using electronic skin and flexible matrix electrodes: experimental evaluation. IEEE Trans. Haptics 10, 162–172. doi: 10.1109/TOH.2016.2618377

Johansson, R., and Westling, G. (1987). Signals in tactile afferents from the fingers eliciting adaptive motor responses during precision grip. Exp. Brain Res. 66, 141–154. doi: 10.1007/BF00236210

Kim, J., Lee, M., Shim, H. J., Ghaffari, R., Cho, H. R., Son, D., et al. (2014). Stretchable silicon nanoribbon electronics for skin prosthesis. Nat. Commun. 5:5747. doi: 10.1038/ncomms6747

Kingdom, F. A. A., and Prins, N. (2016). “Psychophysics,” in Science Direct 2nd Edn. doi: 10.1016/C2012-0-01278-1

Lederman, S. J., and Klatzky, R. L. (1987). Hand movements: a window into haptic object recognition. Cogn. Psychol. 19, 342–368. doi: 10.1016/0010-0285(87)90008-9

Li, Y., and Brånemark, R. (2017). Osseointegrated prostheses for rehabilitation following amputation. Der Unfallchirurg 120, 285–292. doi: 10.1007/s00113-017-0331-4

Markovic, M., Dosen, S., Cipriani, C., Popovic, D., and Farina, D. (2014). Stereovision and augmented reality for closed-loop control of grasping in hand prostheses. J. Neural Eng. 11:046001. doi: 10.1088/1741-2560/11/4/046001

Markovic, M., Schweisfurth, M. A., Engels, L. F., Farina, D., and Dosen, S. (2018). Myocontrol is closed-loop control: incidental feedback is sufficient for scaling the prosthesis force in routine grasping. J. Neuroeng. Rehabil. 15:81. doi: 10.1186/s12984-018-0422-7

Mayer, R. M., Mohammadi, A., Alici, G., Choong, P., and Oetomo, D. (2018). “Static force dependency of bone conduction transducer as sensory feedback for stump-socket based prosthesis,” in ACRA 2018 Proceedings (Lincoln).

Mayer, R. M., Mohammadi, A., Alici, G., Choong, P., and Oetomo, D. (2019). “Bone conduction as sensory feedback interface: a preliminary study,” in Engineering in Medicine and Biology (EMBC) (Berlin).

Mohammadi, A., Xu, Y., Tan, Y., Choong, P., and Oetomo, D. (2019). Magnetic-based soft tactile sensors with deformable continuous force transfer medium for resolving contact locations in robotic grasping and manipulation. Sensors 19:4925. doi: 10.3390/s19224925

Ninu, A., Dosen, S., Muceli, S., Rattay, F., Dietl, H., and Farina, D. (2014). Closed-loop control of grasping with a myoelectric hand prosthesis: which are the relevant feedback variables for force control? IEEE Trans. Neural Syst. Rehabil. Eng. 22, 1041–1052. doi: 10.1109/TNSRE.2014.2318431

Patterson, P. E., and Katz, J. A. (1992). Design and evaluation of a sensory feedback system that provides grasping pressure in a myoelectric hand. J. Rehabil. Res. Dev. 29, 1–8. doi: 10.1682/JRRD.1992.01.0001

Saunders, I., and Vijayakumar, S. (2011). The role of feed-forward and feedback processes for closed-loop prosthesis control. J. NeuroEng. Rehabil. 8:60. doi: 10.1186/1743-0003-8-60

Shaw-Cortez, W., Oetomo, D., Manzie, C., and Choong, P. (2018). Tactile-based blind grasping: a discrete-time object manipulation controller for robotic hands. IEEE Robot. Autom. Lett. 3, 1064–1071. doi: 10.1109/LRA.2020.2977585

Shaw-Cortez, W., Oetomo, D., Manzie, C., and Choong, P. (2019). Robust object manipulation for tactile-based blind grasping. Control Eng. Pract. 92:104136. doi: 10.1016/j.conengprac.2019.104136

Shehata, A. W., Scheme, E. J., and Sensinger, J. W. (2018). Audible feedback improves internal model strength and performance of myoelectric prosthesis control. Sci. Rep. 8:8541. doi: 10.1038/s41598-018-26810-w

Stephens-Fripp, B., Alici, G., and Mutlu, R. (2018). A review of non-invasive sensory feedback methods for transradial prosthetic hands. IEEE Access 6, 6878–6899. doi: 10.1109/ACCESS.2018.2791583

Stephens-Fripp, B., Mutlu, R., and Alici, G. (2019). A comparison of recognition and sensitivity in the upper arm and lower arm to mechanotactile stimulation. IEEE Trans. Med. Robot. Bion. 2, 76–85. doi: 10.1109/TMRB.2019.2956231

Svensson, P., Wijk, U., Björkman, A., and Antfolk, C. (2017). A review of invasive and non-invasive sensory feedback in upper limb prostheses. Expert Rev. Med. Dev. 14, 439–447. doi: 10.1080/17434440.2017.1332989

Westling, G., and Johansson, R. (1984). Factors influencing the force control during precision grip. Exp. Brain Res. 53, 277–284.

Keywords: neuroprostheses, sensory feedback restoration, human-robot interaction, tactile feedback, bone conduction

Citation: Mayer RM, Garcia-Rosas R, Mohammadi A, Tan Y, Alici G, Choong P and Oetomo D (2020) Tactile Feedback in Closed-Loop Control of Myoelectric Hand Grasping: Conveying Information of Multiple Sensors Simultaneously via a Single Feedback Channel. Front. Neurosci. 14:348. doi: 10.3389/fnins.2020.00348

Received: 10 September 2019; Accepted: 23 March 2020;

Published: 27 April 2020.

Edited by:

Loredana Zollo, Campus Bio-Medico University, Italy

Reviewed by:

Marco D'Alonzo, Campus Bio-Medico University, Italy

Copyright © 2020 Mayer, Garcia-Rosas, Mohammadi, Tan, Alici, Choong and Oetomo. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Raphael M. Mayer, r.mayer@student.unimelb.edu.au

Disclaimer: All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article or claim that may be made by its manufacturer is not guaranteed or endorsed by the publisher.
