94% of researchers rate our articles as excellent or good

Learn more about the work of our research integrity team to safeguard the quality of each article we publish.

Find out more

HYPOTHESIS AND THEORY article

Front. Neurosci., 28 February 2020

Sec. Auditory Cognitive Neuroscience

Volume 14 - 2020 | https://doi.org/10.3389/fnins.2020.00164

This article is part of the Research TopicSmart Mobile Data Collection in the Context of NeuroscienceView all 12 articles

Robin Kraft1,2*

Robin Kraft1,2* Winfried Schlee3

Winfried Schlee3 Michael Stach1

Michael Stach1 Manfred Reichert1

Manfred Reichert1 Berthold Langguth3

Berthold Langguth3 Harald Baumeister2

Harald Baumeister2 Thomas Probst4

Thomas Probst4 Ronny Hannemann5

Ronny Hannemann5 Rüdiger Pryss6

Rüdiger Pryss6The increasing prevalence of smart mobile devices (e.g., smartphones) enables the combined use of mobile crowdsensing (MCS) and ecological momentary assessments (EMA) in the healthcare domain. By correlating qualitative longitudinal and ecologically valid EMA assessment data sets with sensor measurements in mobile apps, new valuable insights about patients (e.g., humans who suffer from chronic diseases) can be gained. However, there are numerous conceptual, architectural and technical, as well as legal challenges when implementing a respective software solution. Therefore, the work at hand (1) identifies these challenges, (2) derives respective recommendations, and (3) proposes a reference architecture for a MCS-EMA-platform addressing the defined recommendations. The required insights to propose the reference architecture were gained in several large-scale mHealth crowdsensing studies running for many years and different healthcare questions. To mention only two examples, we are running crowdsensing studies on questions for the tinnitus chronic disorder or psychological stress. We consider the proposed reference architecture and the identified challenges and recommendations as a contribution in two respects. First, they enable other researchers to align our practical studies with a baseline setting that can satisfy the variously revealed insights. Second, they are a proper basis to better compare data that was gathered using MCS and EMA. In addition, the combined use of MCS and EMA increasingly requires suitable architectures and associated digital solutions for the healthcare domain.

For many use cases in the healthcare domain, e.g., in the assessment of chronic diseases and disorders, there is a need for the collection of large, qualitative, longitudinal, and ecologically valid data sets. Additionally, contextual information like environmental factors can give even more valuable insights to researchers, healthcare providers (e.g., physicians or therapists), and last but not least, the patients themselves. At the same time, smart mobile devices (e.g., smartphones and smartwatches) and low-powered sensors are becoming increasingly ubiquitous. Two concepts that highly benefit from these advancements are mobile crowdsensing (MCS) and ecological momentary assessments (EMA). They can be used in combination in the form of mobile apps to correlate EMA assessment data with sensor measurement data in order to gain even more valuable insights about patients. However, there are numerous challenges when implementing a software solution in order to provide the desired functionality, to cope with technical aspects, as well as to comply with high standards and regulations in the healthcare domain. In this work, we discuss these challenges, derive several recommendations and propose a reference architecture for a respective software platform. These insights were mainly gained through several studies that combined MCS and EMA based on mHealth apps that we have developed in the last years. The mentioned studies, in turn, address different healthcare questions and are mostly running for many years. This provides us with a proper basis for the proposed reference architecture as well as the introduced set of recommendations. To conclude, the work at hand provides the following contributions:

• Various challenges are pointed out and discussed on the basis of the ongoing research project TrackYourTinnitus (TYT), which has been running since 2014.

• A number of recommendations are derived from the findings during this and other related projects.

• A reference architecture for a platform enabling the combination of MCS and EMA is proposed that aims to address the defined recommendations. Additionally, technical considerations for the implementation of the architecture are discussed.

The remainder of this paper is organized as follows. In section 2, related work in the fields of mobile crowdsensing and ecological momentary assessments is presented, and the combination of both concepts is discussed. Lessons learned during the operation of the TrackYourTinnitus (TYT) project are presented in section 3. In section 4, we derive recommendations for a MCS-EMA platform, propose a reference architecture to address these recommendations, and discuss selected technical considerations. Furthermore, the findings and their implications for MCS and EMA research are discussed in section 5. Finally, section 6 concludes the paper with a summary and an outlook.

In this section, we discuss mobile crowdsensing (MCS) in the healthcare domain. We cover related work in the fields of MCS and EMA and explain how we relate ecological momentary assessments (EMA) apps to MCS.

Mobile crowdsensing is a paradigm in which a community is leveraging devices with sensing and computing capabilities to collectively share data and extract information in order to measure and map phenomena of common interest. Therefore, it is also referred to as community sensing. As opposed to personal sensing, where the phenomena that are monitored belong to an individual user, community sensing applications focus on monitoring large-scale phenomena that cannot easily be measured by a single user or device (Ganti et al., 2011). This set of applications can then further be classified into participatory sensing (Burke et al., 2006) and opportunistic sensing (Lane et al., 2010) applications. Participatory sensing requires an active and conscious involvement of the user in order to contribute sensor data, while in opportunistic sensing, user involvement is minimal and sensor measurements as well as data transmission are done passively. In reality, mobile crowdsensing applications will often be located somewhere between these two extremes and use both paradigms to some extent. Furthermore, there exist recent works that reflect the categories of participatory and opportunistic sensing in the healthcare context (e.g., Pryss, 2019).

Furthermore, we consider the concept of mobile crowdsensing in the healthcare domain. Therefore, we are focusing on correlating personal sensing data with assessment data in order to gain insights on specific health conditions, (chronic) diseases and the patients' behavior. We consider the potential knowledge generated from this data as the phenomenon of common interest in terms of mobile crowdsensing. There are a number of applications in the field of healthcare (Guo et al., 2015). Its use cases include data collection in clinical and health/psychological trials (Pryss et al., 2015; Schobel et al., 2015), environmental monitoring and pollution measurement like noise pollution (Schweizer et al., 2011; Zappatore et al., 2017) or air pollution (Mun et al., 2009), public health (Wesolowski et al., 2012), and personal well-being (Consolvo et al., 2006). Although various mobile applications and solutions have been proposed, less works exist that cover reference settings to build generic solutions (Tokosi and Scholtz, 2019). In addition, few works are based on comprehensive experiences that are gained through various long-running projects (Tokosi and Scholtz, 2019).

Ecological Momentary Assessment (EMA) (Stone and Shiffman, 1994) denotes a range of research methods aiming to assess phenomena with ecological validity by allowing subjects and patients to repeatedly report in real time, in real-world settings, over time, and across contexts and therefore avoiding the bias of retrospective reports (Pryss et al., 2018a). Among numerous other aspects, EMA is characterized by several key features (Shiffman et al., 2008):

• Ecological: Data is collected in situ, i.e., in real-world settings and environments, which constitutes the ecological validity.

• Momentary: Assessments focus on current or very recent states in real time, which aims to avoid a bias associated with retrospective assessments.

• Strategic sampling: Assessment timings are strategically selected by specific sampling schemes, e.g., based on particular events of interest or by random, representative samplings across contexts.

• Longitudinal data: Subjects complete multiple assessments over time, which provides longitudinal data with insights on how the state varies over time and across situations.

A related methodology in the field of momentary research is the Experience Sampling Method (ESM) (Larson and Csikszentmihalyi, 2014; Van Haren, 2018), which aims at measuring momentary behavior, thoughts, symptoms, and feelings of participants, collected through self-reports that are typically filled out several times a day over several consecutive days (Myin-Germeys et al., 2009; Van Berkel et al., 2018). Generally, ESM has a focus on random time sampling and private, subjective experiences, while EMA is defined more broadly, as it also includes other sampling approaches and behavioral as well as physiological measures (Stone and Shiffman, 2002). Since we are striving to make our architecture as generic as possible and to additionally address physiological sampling via mobile sensors, we focus on EMA within the scope of this work.

EMA studies can be carried out with the help of portable electronic devices, which support the following EMA key functions (Shiffman, 2007; Shiffman et al., 2008):

1. Present assessment content to the subject (i.e., display questions and response options).

2. Manage assessment logic (e.g., handle branching and validate inputs).

3. Provide time-stamp data to document when assessments are completed.

4. Store assessment data.

5. Manage prompting schedules (e.g., determine when assessments should be made).

6. Prompt the subject to complete assessments.

Modern smartphones offer all of these functions, as they provide high-resolution displays, advanced processing power and storage, as well as push notifications (Raento et al., 2009). They have already been used in different EMA studies (Ebner-Priemer and Kubiak, 2007; Schlee et al., 2016). We summarize smartphone applications that offer EMA functionality using the term EMA apps. Smartphones offer additional capabilities that go beyond the initially defined EMA key functions, most importantly advanced processing capabilities, an (almost) always available network connection and built-in as well locally connected sensors (Van Berkel et al., 2018). Furthermore, data can be stored locally on the device and synchronized with the server, enabling an offline availability. Therefore, we explore different extensions of EMA apps and their combinations and study their effects. These extensions can be broadly categorized in (1) guidance, (2) feedback, (3) adjustable prompts, and (4) dynamic questionnaires. Generally, we distinguish between EMA apps and features that are used for data collection only (mainly research) and others that offer a benefit to the user (research and health care). The four categories of extensions we consider are described in the following:

• Guidance: We refer to guidance as the option for the user of the EMA app to link to a contact person. This contact person might be some kind of healthcare provider (HCP) that has some professional qualifications, e.g., a physician or therapist. The HCP might influence the process of EMA prompts, provide feedback to submitted data, and offer general advice to the user, or just act as an observer.

• Feedback: The EMA app could offer feedback to the user when he/she submits questionnaires. This feedback can be in the form of text messages by the HCP or automated feedback by the app, like tips and warnings when certain thresholds are exceeded, as well as graphical feedback in the form of graphs about the history of different measurements. We assume that feedback of this kind might act as an incentive to users and therefore increase adherence, but we also want to study the effects of this feedback on the EMA data.

• Adjustable prompts: Assessment prompts (i.e., notifications) can either be fixed and determined by the system, defined by the HCP, event-triggered (e.g., when a patient perceives his tinnitus, or when a context change is detected through sensor data), or can be adjusted by the user in a flexible manner.

• Dynamic questionnaires: The content of EMA questionnaires could be dynamic and adjusted depending on answered questionnaires in the past, occurring events, or other external parameters (e.g., the current weather retrieved through a web service).

There are a number of potential challenges when employing EMA studies, which are outlined in the following (Van Berkel et al., 2018):

• Participant burden: Answering questionnaires multiple times a day can be burdensome for participants. To counteract this issue, the number of questions, alerts, and question types should be kept as small as possible.

• Participant retention: Related to the frequent answering of questionnaires, study dropout rates are generally high. There has to be some sort of incentive for participants in order to keep them entering their data in a constant manner.

• Programming: There is no generic software solution that allows to employ EMA studies on mobile devices without requiring at least basic programming skills.

• Platform heterogeneity: Flexible software is required in order to support a large number of different hardware devices and operating systems.

• Data quality: Since data is not collected in a controlled environment, participants' data might be of low quality or noisy. Mechanisms should be in place to avoid or compensate missing, wrong or careless answers, as well as response shifts (i.e., changes in the participant's internal standards) or changes in the participants reactivity (i.e., behavioral adjustments because the participants know that they are being observed). In the context of participant retention, participants might answer the questionnaires as often as possible, even in a dishonest way, if they expect a reward or think they are supporting the platform in this way.

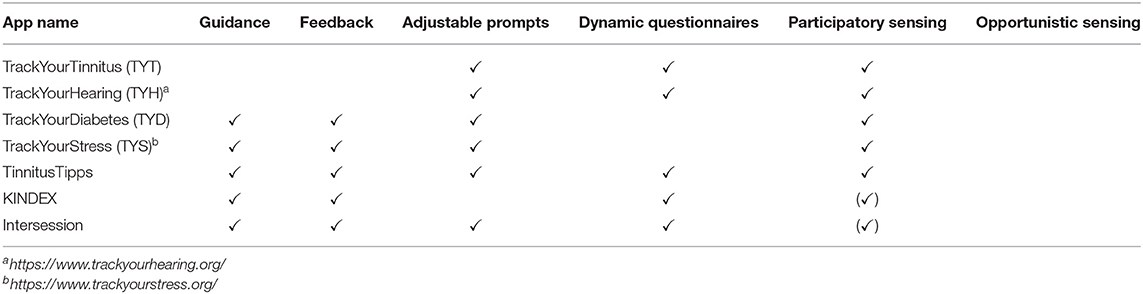

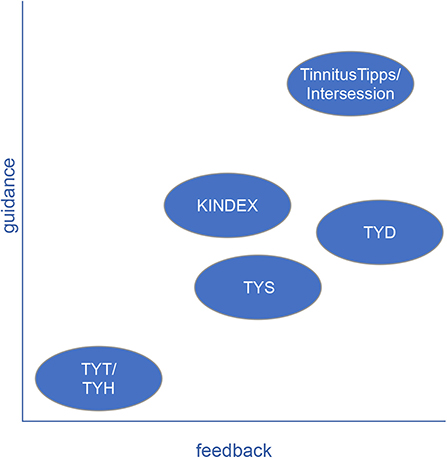

Crucially, smartphones enable us to not only collect explicit answers to EMA questionnaires, but additionally capture the context in which they are collected (Van Berkel et al., 2018). We consider EMA apps similar to mobile crowdsensing, in which the assessed phenomenon in terms of mobile crowdsensing is the ecological data collected in EMA questionnaires. Consequently, we combine the concepts and features of EMA apps with the paradigms of mobile crowdsensing by correlating questionnaire responses and sensor data in order to gain new insights on certain phenomena. Furthermore, we derive different classes of mobile crowdsensing EMA apps depending on the EMA features they provide and the crowdsensing paradigms that they make use of. Table 1 shows examples for apps that are incorporating both EMA and mobile crowdsensing features that were developed by the authors. The TrackYourTinnitus (TYT) project tracks one's individual tinnitus and is described in detail in section 3. Similar to TYT, TrackYourHearing (TYH), TrackYourDiabetes (TYD), and TrackYourStress (TYS) (Pryss et al., 2019) help the user to assess and track the progress of their hearing loss, diabetes, or stress level, respectively, and allow them to be more sensitive to symptom changes in specific contexts. The TinnitusTipps app was designed to enable the communication between healthcare providers (HCP) and tinnitus patients, including the assessment of the user's tinnitus and various automatic as well as manual feedback options. The KINDEX mum screen enables the assessment of psychosocial stress factors during pregnancy (Ruf-Leuschner et al., 2016). Finally, the Intersession app focuses on the assessment and guidance of users during the time between therapy sessions. Even though the last two apps are not incorporating any sensor measurements and are therefore by definition not utilizing MCS, we consider their assessed ecological data as phenomenon of common interest in terms of mobile crowdsensing and their contributions regarding guidance and feedback as a valuable basis for MCS-EMA platforms. The number of users, submitted answer sheets and released versions as well as the incorporated sensor measurements for the developed apps are shown in Table 2. The sensor measurements are performed while the patients answer the questionnaires and stored together with the answer data in order to allow to investigate correlations. To put these apps into perspective, Figure 1 shows how they are incorporating guidance and feedback (as defined in section 2.2.1) on a relative two-dimensional scale based on a subjective rating (however, guided by the extensive experiences) by the authors.

Table 1. Examples of apps developed by the authors combining mobile crowdsensing (MCS) and ecological momentary assessments (EMA), compared according to their respective features.

Figure 1. Subjective and relative rating of guidance and feedback of selected EMA apps developed by the authors.

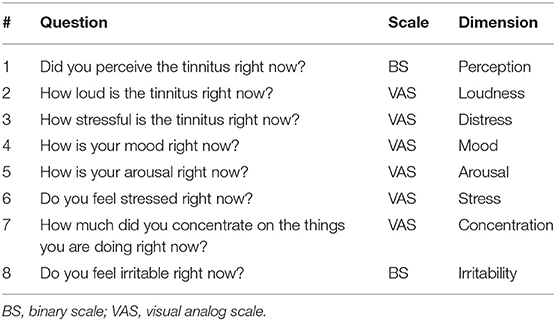

The TrackYourTinnitus (TYT) platform is available and has been maintained since April 2014. It consists of a website for registration1, two native mobile applications (iOS and Android), and a central backend that stores the collected data in a relational database. The mobile apps track the individual tinnitus perception by asking the patients to complete tinnitus assessment EMA questionnaires at different times during the day and on a random basis. The daily questionnaire is assessing tinnitus by measuring eight dimensions, e.g., tinnitus loudness and distress, utilizing the questions shown in Table 3. Furthermore, the apps measure the environmental sound level while patients fill out the questionnaires (Pryss et al., 2015). Medically, tinnitus is the perception of a sound when no corresponding external sound is present. The symptoms, in turn, are subjective and vary over time. Hence, TYT was realized to monitor and evaluate the variability of symptoms over time based on EMA and mobile crowdsensing (Schlee et al., 2016).

Table 3. Questions of the daily questionnaire in the TrackYourTinnitus (TYT) smartphone application, along with their scale and the dimension they measure (Schlee et al., 2016; Pryss et al., 2017).

One potential risk worth considering is whether continuous tracking of tinnitus with the app could aggravate the patient's symptoms by drawing additional attention to them. However, it has been shown that the regular use of the TYT app has no significant negative effect on the perceived tinnitus loudness and the tinnitus distress. Therefore, the app can be considered as a safe method for the longitudinal assessment of tinnitus symptoms in the everyday life of patients (Schlee et al., 2016). Another health risk is that patients (or their HCP) use TYT as a treatment tool and unnecessarily change their treatment plan due to self-reported symptoms in the app. In order to make patients aware of these risks, they are outlined on the TYT website2.

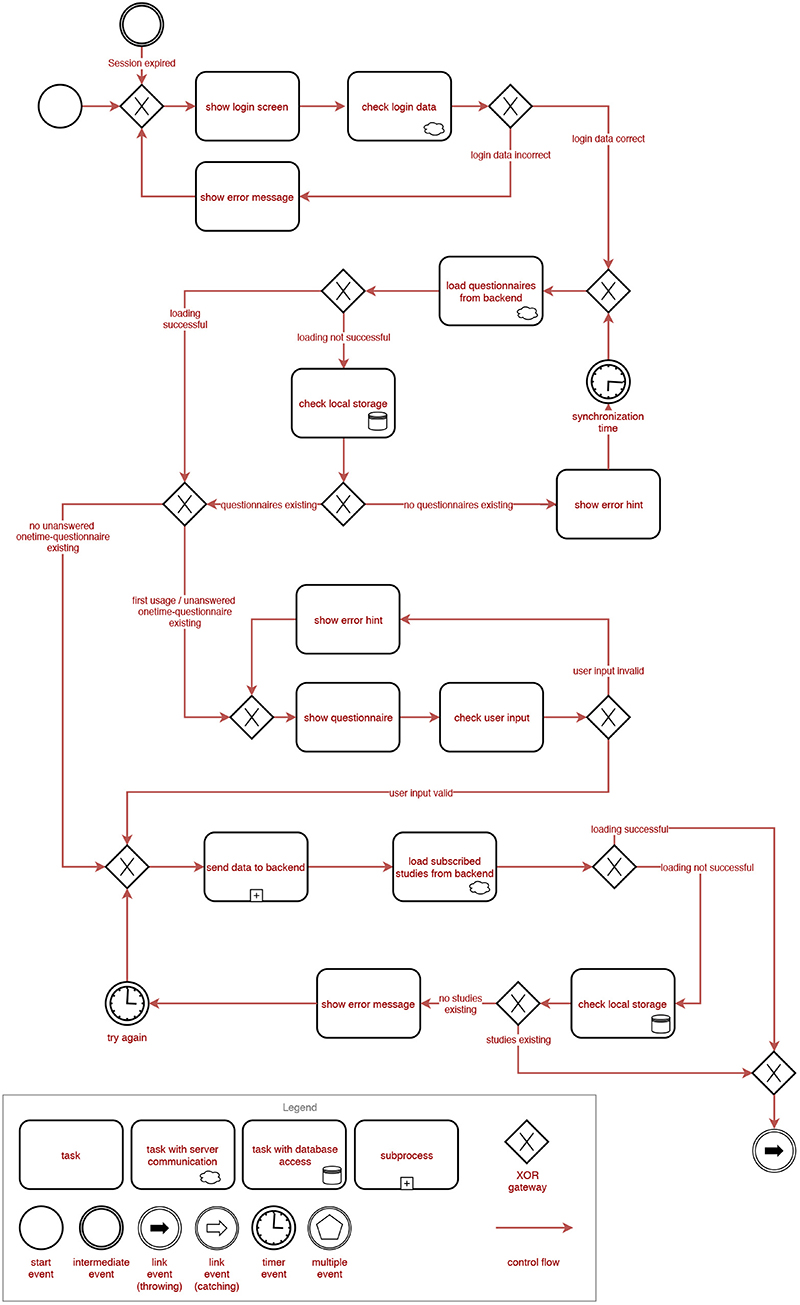

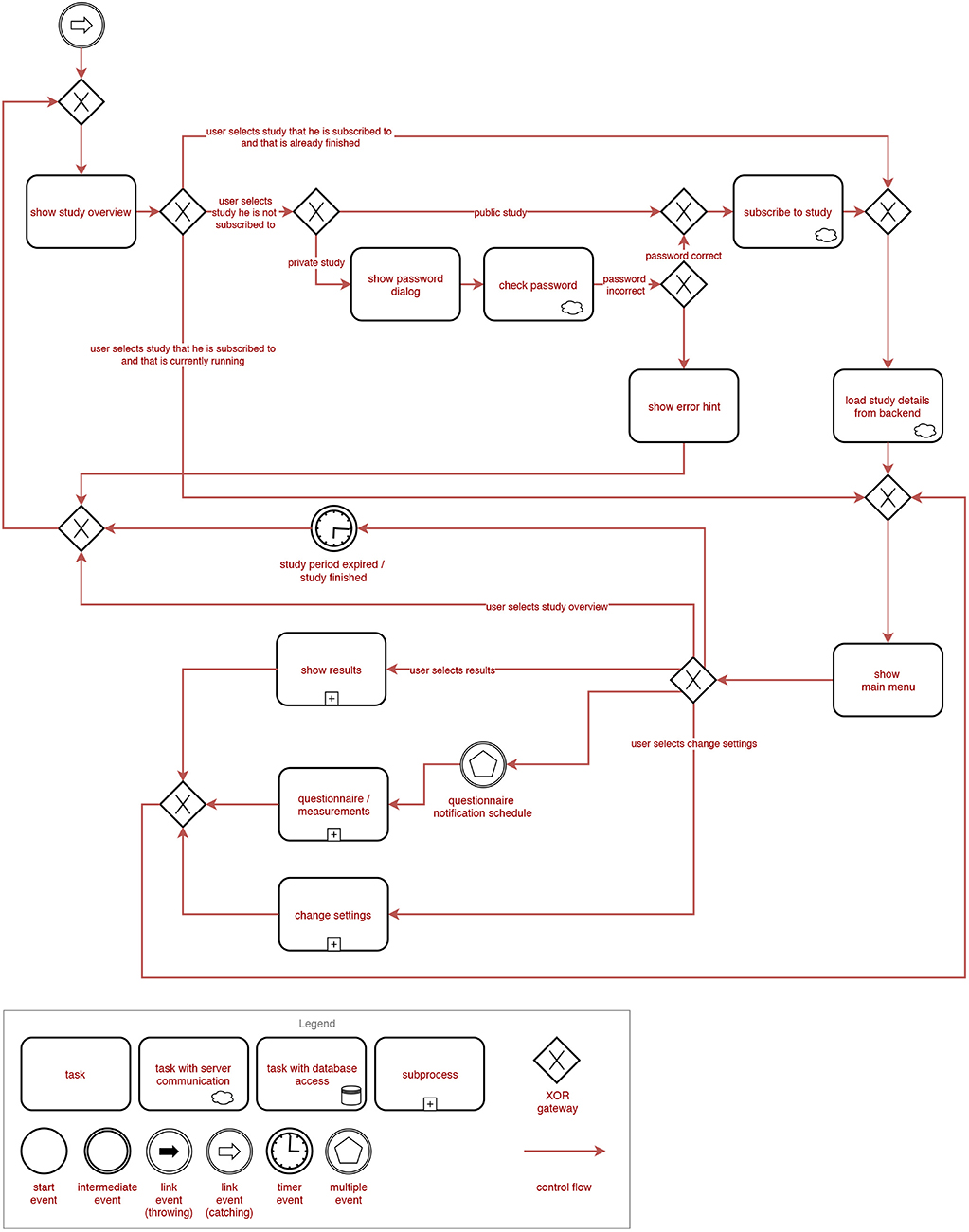

Figures 2, 3 show the general process a user is going through when using the TYT iOS or Android application. Note that these figures are process-oriented graphs in terms of the Business Process Modeling Notation (BPMN). This notation is an industry standard and also well-known for the documentation of healthcare-related procedures (Reichert and Pryss, 2017). With respect to these figures, first of all, a user authenticates himself/herself with his/her login data. Then, all available questionnaires are loaded from a central backend. If the loading is unsuccessful (e.g., no connection to the server can be established), locally stored data is used until the next synchronization attempt. In case there are no locally stored questionnaires, the synchronization attempt is retried until it succeeds. The app then checks if there are first usage (i.e., questionnaires that are only answered once after the first login) or one-time (i.e., questionnaires that are only answered once but might be answered at a later time) questionnaires available. If this is the case, these questionnaires are displayed and can be filled in by the user one after the other. Data is then synchronized with the backend by uploading all newly answered questionnaire data and loading all studies the user is subscribed to. If the synchronization is unsuccessful, the local storage is checked once again and the process is retried after some time if no data can be retrieved both remotely and locally. In the next step, an overview of all available studies is presented to the user. He/She may then select a study from that overview. Depending on the study and the user's subscription status, the following process differs. If the user is currently not subscribed to the study, he/she will be able to (a) directly subscribe to that study if it is public, or (b) be prompted to enter a password if it is a private study. For private studies, the password is then checked with the backend. If the password is correct, the user is subscribed to the study. Otherwise, an error hint is displayed and the user is redirected back to the study overview. If the user is currently subscribed to the study and that study is already finished, its details are loaded from the backend and the user is forwarded to the main menu. If the user is currently subscribed to the study and that study is still running, the user is also forwarded to the main menu. From the main menu, the user can choose to go back to the study overview, display his/her results, fill in questionnaires and perform sensor measurements, and finally, change the settings. From the results, questionnaire and settings views, he/she can always return to the main menu. If the user selects the study overview, or if the study period is expired (respectively, if the study is finished), the study overview is displayed once again.

Figure 2. BPMN representation of the general process of the TrackYourTinnitus (TYT) smartphone application (Part 1).

Figure 3. BPMN representation of the general process of the TrackYourTinnitus (TYT) smartphone application (Part 2).

During the development and advancement of the platform, we faced several challenges and peculiarities. Additionally, we gained some valuable insights when implementing such a combination of an EMA and MCS approach. First, we required a basic functionality to identify different users. One could argue that, since data has to be stored anonymized, a device ID would be sufficient, but this would prevent the users from changing devices without data loss. Therefore, we implemented basic authentication and authorization mechanisms, including registration via email, login with username and password, as well as password reset features.

The core of the application is the presentation and fill-in process of (EMA) questionnaires. In order to facilitate adding new questionnaires and adjusting existing questionnaires at a later time, the platform should offer a generic approach to handle questionnaires. We achieved this by defining the questionnaires as JavaScript Object Notation (JSON) objects containing an array of questionnaire elements (e.g., headline, text, multiple-choice-question), stored on the backend. The apps provide components with functionalities to render, configure, and handle the input for each of these elements. The components are then put together in a list view, and additional checks like input validation or ensuring that required questions are filled in are performed. Another requirement was to make the questionnaires easy to use, while not introducing bias. In order to improve usability of the apps, we tried to make the questionnaires look similar to their paper-pencil counterpart while using as many system-provided and default UI elements as possible when implementing the element components. However, some of the default UI elements are not suitable for the use in psychological questionnaires and had to be adjusted. For instance, default iOS and Android sliders have a pre-selected value, which fosters undesirable anchoring affects (Tversky and Kahneman, 1974).

Furthermore, a sophisticated algorithm has to be deployed in order to implement the notification (i.e., prompting) schedules. The algorithm has to account for the users' sleep and work schedules, ensuring notifications are not too close to each other3 and allowing different adjustments by the user (e.g., the time frame and number of notifications per day). Since we managed the notification schedules exclusively inside the apps, there was no way to retrieve any information on the scheduled and received notifications. Therefore, we were unable to extract valuable information on how users change their notification schedules and, most importantly, we could not evaluate the notification adherence. We offered both random (in a given time frame, adjustable by the user) and fixed (at an exact point in time, chosen by the user) notifications. However, users reported problems with random notifications not being delivered as configured or not delivered at all. While fixed notifications have proven to be more reliable, more flexible, and less disruptive to the user, their value in terms of EMA is to be questioned. Users might integrate answering the questionnaire into their daily routine, which can lead to a possible bias.

In our first version of the app, we incorporated an environmental sound measurement. If enabled by the user, the app tracks the average loudness recorded by the smartphone microphone while the user answers the questionnaires. This value is then stored together with the questionnaire data and can be correlated to gain new valuable insights on tinnitus and its interrelations with environmental sound. However, due to manufacturer and device model differences, measurements are not comparable across users. Calibrations with different device models or other measures to ensure comparability should be performed before integrating similar measurements into mobile applications. Additionally, these sensor measurements are hard-coded into the apps. A dynamic framework to integrate internal and external sensors would facilitate studies aiming to correlate different sensor data with questionnaire data. In this way, one could integrate additional sensors, e.g., positioning with GPS in order to investigate the interrelations to motion patterns or the influence of weather-related factors.

Another aspect worth considering is incentives. There needs to be some sort of motivation for users to continuously submit data. Zhang et al. (2015) divide incentives in mobile crowd sensing applications into entertainment, service, and monetary incentives. Since we do not consider monetary incentives sustainable in the long term (especially in the research context), we focus on the former two categories in order to increase the users' extrinsic and intrinsic motivation. While in TYT, we provided some minimalistic feedback in the form of a chart of the perceived tinnitus loudness and an option to review the history of submitted questionnaires for each individual user, we believe the main incentive for users is the contribution to research on a chronic disorder from which they are suffering. However, more than 78% of users drop out after 10 days of participation. More incentive mechanisms, like advanced feedback, gamification, or social features should be implemented (Agrawal et al., 2018).

In order to perform different studies with the app (and to exclude test users from the actual data set), the need to separate users into study groups inside the app emerged. We updated the app to incorporate a basic study allocation. Users are able to join studies by manually selecting them from a list inside the app. However, users can currently only be member of a single study at a time and there is no functionality in place for the study manager to control or verify which user joins which study without checking the database.

Since mobile devices are not guaranteed to always be connected to the internet (i.e., be online), the app should also be functional without internet connection whenever possible. TYT offers a basic offline functionality by initially downloading all questionnaires and storing them on the device. Additionally, the users' given answers for questionnaires are cached on the device if there is no internet connectivity until the connection is restored. This way, the feedback features also remain functional. However, other features, e.g., the study management, are only available if the device is online.

Furthermore, safety, security, and privacy are aspects of high importance in the healthcare domain. Region-specific regulations, e.g., the General Data Protection Regulation (GDPR) and the Medical Device Regulation (MDR) in the EU, as well as high expectations of patients need to be considered when designing a software system in this field. TYT applies state-of-the-art security measures with an email verification as part of the registration process, credential-based authentication and token-based authorization (see above) as well as encrypted data transmission via SSL/TLS (Rescorla, 2018). Health risks are outlined on the website. However, since safety, security, and privacy requirements are constantly evolving, a more transparent informed consent, additional security measures and a privacy-preserving design would be desirable for the future (e.g., Beierle et al., 2019).

Data quality of the submitted data is another critical issue in MCS-EMA apps (see section 2.2.2). As already discussed above, reliable sensor and comparable measurements on mobile devices are difficult to achieve due to the variety of device models. But, also for the questionnaire data, no real statement can be made regarding its quality. Since we only require the user to answer two of the eight questions in the daily questionnaire, users can skip most of the questions if they would like to do so, which leads to missing values. Also, if users feel forced to answer a question or have malicious intentions, they might provide untruthful data. In addition, the use of a smartphone to gather large amounts of personal data in real life that is stored to a large database for scientific research could boost competition thoughts. Consequently, participants might provide data only for the purpose of providing more data than others. Such factors should be taken into account and mechanisms should be in place to cope with data quality.

Moreover, scientists providing the platform and HCPs want to analyze the collected data. In TYT, data analysis is only possible in a static way by querying the raw database. More flexible, on-demand analysis functionalities for scientists evaluating the platform data are desirable. Furthermore, HCPs and their patients could benefit from a dynamic analysis of the patients' data, providing detailed insights and building the baseline for tailored feedback.

Finally, the experiences gained with TrackYourTinnitus and the projects shown in Table 2 are discussed in the light of their general contribution and their generalizability. A recent review of mobile health crowdsensing research (Tokosi and Scholtz, 2019) shows that the projects shown in Table 2 and the related papers are heavily recognized by their selected key terms of existing works. Tokosi and Scholtz (2019) also shows that although more and more research is pursued in this context, less experiences are reported that were gained over multiple large-scale and long-running projects. Therefore, we consider our experiences as a proper starting point to conceive a reference architecture that incorporates aspects that are relevant on one hand. On the other, these aspects have shown their importance at multiple times. Furthermore, the authors have already worked on better generic solutions for parts of the reference architecture. For example, for the REST interface (see Figure 4) in Pryss et al. (2018b), a more generic solution was proposed. This solution, in turn, is utilized by all projects shown in Table 2 that have been started after TrackYourTinnitus. However, as for other purposes, like mobile data collection, better generic solutions have been proposed (e.g., Schobel et al., 2019). A configurable crowdsensing platform based on (1) the archetype shown in (Schobel et al., 2019) and (2) the results of this work is currently conceived. Moreover, developments, such as PACO4 show that easily customizable MCS-EMA apps are highly welcome by users. In addition, commercial tools, such as ilumivu5 emphasize the need of generic solutions in the given context of EMA and mobile crowdsensing. Thereby, the ilumivu technical solution provides already sophisticated features for EMA apps on a generic level. Importantly, these features deal with many aspects raised in this work. On the other, ilumivu still does not consider all of the discussed aspects. For example, ilumivu does not convey how they cope with a management of incentives. Following this, the work at hand can be utilized to reflect existing solutions or new developments with the shown experiences and derived recommendations, especially as they are gained over time and across projects. We do not claim that these recommendations are complete or cover every aspect, but we consider them as a proper starting point for various projects and questions in the context of healthcare and the combination of mobile crowdsensing and EMA.

Based on the findings in section 3, we derive a number of recommendations for a mature and contemporary MCS-EMA platform. We then propose a reference architecture to address these recommendations and discuss technical considerations with respect to the implementation.

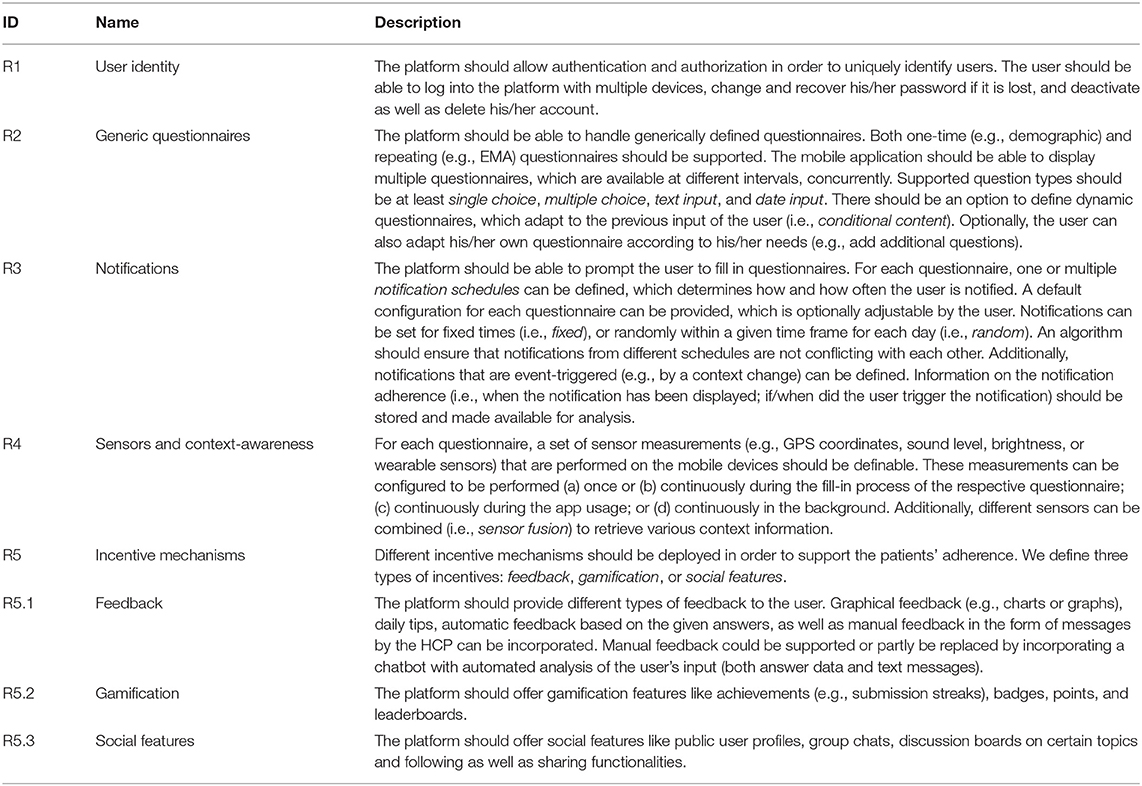

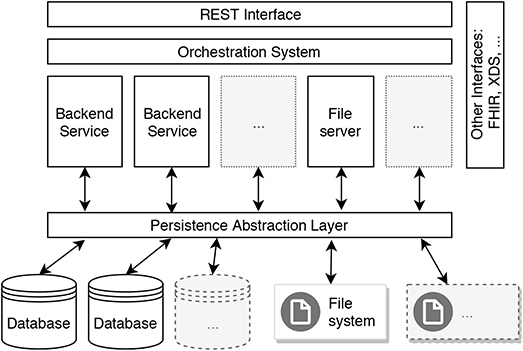

We derived twelve recommendations from the lessons learned during the TYT project (see section 3), various discussions with colleagues and domain experts, as well as general considerations when building a modern software system. Namely, these recommendations are (R1) User Identity, (R2) Generic Questionnaires, (R4) Sensors and Context-Awareness, (R5) Incentive Mechanisms, (R6) Groups, Studies and HCPs, (R7) High Availability and Performance, (R8) Offline Availability, (R9) Safety, Security, and Privacy, (R10) Data Quality, (R11) Data Analysis, and (R12) Interoperability. The recommendations are described in detail in Tables 4, 5.

Table 4. Recommendations for a platform combining mobile crowdsensing (MCS) and ecological momentary assessments (EMA) in the healthcare domain (Part 1).

Table 5. Recommendations for a platform combining mobile crowdsensing (MCS) and ecological momentary assessments (EMA) in the healthcare domain (Part 2).

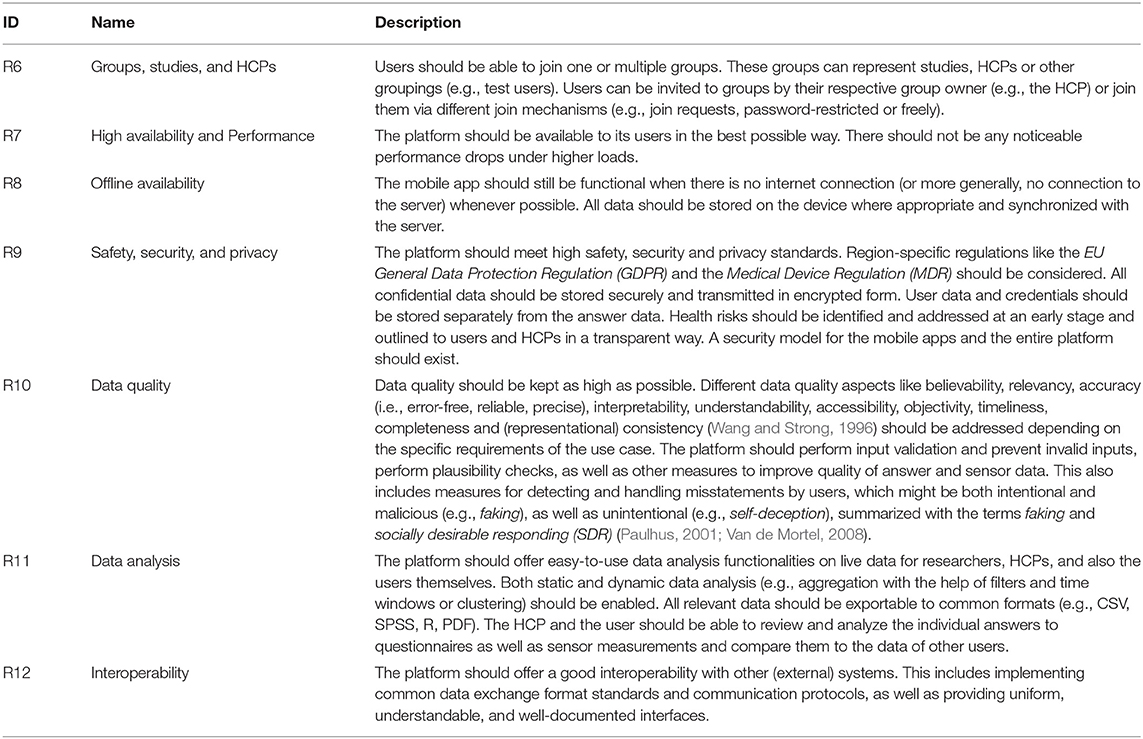

Based on the recommendations defined in section 4.1, we propose a reference architecture for a platform supporting the combination of mobile crowdsensing and ecological momentary assessments in the healthcare domain. Figure 4 shows the general architecture. It comprises a central backend with different services, a database and a file server, as well as mobile apps for both Android and iOS, a web dashboard for HCPs and another web dashboard for system administrators (admins). The clients (mobile apps, HCP dashboard and admin dashboard) communicate with the backend via a RESTful interface. Files, like multimedia and documents, are stored on a file server. Relevant files are downloaded and additionally stored on the mobile devices. All relevant data, like questionnaires, notification schedules as well as answer and sensor data are synchronized between the central database in the backend and the mobile apps' local databases. The backend additionally provides other interfaces for external systems, implementing common standards in the healthcare domain.

Furthermore, we discuss technical considerations in order to address some of the architectural aspects of the defined recommendations in respect to our reference architecture. First, in order to achieve high availability, the system has to be scalable, and in the best case, elastic. According to definitions provided by Herbst et al., scalability is “the ability of a system to handle increasing workloads with adequate performance,” while elasticity is “the degree to which a system is able to adapt to workload changes by provisioning and deprovisioning resources in an autonomic manner, such that at each point in time the available resources match the current demand as closely as possible” (Herbst et al., 2013). We suggest to use a cloud-native approach to address these recommendations. A cloud-native application (CNA) is explicitly designed to be operated in the cloud. Therefore, such application is—by design—distributed, elastic, and horizontally scalable. Furthermore, it is composed of microservices with a minimum of isolated states (Kratzke and Quint, 2017). The internal architecture for a cloud-native implementation of the backend in our reference architecture is shown in Figure 5. The backend can be decomposed to multiple microservices, and these microservices can then be replicated in order to enable horizontal scalability. Optimally, the database, file server and file system should be distributed and/or replicated as well. In order to provide elasticity, an orchestration system is used to monitor metrics describing the load of the system and automatically orchestrate resources based on these metrics in order to scale in and scale out. A common approach would be to use Docker6 as container technology to implement microservices and Kubernetes7 (Burns et al., 2016) as container-orchestration system.

Figure 5. Scalable design of a backend in the reference architecture for MCS-EMA platforms in the healthcare domain.

In order to provide high levels of security and privacy, all communication between different components of the architecture should be encrypted. All personal and private user data should be stored separately from the application data to reduce the risk of it being exposed in case of a data breach. Optionally, in a privacy-preserving design, this data should be encrypted in a way that it can only be decrypted by each respective user himself. In the best case, a dedicated privacy model is incorporated or developed (e.g., Beierle et al., 2019).

Furthermore, for the development of the mobile apps, it has to be decided whether to develop a native app for each target platform (e.g., Android, iOS, web browser) or use cross-platform frameworks that enable the developer to use a single code-base and deploy this code to different platforms. We recommend to use cross-platform frameworks (e.g., Xamarin8, Flutter9, or Ionic10) for small developer teams and teams which are prone to changes (e.g., research projects), since the single code base requires less efforts for development and maintenance, as well as causes lesser heterogeneity-based challenges in programming languages and tools, which makes it easier for new developers to enter the team. However, for bigger and more consistent developer teams, native app development might be better suited. Native apps might provide a better interface to the operating system and therefore more control over sensors and the user interface, as well as potentially better performance. This has special value to MCS apps incorporating advanced sensor usage.

Finally, in order to provide good interoperability with other internal as well as external systems, common interfaces should be provided. This includes state-of-the-art architectural styles in web technology like REST (Fielding and Taylor, 2000; Pryss et al., 2018b), but also standards in the healthcare domain [e.g., FHIR11 or XDS (Trotter and Uhlman, 2011)]. Standards that one wants to support should be considered at an early stage when designing the data models.

We argue that, when considering mobile crowdsensing in the healthcare domain, differentiating only between participatory and opportunistic sensing is not sufficient. Other aspects like context-awareness, incentive mechanisms, groups, security, and privacy, data quality, as well as technical aspects like availability, performance, offline availability and interoperability should be also thoroughly taken into account. Additionally, although personal sensing data on its own only belongs to an individual user, it can be used in order to be beneficial for the community as a whole by processing, clustering, and correlating this type of data. Therefore, we further argue that in the context of mobile crowdsensing in healthcare, there is no distinct separation between community sensing and personal sensing, and that both concepts should be considered depending on the scenario that is addressed.

Furthermore, in the literature, MCS and EMA are considered as separate, mostly unrelated concepts. While they have different origins, we argue that both concepts make use of similar approaches, namely leveraging the crowd and their (already existing) mobile devices in order to assess phenomena of common interest. Therefore, they should be considered closely related to each other, and their combination should get more awareness. Beyond that, the architectural model is often not provided in publications on MCS and EMA studies, although we argue that it has meaningful implications on the comparability of their results. We believe that a reference architecture, such as that introduced in this work, can raise awareness and counteract this issue to a certain degree. In this context, we have particularly shown which aspects the reference architecture incorporates to develop more generic technical solutions based on it.

In this work, we discussed the combination of mobile crowdsensing (MCS) and ecological momentary assessment (EMA) in the healthcare domain. We introduced both terms and described how we considered their underlying concepts that are similar to each other, which fosters combining MCS and EMA in a single approach. Furthermore, we discussed the lessons we learned from the TrackYourTinnitus project, which is running for over 5 years. Based on these findings, we derived recommendations for a platform supporting the combination of MCS and EMA in the healthcare domain. We then proposed a reference architecture for such a platform, described its components and how they interact. Additionally, we outlined how the reference architecture could be implemented in order to address the defined recommendations from the technical side. Furthermore, we discussed how MCS and EMA research should be considering both concepts in combination and propose that publications in this field should refer to the used architectural model.

In conclusion, one can see that there are numerous conceptual, architectural and technical, as well as legal challenges when designing a MCS-EMA platform for the healthcare domain. We believe that the defined recommendations can—adjusted to the individual factors, needs and requirements of a (research) project or product—act as foundation for future MCS-EMA systems. All the different aspects should be considered at an early stage of the project. Additionally, the reference architecture can serve as a generic template for a platform implementation. Technical considerations should be kept in mind in order to be able to scale and cope with future requirements. However, we believe that the combination of MCS and EMA is a promising approach for many different use cases in the healthcare domain. For this endeavor, our reference architecture and recommendations shall be a basis for more generic and comparable technical solutions.

RK and RP substantially contributed to the TrackYourTinnitus platform, drafted, and revised the manuscript. WS, MS, MR, BL, and TP substantially contributed to the TrackYourTinnitus platform and revised the manuscript. HB read and revised the manuscript. RH substantially contributed to the TinnitusTipps platform and revised the manuscript.

RH was employed by the company Sivantos GmbH, Germany.

The remaining authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

1. ^https://www.trackyourtinnitus.org/

2. ^https://www.trackyourtinnitus.org/about

3. ^We chose 15 min as minimal distance between two consecutive notifications.

8. ^https://dotnet.microsoft.com/apps/xamarin

Agrawal, K., Mehdi, M., Reichert, M., Hauck, F., Schlee, W., Probst, T., et al. (2018). Towards incentive management mechanisms in the context of crowdsensing technologies based on trackyourtinnitus insights. Proc. Comput. Sci. 134, 145–152. doi: 10.1016/j.procs.2018.07.155

Beierle, F., Tran, V. T., Allemand, M., Neff, P., Schlee, W., Probst, T., et al. (2019). What data are smartphone users willing to share with researchers? J. Ambient Intell. Human. Comput. 2019, 1–13. doi: 10.1007/s12652-019-01355-6

Burke, J. A., Estrin, D., Hansen, M., Parker, A., Ramanathan, N., Reddy, S., et al. (2006). Participatory Sensing. Los Angeles, CA: UCLA; Center for Embedded Network Sensing.

Burns, B., Grant, B., Oppenheimer, D., Brewer, E., and Wilkes, J. (2016). Borg, Omega, and Kubernetes. New York, NY: ACM Queue.

Consolvo, S., Everitt, K., Smith, I., and Landay, J. A. (2006). “Design requirements for technologies that encourage physical activity,” in Proceedings of the SIGCHI Conference on Human Factors in Computing Systems (Montreal, QC: ACM), 457–466.

Ebner-Priemer, U. W., and Kubiak, T. (2007). Psychological and psychophysiological ambulatory monitoring. Eur. J. Psychol. Assess. 23, 214–226. doi: 10.1027/1015-5759.23.4.214

Fielding, R. T., and Taylor, R. N. (2000). Architectural styles and the design of network-based software architectures (Doctoral dissertation), University of California, Irvine, Irvine, CA, United States.

Ganti, R. K., Ye, F., and Lei, H. (2011). Mobile crowdsensing: current state and future challenges. IEEE Commun. Mag. 49, 32–39. doi: 10.1109/MCOM.2011.6069707

Guo, B., Wang, Z., Yu, Z., Wang, Y., Yen, N. Y., Huang, R., et al. (2015). Mobile crowd sensing and computing: the review of an emerging human-powered sensing paradigm. ACM Comput. Surv. 48:7. doi: 10.1145/2794400

Herbst, N. R., Kounev, S., and Reussner, R. (2013). “Elasticity in cloud computing: what it is, and what it is not,” in Proceedings of the 10th International Conference on Autonomic Computing (ICAC 13) (San Jose, CA), 23–27.

Kratzke, N., and Quint, P.-C. (2017). Understanding cloud-native applications after 10 years of cloud computing–a systematic mapping study. J. Syst. Softw. 126, 1–16. doi: 10.1016/j.jss.2017.01.001

Lane, N. D., Miluzzo, E., Lu, H., Peebles, D., Choudhury, T., and Campbell, A. T. (2010). A survey of mobile phone sensing. IEEE Commun. Mag. 48, 140–150. doi: 10.1109/MCOM.2010.5560598

Larson, R., and Csikszentmihalyi, M. (2014). “The experience sampling method,” in Flow and the Foundations of Positive Psychology, ed M. Csikszentmihalyi (Dordrecht: Springer), 21–34.

Mun, M., Reddy, S., Shilton, K., Yau, N., Burke, J., Estrin, D., et al. (2009). “Peir, the personal environmental impact report, as a platform for participatory sensing systems research,” in Proceedings of the 7th International Conference on Mobile Systems, Applications, and Services (Kraków: ACM), 55–68.

Myin-Germeys, I., Oorschot, M., Collip, D., Lataster, J., Delespaul, P., and Van Os, J. (2009). Experience sampling research in psychopathology: opening the black box of daily life. Psychol. Med. 39, 1533–1547. doi: 10.1017/S0033291708004947

Paulhus, D. L. (2001). “Socially desirable responding: the evolution of a construct,” in The Role of Constructs in Psychological and Educational Measurement, eds H. I. Braun, D. N. Jackson, and D. E. Wiley (Abingdon: Routledge), 61–84.

Pryss, R. (2019). “Mobile crowdsensing in healthcare scenarios: taxonomy, conceptual pillars, smart mobile crowdsensing services,” in Digital Phenotyping and Mobile Sensing, eds H. Baumeister and C. Montag (Cham: Springer), 221–234.

Pryss, R., John, D., Schlee, W., Schlotz, W., Schobel, J., Kraft, R., et al. (2019). Exploring the time trend of stress-levels while using the crowdsensing mhealth platform trackyourstress and the influence of stress reactivity. JMIR mHealth uHealth 7:e13978. doi: 10.2196/13978

Pryss, R., Probst, T., Schlee, W., Schobel, J., Langguth, B., Neff, P., et al. (2018a). Prospective crowdsensing versus retrospective ratings of tinnitus variability and tinnitus–stress associations based on the trackyourtinnitus mobile platform. Int. J. Data Sci. Anal. 8, 327–338. doi: 10.1007/s41060-018-0111-4

Pryss, R., Reichert, M., Herrmann, J., Langguth, B., and Schlee, W. (2015). “Mobile crowd sensing in clinical and psychological trials–a case study,” in 2015 IEEE 28th International Symposium on Computer-Based Medical Systems (São Carlos: IEEE), 23–24.

Pryss, R., Schlee, W., Langguth, B., and Reichert, M. (2017). “Mobile crowdsensing services for tinnitus assessment and patient feedback,” in 2017 IEEE International Conference on AI & Mobile Services (AIMS) (Honolulu, HI: IEEE), 22–29.

Pryss, R., Schobel, J., and Reichert, M. (2018b). “Requirements for a flexible and generic api enabling mobile crowdsensing mhealth applications,” in 2018 4th International Workshop on Requirements Engineering for Self-Adaptive, Collaborative, and Cyber Physical Systems (RESACS) (Banff, AB: IEEE), 24–31.

Raento, M., Oulasvirta, A., and Eagle, N. (2009). Smartphones: an emerging tool for social scientists. Sociol. Methods Res. 37, 426–454. doi: 10.1177/0049124108330005

Reichert, M., and Pryss, R. (2017). Flexible support of healthcare processes. Process Model. Manag. Healthc. 1, 35–66. doi: 10.1201/b21884-2

Rescorla, E. (2018). The Transport Layer Security (TLS) Protocol Version 1.3. Technical report, RFC Editor.

Ruf-Leuschner, M., Brunnemann, N., Schauer, M., Pryss, R., Barnewitz, E., Liebrecht, M., et al. (2016). The kindex-app–an instrument for assessment and immediate analysis of psychosocial risk factors in pregnant women in daily practice by gynecologists, midwives and in gynecological hospitals. Verhaltenstherapie 26, 171–181. doi: 10.1159/000448455

Schlee, W., Pryss, R. C., Probst, T., Schobel, J., Bachmeier, A., Reichert, M., et al. (2016). Measuring the moment-to-moment variability of tinnitus: the trackyourtinnitus smart phone app. Front. Aging Neurosci. 8:294. doi: 10.3389/fnagi.2016.00294

Schobel, J., Probst, T., Reichert, M., Schickler, M., and Pryss, R. (2019). Enabling sophisticated lifecycle support for mobile healthcare data collection applications. IEEE Access 7, 61204–61217. doi: 10.1109/ACCESS.2019.2916142

Schobel, J., Pryss, R., and Reichert, M. (2015). “Using smart mobile devices for collecting structured data in clinical trials: results from a large-scale case study,” in 2015 IEEE 28th International Symposium on Computer-Based Medical Systems (São Carlos: IEEE), 13–18.

Schweizer, I., Bärtl, R., Schulz, A., Probst, F., and Mühläuser, M. (2011). “NoiseMap–real-time participatory noise maps,” in Second International Workshop on Sensing Applications on Mobile Phones (Seattle, WA: Citeseer), 1–5.

Shiffman, S. (2007). “Designing protocols for ecological momentary assessment,” in The Science of Real-Time Data Capture: Self-Reports in Health Research, eds A. Stone, S. Shiffman, A. Atienza, and L. Nebeling (Oxford: Oxford University Press), 27–53.

Shiffman, S., Stone, A. A., and Hufford, M. R. (2008). Ecological momentary assessment. Annu. Rev. Clin. Psychol. 4, 1–32. doi: 10.1146/annurev.clinpsy.3.022806.091415

Stone, A. A., and Shiffman, S. (1994). Ecological momentary assessment (EMA) in behavorial medicine. Ann. Behav. Med. 16, 199–202. doi: 10.1093/abm/16.3.199

Stone, A. A., and Shiffman, S. (2002). Capturing momentary, self-report data: a proposal for reporting guidelines. Ann. Behav. Med. 24, 236–243. doi: 10.1207/S15324796ABM2403_09

Tokosi, T. O., and Scholtz, B. M. (2019). “A classification framework of mobile health crowdsensing research: a scoping review,” in Proceedings of the South African Institute of Computer Scientists and Information Technologists 2019 (Skukuza: ACM), 4.

Trotter, F., and Uhlman, D. (2011). Hacking Healthcare: A Guide to Standards, Workflows, and Meaningful Use. Sebastopol, CA: O'Reilly Media, Inc.

Tversky, A., and Kahneman, D. (1974). Judgment under uncertainty: heuristics and biases. Science 185, 1124–1131. doi: 10.1126/science.185.4157.1124

Van Berkel, N., Ferreira, D., and Kostakos, V. (2018). The experience sampling method on mobile devices. ACM Comput. Surv. 50:93. doi: 10.1145/3123988

Van de Mortel, T. F. (2008). Faking it: social desirability response bias in self-report research. Aust. J. Adv. Nurs. 25:40.

Van Haren, S. (2018). An exploratory study into the use of mobile experience sampling as a method for assessing infant language development (Master's thesis), Tilburg University, Tilburg, Netherlands.

Wang, R. Y., and Strong, D. M. (1996). Beyond accuracy: what data quality means to data consumers. J. Manag. Inform. Syst. 12, 5–33. doi: 10.1080/07421222.1996.11518099

Wesolowski, A., Eagle, N., Tatem, A. J., Smith, D. L., Noor, A. M., Snow, R. W., et al. (2012). Quantifying the impact of human mobility on malaria. Science 338, 267–270. doi: 10.1126/science.1223467

Zappatore, M., Longo, A., and Bochicchio, M. A. (2017). Crowd-sensing our smart cities: a platform for noise monitoring and acoustic urban planning. J. Commun. Softw. Syst. 13:53. doi: 10.24138/jcomss.v13i2.373

Keywords: mobile crowdsensing (MCS), crowdsourcing, ecological momentary assessments (EMA), mobile healthcare application, chronic disorders, reference architecture

Citation: Kraft R, Schlee W, Stach M, Reichert M, Langguth B, Baumeister H, Probst T, Hannemann R and Pryss R (2020) Combining Mobile Crowdsensing and Ecological Momentary Assessments in the Healthcare Domain. Front. Neurosci. 14:164. doi: 10.3389/fnins.2020.00164

Received: 05 September 2019; Accepted: 13 February 2020;

Published: 28 February 2020.

Edited by:

Alejandro Rodríguez González, Polytechnic University of Madrid, SpainReviewed by:

Kazuhiro Yoshiuchi, The University of Tokyo, JapanCopyright © 2020 Kraft, Schlee, Stach, Reichert, Langguth, Baumeister, Probst, Hannemann and Pryss. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Robin Kraft, cm9iaW4ua3JhZnRAdW5pLXVsbS5kZQ==

Disclaimer: All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article or claim that may be made by its manufacturer is not guaranteed or endorsed by the publisher.

Research integrity at Frontiers

Learn more about the work of our research integrity team to safeguard the quality of each article we publish.