
ORIGINAL RESEARCH article

Front. Neurosci., 28 March 2017

Sec. Auditory Cognitive Neuroscience

Volume 11 - 2017 | https://doi.org/10.3389/fnins.2017.00153

Music can trigger emotional responses in a more direct way than any other stimulus. In particular, music-evoked pleasure involves brain networks that are part of the reward system. Furthermore, rhythmic music stimulates the basal ganglia and may trigger involuntary movements to the beat. In the present study, we created a continuously playing, rhythmic, dance floor-like composition in which the ambient noise from the MR scanner was incorporated as an additional rhythm instrument. By treating this continuous stimulation paradigm as a variant of resting state, the data were analyzed with stochastic dynamic causal modeling (sDCM), which was used to explore functional dependencies and interactions between core areas of auditory perception, rhythm processing, and reward processing. The sDCM model was a fully connected model comprising the following areas of both hemispheres: auditory cortex, putamen/pallidum, and ventral striatum/nucleus accumbens. The resulting parameter estimates were compared with those obtained from ordinary resting-state data acquired without additional continuous stimulation. Besides reduced connectivity within the basal ganglia, the results indicated reduced functional connectivity of the reward system, namely of the right ventral striatum/nucleus accumbens from and to the basal ganglia and auditory network, while listening to rhythmic music. In addition, the right ventral striatum/nucleus accumbens also showed a change in its hemodynamic parameters, reflecting an increased level of activation. These converging results may indicate that, when we listen to rhythmic music, the dopaminergic reward system reduces its functional connectivity and relinquishes its constraints on other areas.

Playing and enjoying music is a universal phenomenon found in all known present and past cultures (Gray et al., 2001; Zatorre and Krumhansl, 2002; Fritz et al., 2009), and emotional responses to music are an experience that almost everyone has had. Further, in all cultures, across all ages, and without any requirement of musical expertise, people readily dance and synchronize their body movements to rhythmic music (Phillips-Silver and Trainor, 2005). Consequently, the past two decades have seen increasing interest in mapping the neuroanatomical correlates of music processing in order to understand the underlying mechanisms that make music such a unique stimulus in human culture and, although this is controversially discussed, in human evolution (Levitin and Tirovolas, 2009). It turns out that music processing activates not only the auditory system but a complex, distributed network covering cortical and subcortical areas (Peretz and Zatorre, 2005; Zatorre and McGill, 2005; Koelsch, 2011, 2014; Zatorre, 2015). Two aspects have received particular attention in recent years: the processing of rhythm and the emotions evoked by music.

It is a daily experience that listening to predominantly rhythmic music often spontaneously triggers toe tapping, foot tapping, or head nodding in synchrony with the beat, and that this is perceived as pleasurable. Performing rhythmic movements in synchronization with the perceived beat is an almost automatic and often involuntary process that does not require cognitive awareness (Phillips-Silver and Trainor, 2005; Trost et al., 2014). It also requires no musical skills or formal musical training and is readily triggered by auditory stimulation. Rhythm processing, i.e., both the production of rhythmic movements and the perception of rhythmic sounds, has been shown to activate the basal ganglia, in particular the putamen, as well as the supplementary motor area (SMA), pre-SMA, and cerebellum (Grahn and Brett, 2007; Zatorre et al., 2007; Chen et al., 2008a,b; Grahn and Rowe, 2009); other substructures, such as the caudate nucleus, have also been identified as encoding musical meter (Trost et al., 2014). Moreover, the perception of rhythms at a preferred tempo has been shown to increase activity in the premotor cortex (Kornysheva et al., 2010).

Besides rhythm, there is mounting evidence that listening to music induces emotional experiences and may interact with the affective systems. Neuroanatomically, these processes are tightly linked not only to the amygdala but also to a network involved in expecting and experiencing reward (Levitin and Tirovolas, 2009; Chanda and Levitin, 2013; Koelsch, 2014; Salimpoor et al., 2015). In particular, the ventral striatum, comprising the caudate nucleus and the nearby nucleus accumbens, together with the ventral tegmental area, the ventral pallidum, and other, mainly frontal areas, has repeatedly been related to music listening (Blood and Zatorre, 2001; Levitin and Tirovolas, 2009; Chanda and Levitin, 2013; Salimpoor et al., 2013, 2015; Zatorre and Salimpoor, 2013; Koelsch, 2014; Zatorre, 2015). Interestingly, Salimpoor et al. (2011) demonstrated anatomically distinct increases in dopamine release, depending on whether an emotional response to music was anticipated or experienced. While the dorsal striatum showed elevated dopamine release only during the anticipation of an emotional response to the music, the nucleus accumbens showed it during the experience of an emotional response (Salimpoor et al., 2011). Further, activity in the nucleus accumbens also predicted how much a listener would be willing to spend on the music (Salimpoor et al., 2013).

The aspects described above, emotional responses to music and synchronized movement to rhythmic auditory stimulation, are interwoven, particularly in musical groove. Groove is often defined as the musical quality that induces movement and pleasure (Madison, 2006; Madison et al., 2011; Janata et al., 2012; Witek et al., 2014). Further, a recent transcranial magnetic stimulation study demonstrated that musical groove can modulate excitability within the motor system, with stronger motor-evoked potentials for high-groove music (Stupacher et al., 2013). From a musicological perspective, there is strong evidence that the experience of groove is mainly triggered by beat density and syncopation, as well as by moderate rhythmic complexity, i.e., neither too simple nor too complex (Stupacher et al., 2013; Witek et al., 2014, 2015). An example of this type of music is the genre of electronic dance music (Panteli et al., 2016), to which the stimulus of the present study also belongs.

Based on the studies described above, it is evident that rhythmic music, such as electronic dance music, triggers responses in different parts of the basal ganglia loop. In particular, the ventral striatum and the putamen appear to be key areas that respond to the rhythm of the music and may generate an emotional response. Therefore, it was hypothesized that rhythmic music would affect connectivity within a network comprising the auditory cortex, putamen/pallidum, and ventral striatum/nucleus accumbens.

This hypothesis was tested with a continuous stimulation paradigm and a resting-state-like analysis based on stochastic dynamic causal modeling (sDCM). This procedure does not test activation strength per se, since there is no reference condition for a contrast; instead, it examines fluctuations of the BOLD signal and functional dependencies between regions. In general, DCM allows the specification of models with up to eight anatomically distinct regions that are either directly or indirectly connected to each other. Here, the model space was restricted to a plausible model of six areas, representing sensory input, rhythm processing in the basal ganglia, and the reward system.

The participants were recruited from the student population at the University of Bergen. In total, 26 right-handed, healthy adults (music group: eight female, five male, mean age 30.8 ± 8.4 years; control group: six female, seven male, mean age 22.8 ± 3.7 years) participated in this fMRI study. All participants gave written informed consent in accordance with the Declaration of Helsinki and institutional guidelines, and approval for fMRI studies in healthy subjects was obtained from the regional ethics committee for western Norway (REK-Vest). Half of the subjects (n = 13) listened to the music during scanning, while the other 13 served as control subjects in an ordinary resting-state fMRI condition without any additional auditory stimulation. Both groups were instructed to lie still with their eyes open. Participants were compensated for their effort with 200 NOK. Exclusion criteria were neurological or psychiatric disorders, claustrophobia, any surgery of the brain, eyes, or head, pregnancy, implants, braces, large tattoos, and non-removable piercings. All participants were given an emergency button and were informed that they could withdraw from the study at any point. Participants were recruited mainly through announcements at the University of Bergen and Haukeland University Hospital.

To overcome a limitation of earlier studies, which often used short or chunked pieces of music, the present study used a new continuous-stimulation design with an experimentally controlled composition that was synchronized with the sounds generated by the MR scanner. The music was created as electronic dance music from 12 samples of different instruments, such as dance drums, bass, kick snare, and guitar, mixed with Adobe Audition 2.0 (www.adobe.com) into a 10.16 min-long sequence at 120 beats per minute (see Supplementary Material).

The piece was composed of repeated 20-s periods in which all instruments were playing, alternating with periods in which only a few instruments were playing. However, the overall rhythm was present throughout the entire 10.16 min sequence. During image acquisition, the TR was set to 2 s, including a 0.5 s silent gap. In this way, the MR scanner was synchronized with the rhythm of the music and thereby acted as an “additional” instrument rather than being perceived as an unrelated, unsynchronized auditory stimulus. During scanning, the subjects in the music group were asked to relax, to listen to the music, and not to move. Participants were interviewed after the examination, and all except one reported having experienced the music as pleasant and relaxing. The control subjects were simply asked to relax. Although the control subjects were examined with the same sequence, i.e., with brief silent gaps of 500 ms, they did not report perceiving the scanner noise as any kind of music; only during the debriefing afterwards were they told that they had served as control participants in a study focusing on rhythm perception.

The fMRI study was performed on a 3 T GE Signa Excite scanner. The axial slices for functional imaging were positioned parallel to the AC–PC line with reference to a high-resolution anatomical image of the entire brain volume, obtained using a T1-weighted gradient echo pulse sequence. The functional images were acquired using an echo-planar imaging (EPI) sequence with 311 volumes, each containing 24 axial slices (64 × 64 matrix, 3.4 × 3.4 × 5.5 mm³ voxel size, TE 30 ms) that covered the cerebrum and most of the cerebellum. This low spatial resolution was chosen to achieve a short acquisition time (TA) of 1.5 s. Together with the 0.5 s silent gap, this resulted in an effective TR of 2 s. The stimuli were presented through MR-compatible headphones with insulating material that also attenuated the ambient scanner noise by 24 dB.
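
The synchronization between scanner and music follows from simple arithmetic on the values given above (120 bpm, TR 2 s, TA 1.5 s, 20-s periods). The sketch below is a hedged illustration that uses only those stated values: at 120 bpm one beat lasts 0.5 s, so each TR spans exactly four beats, the acquisition occupies three beats, and the silent gap one beat. Whether the scanner bursts were phase-aligned with the downbeat is not stated in the text; the sketch only checks durations, and the helper name `in_beats` is hypothetical.

```python
# Timing arithmetic for the continuous-stimulation design.
# All numbers come from the Methods text; nothing here is measured data.
BPM = 120              # tempo of the composition (beats per minute)
TR = 2.0               # effective repetition time (s)
TA = 1.5               # acquisition time per volume (s)
SILENT_GAP = TR - TA   # silent gap between volumes (s)
BLOCK = 20.0           # duration of the "all instruments" periods (s)

beat = 60.0 / BPM      # seconds per beat


def in_beats(seconds: float) -> float:
    """Express a duration in beats at the composition's tempo."""
    return seconds / beat


timing = {
    "beat_s": beat,
    "beats_per_TR": in_beats(TR),
    "beats_per_TA": in_beats(TA),
    "beats_per_gap": in_beats(SILENT_GAP),
    "TRs_per_block": BLOCK / TR,
}

if __name__ == "__main__":
    for name, value in timing.items():
        print(f"{name}: {value:g}")
```

Because `beats_per_TR` is a whole number, the scanner noise recurs at the same position relative to the beat on every volume, provided the first volume is aligned, and each 20-s period spans exactly 10 volumes.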

The DICOM data were converted into 4D NIfTI files using dcm2nii (http://www.mccauslandcenter.sc.edu/mricro/). The BOLD-fMRI data were pre-processed using SPM12 (http://www.fil.ion.ucl.ac.uk/spm). The EPI images were first realigned to correct for head movements during image acquisition, and the images were corrected for movement-induced distortions (“unwarping”). The data were subsequently inspected for residual movement artifacts; all translations were < 2 mm and all rotations < 2°. Afterwards, the realigned image series were normalized to the stereotaxic space of the Montreal Neurological Institute (MNI), as defined by the EPI template provided with the SPM12 software package (“Old normalize”). Normalization parameters were estimated from the mean image generated by the realign-and-unwarp procedure and subsequently applied to the realigned time series. The normalized images were resampled to a voxel size of 2 × 2 × 2 mm and finally smoothed with an 8 mm Gaussian kernel.
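
Two of the pre-processing checks above can be sketched numerically. The first hypothetical helper, `motion_within_limits`, applies the 2 mm / 2° limits to a realignment-parameter array laid out like SPM's `rp_*.txt` files (three translations in mm, then three rotations in radians, relative to the reference volume); the authors' exact inspection procedure is not described, so this is only one plausible reading. The second helper, `fwhm_to_sigma`, converts the smoothing kernel width, which SPM specifies as a full width at half maximum, into a Gaussian standard deviation.

```python
import numpy as np


def motion_within_limits(rp, max_trans_mm=2.0, max_rot_deg=2.0):
    """Check SPM-style realignment parameters against fixed motion limits.

    rp: array of shape (n_volumes, 6); columns 0-2 are translations (mm),
    columns 3-5 are rotations (radians), as in SPM's rp_*.txt files.
    """
    rp = np.asarray(rp, dtype=float)
    if rp.ndim != 2 or rp.shape[1] != 6:
        raise ValueError("expected an (n_volumes, 6) array")
    max_trans = np.abs(rp[:, :3]).max()               # largest translation (mm)
    max_rot = np.degrees(np.abs(rp[:, 3:]).max())     # largest rotation (deg)
    return bool(max_trans < max_trans_mm and max_rot < max_rot_deg)


def fwhm_to_sigma(fwhm_mm, voxel_mm=1.0):
    """Convert a Gaussian FWHM to its standard deviation, in units of voxel_mm."""
    return fwhm_mm / (2.0 * np.sqrt(2.0 * np.log(2.0))) / voxel_mm


if __name__ == "__main__":
    print(motion_within_limits(np.zeros((311, 6))))
    print(fwhm_to_sigma(8.0, voxel_mm=2.0))
```

With the values in the text, the 8 mm kernel corresponds to a standard deviation of about 3.4 mm, or roughly 1.7 voxels at the 2 mm resampled resolution.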

Following the approach proposed by Di and Biswal (2015), the data were modeled in SPM using a design matrix consisting only of sinusoidal functions covering a frequency range of 0.005–0.1 Hz. Accordingly, the high-pass filter was set to a liberal cut-off of 360 s. This GLM is needed only to extract the time courses that feed into the sDCM analysis. Hence, only a single F-contrast spanning all sinusoidal functions was defined.
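
The following sketch shows how such a sinusoidal design matrix might be built for 311 volumes at TR = 2 s. It assumes sine and cosine pairs at the Fourier frequencies of the run, k / (N · TR), which the text does not specify, so the exact spacing and number of regressors used by the authors may differ; the function names are hypothetical. The second function mirrors, as we understand it, the DCT-based high-pass filter in SPM's `spm_filter`, which builds fix(2 · N · TR / cut-off + 1) cosine columns and drops the constant one.

```python
import numpy as np


def sinusoidal_design(n_scans, tr, f_min=0.005, f_max=0.1):
    """Sine/cosine regressors at Fourier frequencies k/(n_scans*tr) within [f_min, f_max]."""
    t = np.arange(n_scans) * tr                 # acquisition times (s)
    k = np.arange(1, n_scans // 2 + 1)          # harmonic indices
    freqs = k / (n_scans * tr)                  # frequencies (Hz)
    freqs = freqs[(freqs >= f_min) & (freqs <= f_max)]
    cols = []
    for f in freqs:
        cols.append(np.sin(2 * np.pi * f * t))
        cols.append(np.cos(2 * np.pi * f * t))
    return np.column_stack(cols)


def spm_highpass_dct_count(n_scans, tr, cutoff_s):
    """Number of DCT drift regressors removed by an SPM-style high-pass filter."""
    # int() truncates like MATLAB's fix() for positive values; the constant column is dropped
    return int(2 * n_scans * tr / cutoff_s + 1) - 1


if __name__ == "__main__":
    X = sinusoidal_design(311, 2.0)
    print(X.shape)
    print(spm_highpass_dct_count(311, 2.0, 360.0))
```

Under these assumptions the design has 59 frequencies (118 columns), and the 360 s filter removes three cosine drifts, the fastest at 3 / (2 × 311 × 2 s) ≈ 0.0024 Hz. This lies below the 0.005 Hz lower bound of the sinusoidal set, which is presumably why such a liberal cut-off was chosen.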

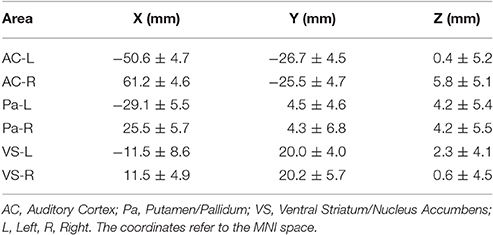

The dynamic causal modeling approach allows the specification of models with up to eight regions. Following the a priori hypothesis, six anatomically distinct areas covering the core areas of auditory perception, rhythm processing, and reward processing were selected for the present analysis. The coordinates of four of these areas, representing the auditory cortex and the putamen/pallidum, were based on published coordinates; the coordinates for the ventral striatum/nucleus accumbens were identified directly on the MNI template. Since there was no prediction regarding hemispheric lateralization, areas were defined for both the left and right hemispheres. In total, six anatomical areas of interest were defined: the auditory cortex (AC), with reference to subarea Te1 (Morosan et al., 2001), the putamen/pallidum (PA; Grahn and Rowe, 2009), and the ventral striatum/nucleus accumbens (VS), each in the left and right hemispheres (see Table 1 for the exact coordinates). For each region, the time series of the most significant voxel was extracted using a liberal threshold of p < 0.05, uncorrected, since the significance of the GLM fit is of minor relevance for this approach and served only as a basis for extracting time courses. If no local maximum lay within 8 mm of the target coordinate, the nearest sub-threshold voxel within this 8 mm range was used. This procedure ensured that only voxels showing fluctuations over time were selected for the analysis.
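
The voxel-selection rule described above can be sketched as follows. `select_peak_voxel` is a hypothetical, simplified helper that works on precomputed arrays rather than on SPM's VOI tools: it searches an 8 mm sphere around the target coordinate and takes the voxel with the smallest uncorrected p-value below 0.05. Picking the single most significant voxel stands in for the local-maximum search mentioned in the text, and the fallback (the voxel nearest to the target) is our reading of "nearest sub-threshold voxel", not a confirmed detail of the authors' procedure.

```python
import numpy as np


def select_peak_voxel(coords_mm, p_values, target_mm, radius_mm=8.0, alpha=0.05):
    """Return (voxel index, passes_threshold) for one region of interest.

    coords_mm : (n_voxels, 3) MNI coordinates of voxel centres (mm)
    p_values  : (n_voxels,) uncorrected p-values of the F-contrast
    target_mm : (3,) target coordinate, e.g. from Table 1
    """
    coords = np.asarray(coords_mm, dtype=float)
    p = np.asarray(p_values, dtype=float)
    dist = np.linalg.norm(coords - np.asarray(target_mm, dtype=float), axis=1)
    in_sphere = np.flatnonzero(dist <= radius_mm)
    if in_sphere.size == 0:
        raise ValueError("no voxels within the search radius")
    significant = in_sphere[p[in_sphere] < alpha]
    if significant.size > 0:
        # most significant voxel inside the sphere
        return int(significant[np.argmin(p[significant])]), True
    # assumed fallback: the voxel closest to the target coordinate
    return int(in_sphere[np.argmin(dist[in_sphere])]), False
```

In a real pipeline the returned index would be used to read that voxel's time course from the pre-processed 4D data before entering it into the sDCM.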

Table 1. The table reports the mean coordinates (±SD) for the six areas used in the stochastic DCM analysis.

The terms ventral striatum and nucleus accumbens, as well as putamen and pallidum, are often used interchangeably in the neuroimaging literature. Although these are anatomically and functionally distinct areas, they are often difficult to separate in functional neuroimaging data with low spatial resolution (in the present case 3.4 × 3.4 × 5.5 mm³) due to their proximity. Therefore, the terms ventral striatum/nucleus accumbens and putamen/pallidum are used in the following to reflect that the precise spatial localization of these areas is limited by the intrinsic resolution of the raw fMRI data.

Dynamic causal modeling (DCM) is a method based on generative models that describe the brain as a non-linear but deterministic system. These generative models of the network of neuronal populations consist of a set of differential equations describing how the hidden neuronal states could have generated the observed data. Because the system is described by these equations, the model can be inverted, and the parameters of the hidden states, which are not directly observable, can be estimated (Friston et al., 2011). Originally developed for task-related fMRI, DCM has been extended to resting-state fMRI in the form of stochastic DCM (Friston et al., 2011). The sDCM model was defined as a fully connected model in which each node was connected with every other node. The model (DCM12, revision 5729) was defined within SPM12 (rev. 6225). After model estimation, post hoc Bayesian model optimization was applied (Friston and Penny, 2011). The resulting connectivity estimates were subjected to group-specific one-sample t-tests as well as between-group two-sample t-tests using SPSS (ver. 22). A Bonferroni correction was applied, taking into account that 36 t-tests were performed. In addition, the hemodynamic response was examined in more detail by analysing the estimated parameters for transit (transit time through the “balloon”), decay (signal decay), and epsilon (neural efficiency). These parameters refer to the revised Balloon model (Friston et al., 2000; Buxton et al., 2004; Buxton, 2012), which expresses the metabolic response in terms of cerebral blood volume, cerebral blood flow, and oxygen extraction rate. A DCM analysis estimates the metabolic parameters transit and decay separately for each region, whereas ε is a single global parameter per subject.
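
The neuronal part of a stochastic DCM can be illustrated with a linear state equation, dx/dt = A x + w, where the off-diagonal entries of A are the couplings between regions, the diagonal entries are self-inhibition, and w is endogenous random fluctuation. The sketch below is a hedged illustration, not the DCM12 implementation: it simulates six regions with an assumed, fully connected A using Euler–Maruyama integration and omits the hemodynamic forward model, the priors, and model inversion entirely. It also shows that a fully connected six-region model has 6 × 6 = 36 connectivity parameters, which presumably corresponds to the 36 t-tests mentioned above.

```python
import numpy as np

REGIONS = ["AC_L", "AC_R", "PA_L", "PA_R", "VS_L", "VS_R"]


def simulate_neuronal_states(A, n_steps=5000, dt=0.01, noise_sd=0.1, seed=0):
    """Euler-Maruyama integration of dx/dt = A x + w for a linear stochastic network."""
    A = np.asarray(A, dtype=float)
    rng = np.random.default_rng(seed)
    x = np.zeros((n_steps, A.shape[0]))
    for t in range(1, n_steps):
        drift = A @ x[t - 1]                                  # coupled neuronal dynamics
        noise = noise_sd * np.sqrt(dt) * rng.standard_normal(A.shape[0])
        x[t] = x[t - 1] + drift * dt + noise
    return x


n = len(REGIONS)
# Hypothetical fully connected A: weak positive coupling, negative self-connections
A = np.full((n, n), 0.05)
np.fill_diagonal(A, -0.5)

n_params = A.size                    # 36 parameters, self-connections included
bonferroni_alpha = 0.05 / n_params   # per-test threshold for 36 tests

if __name__ == "__main__":
    x = simulate_neuronal_states(A)
    print(x.shape, n_params, round(bonferroni_alpha, 5))
```

The assumed A has eigenvalues with negative real parts, so the simulated activity fluctuates around zero instead of diverging; in the reported results, a "less negative" self-connection corresponds to a diagonal entry of A moving closer to zero.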

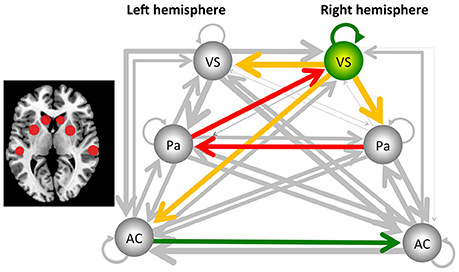

The sDCM results revealed significant (Bonferroni-corrected) connections between the auditory cortex, putamen/pallidum, and ventral striatum/nucleus accumbens in both hemispheres and in both groups. More importantly, several significant group differences were detected. In the following, both uncorrected and Bonferroni-corrected differences are reported; the latter are marked with “*.” All differences are described relative to the control group. Reduced connectivity was detected from the right ventral striatum/nucleus accumbens to its left homolog [t(24) = −2.295, p < 0.031], to the right putamen/pallidum [t(24) = −3.196, p < 0.004], and to the left auditory cortex [t(24) = −2.140, p < 0.043]. In addition, reduced connectivity was detected from the left putamen/pallidum to the right ventral striatum/nucleus accumbens [t(24) = −3.901, p < 0.001*] and from the right to the left putamen/pallidum [t(24) = −4.562, p < 0.001*]. These latter two results remained significant after Bonferroni correction (see Figure 1). Finally, the intrinsic, self-inhibitory connection of the right ventral striatum/nucleus accumbens was reduced in the music group [i.e., less negative, t(24) = 2.138, p < 0.043]. By contrast, increased connectivity was detected only for the connection from the left to the right auditory cortex [t(24) = 2.354, p < 0.027].
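
Which of the reported group differences survive Bonferroni correction can be checked directly against the per-test threshold 0.05 / 36 ≈ 0.0014. The sketch below takes the p-value bounds exactly as reported above ("p < …") and treats each bound as the p-value, which is an assumption since the exact values are not given; the dictionary labels are shorthand introduced here.

```python
BONFERRONI_ALPHA = 0.05 / 36   # 36 t-tests, as stated in the Methods

# Reported upper bounds on the p-values of the group comparison (music vs. control)
reported = {
    "VS_R -> VS_L": 0.031,
    "VS_R -> PA_R": 0.004,
    "VS_R -> AC_L": 0.043,
    "PA_L -> VS_R": 0.001,
    "PA_R -> PA_L": 0.001,
    "VS_R self-connection": 0.043,
    "AC_L -> AC_R": 0.027,
}

# A bound at or below the threshold guarantees the test survives correction
survivors = [name for name, p in reported.items() if p <= BONFERRONI_ALPHA]

if __name__ == "__main__":
    print(f"threshold: {BONFERRONI_ALPHA:.5f}")
    print(survivors)
```

Only the two putamen/pallidum connections pass, matching the two effects marked with “*” above; the next strongest effect (p < 0.004) misses the corrected threshold by roughly a factor of three.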

Figure 1. Results from the stochastic dynamic causal modeling (sDCM) analysis, using the auditory cortex (AC), the putamen/pallidum (PA), and the ventral striatum/nucleus accumbens (VS) of the left and right hemispheres as core areas. Dark arrows indicate connections that did not differ significantly between the music and control groups; line thickness indicates connection strength. Yellow/red arrows indicate decreased connectivity. In addition, the right ventral striatum/nucleus accumbens reduced its self-inhibition, indicated by the green arrow, and changed its hemodynamic response parameters, indicated by the green sphere. Finally, the green arrow from the left to the right auditory cortex indicates a significant increase in connectivity. The two red arrows indicate effects that were also significant after Bonferroni correction. The panel on the left shows the localization of the areas (see Table 1 for the exact coordinates).

For the hemodynamic parameters transit, decay, and epsilon, the group comparison showed that transit and decay differed significantly only for the right ventral striatum/nucleus accumbens. Although the corresponding p-values do not survive Bonferroni correction, they are worth mentioning, since differences were, again, detected only for this area. Transit was significantly reduced [t(24) = −2.158, p < 0.041], while decay was significantly increased [t(24) = 2.202, p < 0.038].
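
To make the meaning of transit and decay more concrete, the sketch below simulates the Balloon model of Friston et al. (2000) for a brief neural input: decay is the rate at which the vasodilatory signal s decays, transit is the mean transit time through the venous "balloon", and ε scales how strongly neural input drives s. The parameter values are illustrative, close to those reported by Friston et al. (2000), and are assumptions here; DCM estimates these quantities relative to prior values, so the numbers do not correspond to the estimates in this study. The function name `balloon_bold` is hypothetical.

```python
import numpy as np


def balloon_bold(transit=0.98, decay=0.65, epsilon=0.5, gamma=0.41, alpha=0.32,
                 e0=0.34, v0=0.02, stim_s=1.0, duration_s=30.0, dt=0.01):
    """Forward-Euler simulation of the Balloon model's BOLD response to a brief input."""
    n = int(round(duration_s / dt))
    s, f, v, q = 0.0, 1.0, 1.0, 1.0          # vasodilatory signal, inflow, volume, deoxy-Hb
    k1, k2, k3 = 7.0 * e0, 2.0, 2.0 * e0 - 0.2
    y = np.zeros(n)
    for i in range(n):
        # BOLD signal change as a function of venous volume and deoxyhaemoglobin
        y[i] = v0 * (k1 * (1 - q) + k2 * (1 - q / v) + k3 * (1 - v))
        u = 1.0 if i * dt < stim_s else 0.0  # boxcar neural input
        e_f = 1.0 - (1.0 - e0) ** (1.0 / f)  # oxygen extraction fraction
        ds = epsilon * u - decay * s - gamma * (f - 1.0)
        df = s
        dv = (f - v ** (1.0 / alpha)) / transit
        dq = (f * e_f / e0 - v ** (1.0 / alpha) * q / v) / transit
        s, f, v, q = s + ds * dt, f + df * dt, v + dv * dt, q + dq * dt
    return np.arange(n) * dt, y


if __name__ == "__main__":
    for tt in (0.98, 0.7):                   # compare two transit times
        t, y = balloon_bold(transit=tt)
        print(f"transit={tt}: peak {y.max():.4f} at {t[np.argmax(y)]:.2f} s")
```

Varying `transit` and `decay` in this way shows how the shape and latency of the modelled BOLD response depend on them, which is the level at which the group differences for the right ventral striatum/nucleus accumbens are expressed.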

The aim of this study was to investigate the brain networks involved in processing a continuously playing piece of rhythmic music. The analysis of continuous stimulation paradigms is generally challenging. Here, we present, for the first time, the application of stochastic dynamic causal modeling as a tool for analysing connectivity in a study with continuous stimulation. The sDCM analysis provided evidence that, compared with the control group without music stimulation, dance floor-like rhythmic music decreased connectivity from the right putamen/pallidum, via the left putamen/pallidum, to the right ventral striatum/nucleus accumbens. Further, the right ventral striatum/nucleus accumbens changed its level of activity, as reflected by reduced self-inhibition and an altered hemodynamic response. In addition, functional connectivity was reduced from the right ventral striatum/nucleus accumbens to the left ventral striatum/nucleus accumbens, the left auditory cortex, and the right putamen/pallidum (see Figure 1).

Taken together, the results indicate that mainly the right ventral striatum/nucleus accumbens changed its activity and network coupling when listening to this piece of rhythmic, dance floor-like music. The analysis also shows significantly reduced connectivity from the right to the left putamen/pallidum, which may reflect reduced coupling in motor-related brain areas. Future studies may examine whether this is related to the experience of groove (Stupacher et al., 2013).

The results show that listening to rhythmic music not only changes the level of activation of the reward system, measured in terms of BOLD signal fluctuation, but also changes connectivity within a network related to reward processing. The results further support the view that the ventral striatum, i.e., presumably the nucleus accumbens, is deeply involved in processing music-evoked emotions and reduces its connectivity to other areas. This does not imply reduced activation but rather activity on a different time scale (Mueller et al., 2015). In fact, the significantly reduced self-inhibition and the changed hemodynamic parameters of the right ventral striatum/nucleus accumbens indicate an increased level of activation. The results are also in line with a recent meta-analysis by Koelsch (2014), who identified the right ventral striatum, and in particular the right nucleus accumbens, as an important structure for processing music-induced emotions. In this respect, the nucleus accumbens, which is part of the dopaminergic system, is regarded as part of the reward processing system that is mainly active while reward is experienced, and its activity may correlate with the reward value (Salimpoor et al., 2013, 2015; Ikemoto et al., 2015; Zatorre, 2015). This holds for any type of reward, in particular biologically relevant rewards such as food or sex, but also money (Daniel and Pollmann, 2014) and even fairness (Cappelen et al., 2014). By contrast, the dorsal striatum/caudate is generally more related to the anticipation of reward, but also to music-induced frisson (Koelsch et al., 2015; Zatorre, 2015). This notion is further supported by a PET study showing that dopamine release differed between the ventral and dorsal striatum: the dorsal striatum/caudate appeared to be more involved in the anticipation and prediction of reward, while the ventral striatum/nucleus accumbens showed the strongest dopamine release while an emotional response to music was experienced (Salimpoor et al., 2011).

The results presented here may seem to contradict an fMRI study by Salimpoor and colleagues, who detected increased connectivity between the auditory cortex and the ventral striatum (Salimpoor et al., 2011). However, one has to bear in mind that the present study used a continuous stimulation paradigm with an unfamiliar piece of music that lasted 10.16 min, much longer than the stimuli of most other studies, which typically last less than a minute. Further, all measures here are derived from BOLD fluctuations and how they propagate through the specified network. In this respect, the results may not be directly comparable to those of studies in which connectivity measures were based on task contrasts.

Finally, the sDCM analysis also indicated increased connectivity from the left to the right auditory cortex compared with ordinary resting-state fMRI. This confirms the validity of our approach of analysing data from a continuous stimulation paradigm with sDCM. Moreover, increased connectivity from left to right was to be expected, since the right auditory cortex is assumed to be dominant in processing tonal information such as music (Tramo, 2001; Zatorre et al., 2007).

The results presented here are also significant from a methodological perspective. The unconstrained DCM model not only confirmed the role of the right ventral striatum but also the validity of the presented sDCM approach. Note that this is the first time a continuous stimulation protocol has been combined with a stochastic DCM approach. Since a stochastic DCM model is set up as a fully connected model without any constraints, it is a significant result that the contribution of the right ventral striatum emerged naturally from the model estimation. Further, there were no significant group differences in the hemodynamic parameters or the self-inhibition of the auditory system. This may indicate that continuous auditory stimulation does not change hemodynamic fluctuations within the auditory system compared with resting-state fMRI, although the level of activation might differ.

However, the selected approach has important limitations that must be borne in mind and that restrict the general conclusions that can be drawn from the presented results. Firstly, due to methodological constraints, the present study only compared music perception with resting state and used only one piece of music. Although there is strong evidence that the detected effects are mostly related to the presentation of rhythmic music and that the majority of the participants experienced this music as pleasant, one cannot entirely rule out that comparable effects might have emerged when listening to other types of (pleasant) stimuli in a similarly passive, “resting” situation. In addition, the experience of groove was not formally assessed in this study, although several participants in the music group reported it; one might also speculate whether the observed decoupling could be related to suppressing the urge to move along. Secondly, the DCM approach generally limits the number of areas that can be included in a model, and these areas should be sufficiently far apart to avoid overlapping effects due to smoothing. Accordingly, the selection of the areas of interest was based on the a priori hypothesis described in the introduction, which comprised the auditory cortex, the basal ganglia, and the reward system. Therefore, only these six anatomically distinct areas were included in the model; future studies may include additional areas such as the ventromedial prefrontal cortex or the amygdala. Finally, fMRI studies with 13 participants per group are still within the standard range, but larger samples are required and recommended in future studies for drawing more general conclusions.

This study demonstrated reduced functional connectivity, and thus less constrained activation, of the reward processing system, namely the right ventral striatum and nucleus accumbens, while listening to a several-minutes-long, pleasurable, rhythmic piece of music, as compared to silence. The results give deeper insight into the power of music and show how easily and how strongly rhythmic music interacts with the affective and basal ganglia systems, suggesting that emotional experiences during music listening may be generated through stronger activation and, simultaneously, reduced functional connectivity of the reward system. Thus, “The powers of cognition that are set into play by this representation [of a beautiful object] are hereby in a free play, since no determinate concept restricts them to a particular cognition” (Kant, 1790; Guyer, 2002).

HB contributed to the analysis of the data and writing of the article. BO contributed to the planning, stimulus preparation, and writing of the article. KS contributed to the planning, stimulus preparation, performance, analysis, and writing of the article.

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

We thank all participants for their participation, and the staff of the radiological department of the Haukeland University Hospital for their help during data acquisition. We would like to thank particularly Kjetil Vikene for inspiring discussions throughout the writing process. The study was supported by a grant to KS from the Bergen Research Foundation (When a sound becomes speech) and the Research Council of Norway (217932: It's time for some music).

The Supplementary Material for this article can be found online at: http://journal.frontiersin.org/article/10.3389/fnins.2017.00153/full#supplementary-material

Blood, A. J., and Zatorre, R. J. (2001). Intensely pleasurable responses to music correlate with activity in brain regions implicated in reward and emotion. Proc. Natl. Acad. Sci. U.S.A. 98, 11818–11823. doi: 10.1073/pnas.191355898

Buxton, R. B. (2012). Dynamic models of BOLD contrast. Neuroimage 62, 953–961. doi: 10.1016/j.neuroimage.2012.01.012

Buxton, R. B., Uludağ, K., Dubowitz, D. J., and Liu, T. T. (2004). Modeling the hemodynamic response to brain activation. Neuroimage 23(Suppl. 1), S220–S233. doi: 10.1016/j.neuroimage.2004.07.013

Cappelen, A. W., Eichele, T., Hugdahl, K., Specht, K., Sørensen, E. Ø., and Tungodden, B. (2014). Equity theory and fair inequality: a neuroeconomic study. Proc. Natl. Acad. Sci. U.S.A. 111, 15368–15372. doi: 10.1073/pnas.1414602111

Chanda, M. L., and Levitin, D. J. (2013). The neurochemistry of music. Trends Cogn. Sci. 17, 179–193. doi: 10.1016/j.tics.2013.02.007

Chen, J. L., Penhune, V. B., and Zatorre, R. J. (2008a). Listening to musical rhythms recruits motor regions of the brain. Cereb. Cortex 18, 2844–2854. doi: 10.1093/cercor/bhn042

Chen, J. L., Penhune, V. B., and Zatorre, R. J. (2008b). Moving on time: brain network for auditory-motor synchronization is modulated by rhythm complexity and musical training. J. Cogn. Neurosci. 20, 226–239. doi: 10.1162/jocn.2008.20018

Daniel, R., and Pollmann, S. (2014). A universal role of the ventral striatum in reward-based learning: evidence from human studies. Neurobiol. Learn. Mem. 114C, 90–100. doi: 10.1016/j.nlm.2014.05.002

Di, X., and Biswal, B. B. (2015). Dynamic brain functional connectivity modulated by resting-state networks. Brain Struct. Funct. 220, 37–46. doi: 10.1007/s00429-013-0634-3

Friston, K. J., Li, B., Daunizeau, J., and Stephan, K. E. (2011). Network discovery with DCM. Neuroimage 56, 1202–1221. doi: 10.1016/j.neuroimage.2010.12.039

Friston, K. J., Mechelli, A., Turner, R., and Price, C. J. (2000). Nonlinear responses in fMRI: the Balloon model, Volterra kernels, and other hemodynamics. Neuroimage 12, 466–477. doi: 10.1006/nimg.2000.0630

Friston, K. J., and Penny, W. (2011). Post hoc Bayesian model selection. Neuroimage 56, 2089–2099. doi: 10.1016/j.neuroimage.2011.03.062

Fritz, T., Jentschke, S., Gosselin, N., Sammler, D., Peretz, I., Turner, R., et al. (2009). Universal recognition of three basic emotions in music. Curr. Biol. 19, 573–576. doi: 10.1016/j.cub.2009.02.058

Grahn, J. A., and Brett, M. (2007). Rhythm and beat perception in motor areas of the brain. J. Cogn. Neurosci. 19, 893–906. doi: 10.1162/jocn.2007.19.5.893

Grahn, J. A., and Rowe, J. B. (2009). Feeling the beat: premotor and striatal interactions in musicians and nonmusicians during beat perception. J. Neurosci. 29, 7540–7548. doi: 10.1523/JNEUROSCI.2018-08.2009

Gray, P. M., Krause, B., Atema, J., Payne, R., Krumhansl, C. L., and Baptista, L. (2001). The music of nature and the nature of music. Science 291, 52–54. doi: 10.1126/science.10.1126/SCIENCE.1056960

Guyer, P. (2002). “Immanuel Kant: critique of the power of judgment,” in The Cambridge Edition of the Works of Immanuel Kant in Translation, ed P. Guyer (Cambridge: Cambridge University Press).

Ikemoto, S., Yang, C., and Tan, A. (2015). Basal ganglia circuit loops, dopamine and motivation: a review and enquiry. Behav. Brain Res. 290, 17–31. doi: 10.1016/j.bbr.2015.04.018

Janata, P., Tomic, S. T., and Haberman, J. M. (2012). Sensorimotor coupling in music and the psychology of the groove. J. Exp. Psychol. Gen. 141, 54–75. doi: 10.1037/a0024208

Koelsch, S. (2011). Toward a neural basis of music perception - a review and updated model. Front. Psychol. 2:110. doi: 10.3389/fpsyg.2011.00110

Koelsch, S. (2014). Brain correlates of music-evoked emotions. Nat. Rev. Neurosci. 15, 170–180. doi: 10.1038/nrn3666

Koelsch, S., Jacobs, A. M., Menninghaus, W., Liebal, K., Klann-Delius, G., von Scheve, C., et al. (2015). The quartet theory of human emotions: an integrative and neurofunctional model. Phys. Life Rev. 13, 1–27. doi: 10.1016/j.plrev.2015.03.001

Kornysheva, K., von Cramon, D. Y., Jacobsen, T., and Schubotz, R. I. (2010). Tuning-in to the beat: aesthetic appreciation of musical rhythms correlates with a premotor activity boost. Hum. Brain Mapp. 31, 48–64. doi: 10.1002/hbm.20844

Levitin, D. J., and Tirovolas, A. K. (2009). Current advances in the cognitive neuroscience of music. Ann. N.Y. Acad. Sci. 1156, 211–231. doi: 10.1111/j.1749-6632.2009.04417.x

Madison, G. (2006). Experiencing groove induced by music: consistency and phenomenology. Music Percept. 24, 201–208. doi: 10.1525/mp.2006.24.2.201

Madison, G., Gouyon, F., Ullén, F., and Hörnström, K. (2011). Modeling the tendency for music to induce movement in humans: first correlations with low-level audio descriptors across music genres. J. Exp. Psychol. Hum. Percept. Perform. 37, 1578–1594. doi: 10.1037/a0024323

Morosan, P., Rademacher, J., Schleicher, A., Amunts, K., Schormann, T., and Zilles, K. (2001). Human primary auditory cortex: cytoarchitectonic subdivisions and mapping into a spatial reference system. Neuroimage 13, 684–701. doi: 10.1006/nimg.2000.0715

Mueller, K., Fritz, T., Mildner, T., Richter, M., Schulze, K., Lepsien, J., et al. (2015). Investigating the dynamics of the brain response to music: a central role of the ventral striatum/nucleus accumbens. Neuroimage 116, 68–79. doi: 10.1016/j.neuroimage.2015.05.006

Panteli, M., Rocha, B., Bogaards, N., and Honingh, A. (2016). A model for rhythm and timbre similarity in electronic dance music. Musicae Sci. doi: 10.1177/1029864916655596. [Epub ahead of print].

Peretz, I., and Zatorre, R. J. (2005). Brain organization for music processing. Annu. Rev. Psychol. 56, 89–114. doi: 10.1146/annurev.psych.56.091103.070225

Phillips-Silver, J., and Trainor, L. J. (2005). Feeling the beat: movement influences infant rhythm perception. Science 308, 1430. doi: 10.1126/science.1110922

Salimpoor, V. N., Benovoy, M., Larcher, K., Dagher, A., and Zatorre, R. J. (2011). Anatomically distinct dopamine release during anticipation and experience of peak emotion to music. Nat. Neurosci. 14, 257–262. doi: 10.1038/nn.2726

Salimpoor, V. N., van den Bosch, I., Kovacevic, N., McIntosh, A. R., Dagher, A., and Zatorre, R. J. (2013). Interactions between the nucleus accumbens and auditory cortices predict music reward value. Science 340, 216–219. doi: 10.1126/science.1231059

Salimpoor, V. N., Zald, D. H., Zatorre, R. J., Dagher, A., and McIntosh, A. R. (2015). Predictions and the brain: how musical sounds become rewarding. Trends Cogn. Sci. 19, 86–91. doi: 10.1016/j.tics.2014.12.001

Stupacher, J., Hove, M. J., Novembre, G., Schütz-Bosbach, S., and Keller, P. E. (2013). Musical groove modulates motor cortex excitability: a TMS investigation. Brain Cogn. 82, 127–136. doi: 10.1016/j.bandc.2013.03.003

Tramo, M. J. (2001). Music of the hemispheres. Science 291, 54–56. doi: 10.1126/science.10.1126/SCIENCE.1056899

Trost, W., Frühholz, S., Schön, D., Labbé, C., Pichon, S., Grandjean, D., et al. (2014). Getting the beat: entrainment of brain activity by musical rhythm and pleasantness. Neuroimage 103, 55–64. doi: 10.1016/j.neuroimage.2014.09.009

Witek, M. A. G., Clarke, E. F., Wallentin, M., Kringelbach, M. L., and Vuust, P. (2014). Syncopation, body-movement and pleasure in groove music. PLoS ONE 9:e94446. doi: 10.1371/journal.pone.0094446

Witek, M. A. G., Kringelbach, M. L., and Vuust, P. (2015). Musical rhythm and affect: comment on “The quartet theory of human emotions: an integrative and neurofunctional model” by S. Koelsch et al. Phys. Life Rev. 13, 92–94. doi: 10.1016/j.plrev.2015.04.029

Zatorre, R. J. (2015). Musical pleasure and reward: mechanisms and dysfunction. Ann. N.Y. Acad. Sci. 1337, 202–211. doi: 10.1111/nyas.12677

Zatorre, R. J., Chen, J. L., and Penhune, V. B. (2007). When the brain plays music: auditory-motor interactions in music perception and production. Nat. Rev. Neurosci. 8, 547–558. doi: 10.1038/nrn2152

Zatorre, R. J., and Krumhansl, C. L. (2002). Mental models and musical minds. Science 298, 2138–2139. doi: 10.1126/science.1080006

Zatorre, R. J., and Salimpoor, V. N. (2013). From perception to pleasure: music and its neural substrates. Proc. Natl. Acad. Sci. U.S.A. 110(Suppl. 2), 10430–10437. doi: 10.1073/pnas.1301228110

Keywords: music, rhythm, basal ganglia, reward system, ventral striatum, nucleus accumbens, fMRI, dynamic causal modeling

Citation: Brodal HP, Osnes B and Specht K (2017) Listening to Rhythmic Music Reduces Connectivity within the Basal Ganglia and the Reward System. Front. Neurosci. 11:153. doi: 10.3389/fnins.2017.00153

Received: 14 August 2016; Accepted: 09 March 2017;

Published: 28 March 2017.

Edited by:

Simone Dalla Bella, University of Montpellier 1, France

Copyright © 2017 Brodal, Osnes and Specht. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) or licensor are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Karsten Specht, karsten.specht@psybp.uib.no

Disclaimer: All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article or claim that may be made by its manufacturer is not guaranteed or endorsed by the publisher.
